Guest post by Pat Frank

Readers of Watts Up With That will know from Mark I that for six years I have been trying to publish a manuscript with the post title. Well, it has passed peer review and is now published at Frontiers in Earth Science: Atmospheric Science. The paper demonstrates that climate models have no predictive value.

Before going further, my deep thanks to Anthony Watts for giving a voice to independent thought. So many have sought to suppress it (freedom denialists?). His gift to us (and to America) is beyond calculation. And to Charles the moderator, my eternal gratitude for making it happen.

Onward: the paper is open access. It can be found here, where it can be downloaded; the Supporting Information (SI) is here (7.4 MB pdf).

I would like to publicly honor my manuscript editor Dr. Jing-Jia Luo, who displayed the courage of a scientist, a level of professional integrity found lacking among so many during my 6-year journey.

Dr. Luo chose four reviewers, three of whom were apparently not conflicted by investment in the AGW status-quo. They produced critically constructive reviews that helped improve the manuscript. To these reviewers I am very grateful. They provided the dispassionate professionalism and integrity that had been in very rare evidence within my prior submissions.

So, all honor to the editors and reviewers of Frontiers in Earth Science. They rose above the partisan and hewed to the principled standards of science when so many did not, and do not.

A digression into the state of practice: Anyone wishing a deep dive can download the entire corpus of reviews and responses for all 13 prior submissions, here (60 MB zip file, Webroot scanned virus-free). Choose “free download” to avoid advertising blandishment.

Climate modelers produced about 25 of the prior 30 reviews. You’ll find repeated editorial rejections of the manuscript on the grounds of objectively incompetent negative reviews. I have written about that extraordinary reality at WUWT here and here. In 30 years of publishing in Chemistry, I never once experienced such a travesty of process. For example, this paper overturned a prediction from Molecular Dynamics and so had a very negative review, but the editor published anyway after our response.

In my prior experience, climate modelers:

· did not know to distinguish between accuracy and precision.

· did not understand that, for example, a ±15 C temperature uncertainty is not a physical temperature.

· did not realize that deriving a ±15 C uncertainty to condition a projected temperature does *not* mean the model itself is oscillating rapidly between icehouse and greenhouse climate predictions (an actual reviewer objection).

· confronted standard error propagation as a foreign concept.

· did not understand the significance or impact of a calibration experiment.

· did not understand the concept of instrumental or model resolution, or that it has empirical limits.

· did not understand physical error analysis at all.

· did not realize that ‘±n’ is not ‘+n.’

Some of these traits consistently show up in their papers. I’ve not seen one that deals properly with physical error, with model calibration, or with the impact of model physical error on the reliability of a projected climate.

More thorough-going analyses have been posted up at WUWT, here, here, and here, for example.

In climate model papers the typical uncertainty analyses are about precision, not about accuracy. They are appropriate to engineering models that reproduce observables within their calibration (tuning) bounds. They are not appropriate to physical models that predict future or unknown observables.

Climate modelers are evidently not trained in the scientific method. They are not trained to be scientists. They are not scientists. They are apparently not trained to evaluate the physical or predictive reliability of their own models. They do not manifest the attention to physical reasoning demanded by good scientific practice. In my prior experience they are actively hostile to any demonstration of that diagnosis.

In their hands, climate modeling has become a kind of subjectivist narrative, in the manner of the critical theory pseudo-scholarship that has so disfigured the academic Humanities and Sociology Departments, and that has actively promoted so much social strife. Call it Critical Global Warming Theory. Subjectivist narratives assume what should be proved (CO₂ emissions equate directly to sensible heat), their assumptions have the weight of evidence (CO₂ and temperature, see?), and every study is confirmatory (it’s worse than we thought).

Subjectivist narratives and academic critical theories are prejudicial constructs. They are in opposition to science and reason. Over the last 31 years, climate modeling has attained that state, with its descent into unquestioned assumptions and circular self-confirmations.

A summary of results: The paper shows that advanced climate models project air temperature merely as a linear extrapolation of greenhouse gas (GHG) forcing. That fact is multiply demonstrated, with the bulk of the demonstrations in the SI. A simple equation, linear in forcing, successfully emulates the air temperature projections of virtually any climate model. Willis Eschenbach also discovered that independently, a while back.
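For readers unfamiliar with the idea, "linear in forcing" means the emulated anomaly is a constant plus a constant multiple of the forcing. The Python sketch below fits such a line by ordinary least squares. It is an illustration under stated assumptions only: the function names and the synthetic forcing and temperature series are hypothetical, and this is not the specific emulation equation given in the paper or its SI.

```python
def fit_linear_emulator(forcing, temps):
    """Ordinary least-squares fit of temps ~ a + b * forcing; returns (a, b).

    Illustrative only: a generic straight-line fit, not the paper's equation.
    """
    n = len(forcing)
    if n != len(temps) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_f = sum(forcing) / n
    mean_t = sum(temps) / n
    sxx = sum((f - mean_f) ** 2 for f in forcing)
    sxy = sum((f - mean_f) * (t - mean_t) for f, t in zip(forcing, temps))
    b = sxy / sxx          # slope: temperature change per unit forcing
    a = mean_t - b * mean_f  # intercept
    return a, b


def emulate(forcing, a, b):
    """Evaluate the fitted line at each forcing value."""
    return [a + b * f for f in forcing]


# Hypothetical, synthetic series: forcing (W m^-2) and anomalies (C).
forcing = [0.0, 0.5, 1.0, 1.5, 2.0]
temps = [0.1, 0.3, 0.5, 0.7, 0.9]
a, b = fit_linear_emulator(forcing, temps)
```

Because the synthetic series is exactly linear, the fit recovers an intercept of 0.1 and a slope of 0.4; real model output would leave residuals.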

After showing its efficacy in emulating GCM air temperature projections, the linear equation is used to propagate the root-mean-square annual average long-wave cloud forcing systematic error of climate models through their air temperature projections.

The uncertainty in projected temperature is ±1.8 C after 1 year for a 0.6 C projection anomaly and ±18 C after 100 years for a 3.7 C projection anomaly. The predictive content in the projections is zero.
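The tenfold ratio between the two stated figures (±1.8 C at 1 year, ±18 C at 100 years) is what you get if an equal per-year uncertainty is combined in quadrature (root-sum-square), so that it grows as the square root of the number of annual steps. The sketch below simply assumes that rule and uses the 1.8 C per-year value from the text; the function name is hypothetical, and the full calculation is in the paper and SI.

```python
import math


def propagated_uncertainty(per_step, n_steps):
    """Combine n_steps equal per-step uncertainties in quadrature.

    Root-sum-square: sqrt(n_steps * per_step**2) = per_step * sqrt(n_steps).
    Assumes identical per-step terms combined this way (an assumption here).
    """
    if n_steps < 0:
        raise ValueError("n_steps must be non-negative")
    return math.sqrt(sum(per_step ** 2 for _ in range(n_steps)))


# Per-year value of 1.8 C taken from the text; the quadrature rule is assumed.
for years in (1, 10, 100):
    print(f"{years:>3} years: ±{propagated_uncertainty(1.8, years):.1f} C")
```

This prints ±1.8, ±5.7 and ±18.0 C. Under this assumption the bounds widen as √N, so a calculation begun in an earlier year covers more steps and ends wider.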

In short, climate models cannot predict future global air temperatures; not for one year and not for 100 years. Climate model air temperature projections are physically meaningless. They say nothing at all about the impact of CO₂ emissions, if any, on global air temperatures.

Here’s an example of how that plays out.

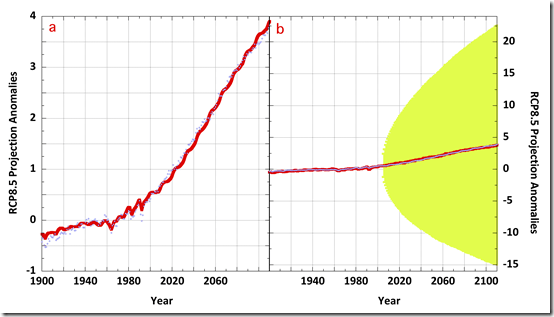

Panel a: blue points, GISS model E2-H-p1 RCP8.5 global air temperature projection anomalies. Red line, the linear emulation. Panel b: the same except with a green envelope showing the physical uncertainty bounds in the GISS projection due to the ±4 Wm⁻² annual average model long wave cloud forcing error. The uncertainty bounds were calculated starting at 2006.

Were the uncertainty to be calculated from the first projection year, 1850 (not shown in the Figure), the uncertainty bounds would be very much wider, even though the known 20th century temperatures are well reproduced. The reason is that the underlying physics within the model is not correct. Therefore, there’s no physical information about the climate in the projected 20th century temperatures, even though they are statistically close to observations (due to model tuning).

Physical uncertainty bounds represent the state of physical knowledge, not of statistical conformance. The projection is physically meaningless.

The uncertainty due to annual average model long wave cloud forcing error alone (±4 Wm⁻²) is about ±114 times larger than the annual average increase in CO₂ forcing (about 0.035 Wm⁻²). A complete inventory of model error would produce enormously greater uncertainty. Climate models are completely unable to resolve the effects of the small forcing perturbation from GHG emissions.
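The stated ratio is plain division of the two forcing magnitudes quoted in that paragraph:

```latex
\frac{4\ \mathrm{W\,m^{-2}}}{0.035\ \mathrm{W\,m^{-2}}} \approx 114.3
```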

The unavoidable conclusion is that whatever impact CO₂ emissions may have on the climate cannot have been detected in the past and cannot be detected now.

It seems Exxon didn’t know, after all. Exxon couldn’t have known. Nor could anyone else.

Every single model air temperature projection since 1988 (and before) is physically meaningless. Every single detection-and-attribution study since then is physically meaningless. When it comes to CO₂ emissions and climate, no one knows what they’ve been talking about: not the IPCC, not Al Gore (we knew that), not even the most prominent of climate modelers, and certainly no political poser.

There is no valid physical theory of climate able to predict what CO₂ emissions will do to the climate, if anything. That theory does not yet exist.

The Stefan-Boltzmann equation is not a valid theory of climate, although people who should know better, including the NAS and every US scientific society, evidently think otherwise. Their behavior in this is the most amazing abandonment of critical thinking in the history of science.

Absent any physically valid causal deduction, and noting that the climate has multiple rapid response channels to changes in energy flux, and noting further that the climate is exhibiting nothing untoward, one is left with no bearing at all on how much warming, if any, additional CO₂ has produced or will produce.

From the perspective of physical science, it is very reasonable to conclude that any effect of CO₂ emissions is beyond present resolution, and even reasonable to suppose that any possible effect may be so small as to be undetectable within natural variation. Nothing among the present climate observables is in any way unusual.

The analysis upsets the entire IPCC applecart. It eviscerates the EPA’s endangerment finding, and removes climate alarm from the US 2020 election. There is no evidence whatever that CO₂ emissions have increased, are increasing, will increase, or even can increase, global average surface air temperature.

The analysis is straightforward. It could have been done, and should have been done, 30 years ago. But it was not.

All the dark significance attached to whatever is the Greenland ice-melt, or to glaciers retreating from their LIA high-stand, or to changes in Arctic winter ice, or to Bangladeshi deltaic floods, or to Kiribati, or to polar bears, is removed. None of it can be rationally or physically blamed on humans or on CO₂ emissions.

Although I am quite sure this study is definitive, those invested in the reigning consensus of alarm will almost certainly not stand down. The debate is unlikely to stop here.

Raising the eyes, finally, to regard the extended damage: I’d like to finish by turning to the ethical consequence of the global warming frenzy. After some study, one discovers that climate models cannot model the climate. This fact was made clear all the way back in 2001, with the publication of W. Soon, S. Baliunas, S. B. Idso, K. Y. Kondratyev, and E. S. Posmentier, “Modeling climatic effects of anthropogenic carbon dioxide emissions: unknowns and uncertainties,” Climate Res. 18(3), 259-275, available here. The paper remains relevant.

In a well-functioning scientific environment, that paper would have put an end to the alarm about CO₂ emissions. But it didn’t.

Instead, the paper was disparaged and then nearly universally ignored. (Reading it in 2003 is what set me off. It was immediately obvious that climate modelers could not possibly know what they claimed to know.) There will likely be attempts to do the same to my paper: derision followed by burial.

But we now know this for a certainty: all the frenzy about CO₂ and climate was for nothing.

All the anguished adults; all the despairing young people; all the grammar school children frightened to tears and recriminations by lessons about coming doom, and death, and destruction; all the social strife and dislocation. All the blaming, all the character assassinations, all the damaged careers, all the excess winter fuel-poverty deaths, all the men, women, and children continuing to live with indoor smoke, all the enormous sums diverted, all the blighted landscapes, all the chopped and burned birds and the disrupted bats, all the huge monies transferred from the middle class to rich subsidy-farmers.

All for nothing.

There’s plenty of blame to go around, but the betrayal of science garners the most. Those offenses would not have happened had not every single scientific society neglected its duty to diligence.

From the American Physical Society right through to the American Meteorological Society, they all abandoned their professional integrity, and with it their responsibility to defend and practice hard-minded science. Willful neglect? Who knows. Betrayal of science? Absolutely for sure.

Had the American Physical Society been as critical of claims about CO₂ and climate as they were of claims about palladium, deuterium, and cold fusion, none of this would have happened. But they were not.

The institutional betrayal could not be worse; worse than Lysenkoism because there was no Stalin to hold a gun to their heads. They all volunteered.

These outrages: the deaths, the injuries, the anguish, the strife, the malused resources, the ecological offenses, were in their hands to prevent and so are on their heads for account.

In my opinion, the management of every single US scientific society should resign in disgrace. Every single one of them. Starting with Marcia McNutt at the National Academy.

The IPCC should be defunded and shuttered forever.

And the EPA? Who exactly is it that should have rigorously engaged, but did not? In light of apparently studied incompetence at the center, shouldn’t all authority be returned to the states, where it belongs?

And, in a smaller but nevertheless real tragedy, who’s going to tell the so cynically abused Greta? My imagination shies away from that picture.

An Addendum to complete the diagnosis: It’s not just climate models.

Those who compile the global air temperature record do not even know to account for the resolution limits of the historical instruments, see here or here.

They have utterly ignored the systematic measurement error that riddles the air temperature record and renders it unfit for concluding anything about the historical climate, here, here and here.

These problems are in addition to bad siting and UHI effects.

The proxy paleo-temperature reconstructions, the third leg of alarmism, have no distinct relationship at all to physical temperature, here and here.

The whole AGW claim is built upon climate models that do not model the climate, upon climatologically useless air temperature measurements, and upon proxy paleo-temperature reconstructions that are not known to reconstruct temperature.

It all lives on false precision; a state of affairs fully described here, peer-reviewed and all.

Climate alarmism is artful pseudo-science all the way down: made to look like science, but not science.

Pseudo-science not called out by any of the science organizations whose sole reason for existence is the integrity of science.

Wow! What a blockbuster of an article, Anthony. Knowledgeable people in the scientific and political communities need to read this article and the accompanying paper and SI and take this to heart. Especially the Republican Party. But of course, we know what the apocalyptic types will do. We must not allow that, especially in any attempt by the Trump administration to overturn the endangerment finding made by the previous (incompetent) administration.

Politicians are mostly too thick to understand it. That applies to any politician, any party, any country.

Politicians are following the money, as they always have done, that is, money for their own pockets.

And the politicians (e.g. Trudeau, Gore) continue to fly a lot, continue to drive around in big SUVs, and continue to live in multiple, very large houses.

Often very close to those rising oceans……

Adam Gallon

100% correct, and the very reason why the climate alarmists stole a march on sceptics. They framed their argument politically, and everyone, irrespective of education, has the right to a political opinion. Sceptics chose the scientific route, and less than 10% of the world is scientifically educated.

When it comes time to vote, guess who gets the most, and the cheapest votes for their $/£?

And, so it seems, are the scientists “mostly too thick”!

Not too thick, only unsceptical. The more intelligent you are, the more you can utilise your intelligence to justify your need to believe something, and the more prone you will be to confirmation bias.

Cherry picking evidence that suits you and finding justifications to hand wave away that which doesn’t requires a good brain, but good brains are still at the mercy of their owners’ emotional defences, including pride, self interest, misplaced fear and stubbornness.

100 percent spot on. This is done in all areas of our society. Government, non government, business, media. People wordsmithing their agendas, justifying their actions. Corruption years ago was someone taking money under the table. These days it is taken over the table, people are just smarter in justifying their course of action. Sadly most of them believe their own rhetoric.

Someone needs to get this paper to Trump…..

Have HIM go public with it….

And have him ask for a rebuttal from the Climate Science world….. since the clown media exact their *freedom denialism* on him whenever they can…..

Don’t sit on this…….

Trump wouldn’t read it. He’d ask for the short version in 25 words or less.

The CACA crock is built upon climate models that don’t model climate, climatologically useless air temperature measurements, and proxy paleo-temperature reconstructions which don’t reconstruct temperature.

Edited down to 25 words.

Because error propagation.

@DanM: No, please please please don’t give it to Trump. His credibility is low — and falls further with every tweet. Trump’s daily dribble of dubious pronouncements is easily dismissed as ignorant, self-serving prattle.

We “deniers” need to stay focused on science vs. non-science, as the article’s author suggests. “Climate science” presents a non-falsifiable theory as inevitable outcome — as Richard Feynman once said, that is not science.

If we are to convince the “more educated” segment of society of the perniciousness of “climate science”, we must disentangle the science from the politics. The two are antithetical: The former is, very generally speaking, about parsing signal from noise; the latter is, very generally speaking, the exact opposite.

The “more educated” don’t get that yet, don’t get that their religious belief in CO2-induced End Times is based on corrupted scriptures. When they do, enlightenment will follow.

Richie, the skeptic community has been riven with dislike or distrust for too long. The spat between Anthony Watts and Tony Heller is a good example.

You may dislike Trump’s tweeting, but he is uniquely willing and able to take climastrology head on, and his tweets probably reach a group of people that your preferred approach never would.

If Trump picks this up and tweets it around, good for him, good for everyone. If you want to engage your community in a scientific debate, good for you.

There are plenty of alarmists out there pushing out nonsense, we don’t need to criticize each other for doing what we can, where we can, to push it back.

Richie, Trump is the only one in 30 years in politics to call BS on these climate terrorists. The only one to call BS on China trade practices. The only one willing to rescind a deal to give nukes to Iran. The only one to even mention the USA cannot just have everyone in the world move here. The only one to suggest NATO pay their own way. You need to listen to people that did not spout “Russian collusion” for 2+ years knowing it was a bald-faced lie. You better get on board, as this guy is the ONLY one with credibility.

Trump will not read this paper, and nor should he; he is not a scientist and has never pretended to be! Contrary to popular belief, I am sure, neither Trump nor any ex-president makes ALL decisions on subjects such as this. Not one single person has the amount of knowledge or education required to “run” a country. Trump relies on his advisers, I am sure, which is the right thing to do.

Actually, as a grad of a good B-school, Trump must have taken statistics courses. He could read and understand, or at least get the gist of, this paper, but his attention span is short and digesting the whole thing would be a waste of time for any president.

The abstract and conclusions, with a graph or two, in his daily summary would be the most for which we could or should hope.

Politicians don’t WANT to understand it (except Trump). AGW is a HUGE gravy train for them…

Not sure if I completely agree, Adam.

I agree that there are many who are too thick to understand, but there are also many who don’t want to understand and others that don’t have time to understand.

The don’t-want-to-understand people don’t care. They are either already hard core Warm Cult or have been informed by their spin merchants that Climate Change is what their voters want. These are either ‘Science is Settled… and if it isn’t, then it should be’ or would support the reintroduction of blood sports if their internal polling said it would win them another term.

Then there are the ones who don’t have time to care. Politicians are busy people. All that sunshine isn’t going to get blown up people’s…. ummm… egos by itself, you know. They don’t have time to sit down and read reports; they have Important Meetings to attend. Hence they surround themselves with staffers who – nominally – do all the reading for them and feed them the 10-word summary. Now that all sounds fine and dandy, and Your Country May Vary, but here in Oz most staffers are the 24-year-olds who have successfully backstabbed and grovelled their way through the ‘Young’ branch of their party and the associated faction politics. Since very few of these people have anything remotely resembling a STEM background, they are, for all intents and purposes, masculine bovine mammaries.

Like they say, Sausages and Laws. 🙁

Great article.

In layman’s terms:

If the climate modelers were financial advisors the world would be living under one gigantic bridge.

If climate modelers were engineers, there wouldn’t be any bridges to live under.

Climate modelling is Cargo Cult Science!

Was it Freeman Dyson or Richard Feynman who stated this years ago?

Climate models are not real models.

Real models make right predictions.

Climate models make wrong predictions.

The so called “climate models”, and government bureaucrat “scientists” who programmed them, are merely props for the faith based claim that a climate crisis is in progress.

If people who joined conventional religions believed that, they would point to a bible as “proof”.

In the unconventional “religion” of climate change, their “bible” is the IPCC report, and their “priests” are government bureaucrat and university “scientists”.

Scientists and computer models are used as props, to support an “appeal to authority” about a “coming” climate crisis, coming for over 30 years, that never shows up !

In the conventional religions, the non-scientist “priests” and their bibles say: ‘You must do as we say, or you will go to hell’.

In the climate change “religion”, the scientist “priests” say: ‘You must do as we say, or the Earth will turn into hell for your children’.

” … the whole aim of practical politics is to keep the populace alarmed (and hence clamorous to be led to safety) by menacing it with an endless series of hobgoblins, most of them imaginary.”

— From H. L. Mencken’s In Defense of Women (1918).

My climate science blog:

http://www.elOnionBloggle.Blogspot.com

Concerning the Green New Deal:

“Politics is the art

of looking for trouble,

finding it everywhere,

diagnosing it incorrectly,

and applying the wrong remedies.”

Groucho Marx

It was Feynman, in his 1974 Caltech commencement address.

[excerpt from this excellent article]

“In their hands, climate modeling has become a kind of subjectivist narrative, in the manner of the critical theory pseudo-scholarship that has so disfigured the academic Humanities and Sociology Departments, and that has actively promoted so much social strife. Call it Critical Global Warming Theory. Subjectivist narratives assume what should be proved (CO₂ emissions equate directly to sensible heat), their assumptions have the weight of evidence (CO₂ and temperature, see?), and every study is confirmatory (it’s worse than we thought).

Subjectivist narratives and academic critical theories are prejudicial constructs. They are in opposition to science and reason. Over the last 31 years, climate modeling has attained that state, with its descent into unquestioned assumptions and circular self-confirmations.”

…

Raising the eyes, finally, to regard the extended damage: I’d like to finish by turning to the ethical consequence of the global warming frenzy. After some study, one discovers that climate models cannot model the climate. This fact was made clear all the way back in 2001, with the publication of W. Soon, S. Baliunas, S. B. Idso, K. Y. Kondratyev, and E. S. Posmentier Modeling climatic effects of anthropogenic carbon dioxide emissions: unknowns and uncertainties. Climate Res. 18(3), 259-275, available here. The paper remains relevant.

In a well-functioning scientific environment, that paper would have put an end to the alarm about CO₂ emissions. But it didn’t.

Instead the paper was disparaged and then nearly universally ignored (Reading it in 2003 is what set me off. It was immediately obvious that climate modelers could not possibly know what they claimed to know). There will likely be attempts to do the same to my paper: derision followed by burial.

But we now know this for a certainty: all the frenzy about CO₂ and climate was for nothing.

[end of excerpt]

So the false narrative of global warming alarmism has once again been exposed, even though this paper was REPRESSED for SIX YEARS!

It is absolutely clear, based on the evidence, that global warming and climate change alarmism was not only false, but fraudulent. Its senior proponents have cost society tens of trillions of dollars and many millions of lives – an entire global population has been traumatized by global warming alarmism, the greatest scientific fraud in history – these are crimes against humanity and their proponents belong in jail – for life!

Oh yes indeed. But who will do it?

Too many people are making too much money off of this ridiculous hoax. Too many politicians are acquiring too much power off of this insanity. The media spin their narrative continually because it plays into the leftist desire to smash capitalism.

We need somebody to break this thing once and for all. Trump has tried but he is so controversial in so many ways that the message is lost. So we will all just continue in our own little way trying to change the opinion of those close to us and hope that our own prophet will appear and throw the money lenders out of the temple of pseudo-science once and for all.

Is jail for life sufficient punishment for the theft of trillions in treasure and loss of life for tens of millions?

Pat’s math nonsense has been completely dismantled here:

https://patricktbrown.org/2017/01/25/do-propagation-of-error-calculations-invalidate-climate-model-projections-of-global-warming/

here:

https://andthentheresphysics.wordpress.com/2017/01/26/guest-post-do-propagation-of-error-calculations-invalidate-climate-model-projections/

and years ago here:

https://tamino.wordpress.com/2011/06/02/frankly-not/

…and by Nick Stokes here:

https://moyhu.blogspot.com/2019/09/another-round-of-pat-franks-propagation.html

and two years ago here:

https://moyhu.blogspot.com/2017/11/pat-frank-and-error-propagation-in-gcms.html

Unlike other zombie myths, let us hope this one is finally laid to rest.

Hahahaha — tamino. Fortunately haven’t heard anything about that abomination for yrs….

Calling tamino names is not a scientific argument. We are claiming to be scientists. The standards that we impose upon ourselves must be rigorous.

Where is an analysis of the tamino paper that refutes tamino?

Apparently you didn’t bother to read Pat Frank’s responses.

It’s not surprising that a young computer gamer would object to Pat’s work. Young Dr. Brown would need to find a new career should Pat’s conclusions be confirmed.

There are 5 references put forward to refute Mr. Frank’s paper.

Calling people names is not a scientific argument.

You refer to Mr. Frank’s responses. Where are the references to his responses that allow a review of the arguments? Who are the people with sufficient credibility who stand behind Mr. Frank’s work and refute the arguments (pseudo arguments) put forth in these five references.

Lord Monckton has made a different argument that claims to demolish the alarmists. But the alarmists have put forward a criticism of Monckton that I have not seen addressed.

Rigorous argument is the hallmark of science. There is no shortcut.

No name calling. GIGO GCMs are science-free games. They are worse than worthless wastes of taxpayer dollars, except to show how deeply unphysical is the CACA scam.

Patrick Brown’s arguments did not withstand the test of debate, carried out beneath his video.

ATTP thinks (+/-) means constant offset. And Tamino ran away from the debate — which was about a different analysis anyway.

Nick’s moyhu posts are just safe-space reiterations of the arguments he failed to establish in open debate.

Skeptic t-shirt.

https://www.amazon.com/dp/B07XNNBBXZ

It’s brilliant, and the list looks pretty complete. 🙂

Missing at least one relevant point:

Uncertainty Propagation

I’ll have my wife add it on there after mine arrives. She’s good at that sort of thing and has produced a number of fun items for me to wear.

I never, ever buy t-shirts. I just bought this one. I could not resist. Thanks for the link.

How do you see the back? – there is no simple link !!!

Click on the small image of the back, then hover your mouse/cursor over the resultant view to see a magnified view.

OK, thanks for that! I couldn’t find the image of the back of the shirt…

It was way over to the left side on my screen, and I couldn’t seem to locate it until I knew what to look for.

The list is pretty damn complete, thx !

JPP

I have been waiting for years hoping that someone would come up with an A+B proof that definitively buries the non-scientific proceedings of the “climate religion”. Pat Frank’s publication hits that nail with a beautiful hammer! Every student writing a report about a practical physics experiment has to calculate the error margins. That these so-called scientists (some are even at ETH Zurich) don’t even seem to understand what an error margin means was a real shock to me. Just recently I’ve been reading something about the UN urging for haste and mentioning that scientific arguments are not relevant anymore and should be ignored… Do you see something coming?

“…for giving a voice to independent thought.”

Although it’s been a struggle for some of us.

Add to the list of what people don’t know.

Most people don’t understand that at this distance from the sun objects get hot (394 K) not cold (- 430 F).

The atmosphere/0.3 albedo cools the earth compared to no atmosphere.

And because of a contiguous participating media, i.e. atmospheric molecules, ideal BB LWIR upwelling from the surface/oceans is not possible.

396 W/m^2 upwelling is not possible.

333 W/m^2 downwelling/”back” LWIR 100% perpetual loop does not exist.

RGHE theory goes into the rubbish bin of previous failed consensual theories.
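The 394 K figure quoted in that comment can be reproduced with the Stefan-Boltzmann law if one assumes a perfectly absorbing flat surface held face-on to sunlight at about 1361 W/m², radiating from one side only, with no conduction or convection. The sketch below makes those assumptions explicit; the function name and the `geometry_factor` parameter are illustrative and not taken from the comment. With albedo 0.3 and the usual factor of 1/4 for averaging over a rotating sphere, the same formula gives the familiar value of about 255 K.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def equilibrium_temperature(solar_flux, albedo=0.0, geometry_factor=1.0):
    """Radiative-equilibrium temperature (K) of an idealized blackbody emitter.

    Absorbed flux = solar_flux * (1 - albedo) * geometry_factor, balanced by SIGMA * T**4.
    geometry_factor = 1.0: flat plate facing the sun, emitting from one face.
    geometry_factor = 0.25: absorbed flux averaged over a rotating sphere.
    """
    absorbed = solar_flux * (1.0 - albedo) * geometry_factor
    return (absorbed / SIGMA) ** 0.25


S0 = 1361.0  # approximate solar constant at Earth's distance, W m^-2
t_plate = equilibrium_temperature(S0)                                     # about 394 K
t_sphere = equilibrium_temperature(S0, albedo=0.3, geometry_factor=0.25)  # about 255 K
```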

Nick, you’ve got it wrong, it’s not a 333 feedback loop…. 396 – 333 = 63 watts per sq. M radiated from the ground to the sky on average. At the basic physics of it all, the negative term in the Stefan-Boltzmann two surfaces equation, which is referred to as “back radiation”, 333 watts in this case, is how much the energy content of the wave function of the hotter body is negated by a cooler body’s wave function. But only high level physicists think of it in those terms. Most just use the back radiation concept. So do climatologists. Engineers prefer to just use SB to calculate heat transfer from hot to cold directly, to be sure they don’t inadvertently get dreaded temperature crosses in their heat exchangers.
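In the idealized textbook case of two large, parallel blackbody surfaces, the net radiative transfer is σ(T_hot⁴ − T_cold⁴), and the 333 W/m² term that comment calls “back radiation” is the second term. The sketch below assumes emissivity 1, a 289 K (about 16 C) surface, and treats 333 W/m² as the emission of a blackbody sky; all names are illustrative. It reproduces the 396 − 333 = 63 W/m² arithmetic to rounding.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def blackbody_flux(t_kelvin):
    """Emitted flux SIGMA * T**4 for an ideal blackbody (emissivity 1)."""
    return SIGMA * t_kelvin ** 4


def net_exchange(t_hot, t_cold):
    """Net flux between two large, parallel blackbody surfaces: SIGMA * (Th**4 - Tc**4)."""
    return SIGMA * (t_hot ** 4 - t_cold ** 4)


t_surface = 289.0                # assumed surface temperature, K (about 16 C)
t_sky = (333.0 / SIGMA) ** 0.25  # temperature whose blackbody flux is 333 W m^-2, about 276.8 K
upwelling = blackbody_flux(t_surface)  # about 395.6 W m^-2
net = net_exchange(t_surface, t_sky)   # about 62.6 W m^-2, i.e. upwelling - 333
```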

DMac

The 396 W/m^2 is a theoretical “what if” calculation for the ideal LWIR from a surface at 16 °C (289 K). It does not, in actual fact, exist.

The only way a surface radiates as a BB is into a vacuum where there are no other heat transfer processes occurring.
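For reference, the “what if” number itself is a one-line Stefan-Boltzmann calculation. Below is a minimal Python sketch. It adds an emissivity parameter (an assumption) so the ideal case can be compared with a non-ideal surface, where 0.97 is used only as an example value:

```python
# Stefan-Boltzmann emission from a surface at a given temperature.
SIGMA = 5.670374419e-8  # W m^-2 K^-4


def emitted_flux(temp_k: float, emissivity: float = 1.0) -> float:
    """Radiated flux in W/m^2; emissivity 1.0 is the ideal blackbody case."""
    return emissivity * SIGMA * temp_k**4


t_surface = 16.0 + 273.15  # 16 C expressed in kelvin
print(f"ideal BB:        {emitted_flux(t_surface):.1f} W/m^2")      # about 396
print(f"emissivity 0.97: {emitted_flux(t_surface, 0.97):.1f} W/m^2")
```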

As demonstrated in the classical fashion, by actual experiment:

https://principia-scientific.org/debunking-the-greenhouse-gas-theory-with-a-boiling-water-pot/

No 396, no 333, no RGHE, no GHG warming.

“…how much the energy content of the wave function of the hotter body is negated by a cooler body’s wave function…”

Classical handwavium nonsense. If a cold body “negated” a hot body there would be refrigerators without power cords. I don’t know of any. You?

I think you both have it wrong. Two objects near each other send radiation back and forth continuously and the outgoing flux can be calculated using the temperature and albedo of each. The fact that an IR Thermometer works at all proves this to be true.

In the case of the Earth’s surface and a “half-silvered” atmosphere, there is a continuous escaping to space of some of the radiation from the surface (directly) and from the atmosphere (directly and indirectly) according to the GHG concentration.

I am weary of arguments that there is no “circuit” between the atmosphere and the surface. Of course there is – there is a thermal energy “circuit” between all objects that have line-of-sight of each other, including between me and the Sun. There is nothing mysterious about this. That is how radiation works.

A simple demonstration of this is to build a fire using one stick. Observe it. Make a sustainable fire as small as possible. Now split the stick in two and make another fire, placing the two sticks in parallel about 10 mm apart. The fire can be smaller than the previous one because the thermal radiation back and forth between the two is conserved. There is no net energy gain doing this for either stick, but there is net benefit (if the object is to make the smallest possible fire).

Radiation continues regardless of whether there is anything “on the receiving end” and always will.

“Two objects near each other send radiation back and forth continuously and the outgoing flux can be calculated using the temperature and albedo of each. The fact that an IR Thermometer works at all proves this to be true. ”

Is this what you have in mind: Q = sigma * A * (T1^4 – T2^4)

Where are the other 5 terms? 2 Qs, 2 epsilons, second area?

This is not “net” energy, it’s the work required to maintain the different temperatures.
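The equation quoted above is the special case of two black surfaces (emissivity 1) in which surface 1 sees only surface 2. The standard textbook radiation-network result is more general. It covers a two-surface enclosure of diffuse, gray, opaque surfaces with no absorbing medium between them, and it carries both emissivities, both areas and a view factor F12. A Python sketch of that expression, with illustrative argument names:

```python
# Net exchange in a two-surface enclosure of diffuse, gray, opaque surfaces
# (textbook radiation-network result; assumes no absorbing gas in between).
SIGMA = 5.670374419e-8  # W m^-2 K^-4


def two_surface_exchange(t1, t2, eps1, eps2, a1, a2, f12):
    """Net heat flow q12 in watts from surface 1 to surface 2.

    t1, t2     surface temperatures (K)
    eps1, eps2 surface emissivities (0 < eps <= 1)
    a1, a2     surface areas (m^2)
    f12        view factor: fraction of surface 1's emission reaching 2
    """
    surface_1 = (1.0 - eps1) / (eps1 * a1)   # surface resistance of 1
    geometric = 1.0 / (a1 * f12)             # space (view-factor) resistance
    surface_2 = (1.0 - eps2) / (eps2 * a2)   # surface resistance of 2
    return SIGMA * (t1**4 - t2**4) / (surface_1 + geometric + surface_2)


# Black surfaces, full view: reduces to sigma * A * (T1^4 - T2^4)
print(two_surface_exchange(300.0, 280.0, 1.0, 1.0, 1.0, 1.0, 1.0))
# Large parallel plates with emissivities 0.9 and 0.8, per m^2
print(two_surface_exchange(300.0, 280.0, 0.9, 0.8, 1.0, 1.0, 1.0))
```

With eps1 = eps2 = 1 and F12 = 1, the expression collapses back to sigma * A * (T1^4 – T2^4). For two large parallel plates (A1 = A2, F12 = 1), the denominator per unit area becomes 1/eps1 + 1/eps2 – 1. The result is antisymmetric: swapping the two surfaces just flips the sign of q12.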

Nonsense.

Two objects one hot and one cold: energy flows (heat) from the hot to the cold (EXCLUSIVELY) until they come to equilibrium. The only way to reverse this energy flow is by adding work in the form of a refrigeration cycle.

IR instruments are designed, fabricated and applied based on temperature sensing elements. Power flux is inferred based on an assumed emissivity.

Assuming 1.0 for the earth’s surface, or for most other molecular materials, is just flat wrong.

The Instruments & Measurements

But wait, you say, upwelling LWIR power flux is actually measured.

Well, no it’s not.

IR instruments, e.g. pyrheliometers, radiometers, etc. don’t directly measure power flux. They measure a relative temperature compared to heated/chilled/calibration/reference thermistors or thermopiles and INFER a power flux using that comparative temperature and ASSUMING an emissivity of 1.0. The Apogee instrument instruction book actually warns the owner/operator about this potential error noting that ground/surface emissivity can be less than 1.0.

That this warning went unheeded explains why SURFRAD upwelling LWIR with an assumed and uncorrected emissivity of 1.0 measures TWICE as much upwelling LWIR as incoming ISR, a rather egregious breach of energy conservation.
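The inference step described here can be made concrete with a minimal Python sketch. It assumes the instrument converts a sensed surface temperature into flux with emissivity fixed at 1.0, while the surface’s true emissivity is lower (0.95 below is a hypothetical value). It deliberately leaves out reflected downwelling radiation, which a real instrument viewing a surface with emissivity below 1 would also receive:

```python
# Flux inferred from a surface temperature when emissivity is assumed to be
# 1.0, versus the flux that surface actually emits at a lower emissivity.
# Simplification: reflected downwelling radiation is ignored.
SIGMA = 5.670374419e-8  # W m^-2 K^-4


def inferred_and_emitted(temp_k: float, true_emissivity: float):
    """Return (flux assuming emissivity 1.0, flux actually emitted), W/m^2."""
    inferred = SIGMA * temp_k**4
    emitted = true_emissivity * SIGMA * temp_k**4
    return inferred, emitted


inferred, emitted = inferred_and_emitted(289.0, 0.95)  # 0.95 is hypothetical
print(f"inferred {inferred:.1f} W/m^2, emitted {emitted:.1f} W/m^2, "
      f"ratio {inferred / emitted:.3f}")
```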

This also explains why USCRN data shows that the IR (SUR_TEMP) parallels the 1.5 m air temperature (T_HR_AVG) and not the actual ground (SOIL_TEMP_5). The actual ground is warmer than the air temperature with few exceptions, contradicting the RGHE notion that the air warms the ground.

Sun warms the surface, surface warms the air, energy moves from surface to ToA according to Q = U A dT, same as the insulated walls of a house.
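Q = U A dT is the steady-state conduction formula used for building envelopes. Here is a short Python sketch with hypothetical wall values (U = 0.35 W/(m^2·K), A = 100 m^2, dT = 20 K):

```python
# Steady-state conduction through a wall: Q = U * A * dT.
def conductive_heat_flow(u_value: float, area_m2: float, delta_t_k: float) -> float:
    """Heat flow in watts for overall coefficient U in W/(m^2*K)."""
    return u_value * area_m2 * delta_t_k


# Hypothetical wall: U = 0.35 W/(m^2*K), 100 m^2, 20 K inside-outside difference
print(conductive_heat_flow(0.35, 100.0, 20.0))  # about 700 W
```

Unlike the radiative sigma * T^4 terms elsewhere in the thread, this heat flow is linear in the temperature difference.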

Nonsense.

All objects radiate, unless they are at absolute zero.

Net energy flows from the hot object to the cold object, but energy IS flowing in both directions.

This is a reply to your claim that “Two objects one hot and one cold: energy flows (heat) from the hot to the cold (EXCLUSIVELY) until they come to equilibrium.” Wrong. Energy flows in both directions (unless one happened to be at absolute zero); however, the energy flowing from the hotter object to the colder one is greater than the energy flow in the opposite direction. The result is that the NET flow is unidirectional until equilibrium. But flow =/= net flow.

You are absolutely correct, CinW. Very close to the Earth’s surface, a downward-facing calculation using MODTRAN will reproduce the Stefan-Boltzmann law with an emissivity of just about exactly 0.97. The typical earth materials have emissivities averaging about 0.97.

As one rises away from the Earth’s surface the calculated effective emissivity of the downward view will decline, eventually to a value of 0.63 or so, because of the intervening IR active gasses.

Claiming the SB law applies only to a cavity in vacuum is an utterly immaterial argument. The lack of a cavity is why emissivity is less than one for surfaces in vacuum.

I think you mean each of the 2 separated fires is a bit smaller than the original single fire…view factor considerations…but I’m thinking draft is an important factor for sticks 10 mm apart versus 0 mm…

Kevin kilty – September 7, 2019 at 6:50 pm

Utterly silly claim, ….. with no basis in fact.

The atmosphere is constantly moving across the surface of the Earth in weather patterns, so it is unlikely that the surface and the atmosphere will ever reach equilibrium unless a weather pattern becomes stuck and the surface is given time to reach equilibrium with the atmosphere. The surface is heated by solar radiation but cools or heats up through thermal interaction with the atmosphere close to it. My model of the thermal Earth does not have any back radiation; there is local thermal equilibrium between the surface and the overlying atmosphere if the atmosphere remains static long enough for equilibrium to be reached.

Donald P, ….. I criticized Kevin kilty simply because the ppm density of Kevin’s stated “IR active gasses” is pretty much constantly changing, with H2O vapor being the dominant one. Also, the IR being radiated from the surface is not polarized, meaning, ……. the higher the elevation from the emitting surface, ….. the more diffused or spread out the IR radiation is. Just like the visible light from a lightbulb decreases in intensity (brightness) the farther away the viewer is.
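The lightbulb comparison is the inverse-square law for an isotropic point source. Below is a minimal Python sketch, assuming a hypothetical 60 W source radiating its power equally in all directions. It models only the point-source case used in the analogy, not an extended emitting surface:

```python
import math


def point_source_irradiance(power_w: float, distance_m: float) -> float:
    """Irradiance (W/m^2) at a distance from an isotropic point source.

    The power is spread over a sphere of area 4 * pi * r^2.
    """
    return power_w / (4.0 * math.pi * distance_m**2)


for r in (1.0, 2.0, 4.0):
    print(f"{r:.0f} m: {point_source_irradiance(60.0, r):.3f} W/m^2")
```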

Samuel C Cogar,

Before launching into someone, you ought to know what you are talking about. Run some models using the U of Chicago wrapper for MODTRAN and see what you get looking down close to the surface and again high in the atmosphere. I have run hundreds of MODTRAN models and they are very educational. By the way, MODTRAN is among the most reliable codes of any sort around (Tech. Cred. 9), so do not hide behind “it’s just a model”.

I have no idea why you do not understand the impact of IR active gasses in an atmosphere. The ramifications involve the sensors and controls in millions of boilers, furnaces, power plants, etc. Every day, all day long.

Nick, busses could drive through the holes in your experiment. You can’t disprove the negative term Thot^4-Tcold^4 in the SB equation with a boiling kettle, because the instrumentation on many fired heaters and industrial furnaces confirms it every hour, every day, worldwide. I’ve designed some of them. SB is right, so there is a RGHE resulting from CO2 and H2O in the atmosphere. I know H2O and CO2 absorb and emit IR from many years of calculating it and reading instruments that confirm it. End of story.

>>>>>>MarkW

September 7, 2019 at 5:21 pm

”Nonsense.

All objects radiate, unless they are at absolute zero.

Net energy flows from the hot object to the cold object, but energy IS flowing in both directions.”<<<<<<

No reply function under your post so I put this here….please forgive..

As a non-scientist, I have trouble visualizing this. How can an object lose (emit) and gain (absorb) energy at the same time? What is the mechanism? (in simple terms)

Mike

How do things lose and gain energy [not heat] at the same time?

Consider two flashlights (torches in the UK) pointing at each other. The light from each shines out from the bulb and is, in part, received by the other. Now, suppose the batteries in one start to fade and the emission of light decreases. Will this affect the amount of light emerging from the other one? Not at all. Nothing about one light affects what the other does. They both shine as they are able, or not if they are turned off.

Nick S above is thinking about conduction of heat, not radiation of energy. Different rules apply for that. There are three modes of energy transfer: conduction, convection and radiation. People with no high school science education frequently confuse conduction and radiation lumping both into “transfer”.

Light is not conducted through the air from one flashlight to the other – it is radiated, and this would happen even if there was no air at all.

Now consider that the original IMAX projector had a 25-kilowatt short-arc xenon bulb in it, which produced enough light to brightly illuminate that hundred-foot-wide screen. Point one at a flashlight. Is the flashlight’s radiance in any way “countered” or “dimmed” or “enhanced”? No, not at all. They are independent, disconnected systems with a gap between them that can only be bridged by the radiation of photons.

Infrared radiation is a form of light, light with a wavelength longer than we can perceive. Some insects can see IR, as can some snakes, but not us. Some creatures can see UV; we can’t see that either. Not being able to see it doesn’t mean it is not flowing like the visible photons from a flashlight. An IR camera can see the IR radiation. The temperature is converted to a colour scale for convenience. Basically it is a size-for-size wavelength conversion device.

It happens that all material in the universe is capable of emitting photons, but not nearly equally, however. Non-radiative gases are so-termed because they don’t emit (much) IR, but they will emit something if heated high enough. That doesn’t happen in the atmosphere.
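Where the peak of thermal emission falls can be estimated with Wien’s displacement law. A short Python sketch follows, using about 289 K for a surface and about 5772 K for the sun’s effective temperature as example values (assumptions, not taken from the comment):

```python
WIEN_B = 2.897771955e-3  # Wien displacement constant, m*K


def peak_wavelength_um(temp_k: float) -> float:
    """Wavelength (micrometres) at which blackbody emission per unit
    wavelength peaks, from Wien's displacement law."""
    return WIEN_B / temp_k * 1e6


print(f"surface, 289 K: {peak_wavelength_um(289.0):.2f} um")   # about 10 um
print(f"sun, 5772 K:    {peak_wavelength_um(5772.0):.2f} um")  # about 0.5 um
```

The roughly 10 µm surface peak sits well beyond the red end of human vision (about 0.7 µm), while the sun’s peak falls within the visible band.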

It isn’t quite true that all objects will radiate energy down to absolute zero. That only applies to black objects or gases with absorption bands in the IR. We are only talking about IR radiation when we discuss the climate.

Something very interesting and rather counter-intuitive is that an object such as a piece of steel will have a certain emissivity, say 0.85. (Water is almost absolutely black in IR, BTW.) When the steel is heated hundreds of degrees, until it is glowing yellow, for example, the emissivity rating stays essentially the same.

If you heat a black rock from 0 to 700 °C, it can be seen easily in the dark, glowing, but it is still “black”; it is just very hot, radiating energy like crazy. Hold your hand up to it. Feel the radiation warm your skin. Your skin is radiating energy too, back to the hot rock. Not nearly as much, so you gain more than you lose.

A glowing object retains (pretty much) the emissivity that it has at room temperature. We see it glow because our eyes are colder than the rock. For this reason, missiles tracking aircraft with “heat-seeking technology” chill the receptor to a very low temperature, often using de-compressed nitrogen gas which is stored nearby. When the missile is armed and “ready” it means the gas is flowing and the sensor is chilled. If the pilot doesn’t fire it within a certain time, the gas is depleted and the missile is essentially useless.

When the receptor is very cold, it “sees” the aircraft much more easily, even if the skin temperature is -60C, so it works.

IR radiation is like stretched light. Almost any solid object emits it all the time, in all directions. When the amount received from all the objects in a room balances with what the receiving object emits, its temperature stops changing. That is the very definition of thermal equilibrium. In=Out=stable temperature. It does not mean the flashlights stopped shining.
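The “In=Out=stable temperature” point can be illustrated with a toy time-stepping model. The Python sketch below assumes a black body, per unit area, with a fixed absorbed flux (240 W/m^2) and a hypothetical heat capacity. It emits sigma * T^4 at every step, and the temperature simply stops changing once emission matches absorption:

```python
SIGMA = 5.670374419e-8  # W m^-2 K^-4


def relax_to_equilibrium(absorbed_flux, heat_capacity, t_start,
                         dt=1000.0, steps=5000):
    """Step C * dT/dt = F_in - SIGMA * T^4 forward with explicit Euler.

    absorbed_flux  constant absorbed flux, W/m^2
    heat_capacity  heat capacity per unit area, J/(m^2*K)
    t_start        initial temperature, K
    Returns the final temperature and the final emitted flux.
    """
    temp = t_start
    for _ in range(steps):
        emitted = SIGMA * temp**4            # the body never stops emitting
        temp += dt * (absorbed_flux - emitted) / heat_capacity
    return temp, SIGMA * temp**4


# Hypothetical values: 240 W/m^2 absorbed, 1e5 J/(m^2*K), start at 200 K
final_t, final_out = relax_to_equilibrium(240.0, 1.0e5, 200.0)
print(f"T = {final_t:.2f} K, emitted = {final_out:.2f} W/m^2")
```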

Crispin, excellent explanation. But Nick will not accept it and will repeat his nonsense over and over again.

I refer to a thought experiment from the first time I heard this entire line of argumentation:

Consider two stars in isolation in space.

One is at 5000 K

One is at 6000 K

Now bring those stars into a close orbit, far enough apart that negligible mass is being transferred gravitationally, but with each intercepting a large portion of the radiation being emitted from the other one.

Clearly each star is now gaining considerable energy from the other, and the temperature of each will rise.

Each star has the same core temperature and the same internal flux from the core to the photosphere, but now each also has additional heat flux from the nearby star.

So, what happens to the temperature of each star?

It is obvious, to me at least, that both stars will become hotter.

The cooler one will make the hotter one even hotter, and the hotter one will make the cooler one hotter as well, as each star is now being warmed by energy that was previously radiating away to empty space.

Can anyone imagine or describe how the cooler star is not heating the warmer star?

My assertion is that the same logic applies to two such objects no matter what the absolute or relative temperatures of each might happen to be.

If the two objects are of identical diameter, the warmer star will be adding more energy to the cooler star than it is getting back from the cooler star.

But a situation could easily be postulated wherein the cooler star has a different diameter than the warmer star, such that the flow is exactly equal from one star to the other, as can a scenario in which the cooler star is sufficiently different in diameter that it is adding more energy to the warmer star than it is getting back from the other.

In this last case, the cooler object is actually warming the warmer star more than it is itself being warmed by the warmer star.
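The steady state of this thought experiment can be written as a pair of energy balances. Below is a minimal Python sketch under these assumptions: the two stars have equal surface area, and each has a fixed internal power per unit area, set by its 6000 K or 5000 K isolated temperature. A hypothetical fraction f = 0.1 of each star’s output is absorbed by the other, with no reflection, and absorbed energy is spread evenly over each surface:

```python
SIGMA = 5.670374419e-8  # W m^-2 K^-4


def coupled_emission(e1_alone, e2_alone, f):
    """Steady-state emitted flux (W/m^2) of two equal-area bodies.

    e1_alone, e2_alone  internal power per unit area (the flux each body
                        emits when isolated)
    f                   fraction of each body's emission absorbed by the other
    Solves E1 = e1 + f*E2 and E2 = e2 + f*E1 for E1 and E2.
    """
    denom = 1.0 - f * f
    big_e1 = (e1_alone + f * e2_alone) / denom
    big_e2 = (e2_alone + f * e1_alone) / denom
    return big_e1, big_e2


def temperature(flux):
    return (flux / SIGMA) ** 0.25


e1 = SIGMA * 6000.0**4
e2 = SIGMA * 5000.0**4
f = 0.1                                   # hypothetical coupling fraction
E1, E2 = coupled_emission(e1, e2, f)
to_space = (1.0 - f) * (E1 + E2)          # emission not caught by the other star
print(f"T1 = {temperature(E1):.0f} K, T2 = {temperature(E2):.0f} K")
print(f"loss to space / internal power = {to_space / (e1 + e2):.6f}")
```

Under these assumptions, both stars settle hotter than in isolation (roughly 6090 K and 5250 K). The energy leaving the pair to space still equals the internal power supplied, since (1 - f)(E1 + E2) = e1 + e2. The model ignores the uneven heating, rotation and stellar-structure questions raised further down.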

I’ve come across that scenario, or similar (which underpins the entire radiative AGW hypothesis), many times, and it is only in the past few minutes, with the help of a bottle of wine, that the solution has flashed into my mind.

I always knew that the cooler star won’t make the warmer star hotter but it will slow down the rate of cooling of the warmer star. I think that is generally accepted.

However, the novel point which I now present is that, in addition, the warmer star then being warmer than it otherwise would have been will then radiate heat away faster than it otherwise would have done so the net effect is that the two stars combined will lose heat at exactly the same rate as if they had not been radiating between themselves.

Meanwhile the warmer star’s radiation to the cooler star will indeed warm the cooler star but being warmer than it otherwise would have been the cooler star will also radiate heat away faster than it otherwise would have done so the net effect, again, is that the two stars combined will lose heat at exactly the same rate as if they had not been radiating between themselves.

The reason is that radiation operates at the speed of light which is effectively instantaneously at the distances involved so all one is doing is swapping energy between the two instantaneously with no net reduction in the speed of energy loss to space of the combined two units.

In order to get any additional net heating one needs an energy transfer mechanism that is slower than the speed of light i.e. not radiation.

Therefore, conduction and convection being slower than the speed of light are the only possible cause of a net temperature rise and that can only happen if the two units of mass are in contact with one another as is the case for an irradiated surface and the mass of an atmosphere suspended off that surface against the force of gravity.

Can anyone find a flaw in that?
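For a sense of scale on “effectively instantaneously”, here is a short Python sketch of light travel times. The 100 km height used for the top of the atmosphere is a hypothetical round figure; the astronomical unit is the IAU-defined value:

```python
SPEED_OF_LIGHT = 299_792_458.0   # m/s, exact by SI definition
AU = 149_597_870_700.0           # m, IAU 2012 definition
TOA_HEIGHT = 100_000.0           # m, hypothetical round figure


def light_travel_time_s(distance_m: float) -> float:
    """One-way light travel time in seconds."""
    return distance_m / SPEED_OF_LIGHT


print(f"surface to ~100 km: {light_travel_time_s(TOA_HEIGHT) * 1e3:.3f} ms")
print(f"sun to Earth:       {light_travel_time_s(AU):.0f} s")
```

That is roughly a third of a millisecond for the atmospheric path and a little over eight minutes from the sun.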

To make it a bit clearer, the potential system temperature increase that could theoretically arise from the swapping of radiation between the two stars is never realised because it is instantly negated by an increase in radiation from the receiving star.

One star radiates a bit more than it should for its temperature and the other radiates a bit less than it should for its temperature but the energy loss to space is exactly as it should be for the combined units so no increase in temperature can occur for the combined units.

The S-B equation is valid only for a single emitter. If one has dual emitters the S-B equation applies to the combination but not to the discrete units.

The radiative theorist’s mistake is in thinking that the radiation exchange between two units slows down the radiative loss for BOTH of them. In reality, radiation loss from the warmer unit is slowed down but radiative loss from the cooler unit is speeded up and the net effect is zero.

Unless the energy transfer is slower than the speed of light the potential increase in temperature cannot be realised.

Which leaves us with conduction and convection alone as the cause of a greenhouse effect.

I should have said “…now each also has additional energy flux from the nearby star.”

When energy is absorbed by an object, in most cases it will increase in temperature, that is, it will warm up.

Exceptions clearly exist, as when energy is added to a substance undergoing a phase change and the added energy does not show up as sensible heat but rather exists as latent heat in the new phase of the material.

But in general conversational parlance, I think most of us understand what concept is being conveyed when one uses the word “heat”, when what is actually meant is more precisely termed “energy”.

I wasn’t happy with my previous effort so try this instead:

Consider two objects in space, one warmer than the other and exchanging radiation between them.

Taking a view from space and bearing in mind the S-B equation that mass can only radiate according to its temperature, what happens to the temperatures of the individual objects?

The warmer object can heat the cooler object via a net transmission of radiation across to it so the temperature of the cooler object can rise and more radiation to space can occur from the cooler object.

However, the cooler object will be drawing energy from the warmer object that would otherwise be lost to space.

From space the warmer object would appear to be cooler than it actually is because the cooler object is absorbing some of its radiation.

The apparent cooling of the warmer object would be offset by the actual warming of the cooler object so as to satisfy the S-B equation when observing the combined pair of units from space.

So, the actual temperature of the two units combined would be higher than that predicted by the S-B equation but as viewed from space the S-B equation would be satisfied.

That scenario involves radiation alone and since radiation travels at the speed of light the temperature divergence from S-B for the warmer object would be indiscernible for objects less than light years apart and for objects at such distances the heat transmission between objects would be too small to be discernible.

So, for radiation purposes for objects at different temperatures the S-B equation is universally accurate both for short and interstellar distances.

The scenario is quite different for non-radiative processes which slow down energy transfers to well below the speed of light.

As soon as one introduces non-radiative energy transfers the heating of the cooler object (a planetary surface beneath an atmosphere) becomes magnitudes greater and is easily measurable as compared to the temperature observed from space (above the atmosphere).

So, in the case of Earth, the view from space shows a temperature of 255 K, which accords with radiation to space matching radiation in from the sun.

But due to non-radiative processes within the atmosphere, the surface temperature is at 288 K.

The same principle applies to every planet with an atmosphere dense enough to lead to convective overturning.
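The 255 K figure is the standard effective radiating temperature of a planet. Below is a minimal Python sketch, assuming a solar constant of about 1361 W/m^2 and an albedo of 0.3 (values not stated in the comment). The factor of 4 spreads the sunlight intercepted by the planet’s disk over its whole spherical surface:

```python
SIGMA = 5.670374419e-8     # W m^-2 K^-4
SOLAR_CONSTANT = 1361.0    # W/m^2, assumed
ALBEDO = 0.3               # assumed planetary albedo


def effective_temperature(solar_constant: float, albedo: float) -> float:
    """Temperature at which a planet's emission balances absorbed sunlight.

    Sunlight is intercepted by a disk (pi R^2) but emitted from a sphere
    (4 pi R^2), hence the division by 4.
    """
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    return (absorbed / SIGMA) ** 0.25


t_eff = effective_temperature(SOLAR_CONSTANT, ALBEDO)
print(f"effective temperature: {t_eff:.1f} K")         # about 255 K
print(f"gap to 288 K surface:  {288.0 - t_eff:.1f} K")
```

With these inputs, the sketch gives about 254.6 K, so the gap to the 288 K surface figure is roughly 33 K. It only computes that gap; it says nothing about what causes it.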

Stephen,

Thank you for responding.

Very interesting thoughts you have added.

I did of course realize that there would be after some delay (perhaps a very exceedingly brief delay?) a new equilibrium temperature and if this is hotter then the immediate effect will be an increase in output of the star.

I have to step out at the moment and will comment more fully later this evening, but for now a few brief thoughts in response to your thoughtful comments:

– How far into a star can a photon impinging upon that star go before being absorbed? Probably different for different wavelengths, no?

– How fast can the star transfer energy from the side of the star facing the other star to the side facing empty space? I had not considered it, but most stars are known to rotate, although the thought experiment did not stipulate this. Stars are very large. If the stars are not rotating, will it not take a long time for energy to make its way to the far side?

– If the star warms up on the side facing the other star, will it not tend to shine most of the increased output towards the other star? Each point on the surface is presumably radiating omnidirectionally. If the surface now has another input of energy, will it not have to increase output? If its output is increased, is that synonymous with, or equivalent to, an increase in temperature?

– If it takes a long time (IOW not instantaneous) for energy to be transferred to the far side, will not most of the increased output be aimed right back at the other star?

OK, got to dash now, but you have got me thinking… my thought experiment only went as far as the instantaneous change that would occur, not to the eventual result when a new equilibrium was reached, but several questions arise when that is considered.

Stars can be cooler AND simultaneously more luminous… in fact this happens to all stars as they move into the red giant branch on the H-R diagram, to give one example.

So, will the stars each expand when heated from an external source, and not get hotter, but instead become more luminous while staying the same temp?

I suppose now we will have to have a look at published thoughts on the subject, and maybe measurements of the relative temp of similar stars when in isolation and when in binary and trinary close orbits with other stars.

How fast does gas conduct energy, how fast does a parcel of gas on the surface convect, and how efficient is radiation inside a star? Does all of the incident energy really just shine right back out? If it happens instantly, won’t it just shine back at the first star, so they are now sending photons back and forth (hoo boy, I see where this is going!)

BTW…all honest questions…I do not know for sure what the answers are.

How sure are you about your view on this?

I think to keep it simple at first, let us just consider the case where the stars are the same diameter.

Does it matter how close they are and/or how large they actually are?

Thanks again for responding…few have done so over the years to this thought experiment.

Nicholas,

I’m sure I am right on purely logical grounds.

I have been confronted with this issue many times but only now has it popped into my mind what the truth is.

You mention a number of potentially confounding factors but none of them matter.

Whatever the scenario,the truth must be that the S-B equation simply does not apply to discrete units where radiation is passing between them.

If viewing from outside the system, then one will be radiating more than it ‘should’ and one will be radiating less than it ‘should’, with a zero net effect viewed from outside.

However, the discrepancy is indiscernible for energy transfers at the speed of light. For slower energy transfers the discrepancy becomes all too apparent hence the greenhouse effect induced by atmospheric mass convecting up and down within a gravity field rather than induced by radiative gases.

At its simplest:

S-B applies only to radiation between two locations, a surface and space.

Add non radiative processes and/or more than two locations and S-B does not apply.

A planetary surface beneath an atmosphere open to space involves non radiative processes (conduction and convection) and three locations (surface, top of atmosphere and space).

The application of S-B to climate studies is an appalling error.

It seems Podsiadlowski (1991) may have explored the effects of irradiation on the evolution of binary stars, in particular with regard to high X-ray flux.

I am sure there must be plenty of literature on how binary stars affect each other’s evolution, but most of what I find in a quick look has to do with mass transfer situations.

Be back later, but:

http://www-astro.physics.ox.ac.uk/~podsi/binaries.pdf

Page 38 is where I got to for now.

This is paywalled:

http://adsabs.harvard.edu/abs/1991Natur.350..136P

Just reading some easily found papers, I have come across a few references to what happens in such cases, which are actually common: it is thought most stars are binary.

http://articles.adsabs.harvard.edu/cgi-bin/nph-iarticle_query?bibcode=1985A%26A…147..281V&db_key=AST&page_ind=0&data_type=GIF&type=SCREEN_VIEW&classic=YES

The second paragraph of this paper starts out stating: “In general, the external illumination results in a heating of the atmosphere.”

Second paragraph begins:

“For models in radiative and convective equilibrium, not all the incident energy is re-emitted”

The details are apparently, as I surmised, quite complex, and have been considered by various stellar physicists going back to at least Chandrasekhar in 1945.

This one is a relatively old paper, 1985 it appears, and is of course paywalled.

I think I read once on here that there is a way to read most paywalled scientific papers without paying. Maybe someone can help on this.

But most papers on this topic are concerned with the apparently more interesting effects of mass transfer in binary systems, the primary mechanism for which is something called Roche Lobe Overflow (RLOF). Along the way one learns about stars called “redbacks” and “black widows”, among others.

https://iopscience.iop.org/article/10.1088/2041-8205/786/1/L7/pdf

Limb brightening, grey atmospheres, stars in convective equilibrium…have to read up on these and refresh my memory…I only took a couple of classes in astrophysics.

“Standard CBS models do not take into account either evaporation of the donor star by radio pulsar irradiation (Stevens et al. 1992), or X-ray irradiation feedback (Büning & Ritter 2004). During an RLOF, matter falling onto the NS produces X-ray radiation that illuminates the donor star, giving rise to the irradiation feedback phenomenon. If the irradiated star has an outer convective zone, its structure is considerably affected. Vaz & Nordlund (1985) studied irradiated grey atmospheres, finding that the entropy at deep convective layers must be the same for the irradiated and non-irradiated portions of the star. To fulfill this condition, the irradiated surface is partially inhibited from releasing energy emerging from its deep interior, i.e., the effective surface becomes smaller than $4\pi R_2^2$ ($R_2$ is the radius of the donor star). Irradiation makes the evolution depart from that predicted by the standard theory. After the onset of the RLOF, the donor star relaxes to the established conditions on a thermal (Kelvin–Helmholtz) timescale, $\tau_{KH} = G M_2^2/(R_2 L_2)$ ($G$ is the gravitational constant and $L_2$ is the luminosity of the donor star). In some cases, the structure is unable to sustain the RLOF and becomes detached. Subsequent nuclear evolution may lead the donor star to experience RLOF again, undergoing a quasicyclic behavior (Büning & Ritter 2004). Thus, irradiation feedback may lead to the occurrence of a large number of short-lived RLOFs instead of a long one. In between episodes, the system may reveal itself as a radio pulsar with a binary companion. Notably, the evolution of several quantities is only mildly dependent on the irradiation feedback (e.g., the orbital period).”
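The Kelvin–Helmholtz timescale in the quoted passage is simple to evaluate. Here is a rough Python sketch that plugs in approximate present-day solar values purely as an example. The quoted paper applies the timescale to donor stars, whose mass, radius and luminosity differ:

```python
G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30           # kg, approximate
R_SUN = 6.957e8            # m, nominal solar radius
L_SUN = 3.828e26           # W, nominal solar luminosity
YEAR_S = 365.25 * 86400.0  # Julian year in seconds


def kelvin_helmholtz_timescale(mass, radius, luminosity):
    """tau_KH = G M^2 / (R L), in seconds."""
    return G * mass**2 / (radius * luminosity)


tau = kelvin_helmholtz_timescale(M_SUN, R_SUN, L_SUN)
print(f"tau_KH for the sun: {tau / YEAR_S:.2e} years")  # roughly 3e7
```

For the sun, this comes out at roughly 30 million years.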

“A planetary surface beneath an atmosphere open to space involves non radiative processes (conduction and convection) and three locations (surface, top of atmosphere and space).”

I agree with this completely.

There is no reason to think that radiative properties of CO2 dominate all other influences, and many reasons to believe its influence at the margin is very small, if not negligible or zero. If it is negligible or zero there are many possible reasons for it being so.

One need not be able to explain the precise reasons, however, to know that there is no causal correlation at any time scale, between CO2 and the temperature of the Earth.

“The application of S-B to climate studies is an appalling error.”

I am still trying to figure out why there is such a variety of views on this point.

I confess I find this baffling.

I do not know who is right.

My thought experiment is conceived to look narrowly at the question of whether or not radiant energy from a cool object impinges upon a warmer object, and what happens if and when it does.

How fast everything happens seems to me to be a separate question.

The speed of light is very fast, but it is not instantaneous.

I can find many references confirming that when photons are absorbed by a material, the effect is generally to make the material become warmer, because energy is added.

I have not found anything that says that the temperature of the substance that emitted the photons changes that.

“The warmer object can heat the cooler object via a net transmission of radiation across to it so the temperature of the cooler object can rise and more radiation to space can occur from the cooler object.

However, the cooler object will be drawing energy from the warmer object that would otherwise be lost to space.

From space the warmer object would appear to be cooler than it actually is because the cooler object is absorbing some of its radiation.

The apparent cooling of the warmer object would be offset by the actual warming of the cooler object so as to satisfy the S-B equation when observing the combined pair of units from space.”

I had not seen this previously.

I have to disagree.

Perhaps I misunderstand, or perhaps you misspoke.

The warmer star appears cooler because the cooler star is intercepting some of its radiation?

But radiation works by line of sight. And whatever photons the cooler star absorbs cannot have any effect on how the warmer star radiates.

Here is how it must be in my view:

Each star had, when isolated in space, a given temp, which was a balance between the flux from the core and the radiation emitted from the surface. Flux from the core is either via radiation or convection according to accepted stellar models, and these tend to be in discrete zones.

When the stars are brought into orbit (and let’s stipulate circular orbits around a common center of mass, in a plane perpendicular to the observer (us), so they are not at any time eclipsing each other from our vantage point) near each other, each is now being irradiated by the other. And radiation emitted in the direction of the other star by either one of them is either reflected or absorbed. Each star emits across a wide range of energies, and the optical depth of the irradiated star to these wavelengths varies depending on the wavelength of the individual photons.

Since in the new situation the flux leaving the core remains the same, and since the surface area of each star that is losing energy to open space is now diminished, each one will have to become more luminous. Each star now has an additional flux of energy reaching its surface, due to being irradiated.

Since each star is absorbing some energy from the other, each will initially get hotter.

The stars will each respond by expanding, because that portion of the star has first become hotter.

I’m not happy with my description either so still working on it. There is something in it though which is niggling at me but best to leave it for another time.

The thing is that one should be considering two objects rather than two stars, both of which are being irradiated by a separate energy source. The issue is then one of timing, involving the delay caused by the to-and-fro transfer of radiative energy throughput between the two irradiated objects.

I have previously dealt with it adequately in relation to objects in contact with one another, such as a planet and its atmosphere, which involves non-radiative transfers. But I need to create a separate narrative where the irradiated objects are not in contact, so that only radiative transfers are involved.

The general principle of a delay in the energy throughput resulting in warming of both objects, whilst not upsetting the S-B equation, should apply for radiation just as it does for slower non-radiative transfers, but it needs different wording and I’m not there yet.

Your comments are helpful though.

No, there are no refrigerators without power cords: heat flows from hot to cold unless you put work into it. That’s not handwavium; it’s standard physics, classical, yes. There’s no helping you. I can only stop others from accepting your erroneous view.

Heat does not flow in radiation as in conduction. Bodies radiate. Two bodies not at absolute zero will both radiate, and each body will capture some radiation from the other. There will be a net energy gain in some cases (for example, from a large hot object to a small cold one, the cold one gains net heat). If the bodies are spheres in space, a lot of the radiation just travels away through “space”, except where areas intersect (view factor). Even if a cold body “sees” a hot one, it still radiates photons to it. There is no magic switch turning off the radiation. The cold body may send 1000 photons to the hot one, but the hot one may send a trillion to the cold one.
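A minimal sketch of the mutual-radiation point, assuming two ideal black bodies (emissivity 1) and a single area-times-view-factor term shared by both directions (reciprocity, A₁F₁₂ = A₂F₂₁). The temperatures (600 K and 300 K) and the 0.1 m² geometric factor are hypothetical choices; the sketch only shows that the cold body still sends power to the hot one while the net flow runs from hot to cold.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def radiative_exchange(t_hot_k: float, t_cold_k: float, area_view_m2: float = 0.1):
    """Return (hot->cold, cold->hot, net) power in watts between two black bodies.

    area_view_m2 is A1*F12, which by reciprocity equals A2*F21, so the same
    geometric factor applies to radiation travelling in either direction.
    """
    hot_to_cold = area_view_m2 * SIGMA * t_hot_k ** 4   # emitted by hot, absorbed by cold
    cold_to_hot = area_view_m2 * SIGMA * t_cold_k ** 4  # emitted by cold, absorbed by hot
    return hot_to_cold, cold_to_hot, hot_to_cold - cold_to_hot


h2c, c2h, net = radiative_exchange(600.0, 300.0)
print(f"hot->cold {h2c:.1f} W, cold->hot {c2h:.1f} W, net {net:.1f} W")
```

With these numbers the two one-way flows differ by a factor of (600/300)⁴ = 16, and the net goes to zero when the temperatures are equal.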

Please explain how a room-temperature thermal imaging camera works. It measures temperatures down to -20°C whilst its sensor sits far above that, in an operating environment of up to 50°C.

This is an UNCOOLED microbolometer sensor.

For objects cooler than the microbolometer, less radiation is focussed on the array, so the array is only slightly warmed.

For objects hotter than the microbolometer array, more radiation is focussed on the array, so the array is warmed more.

The array cannot be cooled unless you believe in negative IR energy!

There is a continual exchange of IR from hot to cold and from cold to hot. The NET radiation is from hot to cold. BUT the cold still adds energy to the hot!

FLIR data sheet

https://flir.netx.net/file/asset/21367/original

Detector type and pitch: Uncooled microbolometer

Operating temperature range: -15°C to 50°C (5°F to 122°F)

Please tell me how the Uncooled microbolometer knows what the temperature is?

What property of the radiation tells the meter what temperature it is at, i.e., what is it that “warms it up a bit”?

The bolometer works by turning radiation into heat in each pixel of the array. Radiation from parts of the object at different temperatures heats different pixels more or less. The individual pixels are constructed a couple of micrometers away from the chip base. All the pixels can maintain a fairly steady temperature by radiating from the backside into the base chip.

The temperature is measured by the varying resistance of each pixel. Once a stable image has formed, any additional energy goes into the base chip. The pixels are separated enough to not allow much transfer of heat to adjacent pixels.
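Reading temperature from resistance can be sketched with a linear temperature-coefficient-of-resistance (TCR) model. This is an assumption for illustration: R0_OHM, T0_C and ALPHA_PER_K are hypothetical values (negative coefficients of a few percent per kelvin are commonly quoted for vanadium-oxide bolometer films), and a real camera converts pixel signals to scene temperature through calibration rather than this bare inversion.

```python
# Hypothetical pixel parameters for illustration only.
R0_OHM = 100e3        # pixel resistance at the reference temperature
T0_C = 30.0           # reference temperature (e.g. the substrate), deg C
ALPHA_PER_K = -0.02   # temperature coefficient of resistance, per kelvin


def resistance_at(temp_c: float) -> float:
    """Linear TCR model: R(T) = R0 * (1 + alpha * (T - T0))."""
    return R0_OHM * (1.0 + ALPHA_PER_K * (temp_c - T0_C))


def temperature_from(resistance_ohm: float) -> float:
    """Invert the linear model to recover pixel temperature from a measured resistance."""
    return T0_C + (resistance_ohm / R0_OHM - 1.0) / ALPHA_PER_K


# A pixel warmed by 0.05 K drops in resistance by about 0.1 %.
r = resistance_at(30.05)
print(f"{r:.1f} ohm -> {temperature_from(r):.3f} C")
```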

Yes, if a black body had a temperature of 16 °C, it would radiate at 396 W/m².

Notice that the Earth does not have a constant temperature all over. In some places the temperature is 288 K, at others it’s 293 K or 283 K.

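A black body at 288 K will radiate σT⁴ ≈ 390.1 W/m² (with σ = 5.670 × 10⁻⁸ W m⁻² K⁻⁴). The figures below use 390.7 W/m² as the 288 K reference; since every term scales with that reference, the conclusion is unaffected.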

The average for black bodies radiating at 293 K and 283 K will be

$$\frac{1}{2}\left[\left(\frac{293}{288}\right)^4 + \left(\frac{283}{288}\right)^4\right] \times 390.7\ \mathrm{W/m^2} = \frac{418.546 + 364.266}{2}\ \mathrm{W/m^2} = 391.406\ \mathrm{W/m^2},$$

which is higher than 390.7 W/m².

For temperatures of 298 K and 278 K, still averaging 288 K, the wattage will be

$$\frac{1}{2}\left[\left(\frac{298}{288}\right)^4 + \left(\frac{278}{288}\right)^4\right] \times 390.7\ \mathrm{W/m^2} = \frac{447.856 + 339.198}{2}\ \mathrm{W/m^2} = 393.527\ \mathrm{W/m^2},$$

which is 2.827 W/m² greater than 390.7 W/m².

For 302 K and 274 K, the average is

$$\frac{1}{2}\left[\left(\frac{302}{288}\right)^4 + \left(\frac{274}{288}\right)^4\right] \times 390.7\ \mathrm{W/m^2} = \frac{472.390 + 320.092}{2}\ \mathrm{W/m^2} = 396.241\ \mathrm{W/m^2}.$$

The greater the variation in temperatures from “average”, the more power per square meter is radiated from Earth’s surface, even though the average temperature stays the same.
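The pairwise averages above can be reproduced with a short script. It follows the comment's own method, scaling each temperature's flux from the 390.7 W/m² baseline at 288 K by (T/288)⁴; the function names are illustrative. Because flux grows as T⁴, a convex function, the mean of the fluxes exceeds the flux at the mean temperature (Jensen's inequality), and the gap widens as the spread grows.

```python
REFERENCE_T_K = 288.0    # reference temperature used in the comment
REFERENCE_FLUX = 390.7   # W/m^2, the comment's flux at the reference temperature


def scaled_flux(temp_k: float) -> float:
    """Black-body flux at temp_k, scaled from the reference: (T/288)^4 * 390.7."""
    return (temp_k / REFERENCE_T_K) ** 4 * REFERENCE_FLUX


def mean_pair_flux(t_low_k: float, t_high_k: float) -> float:
    """Average flux of two equal-area patches at t_low_k and t_high_k."""
    return (scaled_flux(t_low_k) + scaled_flux(t_high_k)) / 2


# Each pair averages 288 K, but the spread grows from 10 K to 28 K.
for low, high in [(283.0, 293.0), (278.0, 298.0), (274.0, 302.0)]:
    mean = mean_pair_flux(low, high)
    print(f"{low:.0f} K & {high:.0f} K -> {mean:.3f} W/m^2 "
          f"(+{mean - REFERENCE_FLUX:.3f} over the uniform 288 K case)")
```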

Bingo!

Plus, engineers will physically test heat transfer under controlled conditions to ensure their calculations match reality.

Energy flows in an electromagnetic field, high energy to low, and unlike running red lights, the Second Law of Thermodynamics is inviolable. Period.

Tom, all I read on here is photons, never energy. Just about everybody on here says photons are photons.

But surely they cannot all be equal? Otherwise there would be no SW, no Near IR and no LWIR.

My understanding is that photons are electrically neutral with no energy of their own, and they flow within an electromagnetic field between the energy-emitting and energy-absorbing molecules of surfaces coupled by the electromagnetic field. They may be considered to mediate or denominate a flow of energy out of and into the molecules. They have the following basic properties:

Stability,

Zero mass and energy at rest, i.e., nonexistent except as moving particles,

Elementary particles despite having no mass at rest,

No electric charge,

Motion exclusively within an electromagnetic field (EMF),

Energy and momentum dependent on EMF spectral emission frequency,

Motion at the speed of light in empty space,

Interactive with other particles such as electrons, and

Destroyed or created by natural processes – e.g., radiative absorption or emission.

Photons have energy. Photons with energy above 10 eV can remove electrons from atoms (i.e., ionize them).
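Photon energy depends on frequency, E = hν = hc/λ, which is why SW, near-IR and LWIR photons are not equal. A minimal sketch using the exact SI values of h, c and the electronvolt; the example wavelengths are illustrative choices, and the 10 eV threshold is the figure quoted in the comment above.

```python
H = 6.62607015e-34     # Planck constant, J s (exact SI value)
C = 299_792_458.0      # speed of light in vacuum, m/s (exact)
EV = 1.602176634e-19   # joules per electronvolt (exact)


def photon_energy_ev(wavelength_m: float) -> float:
    """Energy of one photon, E = h c / lambda, expressed in electronvolts."""
    return H * C / wavelength_m / EV


for label, wavelength in [
    ("visible, 500 nm", 500e-9),
    ("near IR, 1 um", 1e-6),
    ("thermal LWIR, 10 um", 10e-6),
]:
    print(f"{label}: {photon_energy_ev(wavelength):.3f} eV")

# Wavelength at which a photon carries exactly 10 eV; shorter wavelengths carry more.
threshold_m = H * C / (10.0 * EV)
print(f"10 eV photons: {threshold_m * 1e9:.0f} nm")
```

A 10 µm thermal-IR photon carries roughly one-twentieth the energy of a 500 nm visible photon, and only ultraviolet photons shorter than about 124 nm reach 10 eV.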

My previous response to this was evidently not sufficiently clear (or acceptable), which is unfortunate since there seems to be a good deal of misunderstanding about energy within an electromagnetic field and photons that mediate that flow. As mediators or markers of that energy they have no existence except within the field and as indication that it exists. Information concerning that is available and can clear up most if not all of the mystery.

The photon is a boson of a special sort, sometimes called a force particle, as bosons are intrinsic to physical forces like electromagnetism and possibly even gravity. In 1924, in an effort to fully understand Planck’s law of blackbody radiation in thermodynamic equilibrium, physicist Satyendra Nath Bose (1894 – 1974) proposed a method to analyze photons’ behavior. Einstein, who translated Bose’s paper into German and had it published, extended its reasoning to matter particles. Among bosons are the basic “gauge bosons” that mediate the fundamental physical forces. These four gauge bosons have been experimentally tracked if not observed. They are:

The photon – the particle of light that transmits electromagnetic energy and acts as the gauge boson mediating the force of electromagnetic interactions,

The gluon – mediating the interactions of the strong nuclear force within an atom’s nucleus,

The W Boson – one of the two gauge bosons mediating the weak nuclear force, and

The Z Boson – the other gauge boson mediating the weak nuclear force.

There is also the unstable Higgs boson, which lends the weak-force gauge bosons the mass they would otherwise lack.
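Bose's counting of photons gives each radiation mode a mean occupation of 1/(e^{hν/kT} − 1), which leads to Planck's law; integrating Planck's law over all frequencies recovers the Stefan–Boltzmann law, with σ = 2πk⁴/(c²h³) · ∫₀^∞ x³/(eˣ − 1) dx, where x = hν/kT and the integral equals π⁴/15. The sketch below checks this numerically with a midpoint rule, a simple choice for illustration, truncated at x = 60 where the integrand is negligible.

```python
import math

H = 6.62607015e-34    # Planck constant, J s
C = 299_792_458.0     # speed of light in vacuum, m/s
K_B = 1.380649e-23    # Boltzmann constant, J/K


def planck_integral(x_max: float = 60.0, steps: int = 200_000) -> float:
    """Midpoint-rule estimate of the integral of x^3 / (e^x - 1) from 0 to x_max.

    x = h*nu / (k*T); 1 / (e^x - 1) is the Bose-Einstein mean occupation of a mode.
    """
    dx = x_max / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * dx
        total += x ** 3 / math.expm1(x)  # expm1 keeps e^x - 1 accurate for small x
    return total * dx


integral = planck_integral()
sigma = 2.0 * math.pi * K_B ** 4 / (C ** 2 * H ** 3) * integral
print(f"integral = {integral:.6f} (pi^4/15 = {math.pi ** 4 / 15:.6f})")
print(f"sigma    = {sigma:.6e} W m^-2 K^-4")
```

The recovered σ is the same constant behind the σT⁴ flux figures used in the thread.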

I am also to blame for not fully understanding your point. As a health (radiation) physicist (but educated as a generalist physicist), I am rather entrenched in conceptualizing photons as energetic wave packets, whose deposited energy has consequences, cell damage, heating, etc. And, with that said, however one conceptualizes the photon, the absorption or scattering of a photon imparts energy in the receptor.

“Most people don’t understand that at this distance from the sun objects get hot (394 K) ”

Off hand I’d say ALL people don’t understand it because it isn’t true.

Thank-you Charles and thank-you Anthony, for this and all you do.

Thank YOU for the hard fought effort to post the paper!

Thanks, but no thanks are necessary, Sunsettommy. I was compelled to do it. Compelled. My sanity demanded it.

I’m just very glad that the first slog is over.

Of course, now comes the second slog. 🙂 But still, that’s an improvement.

Fantastic paper and comments, Pat!

When I was trained in atmospheric science, it was well understood, from radiation physics derived post-Einstein by the pioneers of the science, that CO2 is only a GHG of secondary significance in the troposphere because of the hydrological cycle, and that it cannot control the IR flux to space in its presence. It has no controlling effect on climate.

These conclusions were derived empirically from the calculations, and the only thing that changed this was the advent of these horrible models you cite, the lies that were told about them to get grant money, and the continued lies being told about them: that they are accurate and can be used to make public policy today.

Money is the motivating factor behind the lying, both for the taxpayer-funded grant money keeping the climate hysteria gravy train rolling in the universities and for the political class that saw an opportunity to exploit this fraud by creating a fake Rx: that carbon taxation will fix it.

This terrible corruption has spread through the public university system and must be stopped. The political class falls back on the universities that promote climate hysteria as a means of defending their horrible ideas about carbon taxes and cap and trade.

You are correct that a hostile response to you will be forthcoming. It is always what happens when funding for fraud needs to be cut off and the perpetrators are threatened with unemployment as a result.

Thanks, Chuck.

Gordon Fulks has written of your struggles in Oregon. You’ve had to withstand a lot of abuse, telling the truth about climate and CO2 as you do.

Chuck’s testimony before an OR legislative committee:

https://olis.leg.state.or.us/liz/2018R1/Downloads/CommitteeMeetingDocument/145657

Hopeless but valiant struggle in defense of science against the false religion and corrupt political ideology of CACA.

“Being trained in the atmospheric science major I was, it was well understood from radiation physics derived post Einstein by the pioneers of the science that CO2 is only a GHG of secondary significance in the troposphere because of the hydrological cycle and cannot control the IR flux to space in its presence. It has no controlling effect on climate.”

A brilliant summation of reality. CO2 doesn’t “drive” jack shit. Just like ALWAYS. A quick review of the Earth’s climate history shows that atmospheric CO2 does NOT “drive” the Earth’s temperature. Nor will it ever. This is, and will always be (until the Sun goes Red Giant and makes Earth uninhabitable or swallows it up), a water planet.

But this is what happens when so-called “scientists” obsess about PURELY HYPOTHETICAL situations (i.e., doubling of atmospheric CO2 concentration with ALL OTHER THINGS HELD EQUAL, which of course will NEVER HAPPEN) and extrapolate from there with imaginary “positive feedback loops” which simply don’t exist here in the REAL world.

Many, many thanks, Pat. Six years to get a paper reviewed!! Holy Cow! You have the patience of Job. The world is a better place because of your tenacity! It demonstrates that there is hope after all.

Thank you Professor Frank from this Irishman.

For you to even exist in such a social, religious, and scientific desert as the country of my birth has become, is a minor miracle.

As you say, without people like Anthony and his wonderful band of realist contributors and helpers we would be lost.

Just look at a few crazy headlines from this past week:

A “scientist” proposes we start eating cadavers, my Pope proposes we stop producing and using all fossil fuels NOW to prevent run away global warming.

God help me to leave this madhouse soon.

Keep hope, Patrick Healy.

Things have gotten much worse in the past, and we’ve somehow muddled our way back to better things. 🙂

Patrick,

Pat lives in the worst of the USA’s madhouses, i.e., the SF Bay Area, albeit outside of the verminous, rat-infested, human-fecal-matter-encrusted, diseased and squalid City.

He attended college and grad school in that once splendid region*, earning a PhD in chemistry from Stanford and enjoying a long career at SLAC.

*In 1969, Redwood City still billed itself as “Climate Best by Government Test!”

An excellent paper and commentary. I will reference it often.

Thanks, Rs. Your critical approval is welcome.

...and thank you, Pat, for “he persisted”.

Appreciated, Latitude. 🙂