Propagation of Error and the Reliability of Global Air Temperature Projections

Guest essay by Pat Frank

Regular readers at Anthony’s Watts Up With That will know that for several years, since July 2013 in fact, I have been trying to publish an analysis of climate model error.

The analysis propagates a lower limit calibration error of climate models through their air temperature projections. Anyone reading here can predict the result. Climate models are utterly unreliable. For a more extended discussion see my prior WUWT post on this topic (thank-you Anthony).

The bottom line is that when it comes to a CO2 effect on global climate, no one knows what they’re talking about.

Before continuing, I would like to extend a profoundly grateful thank-you! to Anthony for providing an uncensored voice to climate skeptics, over against those who would see them silenced. By “climate skeptics” I mean science-minded people who have assessed the case for anthropogenic global warming and have retained their critical integrity.

In any case, I recently received my sixth rejection; this time from Earth and Space Science, an AGU journal. The rejection followed the usual two rounds of uniformly negative but scientifically meritless reviews (more on that later).

After six tries over more than four years, I now despair of ever publishing the article in a climate journal. The stakes are just too great. It’s not the trillions of dollars that would be lost to sustainability troughers.

Nope. It’s that if the analysis were published, the career of every single climate modeler would go down the tubes, starting with James Hansen. Their competence comes into question. Grants disappear. Universities lose enormous income.

Given all that conflict of interest, what consensus climate scientist could possibly provide a dispassionate review? They will feel justifiably threatened. Why wouldn’t they look for some reason, any reason, to reject the paper?

Somehow climate science journal editors have seemed blind to this obvious conflict of interest as they chose their reviewers.

With the near hopelessness of publication, I have decided to make the manuscript widely available as samizdat literature.

The manuscript with its Supporting Information document is available without restriction here (13.4 MB pdf).

Please go ahead and download it, examine it, comment on it, and send it on to whomever you like. For myself, I have no doubt the analysis is correct.

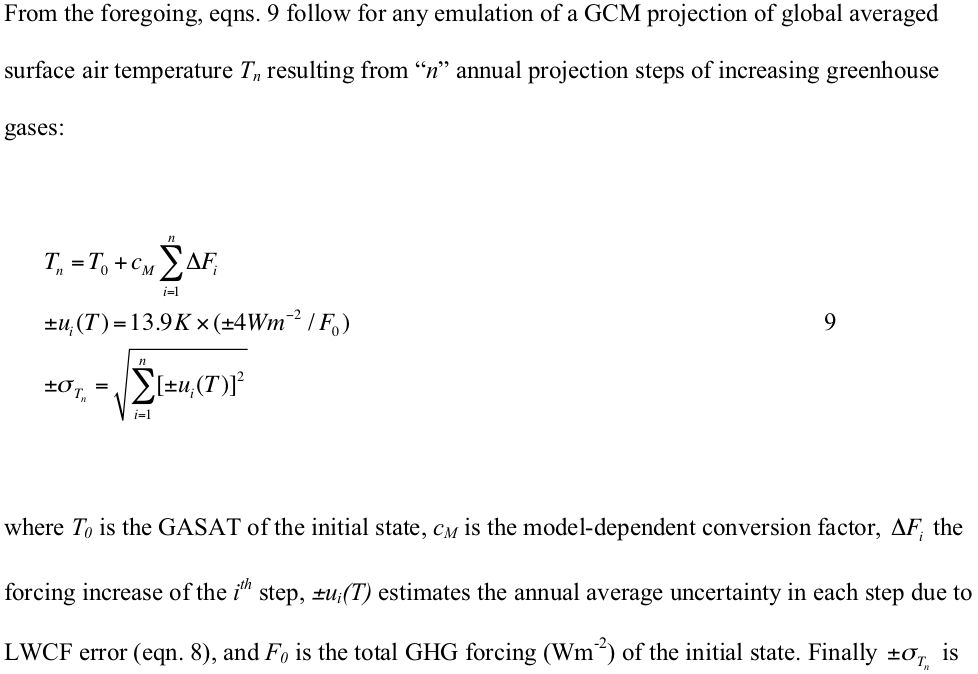

Here’s the analytical core of it all:

Climate model air temperature projections are just linear extrapolations of greenhouse gas forcing. Therefore, they are subject to linear propagation of error.

Complicated, isn’t it? I have yet to encounter a consensus climate scientist able to grasp that concept.

Willis Eschenbach demonstrated that climate models are just linearity machines back in 2011, by the way, as did I in my 2008 Skeptic paper and at CA in 2006.

The manuscript shows that this linear equation …

… will emulate the air temperature projection of any climate model; fCO2 reflects climate sensitivity and “a” is an offset. Both coefficients vary with the model. The parenthetical term is just the fractional change in forcing. The air temperature projections of even the most advanced climate models are hardly more than y = mx+b.
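The equation itself did not survive in this copy of the post, so what follows is only a sketch of the form the description implies: a coefficient times a constant scale times the fractional change in forcing, plus an offset. The function name, the `scale_k` constant, the base forcing `f0`, and every numeric value below are placeholders for illustration, not values taken from the manuscript.

```python
from itertools import accumulate


def emulate_anomaly(forcing_increments, f_co2, f0, scale_k, offset=0.0):
    """Linear emulator sketch (assumed form, not the manuscript's exact equation).

    T_i = f_co2 * scale_k * (f0 + sum(dF_1..dF_i)) / f0 + offset
    """
    cumulative = accumulate(forcing_increments)
    return [f_co2 * scale_k * (f0 + total) / f0 + offset for total in cumulative]


# Hypothetical inputs: five equal annual forcing increments in W/m^2.
increments = [0.04] * 5
temps = emulate_anomaly(increments, f_co2=0.42, f0=34.0, scale_k=33.0)

# Equal forcing increments give equal temperature increments: y = mx + b.
steps = [later - earlier for earlier, later in zip(temps, temps[1:])]
```

With constant increments the step-to-step change is constant, which is the straight-line behavior the paragraph describes.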

The manuscript demonstrates dozens of successful emulations, such as these:

Legend: points are CMIP5 RCP4.5 and RCP8.5 projections. Panel ‘a’ is the GISS GCM Model-E2-H-p1. Panel ‘b’ is the Beijing Climate Center Climate System GCM Model 1-1 (BCC-CSM1-1). The PWM lines are emulations from the linear equation.

CMIP5 models display an inherent calibration error of ±4 Wm⁻² in their simulations of long wave cloud forcing (LWCF). This is a systematic error that arises from incorrect physical theory. It propagates into every single iterative step of a climate simulation. A full discussion can be found in the manuscript.

The next figure shows what happens when this error is propagated through CMIP5 air temperature projections (starting at 2005).

Legend: Panel ‘a’ points are the CMIP5 multi-model mean anomaly projections of the AR5 RCP4.5 and RCP8.5 scenarios. The PWM lines are the linear emulations. In panel ‘b’, the colored lines are the same two RCP projections. The uncertainty envelopes are from propagated model LWCF calibration error.

For RCP4.5, the emulation departs from the mean near projection year 2050 because the GHG forcing has become constant.
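As a rough sketch of how such an envelope could be built: assume the calibration uncertainty enters every annual step and that the steps combine as a root-sum-square. That combination rule, the forcing-to-temperature conversion through a linear emulator slope, and the coefficient values are all assumptions made for illustration; the manuscript's own procedure may differ in detail.

```python
import math


def propagate_rss(step_uncertainties):
    """Running root-sum-square of per-step uncertainties."""
    total_sq = 0.0
    envelope = []
    for u in step_uncertainties:
        total_sq += u * u
        envelope.append(math.sqrt(total_sq))
    return envelope


def forcing_to_temperature(u_forcing, f_co2, scale_k, f0):
    """Convert a forcing uncertainty to kelvin via an assumed linear slope."""
    return f_co2 * scale_k * u_forcing / f0


# Placeholder coefficients; only the 4.0 W/m^2 figure comes from the post.
u_step = forcing_to_temperature(4.0, f_co2=0.42, scale_k=33.0, f0=34.0)
envelope = propagate_rss([u_step] * 100)
```

With a constant per-step value the envelope grows as the square root of the number of steps, so it widens quickly at first and more slowly later.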

As a monument to the extraordinary incompetence that reigns in the field of consensus climate science, I have made the 29 reviews and my responses for all six submissions available here for public examination (44.6 MB zip file, checked with Norton Antivirus).

When I say incompetence, here’s what I mean and here’s what you’ll find.

Consensus climate scientists:

1. Think that precision is accuracy

2. Think that a root-mean-square error is an energetic perturbation on the model

3. Think that climate models can be used to validate climate models

4. Do not understand calibration at all

5. Do not know that calibration error propagates into subsequent calculations

6. Do not know the difference between statistical uncertainty and physical error

7. Think that “±” uncertainty means positive error offset

8. Think that fortuitously cancelling errors remove physical uncertainty

9. Think that projection anomalies are physically accurate (never demonstrated)

10. Think that projection variance about a mean is identical to propagated error

11. Think that a “±K” uncertainty is a physically real temperature

12. Think that a “±K” uncertainty bar means the climate model itself is oscillating violently between ice-house and hot-house climate states
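Items 1 and 10 turn on the difference between spread about a mean (precision) and distance from the true value (accuracy). A minimal sketch with made-up numbers, in which the runs and the "true" value are hypothetical:

```python
import statistics

# Hypothetical data: five runs that agree closely with one another
# but share a common offset from the (assumed known) true value.
true_value = 15.0
runs = [17.9, 18.0, 18.1, 18.0, 18.0]

spread = statistics.stdev(runs)            # precision: scatter about the mean
bias = statistics.mean(runs) - true_value  # accuracy: distance from the truth
```

Here the scatter is well under a tenth of a unit while the offset from the true value is about three units: tight agreement among runs says nothing about how close they are to reality.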

Item 12 is especially indicative of the general incompetence of consensus climate scientists.

Not one of the PhDs making that supposition noticed that a “±” uncertainty bar passes through, and cuts vertically across, every single simulated temperature point. Not one of them figured out that their “±” vertical oscillations meant that the model must occupy the ice-house and hot-house climate states simultaneously!

If you download them, you will find these mistakes repeated and ramified throughout the reviews.

Nevertheless, my manuscript editors apparently accepted these obvious mistakes as valid criticisms. Several have the training to know the manuscript analysis is correct.

For that reason, I have decided their editorial acuity merits them our applause.

Here they are:

- Steven Ghan: Journal of Geophysical Research-Atmospheres

- Radan Huth: International Journal of Climatology

- Timothy Li: Earth Science Reviews

- Timothy DelSole: Journal of Climate

- Jorge E. Gonzalez-Cruz: Advances in Meteorology

- Jonathan Jiang: Earth and Space Science

Please don’t contact or bother any of these gentlemen. On the other hand, one can hope some publicity leads them to blush in shame.

After submitting my responses showing the reviews were scientifically meritless, I asked several of these editors to have the courage of a scientist, and publish over meritless objections. After all, in science analytical demonstrations are bulletproof against criticism. However, none of them rose to the challenge.

If any journal editor or publisher out there wants to step up to the scientific plate after examining my manuscript, I’d be very grateful.

The above journals agreed to send the manuscript out for review. Determined readers might enjoy the few peculiar stories of non-review rejections in the appendix at the bottom.

Really weird: several reviewers inadvertently validated the manuscript while rejecting it.

For example, the third reviewer in JGR round 2 (JGR-A R2#3) wrote that,

“[emulation] is only successful in situations where the forcing is basically linear …” and “[emulations] only work with scenarios that have roughly linearly increasing forcings. Any stabilization or addition of large transients (such as volcanoes) will cause the mismatch between this emulator and the underlying GCM to be obvious.”

The manuscript directly demonstrated that every single climate model projection was linear in forcing. The reviewer’s admission of linearity is tantamount to a validation.

But the reviewer also set a criterion by which the analysis could be verified — emulate a projection with non-linear forcings. He apparently didn’t check his claim before making it (big oh, oh!) even though he had the emulation equation.

My response included this figure:

Legend: The points are Jim Hansen’s 1988 scenario A, B, and C. All three scenarios include volcanic forcings. The lines are the linear emulations.

The volcanic forcings are non-linear, but climate models extrapolate them linearly. The linear equation will successfully emulate linear extrapolations of non-linear forcings. Simple. The emulations of Jim Hansen’s GISS Model II simulations are as good as those of any climate model.

The editor was clearly unimpressed with the demonstration, and with the fact that the reviewer had inadvertently validated the manuscript analysis.

The same incongruity of inadvertent validations occurred in five of the six submissions: AM R1#1 and R2#1; IJC R1#1 and R2#1; JoC, #2; ESS R1#6 and R2#2 and R2#5.

In his review, JGR R2 reviewer 3 immediately referenced information found only in the debate I had (and won) with Gavin Schmidt at Realclimate. He also used very Gavin-like language. So, I strongly suspect this JGR reviewer was indeed Gavin Schmidt. That’s just my opinion, though. I can’t be completely sure because the review was anonymous.

So, let’s call him Gavinoid Schmidt-like. Three of the editors recruited this reviewer. One expects they called in the big gun to dispose of the upstart.

The Gavinoid responded with three mostly identical reviews. They were among the most incompetent of the 29. Every one of the three included mistake #12.

Here’s Gavinoid’s deep thinking:

“For instance, even after forcings have stabilized, this analysis would predict that the models will swing ever more wildly between snowball and runaway greenhouse states.”

And there it is. Gavinoid thinks the increasingly large “±K” projection uncertainty bars mean the climate model itself is oscillating increasingly wildly between ice-house and hot-house climate states. He thinks a statistic is a physically real temperature.

A naïve freshman mistake, and the Gavinoid is undoubtedly a PhD-level climate modeler.

Most of Gavinoid’s analytical mistakes fall under list items 2, 5, 6, 10, and 11. If you download the paper and Supporting Information, section 10.3 of the SI includes a discussion of the total hash Gavinoid made of a Stefan-Boltzmann analysis.

And if you’d like to see an extraordinarily bad review, check out ESS round 2 review #2. It apparently passed editorial muster.

I can’t finish without mentioning Dr. Patrick Brown’s video criticizing the YouTube presentation of the manuscript analysis. This was my 2016 talk for the Doctors for Disaster Preparedness. Dr. Brown’s presentation was also cross-posted at “andthentheresphysics” (named with no appreciation of the irony) and on YouTube.

Dr. Brown is a climate modeler and post-doctoral scholar working with Prof. Kenneth Caldeira at the Carnegie Institution, Stanford University. He kindly notified me after posting his critique. Our conversation about it is in the comments section below his video.

Dr. Brown’s objections were classic climate modeler, making list mistakes 2, 4, 5, 6, 7, and 11.

He also made the nearly unique mistake of confusing a root-sum-square average of calibration error statistics with an average of physical magnitudes; nearly unique because one of the ESS reviewers made the same mistake.

Mr. andthentheresphysics weighed in with his own mistaken views, both at Patrick Brown’s site and at his own. His blog commentators expressed fatuous insubstantialities and his moderator was tediously censorious.

That’s about it. Readers moved to mount analytical criticisms are urged to first consult the list and then the reviews. You’re likely to find your objections critically addressed there.

I made the reviews easy to appraise by starting them with a summary list of reviewer mistakes. That didn’t seem to help the editors, though.

Thanks for indulging me by reading this.

I felt a true need to go public, rather than submitting in silence to what I see as reflexive intellectual rejectionism and indeed a noxious betrayal of science by the very people charged with its protection.

Appendix of Also-Ran Journals with Editorial ABM* Responses

Risk Analysis. L. Anthony (Tony) Cox, chief editor; James Lambert, manuscript editor.

This was my first submission. I expected a positive result because they had no dog in the climate fight, their website boasts competence in mathematical modeling, and they had published papers on error analysis of numerical models. What could go wrong?

Reason for declining review: “the approach is quite narrow and there is little promise of interest and lessons that transfer across the several disciplines that are the audience of the RA journal.”

Chief editor Tony Cox agreed with that judgment.

A risk analysis audience not interested to discover there’s no knowable risk to CO2 emissions.

Right.

Asia-Pacific Journal of Atmospheric Sciences. Songyou Hong, chief editor; Sukyoung Lee, manuscript editor. Dr. Lee is a professor of atmospheric meteorology at Penn State, a colleague of Michael Mann, and altogether a wonderful prospect for unbiased judgment.

Reason for declining review: “model-simulated atmospheric states are far from being in a radiative convective equilibrium as in Manabe and Wetherald (1967), which your analysis is based upon.” and because the climate is complex and nonlinear.

Chief editor Songyou Hong supported that judgment.

The manuscript is about error analysis, not about climate. It uses data from Manabe and Wetherald but is very obviously not based upon it.

Dr. Lee’s rejection follows either a shallow analysis or a convenient pretext.

I hope she was rewarded with Mike’s appreciation, anyway.

Science Bulletin. Xiaoya Chen, chief editor, unsigned email communication from “zhixin.”

Reason for declining review: “We have given [the manuscript] serious attention and read it carefully. The criteria for Science Bulletin to evaluate manuscripts are the novelty and significance of the research, and whether it is interesting for a broad scientific audience. Unfortunately, your manuscript does not reach a priority sufficient for a full review in our journal. We regret to inform you that we will not consider it further for publication.”

An analysis that invalidates every single climate model study for the past 30 years, demonstrates that a global climate impact of CO2 emissions, if any, is presently unknowable, and that indisputably proves the scientific vacuity of the IPCC, does not reach a priority sufficient for a full review in Science Bulletin.

Right.

Science Bulletin then courageously went on to immediately block my email account.

*ABM = anyone but me; a syndrome widely apparent among journal editors.

Pat,

This has already been explained to you numerous times, so it’s unlikely that this attempt will be any more successful than previous attempts. The error that you’re trying to propagate is not an error at every timestep, but an offset. It simply influences the background/equilibrium state, rather than suggesting that there is an increasing range of possible states at every step. For example, if we ran two simulations with different solar forcings (but everything else the same), this wouldn’t suddenly mean that they would/could diverge with time, it would mean that they would settle to different background/equilibrium states.

@ and Then There’s Physics

I’m a layman and no mathematician but having read the first few pages of the paper it seems to me that your points are answering the wrong question. (?)

The point made, or so it appears to me, is that where there is uncertainty in the assumptions being made within a model then – if, as they should be those uncertainties are expressed and included within the model, as the time-steps are calculated then the uncertainty grows into a wide band with a diverging top and bottom spread of values. In other words they diverge.

If the uncertainties are not included as part of the model then surely it is linear and unable to produce meaningful results?

If you have multiple uncertainties, as in climate, which are input into a model then the spread or divergence must become even greater with time.

Some of those would seem to be (but are far from limited to) temperatures and their effect on atmospheric water vapour levels; cloud formation and cloud cover; solar activity; volcanic activity etc. Each would have an effect on some of the others, with an amount of uncertainty which would need to be expressed.

As I said, I am a layman and would appreciate it if you could enlighten me.

Thanks

“The point made, or so it appears to me, is that where there is uncertainty in the assumptions being made within a model then – if, as they should be those uncertainties are expressed and included within the model, as the time-steps are calculated then the uncertainty grows into a wide band with a diverging top and bottom spread of values. In other words they diverge.”

Except this is not correct. An uncertainty only propagates if it applies at every step (i.e., if there is some uncertainty in the expected value at every step). If, however, some value is “wrong” by some amount that is the same at all time steps, then this does not propagate (by “wrong” I mean potentially different to reality). In this case, it is quite possible that the cloud forcing is “wrong” by a few W/m^2. What this would mean is that the equilibrium state would also then be “wrong”. It doesn’t mean, however, that the range of possible equilibrium states will grow with time, since this error does not propagate.

As I mentioned in the first comment, imagine we could run a perfect model in which every parameter exactly matched reality. Now imagine running the same model, apart from the Solar forcing being different by a few W/m^2. What would happen is that this would change the equilibrium state (there would be a constant offset between the “perfect” model and this other model). It would not mean that the difference between the model with the different solar forcing, and the “perfect” model would grow with time.
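The thought experiment above can be sketched with a toy zero-dimensional energy-balance model. This is an illustration only, not a GCM: the feedback parameter, heat capacity, time step, and forcing values are all hypothetical, and the function name is made up for the example.

```python
def run_energy_balance(forcing, feedback=1.2, heat_capacity=8.0, dt=1.0, steps=400):
    """Step dT/dt = (forcing - feedback * T) / heat_capacity with forward Euler."""
    temp = 0.0
    history = []
    for _ in range(steps):
        temp += dt * (forcing - feedback * temp) / heat_capacity
        history.append(temp)
    return history


# Two runs that differ only by a constant 4 W/m^2 forcing offset.
reference = run_energy_balance(forcing=3.7)
perturbed = run_energy_balance(forcing=3.7 + 4.0)

# Difference between the runs at each step.
gap = [p - r for p, r in zip(perturbed, reference)]
```

In this toy setup the gap between the two runs rises toward the fixed value `4.0 / feedback` and then stays there, which is the constant-offset behavior the comment describes. Whether a GCM's cloud forcing error behaves like this fixed offset is the point in dispute in the thread.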

“An uncertainty only propagates if it applies at every step”

Um… No. A climate model is essentially an attempt to integrate a bunch of co-dependent variables numerically. If you knew anything about numerical integration, you would know that errors propagate wildly. The tool is, fundamentally, unsuited to the purpose to which it is being put.

ATTP, stop focusing on the output result and thinking its error is within an acceptable range and therefore OK to propagate.

The issue is that the uncertainty that is propagated at each time step isn’t seen in the output because the output has been constrained by design to be within “reasonable” values. This is seen to be evidence the model is “doing the right thing” but the real problem is that at every single time step the output is meaningless for a climate calculation because the climate signal is much smaller than the error and what we’re left with is a fitted result.

Now you’ll arc up and suggest GCMs aren’t fits and are based on physics but again you’re mistaken because there are components (eg clouds) that aren’t and they’re approximations, they’re fits and by including them the models themselves are reduced to fits.

The whole GCM enterprise further relies on the assumption that errors cancel at each step throughout and that’s a ridiculous assumption. Completely unjustified and most certainly incorrect. In fact there is a small (unintentionally) built in bias that results in an expected result.

“imagine we could run a perfect model in which every parameter exactly matched reality.”

Yet you are UNABLE to run one where ANY parameter matches reality.

The ONLY thing you have is hallucinogenic anti-science IMAGINATION and FAIRY-TALES

“If you knew anything about numerical integration, you would know that errors propagate wildly.”

I know lots about numerical integration (so does ATTP). I have spent a large part of my professional life doing it, in computational fluid dynamics, a regular engineering activity of which GCMs are a subset. Your statement is nonsense.

There’s no reason that difference would be equal over time. That’s a sign you have created a linear model, and it’s a decidedly non-linear system you’re modeling.

If this is what you think, you guys are lost.

Micro,

I totally agree. This is probably the most telling comment of this whole discussion.

rip

ATTP, I was thinking about this more. You totally do not get that WV acts as a regulating medium: it actively alters the outgoing radiation response based on cooling temperatures, and not the stupid SB 4th power decay. This is on top of that; it’s the bends in the clear sky cooling profile. And since this is decidedly non-linear, and it controls the response to CO2, you’re not accounting for it in your models.

Think about how much the atm column shrinks at night. when it’s calm, it can only cool by radiation, and radiation is omni directional. Also for every gram of water vapor, there is a 4.21 J exchange of IR for a condense – reevaporation cycle as let’s say a 3,000 meter tall stack cools.

Interestingly it cools really quickly till air temps near dew point, then it stops cooling. It’s just there’s about -50W/m^2 of radiation to space just through the optical window based on SB calculations, yet net radiation is less than -20W/m^2. There’s about 35W/m^2 of sensible heat keeping the surface temp from falling as quickly.

There’s a 90F difference in the middle of the spectrum, I’ve measured over 100F differences.

How much energy is about 1 psi change between morning min T and afternoon Max temps at the surface(plus enthalpy lost, water condensed)? Oh wait, without the pressure change, average of about 3,300W/m^3.

attp says:

Funny, your example uses the ONLY independent variable in the whole shebang. Use any other co-dependent variable, and your example is busted.

Only in linear systems

In chaotic systems a single butterfly flapping its wings once….

…and that is a huge point. Climate models treat the climate as a linear system, because we do not have computational tools that can address the uncertainty of non linear systems.

To accept chaotic behaviour is merely to affirm ‘we can’t predict where this is going at all’. Or to put it in the vernacular. Climate science is at that level just bunk.

Even those people here who look for ‘cycles’ in climate with the ardent passion of ‘chemtrail’ observers, may in the end be barking up only a slightly less egregious gum tree than the climate scientists. Chaotic behaviour produces quasi-periodic fluctuations: that is, over short time spans it may look briefly like a cycle, but then as it moves towards new attractors, it will enter a different ‘cycle’ and those of us who have built electronic circuits utilising chaotic feedback (super regenerative radios) know that, absent a forcing signal, what you get is NOISE pure and simple, with no detectable single spectral component.

Nothing is more infuriating than to have someone lecturing you on the characteristics of linear equations, challenging you to disprove their finer points, when your whole position is predicated on a provable assertion that what is being modelled cannot be represented by linear equations in the first place.

“Except this is not correct. An uncertainty only propagates if it applies at every step (i.e., if there is some uncertainty in the expected value at every step). If, however, some value is “wrong” by some amount that is the same at all time steps”

incorrect, the value increases with each step over time, you are a completely anti scientific chappy, clueless

Typical attp tactic, start off with a lie then spin sophistry round your false strawman.

Micro thanks for exposing ATTP’s cut and paste knowledge. Once you get in depth with him, he vanishes every time and runs back to his echo chamber

this annual average ±4.0 Wm⁻² year⁻¹ uncertainty in simulated LWCF is approximately ±150% larger than all the forcing due to all the anthropogenic greenhouse gases put into the atmosphere since 1900 (~2.6 Wm⁻²), and approximately ±114× larger than the average annual ~0.035 Wm⁻² year⁻¹ increase in greenhouse gas forcing since 1979
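The two ratios in that passage can be checked with simple division. The variable names are made up for this check; the three input numbers are the ones quoted above.

```python
# Figures quoted from the manuscript passage above (W/m^2).
lwcf_uncertainty = 4.0
ghg_forcing_since_1900 = 2.6
annual_ghg_increase = 0.035

# Uncertainty relative to total forcing since 1900, and to the annual increase.
ratio_total = lwcf_uncertainty / ghg_forcing_since_1900
ratio_annual = lwcf_uncertainty / annual_ghg_increase
```

The first ratio comes to about 1.54 (roughly 150%) and the second to about 114, matching the quoted figures.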

The error DOES in my opinion propagate.

And Then There’s Physics says If, however, some value is “wrong” by some amount that is the same at all time steps, then this does not propagate.

If the correction for cloud fraction error was a simple linear adjustment to models to correct the error, we would never have known about it. The adjustment would have been applied, and the model prediction would have aligned with observed cloud fraction.

Since nobody can accurately predict how clouds respond to GHG forcing, the margin for error grows with every iteration step. The uncertainty of how clouds will respond to the GHG forcing applied in a single step has to be carried through to the next iteration.

When the margin for error drastically exceeds what is physically plausible, I think we can safely assume the predictions of the model are total nonsense.

Page 23 of Pat Frank’s paper, hindcast cloud fraction error of global climate models.

ATTP says,

This assumes a linear response. It assumes that climate (and thus, presumably, weather) is a linear function of forcings.

If the initial value is “wrong” by some amount – or inaccurate by some amount – then that will affect the next iteration in some way.

If the next iteration is affected by the same amount every single time then the response is always constant.

Once again we have pseudoscience pretending that clouds don’t exist. That phase changes (water vapour to water droplets, for example) are smooth.

Why does ATTP worry about a declining Arctic Icecap when he doesn’t believe in non-linear phase changes? Melting can’t exist in his understanding of climate!

Except he has no understanding. He’s just a climate fanatic. It’s faith, not science.

You’ve got the essence, Old England, “that where there is uncertainty in the assumptions being made within a model then – if, as they should be those uncertainties are expressed and included within the model, as the time-steps are calculated then the uncertainty grows into a wide band…

You have grasped the central point that continually eludes ATTP and virtually every single climate modeler.

The error is systematic, resident in the model, and is introduced into a simulation by the model itself. It enters every simulation time-step, and necessarily produces an increasing uncertainty in the projection.

Look at ATTP’s reply to you. His “wrong by some amount” supposes a constant offset error and is a completely wrong description of the systematic error.

Look at manuscript Figure 5. Every single model has a different error profile with positive and negative excursions. I pointed this out to ATTP in prior conversations. He ignores it. Perhaps because he doesn’t understand the significance. Change the parameter set of any one model, and its error profile will be different.

But ATTP (and others) want to add up all the errors to get one number, and then assume that number is a constant offset error that will correct any model expectation value to be error-free. His (their) idea is beyond parody.

Then he goes on to suppose statistical uncertainty is physical error, i.e., ATTP: “the range of possible equilibrium states will grow with time, since this error does not propagate,”

ATTP makes a standard mistake of my reviewers, here specifically number 6, but he has already also made mistakes 4, 5, 7 and 8.

He makes those same mistakes over, and over again.

TimTheToolMan gets it right, as usual.

Tim, do you have any idea why uncertainty is so opaque to climate modelers?

It’s dead obvious to any experimental scientist or engineer.

In this post, Nick Stokes admitted that GCMs are engineering models. I.e., Nick: “a regular engineering activity of which GCMs are a subset.”

Engineering models are useless outside their calibration bounds. Nick has repudiated the entire global warming scary-2100 enterprise.

Yet another inadvertent validation in an attempted refutation. Thank-you, Nick.

Eric Worrall, your comment is right on.

Thanks for posting Figure 5. It shows that every model has a different error profile, with positive and negative excursions.

Mere inspection of the figure shows how ludicrous is ATTP’s idea that all those errors should be merely added together into a number. And then subtracted away to make everything accurate. Only in consensus climate science.

“Engineering models are useless outside their calibration bounds.”

So what are the “calibration bounds” of, say, Nastran? Or Fluent, or Ansys? Pat, you don’t have a clue about engineering models.

well it’s obvious you don’t understand this.

Calibration isn’t defined by the simulator, but the models as applied to the design you’re evaluating. And it’s in comparison to the real circuit in operation.

Pat writes

I don’t think it is. I think even Nick gets it and one day might even accept it (no Nick, it doesn’t mean your CFD work is dead, or weather models are wrong – models have their place still!) but no climate modeler can admit to it because it’d be well…a career limiting move. And as the GCMs are the cornerstone to so much of our science today, untangling the mess would be horrendous. Better to let sleeping dogs lie.

Pat Frank,

Look at manuscript Figure 5.

Ok, it shows latitudinal profiles of 25-year averaged model cloud fraction error versus cloud fraction observations averaged over a similar timescale. It demonstrates latitudinal error offsets between models and observations, as well as showing differences between models.

Every single model has a different error profile with positive and negative excursions.

Yes, this is well known and clearly understood by ATTP. Different models, different offsets.

Change the parameter set of any one model, and its error profile will be different.

Yes, this would obviously be true but how is it relevant to error propagation within a projection? Within an individual model projection run the parameter set will remain the same, thereby maintaining the same offset error.

Put in context of your Figure 5, your error propagation suggests that those error profiles should change quite dramatically over time. Why would that happen?

Nick Stokes, no matter your diversionary sneering, I’m clued in enough to know that engineering models are unreliable outside their calibration bounds.

Paulski0 “Within an individual model projection run the parameter set will remain the same, thereby maintaining the same offset error.”

Not correct, for two reasons. The parameters are not unique. They have large uncertainty widths. One can get the same apparent error with different suites of parameters. A given error is just representative. It does not transmit the true range of model errors. The uncertainty is made cryptic unless this is taken into account.

Second, even with unchanging parameter sets, any given projection simulation step is wrong, but to some unknown amount. Those wrong climate states are projected forward. Every step begins with initial value errors.

The projection error from step to step therefore varies, and in unknowable ways.

In a futures projection, one can’t know the errors. One only knows the uncertainty, by way of the propagated calibration error statistic. And uncertainty grows with each projection step because of increasing ignorance of the relative positions of the simulated state and the correct physical state in phase space.

Error propagation says nothing about error profiles in projection simulations. It addresses the reliability of the projection. Not its error.
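
The bookkeeping described above can be sketched in a few lines. This is a minimal illustration only, assuming a constant per-step calibration uncertainty combined across steps as a root-sum-square; the step value and the function name are made-up placeholders, not figures or code from the paper.

```python
import math

def propagated_uncertainty(step_sigma, n_steps):
    """Root-sum-square of n equal per-step uncertainties."""
    return math.sqrt(sum(step_sigma ** 2 for _ in range(n_steps)))

# Hypothetical per-step uncertainty (arbitrary units), for illustration only.
STEP_SIGMA = 1.0

for n in (1, 4, 25, 100):
    # The envelope widens as sqrt(n); it says nothing about where a run lands.
    print(n, propagated_uncertainty(STEP_SIGMA, n))
```

The growing number is a statement about how little is known of the state at step n, not a forecast of how far the simulated trajectory will wander.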

I think you and the reviewers may be missing the point here attp. Millions spent on building climate models…and a simple linear model can recreate them very closely…..surely this is worth publishing and worth investigating further?

A simple linear model is what climate models are, stripped of decorative complexity, but whilst models may be represented by a model of that nature, reality it seems is just too complicated for that class of model to have a snowball’s chance in hell of representing the vagaries of actual climate.

So I don’t know what you are saying, but it’s not worth spending a copper nickel on.

I looked into cutting edge attempts by seriously bright mathematicians to even discern whether a given set of non linear partial derivatives led to a bounded set of solutions (broadly, a climate that never goes below snowball earth or boils the oceans dry) and we can’t even do THAT. Observationally climate is amazingly stable.

But wobbles a lot as well.

And we have absolutely no idea whether it could one day wobble off to a whole new regime, just because a butterfly flapped its wings, let alone by injecting tons of CO2 into it. All we can say is that in times gone by, when CO2 was way greater than it is today, or is likely to be in the foreseeable future, the climate seems to have been stable enough for life to flourish.

The state of climate change science stripped down to the actual science, which is almost none, is simply stated:

1/. We don’t know.

2/. Even if we did know the partial differentials governing it, we still wouldn’t know what the climate will do.

3/. We lack both the mathematics and the computational power to ever know better than that.

4/. Climate change is therefore not worth spending any grant money on.

5/. Even WUWT has no function beyond pointing out points 1, 2, 3 and 4.

6/. The IPCC is an organization without any purpose, since it exists to advise governments on situations that have no existence in reality.

7/. Renewable energy is therefore a crock of excrement, a pointless waste of money.

8/. Anyone who disagrees with any of the points above is like a holocaust denier.

9/. There is an urgent need to set up an international organisation to help whole swathes of the population come to terms with the facts that:

– the cheque isn’t in the post

– the tooth fairy doesn’t exist

– he/she won’t love you in the morning.

– ‘man made climate change’ is as real as Tinkerbelle.

Great post, Leo Smith. Your number 1 has been the conclusion of my AGW assessment from the first. 🙂

If only you were head of the US National Academy. Or Pres. Trump’s science advisor. 🙂

Another point, that I think I’ve made to Pat before, is that if he is correct he should be able to easily demonstrate this. If you’re running computational models, one way to estimate the uncertainty is to simply run them many times with different initial conditions. If the uncertainty propagates as Pat suggests, then the range of results should reflect this. As I understand it, this has been done, and they do not.

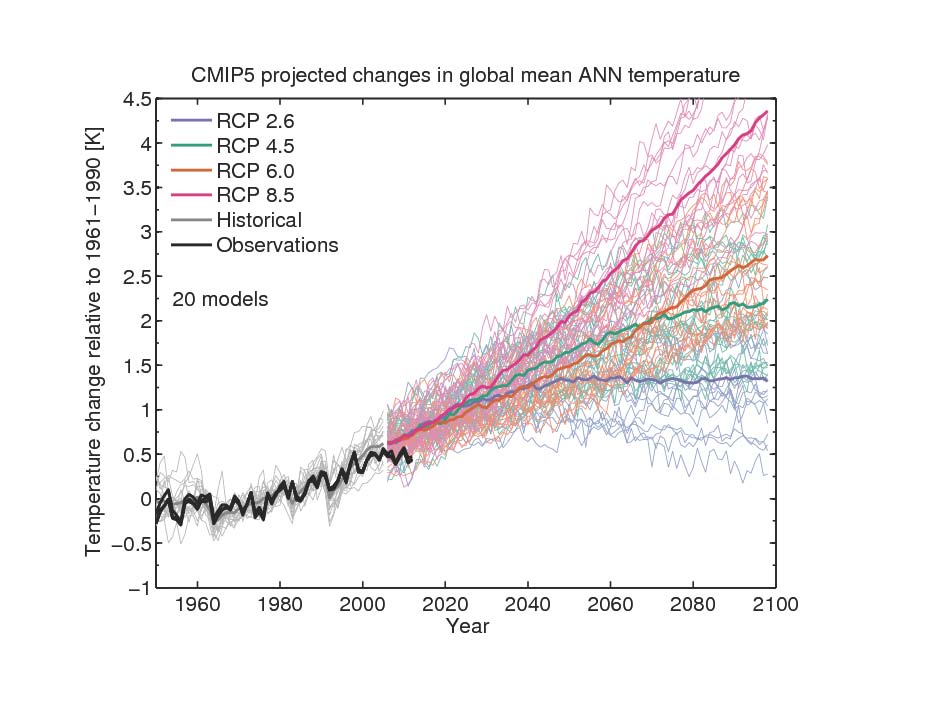

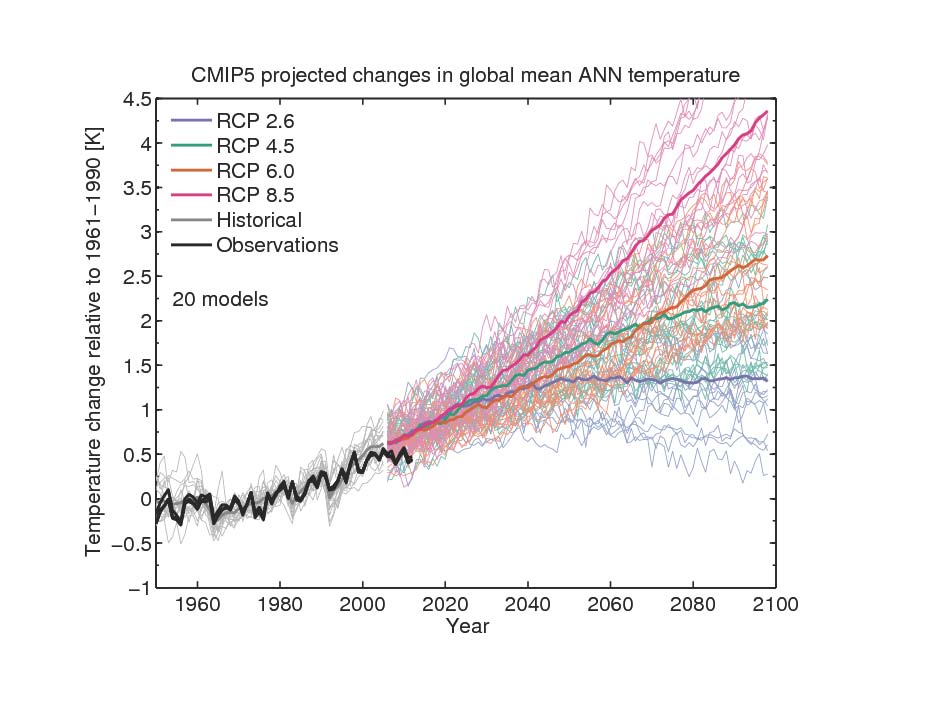

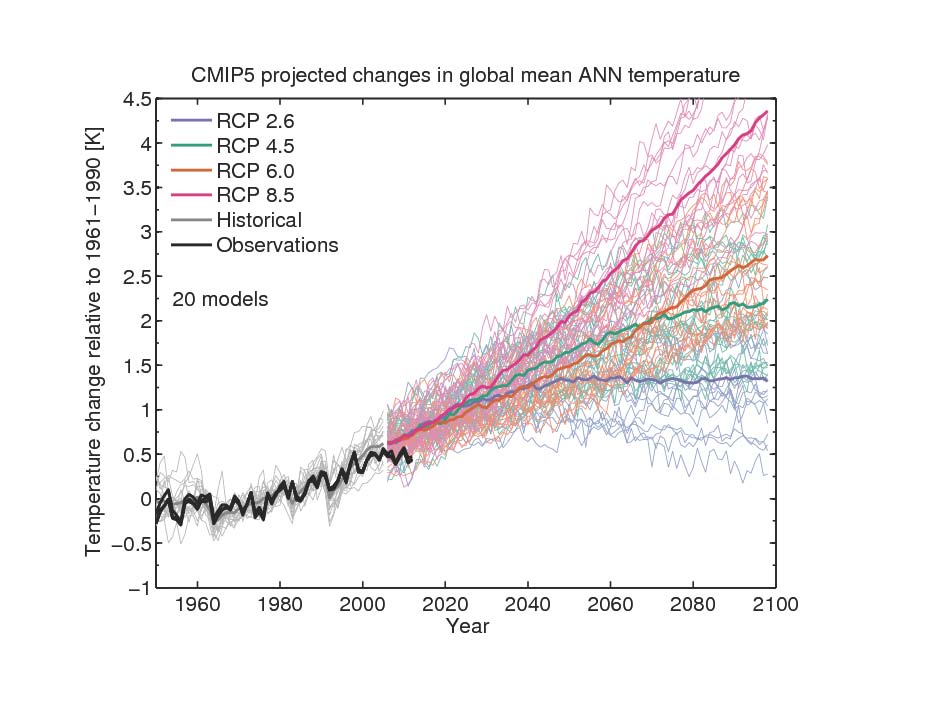

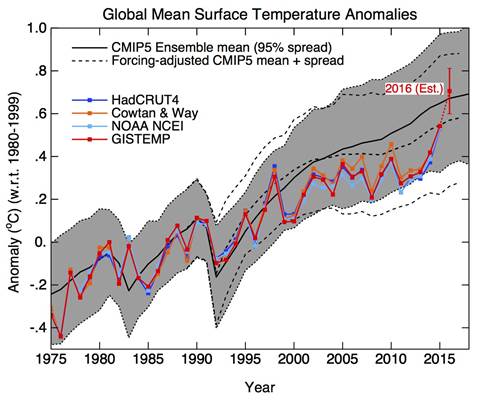

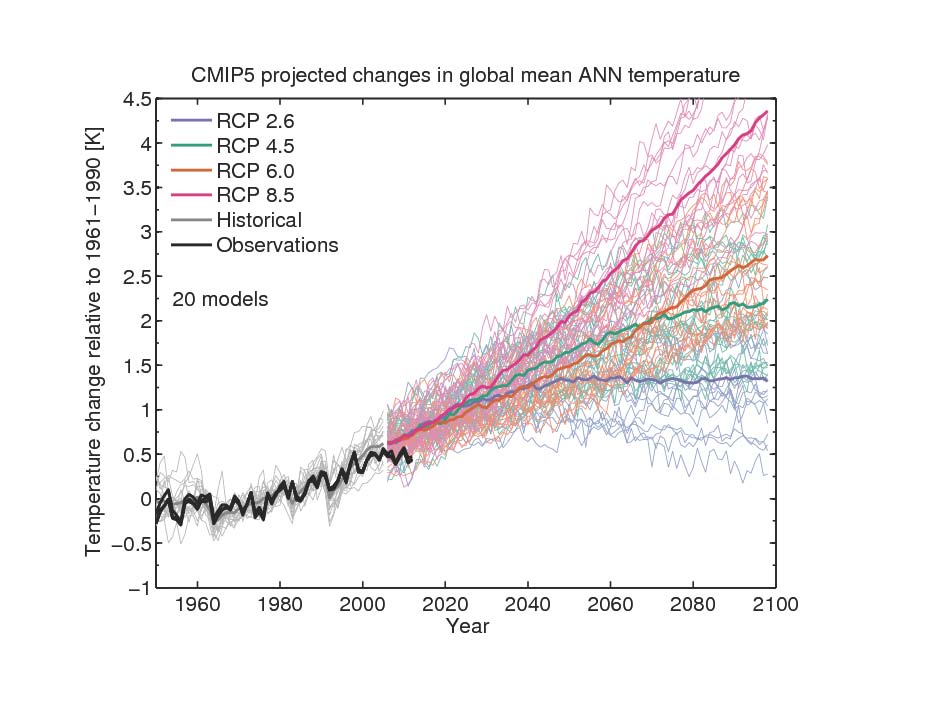

This is clear even from the published CMIP5 simulations. Pat Frank claims that the error arising from cloud uncertainty alone should accumulate to an extent of ±16°C by 2100. And he seems to infer the cloud error from disagreement between the models. But the CMIP5 models clearly do not diverge by 16°C by 2100. Here is a plot

The spread is mainly due to the different scenarios; for an individual scenario it is maybe ±0.6°C.

Yes Nick

Hundreds of scam CO2-hatred “scenarios”.

NOT ONE anywhere near REALITY.

Thanks for drawing that to everybody’s attention.

Nick

And that’s your argument to establish the effectiveness of the models? Really

That’s not true Andy! Give Nick his due. ONE of those models is quite close to reality – the one at the very bottom. The rest of the models should clearly be fired. But that one should be given a prize, and it shows that temperatures in 2100 will be about the same as today. So according to the one believable model, there is no C in CAGW, and no real W either. Great! Can we all pack up and go home now? And stop wasting money on this nonsense?

@aTTP (1:24am) and Nick Stokes (2:36am)

It seems the issue is not in getting different results with different initial conditions but rather running slightly different models from the same initial condition.

The simplest setup would be to select a single tunable parameter (e.g. clouds), vary the value up or down to create 2 model formulations, and run them both from the same initial conditions. The different values may cause divergence or other feedbacks/interactions may dampen it to insignificant.
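
The setup proposed here can be sketched with a toy model. To be clear, this is not a GCM: the relaxation equation, the `feedback` parameter and every number below are hypothetical, chosen only to show two formulations started from the same initial condition.

```python
def run_toy_model(feedback, forcing=1.0, steps=100, t0=0.0):
    """Iterate a toy relaxation model: T <- T + 0.1 * (forcing - feedback * T)."""
    t = t0
    for _ in range(steps):
        t += 0.1 * (forcing - feedback * t)
    return t

# Same initial condition (t0 = 0), one tunable parameter nudged down and up.
low = run_toy_model(feedback=0.9)
high = run_toy_model(feedback=1.1)
print(low, high, low - high)
```

In this damped toy the two runs settle to different but bounded values. Whether a real model damps or amplifies such a perturbation is exactly the open question in the comment above.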

If I understand the source of Nick’s spaghetti graph, the graph demonstrates the differences between models, not the potential uncertainty inherent in any one model. Each spaghetti line has its own uncertainty band that is not displayed.

MJB,

Yes, you could also do what you suggest (i.e., run with the same initial conditions, but different parameters). If we consider clouds, then there is probably a range of a few W/m^2. This would correspond to potentially a difference of about 1K; not even close to the +-15K suggested by Pat Frank.

As far as the spaghetti graph is concerned, I think it is a combination of individual models run more than once and different models, so you are correct that it isn’t a true uncertainty. However, it does illustrate that the range is unlikely to be as large as suggested by Pat Frank.

If constraints are being applied for each calculation then you are not getting modelled outputs but constrained outputs. Do the runs with no constraints to see the inherent validity of the underlying physics, not the hand-tailoring needed to sell a story.

But hey, if the need is to sell a story….

I find it hilarious that Nick and others still think the chart at this comment is relevant since it is pseudoscience crap:

https://wattsupwiththat.com/2017/10/23/propagation-of-error-and-the-reliability-of-global-air-temperature-projections/#comment-2643766

How can anyone think wild guesses to year 2100 can be considered good science, when most of it is UNVERIFIABLE! Models are a TOOL for research, not to create actual fact based science, since it lacks real data for the next 83 years. This is what the AGW conjecture is based on, a puddle of unverifiable guesses,

Bwahahahahahahahahaha!!!

Imagine what real Meteorologists, who do short term modeling for weather prediction in the next few days, know about how quickly short term predictions can spiral out of reality. I see them adjusting their forecasts daily, sometimes even in hours, as new information comes in, but they can still be waaaaay off anyway, as they were in my city just yesterday.

Models are a TOOL for research,not a creator of data.

…and Then There’s Physics ,

You said, “However, it does illustrate that the range is unlikely to be as large as suggested by Pat Frank.” I’m not sure that you can justify that statement. The propagation of errors provides a probabilistic uncertainty range, which is an upper bound, not the most likely outcomes. That is, with numerous ensemble runs, they are most likely to cluster around the most probable values, but that doesn’t preclude them from sometimes reaching the maximum values if a large enough number of runs are made.

Extrapolating the apparent arc of the upper limit from the spaghetti plot of model runs, you reach a maximum divergence value of approximately 8.5K to 9.5K, truly slightly more than half the 15K to 16K suggested.

Clyde,

Normally what’s presented are 1, or 2, sigma uncertainties. This would mean that either about 68% (1 sigma), or 95% (2 sigma), of your results should lie within this range. Depending on what is presented, you would expect either about 1/3 of your results (1 sigma), or 5% of your results (2 sigma), to lie outside the range. Therefore, if you ran a lot of simulations and the results never ended up outside the range, then the range would probably be too large.
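
For a normal distribution these fractions can be checked directly. A minimal sketch using the standard library error function; the helper name `fraction_within` is just an illustrative choice.

```python
import math

def fraction_within(k):
    """Fraction of a normal distribution within k standard deviations of the mean."""
    return math.erf(k / math.sqrt(2))

for k in (1, 2):
    inside = fraction_within(k)
    print(f"{k} sigma: {inside:.1%} inside, {1 - inside:.1%} outside")
```

This only applies if the quoted range really is a normal-distribution sigma, which is itself part of what is being argued in this thread.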

Bryan,

Except, the range is mostly because of the range of emission scenarios, rather than scatter for a single scenario. Therefore, the overall range isn’t representative of some kind of model uncertainty.

sun: the RCPs aren’t “guesses,”

they’re assumptions.

Crackers, when they run to year 2100, they are indeed wild guesses, since there is ZERO evidence to support it; you are playing word games here. They are unverifiable, can’t run a hypothesis on it since most of it is far into the future, thus qualifies as wild guesses.

He writes,

“sun: the RCPs aren’t “guesses,”

they’re assumptions.”

Yawn, is this how low science literacy has fallen?

The difference between an assumption and a guess is basically the reputation of the person making them.

Fenchie77,

You are of course correct. The fact that the models require constraints is enough to invalidate them.

I doubt there is a Mechanical Engineer in the crowd that would trust his/her family’s safety to a 5th floor apartment deck that was designed with, or the design was verified by, a stress analysis (i.e., modelling) program that required constraints be placed within it to keep the calculations within reasonable ranges.

ATTP, “ one way to estimate the uncertainty is to simply run them many times with different initial conditions.”

No, it’s not. Your proposed method tells one nothing about physical uncertainty.

Mistakes 1, 3, 4, 6, and 10. Good job, ATTP.

Nick Stokes, “Pat Frank claims that the error arising from cloud uncertainty alone should accumulate to an extent of ±16°C by 2100.”

No, I don’t Nick. You’re proposing that ±16°C is a physically real temperature.

It’s an uncertainty statistic. An ignorance measure. It’s not physical error.

You’ve made mistakes 2, 6, 11 and, implicitly, 12.

You’ve many times now demonstrated knowing nothing about physical error analysis. Now it’s many times plus one more.

Pat,

Hold on. You’re suggesting the results from the models are far more uncertain than mainstream climate modellers suggest and yet you’re also suggesting that if you ran the models many times (with different initial conditions and using different parameter values) you would get an overall result that was not representative of the uncertainty. This doesn’t seem consistent.

Nick Stokes

October 23, 2017 at 2:36 am

My comment to you is not actually about the particular point you are trying to make there, but more about contemplating the validity of the whole argument in question here about GCMs.

You see, you have a clear beautiful plot there, but it is really not very relevant, as it does not also show the corresponding ppm concentration trends.

Last time I checked AGW is all about temps as per ppm…….and the correlation there…..

Ignoring this actually puts one in the position of misinterpreting the value of GCMs as an experiment…..either intentionally or not.

So while the nice plot you posted helps with your point, maybe, in its essence it misleads towards misinterpretation and confusion about the actual value of GCMs as an experiment, which by the way are not climate models anyhow, and very very expensive experimental tools at that.

Don’t you think that the plot you provide, the way it stands, has not much support value about the RF or the fCO2 as contemplated by the AGW hypothesis one way or another?

cheers

“That’s not true Andy! Give Nick his due. ONE of those models is quite close to reality – the one at the very bottom.”

yeah, predict 1 2 3 4 5 6 and throw a dice, and one will be right.

Logic is not you nick or ATP

Idiots pretending to be scientists. Why not get English lit Mosher in on the act too

or some more of pseudo science sensitivity studies that are nothing but tuned junk driven by observations

ATTP

“Pat,

Hold on. You’re suggesting the results from the models are far more uncertain than mainstream climate modellers suggest and yet you’re also suggesting that if you ran the models many times (with different initial conditions and using different parameter values) you would get an overall result that was not representative of the uncertainty. This doesn’t seem consistent.”

It’s not inconsistent.

The models are far more uncertain than claimed, because so much comes from hindcast tuning, not physics (the physics being incomplete and in places not understood). Unless you are going to be uber absurd and claim that is not true.

The range of outcomes is uncertainty (in model physics, which leads to instability (not variability)), error, and different tunings.

as with Mosher, logical examination is not for you, as usual, add Nick in there

However if you replace the linear models by non linear ones, the behaviour is exactly as he describes.

It is not the coherence of linear models that is under criticism, it is their applicability at all.

It is of no use to refute the fact that your cat scratched my leg, by pointing out that dogs just dont do that.

ATTP, physical uncertainty is with respect to physical reality, not with respect to model spread.

You’re conflating model precision with model accuracy (mistake #1). You make this mistake repeatedly. So do climate modelers. You all seem unable to grasp the difference.

Running a model over and over, with different initial conditions, tells you nothing, nothing, about physical uncertainty (mistake #3).

Unless (BIG! unless here) your model is falsifiable and produces physically unique predictions.

Climate models violate both conditions.

Run them until you’re blue in the face, and you’ll have learned nothing except how they move around.
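
The distinction being drawn between precision and accuracy can be shown with a toy ensemble. This is a sketch under loud assumptions: `TRUE_VALUE` and `MODEL_BIAS` are invented numbers, and in a real projection the true value is not available, which is why the offset cannot be computed there.

```python
import random
import statistics

TRUE_VALUE = 10.0   # hypothetical "physical reality" (unknown in a real projection)
MODEL_BIAS = 3.0    # hypothetical systematic error shared by every run

def run_model(initial_perturbation):
    """Toy model whose result depends only weakly on the initial condition."""
    return TRUE_VALUE + MODEL_BIAS + 0.1 * initial_perturbation

rng = random.Random(0)
runs = [run_model(rng.gauss(0.0, 1.0)) for _ in range(1000)]

spread = statistics.stdev(runs)                   # precision: run-to-run scatter
offset = abs(statistics.mean(runs) - TRUE_VALUE)  # accuracy: distance from truth
print(f"ensemble spread = {spread:.2f}, offset from truth = {offset:.2f}")
```

The ensemble is tight, yet every member shares the same offset, so the spread alone reveals nothing about it.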

Nick Stokes October 23, 2017 at 2:36 am

Nick, can you extend your chart so we can see how high the projections go for RCP 8.5? The chart cuts them off at the year ~ 2080.

I suspect that if you increase the vertical axis and in addition, include the uncertainty surrounding each run, you will end up with roughly the range suggested by the author of this post.

Think!?

A never believable claim from confirmed liars or misdirection specialists.

If you believe your falsehood, write up a mathematical article and publish it.

Until then, your belief is just so much speculation.

Without proof or logic.

sun says ‘when they run to year 2100, they are indeed wild guesses,since there is ZERO evidence to support it’

no, they’re assumptions, not guesses.

there can be no evidence from the future, only assumptions.

a model has to assume path of future emissions. these are the RCPs. there are four of them for different scenarios of future energy use.

unless you can predict for us that future path. go ahead and try.

So much for contributions from Nick.

What are the starting uncertainties in climate models, Nick?

Technically, adjusting a temperature record is an immediate admission of error and even roughly identifies the error range.

Yet, not one of the models initializes with that one uncertainty or propagates it through.

Gross assumptions regarding total lack of temperature equipment calibration or certification

Total lack of side by side measurements before swapping equipment.

Total lack of side by side measurements before moving the temperature station.

Total failure to track temperature station infestations or to identify errors caused.

Instead, Nick apparently espouses averaging temperatures repeatedly to accurize numbers and improve precision.

Run the models many times…

A solution that is far worse than claiming stopped clocks are correct twice a day.

The sample standard deviation (SD) in a statistical sense is only meaningful if the underlying population is normally distributed; The percentage of values claimed to fall within some error window depends on the shape of the population distribution. If instead you are talking about the standard deviation of a sampling distribution of a summary statistic (such as the mean), then the central limit theorem is invoked to adopt the assumption that the theoretical sampling distribution of that summary statistic (which you are sampling from) is distributed Normal. The standard error (SE) is the sample estimate of the standard deviation of that sampling distribution.

If the SE (or sometimes the SD though far less likely) is used to support a statement of confidence about the population parameter, such as the mean, then the correct confidence statement is that the error window has some x chance of encompassing the population parameter. Again, assuming a Normal distribution. The notion that the one confidence window you calculate will contain x percent of ‘the data’ or sample statistic should I run the process over and over again is incorrect. Each time you sample, both the mean and the SE vary, and as such so will any confidence statement drawn from the sample statistics.

The proper statement of interpretation of confidence (or uncertainty) is that, in the long run of N (very large) samples of size ‘n’, my ‘x level of confidence’ error windows will capture the population parameter x percent of the time.
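
That long-run reading can be checked by simulation. A minimal sketch, assuming a normal population and a z-based 95% window; the population values, sample size and seed are arbitrary choices for illustration.

```python
import random
import statistics

def coverage(pop_mean=0.0, pop_sd=1.0, n=30, trials=2000, z=1.96, seed=1):
    """Fraction of z-based confidence windows that capture the population mean."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(pop_mean, pop_sd) for _ in range(n)]
        mean = statistics.mean(sample)
        se = statistics.stdev(sample) / n ** 0.5   # standard error of the mean
        # Both mean and se change with every sample, so the window moves each time.
        if mean - z * se <= pop_mean <= mean + z * se:
            hits += 1
    return hits / trials

print(coverage())
```

The capture rate should come out close to, and typically a little under, 95%, because the sample standard deviation is used with a z multiplier rather than a t multiplier.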

Error propagation is different altogether. Different formulae, and they also depend on what operations you are performing on your data.

Generating an error bar from a large collection of predictions from different models and even, within each model, varying the initial conditions is an ad hoc method to generate error intervals. It seems supremely naive to believe that varying these things will happen to capture the uncertainty in the accuracy of the coefficients and values of the model parameters for any coefficient or parameter value that itself possesses some non-negligible and varied amount of uncertainty associated with it.

Even in a bivariate linear regression model, Y = B1X1 + B2, there is uncertainty in the prediction of Y (y’) and uncertainty in the estimate (b1) of the B1 coefficient and the estimate (b2) of the B2 intercept and, often times, even uncertainty in the observations (x) of X used to generate the model in the first place.

Suppose we sample from a linear system. We don’t know it but the X’s in our model are all appropriate in explaining Y. Good for us so far. But we don’t know what the exact values for X are. So, we sample Y, we also sample X1 to Xk, we then crunch the numbers (do the regression) and come up with the estimates (b1 to bk) of the coefficients (B1 to Bk). Thus, we now have a model. The accuracy of our measurements of Y and X1 to Xk (and, normally, the appropriateness of our X1 to Xk in explaining Y but, again, here we are assuming they are appropriate) will help determine how well this model actually does in explaining and predicting Y. Y is unknown as are (probably most) of the true values of X – presumably Time (year) would be one of them. Our measurements of Y and X1 to Xk (y and x1 to xk) are, for the most part, all we have, but we based our model off of the measurements. There are uncertainties in the measurements. We don’t know the direction of those errors or their magnitude (offsets as I think is being used above), because we don’t know the relation of the measurements to their true value. These errors will propagate as the model is run iteratively, being fed its own outputs as inputs at each iteration.
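
The estimation step described above can be sketched for the single-predictor case. This is an illustrative ordinary least squares fit with textbook standard errors; the "true" system Y = 2X + 1 and the noise levels are hypothetical values picked for the example.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y = b1*x + b2, with standard errors."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    b1 = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    b2 = my - b1 * mx
    # Residual variance with n - 2 degrees of freedom (two fitted coefficients).
    resid_var = sum((y - (b1 * x + b2)) ** 2 for x, y in zip(xs, ys)) / (n - 2)
    se_b1 = (resid_var / sxx) ** 0.5
    se_b2 = (resid_var * (1 / n + mx ** 2 / sxx)) ** 0.5
    return b1, b2, se_b1, se_b2

rng = random.Random(2)
true_x = [float(i) for i in range(20)]
# Hypothetical true system Y = 2*X + 1, observed with noise in both x and y.
xs = [x + rng.gauss(0, 0.5) for x in true_x]
ys = [2 * x + 1 + rng.gauss(0, 1.0) for x in true_x]
print(fit_line(xs, ys))
```

Note that these standard errors treat the x measurements as exact, so they understate the uncertainty when x is itself noisy, which is the situation the comment is describing.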

Tweaking the estimated values of X1 to Xk and b1 to bk to generate different estimates of Y (y’) is an ad hoc attempt to quantify this additional uncertainty in X and Y through ’empirical’ simulation.

Pat Frank well-approximates the model temperature outputs using a simplified linear equation. He then focuses on the effect of cloud coverage on solar insolence (if memory serves) and (presumably) uses error propagation formulae to quantify the effect of this uncertainty in the estimate of temperature.

There is either a theoretical/mathematical explanation for why error propagation does not apply or there isn’t and the modellers’ technique for evaluation is gravely misguided.

Pat Frank October 23, 2017 at 9:36 am

Nick Stokes, “Pat Frank claims that the error arising from cloud uncertainty alone should accumulate to an extent of ±16°C by 2100.”

No, I don’t Nick. You’re proposing that ±16°C is a physically real temperature.

It’s an uncertainty statistic. An ignorance measure. It’s not physical error.

You’ve made mistakes 2, 6, 11 and, implicitly, 12.

You’ve many times now demonstrated knowing nothing about physical error analysis. Now it’s many times plus one more.

_________________________

What is it they say Pat, a little knowledge is…. 😉

At least Nick might run off now and try understand physical error analysis, seems the sort that does not like understanding things 🙂

* Does not like Not understanding things.. heh, wish I could edit my stupidity instead of posting again 🙁

“the effect of cloud coverage on solar insolence”

Busy old fool, unruly sun

Much as I hate to chip in in support of both Nick and aTTP, they are giving you accurate information. If the models were wrong in the ways described above…. they would be “more” wrong and it would be very obvious to even the most committed warmist modeller. All models are wrong, it’s inherent in modelling. Some are really, really wrong. But most of the ones in active use are not. I would agree that the current crop run hot and I’m not a massive fan of Zeke’s recent work, trying to show that they don’t. But we have to apply healthy scepticism and critical thought to all of this. We cannot push that all to one side because we simply like the sound of what’s being said. Mosher, to his previously sceptical credit, makes that point often. He sometimes, at least recently, doesn’t take his own advice. But I guess we are all guilty of that.

Depending on your nationality there’s always the PNAS route to publishing. Pal reviews can cut both ways.

Cracker,

Assumption

“a thing that is accepted as true or as certain to happen, without proof.”

Guess

“estimate or suppose (something) without sufficient information to be sure of being correct.”

Meanwhile you keep playing word games while I keep saying they are junk,you never disputed that they are junk.

I stated:

“Crackers, when they run to year 2100, they are indeed wild guesses, since there is ZERO evidence to support it; you are playing word games here. They are unverifiable, can’t run a hypothesis on it since most of it is far into the future, thus qualifies as wild guesses.”

and,

“How can anyone think wild guesses to year 2100 can be considered good science, when most of it is UNVERIFIABLE! Models are a TOOL for research, not to create actual fact based science, since it lacks real data for the next 83 years. This is what the AGW conjecture is based on, a puddle of unverifiable guesses,”

You have NOTHING to sell here.

You are pathetic.

Nick by eyeball, the spread is eight + from the smudge at ~0C in 2100 to the topmost steeply exiting the top of the graph at about 2075. And these represent the models that survived the cut. You would still be wrong with a linear model but more difficult to criticize had you guys not been charged with the task by Grouchmarxist highschool drop out Maurice Strong (creator of both UNFCCC and IPCC) to find burning fossil fuels will destroy the planet, thereby justifying trashing economies and freedoms and having global governance by elites. Models vs observations to date show climate sensitivity to be at most ~1, but this takes the scare out of rising CO2.

I’m thinking we should crowd source a large fund and place a bet that with the collapse of the Paris agreement we will not achieve a rise of 1.5C going gangbusters with fracking oil and gas, burning coal, making concrete, etc. If we haven’t got over halfway there by 2050 we declare a win and make the fund available to third world economies for developing cheap reliable electricity generation. Honesty in temperature collection would need some resources and oversight.

“Nick by eyeball, the spread is eight + from the smudge at ~0C in 2100 to the topmost steeply exiting the top of the graph at about 2075.”

The spread for each scenario is much smaller. The fact that scientists don’t know what will be done about GHGs and have to cover the range of possibilities has nothing to do with error propagation. But there is a real test of PF’s ridiculous errors. ±15°C would be about ±9°C in the 30 years since Hansen’s prediction. Now we quibble about small fractions of a degree difference in scenarios, and another small fraction that might be a transient for El Nino, but there is nothing like a 9°C error.
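
The ±9°C figure is consistent with scaling a root-sum-square uncertainty by the square root of elapsed time. A minimal check, with the caveat that the 83-year span to 2100 is an assumption; the comment does not say how the figure was obtained.

```python
import math

def scale_rss(sigma_total, total_years, elapsed_years):
    """Scale an uncertainty that grows as sqrt(time) down to a shorter span."""
    return sigma_total * math.sqrt(elapsed_years / total_years)

# Assumed inputs: +/-15 at the end of an 83-year span, evaluated at 30 years.
print(round(scale_rss(15.0, 83, 30), 1))
```

Whether that scaled number should then be compared against an observed temperature error is the point disputed in the replies.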

Nick Stokes first thinks uncertainty is physical error (mistake #6), and then effortlessly moves on to suppose it’s a physical temperature instead (mistake 11).

Nick’s self-contradictory assignments also implicitly embrace mistakes 2, 4 and 12.

Clyde Spencer it’s even worse than that, because the cloud forcing error is inherent in the model and is systematic.

That means one never knows the most probable value.

ATTP supposes that model precision is a measure of reliability.

Mistakes 1 and 3.

blunder bunny wrote, “they would be “more” wrong and it would be very obvious to even the most committed warmist modeller.”

Not correct. GCMs are tuned to give a reasonable projection. That practice hides physical error and side-steps uncertainties.

Mark – Helsinki I can’t offer a rationale for it all. 🙂

RW I can’t add anything to your thoughtful post, but can mention that,

Vasquez, V. R., and W. B. Whiting (2006), Accounting for Both Random Errors and Systematic Errors in Uncertainty Propagation Analysis of Computer Models Involving Experimental Measurements with Monte Carlo Methods, Risk Analysis, 25(6), 1669-1681, doi: 10.1111/j.1539-6924.2005.00704.x.

assess random and systematic errors in nonlinear numerical models and recommend propagating systematic model error as the root-sum-square.

The precedent of that paper, by the way, encouraged me to make Risk Analysis my first journal for submission. The rest is history. 🙂

Nick Stokes “But there is a real test of PF’s ridiculous errors. ±15°C …”

That’s not physical error, Nick.

Mistakes 4, 5, 6 and 11, and probably 12 implicitly.

Well done. 🙂

Why don’t people understand uncertainty? They taught us that in first year physics. I could easily make a model that only ever has one outcome, but if it propagates an uncertain value then the error bars will be huge by the end. That doesn’t mean my model will ever show that, thus conflating model precision with uncertainty. The error bar means that my model could be wrong by that much. Of course if your model is wrong it won’t tell you, that’s the whole point of error bars.

Pat Frank, thanks for the reference. I have downloaded it and will check it out.

From ..and Then There’s Physics October 23, 2017 at 9:36 am

“you’re also suggesting that if you ran the models many times (with different initial conditions and using different parameter values) you would get an overall result that was not representative of the uncertainty.”

But if the model would indeed propagate the suggested systematic, “throughout”, physical error, it *will* be noticed. The current models are not sufficiently taking into account non-linear effects of known modelling errors. This then causes the accuracy of the model to decrease rapidly with each time-step and explains perfectly the issues seen today comparing measurements with runs of 10-20 years ago.

Many climate scientists seem to make the same mistake simply because they continue to apply tools without allowing rigorous review of the validity of using those tools that way. This is a larger systematic *human* error in that particular field. And it’s not the first time in recent history but certainly becoming the most costly. And the cause of it lies within the underlying role of politics, money and emotion, which has grown into something too big to “fail”. The cure here is “back to basics”: re-examination of the toolbox itself.

Jarryd Beck, thank-you. 🙂

It seems to me that training in climate modeling completely neglects physical error analysis. Not one climate modeler I’ve encountered has a clue about it. And they’re often hostile to it.

I like his self declared hero status after his sixth rejection, obviously due to the corrupt system and fear of what the analysis would unleash – no other explanation possible here.

The fact that the insiders circle the wagons when criticized is proof that the criticism is meritless.

Gotcha.

“Imagine what real Meteorologists, who do short term modeling for weather prediction in the next few days, know about how quickly short term predictions can spiral out of reality. I see them adjusting their forecasts daily, sometimes even in hours, as new information comes in, but they can still be waaaaay off anyway”

This is a big reason why us real operational meteorologists (for 35 years) have such a high % of skeptics vs in other sciences. We must constantly reconcile the forecast with realities. Quickly adjust based on models that also quickly dial in new/fresh data and come out with a new scenario that can sometimes look much different than the previous one………..with errors/changes often growing exponentially with time.

Individual ensemble members of the same model can look completely different beyond a week. Different models in week 2 can have very different outcomes, not just regionally but in the position of many large scale features that define the pattern.

However, despite this, climate models are much different and they are not as affected by the random, chaotic short term fluctuations in initial conditions that can never be captured perfectly and lead to exponentially growing errors with time.

For instance, if the amount of solar forcing in a climate model was too high/low, one would not expect it to result in output/projections that amplify exponentially over time. It would remain pretty much constant. There would also be potential negative/positive feedbacks but they would be limited and probably not greater than the error from the solar forcing being too high/low.

Another difference. With weather models, we change them/equations every several years or so to make potential slight improvements, with experimental models constantly being run and compared to the existing models……..with mixed results.

I am not involved in modeling but it seems clear that certain models are superior to others, especially when it comes to handling particular atmospheric dynamics. However, the gatekeepers of all models seem committed to making improvements of their models vs justifying keeping the current one(s).

Skill scores for different time frames are constantly tracked and accountability/performance is well known and acknowledged based on the blatantly obvious, non adjusted statistic for all to see.

I don’t see this being the case for climate models. Adjustments have lagged well behind the reality of observations screaming out loud and clear that they are too warm. Anyone with a few objective brain cells can see that global temperatures are not increasing at the rate of model projections. If it takes an El Nino spike higher in global temperatures to get up close to the model projections ensemble mean for instance, instead of treading along the lower baseline of the range for a decade, then the models are too warm.

There can be no scientific justification to continue with those same models. They need to be adjusted. Wishing and hoping and having decades before needing to truly reconcile models with reality because you are convinced the equations are right and the atmosphere will come around is not authentic science……….it’s just a tool to be used for something other than authentic climate science.

Pat,

Thank you very much for this excellent article, the work and well thought out discussion. I may not agree entirely with everything but believe you make some great points and it deserves to be read/published………even if the gatekeepers don’t agree with all of it.

One wonders whether it would have been published if they had disagreed with just as much of it but it supported the CAGW narrative.

WTF,

Pat referred to a nice post Willis Eschenbach made a few years ago, which YOU should visit, that materially supports the main point Pat makes here. Here is a useful quote from Willis:

” Willis Eschenbach

May 16, 2011 at 12:01 am

Steve McIntyre has posted up R code for the analysis I’ve done, at ClimateAudit.

The main issue for me is that the climate model isn’t adding anything. I mean, if you can forecast the future directly from the forcings, then there’s no value-added. A good model should give you something that you can’t get from a simple transformation of the inputs. It should add information to the mix.

But the GCMs don’t add anything new, they just spit the forcings out in a slightly different form.

Now, you could say that the model is valuable because it allows us to calculate the variables of lambda and tau … except that each model comes out with a different value of those two.

The main problem, however, is that we have nothing to show us that the underlying concept is true, that forcing actually controls temperature linearly. So that means that the different lambdas and taus we might get from the model may mean nothing at all …

w.”

https://wattsupwiththat.com/2011/05/14/life-is-like-a-black-box-of-chocolates/#comment-661218
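The "simple transformation of the inputs" described in the quote can be pictured as a one-box lagged linear response, in which temperature relaxes toward lambda times the forcing with time constant tau. The following is a minimal sketch under stated assumptions, not the R code referred to above: the function name, the step forcing series, and the values of lambda and tau are all hypothetical, chosen only for illustration.

```python
import math

def lagged_linear_response(forcings, lam, tau, dt=1.0):
    """One-box emulator: temperature anomaly relaxes toward lam * F.

    forcings: sequence of forcing values (W/m^2), one per time step
    lam:      sensitivity (K per W/m^2), hypothetical value
    tau:      time constant in the same units as dt, hypothetical value
    """
    alpha = 1.0 - math.exp(-dt / tau)  # fraction of the gap closed per step
    temps = []
    t = 0.0
    for f in forcings:
        t += alpha * (lam * f - t)  # move toward the equilibrium lam * f
        temps.append(t)
    return temps

# Hypothetical input: a step forcing of 3.7 W/m^2 held for 50 steps
forcings = [3.7] * 50
temps = lagged_linear_response(forcings, lam=0.5, tau=4.0)
print(f"final anomaly: {temps[-1]:.3f} K, equilibrium: {0.5 * 3.7:.3f} K")
```

With only two free parameters, the output is fully determined by the forcing series, which is the sense in which such an emulator "adds nothing" beyond its inputs.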

Imagine people trying to model chaos with linear functions, using ZERO real data, only the not-yet-existing data of the future...

Ha ha ha ha ha…………..

Mike, I wasn’t trying to denigrate meteorologists over their prediction being wrong in my city, just trying to point out that even short-term predictions based on REAL data can STILL be off from the forecast target.

You wrote,

“This is a big reason why us real operational meteorologists (for 35 years) have such a high % of skeptics vs in other sciences. We must constantly reconcile the forecast with realities. Quickly adjust based on models that also quickly dial in new/fresh data and come out with a new scenario that can sometimes look much different than the previous one………..with errors/changes often growing exponentially with time.”

The big difference is that you use real, regularly updated data to adjust the forecast, while the IPCC creates a spaghetti-based climate model using a lot of assumptions on forcings we know little about and says we can make a forecast far into the future with significant confidence.

The whole thing is absurd!

Sunset,

I never considered your comment as denigrating meteorologists. Just the opposite, a compliment with regards to how we are reality based in using models based on their usefulness.

I’ve busted at least hundreds of forecasts; it’s part of the job. The best busted forecast is the one that gets updated the quickest. I was on television for 11 years, and that means that thousands of people see the face and person who busts forecasts, and you hear about it.

In the earliest years, I hesitated to update as quickly because of believing the models when I made the first forecast and sort of hoping they would revert to the previous solution when they diverted the wrong way.

I also showed overconfidence because of too much trust in models.

The reality is that you can be the best model data analyst on the planet but if the model is wrong, it doesn’t matter………you will be wrong.

With experience, you learn to be more skeptical and to recognize certain model tendencies. Having so many more models and ensembles available provides an enormous opportunity to consider potentially different scenarios.

In the 1980’s, most of us just used one (or 2) operational model and went with whatever it showed.

Reading the “climategate” emails, the “corruption” is well documented. I would not regard every single individual with bias as corrupt, since they also display expectation bias. Trenberth’s assertion that there must be something wrong with the data tells an entire story in one brief sentence. Other emails, such as Jones indicating that papers critical of model results and methods need to be suppressed (not published) rather than addressed substantively, are also revealing. The “corruption” may initially have been more due to “noble cause” fixation than to economic bias, but once economics and university and agency policy enter the picture, the result can be outright corruption. Any of the journals could have published Dr. Frank’s paper and then left the podium open for actual discussion and demonstration of any mistake he might have made. Not doing so looks unscientific, and outright faith-based rather than grounded in scientific argument.

WTF, ad hominem comment.

If you can’t appraise the manuscript and the reviews you have nothing worthwhile to offer.

So far, you’ve offered nothing more worthwhile than a view into your character.

Forrest,

I think that is pretty easy. Run a climate model many times with different initial conditions, and show that the range of outputs diverges as suggested by Pat’s proposed error propagation.

Forrest,

As far as I’m aware, they have. There is some uncertainty (i.e., running a model with different initial conditions does indeed produce a different path/output) but they do not show the output diverging as suggested by Pat Frank’s analysis. We expect the equilibrium state to be constrained by energy balance and so it is very hard to see how it could diverge, as suggested by Pat Frank, without violating energy conservation.

Forrest,

I wasn’t suggesting that the equilibrium state should be the same at all times; I’m pointing out that it should tend towards a state in which energy is in balance (i.e., energy coming in matches energy going out). The reason it has changed in the past is because things have happened to change the energy balance. The Sun’s output isn’t constant. Our orbit around the Sun can vary. Volcanoes can erupt. Ice sheets can retreat/advance (often due to orbital variations), greenhouse gases can be released/taken up, etc. However, the state to which it will tend will be one in which the energy coming in matches the energy going out.

So, if someone wants to argue that the range of possible temperature is 30K (as appears to be suggested by Pat Frank’s error analysis) then one should try to explain how these states all satisfy the condition that they should be in approximate energy balance (or tending towards it).
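The energy-balance constraint described in this comment can be illustrated with a zero-dimensional toy model. This is a sketch only, not a GCM and not anything run by the commenters: the albedo, effective emissivity, and heat capacity are assumed round numbers, and the seven starting temperatures are hypothetical, chosen to span 30 K.

```python
SIGMA = 5.670374419e-8    # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR = 1361.0            # solar constant, W m^-2
ALBEDO = 0.30             # assumed planetary albedo
EMISSIVITY = 0.61         # assumed effective emissivity (crude greenhouse stand-in)
HEAT_CAPACITY = 4.0e8     # assumed J m^-2 K^-1, roughly a 100 m ocean mixed layer
SECONDS_PER_YEAR = 3.156e7

def absorbed():
    """Globally averaged absorbed solar flux, W m^-2."""
    return SOLAR * (1.0 - ALBEDO) / 4.0

def equilibrium_temperature():
    """Temperature at which outgoing emission equals absorbed sunlight."""
    return (absorbed() / (EMISSIVITY * SIGMA)) ** 0.25

def run(t0, years=50.0, dt_years=0.1):
    """Forward-Euler integration of C dT/dt = absorbed - emitted."""
    dt = dt_years * SECONDS_PER_YEAR
    t = t0
    for _ in range(int(round(years / dt_years))):
        net = absorbed() - EMISSIVITY * SIGMA * t ** 4
        t += dt * net / HEAT_CAPACITY
    return t

# Hypothetical initial temperatures spanning 30 K
starts = [273.0, 278.0, 283.0, 288.0, 293.0, 298.0, 303.0]
finals = [run(t0) for t0 in starts]
spread_start = max(starts) - min(starts)
spread_end = max(finals) - min(finals)
print(f"equilibrium: {equilibrium_temperature():.2f} K")
print(f"spread at start: {spread_start:.1f} K, after 50 years: {spread_end:.6f} K")
```

In this toy, every starting state relaxes to the same balance temperature because the forcing terms are identical across runs. It addresses only the spread from different initial conditions, not uncertainty in the forcing terms themselves.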

Yes, that is the controversy. Pat is essentially arguing that something that would produce an offset should be propagated – at every timestep – as an error. This is not correct, which should be pretty clear from Nick Stokes’s recent comment with the output from climate models.
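The two treatments in dispute can be written down side by side. The sketch below is illustrative only: the per-step value `u` is a hypothetical unit uncertainty, not a figure from the manuscript, and the root-sum-square rule assumes equal and independent per-step uncertainties, which is itself part of what is being argued over.

```python
import math

def constant_offset(u, n_steps):
    """Treat u as a fixed bias: the same size at every step."""
    return [u for _ in range(n_steps)]

def root_sum_square(u, n_steps):
    """Propagate u at every step as an independent uncertainty.

    After k steps the combined value is sqrt(k * u**2) = u * sqrt(k).
    """
    return [u * math.sqrt(k) for k in range(1, n_steps + 1)]

u = 1.0          # hypothetical per-step uncertainty, arbitrary units
n_steps = 100    # hypothetical number of time steps

offset = constant_offset(u, n_steps)
propagated = root_sum_square(u, n_steps)
print(f"offset after {n_steps} steps: {offset[-1]:.1f}")
print(f"root-sum-square after {n_steps} steps: {propagated[-1]:.1f}")
```

Under the first treatment the envelope stays flat, and under the second it grows as the square root of the number of steps. Which treatment applies to a calibration error is the point of disagreement in this thread.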

I see that “nophysics” has very little comprehension of error propagation.

Why is that not a surprise?

Little errors GROW to be big errors… that is the way the climate change mantra works !!

in response to ATTP,

This argument is seriously flawed.

Just like shorter-range EPS global weather models, the outturn is constrained to within realistic climatic values; otherwise they do indeed blow out into a massive range of error. Climate models will be no different, but the constraint range means error propagation is limited with each time step.

Seems to me that since the models are blatantly wrong…the offsets, forcings, whatever, are cancelling each other out….either way, you end up linear that exactly matches CO2….something anyone could do with a ruler

First problem seems to be getting modelers to admit that…

but then they are handicapped from the get go…..they are having to back cast to a fake temp history in the first place

forcings add. (aerosol forcing is negative).

by 2016 the anthro GHG forcings add to a CO2-equivalent of 489 ppmv

https://www.esrl.noaa.gov/gmd/aggi/aggi.html

489 ppm CO2eq of anthropo forcing? …

current level of CO2 is ~400ppm, meaning, without human action Earth would “enjoy” -89 CO2eq GHG forcing. +2K per CO2 doubling is also -2K per CO2 /2, hence -2K for the effect of going from 400 to 200, another -2K for going from 200 to 100 etc. Let’s stop here, although the theory goes that we should keep going on.

So the theory says that without human GHG, Earth temp would be no less than 4K below current level. Remember that LIA was only 1K below current (so says IPCC), so imagine the effect

I say: LOL !!!
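The halving arithmetic in this comment follows from a logarithmic response to concentration. As a minimal sketch, assuming the commenter's figure of 2 K per doubling and a purely logarithmic relation (the function name is hypothetical):

```python
import math

def delta_t(c_new, c_old, sensitivity_per_doubling=2.0):
    """Temperature change (K) for a CO2 change, assuming a log2 response."""
    return sensitivity_per_doubling * math.log2(c_new / c_old)

# The two halvings described above, starting from ~400 ppm
for target in (200.0, 100.0):
    print(f"400 -> {target:.0f} ppm: {delta_t(target, 400.0):+.1f} K")
```

Each halving contributes the same step, so 400 to 100 ppm gives twice the change of 400 to 200 ppm under this assumption.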

paqyfelyc claim – “THERE IS a line of code that says “this much more CO2 give this much less heat loss (aka warming)”

there is a line of code, a well-honed equation with evidence to back it up, that uses CO2’s radiative forcing (which is not warming), at the tropopause, not the surface.

because it’s a fact that CO2 absorbs IR. and a fact that the earth emits IR. it’s not difficult to understand, with a model or equations, why that means more CO2 means more warming.

You’re ignoring the water vapor that has 10 or 20 times the energy content, with a temperature sensitivity at Sea level air pressure and temp. And it does what it wants.

micro: water vapor certainly isn’t ignored in climate models.

but water vapor in the atmosphere only changes when the temperature first changes; then it’s a feedback.

Bzzzzzzzz! Wrong.

Do you live someplace that you get dew at night?

Oh, it’s a feedback, about -35 W/m^2.

A lot more than CO2’s forcing.

“radiative forcing” is orwellian newspeak. Indeed CO2 radiates (as just any matter…), and that’s the real radiation that should appear in the equations, not some “forcing”.

it’s not difficult to understand, even without a model or equations, why that means more CO2 means more RADIATION in and out of the atmosphere and less radiation directly from Earth gets to space. Whether, and if so to what extent, this results in warming (or even cooling!) is much more questionable.

This is what my work addresses. Specifically cooling under clear calm skies. This is the only condition that really matters. But that’s another argument for another time.

What I found was that surface cooling rates adjust themselves: as the air gets near dew point, water vapor condenses, and that sensible heat supplies a significant portion of the energy radiating to space. At dusk the surface was cooling at 3 or more degrees F/hr, but an hour or two later the rate can be near zero, even though there are still 5 hours of dark and there is still a 100F (the other night here) temp difference that has to be radiating to space.

You can see this everywhere by just logging RH, dew point and air temp: under clear skies the temp stops falling some nights, and you can measure an 80-100F temperature differential with an IR thermometer, and it isn’t cloudy either.

Everyone assumed it was just reaching equilibrium, it is not. This is the biggest “discovery” in climate science in 100 years, because it shows us water vapor has been actively regulating temps, not ghg’s.

Oh, so CS is just the ratio of the two cooling rates times the 1.1C/doubling CO2, so above the location that got measured it’d be about 1.1C/3, so 0.33C/doubling.

Frankly I should get a Nobel Prize for this.

@micro6500

Your work makes sense, so much so that I don’t see anything new in it. Of course atmospheric water is a major heat buffer that prevents temperature from going down as long as there remains water vapor to turn into liquid water and hence to compensate for heat escaping through radiation. I doubt very much this deserves a Nobel, or Captain Obvious would already have been awarded one (but who knows? Al Gore and Obama got one, so with the right political connection …)

Even “climate scientists” know that, although I suspect they don’t care. The word “dew” doesn’t even appear in the description of the NCAR Community Atmosphere Model (CAM 3.0) : the only water movement they care about is evaporation and cloud formation.

But I figured out it was a negative feedback to CO2. Tell me anyone who has proof of that?

But you’re right, it was stupid obvious. But people assumed it was something else. I recognized it for what it was, the end of CO2 panic.

paqyfelyc says – “radiative forcing” is orwellian newspeak. Indeed CO2 radiates (as just any matter…), and that’s the real radiation that should appear in the equations, not some “forcing”.”

RF comes from solving the two-stream equations, which are obtained from applying energy conservation and the Planck law to the atmosphere.

now i think it’s the two-stream equations that appear in the models, and not the RF relations. see, for example, equations 4.229 & 4.230 in this model description:

http://www.cesm.ucar.edu/models/atm-cam/docs/description/description.pdf

The problem then is they are either using the wrong terms or leaving the big one out. The assumption that CO2 adds is incomplete: it adds, but water vapor drops nearly as much as was added. It is the negative feedback that is either unknown or ignored. And it only does so for part of the night; averaging a whole day hides the fact that it varies.

@crackers345 October 25, 2017 at 8:10 am