From Dr. Roy Spencer’s Global Warming Blog

Roy W. Spencer, Ph. D.

The Version 6 global average lower tropospheric temperature (LT) anomaly for March, 2024 was +0.95 deg. C departure from the 1991-2020 mean, up slightly from the February, 2024 anomaly of +0.93 deg. C, and setting a new high monthly anomaly record for the 1979-2024 satellite period.

New high temperature records were also set for the Southern Hemisphere (+0.88 deg. C, exceeding +0.86 deg. C in September, 2023) and the tropics (+1.34 deg. C, exceeding +1.27 deg. C in January, 2024). We are likely seeing the last of the El Nino excess warmth of the upper tropical ocean being transferred to the troposphere.

The linear warming trend since January, 1979 remains at +0.15 C/decade (+0.13 C/decade over the global-averaged oceans, and +0.20 C/decade over global-averaged land).

The following table lists various regional LT departures from the 30-year (1991-2020) average for the last 14 months (record highs are in red):

| YEAR | MO | GLOBE | NHEM. | SHEM. | TROPIC | USA48 | ARCTIC | AUST |

| 2023 | Jan | -0.04 | +0.05 | -0.13 | -0.38 | +0.12 | -0.12 | -0.50 |

| 2023 | Feb | +0.09 | +0.17 | +0.00 | -0.10 | +0.68 | -0.24 | -0.11 |

| 2023 | Mar | +0.20 | +0.24 | +0.17 | -0.13 | -1.43 | +0.17 | +0.40 |

| 2023 | Apr | +0.18 | +0.11 | +0.26 | -0.03 | -0.37 | +0.53 | +0.21 |

| 2023 | May | +0.37 | +0.30 | +0.44 | +0.40 | +0.57 | +0.66 | -0.09 |

| 2023 | June | +0.38 | +0.47 | +0.29 | +0.55 | -0.35 | +0.45 | +0.07 |

| 2023 | July | +0.64 | +0.73 | +0.56 | +0.88 | +0.53 | +0.91 | +1.44 |

| 2023 | Aug | +0.70 | +0.88 | +0.51 | +0.86 | +0.94 | +1.54 | +1.25 |

| 2023 | Sep | +0.90 | +0.94 | +0.86 | +0.93 | +0.40 | +1.13 | +1.17 |

| 2023 | Oct | +0.93 | +1.02 | +0.83 | +1.00 | +0.99 | +0.92 | +0.63 |

| 2023 | Nov | +0.91 | +1.01 | +0.82 | +1.03 | +0.65 | +1.16 | +0.42 |

| 2023 | Dec | +0.83 | +0.93 | +0.73 | +1.08 | +1.26 | +0.26 | +0.85 |

| 2024 | Jan | +0.86 | +1.06 | +0.66 | +1.27 | -0.05 | +0.40 | +1.18 |

| 2024 | Feb | +0.93 | +1.03 | +0.83 | +1.24 | +1.36 | +0.88 | +1.07 |

| 2024 | Mar | +0.95 | +1.02 | +0.88 | +1.34 | +0.23 | +1.10 | +1.29 |

The full UAH Global Temperature Report, along with the LT global gridpoint anomaly image for March, 2024, and a more detailed analysis by John Christy, should be available within the next several days here.

The monthly anomalies for various regions for the four deep layers we monitor from satellites will be available in the next several days:

Lower Troposphere:

http://vortex.nsstc.uah.edu/data/msu/v6.0/tlt/uahncdc_lt_6.0.txt

Mid-Troposphere:

http://vortex.nsstc.uah.edu/data/msu/v6.0/tmt/uahncdc_mt_6.0.txt

Tropopause:

http://vortex.nsstc.uah.edu/data/msu/v6.0/ttp/uahncdc_tp_6.0.txt

Lower Stratosphere:

http://vortex.nsstc.uah.edu/data/msu/v6.0/tls/uahncdc_ls_6.0.txt

I had expected temperatures to start to fall this month. Will be interesting to see how this compares to the surface data sets.

This breaks the old March record, set in 2016 by 0.3C, which in turn was about 0.3C warmer than the other contenders.

Top ten warmest March Anomalies in UAH history, back to 1979 are.

This makes 9 months in a row that have been the record for that month.

So what?

You’ll have to ask this web site why they keep publishing UAH updates every month. If you are not interested in them, you could just ignore them.

It’s not easy to ignore a 0.02 degrees of statistical heating increase. I had to get up early to turn off the furnace each morning in March because it was getting too hot to sleep.

Unfortunately, my thermostat is only programmable in degree units, so its useless to reprogram it. I’ve written Gavin Newsom about the lack of oversight that major Corporations have from the State when designing useful thermostats, but I haven’t heard back.

Yes, as Colonel Kurtz said –

“the horror”

He could have been talking about that 0.02 degrees of blast-furnace heating the planet is experiencing.

And what are the +/- tolerances around your setting? Perhaps 2 or 3 degrees F?

I was asking about the statement you made. I suspect you think that the data to which you refer is alarming and somehow tied to fossil fuel use, but I was giving you the chance to say that, if indeed that was your point.

You know that the bellboy will never be able to show any human causation in these El Nino events.

It has, in fact, many time stated that he purposely doesn’t mention it…

… a tacit admission that it knows these events are totally natural.

You can’t look at a graph of temperatures and deem to know what is causing the observed variability, you need to construct a physical theory to explain the observations. Bellman is not trying to explain why the temperatures are changing in the way that they are, they are simply describing the change.

Why do new temperature records keep getting set? Maybe it’s because El Nino is getting stronger and stronger over time, as you claim, but you have to present your physical theory, backed up by evidence, to convince anyone of this. Why is El Nino getting stronger and stronger? You never can say.

The GAT is a meaningless number that cannot represent “the climate”, yet all you ruler monkeys treat it as such.

The global air temperature anomaly does not represent the climate, it represents a change in the global energy state (i.e. it tells us that the climate is changing).

But, importantly, just seeing a change in the global energy state doesn’t tell you anything about what is causing the change, you only know that a change is occurring. Bnice thinks they know exactly what is causing the change – El Nino getting warmer and warmer over time, but can’t point to any physical theory to actually substantiate that conjecture.

Nice hand-waving, devoid of meaningful content.

“The global air temperature anomaly does not represent the climate, it represents a change in the global energy state (i.e. it tells us that the climate is changing).”

Malarky! The temperature doesn’t determine the energy state. The energy state is a complex functional relationship with numerous factors, such as humidity and pressure. The temperature can change while the energy state remains constant!

You would have us believe that things like pressure and humidity are not relevant factors. So does climate science. That’s why climate science has refused to convert to using enthalpy instead of temperature even though the data to calculate enthalpy has been available for at least some measurement stations for over 40 years!

“You can’t look at a graph of temperatures and deem to know what is causing the observed variability,”

You can’t look at a graph of temperatures and know *anything* about what is actually going on. It is *energy* content that is important and temperature is a piss-poor metric for energy, i.e. enthalpy.

What new temperature records are being set? Max temps? Min temps? Daily mid-range temps? Global anomaly values?

Record temperatures are typically WEATHER related, not climate related. And they are blips on the radar, not indicative of climate change at all. You don’t even have this one right!

And *you* want to talk about someone never saying? When are you going to give us the variances of the temperature data for ONE station over a period of time? If you don’t know the variance then you have no metric for determining how accurate the “average” value might be! But you seem to be ok with that since it is *your* failing to elucidate on the subject.

You can look at a graph of global temperatures and tell whether the planet was in an ice age or an interglacial period. Temperature is a useful metric to track. Sometimes it would behoove you to take a step back, inhale, and ask yourself if you’re really making any sense.

Check the mirror you are standing in front of first.

To paraphrase: “The Hypocrisy runs deep in you”. Just a few messages ago you admitted temperature cannot differentiate between different climates. Now you are claiming that temperature *can* differentiate between different climates.

Pick one and stick with it.

Who are you paraphrasing? I said that two places with the same mean climate might exhibit different mean temperatures, so you will need to observe other parameters to determine if they have different climates. I then said that two places might have all other parameters equal (e.g. dry, arid), and you need to observe the mean temperature to know if they have different climates.

This is different than noting that the global mean surface temperature can distinguish between different global climate states like ice ages and interglacials.

Sometimes I hope you’re just playing the fool for laughs, because the possibility that you are genuinely this obtuse is too sad to dwell on for long.

Just stop with the irony, you don’t WTF you yap about.

It’s all he has. There’s nothing as bad as being intentionally ignorant.

And then puffing yourself up as some kind of expert.

Why then do the climate models exhibit such a tight correlation with CO2 while missing the long pauses that occur while CO2 is growing? Why do the models with such a tight correlation with CO2 run too hot?

What parameters are the models missing?

The problem is that temperature is *NOT* the only control knob yet the models pretend that it is and that CO2 is the controlling factor.

CO2 is *not* the only factor and temperature is a piss-poor metric for energy content. Two simple facts which, for some reason, you can’t accept.

Plus the fact that after just a few iterations, these massive climate models become nothing but linear extrapolations of rising CO2 content.

Then he tried to gaslight by claiming the GAT and CO2 aren’t the only parameters the climate pseudoscientists look at.

Climate models model internal variability, but they do not replicate it. That is, random, or quasi-cyclic, fluctuations appear in the models with the correct periodicity and magnitude, but not at the same time as they are occurring in the real world, because the models are, well, models. If you post-hoc force the model with known factors, it will capture the resultant variability (e.g. adding forcing from a volcanic eruption), but the model has no way of knowing that a volcanic eruption is supposed to occur in the future. That’s just a random occurrence that has a short term impact on the long term trend.

Similarly, models produce hurricanes, but they don’t produce the exact set of hurricanes we experience in the real world unless we are feeding them tons and tons of data about the exact conditions occurring at the time of the storm, and even then they don’t give us the exact storm we are observing (that’s why there’s uncertainty in e.g. storm track forecasts).

That is flagrantly wrong, and betrays a deep ignorance of how climate models are built and how they function. I’d again refer you to the intro textbook I linked to.

Is hand-waving all you climate chicken littles have in the tank?

It’s ok to say things like, “I don’t understand what you just wrote, Alan, can you simplify/clarify it for me?” But, again, if you’d go do your reading as I prescribed you wouldn’t be having this struggle right now.

Not going to waste my time reading crap written by compatriots of Jones and Mann who decided they needed to “hide the decline” with fraudulent contortions to historic data.

“Climate models model internal variability, but they do not replicate it. That is, random, or quasi-cyclic, fluctuations appear in the models with the correct periodicity and magnitude, but not at the same time as they are occurring in the real world, because the models are, well, models.”

This is nothing more than saying some models are useful and that the models will be right in the long term. The models are now almost 40 years old and they are getting WORSE, not better, when compared to observations!

” the model has no way of knowing that a volcanic eruption is supposed to occur in the future.”

And yet it is these occurrences that have a large impact on the future! Like I keep saying, you are trying to say that the models *can* predict the future while at the same time saying they can’t! If you don’t know what is going to happen in the future then how do you predict the future?

“Similarly, models produce hurricanes, but they don’t produce the exact set of hurricanes we experience in the real world”

The models say hurricane occurrences are supposed to INCREASE while in the real world they aren’t. That is not just missing an exact match, it is just plain wrong!

Supposedly the models have shown for the last 30 years that food production will crash because of higher temps but for 30 years all we see are continued record food production every year. Same for the Artic ice disappearing, polar bears going extinct, and the oceans boiling. Is there *anything* the models get right? It certaintly isn’t getting the temp right.

“That is flagrantly wrong”

No, it is right. It can be shown by the close correlation of the models predicted temperature rise with the predicted growth of CO2 emissions. If the models had other factors significant factors right you wouldn’t see the close correlation with CO2. It’s why Monckton’s pauses are so important to recognize and understand.

I think they’re doing pretty darn well compared to observations:

No one has ever said models predict the future, in fact scientists are extremely careful to point out that the models do not provide predictions. That’s why the IPCC presents a range of different projections based on various forcing scenarios. What models say is, “if emissions follow this pathway, these are likely outcomes,” for instance. They have no way of knowing which pathway might actually be followed, because that’s a function of human behavior.

CO2 forcing has been the dominant driver of the warming observed over the past 50 or so years, so it’s unsurprising that the models generally show the warming as a function of CO2 forcing. There isn’t any other forcing capable of explaining the observed change:

Monckton’s pauses are completely unimportant, it’s just a dumb thing that people without any understanding of climate dynamics fixate on.

“I think they’re doing pretty darn well compared to observations:”

Bullcrap. Even the IPCC has had to admit the models are running too hot. The only scenario that is even close is one using a CO2 growth rate that is *SMALLER* than what is being seen in reality!

“in fact scientists are extremely careful to point out that the models do not provide predictions.”

Huh? What scientists are you speaking of? Then why so many studies saying that the earth is going to turn into a cinder? Why so many studies predicting a crash in food production due to HIGHER temperatures? Why so many studies predicting a growth in species extinctions due to HIGHER temperatures? And on and on and on and on and on …..

“CO2 forcing has been the dominant driver of the warming observed over the past 50 or so years”

An unproven assumption. It’s religious dogma, nothing more. If it was the DOMINANT driver then we wouldn’t have seen the multi-decadal pauses in temperature rise over the past 30 years since CO2 has continued to grow practically unabated the whole time!

A subset of models in CMIP6 run too hot, that is not “the” models. But even then, observations fall well inside the envelope of CMIP6 projections:

And, as shown above, even Hansen’s earlier projections from 1988 were on the mark.

I don’t know of a single study saying the earth is going to turn into a cinder. Can you cite them? Most projections show temperature rising because most projections involve scenarios with a continuing CO2 increase. Projections involving scenarios with net-zero CO2 or a CO2 drawdown do not show temperatures continuing to rise over the 21st century. This, again, highlights the difference between a prediction and a projection. Prediction: this is how humans are going to behave in the coming years, and this is how the climate will likely change as a result. Projection: this is one possible way humans might behave in the coming years, and if they do, this is how the climate will likely change as a result.

Chew on those for a while and see if you can spot the differences.

A projection is, at its base *is* a prediction. If you don’t assume how humans will behave then you can’t project anything – it all becomes swirls in a cloudy crystal ball.

If you can’t assign a value to a “projection” for how likely it is to happen then it is worthless. The only way to assign such a value is to assume how people will act – the same as for a prediction.

Do you also believe in the “projections” made by carnival fortune teller hucksters?

What a moronic comment !

Something you would expect from a RABID AGW-cult-apostle.

It will take quite a while—years—for Tongan eruption blasted water vapor and sulfate aerosols to settle out of the stratosphere.

https://www.pnas.org/doi/10.1073/pnas.2301994120

Don’t blame me, I was nowhere near that volcano.

I blame the trendologists.

I’m afraid that I will have to confess to seeing something in the entrails of Hunga-Tonga. This was not a typical El Nino event. In 1998 and 2016, the temperature peaked and declined the next month. This time, it seems that there is a convention being held around the 0.9 anomaly, without the usual rapid decline. Things are getting curiouser and curiouser.

NASA has pointed out that the massive volume of water (Tonga) reaching the ozone layer will take 5 years or more to dissipate.

They say that this water:

“…would not be enough to noticeably exacerbate climate change effects.”

The real problem nowadays, is finding enough virgins to tame these volcanoes. !

I’ll be within a few thousand miles in a couple of weeks. Maybe I should keep my eyes peeled.

Tonga no longer keeps its virgins peeled:

Gosh darn it!

Ah so are we one step closer to finding the cause of the eruption? An in-depth investigation of Tongan virgins may be necessary – volunteers please form an orderly queue.

The gods are angry!

The gods must be crazy!

I’d volunteer to save the planet, but…. Hmmm…. Maybe Greta will volunteer? Nah.

I think that the problem started in the ’60s or ’70s.

We know of four factors which have contributed significantly to the recent warmth (and there might be others we don’t know about). The four are:

#1. The unusual 2022 Hunga Tonga volcanic eruption, which humidified the stratosphere, which you mentioned.

#2. The El Niño, of course.

#3. The IMO 2020 pollution controls on ships. They resulted in “an estimated 46% decrease in ship-emitted aerosols.” Because ships are a major contributor, that caused a sharp 10% decrease in total global sulphur dioxide (SO2) emissions.

That’s a large reduction in air pollution in a very short time. Cleaner air reflects less sunshine, which causes you-know-what to become slightly milder.

#4. The slow, steady rise (25 ppmv/decade) in the atmospheric concentration of what Scientific American once called “the precious air fertilizer.”

The rise is generally benign. (It’s extremely helpful to Germany, which managed to get along last winter without Russian natural gas, and probably will be okay this winter, too, thanks to milder than usual winters.)

It is all good news, except that the first 2 of those 4 factors are transient.

Too bad MV Dali didn’t get the clean bunker fuel memo.

Nope – CO2 does nothing other than improve the biosphere.

You missed the most important factor driving the trend. Solar intensity shifting northward. It peaked in the SH 500 years ago. The change in the NH is accelerating slightly typical of a sine wave past its minimum.

The middle chart in the attached shows the UAH trend across latitudes. It has distinctive lobes in the region of the Ferrel Cells in both hemispheres.

Note the ocean heat content peaks at 45S also in the Ferrel Cell. These are net condensing regions as shown by the high negative Net radiation in the region of the Ferrel Cells.

Now explain to me how CO2 is selectively warming in the regions of the Ferrel Cells.

Come on Rick. Don’t be drawn into this ”trend” bullshit. The GAT was more or less the same in 1958 as it was in 2001 and today’s temps (besides this latest peaking) is probably more or less the same as it was just after WW2 there is no ”trend” in the weather because there is no ”trend” in temperatures. Hysterical narratives is the only place we can find a real trend.

The red arrow on the graph below shows the start of the satellite measurements.

There is a well established warming trend; most notably in the NH. The Vikings abandoned Greenland in 1450. The colony failed through climate changing from when they first arrived about 400 years earlier.

Thames frost fairs were a feature from 1600 up to the early 1800s.

The CET has readings dating back to 1659. The first 100 years warmed 0.6C. The second 100 increased but next to nothing. The third 100 to 1959 warmed 0.6C and the last 70 warmed 1.4C. The latter likely influenced by urban development.

Oceans have been steadily rising for at least 200 years through a combination of melting land ice and heat retention.

I am not a climate change denier. The climate has never been static and will never be static.

Earth is within 200 years of the permafrost rising and advancing south. Greenland will be 100% permanent ice cover by the end of this century.

The chart you attach goes to 2002 – it is historical but will still show a warming trend. Add the last 20 years and then trend it. It will have a firm upward trend.

The chart you attach goes to 2002 – it is historical but will still show a warming trend. Add the last 20 years and then trend it. It will have a firm upward trend.

Add the 20 years pre 58 and you will probably have a ”firm” downward trend to then.

I am not discounting your theory – you have done a lot of good work – but to use the last few decades of rising and falling temps as evidence of that will be problematic IMO because we may be experiencing short term oscillations which are completely unrelated.

Whatever trend it produced would be unreliable. I do not believe anything that purports to be global before the satellite era. I put some faith in single station records. So going 20 years before 1950 and thinking it global is akin to navel gazing. Even now, there is no true global picture of temperature. UAH is about 10 degrees short of both poles.

I am not relying solely on the last few decades. The trends are long established based on local temperature readings, rising sea level, retreating glaciers, retreating permafrost and historical records of notable events such as the Thames frost fairs.

The most notable change during the satellite era is the atmospheric water. It has increased by about 5% in the past 35 years. That is a big increase for such a short time and the reason the SH is getting a little warmer in the mid latitudes despite the solar intensity moving north. The only place actually cooling is the Southern Ocean and maybe Antarctica but there is not much high resolution data for Antarctica.

I would not be buying land north of 40N with a view to establishing a multi-generational legacy. Most permafrost still retreating but not as fast as predicted by the models. That is because those expectations are based on little understanding of snow formation.

https://par.nsf.gov/servlets/purl/10321741

I have history on my side in predicting the snowfall will overtake snow melt. I predict the reversal of permafrost across most land adjacent the Arctic Ocean will occur by 2200.

It might be sooner that many anticipate. The solar magnetic field is shifting. The grand solar minimum is approaching. Volcanism is on an upswing. The historical correlation of all three is strong to an ice age of some magnitude.

[deleted my comment]

Increasing CO2 changes the specific heat of air and the same energy (joules) results is a minor uptick in temperature. So keep it on the list.

Iceland and other volcanos…. just grist for the mill.

There has been a very large amount of energy released by this El Nino, and it hasn’t dissipated yet.

Absorbed solar radiation remains high.

El Nino peaks are usually in February or March, this El Nino started much earlier in the year than usual so it is quiet understandable that it has been somewhat protracted.

Of course, there is not the slightest evidence of any human causation… But you know that.

ps.. I suspect that when the “map” comes out we will see a large darker yellow (+2.5) area emanating from the Nina region.

Looking at the sequence of images, you can see how in April, the Tropics were all white, then warmth starts to spread quickly from the Nino region all around the global Tropics

In November, December, you can see parts of the Nino region reaching +1.5 then starting to spread.

In February, you can see a +2.5C blob starting to form in the Nino region.

There is absolutely no possibility of any human CO2 causation in this event. !

It’s almost as if there’s an El Niño. I wonder why nobody’s mentioned it before.

The question still remains. Why did this one seem to cause a much quicker global response, and does that mean it will cool down faster.

Here’s my rendition of the past year in UAH.

And here’s the same period over 2015-16.

I need to work on the resolution. But the point is 2015 warmed up much more quickly and so far hasn’t cooled to the extent 2016 had by this point.

You mean that 2023 warmed up much more quickly, with more warming, as well as starting much earlier and holding its peak a lot longer.

That is what your graph shows, so I assume it was a typo.

Well done. Yes I meant 2023, not 2015.

Constantly having to correct your errors..

Pity you are incapable of learning from the.

Still waiting for evidence of human causation for this El Nino.

ps.. Thanks for the graph showing just how early and powerful this El Nino has been. 🙂

Yep, 2015 El Nino was nowhere near as extensive or long lived as the current one.

As I have been saying all along.

Thank You. !

Wrong. But I’m sure you are used to that by now.

Both started at about the same anomaly.. (-0.03 and -0.04)

2023 has gone much higher and much longer…

Don’t let those facts get in the way of your idiocy.

Even your charts show just how much energy has been released by the current El Nino.

Noted you still have zero evidence about any human causation.

I assume that means you no longer think that AGW is real.

And you do know that ONI is only a temperature measurement over a small area.

It does not say how much energy is released to the atmosphere by the El Nino.

Try not to remain ignorant all your life.

If you don’t like the ONI index, here’s the MEI.

“It does not say how much energy is released to the atmosphere by the El Nino.”

How much energy an El Niño releases depends on how much energy there is in the oceans. As the world keeps warming the oceans have more energy, so the same strength El Niño nowadays will be releasing more energy.

There was probably a lot more energy in the oceans this time becasue we had several La Niñas in a row, keeping the surface cooler but putting more energy into the oceans.

But you want to use the La Niñas to claim there was no warming, and then think the extra energy released by each successive El Niño means that all the surface warming is caused by El Niños getting stronger and stronger.

Even you have to admit those that pretty pathetic attempts !!

He’s just hand-waving (as usual).

We have mentioned it , many, many times.

It is the source of the current warming, and El Ninos are what creates the trend-monkey linear trend in UAH data.

No indication of human causation what so ever..

Glad you are finally waking up to that fact.

“does that mean it will cool down faster.”

Unknown.

No-one has figured out the full effects of the HT eruption yet.

ENSO models indicate a drop to La Nina conditions in a few months’ time, but the lingering effects of HT are unknown.

Certainly, even someone as biased as you are, would have to admit that these events have absolutely zero human causation.

Are you prepared to admit that fact ?????

“even someone as biased as you are would have to admit that these events have absolutely zero human causation.

Are you prepared to admit that fact ?????”

Crickets !!

As expected….. bellboy cannot be honest…. ever. !!

How do you explain more than seven years of cooling after 2015-16 Super El Nino, which ended the “Pause” between it and 1997-98 Super El Nino?

I think you’ve answered your own question. If you start a trend at or just before a high peak you will get a downward trend for a time.

If you start a trend after the El Nino has finished.. you get a near zero trend or cooling.

Great to see you are finally coming to the REALITY that the only warming is coming from these big NATURAL El Nino events.

You like to use those La Niñas don’t you. And ignore the uncertainty in looking at just 6 years.

When can we be certain Bell?

30-years is the globally recognised period of ‘climatology’.

Always look at 30-year trends.

The 30 years was made up by the WMO because it suited their purposes at the time. It is even more meaningless than your suggestion.

Mike gives his opinion.

30-year trends of what? Temperature? What does temperature tell you about climate? Why can Las Vegas and Miami have the same temperatures but different climates?

Really?

Yes, REALLY!

If it is 100F in Las Vegas and 100F in Miami are their climates the same?

This is the second time I’ve asked you this. Are you going to answer or just continue to evade answering?

“30-year trends.”

Just happens to be about half the time period of one of the major ocean cycles.

How convenient for the climate scammers….

Until the AMO start dropping down again.. 😉

30 years was originally for a micro-climate, aka regional climate. That interval was picked by WMO and IPCC because of several reasons, one of which was it made it easier to ignore natural variation and attribute everything to man’s activities.

Yes, man has had a impact on climate. Look at the blacktop, steel and concrete, ships, cars, and 8 billion people needing energy, shelter, food, water, etc.

The energy released in coal fired steam turbine generators in 1 year is sufficient to raise the temperature of the lower 1000 feet of the atmosphere by 0.1 C. Those joules never seem to get entered into the ledger.

Thanks for showing everyone that the warming is totally down to El Ninos. (you can even see the minor El Nino in 2020 (or are you saying La Nina caused that spike)

Well done.

I assume you still haven’t got any evidence of human causation.

Thus are concurring this is NGW, and not AGW.

Thanks for admitting you still haven’t a clue about how energy works, prefer magic to science. Well done.

Looking at that insignificant period of cooling between El Niños, here’s my map of the global trends over that period. Seems clear that some of the greatest cooling is from the Niño regions, suggesting that this cooling is the result of positive ENSO conditions at the start, given way to the succession of La Niñas towards the end.

And, are the oceans boiling ? 😀

Do you require boiling oceans before you accept there is a problem?

Might be a bit late at that point.

How can anomalies based on averages tell you that the oceans are going to boil? What is causing the averages to go up?

I didn’t say the oceans would boil. I just said that if they did it would obviously be a bit late for worrying about controlling temperatures.

Increased energy in the system due to the heat-retaining impacts of increasing atmospheric greenhouse gases.

“Do you require boiling oceans before you accept there is a problem?”

Words matter. This sentence of yours has the implicit assumption that the oceans are going to boil – else there would be no problem to accept!

“Increased energy in the system due to the heat-retaining impacts of increasing atmospheric greenhouse gases.”

And how does temperature measure that increased energy? Stop evading and answer the question!

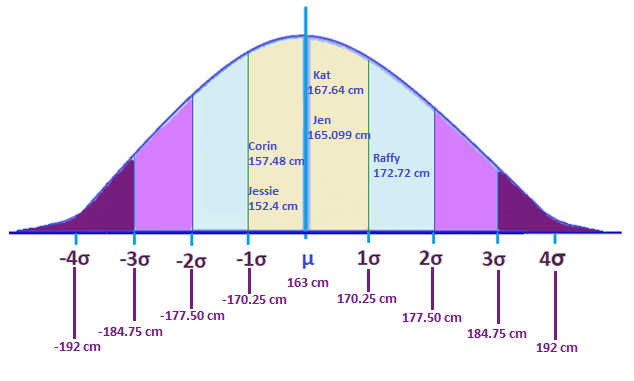

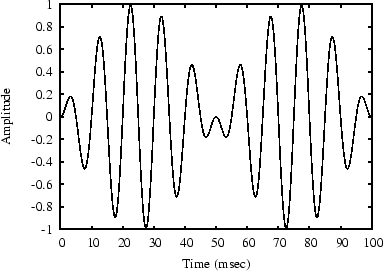

The correct answer is they don’t. I’ve been analyzing thermometer data in New England, specifically central Maine. Figure 1 are monthly average temperatures for the month of January at one single CRN station for the past 22 years. One could mistakenly think and oversimplify from looking at this graph that winter “just isn’t what it used to be.” But when you investigate further, you can see a more complicated and detailed picture.

I took all of the recorded maximums for each of these months, organized them from descending to ascending order, and graphed the results in Figure 2. The lowest points are the coldest recorded temperatures for each month, while the highest points are the highest recorded temperatures for each month. It’s normal for the temperature in this region to get into the mid and upper 50’s. You can see it’s familiar looking at Figure 1, but the monthly averages are hiding a lot of detail.

For example, the cold monthly averages can be cold due to extended periods of very cold weather (2003) or severe cold snaps with the rest of the month being more typical (2005). The month with the “warmest” average (2023) wasn’t even warm per say; it just lacked the severe cold that is typically featured. I think it’s misleading to say that it was a warm winter month. That’s been the feature for the past 4 years or so by looking at this graph. Some of the months with warmer averages had registered colder temperatures than the months with colder averages.

Bellman should REALLY contemplate this.

Any time you average something you lose detail – and it is the details that actually tell you what is going on with a physical process.

They start off calculating a daily “average” temperature which isn’t really an average temp at all. It’s a mid-range value, a median, and that mid-range value can be the same for two vastly different climates, such as Las Vegas and Miami – meaning it is simply a garbage metric from the word go.

And then they just keep averaging the averages over and over and never look at the variances of any of the data sets!

Your analysis makes much more sense to me as a way to determine what is actually going on. If Tmax is not changing much (and from your figure 2 it isn’t) then whining about it getting “warmer* is meaningless, especially when their “anomalies” are smaller than the measurement uncertainties in the data.

The minimum temps in your graph appear to have had a step change at the beginning but that could just be an artifact of your starting point. After about 2005 I don’t see where the minimum temps show much of a trend, just variation.

Thanks. That’s what I think about averaging too, and based on my limited experience conversing with other commentators in the blogosphere, I think knowing that puts you ahead of many people. Averaging hides so much important detail; alarmists look at Figure 1 and think it hasn’t been anomalously cold in this year in almost 20 years. When you average, you don’t respect the non-linearity of the climate system; you disregard it erroneously as a trivial detail.

Here is the same setup but with minimum temperatures. These are important because winter minimums are claimed to be warming the fastest of any of the measurements. But what I think originates from is improper OLS analysis. Winters in the NH have more variance and have unstable atmospheric conditions so when you graph these data points, the ranges are much more spread out. Look at the cold monthly averages compared to the warmer monthly averages; there’s a huge difference between them, like 20F or so. And the difference is even bigger when looking at the second figure. There are nights that can be above freezing in the low-to-mid 40s, while other nights can be well below 0°F.

Yep.

But just like they do with measurement uncertainty they ignore the variances of the data. Thus averages are always considered to be 100% accurate.

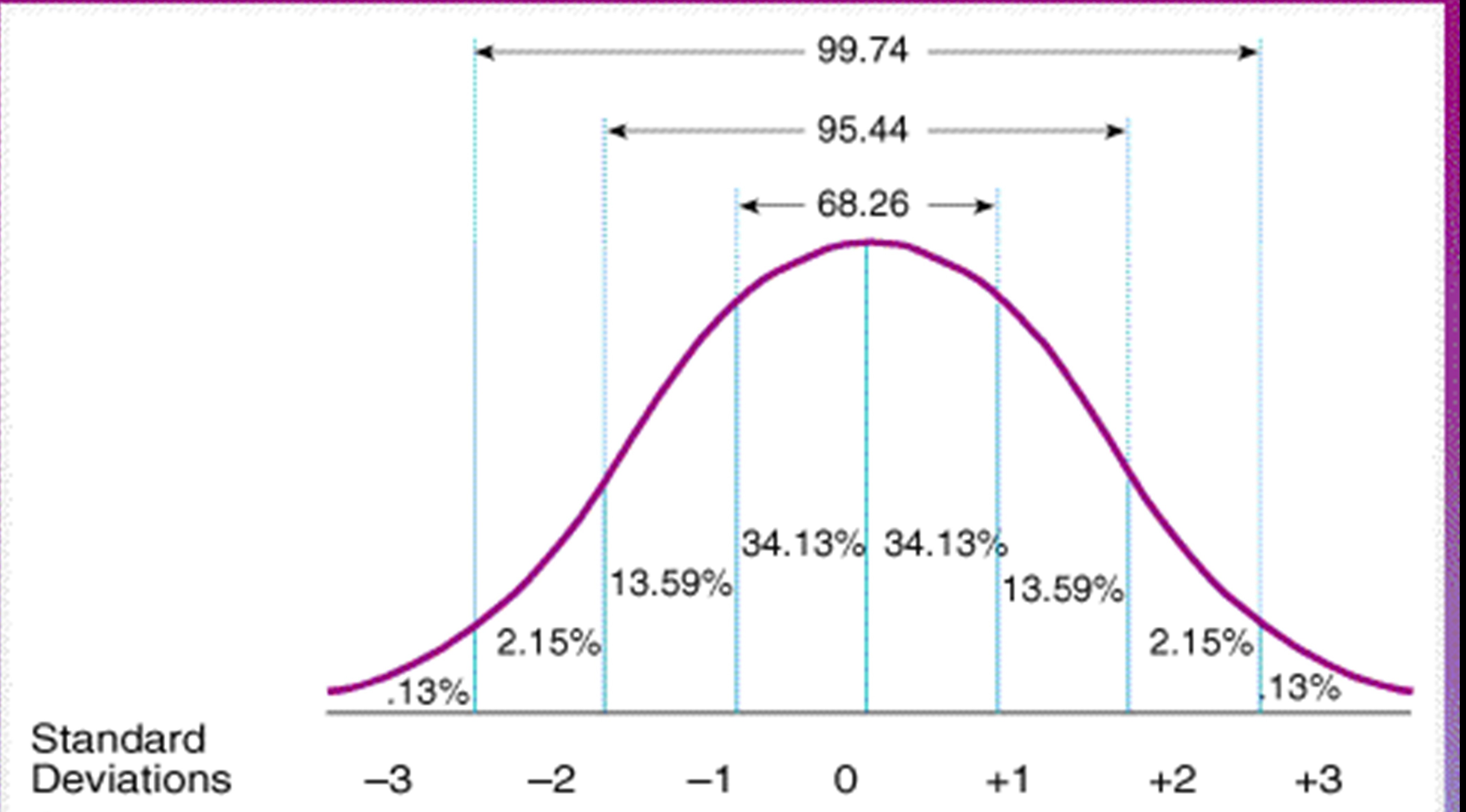

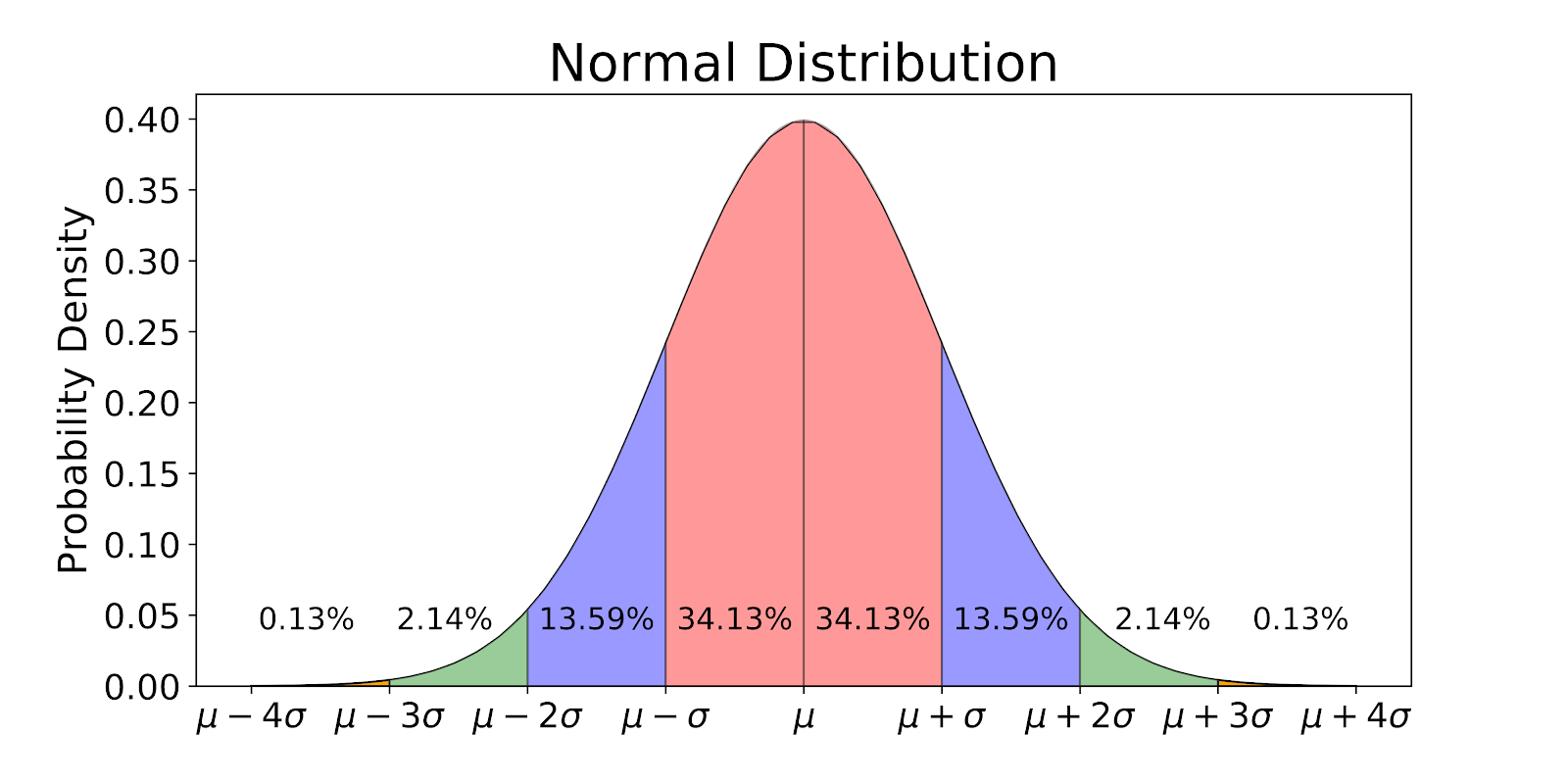

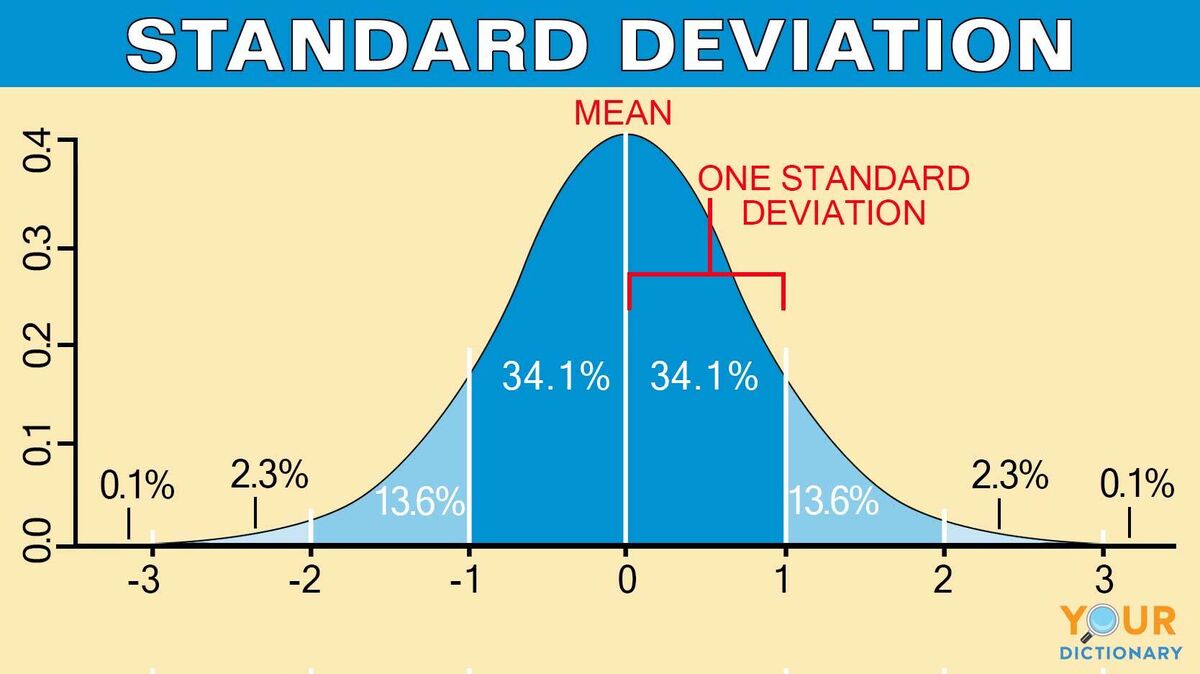

Far too many climate scientists and statisticians mouth the words like “standard deviation” and have absolutely zero intuitive feel for what the word means.

If your data has a small variance then you get a sharp hump surrounding the average – the possible values the average *could* actually be are small in quantity. On the other hand if the variance is large then you get a small, broad hump around the average meaning the actual possible values the average could really be is much larger.

It’s why in the GUM the standard deviation of experimental results is closely related to the uncertainty associated with that data. If you take 100 measurements and get the same value each time you can be pretty confident of the value you have for the average. If you take 100 measurements and they are spread all over creation then you simply can’t be sure of anything about what the average is telling you.

It’s why too many climate scientists and statisticians think the standard deviation of the sample means is the measurement uncertainty, i.e. the accuracy, of the average. It isn’t. The standard deviation of the sample means is a metric for *sampling error” and not a metric for accuracy.

There is no evidence there is a problem of any sort…

Temperatures are still well below the Holocene average

Arctic sea ice is still well above the Holocene average.

Extreme weather is not increasing or changing much at all…

So come on chicken-little… tell use where this “problem” is … apart from in your head. !!

Maybe the world’s scientific community is wrong and you, bnice2000, a commenter on an internet blog, are right.

We can’t rule out the possibility.

It just seems a bit unlikely.

The worlds scientific community is being ruled by money. Money from governments in the form of grants. From jobs being paid for by elites hoping to gather in some of the trillions being spent on CO2 reduction.

“Money from governments in the form of grants.”

Try selling that to the RA with multiple room mates, working night and day assemble an analyze the data.

Folks, the Gorman’s et. al. ignore the fact that the $ are almost all in denial. Ironically, they don’t really fund much above ground research, since they know how inconvenient most of the findings would be. Better to shadow fund Q social media,, thru Heartland and Koch. But if a credible alt.warming process were found, the finders would be blinded by the bling being thrown at them.

Story tip: Underground web site runs out of direct funding and needs pass thru advertising from alt. sources. Readers do not appreciate the turds from Hillsdale “College” left behind after visits. Especially the Heads I Win Tails You Lose questionnaires..

Marxist-democrat Fake News talking points, filled with personal HaTe. Go blob go.

Boiling oceans? Not required, but a lot better science (real science) and untampered data is required to prove man is creating hell on earth.

Ignore the wars for the purposes of the above comment. It was only about climate.

Yes, but only once and briefly. Seems a minor volcanic disturbance resulted in a short duration of bubbles appearing in the Atlantic. Other than that…..

I gave you an upvote for posting some interesting figures and for not mentioning that we’re all doomed.

The other El Nino peaks were just a couple of months or so long, but the current one is holding on – why the difference – anyone?

Water and aerosols in the stratosphere.

This just seems like conjecture. It might be true, but no one here who claims this can point to any research proving it.

Oh my, the irony is getting a bit too high here.

Go visit NASA on the effects of Tonga on the stratosphere.

Could you cite the specific research you’re referring to?

I used your comment as search terms. Here is my first return:

https://www.nasa.gov/earth/tonga-eruption-blasted-unprecedented-amount-of-water-into-stratosphere/#:~:text=The%20underwater%20eruption%20in%20the,affect%20Earth's%20global%20average%20temperature.

It appears to be the Weekly Reader version, but sums it up.

“In contrast, the Tonga volcano didn’t inject large amounts of aerosols into the stratosphere, and the huge amounts of water vapor from the eruption may have a small, temporary warming effect, since water vapor traps heat. The effect would dissipate when the extra water vapor cycles out of the stratosphere and would not be enough to noticeably exacerbate climate change effects.”

Bold and italics mine. Is there an alt. version that disputes this?

Please see my previous comments.

All I’m seeing is a pic of the last remaining Tonga virgins. If I missed your research link, would you please link to that comment? It’s a tool across from your nom de WUWT on the referenced comment.

I mentioned above that EL Ninos usually start to dissipate in February or March.

This one happened much earlier than usual, so a protracted period at the top is not unexpected.

ENSO models are showing a dip and probably La Nina condition in a few months…

Trouble is , no-one has yet figured out the full effect of the HT eruption.

Time will tell.

Surface temperatures may have fallen this month. This data is for atmospheric temperatures and may represent some residual warming.

That’s why I said it will be interesting to see the surface data when it comes out.

These data points frankly don’t change anything really. The climate fluctuates as history has shown. Pouring money into this green energy rubbish is futile and a waste of money. Just look at wind, solar and EV’s failures. A severe hail storm in TX pummeled a 10,000 acre solar farm. Is that a good ROI? I think not, unless of course your investment was propped up by taxpayer subsidies who are now on the hook perhaps either directly or indirect. Human adaption is more appropriate and useful. We ought just get on with life and end the climate change madness. Just imagine, if our focus was on productive endeavors with a real return on investment our lives would be free from the anxiety created by these raving climate alarmist lunatics.

And even if they manage to survive hail and marine corrosion, it all has to be repeated a couple decades down the road.

Great solution to a non-problem.

There is no need for alarm. 2023, IPCC’s new chairman, Professor Jim Skea.

https://www.thegwpf.org/new-ipcc-chairman-is-right-to-rebuke-misleading-climate-alarm/

I don’t think many city slickers even realize how big 10,000 acres is. That’s really just a big house right?

A square, 1 mile on a side is 640 acres. 10,000 / 640 = a square ~16 miles on a side. That’s a pretty big area.

Actually it’s 4 miles on a side but still rather big.

Interesting data. I have to wonder how much Tonga and El Nino added to the last 3 years.

With a dud of a brief El Niño decaying rapidly back intto La Niña territory and Arctic ice extent annual high rising to “14th lowest” extent, this has to signal something unusual going on. Isn’t someone in the game harkening back to the rare Hunga Tonga Hunga Pacific seafloor eruption which sent enough water into the high stratosphere to add 10% or more water to this fairly dry layer?

I’m sure that climateers would have quickly latched onto this lifeline if the effect had caused a sudden cooling. It was a NASA scientist who predicted it would result in noticeable warming.

https://www.space.com/tonga-eruption-water-vapor-warm-earth

Bellman, have a look at this comment:

https://wattsupwiththat.com/2024/04/02/uah-global-temperature-update-for-march-2024-0-95-deg-c/#comment-3891796

Please try to learn something from it.

Why? What do you think it’s saying that I don’t already know?

Those are what months of weather look like when averaged into a single number. Do you think that’s appropriate? That’s how it is for each station recording temperature around the globe. Just to give some perspective.

Do you understand non-linearity or deterministic chaos, Bellman?

“Do you think that’s appropriate?”

Yes.

Bellman is a monkey.

That’s insulting. I’m actually an ape.

1360 W/m2 is what months of zenith solar flux looks like when averaged into a single number. Do you think that’s appropriate?

And now the Inappropriate Analogy fallacy.

Not a very good analogy.

And no, there are important changes that are unevenly distributed across the solar spectrum. The construct of total solar irradiance conceals the role each spectrum plays. The UV spectrum, for example, can cause DNA damage in plants and animals, while visible light drives photosynthesis in plants.

It’s why Freeman Dyson was so critical of the “climate models”, saying they are not holistic at all. It’s nothing more than religious dogma that more CO2 is bad. From a holistic point-of-view CO2 is a fertilizer that promotes food growth and resistance to drought. What is “bad” about that?

What is different about it?

Solar output is just one of the factors that affect temperature. Solar output can increase or decrease, yet the temperature could remain unaffected. I’m unsure why so many think that curve-fitting CO2 concentration or total solar irradiance onto the surface temperature record is a reasonable attribution exercise.

They do it because they can manipulate the numbers to generate “scare” money.

The true answer is “because of measurement uncertainty being in at least the units digit we simply don’t know if the global average temperature is actually going up, going down, or stagnating”.

So they just assume “no measurement uncertainty” and just go merrily down the primrose path.

NO, it is not appropriate!

Radiation flux is based on T^4. The average flux will *NOT* give a complete picture of what is happening with temperature!

It’s why nighttime temperature follows an decaying exponential or polynomial. Heat loss at higher temperatures is much higher with the beginning night temperature. The actual heat loss has to be an integral of the entire curve. Just doing a mid-range value of the nighttime temp will *not* give a proper value for heat loss.

Averages, or more appropriately medians, are the refuge of those unwilling to actually do the hard work. It allow all kinds of actual details to be ignored. Something climate science is famous for.

Even fading El Niño won’t drop monthly anomalies back to last years’ Ls Niña negative reading. The high anomalies since last summer are mainly due to the huge mass of water and other ejecta blown into the stratosphere by the enormous 2022 Tongan underwater eruption.

Somebody please wake me when we pass the MWP beautiful weather.

I’ll break my rule of internet sarcasm.

So the world is nearly a whole one degree warmer than a year ago.

Anyone notice the difference?

NO 😀

That’s my thinking as well. I’ve been around since the early 70’s and have lived within a roughly 40 mile radius. The only thing that is noticeable to me is that there were more big snow storms in the late 70’s. Getting a lot of snow in central Maryland is as much a function of weather fronts, the jet stream, polar vortex, etc as it is of temperature. I can recall some very cold (but mostly snowless) winters in the last 40 some years, as the moisture wasn’t available to allow for lots of snow. And I can recall some very warm winters, even way back when.

Seasons come and seasons go, and other than year to year variability, I would never have a clue that the world (in general) is a degree or so warmer. That would be like someone measuring my hair and telling me that my hair is a millimeter or so longer this week than it was last week…

The temperature in my backyard is up about 20C since I put my slippers on this morning. Another 5C or more would be nice.

My personal experience in the SH was a tremendous growing season at 37S and a dry start to autumn that was corrected in a single day – somewhat unusual. There were 5 days of summer and a tad later than my earliest memories of 37S.

On a broader front, Australia appears to be getting more water in the centre. Through this year, it sustained the monsoon trough over land. One low intensity convective storm formed over land near Darwin and was sustained for a couple of weeks; picking up water from the land and ocean to the north while pulling in drier air from the south..

The water is possibly due to the current El Nino however there is a sustained upward trend in atmospheric moisture. Up around 5% through the satellite era. This is due to the NH warming up as the peak solar intensity shifts northward. The low to mid latitudes in the SH are benefitting from this extra moisture and higher levels of CO2.

You have to look hard but there are signs of warming and they are all good for now.

The warming is leading to more snowfall in the NH and that will eventually overtake the melt. Greenland is the only place where there is sustained upward trend in permanent ice extent.. There is when location on the northern coast of Alaska where the permafrost is rising and a Northern slope on Mount Marmot that also has rising permafrost. But still a couple of centuries before the permafrost advance down Southern slopes and southward on flatter land.

We barely had snow on the ground this winter, historic flooding, 70 degree days in January. Extremely atypical for my area. I’m not sure why you’re so blithely suggesting that it’s impossible to notice abnormal weather conditions.

This is all caused by too much CO2?

Prove your ridiculous assertion.

I did not say the abnormal weather this winter was caused by CO2, I said the abnormal weather was noticeable. Above, Michael asked if anyone had “noticed a difference” between the weather this year and last, and I was responding to that question.

Don’t run away, you certainly implied it.

Cee-Oh-Two!!!!

RUN AWAY!!!

“abnormal weather conditions”

Abnormal weather conditions are *NOT* climate. You are dissembling hoping no one would notice.

I never said the weather conditions were climate, I said the difference in weather this year is noticeable, in response to Michael’s question.

Is your nickname Captain Obvious? Yes, weather changes from year to year. So what?

It’s not obvious to Michael, who asked if anyone had actually noticed different weather this year wing to the abnormally warm temperature.

What “abnormally warm temperature” [singular]?

Hi, welcome to the conversation. Please go look at the temperature series that is the subject of this discussion thread. Thanks.

Shuttup.

Slightly strange times?

The International Research Institute has the El Nino probably ending in April. Their model is then for only TWO months of neutral conditions before it probably flips back to La Nina.

(I know, it is just a model, but IRI is generally pretty close.)

….and returning to its trend line of +0.18C per decade warming

This warming trend is smaller than the resolution of the measuring instruments.

I’ll never understand why it is so hard for people to understand this fact. A typical measurement uncertainty of +/- 0.5C for the measuring instruments means you simply can’t tell what is happening in the hundredths digit. Even the tenths digit is questionable. It all goes back to the idiotic assumption in climate science that all measurement uncertainty is random, Gaussian, and cancels leaving the stated values as 100% accurate – followed by the assumption that the standard deviation of the sample means is the measurement uncertainty of the mean. It’s these kinds of assumptions that get bridge pilings run into by container ships!

It’s a good thing Baltimore did not capsize.

(Something democrat representative Hank Johnson might say.)

Christy et al. 2003 assess the uncertainty on the trend at ±0.05 C/decade.

The AR(1) corrected uncertainty is also +0.05 C/decade.

Both are bullshit, and you propagate it.

These are the standard deviation of the sample means values, i.e. what statisticians call the “uncertainty of the mean” – which is total and utter BS because statisticians never factor in measurement uncertainty. It’s based on the assumption that all measurement uncertainty is random, Gaussian, and cancels so the stated values can be assumed to be 100% accurate. The true measurement uncertainty of the mean is related to the variance of the data – which climate science never evaluates at all at any level, not even the daily temperature data.

If statisticians would propagate the entire value of each data point into the sample they would have a data set consisting of “stated value +/- measurement uncertainty” for each data point selected for the sample. The mean of that sample would then become its own “stated value +/- measurement uncertainty”. When the mean of those sample means is evaluated then *it* would become a “stated value +/- measurement uncertainty”.

But you *never* see this done in climate science. It’s easier to just assume all measurement uncertainty is random, Gaussian, and cancels – no matter how idiotic such an assumption is.

Do you still believe that systematic errors affecting multiple different thermometers undergo a ‘context switch’ to behave like random errors? Or have you improved your reading skills?

Their usual hand-waving is to claim that after you glom a whole bunch of different thermometers together, the systematic errors magically transmogrify into random and then disappear in a puff of greasy green smoke.

And when you mention the strict precision demanded by climate science and the slim chance of these errors being scattered around such a value, they just shrug it off like it’s some trivial detail.

LOL

Only in climate science (i.e. trendology) does combining multiple independent variables results reducing measurement uncertainty. In every other technological endeavor the uncertainty increases.

They will never acknowledge this fundamental truth because it reduces all their curving fitting gyrations to meaninglessness.

Yes. Though to be pedantic it’s not a belief or a feeling. It is a fact. And just to be clear I’m answering yes to the scenario of each thermometer having its own separate and independent systematic error such that r(x_i, x_j) = 0 for all combinations of thermometers i and j.

The magic of trendology.

Prove your ridiculous assertion.

Only in climate science (i.e. trendology) does combining multiple independent variables results in reducing measurement uncertainty. In every other technological endeavor the uncertainty increases.

How do you know a given batch of thermometers all read high, by different and unknown amounts?

You don’t, and your little combination won’t randomize them.

He’s basing it on Page 57 of JCGM 100:2008, specifically referring to section E.3.6. Look at point c).

Note C only means that you can’t normally separate out random and systematic uncertainty in a measurement.

u(total) = u(random) + u(systematic)

Measurements are given as “stated value +/- u(total).

You can’t know u(random) because it *is* random. If you know u(systematic) then it should be used to condition the total measurement uncertainty. The problem is *knowing* what the systematic uncertainty in a field measurement device is. It’s actually part of the Great Unknown and, as such, it can only be recognized as part of the total uncertainty but it can’t be quantified.

It’s why measurements are *not* given as:

stated value +/- u(random) +/- u(systematic)

They are given as: stated value +/- u(total)

See the text following “c”.

“Benefit c) is highly advantageous because such categorization is frequently a source of confusion; an uncertainty component is not either “random” or “systematic”. Its nature is conditioned by the use made of the corresponding quantity, or more formally, by the context in which the quantity appears in the mathematical model that describes the measurement. Thus, when its corresponding quantity is used in a different context, a “random” component may become a “systematic” component, and vice versa.” (bolding mine, tpg)

See E.3.7: “E.3.7 For the reason given in c) above, Recommendation INC-1 (1980) does not classify components of uncertainty as either “random” or “systematic”. In fact, as far as the calculation of the combined standard uncertainty of a measurement result is concerned, there is no need to classify uncertainty components and thus no real need for any classificational scheme.”

bdgwx is like most of those in climate science – absolutely no training or understanding of physical science and/or metrology.

Therefore they like to take the easy way out and just assume that the stated value of a measurement is always 100% accurate.

Thank you, Mr. Gorman. I interpreted it as a correction for systematic error and random error not needing to be classified as either specific components, since that’s what was said at the beginning of the chapter.

If these two thermometers have no relationship between each other, what logic leads you to arrive at the conclusion that their errors behave like random errors?

Just think of the fact that each daily recording is usually measured to the tenth of a degree. Do you really think that those separate errors’ influences are all randomly distributed around the true value? You don’t even know the true value!

There isn’t any logic. There is only the meme that all measurement uncertainty is random, Gaussian, and cancels. This is coupled with the meme that once calibrated in the lab field instruments will retain 100% accuracy after being installed, regardless of microclimate changes at the station like green grass turning brown or vice versa. And these memes have the corollary that the variance of the generated data is of no value and can be ignored when averaging different stations together.

All this is done to make it *easy* instead of accurate or meaningful analysis these memes allow temperature to be used as an extensive property of instead of an intensive property.

Because different thermometers have different systematic errors. For example, if you enlist NIST to calibrate 1000 independent thermometers they are not going to come back and say each one was off by exactly +0.57 C (or whatever). They are going to come back with a distribution of errors. When you randomly sample the population of thermometers you discover a different error each time you sample. In this context the error you observe is random even though in the context of a single thermometer it is systematic.

No. I certainly don’t think all of the influence is random. But that’s not the question at hand. The fact you are challenging is that a context switch can cause a systematic error to act like a random error like would be the case when you consider a large set of independent instruments each with own different systematic error that act as if they are random when the context is switch to that of the group as opposed to that of an individual.

Let me ask you this. Do you think if given 1000 independent thermometers that you would find that they are all off by exactly -0.21 C (or some other specific value)?

Just because each error for every different thermometer doesn’t produce the exact same quantitative value as a result of the same systematic bias doesn’t mean the influence becomes random. Systematic errors, in that context, can still form a distribution resembling that of a random bias; it doesn’t mean they undergo a context switch into behaving like random errors, and therefore, they don’t cancel out or reduce with the LOLN. And once again, it’s extremely unlikely that they would, given the precision demanded by climate science. We can’t even test that in a time series.

And my answer to your question is no.

That’s exactly what it means. Do the experiment yourself via a monte carlo simulation.

Prove it. Show me the monte carlo simulation in which you simulated a group of thermometers each with their own systematic error and which a measurement model of say y = a – b or y = (a+b)/2 where a and b are two randomly selected thermometer readings exhibits no cancellation of error.

What’s extremely unlikely is for every single thermometer in existence to have the exact same systematic error.

Then you are close to the epiphany. If those thermometers don’t have the same systematic error then randomly sampling from the population will yield a random error. And each time you randomly sample it is more likely than not that you will get a completely different error. Create a histogram of your samples and surprise…surprise…you get a probability distribution.

You’ve been given jpg’s of NE KS temps many times in the past. Even stations as close as 20 miles (Topeka to Lawrence, Topeka to Holton) show different temperatures. How do you determine if the systematic bias in each station cancels with another?

Uncertainty is *NOT* error. How many times does this have to be pointed out to you? EVERY metrology expert will tell you this in no uncertain terms.

It’s why some physical scientists are not even using the “stated value +/- uncertainty” any more and are just using a range of values instead. How does a sampling of such do anything to cancel out systematic bias?

If I tell you that Station 1 measurement is 49.5F to 50.5F and Station 2 is 49.7F to 50.7F how does this cancel anything?

Once again, LOSE THE “TRUE VALUE +/- ERROR” mindset. It’s been shown to be more trouble than its worth! It’s why the current meme is “stated value +/- uncertainty”. And uncertainty is the range of values that can be reasonably assigned to the measurand – it is *NOT* the error in the reading!

There is no way to separate out the random component from the systematic component. If there is no way to separate the components then you simply can’t just assume that systematic bias is random.

Most systematic errors deviate in one direction only. As Mr. Gorman noted, these errors affecting these separate thermometers would have to go in two separate directions and be the exact opposite distance from zero to cancel out. But there will definitely be variability in the quantitative amounts of uncertainty because there could be more than one systematic error present at a time, as well as random error along with the environmental conditions. Your definition for what classifies as a systematic error is strict; we are measuring temperature in different regions all over the world with different climates. We *know* that UHI, for example, wouldn’t be as aggregated in cool, maritime climates as opposed to in hot, dry climates in landlocked areas like Arizona.

If what you said was true, why would climate scientists bother doing adjustments?

It all started with climate experts Jones and Mann needing to “hide the decline”.

“If what you said was true, why would climate scientists bother doing adjustments?”

I’m guessing that you know better, and are trying to make a philosophical point, for effect.

ANY measurement found to have been made with a known error, needs to have it corrected. In our cases, it is used for many, many purposes besides GAT. Local evaluations. Evaluations over shorter time periods. More.

Bigger pic, the “systemic error” whines can be made just as justifiably to any scientific or engineering evaluation. Those known need to be identified and corrected. The Bigfoot unknown errors, repeatedly mentioned by many here, need to be estimated – in light of the fact that the bigger they are, the more likely they are to have already been accounted for – and thrown into the evaluative mix, to see if they make a significant difference to the final error.

Systemic errors in GAT v time evaluations would not only have to be orders of magnitude bigger than probable, to matter. But they would also have to line up quite regularly in size and direction, over time, to qualitatively change any of the resulting trends. So, the Gorman’s keep those chimps busy typing up the encyclopedia Britannica, and they’re sure to get ‘er done sooner or later….

Bullshit, blob. True values and thus error are completely unknowable.

You might understand this if you had any real-world metrology experience.

“True values and thus error are completely unknowable.”

To God’s own exactitude, got me there. Please provide me with a single scientific or engineering evaluation for which this isn’t true.

On second thought, all in. My uncle wanted to lose weight, and I charted an aspirational weight v time table for him to meet. He was bummed at not doing so, but just caught me out. Seems that he read your post. Here in St. Louis, we are on clay rich ground, and it’s been shrinking from the drought. There is certainly a changing gravitational anomaly below his scale. He lent his high res gravimeter to a drinking buddy and so the changing systemic error is unknowable. The program’s now obviously jetted because of that changing, unknowable, systemic, error. My uncle thanks you. My beleaguered aunt, not so much…

You’re off in the weeds again, blob.

About your usual level of informed response….

Still waiting for the scientific/engineering evaluation that would not be completely invalidated by your repeated rant.

Triple negatives are a forte of your word salads, blob.

The Global Average Temperature anomaly. It is *NEVER* given with an associated measurement uncertainty by climate science.

The standard deviation of the sample means is *NOT* the measurement uncertainty of the average. Unless you use the climate science meme that all measurement uncertainty is random, Gaussian, and cancels – apparently even systematic uncertainty is considered to be random, Gaussian, and cancels by climate science.

“in light of the fact that the bigger they are, the more likely they are to have already been accounted for”

“Systemic errors in GAT v time evaluations would not only have to be orders of magnitude bigger than probable, to matter. But they would also have to line up quite regularly in size and direction, over time, to qualitatively change any of the resulting trends.”

What do you base that on? We can’t quantitatively state these errors because we get one chance to record a day’s worth of data. All climate science really cares about are TOBS, UHI, station moves, and the switch from LiG to electronic thermometers, but not bee’s nests laid in the instrument, calibration drift, wildlife interactions with the thermometer, cognitive state of the observer at the time of data collection process, etc.

“What do you base that on?”

The fact that there is a Trumpian YUGE discrepancy between the level of systemic error likely to be discovered and that which would significantly compromise GAT evaluations. All you need to do is to introduce such an error into GAT trends and perform the Monte Carlo simulation of trends over any physically significant time period – per bdgwx invitation – to see how paltry they are.

And to pile on, most of the “systemic errors” alluded to – but not quantified – would correlate. And positive error correlation of data points reduces the standard error of any resulting trend from what it would be otherwise

You have a battery, and a digital multimeter.

What is the true value of the open-circuit voltage of the battery?

Take all the time you need to answer.

You don’t really expect a coherent answer, do you? My guess is that he can’t even diagram the measurement circuit!

The way he rants about “errors”, he should be able to answer.

There are a multitude of variables that interact with each other and can affect the temperature at a given time. Temperature isn’t some one-dimensional, static property you can easily fit into a simplistic model. Anyone who tried to do that would, at best, be superficially estimating. You would have to assess each individual measurement at every station in the GHCN network to try to estimate how this has affected the GAT. That’s literally what it would take.

I sound like a broken record saying this, but we only get one chance to record the measurement. After that, our chance is gone forever. At the time of the recording, the vegetation that was present could be gone now or maybe there is artificial infrastructure there now that wasn’t there before.

What do you base that off?

What do you base that off?”

The fact that we call them “systemic errors”. So, for any one of them, they would tend to occur in groups, over time. That means that for trends, that particular systemic error component would tend to group the data tighter than if they were distributed independently,

But of course bdgwx remains right, in that the variety and randomness of those errors in GAT evaluations means that they can be effectively treated as another source of random error.

We *can’t* separate systematic error from random error, and there are multiple systematic errors. Climate science is tracking changes to the tenth of a degree; how would you know if particular systemic error components would grip the data ‘tighter’?

“.…how would you know if particular systemic error components would grip the data ‘tighter’?“

Because any one systemic error would tend to correlate as it was exhibited over and over. The would tend to reduce the standard error of any trend of them, from what it would be if they were randomly distributed. Now, I’m not saying that this is the actual case w.r.t. GAT evaluations, over time. Rather, I agree with bdgwx, that there are many such sources, and Engineering Statistics 101 tells us that as the number of them increases, they will tend towards normality.

Nonsense.

Where, on Mars?

Standard error is a metric for sampling error, not for accuracy.

“ Statistics 101 tells us that as the number of them increases, they will tend towards normality.”

The standard deviation of the sample means will tend toward normality because of the CLT – *IF* all the restrictions on using the CLT are met. But that only tells you how precisely you have calculated the population mean, it tells you NOTHING about the accuracy of the mean you have so precisely located.

The accuracy of that mean can only be established propagating the measurement uncertainty of the data, both in the population and in the samples you take.

Climate science and statisticians like to sample just the stated values of the population while ignoring the measurement uncertainty.

If the population data is x1 +/- u1, x2 +/- u2, etc then climate science and statisticians will use sample data of x1, x5, x30, etc. while ignoring u1, u5, u30, etc.

Thus their sample means are considered to be 100% accurate for that sample, no measurement uncertainty at all. The standard error of the sample means is thus calculated from those supposedly 100% accurate sample means.

In reality, each of those sample data points should be x1 +/- u1, x5 +/- u5, x30 +/- u30, etc. Thus the sample mean would be x_avg +/- u_c(x). The standard deviation of those sample means would then inherit the uncertainties from the sample means.

Bottom line, the trend line itself should be conditioned by the measurement uncertainties of the data used – unless you are in climate science. Only if the measurement uncertainty is less than the delta from data point 1 to data point 2 can a true trend line be determined. If it is greater than the delta then you can’t know if the delta is a “true value” or not. It might be up, down, or sideways – and you won’t know.

Exactly. Whether averaging reduces the effect of error is highly dependent on the nature of the error. If the error behaves randomly in the context of a group of instruments then averaging will provide some cancellation effect. An example is the individual instrument biases. They are all different so they behave randomly when viewed in the context of a group. However, if the error behaves systematically it will not reduce when averaging. And example is the time-of-observation bias. It introduces a persistent error in one direction even in the context of a group of instruments.

Error is not uncertainty!

When are you ever going to figure this out?

Random is *NOT* a sufficient criteria. Behaving differently doesn’t mean you will get equal negative and positive biases in the instrumentation.

And you *still* need to dump your dependence on “error”. Uncertainty is *NOT* error! Uncertainty means you don’t know the ratio of random to systematic. You just know the values that can be reasonable assigned to the measurand.

“So, for any one of them, they would tend to occur in groups, over time.”

Why? What happens to micrometers as they are used? Is the wear grouped? Or is the wear higher in a busy machine shop vs a jewelers workstation?

In order for systematic bias to cancel there has to be as many drifting lower as higher and in the same amounts. In a group of measuring devices is it always true that some drift lower and some higher and in equal increments?

“That means that for trends, that particular systemic error component would tend to group the data tighter than if they were distributed independently,”

Trends are based on measurements that are uncertain. If some drift at a slower rate than others then you are asserting that has little impact on the trend itself. How can you prove that? Especially when the individual drift component values are part of the Great Unknown?

“Anyone who tried to do that would, at best, be superficially estimating. “

And yet that is the very assumption that climate science makes when doing pairwise homogenization and/or infilling of temperature data.

Did you really mean to say that the climate data is full of superficial GUESSES?

“ random error.”

Random error implies equally more and less, i.e. data surrounding a central value.

Why is it appropriate to assume that systematic bias in LIG’s or PTR’s show negative and positive calibration drift in equal amounts over time?

It would be far more appropriate to assume that the systematic error is a skewed distribution and not a random distribution that cancels equal amounts of positive and negative data.

There is a reason why Taylor and Bevington both assert that systematic bias cannot be easily studied using statistical analysis – IT’S BECAUSE IT IS *NOT* RANDOM. It’s not even random across a group of measuring devices! Even worse it is part of the GREAT UNKNOWN known as “measurement uncertainty”.

Remember, even random distributions don’t have to have equal components on each side of the average. Otherwise we wouldn’t need statistical descriptors like kurtosis and skewness.

“All you need to do is to introduce such an error into GAT trends and perform the Monte Carlo simulation of trends “

Here we go with a monte carlo simulation again – USING RANDOM NUMBERS which cancel instead of real world data points with measurement uncertainties!

Your entire clam was invalidated by Hubbard and Lin when they found that adjustments have to be done on a station-by-station basis and need to be determined by comparison with a calibrated device. If the systematic bias was insignificant there would be 1. no need for adjustments to account for them and 2. there would be no need for a calibrated instrument to develop the adjustment value.

Standard error of a trend is nothing more than a metric for how well the trend line fits the data points. If those data points are given as “stated values” only, with no associated measurement uncertainty for each then the trend line is garbage, pure, unadulterated garbage. It simply doesn’t matter how well the trend line fits the stated values unless it is assumed that the stated values are 100% accurate.

“…instead of real world data points with measurement uncertainties!”

AGAIN, with the anodyne objection that you can make of any engineering or scientific evaluation. In the “real world”, we judge whether or not these Bigfoot individual data “associated measurement uncertainties”, could possibly change outcomes qualitatively. In this case, hell no.

GISS annual surface temps come with 2 sigma uncertainties and complete drill down into their derivation. Let’s both download 1979-latest data, trend it with expected values, and then bootstrap random samples of it with the the provided 2 sigma intervals. Then we can see how much the annual data point uncertainties increase the trend standard errors. Here are the uncertainties and the code for their derivation.

Spoiler alert folks. He will deflect and boing around to his boards…

https://data.giss.nasa.gov/gistemp/uncertainty/

Time’z up. Pencil’s down.

1979-2018 trend: 1.74 +/- 0.13 degC/century, using expected values

Now:

Use the provided confidence intervals to calc standard deviations, randomly sample the resulting probability distributions, and then calculate as before.

Do it over and over, 1300 times.

Find the average standard trend variance of the 1300 repeats.

Find the resulting standard trend error.

1979-2018 trend: 1.74 +/- 0.16 degC/century

Yes, I broke a rule of sig figs. Otherwise I couldn’t have shown you any increase in the standard trend. Not worth it to plot, because the C.I. curves would overlay…

What’d you get, Tim?

You ran away from the battery problem, blob.

Not surprised.

Those are examples of errors that are handled via pairwise homogenization. I’m not saying PHA is going to handle it perfectly. It certainly won’t. But it’s not the case that these types of things are ignored.

Here’s the problem blob ran away from, maybe you can hep him out:

You have a battery, and a digital multimeter.

What is the true value of the open-circuit voltage of the battery?