By Christopher Monckton of Brenchley

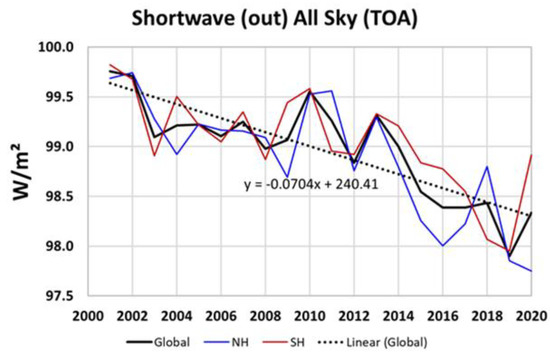

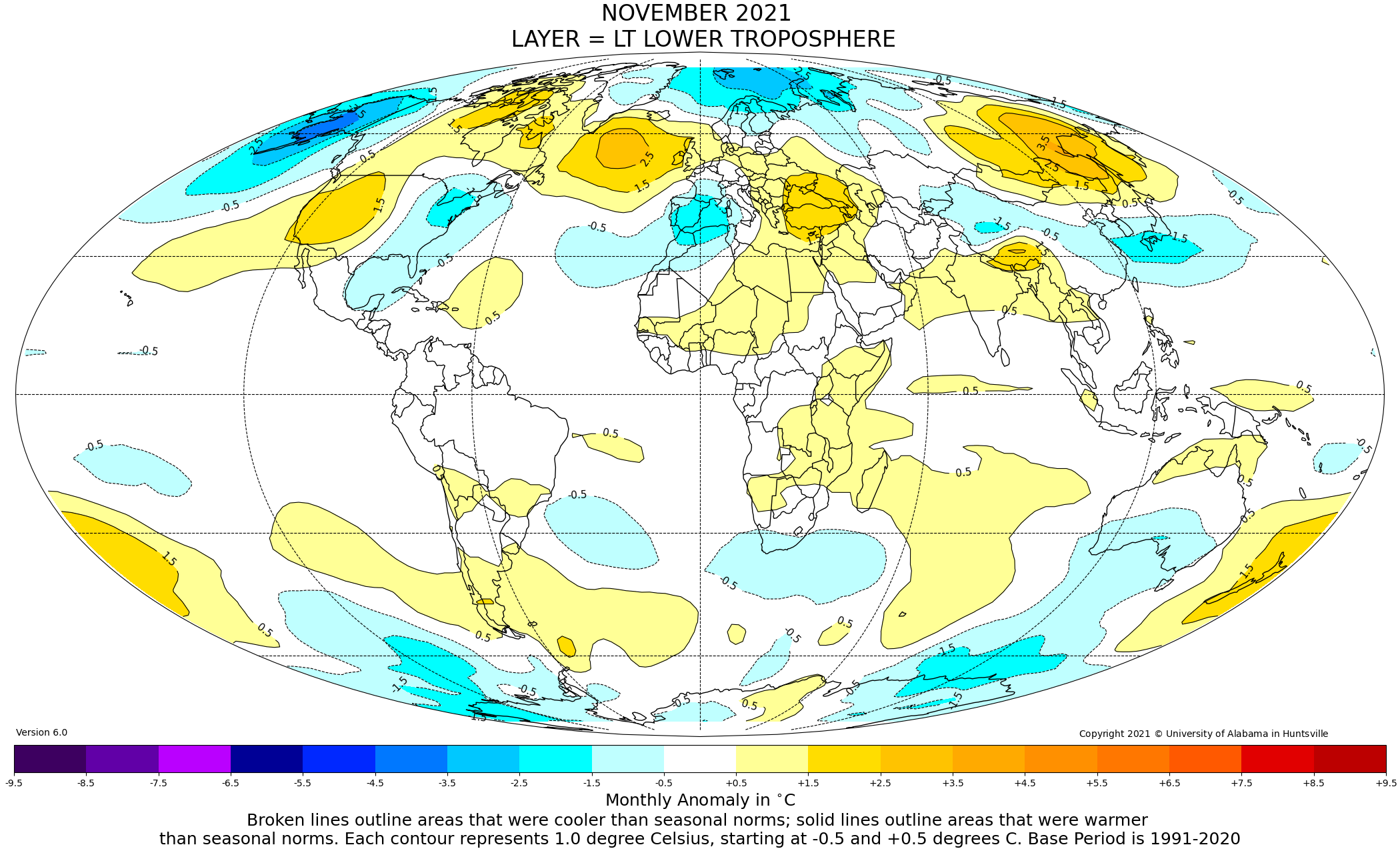

On the UAH data, there has been no global warming at all for very nearly seven years, since January 2015. The New Pause has lengthened by three months, thanks to what may prove to be a small double-dip La Niña.

On the HadCRUT4 data, there has been no global warming for close to eight years, since March 2014. That period can be expected to lengthen once the HadCRUT data are updated – the “University” of East Anglia is slower at maintaining the data these days than it used to be.

Last month I wrote that Pat Frank’s paper of 2019 demonstrating by standard statistical methods that data uncertainties make accurate prediction of global warming impossible was perhaps the most important ever to have been published on the climate-change question in the learned journals.

This remark prompted the coven of lavishly-paid trolls who infest this and other scientifically skeptical websites to attempt to attack Pat Frank and his paper. With great patience and still greater authority, Pat – supported by some doughty WUWT regulars – slapped the whining trolls down. The discussion was among the longest threads to appear at WUWT.

It is indeed impossible for climatologists accurately to predict global warming, not only because – as Pat’s paper definitively shows – the underlying data are so very uncertain but also because climatologists err by adding the large emission-temperature feedback response to, and miscounting it as though it were part of, the actually minuscule feedback response to direct warming forced by greenhouse gases.

In 1850, in round numbers, the 287 K equilibrium global mean surface temperature comprised 255 K reference sensitivity to solar irradiance net of albedo (the emission or sunshine temperature); 8 K direct warming forced by greenhouse gases; and 24 K total feedback response.

Paper after paper in the climatological journals (see e.g. Lacis et al. 2010) makes the erroneous assumption that the 8 K reference sensitivity directly forced by preindustrial noncondensing greenhouse gases generated the entire 24 K feedback response in 1850 and that, therefore, the 1 K direct warming by doubled CO2 would engender equilibrium doubled-CO2 sensitivity (ECS) of around 4 K.

It is on that strikingly naïve miscalculation, leading to the conclusion that ECS will necessarily be large, that the current pandemic of panic about the imagined “climate emergency” is unsoundly founded.

The error is enormous. For the 255 K emission or sunshine temperature accounted for 97% of the 255 + 8 = 263 K pre-feedback warming (or reference sensitivity) in 1850. Therefore, that year, 97% of the 24 K total feedback response – i.e., 23.3 K – was feedback response to the 255 K sunshine temperature, and only 0.7 K was feedback response to the 8 K reference sensitivity forced by preindustrial noncondensing greenhouse gases.

Therefore, if the feedback regime as it stood in 1850 were to persist today (and there is good reason to suppose that it does persist, for the climate is near-perfectly thermostatic), the system-gain factor, the ratio of equilibrium to reference temperature, would not be 32 / 8 = 4, as climatology has hitherto assumed, but much closer to (255 + 32) / (255 + 8) = 1.09. One must include the 255 K sunshine temperature in the numerator and the denominator, but climatology leaves it out.

Thus, for reference doubled-CO2 sensitivity of 1.05 K, ECS would not be 4 x 1.05 = 4.2 K, as climatology imagines (Sir John Houghton of the IPCC once wrote to me to say that apportionment of the 32 K natural greenhouse effect was why large ECS was predicted), but more like 1.09 x 1.05 = 1.1 K.
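The arithmetic in the preceding paragraphs can be checked with a short Python sketch. It uses only the round numbers quoted in the text; the variable names are illustrative and not taken from any published code.

```python
# Round-number inputs quoted in the text for 1850 (all in kelvin).
EMISSION_TEMP = 255.0      # emission ("sunshine") temperature
GHG_REFERENCE = 8.0        # direct warming forced by noncondensing greenhouse gases
FEEDBACK_RESPONSE = 24.0   # total feedback response
REF_2XCO2 = 1.05           # reference doubled-CO2 sensitivity

reference_temp = EMISSION_TEMP + GHG_REFERENCE          # 263 K
equilibrium_temp = reference_temp + FEEDBACK_RESPONSE   # 287 K

# Apportion the feedback response in proportion to each reference term.
sunshine_share = EMISSION_TEMP / reference_temp
feedback_to_sunshine = sunshine_share * FEEDBACK_RESPONSE
feedback_to_ghg = FEEDBACK_RESPONSE - feedback_to_sunshine

# System-gain factor computed the two ways the text contrasts.
gain_excluding_sunshine = (GHG_REFERENCE + FEEDBACK_RESPONSE) / GHG_REFERENCE
gain_including_sunshine = equilibrium_temp / reference_temp

ecs_excluding = gain_excluding_sunshine * REF_2XCO2
ecs_including = gain_including_sunshine * REF_2XCO2

print(f"share of reference temperature from sunshine: {sunshine_share:.1%}")
print(f"feedback response to sunshine temperature:    {feedback_to_sunshine:.1f} K")
print(f"feedback response to greenhouse gases:        {feedback_to_ghg:.1f} K")
print(f"gain factor, sunshine excluded / included:    "
      f"{gain_excluding_sunshine:.2f} / {gain_including_sunshine:.2f}")
print(f"ECS, sunshine excluded / included:            "
      f"{ecs_excluding:.1f} K / {ecs_including:.1f} K")
```

The sketch only reproduces the apportionment described above; it says nothing about whether the 1850 feedback regime persists today.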

However, if there were an increase of just 1% (from 1.09 to 1.1) in the system-gain factor today compared with 1850, which is possible though not at all likely, ECS by climatology’s erroneous method would still be 4.2 K, but by the corrected method that 1% increase would imply a 300% increase in ECS from 1.1 K to 1.1 (263 + 1.05) – 287 = 4.5 K.
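The sensitivity of ECS to the system-gain factor can be explored with a small Python sketch. It evaluates the expression in the paragraph above, gain × (263 + 1.05) – 287, for a few gain values; the function name and the choice of test values are assumptions made for illustration.

```python
REFERENCE_1850 = 263.0     # K, emission temperature plus greenhouse-gas reference sensitivity
EQUILIBRIUM_1850 = 287.0   # K, equilibrium temperature in 1850
REF_2XCO2 = 1.05           # K, reference doubled-CO2 sensitivity


def ecs_from_gain(gain):
    """ECS implied by applying `gain` to the reference temperature after doubled CO2."""
    return gain * (REFERENCE_1850 + REF_2XCO2) - EQUILIBRIUM_1850


base_gain = EQUILIBRIUM_1850 / REFERENCE_1850   # about 1.09
for label, gain in [
    ("1850 gain (287/263)", base_gain),
    ("1850 gain plus 1%", 1.01 * base_gain),
    ("rounded gain of 1.10", 1.10),
]:
    print(f"{label:22s} gain = {gain:.4f}  ECS = {ecs_from_gain(gain):.2f} K")
```

Evaluated this way, the unrounded 1850 gain gives about 1.1 K, a 1% increase on it gives about 4.0 K, and the rounded value of 1.10 gives about 3.5 K. None of these reproduces the quoted 4.5 K exactly, but all show the same qualitative point: a change of about 1% in the gain factor changes ECS severalfold.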

And that is why it is quite impossible to predict global warming accurately, whether with or without a billion-dollar computer model. Since a 1% increase in the system-gain factor would lead to a 300% increase in ECS from 1.1 K to 4.5 K, and since not one of the dozens of feedback responses in the climate can be directly measured or reliably estimated to any useful degree of precision (and certainly not within 1%), the derivation of climate sensitivity is – just as Pat Frank’s paper says it is – pure guesswork.

And that is why these long Pauses in global temperature have become ever more important. They give us a far better indication of the true likely rate of global warming than any of the costly but ineffectual and inaccurate predictions made by climatologists. And they show that global warming is far smaller and slower than had originally been predicted.

As Dr Benny Peiser of the splendid Global Warming Policy Foundation has said in his recent lecture to the Climate Intelligence Group (available on YouTube), there is a growing disconnect between the shrieking extremism of the climate Communists, on the one hand, and the growing caution of populations such as the Swiss, on the other, who have voted down a proposal to cripple the national economy and Save The Planet on the sensible and scientifically-justifiable ground that the cost will exceed any legitimately-conceivable benefit.

By now, most voters have seen for themselves that The Planet, far from being at risk from warmer weather worldwide, is benefiting therefrom. There is no need to do anything at all about global warming except to enjoy it.

Now that it is clear beyond any scintilla of doubt that official predictions of global warming are even less reliable than consulting palms, tea-leaves, tarot cards, witch-doctors, shamans, computers, national academies of sciences, schoolchildren or animal entrails, the case for continuing to close down major Western industries one by one, transferring their jobs and profits to Communist-run Russia and China, vanishes away.

The global warming scare is over. Will someone tell the lackwit scientific illiterates who currently govern the once-free West, against which the embittered enemies of democracy and liberty have selectively, malevolently and profitably targeted the climate fraud?

“There is no need to do anything at all about global warming except to enjoy it.”

And indeed I would were it to appear…

Thank you Lord Monckton.

Yep. I was hoping for a nice warm retirement. Maybe I’ll buy new skis instead.

In my area, most of the forecasters said that the high temperature record for the date set in 1885 would be challenged. In actuality, yesterday’s high fell short of the record by 3F.

Nothing to see here, and the warm front is being replaced by a cold front, so enjoying the warmth was nice while it lasted.

Yes, enjoy the heat dome and record 40C plus temps, enjoy the 1 in 1,000 year deluges sweeping away homes and drowning the subways and cutting off your major cities, enjoy the 100 mph winds cutting off your electricity for days.

Do you imagine the examples of weather events you have highlighted are something unique to the 21st century then, griff?

Yea, like a 1 in 100 year flood now happens every 3 months? griff depends on fellow trolls not bothering to fact-check his manifesto.

There are likely over 7 billion once in a lifetime events happening every single day.

you and griffter lack a basic understanding of statistics…

a 1 in 100 year flood in a given location occurs roughly once every 100 years

a 1 in 100 year flood across tens of thousands of different locations across the earth may very well occur once every few months

Menace, there is nothing in weather or statistics that says you can’t have three or more 100yr floods, droughts, etc. within a year at one location. You may or may not thereafter see another for several hundred years. Your understanding of statistics (and weather) is that of the innumerate majority.

Ron Long is a geologist and you can be sure he understands both stats and weather along with a heck of a lot more.

That was my understanding as well, Gary. Most people just don’t get statistics … statistically speaking, of course.

very good!

I suspect very few rivers are so well studied that it’s precisely known what the “once per century” flood might look like. But, a study of the flood plain should suffice for guidance as to what land should not be developed and if so, what sort of measures can be taken to minimize the risk. Hardly ever done of course. Instead, often wetlands in floodplains are filled in and levees are built pushing the flood downstream. Seems like more of an engineering problem, not one of man-caused climate catastrophe – unless you consider bad engineering to be man-caused.

@menace…. a 100 year flood has a 1% chance of occurring each and every year. It is possible to have more than one 100 year flood in a given year. Read up on recurrence intervals in any good fluvial hydraulics textbook.
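The recurrence-interval arithmetic under discussion can be sketched in Python. This assumes each year at a site is an independent trial with a 1% chance of a "100 year" flood (a simple binomial model); the function name and the 10,000-site figure are illustrative, and real sites and years are not fully independent.

```python
from math import comb


def prob_at_least(k, years, annual_prob=0.01):
    """Probability of k or more flood years out of `years` independent years at one site."""
    p_fewer = sum(
        comb(years, j) * annual_prob**j * (1 - annual_prob) ** (years - j)
        for j in range(k)
    )
    return 1.0 - p_fewer


# Chance that a given site sees at least one "100 year" flood in a century.
p_one_in_century = prob_at_least(1, 100)

# Chance that a given site sees two or more "100 year" floods in a single decade.
p_two_in_decade = prob_at_least(2, 10)

# Expected number of sites, out of 10,000, with a "100 year" flood in any one year.
expected_per_year_10k_sites = 10_000 * 0.01

print(f"at least one in 100 years at one site: {p_one_in_century:.3f}")
print(f"two or more in 10 years at one site:   {p_two_in_decade:.4f}")
print(f"expected sites per year out of 10,000: {expected_per_year_10k_sites:.0f}")
```

Under these assumptions a single site has only about a 63% chance of seeing its "100 year" flood in any given century, repeat events within a decade are rare but far from impossible, and across many sites such floods are expected somewhere every year.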

Exactly! As another example, proton decay. If it does decay via a positron, the proton’s half-life is constrained to be at least 1.67×10^34 years, many orders of magnitude longer than the current age of the Universe. But that doesn’t stop science from spending millions of dollars on equipment and installations looking for a decay if they look at enough protons at once.

Just no.

Your understanding of statistics is on par with Griff.

At the same location you can have several 1 in 100 floods in the same decade, even the same year.

Or also a 1% chance happening every year.

I suspect the idiots think- if there is a 1 in 100 year flood SOMEWHERE on the planet most years, then that proves there is a problem. After all, such a flood should only happen once per century on the entire planet. Yes, that sounds dumb but all the climatistas that I personally know think at that level.

Rod, Griff is a rabble rouser and not interested in a careful and critical evaluation of various views on climate. He needs to be totally ignored – not even given a down arrow. There are plenty of other contributors that make thoughtful contributions on this site.

Absolutely correct, the more people respond the more he will put forward his stupid observations. Can I suggest that no one responds to him at all in the future, as I believe he writes his endlessly ridiculous comments merely to evoke a response rather than intellectual argument. Let us all ignore him from now on and hope that will make him go away.

Actually, it is useful that nitwits like Griff comment here: for they are a standing advertisement for the ultimate futility of climate Communism.

Christopher,

Well said. And thank you so very much for all your tireless work.

I am confident that eventually sanity will return to the world and science. But sadly I probably won’t live to see it.

Let’s hope that at least we’ve reached peak insanity. Ironically, the one thing that may help to reverse the madness is a sustained period of cooling. It’s ironical because sceptics are familiar with the history of climate – unlike clowns such as Biden and Johnson – and they understand how devastating a significant cooling would be.

So, yes, let’s enjoy this mild warming while it lasts.

Chris

You’re right once again. It’s always useful to learn what’s on the minds of your enemies, regardless how limited they may be, because, in the words of C.S. Lewis, “… those who torment us for our own good will torment us without end for they do so with the approval of their own conscience.”

I’m mindful of once well respected scientists, like Stephen Schneider who cast away professional integrity for “The Cause”.

He claimed to be in a moral dilemma where in fact there is none. He said … “we have to offer up scary scenarios, make simplified, dramatic statements, and make little mention of any doubts we might have.” committing the sin of omission and the fallacy of false dichotomy. All he ever needed to do was to follow his own words … “as scientists we are ethically bound to the scientific method, in effect promising to tell the truth, the whole truth, and nothing but”.

Once again I thank you for your integrity and hard work. If we cannot trust those who have the knowledge, where does that leave us?

Are you here just for everyone else’s entertainment? It certainly seems that way.

Griffie-poo, pray tell us when, if ever, these weather disasters did NOT occur. You can’t, of course.

You do know that the annual global death toll due to weather has been declining since the beginning of the 20th Century?

Does this also mean winter temps in England not falling below 10C, keeping at 12 to 15C, say? This will be important when we can no longer heat our homes with gas central heating.

griff, you do know you’re going to die?

Probably sooner rather than later, you’re that wound-up.

That fact is used to create fear to manipulate us, and life in many ways is a struggle to come to terms with our mortality.

I gave up on mortality around Freshman year in high school. I’d done a bit of reading and I came to the conclusion just to ignore it. I believe in God because I can’t see any other way to think about the Universe. There’s really nothing to be gained by thinking too much about mortality.

It’s the truly egalitarian life.

Easy, griff, you are pointing to the alternative:

Good luck with your climate way-of-life changes! All these weather events during the warming pause, so it’s not related to warming, but to natural variability you just have discovered.

I see griff is still trying to convince people that prior to CO2 there was no such thing as bad weather.

Prior to Adam and Eve eating the Forbidden Fruit, the weather was constant and always like a nice day in Tahiti, and there was only enough CO2 in the air to keep the existing plants in the Garden of Eden alive. It has all been down hill since then! Even the snake has to live in the grass. Alas, we are doomed! [Imaginative sarcasm primarily for the benefit of ‘griffy.’]

…. and the idiot lives in England too.

I got out on parole after 23 years. I’m thinking of moving to Costa Rica to stay warm. This Bay Area sh!t just ain’t cutting it.

Derp

Please show your math.

Ditto

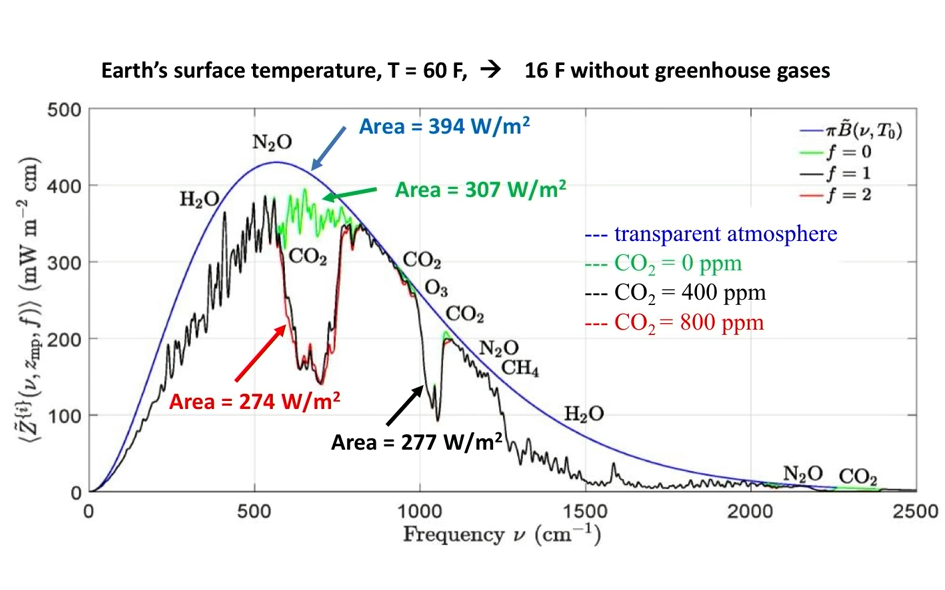

Maybe this will help:

Which means that in the approximately 20 thousand years that modern H. sapiens have lived in Europe, there have been at least 20 such deluges. Nothing new! And, inasmuch as most cultures have legends of greater floods, we might well be in store for similar. But, it is to be expected, not the result of slight warming.

Winds were more frequent and much more ferocious at the end of the last glaciation because of the cold ice to the north and the warming bare soil exposed by the retreat of the glaciers.

You seem to be doing your hand-waving fan dance based on what you have experienced during your short life, rather than from the viewpoint of a geologist accustomed to envisioning millions and tens of millions of years. It is no wonder that you think that the sky is falling.

I know many posters know this but the pillock, Griff, is laughing at the people who take the trouble to put him/her/it right on CC etc.

You must understand, this idiot’s Mother has to lean his bed sheets against the wall to crack ’em in order to fold ’em for the wash. He’s also a waste of blog space. Please ignore him – even if you enjoy the sport.

It’s NOT about griffter, every regular here knows he’s been schooled time and time again about his baseless claims yet he persists. It’s about anyone who might be new here so they know that griffter is a despicable liar who parrots discredited BS.

About 8000 years ago, a group of several hundred people in the Himalayas were killed in a hailstorm, leaving them with tennis ball sized holes in their skulls. Weather extremes have always happened from time to time. No evidence they’re increasing now.

Hadn’t heard about that one. got a search to go to?

https://www.indiatoday.in/india/north/story/uttarakhand-roopkund-skeleton-lake-mystery-solved-bones-9th-century-tribesmen-died-of-hail-storms-165083-2013-05-31

You really need to stop posting your lack of knowledge… Take some time off, and dedicate yourself to getting a real education!

In contemporary England? Surely you jest. England stopped doing education several decades ago.

The stench of desperation in these words!

Sorry old boy, your threatened climate “attacks” have all happened now and then for over 3000 years. There were several pueblo cultures in the current Arizona/New Mexico and they lived there for centuries and prospered to the degree possible until a super drought around 1000CE and Inca invaders broke the whole area apart and died out.

Those droughts and other climate effects have returned many times over the years.

The last one was more or less in the 1930’s, further north and east. 100mph winds occur regularly, particularly in the mountainous states.

There is no need to look for “human caused” climate change. The natural changes seem to be plenty powerful and it is difficult to find any “climate changes”.

Keep in mind, the UN set up the United Nations Environment Program SPECIFICALLY to evaluate “HUMAN-CAUSED” environmental changes. No science need apply. Apparently, despite all the history, only humans can change the climate. Forget the Sun, currently in a major low point causing many effects on earth, earthquakes, fickle winds(mostly caused by the sun) and waaay more.

all of this shit has happened many times before down thru all written history.

It is nothing new, nothing catastrophic, & it sure isn’t unprecedented. No need to tell you to do some research because you won’t & besides you already know that all you are doing is spreading bullshit & lying through your teeth like a flim flam huckster.

Climate Communists such as Griff are not, perhaps, aware that one would expect hundreds of 1-in-1000-year events every year, because there are so many micro-climates and so many possible weather records. They are also unaware that, particularly in the extratropics, generally warmer weather is more likely than not to lead to fewer extreme-weather events overall, which is why even the grim-faced Kommissars of the Brussels tyranny-by-clerk have found that even with 5.4 K warming between now and 2080 there would be 94,000 more living Europeans by that year than if there were no global warming between now and then.

I saw that movie too.

Now THAT’s Cognitive Dissonance if ever I saw it.

Exactly what I keep telling myself HotScot. Griff needs to take a course in human psychology to understand what is going on in his head.

I’d bet he’s getting a $1 per reply he gets, or some such. He doesn’t even make usable claims.

Global cooling trend intact since February 2016.

It’s a pleasure! On balance, one would expect global warming to continue, but these long Pauses are a visually simple demonstration that the rate of warming is a great deal less than had originally been predicted.

There has been no warming but, the science, man. The science. The science of consensus says that we’ve only got a few years left on the clock before earth becomes uninhabitable. Doctor of Earthican Science, Joe Biden says we have only eight years left to act before doomsday. DOOMSDAY!

Teh siense, you meant?

Isn’t it interesting that the ones least able to fully understand ‘Science’ are the ones proclaiming it the loudest.

Yes, that is always the way. Ignorance is always demonstrated by those that lack training or rational thought.

On close inspection, Tom, it is not the science they are proclaiming. The science is untenable. So, they proclaim their virtuousness, and your/mine ignorance of the necessity to not question the science, in order to save the world from mankind’s industrial nature.

Literally! I mean it. Not figuratively. You know the thing.

More Goreball warning, as the Earth recovers from the devastating LIA. Record food crops from the delicious extra CO2.

Viscount Monckton,

Thanks for your article which I enjoyed.

Trolls make use of minor and debatable points as distractions so I write to suggest a minor amendment to your article. Please note that this is a genuine attempt to be helpful and is not merely nit-picking because, in addition to preempting troll comments, my suggested minor amendment emphasises the importance of natural climate variability which is the purpose of your study of the ‘new pause’.

You say,

I write to suggest it would be more correct to say,

‘Therefore, if the feedback regime as it stood in 1850 were to persist today (and there is good reason to suppose that it does persist, for the climate is probably near-perfectly thermostatic), …’

This is because spatial redistribution of heat across the Earth’s surface (e.g. as a result of variation to ocean currents) can alter the global average temperature (GAT). The change to GAT occurs because radiative heat loss is proportional to the fourth power of the temperature of an emitting surface and temperature varies e.g. with latitude. So, GAT changes to maintain radiative balance when heat is transferred from a hot region to a colder region. Calculations independently conducted by several people (including me and more notably Richard Lindzen) indicate the effect is such that spatial redistribution of heat across the Earth’s surface may have been responsible for the entire change of GAT thought to have happened since before the industrial revolution.
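The fourth-power effect described above can be illustrated with a toy Python sketch. It assumes two equal-area regions radiating as black bodies, with total emission held fixed while heat is spread evenly between them; the temperatures (300 K and 260 K) and the function name are invented for illustration and are not drawn from any dataset or from the calculations the comment mentions.

```python
def uniform_equivalent(temps_k):
    """Single temperature that emits the same total flux as equal-area regions at temps_k.

    Emission per unit area is proportional to T**4, so the Stefan-Boltzmann
    constant and the emissivity cancel when comparing the two states.
    """
    mean_fourth_power = sum(t**4 for t in temps_k) / len(temps_k)
    return mean_fourth_power ** 0.25


regions = [300.0, 260.0]   # hypothetical "hot" and "cold" region temperatures, in kelvin

mean_before = sum(regions) / len(regions)   # arithmetic mean with the contrast in place
mean_after = uniform_equivalent(regions)    # mean once heat is spread evenly

print(f"mean temperature with contrast: {mean_before:.2f} K")
print(f"mean temperature when uniform:  {mean_after:.2f} K")
print(f"change in mean at equal total emission: {mean_after - mean_before:+.2f} K")
```

In this toy case the arithmetic mean rises by about 2 K with no change in total outgoing radiation, which is the mechanism the comment describes. The size of any real-world effect depends on how much redistribution actually occurs.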

Richard

You make a fine comment to go with CM’s article. Your assertion is one reason why using averages for the GAT makes no sense. An average only makes sense if the actual radiation occurs in that fashion. Otherwise, part of the earth (the equator) receives a predominant amount of the radiation and it reduces away from that point. Since temp is based on an exponent of 4, the temps will also vary based on this factor. Simple averages and “linear” regression, homogenization, etc. simply cannot follow the temps properly.

Richard Courtney asks me to add a second qualifier, “probably”, to the first, “near”, in the sentence “The climate is near-perfectly thermostatic”. However, Jouzel et al. (2007), reconstructing the past 810,000 years’ temperatures in Antarctica by cryostratigraphy, concluded that in all that time (after allowing for polar amplification) global temperatures varied by little more than 3 K either side of the period mean. The climate is, therefore, near-perfectly thermostatic. Compensating influences such as Eschenbach variability in tropical afternoon convection keep the temperature within quite narrow bounds.

And owing to the fact that the vast majority, 99.9% of the earth’s surface is constantly exposed to deep space at near absolute zero and the Sun takes up such a small heat source in area it is remarkable that it does keep such a good control of temperature. I put that control largely down to clouds, especially at night time in winter.

Of the numerous thermostatic processes in climate, the vast heat capacity of the ocean is probably the most influential.

Most voters believe that climate change is real and dangerous because they are force fed a constant diet of media alarmism, supported by dim politicians and green activists. It is so uncool [no pun intended] to be a climate heretic when the religious orthodoxy promotes ideological purity and punishes rational thinking.

Most people are uncomfortable living outside the orthodoxy especially when one is exposed to a constant barrage of dogma reinforcing it every minute. The media, now acting as the public relations branch of “progressive” governments, tailor their reporting to suit.

“…the “University” of East Anglia is slower at maintaining the data these days than it used to be…”

Hey, they’ve been really busy. Those “adjustments” don’t do themselves, you know.

UEA is now redundant. Stock markets do the predictions – FTSE 100 is currently running at 2.7C and needs to divest itself of commodities, oil and gas to reach the magic 1.5C. I don’t know where they imagine the materials will come from for their electric cars, heat pumps, wind farms, mobile phones, double glazing etc. No doubt China will step in to save the day just before we run into the buffers.

Wind turbines are self replicating organisms dontchaknow. That’s why the electricity they produce is so cheap.

It is only “cheap” if you live in never-ending wind land, otherwise you have to keep a gas-fired power plant idling in the background, MW for MW.

Truth be known, UEA is now only half-fast compared to what they used to be.

UAH is a multiply adjusted proxy measurement of the Troposphere, which doesn’t even agree with similar proxy Tropospheric measurements (why do these pages never mention RSS these days?).

I think we have to take it as at least an outlier and quite probably not representative of what’s happening.

Predictable attempt to discredit the most reputable and accurate measuring system we have.

The irony is that if UAH cannot be relied on then nor can any other system of measurement.

Would be interested to know the trend from the old pause at its maximum including the new pause.

I imagine it would be Roy’s 0.14 trend, but it might be lower.

So 1997? to 2021?

Depends on when exactly you think the old pause started. Cherry picking the lowest and longest trend prior to 1998, the trend from April 1997 is 1.1°C / decade.

Starting just after the El Niño the trend from November 1998 is 1.6°C / decade.

Did you enjoy Antarctica’s second coldest winter on record? I know I did.

How was the UK’s 3rd warmest autumn for you, fret? Did you manage to find your usual cloud?

What will you make of the situation if next year it is the 4th warmest Autumn? Or even if it is tied with this year? Do you really believe that the ranking has any significance when depending on hundredths of a degree to discriminate?

Did you not learn anything, ToeFungalNail? When the difference in temperature is less than the measurement error any ranking of the warmest month, year, or season is bogus. Why do you persist in (fecklessly) trying to mislead?

-51 °C central Greenland last week, coldest I have ever seen that!

I assume you mean 0.11 and 0.16 C/decade

the old pause started prior to 1997 El Nino spike, there was never pause starting from post-spike… indeed it is that large spike that made the long statistical “pause” possible

Yes, sorry. Good catch. I was thinking of the per century values.

I’m not sure if anyone sees the irony of claiming that a pause only exists prior to a temperature spike and vanishes if you start after the spike.

Bellman,

Your comment is lame and demonstrates you do not understand the calculation conducted by Viscount Monckton. I explain as follows.

(a)

The “start” of the assessed pause is now

and

(b) the length of the pause is the time back from now until a trend is observed to exist at 90% confidence within the assessed time series of global average temperature (GAT).

The resulting “pause” is the length of time before now when there was no discernible trend according to the assessed time series of GAT.

I see no “irony” in the “pause” although its name could be challenged. But I do see much gall in your comment which attempts to criticise the calculation while displaying your ignorance of the calculation, its meaning, and its indication.

Richard

I’ve already answered this a couple of times, but no, Monckton’s pause is not based on confidence intervals, nor have I ever seen him claim that it starts at the end. Here’s Monckton’s definition

If you think you understand how the pause is calculated better than me, feel free to calculate when the pause should have started or ended this month and share your results, along with the workings.

For my part, I just have a function in R that calculates the trend from each start date to the current date, and then I just look back to see the earliest negative value. I could get the earliest date programmatically, but it’s useful to have a list of all possible trends, just to see how much difference a small change in the start date can make.
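The procedure described in the comment above can be sketched in Python (the commenter's own function is in R and is not shown, so this is an independent illustration, not that code). It fits an ordinary least-squares trend from each candidate start month to the latest month and reports the earliest start whose trend is not positive. The function names, the `min_len` parameter and the demo series are all hypothetical; the demo values are invented and are not UAH anomalies.

```python
def ols_slope(values):
    """Least-squares slope of `values` against their index (units per time step)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    return sxy / sxx


def trends_to_latest(anomalies, min_len=3):
    """Trend from every candidate start index to the end of the series."""
    last_start = len(anomalies) - min_len
    return [ols_slope(anomalies[start:]) for start in range(last_start + 1)]


def pause_start(anomalies, min_len=3):
    """Earliest start index whose trend to the latest value is zero or negative."""
    for start, slope in enumerate(trends_to_latest(anomalies, min_len)):
        if slope <= 0:
            return start
    return None   # every candidate period shows a positive trend


# Invented series: steady rise, a one-step spike, then a flat stretch.
demo = [0.0, 0.1, 0.2, 0.3, 0.6, 0.3, 0.3, 0.3, 0.3, 0.3]

for start, slope in enumerate(trends_to_latest(demo)):
    print(f"start index {start}: trend {slope:+.4f} per step")
print("pause starts at index", pause_start(demo))
```

In the demo the spike sits at index 4, yet the earliest non-positive trend begins at index 2. That shows how a spike near the start of the window can pull the calculated start date earlier, and why listing every trend, rather than just the earliest date, is informative.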

Bellman,

I object to you blaming me for your inability to read.

I said,

(a)

The “start” of the assessed pause is now

and

(b) the length of the pause is the time back from now until a trend is observed to exist at 90% confidence within the assessed time series of global average temperature (GAT).

You quote Viscount Monckton as having said,

As usual, the Pause is defined as the longest period, up to the most recent month for which data are available, during which the linear-regression trend on the monthly global mean lower-troposphere temperature anomalies shows no increase.

The only difference between those two explanations is that

I state the confidence (90%) that is accepted as showing no change (or ” no increase”) normally applied in ‘climate so-called science’

but

the noble Lord assumes an interested reader would know that.

I assume your claim that you cannot read is sophistry intended to evade the need for you to apologise for having posted nonsense (i.e. you don’t want to say sorry for having attempted ‘bull sh** baffles brains’)

Richard

“The only difference between those two explanations is that

I state the confidence (90%) that is accepted as showing no change”

And that’s where your method is different from Lord Monckton’s. And no amount of personal insults will convince me you are right and I’m wrong. I said before, if you want to convince me about your 90% confidence interval approach, actually do the work, show me how that will make January 2015 the start date this month.

Here’s some of my workings, each value represents the trend in degrees per century starting at each month.

The pause starts on January 2015, because that is the earliest month with a zero trend, actually -0.03.

If you wanted to go back as far as possible to find a trend that was significant at the 90% level the pause would be much longer.

“(b) the length of the pause is the time back from now until a trend is observed to exist at 90% confidence within the assessed time series of global average temperature (GAT).”

Please provide a link to where Monckton says that he uses 90% confidence limits.

He doesn’t.

And, what’s more he doesn’t have to, as denizens don’t require it and he ignores all critics with bluster and/or ad hom.

In short he has blown his fuse and this place is the only one he can get traction for his treasured snake-oil-isms.

Forgot:

(the real driving motivation for his activities, so apparent in his spittle-filled language) …..

Accusing all critics of being communists or paid trolls.

Quite, quite pathetic.

And this is the type of science advocate (let’s not forget with diplomas in journalism and the Classics) who you support to keep your distorted view of the world and its climate scientists away from reality.

Paid climate Communists such as Bellman will make up any statistic to support the Party Line and damage the hated free West. The UAH global mean lower-troposphere temperature trend from April 1997 to November 2021 was 0.1 C/decade, a rate of warming that is harmless, net-beneficial and consistent with correction of climatology’s elementary control-theoretic error that led to this ridiculous scare in the first place.

Ad hominems aside I’d be grateful if you could point out where you think my statistics are wrong or “made up”.

Given that there is currently a lively discussion going on between me and Richard S Courtney about how you define the pause this would be a perfect opportunity to shed some light on the subject – given you are the only one who knows for sure. I say it is based on the longest period with a non-positive trend, whilst Courtney says it is the furthest you can go back until you see a significant trend.

It would be really easy to say Courtney is correct and here’s why, or no sorry Courtney, much as it pains me to say it, the “paid climate communist” is right on this one.

The furtively pseudonymous “Bellman” asks me to say where what it optimistically calls its “statistics” are wrong. It stated, falsely, that the world was warming at 1.1 C/decade, when the true value for the relevant period was 0.1 C/decade.

It was an honest mistake, for which I apologized several days ago when it was pointed out

https://wattsupwiththat.com/2021/12/02/the-new-pause-lengthens-by-a-hefty-three-months/#comment-3402617

Maybe, if you had just asked if it was correct, instead of making snide innuendos, I could have set the record straight to you as well. Unfortunately the comment system here doesn’t allow you to make corrections after a short time, and any comment I add will appear far below the original mistake.

For the record, here’s what the comment should have said

As an aside, I like the fact that Monckton is accusing me of making up statistics to “support the Party Line and damage the hated free West”, when I’m actually using the statistics to support his start date for the pause.

Unclear what you had in mind when you wrote the phrase “the old pause at its maximum” here …

… I’ll take it as “(the start of) the longest zero-trend period in UAH (V6)”, which is May 1997 to December 2015.

I haven’t updated my spreadsheet with the UAH value for November yet (I’ll get right on that, promise !), but the values for “May 1997 to latest available value” are included in the “quick and dirty” graph I came up with below.

Notes

1) UAH can indeed be considered as “an outlier” (along with HadCRUT4).

2) UAH trend since (May) 1997 is between 1.1 and 1.2 (°C per century), so your 1.4 “guesstimate” wasn’t that far off.

HadCRUT4 trend is approximately 1.45.

The other “surface (GMST) + satellite (LT)” dataset trends are all in the range 1.7 to 2.15.

3) This graph counts as “interesting”, to me at least, but people shouldn’t try to “conclude” anything serious from it !

It is important to remember that the UAH lower troposphere temperature is a complex convolution of the 0-10km temperature profile which decreases exponentially with altitude; it is not the air temperature at the surface.

And the GHE occurs at altitude in the Troposphere.

Ergo the IPCC tropospheric hotspot.

Stephen, actually, GISS and UAH used to be very closely in agreement until Karl (from which I coined the term “Karlization of temperatures”) ‘adjusted’ us out of the Dreaded Pause™ in 2015 on the eve of his retirement. Mearns, who does GISS’s satellite Ts, then responded with his complementary adjustments. It bears mentioning that Roy Spencer invented the method and was commended for it by NASA at the time.

Karl added 0.12 C to the ARGO data to “be consistent with the [lousy] ship engine intake data.” This adds an independent warming trend over time as more and more ARGO floats come into the datasets, replacing the use of engine intakes in ongoing data collection.

Karl also used Night Marine Air Temperatures (NMAT) to adjust SSTs. Subsequent collected data have shown NMAT diverging from SST significantly. Somebody should readdress his “work.”

UAH is an honest broker that both sides can agree upon.

Yep but it is not a measurement of the earths surface, so useful but not the full picture.

Cooling of the earth shows up 2-3 months later in the lower troposphere.

Exactly Simon, we need to include the temps inside a volcano to get the full picture.

You might think that but I am for going with all the recognised data sets to get the full picture.

Just like Russia colluuuusion 😉

Duh… the one trick pony is now a no trick ass.

Are you calling yourself an ass?

Russia colluuuusion indeed. Along with your Xenophobia..that one always cracks me up.

No quarrel with that, but realize the limitations of each dataset. The “Karlized” set should not be used for scientific analyses. Also, RSS needs to explain its refusal to dump obviously bad data. Additionally, their method for estimating drift is model based as opposed to UAH’s empirical method.

Why would you measure the earths surface? Asphalt can get up to enormous temperatures not representative of the air on a sunny day. The entire point of measuring the lower troposphere is that it won’t have UHI.

That’s how they originally sold the satellites to Congress.

“Why would you measure the earths surface? “

Umm because we live here, or at least I do.

Ummm, no. You live in the lower part of the atmosphere, not in the surface! The surface is the ocean and land, i.e. the “solid” part of the planet. The atmosphere is the gaseous part of the planet. The atmosphere is an insulator and it has a gradient from the boundary with the surface and toward space. The surface has a gradient in two directions, downward and upward into the atmosphere.

Do you live in a rural location or in a city? It makes a big difference in measured temperatures.

Simon: Happily for you, UAH does agree with the direct measurements of balloon sondes. Agreement with independent measures is, of course, the highest order of validation. The good fellow who invented it, Dr. Roy Spencer, received a prestigious commendation from NASA back in the days when that meant a lot.

Look I have no issue with UAH, it is just not the complete picture. It has also had a lot of problems going back so anyone who thinks it is the be all and end all is, well, wrong.

And you think any of the lower atmosphere temperature data sets don’t have problems going back? They are probably less reliable because of coverage issues and the methods used to infill.

”Look I have no issue with UAH”……”( I’m just uncomfortable with what it’s showing)”

…. and it is due to natural variability.

When solar magnetic activity is high TSI goes up and warms the land and oceans. When magnetic activity goes down there is an influx of energetic GCRs, which enhances cloud formation. Clouds increase albedo, which should reduce atmospheric warming, but clouds also reduce heat re-radiation back into space.

The balance between the two is an important factor for the atmospheric temperature status, and at specific levels of reduction of solar activity the balance is tilted towards the clouds’ warming effect.

Hence, we find that when global temperature is above average and the amount of ocean evaporation is also above average, during falling solar activity there will be a small increase in the atmospheric temperature.

http://www.vukcevic.co.uk/UAH-SSN.gif

After a prolonged period of time (e.g. a grand solar minimum) the oceans will cool, evaporation will fall and the effect will disappear.

“UAH is an honest broker that both sides can agree upon.”

Yet ever since UAH showed a warmer month one side keeps claiming satellite data including UAH is not very reliable. See carlo, monte’s analysis

https://wattsupwiththat.com/2021/12/02/uah-global-temperature-update-for-november-2021-0-08-deg-c/#comment-3401727

according to him the monthly uncertainty in UAH is at least 1.2°C.

Bellman said: “according to him the monthly uncertainty in UAH is at least 1.2°C.”

Which is odd because both the UAH and RSS groups using wildly different techniques say the uncertainty on monthly global mean TLT temperatures is about 0.2. [1] [2]

Why do you continue to use the word “uncertainty” when you don’t understand what it means?

Comparisons of line regressions against those from radiosondes is NOT an uncertainty analysis.

I use the word uncertainty because we don’t know what the error is for each month, but we do know that the range in which the error lies is ±0.2 (2σ) according to both UAH and RSS.

Note that I have always understood “error” to be the difference between measurement and truth and “uncertainty” to be the range in which the error is likely to exist.

Which is a Fantasy Island number, demonstrating once again that you still don’t understand what uncertainty is.

I compared UAH to RATPAC. The monthly differences fell into a normal distribution with σ = 0.17. This implies an individual uncertainty for each of 2σ = 2*√(0.17^2/2) = 0.24 C, which is consistent with the Christy and Mears publications. Note that this is despite UAH having a +0.135 C/decade trend while RATPAC is +0.212 C/decade, so the differences increase with time due to one or both of these datasets having a systematic time-dependent bias. FWIW the RSS vs RATPAC comparison implies an uncertainty of 2σ = 0.18 C. It is lower because the differences do not increase with time like what happens with UAH. The data is inconsistent with your hypothesis that the uncertainty is ±1.2 C by a significant margin.
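The arithmetic in that comment can be checked with a short sketch. It rests on an assumption the comment leaves implicit: that the two datasets have independent errors of equal size, so the variance of the differences is twice the variance of each. The function name `per_dataset_sigma` is hypothetical.

```python
import math


def per_dataset_sigma(sigma_diff):
    """SD of each dataset's error, assuming two independent datasets with
    equal error variance, so that var(difference) = 2 * var(each)."""
    return math.sqrt(sigma_diff ** 2 / 2)


sigma_diff = 0.17  # SD of the monthly UAH minus RATPAC differences, as quoted
two_sigma = 2 * per_dataset_sigma(sigma_diff)
print(round(two_sigma, 2))  # 0.24
```

If one dataset were much noisier than the other, the equal split would not hold and this figure would overstate one uncertainty and understate the other.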

Tell us again how temps recorded in integers can be averaged to obtain 1/100th of a degree. The uncertainty up to at least 1980 was a minimum of ±0.5 degrees. It is a matter of resolution of the instruments used and averaging simply cannot reduce that uncertainty.

As Carlo, Monte says, “Comparisons of line regressions against those from radiosondes is NOT an uncertainty analysis.”

Linear regression of any kind ignores cyclical phenomena from 11 year sunspots, to 60 years cycles of ocean currents, to orbital variation.

Even 30 years for “climate change” ignores the true length of time for climate to truly change. Tell what areas have become deserts in the 30 to 60 years. Have any temperate boundaries changed? Have savannahs enlarged or shrunk due to temperature? Where in the tropics has become unbearable due to temperature increases?

Uncertainty: parameter, associated with the result of a measurement, that characterizes the dispersion of the values that could reasonably be attributed to the measurand.

If the uncertainty of a monthly UAH measurement is 1.2°C, then say if the measured value is 0.1°C, you are saying it’s reasonable to say the actual anomaly for that month could be between -1.1 and +1.3. If it’s reasonable to say this, you would have to assume that at least some of the hundreds of measured values differ from the measurand by at least one degree. If you compare this with an independent measurement, say radiosondes or surface data, there would be the occasional discrepancy of at least one degree. The fact you don’t see anything like that size of discrepancy is evidence that your uncertainty estimate is too big.

UNCERTAINTY DOES NOT MEAN RANDOM ERROR!

You still have no idea of the difference between error and uncertainty. Uncertainty is NOT a dispersion of values that could reasonably be attributed to the measurand. That is random error. Each measurement you make of that measurand has uncertainty. Each and every measurement has uncertainty. You simply can not average uncertainty away as you can with random errors. What that means is that your “true value” also has an uncertainty that you can not remove by averaging.

As to your comparison. You are discussing two measurands using different devices. You CAN NOT compare their uncertainties nor assume that measurements will range throughout the range.

Repeat this 1000 times.

“UNCERTAINTY IS WHAT YOU DON’T KNOW AND CAN NEVER KNOW!”

Why do you think standard deviations are accepted as an indicator of what uncertainty can be? Standard deviations tell you what the range of values were while measuring some measurand. One standard deviation means that 68% of the values fell into that range. It means your measured values of the SAME MEASURAND will probably fall within that range. It doesn’t define what your measurement will be, only what range it could fall in.

Your assertion is a fine example of why scientific measurements should never be stated without including an uncertainty range. Not including this information leads people into the mistaken view that measurements are exact.

“Uncertainty is NOT a dispersion of values that could reasonably be attributed to the measurand.”

That is literally how the GUM defines it

“As to your comparison. You are discussing two measurands using different devices. You CAN NOT compare their uncertainties nor assume that measurements will range throughout the range.”

I’m not saying compare their uncertainties, I’m saying having two results will give you more certainty. I’m really not sure why you wouldn’t want a second opinion if the exact measurement is so important. You know there’s an uncertainty associated with your first measurement, how can double checking the result be a bad thing?

““UNCERTAINTY IS WHAT YOU DON’T KNOW AND CAN NEVER KNOW!””

I don’t care how many times you repeat this, you are supposed to know the uncertainty. Maybe you mean you can never know the error, but as you keep saying error has nothing to do with uncertainty I’m not sure what you mean by this.

“I’m saying having two results will give you more certainty.”

Only if you are measuring the SAME THING. This will *usually* generate a group of stated values plus random error where the random errors will follow a gaussian distribution and will tend to cancel out. Please note carefully that uncertainty is made up of two factors, however. One factor is random error and the other is systemic error. Random error will cancel, e.g. reading errors, systemic error will not.
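The distinction drawn here between random and systematic error can be illustrated with a small simulation. This is only a sketch under stated assumptions: one instrument, one fixed true value, Gaussian reading noise, and a constant calibration offset. All the numbers are invented for illustration.

```python
import random
import statistics

random.seed(3)

TRUE_VALUE = 20.0   # assumed true value of the single quantity being measured
BIAS = 0.3          # assumed constant (systematic) calibration offset
NOISE_SD = 0.5      # assumed SD of the random reading error

# Repeated readings of the same thing with the same biased instrument.
readings = [TRUE_VALUE + BIAS + random.gauss(0, NOISE_SD) for _ in range(10000)]
mean_reading = statistics.mean(readings)

# The random part averages away; the offset does not.
print("mean of readings:", round(mean_reading, 3))
print("distance from true value:", round(mean_reading - TRUE_VALUE, 3))
```

The mean settles near the true value plus the offset, so averaging alone cannot reveal the systematic part; some independent reference is needed for that.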

If you are measuring *different* things then the errors will most likely not cancel. When measuring the same thing the stated values and uncertainties cluster around a true value. When measuring different things, the stated values and uncertainties do not cluster around a true value. There is no true value. In this case no number of total measurements will lessen the uncertainty associated with the elements themselves or the uncertainties associated with the calculated mean.

“I don’t care how many times you repeat this, you are supposed to know the uncertainty.”

Uncertainty is not error. That is a truism. Primarily because uncertainty is made up of more than one factor. If I tell you that the uncertainty of a measurement is +/- 0.2 can you tell me how much of that uncertainty is made up of random error and how much is made up of other factors (e.g. hysteresis, drift, calibration, etc)?

If you can’t tell me what each factor contributes to the total uncertainty then you can’t say that uncertainty *is* error because it is more than that. Uncertainty is not error.

“Only if you are measuring the SAME THING.”

In this case, you are measuring the same thing.

“Random error will cancel, e.g. reading errors, systemic error will not.”

Which is why it’s a good thing you are using different instruments.

“If you are measuring *different* things then the errors will most likely not cancel.”

Why not?

“When measuring different things, the stated values and uncertainties do not cluster around a true value”

Of course they do. The true value is the mean, each stated value is a distance from the mean.

“Uncertainty is not error.”

You don’t know the error, you do know the uncertainty.

“If I tell you that the uncertainty of a measurement is +/- 0.2 can you tell me how much of that uncertainty is made up of random error”

When you are stating uncertainty you should explain how it was established.

Also note that systematic errors are at least as much of a problem if you are measuring the same thing as if you are measuring different things.

Why are you so desperate to make uncertainty as small as possible?

I’d have thought it was always going to be a good idea to be as certain as possible. Why would you want to be less certain?

How in the world did you jump to this idea? I never said or implied this. Instead I’ve been trying to show you how temperature uncertainties used in climastrology are absurdly small, or just ignored completely.

I was being flippant with your question, “Why are you so desperate to make uncertainty as small as possible?”

And yet the fact remains, that temperature uncertainties used in climastrology are absurdly small, or just ignored completely. Subtracting baselines does NOT remove uncertainty.

But it isn’t a fact, just your assertion. You say they are small because you can’t believe they could be so small, and in contrast give what to me seem absurdly large uncertainties.

Then you again make statements like “Subtracting baselines does NOT remove uncertainty”, as if merely you saying it makes it so.

I’m finished trying to educate you lot, enjoy life on Mars.

“Also note that systematic errors are at least as much of a problem if you are measuring the same thing as if you are measuring different things”

So what? In one case you will still get clustering around a “true value” helping to limit random error impacts. In the other you won’t.

The so what, is it’s a good idea to measure something with different instruments using different eyes.

Look at the word you used — error. ERROR IS NOT UNCERTAINTY!

I can use a laser to get 10^-8 precision. Yet the uncertainty still lies with at least +/-10^-9. A systematic ERROR will still give good precision but it will not be ACCURATE!

Sorry if I’ve offended you again, but it was the Other Gorman who used the dreaded word.

“Uncertainty is not error. That is a truism. Primarily because uncertainty is made up of more than one factor. If I tell you that the uncertainty of a measurement is +/- 0.2 can you tell me how much of that uncertainty is made up of random error and how much is made up of other factors (e.g. hysteresis, drift, calibration, etc)?”

Rather than endlessly shout UNCERTAINTY IS NOT ERROR to a disinterested universe, it would be a lot more useful if you explained what you think uncertainty is. I’ve given you the GUM definition and you rejected that. I’ve tried to establish, without success, if your definition has any realistic use. All you seem to want is for it to be a word that can mean anything you want. You can tell me the uncertainty in global temperatures is 1000°C, but any attempt to establish if that means you realistically think global temperatures could be as much as 1000°C is just met with UNCERTAINTY IS NOT ERROR.

Sorry, that’s just not so. If uncertainty was error then the GUM definition wouldn’t state that after error is analyzed there still remains an uncertainty about the stated result.

It is *you* that keeps on rejecting that.

“… although error and error analysis have long been a part of the practice of measurement science or metrology. It is now widely recognized that, when all of the known or suspected components of error have been evaluated and the appropriate corrections have been applied, there still remains an uncertainty about the correctness of the stated result, that is, a doubt about how well the result of the measurement represents the value of the quantity being measured.”

This is the GUM definition. Please note carefully that it specifically states that uncertainty is not error. All the suspected components of error can be corrected or allowed for and you *still* will have uncertainty in the result of the measurement.

All this means is that once the uncertainty in your result exceeds physical limits that you need to re-evaluate your model. Something is wrong with it! In fact, if you are trying to model the global temperature to determine a projected anomaly 100 years in the future, and the uncertainty in your projection exceeds the value of the current temperature then you need to stop and start over again. For that means your model is telling you something you can’t measure! Anyone can stand on the street corner with a sign saying the world will end tomorrow. Just how much uncertainty is there in such a claim? If the sign says it will be 1C hotter tomorrow just how much uncertainty is there in such a claim?

And what the climatologists fail to recognize is that the correction factors themselves also have uncertainty that must be accounted for.

Hadn’t thought of that! Just keep going down the rabbit hole!

“Sorry, that’s just not so.”

I was talking to Jim, reminding him he rejected the error free definition of measurement uncertainty, as well as the definitions based on error.

“All this means is that once the uncertainty in your result exceeds physical limits that you need to re-evaluate your model.”

Really? You can’t accept the possibility that what it tells you is your uncertainty calculations are wrong?

“I can use a laser to get 10^-8 precision. Yet the uncertainty still lies with at least +/-10^-9.”

You keep confusing precision with resolution. If that’s not clear let me say RESOLUTION IS NOT PRECISION.

“A systematic ERROR will still give good precision but it will not be ACCURATE!”

Yes, that’s why I’m saying it’s useful to measure something twice with different instruments, even if their resolution is too low to detect random errors.

Oh yeah, you’re the world’s expert on all things metrology, everyone needs to listen up.

How many of those dozens of links that Jim has provided to you on a silver platter have you studied? Any?

No, you are just like Nitpick Nick Stokes, who picks at any little thing to attack anyone who threatens the global warming party line.

Go read his web site, he’ll tell you what you want to hear.

“Oh yeah, you’re the world’s expert on all things metrology, everyone needs to listen up.”

I am absolutely not an expert on anything – especially metrology. If I appear to be it’s because I’m standing on the shoulders of midgets.

“How many of those dozens of links that Jim has provided to you on a silver platter have you studied? Any?”

Enough to know that any he posts directly contradict his argument. Honestly the difference between SD and SEM is well documented, well known and Jim is just wrong, weirdly wrong, about them.

“No, you are just like Nitpick Nick Stokes, who picks at any little thing to attack anyone who threatens the global warming party line.”

I’m flattered that you compare me with Stokes.

The truth finally emerges from behind the greasy green smokescreen…

So Señor Experto, do tell why machine shops all don’t have a battalion of people in the backroom armed with micrometers to recheck each and every measurement 100-fold so that nirvana can be reached through averaging?

Finally, a sensible question, though asked in a stupid way.

Why don’t machine shops all make hundreds of multiple readings to increase the precision of their measurements? I think Bevington has a section on this that sums it up well. But the two obvious reasons are

1) it isn’t very efficient. Uncertainty decreases with the square root of the number of samples, so the more you do the less of a help it is. Take four measurements and you might have halved the uncertainty, but to get to a tenth the uncertainty, and hence that all important extra digit, would require 100 measurements, and to get another digit is going to require 10000 measurements. I can’t speak for how machine shops are organized, but I can’t imagine it’s worth employing that many people just to reduce uncertainty by a hundredth. If you need that extra precision it’s probably better to invest in better measuring devices.

2) as I keep having to remind you, the reduction in uncertainty is a theoretical result. Taking a million measurements won’t necessarily give you a result that is 1000 times better. The high precision is likely to be swamped out by any other small inaccuracies.
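The square-root scaling in point 1 can be written out as a short sketch. It assumes the idealized relation SEM = SD/√N with independent measurements, which point 2 notes is a theoretical result; the function name `measurements_needed` is hypothetical.

```python
import math


def measurements_needed(reduction_factor):
    """Independent measurements needed to shrink the standard error of the
    mean by reduction_factor, assuming SEM = SD / sqrt(N)."""
    return math.ceil(reduction_factor ** 2)


for factor in (2, 10, 100, 1000):
    print(factor, measurements_needed(factor))
# 2 4
# 10 100
# 100 10000
# 1000 1000000
```

Each extra decimal digit costs a hundred times more measurements, which is the efficiency argument being made.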

Efficiency isn’t the point, you are running around the bush to try and not answer the question. The question is whether it can be done with more measurements.

And he ran away from the inconvenient little fact that no machine shop has a backroom filled with people who do nothing but repeat others’ measurements, not 100, not 20, not 5, not 1.

“Efficiency isn’t the point”

Really, wasn’t the question

“do tell why machine shops all don’t have a battalion of people in the backroom armed with micrometers to recheck each and every measurement 100-fold so that nirvana can be reached through averaging?”

I’m really not convinced by all these silly hypothetical questions, all of which seem to be distracting from the central question, which is does in general uncertainty increase or decrease with sample size.

Honestly the difference between SD and SEM is well documented, well known and Jim is just wrong, weirdly wrong, about them.

If I am so wrong why don’t you refute the references I have made and the inferences I have taken from them. Here is the first one to refute.

SEM = SD / √N, where:

SEM is the standard deviation of the sample means distribution

SD is the standard deviation of the population being sampled

N is the sample size taken from the population

What this means is you need to decide what you have in a temperature database. Do you have a group of samples or do you have a population of temperatures.

This is a simple decision to make, which is it?

I don’t need to refute the references because they agree with me.

“SEM = SD / √N, where:

SEM is the standard deviation of the sample means distribution

SD is the standard deviation of the population being sampled

N is the sample size taken from the population”

See, that’s what I’m saying and not what you are saying. You take the standard deviation of the sample, which is an estimate of the SD of the population, and then think that is the standard error of the mean. Then you multiply the sample standard deviation by √N in the mistaken belief that this will give you SD.

In reality you divide the sample standard deviation by √N to get the SEM. This is on the assumption that the sample standard deviation is an estimate of SD.

Here’s a little thought experiment to see why this doesn’t work. You took a sample of size 5, took its standard deviation and multiplied by √5 to get a population standard deviation more than twice as big as the sample standard deviation. But what if you’d taken a sample of size 100, or 1000 or whatever. Using your logic you would multiply the sample deviation by √100 or √1000. This would make the population deviation larger the bigger your sample size. But the population standard deviation is fixed. It shouldn’t change depending on what size sample you take. Do you see the problem?
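The thought experiment can be run as a rough simulation. This sketch assumes a normally distributed population with a known SD of 2.0 (an invented figure) and draws samples of size 5, 100 and 1000 from it.

```python
import math
import random
import statistics

random.seed(1)
POP_SD = 2.0  # assumed "true" population standard deviation for the simulation

results = {}
for n in (5, 100, 1000):
    sample = [random.gauss(10.0, POP_SD) for _ in range(n)]
    sd = statistics.stdev(sample)  # sample SD: an estimate of POP_SD
    # Store (sample SD, sample SD * sqrt(n), sample SD / sqrt(n)).
    results[n] = (sd, sd * math.sqrt(n), sd / math.sqrt(n))
    print(n, [round(v, 3) for v in results[n]])
```

The first column stays in the neighbourhood of 2.0 whatever the sample size, the second grows without limit as the sample gets bigger, and the third (the usual SEM estimate) shrinks.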

“What this means is you need to decide what you have in a temperature database. Do you have a group of samples or do you have a population of temperatures.

This is a simple decision to make, which is it?”

Of course you don’t have the population of temperatures. The population is all temperatures across the planet over a specific time period. It’s a continuous function and hence infinite. You are sampling the population in order to estimate what the population mean is.

They use temperatures from one location to infill temps at other locations. So what components of the population don’t you have? Are you saying you need a grid size of 0km,0km in order to have a true population?

Sampling only works if you have a homogenous population to sample. Are the temps in the northern hemisphere the same as the temps in the southern hemisphere? How does this work with anomalies?

Well yes, that’s what sampling is. Taking some elements as an estimate of what the population is. Of course, as I’ve said before the global temperature is not a random sample, and you do have to do things like infilling and weighting which is why estimates of uncertainty are complicated.

This is just more jive-dancing bullsh!t, you are not sampling the same quantity. You get one chance and it is gone forever.

“In reality you divide the sample standard deviation by √N to get the SEM. This is on the assumption that the sample standard deviation is an estimate of SD.”

If you already have the SD of the population then why are you trying to calculate the SEM? The SEM is used to calculate the SD of the population! If you already have the SD of the population then the SEM is useless!

You have to know the mean to calculate the SD of the population and you have to know the size of the population to calculate the mean. That implies you know the mean exactly. It is Σx/n where x are all the data values and n is the number of data values. Thus you know the mean exactly. And if you know the mean exactly and all the data values along with the number of data values then you know the SD exactly.

The SEM *should* be zero. You can’t have a standard deviation with only one value – i.e. the mean which you have already calculated EXACTLY!

This is why you keep getting asked whether your data set is a sample or if it is a population!

“DON’T confuse me with facts, my mind is MADE UP!“

What facts? Someone makes a claim that the sample standard deviation is the standard error of the mean. Something which anyone with an elementary knowledge of statistics knows to be wrong and can easily be shown to be wrong. But I’m always prepared to be proven wrong, and that someone has given me an impressive list of quotes, from various sources, except that none of the quotes says that the sample standard deviation is the same as the standard error of the mean, and most say the exact opposite.

Why do I need to read the full contents of all the supplied documents. If you are making an extraordinary claim don’t throw random quotes at me – show me something that supports your claim.

“The standard error of the sample mean depends on both the standard deviation and the sample size, by the simple relation SE = SD/√(sample size). The standard error falls as the sample size increases, as the extent of chance variation is reduced.”

From:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1255808/

“However, the meaning of SEM includes statistical inference based on the sampling distribution. SEM is the SD of the theoretical distribution of the sample means (the sampling distribution).”

From:

https://www.investopedia.com/ask/answers/042415/what-difference-between-standard-error-means-and-standard-deviation.asp

Here is an image from:

https://explorable.com/standard-error-of-the-mean

Yes all three posts are saying exactly what I’m saying. Why do you think I’m wrong?

You say in a prior post that the “sample standard deviation IS NOT the standard error of the mean”. Did you read what I posted?

Let me paraphrase, the Standard Error of the (sample) Mean, i.e., the SEM, is the Standard Deviation of the sample means distribution.

Look at the image.

If (qbar) has a distribution then there must be multiple samples, each with their own (qbar). By subtracting (qbar) from each value you are in essence isolating the error component.

Lastly, this is dealing with one and only one measurand. What the GUM is trying to do here is find the interval within which the true value may lie. This is important because it acknowledges that random errors quite probably won’t be removed by doing only a few measurements. If they did, the experimental standard deviation of the mean would be zero thereby indicating that there is no error left. The distribution of (qbar) would be exactly normal.

One should keep in mind that this is only dealing with measurements and error and in no way assesses the uncertainty of each measurement.

“You say in a prior post that the “sample standard deviation IS NOT the standard error of the mean”. Did you read what I posted?”

Yes based on this comment, where you multiplied the standard deviation of a set of 5 numbers by √5 to calculate the standard deviation of the population.

The sample standard deviation is not the same thing as the standard error of the mean.

“Let me paraphrase, the Standard Error of the (sample) Mean, i.e., the SEM, is the Standard Deviation of the sample means distribution.”

Correct, but standard deviation of the sample means, is not the same as the standard deviation of the sample.

“If (qbar) has a distribution then there must be multiple samples, each with their own (qbar).”

No, there do not have to be literal samples. The distribution exists as an abstract idea. If you took an infinite number of samples of a fixed size there would be the required distribution, but you don’t need to physically take more than one sample to know that the distribution would exist, and you can estimate it from your one sample.

It would in any event be a pointless exercise because if you have a large number of separate samples, the mean of their means would be much closer to the true mean. There would be no point in working out how uncertain each sample mean was, when you’ve now got a better estimate of the mean.
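The claim that the spread of sample means can be estimated from a single sample can be checked numerically. This is a sketch under assumptions that are not in the thread: a normal population with mean 15.0 and SD 3.0, random sampling, and samples of size 50.

```python
import math
import random
import statistics

random.seed(2)
POP_MEAN, POP_SD, N = 15.0, 3.0, 50  # invented values for the simulation


def draw_sample(n):
    """Draw n independent values from the assumed population."""
    return [random.gauss(POP_MEAN, POP_SD) for _ in range(n)]


# Estimate of the SEM from ONE sample: its own SD divided by sqrt(N).
one_sample = draw_sample(N)
sem_from_one_sample = statistics.stdev(one_sample) / math.sqrt(N)

# Empirical SD of the means of many separate samples of the same size.
sample_means = [statistics.mean(draw_sample(N)) for _ in range(2000)]
sd_of_sample_means = statistics.stdev(sample_means)

theoretical_sem = POP_SD / math.sqrt(N)
print(round(sem_from_one_sample, 3),
      round(sd_of_sample_means, 3),
      round(theoretical_sem, 3))
```

The single-sample figure is itself only an estimate, so it will not match the other two exactly, and none of this covers non-random sampling or systematic measurement error.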

This nonsense constitutes “debunking” in your world?

You *HAVE* to be trolling, right? How do you get a DISTRIBUTION without multiple data points?

Total and utter malarky! Again, with one data point how do you define a distribution, be it literal or virtual?

Multiple samples allow you to measure how good your estimate is. A single sample does not! There is no guarantee that one sample consisting of randomly chosen points will accurately represent the population. And with just one sample you have no way to judge how representative the sample mean and standard deviation are of the total population.

Look at what *YOU* said: “The sample standard deviation is not the same thing as the standard error of the mean.”

When you have only one sample you are, in essence, saying the sample standard deviation *is* the standard deviation of the sample means. You can only have one way. Choose one or the other.

“True mean”? You *still* don’t get it, do you?

“There would be no point in working out how uncertain each sample mean was, when you’ve now got a better estimate of the mean.”

And, once again, you advocate for ignoring the uncertainty of the data points. If your samples consist only of stated values and you ignore their uncertainty then you have assumed the stated values are 100% accurate.

If your data set consists of the total population, with each data point consisting of “stated value +/- uncertainty” then are you claiming that the mean of that total population has no uncertainty? That each stated value is 100% accurate? If so then why even include uncertainty with the data values?

If the mean of the total population has an uncertainty propagated from the individual components then why don’t samples from that population have an uncertainty propagated from the individual components making up the sample? How can the population mean have an uncertainty while the sample means don’t?

“You *HAVE* to be trolling, right? How do you get a DISTRIBUTION without multiple data points?”

“Total and utter malarky! Again, with one data point how do you define a distribution, be it literal or virtual?”

So you didn’t read any of the links I gave you?

I did read your links. And I told you what the problems with them were. And you *still* haven’t answered the question. How do you get a distribution without multiple data points?

Fine, disagree with every text book on the subject, because they don’t understand it’s impossible to work out the SEM from just one sample. Just don’t expect me to follow through your tortured logic.

“And you *still* haven’t answered the question. How do you get a distribution without multiple data points?”

The distribution exists even though you haven’t sampled it. It’s what would happen if, and I repeat for the hard of understanding, if, you took an infinite number of samples of a specific size. You don’t actually need to take an infinite number of samples to know it exists – it exists as a mathematical concept.

He’s still pushing this “standard error of the mean(s)”, asserting this is the “uncertainty” of a temperature average.

He will never let go of this.

“When you have only one sample you are, in essence, saying the sample standard deviation *is* the standard deviation of the sample means. You can only have one way. Choose one or the other.”

You are really getting these terms confused. The sample standard deviation is absolutely, positively, not the standard deviation of the sample means. One is the deviation of all elements in the sample, the other is the deviation expected from all sample means of that sample size.
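For reference, the two quantities being distinguished can be written in the GUM’s own notation (sections 4.2.2 and 4.2.3), for n independent observations q_j of the same quantity with arithmetic mean (qbar). This only restates the definitions; it does not settle whether they apply to a temperature average.

$$
s^2(q_k) = \frac{1}{n-1}\sum_{j=1}^{n}\left(q_j - \bar{q}\right)^2,
\qquad
s^2(\bar{q}) = \frac{s^2(q_k)}{n}
$$

The first is the experimental variance of the observations, whose square root is the sample standard deviation. The second is the experimental variance of the mean, whose square root is what is being called the standard error of the mean in this thread.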

This is why I prefer to call it the error of the mean, rather than the standard deviation of the mean (whatever the GUM says), simply because it avoids the confusion over which particular deviation we are talking about.

“This is important because it acknowledges that random errors quite probably won’t be removed by doing only a few measurements.”

Bingo!

“You keep confusing precision with resolution. If that’s not clear let me say RESOLUTION IS NOT PRECISION.”

OMG! No wonder you have a difficult time. Resolution, precision, and repeatability are intertwined. Resolution lets you make more and more precise measurements, i.e., precision. Higher precision allows better repeatability. Why do you think people spend more and more money on devices with higher resolution if they don’t give better precision?

Intertwined, not the same thing. You know like error and uncertainty are intertwined.

OMG. You still refuse to learn that error and uncertainty are two separate things. The only thing the same is that the units of measure are the same.

Explain how there can be uncertainty without error.

Even Tim points out that uncertainty is made up from random error and systematic error. Just because you can have a definition of uncertainty in terms that don’t use the word error, doesn’t mean that error isn’t the cause of uncertainty.

There is no possible explanation that you might accept, so why bother?

Don’t whine.

Knowing error exists doesn’t mean you know what it is, how large it is, or how it affects the measurement.

Uncertainty is *NOT* error.

Why do you keep ignoring what you are being told? Go away troll.

Which is not saying that uncertainty is not caused by error, it’s saying there will always be other reasons for uncertainty as well as error.

I didn’t say that standard deviation isn’t made up of several different things. But error and uncertainty are not directly related. For example, if there was no error, i.e., the errors canceled out because they were in a normal distribution, you can still have uncertainty. That is where resolution comes in. There is always a digit beyond the one you can measure with precision.

But isn’t that just another error? Depending on your resolution and what you are measuring it might be random or systematic, but it’s still error. A difference between your measure and what you are measuring.

NO! It is uncertainty, the limit of what can be known.
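Whatever name is given to it, the effect of limited resolution can be simulated. The sketch below assumes a hypothetical instrument that displays readings rounded to the nearest 0.1 unit and underlying values spread evenly over a range much wider than that; both are illustrative assumptions, as are all the names. It shows that the gap between displayed and underlying value is unknown for any single reading but bounded by half the resolution, with a spread of about resolution/√12, the figure conventionally assigned to a rectangular distribution.

```python
import random
import statistics

random.seed(2)

RESOLUTION = 0.1  # assumed display resolution, arbitrary units

# Hypothetical underlying values, spread over a range much wider than
# the resolution so the rounding remainder is effectively uniform.
underlying = [random.uniform(0.0, 10.0) for _ in range(50000)]

# What the instrument displays: the value rounded to the nearest step.
displayed = [round(v / RESOLUTION) * RESOLUTION for v in underlying]

# Gap between displayed and underlying value for each reading.
gaps = [d - v for d, v in zip(displayed, underlying)]

largest_gap = max(abs(g) for g in gaps)
spread_of_gaps = statistics.pstdev(gaps)
rectangular_sd = RESOLUTION / 12 ** 0.5

print(f"largest gap:        {largest_gap:.4f}")
print(f"spread of gaps:     {spread_of_gaps:.4f}")
print(f"resolution/sqrt(12): {rectangular_sd:.4f}")
```

In practice only the displayed value is available, so the individual gap cannot be computed for a real reading; the simulation can only do so because it generated the underlying values itself.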

Why is this so hard?

You and bwx are now the world’s experts on uncertainty, but still can’t find the barn.

What do you think error means?

Why do you insist on treating it as error?

Why do you answer with a question?

As C,M points out, limited resolution is UNCERTAINTY, not error.

Repeat 1000 times, “UNCERTAINTY IS NOT ERROR.”

As I’ve pointed out to you already, using different instruments won’t help if the uncertainty in each is higher than what you are trying to measure. The only answer is to calibrate one of them and then use it to measure. If you use two instruments you have 2 chances out of three that both will be either high or low and only 1 chance out of three that one will be high and the other low thus leading to a cancellation of error. Would you bet your rent money on a horse with only a 30% chance to win?

Again, nobody specified in this question that there was a machine that guaranteed there would be zero uncertainty in the first measurement. If such a thing were possible and you could also rule out human error, then no, why would you ever need to discuss uncertainty? Everything would be perfect, and getting a second opinion from Mike, who also has a zero uncertainty device, would not help, though it wouldn’t hurt either.

And again, you need to brush up on your probability if you think there is a one in three chance of the two cancelling out.
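The counting behind this objection can be sketched as follows, under the assumption (not stated in the thread) that the two instruments err independently and that each is equally likely to read high or low. The names are illustrative only.

```python
from itertools import product

# Each instrument is assumed equally likely to read high or low,
# independently of the other.
SIGNS = ("high", "low")

# All equally likely combinations for (instrument 1, instrument 2).
outcomes = list(product(SIGNS, repeat=2))

# Combinations where the two errors have opposite signs.
opposite = [pair for pair in outcomes if pair[0] != pair[1]]

p_opposite = len(opposite) / len(outcomes)

print(outcomes)
print(p_opposite)  # 0.5
```

There are four equally likely combinations rather than three, because high/low and low/high are distinct outcomes. Opposite signs only guarantee partial cancellation; how much cancels depends on the sizes of the two errors, which this count does not model.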

But, yet again, all this talk about the specifics of a workshop is just distraction. I’m not trying to set up a time and motion study, just answering the question: would having a second measurement improve the uncertainty?

As I’ve tried to tell before, this is what a formal uncertainty analysis does. Try applying for accreditation as a calibration lab and you’ll learn PDQ what I’m talking about.

Are you disagreeing with me or Tim here? He was implying you couldn’t know how much of the uncertainty was due to random error.

Did you actually read what I wrote? Apparently not—a formal uncertainty analysis is how you “explain how it was established“…

Tim has been trying to help you understand that which you do not understand.

Really?

Just how does that help you get to a more accurate answer by averaging their readings? You must *still* propagate the uncertainty – meaning the uncertainty will grow. It will not “average out”.

We’ve been over this multiple times. It’s because they do not represent a cluster around a true value. The measurements of the same thing are related by the thing being measured. Each one gives you an expectation of what the next measurement will be. Measurements of different things are not related by the things being measured. The current measurement does not give you any expectation of what the next measurement will be. Measurements of the same thing give you a cluster around the true value. Measurements of different things do not give you a cluster around a true value, the measurements may give you a mean but it is not a true value.

Yes, really. The scenario was “This is like measuring the run out on a shaft with your own caliper and then asking Mike to come over and use his.”

“You must *still* propagate the uncertainty – meaning the uncertainty will grow. It will not “average out”.”

Obviously nothing I can say will convince you that you are wrong on this point, not even quoting the many sources you point me to. You have a mind that is incapable of being changed, which is a problem in your case because the ideas you do have are generally wrong.

But I’m really puzzled why you cannot see the issue in this simple case. You’ve measured something, you have a measurement, you know there’s uncertainty in that measurement. That uncertainty means your measurement may not be correct. It may not be correct due to any number of factors, including random errors in the instrument, defects in the instrument, mistakes made by yourself or any number of other reasons. Why on earth would you consider it a bad idea to get someone else to double check your measurements? The second measurement will also have uncertainty, with all the same causes, but now you have a bit more confidence in your result, because you’ve either got two nearly identical results, or you have two different results. You either have more confidence that the first result was correct, or confidence that you have identified an error in at least one reading. Why would you prefer just to assume your result is correct and refuse to have an independent check? Remember how you keep quoting Feynman at me – you’re the easiest person to fool.

Whether you would actually just use an average of two as the final measurement, I couldn’t tell you. It’s going to depend on why you want the measurement in the first place, and how different the results are. But, on the balance of probabilities, and ignoring any priors, you can say that the average is your “best estimate” of the true value.
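A hedged simulation of the shaft example may help separate the two positions. It assumes two hypothetical calipers with independent random error of the same size, measuring the same shaft, and then repeats the exercise with an offset shared by both. The true value, the error size, the offset and all names are invented for illustration.

```python
import random
import statistics

random.seed(1)

TRUE_VALUE = 10.0  # hypothetical true run-out, arbitrary units
SIGMA = 0.1        # assumed random error of each caliper
SHARED_BIAS = 0.05 # assumed offset common to both calipers
TRIALS = 20000

def reading(bias=0.0):
    """One caliper reading: true value plus bias plus random error."""
    return TRUE_VALUE + bias + random.gauss(0.0, SIGMA)

# Case 1: a single reading versus the average of two independent readings.
single = [reading() for _ in range(TRIALS)]
averaged = [(reading() + reading()) / 2 for _ in range(TRIALS)]

# Case 2: both calipers share the same systematic offset.
biased_avg = [(reading(SHARED_BIAS) + reading(SHARED_BIAS)) / 2
              for _ in range(TRIALS)]

sd_single = statistics.stdev(single)
sd_averaged = statistics.stdev(averaged)
mean_biased = statistics.mean(biased_avg)

print(f"spread of a single reading:     {sd_single:.4f}")
print(f"spread of the average of two:   {sd_averaged:.4f}")
print(f"mean of averages, shared bias:  {mean_biased:.4f}")
```

Under these assumptions the average of two readings scatters less than a single reading (by roughly a factor of √2), while a shared offset passes straight through the average untouched. Which case applies to a real pair of instruments is exactly what the thread is arguing about, and the simulation does not decide it.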

But what I still can’t fathom is how you think having two readings actually increases the uncertainty. Maybe you are not using uncertainty in the sense of measurement uncertainty. “I was certain I had the right value, but then someone got a different result and now I’m less certain.”

“Unskilled, and Unaware”

“We’ve been over this multiple times. It’s because they do not represent a cluster around a true value.”

And you still haven’t figured out that the mean of a population is a true value. And the individual members are dispersed around that true value.

Why do you need to defend the shoddy and dishonest numbers associated with climastrology?

Hey! Kip Hansen just posted an article about SLR uncertainty, you better head over there and nitpick him, show him what’s what.

Really? You *still* think the mean value of the measurements of different things gives you a true value? The mean of the measurements of a 2′ board and an 8′ board will give you a “true value”?

You truly are just a troll, aren’t you?

You don’t seem to understand what you are writing.

The uncertainty interval can be established in many ways. Resolution limits, instrument limits, hysteresis impacts, response time, etc. Why don’t climate scientists explain their uncertainty intervals in detail – if they even mention them at all?

Before they showed up here and were told that uncertainty used by climatastrology was ignored or absurdly small, they had no idea the word even existed. And now they are the experts, believing that averaging reduces something they don’t understand. The NIST web site tells them what they want to hear.

“Really? You *still* think the mean value of the measurements of different things gives you a true value?”

Yes I do. Do you still not think they are?

“The mean of the measurements of a 2′ board and an 8′ board will give you a “true value”?”

Yes, they will give you the true value of the mean of those two boards. I suspect your problem is in assuming a true value has to represent a physical thing.

“You truly are just a troll, aren’t you?”

No.

“You don’t seem to understand what you are writing.”

What bit of “You don’t know the error, you do know the uncertainty.” do you disagree with? You don’t know what the error is because you don’t know the true value; you do know the uncertainty, or at least have a good estimate of it, or else there would be no point in all these books explaining how to analyze uncertainty.

This is some fine technobabble word salad here.