June 1st, 2022 by Roy W. Spencer, Ph. D.

The Version 6.0 global average lower tropospheric temperature (LT) anomaly for May, 2022 was +0.17 deg. C, down from the April, 2022 value of +0.26 deg. C.

The linear warming trend since January, 1979 still stands at +0.13 C/decade (+0.12 C/decade over the global-averaged oceans, and +0.18 C/decade over global-averaged land).

Various regional LT departures from the 30-year (1991-2020) average for the last 17 months are:

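| YEAR | MO | GLOBE | NHEM. | SHEM. | TROPIC | USA48 | ARCTIC | AUST |
|------|----|-------|-------|-------|--------|-------|--------|------|
| 2021 | 01 | 0.12 | 0.34 | -0.09 | -0.08 | 0.36 | 0.50 | -0.52 |
| 2021 | 02 | 0.20 | 0.31 | 0.08 | -0.14 | -0.66 | 0.07 | -0.27 |
| 2021 | 03 | -0.01 | 0.12 | -0.14 | -0.29 | 0.59 | -0.78 | -0.79 |
| 2021 | 04 | -0.05 | 0.05 | -0.15 | -0.28 | -0.02 | 0.02 | 0.29 |
| 2021 | 05 | 0.08 | 0.14 | 0.03 | 0.06 | -0.41 | -0.04 | 0.02 |
| 2021 | 06 | -0.01 | 0.30 | -0.32 | -0.14 | 1.44 | 0.63 | -0.76 |
| 2021 | 07 | 0.20 | 0.33 | 0.07 | 0.13 | 0.58 | 0.43 | 0.80 |
| 2021 | 08 | 0.17 | 0.26 | 0.08 | 0.07 | 0.32 | 0.83 | -0.02 |
| 2021 | 09 | 0.25 | 0.18 | 0.33 | 0.09 | 0.67 | 0.02 | 0.37 |
| 2021 | 10 | 0.37 | 0.46 | 0.27 | 0.33 | 0.84 | 0.63 | 0.06 |
| 2021 | 11 | 0.08 | 0.11 | 0.06 | 0.14 | 0.50 | -0.43 | -0.29 |
| 2021 | 12 | 0.21 | 0.27 | 0.15 | 0.03 | 1.63 | 0.01 | -0.06 |
| 2022 | 01 | 0.03 | 0.06 | 0.00 | -0.24 | -0.13 | 0.68 | 0.09 |
| 2022 | 02 | -0.00 | 0.01 | -0.02 | -0.24 | -0.05 | -0.31 | -0.50 |
| 2022 | 03 | 0.15 | 0.27 | 0.02 | -0.08 | 0.22 | 0.74 | 0.02 |
| 2022 | 04 | 0.26 | 0.35 | 0.18 | -0.04 | -0.26 | 0.45 | 0.60 |
| 2022 | 05 | 0.17 | 0.24 | 0.10 | 0.01 | 0.59 | 0.22 | 0.19 |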

The full UAH Global Temperature Report, along with the LT global gridpoint anomaly image for May, 2022 should be available within the next several days here.

The global and regional monthly anomalies for the various atmospheric layers we monitor should be available in the next few days at the following locations:

Lower Troposphere: http://vortex.nsstc.uah.edu/data/msu/v6.0/tlt/uahncdc_lt_6.0.txt

Mid-Troposphere: http://vortex.nsstc.uah.edu/data/msu/v6.0/tmt/uahncdc_mt_6.0.txt

Tropopause: http://vortex.nsstc.uah.edu/data/msu/v6.0/ttp/uahncdc_tp_6.0.txt

Lower Stratosphere: http://vortex.nsstc.uah.edu/data/msu/v6.0/tls/uahncdc_ls_6.0.txt

No sign of an emergency. Shurely shome mistake!

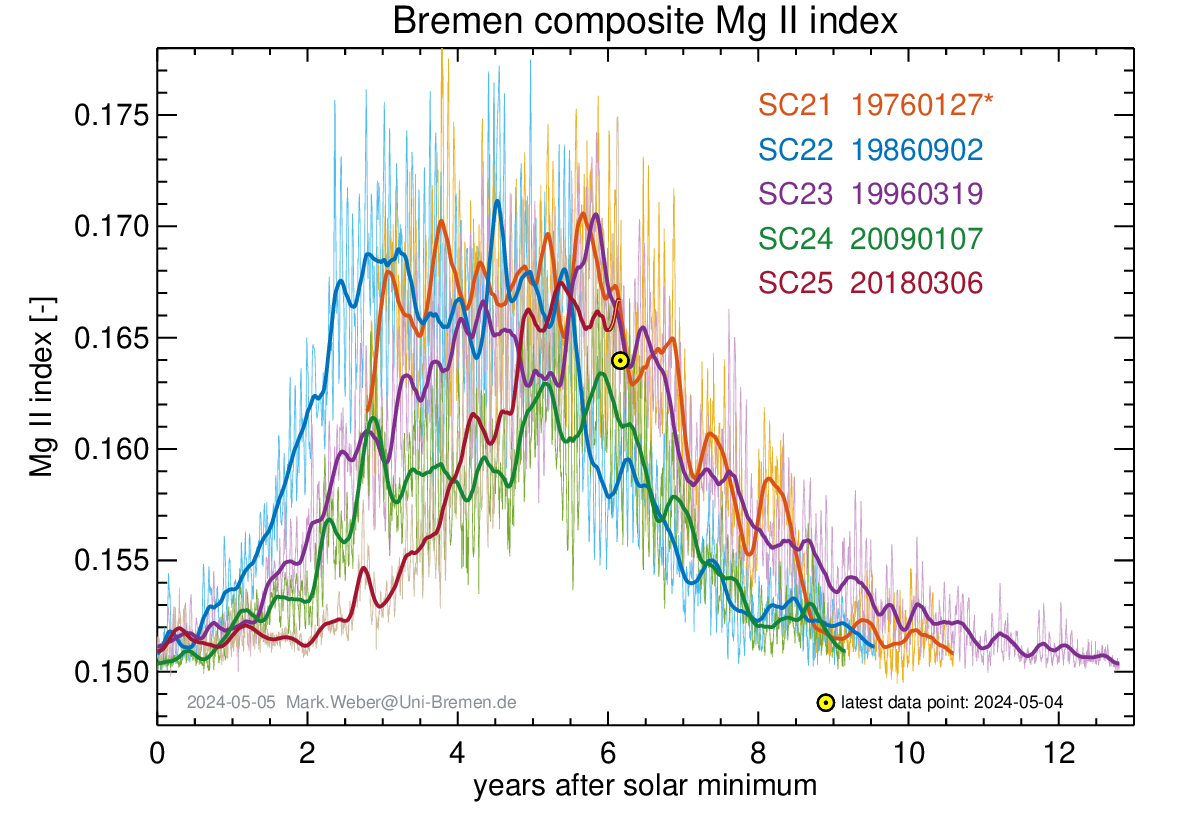

Not necessarily. Solar activity went up a bit in May too, but it is still on track with the cycle of a century ago.

Yes, we have an increase in activity in May, but we are already seeing another decrease.

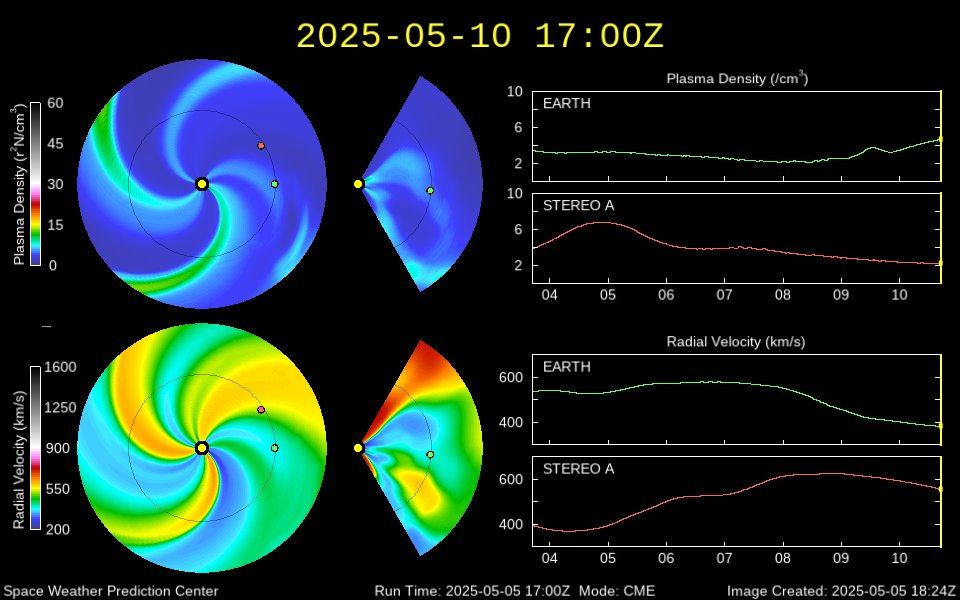

The solar wind ripples a lot.

Jupiter is moving away from Saturn, which will not increase solar activity.

https://www.theplanetstoday.com/

neat, but why did Saturn start radiating a few hours ago?

Cooling since Feb 2016 or Feb 2020 – take your pick – even as atmospheric CO2 increases. The Global Warming (CAGW) narrative is proved false – again! Told you so 20 years ago.

See electroverse.net for extreme-cold events and crop failures all over our blue planet.

Food shortages, fuel and food inflation, imminent famine, caused primarily by cold and wet weather and green energy nonsense.

The world has suffered from woke, imbecilic politicians. We need a few leaders with real science skills and real integrity, not the current crop of gullible green traitors and fools.

So basically the global average temperature anomaly was so small no human could detect it.

And so meaningless no one could detect it.

Of course people can detect it, that’s why your house thermostat is settable to 1/100ths of a degree! Duh.

Any chance to update the two temperature graphs off to the right? I’m really trying to see the USCRN graph, which is tough to find online. Anyone have a link to it?

Click on it, or go to https://wattsupwiththat.com/global-temperature/

Weird! Clicking the link leads to the UAH graph for March, but then clicking on the graph leads to a graph only page for February’s plot – from 2021!

https://www.ncdc.noaa.gov/temp-and-precip/national-temperature-index/time-series/anom-tavg/1/0

https://www.ncei.noaa.gov/access/monitoring/national-temperature-index/

Global cooling trend intact now for six years and three months, after peak of 2016 Super El Niño, which ended the Pause after 1998 SEN.

“The linear warming trend since January, 1979 still stands at +0.13 C/decade (+0.12 C/decade over the global-averaged oceans, and +0.18 C/decade over global-averaged land).”

**********

4.33 decades x .13 deg. Celsius/decade = 0.56 deg. C.
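The arithmetic above can be checked with a short sketch. It assumes the span is counted as elapsed months from January 1979 to May 2022 (the counting convention is an assumption; the comment only states 4.33 decades), and `implied_change` is a hypothetical helper name.

```python
# Cumulative change implied by a constant linear trend.
# Assumption: the span is the elapsed time from Jan 1979 to May 2022.
START = (1979, 1)  # trend start stated in the post
END = (2022, 5)    # month covered by this update

def implied_change(trend_per_decade, start=START, end=END):
    """Return (decades elapsed, total change in deg C) for a linear trend."""
    months = (end[0] - start[0]) * 12 + (end[1] - start[1])
    decades = months / 120.0
    return decades, trend_per_decade * decades

decades, change = implied_change(0.13)
print(f"{decades:.2f} decades x 0.13 C/decade = {change:.2f} C")
```

The same helper can be called with 0.12 or 0.18 to get the ocean and land figures implied by the trends quoted from the post.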

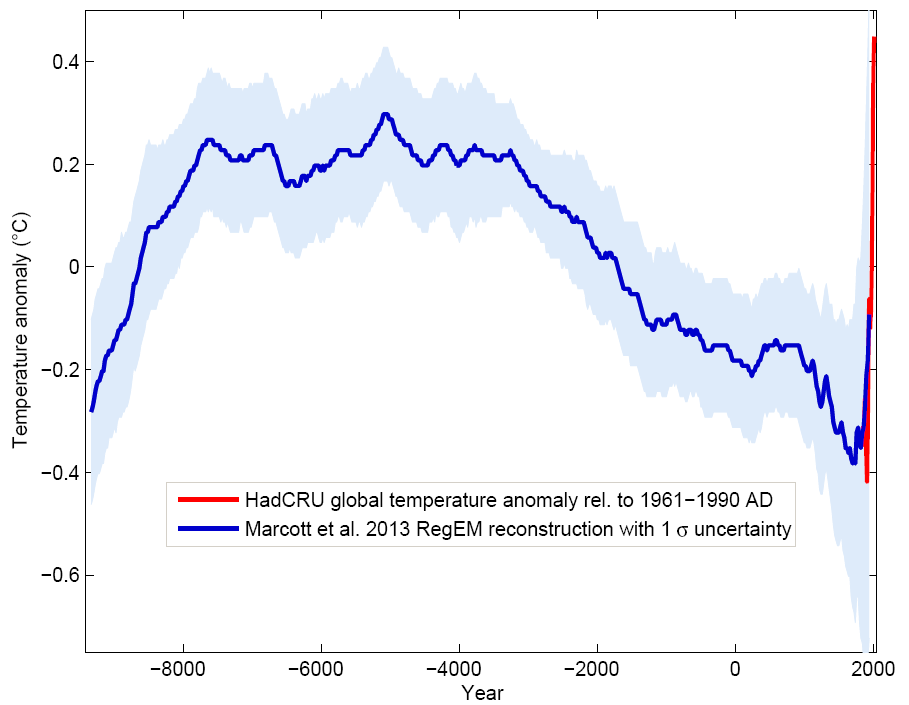

I seem to recall reading somewhere that the upside (warming side) of the Younger Dryas saw warming per decade that was much faster than this. So the alarmists will please excuse me if I don’t exactly go into panic mode here.

Disinformation bot says that’s disinformation.

Bots are one of the things Elon Musk is concerned about in his attempt to buy Twitter. His effort to purchase Twitter is on hold now because of the belief that there are considerably more bots on Twitter than its management is willing to admit to.

Using the Monckton method…

The pause period (<= 0 C/decade) is now at 92 months (7 years, 8 months).

The 2x warming period (>= 0.26 C/decade) is now at 184 months (15 years, 4 months).

The peak warming period (0.34 C/decade) is now at 137 months (11 years, 5 months).
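The comment does not spell out the procedure, so the following is only one reading of the "Monckton method": search for the longest period ending at the most recent month whose least-squares trend meets a threshold. The function names, the 24-month minimum window, and the synthetic series are all assumptions made for illustration; the real UAH record is not used here.

```python
import numpy as np

def trend_per_decade(anoms):
    """Ordinary least-squares slope of monthly anomalies, in C/decade."""
    t = np.arange(len(anoms)) / 120.0  # month index converted to decades
    slope, _intercept = np.polyfit(t, anoms, 1)
    return slope

def longest_trailing_period(anoms, predicate, min_months=24):
    """Months in the longest window ending at the final month whose trend
    satisfies `predicate`; 0 if no window of at least `min_months` does."""
    anoms = np.asarray(anoms, dtype=float)
    for start in range(len(anoms) - min_months + 1):
        if predicate(trend_per_decade(anoms[start:])):
            return len(anoms) - start  # earliest qualifying start = longest window
    return 0

# Synthetic stand-in for the real record: 120 months of warming, then 60 flat months.
synthetic = np.concatenate([
    np.linspace(0.0, 0.5, 120, endpoint=False),
    np.full(60, 0.5),
])

# Small tolerance so floating-point noise around a zero slope still counts as a pause.
pause_months = longest_trailing_period(synthetic, lambda s: s <= 1e-9)
print(pause_months, "months")
```

Swapping the predicate (for example `lambda s: s >= 0.26`) gives the other two kinds of period listed above. Because the start month is chosen by the search itself, the result describes the chosen window rather than an independently selected one.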

And here is the latest global average temperature analysis comparing UAH with several widely available datasets

The unjustifiedly adjusted, poorly-cited, interpolated (ie, made up) surface station “data” sets are cooked book packs of lies.

UAH is adjusted and interpolated too; arguably more so than the other datasets.

Totally justifiably, to fix specific, known issues, not systematically to cool the past and heat the present. HadCRU’s Jones admitted heating the land to keep pace with phony ocean warming. GISS’ UHI adjustments make the “data” warmer, not cooler. UAH doesn’t need to infill swaths 1200 km across with pretend “data”.

UAH infills up to 15 cells away. That is 15 * 2.5 * 111.3 km = 4174 km at the equator. They also infill temporally up to 2 days away. That’s something not even surface station datasets do. They also perform many of the same types of adjustments as the surface station datasets. They have a diurnal heating cycle adjustment which is similar in concept to the time-of-observation adjustment. They have to merge timeseries from different satellites similar in concept to homogenization. And the details of how they do these are arguably more invasive than anything the surface station datasets are doing. [Spencer & Christy 1992]
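The distance arithmetic in the comment above can be reproduced with a few lines. The 15-cell reach, the 2.5-degree cell size, and the 111.3 km per degree figure are taken from the comment as given; the cosine scaling with latitude is an added assumption that applies to east-west distance only, and `infill_reach_km` is a hypothetical name.

```python
import math

KM_PER_DEGREE = 111.3  # figure used in the comment

def infill_reach_km(cells=15, cell_deg=2.5, lat_deg=0.0):
    """East-west distance spanned by `cells` grid cells at a given latitude."""
    return cells * cell_deg * KM_PER_DEGREE * math.cos(math.radians(lat_deg))

print(round(infill_reach_km()))             # reach at the equator, in km
print(round(infill_reach_km(lat_deg=60)))   # same number of cells at 60 degrees latitude
```

The second call shows why the comment specifies "at the equator": the same number of longitude cells covers a shorter distance at higher latitudes.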

Why is it justified when UAH does it, but not justified when the others do it?

Surface temperature adjustments are applied to long past temperatures, sometimes many adjustments to the same data, and the trend in the adjustments is a large part of the supposed global trend. Any system is going to have to use some adjustments sometimes, but the bias in the surface temperature adjustments defies sanity.

There are so many problems with the ground based sensor network that only someone with absolutely no intention of honesty or integrity would ever use it.

The surface station project showed that over 80% of the sensors used in the US network were so poorly maintained that the data from them was worthless. No amount of mathematical gymnastics could rescue a signal from them.

In addition to local problems, most of the sensors were located in areas that had developed and built up resulting in UHI contamination.

There is the issue of undocumented changes in stations, both changes in instrumentation and location. Even when changes were documented, there was no period where the sensors operated side by side for a period of time, they were just swapped out.

Up until the 70’s, the sensors were analog sensors that were read by the human eyeball. These measurements, even when done properly, were only good to 1 degree.

Finally, the biggest problem with the ground based sensor network is that it is just way, way, way too sparse. Even today, only the US, southern Canada and Europe come anywhere close to being adequately monitored; that’s less than 5% of the Earth’s surface. The oceans are close enough to unmonitored that the difference is minor.

The very idea that this network can be used to calculate the Earth’s temperature to within 0.01C is laughable. The notion that we could use this network to do the same over 150 years ago is so ludicrous that only someone with no connection to reality could make such a claim.

MarkW said: “There are so many problems with the ground based sensor network that only someone with absolutely no intention of honesty or integrity would ever use it.”

And yet Anthony Watts himself said of the Berkeley Earth dataset that he was prepared to accept whatever result they produce even if it proves his premise wrong. For those that don’t know Berkeley Earth maintains a ground based observation dataset.

Anthony said that before BEST actually showed their work. His opinion now is quite a bit different.

Why am I not surprised that you chose the old quote and not the new one?

Could it be because you have no intention of telling the truth?

MarkW said: “Anthony said that before BEST actually showed their work. His opinion now is quite a bit different.”

That is interesting isn’t it. He was okay with the BEST method until he saw the result. It is doubly ironic in this discussion since BEST uses the scalpel method as a direct response to criticisms of adjustments.

MarkW said: “Why am I not surprised that you chose the old quote and not the new one?”

I picked that quote to show that Anthony Watts has no problem using a ground based sensor network. Do you think that means he has no honesty or integrity?

BTW…he is also rebooting his surface station project as we speak. Do you think that too means he has no honesty or integrity?

It is the same for UAH. They have to adjust the entire satellite timeseries to get them aligned. Anyway, what is the bias in the surface temperature adjustments?

bdgwx –

We’ve been down this road several times before.

The biases in the satellite record can be measured and corrections applied as needed, sometimes to the entire record.

The biases in thousands of temperature sensors is impossible to quantify and yet corrections are applied willy-nilly to data several decades old without regard to what the accuracy of the readings were then compared to today.

The UAH data certainly has uncertainty, both in the actual measurements of radiance as well as in the calculation algorithms used to convert from radiance to temperature. Those uncertainties *should* be acknowledged, listed, and propagated through to the final results.

The surface data has the same issue.

Yet the purveyors of each stubbornly cling to the dogma that all error is random, symmetrical, and cancels out of the final results.

Stop trying to conflate measurable bias with unmeasurable bias. UAH adjustments result from measurable bias such as orbital decay, etc. This is not possible with the surface record. UAH is, therefore, the better metric to use. That said, I don’t think any of the data sets that are based on averages of averages of averages which are then used to calculate anomalies are worth the paper used to publish them.

Really? UAH measured all of the biases in the raw data? How exactly did UAH measure all of the biases in the raw data?

He didn’t say that, you did. This is a misleading statement.

He said-

The biases in thousands of temperature sensors is impossible to quantify and yet corrections are applied willy-nilly

It’s hopeless, Tim, this guy refuses to be educated, he might as well be Dilbert’s pointy-haired boss. But don’t stop putting reality out there, others read it.

Educate me. How does UAH measure all of the biases in the raw data?

His goal is indoctrination, not education. Like most of the other warmunists.

So pointing out that UAH takes the liberty of making adjustments that arguably go above and beyond what surface station datasets are doing is indoctrination?

Yes! What *is* the bias in the surface temperature adjustments?

How can you adjust surface data from the 40’s based on calibration measured in 2000?

If you know the orbital measurements from the time the satellite was launched then you *can* adjust for any bias caused by orbital fluctuation. Similar measurements are not available for surface measurement devices.

TG said: “Yes! What *is* the bias in the surface temperature adjustments?”

That’s what I said!

TG said: “How can you adjust surface data from the 40’s based on calibration measured in 2000?”

The same way UAH selected only the 1982 overlap period of NOAA-6 and NOAA-7 as the basis for the diurnal heating cycle adjustment required for the satellite merging step.

TG said: “If you know the orbital measurements from the time the satellite was launched then you *can* adjust for any bias caused by orbital fluctuation.”

How do you think UAH does that?

BTW…Perhaps if you have time you can comment on taking the average of the two PRT readings from the hot target as an input into the radiometer calibration procedure. Why not just use one PRT since, according to you, uncertainty increases when averaging?

“That’s what I said!”

I know! The point is that you typically ignore that fact!

“The same way UAH selected only the 1982 overlap period of NOAA-6 and NOAA-7 as the basis for the diurnal heating cycle adjustment required for the satellite merging step.”

The overlap period can be measured and allowed for! How do you measure the calibration of surface measurements from 80 years ago? You are, once again, ignoring this fact by trying to say the measured overlap period of the satellites is the same thing as adjusting surface measurements from 80 years ago based on guesses and not measurements! The operative word here is “measured”. UAH can *measure* orbital variations, no one can “measure” the calibration of devices in the past!

“How do you think UAH does that?”

They *MEASURE* the orbits.

“BTW…Perhaps if you have time you can comment on taking the average of the two PRT readings from the hot target as an input into the radiometer calibration procedure. Why not just use one PRT since, according to you, uncertainty increases when averaging?”

We’ve covered this MULTIPLE times and yet you *never* seem to learn. Calibration of the sensor is *NOT* the same thing as the calibration of the measuring device. The ARGO floats are a prime example. The sensor in the ARGO floats can be calibrated to .001C – but the float uncertainty is +/- 0.5C!

It’s the same for the satellites. Calibrate the sensor and the measuring device however you want. That does *not* change the fact that a level of uncertainty remains when the satellite is pointed at the earth! It’s no different than using a micrometer to measure two different things. One can be a gauge block used for calibration and the next the journal on a crankshaft. Uncertainty remains in the amount of force the faces of the device apply to the measured things. If a different amount of force is applied to the gauge block than the crankshaft journal then the readings will have uncertainty that must be allowed for. It’s the same for the satellites. If there are invisible particles in the atmosphere affecting the radiance of the atmosphere at some snapshot point on the earth while there are none in a later snapshot at a different point then there *will* be uncertainty in the measurements taken.

No measurement taken in the real world is ever perfect. No measurement device is ever perfectly calibrated. That’s the entire purpose of using significant figures and uncertainty propagation in the real world.

TG said: “The overlap period can be measured and allowed for!”

So it’s okay to take a single overlap period of only two instruments measuring different locations and apply that knowledge to all of the other (and completely different) time periods and other (and completely different) instruments? Is that what you’re saying?

TG said: “They *MEASURE* the orbits.”

It’s not just the orbit. How do they “MEASURE” the temperature bias caused by the change in orbit? How do they “MEASURE” the temperature bias caused by the limb effect? How do they “MEASURE” the temperature bias caused by spatially incomplete data? How do they “MEASURE” the temperature bias caused by temporally incomplete data? How do they “MEASURE” the temperature bias caused by the residual annual cycle of the hot target? How do they “MEASURE” the bias caused by the differing locations of instrument observations? Etc. Etc. Etc.

TG said: “We’ve covered this MULTIPLE times and yet you *never* seem to learn. Calibration of the sensor is *NOT* the same thing as the calibration of the measuring device.”

What does that have to do with anything? The question was…why not just use one PRT since, according to you, uncertainty increases when averaging?

I’ll even extend the question. Why do they average anything at all, including but not limited to the two PRTs? Given your abject refusal to accept established statistical facts and your adherence to the erroneous belief that averages have more uncertainty than the individual elements they are based on, you’d think you would be just as vehemently opposed to UAH as you are to any other dataset. Yet here you are defending their methodological approach, in which averaging and adjustments abound.

“So it’s okay to take a single overlap period of only two instruments measuring different locations “

Again, this can be measured. Adjustments to surface stations in the distant past cannot be measured, only guessed at.

The two are not the same!

” How do they “MEASURE” the temperature bias caused by the limb effect?”

They don’t measure TEMPERATURE! They measure radiance. The temperatures calculated from those radiance measurements *do* have uncertainty, just as the radiance measurements themselves have uncertainty. But you can MEASURE systematic bias in the satellites because they *exist* today. You can’t do that with surface data from 30, 40, 80 years ago!

I simply don’t understand why this is so hard for you to grasp. Have you bothered to go look up the work of Hubbard and Lin? It doesn’t sound like it. It just sounds like you are throwing crap against the wall hoping some of it will stick so you can use it to cast doubt on UAH.

“What does that have to do with anything? The question was…why not just use one PRT since, according to you, uncertainty increases when averaging?”

Once again you show you have absolutely no grasp of physical reality. Those PRT sensors exist in a measurement device. That device will *add* to the uncertainty of the measurement based on its design, maintenance, location, etc. Even the electronics associated with those PRT sensors have uncertainty associated with each and every part on the circuit board. That adds to the uncertainty of the measurement as well.

Even if you put multiple PRT sensors, each with its own measuring equipment, in the same box there is no guarantee you will get the same reading from each. The electronic equipment that reads the sensor and stores the data can have different tolerances for each sensor. The uncertainties associated with each measurement device *will* add. That is why averaging only truly works for multiple measurements of the SAME THING using the SAME DEVICE. And even then you need to show that the same measurement device doesn’t have different systematic error on each measurement such as the measuring faces on a device wearing as material is passed across them.

The minute you separate those measurement devices physically into separate boxes the uncertainty gets worse because you are now measuring different things with different measurement devices.
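The disagreement in this exchange turns on which error components averaging can and cannot reduce. A minimal simulation, under assumed values, separates the two cases: an error that is independent in each sensor, and an error shared by both. The target temperature and both sigma values are hypothetical, and nothing here represents the actual PRT specifications or the UAH calibration procedure.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 200_000           # number of simulated calibration readings
TRUE_TEMP = 300.0     # hypothetical hot-target temperature, K
SIGMA_RANDOM = 0.1    # hypothetical independent noise per sensor, K
SIGMA_SHARED = 0.1    # hypothetical error common to both sensors, K

shared = rng.normal(0.0, SIGMA_SHARED, N)  # same draw applied to both sensors
prt1 = TRUE_TEMP + shared + rng.normal(0.0, SIGMA_RANDOM, N)
prt2 = TRUE_TEMP + shared + rng.normal(0.0, SIGMA_RANDOM, N)
mean = (prt1 + prt2) / 2.0

single_sd = np.std(prt1 - TRUE_TEMP)  # spread of one sensor's error
mean_sd = np.std(mean - TRUE_TEMP)    # spread of the two-sensor average's error

# Standard propagation: the shared part is untouched, the independent part halves in variance.
expected_mean_sd = np.sqrt(SIGMA_SHARED**2 + SIGMA_RANDOM**2 / 2.0)

print(f"single sensor: {single_sd:.3f} K")
print(f"average of two: {mean_sd:.3f} K (propagation formula: {expected_mean_sd:.3f} K)")
```

Under these assumptions the average has a smaller spread than a single sensor, but it never drops below the shared component. How much of a real instrument's error is shared versus independent is exactly what the commenters dispute, and the simulation does not settle that.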

“It is the same for UAH. They have to adjust the entire satellite timeseries to get them aligned.”

The UAH team must do a pretty good job of it because the UAH satellite data correlates with the Weather Balloon data.

TA said: “The UAH team must do a pretty good job of it because the UAH satellite data correlates with the Weather Balloon data.”

Does it?

[Christy et al. 2020]

UAH and Weather Balloons correlate:

https://www.researchgate.net/publication/323644914_Examination_of_space-based_bulk_atmospheric_temperatures_used_in_climate_research

TA said: “UAH and Weather Balloons correlate:

https://www.researchgate.net/publication/323644914_Examination_of_space-based_bulk_atmospheric_temperatures_used_in_climate_research“

So says Christy in that one publication using a procedure dependent on adjustments. I’ll just let Christy’s words speak for themselves.

And

They literally “adjust the radiosonde to match the satellite” in this publication.

Are you okay with this especially since you’ve mentioned your dissatisfaction with adjustments before?

And notice how the other publication, in which Christy is listed as the lead author, comes to a different conclusion.

They have to make up false UAH temperature data to fit the false climate agenda.

Correction — they have to make up false USHCN temperature data to fit the false climate agenda. UAH data is good stuff.

That is quite the indictment upon Dr. Spencer and Dr. Christy. Ya know…Dr. Christy has given testimony to congress on more than one occasion using his “false UAH temperature data”. I wonder what Anthony Watts and the rest of the WUWT editors and audience think about this?

UAH is good data, USHCN is falsified data. My “corrective” comment clarified that. Bottom line, there is no man-made climate change — just man-made climate alarmism — using junk science. CO2 is being demonized just like the Jews were.

So UAH and all of their adjustments is good, but USHCN using similar and arguably less invasive adjustments is falsified? What criteria are you using to make these classifications? I’d like to see if I can replicate your results if you don’t mind.

Here’s the data … https://www.ncei.noaa.gov/pub/data/ushcn/v2.5/

I know where the data is. I use it all of the time. I also know where the source code is (it is here). I didn’t ask where to find the data though. I asked what criteria you are using to classify UAH as good and USHCN as falsified even though UAH arguably uses more invasive adjustments than USHCN?

And it was only a few months ago that you told us “Altered data is no longer “data” — it is someone else’s “opinion” of reality.“ and “intellectual tyranny“ and “If you change reality (RAW data), you then create a false reality“ and “The “source” is the difference between the Raw and the Altered data. The “altered” data is in essence manufactured miss-information — not data.“

So I’d really like to know how UAH is so good even though by your criteria from a few months ago the data is tyrannical, opinion, false reality, misinformation, and/or not even data at all.

BTW…where is the UAH source code?

Why did Obama’s EPA hold a closed session to demonize CO2 through the Endangerment Finding?

JS said: “Why did Obama’s EPA hold a closed session to demonize CO2 through the Endangerment Finding?”

I have no idea. And it’s irrelevant because that has nothing to do with UAH’s methodology and adjustments.

I still want to know what criteria you are using to classify UAH as good and USHCN as falsified. I’d also be interested in knowing why you were broadly and generally against adjustments only a few months ago and now consider them good at least in the context of what UAH did. What changed there?

Why is increasing CO2 increasing polar bears?

JS said: “Why is increasing CO2 increasing polar bears?”

I have no idea. And what does that have to do with the discussion? Is this a joke to you or something?

Why does increasing CO2 cause decreasing violent tornadoes?

JS said: “Why does increasing CO2 cause decreasing violent tornadoes?”

I’m trying to have a serious discussion here. If you’re not willing to provide explanations I have no choice but to think that you are deflecting and diverting attention away from the fact that you espouse the goodness of UAH even though they use methods you earlier demonized. The best conclusion I can draw is that you prioritize the result over the method. Am I wrong?

The demonization of CO2 is the core problem — all else is a distraction. So … why is increasing CO2 reducing major hurricanes?

“So I’d really like to know how UAH is so good even though by your criteria from a few months ago the data is tyrannical, opinion, false reality, misinformation, and/or not even data at all.”

UAH adjustments are based on measured factors.

Surface data adjustments are based on biased guesses at the calibration error of measurement stations in the far distant past.

USHCN and UAH do *not* use similar adjustment processes.

How many times does this have to be pointed out to you before you internalize it?

UAH adjustments are *MEASURED* and applied consistently across the data set.

USHCN adjustments are pure guesses at the calibration bias of measurement stations in the distant past. Those guesses are purely subjective and typically cool the past – based on the biases of those making the guesses.

TG said: “USHCN and UAH do *not* use similar adjustment processes.”

Really? You don’t think UAH makes an adjustment for the time of observation of a location? You don’t think UAH makes an adjustment to correct for the changepoints caused by instrument changes?

Ya know what I think…I think you have no idea what UAH is doing and are just giving your typical knee jerk “nuh-uh” responses. Prove me wrong.

TG said: “UAH adjustments are *MEASURED* and applied consistently across the data set.”

There it is again! Explain to everyone how UAH adjustments are “MEASURED”.

UAH *knows* when and where their satellite will be at any point in time by tracking the satellite orbit. We were doing that back in the 60’s with amateur radio satellites such as the OSCAR series of satellites.

Time of observation 80 years ago for a surface station simply can’t be measured, only guessed at. Unless you have a time machine *no* one can go back and measure calibration bias for a surface station or exactly when a temperature was measured.

Why are you so adamant about trying to say that adjustments to readings made 80 years ago by a surface station based on measurements made today are just as accurate as the adjustments made by UAH today? It sure sounds like you are just pushing a meme or an agenda rather than physical fact.

TG said: “UAH *knows* when and where their satellite will be at any point in time by tracking the satellite orbit.”

How does UAH “measure” the temperature bias (in units of C or K) caused by the changing orbital trajectory? That is the question. And the question extends to all of the other biases as well. How is the temperature bias measured exactly?

“I wonder what Anthony Watts and the rest of the WUWT editors and audience think about this?”

Well, if they are anything like me, they think this is a silly question.

TA said: “Well, if they are anything like me, they think this is a silly question.”

You think it is silly to ask how Anthony Watts feels about his site being used to promote and advocate for something many on here believe is fraudulent? Do you really think Anthony Watts would just say “meh” and move on?

Are you for real ? You wonder….really ?

Many have asked this same question.

The past is always cooler than we remember, and the future warmer than we expect. (It’s a very scientifically objective process, as defined in the Klimate Koran.)

Because UAH gives the answer these guys want to see.

“Totally justifiably, to fix specific, known issues, not systematically to cool the past and heat the present”

Just a way of saying that UAH adjustments are good because we like the results. And the others are bad because we don’t.

Nick, your ability to read minds is as bad as your ability to explain the inexcusable.

Nick, you denigrate every person that read those devices and recorded those measurements when you modify them. You denigrate every person that built the station and maintained it. You are saying that the readings were done incorrectly because you have identified something wrong with them from a time more than a century after they were made.

You can’t say the readings were correct but “weren’t right”. That is entirely illogical. If they are correct, then they are correct. If a “break” occurs, then previous records still remain correct, but the new ones are different. The appropriate action is to discard one or the other. You can’t correct “correct” data. You are simply making up new information to replace existing data.

You have never provided a scientific field where previous measured data has been replaced with new information by using some scheme to identify both a “break” and what the new information should be. Why don’t you identify one?

If you don’t believe data is correct you destroy any trust in it by replacing it with new information. Isn’t it funny how all corrections go in one direction. As a mathematician you need to tell us how likely that would be for so many to come out that way!

“You are saying that the readings were done incorrectly”

Nobody is saying that. Adjustments are made for homogeneity – putting different readings on the same basis, as when a station moves, for example.

Never understood the rationale for changing temp data because a station moved. Temp stations measure the microclimate of a precise location. If you move the station then you have stopped measuring the microclimate of one location and are now measuring the microclimate of a new location. The microclimate changes dramatically with distance; where I live it is 2 degrees cooler than a 5-minute drive down the road.

Simonsays said: “Never understood the rationale for changing temp data because a station moved.”

When you move a station you change what it is measuring. For example, if a station is 200m elevation and you move it to a nearby location at 100m then you have introduced a nearly +1 C changepoint in the timeseries assuming the station is in a typical dry adiabatic environment. If that changepoint is not corrected then it creates a significant warm bias on the trend. UAH has a similar, but vastly more complicated issue regarding the drift and decay of the satellite orbits. The locations they are measuring are changing.
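The station-move example can be put into numbers with a small sketch. It assumes the standard dry adiabatic lapse rate of about 9.8 C per km, a move halfway through a 20-year record, and an otherwise perfectly flat temperature series; the flat series and all names are illustrative assumptions, not real station data.

```python
import numpy as np

DRY_ADIABATIC_LAPSE_C_PER_KM = 9.8  # approximate standard value

def elevation_offset_c(old_elev_m, new_elev_m):
    """Warming (positive) or cooling introduced by moving a station in elevation."""
    return (old_elev_m - new_elev_m) / 1000.0 * DRY_ADIABATIC_LAPSE_C_PER_KM

def trend_c_per_decade(series):
    """Least-squares slope of a monthly series, in C/decade."""
    t = np.arange(len(series)) / 120.0
    return np.polyfit(t, series, 1)[0]

offset = elevation_offset_c(200.0, 100.0)  # station moved from 200 m down to 100 m

# Hypothetical record: 240 months with no real climate change, moved at month 120.
raw = np.zeros(240)
raw[120:] += offset

corrected = raw.copy()
corrected[120:] -= offset  # remove the step introduced by the move

print(f"offset from move: {offset:+.2f} C")
print(f"trend before correction: {trend_c_per_decade(raw):+.3f} C/decade")
print(f"trend after correction:  {trend_c_per_decade(corrected):+.3f} C/decade")
```

In this idealized case the uncorrected step alone produces a large apparent warming trend. Real moves also change terrain, moisture, and land use, so a lapse-rate correction on its own is only a first approximation.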

There is a similar issue for instrument package changes as well. UAH is not immune from this either. In fact, they have a very complex adjustment procedure for dealing with this. In fact, the UAH adjustments in this regard are so complex that it requires correcting for biases in their bias corrections. But it has to be done; otherwise the commissioning/decommissioning of satellites through the years introduces significant changepoints in the underlying data that UAH processes.

The question is we all know that we change the temperature of the measurement yet your claim of +1 C is only a guess. That may work most of the time, yet I know I can find places where that will not be true. Take the move of the Detroit Lakes, Minnesota station: you moved it from a swampy, tree-covered, moist area to a high and dry prairie; the differences are going to be more than altitude. In fact, the variables will make any comparison or correction only a WAG without running both for several years and then comparing the data. Of course that was not done. All the correction in the world will not give you a real number only a guess and a bad guess at that.

mal said: “The question is we all know that we change the temperature of the measurement yet your claim of +1 C is only a guess.”

It is an example. Nothing more. Do you understand the concept and the problem?

mal said: “Take the move of the Detroit Lakes, Minnesota station: you moved it from a swampy, tree-covered, moist area to a high and dry prairie; the differences are going to be more than altitude.”

Maybe and maybe not. It depends. Some datasets, like GISTEMP, use pairwise homogenization to identify and correct non-climatic changepoints. Some datasets, like BEST, treat the changepoints as the commissioning of a new station timeseries, avoiding the adjustment altogether.
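The idea behind pairwise comparison can be sketched in a few lines. This is a deliberately simplified illustration, not the pairwise homogenization algorithm any dataset actually runs: it uses one neighbor, assumes a single step change, and works on synthetic data. The function name, noise levels, and step size are all assumptions.

```python
import numpy as np

def find_step(diff, min_seg=12):
    """Locate the single most likely step in a target-minus-neighbor series.
    Returns (index of first month after the step, estimated step size)."""
    best_k, best_step, best_score = None, 0.0, 0.0
    for k in range(min_seg, len(diff) - min_seg + 1):
        left, right = diff[:k], diff[k:]
        step = right.mean() - left.mean()
        # Standard error of the difference in means (Welch-style).
        se = np.sqrt(left.var(ddof=1) / len(left) + right.var(ddof=1) / len(right))
        score = abs(step) / se
        if score > best_score:
            best_k, best_step, best_score = k, step, score
    return best_k, best_step

rng = np.random.default_rng(42)
n = 240
regional = np.cumsum(rng.normal(0.0, 0.05, n))  # climate signal shared by both stations
neighbor = regional + rng.normal(0.0, 0.1, n)
target = regional + rng.normal(0.0, 0.1, n)
target[120:] += 1.0                             # hypothetical station move at month 120

# Differencing removes the shared signal and leaves the non-climatic step.
k, step = find_step(target - neighbor)
print(f"step detected near month {k}, size about {step:+.2f} C")
```

Once a step is located, one option is to subtract it from the later segment; the other approach mentioned above is to split the record at that month and treat the two pieces as separate stations. The sketch assumes the neighbor itself has no step and shares the target's climate signal, which is the assumption critics of the method question.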

mal said: “All the correction in the world will not give you a real number only a guess and a bad guess at that.”

That’s not what the evidence says [Hausfather et al. 2016]. But if you are convinced of it then why not forward your grievance to Anthony Watts and the WUWT editors regarding their promotion of a dataset that takes great liberty in employing adjustments?

“Maybe and maybe not. It depends. Some datasets, like GISTEMP, use pairwise homogenization to identify and correct non-climatic changepoints.”

Pairwise homogenization is a joke from the start to the finish. A difference as small as 20 miles can make a 1C or more difference in readings because of microclimate changes. And not just because of elevation. Terrain and land use make a *huge* difference as well in things like humidity, wind, etc. The east side of a moderate hill can have vastly different temps than the west side of a hill even if both are at the same elevation. Creation of an impoundment, even a large beaver pond, between two stations can cause a change in temperature readings in a specific measurement location. Why should this be considered a candidate for “homogenization” when it is actually measuring the change in the microclimate correctly?

TG said: “Pairwise homogenization is a joke from the start to the finish.”

Not according to the abundance of evidence available.

I’ll say this over and over if I have to. “Nuh-uh” arguments like what you often employ are not convincing, in the same way that it is not convincing when someone simply “nuh-uhs” the 1LOT, general relativity, the standard model, or the SB law.

Don’t be a “nuh-uh”er. If you have new evidence to add then present it. Start by quantifying how different from reality a trend calculated from PHA corrected data is vs. an alternative method you feel is better. That’s how you can be convincing. That’s how you’ll get people’s attention.

If you can’t or won’t do this then pesky skeptics like me have no choice but to dismiss your “nuh-uh” arguments.

Look at the attached. This is a small area of northeast Kansas. Look at the variation within small, small microclimates. There are several degrees of temperature difference.

How does ANY homogenization, let alone averaging, retain the deviations shown here? The variance involved is huge. Averaging and homogenization remove (hide) the original variance, making temperatures look more accurate with less variation than there actually is.

That is why people say GAT has several degrees of uncertainty. Nobody ever shows how variance is retained through mathematical manipulation.

When combining samples, means can be combined directly, in other words just making one set of numbers. Variances however are additive. Why is this never addressed?

“Not according to the abundance of evidence available.”

The proof was in Hubbard and Lin’s work around 2002. Their conclusion was that adjustments *had* to be done on a station-by-station basis in order to have any kind of validity at all. All those “homogenization” so-called scientists simply ignore their work and think that they can use other stations to infill or correct others.

Microclimate differences as small as the type of surface below the measuring stations, e.g. Bermuda vs fescue or sand vs clay, can cause differences in the readings of calibrated measurement stations even in close proximity.

Homogenization is nothing more than a guess with an unidentified uncertainty!

That is a perfect example of a station record that should be stopped and a new one started.

You need to ask why there is such a predilection for creating a “long” record, and why it is considered necessary.

There is a statistical reason and the folks insisting on doing it should tell you what it is!

Combining the old data with the new is fraudulent, don’t you get it?

CM said: “Combining the old data with the new is fraudulent, don’t you get it?”

I don’t get it. But it sounds like you are convinced of it. Perhaps you can direct your grievance to Anthony Watts and the WUWT editors who allow Dr. Spencer and Dr. Christy’s dataset, which you believe is fraudulent since they combine old and new data as part of their adjustment procedures, to be published and advocated for on a monthly basis.

Request DENIED.

Those adjustments are based on MEASURED factors, not subjective, biased guesses!

TG said: “Those adjustments are based on MEASURED factors, not subjective, biased guesses!”

And there it is again! How exactly are all of the adjustments made by UAH “MEASURED” and are not subjective?

Radar, directional antennas, visible crossing points in the sky, telescopes, optical distance measuring devices such as lasers.

Jeesh, amateur radio operators have been tracking their communication satellites since the early 60’s, and doing so pretty darn accurately. The orbits of those first satellites had to be known accurately in order for low power radio equipment and highly directional antennas to communicate through them. A little off on time overhead or on azimuth and you had a missed pass.

Tracking equipment is far, far advanced over what we had in the 60’s and 70’s.

Stick to your math, dubious as some of it is, because you don’t seem to know much about the physical world.

How do radar, directional antennas, telescopes, optical distance measuring devices, and amateur radio operators measure the temperature bias caused by orbital drift and decay? Where can I find these measurements in units of K or C?

“ If that changepoint is not corrected then it creates a significant warm bias on the trend.”

Which is why each data set should stand on its own. Stop one and start another. Since you do *not* know the calibration status of the old measurement station over time you simply cannot change the old data by 1C and expect any kind of accuracy at all!

TG said: “Which is why each data set should stand on its own. Stop one and start another.”

That’s not how UAH does it.

However, it is how BEST does it.

Maybe Anthony Watts, Monckton, and WUWT editors should start preferring BEST over UAH?

How do YOU know which one the people you list prefer?

UAH *is* one long radiance measurement data base. The issues with the satellites are *measurable* and can, therefore, be adjusted for across the record. The calibration bias of thermometers 80 years ago simply can’t be measured today therefore adjustments to those readings are biased guesses.

Not only biased, but in the end, they are declaring them wrong even though Nick Stokes has already declared they weren’t incorrect. I don’t get the logic of “correcting” correct data. Ultimately, there is only one reason, and that is to make the data look like you want it to look.

There is no rationale for that. The proper way to do that is to maintain both sites for a period of time and note the differences; that was not done. So now you only have guesses and there is no way to prove or disprove the guess.

Add in the human change of the old Stevenson Screen from whitewash to latex paint; we have no data on how that changed the measurements, since that was not tested.

Yet again, we have no knowledge of the impact of changing from the Stevenson Screen to the new electronic measuring system, since no tests were done, and when it was done the differences were dismissed because the result was not what the experts wanted to hear.

No, we cannot take the surface measurements as fact because the variables were never controlled and all the adjustments (guesses) will not fix that problem. No one can honestly say the land temperature record is accurate within plus or minus 3C for any given time period. Let alone within a hundredth of a degree.

So again I will make this statement, and prove me wrong (you can’t): The climate is always changing; the present question remains how much and which way. No one can “prove” how much and which way; all are guessing. My guess is it is going up; how much and why God only knows, mankind does not have a clue. Prove me wrong.

mal said: “No one can honestly say the land temperature record is accurate within plus or minus 3C for any given time period. Let alone within a hundredth of a degree.”

Rhode et al. 2013 and Lenssen et al. 2019 say it is about ±0.05 C for the modern era. Even Frank 2010 who uses questionable methodology thinks it could be as low as ±0.46 C.

But if you truly think ±3 C is the limit of our ability then how do you eliminate 6 C worth of warming since 1979 for a rate of 1.4 C/decade?

As usual you are confusing SENSOR uncertainty with measurement station uncertainty!

They are *NOT* the same thing. It’s why the ARGO float sensor can be calibrated to .001C but the float uncertainty is +/- 0.5C!

Your lack of understanding about the real world is showing!

TG said: “As usual you are confusing SENSOR uncertainty with measurement station uncertainty!”

No. I’m not. On the contrary it is you who continually confuses location and time specific uncertainty with the global average temperature uncertainty. I’ll repeat this as many times as needed. The combined uncertainty is not the same thing as the uncertainty of the individual elements being combined. It is a different value. Your own preferred source says so.

dr. adjustor weighs in on uncertainty, again, and does a face plant, again.

They don’t even realize they are acting like stock day traders who only use apps to track stock prices. Day traders are hoping to tell what a stock is going to do using exactly the same methods, price vs time. The problem is, 99% of them never dig into the underlying company fundamentals information to know why a company’s worth goes up or down. By the time a stock’s price goes up or down, they are already behind the eight ball and must try to catch up. Other traders who do the basic research have already beaten them to the punch.

The problem with climate science’s obsession with temperature trends is that they will never know what the fundamentals are. Any predictions are fraught with built-in error. Just look at the range of model predictions to see what I mean.

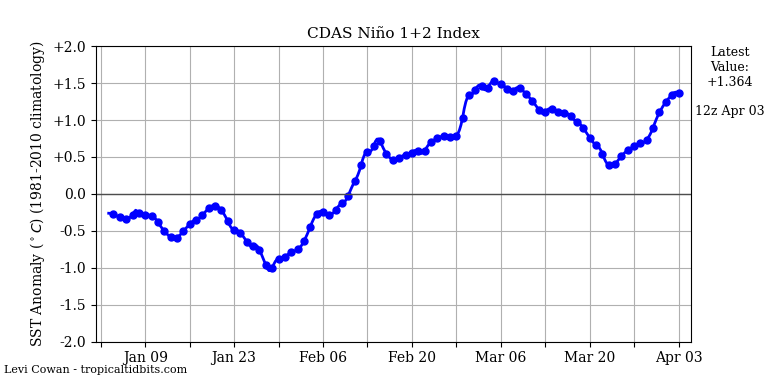

How many times do you want me to show you this graph? Time is not a factor in it, just CO2 and ENSO.

Will you ever ask Monckton why he doesn’t try to analyze his claim of a pause? Why he only considers time as a factor and ignores ENSO?

More dodging and weaving, you know the answer to this question but refuse to acknowledge it.

Somehow you have time on the x-axis and a temperature anomaly on the y-axis. I don’t see where CO2 has a temperature anomaly associated with it so I am not sure what (CO2 + ENSO) actually means. It looks like a time series to me rather than a functional relationship between the two variables. You need to show the equation you used to derive the temperature anomaly from the time.

I’ve tried to explain this before. I’ve calculated a multiple linear regression on the data. The dependent variable is the UAH anomaly, the independent variables are CO2 and ENSO (with some adjustments for lag and smoothing CO2). The red line is simply the prediction for each month based on those two variables.

By definition, the linear equations are a functional relationship. As I said, time is not a factor in the predictions, though it makes little difference as CO2 is close to a linear rise with respect to time.

Should also mention that the linear regression is based on the data up to the start of the “pause”, shown in green. This allows the pause to be the test data.
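For readers who want to see what a fit of this kind looks like, here is a minimal sketch using ordinary least squares. It does not reproduce the graph above: the CO2 and ENSO series are synthetic stand-ins, and the coefficients, the 4-month ENSO lag, and the train/test split index are all assumptions made up for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
n_months = 520
t = np.arange(n_months)

# Synthetic stand-ins, NOT real observations.
co2 = 337.0 + 0.15 * t                                   # ppm, near-linear rise
enso = np.sin(2 * np.pi * t / 45.0) + 0.3 * rng.standard_normal(n_months)

LAG = 4                                                  # hypothetical ENSO lag (months)
enso_lagged = np.roll(enso, LAG)                         # value from LAG months earlier

# Design matrix: intercept, CO2, lagged ENSO. Drop the first LAG wrapped rows.
X = np.column_stack([np.ones(n_months), co2, enso_lagged])[LAG:]
true_coefs = np.array([-3.2, 0.009, 0.12])               # assumed, for generating data
y = X @ true_coefs + 0.05 * rng.standard_normal(n_months - LAG)

# Fit only on the earlier period; hold out the rest as test data.
split = 420
coefs, *_ = np.linalg.lstsq(X[:split], y[:split], rcond=None)

# Predict the held-out period from CO2 and ENSO alone (time is not a regressor).
pred_test = X[split:] @ coefs
rmse_test = float(np.sqrt(np.mean((y[split:] - pred_test) ** 2)))
```

Note that time never enters the design matrix directly, although a near-linear CO2 series is itself almost a proxy for time, as the comment above acknowledges.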

“There is no rationale for that. The proper way to do that is to maintain both sites for a period of time and note the differences; that was not done.”

That really doesn’t help much in correcting past data because you simply can’t identify what the calibration status of the original location was in the past. Most stations have drift which means the calibration changes over time. Identifying the present error because of drift doesn’t identify the past error because of drift. Thus applying present error correction to past temperature readings just isn’t very “scientific”.

In fact it is fraudulent.

One of the other components of drift in land surface stations is land use changes and flora growth. Wind breaks can grow and affect wind velocities at a station over time. Buildings and asphalt not even close can affect temperature. Grass changes both underneath the station and surrounding areas can affect measured temperatures. These are all things that can cause microclimate changes at the station and appear as drift but are not because of “thermometer” calibration.

You nailed it. No one can *prove* you wrong.

A station move should be treated by stopping the old record and starting a new one. Trying to create a “long record” from two different microclimates by creating new information is very unscientific. If the data is unfit for purpose, discard it.

Homogenization done by creating new information just creates additional bias. Why does homogenization end up cooling temps in almost all cases? Tell us what the chances of that occurring are and why. Why not both cooling and heating in equal portions?

I am still waiting on you to answer my question!

“You have never provided a scientific field where previous measured data has been replaced with new information by using some scheme to identify both a “break” and what the new information should be. Why don’t you identify one?”

JG said: “A station move should be treated by stopping the old record and starting a new one.”

That’s what BEST does.

JG said: “Trying to create a “long record” from two different microclimates by creating new information is very unscientific.”

UAH does this.

JG said: “Homogenization done by creating new information just creates additional bias.”

UAH does this.

JG said: “Why does homogenization end up cooling temps in almost all cases?”

It is true that for the US it increases the warming trend relative to the raw data. This is primarily because the time-of-observation change bias is negative and the instrument/shelter change bias is negative.

However, on a global scale the net effect of adjustments is to reduce the overall warming trend.

“UAH does this.”

No, it doesn’t.

TG said: “No, it doesn’t.”

I’m going to call your bluff here. I don’t think you have any idea what UAH is doing. Explain to everyone how UAH does the limb correction, diurnal heating cycle correction, deep convection removal, linear diurnal drift correction, non-linear diurnal drift correction, removal of residual annual cycle related to hot target variations, orbital decay correction, removal of dependence on time variations of hot target temperature, deconvolution of the TLT layer, spatial infilling, temporal infilling, etc. See if you can do so without invoking the creation of new data or the combining of timeseries representing different microclimates and being correct in your explanations.

Are you really this dense? Or is this all part of an act?

As I keep telling you — THESE ARE ALL MEASURED! They are not guesses (meaning UNMEASURED) at the calibration of a measuring device 80 years ago based on current calibration.

Take orbital fluctuations. THEY CAN BE MEASURED AND THEY APPLY TO *ALL* OF THE DATA.

You keep trying to justify changes to past surface temperature measurements based on nothing but biased guesses. It’s indefensible. You only look like a fool in trying to defend the practice.

TG said: “As I keep telling you — THESE ARE ALL MEASURED!”

You keep saying it, but saying it over and over does not make it right. You also keep deflecting and diverting away from explaining HOW all of their bias corrections are measured. Why is that?

TG said: “Take orbital fluctuations. THEY CAN BE MEASURED AND THEY APPLY TO *ALL* OF THE DATA.”

How do they “measure” the temperature bias caused by orbital drift and decay?

TG said: “You keep trying to justify changes to past surface temperature measurements based on nothing but biased guesses.”

I’m not talking about surface temperature measurements. I’m talking about UAH adjustments. Those adjustments change past measurements.

Because ALL of the necessary information is recorded along with the microwave data.

Is this really so hard for you?

CM said: “Because ALL of the necessary information is recorded along with the microwave data.”

Where in the raw MSU data is the temperature bias recorded? Can you post a link to it so that I can review it? How come Spencer and Christy do not mention it in any of their methods papers?

CM said: “Is this really so hard for you?”

Yes. I’ve searched extensively. I don’t see it anywhere. Spencer and Christy don’t seem to be aware of it either.

Do you understand what is being measured? Do you think the satellites have devices that read physically remote temperature sensors?

Here is a paper that reviews some of the issues with using spectral irradiance to calculate temperatures.

Atmospheric Soundings | Issues in the Integration of Research and Operational Satellite Systems for Climate Research: Part I. Science and Design |The National Academies Press

Wikipedia has a succinct definition of UAH measurements.

WTH is “temperature bias”?

CM said: “WTH is “temperature bias”?”

The error of the temperature measurement. Where is that included in the raw MSU data?

You still don’t understand that uncertainty =/= error, yet you go around lecturing on the subject.

CM said: “You still don’t understand that uncertainty =/= error, yet you go around lecturing on the subject.”

Nobody is talking about uncertainty here.

The claim is that UAH “measures” all of the temperature biases that exist. You said all of the necessary information in the context of the temperature biases is included along with the microwave data.

My question is where is it included? I don’t see it. Dr. Spencer and Dr. Christy do not see it.

If you can’t or won’t form a response directly related to your claim that all information is included along with the microwave data and/or the temperature bias itself then I have no choice but to dismiss your claim.

I’m going to be blunt here. I don’t think you have the slightest idea how UAH is producing their published products. Prove me wrong.

Once again, you are in no position to place demands on other people.

“The claim is that UAH “measures” all of the temperature biases that exist.”

Stop making things up. *NO* one claims this.

They claim that orbital factors can be measured.

And the satellites do *not* measure temperature, they measure radiance. And the measure of that radiance *certainly* has uncertainty because of many external factors as well as internal factors.

And this doesn’t even include the uncertainties in the conversion algorithm changing radiance to temperature.

And you’ve been told *many* times that the uncertainties of the UAH are less than any of the surface temperature measurements. For one thing there are just a limited number of satellites compared to the thousands of temperature measuring devices. For independent, random variables the uncertainty grows with an increased number of measurement devices (just like the variance of independent, random variables add).

For some reason you just can’t seem to accept any of this. You are pushing an agenda – trying to denigrate the usefulness of UAH compared to the surface temp data and the climate models. If you think that isn’t becoming more and more obvious with each of your posts then you are only fooling yourself!

TG said: “Stop making things up. *NO* one claims this.”

UAH publishes temperature products. I’m told that all of the biases they correct for are “measured”. Examples of the statements are here, here, and here.

TG said: “And the satellites do *not* measure temperature, they measure radiance.”

We are not discussing how the temperatures are measured. We are discussing how the biases are measured.

The way in which the temperatures are measured is a big topic as well and worthy of discussion. It’s just not what is being discussed at the moment.

Direct measurement of errors is quite impossible because true values are unknowable.

Like you keep trying to tell them – error is not uncertainty. They never seem to be able to internalize that! It’s probably because they’ve never been in a situation where their personal liability is at issue if they don’t account for uncertainty properly.

Each and every statistics textbook publisher today should be sued for never including uncertainty of data elements in their teaching examples. Even if they ignore the uncertainty intervals in working out the examples they would have to explicitly state that and the students would at least get an inkling about the effects of uncertainty.

They still haven’t gotten past the terminology section of the GUM that explains this quite clearly.

If bdgwx is following his usual tack, he is hoping to get some kind of answer for his “measurement bias” demand that he can then turn around and use as a weapon in his Stump the Professor game.

Then those biases (systematic errors) cannot possibly be included with the microwave data.

Your clown show is quite threadbare.

Why not? Are you saying the MSU’s can’t be calibrated?

No. I didn’t say that.

“ I’m told that all of the biases they correct for are “measured”.”

All the biases you MENTIONED *are* measured. They mostly had to do with orbital fluctuations. Those can be measured down to the width of a laser beam!

“We are not discussing how the temperatures are measured. We are discussing how the biases are measured.”

*YOU* were talking about the satellites measuring temperature. They don’t.

And you’ve been told multiple times about the uncertainties associated with UAH by several people on here. You just conveniently forget them all the time and claim we think UAH is 100% accurate!

TG said: “All the biases you MENTIONED *are* measured. They mostly had to do with orbital fluctuations.”

I mentioned a lot of biases in this thread. Orbital fluctuations are one among many. But if you want to focus on just that for now that’s fine. How does UAH “measure” the temperature bias or systematic error caused by orbital fluctuations? Be specific.

TG said: “*YOU* were talking about the satellites measuring temperature. They don’t.”

I’m talking about how UAH applies adjustments; not how the temperature is measured. That is a different topic.

TG said: “And you’ve been told multiple times about the uncertainties associated with UAH by several people on here. You just conveniently forget them all the time and claim we think UAH is 100% accurate!”

Uncertainty has nothing to do with this. That is a different topic.

Stay focused. How does UAH “measure” the temperature bias or systematic error?

Why do you expect and insist that Tim know this?

Go ask Spencer, it’s his calculation.

I don’t need to ask Spencer. He and Christy published a textual description of the procedure they used to identify and quantify the bias adjustments.

Then WTH are you demanding I tell you?

Fool.

“The error of the temperature measurement. Where is that included in the raw MSU data?”

The MSU (Microwave Sounding Unit) doesn’t measure temperature. It measures radiance. That radiance is then converted to temperature using an algorithm that has many inputs.

A true uncertainty analysis of all elements would be quite instructive, and that includes the uncertainty of the measuring device as well as the uncertainty of the algorithm.

TG said: “The MSU (Microwave Sounding Unit) doesn’t measure temperature. It measures radiance. That radiance is then converted to temperature using an algorithm that has many inputs.”

So the temperature bias or systematic error is not included in the raw MSU data stream?

TG said: “A true uncertainty analysis of all elements would be quite instructive, and that includes the uncertainty of the measuring device as well as the uncertainty of the algorithm.”

We are not talking about uncertainty. We are talking about the temperature bias or systematic error of the measuring device and algorithm that aggregates all of the measurements.

You and Carlo Monte keep telling me that this temperature bias or systematic error is measured. I want to know how you think it is measured. Where do I find these measurements?

NO!

All the information needed is already known, there is no need to make up fake data, as is your wont.

I’ll give you a dose of bellcurveman: “Go talk to Spencer and ask him”.

CM said: “All the information needed is already known, there is no need to make up fake data, as is your wont.”

Where does it exist? Where can I find it? How come Dr. Spencer and Dr. Christy make no mention of it?

There are papers on the satellites used. I have read several but didn’t save them. If you search the internet you can find papers discussing the MSU’s and other sensors on the satellites.

I have a lot of them downloaded going all the way back to the NIMBUS prototype that preceded the operational TIROS-N and successors.

Well pin a bright shiny star on your lapel.

Adjustments are made for homogeneity. That is where the fiddles enter. If the data are not already homogeneous, they should be discarded and not incorporated into the history.

Data are never homogeneous. Look at the attached. The differences in temperature are large over a very small area. Like it or not, microclimates are not the same at any distance. Adjusting temperature data to achieve homogeneous temperature averages is a farce. It is done in order to manufacture long temperature records at individual stations.

That is not a scientific treatment of recorded, measurement data.

If you discard all of the data then how do you eliminate the possibility that the planet warmed by say 5.5 C as opposed to the 0.55 ± 0.21 C since 1979 like what UAH says?

Don’t you get it yet? There is no single temperature of “the planet”.

If the uncertainty of the data is greater than the differential trying to be identified then it is impossible to determine the differential.

The planet could have warmed or cooled by 5.5C and you simply can’t tell if the uncertainty is 6C. If the uncertainty is 1C then how do you determine a differential of .55C?

Your uncertainty of 0.21C is LESS than the uncertainty of the global measuring devices! You are, as usual, either

Neither of these is true in the real world.

“You can’t correct “correct” data. You are simply making up new information to replace existing data.”

Exactly. Alarmists want us to think they know exactly how to adjust past temperatures.

Alarmists want us to accept their manipulation of the temperature record as legitimate.

We don’t need a new temperature record. The old one does much better. The old one says we have nothing to worry about from CO2. The old one was recorded when there was no bias about CO2 warming.

Alarmists don’t want us to know this so they bastardized the past temperature records in order to scare people into doing what the Alarmists want them to do: Destroy our nations and societies by demonizing CO2 to the point that oil and gas are banned.

All because of a bogus, bastardized temperature record. It is the only “evidence” the alarmists have to back up their claims of unprecedented warming, and their evidence is a lie they made up out of whole cloth.

Notice all the alarmists jumping in to defend these temperature record lies. They *have* to defend them because it’s the only thing they have to promote their scary CO2 scenarios. Without the bastardized temperature record, the alarmists would have nothing to show and nothing to talk about.

Keep telling us how you know better what the temperature was in 1936, than the guy that wrote the temperature down at that time. The bastardized temperature record is a bad joke. And these jokers want us to accept it. No way! Go lie to someone else.

Most UAH adjustments are based on MEASURED bias such as orbital fluctuations.

This is simply not possible with surface data collected by thousands of temperature sensors, be they land or ocean.

Thus the UAH record is consistent while the surface data is not. UAH adjustments don’t cool the past and heat the present by changing past data, decades old, based on current measurement of accuracy.

It isn’t a matter of like/dislike. It is a matter of consistency.

TG said: “Most UAH adjustments are based on MEASURED bias such as orbital fluctuations.”

There it is again. How exactly do you think UAH “MEASURED” the biases they analyzed?

TG said: “UAH adjustments don’t cool the past and heat the present by changing past data, decades old, based on current measurement of accuracy.”

Oh yes they do. They also make adjustments to future data using past measurements in more than one way.

TG said: “It is a matter of consistency.”

0.307 C/decade worth of adjustments from version to version over the years isn’t what I would describe as consistency. But what do I know. I still accept that averaging reduces uncertainty, the 1LOT is fundamental and unassailable, and that the Stefan-Boltzmann Law is more than just a mere suggestion that only works if the body is in equilibrium with its surroundings.

“There it is again. How exactly do you think UAH “MEASURED” the biases they analyzed?”

Highly directional antenna. Telescopes. Time differences between observation points. Radar.

LOTS OF WAYS TO MEASURE!

“Oh yes they do. They also make adjustments to future data using past measurements in more than one way.”

BUT THESE ARE *MEASURED* biases! Not guesses about calibration of devices 80 years ago!

“0.307 C/decade worth of adjustments from version to version over the years isn’t what I would describe as consistency.”

The biases are *MEASURED* and applied consistently to the data.

“ I still accept that averaging reduces uncertainty,”

Averaging does *NOT* reduce uncertainty unless you can show that all of the error is random and symmetrical. Which you simply cannot show for surface temperature measurements which consist of multiple measurements of different things using different devices. You cannot show that all of the errors from all those measurements of different things using different devices form a random, symmetrical distribution where they all cancel out.
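The distinction being argued over can be illustrated with a small simulation. This is a toy sketch under stated assumptions, not a model of any real network: errors are Gaussian, the "shared" component is a single bias common to every sensor in a trial, and the function name and all numbers (100 sensors, 0.5 C spreads, 2000 trials) are hypothetical.

```python
import random
import statistics

def spread_of_mean_error(n_sensors, random_sd, shared_bias_sd,
                         trials=2000, seed=1):
    """Standard deviation of the error in an n-sensor average, estimated
    by simulation. Each sensor error = shared bias + independent noise."""
    rng = random.Random(seed)
    mean_errors = []
    for _ in range(trials):
        # One bias draw per trial, common to every sensor (systematic part).
        shared = rng.gauss(0.0, shared_bias_sd)
        errs = [shared + rng.gauss(0.0, random_sd) for _ in range(n_sensors)]
        mean_errors.append(sum(errs) / n_sensors)
    return statistics.pstdev(mean_errors)

# Purely independent, symmetric errors: the spread shrinks roughly as 1/sqrt(N).
random_only = spread_of_mean_error(100, 0.5, 0.0)

# Add a shared systematic component: averaging does not remove it.
with_shared = spread_of_mean_error(100, 0.5, 0.5)

print(round(random_only, 3), round(with_shared, 3))
```

With these made-up numbers the independent case comes out near 0.05 C while the shared-bias case stays near 0.5 C. The simulation says nothing about which case better describes real surface stations; that is the substance of the disagreement.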

“ if the body is in equilibrium with its surroundings”

You can’t even get this one correct. It has to also be in equilibrium internally – no conduction or convection internally. No equilibrium – wrong answer from S-B!

TG said: “Highly directional antenna. Telescopes. Time differences between observation points. Radar.”

UAH uses directional antenna, telescopes, and radar?

TG said: “BUT THESE ARE *MEASURED* biases!”

How?

TG said: “Averaging does *NOT* reduce uncertainty unless you can show that all of the error is random and symmetrical.”

Well now this is a welcome change of position. It was but a couple of months ago you were still telling Bellman and me that the uncertainty of an average is more than the uncertainty of the individual elements upon which it is based.

TG said: “You can’t even get this one correct. It has to also be in equilibrium internally – no conduction or convection internally. No equilibrium – wrong answer from S-B!”

Wow. Just wow!

You might as well extend your rejection to Planck’s Law as well since the Stefan-Boltzmann Law is derived from it. Obviously you’re probably wanting to apply your rejection to the radiant heat transfer equation $q = \varepsilon \sigma (T_h^4 - T_c^4) A_h$ since it is derived from the SB law, which in turn is going to force you to reject the 1LOT and probably 2LOT as well. Actually, the more I think about it, your rejection here is so thorough I’m not sure I’m going to be able to convince you that any thermodynamic law is real. And if I can’t do that then how can anyone possibly convince you of anything related to physics?
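As a worked instance of the radiant heat transfer equation quoted above, here is a short sketch. The input values (emissivity 0.95, 300 K and 280 K, 1 m² of area) are arbitrary illustrations, and the function name is hypothetical; only the Stefan-Boltzmann constant is a fixed physical value.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4 (CODATA value).
SIGMA = 5.670374419e-8

def net_radiant_power_w(emissivity, t_hot_k, t_cold_k, area_m2):
    """Net radiated power q = eps * sigma * (Th^4 - Tc^4) * A, in watts."""
    return emissivity * SIGMA * (t_hot_k ** 4 - t_cold_k ** 4) * area_m2

# Illustrative inputs only: a 1 m^2 surface at 300 K facing 280 K surroundings.
q = net_radiant_power_w(0.95, 300.0, 280.0, 1.0)
print(round(q, 1))  # about 105.2 W

# The fourth-power dependence: doubling the temperature of a body radiating
# to 0 K surroundings multiplies the emitted power by 2**4 = 16.
ratio = (net_radiant_power_w(1.0, 600.0, 0.0, 1.0)
         / net_radiant_power_w(1.0, 300.0, 0.0, 1.0))
```

The formula itself is agnostic about the equilibrium question raised in the replies; the sketch only shows the arithmetic.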

“UAH uses directional antenna, telescopes, and radar?”

Once again your lack of understanding of the physical world is just simply dismaying! The TRACKING stations use those to measure orbital information!

“How?”

Do you *truly* need a dissertation on how radar works? Or a laser distance measuring device? At it’s base, satellite tracking just uses triangulation and basic trigonometry, Do you need a class on navigating and trig?

“Well now this is a welcome change of position. It was but a couple of months ago you were still telling Bellman and me that the uncertainty of an average is more than the uncertainty of the individual elements upon which it is based.”

No, that is *NOT* what I told you. I told you that the uncertainty of the mean of a sample *has* to have the uncertainty of the elements in the sample propagated to the mean of the sample. You cannot just assume the mean of the sample is 100% accurate with no uncertainty.

If the individual elements can be shown to have only random error and that it is symmetrically distributed then it can be assumed that the errors cancel. You simply cannot show, just by assumption, that the uncertainty in measurements of different things using different devices results in an error distribution that is random and symmetrical!

You and bellman keep wanting to assume the standard deviation of the sample means is the uncertainty of the mean calculated from those sample means. That is wrong unless you can show that the uncertainties form a random and symmetrical distribution.

If the sample means M1 through Mn each carry their own uncertainty, such as +/- 1.0, +/- 1.5, etc., then you simply can’t say the uncertainty of the average (M1 + M2 + … + Mn)/N is the standard deviation of M1 through Mn. Doing so requires ignoring those uncertainties.
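For readers following the argument, here is a minimal Python sketch of the quantities being compared. It is only an illustration: the values in `means` and `uncerts` are hypothetical, the function names are made up for this example, and the root-sum-square formula assumes the individual uncertainties are independent, which is exactly the assumption under dispute here.

```python
import math
import statistics


def spread_of_values(values):
    """Sample standard deviation of the values M1..Mn themselves."""
    return statistics.stdev(values)


def propagated_uncertainty_of_mean(uncertainties):
    """Uncertainty of (M1 + ... + Mn)/N by root-sum-square propagation.

    Assumes the individual uncertainties are independent (an assumption).
    """
    n = len(uncertainties)
    return math.sqrt(sum(u * u for u in uncertainties)) / n


def worst_case_uncertainty_of_mean(uncertainties):
    """Upper bound for (M1 + ... + Mn)/N if nothing cancels (linear sum)."""
    return sum(uncertainties) / len(uncertainties)


# Hypothetical numbers, for illustration only.
means = [10.2, 9.8, 10.5, 10.1]
uncerts = [1.0, 1.5, 1.0, 0.5]

print("std dev of the means:      ", spread_of_values(means))
print("root-sum-square propagated:", propagated_uncertainty_of_mean(uncerts))
print("worst case (no cancelling):", worst_case_uncertainty_of_mean(uncerts))
```

The three numbers answer different questions. The first describes how scattered the M values are, while the other two describe how the stated +/- figures carry through the averaging under two different assumptions about cancellation.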

That *is* what you, Bellman, and all the climate scientists want to do – ignore uncertainty because it is inconvenient to have to propagate it and consider it in your analysis of the data.

So you just assume it all cancels no matter what!

Planck’s Law is the same. It assumes an object in equilibrium. I don’t know why that is so hard for you to understand. Any heat that is being conducted within an object is not available for radiation. It can’t do both conduction and radiation at the same time.

from http://www.tec-science.com:

“The Stefan-Boltzmann law states that the intensity of the blackbody radiation in thermal equilibrium is proportional to the fourth power of the temperature! ” (bolding mine, tg)

Perhaps this will help you understand – if an object is not at thermal equilibrium then how do you know its actual temperature? It could be cooler on part of its surface and warmer on another. What temperature do you use in the S-B calculation?

TG said: “The TRACKING stations use those to measure orbital information!”

I’m not asking how UAH knows the orbital trajectories. That’s easy. I’m asking how you think UAH measures the temperature bias caused by orbital drift and decay.

TG said: “Any heat that is being conducted within an object is not available for radiation. It can’t do both conduction and radiation at the same time.”

Excuse me? Are you telling me that I can’t get radiation burns from fire because it is conducting heat to its surroundings? Are you telling me that I can’t feel the radiant heat from a space heater because it is conducting heat internally and externally to the air surrounding it?

TG said: “Perhaps this will help you understand”

No it doesn’t. I know what the SB law says. That does not help me understand your position that the SB law is invalid unless the body is in thermal equilibrium with its surroundings. That was your statement. And it evolved from your original statement that water below the surface does not radiate according to the SB law. It is also important to note that the SB law already has a provision for bodies that are not true blackbodies via the emissivity coefficient. Your source only examines the idealized case where emissivity is 1. Bodies do not have to be in thermal equilibrium with their surroundings or even within for them to radiate toward their surrounds according to the SB law. This is the whole principle behind the operation of thermopiles and radiometers. Anything and everything with a temperature emits radiation that delivers energy in accordance with the SB law to the thermopile or radiometer. You just have to set the emissivity correctly to get a realistic temperature reading. My Fluke 62 forces me to set the emissivity coefficient of what I’m measuring. And it is rarely in thermal equilibrium with the target, regardless of whether that target is a parcel of water below the surface, a clear or cloudy sky, a flame, etc., and yet it still works.
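To make the emissivity point concrete, here is a simplified Python sketch of turning a measured radiant exitance back into a temperature with the SB law. It is an idealized broadband calculation, not a description of how any particular instrument works: real handheld IR thermometers sense a limited wavelength band and also deal with reflected ambient radiation, both of which are ignored here. The function names and the 300 K / 0.95 example values are assumptions for illustration.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def radiant_exitance(temp_k, emissivity):
    """Grey-body radiant exitance in W/m^2: M = emissivity * sigma * T^4."""
    return emissivity * SIGMA * temp_k ** 4


def inferred_temperature(exitance, emissivity_setting):
    """Invert the SB law for T, given the emissivity dialed into the meter."""
    return (exitance / (emissivity_setting * SIGMA)) ** 0.25


# Hypothetical target: a surface at 300 K with emissivity 0.95.
signal = radiant_exitance(300.0, 0.95)

print("setting 0.95:", inferred_temperature(signal, 0.95), "K")
print("setting 1.00:", inferred_temperature(signal, 1.00), "K")
```

With the matching emissivity setting the sketch recovers the target temperature. With the setting left at 1.0 it reads a few kelvin low, which is why the setting matters.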

BTW…here is the radiant heat transfer equation for grey bodies.

Q = σ(Th^4 – Tc^4) / [(1-εh)/(Ah*εh) + 1/(Ah*Fhc) + (1-εc)/(Ac*εc)], where h is the hot body, c is the cold body, T is temperature, A is area, ε is emissivity, and Fhc is the view factor from hot to cold. It is derived from the 1LOT and the SB law. What would the point be if it only worked when h and c were in thermal equilibrium?
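As a rough illustration, the grey-body exchange equation above can be written as a short Python function. This is a sketch under the usual textbook assumptions (two diffuse grey surfaces, each at a single uniform temperature). The function and parameter names are hypothetical, and the denominator is grouped as (1-εh)/(Ah εh) + 1/(Ah Fhc) + (1-εc)/(Ac εc).

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def net_radiant_exchange(t_hot, t_cold, eps_hot, eps_cold,
                         area_hot, area_cold, view_factor):
    """Net radiant heat flow in watts from the hot body to the cold body.

    Temperatures are in kelvin, areas in m^2, and view_factor is Fhc.
    """
    # Sum of the two surface resistances and the space resistance.
    resistance = ((1.0 - eps_hot) / (area_hot * eps_hot)
                  + 1.0 / (area_hot * view_factor)
                  + (1.0 - eps_cold) / (area_cold * eps_cold))
    return SIGMA * (t_hot ** 4 - t_cold ** 4) / resistance


# Hypothetical example: two 1 m^2 surfaces with emissivity 0.95, Fhc = 1.
print(net_radiant_exchange(310.0, 290.0, 0.95, 0.95, 1.0, 1.0, 1.0), "W")
print(net_radiant_exchange(300.0, 300.0, 0.95, 0.95, 1.0, 1.0, 1.0), "W")
```

The second call shows what happens when Th = Tc: the net exchange is zero, even though each surface is still emitting.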

I’ll repeat. Anything and everything emits radiation. It doesn’t matter where it is. This includes parcels of water below the surface regardless of whether the parcel is in thermal equilibrium with its surroundings or not. It will emit radiation all of the time. And we use this fact in combination with the 1LOT and SB law to determine the temperature of the parcel. That’s why my Fluke 62 records the correct temperature of water even when dunked below the surface.

“I’m asking how you think UAH measures the temperature bias caused by orbital drift and decay.”

What difference does it make as long as it is consistent? Again, as I’ve told you multiple times, the satellites don’t measure temperature, they measure radiance. They then convert that into a temperature. As long as they do the conversion in a consistent manner on all of the data then the metric they determine is as useful as any surface measurement data and probably more useful because their coverage of the earth is better!

” Are you telling me that I can’t get radiation burns from fire because it is conducting heat to its surroundings? “

NO! That is *NOT* what I said. What I said is that any heat the fire is conducting into the ground is not available for radiation! That heat can’t radiate while it is being conducted into the ground! S-B won’t give you the right answer because it requires thermal equilibrium of the object. If there is conduction going on within the object then it is not at thermal equilibrium! It’s the exact same thing for the space heater. Heat that is being conducted internally or externally is not also available for radiation. S-B will give you the wrong answer.

Why is this so hard for you to understand?

“That does not help me understand your position that the SB law is invalid unless the body is in thermal equilibrium with its surroundings.”

Because conducted heat is not available for radiation. Again, why is this so hard to understand?

“emissivity coefficient.”

Which has nothing at all to do with the difference between conducted heat and radiated heat. Nice try at the argumentative fallacy of Equivocation.

“Your source only examines the idealized case where emissivity is 1”

Again, the difference between conducted heat and radiated heat has nothing to do with emissivity. Emissivity is a measure of the efficiency of radiation, it is not a measure of conductivity.

“Bodies do not have to be in thermal equilibrium with their surroundings or even within for them to radiate toward their surrounds according to the SB law.”

They do *NOT* have to be in thermal equilibrium in order to radiate. They *DO* have to be in thermal equilibrium in order to radiate according to the S-B equation. Conducted heat, be it internal or external, is not available for radiation. The S-B equation assumes that *all* the heat in a body is available for radiation — meaning it is in thermal equilibrium.

” You just have to set the emissivity correctly to get a realistic temperature reading. My Fluke 62 forces me to set the emissivity coefficient of what I’m measuring.”

Again, emissivity is a measure of radiative efficiency. It has nothing to do with the total heat in an object, part of which is radiated and part of which is being conducted.

“Q = σ(Th^4 – Tc^4) / [(1-εh)/(Ah*εh) + 1/(Ah*Fhc) + (1-εc)/(Ac*εc)]”

Where is the factor for the amount of heat being conducted away and is thus not available for radiation?

Your equation has an implicit assumption of thermal equilibrium and your blinders simply won’t let you see that!

As usual, it is an indication of your lack of knowledge of the real world! To use your space heater analogy, the amount of heat being conducted away from the space heater to the floor via conduction through its feet is *NOT* available for radiation via the heating coils. Thus S-B will *not* give a proper value for radiated heat based on the input of heat (via electricity from the wall) to the heater. If the coils are not in thermal equilibrium because one end of the coil is hotter than the other end because of conductivity then the total radiation from the coil can’t be properly calculated because S-B has no factor for conductivity.

“Anything and everything emits radiation. It doesn’t matter where it is. This includes parcels of water below the surface regardless of whether the parcel is in thermal equilibrium with its surroundings or not. “

Your first two sentences are true. Your third one is not. Conductive heat is not available for radiation and therefore S-B can’t give you a proper value for the amount of radiation from an object.

You proved this with your own equation. It has no factor for conductive heat.

Take off your blinders, open your eyes and stop trying to tell everyone that blue is really green!

TG said: “What difference does it make as long as it is consistent?”

You said it was MEASURED. I want to know how you think it was MEASURED.

TG said: “They do *NOT* have to be in thermal equilibrium in order to radiate.”

Exactly. Yet that is what Jim Steele was vehemently rejecting for water below the surface. He doesn’t think it radiates at all, which you then started defending, perhaps because you jumped into the conversation late and were unaware of context. I don’t know.

TG said: “They *DO* have to be in thermal equilibrium in order to radiate according to the S-B equation.”

That is for the body itself. This discussion is not analyzing bodies that are not in thermal equilibrium themselves. This discussion is analyzing two bodies. A parcel of water at temperature Th and its surroundings at temperature Tc. Both bodies will radiate toward each other according to the SB law with an emissivity of ~0.95 or so. This happens even though there is no equilibrium between the parcel and surroundings.

BTW…a body that is not in thermal equilibrium will have a rectification error whose magnitude is related to the spatial variability of its radiant exitance or temperature. It is an important consideration, especially for the 3 layer energy budget models in which the layers are not themselves in thermal equilibrium. We just aren’t discussing non-homogeneous emitters right now.
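One way to picture that rectification error is a small Python sketch comparing the exitance computed from a body's average temperature with the average of the exitances of its parts. This is my reading of the term, not a formal definition. It assumes equal-area patches with a common emissivity, and the function names and patch temperatures are hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def exitance_of_mean_temperature(temps_k, emissivity=1.0):
    """SB exitance evaluated at the average temperature of the patches."""
    mean_t = sum(temps_k) / len(temps_k)
    return emissivity * SIGMA * mean_t ** 4


def mean_exitance(temps_k, emissivity=1.0):
    """Average of the SB exitances of the individual patches."""
    return emissivity * SIGMA * sum(t ** 4 for t in temps_k) / len(temps_k)


def rectification_error(temps_k, emissivity=1.0):
    """Gap between the two; zero only when every patch has the same T."""
    return (mean_exitance(temps_k, emissivity)
            - exitance_of_mean_temperature(temps_k, emissivity))


# Hypothetical patches: a uniform body and a non-uniform one, both averaging 300 K.
print(rectification_error([300.0, 300.0]), "W/m^2")
print(rectification_error([280.0, 320.0]), "W/m^2")
```

For the uniform body the gap is zero. For the 280 K / 320 K pair it comes to roughly 12 W/m^2, and it grows as the temperature spread grows.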

TG said: “Where is the factor for the amount of heat being conducted away and is thus not available for radiation?”

Nowhere. That means conduction does not directly affect the radiant exitance of a body. It only does so indirectly via its modulation of T. This is why conduction and radiation happen simultaneously. For two bodies H and C at temperatures Th and Tc heat will transfer via conduction (if they are in contact) and radiation simultaneously. A radiant space heater (body H) is both conducting and radiating heat to the surroundings (body C). A parcel of water (body H) is both conducting and radiating heat to the surroundings (body C).
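A minimal Python sketch of that point, computing both transfer rates from the same pair of temperatures. The conduction term uses Fourier's law for a flat slab and the radiation term uses the simple form q = ε σ (Th^4 – Tc^4) A quoted earlier. All parameter values and names below are hypothetical, chosen only for illustration.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def conduction_rate(t_hot, t_cold, conductivity, area, thickness):
    """Fourier's law for a flat slab: q = k * A * (Th - Tc) / d, in watts."""
    return conductivity * area * (t_hot - t_cold) / thickness


def radiation_rate(t_hot, t_cold, emissivity, area):
    """Net radiant transfer: q = emissivity * sigma * A * (Th^4 - Tc^4), in watts."""
    return emissivity * SIGMA * area * (t_hot ** 4 - t_cold ** 4)


# Hypothetical values: k in W/(m K), area in m^2, thickness in m.
t_hot, t_cold = 320.0, 290.0
q_cond = conduction_rate(t_hot, t_cold, conductivity=0.6, area=0.01, thickness=0.05)
q_rad = radiation_rate(t_hot, t_cold, emissivity=0.95, area=0.5)

print("conduction:", q_cond, "W")
print("radiation: ", q_rad, "W")
```

Both rates are non-zero whenever Th differs from Tc. Conduction enters the radiation term only through the temperatures it helps set.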

TG said: “Your equation has an implicit assumption of thermal equilibrium and your blinders simply won’t let you see that!”

On the contrary, it has an implicit assumption that there is no thermal equilibrium. In other words, Th != Tc. If there were a requirement that Th = Tc, then what would the point of it be, since it would just reduce to q = 0? The whole point of heat transfer is for bodies that are not in thermal equilibrium.

“You said it was MEASURED. I want to know how you think it was MEASURED.”

Nice job of equivocation! IT *IS* MEASURED. You questioned how it is applied to the data, not the value of the measurement. And apparently you know nothing of the use of lasers in determining distance and direction let alone radar!

“Exactly. Yet that is what Jim Steele was vehemently rejecting for water below the surface. He doesn’t think it radiates at all, which you then started defending, perhaps because you jumped into the conversation late and were unaware of context. I don’t know.”

What Steele was saying is that radiation plays almost NO part in the heating of the water below the surface! In fact it is probably not even measurable because the heat transport will be so totally dominated by the conduction factor! If it’s not measurable then does it exist?

You are trying to argue how many angels can stand on the head of a pin!

“That is for the body itself. This discussion is not analyzing bodies that are not in thermal equilibrium themselves.”

You are moving the goalposts! Thermal equilibrium *is* a requirement for giving the proper answer from S-B. *YOU* are the one that brought up S-B and said it will give the proper answer even for objects not in thermal equilibrium.

Are you now changing your assertion?