Guest Essay by Kip Hansen

“…we should recognise that we are dealing with a coupled nonlinear chaotic system, and therefore that the long-term prediction of future climate states is not possible.”

– IPCC AR4 WG1

Introduction:

The IPCC has long recognized that the climate system is 1) nonlinear and 2) chaotic. Unfortunately, few of those dealing in climate science – professional and citizen scientists alike – seem to grasp what this really means. I intend to write a short series of essays to clarify the relationship between Climate and Chaos. This will not be a highly technical discussion, but an even-handed, basic introduction to the subject, to shed some light on just what the IPCC means when it says “we are dealing with a coupled nonlinear chaotic system” and how that should change our understanding of the climate and climate science.

My only qualification for this task is that, as a long-term science enthusiast, I have followed the development of Chaos Theory since the late 1960s, and during the early 1980s I often waited for hours, late into the night, as my Commodore 64 laboriously drew images of strange attractors on the screen or printed them out on my old Star 9-pin printer.

PART 1: Linearity

In order to discuss nonlinearity, it is best to start with linearity. We are talking about systems, so let’s look at a definition and a few examples.

Edward Lorenz, a meteorologist and the father of Chaos Theory, gives this definition in his book “The Essence of Chaos”:

Linear system: A system in which alterations of an initial state will result in proportional alterations in any subsequent state.

In mathematics there are lots of linear systems. The multiplication tables are a good example: x times 2 = y. 2 times 2 = 4. If we double the “x”, we get 4 times 2 = 8. 8 is the double of 4, an exactly proportional result.

When graphing a linear system as we have above, we are marking the whole infinity of results across the entire graphed range. Pick any point on the x-axis (it need not be a whole number) and draw a vertical line up until it intersects the graphed line; the y-axis value at that exact point is the solution of the formula for that x-axis value. We know, and can see, that 2 * 2 = 4 by this method. If we want to know the answer for 2 * 10, we only need to draw a vertical line up from 10 on the x-axis and see that it intersects the line at y-axis value 20. 2 * 20? Up from 20 we see the intersection at 40, voila!
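The graph-reading exercise can also be done in a few lines of Python. This is only an illustrative sketch: it computes y = 2x for the values read off the graph and spot-checks Lorenz's definition by asking whether doubling the input doubles the output. The squaring function is an added contrast of mine, not something from the essay.

```python
def times_two(x):
    """The multiplication-table system from the text: y = 2x."""
    return 2 * x

def square(x):
    """A nonlinear contrast (not from the essay): y = x squared."""
    return x * x

def doubling_doubles(f, x):
    """Spot check of proportionality: does doubling the input double the output?"""
    return f(2 * x) == 2 * f(x)

for x in (2, 10, 20):
    print(f"2 * {x} = {times_two(x)}; proportional: {doubling_doubles(times_two, x)}; "
          f"x squared proportional: {doubling_doubles(square, x)}")
```

A single spot check cannot prove a system is linear, but one failure, as with the squaring function, is enough to show it is not.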

[Aside: It is this feature of linearity that is taught in modern schools. School children are made to repeat this process of making a graph of a linear formula many times, over and over, and using it to find other values. This is a feature of linear systems, but it becomes a bug in our thinking when we attempt to apply it to real world situations, primarily by encouraging this false idea: that linear trend lines predict future values. When we see a straight line, a “trend” line, drawn on a graph, our minds, remembering our school-days drilling with linear graphs, want to extend that line beyond the data points and believe that it will tell us future, uncalculated values. This idea is not true in general application, as you shall learn.]

Not all linear relationships have a ratio as simple as 2: the relationship between the radius of a circle and its circumference is also linear. In C = 2πR, as we increase the radius, R, we get a proportional increase in the circumference, C, but in a different ratio, set by the constants in the equation: 2 and π.

In the kitchen, one can have a recipe intended to serve four, and safely double it to create a recipe for 8. Recipes are [mostly] linear. [My wife, who has been a professional cook for a family of 6 and directed an institutional kitchen serving 4 meals a day to 350 people, tells me that a recipe for 4 multiplied by 100 simply creates a mess, not a meal. So recipes are not perfectly linear.]

An automobile accelerator pedal is linear (in theory) – the more you push down, the faster the car goes. It has limits and the proportions change as you change gears.

Because linear equations and relationships are proportional, they make a line when graphed.

A linear spring is one with a linear relationship between force and displacement, meaning the force and displacement are directly proportional to each other. A graph showing force vs. displacement for a linear spring will always be a straight line, with a constant slope.

In electronics, one can change voltage using a potentiometer – turning the knob – in a circuit like this:

In this example, we change the resistance by turning the knob of the potentiometer (an adjustable resistor). As we turn the knob, the voltage increases or decreases in a direct and predictable proportion, following Ohm’s Law, V = IR, where V is the voltage, R the resistance, and I the current flow.
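The spring and the potentiometer can be checked the same way. A common textbook test of linearity is superposition, f(ax + by) = af(x) + bf(y), and the Python sketch below applies it to Hooke's law and to Ohm's law. The spring constant and the current are hypothetical values, and holding the current constant while the resistance changes is an assumption about the circuit, not a description of the one in the essay.

```python
import math

SPRING_K = 50.0    # hypothetical spring constant, newtons per metre
CURRENT_A = 0.02   # hypothetical constant current through the potentiometer, amperes

def spring_force(displacement_m):
    """Linear spring (Hooke's law): F = k * x."""
    return SPRING_K * displacement_m

def pot_voltage(resistance_ohm):
    """Ohm's law, V = I * R, with the current assumed fixed."""
    return CURRENT_A * resistance_ohm

def superposes(f, x, y, a=2.0, b=3.0):
    """Superposition test: is f(a*x + b*y) equal to a*f(x) + b*f(y), up to float rounding?"""
    return math.isclose(f(a * x + b * y), a * f(x) + b * f(y))

print("spring:", superposes(spring_force, 0.01, 0.04))
print("potentiometer:", superposes(pot_voltage, 100.0, 470.0))
print("square, for contrast:", superposes(lambda r: r ** 2, 100.0, 470.0))
```

`math.isclose` is used instead of `==` because floating-point arithmetic rounds, so two mathematically equal results can differ in the last digit.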

Geometry is full of lovely equations, many of them simple linear relationships. Knowing enough side-lengths and angles, one can calculate the lengths of the remaining sides and angles. If we know the radius of a circle or a sphere, we can find the diameter (by definition) and the circumference, both linear in the radius, as well as the area or surface area, which are not linear: they grow with the square of the radius.
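A quick numerical check, sketched in Python with arbitrary radius values, shows which circle formulas scale in proportion to the radius and which do not:

```python
import math

def circle_measures(radius):
    """Return (diameter, circumference, area) for a circle of the given radius."""
    diameter = 2 * radius
    circumference = 2 * math.pi * radius   # C = 2*pi*R: linear in R
    area = math.pi * radius ** 2           # A = pi*R^2: grows with the square of R
    return diameter, circumference, area

small = circle_measures(1.0)
large = circle_measures(2.0)   # double the radius
for name, a, b in zip(("diameter", "circumference", "area"), small, large):
    print(f"{name}: x{b / a:.1f} when the radius doubles")
```

Doubling the radius doubles the diameter and the circumference but quadruples the area, so only the first two are linear in R.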

Aren’t these linear graphs boring? They all have these nice straight lines on them.

Richard Gaughan, the author of Accidental Genius: The World’s Greatest By-Chance Discoveries, quips: “One of the paradoxes is that just about every linear system is also a nonlinear system. Thinking you can make one giant cake by quadrupling a recipe will probably not work. … So most linear systems have a ‘linear regime’ – a region over which the linear rules apply – and a ‘nonlinear regime’ – where they don’t. As long as you’re in the linear regime, the linear equations hold true.”

Linear behavior in real dynamic systems is almost always valid only over a small operational range, and some models, some dynamic systems, cannot be linearized at all.

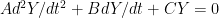

How’s that? Well, many of the formulas we use for the processes, the dynamical systems, that make civilization possible are ‘almost’ linear, or more accurately, we use the linear versions of them, because the nonlinear versions are not easily solvable. For example, Ian Stewart, author of Does God Play Dice?, states:

“…linear equations are usually much easier to solve than nonlinear ones. Find one or two solutions, and you’ve got lots more for free. The equation for the simple harmonic oscillator is linear; the true equation for a pendulum is not. The classic procedure is to linearize the nonlinear by throwing away all the awkward terms in the equation.

….

In classical times, lacking techniques to face up to nonlinearities, the process of linearization was carried out to such extremes that it often occurred while the equations were being set up. Heat flow is a good example: the classical heat equation is linear, even before you try to solve it. But real heat flow isn’t, and according to one expert, Clifford Truesdell, whatever good the classical heat equation has done for mathematics, it did nothing but harm to the physics of heat.”
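Stewart’s pendulum example is easy to try. Below is a minimal Python sketch, assuming a 1 m pendulum with g = 9.81 m/s², no friction, and a classic fourth-order Runge–Kutta integrator with a small fixed step (all choices of mine, not Stewart’s). It times one swing of the true equation, θ'' = −(g/L) sin θ, and of its linearized form, θ'' = −(g/L) θ, from several starting angles.

```python
import math

G_OVER_L = 9.81  # assumed: g = 9.81 m/s^2 and a 1 m pendulum, so g/L = 9.81 per s^2

def true_pendulum(theta):
    """Angular acceleration of the full pendulum: -(g/L) * sin(theta)."""
    return -G_OVER_L * math.sin(theta)

def linearized_pendulum(theta):
    """Simple harmonic oscillator: sin(theta) replaced by theta."""
    return -G_OVER_L * theta

def period(accel, theta0, dt=1e-4):
    """Estimate the period from the swing that starts at rest at theta0 > 0.

    Both equations are symmetric, so the fall from theta0 to zero is a quarter period.
    Integration uses the classic fourth-order Runge-Kutta method with step dt.
    """
    t, theta, omega = 0.0, theta0, 0.0
    while True:
        k1t, k1w = omega, accel(theta)
        k2t, k2w = omega + 0.5 * dt * k1w, accel(theta + 0.5 * dt * k1t)
        k3t, k3w = omega + 0.5 * dt * k2w, accel(theta + 0.5 * dt * k2t)
        k4t, k4w = omega + dt * k3w, accel(theta + dt * k3t)
        new_theta = theta + dt * (k1t + 2 * k2t + 2 * k3t + k4t) / 6
        new_omega = omega + dt * (k1w + 2 * k2w + 2 * k3w + k4w) / 6
        if new_theta <= 0.0:
            # Interpolate linearly within the step to find the zero crossing.
            return 4 * (t + dt * theta / (theta - new_theta))
        t, theta, omega = t + dt, new_theta, new_omega

for degrees in (5, 90, 170):
    theta0 = math.radians(degrees)
    t_true = period(true_pendulum, theta0)
    t_lin = period(linearized_pendulum, theta0)
    print(f"start {degrees:>3} deg: true {t_true:.3f} s, linearized {t_lin:.3f} s, "
          f"ratio {t_true / t_lin:.3f}")
```

From a 5° start the two periods agree to within a fraction of a percent; from 90° the true pendulum takes about 18% longer per swing; from 170° it takes more than twice as long. The linearized equation serves well only inside its linear regime.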

One homework help site explains it this way: “The main idea is to approximate the nonlinear system by using a linear one, hoping that the results of the one will be the same as the other one. This is called linearization of nonlinear systems.” Outside a system’s linear regime, this is a false hope.

The really important thing to remember is that these linearized formulas of dynamical systems, which are in reality nonlinear, are analogies. Like all analogies (“Life is like a game of baseball”), they are not perfect: they are approximations, useful in some cases, maybe helpful for teaching and back-of-the-envelope calculations. But if your parameters wander out of the system’s ‘linear regime’, your results will not just be a little off; they risk being entirely wrong, because the nature and behavior of nonlinear systems is strikingly different from that of linear systems.

This point bears repeating: The linearized versions of the formulas for dynamic systems used in everyday science, climate science included, are simplified versions of the true phenomena they are meant to describe – simplified to remove the nonlinearities. In the real world, these phenomena, these dynamic systems, behave nonlinearly. Why then do we use these formulas if they do not accurately reflect the real world? Simply because the formulas that do accurately describe the real world are nonlinear and far too difficult to solve – and even when solvable, produce results that are, under many common circumstances, in a word, unpredictable.

Stewart goes on to say:

“Really the whole language in which the discussion is conducted is topsy-turvy. To call a general differential equation ‘nonlinear’ is rather like calling zoology ‘nonpachydermology’.”

Or, as James Gleick reports in Chaos: Making a New Science:

“The mathematician Stanislaw Ulam remarked that to call the study of chaos ‘nonlinear science’ was like calling zoology ‘the study of non-elephant animals.’”

Amongst the dynamical systems of nature, nonlinearity is the general rule, and linearity is the rare exception.

Nonlinear system: A system in which alterations of an initial state need not produce proportional alterations in any subsequent states, one that is not linear.

When using linear systems, we expect that the result will be proportional to the input. We turn up the gas on the stove (altering the initial state) and we expect the water to boil faster (increased heating in proportion to the increased heat). Wouldn’t we be surprised though, if one day we turned up the gas and instead of heating, the water froze solid! That’s nonlinearity! (Fortunately, my wife, the once-professional cook, could count on her stoves behaving linearly, and so can you.)

What kinds of real world dynamical systems are nonlinear? Nearly all of them!

Social systems, like economics and the stock market, are highly nonlinear, often reacting non-intuitively and non-proportionally to changes in input, such as news or economic indicators.

Population dynamics; the predator-prey model; voltage and power in a resistor: P = V²/R; the radiant energy emission of a hot object depending on its temperature: R = kT⁴; the intensity of light transmitted through a thickness of a translucent material; common electronic distortion (think electric guitar solos); amplitude modulation (think AM radios); the list is endless. Even the heating of water on a stove, as far as the water is concerned, has a linear regime and a nonlinear regime, which begins when the water boils instead of heating further. [Because water boils at a lower temperature at higher altitudes, the temperature at which the system goes nonlinear allowed Sir Richard Burton to determine altitude with a thermometer when searching for the source of the Nile River.] Name a dynamic system, and the chance of it being truly linear is vanishingly small. Nonlinearity is the rule.
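To see how far these relationships are from proportional, the Python sketch below doubles the input to three of them. It assumes a blackbody, so that k in R = kT⁴ is the Stefan–Boltzmann constant, and Beer–Lambert (exponential) attenuation for light passing through a translucent material; the resistance, temperatures, thicknesses and attenuation coefficient are illustrative values only.

```python
import math

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4 (assumes a blackbody)

def resistor_power(volts, ohms):
    """P = V^2 / R."""
    return volts ** 2 / ohms

def radiant_emission(kelvin):
    """R = k T^4, taking k as the Stefan-Boltzmann constant."""
    return SIGMA * kelvin ** 4

def transmitted_fraction(thickness, attenuation=0.5):
    """Beer-Lambert attenuation; the coefficient (per unit thickness) is hypothetical."""
    return math.exp(-attenuation * thickness)

print("power ratio when V doubles:", resistor_power(20, 100) / resistor_power(10, 100))
print("emission ratio when T doubles:", radiant_emission(600) / radiant_emission(300))
print("transmission ratio when thickness doubles:",
      transmitted_fraction(4) / transmitted_fraction(2))
```

Doubling the voltage quadruples the power, doubling the temperature multiplies the emission by 16, and doubling the thickness does not halve the transmitted light: it squares the transmitted fraction.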

What does the graph of a nonlinear system look like? Like this:

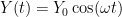

Here is a simple little formula for Population Dynamics, where resources limit the population to a certain carrying capacity, such as the number of squirrels on an idealized May Island (named for Robert May, who pioneered this work): x_next = rx(1-x). Some will recognize this equation as the “logistic equation”. Here we have set the carrying capacity of the island as 1 (100%) and express the population, x, as a decimal fraction of that carrying capacity. Each new year we start with the ending population of the previous year as the input for the next. r is the growth rate. So next year’s population is the growth rate, times the population, times the bit (1-x), which is the fraction of the carrying capacity still unused. The graph shows the results over 30 years using several different growth rates.

We can see many real life population patterns here:

1) With the relatively low growth rate of 2.7 (blue) the population rises sharply to about 0.6 of the carrying capacity of the island and after a few years, settles down to a steady state at that level.

2) Increasing the growth rate to 3 (orange) creates a situation similar to the above, except the population settles into a saw-tooth pattern which is cyclical with a period of two.

3) At 3.5 (red) we see a more pronounced saw-tooth, with a period of 4.

4) However, at growth rate 4 (green), all bets are off and chaos ensues. The population slams up and down, finally hitting a [near] extinction in year 14; if the vanishingly small population survived that at all, it would rapidly increase and start all over again.

5) I have thrown in the purple line, which graphs a linear formula of simply adding a little each year to the previous year’s population, x_next = x(1+(0.0005*year)), slow steady growth of a population maturing in its environment, to contrast a formula which represents the realities of population dynamics with a simplified linear version of it. (Not all linear formulas produce straight lines; some, like this one, are curved, and more difficult to solve.) None of the nonlinear results look anything like the linear one.
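Readers who want to reproduce the May Island experiment can iterate the map directly. This is a minimal Python sketch; the starting population of 0.2 is my assumption (the essay does not state the value used for the graph), and so is counting years for the purple line from 1, so the exact numbers will differ from the graph while the qualitative patterns should not.

```python
def logistic_orbit(r, x0, years):
    """Iterate May's logistic map x_next = r * x * (1 - x); return [x_0, ..., x_years]."""
    xs = [x0]
    for _ in range(years):
        x = xs[-1]
        xs.append(r * x * (1 - x))
    return xs

def purple_orbit(x0, years):
    """The essay's linear comparison, x_next = x * (1 + 0.0005 * year), years counted from 1."""
    xs = [x0]
    for year in range(1, years + 1):
        xs.append(xs[-1] * (1 + 0.0005 * year))
    return xs

X0 = 0.2  # hypothetical starting population; the essay does not give one

for r in (2.7, 3.0, 3.5, 4.0):
    last = logistic_orbit(r, X0, 30)[-8:]
    print(f"r = {r}: years 23-30 ->", [round(x, 3) for x in last])
print("linear: years 23-30 ->", [round(x, 3) for x in purple_orbit(X0, 30)[-8:]])
```

At r = 2.7 the population should settle near 1 − 1/r ≈ 0.63; at r = 3.5 it should cycle through four values; at r = 4 no repeating pattern appears.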

Anyone who deals with populations in the wild will be familiar with Robert May’s work on this; it is the classic formula, along with the predator/prey formula, of population dynamics. Dr. May eventually became Princeton University’s Dean for Research. In the next essay, we will get back to looking at this same equation in a different way.

In this example, we changed the growth rate in the equation gradually upwards, from 2.7 to 4, and found chaos resulting. Let’s look at one more aspect before we move on.

This image shows the results of x_next = 4x(1-x), the green line in the earlier graph, extended out to 200 years. Suppose you were an ecologist who had come to May Island to investigate the squirrel population, and spent a decade there in the period circled in red, say year 65 to 75. You’d measure and record a fairly steady population of around 0.75 of the carrying capacity of the island, with one boom year and one bust year, but otherwise fairly stable. The paper you published based on your data would fly through peer review and be a triumph of ecological science. It would also be entirely wrong. Within ten years the squirrel population would begin to wildly boom-and-bust and possibly go functionally extinct in the 81st or 82nd year. Any “cause” assigned would be a priori wrong. The true cause is the existence of chaos in the real dynamic system of populations under high growth rates.
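The hypothetical ecologist’s problem can be mimicked by sampling the r = 4 series one decade at a time. A minimal Python sketch follows, again assuming a starting population of 0.2 (not given in the essay); each ten-year window gets its own mean and range, as if it were the only decade anyone had studied.

```python
def logistic_orbit(r, x0, years):
    """Iterate x_next = r * x * (1 - x); return [x_0, ..., x_years]."""
    xs = [x0]
    for _ in range(years):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs

def decade_summaries(series, width=10):
    """Return (first_index, mean, min, max) for each consecutive, non-overlapping window."""
    summaries = []
    for start in range(0, len(series) - width + 1, width):
        window = series[start:start + width]
        summaries.append((start, sum(window) / width, min(window), max(window)))
    return summaries

X0 = 0.2  # hypothetical starting population, not taken from the essay
years_1_to_200 = logistic_orbit(4.0, X0, 200)[1:]
for start, mean, low, high in decade_summaries(years_1_to_200):
    print(f"years {start + 1:>3}-{start + 10:>3}: mean {mean:.2f}, range {low:.2f} to {high:.2f}")
```

Different decades report quite different pictures of the “same” population. Note that after a few dozen steps a floating-point run at r = 4 no longer tracks the exact mathematical orbit, a preview of the sensitivity discussed next.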

You may think this a trick of mathematics, but I assure you it is not. Ask salmon fishermen in the American Northwest and the sardine fishermen of Steinbeck’s Cannery Row. Natural populations can be steady, they can ebb and flow, and they can be truly chaotic, with wild swings, booms and busts. The chaos is built-in and no external forces are needed. In our May Island example, trouble begins to set in when the squirrels become successful: once their growth factor rises above three, their population begins to fluctuate up and down, and as it climbs further (past about 3.57) the fluctuations become chaotic. When they become too successful (too many surviving squirrel pups each year, a growth factor of 4), disaster follows on the heels of success. For real world scientific confirmation, see this paper: Nonlinear Population Dynamics: Models, Experiments and Data by Cushing et al. (1998).

Let’s see one more example of nonlinearity. In this one, instead of doing something as obvious as changing a multiplier, we’ll simply change the starting point of a very simple little equation:

At the left of the graph, the orange line overwrites the blue, as they are close to identical. The only thing changed between the blue and orange is the initial value: 0.543215 has been rounded to five decimal places, up to 0.54322 or down to 0.54321 depending on the rounding rule, a change of only 0.000005 (five millionths). That is just what your computer, if set to use only 5 decimal places, would do, automatically, without your knowledge. In dynamical sciences, a lot of numbers are rounded up or down: all computers have a limited number of digits that they will carry in any calculation, and have their own built-in rounding rules. In our example, the values begin to diverge at day 14, if these are daily results, and by day 19, even the sign of the result is different. Over the period of a month and a half, whole weeks of results are entirely different in numeric values, sign and behavior.
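The equation behind this graph is not shown, so the Python sketch below uses a hypothetical stand-in: x_next = 1 − 2x², which is the r = 4 logistic map rewritten in the variable 2x − 1, so its values run from −1 to 1 and can change sign. It starts one run from 0.543215 and another from the rounded 0.54322, then reports the first step at which the runs differ by more than 0.1 and the first step at which their signs disagree. The day numbers it prints need not match the graph.

```python
def stand_in_orbit(x0, days):
    """Iterate the hypothetical stand-in map x_next = 1 - 2 * x**2 for the given number of days."""
    xs = [x0]
    for _ in range(days):
        xs.append(1 - 2 * xs[-1] ** 2)
    return xs

def first_day(condition, run_a, run_b):
    """Return the first day index where condition(a, b) holds, or None if it never does."""
    for day, (a, b) in enumerate(zip(run_a, run_b)):
        if condition(a, b):
            return day
    return None

DAYS = 60
original = stand_in_orbit(0.543215, DAYS)
rounded = stand_in_orbit(0.54322, DAYS)   # the same start rounded to five decimal places

split = first_day(lambda a, b: abs(a - b) > 0.1, original, rounded)
flip = first_day(lambda a, b: a * b < 0, original, rounded)
print("runs first differ by more than 0.1 on day", split)
print("runs first disagree in sign on day", flip)
```

Because this map roughly doubles small differences at each step on average, a five-millionths change at the start grows to order one within a few weeks of “days”.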

This is the phenomenon that Edward Lorenz found in the 1960s when he programmed his early computer models of the weather, and it shocked him to the core.

This is what I will discuss in the next essay in this series: the attributes and peculiarities of nonlinear systems.

Take Home Messages:

1. Linear systems are tame and predictable – changes in input produce proportional changes in results.

2. Nonlinear systems are not tame – changes in input do not necessarily produce proportional changes in results.

3. Nearly all real world dynamical systems are nonlinear; exceptions are vanishingly rare.

4. Linearized equations for systems that are, in fact, nonlinear, are only approximations and have limited usefulness. The results produced by these linearized equations may not even resemble the real world system results in many common circumstances.

5. Nonlinear systems can shift from orderly, predictable regimes to chaotic regimes under changing conditions.

6. In nonlinear systems, even infinitesimal changes in input can produce unexpectedly large changes in the results – in numeric values, sign and behavior.

# # # # #

Author’s Comment Reply Policy:

This is a fascinating subject, with a lot of ground to cover. Let’s try to have comments about just the narrow part of the topic that is presented here in this one essay which tries to introduce readers to linearity and nonlinearity. (What this means to Climate and Climate Science will come in further essays in the series.)

I will try to answer your questions and make clarifications. If I have to repeat the same things too many times, I will post a reading list or give more precise references.

# # # # #

Great stuff, can’t wait for the rest, thanks!

What the IPCC, like many others, has long recognized is false. Linearity and chaos apply to models, not to the real world. Whether models are linear often depends merely on the scale factor applied. A system is linear if f(ax+by) = af(x)+bf(y). Equations do not exist in the real world to apply the definition to. Models and equations are strictly manmade.

Chaos does not exist in the real world because the real world has no initial conditions to which it might be sensitive. Only models do. Climatologists and physicists alike often confuse the real world with their models.

+100

Ditto.

I like to paint. If I “paint a tree”, my model of the tree is not the tree.

http://www.maxphoton.com/let-light/

It is mind-blowing how many full-grown, professional adults can’t maintain that separation. In fact, the entire climate catastrophic fiasco hinges on this Tyranny of the Model.

Max Photon:

I was standing gazing at a distant mountain, thinking about how to paint a picture of all those trees with all the contrast and textures.

A friend asked me what I was thinking about.

I replied: ‘I was thinking about how to paint all those trees.’

I realized how ambiguous that statement was and added: ‘It would take a heck of a lot of paint to paint all those trees. There are thousands of them.’

Richard,

All you can do is create symbols (by placing pigment on fiber) that make the viewer’s mind say “tree.”

Max

Or put a coat of paint on each tree. Something about not seeing the painting for the trees.

Reply to Jeff Glassman ==> “Chaos does not exist in the real world” If only it were so. The natural dynamical systems of the world are almost exclusively nonlinear and subject to chaos.

Turbulence in fluid flows of all kinds, including the atmosphere. Heat transfer through and between materials. Everyday population dynamics. Passage of radiant energy through a translucent medium (the atmosphere). All nonlinear dynamical systems, and subject to all the behaviors of nonlinearity.

Stay tuned to the whole series, and see if I can’t convince you of this.

Thanks for reading here.

..if this trend continues

It is so. And what makes it so is logic applied to our definitions of chaos. Many definitions exist, but one essential they all share is system sensitivity to its initial conditions. When Mitchell Feigenbaum (you quoted below; a reference would help) expressed chaos in terms of rapid growth, he was undoubtedly referring to rapid growth from its initial conditions (ICs). Devaney provides a popular definition, and several papers use it as a starting point for even more definitions. But I still have an opening in my collection of chaos definitions for one from a scientific field that does not have this IC property.

In Lorenz’s work, his systems were systems of equations. His domain was models of the real world.

One thing is certain. Nothing in the real world has initial conditions. Nor, for that matter, parameters, units, equations, coordinate systems, dimensions, sets, taxonomies, clocks, logic. These are all manmade constructs, expressed in human languages, from what impinges on our senses and instruments from the real world.

Jeff: one feature of nonlinear dynamics is that you can close your eyes, point to any point in time, and say “these are my initial conditions.” So every chaotic system in the real world has initial conditions. Such systems are sensitively dependent on those initial conditions, so humans can never estimate them accurately enough to insert into a model that can predict the future. However, as Kip says, knowing that the systems are chaotic means that we understand better why the models don’t work.

Reply to Jeff Glassman ==> It has been some time since I heard someone claim that their own sense of logic trumped the Real World. There are so many real world examples of these chaotic behaviors in natural systems that I find your continuing assertions to the contrary difficult to understand.

Did you read the linked study Nonlinear Population Dynamics: Models, Experiments and Data by Cushing et al. (1998)? It is a marvelous example of truly exemplary science on this topic.

I can only suggest reading any of the four books listed in the Introduction to Chaos Theory Reading List.

Reading any one of them should manage to bring you around…I hope!

“All nonlinear dynamical systems, and subject to all the behaviors of nonlinearity.”

That doesn’t make them chaotic. You beautifully demonstrate how some population equations behave chaotically; however, do actual populations exhibit such characteristics? Sure, they’re complicated and subject to random events (like hurricanes), but that’s not chaos. I’m not going so far as to say there are no real world systems that are chaotic, but they seem really rare to me. Take squirrel populations, for example. To be chaotic in the real world, in a way equivalent to the minute changes that significantly alter virtual populations, a population of a hundred squirrels would have to be noticeably altered, a few years after the change, by an oak tree producing 2% fewer acorns (or by the removal of 1 inch of one squirrel’s tail). These kinds of incredibly small changes don’t seem to be significant in the real world; in other words, the real world population of squirrels is not nearly as sensitive to initial conditions as would be required to consider it chaotic. Oh, and butterflies don’t really set off hurricanes except in virtual worlds. Admittedly, engineers are notorious for only looking at 3 significant digits (often 2, sometimes 1), but I really haven’t run across many instances in which I needed more than 2 in 25 years of real world electro-chemical processing, which tells me those processes are not chaotic, even though they are “dynamical” and nonlinear (and sometimes really, really complicated, in what is certainly a variable-rich environment subject to all sorts of random events).

Reply to John West ==> I will try one more time.

Please read any one of the books in the reading list for general understanding, or even just the Wikipedia article on Chaos Theory.

Read the paper I linked on Nonlinear Population Dynamics: Models, Experiments and Data by Cushing et al. (1998). It is a marvelous example of truly exemplary science on this topic. This paper finds the very odd behaviors of nonlinear population dynamics in actual living populations in tightly controlled lab experiments, not just fooling around with numbers on a computer. Seeing should be believing.

Since you are a chemist, try reading this paper: Nonlinear Chemical Dynamics: Oscillations, Patterns, and Chaos. The BZ reaction and subsequent discoveries. It is all in there…right in your field.

Good luck.

It is not necessary to get any more complicated than the example of a “real world” pendulum (with bearing friction) to demonstrate chaos in almost all physical systems. Once it is released, the best you can do is calculate an envelope outside which the swinging bob CANNOT BE at any given moment thereafter, leaving a band of possible values for where it is at any given point in time.

The bigger and more complex the physical system, the more difficult it becomes to calculate the envelope of possibilities. The 500,000-year record of reconstructed global temperature does a pretty good job of painting such a chaotic envelope for our granddaddy of all earthly non-linear systems. Within that envelope, the system pretty much has a life of its own, in which specific responses to specific forcing functions are impossible to calculate. Neither episodes of horrific volcanic activity nor cataclysmic meteor strikes have taken global climate outside that cyclic envelope of temperature values.

Mr. Hansen et al., I think you and Mr. Glassman are making different arguments, his philosophical and yours physical. In the real world the initial conditions occurred so long ago there is really no way to mentally get from there to the present. Equations, chaos, thought, and all the processes, linear or otherwise, are all chaotic in the sense that they are unpredictable, because it is impossible to describe them in any mathematics accurately enough to match reality. Even the idea of predicting a solar eclipse hundreds of years from now rests on the assumption that no large disturbance in the solar system, say a Jupiter-sized planet from far out in the Oort cloud passing between the earth and Venus, will happen. We just don’t know. As Dr. Essex so adroitly shows in several of his presentations, we don’t even know how we could know.

The simplest definition of chaos is unpredictability. The simplest example I can think of is a machine with two buttons, labeled “press a button to start, press a button to stop”. When you press a button, the innards do some pseudo-random calculation and assign start and stop to each of the buttons. Every time you press a button the process repeats. When you press a button the machine starts. Press a button and it may stop, or it may not. Every time you press a button it may stop, or not. When it does stop, pressing a button may start it, or maybe not. After an initial button press there is virtually no way to reliably predict what will happen on the next button press; the response is essentially unpredictable and chaotic. It’s also neither linear nor non-linear; it’s a simple binary response.

One question- In your graph(7) of xnext = 4x(1-x), after a million or so iterations, is it possible to derive the original equation from the output?

Jeff, the natural world certainly had an initial condition. If you believe it did not, then I must assume that you do not believe or accept the Big Bang theory. I personally don’t know if it is truly correct, but I am willing to admit that it is, and if it is, that was the initial state of all the chaos that has happened since! Of course, that is worthless for a climate modeler, since all the computing power in the world, now or whatever will be developed, will never be capable of modeling ten years of climate, let alone all the states and chaos since the Big Bang. Even if you could, the model would still not work, since a model cannot forecast anything in a chaotic system; chaos cannot be predicted. I assume you are with me on the point that the people who model climate are, for the most part, fools on a fool’s errand.

Jeff

What’s your point – that everything is a cloud of unknowing and only religion can make sense of it. That making an image of nature, let alone God, is forbidden idolatry?

Have you been tasked with evading the implications of ubiquitous chaos and nonlinearity that are uncomfortable for CAGW?

Thanks for trying one more time.

Note in the paper “Nonlinear Chemical Dynamics: Oscillations, Patterns, and Chaos” the authors say “A small but growing number of chemical systems are now known to exhibit chaotic behavior”.

In other words, it’s rare.

They also note: “three fundamental classes of dynamical behavior (stationary, periodic, and chaotic)”.

Hmmm… Exactly how does one differentiate between an amalgamation of coupled complex periodic oscillating systems (with various damping) and a chaotic system? Sensitivity to initial conditions?

Furthermore right in their intro they say “Chemical reactions with nonlinear kinetic behavior can give rise to a remarkable set of spatiotemporal phenomena. These include periodic and chaotic changes …” [bold mine]

So nonlinear (even in the dynamical systems realm) can be something other than chaotic, i.e. periodic.

It seems to me these days we can be too quick to label something chaotic; chaos in the gaps of understanding (or possibly computational ability) if you will. The quintessential question being ‘is weather chaotic?’. Many say it is and while weather simulating models certainly behave chaotically I’m unconvinced that weather is chaotic. Call me a skeptic.

I’ll try to “let it go” for the next two parts and just leave this particular objection (of going from linear to chaotic too quickly) here.

Mr. Glassman’s point is shown in the squirrel population figure where the squirrel population values go to 0 at times. If this really happened just once, there would be no more squirrels on May Island.

I wondered about that too. But it appears one cannot assume that the change in squirrel population is based only on reproduction. Heck, if that were true, a population of one squirrel is the same as zero and the population would never recover. So there must be a way for squirrels to arrive from afar, perhaps as stowaways on a visiting supply ship or with a boat of researchers. Or maybe they swim from a neighbouring island, assuming they can swim, of course. I guess that’s another part of the chaos.

Reply to DHR and PaulH ==> A couple of points: in the population dynamics graph, it appears that the squirrel population has reached zero; in actuality, it is a very small number. In the real world, this could represent a local extinction event, or it could represent a common ecological case where a plant or animal becomes so locally rare that it appears to have vanished, only to be discovered again in the exact same place some number of years later.

Mr. Glassman seems to be railing against mathematical models in general and incorrectly believes that the chaos is a product of the math — which it is not. The chaotic behaviors are natural phenomena, only recently being discovered to also exist in the very mathematics of the systems described.

Maybe there’s half a squirrel. That’s a start.

100 %

Jeff Glassman

March 15, 2015 at 10:19 am

“Chaos does not exist in the real world because the real world has no initial conditions to which it might be sensitive. Only models do. Climatologists and physicists alike often confuse the real world with their models.”

Any momentary state can count as the initial state of what comes after it, so, you’re wrong. Chaos exists in the real world. It was OBSERVED by Lorenz before the entire branch of chaos mathematics and simulation came into being.

No. You are introducing the man-made element by assigning a point in time. Chaotic progression demonstrable from a point in time is still an arbitrary man-made condition. Chaos exists in the real world only within our context, not nature’s. If we are prepared to describe climate as “non-linear and chaotic”, we are basically saying that, for us, it is unknowable. We attempt to describe it and model it, but we can do so only imperfectly. And since our knowledge is imperfect, our models are even more so. Neither arrives at truth. Schrödinger!

Dirk, I agree. I’m not sure I even understand Jeff Glassman’s point. ALL of our ideas are simply models of the reality “out there”. Those models may be understood as algorithms. We supply inputs to the algorithms, such as initial or boundary conditions. We turn the crank and produce some prediction for the output.

If the models are good ones and the initial/boundary conditions are chosen appropriately, then the predicted outcomes can be of great value in allowing us to anticipate, and thus control, ACTUAL outcomes in the real world.

Consider a man throwing a baseball. If he is good at it (meaning his brain stores an excellent algorithm for the process of throwing the ball), then he can with a high degree of certainty deliver the ball to some precise location at a precise time given inputs such as a visual field (boundary conditions), and the state of play at a given moment (initial conditions). That is the value of the model that is in his head.

Furthermore, this is a highly non-linear system. A change amounting to only fractions of a degree in direction and azimuth can make the difference between a strike or a ball. A Home Run or an Out. Winning the World Series or losing the World Series.

I have chosen an active, participatory example. If that is not to one’s taste, we could choose a more passive example. We can use the models (algorithms) of orbital mechanics to predict solar eclipses hundreds of years ahead. Newton’s laws plus observations of the sun / earth / moon positions (boundary and initial conditions) permit prediction of a total eclipse on, let us say, the island of Tahiti beginning at 11:05am on May 5, 2034. That sort of pre-knowledge can also be very useful for controlling outcomes. (Mark Twain provides an interesting fictional instance of that in A Connecticut Yankee in King Arthur’s Court.)

In what sense is it useful to argue that initial / boundary conditions “don’t exist in the real world”? It makes just as much sense to fuss that MODELS “don’t exist” in the real world. True, and so what?

Paul Coppin: If you are trying to say “we don’t know what’s going to happen, but Nature does”, that’s wrong. There is chaos and uncertainty, down to the quantum level. Especially at the quantum level. “Nature” has no idea when a radioactive atom is going to decay. Or exactly where a leaf dropped in a rushing stream will be in 30 seconds, etc., etc.

The Universe is simply not predictable no matter how closely it is measured.

Dear Eustace Cranch,

To be sure you did not miss my apology to you here: http://wattsupwiththat.com/2015/03/12/claim-climate-communication-needs-to-be-less-optimistic-more-climate-disruption/#comment-1881285

here it is!

Take care,

Janice

DirkH,

Look more closely at what Lorenz did. He input what he thought was a previous state (and later discovered it was not) into his model and got something completely unexpected. THIS is what he observed. It was his model which was chaotic. He was never in a position to claim his model was an accurate description of reality.

In fact, it would be difficult, if not impossible, to prove any physical system was chaotic unless you could define a model which accurately followed the physical system, thus demonstrating it. The difficulty is that chaotic mathematical functions are highly sensitive to initial conditions, which, in turn, implies that building such a demonstrator is very hard. Regardless, this is a statement about the modeler’s limited knowledge and not about the physical system. You can never rule out the possibility that some modulating variable or group of variables can be found which would simplify the model.
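The episode debated here is usually told (for example in Gleick’s book) as follows: Lorenz restarted a run from printed values rounded to three decimal places, and the new run soon bore no resemblance to the old one. Below is a minimal sketch of that effect. It assumes his later three-variable 1963 convection system rather than the larger weather model he was running at the time, with the standard parameters σ = 10, ρ = 28, β = 8/3 and a fixed-step fourth-order Runge-Kutta integrator (my choice, not his). Two runs start 1e-8 apart in z, and the sketch prints how far apart they are at a few later times. It demonstrates sensitivity in a model, which is exactly what is in dispute here; by itself it says nothing about the atmosphere.

```python
import math

DT = 0.01                      # time step (an assumption for this sketch)
STEPS_PER_UNIT = round(1 / DT)


def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of Lorenz's 1963 convection equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance one fixed step with the classical fourth-order Runge-Kutta method."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation_history(delta=1e-8, dt=DT, steps=30 * STEPS_PER_UNIT):
    """Distance between two runs whose starting z values differ by delta."""
    run_a = (1.0, 1.0, 1.0)
    run_b = (1.0, 1.0, 1.0 + delta)
    seps = []
    for _ in range(steps):
        run_a = rk4_step(run_a, dt)
        run_b = rk4_step(run_b, dt)
        seps.append(math.dist(run_a, run_b))
    return seps


seps = separation_history()
for t in (1, 10, 20, 30):
    print(f"t = {t:2d}: separation = {seps[t * STEPS_PER_UNIT - 1]:.3e}")
```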

Think about it.

Eustace Cranch,

There is chaos and uncertainty, down to the quantum level. Especially at the quantum level.

There is indeed, but only to the observer. The claim that you could know all of the causes and their precise states and STILL get different answers ultimately means that cause and effect is an illusion. The illusion may BE reality, but it is a proposition that is not likely provable, as you would be hard pressed to show you know all of the inputs.

Until such a proof comes along, it is better to assume that “random” (even when constrained, i.e., “chaotic”) is a description of modeler limitations and not reality.

Epistemological and not Ontological.

Yes, Jeff, but really there is chaos. Chaos, not in the sense of the Physics definition, but in the sense of the state of mind of the proponents of the alarmist theories. They are quite literally in a “state of extreme confusion and disorder”; that is to say, their thinking, and indeed their subsequent actions, are chaotic.

From a Confucian scholar’s point of view, this sort of malfunction in thinking is inevitable. …

In the Western tradition, dominated by cosmogonic myth and speculation, in the beginning, at the origin, there is chaos. The transition from religious to philosophic and scientific speculation occurs in terms of the transition from mythos to logos. In our tradition, because of the dominance of scientific thinking in the last four or five centuries, it is forgotten that, before there was a logos of mythos, there had to be a mythos of chaos; before scientific thinking could rationalize the myths, the myths had to organize chaos. In this view, reasoning is twice removed from the sources of individual and social experience in the primordial chaos at the time of the beginnings.

It is good to see a distinct and culturally different philosophical view expressed here.

Really? Why don’t you try it with physical systems from the real world to see if chaos does not exist in the real world? Do your best to prepare them ‘identically’ and watch them evolve over time.

A lot depends on how close. What precision do you need? For a circle, pi = Circumference/diameter = C/d, and therefore C = pi*d and, conversely, d = C/pi. Pi is irrational (indeed transcendental), so try as you will, you can never get an exact value for it. Therefore, if you know d exactly, then C is always approximate, and vice versa: if C is known exactly, then d is only approximate. In the practical world we can know C and d to the limits of our measurements by starting with a good value of Pi with many digits. (Sometimes the constants have relatively large uncertainty in the real world due to measurement error.)

Now suppose you know d exactly and approximate C, then use C in n recursive calculations for some large n. Your final value F(n,C) will be a function of n and of C calculated with m digits of Pi. If your calculations are sensitive to round-off error, then at some large n, F(n,C) will be nonsense, and the results will differ depending on the value of Pi used at the start (i.e., on m, the number of digits used to estimate Pi).

Sometimes the model can be perfect (C = Pi*d), but because of our limits in specifying Pi, the calculations will always fail for large n. Now consider a global climate model with a very large number of constants that cannot be measured very well, and run it over and over for 1000 years, where each value of the global mean temperature depends on the previous year’s global mean temperature and the previous year’s calculations of all the “constants”. We can see that its long-term predictions can go far astray.

The above discussion refers to cascading errors in the values of approximately known constants. Now introduce many parameters estimated with relatively large errors. Aye yi yi! Now consider the uncertainty of the global climate models.
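The cascading round-off argument above can be made concrete with a minimal sketch. As an assumption for illustration, the commenter’s recursive calculation F(n, C) is replaced by the logistic map x -> r·x·(1 - x) with r = 3.9, a standard chaotic recursion, seeded with the fractional part of C = pi·d for d = 1. The reference run uses Python’s double-precision math.pi (itself only an approximation), and the other runs use pi truncated to m decimal places. The function names are hypothetical.

```python
import math


def truncated_pi(m: int) -> float:
    """Pi truncated (not rounded) to m decimal places, e.g. m=4 -> 3.1415."""
    return math.floor(math.pi * 10**m) / 10**m


def first_divergence(seed_a: float, seed_b: float, r: float = 3.9,
                     tol: float = 0.1, max_steps: int = 1000):
    """Step at which two logistic-map runs first differ by more than tol (None if never)."""
    a, b = seed_a, seed_b
    for n in range(1, max_steps + 1):
        a = r * a * (1.0 - a)
        b = r * b * (1.0 - b)
        if abs(a - b) > tol:
            return n
    return None


d = 1.0                        # diameter, known "exactly"
c_ref = math.pi * d            # reference circumference (double-precision pi)
seed_ref = c_ref - math.floor(c_ref)

results = {}
for m in (4, 8, 12):
    c_m = truncated_pi(m) * d
    results[m] = first_divergence(seed_ref, c_m - math.floor(c_m))
    print(f"pi to {m:2d} decimals: run departs from reference at step {results[m]}")
```

More digits of pi delay the departure, but each extra handful of digits buys only a roughly fixed number of additional reliable steps, because the initial error grows exponentially.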

Wise guys in college used to say, “Constants aren’t and Variables won’t.”

Somebody says, “Really? Why don’t you try it with physical systems from the real world to see if chaos does not exist in the real world? Do you best to prepare them ‘identically’ and watch them evolve over time.”

=======================================================================

Such mathematics are not my area at all. However, philosophically I see the arguments against chaos as having some legs. In some recent debates with Brandon Gates, he kept informing me that all models are wrong. (It did not matter that I told him over and over that of course they are, but in the engineering world you learn from your wrong models, and the IPCC does not.) The question is, why are all models wrong?

The answer is no models are infinitely precise, and all errors propagate. Beyond, and additive to that, not all fundamental forces are absolutely understood on every level including the quantum level.

Take the modeled pool table and classic pool break. The model says this and this will happen, and predicts where all the balls will end up. But it changes every time, despite all attempts to model it. The felt varies against what is known. One ball is slightly out of round. The humidity varies. The cue is imperfect, or the cue ball is struck a hemi-demi-semi bit off. The error in the model vs. reality propagates, and it never gets it right.

Math is a symbol of something. Thus one apple plus one apple equals exactly two apples. As an idea this is perfectly true. But no two apples are exactly alike, therefore the “perfect” answer, is not in truth perfect.

Macroscopically and microscopically, nothing is known perfectly or infinitely precisely, and errors propagate. Is chaos nothing more than the truth that all models have errors vs. the material reality? . . .

David, mathematical chaos is not necessarily a completely unordered state where no further entropy can occur, which is how the word is used philosophically. If you consider snowflakes, you are looking at a system that is fully deterministic and yet which, except in the broadest of terms, you cannot predict, ever. There are many other natural systems that are fully deterministic yet cannot be forecast. All systems following fractal rules fall into this category (mountains eroding, trees branching, and the distribution of leaves on trees).

David A,

Because that’s a ridiculous assertion stated so broadly.

My bottom line in this argument is that if you have little to no faith in models, you should be the first in line arguing for no change. Consistency goes a long way in a debate.

Now from my point of view, I not only want to know why CMIP5 models are wrong, I want them to be fixed. Realizing of course that they’ll never be perfect. Normally I’d then be asking how wrong they can be and still be useful. In this context, I see it as a moot question: they’re the best we’ve got, and I don’t see them getting much better any time soon as much as I wish that were so.

We have an infinitely better idea what CO2 at 280 ppmv looks like, because that era is in the rear-view mirror.

Do you get it yet? No? Very well, keep talking about chaos and unpredictability. Some day yet it may sink in.

Brandon Gates quotes me.

David A,

“In some recent debates with Brandon Gates, he kept informing me that all models are wrong. (It did not matter that I told him over and over of course they are, but in the engineering world you learn from your wrong models, the IPCC does not)

===========================================

Brandon states….”Because that’s a ridiculous assertion stated so broadly”

==========================

Full stop, Brandon. My “broad assertion” comment was here. In the thread I gave you several graphics and explained how the IPCC chooses, and then utilizes for future projections, the “modeled mean” of models knowingly wrong in ONE direction. (They purposefully chose a KNOWN-to-be-wrong answer to keep their scary stories alive.) I also gave you links to detailed analysis of why this is politically based post-normal science, as opposed to real science.

You answered, with arm-flapping detail, moot points about why all models are wrong, continually avoiding the FACT that the IPCC does CHOOSE to use the mean of models that are wrong in one direction.

If they were building aviation instruments required for doing instrument landings, every one of their planes would crash into the runway because the pilots thought they were higher than they were. (Worse yet, they would face murder charges, because they KNEW their modeled mean was telling them the planes were higher than they actually were, and they sold them anyway.) “Arguing the broad term general about all models have errors”

You did in that post exactly what you accused me of in this post, “arguing the broad term general about all models have errors”, while ignoring the real story, no matter how clearly or how often it was pointed out to you. The rest of your comment follows this pattern.

David A,

You are ascribing motive, which is opinion. The factual statement is demonstrably false; the CMIP5 ensemble results as published in AR5 do not err in ONE direction:

http://1.bp.blogspot.com/-ZY_oL2cq4r4/VQiX3rRH2aI/AAAAAAAAAYo/0VNOKoRIQJw/s1600/CMIP5%2Bvs%2BHADCRUT4%2Btrend%2B1860-2014%2B01.png

Rate of change for all series computed with a least-squares linear regression over the interval 1860 through 2014. This plot shows the result of subtracting the HADCRUT4 trend from each individual model trend. As you can see, roughly half of the models in the ensemble understate the observed trend over the entire interval. On balance, the CMIP5 ensemble is hot by 0.07 °C/century, but NOT, as you ignorantly claim, because all the models the IPCC has chosen are wrong in ONE direction.

All data from KNMI Climate Explorer.
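The comparison described above (each model’s least-squares linear trend over 1860-2014, minus the HADCRUT4 trend, expressed per century) can be sketched as follows. The function names are hypothetical, annual mean values are assumed, and the input series are synthetic toy numbers for illustration only; they are not KNMI Climate Explorer, CMIP5, or HADCRUT4 data.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    return sxy / sxx


def trend_per_century(years, temps):
    """Linear trend in degrees C per century, from annual values."""
    return 100.0 * ols_slope(years, temps)


def trend_difference(years, model_temps, observed_temps):
    """Model trend minus observed trend; positive means the model runs 'hot'."""
    return trend_per_century(years, model_temps) - trend_per_century(years, observed_temps)


# Synthetic toy series for illustration only (NOT CMIP5 or HADCRUT4 data).
years = list(range(1860, 2015))
observed = [0.005 * (y - 1860) for y in years]   # 0.5 C/century by construction
model = [0.006 * (y - 1860) for y in years]      # 0.6 C/century by construction
print(f"model minus observed: {trend_difference(years, model, observed):+.3f} C/century")
```

Applied to each ensemble member in turn, the sign of this difference is what separates models that run hot from those that run cool over the interval.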

The balance of your post ignores my central point: We have an infinitely better idea what CO2 at 280 ppmv looks like, because that era is in the rear-view mirror.

If you have any ability to be logically consistent, you will realize the import of my point.

Chaos for sure exists in any model of the real world though, and it doesn’t matter what you say, in the end all we have is models of the real world.

That is ultimately the only sane conclusion anyone who studies metaphysics can arrive at. There almost certainly is a real world out there, but we never deal with it directly. We only deal with models of it in our own heads and perceptions.

The game is not to throw models away and deal with reality directly – we can’t – or at least according to the mystics, all you end up with is bliss and no sense of identity 😉 – so we have to be in the business of better models, and in those better models chaos is for sure the best tool we have to describe what happens.

For sure models ain’t reality. That is the first step. The second step is to realise that reality is unattainable and we are stuck with models of it.

Chaos absolutely exists in the real world. Read James Gleick’s book “Chaos: Making a New Science”; if you understand that, and it’s amazingly understandable, you’ll know more about chaos than I’ve seen Climatologists demonstrate.

Paul, so you disagree with this statement…

ferdberple

March 15, 2015 at 12:48 pm

Predictable systems can be thought of as having a single attractor. A planet orbiting a star is predictable. However, when you add a third body the system becomes chaotic, except in the case where all 3 bodies lie in the same plane.

Chaotic doesn’t mean unpredictable, but it does mean unpredictable for all practical purposes. Given infinite precision and infinite time, you can predict a chaotic system.


======================

Kip Hansen

March 15, 2015 at 1:33 pm

Reply to ferdberple ==> Yes and Yes. Think of a child learning to ride a bike: pedaling fast enough to get the bike up to speed, while steering close enough to straight, will get the bike to that stable “Look at me, Mom, I’m riding a bike!” point.
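ferdberple’s remark that a chaotic system can be predicted “given infinite precision and infinite time” can be illustrated with a minimal sketch. It uses Python’s exact rational arithmetic (fractions.Fraction) on the logistic map at r = 4, a textbook chaotic system chosen as a stand-in, not anything from the article. Every step is computed with zero round-off, but starting from 1/3 the denominator at step n is 3 raised to the power 2^n, so the digits needed roughly double each step: exact prediction is possible in principle and hopeless in practice.

```python
from fractions import Fraction


def exact_logistic_orbit(x0: Fraction, steps: int, r: Fraction = Fraction(4)):
    """Iterate x -> r*x*(1 - x) in exact rational arithmetic (no round-off at all)."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(r * x * (1 - x))
    return xs


orbit = exact_logistic_orbit(Fraction(1, 3), steps=10)
for n, x in enumerate(orbit):
    # Digits needed just to write down the denominator of x_n exactly.
    print(f"step {n:2d}: denominator has {len(str(x.denominator))} digits")
```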

Nicely formulated Jeff. The map is not the territory. The distinction used to be called a priori/ a posteriori.

I highly recommend the work of Gregory Chaitin. He has continued the work of Goedel and Turing on the efficacy of modeling and meta-modeling. In essence it can be proved that no model can model reality, because the model would have to be more complex and bigger than said reality in order to model it.

Skip, reality may be complex or chaotic in a vulgar usage, but in a technical usage we are just describing reality as complex or chaotic. These theories while useful now will most likely themselves pass in time for more useful theories, at which time Reality will no longer be chaotic 😉

I think my post here, http://wattsupwiththat.com/2015/03/15/chaos-climate-part-1-linearity/#comment-1884218, is articulating what Fred said below: “Chaotic doesn’t mean unpredictable, but it does mean unpredictable for all practical purposes. Given infinite precision and infinite time, you can predict a chaotic system.”

In this sense, a chaotic system does not refer to the physics involved but to our capacity to predict and analyze it. Of course, I understand that some factors increase in a linear manner and many are exponential, and that in nature many systems interact. I think, however, it may be fair to say that nature is not chaotic, but our capacity to predict it is.

Philosophy

The Hindu word for creation is maya, which literally translates as to divide that which is indivisible. In effect, from the singularity of infinite energy beyond space and time comes all relative cause and effect. To focus on one is by nature to miss the interaction of all. Thus science can know nothing perfectly, as infinite precision and omniscience would be required. To measure is to see only a part of the whole picture.

Below is an excerpt from a book published in the 1940s:

“The ancient Vedic scriptures declare that the physical world operates under one fundamental law of maya, the principle of relativity and duality. God, the Sole Life, is an Absolute Unity; He cannot appear as the separate and diverse manifestations of a creation except under a false or unreal veil. That cosmic illusion is maya. Every great scientific discovery of modern times has served as a confirmation of this simple pronouncement of the rishis.

Newton’s Law of Motion is a law of maya: “To every action there is always an equal and contrary reaction; the mutual actions of any two bodies are always equal and oppositely directed.” Action and reaction are thus exactly equal. “To have a single force is impossible. There must be, and always is, a pair of forces equal and opposite.”

Fundamental natural activities all betray their mayic origin. Electricity, for example, is a phenomenon of repulsion and attraction; its electrons and protons are electrical opposites. Another example: the atom or final particle of matter is, like the earth itself, a magnet with positive and negative poles. The entire phenomenal world is under the inexorable sway of polarity; no law of physics, chemistry, or any other science is ever found free from inherent opposite or contrasted principles.

Physical science, then, cannot formulate laws outside of maya, the very texture and structure of creation. Nature herself is maya; natural science must perforce deal with her ineluctable quiddity. In her own domain, she is eternal and inexhaustible; future scientists can do no more than probe one aspect after another of her varied infinitude. Science thus remains in a perpetual flux, unable to reach finality; fit indeed to formulate the laws of an already existing and functioning cosmos, but powerless to detect the Law Framer and Sole Operator. The majestic manifestations of gravitation and electricity have become known, but what gravitation and electricity are, no mortal knoweth.”

=========================================

http://www.ananda.org/autobiography/#chap30


“Chaos does not exist in the real world because the real world has no initial conditions to which it might be sensitive.”

There is hysteresis, especially in oceanic modes, providing greatly ranging initial conditions. That has though little to do with whether what came before and what follows is actually chaotic or not.

At some point, introduce the distinction between nonlinear and nonlinear dynamic (lagged feedback) systems. A pendulum is the former. Old clocks show pendulums are still well behaved. Squirrels and climate are both the latter, and are not.

Reply to Rud ==> Yes, gently kicked pendulums are wonderfully predictable and constant. However, kick them too hard, or break the pendulum into two sections and they devolve into chaotic behaviors.

I knew about the double pendulum behaviour.

Pendulum math gets really messy once you reach the point where the small-angle approximation sin(x) ≈ x breaks down.
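Rud’s point that a simple pendulum is nonlinear yet perfectly well behaved, and the remark about sin(x) ≈ x, can be quantified with a short sketch. It uses the standard exact result for a frictionless simple pendulum released from rest at amplitude θ0: the period equals the small-angle period 2π√(L/g) divided by the arithmetic-geometric mean AGM(1, cos(θ0/2)). The function names are my own. The response is nonlinear (the period depends on the amplitude) but completely predictable; nothing here is chaotic.

```python
import math


def agm(a: float, b: float) -> float:
    """Arithmetic-geometric mean of two positive numbers."""
    while abs(a - b) > 1e-15 * a:
        a, b = (a + b) / 2.0, math.sqrt(a * b)
    return a


def period_ratio(amplitude_deg: float) -> float:
    """Exact period of a frictionless simple pendulum divided by the
    small-angle period 2*pi*sqrt(L/g), for release from rest at amplitude_deg."""
    if not 0.0 <= amplitude_deg < 180.0:
        raise ValueError("amplitude must be in [0, 180) degrees")
    theta0 = math.radians(amplitude_deg)
    return 1.0 / agm(1.0, math.cos(theta0 / 2.0))


for deg in (1, 10, 30, 60, 90, 150):
    print(f"{deg:3d} deg: period is {period_ratio(deg):.4f} x the small-angle value")
```

At a few degrees the small-angle period is essentially exact; by 90 degrees the true period is about 18% longer.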

This needs to be taught in schools, together with physics and calculus. And it should be required that anyone who aspires to have an influence on public policy pass a test at this level of mathematics.

All scientists who study the causes of climate and weather pattern variations need most of their master’s coursework at this level of mathematics.

“This level of mathematics” is stripping the basics down to the bone! I would certainly pray that climate “scientists” know a hell of a lot more mathematics than this!!!

(You’re making me nervous.)

Don’t forget economics.

Very interesting, I look forward to the next part.

@Jeff Glassman: I would offer up one of the hardest physical phenomena there is to model to disprove your assertion: turbulence in fluids. It is inherently chaotic and it dominates so many important aspects of our lives, from the weather to corrosion of pipes to the way our bodies work.

Re: pipe turbulence — good point rxc

– That we have not solved (and likely cannot solve) the equation describing it,

Dr. Christopher Essex gives as one example of why climate is NOT simulatable:

(from my 2/24/15 comment here: http://wattsupwiththat.com/2015/02/23/inconvenient-study-la-nina-killed-coral-reefs-4100-years-ago-and-lasted-over-two-millenia/#comment-1867617)

“{Essex video here on youtube: https://www.youtube.com/watch?v=19q1i-wAUpY}

{25:17} — Solving the closure problem. {i.e., the “basic physics” equations have not even been SOLVED yet, e.g., the flow of fluids equation “Navier-Stokes Equations” — we still can’t even figure out what the flow of water in a PIPE would be if there were any turbulence.}”

Janice

If this were so, then chemical and petrochemical plants requiring thousands of pressure-drop flow calculations (and ultimately power-consumption calculations) involving turbulent fluid flow and transfer through pipes could not be designed. If turbulent flow through pipes cannot be figured out, then how do these plants get designed, built and operated?

Robert Stevenson

The industries absolutely do “use” turbulent flow approximations all the time. Just like they “use” linear approximations of beam stress-strain and linear approximations of resistance curves. No one at any time can “predict” the exact start of turbulent flow, nor what happens inside the pipe during turbulent flow – All that the designers can do is deliberately select a pump power and valve diameter and pipe diameter sufficient to guarantee that turbulent flow must occur (at some point in the pump discharge path) and that fluid speed and pipe roughness and fluid temperature and pressure are sufficient for that turbulent flow to STAY turbulent all the way through the pipe from end-to-end.

But – to answer the question about “What is the fluid “doing” at any point inside the pipe?” … That we cannot answer.

I agree that turbulent flow in pipes is chaotic, but it has been adequately modeled; for turbulent flow in smooth tubes the Blasius equation gives the friction factor accurately for a wide range of Reynolds numbers (Re):

f = 0.079/Re^0.25, for 4000 < Re < 10^5

Pipe flow models developed using dimensional analysis give excellent predictions in the turbulent region for velocity and pressure drop. Why in your view cannot models be developed for turbulent flow in the atmosphere to be used to predict the effects of man made global warming and future climate changes?
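A minimal sketch of how the Blasius correlation quoted above feeds a pipe pressure-drop estimate. The 0.079 coefficient corresponds to the Fanning friction factor (the Darcy form is four times larger, about 0.316/Re^0.25), so the sketch uses ΔP = 4f(L/D)(ρv²/2) for fully developed flow in a smooth pipe. The function names and the water-like property values are hypothetical.

```python
def blasius_fanning(re: float) -> float:
    """Blasius correlation for the Fanning friction factor in a smooth pipe."""
    if not 4000.0 < re < 1.0e5:
        raise ValueError("Blasius correlation quoted for 4000 < Re < 1e5 only")
    return 0.079 / re ** 0.25


def pressure_drop(density, velocity, diameter, length, viscosity):
    """Time-averaged pressure drop (Pa) for fully developed flow in a smooth pipe.

    Uses the Fanning form: dP = 4 f (L/D) (rho v^2 / 2). SI units throughout.
    """
    re = density * velocity * diameter / viscosity   # Reynolds number
    f = blasius_fanning(re)
    return 4.0 * f * (length / diameter) * 0.5 * density * velocity ** 2


# Illustrative water-like values (hypothetical): 5 cm pipe, 1 m/s, 10 m long.
dp = pressure_drop(density=1000.0, velocity=1.0, diameter=0.05, length=10.0, viscosity=1.0e-3)
print(f"pressure drop over 10 m: {dp:.0f} Pa")
```

Note what the correlation does and does not deliver: a bulk, time-averaged pressure drop that is good enough to size pumps, not the instantaneous velocity at any point inside the pipe. That is the same distinction the two preceding comments draw.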

To invalidate (not disprove; this is about science, not symbolic logic) what I said, you would need to provide a (necessarily new) definition for either linearity or chaos, and then show how turbulent fluid flow is either nonlinear or chaotic according to either definition and without resorting to a model for turbulent flow.

The existence of hard and unsolved problems shows only the limitations of our abilities to model, including poor choices for observations, parameters, or scale factors.

Dear Mr. Glassman,

It would be helpful, I think, so that other commenters could even possibly invalidate or respond precisely to your statements at 10:19am and 11:02am today, if you would define your terms and write more clearly. It appears that you and everyone else (John West, too, perhaps…) are writing past each other here… . And you know what happens when computations start to diverge from reality….. !!!!! 🙂

That is: people are responding to what they THOUGHT you meant,

however,

from your reply, it appears that

what you meant is not what they thought.

#(:))

Janice

Janice, 3/15/15 @ 11:19:

What I believe to be the essentials of the definitions were contained in my opening paragraph. For linearity/nonlinearity, the essence is the equation I gave. For chaos, the key criterion is a heightened sensitivity to initial conditions. If scientific definitions exist without these properties, I hope the posters here will illuminate the dialog.

You might, though, enjoy these related, circular definitions from IPCC, intended for laymen (e.g., Policymakers):

>>Chaos A dynamical system such as the climate system, governed by nonlinear deterministic equations (see Nonlinearity), may exhibit erratic or chaotic behaviour in the sense that very small changes in the initial state of the system in time lead to large and apparently unpredictable changes in its temporal evolution. Such chaotic behaviour may limit the predictability of nonlinear dynamical systems. AR4, Glossary, p. 942.

>>Predictability The extent to which future states of a system may be predicted based on knowledge of current and past states of the system.

>>Since knowledge of the climate system’s past and current states is generally imperfect, as are the models that utilise this knowledge to produce a climate prediction, and since the climate system is inherently nonlinear and chaotic, predictability of the climate system is inherently limited. Even with arbitrarily accurate models and observations, there may still be limits to the predictability of such a nonlinear system (AMS, 2000)
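The “heightened sensitivity to initial conditions” that both Mr. Glassman and the IPCC glossary treat as the key criterion is usually quantified by the largest Lyapunov exponent: the average exponential rate at which nearby trajectories separate. A minimal sketch for the logistic map (an illustrative stand-in, not a climate model) averages ln|f′(x)| along an orbit; a positive value signals chaos, a negative value a stable, predictable cycle. The parameter values and function name are assumptions for illustration.

```python
import math


def lyapunov_logistic(r: float, x0: float = 0.3, transient: int = 1000,
                      steps: int = 20000) -> float:
    """Estimate the Lyapunov exponent of x -> r*x*(1 - x) as the orbit average of ln|f'(x)|."""
    x = x0
    for _ in range(transient):      # let the orbit settle onto its attractor first
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(steps):
        x = r * x * (1.0 - x)
        slope = abs(r * (1.0 - 2.0 * x))        # |f'(x)|, the local stretching factor
        total += math.log(max(slope, 1e-300))   # guard against log(0) if x hits 0.5 exactly
    return total / steps


for r in (2.5, 3.2, 3.5, 3.9):
    lam = lyapunov_logistic(r)
    label = "chaotic (nearby runs separate)" if lam > 0 else "stable (nearby runs converge)"
    print(f"r = {r}: exponent {lam:+.3f} -> {label}")
```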

By the way, IPCC’s definition of climate system is a good-enough real world system, but its definition of nonlinearity is a property of simple models sans mathematics.

So in IPCC speak, a real world system is chaotic and unpredictable if the models of it do not produce predictable results. This implies a certain arrogance that climate models are perfect. But more importantly, IPCC blames the failure of its models to predict climate on the climate, not the models.

I think Mr. Glassman is quite clear. He understands the difference between the model and that which is being modeled.

Others should be so clear.

If you by “chaotic” mean “random but constrained” or even plain old “unpredictable” then you are making a statement about your knowledge of the world as expressed in your model. Assuming your model is the world is reification where the models become the reality. In no way does the difficulty in modeling turbulence disprove what Jeff Glassman has said.


Glassman: “Chaos does not exist in the real world … .”

rxc: “…– turbulence in fluids. It is inherently chaotic … .”

— This is the crux of the difference that Mr. Glassman needs to clarify. As it is, he has left great ambiguity. What does he mean precisely? Until he clarifies his meaning, we might as well just ignore what he writes. Those who applaud and those who attempt to refute him are equally likely to be mistaken.

You as well, Janice. What could “chaotic” mean and what does it mean to you. So far the best definition would be “random but constrained” but “random” really means “unknown or incalculable”. It can only mean something regarding the extent of one’s knowledge and never about the real world which I think obviously doesn’t suffer from this unknowability.

Glassman: “Chaos does not exist in the real world … .”

Why would anyone think otherwise?

Reply to DAV, Glassman, and janice ==> Perhaps this is my fault, as author, in assuming that my use of the word “chaotic” would be understood in the sense intended when we speak of nonlinearity and ‘chaos theory’.

Amongst the many scholarly definitions of CHAOS is this from Mitchell Feigenbaum:

Edward Lorenz offers this:

For readers who think that “chaotic” means the same thing as “entirely random”, see the Wiki article for a brief introduction.

Much of this I hope to make clear in the second and third part of this series.

Dear Mr. Hansen,

Thank you for that very nice definition of terms. That you can explain your position so clearly to a layperson like me shows that you are a master of your subject. It is clear from your writing in your post and your above comment that Glassman and DAV are either: 1. honestly mistaken; or 2. using ambiguity and imprecision deliberately (for what purpose, I will not guess).

Thank you for the great education. Starting a building with the foundation — only way to go!

Janice

Kip, thanks. I hope the future articles make it clear that “chaos” is only an expression of our ability to predict and not something actually present in physical systems. The models are not the reality.

Janice,

The fluid is not chaotic. It knows exactly what it is doing at all time.

It only appears chaotic to the observer.

Chaos is in the eye of the modeler, not in what is being modeled.

“It only appears chaotic to the observer.”

Science is about observation.

That’s why “chaotic” is the term used to describe that situation.

It is a useful concept. Mr. Glassman is using “chaos” in some obscure, largely unhelpful, way.

Max Photon,

It only appears chaotic to the observer.

An apt description but some people will fail to see that happens only when the observer becomes a modeler and sees chaotic behavior in the model predictions. There is a tendency to see one’s model as being the reality. Climate modelers and the IPCC seem to have fallen into this trap (called reification) as demonstrated, e.g., by their hunt for “missing” heat. “Missing” because it was predicted by the models so it should be present.

As long as the difference between models and reality is maintained or at least commonly understood there isn’t really anything wrong with shortcut descriptions. However, it is quite evident some here can’t see the distinction. From some of Kip Hansen’s comments it seems he may be one of them.

Janice and Mr. Glassman, do my layman’s thoughts expressed in this post here, http://wattsupwiththat.com/2015/03/15/chaos-climate-part-1-linearity/#comment-1884218, articulate some of the same thoughts Mr. Glassman is expressing in a more detailed manner?

Missed the link to my comment…

http://wattsupwiththat.com/2015/03/15/chaos-climate-part-1-linearity/#comment-1884218

rxc: Many years ago we were on holiday and I wanted to see if water came out of the shower head in streams, like the single stream from a hosepipe. I turned the shower on and took two photographs through the shower towards the light. The first exposure, at about 1/30th second, showed streams of water; the second, at a wider aperture and 1/1000th second, showed droplets all in lines. I reasoned that the turbulence in the shower head prevented a linear egress of the water. I would also hazard a guess that the greater the number of holes in the shower head, the less chance there would be of predicting how many droplets would come out of each hole.

I will add that I do enjoy swimming, good food, wine and sight-seeing when I go away!!

http://www.sciencealert.com/this-tap-saves-water-by-creating-incredible-patterns

That’s what quantum mechanics is about. You can’t predict the path an individual photon takes, but there is an overall ‘order’. From one perspective it is chaotic, but from another perspective it is ordered. It’s like Schrödinger’s cat: chaos exists and doesn’t exist at the same time.

Alex, chaos and order only exist in the mind of the observer.

Everything is in the eye (or brain) of the modeler. Doesn’t mean there is nothing outside the modeler’s brain for him to model.

E.g., for those who say that evolution (for example) is “only” a theory — they beg the question, a theory of what? In order for there to be a theory at all, there must be something, some phenomenon/a, for the theory to be about. A theory with no real-world referent is like a map that isn’t a map OF anything.

Chaos was observed. Chaos is still observed. Go ahead, draw me a map that doesn’t represent -anything-, not even that weird house-like place you dreamed about the other night.

The model is not the thing modeled. And it can’t exist without the thing modeled.

Chaos was observed

Actually it was only observed in models. If anything, it would mean that the models were far from complete. To say that whatever the model is trying to predict is chaotic because the model predictions are chaotic is to say the model is accurate, when quite obviously it is not.

Max, you appear to make a novice mistake. Chaos in the mathematical sense is not an absence of order. It is a level of complexity that defies long term precise predictions.

Excellent topic, a very good read. Thank you for taking the time to put this together.

“1) nonlinear and therefore, 2) chaotic” … “Nonlinear systems are not tame – changes in input do not necessarily produce proportional changes in results.”

There are plenty of nonlinear yet “tame” (in that they are completely predictable) systems. Nonlinear includes exponential and logarithmic functions, neither of which is chaotic. This post is oversimplification past the point of absurdity.

I disagree. It is very simplified and could do with more caveats that people can follow up on if they are interested.

But this is an introduction.

It is the level that should be taught to 11 to 13 year olds in schools, but isn’t.

I think this article is useful.

Trigonometry and logarithms are typically introduced in 9th-10th grade in US public schools, to students of about 14-15 years old. That would be their first real exposure to nonlinear math functions.

“1) nonlinear and therefore, 2) chaotic”

I disagree with this, too. Non-linear and chaotic are quite different and should always be presented as such – even at an introductory level.

joelobryan, That seems a little later than I recall from my schooldays. I’m sure it was long before we started studying for our GCSEs.

Maybe it’s different between the US and England. It would be interesting to know what variation there is in the world’s mathematics teaching.

But I still think these ideas ought to be taught to children of around 11 to 13 years old.

Reply to the “Nonlinear/Chaotic” point ==> I am talking here quite specifically about nonlinear dynamical systems.

Nonlinear mathematical functions are math, not dynamical systems.

For those who are stubbornly holding to “what I learned in college mathematics” — please read any one, or all, of the following books:

Gleick is fun. Explains nonlinear dynamics in context of the people and their discovery process. Stewart is more straightforward technical. Both highly recommended.

There are of course many nonlinear dynamical systems that are not chaotic. It is usually very difficult to prove that a system is chaotic in the mathematical sense. There are also many well-behaved nonlinear systems that can be easily analyzed and simulated: a car, a robot, celestial motions and a bridge, to name a few. Not many linear systems are analyzed in robotics, but it is still possible to get very good results.
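As a small illustration of a system that is nonlinear yet tame, here is a minimal sketch using the logistic map x(n+1) = r·x(n)·(1 - x(n)), a standard textbook example chosen for illustration (it is not taken from the comments above). With the assumed setting r = 2.5 the rule is nonlinear, but it settles onto the fixed point 1 - 1/r = 0.6, so two slightly different starting values end up in the same place.

```python
def logistic_map(r: float, x0: float, steps: int) -> float:
    """Iterate x(n+1) = r * x(n) * (1 - x(n)) and return the value after `steps` steps."""
    x = x0
    for _ in range(steps):
        x = r * x * (1.0 - x)
    return x


r = 2.5                          # assumed parameter: nonlinear, but not chaotic
fixed_point = 1.0 - 1.0 / r      # analytic fixed point, 0.6 for r = 2.5

a = logistic_map(r, 0.2, 200)
b = logistic_map(r, 0.2000001, 200)   # a slightly different starting value

# Both runs settle onto the same fixed point, so the small initial difference dies away.
print(a, b, fixed_point)
```

The same rule with r raised toward 4 is the classic example where this tidy convergence breaks down, which is why "nonlinear" and "chaotic" are not interchangeable.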

The only valid point in your article seems to be that you need to be careful when handling nonlinear systems, but everyone with any knowledge about the subject knows that.

Would you please say what you mean by dynamical systems? What would make a system non-dynamical or partially dynamical, for instance?

God does not play dice, hirelings do. God owns the Casino!

Reply to Will ==> The Wiki gives:

“A dynamical system is a concept in mathematics where a fixed rule describes how a point in a geometrical space depends on time. Examples include the mathematical models that describe the swinging of a clock pendulum, the flow of water in a pipe, and the number of fish each springtime in a lake.

At any given time a dynamical system has a state given by a set of real numbers (a vector) that can be represented by a point in an appropriate state space (a geometrical manifold). Small changes in the state of the system create small changes in the numbers. The evolution rule of the dynamical system is a fixed rule that describes what future states follow from the current state. The rule is deterministic; in other words, for a given time interval only one future state follows from the current state.”

(Sorry that is so dense, but you did ask for it).

In plain English, a dynamical system is a real-world process, a series of events (any continuum is a series of discrete events, one after another) in which the next state of the system depends on the current state. In our population example (squirrels on May Island), the calculation for the number of squirrels next year starts with the ending value of this year. The thing we are measuring is moving through time.
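The "next state depends on the current state" idea can be sketched in a few lines. The rule below is a hypothetical discrete logistic-growth rule with an assumed growth rate of 0.5 and an assumed carrying capacity of 1,000 squirrels; it is not the formula from the article, only an illustration of a fixed, deterministic rule applied year after year.

```python
def next_year(count: float, growth_rate: float = 0.5, capacity: float = 1000.0) -> float:
    """Hypothetical rule: next year's squirrel count depends only on this year's count."""
    return count + growth_rate * count * (1.0 - count / capacity)


def trajectory(start: float, years: int) -> list[float]:
    """Apply the rule repeatedly; each year starts from the previous year's ending value."""
    counts = [start]
    for _ in range(years):
        counts.append(next_year(counts[-1]))
    return counts


history = trajectory(100.0, 10)
for year, count in enumerate(history):
    print(f"year {year:2d}: {count:7.1f} squirrels")
```

The whole "system" is just the rule plus a starting value; everything after year 0 follows from them.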

And “In physics and other sciences, a nonlinear [dynamical] system, in contrast to a linear system, is a system which does not satisfy the superposition principle – meaning that the output of a nonlinear system is not directly proportional to the input. …. Nonlinear problems are of interest to engineers, physicists and mathematicians and many other scientists because most systems are inherently nonlinear in nature.”
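The superposition principle in that definition can be spot-checked numerically: a linear rule f must satisfy f(a + b) = f(a) + f(b) and f(k·a) = k·f(a). The helper below is a hypothetical sketch that tests a few sample points; passing a spot check does not prove linearity, but a single failure is enough to show a function is nonlinear.

```python
import math
from typing import Callable


def satisfies_superposition(
    f: Callable[[float], float],
    samples: tuple = ((0.3, 1.7), (2.0, -0.5), (1.25, 4.0)),
    k: float = 3.0,
) -> bool:
    """Spot-check additivity f(a+b) == f(a)+f(b) and homogeneity f(k*a) == k*f(a)."""
    for a, b in samples:
        if not math.isclose(f(a + b), f(a) + f(b), rel_tol=1e-12, abs_tol=1e-12):
            return False
        if not math.isclose(f(k * a), k * f(a), rel_tol=1e-12, abs_tol=1e-12):
            return False
    return True


candidates = {
    "2x": lambda x: 2.0 * x,
    "2x + 1": lambda x: 2.0 * x + 1.0,
    "x**2": lambda x: x * x,
    "sin(x)": math.sin,
}
for name, func in candidates.items():
    print(f"{name:8s} superposition holds: {satisfies_superposition(func)}")
```

Note that the straight-line rule 2x + 1 fails the check: in the superposition sense, only straight lines through the origin are linear, while x², sin(x) and log(x) are all nonlinear.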

Kip Hansen, 3/15/2015 @ 12:14 pm

Thinking that I might be able to help you, I found all four of your references available for preview on-line, including the indices and the ability to search the contents. I found some discussions about competing definitions of chaos, but these authors support you: none of you can define the term you pretend to discuss. How easy it is to write volumes about something undefined. Real science articles do not enjoy that luxury.

So too, zero change in inputs can have these results??

Reply to RobRoy ==> Very good question! The answer is “No”. Re-running calculations (re-doing an experiment) with exactly the same inputs gives exactly the same results, assuming you are using the same computer (more on this next time).

I like this definition of chaos.

Kip Hansen

March 15, 2015 at 11:50 am

“Chaos: When the present determines the future, but the approximate present does not approximately determine the future.”
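That definition, together with the answer to RobRoy above, can be illustrated with a minimal sketch. It assumes the logistic map with r = 4.0, a commonly used chaotic setting chosen here for illustration. Re-running with exactly the same starting value reproduces the run value for value, while a starting value that differs by one part in ten billion soon gives a completely different sequence.

```python
def logistic_orbit(x0: float, steps: int, r: float = 4.0) -> list[float]:
    """Return [x0, x1, ..., x_steps] for the logistic map x(n+1) = r * x(n) * (1 - x(n))."""
    orbit = [x0]
    for _ in range(steps):
        x = orbit[-1]
        orbit.append(r * x * (1.0 - x))
    return orbit


run1 = logistic_orbit(0.3, 60)
run2 = logistic_orbit(0.3, 60)            # exactly the same input
nearby = logistic_orbit(0.3 + 1e-10, 60)  # the "approximate present"

# Same input, same machine: the two runs are identical, value for value.
print("identical re-run matches:", run1 == run2)

# Nearby input: the gap starts near 1e-10 and grows until the runs are unrelated.
for n in range(0, 61, 10):
    print(f"step {n:2d}: gap = {abs(run1[n] - nearby[n]):.3e}")
```

The rule is fully deterministic in both cases; only the precision of the starting value differs.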

It seems like global climate is the sum of all the regional weather events in a constant chain reaction. It’s never really any one thing at any one time.

Yes, RobRoy. That effectively defines a nonlinear system: it is the division by zero, or where something tangible or measurable happens in zero time or zero space.

Yes, I’m in agreement with Jeff G and John West

We really do have to get our heads around the difference between something that is ‘straight-line’ linear, Y = Ax + B for instance, and something that is not a straight line, such as x-squared, log(x) or sin(x).

They are still linear functions.

A pendulum is linear no matter what size swing it takes, but limiting calculations to small swings is sheer laziness (for lack of a better word) by people who do not want to be bothered to do the actual calculations. The pendulum is always responding in a linear fashion to whatever forces are applied to it, no matter what size swing.
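For comparison with the textbook model: the frictionless pendulum obeys θ'' = -(g/L)·sin θ, and the small-angle approximation sin θ ≈ θ turns it into the linear equation θ'' = -(g/L)·θ. One checkable difference is the period. The linear model predicts the same period for every swing, while the full equation's period grows with amplitude. The sketch below assumes the standard closed form T = 2π·√(L/g) / AGM(1, cos(θ₀/2)), where AGM is the arithmetic-geometric mean, and reports T relative to the linear prediction 2π·√(L/g).

```python
import math


def agm(a: float, b: float, tol: float = 1e-15) -> float:
    """Arithmetic-geometric mean of two positive numbers."""
    while abs(a - b) > tol * abs(a):
        a, b = (a + b) / 2.0, math.sqrt(a * b)
    return a


def period_ratio(amplitude_deg: float) -> float:
    """Period of the full pendulum equation divided by the small-angle (linear) period."""
    theta0 = math.radians(amplitude_deg)
    return 1.0 / agm(1.0, math.cos(theta0 / 2.0))


for amp in (1, 10, 30, 60, 90, 150, 179):
    print(f"{amp:3d} deg swing: period is {period_ratio(amp):.4f} x the linear prediction")
```

For small swings the ratio is essentially 1, which is why the linear approximation works there; for large swings the two models clearly disagree.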

Likewise water in a pipe or swirling in a bucket. Each individual molecule is always responding in a linear fashion to whatever forces are being applied to it at all times.

It is the fact that there are about 36 million million million million molecules in just one liter of water that makes the calculation ‘a bit difficult’. Present-day digital technology is not up to the task, but that does not make it a non-linear system. Basically, it’s ‘rounding error’.

Likewise applying the tag ‘chaos’.

Each molecule of water knows exactly where it’s going, where it’s been and what force is acting on it at all times, and responds in a linear fashion. How can it do otherwise? If each molecule is linear, how can the body of water be non-linear?

If we really do want to solve the problem of what a liter of water does in a pipe, simply get a liter of water and a pipe and let the water solve it for you.

Until the digital computer can resolve the forces on each of the 3.6 x 10^25 water molecules in each litre, on (at a guess) sub-nanosecond timescales, their behaviour will appear chaotic or non-linear.

As skeptics, we must not fall into the beguiling trap of thinking (digital) computers are some sort of all-powerful wonder machines, as the warmists do. Their unquestioning gullibility and naivety are mind-blowing sometimes…

Peta in Cumbria ==> Both Gleick and Stewart (see reading list pull quote) insist that the very systems themselves are nonlinear in nature — not just that we have linearized them out of laziness (which is also true). These systems appear nonlinear because they are nonlinear. Nonlinearity is not a trick of lazy mathematicians or physicists.

Read this paper: Nonlinear Population Dynamics: Models, Experiments and Data by Cushing et al. (1998) and check back in here with your opinion.

P in C, you raise two deep philosophical points from the history of science, both going back centuries now, and both crucially important. Bravo.

First is Pascal’s notion that if you knew everything about some state of the universe (the position, momentum, … of every atom, plus all the laws of physics like Newton’s), then the future would be in principle computable and knowable. This is also known as LaGrange’s Demon (since the Devil could also do the calculations). Pascal quit math for Christian asceticism shortly after founding probability theory to solve a compulsive Paris gambler friend’s question about how to divide the table stakes if the game stopped short. LaGrange stayed a mathematician to the end. This was the deterministic Clockwork Universe idea that Newton inspired.

It eventually foundered on two problems. First, the present state is not precisely knowable (pi has an infinite number of digits). That underlies the Lorenz butterfly-effect problem of sensitive dependence on initial conditions, undoubtedly coming in a subsequent installment of this excellent primer. It was Poincaré who finally laid the celestial clockwork metaphor to rest, by showing that Newton’s three-body problem was not solvable (because of nonlinear dynamic ‘chaos’, although Henri did not have that nomenclature in his time).

Second, Turing computability. Some things are not practically computable (in the digital sense) EVER! See a nice little supplemental reading book, Computability and Unsolvability, by Martin Davis (1958). Which is why GCMs must be parameterized…

The IPCC dogma versus skeptic stuff highlighted by Kip Hansen’s excellent first post in his series (if the rest are as good as this one, beyond excellent and worthy of a book) has very deep intellectual roots. ‘Those who do not study history are doomed to repeat it…’ Something like that.

The pendulum starting from “true north” presents special difficulties because of the sensitivity to initial conditions.

PoC, these are deep waters. If you are making the observation that the laws of nature appear to be linear and satisfy the superposition principle at the microscopic classical level, and appear to be strictly reversible at that level, I certainly wouldn’t argue with you, although quantum theory introduces a sort of nonlinearity at the very smallest length/time scales via pair production and vacuum polarization, there is the puzzle of mass (there are nonlinear Higgs models that are still not ruled out as candidates, given that the Higgs boson is not yet really ruled in), and ultimately all of this is empirical probable truth, true so far as we can tell, and we may or may not find some fundamental nonlinearity somewhere.