Guest essay by Larry Hamlin

NASA GISS has released its global average temperature anomaly for November 2023 (provided below). It shows an El Niño-driven value of 1.44 degrees C (2.592 degrees F), a result the L A Times hyped as “a new monthly record for heat” and the “hottest November”.

This GISS anomaly value represents a November global absolute average temperature of 59.792 degrees F.

The prior highest GISS November global average temperature anomaly was measured in 2020 at 1.10 degrees C (1.98 degrees F), which represents a November global absolute average temperature of 59.18 degrees F. That difference of 0.612 degrees F is what the Times has hyped as the “hottest November”.

The highest prior GISS-measured El Niño year anomaly was in 2016 at 1.37 degrees C (2.466 degrees F), which represents an absolute temperature of 59.666 degrees F, a difference of only 0.126 degrees F (1/8th of a degree F) from the El Niño-driven November 2023 anomaly value.
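The absolute values above are consistent with adding each anomaly, converted to Fahrenheit, to a fixed baseline of 57.2 degrees F. That baseline is not stated in the essay; it is inferred here from the arithmetic (59.792 minus 2.592), so treat it as an assumption. A minimal Python sketch that reproduces the figures:

```python
# ASSUMPTION: baseline inferred from 59.792 F - 2.592 F; the essay does not state it.
BASELINE_F = 57.2


def anomaly_c_to_f(delta_c: float) -> float:
    """Convert a temperature *difference* from C to F (scale factor only, no +32)."""
    return delta_c * 9.0 / 5.0


def absolute_f(delta_c: float, baseline_f: float = BASELINE_F) -> float:
    """Add the Fahrenheit anomaly to the assumed baseline temperature."""
    return baseline_f + anomaly_c_to_f(delta_c)


# Anomaly values (degrees C) as quoted in the essay.
anomalies_c = {"Nov 2023": 1.44, "Nov 2020": 1.10, "2016": 1.37}
for label, a in anomalies_c.items():
    print(f"{label}: {anomaly_c_to_f(a):.3f} F anomaly -> {absolute_f(a):.3f} F")

print(f"2023 minus 2020: {absolute_f(1.44) - absolute_f(1.10):.3f} F")
print(f"2023 minus 2016: {absolute_f(1.44) - absolute_f(1.37):.3f} F")
```

Because an anomaly is a temperature difference, converting it from C to F uses only the 9/5 scale factor and never the +32 offset.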

The climate alarmist propaganda media misleadingly exaggerate the small anomaly differences between these measurements by deliberately concealing their specific numerical values. Instead, they hype these carefully hidden small differences as “a new monthly record for heat” and the “hottest November”, even though the latest GISS global anomaly value differs by only 1/8th of a degree F from the highest prior value, set in the 2016 El Niño year.

The L A Times climate alarmist article continues to conceal and downplay the overwhelming importance of the large 2023 El Niño event, and the obvious impact such a naturally occurring, worldwide climate event has in greatly increasing both absolute and anomaly temperature measurements around the world.

The Times article ridiculously hypes (shown below) that November 2023 is the “sixth straight month to set a heat record”, which “has truly been shocking”, with people “running out of adjectives to describe this”. Yet the NASA GISS data shown above clearly establish that this multi-month pattern of increasing anomalies is entirely consistent with the 2016 global El Niño event, which saw 7 straight months of increasing anomalies from October 2015 through April 2016.
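The comparison above turns on counting consecutive month-over-month increases in an anomaly series. A minimal sketch of that count follows, assuming the monthly values are supplied in time order; the function name is hypothetical and no GISS values are included, so the actual monthly anomalies would have to be loaded separately.

```python
from typing import Sequence


def longest_increasing_run(values: Sequence[float]) -> tuple[int, int]:
    """Return (start_index, length) of the longest run of strictly increasing values.

    A run of length n covers n consecutive months, each one higher than the
    month before it (the first month of the run is its starting point).
    """
    if not values:
        return (0, 0)
    best_start, best_len = 0, 1
    start = 0
    for i in range(1, len(values)):
        if values[i] <= values[i - 1]:
            start = i  # the run is broken; a new run begins at month i
        length = i - start + 1
        if length > best_len:
            best_start, best_len = start, length
    return (best_start, best_len)
```

For example, `longest_increasing_run([0.5, 0.6, 0.4, 0.7, 0.9, 1.1])` returns `(2, 4)`: four rising months starting at index 2. Under this convention, October 2015 through April 2016 would be a run of length 7. A streak of monthly records (each month beating the same calendar month in every earlier year) would be a different count.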

The GISS November 2023 global average temperature anomaly is a mathematically derived composite of anomaly measurements spanning an extraordinary array of five disparate global climate regions (shown below), together with the huge differences in climate behavior between the hemispheres, with their unique and far-flung oceans, continents, mountains, deserts, rain forests, lowlands, etc.

The mathematically contrived global average temperature anomaly result is created through a composite menagerie of widely disparate climate region data outcomes that apply to no specific region or location anywhere on earth.

Additionally, climate alarmist hyped claims about “limiting global warming to 2 degrees C (temperature anomaly value) above pre-industrial times” are based on an emissions scenario referred to as RCP8.5 that was rejected by Working Group I (The Physical Science Basis) of the UN Intergovernmental Panel on Climate Change (IPCC) Sixth Assessment Report (AR6, 2021).

The climate alarmist propaganda media misrepresent the contrived global average temperature anomaly data in support of their hyped claims, while ignoring and concealing extensive anomaly and absolute temperature measurement data that conflicts with their highly contrived, anomaly-driven methodology.

Extensive NOAA anomaly and absolute temperature measurement data is readily available for the Contiguous U.S., covering both average temperature anomalies and maximum absolute temperatures.

The graph below shows NOAA’s average temperature anomaly measurements for the Contiguous U.S. through November 2023. It clearly demonstrates that there is no increasing trend in the Contiguous U.S. anomaly data from the most accurate USCRN temperature measurement stations, which went into operation in 2005.

The NOAA November 2023 El Niño year average temperature anomaly value is 1.44 degrees F compared to the prior November 2016 El Niño year average temperature anomaly value of 4.88 degrees F.

Furthermore, the highest NOAA Contiguous U.S. November average temperature anomaly measured by the USCRN stations was also the November 2016 El Niño year value of 4.88 degrees F, compared to the November 2023 El Niño year value of 1.44 degrees F.

The next highest NOAA Contiguous U.S. November anomaly values after the 4.88-degree F 2016 value occurred, in order from highest to lowest, in 2020, 2009, 2017, 2021, 2005, 2015 and then 2023.

Thus, the November 2023 Contiguous U.S. average temperature anomaly value is only the 8th highest measured by the USCRN for the month of November.

The L A Times alarmist article conceals from its readers the most relevant 2023 Contiguous U.S. average temperature anomaly data, which clearly show the lack of any record-breaking anomaly outcomes in the Contiguous U.S. region.

Instead, the Times article hypes a contrived global average temperature anomaly outcome that applies nowhere on earth while at the same time falsely positing that this contrived global anomaly outcome is relevant to the Contiguous U.S. region.

The NOAA climate data shown below provide the November absolute maximum temperature measurements for the Contiguous U.S. from 1895 to 2023 and establish that November 2023 is only the 109th highest of the 129 November maximum temperatures recorded over that period.

The NOAA data below provide the January-through-November (year-to-date) maximum temperatures for the Contiguous U.S. from 1895 to 2023, establishing that the 2023 January-through-November absolute maximum temperature ranks only 115th out of 129 such interval values.

The NOAA data below provide the absolute maximum temperatures for all months between 1895 and November 2023 for the Contiguous U.S., establishing that November 2023 is only the 592nd highest of the 1547 monthly maximum temperatures measured, with the highest maximum temperatures ever measured occurring in the Dust Bowl era of the 1930s.
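The rank counts quoted here can be sanity-checked against the length of the record: January 1895 through November 2023 contains 1547 monthly values and 129 Novembers. A small sketch (the helper names are hypothetical) that computes those counts and expresses a rank as the share of values ranked above it, assuming rank 1 is the highest value:

```python
def months_in_record(first_year: int, last_year: int, last_month: int) -> int:
    """Count monthly values from January of first_year through last_month of last_year."""
    return (last_year - first_year) * 12 + last_month


def years_in_record(first_year: int, last_year: int) -> int:
    """Count annual (or single-calendar-month) values, inclusive of both end years."""
    return last_year - first_year + 1


def share_ranked_higher(rank: int, total: int) -> float:
    """Fraction of values ranked above `rank`, ASSUMING rank 1 is the highest value."""
    if not 1 <= rank <= total:
        raise ValueError("rank must be between 1 and total")
    return (rank - 1) / total


total_months = months_in_record(1895, 2023, 11)
total_novembers = years_in_record(1895, 2023)
print(total_months, total_novembers)
for label, rank, total in (("Nov 2023, all months", 592, total_months),
                           ("Nov 2023, Novembers only", 109, total_novembers)):
    print(f"{label}: {share_ranked_higher(rank, total):.1%} of values ranked higher")
```

The first line printed is `1547 129`, matching the totals used in the rankings. If a ranking table numbers from the coldest value instead, the rank would need to be flipped before applying `share_ranked_higher`.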

NOAA has extensive temperature measurement data available for 9 U.S. climate regions, as shown in its map below, accessible through NOAA’s Regional Time Series option at the links noted for the graphs above.

Without belaboring this analysis any further, the measured data for all 9 of NOAA’s Contiguous U.S. climate regions establish that November 2023 does not represent the highest absolute maximum temperature for any of these regions, whether one evaluates just the month of November, the January-to-November interval, or all months from 1895 through November 2023.

NOAA also has data available for all 48 Contiguous U.S. states as well as for Alaska using NOAA’s Statewide Time Series option available at the links noted above.

NOAA data for California establish that November 2023 does not represent the highest absolute maximum temperature, whether one evaluates just the month of November, the January-to-November interval, or all months from 1895 through November 2023. As shown below, November 2023 ranks only 602nd of the 1547 monthly absolute maximum temperatures recorded from 1895 to November 2023.

NOAA’s extensive and readily available climate data for the Contiguous U.S. (both average temperature anomaly and absolute maximum temperature measurements) clearly show that climate alarmist propaganda claims of a “climate emergency”, including those in the L A Times, are unsupported by these measurements.

Additionally, climate alarmists deliberately conceal this extensive NOAA data while erroneously misrepresenting the critical climate science differences between maximum absolute temperature and average temperature anomaly measurements.

“instead hyping these carefully hidden small differences as being “a new monthly record for heat” and the “hottest November” even when the latest measured GISS global anomaly value is only 1/8th of a degree F changed from the highest prior year 2016 EL Nino value.”

It isn’t hype; all those things are true. Here is a stacked graph showing the monthly values of the global average. Each rectangle extends from that month’s temperature in the year shown by its color down to the value of the next-ranked year. Black is 2023; the pinkish color is 2016. The dataset is TempLS, which is virtually the same as GISS.

Even the YTD for 2023 is about 0.14C higher than any previous year, and December will only increase that.

Here is the underlying table of values for that graph, this time with GISS data:

The monthly averages are in descending order, with the year shown by a colored square.

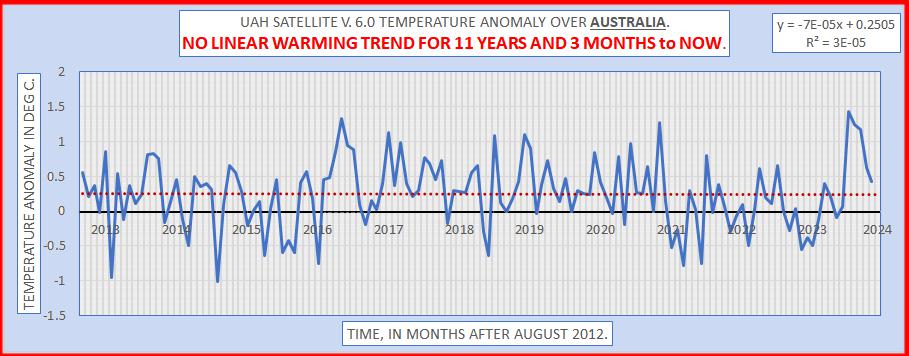

I’ve looked into the GHCN to extract information on the distribution of maximum and minimum temperatures rather than focusing on anomalies. There’s a lot of missing data, especially in the vicinity of the Arctic Circle, and the scarcity of weather stations just exacerbates the issue. That’s why I find it puzzling that when the monthly GHCN map is published, the entire area appears bright red, almost as if someone simply used a crayon to color it all in. Averaging temperatures over fixed periods isn’t very informative since the climate doesn’t neatly reset every 30, 60, or 365 days – it’s a chaotic system. The satellites still offer some utility as they cover all Earth’s grids, allowing them to capture natural events like ENSO in the best possible way.

You can visualise the GHCN (and ERSST) average anomaly data here. It shows the average, not min/max, but it does show the stations reporting. It’s a globe like Google Earth, which you can rotate and zoom into. The coloring is by station anomaly interpolated over each triangle, so it is accurate at each station. You can click on stations for numerical data, and ask for display with or without stations marked. It has a glitch that I have had trouble fixing, where a few triangles don’t get colored. Here is a hemisphere showing how the Eastern US was relatively cool in November; stations are not shown.

And here is a closeup of the US, now with stations shown.

Is that Earth or Mars?

It appears to be bassackwards to me. Looks like they’ve taken the average anomalies and worked backwards to fabricate some sort of regional pattern. All they had to do was to take the actual temperatures from each station and build up from there but no, that’s obviously far too old-fashioned for such a progressive thinker like little Nicky.

“All they had to do was to take the actual temperatures from each station and build up from there”

That is exactly what is done. For that month, the station’s measured temperature has its climatological mean subtracted, and a color is assigned according to the scale. Then the triangle mesh is shaded in between those colors. Here is some detail of the US part showing the mesh:
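The two steps described in that reply can be sketched as: subtract each station’s climatological mean to get its anomaly, then fill each triangle by linear (barycentric) interpolation between its three corner anomalies. The barycentric scheme is an assumption about what “shaded in between” means, not the viewer’s actual code, and the function names and station values are hypothetical. Because each weight is 1 at its own corner and 0 at the other two, the shaded value equals the station anomaly exactly at each station.

```python
def station_anomaly(monthly_mean: float, climatology: float) -> float:
    """This month's station mean minus that station's long-term mean for the same month."""
    return monthly_mean - climatology


def barycentric_interpolate(p, a, b, c, va, vb, vc):
    """Linearly interpolate corner values va, vb, vc at point p inside triangle abc.

    Points are (x, y) tuples. Each weight is 1 at its own corner and 0 at the
    other two, so the result equals the station value exactly at each station.
    """
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle: corners are collinear")
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    wc = 1.0 - wa - wb
    return wa * va + wb * vb + wc * vc


# Hypothetical stations at the corners of one mesh triangle (x, y in arbitrary units).
corners = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
anoms = [station_anomaly(t, clim) for t, clim in ((5.0, 3.5), (2.0, 4.0), (7.0, 6.0))]
centre = (1 / 3, 1 / 3)
print(barycentric_interpolate(centre, *corners, *anoms))
```

At the triangle’s centre the three weights are equal, so the printed value is simply the mean of the three corner anomalies.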

So the “measured mean” temperature (an “averaged” construct) then has another “averaged temperature” construct (the climatological mean) subtracted.

And the plot just gets thicker from there on?

(as happens in most mystery thriller scripts)

Sir Arthur Conan Doyle would be Stoked.

(sorry Nick, couldn’t stop myself)

I was thinking the exact same thing. Why bother using anomalies when you have actual temperatures unless you’re trying to hide something?

I note that Nick evaded your question, never actually providing an answer.

Anomalies are assumed by climate science to “normalize” the temperature variations at different locations, hemispheres, or whatever thus allowing them to be compared.

It’s just like the assumption that all measurement uncertainty is random, Gaussian, and cancels – i.e. garbage.

The climate science assumption with the anomalies is that their variances all cancel thus allowing direct comparison using averages.

Climate science is just chock-full of statistical garbage like this.

It’s even worse than that. Say you are a race car owner. Your crew chief has measured the braking force of each of your two cars.

Car 1 @ 200 mph can initiate a rate of change of 1 fps.

Car 2 @ 100 mph can initiate a rate of change of 5 fps.

The crew chief tells you that, on average, the cars can generate a rate of 3 fps. You see every other car on the track outbraking your fast car, yet the crew chief told you it should be close to average.

Do you blame the driver?

If you want to combine multiple station records, you can’t use the absolute temperature unless both station records have the same length and are not missing any values. Otherwise you will introduce spurious trends into the resulting dataset. Anomalies avoid this problem because the datasets are normalized to a common baseline.

Research has also shown that anomalies are correlated over much larger distances than the absolute temperature, which helps in areas with low station density. The reason for this is trivially intuitive from common experience – the temperature above my driveway is rarely the same as the temperature under the trees in my back yard, but if it’s a hotter than normal day on the driveway it’s almost certainly going to be a hotter than normal day in the back yard, even though the definition of “hotter than normal” is different for both areas.

Note: assertion without evidence.

https://agupubs.onlinelibrary.wiley.com/doi/10.1029/JD092iD11p13345

OMG!

“Error estimates are based in part on studies of how accurately the actual station distributions are able to reproduce temperature change in a global data set produced by a three-dimensional general circulation model with realistic variability. ” (bolding mine, tpg)

And you claim this study is EVIDENCE? Comparing actual station data to that produced by a MODEL?

And exactly *what* is REALISTIC VARIABILITY? Someone’s GUESS?

As always, it is models all the way up, down, left, and right.

It sounds like the abstract piqued your interest, so maybe you should now go and delve into the study to get the answers to your questions.

YOU are the one quoting the study as an authority. It is thus YOUR responsibility to show how it supports your claim, not mine. *YOU* supply the answers, not me.

You asked for evidence, I provided it. The questions you have are answered in the manuscript, and I do not need to copy and paste the entirety of the document here, you are a big boy and more than capable of clicking a link.

You provided NOTHING! You gave a link to a document that says physical data is in error while model data is accurate!

You can’t even answer as to how that can be a valid assumption! And the document doesn’t answer it either!

You got caught using an argumentative fallacy and now you are trying to weasel your way out of it. My guess is that you didn’t even bother to actually read the document – you just looked at the title and cherry-picked it. Have you been taking lessons from bellman?

His evidence is a study that compared actual station data to that from a MODEL with “realistic variation”!

In climate science, it’s ALWAYS models all the way down. And the models are always assumed to be 100% accurate and realistic! It’s the ACTUAL STATION DATA that creates the errors!

And that these “experts” possess the necessary knowledge to go back and edit historic data, claiming they are able to “remove” biases.

“Anomalies avoid this problem because the datasets are normalized to a common baseline.”

Anomalies do *NOT* avoid this problem!

Winter temps have a higher variance than summer temps. When you subtract a constant (the long term average) from those temps the anomalies thus generated will reflect the very same variance differences! You can’t avoid that. All you can do is “ass”ume it away.
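The arithmetic behind this point can be checked directly: subtracting a constant baseline shifts every value by the same amount, so the mean changes but the variance does not, and anomalies keep each record’s original spread. A small sketch with hypothetical winter and summer values (not station data), chosen only so the two sets differ in spread:

```python
import statistics


def anomalies_from(values, baseline):
    """Subtract a constant baseline from every value (a pure shift)."""
    return [v - baseline for v in values]


# Hypothetical monthly temperatures (deg F), chosen only so the two sets differ in spread.
winter = [18.0, 35.0, 22.0, 41.0, 12.0, 30.0]
summer = [84.0, 88.0, 86.0, 90.0, 85.0, 87.0]

for name, temps in (("winter", winter), ("summer", summer)):
    anoms = anomalies_from(temps, statistics.mean(temps))
    # Sample variance before and after the shift; they match up to rounding.
    print(name, round(statistics.variance(temps), 3), round(statistics.variance(anoms), 3))
```

Both printed variances agree within each set, and the winter set’s variance remains far larger than the summer set’s after the shift.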

You cannot average distributions with different variances and get a physically meaningful value. The variance in height of Shetland ponies is smaller than the variance in height of quarter horses. If you average the heights of Shetlands and use that to calculate an anomaly for a herd of Shetlands versus doing the same thing for quarter horses the variance of the anomalies produced for the Shetlands will be different than the variances for the quarter horses. Averaging those anomalies, a set for Shetlands and a set for quarter horses, WILL TELL YOU NOTHING PHYSICALLY MEANINGFUL about either herd or even for horses in general.

You are trying to defend doing the exact same thing for temperatures and give the result some kind of physical meaning. The truth is that there is *NO* physical meaning you can discern from what you calculate!

“Research has also shown that anomalies are correlated over much larger distances than the absolute temperature, which helps in areas with low station density”

What research? Are you trying to tell me that the variance of temps in San Diego and Boston is the same as the variance of temps in Lincoln, NE? Are you trying to tell me that the variance of temps on the north side of the Kansas River valley is the same as on the south side? If so, then explain why weather forecasts are always given for locations north of I-70 (which parallels the river valley) separately from locations south of I-70. The river valley is only 20-30 miles wide; that is not even a “large” distance! The variance of temps on top of Pikes Peak is different from the variance of the temps in Colorado Springs, just a few miles away!

Once again, most of the temp correlation studies I have seen are actually looking at the correlation with time and the sun’s travel across the earth, not at the correlation of the temps themselves. Of course the temps are going to go up during the day and down at night (typically). That doesn’t mean the temps themselves are correlated; terrain, geography, elevation, humidity, etc. usually militate against temps being highly correlated even just a few miles apart. And if they are not highly correlated, then you cannot just assign the temps at one location to another location without considering the intervening terrain, geography, etc. And none of the studies I have seen take that into consideration!

“the temperature above my driveway is rarely the same as the temperature under the trees in my back yard, but if it’s a hotter than normal day on the driveway it’s almost certainly going to be a hotter than normal day in the back yard,”

That does *not* mean the variance of the temps in the two locations is the same. And if it is not the same, then the anomalies won’t be the same either, meaning you can’t just substitute one for the other.

I have three different thermometers at my location: one on the porch on the north side of the house, one under the deck on the south side of the house, and one out in the middle of a 3 acre plot which is at least 50 yards from any building or equipment. Not only are the temps usually different, the variances of the temps are different too. And none of them is highly correlated with the thermometer at Forbes AFB, about 1.5 miles from my location. Humidity is different (I live in the midst of soybean and corn fields vs a flat airport with lots of concrete/asphalt/etc). Wind is always different. Temperatures are always different. Even air pressure can be quite different.

You simply can’t say that because temps at two different locations both go up during the day that the temps are correlated and you can substitute the temp at one location for missing temps at another location. That just totally ignores physical reality!

What you need to do is not remove the variance, but to move the series onto the same baseline (so they have a zero that is aligned). That is all you need to do to resolve the problem I described (needing to combine records of varying lengths).

Doing this can’t give you breed-specific insights, but it can absolutely yield meaningful information about horse populations in general. Nobody ever said that the global temperature anomaly yields regional insights, it’s used as a generic metric to track the energy content of the climate system. If the global mean anomaly is low enough, the planet is in an ice age. High enough, and the poles are ice free. And everything in between. This is useful information to track. Nobody ever said the investigation ends there.

“What you need to do is not remove the variance, but to move the series onto the same baseline (so they have a zero that is aligned). That is all you need to do to resolve the problem I described (needing to combine records of varying lengths).”

Malarky! You cannot change the variance of two distributions by just moving them along the x-axis!

If the variances are different, that implies that the ranges are different also. If one distribution has a range of 10 with a median of 5 and a second one has a range of 20 with a median of 10, then shifting the distribution with the range of 20 along the x-axis so its median becomes 5 WON’T CHANGE THE DIFFERENCES IN THE VARIANCE! Neither will shifting the distribution with a range of 10 so its median is now 10.

The anomalies generated by the two different distributions will inherit the same variances as the distributions themselves. Meaning that the average of the anomalies will remain meaningless.

If the average height of Shetland ponies is 4′ and that of quarter horses is 5′ you are claiming that by adding 1′ to all the heights of Shetland ponies you can somehow change the variance in heights of the Shetland’s to be the same as the variance in the heights of the quarter horses!

Where did you learn your statistics?

“Doing this can’t give you breed-specific insights, but it can absolutely yield meaningful information about horse populations in general.”

Again, MALARKY!

What you have is a multi-modal distribution! Neither the average nor the median tells you anything useful! Every statistics book I have says you should describe such a distribution using the 5-number summary or something similar. I’ll ask again, WHERE DID YOU LEARN YOUR STATISTICS?
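For reference, the five-number summary mentioned here (minimum, first quartile, median, third quartile, maximum) can be computed with Python’s standard `statistics` module. This is a minimal sketch; quartile conventions differ between textbooks and software, and `method="inclusive"` is just one choice. The pooled winter and summer values are hypothetical, picked only to produce a two-cluster data set.

```python
import statistics


def five_number_summary(data):
    """Return (minimum, Q1, median, Q3, maximum) for a sequence of numbers.

    Quartiles come from statistics.quantiles(..., method="inclusive");
    other quartile conventions give slightly different Q1 and Q3.
    """
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    return min(data), q1, median, q3, max(data)


# Hypothetical values: a cold cluster and a warm cluster pooled together.
winter = [18.0, 35.0, 22.0, 41.0, 12.0, 30.0]
summer = [84.0, 88.0, 86.0, 90.0, 85.0, 87.0]
pooled = winter + summer

print("mean:", round(statistics.mean(pooled), 2))
print("five-number summary:", five_number_summary(pooled))
```

Here the pooled mean (56.5) and the median (62.5) both land in the gap between the two clusters, where no observation lies, while the five-number summary at least exposes the spread.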

The *exact* same thing applies to temperatures. When you combine winter temps with summer temps you get a multi-modal distribution. The average is meaningless. And using anomalies doesn’t help since they inherit the variances of the underlying absolute temps. Temperature variance is a vital piece of knowledge for determining climate as are the absolute temps. The variance of the temps in a coastal city is vastly different than the variance of the temps in a high plains city. Yet you would have us believe that they have the same climate if the average anomaly is the same!

“it’s used as a generic metric to track the energy content of the climate system.”

Temperature is the most piss-poor metric for energy content there is. Energy content is highly dependent on humidity and pressure. If it wasn’t then Las Vegas (low humidity) and Miami (high humidity) would have the same climate! Have you *ever* looked at a set of steam tables?
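The dependence of air’s heat content on humidity can be made concrete with the standard psychrometric approximation for the specific enthalpy of moist air, h ≈ 1.006·T + w·(2501 + 1.86·T) kJ per kg of dry air, with T in °C and w the humidity ratio. The sketch below is illustrative only: it assumes the Magnus approximation for saturation vapour pressure, a sea-level pressure of 1013.25 hPa for both cases, and hypothetical dry and humid conditions at the same 35 °C. It is not how any temperature index is computed.

```python
import math


def saturation_vapour_pressure_hpa(t_c: float) -> float:
    """Magnus approximation for saturation vapour pressure over water (hPa)."""
    return 6.112 * math.exp(17.62 * t_c / (243.12 + t_c))


def humidity_ratio(t_c: float, rh: float, p_hpa: float = 1013.25) -> float:
    """Mass of water vapour per mass of dry air (kg/kg) for relative humidity rh in [0, 1]."""
    e = rh * saturation_vapour_pressure_hpa(t_c)  # actual vapour pressure, hPa
    return 0.622 * e / (p_hpa - e)


def moist_air_enthalpy(t_c: float, w: float) -> float:
    """Approximate specific enthalpy of moist air, kJ per kg of dry air."""
    return 1.006 * t_c + w * (2501.0 + 1.86 * t_c)


# Hypothetical cases: same air temperature, different humidity.
for label, rh in (("dry (10% RH)", 0.10), ("humid (60% RH)", 0.60)):
    w = humidity_ratio(35.0, rh)
    print(f"{label}: w = {w:.4f} kg/kg, h = {moist_air_enthalpy(35.0, w):.1f} kJ/kg")
```

Under these assumptions the humid case carries roughly twice the enthalpy of the dry case at the same thermometer reading.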

You might be able to fog your CAGW buddies with all this crappola but you aren’t fooling anyone that has ever spent any time in a tent in below zero weather or time in Boston vs Topeka, KS both in the summer and winter.

You are as bad as bellman and bdgwx – totally unable to relate statistics to the real world. Statistics are *NOT* the real world. The real world is the real world. And in the real world you have humidity, pressure, terrain, geography, prevailing winds, elevation, etc. None of which are considered in averaging temperatures in climate science. You also have measurement uncertainty in the real world; you can’t just assume it all cancels. Buildings and bridges collapse when you do that!

I did not say that you could. I said that you can place the two series onto a normalized axis and remove spurious trends that arise from combining records of different lengths.

Of course I’m saying nothing of the kind, but tilting at windmills does seem to be the favorite pastime of WUWT acolytes.

I’m saying that if you observe the mean height of the horse population changing through time that that is relevant information about horses. Obviously you cannot say from the population mean whether there has been a change in the height of shetlands or in quarter horses, but now you know that there is change occurring that you should be investigating.

“I did not say that you could. I said that you can place the two series onto a normalized axis and remove spurious trends that arise from combining records of different lengths.”

Still malarky! If the distributions are not identical, i.e. don’t have the same variance, then exactly what does shifting one of them along the x-axis do as far as eliminating spurious trends?

A statistical description of a distribution includes *both* the average and the variance. All you are doing is changing the average value by adding or subtracting a constant. That does *NOTHING* to normalize the variance!

“Of course I’m saying nothing of the kind, but tilting at windmills does seem to be the favorite pastime of WUWT acolytes.”

If that is not what you are saying then exactly HOW are you treating the distributions to give them the same variance? If they don’t have the same variance then they are not identical distributions!

What you are implying is that you can make a multi-modal distribution into a uni-modal distribution merely by shifting one mode along the x-axis regardless of whether each mode has the same variance!

It is this kind of idiotic statistics that makes climate science into such a laughing stock!

“I’m saying that if you observe the mean height of the horse population changing through time that that is relevant information about horses.”

If you can’t identify what changed then knowing it changed is totally useless! Why is that so hard to understand?

“you should be investigating”

Investigating what? What is climate science investigating? It’s all CO2 and models based on CO2 growth! All based on not knowing what exactly is changing as far as temperature is concerned! The minute you use (Tmax + Tmin)/2 you have lost the very data you need to determine what is happening! Ag science tells you that growing seasons are expanding while grain crop harvests are setting records! Who in climate science is investigating why that is? It isn’t from the planet burning up or the oceans boiling!

Here’s an excerpt from a 2018 study on crop harvest variability: “Weather variability in general (1, 15, 16) and the frequency and intensity of heat waves and droughts are expected to increase under global warming ”

Really? The physical data shows that heat wave and drought intensity is *NOT* increasing. Who in climate science is investigating why that is using actual physical data instead of models?

Pretending that multi-modal distributions can be treated as if they are uni-modal is just part and parcel of the idiotic memes climate science uses to make things “easier”, regardless of whether the result is physically meaningless or not!

It feels like you and I have been down this road before. But one more time for the folks in the cheap seats, here is an illustration. Take two records of unequal lengths (station B doesn’t start reporting until 1950):

Take their average:

You should, if you are observant, see that there is a problem. The average doesn’t look like it is representative of the trends in either series. They both seem to show slightly negative trends, but the average is sharply positive! Now calculate the anomalies, using the 1951-1980 reference period for the baseline:

And take the average of the anomalies:

Well, that’s a bit better! Now the mean actually seems to be representative of the trends in the individual series. This is one of the important reasons to use the anomaly: it allows us to combine records of unequal lengths. Our alternative would be to dump any records shorter than the longest (data loss), or to infill missing values (more uncertainty).

Notice we are not normalizing the series to have the same variance, we are just setting them to a common zero.
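The graphs in this comment are not reproduced here, but the same experiment can be sketched with synthetic numbers. The values below are invented for illustration and are not station data: station A runs from 1900 to 2000 around 10 °C, station B starts in 1950 around 20 °C, and both cool at the same hypothetical rate of 0.005 °C per year. The anomaly baseline is 1951-1980, as in the comment. The sketch compares the least-squares trend of the plain average with the trend of the average of anomalies.

```python
def linear_slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


years = list(range(1900, 2001))
# Synthetic stations (illustrative only): both cool by 0.005 C per year.
station_a = {y: 10.0 - 0.005 * (y - 1900) for y in years}
station_b = {y: 20.0 - 0.005 * (y - 1900) for y in years if y >= 1950}


def yearly_mean(records, year):
    """Average over whichever stations report in `year`."""
    vals = [r[year] for r in records if year in r]
    return sum(vals) / len(vals)


def anomalies(record, start=1951, end=1980):
    """Subtract the station's own mean over the baseline period from every value."""
    base = [v for y, v in record.items() if start <= y <= end]
    ref = sum(base) / len(base)
    return {y: v - ref for y, v in record.items()}


abs_mean = [yearly_mean([station_a, station_b], y) for y in years]
anom_mean = [yearly_mean([anomalies(station_a), anomalies(station_b)], y) for y in years]

print(f"slope of absolute average: {linear_slope(years, abs_mean):+.4f} C/yr")
print(f"slope of anomaly average:  {linear_slope(years, anom_mean):+.4f} C/yr")
```

With these inputs the plain average picks up a positive trend purely because station B’s higher level enters the record in 1950, while the anomaly average recovers the shared cooling rate.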

You probably don’t even know what you have actually shown. You are displaying how manipulating data allows you to show what you think is correct.

“If the global mean anomaly is low enough, the planet is in an ice age. High enough, and the poles are ice free. And everything in between.”

And yet we are told we just lived through the hottest year on record, while Arctic ice is nowhere close to as low as it has been.

And when it was lower in recent past years, it was not the hottest year ever.

So, either your idea about that correlation is wrong, or what we are told about temperature is wrong.

Or both.

Warmistas have recited a litany of disasters for many years, that they have insisted would categorically occur if the globe heats up.

Those disaster scenarios and events have failed to transpire, even as we are told the globe has heated up and is now hotter than ever.

So which is wrong?

How should we be expected to believe people that say such things, especially when we know they make stuff up and alter historical records? And then lie about making stuff up and altering records?

Year to year variability in Arctic sea ice is controlled by more factors than just ambient air temperature. The thing to look at is the trend. The long term (decades +) trend is being driven by a change in temperature in the Arctic.

Most of the more dire consequences of climate change have not been projected to happen for several decades to come. You can’t say something has “failed to transpire” when it is supposed to transpire at a future date.

“What you need to do is not remove the variance, but to move the series onto the same baseline (so they have a zero that is aligned). That is all you need to do to resolve the problem I described (needing to combine records of varying lengths).”

I think you have not spent nearly enough time outside for long stretches, making careful observations of weather conditions under every possible circumstance, and in different types of environments.

Microclimates vary hugely over short distances, and the amount of this variance also varies gigantically.

Under some conditions, a rural farm area may be ten to fifteen degrees colder than areas nearby, even though on most days and nights it is rarely more than a few degrees different.

On one of the few nights a year that Florida has radiational cooling events with light winds and low dewpoints at all levels of the atmosphere, cold pockets form where the temperature is far below freezing, while on higher terrain nearby, or in places with habitation and hence paving, homes and other buildings, it is in the mid-40s.

Then some high clouds blow in on the jet stream and the farm jumps up in temp 10 degrees within minutes, but the warmer spots do not change.

Go out west to places with huge changes in terrain and ground cover, from forests to rocky deserts within a few miles, and the situation is so much more complex, and such variances are so much more commonplace.

I wonder if microclimates are taken into account at all?

Because it sounds like the idea you are suggesting is about like declaring that microclimates can be ignored.

Which is something only someone who spends a lot of time inside, and little time outside observing in all conditions and all times of day and night in all sorts of places, could ever say. Or think.

But then, many of us know this full well.

The people who do climate modelling are generally not the same people who spend long, lonely hours outside observing over many years.

From Hubbard and Lin (2006):

“For example, gridded temperature values or local area-averages of temperature might be unresolvedly contaminated by Quayle’s constant adjustments in the monthly U.S. HCN data set or any global or regional surface temperature data sets including Quayle’s MMTS adjustments. It is clear that future attempts to remove bias should tackle this adjustment station by station. Our study demonstrates that some MMTS stations require an adjustment of more than one degree Celsius for either warming or cooling biases. These biases are not solely caused by the change in instrumentation but may reflect some important unknown or undocumented changes such as undocumented station relocations and siting microclimate changes (e.g., buildings, site obstacles, and traffic roads).”

‘Spurious trends’ hahaha.

You do realise, don’t you, that the station siting requirements were a way of establishing a common frame of reference for all temperature stations? That by ignoring these and including airports and urban stations you have introduced random variables that cannot be allowed for and, therefore, your datasets are now nothing but ‘spurious trends’ in their entirety? Your weak excuses aside, the datasets are complete and utter junk.

Btw – the very fact that you think using temperatures taken under some trees and in your driveway is perfectly fine leads me to the conclusion that you should never, ever take a professional temperature reading.

In the US, at least, the entire monitoring network was historically run by volunteers. So you can set requirements and guidelines, but you can’t really enforce them. Nor can you prevent, say, a parking lot from being built in what used to be a nice open green space. Nor can you prevent stations from being relocated when necessary. So you wind up with a transient station network whose composition changes through time, and you are forced to do your analysis within this context.

Since 2005 or so in the US, we have the pristine reference network, but we don’t have time machines to go back and install that network a century ago. So scientists do the best that can be done with existing historical data. Happily, the full, adjusted network is in near-perfect agreement with the reference network over their period of overlap:

Making scientists confident that the identified trends are not spurious or unduly influenced by non-climatic biases.

Always on WUWT there is an insistence that the data either have to be completely perfect and without a single flaw or we cannot use them, and I think this is unscientific and antithetical to the advancement of human knowledge. Never, ever, not once ever, on WUWT is there discussion of “here is how we would approach the analysis using imperfect datasets,” it’s always just “let’s close our eyes and pretend like we live in complete darkness.”

“you are forced to do your analysis within this context.”

That analysis cannot simply make things up in order to make things easier. If that means having to use shorter station data lengths then so be it. Include that as part of the analysis write-up. Making up “adjustment” data is *NOT* the way to do it!

“So scientists do the best that can be done with existing historical data. “

NO, they do not do the best they can. If they did they would use the measurement uncertainty associated with the data to say “we can’t actually tell what is going to happen in the next 100 years”!

“Making scientists confident that the identified trends are not spurious or unduly influenced by non-climatic biases.”

Not a single post you have made or link you have given has done one solitary thing to analyze the measurement uncertainty associated with the temperature record data sets. NOTHING. NADA. ZIP!

“Always on WUWT there is an insistence that the data either have to be completely perfect and without a single flaw or we cannot use them”

NO!!!!! That is *NOT* what is being insisted on! You are just whining. What is being insisted upon is that climate science do a valid analysis of the uncertainties associated with the data. The only ones assuming the data is perfect are climate scientists, including you!

Metrology protocol says that any statement of measurement, including the GAT, should have associated with it an uncertainty interval: “stated value +/- measurement uncertainty”.

When have *YOU* ever given any kind of uncertainty interval for anything associated with temperature?

Everything that is done as a part of the temperature analyses is included as part of the documentation.

Here is NASA’s GISTEMP analysis with the uncertainty estimate plotted:

And here is a link to the paper describing in detail NASA’s uncertainty analysis:

https://pubs.giss.nasa.gov/abs/le05800h.html

I’m happy we are able to put this myth that climate scientists ignore uncertainty to bed once and for all.

“Everything that is done as a part of the temperature analyses is included as part of the documentation.”

No, it isn’t. Can you tell me the systematic measurement uncertainty in the temp measuring device at Forbes AFB? At Moffat AFB? At the Topeka Municipal Airport?

If you can’t then the temperature analyses are NOT documenting all factors.

Can you give me the current systematic measurement bias for ANY measurement device in any temperature data base?

If you can’t then how can you say that all involved factors are being documented?

These NASA goobers are claiming ±0.2K air temperature uncertainty in 1880.

I call bullshit.

So does Berkeley Earth data.

You’ve opened Pandora’s box. The Gormans, karlomonte, etc. will seize this opportunity to reject well-established procedures for assessing uncertainty, making numerous algebra mistakes in the process, some so egregious a middle schooler could spot them.

If history is any guide then the discussion of uncertainty will devolve quickly and yield hundreds of posts. For that reason I’ll try to keep my own separated in a different subthread so as not to disrupt the flow here.

The Gorman twins do seem to have a proclivity for this topic and seem to have developed quite a cult of thought around their peculiar ideas. I appreciate your comments on the subject, I run out of patience trying to wade through the quagmire.

Yer an idiot as well as a troll.

beeswax and bellcurveman will welcome you with open arms.

You and climate science are stuck in 19th century methodologies. It’s obvious when you continue to use the standard deviation of the sample means as the accuracy of the mean as well as writing an average as “stated value” only instead of “stated value +/- uncertainty”.

NO ONE involved in metrology in the real world accepts “stated value” as acceptable today. Not even in the GUM. Yet that is *all* that climate science uses. It is based on the meme (never actually stated) that all uncertainty is random, Gaussian, and cancels. So the average is always 100% accurate.

Even the hidebound medical establishment has recognized that the use of the standard deviation of the sample means is not an acceptable metric for the accuracy of the average. Yet climate science stubbornly keeps right on using it.

Calculating the average of a huge number of inaccurate data points does *NOT* increase the accuracy of the calculated average. Never has and never will – unless you are a climate scientist!

stated value +/- uncertainty:

No one ever said it did. The precision of the estimate of the mean increases with a larger sample size, there might still be an inaccuracy arising from non-random bias. You need to use adjustments to deal with non-random bias, but you don’t like adjustments.

You keep talking past everyone and deliberately misunderstanding everything they say. I’m not sure how you find this a fulfilling way to spend your time.

±0.2K in 1880 is absurd, and not a single one of you trendology goobers can see this.

You don’t know WTF you yap about.

Do please substantiate this assertion.

Are you blind? Can you not see what is on the very graph that YOU posted?

Dearest karlo, I am obviously talking about your assertion that the shown uncertainty is absurd. That is what I want you to substantiate.

The instrumental uncertainty of LIG thermometers prior to 1890 is at least ±1 K.

We are not talking about the instrumental uncertainty of a single thermometer, we are talking about the uncertainty in the global mean temperature anomaly analysis prepared by NASA. Can you speak to this?

Yeah, it’s like thinking you can reduce entropy. Where did the numbers from 1880 come from? A model?

And the GAT is a meaningless index that tells nothing about climate.

Well they came from the same places that the numbers post 1980 came from. Have you read any of the relevant literature on this subject? You seem to have awfully strong opinions about NASA’s temp products for someone who doesn’t seem to know much about them.

You are a data fraudster who pushes the clownish line that “biases” in historic temperature data can be “removed”; apparently NASA is part of this clown show, as you are here regurgitating the same fiction.

Do you expect to be treated seriously?

We *HAVE* been speaking to it. And your only reply is to continue stating the climate science meme that all measurement uncertainty is random, Gaussian, and cancels. It can therefore be totally ignored and all averages and anomalies can be assumed to be 100% accurate!

It is as simple as understanding that the standard deviation of the sample means is *NOT* the uncertainty of the average. It is a measure of the uncertainty of the sample means, not of the average.

σ or σ^2 of the population is the proper measure of the uncertainty of the average. And σ = (standard deviation of the sample means) * sqrt(n).
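For reference, the usual textbook relation between the two quantities being argued about is s = SEM × √n, where s is a standard deviation (not a variance). A minimal Python sketch with hypothetical readings, just to show the arithmetic:

```python
import math
from statistics import stdev

# Hypothetical readings (°C), for illustration only.
readings = [12.1, 13.4, 11.8, 12.9, 13.0, 12.4, 12.7, 13.2]

n = len(readings)
s = stdev(readings)          # sample standard deviation: spread of individual values
sem = s / math.sqrt(n)       # standard error of the mean: expected spread of sample means

# Going back the other way: s = SEM * sqrt(n), a standard deviation, not a variance.
print(f"n={n}, s={s:.3f}, SEM={sem:.3f}, SEM*sqrt(n)={sem * math.sqrt(n):.3f}")
```

The sketch only demonstrates the identity; it says nothing about systematic bias in the readings themselves.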

σ^2 goes UP as n goes up!

I’ve not said this once, or alluded to it. Only you have.

A larger sample makes the estimate of the population mean more precise. You and I agree on this. It does not make the estimate of the mean more accurate because there might be a source of systematic bias in the sample. And I think you and I both agree on this. So why you’re acting like we’re in an argument about it is puzzling.
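The precision-versus-accuracy point both sides agree on can be illustrated with a small simulation. This is a hedged sketch, not a model of any real network: every hypothetical reading gets random noise plus one shared constant bias (0.5 here, an arbitrary choice).

```python
import random
from statistics import mean

def simulate_mean(n, true_value=20.0, bias=0.5, noise_sd=1.0, seed=0):
    """Average of n readings, each with random noise plus a shared constant bias."""
    rng = random.Random(seed)
    readings = [true_value + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return mean(readings)

for n in (10, 1_000, 100_000):
    m = simulate_mean(n)
    print(f"n={n:>7}: mean={m:.3f}, error vs true value={m - 20.0:+.3f}")
```

As n grows the scatter from the random noise shrinks, but the error settles near the shared +0.5 bias rather than near zero: more samples buy precision, not accuracy, unless the bias is identified and handled separately.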

And no, you haven’t spoken a single word about the uncertainty analysis from NASA cited above.

What is YOUR background in formal uncertainty analysis?

How do YOU know this NASA bunch knows WTH they are doing?

Just because you found it in a journal paper?

You (and they) believe that anomalization reduces LIG temperature measurement uncertainty by a factor of at least 5.

This is a lie: subtracting a baseline increases uncertainty.
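Whether subtracting a baseline raises or lowers the uncertainty depends, under the GUM's first-order law of propagation, on how correlated the errors in the reading and the baseline are. A minimal sketch with hypothetical ±0.5 standard uncertainties:

```python
import math

def u_difference(u_x, u_b, r=0.0):
    """Standard uncertainty of y = x - b (GUM law of propagation, first order).

    r is the correlation coefficient between the errors in x and b.
    If b were an exact constant (u_b = 0), u(y) would simply equal u(x).
    """
    var = u_x**2 + u_b**2 - 2.0 * r * u_x * u_b
    return math.sqrt(max(var, 0.0))

print(u_difference(0.5, 0.5, r=0.0))  # independent errors: about 0.707
print(u_difference(0.5, 0.5, r=1.0))  # fully shared offset: 0.0
```

With independent errors the difference is more uncertain than either input; with a fully shared offset the common part cancels. Real cases sit somewhere in between, and estimating r is the hard part.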

Yet you keep arguing that the GAT is accurate. The only way that can be is if all systematic bias is random, Gaussian, and cancels. The meme that permeates the entirety of climate science.

You seem to want your cake and to eat it too.

Or are you agreeing that the GAT calculated out to the hundredths digit is a useless piece of garbage?

You are not showing uncertainty. You are showing how precisely you have calculated an inaccurate mean using inaccurate data.

Why does that keep escaping your understanding?

Precision is not accuracy!

The GISTEMP uncertainty analysis includes estimates for uncertainty arising from systematic biases, which, having read the relevant literature, I’m sure you already know. The range of uncertainty thus encompasses the precision and accuracy of the analysis. There’s a 95% chance that the right answer is inside that envelope.

Actually it does *NOT* make estimates of the measurement uncertainty. It only makes estimates of how precisely they have calculated the inaccurate average from inaccurate data.

Precision is *NOT* accuracy.

NASA makes absolutely *NO* provision for the combination of temperature distributions with different variances, e.g. winter vs summer temps in opposite hemispheres.

They, like you, assume that all temperature distributions have the same variance so it can be ignored!
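For what it is worth, there is a standard statistical identity for combining groups with different variances (the law of total variance): the combined variance is the size-weighted mean of the within-group variances plus the size-weighted variance of the group means. The sketch below uses made-up cold-season and warm-season values and is not a description of how NASA actually combines station data.

```python
from statistics import fmean, pvariance

def pooled_population_variance(groups):
    """Variance of the combined population formed by concatenating groups.

    Law of total variance: mean of the within-group variances (weighted by size)
    plus the variance of the group means (weighted by size).
    """
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    within = sum(len(g) * pvariance(g) for g in groups) / n_total
    between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups) / n_total
    return within + between

# Hypothetical monthly means (°C): a cold-season set and a warm-season set.
winter = [2.0, 4.0, 3.0, 5.0]
summer = [24.0, 26.0, 25.0, 23.0]
print(pooled_population_variance([winter, summer]), pvariance(winter), pvariance(summer))
```

Here each group has a variance of 1.25, but the combined variance is 111.5, almost all of it from the gap between the two group means.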

“There’s a 95% chance that the right answer is inside that envelope.”

There is a 95% chance that the inaccurate average obtained from inaccurate data is within the envelope of the averages of the samples.

If the population data is inaccurate then the sample means will be inaccurate and, in turn, the average calculated from those sample means will be inaccurate.

It simply doesn’t matter how many digits you use in calculating the average of the sample means, that average of the sample means will still be inaccurate if the sample means are inaccurate. And if the population data is inaccurate then the sample means will be inaccurate also.

Why can’t you address this? Why are you and the rest stuck in the meme that the standard deviation of the sample means tells you how accurate the average calculated from those means is? Why do you ignore the inaccuracy of the sample means?

Again, NASA’s uncertainty estimate includes consideration of uncertainty imparted by systematic bias. The uncertainty envelope includes uncertainty arising from inaccuracy.

“inaccuracy” — a big tell that you know next to nothing about the subject.

How do you fit ±1K into a ±0.2K “envelope”?

You haven’t read or understood anything I’ve written.

You first need to define your measurand. What are you hoping to accomplish?

Let’s say your measurand is the perimeter of a 30-sided irregular table.

How would you go about it?

You would make multiple measurements of each side and use the GUM procedure of finding the mean, the variance, and the subsequent uncertainty. That will give a value of P1 ± u(P1). You do the same for P2 through P30. Then you calculate a combined uncertainty of all the pieces.
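The table-perimeter procedure can be sketched in Python. This assumes the side measurements are uncorrelated, so the perimeter's combined standard uncertainty is the root-sum-square of the per-side Type A uncertainties. Type B contributions (e.g. tape calibration) are left out, and a systematic error shared by all sides would need correlation terms not modelled here. The readings are hypothetical.

```python
import math
from statistics import fmean, stdev

def side_estimate(readings):
    """Mean of repeated readings of one side and its Type A standard uncertainty s/sqrt(n)."""
    return fmean(readings), stdev(readings) / math.sqrt(len(readings))

def perimeter_with_uncertainty(sides):
    """Sum the side estimates P1..Pk and combine their uncertainties in quadrature.

    Assumes uncorrelated side measurements, so each sensitivity coefficient is 1
    and u_c(P) = sqrt(sum of u(Pi)^2).
    """
    estimates = [side_estimate(r) for r in sides]
    total = sum(m for m, _ in estimates)
    u_c = math.sqrt(sum(u**2 for _, u in estimates))
    return total, u_c

# Hypothetical: 30 sides, each measured three times (cm).
sides = [[50.0 + 0.1 * i, 50.1 + 0.1 * i, 49.9 + 0.1 * i] for i in range(30)]
P, u_P = perimeter_with_uncertainty(sides)
print(f"P = {P:.2f} cm ± {u_P:.2f} cm (standard uncertainty)")
```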

With temperatures, you get one shot. No multiple measurements of each temp, all you have is Type B for each measurement and the variance of the data.

Let’s say you want to measure the average height of a person in a room with 10,000 people in it. You can measure the heights of 2000 people at most. Explain your process.
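One conventional way to attack the room problem is simple random sampling without replacement. A hedged sketch: the 10,000 heights are generated from an arbitrary hypothetical distribution, and only sampling variability is modelled (no measurement error, no shoes). Because 2000 is a large fraction of 10,000, the finite population correction sqrt((N − n)/(N − 1)) is applied to the standard error.

```python
import math
import random
from statistics import fmean, stdev

def estimate_mean_from_sample(population, n, seed=0):
    """Estimate the population mean from a simple random sample without replacement.

    Returns the sample mean and its standard error, including the finite population
    correction. Measurement error and systematic bias are NOT modelled here.
    """
    rng = random.Random(seed)
    sample = rng.sample(population, n)
    N = len(population)
    fpc = math.sqrt((N - n) / (N - 1))
    return fmean(sample), stdev(sample) / math.sqrt(n) * fpc

# Hypothetical room: 10,000 heights in cm drawn from an arbitrary distribution.
rng = random.Random(42)
room = [rng.gauss(170.0, 9.0) for _ in range(10_000)]
est, se = estimate_mean_from_sample(room, 2000)
print(f"estimate = {est:.2f} cm, standard error = {se:.2f} cm, true mean = {fmean(room):.2f} cm")
```

Systematic effects such as shoes or different measuring devices would have to be handled separately; this sketch only shows the sampling part.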

What it sounds like you’re saying, to all of us, is that you should only measure the height of a single person in the room, because each time you include another person in your average, your uncertainty of the average height of the people in the room increases due to measurement error. If this is not what you’re saying, please clarify.

I skimmed through this NASA production, looks to me like they confuse error and uncertainty. It certainly does not adhere to the international standard for expressing uncertainty (the GUM).

4.2.1: “The major source of station uncertainty is due to systematic, artificial changes in the mean of station time series due to changes in observational methodologies. “

This is total bullshit, hand-waving.

TOBS, which hasn’t been a problem since ASOS.

Says a lot right there. They don’t recognize uncertainty lies in the process of monitoring the temperature.

First, you don’t measure the height of the average person. You measure the height of the population subjects. From that you have a distribution of data. You may calculate a mean, variance, and standard deviation.

From the GUM

Basically, the variance and accompanying standard deviation describe the dispersion of the values that could be attributed to the measurand.

Because of your limited sample of people, there is a real chance that the uncertainty of your mean is very large.

Your very question illustrates your lack of training in making measurements. Your understanding is incorrect.

It is not each measurement that adds uncertainty. It is the effect on the distribution of measurements that each measurement has. If all the measurements cluster closely around a mean, the variance will decrease and the uncertainty will be small. That is, the dispersion of values attributed to the mean will be small.

If the measurements are spread out, the dispersion and uncertainty will be large.

This subject is obviously something you have not studied. It is something every engineering student must learn in lab classes. Making inaccurate measurements just won’t be acceptable. Not understanding influence quantities in evaluating deviations from standards is inherent in engineering.

“First, you don’t measure the height of the average person.”

I think this is why there is a lot of confusion in these discussions. Some people never understand that “the average height of a person” is not the same as “the height of the average person”.

Alan J was careless in his choice of words.

No. He was entirely correct in his choice of words. It’s the way some insist on thinking average height, means height of the average, that is the problem.

One cannot measure the average of anything directly. It has to be calculated from sample measurements.

It isn’t surprising that you and Alan J are at odds with other commenters here when you don’t choose your words carefully.

“One cannot measure the average of anything directly.”

Of course not. It’s measured indirectly. But that doesn’t mean you are not measuring it.

If you disagree that sampling is a measurement then you need to take it up with NIST and their example from TN1900, which defines the average monthly maximum temperature as a measurand, which can be measured by looking at a sample of daily temperatures.
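As I understand TN1900 Example 2, the monthly average of daily maxima is estimated by the sample mean, its standard uncertainty is s/√m, and a 95 % interval uses a coverage factor from Student's t with m − 1 degrees of freedom. The sketch below follows that recipe, with the coverage factor k passed in by the caller. The five daily values are hypothetical, not the NIST data. k ≈ 2.776 is the 97.5th percentile of t with 4 degrees of freedom.

```python
import math
from statistics import fmean, stdev

def monthly_average_tn1900_style(daily_tmax, k):
    """Average of daily maximum temperatures with u = s / sqrt(m).

    Returns (mean, standard uncertainty, expanded interval mean ± k*u).
    k is a coverage factor supplied by the caller; for ~95 % coverage it would be
    the 97.5th percentile of Student's t with m - 1 degrees of freedom.
    """
    m = len(daily_tmax)
    t_bar = fmean(daily_tmax)
    u = stdev(daily_tmax) / math.sqrt(m)
    return t_bar, u, (t_bar - k * u, t_bar + k * u)

# Hypothetical five days of maxima (°C).
mean_t, u_t, interval = monthly_average_tn1900_style([20.0, 22.0, 24.0, 26.0, 28.0], k=2.776)
print(f"mean = {mean_t:.2f} °C, u = {u_t:.3f} °C, 95 % interval = ({interval[0]:.1f}, {interval[1]:.1f}) °C")
```

Note that the day-to-day scatter of the readings, not the resolution of the thermometer, dominates u in this approach.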

It’s not even “measured” indirectly. The average is a statistical descriptor, not a measurement. The average doesn’t even have to exist. How can something that doesn’t exist be a measurand?

What people call something and what it actually is don’t always match. You are a prime example of doing that when you say the standard deviation of the sample means is the accuracy of the mean.

And in less than five days, they get to start their out-of-tune orchestra up all over again, using the same bad music arrangements.

This is just quibbling semantics, but that isn’t what I said. I said “Let’s say you want to measure the average height of a person in a room.” i.e., the average of all of the heights of all of the people. There are 10,000 people in the room, and all of them have a height, and we can compute the average of those heights. We want to estimate that number. In my example, we are restricted to only being able to measure the height of 2000 of the 10,000 people.

Nothing in the remainder of your response explains how you would approach this task, or if you even think it can be achieved. Care to actually take a stab at it?

You are not familiar enough with measurements to ask pertinent questions. Here is an article that is an excellent example of the issues surrounding averages. The big point is that they have variance. In other words uncertainty. Regardless of how accurate your average is calculated there are data surrounding that measurand that must be taken into account when dealing with it.

When U.S. air force discovered the flaw of averages (thestar.com)

This is an excellent example of how the measurements that make up a measurand can each have their own uncertainties and that they all combine into one uncertainty that illustrates the range to be expected.

I know you, programmers, and mathematicians have had basic statistics where sampling theory was discussed. I’ll also guarantee that 99.9% of these basic courses never addressed how to determine basic measurement uncertainty. I’ll also guarantee that 99.9% of these courses never addressed what to do when height measurements were 5’8.25″±0.25″. They all assumed the 5’8.25″ was exact. Prove me wrong.

I know that graduate chemists, physicists, engineers, etc. had senior-level lab classes that emphasized measurement accuracy and uncertainty. Their future jobs depended on knowing how to guarantee that their work ended up with satisfactory statements of value ± uncertainty.

Nowhere have I denied that averages carry uncertainty, nor have I claimed that the average is an ideal metric for every scenario. Those are motes of fairy dust you are swatting at, having imagined them in your head.

And you’ve again failed to even attempt to explain how you might approach the problem posed, or if you even think it is a solvable problem. At this moment it isn’t clear to me if you actually believe the average is a metric that is possible to estimate or use.

So writes the clueless noob who doesn’t understand Lesson One about uncertainty.

You’ve been told at least twice now how to handle this! Can’t you read?

Why do you think piston diameters are given a stated value +/- uncertainty? It’s so engine designers can make sure the cylinders can handle the varying sizes of pistons. They don’t design to a highly precisely calculated average. Nor do they assume that how precisely you have calculated the average tells them exactly what size to make the cylinders.

It works the other way around as well. Pistons are designed to fit cylinder walls with a specified uncertainty. In an ICE engine it’s done by fitting the pistons with compression and oil rings that can adjust to the size of the cylinder. The piston designers don’t make the pistons to fit a highly precise calculation of the average size of a cylinder wall.

Examples abound EVERYWHERE. The original M-16 rifle as compared to the AK-47. Every place you see a rubber o-ring such as in the end of your garden hose connector!

Every bridge you drive over has been carefully designed to the measurement uncertainty of the shear stress of the beams, not to a highly precise calculated average!

Temperature averages are no different. Any calculation of the average using samples which winds up with more decimal places than in the measurement uncertainty is basically scientific fraud. It’s stating something that you can’t possibly know.

Climate science has been getting away with this fraud for literally decades, even centuries. But thanks to people like Mr Watts, Kip Hansen, Pat Frank, etc. more and more people are becoming aware of this. It’s not going to end well for climate science. It’s just a matter of time.

If you could measure 2001 people instead of 2000, would the quality of your evaluation probably improve, or not?

If you measure each person with a different device then the uncertainty of the average will grow with each measurement – exactly what is done with global temperature.

If you measure each person with their shoes on then the uncertainty of the average will grow with each measurement because the soles of shoes vary widely with style and wear.

It’s what is meant when it is stated that you can minimize uncertainty if you are measuring the same thing multiple times using the same measurement device under the same environmental conditions.

If you don’t meet *all* those requirements then uncertainty grows with each additional inaccurate measurement.

And global temperature measurements don’t meet ANY of those requirements.

It’s why the medical establishment has finally understood that the standard deviation of the sample means is *not* the proper metric for judging the accuracy of the mean. It leads to being unable to reproduce results as well as overestimating possible efficacy.

In the end, the “stated value +/- uncertainty” is meant to give other experimenters something they can use to judge the reasonableness of their results as well as to provide what is euphemistically called a “safety margin” when designing something that can fail, perhaps catastrophically – such as a bridge.

The trendologists really hate that uncertainty increases.

this reasoning implies that if you measure enough people your uncertainty will grow to greater than the height of the room. How can the uncertainty be greater than the limits imposed by known quantities?

Error is not uncertainty!

Our friend Tim tells us that the uncertainty grows with each measurement, so soon we will not be able to say whether the average height is greater than the height of the room or smaller than an ant. So you’d say you disagree with Tim?

Your interpretation of Mr. Gorman’s reasoning is erroneous. The key point being stressed to you is that uncertainty increases because the measuring conditions are not consistently stable and comparable.

Furthermore, it is a poor analogy because measuring temperatures is like a room full of people who wander in and out.

You’re an idiot.

Hope this helps.

If the measurements are high precision, the uncertainty will grow slowly. That is a good reason to use high precision, because if the uncertainty becomes non-physical, it is telling you that the experiment is poorly designed.

Your comments are always enlightening. Thank you!

If you are measuring different things then high precision may not be as much help as you think. The variances of the measurands determine the uncertainty, not the measuring device.

“this reasoning implies that if you measure enough people your uncertainty will grow to greater than the height of the room”

What are you expecting to happen?

As you add people you are going to increase the range of the heights you encounter. Range is an indication of the uncertainty of an average. The wider the range the smaller the hump at the average. The distribution gets squished. Variance is related to range. Standard deviation is related to variance.

The range will never exceed the height of the room but the number of elements at the end of the range might grow extending out the standard deviation interval.

At some point you will probably reach an equilibrium that defines the distribution. What makes you think it will be a Gaussian distribution when different measuring devices and different environments are involved?

If it isn’t Gaussian then you can’t assume any specific level of cancellation that would reduce uncertainty, at least not without some involved statistical analysis, which climate science does *NOT* do.

And even if the distribution looks Gaussian you *still* can’t account for the systematic bias introduced by the different measuring devices and/or the different measuring environment.

The best you could do is say the different measurement devices introduced an uncertainty of x inches. The different shoe designs introduced an additional uncertainty of y inches.

Did everyone stand at parade attention with their feet flat on the floor or did some slump and some didn’t have their heels against the wall? Add in some uncertainty for that, z inches.

Did the group consist of men and women? Those of Nordic descent and those of Mexican descent? Did you wind up with a multi-modal distribution or a Gaussian distribution?

How precisely you calculate the average is meaningless without knowing all the other factors describing the distribution. The SEM only works for a Gaussian distribution because a multi-modal distribution doesn’t even have a meaningful average or standard deviation!

I expect that your model of uncertainty should not allow impossible things to happen, but yours does, because we aren’t uncertain about impossible things happening. You claim that the uncertainty will never exceed the height of the room, but you also claim that the uncertainty grows without bound as the number of measurements increases. These cannot both be true, and you don’t attempt to explain the contradiction.

And as I’ve said ad nauseam, I agree that you cannot address systematic bias by taking a large sample. We agree on this point. You address systematic bias by identifying and adjusting for it. You keep arguing as though this is a point of contention.

So it must get smaller? Is this your claim?

Fool.

Little wonder climate pseudoscience is so off in the weeds.

Go buy a battery car.

“Go buy a battery car.”

Coincidentally, I didn’t wait for your permission. Our new Bolt EUV was bought on Friday, and is in our driveway as we exchange posts. It’s perfect for 95% of our driving, and will result in lower OpEx/mile than any ICE vehicle. It will also allow us to extend the life of our ’18 Colorado diesel for the rest of my driving life. We use that to pull our Escape 5.0 TA around El Norte, ~3 months/year, and for lots of family camping.

Don’t worry – we’ll still use the e bikes in clement weather to get us from A to B around St. Louis, and we rack them behind the Escape for trips. That way, we park close, get to see the city/countryside better on the way, and generally enjoy life more.

Is there anything else we should be doing…..?

Oh look, blob is a virtue signaler … what a surprise.

Don’t get it wet, or too hot, or too cold…

Measuring many samples allows one to determine the variance in the population (especially non-stationary time-series), confirm that the probability distribution is close enough to normal to justify most parametric statistical measures, and calculate those statistical measures, which one can’t do with a single measurement. However, the precision of the individual measured samples bounds the justifiable precision of the calculated statistical parameters. That is, if one measures the sample with a crude measuring device, they are not justified in claiming as many significant figures in any of the measurements or calculations as one would be with a high-precision measuring instrument. If one starts with a large uncertainty, it grows rapidly with each additional measurement. That is why high precision (and accuracy) is desired in the measuring device.

How do you distinguish accuracy from precision? Why show a temperature to a precision of +/- 0.01 deg if you think that the accuracy is only +/- 1 deg? It is deceptive because it gives the false impression that the accuracy is known to the same number of significant figures as the precision.
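A common reporting convention (the GUM recommends quoting the uncertainty to at most two significant digits) is to round the uncertainty first and then round the stated value to the same decimal place, so the number of digits never implies more than the uncertainty supports. A minimal Python sketch; the function name and the example numbers are hypothetical:

```python
import math

def format_measurement(value, uncertainty, sig_figs=2):
    """Format 'value ± uncertainty' with the uncertainty rounded to sig_figs
    significant figures and the value rounded to the same decimal place."""
    if uncertainty <= 0:
        raise ValueError("uncertainty must be positive")
    exponent = math.floor(math.log10(uncertainty))
    decimals = sig_figs - 1 - exponent        # digits kept after the decimal point
    u_rounded = round(uncertainty, decimals)
    v_rounded = round(value, decimals)
    if decimals > 0:
        return f"{v_rounded:.{decimals}f} ± {u_rounded:.{decimals}f}"
    return f"{v_rounded:.0f} ± {u_rounded:.0f}"

print(format_measurement(14.8734, 1.0, sig_figs=1))  # 15 ± 1
print(format_measurement(25.5913, 0.872))            # 25.59 ± 0.87
```

With a ±1 uncertainty, the hundredths digit simply disappears from the reported value.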

The UAH is guilty of this, they convert irradiance to temperature and save the results in K with five significant digits, 0.01K.

Pat Frank has a document out explaining this in detail. Of course you and climate science ignore the facts because they are inconvenient.

“The precision of the estimate of the mean increases with a larger sample size, there might still be an inaccuracy arising from non-random bias. “

Pure malarky!

Precision is not ACCURACY! Do I need to repost the graphic showing the difference? How many times will you need it reposted before accepting what it shows? I’d like to know.

The uncertainty gray line you are showing is *NOT* the accuracy of the mean, it is the precision with which you have calculated that inaccurate mean!

Where is the non-random bias in your graph? Did you just assume that it would cancel? I.e. that the non-random bias is actually random?

The problem is that everything concerning uncertainty is just whizzing by you right over your head and you simply won’t look up to see it!

IT SIMPLY DOESN’T MATTER HOW PRECISELY YOU CALCULATE THE POPULATION AVERAGE IF THAT AVERAGE IS INACCURATE! And the precision with which you calculate the population average tells you *nothing* about the accuracy of the average.

The average uncertainty is *NOT* the uncertainty of the average! The average uncertainty is not a measure of the variance of the population data nor is it somehow a cancellation of systematic bias!

If you had taken the time to understand my comment before replying to it, you would see that I am actually distinguishing between precision and uncertainty using the very same criteria that you are. On this point we actually agree, yet even so you cannot help but take an adversarial tone. It’s a shame.

On the contrary, non-random bias is carefully accounted for in the uncertainty estimate:

But, again, I’m sure I’m just repeating what you already know.

“Uncertainties arise from measurement uncertainty, changes in spatial coverage of the station record, and systematic biases due to technology shifts and land cover changes. “

Stating that uncertainties arise from measurement uncertainty is *NOT* stating that they properly account for it! In actuality they use the same meme you do – all measurement uncertainty is random, Gaussian, and cancels. Thus they can ignore it!

If they *truly* accounted for it, they would be using the relationship that the standard deviation of the temperature data is the standard deviation of the sample means *multiplied* by the square root of the sample size. In other words, the uncertainty interval associated with their “global average temperature” would *increase* instead of decreasing.

It is the variance of the population data that is the true determinant of the uncertainty of the average, not the precision with which you calculate an inaccurate mean from inaccurate data.
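The textbook relationship between the two quantities being contrasted here is SEM = s/√N, so the standard deviation of the data can be recovered as s = SEM × √N. A minimal sketch with hypothetical, randomly generated readings (`sd_and_sem` is an illustrative name):

```python
import math
import random
import statistics

def sd_and_sem(data):
    """Sample standard deviation of the data and the standard error of the mean."""
    sd = statistics.stdev(data)
    sem = sd / math.sqrt(len(data))
    return sd, sem

rng = random.Random(42)
readings = [rng.gauss(15.0, 2.0) for _ in range(400)]  # hypothetical readings, degC
sd, sem = sd_and_sem(readings)
recovered_sd = sem * math.sqrt(len(readings))  # SEM x sqrt(N) gives back the SD
print(f"SD={sd:.3f}  SEM={sem:.3f}  SEM*sqrt(N)={recovered_sd:.3f}")
```

The SD describes the spread of the readings themselves and does not shrink with N; only the SEM does.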

Well, sure, you can’t read only the abstract and conclude that the work is robust. I’ll look forward to your analysis of the actual paper and methodology described therein.

Again, how do you fit ±1K into a ±0.2K “envelope”?

Well said, they are no different from perpetual motion machine inventors trying to defeat entropy.

bgwax is still a clown show.

But nice lits o’haet, always a kook sign.

Put your money where your mouth is dude!

Show us where NIST TN 1900 Example 2 is incorrect.

Use some math and show their errors.

Then show the math as to how you reach a better measurement uncertainty for the same month and what it is?
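For readers who haven't seen it, NIST TN 1900 Example E2 treats a month's daily maximum temperatures as repeated observations of the monthly mean plus independent errors, takes s/√m as the standard uncertainty, and uses a Student-t coverage factor. The sketch below follows that structure as we understand it; the readings are hypothetical and are NOT the TN 1900 data set, and `tn1900_style_estimate` is an illustrative name. The coverage factor is passed in rather than computed, to stay within the standard library.

```python
import math
import statistics

def tn1900_style_estimate(daily_tmax, k):
    """Mean of daily maxima, its standard uncertainty s/sqrt(m), and the
    interval mean +/- k*u. The coverage factor k is supplied by the caller
    (e.g. a Student-t quantile for m-1 degrees of freedom)."""
    m = len(daily_tmax)
    mean = statistics.fmean(daily_tmax)
    u = statistics.stdev(daily_tmax) / math.sqrt(m)
    U = k * u  # expanded uncertainty
    return mean, u, (mean - U, mean + U)

# Hypothetical daily maximum temperatures (degC), NOT the TN 1900 data.
readings = [24.1, 26.3, 25.0, 27.2, 23.8, 25.9, 26.7, 24.5, 25.4, 26.0]
# 2.262 is the two-sided 95 % Student-t quantile for 9 degrees of freedom.
mean, u, interval = tn1900_style_estimate(readings, k=2.262)
print(f"mean={mean:.2f} degC  u={u:.3f}  95% interval={interval}")
```

Any competing estimate would have to state which of these assumptions (independence, no shared systematic error) it rejects and what it puts in their place.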

Good luck! I will keep asking till you answer this.

His entire repertoire of uncertainty consists of blindly stuffing the average formula into the GUM and declaring:

“See! Averaging reduces measurement uncertainty!”

And of course there is the bellcurveman classic line:

“I can’t believe it is that big!”


I’m not sure when I said that. But if it’s in relation to the idea that 100 thermometers, each with a measurement uncertainty of 0.5°C, could produce an average whose measurement uncertainty is 5°C, then yes, I find it hard to see how that is possible.
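The 5°C figure is what root-sum-square propagation gives for the *sum* of 100 readings at 0.5°C each, which is the sum-versus-average distinction at issue. A minimal sketch of the two textbook limiting cases, assuming either fully independent or fully correlated errors (`propagated_uncertainty` is an illustrative helper):

```python
import math

def propagated_uncertainty(u_each, n, correlated=False):
    """Standard uncertainty of the sum and of the mean of n readings,
    each with standard uncertainty u_each.
    correlated=False: errors assumed independent (root-sum-square).
    correlated=True:  errors assumed fully correlated (they add linearly)."""
    u_sum = n * u_each if correlated else math.sqrt(n) * u_each
    u_mean = u_sum / n  # dividing the sum by n divides its uncertainty by n
    return u_sum, u_mean

print(propagated_uncertainty(0.5, 100))                   # independent errors
print(propagated_uncertainty(0.5, 100, correlated=True))  # one shared error
```

With independent errors the sum carries 5°C and the mean 0.05°C; with a single shared error the mean keeps the full 0.5°C. Real instruments sit somewhere between these limits, which is where the disagreement lies.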

That’s because you have absolutely ZERO experience in reality.

You simply can’t accept that when you combine 10 2″x4″ boards, uncertainty accumulates in the final length as you add each board. Make it 100 boards and the final length will be even *more* inaccurate.
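The board example can be checked numerically. This is a Monte Carlo sketch under stated assumptions: a hypothetical nominal length of 96 inches, a hypothetical 1/8-inch standard deviation per board, and independent random errors (`total_length_spread` is an illustrative name). The spread of the total grows as √n times the per-board spread.

```python
import math
import random
import statistics

def total_length_spread(n_boards, nominal=96.0, sd=0.125, trials=5_000, seed=1):
    """Standard deviation of the total length of n_boards laid end to end,
    estimated by simulation with independent Gaussian errors per board."""
    rng = random.Random(seed)
    totals = [sum(rng.gauss(nominal, sd) for _ in range(n_boards))
              for _ in range(trials)]
    return statistics.stdev(totals)

for n in (10, 100):
    print(n, round(total_length_spread(n), 3), round(0.125 * math.sqrt(n), 3))
```

A shared systematic error (say, a miscalibrated tape used for every board) would instead add linearly, n times the per-board error.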

If you would *ever* actually work through Taylor’s book on uncertainty, especially Chapters 2 and 3, and actually DO THE EXAMPLES, it should become obvious that uncertainty accumulates when you combine measurements of different things.

Something you and climate science just absolutely refuse to accept. Your ingrained meme is that all uncertainty is random, Gaussian, and cancels. Neither of you can break out of the paper bag formed from that meme.

A lecture on reality from someone who still doesn’t understand that an average is not a sum.

The “well established” procedures you speak of have been abandoned by the medical establishment. It has been specifically recognized in the medical establishment that using the standard deviation of the sample means is *NOT* the proper measure of uncertainty. You’ve been given the links and specific quotes concerning this but you continue to ignore them.

The standard deviation of the sample means tells you *NOTHING* about the accuracy of the mean you have calculated. That can only be determined by analysis and propagation of the measurement uncertainty of the individual data points.

The medical establishment has been searching for reasons as to why so many experiments can’t be replicated. The use of the standard deviation of the sample means as the accuracy of the mean has been identified as one basic reason. It simply doesn’t matter how many samples you take if those samples are not accurate. You can calculate the average of those inaccurate samples down to the millionth digit but the result will still be as inaccurate as the data used to determine the average.

You and AlanJ are prime examples of why climate science remains stuck in 19th century methodologies while the medical establishment, agricultural science, and even HVAC engineering have moved on into the 21st century. Even the GUM has recognized this progression in methodology of determining measurement uncertainty.

Until climate science starts to recognize that the standard deviation of the sample means is *NOT* a measure of *accuracy* of the mean it will not progress past 19th century methodology.

And all they can do is mash the red button, heh.

This is not true. Errors occur. They should be noted in official documents. They can be interpolated to resolve singular errors.

What is not kosher is changing 50 years worth of data just so you can match it up with a recent trend using a different measuring device, different enclosure, etc.

You might tell us why recent measurements are not adjusted to match long records from the past instead of the other way around.

The various issues addressed by the major temperature analyses (GISTEMP, HadCRUT, BEST) are quite thoroughly documented in the literature. Being ignorant of the literature, and then assuming that if they aren’t aware of it then it mustn’t exist (blindness to one’s own ignorance) is something of a hallmark of WUWT acolytes.

It’s an arbitrary choice, but we live in the present, it’s our frame of reference, so it kind of makes sense to adjust past temperatures relative to the present day rather than present day temperatures relative to the past. There is no impact on the end result either way.
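The "no impact either way" claim can be checked for the simple case of a single constant offset at a known break. A minimal sketch with a hypothetical series and offset (`adjust` and `ols_slope` are illustrative helpers): shifting the early segment up and shifting the late segment down produce series that differ only by a constant, so the fitted trend is identical. It says nothing about whether the offset itself was estimated correctly.

```python
def ols_slope(y):
    """Ordinary least-squares slope of y against its index 0..n-1."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((i - x_mean) * (yi - y_mean) for i, yi in enumerate(y))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den

def adjust(series, break_index, offset, anchor="present"):
    """Remove a step of size `offset` at break_index.
    anchor="present": shift the segment BEFORE the break.
    anchor="past":    shift the segment AFTER the break the other way."""
    if anchor == "present":
        return [v + offset if i < break_index else v for i, v in enumerate(series)]
    return [v - offset if i >= break_index else v for i, v in enumerate(series)]

# Hypothetical record: a new instrument at index 4 reads 0.5 lower.
raw = [10.0, 10.1, 10.3, 10.2, 9.6, 9.8, 9.7, 9.9]
to_present = adjust(raw, 4, -0.5, anchor="present")
to_past = adjust(raw, 4, -0.5, anchor="past")
print(ols_slope(to_present), ols_slope(to_past))
```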

You lose! That is not a scientific reason based on data and logic at all.

Have you ever looked at what that does to a “global average temperature”? If not, your scientific background is suspect. You can’t make decisions like that without examining multiple scenarios.

Who isn’t aware of the literature?

Have you read Hubbard and Lin’s 2006 analysis showing that regional temperature adjustments are just plain bad? That because of micro-climate differences any adjustments have to be done on a station-by-station basis? I.e. they have to be lab calibrated ON SITE, which is very seldom done?

Why has climate science ignored their findings? Why have YOU ignored it?

That’s just patently false. PHA was developed in part because of the findings of Hubbard & Lin.

[Hubbard & Lin 2006]

[Menne & Williams 2009]

Notice that Menne & Williams cite the work of Hubbard & Lin.

Why are you not addressing the actual issue? As I pointed out, PHA only serves to identify discontinuities. It can *NOT* evaluate what adjustments are appropriate to create a homogenous long record.

Hubbard and Lin SPECIFICALLY point out that adjustments must be done on a station-by-station basis. When you are using other stations to determine the adjustments for a specific station or to infill missing data you are ignoring what Hubbard and Lin found.

Why can’t you address that simple fact?

NOAA’s implementation of PHA does just that. BEST uses a similar method for detection, but instead of making adjustments they opted to split the record. They call it the “scalpel” method.
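To make the detection-versus-adjustment distinction concrete, here is a toy single-break search on a candidate-minus-neighbour difference series, followed by a "scalpel"-style split. This is NOT NOAA's PHA or BEST's code; it is a simplified sketch with hypothetical data, using a two-sample t-like statistic to locate one break. The step size it reports is only an estimate of the offset, which is the quantity the two sides are arguing about.

```python
import math
import statistics

def best_single_break(diff, min_seg=3):
    """Toy change-point search: return (break_index, t_statistic) for the
    split of a difference series that maximises a two-sample t statistic."""
    n = len(diff)
    best_k, best_t = None, 0.0
    for k in range(min_seg, n - min_seg + 1):
        left, right = diff[:k], diff[k:]
        n1, n2 = len(left), len(right)
        # Pooled variance of the two segments (two-sample t-test form)
        pooled_var = ((n1 - 1) * statistics.variance(left)
                      + (n2 - 1) * statistics.variance(right)) / (n - 2)
        if pooled_var == 0:
            continue
        t = abs(statistics.fmean(right) - statistics.fmean(left)) / math.sqrt(
            pooled_var * (1 / n1 + 1 / n2))
        if t > best_t:
            best_k, best_t = k, t
    return best_k, best_t

def scalpel_split(series, k):
    """Cut the record at the break instead of adjusting it."""
    return series[:k], series[k:]

# Hypothetical candidate-minus-neighbour differences with a step at index 6.
diff = [0.1, -0.1, 0.0, 0.05, -0.05, 0.1, 0.6, 0.5, 0.7, 0.55, 0.65, 0.6]
k, t = best_single_break(diff)
step = statistics.fmean(diff[k:]) - statistics.fmean(diff[:k])
before, after = scalpel_split(diff, k)
print(k, round(t, 1), round(step, 3))
```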

That’s what PHA does.

Data FRAUD, and you defend these practices.

That is *NOT* what the documentation I read says. It said it can accurately identify change points. It did *not* say it could accurately determine the adjustment values over time to homogenize the differing records accurately.

PHA finds the inconsistencies on a station-by-station basis. Since it can’t evaluate differing micro-climates or calibration it simply cannot accurately evaluate adjustment VALUES.

Anyone who thinks it can is only fooling themselves. It’s what Hubbard and Lin tried to tell everyone. Microclimate matters. It doesn’t matter if you move the station 1 inch or 1 mile. If you can’t evaluate the micro-climate then you can’t evaluate any adjustments, especially for measurements far in the past.

From Hubbard and Lin.

You haven’t shown where Menne & Williams 2009 addressed measurement uncertainty at all. Identifying breaks is not the same as determining accurate adjustments.

HL06 are making a very specific argument that a generic offset adjustment from Quayle, 1991 is inappropriate for considering localized (single station-level) changes, and that a station-specific adjustment should be made in these cases instead. I don’t have the requisite expertise to evaluate those claims, but they are quite irrelevant when considering network-wide global/CONUS indexes.

USHCN has also been replaced by ClimDIV. I’m not sure how relevant this research is for the present day.

The paper has 27 citations.

Pushing data fraud again?

Your feathers are on display for all to see.

“ but they are quite irrelevant when considering network-wide global/CONUS indexes.”

They are *TOTALLY* relevant!

You state you don’t have the expertise to evaluate the claims but then turn around and use the argumentative fallacy of Argument by Dismissal to say they are irrelevant!

Unfreakingbelievable!

I’m saying that if Hubbard and Lin’s argument is correct, it has little impact on global/CONUS wide temperature estimates. It’s something that is of concern when you’re looking at small scales, e.g. areas represented by a single station. But also as stated, I’m not sure the adjustment from Quayle is still being used for the instrumentation change bias adjustment, since automated algorithms are being employed.

“All errors are random and cancel” — yawn, the same-old same-old.

“I’m saying that if Hubbard and Lin’s argument is correct, it has little impact on global/CONUS wide temperature estimates.”

In other words don’t confuse me with facts! My meme is that all measurement uncertainty is random, Gaussian, and cancels – and I’m sticking with that meme.

Thus the global/CONUS wide temperature estimates are 100% accurate – or at least accurate out to the milli-degree!

Most are actually systematic uncertainties which can never be discovered using statistical analysis.

A fact these rubes will never acknowledge—instead they invoke a magical incantation that transmogrifies systematic uncertainties into random ones, which then disappear in a puff of greasy green smoke.

They are not normalized to a common baseline. They are normalized to a STATION common baseline and all based on a similar period of time. Do you know the difference? It doesn’t mean the anomalies have one single baseline for the globe.
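The station-by-station normalization described here can be sketched as follows, with two hypothetical stations at very different absolute temperatures. Each station's anomaly is taken against its own baseline-period mean; for contrast, the same readings are also expressed against one single hypothetical global value, as proposed in the next comment. The published analyses involve further steps (monthly climatologies, gridding, infilling) that are not shown; `station_anomalies` is an illustrative name.

```python
import statistics

def station_anomalies(temps_by_year, base_start, base_end):
    """Anomalies relative to this station's own mean over the baseline years."""
    baseline = statistics.fmean(
        t for y, t in temps_by_year.items() if base_start <= y <= base_end)
    return {y: t - baseline for y, t in temps_by_year.items()}

# Hypothetical annual means, degC
valley = {2000: 20.1, 2001: 20.3, 2002: 19.9, 2003: 20.6}
mountain = {2000: 8.0, 2001: 8.2, 2002: 7.8, 2003: 8.5}

valley_anom = station_anomalies(valley, 2000, 2002)
mountain_anom = station_anomalies(mountain, 2000, 2002)

COMMON_BASE = 14.0  # hypothetical single global reference value
valley_vs_common = {y: t - COMMON_BASE for y, t in valley.items()}
mountain_vs_common = {y: t - COMMON_BASE for y, t in mountain.items()}
print(valley_anom[2003], mountain_anom[2003])
print(valley_vs_common[2003], mountain_vs_common[2003])
```

Against their own baselines both stations show the same 2003 departure; against the single reference value the numbers mostly reflect each site's climatology.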

It is my contention that climate science should determine a common single global baseline value used for all anomalies. That would do two things, one, tell all what the best temperature is, and two, show variations from that temperature so a common point is used.

I am not going to pay for a junk document. It is up to you since you presented the paper, to answer questions. If measurements are correlated, the GUM requires a correlation calculation be added to the combined uncertainty. Was this done? If so how was it determined?
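The correlation term being asked about comes from the GUM's law of propagation of uncertainty for correlated input quantities (section 5.2): u_c² = Σᵢ Σⱼ cᵢ cⱼ u(xᵢ) u(xⱼ) r(xᵢ, xⱼ), which expands to the uncorrelated sum plus 2 Σ_{i<j} of the cross terms. A minimal sketch, applied to a hypothetical mean of four readings each with u = 0.5 (`combined_uncertainty` is an illustrative name):

```python
import math

def combined_uncertainty(c, u, r):
    """Combined standard uncertainty with correlated inputs.
    c: sensitivity coefficients, u: standard uncertainties,
    r: correlation matrix with r[i][i] == 1.
    The full double sum equals sum(c_i^2 u_i^2) + 2 * sum_{i<j}(cross terms)."""
    n = len(c)
    total = 0.0
    for i in range(n):
        for j in range(n):
            total += c[i] * c[j] * u[i] * u[j] * r[i][j]
    return math.sqrt(total)

n = 4
c = [1 / n] * n  # the mean: each reading enters with weight 1/n
u = [0.5] * n
independent = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
fully_correlated = [[1.0] * n for _ in range(n)]
print(combined_uncertainty(c, u, independent))       # correlations ignored
print(combined_uncertainty(c, u, fully_correlated))  # correlations at 1
```

Whether a given analysis includes this term, and how it estimates r, is the question the comment is putting to the paper.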

“It is my contention that climate science should determine a common single global baseline value used for all anomalies.”

It’s already done. The base is set at 273.15 K, it’s just Americans who refuse to use it.

But using that as an anomaly for global temperatures fixes none of the problems that using different bases is intended to fix.

“That would do two things, one, tell all what the best temperature is,”

How does it do that? What do you mean by best? Best for me during summer is not the same as best during winter.

Well, doesn’t that say something about how useless an average anomaly is?

No.

And every time historical data is changed, the baseline(s) change. There is no assurance that all the old baselines get updated. That is a good reason for changing current readings and keeping the archival data untouched. It is far less effort and there is less chance for errors.

“let sleeping dogs lie”?

“The reason for this is trivially intuitive from common experience – the temperature above my driveway is rarely the same as the temperature under the trees in my back yard, but if it’s a hotter than normal day on the driveway it’s almost certainly going to be a hotter than normal day in the back yard, even though the definition of “hotter than normal” is different for both areas.”

Let’s assume for the sake of discussion that this is exactly true, even though you say it is only “almost certainly” the case.

Leaving that aside.

If we are going to use the temp of the driveway, where we have a thermometer, to figure out the temp of the backyard, where we have no thermometer, we must also expect that the conditions that make one place warmer than normal will have the same effect in the backyard, in magnitude and direction. So if it is hotter than normal on the driveway, we might figure that it is because the humidity is lower than average, there is less breeze than average, there are fewer clouds than average, and the pressure is higher than average. But there is no logical reason to expect that the back yard, where it is grassy and kind of shady, is going to be as far above its average temp as the driveway, which is blacktop paving with no vegetation and no trees!

That driveway might be 5 degrees hotter than when it is breezy, partly cloudy, and very humid, while the same change in conditions may well leave the grassy back yard only half a degree warmer.
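The two comments are about different statistics: the earlier one concerns whether anomalies move together (correlation), this one concerns how large they are (the scale factor between sites). A minimal sketch with hypothetical anomaly values shows the two can diverge: a correlation near 1 with a regression slope near 0.1 (`slope_and_correlation` is an illustrative helper).

```python
import math

def slope_and_correlation(x, y):
    """Least-squares slope of y on x and the Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sxx, sxy / math.sqrt(sxx * syy)

# Hypothetical daily anomalies (degrees) at the two spots
driveway = [1.0, -0.5, 2.0, 0.5, -1.5]
backyard = [0.12, -0.04, 0.19, 0.06, -0.16]
slope, corr = slope_and_correlation(driveway, backyard)
print(f"slope={slope:.3f}  correlation={corr:.3f}")
```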

Do you have any idea how much the actual density of air varies over the course of a year?

Do you know how much the specific heat of air varies from place to place and day to day?
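Both quantities can be estimated from standard approximations. This sketch assumes the ideal-gas law for dry air (specific gas constant 287.05 J/(kg·K)) and the common psychrometric approximation for moist-air enthalpy, h ≈ 1.006·t + W·(2501 + 1.86·t) kJ per kg of dry air, with t in °C and W the humidity ratio in kg/kg. The input values are hypothetical; the point is that the same thermometer reading can correspond to very different heat content.

```python
R_DRY = 287.05  # J/(kg K), specific gas constant for dry air

def dry_air_density(pressure_pa, temp_c):
    """Ideal-gas density of dry air, kg/m^3."""
    return pressure_pa / (R_DRY * (temp_c + 273.15))

def moist_air_enthalpy(temp_c, humidity_ratio):
    """Approximate specific enthalpy of moist air, kJ per kg of dry air."""
    return 1.006 * temp_c + humidity_ratio * (2501.0 + 1.86 * temp_c)

print(dry_air_density(101_325, 15.0))  # sea-level standard conditions
print(dry_air_density(85_000, 15.0))   # hypothetical high-altitude pressure
print(moist_air_enthalpy(30.0, 0.0))   # 30 degC, dry
print(moist_air_enthalpy(30.0, 0.020)) # 30 degC, humid
```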