Guest Post by Willis Eschenbach [see update at the end]

How much is a “Whole Little”? Well, it’s like a whole lot, only much, much smaller.

There’s a new paper out. As usual, it has a whole bunch of authors, fourteen to be precise. My rule of thumb is that “The quality of research varies inversely with the square of the number of authors” … but I digress.

In this case, they’re mostly Chinese, plus some familiar western hemisphere names like Kevin Trenberth and Michael Mann. Not sure why they’re along for the ride, but it’s all good. The paper is “Record-Setting Ocean Warmth Continued in 2019“. Here’s their money graph:

Now, that would be fairly informative … except that it’s in zettajoules. I renew my protest against the use of zettajoules for displaying or communicating this kind of ocean analysis. It’s not that they’re inaccurate; they aren’t. It’s that nobody has any idea what that actually means.

So I went to get the data. In the paper, they say:

The data are available at http://159.226.119.60/cheng/ and www.mecp.org.cn/

The second link is in Chinese, and despite translating it, I couldn’t find the data. At the first link, Dr. Cheng’s web page, as far as I could see the data is not there either, but it says:

When I went to that link, it says “Get Data (external)” … which leads to another page, which in turn has a link … back to Dr. Cheng’s web page where I started.

Ouroboros wept.

At that point, I tossed up my hands and decided to just digitize Figure 1 above. The data may certainly be available somewhere between those three sites, but digitizing is incredibly accurate. Figure 2 below is my emulation of their Figure 1. However, I’ve converted it to degrees of temperature change, rather than zettajoules, because it’s a unit we’re all familiar with.

So here’s the hot news. According to these folks, over the last sixty years, the ocean has warmed a little over a tenth of one measly degree … now you can understand why they put it in zettajoules—it’s far more alarming that way.

Next, I’m sorry, but the idea that we can measure the temperature of the top two kilometers of the ocean with an uncertainty of ±0.003°C (three-thousandths of one degree) is simply not believable. For a discussion of their uncertainty calculations, they refer us to an earlier paper here, which says:

When the global ocean is divided into a monthly 1°-by-1° grid, the monthly data coverage is <10% before 1960, <20% from 1960 to 2003, and <30% from 2004 to 2015 (see Materials and Methods for data information and Fig. 1). Coverage is still <30% during the Argo period for a 1°-by-1° grid because the original design specification of the Argo network was to achieve 3°-by-3° near-global coverage (42).

The “Argo” floating buoy system for measuring ocean temperatures was put into operation in 2005. It’s the most widespread and accurate source of ocean temperature data. The floats sleep for nine days down at 1,000 metres, and then wake up, sink down to 2,000 metres, float to the surface measuring temperature and salinity along the way, call home to report the data, and sink back down to 1,000 metres again. The cycle is shown below.

It’s a marvelous system, and there are currently just under 4,000 Argo floats actively measuring the ocean … but the ocean is huge beyond imagining, so despite the Argo floats, more than two-thirds of their global ocean gridded monthly data contains exactly zero observations.

And based on that scanty amount of data, which is missing two-thirds of the monthly temperature data from the surface down, we’re supposed to believe that they can measure the top 651,000,000,000,000,000 cubic metres of the ocean to within ±0.003°C … yeah, that’s totally legit.

Here’s one way to look at it. In general, if we increase the number of measurements we reduce the uncertainty of their average. But the uncertainty only shrinks with the square root of the number of measurements. This means that if we want to reduce our uncertainty tenfold, say from ±0.03°C to ±0.003°C, we need a hundred times the number of measurements.

And this works in reverse as well. If we have an uncertainty of ±0.003°C and we only want an uncertainty of ±0.03°C, we can use one-hundredth of the number of measurements.

This means that IF we can measure the ocean temperature with an uncertainty of ±0.003°C with 4,000 Argo floats, we could measure it with ten times the uncertainty, ±0.03°C, using a hundredth of that number: forty floats.
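To make the square-root rule concrete, here’s a minimal Python sketch. It assumes, as the argument above does for the sake of illustration, that each float’s error is independent of the others, so the uncertainty of the average scales as 1/√N. The function names are hypothetical.

```python
import math

def scaled_uncertainty(sigma_ref, n_ref, n_new):
    """Uncertainty of an average after going from n_ref to n_new measurements,
    assuming independent errors, so uncertainty scales as 1/sqrt(N)."""
    return sigma_ref * math.sqrt(n_ref / n_new)

def floats_needed(sigma_ref, n_ref, sigma_target):
    """Measurements needed to reach sigma_target under the same 1/sqrt(N) rule."""
    return n_ref * (sigma_ref / sigma_target) ** 2

# 4,000 floats at +/-0.003 C, scaled down to 40 floats (approximately 0.03)
print(scaled_uncertainty(0.003, 4000, 40))
# floats needed for +/-0.03 C, starting from 4,000 floats at +/-0.003 C (approximately 40)
print(floats_needed(0.003, 4000, 0.03))
```

If the errors are correlated rather than independent, the real gain from adding floats is smaller than this rule suggests.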

Does anyone think that’s possible? Just forty Argo floats, that’s about one for each area the size of the United States … measuring the ocean temperature of that area down 2,000 metres to within plus or minus three-hundredths of one degree C? Really?

Heck, even with 4,000 floats, that’s one for each area the size of Portugal and two kilometers deep. And call me crazy, but I’m not seeing one thermometer in Portugal telling us a whole lot about the temperature of the entire country … and this is much more complex than just measuring the surface temperature, because the temperature varies vertically in an unpredictable manner as you go down into the ocean.
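The area-per-float comparisons can be checked by dividing the ocean’s surface area by the number of floats. This sketch assumes an ocean area of about 361 million km², a value not given in the post. For comparison, Portugal is roughly 92,000 km² and the United States roughly 9.8 million km².

```python
OCEAN_AREA_KM2 = 3.61e8  # approximate global ocean surface area in km^2 (assumption)

def area_per_float(n_floats, ocean_area_km2=OCEAN_AREA_KM2):
    """Average ocean surface area, in km^2, that each float has to represent."""
    return ocean_area_km2 / n_floats

for n in (4000, 40):
    print(f"{n} floats: {round(area_per_float(n))} km^2 each")
# 4000 floats: 90250 km^2 each
# 40 floats: 9025000 km^2 each
```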

Perhaps there are some process engineers out there who’ve been tasked with keeping a large water bath at some given temperature, and who could tell us how many thermometers it would take to measure the average bath temperature to ±0.03°C.

Let me close by saying that with a warming of a bit more than a tenth of a degree Celsius over sixty years it will take about five centuries to warm the upper ocean by one degree C …

Now to be conservative, we could note that the warming seems to have sped up since 1985. But even using that higher recent rate of warming, it will still take three centuries to warm the ocean by one degree Celsius.
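The “five centuries” figure is a simple linear extrapolation. Here’s a minimal sketch, assuming 0.12°C as a reading of “a bit more than a tenth of a degree” over sixty years (the exact value isn’t stated):

```python
def years_to_warm(target_c, warming_c, period_years):
    """Years needed to add target_c degrees of warming at a constant linear rate."""
    rate = warming_c / period_years  # degrees C per year
    return target_c / rate

print(years_to_warm(1.0, 0.12, 60))  # about 500 years
```

Working backwards, “three centuries” corresponds to a rate of about 1/300 ≈ 0.0033°C per year. The post doesn’t give the post-1985 rate itself, so that figure is back-calculated, not measured.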

So despite the alarmist study title about “RECORD-SETTING OCEAN WARMTH”, we can relax. Thermageddon isn’t around the corner.

Finally, to return to the theme of a “whole little”, I’ve written before about how, to me, the amazing thing about the climate is not how much it changes. What has always impressed me is the amazing stability of the climate despite the huge annual energy flows. In this case, the ocean absorbs about 6,360 zettajoules (1 ZJ = 10²¹ joules) of energy per year. That’s an almost unimaginably immense amount of energy: by comparison, the entire human energy usage from all sources, fossil and nuclear and hydro and all the rest, is about 0.6 zettajoules per year …

And of course, the ocean loses almost exactly that much energy as well—if it didn’t, soon we’d either boil or freeze.

So how large is the imbalance between the energy entering and leaving the ocean? Well, over the period of record, the average annual change in ocean heat content per Cheng et al. is 5.5 zettajoules per year … which is about one-tenth of one percent (0.1%) of the energy entering and leaving the ocean. As I said … amazing stability.
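These percentages can be reproduced from the numbers in the text. Here’s a minimal sketch. All three constants are taken from this post, and nothing else is assumed:

```python
OCEAN_INFLOW_ZJ_PER_YEAR = 6360  # energy absorbed by the ocean each year, from the text
HUMAN_USE_ZJ_PER_YEAR = 0.6      # total human energy use per year, from the text
OHC_TREND_ZJ_PER_YEAR = 5.5      # Cheng et al. average annual OHC change, from the text

# Imbalance as a percentage of the energy flowing through the ocean each year
imbalance_pct = 100 * OHC_TREND_ZJ_PER_YEAR / OCEAN_INFLOW_ZJ_PER_YEAR
print(f"imbalance: {imbalance_pct:.3f}% of annual throughput")  # 0.086%

# How many times larger the ocean's annual throughput is than all human energy use
print(f"ocean inflow / human use: {OCEAN_INFLOW_ZJ_PER_YEAR / HUMAN_USE_ZJ_PER_YEAR:,.0f}x")  # 10,600x
```

That’s about 0.086%, i.e. roughly one-tenth of one percent.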

And as a result, the curiously hubristic claim that such a trivial imbalance somehow perforce has to be due to human activities, rather than being a tenth of a percent change due to variations in cloud numbers or timing, or in El Nino frequency, or in the number of thunderstorms, or a tiny change in anything else in the immensely complex climate system, simply cannot be sustained.

Regards to everyone,

w.

h/t to Steve Milloy for giving me a preprint embargoed copy of the paper.

PS: As is my habit, I politely ask that when you comment you quote the exact words you are discussing. Misunderstanding is easy on the intarwebs, but by being specific we can avoid much of it.

[UPDATE] An alert reader in the comments pointed out that the Cheng annual data is here, and the monthly data is here. This, inter alia, is why I do love writing for the web.

This has given me the opportunity to demonstrate how accurate hand digitization actually is. Here’s a scatterplot of the Cheng actual data versus my hand digitized version.

The RMS error of the hand digitized version is 1.13 ZJ, and the mean error is 0.1 ZJ.

Hi Willis,

Could you please explain how you converted the energy change to a temperature change?

David, the conversion is possible because the “specific heat” of seawater is about 4 megajoules per tonne per degree C. In other words, it takes 4 megajoules to warm a tonne of water by 1°C.

w.
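For anyone who wants to reproduce the conversion, here’s a minimal Python sketch. The 651,000,000,000,000,000 m³ volume and the 4 MJ per tonne per °C come from the post. The seawater density of 1,025 kg/m³ is an assumption, and the figures actually used for Figure 2 may differ slightly.

```python
# Convert a change in ocean heat content (zettajoules) into an average
# temperature change for the upper 2,000 m of the ocean.
VOLUME_M3 = 6.51e17         # upper 2,000 m of ocean, from the post
DENSITY_KG_PER_M3 = 1025.0  # typical seawater density (assumption)
CP_J_PER_KG_K = 4000.0      # ~4 MJ per tonne per degree C, from the post

def zj_to_degc(delta_zj):
    """Average temperature change (deg C) produced by delta_zj zettajoules."""
    mass_kg = VOLUME_M3 * DENSITY_KG_PER_M3
    return delta_zj * 1e21 / (mass_kg * CP_J_PER_KG_K)

print(f"{zj_to_degc(1.0):.6f} C per ZJ")  # 0.000375 C per ZJ
print(f"{zj_to_degc(5.5 * 60):.3f} C")    # 0.124 C: 5.5 ZJ/yr for 60 years
print(f"{zj_to_degc(1.13):.6f} C")        # 0.000423 C: the 1.13 ZJ RMS digitizing error
```

On these assumptions, it takes roughly 2,670 ZJ to warm the upper ocean by 1°C. The 1.13 ZJ RMS digitizing error in the update works out to about four ten-thousandths of a degree.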

“The RMS error of the hand digitized version is 1.13 ZJ, and the mean error is 0.1 ZJ.”

Hey Willis, can we have that in degrees please 😉

seriously, nice work.

“Not sure why they’re along for the ride, but it’s all good. ”

How do you expect them to get a hockey stick without having Mann on board to present incompatible data from a variety of data sources in the same colour and pretend it’s a trend?

Where would any search for missing heat be without the bona fides of Trenberth?

You knocked the Puck outa that post!

Trenberth and Mann have ‘prestige’ in the right circles to get the best coverage for the ‘oceans are boiling’ story. What with them now being acid too, count me out from having a paddle.

Then changes in salinity alter the specific heat by more than the “accuracy” the paper claims allows for.

12 cheeseburgers. Your comment is talking about 12 cheeseburgers ± a few pickles.

Maybe 12 pickle molecules.

All their claims are pickled, or they are all pixelated.

Excellent commentary on the error bars, yet certainly there are other factors that increase the absurdity of their claims…

The floats are not fixed or tethered to one location! They all move!

Finally, making a WAG that they are right: as the atmosphere warms far quicker, the difference between the ocean T and the atmospheric T increases, so over time the oceans’ ability to counter the atmospheric warming increases.

Yeah, but how many sesame seeds per bun?

They should use scarier units, such as electron-volts. 1 ZJ = 6.24E39 eV! Now THAT’S some scary stuff!

zetta = 10²¹

J/eV = 1.6×10⁻¹⁹, so do 1/x inversion

eV/J = 6.25×10¹⁸

eV/ZJ = 6.25×10¹⁸ × 10²¹ → 6.25×10³⁹!

Yay.

Math.
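The arithmetic can be checked with the exact SI value of the electronvolt (1.602176634×10⁻¹⁹ J). The 6.25 above comes from rounding that value to 1.6. Here’s a minimal sketch:

```python
EV_IN_JOULES = 1.602176634e-19  # exact SI definition of the electronvolt
ZETTA = 1e21                    # SI prefix zetta

def zj_to_ev(zj):
    """Convert zettajoules to electronvolts."""
    return zj * ZETTA / EV_IN_JOULES

print(f"{zj_to_ev(1):.3e}")  # 6.242e+39
```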

Thanks, Goatguy. My go-to resource on conversions is Unit Juggler, q.v.

w.

Maths – FIFY

Excellent article. Would there be any other reason (other than leveraging alarmism) that the original paper would use zettajoules as a measurement? Were they trying to illustrate something else?

Will Mann & co. tell us ARGO is measuring zettajoules?

The hysteria surrounding this is unbelievable … here’s CNN:

Oceans are warming at the same rate as if five Hiroshima bombs were dropped in every second

People truly don’t realize how big the energy flows in the climate system actually are. Five Hiroshima bombs per second is the same as 0.6 watts per square metre … and downwelling energy at the surface is about half a kilowatt per square metre.

w.
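Here’s a sketch of the conversion from “five Hiroshimas per second” to watts per square metre. The 15-kiloton yield and the 361 million km² ocean area are assumptions, since published estimates of the Hiroshima yield vary by a few kilotons. The figure of 4.184×10¹² J per kiloton of TNT is the standard convention, and 5.1×10¹⁴ m² is Earth’s total surface area.

```python
KT_TNT_J = 4.184e12      # joules per kiloton of TNT (standard convention)
HIROSHIMA_KT = 15.0      # approximate Hiroshima yield in kilotons (assumption)
EARTH_AREA_M2 = 5.1e14   # Earth's total surface area
OCEAN_AREA_M2 = 3.61e14  # ocean surface area (assumption)

def bombs_per_second_to_wm2(bombs_per_s, area_m2=EARTH_AREA_M2):
    """Average power flux (W/m^2) of bombs_per_s Hiroshima-sized bombs spread over area_m2."""
    watts = bombs_per_s * HIROSHIMA_KT * KT_TNT_J
    return watts / area_m2

print(round(bombs_per_second_to_wm2(5), 2))                 # 0.62, over the whole Earth
print(round(bombs_per_second_to_wm2(5, OCEAN_AREA_M2), 2))  # 0.87, over the ocean only
```

Spread over the whole globe, that’s the 0.6 W/m² quoted above. Spread over the ocean alone, it’s nearer 0.9 W/m². Either way, it’s tiny next to the roughly half a kilowatt per square metre of downwelling energy at the surface.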

YES. And what is more, for every kilogram of water evaporated from the oceans, some 694 watt-hours are removed from the surface and dissipated into the clouds and beyond to space. This is why the oceans never seem to get above 35°C, even after tens of thousands of years of these bombs being dropped every second.

A watched kettle never boils it appears.
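The 694 watt-hour figure follows from the latent heat of vaporization of water, about 2.5 MJ/kg. The exact value varies slightly with temperature. Here’s a minimal check:

```python
LATENT_HEAT_J_PER_KG = 2.5e6  # latent heat of vaporization, approx. 2.5 MJ/kg near 0 C
J_PER_WH = 3600.0             # one watt-hour is 3,600 joules

def wh_per_kg_evaporated(latent_heat=LATENT_HEAT_J_PER_KG):
    """Energy carried away per kilogram of water evaporated, in watt-hours."""
    return latent_heat / J_PER_WH

print(round(wh_per_kg_evaporated()))  # 694
```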

“A watched kettle never boils it appears.”

+1

Willis

Nice article. The British press are talking about a surge in warming with oceanic apocalypse around the corner.

One of the problems is context, such as your pertinent comment about the huge amount of energy entering the ocean, of which the human contribution is actually minuscule. That somehow never makes it into the media.

The other problem is that most people have problems with numbers. It would be useful if numbers less than one could be expressed in words, for example “one hundredth of a degree centigrade” rather than the figure. The smaller the number, such as 0.001, the less likely it is that the average person will understand it.

Tonyb

TonyB

Because many people have problems with numbers, it behooves us to translate oddball metrics into things people can understand. Willis did exactly that.

Something else we can do is explain in short sentences that a claim for a detected change that is smaller than the uncertainty about that change has to be accompanied by a “certainty” number.

Mmm. It is not that we can’t calculate some average value from a host of instruments and readings. It is just that propagating the uncertainties by adding in quadrature to get the “quality” of the average (the mean) means getting a number with a pretty large uncertainty.

Until we know the number of readings and the number of instruments we can’t say exactly what the uncertainty is, but it is certainly more than 1.5 degrees C.

Suppose the claimed change is 0.1 degrees ±1.5, for example. We have to consider what certainty claim should accompany the 0.1. Suppose the errors in readings were Normally distributed (a reasonable assumption). Given a 1-sigma uncertainty of ±1.5 C, we can say the true average value is going to be within 1.5 degrees 68% of the time (were we to repeat the experiment). To say it is within 0.1 degrees is quite possible, provided we admit there is, for example, only a 2% chance that this is true.

The public does not consider the implications of claims for small detected changes with a large uncertainty. If the public were all educated and sharp-eared consumers of information they would insist that the purveyors of calamity and disaster state the claims properly. Clearly, scientists are not going to do this unprovoked.

The reason I said “2%” is because there is 98% chance that the true answer lies outside the little range within which the “0.1 degrees” lies. That’s just how it is folks.
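How small that probability is depends on how narrow the band is. Here’s a minimal Python sketch that assumes, as the comment does, zero-mean, normally distributed errors with a 1-sigma value of 1.5°C. The name prob_within is a hypothetical helper name.

```python
import math

def prob_within(half_width, sigma):
    """P(|error| < half_width) for a zero-mean normal error with standard deviation sigma."""
    return math.erf(half_width / (sigma * math.sqrt(2)))

sigma = 1.5  # 1-sigma uncertainty from the comment, degrees C
print(round(prob_within(1.5, sigma), 3))   # 0.683
print(round(prob_within(0.1, sigma), 3))   # 0.053
print(round(prob_within(0.05, sigma), 3))  # 0.027
```

With these assumptions, a ±0.1° band captures about 5% of outcomes and a ±0.05° band about 3%. The exact percentage depends on the band chosen, but either way it is small, which is the point being made.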

The British press – notably the Guardian, chief doomsayer among them all!

Carbon 500

Is that the Manchester Grauniad?

cheers

Mike

Willis, since the very outset of the AGW hysteria, I’ve regarded the leftist media as the most culpable “dealer” in the whole supply chain of charlatans who contrive to benefit themselves from this perfidy –

1. the media is addicted to ‘click-bait’ stories;

2. dodgy academics know the media will publish every alarmist press release they put out;

3. the media knows that politicians will shamelessly jump aboard any issue that can garner them votes;

4. the circle of perfidy is completed when university administrators work on their academics to produce research that will pressure politicians and bureaucrats to direct grant funding to those projects that they can claim are “doing something”.

And so it goes on and on and on.

Hopefully, in the not too distant future, there will be another “Enlightenment” event that will end the current auto-da-fé inquisition being inflicted on climate data.

Willis,

Not the Hiroshima bombs again!

This old chestnut was discredited years ago, I thought.

I remember when this bogeyman was being pushed and it was claimed the earth was subjected to 5 Hiroshima bombs per second by global warming, someone pointed out that the Sun was bombarding the earth’s atmosphere with 1700 Hiroshima bombs a second.

Did another 5 really matter?

It does once it is translated into Manhattans of ice melted away in the Arctic!

Nicholas,

As distinct from the Antarctic gaining Manhattans of Polar Ice!

I prefer to measure in ham sandwiches. The oceans are warming by 85 million ham sandwiches a second. Don’t tell AOC or she will say this is unfair to the vegan fish.

Impossible to melt Manhattan islands’ worth of ice with 5 Hiroshima bombs.

Leaving your Manhattans and ice reference as the ice in a few shallow Manhattan drinks.

Try cutting back.

Interesting. The result of the von Schuckmann paper using Argo float data to 2012 was 0.62 W/m² (+/- around 0.1 W/m²). This is from memory. It might be 0.64 +/- 0.09, but it’s close. Also, she did the same thing in 2010 when the float deployment wasn’t quite complete and got 0.72 W/m².

Her reference to 0.003°C accuracy was for the precision of the thermometers on the Argo floats themselves, not the overall accuracy of the gridded result, which involves… models. As you can see from the above, her error is ~1/6 of the result, and that error translates directly into the temp conversion because the relevant water mass and the specific heat capacity of water are known constants.

Von Schuckmann seemed to be the go-to authority around 2012. I’ve not followed OHC in any detail for a long time since then, though.

Note that “device resolution” is not at all the same concept as “device precision”, and neither is equivalent to what is known in the scientific world (as opposed to the fantasy world of climate science) as “accuracy”.

In fact these are all quite distinct concepts, not to mention different in how they help to try to determine exactly what has been measured and how anyone should have confidence that the result given is meaningful and properly expressed.

Metrology is an entire discipline in and of itself…as is statistical analysis.

Neither of these fields of study has ever been discovered to exist by any of the alarmists, let alone incorporated into the malarkey they (seemingly reflexively) spewed forth.

My frequent thoughts, exactly, but you are much more eloquent than I could ever hope to be.

Looking at Willis’ error bars in his digitised graph, you can eyeball the 2010 error bar and see that it’s roughly 1/6 of the full reading. This is in keeping with the von Schuckmann 2010 and 2012 +/- errors stated in my comment above.

So it also bears out my point that the 0.003°C is related to the precision of the Argo float thermometers and not the accuracy of the modelled sum of gridded areas. The precision of the Argo float thermometer would’ve been calibrated in the laboratory before deployment. This would explain such fine precision as being credible whereas 0.003°C is indeed not credible for the OHC or its ocean temperature derivative that Willis derived.

Any measurement, as well as any calculation derived from any measurement, can only legitimately be reported to the same number of significant figures as the least certain element of the calculation.

People that work in labs know how difficult it is to accurately measure even a small vessel of water to within one tenth of a degree.

The resolution of the device simply gives the maximum theoretical precision, and the calibration standard the maximum theoretical possible accuracy.

These guys think measuring random places in the ocean a few times a month lets them translate this theoretical value (if one wants to be generous and assume that the manufacturer’s supplied info is true without fail and in every case) of the sensor in the ARGO float, to the accuracy of their calculation for the heat content of the entire ocean and how this is changing over the years.

No explanation for how they have the same size error bar in the year 2000, prior to a single ARGO float being deployed, as they show in 2010, when the project had only recently reached an operational number of devices deployed.

And not much different (in absolute terms) than decades prior to that when virtually no measurement of deep water had ever been made, and electronic temperature sensors had not even been invented yet.

On top of that…it needs to be mentioned in every discussion, that all of the results they get are at several stages adjusted and “corrected”, and made to match the measured TOA energy imbalances between upwelling energy and incoming solar energy.

Just what is the “right” temp for the oceans? We are in an ice age so I would guess that we are running a little cold.

I would like things to be a little warmer as our governor here in NY is working hard to destroy our energy infrastructure and I’ll be freezing to death if the climate doesn’t warm a bit.

I’d like to know how many HBPS (Hiroshima bombs per second) are “going off” when the Fleet of Elon’s Teslas are charging/discharging every day.

Need some balancing perspective here.

How many Tesla cars have been sold in the US so far? 2012-2020 over 890,000. Compare to just Ford F-series pickup truck sales per year:

2019 1,000,000 or so…

2018 909,330

2017 896,764

2016 820,799

2015 780,354

2014 753,851

2013 763,402

2012 645,316

The Ford F-Series outsells all makes and models of EVs combined in the US by a wide margin.

Why is market capitalization of Tesla greater than Ford and GM combined? Market expectations for Tesla must include not only huge growth in car/truck sales but also other things not yet identified. Or maybe Tesla stock is just over priced.

“Oceans are warming at the same rate as if five Hiroshima bombs were dropped in every second”

Jan 2014 Skeptical Science:

“… in 2013 ocean warming rapidly escalated, rising to a rate in excess of 12 Hiroshima bombs per second”

https://skepticalscience.com/The-Oceans-Warmed-up-Sharply-in-2013-We-are-Going-to-Need-a-Bigger-Graph.html

Well that’s the first thing you said that’s not true. It is quite believable the hysteria surrounding this.

Oh we forgot !!!!

SUB-SEA VOLCANOES

Shhh! Don’t introduce nasty old facts……

All those Hiroshimas seem to be causing nuclear winter in BC.

Every second day there’s a fresh layer of fallout needing to be plowed and shovelled.

It’s just about time to see a travel agent about a trip to somewhere warmer. Maybe Montreal.

I just received the Hiroshima bomb analogy from the ‘scholarly’ Sigma Xi Smart Briefs, relying on a second hand source. Who in academia are teaching that second-hand sources are authorities? Thought that we were relying on climate scientists who rely on second-hand data?

https://edition.cnn.com/2020/01/13/world/climate-change-oceans-heat-intl/index.html

Everybody signs on ’cause it’s publish or perish… and then when one of the others does a paper… the others jump on it too…

…only problem I have with Argo…each one floats around in the same glob of water

Argo in situ calibration experiments reveal measurement errors of about ±0.6 C.

Hadfield, et al., (2007), J. Geophys. Res., 112, C01009, doi:10.1029/2006JC003825

At WUWT a few years ago, usurbrain posted a very comprehensive criticism of the accuracy of argo floats.

The entire paper is grounded in false precision.

Just like the rest of consensus climatology. It’s all a continuing and massive scandal.

Thanks, Pat, always good to hear from you. I hadn’t seen that study. From the abstract:

Don’t know whether to laugh or cry …

w.

Which means they don’t know if the oceans have warmed or cooled, period.

The way I look at it, Jeff, given atmospheric temperatures are generally increasing, a process that is influenced minimally by increased levels of CO2, it seems safe to extrapolate that the upper levels of the oceans are warmer than before and thus injecting massive amounts of heat into the atmosphere.

Could be Chad. But the paper reviewed by Willis doesn’t demonstrate it.

I don’t think we really know how much “the Earth has warmed” in any given time frame.

Thanks, Willis. It’s always a pleasure to read your work. It’s never anything short of analytically sound and creative.

“Don’t know whether to laugh or cry …”

I am gonna stick with anger, personally… tempered with an overwhelming and deep-seated fatalism, and rounded over time by a raging river of humor.

“Argo in situ calibration experiments reveal measurement errors of about ±0.6 C.”

….that’s all of global warming

Exactly right, Latitude. And the land-station data are no better.

Except for the CRN data, which are of limited coverage (the US) and date only from 2003.

Yes, the USCRN is gold standard.

And the CONUS trend based on USCRN is 0.12 C/decade higher than that of ClimDiv (a very significant divergence).

I couldn’t find any reference to that figure in that paper?

I did find this “The temperatures in the Argo profiles are accurate to ± 0.002°C”

http://www.argo.ucsd.edu/FAQ.html

Believe me, I’m not a warmist, but where did you get that figure from??

From this and Figure 2, we conclude that the Argo float measured increase in global ocean temperature is 0.08C +/- 0.6C (face palm)

‘Science’ by Kevin Trenberth and Michael Mann……

I see, thanks for that!

I knew a university type that wrote a paper with a long title.

Then the title was changed, and a bit more, and the thing was published in a different journal. Repeat. Again, and again.

At an end-of-year party the grad students gave each of the faculty a “funny” sort of gift. One person was given rose-colored glasses.

The “change-the-title” person was given an expanded resume with each of his publication titles permutated in every manner possible.

This made for a large document.

I, of course, had nothing to do with any of this.

@Willis

http://159.226.119.60/cheng/images_files/OHC2000m_annual_timeseries.txt

Thanks, Krishna, appreciated. Curiously, that one goes back to 1940 but the one in their study starts in 1958 …

w.

@Willis

their study starts in 1958 …

So, not all data have an ARGO origin…

Ah, splicing. That’s where Dr. Mann shines.

I can hardly believe they can measure it either… but then we have to explain this regular increase. It should be a mess…

Exactly my thoughts. A surprisingly noiseless plot even for 100% coverage of a uniform ocean. Surely an El Nino year affects the average temperature by a hundredth of a degree, let alone the average of the poor coverage – or the extremely poor coverage pre-Argo.

SST has a very small effect when you are covering down to 2km.

There are changes to deeper currents. The Humboldt Current is affected down to 600 m. There is a half-degree effect at the surface, which would be bigger than the plot for the average down to 2000 m. My comment is more about the effect on limited sampling even if the actual average remained the same, e.g. a shift of warmer water (0.01°C) to where it is sampled.

Excellent!

I am always amazed they think numbers like 0.003C is an accurate variable range when the equipment used to gather data doesn’t even remotely reach that level of accuracy in the first place.

Interesting, because in one paper the accuracy requirement for the Argo floats is stated to be 0.005 C, which is larger than the 0.003 C they claim to measure year over year…

https://www.terrapub.co.jp/journals/JO/pdf/6002/60020253.pdf

I can totally see how they could convince themselves that, by using the power of averaging, they could produce such accuracies. The technique works well in some circumstances, in the presence of truly random noise. The problem is that nature usually does not throw truly random noise at us. Nature likes to throw red noise at us.

Red noise has decreasing energy as frequency increases. White (truly random) noise has equal energy at all frequencies. That means the energy of white noise is infinite, clearly impossible.

Because of the low frequencies of red noise, it tends to look like a slow drift. For that reason, averaging a signal containing red noise does not, at all, improve accuracy.

The problem with statistics is that most scientists do not understand the assumptions they are making when they apply statistics. I have a hint for them: the ocean is not remotely similar to a vat of Guinness.
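Here’s a minimal sketch of why correlated (“red”) noise undermines averaging. The comment doesn’t specify a noise model, so as an assumption this uses AR(1) noise, where each reading is correlated with the previous one by a factor phi (phi = 0 is white noise; phi near 1 is strongly red). The hypothetical function below computes the effective number of independent samples in an average. The uncertainty of the mean then scales as 1/√n_eff rather than 1/√n.

```python
def effective_n(n, phi):
    """Effective number of independent samples in the mean of n readings of
    stationary AR(1) noise with lag-1 autocorrelation phi (0 <= phi < 1)."""
    # Var(mean) = (s^2 / n^2) * [n + 2 * sum_{k=1}^{n-1} (n - k) * phi**k],
    # where s^2 is the variance of a single reading; n_eff = s^2 / Var(mean).
    total = n + 2 * sum((n - k) * phi ** k for k in range(1, n))
    return n * n / total

for phi in (0.0, 0.9, 0.99):
    print(f"phi={phi}: 1000 readings behave like {effective_n(1000, phi):.1f} independent ones")
```

With phi = 0.9, a thousand readings are worth only about 53 independent ones, and with phi = 0.99 fewer than six. In the limit of a pure random walk (phi = 1), the process isn’t stationary and the average never settles down at all.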

Ha! Averaging works just fine when I’m grinding a crankshaft. I just use a wooden meter stick and measure 50K times… all the accuracy I want, great tolerances.

(Three econometricians) encounter a deer, and the first econometrician takes his shot and misses one meter to the left. Then the second takes his shot and misses one meter to the right, whereupon the third begins jumping up and down and calls out excitedly, “We got it! We got it!”

You must be the guy who did the last overhaul on my Jaguar E-Type.

You don’t mention if that was good or bad.

There is one overhaul item that is different than on 99.9% of other cars: valve lash.

Of all the car servicing disasters I have heard of, the worst was for a Jag E-Type. It seems that there are overpowering temptations to take shortcuts that don’t turn out well.

I’m guessing Ron isn’t a satisfied customer.

The power of the Central Limit Theorem!!

Even if it was white noise, that would only matter if they were repeatedly measuring the same piece of water. Measuring a second piece of water, hundreds of miles away, tells you nothing new about the piece of water right in front of you.

Hi Willis! Why so many names on the paper? They’re in it to get a paper count: it’s like beach-bums showing off their pecs: it’s a confirmation- in their eyes- that they are the best. I have a thing, never believe the 5-star on Amazon.

It is an LPU (Least Publishable Unit) exemplar. i.e. a confected, sexed up document aimed at a) publicity, b) some rationale for funding and c) free sexed up content bribes to the backside sniffers in the msm.

@Willis PS

monthly

http://159.226.119.60/cheng/images_files/OHC2000m_monthly_timeseries.txt

Outstanding, thanks.

w.

Willis,

Go to Le Quéré et al. 2018, the annual ‘bible’ paper on the Global Carbon Budget, which I have been studying, particularly to gauge the error margin for the oceans.

There are 76 Co-authors (!) and it must be the holy grail for mainstream climate scientists.

Thank you Willis. Great conversion to reality mode.

Highly related, also, thanks Anthony et al., for getting the ENSO meter back on the sidebar.

Brilliant. Thank you. I saw this splashed all over the front page of the Grauniad (no, I didn’t buy it) and found it hard to tie up with the recent peer-reviewed publications reproduced over at Pierre Gosselin’s brilliant site (No Tricks Zone). You have clarified the situation.

http://www.woodfortrees.org/plot/hadsst3gl/from:1964/plot/hadsst3nh/from:1964/plot/hadsst3sh/from:1964/plot/hadsst3gl/from:1964/trend

Willis, thanks for the post. I agree with your comments about their report. There are some of us who think the reason for the difference between NH SST and SH SST is pollution.

The reviewers need to be disqualified from any future vetting.

Willis thanks for another lesson!

The magnitude of our oceans still challenges my little brain.

Mac

I hope I’m alive when the world wakes up to the ginormous scientific fraud that is being perpetrated by Michael “Piltdown” Mann et al.

Thanks for putting this massively hyped paper into context. It’s all over the broadsheets in the U.K.

Perhaps you could clarify one thing that bothers me on OHC? The common claim in the press releases for papers like this is that “90% of warming due to increases in GHG is in the oceans”, yet the oceans only cover ca. 70% of the earth’s surface.

At the equator this rises to ca. 79%, and the DLW, due to higher air temps, will be greater there than at other latitudes. Is that sufficient to support the ‘90%’ claim, or is the figure simply alarmist padding?

James, we don’t actually know how much “warming due to increases in GHG” there is. It might actually be zero. The claim that 90% of it is “in the oceans” is simply not supportable.

w.

They say 90%; that is Trenberth’s “missing heat.”

They “know” the heat is there because their (failed) models say it must be. They cannot find it in the surface record, so they hide it in the deep ocean where no one can check their work.

In reality the missing heat is in their heads. That is why they keep exploding.

If 90% of the heat is in the oceans, and the result is they have warmed by a tenth of a degree in sixty years, can we call it a day and cancel the ‘climate crisis’?

Seems reasonable to me.

Obviously you haven’t heard… “60% of the time, it works every time….”

90% of statistics are wrong, and the other half are mostly just made up.

So here’s the hot news. According to these folks, over the last sixty years, the ocean has warmed a little over a tenth of one measly degree.

I know you’re not trying to be funny, but worrying about a + 0.12 K change since 1960 kinda makes a joke of worrying about the “hidden” warming.

“I know you’re not trying to be funny, but worrying about a + 0.12 K change since 1960 kinda makes a joke of worrying about the “hidden” warming.”

Temperature isn’t heat content.

Mass and specific heat come into it.

Try working out what that 0.12K delta would look like if it were applied to the atmosphere.

You’ll need the fact that the oceans have a mass 250x that of the atmosphere and that the specific heat of water is 4x that of air.
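As a rough sketch of that exercise, the snippet below uses the comment’s own ratios (ocean mass about 250 times the atmosphere’s, specific heat of water about 4 times that of air) and conserves energy: m_o·c_w·ΔT_o = m_a·c_a·ΔT_a. It assumes the ratios apply to the whole mass that warmed by 0.12 K, which is a simplification; the function name is illustrative.

```python
def equivalent_air_warming(delta_t_ocean, mass_ratio=250.0, heat_capacity_ratio=4.0):
    """Temperature change if the ocean's heat gain went into the atmosphere instead.

    Energy conservation: m_o * c_w * dT_o = m_a * c_a * dT_a, so
    dT_a = dT_o * (m_o / m_a) * (c_w / c_a).
    Default ratios are the rough figures quoted in the comment (assumed).
    """
    return delta_t_ocean * mass_ratio * heat_capacity_ratio


if __name__ == "__main__":
    print(f"{equivalent_air_warming(0.12):.0f} K")  # prints 120 K
```

With those ratios the same energy would warm the atmosphere by roughly 120 K, which is the contrast the comment is pointing at.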

I was thinking that one could make quite a bit of money by betting people that they could not tell which bowl of water sitting in front of them was warmer…iffen the difference was even 1°, let alone one tenth of that amount.

How many people could tell when the room they were sitting in had warmed by a tenth of a degree, or even one degree?

Typically a room has to change by that amount (~1° C) before a wall thermostat kicks on or off, simply to avoid short cycling of the (air conditioning or heating) equipment being regulated.

Put another way…even in a room which is climate controlled by a properly operating thermostat, the air temperature will vary by at least one or two degrees (F, or about 1°C) between when the thing kicks on and when it kicks off.

This is the whole reason for reporting a temperature change in the ridiculous unit of a zettajoule to begin with, and why published MSM accounts of such a study are then helpfully translated into the readily relatable (to the average person in one’s daily life) unit known as one Hiroshima.

They could relate in terms of units such as “the amount of energy delivered by the Sun to the Earth in a day”…but that would make the number appear as meaninglessly tiny as it really is.

Try working out what that 0.12K delta would look like if it were applied to the atmosphere.

I don’t care if the 0.12K delta occurred for a million gigatons of mass, it would raise the temp of a flea, guess what, 0.12K. You were trying to make some kind of “point”, and you blew it.

And to add, all your “point” demonstrates is the obvious — the oceans have a huge thermal inertia and can absorb/release large amounts of energy with only small temperature changes. That’s a very good thing because it greatly decreases temp changes due to varying energy inputs.

Thanks to Krishna, I can now demonstrate just how accurate my hand digitization of the data graph actually was. Here’s the comparison …

RMS error of the digitizing is 1.1 ZJ.

w.
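For anyone wanting to repeat that kind of check, a root-mean-square error is the square root of the mean squared difference between the digitized values and the published ones, matched year by year. A minimal sketch; the function name and the sample numbers in the usage line are hypothetical, not the data behind the comparison.

```python
import math


def rms_error(digitized, published):
    """Root-mean-square difference between two equal-length series (e.g. ZJ by year)."""
    if len(digitized) != len(published) or not digitized:
        raise ValueError("series must be non-empty and the same length")
    squared = [(d - p) ** 2 for d, p in zip(digitized, published)]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(round(rms_error([10.0, 20.5, 31.0], [11.0, 20.0, 30.0]), 3))  # 0.866
```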

Very nice work, Willis!

Do you use WebPlotDigitizer?

Or some other tool?

Or do you just pull up the image in MS Paint or similar, and enter the pixel values in a spreadsheet, and then convert them yourself?

I’m running a Mac, and I use “Graphclick” for digitizing.

w.

You’re right Willis it’s nonsense.

The fact that the atmosphere cannot heat the ocean deserves a mention in my opinion. Heat flows from the ocean to the atmosphere and is then lost to space, never the other way round.

It’s the sun, stupid 😀 😀

As always said 😀

Good comment, John.

“the atmosphere cannot heat the ocean …”

True, however, it can, and does, slow its cooling.

Just like it does over land.

It’s called the GHE, caused by GHGs.

But we don’t know if any warming or cooling is human caused. Their margin of error means they don’t even know if the oceans are warming or cooling.

Jeff Alberts

Their margin of error for the data shown in the first chart in Willis’s post (their Fig. 1) is stated as “… 228 ± 9 ZJ above the 1981–2010 average.” Their best estimate far exceeds the error margin.
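To put that margin in temperature terms, a rough conversion divides energy by the heat capacity of the upper 2000 m, ΔT = E / (ρVc). This sketch assumes the 651,000,000,000,000,000 m³ volume quoted elsewhere in this thread, a seawater density of 1025 kg/m³ and a specific heat of 3990 J/(kg·K); the last two are typical values, not figures from the paper.

```python
ZJ = 1e21  # joules per zettajoule


def zj_to_kelvin(energy_zj, volume_m3=6.51e17, density=1025.0, specific_heat=3990.0):
    """Convert a heat-content anomaly (ZJ) into a mean temperature change (K).

    Assumed defaults: top-2000 m volume as quoted in the thread,
    typical seawater density (kg/m^3) and specific heat (J/(kg*K)).
    """
    heat_capacity = volume_m3 * density * specific_heat  # J per K for the whole layer
    return energy_zj * ZJ / heat_capacity


if __name__ == "__main__":
    print(f"228 ZJ -> {zj_to_kelvin(228):.3f} K")
    print(f"  9 ZJ -> {zj_to_kelvin(9):.4f} K")
```

With those assumptions, 228 ZJ comes to roughly 0.09 K and the ±9 ZJ margin to roughly ±0.003 K.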

“Their margin of error means they don’t even know if the oceans are warming or cooling.”

I think this is the most important point to come out of this article. The alarmists are making exaggerated claims based on what? Based on a margin of error in their measurements of 0.6C!

See Connolly and Connolly radiosonde data. Ain’t no greenhouse effect bro.

I don’t believe that heat flow from the atmosphere to the oceans can be ruled out, but the issue here is the vast difference in the thermal capacity of air and water. If there was a situation where the atmosphere was warmer than the oceans, so little heat would flow that its effect on the ocean temperature would be very small.

Why not?

Uncertainty is one of those concepts that alarmists can’t understand, for if they did, they would know with absolute certainty that they can only be wrong. The most obvious example is calling an ECS with +/- 50% uncertainty ‘settled’ where even the lower bound is larger than COE can reasonably support.

Great article, as usual! I look forward to your down-to-earth explanations and analysis for those of us who have some science and/or engineering background, but are not experts in the field of weather or climate and have had reservations about the “certainty” some have on how the complex systems of our planet work.

I was fascinated with the whole Argo project when it started up years ago, but noticed that when its data didn’t immediately confirm rapid “global warming” it dropped out of the news. Thanks again for giving us some perspective on the actual magnitude of trends in our ocean systems.

Willis,

At their provided link:

http://159.226.119.60/cheng/

I did find this data in .txt tabular form here:

http://159.226.119.60/cheng/images_files/OHC2000m_annual_timeseries.txt

http://159.226.119.60/cheng/images_files/OHC2000m_monthly_timeseries.txt
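A sketch of how those files might be read in Python. The thread doesn’t describe their layout, so this assumes plain text with whitespace-separated columns, time (year or decimal year) first and the OHC value second, and it skips header lines or anything else that doesn’t parse as numbers; check the actual files (they may use other separators or extra columns) before relying on it. The function names are hypothetical.

```python
import urllib.request

ANNUAL_URL = "http://159.226.119.60/cheng/images_files/OHC2000m_annual_timeseries.txt"


def parse_timeseries(text):
    """Return (time, value) pairs from lines whose first two fields are numeric.

    Assumption: whitespace-separated columns, time first, OHC second.
    Lines that don't match (headers, comments, blanks) are skipped.
    """
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue
        try:
            rows.append((float(fields[0]), float(fields[1])))
        except ValueError:
            continue  # header or comment line
    return rows


def fetch_timeseries(url=ANNUAL_URL):
    """Download and parse one of the text files (requires network access)."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return parse_timeseries(response.read().decode("utf-8", errors="replace"))


if __name__ == "__main__":
    sample = "Year OHC\n1960 -50.1\n1961 -48.7\n"  # made-up sample, not real data
    print(parse_timeseries(sample))
```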

============================

My comments:

Their paper states, “The OHC values (for the upper 2000 m) were obtained from the Institute of Atmospheric Physics (IAP) ocean analysis (see “Data and methods” section, below), which uses a relatively new method to treat data sparseness ….”

IOW, they made up a lot of fake data to infill as they liked.

To wit, from their Methods: “Model simulations were used to guide the gap-filling method from point measurements to the grid, while sampling error was estimated by sub-sampling the Argo data at the locations of the earlier observations (a full description of the method can be found in Cheng et al., 2017).”

Mann and Trenberth were likely recruited and brought on board during manuscript drafting by Dr. Fasullo. Mann was listed as senior author, but that was just more pandering to help get the paper published in a high-impact Western journal. They might as well have put Chinese President Xi as senior author.

What you have to love about these lying perps is the way they ended the manuscript:

“It is important to note that ocean warming will continue even if the global mean surface air temperature can be stabilized at or below 2°C (the key policy target of the Paris Agreement) in the 21st century (Cheng et al., 2019a; IPCC, 2019), due to the long-term commitment of ocean changes driven by GHGs. Here, the term “commitment” means that the ocean (and some other components in the Earth system, such as the large ice sheets) are slow to respond and equilibrate, and will continue to change even after radiative forcing stabilizes (Abram et al., 2019). However, the rates and magnitudes of ocean warming and the associated risks will be smaller with lower GHG emissions (Cheng et al., 2019a; IPCC, 2019). Hence, the rate of increase can be reduced by appropriate human actions that lead to rapid reductions in GHG emissions (Cheng et al., 2019a; IPCC, 2019), thereby reducing the risks to humans and other life on Earth.”

What a stinkin’, heapin’ load of dog feces. “Reducing risks to humans and other life?” They might as well ask for offerings to volcano gods and conjure up voodoo incantations and spells. They have to reveal an agenda and appeal to the IPCC to infill their conclusions with junk science claims.

Maybe someone should point out to Mann, Trenberth, and Fasullo that this Chinese-origin paper (sponsored by the “Chinese Academy of Sciences”, the “State Key Laboratory of Satellite Ocean Environment Dynamics, Second Institute of Oceanography, Hangzhou”, and the “Ministry of Natural Resources of China, Beijing”) is from the largest global anthro-CO2 emitter, a nation with no reduction INDCs under Paris COP21, which makes this line laughable propaganda: “reduced by appropriate human actions that lead to rapid reductions in GHG emissions.” The Chinese have no intention of making “rapid reductions”, and those 3 TDS-afflicted stooges know that.

These 3 Stooges (Mann, Trenberth, Fasullo) just let themselves be the useful idiots for the Chinese Communist Party and their economic war on the West and the UN’s dedicated drive for global socialism.

Thanks, Joel. Formatting fixed.

w.

Voodoo incantations are more reliable than the fantasy of measuring temperature to three decimal places of accuracy when the measuring device only measures two decimal places. At least voodoo might be correct occasionally.

Here, the term “commitment” means that the ocean (and some other components in the Earth system, such as the large ice sheets) are slow to respond and equilibrate, and will continue to change even after radiative forcing stabilizes (Abram et al., 2019).

Without any “forcing” (i.e. radiative imbalance) the massive heat reservoir of the oceans will continue to warm.

Wow, they have officially abandoned one of the axioms of physics: the conservation of energy.

Now that’s what I call “missing heat” !!

greg

Amazing that they’ve fallen for the naïve error of believing in thermal inertia, in the same way that a heavy rolling object has kinetic inertia. There is no thermal inertia. Heat input stops, heating stops. Thermal “inertia” is used as a metaphor for massive heat capacity of oceans, but it indeed does not exist.

Now they’re on record as believing in magic.

Useful (well compensated) idiots?

Damn you and your facts Willis, a whole lot of time and money went into making that graph look scary.

Yes, conversion to reality mode is much appreciated.

I’m quite sure CNN and LAT will be telling us what a zettajoule is any time now. Not.

The fact that they go back 60 years to get such a small result is indicative of the problem with Ocean Heat Content. Before ARGO the data was laughably unreliable: canvas buckets, engine cooling-water intakes at anywhere from two to ten meters depth, and almost nothing from the entire Southern Hemisphere, where most of the ocean is found. ARGO data itself has been adjusted as well.

Just Bad Science…

“Just Bad Science…”

The data isn’t “bad”. It is just data. Bad is the use of it without keeping the limitations in mind.

Thanks for the expose’, Willis!

RE: “….Kevin Trenberth and Michael Mann. Not sure why they’re along for the ride…”

There seems to be a persistent correlation between these ‘authors’ and deliberate attempts to mislead and scare people into participation in their zeta-deceits whilst masking their +/-0.001 truth content.

I smell fraud/tampering. The line is way too linear.

The fraud is in the extra data they created using models.

“Model simulations were used to guide the gap-filling method from point measurements to the grid, while sampling error was estimated by sub-sampling the Argo data at the locations of the earlier observations (a full description of the method can be found in Cheng et al., 2017).”

https://link.springer.com/content/pdf/10.1007/s00376-020-9283-7.pdf

Figure 3 of the paper shows trends amongst the Indian, Atlantic, Southern, and Pacific Oceans to a depth of 2,000 meters. Except for the Southern Ocean, the graphic appears to show significant areas that are cooling. And, there are large areas of the Pacific showing no change at all. So what explains these anomalies? And, is a maximum depth of 2,000 meters valid inasmuch as the ocean is much deeper than that in certain locations?

The paper claims to have data measurements below 2000 m after 1991.

“The deep OHC change below 2000 m was extended to 1960 by assuming a zero heating rate before 1991, consistent with Rhein et al., (2013) and Cheng et al., (2017). The new results indicate a total full-depth ocean warming of 370 ± 81 ZJ (equal to a net heating of 0.38 ± 0.08 W m−2 over the global surface) from 1960 to 2019, with contributions of 41.0%, 21.5%, 28.6% and 8.9% from the 0–300-m, 300–700-m, 700–2000-m, and below-2000-m layers, respectively.”
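The conversion from total energy to a mean heating rate in that quote can be checked by spreading the energy over Earth’s whole surface (about 5.1E+14 m²) and over the period in seconds. A sketch; treating 1960 to 2019 as 60 years of 365.25 days is an assumption about how the period was counted.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
EARTH_SURFACE_M2 = 5.1e14  # approximate total surface area of Earth


def mean_heating_w_per_m2(energy_zj, years, area_m2=EARTH_SURFACE_M2):
    """Average flux (W/m^2) that would deliver energy_zj over `years`."""
    return energy_zj * 1e21 / (years * SECONDS_PER_YEAR * area_m2)


if __name__ == "__main__":
    central = mean_heating_w_per_m2(370, 60)
    margin = mean_heating_w_per_m2(81, 60)
    print(f"{central:.2f} +/- {margin:.2f} W/m^2")  # 0.38 +/- 0.08 W/m^2
```

Under that assumption the script reproduces the 0.38 ± 0.08 W m−2 figure.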

IIRC, HadSST3 has ±0.03°C uncertainty, so these guys claim 10X better….

However, the rates and magnitudes of ocean warming and the associated risks will be smaller with lower GHG emissions

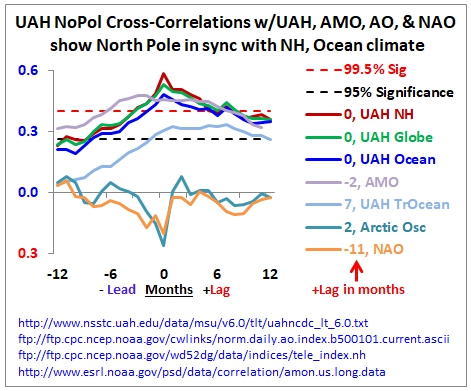

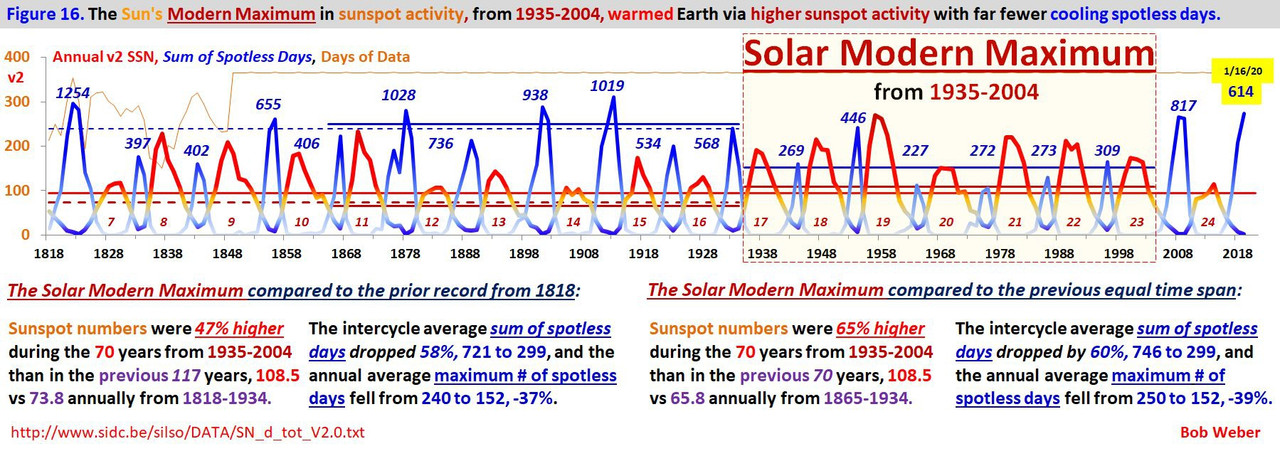

Climatologists just don’t know that positive MEI, not CO2 or GHGs, drives SST growth:

The ‘pros’ just don’t seem to realize CO2 follows Nino34, MEI, OLR:

Human GHGs don’t change the weather or climate. ML CO2 naturally follows the climate.

The Argo buoys may well take measurements of the top 2,000 metres of the Earth’s oceans, but these oceans average some 5,000 metres in depth, so we basically know diddlysquat about 60% of the overall oceanic volume.

Not to worry. They made up data to cover that area and show it in their charts starting in 1991 even before Argo.

We must all assume

the depths filled with missing heat

boil bottom feeders

How can this be published? The ‘data’ for the most part is made up, and uncertainties are huge. I doubt the temperature ‘data’ prior to 1978 is knowable to ±1°C. Yet they show it 50 times more precisely?

For the technically obsessed of us, how did you digitize the graph, on screen or with an actual digitizer?

Love your posts. You are a gifted creative writer and a supurb technical writer. Rare combination. We are grateful indeed.

Thanks for the kind words, Tom. I not only write the posts, I do the scientific research for them as well. Regarding digitization, I’m running a Mac, and I use “Graphclick” for digitizing.

w.

“supurb technical writer”

Indeboobably.

OT, but Australia has reduced its per capita CO2 emissions by some 40% since 1990

http://joannenova.com.au/2020/01/global-patsy-since-1990-each-australian-have-already-cut-co2-emissions-by-40/

Yes, the Australians only breathe out 60 times for every 100 times they breathe in.

That’s coffee all over my keyboard.

Recent bush fires have erased that and then some. Not that the CO2 matters.

But the rains will surely return to Australia, as the next La Niña will be a monster, just as California’s and Texas’s perma-drought claims of 7 years ago were erased. If the climate change socialists weren’t lyin’, they wouldn’t be tryin’.

Estimates of CO2 from fires – 400 megatonnes, likely a high estimate. That would require a fuel load of 44 tonnes/hectare, which is right at the top of the possible fuel load per table 9.2 here.

Aussie emissions 2018 – 560 megatonnes.

So … big, but not bigger than the Australian emissions.

w.

Willis,

You did not plot ocean temperature in degrees C, but variation in temperature from the average level in degrees C. I know that is what you meant, but it can be confusing to some.

We had the same news flash about a year ago, also with a conversion to joules to make the number bigger.

“we’re supposed to believe that they can measure the top 651,000,000,000,000,000 cubic metres of the ocean to within ±0.003°C”

Sounds easy. Australia’s BOM thinks it can “correct” daily temperatures at a weather station in 1941 using the daily data from 4 “surrounding stations” located 220, 445, 621 and 775 km away, with totally different geography (a coastal station versus 4 inland ones), that only have daily temperature records from the late 1950s to the early 70s.

Now that’s a neat trick.

Thanks for preparing this post, Willis. When I saw a news headline for the paper, I thought, Oh, no. Not again.

The last post I prepared on the same topic was about a year ago:

https://wattsupwiththat.com/2019/01/23/deep-ocean-warming-in-degrees-c/

For anyone interested, the cross post at my blog is here, too:

https://bobtisdale.wordpress.com/2019/01/23/deep-ocean-warming-in-degrees-c/

Regards,

Bob

And they spend how many resources (human and material) to get these results?

According to local press, the EU commissioner for «whatever» has just announced 100.000.000.000 euros «to stop CO2 and protect natural resources».

This is getting insane…

Getting?

Sorry, English is not my «first language»…

That’s a joke implying that, in this case, it has been crazy for a long time.

Considering only short wave radiation can warm the ocean, any ocean heating is caused by the sun.

That places a heavy burden on those saying surface heating is due to anything other than the sun, as they must now take their zettajoules out of any warming calculations they attribute to greenhouse gases.

Scott, longwave does indeed warm the oceans. See my post “Radiating The Ocean” for reasons why.

w.

Scott:

Be sure to read the many comments on Willis’ 2011 post, challenging his claim that “longwave does indeed warm the oceans.” This ex cathedra pronouncement is made by one who believes that there’s no difference between the LW response of solid earth surfaces and that of water–which evaporates.

The “sky dragon slayers” claim that a warmer ocean can’t be warmed by longwave infrared radiation from CO2 in a cooler atmosphere because they confusedly imagine that the 2nd Law of Thermodynamics prohibits it. They are wrong.

Alternately, it is occasionally claimed that longwave infrared (LWIR) radiation doesn’t warm the ocean because it is absorbed at the surface and just causes evaporation. That claim is also false, but less obviously so. That appears to be the fallacy which has misled you, 1sky1, so I’ll address that one.

A single photon of 15 µm LWIR radiation contains only 1.33E-20 J of energy.†

To evaporate a single water molecule, from a starting temperature of 25°C, requires 7.69E-20 J of energy.‡

That means that evaporating a single molecule of liquid water at 25°C would require the energy of nearly six 15 µm LWIR photons, all delivered to that one molecule.

By contrast, raising the temperature of one molecule of water by 1°C takes only about 1.25E-22 J, less than 1% of the energy of a single 15 µm photon.

So a single absorbed photon falls short of evaporating a molecule, and water can absorb “downwelling” LWIR radiation without evaporating.

– – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – –

† The energy in joules of one photon of light of wavelength λ is hc/λ, where at 15 µm:

h = Planck’s constant, 6.626E-34 J·s

c = velocity of light in a vacuum, 3.00E+8 m/s

hc = 6.626E-34 × 3.00E+8 = 1.988E-25 J·m

λ = 15 µm = 15E-6 m

hc/λ = 6.626E-34 × 3.00E+8 / 15E-6 = 1.33E-20 J

So, one 15 µm photon contains 1.33E-20 J of energy.

‡ Water has molecular weight 1 + 1 + 16 = 18.

So one mole of water weighs 18 grams = Avogadro’s number of molecules, 6.0221409E+23.

So, one gram of water is 6.0221409E+23 / 18 molecules.

540 calories are required to evaporate one gram of 100°C water, plus one calorie per degree to raise it to 100°C from its starting temperature.

So if it starts at 25°C, 540+75 = 615 calories are needed.

So one molecule requires 615 / (6.0221409E+23 / 18) = 1.83822E-20 calories to evaporate it.

1 Joule = 0.239006 calories, so

one molecule requires 1.83822E-20 calories / (0.239006 calories/joule) = 7.69109E-20 J to evaporate it.
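The footnote arithmetic can be re-run in a few lines. Evaluating hc/λ with λ in metres gives about 1.33E-20 J per 15 µm photon, and the per-molecule evaporation energy (using the footnote’s 615 calories per gram and its calorie conversion factor) comes out at about 7.69E-20 J, a ratio of roughly six photons per evaporated molecule. The function names are illustrative.

```python
PLANCK_H = 6.626e-34       # J*s
SPEED_OF_LIGHT = 3.00e8    # m/s
AVOGADRO = 6.0221409e23    # molecules per mole
CAL_PER_JOULE = 0.239006   # conversion factor used in the footnote
WATER_G_PER_MOL = 18.0


def photon_energy_j(wavelength_m):
    """Energy of one photon, E = h*c/lambda."""
    return PLANCK_H * SPEED_OF_LIGHT / wavelength_m


def evaporation_energy_per_molecule_j(start_c=25.0, latent_cal_per_g=540.0):
    """Heat one molecule from start_c to 100 C (1 cal/g/C), then evaporate it."""
    cal_per_gram = latent_cal_per_g + (100.0 - start_c)
    cal_per_molecule = cal_per_gram * WATER_G_PER_MOL / AVOGADRO
    return cal_per_molecule / CAL_PER_JOULE


if __name__ == "__main__":
    e_photon = photon_energy_j(15e-6)
    e_evap = evaporation_energy_per_molecule_j()
    print(f"photon: {e_photon:.3e} J, evaporation: {e_evap:.3e} J, "
          f"ratio: {e_evap / e_photon:.1f}")
```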

Your theoretical calculations do not alter geophysical realities. Indeed, water need not entirely evaporate upon being irradiated by LWIR. Nevertheless, since practically all such radiation is absorbed within a dozen microns of the surface, it’s only the skin that is warmed directly and profoundly, thereby decreasing its density strongly and producing an adjacent Knudsen layer in the air. That development makes it very difficult to mix heat into any subsurface layer, let alone the top 2000 m of the ocean. It’s the warming of that layer that is at issue here.

BTW, the observation-based maps of actual surface fluxes of Q, linked in my comment below, are found on pp. 42-43.

The point is that since it takes a measurable amount of time for a single molecule of water to absorb enough photons to increase its chances of evaporating, that is enough time for that water molecule to transfer some or all of the energy absorbed to other molecules of water.

1sky1, neither you nor anyone else has been able to refute the four arguments I put forward in Radiating The Ocean.

Your current claim is that the LW is all absorbed in the top dozen microns of the ocean and it cannot mix with the rest of the ocean, viz:

Average downwelling LW at the ocean surface is on the order of 360 W/m2, which is 360 joules/second/m2. A micron is a thousandth of a mm. One mm over an area of one square meter is one kg. One micron over one square meter is one gram. 12 microns is 12 grams.

It takes 4 joules to raise one gram by 1°C. We’re warming 12 grams, so it takes 48 joules to warm the surface layer by 1°C.

The water is getting 360 joules per second. That would heat your 12 microns of water by 7.5°C/second. If the 12-micron layer of surface ocean starts at say 25°C, it would start boiling in ten seconds …

Nice try, though. Vanna, what kind of wonderful prizes do we have for our unsuccessful contestants?

w.
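The skin-layer arithmetic above can be written out as a short script. It deliberately keeps the same simplifications as the comment (all 360 W/m² absorbed in a 12-micron layer, with no emission, evaporation or mixing, and 4 J per gram per °C); the point of the calculation is that the real skin plainly does not heat this fast. The function name is illustrative.

```python
def skin_layer_heating(flux_w_m2=360.0, thickness_m=12e-6,
                       density_kg_m3=1000.0, specific_heat_j_per_g_k=4.0):
    """Heating rate (K/s) of a surface layer that absorbs flux_w_m2 and loses nothing."""
    grams_per_m2 = thickness_m * density_kg_m3 * 1000.0  # 12 microns -> 12 g per m^2
    joules_per_kelvin = grams_per_m2 * specific_heat_j_per_g_k  # 48 J/K per m^2
    return flux_w_m2 / joules_per_kelvin


if __name__ == "__main__":
    rate = skin_layer_heating()
    seconds_to_boil = (100.0 - 25.0) / rate
    print(f"{rate:.1f} K/s, boiling after about {seconds_to_boil:.0f} s")
    # prints: 7.5 K/s, boiling after about 10 s
```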

Willis.. isn’t “boiling” also called evaporation taking the heat with it? I think the issue is LW photons don’t penetrate as much as SW ones. So the LW reaction with the ocean occurs mostly in the surface layers while the SW ones penetrate deeper before interacting.

Also the 360 W/m2 only occurs when the Sun is directly overhead and falls off as the spot rotates away (or you move north or south in latitude). And as the incidence angle increases, so does the reflection, up to where it hits the critical angle.

What you are outlining is the “worst case” and from a real world perspective only occurs at a small spot on Earth at any given time. Maybe we can say LW radiation does impact the ocean temperatures, but not nearly what SW does.

neither you nor anyone else has been able to refute the four arguments I put forward in

Haven’t you made graphs before that depict the morning SST as cooler before daytime SW heating? There’s your answer.

Is the ocean surface warmer at dawn or at dusk? If it’s warmer at dawn (and not from upwelling), then the LW warmed it in the absence of solar SW. If it’s not warmer, as is the reality AFAIK, then LW doesn’t warm the ocean overnight.

Arguing photon exchanges misses what’s important: there’s no net LW warming, illustrating that colder air doesn’t warm a warmer ocean.

This plot indicates the atmosphere keys off the ocean:

The atmospheric LW isn’t warming the ocean, and the residence time for heat flow from the ocean hasn’t changed over time, being very linear with SST. The atmosphere consistently holds a 4% higher temperature than the ocean over a month than it receives, a short residence time:


Hotter land surfaces provide additional heating effects on top of ongoing ocean-air heat exchange.

The linear UAH-SST 4% factor would be non-linear with increasing LW if LW drove SST, and would be a perpetual energy machine, as the LW would raise the SST, increasing LW eventually, leading to runaway positive feedback loop ocean warming, which is not observed.

The water is getting 360 joules per second.

The equatorial ocean gets full TSI minus albedo at the sub-solar point. Evaporation occurs after morning insolation rises from peaking insolation, not at the daily average.

It’s remarkable how many naive rationalizations are invoked here in avoiding the actual thermodynamic behavior of water. Being a relatively poor heat conductor, molecular transfer is quite limited; local convective currents due to density gradients keep the warmest water strongly confined near the surface. Nor is heat flux in water exempt from following the NEGATIVE gradient of temperature specified by Fourier’s Law.

But the gong-show winner is the notion that the flux density of absorbed DLWIR need only be normalized by the thickness of water-layer to obtain its rate of temperature change. Not only does this inept calculation ignore that such rates are critically dependent upon temperature differences, but it fails to account for LWIR emissions from the surface as well as the strong COOLING produced by evaporation. We only have coupled LWIR exchange within the atmosphere, NOT any bona fide external forcing.

The real-world consequence is that on an annual-average basis LATENT heat transfer from the ocean to the atmosphere exceeds that of all SENSIBLE heat transfers by nearly an order of magnitude. That is what is shown unequivocally in the WHOI-derived maps I referenced. Self-styled dragon-slayers remain unequipped to deal with that reality.

Wind is responsible for much if not most of the energy that goes into creating water vapor. Where exactly is the frictional heating of the atmosphere onto the surface referenced in the back radiation pseudoscience? http://www.cgd.ucar.edu/staff/trenbert/trenberth.papers/BAMSmarTrenberth.pdf

We’ve had a lot of fog here recently as warm air has moved over the cold damp surface, something else that apparently never happens.

Your sciency answer sounds so clever

But since when did water have to get to 100C to evaporate?

Does the sweat on your skin get to 100C to evaporate?

How do you think the surface “dries” when there is no sunshine?

AC, if you are claiming that the LW simply goes into evaporating the skin layer, then we have a very big problem.

Globally, evaporation is estimated via a couple of ways as being on the order of 80 W/m2. This evaporates about a meter of water, which is the global average rainfall.

But if all 360W/m2 were to evaporate water, then we’d be seeing about 4 metres (~13 feet) of rain on average. So we know that the LW is not simply going into evaporation.

w.
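The rainfall figures follow from dividing the energy flux by the latent heat of vaporization. A sketch, assuming a latent heat of about 2.45E+6 J/kg (a typical value for water near 20°C) and a fresh-water density of 1000 kg/m³; the function name is illustrative.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
LATENT_HEAT_J_PER_KG = 2.45e6  # assumed, roughly the value for water near 20 C


def evaporation_depth_m_per_year(flux_w_m2, latent_heat=LATENT_HEAT_J_PER_KG,
                                 density_kg_m3=1000.0):
    """Depth of water (m/yr) that a steady latent-heat flux could evaporate."""
    kg_per_m2 = flux_w_m2 * SECONDS_PER_YEAR / latent_heat
    return kg_per_m2 / density_kg_m3


if __name__ == "__main__":
    for flux in (80.0, 360.0):
        print(f"{flux:.0f} W/m^2 -> {evaporation_depth_m_per_year(flux):.1f} m/yr")
```

With these assumptions 80 W/m² evaporates about 1.0 m of water per year and 360 W/m² about 4.6 m, the same order as the figures above.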

Mr Eschenbach, I did not mention anything to do with LW, I was merely pointing out that water does not need to get hot to evaporate.

So all the calculations to show “100C” were very nice but totally immaterial to evaporation.

The other day when this thread first appeared, I went and reviewed what occurs in the situation where water evaporates off of a cool surface, because no one can deny that a wet shirt or a mass of water will indeed create water vapor without ever getting anywhere close to 100° C.

A shirt will dry out.

A puddle on the floor will evaporate, unless the R.H. is 100%

There are tables for the amount of energy required to evaporate water at various temperatures.

It takes more energy to evaporate cool water than to evaporate hot water.

Water can evaporate, as I understand it, without being hot, because molecules are not all moving at the same velocity in a liquid.

Some have enough energy to escape from the surface.

When relative humidity is at 100%, the same number of molecules of water are leaving the surface of the water as are entering it from the air (ignoring supersaturation).

Comparison of the oceanic surface fluxes of latent and sensible heat Q is available in global maps shown on pp. 41-42 of:

ftp://ftp.iap.ac.cn/ftp/ds134_OAFLUX-v3-radiation_1_1month_netcdf/OAFlux_TechReport_3rd_release.pdf

Please, Scott, stay away from the Principia crackpot disinformation website, and their sky dragon book. They kill brain cells.

I came up with a thought experiment a while back which when presented to even ardent believers in this idea of thermodynamic impossibilities, convinced them they were mistaken.

Here it is:

Consider two stars in space, each in isolation.

Both have the same diameter.

One star is at 4000 K, and the other is at 5000 K.

Each is in stable thermal equilibrium between heat produced in the core, transferred via radiation and convection to the surface, and radiation of this energy into space.

Now, bring these two stars into orbit with each other, such that they are as close as possible without transferring any mass.*

Now describe what happens to the temperature of each star?

Each now has one side facing another star in close proximity, where before they were each surrounded by empty space.

What happens to the temperature of each of the stars?

Can anyone seriously think that the cooler star does not cause the warmer star to increase in temperature and reach a new equilibrium, at a now higher temperature?

If so, what becomes of the photons from the cooler star that impinge upon the hotter star?

In truth, the interaction would be complex, but the scenario described is a common one which has long ago been observed and described by astrophysicists.

The details are homework for anyone still thinking that the laws of thermodynamics operate as believed by dragonistas.

*Alternative scenario: Postulate further that they are white dwarf stars, cooling so slowly that they stay the same temp for the interval of the experiment.
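One deliberately crude way to put numbers on this thought experiment, under assumptions that are not in the comment: model two equal, facing blackbody patches, one on each star. Each keeps the internal heat supply it had in isolation (σT₀⁴ per m²), a fraction f of each patch’s sky is filled by the other star, and all incident radiation is absorbed. Stefan-Boltzmann balance then gives x = F₁ + f·y and y = F₂ + f·x, with x = σT₁⁴ and y = σT₂⁴, which can be solved directly. This is an idealization, not a stellar model; the view factor used below is hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def facing_patch_temperatures(t1_isolated, t2_isolated, view_factor):
    """Steady-state temperatures (K) of two facing blackbody patches.

    Assumptions (an idealization):
    - each patch keeps its isolated internal supply, sigma*T0^4 per m^2;
    - a fraction view_factor (< 1) of each patch's sky is the other patch;
    - all incident radiation is absorbed.
    Balance: x = F1 + f*y and y = F2 + f*x, with x = sigma*T1^4, y = sigma*T2^4.
    """
    if not 0.0 <= view_factor < 1.0:
        raise ValueError("view_factor must be in [0, 1)")
    f1 = SIGMA * t1_isolated ** 4
    f2 = SIGMA * t2_isolated ** 4
    denom = 1.0 - view_factor ** 2
    x = (f1 + view_factor * f2) / denom
    y = (f2 + view_factor * f1) / denom
    return (x / SIGMA) ** 0.25, (y / SIGMA) ** 0.25


if __name__ == "__main__":
    hot, cool = facing_patch_temperatures(5000.0, 4000.0, view_factor=0.5)
    print(f"hotter star's facing side: {hot:.0f} K, cooler star's: {cool:.0f} K")
```

In this idealization both facing sides end up warmer than in isolation, and the hotter star stays the hotter of the two.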

Too much like LM

Serving ping pong balls from a pressure-driven vat, with 300 balls added each minute.

Now have someone hit 1 in 3 back into the vat.

Result: the pressure-driven vat serves out balls at a rate of over 400 a minute at equilibrium.
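The ping-pong numbers work out as a simple recirculation balance: if 300 new balls arrive each minute and one in three served balls is hit back in, the steady serving rate S satisfies S = 300 + S/3, so S = 300 / (1 - 1/3) = 450 a minute. The sketch below checks that by iterating minute by minute; the function name is illustrative.

```python
def steady_serving_rate(new_per_minute=300.0, returned_fraction=1.0 / 3.0, minutes=200):
    """Iterate S_next = new + returned_fraction * S until it settles.

    Converges to new / (1 - returned_fraction) for 0 <= returned_fraction < 1.
    """
    served = new_per_minute
    for _ in range(minutes):
        served = new_per_minute + returned_fraction * served
    return served


if __name__ == "__main__":
    print(round(steady_serving_rate(), 6))          # iterated: 450.0
    print(round(300.0 / (1.0 - 1.0 / 3.0), 6))      # closed form: 450.0
```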

Mods,

I believe I have a comment in moderation bin posted here a day or so ago.

Thanks.

Nicholas, I just looked in both the Pending and the Spam lists, no posts from you. Might have posted it in some other location or thread by mistake …

Sorry,

w.

Ok, thanks.

Sometimes I change my mind after writing something.

Angech,

What is LM?

The question is clearly presented, and has nothing to do with vats full of ping pong balls and pressurized air.

Photons are not little balls of solid matter being propelled by a jet of air.

I will accept your expertise on the subject of vats full of ping pong balls, and assert that it has nothing to do with what happens to stars in space and the photons of electromagnetic radiation they emit and absorb.

The warmer star will cool less quickly, it will not get warmer.

If the energy being generated by the first star stays the same, adding new energy from a second star, regardless of the second star’s temperature will cause the first star to warm.

“The warmer star will cool less quickly, it will not get warmer.”

Do you care to support this assertion with any rationale for believing how and why it may be so?

For one thing, stars are highly stable with regard to their temperature at the radiating surface, over vast stretches of time.

What do you mean when you assert a star is cooling?

Is the Sun cooling over time?

Not according to currently accepted astrophysics.

For one thing, the energy radiated away at the surface takes tens of thousands of years to get from the core to the surface…first through the radiative zone and then through the convective zone.

There are parameters which can vary in my thought experiment which are not delineated:

– Are the stars rotating, and if so how fast?

– How massive are the stars? Stars smaller than about 0.3 solar masses are thought to be entirely convective, while those larger than about 1.2 solar masses are thought to have a convective core surrounded by a radiative envelope. Those in between are like the Sun, with an inner radiative zone and an outer convective zone.

But regardless of these factors, when the stars were in isolation, surrounded by empty space, they were in equilibrium between energy generated in the core and energy emitted at the surface.

Bringing another star into close proximity changes the amount of energy in the outer layer of the star…it increases.

So the star is no longer in equilibrium.

Instead of cold space and no influx, one side of the entire star now has a huge influx of energy from the second star.

Consider some other cases: What if the two stars are initially identical in temperature?

Then what happens to each?

Now consider the case where one is only slightly cooler than the other.

How is what happens in the case when they are identical changed to any significant degree?

I am curious to know how well you are considering the actual situation described.

Paper titled “Reflection effect in close binaries: effects of reflection on spectral lines”:

“The contour maps show that the radiative interaction makes the outer surface of the primary star warm when its companion illuminates the radiation. The effect of reflection on spectral lines is studied and noticed that the flux in the lines increases at all frequency points and the cores of the lines received more flux than the wings and equivalent width changes accordingly.”

https://link.springer.com/article/10.1007/s10509-013-1660-6
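The questions above (identical stars, a slightly cooler companion) can be made concrete with the simple energy balance often used to describe the reflection effect: the lit side of a star must re-emit its own flux plus whatever it absorbs from the companion. A minimal sketch, assuming the companion acts as a point source (its surface flux diluted by (R/d)²), a hypothetical albedo, and looking only at the surface point directly facing the companion; the function name and example numbers are illustrative, not taken from the paper cited:

```python
def irradiated_temperature(t_star_k, t_companion_k, r_companion, distance, albedo=0.0):
    """Temperature of the surface point on a star directly facing its companion.

    Energy balance per unit area: sigma*T_irr^4 = sigma*T_star^4 + (1 - A) * F_in,
    with F_in = sigma * T_comp^4 * (R_comp / d)^2 for a point-source companion.
    The Stefan-Boltzmann constant cancels, so it never appears.
    """
    dilution = (r_companion / distance) ** 2  # fraction of the companion's surface flux that arrives
    return (t_star_k**4 + (1.0 - albedo) * dilution * t_companion_k**4) ** 0.25


# Hypothetical example: the lit point is 4 companion radii from the companion's center.
identical = irradiated_temperature(5772.0, 5772.0, r_companion=1.0, distance=4.0)
slightly_cooler = irradiated_temperature(5772.0, 5700.0, r_companion=1.0, distance=4.0)
print(f"identical companion: {identical:.0f} K, slightly cooler companion: {slightly_cooler:.0f} K")
```

In this sketch a slightly cooler companion warms the lit face almost as much as an identical one; the (R/d)² dilution matters far more than the small temperature difference. The point-source approximation breaks down when the stars nearly touch, and real treatments also integrate over the companion's visible disk.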

True. I discuss this question at length in my post “Can A Cold Object Warm A Hot Object“. The answer, of course, is “compared to what”?

w.

Hi Willis,

Not sure if this response is directed to me, but if so…

I devised my thought experiment after participating in, but mostly just reading, many of the back-and-forth discussions among WUWT regulars on your threads on this topic and on those of some other contributors.

At first I did not know what to make of the ongoing disagreements among people who are apparently very knowledgeable on the subject of radiative physics.

I thought…how can it be that there is this basic disagreement about something that should be able to be settled by easily devised experiments or observations?

After a while, I decided to think of a dramatic case of two objects at different temps, in close proximity, and how they would be different than if each was in isolation.

At one point I even found decades-old astrophysics papers on this exact situation, although none written with the goal of answering this question.

I will see if I can find that material.

Hi Dave,

A few comments below, Willis posted a link to one of his articles from 2017.

I had participated in that discussion (I used to use the handle “Menicholas”) but had apparently not stuck around until the thread was no longer accepting new comments.

Anywho… I missed your reply to the example I gave in response to one of the people who assert that CO2 is in too small a concentration to have much effect on… I am not sure what: radiation, optical properties, etc.

I am not anywhere close to having enough expertise to jump in on one side or another of many of the issues of radiative physics, but whenever possible I try to add something, or ask a question, in those instances when I am not following a line of logic or if I have info that someone else may not have considered.

Here is the comment, about using lake dyes like Blue Lagoon to dye an entire pond or lake in order to inhibit growth of aquatic plants and/or algae.

I just wanted to say, I agree with your assessment that the dye molecules are obviously absorbing the photons and so are almost certainly warming the pond.

Beyond that…I am not sure what it says about any of the basic disagreements about physics that are ongoing.

I am only hoping one day to be around when everyone finds some way to agree on such questions.

https://wattsupwiththat.com/2017/11/24/can-a-cold-object-warm-a-hot-object/#comment-2219952

You replied:

“What an interesting comment, menicholas! I had never heard of Blue Lagoon and products like it. Thank you for teaching me something.

Let’s do the arithmetic. Four acre-feet = 5,213,616 quarts. So 1 qt / 4 acre-feet = 0.1918 ppmv, blocks enough light from passing through 4 feet of water to prevent algae growth on the bottom. Impressive!

A column of the Earth’s atmosphere has about the same mass as a 30 foot column of water. So blocking the light through just four feet of water should require an even darker tint than blocking the absorbed shades of light through the Earth’s atmosphere.”
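The arithmetic in the quoted reply can be checked with exact US customary definitions. A minimal sketch: four acre-feet is roughly 1.3 million US gallons, which is about 5.21 million US quarts, so the 5,213,616 figure matches quarts and one quart is about 0.19 ppmv. Taking standard sea-level pressure and fresh-water density (assumptions for this check), the water column with the same weight per unit area as the atmosphere comes out a bit under 34 feet, the same ballpark as the 30 feet quoted:

```python
# Exact US customary definitions: 1 ft^3 = 1728 in^3, 1 US gallon = 231 in^3.
CUBIC_INCHES_PER_CUBIC_FOOT = 1728
CUBIC_INCHES_PER_US_GALLON = 231
SQUARE_FEET_PER_ACRE = 43_560


def us_quarts_in_acre_feet(acre_feet):
    cubic_inches = acre_feet * SQUARE_FEET_PER_ACRE * CUBIC_INCHES_PER_CUBIC_FOOT
    return cubic_inches / CUBIC_INCHES_PER_US_GALLON * 4  # 4 quarts per gallon


quarts = us_quarts_in_acre_feet(4)
ppmv = 1e6 / quarts  # one quart of dye in four acre-feet of water

# Height of a water column exerting the same pressure as the atmosphere at sea level.
SEA_LEVEL_PRESSURE_PA = 101_325
WATER_DENSITY_KG_M3 = 1000.0  # fresh water, assumed
G_M_S2 = 9.80665
water_column_m = SEA_LEVEL_PRESSURE_PA / (WATER_DENSITY_KG_M3 * G_M_S2)
water_column_ft = water_column_m / 0.3048

print(f"{quarts:,.0f} quarts, {ppmv:.4f} ppmv, water column {water_column_ft:.1f} ft")
```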

And most of the quart of Blue Lagoon (and there are plenty of other such dyes) is water and possibly other solvents…so the concentration is very small indeed.

You should see what happens when a tech spills some on his clothing or skin!

When will they start using the Jeff Severinghaus proxy, I wonder?

https://tambonthongchai.com/2019/09/08/severinghaus/

And when will they take other sources of heat into account?

https://tambonthongchai.com/2018/10/06/ohc/

I think the error bars back near 1960 should be a lot larger.

“Perhaps there are some process engineers out there who’ve been tasked with keeping a large water bath at some given temperature, and how many thermometers it would take to measure the average bath temperature to ±0.03°C.”

Willis:

I spent my 40-year career in laboratories where tight temperature control and precise measurement were often key requirements. There were not many cases where control better than +/- 0.1 C was necessary or possible. Liquid baths are easier to control than air due to thermal mass/inertia, but precision requires good continuous mixing. Without mixing, it would take an array of sensors distributed both vertically and horizontally to obtain an accurate average. Sensors with resolution in the hundredths-to-thousandths of a degree range are quite expensive, so it is much cheaper to stir the bath to ensure a uniform temperature. A good example is a combustion calorimeter, which uses a small propeller-type stirrer and, in the old days, a single high-resolution mercury-in-glass thermometer (read with a microscope) or, these days, an RTD. Of course, in a calorimeter we just want to measure the temperature change, not control it. Control of temperature to thousandths of a degree is incredibly difficult and, in my experience, only attempted where large budgets are available. Small commercial lab temperature baths are typically accurate to about 0.1 C and cost several thousand dollars.

Thanks, Rick. I figured that was the case, but you have the experience to support it.

w.

I neglected to add that often, when you dig into calibration certificates, you find that the Measurement Uncertainty (MU) of your high-resolution instruments is much bigger than you might expect. 0.1 C resolution may come with +/-1.0 C MU.
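The difference between averaging down random error and living with calibration uncertainty can be illustrated with the usual error-propagation rule. A minimal sketch, assuming each sensor's random error (including the real spatial spread across an unstirred bath) is independent with standard deviation σ, so averaging N sensors shrinks it by √N, while a calibration offset shared by every sensor does not shrink at all. The 0.3 °C spread and the offsets are hypothetical numbers, not measurements:

```python
import math


def sensors_needed(spread_sd_c, target_se_c):
    """Independent sensors needed so the standard error of the mean is <= target."""
    n = (spread_sd_c / target_se_c) ** 2
    # Round first so tiny float error (e.g. 100.00000000000001) doesn't ceil to 101.
    return math.ceil(round(n, 9))


def combined_uncertainty(random_sd_c, n_sensors, systematic_c):
    """Random error averages down as 1/sqrt(N); a shared systematic offset does not."""
    random_part = random_sd_c / math.sqrt(n_sensors)
    return math.sqrt(random_part**2 + systematic_c**2)


print(sensors_needed(0.3, 0.03))               # sensors for +/-0.03 C given a 0.3 C spread
print(combined_uncertainty(0.3, 100, 0.1))     # 100 sensors sharing a 0.1 C calibration offset
print(combined_uncertainty(0.3, 10_000, 0.1))  # 100x more sensors barely helps
```

With a 0.3 °C spread, reaching ±0.03 °C takes about 100 independent sensors, and once a shared 0.1 °C offset is present no number of extra sensors brings the total below 0.1 °C.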

This rubbish has been running on Sky News UK all day and it was in the Guardian yesterday. I noticed John Abraham is in the list of authors, he of the Guardian’s now-defunct “Climate Consensus – the 97%” that he ran with Dana Nuccitelli.

Abraham did something similar in the Guardian in January 2018 concerning 2017.

https://www.theguardian.com/environment/climate-consensus-97-per-cent/2018/jan/26/in-2017-the-oceans-were-by-far-the-hottest-ever-recorded

Old propaganda beefed up.

Yes, there is a historical sequence of implausible papers. Good that Willis exposed the flaws in this one. In 2018 it was Resplandy et al., which Nic Lewis critiqued; a year later it was retracted. In the meantime, Cheng et al. (2019) made the same claims of ocean warming, drawing upon Resplandy despite its flaws. Benny Peiser of GWPF protested to the IPCC for relying on Cheng (2019) for their ocean alarm special report last year. Nic Lewis also did an analysis of that paper and found it wanting. The main difference with Cheng et al. (2020) is the addition of a bunch of high-profile names and the dropped reference to Resplandy.

https://rclutz.wordpress.com/2020/01/14/recycling-climate-trash-papers/

Thanks Ron.

” “The quality of research varies inversely with the square of the number of authors” … but I digress.”

Ha ha ha ha ha ha ha ha ha ha ha ha ha!

This looks like yet another ‘study’ in which the likely errors are significantly greater than the tiny result obtained, which is then heralded as catastrophic. The ambitious claim that such a totally trivial temperature change is (mostly) due to human activities, rather than to variations in cloud cover, some El Niño/La Niña cycle, or the activity of tropical thunderstorms, is pure nonsense.

So Willis (my hat’s off to you) says the oceans absorb 6360 units, while the total created by man is 0.6 units (please correct me if I’m wrong), meaning that the potential anthropogenic contribution is 0.0094% of the total.
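Taking the two figures in this comment at face value (their units aren't stated here, but the ratio holds as long as both share the same units), the percentage can be checked in a couple of lines:

```python
ocean_uptake = 6360.0     # figure as quoted in the comment
human_contribution = 0.6  # figure as quoted in the comment, same units assumed

share_percent = human_contribution / ocean_uptake * 100
print(f"{share_percent:.4f}%")
```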

That seems reasonable given the 0.003° accuracy coming from the 3858 Argo buoys wandering about.

Finally, the missing heat Trenberth was moaning about…

So how exactly does this differ from the IPCC’s AR4 Report, Chapter Five, Executive Summary, page 387, where it says:

The oceans are warming. Over the period 1961 to 2003, global ocean temperature has risen by 0.10°C from the surface to a depth of 700 m.

Really? 0.10°, not 0.11° or 0.09°, but 0.10° of warming in 42 years. That’s real precision, that’s for sure.

The mistake Eschenbach makes here is to confuse 0.1 degree of warming in the first 2000 m of the ocean with UNIFORM warming across those 2000 m.

Unfortunately for us land dwelling creatures, the temperature of the ocean at 5m is a lot more important than at 1675m. And we’re all perfectly aware that surface ocean temperatures have already warmed by 1 degree. This is basic knowledge that Eschenbach stealthily avoids by pretending that first the ocean must warm by 1 degree at a depth of 2000m before we are allowed to say

So here’s a question for Eschenbach. Yes, let’s say it’ll take five centuries for the ocean down to 2000 m to warm 1 degree. By how much do you believe the ocean surface will have warmed for the average warming through 2000 m to be 1 degree? Right now we’re at surface: 1 degree, 2000 m: 0.1 degree. So my naive guess is 10 degrees.

When considering a depth of two kilometres, an average warming of 0.1 degree is truly remarkable.
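The disagreement here turns on how warming is distributed with depth. A minimal sketch, assuming (hypothetically, since nobody in the thread specifies a profile) that warming decays exponentially with depth, ΔT(z) = ΔT₀·exp(−z/H), shows how a surface change and a 0–2000 m average relate, and that the ratio between them depends entirely on the assumed e-folding depth H. The 1-degree surface figure is the commenter's, which is disputed in the reply:

```python
import math


def column_mean_warming(surface_dt, efold_m, depth_m=2000.0):
    """Average of dT(z) = surface_dt * exp(-z / efold_m) over 0..depth_m (exact integral)."""
    return surface_dt * (efold_m / depth_m) * (1.0 - math.exp(-depth_m / efold_m))


def efold_for_mean(surface_dt, target_mean, depth_m=2000.0, lo=1.0, hi=1e6):
    """Bisection for the e-folding depth that gives a target column mean.

    Works because the column mean increases monotonically with efold_m.
    """
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if column_mean_warming(surface_dt, mid, depth_m) < target_mean:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


h = efold_for_mean(1.0, 0.1)             # profile matching 1 deg at surface, 0.1 deg mean
print(f"e-folding depth ~{h:.0f} m")
print(column_mean_warming(10.0, h))      # same shape, 10x surface change -> ~1 deg mean
print(column_mean_warming(1.0, 1000.0))  # a deeper-penetrating (hypothetical) profile
```

With a fixed profile shape the column mean scales linearly with the surface change, which is where a tenfold guess comes from; with a deeper-penetrating profile, the same 1-degree column mean would need far less surface warming. The sketch says nothing about which profile is realistic.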

Butts January 14, 2020 at 2:03 pm

Grrrr. This is why I ask people to QUOTE MY DANG WORDS!! I made no such claim and I have no such confusion.

“Warmed by 1 degree” since when? Our data older than about forty years is very uncertain. The Reynolds OI SST data says that since 1981 (the start of their dataset) the ocean has warmed by 0.4°C.

However, if you can accept greater uncertainty, the HadCRUT SST dataset says that the SST has warmed 0.7°C since 1870 …

So no, Butts, we’re not “perfectly aware” of any one-degree rise in SST for a simple reason … it hasn’t happened. It’s just more alarmism.

w.

Willis wrote: ” HadCRUT SST dataset says that the SST has warmed 0.7°C since 1870 …”

What about the data back to the Medieval Warm Period? That is what we need in order to tell whether it is anything unusual.

Jim, the whole question of paleo SSTs is fraught with complexitudes … there’s a good paper called “Past sea surface temperatures as measured by different proxies—A cautionary tale from the late Pliocene.”

The abstract says:

Hmmm …

w.

Willis wrote:” whole question of paleo SSTs is fraught with complexitudes …”

Which, as far as I can tell, means we have no way of knowing if the current ocean temperature is unusual. If it is not, then it cannot be used as evidence of CO2 causing unusual warming.

“Which, as far as I can tell, means we have no way of knowing if the current ocean temperature is unusual.”

Oh yes, we have. It is not unusual. The proxies do have large margins of error (on the order of 1–2 degrees at two sigma), but not so large that it isn’t easy to show that ocean temperatures were much lower during glacials and significantly warmer during peak interglacials, including the warmest part of this interglacial 8,000–10,000 years ago.

And there are qualitative “climate proxies” that are pretty definitive, like fossil coral reefs, or glacial dropstones or iceberg ploughmarks.

Oh, snap!

Someone get a bucket of water to revive Butts with!

I’d suggest a bucket used to measure SST…

As Willis correctly asserts, the notion of measuring the top two kilometers of the whole ocean volume to such precision is ludicrous.

For the study authors to assert any sort of confidence in the accuracy of the result is even worse, IMO.

And several reasons for these doubts exist, some of which are not even debatable:

– The ARGO floats are not evenly distributed; each one covers a stupendously huge volume of water.

– There are large areas where there are zero floats, including the entire Arctic Ocean, all of the coastal regions, and any areas of the sea that are shallow banks or continental slopes.

– The floats do not go all the way to the bottom, where there are large variations in water temperature across the global ocean, so the import of the results, even if they are as asserted, is dubious at best… even if it were not such a tiny change in actual temperature.

– The floats are not checked or recalibrated on any sort of systematic or ongoing basis.

– And perhaps the worst indictment of the methodology and results is that when the results of the ARGO floats were first analyzed, after deployment had reached what was considered a sufficient number of floats to be meaningful, they showed that the ocean was actually COOLING! Since that was not what was desired… or, as they phrased it, what was “expected”, it was assumed the result was erroneous and the raw data was adjusted upwards until it showed warming!

So ever since, all the data has been adjusted upwards, guaranteeing that warming would be what was shown, no matter what was actually measured, let alone what the reality in the ocean was.

It matters not at all that they were able to come up with a justification for making the adjustment.

Everyone knows that the results would not have been adjusted downwards for any reason, even a legitimate and obvious one.
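The "stupendously huge volume of water" per float in the first point above can be roughed out. A back-of-envelope sketch, assuming a global ocean area of about 361 million km², treating all of it as at least 2000 m deep (which overstates the volume, since shelves and shallow seas are shallower), and using the 3858-float count quoted earlier in this thread:

```python
OCEAN_AREA_KM2 = 3.61e8  # approximate global ocean surface area
SAMPLED_DEPTH_KM = 2.0   # upper 2000 m, assumed sampled everywhere (an overestimate)
FLOAT_COUNT = 3858       # float count quoted earlier in the thread

sampled_volume_km3 = OCEAN_AREA_KM2 * SAMPLED_DEPTH_KM
volume_per_float_km3 = sampled_volume_km3 / FLOAT_COUNT
print(f"~{volume_per_float_km3:,.0f} km^3 of water per float")
```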