Essay by Eric Worrall

The oceans swallowed my global warming? Desperate butt covering from alarmists who are facing increasingly embarrassing questions about the failure of the world to end.

14 July 2022 16:41

Factcheck: No, global warming has not ‘paused’ over the past eight years

A decade ago, many in the climate community were fixated on an apparent “pause” in rising global surface temperatures. So many studies were published on the so-called “hiatus” that scientists joked that the journal Nature Climate Change should be renamed Nature Hiatus.

However, after a decade or so of slower-than-average warming, rapid temperature rise returned in 2015-16 and global temperatures have since remained quite warm. The last eight years are the warmest eight years since records began in the mid-1800s.

While the hiatus debate generated a lot of useful research on short-term temperature variability, it is clear now that it was a small variation on a relentlessly upward trend in temperatures.

But nearly a decade later, talk of a “pause” has re-emerged among climate sceptics, with columnist Melanie Phillips claiming in the Times this week that, “contrary to the dogma which holds that a rise in carbon dioxide inescapably heats up the atmosphere, global temperature has embarrassingly flatlined for more than seven years even as CO2 levels have risen”.

This falsehood appears to be sourced from a blog post by long-time climate sceptic Christopher Monckton, which claims to highlight the lack of a trend in global temperatures over the past eight years.

In a rebuttal letter to the Times, Prof Richard Betts – head of climate impacts research at the Met Office Hadley Centre and University of Exeter – points out that it is “fully expected that there will be peaks of particularly high temperatures followed by a few less hot years before the next new record year”.

In fact, the last eight years have been unusually warm – even warmer than expected given the long-term rate of temperature increases – with global temperatures exceeding 1.2C above pre-industrial levels. The temperature record is replete with short-term periods of slower or more rapid warming than average, driven by natural variability on top of the warming from human emissions of CO2 and other greenhouse gases.

There is no evidence that the past eight years were in any way unusual and the hype around – and obvious end of – the prior “pause” should provide a cautionary tale about overinterpreting year-to-year variability today.

…

Human-emitted greenhouse gases trap extra heat in the atmosphere. While some of this heat warms the Earth’s surface, the vast majority – around 93% – goes into the oceans. Only 1% or so accumulates in the atmosphere and the remainder ends up warming the land and melting ice.

…

Most years set a new record for ocean heat content, reflecting the continued trapping of heat by greenhouse gases in the atmosphere. The figure below shows annual OHC estimates between 1950 and present for both the upper 700m (light blue) and 700m-2000m (dark blue) depths of the ocean.

…

Read more: https://www.carbonbrief.org/factcheck-no-global-warming-has-not-paused-over-the-past-eight-years/

Lord Monckton apparently stirred the hive by publishing a few articles on the growing pause, like this article from three weeks ago.

His article on the last 6 years is entertaining because, where’s the warming? Wasn’t there supposed to be a hockey stick or something? Oh yeah, it disappeared into the ocean depths, allegedly.
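Monckton’s “pause” is commonly described as the longest period, ending at the most recent month, over which the least-squares linear trend in global temperature is zero or negative. A minimal Python sketch of that definition follows. It is not Monckton’s own code; `ols_slope`, `pause_length`, the `min_months` cutoff and the toy series are hypothetical, chosen only for illustration.

```python
def ols_slope(values):
    """Least-squares slope of values against their index (units per step)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    sxy = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    return sxy / sxx


def pause_length(series, min_months=24):
    """Length of the longest run ending at the latest value whose
    least-squares trend is zero or negative (0 if there is none)."""
    if min_months < 2:
        raise ValueError("a trend needs at least two points")
    # Scan start points from the earliest, so the first hit is the longest run.
    for start in range(len(series) - min_months + 1):
        if ols_slope(series[start:]) <= 0:
            return len(series) - start
    return 0


# Toy series (not real data): a rise followed by a flat stretch.
toy = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.5, 0.5, 0.5, 0.5]
print(pause_length(toy, min_months=3))  # prints 5
```

Because the answer depends entirely on where the scan first finds a non-positive trend, the same sketch also shows why the choice of start date matters so much, which several commenters below argue over.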

Over the last 172 years, since 1850, temperatures have risen a little. Except for that period between the 1940s and 1970s, when the drop in global temperature triggered climate scientists like Stephen Schneider to suggest we should use nuclear reactors to melt the polar ice, to prevent an ice age. Schneider later claimed he’d made a mistake, and went on to become a global warming activist.

But that context doesn’t stop in 1850.

Looking before 1850, there were notable warm periods during the last few thousand years, like the Medieval Warm Period, Roman Warm Period and Minoan Warm Period, which look suspiciously like our current modern warm period, except back then people didn’t drive automobiles.

Going back further, 9000-5000 years ago, during the Holocene Optimum, the sea level was around 2m higher than today, so it was probably pretty warm back then as well.

20,000 years ago, much of the world was covered by massive ice sheets.

Three million years ago, the world was so warm Antarctica was mostly ice free – until the onset of the Quaternary Glaciation, which we are still enduring today. To put the Quaternary Glaciation into context, the Quaternary is one of only five comparable great cold periods which have been identified over the last two billion years.

55 million years ago was the Palaeocene – Eocene thermal maximum, an extremely warm period of such abundance our primate ancestors spread throughout much of the world.

When you take a more complete look at the context, rather than the limited 172 year / 0.0000086% of climate history Carbon Brief seems to want you to focus on, there is nothing unusually warm about today’s global temperatures. Even if further global warming does occur, if those little primate ancestors with walnut size brains could manage to thrive in the Palaeocene – Eocene thermal maximum, I’m pretty sure we could figure out how to cope with a small fraction of the warming they enjoyed.
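The 0.0000086% figure is easy to check. A minimal Python sketch, assuming the denominator is the two billion years over which the five great cold periods were identified (the essay doesn’t state the denominator outright):

```python
# Assumption: "climate history" means the two billion years over which the
# five great cold periods were identified; the essay doesn't say so directly.
record_years = 2022 - 1850        # instrumental record since 1850
history_years = 2_000_000_000     # assumed denominator

share_percent = record_years / history_years * 100
print(f"{record_years} years is {share_percent:.7f}% of {history_years:,} years")
```

Under that assumption the output reproduces the 0.0000086% in the text.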

Whatever happens, they make something up, like “safe and effective.”

The Arctic cooling for the first time since the mid-1970s could mean the second pause is not temporary this time.

Poor ole Joe wasn’t double vaccinated and triple boosted.

Or the vaccine just is useless.

Dr Birx says 4 months

I never again will be hoodwinked by the cdc and these stupid ‘shots’. I don’t like being hoodwinked.

The vaccines do not prevent infections and are useless for Omicron, which is a very different, and much less deadly, disease than Covid.

If you recover in 2 to 7 days, you most likely had Omicron.

If you recover in 1 to 3 weeks, or possibly die, you most likely had Covid.

There is no evidence in US all-cause mortality data that vaccines reduced deaths in 2021, versus 2020 with no vaccines.

It is very difficult to determine if vaccines reduced hospitalizations in 2021. Up to half of “Covid patients” in a NYC hospital survey actually went to the hospital for another unrelated disease, not Covid. There were strong financial incentives for them to be called Covid patients after they entered the hospital for other reasons.

If you trust the deaths with Covid statistic (actually any death within 28 days of a Covid positive PCR test, from any cause) the deaths with Covid in 2021, with vaccines, were much higher than in 2020, with no vaccines.

There is strong evidence that Covid vaccines were not safe and were not effective. The root cause is most likely the extremely rushed development time financed by the Trump Administration. There is a good reason that vaccines usually take 5 to 15 years for development and testing, rather than nine months.

The lethality of COVID was pure propaganda. During the shamdemic no one apparently died from influenza, which is an epidemiological impossibility. I propose that those who did perish from infection (COVID, influenza, or other agent) suffered Vitamin D hypovitaminosis. This phenomenon has been observed for decades.

But then again he could be like the others and just got saline.

Just a heads up, but they’re going to invent more “warming” in the oceans.

Easy enough to do: just remove the coolest 10% of Argo floats due to “anomalous readings”, and presto change-o, a new ocean warming appears before your eyes.

I mean there’s a new paper out. Trenberth and Tamino are involved. It’s going to be karlization of the Argo set.

Like Hansen did culling temperature stations to remove rural and higher altitude inconvenient temperature stations?

Here’s evidence of what CNN is plotting to do to “milk” the global warming thing because COVID is losing fear factor impact with the public.

Utterly disgusting –

https://twitter.com/i/status/1549770683890253825

The Antarctic was far from ice-free 3 Ma, but the Arctic largely was.

The Cenozoic Ice Age began with formation of the East Antarctic Ice Sheet 34 Ma, with the formation of the Southern Ocean. Northern Hemisphere ice sheets did have to await the closing of the Isthmus of Panama about 3 Ma.

Don’t forget “common sense” solutions…

What got me involved in following climate change was the attempt with MBH98 to make the LIA and Medieval Warm period go away, and pretend all climate change is anthropogenic. Mann has still not retracted that atrocity.

Mann wants to mann-u-facture climate history….what a hockey puck!

Various alarmists still try to claim that nobody has refuted the Hockey Stick.

Tom Wigley and Keith Briffa were sceptical, as shown in the Climategate e-mail release:

Wigley “I have just read the M&M stuff criticising MBH. A lot of it seems valid to me. At the very least MBH is a very sloppy piece of work – an opinion I have held for some time”

Briffa “I believe the recent warmth was probably matched about 1000 years ago”

Dennis says we’re in another “hiatus”, in Dessler’s ECS & Cloud Feedback symposium at this point in the video, posted earlier in April 2022. Nobody argued. It is being discussed by the academics and it’s widely acknowledged.

https://youtu.be/aQznFJ9eVrk?t=2187

Sorry, Dennis Hartmann. Schmidt chimes in shortly after and points out his southern ocean cooling observations.

“and obvious end of – the prior “pause” should provide a cautionary tale about overinterpreting year-to-year variability today.”

Betts is one of the several dozen climate spinners on the list of those who will share the blame for the mounting policy-generated global economic crisis, the worst the world has known (eclipsing those of the 20th-century monsters). Trillions have been wasted on a totally failed, false-front science used to enable a neo-Marxist Great Reset that will cost many trillions more of precious funds needed to repair every facet of our trashed civilization: our education system from K to phony PhD, and hundreds of other things.

Before the middle of the first decade of the new millennium, I think climate science was, in the main, honest, if misguided in its beliefs. After the total failure of climate predictions, the continual moving of goalposts, fiddling of climate data, blocking publication of adversarial climate papers, and dirty tricks like pushing the real 20th-century temperature highstand of the 1930s-40s down to make the 1998 El Niño the new record, etc., amounted to prima facie criminal activity to cling to the broken theory and preserve the cash trough. You even cajoled a failing prime minister to steal another billion from the taxpayer for climate on her way out of office.

Shame, shame on you and your co-conspirator colleagues. Oh, and you also know that it was an El Niño year that interrupted the 18yr+ “Dreaded Pause”, now apparently resuming. You know Gavin Schmidt stated last year that climate models are “running way too hot, and we don’t know why.” You and Gavin do know why! A wise man these days would be seriously considering cementing in his pension in these turbulent times.

The very use of “anomalies” is a fraud of its own. Tell us the 5-number descriptor of the values: minimum, first quartile, median, third quartile, and maximum of the data used to calculate these multi-year averages and then the anomaly of the current average compared to the historical average.
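The five-number summary requested here is standard descriptive statistics. A minimal Python sketch using only the standard library’s `statistics` module follows. The temperature values are invented for illustration and come from no dataset, `five_number_summary` and `anomaly` are hypothetical helper names, and the anomaly is simplified to “mean of current values minus mean of baseline values”, not the gridded procedure any real dataset uses.

```python
import statistics


def five_number_summary(values):
    """Minimum, first quartile, median, third quartile, maximum."""
    # statistics.quantiles defaults to the 'exclusive' method; other
    # quartile conventions give slightly different Q1/Q3 values.
    q1, median, q3 = statistics.quantiles(values, n=4)
    return {"min": min(values), "q1": q1, "median": median,
            "q3": q3, "max": max(values)}


def anomaly(current, baseline):
    """Mean of the current values minus the mean of the baseline values."""
    return statistics.fmean(current) - statistics.fmean(baseline)


# Hypothetical absolute temperatures (C), invented for illustration only.
baseline = [13.9, 14.0, 14.1, 14.2, 14.3, 14.4, 14.5, 14.6]
current = [14.5, 14.6, 14.7]

print(five_number_summary(baseline))
print(f"anomaly: {anomaly(current, baseline):+.2f} C")
```

Reporting the summary alongside the anomaly shows how the spread of the underlying values compares with the size of the anomaly itself.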

If the average absolute global temp is 15C and we have a .13C/decade increase that’s a 0.9% change. Something no one could possibly consider to be “catastrophic”!

Add to that a MINIMUM possible uncertainty of at least +/- 0.5C and you couldn’t even tell if the change was or wasn’t 0.13C over a decade!

It’s all how you spin it (as Popper and Feynman spin in their graves). This is truly the age of neo-deception and the entrapment of humanity by the technocracy.

Put it in Kelvins and it is even less
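The percentages in this exchange are simple ratios, so they are easy to check. A short Python sketch, taking the comment’s 0.13C/decade and its assumed 15C absolute mean at face value. A percentage of a Celsius temperature depends on where the scale puts zero, which is why the Kelvin figure comes out so much smaller:

```python
# Figures from the comment above; 15 C is the assumed absolute global mean.
trend_per_decade = 0.13           # warming rate, C (or K) per decade
mean_celsius = 15.0               # assumed absolute global mean, C
mean_kelvin = mean_celsius + 273.15

# Percentage change per decade relative to each scale's zero point.
pct_c = trend_per_decade / mean_celsius * 100
pct_k = trend_per_decade / mean_kelvin * 100

print(f"Relative to {mean_celsius} C:  {pct_c:.2f}% per decade")
print(f"Relative to {mean_kelvin:.2f} K: {pct_k:.3f}% per decade")
```

The Celsius ratio is about 0.87% per decade, which rounds to the comment’s 0.9%; the Kelvin ratio is about 0.045%.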

Mr. Gorman: I’ve read the arguments re: anomalies, and I think there may be a good faith use for such math devices. But this is Climate Science, so there is no chance this is a good faith use!

Using anomalies is fine if *all* the rules are followed. But variance and uncertainty attach to the anomalies in the same magnitude as they do to the absolutes.

The climate crowd specifies neither the variance of their data nor its uncertainty. They substitute the average residual between the stated data and the linear trend line as its “uncertainty”. It isn’t uncertainty; it is a best-fit metric and ignores the uncertainty associated with the measurement stated values.

They try to justify the use of anomalies as a way to weight all data equally, but that’s just the beginning of the fraud. Since winter temps have a larger variance than summer temps, jamming them all together using anomalies makes no sense at all, especially when they don’t bother to even quote what the variance of the actual data is! Not only that but jamming winter temps and summer temps in the same month (i.e. northern hemisphere temps in July with southern hemisphere temps in July) together generates at least a bi-modal distribution resulting in an average value that is meaningless – and it should be recognized as such by a trained statistician.

I simply don’t trust *anything* put out by most climate scientists today, be it pro-CAGW or anti-CAGW. I only trust actual plots of absolute temps and even then am skeptical if the author doesn’t recognize the uncertainties associated with those temperature measurements. When almost all temperature measurement devices, both old and new, have at least an uncertainty of +/- 0.5C it is impossible to know exactly what is happening with the climate in the hundredths digit. Averaging measurements from different things using different devices does *not* guarantee a normal distribution of measurements where uncertainty cancels – i.e. averaging temps on a global basis doesn’t decrease uncertainty in the result, it only grows it.
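The distinction drawn in this comment, between a fit statistic computed from scatter about a trend line and the measurement uncertainty of the readings themselves, can be made concrete. The Python sketch below fits an ordinary least-squares trend and reports the residual standard error (a close relative of the “average residual” described above) and the standard error of the slope. The anomaly values are made up, `ols_trend` is a hypothetical helper, and nothing here claims to reproduce how any particular dataset states its uncertainty.

```python
import math


def ols_trend(x, y):
    """Least-squares slope and intercept, plus two fit-based statistics."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    # Residual standard error: scatter of the stated values about the line.
    resid_se = math.sqrt(sum(r * r for r in residuals) / (n - 2))
    # Standard error of the slope, derived from that same scatter only.
    slope_se = resid_se / math.sqrt(sxx)
    return slope, intercept, resid_se, slope_se


# Hypothetical annual anomalies (C), made up for illustration only.
years = list(range(2000, 2010))
anomalies = [0.10, 0.15, 0.12, 0.20, 0.18, 0.22, 0.25, 0.21, 0.27, 0.30]

slope, intercept, resid_se, slope_se = ols_trend(years, anomalies)
print(f"trend: {slope * 10:+.3f} C/decade, slope SE: {slope_se * 10:.3f} C/decade")
print(f"residual SE: {resid_se:.3f} C")
# A per-reading instrument uncertainty (say +/-0.5 C) appears nowhere above,
# so it has no effect on either statistic.
```

Both statistics come only from how far the points sit from the fitted line; an instrument uncertainty attached to each reading would have to be propagated by a separate calculation.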

Yes.

I agree. When questioned on it, though, the argument is ‘well, we don’t know global average T exactly, so we use anomalies, which we can measure more accurately.’ I don’t see how, when the same problems of calculating the average T plague the measurement of average global anomalies.

But measuring as anomalies does invite fiddling temperatures which is an ‘extra degree of freedom’ for cooking data. It also allows easy use of algorithms for making hundreds of small adjustments daily.

When you argue a point regarding apparent artifacts in the data, the response is usually ‘Oh, that’s because of a station move adjustment.’ Over a long time looking at T data, I noted some station moves for sites that weren’t showing warming, and it became clear that station moves had become part of the T fiddler’s toolbox. The classic example was discontinuing the Death Valley Ranch Station because it stubbornly held onto the world’s highest temperature, set in 1913, over 100 years later.

They moved the site a few miles down the road to a heat-enhancing microclimate. In 2021 they announced a ‘new’ world record, which was still a few degrees cooler than the old ranch one. Not knowing the back story, the world was stricken by this ‘warming’.

“Anomalies” without identifying context and error bounds.

Alarmists ignore the fact that smudged temperature adjustments and infilled temperatures increase error bounds.

On top of that, alleged climate “experts” “average” thousands of unique individual temperature measurements.

“If the average absolute global temp is 15C and we have a .13C/decade increase that’s a 0.9% change. Something no one could possibly consider to be “catastrophic”!”

Assuming the temperature at which water turns to ice is 0.

Considering the efforts to convert private pensions to public funds, there may be little security there.

Betts has a history of telling climate lies that makes BoJo look like George Washington.

Except for that period between the 1940s and 1970s, when the drop in global temperature …

_____________________________________________________________

A simple plot of the January-December Annual Mean from GISTEMP LOTI shows that Temperature also dropped from about 1880 to 1910 which is never explained.

1880 to 1910 is not global and is not accurate

Richard:

You can argue that it is not accurate but you cannot show that it was not global. Based on the fact that evidence speaks for itself, we can at least say there is measurement evidence of cooling from 1880-1910. Proof of global cooling in the form of widely dispersed glacier terminations is still obtainable.

It doesn’t matter if cooling cannot (now) be proven to be global. Asserting that evidence from one region is not proof of global cooling is a risky bet. Evidence from the only region with measurements is indicative of the whole, absent any contrary evidence, and you offered none.

Claiming that 93% of the heating (that is supposed to exist) is going into the oceans is downright humorous. The claim from Denver is that the deep oceans are warming. Proving that is basically impossible. Essentially they say the claim stands because there is no definitive proof the claim is not true. That is like the EPA claiming that all airborne particulate matter from any source is equally toxic (the equitoxicity rule) because they “have no proof that it is not”. I kid you not, that is what they said.

I think you should stay clear of statements about what was not global without evidence. Stick to claiming the proof of “globality” is not in evidence. Then you can sit in judgement of evidence as it appears. If you have credibility, people will listen to your sage assessments.

Evidence from the only region with measurements is indicative of the whole, absent any contrary evidence …

____________________________________________________

Excellently stated

Excellently stated nonsense.

Bizarre logic

Saying ‘I’m right because you can’t prove me wrong’.

That’s not how science works

Science requires data.

Data quality must be analyzed to determine if there are sufficient data, with sufficient accuracy, to draw a conclusion. The global average temperature before 1920 is a rough estimate of the Northern Hemisphere only. Not an accurate global average.

And your statement that it was not global is backed by NO EVIDENCE and is thus not scientific.

So assuming that, when ALL the areas with temperature records show warming, the rest of the world does not, is typical Richard Greene.

Since you seem to comment at cross purposes every day, I would like to know why you are commenting at all.

Are you just a troll?

The following charts show locations of land based weather stations in various years. They have poor global coverage before 1920 and are still not so great today.

Honest global warming chart Blog: Poor distribution of land-based weather stations (elonionbloggle.blogspot.com)

Surface data in general, even today, is a ‘rough estimate’.

Certainly rough, when you consider that most of the atmosphere at Earth’s surface is over ocean. There we mostly have only satellite data. No thermometers next to runways.

Well stated Richard. So we don’t need to worry about 2°C above preindustrial because we do not know the preindustrial temperature.

Central England’s three weather stations already had well over +2 degrees C of warming since the very cold 1690s, closer to +3 degrees C.

People living there in the 1690s would have loved today’s much more pleasant temperatures.

“People living there in the 1690s would have loved today’s much more pleasant temperatures.” Exactly. Today’s temperatures are pleasant and life sustaining. Get your hand off the alarm button.

There were few measurements in the Southern Hemisphere before 1920 and ocean measurements were mainly in Northern Hemisphere shipping lanes with questionable accuracy of buckets and thermometers.

“ Evidence from the only region with measurements is indicative of the whole, absent any contrary evidence, and you offered none.”

Anti-science baloney

Sparse coverage of the Northern Hemisphere is not a global average. Bucket and thermometer measurement methodology makes the problem worse.

Global coverage by well-maintained and well-sited weather stations was better during the British Empire than after its demise, 1948-64, especially in the Southern Hemisphere. There was no continuous South Pole data, however, until 1958.

If we’re concerned about accuracy, no global readings before the satellite era make the cut, and even those are debatable. That includes ice proxies and Mann’s treemometer concoctions and any other numbers you want to discuss. So what were we getting worked up about? Greta’s feelings?

I have no idea why people are getting worked up about a prediction of climate doom. I guess they have blind trust in leftist politicians and government bureaucrats.

We love global warming here in Michigan USA.

Give us more of that.

Yup! Sure has been hot and dry here in SE Michigan this summer. I want some rain. Must be La Niña?

We have something better than a global average temperature that NOT ONE PERSON lives in:

We have almost 8 billion first-hand witnesses who have lived with up to 47 years of global warming. If you were born after 1974 you have experienced global warming for your entire life.

I bet a lot of people barely noticed. No one was harmed.

We have an advantage here in Michigan, where we have lived in the same home since 1987, and four miles south for 10 years before that. Living in one place makes it easier to notice a small gradual change in the climate. Our winters are generally not as cold as they were in the 1970s. And last winter had the least amount of snow of any winter since 1977. So not only have we been able to notice the mild global warming, but we also love it. And we don’t need an average temperature number to tell us how climate change actually affected us.

I’ve lived 80 years and see clearly that the warming and cooling are cyclical. What I’m experiencing this year is bringing back memories of the 1950s, and your choosing 1974 brings back memories of the extreme snow and cold I experienced then. It’s no warmer now than what I experienced repeatedly. I’ve lived in the same spot since 1968 and I see the same ups and downs decade to decade.

But our first-hand experiences with actual climate change over many decades don’t count because we are not climate scientists with supercomputers and climate models. And even worse, we never make scary predictions of the future climate !

But 1850 is ok?

Other than saying the climate has warmed since the Maunder Minimum period in the late 1600s, we do not have accurate global data until UAH in 1979. Surface data after WWII could have been reasonably accurate — still too much infilling — but has had huge arbitrary adjustments for the 1940 to 1975 period. Can’t trust those climate bureaucrats.

I wouldn’t put too much faith in UAH. We truly have no idea what the uncertainty of UAH is. It doesn’t even measure temperature but atmospheric emissions. Those are then converted to temperature. No idea of what the uncertainties are associated with the emission measurements and no idea of the uncertainties of the conversion algorithms.

All we really get is the average residual between the UAH measurements and the UAH average trend line and that is called an “uncertainty”. It is actually a best-fit metric for linear trend lines and the stated values, it is not measurement uncertainty.

Except UAH more closely resembles the sea surface temperature than others.

It’s safe to assume the average temperature either went up or down since 1979. Every data source says up. I say up too.

I think it was the late Prof Bob Carter who made the point that the uncertainty range of the supposed global thermometer record from ~1850 was greater than the claimed temperature rise since then.

Gee thanks know-it-all. Quit thinking you or me have ANYTHING to do with earthly temp changes besides ill-placed thermometers.

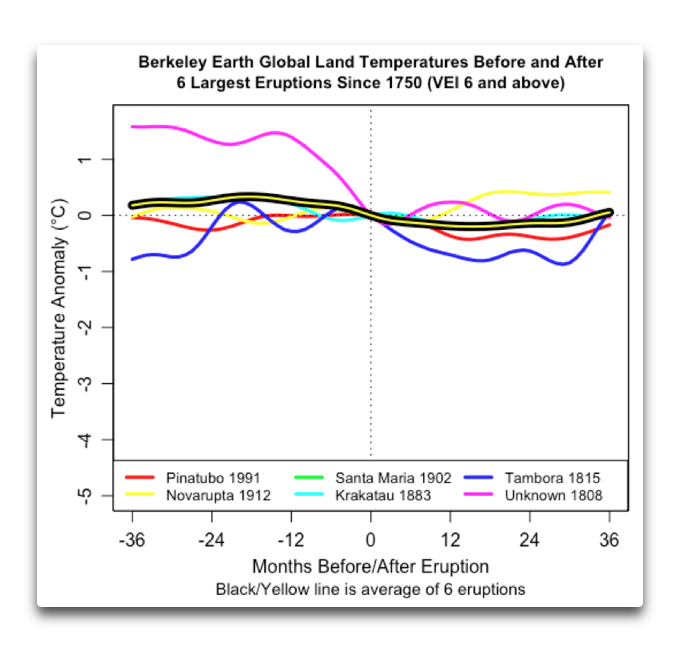

Krakatoa erupted in 1883 and apparently injected so much sulfur and ash into the upper atmosphere that it caused a global cooling event that lasted well into the 20th century. (1:25.00 in the video)

https://www.youtube.com/watch?v=yCXSDzo0tLg

They don’t quantify how much cooler, or how far exactly “well into the 20th century” means, but I’m sure it was a contributory factor.

A volcano affecting the global climate for over 17 years?

I don’t believe it.

Science isn’t about belief.

Volcanoes can affect the climate for a few years.

Not 17+ years.

I know baloney when I read it.

And you piled on with a meaningless character attack.

Troll on Richard.

In one comment you tell someone else they are unscientific for not providing “data”, then in this post you say, without any proof, “I know baloney when I read it”, without ANY data.

You ARE just a troll.

Find one scientist who even believes any one modern era volcano affected the global climate for 17+ years

And Climate “science” ain’t about science. Hence the autistic girl as spiritual leader and chastiser-in-chief.

If the cooling is enough to cause ice fields to increase in size, then even after the sulfur drops out of the atmosphere, the ice fields will continue to cool the planet until they finish melting.

SO2 emissions have already been reduced by 75% to 80% since 1970. There was global cooling with high levels of SO2 before 1975, and global warming with high levels of SO2 after 1975. Conclusion: SO2 is a minor climate variable.

Where the SO2 is makes all the difference. Emissions from power plants and such stay in the lower atmosphere and are washed out of the atmosphere quickly. Volcanoes, especially the big ones, put SO2 into the upper atmosphere where they can stick around for years.

Trying to judge what impact volcanoes have by looking at power plant emissions is a fool’s errand.

Cooling is expected to be 2-3 years. However, maybe it tripped a tipping point. Tipping points are all the rage these days. Why not in 1886?

Crispin Pemberton-Piggot:

For a VEI4 eruption, it takes at least 5 years before their emissions fully settle out of the atmosphere. After that time, temperatures begin to rise because of the fully cleansed air.

For larger eruptions, more time is required: 15 years or more for a VEI7.

The only unambiguous VEI-7 eruption to have been directly observed in recorded history was Mount Tambora in 1815 and caused the Year Without a Summer in 1816.

Not out of the question, but much larger volcanoes have blown off in the last few thousand years. I believe that the Arctic Ocean is the driver of long-term swings in temperature. When ice extent is high, it insulates the ocean from giving up heat and also increases albedo, contributing to keeping the extent at a higher level. Over time, however, the ocean warms under the ice, from water intruding from the Pacific and Atlantic. Eventually, the ice begins to thin from underneath. As it breaks up, wind becomes a factor, cooling the surface and mixing the surface layer. Cooling increases as albedo drops, so it takes some time for the ice to get back to minimum. As part of this process, Arctic air temperatures swing from continental cold toward a more marine state, with follow-on effects on global temperatures.

Or the even larger event in 1815.

Pretty sure that would mean that an individual volcano has to be averaged into the effects of all the much smaller ones. I don’t see how you avoid that conclusion, which reduces the short term effect of the larger volcano. Honestly, 50 years of study and they can’t quantify the effect of anything? Except that whatever happens must make it worse. This is what we’re calling science these days?

Krakatoa is not the only such event in recent history. The explosion of Mount Tambora in 1815 produced a huge drop in insolation for years afterwards. 1816 was known as ‘the year without a summer’, and it ushered in years of crop disasters on a global basis. Some estimate it reduced the world’s average temperature by at least half a degree C.

It’s interesting that the last London Frost Fair, in January 1814, predates the Tambora eruption of 5 April 1815 by over a year. The winter of 1813-14 is regarded as one of Europe’s coldest, and Napoleon had problems with supplies because of that cold winter. So the eruption was given a head start, in Europe at least.

Not in Australia – we had the millennium drought along with our highest temperatures recorded during the 1890s. It was so hot that birds fell out of the sky and citizens were sent by train to the tablelands closer to Sydney. Some 45 people died that night in January 1896 in the Bourke area in western NSW. Now that is hot.

Willis disproved this claim several times.

e.g.,

‘Stacking Up Volcanoes’ – Willis Eschenbach

Krakatau barely affected temperatures for several months.

Steve Case:

The temperature drop was due to volcanic eruptions in 1880 (VEI4?), two in 1883 (VEI4 and VEI6), three in 1886 (VEI4, VEI5, and VEI4?), six VEI4 between 1888 and 1889, four in 1902 (VEI4, VEI4?, VEI4, VEI5?), and four between 1903 and 1907 (VEI4, VEI4, VEI4?, VEI5).

That was a period when the atmosphere was well polluted with dimming volcanic SO2 aerosols, as well as Industrial SO2, which rose from 9 Megatons in 1880 to 32 Megatons in 1910.

Volcanic activity has been increasing for the past 200 years. You have cherry picked data to obscure that trend.

Global Volcanism Program | Has volcanic activity been increasing?

There were some observations in the Arctic, some areas of the Northern Hemisphere, Australia and New Zealand that showed cooling. The problem is that GISS coverage was very poor between 1880 and 1910 over the entire ocean, Africa, the Middle East, South America, Antarctica and anywhere from Mexico south in North America.

https://data.giss.nasa.gov/gistemp/station_data_v4_globe/

Fact checkers seem to always leave out important … facts.

What about the PDO shift in 2014? It completely explains the warming that led to the “rapid temperature rise”. Since then there’s been a 7 year cooling trend of nearly 0.2 C / decade. We are already returning back to the situation prior to 2014 and with the AMO about ready to change into its cool phase, the cooling will only accelerate.

Careful, you might wake up even more people than the initial hockey stick sceptics and independent thinkers with these details and factors. That could cause scrutiny from Soros-funded DAs.

fact checkers are fact chokers

The only difference between the two pauses has been the strong El Nino in 2015 and data manipulation of global temperature sets like GISS, HADCRUT4 and RSS trying to hide the decline.

https://www.woodfortrees.org/plot/uah6/from:1998/to:2015/plot/uah6/from:2016/plot/uah6-land/from:1998/to:2015/trend/plot/uah6/from:2016/trend

It might not be a pause…it might be topping out of the Modern Warming Period and a beginning of a return to Little Ice Age type climate….climate changes…none of us alive today will see the next bottom…..call it the Modern Cool Period beginning soon.

Monckton is a data mining fraud.

He hurts the cause of climate realists.

The UAH data begin in 1979.

He truncates data he does not like to create meaningless short-term trends.

“The (UAH) linear warming trend since January, 1979 still stands at +0.13 C/decade (+0.11 C/decade over the global-averaged oceans, and +0.18 C/decade over global-averaged land).”

Monckton cherry picks short term trends because he is biased.

There have been many short-term flat trends since the latest global warming trend began during the 1690s (the coldest decade of the Maunder Minimum period).

There was even a significant global cooling trend from 1940 to 1975, which has since been “revised away”.

Not one of those short-term flat trends, nor the 1940 to 1975 global cooling, had any ability to predict the climate changes that followed. Global warming continued even after the 1940 to 1975 cooling trend. Those trends were meaningless variations within a longer-term warming trend.

If you’re going to attack someone on their work, provide proof of your position.

Monckton has the whole 42 year UAH record available.

42 years is climate.

He cherry-picks only the years he wants, to make a meaningless claim.

8 years is weather.

The Monckton starting year of 2015 includes the unusually large 2015/2016 El Nino heat release, unrelated to greenhouse gases. Choosing that starting year is also biased.

Even the 42 years of UAH data are not enough to establish long-term trends. First, we know that from the mid-1950s to the late 1970s we were in a cooler period, but that period is not in the satellite record. Second, there were years in the 1980s and early 1990s in which global temperatures were depressed by stratospheric volcanic eruptions, which have a non-CO2-related effect on the per-decade UAH rise. And the 1930s to mid-1950s were nearly, if not just, as warm as today.

These variable conditions may be the result of longer term trends influenced by the PMO and AMO. But the issue is that our lack of a complete global dataset more than 42 years old hinders our ability to understand what, if any, contribution CO2 is adding to the recent rise in warming over and above natural cycles. Therefore, anyone who says that the science is settled is either ignorant or lying.

The pre-1979 surface data can’t be trusted.

In 1975 NCAR reported significant global cooling from 1940 to 1975 that has since been “revised away” with no explanation. It’s true that the accuracy of the global average temperature is questionable before 1979 and the use of weather satellites.

But your statement about that is wrong.

You wrote: “The issue is that our lack of a complete global dataset more than 42 years old hinders our ability to understand what, if any, contribution CO2 is adding to the recent rise in warming over and above natural cycles.”

We could have an accurate data set for 100 years and we STILL would not know the exact effect of CO2. There are too many climate change variables to know exactly what CO2 does. To know exactly what CO2 does, you would have to know what every other climate change variable does. We don’t know that, and are not even close to knowing that.

The warming since 1975 could be 100% natural or 100% CO2 but both would be a wild guess, and probably very unlikely. The rate of warming since 1975 suggests 100% natural causes are unlikely.

CO2 is a greenhouse gas. Adding CO2 to the atmosphere should impede Earth’s ability to cool itself by some amount. There is no evidence that amount has been harmful in any way. AGW is a reasonable assumption. But CAGW is an unreasonable prediction.

It’s rather frustrating that alarmists continue to insist that since their assessment of what data can and cannot be trusted begins at the end of the strongest cooling trend in recent history, essentially climate begins at that moment.

It’s a lot like watching a game of hockey starting late in the third period and analyzing teams based on how they behave when the score is particularly one-sided and time is running out.

Of course, when you then extrapolate based on that tiny amount of biased information, you’re going to get a hockey stick.

The data over that short a time period doesn’t suggest much of anything, especially since, when you use your conclusions to go back in time, it’s a very poor model of past temperature and requires gymnastics like flattening out the Little Ice Age and the Medieval Warm Period.

And even then, it’s not enough. You have NOAA altering the past temperature record because the trend in the 1930s thoroughly breaks the loose association between CO2 and temperature. And even then, it’s not enough unless you program “tipping points” into models.

Why assume changing CO2 drives anything? It’s just one of millions of variables, and after 30 years of desperately trying to make this tail wag the climate dog, it still requires charlatans like Mann committing malpractice on the scientific method.

“It’s rather frustrating that alarmists continue to insist that since their assessment of what data can and cannot be trusted begins at the end of the strongest cooling trend”

It’s worse than that.

Alarmists predict a future global warming rate 2x faster than the 1975 to 2020 period. And they never mention they made the same predictions from 1975 to 2020, and were wrong for the entire 45 years!

“We could have an accurate data set for 100 years and we STILL would not know the exact effect of CO2. There are too many climate change variables to know exactly what CO2 does. “

Exactly! Which is why short term trends *must* be considered. They are not meaningless. They are indicators of something occurring that long term trends do not adequately address.

“The rate of warming since 1975 suggests 100% natural causes are unlikely.”

Because of uncertainty in the measurements, including satellite measurements, how do we *really* know what the rate of warming actually is? The uncertainty interval completely masks the range in which the supposed measurements lie. How do you determine a 0.13°C difference when the uncertainty is more than ±0.5°C?
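Whether averaging reduces uncertainty depends on the kind of error involved, which is the crux of this exchange. A minimal sketch with made-up numbers (the 0.5 °C error scale, the 0.3 °C bias, the reading count and the name `mean_error` are illustrative assumptions, not properties of any real instrument or dataset): independent random errors largely cancel in a mean, while an error shared by every reading does not. It also assumes every reading measures the same quantity, so on its own it says nothing about averaging readings of different things.

```python
import random
import statistics


def mean_error(n_readings, random_sd, shared_bias, seed=0):
    """Mean error of n readings, each = shared bias + independent Gaussian noise.

    All inputs are illustrative assumptions, not values from any real dataset.
    """
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    errors = [shared_bias + rng.gauss(0.0, random_sd) for _ in range(n_readings)]
    return statistics.fmean(errors)


# Independent errors only: the mean error is typically near random_sd / sqrt(n).
print(round(mean_error(1000, random_sd=0.5, shared_bias=0.0), 3))
# Add a 0.3 °C bias common to every reading: it passes straight through the average.
print(round(mean_error(1000, random_sd=0.5, shared_bias=0.3), 3))
```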

Averaging does *NOT* increase accuracy nor does it lessen uncertainty, not when you are combining multiple measurements of different things. And that is what temperature measurements are, multiple measurements of different things. There is no guarantee they will generate a normal curve which can be described by the usual statistical descriptions of standard deviation and average. Temperature data should be described using the 5-number description: minimum, first quartile, median, third quartile, and maximum. Why are climate scientists, especially CAGW advocates, so reticent about doing this?
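The five-number summary suggested here is easy to compute with Python's standard library. A minimal sketch: the readings and the function name are made up for illustration, and the quartile convention (`method="inclusive"`) is one choice among several, so other tools may report slightly different Q1 and Q3 values.

```python
import statistics


def five_number_summary(values):
    """Return (minimum, Q1, median, Q3, maximum) of a list of readings."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return min(values), q1, median, q3, max(values)


# Hypothetical readings in °C, for illustration only.
readings = [12.0, 14.5, 15.0, 16.0, 18.5, 21.0, 30.0]
print(five_number_summary(readings))  # -> (12.0, 14.75, 16.0, 19.75, 30.0)
```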

“Exactly! Which is why short term trends *must* be considered. ”

I’ll ask again, and not expect an answer, why do you consider the last 8 years to be a short term trend that needs to be considered and not the last 10 or 12 years?

They *ALL* need to be considered! When doing forecasts, however, the further back you go the less weight individual data values should have. The past eight years should be weighted heavier than years 10, 11, and 12.

It’s EXACTLY like forecasting weather! What happens today in weather is a far better predictor of what is going to happen tomorrow than the weather from 10, 11, or 12 days ago.

Do *YOU* believe the average weather of the past 30 days is a better predictor of the weather tomorrow than the weather you are experiencing today?

Why wouldn’t what happened this year be a better predictor of next year than a year 12yrs in the past? 20 yrs in the past? 40yrs in the past?

Your claim seems to be that what happened 40yrs ago is just as predictive of next year as what happened this year.

Forgive me but that belief is a religious one, not a scientific one.

“They *ALL* need to be considered!”

So why do I never see anyone here doing that? The only short term trends that are considered noteworthy are those showing a negative trend. If more recent term trends are considered more important, why did Monckton spend so much time going on about an 18 year “pause” whilst ignoring the more recent 7 or 8 years showing an accelerated rate of warming?

“It’s EXACTLY like forecasting weather!”

We are not trying to forecast anything here, we are trying to establish what the current trend is and if it has changed.

“Do *YOU* believe the average weather of the past 30 days is a better predictor of the weather tomorrow than the weather you are experiencing today?”

No I don’t. On the other hand the weather of the past 30 years is likely to be a better indicator of the weather in a few years time than the weather yesterday.

“Your claim seems to be that what happened 40yrs ago is just as predictive of next year as what happened this year.”

My claim is that looking at all the data over the last 40 years is a better indicator of what’s currently happening than the last few years, and that before you assume it’s not, you should show a statistically significant indication there has been a change.
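One common way to ask whether a trend has changed is to fit each period separately and compare the two slopes against their standard errors. A rough sketch, assuming independent residuals (monthly temperature anomalies are autocorrelated, which widens the real uncertainties) and a breakpoint fixed in advance; picking the breakpoint after looking at the data makes a spurious “change” easier to find. The function names and the tiny synthetic series are hypothetical, for illustration only.

```python
import math


def ols_slope_and_se(x, y):
    """Ordinary least squares slope and its standard error.

    Assumes independent residuals; autocorrelated data would need a correction.
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    return slope, math.sqrt(sse / (n - 2) / sxx)


def slope_difference_z(segment_a, segment_b):
    """Approximate z-score for the difference between two separately fitted slopes.

    Each segment is an (x, y) pair of lists; |z| well above ~2 suggests a real change.
    """
    slope_a, se_a = ols_slope_and_se(*segment_a)
    slope_b, se_b = ols_slope_and_se(*segment_b)
    return (slope_b - slope_a) / math.sqrt(se_a ** 2 + se_b ** 2)


# Synthetic series: a straight line plus an alternating ±0.05 wiggle.
wiggle = [0.05 if i % 2 == 0 else -0.05 for i in range(10)]
x_a = list(range(10))
y_a = [0.1 * xi + w for xi, w in zip(x_a, wiggle)]
x_b = list(range(10, 20))
y_b_same = [0.1 * xi + w for xi, w in zip(x_b, wiggle)]
y_b_faster = [0.3 * xi + w for xi, w in zip(x_b, wiggle)]

print(slope_difference_z((x_a, y_a), (x_b, y_b_same)))    # about 0: no change
print(slope_difference_z((x_a, y_a), (x_b, y_b_faster)))  # far above 2: a clear change
```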

“Forgive me but that belief is a religious one, not a scientific one.”

Yes, wanting to see evidence for something is the religious approach.

“more recent 7 or 8 years showing an accelerated rate of warming?”

Accelerated compared to what?

See attached. I don’t see any acceleration in the rate of warming!

Accelerated compared with the previous warming. e.g. 0.26°C / decade compared with 0.13°C / decade.

Of course you don’t see any acceleration on that graph. That’s because a) there isn’t any, and b) you are not cherry-picking the most recent years at any point.

For example here is the trend from December 2007 to October 2015. Trend is 0.28°C / decade, more than twice the overall trend. By your logic this should have been of more interest than the 18 year pause.

Here’s the graph:

OK, let’s look at all of the “decadal (120-month trailing) trends” available from the UAH dataset, and how they have evolved (/ are evolving ?) over time.

Following the 1997/9 “massive / Godzilla” El Nino, the UAH decadal trends show a jump from a (roughly) zero value to a double-peak (in 1998 and 2001/2), followed by a decline to a minimum at the beginning of 2012.

Following the 2015/6 “massive / Godzilla” El Nino the UAH decadal trends show a double-peak (in 2017 and 2020), and are currently “trending” lower …

Will the decadal trend continue down for the next 7 or 8 years ?

Nobody “knows” for certain.
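The “decadal (120-month trailing) trends” described above can be computed roughly as follows. A sketch assuming a gap-free Python list of monthly anomalies; the function names are hypothetical, this is not the commenter's own code, and the usage runs on a made-up straight-line series rather than UAH data. Consecutive windows share 119 of their 120 months, so neighbouring values on such a graph are far from independent.

```python
def ols_slope(x, y):
    """Ordinary least squares slope of y against x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    num = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    den = sum((xi - x_bar) ** 2 for xi in x)
    return num / den


def trailing_trends(anomalies, window=120):
    """Trend in °C per decade for each trailing `window`-month block.

    `anomalies` is a gap-free list of monthly values, oldest first. Entry k of
    the result covers months k .. k + window - 1.
    """
    months = list(range(window))  # time axis in months within each block
    trends = []
    for end in range(window, len(anomalies) + 1):
        block = anomalies[end - window:end]
        trends.append(ols_slope(months, block) * 120)  # per month -> per decade
    return trends


# Synthetic series rising at exactly 0.13 °C per decade (not real UAH data).
synthetic = [0.13 * m / 120 for m in range(480)]
trends = trailing_trends(synthetic)
print(len(trends), round(min(trends), 3), round(max(trends), 3))  # -> 361 0.13 0.13
```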

OK.

Your own graph shows that the 10 year trend up to 2021, the time Monckton starts his new pause analysis, was the highest it’s ever been, over 0.5°C / decade. It’s now down to around 0.2°C / decade, faster than the underlying UAH trend, faster than most data sets over the last 40+ years.

None of this means the rate of warming has accelerated. All the graph really shows is that 10-year trends are very uncertain, and any conclusion drawn from any randomly chosen 10-year period is likely to be very wrong.

If a trend [ = “a rate of warming” ] increases in magnitude then it is, by definition, “accelerating”.

If it reduces in magnitude then it is, by definition, “de-celerating”.

Going from a trend of 0.5°C/decade to 0.2°C/decade is a de-celeration of 60%
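Written out, the 60% figure is the drop in the 10-year trend divided by its earlier value, using the approximate 0.5 and 0.2 °C/decade readings quoted from the graph:

```latex
\frac{0.5\ {}^{\circ}\mathrm{C/decade} - 0.2\ {}^{\circ}\mathrm{C/decade}}{0.5\ {}^{\circ}\mathrm{C/decade}}
  = \frac{0.3}{0.5} = 0.6 = 60\%
```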

Yes they are, which is why the default integration time for something to be considered as “climate” is 30 years.

I still consider my graph to be “intriguing” though, given “monster” El Ninos (1982/3, 1997/8, 2015/6) happen every 15 to 18 years or so …

According to your graph we are already below 1991/1992 temps.

That’s a 30 year spread, not a 10 year spread.

What it really shows is the cyclic factors in the temperatures. Right now it appears we are on the cooling side of a cycle. The question is where it will end.

1) “My graph” is of (10-year / 120-month) trends, not “temps”.

2) UAH, like RSS and STAR, doesn’t provide (global mean) “temperatures”, but “temperature anomalies” at various altitude (/ pressure level) bands derived from satellite MSU data.
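The distinction between temperatures and temperature anomalies can be sketched in a few lines. This is a generic illustration, not UAH's actual procedure: each value is expressed relative to the mean of the same calendar month over a chosen base period. The function name, the (year, month, value) record layout and the base years are assumptions, and the sketch also assumes every calendar month in the records appears at least once in the base period.

```python
from collections import defaultdict


def monthly_anomalies(records, base_start, base_end):
    """Turn (year, month, value) records into (year, month, anomaly) records.

    Each anomaly is the value minus the mean of the same calendar month
    over the inclusive base period base_start..base_end.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for year, month, value in records:
        if base_start <= year <= base_end:
            sums[month] += value
            counts[month] += 1
    # Per-calendar-month baseline ("climatology") for the base period.
    climatology = {month: sums[month] / counts[month] for month in sums}
    return [(year, month, value - climatology[month]) for year, month, value in records]


# Hypothetical absolute values (°C) for January and July only.
records = [(2000, 1, -10.0), (2000, 7, 5.0), (2001, 1, -12.0), (2001, 7, 7.0), (2002, 1, -9.0)]
print(monthly_anomalies(records, 2000, 2001))
# -> [(2000, 1, 1.0), (2000, 7, -1.0), (2001, 1, -1.0), (2001, 7, 1.0), (2002, 1, 2.0)]
```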

Hopefully the following graph will make the difference clearer (with trends in °C per decade so they fit on the same Y-axis number scale).

Temperature anomalies are crap from the start to the finish. They are derived from bad data using bad statistical processes.

You can post anomalies all day long, such as the global average temperature, and I’ll show you how they are crap. I’ve done it multiple times with you and you just refuse to listen, instead just relying on religious dogma to carry you through!

“Temperature anomalies are crap from the start to the finish.”

Yet you use them to claim there’s been a change in the trend with no uncertainty.

Seriously, show some consistency. If there is no record that shows what’s actually happening to global temperatures, then all your claims that there is a pause, or that you can prove CO2 is not correlated with temperature, must be pure speculation.

Uncertainty is not going to help you. If we don’t know for sure temperatures are rising, we also don’t know that they are not rising at a much faster rate.

“Yet you use them to claim there’s been a change in the trend with no uncertainty.”

I am criticizing what is out there. I have stated several times just in this subthread that I have little confidence in *any* of the so-called temperature data, especially the derived anomalies.

“If there is no record that shows what’s actually happening to global temperatures, then all your claims that there is a pause, or that you can prove CO2 is not correlated with temperature, must be pure speculation.”

I have been especially consistent in criticizing *all* of the so-called temperature constructions. I guess you just don’t bother to read or you have a failing memory. That does *NOT* mean I can’t comment on what is out there. *I* am not the one that is saying the 40 year trend line is what we should depend on for creating expectations for the near-term future. That is *YOU*!

“Uncertainty is not going to help you. If we don’t know for sure temperatures are rising, we also don’t know that they are not rising at a much faster rate.”

Which is *EXACTLY* what I have been saying. We don’t know for sure if the trend slope is negative, positive, or zero. I’ve used that sentence multiple times with you!

Are you finally coming around to agreeing with me? Or are you going to continue assuming that measurement uncertainty is irrelevant and can be ignored?

“I have been especially consistent in criticizing *all* of the so-called temperature constructions. I guess you just don’t bother to read or you have a failing memory. That does *NOT* mean I can’t comment on what is out there.”

Granted my memory isn’t that good and not likely to get better at my age. But all I’m trying to get you to acknowledge is the inconsistency in you claiming that all temperature constructions are useless, yet also claiming you know with certainty that the trend has changed over the last 8 years, and claiming this trend shows all models are wrong and CO2 cannot be causing warming.

“*I* am not the one that is saying the 40 year trend line is what we should depend on for creating expectations for the near-term future. That is *YOU*!”

Maybe you need to worry about your own memory. You keep remembering things I haven’t said. All I’ve said is that a 40 year trend is likely to be a better predictor than an 8 year trend. I certainly don’t think you should depend on it.

“We don’t know for sure if the trend slope is negative, positive, or zero. I’ve used that sentence multiple times with you!”

But you insist we shouldn’t ignore it because short-term trends often become long-term ones. Do you see the problem? How can we concentrate on this short trend if we don’t know whether it’s warming or cooling much faster than before?

“Are you finally coming around to agreeing with me? Or are you going to continue assuming that measurement uncertainty is irrelevant and can be ignored?”

I keep telling you my thoughts on the subject, but you keep forgetting.

“We are not trying to forecast anything here, we are trying to establish what the current trend is and if it has changed.”

The current trend has *certainly* changed over the past eight years. You just don’t want to admit it.

Of what use is a trend line if you aren’t going to use it as a predictor of the future? What does knowing what happened in the past 40 or 50 years tell you? That it warmed over that period? So what? Does that mean it’s going to continue to warm? Or does it mean you simply don’t know what it’s going to do in the future?

If you don’t know what it’s going to do in the future then it’s all just mental masturbation with no purpose.

“No I don’t. On the other hand the weather of the past 30 years is likely to be a better indicator of the weather in a few years time than the weather yesterday.”

ROFL! So if it is sunny and hot today you think it is not likely to be sunny and hot tomorrow if 30 yrs ago it rained?

Why is the weather of 30yrs ago a better predictor of next year than this year? On what do you base that assumption? Tradition?

Bottom line? You are as bad at forecasting as you are at handling uncertainties.

“My claim is that looking at all the data over the last 40 years is a better indicator of what’s currently happening than the last few years”

You would make a *terrible* farmer where you must base your choice of crop and planting time based on what happened over the last few years rather than what happened 40 years ago!

You are bad, bad, bad at forecasting.

The same thing would apply to almost anything one can think of – sizing growth investment in infrastructure, writing long term contracts for supplies, determining highway investments, etc.

“you should show a statistically significant indication there has been a change.”

Exactly what Monckton has done and which you can only denigrate because you are jealous of what he has done.

“The current trend has *certainly* changed over the past eight years.”

Only if you ignore all uncertainties. You only want to use the stated values of the trend. But in that case, the trend is always changing. If you look at all 8 year trends over the UAH data, there are some warming at over 0.5°C / decade, and some cooling by 0.3°C / decade.

“Of what use is a trend line if you aren’t going to use it as a predictor of the future?”

To tell you what has been happening.

“What does knowing what happened in the past 40 or 50 years tell you?”

It tells you it’s been warming. It allows you to investigate reasons for that warming.

“Does that mean it’s going to continue to warm?”

You’re the one who keeps insisting the current trend needs to be considered a possible indication of what’s going to happen.

If you have no other information it may be the best assumption that a trend will continue into the future, but you don’t want to project that too far.

But it’s far better to understand what’s happening and try to use that information for future predictions.

“ROFL! So if it is sunny and hot today you think it is not likely to be sunny and hot tomorrow if 30 yrs ago it rained?”

No. If it’s unusually sunny and hot today, I’m not going to assume that it will continue to be unusually sunny and hot for the next 5 years. It’s reasonable to assume that if it’s rained on occasions over the last 30 years, that it will rain at some point in the future.

It was 40°C here a couple of days ago. I know that’s far from the average, so I’m not going to assume that tomorrow will also be 40.

“You would make a *terrible* farmer where you must base your choice of crop and planting time based on what happened over the last few years rather than what happened 40 years ago!”

If I were a farmer I’d listen to what the weather forecasts were rather than assume the weather this year was going to be the same as it was last year.

“You are bad, bad, bad at forecasting.”

Give one example of a forecast I made that has been proven wrong.

“The same thing would apply to almost anything one can think of”

To be clear, when I say look at long term statistics, I’m also including trends etc. I’m not saying the weather next year is likely to be the same as it was 30 years ago, because we know there’s been 30 years of warming since then. I’m just saying don’t assume that just because this summer was unusually hot means next summer will also be unusually hot.

“Exactly what Monckton has done…”

I’ve never seen Monckton give any evidence of a statistically significant change in trend. If you know otherwise, then show me where he does. I’ve looked at the data enough to satisfy myself that no such evidence exists, but I’m always willing to look at any new evidence.

“Only if you ignore all uncertainties. “

If you consider uncertainties then the 40 year trend is just as meaningless as the 8 year trend. So are you saying you can’t use the 40 year trend either?

“You only want to use the stated values of the trend.”

Malarky! *I* am the one that informed you that your “uncertainty of the trend” was not an uncertainty at all – it is only a best-fit metric between the trend line and the stated values with no regard to the uncertainty associated with the stated values!

If the uncertainties of the stated values are so bad over the past eight years that the trend is unusable then so is the trend line for the past 40 years!

“To tell you what has been happening.”

What use is that? Are you going to use it to guess the future? It’s already the past. You lived through it. Apparently it didn’t cause the elimination of the human race.

“It tells you it’s been warming. It allows you to investigate reasons for that warming.”

Does that mean it is always going to warm? It’s pretty obvious that you think so.

It’s been 40 years with CAGW advocates creating and running climate models – and we are no closer to knowing the reasons for why the atmosphere acts as it does. The models all show the warming going up with the same slope forever – just like your 40 year trend. Apparently we are never going to have another ice age according to the models.

The 18 year pause and the current 8 year pause *should* be clues to honest researchers that the models aren’t working correctly since they don’t reproduce them at all. Calling the pauses “noise” is just the argumentative fallacy of Argument by Dismissal.

“If you consider uncertainties then the 40 year trend is just as meaningless as the 8 year trend.”

Only if you don’t understand how uncertainties in a trend work. Which of course, you don’t and I’m sure you are about to illustrate that again.

“Malarky! *I* am the one that informed you that your “uncertainty of the trend” was not an uncertainty at all”

You don’t inform someone by spouting nonsense. You insist that there is no uncertainty in a trend line, because “it is just a best fit metric”. Hence you want to rely only on the stated value of the trend line. That’s your only justification for saying the trend has certainly changed 8 years ago.

“If the uncertainties of the stated values are so bad over the past eight years that the trend is unusable then so is the trend line for the past 40 years!”

Pointless explaining this again. But here goes. The uncertainty in the trend line is not normally based on supposed uncertainty in the measurements, but on the variation in the data. This variation can come from different sources, including measurement error, but is mainly due to other factors not related to the independent variable (time in this case).

The less data you have, the more this variability contributes to the uncertainty of the trend. Hence the uncertainty over an 8 year period is much greater than over a 40 year period.
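The dependence on record length can be made concrete with the textbook formula for the standard error of an ordinary least squares slope. A sketch, assuming evenly spaced monthly points and independent residuals with an arbitrary 0.2 °C standard deviation (real anomalies are autocorrelated, which makes both standard errors larger); the function name is hypothetical. With the same scatter, the slope's standard error falls roughly as n^(-3/2), so 96 months gives a standard error about eleven times that of 480 months.

```python
import math


def trend_standard_error(n_months, residual_sd):
    """Standard error (°C per decade) of an OLS slope fitted to n evenly spaced monthly points.

    Assumes independent residuals with standard deviation residual_sd (°C).
    For x = 0, 1, ..., n - 1 months, sum((x - mean)^2) = (n**3 - n) / 12.
    """
    sxx = (n_months ** 3 - n_months) / 12.0
    se_per_month = residual_sd / math.sqrt(sxx)
    return se_per_month * 120  # °C per month -> °C per decade


se_8_years = trend_standard_error(96, residual_sd=0.2)
se_40_years = trend_standard_error(480, residual_sd=0.2)
print(round(se_8_years / se_40_years, 1))  # -> 11.2
```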

Gorman wants to ignore all this uncertainty and base the uncertainty only on the measurement uncertainty for each month. That’s fine, and you can include it. But he also believes the uncertainty in UAH data is huge, much larger than all the variation observed in the data over the last 40 years. And he thinks the best way to determine the actual uncertainty in the trend line is to assume it’s possible for the trend to go from the coldest possible value for the first month to the warmest possible value for the last month, or vice versa.

Hence he thinks the uncertainty over 40 years could be 2.8°C divided by the time frame. So this would be around 0.13 ± 0.7°C / decade, whilst over the last 8 years it would be 0 ± 3.5°C / decade.
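The endpoint arithmetic in this paragraph can be checked directly. This only reproduces the figures as described (a 2.8 °C total swing spread over the span); the function name is hypothetical and the sketch is not a reconstruction of anyone's actual calculation.

```python
def worst_case_trend_bound(total_swing_c, span_years):
    """Trend change (°C per decade) if the whole swing is spread across the span.

    Corresponds to reading the first month at one extreme of its range
    and the last month at the other.
    """
    span_decades = span_years / 10.0
    return total_swing_c / span_decades


print(round(worst_case_trend_bound(2.8, 40), 2))  # -> 0.7
print(round(worst_case_trend_bound(2.8, 8), 2))   # -> 3.5
```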

For some reason he doesn’t think this invalidates the claim that the pause proves CO2 does not affect temperature, or that there has certainly been a change in trend over the last 8 years.

“What use is that? Are you going to use it to guess the future? It’s already the past. You lived through it. Apparently it didn’t cause the elimination of the human race.

…

Does that mean it is always going to warm? It’s pretty obvious that you think so.”

So many straw men.

Once again I am not trying to predict the future. I want to know if temperatures have been warming, past tense. I’d like to know if that warming stopped 8 years ago.

One reason for asking these questions might be to see what this would mean if the trend continued into the future, but it is not a good way of predicting the future.

I absolutely do not think it is always going to warm. If, as I think is likely, warming is being controlled by CO2, then warming will stop at some point after CO2 stops rising. It might also stop if other hypothetical cooling events occur, e.g. the earth is hit by a major asteroid, there’s a super volcano, or the sun were to go cold.

Linear regression can only really tell you about the trend within the scope of the data. Extrapolating far beyond that is dangerous for many reasons.
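One quantifiable part of the danger in extrapolation is that the statistical uncertainty of a fitted line grows with distance from the centre of the data. A sketch using the standard ordinary least squares formula, assuming independent residuals; the function name and synthetic series are illustrative. It captures only the uncertainty of the line itself under a straight-line model; the larger risk, that the straight-line model stops holding outside the data, is not something this formula can measure.

```python
import math


def fitted_line_se(x, y, x0):
    """Standard error of an OLS line's fitted value at x0.

    se = s * sqrt(1/n + (x0 - x_bar)**2 / sxx), where s is the residual
    standard deviation; it is smallest at x_bar and grows away from it.
    """
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    s = math.sqrt(sse / (n - 2))
    return s * math.sqrt(1.0 / n + (x0 - x_bar) ** 2 / sxx)


# Synthetic data: ten points on a line with an alternating ±0.05 wiggle.
x = list(range(10))
y = [0.1 * xi + (0.05 if xi % 2 == 0 else -0.05) for xi in x]
for x0 in (4.5, 9, 30):  # centre of the data, its edge, far beyond it
    print(x0, round(fitted_line_se(x, y, x0), 4))
```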

I do not believe it’s likely that global warming will result in the extinction of the human race. But arguing that it hasn’t happened yet so cannot happen no matter how warm it gets, is another of your fallacious arguments.

“You’re the one who keeps insisting the current trend needs to be considered a possible indication of what’s going to happen.”

They *are* a possible indication of what could happen! Otherwise the pauses would not have happened at all! Even better they are indicators that the models are wrong since the models don’t reproduce the pauses!

“If you have no other information it may be the best assumption that a trend will continue into the future, but you don’t want to project that too far.”

Ahhhh! So now you are changing your tune, eh? It’s always temporary with you. You will soon enough fall back into calling the pauses discontinuities, statistically insignificant, and cherry-picked data.

“But it’s far better to understand what’s happening and try to use that information for future predictions.”

Of course! And Monckton is pointing out that the models don’t understand what is happening since they don’t reproduce the pauses in the face of ever increasing CO2!

“No. If it’s unusually sunny and hot today, I’m not going to assume that it will continue to be unusually sunny and hot for the next 5 years.”

How about for 18 years?

“It’s reasonable to assume that if it’s rained on occasions over the last 30 years, that it will rain at some point in the future.”

And if you can’t tell from your model when that rain is going to happen then what use is the model?

“If I were a farmer I’d listen to what the weather forecasts were rather than assume the weather this year was going to the same as it was last year.”

The Old Farmers Almanac has provided better long range forecasts for the next year than the climate models! The OFA claims an 80% average accuracy with its long range monthly forecasts. Since they publish a past year review on their accuracy each year, if they were fudging the numbers they’d get caught out pretty quickly.

The OFA does *NOT* use a 40 year trend line to forecast the next years weather!

“Give one example of a forecast I made that has been proven wrong.”

You keep claiming that Monckton’s pause is unusable, statistically insignificant, causes discontinuities, etc. All to try and dissuade people from using the current pause to say that it might continue next year. You’ve said this for over a year. But we just keep seeing the pause continue every month regardless of your claim that it can’t because the 40 year trend line says it can’t.

“I’m not saying the weather next year is likely to be the same as it was 30 years ago, because we know there’s been 30 years of warming since then.”

Really? It sure sounds like that is what you’ve been saying! It sure sounds like you are now trying to change your tune! But then, just like with uncertainty, you revert right back to saying that next year will be just like the past 30 years! That’s based on your sentence “I’ve never seen Monckton give any evidence of a statistically significant change in trend.” In other words you *are* saying that the weather next year will be what the 40 year trend line says and not what the pause trend says.

You want your cake and to eat it too. It just doesn’t work that way!

“They *are* a possible indication of what could happen!”

It’s possible they could be the start of a new long-term trend. And it’s possible the trend since the start of 2011, 0.33°C / decade, will continue into the distant future. Anything’s possible, but with statistics I’d prefer to be skeptical and wait for clear evidence before basing assumptions on slim possibilities.

“Ahhhh! So now you are changing your tune, eh?”

And I see we are in another long session of strawman arguments. I say that the best indicator of future trends, if you have no other information, might be the trend over the last 40 years. I’ve no idea why you think that disagrees with anything else I’ve said.

“How about for 18 years?”

Obviously if I think it’s unlikely the current weather conditions will last for the next 5 years, I doubt they will last for the next 18. What is your point?

“And if you can’t tell from your model when that rain is going to happen then what use is the model?”

By model, you mean looking at weather over the last 30 years as an indicator of the range of likely weather for the near future?

The point isn’t to predict when it will rain, the point is to know that rain is possible at times. That’s all I’m claiming, along with the idea that this is better than just using the weather from the last 8 years as a model.

“The Old Farmers Almanac has provided better long range forecasts for the next year than the climate models!”

I’m sure you’ve got lots of anecdotal evidence to back that up.

“The OFA claims an 80% average accuracy with its long range monthly forecasts.”

Piers Corbyn claims 85% accuracy. It doesn’t make it true.

“You keep claiming that Monckton’s pause is unusable, statistically insignificant, causes discontinuities, etc”

That is not a forecast.

I might have jokingly said in 2016 that knowing Monckton he would be claiming that’s the start of a new pause in a few years time. That would have been a forecast.

“All to try and dissuade people from using the current pause to say that it might continue next year. You’ve said this for over a year. ”

An absolute lie. I’ve never said the new pause will end next year. I’m pretty sure it will continue for a number of years yet. It really just depends on when the next big El Niño arrives.

“Really? It sure sounds like that is what you’ve been saying!”

It might sound like it to you, because you’ve got a weird habit of ignoring or misunderstanding everything I say.

“But then, just like with uncertainty, you revert right back to saying that next year will be just like the past 30 years! That’s based on your sentence “I’ve never seen Monckton give any evidence of a statistically significant change in trend.” In other words you *are* saying that the weather next year will be what the 40 year trend line says and not what the pause trend says.”

See what I mean?

How on earth does “I’ve never seen Monckton give any evidence of a statistically significant change in trend.”, translate in your mind to me saying “the weather next year will be what the 40 year trend line says”?

“When doing forecasts, however, the further back you go the less weight individual data values should have.”

Do you have a particular weighting you want to use? I’ve tried various ones, and they generally increase the trend slightly. Here for example, I’ve effectively reduced the weight by 10% per year going back from present. The trend increases to 0.15°C / decade.

(Blue line is the unweighted trend, red the weighted one.)

I don’t think you understand how weighting works in such a situation. Take the leftmost value and assign a weight of 1 to it, so that value only appears once. Take the second value and assign an incrementally increased weight to it, e.g. a 2, so it appears twice. Follow through with all the data values so that the rightmost value appears the same number of times as the number of data values in the set. If you have 40 years of monthly data then your last data entry will appear 480 times while the first data point will only appear once. The second to last data point will appear 479 times. Your trend line *should* bend to minimize the residuals between it and the 480 appearances of the last data point and the 479 appearances of the next to last data point and etc. Your x-axis is no longer time but order of appearance.

Use whatever weighting algorithm you want but it *has* to give more weight to current data than past data. And that does *NOT* mean just multiplying the data by some value.
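Taken literally, the repetition scheme described above can be sketched in a few lines. This is one reading of the description, not code from either commenter: the k-th point is copied k times. If each copy keeps its original time as x, an ordinary least-squares fit to the copied data is exactly a weighted least-squares fit with integer weights 1, 2, ..., n. Replacing x with order of appearance, as the comment suggests, is a different transformation. `replicate_by_rank`, `ols` and `wls` are hypothetical helper names.

```python
def replicate_by_rank(x, y):
    """Copy the k-th point (1-based) k times, so the newest point appears n times."""
    xr, yr = [], []
    for k, (xi, yi) in enumerate(zip(x, y), start=1):
        xr.extend([xi] * k)
        yr.extend([yi] * k)
    return xr, yr


def ols(x, y):
    """Ordinary least-squares slope and intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return b, my - b * mx


def wls(x, y, w):
    """Weighted least squares: each squared residual is multiplied by its weight."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    b = sxy / sxx
    return b, my - b * mx


x = [0, 1, 2, 3]
y = [1.0, 3.0, 2.0, 4.0]
xr, yr = replicate_by_rank(x, y)  # 1 + 2 + 3 + 4 = 10 points
```

With 480 monthly values the copying produces 480 * 481 / 2 = 115,440 points, which is why expressing the same scheme as weights is the more practical way to compute it.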

Could you provide a reference to this technique?

The usual way I’ve seen to add weighting is to provide a weight to the cost of each residual.
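Written out, weighting the cost of each residual means minimizing a weighted sum of squared residuals. This is the standard weighted least-squares formulation, shown here for a straight line; the symbols are chosen for this illustration, with w_i the weight on observation i:

```latex
S(a, b) = \sum_{i=1}^{n} w_i \,(y_i - a - b\,x_i)^2

\bar{x}_w = \frac{\sum_i w_i x_i}{\sum_i w_i}, \qquad
\bar{y}_w = \frac{\sum_i w_i y_i}{\sum_i w_i}

\hat{b} = \frac{\sum_i w_i (x_i - \bar{x}_w)(y_i - \bar{y}_w)}{\sum_i w_i (x_i - \bar{x}_w)^2}, \qquad
\hat{a} = \bar{y}_w - \hat{b}\,\bar{x}_w
```

Setting every w_i = 1 recovers ordinary least squares, and integer weights w_i = i give the same line as repeating the i-th point i times while keeping x_i as its time.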

“Could you provide a reference to this technique?”

If I can find any old studies we did for sizing central office growth additions I’ll forward them to you.

You might look here: https://atlasprecon.com/weighted-average-forecasting/

It will give some hints on how to do this. I’m not your research assistant. If you want to know more on how to do it then do your own research.

“I’m not your research assistant.”

Yet you seem to think I’m yours. You keep making vague claims without ever doing the work to show they are true. You insist that a weighted trend will demonstrate the pause, but when I do the work and show it doesn’t, you claim I’m not doing it correctly and still won’t justify the way you want it done.

I don’t know if you’ve noticed, but I’ve now done it exactly as you described, and it still shows an accelerated warming trend, not a pause.

The link you provided is not about weighted regressions; it’s about weighted averages.

“Yet you seem to think I’m yours.”

Really? I am the *ONLY* one of us two that have worked out the examples throughout Taylor’s book on uncertainty. I’ve even obtained multiple statistics books and given you their reference and exact quotes. I have gone back through my forecasting notes and given you the proper method for weighting past data and given you a link to show how it is done!

And you just keep quoting religious dogma that the best-fit metric is a measure of uncertainty, that all uncertainty in all populations cancel and the accuracy of the mean is the standard deviation of the sample means.

You did *NOT* do the weighting correctly and you probably never will. It’s just too hard for you to understand even with my simple explanation of how to do it.

Nor can you get it into your head that you cannot just analyze physical phenomena by using linear regression based on the earliest start value and the latest end value. You HAVE to look at the pieces of the data along the way if you are trying to isolate physical phenomena. That’s why Monckton does what he does.
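For what it's worth, here is a rough sketch of the two approaches being argued about. `longest_flat_tail` follows my understanding of how Monckton's pause is usually described: step the start month forward until the least-squares trend from there to the latest month is zero or negative. `window_trends` looks at the data piece by piece in fixed windows. Both function names and the `min_len` parameter are hypothetical.

```python
def ols_slope(y):
    """Least-squares slope of y against its index 0, 1, 2, ..."""
    n = len(y)
    mx = (n - 1) / 2
    my = sum(y) / n
    sxx = sum((i - mx) ** 2 for i in range(n))
    sxy = sum((i - mx) * (yi - my) for i, yi in enumerate(y))
    return sxy / sxx


def longest_flat_tail(y, min_len=3):
    """Earliest start index whose trend to the final point is <= 0, else None.

    Scanning from the oldest start forward means the first hit is the longest
    such period ending at the latest value.
    """
    for start in range(len(y) - min_len + 1):
        if ols_slope(y[start:]) <= 0:
            return start
    return None


def window_trends(y, width, step):
    """(start index, slope) for consecutive fixed-width windows."""
    return [(s, ols_slope(y[s:s + width])) for s in range(0, len(y) - width + 1, step)]
```

Neither function says anything about whether a change in slope is statistically significant; that needs an uncertainty estimate on each trend.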

I’ve attached Abbott’s graph again. Maybe you’ll open your eyes and see it this time. It distinctly shows cyclical processes which your trend line does not and can not show. So you just remain stuck in your rut.

“Really? I am the *ONLY* one of us two that have worked out the examples throughout Taylor’s book on uncertainty.”

Congratulations. Have a gold star.

“I’ve even obtained multiple statistics books and given you their reference and exact quotes.”

I’m sure you believe that this has some bearing on anything.

“I have gone back through my forecasting notes and given you the proper method for weighting past data and given you a link to show how it is done!”

Your memory is playing tricks again. Your link said nothing about weighted regressions, as I told you.

But again, you keep giving me these tasks. You don’t do the work yourself. You show me what your weighted regression looks like. Don’t expect me to do it for you then complain if you don’t like the result.

“And you just keep quoting religious dogma that the best-fit metric is a measure of uncertainty, that all uncertainty in all populations cancel and the accuracy of the mean is the standard deviation of the sample means.”

All things I’ve never said. But keep arguing with the voices in your head. It’s amusing in a grim sort of way.

“You did *NOT* do the weighting correctly and you probably never will.”

Then you do it.

“It’s just too hard for you to understand even with my simple explanation of how to do it. ”

Really? What do you think I did wrong? As far as I can tell I followed your explanation to the letter. Maybe if you show me your workings we can see who’s done it more correctly.

“I’ve attached Abbott’s graph again. Maybe you’ll open your eyes and see it this time.”

Look at the comment I was responding to. There was no graph.

“It distinctly shows cyclical processes which your trend line does not and can not show.”

And has absolutely nothing to do with what we were talking about, or what you were claiming.

You said:

Your graph is showing a trend from 1975 – 2009. I was talking about the trend over the length of your two overlapping pauses – 1997 – 2022. I’m talking about UAH data, you are using some old HadCRUT data. You claim it stops in 2016, it stops around 2010.

“Your memory is playing tricks again. Your link said nothing about weighted regressions, as I told you.”

You are the only one with a failing memory. I laid out how you do it in excruciating detail. I even gave you a link: https://atlasprecon.com/weighted-average-forecasting/

but you apparently didn’t go look at it or try to figure it out.

It’s a total waste of time trying to lead you to water, you just refuse to drink – like an old, stubborn mule!

“Then you do it.”

I’ve shown you how to do it. I’ve given you a link on the process. *YOU* are the one that needs to learn how to do it, not me. YOU need to do it as a learning exercise. Me doing it won’t teach you anything! I’ve attached two very simple graphs showing how I’ve done a very simple exercise. You use a polynomial fit to see how the slope of the trend line changes as you weight the most recent data more heavily vs a straight linear regression line of the data.

“Really? What do you think I did wrong? As far as I can tell I followed your explanation to the letter. Maybe if you show me your workings we can see who’s done it more correctly.”

You didn’t follow it at ALL! You don’t just multiply the data values, that changes the actual data without weighting it at all. As usual, you just don’t get it!

“And has absolutely nothing to do with what we were talking about, or what you were claiming.”

Of course it does! Stop whining. It shows you simply cannot just depend on a long term linear regression to determine what is actually happening! Something you just refuse to understand. There are none so blind as those who will not see!

“You are the only one with a failing memory. I laid out how you do it in excruciating detail.”

I was specifically talking about the link, the one you keep repeating and claiming explains how to do the weighted regression. It does not. Maybe you posted the wrong link twice, but the one you posted is about “weighted average forecasting”. It says nothing about regression. All it does is calculate a weighted average by month and use that as a forecast.
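For comparison, a weighted-average forecast can be sketched as follows. This is a generic weighted moving average, not necessarily the linked page's exact procedure; `weighted_average_forecast` and the weights used are hypothetical. It produces a single next value, not a trend line.

```python
def weighted_average_forecast(history, weights):
    """Forecast the next value as a weighted average of the most recent values.

    weights[-1] applies to the newest observation, weights[0] to the oldest one used.
    """
    if len(history) < len(weights):
        raise ValueError("need at least as many observations as weights")
    recent = history[-len(weights):]
    return sum(w * v for w, v in zip(weights, recent)) / sum(weights)


history = [float(v) for v in range(1, 11)]  # hypothetical series 1..10
forecast = weighted_average_forecast(history, [1, 2, 3])
```

On a steadily rising series such as 1, 2, ..., 10 this gives (8*1 + 9*2 + 10*3) / 6, about 9.33, which is below the last observed value. On its own, a weighted average lags a trend rather than extrapolating it.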

As to your excruciating description, I followed it and gave you two graphs. They didn’t give you the result you wanted, so you insist I’d done them wrong, but you still refuse to show me what they should look like.

“Me doing it won’t teach you anything!”

I’m not asking you to teach me anything, and if I was I’d be asking for my money back. What I’m asking is for you to justify your claims.

“You use a polynomial fit to see how the slope of the trend line changes as you weight the most recent data more heavily vs a straight linear regression line of the data.”

Pity your “excruciating detail” failed to mention you want a polynomial. So what order of polynomial does your method require?

“As usual, you just don’t get it!”

Then show me how you did it. Show me your result.

“There are none so blind as those who will not see!”

There are none so empty as those who are full of themselves.

Wow! Go take your medicine! The entire issue was how you extend past data into the future – FORECASTING. *YOU* want to extend the linear regression line by saying that anything other than the linear regression line is somehow wrong!

I’ve told you MULTIPLE TIMES that such a view is nothing more than the old argumentative fallacy of Appeal to Tradition. When forecasting the *worst* thing you can do is extend a long term linear regression line because that gives equal weight to data in the far past compared to recent data. The link I gave you gives a base for weighting data to make it more applicable to a forecast!

You *REALLY* don’t remember me telling you this? You are going to make me go back through the thread to find it?

What did you expect a weighted data graph to give you? Another linear regression line with a different slope? That’s what you get when you generate a new set of data by just multiplying existing data by some factor! If you multiply the new data by a larger number or the old data by a smaller number exactly what did you expect to get besides another linear regression line with a larger slope?

That simply isn’t how you forecast from past data!
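The distinction being argued over here, multiplying the data values versus weighting the residuals, is easy to demonstrate. This is a minimal sketch with hypothetical, perfectly linear data (y = 2x + 1) and weights 1 to 10. Weighting the residuals leaves the fitted slope at 2, because every point already sits on the line. Multiplying each value by its weight creates new, curved data whose straight-line fit has slope 21.

```python
def ols(x, y):
    """Ordinary least-squares slope and intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return b, my - b * mx


def wls(x, y, w):
    """Weighted least squares: each squared residual is multiplied by its weight."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    b = sxy / sxx
    return b, my - b * mx


x = list(range(10))
y = [2.0 * xi + 1.0 for xi in x]  # hypothetical, perfectly linear data
w = [k + 1 for k in range(10)]  # newer points weighted more heavily

b_weighted, _ = wls(x, y, w)  # weights the residuals; data unchanged
b_scaled, _ = ols(x, [wi * yi for wi, yi in zip(w, y)])  # changes the data itself
```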

What difference does it make as to what order of polynomial it is? Create the data yourself and do a fit. It’s the only way you are going to learn! It’s pretty obvious what data values I used just from the graph – 1,2,3,4,5,6,7,8,9,10. And how to do it is obvious in the link I gave you.
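One way to reproduce the 1-to-10 example: repeat each value k exactly k times, place the copies on an x axis of order of appearance (1 to 55), and fit a polynomial by least squares. The sketch uses a small normal-equations solver so it needs no libraries. `polyfit` here is a hypothetical helper, not numpy's, and the degree is a free choice the thread never settles. Because later values occupy more positions on the x axis, the fitted curve should flatten toward the right, even though the underlying values rise by exactly 1 per step.

```python
def polyfit(x, y, degree):
    """Least-squares polynomial coefficients; coeffs[j] multiplies x ** j."""
    m = degree + 1
    # Normal equations A c = b, with A[j][k] = sum x^(j+k) and b[j] = sum y x^j.
    A = [[sum(xi ** (j + k) for xi in x) for k in range(m)] for j in range(m)]
    b = [sum(yi * xi ** j for xi, yi in zip(x, y)) for j in range(m)]
    # Gaussian elimination with partial pivoting.
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, m):
            f = A[r][col] / A[col][col]
            for c in range(col, m):
                A[r][c] -= f * A[col][c]
            b[r] -= f * b[col]
    # Back substitution.
    coeffs = [0.0] * m
    for r in range(m - 1, -1, -1):
        s = b[r] - sum(A[r][c] * coeffs[c] for c in range(r + 1, m))
        coeffs[r] = s / A[r][r]
    return coeffs


def poly_slope(coeffs, x):
    """Derivative of the fitted polynomial at x."""
    return sum(j * c * x ** (j - 1) for j, c in enumerate(coeffs) if j > 0)


values = list(range(1, 11))
y_rep = [float(v) for v in values for _ in range(v)]  # value v appears v times
x_order = list(range(1, len(y_rep) + 1))  # order of appearance, 1..55
coeffs = polyfit(x_order, y_rep, 2)
print(poly_slope(coeffs, 1), poly_slope(coeffs, len(x_order)))
```

Normal equations are adequate for low degrees on small data like this. For higher degrees or real data, a library routine such as numpy.polyfit is better conditioned.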

It is *really* frustrating discussing anything with you because you know so little about the subject but are so willing to dismiss everything out of hand if it doesn’t fit your narrow point of view of how things ought to be!

I DID SHOW YOU! The graph is so simple a 6th grader could figure it out!

You have so blinded yourself you can’t even figure out a simple graph based on 10 numbers. And you are criticizing others?

“Wow! Go take your medicine! The entire issue was how you extend past data into the future…”

These attempts at distraction are getting feeble. You know full well what I was talking about, and if you don’t, you can reread the comments. You gave me a link and keep claiming it answered the question of how you wanted me to do a weighted linear regression, and when I point out it’s about a weighted average forecast, you claim that’s what you meant all along.

“*YOU* want to extend the linear regression line by saying that anything other than the linear regression line is somehow wrong!”

Stop lying. I keep telling you I don’t want to do that.

“I’ve told you MULTIPLE TIMES that such a view is nothing more than the old argumentative fallacy of Appeal to Tradition.”

Yet you have no problem with using the argumentative fallacy of a strawman.

“What did you expect a weighted data graph to give you?”

Stop playing games, and say exactly what you want me to do, or better yet do it yourself. I’m sure if you fiddle about with the data enough you can get something that looks like a pause.

“What difference does it make as to what order of polynomial it is?”

Seriously?

“Create the data yourself and do a fit.”

I did and posted the result.

“It is *really* frustrating discussing anything with you because you know so little about the subject but are so willing to dismiss everything out of hand if it doesn’t fit your narrow point of view of how things ought to be!”

Ditto.

“I DID SHOW YOU! The graph is so simple a 6th grader could figure it out! ”

Show me what it looks like when you use real data.

“You have so blinded yourself you can’t even figure out a simple graph based on 10 numbers.”

Explain to me how you would use your graph to predict what the next value would be.

Your data is growing linearly, but you want to weight the newer values. So you fit this curve which gives the impression the slope is declining, but the x axis isn’t useful, so presumably you need to re-scale it, as I did with the UAH data. At that point do you just get back to a linear line and predict the next value will be 11?
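Following that question through: if each copy of a value keeps its original time on the x axis instead of its order of appearance, the copied data can be fitted with an ordinary straight line and used to predict the next step. This is a minimal sketch with the same hypothetical 1-to-10 data. Because those values lie exactly on a line, the weighting changes nothing, and the prediction for step 11 is 11.

```python
def ols(x, y):
    """Ordinary least-squares slope and intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return b, my - b * mx


times = list(range(1, 11))
values = [float(t) for t in times]  # the 1..10 example from the thread

# Copy the k-th point k times, but keep its original time as x.
x_rep, y_rep = [], []
for k, (t, v) in enumerate(zip(times, values), start=1):
    x_rep += [t] * k
    y_rep += [v] * k

slope, intercept = ols(x_rep, y_rep)
next_value = intercept + slope * (times[-1] + 1)  # prediction for step 11
```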

So here is the same weighted technique, using a polynomial. I tried a quadratic but that didn’t look much different to the linear, so here I’m using a cubic.

And here’s the same using the proper x scale, with the normal linear regression in blue.

“I’ve told you MULTIPLE TIMES that such a view is nothing more than the old argumentative fallacy of Appeal to Tradition.”

Do you actually know what appeal to tradition means?

I think this is what you are getting at. The red line shows the linear trend for your increasing-repetition weighting scheme; the blue line is the non-weighted linear trend. As before, the weighted trend predicts warmer temperatures at this time than the unweighted trend.

Here’s the same, but with the proper x-axis scale.

Not true

Weather predictions work well for a few days

Climate predictions are notoriously inaccurate.

Extrapolating short-term trends does not create a good long-term climate forecast. Even extrapolating 30- to 50-year trends does not create a good climate prediction for the next 30 to 50 years.

The climate will get warmer,

unless it gets colder.