Guest Post by Willis Eschenbach

Much has been made of the argument that natural forcings alone are not sufficient to explain the 20th Century temperature variations. Here’s the IPCC on the subject:

I’m sure you can see the problems with this. The computer model has been optimized to hindcast the past temperature changes using both natural and anthropogenic forcings … so of course, when you pull a random group of forcings out of the inputs, it will perform more poorly.

Now, both Anthony and I often get sent the latest and greatest models that purport to explain the vagaries of the historical global average temperature record. The most recent one used a cumulative sum of the sunspot series, plus the Pacific Decadal Oscillation (PDO) and the North Atlantic Oscillation (NAO), to model the temperature. I keep pointing out to the folks sending them that this is nothing but curve fitting … and in that most recent case, it was curve fitting plus another problem: they are using as an input something which is part of the target. The NAO and the PDO are each part of what makes up the global average temperature. As a result, it is circular to use them as inputs.

But I digress. I started out to show how not to model the temperature. To do this, I wanted to find the simplest model I could that a) did not use greenhouse gases, and b) used only the forcings used by the GISS model in the Coupled Model Intercomparison Project Phase 5 (CMIP5). These were:

[1,] “WMGHG” [Well Mixed Greenhouse Gases]
[2,] “Ozone”
[3,] “Solar”
[4,] “Land_Use”
[5,] “SnowAlb_BC” [Snow Albedo (Black Carbon)]
[6,] “Orbital” [Orbital variations involving the Earth’s orbit around the sun]
[7,] “TropAerDir” [Tropospheric Aerosol Direct]
[8,] “TropAerInd” [Tropospheric Aerosol Indirect]

After a bit of experimentation, I found that I could get a very good fit using only Snow Albedo and Orbital variations. That’s one natural and one anthropogenic forcing, but no greenhouse gases. The model uses the formula

Temperature = 2012.7 * Orbital – 27.8 * Snow Albedo – 2.5

and the result looks like this:

The red line is the model, and dang, how about that fit? It matches up very well with the Gaussian smooth of the HadCRUT surface temperature data. Gosh, could it be that I’ve discovered the secret underpinnings of variations in the HadCRUT temperature data?

And here are the statistics of the fit:

Coefficients:
                              Estimate Std. Error t value Pr(>|t|)
(Intercept)                    -2.4519     0.1451 -16.894  < 2e-16 ***
hadbox[, c(9, 10)]SnowAlb_BC  -27.7521     3.2128  -8.638 5.36e-14 ***
hadbox[, c(9, 10)]Orbital    2012.7179   150.7834  13.348  < 2e-16 ***
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.105 on 109 degrees of freedom
Multiple R-squared:  0.8553,  Adjusted R-squared:  0.8526
F-statistic: 322.1 on 2 and 109 DF,  p-value: < 2.2e-16
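The summary statistics above are internally consistent, and that can be checked from R² and the degrees of freedom alone. A minimal sketch, assuming the usual definitions for an ordinary least-squares fit with an intercept; the observation count of 112 is inferred from the 109 residual degrees of freedom plus three fitted parameters, not stated in the output.

```python
# Consistency check on the regression summary, using only numbers that
# appear in it: R^2 = 0.8553, 109 residual degrees of freedom, and two
# predictors (Orbital, SnowAlb_BC) plus an intercept.

def adjusted_r2(r2: float, n: int, p: int) -> float:
    """Adjusted R^2 for n observations and p predictors (intercept not counted in p)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def f_statistic(r2: float, n: int, p: int) -> float:
    """Overall F statistic for a least-squares fit with an intercept and p predictors."""
    return (r2 / p) / ((1.0 - r2) / (n - p - 1))


p = 2                 # predictors: Orbital and SnowAlb_BC
resid_df = 109        # "on 109 degrees of freedom"
n = resid_df + p + 1  # observations implied by the residual df (assumption: one intercept)

r2 = 0.8553
print(f"n = {n}")
print(f"adjusted R^2 = {adjusted_r2(r2, n, p):.4f}")
print(f"F = {f_statistic(r2, n, p):.1f} on {p} and {resid_df} DF")
```

Both derived values land on the reported 0.8526 and 322.1, which is a reminder that these headline numbers are all functions of the same in-sample R² and say nothing extra about whether the model is physically meaningful.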

I mean, an R^2 of 0.85 and a p-value less than 2.2E-16, that’s my awesome model in action …

So does this mean that the global average temperature really is a function of orbital variations and snow albedo?

Don’t be daft.

All that it means is that it is ridiculously easy to fit variables to a given target dataset. Heck, I’ve done it above using only two real-world variables and three tunable parameters. If I add a few more variables and parameters, I can get an even better fit … but it will be just as meaningless as my model shown above.
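A minimal sketch of how easy this is, in plain Python with no libraries. The target and the predictors are synthetic and hypothetical: the predictors are seeded random walks that by construction have nothing to do with the target. The property it leans on is a standard fact about ordinary least squares with an intercept: adding a predictor can never lower the in-sample R², whatever that predictor is. `ols_r2` and `random_walk` are helpers written for this illustration, not from any library.

```python
import math
import random


def ols_r2(y, predictors):
    """Fit y = b0 + b1*x1 + ... by ordinary least squares; return (coefs, R^2).

    Solves the normal equations (X^T X) b = X^T y by Gauss-Jordan elimination
    with partial pivoting. Fine for a handful of predictors; not meant for
    large or badly conditioned problems.
    """
    n = len(y)
    # Design-matrix rows: [1, x1_i, x2_i, ...]; the leading 1 is the intercept.
    rows = [[1.0] + [col[i] for col in predictors] for i in range(n)]
    k = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    a = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))]
         for i in range(k)]
    for c in range(k):
        pivot = max(range(c, k), key=lambda r: abs(a[r][c]))
        a[c], a[pivot] = a[pivot], a[c]
        for r in range(k):
            if r != c:
                f = a[r][c] / a[c][c]
                a[r] = [v - f * w for v, w in zip(a[r], a[c])]
    coefs = [a[i][k] / a[i][i] for i in range(k)]
    fitted = [sum(b * x for b, x in zip(coefs, row)) for row in rows]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return coefs, 1.0 - ss_res / ss_tot


def random_walk(n, rng):
    """Cumulative sum of Gaussian steps: a wandering series with no meaning."""
    x, out = 0.0, []
    for _ in range(n):
        x += rng.gauss(0.0, 1.0)
        out.append(x)
    return out


rng = random.Random(42)
n = 112
# Hypothetical target: a trend plus a slow wiggle, unrelated to the predictors.
target = [0.006 * t + 0.1 * math.sin(2 * math.pi * t / 60) for t in range(n)]
walks = [random_walk(n, rng) for _ in range(3)]

r2_values = []
for m in range(1, 4):
    _, r2 = ols_r2(target, walks[:m])
    r2_values.append(r2)
    print(f"{m} unrelated predictor(s): R^2 = {r2:.3f}")
```

The exact R² values depend on the seed; what does not depend on it is that each added predictor, however meaningless, leaves the in-sample fit at least as good as before.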

Please note that I don’t even have to use data. I can fit the historical temperature record with nothing but sine waves … Nicola Scafetta keeps doing this over and over and claiming that he is making huge, significant scientific strides. In my post entitled “Congenital Cyclomania Redux”, I pointed out the following:

So far, in each of his previous three posts on WUWT, Dr. Scafetta has said that the Earth’s surface temperature is ruled by a different combination of cycles depending on the post:

First Post: 20 and 60-year cycles. These were supposed to be related to some astronomical cycles which were never made clear, albeit there was much mumbling about Jupiter and Saturn.

Second Post: 9.1, 10-11, 20 and 60-year cycles. Here are the claims made for these cycles:

9.1 years: this was justified as being sort of near to a calculation of (2X+Y)/4, where X and Y are lunar precession cycles,

“10-11” years: he never said where he got this one, or why it’s so vague.

20 years: supposedly close to an average of the sun’s barycentric velocity period.

60 years: kinda like three times the synodic period of Jupiter/Saturn. Why three times? Why not?

Third Post: 9.98, 10.9, and 11.86-year cycles. These are claimed to be

9.98 years: slightly different from a long-term average of the spring tidal period of Jupiter and Saturn.

10.9 years: may be related to a quasi 11-year solar cycle … or not.

11.86 years: Jupiter’s sidereal period.

The latest post, however, is simply unbeatable. It has no less than six different cycles, with periods of 9.1, 10.2, 21, 61, 115, and 983 years. I haven’t dared inquire too closely as to the antecedents of those choices, although I do love the “3” in the 983-year cycle.
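The same trick works with sine waves. The sketch below, plain Python and purely illustrative, fits a seeded random walk (a hypothetical series that contains no cycles at all) using the six periods listed above, treated as years on a hypothetical annual series, adding one cycle at a time. Each cycle contributes two tunable parameters (a sine and a cosine coefficient, equivalent to an amplitude and a phase), and the in-sample R² can only go up as cycles are added. `solve` and `fit_cycles` are hypothetical helpers written for this example.

```python
import math
import random


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            m[r] = [v - f * w for v, w in zip(m[r], m[c])]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_cycles(y, periods):
    """Least-squares fit of y(t) = c + sum_k [a_k sin(2 pi t / P_k) + b_k cos(2 pi t / P_k)].

    Returns the in-sample R^2.
    """
    n = len(y)
    cols = [[1.0] * n]
    for period in periods:
        w = 2 * math.pi / period
        cols.append([math.sin(w * t) for t in range(n)])
        cols.append([math.cos(w * t) for t in range(n)])
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(u * v for u, v in zip(ci, y)) for ci in cols]
    beta = solve(xtx, xty)
    fitted = [sum(b * col[t] for b, col in zip(beta, cols)) for t in range(n)]
    mean_y = sum(y) / n
    ss_res = sum((a - f) ** 2 for a, f in zip(y, fitted))
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot


# Hypothetical target: a seeded random walk with no cycles in it by construction.
rng = random.Random(1)
level, target = 0.0, []
for _ in range(140):
    level += rng.gauss(0.0, 0.1)
    target.append(level)

periods = [9.1, 10.2, 21, 61, 115, 983]  # the six periods from the list above
r2_by_count = []
for k in range(1, len(periods) + 1):
    r2 = fit_cycles(target, periods[:k])
    r2_by_count.append(r2)
    print(f"{k} cycle(s), {1 + 2 * k} parameters: R^2 = {r2:.3f}")
```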

I bring all of this up to do my best to discourage this kind of bogus curve fitting, whether it is using real-world forcings, “sunspot cycles”, or “astronomical cycles”. Why is it “bogus”? Because it uses tuned parameters, and as I showed above, when you use tuned parameters it is bozo simple to fit an arbitrary dataset using just about anything as input.

But heck, you don’t have to take my word for it. Here’s Freeman Dyson on the subject of the foolishness of using tunable parameters:

When I arrived in Fermi’s office, I handed the graphs to Fermi, but he hardly glanced at them. He invited me to sit down, and asked me in a friendly way about the health of my wife and our newborn baby son, now fifty years old. Then he delivered his verdict in a quiet, even voice. “There are two ways of doing calculations in theoretical physics”, he said. “One way, and this is the way I prefer, is to have a clear physical picture of the process that you are calculating. The other way is to have a precise and self-consistent mathematical formalism. You have neither.” I was slightly stunned, but ventured to ask him why he did not consider the pseudoscalar meson theory to be a self-consistent mathematical formalism.

He replied, “Quantum electrodynamics is a good theory because the forces are weak, and when the formalism is ambiguous we have a clear physical picture to guide us. With the pseudoscalar meson theory there is no physical picture, and the forces are so strong that nothing converges. To reach your calculated results, you had to introduce arbitrary cut-off procedures that are not based either on solid physics or on solid mathematics.”

In desperation I asked Fermi whether he was not impressed by the agreement between our calculated numbers and his measured numbers. He replied, “How many arbitrary parameters did you use for your calculations?” I thought for a moment about our cut-off procedures and said, “Four.” He said, “I remember my friend Johnny von Neumann used to say, with four parameters I can fit an elephant, and with five I can make him wiggle his trunk.” With that, the conversation was over. I thanked Fermi for his time and trouble, and sadly took the next bus back to Ithaca to tell the bad news to the students.
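Von Neumann's quip has a precise mathematical counterpart: a polynomial with k free coefficients can be made to pass exactly through any k points with distinct x values. The sketch below uses Lagrange interpolation with exact fractions; the points are made up for illustration, and `lagrange` is a helper written here, not a library function.

```python
from fractions import Fraction


def lagrange(points):
    """Return, as a function, the unique polynomial of degree at most
    len(points) - 1 through the given (x, y) points. It has exactly
    len(points) free coefficients."""
    def p(x):
        total = Fraction(0)
        for i, (xi, yi) in enumerate(points):
            term = Fraction(yi)
            for j, (xj, _) in enumerate(points):
                if j != i:
                    term *= (Fraction(x) - xj) / (xi - xj)
            total += term
        return total
    return p


# Four parameters: four made-up points, fitted exactly.
data = [(0, 3), (1, -7), (2, 12), (3, 0)]
p = lagrange(data)
print([p(x) == y for x, y in data])  # [True, True, True, True]

# Five parameters: add a fifth point (the "wiggle") and it is fitted exactly too.
data5 = data + [(4, 100)]
p5 = lagrange(data5)
print(all(p5(x) == y for x, y in data5))  # True
```

A perfect fit here carries no information about the points at all: any four (or five) values would have been matched just as well.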

So, you folks who are all on about how this particular pair of “solar cycles”, or this planetary cycle plus the spring tidal period of Jupiter, or this group of forcings miraculously emulates the historical temperature with a high R^2, I implore you to take to heart Enrico Fermi’s advice before trying to sell your whiz-bang model in the crowded marketplace of scientific ideas. Here’s the bar that you need to clear:

“One way, and this is the way I prefer, is to have a clear physical picture of the process that you are calculating. The other way is to have a precise and self-consistent mathematical formalism. You have neither.”

So … if you look at your model and indeed “You have neither”, please be as honest as Freeman Dyson and don’t bother sending your model to me. I can’t speak for Anthony, but these kinds of multi-parameter fitted models are not interesting to me in the slightest.

Finally, note that I’ve done this hindcasting of historical temperatures with a one-line equation and two forcings … so do we think it’s amazing that a hugely complex computer model using ten forcings can hindcast historical temperatures?

My regards to you all on a rainy, rainy night,

w.

The Usual Polite Request: Please quote the exact words that you are discussing. It prevents all kinds of misunderstandings. Only gonna ask once. That’s all.

For any who, like me, will want to read the rest of Dyson’s remarks, here is the link:

http://lilith.fisica.ufmg.br/dsoares/fdyson.htm

Thank you, Juan!

“One way, and this is the way I prefer, is to have a clear physical picture of the process that you are calculating. The other way is to have a precise and self-consistent mathematical formalism. You have neither.”

Willis, would you apply this condition to attempts to explain a biological phenomenon? Such as, for instance, why the European eel (Anguilla anguilla) persists in crossing the Atlantic to the Sargasso Sea, in the western North Atlantic, to spawn each year?

phil salmon March 25, 2018 at 7:29 am

I can’t even imagine a “precise and self-consistent mathematical formalism” that describes eel migration, so it’s not at all clear what you are asking. It seems like what you are asking is like asking to define love using calculus … incommensurability roolz.

Regards,

w.

Willis – Wegener? Brett

Exactly. Incommensurability – great word, thanks!

Dyson and Fermi can afford the “luxury” of requiring precise mathematical and physically mechanistic proof when looking inside the atom or at the big bang.

However with eels and love, a level of complexity is reached where such strict requirements can’t be applied.

My point is that climate has such a level of complexity. Not only does it involve chaotic nonlinear pattern-formation processes, it also is significantly affected by living organisms. Ever since the great oxygenation event a little over 2 billion years ago, living organisms have had a massive effect on climate. Thus for example the attempts to model CO2 effects on climate are flawed if they don’t include the greening effect of CO2 causing an enhancement of transpiration and the hydrological cycle in arid and marginal regions.

But I agree that curve fitting to astrophysical processes is invalidated, as you point out, by the ease of fitting data to even a limited “toolkit” of proposed oscillating forcers. This approach also makes another fundamental mistake in assuming the climate to be essentially passive, such that all its ups and downs are driven by some external astrophysical agent. This is the same error as alarmist CAGW, which requires a completely passive climate in which all warming or cooling is imposed by atmospheric, and always human-contributed, gases or particles. Both of these are wrong. The climate is active, not passive, and changes by itself, in and of itself. Optionally with a little help from outside.

Ptolemy2

“Thus for example the attempts to model CO2 effects on climate are flawed if they don’t include the greening effect of CO2 causing an enhancement of transpiration and the hydrological cycle in arid and marginal regions.”

I’m pretty sure most new models do this. Some may just include a parameter for the vegetative sink for CO2, but I think there are also parameters representing vegetation and land use.

“This is the same error as alarmist CAGW which requires a completely passive climate and all warming or cooling is imposed by atmospheric and always human-contributed gasses or particles. Both these are wrong. The climate is active, not passive and changes by itself, in and of itself. Optionally with a little help from outside.”

I always wonder who people mean by “alarmist CAGW.” Is this the greenies? The media? Or do you mean climate scientists? At any rate, I doubt there are many people so stupid that they believe all variation in weather is due to human-induced causes. Why do you say such things? Do you actually believe that? Why? Do you realize that just by saying something or reading it again and again it will seem to be true, even if you weren’t sure to begin with? Obviously there aren’t a lot of people you have to convince around here. I’m honestly puzzled why people say the same things again and again – not just any things; the comments have to be DEROGATORY.

Kristi

It was not my intention to be derogatory. I think “alarmist CAGW” is a reasonable description of the dominant body of opinion in the media, politics and academia, that recent warming is anthropogenic and a cause for alarm. There is nothing wrong with alarmism if there is a real threat on the horizon. Churchill was right to be alarmist about political and military developments in Germany in the 1930s. People are right to be alarmist about antibiotic resistance.

You are right that progress is not helped by derogatory language and labelling. The climate research community is moving toward acceptance for instance that climate is not “passive”, that oceanic circulation shifts can cause 10, 100 – 1000 year timescale climate changes without necessarily outside forcing. This knowledge was of course always there in the oceanography literature, it’s just a question of connections between disciplines.

However it has to be said that a lot of communications about climate research do appear to assume a passive climate, albeit not explicitly. Did climate warm? – has to be CO2 or maybe soot; did it cool? – has to be particulate pollution or a volcano or two (at least the latter is not anthropogenic).

For instance a Canadian academic a few years back published a paper asserting that 99% of recent warming was anthropogenic, “natural” processes could be restricted to no more than 1%. Such a statement had clear political implications, which was evidently intentional. However it cannot have been made on the basis of understanding of “natural” ocean driven multidecadal climate variability.

But yes – cutting out inflammatory language is perhaps the single thing that would most advance the climate debate and the research process. All genuine attempts to advance understanding should be respected, from whichever direction they come.

Kristi, just saying “I’m pretty sure most new climate models do this”, doesn’t cut it.

These models have a long history of excluding critical factors.

ptolemy2 is phil salmon btw. Forgot that I was still anonymous on this pc.

Kristi, do not play the sympathy card. Climatism has been and is a practitioner of abuse and mendacity. For reasons not really concerned with climate, which is just a tool. CO2 and ridiculous CMIPs, now disavowed by IPCC even, but still used for meaningless scenarios and projections. Not science but politics, so leave off wasting our time please. Your steed has expired. Brett

Phil,

Thanks for the comment.

” I think “alarmist CAGW” is a reasonable description of the dominant body of opinion in the media, politics and academia, that recent warming is anthropogenic and a cause for alarm.

I think this is widely seen as derogatory. What is an alarmist, anyway? Someone who is concerned about the evidence that things are changing, and the changes to come? I can see talking about some in the media as alarmist, saying the latest storm is a sign of the coming devastation, but I think it’s destructive when the term is applied broadly to the scientific community. Then there is the “catastrophic” part. What does this mean, exactly? Seems like it’s intentionally exaggerated. What about all those who are simply concerned by the potential for major disruption to human and biological systems? Much of my concern is based on the uncertainty of what will happen through destabilization of communities that have adapted together to their environment. This is what I know most about, so it’s what I think about, but if it’s ever addressed here it’s in a derisive way.

There is far too much knee-jerk dismissal of science that isn’t understood by those dismissing it. There is very little healthy skepticism among many who comment on WUWT; instead, denial is fostered. It’s reached the point that research in other fields that have nothing to do with climate modeling is dismissed just because it talks about a model, even if it’s just a multivariate regression.

The climate debate has become one of politics vs. science. I’d go so far as to say, the “skeptic” movement is anti-science. It promotes more misunderstanding than understanding.

“For instance a Canadian academic a few years back published a paper asserting that 99% of recent warming was anthropogenic, “natural” processes could be restricted to no more than 1%.”

This is obviously foolish. I’m the last to argue there aren’t fools out there. Al Gore is one.

” The climate research community is moving toward acceptance for instance that climate is not “passive”,”

I don’t know what you mean by this.

Please don’t be offended by what I say here. These are my perceptions. It matters to me much less what our carbon policy is than that the scientific community has such widespread public distrust.

Regards,

Kristi

Brett – sympathy card? You think I want your sympathy? What an absurd idea!

Yep, science has been crushed under the weight of politics. Maybe if hard-core deniers like yourself were less influenced by politics they might actually consider the science without bias.

@Kristi Silber

March 28, 2018 at 12:33 am

“what is an alarmist, anyway? Someone who is concerned about the evidence that things are changing, and the changes to come? ”

Not just that, but someone who denies that things were changing before and will keep changing anyway (implying that all changes are man’s doing, and hence that what man did, he can stop doing and undo), AND who holds that these changes are not just bad but DOOM (implying that we cannot balance the good and the bad, and must just go back to a previous era, before man CO2-sinned).

” Much of my concern is based on the uncertainty of what will happen through destabilization of communities that have adapted together to their environment.”

Then creationist you are. Adaptation is not a state, it is a process. Living communities cannot be destabilized, because they are not stable in the first place. Most species exist only because change happens, and they themselves prompt change that will destroy (or at least displace or put in dormancy) them.

The poster story of nature conservation failure is how man almost destroyed some redwoods (Sequoia sempervirens) by trying to protect them from fire. Trouble is, fire destroys their competitors more than it hurts the redwoods, so fire shouldn’t be suppressed. Likewise, man tried to protect rare marsh species through wetland conservation. Complete failure, as these species depended on an ecological succession of drier and wetter phases.

We don’t make this mistake anymore. You still do. Change not only happens, it is necessary for biodiversity.

“The climate debate has become one of politics vs. science. I’d go so far as to say, the “skeptic” movement is anti-science. It promotes more misunderstanding than understanding.”

Oh. Well, just look at this:

This is an OFFICIAL IPCC figure.

It presents “observations” Vs “model results” natural forcing. There are NO observations of natural forcing, and no way to observe this, {and the very notion of “natural forcing” is just … WTF???… Just think about it. Nature is forcing itself? } .

It presents “observations” Vs “model results” anthropogenic forcing. Likewise, there are NO observations of anthropogenic forcing and no way to observe it. Besides, there is just no reason for anthropogenic forcing to be so jerky, it should be a nice smooth curve, copy-pasted from CO2 concentration at MLO.

Such is the state of “climate science”: it calls “observations” things it didn’t observe and has no way to observe.

Such is your state: you believe this is science, and you call anti-science anyone who demands science, that is, proper data not made out of improper modeling.

Who promotes more misunderstanding than understanding? Rhetorical question. You, obviously.

“Please don’t be offended by what I say here.”

Your tone is very polite. Trouble is, such a nonsensical belief, and calling pseudoscience “science”, is offensive in itself to scientific minds like those of most denizens of WUWT.

“It matters to me much less what our carbon policy is than that the scientific community has such widespread public distrust.”

Well, I am pissed off that so many people believe in bullshit like organic food, astrology, electromagnetic hypersensitivity, homeopathy, GMO and palm oil and vaccine dangerousness, etc. despite scientific proof (BTW, such anti-science beliefs are very well correlated with CAGW belief; does it surprise you? Not me). Now, I also understand why they do, and recognize their right to act according to their belief. I just don’t recognize their right to have their beliefs turned into law. You see a pattern here?

Remember, Feynman said “Science is the belief in the ignorance of experts”. You know that Newton was wrong, and Einstein was wrong and didn’t trust himself (which made him SO scientific, after all).

I don’t trust any man nor any theory, unless and insofar as it produces some actually working stuff: planes, engines, solar panels and the like. I trust the technicians who say they made this stuff using a theory, and if it works, well, there is truth enough in the theory. No such thing in “climate science”.

BUT. I believe in science, which is a process. I don’t trust the scientific community. Moreover, I cannot trust a community that didn’t kick out Michael Mann, the way the medical community kicked out Jacques Benveniste (just another example of a man doing both very good science and very bad, in contrast to M. Mann, who never did any good science).

Scientific community deserves such widespread public distrust. When it starts being trustworthy, then you can blame the public. Not before. Won’t happen, unfortunately.

I have watched people at WUWT convincingly show that using the thickness of tree rings of certain trees as a proxy for local atmospheric temperature is scientifically false.

They are correct in pointing out that for some of the trees used as temperature proxies, the thickness of their tree rings is not solely dependent upon atmospheric temperature. This conclusion is based on the common-sense idea that the annual growth rate of many trees can be influenced by factors such as soil moisture, cumulative hours of exposure to sunshine (which is affected by cloudiness), maximum or minimum daily temperatures (as opposed to mean daily temperatures), total rainfall, etc.

They are also correct in pointing out that some of the scientists using tree rings as long-term temperature proxies have used dubious methods to amalgamate and process their data (e.g. hide-the-decline Michael Mann).

However, these same people at WUWT have then made the sweeping statement that ALL use of tree rings as temperature proxies is suspect. Anyone with any idea of how tree-ring temperature proxies work knows that this last leap in logic is completely false. It is easy to show that there are some circumstances where the tree-ring widths of specifically selected species do in fact primarily depend upon nearby mean sea surface temperatures. That this is indeed the case can be shown by comparing modern instrumental temperature records to measured tree-ring widths.

Unfortunately, these “experts” have convinced the majority of the mob that the use of tree-ring widths as temperature proxies is scientific anathema. They have been so successful at doing this that it has now become virtually impossible to talk about this diagnostic in a sensible manner without being shouted down.

The same is now becoming true of using curve fitting as a valid diagnostic tool. Of course, there are many ways to use curve fitting that can fool the user into believing that they have found some magical window that allows them to clearly see the underlying physical principles of a natural phenomenon. This is particularly true when curve fitting is used as a diagnostic in climate science because of the inherently complex nature of the physics of the climate system. Many of the systems that are under study are inter-dependent upon other parts of the climate system and so it isn’t long before a hypothesis or model has so many free parameters that it could just about fit any physical system through a simple adjustment of the multitude of fitting parameters.

However, it is logically false to claim that, because these dangers exist, it is virtually pointless to use curve-fitting methods to try to understand the underlying climate physics.

For example, take the 9.1-year cycle that is clearly detected in the world mean temperatures. Wavelet analysis shows that this 9.1-year cyclical pattern is present in the temperature record from 1870 to 1915, then disappears between 1915 and 1960, before reappearing after that date.
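The wavelet analysis mentioned above is not reproduced here. As a simpler stand-in (a different technique from a wavelet transform), the sketch below estimates the amplitude of a fixed 9.1-year sinusoid in consecutive 45-year windows using a single-frequency Fourier sum. The series is entirely synthetic and hypothetical: a unit-amplitude 9.1-year cycle that is switched off between 1915 and 1960, mimicking the pattern described, not real temperature data. `cycle_amplitude` is a helper written for this illustration.

```python
import math


def cycle_amplitude(x, period):
    """Amplitude of a sinusoid with the given period in x, estimated from a
    single-frequency discrete Fourier sum. Accurate to a few percent when the
    window spans several periods (leakage from the 2x-frequency term)."""
    n = len(x)
    w = 2 * math.pi / period
    s = sum(v * math.sin(w * t) for t, v in enumerate(x))
    c = sum(v * math.cos(w * t) for t, v in enumerate(x))
    return 2.0 / n * math.hypot(s, c)


# Hypothetical annual series, 1870-2014: a unit-amplitude 9.1-year cycle that
# is switched off from 1915 through 1959.
years = list(range(1870, 2015))
signal = [0.0 if 1915 <= yr < 1960 else math.sin(2 * math.pi * (yr - 1870) / 9.1)
          for yr in years]

window = 45
amps = []
for start in range(0, len(years) - window + 1, window):
    seg = signal[start:start + window]
    amp = cycle_amplitude(seg, 9.1)
    amps.append(amp)
    print(f"{years[start]}-{years[start + window - 1]}: amplitude ~ {amp:.2f}")
```

On real data the windowed amplitude would also pick up noise and neighbouring frequencies, so a detected or missing cycle in one window is a starting point for investigation, not a result.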

These observational facts allow us to speculate as to why this might be the case.

One hypothesis that has been put forward is that the effect of lunar tides upon the Earth’s climate system may be responsible for this cyclical signal. This is based on the simple mathematical fact that if you have two rates associated with the tidal forcings [in this case the 8.85-year lunar apsidal cycle (LAC) and the 9.3-year (= 18.6/2) half lunar nodal cycle (LNC)], they will impact the climate system with a period equal to their harmonic mean, giving:

2 × (8.85 × 9.3) / (8.85 + 9.3) = 9.069 years ≈ 9.1 years.
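One standard way to see where a harmonic mean of two periods comes from is the sum-to-product identity sin a + sin b = 2 sin((a + b)/2) cos((a - b)/2). Adding two equal-amplitude sinusoids with periods P1 and P2 gives a carrier whose period is the harmonic mean 2·P1·P2/(P1 + P2), multiplied by a slow envelope whose magnitude repeats every 1/|1/P1 - 1/P2|. The sketch below checks this numerically for 8.85 and 9.3 years; it assumes, purely for illustration, that the two tidal influences simply add with equal amplitude, which the comment does not establish.

```python
import math
import statistics

LAC = 8.85            # lunar apsidal cycle, years (value from the comment)
HALF_LNC = 18.6 / 2   # half the lunar nodal cycle, years

carrier = statistics.harmonic_mean([LAC, HALF_LNC])  # 2*P1*P2/(P1 + P2)
beat = 1.0 / abs(1.0 / LAC - 1.0 / HALF_LNC)         # repeat time of |envelope|
print(f"carrier period ~ {carrier:.3f} years")
print(f"envelope (beat) period ~ {beat:.1f} years")

# Check sin a + sin b == 2 sin(2*pi*t/carrier) * cos(pi*t*(1/P1 - 1/P2)) at a few times.
for t in (0.0, 3.7, 50.0, 123.4):
    lhs = math.sin(2 * math.pi * t / LAC) + math.sin(2 * math.pi * t / HALF_LNC)
    rhs = (2 * math.sin(2 * math.pi * t / carrier)
           * math.cos(math.pi * t * (1 / LAC - 1 / HALF_LNC)))
    assert math.isclose(lhs, rhs, abs_tol=1e-9)
```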

This is just the old mathematical problem: if Bob takes 4 hours to dig a hole and Fred takes 8 hours to dig a hole, how long does it take Bob and Fred, working together, to dig two holes?

Answer: it is the harmonic mean of their two times for digging a hole, i.e.

2 × (4 × 8) / (4 + 8) = 5.33 hours
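As a check on the arithmetic, using the 4-hour and 8-hour times that appear in the formula, here is a small sketch with exact fractions: together Bob and Fred dig 1/4 + 1/8 = 3/8 of a hole per hour, so a single hole takes 8/3 ≈ 2.67 hours, while the harmonic mean, 16/3 ≈ 5.33 hours, is the time they need for two holes.

```python
from fractions import Fraction

bob_hours = Fraction(4)   # hours for Bob to dig one hole
fred_hours = Fraction(8)  # hours for Fred to dig one hole

combined_rate = 1 / bob_hours + 1 / fred_hours  # holes per hour, working together
one_hole = 1 / combined_rate
two_holes = 2 / combined_rate
harmonic_mean = 2 * bob_hours * fred_hours / (bob_hours + fred_hours)

print(one_hole, float(one_hole))    # 8/3 hours
print(two_holes, float(two_holes))  # 16/3 hours
print(two_holes == harmonic_mean)   # True
```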

Hence, it is not unreasonable to propose that the lunar tides may play a role in influencing the world’s mean temperature.

The question then becomes: “If this is the case, then how could the lunar tides accomplish this task?”

So here is a simple application of curve fitting technique that can validly be used to help a researcher to further investigate the underlying physics.

astroclimateconnection March 25, 2018 at 7:36 am

Astro, I cannot tell you how absolutely unpleasant this kind of attack is. You have made a host of claims about “people”, “they”, “these same people”, and “these ‘experts'”, without a single quotation or identification of anyone.

This is nothing but throwing excrement at the wall and seeing if it will stick … it does, but only to you.

This is why I insist that people QUOTE WHAT THE HECK THEY ARE TALKING ABOUT. Your spray-gun style is impossible to disagree with, because you haven’t said either who is doing something or what they actually said. I’ve written at length about tree-rings. Are you talking about me? If so, what did I say and where did I say it?

Gotta say … you are off to an extremely bad start. I suppose I should read the rest of your rant, but I got this far and I was too nauseous to proceed.

If you actually have a scientific point to make, I suggest that you start over entirely and that you make it without your nasty insinuations about unspecified actions by un-named people. I’ll read that scientific comment. I won’t read the rest of your current comment. It’s just too ugly.

w.

My apologies. The comments that I made were not specifically directed at you or anyone else in particular. They are general comments which apply to the overall tone of the discussion on these issues.

All I am trying to say is that it is very easy to devalue a useful scientific technique or method by pointing out its flaws and inconsistencies. Many of the criticisms that are given are valid. However, I believe that it is illogical to then conclude that little of real value can be obtained by using these techniques. I am not specifically accusing you of saying that; however, I fear that some of those who are reading your post are erroneously drawing this false conclusion. I believe that you are too experienced a researcher to make such a silly mistake. However, I am left with the impression that some of the other commenters are not being as discerning as you.

If you read the last third of my post, you will see that I give a specific example of a case where curve fitting can actually be used to guide the direction of a research investigation. I believe that it can be useful to see what cyclical frequencies are present in the observational data, and that knowledge of these frequencies can be used to draw a limited inference about the underlying physics in some cases.

I will not respond to your personal attacks specifically directed at me, nor to your smearing of Nicola Scafetta, other than to say that it is a serious character flaw in an otherwise sterling researcher and scientist.

astroclimateconnection March 25, 2018 at 2:39 pm

NO, they are not “general comments”. They are nasty accusations against un-named people, which is cowardly.

Again I say, QUOTE WHATEVER THE HELL YOU ARE TALKING ABOUT. Your vague handwaving without any specific examples is meaningless.

More anonymous accusations against un-named people. You are truly an unpleasant person.

And I’m left with the impression that you are a craven coward who is using his anonymity to make ugly accusations without specifying who the hell you are talking about.

And if you read my last comment you’d know that I said I wasn’t going to read one more word of that pile of bovine waste products, my stomach won’t take it.

Indeed it can be. However, in climate science, such situations are not common.

I did not “smear” Scafetta. I described his ridiculous claims about how the historical temperature is cyclical, complete with a variable number of cycles which changed with every post. Those are FACTS, not any kind of personal attack. He may be a fine man who loves his kids and is kind to his dog.

His science, however, is a joke, as I clearly demonstrated.

Finally, as to what you call my “personal attacks” on you, I have nothing against you personally. However, you have a nasty, ugly habit of smearing excrement on everything in range without making a single verifiable or falsifiable claim. This is a habit that you need to get rid of ASAP if you wish to have a discussion with me. In general, I don’t take well to attacks, whether on me or someone else, from people without the albondigas to sign their own names to their words. And when these attacks are unquoted, uncited, and unsupported in the deliberately vague manner of your attacks, I will make the slimy amoral nature of your words clear to you.

Don’t like it? Either change your ways or go play somewhere else. Sorry, but I don’t suffer fools gladly, and I have no plans to change that.

w.

[Recommend we let this reply cool down for a few minutes/hours. Then read it again. .mod]

Willis, I think you overreact. I saw no indication of accusation of wrongdoing, simply discussion in a general way. Not a rant. You are too quick to take offense, and to give it. It must be hard addressing all these comments, but no one’s trying to tear you a new one (that I’ve noticed). Your efforts are appreciated.

” However, you have a nasty, ugly habit of smearing excrement on everything in range without making a single verifiable or falsifiable claim.”

Willis, you are freaking paranoid. This person is trying to be nice and not make any personal attacks, not insult anyone. He’s talking generally and may not even know who gave him his impressions. Can you not just have a conversation? Even after he apologized for giving you the wrong impression, you have to insult him:

“And I’m left with the impression that you are a craven coward who is using his anonymity to make ugly accusations without specifying who the hell you are talking about.”

It’s you who are making ugly accusations.

Kristi Silber March 25, 2018 at 5:23 pm

Thanks, Kristi. Me, I think you under-react, so I guess we are even.

Here’s the thing. I won’t put up with someone slinging mud at un-named people like this:

First off, that’s total bollocks. There have been and will continue to be discussions of tree rings here. However, the field has been badly tainted by the “one tree in Yamal” problem, along with the Mannian data-mining method that finds hockey sticks in red noise … but that has nothing to do with WUWT. That was done by Michael Mann and his insistence on crappy science and post-hoc selections of tree ring proxies, data snooping at its worst. It has nothing to do with “experts” or “the mob” here at WUWT … and just who is “the mob” who is blindly following the “experts”? You?

Here’s the problem. There is absolutely no way to respond to astroboy’s attack on WUWT because there are no names, no examples, no citations, no quotations, nothing at all but ugly accusations. I will not put up with that kind of an attack.

And no, that is not a “discussion” as you blithely claim. He’s given us nothing to discuss. It is an attempt to tear down and discredit the site by claiming that on WUWT there are “experts” who are misleading “the mob”.

Really? That passes for a “discussion” on your planet?

Now, I’m more than willing to discuss that unlikely possibility … but there’s nothing there I can discuss. Am I one of the “experts” misleading “the mob”? I can’t say, but it certainly could be what he means … or not, which is why it is such a slimy thing to say. If I protest, then he can say with feigned innocence “Oh, I wasn’t talking about you, how could you think such a terrible thing of me, I was speaking of someone else”.

And if I don’t protest, then lots of folks will assume he means me. Lose-lose.

At the end of every one of my posts, I ask people to QUOTE THE EXACT WORDS THAT THEY ARE DISCUSSING. I do that from long experience of underhanded people like astroturf or whatever his name is. And I’m not doing it for my health. I am perfectly serious. Allowing this kind of handwaving and mud-slinging to go on unopposed is a huge mistake. All that happens is that other folks, usually anonymous popups like astroslug, jump up to agree and pile on and start repeating the accusations and making more totally unsubstantiated claims. Before you know it the thread goes to hell.

The only way I’ve found to deal with that is to head it off at the pass by slapping whoever is starting the nonsense alongside the head and saying “CITE YOUR NASTY ALLEGATIONS, YOU HOCKEY PUCK!” or something like that.

Now Kristi, when you get around to writing a scientific post, publishing it here on WUWT, and curating it, and I hope you will do so, then you can try any method you like when this kind of madness of the crowd starts kicking in … and it will. At that point, you are welcome to try rubbing their tummies and blowing in their ears, or any other method you’d like.

However, I’ll stick with what works for me …

Thanks for the good thoughts,

w.

Willis,

Thank you for your reply. I was a bit worried about what I may have brought upon myself.

I see where you are coming from, and you made some good points. I had to go back and read the posts. Perhaps if you hadn’t stopped reading just when you did it might have made more sense.

It’s none of my business, in a sense – but on the other hand, exchanges like that might set the tone, make people afraid to comment. And the thing about flinging and sticking excrement is just too graphic. It would be great if that image weren’t around again. Please?

“Thanks, Kristi. Me, I think you under-react”

Huh. I try hard to keep my cool even though I have to wade through scores of comments I find offensive/nonsensical. I don’t care half as much about climate change as I do about the fact that scientists have had their raison d’etre stolen. Without the public’s trust, science loses its value to society. I believe with 98.6% certainty that the distrust is not merited, and that makes me angry. It’s not easy being among the 1.4% minority around here. Not sure why I do it.

I have an article in mind, but it would be very unpopular. I don’t like being attacked, either. Thanks for being civil.

Regards,

Kristi

My name is Ian Wilson. Because I use blogger.com to post here on WUWT, it automatically uses my blog site name on blogger.com, which is astroclimateconnection. I log in to the WUWT comments section using this method because it is convenient and because it allows my comments to be distinguished from those of another Ian Wilson who posts here from time to time.

I agree that Nicola Scafetta has been all over the place with his claimed solar-planetary cycles, but there is method in his madness. Most of the changes in the cycle lengths have come about because of his evolving formulation of what he perceives to be the most likely explanation for what he is observing in the data. I am sure that if I were to review your published research work here at WUWT, it would include ongoing changes to some of the claims that you make. These changes are to be expected in an ongoing investigation and show that the researcher is reformulating their beliefs and opinions as the evidence unfolds.

Again, I will ignore the personal attacks (fool, slimy amoral nature, astroboy, astroturf, astroslug etc. etc.) and try to appeal to your better nature.

It is impossible to give a specific example where someone has said that tree-ring widths are not trustworthy. However, it cannot be denied that if someone tries to discuss a scientific result on WUWT that relies upon tree-ring widths as a proxy for atmospheric temperatures, there is usually a spray of comments that pooh-pooh the findings with the blanket statement that “tree-rings can’t be trusted”. This is not your fault, nor is it Anthony’s fault that this is happening. However, it is hard to deny that these dismissive attitudes are present when this issue comes up.

All I am trying to do is express my fear that a similar pattern of events could inadvertently result from this particular post, even though you don’t intend it. You (and most of your readers) and I know that curve fitting and spectral analysis are scientifically valid techniques if they are done properly.

I think that on this point we can get some agreement.

Sorry, my specific example should have read:

This is just the old mathematical problem: If you travel at 10 mph from town A to town B and 20 mph on the return trip, what is the average speed?

Answer: it is the harmonic mean of the two speeds, i.e.

2 / ((1/10) + (1/20)) = 13.33 mph (exactly 40/3 mph)
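A quick check of that arithmetic, assuming equal distances out and back (the condition that makes the harmonic mean the right average). Total distance over total time and Python’s `statistics.harmonic_mean` give the same answer, and the distance itself cancels out:

```python
from statistics import harmonic_mean

def round_trip_average_speed(speed_out, speed_back, distance=1.0):
    """Average speed over a round trip with equal legs: total distance / total time."""
    total_time = distance / speed_out + distance / speed_back
    return 2 * distance / total_time

print(round(round_trip_average_speed(10, 20), 3))  # 13.333
print(round(harmonic_mean([10, 20]), 3))           # 13.333
```

The naive arithmetic mean (15 mph) is wrong because the slower leg takes twice as long, so it carries twice the weight in the average.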

I think there is a math error in your digging example. If Fred takes two hours alone, Bob helping will decrease the time by at least a little, not increase it to more than twice Fred’s unassisted time.
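The digging example itself isn’t reproduced in this thread, so the numbers below are illustrative: Fred’s 2 hours comes from the comment, while Bob’s 3 hours is a hypothetical value. Assuming their work rates simply add, the combined time is the reciprocal of the summed rates, which is always shorter than either worker’s time alone:

```python
def combined_time(*solo_times):
    """Time to finish one job together, assuming work rates (1/t each) simply add."""
    total_rate = sum(1.0 / t for t in solo_times)
    return 1.0 / total_rate

fred = 2.0  # hours, Fred alone (from the comment)
bob = 3.0   # hours, Bob alone (hypothetical value for illustration)
together = combined_time(fred, bob)
print(round(together, 2))  # 1.2
assert together < min(fred, bob)  # help can only shorten the job
```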

Loren Wilson March 25, 2018 at 3:44 pm

Sounds like maybe astroclimateconnection is one of “the mob” that he claims have been led astray by the “experts” here at WUWT … or maybe he’s just wrong …

w.

As you point out, tree rings are affected by many things, not just temperature.

The list of other things is a lot longer than the list you give.

There are other problems.

Tree rings only form during the growing season, so you know nothing about the rest of the year.

Also, trees have optimum temperatures. Because of this, both temperature increases and temperature decreases can cause decreases in ring growth.
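A toy illustration of the optimum-temperature problem. It assumes, purely for illustration, an inverted-parabola growth response with a hypothetical optimum `T_OPT` and tolerance `WIDTH` (neither comes from any real tree data). A given ring width then corresponds to two temperatures, one on each side of the optimum, so the width alone cannot say which one occurred:

```python
T_OPT = 15.0   # hypothetical optimum growing-season temperature, degrees C
WIDTH = 8.0    # hypothetical half-width of the tolerance range, degrees C

def ring_growth(temp_c):
    """Toy relative ring width: 1.0 at the optimum, falling to 0 at T_OPT +/- WIDTH."""
    return max(0.0, 1.0 - ((temp_c - T_OPT) / WIDTH) ** 2)

def temperatures_for_growth(growth):
    """Invert the toy response: both temperatures that give the same relative growth."""
    offset = WIDTH * (1.0 - growth) ** 0.5
    return T_OPT - offset, T_OPT + offset

cool, warm = temperatures_for_growth(ring_growth(11.0))
print(cool, warm)  # 11.0 19.0
```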

Since it is impossible to filter out all of these other things, the only thing tree rings measure is the quality of the growing season.

It is not “unscientific” to proclaim that tree rings can NEVER be used as temperature proxies.

My comment seems to have gone to moderation for some GFR!

Your Freeman Dyson story reminds me of another story about Fermi told to me by a very senior member of our group when I was a young grad student. He’d made a very careful series of nuclear measurements and then fitted the latest theory to them. He took the data plot with error bars plus the fit plotted over the data and showed it to Fermi. Fermi laid the plot on his desk, pulled a ruler out of a drawer and drew a straight line through the data. “You will never convince me that the theory is any better than that.”

Thanks, Paul. Interesting story to me, since I actually use that method. I calculate the R^2 of a straight-line fit and the R^2 of the proposed curve. Unless the second is significantly better than the first, Occam suggests that the straight line is first choice.
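A minimal sketch of that straight-line-versus-curve comparison in plain Python. Because a curve with more parameters can never fit worse in-sample than a straight line nested inside it, the sketch also reports adjusted R^2, which charges for each extra fitted term. Treating adjusted R^2 as the yardstick for “significantly better” is an assumption here, not necessarily the test described above; the cubic “proposed curve” and the data are made up:

```python
def polyfit(x, y, degree):
    """Least-squares polynomial coefficients [c0, c1, ...] via the normal equations."""
    m = degree + 1
    A = [[sum(xi ** (i + j) for xi in x) for j in range(m)] for i in range(m)]
    b = [sum(yi * xi ** i for xi, yi in zip(x, y)) for i in range(m)]
    for col in range(m):  # Gaussian elimination with partial pivoting
        piv = max(range(col, m), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, m):
            f = A[r][col] / A[col][col]
            for c in range(col, m):
                A[r][c] -= f * A[col][c]
            b[r] -= f * b[col]
    coef = [0.0] * m
    for i in reversed(range(m)):  # back substitution
        coef[i] = (b[i] - sum(A[i][j] * coef[j] for j in range(i + 1, m))) / A[i][i]
    return coef

def r_squared(y, yhat):
    mean_y = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, yhat))
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot

def adjusted_r_squared(r2, n, n_predictors):
    """Penalise R^2 for the number of fitted terms (excluding the intercept)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - n_predictors - 1)

def compare(x, y, degree):
    """R^2 and adjusted R^2 for a straight line and a polynomial 'curve'."""
    results = {}
    for name, d in (("line", 1), ("curve", degree)):
        coef = polyfit(x, y, d)
        yhat = [sum(c * xi ** k for k, c in enumerate(coef)) for xi in x]
        r2 = r_squared(y, yhat)
        results[name] = (r2, adjusted_r_squared(r2, len(x), d))
    return results

# Made-up data: a linear trend plus a small repeating wiggle.
x = [i / 19 for i in range(20)]
wiggle = [0.3, -0.2, 0.1, -0.4, 0.2]
y = [1.0 + 2.0 * xi + wiggle[i % 5] for i, xi in enumerate(x)]
for name, (r2, adj) in compare(x, y, degree=3).items():
    print(f"{name:5s}  R^2={r2:.3f}  adjusted={adj:.3f}")
```

If the curve’s raw R^2 edges out the line’s but its adjusted R^2 does not, the extra parameters are buying nothing, and Occam points to the line.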

w.

Nice post, Willis. The salient curve-fitting point applies to a LOT more than just Scafetta. Wadhams’ Arctic ice and Amstrup’s polar bears come readily to mind. And your point can be broadened to a lot more modeling and statistical practices in ‘climate science’: homogenization, sea level rise (Nerem), parameter tuning,…

As Mark Twain said, “There are lies, damned lies, and statistics.” Or, to quote physicist Ernest Rutherford, “If you need statistics to make sense of your experiment, you should have done a better experiment.” Or, to more optimistically quote statistician George Box, “All models are wrong, but some are useful.” The climate problem with Box’ observation is, which?

ristvan March 25, 2018 at 8:20 am: “All models are wrong, but some are useful.” The climate problem with Box’ observation is, which?

Willis Eschenbach: “Here’s the bar that you need to clear: ‘One way, and this is the way I prefer, is to have a clear physical picture of the process that you are calculating.’”

WR: We need a clear physical picture of the processes that create our future weather and future climate. That is one. We need to know all interrelations between factors that play a role and we need to quantify everything. And to quantify well, we need appropriate data over long periods.

We have neither of them.

Most ‘models’ are part of the created ‘virtual world’ in which ‘science is settled’. But we need real world data and we need to understand real world processes. Plus a theory that makes sense.

Great post Willis!

Willis,

“That’s one natural and one anthropogenic forcing, ”

I have to quibble with this one. How can snow related albedo be anthropogenic? Orbital variability represents a natural change in forcing, while snow related albedo is the natural response to a change in forcing. Considering snow related albedo anthropogenic is tacitly acknowledging that CO2 is the primary forcing influence on the climate, which it absolutely is not, in fact, it’s not even properly called forcing.

Only the Sun forces the climate system while changing CO2 concentrations and changes to snow albedo are changes to the system. A change to the system can be said to be EQUIVALENT to a change in forcing keeping the system constant. This is what it means when claims are made about CO2 ‘forcing’. Note as well that the pedantic model adds equivalent forcing from CO2 to a system modified with increased CO2 concentrations counting the effect twice.

This is just another of the many levels of misdirection, indirection and misrepresentation between the controversy (alarmism vs. sanity) and what’s actually controversial (the climate sensitivity factor).

Snow and ice albedo rises substantially during the ice ages as a result of orbital and tilt variations eventually resulting in -5.0C temperature changes.

If that explains the ice ages, why would it not work for today’s climate, albeit with much smaller changes?

Willis,

Yes, ice albedo has an effect, but it’s not a feedback and not anthropogenic. It’s the system’s natural response to forcing, where forcing is exclusively solar input. Changing CO2 concentrations also changes the system, but the size of that influence, which is at the root of the controversy, is far lower than claimed by the IPCC and has little effect on the average temperature or on where the average 0C isotherm lies in each hemisphere.

Note that in the ice ages, a far larger portion of the planet was covered in ice, and its melting had a proportionally far larger influence on the planet’s temperature. The magnitude of its influence decreases as the 0C isotherm moves towards the poles. If all of the ice on the planet were to disappear, the resulting increase in absorbed solar energy would only be about half of the emissions required to increase the surface temperature by 3C. This is because 2/3 of it is moot, as clouds are already reflecting that energy. This effect is also evident in the seasonal response of the planet, where surface snow extends nearly as far as the ice-age glaciers did.
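For scale, the extra surface emission implied by a 3 °C rise follows from the Stefan-Boltzmann law, j = σT⁴. This sketch assumes an ideal blackbody surface at a global mean of 288 K (both simplifications), and it quantifies only the “emissions required” side of the comparison above, not the ice-albedo side:

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def blackbody_emission(temp_k):
    """Radiant exitance of an ideal blackbody, W/m^2."""
    return SIGMA * temp_k ** 4

T_SURFACE = 288.0  # assumed global mean surface temperature, K
delta = blackbody_emission(T_SURFACE + 3.0) - blackbody_emission(T_SURFACE)
print(f"{delta:.1f} W/m^2")  # 16.5 W/m^2
# Linearised check using d(sigma T^4)/dT = 4 sigma T^3 (slightly smaller, about 16.3)
print(f"{4 * SIGMA * T_SURFACE ** 3 * 3.0:.1f} W/m^2")
```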

The relevant effect of ice and snow is to change the effects of clouds from only trapping heat at the surface when ice is present to both trapping heat at the surface and reflecting away additional energy when the surface is ice free.

Willis,

The variable is called “Snow Albedo – Black Carbon”, which led me to think that they are using the change in snow albedo due to soot on the snow as their metric. But this could be a wrong interpretation.

w.

Thank you, Willis. Very well done!

Willis,

The problem to solve is actually this: starting from different time series (which may be linked in some way still to be discovered), find, by natural or artificial intelligence, the structure of a conceptual model that reproduces those time series as well as possible. These time series are not linked to any parameter; they are (imperfect) indicators of the behaviour of some elements of the system. Once such a conceptual (hypothetical) model is defined, a causality analysis (going beyond the Granger causality approach; see Judea Pearl’s book “Causality”) can be undertaken, and the model can eventually be cleaned of insignificant links. To the best of my understanding, the most comprehensive and likely HYPOTHETICAL model that could be built looks like this one: https://www.dropbox.com/preview/Climate/meta-model%20climate_20180115.pptx. Remember that such a model is highly non-linear, and a tiny fluctuation in one of the parameters could have a significant effect.

Also, from “common wisdom”, it is understood that a “primary cause” must send one or many “causal arrows” but not receive any. An “effect” must exhibit the symmetrical characteristics. This is NOT the case for temperature AND CO2 (or other GHGs). They receive and emit many causal arrows in the model; they belong to the category of “relay variables”, embedded in several (in)direct feedback loops. In systems analysis it is recommended NOT to try to modify such relay variables, as the effect is either damped out by a strong stabilizing feedback loop or leads to a highly unpredictable outcome. Relay variables are part of several feedback loops, which may or may not be stabilizing. For temperature, paleoclimatic evidence shows that the climate system is in a chaotic mode, spinning around two strange attractors in the phase plane: the “moderate” and the “glacial” state:

https://www.dropbox.com/preview/Climate/Phase%20plan%20analysis%20of%20Vostok%20data.pptx

All other fluctuations observed are actually nothing more than orbital fluctuations around those attractors. It seems obvious to me that the climate system is remarkably stable (the temperature feedback loops must be very effective) and that it simply switches between these two modes. Now, coming back to causality, if you take a look at the first figure linked, you will see that in this (hypothetical) model, the causes are at the top of the figure: cosmic rays, gravity and electromagnetic planetary fields, meteorites. And, I am afraid, these “causes” are not tunable by any carbon tax, energy transition or efficiency program. It is also possible that such a complex system generates endogenous fluctuations, resulting simply from its structure. In a nutshell, such a “meta-model” leads to the conclusion that climate fluctuations are natural and of chaotic nature, and thus non-predictable at a longer time horizon (certainly not at a century time scale, as IPCC is claiming to do with its projections).
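The cause / effect / relay distinction described above can be stated mechanically on a directed graph: a node that only sends arrows is a primary cause, one that only receives them is an effect, and one that does both is a relay variable. The linked figure isn’t reproduced here, so the edge list below is a hypothetical toy graph built from variables named in the comment, not the actual meta-model:

```python
from collections import defaultdict

# Hypothetical toy causal graph (cause -> effect); NOT the meta-model from the linked figure.
EDGES = [
    ("cosmic rays", "clouds"),
    ("clouds", "temperature"),
    ("temperature", "CO2"),
    ("CO2", "temperature"),
    ("temperature", "ice cover"),
]

def classify_nodes(edges):
    """Label each node 'cause', 'effect' or 'relay' from its incoming/outgoing arrows."""
    indeg, outdeg = defaultdict(int), defaultdict(int)
    for src, dst in edges:
        outdeg[src] += 1
        indeg[dst] += 1
    labels = {}
    for node in set(indeg) | set(outdeg):
        if indeg[node] == 0:
            labels[node] = "cause"    # only sends arrows
        elif outdeg[node] == 0:
            labels[node] = "effect"   # only receives arrows
        else:
            labels[node] = "relay"    # both, e.g. inside a feedback loop
    return labels

for node, label in sorted(classify_nodes(EDGES).items()):
    print(f"{node:12s} {label}")
```

In this toy graph, temperature and CO2 come out as relays because of the two-way loop between them, which is the structural point the comment makes.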

Henri,

Interesting post. Unfortunately, I don’t have a dropbox account and couldn’t see your figures, which sound interesting.

“In a nutshell, such a “meta-model” leads to the conclusion that climate fluctuations are natural and of chaotic nature, and thus non-predictable at a longer time horizon (certainly not at a century time scale, as IPCC is claiming to do with its projections).”

I don’t think this is quite true. There are constraints to the behavior of climate: patterns, interactions, feedbacks, lag times and buffers that tend to keep things from getting unstable. Not everything is unpredictable or chaotic; some solar effects on climate are predictable, it’s just that they are sometimes swamped by other events or interactions. You could have a series of volcanoes swamp a change in W/m2, for example.

Predicting averages and trends seems to me very different from predicting individual weather events.

This tendency to see reality in statistics is a problem throughout our society. I have arguments with the PC folks on campus who insist that there being only about 15-20% women and minorities in mechanical engineering is “proof” of some sort of discrimination which they currently explain as “chilly climate”. But they can never tell me anything specific about this chilly climate. They can’t point to a mechanism, or any method by which it works, nor who is involved, or when it occurs, or anything tangible. As nearly as I can see we do somersaults trying to recruit more women and minorities right up to giving them unrealistic assessments of their capabilities and expectations for future success.

Curve fitting climate outcomes is a likelihood sort of analysis: statistical evidence perhaps, but without a solid physical model I don’t find it all that persuasive. Back before the Voyager fly-by missions of Jupiter and Saturn, I had a short correspondence with some astronomers at Cornell who had estimated radii of the moons of the giant planets through statistical analysis of occultation light curves. While they used reasonable models of limb-darkening, they had no way to handle background variations in the light curves (somewhat like a parameterization problem). Their estimates of radii could be greatly in error, as I tried to illustrate with examples, and after the fly-by they did turn out to be quite wrong. People just will not apply much skepticism to their favorite models.

Curve fitting can work if the wind is fair and the force is with you. Kepler figured out that planets moved in elliptical orbits with the Sun at one of the foci by curve fitting. It was Newton who later (sort of) figured out why. (We still don’t seem to really understand squat about gravity, although we can characterize its effects very satisfactorily.) But I think Kepler’s work was a rare exception where a single “easily” analyzed natural phenomenon almost completely controlled the situation.
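Kepler’s third law is another compact example of curve fitting that worked: a straight-line fit of log period against log orbital radius for the six planets known to him gives a slope of almost exactly 3/2, i.e. T² ∝ a³. The values below are standard rounded figures (semi-major axis in AU, sidereal period in years):

```python
import math

# Semi-major axis (AU) and sidereal period (years), rounded standard values.
PLANETS = {
    "Mercury": (0.387, 0.241),
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.862),
    "Saturn": (9.537, 29.457),
}

def loglog_slope(pairs):
    """Ordinary least-squares slope of log(period) against log(semi-major axis)."""
    pairs = list(pairs)
    xs = [math.log(a) for a, _ in pairs]
    ys = [math.log(t) for _, t in pairs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx

print(round(loglog_slope(PLANETS.values()), 3))  # very close to 1.5
```

The difference from most climate curve fits is that one dominant physical process controls the data, and the fitted exponent later fell straight out of Newton’s theory.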

I put “easily” in quotes because what Kepler did was anything but easy given the mathematical and theoretical tool kit he had to work with.

In general I think Willis is dead right. It’s reasonable to try curve fitting on the off chance that you might learn something. But you probably won’t. Then, if it fails to tell you anything useful, you should move on. Adding more variables to salvage your failed curve fit is likely to be a total waste of time.

To play Devil’s Advocate slightly, though, the Fermi story is largely irrelevant. We are not trying to get to the sort of “truths” that Fermi and Dyson were, but to get to a point where we can say with some degree of reasonable certainty whether or not man-made CO2 is going to be a problem.

The problem is that climate science claims (i) a level of understanding of the climate and (ii) an ability to model that are obviously far beyond their actual capabilities. I am not looking for Fermi’s level of proof, because we are dealing with potentially serious real world problems.

Phoenix, if we are indeed dealing with “potentially serious real world problems” then I would want a GREATER degree of proof than if we’re discussing the mating habits of coleopterids, not a lesser degree … call me crazy, but I’m unwilling to spend billions of dollars based on “some degree of certainty” as the climate alarmists recommend, thank you very much.

w.

Willis, I agree with you entirely on the uselessness of curve fitting in climate modelling.

see http://climatesense-norpag.blogspot.com/2017/02/the-coming-cooling-usefully-accurate_17.html

Here is a quote from Section 1 of the link:

“Harrison and Stainforth 2009 say (7): “Reductionism argues that deterministic approaches to science and positivist views of causation are the appropriate methodologies for exploring complex, multivariate systems where the behavior of a complex system can be deduced from the fundamental reductionist understanding. Rather, large complex systems may be better understood, and perhaps only understood, in terms of observed, emergent behavior. The practical implication is that there exist system behaviors and structures that are not amenable to explanation or prediction by reductionist methodologies. The search for objective constraints with which to reduce the uncertainty in regional predictions has proven elusive. The problem of equifinality ……. that different model structures and different parameter sets of a model can produce similar observed behavior of the system under study – has rarely been addressed.” A new forecasting paradigm is required.

An exchange with Javier on a recent WUWT thread went:

“Javier

March 19, 2018 at 11:37 am

Norman, don’t you read my articles here at WUWT? I wrote an article last week about the millennial solar cycle and how it is identified both in solar activity proxies and climate proxies. You can look it up.

The problem is that the millennial cycle does not peak in 2004. It peaks ~ 2095, and definitely between 2050-2100. The article explains it.

Dr Norman Page

March 19, 2018 at 2:00 pm

Javier as you see I wrote -” Looks like we are on the same page” after seeing your 13th article Fig 5 and Fig 7 see also the spectral analysis in comment

https://wattsupwiththat.com/2018/03/13/do-it-yourself-the-solar-variability-effect-on-climate/#comment-2764127

Nowhere in the article do I see an explanation for ” It peaks ~ 2095, and definitely between 2050-2100. ”

Your 5:29 pm comment of the 13th shows a Figure with a peak late in the 21st century. But this looks like a curve derived from some mathematical formula. Nature doesn’t do math – it creates fuzzy cycles. I pick my peak from the extant empirical temperature and neutron data. The 990 – 2004 cycle is not symmetrical – more like a sawtooth shape with about a 650 year down leg and 350 year up leg. Projections which ignore the 2004 apex or turning point are unlikely to be successful in my opinion.”

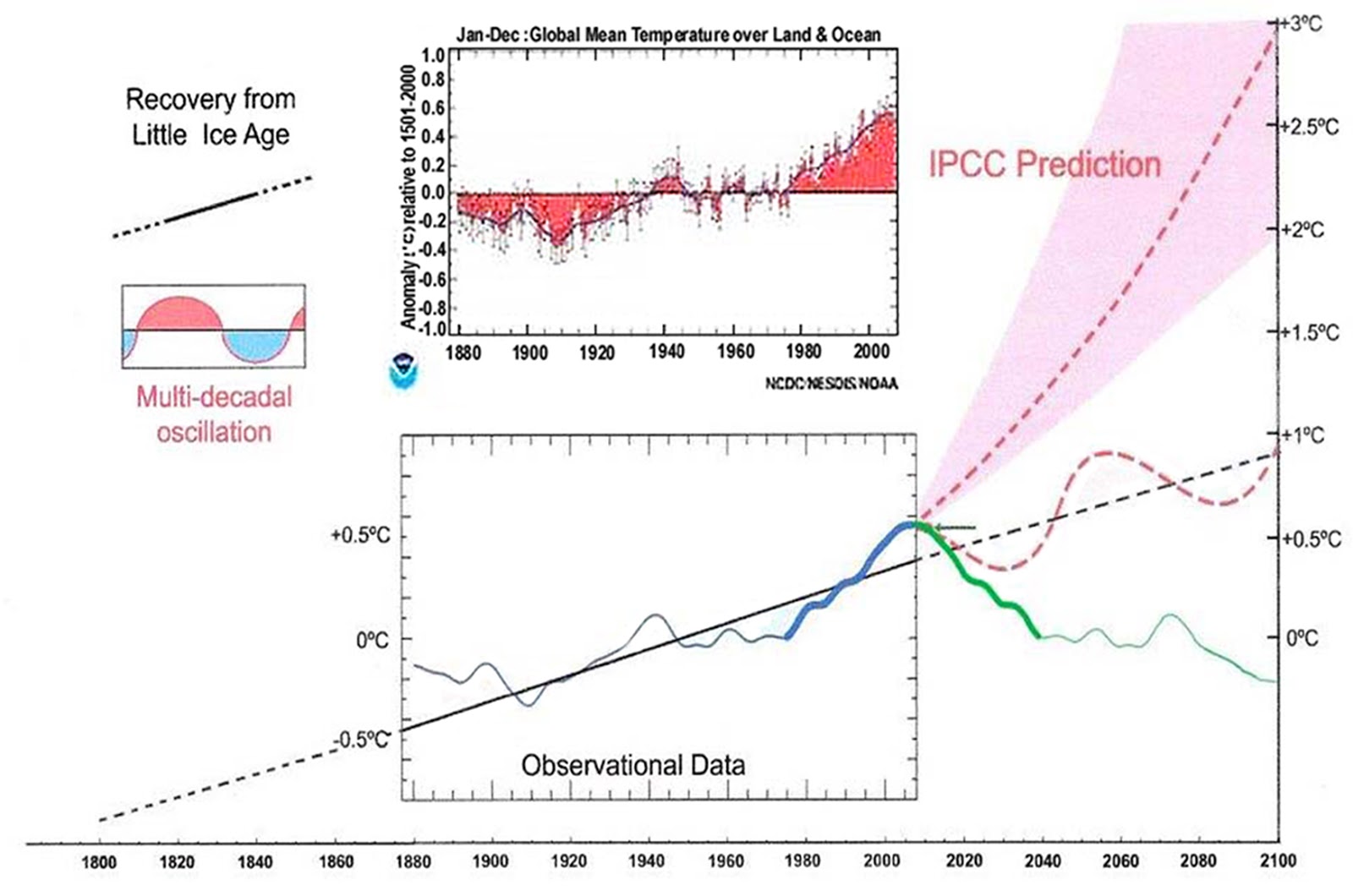

Here is my forecast to 2100, based on the observed millennial and 60-year cycles picked from the data in Figs 3 and 4 in the link.

Fig. 12. Comparative Temperature Forecasts to 2100.

Fig. 12 compares the IPCC forecast with the Akasofu (31) forecast (red harmonic) and with the simple and most reasonable working hypothesis of this paper (green line): that the “Golden Spike” temperature peak at about 2003 is the most recent peak in the millennial cycle. Akasofu forecasts a further temperature increase to 2100 of 0.5 °C ± 0.2 °C, rather than the 4.0 °C ± 2.0 °C predicted by the IPCC, but this interpretation ignores the millennial inflexion point at 2004. Fig. 12 shows that the well-documented 60-year temperature cycle coincidentally also peaks at about 2003. Looking at the shorter, roughly 60-year wavelength modulation of the millennial trend, the most straightforward hypothesis is that the cooling trends from 2003 forward will simply be a mirror image of the recent rising trends. This is illustrated by the green curve in Fig. 12, which shows cooling until 2038, slight warming to 2073 and then cooling to the end of the century, by which time almost all of the 20th century warming will have been reversed. Easterbrook 2015 (32) based his 2100 forecasts on the warming/cooling (mainly PDO) cycles of the last century. These are similar to Akasofu’s because Easterbrook’s Fig 5 also fails to recognize the 2004 millennial peak and inversion. Scafetta’s 2000-2100 projected warming forecast (18) ranged between 0.3 °C and 1.6 °C, which is significantly lower than the IPCC GCM ensemble mean projected warming of 1.1 °C to 4.1 °C. The difference between Scafetta’s paper and the current paper is that his Fig. 30 B also ignores the millennial temperature trend inversion, here picked at 2003, and he allows for the possibility of a more significant anthropogenic CO2 warming contribution.


“One way, and this is the way I prefer, is to have a clear physical picture of the process that you are calculating. The other way is to have a precise and self-consistent mathematical formalism. You have neither.”

Hoping to predict the earth’s long-term temperature by having a clear physical picture of the solar system is a non-starter. Consider the recent issues raised by the CERN CLOUD experiments. No one has that clear picture. The other way is probably a non-starter as well, with or without “self-consistent mathematical formalism,” whatever that means. The databases do not have sufficient coverage in time and space to yield useful results. A third requirement is that the results must be testable within a practical time frame. Now, this is not likely to be achievable within the lifetimes of most humans alive today.

Expectations of long-term climate studies should be defined before embarking on lifetime projects running down rabbit holes and producing nothing of value. A practical goal is to successfully predict global mean temperatures, or whatever, within a range of values narrow enough to realistically guide public policy decisions. Until then, “What if” studies can be deferred for a few decades until the boundary conditions are known, that is, probability weighted estimates, not hot button “high” estimates or “low” estimates that are, by themselves, meaningless.

Willis wrote: “After a bit of experimentation, I found that I could get a very good fit using only Snow Albedo and Orbital variations.”

When one performs a multiple linear regression, isn’t one first supposed to analyze the explanatory variables for covariance? When two variables are highly correlated, I believe one is supposed to eliminate one of them and admit that one can’t know which potential explanatory variable is responsible. However, you did explicitly say that you arrived at your equation by performing a multiple linear regression.
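One common way to do the screening described above is to compute pairwise correlations among the candidate explanatory variables and flag highly correlated pairs before running the regression. For a pair of predictors, the variance inflation factor is 1/(1 − r²). The 0.8 cutoff and the three series below are illustrative assumptions, not values from the post:

```python
import math
from itertools import combinations

def pearson_r(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def flag_collinear(predictors, threshold=0.8):
    """Return (name1, name2, r, VIF) for each pair whose |r| exceeds the threshold."""
    flagged = []
    for (n1, s1), (n2, s2) in combinations(predictors.items(), 2):
        r = pearson_r(s1, s2)
        if abs(r) > threshold:
            vif = 1.0 / (1.0 - r * r) if abs(r) < 1 else math.inf
            flagged.append((n1, n2, r, vif))
    return flagged

# Made-up predictors: two rising trends and one oscillation.
t = list(range(50))
predictors = {
    "trend_a": [0.02 * i for i in t],
    "trend_b": [0.03 * i + 0.1 * math.sin(i) for i in t],
    "cycle": [math.sin(2 * math.pi * i / 11) for i in t],
}
for n1, n2, r, vif in flag_collinear(predictors):
    print(f"{n1} vs {n2}: r={r:.2f}, VIF={vif:.1f}")
```

Only the two trends get flagged; either could “explain” a trending target equally well, which is exactly the ambiguity described above.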

Many ENSO indices involve SST, which is a problem when one is trying to predict or explain warming. Christie et al used a cumulative MEI index, which would imply that the 1982 El Nino still impacts today’s temperature. If one wants a temperature-independent ENSO index, an older version relied upon the difference in surface pressure between Tahiti and Darwin. Total atmospheric pressure is conserved.

Frank March 25, 2018 at 2:43 pm

Thanks, Frank. I used a simple procedure. I had a total of twelve different explanatory variables. I did a multiple linear regression with all of them. Then I started throwing out the ones that didn’t explain much (large p-values). When I got down to just a few, I messed around adding and taking out variables until I found the best pair, which were orbital (which is basically a straight line) and snow albedo – BC.
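A rough sketch of the mechanical first part of that procedure: fit all candidates, then repeatedly drop the predictor with the largest p-value. The assumptions here are loud ones. P-values use a normal approximation to the t distribution (reasonable for large samples, optimistic for small ones). The stopping point `keep` is arbitrary. The data are synthetic. The hand-tuned final stage isn’t modelled. Note too that choosing predictors this way is itself a form of the data snooping the post warns about, so the survivors’ p-values are no longer honest:

```python
import math
import random

def invert(M):
    """Gauss-Jordan inverse of a small square matrix (lists of floats)."""
    n = len(M)
    A = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        p = A[col][col]
        A[col] = [v / p for v in A[col]]
        for r in range(n):
            if r != col:
                f = A[r][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [row[n:] for row in A]

def ols_pvalues(columns, y):
    """OLS with intercept; returns {name: (coefficient, two-sided p-value)}."""
    names = list(columns)
    n, k = len(y), len(names) + 1
    X = [[1.0] + [columns[name][i] for name in names] for i in range(n)]
    XtX = [[sum(row[a] * row[b] for row in X) for b in range(k)] for a in range(k)]
    Xty = [sum(row[a] * yi for row, yi in zip(X, y)) for a in range(k)]
    inv = invert(XtX)
    beta = [sum(inv[a][b] * Xty[b] for b in range(k)) for a in range(k)]
    resid = [yi - sum(b * v for b, v in zip(beta, row)) for row, yi in zip(X, y)]
    sigma2 = sum(r * r for r in resid) / (n - k)
    out = {}
    for j, name in enumerate(names, start=1):
        t = beta[j] / math.sqrt(sigma2 * inv[j][j])
        out[name] = (beta[j], math.erfc(abs(t) / math.sqrt(2)))  # normal approximation
    return out

def backward_eliminate(columns, y, keep=2):
    """Drop the predictor with the largest p-value until `keep` predictors remain."""
    cols = dict(columns)
    while len(cols) > keep:
        stats = ols_pvalues(cols, y)
        worst = max(stats, key=lambda name: stats[name][1])
        del cols[worst]
    return sorted(cols)

# Synthetic example: y depends on x1 and x2; x3..x6 are pure noise.
random.seed(42)
n = 200
data = {f"x{i}": [random.gauss(0, 1) for _ in range(n)] for i in range(1, 7)}
y = [2.0 * a - 1.5 * b + random.gauss(0, 0.5) for a, b in zip(data["x1"], data["x2"])]
print(backward_eliminate(data, y, keep=2))  # ['x1', 'x2']
```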

It’s a problem even when the indices are pressure-based, as I discussed above. You reference the SOI, the Southern Oscillation Index, based on the difference in surface pressure between Tahiti and Darwin … but those pressure differences are in turn correlated with temperature differences. We know that inter alia because the SOI and e.g. the Nino3.4 index are closely related, although one is pressure-based and the other temperature-based (R^2 = 0.41, p-value < 2.2E-16).

w.

If you are interested, Wikipedia (and many other places) has an article on the problem of multicollinearity among predictors in multiple linear regression.

“Multicollinearity does not reduce the predictive power or reliability of the model as a whole, at least within the sample data set; it only affects calculations regarding individual predictors. That is, a multivariate regression model with collinear predictors can indicate how well the entire bundle of predictors predicts the outcome variable, but it may not give valid results about any individual predictor, or about which predictors are redundant with respect to others.”
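A small synthetic demonstration of the point in that quotation. With two almost identical predictors, the individual fitted coefficients swing around from one noise realisation to the next, while their sum, the part the data actually pin down, stays close to the true value. The data and the closed-form two-predictor solver are illustrative assumptions:

```python
import random

def fit_two_predictors(x1, x2, y):
    """OLS slopes for y ~ b0 + b1*x1 + b2*x2, solved from centred 2x2 normal equations."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    a = [v - m1 for v in x1]
    b = [v - m2 for v in x2]
    c = [v - my for v in y]
    s11 = sum(u * u for u in a)
    s22 = sum(v * v for v in b)
    s12 = sum(u * v for u, v in zip(a, b))
    s1y = sum(u * w for u, w in zip(a, c))
    s2y = sum(v * w for v, w in zip(b, c))
    det = s11 * s22 - s12 * s12
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det

rng = random.Random(1)
n = 200
x1 = [rng.gauss(0, 1) for _ in range(n)]
x2 = [v + rng.gauss(0, 0.01) for v in x1]  # nearly a copy of x1
for trial in range(3):
    y = [u + v + rng.gauss(0, 0.5) for u, v in zip(x1, x2)]  # true b1 = b2 = 1
    b1, b2 = fit_two_predictors(x1, x2, y)
    print(f"trial {trial}: b1={b1:6.2f}  b2={b2:6.2f}  b1+b2={b1 + b2:.2f}")
```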

https://en.wikipedia.org/wiki/Multicollinearity

Pressure can’t change temperature globally and thereby contribute to global warming. Temperature in NINO regions can contribute to global warming. So, if I’m trying to separate the natural variability signal from ENSO from the GHG signal, I’d prefer to model the effect of ENSO using the SOI. However, I must admit I am having a hard time turning my gut feeling into a robust rationale. So I’ll defer to your argument.

Thanks for your reply.

Willis, you’ve got the trunk and the tail moving on the elephant with just two parameters.

In an above article, it is reported that climate scientists now have a global warming explanation for their embarrassing adventures of getting stuck in the Arctic Ice while surveying the inexorable meltdown. They studiously ignored what’s becoming a Fleet of Fools in Antarctica on a similar quest.

Now you are sending a lot of climate scientists away unhappy that a two parameter model betters their $300 million supercomputer products and it uses just two natural forcings (although I get it that the coefficients have been derived to navigate the temperature swings).

In both the ice and curve-fitting exercises, enormous hubris is on display. That they would then forecast worse-than-we-thought futures with these meaningless creations pretty well sums up the totality of their scientific research. Alas, where are today’s Enrico Fermis and Richard Feynmans to save researchers from their hubris?

Nicely done, Willis. When you mentioned that you can fashion a fit by tuning any inputs, it occurred to me that you could make the futility of the exercise even more obvious by using, say, the actual price of beef over the past century together with some other unrelated data set. It’s risen over time and with increased CO2. The USGS has annual mineral and metal prices since 1900 that could also be used.
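Gary’s point is easy to demonstrate: any two series that both trend upward over the same period will correlate strongly, whether or not they have anything to do with each other. The two series below are synthetic stand-ins, not actual beef prices or CO2 data. One is a linear trend with a wiggle and the other an accelerating trend:

```python
import math

def pearson_r(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))

years = range(1900, 2018)
# Synthetic, causally unrelated series that both happen to rise over the period.
price_like = [1.0 + 0.05 * (yr - 1900) + 0.5 * math.sin(yr / 3.0) for yr in years]
co2_like = [295.0 + 0.0045 * (yr - 1900) ** 2 for yr in years]
r = pearson_r(price_like, co2_like)
print(f"r = {r:.2f}, R^2 = {r * r:.2f}")
```

A high R^2 here says only that both series go up; it says nothing about cause.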

Cheers, and enjoy the rain while it lasts.

Gary

Thanks, Gary. You should enjoy this website, “Spurious Correlations” …

w.

“Now you are sending a lot of climate scientists away unhappy that a two parameter model betters their $300 million supercomputer products and it uses just two natural forcings (although I get it that the coefficients have been derived to navigate the temperature swings).”

A common mistake. When you compare two models (a GCM and Willis’s), you can’t simply compare them on the lowest-dimension statistic. For example, if I build a model of an aircraft, say with 6 degrees of freedom and every last aero detail, it is designed to predict more than one thing. It can of course also be used to predict the glide path on landing. The simple problem, predicting the glide path, can ALSO be done with a very simple model. In practice the simple model can even outperform the complex model. If you argue that the simple model is “better”, you really miss the point, because the simple model can only do one thing.

It can’t do takeoffs, or rolls, or turns, or a Herbst maneuver, whereas the 6 DOF model can. The simple model can’t do stalls, or spins, or any of the things that the real model can.

A GCM has to do more than surface temperatures. It has to do precipitation, winds, temperature at all altitudes, etc.

For SOME uses a simple model may be better than a complex model, such as calculating a glide path, but no one who works in the modelling business would argue or fret over cases where simple models outperform complex models.

WRT Willis’s model, there is a reason why snow albedo works so well. Any guess why? And it’s not a natural forcing. Do you have a wild guess why?

Too funny.

Thank you, Steven, for your thoughtful comments on the differences between models and the omnibus things they try to show. I think I was clear that I didn’t mean Willis had a useful climate model, costing a few dollars, by comparison with the science models. Two things:

a) For your aeronautical example, yes, they can make a model and test it in a short period, and the variables in the model come from physics and from a century of successful flight and experiment (wind tunnels etc.). At the start of a radical new design it wouldn’t hurt to have a model that gives some confidence that the thing will simply fly, which is the number one question. They can, of course, make a small physical model too, aware that, technically, the materials and air won’t be right for the downsized physical model.

The latter small assurance is really where we are with climate science. Complex interactions, poor quality and distribution of data sites, limited experimentation, and incomplete knowledge of what the variables are make it a different animal from aeronautics. Number one in climate science is temperature. It’s called global warming, for goodness’ sake, notwithstanding the name changes. Do their models fly (in a forecast)? Perhaps one day, but for the moment they have only crashes and burns. This is because tuning models and manipulating parameters with this basket of variables and unknowns, the way they do it, isn’t even at the nailing-two-sticks-together-and-throwing-it stage, and is little different from Willis’s model.

b) Aeronautical engineers don’t change the “data” (out of frustration?) to make the electronic model “fit”. I grant you TOBS, station moves, equipment changes…, but I am perplexed by the point Mark Steyn made at a Senate hearing, to the effect of: how can we be so confident what the temperature will be in a hundred years when we still don’t know what it will be in 1950? Now take this jambalaya and tune parameters to hindcast a model!

Steven, I believe you are an extraordinarily smart guy, but with a blind spot you didn’t used to have. I needn’t explain what the poker term “tell” is. Climate scientists routinely invoke “the physics” to aggrandize their craft, when it has really been a curve-fitting exercise after a sobering attempt at applying physics. Sociology became social “science” after it was thoroughly corrupted by anti-capitalist ideology; and what about the Deutsche Demokratische Republik invocation?

You always invoke favorable comparisons between climate models that haven’t worked and sophisticated engineering models that work like a clock (I’m an engineer, and uncertainty is always our number one concern; it’s why most engineers are CAGW sceptics).

Another tell is that you now come in to do battle against sceptics on articles showing fairly poor science. I know many here are knee-jerk anti-global-warming types, no different from mindless proponents of it. But you seem to show contempt for scepticism in general these days, when you know it should be the default position until bona fides are at least half established.

Re albedo, I initially didn’t realize Willis had soot in mind and thought he had erred in labelling albedo as anthropogenic instead of a natural forcing. I’m sure you want to tell me that the data come from a model. The larger albedo effect is measured by satellite, which I’m sure you would also point out is indirect and based on a model. “Model” is a word, not a certificate of worthiness. Good forecasts are the certificate. Plunge a good thermometer into boiling distilled water at sea level and I can predict what it will read.

Thank you for the Fermi – Dyson conversation; I hadn’t seen that before.

I have a simple thermal balance model for the 0-2000 m layer of the oceans in which the emissivity changes as a log function of CO2 concentration. It has a single factor, determined by minimising the squared error between the NOAA measured temperature data and the modelled temperature. The measured and modelled temperature anomalies are aligned at the year 2017, to take advantage of the more thorough ARGO data. This chart shows the comparison:

https://1drv.ms/b/s!Aq1iAj8Yo7jNgnXLo5LnjuHhohGM
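A minimal sketch of what a one-factor thermal balance fit of this kind might look like. Everything here is an assumption made for illustration, not a detail of the model described above: the 2000 m heat capacity, the fixed absorbed flux, the starting temperature and emissivity, the form ε(t) = ε0·(1 − k·ln(C/C0)), the annual Euler time step, and the golden-section search used to find k. The synthetic check only shows that the fitting step recovers a known k; it says nothing about real ocean data.

```python
import math

SIGMA = 5.670374419e-8        # Stefan-Boltzmann constant, W m^-2 K^-4
SECONDS_PER_YEAR = 3.156e7
# Assumed heat capacity of a 2000 m water column (depth * density * specific heat), J m^-2 K^-1
HEAT_CAPACITY = 2000.0 * 1025.0 * 3990.0


def simulate(k, driver, t0=288.0, eps0=0.65):
    """Annual Euler steps of a one-layer energy balance.

    Hypothetical form: eps(t) = eps0 * (1 - k * driver[t]); the absorbed flux
    is held fixed at the value that balances emission at t0 (driver[0] = 0).
    """
    absorbed = eps0 * SIGMA * t0 ** 4
    temps = [t0]
    for x in driver[1:]:
        eps = eps0 * (1.0 - k * x)
        net = absorbed - eps * SIGMA * temps[-1] ** 4   # W m^-2 into the layer
        temps.append(temps[-1] + net * SECONDS_PER_YEAR / HEAT_CAPACITY)
    return temps


def sse(k, driver, measured, ref):
    """Squared error between modelled and measured anomalies, both zeroed at index ref."""
    model = simulate(k, driver)
    return sum(((m - model[ref]) - (o - measured[ref])) ** 2
               for m, o in zip(model, measured))


def fit_k(driver, measured, ref, lo=0.0, hi=0.05, tol=1e-9):
    """Golden-section search for the single factor k (assumes one minimum in [lo, hi])."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    while b - a > tol:
        c, d = b - g * (b - a), a + g * (b - a)
        if sse(c, driver, measured, ref) < sse(d, driver, measured, ref):
            b = d
        else:
            a = c
    return (a + b) / 2.0


# Synthetic check: CO2 rising from 285 to 405 ppm over 168 years (illustrative numbers only).
years = 168
co2 = [285.0 + 120.0 * (i / (years - 1)) ** 2 for i in range(years)]
driver = [math.log(c / co2[0]) for c in co2]
measured = simulate(0.01, driver)            # pretend "observations" made with k = 0.01
k_fit = fit_k(driver, measured, ref=years - 1)
print(round(k_fit, 4))                       # 0.01
```

Aligning both series at a reference index (here the last year, standing in for 2017) means only the shape of the anomaly curve is fitted, not its absolute level.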

Limiting CO2 to 570 ppm results in an equilibrium rise of 0.083 K from the 1850 level; about 0.64 K from the current level.

Same model, but using the measured sunspot number with a 22-year delay to modify the emissivity, rather than any sensitivity to CO2:

https://1drv.ms/b/s!Aq1iAj8Yo7jNgniSxAGfk6xFTfkM
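The lagged sunspot driver could be built along these lines. This is a hypothetical sketch: the function names, returning None for the first 22 years (which have no earlier value to draw on), the default ε0, and the linear form ε(t) = ε0·(1 − k·SSN(t − 22)) are assumptions for illustration, not the commenter’s code.

```python
from typing import List, Optional


def lagged(series: List[float], lag: int = 22) -> List[Optional[float]]:
    """Delay an annual series by `lag` years; element t holds series[t - lag].

    The first `lag` years have no earlier value and are returned as None.
    """
    lag = min(max(lag, 0), len(series))
    return [None] * lag + list(series[: len(series) - lag])


def sunspot_emissivity(ssn: List[float], k: float, eps0: float = 0.65,
                       lag: int = 22) -> List[Optional[float]]:
    """Hypothetical linear form: eps(t) = eps0 * (1 - k * SSN(t - lag))."""
    return [None if s is None else eps0 * (1.0 - k * s) for s in lagged(ssn, lag)]


ssn = [float(v) for v in range(30)]   # placeholder values, not real sunspot numbers
eps = sunspot_emissivity(ssn, k=1e-4)
print(eps[22])                        # 0.65, since it uses SSN(0) = 0.0
```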

For this simple model the CO2-dependent emissivity gives a better fit than the sunspot-dependent emissivity. Of course, both CO2 and temperature could be driven in the same way by another variable.

The 0-2000 m thermal response is highly damped and is a better indication of thermal trends than any other temperature measurement, all of which carry the noise created by the chaos of weather. The heat imbalance reaches 1.4 W/sq.m, or 504 TW globally, which is well within the estimated 1000 to 1500 TW transport capacity of the thermohaline deep ocean circulation.
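Converting a flux imbalance to a total power is a multiplication by area. The 504 TW figure matches 1.4 W/sq.m applied over roughly 3.6 × 10^14 m², which is approximately the world ocean surface area; over the whole Earth surface (about 5.1 × 10^14 m²) the same flux would be about 714 TW. Both area values are standard approximations assumed here, not figures from the comment.

```python
OCEAN_AREA_M2 = 3.6e14   # approximate world ocean surface area (assumed value)
EARTH_AREA_M2 = 5.1e14   # approximate total surface area of the Earth (assumed value)


def imbalance_tw(flux_w_per_m2: float, area_m2: float) -> float:
    """Convert a flux imbalance (W/m^2) over an area (m^2) to terawatts."""
    return flux_w_per_m2 * area_m2 / 1e12


print(round(imbalance_tw(1.4, OCEAN_AREA_M2)))   # 504
print(round(imbalance_tw(1.4, EARTH_AREA_M2)))   # 714
```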

Rick, I don’t get the connection between emissivity and either CO2 or sunspots. Emissivity is a function of the nature of the emitting surface alone. How does, say, a black ball at a temperature of 290 K know how much CO2 or how many sunspots are above it? Reread the Fermi quote.

It is not a black ball. It is water with a thin yet complex surface coating or layer, which I have reduced to a single factor that I have termed emissivity because it reduces the rate of heat loss from the surface. In one version of the model the emissivity changes by a small factor based on a log function of the CO2 content in the surface layer. In the other I adjust the emissivity by a small factor based on a linear relationship with sunspots.

My emissivity term is more aptly described as the effective emissivity, as it is based on the measured average conditions at the Earth’s surface to achieve the initial thermal balance. Using the effective emissivity of the surface, where conditions can be measured, gives a better representation of Earth’s thermal balance than treating some non-surface layer as a black body emitting at an implied temperature somewhere above the actual surface.

RickWill March 26, 2018 at 5:17 am

Like Gary, I don’t have a clue what this means. Emissivity is inherent in the substance. The emissivity of water is not a function of CO2 concentration. What am I missing?

w.

Read it as effective emissivity: a single factor based on the ratio of the emitting power of Earth’s oceans to space to what it would be if they were a black body.

The oceans are the dominant store of heat in the climate system, and the ocean surface has the highest temperature, meaning all heat flows from that surface, whether into the deep ocean by mixing through waves and currents, or to the atmosphere by various means and then into space.

RickWill March 26, 2018 at 2:31 pm

I’m sorry, but near as I can tell you’re just making things up. AFAIK there is absolutely no evidence that emissivity is affected by either CO2 or sunspots. If you have a source saying that this is even theoretically possible, now would be the time to bust it out …

w.

Possibly the best-known and most readily available model of how the atmospheric layer affects the emissive power of the Earth’s surface is MODTRAN:

http://climatemodels.uchicago.edu/modtran/

This enables the user to adjust the surface temperature as well as various atmospheric components and then determine the radiating power of the surface. If you use it with the preset values it produces a radiating power at top of atmosphere of 298.52W/sq.m for a surface temperature of 299.7K. A black body surface at that temperature would emit 457.4W/sq.m. So the effective emissivity is determined to be 0.652 in this example.

If the value of CO2 is set to 280ppm then the radiating power is 300.22W/sq.m. In this case the effective emissivity is 0.656. MODTRAN readily demonstrates how CO2 alters the effective emissivity.
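The “effective emissivity” in this comment is simply the top-of-atmosphere flux divided by σT⁴ at the surface temperature. A short sketch reproducing that ratio from the MODTRAN outputs quoted above; those outputs are taken as given rather than recomputed with MODTRAN, and σ is the CODATA value of the Stefan-Boltzmann constant.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def blackbody_flux(temp_k: float) -> float:
    """Black-body emission sigma * T^4, in W/m^2."""
    return SIGMA * temp_k ** 4


def effective_emissivity(toa_flux: float, surface_temp_k: float) -> float:
    """Ratio of top-of-atmosphere flux to black-body emission at the surface temperature."""
    return toa_flux / blackbody_flux(surface_temp_k)


# MODTRAN outputs as quoted in the comment (not re-run here)
print(round(effective_emissivity(298.52, 299.7), 4))  # 0.6526 (preset CO2)
print(round(effective_emissivity(300.22, 299.7), 4))  # 0.6563 (CO2 = 280 ppm)
```

The two ratios agree with the 0.652 and 0.656 quoted to within rounding. Note that this single number bundles surface emissivity together with atmospheric absorption and re-emission.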

My model determines an effective emissivity of the ocean surface. In one case I use CO2 as the only factor affecting the effective emissivity. In the other I use sunspot number as the sole factor affecting emissivity. It happens that when the effective emissivity is modified by a small factor dependent on the log of CO2, the model gives a good fit to the measured temperature anomaly. The sunspot-dependent emissivity does not give a very good fit.

Rick, it sounds like you are measuring the amount of energy radiated from say the ocean that makes it through the atmosphere, comparing it to the amount emitted at the ocean surface, and giving it the name “effective emissivity”.

Let me suggest that, if this is the case, you call it by some other name. “Emissivity” has a clearly defined meaning in science, and it has nothing to do with anything but the material. In particular, it does not depend on whether the underlying material is in a vacuum or surrounded by a gas.

What it appears you are measuring is the absorptivity of the overlying atmosphere (which per Kirchhoff’s law is equal to the emissivity of the atmosphere at any given frequency).

But this is not the emissivity, or even the “effective emissivity” of the ocean. All you do with that name is confuse things entirely as you have just seen here.

In general, trying to redefine a name that already has a clear scientific meaning is an exercise in futility.

w.

No part of Earth’s surface meets the strict definition of emissivity, as it applies only to an isothermal surface. However, the term is widely used that way.

The surface of Earth’s oceans is most often warmer than the air above and the water below, so all heat flows from this surface, whether up or down. That should be the emitting temperature. I could go through the complex process of analysing the emission and absorption for myriad layers of gases in the atmosphere, similar to MODTRAN, but the energy released from the surface all ends up radiated to space. I have lumped all that complexity into a single parameter. In my view it is best described as emissivity, as it reduces the radiation from the emitting surface at its average temperature compared with what a black surface with no absorbing layers would produce.

An observer on the Moon would see a multicoloured sphere. Without prior knowledge of the atmosphere, they would see a surface with varying emissive power due to changes in the emitting surface temperature and the pixel-level emissivity. The emissivity changes all over that surface. I reduce all those pixel-level values to a single average value that encompasses the very thin atmospheric layer.

I am willing to consider terms other than effective emissivity, but it is not absorption, as atmospheric absorption only affects a modest proportion of the energy ultimately emitted to space.

Transmittance factor may be a more applicable term, as it implies passing through the atmosphere and what is eventually released to space. The single term would lump together the average surface emissivity and the average transmittance of the atmospheric layer.

RickWill March 27, 2018 at 9:36 pm

Thanks, Rick. I don’t understand the purpose of lumping together a) the emissivity of the underlying surface, b) the transmissivity of the overlying atmosphere, and c) the “atmospheric window”, running all of that in reverse through the Stefan-Boltzmann equation and calling it “effective emissivity”. Other than confusing me and everyone else, what do you gain from that?

Best regards,

w.

The purpose is to simplify Earth’s energy balance to a single dependent factor that lumps atmospheric, surface and external factors together then use the model to test various theories on how measured changes in the atmosphere or outside the atmosphere, like sunspots, correlate to measured changes in the temperature anomaly.

I will do a write-up on the model covering the key features and results, and publish it. The reason I made the initial post here was to make the point that a single-parameter model, based on increasing CO2, gives good correlation with the measured 0-2000 m ocean temperature anomaly. This temperature is a good representation of the total energy in the climate system on Earth and has little noise. It is the most likely candidate for determining the actual climate trend. I do not need a myriad of tunable parameters to achieve good correlation; just one that is a log function of CO2.

If the 0-2000 m anomaly continues on its current trajectory till 2030, I would say CO2 is a dominant factor. If we see a downturn in the anomaly in the next couple of years, it would indicate that CO2 is not a dominant player in the energy balance.

And the margin of error in the actual measurement is what? And the NIST-traceable calibration records are available for review where? Claiming a 1-sigma accuracy better than ±0.5°C for any temperature record is optimistic fantasy at best. This would fail an ISO 9000 audit immediately in industry. This is why the CO2 sensitivity is unlikely to be high. We are still bouncing around in the same natural variability range we always have, in spite of nearly doubling the CO2. There is no statistically valid evidence of any unusual temperature variation at all.

What matters most to me is the method used to create an Average Global Temperature going back in time. Focusing on the land-based data only for a moment, it is pretty clear there are a lot of gaps in the record. Take, for example, the GHCN daily maxima and minima data set. I pulled the data from this site.