A Quick Note from Kip Hansen

A quick note for the amusement of the bored but curious.


While in search of something else, I ran across this enlightening page from the folks at UCAR/NCAR [The University Corporation for Atmospheric Research/The National Center for Atmospheric Research — see pdf here for more information]:

“What is the average global temperature now?”

We are first reminded that “Climate scientists prefer to combine short-term weather records into long-term periods (typically 30 years) when they analyze climate, including global averages.” As we know, these 30-year periods are referred to as “base periods” and different climate groups producing data sets and graphics of Global Average Temperatures often use differing base periods, something that has to be carefully watched for when comparing results between groups.

Then things get more interesting, in that we get an actual number for Global Average Surface Temperature:

“Today’s global temperature is typically measured by how it compares to one of these past long-term periods. For example, the average annual temperature for the globe between 1951 and 1980 was around 57.2 degrees Fahrenheit (14 degrees Celsius). In 2015, the hottest year on record, the temperature was about 1.8 degrees F (1 degree C) warmer than the 1951–1980 base period.”

Quick minds see immediately that 1.8°F warmer than 57.2°F is actually 59°F [or 15°C], which they simply could have said.
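For anyone checking the arithmetic, the only subtlety is that an absolute reading and an anomaly (a difference) convert between scales differently. Only absolute values carry the 32-degree offset. The minimal sketch below uses UCAR's two quoted figures. The function names are my own, for illustration only.

```python
def f_to_c(temp_f: float) -> float:
    """Convert an absolute Fahrenheit reading to Celsius (applies the 32-degree offset)."""
    return (temp_f - 32.0) * 5.0 / 9.0


def delta_f_to_c(delta_f: float) -> float:
    """Convert a Fahrenheit *difference* (e.g. an anomaly) to Celsius: scale only, no offset."""
    return delta_f * 5.0 / 9.0


baseline_f = 57.2  # UCAR's 1951-1980 global average
anomaly_f = 1.8    # UCAR's 2015 anomaly against that base period
absolute_2015_f = baseline_f + anomaly_f

print(f"base period: {baseline_f:.1f} F = {f_to_c(baseline_f):.1f} C")        # 57.2 F = 14.0 C
print(f"anomaly:     {anomaly_f:.1f} F = {delta_f_to_c(anomaly_f):.1f} C")     # 1.8 F = 1.0 C
print(f"2015:        {absolute_2015_f:.1f} F = {f_to_c(absolute_2015_f):.1f} C")  # 59.0 F = 15.0 C
```

Running the anomaly through the absolute formula by mistake would give roughly -16.8 C, which is why the two conversions are kept separate.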

UCAR/NCAR goes on to “clarify”:

“Since there is no universally accepted definition for Earth’s average temperature, several different groups around the world use slightly different methods for tracking the global average over time, including:

NASA Goddard Institute for Space Studies

NOAA National Climatic Data Center

UK Met Office Hadley Centre”

We are told, in plain language, that there is no accepted definition for Earth’s average temperature, but assured that it is scientifically tracked by the several groups listed.

It may seem odd to the scientifically-minded that Global Average Temperature is measured and calculated to the claimed precision of hundredths of a degree Celsius without first having an agreed upon definition for what is being measured.

When I went to school, we were taught that all data collection and subsequent calculation requires the prior establishment of [at least] an agreed upon Operational Definition of the variables, terms, objects, conditions, measures, etc. involved.

A brief of the concept: “An operational definition, when applied to data collection, is a clear, concise detailed definition of a measure. The need for operational definitions is fundamental when collecting all types of data. When collecting data, it is essential that everyone in the system has the same understanding and collects data in the same way. Operational definitions should therefore be made before the collection of data begins.”

Nonetheless, after having informed the world that there is no agreed upon definition for Global Average Temperature, UCAR assures us that:

“The important point is that the trends that emerge from year to year and decade to decade are remarkably similar—more so than the averages themselves. This is why global warming is usually described in terms of anomalies (variations above and below the average for a baseline set of years) rather than in absolute temperature.”

In fact, the reported anomalies themselves differ one from another by more than 0.49°C, an amount just slightly smaller than the whole reported temperature anomaly from 1987 to date (a 30-year climate period). [The difference between GISS June 2017 and UAH June 2017.]

So, let’s summarize:

- We are told that 2015, the HOTTEST year ever, was … what? … 59°F or 15°C, which is not hot except maybe in the opinion of the Inuit and other Arctic peoples, and which may be a clue as to why they really talk in anomalies instead of absolute temperatures.

- Although a great deal of fuss is being made out of Global Average Temperature, there is no agreed upon definition of what Global Average Temperature actually means or how to calculate it.

- Despite the problems of the second point above, major scientific groups around the country and the world are happily calculating away on the as-yet undefined metric, each in a slightly different way.

- Luckily (literally, apparently), the important point is that although all the groups get different answers to the Global Average Surface Temperature question (we suppose it’s because of that lack of an agreed upon definition of what they are calculating), the trends they find are “remarkably similar”. [That choice of wording does not fill me with confidence in the scientific rigor of the findings; it sounds so much like my term, “luckily”.] Even less reassuring is being told that the trends are “more [remarkably similar] … than the averages themselves.”

- And finally, because there is no agreed upon definition of Global Average Temperature, and the results for the undefined metric from varying groups are less [remarkably] similar than the trends, even the calculated anomalies themselves from the different groups are as far apart from one another as the entire claimed temperature rise over the last 30-year climatic period.

# # # # #

Author’s Comment Policy:

Although some of this brief note is intended tongue-in-cheek, I found the UCAR page interesting enough to comment on.

Certainly a far cry from settled science, on both counts by the way: not settled, and [some of it] not solid science.

I’m happy to read your comments and reply — but not to Climate Warriors.

# # # # #

Using anomalies to study temperatures, when we have such a ridiculously small set of numbers for an almost incomprehensible reality, is simply nonsense.

1- There is no such thing as “normal” in climate or weather.

2- What exactly am I supposed to expect in the future based upon the range of possibilities we see in the geologic record? Are the changes we see happening really all that extreme?

3- No.

a·nom·a·ly /əˈnäməlē/ (noun)

1. something that deviates from what is standard, normal, or expected.

Anomalies only exist in the minds of the creators of “normal”.

There is a way to calculate a meaningful average: the Stefan-Boltzmann temperature of an ideal black body emitting the average emissions of the surface. This is the convention used for establishing temperatures from satellite measurements, whose sensors only measure emissions. Averaging emissions is far more valid than averaging temperature, since Joules are Joules and each is capable of the same amount of work; in fact, the units of work are Joules. The system is quite linear in Joules, which should be expected based on the constraints of COE (conservation of energy). The problem is that the temperature-centric sensitivity used by the consensus is intrinsically nonlinear, since emitted energy is proportional to the temperature raised to the fourth power.
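To see why the choice matters, here is a minimal sketch comparing the two averages for two hypothetical regions of equal area at 250 K and 300 K (made-up values, not data). Averaging the temperatures and averaging the black-body emissions, then converting back to a temperature, give different answers because emission goes as T to the fourth power.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def bb_emission(temp_k: float) -> float:
    """Flux emitted by an ideal black body at temp_k (W/m^2)."""
    return SIGMA * temp_k ** 4


def bb_equivalent_temp(flux_w_m2: float) -> float:
    """Temperature of the ideal black body that emits flux_w_m2."""
    return (flux_w_m2 / SIGMA) ** 0.25


# Two hypothetical regions of equal area.
temps_k = [250.0, 300.0]

mean_temp_k = sum(temps_k) / len(temps_k)                        # average the temperatures
mean_flux = sum(bb_emission(t) for t in temps_k) / len(temps_k)  # average the emissions
equiv_temp_k = bb_equivalent_temp(mean_flux)                     # convert back to a temperature

print(f"average of temperatures:         {mean_temp_k:.1f} K")
print(f"temperature of average emission: {equiv_temp_k:.1f} K")
```

Here the emission-based figure comes out about 3.4 K warmer than the plain temperature average. It can never come out lower, because a fourth-power mean is always at least as large as the arithmetic mean.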

The reason they do this is that expressing the sensitivity as 0.8C per W/m^2 sounds plausible, while expressing the same thing in the energy domain becomes 4.3 W/m^2 of incremental surface emissions per W/m^2 of forcing, which is obviously impossible. The 1 W/m^2 of forcing must result in 3.3 W/m^2 of ‘feedback’, and any system whose positive feedback exceeds the input is unconditionally unstable. The climate system is quite stable, otherwise we wouldn’t even be here to discuss it.
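The 4.3 figure can be checked from the slope of the Stefan-Boltzmann law, dP/dT = 4σT³. The sketch below assumes a mean surface temperature of 288 K, which the comment does not state. It only reproduces the unit conversion and does not test the stability argument itself.

```python
SIGMA = 5.670374419e-8          # Stefan-Boltzmann constant, W m^-2 K^-4
surface_temp_k = 288.0          # assumed mean surface temperature (not stated in the comment)
sensitivity_k_per_w_m2 = 0.8    # the quoted sensitivity, K per W/m^2 of forcing

# Slope of the Stefan-Boltzmann curve at the surface temperature: dP/dT = 4 sigma T^3
slope_w_m2_per_k = 4 * SIGMA * surface_temp_k ** 3

# Extra surface emission per 1 W/m^2 of forcing, and the part not supplied by the forcing itself
surface_emission_per_forcing = sensitivity_k_per_w_m2 * slope_w_m2_per_k
implied_feedback = surface_emission_per_forcing - 1.0

print(f"slope at {surface_temp_k:.0f} K:        {slope_w_m2_per_k:.2f} W/m^2 per K")
print(f"surface emission per W/m^2: {surface_emission_per_forcing:.2f}")
print(f"implied 'feedback':         {implied_feedback:.2f}")
```

At 288 K the slope is about 5.42 W/m^2 per K. Multiplying by 0.8 gives roughly 4.33 W/m^2, of which about 3.33 W/m^2 is the ‘feedback’ the comment refers to.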

Climate science has abused the meaning of Black Body. What it means in climate science is not what it means to Kirchhoff.

And Kirchhoff has been proven wrong too, in the lab. The material makeup of the “black body” matters. Kirchhoff claimed it did not.

Kirchhoff’s radiation law refers to bodies in local thermal equilibrium. Inside your refrigerator, or your living room, all items come to the same temperature eventually, regardless of emissivity. That is Kirchhoff’s law. In a far-from-equilibrium situation like sun, earth, and space, where equilibrium does not occur, emissivities do make a difference, unless the bodies concerned are greybodies, which also follow Kirchhoff’s law at thermal equilibrium.

The earth is sometimes considered a blackbody as an approximation. The sun is a blackbody.

Kirchhoff’s Law of Thermal Emission is proven false. Laboratory experiments have invalidated it.

I’ll say it again, EARTH IS NOT A BLACK BODY AND CANNOT BE TREATED AS ONE

Correct! It surprises me they have got away with such nonsense for so long.

Well we can be sure that the earth is NOT a ” Black Body “.

There is NO such thing as a black body, in ANY sense of the term.

It is a fictional abstract that simply cannot exist anywhere, so since it cannot exist, it must not exist anywhere.

There is NO material or object OF ANY KIND that can and does absorb 100.000000..% of even ONE single frequency or wavelength of Electro-Magnetic Radiation energy that falls upon it. And a ” Black Body ” is required to do that for ALL frequencies and wavelengths from zero frequency up to zero wavelength.

In order to have zero reflectance, a black body would have to have the exact same permeability and permittivity as free space (μ₀ and ε₀), and at all frequencies and wavelengths.

So the Stefan-Boltzmann law, and the Planck Radiation formula for the spectral radiant emittance of a black body, are all simply theoretical.

But REAL bodies that are quite good approximations, over limited ranges, to what a BB is supposed to do can be constructed, and those theories are very useful for doing practical calculations and designs. We do know that no body can, due solely to its Temperature, emit any wavelength or frequency of radiation energy at a higher rate than predicted for a black body.

So BB radiation theory provides a boundary envelope constraining REAL THERMAL radiation spectral radiant emittance. NON-THERMAL sources of radiation energy can’t be compared to BBs, because their radiances are not driven by any Temperature, but by material specific properties.

BB radiation is entirely independent of ANY material considerations, and depends only on Temperature.

G

Mark,

I never said the Earth is a black body. Only that the surface itself (excluding the effects of the atmosphere) has an emissivity close enough to 1 that we can consider it to be a black body without introducing any significant error. The equivalent surface temperatures calculated from satellite data based on considering the surface an ideal BB match measured surface temperatures quite well and track changes in surface temperatures even better.

Nothing is an ideal bb, but there’s a lot that is approximately a bb, including the surface of the Earth (actually the top of the oceans and bits of land that poke through), the surface of the Moon and much more.

The Earth itself, at least viewed from space, looks more like a gray body with an effective emissivity of about 0.61 relative to its surface temperature than like a black body.

We can also use the concept of an equivalent black body to establish an energy domain equivalence. For example, the average of 239 W/m^2 emitted by the Earth has an EQUIVALENT black body temperature of 255K. What this means is that the total radiation (energy) leaving the Earth is the same amount as that from an ideal BB at 255K.
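Both numbers in the two paragraphs above follow from inverting the Stefan-Boltzmann law. The sketch below assumes a mean surface temperature of 288 K for the gray-body emissivity. The comment does not specify that value, and a slightly different choice moves the emissivity by a point or so.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
outgoing_w_m2 = 239.0    # average emission leaving the Earth, per the comment
surface_temp_k = 288.0   # assumed mean surface temperature

# Temperature of an ideal black body emitting the same flux: T = (P / sigma)^(1/4)
equivalent_temp_k = (outgoing_w_m2 / SIGMA) ** 0.25

# Gray-body view: emissivity that makes a 288 K surface emit only 239 W/m^2
effective_emissivity = outgoing_w_m2 / (SIGMA * surface_temp_k ** 4)

print(f"equivalent black body temperature: {equivalent_temp_k:.1f} K")
print(f"effective emissivity at {surface_temp_k:.0f} K:   {effective_emissivity:.2f}")
```

This gives roughly 254.8 K and 0.61, consistent with the figures quoted.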

I don’t get why so many people get so bent out of shape regarding the concept of EQUIVALENCE. It all boils down to EQUIVALENT energy, and since Joules are Joules, why is this such a big deal?

George,

Many years ago I read an article in Scientific American (back when it was worth reading) about an ‘invention’ that came very close to being a Black Body. It was a stack of double-edged razor blades bolted together. The sharp edges allowed light to enter between the blades, but it got trapped in the ‘canyons’ between them. Thus, it came very close to absorbing all the light that impinged on the razor blade edges.

Black Body razor blade details: https://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19630000002.pdf

cnoevil,

Do I understand you to say both that satellite EST, based on BB radiation, is very close to measured surface temperature (14C or 287K) and that BB EST is really 255K. Please explain.

“It was a stack of double-edged razor blades …”

Will any razor blades work or do they need to be some of Occam’s?

@graphicconception

“Will any razor blades work or do they need to be some of Occam’s?”

Ha!

Skorrent1,

Yes, that is correct. The surface temperatures derived from observed emissions at TOA track surface measurements very well.

The total radiation at TOA is not measured by weather satellites, which only measure emissions in a couple of bands in the transparent region of the atmosphere and a narrow band that’s sensitive to water vapor content. However, the same analysis that applies a radiative transfer model to measured results to establish the surface temperature can be used to determine the total power leaving the planet, and this is very close to the 240 W/m^2 corresponding to a 255K average temperature.

There is such a thing as a black body: take a box and coat the inside with graphite or soot. Seal it, and the soot or graphite will create a radiation curve and induce thermal equilibrium.

This is essential to the gaseous sun model.

Kirchhoff claimed it does not matter what material constitutes the black body, and he used soot or graphite to speed up the process of thermal equilibrium, as those materials absorb and emit.

But Kirchhoff was incorrect: it does indeed matter what the cavity consists of. This has been tested in laboratory experiments, and using different materials produces different results.

Kirchhoff assumed any material would produce the same results, but obviously he might have had to wait 10 days for thermal equilibrium and the radiation curve he wanted, so he covered the inside with soot or lamp black.

The thing is, it was the lamp black that produced the results he wanted NOT the cavity.

This means THE GASEOUS SOLAR MODEL IS DEAD

If one understands the standard model for a gaseous sun and the implications of Kirchhoff’s law being wrong, it means the sun cannot be a gaseous body.

Furthermore, if the sun has even a tiny amount of condensed matter, it cannot collapse into a black hole, ever.

Black holes are anti science nonsense.

For the earth to be treated as a “black body” there would have to be NO inputs and NO outputs.

Anti science JUNK

This is not gas folks.

http://wwwcdn.skyandtelescope.com/wp-content/uploads/Faculae-on-puffy-granules-Goran-Scharmer_RSAS.jpg

http://wwwcdn.skyandtelescope.com/wp-content/uploads/Puffy-Granules_m.jpg

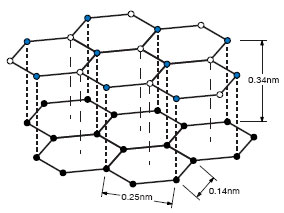

It’s a lattice, similar to this, which is not possible with the gaseous sun

http://www.sunflowercosmos.org/img_glossary_of_astronomy/granule.jpg

Best guess, like Jupiter the sun has liquid metallic hydrogen. If true, a black hole can never form from a star.

Liquid profile, matter. Not plasma or gas. You really have to grasp at straws to claim this is not a liquid form of matter.

http://www.espritsciencemetaphysiques.com/wp-content/uploads/2016/01/article-0-1885c54e000005dc-549_634x632.jpg

graphicconception,

Occam only had one razor. You will need multiple Occams.

Here is Kirchhoff’s law tested in the lab. If this law falls, MOST of current astronomy will have to be redone.

Pierre Marie Robitaille.

https://youtu.be/YQnTPRDT03U

Kirchhoff’s law is incorporated into Planck’s law, which is the birth of quantum physics. This will also fall.

Most of what we think we know is wrong.

It is well understood that Temperature varies from time to time, and from place to place on the earth.

For example, during any ordinary week, it is not at all uncommon in Downtown Sunnyvale, where I live, to have a 24 hour variation in Temperature of 30 deg. F or more. It is quite routine. A 30 deg. F swing is a 16.7 deg. C swing, which is much greater than the whole 12 to 24 deg. C range that the entire global mean Temperature has remained within for about the last 650 million years.

Since it is known that Temperature varies with time, then the only way to get a VALID global MEAN Temperature is to SAMPLE a Nyquist VALID sampling of global locations, ALL AT THE VERY SAME INSTANT OF TIME.

Measuring one temperature here now, and another temperature over there at some other time, and maybe that place there next week, is total BS. You are simply gathering up instances of total noise.

And when you don’t even do that but read the difference from this thermometer, and what it might have said some 30 years ago, is also complete rubbish.

So we have rubbish to the nth degree.

And throughout all of this, on any northern midsummer day, there could be places on earth with Temperatures spanning an extreme range of about 150 deg. C, and a routine range of 120 deg. C.

And due to a clever argument, by Galileo Galilei, we know that at any time there can be an infinite number of places on earth having any specific Temperature in that range that you want to pick.

And throughout all of this, the planet pays no heed to these machinations, and couldn’t care less what GISS thinks the TEMP is anywhere at any time. It’s ALL “Fake NEWS”.

G

George

The only data I consider suitable for establishing an average is satellite data sampled every 4 hours, covering the entire globe with a grid size of 30km or less.

George ==> Ah, I knew this essay would provoke the Truth to peek its glorious head out of the clouds!

george e. smith ==> I like your CliSci summary so much, I’d like your expressed permission to quote you in the future. May I have it? (*** – and please assure me that this is your real name, not an internet handle — I won’t quote pseudonyms) .

They had talking thermometers back in the old days, “…read the difference from this thermometer, and what it might have said some 30 years ago,…”?

” ALL AT THE VERY SAME INSTANT OF TIME.”

I was watching a PBS program on Einstein last night where they claimed that Einstein proved that there was no such thing as synchronicity.

However, even more challenging is that because half the globe is dark and half light at what is approximately the same time, one really would need to take two global readings, approximately 12 hours apart, to get Tmax and Tmin. But, then one would be dealing with possible changes due to weather! So, I think that it is really better to take an annual time series where every station is sampled in the late afternoon for Tmax, and again just before sunrise for Tmin — or do continuous sampling in case a moving cold front would change the times of Tmax and Tmin.

Clyde,

” …one really would need to take two global readings …”

Modern satellite data is getting close to continuous in real time, and the resolution keeps getting better and better. Most of the available data is aggregated as 3-hour samples, where most points on the globe are sampled 8 times a day from geosynchronous satellites and twice a day from polar orbiters, which is far more samples than required by the Nyquist rate to determine min/max accurately. Spatial resolution from the earliest satellites is about 30 km pixels, but newer data is available with < 10 km resolution. At the very least, going back about 3 decades, each point on the planet has been sampled twice a day by at least 1 polar orbiter, and there are usually at least 2 of these at any one time. The biggest problem with weather satellite data is that it comes from many different generations of satellites with different fields of view and sensor characteristics, all at the same time, and merging them together is not a trivial task, but this is a solved problem.

Anything based on sparse, homogenized surface measurements is GIGO, but results arising from satellite data are generally much more representative of reality.

George;

What would you consider a “Nyquist valid sample of global locations”? I wonder what it would cost to provide that coverage?

A bigger issue is that you’re not asking a thermometer from 30 years ago but listening to Chinese whispers.

Or should that be Hansen whispers?

Averaging the high and low for a 24 hour period does not make much sense if one wants to know what the actual average temp is, or was.

During summer, the temp in a place like Southwest Florida may have been in the 88-92 F range for 12-15 hours of the day, and may have gotten as low as 75 or so for a half an hour during a brief downpour before warming right back up after the sun came back out, and then cooled gradually after sunset to get to about 80 by dawn. In fact that was about what happened at my house today.

The halfway point between the daily high and low is not at all what one gets if one records the temp in 15 minute increments and then averages those readings.

Different sorts of trends at other places and times of year are very easily shown to give a similar disparity between the median of the high and low and the average of what the temp was during those 24 hours.

In winter lots of places are very cold all during the long night time and warm up briefly in the afternoon, for example.
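A minimal sketch of the comparison described above follows. The readings are invented, a crude step-and-ramp profile loosely following the Southwest Florida summer day: 13 hours near 90 F, a half-hour downpour at 75 F, then a steady overnight cooling from 90 F to 80 F. They are not measurements.

```python
from statistics import mean

# Hypothetical readings every 15 minutes (96 per day), in deg F.
hot_before_rain = [90.0] * 26                        # 6.5 hours near 90 F
downpour = [75.0] * 2                                # half an hour at 75 F
hot_after_rain = [90.0] * 26                         # another 6.5 hours near 90 F
night = [90.0 - 10.0 * i / 41 for i in range(42)]    # 10.5 hours cooling from 90 to 80 F

readings = hot_before_rain + downpour + hot_after_rain + night
assert len(readings) == 96  # one full day of 15-minute samples

midrange = (max(readings) + min(readings)) / 2  # the (Tmax + Tmin) / 2 daily "average"
time_mean = mean(readings)                      # average of every 15-minute reading

print(f"(Tmax + Tmin) / 2:          {midrange:.1f} F")
print(f"mean of 15-minute readings: {time_mean:.1f} F")
```

With these made-up numbers the two “averages” are 82.5 F and 87.5 F, a 5 F gap caused entirely by a half-hour shower setting Tmin.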

And the illogical, error-introducing process by which they round F degrees and then convert to C degrees (or whatever the actual process is) makes it even more ridiculous.
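The comment admits it is unsure of the actual procedure, so this sketch only covers the simplest hypothetical version. It assumes readings are recorded to the whole degree F and then converted, and asks how far that can land from converting the unrounded reading. The readings are a made-up sweep from 70.00 F to 71.00 F.

```python
def f_to_c(temp_f: float) -> float:
    """Convert an absolute Fahrenheit reading to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


# Hypothetical true readings from 70.00 F to 71.00 F in 0.01 F steps.
true_readings_f = [70.0 + i / 100 for i in range(101)]

# Error from recording the whole-degree F value and converting that,
# instead of converting the unrounded reading.
# (Python's round() sends exact halves to the nearest even number.)
errors_c = [abs(f_to_c(round(x)) - f_to_c(x)) for x in true_readings_f]
max_error_c = max(errors_c)

print(f"worst-case error: {max_error_c:.3f} C")  # half a deg F is 5/18 deg C
```

Any single reading can be off by up to about 0.28 C from rounding alone, which is larger than many of the hundredths-of-a-degree differences discussed in the post.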

Unless the temps are measured on some sort of grid pattern in three dimensions, I doubt the number they give for global average temp means much of anything.

Certainly not fit for scientific discussion or understanding of the atmosphere, let alone policy making that affects our entire economy over many years.

That these pulled-from-a-hat numbers are then compared to a 30-year base period that is itself constantly adjusted away from the actual measurements makes the entire exercise of climate science in 2017 more of a joke than a serious avenue of scientific investigation.

“Averaging the high and low for a 24 hour period does not make much sense if one wants to know what the actual average temp is, or was.”

Exactly. Whenever I hear a discussion about “average” temps I think about the events of Nov. 11, 1911, a day when a stable high was sitting over the central US setting record high temps and was followed by a fast moving Arctic cold front that set record lows the same day. Oklahoma City went from a record 83°F down to 17°F in a few hours. Springfield, MO dropped 40°F in 15 minutes and another 27°F by midnight. That was 80° at 3:45PM, 40° at 4PM and 13° by midnight.

So feel free to talk about 0.01° differences and TOBS etc. Granted 11/11/1911 was exceptional (and probably removed as an outlier from the record) but similar events happen almost every year. If there is such a thing as an “average temp” nobody knows what it is or ever was.

DJ,

The Nyquist rate tells us that we can resolve a periodic function with only 2 samples per period, which, if we consider diurnal variability to be periodic, means only 2 samples per day.

@co2isnotevil

This is what George wrote, in part:

It appears that George is suggesting that there is a Nyquist sampling interval over a geographical area (one thermometer per 10, 100, or 1,000 square miles, for example) that is necessary to properly resolve the global average temperature. This would be separate from the minimum number of samples per day that would be required. And to the extent that air temperatures are non-sinusoidal, you may need more than 2 per day to capture the signal. Even with 2 per day on a sinusoid, that only means you can resolve the frequency. It certainly doesn’t guarantee you’ll catch the max and min.
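The last point is easy to demonstrate. The sampling theorem strictly needs a rate above twice the signal frequency, and exactly two samples per cycle sits right at that limit. This minimal sketch uses a hypothetical pure-sine daily cycle (20 C mean, 5 C amplitude, peak at hour 6). Real diurnal curves are not sinusoids, so treat it only as a best case.

```python
import math


def diurnal_temp_c(hour: float, mean_c: float = 20.0, amplitude_c: float = 5.0) -> float:
    """Hypothetical pure-sine daily cycle: peaks at hour 6, bottoms out at hour 18."""
    return mean_c + amplitude_c * math.sin(2 * math.pi * hour / 24)


true_max, true_min = diurnal_temp_c(6), diurnal_temp_c(18)

# Two samples per day, 12 hours apart, starting at different hours.
for start_hour in (0, 3, 6):
    first, second = diurnal_temp_c(start_hour), diurnal_temp_c(start_hour + 12)
    print(f"start {start_hour:2d}h: samples {first:5.2f} and {second:5.2f} "
          f"(true max {true_max:.2f}, true min {true_min:.2f})")
```

Sampled at hours 0 and 12, both readings are 20.00 and the whole 10-degree swing disappears. Only the lucky 6/18 schedule recovers the true max and min.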

“any system whose positive feedback exceeds the input is unconditionally unstable. The climate system is quite stable, otherwise we wouldn’t even be here to discuss it.”

Ergo, the claims of the warmunists that a feedback mechanism will kick in to drive temperatures higher than their estimate of the CO2 equilibrium sensitivity (which is way too high) are twaddle.

Anthony: Edit function please.

Regarding the large difference between the GISS and UAH anomalies for June 2017: these datasets have different baseline periods. GISS uses 1951-1980, and only two of those years are in the UAH record. I don’t remember UAH’s baseline period, but I doubt it begins before 1985.

UAH is 1981-2010

That’s part of the problem. If you use different base periods, you get different answers. That’s not really science—I’m not sure what it is. Also, as temperatures level off, the anomalies decrease, so using an older base period gives more warming.
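Before two groups' anomalies can be compared, they have to be moved onto a common base period. The usual way is to subtract each series' own mean over the shared base years. This minimal sketch uses invented anomaly values and a shortened, hypothetical 1981-1983 base period purely for illustration; `rebaseline` is my own helper name.

```python
from statistics import mean


def rebaseline(anomalies: dict[int, float], base_years: list[int]) -> dict[int, float]:
    """Re-express anomalies relative to their mean over base_years."""
    offset = mean(anomalies[year] for year in base_years)
    return {year: value - offset for year, value in anomalies.items()}


# Hypothetical anomalies (deg C) measured against some older base period.
old_base = {1981: 0.10, 1982: 0.20, 1983: 0.30, 2017: 0.90}

# Move them onto a (shortened, hypothetical) 1981-1983 base period.
new_base = rebaseline(old_base, [1981, 1982, 1983])

print(f"2017 anomaly, old base: {old_base[2017]:.2f} C")
print(f"2017 anomaly, new base: {new_base[2017]:.2f} C")
```

Only a constant offset changes: the 2017 value drops from 0.90 to 0.70, while every year-to-year difference stays the same. That is why a raw GISS-versus-UAH gap mixes real disagreement with baseline choice.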

” in that we get an actual number for Global Average Surface Temperature:”

But we did have that, from NCDC/NOAA. Try this.

(1) The Climate of 1997 – Annual Global Temperature Index: “The global average temperature of 62.45 degrees Fahrenheit for 1997” = 16.92°C.

http://www.ncdc.noaa.gov/sotc/global/1997/13

(2) http://www.ncdc.noaa.gov/sotc/global/199813

Global Analysis – Annual 1998 – does not give any “Annual Temperature”, but the 2015 report does state: “The annual temperature anomalies for 1997 and 1998 were 0.51°C (0.92°F) and 0.63°C (1.13°F), respectively, above the 20th century average.” So 1998 was 0.63°C – 0.51°C = 0.12°C warmer than 1997, and 1998 = 16.92°C + 0.12°C = 17.04°C.

(6) average global temperature across land and ocean surface areas for 2015 was 0.90°C (1.62°F) above the 20th century average of 13.9°C (57.0°F) = 0.90°C + 13.9°C = 14.80 °C

http://www.ncdc.noaa.gov/sotc/global/201513

Now the math and thermometer readers at NOAA reveal – 2016 – The average global temperature across land and ocean surface areas for 2016 was 0.94°C (1.69°F) above the 20th century average of 13.9°C (57.0°F), =0.94°C + 13.9°C = 14.84 °C

https://www.ncdc.noaa.gov/sotc/global/201613

So NOAA’s results say that 16.92 & 17.04 are less than 14.80 & 14.84. Which numbers do you think NCDC/NOAA considers the record high? Failure at 3rd grade math, or failure to scrub all of the past? (See the ‘Ministry of Truth’ in 1984.)
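The commenter's chain of arithmetic can be reproduced from the quoted NOAA figures. This sketch only repeats that arithmetic; it does not explain why the 1997 absolute value and the later 20th-century average are inconsistent, which the quoted reports do not address.

```python
def f_to_c(temp_f: float) -> float:
    """Convert an absolute Fahrenheit reading to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


century_avg_c = 13.9                        # 20th-century average in the 2015/2016 reports

year_1997_c = f_to_c(62.45)                 # absolute value quoted in the 1997 report
year_1998_c = year_1997_c + (0.63 - 0.51)   # plus the 1998-minus-1997 anomaly difference
year_2015_c = century_avg_c + 0.90
year_2016_c = century_avg_c + 0.94

for year, value in [(1997, year_1997_c), (1998, year_1998_c),
                    (2015, year_2015_c), (2016, year_2016_c)]:
    print(f"{year}: {value:.2f} C")
```

This prints 16.92, 17.04, 14.80 and 14.84, matching the figures above.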

For all the data adjusters, please apply Nils-Axel Mörner’s quote

“in answer to my criticisms about this “correction,” one of the persons in the British IPCC delegation said, “We had to do so, otherwise there would not be any trend.” To this I replied: “Did you hear what you were saying? This is just what I am accusing you of doing.”

http://www.21stcenturysciencetech.com/Articles_2011/Winter-2010/Morner.pdf

DD, I have been quoting those figures, as I did about an hour before you, waiting for the usual suspects to come along and explain what was done and why in their twisted scientific world.

But Nick, Steve & Zeke have ignored it for the last 2 years.

Simply stated, their methodology gives them the ability to make the numbers and thus the trend anything they want it to be.

The question of objective physical reality seems a quaint and unimportant notion to them, as they work to pound the square peg of reality into the round hole of warmista dogma.

Great post, DD More.

Donald ==> This post is not a scientific report — just a quick note on CliSci Double-Speak. The “differing base periods” (mentioned in paragraph 3) are part of the double-speak problem.

“When you have many standards, you do not have a standard.”

“Doublespeak” is synonymous with “equivocation.” An equivocation is an argument in which a term changes meaning in the midst of the argument. While an equivocation looks like a syllogism it isn’t one. Thus, while it is logically proper to draw a conclusion from a syllogism it is logically improper to draw a conclusion from an equivocation. To draw such a conclusion is “equivocation fallacy.”

If a change in the meaning of a term is made impossible through disambiguation of the language in which an argument is made, then the equivocation fallacy cannot be applied. Thus I long ago recommended to the USEPA that they disambiguate their global warming argument. They did not respond to my recommendation. The National Climate Assessment did not respond to the same recommendation. Review of the arguments of both organizations reveals that they are examples of equivocations. Were they to disambiguate their arguments, these arguments would be revealed to be nonsensical.

And they work out their average, when half of the world has no data!

https://www.ncdc.noaa.gov/temp-and-precip/global-maps/

So what…50% of the population is below average intelligence (:-))

actually slightly more than that

Leo ==> cute! (but true-ish)

The real problem is the 10-15% of the people who think they are smarter than they actually are. These are the ones who like to assimilate facts so they sound like they know what they’re talking about but never actually look into the things they think they already know.

Then there’s liars, like Michael Mann and the hockey team, who think they are smart enough to get away with lies!

At the antipode of Lake Wobegon.

Is that a problem? Can’t they just make it up? (/sarc)

Interpolation of data is common in science. However, when your interpolation algorithm creates a blotchy pattern, as the anomaly maps do, that in no way reflects reality, you should change the algorithm.

http://www.cpc.ncep.noaa.gov/products/analysis_monitoring/enso_update/gsstanim.gif

Notice how the actual data is what you’d expect? The anomaly, or the current temperature’s difference from “average”, creates a blotchy, unrealistic pattern that you never see. If you were to reconstruct what NOAA claims is average, you would get this blotchy pattern of temperature that you never see in reality; clearly the algorithm parameters used to create the “average” are erroneous. I have one guess as to which way the algorithm skews past data.

Didn’t Hansen/Lebedeff ‘fix’ this with homogenization? /sarc

So much of what’s wrong with climate science can be traced back to one individual who’s either the most incompetent or the most malevolent scientist there ever was. I prefer to think that it’s just incompetence driven by confirmation bias and group think rather than a vindictive quest driven by ego…

If you go back to the beginning of the whole thing, there was no group think…that came later.

And it is difficult to imagine where confirmation bias crept in, since there has been no confirmation other than what has been invented to match the original assertions.

I personally prefer to take an unflinching look at what sort of person he has demonstrated himself to be.

Giving the benefit of the doubt is fine for an initial assumption…but we have lots of evidence now with which to draw a conclusion.

When someone who makes clear predictions on a regular basis, and has never been right yet, maintains an air of confident self-assurance…what should one think then?

His track record proves his incompetence, his unwillingness to accept being incorrect shows he is not fact but ego driven, and his vindictive attitude towards those who have been correct where he has been wrong shows he is not scientific and not a humble person.

Dissecting his character flaws is beside the point however.

He is wrong.

Calculating it isn’t the issue. Whether or not it has any physical meaning is the issue. And the answer is, it doesn’t. It’s a useless calculation no matter how it is derived.

🙂 This one made me laugh… It’s the precision they claim to have that drives me nuts. It’s just not possible given the data, the instruments used over the time period used, and the methods they use to calculate an “average”.

So now I have a new thought to make me chuckle – they don’t even know what it is they are measuring. That actually is not surprising – it explains how they (the activists) can so easily change their story – just go with the data and methods that back your story line instead of committing to a method.

I have a suggestion, why not just use the raw data as is and accept the error margin that goes with it? So you have data starting in the 1800’s with a wide error and better data as you get to present. I bet used in such a way, the entire story of AGW disappears into the margin of error.

Tony’s graph….

Now THAT really sheds light on the Adjustments and Manipulations NASA made to the Past Data

I suspect that the same errors that cause the models to run hot in the future make them run cold in the past, so they adjust past temperatures to match the models, elevating the legitimacy of the models over the ground truth.

co2 ==> Models actually produce “chaotic” results — that is, results highly sensitive to initial conditions, the typically shown spaghetti-graph of results all over the place in a widening cone going forward — the tuning is what makes them show temperature generally up in the future.

Kip,

I’ve done a lot of modelling of both natural and designed systems. One of the first tests I apply to any model is to vary the initial conditions to make sure the results converge to the same answer. Otherwise, it most likely indicates an uninitialized variable.

co2 ==> I suppose you are familiar with the multi-run output graphs from climate models: they run them with today’s initial conditions, then re-run them with very slightly different initial conditions, over and over, and get results that look like this:

That is chaotic output caused by extreme sensitivity to initial conditions — and the results have absolutely nothing whatever to do with the real world.

“RAW DATA !!!”

You can’t trust raw data; you don’t know if the people picking it washed their hands after going to the toilet!!

Raw data needs cleaning & cooking before consumption.

(:-))

I always forget whether I’m supposed to wash my hands before or after I’ve gone to the bathroom when I handle food. That’s why my wife does the cooking. (Kidding!)

Don’t you mean, “Raw data needs cleaning & cooking before presumption.”?

(Or maybe the “presumption” comes first? I always forget. 8-)

Gunga ==> Actually, in truth, I often feel the need to wash my hands after handling climate data…

Raw data needs cleaning & cooking before consumption.

“… before corruption“

Robert of Texas ==> Seriously, the “error margins”: in this case, the original measurements are actually “ranges”, usually at least 1 degree wide, which have to be added to the actual error margins (thermometer error, human error, interpolation error), which in turn have to be added to the confidence intervals… The total width of the “error bars” would far exceed the claimed temperature rise since 1880. I would guess at least +/- 2 degrees C, certainly for all records before the mid-20th century.
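For readers who want to play with the arithmetic, here is a minimal Python sketch of two common ways to combine error components: the worst-case linear sum the comment describes, and the root-sum-square (RSS) combination usually used when the errors are assumed independent and random. The component sizes are hypothetical placeholders, not values from any dataset.

```python
import math

def combine_errors(components, method="linear"):
    """Combine half-width error components (same units, e.g. degrees C).

    method="linear": worst case, the half-widths simply add.
    method="rss": root-sum-square, assumes independent random errors.
    """
    if method == "linear":
        return sum(components)
    if method == "rss":
        return math.sqrt(sum(c * c for c in components))
    raise ValueError(f"unknown method: {method}")

# Hypothetical half-widths in degrees C (illustration only): reading a
# 1-degree range (+/-0.5), thermometer, human, and interpolation error.
components = [0.5, 0.2, 0.2, 0.3]
print(f"linear: +/-{combine_errors(components):.2f} C")
print(f"rss:    +/-{combine_errors(components, 'rss'):.2f} C")
```

Which rule is appropriate depends on whether the errors really are independent; systematic errors (the same bias in every reading) do not shrink under RSS.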

https://en.wikipedia.org/wiki/Circular_reasoning

https://en.wikipedia.org/wiki/Motivated_reasoning

And in spite of calculating an actual number, there is never any discussion of measurement error. Anyone who has actually done any sort of statistical analysis knows this is possible to do, and it will give remarkably useful information about your average from year to year. Specifically, it will tell you whether the year-to-year variability is within expected norms. We could then actually have a discussion of whether we should be using 1, 2, or 3 standard deviations, depending on the degree of confidence you wanted in those numbers (roughly 68, 95, or 99.7%, respectively). But we don’t do that. Why? Because it would detract from the anxiety.
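A minimal Python sketch of the kind of check described above: compute a baseline mean and standard deviation, express a later year as a number of standard deviations from that mean (a z-score), and see what fraction of a normal distribution falls within 1, 2, or 3 standard deviations. The baseline values are hypothetical, and the normal-distribution assumption is exactly that, an assumption.

```python
import math
import statistics

def coverage(k):
    """Fraction of a normal distribution lying within +/- k standard deviations."""
    return math.erf(k / math.sqrt(2))

def z_score(value, baseline):
    """How many baseline standard deviations `value` lies from the baseline mean."""
    mean = statistics.mean(baseline)
    sd = statistics.stdev(baseline)  # sample standard deviation
    return (value - mean) / sd

for k in (1, 2, 3):
    print(f"+/-{k} sd covers {coverage(k):.1%}")

# Hypothetical annual means (deg C) for a baseline period, and one later year.
baseline = [13.9, 14.1, 14.0, 13.8, 14.2, 14.0, 13.9, 14.1]
print(f"z = {z_score(14.5, baseline):.2f}")
```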

I like the overall approach they use.

“We prefer to look at long-term averages of approximately 30 years.

But Hey! LOOK AT THIS NUMBER!”

Does this mean that 2015 was hotter than every preceding year, and 1 degree C warmer than the 30-year mean? I think a mean must be compared to another mean, or a single warm data point with the mean of warm data.

Where am I wrong in this?

It’s warmer than the base period of 1951 to 1980. Yes, the 30 year mean. It’s a mean for 2015 compared to the mean of the base period—mean compared to mean.

No, it is a 39-year mean compared to a 1-year mean!

Sorry. 30 years, not 39.

That’s what I said: The mean for 2015 is compared to the mean of the base period 1951 to 1980.

I keep belabouring this point, so here goes again.

The hottest year ever was 2015, and it was 59F or 15C; yet in 1998 it was (and still is) clearly stated that the temperature in 1997 was 62.45F or 16.92C, and in 1999 they said 1988 was even hotter.

They currently show 1998 as being 58.13F or 14.53C just by changing baseline – Yeah Right!

Anomalies make it easier to hide the cheating……

There is no such thing as an average temperature. You can’t even measure the average temperature in a room (without measuring the temperature of every molecule), let alone a planet’s atmosphere.

+1,000,000. The average annual temperature of the Earth is an utterly nonsensical concept. It’s hard to believe that hundreds of thousands of university professors don’t have the cojones to make an honest statement like this. It’s basic and elementary: undergraduate physics, really.

Apparently it is not undergraduate physics anymore…..

Sheri ==> Under-grad physics is now what they used to teach in High School — real physics doesn’t start until Grad School these days….you need to be able to do Maths — which generally are not really taught in HS or undergrad uni courses anymore.

Kip: That is truly disturbing.

Kip – to be fair, what you are taught in college (undergrad) depends on your major. For example, engineering students take heavy-duty math and physics in freshman year. They’re used as weed-out courses to separate the sheep from the goats. Majors in pre-med and the basic sciences, as a rule, have to pass a calculus course. Business majors, at least at UNC and NC State, have to do the same.

But for sure, rigor in math and sciences is restricted to engineering and science majors.

In my semi-rural county on the NC coast, every high school kid on a graduation track has to pass Algebra II. Anyone showing an interest and facility for math takes calculus.

English/humanities majors in college get a pass in math if they place out. Seems reasonable to me.

What you do is measure multiple points, and then give error bars to account for the fact that the molecules you didn’t measure might be different from the ones you did.

I’m reminded of a story about the difference between accuracy and precision.

Seems a recently minted engineer was doing calculations out to several decimal places when his boss looked over his shoulder and observed, “I don’t know about that last number, but the first one is wrong.”

+1

Yep

Someone obviously calculated a figure approximately 1 deg. C. Someone else converted that to Fahrenheit and in the process implied an accuracy that simply isn’t justifiable.
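A small Python sketch of the conversion point: an absolute temperature and a temperature difference convert differently (a difference gets no 32-degree offset), and converting a rounded Celsius figure adds digits without adding information. The inputs are the figures quoted from UCAR/NCAR in the post; the function names are just illustrative.

```python
def c_to_f(temp_c):
    """Convert an absolute temperature from degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9 / 5 + 32

def delta_c_to_f(diff_c):
    """Convert a temperature difference (an anomaly): scale only, no 32-degree offset."""
    return diff_c * 9 / 5

base_c = 14.0     # 1951-1980 average quoted by UCAR/NCAR
anomaly_c = 1.0   # 2015 departure quoted by UCAR/NCAR

print(round(c_to_f(base_c), 1))              # 57.2
print(round(delta_c_to_f(anomaly_c), 1))     # 1.8
print(round(c_to_f(base_c + anomaly_c), 1))  # 59.0

# "About 1 degree C" carries roughly one significant figure; the converted
# 1.8 F carries two, which is the extra implied precision objected to above.
```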

As Kip points out the various global averages are …

Specifying the anomalies to two decimal places is risible.

I believe Norman Augustine had a law regarding “On making a precise guess.”

“The weaker the data available upon which to base one’s conclusion, the greater the precision which should be quoted to give the data authenticity.”

True story:

8 people around a table 40 years ago.

Private engineer pulls a statistic out of the air and states, “80% of I & I (inflow/infiltration) comes from roof drain connections (so our proposal is obviously the best….)”

Head of State agency turns to agency engineer and asks, “is that correct?”

Agency engineer doesn’t know but answers “Yes” to avoid admitting he doesn’t know something.

Three weeks later Head of State agency is quoting the made up stat at various meetings.

The stat was an educated guess for a specific location and wasn’t far off, but for a number of years it became “accurate and authentic” for all locations.

“Norman Augustine”

There’s a name I haven’t heard in a while.

IPCC AR5 Annex III: Glossary

Energy budget (of the Earth): The Earth is a physical system with an energy budget that includes all gains of incoming energy and all losses of outgoing energy. The Earth’s energy budget is determined by measuring how much energy comes into the Earth system from the Sun, how much energy is lost to space, and accounting for the remainder on Earth and its atmosphere. Solar radiation is the dominant source of energy into the Earth system. Incoming solar energy may be scattered and reflected by clouds and aerosols or absorbed in the atmosphere. The transmitted radiation is then either absorbed or reflected at the Earth’s surface. The average (WAG) albedo of the Earth is about 0.3, which means that 30% of the incident solar energy is reflected into space, while 70% is absorbed by the Earth. Radiant solar or shortwave energy is transformed into sensible heat, latent energy (involving different water states), potential energy, and kinetic energy before being emitted as infrared radiation.

With the average surface temperature of the Earth of about 15°C (288 K), (SEE THAT??!!)

the main outgoing energy flux is in the infrared part of the spectrum. See also Energy balance, Latent heat flux, Sensible heat flux. (That was back in ’13-’14 when AR5 was being published.)

Global mean surface temperature: An estimate of the global mean surface air temperature. However, for changes over time, only anomalies, as departures from a climatology, are used, most commonly based on the area-weighted global average of the sea.

Land surface air temperature: The surface air temperature as measured in well-ventilated screens over land at 1.5 m above the ground.

The SURFACE IS NNNOOOOTTTTT the GROUND!!!!!!!! In fact most weather measuring stations do not even measure or record GROUND temperature.

Now, during the day the earth and air both get hot but objects sitting in the sun get hotter than both. Once the sun goes down the air cools rapidly, the ground does not so the idea that the air warms the ground is patently BOGUS!!!!

The genesis of RGHE theory is the incorrect notion that the atmosphere warms the surface. Explaining the mechanism behind this erroneous notion demands RGHE theory and some truly contorted physics, thermo and heat transfer, energy out of nowhere, cold to hot w/o work, perpetual motion.

Is space cold or hot? There are no molecules in space so our common definitions of hot/cold/heat/energy don’t apply.

The temperatures of objects in space, e.g. the earth, moon, space station, Mars, Venus, etc., are determined by the radiation flowing past them. In the case of the earth, the solar irradiance of 1,368 W/m^2 has a Stefan-Boltzmann black body equivalent temperature of 394 K. That’s hot. Sort of.

But an object’s albedo reflects away some of that energy and reduces that temperature.

The earth’s albedo reflects away 30% of the sun’s 1,368 W/m^2, leaving 70%, or 958 W/m^2, to “warm” the earth, for an S-B BB equivalent temperature of 361 K: 33 C cooler than the earth with no atmosphere or albedo.
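The two temperatures above come from inverting the Stefan-Boltzmann law, T = (F / σ)^(1/4). A minimal Python sketch reproducing that arithmetic with the comment’s own inputs (1,368 W/m^2 and an albedo of 0.30); it treats the earth as an ideal black body facing the full, unspread flux, which is the comment’s framing, not a general model.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def bb_temperature(flux_w_m2):
    """Temperature (K) of an ideal black body emitting flux_w_m2: T = (F / sigma)^(1/4)."""
    return (flux_w_m2 / SIGMA) ** 0.25

solar = 1368.0   # W/m^2, figure used in the comment
albedo = 0.30    # figure used in the comment
absorbed = solar * (1 - albedo)

print(f"{bb_temperature(solar):.1f} K")  # about 394 K
# About 360.5 K; the comment rounds this to 361 K.
print(f"{absorbed:.1f} W/m^2 -> {bb_temperature(absorbed):.1f} K")
```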

The earth’s albedo/atmosphere doesn’t keep the earth warm, it keeps the earth cool.

Shouldn’t the energy budget of Earth include heat from the core?

If the heat from the core comes from radioactive decay then YES, but let’s make it small so we can virtually ignore it.

If the heat from the core comes from the gravito-thermal effect in solids then NO because then it will come from the sun and that will COMPLETELY mess up the K-T diagram.

Perhaps it comes from somewhere else?

(/s, possibly somewhere in the above)

Heat from the “ground”, called heat flow, is very small compared to heat from the sun. It is measured in milliwatts and is less than 100 milliwatts/m2. Wiki says it is 91.6 mW/m2. Geologists, geophysicists, engineers work with heat flow routinely.
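A quick check of that comparison, as a Python sketch. It uses the 91.6 mW/m^2 figure quoted above and the 1,368/4 = 342 W/m^2 top-of-atmosphere average that appears elsewhere in this thread; both are the thread’s numbers, not independently sourced.

```python
geothermal_w_m2 = 91.6e-3      # mean surface heat flow quoted in the comment
solar_mean_w_m2 = 1368.0 / 4   # solar constant spread over the whole sphere (342)

ratio = geothermal_w_m2 / solar_mean_w_m2
print(f"geothermal / solar: {ratio:.3%}")  # roughly 0.027%
```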

“Once the sun goes down the air cools rapidly, the ground does not ”

Respectfully disagree, based on observations anyone can make nearly every day in every location.

Surfaces in the sun warm far more rapidly than the air does…the air never gets as hot as surfaces that have the sun shining on them.

And likewise, after sunset, surfaces cool far more rapidly than air.

This is why dew forms long before fog.

Dew can form on grass and hoods of cars and such before twilight is even over, while fog might not set in for several hours more.

At colder temps and drier air, frost can form and maintain at an air temp of 38 degrees when there is no wind (below 5 mph).

This shows again that surfaces in fact cool far more rapidly than air does.

>>

And likewise, after sunset, surfaces cool far more rapidly than air.

<<

And if fog forms, it’s called “radiation fog.” I still remember the five types of fog from my meteorology training many, many years ago.

Jim

“the average annual temperature for the globe between 1951 and 1980 was around 57.2 degrees Fahrenheit (14 degrees Celsius). In 2015, the hottest year on record, the temperature was about 1.8 degrees F (1 degree C) warmer than the 1951–1980 base period.”

It’s false analysis to compare a 30-year average with the measurement of a single year.

The valid comparison is 1951-1980 with 1996-2015. That difference is about 0.5 C = 0.9 F, half of what NCAR reports.
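The distinction drawn here (one year against a multi-year mean, versus a window mean against a window mean) is easy to express in code. A minimal Python sketch follows; the series is synthetic (a hypothetical linear trend plus a repeating wiggle), so the numbers it prints say nothing about the real record.

```python
def window_mean(series, start, end):
    """Mean of `series` (dict year -> value) over the inclusive range start..end."""
    values = [series[y] for y in range(start, end + 1)]
    return sum(values) / len(values)

# Synthetic anomaly series in deg C, for illustration only.
series = {y: 0.01 * (y - 1951) + 0.1 * ((y % 4) - 1.5) for y in range(1951, 2016)}

base = window_mean(series, 1951, 1980)
single_year = series[2015] - base
recent_window = window_mean(series, 1996, 2015) - base

print(f"2015 vs 1951-1980 mean:      {single_year:+.2f}")
print(f"1996-2015 vs 1951-1980 mean: {recent_window:+.2f}")
```

A single year carries its own year-to-year noise on top of any trend, which is why the two printed differences need not agree.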

Pat Frank ==> The comparison is just of magnitude, not of the actual measurements. The CAGW hypothesis consensus crowd is aghast that the current “anomaly” is about 0.5 °C above the climatic average (30-year average), yet the magnitude of the claimed rise and the magnitude of the difference in calculated anomaly between climate groups are the same.

Agreed, Frank. The caveat to your 30/30 comparison should also be that this is a recognized cold period compared to a recognized warm period. A further comparison of the previous warm period would therefore be enlightening in terms of cyclicality. Something like 1910-1940 perhaps.

Nobody is interested in coming up with a standard definition of what constitutes “the globe” that is being averaged because it is the equivalent of setting the goalposts in cement. Remember when the global average temperature wasn’t rising, so they had to add in heat from the deep oceans? A loose definition allows for easy adjustment.

Badgers don’t have Kangos.

Yes, the IPCC’s self-serving consensus needs all the wiggle room they can fabricate in order to provide what seems like plausible support for what the laws of physics preclude. The more unnecessary levels of indirection, additional unknowns and imaginary complexity they can add, the better they can support their position.

Speaking of operational definitions, how about the term anomaly. Standard dictionary definitions such as the OED on-line definition “Something that deviates from what is standard, normal, or expected” does not seem applicable to annual global mean temperatures. Can someone please explain to me what is the “standard” or “normal” or “expected” annual global mean temperature? The use of the term anomaly in this case seems to continue the recent “trend (sic)” of diluting the specificity of language.

I believe that was exactly the idea.

RE: “anomaly” — in CliSci, the current usage seems to be simply “the difference between this and that” — calling it an “anomaly” instead of “the difference” makes it sound like it is something wrong — casts a negative connotation on it — as if it were something that shouldn’t be there.

It is propaganda by language alone — “propter nomen” or “because of the name” — a difference is just a difference but an anomaly is something wrong.

Plus it sounds more sciencier.

Peter,

Yes, the term “residual” might be a better choice than “anomaly.”

In aviation the standard atmospheric temperature is 15 C. It is now and it was 30 years ago.

Skinner ==> The same is true in the search for “Earth-like planets”.

I found this blogpost about the difference between average temperature and average irradiance interesting and informative.

http://motls.blogspot.com/2008/05/average-temperature-vs-average.html?m=1

Trenberth et al 2011jcli24 Figure 10

This popular balance graphic and assorted variations are based on a power flux, W/m^2. A W is not energy but energy over time, i.e. 3.4 Btu/h (English units) or 3.6 kJ/h (SI). The 342 W/m^2 ISR is determined by spreading the average 1,368 W/m^2 solar irradiance (solar constant), which is intercepted by the Earth’s disc, over the spherical ToA surface area (1,368/4 = 342).

There is no consideration of the elliptical orbit (perihelion = 1,415 W/m^2 to aphelion = 1,323 W/m^2) or day or night or seasons or tropospheric thickness or energy diffusion due to oblique incidence, etc.
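Both numbers in the two paragraphs above follow from simple geometry, sketched here in Python. The divide-by-4 comes from a disc of area πR² intercepting the light while a sphere of area 4πR² receives it; the perihelion and aphelion values come from the inverse-square law. The orbital eccentricity of 0.0167 is an assumed approximate value, and the 1,368 W/m^2 is taken to apply at the mean (semi-major axis) distance.

```python
SOLAR_CONSTANT = 1368.0  # W/m^2, mean value used in the comment
ECCENTRICITY = 0.0167    # assumed approximate value for Earth's orbit

# Intercepted by a disc (pi R^2), spread over a sphere (4 pi R^2): divide by 4.
toa_mean = SOLAR_CONSTANT / 4

# Inverse-square law with r = a(1 - e) at perihelion and r = a(1 + e) at aphelion.
perihelion = SOLAR_CONSTANT / (1 - ECCENTRICITY) ** 2
aphelion = SOLAR_CONSTANT / (1 + ECCENTRICITY) ** 2

print(f"ToA mean:   {toa_mean:.0f} W/m^2")
print(f"perihelion: {perihelion:.0f} W/m^2")
print(f"aphelion:   {aphelion:.0f} W/m^2")
```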

This popular balance models the earth as a ball suspended in a hot fluid with heat/energy/power entering evenly over the entire ToA spherical surface. This is not even close to how the real earth energy balance works. Everybody uses it. Everybody should know better.

An example of a real heat balance based on Btu/h is as follows. Basically: (incoming solar radiation spread over the earth’s cross-sectional area, Btu/h) = (U*A*dT et al. leaving the lit side perpendicular to the spherical ToA surface, Btu/h) + (U*A*dT et al. leaving the dark side perpendicular to the spherical ToA surface, Btu/h). The atmosphere is just a simple HVAC/heat flow/balance/insulation problem.

Latitude Range | Net Area (m^2) | Incident Power Flux × Cos ϴ (W/m^2) | Power In (W)
0 to 10 | 3.875E+12 | 1,362.8 | 5.280E+15
10 to 20 | 1.151E+13 | 1,321.4 | 1.520E+16
20 to 30 | 1.879E+13 | 1,239.8 | 2.329E+16
30 to 40 | 2.550E+13 | 1,120.6 | 2.857E+16
40 to 50 | 3.143E+13 | 967.3 | 3.041E+16
50 to 60 | 3.642E+13 | 784.7 | 2.857E+16
60 to 70 | 4.029E+13 | 578.1 | 2.329E+16
70 to 80 | 4.294E+13 | 354.1 | 1.520E+16
80 to 90 | 4.429E+13 | 119.2 | 5.280E+15
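The rows above can be reproduced under an assumption inferred from the numbers themselves (the comment does not say so): the “latitude” bands are measured as angular distance from the subsolar point across the sunlit hemisphere, each band’s area is the matching slice of a spherical cap, 2πR²(cos a − cos b), and the flux is 1,368 W/m^2 times the cosine of the band’s mid-angle. A minimal Python sketch, with an assumed Earth radius of 6,371 km:

```python
import math

SOLAR = 1368.0    # W/m^2, value used in the comment
RADIUS = 6.371e6  # m, assumed mean Earth radius

def band_rows(step_deg=10):
    """Yield (lo, hi, area_m2, flux_w_m2, power_w) for bands of angle from the subsolar point."""
    for lo in range(0, 90, step_deg):
        hi = lo + step_deg
        a, b = math.radians(lo), math.radians(hi)
        area = 2 * math.pi * RADIUS ** 2 * (math.cos(a) - math.cos(b))  # spherical-cap slice
        flux = SOLAR * math.cos(math.radians((lo + hi) / 2))            # mid-band incidence
        yield lo, hi, area, flux, area * flux

total = 0.0
for lo, hi, area, flux, power in band_rows():
    total += power
    print(f"{lo:>2} to {hi:<2}  {area:.3e}  {flux:7.1f}  {power:.3e}")

# Compare the band total with the power intercepted by the Earth's cross-sectional disc.
print(f"sum {total:.3e} W vs disc {SOLAR * math.pi * RADIUS ** 2:.3e} W")
```

The small gap between the two totals comes from evaluating the cosine only at each band’s midpoint.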

You might note that the average temperatures of those latitude zones are not proportional to the fourth root of the radiation flux they receive. Thanks to Hadley circulation, regions closer to the equator are cooler than predicted, regions closer to the poles are warmer than predicted. Average temperatures equal predicted temperatures around 40 degrees north and south.

On a side note, check out the equatorial Pacific heat trend. A weak La Nina is forming, methinks.

http://www.cpc.ncep.noaa.gov/products/analysis_monitoring/enso_update/heat-last-year.gif

Actually this is a very good point. The real SSTs show a La Nina-like pattern, with an anomalously cool tongue of trade-wind-induced cool water in the eastern tropical Pacific. This is clear to see. What hides this La Nina-like SST pattern is anomalies… those normals are not what they seem, because each Nino has its strongest warm pool in a different location, so the normals have huge spatial errors and spread out the “so-called normal SST pattern”.

You can clearly see that the tropical eastern Pacific is much cooler than elsewhere at similar latitudes, but the anomalies say there is nothing out of the ordinary to see here. However, Outgoing Longwave Radiation (OLR) also shows a lack of convection associated with these cool tropical SSTs; hence tropical convection has adopted a La Nina-like pattern over the Pacific, and has done so for many months now.

The question is not really whether a La Nina-like pattern is occurring, but how strong it gets.

http://www.cpc.ncep.noaa.gov/products/analysis_monitoring/enso_update/sstanim.gif

OLR

http://www.cpc.ncep.noaa.gov/products/analysis_monitoring/enso_update/olra-30d.gif

Seeing as the temperature in 2015 is 1 year/noise, not climate (by their own definition), they should be saying how the period 1986 to 2015 compares to 1951-1980, to be scientific instead of misleading and political!

Using 1951-1980 as the base period is interesting, since temperature was falling and CO2 rising during that period. Why not 1911-1940, which was warmer than 1951-1980 and had a warming rate quite similar to post-1980? Using 1911-1940 would isolate CO2 increase effects (?) from temperature and provide a better baseline for comparison with the current temperature trend. Until 2014, two of our major northern California cities, Santa Rosa and Ukiah, showed cooling compared to the 1930s, and only exhibited warming after “homogenization.” Michael Crichton found the same for Alice Springs, Australia, in his novel “State of Fear,” http://www.thesavvystreet.com/state-of-fear-gets-hotter-with-global-warming/ which I just read again; I find its observations and conclusions as timely as they were in 2004.

re. State of Fear

He also points out that scientists are far from impartial observers.

Scientists know how ‘human’ they are but they won’t admit that in public. Instead, we have folks like Dr. Michael Mann claiming godlike certainty. It’s actually evil.

Thank you, Kip, for another thought provoking article.

Re Global Averages, I am curious if anyone has updated that now decades old question: What is the Global Average telephone number?

other Ed ==> I’ll bet’cha it isn’t 555 555-5555!

If we ignore access codes, international calling codes and area codes, we are left with seven digit subscriber numbers. The largest possible seven digit number is 999 9999 or, expressed differently, 9,999,999. That’s one less than 10,000,000.

000 0000 isn’t a possible phone number, but for ease of arithmetic, let’s pretend it is. That makes the average 4,999,999.5 which isn’t a valid phone number except at Hogwarts. Possibly it’s 499 9999 ext. 5.

I’m guessing that a seven digit phone number can’t have a leading zero because of the way the old mechanical switches worked. That means the lowest possible phone number would be 100 0000. In that case the average would be 549 9999.5
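For the record, the tongue-in-cheek arithmetic can be checked in a few lines of Python. The average of an evenly spaced run of integers is the midpoint of its ends, so allowing every seven-digit string gives 4,999,999.5, while excluding a leading zero gives 5,499,999.5.

```python
def midpoint(lo, hi):
    """Average of all integers from lo to hi inclusive (an evenly spaced run)."""
    return (lo + hi) / 2

print(midpoint(0, 9_999_999))          # 4999999.5, leading zero allowed
print(midpoint(1_000_000, 9_999_999))  # 5499999.5, no leading zero
```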

Hope that helps. 😉

commie ==> Come on, man! It’s not Climate Science …… If it were Climate Science, we would have a half-a-dozen international groups using nationally funded super-computers calculating away on half-a-dozen different definitions of Global Average Phone Number using several dozen different approaches running multiple model runs producing chaotic results which could then be averaged into perfectly nonsensical, but “Good Enough for Climate Science”, answers. You gotta get real!

Whatever it is, it’s a government number and it’s warmer than it was 20 years ago. If you phone it they will want some tax money. It was 1.5 less 200 years ago.

Call immediately as it will be ringing under water by next week, or so.

Kip,

Of course it isn’t! Because 555 555 555 is the phone number given out by good looking women in the bar that don’t want to be bothered by ugly geeks.

Clyde ==> I suppose this bit of wisdom is from personal experience? 🙂

My old phone number was 41W; the W indicated that it was a party line, and we shared ours with the Methodist minister. Private lines only had numbers, like my best friends had 2 and 45. These numbers would not fit in an average very well.

commie, not all phone numbers are possible. In the US, there are no phone numbers that start with 0, 911, 811 or 411.

“When I went to school, we were taught that all data collection and subsequent calculation requires the prior establishment of [at least] an agreed upon Operational Definition of the variables, terms, objects, conditions, measures, etc. involved.”

Yes…that is required for good science, but AGW is not about science. It is about politics and advocacy. In those realms it is a great advantage to NOT have agreed upon operational definitions. It is very important to be able to make things mean anything you want them to mean any time you want. The latest example is the use of the terms alt-right and alt-left. No one can define those terms, but they can be thrown around very effectively in the realms of politics and persuasion.

A good (for the umpteenth time) explanation of the use of anomalies versus absolutes is a recent post at RealClimate: http://www.realclimate.org/index.php/archives/2017/08/observations-reanalyses-and-the-elusive-absolute-global-mean-temperature/

Tom ==> Yes, that and the fact that if they used the actual Absolute Average Global Temperature [59°F or 15°C] no one would hear the phrase “Global Warming” or “Hottest Year Ever” without laughing.

The global anomalies are calculated with a theoretical even spread of data that is (very loosely, it appears) based on the actual data. If it really was the result of only averaging the records and weighting, then he would have a point, and we would have a global TA that was similar to that in the 70s that induced a global cooling scare.

Hey, don’t complain! He was being honest about errors. I think it was sort of like tiptoeing through a minefield 🙂

Now I’m really confused 😉

Thank you (as always), Kip.

“I believe that climate scientists put decimal points in their forecasts to show they have a sense of humor.”

H/T William Gilmore Simms

An average is not necessary. Think more in terms of an index such as the Dow Jones, or S&P500 stock indexes. It does not matter what the number is when finding a trend, it only matters that the index is consistently calculated the same way each time it is calculated.

Chris ==> Ah, if that were only the case we would be dealing with…..something like….Science.

The CAGW trick is to keep calculating, keep re-defining, keep adjusting (both the past and the present — they’d adjust the future if they could figure out how to do it) : The only thing the CAGW promoters don’t do is change their overall narrative (kinda like the NY Times — which has a pre-established editorial narrative for all possible stories, journalists write to the narrative, not the actual news).

Chris, if the index you calculate doesn’t at least approximate the movement of the whole, then it is worthless.

That’s one reason why the DOW has fallen out of favor. 100 years ago, the 50 top companies represented the bulk of the total value in the market. Today the fraction of total wealth represented by the top 50 companies is only a tiny fraction of total market value.

Chris & MarkW ==> The index idea is perfectly valid for some sorts of data — the trick is knowing what the Index really represents and what movements of the index mean in the real world.

In the stock market, an index can give a good general idea of investor confidence (well, these days, computer-trading programmatic confidence, maybe). A consumer price index can inform us about prices consumers pay for common items. A Grocery Basket Index tells us if prices of basic foods are rising or falling, and how much.

An Index of Land & Sea Surface Temperatures can tell us only what it is really counting. It probably can not tell us if the Earth is warming and certainly not why.

It is the SIGNIFICANCE — the implied meaning — of these indexes that are false. Indexes are great propaganda tools, because they produce scientific looking numbers to which can be attached all sorts of meanings that seem reasonable at first glance.

I’ve been a science geek all my life, with hobbies ranging from astronomy to ornithology, so initially I took the climate scientists at face value. Then I stumbled into Climate Audit and WUWT, and was blown away by the politics and deceit.

It’s very unfortunate that such a new and promising scientific field was hijacked by politicians and ideologues. Caution, skepticism and moderation are no match for fear and paranoia. But I think we’ve passed peak hysteria, even if the politicians and media will refuse to let go.

If the earth approximates a black body, or is even remotely close to one, are all the photographs of it from space shown by NASA faked in a studio or photoshopped?

Cage ==> You can probably be sure that ALL photos on the internet have been Photoshopped, to one degree or another. I actually Photoshop even simple graphs I use in my essays: to increase contrast, to take out background greys, to improve readability (especially borrowed images of graphs). I also Photoshop images (photos) to improve their appearance on the web. Years ago one had to do this or everything was ghastly; not so much today, but I do it as a matter of course.

David,

The Earth, as viewed from space, is not a black body, but the Moon is once you subtract the reflected energy, and the Earth would be too if not for its atmosphere. Relative to the emission behavior of something like a planet or moon, reflected light is irrelevant to the radiant balance, except indirectly by its absence. It might seem that the Moon is very bright, but its albedo is only about 0.12; if its albedo were 1.0, it would be as bright as the Sun, with a temperature of absolute 0 and no emissions in the LWIR!

If you look at Earth in the LWIR and were only concerned about the total average emissions, it would be indistinguishable from an ideal BB at about 255K. If you further examined the emitted spectrum, you would notice that the peak average emissions (color temperature per Wien’s displacement law) for clear skies correspond to the average temperature of the surface below, and for cloudy skies correspond to the temperature of the cloud tops when adjusted for non-unit cloud emissivity; but in both cases, the spectrum has gaps arising from GHG absorption, reducing the total emitted energy to what an ideal BB at 255K would emit.

It’s important to point out that the emission temperature of Earth is dominated by the emission temperature of clouds covering about 2/3 of the planet, which for Earth clouds is about 262K, so the NET absorption band attenuation required by GHG’s for the emissions to be equivalent to BB at 255K is not a whole lot.
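The quantities in the two paragraphs above can be put side by side with two textbook black-body relations: Wien’s displacement law for the peak wavelength, λ_peak = b / T, and the Stefan-Boltzmann law for total emission, σT⁴. A minimal Python sketch; it treats the 255 K, 262 K, and 288 K figures from this thread as ideal black bodies and deliberately ignores the cloud-emissivity adjustment mentioned above.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m K

def peak_wavelength_um(temp_k):
    """Wavelength of peak black-body emission in micrometres (Wien's displacement law)."""
    return WIEN_B / temp_k * 1e6

def bb_flux(temp_k):
    """Total black-body emission in W/m^2 at temp_k (Stefan-Boltzmann law)."""
    return SIGMA * temp_k ** 4

for t in (255.0, 262.0, 288.0):
    print(f"{t:.0f} K: peak {peak_wavelength_um(t):.1f} um, {bb_flux(t):.0f} W/m^2")

# Emission gap between ideal black bodies at the cloud-top and effective temperatures.
gap = bb_flux(262.0) - bb_flux(255.0)
print(f"262 K vs 255 K emission gap: {gap:.0f} W/m^2")
```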

The first (and only) time I ever looked at all 40 or so of the CMIP models, I made the “mistake” of calculating absolute temperatures rather than anomalies. I was astounded to note that the different models varied by about 3 degrees C in their baseline absolute temperature for their starting year (1880, I think). Now consider two models differing by 3 C. Each model will include some areas of the globe that are below the freezing point of water, but one will have a much higher area of ice than the other, affecting estimates of albedo, etc. So that alone would lead to major changes in how well each model matches reality. Since all models are tuned, each will adopt a different method of tuning in order to match historical records. So we would see some more or less arbitrary choices of aerosols, clouds, and other items of great uncertainty in order to make the fudge factors work.

Yes. See Mauritsen 2013 on the absolute temperature disparities in CMIP3 and 5 and the model tuning implications. Discussed in essay Models all the way Down.

Well at least they didn’t try to report it to 0.01.

Well look to how religions in the past reconciled the contradictory, vague and deceptive aspects of their various scriptures and dogmas.

The climatocracy are doing much the same.

Who is their God? Al Gore? Lol! He’s big enough.

Who wants to get rich beyond their wildest dreams ?

Invent an a/c compressor that isn’t so loud/annoying that the cicadas compete with the noise.

Independent of incomparable baselines, the global anomalies aren’t fit for purpose for a basic reason: inadequate coverage. This is true for the land-only record, where large swaths of Africa, South America, and northern Eurasia have either no data or no long-term data. The same is true, only more so, for the oceans in the pre-float/Argo era. Best would be to create a Dow Jones-like global index of good, well-maintained stations with long records. For example Rutherglen Ag and Darwin in Australia, De Bilt Netherlands, Sulina Romania, Armagh Ireland, Hokkaido Japan, Lincoln (University station) Nebraska, Reykjavik Iceland, Durban South Africa. Note not all are GHCN. No homogenization. Perhaps coverage-area weighted. That way one has an unbiased land-record anomaly trend. Why has this not been done? I suspect because it would show little or no warming, just like each of the named candidates for the index.

Rud ==> Gads, hate to be serious on this thread….the satellite products are problematic as well, as they are derived metrics — in that they do not measure temperature themselves, but ‘something’ else which is then translated into what is believed to be the equivalent of ‘surface temperature’.

That said, they may, in the long run, prove to be useful for climate studies.

When will the next “base” period be defined and used?

Matthew W ==> There are some groups using 1980-2010 (or maybe 1981-2010).

PS: Try not to ask serious questions when we are fooling around and making fun……Kip 🙂

Is global average temperature a meaningful concept? A bit like averaging all the numbers in the phone book – only one phone will answer.

The global average in C is not particularly useful because there is too much latitudinal and regional variation. But a correctly computed global anomaly is useful (for climate trends) because it refers to change over time relative to each specific station independently. That change over time can meaningfully be averaged globally. The residual big problem is individual station quality. As said above, most of GHCN is not fit for purpose. And there are many fit-for-purpose stations not in GHCN. Rutherglen, Australia; University of Nebraska at Lincoln; and University of Durban, South Africa are examples noted above.
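The difference between averaging absolute temperatures and averaging per-station anomalies can be shown with a toy example. This is a sketch with made-up numbers: two hypothetical sites, `warm_site` and `cold_site`, both warming at 0.1 °C per year. When the cold site stops reporting, the plain average in °C jumps by about 15 degrees, while the average of anomalies (each taken against that station's own base-period mean) keeps following the shared trend. It says nothing about station quality, which is the separate problem the comment raises.

```python
from typing import Dict, List, Optional

# Made-up toy data: a warm site and a cold site sharing the same +0.1 °C/yr trend.
YEARS = list(range(10))
stations: Dict[str, List[Optional[float]]] = {
    "warm_site": [25.0 + 0.1 * y for y in YEARS],
    # The cold site stops reporting after year 6 (None = missing).
    "cold_site": [-5.0 + 0.1 * y if y <= 6 else None for y in YEARS],
}


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


def absolute_average(year: int) -> float:
    """Plain average, in °C, of whatever stations reported that year."""
    return mean([s[year] for s in stations.values() if s[year] is not None])


# Each station's baseline is its own mean over a shared base period (years 0-4).
baselines = {name: mean([t for t in s[0:5] if t is not None])
             for name, s in stations.items()}


def anomaly_average(year: int) -> float:
    """Average of each reporting station's departure from its own baseline."""
    return mean([s[year] - baselines[name]
                 for name, s in stations.items() if s[year] is not None])


for y in YEARS:
    print(y, round(absolute_average(y), 2), round(anomaly_average(y), 2))
```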

Rud ==> Some thought has to be given to the idea that anomalies “refer to change over time relative to each specific station independently” and thus “can meaningfully be averaged globally”.

Obviously one can do it mathematically, and maybe even worm out a way to make it seemingly properly weighted for area, etc., etc., but it will not, and cannot, tell us anything about the quantity of extra solar energy being retained by the Earth due to GHGs. It will only tell us something (mostly political) about how temperatures are generally changing, and even that is only maybe meaningful.

Forrest ==> Well, there are lots of ways to retain the useful and necessary information from weather station data. Aggregating is not always a good or even useful idea — visualizations that show multiple data sets on the same graph, for instance, can give a better idea of regional trends or boundaries.

See my recent series on Averages.

Forrest ==> Ah… aggregating onto a single visual… yes, often a very good choice. There are a lot of interesting ways to show data visually, each making its own contribution to our understanding.

In CliSci, the continuous insistence on showing a single global average metric for climate phenomena has been obscuring and hiding most of the important information for decades.

A global average with absolute precision is impossible.

However, you can get an average; it’s just that the error bars will depend on the number and distribution of your sensors.

The more sensors you have and the more complete their distribution, the lower your error bars will be.
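As a rough sketch of how error bars shrink with sensor count: if each sensor has the same independent error SD σ, the standard error of the mean is σ/√n. Real networks are clustered, so the sketch also includes a crude equal-correlation assumption (`rho`, a hypothetical parameter) under which the variance of the mean is σ²(1 + (n − 1)ρ)/n, so the error stops shrinking and levels off near σ·√ρ no matter how many correlated sensors are added. The numbers are illustrative only and are not an estimate for any real network.

```python
import math


def standard_error(sigma: float, n: int, rho: float = 0.0) -> float:
    """Standard error of the mean of n sensors, each with error SD sigma,
    assuming every pair of sensor errors has the same correlation rho.

    rho = 0 gives the textbook sigma / sqrt(n); rho > 0 is a crude stand-in
    for sensors clustered in the same region, whose errors move together.
    """
    if n < 1:
        raise ValueError("need at least one sensor")
    return sigma * math.sqrt((1 + (n - 1) * rho) / n)


for n in (10, 100, 1000, 10000):
    print(n, round(standard_error(1.0, n), 4), round(standard_error(1.0, n, rho=0.3), 4))
```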

The error bars for the current climate network would have to be at least 5 °C, given the paucity of sensors and their extremely poor distribution (most are in North America and Western Europe).

As you go back into the past, the quality, number, and distribution of the sensors all get worse.

MarkW ==> Yes, the GAST error bars, if based on reality, would be larger/wider than the rise in temperature over the industrial era.

That said, Mosher is probably right: at least we know it is warmer now than in the depths of the Little Ice Age.

These 30-year periods have no scientific meaning or value. This period came about because it was hoped that, given this long, the failure of reality to match the models regarding the relationship between CO2 and temperature increases would be overcome by a change in reality.

It simply has no meaning, no value, and no validity other than as a political tool. It could easily have been 40 or 35 years without making any difference at all.

It is indeed a classic example of an area where numbers are picked out of thin air and whose only value comes from their perceived impact in supporting ‘the cause’.

knr ==> Yes, that is correct. There is nothing particularly scientific or even magical about the 30 in 30-year-climatic-average. It is just a convention in today’s climate science (might change tomorrow).
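Because the base period is a convention, anomalies from groups using different base periods (for example 1951-1980 versus 1981-2010) have to be shifted to a common base before they are compared. A minimal sketch, assuming a year-to-anomaly dictionary that fully covers the new base period; `rebaseline` is a hypothetical helper and the series is synthetic, not real data. Shifting changes every value by the same constant, so year-to-year differences and trends are untouched; only the zero line moves.

```python
from typing import Dict


def rebaseline(anomalies: Dict[int, float], start: int, end: int) -> Dict[int, float]:
    """Re-express a year -> anomaly series relative to a new base period.

    Subtracts the series' own mean over start..end (inclusive), so the result
    averages zero over the new base period.
    """
    base_years = range(start, end + 1)
    missing = [y for y in base_years if y not in anomalies]
    if missing:
        raise ValueError(f"no data for base-period year(s): {missing}")
    offset = sum(anomalies[y] for y in base_years) / len(base_years)
    return {year: value - offset for year, value in anomalies.items()}


# Synthetic series (not real data): a steady 0.01 °C/yr rise, zero-centred on 1951-1980.
series_5180 = {y: 0.01 * (y - 1965.5) for y in range(1951, 2011)}
series_8110 = rebaseline(series_5180, 1981, 2010)
print(round(series_5180[2010], 3), round(series_8110[2010], 3))
```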

60 years would be a better base; then we have the other half of the sine wave on the main sub-century natural variability curve. This helped warmer disaster proponents over the first half of the wave, but now it’s peaked and is going down again, much to their chagrin. You will see this base period changed before they endure the return of the Pause.

The concept of climate normals goes back to the 1930s in the US. I suspect the interval was chosen partly due to limited coverage, and more so due to the onerous task of doing the necessary calculations by hand.

Great news. The world’s average temperature is 15 degrees, and that is the hottest the world has been since the industrial revolution began. Global warming? Still a bit chilly isn’t it? Where’s Josh with an appropriate cartoon?

Robber ==> Mother to child at breakfast table: “OK, Jimmy, your lunch is in your backpack…the school bus will be here soon ..let me check the weather..Oh, Hottest Day ever again, 59 degrees, better wear your sweater”….

“In fact, the annual anomalies themselves differ one-from-another by > 0.49°C —”

Apologies for not being able to put up the plot now, but it would be good to see a moving SD over 120 months of differences. In places like Argentina (hardly a backwater in the early 20th C), there are data only for Buenos Aires until 1960. Surely the different methods mean that the spread of differences decreases with time?

And it does, until mid-century, down to what is expected for monthly uncertainties of 0.1 °C (or √2 times that for the difference). This is for the difference between BEST and CRUTemp.
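A minimal sketch of the moving SD of differences and of the √2 rule, assuming the two datasets' monthly errors are independent (if they draw on overlapping station records, their errors are positively correlated and the difference will understate each one's error). The window is a parameter since 120 and 60 months are both mentioned in this exchange. The function names are hypothetical and the "datasets" are synthetic noise around a made-up signal.

```python
import math
import random
import statistics
from typing import List


def sample_sd(xs: List[float]) -> float:
    """Sample standard deviation (n - 1 denominator)."""
    m = sum(xs) / len(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / (len(xs) - 1))


def rolling_sd_of_difference(a: List[float], b: List[float], window: int) -> List[float]:
    """SD of (a - b) over every run of `window` consecutive months."""
    if len(a) != len(b):
        raise ValueError("series must have the same length")
    if not 2 <= window <= len(a):
        raise ValueError("window must be between 2 and the series length")
    diff = [x - y for x, y in zip(a, b)]
    return [sample_sd(diff[i:i + window]) for i in range(len(diff) - window + 1)]


# Synthetic check only: two "datasets" measuring the same made-up monthly signal,
# each with independent errors of SD 0.1 °C.
rng = random.Random(42)
months = 1200
signal = [math.sin(2 * math.pi * m / 12) for m in range(months)]
ds1 = [s + rng.gauss(0.0, 0.1) for s in signal]
ds2 = [s + rng.gauss(0.0, 0.1) for s in signal]

sds = rolling_sd_of_difference(ds1, ds2, window=120)
print(f"0.1 * sqrt(2) = {0.1 * math.sqrt(2):.3f}; median rolling SD = {statistics.median(sds):.3f}")
```

With independent errors the rolling SD hovers around 0.1·√2 ≈ 0.141 °C; a real difference series that sits well above that level in some decades points to something beyond measurement noise.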

Why does it get worse as third-world countries start taking temperatures seriously?

Do both of these either have or leave out oceans? Need to compare two similar things. Also, not sure you can really do standard deviation with just two numbers. Comparison with 3-4 land only data sets would be more informative. But, I understand what you are getting at.

They’re both land-only, and the SD is of 60 values (the differences over 60 consecutive months); 60 is an arbitrary choice, meant as an indicator of more precise measurements as more and better data come in. That they seem to correlate better when the 40s blip needs to go than they do for the past 30 years is a concern.

How dare you guys send the hockey schtick Mann to Oz! Can’t you keep him over there somehow? We have enough fake scientists here already.

In geological time scales we are discussing in this post an indiscernible rise in temperature at a time we should be warm anyway. Carry on.

Kip, that’s for starters. When they keep systematically changing the past data through an algorithm, both their global temperatures and the anomalies for previous base periods have a life of only one month. As Mark Steyn said at the Senate Committee, how can one consider what the temperature will be in 2100 when we still don’t know what it will be in 1950? This means even their models are tuned to something that doesn’t exist anymore.

Standard day sea level definition = 59 deg F / 15 deg C, 1013 millibars / 14.7 psi.
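The standard sea-level atmosphere uses 15 °C and 1013.25 hPa (1 hPa equals 1 millibar). A small sketch of the unit conversions behind those figures; the helper names are hypothetical. It also shows why a temperature *difference* converts with the 9/5 factor alone and no 32-degree offset, which is how the article's 1 °C anomaly becomes 1.8 °F.

```python
PA_PER_PSI = 6894.757293168  # pascals in one pound-force per square inch


def celsius_to_fahrenheit(c: float) -> float:
    """Convert a temperature reading from °C to °F."""
    return c * 9 / 5 + 32


def celsius_delta_to_fahrenheit(dc: float) -> float:
    """Convert a temperature difference (e.g. an anomaly); no 32-degree offset."""
    return dc * 9 / 5


def hpa_to_psi(hpa: float) -> float:
    """Convert hectopascals (numerically equal to millibars) to psi."""
    return hpa * 100 / PA_PER_PSI


print(celsius_to_fahrenheit(15.0))       # 59.0
print(round(hpa_to_psi(1013.25), 2))     # 14.7
print(celsius_delta_to_fahrenheit(1.0))  # 1.8
```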

Thanks! Interesting article

But is it not the same with so much in climate science?

Just testing to see how long before this comment is deleted.

tom0mason ==> Why would you think that anyone would delete your comment?

Most comments here do not even go through moderation — most are simply passed through (after the usual forbidden words automagic-moderation).

Your comment would only be deleted if it was in gross violation of WUWT Policy — it has to be pretty bad to get deleted altogether. The Moderator or the post author (in this case, myself) might “snip” out some bit of egregious offensiveness (death threats, nasty name calling) etc.

Your comment above doesn’t violate any WUWT policy — in fact, doesn’t actually say anything other than you think it might be deleted.

It’s simple projection, Kip. It’s SOP for the warmunists, so they believe everyone must do it.

I’ve had some problems with WordPress and/or Firefox lately. Although it appears my comments were being accepted they were not. Things seemed to have settled down after uninstalling/reinstalling the browser (Firefox).

tom ==> Good, glad you got it sorted out. Neither the management nor the authors here want anyone to feel their input is not welcome. I’ll admit that I will occasionally get a comment that “can’t be posted” … and have to re-write it even though there are no obvious “bad” words etc.

Tom and Forrest ==> you can always ask the Moderator. Including MODERATOR in your comment, either on a post or on the Test page, automagically calls the comment to his/her/its attention. The Mod can check to see if your comment is struck somewhere. The author of an individual post can usually do the same thing.

@Forrest Gardener

Basically, my comment above was a test: my comments appeared to have been accepted and posted, but after I closed the Firefox browser and then restarted any browser, the comments had disappeared.

I finally realized it was probably Firefox, and remembered it had updated itself twice recently. I can only think something had screwed up in the update process.

It was not just this site but also on other WordPress sites and only with Firefox.

As I said, a complete uninstall/reinstall of Firefox today appears (I hope) to have cleared the issue.

P.S. I am on a Linux system which has been very stable for more than 5 years.

Worse than not knowing the present temperature, the pre-industrial temperature is even more uncertain. We are told by COP21 that we should not exceed 2 °C above the pre-industrial temperature. But the best temperature record has a range of 7 to 10 °C. A 3-degree spread is greater than the 2-degree target. And there are no SST data. The uncertainty is unknown. They have no idea what absolute temperature they are aiming for.

http://blogs.nature.com/news/files/2012/07/berkeley.jpg

Strangelove ==> Hmmmm….. The one good thing about the BEST graphs is that they include some sort of error bars or CIs, although not wide enough: the global averages before the world wars are really just vague guesses and would have error bars just as wide as those shown for 1750 (no instrumental record goes back that far, and it is too close to the present to use paleo methods, in my opinion).

Where is this graph from — can you give a link?

Here’s the link. Slightly different 1750s range: 6.6 to 9.6 °C.

http://berkeleyearth.lbl.gov/regions/global-land

Lensman ==> Yeah — different. Thanks for the link.

The image you provided is from the original BEST project Results paper from 2012:

static.berkeleyearth.org/papers/Results-Paper-Berkeley-Earth.pdf

which does not appear on their current site, and most links to it returned by Google are broken. This one returns the .pdf file.

I do not trust the BEST data, methods, or motivation — particularly their attempts at the attribution problem.

I was taught that an operational definition is a definition of a term in a manner so explicit that all persons applying the definition as a criterion for identifying something would come to exactly the same conclusion as to whether or not the definition applies in any particular instance of its attempted application.

In empirical science an operational definition of a quantity is a definition that references the complete, replicable process for quantifying the result of the operation. This, in principle, allows separate investigators to apply the same process to the determination of a quantity and to directly compare their results. For example, ‘temperature’ can be measured by a process that involves the comparison of voltages between two thermocouples, one of which is in thermal contact with the object of interest and the other is in contact with a specific medium of precisely known reference temperature.
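To make the thermocouple example concrete, here is a minimal sketch of such a replicable procedure, assuming a Type K thermocouple with a constant sensitivity of roughly 41 µV per °C (a common near-room-temperature approximation; real instruments use the published reference tables or polynomials instead). The function name and the 820 µV reading are hypothetical. Anyone who applies the same procedure with the same constants to the same voltage gets the same number, which is the point of an operational definition.

```python
# Assumption: a Type K thermocouple with a constant sensitivity of about
# 41 microvolts per °C, roughly right near room temperature. Real instruments
# use the published reference tables/polynomials, not a single constant.
TYPE_K_UV_PER_C = 41.0


def junction_temperature(measured_uv: float, reference_c: float,
                         sensitivity_uv_per_c: float = TYPE_K_UV_PER_C) -> float:
    """Temperature at the measuring junction, from the voltage between the two
    junctions and the known temperature of the reference junction (linear model)."""
    return reference_c + measured_uv / sensitivity_uv_per_c


# Reference junction held in an ice bath at 0 °C; 820 µV measured.
print(junction_temperature(820.0, 0.0))
```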

Logically an operational definition identifies a well-characterized parent group to which a term belongs, along with necessary and sufficient criteria to distinguish it from all other members of the same group. For example, to define ‘sanguine’ as ‘the color of blood’ identifies the parent group (‘colors’) and provides a criterion (‘is your color the same color as blood?’) that clearly distinguishes it from other members of the parent group.

The important point of an operational definition is that it completely removes all individual variation among observers from the exercise.

tadchem ==> And for CliSci, lacking an Operational Definition for one of its most commonly referenced metrics…..? Maybe the same for sea surface temperature (there are at least two different terms in use) and sea level … worse than Biology, which lacks an agreed-upon definition of what constitutes a “species”.

Hansen:

I have previously criticized you for trying to be a ‘jack of all trades’ writer, covering too many subjects to be an expert in all of them.

I particularly criticized your article on obesity, where you claimed calories didn’t matter, something fat people love to hear!

To demonstrate that I have nothing against you, and only judge what you write:

I can’t tell you how disappointed I am after reading this article and finding it was better than a related post I made on my climate change blog:

http://elonionbloggle.blogspot.com/2017/08/total-confusion-on-absolute-mean-global.html

I congratulate you on a good article, and selecting a far too often forgotten subject:

What is the absolute mean global temperature?

A secondary question, ignored just as often, and perhaps a subject for your next article, is:

How can one number represent the ever changing climate on our planet?

Richard ==> Thank you for your kind comment — it is a sign of real intellectual maturity to step out of the all-too-ubiquitous trend of personalization — making everything personal or about a person — rather than discussing ideas, concepts, understandings. This is much appreciated.