UPDATE: for those visiting here via links, see my recent letter regarding Dr. Richard Muller and BEST.

I have some quiet time this Sunday morning in my hotel room after a hectic week on the road, so it seems like a good time and place to bring up statistician William Briggs’ recent essay and to add some thoughts of my own. Briggs has taken a look at what he thinks will be going on with the Berkeley Earth Surface Temperature project (BEST). He points out the work of David Brillinger, whom I met with for about an hour during my visit. Briggs isn’t far off.

Brillinger, another affable Canadian from Toronto, with an office covered in posters to remind him of his roots, has not even a hint of the arrogance and advance certainty that we’ve seen from people like Dr. Kevin Trenberth. He’s much more like Steve McIntyre in his demeanor and approach. In fact, the entire team seems dedicated to providing an open source, fully transparent, and replicable method no matter whether their new metric shows a trend of warming, cooling, or no trend at all, which is how it should be. I’ve seen some of the methodology, and I’m pleased to say that their design handles many of the issues skeptics have raised and has done so in ways that are unique to the problem.

Mind you, these scientists at LBNL (Lawrence Berkeley National Labs) are used to working with huge particle accelerator datasets to find minute signals in the midst of seas of noise. Another person on the team, Dr. Robert Jacobsen, is an expert in analysis of large data sets. His expertise in managing reams of noisy data is being applied to the problem of the very noisy and very sporadic station data. The approaches that I’ve seen during my visit give me far more confidence than the “homogenization solves all” claims from NOAA and NASA GISS, and I expect that the BEST result will be closer to the ground truth than anything we’ve seen.

But as the famous saying goes, “there’s more than one way to skin a cat”. Different methods yield different results. In science, sometimes methods are tried, published, and then discarded when superior methods become known and accepted. I think, based on what I’ve seen, that BEST has a superior method. Of course that is just my opinion, with all of its baggage; it remains to be seen how the rest of the scientific community will react when they publish.

In the meantime, never mind the yipping from climate chihuahuas like Joe Romm over at Climate Progress who are trying to destroy the credibility of the project before it even produces a result (hmmm, where have we seen that before?). It is simply the modus operandi of the fearful, who don’t want anything to compete with the “certainty” of climate change they have been pushing courtesy of NOAA and GISS results.

One thing Romm won’t tell you, but I will, is that one of the team members is a serious AGW proponent, one who wields some very great influence because his work has been seen by millions. Yet many people don’t know of him, so I’ll introduce him by his work.

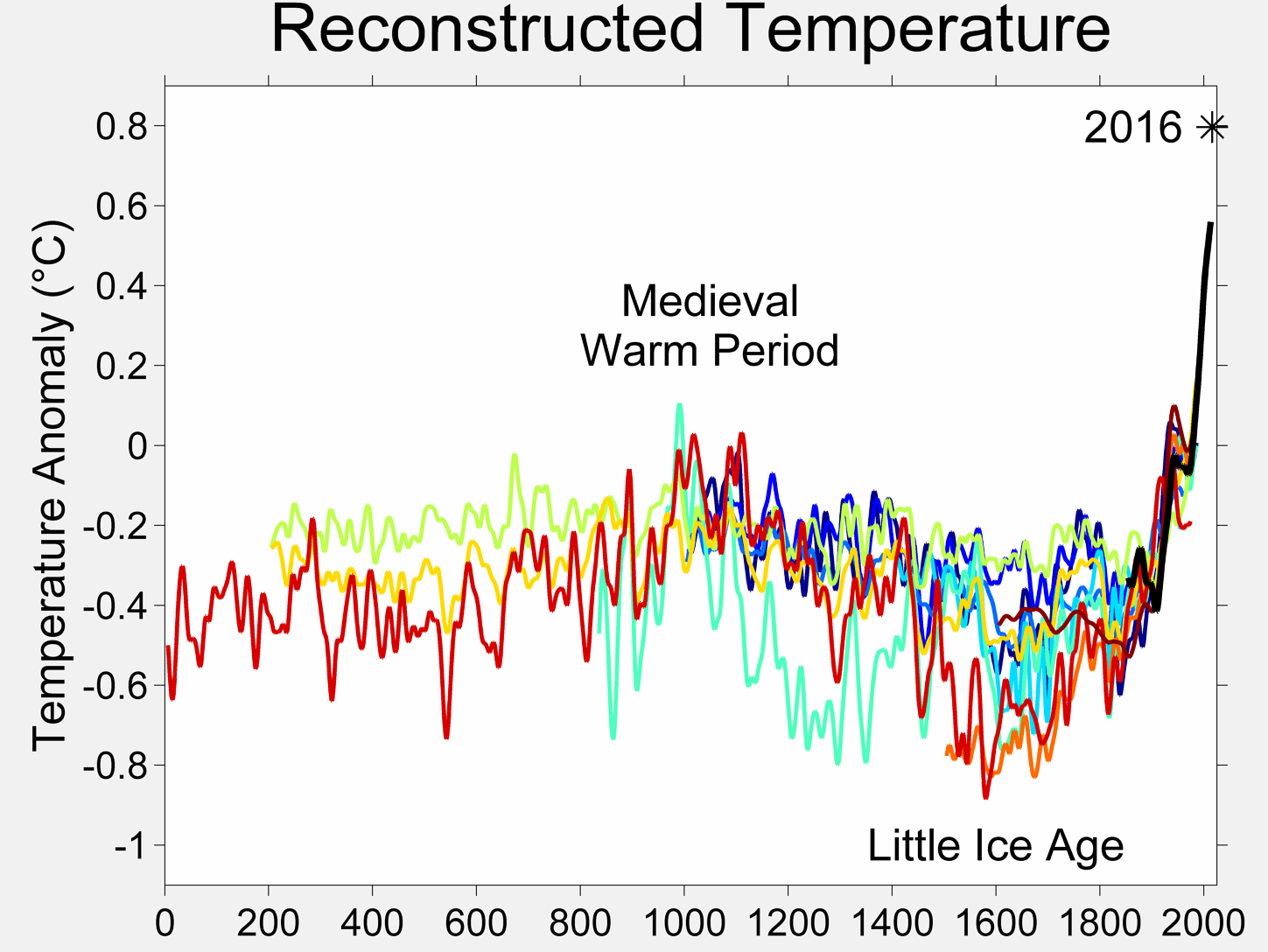

We’ve all seen this:

It’s one of the many works of global warming art that pervade Wikipedia. In the description page for this graph we have this:

The original version of this figure was prepared by Robert A. Rohde from publicly available data, and is incorporated into the Global Warming Art project.

And who is the lead scientist for BEST? One and the same. Now contrast Rohde with Dr. Muller who has gone on record as saying that he disagrees with some of the methods seen in previous science related to the issue. We have what some would call a “warmist” and a “skeptic” both leading a project. When has that ever happened in Climate Science?

Other than making a lot of graphical art that represents the data at hand, Rohde hasn’t been very outspoken, which is why few people have heard of him. I met with him and I can say that Mann, Hansen, Jones, or Trenberth he isn’t. What struck me most about Rohde, besides his quiet demeanor, was the fact that it was he who came up with a method to deal with one of the greatest problems in the surface temperature record that skeptics have been discussing. His method, which I’ve been given in confidence and agreed not to discuss, gave me one of those “Gee whiz, why didn’t I think of that?” moments. So, the fact that he was willing to look at the problem fresh, and come up with a solution that speaks to skeptical concerns, gives me greater confidence that he isn’t just another Hansen and Jones re-run.

But here’s the thing: I have neither certainty nor expectations about the results. Like them, I have no idea whether it will show more warming, about the same, no change, or cooling in the land surface temperature record they are analyzing. Neither do they, as they have not run the full data set, only small test runs on certain areas to evaluate the code. However, I can say that having examined the method, on the surface it seems to be a novel approach that handles many of the issues that have been raised.

As a reflection of my increased confidence, I have provided them with my surfacestations.org dataset to allow them to use it to run comparisons against their data. The only caveat is that they won’t release my data publicly until our upcoming paper and the supplemental info (SI) have been published. Unlike NCDC and Menne et al, they respect my right to first publication of my own data and have agreed.

And, I’m prepared to accept whatever result they produce, even if it proves my premise wrong. I’m taking this bold step because the method has promise. So let’s not pay attention to the little yippers who want to tear it down before they even see the results. I haven’t seen the global result, nobody has, not even the home team, but the method isn’t the madness that we’ve seen from NOAA, NCDC, GISS, and CRU, and there aren’t any monetary strings attached to the result that I can tell. If the project were terminated tomorrow, nobody would lose jobs, no large government programs would get shut down, and no dependent programs would crash either. That lack of strings attached to funding, plus the broad mix of people involved, especially those who have previous experience in handling large data sets, gives me greater confidence in the result being closer to a bona fide ground truth than anything we’ve seen yet. Dr. Fred Singer also gives a tentative endorsement of the methods.

My gut feeling? The possibility that we may get the elusive “grand unified temperature” for the planet is higher than ever before. Let’s give it a chance.

I’ve already said way too much, but it was finally a moment of peace where I could put my thoughts about BEST to print. Climate related website owners, I give you carte blanche to repost this.

I’ll let William Briggs have a say now, excerpts from his article:

=============================================================

Word is going round that Richard Muller is leading a group of physicists, statisticians, and climatologists to re-estimate the yearly global average temperature, from which we can say such things like this year was warmer than last but not warmer than three years ago. Muller’s project is a good idea, and his named team are certainly up to it.

The statistician on Muller’s team is David Brillinger, an expert in time series, which is just the right genre to attack the global-temperature-average problem. Dr Brillinger certainly knows what I am about to show, but many of the climatologists who have used statistics before do not. It is for their benefit that I present this brief primer on how not to display the eventual estimate. I only want to make one major point here: that the common statistical methods produce estimates that are too certain.

…

We are much more certain of where the parameter lies: the peak is in about the same spot, but the variability is much smaller. Obviously, if we were to continue increasing the number of stations the uncertainty in the parameter would disappear. That is, we would have a picture which looked like a spike over the true value (here 0.3). We could then confidently announce to the world that we know the parameter which estimates global average temperature with near certainty.

Are we done? Not hardly.

Read the full article here
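
For readers who want to play with the point in the excerpt above, here is a minimal Python sketch of how the uncertainty in the estimated parameter shrinks as the number of stations grows. It is not taken from Briggs’ article or from anything BEST has written. The true value of 0.3 comes from the excerpt; the Gaussian station readings, the spread of 5.0, and the function names `simulate` and `mean_and_standard_error` are assumptions made up for this illustration.

```python
import math
import random
import statistics


def simulate(n_stations, true_mean=0.3, spread=5.0, seed=0):
    """Draw hypothetical station readings from an assumed Gaussian model."""
    rng = random.Random(seed)
    return [rng.gauss(true_mean, spread) for _ in range(n_stations)]


def mean_and_standard_error(samples):
    """Return the sample mean and its standard error, s / sqrt(n)."""
    n = len(samples)
    mean = statistics.fmean(samples)
    sd = statistics.stdev(samples)
    return mean, sd / math.sqrt(n)


# More stations: the standard error of the mean keeps shrinking,
# while the spread of the individual readings stays about the same.
for n in (20, 200, 2000, 20000):
    readings = simulate(n)
    mean, se = mean_and_standard_error(readings)
    sd = statistics.stdev(readings)
    print(f"n={n:6d}  mean={mean:+.3f}  std.err={se:.3f}  spread={sd:.3f}")
```

The thing to watch in the output is the last two columns: the standard error drops roughly as one over the square root of the station count, but the station-to-station spread does not budge.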

It does look very promising. But is there a possibility that the surface temperature data will turn out to be so poor that it is impossible to draw any robust conclusions from it? If so, how likely is that to be the case?

David Brillinger is an expert in time series. It would be very interesting to see what the BEST project will do to appraise the issues surrounding compliance with the Nyquist-Shannon Sampling Theorem. In both space and time.

I have mentioned this on quite a number of occasions here on WUWT. It is an essential point with regard to interpreting the shape of the signal from sampled data. Have a look at “aliasing” to see what can happen to the interpretation of sampled data when the sampling rate is poorly selected.

I should repeat – it is not the same issue as statistical sampling and convergence to underlying properties. But on that topic, it would also be interesting to see where BEST stands on the issue of stationarity.
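
For anyone who would rather see aliasing than look it up, here is a minimal Python sketch. The numbers are assumptions chosen only for illustration (a signal with 9 cycles per unit of time, sampled 10 times per unit of time, well below the 18 samples the sampling theorem asks for), and the function names `sample_cosine` and `alias_frequency` are made up for this example. It has nothing to do with whatever BEST actually does.

```python
import math


def sample_cosine(freq, sample_rate, n_samples):
    """Sample cos(2*pi*freq*t) at t = k / sample_rate for k = 0..n_samples-1."""
    return [math.cos(2 * math.pi * freq * k / sample_rate)
            for k in range(n_samples)]


def alias_frequency(freq, sample_rate):
    """Apparent frequency of a sinusoid at `freq` once sampled at `sample_rate`."""
    folded = freq % sample_rate
    return min(folded, sample_rate - folded)


# A fast signal (9 cycles) sampled too slowly (10 samples per unit time)...
fast = sample_cosine(freq=9.0, sample_rate=10.0, n_samples=20)
# ...gives the same sample values as a slow signal (1 cycle) would.
slow = sample_cosine(freq=1.0, sample_rate=10.0, n_samples=20)

largest_gap = max(abs(a - b) for a, b in zip(fast, slow))
print("apparent frequency:", alias_frequency(9.0, 10.0))
print("largest difference between the two sample sets:", largest_gap)
```

The two sets of samples agree to within floating-point rounding, so from the sampled data alone there is no way to tell the fast signal from the slow one. The same folding applies to sampling in space as well as in time.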

His method, which I’ve been given in confidence, and agreed not to discuss

is somewhat disturbing. Smacks a la Corbin or worse. One may hope that the method would eventually be disclosed, otherwise it may be time for a FOIA.

REPLY: Leif, that’s really out of line, and I’m disappointed that you would go there with such a heavy-handed comment.

Have you never discussed some idea, method or process with a colleague in confidence, holding that confidence until they had a chance to publish their work in a paper? As indicated in the article, there will be full disclosure once the project is ready. I have no reason to believe otherwise. Dr. Muller knows that I and many others will publicly eviscerate him should those promises of full disclosure and replicability not be kept.

– Anthony

I must say I second Pamela Gray.

The climate will keep on changing.

Our problem is we do not know what causes it to change and how.

It seems to me a lot of hassle with no real point.

Beesaman says:

“I too seem to have become persona non grata over at Climate Progress (is it funded by Colonel Gaddafi I wonder, for all its diatribe about being nonpartisan it certainly acts in a partisan manner?)”

I can sympathize.

Climate Progress is funded by someone actually worse than Col. Gaddafi: George Soros. That’s why CP is so heavily partisan, and why it censors out honest scientific skeptics. Soros’ ultimate goal is a totalitarian world government.

Joe Romm is just a Soros sock puppet. He’s bought and paid for, so he does exactly what George tells him to do. You will never get the true climate picture at CP, because it is a climate propaganda echo chamber populated by mutual head-nodders. If contrary views can’t be posted at a blog, any time spent there is wasted.

Are there some scientists participating in this project who have created physical hypotheses and produced empirical research which is generally accepted in that specialty as showing that the hypotheses are reasonably well-confirmed?

It will be good just to see a climate project whose methods are reproducible, whose data and meta data are available. No matter what it shows it will at least be usable research.

Pamela Gray says:

March 6, 2011 at 8:16 am

What Pamela said. Extremely well stated, Ms. Gray.

Leif Svalgaard says:

March 6, 2011 at 10:05 am

The project will be worthless if all data and metadata is not published. I hope it will be.

Isn’t it kind of ironic that that colorful hippie artwork shows that the temperature has been below normal for “98%” of the time for its complete 2 000 year time frame?

So essentially if the next 2 000 years are slightly above normal for “98%” of the time, the complete 4 000 year time frame will produce a normal average. :p

Andrew Zalotocky says:

March 6, 2011 at 9:45 am

It does look very promising. But is there a possibility that the surface temperature data will turn out to be so poor that it is impossible to draw any robust conclusions from it? If so, how likely is that to be the case?

That in itself will be an excellent conclusion.

“Global Warming Art”; I came across that before. A warmist referred to a Wikipedia graph as a source, and I looked up where it came from because I thought it was one of William Connolley’s artworks, but it turned out to be from “Global Warming Art”, which gave me an even creepier feeling. Wikipedia links to this website for “Global Warming Art”:

http://www.globalwarmingart.com/

It looks defunct ATM. Don’t know whether to trust these guys or this BEST thing.

REPLY: they have a web database error, just needs a rebuild. I’ll let them know – Anthony

I apologize for posting a downer, but I cannot see any good coming from the BEST project. All they are doing is reanalyzing statistical data on temperature. But there is no great disagreement on temperature increase over the last century. The disagreements are on the reliability of the data and the cause of the rise. (Of course, there is the matter of Hansen constantly decreasing the warmth of the Thirties and increasing that of the Nineties, but I doubt that they tackle that.)

Consequently, the BEST project will come up with a number that is close to Hansen’s number and once again we will hear trumpeted the claim that there is a scientific consensus on Hansen’s work. The BEST project is an attempt to revive the meme of scientific consensus.

Briggs has a link to a UK Guardian article:

http://www.guardian.co.uk/science/2011/feb/27/can-these-scientists-end-climate-change-war

where Muller commits to posting all of the data and methods online. Judith Curry is mentioned as a member of BEST also. Steve McIntyre is quoted as saying “anything that [Muller] does will be well done”.

I look forward to the results.

As I’ve been “forced” to think about “temperature” and “average temperature”…etc.,

I’ve also been forced to think of my ENGINEERING BACKGROUND and realized that these efforts are essentially MAGNIFICENT FRAUDS from the get go.

Let’s use this as an example. I take a temperature at my house. (Right now, 30 F). I take it in two hours…a front comes through, it’s 25 F. Normally that’s the “peak temperature” of the day. Is my temperature today 25 F, or 30 F? Is that my “maximum”? What defines what temperature is “significant”?

Likewise, what do I use? Surface station records? (See http://www.surfacestations.org) Ship’s logs? (Again, WHEN was the reading taken?) Tree ring proxies (see climateaudit.org), ice core and O18/O16 ratios (dubious from the get go)?

It all comes down to what ENERGY CONTENT THERE IS, and which way THAT is trending.

But that, like the Seven Bridges of Königsberg problem, becomes irreducible or insoluble. The solution to that: create a MEANINGLESS metric and convince people (the ignorant and the academically gullible) that you can solve it.

Alas, this work does not: build houses, drill oil wells, make nuclear plants, make medical devices. (Do I sound INSANE? No, there is a SANITY to this comment. It is meant to point out this POINTLESS EFFORT, which DOES NOT HELP HUMANITY versus things that do.)

Let’s hope these folks might find something MEANINGFUL to do with their short (we are all in that realm) lives before they pass the torches on to the next generation.

Thanks for this Anthony. What you describe is what we have all hoped for. Real science, that attempts to achieve objectivity. Real science, that uses empirical measurements to calibrate. Real science, based on deductive reasoning in the spirit of Karl Popper. Real science, that informs debate not becomes the debate. As scientists or those who would call themselves such, we must at the end remain true to our philosophy for only then can we stand with pride. So to that I would add this sage advice:

“Polonius:

This above all: to thine own self be true,

And it must follow, as the night the day,

Thou canst not then be false to any man.

Farewell, my blessing season this in thee!

Laertes:

Most humbly do I take my leave, my lord.

Hamlet Act 1, scene 3, 78–82”

Anthony, as a person who above all knows the importance of investigating the actual environment of each surface station, and the difficulty (such that you had to enlist scores [hundreds?] of volunteers to help), how can you accept this BEST approach on faith? Are they investigating the actual conditions of any of their 30,000 stations? Will they know when or where a station was moved and records were lost concerning it? I have written to them about my concerns and received no answer. Please please reassure me on this point. Without metadata such as you developed in the surfacestations project, the data are meaningless.

I think it will be nice to know the answer, but will it really have any effect on the politics? Some people seem to not let ‘facts’ interfere with ‘The Cause’. I don’t think it answers the real underlying question as to cause. [Just to be clear, I mean the thing(s) that effected the change(s), not ‘The Cause’].

Stephen Richards says:

“The project will be worthless if all data and metadata is not published. I hope it will be.”

I agree. But it is only fair to allow them to withhold their data, methodologies, metadata, code, etc., until publication. It should all be archived publicly online at that point.

This is a golden opportunity to set an example of scientific transparency. If everything is publicly archived at publication, then it will be much harder for anyone else to argue that they shouldn’t have to do the same thing.

Thanks, Anthony, this is good news and sorely needed!

I’m terribly ambivalent about AGW, mostly because I’m such a critic of the methodologies employed by Jones/Mann/Briffa etc. I’ve often argued to reboot the whole process and start over, which earns me angry stares from nearly everyone in the climate science community.

The climate system is FAR more complex than the Hockey Team & their groupies (Gavin) want to admit, with many more influences (solar, galactic, physical, chemical, biological) than their models attest to. Let’s see what some clean data shows.

Mind you, these scientists at LBNL (Lawrence Berkeley National Labs) are used to working with huge particle accelerator datasets to find minute signals in the midst of seas of noise.

I commune with physicists from DOE’s Fermilab and can attest to their focus and dedication on dealing with amazingly complex data analysis, so this is a fantastic addition!

Let’s get the catastrophists away from the process entirely, and let the chips & data fall where they may. I can live with any conclusions if the science is sound.

“I’m prepared to accept whatever result they produce, even if it proves my premise wrong.”

I read that statement by Anthony as saying that he will approach the result with an open mind, not dismiss or accept it in advance.

I too will try to treat it on its merits, but I have very severe doubts that there is enough data of sufficient quality to give meaningful results over even the last ten decades (or maybe much less than that), let alone over any longer period.

I also have very severe doubts that the “land temperature”, which they are doing first, is as valuable a measure as the “sea temperature”. But then, there is even less data for that, isn’t there?

Probably the first thing I will look for in the results is their evaluation of uncertainty. If the older results are not shown with greater uncertainty, then I am going to have difficulty accepting anything.

“His method, which I’ve been given in confidence and agreed not to discuss”

I have no problem with that. It’s a work in progress. Obviously, the method will need to be fully documented with the results.

I await the results with interest but no great expectations.

1DandyTroll says:

March 6, 2011 at 11:09 am

Yo dude, there you go spreading all that logic about again!

Do you think it might catch on, especially in the “hippie artwork” world?

I still don’t see why you need a whole bunch of data points in the first place. We have the Central England Temperature, the Irish record of similar length, and probably a couple others. If you’re looking for long-term GLOBAL trends, they must show up in a consistent and well-calibrated record of two or three consistently rural places. If nothing else, simple data gives you a much better chance of pinning down the contaminating factors.

The big step forward with this project will be that the data and method will be published and available for inspection and critique. The reconstruction will be more credible than its predecessors for this reason alone. No more excuses about “data confidentiality” and losing the data.

If Global Warming causes Global Cooling, what then does Global Cooling cause? Snowball Earth?