Last week, Larry Kummer posted a very thoughtful article here on WUWT:

A climate science milestone: a successful 10-year forecast!

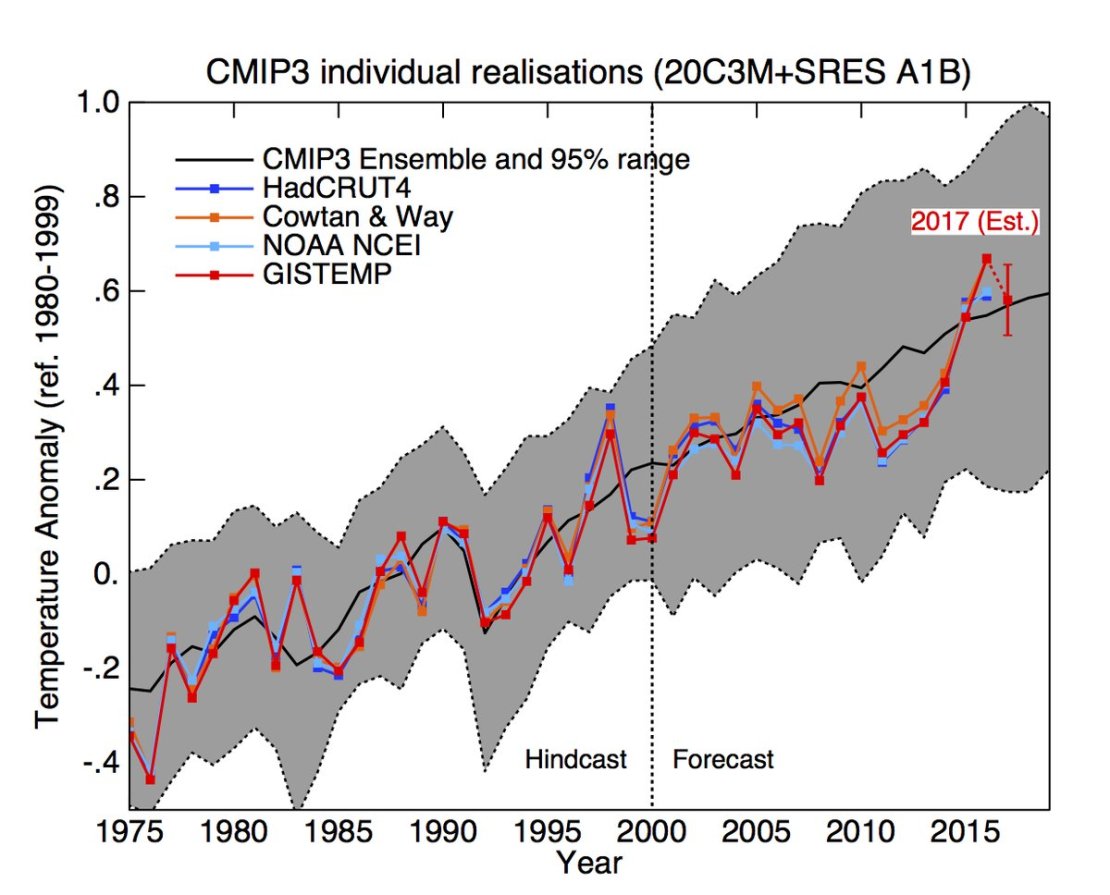

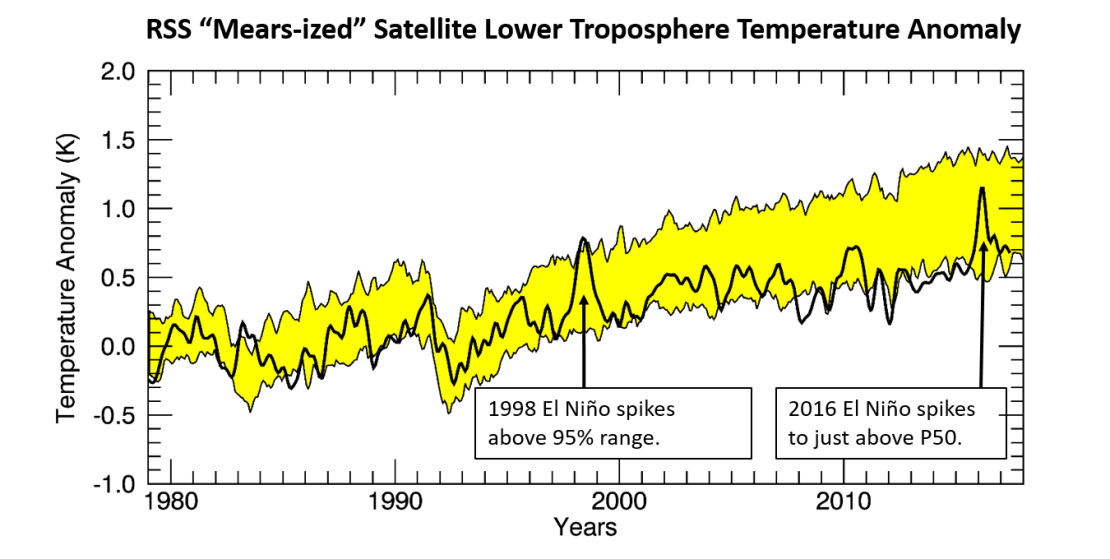

At first glance, this did look like “a successful 10-year forecast”:

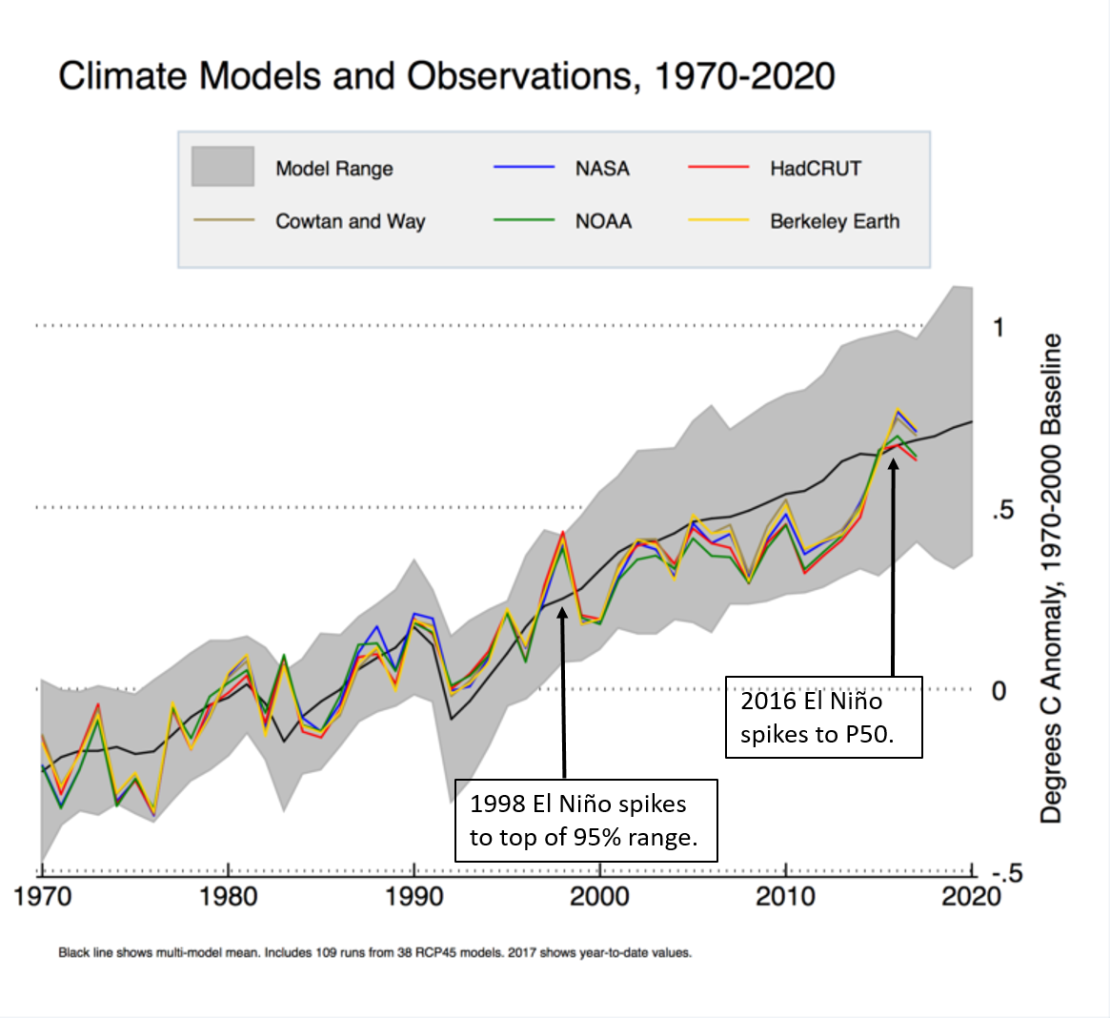

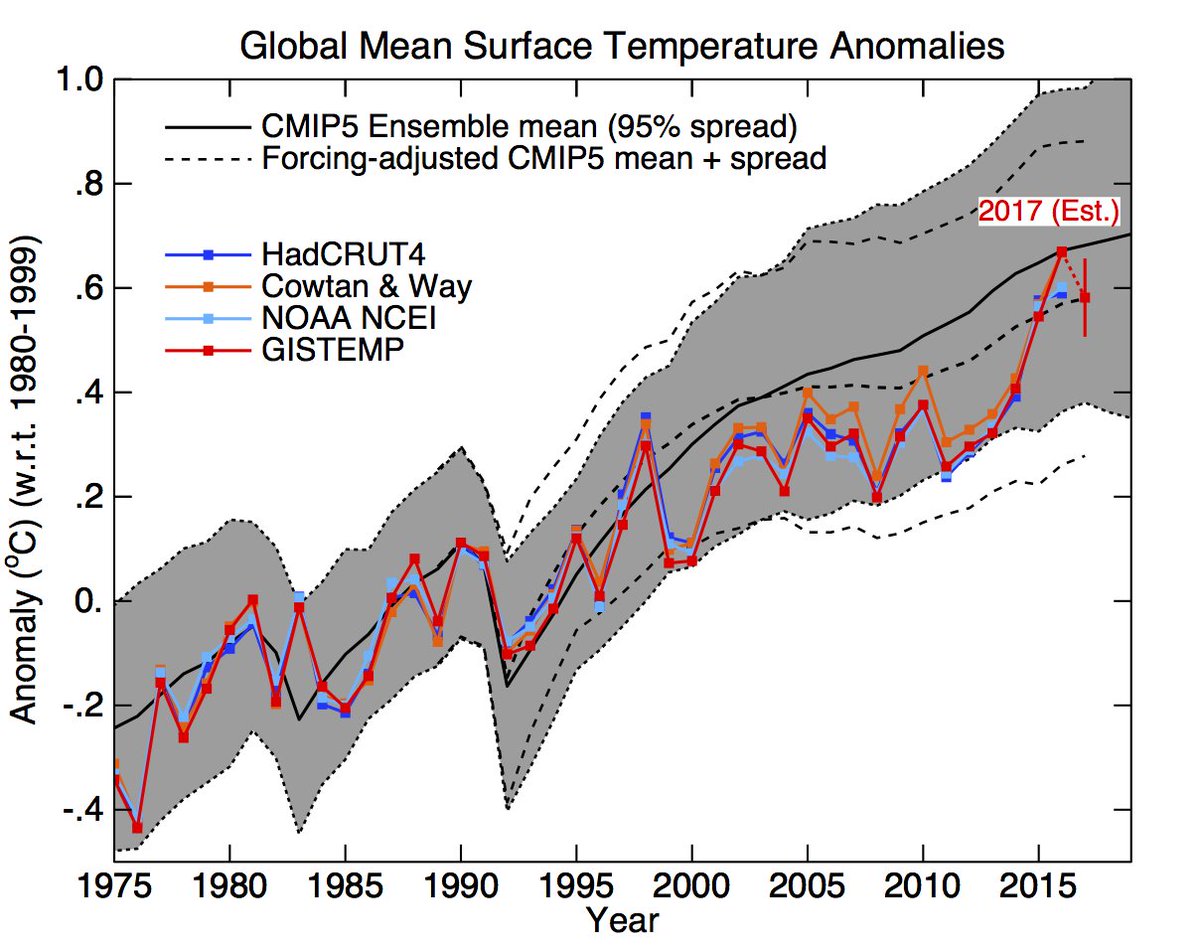

The observations track closer to the model ensemble mean (P50) than most other models, and the 2016 El Niño spikes at least a little bit above P50. Noticing that this was a CMIP3 model, Larry and others asked if CMIP5 (the current Coupled Model Intercomparison Project) yielded the same results, to which Dr. Schmidt replied:

CMIP5 version is similar (especially after estimate of effect of misspecified forcings). pic.twitter.com/Q0uXGV4gHZ

— Gavin Schmidt (@ClimateOfGavin) September 20, 2017

The CMIP5 model looks a lot like the CMIP3 model… But the observations bounce between the bottom of the 95% band (P97.5) and just below P50… Then spike to P50 during the 2016 El Niño. When asked about the “estimate of effect of misspecified forcings,” Dr. Schmidt replied:

As discussed here: https://t.co/0d0mcgPq0G

(Mostly misspecification of post-2000 solar and volcanoes)

— Gavin Schmidt (@ClimateOfGavin) September 21, 2017

Basically, the model would look better if it was adjusted to match what actually happened.

The only major difference between the CMIP3 and CMIP5 model outputs was the lower boundary of the 95% band (P97.5), which lowered the mean (P50).

CMIP5 yielded a range of 0.4 to 1.0 °C in 2016, with a P50 of about 0.7 °C. CMIP3 yielded a range of 0.2 to 1.0 °C in 2016, with a P50 of about 0.6 °C.

They essentially went from 0.7 +/- 0.3 to 0.6 +/- 0.4.

Progress shouldn’t consist of expanding the uncertainty… unless they are admitting that the uncertainty of the models has increased.
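The “+/-” shorthand is just the midpoint and half-width of each 2016 range. A minimal Python sketch, using the range values read off the charts above (the function name is illustrative only, and it treats each range as symmetric about its midpoint):

```python
def midpoint_and_half_width(low, high):
    """Return (centre, half-width) for a range [low, high], treated as symmetric."""
    centre = (low + high) / 2
    half_width = (high - low) / 2
    return centre, half_width

# 2016 ranges in deg C, as read off the CMIP5 and CMIP3 charts
ranges = {"CMIP5": (0.4, 1.0), "CMIP3": (0.2, 1.0)}

for name, (low, high) in ranges.items():
    centre, half = midpoint_and_half_width(low, high)
    print(f"{name}: {centre:.1f} +/- {half:.1f} C")
```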

Larry then asked Dr. Schmidt about this:

Small question: why are the 95% uncertainty ranges smaller for CMIP5 than CMIP3, by about .1 degreeC?

— Fabius Maximus (Ed.) (@FabiusMaximus01) September 22, 2017

Dr. Schmidt’s answer:

Not sure. Candidates would be: more coherent forcing across the ensemble, more realistic ENSO variability, greater # of simulations

— Gavin Schmidt (@ClimateOfGavin) September 22, 2017

“Not sure”? That instills confidence. He seems to be saying that the CMIP5 model (the one that failed worse than CMIP3) may have had “more coherent forcing across the ensemble, more realistic ENSO variability, greater # of simulations.”

I’m not a Twitterer, but I do have a rarely used Twitter account and I just couldn’t resist joining in on the conversation. Within the thread, there was a discussion of Hansen et al., 1988. And Dr. Schmidt seemed to be defending that model as being successful because the 2016 El Niño spiked the observations to “business-as-usual.”

well, you could use a little less deletion of more relevant experiments and insertion of uncertainties. https://t.co/nbAQhtjznr pic.twitter.com/gb29tm6rup

— Gavin Schmidt (@ClimateOfGavin) September 5, 2017

I asked the following question:

So… the monster El Niño of 2016, spiked from ‘CO2 stopped rising in 2000’ to “business as usual”?

— David Middleton (@dhm1353) September 25, 2017

No answer. Dr. Schmidt is a very busy person and probably doesn’t have much time for Twitter and blogging. So, I don’t really expect an answer.

In his post, Larry Kummer also mentioned a model by Zeke Hausfather posted on Carbon Brief…

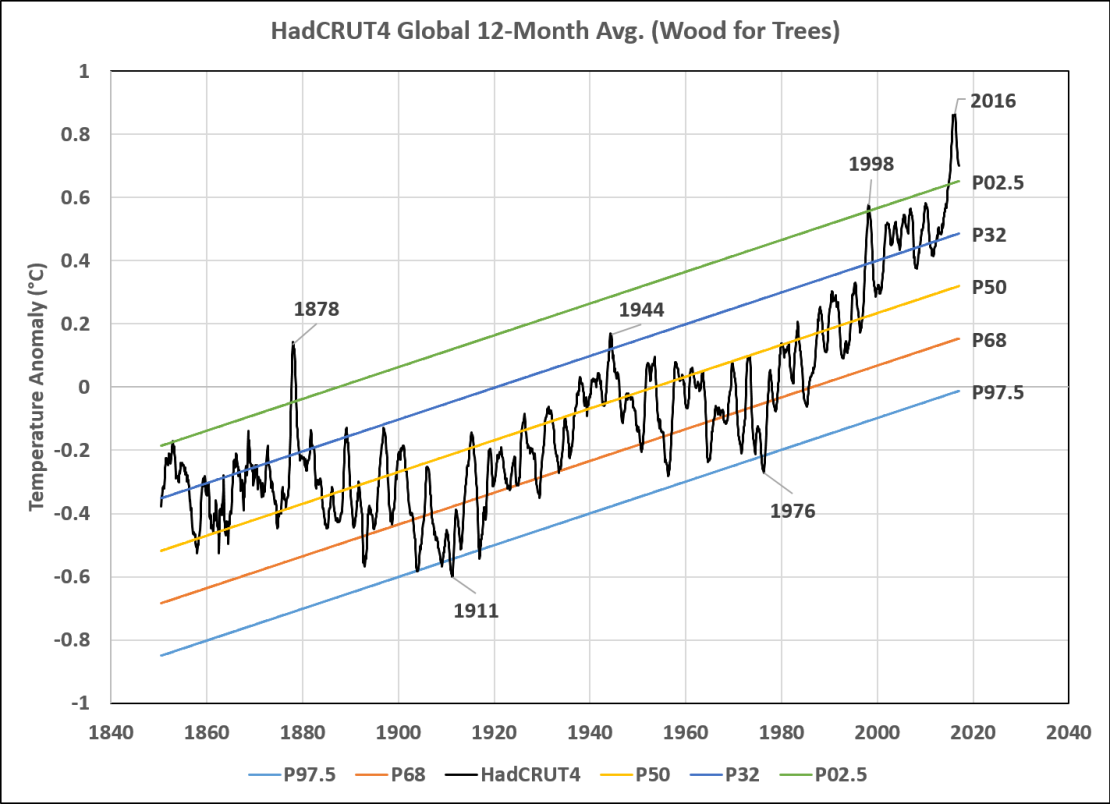

El Niño events like 1998 and 2016 are not high-probability events. On the HadCRUT4 plot below, I have labeled several probability bands:

| Standard Deviation | Probability Band | % of Samples with Higher Values |
|---|---|---|
| +2σ | P02.5 | 2.5% |
| +1σ | P32 | 32.0% |
| Mean | P50 | 50.0% |
| -1σ | P68 | 68.0% |
| -2σ | P97.5 | 97.5% |

Yes… I am assuming that HadCRUT4 is reasonably accurate and not totally a product of the Adjustocene.

I removed the linear trend, calculated a mean (P50) and two standard deviations (1σ & 2σ). Then I added the linear trend back in to get the following:

The 1998 El Niño spiked to P02.5. The 2016 El Niño spiked pretty close to P0.01. A strong El Niño should spike from P50 toward P02.5.

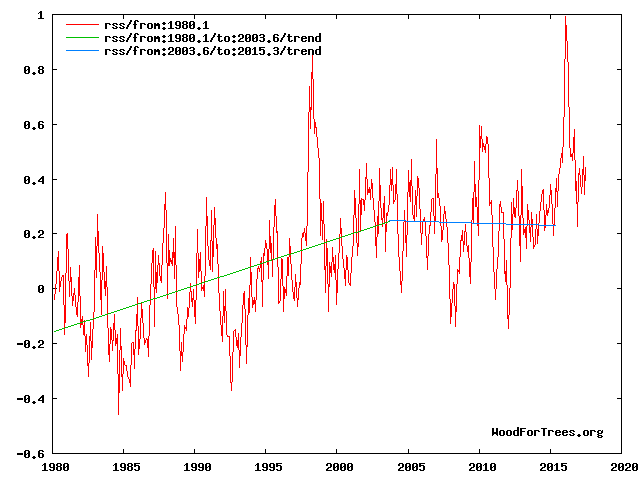
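The band construction described above (remove the linear trend, compute the mean and standard deviation of what is left, then add the trend back) can be sketched in Python with NumPy. This is a minimal sketch of that general procedure, not the spreadsheet actually used for the chart; the input series is a made-up placeholder, not HadCRUT4, and `trend_bands` is a hypothetical helper name.

```python
import numpy as np

def trend_bands(years, values):
    """Return band curves that follow the linear trend of `values`.

    Steps: fit and remove a least-squares linear trend, take the mean and
    sample standard deviation of the residuals, then add the trend back.
    Keys use the probability-band labels from the table above.
    """
    years = np.asarray(years, dtype=float)
    values = np.asarray(values, dtype=float)

    # 1. Fit and remove the linear trend
    slope, intercept = np.polyfit(years, values, 1)
    trend = slope * years + intercept
    residuals = values - trend

    # 2. Mean (essentially zero after a least-squares fit) and sigma
    mu = residuals.mean()
    sigma = residuals.std(ddof=1)

    # 3. Put the trend back in
    centre = trend + mu
    return {
        "P02.5": centre + 2 * sigma,  # +2 sigma
        "P32": centre + sigma,        # +1 sigma
        "P50": centre,                # mean
        "P68": centre - sigma,        # -1 sigma
        "P97.5": centre - 2 * sigma,  # -2 sigma
    }

# Placeholder series, NOT HadCRUT4: a 0.017 C/yr trend plus random noise
years = np.arange(1979, 2018)
rng = np.random.default_rng(42)
series = 0.017 * (years - 1979) + rng.normal(0.0, 0.1, years.size)

bands = trend_bands(years, series)
# Years whose value lies above the +2 sigma (P02.5) curve
print(years[series > bands["P02.5"]])
```

Because the bands ride on the fitted trend, a spike is judged against where the trend says that year should be, not against a flat long-term average.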

All of the models fail in this regard. Even the Mears-ized RSS satellite data exhibit the same relationship to the CMIP5 models as the surface data do.


The RSS comparison was initialized to 1979-1984. The 1998 El Niño spiked above P02.5. The 2016 El Niño only spiked to just above P50… Just like the Schmidt and Hausfather models. The Schmidt model was initialized in 2000.
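“Initialized to 1979-1984” means the series are shifted so that each averages zero over that reference period before they are compared. A minimal sketch of that re-baselining step, assuming annual values keyed by year; the data below are placeholders, not real model or RSS output, and `rebaseline` is a hypothetical helper.

```python
def rebaseline(series, start, end):
    """Shift an annual series so that its mean over start..end (inclusive) is zero.

    `series` maps year -> temperature or anomaly in deg C.
    """
    base = [series[y] for y in series if start <= y <= end]
    if not base:
        raise ValueError("no values inside the reference period")
    offset = sum(base) / len(base)
    return {y: v - offset for y, v in series.items()}

# Placeholder series, NOT real model or RSS data. The model curve has an
# arbitrary +0.3 C offset, which re-baselining removes.
model = {y: 0.3 + 0.02 * (y - 1979) for y in range(1979, 2017)}
obs = {y: 0.015 * (y - 1979) for y in range(1979, 2017)}

model_b = rebaseline(model, 1979, 1984)
obs_b = rebaseline(obs, 1979, 1984)
print(f"2016 gap after re-baselining: {model_b[2016] - obs_b[2016]:.3f} C")
```

Picking a different reference period shifts each curve by its own mean over that period, so the apparent gap between models and observations depends partly on where they are pinned together.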

This flurry of claims that the models don’t “run hot” because the 2016 El Niño pushed the observations toward P50 is being driven by an inconvenient paper that was recently published in Nature Geoscience (discussed here, here and here).

Claim of a substantial gap between model projections for global temperature & observations is not true (updated with 2017 estimate): pic.twitter.com/YHzzXtbhs9

— Gavin Schmidt (@ClimateOfGavin) September 20, 2017

21 September 2017 0:27

Factcheck: Climate models have not ‘exaggerated’ global warming

ZEKE HAUSFATHER

A new study published in the Nature Geosciences journal this week by largely UK-based climate scientists has led to claims in the media that climate models are “wrong” and have significantly overestimated the observed warming of the planet.

Here Carbon Brief shows why such claims are a misrepresentation of the paper’s main results.

[…]

All (95%) of the models run hot, including “Gavin’s Twitter Trick”. From Hansen et al., 1988 to CMIP5 in 2017, the 2016 El Niño spikes toward the model ensemble mean (P50)… Despite the fact that it was an extremely low-probability weather event (<2.5%). This happens irrespective of when the models are initialized. Whether the models were initialized in 1979 or 2000, the observed temperatures all bounce from the bottom of the 95% band (P97.5) toward the ensemble mean (P50) from about 2000-2014 and then spike to P50 or slightly above during the 2016 El Niño.

Aren’t we wasting too much time on a “prediction after the fact”?

“Predictions are hard, especially about the future.” (Yogi Berra, American philosopher)

If it doesn’t fit, you must acquit!

“The future ain’t what it used to be.”

So the bottom line here is that Gavin Schmidt deliberately chose the out-of-date CMIP3 model output because its ensemble mean looks a lot closer to the observations than that of the more recent CMIP5.

Scientific fraud, clear and simple.

The rest about grey bands and percentiles is just smoke. This article fails to clearly make the most important point because it goes into too much detail.

Good to bring this out but could have been a lot clearer and to the point.

CMIP5 version is similar (especially after estimate of effect of misspecified forcings)

so their models run too hot and make poor forecasts… until they do some post hoc parameter tweaking, at which point it is no longer a forecast but a new hindcast.

The 2007 models look better than the 2013 models. Despite having had more time and additional observations from the post Mt Pinatubo period they get worse results. They are now going backwards.

It is a very telling admission of the failure and misdirection of the modelling community that Gavin Schmidt chose the older models to misinform the public.

Wrong. It was a piece of JUNK. (See comments on the thread accompanying that “thoughtful” (cough) piece.)

Got that right. But it was a piece of junk contrived to subvert.

cunctator is a wolf in sheep’s clothing.

Kummer’s thoughts are agenda driven. The man loves the sight of his own words, doesn’t he? 🙂

If that was the case, I doubt he would have written such an eloquent dissection of RCP8.5 as this…

https://wattsupwiththat.com/2015/11/05/manufacturing-nightmares-an-example-of-misusing-climate-science/

maybe google fabius maximus and the fabian society

also see

https://www.crossroad.to/articles2/05/dialectic.htm

David,

“If that was the case, I doubt he would have written such an eloquent dissection of RCP8.5 as this…”

Was RCP8.5 being taken seriously? By anyone? Or was it something so far “out there” that eviscerating it did no real harm to the alarmist cause? Essentially beating a dead (on arrival) horse… If so, what a convenient way to demonstrate one was being tough on the alarmists, without laying a finger on them, so to speak.

RCP 8.5 is the basis of pretty-well every claim of CAGW. It is routinely described as the “expected” or “business-as-usual” case in peer-reviewed literature.

Yes Janice, my thoughts exactly! I thought the world was supposed to end on the 23rd but it just ended for me after reading this very, very stupid statement on WUWT:

F%ck me, whatever happened to emotional intelligence? Have IQs dropped sharply since I’ve been away?

“Middleman” – always political – has finally shown his true stripes! God help us indeed, because these ideologues will kill us all before they finally wake up! ;-(

There’s no logical inconsistency with describing a post as “thoughtful” and mostly disagreeing with it. While being rude and obnoxious can be fun, it isn’t always necessary.

This was my last comment on Larry’s post:

https://wattsupwiththat.com/2017/09/22/a-climate-science-milestone-a-successful-10-year-forecast/#comment-2619709

Larry’s conclusions:

https://wattsupwiththat.com/2017/09/22/a-climate-science-milestone-a-successful-10-year-forecast/

Let’s see…

I disagreed with:

His characterization of Gavin’s Twitter Trick as a demonstration of predictive skill in a climate model.

Boosting funding for climate sciences.

Beginning a well-funded conversion to non-carbon-based energy sources.

And beginning more aggressive efforts to prepare for extreme climate.

I politely disagreed with most of his post… without insulting him.

Well, that would be thought crime committed in your own head, an absurd continuum that logically leads to casting everything from “the other” as hate speech.

Maybe you are right though, if the words “very, very stupid statement” fit the bill.

What is going on with these really dumb articles about the skill of model ensembles?

When you read the literature and all the approaches to this “problem”, you wade straight into the absurdity of arguing over the baseline for model runs: starting them today, hindcasting against this week’s adjusted version of last century’s data, and “sliming” it laterally to find the best fit. And I’m not joking; this is the dead-set reality and the very best received wisdom available today from these insane collectivists*.

*I can describe them thusly because they make no pretence themselves of being anything other!

Nice out-of-context quote.

The tortured data is starting to confess, just a couple more adjustments and it fits 100%.

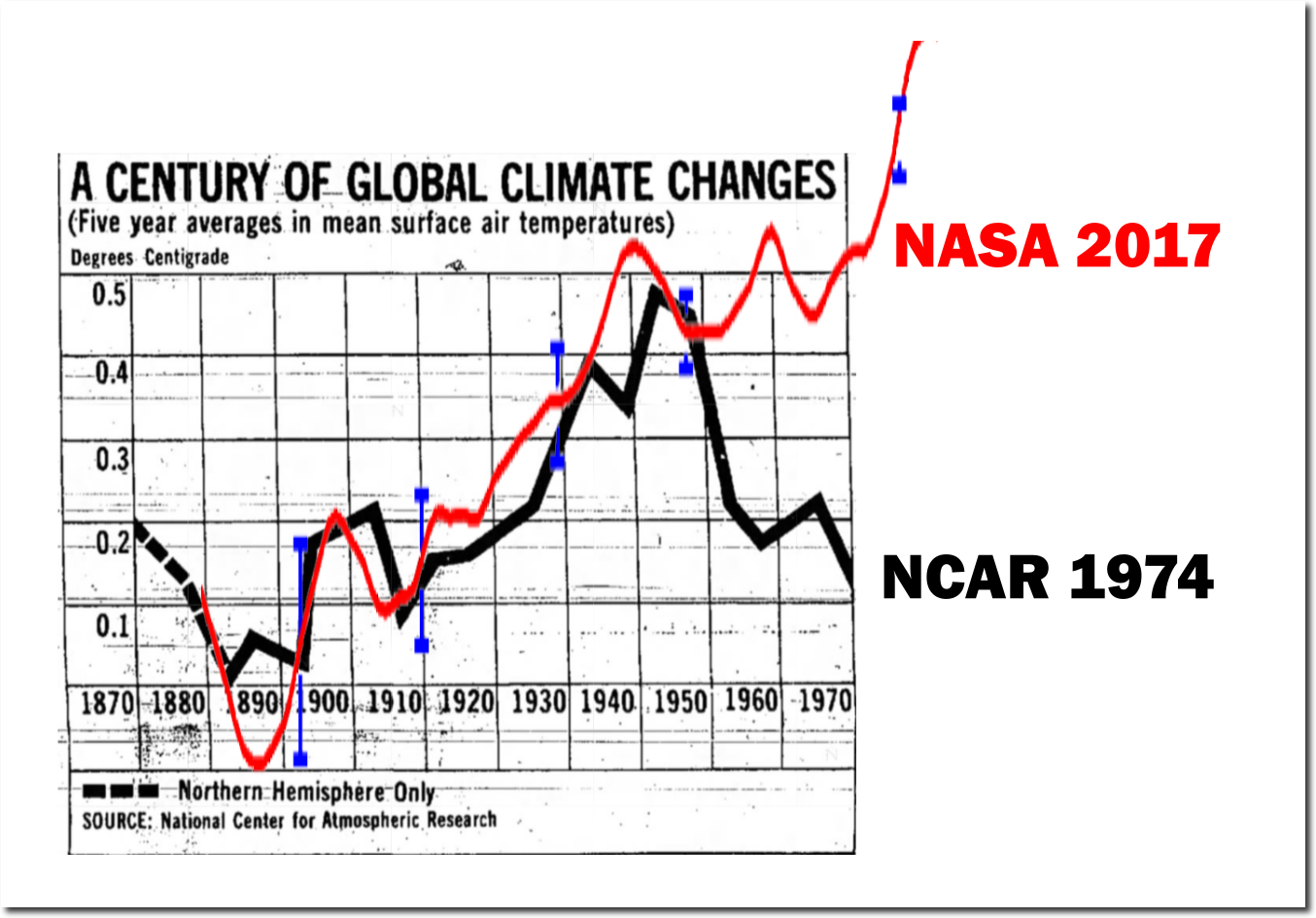

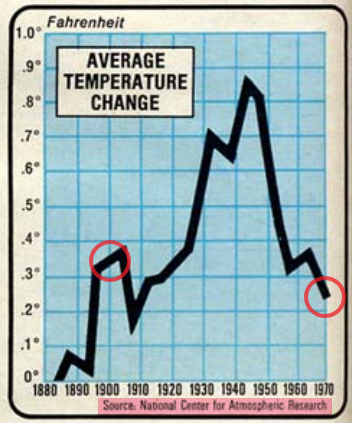

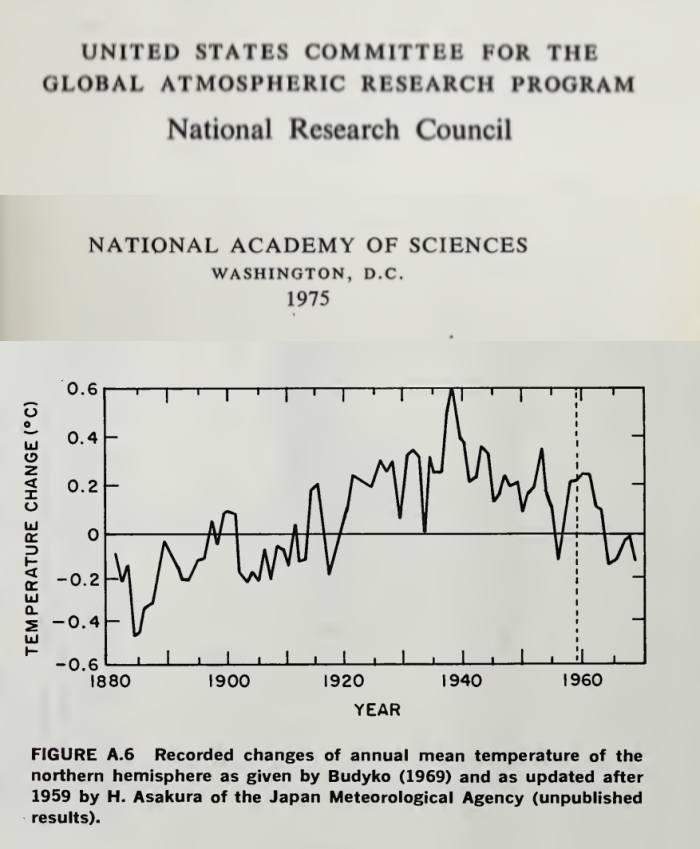

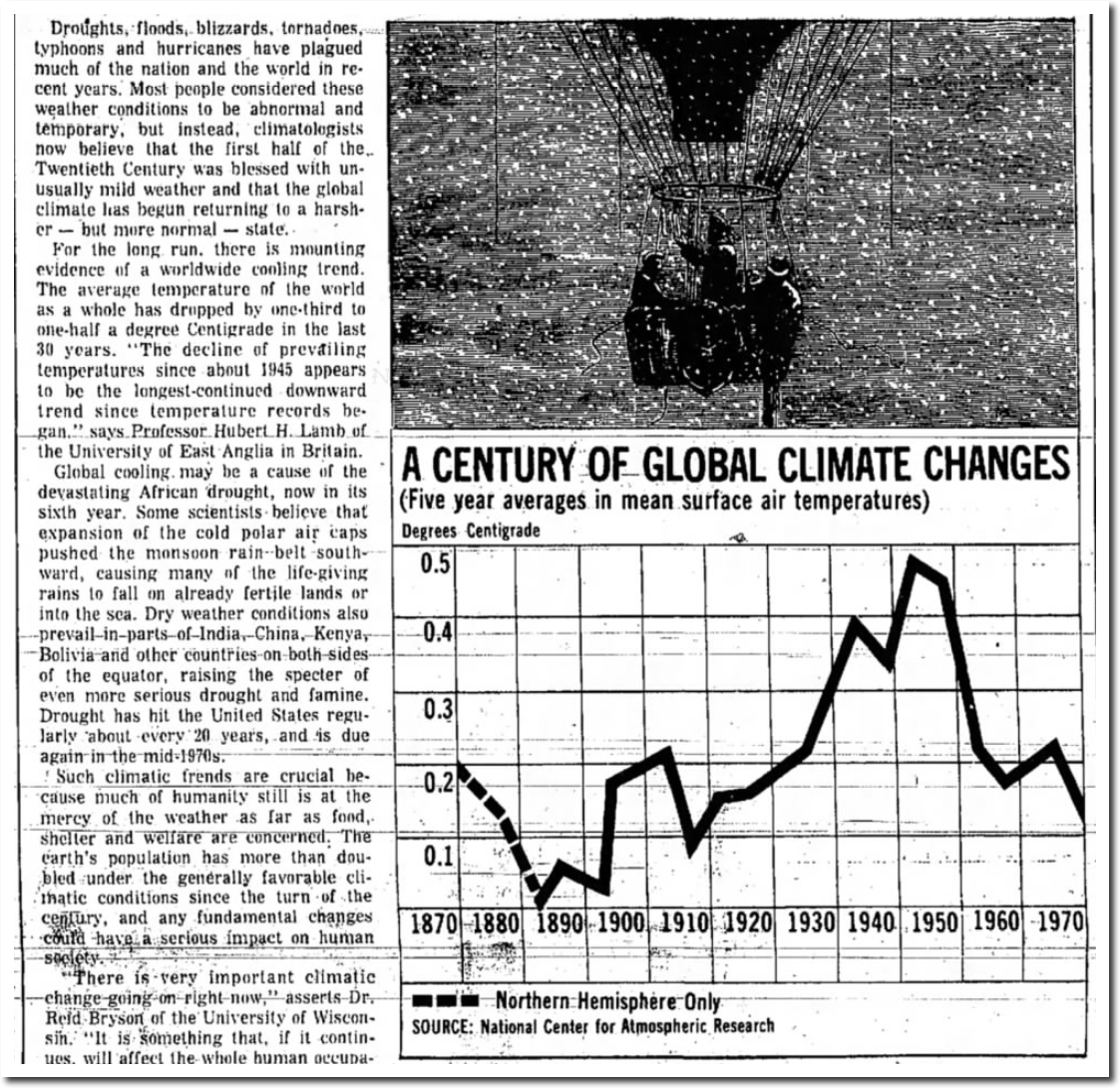

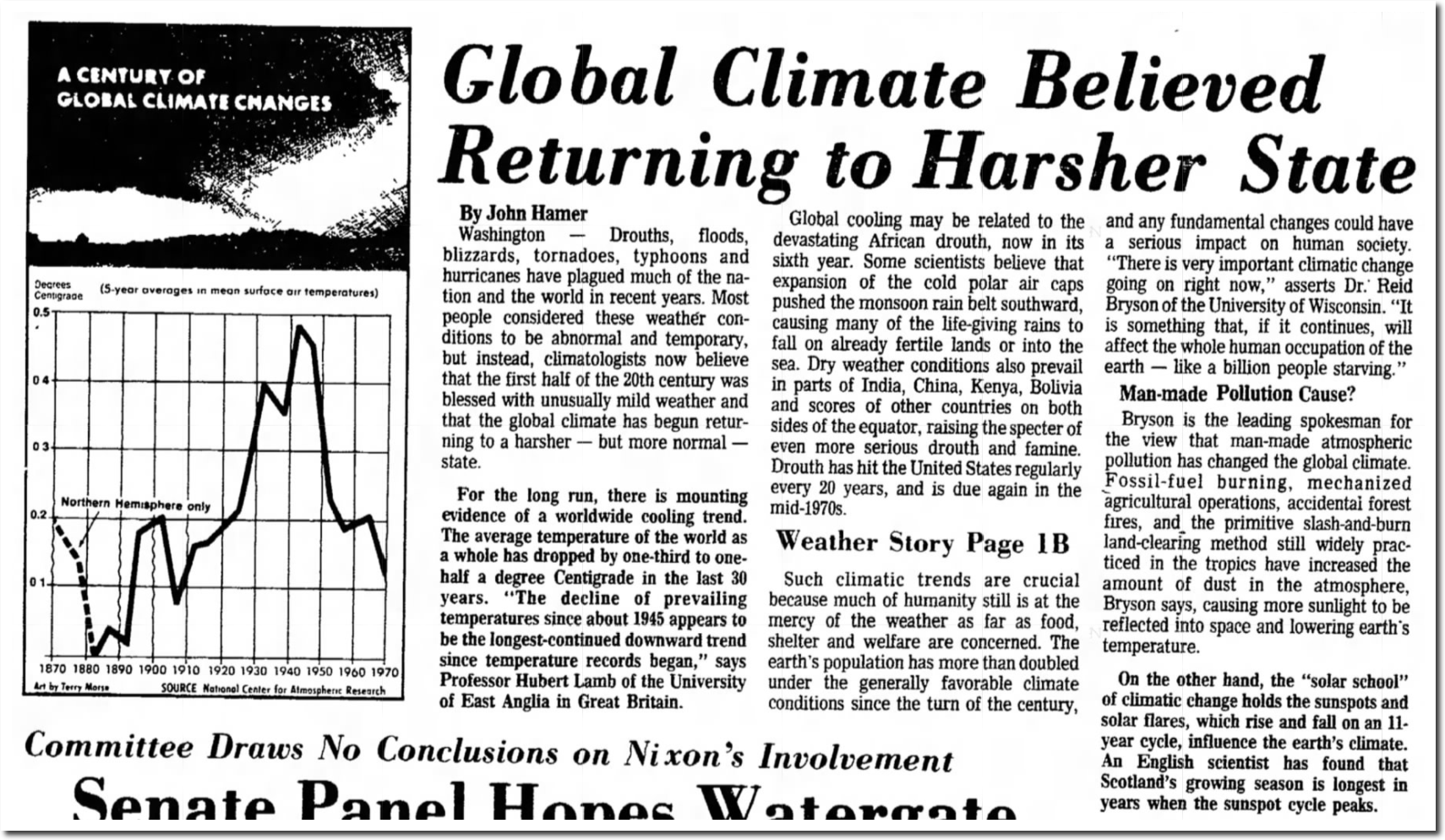

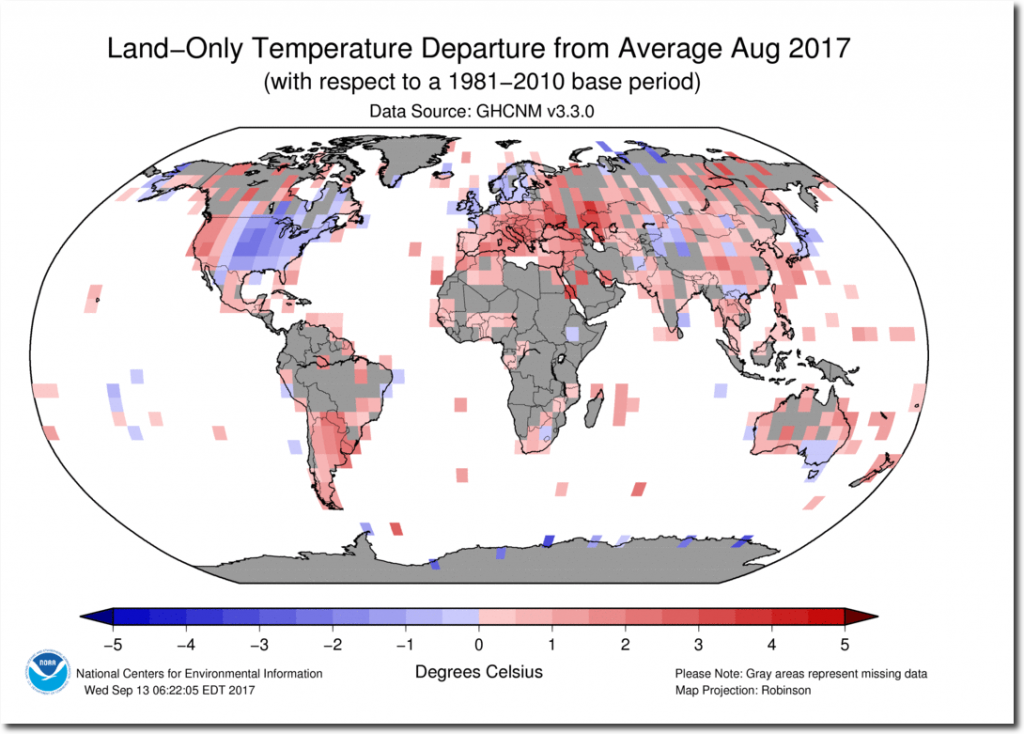

Sorry, but knowing how these ‘data’ lines evolved, I cannot take anything based on them seriously. Just a few examples here:

That’s why I trust the Climategate CRU more than GISS & NOAA.

I am somewhat suspicious of the CRU figures to put it mildly – not least because the main London temperatures from Heathrow airport are routinely in the 2-4 deg C range above the rural temperatures outside – and sometimes even more.

Winter minimum temperatures for London are routinely 4 degC hotter than outside London, and sometimes more. Last week’s daily forecast figures showed Heathrow as 2 degC above the adjacent towns, which themselves suffer from UHI.

These are then adjusted for UHI, but not, so far as I can make out, by anything like the actual super-heated temps that Heathrow records. Those effects alone are enough to create overheating in the UK temperature records held by CRU.

Once a “researcher” starts correcting data to fit the theory. . .

Notice that if roughly the correct reading had originally been used from 1970 (NCAR 1974), the NASA 2017 rise in temperature would only have reached about 1950s levels now. They have adjusted past trends so much that different decades appear warmer, when probably the late 1930s were the warmest years. Reducing the cooling has conned many over the decades into thinking recent years were warmer, when in fact the only thing that happened was the planet recovering from a cooling period that lasted until the late 1970s. This is why absolute temperatures should be used and not anomalies, so that this fiddling would be a lot more difficult to hide.

Steven Goddard produces these plots, and they seem to circulate endlessly, with no attempt at fact-checking, or even sourcing. I try, but it’s wearing. The first GISS plot is not the usual land/ocean data; it’s a little used Met Stations only, essentially an update of a 1987 paper. I don’t know if it’s right. But the second shows what is claimed to be a NCAR plot, or at least based on NCAR data. That plot isn’t from any NCAR publication, and the data is not available anywhere. Instead it is from the famous Newsweek 1975 article on “global cooling”. And that just said it was a temperature plot; didn’t even say what it was temperature of. From the context, it seemed to be US, which figures. There was very little digitised global data in 1974.

Nick, the 1974 NCAR line is real, as it was in an old Newsweek magazine back in 1974:

2001 version is NASA, NOT Met, Nick:

https://web.archive.org/web/20011129113305/http://www.giss.nasa.gov:80/data/update/gistemp/graphs/Fig.A.ps

“The first GISS plot is not the usual land/ocean data; it’s a little used Met Stations only, essentially an update of a 1987 paper.”

Sunset,

“NASA, NOT Met”

Yes, that is exactly what I said about Newsweek. It’s their plot, not NCAR’s. And note that they don’t say what it is the temperature of. I think it’s US (lower 48). But it’s not the sort of detail that bothers SG or his fans.

“NASA, NOT Met”

As I said, and as it is clearly labelled, it is Met Stations. It is an index that Hansen and Lebedeff used in 1987, with met stations extrapolated to cover the whole Earth (since they had no SST). GISS has kept updating it, but it is rarely quoted, and no-one else calculates such an index.
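For readers wondering how a met-stations-only index can cover the whole globe: as I understand Hansen and Lebedeff (1987), a location is estimated from stations within 1200 km, with each station’s weight falling linearly from 1 at zero distance to 0 at 1200 km. The sketch below illustrates only that distance-weighting idea; the station values are invented, `estimate_at` is a hypothetical helper, and the real GISS procedure combines overlapping station records in a more involved way.

```python
import math

EARTH_RADIUS_KM = 6371.0
RADIUS_KM = 1200.0  # influence radius described by Hansen & Lebedeff (1987)

def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))

def estimate_at(lat, lon, stations):
    """Distance-weighted anomaly at (lat, lon) from (lat, lon, anomaly) tuples.

    Weight falls linearly from 1 at distance 0 to 0 at RADIUS_KM.
    Returns None if no station lies within the radius.
    """
    num = den = 0.0
    for s_lat, s_lon, anom in stations:
        d = great_circle_km(lat, lon, s_lat, s_lon)
        w = max(0.0, 1.0 - d / RADIUS_KM)
        num += w * anom
        den += w
    return num / den if den > 0 else None

# Invented stations: (latitude, longitude, anomaly in deg C)
stations = [(0.0, -5.0, 1.0), (0.0, 5.0, 3.0)]
print(estimate_at(0.0, 0.0, stations))
```

This is why such an index can report a value for ocean areas with no ocean measurements at all: coastal and island stations are spread out over up to 1200 km.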

First quote should read

” it was in an old Newsweek magazine”

“I don’t know if it’s right” – LOL.

As for the NCAR data, so what if you can’t find the data in a publication 40-50 yrs after the fact? Do you think it was made-up that it came from NCAR to appear in Newsweek, and nobody at NCAR ever noticed? Here’s a supposed NAS representation of data around the same time…

It goes ahead and tells you that it is the northern hemisphere. Clearly the NCAR plot involves smoothing and doesn’t show annual data explicitly, but it’s not hard to see how the NAS one would look quite similar to the NCAR one with longer smoothing intervals.

“…Instead it is from the famous Newsweek 1975 article on “global cooling”. And that just said it was a temperature plot; didn’t even say what it was temperature of. From the context, it seemed to be US, which figures…”

The data is very similar, but it isn’t the graphic out of the Newsweek article. The headline reads “global.” The dashed line of the first 15 years or so says “northern hemisphere only.” So the context is clearly global. Are you saying Steven Goddard took the Newsweek graph, converted it to Celsius in a new plot made to look like it came from the 1970s, and added fake titles, 15 years of fake data, and a fake legend?

Thank you Sunsettommy!

N.B.: Nick played fast and very loose with his baseless claims while insidiously slinging ad hominems.

with no attempt at fact-checking, or even sourcing. I try, but it’s wearing.

Nick, I’m calling you out on this BullSh1t…..All you had to do was ask Tony

You didn’t try sh1t….and posted this crap

Nick

It is good to receive your input, and I think that I can help clarify some of the points that you raise.

You only have to look at Hansen’s own 1981 paper (Science Volume 213) figure 3 to see that he fully accepts the NCAR 74 plot (which is similar to the NAS 1975 plot, more on which later).

http://notrickszone.com/wp-content/uploads/2017/01/NASA-NH-Temperatures-Hansen-81.jpg

This is the very top part of his figure 3 (i.e., the NH 90 – 23.6 N temperature profile plot). Look at the paper and look at what Hansen says on page 961 about Fig 3, viz:

It is clear from Fig 3 itself, that Hansen as at 1981 considered the NH temperature as at 1980 to be some 0.3degC cooler than it was in 1940.

Footnote 9 is the NAS 1975 report (the plot from which I set out below), and footnote 43 is the Brinkman 1976 paper, the Vinnikov 1976 paper, and the Jones & Wigley 1980 paper (I presume that you have read all 3 of those). So the Team were very much on board that this represented the temperature profile of the NH. You will recall that I have frequently pointed out to you what Jones and Wigley say about the inadequacies of SH temps in their 1980 paper, and you will note that Hansen states:

These show cooling of over 0.5°C in the northern hemisphere and around 0.5°C in the southern hemisphere between about 1960 and 1976. People have been conned, when cooling was at least 0.5°C for global temperatures. The 1930s to 1940s were warmer, but that is not shown here.

http://onlinelibrary.wiley.com/store/10.1029/2004JD005306/asset/image_n/jgrd11778-fig-0001.png?v=1&t=j829h01d&s=47343e9607738facd2a12febf82c6b2471bc3139

The link doesn’t work, but the information from ERA-40, NCEP and CRU is here:

http://onlinelibrary.wiley.com/doi/10.1029/2004JD005306/full

Nick

It is always good to receive your input, and I think that I can help clarify some of the points that you raise.

You only have to look at Hansen’s own 1981 paper (Science Volume 213) figure 3 to see that he fully accepts the NCAR 74 plot (which is similar to the NAS 1975 plot, more on which later).

http://notrickszone.com/wp-content/uploads/2017/01/NASA-NH-Temperatures-Hansen-81.jpg

This is the very top part of his figure 3 (i.e., the NH 90 – 23.6 N temperature profile plot). Look at the paper and look at what Hansen says on page 961 about Fig 3, viz:

It is clear from Fig 3 itself, that Hansen as at 1981 considered the NH temperature as at 1980 to be some 0.3degC cooler than it was in 1940.

Footnote 9 is the NAS 1975 report (the plot from which I set out below), and footnote 43 is the Brinkman 1976 paper, the Vinnikov 1976 paper, and the Jones & Wigley 1980 paper (I presume that you have read all 3 of those). So the Team were very much on board that this represented the temperature profile of the NH. You will recall that I have frequently pointed out to you what Jones and Wigley say about the inadequacies of SH temps in their 1980 paper, and you will note that Hansen states:


Here is the NAS 1975 plot referenced in footnote 9 of Hansen’s 1981 paper.

Of course, if you look at various Hansen papers you will see how he has altered the temperature profile of the US. That too has been substantially altered these past 25 years.

Slow down, Nick!

You appear confused:

“Sunset,

“NASA, NOT Met”

Yes, that is exactly what I said about Newsweek. It’s their plot, not NCAR’s. And note that they don’t say what it is the temperature of. I think it’s US (lower 48). But it’s not the sort of detail that bothers SG or hi fans.”

No, it was NOT from NASA, never said it was either.

I gave you the link that shows it is FROM NASA!

You say,

“NASA, NOT Met”

As I said, and is it is clearly labelled, it is Met Stations. It is an index that Hansen and Lebedeff used in 1987, with met stations extrapolated to cover the whole Earth (since they had no SST). GISS has kept updating, but it is rarely quoted, and no-one else calculates such an index.”

The wayback machine shows it is NASA not Metoffice.

https://web.archive.org/web/20011129113305/http://www.giss.nasa.gov:80/data/update/gistemp/graphs/Fig.A.ps

Go ahead and see for yourself:

2001 version: Fig.A.ps

https://realclimatescience.com/nasa-doubling-warming-since-2001/

You have yet to show a link to support your position.

Nick, I have asked Tony about the charts in question.

You know he used to work at NCAR, doing charts and stuff?

Your personal issues with the graphics themselves don’t invalidate the reality of data tampering that has gone on over time.

To be fair Goddard provides many unique lines of evidence almost daily to support the overall message of data tampering over time. You should challenge him on his blog https://realclimatescience.com

http://notrickszone.com/wp-content/uploads/2017/01/NASA-NH-Temperatures-Hansen-81.jpg

Guys

I have a comment that I have tried to post a couple of times that has disappeared into the ether. Hopefully it will get posted in due course in which I explain these plots.

(Found and posted) MOD

Unfortunately, I am unable to cut and copy Figure 7.11 from Observed Climate Variation and Change on page 214. But I can confirm that this plot (endorsed by the IPCC) shows that the NH temperature as at 1989 was cooler than the temperature as at 1940, and the temperature as at 1920. Not much cooler, but a little cooler.

This is the IPCC Chapter 7

Lead Authors: C.K. FOLLAND, T.R. KARL, K.YA. VINNIKOV

Contributors: J.K. Angell; P. Arkin; R.G. Barry; R. Bradley; D.L. Cadet; M. Chelliah; M. Coughlan; B. Dahlstrom; H.F. Diaz; H. Flohn; C. Fu; P. Groisman; A. Gruber; S. Hastenrath; A. Henderson-Sellers; K. Higuchi; P.D. Jones; J. Knox; G. Kukla; S. Levitus; X. Lin; N. Nicholls; B.S. Nyenzi; J.S. Oguntoyinbo; G.B. Pant; D.E. Parker; B. Pittock; R. Reynolds; C.F. Ropelewski; C.D. Schonwiese; B. Sevruk; A. Solow; K.E. Trenberth; P. Wadhams; W.C. Wang; S. Woodruff; T. Yasunari; Z. Zeng; and X. Zhou

Figure 7.11: Differences between land air and sea surface temperature anomalies, relative to 1951–80, for the Northern Hemisphere, 1861–1989. Land air temperatures from P.D. Jones. Sea surface temperatures are averages of UK Meteorological Office and Farmer et al. (1989) values.

I also have a copy of the Official US Dept Energy Report of 1985: Projecting the Effects of Increasing Carbon Dioxide which contains a very similar plot on page 151.

Figure 5.1. Annual mean surface air temperature anomalies from 1880-1981: (solid curve) Vinnikov et al. (1980); and (dashed curve) Jones et al. (1982). Figure from Weller et al. (1983), and includes points updated to 1981 by Jones

This plot (figure 5.1) shows Vinnikov through to 1980 putting 1980 at some 0.4 degC cooler than 1940, and Jones through to 1981 putting 1981 about 0.1 to 0.15 degC cooler than 1940.

This report, on page 152, also includes the Arctic Sea Ice reconstruction from the Vinnikov 1980 paper

An abridged attempt:

You only have to look at Hansen’s own 1981 paper (Science Volume 213) to see that these are well accepted plots, the source of which is sound.

It will be seen from figure 3 of Hansen’s paper that he fully accepts the NCAR 74 plot (which is similar to the NAS 1975 plot, more on which later).

At 7.04 hrs, I have posted a part of Figure 3. Please note that this is the very top part of his figure 3 (i.e., covering solely the NH 90 – 23.6 N temperature profile). If one reviews the paper, it will be noted what Hansen says on page 961 about Fig 3, viz:

It is clear from Fig 3 itself, that Hansen as at 1981 considered the NH temperature as at 1980 to be some 0.3degC cooler than it was in 1940.

Footnote 9 is a reference to the NAS 1975 report/book (the plot from which I set out above at 7.02. This comes from page 148 of the report/book), and footnote 43 is the Brinkman 1976 paper, the Vinnikov 1976 paper, and the Jones & Wigley 1980 paper (I presume that you have read all 3 of those).

So the Team were very much on board that this represented the temperature profile of the NH. I have frequently pointed out what Jones and Wigley say about the inadequacies of SH temps in their 1980 paper, and you will note that Hansen states:

Mr. Stokes should admit that he is dishonest AND careless.

Mr. Stokes is not dishonest… And pretty well all of us are careless at times.

“As for the NCAR data, so what if you can’t find the data in a publication”

The reason for wanting to see the publication is that then it will be explained what the plot really is. What kind of data is it based on? Newsweek wasn’t saying at all. Is it land only? Land/ocean? It looks to me as if the b&w plot above is supposed to be global but probably land only; there was no systematic SST or NMAT at the time. The NASA 2017 is probably land/ocean. These are things you need to know to ensure it is a fair comparison.

Des Moines Register

Lincoln Evening Journal

Nick, Tony writes a post disputing your assertions.

https://realclimatescience.com/2017/09/nick-stokes-busted/

You did make a couple of errors that I didn’t notice, but he sure did.

Here is the link to the IPCC website for Chapter 7. Have a look at page 214.

https://www.ipcc.ch/ipccreports/far/wg_I/ipcc_far_wg_I_chapter_07.pdf

Also have a look at page 202 for Figure 7.1, which shows the Medieval Warm Period and the LIA. These are the plots that the IPCC would sooner one forgot.

As others have noted there appears to be a concerted effort to erase the past. How can anyone have any confidence in the data when it has undergone so much revision?

Richard,

Yes, clearly there was a circulating newspaper story at the time with near identical text and graphs claiming to be global temperature, some of which attribute the writing to John Hamer and the artwork to one Terry Morse. What we don’t have is an NCAR source document saying what kind of measure it is – land only, land/ocean? How much data was available? How about some scepticism?

“How about some scepticism?”

You have none to give!

Nick needs a bigger shovel………

Nick Stokes : Busted Part 2

https://realclimatescience.com/2017/09/nick-stokes-busted-part-2/#comments

Part 3 coming soon.

It appears that Mr. Stokes might be deliberately trying to confuse people, because he is getting badly exposed here and at Tony’s site right now.

Already give this link before, but for some reason you have chosen to not read it. The paper looks at global temperatures including NCAR and they easily agree with the chart and actually show more cooling.

The NCEP/NCAR reanalysis shows more cooling than the 1974 chart between 1960 and 1976.

http://onlinelibrary.wiley.com/doi/10.1029/2004JD005306/full

The satellites show only a 0.2°C difference between land and ocean since 1979. Therefore whether it is land or land/ocean has little relevance. The bigger difference in the HadCRUT and GISS surface data is because they miss a huge amount of the land, so temperatures vary more.

The NH and North American temperatures also show they are very similar. The SH also shows cooling of around 0.5°C, but in the NH it is more.

“Already give this link before, but for some reason you have chosen to not read it. “

I read it, but just couldn’t think of anything to say. What you have linked to is a modern paper on reanalysis. Totally off topic. Yes, one of those has NCAR in the name. But there is no mention of NCAR 1974, or anything even close. It’s just not about that. It does talk about some old NCAR data assimilated into reanalysis, but there is no corresponding reconstruction.

The argument is about where this supposed NCAR plot of 1974, supposedly showing how GISS has altered the record, or some such, actually comes from. I noted a Newsweek source; Tony Heller seems to think something is busted because he found it in a different newspaper. But there is no provenance. Richard Verney mentioned some earlier papers, but they all had NH temperatures; the Goddard plot claims to be global.

I’m sure you can find modern graphs that look something like NCAR 1974. But what doesn’t seem to bother people is the basic question – what are they plotting? And what was NCAR plotting then? It matters.

Nick Stokes September 26, 2017 at 10:02 pm

To answer your questions. The graphs are of air temperature over land. No fiddling with totally bogus SSTs, as per Karlization.

There were more well maintained and monitored reporting stations before independence for British colonies than after, so these data are hard and valid, unlike the made up, pretend “data” of HadCRU, NASA and NOAA today, from fewer stations.

Note Lamb’s comments, from back when the Hadley Centre still practiced science rather than politics.

NCAR’s graphs from the 1970s were the best possible stab at actual science. Now they just produce PC garbage, in cahoots with NASA GISS and HadCRU.

Nick,

That c. 1945-77 (when the PDO flipped) was colder than 1912-44 is not a controversial conclusion. Without totally unwarranted “adjustments”, 1978-2010 was no warmer than 1912-44.

The early 20th century warming and late 20th century warming were practically identical, with a dramatic cold interval in between, just as the early 20th century warming was preceded by a turn of the century cold spell, c. 1879-1911, which was preceded by the first warming cycle of the Current Warm Period, after the end of the LIA.

And need I point out that, during the dramatic postwar cooling, CO2 was increasing, just as it did during the late 20th century warming, but not during the early 20th century warming to anything like the same degree, and during the Depression and WWII, fell.

“To answer your questions. The graphs are of air temperature over land.”

It doesn’t answer the questions. What sort of land? Global? NH only? No-one seems to care.

But what does this say for the honesty of the original plot? It just has NASA 2017 in big letters, and NCAR 1974. And we’re supposed to think that something nefarious has gone on, as is always being claimed at Goddard’s and parroted here. But no mention that one is land (of some sort) and one is global land/ocean. Which would be different even if NCAR 1974 (if the newspapers got it right) had had access to more than a few hundred stations.

With it being in deg F, it is not actually as big as it looks. I don’t know for certain what the plot was, but papers before the 1980s usually only had the NH because SH data were extremely limited. SSTs were limited too, so it was probably only from land observations. Although the actual source would be nice to find and replicate, other data sources confirm the same or a similar representation. It seems there was doubt about anything like this from back then, not just about the source. It was easy to confirm that there were similar data sets that supported it.

I have only been able to find the reanalysis version of NCEP/NCAR, although I wouldn’t say it was off topic, because it supports the earlier newspaper graphs. The paper compares it with corresponding gridded values from the CRUTEM2v data set, produced directly from monthly station data, and also includes processed CRU station values of monthly air temperatures. So a data set that is not from reanalysis supports it too.
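On the deg F point: a temperature difference converts by the factor 5/9 alone, because the 32-degree offset cancels, so a 1 °F swing on the newspaper chart is only about 0.56 °C. A quick check (both helper names are illustrative):

```python
def delta_f_to_c(delta_f):
    """Convert a temperature difference from deg F to deg C (no 32-degree offset)."""
    return delta_f * 5.0 / 9.0

def f_to_c(temp_f):
    """Convert an absolute temperature reading from deg F to deg C."""
    return (temp_f - 32.0) * 5.0 / 9.0

# The 32-degree offset cancels when taking a difference:
print(round(f_to_c(60.0) - f_to_c(59.0), 2))  # 0.56
print(round(delta_f_to_c(1.0), 2))           # 0.56
```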

“Monthly mean 2-m temperature anomalies from ERA-40 and NCEP/NCAR have been compared with corresponding gridded values from the CRUTEM2v data set produced directly from monthly station data [Jones and Moberg, 2003], referred to in subsequent sections simply as Climatic Research Unit (CRU) data. CRUTEM2v is based on anomalies computed for all stations that provide sufficient data to derive monthly climatic normals for the period 1961–1990. Station values are aggregated over 5° × 5° grid boxes and adjustments made for changes over time in station numbers within each box [Jones et al., 1997, 2001].”

“In this paper, processed station values of monthly mean surface air temperature are compared with corresponding values derived from the products of two reanalyses, the 45-Year European Centre for Medium-Range Weather Forecasts (ECMWF) Re-Analysis (ERA-40) and the first National Centers for Environmental Prediction/National Center for Atmospheric Research (NCEP/NCAR) reanalysis.”
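The CRUTEM2v steps in the first quoted passage (station anomalies relative to 1961–1990 normals, then averaging over 5° × 5° grid boxes) can be sketched roughly as follows. This is a simplified illustration under stated assumptions: one calendar month at a time, invented stations, a hypothetical 15-year minimum for defining a normal, and none of the station-count adjustments the quote mentions. The function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean

BASE_START, BASE_END = 1961, 1990
BOX = 5.0  # grid box size in degrees

def station_anomalies(record, min_years=15):
    """record: dict year -> monthly mean temperature for one calendar month.

    Returns dict year -> anomaly relative to the 1961-1990 normal, or None if
    the station has fewer than `min_years` base-period values (assumed threshold).
    """
    base = [t for y, t in record.items() if BASE_START <= y <= BASE_END]
    if len(base) < min_years:
        return None
    normal = mean(base)
    return {y: t - normal for y, t in record.items()}

def box_index(lat, lon):
    """(row, column) of the 5x5 degree box containing (lat, lon)."""
    row = min(int((lat + 90.0) // BOX), int(180 / BOX) - 1)  # clamp lat = 90
    col = int(((lon + 180.0) % 360.0) // BOX)                # wrap lon = 180
    return row, col

def gridded_anomalies(stations, year):
    """stations: list of (lat, lon, record). Returns {box: mean anomaly} for `year`."""
    boxes = defaultdict(list)
    for lat, lon, record in stations:
        anoms = station_anomalies(record)
        if anoms is not None and year in anoms:
            boxes[box_index(lat, lon)].append(anoms[year])
    return {b: mean(v) for b, v in boxes.items()}

# Two invented stations that fall in the same grid box
rec_a = {y: 10.0 for y in range(1961, 1991)}
rec_a[2000] = 11.0
rec_b = {y: 20.0 for y in range(1961, 1991)}
rec_b[2000] = 20.5
stations = [(51.5, -0.1, rec_a), (52.0, -1.0, rec_b)]
print(gridded_anomalies(stations, 2000))
```

Working in anomalies lets a cold station and a warm station in the same box be averaged together without their different absolute levels swamping the change.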

Tony shows that those “met stations only” are part of GISS LAND only. YOU on the other hand claimed it was not land/ocean data,which YOU created out of thin air,since NOBODY said they were land/ocean, you did that.

This is what I despise about you: creating deliberate misdirection with crispy red herrings. You do this a lot. I wonder why anyone thinks you are credible when you make dishonest, misleading comments over and over… You spent a lot of time here on numerous red herrings about things no one else claimed.

Tony writes,

Nick Stokes : Busted Part 3

“Nick Stoke’s final idiotic claim takes us right to the heart of this scam.

The first GISS plot is not the usual land/ocean data; it’s a little used Met Stations only

This was the GISS web page in 2005. Top plot was “Global Temperature (meteorological stations.) No ocean temperatures.

(2005 webpage)

“The 2001 GISS web page had the same thing.”

(2001 webpage)

https://realclimatescience.com/2017/09/nick-stokes-busted-part-3/#comments

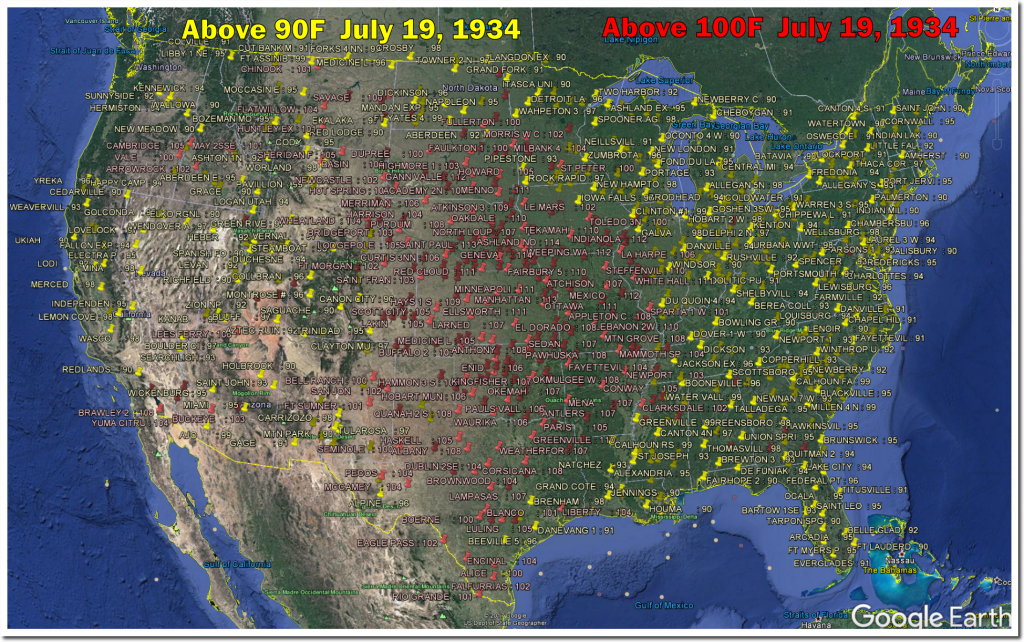

At the bottom of the linked page, Tony shows the 1999 GISS chart for the USA, which has 1934 hotter than 1998, but in the 2017 GISS plot 1998 is now hotter.

Don’t even try lying about this, Nickyboy! I remember SEEING that 1999 chart when it was originally posted on the NASA website. I know you and Mosher claim there was no such level of adjustment, but then again you two have been exposed over and over for red herrings, lies and other shameful crap.

Well, Nick, you have been caught straight out. So very not sorry. 🙂

https://realclimatescience.com/2017/09/nick-stokes-busted/

Feel at liberty to defend yourself on WUWT or on Tony’s site. I’ll bring the popcorn.

Lance,

I’ve been told that for days now. But no-one ever says how. I don’t think they can figure it out.

I said that there was no NCAR source, so we can’t work out just what is being plotted. I said that it came from a Newsweek story. Heller says that he got it instead from another old newspaper. So? Still no NCAR source.

And he says indignantly, well of course it’s land only, it’s all they had. What a defence! He wasn’t telling you that on his graph. He’s claiming that the difference between a 1974 plot of land only (probably NH, despite the newspaper heading) and a 2017 land/ocean plot is due to GISS fiddling, and he never tells you that quite different things are being plotted.

And sunsettommy still doesn’t even try to figure out what the GISS plots are. The GISS index we have been following and discussing for years is GISS Land/Ocean. Those GISS plots marked Met Stations are something different, and he still seems to have no idea what. They are a continuation of the Met Stations index of the paper Hansen and Lebedeff, 1987, which used Met station data to extrapolate over the whole globe. It isn’t land/ocean, and it isn’t land only. Most people think it is no longer a good idea, no-one else does it, and it is rarely referenced. Again, Heller isn’t going to tell you any of this.

Let’s give Tony Heller credit for these graphs!

B

Tony Heller didn’t make the graphs. He exposed them. And he trounces Nick soundly about his claims here too.

Are the adjustments/changes peer reviewed? All of them?

No Sailboarder.

Here is a link to Tony’s site showing the many GISS adjustments over the years. Notice how they eliminate the well-known cooling trend from the 1960s to the 1970s?

History Of NASA/NOAA Temperature Corruption

https://realclimatescience.com/history-of-nasanoaa-temperature-corruption/

and,

NASA Doubling Warming Since 2001

https://realclimatescience.com/nasa-doubling-warming-since-2001/

Tony does fabulous work. Am I missing something, or is his blog not on the WUWT list? If not, what could the reason be for blacklisting one of the best researchers out there?

One of the best presentations there is… It should be required viewing in all high school and college level science classes on how science is NOT supposed to work!!!

@sailboarder –

“Realclimate” is under the “Pro AGW Views” section of WUWT’s links.

Tony now also regularly makes short videos on his Youtube channel under “Tony Heller”.

For example, here is a recent, excellent demonstration of much of the appallingly dishonest presentation of Arctic ice data: https://www.youtube.com/watch?v=cu5Lu_lls4A

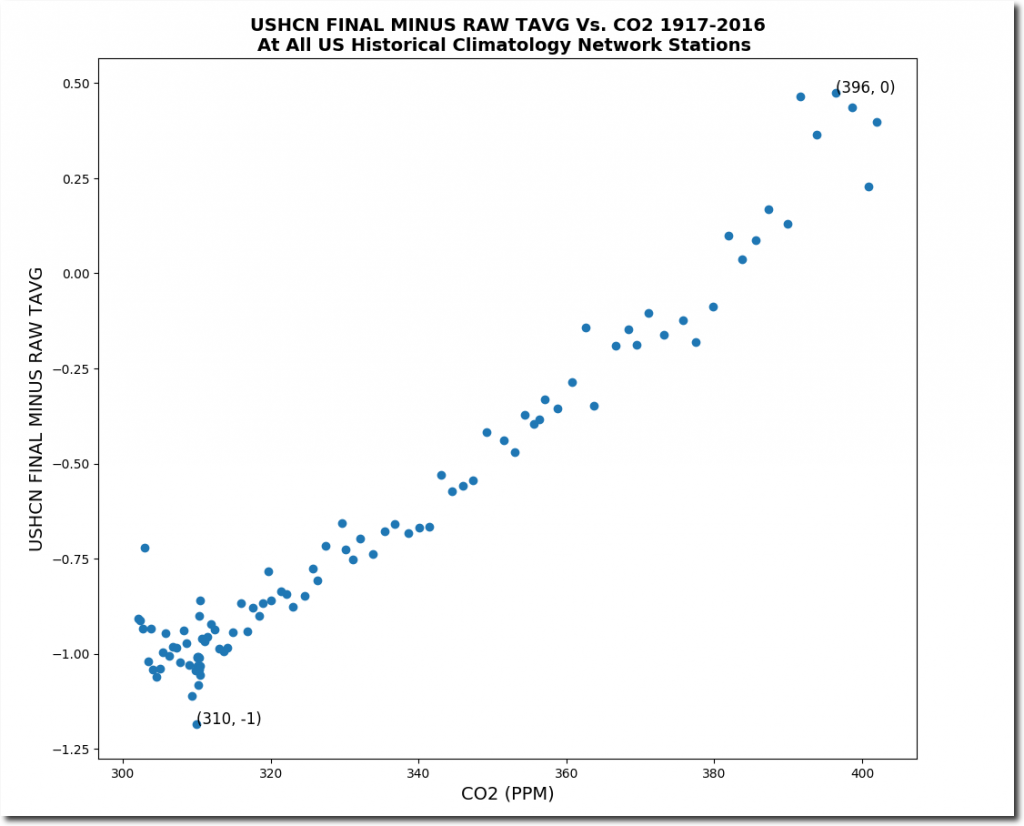

“The gray areas are filled in with computer modeled temperatures, meaning that about 50% of the global data used by NASA and NOAA is fake.”

That may be the very simple explanation of the results: in each run, most of the data is being computer modelled with the very models that predict warming.

This also explains the other finding: the adjustments are perfectly in line with the atmospheric CO2 increase, as would result from a model:

https://imgur.com/a/Jz0Vv

So my take on this is that, more and more, we see computer models agreeing with the very same computer models and not with real data…

Lars, incredibly enough, over at Climate etc. a commenter using the moniker “Steven Mosher” now claims that the correlation between the adjustments and the rise of CO2-levels is a “proof” that the adjustments are correct:

https://judithcurry.com/2017/09/26/are-climate-models-overstating-warming/#comment-858823

lars, every time you see the term reanalysis data used you know you are being lied to.

Oops, my bad – “realclimate” is not “realclimatescience”

I’m probably not the first one to mix them up.

Sorry.

Ross McKitrick has a post at Climate etc https://judithcurry.com/2017/09/26/are-climate-models-overstating-warming/ also looking at this issue.

Excellent analysis by Dr. McKitrick.

He’s not a Doc.

I just promoted him!

Oops, he is! (I was thinking of Steve Mc.)

I would think that if the models were doing a good job, then typical years should have temperatures fluctuating around the ensemble mean, and unusual warming events like an El Nino should be pushing the upper boundary of the envelope of uncertainty. I would also expect that if what are clearly outliers (El Nino) are removed, the trend of the remaining years should parallel the ensemble mean. That isn’t what we are seeing! I’d say that it is evident that the models are (still) running too warm.

“and unusual warming events like an El Nino should be pushing the upper boundary”

Not really. There are unusual cooling events too (La Nina). And if they come first, as in 2008/9 and 2011/12, then the track goes down, then back to average.

NS,

I don’t think you understand. Ninos and Ninas are anomalous events and they should appear as outliers against a background of predictable changes. If the uncertainty boundaries are doing their job properly, showing the extremes of natural variability, then the anomalous events should be pushing those boundaries.

Bingo!

i think nick does understand. as reality edges ever further away from modeled “projections” i think nick, along with many others, will become more obtuse, either that or admit they are wrong. ten years from now the amo will make many so-called experts look like the snake oil salesmen they really are. i don’t doubt they believe their own hype, but belief never trumps reality.

Spot on, Clyde!

Clyde, you’re right… don’t let them trick you by saying La Ninas are as cold as El Ninos are hot.

And La Niña events don’t blast through the bottom of the temperature record like a few El Niño events have blasted through the top.

1911 and 1976 tap P97.5… But they were also the inflection points of the ~65-yr climate cycle.
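The “P” labels in this thread appear to follow the exceedance convention familiar from petroleum reserves reporting, where P97.5 is the value that 97.5% of ensemble members exceed (the bottom of a 95% band) and P2.5 is the top. Assuming that convention, here is a minimal Python sketch of how the labels map onto ordinary percentiles; the ensemble values are made up purely for illustration and are not model output.

```python
# Sketch, ASSUMING the exceedance convention: "Pxx" = value exceeded by xx%
# of ensemble members, i.e. the ordinary (100 - xx)th percentile.

def percentile(values, q):
    """Ordinary q-th percentile (0-100), linear interpolation between ranks."""
    xs = sorted(values)
    if not xs:
        raise ValueError("empty ensemble")
    pos = (len(xs) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(xs) - 1)
    frac = pos - lo
    return xs[lo] + (xs[hi] - xs[lo]) * frac

def exceedance(values, p):
    """Value exceeded by p% of members (P-notation)."""
    return percentile(values, 100.0 - p)

# Hypothetical ensemble of 2016 anomalies (deg C), evenly spaced 0.40..1.00.
ensemble = [0.40 + 0.006 * i for i in range(101)]

for label in (97.5, 50.0, 2.5):
    print(f"P{label}: {exceedance(ensemble, label):.3f} C")
```

Under this convention a year that “taps P97.5” sits at the bottom of the model envelope, not the top.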

Does Nick Stokes know anything?

Anything?

Nick is very knowledgeable and, from my experience, very honest. Even when I disagree with him, I appreciate his informed disagreement. Personal attacks on him don’t really contribute to the debate.

David,

Neither does his disinformation.

And I’m surprised that you don’t see it for what it is.

Nick may be wrong in his interpretation and he might get the facts wrong on occasion… but I really haven’t seen anything that makes me question his honesty.

When people like Nick and Steve Mosher disagree with me, they generally force me to improve my arguments.

David Middleton says:

September 27, 2017 at 1:40 am

Nick may be wrong in his interpretation and he might get the facts wrong on occasion… but I really haven’t seen anything that makes me question his honesty.

When people like Nick and Steve Mosher disagree with me, they generally force me to improve my arguments.

Sorry, I disagree with you. Reread his reply to my post. In my view he is trying to obfuscate, to cast doubt without a well-founded argument. He does not produce a similar graph, or point to a similar warmist-produced graph, that would debunk Tony’s graphs. Yes, he words his post carefully enough not to appear directly wrong, but the dozens of replies below show how wrong he is.

He does not accept any correction but doubles down and tries to evade. I do not see that as honest debate.

I have no trust in the kind of science where historical data changes. Especially since it is not a case of “oh, we found an error and corrected it,” but a continuous, year-by-year sneaky adaptation, always in the same direction.

There are many other problems with the temperature data as it is, even without these sneaky adjustments.

Everybody knows that a city has a higher temperature than its surroundings (UHI). One needs only a car with a thermometer to drive in and out of the city. The bigger the city, the greater the difference.

So doesn’t a growing city cause a rising temperature to be measured by a station that was on the periphery of the city 100 years ago? And wouldn’t moving the station to the periphery, and having it engulfed by the city a second time, cause another warming?

And Berkeley finds no UHI effect in this? On the contrary, a cooling city effect? If the consequences did not cost billions upon billions, it would be funny.

Tony Heller points to the gradual adjustment of the data towards the continuous warming shown in the models. He does great work.

Well, David, I’ve never found too much wrong with your arguments, or your science.

Mosher and Stokes on the other hand push the warmist disinformation irrespective of the facts.

That’s how their propaganda works.

You’ll never convince them that they are mistaken, because they are “believers” not scientists.

Carry on.

“Mosher and Stokes on the other hand push the warmist disinformation irrespective of the facts.”

The word you’re looking for is “disingenuous”, I think.

Thanks Cat,

Yep; that’s the one I was looking for.

Somehow or other it went missing from my vocab, but you found it for me.

Was it at the bottom of the ocean with all that missing heat?

I need to know. There might be more of them down there cooking away.

Sceptical Sam,

I agree with David about Stokes. I think Stokes is actually quite knowledgeable, and I haven’t caught him in an outright lie. However, I think that both he and Mosher (if you can get him to write a coherent paragraph) often indulge in sophistry to support their ideology, and I have never known Stokes to admit that he was wrong. So, I wouldn’t characterize him as being ‘open minded’ or objective. But, as David points out, he makes a good foil to sharpen one’s arguments.

When you can get them to post in complete sentences… 😆

Slightly OT – looks like we have a big La Nina brewing in the Pacific:

http://www.bom.gov.au/climate/enso/#tabs=Sea-sub%E2%80%93surface

Yes, there is a very big la nina coming:

http://www.bom.gov.au/fwo/IDYOC002.gif?1506378561

The spike will soon belong to the past.

It will take far more than a major La Nina to make temperatures from NOAA/GISS (and their companions in mal-adjustment) drop back down to reality.

i agree andy. the downside of the amo should do the trick though. the recent el nino released a lot of heat over a long period of time (it never bested the peak of the 98 event, i don’t care what anyone says they recorded; the natural responses like fish movements do not support that position) and i think the coming la nina will be a long-period event, relatively speaking, without bottoming out as low as previous events. note this is a belief, not a projection.

“big La Nina”?

Not really, says the BoM:

“Five of the eight models suggest SSTs will cool to La Niña thresholds by December 2017, but only four maintain these values for long enough to be classified as a La Niña event.”

But… Nick… The models run hot! 😆

What use the models then Nick?

And BoM?

The BoM has lost its credibility. It is wrong even more frequently than the models.

David Middleton

September 26, 2017 at 3:43 pm

But… Nick… The models run hot! 😆

Mr Middleton shame, that comment is not funny!

I thought it was fracking hilarious! 😎

Yeah. And six months ago the CPC models were projecting a weak El Niño or the warm side of ENSO neutral.

GW, then eventually they will get it correct.

Funny Twitter handle.

The answer is simple.

If Zeke, Gavin and the rest of the phoneys believe their models are right, let them forecast temperatures over the next 20 years.

We’ll then come back and see how close they were. In the meantime, let’s stop obsessing about CO2 and get back to the things that should really be concerning us.

(Sorry – I forgot – Zeke, Gavin and co rely on this nonsense to earn a living.)

And let them back their forecasts with bets on the new London climate betting site.

All bets are off if there is another “pause busting” paper?

Seems like risky business tasking those with control over temperature data to make predictions

Didn’t the boundaries change because they are 95% certain now instead of just 90%?

https://www.democracynow.org/2013/9/27/headlines/ipcc_scientists_now_95_certain_climate_change_caused_by_humans

Just in case people have a short memory.

The real trick is that the models that they are using for these comparisons are not the models that they are showing the politicians. The mid-range of the models in this comparison warm at less than 2 degrees C per century.

By contrast, a few years ago the CSIRO in Australia presented a model to Prime Minister Julia Gillard with six or seven degrees warming by 2070. She used that to justify introduction of a carbon tax.

gee the same CSIRO where nick stokes used to work?

Yes, but that CSIRO model was just “hyperbowl”.

That might be a little esoteric, so for the benefit of our international readers:

But I’m sure Nick will be stoked.

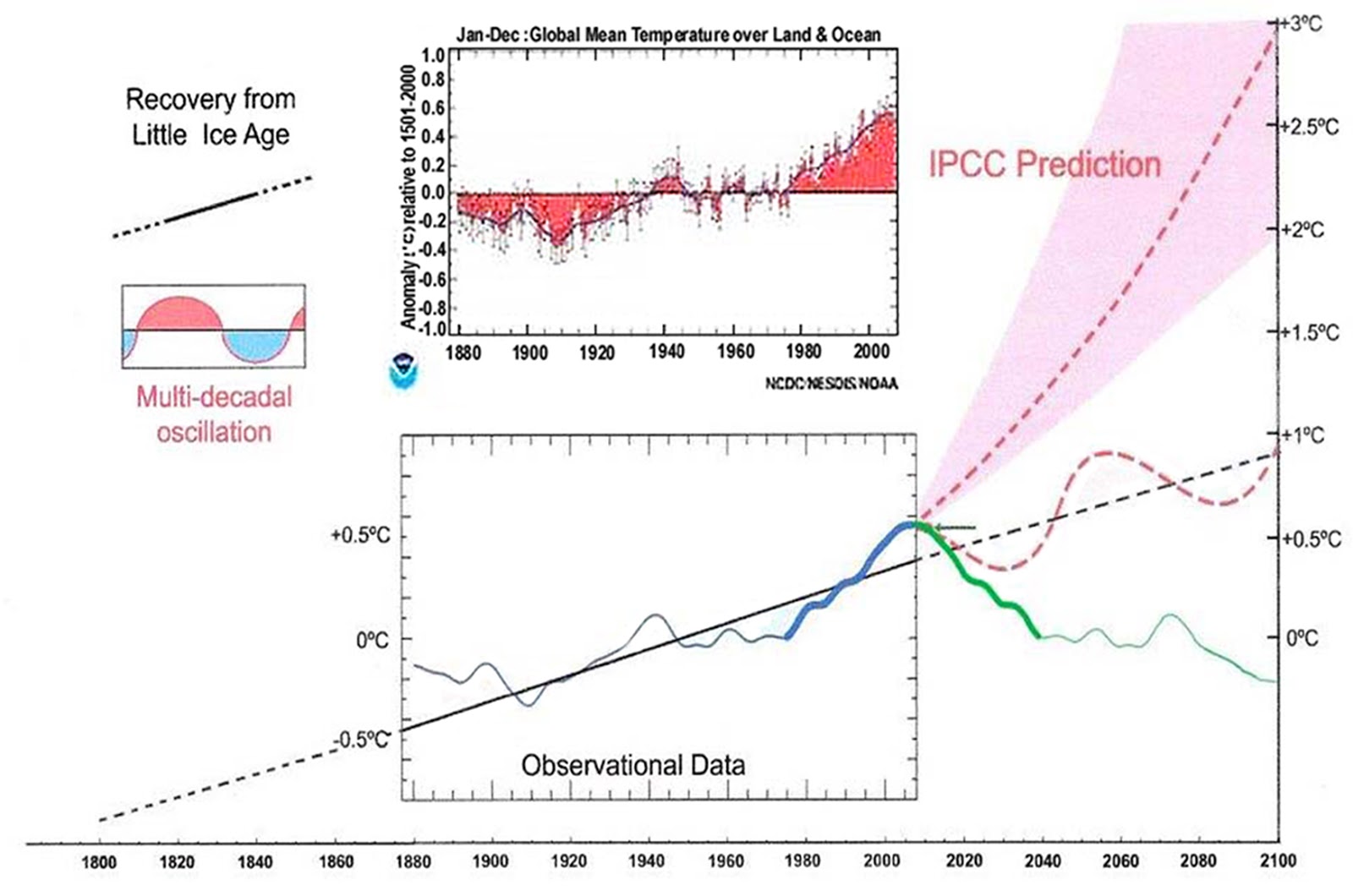

Here is what is really going on. See Figs. 1-12 at

https://climatesense-norpag.blogspot.com/2017/02/the-coming-cooling-usefully-accurate_17.html

Here is fig 4

“The RSS cooling trend in Fig. 4 and the Hadcrut4gl cooling in Fig. 5 were truncated at 2015.3 and 2014.2, respectively, because it makes no sense to start or end the analysis of a time series in the middle of major ENSO events which create ephemeral deviations from the longer term trends. By the end of August 2016, the strong El Nino temperature anomaly had declined rapidly. The cooling trend is likely to be fully restored by the end of 2019.”

and Fig 12

Fig. 12. Comparative Temperature Forecasts to 2100.

“Fig. 12 compares the IPCC forecast with the Akasofu (31) forecast (red harmonic) and with the simple and most reasonable working hypothesis of this paper (green line) that the “Golden Spike” temperature peak at about 2003 is the most recent peak in the millennial cycle. Akasofu forecasts a further temperature increase to 2100 to be 0.5 °C ± 0.2 °C, rather than 4.0 °C ± 2.0 °C predicted by the IPCC. But this interpretation ignores the Millennial inflexion point at 2004. Fig. 12 shows that the well documented 60-year temperature cycle coincidentally also peaks at about 2003. Looking at the shorter 60+/- year wavelength modulation of the millennial trend, the most straightforward hypothesis is that the cooling trends from 2003 forward will simply be a mirror image of the recent rising trends. This is illustrated by the green curve in Fig. 12, which shows cooling until 2038, slight warming to 2073 and then cooling to the end of the century, by which time almost all of the 20th century warming will have been reversed.”

Schmidt continues to make the same egregious error of scientific judgement as the majority of academic climate scientists, lukewarmers, the MSM, the GWPF and the ecoleft chattering classes in general: projecting temperatures forward in a straight line beyond a peak and inversion point in the millennial temperature cycle.

Climate is controlled by natural cycles. Earth is just past the 2003+/- peak of a millennial cycle and the current cooling trend will likely continue until the next Little Ice Age minimum at about 2650.

Using statistical maleficence to pretend that their statistically mal-adjusted temperature series are somewhere near their non-validated models.

It’s a travesty!!

All the data confirms a long term temp rise caused by AGW. You are all distracting yourselves with nitpicking when you should be publishing your evidence in the real world like any good scientist does.

Here, it means nothing.

WTF, after the “data” has been infilled and adjusted. Ever heard of the Texas Marksman game?

“All the data confirms a long term temp rise caused by AGW”

WRONG !

There is basically ZERO data showing any anthropogenic global warming

A highly beneficial and natural rise out of the coldest period in 10,000 years.

Local warming from UHI effects, which feeds through into the calculated global temperature.

Nearly all of the recent (since 1979) REAL warming has come from ocean events and oscillations.

There is a large amount of “fabricated” temperature rise on top of that.

Those “adjustments” are the only form of “anthropogenic warming” in the last 40+ years.

There is ZERO signal of any CO2 based warming in the whole of the satellite era.

WTF. Nonsense. The Figs., data and interpretations linked at 2:44 above are a blog version of the following peer-reviewed paper:

The coming cooling: usefully accurate climate forecasting for policy makers.

Norman J. Page

Houston, Texas

Dr. Norman J. Page

Email: norpag@att.net

DOI: 10.1177/0958305X16686488

Energy & Environment

0(0) 1–18

© The Author(s) 2017


journals.sagepub.com/home/eae

ABSTRACT

This paper argues that the methods used by the establishment climate science community are not fit for purpose and that a new forecasting paradigm should be adopted. Earth’s climate is the result of resonances and beats between various quasi-cyclic processes of varying wavelengths. It is not possible to forecast the future unless we have a good understanding of where the earth is in time in relation to the current phases of those different interacting natural quasi periodicities. Evidence is presented specifying the timing and amplitude of the natural 60+/- year and, more importantly, 1,000 year periodicities (observed emergent behaviors) that are so obvious in the temperature record. Data related to the solar climate driver is discussed and the solar cycle 22 low in the neutron count (high solar activity) in 1991 is identified as a solar activity millennial peak and correlated with the millennial peak -inversion point – in the UAH temperature trend in about 2003. The cyclic trends are projected forward and predict a probable general temperature decline in the coming decades and centuries. Estimates of the timing and amplitude of the coming cooling are made. If the real climate outcomes follow a trend which approaches the near term forecasts of this working hypothesis, the divergence between the IPCC forecasts and those projected by this paper will be so large by 2021 as to make the current, supposedly actionable, level of confidence in the IPCC forecasts untenable.

1) The surface data does show AGW: humans adjusting it to show more warming that didn’t exist.

2) The only warming over the last 20 years has come from the strong El Nino, but human adjustments have made the difference from before bigger.

3) Any warming is no different from noise and almost as big as the errors involved.

4) The AMO and ENSO explain most of the warming during the long-term temperature rise.

5) Taking those in 4) into account, there is little room for anything else.

WTF,

here is real evidence that AGW is not being supported: the failed IPCC per-decade warming projection, in place since 2007, quoted below (the 1990 report projected about 0.3°C per decade, and that was wrong too):

“For the next two decades, a warming of about 0.2°C per decade is projected for a range of SRES emission scenarios. Even if the concentrations of all greenhouse gases and aerosols had been kept constant at year 2000 levels, a further warming of about 0.1°C per decade would be expected.”

https://www.ipcc.ch/publications_and_data/ar4/wg1/en/spmsspm-projections-of.html

The satellite data shows it is LESS than 50% of the predicted rate, BASED on the AGW conjecture:

http://www.woodfortrees.org/graph/rss/from:1990/mean:12/plot/rss/from:1990/trend

Not even close!
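The comparison above boils down to fitting a linear trend to the anomalies and dividing it by the projected 0.2 °C per decade. A minimal Python sketch, assuming an ordinary least-squares fit on monthly anomalies (which is what I understand the woodfortrees `trend` option to draw). No RSS data is included; the series below is synthetic, built to rise at exactly 0.1 °C per decade so the arithmetic can be checked.

```python
# Sketch: OLS trend of a monthly anomaly series in deg C per decade,
# and its ratio to a projected rate. The series is SYNTHETIC, not RSS data.

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

def trend_per_decade(anomalies, start_year):
    """Monthly anomalies starting in January of start_year -> deg C/decade."""
    times = [start_year + i / 12.0 for i in range(len(anomalies))]
    return ols_slope(times, anomalies) * 10.0

# Synthetic check: 27 years of monthly values rising 0.01 C per year.
series = [0.01 * (i / 12.0) for i in range(27 * 12)]
rate = trend_per_decade(series, 1990)
projected = 0.2  # IPCC AR4 near-term projection quoted above, deg C/decade
print(f"trend {rate:.3f} C/decade = {rate / projected:.0%} of projection")
```

To test the “less than 50%” claim itself you would pass in the actual RSS monthly anomalies from 1990 onward; the answer depends on the dataset version and the end date chosen.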

WTF,

Now why would someone like yourself waste time sharing your thoughts at a place where “it means nothing?”

Clyde,

Because I like seeing the excuses you guys come up with for your glaring failures within the scientific community, hence the existence of sites like this.

(You are trolling, debate the comments instead) MOD

Poor WTF.. attention seeking… by mindless yapping

wtf, if you can point me to one paper that identifies the human causal signal in the recent small amount of warming the globe has experienced i will immediately become a warmist.

WTF, an empty vessel makes the louder echo…vassal would work too i suppose.

MOD,

Pointing out the skeptic’s failure to engage with the scientific community and get any counter evidence to work is not trolling, just basic observation.

(This is your second warning, stop trolling and debate the replies) MOD

Creatively preposterous claim, ‘WTF’. The common orthodox warmist demand is for only “peer reviewed” criticism of the orthodoxy, in publications controlled by the guardians of the orthodoxy. One really should climb from under your metaphoric bridge and realize just how preciously irrational that is.

WTF, if you want to be labeled as anything other than a cliche ridden troll, try something really difficult, like defending the temperature records in HADCRUT and GISSTEMP from the conclusion that they have been adjusted and infilled to the point of bearing no resemblance to reality.

WTF,

You are long on opinion and short on substantiation. You accuse skeptics of not making any significant contributions here. However, I view my submissions as a form of informal peer review. But the process depends on people like yourself actually submitting logical comments, or germane counter-citations, not just vitriolic opinions.

Your very first statement, “All the data confirms [sic] a long term temp rise caused by AGW.”, is contested, not supported. It is generally accepted that the Earth has been warming since at least the end of the Little Ice Age. Logically, the second half of your statement cannot be supported. “AGW” is a presumed effect of anthropogenic activities, not the actual process causing the warming. Overlooking your limited ability to even state your beliefs, there is considerable controversy about just what the extent of anthropogenic contributions is.

So, you really aren’t making any contribution here either for or against your belief system. It would seem that your primary purpose is to be an annoyance to people whom you think are not as smart as yourself. That would seem to be a decent definition of a “troll.”

The only way these demonstrated-incorrect models could have been induced to create an accurate hindcast is if the parameters were “tuned” to give that result regardless of reality. Using these faked models to claim that they will give an accurate forecast is ludicrous. Dr Schmidt is being disingenuous perhaps? Or maybe climate science is as difficult for him as was mathematics.

Models are computer programs. They can be written to produce whatever result you want. That doesn’t mean they are correct. Or, if correct, correct for the right reasons.

With enough knobs, dials and degrees of freedom, any past behavior can be reproduced by any model. The problem is always predicting the future and the more wrong the un-tuned model was to begin with, the further from reality predictions of the model ‘tuned’ to the past will be.

“CMIP-5 yielded a range of 0.4° to 1.0° C in 2016, with a P50 of about 0.7° C. CMIP-3 yielded a range of 0.2° to 1.0° C in 2016, with a P50 of about 0.6° C.

They essentially went from 0.7 +/-0.3 to 0.6 +/-04.

Progress shouldn’t consist of expanding the uncertainty…”
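The “+/-” figures are simply the midpoint and half-width of each 2016 range. A quick Python check, using the approximate bounds quoted above and treating each band as symmetric (an assumption; the charted P50 need not sit exactly at the midpoint):

```python
# Sketch: convert a (low, high) band into midpoint +/- half-width.
# Bounds are the approximate 2016 values read off the charts in the post.

def center_halfwidth(low, high):
    """Return (midpoint, half-width) of a band given its bounds."""
    return (low + high) / 2.0, (high - low) / 2.0

ranges = {"CMIP5": (0.4, 1.0), "CMIP3": (0.2, 1.0)}
for name, (low, high) in ranges.items():
    mid, half = center_halfwidth(low, high)
    print(f"{name}: {mid:.1f} +/- {half:.1f} C")
```

On these numbers the CMIP3 band is 0.8 °C wide and the CMIP5 band 0.6 °C wide, which is the comparison the replies below argue over.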

Isn’t that diminishing the uncertainty?

Not if the CMIP3 result is the “progress.”

CMIP5 is supposed to be the progress. It is Phase 5 of the Coupled Model Intercomparison Project whereas CMIP3 was Phase 3. I think Fabius Maximus has things backwards and Gavin doesn’t know enough to tell the difference.

Nick, I made the same point in David’s identical comments on a previous post and he never replied. I thought he was just going to quietly never mention it again. That reaction at least would have been rational.

There were 500+ comments in that thread. I didn’t read them all.

Schmidt’s CMIP3 model was presented as “progress” over CMIP5. CMIP-5 yielded a range of 0.4° to 1.0° C in 2016, with a P50 of about 0.7° C. CMIP-3 yielded a range of 0.2° to 1.0° C in 2016, with a P50 of about 0.6° C.

They essentially went from 0.7 +/-0.3 to 0.6 +/-04.

Progress shouldn’t consist of expanding the uncertainty… unless they are admitting that the uncertainty of the models has increased.

Larry then asked Dr. Schmidt about this oddity and Schmidt didn’t have a coherent answer.

David Middleton: Please provide evidence that Dr. Schmidt claimed that CMIP3 was progress over CMIP5.

I’m pretty sure I wrote my post in English. If you have a problem with something I posted, quote the exact phrase, in context.

David Middleton: I asked about your comment claiming that Dr. Schmidt said CMIP3 was progress over CMIP5. Nothing in what you wrote supports that. So you just plain lied, and continue to lie.

Quote my exact words instead of lying about what I posted.

David Middleton: You wrote “Schmidt’s CMIP3 model was presented as “progress” over CMIP5.” That was flatly untrue.

Quote my exact words, in context, instead of lying about what I posted.

I was referring to the WUWT post by Larry Kummer in this comment…

My comment was in reply to this comment…

The CMIP3 model was referred to as “A climate science milestone: a successful 10-year forecast!” Hence, progress over previous models.

I never said that Dr. Schmidt called it progress. Although he is the one who posted it as a more successful model than the CMIP5 model he subsequently posted.

No, Larry Kummer did not refer to CMIP3 as progress over CMIP5. He referred to progress in the existence of a successful 10-year projection. Emphasis on 10 years. Obviously that had to be CMIP3 because it was published 10 years ago. It could not be CMIP5 because not enough time has passed. So you lied about Kummer’s statement, and continued by implying Schmidt agreed with that false statement. Never anywhere was there any basis in fact for anyone but you having claimed that CMIP3 was progress over CMIP5. My guess is that you got confused, but instead of simply admitting that, you have continued to dig yourself deeper. Pathetic.

When I’m quoting people, I put these things ” ” around the quote or use the blockquote tags.

I didn’t say Larry referred to it as progress. I said he presented it as progress (a milestone in climate science).

Learn to read…

https://wattsupwiththat.com/2017/09/22/a-climate-science-milestone-a-successful-10-year-forecast/

Ed Hawkins’ CMIP5 ensemble has over 10 years in forecast mode. Schmidt’s CMIP3 model was clearly presented as progress over CMIP5 models.

By the way, I think there’s a decimal point missing in “-04.”

Gavin Schmidt’s reproduction of Hansen’s 1988 paper is a lie. Hansen’s Scenario A was presented at the time as the “business as usual” scenario, with continued exponential growth of CO2 emissions. When asked by Congress, under oath, Hansen said that scenario A was “business as usual.” In the 1988 paper his only caveat on that scenario was that, since it had exponential input, it “must eventually be on the high side of reality” as we start running into resource shortages, an event that has not yet happened.

Since 1988, at least through about 2009, CO2 emissions actually grew at roughly the exponential rate assumed in the 1988 study. But after Pat Michaels, Michael Crichton and others started lambasting this prediction because it was so far off, Hansen and Schmidt took the “high side of reality” quote from the original paper out of context and pretended that scenario A was only a “worst case” scenario and that Scenario B was the benchmark prediction for “business as usual.” But in the original model run, Scenario B assumed sufficient emissions controls to attain a constant rate of growth in greenhouse gas emissions, a scenario that never occurred except perhaps immediately following the 2007-09 recession.

In other words, since 2008 our CO2 emissions have actually grown at the exponential rate assumed in Scenario A, and Hansen testified at the time that Scenario A was the “business as usual” scenario. But 30 years later, when that forecast was wildly off base, Hansen and Schmidt lie and say that Scenario B was the “business as usual” scenario.

I used to think that it was. A vs B is kind of subjective. Steve McIntyre demonstrated that B was closest to “business-as-usual.” Nick will probably post a link to McIntyre’s analysis.

David — no, Hansen’s testimony is very clear, he was making a prediction based on emissions, not concentrations — remember, he was making policy recommendations! The fact he got the relationship between emission and concentrations completely wrong cannot make his model of “emissions vs temperature” more accurate..

http://classicalvalues.com/2014/12/getting-skeptical-about-the-claims-made-by-skepticalscience-about-skeptics/

“A vs B is kind of subjective.”

The term “business as usual” is subjective. And what Hansen actually said in his paper was:

But basically none of this matters. The whole point of scenarios is that they cover things outside the science. What decisions people will make in the future. They are used for matters that scientists don’t claim to be able to predict. So there isn’t any use fussing about which they thought most likely. They calculated all of them, and the only thing that matters is, what actually happened?

I discuss that here. It has extracts from the data, and links to Steve McIntyre’s threads, including some of his plots. Basically we agree that the scenario that happened is B; I would give it a B- (SM was writing in 2008).

Just temperature charts. The CO2 scenarios were laid out in narratives.

Forrest,

No, but the numbers are available. He plotted CO2 forcings, which are related to concentration by formula:

Reality is probably closest to B. The temperatures mostly tracked C.

“The term ‘business as usual’ is subjective. And what Hansen actually said in his paper was:

‘Scenario B is perhaps the most plausible”

But basically none of this matters. The whole point of scenarios is that they cover things outside the science.”

First, you are also taking quotes out of context. His comment about Scenario B immediately followed his comment that Scenario A couldn’t last indefinitely and that Scenario C likely wouldn’t be implemented, i.e. this quote on Scenario B was for the long term – not the first 20-30 years of projections (for which scenario A went out about 100 or so years). And ask yourself why Hansen would have told Congress under oath that Scenario A was the “business as usual scenario” if he really thought that Scenario B was the most likely case? Was he lying to Congress? And in later Congressional testimony when discussing scenario B as being plausible, he was discussing that scenario over much longer time frames than the initial 30 years of the forecast.

Nor is the task of validating any of these projections outside science. This is NASA’s model. All they have to do is dust it off the shelf today, put in the actual greenhouse gas emissions and volcanic eruptions that have occurred since 1988, and see how well the model performs with the emissions path we now know occurred. Hansen didn’t do that when he tried to defend his paper; instead he simply made a useless comparison of actual temperatures against his three scenarios, and only in hindsight said that scenario B was the target to hit. (Texas sharpshooter, anyone?)

Here’s a link to a graph that charts annual CO2 emissions globally and by country from 1990 to 2015 and 1960 to 2015, respectively:

Look at those charts and try to say that greenhouse gas emissions followed the same linear trend that existed from 1960 to 1988 onward out to 2010, which is what scenario B entailed. Also, in Hansen’s paper you will find the numerical value of the growth rate assumed for the exponential increase (a modest 1.5% per year), and if you apply that growth rate to the 1998 emissions you get to at least our current emissions.

What Hansen and Schmidt are doing is a bait and switch. The prediction in 1988 was to estimate temperature as a function of emission scenarios, and the 1988 paper expressed “forcing” as a simple function of atmospheric CO2 concentration (mechanically converted to temperature by a theoretical no-feedback estimate of equilibrium temperature at that concentration). Look at the y-axis of FIG. 2 of Hansen ’88, which you cite, and you’ll see that it’s in temperature, not watts per square meter, and again it only actually reflects GHG concentrations, since the temperature values were just a theoretical conversion to equilibrium temperature.

The real-world emissions followed scenario A, as apparently did actual CO2 concentrations in the air (since the alarmist line, even today, is that the cumulative CO2 in the atmosphere from industrial activities has doubled since 1988, and a 1.5% growth rate wouldn’t even get past a factor of 1.6). This is a huge problem for Hansen and Schmidt, since it means that the world did indeed match or even exceed Hansen’s “Scenario A” exactly as he described it in his paper. Their response was a pathetically obvious piece of historical revisionism: pretending that the GHG concentration “forcing” was no longer GHG concentrations, but instead W/m^2 (power), then ginning up some theoretical calculations, and . . . “oh, hey, will you look at that? Forcing seems to look a lot like scenario C.”
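The parenthetical about 1.5% growth not reaching a factor of 1.6 since 1988 is a compound-growth check. A minimal sketch, assuming 29 years of constant growth (1988 to 2017); it also computes the constant annual rate that would be needed to double a quantity over the same span.

```python
def growth_factor(rate: float, years: float) -> float:
    """Multiplier after `years` of constant compound growth at `rate` per year."""
    return (1.0 + rate) ** years


def rate_to_double(years: float) -> float:
    """Constant annual rate that doubles a quantity in `years` years."""
    return 2.0 ** (1.0 / years) - 1.0


if __name__ == "__main__":
    span = 2017 - 1988  # 29 years (assumed span)
    print(f"1.5%/yr over {span} yr: x{growth_factor(0.015, span):.2f}")
    print(f"rate needed to double in {span} yr: {rate_to_double(span):.2%}")
```

With these inputs 1.5%/yr gives a multiplier of about 1.54, while doubling in 29 years needs roughly 2.4%/yr.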

This is so transparent that only the terminally gullible would fall for it. In 1988 they splattered a few lines on a wall, told Congress that the sky was falling because the world’s trajectory was along the top, scary line, and said “you have to listen to us.” Congress didn’t listen to them, and the sky never fell. When they got called on their silliness, they went back to their original paper, gave words and graphs new meanings that were never intended in the original paper, and came up with some entirely new post-hoc calculations to try to pretend that the prediction was right all along, that war is peace, freedom is slavery, and ignorance is strength.

If the goal is to demonstrate your scientific understanding of a system by accurate predictions, then once a prediction is made, you later test whether it’s right or wrong. There’s nothing in between, and you don’t shift the prediction into something it wasn’t. You don’t get to revise history and retroactively readjust the conditions on which the prediction was made. If Hansen in 1988 didn’t understand the Earth’s climate system well enough to get the simple stuff right (how much added heat input results from CO2 emissions of constant growth), it’s a farce to think that he understood the system well enough to get the hard stuff right (what temperature results).

Lovely little clipped picture without context, Nick.

Hansen’s full statement preparatory to your tiny snippet:

Hansen explains his “model” a tad bit more later in the paragraph.

WUWT, Climate Audit and Lucia’s Blackboard also “investigated” Hansen’s always changing forecasts.

http://appinsys.com/GlobalWarming/HansensPredictions1988_files/image001.gif

Note the difference between the “observations” added to Hansen’s forecasts around 2008 and Gavy’s Twitter trick shown above.

Or the temperatures applied by Lucia:

http://appinsys.com/GlobalWarming/HansensPredictions1988_files/image012.jpg

http://appinsys.com/GlobalWarming/HansensPredictions1988_files/image014.jpg

Or Steve McIntyre’s version of Willis’s digitized data with Steve adding in the then current data?

N.B.

Steve McIntyre’s “observations” show observed anomalies ranging from 0.0°C in 1960 to +0.55°C in 2008 (GISS)

or RSS’s +0.16°C in 1979 to +0.45°C in 2008

(Both eyeball estimates.)

Whereas Gavy’s Twitter silliness shows observed temperature anomalies of -0.45°C in 1976 and +0.3°C in 2008.

Yes, the various charts were “re-centered” to align chart basics:

e.g. “In order to put the three Scenarios apples and apples to the GISS GLB temperature series (basis 1951-1980), I re-centered the three scenarios slightly so that they were also zero over 1958-1967.”
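Re-centering as described in that quote amounts to subtracting each series’ mean over a common reference period, so that every series averages zero there. A minimal sketch, assuming annual anomalies stored as a year-to-value mapping; the sample values are made up purely to show the mechanics.

```python
def recenter(series: dict[int, float], start: int, end: int) -> dict[int, float]:
    """Shift a series so its mean over years start..end (inclusive) is zero."""
    base_years = [y for y in range(start, end + 1) if y in series]
    if not base_years:
        raise ValueError("no data in the reference period")
    offset = sum(series[y] for y in base_years) / len(base_years)
    return {y: v - offset for y, v in series.items()}


if __name__ == "__main__":
    # Hypothetical anomalies sitting 0.1 C above zero over 1958-1967.
    toy = {y: 0.1 for y in range(1958, 1968)}
    toy.update({1988: 0.5, 2008: 0.8})
    shifted = recenter(toy, 1958, 1967)
    print(round(shifted[1988], 2), round(shifted[2008], 2))  # prints "0.4 0.7"
```

Re-centering shifts a whole curve up or down without changing its shape, so two charts can show different absolute anomalies for the same year simply because they use different reference periods.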

Hansen had several other views on his forecasts in the document, including:

Three of six faces represent a maximum “B scenario”. Four sides represent a lower “A scenario”

The CO2 increase from 2016 to 2017 was roughly 2.42 ppm, which works out to a 0.604% increase over 2016. A lack of catastrophic warming is not a surprise.
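The percentage is the annual change divided by the earlier year’s level. The comment doesn’t state that level; the quoted 0.604% implies a 2016 base of roughly 400.7 ppm (2.42 / 0.00604), which is back-derived here only to reproduce the arithmetic, not taken from a data source.

```python
def percent_increase(delta: float, base: float) -> float:
    """Year-over-year change as a percentage of the earlier year's level."""
    return 100.0 * delta / base


def implied_base(delta: float, pct: float) -> float:
    """Earlier-year level implied by a change and its percentage."""
    return 100.0 * delta / pct


if __name__ == "__main__":
    base = implied_base(2.42, 0.604)  # back-derived, not a measured value
    print(f"implied base: {base:.1f} ppm")
    print(f"increase: {percent_increase(2.42, base):.3f}%")
```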

Kurt September 26, 2017 at 7:47 pm

The real world emissions followed scenario A, as apparently did actual CO2 concentrations in the air (since the alarmist line, even today, is that the cumulative CO2 in the atmosphere from industrial activities has doubled since 1988, and a 1.5% growth rate wouldn’t even get past 1.6). This is a huge problem for Hansen and Schmidt since it means that the world did indeed match or even exceed Hansen’s “Scenario A” exactly as he described it in his paper.

Hansen’s projected CO2 concentrations (ppm) for 2016 were as follows:

Scenario A: 405.5

Scenario B: 400.5

Scenario C: 367.8

Actual global annual average: 402.8

So the actual CO2 level fell between the Scenario A and B projections, not bad agreement after 30 years.
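The gaps are easy to check from the four 2016 figures quoted above (all in ppm). A minimal sketch:

```python
# 2016 CO2 values (ppm) as quoted in the comment above.
projections = {"A": 405.5, "B": 400.5, "C": 367.8}
actual = 402.8


def gaps(proj: dict[str, float], obs: float) -> dict[str, float]:
    """Projection minus observation for each scenario, rounded to 0.1 ppm."""
    return {name: round(value - obs, 1) for name, value in proj.items()}


if __name__ == "__main__":
    for name, gap in gaps(projections, actual).items():
        print(f"Scenario {name}: {gap:+.1f} ppm vs actual")
```

This gives +2.7 ppm for A, -2.3 ppm for B and -35.0 ppm for C.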

David Middleton September 26, 2017 at 4:47 pm “Just temperature charts. The CO2 scenarios were laid out in narratives.”

In the submission to the congressional record, the scenarios were clearly described as emission scenarios. The plots are of the scenarios.

As Kurt points out, actual emissions were in excess of A, mainly because of China.

Steve McIntyre has a good analysis of Hansen’s paper & testimony…

https://climateaudit.org/2008/01/16/thoughts-on-hansen-et-al-1988/

It’s almost too convoluted to figure out what Hansen’s reference case was.

Phil., that’s a common point of confusion: Hansen was pretty close on CO2 concentrations (although A was too pessimistic about the relationship of emissions to concentrations) but way, way off on methane, which flattened in the 1990s for reasons that are still the subject of some debate.

Naturally this leads AGW enthusiasts to promote the idea that Hansen’s model was awesome, because no one could have predicted what methane would do (actual argument from SkS). The implications for the predictability of temperature trends seem largely to escape them.

Steve’s 2008 post is as excellent as his work usually is — he notes that in 1998 Hansen claimed the forcings were more like B. This was probably true, but emissions looked more like A.

Remember, Congress cannot pass a law to change concentrations, legislation can only affect human emissions, and that policy was the subject of the testimony. So when judging his 1988 predictions, Scenario A is the correct choice.

If people want to go back and run Hansen’s model with updated assumptions, they should feel free, but that cannot affect his 1988 predictions one way or another.

Apart from the strong El Niño events of 1998 and 2015-16, GISTEMP has tracked Scenario C, in which CO2 levels stopped rising in 2000, holding at 368 ppm.

The utter failure of this model is most apparent on the more climate-relevant 5-yr running mean:
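A 5-year running mean replaces each year with the average of itself and the two years on either side, which damps single-year spikes such as El Niño peaks. A minimal centered version, assuming an evenly spaced annual series; endpoints without a full window are dropped rather than padded.

```python
def running_mean(values: list[float], window: int = 5) -> list[float]:
    """Centered moving average; returns len(values) - window + 1 points."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    if len(values) < window:
        return []
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]


if __name__ == "__main__":
    # Toy anomalies with one spike; the spike is spread across the window.
    toy = [0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
    print(running_mean(toy))  # prints [0.2, 0.2, 0.2, 0.2, 0.2]
```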

“Steve’s 2008 post is as excellent as his work usually is — he notes that in 1998 Hansen claimed the forcings were more like B. This was probably true, but emissions looked more like A.”

This isn’t accurate. In 1998 Hansen ridiculously alleged that actual forcing approximated Scenario C, even though his data really indicated that actual forcing exceeded scenario A. His deceit in the paper was in ignoring the conservative assumption, made in all three scenarios of his original forecast, that the growth rate in emissions starting in 1988 would be only 1.5% (the three scenarios differing in what the growth rate would do after that), even though the historical growth rate was more like 4%. The link to his original paper is here; look at p. 9345 and Appendix B to see that all three scenarios assumed an unrealistic, instantaneous cut in the growth rate of GHG forcing to 1.5% starting in 1988:

https://pubs.giss.nasa.gov/abs/ha02700w.html

So in 1998, a decade later, in a lame attempt to show that he got his predictions right, Hansen presented a graph indicating that the year-over-year percentage growth rate of the combined forcing of all the GHGs considered in his first paper had started plummeting (due only to methane and some CFCs). But that net forcing growth rate was falling from about 4%, much higher than the rate all three scenarios presumed from 1988 on. Hansen superimposed his three scenarios over this curve without accounting for the discontinuity that should have appeared in each of them at 1988 on the x-axis; i.e., Hansen’s graph inappropriately lined up the curves on the vertical axis to make it look as though the actual growth rates were following scenario C, when really scenarios A, B, and C all should have dropped well below that curve starting in 1988.

Here’s a link to the paper – the offending graph is FIG. 5B (look carefully at the legend on the vertical axis):

https://pubs.giss.nasa.gov/abs/ha01100t.html
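The disputed FIG. 5B plots a year-over-year percentage growth rate of forcing rather than forcing itself. A minimal sketch of that quantity using a made-up forcing series: a series can keep rising in absolute terms while its percentage growth rate falls, which is why the units on the vertical axis matter when comparing curves.

```python
def yoy_growth_pct(series: list[float]) -> list[float]:
    """Percentage change from each year to the next."""
    return [100.0 * (b - a) / a for a, b in zip(series, series[1:])]


if __name__ == "__main__":
    # Hypothetical forcing (W/m^2) rising by a constant 0.04 per year.
    forcing = [1.00 + 0.04 * t for t in range(5)]
    print([round(g, 2) for g in yoy_growth_pct(forcing)])
    # prints [4.0, 3.85, 3.7, 3.57]
```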

Also, look at FIG. 5A. You’ll note that in his original scenario A, the combined forcing of all the gases considered (CO2, methane, CFCs, nitrous oxide, and other trace gases) was forecast to be about 2.2 W/m^2 in 2005. Here is an IPCC chart indicating the actual relative forcing of these gases in 2005:

You’ll notice that the sum of the forcing of CO2, methane, N2O, and CFCs is right on track with Hansen’s scenario A shown in FIG. 5A of his 1998 paper, and this doesn’t even consider the other trace gases Hansen threw into scenario A. The very notion that GHG forcing followed Hansen’s admittedly unrealistic scenario C beginning in 1988, given all the global growth throughout the 1990s and 2000s, is so absurd that, to be suckered by that argument, you have to want to believe it so badly that all rational thought goes out of your mind.

Kurt September 27, 2017 at 3:17 pm

“Hansen’s projections for CO2 were as follows for 2016: Scenario A: 405.5; Scenario B: 400.5; Scenario C: 367.8 – Actual global annual average: 402.8

So the CO2 projection fell between A and B, not bad agreement after 30 years.”

There is no agreement to be had; Hansen’s prediction, even on how much CO2 would be in the atmosphere was way off.

No, it was not. As shown above, A was about 3 ppm above actual and B was about 2 ppm below actual.

I don’t know where you came up with those numbers – they certainly weren’t in Hansen’s 1988 paper, so I suspect that they were put out post hoc as some sort of deceitful statistical trick.

Clearly you don’t know, so you make up your own conspiracy theory. They are from the file containing the projected concentrations released by Hansen in 1989, the same source McIntyre used when he plotted the data in 2008.

But let’s assume for a second that you have accurately correlated each of Hansen’s emissions scenarios with a 1988 prediction of CO2 atmospheric concentrations in 2016 under each of those scenarios.

Let’s not, because that’s not what I or McIntyre did!

With respect to CO2 emissions, Hansen’s scenario A assumed that CO2 emissions would only grow at a 1.5% rate.

No, it did not. It assumed that from 1981 onwards (after the end of Keeling’s observations) the ‘annual increment’ in CO2 would grow at 1.5% yr⁻¹, i.e. it is the second derivative, not the first as you have erroneously supposed. Since the annual increment in 1981 was 1.4 ppm, 36 years of 1.5% growth in that increment would mean that it would now be growing at 2.3 ppm yr⁻¹.
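The point here is that the 1.5%/yr applies to the annual increment (the ppm added each year), not to the concentration itself. A minimal sketch of that reading, taking the 1.4 ppm/yr increment in 1981 from the comment; the 340 ppm starting concentration is an assumed round number for illustration, not a figure from the thread, and the helper names are hypothetical.

```python
def increment(year: int, base_year: int = 1981, base_inc: float = 1.4,
              rate: float = 0.015) -> float:
    """Annual CO2 increment (ppm/yr) if the increment itself grows at `rate`."""
    return base_inc * (1.0 + rate) ** (year - base_year)


def concentration(year: int, c0: float = 340.0, base_year: int = 1981) -> float:
    """Concentration (ppm) by summing increments; c0 is an ASSUMED 1981 level."""
    return c0 + sum(increment(y, base_year) for y in range(base_year + 1, year + 1))


if __name__ == "__main__":
    print(f"increment in 2017: {increment(2017):.2f} ppm/yr")
    print(f"concentration in 2016: {concentration(2016):.1f} ppm (assumed c0)")
```

Under these inputs the 2017 increment comes out at about 2.4 ppm/yr, in the same range as the figure quoted above, while the concentration grows much more slowly in percentage terms than the increment does.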

The world blew through that rate throughout the 1990s and 2000s, and even after the stall in CO2 emissions growth over the last several years, growth since 1998 still averages around 2% a year, well above Hansen’s 1.5% growth rate for emissions scenario A (33% off); and his scenario B assumed that growth rates would drop to 1.0% in 1990, 0.5% in 2000 and 0% in 2005. Needless to say, scenario B didn’t happen.
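The Scenario B schedule described in this comment can be written as a piecewise growth rate. This sketch simply encodes the commenter’s description (1.5%/yr before 1990, 1.0% from 1990, 0.5% from 2000, 0% from 2005); it is not taken from Hansen’s appendix, whose exact breakpoints should be checked there. It also reproduces the “33% off” comparison of a 2% rate against 1.5%.

```python
def scenario_b_rate(year: int) -> float:
    """Annual growth rate per the comment's description of Scenario B."""
    if year < 1990:
        return 0.015
    if year < 2000:
        return 0.010
    if year < 2005:
        return 0.005
    return 0.0


def relative_excess(observed: float, assumed: float) -> float:
    """How far an observed rate exceeds an assumed one, as a fraction."""
    return observed / assumed - 1.0


if __name__ == "__main__":
    print([scenario_b_rate(y) for y in (1988, 1995, 2002, 2010)])
    # prints [0.015, 0.01, 0.005, 0.0]
    print(f"2% vs 1.5%: {relative_excess(0.02, 0.015):.0%} higher")
    # prints "2% vs 1.5%: 33% higher"
```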