By Andy May

In the Great Climate Change Debate between Professor David Karoly and Professor Will Happer, Glenn Tamblyn was called upon to finish the consensus side of the debate after Karoly backed out. The details are described in my latest book. The debate contained an illuminating exchange of opinions on satellite versus surface temperature measurements. This is Glenn Tamblyn’s opinion:

“Stitching together raw data from multiple satellites is very complex. Thus, the satellite datasets are much less accurate than the surface temperature datasets.

Professor Happer’s stronger emphasis on satellite temperature measurements does not agree with the experts on the subject.”

(Tamblyn, 2021b, pp. 7-8)

Satellites measure microwave radiation emitted from oxygen atoms in the atmosphere to estimate the “brightness” temperature, which can then be converted to an actual atmospheric temperature. No correlation to any other measurements is required. The measured brightness is compared to the brightness temperature of deep space (-455°F) and a target of known temperature within the satellite to compute the actual temperature in the atmosphere.[1]

Due to interference and clouds, this technique does not work close to the surface, so satellite atmospheric temperatures cannot be directly compared to surface measurements. The satellite measurements are best for measuring air temperatures in the mid-troposphere and the lower stratosphere.

The Hadley Centre has estimated that their best current estimate of global monthly average SST accuracy (sea surface temperature average uncertainty from 2000 to 2021) is about ±0.033°C and David Karoly supplied an estimate of ±0.1°C. This is a bit less accurate than the accuracy Roy Spencer and John Christy estimate for their satellite measurements of ±0.011°C for a monthly average.[1]

Given these peer-reviewed analyses, we can comfortably assume that the satellite data is at least as accurate as the surface data, if not more accurate. Besides, the satellite data covers a larger volume of the atmosphere, and it uniformly covers more of the globe than the surface data.

Tamblyn seems to think that because the data used to create the satellite record is from multiple satellites, this necessarily means the satellite temperature record is less accurate. This is incorrect, the satellites are merged with an accurate procedure described by John Christy.[2]

We also need to remember that the surface measurements are made in a zone with great volatility and a large diurnal variation. The satellites measure higher in the atmosphere, in a more stable environment, and are better suited to estimating climate change, as opposed to surface weather changes.

In Figure 1, the UAH satellite lower troposphere temperature record (light blue) is centered at about 10,000 feet.[2] It is compared to the Hadley Centre sea surface temperatures (HadSST4, in gray) and the land and ocean surface temperature dataset (HadCRUT5, in orange).

Happer, clearly states that the satellites are measuring a different temperature and have consistent, nearly global coverage. Ground measurements, on the other hand, are sparse, irregularly spaced, and made with many different devices.[3]

In Figure 1 we can see the HadSST and the UAH satellite lower troposphere warming trends are identical at 0.14°C/decade. The major difference is that the El Niños and La Niñas are more extreme in the lower troposphere than on the surface. Two prominent El Niños are visible in 1998 and 2016. Prominent La Niñas have the reverse relationship to the El Niños as seen in 2008 and 2011.

The HadCRUT5 dataset is well below the other two in the beginning of the period and above at the end. It has a warming rate of 0.19°C/decade. This 36% increase in warming rate is entirely due to adding land-measured surface temperatures to the SSTs, even though land is less than 30% of Earth’s surface.

Figure 1 leads us to three important conclusions. First, the El Niño years support the idea that a warmer surface will lead to more evaporation, which carries heat from the ocean surface to the lower troposphere; the same process that carries heat from an athlete’s skin into the atmosphere. As the evaporated water condenses to droplets, usually in clouds, it releases the heat of evaporation into the surrounding air. The lower troposphere UAH temperature, plotted in Figure 1, is most sensitive to temperatures around 10,000 feet, but it includes some emissions below 6,500 feet, which is a common altitude of lower clouds.

Second, since the overall rate of HadSST warming is about the same as the UAH lower troposphere warming, it suggests that the extra warming in the HadCRUT5 land plus ocean dataset is suspect.

Third, if HadCRUT5 is correct, it means the surface is warming faster than the lower and middle troposphere. If this is true, the IPCC Reports and models suggest that the warming is not due to greenhouse gases.[4] It could be that additional warming in the troposphere, above 6,500 feet, is due to El Niños and not due to GHGs (greenhouse gases). It is hard to accept that the data plotted in Figure 1 is both accurate and consistent with the idea that greenhouse gases are causing surface warming. For a further discussion of the relationship between GHGs and the ratio of surface to middle troposphere warming see my previous post.

In summary, both common methods of determining the global average temperature, satellite, and surface, are probably accurate to within a tenth of a degree, as suggested by Karoly. Both methods show the world is warming, but currently the HadCRUT5 surface warming estimate of 0.19°C/decade is significantly higher than the satellite lower troposphere and SST estimates of 0.14°C/decade. The excess surface warming is entirely due to land measurements or adjustments to them. The adjustments made to the CRUTEM5 land surface temperature measurements and the similar Global Historical Climatology Network (GHCN) are significant and controversial as explained by Peter O’Neill and an impressive list of coauthors.[5] To be clear, O’Neill, et al. evaluated the GHCN data and not CRUTEM5, but the adjustments to each of these datasets are similar and they both have the same weaknesses. The justification for the 36% increase in warming rate between the ocean and the land plus ocean records is not clear and probably indicates a problem with the CRUTEM5 land temperature record, the greenhouse gas warming hypothesis, or both.

Download the bibliography here.

This post was originally published at the CO2 Coalition.

-

(Spencer & Christy, 1990) ↑

-

(Christy, Spencer, & Braswell, 2000) ↑

-

(Kennedy, Rayner, Atkinson, & Killick, 2019) and (Karl, Williams, Young, & Wendland, 1986) ↑

-

(IPCC, 2021, pp. Figure 3-10, page 3-162) and (IPCC, 2013, pp. Figure 10.8, page 892). The dependence of the higher tropospheric warming trend on the CO2 greenhouse effect is most easily seen in the 2013 AR5 report since they present natural warming in blue and greenhouse warming in green in their figure. ↑

-

(O’Neill, et al., 2022) ↑

Satellites at least have no infill or missing stations problem. As the satellites agree with weather balloons, I would regard them as more reliable than surface stations, which do have a UHI issue.

Also, when you have 100% coverage of the planet, homogenization can’t be used to distort the data.

Not exactly 100% coverage. I think UAH is 85N-85S and RSS 80N-80S (back before it was “adjusted”.

Satellites do give the best coverage we’ve had.

There are also polar orbiters (NOAA-N) that can fill in the N and S polar regions, not necessarily with the u-wave measurements, but with other data that can be correlated to the u-wave measurements and produce similar temperature proxies.

The area above 85ºN and below 85ºS is a bit less than 1M.km² each

The area of the planet is about 510 M.km²

That means that the UAH data misses only about 0.4% of the planet.

1M km sq. About one Waddam?

🙂 Lols

Satellite data is not 100% coverage all day&night. They have a large % coverage after each cycle but how long does that take? They try to combine data from multiple satellites (multiple sources, multiple different design/aged sensors).

See figure 7.4

https://www.sciencedirect.com/topics/earth-and-planetary-sciences/metop

From Wiki..

“Satellite temperature measurements are inferences of the temperature of the atmosphere at various altitudes as well as sea and land surface temperatures obtained from radiometric measurements by satellites.

Satellites do not measure temperature directly. They measure radiances in various wavelength bands, which must then be mathematically inverted to obtain indirect inferences of temperature. The resulting temperature profiles depend on details of the methods that are used to obtain temperatures from radiances. As a result, different groups that have analyzed the satellite data have produced differing temperature datasets.

The satellite time series is not homogeneous. It is constructed from a series of satellites with similar but not identical sensors. The sensors also deteriorate over time, and corrections are necessary for orbital drift and decay. Particularly large differences between reconstructed temperature series occur at the few times when there is little temporal overlap between successive satellites, making intercalibration difficult.”

https://en.wikipedia.org/wiki/Satellite_temperature_measurements

All important points to remember, the UAH LT data are a convolution of the sensor responsivity with the 0-10km altitude air temperature that decrease exponentially from the surface.

Temperature is never measured directly. A common thermometer measures the expansion or contraction of mercury or alcohol as a proxy for temperature. You can’t measure the kinetic energy of a gazillion molecules.

That looks like an interesting publication. Unfortunately it is paywalled and I can’t find a free copy. I was able to find another interesting paper by some of the same authors using the GOES-R satellites as well.

Inferring missing links and other purposes.

And…the best surface station network (US) covers only 6% of the earth’s surface area and is compromised by many stations falling out of siting specifications due to land use changes.

Many regions have only very sparse coverage.

UAH only has the 9504 of the10368 grid cells filled with values, but they label their global average temperature as being from 90S to 90N which means they are effectively filling in the 864 unfilled cells with the average of the 9504 filled cells to get that full sphere average.

The only datasets that have true full sphere coverage are reanalysis like ERA5. The ERA5 warming is +0.19 C/decade which matches HadCRUT. Note that unlike UAH which uses a trivial global weighted infilling strategy HadCRUT uses a local weighted gaussian regression strategy.

bgdwx

Let me add that as opposed to UAH 6.0, UAH5.6 had a 100 % coverage as well.

This is visible only when processing the two grids for comparison purposes.

Download e.g. ‘tltmonacg_5.6′ or ‘tltmonamg.1979_5.6‘ from

https://www.nsstc.uah.edu/data/msu/t2lt/

and you will see that the topmost and bottommost three latitude bands contain valuable data.

Interesting. I wasn’t aware that v5 had 100% coverage. Interesting indeed!

It is better to hide what you can’t accurately observe.

And, as another commenter wrote, that’s no more than 0.4 % of the Globe, and even less if you take the latitude weighting into account.

” As the satellites agree with weather balloons… ”

Firstly, you very certainly don’t mean ‘satellites’, but rather UAH’s processing of NOAA satellite data.

Moreover, you claim sounds to me a bit strange, because ‘satellites’ in fact agree best with those weather balloon (radiosonde) processing methods which were derived from… satellite data.

To be convinced, you just need to consider the weather balloon subset named RATPAC (RATPAC-A for yearly, RATPAC-B for monthly data).

RATPAC is a tiny subset (85 units) of the about 1500 weather balloons (radiosondes) managed by NOAA within the IGRA data set.

The data provided by these 85 units is highly homogenized, according to techniques (RAOBCORE, RICH) developed about 15 years ago at Vienna U (Austria).

Here you see a comparison I made years ago, of the homogenized RATPAC-B data with

– the original data out of the IGRA data set;

– the average of the entire IGRA data set.

RATPAC-B shows considerably lower trends and higher homogeneity than the IGRA set out of which it was originally selected.

*

Feel free also to have a look at an interesting publication:

Satellite and VIZ–Radiosonde Intercomparisons for Diagnosis of Nonclimatic Influences

John R. Christy and William B. Norris (2006)

https://journals.ametsoc.org/view/journals/atot/23/9/jtech1937_1.xml

There you see how 31 radiaosondes peu à peu miraculously fit satellite data.

*

IGRA radiosonde data starts in 1958, and has data for 13 different atmospheric pressure levels, from surface up to 30 hPa (i.e. about 21 km altitude).

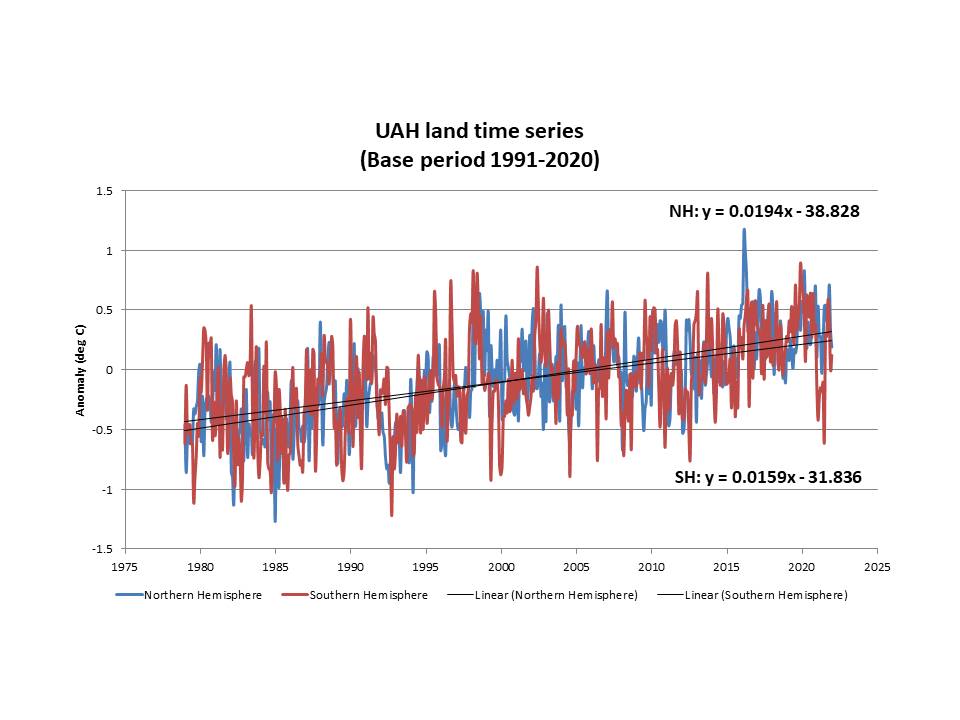

The 700 hPa level was selected for the graph below, because it is nearest to the 10,000 ft mentioned above in Andy May’s head post; moreover, the average absolute temperature of UAH measurements is around -9 C, what gives, when considering a lapse rate of 6.5 °C / km in the LT, about 3.7 km, corresponding to 640 hPa.

Here is the graph:

In blue, you have RATPAC-B at 700 hPa; in red, UAH Globe land.

{ The reason not to choose UAH Global (land+ocean) is that most radiosondes (70 % in RATPAC) are located on land, the rest being on islands. }

Trends for 1979-2022, in °C / decade:

*

But not quite surprisingly when you are familiar with UAH analyses like that made by Richard E. Swanson, the best polynomial fit calculated in a spreadsheet out of RATPAC-B data is obtained when you select the 300 hPa level.

That means however an altitude of about 9 km, where you hardly could find the temperatures measured by UAH for the LT.

Sources

IGRA

https://www1.ncdc.noaa.gov/pub/data/igra/

RATPAC-B

ftp://ftp.ncdc.noaa.gov/pub/data/ratpac/ratpac-b/

Bindidon, Thanks, very helpful addition to the discussion.

Andy May, thanks in turn for the convenient reply.

In fact I wanted to post a longer comment showing more aspects of the radiosondes data.

But I lacked the time to do, and post right here what I had intended to add.

*

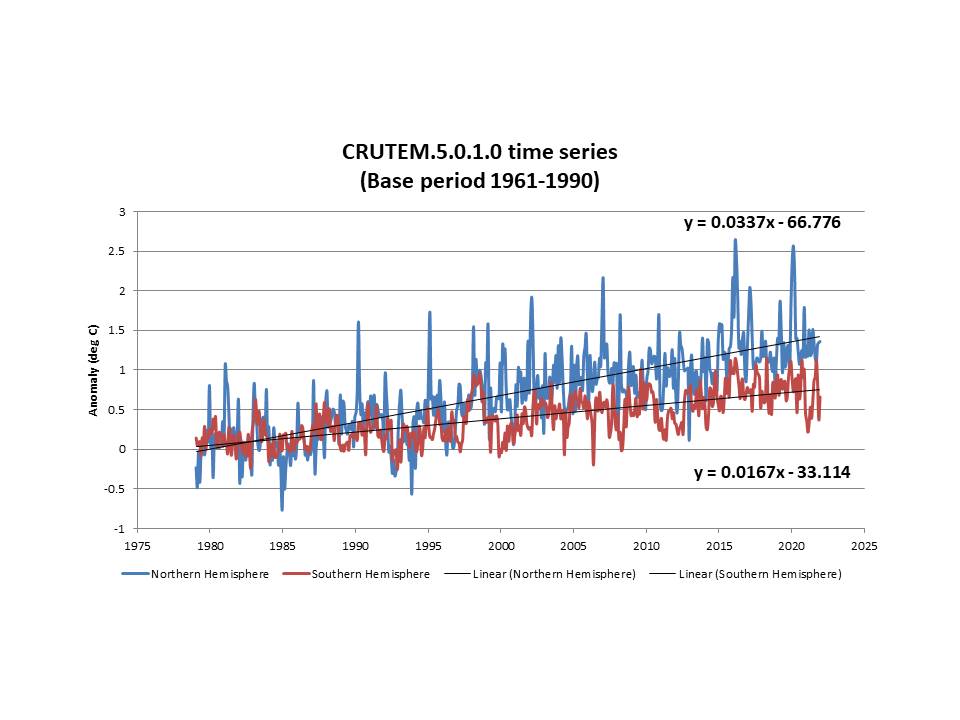

You wrote above

” Both methods show the world is warming, but currently the HadCRUT5 surface warming estimate of 0.19°C/decade is significantly higher than the satellite lower troposphere and SST estimates of 0.14°C/decade.

The excess surface warming is entirely due to land measurements or adjustments to them. “

And in a previous document, you wrote:

” It is possible that the urban heat island effect, combined with the UK Hadley Centre homogenization algorithm has contaminated the land-only CRUTEM5 record and distorted it. “

” Frankly, it suggests there is a problem with CRUTEM5. Figure 3 suggests the problem is getting worse in recent years, not better. “

If that was the case: how then do you interpret this comparison of CRUTEM5 with the surface level of the same RATPAC-B radiosonde data?

Trends for 1979-2022, in °C / decade:

CRUTEM5’s trend for 1958-now (0.22 C / decade) is somewhat higher than that of the radiosonde data (0.22 C / decade).

This is only due to the lower temperatures before 1990, because while CRUTEM5’s trend for 2000-2022 keeps at 0.28, that of the radiosonde data is 0.34 °C / decade.

So your claim about “problems with CRUTEM5” is quite questionable when we compare Hadley’s land surface series with RATPAC-B.

Please don’t tell me that after agreeing to a match of RATPAC-B at 700 hPa with UAH6.0 LT, you would suddenly dispute a match of the same RATPAC-B at surface with a surface time series like CRUTEM5.

*

Furthermore, if you compare the trend of a global surface series (0.19 C/decade) to the trend of a lower tropospheric series (0.14 C/decade) and claim the former is too high just because the latter’s trend is as low as it is that of a sea surface series (0.14 C/decade), then that is not plausible either.

Because as a comparison of UAH6.0 with its previous revision UAH5.6 suggests, we could perfectly interpret the agreement of the UAH global data with a sea surface series as an ocean cooling bias in UAH.

*

I’m quite convinced that a mix of natural causes like heat transfer from the SH to the NH via the Thermohaline circolation, together with anthropogenic causes (UHI, GHE increase) will over the long term provide for a much better explanation than these permanent guesses about biases in land surface measurements.

” CRUTEM5’s trend for 1958-now (0.22 C / decade) is somewhat higher than that of the radiosonde data (0.22 C / decade). ”

should read

” CRUTEM5’s trend for 1958-now (0.22 C / decade) is somewhat higher than that of the radiosonde data (0.19 C / decade). “

In Climate Consensus World, “experts on the subject” should be taken to mean “those who agree with me”

Hi Andy.

Great piece. Conclusion is the same as mine. It ain’t the CO2 who did it.

https://breadonthewater.co.za/2022/03/08/who-or-what-turned-up-the-heat/

It would be great if you could let me know if you agree with my essay. Best wishes.

Henry,

Most of the essay is fine, but a quick read found two things I do not agree with:

Lots of people disagree with this. The ACRIM TSI record shows solar output increasing to around 2000-2005, then beginning a decline. See here:

IPCC Politics and Solar Variability – Andy May Petrophysicist

I do not think volcanic activity has caused any warming in recent times. It can though. The Siberian Traps volcanism (Permian) had an effect for a very long time. The NAIP volcanism of the PETM (Tertiary) also had a long-term effect. More minor eruptions, like Mt. Pinatubo lasted several years at most. Some info here: The Paleocene-Eocene Thermal Maximum or PETM – Andy May Petrophysicist

I will have more in the next few weeks.

Wrote about short term effects of VEI 5-6 (not thousands of years long flood basalt eruptions like the Siberian Traps), just ‘ordinary’ stuff like Pinatubo, in essay Blowing Smoke in eponymous ebook. The short term cooling effect of stratospheric aerosols lasts at most 2-3 years. Presented all the physical science ‘proofs’.

Thanks Andy.

I have strong proof from a statistical analysis of daily data from 54 weather stations balanced on latitude that Tmax started to go down from 1994-1995.

I think I will try to add this into the essay.

However, as we now know, earth kept warming up. So the extra heat is or has come from somewhere else.

The only way to explain the results in Table 2 is extra volcanic activity in the arctic ocean and arctic region. I have given a number of volcanoes that have erupted there.

I have added that graphic in my essay.

it seems most people do not understand that most volcanoes are on the bottom of the oceans. If they erupt, you do not get to see much, especially if is is very deep. It is just the heat that goes into the ocean. Most gasses like CO2 , sulphur and chlorine will be dissolved in the water before you even see it because of the high temperature.

When you conaider heat capacity it is nonsense to calculate average temperature of air and water using a simple average. This violates the conservation if energy.

(T1+T2)/2 = avg(T)

only works when the two objects are the same material and the same mass.

Perhaps this: Intensive and extensive properties – Wikipedia

Let me make it even simpler. If you put a thermometer into two liquids in two containers of different size, each containing a liquid of different heat capacity, and each thermometer reads the same, then the average temperature of the two containers is, guess what?

Nevertheless, a man can become woman of the year. 🙂

Snip snip.

Ouch!

… and Bob’s your Auntie

This confounds thermal mass and temperature concerning ‘heat’. Higher thermal mass contains more ‘heat’ (using statistical mechanics definition) but can be as same temp. Change Delta T requires different delta heat, granted. But the resultant expressed as delta T (not delta heat) should be additive.

Lay translation—to previous comment to the last Andy May post. INM CM5 has higher ocean thermal inertia, one of two main reasons it more closely resembles observations. The reason is exactly the distinction between heat and temp mediated by thermal mass.

By mathematical definition, it is indeed correct to state that the average temperature = (T1 +T2)/2, just as it would be correct to state that the average velocity for a fly and a supersonic transport would be (Vfly+Vsst)/2.

See Tom.1’s reference to the difference between intensive and extensive properties.

I believe you are thinking that temperature is a direct measurement of the heat (sensible thermal energy) content of a liquid . . . nothing could be further from the truth.

To link temperature to energy, one has to consider not only heat capacity, which you mentioned, but also total mass and whether on not the temperature measurement is being made at the exact point of phase change in the material (e.g., for water, the freezing/thawing or boiling/condensation points).

ERA5 uses hourly grids processed on ~12 timesteps. The global average temperature computed from those grids is similar to HadCRUT’s (Tmin+Tmax)/2 strategy.

We know that many surface stations have physical problems with siting and maintenance, and not only in the US. The satellites don’t.

Plus, UAH is able to discern whether there is a tropical troposphere hotspot as modeled by all BUT INM. (There isn’t one.) See my comment to Andy’s last post concerning INMCM5.

So naturally, the alarmists prefer the poorer land surface record that shows more warming, as this excellent post shows.

NOAA has consistently demonstrated that they can make temperature whatever they desire.

h/t Tony Heller

Here is a graph from Gavin Schmidt’s attempt to explain away the CMIP6 models divergence from observations AND how he would prefer to show the model outputs instead of

John Christy’s most recent spaghetti graph.

On realclimate.org [where this image came from] he discussed the graph.

Note that 1) Gavin has arbitrarily removed all the models that he thinks are running too hot,

then does the average [there are 2 colors of models] & then 2) uses surface temps rather than satellite data so it looks like the models are more accurate.

I believe this doctored graph is the one Simon uses all the time.

It’s also convenient how the area between the warmest and coolest models are shaded in hiding all of the individual models. Hiding the fact that all of the models except the Russian model run hotter than even the surface data.

Nobody hides data better than a climate scientist.

There is no scientific justification for averaging the results of various climate models. Basically, the practice is scientific misconduct.

Supposedly the averaging is done to try and get rid of “noise” leaving only the anthropogenic signal. But how does a model produce “noise” in the first place?

That “scientific misconduct” you speak of is used to provide more objective skill (as scored by the anomaly correlation coefficient) in forecasts of the 500 mb heights (and other fields) for operational weather forecasts provided by ensemble models like the GEFS and EPS.

No matter what the errors time will show the trend.

Looking at satellite data for say sea level has the errors larger than the yearly trend as such ask me in two decades what has happened.

Using the errors to help persuade, is the fraud.

These temperature constructs are getting more & more confusing.

My local weather report now posts what the current temperature “feels like”.

I’m moved to ask –

“is that when a cloud bank passes overhead?”

OR

“is that when I’m standing directly in the sunshine, or under a tree?”

OR

“is that just after I’ve had a haircut, a shave, and trimmed my eyebrows, nose hair and ear hair?”

OR

“when having a pee by the forest trail, I didn’t realize it was that cold that day!“

With enough averaging, a record that has no trend will be transformed into one that has a trend. The real (unsmoothed) record of global temperature is in the former category. It is just one long so-called “pause”, which is interrupted by occasional steps during El Ninos. Without the El Ninos, even averaging wouldn’t produce a trend.

https://rclutz.com/2022/03/11/still-no-global-warming-cool-february-land-and-sea/

El Ninos aren’t caused by CO2.

I agree with your words Phil, but I find your graph highly dubious. The HadCRUT4 40’s and 50’s seem rather low.

I was wondering if anyone else saw this. Congrats.

If the steps up are driven by El Nino’s then the first question to ask is what drives the El Nino’s. I am highly doubtful that it is CO2 growth in the atmosphere. The second question to ask is that since that which goes up usually comes down at some point, when can we expect to see the stairsteps down? I’m guessing this is part of a cyclical process. One that the models simply don’t incorporate.

Andy’s is a good defense of the satellite data. The only thing I would add is that the lack of amplified warming in the deep layer temperatures from the satellite versus surface temperature could be due to stronger negative IR feedback than climate models have. Specifically, weak positive or zero water vapor feedback and high cloud feedback (i.e. Lindzens infrared iris) would cause what is being seen, and the forcing could still be increasing CO2. There is no way to know for sure.

Rubbish – ocean surface temperature is limited to 30C over any annual cycle. The sky goes dark day and night over open oceans if the temperature reaches 31C and the surface temperature then regulates back to 30C.

Heat loss is regulated by sea ice such that the water below the ice never gets colder than -1.8C.

These two powerful thermostatic limits regulate earth’s energy balance.

Orbital precession is the dominant driver of the observed climate trends. Atmospheric CO2 is irrelevant to any direct climate impact on the energy balance.

The Nino 34 region has cooled over the last 40 years:

http://bmcnoldy.rsmas.miami.edu/tropics/oni/ONI_NINO34_1854-2021.txt

No climate model shows a cooling trend for the last 40 years over the Nino34 region.

RW, I appreciate your contributions—to a point, The AGW/Climate Change claims have to do mainly with the atmosphere over the land where we live. (Because nothing else is sufficiently scary.) So all your ocean points are at least secondary. No matter if true. And I have yet to see you convincingly couple them here.

Clearly you have not understood the fundamentals of Earth’s energy balance.

Globally, land masses always absorbs heat from the oceans. That is the nature of net precipitation; latent heat absorbed in oceans and some released over land to form precipitation thereby warming the land by reducing the rate of cooling. Without oceans, Earth would be cooler.

The net radiation balance over land is always negative and usually positive over oceans. Oceans absorb heat and give it to the atmosphere over land:

https://wattsupwiththat.com/2021/11/14/global-water-cycle/

Easy for anyone with an ounce of knowledge and a curious mind to verify.

No climate model has predicted the observed reduction in freshwater runoff from land over the past 50 years.

https://www.bafg.de/GRDC/EN/03_dtprdcts/31_FWFLX/freshflux_node.html

https://www.pnas.org/doi/10.1073/pnas.1003292107

The lag in warming is supposed to be due to ocean thermal inertia. All climate models rely on the claimed energy imbalance warming the oceans. The CSIRO model shows the ocean surface in the western Pacific reaching 40C by 2300 – per attached. That is just crazy nonsense.

Globally, land is always cooler than the oceans so there can be no catastrophic warming without oceans playing along with that unphysical requirement.

I agree Roy. But I sure wish the possible problems I’ve highlighted in the last two posts with the land-temperature records (both GHCN and CRUTEM) were looked into. Looking at the discrepancies straight up and simply, it sure looks like the data is bad or GHGs are not important, at least if you believe the models (I don’t).

You are correct, there are other possible explanations for the discrepancies, but are the IPCC looking into them? As far as I can see they only consider GHGs, volcanic eruptions, and aerosols, everything else is held constant. Seems over simplistic, but that is what I see.

Andy May, you mention “The adjustments made to the CRUTEM5 land surface temperature measurements and the similar Global Historical Climatology Network (GHCN) are significant and controversial…”

Here is an illustration of the effect of adjustments, looking at July Tavg data for years 1895-2021. No claim is being made here concerning the validity of adjustments. I am just showing the bulk results for one month for the contiguous U.S.

The USHCN is a subset of GHCN covering the contiguous U.S. There is a list of 1,218 stations for which monthly data is available here at this link as final values after pairwise homogeneity adjustment (FLs.52j), time-of-observation adjustment (tob) and as raw values.

https://www.ncei.noaa.gov/pub/data/ushcn/v2.5/

The readme.txt file explains the file formats and coding. There is a DMFLAG description:

E = The value is estimated using values from surrounding stations because a monthly value could not be computed from daily data; or, the pairwise homogenization algorithm removed the value because of too many apparent inhomogeneities occuring close together in time.

So I analyzed the July data in the FLs.52j file for tavg to separate “E-only” flagged data and not-E-flagged data to compare to each other and to the finalized values. The values plotted are the mean for each year of all non-missing values. I also include a plot of the count of E and not-E entries in each year’s data, and the total count of stations by year for which values are non-missing. In the early years, there are less than 1,218.

The resulting plots are here at this link.

https://drive.google.com/drive/folders/1nBKn8NgO1iZwOk94XisExAIUWdOYZxPw?usp=sharing

The E-only component is cooler at the beginning and warmer at the end of the time series, than the final values (FLs). In the middle, there are relatively fewer E-flagged values, but these fewer entries are much warmer in part of the record. The not-E component is warmer than the final values at the beginning and cooler at the end. The E-only values are cooler than the not-E values in the early part of the time series and much warmer afterward.

Make of it what you will, but these are the bulk results for July Tavg data for USHCN. The E-flagged and not-E-flagged values are very different.

I hope you are not implying there is a contrived upward trend due to the homogenisation process. No respected scientific organisation would ever do such a thing.

/s Lols

USHCN is a US index, not global. Further, it was replaced by climdiv in

2014. No global index uses local average replacements.

USCCRN is a very close match to the linear trend of UAH.

UAH is validated by pristine surface data, as well as balloon data.

ClimDiv is deliberately matched to USCRN, because any massive divergence would show that adjustment were taking place.

GHCN is shown to have manic, unjustified adjustments of all historic data.

Wow! What strikes me are the differences between the plots. Of course, the area covered changes with each plot, but still, they are very different. If nothing else, we need to be aware of the uncertainty in the land-only records.

Yes, and one wonders whether the application of adjustment algorithms improves the reliability of the finalized record as an indicator of overall warming or cooling, or degrades it.

“Given these peer-reviewed analyses, we can comfortably assume that the satellite data is at least as accurate as the surface data, if not more accurate.”

We got a glimpse of the real accuracy when both UAH and RSS brought out new versions a few years ago. They were radically different. While UAH had been warming faster than most surface measures, and RSS slower ( so Lord Monckton used it for his Pause series), after the changes they switched places, with RSS now warmer, and UAH showing less warming than surface data. This is discussed here. The UAH trend went from 0.16 to 0.13 C/decade.

The graph below, from the link, shows the difference between the successive versions of each satellite set, compared with changes to GISS over a longer period of years:

As you’ll see, the version difference could be as much as 0.1C. So if V6 is 0.01 accurate, that must be a sudden change from V5.6.

RSS is now “adjusted” using “climate models”.. so funny !! Try again

UAH is validated with balloon and sample pristine surface data.

Apples and oranges Nick. Most of the big changes are after 2000. In any case, I’m not comfortable with the RSS changes, they used some clearly inaccurate satellite data (NOAA-15). But, even accepting the differences you show in your plot, how do you explain the much larger differences between HadSST and HadCRUT5? Your scale covers -.1 to +.1, Figure 1 shows a difference of 0.05 per decade over 4 decades!

“Apples and oranges Nick. Most of the big changes are after 2000.”

Not apples and oranges. At the time that plot was made in 2018, V5.6 and V6 were both being published by UAH as global indices for TLT. Two values, with a 0.1°C difference. Yet you cite their 1990 claim of 0.01°C accuracy. Was that true of both V5.6 and V6? ???

“how do you explain the much larger differences between HadSST

and HadCRUT5?”

Now that really is apples and oranges (with UAH being a banana). They are measuring different places, as Bellman has been saying. And we have long known that the land surface is warming faster than the sea, as expected, since excess GHG forcing heat has to go into the sea depths.

The trends for 1979-2021 from here are in °C/Century:

1.907 HADCRUT 5

1.435 HadSST 4

2.805 CRUTEM

NOAA gets similar:

1.724 Land/Ocean

1.264 SST

2.959 Land

Land is warming faster, and raises the land/ocean average. That is just the way it is. BTW satellite trends are:

1.345 UAH TLT

2.140 RSS TLT

Not a brilliant demonstration of satellite accuracy.

Here is Roy’s own version of the difference plot – note the different scale

http://www.drroyspencer.com/wp-content/uploads/V6-vs-v5.6-LT-1979-Mar2015.gif

Sorry, it doesn’t show, as it lacks https there. Here is my copy:

Known changes for known satellite issues.

Totally unlike the agenda driven manipulations of the sparse urban surface data.

Good points.

“Known changes for known satellite issues.”

Andy May’s ” the accuracy Roy Spencer and John Christy estimate for their satellite measurements of ±0.011°C for a monthly average” refers to a 1990 estimate. His “the satellites are merged with an accurate procedure described by John Christy.[2]” refers to a 2000 paper. These massive changes were made in 2015. When were the “known satellite issues” known? How do we know that in 2015 they finally solved them all?

And then there is the ongoing huge discrepancy between UAH and RSS. I guess we’ll be told that unlike UAH, RSS make “agenda-driven manipulations”. But when the positions were reversed, and RSS showed more cooling, WUWT loved them. The whole first series of Pause articles of Lord Monckton was based exclusively on RSS. UAH then told a different story.

Since you are dealing in anomalies tell us exactly what you are complaining about. More to the point, how does a temp of 16 vs 15 for a baseline make a difference in an anomaly trend?

The baseline average is supposed to eliminate differences in absolute temp. Are you saying there is a problem with this?

“Since you are dealing in anomalies”

Completely muddled. This whole post deals in anomalies. UAH posts nothing but anomalies. Have you ever seen an underlying average absolute TLT temperature? What would it even mean? At what altitude? It certainly wouldn’t be fifteen or sixteen degrees.

Why can’t you answer the questions?

The very fact that NH/SH temp anomalies are averaged together says that most believe this is ok.

Nick,

You are oversimplifying the computation of error. First of all, true “error” is never really known and can only be estimated. Second, on a series, like HadCRUT5 or UAH 6, it depends upon the time it is estimated and what the measurements are compared to. I referenced Spencer and Christy 1990 deliberately because it measured the error in the satellite measurement directly and obviously ignored the then unknown problems of bias and drift. It was the error of a perfect satellite measurement. It was thus comparable to the estimate of error in HadCRUT5 I was using.

As you say, a full estimate of all possible error in both measurements, if it could even be done, would be huge. But Tamblyn’s comment implied that one was better than the other, I needed to compare apples and apples, which I did. You are trying to compare all current known sources of error in UAH, even post 2000 to a minimalist measure of error in HadCRUT5, not valid and disingenuous.

If we included all known sources of error in HadCRUT5, the total error bars would be huge, probably much larger than UAH, but who knows? I refer you to Pat Frank’s (2019, 2021), Nicola Scafetta (2021), and Peter O’Neill (2022). I think you know all this and are just blowing smoke, but if you really want to understand estimating error, read all the articles.

“It was the error of a perfect satellite measurement. “

Interesting concept.

“I refer you to Pat Frank’s (2019, 2021), Nicola Scafetta (2021), and Peter O’Neill (2022). “

Thank you, but no.

Nick, I find it amusing that you decline to study the science of error and uncertainty. You and the IPCC are a perfect fit.

“Nick, I find it amusing that you decline to study the science of error and uncertainty.”

By declining to “study” Pat Frank’s citation free rants – rants he and superterranea have demonstrably, repeatedly, debunked – he is instead spending his time usefully.

I have read and written plenty about Pat Frank’s paper, which was rightly rejected vigorously by numerous journals, and have been cited I think just twice in research journals. Even Roy Spencer said it was all nonsense.

Because all the great and mighty climastrologers don’t understand that uncertainty is not error. Like yourself.

“Known changes for known satellite issues.”

The changes between v6 and v5 were a lot more than correcting for known satellite issues. It was a complete rewrite, using different methods.

According to Dr Spencer the biggest reason for the change in warming rates was reducing the sensitivity to the land surface temperature.

https://www.drroyspencer.com/2015/04/version-6-0-of-the-uah-temperature-dataset-released-new-lt-trend-0-11-cdecade/

At least UAH uses a consistent method throughout its record. They don’t do correction midway through the entire record because an LIG was replaced so the appearance of a long record is maintained.

Are you saying that UAH makes no correction for the different satellites used over the last 40 years?

I didn’t say that did I? I said they don’t adjust part of the past record in order to maintain a “long record”. You should work on your comprehension.

At some point as new satellites are put into orbit, that may become an issue. You can belch about it at that time.

Then how do they correct for all the different satellites that have been used over the last 40 years?

Maybe I’m not understanding what you think the issue is, but UAH does have to adjust the data for each satellite.

https://www.drroyspencer.com/2015/04/version-6-0-of-the-uah-temperature-dataset-released-new-lt-trend-0-11-cdecade/

You do realize that corrections are the result of a detailed procedure for basically determining a correction table/graph for instruments, right?

These are not “let’s look at the data and change what we think is wrong” type corrections.

It isn’t dealing with “less than perfect data”, as you so ineloquently state, and using the data to locate what you think is in error and then using other data to “calculate” the corrections.

Every scientific instrument that has been calibrated should have a calibration table or sheet that shows how to “correct” readings taken by that instrument.

UAH has not only access to the instruments, but methods and procedures to determine calibration corrections.

That is a far cry from doing a seat of the pants “let’s look at the data and change what we think is wrong” type of correction based only upon some fanciful theory.

I am sure the UAH folks not only keep the original measurements, but also use them to calculate temperatures based upon the new calibration charts they create.

If you have read their procedures you would know that they are very careful in what they do by satellite.

“It isn’t dealing with “less than perfect data”, as you so ineloquently state”

That “ineloquant” statement was from Dr Roy Spencer, as should be clear from the fact I put it in a blockquote and gave you the source of the quotation.

Bellman,

All this is well known. And I agree with you. It still has no impact on what I wrote. Any estimate of error is a snapshot in time.

Where you and Nick are going off the rails is your mixing of modern technology and the technology of 30 years ago. This is explicitly not what I did in the post. You act as if the exact error in either the HadCRUT5 or the UAH records is known today, this is far from true, and there will be a UAH7 and a HadCRUT6 someday with supposed improvements.

I was simply finding two compatible estimates of error and comparing them. You are debating an unrelated issue for which there is no answer. You are asking: “What is the absolute error in UAH.” It doesn’t exist for UAH, and it doesn’t exist for HadCRUT5.

My problem with all of this is the claim of something like 0.01 error. That is most likely a calculation of the standard deviation of sample means and not the uncertainty in the actual measurements. It’s more a measurement of the precision of the measurement than the uncertainty associated with the measurements. The uncertainty associated with the measurements is undoubtedly higher than the precision of the measurements. If so, then trying to estimate a linear trend from the data, at least to a resolution of hundredths of a degree, is a losing proposition. Anomalies don’t help. Anomalies inherit the uncertainty of the base data.

To me this is all nothing more than seeing phantoms in the fog. The uncertainty of what you are seeing makes it impossible to be sure of what is going on.

This is true, but I compared it to a comparable estimate of the error of measurement from HadCRUT5. I wanted to compare apples to apples to show that Tamblyn’s statement was wrong. I did no comprehensive study of the accuracy of UAH, this has already been done by John Christy and others. I just compared the ideal accuracy of both UAH and HadCRUT.

They all use standard deviation of the sample means instead of true propagation of uncertainty from the data elements. Thus comparisons are meaningless as to uncertainty. Berkeley even states they use device resolution as the measurement uncertainty. Simply unfreakingamazing.

No, they don’t. Christy et al. 2003 perform a type B evaluation and propagate the uncertainty per the rules that have been well established for decades by the likes of Bevington, Taylor, GUM, NIST, etc while Mears et al. 2009 use the monte carlo technique. Rhode et al. 2013 performs the jackknife technique for evaluating uncertainty. And as I’ve pointed out to you before you are misrepresenting the device resolution you found in the data files. I think Andy deserves the respect of being given the actual quote (in its entirety) that describes the device resolution figure you see in Berkeley Earth’s data files for full transparency.

1. Assuming the uncertainty of a measurement is equal to the precision of the measurement device is NOT a Type B evaluation.

2. None of the names you dropped claim you can use Monte Carlo techniques on measurements containing systematic error, MC techniques work with random error.

As usual you try to claim statistical analysis techniques work in all measurement situations when both Taylor and Bevington specifically say they don’t and I have provided you their exact quotes. You have one tool in your toolbelt and you try to apply it to everything.

That’s 3 strawmen in one post. And out of respect I’m giving you the opportunity to tell Andy what Berkeley Earth actually said in regards to the uncertainty figure published in the raw data files.

No strawmen, just facts. None of which you actually address. You just use the Argument by Dismissal tactic.

Here is what the Berkjeley Earth raw data file for Tmax, single valued data shows for uncertainty:

” Uncertainty: This is an estimate of the temperature uncertainty, also expressed in degrees Celsius. Please refer to dataset README file to determine the nature of this value. For raw data, uncertainty values usually reflect only the precision at which the measurement was reported. For higher level data products, the uncertainty may include estimates of statistical and systematic uncertainties in addition to the measurement precision certainty.

In addition, the format of these values may reflect conversion from Fahrenheit.” (bolding mine, tg)

I’ve given you this at least twice before. Why don’t you write it on a post-it and stick it up somewhere you can see it? Note carefully the word “MAY” in the text above, not “will” but “may”. So who knows? And if you are using products produced from raw data then you are *NOT* getting anything other than the assumed precision of the measuring device. And even that is questionable. As I showed you before, BE assumes the precision of some data from the 1800’s to be 0.01C! REALLY?

Here is what *I* said: “It’s more a measurement of the precision of the measurement than the uncertainty associated with the measurements.”

And I stand by that statement. ANYTHING based on BE raw data severely understates the uncertainties associated with the product.

Now, why don’t you answer my Points 1 and 2?

TG said: “I’ve given you this at least twice before.”

No. **I** have given this to you at least twice before. This is the first time I’ve seen you post it.

TG said: “Why don’t you write it on a post-it and stick it up somewhere you can see it?”

You mean like here and here?

TG said: “So who knows?”

We all know because Berkeley Earth tells us.

TG said: “BE assumes the precision of some data from the 1800’s to be 0.01C! REALLY?”

Where do you see 0.01 C?

TG said: “Here is what *I* said: “It’s more a measurement of the precision of the measurement than the uncertainty associated with the measurements.””

TG then said: “And I stand by that statement.”

And that statement is dead wrong. It is wrong because you are conflating the instrument resolution uncertainty that you saw in the raw files with the uncertainty of the global average which Berkeley Earth provides here and is documented here which contains far more than just the individual instrument resolution uncertainty.

“We all know because Berkeley Earth tells us.”

I don’t know. How do you know? Do you know *exactly* how BE guesses at the uncertainty of temperatures from 1920 in their “higher level” products?

I’ve posted this to you before. I’m not going to spend my time trying to educate you on this again. Search the uncertainty entries for 1920 in their Tmax, single value raw data.

“Uncertainties represent the 95% confidence interval for statistical and spatial undersampling effects as well as ocean biases.”

In other words they are *STILL* using the standard deviation of the sample means which is *NOT* uncertainty!

TG said: “Search the uncertainty entries for 1920 in their Tmax, single value raw data.”

I don’t see 0.01 anywhere. I see values on the order of 0.1 (for C reporting) and 0.05 (for F reporting) in the raw files that have some variability depending on the number of observations made, but I don’t see 0.01.

Here is a record from 1925:

702142 1 1925.792 3.903 0.0090 31 0

See the 0.0090 value? That is the uncertainty interval for that record. It was recorded for 1925.

.009 uncertainty? Really? This is probably based on the standard deviation of the sample means – which is a measure of the precision of the calculated mean and *NOT* a measure of the accuracy of the mean (i.e. its uncertainty) stemming from propagation of the uncertainties of the data elements.

Got it. Yeah, so that’s telling you that the instrument was marked in 1/10 of degree C increments. Note that 0.05 / sqrt(31) = 0.09. I do agree that the 0.09 is only the uncertainty of the monthly mean with the precision propagated through. It does not include other forms of uncertainty. But we already know that because that’s what the file tells us.

It’s not 0.09, it’s 0.009! Meaning the LIG thermometers would have had to be marked in HUNDREDTHS of a degree! 0.005/sqrt(31) ≈ 0.009

And they had calibrated (in tenths or hundredths!) LIG thermometers in 1925?

“It does not include other forms of uncertainty. But we already know that because that’s what the file tells us.”

And any product using the raw data is going to be WAY off on their uncertainty calculations!

TG said: “It’s not 0.09, it’s 0.009!”

Doh. That’s definitely a typo on my part. I meant to say 0.05 / sqrt(31) = 0.009.

TG said: “0.005/sqrt(31) ≈ 0.009”

0.005 / sqrt(31) = 0.0009 not 0.009

TG said: “Meaning the LIG thermometers would have had to be marked in HUNDREDTHS of a degree!”

0.05 / sqrt(31) = 0.009. In other words, 0.009 means the thermometer is marked in tenths of a degree C.

My thermometer reads to .01 😉

So does mine. But its uncertainty is 0.5C, stated right in the documentation for it. Precision is not accuracy.

Orbital precession dominates the solar variation over Earth. Looking at average solar intensity sets failure as the only destiny in analysing climate change.

The solar intensity over the northern hemisphere has been increasing for 500 years and reducing in the Southern Hemisphere for the same period.

Looking specifically at the land masses in the NH, the solar variation since 1970 to 2020 is not trivial when considered on a monthly basis:

Jan 0.13W/sq.m

Feb 0.36

Mar 0.62

Apr 0.83

May 0.76

Jun 0.32

Jul -0.24

Aug -0.64

Sep -0.7

Oct -0.49

Nov -0.22

Dec -0.05

So boreal springs are getting more sunlight but boreal autumn less. These are for the entire NH land mass but there are more significant variations in solar intensity at various latitude.

Good temperature records show these trends. The oceans are almost constant surface temperature; just more area limits to 30C when the land warms up and net evaporation drops off. However in October, November and December, more of the latent heat input to the oceans is transferred to land so the land cools less in those months than it warms in response to the solar changes in the boreal spring. The other factor is that most of the Earth’s land area is in the NH. Those three months are the only time atmospheric water actually warms the surface. Atmospheric water is a net cooling agent.

Atmospheric water has a residence time estimated at 7 days and annual variation of more than 25%. To think that increasing atmospheric water is going to cause runaway global warming is so naive it is ridiculous. More atmospheric water indicates that land is warming more than oceans as is expected given the changing solar intensity due to orbital precession and global distribution of land and water surfaces..

The surface and sea surface trends were almost identical up to ~1980 and from then the surface and sea surface trends diverge for some reason.

infills and homogenization make surface records unfit for purpose … and garbage …

Exactly.

There is only one reason for homogenization and that is say “we are preserving long records”. That is an absolutely the worst scientific rationalization I have ever seen.

Fabricating new data to replace accurate, measured and recorded data is wrong. Records should be either declared unfit for purpose and discarded or the original stopped and a new one started.

If you are left with short records, deal with it!

Surface temperature data sets aren’t actually measuring climate as they are measuring a tiny fraction of the surface. This tiny fraction are then adjusted to another tiny fraction to try and enhanced confirmation bias by squeezing out further warming.

The best way to describe surface temperature data sets are measuring weather to the higher temperature possible.

In most cases, the “tiny fraction of the surface” amounts to what is in the Stevenson Screen when the readings were taken.

Look at the attached. Can anyone really expect that the min/max temps wouldn’t vary in a similar fashion? This shows a 10 degree difference at least, and in a small geographic area.

The range of different thermometers values reflects the different microclimates where each thermometer resides. To say we “know” what the temperatures were down to the 1/100th of a degree or less is simply science fiction. Even the newest thermometers only read what the temperatures are within that little bitty enclosure at that little bitty piece of land where it is located.

Has anyone yet noticed that plots of landuse change almost exactly match the hockey stick? We even see the levelling off of land development around year 2000, in line with temperature. We could simply be witnessing the changing surface energy balance sheet, if you happen to buy into hockey-stick temperature plots. Roughly 40% of the land surface has now been rendered effectively desert by human activity. This would have significant impact on latent heat flux, or evaporative cooling of the land surface.

“Has anyone yet noticed that plots of landuse change almost exactly match the hockey stick? We even see the levelling off of land development around year 2000, in line with temperature. We could simply be witnessing the changing surface energy balance sheet,”

The same magnitude of temperature increase occurred from 1910 to 1940, when land development was much less than today.

“The same magnitude of temperature increase occurred from 1910 to 1940, when land development was much less than today.”

The same as what? The rate of landcover change in the period you mention appears to be quite rapid thanks to petrol tractors in the US and bigger tillage implements. After the damage was done Roosevelt implemented better conservation practices.

This is a very complex issue. It’s why Freeman Dyson said climate models had to be more holistic in order to better represent the climate.

I would note that since the 40’s we have seen a lot of changes in crops being planted as well as the amount of land covered in crops. I can tell you that the temperature measured in the middle of a 200 acres of soybeans is lower than the temperature on the edges of the field. Evapotranspiration is the likely reason. If that field used to be planted in alfalfa or milo there was probably a far different temperature profile. The same thing applies to the amount of corn being grown compared to what crops were being raised in the 40’s. The higher use of fertilizer to increase crop growth has certainly made a difference in the amount of land usable for raising crops which undoubtedly has changed temperature profiles. Moldboard plowing of fields has all but disappeared in farming today and I’m sure that has made some kind of difference.

Climate models dependent solely on CO2 growth to forecast future temperatures take none of this into consideration. They are not holistic at all. They are mere representations of the modelers biases.

“This is a bit less accurate than the accuracy Roy Spencer and John Christy estimate for their satellite measurements of ±0.011°C for a monthly average.[1]”

The source for that estimate is listed as 1990, which would be several versions of UAH out of date.

But I doubt that the uncertainty in monthly averages is very important, and difference in trends between different data sets is more likely to be caused by a changing bias.

“The HadCRUT5 dataset is well below the other two in the beginning of the period and above at the end.”

That’s just an artifact of using a recent base period. HadCRUT is warming faster than UAH, but what that looks like depends on where you align the anomalies. You don’t know if they both started the same and HadCRUT has pulled away, or if HadCRUT started much lower and has now caught u, or anything else.

“Third, if HadCRUT5 is correct, it means the surface is warming faster than the lower and middle troposphere.”

That also requires UAH to be correct. RSS shows the lower troposphere warming faster than any of the surface data sets.

“The justification for the 36% increase in warming rate between the ocean and the land plus ocean records is not clear and probably indicates a problem with the CRUTEM5 land temperature record, the greenhouse gas warming hypothesis, or both.”

But UAH shows a 50% difference between land and ocean warming rates.

“RSS shows the lower troposphere warming faster than any of the surface data sets.”

RSS is “adjusted” using “climate models” Of course it shows excessive warming. !

Wasn’t RSS using climate models back in the days of version 3? Back when RSS was being declared the most accurate of all the data sets.

I don’t know why using models of how the climate works would be less accurate than the much simpler linear models UAH uses.

Because climate models don’t use actual measurements and UAH does.

Both use actual measurements, both make corrections using models. It’s just that UAH uses an empirically derived linear model for it’s diurnal drift corrections, whereas RSS uses a climate model for its corrections.

I said models don’t use actual measurements. And they don’t. Pay attention!

Of course models use actual measurements. This is less no true for global circulation models as it is for any scientific model like newtonian mechanics, relativistic mechanics, quantum mechanics, the UAH TLT model, etc.

The criticism the skeptical community had with RSS’s approach was not that they used a model; but that they used a GCM model. They listened to the criticism and adopted a model closer to what UAH uses.

BTW…UAH uses a one-size-fits-all model for their LT product. Specifically that model is LT = A*MT + B*TP + C*LS where A, B, and C are set to +1.538, -0.548, +0.010 respectively. I encourage you to play around with this model. Experiment with different parameters for A, B, and C and see how it changes the LT temperature. Even small changes in these tuning parameters can result in large changes in the LT trend.

Not according to Nick Stokes and the other model enthusiasts. The models use the physics, not the measurements for initial values. They only compare past measurements to validate their hindcasts.

Get with Stokes and get your story straight.

You can’t even keep your own story straight. A, B, and C are not measurements. They are guesses. And the rest are not temperature measurements either, they are conversions from radiance to temperature.

TG said: “Not according to Nick Stokes and the other model enthusiasts. The models use the physics, not the measurements for initial values. They only compare past measurements to validate their hindcasts.”

I’m not sure what context Nick Stokes is referring to. And I suspect you are talking about global circulation models. GCMs are models. But not all models are GCMs. For example, F = d/dt(mv) is a model, but it is not a GCM itself. In fact, all GCMs use the F = d/dt(mv) model plus many other models. Models are built on top of other models. When you speak of a model you need to be specific about which model you are referring to avoid confusion.

TG said: “You can’t even keep your own story straight.”

I stand by what I said.

TG said: “A, B, and C are not measurements. They are guesses.”

Duh! They are elements of the model. MT, TP, and LS are the measurements.

TG said: “And the rest are not temperature measurements either, they are conversions from radiance to temperature.”

They are temperature measurements. The units are in Kelvin. Just because they are outputs from yet another model does not make them any less of a measurement than a measurement of a temperature from an RTD which also uses a model to map electrical resistance to a meaningful value in units of C or K.

Just about everything WUWT is about temperature. Temperatures are forecasted using the GCM’s.

“Duh! They are elements of the model. MT, TP, and LS are the measurements.”

You said the temperature models use temperatures! They don’t. They use guesses at temperatures.

I’m sorry, radiance is not temperature. It’s a guess at a temperature. It might be a good guess but it is *still* a guess. That’s why the guesses get modified every so often!

RTD sensors are calibrated against a lab standard and a calibration curve developed. Where is the lab standard used to for a calibration of a radiance sensor? Is there a calibration lab floating at 10000 feet that I’ve never heard about?

TG said: “You said the temperature models use temperatures!”

What I said is that the UAH TLT model is LT = A*MT + B*TP + C*LS where A, B, and C are set to +1.538, -0.548, +0.010 respectively. This model uses tuning parameters A, B, and C to temperature inputs MT, TP, and LS to derive the TLT temperature. Because its output is a temperature and because its inputs are temperatures I suppose you can call that a temperature model that use temperatures. But those are your words; not mine. If YOU have a problem with the way YOU worded that then it is up to YOU to reformulate it. I’m not going to go out of my way to defend your statements so make sure the reformulation is something you are okay with.

“ But those are your words; not mine.”

No, they are *YOUR* words.

You said:

“Of course models use actual measurements. This is less no true for global circulation models as it is for any scientific model like newtonian mechanics, relativistic mechanics, quantum mechanics, the UAH TLT model, etc.” (bolding mine, tg)

Tlt isn’t truly a model. It predicts nothing, nothing backwards and nothing forward. It is a weighted composite of measurements. You may as well say the speedometer on your car is a model.

Again, look around. The model advocates *do* claim they don’t use temperatures

go here: https://www.carbonbrief.org/qa-how-do-climate-models-work

You are doing a poor job of running and hiding. Try again.

“I said models don’t use actual measurements.”

I’m not sure what models you are talking about.

As far as I know, the only models RSS are using is to correct for diurnal drift. Both UAH and RSS do that, they use different models. UAH uses a simple linear model, whilst RSS uses a model based on the diurnal cycle.

You are still showing your mathematician biases.

length x width = area is *NOT* a model. It is a calculation using measurements with uncertainty. Tlt is just a calculation. Only a mathematician would call a calculation a “model”.

And using a model to calibrate a calculation depends on the model being accurate, which they aren’t! UAH uses actual measurements from balloons to calibrate against.

I consider F=d/dt(mv) and LT = 1.538*MT – 0.548*TP + 0.010*LS to be models because F and LT are only approximations of the true force F and true temperature LT. They are approximations based on how Newton and UAH modeled reality. Einstein and RSS modeled reality differently and thus get different results for the force F and temperature LT.

UAH doesn’t calibrate against a “model”. It calibrates its calculations against measurements made by balloons.

note: I would write your F equation as F = ma = m * (dv/dt). It’s much more clear. Or even d(mv)/dt

MT, TP, and LS are measurements, not variables. They are like the measurements of distance. L_total = L1 + L2 + L3. That’s a calculation, not a functional relationship and therefore is not a “model”.

distance is a measurement. Time is a measurement. velocity is a variable, dL/dt, Different combinations of distance and time can give the same velocity. Velocity defines a model describing the functional relationship between distance and time. Acceleration is a variable, different changes in velocity over different time intervals can give the same acceleration, i.e. dV/dt. So acceleration is also a functional relationship.

L x W = A is a model. Getting A from actual measurement means subdividing a lot into 1 sqm blocks and counting each square meter one by one without measuring L and W first. However simple L x W is, it still is a model and a very accurate one that has served as well for thousands of years.

The comparison is (ocean) vs (land plus ocean). UAH does show 50% difference between land and ocean, but the (ocean) rate is 0.12, while (land+ocean) is .14, only a 16% increase.

Sorry, my mistake.

Bellman, Lots of questions/comments. My answers are in [square brackets]

“This is a bit less accurate than the accuracy Roy Spencer and John Christy estimate for their satellite measurements of ±0.011°C for a monthly average.[1]”

The source for that estimate is listed as 1990, which would be several versions of UAH out of date.

But I doubt that the uncertainty in monthly averages is very important, and difference in trends between different data sets is more likely to be caused by a changing bias.

[The original accuracy is applicable for a monthly average. However bias, whether due to a decaying orbit, or another reason, will change that with time. I wanted to have a number comparable to the HadCRUT5 number I was using.]

“The HadCRUT5 dataset is well below the other two in the beginning of the period and above at the end.”

That’s just an artifact of using a recent base period. HadCRUT is warming faster than UAH, but what that looks like depends on where you align the anomalies. You don’t know if they both started the same and HadCRUT has pulled away, or if HadCRUT started much lower and has now caught u, or anything else.

[It is not an artifact of the base period, I was comparing trends, the base period has no effect on the trends.]

“Third, if HadCRUT5 is correct, it means the surface is warming faster than the lower and middle troposphere.”

That also requires UAH to be correct. RSS shows the lower troposphere warming faster than any of the surface data sets.

[The UAH accuracy is not a factor. The UAH trend is the same as the HadSST trend to two decimal places, the HadCRUT5 trend is 36% higher. If all the numbrs are correct, then the surface it warming 36% faster than the middle troposphere and the SST.]

“The justification for the 36% increase in warming rate between the ocean and the land plus ocean records is not clear and probably indicates a problem with the CRUTEM5 land temperature record, the greenhouse gas warming hypothesis, or both.”

But UAH shows a 50% difference between land and ocean warming rates.

[You need to show that or supply a reference. In any case, HadCRUT5 is land+ocean. I am not comparing CRUTEM to HadSST.]

“The original accuracy is applicable for a monthly average. However bias, whether due to a decaying orbit, or another reason, will change that with time. I wanted to have a number comparable to the HadCRUT5 number I was using.“

My problem is you are taking at face value a improbably small uncertainty interval, which given how different the 1990s version of UAH was to current ones doesn’t seem justified.

“It is not an artifact of the base period, I was comparing trends, the base period has no effect on the trends.“

The trends are not affected by the base period. What is affected by base period is the statement “The HadCRUT5 dataset is well below the other two in the beginning of the period and above at the end.”

“The UAH accuracy is not a factor. The UAH trend is the same as the HadSST trend to two decimal places, the HadCRUT5 trend is 36% higher. If all the numbrs are correct, then the surface it warming 36% faster than the middle troposphere and the SST.“

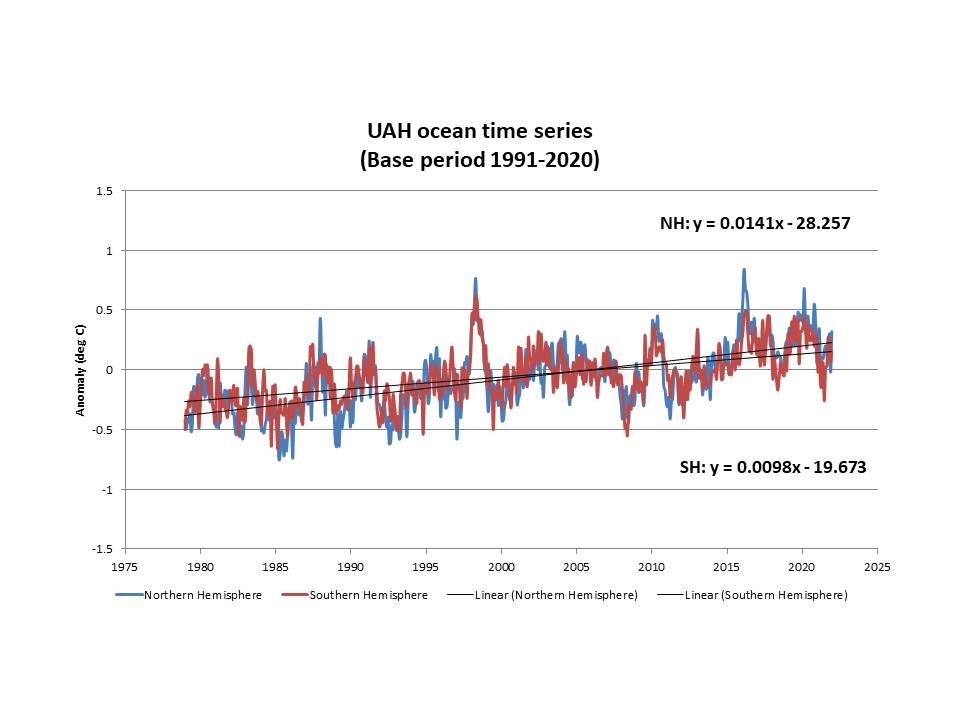

But given that UAH Global and HadSST are measuring different things, having the same trend to two decimal places must be a coincidence. The fact that UAH Sea area trend is different demonstrates it must be a coincidence.

“You need to show that or supply a reference. In any case, HadCRUT5 is land+ocean. I am not comparing CRUTEM to HadSST.”

As Ted pointed out I was mixing up the land only values with global. The correct figures from UAH are Global 0.14 °C / decade, Sea only 0.12 °C / decade.

Source: http://www.drroyspencer.com/2022/03/uah-global-temperature-update-for-february-2022-0-00-deg-c/

The difference is about 16%, compared with the 36% for HadCRUT 5.

Correct, the UAH difference is 16%, I have attached a figure illustrating that.

See my answer to Nick Stokes above. Estimating error is very complicated and comparing the error estimates for two different things, as Tamblyn tried to do, is even more complicated.

What I tried to do was find peer-reviewed estimates of the inherent error in one-month satellite temperature estimates to one-month HadCRUT5 estimates. They can’t be perfect comparisons, they measure two different things as you mention. But, I think I did OK. Both are estimates of all-in error, but both assume no extraordinary or unknown sources of error, both assume ideal conditions. Like with Nick, if you have questions about error estimates in measuring average temperatures, see Pat Frank’s (2019, 2021), Nicola Scafetta (2021), and Peter O’Neill (2022). I think both of you know all this and are just blowing smoke, but if you really want to understand estimating error, read all the articles. This sort of thing was part of my past job for 42 years.

Remember, the original estimate in Spencer’s 1990 paper was for one perfect satellite. But, his estimate was validated (except for some then unknown processing problems) by Christy, et al. 2000, with comparisons to weather balloon data. Like all areas of scientific inquiry, estimating error is a growing thing, too frequently ignored, especially by the IPCC. You should understand all this, Tamblyn did not provide any backup for his assertion that surface measurements were more accurate than satellite. I used as close to an apples-to-apples set of monthly estimates of error under “perfect” conditions to show they are of comparable accuracy, and satellite might even be more accurate.

Comparing an estimate, under perfect conditions, from 1990 to today, as you and Nick are trying to do, is explicitly not valid in this case.

“Correct, the UAH difference is 16%, I have attached a figure illustrating that.”

It doesn’t seem to me to be a problem that satellite data shows a smaller difference between land and sea than surface data sets. Satellites are measuring temperature well above the surface where I would expect there to have been some mixing between the different air masses.

Climate models, at least in the tropics, predict that middle troposphere should warm faster than the surface. If the surface is warming faster, as the data in Figure 1 suggests, it is a problem for the models and the hypothesis that GHGs control warming. That is the main point. It isn’t the difference between the surface and the tropospheric temperatures that is the issue, it is the direction, which is the opposite of what the models say it should be. See the previous post as well.

“Estimating error is very complicated and comparing the error estimates for two different things, as Tamblyn tried to do, is even more complicated.“

I’m certainly not trying to make any claims about the true uncertainty of any data set. It’s complicated and well above my pay grade. To me the exact monthly uncertainty is probably not knowable and not that useful.

I’ve been spending much of my time here being simultaneously being attacked for not accepting the near perfection of whichever satellite data is in fashion, and being attacked for being a cheer leader for UAH becasue I doubt the monthly uncertainty could be as high as ±3 °C.

I don’t necessarily think that UAH is worse or better than any other data set, and prefer to look at a range of evidence. Empirically, comparing one data set to another, it seems impossible that any data set can be accurate to a couple of hundredths of a degree, and impossible that any could be uncertain to multiple degrees.

But as I said, I don’t think the monthly uncertainty is the problem. The problem is that there are differences in the trends in all data sets, and that isn’t caused by random errors – it has to be caused by long terms changes in bias.

I’m not either and it is above my paygrade as well.

Agreed, and well said.

I trust the satellite data, but I also trust this: National Temperature Index | National Centers for Environmental Information (NCEI) (noaa.gov)

We ignore the lessons of the dust bowl and surface energy budget of the USA in 1930s at our peril. Looking at the sky with TOA balances is gettin silly – consensus science has run amok and is destroying civilization.

Furthermore, at least in your choices, sea surface temperatures (a medium with a very different specific heat capacity than air or surficial materials) are averaged with air temperatures over land. They shouldn’t be conflated!

The Hadley Centre has estimated that their best current estimate of global monthly average SST accuracy (sea surface temperature average uncertainty from 2000 to 2021) is about ±0.033°C and David Karoly supplied an estimate of ±0.1°C.

Somebody doesn’t know the difference between precision and accuracy.

Probably!

A few years ago, the Roy Spencer site gave the overall accuracy of the satellite measuring system as around 1 C. All the rest was averaging, lots of averaging.

As we know, whilst averaging can improve precision, it can never change accuracy.

Surface temperatures do not correlate well day-to-day with temperatures above the friction layer. High pressure systems tend to be associated with cold air mass. Cold air is more dense, sinking and giving high pressure to push the air at the surface out of the way. This means little cloud forms so insolation warms the surface during the day, so warm surface is caused by cold atmosphere. At night terrestrial radiation cools the surface so low-level temperatures will be low.

Conversely low pressure systems tend to be warm, with warm air mass rising at a front, for example, to cause a frontal low. Above the centre of the low most of the air mass is warm, but due to cloud the sun does not warm the surface well, but there is also much less diurnal variation with less terrestrial radiation escaping.

Following on from our exchange under your “Comparing AR5 to AR6” article here at WUWT, after “thinking about” the issue a bit more I have come up with a “stripped down” version of my hotspot plot.

While it isn’t clear whether El Ninos (alone ???) result in “additional warming” when it comes to the long-term underlying trends, I don’t think that ENSO’s influence is limited to the lower troposphere (/ below 6,500 feet) …

NB :”400-150 hPa” counts as “above 6,500 feet”, but this isn’t a plot of the “Global / 90°N-90°S” average anomalies, it is strictly limited to the famous “tropical (20°N-20°S) tropospheric hotspot” region !

Still “Work In Progress”, but as I’m limited to attaching 1 image file from my local hard disk per post references to this sub-thread may come in useful in the future …

For ONI (V5), from 1964 to 2021 (for “adjusting” the RATPAC-B line) the trend is -0.135 “units” (°C ???) per century and the average is -0.003 “units”.

From 1979 to 2021 (for “adjusting” all three MSU lines), however, the trend is -0.641 and the average is -0.02.

Looking at the relative amplitudes (Max – Min) of the 1987/8/9 and 1997/8/9 “fluctuations” gave me scaling factors of 0.445 for the RATPAC-B (1964-2021) curve and 0.314 for the MSU (1979-2021) curves.

NB : The “adjustment delay” used for ONI is fixed at 4 months.

YMMV …

Your graphs and analysis are always much appreciated.

While I appreciate the compliment, I wouldn’t qualify what I do as “analysis” … “idle musings”, maybe ?

It is always possible that I might be wrong !

Given the limited scope of the above graphs (20N-20°S latitude band, 400-150 hPa altitude averages), not to mention the lack of verification by other people, I cannot claim to have “definitively proven” that “The climate models run way too hot !” … though that last one is (very ?) suggestive …

All I need to do now is find the “copious spare time” (sic) to extend the above “analysis” to the global latitude “band” (90°N-90°S) and the lower-troposphere datasets (MSU [T]LT + RATPAC 850-300 hPa altitude averages).

None of those uncertainties on the temperature record are correct. It’s absurd to assert you can measure global average temperature to a fraction of a degree C when none of the noise functions on the underlying measurements are known.