By Christopher Monckton of Brenchley

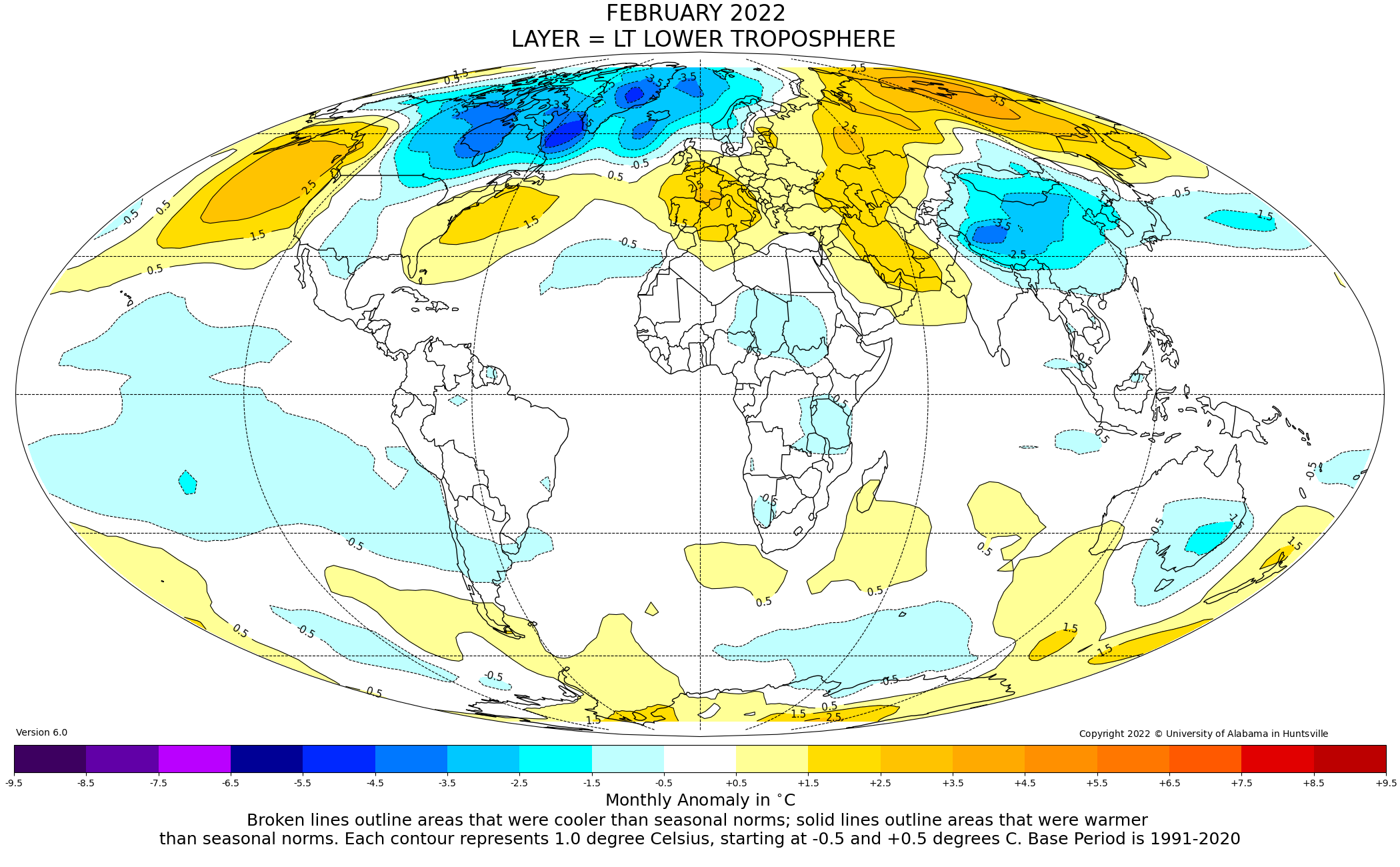

The drop from 0.03 K to 0.00 K from January to February 2022 in the UAH satellite monthly global mean lower-troposphere dataset has proven enough to lengthen the New Pause to 7 years 5 months, not that you will see this interesting fact anywhere in the Marxstream media:

IPeCaC, in its 1990 First Assessment Report, had predicted medium-term global warming at a rate equivalent to 0.34 K decade⁻¹ up to 2030. The actual rate of warming from January 1990 to February 2022 was a mere two-fifths of what had been “confidently” predicted, at 0.14 K decade⁻¹:

The entire UAH record since December 1978 shows warming at 0.134 K decade⁻¹, near-identical to the 0.138 K decade⁻¹ since 1990, indicating very little of the acceleration that would occur if the ever-increasing global CO2 concentration and consequent anthropogenic forcing were exercising more than a small, harmless and net-beneficial effect:

Note that all these charts are anomaly charts. They make the warming look much greater and more drastic than it is in reality. The 0.58 K warming trend since late 1978 represents an increase of just 0.2% in absolute global mean surface temperature – hardly a crisis, still less an emergency.
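For readers who wish to check the arithmetic behind the figures quoted above, here is a minimal sketch in Python. The rates and dates are those stated in the text; the absolute global mean surface temperature of 288 K is an assumption made for illustration (it is not given above), and the function names are hypothetical.

```python
# Rates as quoted in the text, in kelvin per decade.
PREDICTED_RATE = 0.34       # 1990 medium-term prediction
OBSERVED_RATE_1990 = 0.14   # January 1990 to February 2022
FULL_RECORD_RATE = 0.134    # December 1978 to February 2022

# ASSUMPTION (not stated in the text): absolute global mean
# surface temperature of roughly 288 K.
ASSUMED_ABSOLUTE_TEMP_K = 288.0


def months_inclusive(start_year, start_month, end_year, end_month):
    """Count monthly data points from start to end, both included."""
    return (end_year - start_year) * 12 + (end_month - start_month) + 1


def total_warming(rate_per_decade, n_months):
    """Convert a linear trend in K/decade into total warming in K."""
    return rate_per_decade * n_months / 120.0


ratio = OBSERVED_RATE_1990 / PREDICTED_RATE
n_months = months_inclusive(1978, 12, 2022, 2)
warming = total_warming(FULL_RECORD_RATE, n_months)
percent = 100.0 * warming / ASSUMED_ABSOLUTE_TEMP_K

print(f"observed / predicted rate: {ratio:.2f}")
print(f"months in full record:     {n_months}")
print(f"total warming since 1978:  {warming:.2f} K")
print(f"share of absolute temp:    {percent:.1f} %")
```

Under these assumptions the ratio comes out close to two-fifths, the total warming close to 0.58 K, and the percentage close to 0.2%. A different choice of absolute temperature within a few kelvin of 288 K would not change the rounded percentage.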

Meanwhile, the brutal invasion of Ukraine by Mr Putin and his cronies is bringing about a growing realization, among those who have eyes to see and ears to hear, that the global-warming narrative so sedulously peddled by the climate-change industrial complex originated in the Desinformatsiya directorate of the KGB. For a detailed background to this story, visit americanthinker.com and click on the archive for March 2022. There, the kind editors have published a 5000-word piece by me giving some history that readers of WUWT will find fascinating. It is a tale much of which, for security reasons, has not been told until now.

It is worth adding a little more about the economic aspect of this sorry tale of Western feeblemindedness and craven silence in the face of the unpersoning – the relentless campaign of vicious reputational assault – to which all of us who have dared to question the Party Line have been subjected.

Do not believe a word of what either the Russian media or the Western media are saying about Mr Putin. He is not a geriatric who has lost his touch. The events now unfolding in Ukraine have been planned since long before Putin’s silent coup against Boris Yeltsin in 2000, after which, over the following five years, Putin put 6000 of his former KGB colleagues into positions of power throughout the central and regional governments of Russia. Some of those who were in post in 2004 are listed above. Many are still there.

The televised meeting of senior advisers at which Putin shouted at those of them who dithered when recommending that Ukraine should be invaded was a classic maskirovka, designed to convey to the West the impression of an unhinged and mercurial dictator who might reach for the nuclear button at any moment.

The chief purpose of the Ukraine invasion was to hike the price of oil and gas, and particularly of the Siberian gas delivered to Europe via many pipelines, some of which date back to the Soviet era.

It was Putin’s Kremlin, later joined by Xi Jinping in Peking, that founded or took over the various “environmental” lobby groups that have so successfully campaigned to shut down the coal-fired power stations, particularly in Europe, which is now abjectly dependent upon Russian gas to keep the lights on when the unreliables are unreliable.

That is why one should also disbelieve the stories to the effect that the sanctions inflicted on Russia by the West are having a significant impact. The truth is that they were fully foreseen, prepared for and costed. The thinking in the Kremlin is that in due course the increased revenue from Russian oil and gas will more than compensate for any temporary dislocations caused by Western attempts at sanctions, which look impressive but count for remarkably little.

But surely sending close to a quarter of a million troops to Ukraine is expensive? Not really. Putin keeps 1.4 million under arms anyway – about five times as many per head as the UK, which has 200 tanks to Putin’s 15,000. The marginal logistical cost of the invasion is surprisingly small: and Putin will gain Ukraine as his compensation. It is the world’s most fertile agricultural area, and it is big. Russia is already a substantial exporter of grain: once it controls Ukraine it will have as much of a stranglehold on world food prices as it now has on world oil and gas prices, and it will profit mightily by both.

Putin’s first decisive act of policy when he became Tsar of Some of the Russias was to slash the Russian national debt, which currently stands at less than a fifth of the nation’s annual GDP. That is the ninth-lowest debt-to-GDP ratio in the world. Once he has gained control of Ukraine and its formidable grain plain, he can add the profits from worldwide sales to his immense profits from the elevated oil and gas price. His plan is to pay off Russia’s national debt altogether by 2030.

In this respect, Putin’s Russia compares very favourably with Xi’s China, whose national, regional and sectoral debts are colossal. For instance, the entire revenue from ticket sales for the much-vaunted high-speed rail network is insufficient even to meet the interest payments on the debt with which it was built, let alone to meet the operating costs.

Once Putin has restored Kievan Rus and Byelorus to the Sovietosphere, he is planning to expand his nation’s currently smallish economy no less rapidly than did the oil-rich nations of the Middle East. Do not bet that he will fail.

It is galling that those of us who have been sounding warnings about the Communist origin of the global-warming narrative for decades have gone unheeded. The late Christopher Booker, who came to the subject after reading a piece by me in Britain’s Sunday Telegraph and devoted most of his weekly columns to the subject thereafter until his untimely death, wrote week after week saying that by doing away with coal we should put ourselves at the mercy of Russia and its Siberian gas.

However, our politicians, nearly all of whom lack any strategic sense or knowledge of foreign affairs, and who are less scientifically literate than at any time since the Dark Ages, paid no heed. Now some of them are waking up, but far too late.

On the far side of the world, in Australia, the land of droughts and flooding rains, the south-east has been getting some flooding rains. The ridiculous Tim Flannery had been saying to any climate-Communist journalist who would listen a decade ago that global warming would cause all the rivers in south-eastern Australia to run dry. Now, of course, the climate-Communist news media are saying that the floods are because global warming. Bah! Pshaw!

“one should also disbelieve the stories to the effect that the sanctions inflicted on Russia by the West are having a significant impact.”

The ban from the Swift (text messaging – that’s what it is) system is Pythonesque, it merely means Russia will have to pick up the phone more.

As John Lennon once said, gimme some truth.

I have reservations concerning the seizure of properties and bank accounts of Russian oligarchs and forced closure of independent Russian businesses here. I wonder whether we are observing a normalization of such theft of property and rights by governments, even though I don’t have a yacht, etc.

First they came for the Russian yachts…

This is not a new thing.

It is a rather blunt instrument and as you are arguing, there is no due process where both sides can present arguments before the assets are frozen.

Yes, I’m also concerned about sanctions and seizures of assets without legal process. If you were intending to foment revolt and begin a process of regime change through funding a disaffected internal or exile minority, that is exactly what you’d do. This is thus fueling a legitimate fear for Putin and his cronies. If you wanted peace you’d respect property.

Take your disinformation somewhere else you Slavophile troll. What kind of craziness are you spouting? Putin and his oligarchs are the ones with no respect for property and in the act of “regime change” in Ukraine, in case you’ve been living in a cave for the last decade. He has invaded and attacked a peaceful, democratic, sovereign nation with no provocation. He is destroying billions of dollars of private property, has killed thousands already, and has displaced millions of people. Putin is a violent, remorseless, petty little tyrant with Napoleonic ambitions. Now he is a war criminal. He has joined the ranks of Stalin, Mao, and Hitler in the history books. The oligarchs and military leaders who support him are just as culpable. Just to be clear.

Should not be a surprise considering the gulag that Nasty Pelosi runs in Wash DC.

The RAF sank my yacht by mistake, but did not pay compensation

Dear Lord M.,

I always enjoy your essays, but this one and the Am Thinker piece are beyond excellent.

The warmunistas have finally achieved their world war. The bombs are falling. Mothers clutch their children in dank cellars listening to the rolling thunder above. The streets run red with blood. All this wished for, planned for, by design of monsters.

Putin is to blame, and his minions. Caesar never stands alone. But the seeds of destruction were laid by the lefty liberal hippie milli sheeple herders, the barkers of hate, the panic porners, the commissars of death and slavery, in their gilded mansions, mad with greed and lacking any semblance of conscience.

It is a tragic day for humanity. You and a few others saw all this coming years ago and tried to warn us. It is not your fault. Your efforts have been stellar. You could not have done any more. I know that is little consolation. I have no tonic for grief.

Our man in Canada is looking into freezing and seizing accounts of truckers who had a massive protest in front of Parliament Hill for about 5 weeks of the coldest winter in a long time. In this huge long protest, not one person was physically hurt, probably a world record for a protest of this size and duration, a poster of a peaceful protest. This, despite the usual paid small group with swastika placards to taint the truckers with white supremacist BS and give the corrupted MSM a focus for fake news articles.

So much for freedom of speech and the right to protest peacefully in Canada.

It has been inching up for decades. Recall the widespread ‘taking’ of property in the US some while back from people accused of various crimes, no proceedings, certainly no convictions, necessary. More or less every state was getting on that gravy train. I think the courts eventually put some limitations on the practice, either that or the msm stopped reporting on it.

They came 4 me well be4 the yachts…kaint have any freeman loose

You have reservations about governments around the world making life uncomfortable for Russian oligarchs in bed with the war criminal Putin??!! Your utter disconnection from the reality of an unprovoked war on a peaceful, democratic country by a murderous dictator and his evil cronies and the devastating impact it is having on millions of innocent people is…there are no words. Wow. Just wow. Like the global warming nutjobs, you’re bleating about an imaginary problem while a monumental and real crisis happens before your eyes.

At least you didn’t call me a racist, stink.

(Da, da, da) Well,

The Ukraine girls really knock me out (… Wooh, ooh, ooh)

They leave the West behind (Da, da, da)

And Moscow girls make me sing and shout (… Wooh, ooh, ooh)

That Georgia’s always on

My, my, my, my, my, my, my, my, my mind

Oh, come on

Woo (Hey)

(Hoo) Hey

Woo hoo (Yeah)

Yeah, yeah

Hey, I’m back In the U.S.S.R.

You don’t know how lucky you are, boys

Back In the U.S.S.R.

I think this should be 5 years and 7 months, not 7 years and 5 months?

Thanks as ever. Russia, however, has 12,400 tanks, but many are obsolete compared to western armour.

Even obsolete tanks are quite effective against women and children.

Even obsolete tanks will withstand untrained men with machine guns.

And be in no doubt that the vast majority of the non-obsolete tanks will be a match for almost anything, especially tanks operated by the “snowflakes” that our army have gone out of their way to recruit.

Not that our army is likely to see much action, at least until Putin is enforcing his “corridor” through Lithuania to Kaliningrad. Adolf would have been proud of him.

“Adolf would have been proud of him.”

I don’t know, Adolf’s Wehrmacht defeated the French and British armies and then overran Northern France in two weeks.

Absolutely.

But the “Danzig Corridor” was a very successful pretext for invasion.

You think Vlad isn’t aware of that?

And, on the other hand, Zhukov did a great job against the Japanese tanks at Khalkin Gol in 1939 and went on to win against much more sophisticated German tanks using fairly basic but effective T-34s from Stalingrad to Berlin.

Meanwhile the tanks the British were provided with in the early part of the war up to Dunkirk were, ahem, a bit embarrassing.

Zhukov – like most US military tacticians – relied on overwhelming numbers.

Nothing more.

You won’t find many British tanks at the bottom of the English channel, and there are a lot of tanks down there.

Zhukov succeeded where other Soviet Generals foundered.

There’s a US Sherman tank near us recovered from the bottom of Start Bay, South Devon, when a training exercise for D day went tragically wrong when the troops and landing craft were ambushed by German Uboats.

Operation Tiger – an Amphibious D Day Rehearsal Disaster (combinedops.com)

E-boats not U-boats. E-boats were kinda like MTBs (Motor Torpedo Boats which many in the US would recognize as PT Boats) but larger with torpedo tubes that fired from the bow of the hull. They were sturdier built craft than PT boats. Top speeds of MTBs and E-boats were comparable.

The American tanks that sunk were using a British flotation device.

That is true but not fair. It was stupid to launch those DD Tanks off Omaha in the existing sea conditions. And had the officer in charge on the spot been able to communicate with the half of his command that did launch, it would not have happened.

The British lost a few too but their beaches were much more protected and the waters not so rough and so a lot more of theirs made it.

The DD tanks were the only one of Hobarts “follies” that the US decided to use. The other several specialized tank configurations that British Officer came up with did great service with the British.

Ike was offered a limited number of LTVs (Alligators) as the Marines were using in the Pacific, but refused them. That was a mistake.

American Sherman tanks known as Tommy Cookers to the Wehrmacht.

Only when fitted out as a Sherman Firefly with a British 17lb anti tank gun did it stand any kind of chance against German armour

Anyone that thinks that the invading Allies would have done better with the equivalent of a Tiger or Panther during the invasion and pursuit phases in Europe, is mistaken. Does not understand the logistical realities of the time. And does not understand how unreliable the Tiger was nor how the Panther was limited in range by fuel and the very limited service life of its all-metal tracks on hard surface roads.

Admittedly the US should probably have started to introduce the M 26 Pershing earlier and in greater numbers than it did but this failure is understandable considering the fact that even at the time of the Battle in the Ardennes most US front line troops lacked proper winter clothing for most of that battle.

There is a reason why the Russians decided to use lend lease M-4 Shermans and not the T-34s to make the long track over the mountains to reach Austria.

I get tired of the one sided way this argument is presented.

There is a story about some German officer who noticed cans of Spam after they overran the Ardennes line and said something to the effect that Germany was finished if the US was able to supply the Army from across the Atlantic.

In 1942, maybe August….the German who was in charge of War Production flew to Hitler’s Wolf’s Lair or whatever in Ukraine and informed him that Germany could not out produce the Allies…too few people and resources – advised him to make peace.

In the movie, Battle of the Bulge, there is a scene where a German general looks at captured packages from families to US soldiers. The general says much the same thing.

It is a movie, but based on a true story!!

They were known as Tommy-Cookers because the tanks tended to burn rather well when hit close to fuel tanks, which I believe were un-armoured so vulnerable to shell hits! The Sherman Firefly was indeed a good upgrade with its 76.2mm 17lb shells. However, as in so many cases, the British Army was slow to learn, until the Comet tank was developed with a well powered engine, good well sloped armour, & a 76.2 barrelled gun!!! The final success story, the Centurion tank never got to see tank on tank action merely mopping up works towards the end. However, in the early years, British tanks had better armour than the German tanks, but poor firepower, with mere 3lb & 5lb guns, mere popguns compared to 75mm & 88mm guns on the other side!!! However the Sherman tank must always be viewed as a workhorse throughout!!!

The problem with the early British tanks of WW II was not only being under gunned but also poor mechanical reliability.

A British enlisted tank driver gave one of the first M-3 Stuarts delivered to N. Africa a test drive. He did his best to get it to throw a track. When asked by his officer how he liked it, he responded “It’s a Honey”.

And that right there in a nutshell expresses the primary reason the US tanks were favored. No tanks produced by any nation during the war came close to having the mechanical reliability of those that came off the production lines of the US.

Above I noted the “Tommy Cookers” phrase. And it was true until later versions of the M-4 came out with wet storage for the ammunition for their primary gun. Those later versions would still brew up due to the gasoline used for their fuel but they gave the crew time to bail out before their internally stored ammo started blowing up and thus improved survivability.

I think that the Israeli army used nearly 1000 Centurions in the 6 day war, mostly regunned

And Sherman’s upgrade with a 105.

Well sloped armour on the Comet???

A 90 degree upper glacis isn’t well sloped. Ok the turret was reasonable, but the hull was almost a box.

Sherman Tanks were designed to be mass produced in HUGE numbers. They were under armed but, nonetheless, their NUMBERS overran the Wehrmacht.

Tanks were important but:

I suspect many have heard that in the US Army the infantry is known as “the Queen of Battles”. How many have wondered what “the King of Battles” is? Well the answer is the Artillery. King Louis XIV had “Ultima Ratio Regum” (The Ultimate Argument of Kings) cast into all of his artillery pieces and he was correct.

It was the development of artillery that eventually ended the usefulness of castles as fortified redoubts and caused the evolution of siege warfare. It was artillery that turned the infantryman into a gopher, whereby a good trench or hole is an essential for survival in static warfare.

But despite the trenches and holes about 75% of all KIA during WW I were from Artillery. During WW II Artillery accounted for about 64% of the total casualties in the war against Germany and Italy. In the war against Japan it was about 46%.

BS, the Sherman was dominant in North Africa and quite possibly the E8 version was the best tank of the war.

Although it was before the war, I think Zhukov’s victory at Khalkin Gol was the decisive battle of WW2. Japan was soundly defeated and decided to not join the Axis war on the Soviet Union. Had Japan gone into Siberia rather than the Pacific (and not brought America into the war with the Pearl Harbor sneak attack) an Axis victory would have been certain.

When “Blitzkrieg” failed at the outskirts of Moscow…it was “ovah”. It became a war of attrition…Hitler was not too good at arithmetic…believed in “will” over numbers…he was wrong.

True this, the air force was completely designed around lightning war, with no strategic and heavy lift capabilities at all.

I saw a special a few months back that claimed that during the battle for Russia, over half of German supplies were still being delivered in horse drawn carts.

Meanwhile the Russians used US produced trucks, and after the supply chain through Iran had opened the supply of trucks was so prolific that when the sparkplugs on one became fouled they parked it and grabbed another.

And that is another aspect of logistics that the amateurs ignore or are ignorant of. No combatant in WW II even came close to achieving the efficiency or scope of the US vehicle and recovery, repair, and maintenance efforts.

It came not only from a concerted effort of the Army to make it that way but from the fact that during the prewar years the US had a far larger population of men familiar with mechanics than any of the other combatants.

It seems to be a talent that has been forgotten. My father related how during WWII, along with food rationing, one could not get repair parts for cars. When his low-compression 30s-vintage Ford started blowing oil, he shimmed the cylinder with a tin-can, and got many more miles out of it.

Unfortunately, a talent that is pretty much useless these days (unless you have a very old car).

Even with that talent, a modern battle tank doesn’t work that way. Coaxial laser on the main gun, coupled with a targeting computer that calculates range, windage, tube wear, temperature, humidity. Frequency hopping encrypted communications. IVIS for battlefield awareness of every other tank and attached vehicles. Armor that is definitely not “patch and go.”

That said, tankers ARE trained to do everything that they CAN still do, like breaking track (a very physical job that, sorry, females just cannot do). There is less and less of that as technology advances.

The story is probably apocryphal, but I remember reading about the world’s only DC-2 1/2. The story is that during the early days of WW2, the Americans were evacuating ahead of the advancing Japanese. In a Japanese attack on an American airfield a DC-3 had one of its wings destroyed. The mechanics weren’t able to find any undamaged DC-3 wings, but they did find a DC-2 wing. So they jury rigged a way to connect the DC-2 wing onto the DC-3 body and used it to help evacuate the field.

I got it first hand. To be fair, my father was a machinist, amateur gunsmith, and knife maker in his younger days. He worked his way up to be a tool and die maker and jig and fixture builder at the end of his career.

As a teenager, I stripped first-gear in my ’49 Ford V8, drag racing with a friend. I know he had never repaired a transmission of that vintage. Yet, he unhesitatingly pulled the transmission, and repaired it, with the admonition, “Next time, you do it by yourself.” He was prescient!

And, I have heard that in the early years of cars, many a farmer made money pulling tourists out of the mud holes in what passed for roads, using their plow team.

I certainly believe this—they had to regauge thousands of kms of Russian broad gauge railroads before being able to get rail supply east. Trucks ate fuel, plus they were needed for the motorized infantry.

New interesting perspective for me, I haven’t studied the Japan/Russian war, which apparently was never settled after WWII due to mutual claims of 5 islands north of Japan. That Japan needed oil, and COULD have had it with much shorter supply lines from Russia, is something to think about. And without bringing the US into the war, there would have been nothing to obstruct the shipments to Japan.

Russia attacked on 2 fronts. That could have engendered a completely different ending to WWII. Without US steel and trucks and jeeps, etc. the Russian war machine would have probably collapsed.

All of Europe, possibly less the UK, would be speaking German and most of Asia north of the Himalayas would be Speaking Japanese.

Something for me to study in my retirement, thanks Jeffery P.

Even more simple, Hitler doesn’t attack Russia. Builds the bomb and puts it on V2 rockets. War over.

Yes, jeffery,

And interesting that Stalin waited until the peace for the Khalkin Gol campaign was signed before following the Nazis into Poland, then swallowing the Baltic states.

And we all (hopefully) are aware how those occupations worked out.

And some of Putin’s latter day admirers today are shocked, shocked I say, that the children of the poor sods who suffered then and continually until 1991 are lacking in enthusiasm for Putin and his chums, being back in charge of their lives.

And training professional snowflakes:

https://townhall.com/tipsheet/mattvespa/2022/03/04/as-russia-invades-ukraine-our-military-is-ensuring-we-pronouns-and-gender-identity-right-n2604118

The question isn’t Russian tanks against western tanks, because nobody in the west has the courage to stand up to Putin. Not the Stasi informant in Russia and certainly not the president suffering from dementia.

No, Ukraine will have to stand alone, and they don’t have enough tanks to do the job.

The US should stay out.

It’s too late to share responsibility. From 2014, this is the Slavic Spring in the Obama, Biden, Clinton, McCain, Biden Spring series.

This former SF soldier targeted for Europe during the cold war agrees. Weapons and advisors and that is it. No conventional forces deployed in Ukraine. I would bet though that guys from my former unit, the 10th SFG(A), are on the ground in country and advising and providing intel. That is exactly what they are trained to do and exactly the theater, including language, they have been trained to do it in.

I hear stories about the effectiveness of Javelins and our donation of hundreds of these to Ukraine. Perhaps these level the playing field to some extent or at least place some concerns in the minds of Russian tank operators.

Also NLAW’s. (Fire n Forget guided missiles) Apparently they’re better for close combat which will be needed in urban fighting, and also a lot cheaper. Ukraine needs thousands of those rockets.

What a mess it all is.

My understanding of NLAWs is that they are great for lightly armored vehicles, which MOST of any convoy is. Javelins for tanks, NLAWS for the rest, Stingers for air cover, good mix.

It’s hard to subjugate an armed populace when the majority of your troops are trying to protect that expensive armor. As I said on a previous Thread, small hunter/killer teams supported by a local populace will devastate armored maneuver. Send in the Javelins! The Ukraine is and will become more of a bloody mess.

Tanks are meaningless, if The Ukrainians can keep the Russian from total control of the Air they can win as long as the west keeps sending supplies.

They are NOT obsolete compared with Ukraine’s few tanks, while the newest T-14 Tank is very powerful but only 20 of them have been built.

They cannot take Russia on head to head in armored warfare. What they can do is extract a very heavy price in urban combat. You gain nothing knocking down buildings because the rubble serves equally well for cover and concealment for the defenders and obstructs the passage of vehicles, including armor. The only reason to knock down the high buildings is to deny them as observation points. Other than that, it is self defeating in an urban area that one wishes to take control of with troops on the ground.

I’d be very surprised if there are any large scale tank on tank operations. The Ukrainians know they don’t have the equipment for such operations. They seem to be gearing up for hit and run and urban operations.

This is NOT a tank battle, if it were, Russian tanks would be spread out passing through the fields, not sitting in convoys.

I have posted this before, the Flying Tigers is the model. Get US pilot volunteers, place them on furlough, get them Ukrainian citizenship, repaint A 10s, F 15s, and old F117As in Ukrainian colors, give them to Ukraine, and this war would be over in 2 weeks tops. Imagine what US stand off weapons, F117A targeting of Russian AA missile batteries and A 10s attacking the 40 mile long convoy could do. Total game changer.

Either the Russians will withdraw, or they will use nuclear weapons, but either way, it would be over.

It is not a tank battle obviously and it isn’t simply because it can’t be. Your ploy will not work though. You simply will not get the numbers of qualified volunteers even if this government would support such an effort, which it won’t.

The country simply is not big enough either.

And long-range sniper nests.

For sure Putin has his strategy, but I cannot help but think he has underestimated the unified response of the West, especially where there are a few leaders that would see this distraction as a blessing in disguise, affording them the opportunity to hide their abysmal polling figures and low voter confidence by taking “decisive action” against an old foe now universally maligned. Time will tell

“he has underestimated the unified response of the West”

The West’s response at the moment is unified hysteria #putinbad.

Most people giving their “expert” views on the situation would have been unable to point out Ukraine on a map two weeks ago. It was the same with covid, everybody became overnight virologists.

Ukraine has been hung out to dry by the West and NATO.

If you want to stop a bully you have to punch him not tickle his roubles.

“If you want to stop a bully you have to punch him”

That’s right. A bully understands a punch in the nose.

Mike Tyson: Everybody has a plan until he is punched in the face.

I do think Putin underestimated the Ukrainian forces and overestimated the capabilities of the Russian army. I also agree he underestimated the Western response but I believe without an outright ban on Russian oil and gas, the sanctions won’t have the desired effect.

There seems to be an unofficial ban on Russian oil. Private companies are taking it upon themselves and are refusing to handle Russian oil.

I imagine Chicom brokers will be more than happy to fill in the gap and the Chicoms will be happy to buy Russian oil.

Putin can do a lot of damage before all the money runs out.

Lots of Ukrainian citizens have Russian families.

Putin could have levelled the place, but hasn’t.

As pointed out in the article, this is about resource gathering.

Russians pride themselves on 50yr plans, we can’t make plans for 5 minutes in the West.

It’s sad but true…

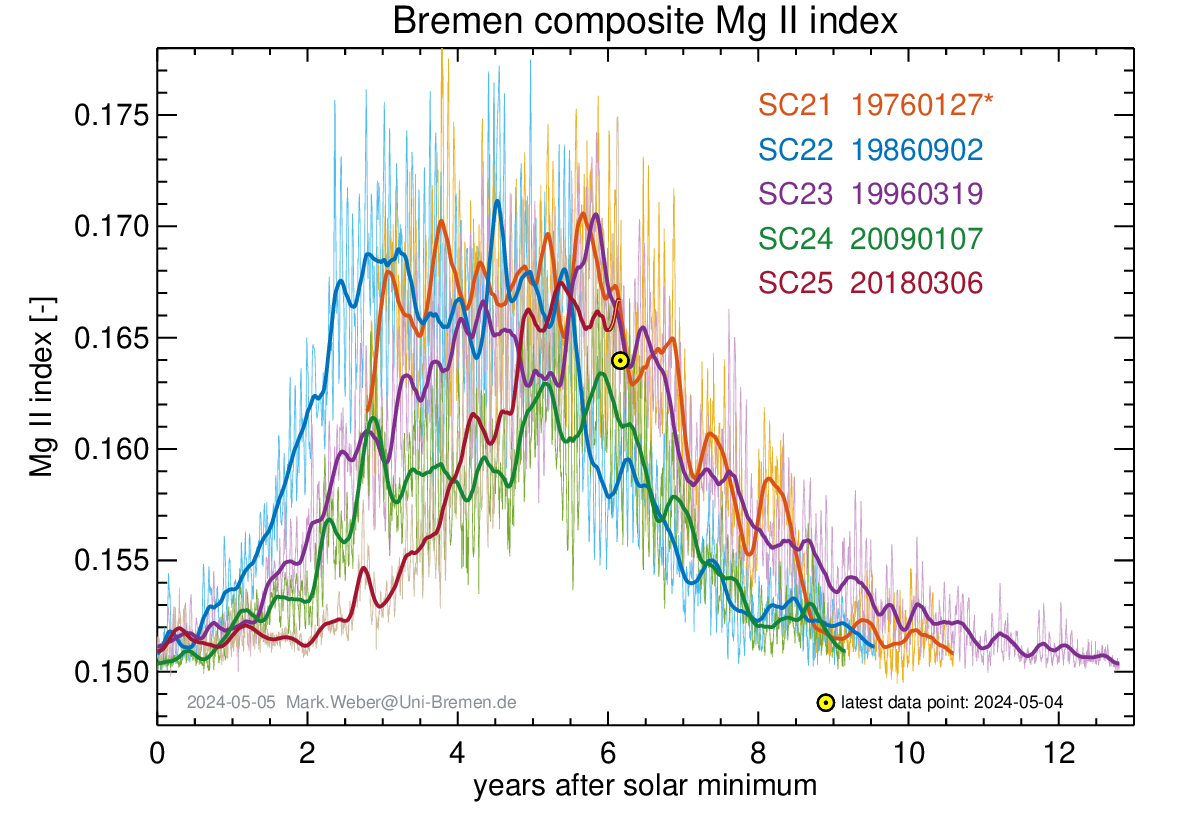

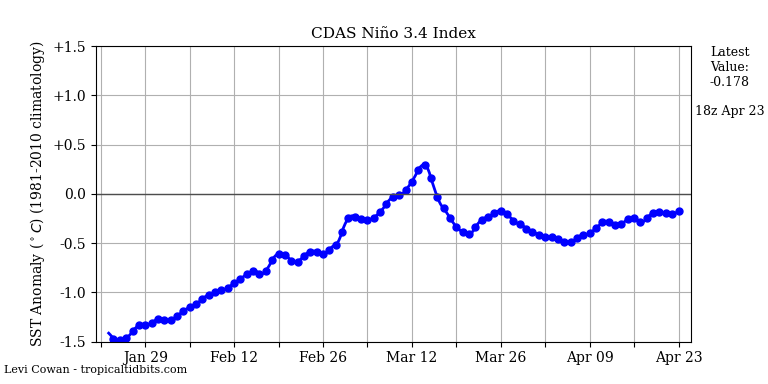

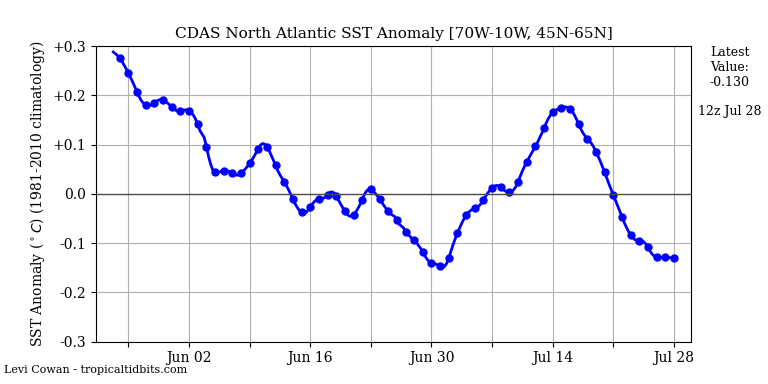

Galactic radiation levels are still at solar minima levels to the 23rd solar cycle.

UV radiation levels are still low.

This indicates a weakening of the Sun’s magnetic activity. Therefore, I predict another La Niña in November 2022.

Very little chance of El Niño.

Most grateful to Ireneusz Palmowski for his interesting material predicting that the current weak La Niña may occur again this coming winter.

Well that’s just great. If that comes to pass I don’t think I will be able to stand the ”it’s climate change” squawking when we have floods again next summer.

I had hoped for an El Nino as selfishly, I’m building a house and need the hot dry days…

I still think China has played a very large role … primarily in raising the price of energy in the west as part of its long term plan to take our manufacturing.

We are now in a position, where if we want to go to war with China … we have to ask them to supply all the basic spare parts.

And, if you look at the way China has put money into Universities that then went woke and started hating Britain … you can see it wasn’t just an attack on British industry, but also an attack on the cohesiveness of British society.

If, Christopher, as you suggest, the Russians have been pouring money into the Green groups, whilst China pours money into academia turning them into Western-hating wokes, then it more or less explains how we got to this appalling situation.

There’s a good chance there is more to the China-Russia story, Mike (aka SS). China could roll through Russia anytime they want, and once Russia has been weakened militarily and economically they might go for it. Taiwan can wait. Remember the Tom Clancy book “The Bear and the Dragon”? The USA won’t be coming to the Russian Rescue in reality, partly because China has more on the Brandon Crime Family than does Russia. What a mess.

China is facing several self inflicted wounds. CMB mentioned the high speed rail debacle that wastes millions per day. There are many others to be sure:

1. Population demographic shift to more retirees than workers, thanks to the one-child policy.

2. Massive imbalance in the ratio of males to females. Millions of female babies were aborted or killed at birth.

3. Belts and Roads initiative debt bomb.

4. A Massive GOAT f**k of a housing bubble crisis. Billions wasted by the likes of Evergrande et al. on failed non real estate ventures.

5. Billions wasted on creating ghost cities nobody will ever live in due to the declining population.

6. The loss of upwards of 80% of the population’s personal wealth due to the housing market crash.

7. Drastic lockdown measures have largely failed, and only served to anger the population.

8. If the Chinese people discover the Wuhan Virus was created in a lab they will go ape s**t and there will be blood.

9. Massive debt incurred in road building projects to nowhere to stimulate the economy.

China is in much worse shape than most people realize. It remains to be seen if they will do something stupid like make a grab for Taiwan.

Can someone tell me why this guy is saying this on twitter please?

Dr Simon Lee

@SimonLeeWx

· 2 Mar 2020

Even the UAH satellite-based estimate of global lower-tropospheric temperatures (which has the slowest warming trend of all major datasets) showed Earth had an exceptionally warm February – the 2nd warmest on record, behind only 2016, which was fuelled by a Super El Niño.

I really wish it would warm up. More snow on the way 😡

Two years ago?

Because that’s about February 2020. 2022 was only 16th warmest.

Here’s the current February graph.

And yet CO2 rises 😉

Why don’t you ask him why he’s saying that?

Sorry guys, my mistake. I could have sworn that when I first looked at that tweet it read March 2 2022.

I guess I’ll have to blame old eyes and a small screen.

Don’t sweat it. I make a ton of mistakes myself. I’m a little embarrassed to admit this, but in one post I said carbon had 12 protons multiple times. Carbon has 12 protons and neutrons, only 6 of which are protons. Now that is embarrassing.

The full monthly lower-troposphere anomaly dataset is reproduced in the head posting. The temperature has fallen quite a bit since February 2020.

And one should be careful not to deploy the device used by the climate Communists, of picking out a single anomalous value that suits the Party Line and then considering it in isolation.

As the head posting shows, the underlying rate of global warming is small, slow, harmless and net-beneficial.

2020 is tied for first place with 2016, despite the largest downturn in anthropogenic CO2 in history.

And everyone knows the climate responds instantly to even the slightest change in CO2. Or something.

It does so, without fail, within about a couple weeks of the leaves coming out, every Spring. The northern hemisphere MLO CO2 peak is in May every year.

I think you’re getting your causation backwards there. CO2 goes down because the leaves come out.

One of us is missing something. When anything reaches a peak, it has nowhere to go but down. And, I did mention the leaves coming out.

I do expect better from you.

I’m sorry if I misunderstood your argument.

You were replying to Matthew Schilling suggesting that the climate does not respond instantaneously to changes in CO2, and so I assumed when you said “It does so, without fail, within about a couple weeks of the leaves coming out, every Spring.” you meant that the climate was reacting immediately to a change in CO2. If that’s not what you meant I apologize, but in that case I’m not sure how it’s relevant to Matthew’s comment.

And we have another new start date for ‘the pause’! Oct 2014 replaces Aug 2015, or whatever other start date provides the longest non-positive duration that can be wrangled from the UAH data.

Notwithstanding the constant changes to whenever this latest ‘pause’ was supposed to have started, we should ask ourselves the usual question:

‘Is a period of 7 years and 5 months (AKA 89 months) without a best estimate warming trend unusual in a data set spanning several decades that, overall, shows statistically significant warming?’

The answer, as usual, is ‘no’.

Rounding up to a neat 90 months, there are 321 such overlapping periods in the full UAH data set. Of these, 111 are periods of no warming or else cooling. More than one third of all consecutive 90-month periods in the UAH data set do not show a warming trend. Despite this, the data set shows an overall statistically significant warming trend.
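For anyone who wants to check this sort of count themselves, here is a minimal sketch of the procedure: fit an ordinary least-squares line to every overlapping 90-month window and count the windows whose slope is zero or negative. The function name `count_flat_windows` and the synthetic series are illustrative assumptions, not the UAH data and not the commenter's own code.

```python
import numpy as np

def count_flat_windows(anomalies, window=90):
    """Return (total_windows, windows_with_non_positive_trend)."""
    y = np.asarray(anomalies, dtype=float)
    t = np.arange(window)
    total = len(y) - window + 1
    flat = 0
    for start in range(total):
        # Slope of the least-squares line through this window
        slope = np.polyfit(t, y[start:start + window], 1)[0]
        if slope <= 0:
            flat += 1
    return total, flat

# Synthetic stand-in series (an assumption, NOT real satellite data):
# a slow linear rise plus an oscillation plus noise.
rng = np.random.default_rng(0)
months = np.arange(240)
series = (0.0011 * months
          + 0.25 * np.sin(2 * np.pi * months / 45)
          + rng.normal(0, 0.1, months.size))

total, flat = count_flat_windows(series)
print(total, flat)
```

To repeat the count on a real dataset, replace `series` with the monthly anomaly column; the counts will depend on the data and on the exact window length chosen.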

Given that Lord M starts counting (from his various start points) at the peak of a big El Nino, and finishes counting at the trough of the recent double-dip La Nina, it is hardly surprising to find yet another ~90 month period of no warming. Suggesting that the underlying long term warming trend has stopped or reversed is a wish.

There is no global warming and as long as La Niña lasts there will not be. Many regions will be cooler.

For example, Australia will have below average temperatures due to cloud cover.

http://tropic.ssec.wisc.edu/real-time/mtpw2/product.php?color_type=tpw_nrl_colors&prod=global2&timespan=24hrs&anim=html5

And soon after the next round of El Nino conditions recommences, that map will look decidedly red. It doesn’t matter what a single month looks like; it’s just a snapshot. What matters is the underlying long term trend; and that remains statistically significant warming.

“What matters is the underlying long term trend; and that remains statistically significant warming.” – said all the climate experts in 1940.

Don’t confuse ToeFungalNail with a climate expert. He’s just a run-of-the-mill climate alarmist who makes “predikshuns” based on his belief that CO2 dominates all other factors that influence the climate.

ToeFungalNail doesn’t understand that CO2 is just one factor out of many. He doesn’t understand that, since climate models are unable to hindcast major changes in the climate when CO2 was stable, the models are essentially useless.

Meab said: “since climate models are unable to hindcast major changes in the climate when CO2 was stable, the models are essentially useless.”

Willeit et al. 2019 was able to hindcast major changes in the climate over the last 3 million years both with stable and unstable CO2 trends. Their model even explains the transition from 40k to 100k year glacial cycles around 800,000 YBP. It hindcasts both the CO2 and T profiles with pretty reasonable skill. As always, if you know of a model that has better skill in replicating both CO2 and T simultaneously I’d love to review it.

Yet another worthless bit of computer modelling, completely devoid of any physical basis.

Would you mind posting a link to the model you had in mind that exhibits better skill and also explains the mid Pleistocene transition and which has a firm physical basis?

I have no idea, but I do know that a model with as many adjustable knobs and dials as climate models can be “adjusted” to fit any desired scenario.

The Standard Model of particle physics has a lot of knobs and dials that can be adjusted. Do you hold the same prejudice against it as you do for the climate models?

This is nonsense.

Wrong. The Standard Model is based on the fundamental constants, has only four forces, six leptons and three quarks, and makes physically-verifiable predictions.

”Willeit et al. 2019 was able to hindcast major changes in the climate over the last 3 million years”

God spare me. You actually believe we have the slightest clue about the details of the climate 3 million years ago?

You need help. And I don’t mean regular help but a team of specialists working round the clock. 🙂

Mike said: “You actually believe we have the slightest clue about the details of the climate 3 million years ago?”

Yes. I’ve not seen a convincing reason to doubt the abundance of evidence which says that glacial cycles were common.

When do you expect the next El Nino to happen and how big will it be?

And that warming is the result of what, TFN? What is the significance of that minor warming?

The AMO (down) cycle will prove you wrong Nail.

ResourceGuy said: “The AMO (down) cycle will prove you wrong Nail.“

The AMO was negative from 1965 to 1998 and positive through at least 2019. Based on Berkeley Earth’s data the temperature increased about 0.50 C during the cool phase (34 years) and 0.45 C during the warm phase (21 years). Those are warming rates of +0.15 C/decade and +0.21 C/decade. Based on that alone you could reasonably hypothesize that the future trend would be lower, but since it was still significantly positive even during the cool phase I don’t think it is reasonable to hypothesize that it would be negative.
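The per-decade rates quoted above follow directly from the stated temperature changes and phase lengths. A quick check, using only the numbers given in the comment (the helper name `rate_per_decade` is just for illustration):

```python
def rate_per_decade(delta_c, years):
    """Convert a total change over a number of years to a per-decade rate."""
    return delta_c / years * 10.0

cool = rate_per_decade(0.50, 34)  # AMO cool phase, 1965 to 1998
warm = rate_per_decade(0.45, 21)  # AMO warm phase, 1999 to 2019

print(f"{cool:+.2f} C/decade, {warm:+.2f} C/decade")
# prints "+0.15 C/decade, +0.21 C/decade"
```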

I’m not suggesting a univariate model but I guess you took it that way.

Except for the last 7 years and 5 months. That is about 1/4 of the 30-year baseline. Not quite a “snap shot.”

As every good climate scientist knows, once a trend starts, it never, ever, ends.

I don’t know if you are this math deficient, or just being your usual duplicitous self.

The calculation of the pause starts at the present and works backwards in time.

According to the leading lights of the AGW panic, such long pauses aren’t possible.

For once I’d like someone who insists Monckton is working backwards to explain exactly what they think he does, and why it makes a difference.

This is about how you determine which month will be the start of the pause. You look at one month after another until you have found the correct start month, i.e. the earliest month that gives you a non-positive trend from that month to the most recent month. It makes no sense to perform your search backwards, as you won’t know you have found the earliest such month until you have gone back to the start of the data set. It’s easier to start at the beginning, look at each potential start month, and stop as soon as you find the first one that gives you a non-positive trend. But it makes no difference which direction you look in; you will get the same result.

NEE!

It.

“The cure for boredom is curiosity. There is no cure for curiosity.” — Dorothy Parker

1) For a given dataset, fix the end-point as “the last available monthly anomaly value”.

2) Working backwards, find the earliest month that results in a (just) negative trend.

3) One month later, when a new “last available monthly anomaly value” becomes available, go to step 1.

The latest results, for the main surface (GMST) and satellite (lower troposphere) datasets, are shown below.

It doesn’t, it’s merely an “interesting” phenomenon.

To classically trained detractors it can (legitimately …) be called “intellectual onanism”.

For ignorant peasants (such as myself, who need to look up what the term “sermo vulgaris” means instead of simply recalling it from memory) the term usually employed is “math-turbation”.

NB : The results still count as “interesting” though …

Thanks. Yes that is how the start date can be determined. The question still remains, why work backwards in step 2? The only way to be sure a given month is the earliest month is to go all the way to the earliest date e.g. December 1978. You could just as easily start at the earliest date and work forwards until you found the first negative trend.

You have been VERY badly misinformed …

That’s not what I’m describing. What I’m saying is you have to look at every start date (or end date if you prefer) calculate the trend from that date to the present, and choose from all possible dates the one that gives you the longest pause.

The forwards method

What’s the trend from December 1978 to February 2022? It’s positive, so reject that as a start date.

What’s the trend from January 1979 to February 2022? That’s positive, so reject that.

Repeat for each month until you get to

What’s the trend from October 2014 to February 2022? It’s negative. Hooray! We have the start date for the longest possible pause. We can stop now.

The backwards method

What’s the trend from January 2022 to February 2022? It’s negative, so it’s the best candidate for a pause, but we have to keep going.

What’s the trend from December 2021 to February 2022? It’s negative, so it’s the best candidate for a pause, but we have to keep going.

And so on till

What’s the trend from March 2018 to February 2022? It’s positive, so not a candidate for a pause – April 2018 remains our best candidate, but it could easily turn negative again, so we keep going.

Until

What’s the trend from October 2017 to February 2022? It’s negative. Hooray, we can use that as a start date for the pause, but we have to keep going.

So on through more negative months until

What’s the trend from September 2014 to February 2022? It’s positive, so not a candidate for a pause. October 2014 is now our best start date. But we can’t know if it won’t turn negative again. So keep going.

Finally

What’s the trend from December 1978 to February 2022? It’s still positive. We’ve come to the end of our data, so go back to the earliest pause date we found, October 2014, and that’s the start of the pause.
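The two searches described above can be sketched in a few lines. This is a minimal illustration, assuming the "trend" is an ordinary least-squares slope from each candidate start month to the last month; the function names and the synthetic series are made up for the example and are not anyone's actual spreadsheet or script.

```python
import numpy as np

def trend(y):
    """Least-squares slope of y against its index (units per month)."""
    return np.polyfit(np.arange(len(y)), y, 1)[0]

def pause_start_forward(y, min_len=2):
    """Walk forward from the first month; stop at the first non-positive trend."""
    for start in range(len(y) - min_len + 1):
        if trend(y[start:]) <= 0:
            return start
    return None

def pause_start_backward(y, min_len=2):
    """Walk backward from the last month; must scan to the beginning,
    remembering the earliest start that gave a non-positive trend."""
    best = None
    for start in range(len(y) - min_len, -1, -1):
        if trend(y[start:]) <= 0:
            best = start
    return best

# Synthetic stand-in series (an assumption, NOT real data):
# 150 months of steady rise, then 50 flat months, plus noise.
rng = np.random.default_rng(1)
series = np.concatenate([0.01 * np.arange(150), np.full(50, 1.5)])
series = series + rng.normal(0, 0.1, series.size)

fwd = pause_start_forward(series)
bwd = pause_start_backward(series)
print(fwd, bwd, fwd == bwd)
```

Both functions return the same index because both are looking for the earliest start month with a non-positive trend to the end of the series; the only difference is that the forward search can stop early.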

NB : I don’t “have to” do that, it’s one option amongst many.

On the other hand, “been there, done that, got the T-shirt” …

Start 8000 years ago

Actually, you can save yourself some time by looking at a graph and seeing what historical dates can be eliminated as being impossible. Not that you will probably notice the difference unless you are still using an old 8-bit computer with a 1MHz clock speed.

True, and I thought about mentioning something to that effect, but it’s getting complicated enough. You can generally tell when the trend isn’t going to go negative, and either stop there if going backwards or start there if going forwards.

In all fairness, I don’t use an algorithm to determine the start of the pause, I just generate a time series of every month and eyeball it to see the earliest start date, and this also allows me to see where to cherry pick periods with fast warming rates.

The issue still is why people think using any process to find the exact start month to give you the longest pause is not cherry-picking as long as it’s calculated and done backwards. To me, the very fact you are doing the calculation for every month is what makes it a cherry-pick.

CMoB is doing the equivalent of your “Trends to last data point” line. And Bellman is right. You can start in either direction, but computationally starting at the beginning requires fewer calculations since you get to stop the moment a non-positive trend is observed. Starting at the end forces you to walk all the way to the beginning.

And yet CO2 keeps rising 🙂

Derg said: “And yet CO2 keeps rising “

ENSO keeps happening too.

A sphincter says what?

ENSO is the El Nino Southern Oscillation. It has been shown to drive the UAH TLT temperature up during the warm phase (El Nino) and drive it down during the cool phase (La Nina).

You hit the nail right on the head!

The fact that CO2 keeps rising *should* mean there will be no pause. That’s what the climate models all show – NO PAUSE.

The longer the pause the more questionable the tie-in between CO2 and temperature becomes.

When the climate models get good enough to predict the pauses then they might become useful for predicting the future. Don’t hold your breath.

I have submitted two articles supporting your position, but Charles has not been willing to publish them. Would you be interested in seeing them?

Yep. Send’em along! Do you still have my email!

TG said: “The fact that CO2 keeps rising *should* mean there will be no pause.”

That would only be true if CO2 were the only thing modulating the atmosphere temperatures.

TG said: “That’s what the climate models all show – NO PAUSE.”

If all climate models show NO PAUSE then why is it that I see a lot of pauses in the CMIP5 members available on the KNMI Explorer?

Again, here is the graph of the models.

Where are the pauses?

TG said: “Where are the pauses?”

Download the tabular data for each member from the KNMI Explorer. Load the data into Excel. Do a =@LINEST(X1:X89) on each monthly value. Look for occurrences where LINEST is less or equal to zero. If Excel is not your thing you can use R or your favorite programming language.

“Download the tabular data for each member from the KNMI Explorer. Load the data into Excel. Do a =@LINEST(X1:X89) on each monthly value. Look for occurrences where LINEST is less or equal to zero. If Excel is not your thing you can use R or your favorite programming language.”

I don’t need to do so. I’ve already posted the data in the form of a graph of the model outputs included in CMIP5.

TG said: “I don’t need to do so. I’ve already posted the data in the form of a graph of the model outputs included in CMIP5.”

How did you apply the Monckton method to the graph? With so many lines on that graph how did you make sure you weren’t confusing members especially when the lines crossed?

I can only assume you are color blind. You can separate out the model runs via their color.

TG said: “I can only assume you are color blind. You can separate out the model runs via their color.”

I zoomed in on the graph you posted and put the pixel grid on it. It looks to me that a lot of them have the same color. I also noticed that when multiple members land on the same pixel the color seems to be a blend of all of them. And the mass in the center looks to be blended together so thoroughly that I can’t tell where an individual member even starts. Maybe my eyes are failing me. Maybe you can help out. Would you mind separating out just a single member here so that I can see how you are doing it?

What’s the big deal? With a computer, going back to 1978 and doing all the calculations won’t even give you enough time to go get a cup of coffee.

It’s not a deal at all, big or small. I just don’t understand why people say it must be done in the slightly more complicated way, and more importantly why they think doing it this way means you are being more honest than if you did it the other way.

If you work forward, you have to take each month in turn and then run the calculations from that month to the current month. Sure you get the same results in the end, but it takes a lot more time to find the last month.

If you start from the current month, you find the answer in one pass.

The claim has been that he cherry picks the start month, which he has never done.

It doesn’t take more time, that’s my point. Start in December 1978 and work forward. You stop when you reach October 2014 as that’s the first negative trend. Start in January 2022 and you have to go all the way back to December 1978 before you can be certain you’ve found the earliest start date.

“The claim has been that he cherry picks the start month, which he has never done.”

My claim is that looking at every possible start date in order to find the result you want is cherry-picking. Again I’ll ask, if I check every possible start date to find the earliest date where the trend is greater than 0.34°C / decade (The rate Monckton claims the 1990 IPCC predicted), would you consider that to be a cherry-pick or just a carefully calculated period?

The start date for that one is October 2010. Would you object if I write an article claiming that for the last 11 years and 5 months the earth has been warming faster than the IPCC predicted, or would you ask why I chose that particular start date?

Poor, hapless, mathematically-challenged Bellman! I do not cherry-pick. I simply calculate. There has been no global warming for 7 years 5 months. One realizes that an inconvenient truth such as this is inconsistent with the Party Line to which Bellman so profitably subscribes, but there it is. The data are the data. And, thanks to the hilarious attempts by Bellman and other climate Communists frenetically to explain it away, people are beginning to notice, just as they did with the previous Pause.

There has been warming at over 0.34°C / decade for the past 11 years and 5 months. I did not cherry pick this date, I calculated it[*]. This is obviously inconvenient to anyone claiming warming is not as fast as the imagined prediction from the 1990 IPCC report, so I can understand why that genius mathematician Lord Monckton chooses to ignore this carefully calculated trend. It doesn’t fit with his “the IPCC are a bunch of communists who make stuff up” party spiel. But the data are the data. And no amount of his usual libelous ad hominems will distract from his inability to explain why this accelerated warming is happening despite the pause.

[*] Of course it is a cherry pick.

Pick a start date of 8000 years ago

Tricky using satellite data.

Then start in 1958. We have good balloon data which agrees with the satellite from 1979. There has been no global warming for 64 years…..At least!

What’s the point? We know how this will go: I’ll point to all the data showing a significant warming trend since 1958 and you’ll say that doesn’t count because you don’t like the data. But for the record, trends since 1958:

GISTEMP: +0.165 ± 0.021 °C / decade

NOAA: +0.150 ± 0.021 °C / decade

HadCRUT: +0.139 ± 0.020 °C / decade

BEST: +0.175 ± 0.018 °C / decade

Above uncertainties taken from Skeptical Science Trend Calculator.

RATPAC-A

Surface: 0.166 ± 0.024 °C / decade

850: 0.184 ± 0.022 °C / decade

700: 0.165 ± 0.022 °C / decade

500: 0.197 ± 0.027 °C / decade

My own calculations from annual global data. Uncertainties are not corrected for auto-correlation.

All uncertainties are 2-sigma.

As usual, you can’t explain the difference between precision and accuracy. How do you get a total uncertainty of 0.02C from measurement equipment with a 0.5C uncertainty?

That’s no different than saying if you make enough measurements of the speed of light with a stop watch having an uncertainty of 1 second you can get your uncertainty for the speed of light down to the microsecond!

The fact is that your “trend” gets subsumed into the uncertainty intervals. You can’t tell if the trend is up or down!

He makes the same blunders, over and over and over, and he still doesn’t understand the word.

It’s the uncertainty in the trend, i.e. the confidence interval. You know, the thing Monckton never mentions in any of his pauses, and you Lords of Uncertainty never call him out on.

“The fact is that your “trend” gets subsumed into the uncertainty intervals. You can’t tell if the trend is up or down!”

Which would mean the pause is meaningless, and calculating an exact start month doubly so.

The same old propaganda, repeated endlessly

The uncertainty of the trend depends on the uncertainty of the underlying data. The uncertainty of the trend simply cannot be less than the uncertainty of the data itself.

Uncertainty and confidence interval are basically the same thing. The uncertainty interval of a single physical measurement is typically the 95% confidence interval. That means when you plot the first data point the true value will lie somewhere in the uncertainty interval. When you plot the next point on a graph it also can be anywhere in the confidence interval. Therefore the slope of the connecting line can be from the bottom of the uncertainty interval of the first point to the top of the uncertainty interval for the next point, or vice versa – from the top to the bottom. That means the actual trend line most of the time can be positive or negative, you simply can’t tell. Only if the bottom/top of the uncertainty interval for the second point is above/below the uncertainty interval of the first point can you be assured the trend line is up/down.

Again, the trend line has no uncertainty interval of its own. The uncertainty of a trend line is based on the uncertainty of the data being used to try and establish a trend line.

Can you whine a little louder, I can’t hear you.

I have never said anything else. UAH is a metric, not a measurement. It is similar to the GAT in that the total uncertainty of the mean is greater than the differences trying to be measured. You can try and calculate the means of the two data sets as precisely as you want, i.e. the standard deviation of the sample means, but that doesn’t lessen the total uncertainty (i.e. how accurate the mean is) of each mean.

Are you finally starting to get the whole picture? So much of what passes for climate science today just totally ignores the uncertainty of the measurements they use. The stated measurement value is assumed to be 100% accurate and the uncertainty interval is just thrown in the trash bin.

Agricultural scientists studying the effect of changing LSF/FFF dates, changing GDD, and changing growing season length have recognized that climate needs to be studied on a county by county basis. National averages are not truly indicative of climate change. And if national averages are not a good metric then how can global averages be any better?

If the climate models were produced on a regional or local basis I would put more faith in them. They could be more easily verified by observational data. Since the weather models have a hard time with accuracy, I can’t imagine that the climate scientists could do any better!

“The uncertainty of the trend depends on the uncertainty of the underlying data.”

No it doesn’t, at least not usually. The data could be perfect and you will still have uncertainty in the trend. But there’s little point going through all this again, given your inability to accept even the simplest statistical argument.

“Therefore the slope of the connecting line can be from the bottom of the uncertainty interval of the first point to the top of the uncertainty interval for the next point, or vice versa – from the top to the bottom.”

Which is why you want to have more than two data points.

“Only if the bottom/top of the uncertainty interval for the second point is above/below the uncertainty interval of the first point can you be assured the trend line is up/down.”

Again, not true, but also again, I see no attempt to extend this logic to anything Monckton says. If, say, there’s a ±0.2°C uncertainty in the monthly UAH data, then by this logic the pause period could have warmed or cooled by 0.4°C, a rate of over 0.5°C / decade. And if you take the Carlo, Monte analysis the change over the pause could have been over 7°C, or ±9°C / decade.

As it happens the uncertainty over that short period is closer to the first figure, around ±0.6°C / decade, using the Skeptical Science Trend Calculator which applies a strong correction for auto correlation. But that uncertainty is not based on any measurement uncertainty, it’s based on the variability of the data combined with the short time scale.
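To illustrate the point that a trend has its own uncertainty arising from the scatter of the data and the length of the period, here is a minimal sketch of the textbook ordinary least-squares slope and its standard error. It is an assumption-laden illustration: it uses synthetic white noise and applies no correction for auto-correlation, so it is not the method used by the Skeptical Science Trend Calculator and its intervals would be narrower than that tool reports.

```python
import numpy as np

def slope_with_stderr(y):
    """OLS slope of y against its index, and the slope's standard error."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    t = np.arange(n, dtype=float)
    t_c = t - t.mean()
    sxx = np.sum(t_c ** 2)
    slope = np.sum(t_c * (y - y.mean())) / sxx
    intercept = y.mean() - slope * t.mean()
    resid = y - (intercept + slope * t)
    s2 = np.sum(resid ** 2) / (n - 2)   # residual variance
    return slope, np.sqrt(s2 / sxx)

# Synthetic monthly series with the same scatter but different lengths
# (illustrative numbers only, NOT real temperature data).
rng = np.random.default_rng(2)
long_series = 0.001 * np.arange(519) + rng.normal(0, 0.2, 519)
short_series = long_series[-89:]

slope_long, se_long = slope_with_stderr(long_series)
slope_short, se_short = slope_with_stderr(short_series)

# Report as per-decade values with a 2-sigma interval (120 months per decade)
print(f"long:  {120 * slope_long:+.3f} ± {2 * 120 * se_long:.3f} per decade")
print(f"short: {120 * slope_short:+.3f} ± {2 * 120 * se_short:.3f} per decade")
```

With the same month-to-month scatter, the shorter window gives a much wider interval on the slope, which is the effect described above.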

“I have never said anything else”

Fair enough if you think the pause is meaningless, but I see many here who claim it proves there is no correlation between CO2 levels. It’s difficult to see how it can do that if you accept the large uncertainties.

Projection time.

Do you want me to enumerate all the times he’s ignored all explanations for why he’s wrong? Insisting that uncertainty in an average increases with sample size, refusing to accept that scaling down a measurement will also scale down the uncertainty, insisting that you can accurately calculate growing degree days knowing only the maximum temperature for the day. To name but three off the top of my head.

You are wrong on each of these. The standard deviation of the sample means is *NOT* the uncertainty of the average value. You can’t even state this properly. The standard deviation of the sample means only tells you how precisely you have calculated the mean of the sample means. It does *NOT* tell you anything about the uncertainty of that average. Precision is *NOT* accuracy. For some reason you just can’t seem to get that right!

There is no scaling down of uncertainty. You refuse to accept that the uncertainty in a stack of pieces of paper is the sum of the uncertainty associated with each piece of paper. If the uncertainty of 200 pages is x then the uncertainty of each piece of paper is x/200. u1 + u2. + … + u200 = x. This is true even if the pages do *not* have the same uncertainty. If the stack of paper consists of a mixture of 20lb paper and 30lb paper then the uncertainty associated with each piece of paper is *NOT* x/200. x/200 is just an average uncertainty. You can’t just arbitrarily spread that average value across all data elements.

Growing degree-days calculated using modern methods *IS* done by integrating the temperature profile above a set point and below a set point. If the temperature profile is a sinusoid then knowing the maximum temperature defines the entire profile and can be used to integrate. If it is not a sinusoid then you can still numerically integrate the curve. For some reason you insist on using outdated methods based on mid-range temperatures – just like the climate scientists do. If the climate scientists would get into the 21st century they would also move to the modern method of integrating the temperature curve to get degree-days instead of staying with the old method of using mid-range temperatures. HVAC engineers abandoned the old method at least 30 years ago!

Thanks for illustrating my point.

Nothing in your points about the distinction between precision and accuracy do you explain how increasing sample size can make the average less certain. Your original example was having 100 thermometers each with an uncertainty of ±0.5 °C, making, you claimed, the uncertainty of the average ±5 °C. If your argument is that this uncertainty was about accuracy not precision, i.e. caused by systematic rather than random error, it still would not mean the uncertainty of the average could possibly be ±5 °C. At worst it would be ±0.5 °C.

What do you think x/200 means if not scaling down. You know the size of a stack of paper, you know the uncertainty of that measurement, you divide the measurement by 200 to get the thickness of a single sheet, and you divide the uncertainty by 200 to get the uncertainty of that thickness.

The outdated methods requiring average temperatures in GDD was exactly the one you insisted was the correct formula here. It shows the need to have both the maximum and minimum temperatures and to get the mean temperature from them along with the range. I suggested you try it out by keeping the maximum temperature fixed and seeing what happened with different minimum temperatures. I take it you haven’t done that. Here’s the formula you wrote down, with some emphasis added by me.

(New Total GDD) = (Yesterday’s Total GDD) + (1/π) * ( (DayAvg – κ) * ( ( π/2 ) – arcsine( θ ) ) + ( α * Cos( arcsine( θ ) ) ) )

DayAvg = (DayHigh + DayLow)/2

κ = 50 (the base temp.)

α = (DayHigh – DayLow)/2

θ = ((κ – DayAvg)/α)
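The experiment suggested above (hold the maximum fixed and vary the minimum) is easy to run. Here is a minimal sketch of the daily increment in the formula as written, with κ = 50. The two early returns for days that lie entirely below or entirely above the base temperature are my assumption, added so that arcsine is never called outside its domain; they are not part of the formula quoted in the comment.

```python
import math

def gdd_increment(day_high, day_low, base=50.0):
    """Daily growing-degree-day increment from the quoted sine formula."""
    if day_high <= base:
        return 0.0                      # assumed: whole day below base
    day_avg = (day_high + day_low) / 2.0
    if day_low >= base:
        return day_avg - base           # assumed: whole day above base
    alpha = (day_high - day_low) / 2.0
    theta = (base - day_avg) / alpha    # lies strictly between -1 and 1 here
    return (1.0 / math.pi) * (
        (day_avg - base) * (math.pi / 2.0 - math.asin(theta))
        + alpha * math.cos(math.asin(theta))
    )

# Same maximum (80), three different minimums
for low in (40.0, 50.0, 60.0):
    print(low, round(gdd_increment(80.0, low), 2))
```

With the maximum held at 80, the increment comes out at about 12.2, 15 and 20 for minimums of 40, 50 and 60, so the result as computed by this formula does depend on the minimum temperature.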

Who let prof bellcurveman have a dry marker again?

“Nothing in your points about the distinction between precision and accuracy do you explain how increasing sample size can make the average less certain.”

Increasing the sample size only increases the PRECISION of the mean. As the standard deviation of the sample means gets smaller, you are getting more and more precise with the value calculated – THAT IS *NOT* THE UNCERTAINTY OF THE MEAN which is how accurate it is.

If you only use the stated value of the mean for each sample and ignore the uncertainty propagated into that mean from the individual members of the sample and then you use that mean of the stated values to determine the mean of the population you have determined NOTHING about how accurate that mean is.

Take 10 measurements with stated values of x_1, x_2, …, x_10 each with an uncertainty of +/- 0.1. Then let q = Σ (x_1, …, x_10). The uncertainty of q, ẟq, is somewhere between ẟx_1 + ẟx_2 + … + ẟx_10 and sqrt(ẟx_1^2 + ẟx_2^2 + … + ẟx_10^2)
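For concreteness, the two bounds on the uncertainty of the sum can be evaluated numerically. A small sketch, with a hypothetical function name, using the ten ±0.1 uncertainties from the example:

```python
import math


def sum_uncertainty_bounds(uncertainties):
    """Return (quadrature sum, direct sum) of the given uncertainties."""
    direct = sum(abs(u) for u in uncertainties)
    quadrature = math.sqrt(sum(u * u for u in uncertainties))
    return quadrature, direct


low, high = sum_uncertainty_bounds([0.1] * 10)
print(f"uncertainty of the sum lies between {low:.3f} and {high:.3f}")
```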

Now, let’s say you want to calculate the uncertainty of the average. q_avg = Σ (x_1, …, x_10) / 10.

The uncertainty of q_avg is somewhere between ẟx_1 + ẟx_2 + … + ẟx_10 + ẟ10 (where ẟ10 = 0) and sqrt(ẟx_1^2 + ẟx_2^2 + … + ẟx_10^2 + ẟ10^2) (where ẟ10 = 0)

Taylor’s Rule 3.18 doesn’t apply here because n = 10 is not a measurement.

from Taylor: “If several quantities x, …, w are measured with small uncertainties ẟx, …, ẟw, and the measured values are used to compute (bolding mine, tg)

q = (x × … × z) / (u × … × w)

If the uncertainties in x, …, w are independent and random, then the fractional uncertainty in q is the sum in quadrature of the original fractional uncertainties.

ẟq/q = sqrt[ (ẟx/x)^2 + … + (ẟz/z)^2 + (ẟu/u)^2 + … + (ẟw/w)^2 ]

In any case, it is never larger than their ordinary sum

ẟq/q ≤ ẟx/x + … + ẟz/z + ẟu/u + … + ẟw/w”

Even if you assume that u, for instance, is 10, the ẟ10 just gets added in.

Thus the uncertainty of the mean of each sample is somewhere between the direct addition of the uncertainties in each element and the quadrature addition of the uncertainties in each element. Since the number of elements is a constant (with no uncertainty) the uncertainty of the constant neither adds to, subtracts from, nor divides the uncertainty of the sum of the uncertainties from each element.

When you use the means of several samples to calculate the mean of the population by finding the average of the means, the uncertainty associated with each mean must be propagated into the average.

Average-of-the-sample-means = (m_1 + m_2 + … + m_n) / n,

where m_1, …, m_n each have an uncertainty of ẟm_1, ẟm_2, …, ẟm_n

The uncertainty ẟAverage_of_the_sample_means is between

ẟAverage_of_the_sample_means / sum_of_the_sample_means =

ẟm_1/m_1 + ẟm_2/m_2 + …. + ẟm_n/m_n

and

sqrt[ (ẟm_1/m_1)^2 + ( ẟm_2/m_2)^2 + … + (ẟm_n/m_n)^2 ]

The standard deviation of m_1, …, m_n is

sqrt[ Σ(m_i – m_avg)^2 / n ], where i runs from 1 to n

This is *NOT* the uncertainty of the mean. Totally different equation.
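As an illustration of the standard deviation of a set of sample means (the square root of the mean squared deviation from their average), here is a short sketch. The five sample means are invented for the example; the standard-library `statistics.pstdev` is used as a cross-check.

```python
import math
import statistics


def std_dev_of_means(means):
    """Population standard deviation of a list of sample means."""
    m_avg = sum(means) / len(means)
    return math.sqrt(sum((m - m_avg) ** 2 for m in means) / len(means))


# Hypothetical sample means, in degrees C.
sample_means = [14.8, 15.1, 15.0, 15.3, 14.9]
spread = std_dev_of_means(sample_means)
print(f"standard deviation of the sample means: {spread:.3f}")
print(f"statistics.pstdev gives:                {statistics.pstdev(sample_means):.3f}")
```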

I simply do not expect you to even follow this let alone understand it. My only purpose is to point out to those who *can* follow it and understand it that the standard deviation of the sample means is *NOT* the same thing as the uncertainty of the mean of the sample means.

Only a mathematician that thinks all stated values are 100% accurate would ignore the uncertainties associated with measurements and depend only on the stated values of the measurements.

Thanks for reminding me and any lurkers here of the futility of arguing these points with you. You’ve been asserting the same claims for what seems like years, provided no evidence but the strength of your convictions, and simply refuse to accept the possibility that you might have misunderstood something.

Aside from the failure to provide any justification, this claim is self-evidently false. You are saying that if you have 100 temperature readings made with 100 different thermometers, each with an uncertainty of ±0.5 °C, then if this uncertainty is caused by systematic error – e.g. every thermometer might be reading 0.5 °C too cold – then the uncertainty in the average of those 100 thermometers will be ±50 °C. And that’s just the uncertainty caused by the measurements, nothing to do with the sampling.

In other words, say all the readings are between 10 and 20 °C, and the average is 15 °C. Somehow the fact that the actual temperature around any thermometer might have been as much as 20.5 °C, means that the actual average temperature might be 65 °C. How is that possible?
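The scenario can be checked with a quick simulation. This sketch assumes the worst case described above, where every one of the 100 thermometers reads exactly 0.5 °C too cold; the "true" temperatures are made-up values between 10 and 20 °C.

```python
import random


def mean(values):
    return sum(values) / len(values)


random.seed(0)  # fixed seed so the run is repeatable
true_temps = [random.uniform(10.0, 20.0) for _ in range(100)]
readings = [t - 0.5 for t in true_temps]  # every thermometer 0.5 C too cold

shift = mean(true_temps) - mean(readings)
print(f"mean of readings:   {mean(readings):.2f} C")
print(f"mean of true temps: {mean(true_temps):.2f} C")
print(f"error in the mean:  {shift:.2f} C")
```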

“Taylor’s Rule 3.18 doesn’t apply here because n = 10 is not a measurement.”

Of course 10 is a measurement. It’s a measure of the size of n, and it has no uncertainty.

Uncertainty is not error.

And the relevance of this mantra is?

The problem is the same regardless of how you define uncertainty. How can 100 thermometers each with an uncertainty of ±0.5 °C result in an average with a measurement uncertainty between ±5 and ±50 °C?

tg: “How is it possible for the uncertainty to be 65C? What that indicates is that your average is so uncertain that it is useless. Once the uncertainty exceeds the range of possible values you can stop adding to your data set. At that point you have no idea of where the true value might lie.”

I’m not surprised you can’t figure this one out!

Oh I figured it out a long time ago. I’m just seeing how far you can continue with this idiocy.

Could you point to a single text book that explains that adding additional samples to a data set will make the average worse?

Taylor and Bevington’s tome. I already gave you the excerpts from their text books that state that statistical analysis of experimental data (i.e. temperatures) with systematic errors is not possible.

“adding additional samples to a data set will make the average worse?”

Very imprecise. It makes the UNCERTAINTY of the average greater. You are still confusing preciseness and accuracy. You can add uncertain data and still calculate the mean very precisely. What you *can’t* do is ignore the uncertainty and state that the preciseness of your calculation of the mean is also how uncertain that mean is.

And all you do is keep claiming Taylor and Bevington are idiots and their books are wrong. ROFL!!

I’m sorry that’s an inconvenient truth for you but it *IS* the truth! The climate scientists combine multiple measurements of different things using different measurement devices all together to get a global average temperature. What do you expect that process to give you? In order to get things to come out the way they want they have to ignore the uncertainties of all those measurement devices and of those measurements and assume the stated values are 100% accurate. They ignore the fact that, in forming the anomalies, the uncertainty in the baseline (i.e. an average of a large number of temperature measurements, each contributing to uncertainty) and the uncertainty in the current measurement ADD even if they are doing a subtraction! They just assume that if all the stated values are 100% accurate then the anomaly must be 100% accurate!
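To illustrate the point about uncertainties adding under subtraction: for a difference such as anomaly = current − baseline, the uncertainty lies between the quadrature sum and the direct sum of the two input uncertainties. The values below (±0.5 for each term) and the function name are assumptions chosen for the sketch, not figures for any real dataset.

```python
import math


def difference_uncertainty_bounds(unc_a, unc_b):
    """Return (quadrature sum, direct sum) for the uncertainty of a - b."""
    return math.hypot(unc_a, unc_b), abs(unc_a) + abs(unc_b)


# Assumed uncertainties for the current value and the baseline.
low, high = difference_uncertainty_bounds(0.5, 0.5)
print(f"anomaly uncertainty lies between {low:.3f} and {high:.3f}")
```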

They just ignore the fact that they are creating a data set with a HUGE variance – cold temps in the NH with hot temps in the SH in part of the year and then vice versa in another part of the year. Wide variances mean high uncertainty. But then they try to hide the variance inside the data set by using anomalies – while ignoring the uncertainty propagated into the anomalies.

At least with UAH you are taking all measurements with the same measuring device. It would be like taking one single thermometer to 1000 or more surface locations to do the surface measurements. At least that would allow you to get at least an estimate of the systematic error in that one device in order to provide some kind of corrective factor. It might not totally eliminate all systematic error but it would at least help. That’s what you get with UAH.

BTW, 100 different measurement devices and measurements would give you at least some random cancellation of errors. Thus you should add the uncertainties using root-sum-square -> sqrt( 100 * 0.5^2) = 10 * 0.5 = 5C. Your uncertainty would be +/- 5C. That would *still* be far larger than the hundredths of a degree the climate scientists are trying to identify. If you took 10 samples of size 10 then the mean of each sample would have an uncertainty of about 1.5C = sqrt( 10 * .5^2) = 3 * .5. Find the uncertainty of the average of those means and the uncertainty of that average of the sample means would be sqrt( 10 * 1.5^2) = 4.5C. (the population uncertainty and the uncertainty of the sample means would probably be equal except for my rounding). That’s probably going to be *far* higher than the standard deviation of the sample means! That’s what happens when you assume all the stated values in the data set are 100% accurate. You then equate the uncertainty in the mean with the standard deviation of the sample means. It’s like Berkeley Earth assuming the uncertainty in a measuring device is equal to its precision instead of its uncertainty.
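The arithmetic in the paragraph above can be reproduced without the intermediate rounding. This sketch simply follows the root-sum-square steps as the commenter describes them; the function and variable names are hypothetical.

```python
import math


def root_sum_square(uncertainties):
    """Combine uncertainties in quadrature."""
    return math.sqrt(sum(u * u for u in uncertainties))


all_100 = root_sum_square([0.5] * 100)          # all 100 readings at once
per_sample = root_sum_square([0.5] * 10)        # one sample of size 10
of_means = root_sum_square([per_sample] * 10)   # ten such samples combined
of_means_rounded = root_sum_square([1.5] * 10)  # using the rounded 1.5

print(f"100 readings:           {all_100:.2f}")
print(f"sample of 10:           {per_sample:.2f}")
print(f"10 samples (unrounded): {of_means:.2f}")
print(f"10 samples (from 1.5):  {of_means_rounded:.2f}")
```

Without rounding, the two-stage figure matches the single-stage one, consistent with the commenter's remark that the difference comes from rounding.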

No, the uncertainty of the mean would be +/- 5C. Thus the true value of the mean would be from 10C to 20C. Why would that be a surprise?

Remember with global temperatures, however, you have a range of something like -20C to +40C. Huge variance. So a huge standard deviation. And anomalies, even monthly anomalies, will have a corresponding uncertainty.

How is it possible for the uncertainty to be 65C? What that indicates is that your average is so uncertain that it is useless. Once the uncertainty exceeds the range of possible values you can stop adding to your data set. At that point you have no idea of where the true value might lie. In fact, with different measurements of different things using different devices there is *NO* true value anyway. The average gives you absolutely no expectation of what the next measurement will be. It’s like collecting boards at random out of the ditch or trash piles, etc. You can measure all those boards and get an average. But that average will give you no hint as to what the length of the next board collected will be. It might be shorter than all your other boards, it might be longer than all the other boards, or it may be anywhere in the range of the already collected boards – YOU SIMPLY WILL HAVE NO HINT AS TO WHAT IT WILL BE. The average will simply not provide you any clue!

With multiple measurements of the same thing using the same device the average *will* give you an expectation of what the next measurement will be. If all the other measurements range from 1.1 to 0.9 with an uncertainty of 0.01 then your expectation for the next measurement is that it would be around 1.0 +/- .01. That’s because with a gaussian distribution the average will be the most common value – thus giving you an expectation for the next measurement.

“And all you do is keep claiming Taylor and Bevington are idiots and their books are wrong. ROFL!!”

I claim nothing of the sort. I keep explaining to you that they disagree with everything you say, which makes them the opposite of idiots.

“I keep explaining to you that they disagree with everything you say, which makes them the opposite of idiots.”

They don’t disagree with everything I say. The problem is that you simply don’t understand what they are saying and you refuse to learn.

These are two entirely different things. Different methods apply to uncertainty in each case.

In scenario 1 you do not get a gaussian distribution of random error, even if there is no systematic error. In this case there is *NO* true value for the distribution. You can calculate an average but that average is not a true value. As you add values to the data set the variance of the data set grows with each addition as does the total uncertainty.

In scenario 2 you do get a gaussian distribution of random error, which tends to cancel, but any systematic error still remains. You can *assume* there is no systematic error but you need to be able to justify that assumption – which you, as a mathematician and not a physical scientist or engineer, never do. You just assume the real world is like your math books where all stated values are 100% accurate.

As Taylor says in his introduction to Chapter 4:

“As noted before, not all types of experimental uncertainty can be assessed by statistical analysis based on repeated measurements. For this reason, uncertainties are classified into two groups: the random uncertainties, which can be treated statistically, and the systematic uncertainties, which cannot. This distinction is described in Section 4.1. Most of the remainder of this chapter is devoted to random uncertainties.” (italics are in original text, tg)

You either refuse to understand this or are unable to understand this. You want to apply statistical analysis to all situations whether it is warranted or not. Same with bdgwx.

Bevington says the very same thing: “The accuracy of an experiment, as we have defined it, is generally dependent on how well we can control or compensate for systematic errors, errors that will make our results different from the “true” values with reproducible discrepancies. Errors of this type are not easy to detect and not easily studied by statistical analysis.”

Temperature measurements are, by definition, multiple measurements of different things using different measurement devices. Thus they are riddled with systematic errors, which do not lend themselves to statistical analysis. There is simply no way to separate out random error and systematic error. A data set containing this information can be anything from multi-modal to highly skewed to having an absolutely huge variance. Your typical statistical parameters such as mean and standard deviation simply do not describe such a data set well at all.