By Andy May

The IPCC lowered their estimate of the impact of solar variability on the Earth’s climate from the already low value of 0.12 W/m2 (watts per square meter) given in their fourth report (AR4) to a still lower value of 0.05 W/m2 in the 2013 fifth report (AR5); the new value is illustrated in Figure 1. These are long-term values, estimated for the 261-year period 1750-2011, and they apply to the “baseline” of the Schwabe ~11-year solar (or sunspot) cycle, which we will simply call the “solar cycle” in this post. The baseline of the solar cycle is the issue, since the peaks are known to vary. The Sun’s output (total solar irradiance or “TSI”) is known to vary on all time scales (Kopp 2016); the question is by how much. The magnitude of short-term changes in solar output, over periods of less than 11 years, is known relatively accurately, to better than ±0.1 W/m2. But the magnitude of solar variability over longer periods of time is poorly understood. Yet small changes in solar output over long periods of time can affect the Earth’s climate in significant ways (Eddy 1976; Eddy 2009). In John Eddy’s classic 1976 paper on the Maunder Minimum, he writes:

“The reality of the Maunder Minimum and its implications of basic solar change may be but one more defeat in our long and losing battle to keep the sun perfect, or, if not perfect, constant, and if inconstant, regular. Why we think the sun should be any of these when other stars are not is more a question for social than for physical science.” (Eddy 1976)

Recent satellite data show that the Sun puts out ~1361 W/m2, measured at 1 AU, the average distance of the Earth’s orbit from the Sun. The Earth intercepts sunlight over a disk of its own radius, but that energy is spread over a sphere with four times the area: half of the surface is always in the dark and the sunlight hits most latitudes at an angle. So, to get the average incoming radiation per square meter of surface, we divide by 4, to get ~340 W/m2. Then, after subtracting the energy reflected by the atmosphere and the surface, we find the average radiation absorbed is about 240 W/m2.
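The two steps in the paragraph above (divide by four, then remove the reflected part) can be sketched as follows. This is a minimal illustration, not the post's own calculation; the albedo of 0.3 is an assumption (a commonly quoted round value that the post does not state), chosen only because it roughly reproduces the ~240 W/m2 figure.

```python
# Global-average solar energy budget, following the steps in the text.
# ASSUMPTION: a planetary albedo of 0.3 is not given in the post; it is a
# commonly quoted round value used here only to illustrate the ~240 W/m2 step.

TSI = 1361.0  # W/m2 at 1 AU (recent satellite value quoted in the post)


def mean_incoming(tsi: float) -> float:
    """Sunlight is intercepted by a disk (pi r^2) but spread over a
    sphere (4 pi r^2), so the surface-average input is tsi / 4."""
    return tsi / 4.0


def mean_absorbed(tsi: float, albedo: float) -> float:
    """Subtract the fraction reflected by the atmosphere and surface."""
    return mean_incoming(tsi) * (1.0 - albedo)


incoming = mean_incoming(TSI)
absorbed = mean_absorbed(TSI, albedo=0.3)
print(f"incoming ~{incoming:.0f} W/m2, absorbed ~{absorbed:.0f} W/m2")
```

This prints about 340 W/m2 incoming and 238 W/m2 absorbed, close to the rounded 240 W/m2 used throughout the post.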

The Earth warms when more energy is added to the climate system; the IPCC calls this added energy a climate “forcing.” According to the IPCC (IPCC 2013, 661), the total anthropogenic forcing over the industrial era (1750 to 2011, 261 years) is about 2.3 (1.1-3.3) W/m2, or about 1.0% of 240 W/m2. Also on page 661, the IPCC estimates the total forcing due to greenhouse gases in 2011 to be 2.83 (2.54-3.12) W/m2. The forcing for CO2 alone is 1.82 (1.63-2.01) W/m2. Using the same methods, they further estimate that CO2 forcing grew by 0.27 W/m2 from 2001 to 2011, or 0.027 W/m2/year. These are a lot of numbers, so we’ve summarized them in Table 1 below.

IPCC Anthropogenic Forcing Estimates (AR5, page 661)

| Time period | Cause | Years | Total forcing (W/m2) | Forcing per year (W/m2/yr) | Percent of 240 W/m2 |
|---|---|---|---|---|---|
| 1750-2011 | Humans | 261 | 2.3 | 0.0088 | 0.96% |
| 1750-2011 | CO2 | 261 | 1.82 | 0.0070 | 0.76% |
| 2001-2011 | CO2 | 10 | 0.27 | 0.0270 | 0.11% |

Table 1. Anthropogenic forcing as estimated by (IPCC 2013, 661).
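The two derived columns of Table 1 follow from simple division: total forcing over the number of years, and total forcing as a share of the ~240 W/m2 of absorbed sunlight. A short sketch that recomputes them from the IPCC totals (the names are illustrative only):

```python
# Recompute the derived columns of Table 1 from the IPCC AR5 totals.
ABSORBED = 240.0  # W/m2, global-average absorbed solar radiation (from the text)

TABLE_1 = [
    # (period, cause, years, total forcing in W/m2)
    ("1750-2011", "Humans", 261, 2.3),
    ("1750-2011", "CO2", 261, 1.82),
    ("2001-2011", "CO2", 10, 0.27),
]


def derived_columns(years: int, forcing: float) -> tuple[float, float]:
    """Return (forcing per year in W/m2/yr, percent of absorbed radiation)."""
    return forcing / years, 100.0 * forcing / ABSORBED


for period, cause, years, forcing in TABLE_1:
    per_year, percent = derived_columns(years, forcing)
    print(f"{period} {cause:<6} {per_year:.4f} W/m2/yr {percent:.3f}% of 240")
```

The per-year values match the table to four decimals, and the percentages match it once rounded to two decimals.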

The IPCC’s assumed list of radiative forcing agents and their total forcing from 1750 to 2011 are shown in Figure 1. Next to the IPCC table, I’ve shown the Central England temperature (CET) record, which is the only complete instrumental temperature record that goes back that far in time. The CET is mostly flat until the end of the Little Ice Age and then, after a dip around the time of the Krakatoa volcanic eruption in 1883, it shows warming to modern times.

Figure 1. On the left is the IPCC list of radiative forcing agents from page 697 of AR5 WG1 (IPCC 2013). Notice that they assume the solar irradiance forcing is very small; in this post we examine this assumption. On the right is the Central England temperature record (CET), the only instrumental temperature record that goes back to 1750. The CET data source is the UK Met Office.

If the Sun were to supply all 2.3 W/m2 of the forcing described in Figure 1, as a steady change over 261 years, the forcing would have to increase by an average of ~0.0088 W/m2/year. So, assuming constant albedo (reflectivity), the change in solar output would have to average 4 × 0.0088, or 0.035 W/m2/year. As noted above, we multiply by four because the Earth intercepts sunlight over a disk but spreads it over a sphere with four times the area. This is a total increase in solar output of 9.2 W/m2 over 261 years (1750-2011), a change of about 0.7%. Some might say we should start at 1951, since that is the agreed date when CO2 emissions became significant (IPCC 2013, 698-700). But I started at 1750 to cover the “industrial era” as defined by the IPCC. The choice is somewhat arbitrary, as long as we go back far enough to precede any significant human CO2 emissions. The year 1750 is also useful because it is near the end of the worst part of the Little Ice Age, the coldest period in the last 11,700 years (the Holocene). Do we know the solar output over the past 261 years accurately enough to say the Sun could not have changed by 9.2 W/m2, or by some large portion of that amount? In other words, is the IPCC assumption that solar variability has a very small influence on climate valid?
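The arithmetic in the paragraph above can be written as a small function. It is a sketch of the post's reasoning, not an IPCC method. The optional `albedo` parameter is an addition for comparison: with `albedo=0.0` it reproduces the post's factor-of-four scaling, while a non-zero value also divides by (1 − albedo), so that only the absorbed part of any change in sunlight counts.

```python
# Solar-output change needed for the Sun alone to supply the IPCC's
# 2.3 W/m2 anthropogenic forcing over 1750-2011, following the post.
FORCING = 2.3   # W/m2, IPCC AR5 total anthropogenic forcing
YEARS = 261     # 1750-2011
TSI = 1361.0    # W/m2


def required_tsi_change(forcing: float, years: int, albedo: float = 0.0):
    """Return (TSI change per year, total TSI change) in W/m2.

    The factor of 4 converts a surface-average forcing back to TSI
    (disk-to-sphere geometry). albedo=0.0 reproduces the post's figure;
    a non-zero albedo additionally divides by (1 - albedo).
    """
    per_year = 4.0 * (forcing / years) / (1.0 - albedo)
    return per_year, per_year * years


rate, total = required_tsi_change(FORCING, YEARS)
print(f"{rate:.3f} W/m2/yr, {total:.1f} W/m2 total, {100 * total / TSI:.1f}% of TSI")
# prints: 0.035 W/m2/yr, 9.2 W/m2 total, 0.7% of TSI
```

Under the common convention that solar forcing equals ΔTSI × (1 − albedo) / 4, passing an assumed albedo of 0.3 gives about 13.1 W/m2 (9.2 / 0.7) instead of 9.2 W/m2.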

How accurate are our measurements of solar output?

The solar cycle variation of TSI is about 1.5 W/m2, or 0.1%, from peak to trough (~5-7 years), or roughly 0.25 W/m2/year and 0.02%/year. These changes are much larger than the long-term change in solar output of ~0.035 W/m2/year (0.0088 W/m2/year of forcing) computed above. So, simply because we can see the ~11-year solar cycle does not necessarily mean we can see a longer-term trend that could have caused the current warming. Satellite TSI instruments deteriorate under the intense sunlight they measure, and they lose accuracy with time. We have satellite measurements of varying quality over much of the last four solar cycles. The raw data are plotted in Figure 2, and the critical ACRIM gap is highlighted in yellow. Because the Nimbus7/ERB (Earth Radiation Budget) and ERBS/ERBE instruments are much less precise and accurate than the ACRIM (Active Cavity Radiometer Irradiance Monitor) instruments, filling this gap is the most important problem in making a long-term TSI composite (Scafetta and Willson 2014).
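To make the comparison concrete, the sketch below converts the ~1.5 W/m2 solar-cycle swing into a yearly rate for half-cycles of 5 to 7 years. It then compares that rate with the ~0.035 W/m2/year TSI change derived earlier for the Sun to account for the warming since 1750. It only restates the post's numbers; nothing here is a measured trend.

```python
# Compare the rate of TSI change within a solar cycle with the secular
# rate the post says the Sun would need to explain warming since 1750.
CYCLE_AMPLITUDE = 1.5          # W/m2, peak-to-trough TSI change (from the text)
TSI = 1361.0                   # W/m2
REQUIRED_RATE = 4 * 2.3 / 261  # ~0.035 W/m2/yr of TSI (derived earlier in the post)


def cycle_rate(half_cycle_years: float) -> float:
    """Average TSI change per year over one rise or fall of the cycle."""
    return CYCLE_AMPLITUDE / half_cycle_years


for years in (5, 6, 7):
    rate = cycle_rate(years)
    print(f"{years} yr half-cycle: {rate:.2f} W/m2/yr "
          f"({100 * rate / TSI:.3f} %/yr), "
          f"about {rate / REQUIRED_RATE:.0f}x the required secular rate")
```

The cycle rate is roughly six to nine times the required secular rate, which is why a visible 11-year cycle says little about a slow underlying trend.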

Figure 2. Raw satellite total solar irradiance (TSI) measurements. The ACRIM gap is identified in yellow. The trend of the NIMBUS7/ERB instrument in the ACRIM gap is emphasized with a red line. Source: (Soon, Connolly and Connolly 2015).

As Figure 2 makes clear, calibration problems have caused the satellites to measure widely different values of TSI; the solar cycle minima range from 1371 W/m2 to 1360.5 W/m2. Currently, the correct minimum is thought to be around 1360.5 W/m2, but just a few years ago it was thought to be ~1364 W/m2 (Haigh 2011). After calibration corrections have been applied, each satellite produces an internally consistent record, but the records are not consistent with one another, and no single record covers two or more complete solar cycles. This makes the determination of long-term trends problematic.

There have been three serious attempts to build a single composite TSI record from the raw data displayed in Figure 2. They are shown in Figure 3.

Figure 3. Three common composites of the data shown in Figure 2. The ACRIM gap is identified in yellow. The PMOD composite is by PMOD (Frohlich 2006), which is also the source of the figure (pmodwrc.ch); the ACRIM composite is from the ACRIM team (Scafetta and Willson 2014); and the IRMB composite is from the Royal Meteorological Institute of Belgium (Dewitte, et al. 2004).

The ACRIM and IRMB composites show an increasing trend during the ACRIM gap, and the PMOD composite shows a declining trend. This figure was made several years ago by the PMOD team, when the TSI baseline was more uncertain, so the IRMB and PMOD composites are shown with a ~1365 W/m2 baseline and the ACRIM composite with a ~1360.5 W/m2 baseline, which is currently preferred. The important point shown in Figure 3 is that the long-term PMOD trend is down, the ACRIM trend is up to the cycle 22-23 minimum (~1996) and then down to the cycle 23-24 minimum (~2009), and the IRMB trend is up. Thus, the direction of the long-term trend is unclear. Figure 4, from (Scafetta and Willson 2014), shows the details of the PMOD and ACRIM trends.

Figure 4. The ACRIM and PMOD composites showing opposing slopes in the solar minima and in the ACRIM gap, highlighted in yellow. Source: (Scafetta and Willson 2014).

In Figure 4 we see the differences more clearly. The ACRIM TSI trend from the solar cycle 21-22 minimum to the 22-23 minimum is +0.5 W/m2 in 10 years, or 0.05 W/m2 per year; the trend then turns down to the cycle 23-24 minimum. The PMOD composite declines steadily, by about 0.14 W/m2 in 22 years (1987-2009), or 0.006 W/m2/year. The difference between these trends is 0.056 W/m2/year. If this difference is extrapolated linearly over 261 years, it amounts to 14.6 W/m2, more than the 9.2 W/m2 required for the Sun to cause the recent warming.
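The extrapolation above is a straight multiplication, sketched here with the slopes read from Figure 4. Because the two slopes have opposite signs, their difference is the sum of their magnitudes. Using the unrounded PMOD slope gives a slightly larger total than rounding the difference to 0.056 W/m2/year first.

```python
# Linear extrapolation of the difference between the ACRIM and PMOD
# trends between solar minima, using the numbers read from Figure 4.
ACRIM_RATE = 0.5 / 10    # W/m2/yr, cycle 21-22 minimum to 22-23 minimum
PMOD_RATE = -0.14 / 22   # W/m2/yr, 1987-2009
YEARS = 261              # 1750-2011
REQUIRED_TOTAL = 9.2     # W/m2, from the post's earlier estimate

difference = ACRIM_RATE - PMOD_RATE                  # opposing slopes add
extrapolated = difference * YEARS
extrapolated_rounded = round(difference, 3) * YEARS  # as in the text (0.056 W/m2/yr)

print(f"difference: {difference:.4f} W/m2/yr")
print(f"over {YEARS} yr: {extrapolated:.1f} W/m2 (unrounded), "
      f"{extrapolated_rounded:.1f} W/m2 (rounded slope)")
print("exceeds required total:", extrapolated > REQUIRED_TOTAL)
```

Either way the result (about 14.6 to 14.7 W/m2) exceeds 9.2 W/m2. Of course, a linear extrapolation of a 10- to 22-year slope over 261 years is an illustration of scale, not a forecast.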

NOAA believes the SORCE satellite TIM (Total Irradiance Monitor) instrument is accurate and accepts the ~1360.5 W/m2 TSI baseline it establishes. NOAA normalized the three composites discussed above to this baseline and then averaged them to produce the record shown in Figure 5. The SORCE/TIM record starts in February 2003, so after that date the average is replaced by the SORCE/TIM record. Averaging three records with differing trends creates a meaningless trend, so this TSI record is of little use for our purposes. However, NOAA also constructs an uncertainty function (something notably missing for the individual composites) from the differences between the composites and the estimated instrument error. The NOAA composite is shown in Figure 5 and its computed uncertainty in Figure 6; both figures show the raw data and a 100-day running average.
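Why an averaged composite has a "meaningless" trend can be shown with synthetic data. The least-squares trend is linear in the data, so the trend of a point-by-point average equals the average of the individual trends. This holds exactly for any records on a common time axis, not just straight lines. The three slopes below are hypothetical round numbers, not NOAA's or any composite team's actual values, and this simple mean is not claimed to be NOAA's exact procedure.

```python
# Illustration (synthetic data): the least-squares trend of an average of
# several records equals the average of their individual trends.
# ASSUMPTION: the three slopes below are hypothetical, not real composite values.


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


years = list(range(1986, 2004))
hypothetical_slopes = {"rising": 0.05, "falling": -0.006, "moderate": 0.02}  # W/m2/yr
composites = {
    name: [1360.5 + slope * (y - years[0]) for y in years]
    for name, slope in hypothetical_slopes.items()
}
# Point-by-point average of the three records, as in a simple composite mean.
average = [sum(values) / len(values) for values in zip(*composites.values())]

for name, series in composites.items():
    print(f"{name:>8}: {ols_slope(years, series):+.4f} W/m2/yr")
print(f" average: {ols_slope(years, average):+.4f} W/m2/yr")
```

The averaged record shows a trend of about +0.021 W/m2/yr, a value that none of the three component records shows.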

Figure 5. The NOAA/NCEI composite. It is the average of the three composites shown above, with the data after Feb. 2003 replaced by the SORCE/TIM data. The ACRIM gap is indicated in yellow. The low points between solar cycles 21-22 and 22-23 are marked on the plot. Data source: NOAA/NCEI.

In the NOAA composite (Figure 5), the increase in the solar minimum value from the solar cycle 21-22 minimum to the solar cycle 22-23 minimum appears, just as it does in the IRMB and ACRIM composites. The solar cycle 23-24 minimum drops back to the level of the 21-22 minimum, but this is a foregone conclusion, since the earlier records are normalized to this value in the SORCE/TIM record. In fact, because everything is normalized to TIM, we have only two points in this whole composite that we can try to use to determine a long-term trend: the 21-22 minimum and the 22-23 minimum. The peaks cannot be used, since they are known to be variable (Kopp 2016). Thus, we don’t know very much.

Figure 6. NOAA TSI uncertainty, computed from the difference between the ACRIM and PMOD values after normalization to the SORCE/TIM values, plus an assumed 0.5 W/m2 uncertainty in the SORCE/TIM absolute scale for the period before the TIM data and uncertainties became available in Feb. 2003. A rapid increase in the computed TIM error occurs late in 2012. The ACRIM gap is highlighted in yellow. The low points between solar cycles 21-22 and 22-23 are marked on the plot. Data source: NOAA/NCEI.

Greg Kopp has calculated that, to observe a long-term change in solar output of 1.4 W/m2 per century (about 3.7 W/m2 since 1750, roughly 40% of the total 9.2 W/m2 required to explain modern warming), non-overlapping instruments would need an accuracy of ±0.136 W/m2 and 10 years of measurements just to see the change (Kopp 2016). As Figure 6 makes clear, the SORCE/TIM instrument, the best instrument in orbit today, has an uncertainty at least 3.5 times the level required to detect such a trend, and its accuracy degraded rapidly after about 10 years.
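The numbers in the paragraph above can be checked directly. The exact product, 1.4 W/m2 per century × 2.61 centuries, is about 3.65 W/m2, roughly 40% of 9.2 W/m2. The sketch assumes that the "at least 3.5 times" figure uses the 0.48 W/m2 lower end of the satellite uncertainty quoted in the Discussion; that pairing is an assumption, not something the post states.

```python
# Arithmetic behind the Kopp (2016) detection requirement discussed above.
KOPP_TREND = 1.4 / 100     # W/m2 per year (1.4 W/m2 per century)
YEARS = 261                # 1750-2011
REQUIRED_TOTAL = 9.2       # W/m2, the post's estimate for the Sun alone
REQUIRED_ACCURACY = 0.136  # W/m2, Kopp's accuracy for non-overlapping instruments
# ASSUMPTION: the "at least 3.5 times" figure is taken to use the 0.48 W/m2
# lower end of the satellite uncertainty quoted in the Discussion.
NOAA_UNCERTAINTY = 0.48    # W/m2

change_since_1750 = KOPP_TREND * YEARS
share = change_since_1750 / REQUIRED_TOTAL
ratio = NOAA_UNCERTAINTY / REQUIRED_ACCURACY

print(f"{change_since_1750:.2f} W/m2 since 1750, {100 * share:.0f}% of {REQUIRED_TOTAL} W/m2")
print(f"uncertainty is {ratio:.1f}x the required accuracy")
# prints: 3.65 W/m2 since 1750, 40% of 9.2 W/m2
#         uncertainty is 3.5x the required accuracy
```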

Discussion

The estimated uncertainty in the NOAA satellite composite is well over 0.5 W/m2, and it increases going back in time before 2003. The three original composites come with no estimated uncertainty, so their accuracy, or lack of it, is unknown. NOAA simply used the differences between the composites to estimate the uncertainty. This makes estimating a trend from the satellite data problematic (Haigh 2011). To look at the longer term, we must rely on solar proxies, such as sunspot counts and proxies of the strength of the solar magnetic field. The relationship of the proxies to solar output is not known and can only be estimated by correlating the proxies with satellite data. Professor Joanna Haigh summarizes this in the following way:

“To assess the potential influence of the Sun on the climate on longer timescales it is necessary to know TSI further back into the past than is available from the satellite data … The proxy indicators of solar variability discussed above have therefore been used to produce an estimate of its temporal variation over the past centuries. There are several different approaches taken to ‘reconstructing’ the TSI, all employing a substantial degree of empiricism and in all of which the proxy data (such as sunspot number) are calibrated against the recent satellite TSI measurements, despite the problems with this data outlined above.” (Haigh 2011)

The uncertainty in these proxy estimates cannot be quantified, but it must be greater than the potential error (uncertainty) in the satellite data, which varies from 0.48 W/m2 to over 0.8 W/m2. Let’s return to the slopes discussed above and illustrated in Figure 4. If we combine the opposing slopes of the ACRIM and PMOD composites, the difference is 0.056 W/m2/year. The NOAA estimated uncertainty (Figure 6) is over 0.7 W/m2 at the cycle 21-22 minimum and over 0.6 W/m2 at the 22-23 minimum. If this uncertainty is considered, the extrapolated long-term linear trend could be as high as 0.13 to 0.18 W/m2/year. Over 261 years, these rates could add up to 34 to 47 W/m2. Both values are much higher than the 9.2 W/m2 required to account for the roughly one degree of warming observed over the past 261 years (see Figure 1 and the discussion).

Given the way the composites have been generated, we have only two points to work with in determining a long-term solar trend: the minima between solar cycles 21-22 and 22-23. Everything has been adjusted to the solar cycle 23-24 minimum, so that point isn’t usable. With two points, all you can fit is a straight line, and a linear change is unlikely for a dynamo. Basically, the satellite data are not enough.
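A line through two uncertain points has a slope uncertainty of roughly the combined endpoint error divided by the time between them. The sketch uses the NOAA uncertainties quoted in the Discussion (over 0.7 and over 0.6 W/m2, treated here as exact) and an assumed ~10-year spacing between the two minima. It shows two standard ways of combining the errors: the worst case, where the errors push in opposite directions and add, and independent random errors, which add in quadrature.

```python
import math

# How uncertainty at two solar minima limits a two-point trend estimate.
SIGMA_FIRST = 0.7    # W/m2, NOAA uncertainty at the cycle 21-22 minimum (lower bound)
SIGMA_SECOND = 0.6   # W/m2, NOAA uncertainty at the cycle 22-23 minimum (lower bound)
SPAN_YEARS = 10      # ASSUMPTION: approximate time between the two minima
YEARS = 261          # 1750-2011

# Worst case: the two errors push in opposite directions, so they add.
worst_case_rate = (SIGMA_FIRST + SIGMA_SECOND) / SPAN_YEARS
# Independent random errors: combine in quadrature.
independent_rate = math.hypot(SIGMA_FIRST, SIGMA_SECOND) / SPAN_YEARS

for label, rate in (("worst case", worst_case_rate), ("independent", independent_rate)):
    print(f"{label:>11}: +/-{rate:.3f} W/m2/yr, +/-{rate * YEARS:.0f} W/m2 over {YEARS} yr")
```

The worst-case value, 0.13 W/m2/year, matches the low end quoted in the Discussion. The quadrature value (about 0.092 W/m2/year, or roughly 24 W/m2 over 261 years) is smaller but still well above 9.2 W/m2.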

We have no opinion on the relative merits of the three composite TSI records discussed. There are, for the most part, logical reasons for all the corrections made in each composite. The problem is that they are all different and have opposing trends. Each composite selects different portions of the available satellite records and applies different corrections. The resulting differences in long-term trends simply reflect the instabilities of the component instruments (Kopp 2016). For discussions of the merits of the ACRIM composite see (Scafetta and Willson 2014), for the PMOD composite see (Frohlich 2006), and for the IRMB composite see (Dewitte, et al. 2004). There are arguments for and against each composite. There are also numerous papers discussing how to extend the TSI record into the past using solar proxies. For a discussion of some of the most commonly used TSI reconstructions of the past 200 years, see (Soon, Connolly and Connolly 2015). The problem with the proxies is that their precise relationship to TSI, or to solar output in general, is unknown and must be based on correlations with the, unfortunately, flawed satellite records.

Whether one matches a proxy to the ACRIM or to the PMOD composite can make a great deal of difference in the resulting long-term TSI record, as discussed in (Herrera, Mendoza and Herrera 2015). As the paper makes clear, reasonable proxy correlations to the ACRIM and PMOD composites can yield computed TSI values for the 1700s that differ by more than 2 W/m2. Kopp discusses this problem in more detail in his 2016 Journal of Space Weather and Space Climate article:

“TSI variability on secular timescales is currently not definitively known from the space-borne measurements because this record does not span the desired multi-decadal to centennial time range with the needed absolute accuracies, and composites based on the measurements are ambiguous over the time range they do cover due to high stability-uncertainties of the contributing instruments.” (Kopp 2016)
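The calibration problem described by Haigh, and by Herrera and colleagues, can be sketched with a deliberately simple, hypothetical example. The same sunspot proxy is fitted by ordinary least squares to two composites that agree at typical modern activity levels but respond differently to sunspots, and each fit is then extrapolated to a period with almost no sunspots. All numbers are made up, and a straight-line regression is a simplification; the published reconstructions use more elaborate methods.

```python
# Hypothetical illustration: calibrating the same sunspot proxy against two
# TSI composites that disagree, then extrapolating to a period with almost
# no sunspots (as in the Maunder Minimum). All numbers are made up.


def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = intercept + slope * xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return mean_y - slope * mean_x, slope


def reconstruct(fit, sunspot_number):
    """Reconstructed TSI (W/m2) for a given sunspot number."""
    intercept, slope = fit
    return intercept + slope * sunspot_number


sunspots = [20, 60, 120, 160, 110, 50, 15, 40, 100, 140]  # hypothetical annual values
composite_a = [1360.0 + 0.012 * s for s in sunspots]     # hypothetical composite A
composite_b = [1360.6 + 0.006 * s for s in sunspots]     # hypothetical composite B

fit_a = fit_line(sunspots, composite_a)
fit_b = fit_line(sunspots, composite_b)

for s in (100, 0):
    a, b = reconstruct(fit_a, s), reconstruct(fit_b, s)
    print(f"sunspots={s:>3}: A={a:.2f}  B={b:.2f}  gap={abs(a - b):.2f} W/m2")
```

The two calibrations agree at 100 sunspots but differ by 0.6 W/m2 at zero. The gap grows with any difference in fitted sensitivity, and no modern data can tell us which fit is right.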

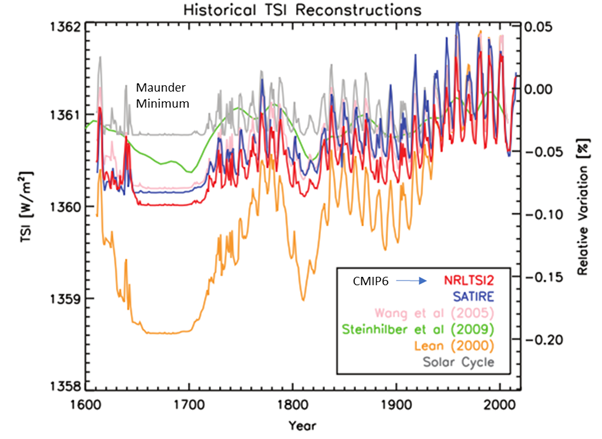

Kopp also provides the following plot (Figure 7) comparing different historical TSI reconstructions. The red NRLTSI2 reconstruction is the one that will be used for the upcoming IPCC report and in CMIP6 (Coupled Model Intercomparison Project Phase 6). The TSI reconstructions plotted in Figure 7 are all empirical and use various proxies of solar activity (mainly sunspot counts) and their assumed relationship to total solar output. Figure 7 illustrates some of the uncertainty in these assumptions.

Figure 7. Various recent published TSI reconstructions. The NRLTSI2 reconstruction will be used for the upcoming IPCC report and CMIP6. There is a great deal of spread during the Maunder Minimum, over 2 W/m2, and the long-term trends are very different. The figure is modified after one in (Kopp 2016).

In answer to the question posed at the beginning of the post: no, we have not measured the solar output accurately enough, over a long enough period, to say definitively that solar variability could not have caused all or a significant portion of the warming observed over the past 261 years. The most extreme reconstruction in Figure 7 (Lean 2000) suggests the Sun could have caused 25% of the warming, and this is without considering the considerable uncertainty in the TSI estimate. There are even larger published TSI differences from the modern day, up to 5 W/m2 (Shapiro, et al. 2011; Soon, Connolly and Connolly 2015; Schmidt, et al. 2012). We certainly have not proven that solar variability is the cause of all or even a large portion of the warming, only that we cannot exclude it as a possible cause, as the IPCC appears to have done.

Works Cited

Dewitte, S., D. Crommelynck, S. Mekaoui, and A. Joukoff. 2004. “Measurement and Uncertainty of the Long-Term Total Solar Irradiance Trend.” Solar Physics 224 (1-2): 209-216. https://doi.org/10.1007/s11207-005-5698-7.

Eddy, John. 1976. “The Maunder Minimum.” Science 192 (4245). https://www.jstor.org/stable/1742583?seq=1#page_scan_tab_contents.

—. 2009. The Sun, the Earth and near-Earth space: a guide to the Sun-Earth system. Books express. https://www.amazon.com/Sun-Earth-Near-Earth-Space-Sun-Earth/dp/1782662960/ref=sr_1_2?ie=UTF8&qid=1537190646&sr=8-2&keywords=The+Sun%2C+the+Earth+and+near-Earth+space%3A+a+guide+to+the+Sun-Earth+system.

Fox, Peter. 2004. “Solar Activity and Irradiance Variations.” Geophysical Monograph (American Geophysical Union) 141. https://www.researchgate.net/profile/Richard_Willson3/publication/23908159_Solar_irradiance_variations_and_solar_activity/links/00b4951b20336363f2000000.pdf.

Frohlich, C. 2006. Solar Irradiance Variability since 1978. Vol. 23, in Solar Variability and Planetary Climates. Space Sciences Series of ISSI, by Calisesi Y., Bonnet R.M., Langen J., Gray L. and Lockwood M. New York, New York: Springer. https://link.springer.com/chapter/10.1007/978-0-387-48341-2_5.

Haigh, Joanna. 2011. Solar Influences on Climate. Imperial College, London. https://www.imperial.ac.uk/media/imperial-college/grantham-institute/public/publications/briefing-papers/Solar-Influences-on-Climate—Grantham-BP-5.pdf.

Herrera, V. M. Velasco, B. Mendoza, and G. Velasco Herrera. 2015. “Reconstruction and prediction of the total solar irradiance: From the Medieval Warm Period to the 21st century.” New Astronomy 34: 221-233. https://www.sciencedirect.com/science/article/pii/S1384107614001080.

IPCC. 2013. In Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change, by T. Stocker, D. Qin, G.-K. Plattner, M. Tignor, S.K. Allen, J. Boschung, A. Nauels, Y. Xia, V. Bex and P.M. Midgley. Cambridge: Cambridge University Press. https://www.ipcc.ch/pdf/assessment-report/ar5/wg1/WG1AR5_SPM_FINAL.pdf.

Kopp, Greg. 2016. “Magnitudes and timescales of total solar irradiance variability.” J. Space Weather and Space Climate 6 (A30). https://www.swsc-journal.org/articles/swsc/abs/2016/01/swsc160010/swsc160010.html.

Scafetta, Nicola, and Richard Willson. 2014. “ACRIM total solar irradiance satellite composite validation versus TSI proxy models.” Astrophysics and Space Science 350 (2): 421-442. https://link.springer.com/article/10.1007/s10509-013-1775-9.

Schmidt, G.A., J.H. Jungclaus, C.M. Ammann, E. Bard, P. Braconnot, and T. J. Crowley. 2012. “Climate forcing reconstructions for use in PMIP simulations of the last millennium.” Geosci. Model Dev. 5: 185-191. http://pubman.mpdl.mpg.de/pubman/faces/viewItemOverviewPage.jsp?itemId=escidoc:1407662.

Shapiro, A., W. Schmutz, E. Rozanov, M. Schoell, M. Haberreiter, A. V. Shapiro, and S. Nyeki. 2011. “A new approach to the long-term reconstruction of the solar irradiance leads to large historical solar forcing.” Astronomy and Astrophysics 529 (A67). https://www.aanda.org/articles/aa/pdf/2011/05/aa16173-10.pdf.

Soon, Willie, Ronan Connolly, and Michael Connolly. 2015. “Re-evaluating the role of solar variability on Northern Hemisphere temperature trends since the 19th century.” Earth Science Reviews 150: 409-452. https://www.sciencedirect.com/science/article/pii/S0012825215300349.

Excellent piece, as usual. The thing that one appreciates and welcomes is the author’s attention to defining an hypothesis clearly, and then marshalling the evidence, while being careful to work through exactly what the logical relationship is between evidence and the hypothesis.

This is so very rare in popularizations of climate issues. Normally what we find is emotional tirades about, for instance, the sun or the MWP, without a careful account of what the hypothesis is, and what sort of evidence we need, and how what we have bears on the hypothesis, and what the alternative explanations might be, and how they in turn can be assessed. Well done again.

I’m no clearer having read this what exactly the effects of the sun have been and are. But at least I do know why this is beyond being answered satisfactorily by the evidence we have now, and what this evidence logically considered can and cannot show. That’s science for you. Some things are just not known with precision at this point. If you cannot know them, all the same you greatly gain from knowing why you can’t.

Thank you. Your comments are sufficient reward for the work I put into the post.

+10 :<)

That is a wonderfully well put comment to a great survey of the state of knowledge on this subject. Thanks for the time put into this, and for the links to the underlying papers!

+ I would say great majority with one or two let’s say minor exceptions

Science is compelled to be a prophesier. We all demand it. Who will listen to your conclusion? “We do not know.” Science can observe the present and try to measure it. We can record our observations and measurements. We can compare our past and present observations. Then we can make guesses about what we didn’t observe – or guesses about what we haven’t observed. But that is the limit of the possible. We can only hope to be educated guessers.

But to believe science can reveal the past and proclaim the future – that is imbuing Science with religious authority. Our current observations and measurements of the TSI are insufficient to draw meaningful conclusions about past and future variability. But as you note the truth doesn’t stop the IPCC from revealing to us the secret knowledge.

Actually, the same could be said for ALL the data in climate science. None of it is of sufficient quality or of sufficient length to even begin to say any of the claims made by the CAGW meme.

In my undergraduate days I was told not to ever touch the collected data. It is what it is. To interpolate or extend beyond the data would have caused an immediate failure of the lab. All that could be concluded was that in the regime observed a certain relationship could be discerned between the dependent and independent variables. We could then determine whether the relationship concurred with or was nonconcurrent with an outcome predicted by theory within that regime. If it concurred, we could then describe how to create other conditions to test theoretic predictions in extended regimes. The purpose was always to test theoretical predictions in ever more extreme conditions to find cases in which the theory made a bad prediction. As we were dealing with pretty well tested physics, of course we never disproved anything, though we did conduct a couple of experiments that didn’t measure what we thought we were measuring. Our professor took about twenty minutes with our setup before laughing at what we did, but he did have to look at it to figure it out. Undergrads can be creative in getting into trouble, luckily it didn’t create anything dangerous.

+1

Exactly. Climate Science should be about developing and maintaining rigorous data collection and storage protocols so our great-great-grandchildren have some basis to develop climate simulations. The enormous investment in modeling could be used as grand-scale experimental design tools to show us where our investment priorities need to be for data collection.

News Flash, it isn’t the radiation from the sun that matters, it is the amount of warming radiation that reaches the earth that counts. You can have a hot sun and plenty of clouds and the earth would cool. The Cosmic Ray theory has a hot sun clearing out cosmic rays reaching the earth, clearing the skies of clouds and making the earth even hotter when the sun is hot.

Nice theory. So how much in reality greater cloud cover during solar minima actually appears in the surface temperature record?

Internal variability completely swamps whatever effect this is supposed to cause during the last 70 years. I wouldn’t be so sure about the LIA, but then again, this means the effect is very slow to take on and probably has an oceanic component. Right?

Saying the Sun has an influence is not necessarily completely bollocks, but then again, the above explanation does not appear to fit the decadal picture. Centennial maybe?

Yep …. the TSI at top of atmosphere, and any “calculated” value of the actual energy reaching the surface is meaningless. To accurately assess the impact of a change in solar energy input, we would have had to have a surface measuring network across the globe, much like the thermometer network that actually measured the daily SW and LW radiation reaching the surface over the last 50 years. Calculated values are simply too uncertain. On top of that, as the article states (good read btw), the uncertainty with the TSI instruments is too large for any meaningful measurement as pertains to climate impacts, and any calculated value would multiply that inherent uncertainty making the calculated value even more useless.

Dr. Deanster, there is such a record of instruments measuring SW and LW radiation at the surface. ETH Zurich is maintaining the database, and provides analysis of brightening and dimming periods over time. Observed tendencies in surface solar radiation:

My synopsis with images and links: https://rclutz.wordpress.com/2017/07/17/natures-sunscreen/

“To accurately assess the impact of a change in solar energy input, we would have had to have a surface measuring network across the globe, much like the thermometer network that actually measured the daily SW and LW radiation reaching the surface over the last 50 years. ”

Yep, and that warming is due to radiation that CO2 is transparent to. CO2 has nothing to do with warming the surface.

Thank you Andy for providing that survey and discussion.

Thanks Ron, you are welcome.

We do not know how constant the solar constant is although some will try to provide the falsehood that we do.

We do not know how weak TSI was during the Maunder Minimum.

We have inadequate measurements of TSI now, much less when trying to extrapolate what it was in the past.

TSI is constantly revised.

In addition TSI changes within themselves are but a small part of the solar/climate connection.

Then to top it off you have the dishonest IPCC trying to downplay solar climate connections and prop up human contribution. It is so ridiculous, ludicrous and so fake, and such a waste of time, but if they want to bring it on, bring it on.

Not to worry, however, because AGW fake theory is now in the process of ending, as the global temperatures are no longer rising and, better yet, falling, and even better yet will continue to fall as we move forward.

More on my thoughts below.

It is ridiculous to even entertain the thought that nonexistent AGW, which has hijacked all natural variability (which was in a warming mode from the end of the Little Ice Age to 2005), had any climatic impact on this event, much less the global temperature rise.

My point will be proven now – over the next few years as global temperatures continue to fall in response to all natural climatic factors now transitioned to a cold mode.

If there is any validity to AGW, the global temperatures should continue to rise now and over the next few years, but they will not because AGW does not exist.

What controls the climate are the magnetic field strengths of both the sun and earth. When in sync as they are now(both weakening) the earth should grow colder.

In response to weakening magnetic fields the following occurs:

EUV light decreases – results in a weaker but more expansive polar vortex. Greater snow coverage.

UV light decreases – results in overall sea surface oceanic temperatures decrease.

Increase in GALACTIC COSMIC RAYS- results in changes to the global electrical circuit, cloud coverage , explosive major volcanic activity.

In other words, during periods of very weak, long-duration magnetic field events the earth cools due to a decrease in overall oceanic sea surface temperatures and a slightly higher albedo due to an increase in global cloud/snow coverage and explosive volcanic activity.

Thus far all overall global temperature trends for the past year or two have been down and I expect this trend to continue.

This is true for every potential factor, not in the beam of the flashlight.

+1

You shine your light where you can, because the lamppost is fixed.

In the case of ‘climate science’, there’s a big fat government guy making sure the lamppost already installed has an indefinite supply of bulbs lighting the spot below it better and better. And the guys with torches are regularly laughed at, their findings ridiculed, and the government tries to defund torches because the guys at the lamppost said they’re spreading misinformation.

A physical greenhouse is a structure which prevents winds, convection, precipitation, etc. – but allows for slow conduction – and contains atmospheric gases.

Where is the Earth’s physical greenhouse?

How can you have a greenhouse gas without a greenhouse?

But I thought our Earth’s greenhouse gases worked like a blanket.

A big holey, damp blanket that can vary in size, altitude, and shape while somehow still keeping the planet overly warm.

[Technically, the mods must point out that the planet is beneath the theoretical CO2 blanket, thus the planet is kept “underly warm” at all times, especially during the Ice Ages. .mod]

The biggest lie in the IPCC’s work and the Alarmists’ claims is the certainty. Is what they say possible? Yes, they could be right. But do they have evidence that allows them to proclaim 95% certainty? Nowhere near. That 95% is a political necessity however, a number that is large enough to convince our dumb and credulous politicians that we must do something.

Given how little we understand about our climate, I cannot understand how any honest climate scientist can support such certainty?

+1

“I cannot understand how any honest climate scientist can support such certainty?”

Their paycheck depends on it.

Should be: Their paycheck depends on it.

I do miss those lost upgrades…

” I cannot understand how any honest climate scientist can support such certainty”

honest climate scientist = oxymoron?

phoenix44, this is the biggest bone of contention when it comes to climate science: the assertion of absolute certainty, quite often stated in abstracts when the content of the same paper shows no such thing.

Yet another excellent article by Andy May, a pleasure to read.

Over a year the solar energy striking the Earth changes by around 7% because the Earth’s orbit is not perfectly circular. It also matters that the two hemispheres don’t absorb the same.

The ratio of the maximum distance from the Sun to the minimum distance is about 1.034. Because the energy arriving at the Earth goes by the inverse square law, the energy striking the planet varies by about 1.07 or 7%.
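A quick check of that arithmetic, as a minimal Python sketch. It assumes an orbital eccentricity of about 0.0167, a value the comment does not state, and derives both ratios from it:

```python
# Sketch: check the inverse-square arithmetic.
# Assumption (not from the comment): Earth's orbital eccentricity e ~ 0.0167.
e = 0.0167

# Ratio of aphelion distance a(1+e) to perihelion distance a(1-e).
distance_ratio = (1 + e) / (1 - e)

# Flux falls off as 1/r^2, so the flux ratio is the distance ratio squared.
flux_ratio = distance_ratio ** 2

print(f"distance ratio: {distance_ratio:.3f}")
print(f"flux ratio:     {flux_ratio:.3f}")
```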

You’ve spotted the elephant in the room! A non-linear system with sinusoidal variation in Parameter X usually gives a different value of Yavg from the simplistic assumption that the average of the dependent variable, Y, corresponds to its value at Xavg.
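The point is essentially Jensen's inequality: for a nonlinear response, the average output is not the output at the average input. The minimal sketch below uses hypothetical numbers that do not come from any comment here. A ±10 K sinusoid about 288 K drives σT^4 emission:

```python
import math

# Hypothetical example: X is a temperature (K) varying sinusoidally around 288 K,
# and Y = sigma * X**4 is the blackbody emission it drives. Numbers are illustrative only.
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
X_MEAN, AMPLITUDE, N = 288.0, 10.0, 10_000

xs = [X_MEAN + AMPLITUDE * math.sin(2 * math.pi * k / N) for k in range(N)]
ys = [SIGMA * x**4 for x in xs]

x_avg = sum(xs) / N
y_avg = sum(ys) / N            # average of the nonlinear response
y_at_x_avg = SIGMA * x_avg**4  # response evaluated at the average input

print(f"Yavg       = {y_avg:.2f} W/m^2")
print(f"Y(Xavg)    = {y_at_x_avg:.2f} W/m^2")
print(f"difference = {y_avg - y_at_x_avg:.2f} W/m^2")
```

Because x^4 is convex, the average of Y always comes out above Y evaluated at the average X. The size of the gap grows with the amplitude of the swing.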

It matters because NASA thinks that a quarter watt per square meter is significant.

My constant complaint is that the error bars on the measurements are huge but the alarmists think they can calculate stuff to within a percent.

While internal variability can cause large variations in temperature over significant periods it is always going to be difficult to ascribe any such similar changes to solar effects.

It often seems like many people acknowledge this may render certain calculations worthless, and then just proceed on the basis that they are a worthwhile pursuit anyway. (Protein folders do something very similar. Who wants to talk themselves out of a job?)

There really are some things in science that should not be attempted. This is widely acknowledged in the general, but not the particular. If scientists won’t address this directly then, ultimately, politicians will.

Snip – irrelevant to the discussion.

I don’t think the planet is a pathetic pile of s***. I would be more supportive if you had used such extreme language to describe Dr Thomas J. Chalko MSc, PhD. (I clicked on your link) Chalko is at a minimum a total nut-case.

Chalko’s “research” is serious? I thought it was a parody.

Because of the elliptical orbit the solar “constant” varies from 1,415 W/m^2 at perihelion to 1,323 W/m^2 at aphelion, a swing of 92 W/m^2. Geometry & algebra. That’s bound to leave a mark.
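The 1,415 and 1,323 W/m^2 figures can be reproduced with the inverse-square law. The sketch below makes two assumptions that the comment does not state. It uses a solar constant of 1368 W/m^2 at 1 AU, which is an older value (about 1361 W/m^2 is the current satellite estimate). It also takes 1 AU as the semi-major axis and uses an eccentricity of 0.0167:

```python
# Sketch: flux at the Earth's actual distance at perihelion and aphelion.
# Assumptions (not from the comment): S0 = 1368 W/m^2 at 1 AU (older value;
# ~1361 W/m^2 is the current estimate), 1 AU taken as the semi-major axis a,
# and orbital eccentricity e = 0.0167.
S0 = 1368.0
e = 0.0167

def flux_at(r_over_a: float, s0: float = S0) -> float:
    """Inverse-square flux at distance r, given as a fraction of the semi-major axis a."""
    return s0 / r_over_a**2

perihelion = flux_at(1 - e)
aphelion = flux_at(1 + e)

print(f"perihelion: {perihelion:.0f} W/m^2")
print(f"aphelion:   {aphelion:.0f} W/m^2")
print(f"swing:      {perihelion - aphelion:.0f} W/m^2")
```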

Because of the tilted axis any particular point on ToA sees a 700 W/m^2 swing from solstice to solstice. There is the origin of the seasons.

Makes the 2 W/m^2 increase from 1750 to 2010 and the 8.5 W/m^2 RCP and 0.05 and 0.12 variations look like rounding errors.

Because of the elliptical orbit the solar “constant” varies from 1,415 W/m^2 at perihelion to 1,323 W/m^2 at aphelion, a swing of 92 W/m^2. Geometry & algebra. That’s bound to leave a mark.

The solar constant doesn’t vary during the orbit because it’s evaluated at 1AU regardless of the position of the Earth. Also because the earth orbits faster when it’s closer to the sun that ‘swing’ cancels out (Kepler’s Laws).

“Also because the earth orbits faster when it’s closer to the sun that ‘swing’ cancels out (Kepler’s Laws).” “phil.”

Prove it.

Kepler’s Law of Areas

See for example:

http://hyperphysics.phy-astr.gsu.edu/hbase/kepler.html

An illustration of this effect is that the time from the March equinox to the September equinox is about 186 days, and from the September equinox to the March equinox about 179 days, QED.
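The equinox-to-equinox asymmetry can be reproduced from Kepler's equation. Mean anomaly advances uniformly in time, so converting the Sun's longitude at each equinox to mean anomaly gives the elapsed days. This is a minimal Python sketch. It assumes approximate modern orbital elements (eccentricity 0.0167, solar perigee at ecliptic longitude 282.9°, year of 365.26 days), none of which are given in the thread. With those inputs the split comes out at roughly 186 and 179 days:

```python
import math

# Sketch: time between equinoxes from Kepler's equation.
# Assumptions (approximate modern values, not from the comment):
E_ORBIT = 0.0167           # eccentricity of Earth's orbit
PERIGEE_LONGITUDE = 282.9  # ecliptic longitude of the Sun at perigee, degrees
YEAR_DAYS = 365.26         # length of the (anomalistic) year, days

def mean_anomaly(true_anomaly: float, e: float) -> float:
    """Convert true anomaly (radians) to mean anomaly via the eccentric anomaly."""
    ecc_anomaly = 2.0 * math.atan2(math.sqrt(1 - e) * math.sin(true_anomaly / 2),
                                   math.sqrt(1 + e) * math.cos(true_anomaly / 2))
    return ecc_anomaly - e * math.sin(ecc_anomaly)  # Kepler's equation

def days_between(sun_lon_start: float, sun_lon_end: float) -> float:
    """Days for the Sun's ecliptic longitude to advance from start to end (degrees)."""
    m1 = mean_anomaly(math.radians(sun_lon_start - PERIGEE_LONGITUDE), E_ORBIT)
    m2 = mean_anomaly(math.radians(sun_lon_end - PERIGEE_LONGITUDE), E_ORBIT)
    # Mean anomaly grows uniformly with time, so its change maps directly to days.
    return ((m2 - m1) % (2 * math.pi)) / (2 * math.pi) * YEAR_DAYS

march_to_sept = days_between(0.0, 180.0)    # northern spring + summer
sept_to_march = days_between(180.0, 360.0)  # northern autumn + winter
print(f"March -> September equinox: {march_to_sept:.1f} days")
print(f"September -> March equinox: {sept_to_march:.1f} days")
```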

Hi Phil, I think you are mistaking distance for speed. The planet is not traveling faster; because the ace has less distance, the time needed to cross it is less.

michael

arc not ace oops

No, the linear velocity changes as does the angular velocity.

The maximum value is 30.29 km/s at perihelion (2–5 January) and the minimum value is 29.27 km/s at aphelion (4–6 July).
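These speeds follow from the vis-viva equation, v^2 = GM(2/r - 1/a). Below is a minimal sketch using assumed standard constants that the comment does not give. It lands within a few hundredths of a km/s of the quoted values, at about 29.29 km/s versus the quoted 29.27 km/s at aphelion:

```python
import math

# Sketch: Earth's orbital speed at perihelion and aphelion from vis-viva,
# v^2 = GM (2/r - 1/a). Assumed constants (not from the comment):
GM_SUN = 1.32712440018e20  # m^3/s^2, heliocentric gravitational constant
A = 1.495978707e11         # m, semi-major axis (1 AU)
E = 0.0167                 # orbital eccentricity

def vis_viva_speed(r: float) -> float:
    """Orbital speed (m/s) at distance r (m) on an ellipse with semi-major axis A."""
    return math.sqrt(GM_SUN * (2.0 / r - 1.0 / A))

v_peri = vis_viva_speed(A * (1 - E)) / 1000  # km/s
v_aph = vis_viva_speed(A * (1 + E)) / 1000   # km/s
print(f"perihelion: {v_peri:.2f} km/s, aphelion: {v_aph:.2f} km/s")
```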

Jorge,

Earth’s orbit is slightly elliptical, not circular, so its mean speed around the sun is an average, not a constant.

Earth is closest to the sun (at perihelion) and orbits fastest around January 3. It is farthest from the sun (at aphelion) and orbits least rapidly around July 4. Since, per Kepler, equal areas must be swept in equal time intervals, the planet’s orbital velocity must be the swiftest at perihelion and slowest at aphelion.

Nicholas:

Came here to say this. You did a better job than I would have.

The data is scaled to 1 AU (line 5), but if you look (line 10, iirc) it includes the actual reading, if I can find the original dataset. I might have to hunt down the SORCE raw data again and double-check; it’s been a while.

That being said, in another discussion I also brought up that, using absolute temperature, we see a change of 0.8 K out of 288 K, a 0.3% change in temperature. If there were a direct correlation, a 4 W/m^2 change in 1365 W/m^2 would account for all of the change to date.

I have seen anything from 1/1.5 to 4 or even 6 in that same time period though.
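The proportional argument can be checked in a few lines, using the comment's own numbers. Like the comment, this sketch assumes a direct (linear) proportionality. Under a Stefan-Boltzmann T^4 response the fractional flux change would instead be about four times the fractional temperature change.

```python
# Sketch of the proportional argument, using the comment's numbers and its
# linear-proportionality assumption.
delta_t, t_surface = 0.8, 288.0  # K
tsi = 1365.0                     # W/m^2

fraction = delta_t / t_surface          # fractional temperature change
equivalent_tsi_change = fraction * tsi  # same fraction of TSI, if the response were linear

print(f"fractional change: {fraction:.2%}")
print(f"equivalent TSI change: {equivalent_tsi_change:.1f} W/m^2")
```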

A serious question:

If the “temperature of the earth” varies directly with changes in radiative energy absorbed,

and if energy radiated from the earth varies as the fourth power of radiating temperature,

then a 1% increase in energy absorbed results in a 4% increase in energy radiated away.
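The 4% figure comes from the fourth-power law. The premise that temperature rises 1% for a 1% rise in absorbed energy is the comment's assumption. This minimal sketch shows both directions of the T^4 relationship. A 1% rise in temperature raises emission by about 4%. Conversely, a 1% rise in emitted flux needs only about a 0.25% rise in temperature:

```python
# Sketch: the Stefan-Boltzmann fourth-power relationship, F proportional to T^4.
temperature_factor = 1.01
emission_factor = temperature_factor ** 4       # 1% warmer -> ~4% more emission

flux_factor = 1.01
temperature_response = flux_factor ** 0.25      # 1% more emission -> ~0.25% warmer

print(f"emission rises by {emission_factor - 1:.2%}")
print(f"temperature rises by {temperature_response - 1:.3%}")
```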

Why is the earth not safely nested in a negative-feedback temperature cocoon?

The IPCC lowered their estimate of the impact of solar variability on the Earth’s climate from the already low value of 0.12 W/m2

==========

This simply shows that W/m2 is a nonsensical measure of climate.

Consider what would happen if the Sun’s TSI remained constant in W/m2 but the radiation shifted so that it was all in the IR band. All the green plants on earth would die and the climate of the earth would be HUGELY different than today.

Yet there is a frequency shift in the Sun during the solar cycle. Where is this accounted for in TSI? It isn’t.

The problem is that the IPCC and solar science in general regard the earth as a lifeless grey body and calculate climate on that basis, without taking into account that the primary driver of climate on planet earth is life.

Very interesting, as noted, real science. I recall studies on coastal plankton productivity in the early 60s that were measuring variation in surface solar insolation. My impression is that biologists have not done much with this, important as proven by plankton seasons. It probably is often overshadowed except in exceptionally consistent clear water. Even then, wave action and other changes are a problem.

First sentence in a 1957 review: “Solar radiation is probably the most fundamental ecological factor in the marine environment.” I haven’t done my homework on this, but I wonder if solar panels are more important than measurements. I do know how difficult it is to measure in coastal waters; usually it isn’t done. So much to learn.

Solar panels have the same problem as the instruments in space – doing their job makes them less efficient at doing their job. Every photon that liberates an electron on the plate makes a change in crystal structure that ever so slightly damages the surface. After a couple of years, the same incident sunlight generates a measurably lower current output from the panel.

Every panel produced has a pretty wide variance from batch to batch and panel to panel, so even changing the panels every season wouldn’t guarantee consistent results in output. As there is no baseline to compare to, solar panels can’t generate usable scientific data on the output of the sun except for order-of-magnitude changes (which, thankfully for all of us living around this star, never happen!)

Based on examination of a lot of marine science papers in the last couple of decades, I wonder if two factors have skewed study. (1) The environmental movement. (2) Movement to computers, satellites and buoys for data. There has still been a lot of valuable work done, but suspect we missed a lot by not being on site.

I think this may be the only recent paper I found locally, but did not chase all the physical journals and never did a computer search. There are a few studying predator-prey reactions with light, but the sun was not important to them. I do know that day/night studies are not common.

Lugo-Fernández, M. Gravois and T. Montgomery. 2008. Analysis of Secchi depths and light attenuation coefficients in the Louisiana-Texas shelf, northern Gulf of Mexico. Gulf of Mexico Science. 16(1):14-27. Interesting that this mid-19th-century method goes back to an Italian named Secchi, maybe others.

Having dived tropical reefs around Guam, I can say there is a distinct difference in the behavior of sea life between the day and night (at least there). I would think any study that purported to evaluate an ecosystem would have to do extensive day/night evaluations in order to even come close to a comprehensive evaluation. Of course I studied physics not biology, so as the old saying goes – what do I know?

From a fisherman’s perspective (my dad), the fish are greatly affected by what happens above the water as well as below. When they want to feed and when they don’t. Location, tide, wind and rain all have a very big impact on catching a fish (and by extension, their activity).

There is a simple solution to that. For long-term measurement, give up watching the sun continuously; just take a fast snapshot once a day.

Do not let the instrument be permanently damaged by the sun. Just hide it behind a shutter and open it for 1 second a day. This way instrument decay will be negligible. We would have data for 800,000 years instead of 10.
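The shutter arithmetic works out to roughly 864,000 years, which is in line with the round 800,000 quoted. This sketch relies on two assumptions that are implicit in the comment and are labeled here. Degradation depends only on cumulative sun exposure. An unshuttered instrument sees the sun continuously for a 10-year life.

```python
# Sketch of the shutter arithmetic. Assumptions (implicit in the comment):
# degradation depends only on cumulative sun exposure, and an unshuttered
# instrument sees the sun continuously for its ~10-year life.
SECONDS_PER_DAY = 86_400
continuous_life_years = 10  # years of useful life under continuous exposure
open_seconds_per_day = 1    # shutter open time per day

# Same cumulative exposure, spread at 1 s/day instead of 86,400 s/day.
shuttered_life_years = continuous_life_years * SECONDS_PER_DAY / open_seconds_per_day
print(f"{shuttered_life_years:,.0f} years")
```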

How sure are we of all the posted trend estimates for solar forcings required for the warming? Is this the right way to go?

How can the IPCC do real physics when the sun’s power is quartered first? The ocean at the sub-solar point responds to full TSI, not TSI/4, under clear skies. Does anyone figure income on a 24-hour average? No, it’s always figured on actual hours of real work. Same with the sun’s energy.

The IPCC loves to downplay the sun’s influence. The way they do this is by just assuming the solar input has a minimal influence, as that is the only way to downplay the glaring discrepancy in solar epochs since 1850, the only way to ignore the energetic difference between the falling phase and the rising, high long-term solar activity regime:

***

I think we can know with certainty whether TSI varies enough to cause climate change, even though we’re uncertain about a number of things wrt TSI, such as long-term averages and extremes, and absolute value.

I determined in 2014 that HadSST3 global SST warms and cools at decadal scales at 120sfu F10.7cm flux, 94 v2 sunspot number, equivalent to 1361.25W/m2 LASP SORCE TSI.

Solar cycle activity extremes and duration above or below this threshold drive climate changes with long-term TSI changes that follow the sun’s magnetic activity and sunspots. The modern warming occurred because sunspot number averaged higher than the 94 SSN decadal warming threshold.

” Does anyone figure income on a 24-hour average? No, it’s always figured on actual hours of real work. Same with the sun’s energy.”

But when a person is not working they are spending the money earned, much like the Sun’s energy is lost at night. So it is the NET that must be considered, not the gross.

Hi Tom. You made two good points; my analogy could’ve been better.

If you’ve seen Willis’ tropical temperature (and rain?) plot covering a day you’ll notice it registers to the morning daylight hours, maximizing early afternoon, then dropping off until the next day. The ocean temperature and evaporation are responding to the instantaneous incoming solar at full TSI under clear skies.

IPCC does this quartering because of the Trenberth cartoon. The concept of a “Forcing,” that the atmosphere can and does heat the surface of the Earth, depends on 24/7 so-called “Downwelling Infrared Radiation,” which would easily be shown to be nonsense without the quartering. Anyone who does not exist in a cave knows that the Sun heats the surface of the Earth, not the atmosphere.

“Forcings” is/are a completely absurd concept. All made up so that the IPCC can sound scientific, but they do not, to anyone who knows anything about science. The Earth has no average temperature that we can know to any certainty, and we certainly did NOT know what it was in 1750.

Since the following is relevant to Michael’s up/down/”back” radiation comment, I trust it will survive mod.

I’ll plow this ground, beat this dead horse, yet some more. Maybe somebody will step up and ‘splain scientifically how/why I’ve got it wrong – or not.

Radiative Green House Effect theory: (Do I understand RGHE theory correctly?)

1) 288 K – 255 K = 33 C warmer with atmosphere – rubbish. (simple observation & Nikolov & Kramm)

But how, exactly is that supposed to work?

2) There is a 333 W/m^2 0.04% GHG up/down/”back” energy loop that traps/re-emits per QED simultaneously warming BOTH the atmosphere and the surface. Good trick. Too bad it’s not real. – thermodynamic nonsense.

And where does this magical GHG energy loop first get that energy?

3) From the 16 C/289 K/396 W/m^2 S-B 1.0 ε BB radiation upwelling from the surface. – which due to the non-radiative heat transfer participation of the atmospheric molecules is simply not possible. (TFK_bams09)

No BB upwelling & no GHG energy loop & no 33 C warmer means no RGHE theory & no CO2 warming & no man caused climate change.

Got science? Bring it!!

Nicholas, The surface is slightly warmer than the atmosphere during the day. It radiates IR, which is mostly captured by water vapor or CO2 in the lower atmosphere and held for a while (maybe 0.5 seconds). While it is being held the molecule is excited and it has thousands of collisions with neighboring molecules and can transfer the energy to them, exciting them and “warming” the air locally. Convection (wind and currents) moves this energy (“warming”) around. This is the greenhouse effect. More greenhouse gases enhances the effect.

I’ve no problem with the concept, my problem is with the magnitude of the effect. Assuming it controls climate change is where they lose me.

“It radiates IR…”

Which is measured incorrectly because emissivity is assumed and 1.0 is wrong, 0.16 is real.

Not enough to matter (63) – unless it’s all BB (396) – which is not possible.

No BB upwelling – GHGs have nothing to absorb and re-radiate – no RGHE.

1 + 2 + 3 = no RGHE & no GHG warming & no CAGW.

Any one of those 3 points takes out RGHE.

Are my three steps a valid representation of the greenhouse effect theory?

The only reason the greenhouse effect theory even exists is to explain how/why the earth is warmer with an atmosphere, step 1, 288 – 255 = 33, which is simply not so.

You concede step two, but want to dicker and nit-pick over the magnitude.

Step 3 exists to explain step 2 and since step 3 does not exist step 2’s magnitude is zero.

How can the IPCC do real physics when the sun’s power is quartered first? The ocean at the sub-solar point responds to full TSI, not TSI/4, under clear skies.

And how long does it react to the full TSI, one minute?

At any given time the earth is receiving TSI*∬cos(𝜃)cos(𝜙)d𝜃d𝜙

that’s where the factor of 4 comes from.

At any given time the earth is receiving TSI*∬cos(𝜃)cos(𝜙)d𝜃d𝜙

that’s where the factor of 4 comes from.

No, it doesn’t. It’s no way that complicated. It’s the disc’s πr^2 area’s 1,368 spread over the sphere’s 4πr^2 area, or disc/4.

That is where it comes from. TSI*cos(𝜃)cos(𝜙) gives the flux/unit area received by any point on the Earth’s surface, and the double integral over the sunlit hemisphere (each angle from -𝜋/2 to 𝜋/2, including the cos(𝜙) area element) gives the total being received by the Earth’s surface at any time. That gives the same factor of 4 that the ratio of projected area to surface area gives, because it comes from the same math.
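Both routes to the factor of 4 can be checked numerically. This minimal sketch integrates TSI*cos(𝜃)cos(𝜙) over the sunlit hemisphere with a midpoint rule, then spreads the total over the whole sphere (4π on a unit sphere). It makes several choices that are assumptions of the sketch rather than statements from the thread. The subsolar point sits on the equator, each angle runs from -𝜋/2 to 𝜋/2, an extra cos(𝜙) serves as the spherical area element, and TSI is taken as 1361 W/m^2:

```python
import math

# Sketch: recover the factor of 4 by integrating incident flux over the sunlit hemisphere.
# theta = longitude from the subsolar point, phi = latitude (subsolar point on the equator).
# Flux per unit area is TSI*cos(theta)*cos(phi); the spherical area element adds a cos(phi).
TSI = 1361.0  # W/m^2 (assumed value)
N = 400       # midpoint-rule steps per angle

h = math.pi / N
angles = [-math.pi / 2 + (k + 0.5) * h for k in range(N)]  # midpoints in (-pi/2, pi/2)

# Double integral over the lit hemisphere of a unit sphere: should be close to pi,
# the area of the disc the sphere presents to the Sun.
lit_integral = sum(math.cos(t) * math.cos(p) ** 2 for t in angles for p in angles) * h * h

total_received = TSI * lit_integral                # power on a unit-radius sphere
mean_over_sphere = total_received / (4 * math.pi)  # spread over the whole surface
print(f"integral ~ {lit_integral:.5f} (pi = {math.pi:.5f})")
print(f"TSI / mean flux ~ {TSI / mean_over_sphere:.4f}")
```

The integral equals the projected disc area π (for r = 1), so dividing by the sphere's area 4π gives the same 1/4 as the disc-to-sphere area ratio.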

how long does it react to the full TSI, one minute?

From Time and Date:

“Position of the Sun

On Wednesday, September 19, 2018 at 16:52:00 UTC the Sun is at its zenith at Latitude: 1° 19′ North, Longitude: 74° 34′ West

The ground speed is currently 463.83 meters/second, 1669.8 kilometres/hour, 1037.5 miles/hour or 901.6 nautical miles/hour (knots). ”

The sun moves 17.3 miles/minute. Is the solar noon over in one minute after it moves 17.3 miles?
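The quoted ground speed follows from the Earth's equatorial circumference divided by one day. This minimal sketch assumes an equatorial circumference of 40,075.017 km and one mean solar day (86,400 s) for the subsolar point to circle the Earth:

```python
# Sketch: ground speed of the subsolar point at the equator.
# Assumptions: equatorial circumference 40,075.017 km; one mean solar day (86,400 s)
# for the subsolar point to go once around the Earth.
EQUATOR_M = 40_075_017.0
METERS_PER_MILE = 1609.344

speed_m_s = EQUATOR_M / 86_400
miles_per_minute = speed_m_s * 60 / METERS_PER_MILE
print(f"{speed_m_s:.2f} m/s, {miles_per_minute:.1f} miles/minute")
```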

The equator receives a daily pulse of sunlight, integrated, and governed by this curve, minus cloud and aerosol effects.

The equator receives a daily pulse of sunlight, integrated, and governed by this curve, minus cloud and aerosol effects

Exactly, that curve shows the cos relationship I gave. Your curve shows the effect at the equator, as you go away from the equator you get a similar cos dependence hence the double integral of cos(𝜃)cos(𝜙).

Yes, integrating results in deriving the factor of 4 reduction, but it’s much easier to understand when you compare the area of the Earth’s surface emitting energy to the area of the plane across which solar energy is arriving.

Why is this often ignored relative to energy absorbed by the atmosphere? When the same integration is performed for the atmosphere, it emits over twice the area it absorbs, thus the net emitted flux either up or down is limited to half of what the atmosphere absorbs. Even more perplexing is that the data confirms the roughly 50/50 split!

“…the data confirms the roughly 50/50 split!”

IR instruments measure temperature with T/C & thermopiles with a temp/mv relationship. W/m^2 is inferred assuming the emissivity. SURFRAD assumes 1.0 which is wrong. BB emission from the surface is not possible.

The up/down/”back” “measurements” are due to bad data, misunderstood and misapplied pyrgeometers.

Why is this often ignored relative to energy absorbed by the atmosphere?

It isn’t. When you do an energy balance for the earth you equate the incoming energy with that leaving.

The incoming energy is TSI(1-albedo)𝝅r^2

Energy leaves from the whole surface area, 4𝝅r^2
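Equating the two expressions makes the r^2 terms cancel and leaves the factor of 4. This minimal sketch turns the balance into an effective radiating temperature. It assumes TSI = 1361 W/m^2 and an albedo of 0.30, values that are not given in this comment:

```python
# Sketch: global energy balance, TSI*(1 - albedo)*pi*r^2 = 4*pi*r^2 * sigma * T^4.
# The r^2 terms cancel, leaving the factor of 4. Assumed inputs (not from the comment):
SIGMA = 5.670374419e-8  # W m^-2 K^-4
TSI = 1361.0            # W/m^2
ALBEDO = 0.30

absorbed = TSI * (1 - ALBEDO) / 4          # mean absorbed flux over the sphere, W/m^2
t_effective = (absorbed / SIGMA) ** 0.25   # temperature that would radiate it away
print(f"absorbed: {absorbed:.1f} W/m^2, effective temperature: {t_effective:.1f} K")
```

These inputs give roughly 238 W/m^2 and about 255 K, which is in line with the ~240 W/m^2 and 255 K figures used elsewhere in the thread.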

Nick,

Yes, the ‘back radiation’ measurements are completely bogus. The data I’m talking about is based on a first principles examination of the energy balance.

The first thing we need to do is unwind Trenberth’s excess complexity that serves no purpose other than to obfuscate.

We can ignore latent heat and thermals, relative to the balance, as whatever effect they are having is already embodied by the average temperature and its emissions. When you subtract their return to the surface from the bogus back radiation term, all that’s left are the 390 W/m^2 offsetting surface emissions.

Relative to the thermodynamic state of the planet, the water cycle links the water in clouds with surface water over short time periods. The result is that energy absorbed and emitted by the liquid and solid water in the atmosphere (clouds) can be considered a proxy for energy absorbed and emitted by the water in the oceans, relative to averages.

Now, let’s reconsider the AVERAGE balance.

In the steady state, 240 W/m^2 arrives from the Sun and 390 W/m^2 is emitted by the surface at its average temperature of 288K. The ISCCP cloud data combined with GHG concentrations tells us that the atmosphere absorbs about 300 W/m^2 of the 390 W/m^2 emitted, leaving only 90 W/m^2 to escape into space.

To offset the 240 W/m^2 arriving, 150 W/m^2 more is required which when added to the 90 passing through exactly offsets the 240 W/m^2 arriving.

The only possible source of these 150 W/m^2 are the 300 W/m^2 absorbed by the atmosphere, leaving 150 W/m^2 more to be returned to the surface and combined with 240 W/m^2 of solar input to offset 390 W/m^2 of surface emissions.

150 W/m^2 is half of the 300 W/m^2 absorbed.

QED
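The bookkeeping in this comment can be checked in a few lines. The inputs are the comment's own figures (240 W/m^2 solar, 288 K surface, 300 W/m^2 absorbed by the atmosphere). This sketch only verifies the arithmetic and does not test the physical interpretation:

```python
# Sketch of the average-balance bookkeeping, using the comment's own figures.
SIGMA = 5.670374419e-8  # W m^-2 K^-4

solar_in = 240.0                        # W/m^2 absorbed from the Sun
surface_emission = SIGMA * 288.0 ** 4   # ~390 W/m^2 at 288 K
absorbed_by_atmosphere = 300.0          # W/m^2 (comment's ISCCP-based estimate)

escaping_directly = surface_emission - absorbed_by_atmosphere         # ~90 W/m^2
atmosphere_to_space = solar_in - escaping_directly                    # ~150 W/m^2
atmosphere_to_surface = absorbed_by_atmosphere - atmosphere_to_space  # ~150 W/m^2

print(f"surface emission:      {surface_emission:.1f}")
print(f"atmosphere to space:   {atmosphere_to_space:.1f}")
print(f"atmosphere to surface: {atmosphere_to_surface:.1f}")
print(f"surface check: {solar_in + atmosphere_to_surface:.1f} vs {surface_emission:.1f}")
```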

Great article Andy. My comment has vanished…

There it is… will a dropbox image show?

https://www.dropbox.com/s/2k5yg60qxxnccwk/The Modern Maximum.jpg

Crap. So many ways to mistype.

Thanks for trying. Giving .png a go:

Lolz 🙂

The way these reconstructions all move upward over time makes solar look favorable, as the latter decades are higher. But the NRLTSI2 reconstruction, for example, is wrong because it doesn’t incorporate the v2 sunspot number revisions, which will raise the TSI of older high solar cycles to levels similar to the TSI of recent high-activity cycles.

TSI acts like a pulse-width amplitude modulated heat source, the longer it’s high the hotter we get, and vice versa, and it’s operated from a narrower range than most of these reconstructions show. I consider the narrower range more realistically tracking solar cycles to be very favorable for solar TSI forcing.

Nearly every star we observe varies with what are mostly unexplained random periods and intensities. If the Sun is as constant as claimed, it would be a very rare and unusual star.

The total solar energy arriving at the surface varies by 80 W/m^2 (almost 6%) between perihelion and aphelion. The 20 W/m^2 difference averaged across the surface after 30% reflection becomes 14 W/m^2. According to the IPCC’s nominal ECS of 0.8C per W/m^2, this should result in a temperature difference of 11C between the 2 hemispheres. Nowhere near enough energy is transported between hemispheres to offset this much of a change in forcing, so where is this temperature difference?
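The arithmetic chain in this comment has three steps. It divides by 4 to average over the sphere, removes 30% reflection, and multiplies by a sensitivity. All inputs in this sketch are the comment's own figures, including the 0.8 K per W/m^2 it attributes to the IPCC:

```python
# Sketch of the comment's arithmetic chain, using its own figures.
swing_at_surface = 80.0   # W/m^2, perihelion-to-aphelion difference (comment's figure)
reflected_fraction = 0.30
sensitivity = 0.8         # K per W/m^2, the "nominal" value the comment attributes to the IPCC

spread_over_sphere = swing_at_surface / 4                          # 20 W/m^2
after_reflection = spread_over_sphere * (1 - reflected_fraction)   # 14 W/m^2
implied_delta_t = after_reflection * sensitivity                   # ~11 K

print(spread_over_sphere, round(after_reflection, 2), round(implied_delta_t, 2))
```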

The two hemispheres respond quite differently to changes in solar insolation and we see this in the ice cores with a clear 22K periodicity corresponding to the precession of perihelion. In the N hemisphere, the winter snow band is mostly over land and snow readily accumulates. In the S hemisphere, the winter snow band is over the ocean and snow and ice slowly extend from the Antarctica mainland, rather than rapidly accumulating in place. A serious error often found in models is to AU-normalize solar input.

Despite what alarmists claim proxies are telling us, we really have no idea about how much the Sun (or the Earth’s orbit for that matter) has varied over geologic time. It’s as likely that the orbit and Sun have been constant for billions of years as it is that the Earth is only a few thousand years old. In fact, the latter is a prerequisite for the former.

The tenuous proxy evidence of a far distant climate that was much warmer or colder than we see in the ice cores is far more likely to be due to variable solar output than the absurd concept of a highly amplified GHG effect arising from CO2 causing warming or the lack of CO2 causing cooling.

The total solar energy arriving at the surface varies by 80 W/m^2 (almost 6%) between perihelion and aphelion.

No, that’s the flux; the energy is measured in J/m^2, and a W is a J/s.

However, the rate at which the Earth moves also depends on its distance from the Sun, which cancels out the increase in flux (Kepler’s Law of Areas).
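The cancellation can be written out in a few lines. This derivation assumes only the inverse-square law and Kepler's second law. In it, S_0 is the flux at the semi-major axis a, R is the Earth's radius, ν is the true anomaly (the orbital angle), and h is the constant specific angular momentum:

```latex
F(r) = S_0 \left(\frac{a}{r}\right)^2,
\qquad
r^2 \frac{d\nu}{dt} = h \quad \text{(Kepler's second law)}

\frac{dE}{d\nu}
  = \pi R^2 \, F(r) \, \frac{dt}{d\nu}
  = \pi R^2 \, S_0 \frac{a^2}{r^2} \cdot \frac{r^2}{h}
  = \frac{\pi R^2 S_0 a^2}{h}
```

The energy intercepted per unit of orbital angle is therefore independent of distance. Equal angles, such as equinox to equinox, receive equal energy even though they take different lengths of time.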

Phil,

The planet responds to the instantaneous energy flux and only averages are affected by the average energy across the period of the average. Otherwise, we wouldn’t notice seasonal variability, much less any temperature difference between night and day. Over the course of a year, the total energy does balance out, but what doesn’t balance out is the asymmetric response as perihelion shifts through the seasons. This was one of Milankovitch’s arguments that’s often ignored but is supported quite well by the ice cores.

The difference in albedo between summer and winter is larger in the N than the S, owing to the aforementioned difference in where the snow belt resides. As a result, the larger winter solar input in the N hemisphere winter is offset by a relatively larger reflectivity, while the lesser solar input to the S hemisphere winter is offset by a lower reflectivity. When this flips in about 11K years, it will add to the asymmetry, rather than act to cancel it out, and the seasonal variability will get significantly larger than it is today, especially in the N hemisphere.

Because of the T^4 relationship between temperature and emissions, and because it’s the forcing/emissions whose range is symmetrically extended, winters get colder by more than summers get warmer, giving glaciers a chance to grow. Today, we are at the opposite end of that cycle, where the relative difference between summer and winter in the N hemisphere is at its lowest possible level.

We don’t notice this because the S hemisphere is less sensitive to instantaneous flux and is more aligned with average flux owing to the much larger fraction of water and where that water is relative to how the surface reflectivity responds to the temperature. In 11K years, the S hemisphere climate will not have changed by much, except perhaps Australia, but the N hemisphere climate will see significant changes across the US, Canada, Europe and Russia while the equatorial climate will stay pretty much the same as it is now.

Over the course of a year, the total energy does balance out, but what doesn’t balance out is the asymmetric response as perihelion shifts through the seasons.

So you agree with me. You said total energy varied and then quoted the change in flux. As I showed, the Earth spends more time from the March equinox to the September equinox (about 186 days) than from the September equinox to the March equinox (about 179 days). Also the length of day varies for the same reason, +/-7.5 mins.

I didn’t mention the shift of per/aphelion relative to season (it’s currently close to the solstice) as that’s a longer term variation.

Phil (replying to co2isnotevil, adding Leif Svalgaard to query)

OK, so let me ask the derivative of that question:

What IS the correct approximation for a “calendar year” measurement (temperature, ice area, solar radiation, whatever) that varies over the 365.25 day year?

1) Do we skip leap years? Average them with 365 days? Use Feb 29 only when convenient?

2) Is “data” on Day-of-Year (DOY) = 62 treated as DOY = 62 for all years, or only for leap years, or just for the 3 of 4 years that are not leap years? Or do we consider DOY = 62 “good enough” for DOY = 62 (and 63)? After all, solar radiation doesn’t change very much.

(By the way, ZERO papers mention this in their plots and printed files! Can Aug 12 1966, 1977, 1998, and 1999 all be the same DOY when plotted?) Or, as mentioned, does it matter?

3) The most accurate method is to add/average/list/compare all 365 days for a seasonally changing value. Impractical, but possible.

So what IS APPROPRIATE (accurate enough) to evaluate monthly averages and “total year effects” of a 365.25 day year?

4) Four equally spaced days (12-22, 3-22, 6-22, 9-22): pick four important dates, each equinox and the two solstices. But not accurate enough.

5) The 12 “average months” are better, but what is the “average solar irradiation absorbed” each month?

Are 28, 30, and 31 day-long months to be equally important?

Which is the “accepted” representative Day-of-Year for the 12 months?

The 15th of each month? (Not per ) The 14th on Feb, but the 15th on every other month?

6) If “The more days, the better” philosophy holds, then what IS adequate:

The 1, 15 of every month?

The 5, 10, 15, 20, 25, 30? (Oopsie. Skips February 28 and 29. Or do we use “the last day of Feb = the 30th of every other month”?)

The 2, 12, 22 of every month? (Gets all of the four special days of solstices and equinoxes!)

Combine those! The 3, 6, 9, 12, 15, 18, 21, 24, 27, 30 of every month?

Skip months entirely? (Although this prevents using any printed reference that DO use monthly information!)

DOY = 10, 20, 30, 40, 50, 60, 70, 80, 90 …..

(OOPSIE – 360 and 365 are not represented properly. Or are they? Is DOY = 360 then DOY = 376 “correct” for an interval trying to approximate an entire year? Seems like that “skips” too many days in the winter of each year!)

DOY = 5, 10, 15, 20, 25, 30, 35, 40, 45, 50, 55 …

For example: “Average for each month (whatever) is calculated for the 15th of the month”

Real world number, 365 day year.
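Two of these questions can be made concrete with Python's standard `datetime` and `calendar` modules. None of 1966, 1977, 1998 or 1999 is a leap year, so Aug 12 falls on DOY 224 in all four. In a leap year such as 2000 it falls on DOY 225. Weighting monthly means by month length is one possible convention for building an annual mean, shown here as an illustration rather than a standard. The function names `doy` and `annual_mean` are hypothetical helpers for this sketch:

```python
import calendar
from datetime import date

# Sketch: how leap years shift day-of-year (DOY) for a fixed calendar date.
def doy(d: date) -> int:
    """Day of year, 1..366 (hypothetical helper)."""
    return d.timetuple().tm_yday

years = [1966, 1977, 1998, 1999, 2000]  # the comment's years plus one leap year
for y in years:
    print(y, "leap" if calendar.isleap(y) else "common", doy(date(y, 8, 12)))

# A day-weighted annual mean from 12 monthly means (hypothetical helper), so that
# 28-, 29-, 30- and 31-day months count in proportion to their length.
def annual_mean(monthly_means: list[float], year: int) -> float:
    days = [calendar.monthrange(year, m)[1] for m in range(1, 13)]
    return sum(v * n for v, n in zip(monthly_means, days)) / sum(days)
```

For example, `annual_mean(values, 2001)` weights February by 28 days and `annual_mean(values, 2000)` by 29 days.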

Well ‘months’ are an artificial construct and the start of the year is totally arbitrary, at best we can just compare like with like from year to year. In England prior to 1750 the start of the year was March 25 not Jan 1. What would make sense to me for this purpose would be to start the year at the winter solstice.

The meteorological year has the winter season being from 1 Dec to the end of Feb. The slight mismatch between the solstices and per/aphelion makes things slightly more complicated.

Little Ice Age = Urban Myth

The Big Lie repeated so often it becomes true.

1. Where are the bodies….

2. In the ‘photo’ we have of it (frozen River Thames), people are dancing (ice skating) on the ice, they are visiting shops and market traders, they are having an outdoor BBQ and generally having A Nice Time

3. What we do have for temperature records show it to be a non-event.

4. Oh. Charles Dickens wrote a lot about White Christmases.

Err. Charles Dickens was a novelist; he wrote fiction. Fiction does NOT revolve around the boring, the everyday and the mundane.

If anything did happen, it was due to Queen Elizabeth continuing the tradition of her father and chopping trees to build a war-machine. That certainly would change the English Climate. And in more ways than one.

Similar to the burning of forest.

And why did she need such a huge war machine? (For the time)

She was generally accepted to be indecisive and totally dependent upon her advisers, especially her ‘nanny’.

Why was she so insecure, so lacking in confidence and untrusting of her own judgement?

Especially when it came to men and suitors.

Not because she was quite addicted to sugar by any chance?

Why courtiers and other folks with aspirations would get their teeth painted black or even knocked completely out – to make themselves appear like The Queen and able to feast on refined sugar. As Mary Queen of Scots knew, if you wanted to curry favour with Liz, you wrapped your request inside a box of sugared almonds.

Any parallels there?

Who has the largest army in this world and who (seemingly and gobsmackingly) goes through 3 cans per day, each, of carbonated soda-pop. 3 cans. Each. Daily. ?????? !!!!!!!

And *who* is the most paranoid about trivial & inconsequential things, such as decimal places of solar grunt and sunspots………

Funny thing, science, innit

and you do ‘get’ that sunspots are the Dancing Faeries on the pinhead?

and that computers & Sputniks are the equivalent to QE1’s nanny?

*Everybody* these days is indecisive (buck passing) and didn’t QE1 set herself as a true role-model in birth-rate reduction?

What is gibberish, Alex?

Survey says!

+1 Robert

So much nonsense based on the utterly ridiculous.

Your jihad against processed sugar is duly noted, and roundly ridiculed.

BTW, how did chopping down trees in England cause the LIA in the southern hemisphere?

Peta,

A summary of the Little Ice Age:

“The Little Ice Age was a horrible time for mankind according to Behringer. Glaciers advanced in the Alps and destroyed homes, it was a time of perpetual war, famines and plagues. Horrible persecutions of Jews and “witches” were common. Society was suffering from the cold and lack of food and they needed to blame someone. They chose Jews and old unmarried women unfortunately. Over 50,000 witches were burned alive. Tens of thousands of Jews were massacred. Not because there was any proof, just because someone had to suffer for the bad climate. Some people, the masses mainly, seem to need to blame someone or humanity’s sins for natural disasters. Behringer notes that in The Little Ice Age: “In a society with no concept of the accidental, there was a tendency to personalize misfortune.”

Source: https://andymaypetrophysicist.com/climate-and-civilization-for-the-past-4000-years/

Hello Peta of Newark

Thank you for the information regarding the size of largest army and their consumption of soda pop.

I never dreamed that the people’s army of the republic of China drank that much soda.

michael

https://www.statista.com/statistics/264443/the-worlds-largest-armies-based-on-active-force-level/

Peta,

Thermometer records in the CET show that the 1690s and 1700s, during the depths of the Maunder Minimum, were the coldest interval of the LIA.

The other coldest cycles of the LIA also fell during periods of low sunspots, with warming cycles between them. But overall and globally, the LIA was significantly cooler than today, as shown by proxy data as well as temperature readings.

It was the most recent of the centennial-scale coolings, alternating with warming cycles, since the Holocene Climatic Optimum.

Peta,

ERI reigned from 17 November 1558 to 24 March 1603. Her “nanny” Kat Ashley died in the summer of 1565, while away from court. So the queen could have relied on her counsel for fewer than seven years.

Peta of Newark September 19, 2018 at 9:17 am

Oh, another thing, Peta bread: QE1 died in 1603, years before England’s great shipbuilding period.

Perhaps you mean the Spanish, you know, that “Great Armada” thingy.

You really need to brush up on your History. I would suggest you start with “The Roman Imperial Army” by Graham Webster (1969). It covers the 1st & 2nd centuries, during the Roman warming period.

After you have read that, get back to us. I also recommend a good history of the Thirty Years’ War in Europe during the Little Ice Age. The comparisons and contrasts between the two time periods are interesting.

Specifically, consider what the Romans were able to do and the later European states were not. For example, the Romans were able to keep eight legions on the Rhine for two hundred years and keep them healthy. In total, the empire maintained between 25 and a maximum of 30 legions throughout its territory. During the Little Ice Age, armies would routinely lose between 50% and 90% of their strength come winter.

The fate of the Catholic army of Johann Tserclaes, Count von Tilly, after the defeat at White Mountain is telling: it froze to death during the retreat.

The Little Ice Age is no urban legend, only someone of vast ignorance would state that.

Start reading.

michael

Michael,

You’re right that the Royal Navy didn’t face a timber crisis until the 1650s. ERI did support the RN; however, her shipbuilding program was slow but steady. She tried to remedy the decline into which the sea service had fallen under the reigns of her brother and sister. Her early goal was 30 ships in 20 years.

Despite this program, the RN relied heavily on commandeered commercial ships for defense against the 130-ship Armada of 1588. The Armada boasted 22 large galleons and 108 armed merchant ships, including four Neapolitan war galleasses.

The RN had 34 smaller warships, plus 163 armed merchant vessels (just 30 of them over 200 tons) and 30 flyboats.

At the outset of the First Anglo-Dutch War (1652–54), Commonwealth Britain had 18 (1st and 2nd rate) ships of the line superior in firepower to the Netherlands’ flagship Brederode, largest in its navy. Furthermore, not only were the British (no longer Royal) navy ships larger, with more guns, but the English guns were bigger than Dutch naval guns. The English could thus fire and hit enemy ships at a longer distance, causing comparatively more damage with their shot. I don’t know the number of 3rd through 6th rate British warships at that time.

In 1652, the Netherlands’ navy had only 79 warships, many in bad repair, so that fewer than 50 were seaworthy. The deficiency in the Dutch navy was to be made good by arming merchantmen. As noted, all were inferior in firepower to the largest English first and second rate ships.

During the war, both sides built up their navies to around 300 ships. Hence, the timber troubles.

When the wars that prompted the naval expansions ended, there was often surplus timber that was redirected to house building. The beams in my parents’ house (which is ~400 years old) were unused ship timbers.

Must be beautiful. Painted or stained & varnished?

With their “natural” bends and angles (the “bents” of a reinforced angle joint where the ribs meet the deck)?

Or as squared-off large lumber beams and columns?

Phil,

So much more wonderful than mighty 20th century warships turned into razor blades.

You are blessed.

Supposedly, parts of the Mayflower were bought from a shipbreaker’s yard in Rotherhithe to help build a barn in the village of Jordans, Bucks. Jordans is a Quaker center, and William Penn is buried there.

The dual American connection makes me suspect a tourist angle, but the barn is in private hands and no longer visitable.

Sorry, my response from yesterday didn’t show up. Stained and varnished; it looks good. I was back visiting this summer, as my sister lives there now. The beams were sort of squared off, with unused mortises from their previous application, and there is not a right angle in the place (it is stone built).

This is a very poor post. Extrapolating the noisy measurements of only 3 or 4 sunspot cycles to 261 years is bad science. There is now general agreement that the changes in TSI are due to changes in the sun’s magnetic field. We have measured the latter accurately since the 1970s. The sun’s magnetic field also determines the range of the diurnal variation of the geomagnetic field, which has been known since the 1740s. It also determines the intensity of geomagnetic storms, known with good accuracy back to the 1840s, and even the modulation of cosmic rays, reaching back much farther in time. All of those effects show that there has not been a large variation [whether 9 or 14 W/m2] of the basal level of TSI, exceeding 0.5 W/m2:

http://www.leif.org/research/EUV-F107-and-TSI-CDR-HAO.pdf

Leif,

The whole point of the post was:

I totally agree with this statement.

You also say:

OK, not very controversial. Now, how accurately can we measure the Sun’s magnetic field and precisely how do we compute the TSI, how accurate is the calculation? To go far back in time we need to compare the magnetic field measurements to sunspots, how accurate is that? Your ppt is very interesting and you have a lot of good correlations, but no indication of accuracy at the level required.

Can you supply TSI at the accuracy Kopp computes? That is, + or – 0.136 W/m^2?

You note that TSI no longer follows the SN. Where is the error, how much error is there?

My only point is that we do not know solar variability, from ANY source, accurately enough to exclude it as a possible cause of recent warming, or at least of a large part of it.

Now, how accurately can we measure the Sun’s magnetic field and precisely how do we compute the TSI, how accurate is the calculation? To go far back in time we need to compare the magnetic field measurements to sunspots, how accurate is that?

My comment supplies the necessary error analysis. The measurements of the solar magnetic field are very accurate. The sensitivity of TSI to the magnetic field can be gauged from the rotational and solar cycle behaviour. There is no way TSI could have varied 13.6 W/m2 [=1%] over the past 300 years, which is 10 times the well-established solar cycle variation. Such a variation would have resulted in a 1/4% variation of absolute temperature [temperature scales as TSI^(1/4), and 1/4% of ~288 K] = 0.72 C. In particular, at every solar minimum the activity falls to nearly zero, so TSI must be almost constant for every minimum, even if we allow for a generous error of 0.5 W/m2.
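The 0.72 C figure follows from treating the climate as a simple radiative balance in which equilibrium temperature scales as the fourth root of the incoming flux, so a fractional change in TSI gives a quarter of that fractional change in temperature. A minimal sketch of that arithmetic, assuming fixed albedo and emissivity and a mean surface temperature of 288 K (the value that reproduces 0.72 C; it is not stated in the comment). The constant and function names are illustrative only:

```python
# Scaling of equilibrium temperature with TSI in a simple Stefan-Boltzmann
# balance: T is proportional to S**(1/4). Albedo and emissivity are held
# fixed, which is an assumption; this is arithmetic, not a climate model.

TSI = 1361.0    # W/m2 at 1 AU, as quoted in the post
T_MEAN = 288.0  # K, assumed mean surface temperature (hypothetical input)


def delta_t_linear(frac_change: float, t: float = T_MEAN) -> float:
    """Linearized response: dT/T = (1/4) * dS/S."""
    return 0.25 * frac_change * t


def delta_t_exact(frac_change: float, t: float = T_MEAN) -> float:
    """Same balance without linearizing: T' = T * (1 + dS/S) ** 0.25."""
    return t * ((1.0 + frac_change) ** 0.25 - 1.0)


if __name__ == "__main__":
    d_s = 13.6            # W/m2, the change under discussion
    frac = d_s / TSI      # roughly 1% of TSI
    print(f"dS/S = {frac:.4%}")
    print(f"linearized dT = {delta_t_linear(frac):.3f} K")
    print(f"exact dT      = {delta_t_exact(frac):.3f} K")
```

The linearized and exact results differ only in the third decimal place, so the quarter-power rule of thumb is adequate at this scale.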

Since it is unlikely that people who disagree will even look at my comment, I affix here the abstract:

A composite record of the total unsigned magnetic (line-of-sight) flux over the solar disk can be constructed from spacecraft measurements by SOHO-MDI and SDO-HMI complemented by ground-based measurements by SOLIS covering the period 1996-2016, which includes the two solar minima in 1996 and 2009 and the two solar maxima in 2001 and 2014. A composite record of solar EUV from SOHO-SEM, TIMED-SEE, and SDO-EVE covering the same period is very well correlated with the magnetic record (R2=0.96), both for monthly means. The magnetic flux and EUV [and the sunspot number] are extremely well correlated with the F10.7 microwave flux, even on a daily basis. The tight correlations extend to other solar indices (Mg II, Ca II) reaching further back in time. Solar EUV creates and maintains the ionosphere. The conducting E-region [at ~105 km altitude] supports an electric current by a dynamo process due to thermal winds moving the conducting region across the Earth’s magnetic field. The resulting current has an easily observable magnetic effect at ground level, maintaining a diurnal variation of the geomagnetic field [discovered by Graham in 1722]. Data on this variation go back to the 1740s [with good coverage back to 1840] and permit reconstruction of EUV [and proxies, e.g. F10.7] back that far. We confirm that the EUV [and hence the solar magnetic field] relaxes to the same [apart from tiny residuals] level at every solar minimum. Since the variation of Total Solar Irradiance [TSI] is controlled by the magnetic field, the reconstruction of EUV does not support a varying ‘background’ on which the solar cycle variation of TSI rides, strongly suggesting that the Climate Data Records advocated by NOAA and NASA are not correct before the space age. Similarly, the reconstruction does not support the constancy of the calibration of the SORCE/TIM TSI-record since 2003, but rather indicates an upward drift, suggesting an overcorrection for sensor degradations.

Leif,

“Very” accurate is not very specific. One of your correlations has an R^2 of 0.96, this is also not good enough to establish that “There is no way TSI could have varied 13.6 W/m2.”

According to Kopp:

So you see, the accuracies that you refer to are orders of magnitude too low; we need >99.9%, which is my point. When it comes to proxies, you can quantify the quality of the correlation, perhaps, but the accuracy of the TSI record is in question, and so are the accuracy and duration of the EUV record. Lots of good work, but the accuracy required just isn’t there to back up your assertion. In any case, R^2 is only a measure of correlation, not accuracy. Further, the relationship to climate is another unknown jump. Speculation stacked on speculation. This is an important area that is being totally ignored by the IPCC, by assuming it doesn’t matter.

the accuracy of the TSI record is in question, so is the accuracy and duration of the EUV record

The quoted accuracy is 0.5 W/m2, which is not in question. The EUV record is solid back to 1740. When you say ‘is in question’ you conveniently omit to say by whom, and by how much. The way of countering my comment is for each slide to argue or show that it is wrong, not by blanket hand waving.

Leif,

The accuracy of 0.5 W/m^2 you quote is only for the TIM instrument and only for its first 10 years. That is the best you can expect – and only for a short time. Even the TIM instrument deteriorates with time. This is all well documented in the post.

As for EUV, exactly how accurate are the measurements made in 1740? I see an R^2 of correlations to SSN of 0.917, not even close to the 0.999 we need to make our case. F10.7? I see an R^2 of correlation of 0.956, not very good at all. These values are from your ppt. I’m quoting your values.

Who is blanket hand waving? We have a particular accuracy we are looking for, + or – 0.136 W/m^2, I’m not seeing it in your data.
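The accuracy figures traded in this exchange are easier to compare as fractions of the ~1361 W/m2 TSI given in the post. A minimal sketch of that arithmetic; the labels and the function name are illustrative, and the only inputs are numbers already quoted in this thread:

```python
# Express absolute TSI figures quoted in this thread as fractions of the
# ~1361 W/m2 mean TSI given in the post.

TSI = 1361.0  # W/m2 at 1 AU

QUOTED = {
    "Kopp accuracy cited by Andy": 0.136,  # W/m2
    "TIM accuracy cited by Leif": 0.5,     # W/m2
    "1% change under discussion": 13.6,    # W/m2
}


def fraction_of_tsi(delta: float, tsi: float = TSI) -> float:
    """Return an absolute irradiance change as a fraction of TSI."""
    return delta / tsi


if __name__ == "__main__":
    for label, delta in QUOTED.items():
        frac = fraction_of_tsi(delta)
        print(f"{label:30s} {delta:6.3f} W/m2 = {frac:.4%} "
              f"= {frac * 1e6:8.1f} ppm")
```

On this arithmetic, ±0.136 W/m2 is about 0.01% of TSI (roughly 100 ppm), 0.5 W/m2 is a little under 0.04%, and the 13.6 W/m2 case is about 1%.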

I see an R^2 of correlation of 0.956, not very good at all.

An R2 of 0.956 means that 95.6% of the variance is explained by the correlation, leaving less than 5% for wiggle room [or error if you wish].
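Both readings of R2 in this exchange are arithmetic on the same quantity. R2 is one minus the ratio of residual to total sum of squares, so for a least-squares fit the RMS residual equals sqrt(1 - R2) times the standard deviation of the fitted series. A minimal sketch using the R2 values quoted above; the function names are illustrative, and the five-point data set is made up only to check the functions (it is not solar data):

```python
import math


def ols_fit(x, y):
    """Ordinary least-squares line y = a + b*x; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


def r_squared(x, y):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    a, b = ols_fit(x, y)
    my = sum(y) / len(y)
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def residual_scatter_fraction(r2):
    """RMS residual divided by the standard deviation of y: sqrt(1 - R^2)."""
    return math.sqrt(1.0 - r2)


if __name__ == "__main__":
    # Made-up toy data, used only to exercise the functions.
    x = [1.0, 2.0, 3.0, 4.0, 5.0]
    y = [2.0, 4.0, 5.0, 4.0, 5.0]
    print(f"toy R^2 = {r_squared(x, y):.3f}")  # 0.600

    # R^2 values quoted in the thread, plus the 0.999 Andy asks for.
    for r2 in (0.917, 0.956, 0.999):
        print(f"R^2 = {r2}: unexplained variance {1 - r2:.1%}, "
              f"residual scatter {residual_scatter_fraction(r2):.1%} of std(y)")
```

On these numbers, R2 = 0.956 leaves residual scatter of about 21% of the series' standard deviation, against about 3% for R2 = 0.999. R2 describes scatter about the fitted line; it is unchanged by a constant offset or a rescaling of either series.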

You are not [doing] as I suggested: for each slide, explain what is wrong with it.

Leif, a separate reply for this statement:

I do not think all indicators suggest that solar activity drops to zero in a solar minimum; your ppt contains a quote from Foukal and Eddy (2007) suggesting that there was a lot of variation even during the Maunder Minimum. Shapiro (2011) notes that sunspots do not form some lower limit of solar activity. The modulation function is still active, even when SN is zero.

In short, as you have written, there is no clear evidence that there is a secular increase in solar activity over the past 300 years, I agree with this. But, there is no clear evidence that there isn’t a secular increase – that is just as speculative.

Andy and Leif

Note the 274-page dissertation by Theodosios Chatzistergos (2017):

“Analysis of historical solar observations and long-term changes in solar irradiance” https://d-nb.info/1142518949/34

David Hagen, thanks for the link; good thesis.

But, there is no clear evidence that there isn’t a secular increase

Yes there is, and very strong indeed.

The sun’s magnetic field heats the corona where EUV is generated. The EUV creates the E-region [at 105 km altitude]. Dynamo action produces a current whose magnetic effect at ground level is easily measured [discovered in AD 1722]. Apart from some years near the beginning, we have kept track of this effect ever since and can thus directly calibrate it in terms of EUV flux [using modern data]. For such a calibration, an R2 greater than 0.9 means extraordinarily significant agreement. The result is direct evidence for a lack of secular increase. This is not in doubt and is not controversial. The causal relationships between solar magnetism, EUV [and F10.7] flux, ionospheric currents, and geomagnetic effect are well understood: see Slide 21 of

http://www.leif.org/research/EUV-F107-and-TSI-CDR-HAO.pdf
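In its simplest form, the calibration step described above (fitting the ground-level geomagnetic effect to measured EUV over the modern overlap, then applying the fit to older data) is a linear regression followed by prediction. A minimal sketch under that assumption; the function names and all numbers are hypothetical placeholders, not Leif's data or his actual procedure, which is in the linked slides:

```python
def fit_line(x, y):
    """Least-squares line y = a + b*x over the calibration overlap."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


def calibrate(proxy_overlap, target_overlap, proxy_earlier):
    """Convert earlier proxy values to target units using the overlap fit."""
    a, b = fit_line(proxy_overlap, target_overlap)
    return [a + b * p for p in proxy_earlier]


if __name__ == "__main__":
    # Invented toy values (NOT observations): a proxy such as a diurnal
    # range, and a target such as EUV flux, both in arbitrary units.
    proxy_modern = [20.0, 30.0, 40.0, 50.0]
    target_modern = [1.0, 2.0, 3.0, 4.0]

    # Hypothetical proxy values at three earlier solar minima.
    proxy_minima = [21.0, 19.5, 20.5]
    levels = calibrate(proxy_modern, target_modern, proxy_minima)
    print("reconstructed minimum levels:", [round(v, 3) for v in levels])
    print("spread between minima:", round(max(levels) - min(levels), 3))
```

Whether the spread between reconstructed minima counts as "small" depends on the uncertainty of the fit and of the early proxy values, which this sketch does not propagate.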