Propagation of Error and the Reliability of Global Air Temperature Projections

Guest essay by Pat Frank

Regular readers at Anthony’s Watts Up With That will know that for several years, since July 2013 in fact, I have been trying to publish an analysis of climate model error.

The analysis propagates a lower limit calibration error of climate models through their air temperature projections. Anyone reading here can predict the result. Climate models are utterly unreliable. For a more extended discussion see my prior WUWT post on this topic (thank-you Anthony).

The bottom line is that when it comes to a CO2 effect on global climate, no one knows what they’re talking about.

Before continuing, I would like to extend a profoundly grateful thank-you to Anthony for providing an uncensored voice to climate skeptics, in the face of those who would see them silenced. By “climate skeptics” I mean science-minded people who have assessed the case for anthropogenic global warming and have retained their critical integrity.

In any case, I recently received my sixth rejection; this time from Earth and Space Science, an AGU journal. The rejection followed the usual two rounds of uniformly negative but scientifically meritless reviews (more on that later).

After six tries over more than four years, I now despair of ever publishing the article in a climate journal. The stakes are just too great. It’s not the trillions of dollars that would be lost to sustainability troughers.

Nope. It’s that if the analysis were published, the career of every single climate modeler would go down the tubes, starting with James Hansen. Their competence comes into question. Grants disappear. Universities lose enormous income.

Given all that conflict of interest, what consensus climate scientist could possibly provide a dispassionate review? They will feel justifiably threatened. Why wouldn’t they look for some reason, any reason, to reject the paper?

Somehow climate science journal editors have seemed blind to this obvious conflict of interest as they chose their reviewers.

With the near hopelessness of publication, I have decided to make the manuscript widely available as samizdat literature.

The manuscript with its Supporting Information document is available without restriction here (13.4 MB pdf).

Please go ahead and download it, examine it, comment on it, and send it on to whomever you like. For myself, I have no doubt the analysis is correct.

Here’s the analytical core of it all:

Climate model air temperature projections are just linear extrapolations of greenhouse gas forcing. Therefore, they are subject to linear propagation of error.

Complicated, isn’t it. I have yet to encounter a consensus climate scientist able to grasp that concept.

Willis Eschenbach demonstrated that climate models are just linearity machines back in 2011, by the way, as did I in my 2008 Skeptic paper and at Climate Audit (CA) in 2006.

The manuscript shows that this linear equation …

… will emulate the air temperature projection of any climate model; fCO2 reflects climate sensitivity and “a” is an offset. Both coefficients vary with the model. The parenthetical term is just the fractional change in forcing. The air temperature projections of even the most advanced climate models are hardly more than y = mx+b.
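The exact equation is given in the manuscript. The sketch below assumes only the straight-line form the paragraph describes: y = mx + b, where x is the fractional change in forcing, the slope m stands in for the f_CO2-dependent term, and b stands in for the offset “a”. It shows how such an emulator can be fit to a projection by ordinary least squares. The function names `fit_linear_emulator` and `emulate` are hypothetical, and the synthetic “projection” is not CMIP5 output.

```python
import numpy as np

def fit_linear_emulator(frac_forcing_change, model_dT):
    """Least-squares fit of dT = m * x + b, with x the fractional change in forcing."""
    x = np.asarray(frac_forcing_change, dtype=float)
    y = np.asarray(model_dT, dtype=float)
    m, b = np.polyfit(x, y, 1)  # degree-1 fit returns [slope, intercept]
    return m, b

def emulate(frac_forcing_change, m, b):
    """Evaluate the fitted straight line at the given forcing values."""
    return m * np.asarray(frac_forcing_change, dtype=float) + b

# Synthetic stand-in for a model projection (NOT real CMIP5 output).
x = np.linspace(0.0, 0.15, 20)   # hypothetical fractional forcing change
model_dT = 12.0 * x + 0.3        # hypothetical projected anomaly, K
m, b = fit_linear_emulator(x, model_dT)
residual = model_dT - emulate(x, m, b)
print(f"m = {m:.3f}, b = {b:.3f}, max |residual| = {np.abs(residual).max():.1e}")
```

With real projections, the residuals indicate how closely a single straight line in forcing reproduces a given model's output.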

The manuscript demonstrates dozens of successful emulations, such as these:

Legend: points are CMIP5 RCP4.5 and RCP8.5 projections. Panel ‘a’ is the GISS GCM Model-E2-H-p1. Panel ‘b’ is the Beijing Climate Center Climate System GCM Model 1-1 (BCC-CSM1-1). The PWM lines are emulations from the linear equation.

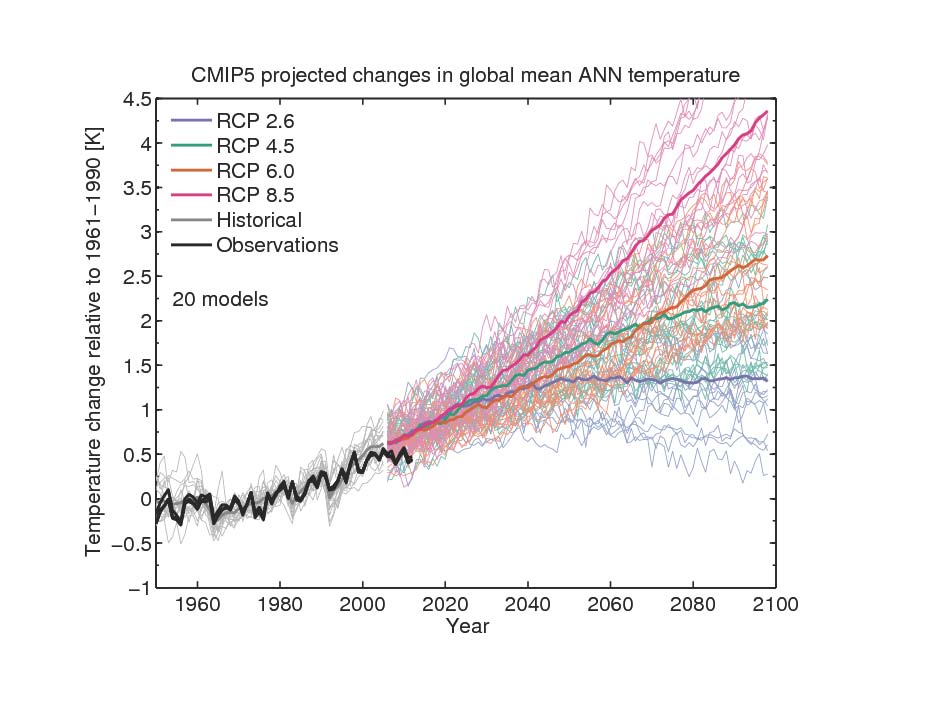

CMIP5 models display an inherent calibration error of ±4 W m⁻² in their simulations of long-wave cloud forcing (LWCF). This is a systematic error that arises from incorrect physical theory. It propagates into every single iterative step of a climate simulation. A full discussion can be found in the manuscript.
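The sketch below shows how a per-step uncertainty accumulates over a step-wise projection. It assumes that each step's contribution is combined in quadrature (root-sum-square), which is the standard rule for combining independent uncertainty contributions. How the manuscript converts the ±4 W m⁻² LWCF error into a per-step temperature uncertainty is not reproduced here, so the ±0.5 K per step is a placeholder, and `propagate_rss` is a hypothetical helper.

```python
import math

def propagate_rss(step_uncertainties):
    """Cumulative root-sum-square (quadrature) uncertainty after each step."""
    total_sq = 0.0
    envelope = []
    for u in step_uncertainties:
        total_sq += u * u                 # squared uncertainties (variances) add
        envelope.append(math.sqrt(total_sq))
    return envelope

# Hypothetical: the same +/-0.5 K uncertainty entering each of 100 annual steps.
envelope = propagate_rss([0.5] * 100)
print(f"after 1 step: ±{envelope[0]:.2f} K, after 100 steps: ±{envelope[-1]:.2f} K")
# after 1 step: ±0.50 K, after 100 steps: ±5.00 K
```

The envelope grows as the square root of the number of steps. It is a ± width drawn around each projected value, not a temperature that the model itself reaches.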

The next figure shows what happens when this error is propagated through CMIP5 air temperature projections (starting at 2005).

Legend: Panel ‘a’ points are the CMIP5 multi-model mean anomaly projections of the IPCC AR5 RCP4.5 and RCP8.5 scenarios. The PWM lines are the linear emulations. In panel ‘b’, the colored lines are the same two RCP projections. The uncertainty envelopes are from propagated model LWCF calibration error.

For RCP4.5, the emulation departs from the mean near projection year 2050 because the GHG forcing has become constant.

As a monument to the extraordinary incompetence that reigns in the field of consensus climate science, I have made the 29 reviews and my responses for all six submissions available here for public examination (44.6 MB zip file, checked with Norton Antivirus).

When I say incompetence, here’s what I mean and here’s what you’ll find.

Consensus climate scientists:

1. Think that precision is accuracy

2. Think that a root-mean-square error is an energetic perturbation on the model

3. Think that climate models can be used to validate climate models

4. Do not understand calibration at all

5. Do not know that calibration error propagates into subsequent calculations

6. Do not know the difference between statistical uncertainty and physical error

7. Think that “±” uncertainty means positive error offset

8. Think that fortuitously cancelling errors remove physical uncertainty

9. Think that projection anomalies are physically accurate (never demonstrated)

10. Think that projection variance about a mean is identical to propagated error

11. Think that a “±K” uncertainty is a physically real temperature

12. Think that a “±K” uncertainty bar means the climate model itself is oscillating violently between ice-house and hot-house climate states
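Item 1 is the distinction between precision and accuracy. The hypothetical readings below are tightly grouped, so they are precise, but they sit about one unit away from the assumed true value, so they are inaccurate. A small spread says nothing about the offset. All numbers are invented for illustration.

```python
import statistics

true_value = 15.0  # hypothetical "true" quantity
readings = [16.01, 15.99, 16.00, 16.02, 15.98]  # hypothetical, tightly grouped

spread = statistics.stdev(readings)             # precision: scatter among the readings
bias = statistics.mean(readings) - true_value   # accuracy: offset from the true value
print(f"spread = {spread:.3f}, bias = {bias:+.2f}")
```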

Item 12 is especially indicative of the general incompetence of consensus climate scientists.

Not one of the PhDs making that supposition noticed that a “±” uncertainty bar passes through, and cuts vertically across, every single simulated temperature point. Not one of them figured out that their “±” vertical oscillations meant that the model must occupy the ice-house and hot-house climate states simultaneously!

If you download them, you will find these mistakes repeated and ramified throughout the reviews.

Nevertheless, my manuscript editors apparently accepted these obvious mistakes as valid criticisms. Several have the training to know the manuscript analysis is correct.

For that reason, I have decided their editorial acuity merits them our applause.

Here they are:

- Steven Ghan: Journal of Geophysical Research-Atmospheres

- Radan Huth: International Journal of Climatology

- Timothy Li: Earth Science Reviews

- Timothy DelSole: Journal of Climate

- Jorge E. Gonzalez-cruz: Advances in Meteorology

- Jonathan Jiang: Earth and Space Science

Please don’t contact or bother any of these gentlemen. On the other hand, one can hope some publicity leads them to blush in shame.

After submitting my responses showing the reviews were scientifically meritless, I asked several of these editors to have the courage of a scientist and publish over meritless objections. After all, in science, analytical demonstrations are bulletproof against criticism. However, none of them rose to the challenge.

If any journal editor or publisher out there wants to step up to the scientific plate after examining my manuscript, I’d be very grateful.

The above journals agreed to send the manuscript out for review. Determined readers might enjoy the few peculiar stories of non-review rejections in the appendix at the bottom.

Really weird: several reviewers inadvertently validated the manuscript while rejecting it.

For example, the third reviewer in JGR round 2 (JGR-A R2#3) wrote that,

“[emulation] is only successful in situations where the forcing is basically linear …” and “[emulations] only work with scenarios that have roughly linearly increasing forcings. Any stabilization or addition of large transients (such as volcanoes) will cause the mismatch between this emulator and the underlying GCM to be obvious.”

The manuscript directly demonstrated that every single climate model projection was linear in forcing. The reviewer’s admission of linearity is tantamount to a validation.

But the reviewer also set a criterion by which the analysis could be verified: emulate a projection with non-linear forcings. He apparently didn’t check his claim before making it (big uh-oh!), even though he had the emulation equation.

My response included this figure:

Legend: The points are Jim Hansen’s 1988 scenario A, B, and C. All three scenarios include volcanic forcings. The lines are the linear emulations.

The volcanic forcings are non-linear, but climate models extrapolate them linearly. The linear equation will successfully emulate linear extrapolations of non-linear forcings. Simple. The emulations of Jim Hansen’s GISS Model II simulations are as good as those of any climate model.

The editor was clearly unimpressed, both with the demonstration and with the fact that the reviewer had inadvertently validated the manuscript analysis.

The same incongruity of inadvertent validations occurred in five of the six submissions: AM R1#1 and R2#1; IJC R1#1 and R2#1; JoC, #2; ESS R1#6 and R2#2 and R2#5.

In his review, JGR R2 reviewer 3 immediately referenced information found only in the debate I had (and won) with Gavin Schmidt at Realclimate. He also used very Gavin-like language. So, I strongly suspect this JGR reviewer was indeed Gavin Schmidt. That’s just my opinion, though. I can’t be completely sure because the review was anonymous.

So, let’s call him Gavinoid Schmidt-like. Three of the editors recruited this reviewer. One expects they called in the big gun to dispose of the upstart.

The Gavinoid responded with three mostly identical reviews. They were among the most incompetent of the 29. Every one of the three included mistake #12.

Here’s Gavinoid’s deep thinking:

“For instance, even after forcings have stabilized, this analysis would predict that the models will swing ever more wildly between snowball and runaway greenhouse states.”

And there it is. Gavinoid thinks the increasingly large “±K” projection uncertainty bars mean the climate model itself is oscillating increasingly wildly between ice-house and hot-house climate states. He thinks a statistic is a physically real temperature.

A naïve freshman mistake, and the Gavinoid is undoubtedly a PhD-level climate modeler.

The majority of Gavinoid’s analytical mistakes include list items 2, 5, 6, 10, and 11. If you download the paper and Supporting Information, section 10.3 of the SI includes a discussion of the total hash Gavinoid made of a Stefan-Boltzmann analysis.

And if you’d like to see an extraordinarily bad review, check out ESS round 2 review #2. It apparently passed editorial muster.

I can’t finish without mentioning Dr. Patrick Brown’s video criticizing the YouTube presentation of the manuscript analysis. This was my 2016 talk for the Doctors for Disaster Preparedness. Dr. Brown’s presentation was also cross-posted at “andthentheresphysics” (named with no appreciation of the irony) and on YouTube.

Dr. Brown is a climate modeler and post-doctoral scholar working with Prof. Kenneth Caldeira at the Carnegie Institution for Science at Stanford University. He kindly notified me after posting his critique. Our conversation about it is in the comments section below his video.

Dr. Brown’s objections were classic climate modeler, making list mistakes 2, 4, 5, 6, 7, and 11.

He also made the nearly unique mistake of confusing a root-sum-square average of calibration error statistics with an average of physical magnitudes; nearly unique because one of the ESS reviewers made the same mistake.
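The difference between the two kinds of average shows up even with a handful of numbers. In the sketch below, the signed errors cancel in an ordinary mean. Their root-mean-square (the square root of the averaged sum of squares) cannot cancel, because every squared term is non-negative. The error values are hypothetical and are not meant to reproduce Dr. Brown's calculation or the manuscript's.

```python
import math

# Hypothetical signed calibration errors (W m^-2) for four models: not real data.
errors = [4.0, -4.0, 3.0, -3.0]

mean_error = sum(errors) / len(errors)                           # signed values cancel
rms_error = math.sqrt(sum(e * e for e in errors) / len(errors))  # squared values cannot cancel
print(mean_error, round(rms_error, 3))  # 0.0 3.536
```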

Mr. andthentheresphysics weighed in with his own mistaken views, both at Patrick Brown’s site and at his own. His blog commentators expressed fatuous insubstantialities and his moderator was tediously censorious.

That’s about it. Readers moved to mount analytical criticisms are urged to first consult the list and then the reviews. You’re likely to find your objections critically addressed there.

I made the reviews easy to appraise by starting them with a summary list of reviewer mistakes. That didn’t seem to help the editors, though.

Thanks for indulging me by reading this.

I felt a true need to go public, rather than submitting in silence to what I see as reflexive intellectual rejectionism and indeed a noxious betrayal of science by the very people charged with its protection.

Appendix of Also-Ran Journals with Editorial ABM* Responses

Risk Analysis. L. Anthony (Tony) Cox, chief editor; James Lambert, manuscript editor.

This was my first submission. I expected a positive result because they had no dog in the climate fight, their website boasts competence in mathematical modeling, and they had published papers on error analysis of numerical models. What could go wrong?

Reason for declining review: “the approach is quite narrow and there is little promise of interest and lessons that transfer across the several disciplines that are the audience of the RA journal.”

Chief editor Tony Cox agreed with that judgment.

A risk analysis audience not interested in discovering there’s no knowable risk to CO2 emissions.

Right.

Asia-Pacific Journal of Atmospheric Sciences. Songyou Hong, chief editor; Sukyoung Lee, manuscript editor. Dr. Lee is a professor of atmospheric meteorology at Penn State, a colleague of Michael Mann, and altogether a wonderful prospect for unbiased judgment.

Reason for declining review: “model-simulated atmospheric states are far from being in a radiative convective equilibrium as in Manabe and Wetherald (1967), which your analysis is based upon.” and because the climate is complex and nonlinear.

Chief editor Songyou Hong supported that judgment.

The manuscript is about error analysis, not about climate. It uses data from Manabe and Wetherald but is very obviously not based upon it.

Dr. Lee’s rejection follows either a shallow analysis or a convenient pretext.

I hope she was rewarded with Mike’s appreciation, anyway.

Science Bulletin. Xiaoya Chen, chief editor, unsigned email communication from “zhixin.”

Reason for declining review: “We have given [the manuscript] serious attention and read it carefully. The criteria for Science Bulletin to evaluate manuscripts are the novelty and significance of the research, and whether it is interesting for a broad scientific audience. Unfortunately, your manuscript does not reach a priority sufficient for a full review in our journal. We regret to inform you that we will not consider it further for publication.”

An analysis that invalidates every single climate model study for the past 30 years, demonstrates that a global climate impact of CO2 emissions, if any, is presently unknowable, and indisputably proves the scientific vacuity of the IPCC does not reach a priority sufficient for a full review in Science Bulletin.

Right.

Science Bulletin then courageously went on to immediately block my email account.

*ABM = anyone but me; a syndrome widely apparent among journal editors.

Unless climate science has somehow been endowed with naturally occurring models, all computational results are “hard-coded in.”

If the climate models correctly handled the physics, this wouldn’t keep happening…

And tuning for the past is tuning for the future too; you cannot have one without the other, David.

The output is all the evidence you need to show they have no idea what they are doing.

All they know from the past is that temps are not going to swing outside of those higher values. And of course they include a few low runs to catch lower trends, so they can claim “one model was right”, which is one of the biggest logical fallacies I have ever heard.

It’s programmed into the code that parameterizes evaporation. In CMIP, it’s done with mass conservation.

http://www.cesm.ucar.edu/models/atm-cam/docs/description/node13.html#SECTION00736000000000000000

“If the climate models correctly handled the physics, this wouldn’t keep happening…”

But it would…..

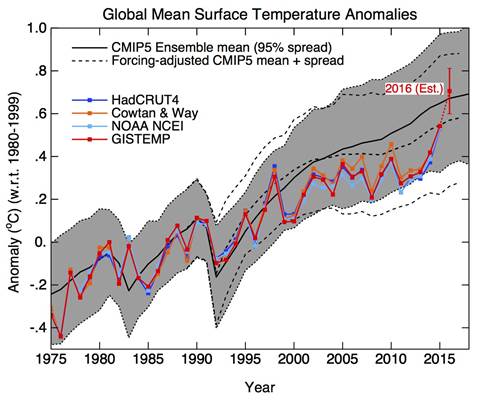

That graph is an ensemble of individual runs which have representation of natural variation within the climate system.

There are 95% confidence limits derived from that spaghetti of runs to encompass that natural variation.

You seem to expect that averaging all those individual runs should have the GMT running up the middle of them, whereas (as we know) there are dips and bumps of natural variation involved.

The actual GMT can only be expected to lie within those confidence limits.
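One way to derive such limits from a spaghetti of runs is to take, for each year, percentiles across the runs and then check which observed years fall inside the band. The sketch below assumes a central 95% band (2.5th to 97.5th percentile). Note that in the probability-of-exceedance convention used in some industries, the lower edge of that band is labelled P97.5, because 97.5% of outcomes exceed it. The ensemble and the “observations” are synthetic and purely illustrative.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
years = np.arange(2000, 2021)
# Hypothetical ensemble: 50 runs sharing a trend plus independent "natural variation".
runs = 0.02 * (years - 2000) + rng.normal(0.0, 0.1, size=(50, years.size))

# Percentiles across runs (axis 0), computed separately for each year.
lower, median, upper = np.percentile(runs, [2.5, 50, 97.5], axis=0)
obs = 0.015 * (years - 2000)          # hypothetical observed anomalies
inside = (obs >= lower) & (obs <= upper)
print(f"{inside.mean():.0%} of years fall inside the 2.5-97.5 percentile band")
```

Comparing `obs` with `median` as well as with the band edges addresses the point raised in the replies: observations can stay inside the band while tracking well away from its center.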

Barely tracking the lower 95% confidence band (P97.5) is not a demonstration of predictive skill.

If the model is a reasonable depiction of reality, the observed temperatures should track around P50 and deflect toward the top or bottom of the 95% band during strong ENSO episodes.

“Barely tracking the lower 95% confidence band (P97.5) is not a demonstration of predictive skill.”

Yes it is … observations are within the constraints of the variation of individual runs, which is all the ensemble model runs can be asked to fairly do.


Also, you do agree we have to compare against the forcings that actually occurred and not the ones used in the model runs? (should be rhetorical).

And let’s see where the GMT tracks during a prolonged +PDO cycle, as compared to a -PDO.

http://research.jisao.washington.edu/pdo/pdo_tsplot_jan2017.png

If you make a barn big enough even a child can hit it !!

David Middleton October 23, 2017 at 12:09 pm

Barely tracking the lower 95% confidence band (P97.5) is not a demonstration of predictive skill.

And yet when the Arctic sea ice extent does this we’re told it’s normal!

http://nsidc.org/arcticseaicenews/charctic-interactive-sea-ice-graph/

Tonyb,

The variation described by multiple runs of a single model is what should be compared to measured reality, not an ensemble of runs of different models. Any model where reality does not fall within that model’s “uncertainty envelope” at some specified level (e.g. two sigma) should be rejected as not an accurate representation of reality. The pooled variation across many different models means nothing… each model is a logical construct which stands, or falls, on its own… most fall. Those should be modified or abandoned.
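The per-model test proposed above can be written in a few lines. This sketch assumes the spread of a single model's runs is summarized by its sample standard deviation, and it uses k = 2 for the “two sigma” level. The function name `model_rejected` and all numbers are hypothetical.

```python
import statistics

def model_rejected(run_values, observed, k=2.0):
    """Hypothetical test: reject one model if the observation lies outside
    mean +/- k * sample standard deviation of that model's own runs."""
    mu = statistics.mean(run_values)
    sigma = statistics.stdev(run_values)
    return abs(observed - mu) > k * sigma

# Hypothetical end-of-period anomalies (K) from five runs of a single model.
single_model_runs = [1.0, 1.2, 0.8, 1.1, 0.9]
print(model_rejected(single_model_runs, observed=0.5))  # True: outside mean ± 2 sigma
print(model_rejected(single_model_runs, observed=0.9))  # False: inside the envelope
```

Each model is tested against its own runs only, so no variance is pooled across different models.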


Red herring fallacy.

‘all computational results are “hard-coded in.”’

If that’s all you mean by the phrase, it’s a pointless tautology. But the original commenter said:

“The computer simulations in question have hard coded in that an increase in CO2 causes warming. Hence these computer simulations beg the question as, does CO2 cause warming, and therefore are of no value.”

If it’s calculated by a computer, it means the result was hard coded in, and therefore of no value. Maybe he meant that all calculated results are of no value – you can’t be sure here – but I hope not.

The physics have to be hard-coded in. However, the model is of little or no value if it never demonstrates predictive skill.

The model is basically hard-coded to yield more warming with more CO2. It has to be. However, more warming with more CO2 is not a demonstration of predictive skill.

X (±y) of warming with each doubling of CO2 would be predictive skill, if the observations approximated X (±y). However, the observations consistently approximate X − y (±y).

DM – climate models are, of course, not hard coded in. They’re the result of numerically solving a large set of PDEs. Look at the description for any model for the mathematics.

GCMs are nothing more than engineering code. If they were based on pure physics, there would be no difference between the dozens of models presented by the IPCC and various modelling groups. There should be no need for “ensembles”.

I don’t know what “just engineering code” means.

The models give different results because they make different choices, especially about parametrizations. This is actually the main use of climate models by scientists: not to project the temp to 2100, but to compare results when a particular component of a model is altered.

Because warming in models is GHG-driven, modeled warming is utterly dependent on GHG extrapolation: higher values, more warming. The models can never go the other way, and history shows that is incorrect, unless you believe in hockey sticks.

To show this, run a climate model with increasing GHGs at current rates and run it 10000 years, and watch the earth turn into a melting ball. After such long runs the models would produce complete nonsense, but we are meant to believe them over 100 years?

ugh

Climate science is nothing but VERY IMPRECISE NUMBERS and you cannot create a precise theory out of very imprecise numbers

You would think that climate scientists would see the outputs as nonsense, but there really are buffoons out there that believe the Earth will become like Venus from our CO2 emissions.

They won’t admit it, but the incorrect theory about Venus is exactly what has led to this junk science.

Pretty sure that’s exactly where Hansen came from: the NASA planetary group, studying Venus, IIRC.

you don’t say Micro 🙂 Thanks for that, it was a suspicion, until I read your post. Now I am convinced.

To sum this up, your paper was not published because it was not deemed ‘popular’ enough. We’re living the Dark Age of science, but the science renaissance is coming soon.

+1 Book burning

I’ll trust climate models more when they can (1) hindcast without tuning and (2) cluster around observations to a reasonable degree and show some accuracy in tracking OBSERVED variability.

Juggling models (like Hausfather does) to bring them down towards obs is scientifically criminal. And Nye says lock up deniers 😀

I do not have detailed knowledge of the spaghetti graphs or the details of model ensembles, however the range of outputs is huge.

Our climate has only one set of input parameters and only one output. Leaving aside chaos theory, the chance of simulating our climate is zilch, given our lack of knowledge and inability to model clouds and other important influences.

How do ensembles of models have any meaning? They contain values or assumptions that initialise the model and exclude alternatives. These alternatives are then given equal probability in parallel calculations in order to give a spread of less likely scenarios. Then the mean of these scenarios is selected if it gives a better match with reality, though it simply shows that the original assumptions were not optimum. How can a mean of wrong values have more credibility than the value closest to the mean?

For years, model tuning involved the warming effect of CO2 reduced by the aerosol cooling factor, ignoring the fact that for years, both values were far beyond credible, observational values. It seems that today, reality is still an inconvenient factor.

cat – where are the observed forcings for CO2 and aerosols published? And why were the RFs used not “credible”?

I think that spaghetti graphs are results of different feedbacks applied in models.

Pat,

If you’re looking for somewhere to post your paper, you could always try viXra.

ATTP, see Micro’s reply to your incorrect post above. Too in-depth for you? 😉

Ken: Why not at your site, where you could attack it and mod away inconvenient truths from Micro?

Nick Stokes October 23, 2017 at 3:12 am

“On that logic you could say that computation could never reveal anything.”

Doesn’t “revealing” necessarily imply proof or the accuracy of what is being revealed? Nothing has been revealed if what is “revealed” is not so. Computation may suggest something, however it can’t reveal, let alone prove anything.

This isn’t rocket science. I have been writing (or building analog) simulations for more than 30 years. If the dominant dynamics in your simulation are divergent, parameter variation will cause divergent results. If the dominant dynamics are convergent, they won’t. We have always been told by warmists that global warming dynamics are divergent. CO2 causes a temperature rise that causes more water vapor that causes more temperature rise that causes both ice to melt and reflect less energy and a warming ocean to give up more CO2. Rinse and repeat. If the models are not diverging from different parameters, they are admitting that the dominant dynamics are convergent. If that is the case, we can cancel the panic. Warmists, which is it?
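The convergent-versus-divergent distinction can be seen in a toy feedback loop in which each step's response feeds back into the next: x ← forcing + gain · x. This is a hypothetical illustration of the commenter's point, not a climate model. The function `iterate_feedback` and the gain values are assumptions chosen for illustration.

```python
def iterate_feedback(forcing, gain, steps=200, x0=0.0):
    """Toy loop: each step's response feeds back into the next, x <- forcing + gain * x."""
    x = x0
    for _ in range(steps):
        x = forcing + gain * x
    return x

for gain in (0.5, 0.6, 1.05, 1.10):
    print(f"gain {gain:.2f}: state after 200 steps = {iterate_feedback(1.0, gain):.4g}")
```

With the gain below 1, the state settles at forcing / (1 − gain), so a 0.1 change in gain moves the result only from 2.0 to 2.5. Above 1, the loop runs away, and the same-sized change in gain separates the results by orders of magnitude.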

actual knowledge and experience makes ATTP dissolve like CO2 in low O2 content water.

This is very disappointing, Pat.

Following the link to the book’s download site brings me to: ?dl=0


Two large computer disaster hot spots and all other possible download links do not show alt text or any other hints.

A very untrustworthy method and place for a simple download.

If I remember correctly, those are fake ads ….

You are correct.

Clicking hot buttons that harbor malicious or seriously greedy content is never worth the effort.

Just click slow download, and down it comes, AtheoK

Pat Frank, I will not!

What you suggest is not safe or rational computing.

I have practiced safe computing since the early 1980s.

If I do not get to read the URL address of a link, I will not click on it.

Clicking unlabeled hot spots is begging for computer/network infection.

When you provide a link that is open and clear; I’ll consider downloading.

Another caveat, vague/hidden/questionable download hotspots surrounded by obvious phishing hot spots is a sure way to keep people with common sense from touching anything on that page.

Vague – The hotspot fails to indicate what will download.

Hidden – The hotspot URL does not show anywhere.

Questionable – Hotspots surrounded by phishing hotspots.

Your claimed download link taps all three danger warnings.

I didn’t get any of that extra stuff when I clicked on the link, not even the choice between slow and turbo download.

I’ve used that site several times to transmit large files to various people. There’s never been any trouble.

Tell you what, though. If you send me the link to a free upload site that you trust, I’ll put the paper there for you. You can email me at pfrank_eight_three_zero_AT_earth_link_dot_net.

Interesting topic, but lots of wasted time. We know that we do not have the data to correctly initialize the models. We also know that we do not have the physics to correctly model atmospheric flows in a free convective atmosphere. As a consequence, we know that all of the models will contain errors. Unfortunately, we have no idea of the size of the errors or how they propagate through time.

Models generate hypotheses. Hypotheses need testing. How do we test the model output? Given the tuning, we clearly need time to pass in order to benchmark model runs against reality. We do not yet have enough actual data to test the CMIP5 hypotheses. Suppose that, as the AMO rolls over into a negative phase, we observe a cooling similar to what the unadjusted data shows for the 1950-1970s period. I think it is safe to say that most of the CMIP5 runs will be rejected and we won’t care about error propagation. Unfortunately, we are currently in a situation where all we have are the hypotheses and not enough data to test them.

As the saying goes, all models are wrong but some are useful. We may come to find out that all the models are wrong and that those pimping the results have done significant damage to both the credibility of science in general and the welfare of the human population.

We may come to find?

Too late, it’s wrong, AGW is wrong, the modern warm period is almost all natural. There is RF from CO2, just that there is also a great big negative water vapor response that cancels it at night.

Kudos to WUWT for giving Pat Frank another kick at the cat in showing us his paper. The one that if published, would overturn the status quo and we could all go home and not have to worry about whether CAGW was really an issue. No one can accuse WUWT of not giving fair time to every unpublished skeptic.

But this was just too much to accept:

“The stakes are just too great. It’s not the trillions of dollars that would be lost to sustainability troughers. Nope. It’s that if the analysis were published, the career of every single climate modeler would go down the tubes, starting with James Hansen. ”

Hopefully, his above quote was not his Introduction to the folks that were considering publishing his analysis.

If someone else had written that statement, then it could be worth taking the time to really investigate his paper further. His hubris about his own work, and his resentment towards the journals for not taking it seriously (even here, many commenters do not), is proof enough (at least for me) that his analysis is not the dragon-slaying that he thinks it is.

spot on.

Errr…No!

Is that a pimple you see in your mirror benbenben

Earthling2, thanks for your self-serving, subjectivist, and critically meaningless contribution.

@Mosher: “in addition there are feedbacks which cannot be predicted”

Who knew that you could program a mathematical model into a computer when you did not understand all of the interactions!!!

I am in awe /not

@Nelson: “Models generate hypotheses.” Do they?

I thought computer models consisted of a set of formulas, i.e., they did what they were written to do.

It strikes me that the GCM linearity is perfect for the task, validated by possessing a linear relationship to funding designed to promote the implementation of ideology.

Here is part of Section 1 of the blog version of my 2017 paper in Energy & Environment.

https://climatesense-norpag.blogspot.com/2017/02/the-coming-cooling-usefully-accurate_17.html

“For the atmosphere as a whole, therefore, cloud processes, including convection and its interaction with boundary layer and larger-scale circulation, remain major sources of uncertainty, which propagate through the coupled climate system. Various approaches to improve the precision of multi-model projections have been explored, but there is still no agreed strategy for weighting the projections from different models based on their historical performance, so that there is no direct means of translating quantitative measures of past performance into confident statements about fidelity of future climate projections. The use of a multi-model ensemble in the IPCC assessment reports is an attempt to characterize the impact of parameterization uncertainty on climate change predictions. The shortcomings in the modeling methods, and in the resulting estimates of confidence levels, make no allowance for these uncertainties in the models. In fact, the average of a multi-model ensemble has no physical correlate in the real world.

The IPCC AR4 SPM report section 8.6 deals with forcing, feedbacks and climate sensitivity. It recognizes the shortcomings of the models. Section 8.6.4 concludes in paragraph 4 (4): “Moreover it is not yet clear which tests are critical for constraining the future projections, consequently a set of model metrics that might be used to narrow the range of plausible climate change feedbacks and climate sensitivity has yet to be developed”

What could be clearer? The IPCC itself said in 2007 that it doesn’t even know what metrics to put into the models to test their reliability. That is, it doesn’t know what future temperatures will be and therefore can’t calculate the climate sensitivity to CO2. This also begs a further question of what erroneous assumptions (e.g., that CO2 is the main climate driver) went into the “plausible” models to be tested any way. The IPCC itself has now recognized this uncertainty in estimating CS – the AR5 SPM says in Footnote 16 page 16 (5): “No best estimate for equilibrium climate sensitivity can now be given because of a lack of agreement on values across assessed lines of evidence and studies.” Paradoxically the claim is still made that the UNFCCC Agenda 21 actions can dial up a desired temperature by controlling CO2 levels. This is cognitive dissonance so extreme as to be irrational. There is no empirical evidence which requires that anthropogenic CO2 has any significant effect on global temperatures. ”

However, establishment scientists go on to make another catastrophic schoolboy error of judgement by making straight-line projections.

“The climate model forecasts, on which the entire Catastrophic Anthropogenic Global Warming meme rests, are structured with no regard to the natural 60+/- year and, more importantly, 1,000 year periodicities that are so obvious in the temperature record. The modelers’ approach is simply a scientific disaster and lacks even average commonsense. It is exactly like taking the temperature trend from, say, February to July and projecting it ahead linearly for 20 years beyond an inversion point. The models are generally back-tuned for less than 150 years when the relevant time scale is millennial. The radiative forcings shown in Fig. 1 reflect the past assumptions. The IPCC future temperature projections depend in addition on the Representative Concentration Pathways (RCPs) chosen for analysis. The RCPs depend on highly speculative scenarios, principally population and energy source and price forecasts, dreamt up by sundry sources. The cost/benefit analysis of actions taken to limit CO2 levels depends on the discount rate used and allowances made, if any, for the future positive economic effects of CO2 production on agriculture and of fossil fuel based energy production. The structural uncertainties inherent in this phase of the temperature projections are clearly so large, especially when added to the uncertainties of the science already discussed, that the outcomes provide no basis for action or even rational discussion by government policymakers. The IPCC range of ECS estimates reflects merely the predilections of the modellers – a classic case of “Weapons of Math Destruction” (6).
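The February-to-July analogy can be made concrete with a toy calculation. The sketch below assumes a pure sinusoidal annual cycle (a hypothetical 10 degree amplitude around a 10 degree mean, not real data), fits an ordinary least-squares line to the rising limb only, and extrapolates it. It illustrates the arithmetic of the analogy, not how any climate model is actually built.

```python
import math

def annual_cycle(month):
    """Hypothetical seasonal temperature (deg C): minimum at month 1, maximum at month 7."""
    return 10.0 - 10.0 * math.cos(2 * math.pi * (month - 1) / 12)

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = m*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    m = sxy / sxx
    return m, mean_y - m * mean_x

# Fit only the rising limb, February (2) through July (7).
months = list(range(2, 8))
m, b = fit_line(months, [annual_cycle(t) for t in months])

# Project the straight line ahead and compare it with the bounded cycle.
for month in (12, 24, 60, 240):
    print(f"month {month:3d}: line {m * month + b:7.1f}  cycle {annual_cycle(month):5.1f}")
```

The fitted line keeps climbing by roughly 4 degrees per month, while the cycle it was fitted to never leaves the 0 to 20 degree band.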

Harrison and Stainforth 2009 say (7): “Reductionism argues that deterministic approaches to science and positivist views of causation are the appropriate methodologies for exploring complex, multivariate systems where the behavior of a complex system can be deduced from the fundamental reductionist understanding. Rather, large complex systems may be better understood, and perhaps only understood, in terms of observed, emergent behavior. The practical implication is that there exist system behaviors and structures that are not amenable to explanation or prediction by reductionist methodologies. The search for objective constraints with which to reduce the uncertainty in regional predictions has proven elusive. The problem of equifinality ……. that different model structures and different parameter sets of a model can produce similar observed behavior of the system under study – has rarely been addressed.” A new forecasting paradigm is required.”

Doc:

What could be clearer? The IPCC itself said in 2007 that it doesn’t even know what metrics to put into the models to test their reliability. That is, it doesn’t know what future temperatures will be and therefore can’t calculate the climate sensitivity to CO2.

They’ve never stopped saying it in one way or another:

“In sum, a strategy must recognise what is possible. In climate research and modelling, we should recognise that we are dealing with a coupled non-linear chaotic system, and therefore that the long-term prediction of future climate states is not possible. The most we can expect to achieve is the prediction of the probability distribution of the system’s future possible states by the generation of ensembles of model solutions. This reduces climate change to the discernment of significant differences in the statistics of such ensembles. The generation of such model ensembles will require the dedication of greatly increased computer resources and the application of new methods of model diagnosis. Addressing adequately the statistical nature of climate is computationally intensive, but such statistical information is essential.”
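The quoted strategy (give up on predicting individual states of a chaotic system and predict the statistics of an ensemble instead) can be seen in miniature with the logistic map, a textbook chaotic system used here purely as a toy stand-in; it is not a climate model, and whether climate ensembles actually achieve this is what the thread disputes. Two runs that start 1e-10 apart soon bear no resemblance to each other, yet the mean of a large perturbed ensemble settles near a stable value.

```python
import random

def logistic(x, r=4.0):
    """One step of the logistic map; chaotic at r = 4."""
    return r * x * (1.0 - x)

def run(x0, steps):
    """Return the trajectory [x0, x1, ..., x_steps]."""
    xs = [x0]
    for _ in range(steps):
        xs.append(logistic(xs[-1]))
    return xs

# Two runs whose initial states differ by 1e-10.
a = run(0.3, 100)
b = run(0.3 + 1e-10, 100)
max_gap = max(abs(p - q) for p, q in zip(a[40:], b[40:]))

# An ensemble of 10,000 slightly perturbed starts: individual members are
# unpredictable, but the ensemble statistics are stable.
rng = random.Random(0)
finals = [run(0.3 + rng.uniform(-1e-6, 1e-6), 100)[-1] for _ in range(10_000)]
ensemble_mean = sum(finals) / len(finals)

print(f"max gap between the two runs after step 40: {max_gap:.3f}")
print(f"ensemble mean after 100 steps: {ensemble_mean:.3f}")
```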

http://ipcc.ch/ipccreports/tar/wg1/505.htm

Keep shouting this from the rooftops, but don’t expect too many to hear you. There’s too much at stake for logic to win the day amongst the academics.

Very random question: are the oscillations in the model output you describe in any way similar to the phenomenon of ‘hunting oscillations’?

Pat

Perhaps you could provide examples of error propagation that will illustrate your position. Being an old land surveyor, I am very familiar with the concept, as it affects all sequential measurements that rely on a previous measurement. Think of surveying a rectangular tract of land using a series of measurements, each containing an angle and a distance. Assume all reasonable care is used to constrain errors. There is an uncertainty in each measured angle and each distance. These errors propagate as the traverse continues, so the error ellipse at each new measured point grows and changes shape. The ellipses soon get larger than the expected error, but you cannot reject any coordinate pair that falls in the ellipse as a blunder or as obviously erroneous. When the traverse closes out on the beginning point, a proportion of the closing error is distributed to each traverse point. If this proportioning process is skipped, you have a large possible positional error at each corner of the tract. The error ellipse at each corner is not the variance you would expect if you reran the traverse many times. It is expressed in the dimensions of the coordinate system, but it is a statistical number, not a possible dimension. It is the size of your propagated uncertainty, and you cannot reject any coordinate that falls in it without further analysis. Depending on your methods, the error ellipse may be far outside any normal set of measurements.

It seems reasonable to me that the iterative process used to generate future climate scenarios is similar to the survey process and subject to the same error propagation analysis.
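The growth of positional uncertainty along an unadjusted traverse can be sketched with a small Monte Carlo simulation. This is a minimal sketch under hypothetical assumptions: a straight traverse of 100 m legs rather than a rectangle, a random error of 0.01 degree on each turning angle and 1 cm on each distance, and no closure adjustment (the case where the proportioning step is skipped). Because each angle error rotates every later leg, the RMS position error grows much faster than the number of legs.

```python
import math
import random

def traverse_spread(n_legs=20, leg=100.0, sigma_angle_deg=0.01,
                    sigma_dist=0.01, trials=2000, seed=42):
    """Monte Carlo RMS position error (m) at each station of an unadjusted traverse.

    Hypothetical setup: every leg is nominally `leg` metres due east, each
    measured turning angle carries a random error of sigma_angle_deg, and each
    measured distance carries a random error of sigma_dist metres.
    """
    rng = random.Random(seed)
    sigma_angle = math.radians(sigma_angle_deg)
    sum_sq = [0.0] * n_legs
    for _ in range(trials):
        x = y = bearing = 0.0
        for i in range(n_legs):
            bearing += rng.gauss(0.0, sigma_angle)   # angle errors carry forward
            d = leg + rng.gauss(0.0, sigma_dist)     # distance error on this leg only
            x += d * math.cos(bearing)
            y += d * math.sin(bearing)
            # The true position of station i + 1 is (leg * (i + 1), 0).
            sum_sq[i] += (x - leg * (i + 1)) ** 2 + y ** 2
    return [math.sqrt(s / trials) for s in sum_sq]

spread = traverse_spread()
for station in (1, 5, 10, 20):
    print(f"station {station:2d}: RMS position error {spread[station - 1]:.3f} m")
```

Closing the traverse on a known point and distributing the misclosure, as described above, is what pulls these errors back in; the sketch deliberately leaves that step out.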

It seems reasonable to me that the iterative process used to generate future climate scenarios is similar to the survey process and subject to the same error propagation analysis.

==============

A good analogy. The problem with the future is that there is no way to “tie in” the end point and redistribute the error. The best that happens is that on each run of the model, it gains or loses energy due to loss-of-precision errors. This “missing” energy is then divided up across the model and added or subtracted back in as required to maintain the fictional accuracy of the model.

However, for a model to be accurate, you want to keep your time slices quite small, which means there will be many trillions upon trillions of iterations. And even if each iteration is 99.9999% accurate, if you iterate enough times it doesn’t take long before your actual accuracy is approximately 0% (99.9999%^n tends to zero as n increases).
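The arithmetic behind the 99.9999% remark is easy to check. The sketch treats “accuracy” as a factor that multiplies at every step, which is the commenter’s simplifying assumption rather than an established property of model error. Under that assumption, even a per-step factor of 0.999999 halves after roughly 700,000 steps.

```python
import math

def iterations_until(fraction_left, per_step=0.999999):
    """Smallest n with per_step**n <= fraction_left, assuming the per-step
    'accuracy' factor simply multiplies at every iteration."""
    return math.ceil(math.log(fraction_left) / math.log(per_step))

for target in (0.5, 0.01):
    print(f"0.999999**n falls to {target} after about {iterations_until(target):,} steps")
```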

Ferd

Thanks for your comment. Can you think of other processes that are subject to uncertainty growing due to iterative processing? I’m guessing here, but maybe some of the financial models are in this boat. The point that seems to mystify so many is the concept of an error uncertainty, carrying the units of the process, that can grow to nonphysical dimensions. The uncertainty tells you about the reliability of the solution, not its likelihood.
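The idea of an uncertainty that carries the units of the process and can outgrow any physically possible range can be shown with the standard root-sum-square rule for identical, independent per-step uncertainties. The ±0.5 per-step value is hypothetical, and whether this rule is the right one for climate model calibration error is exactly what the thread is arguing about; the sketch shows only the arithmetic. Note that the central estimate never moves; only the stated reliability changes.

```python
import math

def rss_uncertainty(step_uncertainty, n_steps):
    """Root-sum-square accumulation of an identical, independent per-step uncertainty."""
    return math.sqrt(n_steps * step_uncertainty ** 2)

# Hypothetical per-step uncertainty of +/-0.5 units, carried through 100 steps.
for n in (1, 10, 50, 100):
    print(f"after {n:3d} steps: +/-{rss_uncertainty(0.5, n):.2f}")
```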

DMA, I struggled very hard to get the concept across. Honestly, I went into the process expecting they’d all be familiar with error propagation. After all, what physical scientist is not? But I drew a total blank with them.

It’s been as though they’ve never heard of the topic. And they’ve been completely refractory to any explanation I could devise, including extracts from published literature.

Pat Frank – Many thanks for this article. Unfortunately, as in all of climate science, the subject is sufficiently far from primary school mathematics for it to be easily countered by obfuscation. It is truly disturbing how long it can take to get past determined gatekeepers. I was pleased that you picked up that Willis Eschenbach had come to the same conclusion as you, via a different path, back in 2011.

The article I wrote for WUWT in 2015 – https://wattsupwiththat.com/2015/09/17/how-reliable-are-the-climate-models/ – came at it from a third direction: pointing out that CO2 was the only factor in the climate models which was used to determine future temperatures. I know perfectly well that I can never get any paper past the gatekeepers, no matter how much extra detail and precision I put into them, so I echo your praise of WUWT for providing an uncensored voice to climate skeptics. I have however sent some of my articles separately to an influential person in a reputable scientific organisation, and had quite reasonable replies until I suggested that their organisation could emulate Donald Trump’s proposed Red Team – Blue Team debate. I received the terse one-line reply “There will be no debate supported by [the organisation].”. [It was a private conversation, so no names.].

I wonder whether there might be scope for a spin-off site from WUWT that would effectively be a journal. Anthony would be the publisher. There would be an editorial committee. When papers are submitted, Anthony would allocate them to the most appropriate editor. That editor would then solicit peer review, in the same way as normal, but possibly using a broader range of reviewers. The main difference would be that the editor would have the knowledge and discretion to discount negative reviews if the criticisms have little merit.

Sounds like pal review.

@crackers345

By “a broader range of reviewers” I intended that the reviews would predominantly be solicited from the same consensus scientists as at present, but possibly with some luke-warmers / skeptics included. So I don’t think it would be fair to characterise that as pal review. The difference would be in having a knowledgeable editor. In my field, the editors know very little and don’t feel qualified to overrule a critical review. If you accuse my idea of anything, you should accuse it of having a pal editor, not pal reviewers.

Mike Jonas, it’s a culture of studied blindness, isn’t it.

Hundreds of billions of dollars and hundreds of thousands of hours of computer time wasted to create ‘ensembles’ (?? What the fashionable CAGW alarmists are wearing this year?) of computer models that produce pathetically simple freshman-in-high-school y = mx + b results?

Linear Thinking In A Cyclical World…..

http://www.maxphoton.com/wp-content/uploads/2014/01/linear-thinking.png

Climate models are about solving a boundary value problem. Weather models solve an initial value problem.

===============

Keep in mind that the famous ensemble mean spaghetti graph is in actual fact a mean of means. The individual model runs themselves are not shown; rather, the spaghetti graph shows only the means of the actual model runs.

The simple fact remains: for one set of forcings, there are an infinite number of different future climates possible. Thus, any attempt to control climate by varying CO2 is a fool’s errand.

http://tutorial.math.lamar.edu/Classes/DE/BoundaryValueProblem.aspx

The biggest change that we’re going to see here comes when we go to solve the boundary value problem. When solving linear initial value problems a unique solution will be guaranteed under very mild conditions. We only looked at this idea for first order IVP’s but the idea does extend to higher order IVP’s. In that section we saw that all we needed to guarantee a unique solution was some basic continuity conditions. With boundary value problems we will often have no solution or infinitely many solutions even for very nice differential equations that would yield a unique solution if we had initial conditions instead of boundary conditions.
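The quoted point can be seen with the standard textbook equation below, solved once with initial conditions and once with boundary conditions. It is a generic illustration of how boundary value problems can have no solution or infinitely many, not a claim about the equations of any particular climate model.

```latex
% General solution of y'' + y = 0
y(x) = c_1 \cos x + c_2 \sin x

% Initial value problem: y(0) = 0, y'(0) = b
c_1 = 0,\quad c_2 = b \;\Rightarrow\; y(x) = b \sin x \quad \text{(exactly one solution)}

% Boundary value problem: y(0) = 0, y(\pi) = 0
c_1 = 0,\quad c_2 \sin\pi = 0 \text{ for every } c_2 \;\Rightarrow\; y(x) = c_2 \sin x \quad \text{(infinitely many solutions)}

% Boundary value problem: y(0) = 0, y(\pi) = B, with B \neq 0
c_1 = 0,\quad c_2 \sin\pi = 0 \neq B \;\Rightarrow\; \text{no solution}
```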

Here is the problem. Climate models are presented to the public as deterministic models with all the authority of a Newtonian two-body problem. As in any model, there are measurement and other errors. It is certainly true that the errors of one period must be propagated as errors in the initial conditions of the next period, and so the cumulative errors grow. It may also be true that there are physical reasons why the expanding errors are physically impossible. But here is the point: you can bound the cumulative errors with a physical constraint if you like, but the fact is that at that point, the errors have swamped the deterministic effects of the model. The model is now just a statistical fit wrapped around some physics equations. There is no deterministic authority to the model. The only way to justify such a model is through the usual regression model validation processes, using a calibration data set and then testing on data held in reserve. It’s just a statistical fit of two trending series: CO2 concentrations rising and temperatures rising. You could just as well fit rising government debt to rising temperature values. Trending data series have approximately one degree of freedom to explain their variation, so your fitting statistics will be terrible.
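The point about fitting one trending series to another (the “government debt” example) is the classic spurious-correlation problem. The sketch below uses two made-up series with no causal link at all; only their shared upward trend makes the raw correlation look impressive. Differencing (comparing year-to-year changes) is assumed here as one common check that removes the shared trend; it is not the only such check.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

def diff(series):
    """Year-to-year changes, which removes a shared linear trend."""
    return [b - a for a, b in zip(series, series[1:])]

rng = random.Random(1)
years = range(100)
# Two unrelated, hypothetical series that both happen to trend upward,
# each with its own independent noise.
debt = [1.0 * t + rng.gauss(0, 5) for t in years]
temperature = [0.02 * t + rng.gauss(0, 0.1) for t in years]

print(f"levels:      r = {pearson(debt, temperature):.2f}")
print(f"differences: r = {pearson(diff(debt), diff(temperature)):.2f}")
```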

“Climate models are presented to the public as deterministic models with all the authority of a Newtonian two-body problem. “

No, they aren’t. People here love to quote just the first sentence of what the AR3 says about models:

“The climate system is a coupled non-linear chaotic system, and therefore the long-term prediction of future climate states is not possible. Rather the focus must be upon the prediction of the probability distribution of the system’s future possible states by the generation of ensembles of model solutions. Addressing adequately the statistical nature of climate is computationally intensive and requires the application of new methods of model diagnosis, but such statistical information is essential. “

You don’t find this caveat in the summary for executives, what you find is “extremely likely” BS.

And anyway, this only translates into “let’s pretend that ensemble model solutions are representative of the probability distribution of the system’s future possible states”, which is utter BS, too.

Really, Nick?

According to you, Nick, people here “love to quote”. How absurd. If so, you should provide proof of your assertion.

A quick WUWT search of the opening phrase in that sentence reveals five examples with three of those examples components of a linked series of articles by Kip Hansen.

When quoted, source links were provided; for anyone desiring to read all of the context.

Within the two remaining search examples, the phrase, or a distant paraphrase is discussed ancillary to other topics.

Ergo, your claim is blatantly false.

Just another fake thread bomb by Nick Stokes.

Thank you for your reply. Regardless of who made what representations, the errors (or stochastic variables, if you like) swamp the deterministic variables, so the models are complicated regressions, not initial value solutions to a deterministic process. Please address the substance of my comment above.

Atheok

“A quick WUWT search of the opening phrase in that sentence reveals five examples”

Too quick. This search returned 36 results for me – a few duplicates, but most different quotes. And if you ask for the “similar results”, you get 49. But the telling statistic is that if you search for the very much linked second sentence, ” Rather the focus must be upon the prediction…” you get only 7. And a few of those are from me, just pointing out the context from which it was ripped.

Nick, You are being a little disingenuous here with your wording of “a few duplicates”. Of your 36 results, more than 20 are from Kip Hansen, mostly from a single multi-part posting. Other results also contain duplicates. You may want to revisit your nonsense (to use one of your own favorite words) claim of “people here love to quote…”

Due to my slow DSL connection I have not read Mr. Frank’s paper and other documentation. However, from the OP and comments, it is clear to me that he is correct in saying that most all the climate modelers and alarmists do not understand that ‘error’ and ‘uncertainty’ are very different things.

Error is the difference between a measured (or assumed) value and the true, unknown and unknowable value. Uncertainty is an estimate of the probable range of the error. So if I state that the length of an object I measure is 1.00 meter and my measurement uncertainty is +/- 4 mm at 95% confidence, it means that there is less than a 2.5% chance that the true length is greater than 1.004 m and less than a 2.5% chance that it is less than 0.996 m. It is possible that the true length of the measured object is exactly 1.00 m, in which case the error is zero, but the uncertainty of the measured value is still +/- 4 mm.

Uncertainty can result from either systematic or random sources. For example, an error in the value of a calibration reference will produce a systematic uncertainty in the calibrated instrument. A gauge block with a stated dimension of 1 cm that has a true dimension of 1.01 cm will result in a systematic uncertainty of +0.01 cm in the calibrated instrument. Random uncertainty components are also present, such as those that arise from instrument resolution. Only the random components of uncertainty can be reduced by statistical analysis of multiple measurements.

With respect to the subject of the post, propagation of Uncertainty may be illustrated by a simple example. Suppose I use a 1 cm gauge block to set a divider (i.e. a compass with two sharp points) which I then use to lay out a 1 meter measuring stick. I start at one end, lay off 1 cm with the divider, make a scribe mark, lay off another cm from this mark and so forth 100 times. Now suppose that my gauge block was actually 1.01 cm. Can I claim that my 1 meter stick is accurate to 0.01 cm – of course not – my process included a systematic error that compounded in each iteration. As a result my meter stick will be off by + 1 cm (100 x 0.01 cm). In reality, of course, the process described will result in additional errors due to inexact placement of divider points and scribe marks, etc. These factors could either increase or decrease the overall error.

Now suppose I made ten meter sticks by this method. Would I gain any confidence in their accuracy by comparing them to each other? I would likely see a small variation due to the random nature of errors in aligning divider points and making marks, but the large systematic error would remain unapparent.
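The meter-stick example can be simulated directly. In this sketch the 1.01 cm gauge block and the 100 steps come from the example above; the 0.005 cm random placement error per divider step is a hypothetical value. Comparing the ten sticks with each other shows only a small scatter, while every stick carries the same +1 cm systematic error that no amount of intercomparison reveals.

```python
import random
import statistics

NOMINAL_STEP_CM = 1.0        # what the gauge block is stated to be
TRUE_STEP_CM = 1.01          # what it actually is: 0.01 cm oversize (systematic)
PLACEMENT_SIGMA_CM = 0.005   # hypothetical random error per divider placement
STEPS = 100                  # 100 steps lay off one nominal metre

def make_stick(rng):
    """Lay off STEPS divider steps, each from the previous mark; return the actual length in cm."""
    return sum(TRUE_STEP_CM + rng.gauss(0.0, PLACEMENT_SIGMA_CM) for _ in range(STEPS))

rng = random.Random(7)
errors = [make_stick(rng) - NOMINAL_STEP_CM * STEPS for _ in range(10)]

# Comparing the sticks with each other reveals only the random scatter;
# the shared systematic offset needs an external reference to detect.
print(f"stick-to-stick spread (stdev): {statistics.stdev(errors):.3f} cm")
print(f"mean error vs a true metre:    {statistics.mean(errors):+.3f} cm")
```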

These days it is not considered proper science to discuss “error” since it is by strict definition unknowable. The analysis of propagation of error thus requires one to start with a fallacious claim that error can be known. In reality, only uncertainty can be reasonably estimated, and then only in probabilistic terms. The analysis of propagation of uncertainty is not trivial even in relatively simple systems when there are several variables with confounding interactions. It can become intractable in complex systems. This is at least one reason that in engineering the issue of uncertainty is often addressed by application of very large safety factors. For example, steel members in a bridge may be designed on paper to carry loads five times greater than the maximum expected. In other situations application of mathematical models may simply have too much uncertainty and require full scale physical testing to evaluate fitness for purpose.
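For the simplest case, the textbook first-order rule (the law of propagation of uncertainty in the ISO Guide to the Expression of Uncertainty in Measurement) combines each input’s standard uncertainty weighted by how sensitive the result is to that input. This sketch assumes uncorrelated inputs and a function that is close to linear over the uncertainty range; correlated inputs, strong nonlinearity, and interacting variables are what make real systems much harder. The rectangle and its uncertainties are hypothetical.

```python
import math

def combined_uncertainty(f, x, u, rel_step=1e-6):
    """First-order (linear) propagation of uncertainty for uncorrelated inputs.

    f: function of a list of input values
    x: best estimates of the inputs
    u: standard uncertainties of the inputs
    Sensitivities df/dx_i are estimated by central differences.
    """
    total = 0.0
    for i, xi in enumerate(x):
        h = rel_step * max(1.0, abs(xi))
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        sensitivity = (f(up) - f(down)) / (2 * h)
        total += (sensitivity * u[i]) ** 2
    return math.sqrt(total)

def area(v):
    """Area of a rectangle from [length, width] in metres."""
    length, width = v
    return length * width

u_area = combined_uncertainty(area, [2.0, 3.0], [0.01, 0.02])
print(f"area = 6.00 m^2 +/- {u_area:.3f} m^2 (standard uncertainty)")
```

Here the sensitivities are simply the other side’s length, so the result is sqrt((3 × 0.01)² + (2 × 0.02)²) = 0.05 m².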

Finally, with respect to Mr. Frank’s publication problems: why would anyone expect a paper that effectively says the results produced by climate models are worthless to receive anything but negative reviews and recommendations against publication, when the editors select as peer reviewers the climate modelers and scientists who rely on model outputs? The conflict of interest is obvious and monumental.

Good summary of error and uncertainty, Rick.

+1 .

Someone please show me a climate model that starts in 1960 and replicates the climate changes since then using only data known at that point in time.

@Nick Stokes

Would you please list the basic physics used in climate models? It is my understanding only advection, the pressure gradient force and gravity are the core dynamics (fundamental physics) of a GCM. The rest are tunable parameters; merely engineering code.

The core dynamics are the Navier-Stokes equations: momentum balance (F=ma), mass balance and an equation of state (basically the ideal gas law). They are called the core because they govern the fastest processes and are critical for time performance. But then follow all the transport equations: energy (heat), mass of constituents, especially water vapor with its phase changes, including surface evaporation. Then there are the radiative transfer equations: SW incoming and IR transfer. This is where CO2 is allegedly “hard coded”, because it affects IR transfer. Then of course there are the equations of ocean transport, of heat especially, but also of dissolved constituents.

Here is a GFDL model of that ocean transport.

That’s what they are telling you was all hard-coded. And maybe just picking a few parameters.

Mr Stokes, I still can’t decide whether you are deliberately obtuse or are really ‘as thick as a plank’.

You can substitute every wonderfully exotic variable in the models with the timetables of London buses and as long as you keep the CO2 forcing variable intact you would get the same results.

It’s complete rubbish. GIGO, but with the proviso that the output is pre-designed.

Nick neglected to mention that ocean models don’t converge.

See Wunsch, C. (2002), Ocean Observations and the Climate Forecast Problem, International Geophysics, 83, 233-245 for a very insightful discussion of the ocean and its modeling.

DR: here’s the description of some of the NCAR CAM model, equations and all:

http://www.cesm.ucar.edu/models/atm-cam/docs/description/description.pdf

“With four parameters I can fit an elephant, and with five I can make him wiggle his trunk.”

Just for fun, I counted the number of times the locution “assumed to be” is used. I stopped counting at 25. I checked a few of them; they weren’t trivial. I didn’t count the number of other ways an assumption can be expressed…

So: hundreds of assumptions, hundreds of parameters.

No wonder the trunk wiggles as requested, like a serpent before its charmer.

I am pretty sure that with the very same model and a little fiddling of parameters, I could easily have CO2 reduce temperature and induce a new ice age, without you being aware of the trick.

Let me point this out again: the radiation model is probably correct; more CO2 does cause more radiation. It’s what happens from there that matters in the models.

They make sure, in the code, that more water vapor is created. In Model E they called it supersaturation: they allowed humidity to go over 100% to get water amplification out of the boundary layer into the atmosphere. Without this hack, the models run cold. Most of these ran hot, so they adjusted aerosols to make them match measurements.

In CMIP they do the same, but in a section called mass conservation. They make sure enough water vapor mass makes it out of the boundary layer.

http://www.cesm.ucar.edu/models/atm-cam/docs/description/node13.html#SECTION00736000000000000000

The problem is that temps follow dew points. Remember, the dew point represents an energy barrier: to go lower, the air has to shed water, which requires it to cool. As the water condenses, it emits sensible heat and IR, and if we were not there, that IR would likely hit another water molecule and help evaporate it. At night, this layer radiates to space, but towards the surface as well; it slows the drop in temperature and reduces how cold it gets before morning.

I was out imaging till 5 am, the temp stopped dropping after midnight. Air temp was low 40’s, IR temp of the sky was -60F. That’s about 50W/m^2 of radiation out the optical window. All night, but temps didn’t really drop.

You can see how in the same situation, net radiation drops.

Also, when you look at temps dropping, an exponential decay will be a smooth curve; its radius of curvature changes, but smoothly. When you look at these curves and see a slight bend, the rate of energy flow (flux) has changed, and you can see that in both of these.

If you understand how semiconductor carriers are manipulated and controlled in a MOSFET so that it operates like a switch, and then consider how the atmosphere actively controls outgoing flux at night, there are a lot of similarities.

micro, I would love to see your work submitted as a guest post here. I understand why you wouldn’t submit it to a journal for review.

But don’t ask about the fudge factors they use to parameterize what they don’t/can’t calculate.

Those fudge factors are the parameter tables they use to tune the model’s output projection to meet expectation.

Start with solid science as the first ingredients, then finish with large doses of crap, and you have a cake that tastes like crap.

I’d say you have a cake that basically IS crap. But then when you dig deep enough, even the IPCC admits this (quoted elsewhere in this discussion).

Pat Frank, you must be on to something. Anything that gets ATTP’s and Nick Stokes’ bowels in such an uproar has got to be worth pursuing.

Mosher……………not so much.