Guest Essay by Kip Hansen

Introduction:

Introduction:

Temperature and Water Level (MSL) are two hot topic measurements being widely bandied about and vast sums of money are being invested in research to determine whether, on a global scale, these physical quantities — Global Average Temperature and Global Mean Sea Level — are changing, and if changing, at what magnitude and at what rate. The Global Averages of these ever-changing, continuous variables are being said to be calculated to extremely precise levels — hundredths of a degree for temperature and millimeters for Global Sea Level — and minute changes on those scales are claimed to be significant and important.

In my recent essays on Tide Gauges, the question of the durability of original measurement uncertainty raised its toothy head in the comments section.

Here is the question I will try to resolve in this essay:

If original measurements are made to an accuracy of +/- X (some value in some units), does the uncertainty of the original measurement devolve on any and all averages – to the mean – of these measurements?

Does taking more measurements to that same degree of accuracy allow one to create more accurate averages or “means”?

My stated position in the essay read as follows:

If each measurement is only accurate to ± 2 cm, then the monthly mean cannot be MORE accurate than that — it must carry the same range of error/uncertainty as the original measurements from which it is made. Averaging does not increase accuracy.

It would be an understatement to say that there was a lot of disagreement from some statisticians and those with classical statistics training.

I will not touch on the subject of precision or the precision of means. There is a good discussion of the subject on the Wiki page: Accuracy and precision .

The subject of concern here is plain vanilla accuracy: “accuracy of a measurement is the degree of closeness of measurement of a quantity to that quantity’s true value.” [ True value means is the actual real world value — not some cognitive construct of it.)

The general statistician’s viewpoint is summarized in this comment:

“The suggestion that the accuracy of the mean sea level at a location is not improved by taking many readings over an extended period is risible, and betrays a fundamental lack of understanding of physical science.”

I will admit that at one time, fresh from university, I agreed with the StatsFolk. That is, until I asked a famous statistician this question and was promptly and thoroughly drummed into submission with a series of homework assignments designed to prove to myself that the idea is incorrect in many cases.

First Example:

Let’s start with a simple example about temperatures. Temperatures, in the USA, are reported and recorded in whole degrees Fahrenheit. (Don’t ask why we don’t use the scientific standard. I don’t know). These whole Fahrenheit degree records are then machine converted into Celsius (centigrade) degrees to one decimal place, such as 15.6 °C.

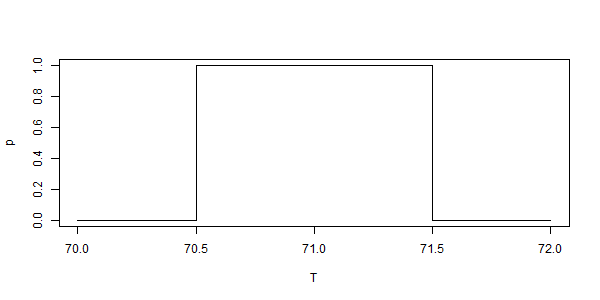

This means that each and every temperature between, for example, 72.5 and 71.5 °F is recorded as 72 °F. (In practice, one or the other of the precisely .5 readings is excluded and the other rounded up or down). Thus an official report for the temperature at the Battery, NY at 12 noon of “72 °F” means, in the real world, that the temperature, by measurement, was found to lie in the range of 71.5 °F and 72.5 °F — in other words, the recorded figure represents a range 1 degree F wide.

In scientific literature, we might see this in the notation: 72 +/- 0.5 °F. This then is often misunderstood to be some sort of “confidence interval”, “error bar”, or standard deviation.

It is none of those things in this specific example of temperature measurements. It is simply a form of shorthand for the actual measurement procedure which is to represent each 1 degree range of temperature as a single integer — when the real world meaning is “some temperature in the range of 0.5 degrees above or below the integer reported”.

Any difference of the actual temperature, above or below the reported integer is not an error. These deviations are not “random errors” and are not “normally distributed”.

Repeating for emphasis: The integer reported for the temperature at some place/time is shorthand for a degree-wide range of actual temperatures, which though measured to be different, are reported with the same integer. Visually:

Even though the practice is to record only whole integer temperatures, in the real world, temperatures do not change in one-degree steps — 72, 73, 74, 72, 71, etc. Temperature is a continuous variable. Not only is temperature a continuous variable, it is a constantly changing variable. When temperature is measured at 11:00 and at 11:01, one is measuring two different quantities; the measurements are independent of one another. Further, any and all values in the range shown above are equally likely — Nature does not “prefer” temperatures closer to the whole degree integer value.

[ Note: In the U.S., whole degree Fahrenheit values are converted to Celsius values rounded to one decimal place –72°F is converted and also recorded as 22.2°C. Nature does not prefer temperatures closer to tenths of a degree Celsius either. ]

While the current practice is to report an integer to represent the range from integer-plus-half-a-degree to integer-minus-half-a-degree, this practice could have been some other notation just as well. It might have been just report the integer to represent all temperatures from the integer to the next integer, as in 71 to mean “any temperature from 71 to 72” — the current system of using the midpoint integer is better because the integer reported is centered in the range it represents — this practice, however, is easily misunderstood when notated 72 +/- 0.5.

Because temperature is a continuous variable, deviations from the whole integer are not even “deviations” — they are just the portion of the temperature measured in degrees Fahrenheit normally represented by the decimal fraction that would follow the whole degree notation — the “.4999” part of 72.4999°F. These decimal portions are not errors, they are the unreported, unrecorded part of the measurement and because temperature is a continuous variable, must be considered evenly spread across the entire scale — in other words, they are not, not, not “normally distributed random errors”. They only reason they are uncertain is that even when measured, they have not been recorded.

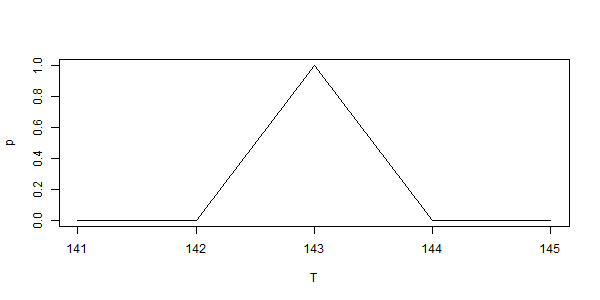

So what happens when we now find the mean of these records, which, remember, are short-hand notations of temperature ranges?

Let’s do a basic, grade-school level experiment to find out…

We will find the mean of a whole three temperatures; we will use these recorded temperatures from my living room:

11:00 71 degrees F 12:00 72 degrees F 13:00 73 degrees F

As discussed above, each of these recorded temperatures really represent any of the infinitely variable intervening temperatures, however I will make this little boxy chart:

Here we see each hour’s temperature represented as the highest value in the range, the midpoint value of the range (the reported integer), and as the lowest value of the range. [ Note: Between each box in a column, we must remember that there are an infinite number of fractional values, we just are not showing them at this time. ] These are then averaged — the mean calculated — left to right: the three hour’s highest values give a mean of 72.5, the midpoint values give a mean of 72, and the lowest values give a mean of 71.5.

The resultant mean could be written in this form: 72 +/- 0.5 which would be a short-hand notation representing the range from 71.5 to 72.5.

The accuracy of the mean, represented in notation as +/- 0.5, is identical to the original measurement accuracy — they both represent a range of possible values.

Note: This uncertainty stems not from the actual instrumental accuracy of the original measurement, which is a different issue and must be considered additive to the accuracy discussed here which arises solely from the fact that measured temperatures are recorded as one-degree ranges with the fractional information discarded and lost forever, leaving us with the uncertainty — a lack of knowledge — of what the actual measurement itself was.

Of course, the 11:00 actual temperature might have been 71.5, the 12:00 actual temperature 72, and the 13:00 temperature 72.5. Or it may have been 70.5, 72, 73.5.

Finding the means kiddy-corner gives us 72 for each corner to corner, and across the midpoints still gives 72.

Any combination of high, mid-, and low, one from each hour, gives a mean that falls between 72.5 and 71.5 — within the range of uncertainty for the mean.

Even for these simplified grids, there are many possible combinations of one value from each column. The means of any of these combinations falls between the values of 72.5 and 71.5.

There are literally an infinite number of potential values between 72.5 and 71.5 (someone correct me if I am wrong, infinity is a tricky subject) as temperature is a continuous variable. All possible values for each hourly temperature are just as likely to occur — thus all possible values, and all possible combinations of one value for each hour, must be considered. Taking any one possible value from each hourly reading column and finding the mean of the three gives the same result — all means have a value between 72.5 and 71.5, which represents a range of the same magnitude as the original measurement’s, a range one degree Fahrenheit wide.

The accuracy of the mean is exactly the same as the accuracy for the original measurement — they are both a 1-degree wide range. It has not been reduced one bit through the averaging process. It cannot be.

Note: For those who prefer a more technical treatment of this topic should read Clyde Spencer’s “The Meaning and Utility of Averages as it Applies to Climate” and my series “The Laws of Averages”.

And Tide Gauge Data?

It is clear that the original measurement accuracy’s uncertainty in the temperature record arises from the procedure of reporting only whole degrees F or degrees C to one decimal place, thus giving us not measurements with a single value, but ranges in their places.

But what about tide gauge data? Isn’t it a single reported value to millimetric precision, thus different from the above example?

The short answer is NO, but I don’t suppose anyone will let me get away with that.

What are the data collected by Tide Gauges in the United States (and similarly in most other developed nations)?

The Estimated Accuracy is shown as +/- 0.02 m (2 cm) for individual measurements and claimed to be +/- 0.005 m (5 mm) for monthly means. When we look at a data record for the Battery, NY tide gauge we see something like this:

| Date Time | Water Level | Sigma |

| 9/8/2017 0:00 | 4.639 | 0.092 |

| 9/8/2017 0:06 | 4.744 | 0.085 |

| 9/8/2017 0:12 | 4.833 | 0.082 |

| 9/8/2017 0:18 | 4.905 | 0.082 |

| 9/8/2017 0:24 | 4.977 | 0.18 |

| 9/8/2017 0:30 | 5.039 | 0.121 |

Notice that, as the spec sheet says, we have a record every six minutes (1/10th hr), water level is reported in meters to the millimeter level (4.639 m) and the “sigma” is given. The six-minute figure is calculated as follows:

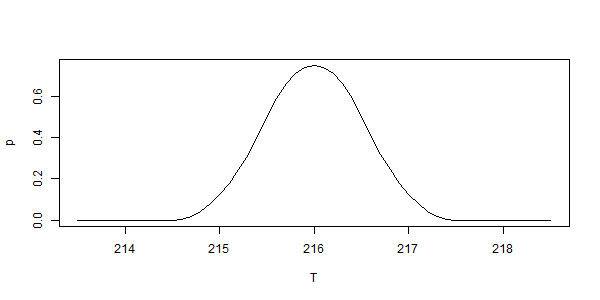

“181 one-second water level samples centered on each tenth of an hour are averaged, a three standard deviation outlier rejection test applied, the mean and standard deviation are recalculated and reported along with the number of outliers. (3 minute water level average)”

Just to be sure we would understand this procedure, I emailed CO-OPS support [ @ co-ops.userservices@noaa.gov ]:

To clarify what they mean by accuracy, I asked:

When we say spec’d to the accuracy of +/- 2 cm we specifically mean that each measurement is believed to match the actual instantaneous water level outside the stilling well to be within that +/- 2 cm range.

And received the answer:

That is correct, the accuracy of each 6-minute data value is +/- 0.02m (2cm) of the water level value at that time.

[ Note: In a separate email, it was clarified that “Sigma is the standard deviation, essential the statistical variance, between these (181 1-second) samples.” ]

The question and answer verify that both the individual 1-second measurements and the 6-minute data value represents a range of water level 4 cm wide, 2 cm plus or minus of the value recorded.

This seemingly vague accuracy — each measurement actually a range 4 cm or 1 ½ inches wide — is the result of the mechanical procedure of the measurement apparatus, despite its resolution of 1 millimeter. How so?

NOAA’s illustration of the modern Acoustic water level tide gauge at the Battery, NY shows why this is so. The blow-up circle to the top-left shows clearly what happens at the one second interval of measurement: The instantaneous water level inside the stilling well is different than the instantaneous water level outside the stilling well.

This one-second reading, which is stored in the “primary data collection platform” and later used as part of the 181 readings averaged to get the 6-minute recorded value, will be different from the actual water level outside the stilling well, as illustrated. Sometimes it will be lower than the actual water level, sometimes it will be higher. The apparatus as a whole is designed to limit this difference, in most cases, at the one second time scale, to a range of 2 cm above or below the level inside the stilling well — although some readings will be far outside this range, and will be discarded as “outliers” (the rule is to discard all 3-sigma outliers — of the set of 181 readings — from the set before calculating the mean which is reported as the six-minute record).

We cannot regard each individual measurement as measuring the water level outside the stilling well — they measure the water level inside the stilling well. These inside-the-well measurements are both very accurate and precise — to 1 millimeter. However, each 1-second record is a mechanical approximation of the water level outside the well — the actual water level of the harbor, which is a constantly changing continuous variable — specified to the accuracy range of +/- 2 centimeters. The recorded measurements represent ranges of values. These measurements do not have “errors” (random or otherwise) when they are different than the actual harbor water level. The water level in the harbor or river or bay itself was never actually measured.

The data recorded as “water level” is a derived value – it is not a direct measurement at all. The tide gauge, as a measurement instrument, has been designed so that it will report measurements inside the well that will be reliably within 2 cm, plus or minus, of the actual instantaneous water level outside the well – which is the thing we wish to measure. After taking 181 measurements inside the well, throwing out any data that seems too far off, the remainder of the 181 are averaged and reported as the six-minute recorded value, with the correct accuracy notation of +/- 2 cm — the same accuracy notation as for the individual 1-second measurements.

The recorded value denotes a value range – which must always be properly noted with each value — in the case of water levels from NOAA tide gauges, +/- 2 cm.

NOAA quite correctly makes no claim that the six-second records, which are the means of 181 1-second records, have any greater accuracy than the original individual measurements.

Why then do they make a claim that monthly means are then accurate to +/- 0.005 meters (5 mm)? In those calculations, the original measurement accuracy is simply ignored altogether, and only the reported/recorded six-minute mean values are considered (confirmed by the author) — the same error that is made as with almost all other large data set calculations, applying the inapplicable Law of Large Numbers.

Accuracy, however, as demonstrated here, is determined by the accuracy of the original measurements when measuring a non-static, ever-changing, continuously variable quantity and which is then recorded as a range of possible values — the range of accuracy specified for the measurement system — and cannot be improved when (or by) calculating means.

Take Home Messages:

- When numerical values are ranges, rather than true discrete values, the width of the range of the original value (measurement in our cases) determines the width of the range of any subsequent mean or average of these numerical values.

- Temperatures calculated from ASOS stations however are recorded and reported temperatures as ranges 1°F wide (0.55°C), and such temperatures are correctly recorded as “Integer +/- 0.5°F”. The means of these recorded temperatures cannot be more accurate than the original measurements –because the original measurement records themselves are ranges, the means must be denoted with the same +/- 0.5°F.

- The same is true of Tide Gauge data as currently collected and recorded. The primary record of 6-minute-values, though recorded to millimetric precision, are also ranges with an original accuracy of +/- 2 centimeters. This is the result of the measurement instrument design and specification, which is that of a sort-of mechanical averaging system. The means of tide gauge recorded values cannot be made more accurate the +/- 2 cm — which is far more accurate than needed for measuring tides and determining safe water levels for ships and boats.

- When original measurements are ranges, their means are also ranges of the same magnitude. This fact must not be ignored or discounted; doing so creates a false sense of the accuracy of our numerical knowledge. Often the mathematical precision of a calculated mean overshadows its real world, far fuzzier accuracy, leading to incorrect significance being given to changes of very small magnitude in those over-confident means.

# # # # #

Author’s Comment Policy:

Thanks for reading — I know that this will be a difficult concept for some. For those, I advise working through the example themselves. Use as many measurements as you have patience for. Work out all the possible means of all the possible values of the measurements, within the ranges of those original measurements, then report the range of the means found.

I’d be glad to answer your questions on the subject, as long as they are civil and constructive.

# # # # #

Thank you for all the hard work.

The first place to start is to point out that Global Average Temperature is NOT a “physical quantity”. You can not take the average of temperature, especially across vastly different media like land sea and ice. It’s scientific bullshit.

Are land + sea temperature averages meaningful?

https://judithcurry.com/2016/02/10/are-land-sea-temperature-averages-meaningful/

Before you start arguing about uncertainty ( which is a very good argument to get into ) you need to make sure are measuring something that is physically meaningful.

Greg, if you don’t think there is a physical “global temperature” what is your opinion of the global average of temperature anomalies? Ditto for sea surface levels.

This whole subject of uncertainty and measurement error is very complex out side a carefully constructed lab experiment. It is certainly key to the whole climate discussion and is something that Judith Curry has been pointing out fro at least a decade now.

However, this simplistic article by Kip does not really advance the discussion and sadly is unlikely to get advanced very much an anarchic chain of blog posts.

Kip clearly does not have the expertise to present a thorough discussion. It would be good if someone like his stats expert could have would have written it. This definately does need a thorough treatment and the currently claimed uncertainties are farcical, I will second him on that point.

Greg. You won’t get any argument from me that “Global Average Temperature” isn’t a poor metric. It’s very sensitive to the constantly changing distribution of warm water in the Pacific Ocean basin. Why would anyone not working on ENSO want a temperature metric that behaves like that? But it really is a physical quantity — if an inappropriate one for the purposes it’s being used for. Don’t you think it was almost certainly lower at the height of the last glaciation, or higher during the Cretaceous?

“if you don’t think there is a physical “global temperature”” – It’s not an opinion. It stems from the definition of temperature. They do indeed extend the notion of temperature in some very special cases for systems out of thermodynamic equilibrium, but typically it’s for dynamical equilibrium and they do lead to nonsense when taking out of context (such as absolute negative temperature). But for systems that are not even in dynamical equilibrium, such as Earth, it’s pure nonsense to average an intensive value that can be defined only locally, due of cvasiequilibrium. It’s not only pure nonsense, but it’s very provable that if you still insist of using such nonsense, you’ll get the wrong physical results out of calculation, even for extremely simple systems.

Don , maybe you should read the link in my first comment. There is a whole article explaining why global mean temperature is not physically meaningful.

Greg ==> I don’t disagree about global means — but one has to call them something — they certainty are a hot topic of conversation and research, even if they don;t really exist.

Dr. Curry’s point are well taken, many people do not understand the differences between energy and temperature. I also point out that “average daily temperature,” which has been interpreted as the average of the daily maximum and minimum is also misunderstood. We are now able to take temperature at the interval of our choice and come up with a weighted average. The average computed from just one daily maximum and one daily minimum assumes the temperatures spend equal amount of time clustered around the average. This is clearly not the case. So when comparing historical temperatures to newer values, it is important to realize the differences.

just to be clear oeman50, that was my article that Judith Curry published on here site. Note the credit just below the title. 😉

The main problem with averaging anything globally is that no living thing on Earth actually experiences the global average. Additionally, the average temperature tells us nothing about the daily range of temperatures. If I experience a day which is 60 degrees in the morning, and 100 degrees in the afternoon, is it not hotter than a day which starts out at 75 and reaches a high of 95? Yet once averaged, the 95 degree day is reported as 5 degrees hotter than the 100 degree day. Of course it gets more complex, but it would be like calculating a globally averaged per capita crime rate. You could do it, but it would be a useless number because the only thing that is important is the criime rate where you are or plan to be. Same with temperature. If we experience a decade where the global average temperature goes up a small amount, was it higher daytime highs that caused it? Was it higher daytime lows that caused it? Was the range the same, but the heat lingered on a little longer after sunset? You can’t tell what is happening unless you look at local specifics, hour by hour. It would be like trying to tell me what song I’m thinking of if I just told you what the average musical note was. Meaning is in the details.

In the same vein, I’ve always wondered why we track the CO2 content of the atmosphere without tracking all of the other greenhouse gases as closely. If CO2 concentration goes up, do we know for a fact that that increases the total amount of greenhouse gases? Could another gas like water vapor decrease at times to balance out or even diminish the total?

It just seems to me that we are standing so far back trying to get the “big picture” that we are missing the details that would have told us the picture was a forgery.

I’m no scientist, so blast me if I’m wrong, but the logic of it all seems to be lost.

Which is why only satellite, radiosonde and atmospheric reanalysis information [I hesitate to use “data.”] are appropriate for use in determining any averages, trends, etc.

In a few [number of?] years ARGO may be useful. Early ARGO information shows no worrisome patterns.

@ur momisugly Greg “This whole subject of uncertainty and measurement error is very complex”

Yes it is: “In 1977, recognizing the lack of international consensus on the expression of uncertainty in measurement, the world’s highest authority in metrology, the Comité International des Poids et Mesures (CIPM), requested the Bureau International des Poids et Mesures (BIPM) to address the problem in conjunction with the national standards laboratories and to make a recommendation.”

It took 18 years before the first version of a standard that deals with these issues in a successful way, was finally published. That standard is called: ´Guide to the expression of uncertainty in measurement´. There now exists only this one international standard for expression of uncertainty in measurement.

“The following seven organizations supported the development of the Guide to expression of uncertainty, which is published in their name:

BIPM: Bureau International des Poids et Measures

IEC: International Electrotechnical Commission

IFCC: International Federation of Clinical Chemistry

ISO: International Organization for Standardization

IUPAC: International Union of Pure and Applied Chemistry

IUPAP: International Union of Pure and Applied Physics

OlML: International Organization of Legal Metrology ..”

The standard is freely available. I think of it as a really good idea to use that standard for what should be obvious reasons. Even some climate scientists are now starting to realize that international standards should be used. See:

Uncertainty information in climate data records from Earth observation:

“The terms “error” and “uncertainty” are often unhelpfully conflated. Usage should follow international standards from metrology (the science of measurement), which bring clarity to thinking about and communicating uncertainty information.”

“Before you start arguing about uncertainty ( which is a very good argument to get into ) you need to make sure are measuring something that is physically meaningful.”

They are connected. The mean of an infinite number of measurements should give you the true value if individual measurements were only off due to random error. You need precise measurements to be sure that the distribution is perfect if you want others to believe that 10 000 measurements has reduced the error by √100. Even the act of rounding up or down means that you shouldn’t pretend that the errors were close to a symmetrical distribution and definitely not close enough to attribute meaning to a difference of 1/100th of the resolution. How anyone could argue against it is beyond me.

To then do it for something that it not an intrinsic property is getting silly. I know what people are thinking but the air around a station in the morning is not the same as that around it when the max is read.

Agreed, TG!

An excellent essay Kip!

I worked with IMD in Pune/India [prepared formats to transfer data on to punched cards as there was no computer to transfer the data directly]. There are two factors that affect the accuracy of data, namely:

Prior to 1957 the unit of measurement was rainfall in inches and temperature in oF and from 1957 they are in mm and oC. Now, all these were converted in to mm and oC for global comparison.

The second is correcting to first place of decimal while averaging: 34.15 is 34.1; 34.16 is 34.2; 34.14 is 34.1 and 34.25 is 34.3; 34.26 is 34.3; 34.24 is 34.2

Observational error: Error in inches is higher than mm and Error in oC is higher than oF

These are common to all nations defined by WMO

Dr. S. Jeevananda Reddy

Dr. Reddy, Very interesting. By the way, you can use alt-248 to do the degree symbol, °.

Take care,

Thank you for this information. I have always suspected the reported accuracy of many averaged numbers were simply impossible. This helps to clarify my suspicions. I also do not understand how using 100 year old measurements mixed with modern ones can result in the high accuracy stated in many posts. They seem to just assume that a lot of values increases the final accuracy regardless of the origin and magnitude of the underlying uncertainties.

Only bullshit results. Even for modern measurements, it’s the hasty generalization fallacy to claim that it applies to the whole Earth. Statisticians call it a convenience sampling. And that is only for the pseudo-measurement that does not evolve radically over time. Combining all together is like comparing apples with pears to infer things about a coniferous forest.

Standard calculations in Chemistry carefully watch the significant digits. 5 grams per 7 mililiters is reported as 0.7 g/mL. Measuring several times with such low precision results in an answer with equally low precision. The extra digits spit out by calculators are fanciful in the real world.

People assume that modern digital instruments are inherently more accurate than old-style types. In the case of temperature at least this is not necessarily so. When temperature readings are collated and processed by software yet another confounding factor is introduced.

With no recognition of humidity, differing and changing elevation, partial sampling and other data quality issues, the idea that we could be contemplating turning the world’s function inside out over a possible few hundredths of a degree in 60 years of the assumed process is plainly idiotic.

AGW is an eco Socialist ghost story designed to destroy Capitalism and give power to those who can’t count and don’t want to work. I’m hardly a big fan of Capitalism myself but I don’t see anything better around. Socialism has failed everywhere it’s been tried.

If quantization does not deceive you Nyqust will.

Kip says: “If each measurement is only accurate to ± 2 cm, then the monthly mean cannot be MORE accurate than that — it must carry the same range of error/uncertainty as the original measurements from which it is made. Averaging does not increase accuracy.”

…

WRONG!

…

the +/- 2cm is the standard deviation of the measurement. This value is “sigma of x ” in the equation for the standard error of the estimator of the mean:

https://www.bing.com/images/search?view=detailV2&ccid=CYUOXtuv&id=B531D5E2BA00E15F611F3DAEC1B85110014F74C6&thid=OIP.CYUOXtuvcFogpL3jEnQw_gEsBg&q=standard+error&simid=608028072239301597&selectedIndex=1

…

The error bars for the mean estimator depends on the sqrt of “N”

roflmao..

You haven’t understood a single bit of what was presented, have you johnson

You have ZERO comprehension when that rule can and can’t be used, do you. !!

(Andy, you need to do better than this when you think Johnson or anyone else is wrong. Everyone here is expected to moderate themselves according to the BOARD rules of conduct. No matter if Johnson is right or wrong,being rude and confrontative without a counterargument,is not going to help you) MOD

I know perfectly well when to use standard error for the estimator of the mean.

…

See comment by Nick Stokes below.

Andy, how about you drop the aggressive, insulting habit of addressing all you replies to “johnson”. If you don’t agree with him, make you point. Being disrespectful does not give more weight to your point of view.

Also getting stroppy from the safely of your keyboard is a bit pathetic.

lighten up greg

ROFL^2

You are a bit rude, Andy, but you are right.

Can we all TRY to be both polite and scientifically /mathematically correct please. It makes for a better blog all round.

Is Andy any ruder than Johnson was?

Especially when Johnson ignores facts, documentation and evidence presented in order to proclaim his personal bad statistics superior.

Nor should one overlook Johnson’s thread bombings in other comment threads.

Sorry, but it very obvious that mark DID NOT understand the original post.

When their baseless religion relies totally on a shoddy understand of mathematical principles, is it any wonder the AGW apostles will continue to dig deeper?

“I know perfectly well when to use standard error for the estimator of the mean.”

Again. it is obvious that you don’t !!

For those who are actually able to comprehend.

Set up a spreadsheet and make a column as long as you like of uniformly distributed numbers between 0 and 1, use =rand(1)

Now calculate the mean and standard deviation.

The mean should obviously get close to 0.5..

but watch what happens to the deviation as you make “n” larger.

For uniformly distributed numbers, the standard deviation is actually INDEPENDENT of “n”

darn typo..

formula is ” =rand()” without the 1, getting my computer languages mixed up again. !!

Furthermore, since ALL temperature measurements are uniformly distributed within the individual ranged used for each measurement, they can all be converted to a uniform distribution between 0 and 1 and the standard deviation remains INDEPENDENT OF “n”</strong)

Obviously, that means that the standard error is also INDEPENDENT of n

Andy, standard deviation and sampling error are not the same things, so please tell me what you think your example is showing?

Sorry you are having problems understanding, Mark.. Your problem, not mine.

Another simple explanation for those with stuck and confused minds.

Suppose you had a 1m diameter target, and, ignoring missed shots”, they were random uniformly distributed on the target.

Now, the more shots you have, the closer the mean will be to bulls eye..

But the error from that mean with ALWAYS be approximately +/- 0.5m uniformly distributed.

“The mean should obviously get close to 0.5.”

“Obviously, that means that the standard error is also INDEPENDENT of n”

Those statements are contradictory. Standard error is the error of the mean (which is what we are talking about). If it’s getting closer to 0.5 (true) then the error isn’t independent of n. In fact it is about sqrt(1/12/n).

I did that test with R : for(i in 1:10)g[i]=mean(runif(1000))

The numbers g were

0.5002 0.5028 0.4956 0.4975 0.4824 0.5000 0.4865 0.5103 0.5106 0.5063

Standard dev of those means is 0.00930. Theoretical is sqrt(1/12000)=0.00913

Seems to me that no matter how data is treated or manipulated there is nothing that can be done to it which will remove the underlying inaccuracies of the original measurements.

If the original measurements are +/- 2cm then anything resulting from averaging or mean is still bound by that +/- 2cm.

Mark, could you explain why you believe that averagaing or the mean is able to remove the original uncertainty ? because I can’t see how it can.

Btw I can see how a trend might be developed from data with a long enough time series – But until the Trend is greater than the uncertainty it cannot constitute a valid trend.

e.g. In temperature a trend showing an increase of 1 deg C from measurements with a +/- 0.5 deg C (i.e. 1 deg C spread) cannot be treated as a valid trend until it is well beyond the 1 deg C, and even then it remains questionable.

I’m no mathematician or statistician but to me that is plain commonsense despite the hard-wired predilection for humans to see trends in everything ………

Maybe someone here has experience with information theory, I did some work with this years ago in relation to colour TV transmissions and it is highly relevant to digital TV . All about resolution and what you need to start with to get a final result. I am quire rusty on it now but think it is very relevant here, inability to get out more than you start with.

Old England:

Consider this; you take your temperature several times a day for a period of time.

Emulating NOAA, use a variety of devices from mercury thermometers, alcohol thermometers, cheap digital thermistors and infra red readers.

Sum various averages from your collection of temperatures. e.g.;

Morning temperature,

Noon temperature,

Evening temperature,

Weekly temperature,

Monthly temperature,

Lunar cycle temperatures, etc.

Don’t forget to calculate anomalies from each average set. With such a large set of temperatures you’ll be able to achieve several decimal places of precision, though of very dubious accuracy.

Now when your temperature anomaly declines are you suffering hypothermia?

When your temperature anomaly is stable are you healthy?

When your temperature anomaly increases, are you running a fever or developing hyperthermia?

Then after all that work, does calculating daily temperatures and anomalies to several decimal places really convey more information than your original measurement’s level of precision?

Then consider; what levels of precision one pretends are possible within a defined database are unlikely to be repeatable for future collections of data.

i.e. a brief window of data in a cycle is unlikely to convey the possibilities over the entire cycle.

Nor do the alleged multiple decimals of precision ever truly improve the accuracy of the original half/whole degree temperature reading.

Then, consider the accuracy of the various devices used; NOAA ignores error rates inherent from equipment, readings, handlings, adjustments and calculations.

“The error bars for the mean estimator depends on the sqrt of “N””

Only true if the measured quantity consists of independent and identically distributed random variables. Amazing how few people seem to be aware of this.

Good luck in proving that there is no autocorrelation between sea-level measurements Mark!

Mark ==> You present exactly what I point out is the misunderstanding when a tide gauge measurement is presented as an integer — notated as 100 +/- 2cm. The +/-2cm is NOT a standard deviation, not an error bar, not a confidence interval — but it sure looks like one as they are all written in the same way. In actual fact, it is the uncertainty of the measurement, brought about by the physical design of the measurement instrument.

Kip: ” In actual fact, it is the uncertainty of the measurement ”

..

Maybe this can clear up your misunderstanding: https://explorable.com/measurement-of-uncertainty-standard-deviation

….

Just remember std deviation is defined independent of the underlying distribution…(i.e. normal, uniform, geometic, etc.)

“The +/-2cm is NOT a standard deviation, not an error bar, not a confidence interval”

Then what is “uncertainty”?

Kip/Nick: Actually a stated instrument MU is a confidence interval. It is defined in the ISO Guides and elsewhere (including NIST) as:

The default is a 95% confidence interval. Thus a measured value of 100 cm can be said to have a true value of between 98 and 102 cm with a 95% confidence if the instrument MU is +/- 2 cm. While it is indeed derived from the standard deviations of various factors that affect the measurement, it is actually a multiple of the combined SDs. Two times the SD for a 95% MU confidence. However, it is not related to the SD of multiple measurements of the measurand. This is a measure of the variability of the thing being measured and such variability is only partly the result of instrument MU. Proper choice of instruments should make instrument MU a negligible issue. Problems arise when the measurement precision required to make a valid determination is not possible with the equipment available. In short, if you want to measure sea level to +/- 1 mm you need a measuring device with an MU of less than 1 mm.

Put another way, you can’t determine the weight of a truck to the nearest pound by weighing it on a scale with a 10 pound resolution no matter how many times you weigh it.

Above I referred to multiple sources of MU that need to be combined. This is known as an uncertainty budget. As an example a simple screw thread micrometer includes the following items: repeatability, scale error, zero point error, parallelism of anvils, temperature of micrometer, temperature difference between micrometer and measured item. However, the vast majority of instrument calibrations are done by simple multiple comparisons of measured values of certified reference standards. In these calibrations there are always at least three sources of MU. The uncertainty of the reference standard, one half the instrument resolution and the standard deviation of the repeated comparison deviation from the reference value. In addition, to be considered adequate the Test Uncertainty Ratio (MU of device being calibrated divided by MU of reference) must be at least 4:1.

This is all basic metrology that should be well understood by any scientist or engineer. But I know from experience that it is not as is clearly evident in these discussions.

Thanks again for you clear and well informed opinion on these matters.

The problem with using S.D as the basis for establishing “confidence intervals” is that it is based soley on statistics and addresses only the sampling error.

If global mean SST is given as +/-0.1 deg C then a “correction” is made due to a perceived bias of 0.05 deg and the error bars are the same ( because the stats are still the same ) then we realise that they are not including all sources of error and the earlier claimed accuracy was not correct.

The various iterations of hadSST have not changed notably in their claimed confidence levels yet at one point they introduced -0.5 deg step change “correction”. This was later backed out and reintroduced as a progressive change, having come up with another logic to do just about the same overall change of 0.5 deg C.

Variance derived confidence levels do NOT reflect the full range of uncertainty, only one aspect: sampling error.

Greg ==> Best stay clear of the Statistics Department at the local Uni….they don’t like that kind of talk. Here either…as you see.

Mark S, you missed the whole point of why this isn’t so in the case of temperatures and tide gauges. If you measure the length of a board a dozen times carefully, then you are right. But if the board keeps changing its own length, then multiple measurings are not going to prove more accurate or even representative of anything. I hope this helps.

If the measurement is made of the same thing, the different results can be averaged to improve the accuracy.

Since the temperature measurements are being made at different times, they cannot be used to improve the accuracy.

That’s basic statistics.

Measuring an individual “thing” and sampling a population for an average are two distinct, and different things. You seem to be confusing the two.

Mark S Johnson,

You are quite wrong. If I handed you an instrument I calibrated to some specific accuracy, say plus or minus one percent of full scale for discussion purposes, you had better not claim any measurement made with it, or any averages of those values, is more accurate than what I specified. In fact, if the measurement involved safety of life, you must return the instrument for a calibration check to verify it is still in spec.

Where anyone would come up with the idea that an instrument calibration sticker that say something like “+/- 2 cm” indicates a standard deviation, I cannot imagine. In the cal lab, there is no standard deviation scheme for specifying accuracy. When we wrote something like “+/- 2 cm”, we meant that exactly. That was the sum of the specified accuracy of the National Bureau of Standards standard plus the additional error introduced by the transfer reference used to calibrate the calibration instrument plus the additional error introduced by the calibration instrument used on your test instrument.

Again, that calibration sticker does not say “+/- 2 cm” is some calculated standard deviation from true physical values. It means what at each calibration mark on the scale, the value will be within “+/- 2 cm” of true physical value. That does not, however specify the Precision of the values you read. That is determined by the way the instrument presents its values. An instrument calibrated to “+/- 2 cm” could actually have markings at 1 cm intervals. In that case, the best that can be claimed for the indication is +/- 0.5 cm. The claimed value would then be +/- 0.5 cm plus the +/- 2 cm calibration accuracy. Claiming an accuracy of better than +/- 2.5 cm would in fact be wrong, and in some industries illegal. (Nuclear industry for example.)

So drop the claims about standard deviation in instrument errors. It does not even apply to using multiple instrument reading the same process value at the same time. In absolutely no case can instrument reading values be assumed to be randomly scattered around true physical values within specified instrument calibration accuracy. Presenting theories about using multiple instruments from multiple manufacturers, each calibrated with different calibration standards by different technicians or some such similar example is just plain silly when talking about real world instrumentation use. You are jumping into the “How many angels can dance on the head of a pin” kind of argument.

Gary, they do not make an instrument that can measure “global temperature.”

…

Measuring “global temperature” is a problem in sampling a population for the population mean. Once you understand this, you may be able to grasp the concept of “standard error” which is comprised of the standard deviation of the instrument used for measurement, divided by the sqrt of the number of obs.

…

Now when/if they build an instrument that can measure the global temperature with one reading, then your argument might hold water.

Mark,

Where above do I mention “global temperature”? My statements were about the use of instrument readings (or observations to the scientific folks.) I would suggest that however that “global temperature” be derived, it cannot claim an accuracy better than the calibration accuracy of the instrumentation used. Wishful thinking and statistical averaging cannot change that.

Remember the early example of averages of large numbers was based upon farm folks at an agricultural fair guessing the weight of a bull. The more guesses that were accumulated, the closer the average came to the true weight. Somehow that has justified the use of averaging in many inappropriate situations. Mathematical proofs using random numbers do not justify or indicate the associated algorithms are universally applicable to real world situations.

Gary, the estimator of the population mean can be made more accurate with more observations. The standard error is inversely proportional to the sqrt of the number of obs.

…..

Here’s an example.

….

Suppose you wanted to measure the average daily high temperature for where you live on Oct 20th. You measure the temp on Oct 20th next Friday.

…

Is this measure any good?

…

Now, suppose you do the same measurement 10/20/2017, 10/20/2018, 10/20/2019 and 10/20/2020, then take the average of the four readings.

..

Which is more accurate?…..the single lone observation you make on Friday, or the average of the four readings you make over the next four years?

….

If you are interested in the real climatic average for your location on Oct 20th, you really need 30 years of data to be precise.

Gary, RE: weight of bull.

…

Here you go again with an incorrect analogy. The weight of an individual bull is not a population mean. Don’t confuse the two. The correct “bull” analogy would be to actually measure the weight of 100 bulls, to determine what the average weight of a bull is. The more bulls you measure, the closer you will get to what the “real” average bull weight is.

BZ!

There will be some of us (like Gary and myself) on here who have regularly sent instruments away to be calibrated and had to carefully consider the results, check the certificates etc. We appear to know rather more about this than some contributors today. I find it interesting that a simple experience like this can help a lot in an important discussion.

“the estimator of the population mean can be made more accurate with more observations. The standard error is inversely proportional to the sqrt of the number of obs.”

Two points here: 1. “estimator” mean guess. 2. your estimator may be made more precise according to a specified estimation algorithm. That does not relate to its accuracy. Your comment about standard deviation only applies to how you derive your guess.

“If you are interested in the real climatic average for your location on Oct 20th, you really need 30 years of data to be precise.”

Good now we are on the same page. You are achieving a desired PRECISION. Accuracy, however remains no better than the original instrumentation accuracy and often worse depending upon how the data is mangled to fit your algorithm. (F to C etc.)

“Here you go again with an incorrect analogy. The weight of an individual bull is not a population mean. Don’t confuse the two. The correct “bull” analogy would be to actually measure the weight of 100 bulls, to determine what the average weight of a bull is. The more bulls you measure, the closer you will get to what the “real” average bull weight is.”

Nope, the exercise was to determine the accuracy of guesses about the weight of a single bull tethered to a post at the fair. A prize was awarded to the person who guessed the closest. It was not about guessing the weight bulls as a population. The observation about that large numbers of guesses was that the average became closer to true weight of the bull as the number of guess increased, one guess per person. It was never claimed that random guess about random bulls would average to any meaningful or useful number.

Guessing the weight of an individual bull is not the same as sampling a population. Hey…..ever hear about destructive testing? It’s what happens when running the test obliterates the item “measured.” For example, how would you insure the quality of 1000 sticks of dynamite? Would you test each one, or would you take a representative random sample and test the smaller number?

Mark S Johnson October 15, 2017 at 9:02 am

“The weight of an individual bull is not a population mean. Don’t confuse the two.”

He didn’t confuse anything. He said “The more guesses that were accumulated, the closer the average came to the true weight. Somehow that has justified the use of averaging in many inappropriate situations.” But you like to fly off on your own illogical tangent, which just gets in the way of those of us trying to understand the arguments.

Then explain how that applies if the measurements are not normally distributed? And if you have no idea if they are normally distributed?Let’s say the sides of the block of metal I have on my desk.

Just to clarify Andy’s concerns. Mark Johnson is confusing uncertainty of the estimate with accuracy of the measure; they’re two different things, something Kip attempts to point out in his essay and also something that anyone familiar with measurement theory and statistics would understand from his essay. It’s possible a person without much practical experience in numerical modeling might miss the distinction, but I can assure you it’s there.

While the “law of large numbers” will reduce the error of estimate as Mark describes, it does nothing to increase accuracy of the measure.

Maybe another example is in order?

If a single measure is accurate +/- 2cm, it has an uncertainty associated with it also, which may perhaps be +/- 5mm. As repeated measures are taken and averaged, the uncertainty (5mm) can be reduced arithmetically as Mark Johnson describes, but the result is a measure accurate +/- 2cm with a lower uncertainty (for example +/- .1 mm).

I hope that resolves the conflicting views expressed here. I agree there’s no reason for ad hominem by either party. It’s a very confusing subject for most people, even some who’ve been involved with it professionally.

When what you are measuring is a population mean, it most certainly does increase the accuracy.

Mark S Johnson: The only person on this thread discussing measures of a population mean is you, and it’s almost certain the only training in statistics you’ve ever had involved SPSS.

Error in a measure is assumed to be normally distributed, not the measure itself. You need to meditate on that. The accuracy of a measure has nothing to do with the uncertainty of the estimate. The “law of large numbers” doesn’t improve accuracy, it improves precision. You’re wrong to argue otherwise.

Bartleby,

That is particularly true if there is a systematic error in the accuracy. If you have a roomful of instruments, all out of calibration because over time they have drifted in the same direction, using them to try to obtain an average will, at best, give you an estimate of what the average error is, but it will not eliminate the error. The only way that you are going to get the true value of the thing you are measuring is to use a high-precision, well-calibrated instrument.

Certainly true if there is systemic error, which really means the measure is somehow biased (part of an abnormal distribution); unless the error of estimate is normal, the law of large numbers can’t be used at all. It can never be used to increase accuracy.

The whole idea of averaging multiple measures of the same thing to improve precision is based on something we call a “normal error distribution” as you point out. We assume the instrument is true within the stated accuracy, but that each individual observation may include some additional error, and that error is normally distributed.

So, by repeatedly measuring and averaging the result, the error (which is assumed normal) can be arithmetically reduced, increasing the precision of the estimate by a factor defined by the number of measures. This is the “Students T” model.

But accuracy isn’t increased, only precision. 100 measures using a device accurate +/- 2cm will result in a more precise estimate that’s accurate to +/- 2cm.

Accuracy and Precision are two very different things.

‘The whole idea of averaging multiple measures of the same thing to improve precision is based on something we call a “normal error distribution”…’

Normal (or Gaussian) distributions are not required, though a great many measurement error sets do tend to a Normal distribution due to the Central Limit Theorem.

All that is required is that the error be equally distributed in + and – directions. Averaging them all together then means they will tend to cancel one another out, and the result will, indeed, be more accurate. Accuracy means that the estimate is closer to the truth. Precision means… well, a picture is worth a thousand words. These arrows are precise:

Bartemis illustrates very effectively, the difference between accuracy and precision.

Bartleby,

“100 measures using a device accurate +/- 2cm will result in a more precise estimate that’s accurate to +/- 2cm.

Accuracy and Precision are two very different things.”

Yes, if you are talking about a metrology problem, which is the wrong problem here. No-one has ever shown where someone in climate is making 100 measures of the same thing with a device. But there is one big difference between accuracy and precision, which is in the BIPM vocabulary of metrology, much cited here, but apparently not read. It says, Sec 2.13 (their bold):

“NOTE 1 The concept ‘measurement accuracy’ is not a quantity and is not given a numerical quantity value. “

Which makes sense. Accuracy is the difference between the measue and the true value. If you knew the true value, you wouldn’t be worrying about measurement accuracy. So that is the difference. If it has numbers, it isn’t accuracy.

Nick Stokes (perhaps tongue in cheek) writes: “So that is the difference. If it has numbers, it isn’t accuracy.”

Nick, if it doesn’t have numbers, it isn’t science. 🙂

Bartleby,

“isn’t science”

Well, it’s in the BIPM vocabulary of metrology.

Nick, there’s an old, old saying in the sciences that goes like this:

If you didn’t measure it, it didn’t happen.”

I sincerely believe that. So any “discipline” that spurns “numbers” isn’t a science. QED.

Bartleby,

I’m not the local enthusiast for use of metrology (or BIPM) here. I simply point out what they say about the “concept ‘measurement accuracy’”.

Nick Stokes writes: “I’m not the local enthusiast for use of metrology (or BIPM) here. I simply point out what they say about the “concept ‘measurement accuracy’”

OK. I don’t think that changes my assertion, that science is measurement based and so requires the use of numbers.

I’m not sure if you’re trying to make an argument from authority here? Id so it really doesn’t matter what the “BIPM” defines; accuracy is a numerical concept and it requires use of numbers. There’s no alternative.

If, in the terms of “metrology”, numbers are not required, then the field is no different from phrenology or astrology, neither of which is a science. Excuse me if you’ve missed that up until now. Numbers are required.

Mark S Johnson,

We have a very different take on what Kip has written. My understanding is that the tide gauges can be read to a precision of 1mm, which implies that there is a precision uncertainty of +/- 0.5mm. HOWEVER, it appears that the builders of the instrumentation and site installation acknowledge that each and all of the sites may have a systematic bias, which they warrant to be no greater than 2 cm in either direction from the true value of the water outside the stilling well. We don’t know whether the inaccuracy is a result of miscalibration, or drift, of the instrument over time. We don’t know if the stilling well introduces a time-delay that is different for different topographic sites or wave conditions, or if the character of the tides has an impact on the nature of the inaccuracy. If barnacles or other organisms take up residence in the inlet to the stilling well, they could affect the operation and change the time delay.

The Standard Error of the Mean, which you are invoking, requires the errors be random (NOT systematic!). Until such time as you can demonstrate, or at least make a compelling argument, that the sources of error are random, your insistence on using the Standard Error of the Mean is “WRONG!”

I think that you also have to explain why the claimed accuracy is more than an order of magnitude less than the precision.

Clyde, a single well cannot measure global average sea level. It does not sample with respect to the geographic dimension. Again there is confusion here with the precision/accuracy of an individual instrument, and the measurement of an average parameter of a population. Apples and oranges over and over and over.

Mark S Johnson,

I never said that a single well measured the average global sea level, and I specifically referred to the referenced inaccuracy for multiple instruments.

You did not respond to my challenge to demonstrate that the probable errors are randomly distributed, nor did you explain why there is an order of magnitude difference between the accuracy and precision.

You seem to be stuck on the idea that the Standard Error of the Mean can always be used, despite many people pointing out that its use has to be reserved for special circumstances. You also haven’t presented any compelling arguments as to why you are correct. Repeating the mantra won’t convince this group when they have good reason to doubt your claim.

Clyde the reason it’s called Standard Error of the Mean is because I’m talking about measuring the mean and am not talking about an individual measurement.

…

This is not about measuring the same block of metal 1000 times to improve the measurement. It’s about measuring 1000 blocks coming off the assembly line to determine the mean value of the block’s you are making.

Mark S Johnson,

You said, “…I’m talking about measuring the mean.” Do you own a ‘meanometer?” Means of a population are estimated through multiple samples, not measured.

You also said, “This is not about measuring the same block of metal 1000 times to improve the measurement. It’s about measuring 1000 blocks coming off the assembly line to determine the mean value of the block’s you are making.”

In the first case, you are primarily concerned about the accuracy and precision of the measuring instrument. Assuming the measuring instrument is accurate, and has a small error of precision, the Standard Error of the Mean can improve the precision. However, no amount of measuring will correct for the inaccuracy, which introduces a systematic bias. Although, if the electronic measuring instrument is wandering, multiple measurements may compensate for that if the deviations are equal or random at each event. But, if you have such an instrument, you’d be advised to replace it rather than try to compensate after the fact.

In the second case, you have the same problems as case one, but you are also confronted with blocks that are varying in their dimensions. Again, if the measuring instrument is inaccurate, you cannot eliminate a systematic bias. While the blocks are varying, you can come up with a computed mean and standard deviation. However, what good is that? You may have several blocks that are out of tolerance and large-sample measurements won’t tell you that unless the SD gets very large; the mean may move very little if any. What’s worse, if the blocks are varying systematically over time, for example as a result of premature wear in the dies stamping them, neither your mean or SD is going to be very informative with respect to your actual rejection rate. They may provide a hint that there is a problem in the production line, but it won’t tell you exactly what the problem is or which items are out of tolerance. In any event, even if you can justify using the Standard Error of the Mean to provide you with a more precise estimate of the mean, what good does it do you in this scenario?

“In the second case, you have the same problems as case one, but you are also confronted with blocks that are varying in their dimensions. In this case you shouldn’t be worrying about your instrument, your concern is your manufacturing process!

Clyde –

You’re playing into the hands of someone ignorant. It’s a common fault on public boards like this.

Both of you (by that I mean Johnson too) are freely exchanging the terms “accuracy” and “uncertainty”; they are not the same. Until you both work that out you’re going to argue in circles for the rest of eternity.

Nick Stokes ==> Said: October 16, 2017 at 10:11 pm

And the rest of the note? The very next sentence….. is!

This is exactly what Kip Hansen has argued all along and exactly what Bartleby just wrote** and yet you have just gone out of your way to cherry pick the quote and completely butcher the context of the very definition you are referring to!

*And measurement error is defined at 2.16 (3.10) thusly: “measured quantity value minus a reference quantity value”

**Bartleby wrote: “100 measures using a device accurate +/- 2cm will result in a more precise estimate that’s accurate to +/- 2c.”

SWB,

“The very next sentence…”

The section I quoted was complete in itself, and set in bold the relevant fact: “is not given a numerical quantity value“. Nothing that follows changes that very explicit statement. And it’s relevant to what Bartleby wrote: “a more precise estimate that’s accurate to +/- 2cm”. BIPM says that you can’t use a figure for accuracy in that way.

Mark ==> The +/- 2 cm is not the standard deviation. It is the original measurement accuracy specification, confirmed by NOAA CO-OPS. The “Sigma” is a different figure, provided by NOAA CO_OPS, as the standard deviation of the 181 1-second records being used to create a six-minute mean. That “sigma”, clarified by NOAA CO-OPS as ““Sigma is the standard deviation, essential[ly] the statistical variance, between these (181 1-second) samples.”

Please re-ready my email exchange with NOAA CO-OPS support:

+/- 2cm is the ACCURACY of the six-minute means — which are the only permanent record made by the Tide Gauge system from meASUREMENTS.

Well said, Kip.

Mark S: “the +/- 2cm is the standard deviation of the measurement”

No, it is not the SD. The SD can only be calculated after a set of readings has been made. The 2cm uncertainty is a characteristic of the instrument, determined by some calibration exercise. It is not an ‘error bar’, it is an inherent characteristic of the apparatus. Being inherent, replicating measurements or duplicating the procedure will not reduce the uncertainty of each measurement.

Were this not so, we would not strive to create better instruments.

You make an additional error I am afraid: each measurement stands alone, all of them. They are not repeat measurements of ‘the same thing’ for it is well known in advance that the level will have changed after the passage of second. The concept you articulate relates to making multiple measurements of the same thing with the same instrument. An example of this is taking the temperature of a pot of water by moving a thermocouple to 100 different positions within the bulk of the water. The uncertainty of the temperature is affected by the uncertainty of each reading, again, inherent to the instrument and the SD of the data. One can get a better picture of the temperature of the water by making additional measurements, but the readings are no more accurate than before, and the average is not more accurate just because the number of readings is increased. Making additional measurements tells us more precisely where the middle of the range is, but does not reduce the range of uncertainty. This example is not analogous to measuring sea level 86,400 times a day as it rises and falls.

Whatever is done using the 1-second measurements, however processed, the final answer is no more accurate than the accuracy of the apparatus, which is plus minus 20mm.

Crispin ==> Bless you, sir…even if you are in Beijing!

Help admin or mod or mods. A close block quote went astray just above. Please, thank you 😉

SWB ==> Think I’ve adjusted it the way you meant.

Nick Stokes==> October 18, 2017 at 12:58 am:

Talk about perversity – I can’t imagine it would be anything else – if you really are being intellectually honest!

Here is the whole reference (Their bold):

How could you completely miss the definition of Accuracy?

It is defined as the “closeness of agreement between a measured quantity value and a true quantity value of a measurand.”

It is very clear that the term is not numeric but ordinal and of course, ordinal quantities have mathematical meaning as you would well know!

“It is very clear that the term is not numeric but ordinal and of course, ordinal quantities have mathematical meaning as you would well know!”

Yes. And what I said, no more or less, is that it doesn’t have a number. And despite all your huffing, that remains exactly true, and is the relevant fact. I didn’t say it was meaningless.

Don’t feed the troll.

Auto

When I first considered the “law of large numbers” years ago, I applied an engineer’s mental test for myself. If I have a machine part that needs to be milled to an accuracy of .001 in, and a ruler that I can read to an accuracy of 1/16 in, could I just measure the part with a ruler 1000 times, average the result, and discard my micrometer? I decided that I would not like to fly in an aircraft assembled that way.

Mark, I am far from an expert but do remember a little of what I leaned in my classes on stochastic processes. If I were able to assume that the distribution from which I was measuring was a stationary or at least wide sense stationary, then the process of multiple measurements as you imply could in fact increase the accuracy. This is actually how some old style analog to digital converters worked by using a simple comparator and counting the level crossings in time you can get extra bits of accuracy. This is similar to your assertion here.

The main flaw here is that you must make the stationarity assumption. Sorry, but temperature measurements and tidal gauge measurements are far from stationary. In fact, the pdf is a continuing varying parameter over time so I have a hard time agreeing with your assertion about the improvement in accuracy.

Alan ==> Oh yes, they have the rule but forget the requirements for applying the rule. “Stationary” and “Static” and “Fixed”… those must be a feature of the thing being measured many times.

The “mean” of an ever-changing, continuous variable, is not “one thing measured many times”

This is essentially about significant digits. Not the standard deviation of a sample of sample means. These two things are different. Ok? You cannot manufacture significant digits by taking samples. Period.

It may be worth remembering – no calculated figure is entitled to more significant figures (accuracy) than the data used in the calculation.

In fact, the further your calculations get from the original measured number, the greater the uncertainty gets.

Three measurements, each with one digit of significance: 0.2, 0.3 and 0.5

…

The calculated average is what?

…

Is it 0?

is it .33?

or is it .33333 ?

In fact the more digits you add, the closer you come to the real value, namely one third.

Mark, what you illustrate in your example is the reduction of uncertainty and convergence on the true value that can be accomplished when averaging multiple observations of the same thing using the same instrument (or instruments calibrated to the same accuracy). It assumes several things, the one thing not mentioned in Kip’s article or your example is that all measures come from a quantity that’s normally distributed. So there are at least three assumptions made when averaging a quantity and using the “law of large numbers” to reduce uncertainty in the measure;

– That all measures are of the same thing.

– That all measures have the same accuracy.

– That the measures are drawn from an underlying normal distribution.

All three assumptions must be met for the mean to have “meaning” 🙂

Briefly, if you average the length of 100 tuna, and the length of 100 whale sharks, you won’t have a meaningful number that represents the average length of a fish. In fact, if you were to plot your 200 observations, you’d likely find two very distinct populations in your data, one for whale sharks and another for tuna. The data don’t come from a normal distribution. In this case, any measure of uncertainty is useless since it depends on the observations coming from a normal distribution. No increase in instrument accuracy can improve precision in this case.

I’ll get to this again in my comment on Kip’s essay below.

Bartleby, I believe this is the crux of the wealth of misunderstanding here: “That all measures are of the same thing.”

….

A population mean is not a “thing” in your analysis of measurement.

…

You can’t measure a population mean with a single measure, you need to do random sampling of the population to obtain an estimator of the mean.

…

This is not at all like weighing a beaker full of chemicals on a scale.

…

You don’t conduct an opinion poll by going to the local bar and questioning a single patron….you need a much larger SAMPLE to get an idea of what the larger population’s opinion is. In the extreme case where N(number of obs) = population size, your measure of the average has zero error.

The “average” temperature is not of any real value, it is the change in temperature, and then, as a change in the equator-polar gradient that seems to matter in climate. Purporting to find changes to the nearest thousandth of a degree with instruments with a granularity of a whole degree appears to be an act of faith by the warmist community. Credo quia absurdiam?

Mark S; You miss the point. What is the mean of 0.2+- 0.5, 0.3+- 0.5, and 0.5+- 0.5. Where the +- is uncertainty. Is it 0.3+- 0.5? How will even an infinite number of measurement reduce the uncertainty?

The range is going to be 0.8 to -0.5. You can say the mean is 0.3333, but I can say it is 0.565656 and be just as correct. Basically, just the mean without the uncertainty limits is useless.

“Bartleby, I believe this is the crux of the wealth of misunderstanding here: “That all measures are of the same thing.”

….

A population mean is not a “thing” in your analysis of measurement.”

Mark, you’ve been beaten enough. Go in peace.

Peter,

The actual rule is that no calculated result is entitled to more significant figures than the LEAST precise multiplier in the calculation.

I suspect that some mathematicians and statisticians unconsciously assume that all the numbers they are working with have the precision of Pi. Indeed, that might be an interesting test. Calculate PI many times using only measurements with one significant figure and see how close the result comes to what is known.

Clyde,

“Calculate PI many times using only measurements with one significant figure”

Something like this was done, by Buffon, in about 1733. Toss needles on floorboards. How often do they lie across a line. That is equivalent to a coarse measure. And sure enough, you do get an estimate of π.

Omg. Look, the example with needles just bakes perfect accuracy into the pie. Now let’s try marking needles as over a line or not with effing cataracts or something…good lord. I don’t understand why the idea of “your observations are fundamentally effing limited man!” is so hard to understand here. Nothing to do with minimizing random sampling error.

Kip is correct if the temperature never deviates from 72degF +/- 0.5degF. You will just write down 72 degF and the error will indeed be has he indicates.

Fortunately the temperature varies far more than that. One day, the temperature high/ow is 72/45 from 71.5 true and 45.6 true, the next day it is 73/43 from 72.3 true and 44.8 true, the next day it is 79/48 from 79.4 true and 47.9 true, and so on. The noise that is the difference between the true and recorded measurement has an even distribution as he notes, but can be averaged as long as the underlying signal swings bigger than the resolution of 1degF.

The Central Limit is a real thing. You average together a bunch of data with rectangular distribution you get a normal distribution. Go ahead and look at the distribution of a 6 sided dice. With one dice it’s rectangular. With two dice it’s a triangle. Add more and more dice and it’s a normal distribution.

Fortunately the signal varies by more than the 1 bit comparator window for the sigma-delta A/D and D/A converters in your audio and video systems, which operate on similar principles. It would be quite obvious to your ears if they failed to work. (yes, they do some fancy feedback stuff to make it better, but you can get a poor man’s version by simple averaging. I’ve actually designed and built the circuits and software to do so)

Peter

You assume you know the “true” temperature. Lets change that to all that you know is 72/45 +- 0.5, 73/43 +- 0.5, and 79/48 +- 0.5. Where the +- is uncertainty. Does the mean also have an uncertainty of +- 0.5. If not why not. Will 1000 measurements change the fact that each individual measurements has a specific uncertainty and you won’t really know the “true” measurement?

for 1,000 measurements the *difference* between the true and the measured will form a rectangular distribution. If that distribution is averaged the average forms normal distribution, per the central limit theorem. The mean of that distribution will be zero, and thus the mean of the written-down measurements will be the ‘true’ measurement.

Try performing the numerical experiment yourself. It’s relatively easy to do in a spreadsheet.

Or go listen to some music from a digital source. The same thing is happening.

Peter; The problem is that you don’t know the true value? It lies somewhere between +- 0.5 but where is unknown.

How odd that your digital sound system appears to know.

You do know the true value for some period (integrating between t0 and t1) as long as the input signal varies by much greater than the resolution of your instrument. You do not know the temperature precisely at t0 or any time in between t0 and t1. But for the entire period you do know at a precision greater than that of your instrument. This is how most modern Analog to Digital measurement systems work.

Whether a temperature average is a useful concept by itself is not for debate here (I happen to think it’s relatively useless). But it does have more precision than a single measurement.

Nick Stokes posted an example above. Try running an example for yourself. It just requires a spreadsheet.

Peter; consider what you are integrating. Is it the recorded value or the maximum of the range or the minimum of the range or some variations of maximum, minimum, and recorded range?

And I’m sorry but integrating from t0 to t1 still won’t give the ‘true’ value. It can even give you a value to a multitude of decimal places. But you still can’t get rid of the uncertainty of the initial measurement.

Consider your analog to digital conversion. You have a signal that varies from +- 10.0 volts. However, your conversion apparatus is only accurate to +- 0.5 volts. How accurate will your conversion back to analog be?

Do you mean accuracy or precision? I’ll try to answer both.

If you mean precision:

It depends on the frequency and input signal characteristics. In the worst case of a DC signal with no noise at any other frequency, the precision is +/- 0.5 volts.