Guest essay by George White

For matter that’s absorbing and emitting energy, the emissions consequential to its temperature can be calculated exactly using the Stefan-Boltzmann Law,

1) P = εσT^4

where P is the emissions in W/m2, T is the temperature of the emitting matter in kelvins, σ is the Stefan-Boltzmann constant, whose value is about 5.67E-8 W/m2 per K^4, and ε is the emissivity, which is 1 for an ideal black body radiator and somewhere between 0 and 1 for a non-ideal system, also called a gray body. Wikipedia defines a Stefan-Boltzmann gray body as one “that does not absorb all incident radiation”, although it doesn’t specify what happens to the unabsorbed energy, which must either be reflected, passed through or do work other than heating the matter. This is a myopic view since the Stefan-Boltzmann Law is equally valid for quantifying a generalized gray body radiator whose source temperature is T and whose emissions are attenuated by an equivalent emissivity.
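As a minimal sketch of Equation 1, the function below evaluates the emissions for a given temperature and emissivity. The function name and the choice of 287K and 0.62 as sample inputs are illustrative assumptions (both values appear later in this essay); only the formula and the value of σ come from the text above.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant in W/m2 per K^4, as quoted in the essay


def emissions(temperature_k, emissivity=1.0):
    """Equation 1: P = emissivity * sigma * T^4, returned in W/m2."""
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must lie between 0 and 1")
    return emissivity * SIGMA * temperature_k ** 4


# Illustrative inputs (assumed here, taken from later in the essay).
black = emissions(287.0)        # ideal black body at 287K
gray = emissions(287.0, 0.62)   # gray body with an emissivity of 0.62

print(f"black body: {black:.1f} W/m2, gray body: {gray:.1f} W/m2")
```

For a fixed temperature the gray body output is simply the black body output scaled by ε, which is the sense in which ε acts as the only free variable.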

To conceptualize a gray body radiator, refer to Figure 1 which shows an ideal black body radiator whose emissions pass through a gray body filter where the emissions of the system are observed at the output of the filter. If T is the temperature of the black body, it’s also the temperature of the input to the gray body, thus Equation 1 still applies per Wikipedia’s over-constrained definition of a gray body. The emissivity then becomes the ratio between the energy flux on either side of the gray body filter. To be consistent with the Wikipedia definition, the path of the energy not being absorbed is omitted.

A key result is that for a system of radiating matter whose sole source of energy is that stored as its temperature, the only possible way to affect the relationship between its temperature and emissions is by varying ε, since the exponent in T^4 and σ are properties of immutable first principles physics and ε is the only free variable.

The units of emissions are Watts per square meter (W/m2) and one Watt is one Joule per second. The climate system is linear to Joules, meaning that if 1 Joule of photons arrives, 1 Joule of photons must leave and that each Joule of input contributes equally to the work done to sustain the average temperature independent of the frequency of the photons carrying that energy. This property of superposition in the energy domain is an important, unavoidable consequence of Conservation of Energy and is often ignored.

The steady state condition for matter that’s both absorbing and emitting energy is that it must be receiving enough input energy to offset the emissions consequential to its temperature. If more arrives than is emitted, the temperature increases until the two are in balance. If less arrives, the temperature decreases until the input and output are again balanced. If the input goes to zero, T will decay to zero.

Since 1 calorie (4.18 Joules) increases the temperature of 1 gram of water by 1C, temperature is a linear metric of stored energy; however, owing to the T^4 dependence of emissions, it’s a very non-linear metric of radiated energy. So while each degree of warmth requires the same incremental amount of stored energy, it requires a steeply increasing incoming energy flux, growing as T^4, to keep from cooling.

The equilibrium climate sensitivity factor (hereafter called the sensitivity) is defined by the IPCC as the long term incremental increase in T given a 1 W/m2 increase in input, where incremental input is called forcing. This can be calculated for emitting matter in LTE by differentiating the Stefan-Boltzmann Law with respect to T and inverting the result. The value of dT/dP has the required units of degrees K per W/m2 and is the slope of the Stefan-Boltzmann relationship as a function of temperature given as,

2) dT/dP = (4εσT^3)^-1
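The differentiation step behind Equation 2 can be written out as a short worked derivation. The final form, T/(4P), is not stated in the essay; it follows from substituting Equation 1 back in and is included only as a convenience for checking numbers.

```latex
\begin{align}
P &= \varepsilon \sigma T^{4} \\
\frac{dP}{dT} &= 4 \varepsilon \sigma T^{3} \\
\frac{dT}{dP} &= \frac{1}{4 \varepsilon \sigma T^{3}} = \frac{T}{4P}
\end{align}
```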

A black body is nearly an exact model for the Moon. If P is the average energy flux density received from the Sun after reflection, the average temperature, T, and the sensitivity, dT/dP, can be calculated exactly. If regions of the surface are analyzed independently, the average T and sensitivity for each region can be precisely determined. Due to the non-linearity, it’s incorrect to sum up and average all the T’s for each region of the surface, but the power emitted by each region can be summed, averaged and converted into an equivalent average temperature by applying the Stefan-Boltzmann Law in reverse. Knowing the heat capacity per m2 of the surface, the dynamic response of the surface to the rising and setting Sun can also be calculated, all of which was confirmed by equipment delivered to the Moon decades ago and more recently by the Lunar Reconnaissance Orbiter. Since the lunar surface in equilibrium with the Sun emits 1 W/m2 of emissions per W/m2 of power it receives, its surface power gain is 1.0. In an analytical sense, the surface power gain and surface sensitivity quantify the same thing, except for the units, where the power gain is dimensionless and independent of temperature, while the sensitivity as defined by the IPCC has a T^-3 dependency and is incorrectly considered to be approximately temperature independent.
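The point about averaging power rather than temperature can be sketched as follows. The two region temperatures are hypothetical placeholders, not lunar measurements, and the regions are assumed to have equal area so that a plain mean is appropriate.

```python
SIGMA = 5.67e-8  # W/m2 per K^4


def equivalent_average_temperature(region_temps_k, emissivity=1.0):
    """Average the emitted power of equal-area regions, then invert Equation 1."""
    powers = [emissivity * SIGMA * t ** 4 for t in region_temps_k]
    mean_power = sum(powers) / len(powers)
    return (mean_power / (emissivity * SIGMA)) ** 0.25


# Hypothetical cold and hot regions of equal area (illustrative values only).
temps = [100.0, 390.0]

naive_mean = sum(temps) / len(temps)
equivalent = equivalent_average_temperature(temps)

print(f"arithmetic mean of T: {naive_mean:.1f} K")
print(f"equivalent temperature from mean power: {equivalent:.1f} K")
```

Because emissions scale as T^4, the hot region dominates the mean power, so the equivalent temperature lands well above the arithmetic mean of the two temperatures.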

A gray body emitter is one where the power emitted is less than would be expected for a black body at the same temperature. This is the only possibility since the emissivity can’t be greater than 1 without a source of power beyond the energy stored by the heated matter. The only place for the thermal energy to go, if not emitted, is back to the source and it’s this return of energy that manifests a temperature greater than the observable emissions suggest. The attenuation in output emissions may be spectrally uniform, spectrally specific or a combination of both and the equivalent emissivity is a scalar coefficient that embodies all possible attenuation components. Figure 2 illustrates how this is applied to Earth, where A represents the fraction of surface emissions absorbed by the atmosphere, (1 – A) is the fraction that passes through and the geometrical considerations for the difference between the area across which power is received by the atmosphere and the area across which power is emitted are accounted for. This leads to an emissivity for the gray body atmosphere of A and an effective emissivity for the system of (1 – A/2).

The average temperature of the Earth’s emitting surface at the bottom of the atmosphere is about 287K, has an emissivity very close to 1 and emits about 385 W/m2 per Equation 1. After accounting for reflection by the surface and clouds, the Earth receives about 240 W/m2 from the Sun, thus each W/m2 of input contributes equally to produce 1.6 W/m2 of surface emissions for a surface power gain of 1.6.
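The arithmetic in this paragraph can be checked with a few lines. This is only a sketch using the essay's round numbers (287K and 240 W/m2); the variable names are illustrative.

```python
SIGMA = 5.67e-8  # W/m2 per K^4

surface_temp_k = 287.0   # average surface temperature quoted above
solar_input = 240.0      # post-albedo solar input in W/m2 quoted above

# Equation 1 with an emissivity of 1 for the surface.
surface_emissions = SIGMA * surface_temp_k ** 4

# Surface power gain: W/m2 of surface emissions per W/m2 of solar input.
surface_power_gain = surface_emissions / solar_input

print(f"surface emissions: {surface_emissions:.1f} W/m2")
print(f"surface power gain: {surface_power_gain:.2f}")
```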

Two influences turn 240 W/m2 of solar input into 385 W/m2 of surface output. First is the effect of GHG’s which provides spectrally specific attenuation and second is the effect of the water in clouds which provides spectrally uniform attenuation. Both warm the surface by absorbing some fraction of surface emissions and after some delay, recycling about half of the energy back to the surface. Clouds also manifest a conditional cooling effect by increasing reflection unless the surface is covered in ice and snow when increasing clouds have only a warming influence.

Consider that if 290 W/m2 of the 385 W/m2 emitted by the surface is absorbed by atmospheric GHG’s and clouds (A ~ 0.75), the remaining 95 W/m2 passes directly into space. Atmospheric GHG’s and clouds absorb energy from the surface, while geometric considerations require the atmosphere to emit energy out to space and back to the surface in roughly equal proportions. Half of 290 W/m2 is 145 W/m2 which when added to the 95 W/m2 passed through the atmosphere exactly offsets the 240 W/m2 arriving from the Sun. When the remaining 145 W/m2 is added to the 240 W/m2 coming from the Sun, the total is 385 W/m2 exactly offsetting the 385 W/m2 emitted by the surface. If the atmosphere absorbed more than 290 W/m2, more than half of the absorbed energy would need to exit to space while less than half will be returned to the surface. If the atmosphere absorbed less, more than half must be returned to the surface and less would be sent into space. Given the geometric considerations of a gray body atmosphere and the measured effective emissivity of the system, the testable average fraction of surface emissions absorbed, A, can be predicted as,

3) A = 2(1 – ε)
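The bookkeeping that leads to Equation 3 can be sketched as below. The function name is hypothetical, and the equal split of absorbed energy between space and the surface is the essay's stated assumption rather than something the code establishes.

```python
def atmosphere_balance(surface_emissions, absorbed, up_fraction=0.5):
    """Split surface emissions into the paths described in the text.

    up_fraction is the share of absorbed energy emitted to space; the
    essay assumes roughly half goes up and half returns to the surface.
    """
    passed_through = surface_emissions - absorbed
    to_space = passed_through + up_fraction * absorbed
    back_to_surface = (1.0 - up_fraction) * absorbed
    return passed_through, to_space, back_to_surface


surface = 385.0   # W/m2 emitted by the surface
absorbed = 290.0  # W/m2 absorbed by GHG's and clouds
solar = 240.0     # W/m2 arriving from the Sun

passed, to_space, back = atmosphere_balance(surface, absorbed)

A = absorbed / surface                 # fraction of surface emissions absorbed
effective_emissivity = to_space / surface
A_from_equation_3 = 2.0 * (1.0 - effective_emissivity)

print(f"passed through: {passed}, to space: {to_space}, back to surface: {back}")
print(f"A = {A:.3f}, A from Equation 3 = {A_from_equation_3:.3f}")
```

With a 50/50 split the planet emits (1 – A/2) of the surface emissions, so solving for A recovers Equation 3; a different up_fraction would change that relationship.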

Non radiant energy entering and leaving the atmosphere is not explicitly accounted for by the analysis, nor should it be, since only radiant energy transported by photons is relevant to the radiant balance and the corresponding sensitivity. Energy transported by matter includes convection and latent heat where the matter transporting energy can only be returned to the surface, primarily by weather. Whatever influences these have on the system are already accounted for by the LTE surface temperatures, thus their associated energies have a zero sum influence on the surface radiant emissions corresponding to its average temperature. Trenberth’s energy balance lumps the return of non radiant energy as part of the ‘back radiation’ term, which is technically incorrect since energy transported by matter is not radiation. To the extent that latent heat energy entering the atmosphere is radiated by clouds, less of the surface emissions absorbed by clouds must be emitted for balance. In LTE, clouds are both absorbing and emitting energy in equal amounts, thus any latent heat emitted into space is transient and will be offset by more surface energy being absorbed by atmospheric water.

The Earth can be accurately modeled as a black body surface with a gray body atmosphere, whose combination is a gray body emitter whose temperature is that of the surface and whose emissions are that of the planet. To complete the model, the required emissivity is about 0.62 which is the reciprocal of the surface power gain of 1.6 discussed earlier. Note that both values are dimensionless ratios with units of W/m2 per W/m2. Figure 3 demonstrates the predictive power of the simplest gray body model of the planet relative to satellite data.

Figure 3

Each little red dot is the average monthly emissions of the planet plotted against the average monthly surface temperature for each 2.5 degree slice of latitude. The larger dots are the averages for each slice across 3 decades of measurements. The data comes from the ISCCP cloud data set provided by GISS, although the output power had to be reconstructed from a radiative transfer model driven by surface and cloud temperatures, cloud opacity and GHG concentrations, all of which were supplied variables. The green line is the Stefan-Boltzmann gray body model with an emissivity of 0.62 plotted to the same scale as the data. Even when compared against short term monthly averages, the data closely corresponds to the model. An even closer match to the data arises when the minor second order dependencies of the emissivity on temperature are accounted for. The biggest of these is a small decrease in emissivity as temperatures increase above about 273K (0C). This is the result of water vapor becoming important and the lack of surface ice above 0C. Modifying the effective emissivity is exactly what changing CO2 concentrations would do, except to a much lesser extent, and the 3.7 W/m2 of forcing said to arise from doubling CO2 is the solar forcing equivalent to a slight decrease in emissivity keeping solar forcing constant.

Near the equator, the emissivity increases with temperature in one hemisphere with an offsetting decrease in the other. The origin of this is uncertain but it may be an anomaly that has to do with the normalization applied to use 1 AU solar data which can also explain some other minor anomalous differences seen between hemispheres in the ISCCP data, but that otherwise average out globally.

When calculating sensitivities using Equation 2, the result for the gray body model of the Earth is about 0.3K per W/m2 while that for an ideal black body (ε = 1) at the surface temperature would be about 0.19K per W/m2, both of which are illustrated in Figure 3. Modeling the planet as an ideal black body emitting 240 W/m2 results in an equivalent temperature of 255K and a sensitivity of about 0.27K per W/m2 which is the slope of the black curve and slightly less than the equivalent gray body sensitivity represented as a green line on the black curve.
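The three sensitivities quoted in this paragraph can be reproduced from Equation 2. This is a sketch using the essay's round inputs (287K, an emissivity of 0.62 and 240 W/m2); the function and variable names are illustrative.

```python
SIGMA = 5.67e-8  # W/m2 per K^4


def sensitivity(temperature_k, emissivity=1.0):
    """Equation 2: dT/dP = 1 / (4 * emissivity * sigma * T^3), in K per W/m2."""
    return 1.0 / (4.0 * emissivity * SIGMA * temperature_k ** 3)


# Gray body model of the Earth: surface temperature, emissivity of 0.62.
gray_body = sensitivity(287.0, 0.62)

# Ideal black body at the surface temperature.
black_body_surface = sensitivity(287.0)

# Ideal black body emitting 240 W/m2: invert Equation 1 for its temperature.
equivalent_temp = (240.0 / SIGMA) ** 0.25
black_body_planet = sensitivity(equivalent_temp)

print(f"gray body at 287K:      {gray_body:.2f} K per W/m2")
print(f"black body at 287K:     {black_body_surface:.2f} K per W/m2")
print(f"black body at {equivalent_temp:.0f}K:     {black_body_planet:.2f} K per W/m2")
```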

This establishes theoretical possibilities for the planet’s sensitivity somewhere between 0.19K and 0.3K per W/m2 for a thermodynamic model of the planet that conforms to the requirements of the Stefan-Boltzmann Law. It’s important to recognize that the Stefan-Boltzmann Law is an uncontroversial and immutable law of physics, derivable from first principles, quantifies how matter emits energy, has been settled science for more than a century and has been experimentally validated innumerable times.

A problem arises with the stated sensitivity of 0.8C +/- 0.4C per W/m2, where even the so called high confidence lower limit of 0.4C per W/m2 is larger than any of the theoretical values. Figure 3 shows this as a blue line drawn to the same scale as the measured (red dots) and modeled (green line) data.

One rationalization arises by inferring a sensitivity from measurements of adjusted and homogenized surface temperature data, extrapolating a linear trend and considering that all change has been due to CO2 emissions. It’s clear that the temperature has increased since the end of the Little Ice Age, which coincidentally was concurrent with increasing CO2 arising from the Industrial Revolution, and that this warming has been a little more than 1 degree C, for an average rate of about 0.5C per century. Much of this increase happened prior to the beginning of the 20th century and since then, the temperature has been fluctuating up and down and as recently as the 1970’s, many considered global cooling to be an imminent threat. Since the start of the 21st century, the average temperature of the planet has remained relatively constant, except for short term variability due to natural cycles like the PDO.

A serious problem is the assumption that all change is due to CO2 emissions when the ice core records show that change of this magnitude is quite normal and was so long before man harnessed fire, when humanity’s primary influences on atmospheric CO2 were to breathe and to decompose. The hypothesis that CO2 drives temperature arose as a knee jerk reaction to the Vostok ice cores which indicated a correlation between temperature and CO2 levels. While such a correlation is undeniable, newer, higher resolution data from the DomeC cores confirms an earlier temporal analysis of the Vostok data that showed how CO2 concentrations follow temperature changes by centuries and not the other way around as initially presumed. The most likely hypothesis explaining centuries of delay is biology, where the biosphere slowly adapts to warmer (colder) temperatures as more (less) land is suitable for biomass and the steady state CO2 concentrations will need to be more (less) in order to support a larger (smaller) biomass. The response is slow because it takes a while for natural sources of CO2 to arise and be accumulated by the biosphere. The variability of CO2 in the ice cores is really just a proxy for the size of the global biomass which happens to be temperature dependent.

The IPCC asserts that doubling CO2 is equivalent to 3.7 W/m2 of incremental, post albedo solar power and will result in a surface temperature increase of 3C based on a sensitivity of 0.8C per W/m2. An inconsistency arises because if the surface temperature increases by 3C, its emissions increase by more than 16 W/m2 so 3.7 W/m2 must be amplified by more than a factor of 4, rather than the factor of 1.6 measured for solar forcing. The explanation put forth is that the gain of 1.6 (equivalent to a sensitivity of about 0.3C per W/m2) is before feedback and that positive feedback amplifies this up to about 4.3 (0.8C per W/m2). This makes no sense whatsoever since the measured value of 1.6 W/m2 of surface emissions per W/m2 of solar input is a long term average and must already account for the net effects from all feedback like effects, positive, negative, known and unknown.
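The inconsistency described here rests on a short calculation, sketched below with the figures from this paragraph (a 287K surface, 3C of warming and 3.7 W/m2 of forcing). The variable names are illustrative.

```python
SIGMA = 5.67e-8  # W/m2 per K^4

surface_temp_k = 287.0  # starting surface temperature
warming_k = 3.0         # claimed temperature increase for doubled CO2
forcing = 3.7           # W/m2 said to arise from doubling CO2

# Increase in surface emissions implied by the warming (Equation 1, emissivity 1).
emissions_increase = SIGMA * ((surface_temp_k + warming_k) ** 4 - surface_temp_k ** 4)

# Amplification of the forcing needed to sustain that increase.
implied_gain = emissions_increase / forcing

print(f"increase in surface emissions: {emissions_increase:.1f} W/m2")
print(f"implied gain on the forcing:   {implied_gain:.1f}")
```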

Another of the many problems with the feedback hypothesis is that the mapping to the feedback model used by climate science does not conform to two important assumptions that are crucial to Bode’s linear feedback amplifier analysis referenced to support the model. First is that the input and output must be linearly related to each other, while the forcing power input and temperature change output of the climate feedback model are not, owing to the T^4 relationship between the required input flux and temperature. The second is that Bode’s feedback model assumes an internal and infinite source of Joules powers the gain. The presumption that the Sun is this source is incorrect, for if it was, the output power could never exceed the power supply and the surface power gain would never be more than 1 W/m2 of output per W/m2 of input, which would limit the sensitivity to be less than 0.2C per W/m2.

Finally, much of the support for a high sensitivity comes from models. But as has been shown here, a simple gray body model predicts a much lower sensitivity and is based on nothing but the assumption that first principles physics must apply; moreover, there are no tuneable coefficients, yet this model matches measurements far better than any other. The complex General Circulation Models used to predict weather are the foundation for models used to predict climate change. They do have physics within them, but also have many buried assumptions, knobs and dials that can be used to curve fit the model to arbitrary behavior. The knobs and dials are tweaked to match some short term trend, assuming it’s the result of CO2 emissions, and then extrapolated based on continuing a linear trend. The problem is that there are so many degrees of freedom in the model, it can be tuned to fit anything while remaining horribly deficient at both hindcasting and forecasting.

The results of this analysis explain the source of climate science skepticism, which is that IPCC driven climate science has no answer to the following question:

What law(s) of physics can explain how to override the requirements of the Stefan-Boltzmann Law as it applies to the sensitivity of matter absorbing and emitting energy, while also explaining why the data shows a nearly exact conformance to this law?

References

1) IPCC reports, definition of forcing, AR5, figure 8.1, AR5 Glossary, ‘climate sensitivity parameter’

2) Kevin E. Trenberth, John T. Fasullo, and Jeffrey Kiehl, 2009: Earth’s Global Energy Budget. Bull. Amer. Meteor. Soc., 90, 311–323.

3) Bode H, Network Analysis and Feedback Amplifier Design assumption of external power supply and linearity: first 2 paragraphs of the book

4) Manfred Mudelsee, The phase relations among atmospheric CO2 content, temperature and global ice volume over the past 420 ka, Quaternary Science Reviews 20 (2001) 583-589

5) Jouzel, J., et al. 2007: EPICA Dome C Ice Core 800KYr Deuterium Data and Temperature Estimates.

6) ISCCP Cloud Data Products: Rossow, W.B., and Schiffer, R.A., 1999: Advances in Understanding Clouds from ISCCP. Bull. Amer. Meteor. Soc., 80, 2261-2288.

7) “Diviner Lunar radiometer Experiment” UCLA, August, 2009

George: Sorry to arrive late to this discussion. You asked: What law(s) of physics can explain how to override the requirements of the Stefan-Boltzmann Law as it applies to the sensitivity of matter absorbing and emitting energy, while also explaining why the data shows a nearly exact conformance to this law?

Planck’s Law (and therefore the SB eqn) was derived assuming radiation in equilibrium with GHGs (originally quantized oscillators). Look up any derivation of Planck’s Law. The atmosphere is not in equilibrium with the thermal infrared passing through it. Radiation in the atmospheric window passes through unobstructed with intensity appropriate for a blackbody at surface temperature. Radiation in strongly absorbed bands has intensity appropriate for a blackbody at 220 K, a 3X difference in T^4! So the S-B eqn is not capable of properly describing what happens in the atmosphere.

The appropriate eqn for systems that are not at equilibrium is the Schwarzschild eqn, which is used by programs such as MODTRAN, HITRAN, and AOGCMs.

Frank,

The Schwarzschild eqn. can describe atmospheric radiative transfer for both the system in a state of equilibrium as well as out of equilibrium, i.e. during the path from one equilibrium state to another. But even what it can describe for the equilibrium state is an average of immensely dynamic behavior.

The point is that the data plotted is the net observed result of all the dynamic physics, radiant and non-radiant, mixed together. That is, it implicitly includes the effect of all physical processes and feedbacks in the system that operate on timescales of decades or less, which certainly includes water vapor and clouds.

Frank,

“So the S-B eqn is not capable of properly describing what happens in the atmosphere.”

This is not what the model is modelling. The elegance of this solution is that what happens within the atmosphere is irrelevant and all that complication can be avoided. Consensus climate science is hung up on all the complexity so they have the wiggle room to assert fantastic claims which spills over into skeptical thinking and this contributes to why climate science is so broken. My earlier point was that it’s counterproductive to try and out-psych how the atmosphere works inside if the behavior at the boundaries is unknown. This model quantifies the behavior at the boundaries and provides a target for more complex modelling of the atmosphere’s interior. GCM’s essentially run open loop relative to the required behavior at the boundaries and hope to predict it, rather than be constrained by it. This methodology represents standard practices for reverse engineering an unknown system. Unfortunately, standard practices are rarely applied to climate science, especially if it results in an inconvenient answer. A classic example of this is testing hypotheses and BTW, Figure 3 is a test of the hypothesis that a gray body at the surface temperature with an emissivity of 0.62 is an accurate model of the boundary behavior of the atmosphere.

I’m only modelling how it behaves at the boundaries and if this can be predicted with high precision, which I have unambiguously demonstrated (per Figure 3), it doesn’t matter how that behavior manifests itself, just that it does. As far as the model is concerned, the internals of the atmosphere can be pixies pushing photons around, as long as the net result conforms to macroscopic physical constraints.

Consider the Entropy Minimization Principle. What does it mean to minimize entropy? It’s minimizing deviations from ideal and the Stefan-Boltzmann relationship is an ideal quantification. As a consequence of so many degrees of freedom, the atmosphere has the capability to self-organize to achieve minimum entropy, as any natural system would do. If the external behavior does not align with SB, especially the claim of a sensitivity far in excess of what SB supports, the entropy must be too high to be real, that is, the deviations from ideal are far out of bounds for a natural system.

As far as Planck is concerned, the equivalent temperature of the planet (255K) is based on an energy flux that is not a pure Planck spectrum, but a Planck spectrum whose clear sky color temperature (the peak emissions per Wien’s Displacement Law) is the surface temperature, but with sections of bandwidth removed, decreasing the total energy to be EQUIVALENT to an ideal BB radiating a Planck spectrum at 255K.

co2isnotevil:

Rather than calling the solution “elegant” I would call it an application of the reification fallacy. Global warming climatology is based upon application of this fallacy.

micro6500,

“There won’t just be notches, there would be some enhancement in the windows”

This isn’t consistent with observations. If the energy in the ‘notches’ was ‘thermalized’ and re-emitted as a Planck spectrum boosting the power in the transparent window, we would observe much deeper notches than we do. The notches we see in saturated absorption lines show about a 50% reduction in outgoing flux over what there would be given an ideal Planck spectrum which is consistent with the 50/50 split of energy leaving the atmosphere consequential to photons emitted by GHG’s being emitted in a random direction (after all is said and done, approximately half up and half down).

Which is a sign of no enhanced warming. The wv regulation will completely erase any forcing over dew point as the days get longer. But it is only the difference of 10 or 20 minutes less cooling at the low rate after an equal reduction at the high cooling rates. So as the days lengthen you get those 20. And a storm will also wipe it out.

George: Figure 3 is interesting, but problematic. The flux leaving the TOA is the dependent variable and the surface temperature is the independent variable, so normally one would plot this data with the axes switched.

Now let’s look at the dynamic range of your data. About half of the planet is tropical, with Ts around 300 K. Power out varies by 70 W/m2 from this portion of the planet with little change in Ts. There is not a functional relationship between Ts and power out for this half of the planet. The data is scattered because cloud cover and altitude have a tremendous impact on power out.

Much of the dynamic range in your data comes from the polar regions, a very small fraction of the planet.

The problem with this way of looking at the data is that the atmosphere is not a blackbody with an emissivity of 0.61. The apparent emissivity of 0.61 occurs because the average photon escaping to space (power out) is emitted at an altitude where the temperature is 255 K. The changes in power out in your graph are produced by moving from one location to another on the planet where the temperature is different, humidity (as GHG) is different and photons escaping to space come from different altitudes. The slope of your graph may have units of K/W/m2, but that doesn’t mean it is a measure of climate sensitivity – the change in TOA OLR and reflected SWR caused by warming everywhere on the planet.

Frank,

Part of the problem here with the conceptualization of sensitivity, feedback, etc. is the way the issue is framed by (mainstream) climate science. The way the issue is framed is more akin to the system being a static equilibrium system whose behavior upon a change in the energy balance or in response to some perturbation is totally unknown or a big mystery, rather than it being an already mostly physically manifested highly dynamic equilibrium system.

I assume you agree the system is an immensely dynamic one, right? That is, the energy balance is immensely dynamically maintained. What are the two most dynamic components of the Earth-atmosphere system? Water vapor and clouds, right?

I think the physical constraints George is referring to in this context are really physically logical constraints given observed behavior, rather than some universal physical constraints considered by themselves. No, there is no universal physical constraint or physical law (S-B or otherwise) on its own, independent of logical context, that constrains sensitivity within the approximate bounds George is claiming.

RW.

The most dynamic component of any convecting atmosphere (and they always convect) is the conversion of KE to PE in rising air and conversion of PE to KE in falling air.

Clouds and water vapour and anything else with any thermal effect achieve their effects by influencing that process.

Since, over time, ascent must be matched by descent if hydrostatic equilibrium is to be maintained it follows that nothing (including GHGs) can destabilise that hydrostatic equilibrium otherwise the atmosphere would be lost.

It is that component which neutralises all destabilising influences by providing an infinitely variable thermal buffer.

That is what places a constraint on climate sensitivity from ALL potential destabilising forces.

The trade off against anything that tries to introduce an imbalance is a change in the distribution of the mass content of the atmosphere. Anything that successfully distorts the lapse rate slope in one location will distort it in an equal and opposite direction elsewhere.

This is relevant:

http://joannenova.com.au/2015/10/for-discussion-can-convection-neutralize-the-effect-of-greenhouse-gases/

Stephen,

“This is relevant:” (post on jonova)

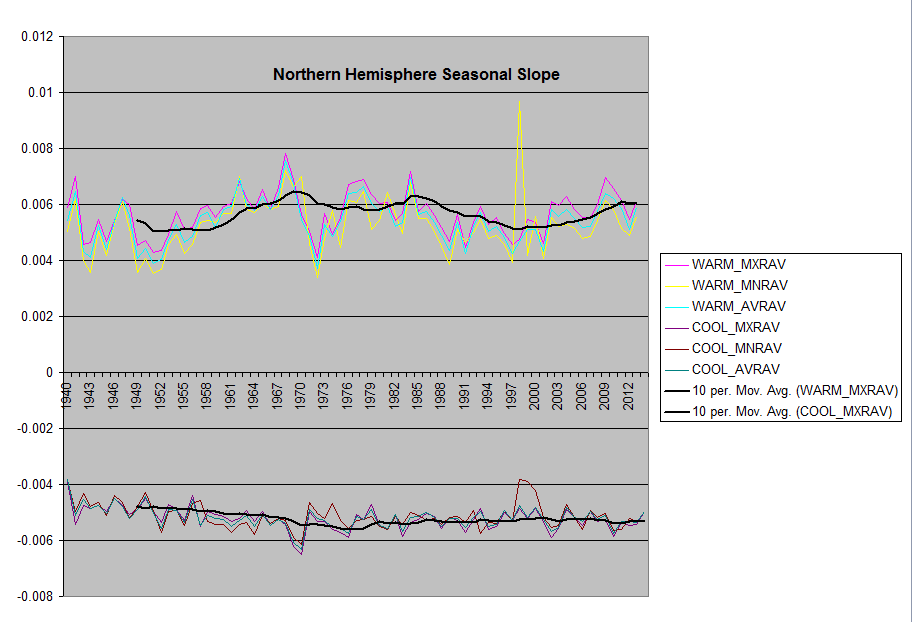

What I see that this does is provide one of the many degrees of freedom that combined drive the surface behavior towards ideal (minimize entropy) which is 1 W/m^2 of emissions per incremental W/m^2 of forcing (sensitivity of about 0.19 C per W/m^2). I posted a plot that showed that this is the case earlier in the comments. Rather than plotting output power vs. temperature, input power vs. temperature is plotted.

co2isnotevil

Everything you can envisage as comprising a degree of freedom operates by moving mass up or down the density gradient and thus inevitably involves conversion of KE to PE or PE to KE.

Thus, at base, there is only one underlying degree of freedom which involves the ratio between KE and PE within the mass of the bulk atmosphere.

Whenever that ratio diverges from the ratio that is required for hydrostatic equilibrium then convection moves atmospheric mass up or down the density gradient in order to eliminate the imbalance.

Convection can do that because convection is merely a response to density differentials and if one changes the ratio between KE and PE between air parcels then density changes as well so that changes in convection inevitably ensue.

The convective response is always equal and opposite to any imbalance that might be created. Either KE is converted to PE or PE is converted to KE as necessary to retain balance.

The PE within the atmosphere is a sort of deposit account into which heat (KE) can be placed or drawn out as needed. I like to refer to it as a ‘buffer’.

That is the true (and only) physical constraint to climate sensitivity to every potential forcing.

As regards your head post the issue is whether your findings are consistent or inconsistent with that proposition.

I think they are consistent but do you agree?

Stephen,

“I think they are consistent but do you agree?”

It’s certainly consistent with the relationship between incident energy and temperature, or the ‘charging’ path. The head posting is more about the ‘discharge’ path as it puts limits on the sensitivity, but to the extent that input == output in LTE (hence putting emissions along the X axis as the ‘input’), it’s also consistent in principle with the discharge path.

The charging/discharging paradigm comes from the following equation:

Pi(t) = Po(t) + dE(t)/dt

which quantifies the EXACT dynamic relationship between input power and output power. When they are instantaneously different, the difference is either added to or subtracted from the energy stored by the system (E).

If we define an arbitrary amount of time, tau, such that all of E is emitted in tau time at the rate Po(t), this can be rewritten as,

Pi(t) = E(t)/tau + dE/dt

You might recognize this as the same form of differential equation that quantifies the charging and discharging of a capacitor where tau is the time constant. Of course for the case of the climate system, tau is not constant and has a relatively strong temperature dependence.
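The charging behavior of this equation can be sketched with a simple forward Euler integration. Note the loud assumption: tau is held constant here purely for illustration, whereas the comment above says it is temperature dependent for the climate system. The function name, step size and input values are likewise hypothetical, and the time units are arbitrary.

```python
def simulate_stored_energy(p_in, tau, e0=0.0, dt=0.01, steps=2000):
    """Integrate Pi = E/tau + dE/dt with forward Euler for constant Pi and tau."""
    energy = e0
    history = [energy]
    for _ in range(steps):
        # Rearranged: dE/dt = Pi - E/tau, where E/tau plays the role of Po.
        de_dt = p_in - energy / tau
        energy += de_dt * dt
        history.append(energy)
    return history


# Illustrative run: constant input of 240 (W/m2) and tau of 1 time unit.
history = simulate_stored_energy(p_in=240.0, tau=1.0)

steady_state = 240.0 * 1.0  # E settles where E/tau equals the input
print(f"E after 1 tau:   {history[100]:.1f}")
print(f"E after 20 tau:  {history[-1]:.3f} (steady state {steady_state})")
```

As with a capacitor, the stored energy rises quickly at first and then levels off once the output E/tau matches the input, which is the balance condition the head post describes.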

Thanks.

If my scenario is consistent with your findings then does that not provide what you asked for, namely

“What law(s) of physics can explain how to override the requirements of the Stefan-Boltzmann Law as it applies to the sensitivity of matter absorbing and emitting energy, while also explaining why the data shows a nearly exact conformance to this law?”

“If my scenario is consistent with your findings then does that not provide what you asked for”,

It doesn’t change the derived sensitivity, it just offers a possibility for how the system self-organizes to drive itself towards ideal behavior in the presence of incomprehensible complexity.

I’m only modelling the observed behavior and the model of the observed behavior is unaffected by how that behavior arises. Your explanation is a possibility for how that behavior might arise, but it’s not the only one and IMHO, it’s a lot more complicated than what you propose.

It only becomes complicated if one tries to map all the variables that can affect the KE/PE ratio. I think that would be pretty much impossible due to incomprehensible complexity, as you say.

As for alternative possibilities I would be surprised if you could specify one that does not boil down to variations in the KE/PE ratio.

The reassuring thing for me at this point is that you do not have anything that invalidates my proposal. That is helpful.

With regard to derived sensitivity I think you may be making an unwarranted assumption that CO2 makes any measurable contribution at all since the data you use appears to relate to cloudiness rather than CO2 amounts, or have I missed something?

Stephen,

“With regard to derived sensitivity I think you may be making an unwarranted assumption that CO2 makes any measurable contribution at all”

Remember that my complete position is that the degrees of freedom that arise from incomprehensible complexity drive the climate system’s behavior towards ideal (per the Entropy Minimization Principle) where the surface sensitivity converges to 1 W/m^2 of surface emissions per W/m^2 of input (I don’t like the term forcing which is otherwise horribly ill defined). For CO2 to have no effect, the sensitivity would need to be zero. The effects you are citing have more to do with mitigating the sensitivity to solar input and are not particularly specific to increased absorption by CO2. Nonetheless, they have the same net effect, but the effect of incremental CO2 is not diminished to zero.

With regard to other complexities, dynamic cloud coverage, the dynamic ratio between cloud height and cloud area and the dynamic modulation of the nominal 50/50 split of absorbed energy all contribute as degrees of freedom driving the system towards ideal.

Stephen,

OK, but the point is the process by which water evaporates from the surface, ultimately condenses to form clouds, and then is ultimately precipitated out of the atmosphere (i.e. out of the clouds) and gets back to the surface is an immensely dynamic, continuously occurring process within the Earth-atmosphere system. And a relatively fast acting one, as the average time it takes for a water molecule to be evaporated from the surface and eventually precipitated back to the surface (as rain or snow) is only about 10 days or so.

The point (which was made to Frank) is that all of the physical processes and feedbacks involved in this process, i.e. the hydrological cycle, and their ultimate manifestation on the energy balance of the system, including at the surface, are fully accounted for in the data plotted. This is because not only does the data span about 30 years, which is far longer than the 10 day average of the hydrological cycle, but each small dot that makes up the curve is a monthly average of all the dynamic behavior, radiant and non-radiant, known and unknown, in each grid area.

Frank,

It seems you have accepted the fundamental way the field has framed up the feedback and sensitivity question, which is really as if the Earth-atmosphere system is a static equilibrium system (or more specifically a system that has dynamically reached a static equilibrium), and whose physical components will respond to a perturbation or energy imbalance in a totally unknown way with totally unknown bounds, to reach a new static equilibrium.

The point is the system is an immensely dynamic equilibrium system, where its energy balance is continuously dynamically maintained. It has not reached what would be a static equilibrium, but instead reached an immensely dynamically maintained approximate average equilibrium state. It is these immensely dynamic physical processes at work, radiant and non-radiant, known and unknown, in maintaining the physical manifestation of this energy balance, that cannot be arbitrarily separated from those that will act in response to newly imposed imbalances to the system, like from added GHGs.

It is physically illogical to think these physical processes and feedbacks already in continuous dynamic operation in maintaining the current energy balance would have any way of distinguishing such an imbalance from any other imbalance imposed as a result of the regularly occurring dynamic chaos in the system, which at any one point in time or in any one local area is almost always out of balance to some degree in one way or another.

The term “climate science” is inaccurate and misleading for the models that are created by this field of study lack the property of falsifiability. As the models lack falsifiability it is accurate to call the field of study that creates them “climate pseudoscience.” To elevate their field of study to a science, climate pseudoscientists would have to identify the statistical populations underlying their models and cross validate these models before publishing them or using them in attempts at controlling Earth’s climate.

Co2isnotevil

I would say that the climate sensitivity in terms of average surface temperature is reduced to zero whatever the cause of a radiative imbalance from variations internal to the system (including CO2) but the overall outcome is not net zero because of the change in circulation pattern that occurs instead. Otherwise hydrostatic equilibrium cannot be maintained.

The exception is where a radiative imbalance is due to an albedo/cloudiness change. In that case the input to the system changes and the average surface temperature must follow.

Your work shows that the system drives back towards ideal and I agree that the various climate and weather phenomena that constitute ‘incomprehensible complexity’ are the process of stabilisation in action. On those two points we appear to be in agreement.

The ideal that the system drives back towards is the lapse rate slope set by atmospheric mass and the strength of the gravitational field together with the surface temperature set by both incoming radiation from space (after accounting for albedo) and the energy requirement of ongoing convective overturning.

The former matches the S-B equation which provides 255K at the surface and the latter accounts for the observed additional 33K at the surface.

Stephen,

“The ideal that the system drives back towards is the lapse rate slope …”

You seem to believe that the surface temperature is a consequence of the lapse rate, while I believe that the lapse rate is a function of gravity alone and the temperature gradient manifested by it is driven by the surface temperature which is established as an equilibrium condition between the surface and the Sun. If gravity was different, I claim that the surface temperature would not be any different, but the lapse rate would change while you claim that the surface temperature would be different because of the changed lapse rate.

Is this a correct assessment of your position?

Good question 🙂

I do not believe that the surface temperature is a consequence of the lapse rate. The surface temperature is merely the starting point for the lapse rate.

If there is no atmosphere then S-B is satisfied and there is (obviously) no lapse rate.

The surface temperature beneath a gaseous atmosphere is a result of insolation reaching the surface (so albedo is relevant) AND atmospheric mass AND gravity. No gravity means no atmosphere.

However, if you increase gravity alone whilst leaving insolation and atmospheric mass the same then you get increased density at the surface and a steeper density gradient with height. The depth of the atmosphere becomes more compressed. The lapse rate follows the density gradient simply because the lapse rate slope traces the increased value of conduction relative to radiation as one descends through the mass of an atmosphere.

Increased density at the surface means that more conduction can occur at the same level of insolation but convection then has less vertical height to travel before it returns back to the surface so the net thermal effect should be zero.

The density gradient being steeper, the lapse rate must be steeper as well in order to move from the surface temperature to the temperature of space over a shorter distance of travel.

The surface temperature would remain the same with increased gravity (just as you say) but the lapse rate slope would be steeper (just as you say) and, to compensate, convective overturning would require less time because it has less far to travel. There is a suggestion from others that increased density reduces the speed of convection due to higher viscosity so that might cause a rise in surface temperature but I am currently undecided on that.

Gravity is therefore only needed to provide a countervailing force to the upward pressure gradient force. As long as gravity is sufficient to offset the upward pressure gradient force and thereby retain an atmosphere in hydrostatic equilibrium the precise value of the gravitational force makes no difference to surface temperature except in so far as viscosity might be relevant.

So, the lapse rate slope is set by gravity alone because gravity sets the density gradient which in turn sets the balance between radiation and conduction within the vertical plane.

One can regard the lapse rate slope as a marker for the rate at which conduction takes over from radiation as one descends through atmospheric mass.

The more conduction there is the less accurate the S-B equation becomes and the higher the surface temperature must rise above S-B in order to achieve radiative equilibrium with space.

If one then considers radiative capability within the atmosphere it simply causes a redistribution of atmospheric mass via convective adjustments but no rise in surface temperature.

Stephen,

“If there is no atmosphere then S-B is satisfied and there is (obviously) no lapse rate.”

I agree with most of what you said with a slight modification.

If there is no atmosphere then S-B for a black body is satisfied and there is no lapse rate. If there is an atmosphere, the lapse rate becomes a manifestation of grayness, thus S-B can still be satisfied by applying the appropriate EQUIVALENT emissivity, as demonstrated by Figure 3. Again, I emphasize EQUIVALENT which is a crucial concept when it comes to modelling anything.

It’s clear to me that there are regulatory processes at work, but these processes directly regulate the energy balance and not necessarily the surface temperature, except indirectly. Furthermore, these regulatory processes can not reduce the sensitivity to zero, that is 0 W/m^2 of incremental surface emissions per W/m^2 of ‘forcing’, but drive it towards minimum entropy where 1 W/m^2 of forcing results in 1 W/m^2 of incremental surface emissions. To put this in perspective, the IPCC sensitivity of 0.8C per W/m^2 requires the next W/m^2 of forcing to result in 4.3 W/m^2 of incremental surface emissions.
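The arithmetic behind the last sentence can be checked with the Stefan-Boltzmann Law from Equation 1. This is a rough sketch that assumes a near-ideal black body surface (emissivity 1) at an average temperature of 288 K; that temperature and the function names are assumptions made for illustration, and a slightly different starting temperature shifts the result by a few hundredths of a W/m^2.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m^2 per K^4


def surface_emissions(temp_k, emissivity=1.0):
    """Emissions in W/m^2 from P = e * sigma * T^4."""
    return emissivity * SIGMA * temp_k ** 4


def incremental_emissions(temp_k, delta_t):
    """Extra surface emissions caused by warming the surface by delta_t kelvin."""
    return surface_emissions(temp_k + delta_t) - surface_emissions(temp_k)


T_SURFACE = 288.0   # assumed average surface temperature, K
SENSITIVITY = 0.8   # K per W/m^2 of forcing, the figure quoted in the comment

# Incremental surface emissions implied by 1 W/m^2 of forcing
delta = incremental_emissions(T_SURFACE, SENSITIVITY)
print(f"{delta:.2f} W/m^2 of surface emissions per W/m^2 of forcing")
```

Under these assumptions the result lands a little above 4.3 W/m^2, in line with the figure quoted above.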

In other terms, if it looks like a duck and quacks like a duck it’s not barking like a dog.

Where there is an atmosphere I agree that you can regard the lapse rate as a manifestation of greyness in the sense that as density increases along the lapse rate slope towards the surface then conduction takes over from radiation.

However, do recall that from space the Earth and atmosphere combined present as a blackbody radiating out exactly as much as is received from space.

My solution to that conundrum is to assert that viewed from space the combined system only presented as a greybody during the progress of the uncompleted first convective overturning cycle.

After that the remaining greyness manifested by the atmosphere along the lapse rate slope is merely an internal system phenomenon and represents that increasing dominance of conduction relative to radiation as one descends through atmospheric mass.

I think that what you have done is use ‘emissivity’ as a measure of the average reduction of radiative capability in favour of conduction as one descends along the lapse rate slope.

The gap between your red and green lines represents the internal, atmospheric greyness induced by increasing conduction as one travels down along the lapse rate slope.

That gives the raised surface temperature that is required to both reach radiative equilibrium with space AND support ongoing convective overturning within the atmosphere.

The fact that the curve of both lines is similar shows that the regulatory processes otherwise known as weather are working correctly to keep the system thermally stable.

Sensitivity to a surface temperature rise above S-B cannot be reduced to zero as you say which is why there is a permanent gap between the red and green lines but that gap is caused by conduction and convection, not CO2 or any other process.

Using your method, if CO2 or anything else were to be capable of affecting climate sensitivity beyond the effect of conduction and convection then it would manifest as a failure of the red line to track the green line and you have shown that does not happen.

If it were to happen then hydrostatic equilibrium would be destroyed and the atmosphere lost.

Stephen,

“However, do recall that from space the Earth and atmosphere combined present as a blackbody radiating out exactly as much as is received from space.”

This isn’t exactly correct. The Earth and atmosphere combined present as an EQUIVALENT black body emitting a Planck spectrum at 255K. The difference being the spectrum itself and its emitting temperature according to Wien’s displacement law.
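To put numbers on the equivalent black body, the sketch below computes the total emissions and the Wien peak wavelength for an ideal 255 K emitter, and compares them with an ideal emitter at 288 K. The 288 K surface value and the function names are assumptions for illustration only; the Wien constant is the standard value of about 2.898E-3 m K.

```python
SIGMA = 5.67e-8    # Stefan-Boltzmann constant, W/m^2 per K^4
WIEN_B = 2.898e-3  # Wien displacement constant, m K


def blackbody_flux(temp_k):
    """Total emissions of an ideal black body in W/m^2."""
    return SIGMA * temp_k ** 4


def wien_peak_um(temp_k):
    """Wavelength of peak spectral emission in micrometers."""
    return WIEN_B / temp_k * 1e6


for label, temp in (("equivalent planet", 255.0), ("assumed surface", 288.0)):
    print(f"{label}: {blackbody_flux(temp):.1f} W/m^2, "
          f"peak near {wien_peak_um(temp):.2f} um")
```

The 255 K case gives close to 240 W/m^2 with a peak a bit beyond 11 micrometers, while the warmer surface peaks at a shorter wavelength.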

I’ve no problem with a more precise verbalisation.

Doesn’t affect the main point though does it?

As I see it your observations are exactly what I would expect to see if my mass based greenhouse effect description were to be correct.

Stephen,

“As I see it your observations are exactly what I would expect to see if my mass based greenhouse effect description were to be correct.”

The question is whether the apparently mass based GHG effect is the cause or the consequence. I believe it to be a consequence and that the cause is the requirement for the macroscopic behavior of the climate system to be constrained by macroscopic physical laws, specifically the T^4 relationship between temperature and emissions and the constraints of COE. The cause establishes what the surface temperature and planet emissions must be and the consequence is to be consistent with these two endpoints and the nature of atmosphere in between.

Well, all physical systems are constrained by the macroscopic physical laws so the climate system cannot be any different.

It isn’t a problem for me to concede that macroscopic physical laws lead to a mass induced greenhouse effect rather than a GHG induced greenhouse effect. Indeed, that is the whole point of my presence here:)

Are your findings consistent with both possibilities or with one more than the other?

Stephen,

“Are your findings consistent with both possibilities or with one more than the other?”

My findings are more consistent with the constraints of physical law, but at the same time, they say nothing about how the atmosphere self organizes to meet those constraints, so I’m open to all possibilities for this.

Stephen wrote: “The most dynamic component of any convecting atmosphere (and they always convect) is the conversion of KE to PE in rising air and conversion of PE to KE in falling air.”

You are ignoring the fact that every packet of air is “floating” in a sea of air of equal density. If I scuba dive with a weight belt that provides neutral buoyancy, no work is done when I raise or lower my depth below the surface: an equal weight of water moves in the opposite direction I move. In water, I only need to overcome friction to change my “altitude”. The potential energy associated with my altitude is irrelevant.

In the atmosphere, the same situation exists, plus there is almost no friction. A packet of air can rise without any work being done because an equal weight of air is falling. The change that develops when air rises doesn’t involve potential energy (an equal weight of air falls elsewhere); it is the PdV work done by the (adiabatic) expansion under the lower pressure at higher altitude. That work comes from the internal energy of the gas, lowering its temperature and kinetic energy. (The gas that falls is warmed by adiabatic compression.) After expanding and cooling, the density of the risen air will be greater than that of the surrounding air and it will sink – unless the temperature has dropped fast enough with increasing altitude. All of this, of course, produces the classical formulas associated with adiabatic expansion and derivation of the adiabatic lapse rate (-g/Cp).
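The -g/Cp expression at the end of that paragraph is easy to evaluate. The sketch below assumes standard textbook values for Earth (g of about 9.81 m/s^2 and Cp of about 1004 J/kg/K for dry air); the function names are hypothetical, and the moist lapse rate is deliberately left out.

```python
G_EARTH = 9.81       # gravitational acceleration, m/s^2 (assumed standard value)
CP_DRY_AIR = 1004.0  # specific heat of dry air at constant pressure, J/(kg K)


def dry_adiabatic_lapse_rate(g=G_EARTH, cp=CP_DRY_AIR):
    """Temperature change with height for a dry adiabatic parcel, in K per meter."""
    return -g / cp


def parcel_temperature(t_surface_k, height_m, g=G_EARTH, cp=CP_DRY_AIR):
    """Temperature of a dry parcel lifted adiabatically from the surface."""
    return t_surface_k + dry_adiabatic_lapse_rate(g, cp) * height_m


print(f"lapse rate: {dry_adiabatic_lapse_rate() * 1000:.2f} K/km")
print(f"parcel at 1 km from 288 K: {parcel_temperature(288.0, 1000.0):.2f} K")
# Doubling g alone doubles the slope, as discussed earlier in the thread
print(f"with 2g: {dry_adiabatic_lapse_rate(g=2 * G_EARTH) * 1000:.2f} K/km")
```

This gives a slope of a little under 10 K per km, and because the slope scales directly with g, it also illustrates the earlier exchange about what changes when gravity alone is varied.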

You presumably can get the correct answer by dealing with the potential energy of the rising and falling air separately, but your calculations need to include both.

Frank,

At any given moment half the atmosphere is rising and half is falling. None of it ever just ‘floats’ for any length of time.

The average surface pressure is about 1000mb. Anything less is rising air and anything more is falling air.

Quite simply, you do have to treat the potential energy in rising and falling air separately so one must apply the opposite sign to each so that they cancel out to zero. No more complex calculation required.

”At any given moment half the atmosphere is rising and half is falling. None of it ever just ‘floats’ for any length of time.”

Nonsense, only in your faulty imagination Stephen.

Earth atm. IS “floating”, calm most of the time at the neutral buoyancy line of the natural lapse rate meaning as Stephen often writes in hydrostatic equilibrium, the static therein MEANS static. This is what Lorenz 1954 is trying to tell Stephen but it is way beyond his comprehension. You waste our time imagining things Stephen, try learning reality: Lorenz 1954 “Consider first an atmosphere whose density stratification is everywhere horizontal. In this case, although total potential energy is plentiful, none at all is available for conversion into kinetic energy.”

Lorenz does not claim that to be the baseline condition of any atmosphere.

Lorenz is just simplifying the scenario in order to make a point about how PE can be converted to KE by introducing a vertical component.

He doesn’t suggest that any real atmospheres are everywhere horizontal. It cannot happen.

All low pressure cells contain rising air and all high pressure cells contain falling air and together they make up the entire atmosphere.

Overall hydrostatic equilibrium does not require the bulk of an atmosphere to float along the lapse rate slope. All it requires is for ascents to balance descents.

Convection is caused by surface heating and conduction to the air above and results in the entire atmosphere being constantly involved in convective overturning.

Dr. Lorenz does claim that to be the baseline condition of Earth atm. as Stephen could learn by actually reading/absorbing the 1954 science paper i linked for him instead of just imagining things.

Less than 1% of abundant Earth atm. PE is available to upset hydrostatic conditions, allowing for stormy conditions per Dr. Lorenz calculations not 50%. If Stephen did not have such a shallow understanding of meteorology, he would not need to actually contradict himself:

“balance between KE and PE in the atmosphere so that hydrostatic equilibrium can be retained.”

https://wattsupwiththat.com/2017/01/05/physical-constraints-on-the-climate-sensitivity/comment-page-1/#comment-2393734

or contradict Dr. Lorenz writing in 1954 who is way…WAY more accomplished in the science of meteorology since as soundings show hydrostatic conditions generally prevail on Earth in those observations & as calculated: “Hence less than one per cent of the total potential energy is generally available for conversion into kinetic energy.” Not the 50% of total PE Stephen imagines showing his ignorance of atm. radiation fields and available PE.

There is a difference between the small local imbalances that give rise to local storminess and the broader process of creation of PE from KE during ascent plus creation of KE from PE in descent.

It is Trick’s regular ‘trick’ to obfuscate in such ways and mix it in with insults.

I made no mention of the proportion of PE available for storms. The 50/50 figure relates to total atmospheric volume engaged in ascent and descent at any given time which is a different matter.

Even the stratosphere has a large slow convective overturning cycle known as the Brewer Dobson Circulation and most likely the higher layers too to some extent.

Convective overturning is ubiquitous in the troposphere.

No point engaging with Trick any further.

”He doesn’t suggest that any real atmospheres are everywhere horizontal. It cannot happen.”

Dr. Lorenz only calculates 99% Stephen not 100% as you imagine or there would be no storms observed. Try to stick to that ~1% small percentage of available PE, not 50/50. I predict you will not be able.

”I made no mention of the proportion of PE available for storms. The 50/50 figure relates to total atmospheric volume engaged in ascent and descent at any given time which is a different matter.”

Dr. Lorenz calculated in 1954 that 99/1 available for ascent/descent which means the atm. is mostly in hydrostatic equilibrium, 50/50 figure is only in Stephen’s imagination not observed in the real world. Stephen even agreed with Dr. Lorenz 1:03pm: “because indisputably the atmosphere is in hydrostatic equilibrium.” then contradicts himself with the 50/50.

”It is Trick’s regular ‘trick’ to obfuscate in such ways and mix it in with insults.”

No obfuscation, I use Dr. Lorenz’ words exactly clipped for the interested reader to find in the paper I linked, and only after Stephen’s initial fashion: 1/15 12:45am: “I think Trick is wasting my time and that of general readers.” No need to engage with me, but to further Stephen’s understanding of meteorology it would be a good idea for him to engage with Dr. Lorenz. And a good meteorological text book to understand the correct basic science.

“Much of the dynamic range in your data comes for polar regions”

This is incorrect. Each of the larger dots is the 3 decade average for each 2.5 degree slice of latitude and as you can see, these are uniformly spaced across the SB curve and most surprisingly, mostly independent of hemispheric asymmetries (N hemisphere 2.5 degree slices align on top of S hemisphere slices). Most of the data represents the mid latitudes.

There are 2 deviations from the ideal curve. One is around 273K (0C) where water vapor is becoming more important and I’ve been able to characterize and quantify this deviation. This leads to the fact that the only effect incremental CO2 has is to slightly decrease the EFFECTIVE emissivity of surface emissions relative to emissions leaving the planet. It’s this slight decrease applied to all 240 W/m^2 that results in the 3.7 W/m^2 of EQUIVALENT forcing from doubling CO2.

The other deviation is at the equator, but if you look carefully, one hemisphere has a slightly higher emissivity which is offset by a lower emissivity in the other. As far as I can tell, this seems to be an anomaly with how AU normalized solar input was applied to the model by GISS, but in any event, seems to cancel.

George, what you are seeing at TOA is my WV regulating outgoing, but at high absolute humidity, there’s less dynamic room. The high rate will reduce as absolute water vapor increases, so the difference between the two speeds will be less. This would be manifest as the slope you found: as absolute humidity drops moving towards the poles, the regulation ability increases and the gap between high and low cooling rates goes up.

Does the hitch at 0C have an energy commensurate with water vapor changing state?

“Does the hitch at 0C have an energy commensurate with water vapor changing state?”

No. Because of the integration time being longer than the lifetime of atmospheric water, the energy of the state changes from evaporation and condensation effectively offset each other, as RW pointed out.

The way I was able to quantify it was via equation 3 which relates atmospheric absorption (the emissivity of the atmosphere itself) to the EQUIVALENT emissivity of the system comprised of an approximately BB surface and an approximately gray body atmosphere. The absorption can be calculated with line by line simulations quantifying the increase in water vapor and the increase in absorption was consistent with the decrease in EQUIVALENT emissivity of the system.

But you have two curves; you need, say, 20% to 100% relative humidity over a wide range of absolute humidity (say Antarctica and rainforest), and you’ll get a contour map showing IR interacting with both water and CO2. As someone who has designed CPUs you should recognize this. This is like making a single assumption for an interconnect model for every gate in a CPU, without modeling length, parallel traces, or driver device parameters. An average might be a place to start, but it won’t get you fabricated chips that work.

micro6500,

In CPU design there are 2 basic kinds of simulations. One is a purely logical simulation with unit delays and the other is a full timing simulation with parasitics back annotated and rather than unit delay per gate, gates have a variable delay based on drive and loading.

The gray body model is analogous to a logical simulation, while a GCM is analogous to a full timing simulation. Both get the same ultimate answers (as long as timing parameters are not violated) and logical simulations are often used to cross check the timing simulation.

George, I was an Application Eng for both Agile and Viewlogic as the simulation expert on the east coast for 14 years.

GCM are broken, their evaporation parameterization is wrong.

But as I’ve shown, we are not limited to that.

My point is that Modtran, Hitran, when used with a generic profile is useless for the questions at hand. Too much of the actual dynamics are erased throwing away so much knowledge. Though it is a big task, that I don’t know how to do.

micro6500,

“GCM are broken …”

“My point is that Modtran, Hitran, when used with a generic profile is useless for the questions at hand.”

While I wholeheartedly agree that GCM’s are broken for many reasons, I don’t necessarily agree with your assertion about the applicability of a radiative transfer analysis based on aggregate values. BTW, Hitran is not a program, but a database quantifying absorption lines of various gases and is an input to Modtran and to my code that does the same thing.

While there are definitely differences between a full blown dynamic analysis and an analysis based on aggregate values, the differences are too small to worry about, especially given that the full blown analysis requires many orders of magnitude more CPU time to process than an aggregate analysis. It seems to me that there’s also a lot more room for error when doing a detailed dynamic analysis since there are many more unknowns and attributes that must be tracked and/or fit to the results. Given that this is what GCM’s attempt to do, it’s not surprising that they are so broken. Simpler is better because there’s less room for error, even if the results aren’t 100% accurate because not all of the higher order influences are accounted for.

The reason for the relatively small difference is superposition in the energy domain since all of the analysis I do is in the energy domain and any reported temperatures are based on an equivalent ideal BB applied to the energy fluxes that the analysis produces. Conversely, any analysis that emphasises temperatures will necessarily be wrong in the aggregate.

Then I’m not sure you understand how water vapor is regulating cooling, because a point snapshot isn’t going to detect it, and it’s only the current average of the current conditions during the dynamic cooling across the planet.

micro6500,

“because a point snapshot isn’t going to detect it”

There’s no reliance on point snapshots, but on averages in the energy domain spanning from 1 month to 3 decades. Even the temperatures reported in Figure 3 are average emissions, spatially and temporally integrated and converted to an EQUIVALENT temperature. The averages smooth out the effects of water vapor and other factors. Certainly, monthly averages do not perfectly smooth out the effects and this is evident by the spread of red dots around the mean, but as the length of the average increases, these deviations are minimized and the average converges to the mean. Even considering single year averages, there’s not much deviation from the mean.
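The difference between averaging in the energy domain and averaging temperatures directly can be shown with two numbers. The sketch below is only an illustration under simple assumptions: ideal black body emitters, equal weighting, and two made-up sample temperatures of 250 K and 300 K. The function names are hypothetical.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m^2 per K^4


def mean_temperature(temps_k):
    """Plain arithmetic mean of the temperatures."""
    return sum(temps_k) / len(temps_k)


def equivalent_temperature(temps_k):
    """Average the emissions first, then convert back to a temperature.

    Assumes each sample radiates as an ideal black body with equal weight.
    """
    mean_flux = sum(SIGMA * t ** 4 for t in temps_k) / len(temps_k)
    return (mean_flux / SIGMA) ** 0.25


samples = [250.0, 300.0]  # made-up temperatures, K
print(f"mean of temperatures:   {mean_temperature(samples):.2f} K")
print(f"equivalent temperature: {equivalent_temperature(samples):.2f} K")
```

Because emissions go as T^4, the equivalent temperature of the averaged emissions comes out a few kelvin above the simple mean of the temperatures, which is why the two kinds of average are not interchangeable.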

The nightly effect is dynamic, that snapshot is just what it’s been, which I guess is what it was, but you can’t extrapolate it, that is meaningless.

Stephen wrote: “At any given moment half the atmosphere is rising and half is falling. None of it ever just ‘floats’ for any length of time. The average surface pressure is about 1000mb. Anything less is rising air and anything more is falling air.”

Yes. The surface pressure under the descending air is about 1-2% higher than average and the pressure underneath rising air is normally about 1-2% lower. The descending air is drier and therefore heavier and needs more pressure to support its weight. To a solid first approximation, it is floating and we can ignore the potential energy change associated with the rise and fall of air.

You can only ignore the PE from simple rising and falling, which is trivial.

You cannot ignore the PE from reducing the distance between molecules which is substantial.

That is the PE that gives heating when compression occurs.

However, PdV work is already accounted for when you calculate an adiabatic lapse rate (moist or dry). If you assume a lapse rate created by gravity alone and then add terms for PE or PdV, you are double-counting these phenomena.

Gases are uniformly dispersed in the troposphere (and stratosphere) without regard to molecular weight. This proves that convection – not potential energy being converted to kinetic energy – is responsible for the lapse rate in the troposphere. Gravity’s influence is felt through the atmospheric pressure it produces. Buoyancy ensures that potential energy changes in one location are offset by changes in another.

Sounds rather confused. There is no double counting because PE is just a term for the work done by mass against gravity during the decompression process involved in uplift and which is quantified in the PdV formula.

Work done raising an atmosphere up against gravity is then reversed when work is done by an atmosphere falling with gravity so it is indeed correct that PE changes in one location are offset by changes in another.

Convection IS the conversion of KE to PE in ascent AND of PE to KE in descent so you have your concepts horribly jumbled, hence your failure to understand.

Brilliant!

George: Before applying the S-B equation, you should ask some fundamental questions about emissivity: Do gases have an emissivity? What is emissivity?

The radiation inside solids and liquids has usually come into equilibrium with the temperature of the solid or liquid that emits thermal radiation. If so, it has a blackbody spectrum when it arrives at the surface, where some is reflected inward. This produces an emissivity less than unity. The same fraction of incoming radiation is reflected (or scattered) outward at the surface; accounting for the fact that emissivity equals absorptivity at any given wavelength. In this case, emissivity/absorptivity is an intrinsic property of material that is independent of mass.

What happens with a gas, which has no surface to create emissivity? Intuitively, gases should have an emissivity of unity. The problem is that a layer of gas may not be thick enough for the radiation that leaves its surface to have come into equilibrium with the gas molecules in the layer. Here scientists talk about “optically-thick” layers of atmosphere that are assumed to emit blackbody radiation and “optically-thin” layers of atmosphere whose emissivity and absorptivity are proportional to the density of gas molecules inside the layer and their absorption cross-section, and whose emission varies with B(lambda,T) while their absorptivity is independent of T.

One runs into exactly the same problem thinking about layers of solids and liquids that are thin enough to be partially transparent. Emissivity is no longer an intrinsic property.

The fundamental problem with this approach to the atmosphere is that the S-B eqn is totally inappropriate for analyzing radiation transfer through an atmosphere whose temperature ranges from 200 to 300 K, and which is not in equilibrium with the radiation passing through it. For that you need the Schwarzschild eqn.

dI = emission – absorption

dI = n*o*B(lambda,T)*dz – n*o*I*dz

where dI is the change in spectral intensity, passing an incremental distance through a gas with density n, absorption cross-section o, and temperature T, and I is the spectral intensity of radiation entering the segment dz.

Notice these limiting cases: a) When I is produced by a tungsten filament at several thousand K in the laboratory, we can ignore the emission term and obtain Beer’s Law for absorption. b) When dI is zero because absorption and emission have reached equilibrium (in which case Planck’s Law applies), I = B(lambda,T). (:))

When dealing with partially-transparent thin films of solids and liquids, one needs the Schwarzschild equation, not the S-B eqn.
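The two limiting cases above can be checked numerically. The sketch below steps the equation dI = n*o*B*dz – n*o*I*dz through a uniform layer with a simple Euler scheme. The function name and the dimensionless values of n, o and the layer depth are hypothetical, chosen only for illustration, and B is treated as a single number at one wavelength and temperature.

```python
import math

def integrate_schwarzschild(i0, b, n, o, depth, steps=10_000):
    """Euler-integrate dI = n*o*B*dz - n*o*I*dz through a uniform layer."""
    dz = depth / steps
    i = i0
    for _ in range(steps):
        i += n * o * (b - i) * dz  # emission term minus absorption term
    return i

# Hypothetical, dimensionless values used only for illustration.
n, o, depth = 1.0, 1.0, 2.0

# Case a) the source is so bright that emission by the gas is ignored (B = 0).
# The result should match Beer's Law, I = I0 * exp(-n*o*z).
beer = integrate_schwarzschild(i0=100.0, b=0.0, n=n, o=o, depth=depth)
beer_exact = 100.0 * math.exp(-n * o * depth)

# Case b) the incoming intensity already equals B, so dI is zero at every step.
equilibrium = integrate_schwarzschild(i0=5.0, b=5.0, n=n, o=o, depth=depth)

print(beer, beer_exact, equilibrium)
```

A real atmosphere would need B, n and o to vary with altitude along the path, which this constant-property sketch does not attempt.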

When an equation such as the S-B or Schwarzschild is at the center of attention of a group of people there is the possibility that the thinking of these people is corrupted by an application of the reification fallacy. Under this fallacy, an abstract object is treated as if it were a concrete object. In this case, the abstract object is an Earth that is abstracted from enough of its features to make it obey one of the two equations exactly. This thinking leads to the dubious conclusion that the concrete Earth on which we live has a “climate sensitivity” that has a constant but uncertain numerical value. Actually it is a certain kind of abstract Earth that has a climate sensitivity.

Terry: From Wikipedia: “The concept of a “construct” has a long history in science; it is used in many, if not most, areas of science. A construct is a hypothetical explanatory variable that is not directly observable. For example, the concepts of motivation in psychology and center of gravity in physics are constructs; they are not directly observable. The degree to which a construct is useful and accepted in the scientific community depends on empirical research that has demonstrated that a scientific construct has construct validity (especially, predictive validity).[10] Thus, if properly understood and empirically corroborated, the “reification fallacy” applied to scientific constructs is not a fallacy at all; it is one part of theory creation and evaluation in normal science.”

Thermal infrared radiation is a tangible quantity that can be measured with instruments. Its interactions with GHGs have been studied in the laboratory and in the atmosphere itself: instruments measure OLR from space and DLR at the surface. These are concrete measurements, not abstractions.

A simple blackbody near 255 K has a “climate sensitivity”. For every degK its temperature rises, it emits about 3.8 W/m2 more, or 3.8 W/m2/K. (Try it.) In climate science, we take the reciprocal and multiply by 3.7 W/m2/doubling to get 1.0 K/doubling. 3.8 W/m2/K is equivalent and simple to understand. There is nothing abstract about it. The earth also emits (and reflects) a certain number of W/m2 to space for each degK of rise in surface temperature. Because humidity, lapse rate, clouds, and surface albedo change with surface temperature (feedbacks), the Earth doesn’t emit like a blackbody at 255 K. However, some quantity (in W/m2) does represent the average increase in TOA OLR and reflected SWR with a rise in surface temperature. That quantity is equivalent to climate sensitivity.
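Taking the "Try it" literally, here is a minimal sketch of the blackbody arithmetic. It uses the value of σ quoted in the essay and the 3.7 W/m2 per doubling figure from the comment above. The function name is hypothetical.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m2 per K4 (value quoted in the essay)

def blackbody_slope(t_kelvin):
    """Derivative of sigma*T^4 with respect to T, in W/m2 per K."""
    return 4.0 * SIGMA * t_kelvin ** 3

slope = blackbody_slope(255.0)

# Cross-check with a plain 1 K finite difference between 255 K and 256 K.
finite_diff = SIGMA * 256.0 ** 4 - SIGMA * 255.0 ** 4

# Reciprocal of the slope times the forcing per doubling quoted in the comment.
sensitivity = 3.7 / slope  # K per doubling

print(round(slope, 2), round(finite_diff, 2), round(sensitivity, 2))
```

The slope comes out a little under 3.8 W/m2/K, so the no-feedback figure is close to 1 K per doubling, as stated.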

Frank:

In brief, that reification is a fallacy is proved by its negation of the principle of entropy maximization. If interested in a more long winded and revealing proof please ask.

Frank,

“Do gases have an emissivity?”

“Intuitively, gases should have an emissivity of unity.”

The O2 and N2 in the atmosphere have an emissivity close to 0, not unity, as these molecules are mostly transparent to both visible light input and LWIR output. Most of the radiation emitted by the atmosphere comes from clouds, which are classic gray bodies. Most of the rest comes from GHGs returning to the ground state by emitting a photon. The surface directly emits energy into space that passes through the transparent regions of the spectrum and this is added to the contribution by the atmosphere to arrive at the 240 W/m^2 of planetary emissions.

Even GHG emissions can be considered EQUIVALENT to a BB or gray body, just as the 240 W/m^2 of emissions by the planet are considered EQUIVALENT to a temperature of 255K. EQUIVALENT being the operative word.

Again, I want to emphasize that the model is only modelling the behavior at the boundaries and makes no attempt to model what happens within.
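The equivalence between 240 W/m^2 and 255K mentioned above is just Equation 1 solved for T with ε = 1. A minimal sketch, with a hypothetical function name:

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m2 per K4 (value quoted in the essay)

def equivalent_temperature(flux, emissivity=1.0):
    """Invert P = e*sigma*T^4 for T, in Kelvin."""
    return (flux / (emissivity * SIGMA)) ** 0.25

t_equiv = equivalent_temperature(240.0)
print(round(t_equiv, 1))
```

This says nothing about where in the system the emissions originate. It only converts a flux at the boundary into the temperature of an ideal black body that would emit the same flux.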

Since emissivity less than unity is produced by reflection at the surface of solids and liquids, and since gases have no surface to reflect, I reasoned that gases would have unit emissivity. N2 and O2 are totally transparent to thermal IR. The S-B equation doesn’t work for materials that are semi-transparent and (you are correct that) my explanation fails for totally transparent ones. The Schwarzschild equation does just fine: with o = 0, dI = 0.

The presence of clouds doesn’t interfere with my rationale for why Doug should not be applying the S-B eqn to the Earth. The Schwarzschild equation works just fine if you convert clouds to a radiating surface with a temperature and emissivity. The tricky part of applying this equation is that you need to know the temperature (and density and humidity) at all altitudes. In the troposphere, temperature is controlled by lapse rate and surface temperature. (In the stratosphere, by radiative equilibrium, which can be used to calculate temperature.)

When you observe OLR from space, you see nothing that looks like a black or gray body with any particular temperature and emissivity. If you look at dW/dT = 4*e*o*T^3 or 4*e*o*T^3 + oT^4*(de/dT), you get even more nonsense. The S-B equation is a ridiculous model to apply to our planet. Doug is applying an equation that isn’t appropriate for our planet.

Frank,

“The S-B equation doesn’t work for materials that are semi-transparent”

Sure it does. This is what defines a gray body and that which isn’t absorbed is passed through. The wikipedia definition of a gray body is one that doesn’t absorb all of the incident energy. What isn’t absorbed is either reflected, passed through or performs work that is not heating the body, although the definition is not specific, nor should it be, about what happens to this unabsorbed energy.

The gray body model of O2 and N2 has an effective emissivity very close to zero.

Frank wrote: “The S-B equation doesn’t work for materials that are semi-transparent”

co2isnotevil replied: “Sure it does. This is what defines a gray body and that which isn’t absorbed is passed through.”

Frank continues: However, the radiation emitted by a layer of semi-transparent material depends on what lies behind the semi-transparent material: a light bulb, the sun, or empty space. Emission (or emissivity) from semi-transparent materials depends on more than just the composition of the material: it depends on its thickness and what lies behind. The S-B eqn has no terms for thickness or radiation incoming from behind. S-B tells you that outgoing radiation depends only on two factors: temperature and emissivity (which is a constant).

Some people change the definition of emissivity for optically thin layers so that it is proportional to density and thickness. However, that definition has problems too, because emission can grow without limit if the layer is thick enough or the density is high enough. Then they switch the definition for emissivity back to being a constant and say that the material is optically thick.

Frank,

“Frank continues: However the radiation emitted by a layer of semi-transparent material depends on what lies behind the semi-transparent material”

For the gray body EQUIVALENT model of Earth, the emitting surface in thermal equilibrium with the Sun (the ocean surface and bits of land poking through) is what lies behind the semi-transparent atmosphere.

The way to think about it is that without an atmosphere, the Earth would be close to an ideal BB. Adding an atmosphere changes this, but cannot change the T^4 dependence between the surface temperature and emissions or the SB constant, so what else is there to change?

Whether the emissions are attenuated uniformly or in a spectrally specific manner, it’s a proportional attenuation quantifiable by a scalar emissivity.
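As a sketch of what "a scalar emissivity" means in this equivalent model, the ratio below divides the 240 W/m^2 of planetary emissions by the black body emissions of the surface. The 288 K surface temperature is an assumption made only for this illustration, and the function name is hypothetical.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m2 per K4 (value quoted in the essay)

def equivalent_emissivity(flux_out, t_surface):
    """Ratio of the flux leaving the system to sigma*T^4 at the surface."""
    return flux_out / (SIGMA * t_surface ** 4)

T_SURFACE = 288.0  # K, assumed average surface temperature (illustrative only)
eps = equivalent_emissivity(240.0, T_SURFACE)
print(round(eps, 3))
```

The number is a bookkeeping ratio between the two boundaries. Whether a single scalar is an adequate description of a spectrally selective atmosphere is exactly what the commenters in this thread dispute.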

Frank,

“The tricky part of applying this equation is that you need to know the temperature (and density and humidity) at all altitudes.”

I agree with what you are saying, and this is a key. You can regard a gas as an S-B type emitter, even without a surface, provided its temperature is uniform. That is the T you would use in the formula. A corollary to this is that you have to have space, or a 0K black body, behind, unless it is so optically thick that negligible radiation can get through.

For the atmosphere, there are frequencies where it is optically thin, but backed by surface. Then you see the surface. And there are frequencies where it is optically thick. Then you see (S-B wise) TOA. And in between, you see in between. Notions of grey body and aggregation over frequency just don’t work.

Nick said: You can regard a gas as a S-B type emitter, even without surface, provided its temperature is uniform.

Not quite. For black or gray bodies, the amount of material is irrelevant. If I take one sheet of aluminum foil (without oxidation), its emissivity is 0.03. If I layer 10 or 100 sheets of aluminum foil on top of each other or fuse them into a single sheet, its emissivity will still be 0.03. This isn’t true for a gas. Consider DLR starting its trip from space to the surface. For a while, doubling the distance traveled (or doubling the number of molecules passed, if the density changes) doubles the DLR flux because there is so little flux that absorption is negligible. However, by the time one reaches an altitude where the intensity of the DLR flux at that wavelength is approaching blackbody intensity for that wavelength and altitude/temperature, most of the emission is compensated for by absorption.

If you look at the mathematics of the Schwarzschild eqn., it says that the incoming spectral intensity is shifted an amount dI in the direction of blackbody intensity (B(lambda,T)) and the rate at which blackbody intensity is approached is proportional to the density of the gas (n) and its cross-section (o). The only time spectral intensity doesn’t change with distance traveled is when it has reached blackbody intensity (or n or o are zero).

When radiation has traveled far enough through a (non-transparent) homogeneous material at constant temperature, radiation of blackbody intensity will emerge. This is why most solids and liquids emit blackbody radiation – with a correction for scattering at the surface (i.e. emissivity). And this surface scattering is the same from both directions – emissivity equals absorptivity.
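The build-up and saturation described in this comment can be illustrated with the closed-form solution of the Schwarzschild eqn for a layer at constant temperature with nothing entering from behind: I = B(lambda,T)*(1 – exp(–n*o*z)). The sketch below assumes that idealized uniform layer, and the function name is hypothetical.

```python
import math

def layer_emission_fraction(tau):
    """Emitted intensity as a fraction of B(lambda,T) for a uniform layer
    with no incoming radiation, where tau = n*o*z."""
    return 1.0 - math.exp(-tau)

# Optically thin: doubling the path very nearly doubles the emission.
thin = layer_emission_fraction(0.01)
thin_doubled = layer_emission_fraction(0.02)

# Optically thick: doubling the path changes almost nothing.
thick = layer_emission_fraction(10.0)
thick_doubled = layer_emission_fraction(20.0)

print(thin_doubled / thin, thick, thick_doubled)
```

In the thin limit the fraction behaves like an emissivity proportional to thickness and density. In the thick limit it behaves like a constant equal to one, which is the switch in definitions described earlier in the thread.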

Frank,

“This is why most solids and liquids emit blackbody radiation”

As I understand it, a Planck spectrum is the degenerate case of line emission occurring as the electron shells of molecules merge, which happens in liquids and solids, but not gases. As molecules start sharing electrons, there are more degrees of freedom and the absorption and emission lines of a molecule’s electrons morph into broad band absorption and emission of a shared electron cloud. The Planck distribution arises as a probabilistic distribution of energies.

Frank,

My way of visualizing the Schwarzschild equation has the gas as a collection of small black balls, of radius depending on emissivity. Then the emission is the Stefan-Boltzmann amount for the balls. Looking at a gas of uniform temperature from far away, the flux you see depends just on how much of the view area is occupied by balls. That fraction is the effective S-B emissivity, and is 1 if the balls are large and dense enough. But it’s messy if not at uniform temperature.

Nick,

“Notions of grey body and aggregation over frequency just don’t work.”

If you are looking at an LWIR spectrum from afar, yet you do not know with high precision how far away you are, how would you determine the equivalent temperature of its radiating surface?

HINT: Wien’s Displacement

What is the temperature of Earth based on Wien’s Displacement and its emitted spectrum?

HINT: It’s not 255K
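For readers who want to follow the hint, here is a minimal sketch of Wien's Displacement Law in its wavelength form, λ_peak = b/T with b about 2.898E-3 m·K. The function names and the 10 micron example are hypothetical. The law applies to a Planck spectrum, so reading a temperature off an emission spectrum with absorption bands involves judgment that this sketch does not capture.

```python
WIEN_B = 2.898e-3  # Wien displacement constant, m*K (wavelength form)

def peak_wavelength_um(t_kelvin):
    """Peak wavelength of a Planck spectrum, in microns."""
    return WIEN_B / t_kelvin * 1e6

def temperature_from_peak(peak_um):
    """Temperature of the Planck spectrum that peaks at peak_um microns."""
    return WIEN_B / (peak_um * 1e-6)

peak_255 = peak_wavelength_um(255.0)      # where a 255K black body would peak
t_example = temperature_from_peak(10.0)   # hypothetical peak at 10 microns

print(round(peak_255, 2), round(t_example, 1))
```

Because only the location of the peak is used, the result does not depend on the distance to the source or on the absolute magnitude of the flux.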