Guest essay by George White

For matter that’s absorbing and emitting energy, the emissions consequential to its temperature can be calculated exactly using the Stefan-Boltzmann Law,

1) P = εσT^4

where P is the emissions in W/m2, T is the temperature of the emitting matter in kelvins, σ is the Stefan-Boltzmann constant whose value is about 5.67E-8 W/m2 per K^4 and ε is the emissivity, which is 1 for an ideal black body radiator and somewhere between 0 and 1 for a non-ideal system, also called a gray body. Wikipedia defines a Stefan-Boltzmann gray body as one “that does not absorb all incident radiation” although it doesn’t specify what happens to the unabsorbed energy, which must either be reflected, passed through or do work other than heating the matter. This is a myopic view since the Stefan-Boltzmann Law is equally valid for quantifying a generalized gray body radiator whose source temperature is T and whose emissions are attenuated by an equivalent emissivity.
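As a minimal sketch of Equation 1, the following Python snippet evaluates the emissions of a black body and a gray body at the same temperature. The function name `emissions` is an illustrative choice, and the 287K temperature and 0.62 emissivity are the values quoted later in this essay for the Earth, used here only as sample inputs.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m^2 per K^4 (value quoted in the text)


def emissions(temperature_k, emissivity=1.0):
    """Equation 1: emitted flux in W/m^2 for matter at temperature_k kelvins."""
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must lie between 0 and 1")
    if temperature_k < 0.0:
        raise ValueError("temperature in kelvins cannot be negative")
    return emissivity * SIGMA * temperature_k ** 4


# Sample inputs taken from later in the essay, used only for illustration.
black_body_flux = emissions(287.0)                  # ideal black body, emissivity 1
gray_body_flux = emissions(287.0, emissivity=0.62)  # gray body at the same temperature

print(round(black_body_flux, 1), round(gray_body_flux, 1))
```

The only difference between the two results is the scalar ε, which is the point made in the text: at a fixed temperature, the emissivity alone sets the ratio of gray body to black body emissions.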

To conceptualize a gray body radiator, refer to Figure 1 which shows an ideal black body radiator whose emissions pass through a gray body filter where the emissions of the system are observed at the output of the filter. If T is the temperature of the black body, it’s also the temperature of the input to the gray body, thus Equation 1 still applies per Wikipedia’s over-constrained definition of a gray body. The emissivity then becomes the ratio between the energy flux on either side of the gray body filter. To be consistent with the Wikipedia definition, the path of the energy not being absorbed is omitted.

A key result is that for a system of radiating matter whose sole source of energy is that stored as its temperature, the only possible way to affect the relationship between its temperature and emissions is by varying ε since the exponent in T^4 and σ are properties of immutable first principles physics and ε is the only free variable.

The units of emissions are Watt/meter2 and one Watt is one Joule per second. The climate system is linear to Joules meaning that if 1 Joule of photons arrives, 1 Joule of photons must leave and that each Joule of input contributes equally to the work done to sustain the average temperature independent of the frequency of the photons carrying that energy. This property of superposition in the energy domain is an important, unavoidable consequence of Conservation of Energy and often ignored.

The steady state condition for matter that’s both absorbing and emitting energy is that it must be receiving enough input energy to offset the emissions consequential to its temperature. If more arrives than is emitted, the temperature increases until the two are in balance. If less arrives, the temperature decreases until the input and output are again balanced. If the input goes to zero, T will decay to zero.
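The approach to steady state described above can be sketched numerically. This is an illustration only: the heat capacity of 1.0E7 Joules per m2 per K (roughly a couple of meters of water), the one day time step and the function name `relax` are assumptions made for this example and do not come from the essay. The 240 W/m2 input is the post albedo solar figure quoted later in the text.

```python
SIGMA = 5.67e-8          # Stefan-Boltzmann constant, W/m^2 per K^4
HEAT_CAPACITY = 1.0e7    # J/m^2 per K, assumed for illustration only
DT = 86400.0             # time step of one day in seconds, assumed


def relax(t_start, p_in, emissivity=1.0, steps=3650):
    """Step the temperature forward with simple Euler integration."""
    t = t_start
    for _ in range(steps):
        p_out = emissivity * SIGMA * t ** 4        # emissions at the current temperature
        t += (p_in - p_out) * DT / HEAT_CAPACITY   # net flux changes the stored energy
    return t


p_in = 240.0
t_balance = (p_in / SIGMA) ** 0.25    # temperature at which emissions equal the input
t_from_cold = relax(200.0, p_in)      # starts too cold, so it warms
t_from_warm = relax(300.0, p_in)      # starts too warm, so it cools

print(round(t_balance, 2), round(t_from_cold, 2), round(t_from_warm, 2))
```

Whether the starting temperature is above or below the balance point, the sketch settles at the same temperature, the one where input and emissions are equal.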

Since 1 calorie (4.18 Joules) increases the temperature of 1 gram of water by 1C, temperature is a linear metric of stored energy; however, owing to the T^4 dependence of emissions, it’s a very non-linear metric of radiated energy. So while each degree of warmth requires the same incremental amount of stored energy, it requires a steeply increasing incoming energy flux, growing as T^4, to keep from cooling.

The equilibrium climate sensitivity factor (hereafter called the sensitivity) is defined by the IPCC as the long term incremental increase in T given a 1 W/m2 increase in input, where incremental input is called forcing. This can be calculated for emitting matter in LTE by differentiating the Stefan-Boltzmann Law with respect to T and inverting the result. The value of dT/dP has the required units of degrees K per W/m2 and is the slope of the Stefan-Boltzmann relationship as a function of temperature given as,

2) dT/dP = 1/(4εσT^3)

A black body is nearly an exact model for the Moon. If P is the average energy flux density received from the Sun after reflection, the average temperature, T, and the sensitivity, dT/dP, can be calculated exactly. If regions of the surface are analyzed independently, the average T and sensitivity for each region can be precisely determined. Due to the non-linearity, it’s incorrect to sum up and average all the T’s for each region of the surface, but the power emitted by each region can be summed, averaged and converted into an equivalent average temperature by applying the Stefan-Boltzmann Law in reverse. Knowing the heat capacity per m2 of the surface, the dynamic response of the surface to the rising and setting Sun can also be calculated, all of which was confirmed by equipment delivered to the Moon decades ago and more recently by the Lunar Reconnaissance Orbiter. Since the lunar surface in equilibrium with the Sun emits 1 W/m2 of emissions per W/m2 of power it receives, its surface power gain is 1.0. In an analytical sense, the surface power gain and surface sensitivity quantify the same thing, except for the units, where the power gain is dimensionless and independent of temperature, while the sensitivity as defined by the IPCC has a T^-3 dependency and is incorrectly considered to be approximately temperature independent.
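The difference between averaging temperatures and averaging emitted power can be shown with a short Python sketch. The two region temperatures below are hypothetical values chosen to make the effect obvious and are not lunar measurements; equal areas and an emissivity of 1 are also assumed.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m^2 per K^4

region_temps = [200.0, 300.0]  # hypothetical region temperatures in K, equal areas

# Naive approach: average the temperatures directly.
t_mean = sum(region_temps) / len(region_temps)

# Approach described in the text: average the emitted power of each region,
# then apply the Stefan-Boltzmann Law in reverse to get an equivalent temperature.
p_mean = sum(SIGMA * t ** 4 for t in region_temps) / len(region_temps)
t_equiv = (p_mean / SIGMA) ** 0.25

print(t_mean, round(p_mean, 1), round(t_equiv, 1))
```

Because emissions grow as T^4, the warmer region dominates the average power and the equivalent temperature comes out higher than the simple mean of the two temperatures.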

A gray body emitter is one where the power emitted is less than would be expected for a black body at the same temperature. This is the only possibility since the emissivity can’t be greater than 1 without a source of power beyond the energy stored by the heated matter. The only place for the thermal energy to go, if not emitted, is back to the source and it’s this return of energy that manifests a temperature greater than the observable emissions suggest. The attenuation in output emissions may be spectrally uniform, spectrally specific or a combination of both and the equivalent emissivity is a scalar coefficient that embodies all possible attenuation components. Figure 2 illustrates how this is applied to Earth, where A represents the fraction of surface emissions absorbed by the atmosphere, (1 – A) is the fraction that passes through and the geometrical considerations for the difference between the area across which power is received by the atmosphere and the area across which power is emitted are accounted for. This leads to an emissivity for the gray body atmosphere of A and an effective emissivity for the system of (1 – A/2).

The average temperature of the Earth’s emitting surface at the bottom of the atmosphere is about 287K, has an emissivity very close to 1 and emits about 385 W/m2 per Equation 1. After accounting for reflection by the surface and clouds, the Earth receives about 240 W/m2 from the Sun, thus each W/m2 of input contributes equally to produce 1.6 W/m2 of surface emissions for a surface power gain of 1.6.

Two influences turn 240 W/m2 of solar input into 385 W/m2 of surface output. First is the effect of GHG’s which provides spectrally specific attenuation and second is the effect of the water in clouds which provides spectrally uniform attenuation. Both warm the surface by absorbing some fraction of surface emissions and after some delay, recycling about half of the energy back to the surface. Clouds also manifest a conditional cooling effect by increasing reflection unless the surface is covered in ice and snow when increasing clouds have only a warming influence.

Consider that if 290 W/m2 of the 385 W/m2 emitted by the surface is absorbed by atmospheric GHG’s and clouds (A ~ 0.75), the remaining 95 W/m2 passes directly into space. Atmospheric GHG’s and clouds absorb energy from the surface, while geometric considerations require the atmosphere to emit energy out to space and back to the surface in roughly equal proportions. Half of 290 W/m2 is 145 W/m2 which when added to the 95 W/m2 passed through the atmosphere exactly offsets the 240 W/m2 arriving from the Sun. When the remaining 145 W/m2 is added to the 240 W/m2 coming from the Sun, the total is 385 W/m2 exactly offsetting the 385 W/m2 emitted by the surface. If the atmosphere absorbed more than 290 W/m2, more than half of the absorbed energy would need to exit to space while less than half will be returned to the surface. If the atmosphere absorbed less, more than half must be returned to the surface and less would be sent into space. Given the geometric considerations of a gray body atmosphere and the measured effective emissivity of the system, the testable average fraction of surface emissions absorbed, A, can be predicted as,

3) A = 2(1 – ε)
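The bookkeeping behind Equation 3 can be checked with a few lines of Python. The sketch below only restates the round numbers used in the preceding paragraph (385, 240 and 290 W/m2) and assumes, as the text does, that the atmosphere splits what it absorbs equally between space and the surface; the variable names are illustrative.

```python
surface_emissions = 385.0   # W/m^2 emitted by the surface (from the text)
solar_input = 240.0         # W/m^2 arriving from the Sun after reflection (from the text)
absorbed = 290.0            # W/m^2 of surface emissions absorbed by the atmosphere (from the text)

fraction_absorbed = absorbed / surface_emissions   # A, about 0.75
passed_through = surface_emissions - absorbed      # goes directly to space

# Assumed equal split of the absorbed energy, per the geometric argument in the text.
emitted_to_space = absorbed / 2.0
returned_to_surface = absorbed - emitted_to_space

planet_emissions = passed_through + emitted_to_space   # should offset the solar input
surface_input = solar_input + returned_to_surface      # should offset the surface emissions

effective_emissivity = planet_emissions / surface_emissions
predicted_a = 2.0 * (1.0 - effective_emissivity)       # Equation 3

print(planet_emissions, surface_input, round(effective_emissivity, 3), round(predicted_a, 3))
```

With these inputs both boundaries balance, and the value of A recovered from Equation 3 matches the 290/385 fraction that was assumed at the start.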

Non radiant energy entering and leaving the atmosphere is not explicitly accounted for by the analysis, nor should it be, since only radiant energy transported by photons is relevant to the radiant balance and the corresponding sensitivity. Energy transported by matter includes convection and latent heat where the matter transporting energy can only be returned to the surface, primarily by weather. Whatever influences these have on the system are already accounted for by the LTE surface temperatures, thus their associated energies have a zero sum influence on the surface radiant emissions corresponding to its average temperature. Trenberth’s energy balance lumps the return of non radiant energy as part of the ‘back radiation’ term, which is technically incorrect since energy transported by matter is not radiation. To the extent that latent heat energy entering the atmosphere is radiated by clouds, less of the surface emissions absorbed by clouds must be emitted for balance. In LTE, clouds are both absorbing and emitting energy in equal amounts, thus any latent heat emitted into space is transient and will be offset by more surface energy being absorbed by atmospheric water.

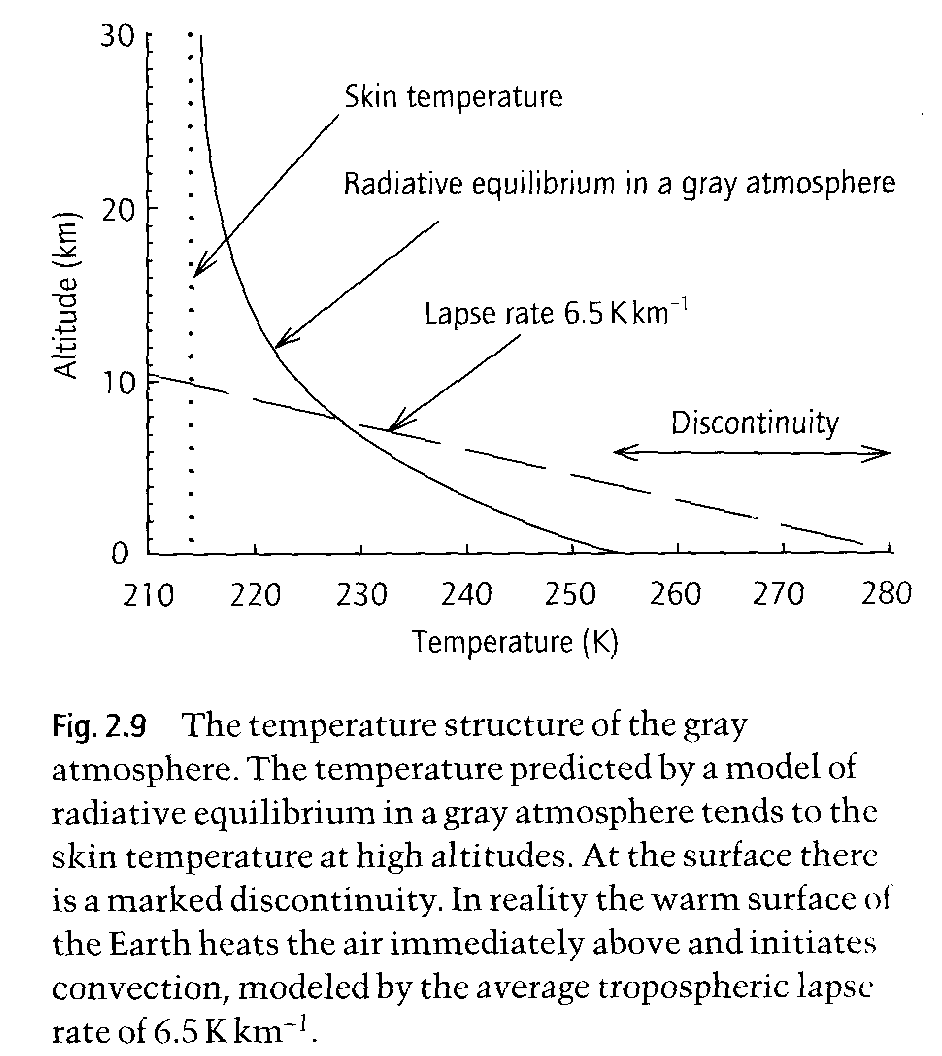

The Earth can be accurately modeled as a black body surface with a gray body atmosphere, whose combination is a gray body emitter whose temperature is that of the surface and whose emissions are that of the planet. To complete the model, the required emissivity is about 0.62 which is the reciprocal of the surface power gain of 1.6 discussed earlier. Note that both values are dimensionless ratios with units of W/m2 per W/m2. Figure 3 demonstrates the predictive power of the simplest gray body model of the planet relative to satellite data.

Figure 3

Each little red dot is the average monthly emissions of the planet plotted against the average monthly surface temperature for each 2.5 degree slice of latitude. The larger dots are the averages for each slice across 3 decades of measurements. The data comes from the ISCCP cloud data set provided by GISS, although the output power had to be reconstructed from a radiative transfer model driven by surface and cloud temperatures, cloud opacity and GHG concentrations, all of which were supplied variables. The green line is the Stefan-Boltzmann gray body model with an emissivity of 0.62 plotted to the same scale as the data. Even when compared against short term monthly averages, the data closely corresponds to the model. An even closer match to the data arises when the minor second order dependencies of the emissivity on temperature are accounted for. The biggest of these is a small decrease in emissivity as temperatures increase above about 273K (0C). This is the result of water vapor becoming important and the lack of surface ice above 0C. Modifying the effective emissivity is exactly what changing CO2 concentrations would do, except to a much lesser extent, and the 3.7 W/m2 of forcing said to arise from doubling CO2 is the solar forcing equivalent to a slight decrease in emissivity keeping solar forcing constant.

Near the equator, the emissivity increases with temperature in one hemisphere with an offsetting decrease in the other. The origin of this is uncertain but it may be an anomaly that has to do with the normalization applied to use 1 AU solar data which can also explain some other minor anomalous differences seen between hemispheres in the ISCCP data, but that otherwise average out globally.

When calculating sensitivities using Equation 2, the result for the gray body model of the Earth is about 0.3K per W/m2 while that for an ideal black body (ε = 1) at the surface temperature would be about 0.19K per W/m2, both of which are illustrated in Figure 3. Modeling the planet as an ideal black body emitting 240 W/m2 results in an equivalent temperature of 255K and a sensitivity of about 0.27K per W/m2 which is the slope of the black curve and slightly less than the equivalent gray body sensitivity represented as a green line on the black curve.
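The three sensitivities quoted above follow directly from Equation 2 and can be reproduced with a short Python sketch. The function name `sensitivity` is an illustrative choice; the temperatures and emissivity are the ones given in the text.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m^2 per K^4


def sensitivity(temperature_k, emissivity=1.0):
    """Equation 2: dT/dP in K per W/m^2 at the given temperature and emissivity."""
    return 1.0 / (4.0 * emissivity * SIGMA * temperature_k ** 3)


gray_surface = sensitivity(287.0, emissivity=0.62)  # gray body model of the Earth
black_surface = sensitivity(287.0)                  # ideal black body at the surface temperature
black_planet = sensitivity(255.0)                   # ideal black body emitting 240 W/m^2

print(round(gray_surface, 2), round(black_surface, 2), round(black_planet, 2))
```

Note that the gray body value is just the black body value at the same temperature divided by ε, so the two differ by the same factor of about 1.6 as the surface power gain.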

This establishes theoretical possibilities for the planet’s sensitivity somewhere between 0.19K and 0.3K per W/m2 for a thermodynamic model of the planet that conforms to the requirements of the Stefan-Boltzmann Law. It’s important to recognize that the Stefan-Boltzmann Law is an uncontroversial and immutable law of physics, derivable from first principles, quantifies how matter emits energy, has been settled science for more than a century and has been experimentally validated innumerable times.

A problem arises with the stated sensitivity of 0.8C +/- 0.4C per W/m2, where even the so called high confidence lower limit of 0.4C per W/m2 is larger than any of the theoretical values. Figure 3 shows this as a blue line drawn to the same scale as the measured (red dots) and modeled (green line) data.

One rationalization arises by inferring a sensitivity from measurements of adjusted and homogenized surface temperature data, extrapolating a linear trend and considering that all change has been due to CO2 emissions. It’s clear that the temperature has increased since the end of the Little Ice Age, which coincidentally was concurrent with increasing CO2 arising from the Industrial Revolution, and that this warming has been a little more than 1 degree C, for an average rate of about 0.5C per century. Much of this increase happened prior to the beginning of the 20th century and since then, the temperature has been fluctuating up and down and as recently as the 1970’s, many considered global cooling to be an imminent threat. Since the start of the 21st century, the average temperature of the planet has remained relatively constant, except for short term variability due to natural cycles like the PDO.

A serious problem is the assumption that all change is due to CO2 emissions when the ice core records show that change of this magnitude is quite normal and was so long before man harnessed fire, when humanity’s primary influences on atmospheric CO2 were to breathe and to decompose. The hypothesis that CO2 drives temperature arose as a knee jerk reaction to the Vostok ice cores which indicated a correlation between temperature and CO2 levels. While such a correlation is undeniable, newer, higher resolution data from the DomeC cores confirms an earlier temporal analysis of the Vostok data that showed how CO2 concentrations follow temperature changes by centuries and not the other way around as initially presumed. The most likely hypothesis explaining centuries of delay is biology: the biosphere slowly adapts to warmer (colder) temperatures as more (less) land becomes suitable for biomass, and the steady state CO2 concentrations will need to be higher (lower) in order to support a larger (smaller) biomass. The response is slow because it takes a while for natural sources of CO2 to arise and be accumulated by the biosphere. The variability of CO2 in the ice cores is really just a proxy for the size of the global biomass which happens to be temperature dependent.

The IPCC asserts that doubling CO2 is equivalent to 3.7 W/m2 of incremental, post albedo solar power and will result in a surface temperature increase of 3C based on a sensitivity of 0.8C per W/m2. An inconsistency arises because if the surface temperature increases by 3C, its emissions increase by more than 16 W/m2 so 3.7 W/m2 must be amplified by more than a factor of 4, rather than the factor of 1.6 measured for solar forcing. The explanation put forth is that the gain of 1.6 (equivalent to a sensitivity of about 0.3C per W/m2) is before feedback and that positive feedback amplifies this up to about 4.3 (0.8C per W/m2). This makes no sense whatsoever since the measured value of 1.6 W/m2 of surface emissions per W/m2 of solar input is a long term average and must already account for the net effects from all feedback like effects, positive, negative, known and unknown.
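The arithmetic behind this inconsistency can be checked with Equation 1. The sketch below assumes a surface emissivity of 1 and a starting temperature of 287K, both taken from earlier in the essay; the variable names are illustrative.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m^2 per K^4

t_surface = 287.0      # K, average surface temperature from the text
warming = 3.0          # K, the claimed response to doubling CO2
forcing = 3.7          # W/m^2, the forcing attributed to doubling CO2

emissions_before = SIGMA * t_surface ** 4
emissions_after = SIGMA * (t_surface + warming) ** 4
delta_emissions = emissions_after - emissions_before

# Surface emissions increase per W/m^2 of forcing implied by a 3C rise.
implied_gain = delta_emissions / forcing

print(round(delta_emissions, 1), round(implied_gain, 1))
```

The implied gain can then be compared against the measured ratio of 385 W/m2 of surface emissions to 240 W/m2 of solar input, which is about 1.6.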

Another of the many problems with the feedback hypothesis is that the mapping to the feedback model used by climate science does not conform to two important assumptions that are crucial to Bode’s linear feedback amplifier analysis referenced to support the model. First is that the input and output must be linearly related to each other, while the forcing power input and temperature change output of the climate feedback model are not, owing to the T^4 relationship between the required input flux and temperature. The second is that Bode’s feedback model assumes an internal and infinite source of Joules that powers the gain. The presumption that the Sun is this source is incorrect, for if it were, the output power could never exceed the power supply and the surface power gain would never be more than 1 W/m2 of output per W/m2 of input, which would limit the sensitivity to be less than 0.2C per W/m2.

Finally, much of the support for a high sensitivity comes from models. But as has been shown here, a simple gray body model predicts a much lower sensitivity and is based on nothing but the assumption that first principles physics must apply. Moreover, there are no tuneable coefficients, yet this model matches measurements far better than any other. The complex General Circulation Models used to predict weather are the foundation for models used to predict climate change. They do have physics within them, but also have many buried assumptions, knobs and dials that can be used to curve fit the model to arbitrary behavior. The knobs and dials are tweaked to match some short term trend, assuming it’s the result of CO2 emissions, and then extrapolated based on continuing a linear trend. The problem is that there are so many degrees of freedom in the model, it can be tuned to fit anything while remaining horribly deficient at both hindcasting and forecasting.

The results of this analysis explain the source of climate science skepticism, which is that IPCC driven climate science has no answer to the following question:

What law(s) of physics can explain how to override the requirements of the Stefan-Boltzmann Law as it applies to the sensitivity of matter absorbing and emitting energy, while also explaining why the data shows a nearly exact conformance to this law?

References

1) IPCC reports, definition of forcing, AR5, figure 8.1, AR5 Glossary, ‘climate sensitivity parameter’

2) Kevin E. Trenberth, John T. Fasullo, and Jeffrey Kiehl, 2009: Earth’s Global Energy Budget. Bull. Amer. Meteor. Soc., 90, 311–323.

3) Bode H, Network Analysis and Feedback Amplifier Design assumption of external power supply and linearity: first 2 paragraphs of the book

4) Manfred Mudelsee, The phase relations among atmospheric CO2 content, temperature and global ice volume over the past 420 ka, Quaternary Science Reviews 20 (2001) 583-589

5) Jouzel, J., et al. 2007: EPICA Dome C Ice Core 800KYr Deuterium Data and Temperature Estimates.

6) ISCCP Cloud Data Products: Rossow, W.B., and Schiffer, R.A., 1999: Advances in Understanding Clouds from ISCCP. Bull. Amer. Meteor. Soc., 80, 2261-2288.

7) “Diviner Lunar Radiometer Experiment” UCLA, August, 2009

I’m particularly interested in answers to the question posed at the end of the article.

George

There is no need to explain an overriding of the law because no overriding takes place. The observed increase in temperature from a perfect black body to where we are today is entirely consistent with the law and can be estimated by anyone who has finished a second year heat transfer course. An exact calculation is more complex, but not beyond your average graduate mechanical engineer.

And the same is true for a non ideal black body, also called a gray body. Unfortunately, consensus climate science fails to make this connection. They simply can’t connect the dots between the sensitivity of the gray body model and the claimed sensitivity which differ by about a factor of 4.

The simple answer is probably that the Stefan Boltzmann law only applies to bodies in thermal equilibrium.

As long as the concentrations of CO2 are changing the earth is storing energy and will continue to do so for several thousand years after CO2 levels stabilise (due to energy being stored in the ocean).

It should also be pointed out that neither Fig. 1 nor Fig. 2 conserves energy. In each case there is energy missing, meaning that the analysis is wrong.

Germinio,

“As long as the concentrations of CO2 are changing …”

The planet has completely adapted to all prior CO2 emissions, except perhaps some of the emissions in the last 8-12 months. If the climate changed as slowly as it would need to for your hypothesis to be valid, we would not even notice seasonal change, nor would hemispheric temperature vary by as much as 12C every 12 months, nor would the average temperature of the planet vary by as much as 3C during any 12 month period.

No. It just means that the earth has a fast and a slow response to any perturbations. Both together need to be considered before any claims that the earth is in thermal equilibrium and that the Stefan Boltzmann law can be applied.

Earth rotates. So it never ever will be in thermal equilibrium.

PS I agree with your assertion as to the necessity for equilibrium. It is not sufficient.

SB also assumes it is isothermal. Well silly me, so does thermal equilibrium require isothermality.

G

CART BEFORE HORSE?

https://wattsupwiththat.com/2016/12/06/quote-of-the-week-mcintyres-comment-to-dilbert-creator-scott-adams-on-climate-experts/comment-page-1/#comment-2363478

Hi again Michael,

I wrote above:

“Atmospheric CO2 lags temperature by ~9 months in the modern data record and also by ~~800 years in the ice core record, on a longer time scale.”

In my shorthand, ~ means approximately and ~~ means very approximately (or ~squared).

It is possible that the causative mechanisms for this “TemperatureLead-CO2Lag” relationship are largely similar or largely different, although I suspect that both physical processes (ocean solution/exsolution) and biological processes (photosynthesis/decay and other biological) play a greater or lesser role at different time scales.

All that really matters is that CO2 lags temperature at ALL measured times scales and does not lead it, which is what I understand the modern data records indicate on the multi-decadal time scale and the ice core data records indicate on a much longer time scale.

This does not mean that temperature is the only (or even the primary) driver of increasing atmospheric CO2. Other drivers of CO2 could include deforestation, fossil fuel combustion, etc. but that does not matter for this analysis, because the ONLY signal that is apparent in the data records is the LAG of CO2 after temperature.

It also does not mean that increasing atmospheric CO2 has no impact on temperature; rather it means that this impact is quite small.

I conclude that temperature, at ALL measured time scales, drives CO2 much more than CO2 drives temperature.

Precedence studies are commonly employed in other fields, including science, technology and economics. The fact that this clear precedence is consistently ignored in “climate science” says something about the deeply held unscientific beliefs in this field – perhaps it should properly be called “climate religion” or “climate dogma” – it just doesn’t look much like “science”.

Happy Holidays, Allan

It’s not normal science. It’s post normal science. The key characteristic of post normal science is to question the certainty of normal science. It’s a reversal of the burden of proof regarding our freedom to do things without first proving no harm. The pressure on this is occurring on every farm, home, beach, city in the world. Thus any amount of normal science suggesting that it is not likely that CO2 is a problem is going to be inadequate. The “sandpile” theory of Al Gore is the operative principle here. Catastrophe always results from piling sand too high. The fact that climate science fails is irrelevant. You must keep feeding the machine until they get it right. And of course they will never get it right because all the models will continue to feature CO2 as the operative principle of the greenhouse effect as they did in AR5 after the science opinion changed from all the warming to half the warming. The models continue to push for all. There are no science arguments to change this. The change can only occur politically and via retaining our culture of individual initiative.

Uh, those laws would be:

1) The law of unethical practitioner (given an accurate & accepted law of physics plus an unethical practitioner, results are unpredictable, usually catastrophically so)

2) The law of money (If you got money, I want some. When dealing with an unethical practitioner, results are unpredictable, usually catastrophically so)

3) Stupid people (ok, those lacking minimal scientific training) can be tricked into believing stupid things. (When manipulated by an unethical practitioner, results are unpredictable, usually catastrophically so)

You forgot the power law (I want to control you. When dealing with sheeple, results are predictable, they will worship and follow you even into catastrophes of their own doing.)

So many mistakes in this I don’t know where to start. If anyone wants an excellent and complete and relatively simple (for such a complicated concept) discussion of the science of CO2, I suggest taking Steve McIntyre’s advice and visiting scienceofdoom. This article isn’t sky dragons, but it is close.

If you think there are so many errors, pick one and I’ll tell you why it’s not an error and we can go on to the next one. Better yet, answer the question.

Figure one conflates absorptivity with emissivity. As drawn the proper coefficient is alpha, not epsilon. Though absorptivity is a function of emissivity, it isn’t the same thing and your figure is mistaken. I won’t carry on pointing out your other errors, minor and major. I’ve answered the question separately.

Figure 1 places a wikipedia defined black body as its source and a wikipedia defined gray body between the black body and where the output is observed. If you keep reading and go on to figure 2, you will see a more proper diagram where the equivalence between atmospheric absorption and effective emissivity of the gray body model are related.

This is just another model, and best practice for developing a model is to represent behavior in the simplest way possible. This way, there are fewer possibilities to make errors.

Uhhh. Figure two is algebraically identical to figure one and still conflates emissivity with absorptivity.

There is no conflation, although absorption and the EFFECTIVE emissivity of the gray body model are related to each other through equation 3.

I got lost at Fig. 1. A black body source emits radiation – OK. A gray body filter absorbs it .. that’s only a half of the story, it also emits radiation back. You have to include this effect.

Curious George,

Yes, you are correct and that point is addressed in Figure 2. Figure 1 simply uses the Wikipedia definitions of a black body and a gray body (one that doesn’t absorb all of the incident energy) to show how even the constrained Wikipedia definition of a gray body is just as valid for a gray body radiator, and it’s this gray body radiator model that closely approximates how the climate system responds to incident energy (forcing), from which the sensitivity can be calculated exactly.

I now look at Figure 2, assuming that the “Gray body atmosphere” is the “Gray body filter” of Fig. 1. In order to absorb all of the Black Body radiation, the Gray Body Filter would have to be black.

I have a feeling that you have a real message, but it needs work. In this form it does not get to me.

Curious George,

The gray body atmosphere absorbs A, passes (1-A) and redistributes A half into space and half back to the surface. The ‘grayness’ is manifested by the (1 – A) fraction that is passed through. This is the unabsorbed energy the wikipedia definition of a gray body fails to account for.

There is a box in the middle with an A. On the left there is Ps=σT^4. This is correct. On the right there are three equations with two arrows. The equation Po=Ps(1-A/2) is identical to Po=εσT^4, given that you have defined ε=(1-A/2). This is wrong. For one thing, T atmosphere is not the same as T surface. Also, the transmitted energy is a function of absorptivity, not emissivity. The correct equation is Po=ασT^4. α is not equal to ε. You are conflating emissivity and absorptivity. If we take the temperature of the gray body “surface” as T2, then what you are showing as Ps(A/2) is actually εσT2^4, but you have shown it to be εσT^4. T is not equal to T2. I could go on, but I won’t.

John,

T atmosphere is irrelevant to this model. Only T surface matters. Besides, other than clouds and GHG’s, the emissivity of the atmosphere (O2 and N2) is approximately zero, so its kinetic temperature, that is, its temperature consequential to translational motion, is irrelevant to the radiative balance and corresponding sensitivity. You might also be missing the fact that the (1-A)Ps term is the power not absorbed by the gray body atmosphere, per the Wikipedia definition of a gray body (see the dotted line?).

“Figure one conflates absorptivity with emissivity. As drawn the proper coefficient is alpha, not epsilon. ”

Except – “To be consistent with the Wikipedia definition, the path of the energy not being absorbed is omitted.” so Figure 1 merely shows a blackbody has epsilon = 1 and between 0 and 1 for the gray body. No conflating at all. Looks like you were just desperate to write “So many mistakes in this I don’t know where to start.” rather than an honest mistake. I don’t have my glasses with me so I’ll refrain from giving it a thumbs up and I suggest that you give it a more thorough read before giving it a thumbs down.

John,

Could you recommend a video (30 to 45 min.) explaining the science and ramifications of CAGW? I have Dr. Dressler’s debate with Dr. Lindzen, but it’s a little old. I’d like your take on a good video explaining CAGW.

Robert Essenhigh developed a quantitative thermodynamic model of the atmosphere’s lapse rate based on the Stefan-Boltzmann law:

“The solution predicts, in agreement with the Standard Atmosphere experimental data, a linear decline of the fourth power of the temperature, T^4, with pressure, P, and, at a first approximation, a linear decline of T with altitude, h, up to the tropopause at about 10 km (the lower atmosphere).” Prediction of the Standard Atmosphere Profiles of Temperature, Pressure, and Density with Height for the Lower Atmosphere by Solution of the (S-S) Integral Equations of Transfer and Evaluation of the Potential for Profile Perturbation by Combustion Emissions. Energy & Fuels, (2006) Vol 20, pp 1057-1067. http://pubs.acs.org/doi/abs/10.1021/ef050276y


How does this apply here? The only temperature in the model is the surface temperature which, at 1 atm, is still subject to the T^4 relationship. The model doesn’t care about how energy is redistributed throughout the atmosphere, just about how that energy is quantified at the boundaries and that from a macroscopic point of view of those boundaries, not only does it behave like a gray body, it must.

co2isnotevil. Essenhigh’s equations enable validating and extending White’s model. Earth’s average black body radiation temperature is not from the surface but from within the atmosphere. White states:

Essenhigh calculates temperature and pressure with elevation. He includes average absorption/emission of H2O and CO2 as the two primary greenhouse gases:

From these, a detailed thermodynamic climate sensitivity could be calculated from Essenhigh’s equations.

George says with regard to incoming energy : “If more arrives than is emitted, the temperature increases until the two are in balance.”

…

This is not necessarily true, especially when considering what happens when the incoming energy melts ice or evaporates water. The temperature remains constant while energy is absorbed, until the ice completely melts, or the water completely evaporates. Only after melting or evaporation ends can the temperature of the remaining mass begin to increase. Since there is both a lot of ice, and a lot of water on the planet earth, this presents a problem with this over-simplified model of the temperature response of our planet to incoming energy from the sun.
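To put rough numbers on the latent heat point, here is a minimal sketch. It assumes approximate textbook values for water (latent heat of fusion near 334 kJ/kg, specific heat near 4.186 kJ/kg/K); the constant and function names are illustrative only and do not come from the article.

```python
# Approximate textbook properties of water (assumed values, not from the thread).
LATENT_HEAT_FUSION = 334e3    # J/kg absorbed while ice melts at constant temperature
SPECIFIC_HEAT_WATER = 4186.0  # J/(kg K) absorbed per kelvin of warming of liquid water


def energy_to_melt(mass_kg):
    """Energy absorbed by melting ice with no change in temperature."""
    return mass_kg * LATENT_HEAT_FUSION


def energy_to_warm(mass_kg, delta_t_kelvin):
    """Energy absorbed by warming liquid water by delta_t_kelvin."""
    return mass_kg * SPECIFIC_HEAT_WATER * delta_t_kelvin


melt_energy = energy_to_melt(1.0)
# Warming of 1 kg of liquid water that the same energy would produce.
equivalent_warming_k = melt_energy / SPECIFIC_HEAT_WATER
print(f"Melting 1 kg of ice absorbs {melt_energy:.0f} J, "
      f"enough to warm 1 kg of water by about {equivalent_warming_k:.0f} K")
```

Whether this storage matters for a long term average is exactly what the replies below argue about; the sketch only shows the size of the effect per kilogram.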

Rob,

Consider the analysis to be an LTE analysis averaged across decades or more. The seasonal formation and melting of ice, evaporation of water and condensation as rain all happens in approximately equal and opposite amounts and more or less cancel. Any slight imbalance is too far in the noise to be of any appreciable impact. There’s also incoming energy turned into work that’s not heat. Consider the origin of hydroelectric power, although it eventually turns into heat when you turn on your toaster.

You have a point there co2isnotevil, consider the electromagnetic emissions visible in this picture. They do not follow the Stf-Boltz temperature relationship. They are not toasters, but a lot of sodium vapor lamps. http://spaceflight.nasa.gov/gallery/images/station/crew-30/hires/iss030e010008.jpg

Even LEDs emit heat, but isn’t the light still just photons leaving the planet?

Sodium vapor lamps and LEDs do not produce photons like an incandescent lamp. Since an incandescent lamp is using heat to generate the photons, it follows the Stf-Bltz equations. Yes the sodium vapor lamps and LEDs produce small amounts of heat, but they are not using heat to generate the photons they emit. So the emissions you see in the picture, being mostly sodium vapor lamps and powered by a hydroelectric dam, would not follow the Stf-Bltz law.

Rob,

So, the biggest anthropogenic influence by man is emitting light into space (Planck spectrum or not) which means that less LWIR must leave for balance and the surface cools. Before man, the biggest influence came from fireflies.

I think you’re confusing whether it’s a Planck spectrum or not with whether or not its emitted energy must conform to the SB Law. Consider that the clear sky emissions of the planet have a color temperature representing the surface temperature, but have an SB equivalent temperature that is lower owing to attenuation in GHG absorption bands.

In effect, we can consider a sodium lamp (or even a laser) a gray body emitter with lots of bandwidth completely attenuated from its spectrum accompanied with broad band attenuation making it seem the proper distance away such that the absolute energy emitted by the lamp measured at some specific distance matches what would be expected based on the color temperature of the lamp.

You missed the point co2isnotevil. The Stf-Blz analysis is inappropriate for the earth system, because there are numerous ways that incoming solar energy is stored/distributed on Earth other than as a temperature differential. My point is that the analysis in this article neglects important details that make the analysis invalid.

Rob,

My point is that the exceptions are insignificant, relative to the required macroscopic behavior. Biology consumes energy as well and turns it into biomass. But you add all this up and you will be hard pressed to find more than 1%.

Consider this co2isnotevil: The ” ε ” value for the Earth is not constant, but is a non-linear function of T. The best example would be comparing the ” ε ” value for Snowball Earth, versus the ” ε ” for Waterworld.

Rob,

Absolutely the emissivity is a function of T and here is that function:

http://www.palisad.com/co2/misc/st_em.png

None the less, in LTE and averaged across the planet, it has an average value and that’s all I’m considering here. The only sensitivity that matters is the long term change in long term averages. Because my analysis emphasizes sensitivity in the energy domain (ratios of power densities), rather than the temperature domain (IPCC sensitivity), the property of superposition makes averages more meaningful.

You can also look here to see other relationships between the variables provided by and derived from the ISCCP cloud data set. Of particular interest is the relationship between post albedo input power and the surface temperature, whose slope is about 0.2C per W/m^2. Where this crosses with the relationship between planet emissions and temperature is where the average is.

http://www.palisad.com/co2/sens

“Biology consumes energy as well and turns it into biomass.”

co2isnotevil, how much energy is “consumed” by increasing the volume of the atmosphere? Warmed gases expand, yes? It’s something I’ve not seen addressed, though maybe I missed it.

mellyrn,

“Warmed gases expand, yes?”

Yes, warmed gases expand and do work against gravity, but it’s not enough to be significant relative to the total energies involved.

What a load of complications you present. Lapse rate, can you explain it? Why is the stratosphere, well, stratified? How about that pesky lapse rate back at its shenanigans in the mesosphere? And then stratification again in the thermosphere?

These questions persist because some think they know the answer but have not questioned assumptions. Just like assuming no bacteria could live at a pH of under 1 and with all sorts of digestive enzymes... Helicobacter pylori ring a bell?

Keith,

“Lapse rate, can you explain it?”

Gravity. None the less, as I keep trying to say, what happens inside the atmosphere is irrelevant to the model. This is a model of the transfer function between surface temperature and planet emissions. The atmosphere is a black box characterized by the behavior at its boundaries. As long as the model matches at the boundaries, how those boundaries get into the state they are in makes no difference. This is standard best practices when it comes to reverse engineering unknown systems.

Anyone who thinks that the complications within the atmosphere have any effect, other than affecting the LTE surface temperature which is already accounted for by the analysis, is over thinking the problem. Part of the problem is that consensus climate science adds a lot of unnecessary complication and obfuscation to framing the problem. Many are bamboozled by the complexity which blinds them to the elegant simplicity of macroscopic behavior conforming to macroscopic physical laws.

No they are not insignificant, they’re the cause of the changing emissivity in your graph.

It is sign of regulation.

micro6500,

“they’re the cause of the changing emissivity in your graph.”

I’ve identified the largest deviation (at least the one around 273K) as the consequence of the water vapor GHG effect ramping up and not as the result of the latent heat consequential to a phase change. The former represents a change to the system, while the latter represents an energy flux that the system responds to. Keep in mind that the gray body model is a model of the transfer function that quantifies the causality between the behavior at the top of the atmosphere and the bottom. This transfer function is dependent on the system, and not the specific energy fluxes and at least per the IPCC, the sensitivity is defined by the relationship between the top (forcing) and bottom of the atmosphere (surface temp).

I understand.

I’m just pointing out that there is a physical reason for emissivity to be changing, it is the atm adapting to the differing ratios of humidity and temperature as you sweep from equator to pole and the day to day swings in temp (which everyone seems to want to toss out!). The big dips are where the limits of the regulation are reached because you’ve hit the min and max temps of your working “fluid”. But in between, you’re seeing the blend of 2 emissivity rates getting averaged.

Do all of the measurements line up on an emissivity line in Fig 3?

So what I haven’t solved is the temp/humidity map that defines outgoing average radiation for all conditions of humidity under clear skies. In the same black box fashion, if you have an equation that defines that line in Fig 3 (instead of an exp regression of the data points), a physical equation based on this changing ratio would have to have the same answer, right?

micro6500,

“if you have an equation that defines that line in Fig 3”

The green line in Figure 3 is definitely not a regression of the data, but the exact relationship given by the SB equation with an emissivity of 0.62 (power on X axis, temp on Y axis). It’s equation 1 in the post.
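As a rough illustration of that relationship, the sketch below evaluates Equation 1 in both directions with the emissivity of 0.62 quoted here. The 288K surface temperature is an assumed round number taken from elsewhere in this thread, and the function names are illustrative, not from the post.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant in W/m^2 per K^4, as given in the post


def emissions(temperature_k, emissivity=1.0):
    """Equation 1: P = emissivity * sigma * T^4, in W/m^2."""
    return emissivity * SIGMA * temperature_k ** 4


def temperature(power_w_m2, emissivity=1.0):
    """Equation 1 inverted: T = (P / (emissivity * sigma))^(1/4), in K."""
    return (power_w_m2 / (emissivity * SIGMA)) ** 0.25


surface_t = 288.0                                    # assumed average surface temperature, K
black_body_p = emissions(surface_t)                  # roughly 390 W/m^2
gray_body_p = emissions(surface_t, emissivity=0.62)  # roughly 242 W/m^2
# Temperature of an ideal black body emitting the same power as the gray body.
equivalent_t = temperature(gray_body_p)              # roughly 255.6 K

print(f"{black_body_p:.1f} W/m^2, {gray_body_p:.1f} W/m^2, {equivalent_t:.1f} K")
```

The last value is the kind of "SB equivalent temperature" mentioned earlier in the thread: lower than the source temperature because the emissions are attenuated.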

So the average of this is about e=.62?

“is about e=.62?”

Yes, the average EQUIVALENT emissivity is about 0.62. To be clear, this is related to atmospheric absorption by equation 3 and atmospheric absorption can be calculated with line by line simulations which gets approximately the same value of A corresponding to an emissivity of 0.62 (within the precision of the data). So in effect, both absorption (emissivity of the gray body atmosphere) and the effective emissivity of the system can be measured and/or calculated to cross check each other.

Rob, you are attempting to apply local physical conditions to a global radiation model of limits on the radiation. The energy that goes to melting ice or evaporating water stays in the system, without changing the system temperature until it affects one or both of the physical boundaries- the surface or the upper atmosphere emissions.

Seeing that oceans comprise almost 70% of the surface of the planet, you cannot call them “local.”

Condensation happens around 18000 feet above MSL on average. That corresponds to the halfway point on atmospheric mass distribution. It is also where flight levels start in the US because barometric altimetry gets dicey and one must rely on en route ATC to maintain separation … enough aviation, back to meat and taters.

Average precipitation is about 34″ of rain per year. The enthalpy escapes sensible quantification via thermometry but once at 18,000 feet, it heats the upper troposphere and even some of the coldest layers of the stratosphere where it RISES…
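For scale, the latent heat carried by that much rain can be converted to an average flux. This is a minimal sketch assuming a latent heat of vaporization of about 2.5 MJ/kg and a water density of 1000 kg/m^3, both approximate textbook values rather than figures from this thread.

```python
INCH_TO_M = 0.0254
WATER_DENSITY = 1000.0             # kg/m^3 (assumed)
LATENT_HEAT_VAPORIZATION = 2.5e6   # J/kg (assumed approximate value)
SECONDS_PER_YEAR = 365.25 * 86400


def latent_heat_flux(rain_inches_per_year):
    """Average latent heat flux in W/m^2 implied by an annual rainfall depth."""
    depth_m = rain_inches_per_year * INCH_TO_M
    mass_per_m2 = depth_m * WATER_DENSITY   # kg of water per m^2 per year
    return mass_per_m2 * LATENT_HEAT_VAPORIZATION / SECONDS_PER_YEAR


flux = latent_heat_flux(34.0)
print(f"34 inches of rain per year carries roughly {flux:.0f} W/m^2 of latent heat")
```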

This is not quite true. Ice colder than the melting point will warm. Evaporation of water will only change if it warms (for a given humidity). The cooling effect of the evaporation will reduce the warming but not eliminate it. This misunderstanding is behind the reason the large negative feedback effect of evaporation cooling is largely ignored. Latent heat is moved from the surface (mostly the oceans) to the clouds when it condenses, and part is radiated to space from cloud tops.

Richard,

Covered this in a previous thread, but the bottom line is that the sensitivity and this model is all about changes to long term averages that are multiples of years. Ice formation and ice melting as well as water evaporation and condensing into rain happens in nearly equal and opposite amounts and any net difference is negligible relative to the entire energy budget integrated over time.

“Atmospheric GHG’s and clouds absorb energy from the surface, while geometric considerations require the atmosphere to emit energy out to space and back to the surface in roughly equal proportions.”

It goes wrong there. It’s true very locally, and would be true if the atmosphere were a thin shell. But it’s much more complex. It is optically dense at a lot of frequencies, and has a temperature gradient (lapse rate). If you think of a peak CO2 emitting wavelength, say λ = 15 μ, then the atmosphere emits upward in the λ range at about 225K (TOA). But it emits to the surface from low altitude, at about 288K. It emits far more downward than up.
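The size of the temperature effect at a single wavelength can be sketched with the Planck function. This compares ideal black body spectral radiance at 15 μ for the two temperatures quoted above; it is only an illustration of the argument, not a radiative transfer calculation, and the function name is illustrative.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_spectral_radiance(wavelength_m, temperature_k):
    """Black body spectral radiance B_lambda(T) in W per m^2 per sr per m."""
    prefactor = 2.0 * H * C ** 2 / wavelength_m ** 5
    exponent = H * C / (wavelength_m * K_B * temperature_k)
    return prefactor / math.expm1(exponent)


wavelength = 15e-6  # 15 micrometres
b_low_altitude = planck_spectral_radiance(wavelength, 288.0)
b_toa = planck_spectral_radiance(wavelength, 225.0)
ratio = b_low_altitude / b_toa
print(f"Radiance at 288K is about {ratio:.1f} times the radiance at 225K at 15 microns")
```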

Nick,

The atmosphere is a thin shell, at least relative to the BB surface beneath it.

You should also look at the measured emission spectrum of the planet. Wavelengths of photons emitted by the surface that would be 100% absorbed show significant energy from space, even in the clear sky. In fact, the nominal attenuation is about 3 dB less than it would be without absorption lines.

George,

The atmosphere is optically thick at the frequencies that matter. Mean free path for photons can be tens of metres. But the more important issue is temperature gradient. You want to use S-B; what is T? It varies hugely through this “thin shell”.

Nick,

The atmosphere is optically thick to the relevant wavelengths only when clouds are present, but not the emissions of the clouds themselves. The clear sky lets about half of all the energy emitted by the surface pass into space without being absorbed by a GHG and more than half of the emissions by clouds owing to less water vapor between cloud tops and space. The nominal attenuation in saturated absorption bands is only about 3 dB (50%) owing to the nominal 50/50 split of absorbed energy.
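For readers less used to decibels, the equivalence between 3 dB and a 50% power ratio is a one-line conversion. The helper names in this small sketch are illustrative.

```python
import math


def db_to_fraction(attenuation_db):
    """Fraction of power remaining after an attenuation given in dB."""
    return 10.0 ** (-attenuation_db / 10.0)


def fraction_to_db(fraction_remaining):
    """Attenuation in dB for a given fraction of power remaining."""
    return -10.0 * math.log10(fraction_remaining)


print(f"3 dB leaves {db_to_fraction(3.0):.3f} of the power")
print(f"A 50% split is {fraction_to_db(0.5):.2f} dB")
```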

The atmospheric temperature gradient is irrelevant for the reasons I cited earlier. The model is only concerned with the relationship between the energy flux at the top and bottom of the atmosphere. How that measured and modelled relationship is manifested makes no difference.

Being Canadian, I have to say. . . . Eh? Are you suggesting that the direction of radiation from any particular particle is not completely random? Given that the energy emitted by the heated particles decreases with temperature and temperature decreases with altitude, I can’t see how emissions are preferentially directed downward. The hottest stuff is the lowest. Heat moves from hot to cool. The heat moves up, not down. As do the emissions. Emissive power decreases with temperature. For any particular molecule, the odds that the energy will go to space are the same as the odds it will go to ground. I’m missing something Nick.

Nick. Never mind. I see it. For others. Consider a co2 molecule at 10 meters. It gets hit by a photon from the surface. It can radiate the energy from that photon in any direction. Now consider a molecule at twenty meters. It too gets hit by a photon from the surface. It is also possible for that molecule to get hit by the photon emitted by the molecule at 10 meters. There are more molecules at 10 meters than at twenty, so there is more emission downwards. Over 10’s of meters this is hard to measure. Over 10 kilometres, a bit less. Of course the odds of the molecule at 20 meters seeing a photon are less because some of those were absorbed at 10 meters. Also, the energy of the photons emitted by the molecules at 10 meters is lower because the temperature is lower. Have you done the math Nick? Is it a wash, or is there more downward emission?

John,

The density profile doesn’t really matter because the ‘excess’ emissions downward are still subject to absorption before they get to the surface and upward emissions have a lower probability of being absorbed.

Also, as I talked about in the article, if the atmosphere absorbs more than about 75% of the surface emissions, then less than half is returned to the surface. If the atmosphere absorbs less than 75% of the surface emissions, then more than half must be returned to the surface. My line by line simulations of a standard atmosphere with average clouds gets a value of A about 74.1%, so perhaps slightly more than half is returned to the surface, but it’s within the margin of error. Two different proxies I’ve developed from ISCCP data show this ratio to bounce around 50/50 by a couple of percent.
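The dependence of the split on A can be sketched with simple energy bookkeeping. The relation used below is an assumption for illustration: planet emissions equal the surface emissions that pass straight through, (1 - A), plus the fraction of the absorbed part, A, that ends up leaving to space. With the equivalent emissivity held at the 0.62 quoted in this thread, the fraction returned to the surface follows directly. The function name is illustrative.

```python
def fraction_returned_to_surface(absorbed_fraction, emissivity=0.62):
    """Share of absorbed surface emissions sent back down.

    Assumed bookkeeping: emissivity = (1 - A) + A * f_up, so
    f_up = (emissivity - (1 - A)) / A and f_down = 1 - f_up.
    """
    f_up = (emissivity - (1.0 - absorbed_fraction)) / absorbed_fraction
    return 1.0 - f_up


for a in (0.70, 0.741, 0.76, 0.80):
    print(f"A = {a:.3f}: {fraction_returned_to_surface(a):.3f} returned to the surface")
```

Under this assumption the break-even point where exactly half is returned sits at A = 2 * (1 - 0.62) = 0.76, which is in line with the "about 75%" stated above, and A = 0.741 gives slightly more than half returned.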

John, a photon at 15 μ carries the same energy regardless of the bulk temperature of the gas. The energy increases directly with the frequency. Due to collisions some molecules always have a higher energy and can emit a photon. The frequency of the photon depends on what is emitting the photon and how the energy is distributed among the electrons in the molecule or atom. The energy of the photon doesn’t depend on the temperature, but the number emitted/volume does.

Does this evolve the atm conditions second by second? If it’s just a static snapshot it is meaningless.

“Does this evolve the atm conditions second by second?”

Not necessary, but is based on averages of data sampled at about 4 hour intervals for 3 decades.

Sensitivity represents a change in long term averages and that is all we should care about when considering what the sensitivity actually is.

Then it’s wrong, the outgoing cooling rate changes at night as air temps near dew point, it is not static. You can not just average this into a “picture” of what’s happening. This is another reason the results are so wrong.

micro6500,

“You can not just average …”

Without understanding how to properly calculate averages, any quantification of the sensitivity is meaningless and quantifying the sensitivity is what this is all about.

Actually sensitivity has to be very low, Min temps are only very minimally affected by co2, it’s 98-99% WV.

John,

The main thing to remember is not so much the concentration gradient, but the temperature gradient. Your notion of a CO2 molecule re-radiating isn’t quite right. GHG molecules that absorb mostly lose the energy through collision before they can re-radiate. Absorption and radiation are decoupled; radiation happens as it would for any gas at that temperature.

At high optical density (say 15 μ), a patch of air radiates equally up and down. Absorption is independent of T. But the re-emission isn’t. What went down is absorbed by hotter gas, and re-emitted at higher intensity.

There is a standard theory in heat transfer for the high optical density case, called Rosseland radiation. The radiant transfer satisfies the diffusion equation. Flux is proportional to temperature gradient, and the conductivity is inversely proportional to optical depth (mean path length). This works as long as most of the energy is reabsorbed before reaching surface or space. Optical depth>3 is a rule of thumb, although the concept is useful lower. It’s really a grey body limit – messier when there are big spectral differences.
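A minimal sketch of the gray Rosseland diffusion form may help: the radiative flux is taken as (16 σ T³ / (3 β)) times the temperature gradient, where β is the mean extinction coefficient (the reciprocal of the photon mean free path). The 30 m mean free path and 6.5 K/km gradient below are hypothetical illustrative inputs, chosen only because "tens of metres" is mentioned above, and a real atmosphere is far from gray.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W/m^2 per K^4


def rosseland_flux(temperature_k, gradient_k_per_m, mean_free_path_m):
    """Diffusive radiative flux magnitude (W/m^2) in the optically thick gray limit.

    q = (16 * sigma * T^3 / (3 * beta)) * |dT/dz|, with beta = 1 / mean free path.
    The flux points from warm to cold.
    """
    beta = 1.0 / mean_free_path_m  # extinction coefficient, 1/m
    conductivity = 16.0 * SIGMA * temperature_k ** 3 / (3.0 * beta)
    return conductivity * abs(gradient_k_per_m)


# Hypothetical inputs: 288 K air, 6.5 K/km gradient, 30 m photon mean free path.
flux = rosseland_flux(288.0, 6.5e-3, 30.0)
print(f"Diffusive radiative flux is about {flux:.2f} W/m^2 for these inputs")
```

The point of the sketch is the scaling: the flux grows with the temperature gradient and with the mean free path, and vanishes when there is no gradient.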

“Have you done the math Nick? Is it a wash, or is there more downward emission?”

I think the relevant math is what I said above. Overall, warmer emits more, and the emission reaching the surface is much higher than that going to space, just based on temp diff.

At issue is it’s not static during the night, it changes as air temps cool toward dew point, as water vapor takes over the longer wave bands (the optical window doesn’t change temp).

Nick

GHG molecules also absorb energy through collision, guess what they do with that energy.

Nick

The atmosphere is a gas and therefore doesn’t emit blackbody/ graybody radiation. It only emits spectral lines. If you are considering particles like dust and water( in liquid and solid phase) then it can emit BB/GB radiation.

Alex,

“It only emits spectral lines.”

Yes, but even more importantly, only a tiny percent of the gas molecules in the atmosphere have spectral lines in the relevant spectra.

Oddly enough, many think that GHG absorption is rapidly ‘thermalized’ into the kinetic energy of molecular motion which would make it unavailable for emission away from the planet (O2/N2 doesn’t emit LWIR photons) and given that only about 90 W/m^2 gets through the transparent window (Trenberth claims even less), it’s hard to come up with the 145 W/m^2 shortfall without substantial energy at TOA in the absorption bands.

I don’t like the term ‘thermalised’. It implies a one way direction when in fact it isn’t. Molecules can lose vibrational energy through collision, they can also obtain rotational energy through collision. It goes equally both ways. Emission and absorption are also equal. A complex interchange but always in balance(according to probability of course).

It’s all a matter of detection. Most people (including scientists) don’t know how stuff works. They are basically lab rats that don’t have a clue. They don’t need to know, they just do their job accurately and precisely. Unfortunately the conclusions they draw can be totally erroneous.

If you imagine a molecule as a sphere then it will emit in any direction. In fact over 41,000 directions if the directions are 1 deg wide. Good luck having a detector in the right place to do that. that’s why it’s easier to use absorption spectroscopy. All energy comes from one direction and there are enough molecules to ‘get in the way’ and absorb energy. There is no consideration for emission, which can be in any direction and undetectable.
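The "over 41,000 directions" figure is the number of square degrees in a full sphere, which can be checked in a couple of lines. The variable names are illustrative.

```python
import math

# A full sphere subtends 4*pi steradians; one radian is 180/pi degrees,
# so one steradian is (180/pi)^2 square degrees.
SQUARE_DEGREES_PER_STERADIAN = (180.0 / math.pi) ** 2
full_sphere_square_degrees = 4.0 * math.pi * SQUARE_DEGREES_PER_STERADIAN

print(f"A full sphere covers about {full_sphere_square_degrees:.0f} square degrees")
```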

The instrumentation is perfect for finding trace quantities of molecules and things. Absolutely useless for determining the total energy emitted by molecules.

Anyone who thinks they can determine emission and energy transfer through this method should have their eye removed with a burnt stick.

Everything that is above zero K Temperature emits thermal radiation; including all atmospheric gases.

It’s called thermal radiation because it depends entirely on the Temperature and is quite independent of any atomic or molecular SPECTRAL LINES.

Its source is simply Maxwell’s equations and the fact that atoms and molecules in collision involve the acceleration of electric charge.

An H2 molecule essentially has zero electric dipole moment, because the positive charge distribution and the negative charge distribution both have their center of charge at the exact same place.

But during a collision between two such molecules (which is ALL that “heat” (noun) is), the kinetic energy and the momentum is concentrated almost entirely in the atomic nuclei, and not in the electron cloud.

The proton and the electron have the same magnitude electric charge (+/-e) but the proton is 1836 times as massive as the electron, so in a collision it is the protons that do the billiard ball collision thing, and the result is a separation (during the collision) of the +ve charge center and the negative charge center due to the electrons, and that results in a distortion of the symmetry of the charge distribution which results in a non-zero electric dipole moment, so you get a radiating antenna that radiates a continuum spectrum based on just the acceleration of the charges. There also are higher order electric moments, which might be quadrupolar, octopolar or hexadecapolar moments, and they all can make very fine radiating antennas.

Yes the thermal radiation from gases is low intensity but that is because the molecular density of gases is very low. They are highly transparent (to at least visible radiation) which is why their thermal radiation isn’t black body Stefan-Boltzmann or Planck spectrum radiation.

Some of the 4-H club physics that gets bandied about in these columns, makes one wonder what it is they teach in schools these days. Well I guess I actually know that since I am married to a public school teacher.

G

George E. Smith,

“Everything that is above zero K Temperature emits thermal radiation; including all atmospheric gases.”

Not at any relevant magnitude relative to LWIR and it can be ignored. In astrophysics, the way gas clouds are detected is by either emission lines if it’s hot enough or absorption lines of a back lit source if it’s not. The problem is that the kinetic energy of an atmospheric O2/N2 molecule in motion is about the same as an LWIR photon, so to emit a relevant photon, it would have to give up nearly all of its translational energy. If only laser cooling could be this efficient.
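The two energies being compared can be put side by side. This sketch assumes the mean translational kinetic energy of a gas molecule is (3/2)kT and uses a 288K temperature and a 15 μ wavelength as illustrative inputs; the photon energy is hc/λ.

```python
H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def mean_translational_energy(temperature_k):
    """Mean translational kinetic energy of a gas molecule, (3/2) k T, in J."""
    return 1.5 * K_B * temperature_k


def photon_energy(wavelength_m):
    """Energy of a single photon, h c / wavelength, in J."""
    return H * C / wavelength_m


kinetic = mean_translational_energy(288.0)   # assumed near-surface air temperature
photon = photon_energy(15e-6)                # 15 micron LWIR photon
ratio = photon / kinetic
print(f"kinetic {kinetic:.2e} J, photon {photon:.2e} J, ratio {ratio:.1f}")
```

With these inputs the two energies are of the same order of magnitude, with the photon carrying roughly twice the mean translational energy.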

A Planck spectrum arises as molecules with line spectra merge their electron clouds forming a liquid or solid and the degrees of freedom increase as more and more molecules are involved. This permits the absorption and emission of photons that are not restricted to be resonances of an isolated molecule’s electron shell. In one sense, it’s like extreme collisional broadening.

Have you tried collision simulations based on nothing but the repulsive force of one electron cloud against another? The colliding molecules change direction at many atomic radii away from where the electrons get close enough to touch/merge. As they cool, they can get closer and the outer electron shells merge which initiates the phase change from a gas to a liquid. In fact, nearly all interactions between atoms and molecules occurs in the outer most electron shell.

Nick Stokes, January 5, 2017 at 6:49 pm

“Atmospheric GHG’s and clouds absorb energy from the surface, while geometric considerations require the atmosphere to emit energy out to space and back to the surface in roughly equal proportions.”

It goes wrong there. It’s true very locally, and would be true if the atmosphere were a thin shell. But it’s much more complex. It is optically dense at a lot of frequencies, and has a temperature gradient (lapse rate). If you think of a peak CO2 emitting wavelength, say λ = 15 μ, then the atmosphere emits upward in the λ range at about 225K (TOA). But it emits to the surface from low altitude, at about 288K. It emits far more downward than up.”

–

Nick. It goes wrong there. When you write, ” It emits far more downward than up.”

Surfaces emit upwards by definition. Very hard to emit anything when it goes inwards instead of outwards.

Nonetheless atoms and molecules emit in all directions equally.

Hence the atmosphere, not being a surface, at all levels emits upwards, downwards and sideways equally.

What you are trying to say, I guess is that there is a lot of back radiation of the same energy before it finally gets away.

This does not and cannot imply that anything emits more downwards than upwards. Eventually it all flows out the upwards plughole [vacuum], while always emitting equally in all directions except from the surface.

Nick,

“It goes wrong there. It’s true very locally, and would be true if the atmosphere were a thin shell. But it’s much more complex. It is optically dense at a lot of frequencies, and has a temperature gradient (lapse rate). If you think of a peak CO2 emitting wavelength, say λ = 15 μ, then the atmosphere emits upward in the λ range at about 225K (TOA). But it emits to the surface from low altitude, at about 288K. It emits far more downward than up.”

Yes, significantly more IR is passed to the surface from the atmosphere than is passed from the atmosphere into space, due to the lapse rate. Roughly a ratio of 2 to 1, or about 300 W/m^2 to the surface and 150 W/m^2 into space. However, if you add these together that’s a total of 450 W/m^2. The maximum amount of power that can be absorbed by the atmosphere (from the surface), i.e. attenuated from being transmitted into space, is about 385 W/m^2, which is also the net amount of flux that must exit the atmosphere at the bottom and be added to the surface in the steady-state. By George’s RT calculation, about 90 W/m^2 of the IR flux emitted by the surface is directly transmitted into space, leaving about 300 W/m^2 absorbed. This means that the difference of about 150 W/m^2, i.e. 450-300, must be part of a closed flux circulation loop between the surface and atmosphere, whose energy is neither adding nor taking away joules from the surface, nor adding or taking away joules from the atmosphere.
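The bookkeeping in that paragraph can be laid out step by step. The sketch below only restates the rounded numbers quoted above; the variable names are illustrative and nothing here is an independent measurement.

```python
# Rounded fluxes quoted in the comment, all in W/m^2.
atmosphere_to_surface = 300.0
atmosphere_to_space = 150.0
surface_emissions = 385.0
transmitted_to_space = 90.0   # surface IR passing straight through the atmosphere

total_atmospheric_emission = atmosphere_to_surface + atmosphere_to_space  # 450
absorbed_from_surface = surface_emissions - transmitted_to_space          # 295, "about 300"
absorbed_rounded = 300.0                                                  # value used in the comment
closed_loop_flux = total_atmospheric_emission - absorbed_rounded          # 150

print(f"total atmospheric emission: {total_atmospheric_emission:.0f}")
print(f"absorbed from the surface:  {absorbed_from_surface:.0f} (rounded to {absorbed_rounded:.0f})")
print(f"closed loop flux:           {closed_loop_flux:.0f}")
```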

Remember, not all of the 300 W/m^2 of IR passed to the surface from the atmosphere is actually added to the surface. Much of it is replacing non-radiant flux leaving the surface (primarily latent heat), but not entering the surface. The bottom line is in the steady-state, any flux in excess of 385 W/m^2 leaving or flowing into the surface must be net zero across the surface/atmosphere boundary.

George’s ‘A/2’ or claimed 50/50 split of the absorbed 300 W/m^2 from the surface, where about half goes to space and half goes to the surface, is NOT a thermodynamically manifested value, but rather an abstract conceptual value based on a box equivalent model constrained by COE to produce a specific output at the surface and TOA boundaries.

Just because the atmosphere as a whole mass emits significantly more downward to the surface and upwards into space does NOT mean upwelling IR absorbed somewhere within has a greater chance of being re-radiated downwards than upwards. Whether a particular layer is emitting at 300 W/m^2 or 100 W/m^2, if 1 additional W/m^2 from the surface is absorbed, that layer will re-emit +0.5 W/m^2 and +0.5 W/m^2 down. The re-emission of the absorbed energy from the surface, no matter where it goes or how long it persists in the atmosphere, is henceforth non-directional, i.e. occurs with by and large equal probability up or down. And it is this re-radiation of absorbed surface IR back downwards towards (and not necessarily back to) the surface that is the physical driver of the GHE or the underlying mechanism of the GHE. NOT the total amount of IR the atmosphere as a whole mass passes to the surface.

The physical meaning of the ‘A/2’ claim or the 50/50 equivalent split is that not more than about half of what’s captured by GHGs (from the surface) is contributing to downward IR push in the atmosphere that ultimately leads to the surface warming, where as the other half is contributing to the massive cooling push the atmosphere makes by continuously emitting IR up at all levels. Or only about half of what’s initially absorbed is acting to ultimately warm the surface, where as the other half is acting to ultimately cool the system and surface.

This was supposed to say:

“Just because the atmosphere as a whole mass emits significantly more downward to the surface THAN upwards into space does NOT mean upwelling IR absorbed somewhere within has a greater chance of being re-radiated downwards than upwards.”

This also was supposed to say:

“Whether a particular layer is emitting at 300 W/m^2 or 100 W/m^2, if 1 additional W/m^2 from the surface is absorbed, that layer will re-emit +0.5 W/m^2 UP and +0.5 W/m^2 down.”

” It emits far more downward than up.”

Photons are emitted equally in all directions. At optical thickness below 300 meters the atmosphere radiates as a blackbody. CO2 is absorbing and emitting (and more importantly kinetically warming the transparent bulk of the atmosphere) according to its specific material properties all the while throughout this 300m section.

The specific material property of CO2 is that it is a very light shade of greybody. It absorbs incredibly well, but re-radiates only a fraction of the incident photons. It transfers radiation poorly. Radiative transfer, up or down, is simply not how it works in the atmosphere.

Admittedly, a bit out of my depth here. “Photons are emitted equally in all direction”. Is this statement impacted by geometry? By this I mean, aren’t both the black body and grey body spherical or at least circular?

Clif,

“Is this statement impacted by geometry?”

Absolutely and this explains the roughly 50/50 split between absorbed energy leaving the planet or being returned to the surface.

It’s for the same reason that we consider the average input about 341 W/m^2 and not 1366 W/m^2 which is the actual flux arriving from the Sun. It just arrives over 1/4 the area over which its ultimately emitted.
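The factor of 1/4 is the ratio of the area of the disk that intercepts sunlight to the area of the sphere that emits. A minimal sketch, with illustrative names and a unit radius since the radius cancels:

```python
import math

SOLAR_FLUX = 1366.0  # W/m^2 arriving from the Sun, as quoted above


def disk_to_sphere_ratio(radius=1.0):
    """Intercepting disk area (pi r^2) over emitting sphere area (4 pi r^2)."""
    return (math.pi * radius ** 2) / (4.0 * math.pi * radius ** 2)


average_input = SOLAR_FLUX * disk_to_sphere_ratio()
print(f"Average input over the whole sphere: {average_input:.1f} W/m^2")
```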

A blackbody has no inherent dimension or shape. It is just a concept. The word “radiation” itself implies circularity, but that’s just the way we like to think of something that goes in every imaginable direction equally.

gymnosperm,

“It absorbs well, but re-radiates only a fraction of the incident photons.”

Not necessarily so. The main way that an energized CO2 molecule returns to the ground state is by emitting a photon of the same energy that energized it in the first place and a collision has a relatively large probability of resulting in such emission. It’s a red herring to consider that much of this is ‘thermalized’ and converted into the translation energy of molecules in motion. If this was the case, we would see little, if any, energy in absorption bands at TOA since that energy would get redistributed across the whole band of wavelengths, nor would we see significant energy in absorption bands being returned to the surface. See the spectrums Nick posted earlier in the comments.

CO2 has only one avenue from the ground state to higher vibrational and rotational energy levels. This avenue is the Q branch and it gets excited at WN 667.4. This fundamental transition is accompanied by constructive and destructive rotations that intermittently occupy the range between 630 and 720. CO2 also has other transitions summarized below.

“Troposphere” was a mental lapse intended as tropopause, but I have left it because it is interestingly true.

If you are measuring light transmission through a gas filled tube and you switch off 667.4, all the other transitions must go dark as well.

The real world is not so simple and there are lots of ways for molecules to gain energy.

It is well known that from ~70 kilometers satellites see CO2 radiating at the tropopause. This is quite remarkable because it is also well known that CO2 continues to radiate well above the tropopause and into the mesosphere.

The point here is that the original source of 667.4 photons is the earth’s surface. In a gas tube it is impossible to know if light coming out the other end has been “transmitted” as a result of transparency, or absorption and re-emission. What we do know is that within one meter 667.4 is virtually extinguished and the tube warms up.

The fate of a 667.4 photon leaving the earth’s surface is the question. The radiative transfer model will have it being passed between layers of the atmosphere by absorption and re-emission like an Australian rules football…

I think it’s quite possible that it really doesn’t do much until water vapor starts condensing, which has a lot of nodes in the 15u area, so during condensing events, the water is a bright emitter, and it could stimulate the CO2 @ 15u. The stuff that goes on inside gas lasers……

Yes.

And the satellites looking down see CO2 radiating at the tropopause, where absorption of solar radiation by ozone adds a lot of new energy. This in spite of looking down through ~60 km of stratosphere reputedly cooling from radiating CO2.

I have been reading the comments in:

http://jennifermarohasy.com/2011/03/total-emissivity-of-the-earth-and-atmospheric-carbon-dioxide/

There is a fascinating exchange between Nasif Nahle and Science of Doom.

SOD argues transmission = 1-absorption and what is absorbed must be transmitted.

Nasif calculates from measurements a column emissivity of .002, and then argues absorption must be similarly low.

Their arguments BOTH fail on Kirchhoff’s law, which pertains only to blackbodies. CO2 is a greybody, a class of materials that DO NOT follow Kirchhoff’s law.

It’s too simplistic a solution.

“It’s too simplistic a solution.”

What’s not transmitted is absorbed and eventually re-transmitted.

The difference between transmission and re-transmission is that transmission is immediate and across the same area as absorption while re-transmission is delayed and across twice the area. It’s the delayed downward re-transmission that makes the surface warmer than it would be based on incident solar input alone. Clouds and GHG’s contribute to re-transmission where the larger effect is from clouds.

The only thing necessary to grasp in this perceived “torrent of words”, a tour de force unlike any on the matter, is George’s explication of the ‘gray body’.

It is that simple. Bravo.

Well, all of this “average” radiation calculation stuff is really good fun.

But, the correct way to analyze this problem is to follow each instance of a “ray” of light (with its corresponding energy) through a complex system and apply the known and very well verified laws of refraction, transmission, scattering, etc to each and every “ray” of light moving through the system.

Once this is done properly one quickly concludes that the “Radiative Greenhouse Effect” simply delays the transit time of energy through the “Sun/Atmosphere/Earth’s Surface/Atmosphere/Energy Free Void of the Universe” system by some very small time increment, probably tens of milliseconds, perhaps as much as a few seconds.

Given that there are about 86 million milliseconds (or 86,000 seconds) in each day, this delay of a few tens or hundreds of milliseconds has NO effect on the average temperature at the surface of the Earth.

I again suggest that folks “read up” about how optical integrating spheres function. The optical integrating sphere exhibits what a climate scientist would consider nearly 100% forcing (aka “back-radiation”) and yet there is no “energy gain” involved.

Yes, a “light bulb” inside an integrating sphere will experience “warming” from “back radiation” and this will change its efficacy (aka efficiency). BUT in the absence of a “power supply”, a unit that can provide “unlimited” energy (within some bounds, say +/- 100%), this change in efficacy cannot raise the average temperature of the emitting body.

This is all well known stuff to folks doing absolute radiometry experiments. “Self absorption” (aka the greenhouse effect) is a well known and understood effect in radiometry. It is considered a “troublesome error source” and means to quantify and understand it are known, if only to a small set of folks that consider themselves practitioners of “absolute radiometry”.

Thanks for your post, Cheers KevinK.

KevinK January 5, 2017 at 7:22 pm

But, the correct way to analyze this problem is to follow each instance of a “ray” of light (with its corresponding energy) through a complex system and apply the known and very well verified laws of refraction, transmission, scattering, etc to each and every “ray” of light moving through the system.

“Radiative Greenhouse Effect” simply delays the transit time of energy by some very small time increment, probably tens of milliseconds, perhaps as much as a few seconds.”

Kevin, a slight problem is that that ray of light/energy package may actually hit millions of CO2 molecules on the way out. A few milliseconds, no problem, but a thousand seconds is about 17 minutes, which means the heat could and does stay around for a significant time interval. Lucky for us in summer I guess.

angech, please consider that light travels at 186,000 miles per second (still considered quite speedy). So even if it “collides” with a million CO2 molecules and gets redirected to the surface, its speed is reduced to (about) 0.186 miles per second (186,000 / 1 million). That is still about 669 miles per hour (above the speed of sound, depending on altitude).

So, given that the vast majority of the mass of the atmosphere around the Earth is within ten miles of the surface, at ~669 miles per hour the “back radiation” has exited to the “energy free void of space” after 0.014 hours (10 miles / 669 mph) which equals (0.014 hours * 60 minutes/hr) = 0.84 minutes = (0.84 minutes * 60 seconds/minute) = 50.4 seconds.

it is very hard to see how a worst case delay of ~50 seconds can be reasonably expected to change the “average temperature” of a system with a “fundamental period” of 86,400 seconds…..

Cheers, KevinK

KevinK,

“it is very hard to see how a worst case delay of ~50 seconds …”

While this kind of delay out into space has no effect, it’s the delay back to the surface that does it. Here’s a piece of C code that illustrates how past emissions accumulate with current emissions to increase the energy arriving at the surface and hence, its temperature. The initial condition is about 240 W/m^2 (239 in the code) of input and emissions by the surface, where A is instantly increased to 0.75. You can plug in any values of A and K you want.

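    #include <stdio.h>

    int main(void)
    {
        double Po, Pi, Ps, Pa;
        int i;
        double A, K;

        A = 0.75;   // fraction of surface emissions absorbed by the atmosphere
        K = 0.5;    // fraction of energy absorbed by the atmosphere and returned to the surface
        Ps = 239.0; // surface emissions, W/m^2
        Pi = 239.0; // input, W/m^2
        Po = 0.0;   // emissions leaving the planet, W/m^2

        for (i = 0; i < 15; i++) {
            printf("time step %d, Ps = %g, Po = %g\n", i, Ps, Po);
            Pa = Ps*A;                    // absorbed by the atmosphere
            Po = Ps*(1 - A) + Pa*(1 - K); // passed through plus emitted upward
            Ps = Pi + Pa*K;               // input plus energy returned to the surface
        }
        return 0;
    }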

Love your work Kevin!

Have you noticed the cooling rate at night decays exponentially?

micro, thanks for the compliment.

I have not considered the decay of the cooling rate. Seems like some investigation is needed, where do I apply for my grant money ???

Cheers, KevinK

If you find some, let me know. Well, it looked like it was reaching equilibrium, but my IR thermometer kept telling me the optical window was still 80 to 100F colder, same as it was when it was cooling fast.

Thanks for doing physics here. It’s a great refresher. Some of it I’ve not revisited since I was at uni.

Ok, here are some references for folks to read at their leisure;

Radiometry of an integrating sphere (see section 3.7, “Transient Response”)

https://www.labsphere.com/site/assets/files/2550/a-guide-to-integrating-sphere-radiometry-and-photometry.pdf

Tech note on integrating sphere applications (see section 1.4, “Temporal response of an Integrating Sphere”)

https://www.labsphere.com/site/assets/files/2551/a-guide-to-integrating-sphere-theory-and-applications.pdf

Note, Optical Integrating Spheres have been around for over a century, well known stuff, very little “discovery/study” necessary.

Another note; the “Transient Response” to an incoming pulse of light is always present, a continuous “steady state” input of radiation is still impacted by this impulse response. However the currently available radiometry tools cannot sense the delay when the input is “steady state”. The delay is there, we just cannot see/measure it.

Cheers, KevinK.

One also has to include the fact that doubling the amount of CO2 in the Earth’s atmosphere will slightly decrease the dry lapse rate in the troposphere which offsets radiative heating by more than a factor of 20. Another consideration is that H2O is a net coolant in the Earth’s atmosphere. As evidence of this the wet lapse rate is significantly lower than the dry lapse rate. So the H2O feedback is really negative and so acts to diminish any remaining warming that CO2 might provide. Another consideration is that the radiant greenhouse effect upon which the AGW conjecture depends has not been observed anywhere in the solar system. The radiant greenhouse effect is really fictitious which renders the AGW conjecture as fictitious. If CO2 really affected climate then one would expect that the increase in CO2 over the past 30 years would have caused at least a measurable increase in the dry lapse rate in the troposphere but such has not happened.

@ willhaas

January 5, 2017 at 8:04 pm : Thanks, Wil.. Radiation is ineffective because of optical depth below 5km, except in the window. We do know that the faster and mightier conduction-thermalisation-water vapour convective and condensate path totally dominates in clearing the opaque bottom half of the troposphere, and then some.. As per Standard Atmospheres. But it still works on Venus and Titan, for starters.

A simple model, based on known physics and 1st principles, yields an estimate of ‘climate sensitivity’ that approximates physical evidence while illustrating (yet again) that climate sensitivity estimates from complex software models of planetary climate are unrealistically way too high!

Very interesting. Thank you, George White!

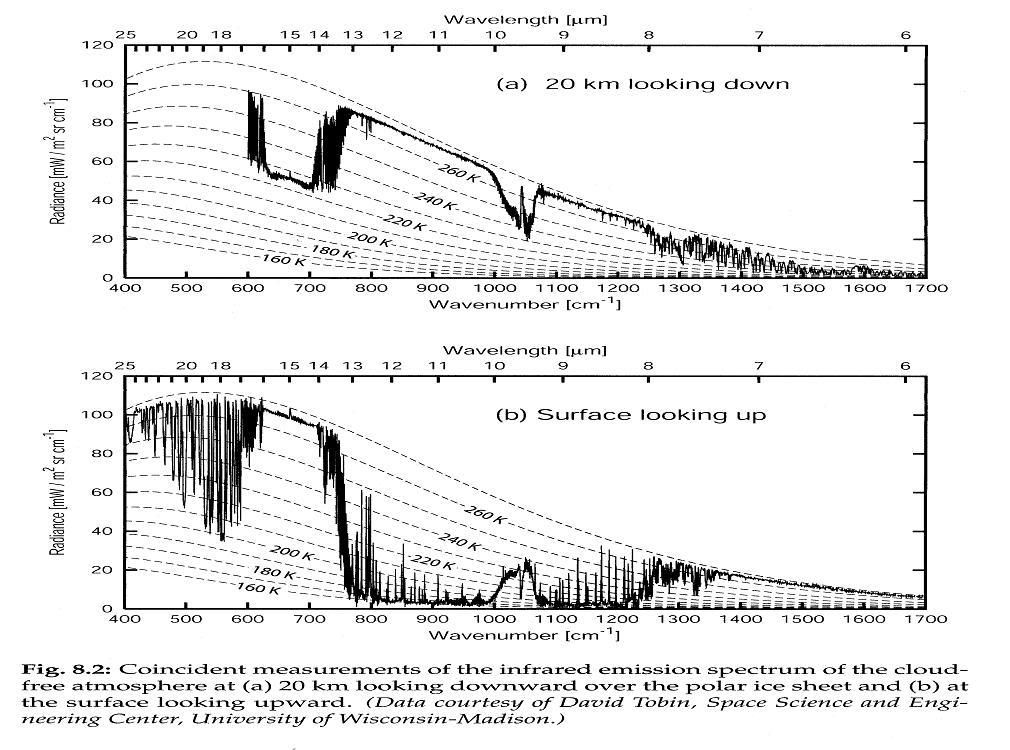

It’s time to show some real spectra, and see what can be learnt. Here, from a text by Grant Petty, is a view looking up from surface and down from 20km, over an icefield at Barrow at thaw time.

If you look at about 900 cm^-1, you see the atmospheric window. The air is transparent, and S-B from surface works. In the top plot, the radiation follows the S-B line for about 273K, the surface temperature. And looking up, it follows around 3K, space.

But if you look at 650 cm^-1, a peak CO2 frequency, you see that it is following the 225K line. That is the temperature of TOA. The big bite there represents the GHE. It’s that reduced emission that keeps us warm. And if you look up, you see it following the 268K line. That is the temperature of air near the ground, which is where that radiation is coming from. And so you see that, by eye, the intensity of radiation down is about twice up.

In this range radiation from the surface (high) is disconnected from what is emitted at TOA.

Nick,

You are conflating a Planck spectrum with conformance to SB. If you apply Wien’s displacement law to the average radiation emitted by the planet, the color temperature of the planet’s emissions is approximately equal to 287K, while the EQUIVALENT temperature given by SB is about 255K owing to the attenuation you point out in the absorption bands. Moreover, as I said before, the attenuation in the absorption bands is only about 3 dB and it looks basically the same from 100 km except for some additional ozone absorption.

Where do you think the 255K equivalent temperature representing the 240 W/m^2 emitted by the planet comes from?

George,

“Where do you think the 255K equivalent temperature representing the 240 W/m^2 emitted by the planet comes from?”

It’s an average. As you see from this clear sky spectrum, parts are actually emitted from TOA (225K) and parts from surface (273K). If you aggregate those as a total flux and put into S-B, you get T somewhere between. Actually, it’s more complicated because of clouds, which replace the surface component by something colder (top of cloud temp), and because there are some low OD frequencies where the outgoing emission comes from various levels.

But the key thing is that you can’t make your assumption that the atmosphere re-radiates equally up and down. It just isn’t so.

Nick,

“But the key thing is that you can’t make your assumption that the atmosphere re-radiates equally up and down. It just isn’t so.”

What do you think this ratio is if it’s not half up and half down?

The sum of what goes up and down is fixed and the more you think the atmosphere absorbs (Trenberth claims even more than 75%), the larger the fraction of absorption that must go up in order to achieve balance.

George,

“What do you think this ratio is if it’s not half up and half down?”