Guest essay by Larry Hamlin

NOAA concocted an array of “Selected Significant Climate Anomalies and Events” for year 2023 that are shown below as prominently featured in their Annual 2023 Global Climate Report.

Of the 25 highlighted entries on NOAA’s “Selected Significant Climate Events” diagram, 13 are related to cyclone and hurricane events across the various oceans of the Northern and Southern hemispheres that occurred during the year 2023.

These 13 highlighted isolated events suggest that 2023 experienced many extreme weather hurricane and cyclone events that will no doubt be hyped by climate alarmists with flawed claims of “extreme hurricane and cyclone climate events” having occurred in year 2023.

However, these highlighted events fail to provide a complete and comprehensive global history of tropical cyclone and hurricane activity for the year 2023, and they grossly misrepresent the totality of the year 2023 cyclone and hurricane outcomes across the global oceans.

A prior WUWT article shown below demonstrates that these NOAA highlighted cyclone and hurricane “events” completely misrepresent the very “normal” global hurricane and cyclone year 2023 outcome.

The conclusion of this WUWT article notes:

Unlike the NOAA year 2023 concocted and prominently featured “Selected Significant Climate Events” diagram which falsely hypes the extent of the year 2023 global hurricane and cyclone season outcomes the data from the Colorado State University Tropical Meteorology Project clearly establishes that the year 2023 was a very unremarkable and “normal” year for cyclones and hurricanes across the globe.

Climate alarmist claims that hype the year 2023 hurricane and cyclone season as being an “extreme weather outcome” are absurd and incompetent as is NOAA’s “Selected Significant Climate Events” hurricane diagram portrayal.

Equally disturbing in the NOAA prominently featured “Selected Significant Climate Events” diagram are the highlights noted for both the Arctic and Antarctic global regions.

Instead of identifying the significant 8-year (2016 to 2023) and 17-year (2007 to 2023) inclusive periods of downward temperature anomaly trends, respectively, for these regions, NOAA hypes sea ice extent issues that are unaddressed in its report.

The reality of the sea ice extent issues for the Arctic and Antarctic regions is addressed here and here.

The Arctic region’s highest average temperature anomaly was 3.00 degrees C, which occurred in year 2016, as shown below from NOAA’s Global Time Series data, with a clear downward temperature anomaly trend since 2016 to 2.55 degrees C in 2023 (ignored and concealed by alarmists).

The Arctic’s year 2023 average temperature anomaly was 0.45 degrees C below its prior highest average temperature anomaly year of 2016.

The Antarctic region’s highest average temperature anomaly was 0.65 degrees C, which occurred in 2007, as shown below from NOAA’s Global Time Series data, which clearly shows a downward temperature anomaly trend since year 2007 (ignored and concealed by alarmists).

The Antarctic’s year 2023 average temperature anomaly of 0.15 degrees C was 0.50 degrees C below that of its prior highest average temperature anomaly year, 2007.

The Arctic and Antarctic global regional year 2023 long term significant downward temperature anomaly trends of 0.45 degrees C and 0.50 degrees C respectively were ignored in the “Selected Significant Climate Anomalies” diagram while the year 2023 North America and Europe global regional increase and decrease anomaly outcomes of 0.02 and 0.01 degrees C respectively are highlighted as being “Selected Significant Climate Anomalies” merit worthy.

Additionally, NOAA’s prominently featured diagram fails to highlight the largest temperature anomaly change of all 16 global regions with that being the reduction of 0.8 degrees C for the East N Pacific region (over a 9 year inclusive period of 2015 to 2023) as shown below.

Rather than address this large year 2023 average temperature anomaly reduction NOAA’s “Selected” diagram highlight for the East N Pacific global region is adorned with a flawed claim that Hurricane Dora exacerbated the fire in Lahaina with that flawed assessment addressed in more detail here.

Also, the Hawaiian global region large year 2023 average temperature anomaly reduction of 0.66 degrees C is unaddressed in the NOAA’s “Selected” highlighted diagram. This large reduction (over a 9 year inclusive period) of 0.66 degrees C from 2015 is shown below.

NOAA’s year 2023 average temperature anomaly data for its 16 global regions had 4 global regions which had by far the largest incremental changes from their prior record high average temperature anomaly values (all of them reductions) with these regions being:

Arctic – a reduction of 0.45 degrees C from year 2016

Antarctic – a reduction of 0.5 degrees C from year 2007

Hawaiian – a reduction of 0.66 degrees C from 2015

East N Pacific – a reduction of 0.8 degrees C from 2015
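As a quick check of the arithmetic behind the first two entries, here is a minimal sketch that uses only the peak-year and 2023 values quoted earlier in this essay. The peak values for the Hawaiian and East N Pacific regions are not stated in the text, so they are left out. The dictionary layout and function name are illustrative only.

```python
# Peak-year and 2023 anomalies (deg C) as quoted in this essay.
regions = {
    "Arctic": {"peak_year": 2016, "peak": 3.00, "y2023": 2.55},
    "Antarctic": {"peak_year": 2007, "peak": 0.65, "y2023": 0.15},
}


def reduction_from_peak(entry):
    """Difference between the peak-year anomaly and the 2023 anomaly."""
    return round(entry["peak"] - entry["y2023"], 2)


for name, entry in regions.items():
    print(f"{name}: {reduction_from_peak(entry):.2f} C below {entry['peak_year']}")
```

This only compares two individual years; it does not fit a trend line through the years in between.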

Yet none of these significantly largest by far year 2023 average temperature anomaly reductions is addressed in the prominent NOAA’s “Selected Significant Climate Anomalies and Events” diagram for any of these regions while upward average temperature anomaly changes as small as 0.02 degrees C (North America) are highlighted.

The NOAA “Selected Significant Climate Anomalies and Events” diagram misrepresents many significant global anomalies and events for year 2023.

NOAA can’t even cherry pick well.

That’s why they call it “selection”.

NOAA can’t . . . etc.

And do not know the difference between climate and weather.

Chalk another one up to “Why we don’t trust our government.”

NOAA are manifesting symptoms of an entity of managerial class output rather than scientific output when it comes to climate.

Downright average administrators doing what they are told to, rather than high integrity scientists doing their best to show us what is true, and their honestly calculated estimation of “how true” ie certainty, to the best of their efforts and knowledge.

In such an environment, people of real talent, curiosity and integrity cannot thrive.

The 2023 Atlantic hurricane season resulted in 12 deaths. The Pacific season resulted in 64 deaths, for a total of 76 deaths.

Annualized, that is 0.2 deaths/day.

OTOH, approximately 25,000 people starved to death every day.

The climate alarmists are like evil magicians. Look at THIS hand that I want you to focus on. Don’t look at the other hand where the fatalities are 125,000 times worse!
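For anyone who wants to check the arithmetic in this comment, a small sketch follows. The inputs are the figures quoted above (12 and 64 storm deaths, roughly 25,000 starvation deaths per day) and are not independently verified here; the variable names are just illustrative.

```python
# Figures quoted in the comment above; not independently verified here.
storm_deaths_2023 = 12 + 64          # Atlantic + Pacific seasons
starvation_deaths_per_day = 25_000   # approximate figure from the comment

storm_deaths_per_day = storm_deaths_2023 / 365
ratio = starvation_deaths_per_day / storm_deaths_per_day

print(f"storm deaths per day: {storm_deaths_per_day:.2f}")
print(f"starvation deaths per storm death: {ratio:,.0f}")
```

Unrounded, the daily figure is about 0.21 and the ratio comes out near 120,000. The 125,000 in the comment follows from rounding the daily figure down to 0.2 first.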

Only 76 deaths? I’ll raise you. Even the mainstream media occasionally leaks a truth like the 90 deaths blamed on the recent cold.

https://www.cbsnews.com/news/freezing-weather-united-states-deaths-cold-continues/

Of course it’s always been true that cold-related deaths far outnumber heat-caused ones and NOAA left them out of their carefully selected anecdotal “climate anomalies”.

Reminds me of this Lancet misdirection

Look at the chartmanship going on with the scale

And compare to fentanyl-related overdose deaths. The latest tally from the CDC is over 107,000 people in the 12-month period ending in August 2022. And this does not include the walking dead or just dead while on street taking it.

In just five years, deaths involving fentanyl — the most frequently implicated substance — rose from 6 per 100,000 people to 22 per 100,000, according to the CDC.

Regarding TCs, NOAA keep peddling the “Storms” narrative, as opposed to TCs (ie hurricanes)

Named storms are higher than average in the Atlantic, for instance, but hurricanes are not. This is because NOAA now “name” more tropical storms, not because more are actually occurring.

https://notalotofpeopleknowthat.wordpress.com/2023/12/01/the-2023-atlantic-hurricane-season-was-average-not-4th-busiest/

Seems to me that I remember WUWT pointing out: Now they are naming Tropical Storms when before they didn’t. My short search doesn’t answer when that happened. I’ll keep looking.

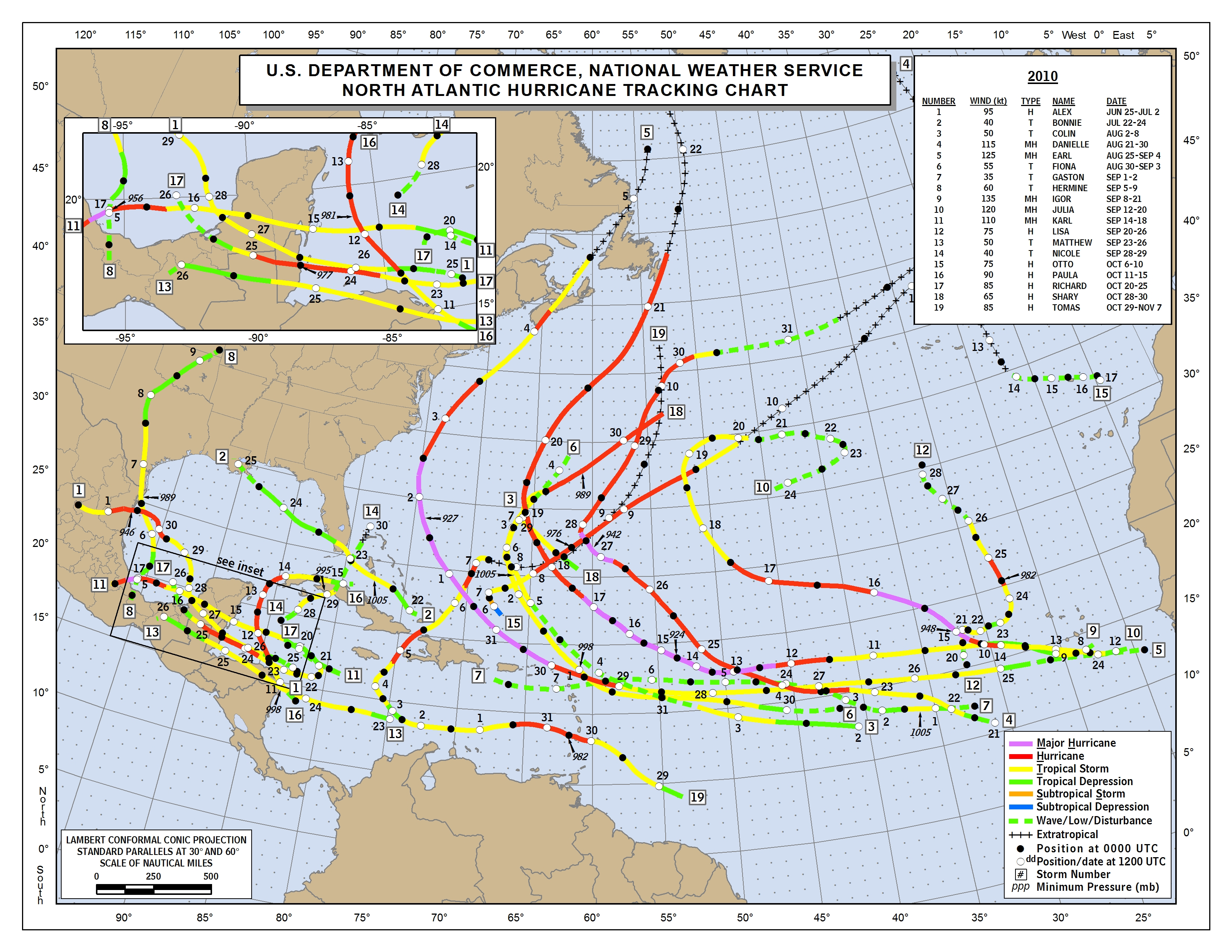

Sure looks like they were doing it in 2010

Uhm, dunno all those listed have wind speeds 35 kts or over.

I’ll keep looking

“Selected…”

I, for one, would not expect anything less than the very best cuts.

In the UK neck of the global woods, we’ve had the more or less usual run of winter depression systems (geddit!) coming over from the Atlantic, now given exotic sounding names like: storm “Gerrit, Henk, Isha etc”, it’s never storm Dave, storm Mike, or storm Steve. Even the names are a bit, well, a bit middle class. Will we get to storm Tarquin?

“…none of these significantly largest by far year 2023 average temperature anomaly reductions is addressed in the prominent NOAA’s “Selected Significant Climate Anomalies and Events” diagram”

I think it’s more than fair to say that the narrative is very much a work in progress.

I’m waiting for “Gentle Breeze Eric”…

You missed it… it was that gentle

The temperature scales are wrong. 0 degrees Celsius correspond to 32 degrees Fahrenheit.

Eduard: Nope, you are wrong. Before commenting in the future, read the heading on the graphs. The data are being presented as anomalies.

Regards,

Bob

PS: Welcome to WattsUpWithThat, Eduard.

?

A simple mistake, Eduard.

If you’re here to learn about climate, you’ve come to the right place

NOAA: the sandcastle attempting to stand against the incoming tide.

No doubt as to who will no longer be in good standing at the end.

The infographic quite plainly states which regions had above normal activity and which had below normal activity. You are tilting at windmills.

This is not how trends work. There will always be short-term variability expressed in the climate system, particularly for small regions, that does not indicate that there has been a change in the long-term underlying trend.

That variability goes both up and down. Guess which one gets the most attention!

Each region that Larry highlights exhibits a long-term warming trend. That is what deserves attention.

You didn’t answer the question. You just deflected. Typical.

The long term trend gets the attention, and the long term trend is upward. Does that answer your question?

You are talking about piddlingly short-term trends.

The longer term trend, say 3000 years, is DOWNWARDS, everywhere. !

Yes, it has warmed slightly since the LIA.

This is an absolutely GOOD THING!!

He is playing with the downvote button again.

The measurement uncertainty is so wide that you can’t KNOW what the long term trend is!

A monkey is capable of taking 30 pairs of numbers and averaging them to obtain some average monthly value. Have you ever studied or had firsthand experience with meteorology? If an average temperature of 25.5°F can represent two different days [(H37, L14) and (H43, L8)], how do you not consider that lunacy?

Not just two different days. Two entirely different climates. If a metric can’t distinguish identifiable differences then it isn’t a good metric. Yet climate science stubbornly clings to using median daily temperature instead of moving to either degree-days or enthalpy.
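To make the example in the two comments above concrete, here is a minimal sketch. It assumes the daily figure is the midpoint of the high and the low, which is what the 25.5°F value implies; the function name is made up for illustration.

```python
def daily_midrange(high_f, low_f):
    """Midpoint of the daily high and low, in degrees F."""
    return (high_f + low_f) / 2


day_a = (37, 14)  # (high, low) from the comment above
day_b = (43, 8)

for high, low in (day_a, day_b):
    # Same midpoint, different spread between high and low.
    print(f"H{high} L{low}: midrange {daily_midrange(high, low)} F, "
          f"diurnal range {high - low} F")
```

Both days give 25.5°F, while the spread between high and low is 23°F on one and 35°F on the other. Whether that lost detail matters for tracking long-term change is the point argued in the replies.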

It is not lunacy at all, if you observe the mean to be changing over time, it might inspire you to investigate the highs and lows to see how they are changing relative to each other. But knowing that change is occurring at all is unquestionably useful.

The mean can represent entirely different days with completely different highs and lows, so what observable change can be noted in that scenario? The highs and lows aren’t even the absolute lowest recordings; they are also hourly averages. The average also ignores crucial details about the day that contributed to the registered temperatures (snow, rain, urban heat island, UV intensity, sea breeze, etc.).

Observing a change in the mean daily temperature over time can let you track the changing seasons. Over longer periods it can let you track changes in the local climate. Convert the mean temperature into the mean anomaly and you can track changes in the regional or even global climate.

You don’t know, without additional investigation, whether a change in the low or high is contributing more to the change in the mean, but the fact that the mean is changing at all is valuable information.

Similarly, if I were to observe an increasing trend in the average height of American males over time, that information is useful. I don’t know from the average alone whether short males are getting tall or whether tall males are getting taller, but knowing there is a directional change happening is useful information.

This is all, like, stats 101 stuff.

Tracking the daily highs and lows would only be informative at a particular station (let’s not forget that near-surface weather station records are highly inhomogeneous); even then, no one thinks to consider the intricate interactions of various factors contributing to the temperature at a given time. Elements like precipitation, UV intensity, and other atmospheric conditions play crucial roles, and reducing this complexity to a single mean value can be misleading. Otherwise, temperature is highly dynamic and varies significantly even over short distances (see the attached image for example). In the case of seasons, the same average temperature can present itself in different seasons; an averaged temperature of 29.5°F, for example, could be the result of an extreme cold front in the spring or autumn, or just a ‘normal’ day in winter. The average ignores, and as a result, increases variance.

Comparing it to tracking the average height of American males is a poor analogy because human height is relatively stable and doesn’t undergo rapid and complex day-to-day changes like temperature. The range of temperature variations across different regions on Earth is, also, far more extensive.

Yes, this is one reason why anomalies are used for combining multiple station records.

Well, of course, a lot of people think about these things, but these things are weather. The thing we are tracking is climate change, which occurs over time periods of decades, where these phenomena are nothing more than background noise. The average anomaly is a perfectly suitable statistic for tracking change over time in the regional climate state. Even when tracking the seasons using an average daily temperature for a single station, the weather on a single day doesn’t really matter because you are tracking change over many successive days, weeks, and years, and the seasonal cycle will eventually win out over day to day variability.

“Yes, this is one reason why anomalies are used for combining multiple station records.”

Anomalies can’t tell you if it is summer or winter. So what good are they in combining multiple station records.

What is the variance of the data distribution associated with those combined station records? Are you ever going to answer that?

That anomalies eliminate seasonality is a feature, not a bug, if you are trying to track climate change.

Anomalies do *NOT* eliminate seasonality! The variance of temperature is different in winter than in summer. Therefore the anomalies are going to also have a different variance in winter and summer meaning the seasonality is *NOT* being eliminated!

You only *think* it’s being eliminated because you ignore the variance of the temperature distributions throughout the year!

And you call ignoring variance in a distribution *science*?

How different a temperature at a given location is from the norm is consistent across seasons, unless there is a change in the climate occurring. You seem to have a preternatural inability to grasp the concept of an anomaly.

“How different a temperature at a given location is from the norm is consistent across seasons, unless there is a change in the climate occurring. You seem to have a preternatural inability to grasp the concept of an anomaly.”

Malarky! That tells me you have NEVER, EVER calculated the variance of winter temps vs summer temps.

Winter temps have a wider variance meaning the anomalies will also have larger variance after the baseline is subtracted!

This has *NOTHING* to do with a change in the climate. Never has, never will.

*YOU* have a preternatural ability to ignore necessary statistical descriptors for a data distribution by just assuming them away.
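A small sketch of the mechanics both sides are arguing about: subtracting a per-month baseline removes the seasonal cycle in the mean, but it leaves each month's spread exactly as it was. The temperatures are invented for illustration and deliberately give January a wider spread than July; whether real station data looks like this is an empirical question the sketch does not settle. The baseline here is simply each month's own mean, which is a simplification.

```python
from statistics import mean, pvariance

# Hypothetical daily temperatures (deg C) for one station; invented values.
temps = {
    "January": [-12.0, -3.0, 4.0, -8.0, 1.0, -6.0],
    "July": [27.0, 29.0, 28.0, 30.0, 27.5, 28.5],
}

# Baseline: each month's own mean (a stand-in for a multi-decade reference).
baseline = {month: mean(values) for month, values in temps.items()}

# Anomaly = observation minus the baseline for that month.
anomalies = {
    month: [t - baseline[month] for t in values]
    for month, values in temps.items()
}

for month in temps:
    print(
        month,
        "anomaly mean:", round(mean(anomalies[month]), 6),
        "variance before:", round(pvariance(temps[month]), 3),
        "variance after:", round(pvariance(anomalies[month]), 3),
    )
```

The anomaly means come out at zero for both months, and the variances before and after are identical within each month, so any winter versus summer difference in spread carries through to the anomalies.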

Oop, Tim! We are talking about the anomaly. Gotta keep track of the conversation, bud.

In other words you have NEVER bothered to calculate the variance of the anomalies.

You just ignore them.

Typical for a climate scientist.

What he’s saying is that anomalies ignore variance, and that’s why it increases. Are the details of what’s causing the temperature to increase or decrease not important? Isn’t it crucial to know the recent wind patterns or UV intensity in x location? Temperature is not some static property; it has various influences, all of which act dynamically, determining what the temperature is at a given time and place. The details are equally, if not more, important than the recorded temperature itself. It seems to me that anomalies are being tracked for people to blame CO2 for all changes on Earth, which is, of course, illogical.

The anomaly is the variance, the anomaly specifically measures how much a measurement at a given moment differs from the typical value. If there were no variance, all anomalies would take a constant value, and if there were a change in the climate at this location with zero variance, the anomalies would express as a straight sloped line.

All of the things you mention are important to understand, but it is also important to have a metric that generally tracks how the system is changing. The change in the global mean temperature anomaly can tell us if the planet is in an ice age, for instance. Obviously it is not the only metric we should consider, and scientists monitor a multitude of aspects of the climate system, from changes in sea ice and ice sheet cover, to sea level and ocean heat content, to changes in ecosystems. The global mean temperature anomaly just happens to be one single metric that is useful for its purpose.

Who doesn’t understand “stats 101” here? Check the mirror for the answer.

“ the anomaly specifically measures how much a measurement at a given moment differs from the typical value. “

Typical value? Does that typical value have a measurement uncertainty? In climate science the measurement uncertainty of the average is assumed to be zero, the average is 100% accurate.

Try telling that to a Boeing engineer today.

“If there were no variance, all anomalies would take a constant value”

NO! They would not. The anomaly today can easily be +1C and tomorrow it could be -1C! That’s not a constant value. Those two values contribute to the variance of the data set, they may even define the total range of values the anomaly has taken on over time! The *real* issue is what is the measurement uncertainty of those anomalies? If it is +/- 0.5C then their values could just as easily be +1.5C or +0.5C and -0.5C or -1.5C!

The total range could be as large as 3C or as small as 1C. YOU DON’T KNOW! It’s all part of the GREAT UNKNOWN and its metric is the measurement uncertainty!
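The range arithmetic in this comment can be written out as interval bounds. This sketch treats the +/- 0.5C as a hard bound on each reading, which is an assumption made for the illustration; the helper name is made up.

```python
def interval(value, half_width):
    """Return (low, high) bounds for a reading with a +/- half_width bound."""
    return (value - half_width, value + half_width)


today = interval(+1.0, 0.5)      # bounds for the +1C anomaly
tomorrow = interval(-1.0, 0.5)   # bounds for the -1C anomaly

# Widest and narrowest possible gap between the two readings.
largest_spread = today[1] - tomorrow[0]
smallest_spread = today[0] - tomorrow[1]

print(today, tomorrow)
print("largest spread:", largest_spread, "smallest spread:", smallest_spread)
```

With these inputs the gap between the two readings can be anywhere from 1C to 3C, matching the figures in the comment.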

“The change in the global mean temperature anomaly can tell us if the planet is in an ice age, for instance.”

The anomalies can’t tell you if you are in Las Vegas or Miami! or Rio de Janeiro! How can they tell you if we are in an ice age?

“The global mean temperature anomaly just happens to be one single metric that is useful for its purpose.”

It is *NOT* fit-for-purpose because it can’t distinguish one climate from another!

Anomalies are not a measure of variance. Anomalies are a rate of change, like going from 50 mph to 60 mph. That is not the variance in speed of travel. It is more correctly called a ΔT, a rate of change. Temperature anomalies should be dimensioned as “ΔT/T·time” in order to properly compare them when averaging.

Here is an example. You are a car owner in NASCAR. You are at a track testing your two cars. You want to know how quickly they can accelerate down a straightaway. You measure each and find one can accelerate at 20 mph/second. The other one at 10 mph/sec.

Would you choose the first one as your main car because it can accelerate twice as fast?

Tell me what another factor might be that is important, and we’ll see if you have a clue.

Now, for temperature. Let’s say Fargo, ND has a monthly anomaly of +1F in July. In the same July, the Antarctic has an anomaly of +1.

On a relative basis does an anomaly of +1 have the same effect in both places?

According to the World Meteorological Organization, climate is weather averaged over multiple decades. So, you can’t dismiss these factors as weather; they affect the recorded measurements, making them impossible to ignore. If you want to track overall energy intake, you would have to find a way to separate the noise from the measurement, but the noise (the weather itself) contributes to the measurement. The factors that go into the recorded temperatures interact dynamically, so there’s no way to separate them. Every single measurement at a weather station has non-random factors that contribute to the temperature at a given time. Would you consider the morning sunrise and the evening sunset noise? During the evening, as the sun sets, the thermometer can experience cooling effects (radiative heat loss), and if there are clear skies, the temperature can drop more rapidly.

Would you consider snow depth or snowfall from an ongoing blizzard noise? Would you consider an averaged temperature of 46.0°F at a thermometer near a cave the same as an averaged temperature of 46.0°F at a thermometer near the top of the mountain?

———————-

Can you explain your reasoning as to why you think this response is an effective rebuttal to:

We are talking about the shortcomings of the average and why it isn’t representative of larger regions.

When you average together many months or years of data, you cancel out a lot of the random noise – what you are left with is the climate signal. So, in fact, you don’t care at all about any particular weather event, what you care about is the long term underlying signal. This process of removing random noise by taking many observations is a standard part of statistical analysis.

Any given observation is a combination of underlying signal + random variability + measurement error. We don’t care about the last two, so we don’t care about one single day, we want to observe many, many days. The random parts go away when we do this and we are left with the part we are interested in – the climate signal.

We don’t care about these differences – that’s one reason why the anomaly makes sense. We care about the trend, and as long as we aren’t introducing a spurious trend by combining the two records (by using anomalies), then we don’t care at all about their difference in elevation.

“When you average together many months or years of data, you cancel out a lot of the random noise – what you are left with is the climate signal.”

Malarky! This *IS* the climate science meme: all measurement uncertainty, including natural variation, is random, Gaussian, and cancels. But this is never, AND I MEAN *NEVER*, justified by climate science in any manner!

As Walter points out, this isn’t noise, It’s part of the signal.

You aren’t even using an AVERAGE. It’s a median value of a multi-modal distribution. And it tells you nothing about the actual signal which is the entire distribution, including what you consider to be “noise”.

“So, in fact, you don’t care at all about any particular weather event, what you care about is the long term underlying signal.”

Like Walter says, the weather event *IS* the signal. It is the WEATHER that determines the climate, not the median daily temperature. The median daily temperature can’t distinguish between the climate in Las Vegas and Miami, or Point Barrow, AK and Lincoln, NE, or any other combination of locations!

“We don’t care about these differences”

Who is “we”? Climate scientists with a monied interest in global warming? Who wouldn’t know the variance of a data distribution from a hole in the ground?

The anomaly makes NO sense. If it can’t distinguish between climates then it gives you *NO* information about the global climate. And the global climate is *NOT* determined by the daily median value of a multi-modal temperature distribution.

It’s like teaching a monkey how to use tools.

If all you have is a hammer, then every problem is a nail!

That is not what I said or implied, it is simply the windmill that you are forever tilting against. The random component of error is random, non-random error is nonrandom and does not cancel with greater numbers of observations. Whether it was 3.5 degrees in Boston on Friday, January 26, 2024 and whether it was 3.5 degrees or 4 degrees or 3 degrees or 0 degrees on Thursday, January 26, 2023 is weather and is, for all intents and purposes, random. If there is a change in the mean temperature on January 26ths in Boston over many years then that is not random, it is a shift in the climate, and that is the thing we want to track.

The weather is a combination of signal + noise + error, and we don’t care about the last two, so we reduce them by making many observations over a long time.
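The claim in this comment can be sketched with a toy simulation. It assumes the random part is independent, zero-mean and Gaussian, which is exactly the assumption disputed in the replies, and it adds a constant offset to stand in for a systematic bias. All constants and the function name are hypothetical.

```python
import random

TRUE_VALUE = 10.0  # hypothetical underlying value (deg C)
BIAS = 0.3         # hypothetical constant systematic offset
NOISE_SD = 2.0     # hypothetical spread of the independent random part


def mean_error(n, rng):
    """Average n simulated readings and return (average - TRUE_VALUE)."""
    total = 0.0
    for _ in range(n):
        # One reading = true value + constant bias + independent noise.
        total += TRUE_VALUE + BIAS + rng.gauss(0.0, NOISE_SD)
    return total / n - TRUE_VALUE


rng = random.Random(0)  # fixed seed so the run is repeatable
for n in (10, 1_000, 20_000):
    print(n, round(mean_error(n, rng), 3))
```

Under these assumptions the error of the average should settle near the constant offset rather than near zero as n grows: the independent part shrinks with more readings, the constant part does not. If the noise is not independent or not zero-mean, the sketch says nothing about what happens.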

“The random component of error is random, non-random error is nonrandom and does not cancel with greater numbers of observations. “

u(total) = u(random) + u(systematic)

Do you know the true value for the temperature at the Forbes AFB measurement device? If not, how do you know the systematic bias it has? If you don’t know the systematic bias then how do you know what the random component is?

As usual, you totally ignore the fact that no one versed in up-to-date metrology uses “error” any longer. The use of error implies you know the true value. How do you know the true value?

The requirement for cancellation of random error requires:

Temperature measurements are:

The temperature data meets NONE of the requirements for assuming cancellation of random errors. NONE!

“weather and is, for all intents and purposes, random.”

You just argued in another message that weather is *NOT* random, that it varies by season! Pick one and stick with it!

“ If there is a change in the mean temperature on January 26ths in Boston over many years then that is not random, it is a shift in the climate, and that is the thing we want to track.”

Malarky! You simply have no way of KNOWING this. You don’t know if one resistor on the substrate of the bridge reading the value of the PTR has changed value, or if someone planted fescue grass instead of Bermuda grass under the measurement station, or if a mud dauber wasp has built a nest in the air intake of the station or if a sandstorm scoured away some of the paint on the station screen, or on and on and on ……

THESE ARE WHAT MEASUREMENT UNCERTAINTY IS MEANT TO ALLOW FOR!

I’ll repeat again, you and climate science want us all to believe

“that all measurement uncertainty is random, Gaussian, and cancels leaving the stated measurement value not as an estimate but as a 100% accurate measurement over time”.

You don’t need to know what the random component of the error is to know that it is reduced by making numerous observations. You can only identify the systematic bias by analyzing the conditions that lead to systematic bias in station sites.

That is, you can reduce u(total) by reducing u(random) by taking many measurements. You can only reduce u(systematic) through investigation and analysis, but you don’t need to know u(total) to identify component of u(systematic).

Of course it does, for a single station in a single location, you very much can assume that a single measurement device is taking multiple measurements of the same thing. You can also assume that daily variance in temperature is orders of magnitude greater than the sources of random measurement error in the device, so the priority is to take multiple measurements over many days to reduce random noise in the dataset.

Pretty silly to claim you have no way of assessing systematic bias in stations when you’re on a website that literally rose to prominence due to an effort to identify systematic station biases.

You enclose this statement in quotation marks as though someone other than you has ever said it. I think you should speak to a doctor about the hallucinations you seem to be having.

“You don’t need to know what the random component of the error is to know that it is reduced by making numerous observations. “

Get a pad of legal paper and write this out 1000 times. Maybe it will sink in.

In order to assume cancellation of random errors it is required:

It is also required that the measurement distribution be Gaussian.

Tmax and Tmin are single measurements of different things even if measured by the same device and the measurement environments are not the same for both so they don’t meet the repeatability condition.

Go spend some time writing these requirements out 1000 times. We’ll wait for you to return.

“That is, you can reduce u(total) by reducing u(random) by taking many measurements.”

No, you can’t. Because you are not measuring the same thing multiple times under repeatability conditions.

“You can only reduce u(systematic) through investigation and analysis, but you don’t need to know u(total) to identify component of u(systematic).”

Does the measurement uncertainty of ASOS stations being +/- 0.3C tell you what the random component of uncertainty is? What the systematic component of uncertainty is? You *know* u(total), what are the component values? Is u(random) the same for every station? Is u(systematic) the same for every station? How about for daytime vs nighttime measurements? Is u(total) Gaussian for every station?

You continue to make unjustified assumptions and try to justify them with handwaving! The meme that more readings means a more accurate average is just garbage. Especially when combining readings from different measuring devices!

“Pretty silly to claim you have no way of assessing systematic bias in stations when you’re on a website that literally rose to prominence due to an effort to identify systematic station biases.”

Give it a BREAK! Identifying that systematic biases exist is a far cry from determining their VALUE! You are just throwing crap against the wall now.

“You enclose this statement in quotation marks as though someone other than you has ever said it. I think you should speak to a doctor about the hallucinations you seem to be having.”

You say it every single time you make a statement that “random error cancels”.

AJ: “You don’t need to know what the random component of the error is to know that it is reduced by making numerous observations.”

AJ: “That is, you can reduce u(total) by reducing u(random) by taking many measurements.”

AJ: “Of course it does, for a single station in a single location, you very much can assume that a single measurement device is taking multiple measurements of the same thing.”

Tmax and Tmin are the same thing? Only a climate scientist would say this! Both humidity and pressure play a part in what the temperature is. Both can change drastically between Tmax and Tmin. You are *NOT* measuring the same thing. You are *NOT* measuring under the same environmental conditions.

Climate science supposedly uses temperature as a proxy for enthalpy. And then ignores the fact that a change in enthalpy can change the temperature!

“You can also assume that daily variance in temperature is orders of magnitude greater than the sources of random measurement error in the device, so the priority is to take multiple measurements over many days to reduce random noise in the dataset.”

But you are *still* measuring different things! Have you *ever* read Possolo’s TN1900, Example 2? Can you list out the assumptions he had to make in that example? Do you understand why he used Tmax as the measurand and not Tmedian?

Again, climate is weather over time. If you eliminate weather then you lose the ability to distinguish climate. It’s why temperature is such a poor proxy for enthalpy. Why do you continue to refuse to address why Las Vegas and Miami can have the same Tmedian value but vastly different climates? How does Tmedian tell you *anything* meaningful?

They are not measurements of different things, they are measurements of air temperature at a fixed distance from the ground, made using the same device. Your whole philosophy about this is just stupid, according to your position, we can’t measure average streamflow because each molecule of water passing by the current profiler produces a measurement of a distinct entity that can’t be compared to any other molecule of water. It’s asinine. I think you know deep down it’s dumb.

Air temperature measurements are a time series—you get exactly one chance to capture a sample before it is gone—FOREVER. N == one!

By the same token, so are streamgage measurements, but I’m sure you’re not silly enough to try and argue that it is impossible to measure average stream flow using these instruments.

A strawman non sequitur is all you can come up with?

Pathetic.

It is impossible to cancel random errors in measuring streamflow by measuring two different streams using two different gauges and taking single measurements at two different times!

Yet that is what you want us to believe about temperature measurements – you can cancel random error by measuring two different streams (two different volumes of air) using two different gauges (different thermometers) and taking the measurements at two different points in time (Tmax vs Tmin).

“They are not measurements of different things, they are measurements of air temperature at a fixed distance from the ground, made using the same device.”

Your willful ignorance has reached a new height! Unfreakingbelievable.

That’s like saying that measuring the length of two different 2″x4″ boards is measuring the same thing – MEASURING A BOARD!

“Your whole philosophy about this is just stupid, according to your position, we can’t measure average streamflow because each molecule of water passing by the current profiler produces a measurement of a distinct entity that can’t be compared to any other molecule of water. It’s asinine. “

You can’t even get this straight! You don’t measure water flow at the molecule level, at least usually! You are as bad as your compatriots – no experience in physical science at all!

My guess is that you know absolutely nothing about the quantum mechanics of how a PN junction works in a diode or transistor. You don’t measure each electron, you measure at the macro level! My guess is that you don’t even know the difference between the observer effect and the uncertainty principle! Or you wouldn’t have made up such an inane comparison!

If you want to cancel random error in the reading of a tape measure used to measure A single 2″x4″ board you take multiple readings OF THAT SINGLE BOARD using THE SAME TAPE MEASURE EACH TIME, and you take them CLOSE TOGETHER IN TIME.

You can’t cancel random measurement error by taking single measurements of every 2″x4″ board in the lumber yard! But that is what temperature measuring is – measuring the characteristics of a volume of air that changes from second to second making each volume into a separate board! Even worse the measurements can be several hours apart in time meaning different environments as well as different boards. And to top it all off you want us to believe that you can cancel random errors by using two different tape measures!

They are measurements of different things. As many times as NIST TN 1900 Example 2 has been mentioned here along with the GUM, one would expect you to have a better idea of how to handle measurement uncertainty.

TN 1900 shows that when measuring different variations of a measurand (monthly average temperatures) one must evaluate the variance in the data as one component of uncertainty “u”. One should notice that this example excludes both systematic uncertainty and measurement uncertainty. In the real world, these would both be added to the total uncertainty. The GUM addresses this in F.1.1.2. I’ll show it here in case you are unfamiliar with it.

In case you are unsure of what this means, it discusses sampling of a measurand such as daily temperatures over a month’s time. TN 1900 addresses this by finding the variance of the data, converting it to the Standard Error of the Mean, and expanding it to achieve a 95% confidence interval.
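The three steps just described (variance of the data, standard error of the mean, expansion to a 95% interval) can be sketched roughly as follows. This is only an illustration: the function name `monthly_mean_interval`, the readings, and the choice to pass the coverage factor in by hand are all assumptions, not values from TN 1900. Like the example under discussion, it leaves out systematic and instrument uncertainty.

```python
import math
import statistics

def monthly_mean_interval(daily_values, coverage_factor):
    """Return (mean, standard uncertainty, expanded uncertainty).

    The standard uncertainty is the sample standard deviation divided
    by sqrt(n); the expanded uncertainty multiplies it by a coverage
    factor supplied by the caller (e.g. a Student-t value).
    """
    n = len(daily_values)
    mean = statistics.mean(daily_values)
    s = statistics.stdev(daily_values)   # sample standard deviation (n - 1)
    u = s / math.sqrt(n)                 # standard error of the mean
    expanded = coverage_factor * u       # expanded uncertainty
    return mean, u, expanded

# Hypothetical daily maximum temperatures in deg C, invented for illustration.
readings = [22.0, 24.0, 26.0, 28.0, 30.0]

# 2.776 is the two-sided 95% Student-t factor for 4 degrees of freedom.
mean, u, expanded = monthly_mean_interval(readings, coverage_factor=2.776)
print(f"mean = {mean:.2f} C, u = {u:.3f} C, U(95%) = {expanded:.2f} C")
```

With only a handful of readings the Student-t factor is noticeably larger than 2, which is why it is passed in explicitly rather than hard-coded.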

The latter part of the GUM statement says to add this to the observed variance of a single sample, i.e., the measurement uncertainty of a single measurement determined by multiple measurements of the same thing, by the same device, by the same person, over a short period of time.

Since NOAA has specified a Type B uncertainty for ASOS stations as ± 1.8° F, this should be added to the uncertainty determined from the data. This would make the TN 1900 example have a measurement uncertainty of about ± 3.6° F.

This is a big deal for pilots of heavy aircraft. A 2° C change is equivalent to a 1000 foot change in altitude. This may not sound like much, but on a maximum load in a 747 it does make a difference.

You may have some training in statistics but you don’t appear to have much in making measurements and assessing their uncertainty. Could you tell us what senior level lab courses in physical sciences like physics, chemistry, or electrical you have taken?

Actually, ASOS uncertainty is ±1.8° F.

USCRN is ±0.3° C or ±0.54° F.

Random error in a given observation means the likelihood of positive errors would be equal to the likelihood of negative errors. In the case of measuring temperature, the errors are asymmetrical; if a thermometer is placed in an area consistently exposed to direct sunlight, there’s likely to be a consistent positive bias. The errors also interact with each other, meaning that any measurement has its own context that separates it apart from another. Regarding the climate system, it’s highly non-linear, which means that small changes can lead to disproportionately large outcomes somewhere else. There’s feedback loops too.

Nonrandom error, like siting issues, are not addressed by taking many observations, the way to do that is with the adjustments the people on the site spent inordinate amounts of time railing against. Random weather noise is absolutely reduced by taking many observations over a long period. The mental effort you spend denying this for… no tangible reason that I can discern, is astonishing.

You STILL haven’t bothered to read Hubbard and Lin on station adjustments have you? Why not?

Adjustments can ONLY be made accurately on a station-by-station basis, require an on-site calibration, and can’t be applied to past data.

Weather is not noise – IT IS PART OF THE SIGNAL! You can’t just average it away!

You can disagree with the suite of adjustments applied, that’s fine. We will all look forward to your publication(s) on the subject after they pass peer review. The point is that systematic bias is not being ignored by mainstream climate science.

They’ve already been peer reviewed and published! The article authors are Hubbard and Lin. Go look it up!

Or don’t. We all know you would rather remain willfully ignorant on the subject!

And systematic uncertainty *is* being ignored by mainstream climate science when they use the standard deviation of the sample means as a measure of the accuracy of the mean. The SEM can be 0 (zero) while the mean is wildly inaccurate. And climate science will never know because they ignore the accuracy metric for the average of a distribution.
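The narrow statistical point here, that the SEM can shrink toward zero while a fixed offset stays in the mean, can be checked with a small simulation. It is a sketch under made-up assumptions: the true value, the bias, the noise level and the function names are all hypothetical, and the noise is drawn as Gaussian purely for convenience.

```python
import math
import random
import statistics

def simulate_readings(true_value, bias, noise_sd, n, seed=0):
    """Generate n readings: true value + constant bias + Gaussian noise."""
    rng = random.Random(seed)
    return [true_value + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]

def sem(values):
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    return statistics.stdev(values) / math.sqrt(len(values))

TRUE_VALUE = 20.0  # hypothetical true temperature, deg C
BIAS = 0.5         # hypothetical constant systematic offset, deg C
NOISE_SD = 0.3     # hypothetical random scatter, deg C

for n in (10, 1000, 100000):
    data = simulate_readings(TRUE_VALUE, BIAS, NOISE_SD, n)
    offset = statistics.mean(data) - TRUE_VALUE
    print(f"n={n:>6}  SEM={sem(data):.4f}  mean - true = {offset:.3f}")
```

As n grows the SEM column falls, but the offset column settles near the bias rather than near zero. The simulation says nothing about how large real station biases are or whether adjustments remove them.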

Heck, climate science can’t even provide the variance of the data they use! How then can they judge the uncertainty of the average?

They don’t do this. The SEM provides an estimate of the precision of the mean, no one treats it differently.

Malarkey! Dividing the standard deviation by the square root of the sample size is endemic in climate science in order to come up with a value for the certainty of the average. By definition this is the SEM, it gives the interval in which the average might lie but it does *NOT* say anything about the accuracy of that average.

Whenever you see the words “confidence interval”, it is speaking of the SEM. That is what GISS calculates for their GISTEMP data. It’s how they get such a small uncertainty interval and high confidence value.

Here is what GISS says about station uncertainty:

“The random uncertainties can be significant for a single station but comprise a very small amount of the global LSAT uncertainty to the extent that they are independent and randomly distributed. Their impact is reduced when looking at the average of thousands of stations.

The major source of station uncertainty is due to systematic, artificial changes in the mean of station time series due to changes in observational methodologies. These station records need to be homogenized or corrected to better reflect the evolution of temperature. The homogenization process is a difficult, but necessary statistical problem that corrects for important issues albeit with significant uncertainty for both global and local temperature estimates.” (bolding mine, tpg)

Not a single proof is provided that the data distribution is Gaussian. If it isn’t then assuming an independent and random distribution of error that cancels cannot be justified!

Nor does GISS recognize that station systematic uncertainty can be due to calibration drift that is *NOT* amenable to identification using statistical analysis. Homogenizing one station with measurements from another station assumes the second station has no systematic bias and totally violates what Hubbard and Lin found clear back in 2002! All the homogenization does is spread systematic bias around which contaminates *other* stations.

It is pretty apparent to me, and it is becoming more obvious to more and more people, that nothing in climate science follows well-known metrology principles. It’s a bunch of statisticians and computer programmers who don’t understand the GREAT UNKNOWN and think they can correct everything using statistics. There’s not a single practicing engineer on this planet that believes that. The biggest surprise of all is that climate science has gotten by with this statistical nonsense for so long without being called on it!

A straw man argument with no significance to the current argument. It is an argumentative fallacy.

Try to stay on task and discuss the issue.

Why do you think NOAA has supplied a ± 1.8° F Type B uncertainty for ASOS stations? Do you think this uncertainty cancels when you average two or more readings from different things. If so, you need to go study the GUM some more.

Short-term weather events can have asymmetric effects on temperature. A sudden rainstorm can lead to a rapid drop in temperature, while the dissipation of clouds after a storm could result in a quick temperature rise. Warm and cold fronts bring directional influence; a cold front passing through will lead to a drop in temperature, while a warm front will lead to a rise in temperature. It’s hard to classify what is truly random in the context of a temperature measurement at a given place.

In the case of a weather station located near Anchorage, we know that Anchorage’s climate is much milder and less variable than inland Alaska due to its proximity to the North Pacific Ocean and the Gulf of Alaska. During the winter, the ocean releases stored heat, preventing extreme cold, while in the summer, it acts as a natural air conditioner, preventing extreme heat. This would be a systematic bias, but it’s inseparable from the measurement because it contributes to the measurement combined with a multitude of other factors.

Consider another station located in Fairbanks, Alaska. The area is continental and is located near the Alaska Range, which can cause temperature inversions, act as a geographical barrier, and impact the movement of air masses, precipitation patterns, and temperature distributions. In short, a different but inseparable systematic bias. You can’t quantitatively state the effect the mountains have on the recorded temperature readings because each temperature reading will have its own context. How can you average those two locations together? This is the way our planet works! It’s bizarre how modern-day climate science assumptions do not reflect real-world meteorology conditions. Seems like you are the one in denial.

Nonrandom error, like siting issues, are not addressed by taking many observations, the way to do that is with the adjustments the people on the site spent inordinate amounts of time railing against.

It’s not something you can just adjust. How does an aging thermometer interact with consistent exposure to sunlight? It’s not the same as a brand new thermometer would. How does an aging thermometer interact with all the weather phenomena, as opposed to a new thermometer? These questions don’t get answered. Yet, people think it’s possible to fix that bias; it interacts with the multitude of other variables. I can’t stress multitude of other variables enough to you!

****

No event in the whole universe is technically random, the nature of it is simply so chaotic that we can treat it as random for all practical applications. We don’t care about these singular, quasi-random weather events if we are assessing how much the climate state has changed. This is the point, and nothing in your diatribe detracts from it.

Of course it is. How else would you account for it? You study how the biasing effect impacts the station record, and you devise an approach for removing it. You publish your method so other people can read about it, and you publish the results of your analysis. If someone thinks they have a better way to do it or if they think there is something wrong with your method, they can publish their own. That’s science.

Your argument is beginning to distill down to, “it all feels too complicated for me.” Which is a personal issue for you, it’s not something that bothers actual scientists.

The set of which you are not a member.

In observational analysis, the characteristics of a random error are:

The weather is unpredictable, but temperature measurements exhibit characteristics that deviate from a normal distribution, and the temperature distribution varies in each region. The weather also does not demonstrate a balanced likelihood of positive and negative influence; it’s asymmetrical, as I mentioned earlier in my ‘diatribe’. So, random weather variability can’t be classified as random error. If you measure the same thing multiple times, your measurements will cluster around the ‘true value,’ which would be the overall energy uptake. In a temperature measurement, what would be the true value? ‘Random weather variability’ also interacts with conditions at the near surface at that field site that are systematic; you can’t quantify and separate these errors from the true value. It’s completely lost within the noise. There will always be noise, and it will always be distinct in each measurement.

You just deflected from my main point, as usual. Of course, I didn’t mean that no one can try, no matter how nonsensical. How can you remove its effect if it manifests itself differently in every measurement?

Once you understand that not a single person here defending the GAT is a physical scientist, an engineer, or a craftsman/machinist/surveyor/etc the insane assertions they make become more understandable.

They are blackboard statisticians who have never had to certify that a bridge will carry a certain load depending on the measurement uncertainty of the shear strength of the beams making up the bridge. All measurement uncertainty is random, Gaussian, and cancels. Therefore the measurement uncertainty becomes Savg/sqrt(N). Just make more measurements and the average will get more and more precise.

“No event in the whole universe is technically random, the nature of it is simply so chaotic that we can treat it as random for all practical applications. “

That’s irrational. It’s no better than saying the weather is an Act of God, an infrequent miracle!

From Bevington, “Data Reduction and Error Analysis”, 3rd edition, Chapter 1:

“The accuracy of an experiment, as we have defined it, is generally dependent on how well we can control or compensate for systematic errors, errors that will make our results different from the “true” values with reproducible discrepancies. Errors of this type are not easy to detect and not easily studied by statistical analysis.”

Taylor, “An Introduction to Error Analysis”, 2nd Ed, Chapter 4:

“As noted before, not all types of experimental uncertainty can be assessed by statistical analysis based on repeated measurements. For this reason, uncertainties are classified into two groups, the random uncertainties which can be treated statistically, and the systematic uncertainties, which cannot”.

If you don’t know the systematic uncertainty component then you can’t know the random component either.

Are you now going to tell us that these two stalwarts of metrology don’t know what they are talking about?

Climate science basically says to ignore anything that might quantify uncertainty. Ignore variance of the data, it’s not important. Ignore systematic bias, we’ll just assume it away with some guessed at adjustments.

It truly is garbage in, garbage out. Ask any Boeing engineer today if you can just assume all measurement uncertainty is random, Gaussian, and cancels.

Here is a graph of temperatures I made several years ago. I was investigating the Central Limit Theorem at the time.

Examine the difference in the variances of the stations that were scattered about the U.S. Treating these as 60 samples of size 6 does give a Gaussian shape that can be used to estimate the mean. The standard deviation of the sample means (SDOM) likewise gives a smaller interval of where the mean may lie. However, the value of the uncertainty in measurement is defined in NIST TN 1900. The variance in all the measurements should be used to calculate the measurement uncertainty of the mean value and is quite large when compared to the SDOM.

This also shows that the distributions of station temperatures can not be considered to be Gaussian whereby averaging multiple stations will cancel errors.
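For anyone who wants to reproduce the "60 samples of size 6" exercise without the station data, here is a rough sketch. The population is a made-up skewed (exponential) set of numbers, not temperatures, and the function name `sample_means` is hypothetical. It only compares the spread of the sample means with the spread of the underlying data.

```python
import random
import statistics

def sample_means(population, n_samples, sample_size, seed=0):
    """Draw n_samples random samples of sample_size; return their means."""
    rng = random.Random(seed)
    return [
        statistics.mean(rng.sample(population, sample_size))
        for _ in range(n_samples)
    ]

# Hypothetical, deliberately skewed population (NOT real station data).
pop_rng = random.Random(1)
population = [pop_rng.expovariate(1.0) for _ in range(5000)]

means = sample_means(population, n_samples=60, sample_size=6)

sd_population = statistics.pstdev(population)  # spread of the data itself
sd_of_means = statistics.stdev(means)          # spread of the sample means

print(f"SD of all data:     {sd_population:.3f}")
print(f"SD of sample means: {sd_of_means:.3f}")
```

The sample means cluster more tightly than the data they came from. Which of the two spreads is the right one to quote as the uncertainty is exactly what is being argued in this thread, and the code does not settle that.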

If you look at the Santa Barbara distribution of temperatures, you will see an almost perfect normal distribution. That is exactly what you are saying about the Alaska stations.

Climate science don’t do histograms.

or variance

or skewness

or kurtosis

or interquartile ranges

or measurement uncertainty

Another purveyor of Fake Data, just like Mickey Mann.

Calibration drift is not usually random. Electronic components change value as they age and typically do so in the same direction. So it can’t cancel.

Nor can you just assume natural variation is random and cancels as AlanJ and climate science does. Natural variation is PART OF THE SIGNAL. If you don’t account for it and just try to “assume” it away you are losing part of the signal, a SIGNIFICANT part.

u(total) = u(random) + u(systematic)

You can’t assume that the random part cancels since you can only know what the total measurement uncertainty is. If you don’t know the uncertainty component values then you don’t know what cancels and what doesn’t.

AlanJ makes the common mistake so endemic in climate science, measurement uncertainty is *NOT ERROR*. It is an interval which contains the values it would be reasonable to assign to the measurand. You can only identify “error” if you know the true value and the true value is part of the GREAT UNKNOWN.

It’s why the universally accepted JCGM has moved away from using “true value +/- error” to using “estimated value +/- measurement uncertainty”. Some in science are not even using estimated value any longer and are just expressing the measurement uncertainty interval, e.g. 15.2C to 15.7C, so as to not confuse those unwilling to move to the new concept in metrology – those like AlanJ and those in climate science.

Oops, I never said it could. You’ve gone off and hallucinated again. Gotta be careful with that, Tim.

No one assumes this, nonrandom signals don’t cancel, and there are non-random multi-decadal signals in the climate system that are present in long term surface temperature records. This is not a problem, we want to see such behavior. What we don’t want is the random element of the weather, which we reduce by taking many measurements.

This is completely false and based on nothing, you’ve just made it up whole cloth. It is absolutely possible to identify error without knowing the “true” value.

“What we don’t want is the random element of the weather, which we reduce by taking many measurements.”

Weather is not random. Climate is weather over a long period. Weather is *the* determining factor in climate.

When you do an average you lose the ability to determine climate. It’s why the “average” for Las Vegas and Miami can be the same while their climates are totally different. A metric for climate that can’t differentiate between climates is useless.
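The point about two places sharing an average while differing in everything else is easy to show with numbers. The two series below are invented for illustration (they are not Las Vegas or Miami data), and `describe` is a hypothetical helper.

```python
import statistics

def describe(values):
    """Return (mean, sample standard deviation, range) of a series."""
    return (
        statistics.mean(values),
        statistics.stdev(values),
        max(values) - min(values),
    )

# Hypothetical monthly mean temperatures in deg C, made up for this sketch.
site_a = [5.0, 10.0, 15.0, 20.0, 25.0, 30.0, 35.0, 30.0, 25.0, 20.0, 15.0, 10.0]
site_b = [18.0, 19.0, 20.0, 21.0, 22.0, 23.0, 22.0, 21.0, 20.0, 19.0, 18.0, 17.0]

for name, series in (("site A", site_a), ("site B", site_b)):
    mean, sd, spread = describe(series)
    print(f"{name}: mean={mean:.1f}  sd={sd:.2f}  range={spread:.1f}")
```

Both series average the same value, yet their standard deviations and ranges are very different. This shows only that the mean by itself does not separate the two series; it does not address how an anomaly changes over time.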

u(total) = u(random) + u(systematic)

Every text on metrology is going to be based on that simple equation. There *is* a reason why “u” is used so often to denote uncertainty – because the values are Unknown!

If you don’t know the values of the components, u(random) and u(systematic) then how can you assume one cancels?

I’ll repeat it again:

Cancellation of random measurement errors requires:

Please elucidate for us just how the temperature measurements of Tmax and Tmin at two different locations meet these requirements.

My guess is that you will just ignore this request, prove me wrong.

The metric is for climate change, we are not trying to define different climate zones by using the temperature anomaly – there are entire classification systems dedicated to this purpose – we are trying to track if the mean state of the climate in these regions and around the world is changing. This is the point you fail to comprehend over and over and over.

Tim, we really must start at a basic level of understanding if we are to have a productive conversation. Tmax and Tmin are measured by the same device in a single location.

“The metric is for climate change, we are not trying to define different climate zones by using the temperature anomaly – there are entire classification systems dedicated to this purpose – we are trying to track if the mean state of the climate in these regions and around the world is changing. This is the point you fail to comprehend over and over and over.”

Again, if you can’t differentiate different climates with the metric then how do you know if the climate is changing?

You are still stuck trying to claim that some hokey Tavg is *climate*, that temperature, especially a hokey median from a multi-modal distribution, is climate. It isn’t. If it was you could tell the difference between San Diego and Ramona, CA based solely on their respective “average” temperature. The actual truth is that you can’t!

As Walter has tried to explain to you, climate is WEATHER, not median temperature. That’s as true for anomalies as it is for the absolute temps. If an anomaly can’t distinguish between summer and winter temps having different variances, then how can the anomalies tell you anything about climate? And it is *YOU* that are saying the anomalies in Lincoln, NE and Rio de Janeiro can tell you the difference in climates between the two locations in December, not me.

Nope, I’m saying that changes in the Tavg anomaly over long periods of time indicate a change in the climate. That’s it, that’s all, nothing more, nothing less. Nobody uses the global mean temperature anomaly to investigate regional or local climate change impacts.

You don’t know this.

Then GAT is a meaningless number that tells nothing about “the climate”.

Tavg can’t tell you the difference in climate between Las Vegas and Miami. Neither can the anomaly. The anomaly at both can be the same and yet the climates are different. Does the anomaly in Las Vegas tell you about the climate change Miami might be seeing?

What you are spouting is religious dogma taken on faith.

“IT’S A MIRACLE I TELL YOU!”

Again, a change in the rate of change in temperature is not indicative of a change in climate.

Worse, there is a dichotomy between the GAT as an anomaly and the multitude of local and regional locations with little to no warming over a long period of time. How do you explain that?

Lastly, what we are trying to show you is that the claimed anomalies are so far within the uncertainty of measurement that you simply cannot draw a conclusion. To say that a temperature has increased 0.01 degrees means you must have measurements with a resolution and accuracy to allow a determination of this small value. Nowhere do temperature measurements, not even in USCRN, have the resolution or the measurement uncertainty to allow this determination. You are trying to convince folks that averaging different things can provide increased resolution. You need to talk to a machinist and see if they agree that you can do that. You have no concept of tolerances and quality control. These folks know what you are purveying would never stand up in the real world.

Now you are down to just posting lies.

He just gets wilder and wilder with his insane assertions. The difference between a measured value and the “true value” is known as a discrepancy. The measured value is “true value + error” (error may be plus or minus). MV = TV + Err. That means Err = MV – TV. If you don’t know TV then you simply cannot know Err.

When the hand waving starts, you know they are flailing.

The random element of the weather determines the uncertainty of what you are calculating. CLIMATE is the AVERAGE of WEATHER over a long period of time. If you are not examining the entire variance of the weather in climate, then you can’t call yourself a climate scientist! You are merely a statistician whose main goal in life is to obtain a mean value of some data regardless of what the signal actually signifies.

Removing the variance (amplitude) of a signal by declaring the different amplitude values as “noise” is attempting to use signal processing language to justify ignoring what the signal is telling you. Do you really think that signal processing is all about limiting the amplitude variations in a signal?

What you are doing is defining the “signal” as the mean value of the signal. That is not “removing” noise!

Every time you try to make excuses you only amplify what you don’t know. You would do better to just admit you have no training in making measurements that must stand in judgement of legal review.

+100

“ If you want to track overall energy intake, you would have to find a way to separate the noise from the measurement, but the noise (the weather itself) contributes to the measurement. “

Somehow climate scientists simply don’t understand this. It’s like an AM radio carrier signal modulated by a time-varying signal. To a climate scientist the modulating signal is noise and only the average frequency of the signal, i.e. the carrier frequency, is of interest.

It’s ignorance of the worst kind because it’s willful.

I think another factor that plays into it is time. Weather does not operate synchronously with the human calendar; we know that time-of-observation bias exists. Who’s to say the observational period 24:00 – 23:59 isn’t a bias itself? Some days have mornings as the warmest part of the day because of a passing cold front. A ‘once-in-a-century’ heatwave is only termed as such due to the relatively short record of measurements; nothing suggests that these so-called ‘once-in-a-century’ heatwaves can’t occur more or less frequently.

This is why the integrative degree-day metric has always been attractive for me. It will catch, assuming the measurements have sufficient granularity, such changes and will be reflected in the metric.

Spikes like heat waves tend to get damped as far as significance when the entire annual degree-day calculation is added up. That doesn’t happen when a week-long heat wave can have a large impact on daily median values which, in turn, have a large impact on a monthly average value.
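For readers who haven't worked with degree-days, here is a minimal sketch of the integration idea. The base temperature of 18 C, the sample readings, and the function name `degree_days` are assumptions made for illustration; the trapezoidal rule on clipped values is a simple approximation, not any agency's official method.

```python
def degree_days(hours, temps_c, base_c, mode="cooling"):
    """Integrate temperature excess relative to a base, in degree-days.

    hours   : observation times in hours, increasing
    temps_c : temperatures in deg C at those times
    mode    : "cooling" counts time above base, "heating" time below it

    The excess is clipped at zero and integrated with the trapezoidal
    rule, which is approximate wherever the curve crosses the base.
    """
    if mode == "cooling":
        excess = [max(t - base_c, 0.0) for t in temps_c]
    elif mode == "heating":
        excess = [max(base_c - t, 0.0) for t in temps_c]
    else:
        raise ValueError("mode must be 'cooling' or 'heating'")

    total = 0.0
    for i in range(1, len(hours)):
        dt_days = (hours[i] - hours[i - 1]) / 24.0
        total += 0.5 * (excess[i - 1] + excess[i]) * dt_days
    return total

# Hypothetical six-hourly readings over one day, deg C.
hours = [0, 6, 12, 18, 24]
temps = [16.0, 18.0, 26.0, 22.0, 16.0]

cdd = degree_days(hours, temps, base_c=18.0, mode="cooling")
hdd = degree_days(hours, temps, base_c=18.0, mode="heating")
print(f"cooling degree-days: {cdd:.2f}, heating degree-days: {hdd:.2f}")
```

Because the metric integrates over time, finer-grained readings (hourly or better) follow the actual temperature curve more closely than a single Tmax/Tmin pair would.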

Not at all uncommon for my location for the daily high to occur one second after midnight when a deep cold front is passing through.

“in the average height of American males “

Another nonsense analJ-ogy.

“Observing a change in the mean daily temperature over time can let you track the changing seasons.”

No, it can’t. It can’t tell you whether it is summer in the SH and winter in the NH or vice versa!

It’s only Stats 101 if you include the VARIANCE associated with your anomalies! Where is the variance calculation?

The temperature anomaly at a given location is a measure of variance. The distribution of temperature anomalies around the globe at a given point in time is a distribution of variances in temperature for that time. If the mean of the distribution of variances (anomalies) is observed to shift over time, that means the global climate is changing.

Where did you get your training in statistical analysis of experimental data?

The anomaly is *NOT* a measure of variance. The anomaly *HAS* variance associated with it at any given point in time.

KM has coined the term trendologist to describe you. It fits you perfectly. To you the variance is how far a stated value is from the trend line fitted to those stated values — all the while ignoring the fact that those stated values have measurement uncertainty and variance associated with them.

You are caught out with your statement that variance is always random, Gaussian, and cancels. Variance does *NOT* cancel. It is not reading error or anything else.

Variance is a measure of the uncertainty of an average, even in a Gaussian distribution. The anomaly is *NOT* a measure of the uncertainty of anything.

“The anomaly is not a measure of variance, it is a measure of variance.” You have simply got to think about what you write before pressing the Post Comment button.

Oh, that’s not any statement I ever made. That’s a statement you are falsely attributing to me. You need to learn to separate the things people are actually saying with the lies you’ve convinced yourself of, it’s causing you delusions.

Who do you think you are fooling?

““The anomaly is not a measure of variance, it is a measure of variance.””

I didn’t say that. That phrase appears ONCE in this entire article. Yet you put quotes around it like *I* said it.

When you ignore the variance of the data then you *ARE* assuming that the variance somehow cancels. The proof is that you absolutely refuse to give us the variance of the data you are calculating the average from.

THE AVERAGE ALONE IS NOT A COMPLETE STATISTICAL DESCRIPTION OF A DATA SET.

Why is that so hard for you to understand? If you can’t tell us the variance then that means you have no idea of what the distribution of the data is. Any assumption you make about “random” can’t be justified without a complete statistical description of the data.

I’ve never said anything contrary to this, you’re just fighting with ghosts and phantoms.

Then give us the variance of the temperature data from Forbes AFB, Topeka, KS.

If you can’t then you are ignoring it!

And if you ignore it then you are *NOT* providing a complete statistical description of the data!

But you never discuss the other statistical parameters which leads one to believe they hold no significance to you.

Tell us about the variance in a monthly series of temperatures. What is it? How do you obtain an uncertainty from it? How do you average one monthly random variable with another and deal with the different variance of each?

He isn’t rational because he is fully enrolled in the climate cult thus will say anything to defend the impossible since rational people know there is NO climate crisis occurring.

FACT: NO Hot Spot exists.

FACT: NO Positive Feedback Loop exists.

FACT: Climate cultists ignore these two easily proven AGW conjecture prediction failures thus AGW is a failed pile of shite but that isn’t accepted because it is a religion they must follow.

Who pays you to post this tripe?

It has nothing to do with the variance of anything. It is a rate of change, not a variance.

The variance for an anomaly is actually derived from the two random variables that are used to calculate it.

Var(X – Y) = Var(X) + Var(Y) where:

X = random variable associated with the monthly average, and,

Y = random variable associated with the baseline average.

The associated uncertainty is the √variance.
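A minimal sketch of the identity above, assuming X and Y are independent (in general Var(X – Y) = Var(X) + Var(Y) – 2Cov(X, Y), so the identity only holds when the covariance is zero). The means and standard deviations below are made-up values for illustration, not station data, and the function name is hypothetical.

```python
import random
import statistics


def variance_of_difference(n=50_000, sd_x=0.5, sd_y=0.3, seed=0):
    """Simulate independent X and Y; return (var(X - Y), var(X) + var(Y))."""
    rng = random.Random(seed)
    # X: stand-in for a monthly average, Y: stand-in for a baseline average.
    xs = [rng.gauss(15.0, sd_x) for _ in range(n)]
    ys = [rng.gauss(14.0, sd_y) for _ in range(n)]
    diffs = [x - y for x, y in zip(xs, ys)]
    var_diff = statistics.pvariance(diffs)
    var_sum = statistics.pvariance(xs) + statistics.pvariance(ys)
    return var_diff, var_sum


var_diff, var_sum = variance_of_difference()
uncertainty = var_diff ** 0.5  # standard uncertainty as the square root of the variance
print(var_diff, var_sum, uncertainty)
```

With these assumed inputs both variances should come out close to 0.5^2 + 0.3^2 = 0.34. The sketch says nothing about what X and Y ought to be for real temperature data; it only checks the arithmetic of the identity.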

Climate science doesn’t even recognize random variables and how they are dealt with. If they did, they would recognize that anomalies are not averages, they are the subtraction of two random variables.

When averaging anomalies, the individual uncertainty of each anomaly must be propagated through the entire calculation, just as you would any uncertainty. See the GUM for doing this.

I found this insightful.

No emergency.

The graph shows the increase in the global mean temperature quite plainly.

Absolutely zero evidence of human causation except urban and mal-adjustments.

Warming that wouldn’t even be noticed except for all the chicken-little idiocy that the AGW cultists like you go on with.

Also, BEST is not “global” in any way….. It is purely URBAN.

Mr. J: And you have an expert to establish the cause of the increase shown? There’s a guy here named Greene who will say it, maybe you should look him up?

So what!

Many times, in the interglacial period there have been warming trends…..

Most of the warming is at night and in winter.

What are your professional credentials in trendology?

I can understand what a “climate anomaly” is: some parameter that can be compared to an average. I do not think that the term “Climate Event” makes any sense. It is invariably used to describe weather events with the strong implication that this event is the new average, so it is a picture of the “New Climate”. A hot year averaged with the previous 29 and compared to each of the previous 30-year groupings in the record may give us some climate information if the record is long enough. The Held v. Montana trial used this term throughout and it was never challenged. All of the young plaintiffs complained of smoky or rainy years, yet none of them had even experienced enough weather to comprise one climate data point for comparison.

Anomalies inherit the variance of the parent components ( var(x+y) = var(x) + var(y) ). The variance of a distribution is a metric for how uncertain the average value is. Anomalies are always more uncertain than each of the parent components. Better to look at the parent components by themselves than the differences.

What are x and y in your equation?

Don’t start. You are out of your depth. We’ve been down this road before. When is variance a valid statistical descriptor? Always? Never? Sometimes?

You could have simply said you don’t know.

ROFL!! In other words you have no idea what the variance of the temperature data is. You never have, you don’t now, and you never will. Like climate science you think the average alone is sufficient to describe a data distribution.

The usual manic laughter and deflection.

It’s a simple question. When you are talking about subtracting two random variables, what exactly are those variables? You won’t answer the question because you don’t understand your own argument. I suspected as much, and now your refusal to answer the simple question demonstrates it.

YES! The question *IS* what exactly are those variables!!!

That’s EXACTLY what I’ve been trying to tell you and you adamantly refuse to address!

How do you combine the sinusoidal daytime temperature profile with the exponential decay nighttime temperature profile to come up with an AVERAGE that is meaningful?

That average, actually a median of a multi-modal distribution, can’t distinguish between two different climates so how can it be a useful metric?

When winter temps have a wider range than summer temps, meaning they have a higher variance, how DO YOU combine them to get an “average” global temperature for the same month, i.e. combining SH temps with NH temps?

Why won’t you address what the variance of all these variables is? You simply can’t even describe a Gaussian distribution using only the average – yet you and climate science do!

Don’t try to put this on me. I’ve looked at the variance of the winter and summer temps from my station. I *KNOW* they are different. I don’t do like you do and just ignore that very basic fact!

It’s on YOU to justify calculating a GAT while ignoring the variance of the data at the same time!

“YES! The question *IS* what exactly are those variables!!!”

If you don’t know, why do you expect anyone else to?

You refuse to answer that simple question, and it’s easy to see why – so you just try to deflect with your other misunderstandings which have nothing to do with the point.

You say:

I’ll assume you meant var(x-y), given you are talking about anomalies. But you can’t just apply the equation var(X-Y) = var(X) + var(Y), without specifying what the random variables X and Y are. And your problem is you never do state what they are and keep using them to mean different things at the same time.

If X is an individual daily temperature and Y is an individual daily temperature from the base period, you can say that var(X) + var(Y) will be the variance in subtracting one from the other. But then you are saying nothing about the variance of the anomaly or of a monthly average. If X is the monthly average and Y is the average of the base period, the equation still works, but then var(X) and var(Y) are not variances of daily values, they are the variance of the averages.
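The distinction drawn above, between the variance of daily values and the variance of an average of them, can be sketched numerically. This is an illustration only: it assumes the daily values are independent draws from one Gaussian distribution, which real temperature series are not (they are autocorrelated and seasonal), and it ignores measurement uncertainty altogether. All numbers and names are hypothetical.

```python
import random
import statistics


def daily_vs_average_variance(days=30, months=2_000, sd_daily=3.0, seed=1):
    """Return (variance of daily values, variance of the monthly means)."""
    rng = random.Random(seed)
    all_daily = []
    monthly_means = []
    for _ in range(months):
        # One simulated month of independent daily values.
        month = [rng.gauss(10.0, sd_daily) for _ in range(days)]
        all_daily.extend(month)
        monthly_means.append(statistics.fmean(month))
    return statistics.pvariance(all_daily), statistics.pvariance(monthly_means)


var_daily, var_means = daily_vs_average_variance()
print(var_daily, var_means, var_daily / 30)
```

Under the independence assumption the variance of the monthly means is close to the daily variance divided by the number of days, so var(X) in the anomaly equation is a different number depending on whether X is a daily value or a monthly average.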

But you always try to pretend they are the same thing. Hence why you find it so difficult to just say exactly what X and Y mean in your equation.

I’ve spent 2 years trying to get you to understand where your mistake is – but it’s futile because in your mind, and those of your cheerleaders below, you are incapable of being wrong. You think you understand how “combining” random variables works, but everything you say betrays your ignorance on the matter.

“If you don’t know, why do you expect anyone else to?”

*YOU* are the one trying to say that var(x+y) ≠ var(x) + var(y) when it comes to temperature. It’s up to *YOU* to identify the variables!

“I’ll assume you meant var(x-y), given you are talking about anomalies.”

Your lack of algebra skills is showing again. “y” can be either negative or positive. You still show it as x + y.

“without specifying what the random variables X and Y are.”

What do you combine to get a temperature anomaly? Be honest. Don’t play stupid. It’s an argumentative fallacy known as Appeal to Ignorance.

If the variance of the monthly average is based on multiple daily values over a decade then those daily values taken as a data set have a variance. Thus the decadal distribution is described by the statistical descriptors of average and variance.

If that decadal monthly average is then used to subtract from tomorrow’s daily median value of temp then the anomaly inherits the variance of the components – the decadal monthly average and its own variance. Remember, the daily Tmedian is made up of two values representing a data set, a small one for sure but still a data set. And that data set of two elements will have a variance of (Tmax – Tmedian)^2 + (Tmin – Tmedian)^2 (the denominator of n-1 is 1).

Thus the variances of the two components will add:

Var(total) = Var(decadal monthly avg) + Var(Tdaily-median)
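The two-element variance described above can be checked directly. The sketch below uses made-up Tmax and Tmin values and a made-up variance for the decadal monthly average; it only verifies the arithmetic of the formula as stated and does not settle whether this is the right variance to attach to an anomaly.

```python
import statistics


def two_point_variance(t_max, t_min):
    """Sample variance (n - 1 = 1) of the two-element set {Tmax, Tmin}."""
    t_mid = (t_max + t_min) / 2  # the daily "Tmedian" of two values
    by_formula = (t_max - t_mid) ** 2 + (t_min - t_mid) ** 2
    by_library = statistics.variance([t_max, t_min])
    return by_formula, by_library


# Hypothetical daily readings in degrees C.
by_formula, by_library = two_point_variance(30.0, 18.0)

# Hypothetical variance for the decadal monthly average, for illustration only.
var_decadal_monthly_avg = 4.0
var_total = var_decadal_monthly_avg + by_formula
print(by_formula, by_library, var_total)
```

For two values the result also equals (Tmax – Tmin)^2 / 2, so the variance of the daily pair is driven entirely by the diurnal range.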

You are a troll, nothing more and nothing less. You can’t even understand the very basics. Give it a break. I’ll not answer you any more on this subject. You don’t know enough about it to make a meaningful comment!

This one still sums it all up completely:

“The only way they can get uncertainty intervals down into the hundredths and thousandths of a degree is by ignoring the uncertainty of the individual components. THE ONLY WAY.

“You can *NOT* decrease uncertainty by averaging. You simply can’t. Trying to discern temperature differences in the hundredths digit by averaging when the underlying data is only accurate to the tenths digit (or even the units digit) is an impossibility.

“It truly is that simple.

“Pat Frank knows more about measurement and uncertainty than you will EVER know.

“*YOU* still think that precision and accuracy are the same thing no matter how many people, including experts, tell you.

“And we are supposed to believe *YOU*?

“*YOU* still think that an average value, a single number, has a distribution associated with it and you can use GUM Eq 10 to evaluate the accuracy of that average value.

“And we are supposed to believe *YOU*?

“You still think that the partial derivative in GUM Eq 10 is NOT a weighting factor for the uncertainty components.

“And we are supposed to believe *YOU*?”

— TG

And this one:

“He’s a troll, Tim” — Pat Frank

“This one still sums it all up completely:”

Yes it does. Lots of arguments by assertion and appeals to authority.

“Pat Frank knows more about measurement and uncertainty than you will EVER know”

The Pat Frank who thinks uncertainty and standard deviations can be negative? That authority?

“*YOU* still think that an average value, a single number, has a distribution associated with it and you can use GUM Eq 10 to evaluate the accuracy of that average value.”

For new listeners, it was karlomonte who insisted you had to use equation 10 to determine the uncertainty of an average.

“You still think that the partial derivative in GUM Eq 10 is NOT a weighting factor for the uncertainty components.”

I said you can call it what you want – it doesn’t change its value. This was in response to Tim claiming that the derivative of x^2 was 2, and insisting that the derivative of 2x was not 2.

“The Pat Frank who thinks uncertainty and standard deviations can be negative? That authority?”

Your reading skills are still atrocious. The standard deviation describes an interval around the mean – implying that it has a component of (mean – σ/2) and a component of (mean + σ/2). A negative interval (-σ/2) and a positive interval (+σ/2).

Someday you *really* need to learn basic algebra.

“For new listeners, it was karlomonte who insisted you had to use equation 10 to determine the uncertainty of an average.”

For new listeners KM said you use Eq 10 to find the uncertainty propagated onto the average. Bellman still thinks the standard deviation of the sample means defines the accuracy of the mean instead of the interval in which the population mean might lie. The accuracy of that mean is measured by the variance of the population, not by how precisely you calculate the mean.

“I said you can call it what you want – it doesn’t change its value.”

No, that is *NOT* what you said. You said the partial derivative was *NOT* a weighting factor after I told you that it was. Then I had to show you how to use relative uncertainty for a quotient and the partial derivative becomes a weighting factor for the uncertainty. You couldn’t even figure out what Possolo did in TN1900, Example 2 until I showed you the simple algebra.

“This was in response to Tim claiming that the derivative of x^2 was 2, and insisting that the derivative of 2x was not 2.”

Your lack of reading comprehension skills is showing again. This is *NOT* what I said. I said specifically that the partial derivative of R^2 becomes a weighting factor of 2. And you said that was wrong. I had to show you how the simple algebra makes it such. And it’s not obvious that you understand that even now!
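For readers following the R^2 dispute, here is a small sketch of first-order uncertainty propagation for y = R^2 with a single input. The values of R and u(R) are hypothetical. It shows both quantities being argued over: the partial derivative itself, which is 2R, and the factor of 2 that appears once the result is written as a relative uncertainty.

```python
def propagate_square(r, u_r):
    """First-order propagation of u(R) through y = R**2 (single input)."""
    sensitivity = 2 * r              # dy/dR, depends on R
    y = r ** 2
    u_y = abs(sensitivity) * u_r     # absolute uncertainty of y
    rel_y = u_y / y                  # relative uncertainty of y
    rel_r = u_r / r                  # relative uncertainty of R
    return sensitivity, u_y, rel_y, rel_r


sensitivity, u_y, rel_y, rel_r = propagate_square(5.0, 0.1)
print(sensitivity, u_y, rel_y, rel_r)
```

Dividing u(y) = 2R·u(R) by y = R^2 gives u(y)/y = 2·u(R)/R, so the derivative is 2R in absolute terms while the relative uncertainty is multiplied by 2.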

“Your reading skills are still atrocious.”

Are you claiming he didn’t say it? Do you want me to fish out the week long comment section where you tried to defend his claim that uncertainty and standard deviations could be negative?

“The standard deviation describes an interval around the mean – implying that it has a component of (mean – σ/2) and a component of (mean + σ/2). A negative interval (-σ/2) and a positive interval (+σ/2).”

Yes, that’s an excellent example of the contortions you went through to pretend that standard deviations could be negative.

“Someday you *really* need to learn basic algebra.”

It’s hilarious that you think this is a convincing putdown, when you so often demonstrate your inability to understand simple equations.

“For new listeners KM said you use Eq 10 to find the uncertainty propagated onto the average.”

Which is what you insist we have to do to determine the measurement uncertainty of an average.

“Bellman still thinks the standard deviation of the sample means defines the accuracy of the mean instead of the interval in which the population mean might lie.”

The interval in which it’s reasonable to attribute the population mean is very much the definition of uncertainty favored by the GUM. But the SEM is not what we are talking about in equation 10 – that’s just propagating the measurement uncertainty onto the exact average.

“The accuracy of that mean is measured by the variance of the population, not by how precisely you calculate the mean.”