May 2nd, 2022 by Roy W. Spencer, Ph. D.

The Version 6.0 global average lower tropospheric temperature (LT) anomaly for April, 2022 was +0.26 deg. C, up from the March, 2022 value of +0.15 deg. C.

The linear warming trend since January, 1979 still stands at +0.13 C/decade (+0.12 C/decade over the global-averaged oceans, and +0.18 C/decade over global-averaged land).

Various regional LT departures from the 30-year (1991-2020) average for the last 16 months are:

YEAR MO   GLOBE   NHEM.   SHEM.  TROPIC   USA48  ARCTIC    AUST
2021 01    0.12    0.34   -0.09   -0.08    0.36    0.49   -0.52
2021 02    0.20    0.32    0.08   -0.14   -0.66    0.07   -0.27
2021 03   -0.01    0.12   -0.14   -0.29    0.59   -0.78   -0.79
2021 04   -0.05    0.05   -0.15   -0.29   -0.02    0.02    0.29
2021 05    0.08    0.14    0.03    0.06   -0.41   -0.04    0.02
2021 06   -0.01    0.30   -0.32   -0.14    1.44    0.63   -0.76
2021 07    0.20    0.33    0.07    0.13    0.58    0.43    0.80
2021 08    0.17    0.26    0.08    0.07    0.32    0.83   -0.02
2021 09    0.25    0.18    0.33    0.09    0.67    0.02    0.37
2021 10    0.37    0.46    0.27    0.33    0.84    0.63    0.06
2021 11    0.08    0.11    0.06    0.14    0.50   -0.43   -0.29
2021 12    0.21    0.27    0.15    0.03    1.62    0.01   -0.06
2022 01    0.03    0.06    0.00   -0.24   -0.13    0.68    0.09
2022 02   -0.01    0.01   -0.02   -0.24   -0.05   -0.31   -0.50
2022 03    0.15    0.27    0.02   -0.08    0.21    0.74    0.02
2022 04    0.26    0.35    0.18   -0.04   -0.26    0.45    0.60

The full UAH Global Temperature Report, along with the LT global gridpoint anomaly image for April, 2022 should be available within the next several days here.

The global and regional monthly anomalies for the various atmospheric layers we monitor should be available in the next few days at the following locations:

Lower Troposphere: http://vortex.nsstc.uah.edu/data/msu/v6.0/tlt/uahncdc_lt_6.0.txt

Mid-Troposphere: http://vortex.nsstc.uah.edu/data/msu/v6.0/tmt/uahncdc_mt_6.0.txt

Tropopause: http://vortex.nsstc.uah.edu/data/msu/v6.0/ttp/uahncdc_tp_6.0.txt

Lower Stratosphere: http://vortex.nsstc.uah.edu/data/msu/v6.0/tls/uahncdc_ls_6.0.txt


In the real world, another month of nothing to worry about. In the fantasy world of ‘climate change’ alarmists…..PANIC!!!!

UAH6 has been collecting data for over 42 years, mostly during an upswing in the approximately 60 to 70-year warming and cooling cycle and over a period of rapidly increasing atmospheric CO2 concentrations. Wouldn’t its 1.3 C/century atmospheric temperature trend (less for the oceans) put an absolute top limit for climate sensitivity?

A maximum warming of our atmosphere of about 1.3 C (or less) over the next hundred years would be a net benefit for Earth’s plants and animals. It would also be well within the Holocene’s estimated temperature variations. All of the CliSciFi in the world, including wildly inaccurate UN IPCC climate models, doesn’t change that observation.

Interesting point.

Accepted carbon dioxide change is 280 -> 410 ppm or 410/280 = 1.46;

So maximum upper limit is 1.9 degrees C, per doubling of carbon dioxide, with *no* natural or cyclic warming.

Yes, Tony, I agree that we have had about a 46% increase in atmospheric concentrations of CO2 since the mid-19th Century. It’s not clear how that translates to an upper limit of 1.9 C/doubling.

You explicitly state “absolute top limit”, so no natural warming. All warming is then due to carbon dioxide. I have to take it as implied that no natural warming also rules out natural cooling.

At this point it is a straight ratio: (410/280) * 1.3 = 1.9

Side note: It has been asserted that there is now “natural cooling” going on, which has been overcompensated for by the addition of the atmospheric carbon dioxide. That without this lucky cooling, the greenhouse warming we are seeing would be “way worse”.

(Where have we heard things like this before?)

Anyway the “natural cooling” was advanced without a scrap of evidence. I dismiss it just as quickly and easily.

Tony, where did I say that all of the warming in the UAH6 trend was related to CO2? I assumed that people would realize my mention of a warming/cooling cycle would indicate natural changes. The implication is that despite rapid increases in atmospheric CO2 concentrations warming was mild even during natural warming cycles. And I really don’t care what the CliSciFi practitioners are saying about natural cooling hiding CO2 warming.

Also, since CO2’s theoretical effect on temperatures is logarithmic, a straight ratio is not applicable.
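To make the arithmetic in this exchange concrete, here is a minimal Python sketch comparing the straight-ratio scaling with a logarithmic one. It assumes, purely for illustration and following the premise debated above, that the full 1.3 C is attributed to the 280 to 410 ppm rise; the function names are hypothetical and nothing here is a climate sensitivity estimate.

```python
import math

C0_PPM = 280.0   # earlier CO2 concentration quoted in the thread
C1_PPM = 410.0   # recent CO2 concentration quoted in the thread
WARMING_C = 1.3  # warming figure used in the thread (assumed here to be all CO2)


def straight_ratio_scaling(warming_c, c0, c1):
    """Reproduce the thread's straight-ratio arithmetic: (c1 / c0) * warming."""
    return (c1 / c0) * warming_c


def per_doubling_logarithmic(warming_c, c0, c1):
    """Warming per CO2 doubling if the response scales with log2(c1 / c0)."""
    return warming_c / math.log2(c1 / c0)


if __name__ == "__main__":
    ratio = straight_ratio_scaling(WARMING_C, C0_PPM, C1_PPM)
    log_based = per_doubling_logarithmic(WARMING_C, C0_PPM, C1_PPM)
    print(f"straight ratio: {ratio:.2f} C")
    print(f"logarithmic:    {log_based:.2f} C per doubling")
```

Under these assumptions the straight ratio gives about 1.9 C and the logarithmic scaling gives about 2.4 C per doubling, so the choice of scaling changes the answer.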

My point about natural cooling (given an assumption that it is occurring) is that the increased Carbon Dioxide (assuming man-made CO2 is warming temperatures) is what is giving us the bountiful agriculture we now have.

Alternatively, if the Global Climate Models are correct and we truly are seeing a warming of several degrees due to CO2, then without the warming from our CO2 emissions, we would be seeing mass starvation throughout the world and so wars for food and resources and perhaps the fall of civilization itself.

Dave, there is only natural warming and natural cooling. If earth was 1.3 C warmer then all the Russian snow would be gone. There is still plenty of snow and low temperatures at 70 degrees north. April will feel like May, May will feel like June. Any warming shouldn’t be assumed to be CO2. 1.3 C equates to 7 watts, not 1.9 watts. That’s just made-up math.

The 7 W/m2 figure is the result of sblaw(289.3) – sblaw(288). That would be the radiative response of a 1.3 K increase in temperature for a blackbody. The 1.9 W/m2 figure is the radiative force at 1.5xCO2. Radiative force and radiative response are different concepts. Be careful not to conflate the two.
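The 7 W/m2 figure can be checked with a few lines of Python. This is a minimal sketch of the blackbody calculation described above; the name `sblaw` follows the comment, while `radiative_response` is a hypothetical helper added for illustration.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def sblaw(temperature_k):
    """Blackbody radiant exitance (W/m^2) at a temperature given in kelvin."""
    return SIGMA * temperature_k ** 4


def radiative_response(t_start_k, delta_k):
    """Change in blackbody emission when temperature rises by delta_k."""
    return sblaw(t_start_k + delta_k) - sblaw(t_start_k)


if __name__ == "__main__":
    # 288 K baseline and a 1.3 K increase, as in the comment above.
    print(f"{radiative_response(288.0, 1.3):.2f} W/m^2")
```

For a 288 K blackbody warmed by 1.3 K this comes to roughly 7.1 W/m2, which matches the figure quoted.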

If maximum warming of the atmosphere was 1.3 C then this is what the earth’s temperature would look like for April. The 4th of April is the midpoint to summer’s peak. Earth reaches 10 C in summer, which is far from what we’re told, as in 17 C for July. 17 C is just for the Northern Hemisphere in July while the Southern Hemisphere is around 3 C. Earth emitted 340 watts at the beginning of April, which is normal. UAH has a pole missing in their data, Antarctic. Which was very cold recently.

“UAH has a pole missing in their data, Antarctic.”

??????????????

I download the UAH data set every month.

Here is a piece of the header and March data.

NoPol   Land  Ocean  SoPol   Land  Ocean
 0.74   0.31   1.23   0.03   0.81  -0.34

Antarctica seems like it is there to me.

Thank you for correcting me. Although SoPol isn’t in the April update. April’s SoPol will appear in May’s update.

April’s data will appear in April’s update. It’s just that April’s data isn’t available yet. It is usually posted within the 1st or 2nd weeks of the month.

Stephen, I honestly have no idea as to what you are trying to say.

My comment was based on the approximately 42 year-long trend of 0.13 C/decade for globally averaged temperature increases reflected in the UAH6 dataset. Based on the fact that the record occurred during a period of maximal rising atmospheric CO2 additions and over a warming period of a cyclical warming/cooling trend, I speculate that is a good indication of the maximal sensitivity of our climate to CO2 increases. That’s it.

You only know anomalies, not the global average surface absolute temperature. If you did, you would know what I’m talking about. Anyone can look at any coordinate on earth for absolute temperatures. Here are 154 climates (in Celsius) on earth for the date shown, all averaged into thermal energy. What you don’t know is what the thermal energy should be in a particular month. In winter this is less than 340 watts and in summer it is above 340 watts. April 2nd is 91 days till summer peak and 91 days till winter minimum. Anomalies make you think there is a trend, ignoring what happens to past anomalies. If earth was 1.3 C above normal then on April 2nd the atmosphere would have 346 watts of thermal energy, not 339 watts as shown. My previous post showed 15 C, which has thermal energy of 390 watts. Summer only reaches 360 watts, so April cannot be 390 watts. If you’re still unsure what I’m talking about, look it up.

Change 154 climates to 154 observations from this site earth :: a global map of wind, weather, and ocean conditions (nullschool.net)

Stephen, my life is becoming too short (74 y/o) to play endless numbers games.

“In the real world, another month of nothing to worry about.”

Indeed, and along with the ice-core millennial temperature reconstructions (GISP2), the UAH instrumental record suggests the idea of a recovery out of the LIA is reasonable.

GISTEMP’s LOTI is out for March. Here’s how many changes they made to their Land Ocean Temperature Index data so far in 2022:

Jan  Feb  Mar  Apr  May  Jun  Jul  Aug  Sep  Oct  Nov  Dec
291  243  252

Yep, essentially flat temperatures over the bulk of 21st Century to date, except for Super El Nino periods. Certainly far under those predicted by the UN IPCC CliSciFi models. All of the governmental lies in the world can’t change that observational fact.

So, they can measure the temperature of the whole earth to hundredths of a degree? BS.

It’s done using a sophisticated Al Gore Rhythm.

You know what they call a woman using the Rhythm method? Mother.

Do you know what they call a woman relying on the Pill? Mom.

Teal S, Alison Edelman A. Contraception Selection, Effectiveness, and Adverse Effects. A Review.

JAMA. 2021;326(24):2507-2518.

“Pregnancy rates of women using oral contraceptives are 4% to 7% per year” – and double that in those under 21 years of age. When the study includes a cohort adherent enough to be followed for a year. And NFP at 22% for a year.

I thought it meant all her children would be musically inclined.

Yup, the Al Gore Rhythm’s gonna get you

(Apologies to Gloria Estefan)

No. UAH claims ±0.20 C of uncertainty on monthly TLT anomalies. See Christy et al. 2003 for details.

The paper indicates that they think that they can calculate the temperature to an uncertainty characterized by variation in the second significant figure to the right of the decimal point.

However, it is generally recommended that the nominal (average) value not carry significant figures beyond the most significant figure in the uncertainty. That is, if one were to add them to obtain the range, the tenths column would be the last significant figure shown for both statistical parameters for monthly data.
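The rounding convention described here can be sketched in a few lines of Python. This is a hypothetical helper written only to illustrate the rule; it is not how UAH formats its published values.

```python
import math


def round_to_uncertainty(value, uncertainty):
    """Round value and uncertainty to the decimal place of the
    uncertainty's most significant figure."""
    if uncertainty <= 0:
        raise ValueError("uncertainty must be positive")
    # Position of the leading significant digit of the uncertainty.
    decimals = -int(math.floor(math.log10(uncertainty)))
    return round(value, decimals), round(uncertainty, decimals)


if __name__ == "__main__":
    # April 2022 anomaly from the post, with the +/-0.20 C figure from the thread.
    print(round_to_uncertainty(0.26, 0.20))
```

Applied to the April anomaly of +0.26 C with an uncertainty of 0.20 C, the convention would report 0.3 ± 0.2 C.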

The paper does say that the uncertainties are “estimates,” not rigorous calculations.

As presented, the numbers suggest that the 95% uncertainties are “estimated” to +/-0.005 deg C. I’m dubious of the claim.

I did a type A evaluation of uncertainty using UAH and RSS. It came in at ± 0.16 C which is consistent with the type B evaluation from Christy et al. 2003 and the monte carlo evaluation from Mears et al. 2011. I’ve not seen a publication or calculation following the GUM procedures that comes to a significantly different conclusion.

The ±0.20 C figure comes from Christy, Spencer, Norris, Braswell, and Parker. If there are concerns with the way it is reported I recommend contacting one of the authors. Dr. Spencer has been active on his blog today so you might get a response to your concern regarding significant figures if you post there.

You didn’t do a “type A” evaluation of uncertainty. How do I know? You don’t have the data and you don’t even know proper statistics nomenclature.

Christy et. al. (2003) did NOT conclude an error of ±0.20 C in that paper.

STOP pretending that you’re a scientist when you’ve clearly established that you’re a phony and an idiot.

meab said: “You didn’t do a “type A” evaluation of uncertainty. How do I know? You don’t have the data and you don’t even know proper statistics nomenclature.”

In accordance with GUM 3.3.5 I computed the positive square root of the variance of the difference of UAH and RSS. This gave me u = 0.12 C for the difference. This means u = sqrt(0.12^2 / 2) = 0.08 C using the root sum square rule for the individual anomalies. Therefore the expanded uncertainty at k = 2 is U = 0.16 C. I encourage you to download the data here and here and do the calculations yourself.
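The calculation described in this comment can be sketched as follows. This is a minimal illustration, assuming the two series carry equal and independent errors so that the variance of the difference splits evenly between them; the function names are hypothetical and no real UAH or RSS data is included.

```python
import math
import statistics


def diff_std(series_a, series_b):
    """Sample standard deviation of the pairwise difference of two series."""
    diffs = [a - b for a, b in zip(series_a, series_b)]
    return statistics.stdev(diffs)


def uncertainty_from_diff_std(s_diff, k=2.0):
    """Split the difference's standard deviation between two series
    (assumes equal, independent errors), then expand by coverage factor k."""
    u_single = s_diff / math.sqrt(2.0)
    return u_single, k * u_single


if __name__ == "__main__":
    # 0.12 C is the standard deviation of the difference quoted in the comment.
    u, expanded = uncertainty_from_diff_std(0.12)
    print(f"u = {u:.3f} C, U(k=2) = {expanded:.3f} C")
```

Starting from 0.12 C, the unrounded result is u of about 0.085 C and U of about 0.17 C; the 0.16 C quoted above follows from rounding u to 0.08 C before doubling.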

meab said: “Christy et. al. (2003) did NOT conclude an error of ±0.20 C in that paper.”

pg 627 column 2 paragraph 2:

meab said: “you’re a phony and an idiot.”

I’ll be the first to admit that I’m ignorant (aka idiot). And the more I learn the more I realize how ignorant (aka idiot) I actually am. Anyway, if you want to convince me that the expanded type A standard uncertainty using UAH and RSS observations is significantly different than 0.16 C then present a different value. We’ll discuss methods and their adherence to established statistical and uncertainty analysis procedures. To convince me that UAH monthly anomalies were not assessed as ±0.20 C you will need to show that the Christy et al. 2003 publication is a forgery and how it went unnoticed especially to Dr. Spencer and Dr. Christy for 20 years.

You calculated the difference between two sets of data with unknown uncertainty for the individual values in the data set?

That’s not a Type A uncertainty! If the values are inaccurate due to systematic errors, either in the sensors themselves or in the calculation algorithm used to convert radiance to temperature, then the Type A uncertainty of each is unknown.

If both sets of data have a 1C uncertainty they could still be within .01C of each other. Meaning *YOU* would see a .01C uncertainty in their difference but it wouldn’t tell you anything about how accurate the actual data is – i.e. their true uncertainty!
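The point about a shared bias can be illustrated with a small Python sketch. The numbers below are made up purely for illustration (they are not UAH or RSS values): both series carry the same 1 C offset from a hypothetical true value, yet they agree closely with each other.

```python
import statistics


def spread_of_difference(series_a, series_b):
    """Sample standard deviation of the pairwise difference of two series."""
    return statistics.stdev([a - b for a, b in zip(series_a, series_b)])


def mean_error(series, truth):
    """Average offset of a series from the (normally unknown) true values."""
    return statistics.mean([s - t for s, t in zip(series, truth)])


# Made-up values: a hypothetical truth plus a bias common to both series.
truth = [0.00, 0.10, 0.20, 0.30]
shared_bias = 1.0
noise_a = [0.01, -0.01, 0.01, -0.01]
noise_b = [-0.01, 0.01, -0.01, 0.01]
series_a = [t + shared_bias + n for t, n in zip(truth, noise_a)]
series_b = [t + shared_bias + n for t, n in zip(truth, noise_b)]

if __name__ == "__main__":
    print(f"spread of A - B: {spread_of_difference(series_a, series_b):.3f}")
    print(f"mean error of A: {mean_error(series_a, truth):.3f}")
```

The spread of the difference is a few hundredths of a degree while each series is off by about 1 C, so agreement between two datasets says nothing about an error they have in common.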

I agree that your quote is accurate, I was wrong about one thing, as you said, Christy et. al (2003) did claim this as their error in 2003. I apologize but, once again, your lack of knowledge has led you to believe in a completely bogus estimate.

What Christy et. al. did in the 2003 paper was to compare their satellite estimate to 3 (or 4 in one case) different SUBSETS of radiosonde data. As easily seen in Table 10, the different radiosonde data SUBSETS lead to distinctly different error estimates. While the monthly anomalies are fairly consistent across each SUBSET, the annual anomalies (radiosonde minus satellite) are HUGELY different, ranging from 0.04 to 0.20 C. That should have been your first clue that the error is not even close to what they claimed. They called out the RMS error computed from just 3 small data subsets as the error estimate. That’s not the error.

Now take a look at Figure 6 from a much more recent Christy paper (2018) found at:

https://www.tandfonline.com/doi/full/10.1080/01431161.2018.1444293

Just in case you can’t see it, it shows the (near) GLOBAL linear temperature trend from 1979 to 2015 calculated from a GLOBAL radiosonde data set (unadjusted), and 3 different estimates from analysis of the same satellite data (UAH, RSS, and NOAA).

The data show a HUGE difference in estimates, from .09 deg C/decade for the unadjusted radiosonde data (about the same for UAH) to .15 C/decade for RSS.
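To put the trend gap in absolute terms, here is a minimal Python sketch. It assumes both trends are exactly linear over 1979 to 2015, which is a simplification, and the function name is hypothetical.

```python
def accumulated_divergence(trend_a, trend_b, start_year, end_year):
    """Temperature gap (deg C) that opens up between two linear trends
    (deg C per decade) over the given span of years."""
    decades = (end_year - start_year) / 10.0
    return abs(trend_a - trend_b) * decades


if __name__ == "__main__":
    # 0.09 and 0.15 C/decade are the trend values quoted in the comment above.
    gap = accumulated_divergence(0.09, 0.15, 1979, 2015)
    print(f"{gap:.2f} C")
```

With the quoted trends, the two estimates drift apart by roughly 0.2 C over the 36 years.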

Something is VERY wrong.

One thing they found is that MANY changes had been made to the radiosondes – changes in equipment, changes in location of ascent, changes in the length of tether (balloon shading instrument package), etc. They needed to correct for all of these (stupidly done) changes. Using each satellite estimate, individually, they found the discontinuities where the radiosonde data suddenly jumped – this was used assuming the jumps were where (stupidly done) changes had been made and they adjusted the radiosonde data to take out the (stupidly done) changes. What did they get? HUGELY different adjusted radiosonde estimates depending on which satellite estimate was used to find the discontinuities.

What does this mean? Looking at the different estimates for the linear trend, there MUST be HUGE differences in the absolute temperature from the radiosondes depending on whether you use UAH, RSS, or NOAA satellite estimates to find the inconsistencies in the radiosonde data and correct for them. HUGE.

This means that you CAN’T use radiosonde data to calibrate the absolute temperature of the satellites and then use it to estimate the error.

You’re a dupe, BadWaxJob.

meab said: “Something is VERY wrong.”

Or very different. Notice that it is an apples to oranges comparison since different grids are used. A significant portion of the trend difference is because UAH and RSS are measuring different things.

meab said: “One thing they found is that MANY changes had been made to the radiosondes – changes in equipment, changes in location of ascent, changes in the length of tether (balloon shading instrument package), etc. They needed to correct for all of these (stupidly done) changes.”

This is true of most datasets including satellite, radiosonde, and surface station. They all have to make adjustments to correct for issues like these.

meab said: “there MUST be HUGE differences in the absolute temperature from the radiosondes depending on whether you use UAH, RSS, or NOAA”

Of course they have different absolute temperatures. They are measuring different things. As figure 6 shows that does explain some of the trend difference between UAH and RSS. Another difference is the fact that both UAH and RSS likely have systematic biases. Either way both are within the ±0.05 C/decade uncertainty that Christy et al. 2003 estimated.

meab said: “This means that you CAN’T use radiosonde data to calibrate the absolute temperature of the satellites and then use it to estimate the error.”

Radiosonde data is not used to calibrate the absolute temperature of the satellites. The MSUs are calibrated against the cosmic microwave background and the average of the platinum resistance thermometers on a hot target. Radiosonde data is used to assess error (Christy et al. 2003), but other methods are used as well. Spencer & Christy 1992 used the same method I did above, just with different source data, and Mears et al. 2011 used the Monte Carlo method.

Badwaxjob,

You purport to be able to do statistics, but you fail at grade-school arithmetic. The HUGE difference in the adjusted RADIOSONDE decadal trend, depending on which satellite ANALYSIS is used to “adjust” the radiosonde data (all satellite ANALYSES use the same data with UAH throwing out more bad data than RSS) adds up to a difference of over two tenths of a degree absolute over the 4 decades of the data all by itself. According to your phony error claim, that leaves very little error for common-mode errors (the TOTAL uncertainty that is common to all “adjusted” radiosonde estimates) and, likewise, almost no errors inherent to the satellite measurements themselves. There is simply no possibility that your original claim of accuracy is correct as it’s not even correct for relative errors.

In addition, your claim of being able to estimate the TOTAL error in UAH by computing the variance between RSS and UAH is LAUGHABLE. It shows a total ignorance of stationary systematic vs. random errors.

Finally, you are obviously completely missing the point, all these estimates are trying to arrive at the exact same thing – the long-term temperature trend. That’s why the satellite ANALYSIS must be and is calibrated to radiosonde data. However, the radiosonde data “adjustments” add up to as much as a THIRD of the computed trend all by themselves.

You should have figured out by now that the field of climate research is SORELY lacking in the estimation of errors. Most climate researchers can’t do even basic statistics. Neither can you.

meab said: “You purport to be able to do statistics, but you fail at grade-school arithmetic.”

I didn’t do the arithmetic. I had Excel do it.

meab said: “According to your phony error claim”

My claim is that the type A evaluation of uncertainty using UAH and RSS monthly anomalies is ±0.16 C, the type B evaluation provided by Christy et al. 2003 is ±0.20C and the monte carlo evaluation provided by Mears et al. 2011 is about ±0.2 C as well. That’s it. If there is any overreach in the interpretation of these figures it is coming from you and you alone. Don’t expect me to defend your misinterpretations.

meab said: “almost no errors inherent to the satellite measurements themselves”

Spencer & Christy 1992 say it is ±0.3 C. Zou et al. 2006 say it is ±0.28-0.42 K.

meab said: “There is simply no possibility that your original claim of accuracy is correct as it’s not even correct for relative errors.”

My type A calculation was 0.16 C. Christy et al. 2003 got 0.20 C and Mears et al. 2011 got about 0.2 C as well. What value do you get? How far off are we?

meab said: “your claim of being able to estimate the TOTAL error in UAH by computing the variance between RSS and UAH is LAUGHABLE.”

I didn’t claim it was the “TOTAL error”. I said it was the type A evaluation of uncertainty and nothing more. I (along with Bellman and others) have been trying to tell the WUWT community that we should not eliminate the possibility of a significant systematic error in the UAH dataset, but people on here are not interested in listening to us. Maybe they’ll listen to you?

meab said: “That’s why the satellite ANALYSIS must be and is calibrated to radiosonde data.”

Like I said before it’s not calibrated to radiosonde data. It’s calibrated to the cosmic microwave background and a hot target using platinum resistance thermometers onboard the satellites themselves.

meab said: “Most climate researchers can’t do even basic statistics.”

Then maybe WUWT and Monckton should not be advertising UAH as the be-all-end-all global average temperature dataset.

Now you’re admitting that the error is greater than you claimed (remember that we were discussing significant digits). They barely have one significant digit after the decimal point.

You obviously have no idea how UAH and others use the radiosonde data – they would be clueless how to convert the microwave soundings to a meaningful temperature without it. That doesn’t surprise me, clueless seems to be your perpetual state of being.

I’ll remind you that I’m the one who pointed out that Christy et al. 2003 say it is 0.20 C. That is in the ballpark of the type A evaluation I got of 0.16 C and the monte carlo evaluation of Mears et al. 2011, but significantly greater than the 0.01 C figure John Shotsky thought it was or the 0.005 C figure Clyde Spencer thought it was. And I’ve made it known on multiple occasions that I’m not going to eliminate the possibility that it is higher than 0.20 C either so I don’t know why you’re directing your concern at me as opposed to JS and CS. And what about Monckton? Are you going to voice your concern to him when he posts his monthly pause article?

Because you’re the fool who incessantly claimed 0.16 when that’s not even the appropriate error to consider.

Because you’re the fool who falsely claimed that the satellite ANALYSIS did not depend on the radiosonde data to calibrate microwave soundings (you don’t even know the difference between calibrating the instrument and calibrating the analysis).

Because you pose as a scientist when you clearly aren’t.

Because you often make false statements and, instead of being a stand-up guy and admitting that you were wrong, you try to get out of the hole you dug for yourself by standing on more of your own excrement.

Because you don’t understand that Monckton’s updates are valid. They clearly illustrate that, while CO2 is a factor, it cannot be the sole control knob on the climate as other factors can conspire to eliminate CO2’s influence for long times – even at the highest concentration of atmospheric CO2 ever measured.

That’s why.

meab said: “Because you’re the fool who incessantly claimed 0.16 when that’s not even the appropriate error to consider.”

Yeah. That’s the value I got. I’m certainly not claiming it is the be-all-end-all uncertainty; far from it. I’d rather go with the higher 0.20 C from Christy et al. 2003 myself. Anyway, what figure did you get when you did a type A evaluation using the UAH and RSS monthly anomalies? What do you think the appropriate error is?

meab said: “Because you’re the fool who falsely claimed that the satellite ANALYSIS did not depend on the radiosonde data to calibrate microwave soundings”

I’m not the one making that claim. It comes from Spencer and Christy 1992 which say the MSU instrument calibration is “totally independent of radiosonde data” in the UAH inaugural publication I cited above. Zou et al. 2006 provide more details and confirm that the calibration is done with the cosmic microwave background and a hot target with two platinum resistance thermometers onboard the satellites. There’s no mention of radiosonde data. So if a false claim has been made it is Spencer, Christy, Zou, Goldberg, Cheng, Grody, Sullivan, Cao, Tarpley and I suspect all of the engineers who worked on the MSUs over the years that are doing so; not me.

meab said: “Because you pose as a scientist when you clearly aren’t.”

I’m not a climate scientist. I’ve been open and transparent about that from day 1.

meab said: “Because you often make false statements and, instead of being a stand-up guy and admitting that you were wrong, you try to get out of the hole you dug for yourself by standing on more of your own excrement.”

I’m not aware of any mistakes that haven’t already been addressed. I’m certainly open to discussing them though. Can you quote something I said in this article that you feel is a false statement?

meab said: “Because you don’t understand that Monckton’s updates are valid.”

Are they? The gist I’m getting from your rhetoric here is that WUWT and Monckton should not be treating UAH as the be-all-end-all global average temperature dataset.

meab said: “They clearly illustrate that, while CO2 is a factor, it cannot be the sole control knob on the climate”

I’ve been trying to explain this to the WUWT community for months. Maybe you can help. John Tillman below was questioning this. It might be helpful if you commented as well. Maybe he’ll listen to you.

There is *NO* be-all-end-all global average temperature dataset!

UAH appears to just be the best one we have. It is not contaminated from adjustments to data 20 years in the past based on calibration adjustments made today. It is not contaminated from homogenization of stations with temperatures from other stations that simply are too far apart to provide adequate correlation in temperatures. Its uncertainty intervals are pretty much standard for all readings around the globe while the uncertainties of sea and land instruments vary all over the globe and are universally ignored by climate scientists and programmers trying to calculate a “global average temperature”.

You are addressing two different things. The first is the temperature datasets (i.e. reality) and the second is the climate models (i.e. tarot cards). The climate models are mostly tuned using CO2 as the main control knob.

When reality doesn’t jibe with the tarot cards then which should be questioned?

Jesus. They use radiosonde data in the ANALYSIS. NOBODY was talking about calibrating the microwave instrument.

I would NEVER compare UAH and RSS to get the error in UAH. They’re based on the same data, and doing this would NEVER give the total error. YOU ARE A COMPLETE IDIOT.

UAH has greater error than you falsely claimed. Be that as it may, it’s probably the best estimate.

You have lied many times.

meab said: “NOBODY was talking about calibrating the microwave instrument.”

meab said: “This means that you CAN’T use radiosonde data to calibrate the absolute temperature of the satellites”

That is what I’m responding to. You brought up the topic of calibration of the absolute temperature. The MSU is what measures the absolute temperature. It is not calibrated with radiosonde data. It is calibrated using the CMB and a hot target with platinum resistance thermometers. Refer to Spencer & Christy 1992 and/or Zou et al. 2006 for details.

meab said: “I would NEVER compare UAH and RSS to get the error in UAH.”

That’s fine. Nobody is going to force you to do so. But in accordance with the GUM and other established procedures for performing a type A evaluation I happen to choose that method. Spencer and Christy used a similar approach in the inaugural publication. There are other ways you can do a type A evaluation though. In another post I did it with the UAH grid following the procedure outlined in GUM section 4. I’ll be able to do the leg work for you if you describe how you would choose to do it. It might be interesting to see how your preferred method compares with my result, the Christy et al. 2003 result, and the Mears et al. 2011 result.

meab said: “UAH has greater error than you falsely claimed.”

Why beat around the bush? Lay it out. What is the UAH uncertainty? How much different is it than UAH/RSS observational type A evaluation of 0.16 C, the Christy et al. 2003 type B evaluation of 0.20 C, and the Mears et al. 2011 monte carlo evaluation of 0.2 C? And how do you arrive at that value whatever it may be?

Here’s the deal. This isn’t personal and I’m not picking on you. But unless you start providing hard data and peer-reviewed citations backing up your claims, I don’t have any other choice but to dismiss them. My challenge to you for the next post is to provide that elusive UAH uncertainty figure that you seem convinced exists, along with peer-reviewed citations backing it up.

Quit cherry picking from stuff you know nothing about.

From the GUM:

“3.3.5 The estimated variance u² characterizing an uncertainty component obtained from a Type A evaluation is calculated from series of repeated observations and is the familiar statistically estimated variance s² (see 4.2). The estimated standard deviation (C.2.12, C.2.21, C.3.3) u, the positive square root of u², is thus u = s and for convenience is sometimes called a Type A standard uncertainty. For an uncertainty component obtained from a Type B evaluation, the estimated variance u² is evaluated using available knowledge (see 4.3), and the estimated standard deviation u is sometimes called a Type B standard uncertainty. Thus a Type A standard uncertainty is obtained from a probability density function (C.2.5) derived from an observed frequency distribution (C.2.18), while a Type B standard uncertainty is obtained from an assumed probability density function based on the degree of belief that an event will occur [often called subjective probability (C.2.1)]. Both approaches employ recognized interpretations of probability.”

Please note – “series of repeated observations”. Let me say once again, multiple measurements of the same thing.

Now let’s look at 4.2, it says:

“4.2 Type A evaluation of standard uncertainty 4.2.1 In most cases, the best available estimate of the expectation or expected value µ_q of a quantity q that varies randomly [a random variable (C.2.2)], and for which n independent observations q_k have been obtained under the same conditions of measurement (see B.2.15), is the arithmetic mean or average q̄ (C.2.19) of the n observations:”

Please note: “for which n independent observations q_k have been obtained under the same conditions of measurement”

Now let’s look at B2.15:

“B.2.15 repeatability (of results of measurements) closeness of the agreement between the results of successive measurements of the same measurand carried out under the same conditions of measurement ”

Please note:

“same measurand carried out under the same conditions of measurement”

The GUM does not let you “average” measurements and their uncertainties when you are measuring different things with different devices. And, before you say they use the same satellites, they do not. Neither do they use the same algorithms or they would have exactly the same results.
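For reference, the Type A evaluation that GUM section 4.2 describes for repeated observations of a single measurand can be sketched in Python as below. The readings in the usage line are made-up illustrative values, and the function name is hypothetical.

```python
import math
import statistics


def type_a_evaluation(observations):
    """Return (mean, experimental standard deviation, standard uncertainty
    of the mean) for n repeated observations of the same measurand."""
    n = len(observations)
    if n < 2:
        raise ValueError("at least two observations are required")
    mean = statistics.mean(observations)
    s = statistics.stdev(observations)   # uses the n - 1 divisor
    u_mean = s / math.sqrt(n)            # standard deviation of the mean
    return mean, s, u_mean


if __name__ == "__main__":
    # Made-up repeated readings of one quantity under the same conditions.
    readings = [10.1, 10.3, 10.2, 10.4, 10.0]
    mean, s, u_mean = type_a_evaluation(readings)
    print(f"mean = {mean:.2f}, s = {s:.3f}, u(mean) = {u_mean:.3f}")
```

The sketch only applies when the observations are of the same quantity under the same conditions, which is the requirement being argued over in this exchange.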

Until you admit to yourself that measurement uncertainty is a real thing and that multiple measurements of the same thing is needed to isolate the uncertainty that exists you will continue to cherry pick things that don’t apply. You basically continue to reveal your background as a mathematician and not someone who has dealt with real world measurements.

It should also be pointed out that the measurement of radiance is a function of a number of factors. You may calibrate your sensor against space but that doesn’t mean it will give you values adequate to estimate temperature when pointed at the ground.

I personally would rather just see the radiance values themselves plotted with no mathematical fiddling at all to try and create a “temperature” value from them. If radiance is a true proxy for temperature then the conversion to temperature would not be needed, just evaluate the radiance trends!

Maybe WUWT should stop publishing UAH data until they specify the uncertainty for each figure. Too many people here think that in publishing the monthly data to 2dp, it means they are claiming it’s ±0.005°C.

People who have a particular interest in any given topic can study it in depth as they choose.

Alternatively, they can bring up the issue here in the comments, as Clyde Spencer has.

Halting publication of data reeks of censorship, the unstated aim, perhaps?

After we stop the publishing, then we can burn the books that have already been published too.

It’s a great idea. I wonder why no one ever thought about doing this before.

I’m going to suggest this to the new Brandon Ministry of Truth department.

I didn’t say UAH should be banned from publishing their data, I was just sarcastically wondering if this blog shouldn’t announce it every month when it clearly upsets so many here.

If you think WUWT not publicizing data is akin to book burning I assume you complain every month they fail to mention the results from RSS and GISS.

UAH is supposed to be the only data set everyone here is supposed to trust, yet every month I seem to be the one who has to say that I don’t think Dr Spencer, et al, are manipulating the data, or fraudulently claiming to know the actual mean to within 0.005°C.

A corollary to this discussion is that of adjustments. I have been told over and over again that adjustments, regardless of intent, are fraud. In fact, some on here go as far as to say it is prosecutable criminal fraud. And that anyone publishing, promoting, analyzing, or otherwise using the data is complicit in the fraud and is equally culpable.

Here are the adjustments UAH has made.

Year / Version / Effect / Description / Citation

Adjustment 1: 1992 : A : unknown effect : simple bias correction : Spencer & Christy 1992

Adjustment 2: 1994 : B : -0.03 C/decade : linear diurnal drift : Christy et al. 1995

Adjustment 3: 1997 : C : +0.03 C/decade : removal of residual annual cycle related to hot target variations : Christy et al. 1998

Adjustment 4: 1998 : D : +0.10 C/decade : orbital decay : Christy et al. 2000

Adjustment 5: 1998 : D : -0.07 C/decade : removal of dependence on time variations of hot target temperature : Christy et al. 2000

Adjustment 6: 2003 : 5.0 : +0.008 C/decade : non-linear diurnal drift : Christy et al. 2003

Adjustment 7: 2004 : 5.1 : -0.004 C/decade : data criteria acceptance : Karl et al. 2006

Adjustment 8: 2005 : 5.2 : +0.035 C/decade : diurnal drift : Spencer et al. 2006

Adjustment 9: 2017 : 6.0 : -0.03 C/decade : new method : Spencer et al. 2017

I’m curious what the WUWT audience thinks of this. Do you think Dr. Spencer and Dr. Christy have committed fraud? Do you think WUWT is complicit since they promote the data?

You are comparing apples and oranges. Revising past thermometer data based on current calibration values *is* a fraud because you don’t know the calibration error of the past readings. Revising CALCULATION algorithms used for changing radiance to temperature is not the same thing at all. That kind of change applies to all temperatures thus calculated.

bdgwx, altering actual data and adjusting algorithms used to analyze data without reasonable justification are both fraud. Neither of the fraud examples is the case for any of the items you mentioned.

I agree. I don’t think either UAH or WUWT is engaging in fraud here. You, Bellman, a few others, and I seem to be in the minority though. I was more interested in soliciting feedback from those who think adjustments regardless of reason or justification are always fraudulent.

Diurnal drift and orbital decay are inevitable and must be accounted for. The methods for doing this are pretty well defined. Of course, any particular level of adjustment can be debated about whether that is truly the best adjustment when you get into the complexity of radiance and temperature and altitude and the tiny atmospheric drag and oblate earth effects, etc. that make Dr. Spencer’s science difficult.

Not adjusting for them would be very bad science.

Even in the “vacuum” of outer space when flying to other planets, adjustments need to be made because things don’t fly exactly where it’s calculated that they should go.

Also, the instruments themselves have to be adjusted for as their temperatures, the satellite temperatures, etc. change.

Yep. There are good reasons for making adjustments. Unfortunately the majority of the WUWT audience does not accept them. They are also blissfully unaware that UAH makes 0.307 C/decade worth of adjustments. It seems like every month we see a post or two saying that UAH is the only dataset the poster will trust because they don’t make adjustments.
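The 0.307 C/decade figure appears to be the sum of the magnitudes of adjustments 2 through 9 in the list above (adjustment 1 has no stated effect). That reading is an assumption, but the arithmetic can be checked with a short Python sketch. The dictionary labels are shorthand for the list entries, and the net figure is shown alongside only for comparison.

```python
# Trend effects (C/decade) of UAH adjustments 2 through 9 as listed in
# this thread; adjustment 1 is omitted because its effect is "unknown".
adjustments = {
    "B: linear diurnal drift": -0.03,
    "C: residual annual cycle (hot target)": +0.03,
    "D: orbital decay": +0.10,
    "D: hot target temperature dependence": -0.07,
    "5.0: non-linear diurnal drift": +0.008,
    "5.1: data criteria acceptance": -0.004,
    "5.2: diurnal drift": +0.035,
    "6.0: new method": -0.03,
}

# Sum of absolute values: how much adjusting was done in total.
total_magnitude = sum(abs(v) for v in adjustments.values())
# Signed sum: how much the adjustments moved the trend on balance.
net_effect = sum(adjustments.values())

print(f"sum of magnitudes: {total_magnitude:.3f} C/decade")  # 0.307
print(f"net effect:        {net_effect:+.3f} C/decade")      # +0.039
```

Simply adding the listed effects treats them as if they all applied to the same trend, which is a simplification, since each figure comes from a different version and a different record length.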

You have no idea what the “majority” of the WUWT audience is.

This is because they do not necessarily post here so the size has never been measured.

So once again, you make assumptions and then cast those assumptions as fact.

That’s your second mistake. Your first mistake is that you believe your assumptions are valid.

Do you think the majority of the WUWT audience supports adjusting data? Will you defend justified adjustments the next time someone posts here challenging them?

The majority of the WUWT audience supports making those adjustments that can be supported with actual data. Willy nilly applying corrections to past data based on current calibration adjustments is *NOT* adequately supported by actual data. Neither is adjusting station data using other station data whose accuracy is not known and whose correlation to the station is determined by the distance between the two stations – especially where a distance of 50 miles or more means the data are *not* adequately correlated.

“Unfortunately the majority of the WUWT audience does not accept them.”

Stop saying this. It’s just not true. It’s only an indication that you simply don’t understand what you are talking about.

Calibrating an instrument in May, 2000 and using the calibration adjustment determined at that point in time to “correct” data from that instrument for the entire interval from 1900 to 2000 is simply fraudulent. You have NO IDEA what the calibration adjustment for that instrument was in 1900! Or 1940. Or 1980. Or 1990!

That is what UNCERTAINTY intervals are meant to provide – an estimate of the interval the true value would be in at a point in time. It is why it is so *important* to propagate uncertainty intervals properly instead of just ignoring them!

The standard deviation of sample means is *NOT* equivalent to the total uncertainty of the mean of the entire data set. The uncertainty of the mean is determined from the propagation of the uncertainty of the individual elements. And when those elements are measurements of different things you simply cannot assume that all errors are random and that they cancel – which is what most climate scientists seem to do.

Based on a couple of posts here I think there may be a misunderstanding about these 9 itemized adjustments, especially the 1st one. It is listed as “simple bias correction” (Christy et al.’s words; not mine) but it includes all of the corrections applied by the MSU processing outside of anything UAH has done and UAH’s own corrections described in Spencer & Christy 1992, which I would not describe as “simple” at all. These adjustments include the interpolation between the CMB and hot target, regression derived limb darkening correction, deep convection removal, spatial infilling, temporal infilling, satellite homogenization, diurnal heating cycle, annual heating cycle, deconvolution, etc. In other words, UAH performs most of the same types of adjustments as the surface station datasets plus some that even they don’t. I just want to make sure this is clear and that there is no confusion or misunderstanding. Refer to the publications cited above for the details.

How many of these are actual “adjustments” to radiance measurements and how many are changes in the calculation function used to convert the radiance measurements to a temperature? If you are changing the calculation function then it would apply to *all* measurements, not just some, and it is not an “adjustment” to the actual measurements themselves.

Perhaps a little thought experiment might help. I have two cameras, an old Nikon and new Minolta. I used the Nikon to take pictures from 1970 through 1990. I’ve used the new Minolta from 1990 through 2010. I find out that the Minolta has a slight hue offset so I use Photoshop to go back and correct all the pictures I’ve taken with the Minolta. Now, do I also correct all the pictures I took with the Nikon for the same offset? A climate scientist of today would. I wouldn’t. What would you do?

The limb darkening correction is an interesting one. The MSU data processing uses the procedure defined in Smith et al. 1974 which is a 3rd order citation off of the Grody et al. 1983 citation in Spencer & Christy 1992. Anyway, this procedure utilizes directly observed soundings to form the basis of the adjustment. But they do not use soundings that are temporally colocated with the MSU observation. Instead they use select balloon and rocket soundings from the 1970’s. In other words they use data from the 1970’s to make adjustments to MSU observations from at least 1979 to 1998 (I believe AMSU uses a different technique).

The grid processing is also interesting. Spencer & Christy 1992 describe an infilling procedure in which empty grid cells are filled in using a simple linear interpolation (distance based) up to 37.5 degrees away. If empty grid cells remain at the monthly level they do a temporal interpolation up to 2 days away to fill in the remaining cells.

The satellite homogenization or merging is interesting too. Like the homogenization used in surface station datasets UAH must also correct the changepoint differences caused by instrument package changes from one satellite to the next. Additionally they must also deal with a time-of-observation issue caused by the orbital timing of different satellites. They deal with this by computing the annual cycle in 1982 and using that as a basis with an additional intersatellite sub-correction to make the ToB bias correction for all data past and future. The details can be found in Spencer & Christy 1992.

BTW…just because I’m pointing out all of the different adjustments UAH utilizes does not mean that I think UAH is an unsuitable option for global average temperature measurements. I just find it interesting that the dataset employs adjustments that are not significantly unlike the adjustments the surface station datasets employ and which are widely criticized here. In fact, an argument can be made that the adjustments in the satellite data are far more intrusive than anything the surface station datasets are doing. My point is only that it seems there is a dichotomy in WUWT’s promotion of UAH and demotion of everything else based on the erroneous belief that UAH does not employ adjustments, grid infilling, etc. like what the others do.

Bellman, I assume you are proposing that for the various surface temperature datasets and, especially, for the UN IPCC CliSciFi climate models as it relates to their “projections” for each and every climate metric.

As far as I’m aware this blog does not publish any other data set on a monthly basis, and if any other data sets are mentioned it’s usually to accuse them of being corrupt.

UAH is assumed to be trustworthy, but still gets accused of being wrong whenever a monthly anomaly isn’t in line with expectations.

But my main point is that, unlike most other data sets, UAH does not give any assessment of the uncertainty in their monthly figures. The best anyone can find is a rough estimate made for earlier versions. Personally, I don’t think this matters too much, but others here get very worked up about it.

Who gets worked up about it? I think UAH measures something other than surface air temperatures six feet above the ground. I know that their basic measurement is not temperature. As to orbital adjustments, those are pretty standard since we have plenty of experience. Do I question their algorithms? No, they have adjusted them as needed and apply them equally. Do I think with all the variables of clouds, particulates, etc., that they can get better than 0.12 uncertainty? I don’t know. My guess is that it is larger than that.

As to thermometer readings recorded as integers, anomalies with 2 or 3 digit decimal point precision, that is a joke!

“Who gets worked up about it?”

If you scroll up, you’ll see the specific comment I was having fun with. E.g.

Then, if you remember back a month, there were a few people saying it was impossible to know what the trend was unless you knew the uncertainty on the monthly values, and that the true rate of change could be multiple degrees per decade.

Anyone that believes UAH is anything more than a metric is mistaken. It is a metric that can be used to track trends in radiance values. Converting those radiance values to temperatures is only useful in providing a measurement unit people are used to seeing. Actual radiance values are affected by all kinds of factors, e.g. humidity, wind, particulates, temperature inversions, trace gas concentrations, clouds, etc. All of which are not tracked closely enough to be used in any conversion algorithm – so the algorithms get tuned just like the climate models do.

Does this mean UAH has uncertainties in the temp values it calculates? OF COURSE! So does everything else. Pick your poison and stop complaining about the choice of poison others choose to use!

Who claims UAH is something other than a metric?

If you are claiming the anomalies reported by UAH do not actually have any correspondence with temperature change, then you are accusing its creators of a serious misrepresentation.

“Pick your poison and stop complaining about the choice of poison others choose to use!”

I’m not sure now if I’m being criticized for pointing out there are uncertainties in UAH or for underestimating what they are. I prefer not to pick any one particular poison. I think it’s useful to have a variety, both to illustrate some of the uncertainties, but also to see that they can’t all be too wrong.

The only relationship between radiance and temperature is a calculated one. None of the satellites measure temperature directly using a thermometer. Even a thermometer doesn’t measure heat directly, it is only a proxy. Actual heat content is a function of humidity and pressure as well as temperature.

It simply doesn’t matter how well the calculation is done, it will always be missing important factors, just like the climate models do.

Don’t put words in my mouth. You are simply too uneducated on the subject to actually pontificate on it. The point is that UAH has just as much uncertainty as any other temperature data set. Any claims otherwise are simply not serious. Those uncertainties propagate into the anomalies just as they do with surface thermometer readings and anomalies calculated from them.

That’s not what you are doing. You are trying to cast UAH as unsuitable for use when in fact it is the temperature data set that suffers least from unjustified adjustments to past measurements. If you don’t like UAH then pick a different one for you to use, but at least justify to yourself why you think it is better even if you can’t justify it to everyone else.

The fact is that *all* measurements have uncertainty. Learn it, live it, love it.

“You are trying to cast UAH as unsuitable for use …”

I’m not sure when you think I’ve done that. It was only a couple of months ago I was being accused of being a UAH groupie.

I use UAH all the time here. When it’s claimed that satellite data proves surface data is wrong, I’ll argue that satellite data can also have problems and you shouldn’t assume one is necessarily better than the other, but that doesn’t mean you reject any satellite data set out of hand. As I’ve said before, I think it’s best to look at a range of data. As you say, all measurements have uncertainty.

The current Monckton pause period (longest trend <= 0 C/decade) sits at 91 months starting in 2014/10.

The current doubled warming period (longest trend >= +0.26 C/decade) sits at 184 months starting in 2006/11.

The current peak warming period is +0.34 C/decade starting in 2011/02.
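For anyone wanting to reproduce this kind of figure, here is a minimal Python sketch of one way to do it. It assumes the periods are defined as the longest span ending at the most recent month whose ordinary least-squares trend meets the threshold; the exact method used for the numbers above is not stated. The function names, the 24-month minimum length, and the synthetic series are all hypothetical.

```python
def ols_slope(values):
    """Ordinary least-squares slope of values against 0, 1, 2, ... (per step)."""
    n = len(values)
    x_mean = (n - 1) / 2.0
    y_mean = sum(values) / n
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    return sxy / sxx


def longest_trend_period(anomalies, threshold, at_most=True, min_months=24):
    """Longest span ending at the last month whose trend meets the threshold.

    anomalies : monthly anomalies in deg C, oldest first
    threshold : trend in C/decade to compare against
    at_most   : True  -> trend <= threshold (a "pause" when threshold is 0)
                False -> trend >= threshold
    min_months: shortest span considered (an assumption; must be >= 2)
    Returns (start_index, n_months), or None if no span qualifies.
    """
    n = len(anomalies)
    for start in range(0, n - min_months + 1):  # earliest start first
        segment = anomalies[start:]
        trend = ols_slope(segment) * 120.0      # per month -> per decade
        qualifies = trend <= threshold if at_most else trend >= threshold
        if qualifies:
            return start, len(segment)
    return None


# Synthetic example: 60 months of warming, then 40 months of slight cooling.
series = [i / 59 for i in range(60)] + [1.0 - 0.1 * i / 39 for i in range(40)]
result = longest_trend_period(series, threshold=0.0, at_most=True)
if result is not None:
    start, n_months = result
    print(f"pause: {n_months} months, starting at index {start}")
```

Because the start month is chosen to maximise the length, the result is sensitive to the end point and will move around as new months are added.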

When I was in high school I went on a field trip and wound up cherrypicking in apple trees. The girls loved it.

My fishing lake is still frozen. I sure could use your global warming.

A clear indication that the next ice age is starting.

Maybe, one of the coldest Aprils ever despite all the CO2.

Monckton pause since 2014/10

Interestingly “NSIDC Sea Ice Daily” graph shows more Arctic sea ice since 2014, compared to present day, as well.

Last time I posted the link, it was unduly long in moderation….so lookitupurslf…..

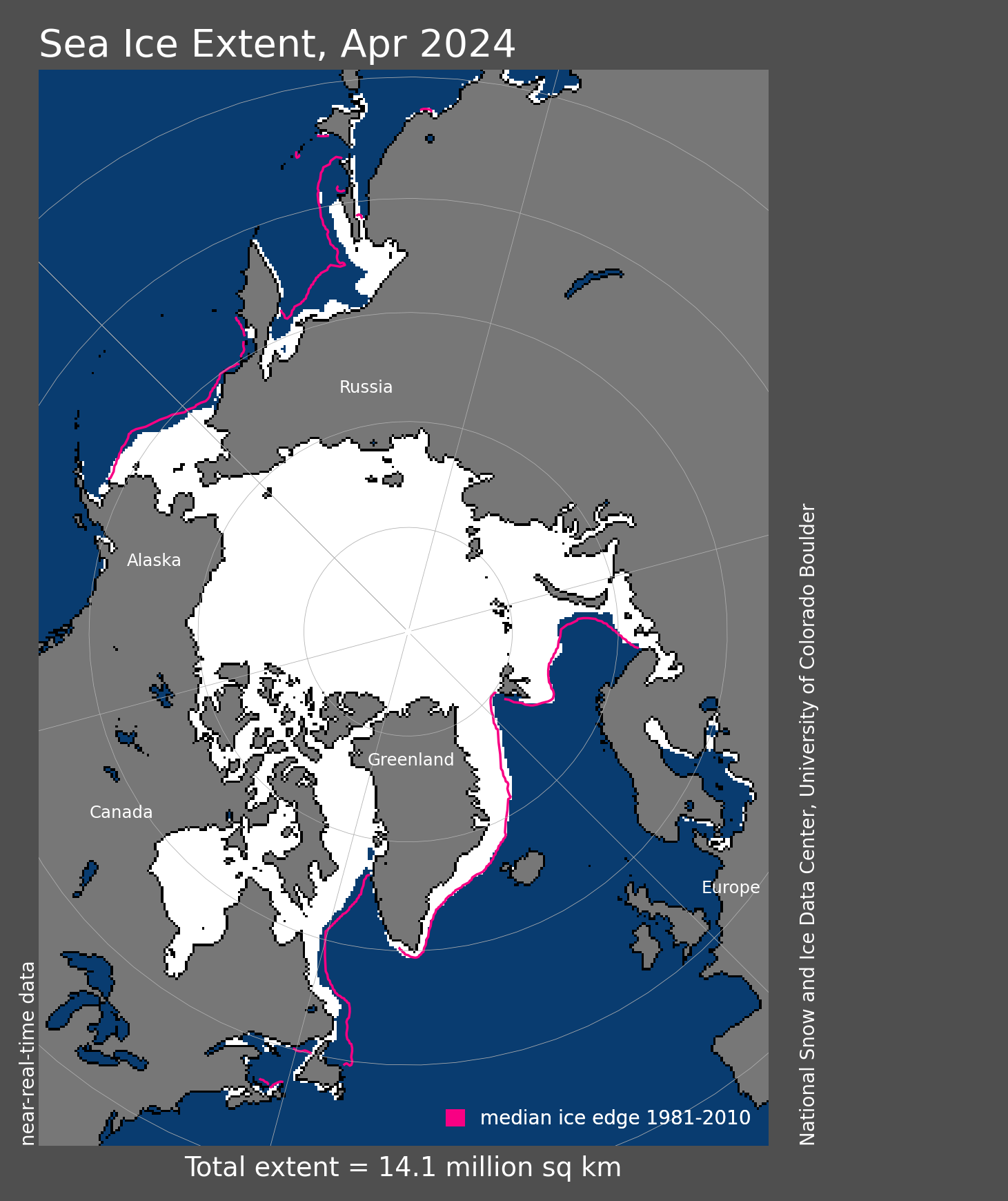

Northern sea ice extent in April 2022.

This is just a nit that I thought was interesting. Why is the eastern side of the Kamchatka Peninsula ice covered but the west side is not?

Smaller waves?

Shallower water, for starters.

Earth has cooled for over six years since February 2016. How is this possible, if CO2 be the control knob on climate?

When is the jig up on the CACA hoax? If six years be insufficient, then when? Until the next Super El Nino in another 18 years?

CO2 isn’t the control. It is only a control knob. Never mind that the Earth actually has warmed over the last six years. The ocean took up about 48 ZJ of energy from 2016 through 2021 [Cheng et al. 2022]. It is the atmosphere that hasn’t warmed because there are many factors like the switch from an ENSO+ to an ENSO- phase that are in play.

Please post evidence that CO2 has any measurable effect on temperatures.

IPCC AR5 WG1 pg 721-730. It’s by no means an exhaustive list of evidence, but it is reasonably comprehensive. I encourage you to read the whole report as well. It is only 1500 pages and is a pretty easy read (relatively speaking) so it won’t take much time to get the high level overview. You can then spend a lifetime (or as much time as you want) taking the deep dive into the 9200+ direct lines of evidence and the hundreds of thousands of 2nd and 3rd order lines of evidence at your discretion.

The old “the heat is hiding in the oceans” meme. An oldie but goldie. Just as lame in 2022 as in 2012 and 2002.

If more salubrious plant food in the air isn’t heating the atmosphere, how does it heat the seas? And why those seas farthest from where the air is supposedly warming?

It’s not just CO2. It’s any polyatomic molecule that has the ability to heat the climate system. It does so by impeding the transmission of energy to space. As a result there is an energy imbalance placed on the climate system consistent with the 1st law of thermodynamics. And because the climate system has a finite heat capacity it is forced to warm because of the imbalance. The warming takes place in the climate system as a whole, but due to numerous energy transfer processes the excess energy gets dispatched to the various heat reservoirs with a lot of variability. Over the course of decades it averages out to about 90% in the ocean, 5% in the land, 4% in snow/ice, and only 1% in the air. Because the air has a very low heat capacity it experiences the most variability with many months and years having a net loss of energy. But because nature desires a high entropy state the air eventually equilibrates with the warmer system overall. See Schuckmann et al. 2020 for more details.

Some of the usual suspects from your prior link, to include Cheng, and just as tendentious. Indeed more so, since so many more perps.

Anti-physical mumbo jumbo, with added gobbledygook from Trenberth, the original “hiding in the oceans” purveyor.

Can you post a link to the alternate peer reviewed publication regarding the same subject matter that you prefer?

CACA spewers claim “the” or “principal” control knob:

https://www.airclim.org/acidnews/co2-principal-climate-control-knob-study-confirms#:~:text=Carbon%20dioxide%20(CO2)%20is,2%20as%20important%20greenhouse%20gasses.

In fact, it’s so negligible as to be best ignored, thanks to its great beneficial effects.

I read the Lacis et al. 2010 publication. First…The authors are not discussing the monthly variability of the TLT temperature. They are discussing the reasons for the difference between Earth’s nominal global mean surface temperature Ts and the effective mean global temperature Te. Second…The authors do not say CO2 is the only agent responsible for Ts – Te anyway. They only say it, along with the other non-condensing GHGs, is the primary or key agent for the difference. Furthermore the authors call out many other agents that play a role in the Ts – Te difference including but not limited to water vapor, clouds, sea ice cover, ice sheets, orbital dynamics, solar dynamics, volcanos, rock weathering etc. They even call out O2 and N2 which aren’t even GHGs. And they discuss climatic change events like the PETM which were not driven by CO2 but can have a significant effect on the final Ts.

Do those temperatures have a traceability to standards?

Part of the issue is that satellite readings are not measurements of temperature. They are measurements of radiance from parts of the atmosphere. Temperatures are then calculated from the radiance values using an algorithm. How closely these calculations actually provide actual temperatures is impossible to physically verify meaning their uncertainty can’t be physically determined either. Any claim of uncertainty is actually based on the standard deviation of sample means of the calculated temperature values. Meaning no one actually knows just how accurate the temperatures truly are. If there are systematic errors in the calculation algorithms all the sample means may be close together, i.e. a small standard deviation of the sample means, but the accuracy of those means could be off by some unknown value – which is the *actual* uncertainty.
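The distinction drawn here between the spread of sample means and the accuracy of those means can be illustrated with a small simulation. This is a sketch under stated assumptions only: the true value, the bias, and the noise level are invented numbers, and nothing in it models the actual UAH processing.

```python
import random
import statistics

random.seed(42)  # fixed seed so the run is repeatable

TRUE_VALUE = 250.0     # hypothetical true temperature, K
SYSTEMATIC_BIAS = 0.5  # hypothetical algorithm bias, K (same in every reading)
NOISE_SD = 1.0         # hypothetical random noise per reading, K


def sample_mean(n):
    """Mean of n simulated readings sharing the same systematic bias."""
    readings = [
        TRUE_VALUE + SYSTEMATIC_BIAS + random.gauss(0.0, NOISE_SD)
        for _ in range(n)
    ]
    return statistics.fmean(readings)


# 500 samples of 100 readings each.
sample_means = [sample_mean(100) for _ in range(500)]

spread = statistics.stdev(sample_means)                # how tightly the means cluster
offset = statistics.fmean(sample_means) - TRUE_VALUE   # how far they sit from truth

print(f"standard deviation of sample means: {spread:.3f} K")
print(f"offset of the means from the true value: {offset:.3f} K")
```

The sample means cluster tightly (a spread of roughly NOISE_SD / sqrt(100)), yet they all sit about SYSTEMATIC_BIAS away from the true value. The spread on its own gives no hint that the offset exists.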

I thought Christy used radiosonde balloons to calibrate/verify their calculations/model. Obviously that would only test it at set altitudes/locations; on the other hand, if they match in some places, there is not much reason to suspect they don’t in general.

The radiosonde data are not true values; all they can do is compare “trends”. Plus the radiosonde data has to be massaged into something that resembles the 0-10km temperature profile convolved with the response curve of the satellites’ detectors (microwave sounding units).

They frequently use radiosonde balloons for comparisons, but the calibration is done with the cosmic microwave background and a hot blackbody target. The hot blackbody target has two platinum resistance thermometers that are averaged to “reduce the noise and improve the accuracy” of the temperature reading [Zou et al. 2006].

This planet is on fire. The end is “Nye”.

I think results for April are wrong. It feels cooler here. I don’t know where it got warmer.

Henry in South Africa

Same in Oregon. Record late snow in Portland.

And hail on the blossoms. Today. Again. Coldest and longest winter in 50 years. Only two sunny days this year so far. Wet, too. Ground is saturated into May (six months and counting) with no end in sight.

Ice ages consist of short hot summers and long cold winters. Just what you get when earth’s orbit elongates per the big Milankovitch cycle.

Same here in NE Oregon. In Union County we’ve had a continuous week of cold and snow at the middle of the month. Now we have had successive days of wind and rain. NWS is calling for a warm up to the mid 60s by Thursday.

Gonna find my late wife’s CD of Carmen Miranda and “Tropical Heatwave” 😁.

I’m in Pendleton. Saturday Cattle Baron BBQ cookoff was interrupted by a violent thunderstorm, leading to fisticuffs between contestants.

Proving once again that global cooling leads to hardship, civil strife, and violence, whereas warming brings life, harmony, and tasty briskets.

Leaving the Little Ice Age for the Tri-tip Age is a good thing.

More BBQ, more plant food in the air.

Same here in Southern Ontario Canada – April was like an extra March.

I guess it shows in the UAH regional data: while the Northern Hemisphere is +0.35C and the Arctic up 0.45C, the US48 was -0.26°C on average.

Here in Vancouver BC daily high temperatures have been below average (other than the odd day or two) since our one week “heat dome” early last summer.

Credible data are tropics: -0.04 and USA48: -0.26 C.

It also went cooler in Holland. Perhaps Roy must check the calibrations?

It really does begin to look that way, that’s for sure

Coolest April on record in Spokane (Eastern Washington) since records began in 1881.

Pre-monsoon temperatures across India and Pakistan tend to skew the global average up a little. It averages out – when the monsoon breaks we’ll likely be experiencing an upswing in temperatures. You can always guarantee that when one part of the globe is cooler, another part is warmer – hence the reason why they use a global average and not regional datasets.

And the average tells you nothing about the climate of anyplace that actually exists!

But Gavin Schmidt says the only temperatures that mean anything are those where you live. At least that was his response to using remote sensing for atmospheric temperatures instead of the corrupted surface estimates.

When the Arctic “shares” its colder air with us, usually it gets our warmer air in return.

Currently quite cool in the UK, too.

Strange how these high temperatures claimed by Alarmists don’t seem to coincide with where people live?

UK mean temperatures for April were +0.22°C above the 1991-2020 average. Close to the UAH global anomaly.

Now where was it I saw this recent topping out pattern starting to form…..

NOAA SST-NorthAtlantic GlobalMonthlyTempSince1979 With37monthRunningAverage.gif (880×481) (climate4you.com)

Looking at those ocean temps a non-climate-scientist scientist or engineer would conclude that the huge increase in CO2 emissions, especially in the developing world, since 2000 have stopped the warming trend.

But I guess good eyesight and logic are not required for climate scientists.

It’s a real bitch when the North Atlantic won’t do what the UN IPCC CliSciFi models tell it to do.

In other news, it appears that non-government adjusted temperatures may be peaking from their Little Ice Age lows during the early 21st Century. Who knows? Certainly not government funded CliSciFi practitioners based on their track record.

The North Atlantic and cycles in general have either been moved to the ignore list or it does carry real weight and that forces even more of the full court media press that rivals the land war in Europe for attention and airtime.

SW Ohio cooler than normal April. Just sayin’.

Over 2.5+ degrees below normal average April temps.

Andrew

4th coldest April in MN

It’s far worse than expected ! Unprecedented !

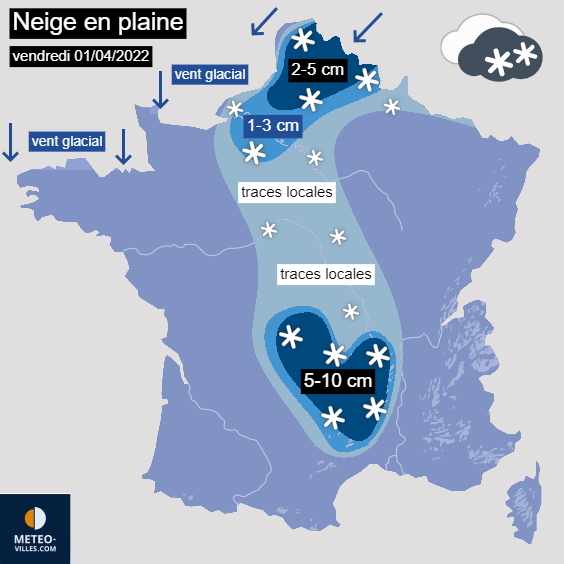

Main climatic events of the year: Storm, flash flood, heat wave after heat wave, followed by cold wave.

No, it was not from a recent global warming scare story. It was the year 1800, from notable weather events in the archives of France.

https://www.meteo-nice.org/chronique

So what about the main weather event of the first quarter of 2022

..a bit limp, what would you expect in the freezing cold weather.

Interesting to note how cool temps in Australia have been all summer long. Is that indicative of a shift in Southern Hemisphere temps?

During La Niña +0.6 in Australia unreliable.

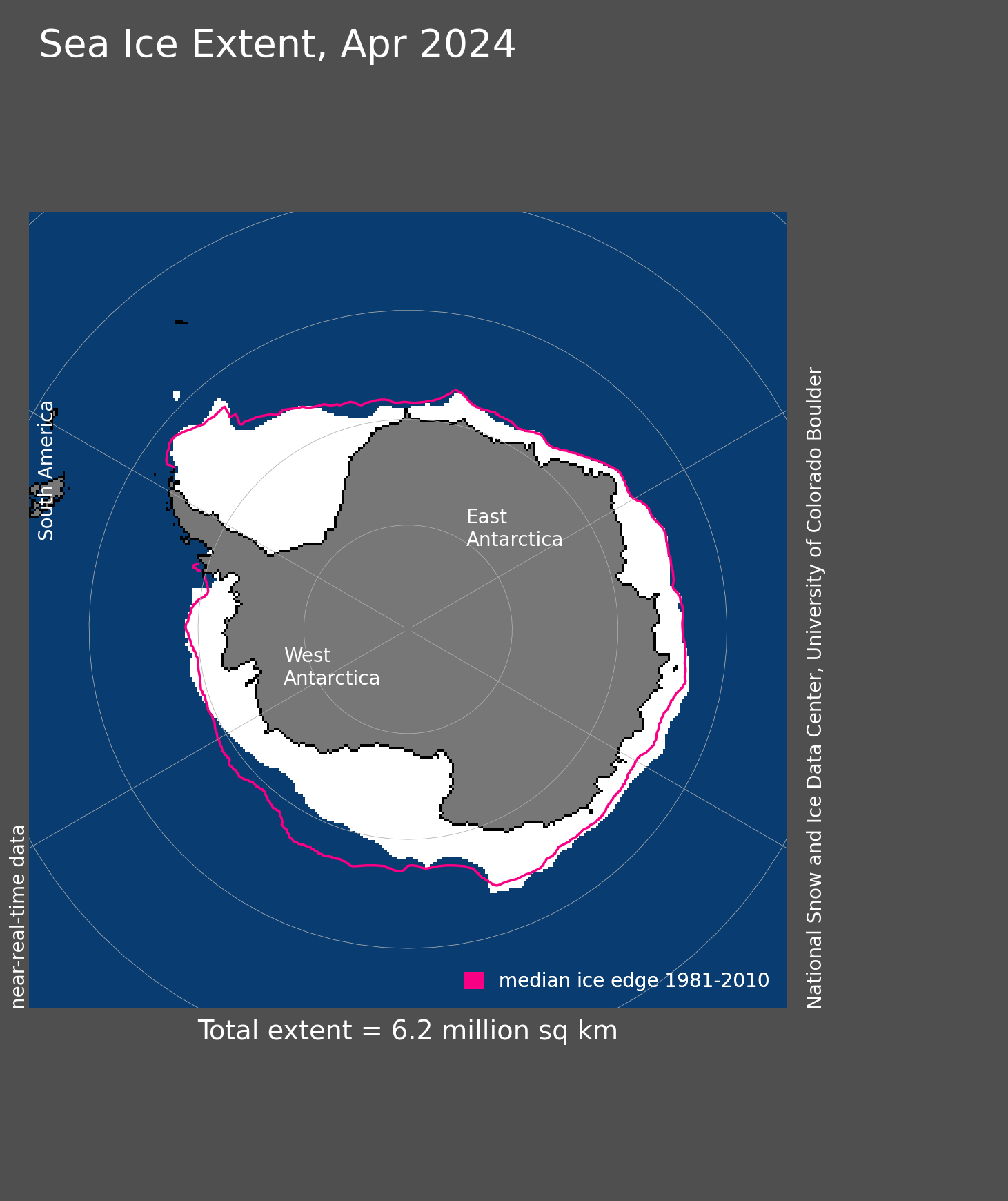

Southern sea ice extent in April 2022.

Not on the west coast of Australia. Same as previous strong La Ninas – continued hot conditions all through summer, and it’s still warm as we go into Autumn/Fall. Still wearing shorts and T shirts.

A major snowstorm in western Nebraska.

The Solar Activity – Global Cooling Trackers – April . 2022

https://climatesense-norpag.blogspot.com/

Quote

1. The Solar Activity – Global Temperature Correlation.

https://blogger.googleusercontent.com/img/a/AVvXsEjbSnv27PK5uV7c0Ma7QRapv7GTZzY9Vj-edBzo4-PGCqMgI436-pZKAJyNWKAArON6oLdvaOa6-XZI7JWxkNUFXA9TLmu09PGbHcanacgzHZPDhmPT51T1alwqM8mTTdnFpygOMjn3TnfMNORzad001xsTOwbtHMtDXinlXYjVTxI-rJXnWXv6iAz8tw=w655-h519

Fig.1. Correlation of the last 5 Oulu neutron count Schwab cycles and trends with the Hadsst3 temperature trends and the 300 mb Specific Humidity. (28,29) see References in parentheses ( ) at https://climatesense-norpag.blogspot.com/2021/08/c02-solar-activity-and-temperature.html

The Millennial Solar Activity Turning Point and Activity Peak was reached in 1991. Earth passed the peak of a natural Millennial temperature cycle in the first decade of the 21st century and will generally cool until 2680 – 2700.

Because of the thermal inertia of the oceans the correlative UAH 6.0 satellite Temperature Lower Troposphere anomaly was seen at 2003/12 (one Schwab cycle delay) and was +0.26C (34). The temperature anomaly at 2022/03 was +0.26C (34). There has been no net global warming for the last 20 years. The Oulu Cosmic Ray count shows the decrease in solar activity since the 1991/92 Millennial Solar Activity Turning Point and peak. There is a significant secular drop to a lower solar activity base level post 2007+/- and a new solar activity minimum late in 2009. The MSATP at 1991 correlates with the MTTP at 2003/4 with a 12/13 +/- year delay. In Figure 1(5) short term temperature spikes are colored orange and are closely correlated to El Ninos. The Hadsst3gl temperature anomaly at 2037 is forecast to be +0.05.

2. Arctic Sea Ice.

Arctic sea ice reached its maximum extent for the year, at 14.88 million square kilometers (5.75 million square miles) on February 25. The 2022 maximum was the tenth lowest in the 44-year record.

Fig.2 Arctic Sea Extent (NSIDC)

Arctic sea ice extent on 05/01/22 (Lt Blue) was 13,571,000 sqkms the twelfth lowest on record, and 150,000 sqkms above the 2011- 2022 average

3.Arctic Sea ice volume.

Average Arctic sea ice volume in March 2022 was 21,700 km^3. This value is the 6th lowest on record for March, about 2,200 km^3 above the record set in 2017. See Data at: http://psc.apl.uw.edu/research/projects/arctic-sea-ice-volume-anomaly/#:~:text=February%202022%20Monthly%20Update,mean%20value%20for%201979%2D2021.

4.Sea Level

Fig. 3 Sea Level https://climate.nasa.gov/vital-signs/sea-level/

It can now be plausibly conjectured that a Millennial sea level peak will follow the Millennial solar activity peak at 1991/92. This may occur at a delay of one half of the fundamental 60 year cycle, i.e. at 2021/22. Fairbridge and Sanders 1987 (18). The rate of increase in sea level from 1990 – present was 3.4 millimeters/year. The net rate of increase from Sept 13th 2019 to Jan. 03 2022 in Figure 3 was 2.3mm/year. If sea level begins to fall by end 2022 the conjecture will be strengthened.

5. Solar Activity Driver and Near term Temperatures

An indicator of temperatures for 5 – 6 months ahead is provided by the SOI graph

Fig.4 30 Day moving SOI

Sustained positive values of the SOI above +7 typically indicate La Niña (cooling) while sustained negative values below −7 typically indicate El Niño (warming). Values between +7 and −7 generally indicate neutral conditions. Values at April 12 2022 indicate possible cooler than normal NH summer temperatures until Sept/Oct. 2022. The 2022 minimum sea ice extent should be greater than in 2021.

http://www.bom.gov.au/climate/enso/#tabs=Pacific-Ocean&pacific=SOI
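The SOI rule of thumb quoted above can be written as a small lookup. This is only a sketch of the stated thresholds (+7 and −7); the function name `classify_soi` and the choice to treat a value of exactly ±7 as neutral are assumptions made for illustration, and the input is assumed to be a sustained (not single-day) SOI value.

```python
def classify_soi(sustained_soi: float, threshold: float = 7.0) -> str:
    """Label a sustained SOI value using the +7 / -7 rule of thumb.

    Hypothetical helper: values above +threshold are treated as La Niña,
    values below -threshold as El Niño, everything else as neutral.
    """
    if sustained_soi > threshold:
        return "La Niña"   # sustained positive SOI: cooling phase
    if sustained_soi < -threshold:
        return "El Niño"   # sustained negative SOI: warming phase
    return "neutral"       # between -7 and +7 (inclusive, by assumption)


if __name__ == "__main__":
    # Illustrative inputs only, not observed SOI readings.
    for value in (12.0, 3.5, -9.0):
        print(value, classify_soi(value))
```

The thresholds describe typical behaviour only, so a label from this function is an indication rather than a declaration of ENSO state.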

Basic Science Summary

The global temperature cooling trends from 2003/4 – 2700 are likely broadly similar to or probably somewhat colder than those seen from 996 – 1700+/- in Figure 5.(.3) From time to time the jet stream will swing more sharply North – South. Local weather in the Northern Hemisphere in particular will be generally more variable, with occasional more northerly extreme heat waves, droughts and floods in summer, and more southerly unusually cold snaps and late spring frosts in winter.

The IPCC-UNFCCC “establishment” scientists deluded first themselves, then politicians, governments, the politically correct chattering classes and almost the entire UK and US media into believing that anthropogenic CO2 and not the natural Millennial solar activity cycle was the main climate driver. This led governments to introduce policies which have wasted trillions of dollars in an unnecessary and inherently futile attempt to control earth’s temperature by reducing CO2…

Equal 4th warmest April in the UAH record. The same as 2020.

Top ten warmest Aprils

The two warmest Aprils were both during very strong El Niños. This year we are in a moderate La Niña.

Arctic sea ice was the highest on yesterday’s date since 2013. And that was close.

But Arctic sea ice extent minimum has been in an uptrend since 2012, and flat since 2007. The natural fluctuation has switched to icier mode.

The extent of sea ice in April in the Northern Hemisphere.

Is the anomaly in the Arctic +0.45 C?

Are records kept for regional averages over the same time period? I’m curious about how, say, North America compares to Europe.

No, it would spoil all of the fun and games if they kept regional datasets – one global average to keep the warming firmly in place and hide the fudge factor in the weeds.

You might want to click on the link to the UAH data. Loads of regional data

The warmest weather is in the Indian Ocean.

La Nina related winds and currents driving the atmospheric and oceanic heat into the Indian Ocean? I guess I could ask Bob Tisdale.

Are there any sea surface CO2 correlates with the hot Indian Ocean areas to suggest increased outgassing there?

It’s a bit worrying as it’s going UP under the influence of a protracted La Niña which is expected to segue into 2023

I don’t know about worrying, but it is interesting. I just looked and if this La Nina persists for only 5 more months it will be the first time in the UAH period of record in which 3 consecutive years of a single season were in the La Nina phase.

In April, a new wave of cold water reached the equatorial Pacific Ocean from the south.

Wind-driven upwelling?

La Niña.

http://www.bom.gov.au/archive/oceanography/ocean_anals/IDYOC007/IDYOC007.202205.gif

In April, there was a negative stratospheric temperature anomaly above 60 S, which portends a strong polar vortex in the south and very cold temperatures in Antarctica.

I’ve been searching for my fir-lined codpiece.

I just find it very interesting how different REGIONS shift and share the burden of “warming”. And then they take a break and cool down. It has never been every region that has shown an increase in temperatures at the same time. I would think that every region showing warming at the same time would be a prediction of global change climate warming.

So there is no global warming, it is all a lie. Got it.

One of the 5 warmest Aprils on record, despite many months of naturally cooling La Nina conditions. It’s like there’s something other than natural influences forcing the warming. No one knows what that could possibly be though.

Not where I live. 4th coldest April evah.

Another monthly meaningless statement. Would you like to include the 30s, 40s and 50s in that ”record”? You are seeing something that is not there dude.

The UAH April record only starts in 1979, so I can’t include the 30s, 40s or 50s. According to the GISS global surface temperature record, the warmest April that occurred during the 1930s, 40s and 50s was +0.19C above the anomaly base (1951-1980). Every single April since 1986 has been warmer than that.

Here we go again with the multiple times adjusted proxy measurement of part of the atmosphere, mysteriously out of step with similar proxy measurements

0.5 C in 45 years. Unless this graph heads south in a serious way I won’t be convinced CO2 is a non event.
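As a rough check on the figure in the comment above, the post's stated linear trend of +0.13 C/decade since January 1979 can be multiplied out to April 2022. This is a minimal sketch that assumes the trend is exactly linear over the whole period; the function name `warming_from_trend` is hypothetical.

```python
def warming_from_trend(trend_per_decade: float,
                       start_year: int, start_month: int,
                       end_year: int, end_month: int) -> float:
    """Total change implied by a constant linear trend between two months."""
    # Whole months elapsed between the two dates.
    months = (end_year - start_year) * 12 + (end_month - start_month)
    # 120 months per decade.
    return trend_per_decade * months / 120.0


if __name__ == "__main__":
    # +0.13 C/decade from January 1979 to April 2022 (values from the post).
    total = warming_from_trend(0.13, 1979, 1, 2022, 4)
    print(f"{total:.2f} C")  # prints "0.56 C"
```

Under that assumption the implied change is a little over half a degree across roughly 43 years, which is in line with the "0.5 C in 45 years" estimate.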

Anyway, the warming is slight, and beneficial, as is the CO2, but it looks to me like it IS causing warming, as it should (to the average temp).