October 1st, 2021 by Roy W. Spencer, Ph. D.

The Version 6.0 global average lower tropospheric temperature (LT) anomaly for September, 2021 was +0.25 deg. C, up from the August, 2021 value of +0.17 deg. C.

REMINDER: We have changed the 30-year averaging period from which we compute anomalies to 1991-2020, from the old period 1981-2010. This change does not affect the temperature trends.

The linear warming trend since January, 1979 is +0.14 C/decade (+0.12 C/decade over the global-averaged oceans, and +0.18 C/decade over global-averaged land).

Various regional LT departures from the 30-year (1991-2020) average for the last 21 months are:

YEAR MO GLOBE NHEM. SHEM. TROPIC USA48 ARCTIC AUST

2020 01 0.42 0.44 0.41 0.52 0.57 -0.22 0.41

2020 02 0.59 0.74 0.45 0.63 0.17 -0.27 0.20

2020 03 0.35 0.42 0.28 0.53 0.81 -0.96 -0.04

2020 04 0.26 0.26 0.25 0.35 -0.70 0.63 0.78

2020 05 0.42 0.43 0.41 0.53 0.07 0.83 -0.20

2020 06 0.30 0.29 0.30 0.31 0.26 0.54 0.97

2020 07 0.31 0.31 0.31 0.28 0.44 0.26 0.26

2020 08 0.30 0.34 0.26 0.45 0.35 0.30 0.25

2020 09 0.40 0.41 0.39 0.29 0.69 0.24 0.64

2020 10 0.38 0.53 0.22 0.24 0.86 0.95 -0.01

2020 11 0.40 0.52 0.27 0.17 1.45 1.09 1.28

2020 12 0.15 0.08 0.22 -0.07 0.29 0.43 0.13

2021 01 0.12 0.34 -0.09 -0.08 0.36 0.49 -0.52

2021 02 0.20 0.32 0.08 -0.14 -0.66 0.07 -0.27

2021 03 -0.01 0.13 -0.14 -0.29 0.59 -0.78 -0.79

2021 04 -0.05 0.05 -0.15 -0.28 -0.02 0.02 0.29

2021 05 0.08 0.14 0.03 0.06 -0.41 -0.04 0.02

2021 06 -0.01 0.31 -0.32 -0.14 1.44 0.63 -0.76

2021 07 0.20 0.33 0.07 0.13 0.58 0.43 0.80

2021 08 0.17 0.26 0.08 0.07 0.33 0.83 -0.02

2021 09 0.25 0.18 0.33 0.09 0.67 0.02 0.37

The full UAH Global Temperature Report, along with the LT global gridpoint anomaly image for September, 2021 should be available within the next few days here.

The global and regional monthly anomalies for the various atmospheric layers we monitor should be available in the next few days at the following locations:

Lower Troposphere: http://vortex.nsstc.uah.edu/data/msu/v6.0/tlt/uahncdc_lt_6.0.txt

Mid-Troposphere: http://vortex.nsstc.uah.edu/data/msu/v6.0/tmt/uahncdc_mt_6.0.txt

Tropopause: http://vortex.nsstc.uah.edu/data/msu/v6.0/ttp/uahncdc_tp_6.0.txt

Lower Stratosphere: http://vortex.nsstc.uah.edu/data/msu/v6.0/tls/uahncdc_ls_6.0.txt

Prediction..

This winter the NH will get down to 2008 – 12 levels.

Better divert those biomass cargo ships to Australia to load with high quality thermal coal.

Somewhere I read, the summer and September are comparable to 1962, where the 3. coldest winter since the 40ies followed.

Well if you are talking about 11-12. that is a non winter, one of the warmest on records

actually a blend of 08-09,10-11 might be pretty good as 09-10 was an el nino and so that would be an antilog. The 11-12 winter was an anti winter

Heavens!

Thanks for this info about warmth surprisingly cooling the head of some Coolistas.

But as do many commenters here, you seem to privilege the view on your good old CONUS.

Depending on where Mike lives, he might be right, because the winters of 2010/12 were, for example, anything but warm in Germany.

I don’t have my desktop here, otherwise I could show the anomaly differences between CONUS and Western Europe at that time.

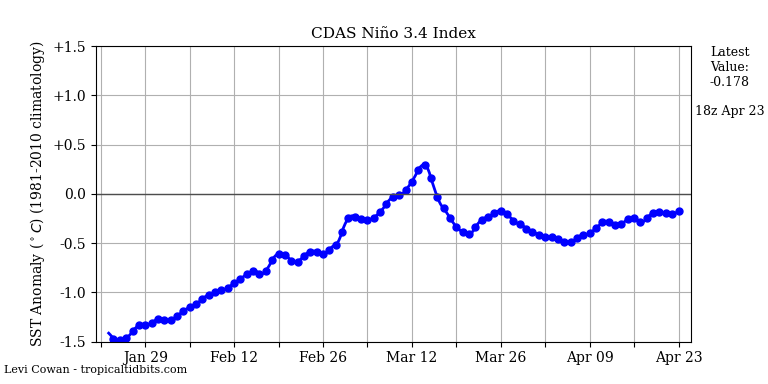

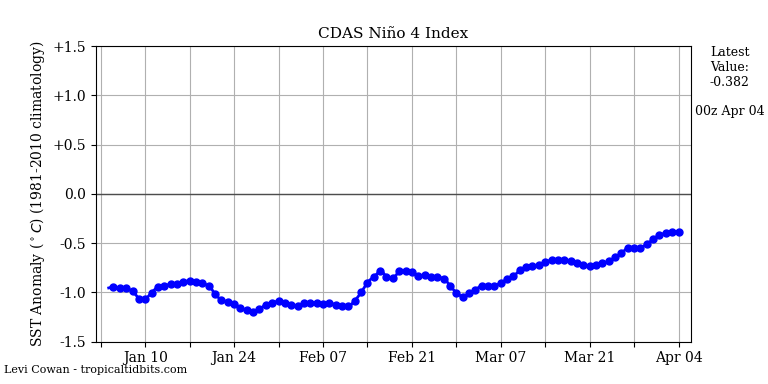

This La Nina looks locked-in.

– Major cold in four months (and sooner).

– Energy systems in the UK and Germany compromised by intermittent green energy schemes.

– The “”Perfect Storm”, brewed by idiot politicians

– What could possibly go wrong, with these energy-imbeciles in charge?

– Extreme cold and energy shortages. This will end badly.

I tried to warn them in 2002 and 2013, but they would not listen.

Regards, Allan

For the Southern Hemisphere I’m predicting extreme heat in 4 months or sooner. 37.6C at my place yesterday – 10C above the long-term average – a record for the date – so sooner rather than later.

Pardon my skepticism but what is your location?

10ks from Beerburrum.

http://www.bom.gov.au/climate/dwo/IDCJDW4010.latest.shtml

Loydo

Beerburrum weather station only since 2000.

Totally pointless.

Several older nearby stations show hottest October day 23rd 1988.

Looks like I live about 40 minutes away from Loydo.

It was nice weather yesterday and a welcome change coming out of the rather cool winter.

I’m in eastern Oz too. Yes, there were three hot days in a row, which was superb because it was during the long weekend. Even in lockdown, my family could have lot’s of fun in the pool, even my missus who never goes in because the water is too cold for her. Now it’s cool again, so I’m the only one who goes in the pool. It seems I need to get a pool heater.

What a perfect location for you Loy’

Beerburrum we’re told by Wikipedia means Green Parrot Mountain

You are a Green parrot and you dump a mountain of “stuff” here.

*SQUAWK! Gettin’ hot!

Loydo want a cracker

She hails from the country experimenting with Fascism, socialism and fantasy “science” at the moment. They seem intent on alienating their entire citizenry.

Beerburrum is a small town near the Queensland Sunshine Coast just north of Brisbane ….

http://www.bom.gov.au/places/qld/beerburrum/forecast/

I thought it was a strange-accented way of saying Birmingham – you know, a little bit South of Glasgow!

come on man glasgow is way north of birmingham, got the whole state of tennessee in between them…..lol

They are succeeding, the ring leaders being a majority of Premiers of State Governments under the influence of the Union controlled leftist Australian Labor Party (not Labour).

A recent blast of northerly winds, from the equator, caused that surge in Northern Australia. Meanwhile Southern NSW is running at a -1C anomaly for 2021.

“-1C anomaly for 2021”

Source?

Ignore the troll she will find some butchered BOM using acorn 2 trash data set to support “the cause”. You can argue this junk all day long but did it change anything for anyone in Australia … nope. Noone noticed or cared but if we all say don’t worry Loydo we will be net zero by 2050 she will be happy (just remember to cross your fingers) 😉

But you’re ok with it if Peter just made it up?

He might as well just make it because that is about what BOM data is. The bottom line is the data is so tortured who cares beside you a few mates. If you need evidence of how little it matters at this stage the PM isn’t going to even attend COP26 in Glasgow. Now all the little greentards will moan to Biden and he will ask Australia to attend and the PM will make a last minute trip to appease Biden.

http://www.bom.gov.au/climate/current/month/nsw/summary.shtml#recordsTminAvgLow

From your link:

So he linked minimum you linked maximum and so the made up numbers game rolls on ;=)

The lows made the average lower than normal.

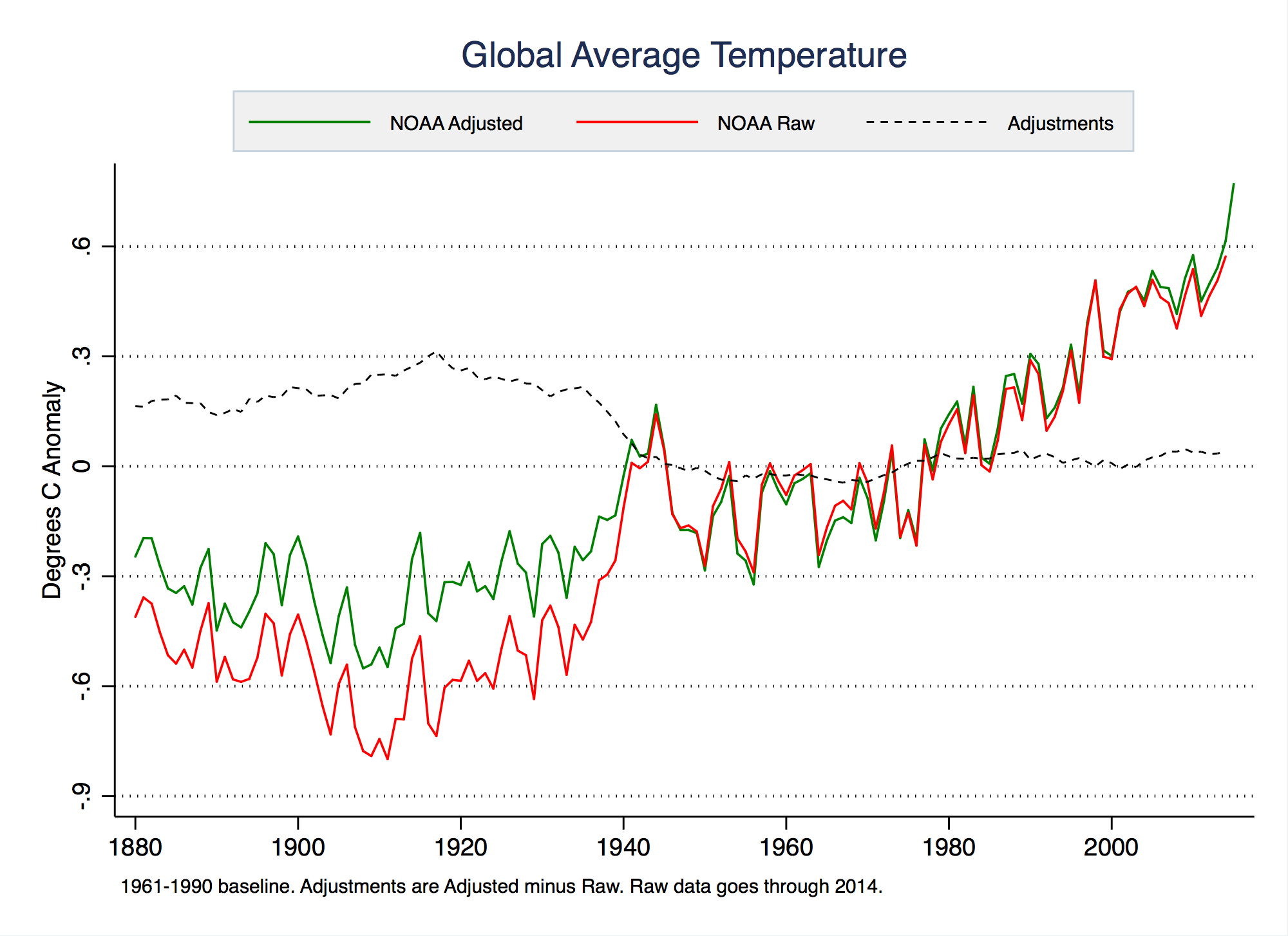

Who on earth uses the 1961-1990 average? That included the end of the global downturn from 1940-1976. We are continually told climate is the average of the past 30 years. So, compared with the past 30 years, is it getting warmer (as predicted) or colder (also as predicted)?

That’s how averages work. They include higher and lower temperatures then mash the temperature into an amorphous number that conveys no information.

“I BLAME GLOBAL WARMING.”

IN THESE DAYS OF “CATASTROPHIC GLOBAL WARMING,” THE SOUTH POLE JUST SUFFERED ITS COLDEST ‘WINTER’ IN RECORDED HISTORY

October 4, 2021 Cap Allon

With an average temperature of -61.1C (-78F), the South Pole has just logged its coldest 6-month spell ever recorded (April-Sept).

Hopefully you and Stokes can use one less blanket for winter in your efforts to fight global warming.. climate change..I mean climate extinction.

Carry on stormtroopers

Mmm, you stick with Elder MacRae.

And you still think Man didn’t land on the moon…one trick pony indeed.

That’s about the fifth or sixth time you’ve repeated this silly lie, I guess that makes you the pony.

All you do is repeat lies.

Such as?

Pretty much everything you post on here.You’re not worth the money you’re getting.

Hiding behind baseless slurs? Name one.

Like that imaginary warmth hiding somewhere in the oceans?Try again,this time maybe show proof the earth is not cooling.And this time no doctored graphics and links that only suit the warmists narrative and are manipulated into oblivion.

THE GREENS’ PREDICTIVE CLIMATE AND ENERGY RECORD IS THE WORST

The ability to predict is the best objective measure of scientific and technical competence.

Climate doomsters have a perfect NEGATIVE predictive track record – every very-scary climate prediction, of the ~80 they have made since 1970, has FAILED TO HAPPEN.

“Rode and Fischbeck, professor of Social & Decision Sciences and Engineering & Public Policy, collected 79 predictions of climate-caused apocalypse going back to the first Earth Day in 1970. With the passage of time, many of these forecasts have since expired; the dates have come and gone uneventfully. In fact, 48 (61%) of the predictions have already expired as of the end of 2020.”

To end 2020, the climate doomsters were proved wrong in their scary climate predictions 48 times – at 50:50 odds for each prediction, that’s like flipping a coin 48 times and losing every time! The probability of that being mere random stupidity is 1 in 281 trillion! It’s not just global warming scientists being stupid.

These climate doomsters were not telling the truth – they displayed a dishonest bias in their analyses that caused these extremely improbable falsehoods, these frauds.

There is a powerful logic that says no rational person or group could be this wrong for this long – they followed a corrupt agenda – in fact, they knew they were lying.

The global warming alarmists have a NO predictive track record – they have been 100% wrong about every scary climate prediction – nobody should believe them.

The radical greens have NO credibility, make that NEGATIVE credibility – their core competence is propaganda, the fabrication of false alarm.

I keep track of your lies. Take off that blanket. And we really did land on the moon…

Of course Mann landed on the moon! It’s where he got his tree ring proxy data showing the hockey stick!

Haven’t seen Stokesy for ages he must be locked down and unable to get to the internet cafe.

Haven’t seen Stokesy for ages. He wrote on his blog that he would be out of action for a while. Hopefully he will be back in 2-3 months.

Well he did tell us how he fights climate change by turning his thermostat down really low during winter and using extra blankets. Maybe he is waiting for it to warm up?

Pretty weak. He plainly, repeatedly, told us, here and in Moyhu, that he had to take care of someone who’s ailing. He found time for 1-2 comments in the interim.

Kindly respect that…..

Why do I have to respect that? A true eco warrior would fight on we are talking about the end of the world 🙂

Is the smiley face a way of saying something without taking responsibility for it?

Without speaking for Mr. Stokes, this was never his view. For example, he explicitly minimized the damage from sea level rise. Things can be real bad, and practically remediable without the straw man manufactured denier hyperbole…

I take full responsibility for everything I post the smile because I find your post stupid given he is a serial troll. I certainly will not speak for Nick as he redefines everything it’s hard to even understand what his point is half the time.

If you are right he will be back in a couple of months to continue his trolling so I get a few weeks of less trolls .. what is not to enjoy about that.

“…..as he redefines everything it’s hard to even understand what his point is half the time.”

I have a different view of his comments, but I will double down on my intent to avoid speaking for him. I hope that we avoid cheap shotting him in his absence, but – predictably – it appears that is now starting….

If only we could cheap-shot some big oily boob in his absence.

His point is to win the argument, no matter how much he has to twist definitions and logic.

https://www.merriam-webster.com/dictionary/sophistry

How does one “minimize the damage” when no damage exists? That’s some special kind of trick.

+1 Rory

So you are predicting Summer will have hot weather?

Well yeah, it was in response to Crystal Balls MacRae predicting cold weather for Canada.

Might be warm up the top right but rather expletive deleted cool down the bottom left. So send us some of your above long term average heat.

It sounds like you are predicting that the southern hemisphere will surprisingly experience Summer.

”For the Southern Hemisphere I’m predicting extreme heat in 4 months or sooner. 37.6C at my place yesterday – 10C above the long-term average – a record for the date – so sooner rather than later.”

Predict what you like. The continent will be cooler than average this summer.

Any ”extreme heat” will have nothing to do with anything.

It is called weather lolly.

No show us how you came to forecast such temperatures?

Allan I have spent 15 years complaining to the BBC, sending endless emails to David Cameron Teresa May and my Conservative MP to whom I delivered an A4 file box with hundreds of pages of evidence which he looked at feigned interest but the response was I have stopped a number of wind farms but it has done nothing for my political career.

The most dangerous word in the English language is “belief” you cannot argue with a belief and politicians like the media have a vested short term interest in scaring people witless to sell product and this is true “The whole aim of practical politics is to keep the populace alarmed (and hence clamorous to be led to safety) by menacing it with an endless series of hobgoblins, all of them imaginary.”— H. L. Mencken US editor (1880 – 1956).

I have repeated arguments with my Son based upon my belief that when politicians say they came into politics because they wanted to make peoples lives better then proceed with witless policy initiatives based upon their personal beliefs and prejudices unsubstantiated by evidence which they wouldn’t understand because to the vast majority numbers represent difficulty. Recently I presented evidence of the nonsense of climate crisis to some friends who immediately recoiled at all of the numbers graphs and text in horror at the quantum. Politicians are only ordinary folk who like the idea of high salary combined with their infantile do good ideology – socialism bordering on communism – AOC is a classic example. Instead of doing what they say in public was the reason they went into politics instead of abiding by their own propaganda once in a position of power they immediately use that position to advance their own ideological agenda.

Climate change is just another leftist bandwagon and Boris Johnson has jumped on board because labour and Liberals are the same so it blurs the image at elections and what politicians want is to be in power at whatever the cost to the environment or to the electorate. Like the markets its all short term and as Margaret Thatcher said “socialism is fine until it runs out of other peoples money”. AOC doesn’t understand the quantum of American debt and Boris is a feckless intellectually diminished thick witted idiot. If millions die from hypothermia because of the way in which the media functions with a dysfunctional government Boris will always make sure its always some one else’s fault.

How many “unexpected” mortaiity events due to exposure to cold (no heat at home) might occur? Does anyone even dare to address that?

I keep wondering if this is how society starts to crumble.

Well, civilization was fun while it lasted, wasn’t it?

I am not sure how the whole southern hemisphere managed to be +0.33 when Antarctica had the coldest temperature ever last month.

It was 0.5 colder than the previous low record in 1976.

Antarctica only accounts for 5% of the SH area.

Land Area?

Here’s the UAH map for September

Not sure how this looks like Antarctica had the coldest month ever.

The upward trend continues smoothly.

Downward trend extends to five years and seven months. With another La Niña in the works, the cooling will extend at least into next year. With SC 25 looking weak, probably longer than that, despite still increasing plant food in the air.

Downward trend extending.

Yes, long term the very mild upward trend of about 0.12C per decade continues. This trend, measured from the coldest point of the last hundred years, is milder than the trend from the end of the 17th century. That means it is both well within natural range and far below the trend predicted by every model based on the hypothesis that changes in CO2 are a primary driver of temperature.

+42X10^42

Linear thinking again

Well, I thought your comment was funny.

Spock said something like it first in reference to Khan.

Yes it does. It’s called the baseline Alex.

What is normally the hottest month on a world scale is it when the earth is closest to the sun perihelion ?

how strong is this effect

Perihelion occurs around mid-January, Aphelion occurs around mid-July, & from I have read over the years, it makes damned all difference to the Earth’s temperature!!! For my own biased views, the big shiny ball thingamagigybob in the sky is what counts, it is a massive fusion reactor after all, converting hydrogen into helium constantly!!! Sceptically yours, AtB.

It is a good question and the answer will surprise most. Perihelion last occurred before the austral summer solstice in 1585. !2,500 years earlier, perihelion occurred on the boreal summer solstice. So from 12,500 years ago to 8,000 years ago the glaciers melted and the sea level rose 140m.

The beginning of the current precession cycle 400 years ago means that perihelion will move toward the boreal summer solstice over the next 12,000 years. That means the current phase of glaciation is under way.

In the present era, January is the month of maximum solar radiation over the oceans. That means the water cycle is in top gear and the ocean surface temperature is at its MINIMUM as cool water is being drawn from cooler depths due to the high level of surface evaporation and transport of water to land. Over the next 12,000 years the sunlight will be increasing over the northern hemisphere causing the water cycle to eventually spin up in June and July resulting in higher rainfall in the boreal summer and higher snowfall during the boreal autumn and winter. The snow will begin to accumulate as the next phase of glaciation sets in.

According to Berkeley Earth these are the 1951-1980 averages in degrees C.

Jan: 12.94 ± 0.03

Feb: 12.45 ± 0.02

Mar: 13.06 ± 0.02

Apr: 13.98 ± 0.02

May: 14.95 ± 0.03

Jun: 15.67 ± 0.03

Jul: 15.95 ± 0.03

Aug: 15.78 ± 0.03

Sep: 15.19 ± 0.03

Oct: 14.26 ± 0.02

Nov: 13.24 ± 0.03

Dec: 12.50 ± 0.03

So July is the warmest month of the year.

That’s because 2/3 of the Earth’s land mass is in the Northern Hemisphere. Land heats (and cools) faster than the ocean because of the ocean’s huge thermal capacity and the fact that the ocean brings up cooler water from a a bit deeper down in the summer, thus diluting the warmer surface. The planet’s average temperature peaks during the NH summer despite the fact that the Earth is closest to the sun in January. Anyone who has been to the SH in their summer can tell you that the sun is intense – ~7% more intense than in the NH during NH summer.

I call BS on those uncertainty intervals, no way they are that small.

Can you post a link to a peer reviewed publication with an analysis of the global mean temperature in absolute terms with an accompanying uncertainty analysis that comes to a significantly different conclusion?

I can give you a textbook reference which says that when you combine independent, random variables that you add their variances. Thus standard deviation grows (since it is the sqrt of the variance).

And single, independent, random temperature measurements of different things are a perfect example. In this case their uncertainty interval represents a variance. The variances add when you combine them.

Will you believe the textbook? I’m not going to waste my time typing it in if you are just going to ignore it.

Is that the one you used on another post, which I argued shows that uncertainties of the mean decreases with sample size?

https://wattsupwiththat.com/2021/10/01/confirmed-all-5-global-temperature-anomaly-measurement-systems-reject-noaas-july-2021-hottest-month-ever-hype/#comment-3360535

As you say when you add things the variance of the sum increases with the sum of the variances. As you should also know when you take the square root to get the standard deviation that means the standard deviation of the sum increases with the root sum square of the standard deviations of the independent variables. And finally when you multiply by 1/n to get the mean the standard deviation will be divided by n, which if all the uncertainties are the same means the standard deviation (uncertainty) of the mean will be equal to the standard deviation of the population divided by the square root of the sample size.

Not this nonsense, again. Please have mercy.

Do you have a point, or are you just going to tut from the sidelines?

Are you going to demonstrate how the average of a month’s worth of temperatures taking every minute by a thermometer with an uncertainty of 0.5°C can have an uncertainty of 6°C?

Are you going to do what you insisted I did, and go through the partial derivatives in the GUM in order to show how the uncertainty of the mean increases with sample size?

Or are you just going to make snide one liners?

Make those u(T) numbers as small as possible, this is your ultimate mission in life!

So your answer to my first three question is no, and to the last is, yes. Thanks for clearing that up.

You don’t like my answers, so why should I waste time repeating them?

You are STILL conflating the combination of independent, random variables with the combination of DEPENDENT, random variables.

The GUM tells you how to handle uncertainty of DEPENDENT, random variables, i.e. multiple measurements of the SAME thing.

The same statistical operations do not apply when you have independent, random, multiple measurements of DIFFERENT things.

You’ve admitted that when you combine independent, random variables that their variances add. The population standard deviation then becomes sqrt(Var). You just won’t admit that you can’t then find an “average” variance by dividing sqrt(Var) by n. The “average” variance is a meaningless metric.

All you do when you try to do this is come up with an average variance for each independent, random variable included in the population. That is *NOT* any kind of standardized metric I can find used anywhere.

If Var1 = 6, Var2 = 10, and Var3 =50 then the sum of the variances is 66 and that is the variance for the combined population. Thus the sqrt(66)=8.1 is the standard deviation for the population.

The *average* variance for the three members using your logic is 66/3 = 22. The standard deviation for an average variance would thus be sqrt(22)=4.7. But that is for each element, not for the population as a whole!

Please note that you can’t add the standard deviations for each element, not even the average standard deviation, and get the population standard deviation. 4.7 * 3 = 14.1, not 8.1!

You still quite get over the hurdle that the standard deviation of the mean describes the interval in which the sample mean might lie. That is a description of how precisely you have calculated the mean. It does NOT describe the accuracy of the mean you have calculated. More samples in the calculation means a more precise mean. That is *NOT* the same thing as the population standard deviation which describes the interval in which any value is expected to be found, including the mean value.

You continue to try and say these are the same thing — but they aren’t.

If you combine multiple, independent, random variables then you might be able to narrow the interval in which the mean will lie by adding more independent, random variables (that’s not a guarantee tho). But the accuracy of the mean is not the interval describing the accuracy of the mean, that is the population uncertainty.

This is a very important distinction. Yet it seems most mathematicians, statistician, and climate scientists simply don’t grasp it. You can’t lower the uncertainty of combining two independent, random temperature measurements by dividing by 2 or sqrt(2). The uncertainty of that mean will always be the RSS of the two uncertainties if not the direct addition of them. Just like you can’t lower the variance of a combination of a series of independent, random variables by dividing by sqrt(2).

Perhaps this text will make it more clear:

—————————————————

The standard deviation s (V ) calculated using the formula 3.3 is the standard deviation of an individual pipetting result (value). When the mean value is calculated from a set of individual values which are randomly distributed then the mean value will also be a random quantity. As for any random quantity, it is also possible to calculate standard deviation for the mean s (Vm ). One possible way to do that would be carrying out numerous measurement series, find the mean for every series and then calculate the standard deviation of all the obtained mean values. This is, however, too work-intensive. However, there is a very much simpler approach for calculating s (Vm ), simply divide the s (V ) by square root of the number of repeated measurements made:” (bolding mine, tpg)

——————————————————

“When the mean value is calculated from a set of individual values which are randomly distributed then the mean value will also be randomly distributed. ”

That’s called uncertainty! If you can’t make repeated measurements then you can’t use the sqrt(N).

And you STILL don’t understand what “independent variables” mean. It does not mean measuring different things as opposed to measuring the same thing, it means the variables are not correlated. You say the GUM tells you how to handle DEPENDENT variables, yet the GUM only uses the word dependent once, and that’s in relation to a measurand depending on parameters.

You are STILL confusing the issue of adding the value of variables to get a sum, with combining separate populations to get an new population.

You STILL haven’t done what you insisted I do and show how equation (10) can be used to show how you handle uncertainties of a mean. Note that this specifically deals with independent quantities.

And you STILL understand that “… the standard deviation of the mean describes the interval in which the sample mean might lie.” is exactly what I and I think everyone else means by the uncertainty of the mean.

“And you STILL don’t understand what “independent variables” mean. It does not mean measuring different things as opposed to measuring the same thing,”

Of course it does! Measurements of the same thing are all DEPENDENT! The values all depend on the measurand. Whether it be length of a board, a chemical analysis of a sample, or the weight of a lead weight.

Measurements of different things are INDEPENDENT! Different boards, different chemical samples, or different lead weights!

“You are STILL confusing the issue of adding the value of variables to get a sum, with combining separate populations to get an new population.”

No, I am not!

What do you think Y = X1 + … + Xn means?

Xi is an independent, random population!

And Y is not a sum of all the various elements. If that were the case then Var(Y) = Var(X1) + Var(X2) + …. + Var(Xn) would make no sense at all!

I think this applies directly here:

——————————————-

4.2.1 In most cases, the best available estimate of the expectation or expected value μq of a quantity q that varies randomly [a random variable (C.2.2)], and for which n independent observations qk have been obtained under the same conditions of measurement (see B.2.15), is the arithmetic mean or average q‾‾ (C.2.19) of the n observations:

_______________________________________

(bolding mine, tpg)

Same conditions of measurement actually means the same measureand and the same measuring device.

This also applies:

——————————————————

Section 5.1.2 (where equation 10 lies)

The combined standard uncertainty uc(y) is an estimated standard deviation and characterizes the dispersion of the values that could reasonably be attributed to the measurand Y (see 2.2.3).

——————————————————

(bolding mine, tpg)

You will note that it speaks to THE MEASURAND. Not multiple measurands. Meaning multiple measurements of the SAME THING.

You are still throwing crap against the wall to see if something will stick.

From “The Basic Practice of Statistics”, Third Edition, David S. Moore:

“The shape of the population distribution matters. Our “simple conditions” state that the population distribution is Normal. Outliers or extreme skewness make the z procedures untrustworth ….”

(same thing applies for t procedures)

There is no guarantee that a combination of independent, random variables will result in a Normal distribution. This applies directly to temperatures.

Here is the main point:

“More samples in the calculation means a more precise mean. That is *NOT* the same thing as the population standard deviation which describes the interval in which any value is expected to be found, including the mean value.”

I’ve been trying to help you to see that the issue here might just be one of definition. A more precise estimate of the mean is exactly how I define the uncertainty of the mean. If I say the mean is 100±2, I’m saying that the calculated mean is probably within 2 of the actual mean. (How probable depends on how the uncertainty interval is defined).

You are defining the uncertainty of the mean, as how good it is at defining the population. If you say the mean is 100±50, you mean that most of the population is between 50 and 150. If it makes any sense to define it like that is another matter, but it would avoid a lot of wasted time if you would be clear about your definition. As I’ve said before, and you threw in my face, I think the distinction is between a confidence interval, and a prediction interval.

“This is a very important distinction. Yet it seems most mathematicians, statistician, and climate scientists simply don’t grasp it.”

Has it ever occurred to you that if on a matter of mathematics and statistics, most mathematicians and statisticians disagree with you, it’s just possible you might be wrong?

Then mean of what, exactly?

It matters.

It’s the average number of meaningless one line posts you make under each comment.

Stop whining, if you don’t understand single sentences, you will never understand Tim’s careful lessons.

All I’m trying to establish here is what you and Tim and co mean when you say “the uncertainty of a mean”. I’m trying to get a careful response as I think this is the source of much of the confusion in these interminable threads.

If you have a statement that mean hight of an adult male in a country is 180cm, with a standard uncertainty of 5cm, do you think that is saying the actual mean is more likely to be somewhere between 175 and 185 cm, or do you think it should be read as most adult men are between 175 and 185cm?

“A more precise estimate of the mean is exactly how I define the uncertainty of the mean.”

The standard deviation of the mean describes the spread in values that the means calculated from various samples of the population will have. it does *not* mean that the mean values calculated from samples of the whole population get closer to any “true value”. Not when you are combining independent, random variables.

The uncertainty of those means takes on the value calculated for the uncertainty of the population. The mean is just one more random value and can fall anywhere in the uncertainty interval.

Remember, for a combination of independent, random variables there is no “true value”. There is no “expected” value like there is when you are taking multiple measurements of the same thing. The mean you calculate from a sample of the population is not a true value. As I keep pointing out the mean value may not even exist in reality, nor can you assume a Normal distribution for the population.

You keep wanting to ignore than variance goes up when you combine independent, random variables. That variance is a direct metric for the uncertainty of the population. The wider the variance gets the more uncertain the mean gets as well. The wider the variance of the population the higher the possibility you have of picking samples from that population that vary all over the place thus the standard deviation of the mean grows as the variance of the population grows. Every time you add an independent, random value to the population the variance gets bigger. So the mean also gets less certain. This doesn’t happen for dependent, random values which cluster around an expected value. But there is *no* expected value for a collection of independent, random variables that are not correlated.

I’m not wrong. There are too many sources, such as Taylor and Bennington that have written tomes on this. There are references all over the internet, many of which I have pointed out to you multiple times. But you stubbornly refuse to understand what they are saying.

You want to believe that taking multiple measurements of one piece of lead is the same as taking multiple measurements of many different pieces of lead. One has values clustered around an expected value and one doesn’t. You want to pretend that they both have distributions clustered around an expected value and you can use the same statistical approach to both. That’s just plain wrong.

“As you should also know when you take the square root to get the standard deviation that means the standard deviation of the sum increases with the root sum square of the standard deviations of the independent variables.”

So what? That’s no different than the root-sum-square Taylor uses when propagating uncertainties for independent, random variables.

“And finally when you multiply by 1/n to get the mean the standard deviation will be divided by n”

Why do you make that leap? The standard deviation of what? Dividing the sum of the VALUES by n to get the population average for a grouping of independent, random values doesn’t mean you divide the total variance by n!

This seems to be where you continue to get lost! Variance of the population is the variance of the population. It doesn’t change, you don’t divide it by n to get an average variance!

There is no requirement that I can find anywhere that says when you divide the sum of the values by n that you also have to divide the variance or sqrt(variance) by n as well.

Can you link to something that says you must do that?

“Why do you make that leap?”

I’ve already explained to you here, it follows from the equation you gave me

Var(Y) = a_1^2Var(X1) + … + a_n^2Var(Xn)

“It doesn’t change, you don’t divide it by n to get an average variance!”

Of course it doesn’t. I’m not looking for an average variance, I’m after the variance of the average.

“There is no requirement that I can find anywhere that says when you divide the sum of the values by n that you also have to divide the variance or sqrt(variance) by n as well.”

You divide the square root of the variance by the square root of n. The square root of the variance is otherwise known as the standard deviation of the population.

“Can you link to something that says you must do that?”

GUM 4.2.3

Why are you so dense?

This applies to multiple measurements of the same measurand, not time series measurements.

Who said anything about time series. We are trying to establish what happens when you take an average.

An average of what? It matters! And Tim already gave you this:

https://wattsupwiththat.com/2021/10/04/uah-global-temperature-update-for-september-2021/#comment-3361862

You desperately want to divide by sqrt(N) because it gives nice small numbers—this is a psychological bias.

I swear you can’t read. From your excerpt of the GUM:

“Thus, for an input quantity Xi determined from n independent, repeated observations Xik” (bolding mine, tpg)

What do you think this is talking about? REPEATED MEASURMENTS OF THE SAME THING!

Why do you always skip over that part in whatever you read?

““It doesn’t change, you don’t divide it by n to get an average variance!”

Of course it doesn’t. I’m not looking for an average variance, I’m after the variance of the average.”

The variance of what average? Variance isn’t an average, it is variance! It doesn’t change when you calculate the average of the values in the population!

“The variance of the average” does not mean that “variance is an average”.

So, to be clear, if I want to estimate the mean height of a human male, I should only measure a single person’s height? Because otherwise the uncertainty of the mean is just going to grow and grow.

The variance will grow as you add more and more people to the database. Not sure what the distribution will lok like but if it is a normal distribution, only 68% will fall within one standard deviation. The rest will fall outside that interval.

The variance does not necessarily increase as you add more people.

Here’s a graph showing the variance for different sample sizes taking from a normal distribution.

A total non sequitur—periodic temperature measurement sets are NOT normal, and you already know this but choose to deny reality.

It was an illustration that increasing sample size does not necessarily increase variance. The example was talking about men’s heights, not temperature, and you’d get similar results whatever the distribution.

(In fact rushing of to test this I notice that the above graph was using a uniform distribution, not a normal one. Sorry. Here’s one using a normal distribution.)

And here’s the same taking random samples from a sine wave.

BFD—has nothing to do with averaging temperatures or corrupting old data.

But it has everything to do with the claim I was addressing: “The variance will grow as you add more and more people to the database.”

Now do you agree with that remark or are you just going to try changing the subject?

I was never discussing people in a database, you must have me confused with someone else.

You *have* to be selective with the people you add or your variance *will* grow! Age, health, wealth, food supply, etc all impact the possible populations of “people”. As you add in more “people” the variance *will* grow – unless you restrict the possible people you add. In which case you are not analyzing independent, random variables.

This was exactly the point I was making to you a day or two ago. Combining two populations does not result in a new population with variance equal to the sum of the two variances.

Combine two populations with the same mean and variance and the variance of the new population will be roughly the same. Combine two populations with very different means but the same variance, and the variance of the new population will be bigger than the sum of the variances.

“Combining two populations does not result in a new population with variance equal to the sum of the two variances.”

Once you restrict the elements you add to the population you no longer have independent, random variables. I’m not sure you understand the definition of independent and random.

“Combine two populations with the same mean and variance and the variance of the new population will be roughly the same.”

Not if they are independent and random populations. The variance will add. I’ve given you quotes from two textbooks verifying this. Now you are just being willfully ignorant.

Why do you think I’m restricting anything. I’m describing the mean and variance of the two populations I have. I keep asking you to check the definition of random independent variables.

Let’s say I have a pack of 100 cards with the numbers 1 to 100 written on them, one unique number on each one. And I have another identical pack off cards. Are the two packs the same thing or different things? Is drawing a random card from each deck independent? Do each deck represent two random independent variables?

Then what of the decks goes from 2 to 101, does that make it more independent or less?

I’ll leave it as an exercise to the reader to determine what happens to the variance if I shuffle the two decks together in either of these examples.

A sine wave is not a probability distribution. Go look it up. It has no variance or standard deviation.

Again, as you’ve been told over and over, temperature measurements as a function of time cannot be considered a fixed population, another fact you are desperate to overlook. Your examples of sampling are meaningless.

No one ever said increasing SAMPLE size increases variance. Adding elements to the population increases variance of the population, at least for independent, random variables.

It depends on what people you are adding. They need to be from the same population. Add the heights of a few Watusis into a population of pygmies and you will get a vastly expanded variance.

So if I want to estimate the mean of a population is it better to have a large or a small sample size?

Temperature “populations” do not exist.

Can you answer the specific I question asked instead of deflecting?

The number of samples irrelevant, again:

—Sampling a fixed population—

IS NOT THE SAME AS

—Periodic time series sampling—

Until you understand this, you will remain hopelessly lost in the swamp.

More deflection. Can you directly answer the question? If I want to calculate a population mean, is it better to have a large or small sample size?

Even in a normally distributed population where the values have some measure of dependency, e.g. you are taking multiple measurements of the same thing, the size of the sample determines the standard deviation of the mean you calculate. You can either take more small samples or take fewer but larger samples.

What if you took many large samples? Would that be worse than many small samples?

Or the corollary…if you want to measure the mean temperature on a particular day make sure you only take one reading and make sure you are using a instrument that isn’t doing 1-minute averages like what AWOS and ASOS does otherwise the uncertainty is just going to grow and grow.

I can only imagine that Monte and the Gormans believe the old adage “measure twice and cut once” is sheer propaganda.

I think they have a lot of psychological tricks to ensure they never consider they might be wrong. Either the rules don;t apply if you are measuring the same thing, or uncertainty doesn’t mean what you think it does.

Currently Tim is arguing that there’s a difference between combining multiple measurements to estimate an area under a curve, and averaging them.

Take all the buildings in NYC and average their heights—what does this number tell you? Not much.

Pick 10-12 at random and average their heights, how close will you be to the average of all of them?

According to you lot, everything is normal, so you’ll be pretty dern close.

This has to one of the silliest comments you have made to date: averaging is NOT integration.

You can use an integral to find the average value of a function between a and b, it’s just the definite integral between a and b, divided by (b – a).

In this case, however, we are only approximating an integral by taking multiple measurements over a time period. Working out the area of each rectangle formed by the time between the readings and the temperature reading. (strictly speaking by the average of the two readings), and the adding together to estimate the area under the curve.

As we are measuring degree days the width of each rectangle is equal to the proportion of the day between the readings, so if they are taking every hour, the width of each rectangle is 1/24.

This to me is effectively averaging 24 temperature readings. But even if you don;t accept that it still means you are combining 24 uncertain thermometer readings, and by Tim’s and possibly your logic the uncertainties should increase.

But as far as I can tell Tim thinks that doesn’t happen here and that this method has less uncertainty than averaging the values.

(He also thinks you can get an accurate value for CDD’s by treating the day as a sine wave, when you only know the maximum value. Do you have any opinions on this you’d like to share?)

“You can use an integral to find the average value of a function between a and b, it’s just the definite integral between a and b, divided by (b – a).”

Of course you can! So what? That isn’t what a degree-day *is*. Again, do your dimensional analysis.

If the area is degree-day and you divide by day you wind up with degree, not degree-day.

This is very simple. Do they not even teach dimensional analysis any more?

“n this case, however, we are only approximating an integral by taking multiple measurements over a time period.”

You do not have to do this if you assume the temperature profile curve is a sine wave. Using that assumption you can do a direct calculation. In any case you still wind up with an AREA, i.e. degree-day!

“Working out the area of each rectangle formed by the time between the readings and the temperature reading.”

What do you think an integral is?

“(strictly speaking by the average of the two readings), and the adding together to estimate the area under the curve.”

I covered this in detail earlier. Let me work up another picture for you. I’ll attach it. You use the midpoint of the line between point A and point B. In doing so you get a good estimate of the area under the curve from A to B.

“This to me is effectively averaging 24 temperature readings.”

Again, learn how to do dimensional analysis! An average of temperature values gives you a dimension of “degree”, not degree-day! The area under the curve is height (degree) multiplied by the width (time – day). AREA! Each rectangle ADDS to the area under the curve!

“ But even if you don;t accept that it still means you are combining 24 uncertain thermometer readings, and by Tim’s and possibly your logic the uncertainties should increase.”

If you do it this way you are correct. It is *NOT* correct if you assume the temperature curve is a sine wave. Look at it this way. Assume a cloudless, windless day. The heating from the sun is then dependent on the angle of the sun and a point on the Earth. The maximum heat input is when the sun is directly overhead. The minimum heat input is when the sun is at either horizon. That describes a sine curve. If S is the amount of heat from the sun when it is overhead then the heat input is H = S * sin(t), where t is the time from sunrise. If the temp is dependent on the heat from the sun then T = Tmax * sin(t)

If you have changing conditions during the day then Tmax may be different for different periods. If you can identify those periods you can do a piecewise integration – no approximation.

” by Tim’s and possibly your logic the uncertainties should increase.

Why would you believe that uncertainty will grow when you involve multiple temperatures in an integration but it won’t grow when you add temperatures from different stations?

Let’s take four successive temperature measurements with stated values of 70.1, 70.2, 70.3, and 70.4, each with an uncertainty of +/- 0.5. Assume the intervals are separated by 1 to make the calculation simple. The midpts of the stated values are 70.15, 70.25, and 70.35. The max uncertainty temps are 70.6, 70.7, 70.8, and 70.9. The midpts for those are 70.65, 70.75, an 70.85.

So the areas are

70.15(1) + 70.25(1) + 70.35(1) = 210.75

70.65(1) + 70.75(1) + 70.85(1) = 212.25

So the positive uncertainty has gone from 0.5C to 1.5C. It has tripled. The original uncertainty for each temp was +/- 0.5C, an interval of 1C. The uncertainty has grown to +/- 1.5C, an interval of 3C.

Why wouldn’t these same temperatures from three separate measurement locations not see the total uncertainty grow from +/- 0.5C to +/- 1.5C?

If the three temperatures are independent, random measurements their uncertainties (variances) should add. Thus you get 0.5 + 0.5 + 0.5 = 1.5.

Are you trying to imply that the uncertainty when doing the approximated integration will *NOT* grow?

Apparently not, it must be racist now or something…

The value is the same if you talk about degree-days over 1 day, or the average degree over that same day. You could just as easily measure the average temperature over a day in degree-days. It would be the same as the length of time is 1.

Changing the dimensions does not change the uncertainty. You are calculating the cooling degree-days for a day by taking multiple measurements and combining them.

“You do not have to do this if you assume the temperature profile curve is a sine wave. Using that assumption you can do a direct calculation. In any case you still wind up with an AREA, i.e. degree-day! ”

You can approximate CDD or HDD by assuming temperature is a sine wave, in which case you only need to know the maximum and minimum values. Are you really saying this will give you a more accurate figure than making multiple measures throughout the day?

“If the temp is dependent on the heat from the sun then T = Tmax * sin(t)”

And you still don’t accept that this is not the formula for a daily temperature profile, even if you assume it follows a sine curve. You simply cannot get that just by knowing the maximum temperature. Your formula would mean that if the max was 75°F, then half the day would be below 0°F and would drop to a minimum of -75°F.

You would also get a completely different profile if you were using Celsius and had a max of 23.9°C. Does that not strike you as improbable?

(Continued)

“Why would you believe that uncertainty will grow when you involve multiple temperatures in an integration but it won’t grow when you add temperatures from different stations?”

I’m not the one claiming that combining samples increases uncertainty. I think the more measurements you take during the day the more accurate the resulting value will be. This is true whether you are taking a daily average or calculating CDDs. In part this is because measurement errors will tend to cancel out the more values you add. But as I said to monte, this assumes the measurement errors are independent, which might not be the case.

You on the other hand argue the more measurements you combine the more the measurement uncertainty increases, whether you are taking an average or summing.

“Are you trying to imply that the uncertainty when doing the approximated integration will *NOT* grow?”

I’m not implying it , I’m stating it. The measurement uncertainty of approximating the integral by taking multiple readings will *NOT* grow as the number of measurements increases. And if the measurement errors are independent the measurement uncertainty will decrease, just as with an average.

This is quite apart from the reduction in uncertainty of having a better fit for the actual temperature profile. If you base an average or integral on just two measurements you are assuming there is a straight line (or at least symmetrical) from one to the next. Adding more measurements between the two will give you a more accurate value.

Are you really saying that you think an estimate of a daily CDD would be less accurate if measurements were taking every minute than if they were taking every hour?

And once again your intuition is fooling you.

We aren’t wrong. You *still* can’t provide any specific reference that says independent, random populations, when combined, don’t have their individual variances add.

You keep trying to say that measuring DIFFERENT THINGS, is the same as measuring the SAME THING.

It isn’t.

And you *still* haven’t figured out what an integral is, have you?

When you integrate a velocity curve over time what do you get? Do you get an average miles/hour? Or do you get total distance travelled?

is it (miles/hour)(hours) = miles?

How does that become average miles/hour?

“We aren’t wrong. You *still* can’t provide any specific reference that says independent, random populations, when combined, don’t have their individual variances add.”

For the last time, when you say add, do you mean as in sum or as in union? You don;t need to see a reference to realize that putting together several populations with the same or different variance and means will not necessarily give you a population whose variance is equal to the sum of all the variances of the original populations. You can check it out yourself with the stats package of your choice.

“When you integrate a velocity curve over time what do you get?”

You get distance traveled, over wise known speed-hours. Once you’ve got that if you divide it by the time you get the average speed. Alternatively if you know the average speed and multiply by the time you get distance traveled.

Similarly if you have 60 degree-days measured over 30 days, you have an average of 2 degree-days per day, or 2 degrees. Or if you know the average temperature over the base line for the month has been 2 degrees, you can multiply by 30 to get 60 cooling degree-days.

None of this quibbling matters, because whatever units you are using, you are still getting them by combining multiple measurement, which you claim will increase uncertainty whether you average of add them.

“For the last time, when you say add, do you mean as in sum or as in union?”

I answered you. Twice actually.

It is a union. A sum would make no sense. You’ve been given the references to show this.

Y = X1 + … + Xn

Var(Y) = Var(X1) + … + Var(X2).

Y was a sum then why would the variances add? The sum wouldn’t have a variance.

Why is this so hard to understand?

“You don;t need to see a reference to realize that putting together several populations with the same or different variance and means will not necessarily give you a population whose variance is equal to the sum of all the variances of the original populations. “

I’ve given you at least five references, two of them textbooks, that say different. You have yet to give me a reference that says that isn’t true. You just say “you don’t need a reference”. Really?

“You get distance traveled, over wise known speed-hours. Once you’ve got that if you divide it by the time you get the average speed. Alternatively if you know the average speed and multiply by the time you get distance traveled.”

OMG! You *really* don’t understand dimensional analysis, do you?

What units does speed have? E.g. miles/hour

What units does time have? E.g. hours

What do you think you get from speed-time? You get (miles/hour) * hour = miles!!!!!!

The area under a curve IS NOT AN AVERAGE! The area under a curve is the area under the curve!

An integral does *NOT* give you an average. You have to do another calculation to get the average.

What do you get when divide degree-day by day? You get degree. An average TEMPERATURE, not an average degree-day!

Why is this so hard to understand?

Where does this dreck they claim come from?? Is the education system really this broken? Ack.

“It is a union. A sum would make no sense. You’ve been given the references to show this.

Y = X1 + … + Xn

Var(Y) = Var(X1) + … + Var(X2).”

Did you notice the “+” signs? This text, whatever it is, is talking about what the variance is of a random variable Y, when Y is the sum of multiple random variables, X, X2, … Xn.

It’s saying the variance of Y will be eual to the sum of the variances of all the Xs.This is the very basis of saying the standard deviation of Y (the uncertainty of Y), will be equal to the square root of the sum of the squares of the standard deviations of each X. And is of course how you get to the formula for the standard error / deviation of the mean.

If the author is claiming you can use this to determine what the variance of the union of different populations is, they are sadly mistaken. For multiple reasons I’ve already told you this cannot be correct, and I’ve all ready given you the correct formula.

But you don’t have to take my word for it, just do it in a stats package or spread sheet. Generate random populations with different means and variances and see what the variance of the combined populations is. See if the effects are the same if the means are identical as when they are completely different.

“You have yet to give me a reference that says that isn’t true. You just say “you don’t need a reference”. Really?”

Here’s one I found

https://www.emathzone.com/tutorials/basic-statistics/combined-variance.html

But in this case I really don’t need to know the exact formula to know that you are wrong. It just follows from thinking about what it would mean if you were correct.

“OMG! You *really* don’t understand dimensional analysis, do you?

What units does speed have? E.g. miles/hour

What units does time have? E.g. hours

What do you think you get from speed-time? You get (miles/hour) * hour = miles!!!!!!”

You do have this strange way of angrily repeating something I’ve just said as if I was wrong. I said the integral of the your velocity over time chart would be distance traveled, I didn’t think it necessary to specify that the distance would be measure in mile.

“The area under a curve IS NOT AN AVERAGE!”

The area under the curve is the average when divided by the range of the x-axis. If your graph was for 1 hour the distance traveled in miles would be the average speed in miles per hour.

“What do you get when divide degree-day by day? You get degree. An average TEMPERATURE, not an average degree-day!”

Again they are one and the same if you are looking at a single day.

And even if you don’t the the connection between integrals and averages, you still haven’t explained why you think the uncertainties will be different.

Gad, go back and take a real calculus course. I learned this stuff in high school. Seriously, who teaches such blatant nonsense?

“You *still* can’t provide any specific reference that says independent, random populations, when combined, don’t have their individual variances add.”

https://www.emathzone.com/tutorials/basic-statistics/combined-variance.html

Note the second formula means that if you combine two populations of the same size and mean, then the combined variance will be the average of the two variances, not the sum.

OMG!

“Suppose we have two sets of data containing n1 and n2 oservations”

Observations of the SAME THING!

50 male wages and 40 female wages, apparently working the same job. If you gathered the wages for 50 independent, male workers at random (pick 50 different jobs, i.e. dishwasher, welder, fireman, etc) *then* you would have independent, random variables to combine. Same for the female workers.

Then the textbook quotes I’ve given you would apply – the variances add.

“Observations of the SAME THING!”

Why do you think they have to be observations of the “SAME THING”™? The page starts by saying n_1 and n_2 have different means and variances. You really need to define what you mean by the same thing.

If you are saying 90 workers all earning different amounts are the same thing, then I think we are getting somewhere.

Projection time.

You imagine many things, being a subscriber to climastrology.

MEASURE THE *SAME* THING TWICE.

It won’t do you any good to measure board 1 and then go measure board 2 before you cut board 1!

This seems to be a subtlety that seems to elude you, Bellman, and bdgws.

Measuring the same thing multiple times and taking multiple measurements of different things require different treatment.

You can’t use the same hammer on both. One is a nail, the other is an ice cream cone!

So you agree that if I need to cut a board to fit, measuring the space the board needs to go into twice and taking the mean of both measurements will give me a better result? What if both measurements have an uncertainty of +/- 1mm? Should I then just be satisfied that a single measurement will yield the best estimate?

“So you agree that if I need to cut a board to fit, measuring the space the board needs to go into twice and taking the mean of both measurements will give me a better result?”

Of course it will. You are taking multiple measurements OF THE SAME THING! Those dependent, random measurements form a probability distribution around an expected value.

“What if both measurements have an uncertainty of +/- 1mm?”

So what? They still form a probability distribution around an expected value, the “true value”.

Do you think that the same thing happens when you measure the length of two different boards? Do those measurements form a probability distribution around a true value?

“Should I then just be satisfied that a single measurement will yield the best estimate?”

You only get one chance for a measurement when you are measuring temperature. Your measurand disappears into the universe never to be seen again once you have measured it.

What do you do in that case?

Even in these kinds of stations there is *still* uncertainty associated with the final averaged temperature. Did you somehow think that each sensor doesn’t have its own drift characteristic? It’s own aging characteristic, it’s own calibration variance?

And when you add that average temperature to the temperature measured by another station 20 miles away do you think those two temperatures do NOT somehow represent measurements of different things making them independent and random?

For if they *are* independent and random then their uncertainties add. Those uncertainties may be smaller than for a different kind of measurement station but they will still add. And when you combine enough of them that uncertainty will *still* swamp out any “differences* you are trying to measure.

No, that isn’t it at all! If you measure the height of a human male from ages 3 to 70 what will the mean tell you? The mean won’t tell you the height of a human male adult! The height of human males *is* age dependent, so in the population of all human males there are correlations between the data points meaning the data points are not independent. There is a confounding variable involved. Even if you measure the height of all human males 28 years of age you will be mixing populations such as pygmies and Watusis and etc. You will wind up with a multi-modal distribution and the mean you calculate won’t be very descriptive of anything.

If you are combining multiple temperature measurements of different things then you will probably have a non-Normal distribution population. You are not even guaranteed that what you calculate for a mean in such a distribution

even exists physically.

Most statistical tools, at least fundamental ones, are based on a Normal population distribution. But uncertainty doesn’t have a probability distribution for a single measurement. If you treat the uncertainty as the variance then those uncertainties *do* grow as you add more members since variances add when combining indiependent, random populations And variance *is* a measure of how uncertain your calculated mean is.

Suppose you combine temperature measurements from Chile and Kansas in July. What kind of distribution will you have? One will have temperatures clustered at a much lower value than the other. You will, in essence, have a double-humped, bimodal distribution. You pull a sample of that population in order to calculate a mean, assuming that sample will have a normal distribution. Will the sample have a normal distribution? Will the mean of the sample work out to be the same as the mean of the entire population? Will the mean of the entire population actually tell you anything about the entire population? If you consider these to be independent, random measurements then shouldn’t you be able to add the means of the two populations? Since the total variance will be large what conclusion can you reach about what that mean implies?

I’m sorry but standard deviation of the mean calculated from multiple samples, or even one sample, is not the same thing as the uncertainty of the mean. Calculating the mean more and more precisely doesn’t mean what you calculate is a “true value”, not for independent, random, uncorrelated variables.

It’s why the GAT is so useless. Everyone assumes that the standard deviation of the mean is synonymous with the uncertainty of the mean. It isn’t. And true scientists trained in metrology *should* recognize that. It is truly dismaying to me that so many scientists, including climate scientists, think that the theory of large numbers or the central limit theory or whatever you want to call it applies to *all* situations. It *ONLY* applies when you have one measurand with multiple measurements being analyzed.

Lol. Let’s simplify things. Suppose we want to estimate the mean of a population that follows a normal distribution. Will our estimate be improved if we take a large sample size versus taking a small one? Or will our estimate of the mean actually be more uncertain the larger our sample size?

You are correct. They aren’t that small.

The other issue is that MORE than 2/3rd of the stations monitoring the temperature is in the Norther Hemisphere.

Are you challenging Berkeley Earth’s data showing that July is the warmest month of the year for the planet as a whole?

It’s cold in the SH when it is hot in the NH.

What makes you think it can’t be colder in the SH than it is warmer in the NH?

Because there’s more land in the Northern Hemisphere.

I thought spatial krigging and weighting of grid squares took care of that.

Drop the ‘k’ and you have ‘rigging’.

Why would you think that?

I am now having grave doubts even of this data. With my own weather station I know as fact that I have just had the coldest September in the 18 years i have kept data. So just to the southwest of London must be totally at odds with the rest of the planet.

September 2021 globally was cooler than last year, at anomaly of 0.25 vs. 0.40.

September 2019 was even warmer, at 0.61, but in 2018 cooler, at 0.13.

September 2017 was a balmy 0.54, while in 2016 a less toasty 0.45.

So last month was the second coolest September of the past six years. From warmest to coolest: 2019, 2017, 2016, 2020, 2021 and 2018.

The warmest month in the dedicated satellite record was February 2016, at the height of Super El Niño, just pipping out peak of 1997-98 SEN.

John, do these anomalies take into account the change in baseline at the start of 2021?

Yes.

Your weather station is at odds with the rest of England, never mind the global satellite data. It was the 3rd warmest September in the past 18 years

Last month was the second coolest of the past six Septembers, globally.

It tied September 2015, and was cooler than 2014’s 0.29 as well. The Septembers of 2013 and 2012 were 0.34 and 0.39.

So this year tied for second coolest of past ten years.I didn’t go farther back.

Earth is cooling.

Maybe I wasn’t clear, but I was talking about the temperature in England. Not the UAH data. That’s why I said andy in epsom’s station was at odds with the rest of England. It was the 3rd warmest September in England in the last 18 years, actually tied with 2nd place.

As you say UAH was only the 5th warmest in the last 18 years, but given the uncertainty who knows the exact ranking.

Can I just ask what your weather station data came ouit to?

Do not have my own weather station and even if I did I doubt it would be as meaningful as Met Office data. I do know from my persoanl experience that there has been a lot of warm weather this September.

THank you. you have just proved that you are a waste of time discussing anything with.

Thanks, I have enough trouble keeping up with the Gormans. But just in case, could you give the figures for your weather station and how it compares with the past 18 years?

I use a Vantage Vue. It has a manufacturer stated uncertainty of +/- 0.5C – better than the Federal standard uncertainty of +/- 0.6C.

“It tied September 2015, and was cooler than 2014’s 0.29 as well. The Septembers of 2013 and 2012 were 0.34 and 0.39.”

I think you need to check your data. 2015 was 0.09°C, 2014 was -0.01°C.

2021 was 5th warmest of the last ten years, that is pretty much in middle.

Ranked order of last 10 Septembers:

Without two major cooling volcanos early in the record and warming Super El Ninos at the middle and on the end, there wouldn’t be much of a trend. Maybe a warming of 0.25 to 0.3 C over the period. A continuation of the post-Little Ice Age warming. It will get cold when that ends.

Compare sat data with 2m data ??? 😀

Here’s the graph of temperatures in England.

Looks like the global warming equivalent of a Rorschach test. People can see whatever they want to see in the graph. I see a cyclical temperature fluctuation with a period of approximately 30 years, with the last ten years or so representing the peak of a cycle.

..I see a 70 year cycle with max around 1940 and 2010, most likely governed by AMO, but also a slight increase between the cycles – the continuing recovery from the little ice age. https://en.wikipedia.org/wiki/Atlantic_multidecadal_oscillation

I’m saying nothing about the trend, just pointing out that this September was warm, and not as was claimed in the original comment the coldest in 18 years.

I find it a good indication of the skepticism here that andy in epsom’s claim has gone unchallenged and been awarded several up votes, whereas any attempt by me to present actual data is immediately jumped upon, down voted and we arguments about things unrelated to the simple fact I was making.

Still, I’d like to see you demonstrate a 30 year cycle on English September temperatures. You say the last ten years has been a peek. It seems odd that a cycle can have a peak lasting a 3rd of its duration.

Why is that odd? Deserts can have dry cycles with peaks that last a long time before it changes.The High Plains have dry/wet cycles where the dry part lasts a significant part of the cycle. That’s why the High Plains are classified as semi-arid and the prairie grass developed a root system that can go down 8 ft or more.

What’s that old Hamlet quote? More things in heaven and earth that dreamt of in your philosophy?

Bellend, you’ve unwittingly posted data that forms a compelling case against climate alarmism. Just like the whole planet, there was rapid warming from 1910 to 1940 (at low CO2 and low rate of CO2 increase), cooling from 1940 to 1970 (at rising CO2) and warming again from 1970 to the present. However, in the last ~ two decades, at the highest CO2 of the whole period, the rate of warming has been much less than it was from 1910 to 1940.

This is proof positive that CO2 is NOT the only control knob on the climate. It’s actually funny that you alarmists routinely post data that contradicts your one (phony) narrative.

Funny how people here will assume a graph showing one month in a small and highly changeable part of the planet, can be used as a proxy for the entire world.

Lets see. The trend from 1970 to 2001 is +0.24 ± 0.36°C / decade, faster than global value over that period, but not statistically significant.

The trend from 2002 to 2021 is -0.09 ± 0.80°C / decade. Cooling, but not statistically significant, nor statistically different from the previous 30 years.

How much of an effect has that cooling had on the underlying warming rate?

The trend from 1970 to 2021 is +0.29 ± 0.18°C / decade. Still warming, and now statistically significant (though I’m not accounting for auto-correlation). But the warming rate is now slightly faster than it was because of all that cooling.

Or,

What are you trying to prove?

I was talking about the whole planet not one month of local data, and you know it, Bellend.

The cooling period of the mid-century is a statistically significant thing – it’s why many were predicting an impending ice age in the early 70s. Claim it isn’t significant, and you’re lying.

Try not thinking so linearly. There’s NO reason to believe that the climate behaves linearly (not even CO2 as the effect of CO2 on temperature is known to be logarithmic). If the climate isn’t linear, it makes NO sense to fit a line. None at all. To see why you’ve been so badly misinformed, try to fit the data with a cubic function. You’ll get a better correlation coefficient than a line and and you’ll see that you’ve been brainwashed into thinking that rising CO2 concentrations explain all (or even most) of the warming.

“I was talking about the whole planet not one month of local data, and you know it, Bellend.”

Maybe I distracted by your by your witty play of words on my pseudonym, but I thought you were responding to my graph of English September temperatures, saying that I had unwittingly posted data that proved your point. If you weren’t talking about England in September, which of my many data points were you talking about?

“The cooling period of the mid-century is a statistically significant thing – it’s why many were predicting an impending ice age in the early 70s. Claim it isn’t significant, and you’re lying.”

What’s your null-hypothesis here? No warming, or warming at the current rate?

“If the climate isn’t linear, it makes NO sense to fit a line”

Mostly agree, and have pointed this out to Monckton several times when he tries to fit a straight line to the entirety of the HadCRUT data, when it clearly isn’t linear. However, it’s usually best to start with a straight line and only change when it’s clear that the data is not fitting.

“To see why you’ve been so badly misinformed, try to fit the data with a cubic function. You’ll get a better correlation coefficient than a line …”

Yes, that’s a given. More degrees of freedom lead to a better fit, which is one reason why you have to be cautious about fitting a polynomial. It might give you a reasonable smoothing, but it ‘s unlikely to be telling you what’s actually happening.

<sigh> okay, so proxy data shows the following ( except for in IPCC reports go figure )

Or

Here is the real Abrantes et al. 2017 publication. Notice that this graph does not appear anywhere in that publication as you have presented. The timeseries is real, but it actually comes from Luterbacher et al. 2016 which is focused not n the NH like what your graph says, but on Europe and is consistent with other studies including the pioneering work of Hurbert Lamb in 1965

By the way the charts I shared do NOT mean that CO2 would not have a modest warming effect on the earth. It would! However the question is not one of the warming effect of CO2 but the overall cyclical warming and cooling of the planet.

Let me place it another way, are you saying there is 0% chance that the warming that we are seeing could be caused by NATURAL processes and enhanced SLIGHTLY by CO2?

If your answer is that it is IMPOSSIBLE for that to be the case I ask why do over a thousand WORLD WIDE papers show that these warm periods existed?

And lest you think that I am using ‘debunked’ information here is one FROM James Hansen… which I believe is NOT as honest as it could be but whatever.

I don’t get it, what is the point of these graphs you post over and over and over? What exactly is their purpose? To lure people into pointless arguments about meaningless hundredths of a Kelvin?

Usually to illustrate the point I’m trying to make, or to proved a better context on someone else’s claim.

Show those temps with an actual temperature. With the y-axis starting a zero and a 15.? baseline, you won’t even be able to draw a line that shows. A graph showing anomalies is simply a propaganda device. Does anyone even think people would care if the temp was 16.5 rather than 15?

So are you saying returning to little ice age temperatures would not be a problem if it’s difficult to see on a graph showing K from 0?

The warming half of an approximately 70-year cycle. Added to the beneficial warming since the Little Ice Age.

You can’t truthfully state that his weather station is at odds with “the rest of England” – only that it is at odds with the “official” temperature” stations. Local conditions can vary wildly when measuring weather. If the “official” stations are mostly near buildings, parking lots, jet exhausts and other heat contributors then of course they will show warming as population and heat pollution rise.

I was replying in kind to his comment “So just to the southwest of London must be totally at odds with the rest of the planet”.

The UK has been warm this September. I know because I live here. If Epsom really was experiencing the coldest September in 18 years whilst everywhere in the country were experiencing the opposite, I’d be very surprised. But why would I, or anyone here trust a single account using their own weather station over all the professional weather stations?

Does that not strike you as being a more likely explanation than there being some vast conspiracy to inflate global temperatures?