Guest essay by Eric Worrall

A team of scientists plans to use AI to improve climate modelling of sub-grid-scale phenomena such as turbulence and clouds. But there is no reason to think an AI will have any more luck than human climate modellers.

International Collaboration Will Use Artificial Intelligence to Enhance Climate Change Projections

MARCH 23, 2021 10:18 PM AEDT

A team of scientists, backed by a $10 million grant from Schmidt Futures, will work to enhance climate-change projections by improving climate simulations using artificial intelligence.

$10 million effort, backed by Schmidt Futures, to be led by NYU Courant Researcher


Led by Laure Zanna, a professor at New York University’s Courant Institute of Mathematical Sciences and NYU’s Center for Data Science, the international team will leverage advances in machine learning and the availability of big data to improve our understanding and representation in existing climate models of vital atmospheric, oceanic, and ice processes, such as turbulence or clouds. The deeper understanding and improved representations of these processes will help deliver more reliable climate projections, the scientists say.

“Despite drastic improvements in climate model development, current simulations have difficulty capturing the interactions among different processes in the atmosphere, oceans, and ice and how they affect the Earth’s climate; this can hinder projections of temperature, rainfall, and sea level,” explains Zanna, part of the Courant Institute’s Center for Atmosphere Ocean Science and a visiting professor at Oxford University. “AI and machine-learning tools excel at extracting complex information from data and will help bolster the accuracy of our climate simulations and predictions to better inform the work of policymakers and scientists.”

…

Due to the complexity of the atmosphere, ocean, and ice systems, scientists rely on computer simulations, or climate models, to describe their evolution. These models divide up the climate system into a series of grid boxes, or grid cells, to mimic how the ocean, atmosphere, and ice are changing and interacting with one another. However, the number of grid boxes chosen is limited by computer power; currently, climate models for multi-decade projections use grid box sizes measuring approximately 50 km to 100 km (roughly 30 to 60 miles). Consequently, processes that happen on scales smaller than the grid cell (clouds, turbulence, and ocean mixing) are not well captured.

…

Read more: https://www.miragenews.com/international-collaboration-will-use-artificial-532909/
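For a sense of why grid size is “limited by computer power”, here is a rough Python sketch. It is an illustration under stated assumptions, not a calculation from the press release: it takes the Earth’s surface area as about 5.1 × 10⁸ km², tiles it with square columns, and uses the common rule of thumb that cost scales with the number of columns (∝ 1/Δx²) times the number of time steps (∝ 1/Δx, from the CFL stability limit), with the number of vertical levels held fixed.

```python
EARTH_SURFACE_KM2 = 5.1e8  # approximate surface area of the Earth (assumption)


def horizontal_columns(grid_km: float) -> int:
    """Approximate number of square grid columns needed to tile the globe."""
    return round(EARTH_SURFACE_KM2 / grid_km**2)


def relative_cost(grid_km: float, reference_km: float) -> float:
    """Rough compute cost relative to a reference grid spacing.

    Assumes cost ~ columns x time steps, with columns ~ 1/dx^2 and
    time steps ~ 1/dx (CFL limit), so cost ~ 1/dx^3. Vertical levels fixed.
    """
    return (reference_km / grid_km) ** 3


for dx in (100, 50, 25, 1):
    print(f"{dx:>4} km: {horizontal_columns(dx):>12,} columns, "
          f"{relative_cost(dx, 100):>10,.0f}x the cost of 100 km")
```

Under those assumptions, halving the spacing from 100 km to 50 km costs roughly eight times as much, and reaching 1 km (still far coarser than individual turbulent eddies) costs about a million times as much.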

Top marks for admitting model temperature projections struggle to capture important processes. Clouds, storms, ocean mixing and turbulence are likely the reason open-ocean surface temperatures in the tropics are capped at 30°C.

But why do I think the AI approach will struggle to improve on human efforts?

The reason is that decades of effort to improve our understanding of the global climate have not answered basic questions, such as how much global temperature changes in response to adding more CO2, and human brains are far more powerful than any AI.

AIs work best when the solution is easy to approach, when a gentle slope of improving results provides a strong indication to the AI that it is making progress.

But I do not think this is a good description of the climate system. The lack of progress over the last three decades, despite thousands of intelligent people dedicating years of their lives to the effort, implies the solution to better climate modelling is very difficult to find. Outside the narrow range of correct solutions there is likely a vast wilderness of poor quality answers, with very little indication of which direction the AI needs to travel to discover a high quality solution.

Either that, or there is something fundamental missing from the theory, and a high quality solution will not be possible until the missing piece of the puzzle is found.
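The contrast between a “gentle slope” and a “vast wilderness” can be shown with plain gradient descent. This is a minimal Python sketch with two made-up loss functions, not a description of how the NYU team trains anything: a smooth bowl whose slope always points at the answer, and a landscape that is flat everywhere except inside a narrow well around the same answer.

```python
import math


def bowl(x: float) -> float:
    """Smooth bowl: the slope everywhere points toward the minimum at x = 3."""
    return (x - 3.0) ** 2


def narrow_well(x: float) -> float:
    """Flat 'wilderness' with a narrow Gaussian well at x = 3 (width 0.05)."""
    return 1.0 - math.exp(-((x - 3.0) / 0.05) ** 2)


def gradient_descent(f, x0: float, lr: float = 0.1, steps: int = 200,
                     h: float = 1e-6) -> float:
    """Plain gradient descent using a central finite-difference gradient."""
    x = x0
    for _ in range(steps):
        grad = (f(x + h) - f(x - h)) / (2 * h)
        x -= lr * grad
    return x


print(gradient_descent(bowl, x0=-5.0))         # converges close to 3.0
print(gradient_descent(narrow_well, x0=-5.0))  # stays at -5.0: the gradient there is zero
```

Starting from the same place, descent on the bowl homes in on x = 3, while on the flat landscape the computed gradient is zero, so it never moves: nothing signposts the direction of the well.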

Even a powerful AI struggles to search a multi-dimensional problem space when the correct answer is poorly signposted: there are many more ways to be wrong than right.
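“Many more ways to be wrong than right” has a simple quantitative form. Suppose, purely for illustration, each parameter is scaled to [0, 1] and a guess counts as “right” only if every parameter falls within a band of width 0.1 around the true value. The chance that a uniformly random guess lands in that band shrinks geometrically with the number of parameters. A Python sketch, with a Monte Carlo check (the target values and band width are hypothetical):

```python
import random


def hit_probability(dims: int, band: float = 0.1) -> float:
    """Exact chance a uniform random point in [0,1]^dims falls in a box of side `band`."""
    return band ** dims


def monte_carlo_hits(dims: int, band: float = 0.1, trials: int = 100_000,
                     seed: int = 0) -> float:
    """Estimate the same probability by random sampling around a fixed target."""
    rng = random.Random(seed)
    target = [0.5] * dims  # hypothetical 'correct' parameter set
    half = band / 2
    hits = 0
    for _ in range(trials):
        if all(abs(rng.random() - t) <= half for t in target):
            hits += 1
    return hits / trials


for d in (1, 2, 3, 6, 10):
    print(f"{d:>2} parameters: {hit_probability(d):.0e}")
```

Six parameters already put a blind guess at one in a million. A real search is smarter than blind sampling, which is exactly why the slope information described above matters so much.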

Even though Schmidt Futures is technically private (it looks like it is tax exempt), NYU is not. Remember the Johnny 5 principle: “It just runs programs.” AI software (or any software) can never be smarter than the IQ of the smartest programmer divided by the number of programmers on the team. Then halve that number for government-funded software. And since it is the product of a government-funded team, no one is responsible for failure. Winning all around!

In a sane world, the grant proposal, all documentation, and the source code should be subject to FOIA and third-party audit.

“Climate scientists™” were never going to be able to do it with REAL intelligence…

… but they think “artificial” will work…

Sorry guys, in both cases it’s the “intelligence” part that is missing.

C’mon man, you don’t think Griff, Simon, Big Oil… are intelligent? 🤓

Not in the least bit… Artificial, maybe, but NEVER the intelligent part.

“The reason is that decades of effort to improve our understanding of the global climate have not answered basic questions, such as how much global temperature changes in response to adding more CO2, and human brains are far more powerful than any AI.

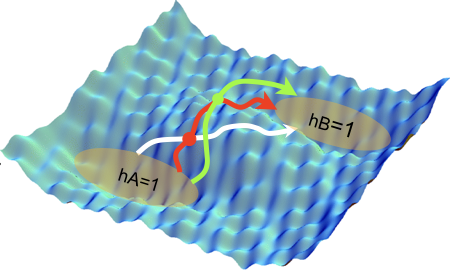

AIs work best when the solution is easy to approach, when a gentle slope of improving results provides a strong indication to the AI that it is making progress.” Good point, Eric. The bottom of the bowl in your animation is at ECS (equilibrium climate sensitivity) = 0 °C. Or rather, ECS is some value not reliably distinguishable from 0 °C. But the modelers have stubbornly refused to consider this the most likely result, so whatever this “team of scientists” accomplishes will be like colorizing a stick-figure cartoon and calling it more realistic.

In this image I have plotted the hourly “vertical integral of total energy” in the atmosphere for one year at a single gridpoint near where I live, using units of watt-hours per square meter on the vertical scale. It is commonly taken that the direct radiative forcing (warming effect) of a doubling of CO2 from pre-industrial times is about 3.7 watts per square meter, or 3.7 watt-hours per hour per square meter. Notice how that value is lost in the vertical scale as the total energy in the atmosphere varies rapidly and constantly as weather happens.

The point? No “team of scientists” using “AI” will ever find that 3.7 Watt-hours per hour by computation. We are blind to it because the atmosphere is the only authentic model of its own performance, and it operates so as to completely obscure our view of the ECS of such a small influence as the doubling of CO2.

I apologize to those readers who may have already seen this from comments on other postings.

This data is from the ERA5 reanalysis product by ECMWF (the European Centre for Medium-Range Weather Forecasts).
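The unit arithmetic in this comment can be checked in a few lines. One watt is one joule per second, so a steady 3.7 W/m² supplies 3.7 Wh/m² each hour, and an energy density in J/m² converts to Wh/m² by dividing by 3600. The Python sketch assumes the plotted values started out in J/m² (the units ERA5 uses for this field); the constant and function names are illustrative.

```python
SECONDS_PER_HOUR = 3600.0
HOURS_PER_YEAR = 8760.0  # non-leap year


def joules_to_watt_hours(j_per_m2: float) -> float:
    """Convert an energy density in J/m^2 to Wh/m^2 (1 Wh = 3600 J)."""
    return j_per_m2 / SECONDS_PER_HOUR


def energy_from_flux(w_per_m2: float, hours: float) -> float:
    """Energy (Wh/m^2) delivered by a constant flux (W/m^2) over `hours`."""
    return w_per_m2 * hours


forcing = 3.7  # W/m^2, the commonly quoted figure for doubled CO2
print(energy_from_flux(forcing, 1))               # Wh/m^2 in one hour
print(energy_from_flux(forcing, HOURS_PER_YEAR))  # Wh/m^2 summed over a year
```

The yearly total is pure arithmetic: it ignores the fact that the climate system responds to, and radiates away, any imbalance.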

Yep, just looking at the curve, it should be obvious the noise drowns out the signal.

Just a note. The curve is not “noise”. It is representative of the true value of the component being measured. Noise is extraneous information that is not part of the generated component and interferes with determining the true value of the component.

Reading this, it seems a lot of folks have been indoctrinated into thinking that the data being measured and recorded IS THE SIGNAL. It is not. Temperature is a continuous function, just like the sound from a violin string. All the other parts of the climate are continuous functions too.

Trying to use entries in a database of discrete measurements of various items is a waste of time if you don’t even have a clue what the physical continuous waveforms are on a synchronous basis.

Using one temperature per day, which is then averaged until it screams stop, is not going to help with continuous variations in clouds on a moment-to-moment basis. We have a pretty good mathematical basis for handling continuous waveforms such as speech by digitizing and processing them. We don’t even have a clue how to define a temperature waveform mathematically. Can you use Fourier or wavelet analysis? How about on a global basis? The same applies to humidity, wind, convection, everything you can think of.
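The point about one number per day can be made concrete. A common daily “Tavg” is the midpoint (Tmax + Tmin)/2, which equals the true time-average only when the daily curve is symmetric. The Python sketch below uses a made-up, asymmetric diurnal curve (a brief afternoon peak on a cool baseline) sampled every minute and compares the two; it then extracts the first few Fourier harmonics with `numpy.fft.rfft`, one of the tools the comment asks about. The curve’s shape and numbers are hypothetical.

```python
import numpy as np

MINUTES = 24 * 60
t = np.arange(MINUTES) / MINUTES  # fraction of the day, sampled each minute

# Hypothetical asymmetric diurnal cycle in deg C: a brief afternoon peak on a cool baseline.
temp = 10.0 + 12.0 * np.exp(-((t - 0.6) / 0.1) ** 2)

true_mean = temp.mean()                   # time-average of the sampled curve
midrange = (temp.max() + temp.min()) / 2  # the usual (Tmax + Tmin) / 2 "Tavg"

print(f"true mean {true_mean:.2f} C, (Tmax+Tmin)/2 {midrange:.2f} C")

# Amplitudes of the mean (index 0) and the first three daily harmonics.
spectrum = np.fft.rfft(temp) / MINUTES
amplitudes = np.abs(spectrum[:4]) * np.array([1, 2, 2, 2])
print(np.round(amplitudes, 2))
```

With this made-up curve the midpoint overstates the time-average by nearly 4 °C, simply because the afternoon peak is brief.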

My fear is that these folks will train an AI with hosed measurements to give the response they want, then claim: see, we can assure you about GAT (global average temperature). It will basically be used to perform multiple regressions on temperature and spit out what it was trained to do.

I think you “get” the problem visually, which is why I posted this. But I also agree with Jim Gorman here that it is really not “noise” per se that drowns out the signal. It is the power of the measurable and rapid changes and reversals of the energy state of the atmosphere itself. I just can’t understand how a climate scientist can claim to have detected the “signal” of human influence on the climate via greenhouse gas emissions.

In statistical terms it is the “variance”.
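The “variance” framing can be sketched numerically. The series below is entirely synthetic, in arbitrary units: a constant offset standing in for a small steady influence, added to large random hourly swings. Nothing here is ERA5 data; the values (offset 3.7, spread 500) are chosen only to show how a small offset compares with the spread of the series it sits in.

```python
import random
import statistics


def offset_to_spread_ratio(offset: float, series: list[float]) -> float:
    """Ratio of a fixed offset to the (population) standard deviation of a series."""
    return offset / statistics.pstdev(series)


rng = random.Random(42)
offset = 3.7                                            # illustrative small influence
swings = [rng.gauss(0.0, 500.0) for _ in range(8760)]   # hypothetical hourly variability
series = [s + offset for s in swings]

print(f"variance {statistics.pvariance(series):,.0f}")
print(f"offset / std dev = {offset_to_spread_ratio(offset, series):.4f}")
```

With these invented numbers the offset is under 1% of one standard deviation of the series.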

AI is the trendy approach to programming. But like all computer programs, AI systems will only say what they are programmed to say.

So are the recipients of the $10 million going to send up balloons to measure how much IR radiation is trapped by clouds at various altitudes, and how much sunlight is reflected? If not, AI by itself cannot improve the modeling of clouds without valid input data.

The PEs are taking information from the artificially intelligent to create an artificial intelligence – yeah that’ll work.

You realize that if they solve the problem, they will all be out of jobs, don’t you?

It seems to me, since I’ve photographed a lot (a LOTTT!!) of clouds, that cloud formation is more oriented toward chaos than anything else. What might appear to be a thunderhead promising a whopper of a storm can peter out to nothing, and at the same time a puffy mop that looks like a dandelion gone to seed can turn into a derecho that will do more damage than a semi-truck on an icy road… or NOT. It is chaos at work there, not prescribed mathematics, and this seems to be something the people who make these proposals fail to understand.

It’s the reason we seldom get forecasts more than 7 days ahead, and those forecasts frequently change within 12 hours.

You can’t do this stuff in a laboratory or pretend that you can make predictions cast in stone, not when you’re addressing a system that changes on a whim, something over which they have absolutely zero control.

This should be interesting.

AI is a fancy tag for neural networks. These can work well if you throw a lot of training data and right and wrong answers at them. An awful lot.

So, can we expect real-world climate data to find its way into modelling that has resisted verification from the outset? Don’t wait up.
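“Right and wrong answers” is what machine-learning people call supervised learning. The simplest neural unit, the perceptron, adjusts its weights only when it gets a labelled example wrong. This toy Python sketch learns the logical AND function from four labelled examples; it shows the training loop only and says nothing about the models the NYU team will actually use.

```python
def predict(w, b, x1, x2) -> int:
    """Threshold unit: output 1 if the weighted sum exceeds zero, else 0."""
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


def train_perceptron(samples, epochs: int = 20, lr: float = 1.0):
    """Classic perceptron rule: adjust weights only when a prediction is wrong."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), label in samples:
            error = label - predict(w, b, x1, x2)  # +1, 0 or -1
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND_DATA)
print([predict(w, b, x1, x2) for (x1, x2), _ in AND_DATA])
```

Four examples suffice here only because AND is trivially separable by a straight line; anything as tangled as cloud physics needs vastly more labelled data, in line with the comment’s “an awful lot”.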

“The lack of progress over the last three decades, despite thousands of intelligent people dedicating years of their lives to the effort, implies the solution to better climate modelling is very difficult to find.”

Doubtful. In reality it means the climate is not doing what the “thousands of intelligent people” and their programmers want it to do.

Or, there is something fundamentally wrong with the theory, and no amount of computer power will produce an observationally valid result until that theory is modified to reflect observed reality, or abandoned altogether.

You’ve hit the nail on the head. They are trying to define continuous functions with sparse digital sampling, i.e., one Tavg per day, and think they can define how that function is varying through time.
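Sampling once per day has a well-known failure mode called aliasing: a variation with a period shorter than two sampling intervals (the Nyquist limit) is not simply lost but misread as a slower one. The Python sketch below samples a made-up oscillation with a 0.9-day period once per day; the daily samples coincide exactly with a 9-day wave that does not exist in the underlying signal. Both signals are hypothetical.

```python
import math


def sample(signal, start: float, interval: float, n: int) -> list[float]:
    """Take n samples of signal(t) every `interval` days starting at `start`."""
    return [signal(start + k * interval) for k in range(n)]


def fast(t: float) -> float:
    """Hypothetical oscillation with a 0.9-day period."""
    return math.sin(2 * math.pi * t / 0.9)


def alias(t: float) -> float:
    """The 9-day wave that once-a-day sampling mistakes `fast` for."""
    return math.sin(2 * math.pi * t / 9.0)


daily = sample(fast, 0.0, 1.0, 10)
slow = sample(alias, 0.0, 1.0, 10)
print(all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(daily, slow)))
```

Nothing in the daily samples reveals that the 9-day wave is an artifact; only sampling faster than the Nyquist limit (here, more than twice per 0.9 days) would expose it.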

The “I” in AI is the wrong choice. Computers are not “Intelligent”.

It’s like humans who have learned stuff by rote. They don’t really know what they are talking about.

The problem with AI is that you are expected to trust the answers. It cannot explain its methodology and reasoning.

And another thing. Use of AI is completely against the Scientific Method.

I can just about condone the use of computer models in some science and engineering situations – e.g. meteorology (where no other method can yield the required forecasts in the required time frame) or structural engineering (where the prototype testing would be prohibitively costly or completely impossible, and anyway the constitutive laws are quite simple and well-understood). But computerised numerical models are still a very poor second to real testing.

But AI isn’t modelling – and how is AI even close to being equivalent to real testing?

The climate frenzy started in the mid-seventies with Hansen’s computer models and wouldn’t exist without them.

Climate computer models are ‘the disease of which they purport to be the cure’.

Because adaptive smoothing functions perform better than human deduction inside a limited frame of reference (i.e. scientific logical domain) and inference over a marginally expanded scope. Same characterization deficits. Same computational limits. Same known and unknown unknowns. Same brown matter to infill the missing links.

My opinion: If the AI models run colder than existing models they will be “disappeared”; if the AI models run hotter than existing models they will be touted as better than mom’s apple pie (which is an impossibility – but there ya go)

Good grief, $10M will barely cover the first week’s leccy bill for any decent computer.

Assuming they can lay hands on one

$10M would have bought a nice little seafront abode in Aus; what about that instead?

Am sure anyone with even a modicum of intelligence, artificial or not, could learn a stack more about climate by shacking up there than a box, any box, of lectronix could teach them

They need to study cloud behaviour first. Models are only as bad as the modellers.

Put Willis in complete charge and control to choose his team and that may be the best $10 mil ever spent!

It can be instructive to read researchers’ words when they talk about what they intend to do, or hope to achieve, when they are seeking funding.

They tend to be a lot more honest about the failings in their field.

I don’t have much objection to govt spending an extra $10 million or so on improving cloud modelling. God knows, they need it. AI might even help a little bit, though not as much as they hope/claim.

And an extra million might possibly have the inadvertent effect of drawing attention to just how bad they are at it, and to how we are wasting $Trillions on political initiatives based on currently inadequate modelling.

So, what you are saying is we should welcome this initiative, promote it like it’s the latest half-naked teenager dancing to a synthesized backbeat, add some brawny boys in the background? You know, advertise the whole thing to the point where everyone in the whole world knows about this marvelous new Thing, then we sit back and wait for the inevitable to happen: a huge spout of garbage flooding the collective consciousness. That would finally prove the worthlessness of this kind of project, and we could return to normal life?

Tempting, tempting.

Unfortunately, most people will fail the Turing Test, so whatever garbage this AI produces, they will gleefully dance to the discordant tune like there’s no tomorrow (literally).

In military terms, Artificial Intelligence translates as Fake Information…

And just to be sure, modelling a cloud perfectly would require resolution down to the smallest eddy, which is smaller than the naked eye can see. Approximations will improve over time, though… always with the approximations.

But hey, at least the poor bastards are recognising those fluffy things in the sky now…
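The “smallest eddy” has a standard estimate in turbulence theory, the Kolmogorov length scale η = (ν³/ε)^(1/4), where ν is the kinematic viscosity and ε is the rate at which turbulent kinetic energy is dissipated per unit mass. The inputs in this Python sketch are illustrative assumptions: ν ≈ 1.5 × 10⁻⁵ m²/s for air near the surface, and ε = 10⁻³ m²/s³, although real dissipation rates in clouds vary over orders of magnitude. It compares η with a 100 km grid box.

```python
def kolmogorov_length(nu: float, epsilon: float) -> float:
    """Kolmogorov microscale eta = (nu^3 / epsilon)^(1/4), in metres."""
    return (nu ** 3 / epsilon) ** 0.25


NU_AIR = 1.5e-5   # m^2/s, approximate kinematic viscosity of air (assumption)
EPSILON = 1e-3    # m^2/s^3, assumed dissipation rate; varies widely in clouds
GRID_BOX = 100e3  # m, the coarse end of the 50 km to 100 km range in the press release

eta = kolmogorov_length(NU_AIR, EPSILON)
cells_per_side = GRID_BOX / eta
print(f"eta ~ {eta * 1000:.2f} mm; ~{cells_per_side:.1e} cells across one grid box")
```

With these assumed values η comes out at roughly a millimetre, so a single 100 km grid box would need tens of millions of cells along each side to resolve it directly.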

If AI really works, then it will take over everything at lightning speed. The reasoning is that if AI works, that AI can be used to create a better AI, that better AI can be used to create an even better AI, and so on (until some physical limit, like chip speed, is hit).

I think what you describe there is heuristics, the recipe for hubris, which is the word you use in polite conversation to say “that guy’s head is full of his own shitty preconceptions”.

Back to GIGO…

The .gif showing the computational energy well is instructive. For a first year undergraduate.

One of the most significant difficulties is that it is unknown where you are starting from on an energy landscape. For one quick example found on the web, look at a bigger picture here:

You simply don’t know where your calculation is starting from and where it might finish. Are you climbing to the main summit, or some little bump on a ridge far removed from the peak of interest?

The program typically focuses on climbing a local ‘energy hill’, but it is likely not the biggest, most important hill. It only climbs to the peak that the program randomly finds or is arbitrarily assigned to. In a cloud you may think you’ve climbed Everest, when you actually took a wrong turning at the South Col and are standing on the summit of Lhotse.
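The Everest/Lhotse problem is easy to reproduce. In this Python sketch a greedy hill climber works on a made-up one-dimensional landscape with a lower peak near x = 2 and the main summit near x = 7. It steps uphill until no neighbouring step improves, so where it finishes depends entirely on where it starts. Random restarts, shown at the end, are one common partial remedy, not a general solution; the landscape, step size and restart count are hypothetical.

```python
import math
import random


def landscape(x: float) -> float:
    """Hypothetical terrain: a lower peak near x = 2 and the main summit near x = 7."""
    return 6.0 * math.exp(-(x - 2.0) ** 2) + 8.8 * math.exp(-(x - 7.0) ** 2)


def hill_climb(f, x: float, step: float = 0.01, max_iter: int = 10_000) -> float:
    """Greedy hill climbing: move to the better neighbour until neither improves."""
    for _ in range(max_iter):
        best = max((x - step, x + step), key=f)
        if f(best) <= f(x):
            return x  # local peak reached
        x = best
    return x


def random_restarts(f, lo: float, hi: float, tries: int = 20, seed: int = 1) -> float:
    """Run several climbs from random starts and keep the highest finish."""
    rng = random.Random(seed)
    finishes = [hill_climb(f, rng.uniform(lo, hi)) for _ in range(tries)]
    return max(finishes, key=f)


print(round(hill_climb(landscape, 0.0), 1))  # climbs the lower peak
print(round(hill_climb(landscape, 9.0), 1))  # climbs the main summit
print(round(random_restarts(landscape, 0.0, 10.0), 1))
```

Restarts only help when some starting point happens to fall in the right basin; in many dimensions that becomes its own needle-in-a-haystack search.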

Of course, people are long aware of this problem, but there is no encompassing solution that I have heard of. It would be front page news if they had solved it. For some background:

M. Mitchell, J. Holland, S. Forrest, “When will a genetic algorithm outperform hill climbing?”, in J. Cowan, G. Tesauro, J. Alspector (Eds.), Advances in Neural Information Processing Systems, Morgan Kaufmann, San Francisco, CA (1994), pp. 51-58.

I thought the science of CAGW was settled?

How will $10 million for yet another model settle it more?

Oh hell, here comes Sky-net.

Don’t you mean “Cli-net”?

Parameterizing clouds is one of the lesser deficiencies in GCMs. Other deficiencies include:

Not using measured water vapor

Failing to account for thermalization

Bogus application of feedback control theory

Failure to account for ocean cycles

Failure to account for variations in solar influence

I think Joni Mitchell had this all figured out a few years back.

I’ve looked at clouds from both sides now

From up and down, and still somehow

It’s cloud illusions I recall

I really don’t know clouds at all. . .

https://youtu.be/aCnf46boC3I <– Have a listen