Guest Post by Werner Brozek, Excerpted from Professor Robert Brown from Duke University, Conclusion by Walter Dnes and Edited by Just The Facts:

A while back, I had a post titled HadCRUT4 is From Venus, GISS is From Mars, which showed wild monthly variations from which one could wonder whether GISS and Hadcrut4 were talking about the same planet. In comments, mark stoval posted a link to this article, Why “GISTEMP LOTI global mean” is wrong and “HadCRUt3 gl” is right, whose title speaks for itself, and Bob Tisdale has a recent post, Busting (or not) the mid-20th century global-warming hiatus, which could explain the divergence seen in the chart below.

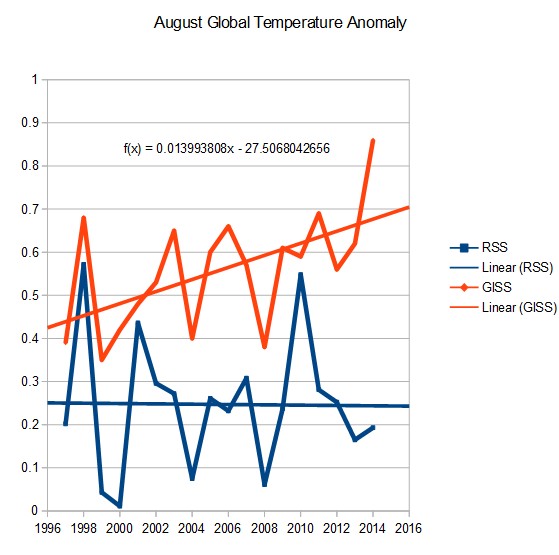

The graphic at the top is the last plot from Professor Brown from his comment I’ve excerpted below, which shows a period of 19 years where the slopes go in opposite directions by a fairly large margin. Is this reasonable? Think about this as you read his comment below. His comment ends with rgb.

rgbatduke

November 10, 2015 at 1:19 pm

“Werner, if you look over the length and breadth of the two on WFT, you will find that over a substantial fraction of the two plots they are offset by less than 0.1 C. For example, for much of the first half of the 20th century, they are almost on top of one another with GISS rarely coming up with a patch 0.1 C or so higher. They almost precisely match in a substantial part of their overlapping reference periods. They only start to substantially split in the 1970 to 1990 range (which contains much of the latter 20th century warming). By the 21st century this split has grown to around 0.2 C, and is remarkably consistent. Let’s examine this in some detail:

We can start with a very simple graph that shows the divergence over the last century:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1915/to:2015/trend/plot/gistemp/from:1915/to:2015/trend

The two graphs have widening divergence in the temperatures they obtain. If the two measures were in mutual agreement, one would expect the linear trends to be in good agreement — the anomaly of the anomaly, as it were. They should, after all, be offset by only the difference in mean temperatures in their reference periods, which should be a constant offset if they are both measuring the correct anomalies from the same mean temperatures.
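A minimal sketch of the point about offsets versus trends, using synthetic series rather than the actual HadCRUT4 or GISS data (the slopes, the 0.10 C offset, and the helper name `linear_trend` are all illustrative assumptions). Two series that differ only in their reference-period mean should show the same fitted slope, while a real disagreement shows up as a slope difference:

```python
import numpy as np

def linear_trend(years, anomalies):
    """Return (slope, intercept) of an ordinary least-squares line."""
    slope, intercept = np.polyfit(years, anomalies, 1)
    return slope, intercept

# Synthetic stand-ins for anomaly series (NOT real HadCRUT4 or GISS data).
years = np.arange(1915, 2015) + 0.5
series_a = 0.007 * (years - 1915)          # first baseline
series_b = 0.007 * (years - 1915) + 0.10   # same trend, different reference mean
series_c = 0.009 * (years - 1915) + 0.10   # genuinely different trend

slope_a, _ = linear_trend(years, series_a)
slope_b, _ = linear_trend(years, series_b)
slope_c, _ = linear_trend(years, series_c)

# A pure baseline offset leaves the slope difference at zero ...
print(f"a vs b slope difference: {slope_b - slope_a:+.4f} C/yr")
# ... whereas a real disagreement appears as a gap that widens with time.
print(f"a vs c slope difference: {slope_c - slope_a:+.4f} C/yr")
```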

Obviously, they do not. There is a growing rift between the two and, as I noted, they are split by more than the 95% confidence that HadCRUT4, at least, claims even relative to an imagined split in means over their reference periods. There are, very likely, nonlinear terms in the models used to compute the anomalies that are growing and will continue to systematically diverge, simply because they very likely have different algorithms for infilling and kriging and so on, in spite of them very probably having substantial overlap in their input data.

In contrast, BEST and GISS do indeed have similar linear trends in the way expected, with a nearly constant offset. One presumes that this means that they use very similar methods to compute their anomalies (again, from data sets that very likely overlap substantially as well). The two of them look like they want to vote HadCRUT4 off of the island, 2 to 1:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1915/to:2005/trend/plot/gistemp/from:1915/to:2005/trend/plot/best/from:1915/to:2005/trend

Until, of course, one adds the trends of UAH and RSS:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1979/to:2015/trend/plot/gistemp/from:1979/to:2015/trend/plot/best/from:1979/to:2005/trend/plot/rss/from:1979/to:2015/trend/plot/uah/from:1979/to:2015/trend

All of a sudden consistency emerges, with some surprises. GISS, HadCRUT4 and UAH suddenly show almost exactly the same linear trend across the satellite era, with a constant offset of around 0.5 C. RSS is substantially lower. BEST cannot honestly be compared, as it only runs to 2005ish.

One is then very, very tempted to make anomalies out of our anomalies, and project them backwards in time to see how well they agree on hindcasts of past data. Let’s use the reference period shown and subtract around 0.5 C from GISS and 0.3 C from HadCRUT4 to try to get them to line up with UAH in 2015 (why not, good as any):

http://www.woodfortrees.org/plot/hadcrut4gl/from:1979/to:2015/offset:-0.32/trend/plot/gistemp/from:1979/to:2015/offset:-0.465/trend/plot/uah/from:1979/to:2015/trend
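One way such an offset could be picked, sketched under stated assumptions: fit a straight line to each series over the common window and choose the shift that makes the two trend lines meet at a chosen year. The function name `offset_to_match`, the synthetic series, and the choice of 2015 as the meeting point are illustrative only; the offsets in the plot above were chosen by eye.

```python
import numpy as np

def offset_to_match(years, series, reference, at_year):
    """Offset to add to `series` so that its OLS trend line meets the
    trend line of `reference` at `at_year`."""
    fit_series = np.polyfit(years, series, 1)
    fit_reference = np.polyfit(years, reference, 1)
    return np.polyval(fit_reference, at_year) - np.polyval(fit_series, at_year)

# Synthetic monthly series covering 1979 through 2014 (NOT real data).
years = 1979 + np.arange(36 * 12) / 12.0
reference = 0.012 * (years - 1979) - 0.20   # stand-in for a satellite series
surface = 0.016 * (years - 1979) + 0.10     # stand-in for a surface series

offset = offset_to_match(years, surface, reference, at_year=2015.0)
aligned = surface + offset                   # same slope, new baseline
print(f"offset to apply: {offset:+.3f} C")
```

Shifting a series this way changes only its baseline, never its slope, which is why any split that remains elsewhere in the record cannot be blamed on the offset.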

We check to see if these offsets do make the anomalies match over the last 36 most accurate years (within reason):

http://www.woodfortrees.org/plot/hadcrut4gl/from:1979/to:2015/offset:-0.32/plot/gistemp/from:1979/to:2015/offset:-0.465/plot/uah/from:1979/to:2015

and see that they do. NOW we can compare the anomalies as they project into the indefinite past. Obviously UAH does have a slightly slower linear trend over this “re-reference period” and it doesn’t GO any further back, so we’ll drop it, and go back to 1880 to see how the two remaining anomalies on a common base look:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1880/to:2015/offset:-0.32/plot/gistemp/from:1880/to:2015/offset:-0.465

We now might be surprised to note that HadCRUT4 is well above GISS LOTI across most of its range. Back in the 19th century splits aren’t very important because they both have error bars back there that can forgive any difference, but there is a substantial difference across the entire stretch from 1920 to 1960:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1920/to:1960/offset:-0.32/plot/gistemp/from:1920/to:1960/offset:-0.465/plot/hadcrut4gl/from:1920/to:1960/offset:-0.32/trend/plot/gistemp/from:1920/to:1960/offset:-0.465/trend

This reveals a robust and asymmetric split between HadCRUT4 and GISS LOTI that cannot be written off to any difference in offsets, as I renormalized the offsets to match them across what has to be presumed to be the most precise and accurately known part of their mutual ranges, a stretch of 36 years where in fact their linear trends are almost precisely the same so that the two anomalies differ only BY an offset of 0.145 C with more or less random deviations relative to one another.

We find that except for a short patch right in the middle of World War II, HadCRUT4 is consistently 0.1 to 0.2 C higher than GISStemp. This split cannot be repaired — if one matches it up across the interval from 1920 to 1960 (pushing GISStemp roughly 0.145 HIGHER than HadCRUT4 in the middle of WW II) then one splits it well outside of the 95% confidence interval in the present.

Unfortunately, while it is quite all right to have an occasional point higher or lower between them — as long as the “occasions” are randomly and reasonably symmetrically split — this is not an occasional point. It is a clearly resolved, asymmetric offset in matching linear trends. To make life even more interesting, the linear trends do (again) have a more or less matching slope, across the range 1920 to 1960 just like they do across 1979 through 2015 but with completely different offsets. The entire offset difference was accumulated from 1960 to 1979.

Just for grins, one last plot:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1880/to:1920/offset:-0.365/plot/gistemp/from:1880/to:1920/offset:-0.465/plot/hadcrut4gl/from:1880/to:1920/offset:-0.365/trend/plot/gistemp/from:1880/to:1920/offset:-0.465/trend

Now we have a second, extremely interesting problem. Note that the offset between the linear trends here has shrunk to around half of what it was across the bulk of the early 20th century with HadCRUT4 still warmer, but now only warmer by maybe 0.045 C. This is in a region where the acknowledged 95% confidence range is of order 0.2 to 0.3 C. When I subtract appropriate offsets to make the linear trends almost precisely match in the middle, we get excellent agreement between the two anomalies.

Too excellent. By far. All of the data is within the mutual 95% confidence interval! This is, believe it or not, a really, really bad thing if one is testing a null hypothesis such as “the statistics we are publishing with our data have some meaning”.
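To put a rough number on "too excellent": if the monthly points were independent and the stated 95% intervals were honest, about 1 point in 20 should land outside them. The sketch below is a back-of-envelope binomial calculation under that independence assumption, which does not strictly hold here (the inputs overlap and monthly anomalies are autocorrelated), so it is an order-of-magnitude illustration only. The count of 480 months is simply 40 years times 12, and the function name is hypothetical.

```python
from math import comb

def prob_at_most_k_outside(n_points, k, coverage=0.95):
    """Binomial probability that at most k of n independent points fall
    outside an interval with the given coverage."""
    p_out = 1.0 - coverage
    return sum(
        comb(n_points, i) * p_out**i * coverage ** (n_points - i)
        for i in range(k + 1)
    )

n_months = 40 * 12                       # 1880 to 1920, monthly values
expected_outside = n_months * 0.05       # points expected outside a 95% interval
p_none_outside = prob_at_most_k_outside(n_months, 0)

print(f"expected outside: {expected_outside:.0f} of {n_months}")
print(f"chance that none fall outside (if independent): {p_none_outside:.1e}")
```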

We now have a bit of a paradox. Sure, the two data sets that these anomalies are built from very likely have substantial overlap, so the two anomalies themselves cannot properly be viewed as random samples drawn from a box filled with independent and identically distributed but correctly computed anomalies. But their super-agreement across the range from 1880 to 1920 and 1920 to 1960 (with a different offset) and across the range from 1979 to 2015 (but with yet another offset) means serious trouble for the underlying methods. This is absolutely conclusive evidence, in my opinion, that “According to HadCRUT4, it is well over 99% certain GISStemp is an incorrect computation of the anomaly” and vice versa. Furthermore, the differences between the two can not be explained by the fact that they draw on partially independent data sources — if this were the case, the strong coincidences between the two across piecewise blocking of the data are too strong — obviously the independent data is not sufficient to generate a symmetric and believable distribution of mutual excursions with errors that are anywhere near as large as they have to be, given that both HadCRUT4 and GISStemp if anything underestimate probable errors in the 19th century.

Where is the problem? Well, as I noted, a lot of it happens right here:

http://www.woodfortrees.org/plot/hadcrut4gl/from:1960/to:1979/offset:-0.32/plot/gistemp/from:1960/to:1979/offset:-0.465/plot/hadcrut4gl/from:1960/to:1979/offset:-0.32/trend/plot/gistemp/from:1960/to:1979/offset:-0.465/trend

The two anomalies match up almost perfectly from the right hand edge to the present. They do not match up well from 1920 to 1960, except for a brief stretch of four years or so in early World War II, but for most of this interval they maintain a fairly constant, and identical, slope to their (offset) linear trend! They match up better (too well!), with again a very similar linear trend but yet another offset, across the range from 1880 to 1920. But across the range from 1960 to 1979, Ouch! That’s gotta hurt. Across 20 years, HadCRUT4 cools Earth by around 0.08 C, while GISS warms it by around 0.07 C.

So what’s going on? This is a stretch in the modern era, after all. Thermometers are at this point pretty accurate. World History seems to agree with HadCRUT4, since in the early 70’s there was all sorts of sound and fury about possible ice ages and global cooling, not global warming. One would expect both anomalies to be drawing on very similar data sets with similar precision and with similar global coverage. Yet in this stretch of the modern era with modern instrumentation and (one has to believe) very similar coverage, the two major anomalies don’t even agree in the sign of the linear trend slope and more or less symmetrically split as one goes back to 1960, a split that actually goes all the way back to 1943, then splits again all the way back to 1920, then slowly “heals” as one goes back to 1880.

As I said, there is simply no chance that HadCRUT4 and GISS are both correct outside of the satellite era. Within the satellite era their agreement is very good, but they split badly over the 20 years preceding it in spite of the data overlap and quality of instrumentation. This split persists over pretty much the rest of the mutual range of the two anomalies except for a very short period of agreement in mid-WWII, where one might have been forgiven for a maximum disagreement given the chaotic nature of the world at war. One must conclude, based on either one, that it is 99% certain that the other one is incorrect.

Or, of course, that they are both incorrect. Further, one has to wonder about the nature of the errors that result in a split that is so clearly resolved once one puts them on an equal footing across the stretch where one can best believe that they are accurate. Clearly it is an error that is a smooth function of time, not an error that is in any sense due to accuracy of coverage of the (obviously strongly overlapping) data.

This result just makes me itch to get my hands on the data sets and code involved. For example, suppose that one feeds the same data into the two algorithms. What does one get then? Suppose one keeps only the set of sites that are present in 1880 when the two have mutually overlapping application (or better, from 1850 to the present) and runs the algorithm on them. How much do the results split from a) each other; and b) the result obtained from using all of the available sites in the present? One would expect the latter, in particular, to be a much better estimator of the probable method error in the remote past — if one uses only those sites to determine the current anomaly and it differs by (say) 0.5 C from what one gets using all sites, that would be a very interesting thing in and of itself.

Finally, there is the ongoing problem with using anomalies in the first place rather than computing global average temperatures. Somewhere in there, one has to perform a subtraction. The number you subtract is in some sense arbitrary, but any particular number you subtract comes with an error estimate of its own. And here is the rub:

The place where the two global anomalies develop their irreducible split is square inside the mutually overlapping part of their reference periods!

That is, the one place they most need to be in agreement, at least in the sense that they reproduce the same linear trends (that is, the same anomalies), is the very place where they most greatly differ. Indeed, their agreement is suspiciously good, as far as linear trend is concerned, everywhere else, in particular in the most recent present where one has to presume that the anomaly is most accurately being computed and the most remote past where one expects to get very different linear trends but instead get almost identical ones!

I doubt that anybody is still reading this thread to see this — but they should.

rgb

P.S. from Werner Brozek:

On Nick Stokes Temperature Trend Viewer note the HUGE difference in the lower number for the 95% (Cl) confidence limits between Hadcrut4 and GISS from March 2005 to April 2016:

For GISS: Temperature Anomaly trend, Mar 2005 to Apr 2016. Rate: 2.199°C/Century; CI from 0.433 to 3.965.

For Hadcrut4: Temperature Anomaly trend, Mar 2005 to Apr 2016. Rate: 1.914°C/Century; CI from -0.023 to 3.850.

In the sections below, we will present you with the latest facts. The information will be presented in two sections and an appendix. The first section will show for how long there has been no statistically significant warming on several data sets. The second section will show how 2016 so far compares with 2015 and the warmest years and months on record so far. For three of the data sets, 2015 also happens to be the warmest year. The appendix will illustrate sections 1 and 2 in a different way. Graphs and a table will be used to illustrate the data. The two satellite data sets go to May and the others go to April.

Section 1

For this analysis, data was retrieved from Nick Stokes’ Trendviewer available on his website. This analysis indicates for how long there has not been statistically significant warming according to Nick’s criteria. Data go to their latest update for each set. In every case, note that the lower error bar is negative so a slope of 0 cannot be ruled out from the month indicated.

On several different data sets, there has been no statistically significant warming for between 0 and 23 years according to Nick’s criteria. Cl stands for the confidence limits at the 95% level.
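The test being applied can be sketched as follows: fit a trend, attach a 95% interval, and call the warming significant only if the lower limit is above zero. The interval calculation here widens the ordinary least-squares standard error for lag-1 autocorrelation, which is an assumed, commonly used approximation and not necessarily what Nick's Trendviewer does; the function names and the synthetic series are hypothetical.

```python
import numpy as np

def trend_with_interval(t, y, z=1.96):
    """OLS slope with an approximate 95% interval, widened for lag-1
    autocorrelation of the residuals (an assumed approximation)."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(t, y, 1)
    resid = y - (slope * t + intercept)
    n = t.size
    # Plain OLS standard error of the slope.
    se_ols = np.sqrt(np.sum(resid**2) / (n - 2) / np.sum((t - t.mean()) ** 2))
    # Lag-1 autocorrelation, clipped so the inflation factor stays finite.
    r1 = np.sum(resid[:-1] * resid[1:]) / np.sum(resid**2)
    r1 = float(np.clip(r1, 0.0, 0.95))
    se = se_ols * np.sqrt((1.0 + r1) / (1.0 - r1))
    return slope, slope - z * se, slope + z * se

def warming_is_significant(lower_limit):
    """Significant warming requires the lower confidence limit to exceed zero."""
    return lower_limit > 0.0

# Lower limits quoted in the post (C/century, Mar 2005 to Apr 2016).
print(warming_is_significant(0.433))    # GISS
print(warming_is_significant(-0.023))   # Hadcrut4

# Synthetic monthly series (NOT real data) to exercise the fit.
rng = np.random.default_rng(0)
t = 2005 + np.arange(134) / 12.0
y = 0.02 * (t - 2005) + rng.normal(0.0, 0.1, t.size)
slope, lower, upper = trend_with_interval(t, y)
print(f"{100 * slope:.2f} C/century, limits {100 * lower:.2f} to {100 * upper:.2f}")
```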

The details for several sets are below.

For UAH6.0: Since May 1993: Cl from -0.023 to 1.807

This is 23 years and 1 month.

For RSS: Since October 1993: Cl from -0.010 to 1.751

This is 22 years and 8 months.

For Hadcrut4.4: Since March 2005: Cl from -0.023 to 3.850

This is 11 years and 2 months.

For Hadsst3: Since July 1996: Cl from -0.014 to 2.152

This is 19 years and 10 months.

For GISS: The warming is significant for all periods above a year.
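The durations above count both the starting month and the latest month, with the satellite sets running to May 2016 and the others to April 2016. A short sketch of that arithmetic (the function name is just for illustration):

```python
def span_years_months(start_year, start_month, end_year, end_month):
    """Length of a period in (years, months), counting both end months."""
    total_months = (end_year - start_year) * 12 + (end_month - start_month) + 1
    return divmod(total_months, 12)

print(span_years_months(1993, 5, 2016, 5))    # UAH6.0     -> (23, 1)
print(span_years_months(1993, 10, 2016, 5))   # RSS        -> (22, 8)
print(span_years_months(2005, 3, 2016, 4))    # Hadcrut4.4 -> (11, 2)
print(span_years_months(1996, 7, 2016, 4))    # Hadsst3    -> (19, 10)
```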

Section 2

This section shows data about 2016 and other information in the form of a table. The table shows the five data sources along the top: UAH, RSS, Hadcrut4, Hadsst3, and GISS.

Down the first column are the following:

1. 15ra: This is the final ranking for 2015 on each data set.

2. 15a: Here I give the average anomaly for 2015.

3. year: This indicates the warmest year on record so far for that particular data set. Note that the satellite data sets have 1998 as the warmest year and the others have 2015 as the warmest year.

4. ano: This is the average of the monthly anomalies of the warmest year just above.

5. mon: This is the month where that particular data set showed the highest anomaly prior to 2016. The months are identified by the first three letters of the month and the last two numbers of the year.

6. ano: This is the anomaly of the month just above.

7. sig: This is the first month for which warming is not statistically significant according to Nick’s criteria. The first three letters of the month are followed by the last two numbers of the year.

8. sy/m: This is the years and months for row 7.

9. Jan: This is the January 2016 anomaly for that particular data set.

10. Feb: This is the February 2016 anomaly for that particular data set, etc.

14. ave: This is the average anomaly of all months to date taken by adding all numbers and dividing by the number of months.

15. rnk: This is the rank that each particular data set would have for 2016 without regards to error bars and assuming no changes. Think of it as an update 20 minutes into a game.

| Source | UAH | RSS | Had4 | Sst3 | GISS |
|---|---|---|---|---|---|
| 1.15ra | 3rd | 3rd | 1st | 1st | 1st |
| 2.15a | 0.261 | 0.358 | 0.746 | 0.592 | 0.87 |
| 3.year | 1998 | 1998 | 2015 | 2015 | 2015 |
| 4.ano | 0.484 | 0.550 | 0.746 | 0.592 | 0.87 |
| 5.mon | Apr98 | Apr98 | Dec15 | Sep15 | Dec15 |
| 6.ano | 0.743 | 0.857 | 1.010 | 0.725 | 1.10 |
| 7.sig | May93 | Oct93 | Mar05 | Jul96 | |
| 8.sy/m | 23/1 | 22/8 | 11/2 | 19/10 | |
| 9.Jan | 0.540 | 0.665 | 0.908 | 0.732 | 1.11 |
| 10.Feb | 0.832 | 0.978 | 1.061 | 0.611 | 1.33 |
| 11.Mar | 0.734 | 0.842 | 1.065 | 0.690 | 1.29 |
| 12.Apr | 0.715 | 0.757 | 0.926 | 0.654 | 1.11 |
| 13.May | 0.545 | 0.525 | | | |
| 14.ave | 0.673 | 0.753 | 0.990 | 0.671 | 1.21 |
| 15.rnk | 1st | 1st | 1st | 1st | 1st |
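Rows 14 and 15 can be reproduced from the monthly rows with a few lines. The sketch below copies the 2016 monthly anomalies and the row 4 record-year values from the table; the variable and function names are illustrative, and a result may differ from the table in the last digit because the monthly inputs are themselves rounded.

```python
# Monthly 2016 anomalies copied from rows 9 to 13 of the table.
monthly_2016 = {
    "UAH":  [0.540, 0.832, 0.734, 0.715, 0.545],
    "RSS":  [0.665, 0.978, 0.842, 0.757, 0.525],
    "Had4": [0.908, 1.061, 1.065, 0.926],
    "Sst3": [0.732, 0.611, 0.690, 0.654],
    "GISS": [1.11, 1.33, 1.29, 1.11],
}

# Average anomaly of the warmest year so far, copied from row 4.
record_year_average = {
    "UAH": 0.484, "RSS": 0.550, "Had4": 0.746, "Sst3": 0.592, "GISS": 0.87,
}

def average_to_date(values):
    """Row 14: sum of the months so far divided by their count."""
    return sum(values) / len(values)

averages = {name: average_to_date(v) for name, v in monthly_2016.items()}

# Row 15 shows 1st wherever the year-to-date average beats the old record.
would_rank_first = {
    name: averages[name] > record_year_average[name] for name in averages
}

for name in averages:
    print(f"{name}: ave {averages[name]:.3f}, would rank 1st: {would_rank_first[name]}")
```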

If you wish to verify all of the latest anomalies, go to the following:

For UAH, version 6.0beta5 was used. Note that WFT uses version 5.6. So to verify the length of the pause on version 6.0, you need to use Nick’s program.

http://vortex.nsstc.uah.edu/data/msu/v6.0beta/tlt/tltglhmam_6.0beta5.txt

For RSS, see: ftp://ftp.ssmi.com/msu/monthly_time_series/rss_monthly_msu_amsu_channel_tlt_anomalies_land_and_ocean_v03_3.txt

For Hadcrut4, see: http://www.metoffice.gov.uk/hadobs/hadcrut4/data/current/time_series/HadCRUT.4.4.0.0.monthly_ns_avg.txt

For Hadsst3, see: https://crudata.uea.ac.uk/cru/data/temperature/HadSST3-gl.dat

For GISS, see:

http://data.giss.nasa.gov/gistemp/tabledata_v3/GLB.Ts+dSST.txt

To see all points since January 2015 in the form of a graph, see the WFT graph below. Note that UAH version 5.6 is shown. WFT does not show version 6.0 yet. Also note that Hadcrut4.3 is shown and not Hadcrut4.4, which is why many months are missing for Hadcrut.

As you can see, all lines have been offset so they all start at the same place in January 2015. This makes it easy to compare January 2015 with the latest anomaly.

Appendix

In this part, we are summarizing data for each set separately.

UAH6.0beta5

For UAH: There is no statistically significant warming since May 1993: Cl from -0.023 to 1.807. (This is using version 6.0 according to Nick’s program.)

The UAH average anomaly so far for 2016 is 0.673. This would set a record if it stayed this way. 1998 was the warmest at 0.484. The highest ever monthly anomaly was in April of 1998 when it reached 0.743 prior to 2016. The average anomaly in 2015 was 0.261 and it was ranked 3rd.

RSS

For RSS: There is no statistically significant warming since October 1993: Cl from -0.010 to 1.751.

The RSS average anomaly so far for 2016 is 0.753. This would set a record if it stayed this way. 1998 was the warmest at 0.550. The highest ever monthly anomaly was in April of 1998 when it reached 0.857 prior to 2016. The average anomaly in 2015 was 0.358 and it was ranked 3rd.

Hadcrut4.4

For Hadcrut4: There is no statistically significant warming since March 2005: Cl from -0.023 to 3.850.

The Hadcrut4 average anomaly so far is 0.990. This would set a record if it stayed this way. The highest ever monthly anomaly was in December of 2015 when it reached 1.010 prior to 2016. The average anomaly in 2015 was 0.746 and this set a new record.

Hadsst3

For Hadsst3: There is no statistically significant warming since July 1996: Cl from -0.014 to 2.152.

The Hadsst3 average anomaly so far for 2016 is 0.671. This would set a record if it stayed this way. The highest ever monthly anomaly was in September of 2015 when it reached 0.725 prior to 2016. The average anomaly in 2015 was 0.592 and this set a new record.

GISS

For GISS: The warming is significant for all periods above a year.

The GISS average anomaly so far for 2016 is 1.21. This would set a record if it stayed this way. The highest ever monthly anomaly was in December of 2015 when it reached 1.10 prior to 2016. The average anomaly in 2015 was 0.87 and it set a new record.

Conclusion

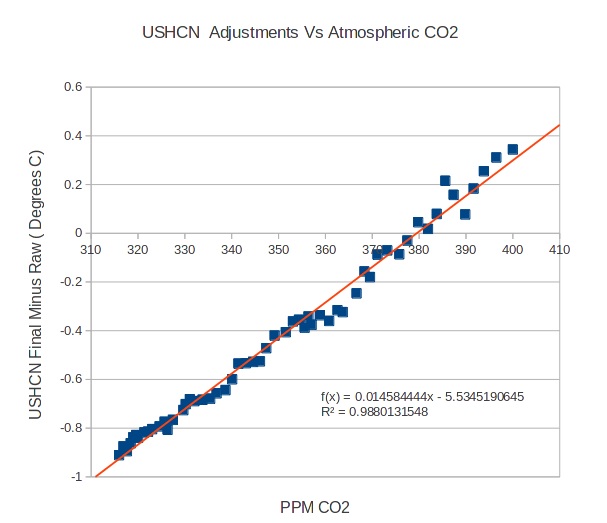

If GISS and Hadcrut4 cannot both be correct, could the following be a factor:

* “Hansen and Imhoff used satellite images of nighttime lights to identify stations where urbanization was most likely to contaminate the weather records.” GISS

* “Using the photos, a citizen science project called Cities at Night has discovered that most light-emitting diodes — which are touted for their energy-saving properties — actually make light pollution worse. The changes in some cities are so intense that space station crew members can tell the difference from orbit.” Tech Insider

Question… is the GISS “nightlighting correction” valid any more? And what does that do to their “data”?

Data Updates

GISS for May came in at 0.93. While this is the warmest May on record, it is the first time that the anomaly fell below 1.00 since October 2015. As for June, present indications are that it will drop by at least 0.15 from 0.93. All months since October 2015 have been record warm months so far for GISS. Hadsst3 for May came in at 0.595. All months since April 2015 have been monthly records for Hadsst3.

There is something weird going on with the data bases, and only a real, outside investigation could reveal what.

As such “derived” or “deranged” data have accumulated political significance, political, agenda driven choices have to be made; how interesting.

What on earth could stray light from low powered LED lighting do to global or local Temperatures ??

As far as I am aware, no current LED lighting technology emits energy in LWIR bands, other than the much reduced thermal radiation due to their case Temperature, and that is peanuts compared to incandescent lighting.

G

* “Hansen and Imhoff used satellite images of nighttime lights to identify stations where urbanization was most likely to contaminate the weather records.” GISS

* “Using the photos, a citizen science project called Cities at Night has discovered that most light-emitting diodes — which are touted for their energy-saving properties — actually make light pollution worse.

I think you missed the point. LED lighting may not do anything to the actual temperature. The referenced GISS press release at http://www.giss.nasa.gov/research/news/20011105/ states that nighttime brightness is used by GISS to ADJUST MEASURED TEMPERATURES. The question is not “what do bright LEDs do to real temperatures?”, but rather “what do bright LEDs do to the GISS temperature adjustments?”. If the adjustments are invalid, the GISS “adjusted data” is invalid.

“What on earth could stray light from low powered LED lighting do to global or local Temperatures”

It appears lights are used as a marker to indicate population centers for UHI avoidance? My best swag is more LEDs in some cities might skew a relative brightness scale, making incandescent rich urban areas appear less populated?

Jeez. Nightlights are not used to correct the data.

nightlights are used to CLASSIFY the stations as urban or rural.

nightlights are used to CLASSIFY a station. Nothing more. read the code

The dataset used, Imhoff 97, is Not a very good source,

Basically it captures what stations were urban in 1997 and which were rural.

As a proxy of urbanity nightlights is ok.. but it really measures electrification.

The actual Adjusting of stations happens by comparing urban and rural and urban are forced into agreement with rural stations.

Well, you’re the only one to say “correct”, everyone else said “adjust”. So assuming you meant “adjust”, your typically condescending comment contradicts itself at the end. If you actually meant “correct”, then you just battled your own strawman, and lost. Good job!

FANTASTIC !!!!

There is very little doubt in my mind that the historic temperature record prior to the advent of satellite-based measurement is absolutely worthless.

I doubt that the historical temperature data are worthless, though I would agree regarding the “adjusted” data and the anomalies produced from them.

Thing is, the raw data needs to be adjusted. Especially during that period of frequent TOBS PM-AM shifts and MMTS conversion. Those will spuriously decrease the warming trend, esp. for the well sited stations. And then there are station moves.

But there are other biases, too. Poor microsite (affecting 77% of the USHCN) is a net warming trend bias on the level of TOBS and is not accounted for at all.

That is Anthony’s Great Discovery.

Not to mention the CRS unit, itself. Tmax trend is way too high and Tmin trend is too low. (It nets out at maybe 25% too high for Tmean.) That is not accounted for by anyone. And it’s a moving bias: by now, a great majority of the USHCN CRS units have been replaced, so the bias is diminished. But CRS units are all over the place in the GHCN. Not only is that not accounted for in any metric, but MMTS is adjusted to the spuriously high level of CRS.

So raw data won’t do. Just won’t. But the fact that it must be adjusted makes it extremely important to apply those adjustments very carefully. And, as much of the GHCN metadata is either flawed or missing, even many adjustments must be inferred. And if BEST can infer from a data jump, we can infer in terms of trend and graduality. Sauce for the goose. And it’s very, very important not to leave out any geese. Viz microsite; viz CRS bias.

Sounds like an argument for a global CRN – real data rather than “adjusted” estimates.

When I think about “adjusting” data, I am reminded of Rumpelstiltskin spinning straw (bad data) into gold (good data). Then I think of GISS, spinning nothing (missing data) into gold (good data). I suspect the Brothers Grimm would be impressed. 😉

“And if BEST can infer from a data jump, we can infer in terms of trend and graduality. Sauce for the goose. And it’s very, very important not to leave out any geese. Viz microsite; viz CRS bias.”

Sorry. but if you do any adjusting you had better double blind test it.

The test dataset is available, so you can apply your adjustment code to blind data

and see how it does.

here is a clue. remember your Mcintyre.

IF you introduce a new method you need to do a methods paper first to show the method works

mixing up a new results and new method paper is just asking for trouble,

Second we dont infer adjustments from data jumps. so get your ducks in line.

You find a data jump by pairwise comparisons and infer that the jump is spurious. In many cases, correctly, I would say.

I think BEST is a very good first step in methodological terms. But I think it cannot ferret out systematic, gradual biases in its present form. This is correctable, however.

Sounds like an argument for a global CRN – real data rather than “adjusted” estimates.

It certainly is a — strong — argument for a GCRN. However . . .

When I think about “adjusting” data, I am reminded of Rumpelstiltskin spinning straw (bad data) into gold (good data).

There is some gold in that-there straw. Yes, it’s a pity we have to dig for it and refine it through demonstrably necessary adjustments.

Thing is, we are stuck with whatever historical data we have. We can’t go back in time and do a GCRN. We have to do the best we can with what we have.

Then I think of GISS, spinning nothing (missing data) into gold (good data). I suspect the Brothers Grimm would be impressed. 😉

Infill in the USHCN is less of a problem than for GHCN. Less missing data, far superior metadata (at least going back to the late 1970s, which covers our study period). Dr. Nielsen-Gammon did us some excellent work on infill — and how to cross-check it. We will address that in followup to our current effort.

The problem here is the mangled, systematically flawed adjustments applied to the GISS and NOAA surface data, and, yes, also to Haddy4. In order to ferret anything meaningful out of the historical non-CRN type data, adjustments do need to be applied. But the trick is to get it right. (Devil, meet details.)

Adjustments must be fully accounted for, clear, concise, replicable, and justifiable by clearly explained means. One must be able to say how and why any adjustment is made.

At least BEST does that, although I dispute their results (again, for easily explained, demonstrable reasons). BEST is a great first shot, but fails to address at least two gradual systematic biases that take it/keep it off the rails.

But with those factors included, the results would be hugely improved, and the stage set for further improvements as they are identified and quantified. Yes, Mosh and I are barking up different trees, using different approaches, but at least he is barking in the right direction. (GISS, NOAA, Haddy, not so much.)

Any researcher or layman must be able to ask why any particular station was adjusted and get a good answer. A “full and complete” answer that can be examined and found correct or wanting. (Not currently forthcoming from the creators of the main surface metrics.) And one may be equally certain that even if an adjustment improves accuracy, it will not result in perfection. Not even the excellent USCRN (i.e., little/no need to adjust) can give us that.

One must also be aware that this effort is in its adolescence. Our team (Anthony’s team) has identified at least two first-order factors which result in systematic, gradual diversion that makes complete hash out of any attempt at homogenization: Microsite and CRS bias. But there may be more. And, therefore, any system of adjustment must be done top-down and amenable to correction and improvement as our knowledge improves.

It is an ongoing effort. We, ourselves, have traveled that path. First, we upgunned our rating metric from Leroy (1999) to Leroy (2010). (And who knows what further refinement would bring?) Then we addressed, step by step, the various criticisms of our 2012 effort. Each step brought overall improvement — we think. And if we thought wrong, then independent review will follow publication and we will continue to address, refine, and improve.

Finally, homogenization, correctly applied, will improve results. But it is tricky to apply correctly, and, if done wrong — which it is — makes the problem worse rather than better.

Individually, no, not worthless. But averaged all together, yes, worthless.

Except when your tree rings become worthless and then they become worthwhile again.

Well we know for sure that the 150 odd years of data from 73% of the globe; namely the oceans, is total trash since it is based on water Temperature at some uncontrolled depth, rather than lower tropospheric air Temperature at some allegedly controlled height above the surface.

Christy et al. showed that those two are not the same, and not even correlated, so the old ships at sea data is total baloney, and not correctable to lower troposphere equivalent.

G

I don’t know about useless. So long as they put proper error bars on the data.

+/- 20C should be about right.

Exactly right. I also believe the error bars used for all the data are wrong; I suspect proper error calculation using instrumentation limits etc. would create larger error bars. Further, I question the use of 95% confidence levels rather than 99%.
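To put a number on the 95% versus 99% question, here is a minimal sketch that assumes independent, normally distributed reading errors (an idealization that station data may well not satisfy). The 0.5 C spread and the count of 100 readings are hypothetical values chosen only for illustration.

```python
from statistics import NormalDist

def ci_half_width(sigma, n, confidence):
    """Half-width of a two-sided normal confidence interval for a mean.

    Assumes n independent readings, each with standard deviation sigma.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    return z * sigma / n ** 0.5

# Hypothetical inputs, not taken from any dataset discussed here.
w95 = ci_half_width(0.5, 100, 0.95)
w99 = ci_half_width(0.5, 100, 0.99)
ratio = w99 / w95
print(round(w95, 3), round(w99, 3), round(ratio, 2))
```

Under these assumptions the 99% interval is roughly 31% wider than the 95% one. Note that a systematic bias in the instruments would not shrink with more readings, so this sketch says nothing about that part of the objection.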

cool, skeptics disappear the LIA and the MWP.

“Further I question the use of 95% confidence levels rather than 99%.”

(*Grin*) What Mosh said. (You don’t even need the 20C.)

Look, I been there and done that. There is no 99%. Fergeddaboutit. One is lucky to get 95%, and even then only in the aggregate (far less the regionals). Heck, even station placement is a bias.

Yeah, we took what we could get. (And you ain’t seen nothin’ yet.) But the results are not meaningless, either — or war colleges wouldn’t stage wargames.

Error spec. on the type of commercial temperature gauges used on ships’ engine inlet temperature is no better than ±2°C, and may be as much as double that. Further, it is highly unlikely that the gauges are ever checked for calibration.

It is fascinating to note that certain climate database “experts” appear to prefer them to the ARGO buoys.

Steven Mosher: “cool, skeptics disappear the LIA and the MWP.”

Sceptics? Really?

You know, I always thought that was what you real climate “scientists” have done.

He was obviously referring to:

Which of course would not disappear them, but simply say for all we know they were far more extreme, or far less. (Saying “I do not know” is never an assertion in any direction.)

To all the foregoing – pretending accuracy that is not true is not science. Sorry, as David A. states, the proper answer is “We DON’T KNOW.” You can all pretend that you can use shoddy, spotty measurements to say what the earth’s temperature was x years ago, and you can all pretend that somehow 95% confidence levels are meaningful. But a real scientist recognizes when their data is useless and accepts that using poor data (if that’s all they have) means their theory remains just that, a theory.

Anyone want to try new pharmaceuticals or a new bridge structure based on the type of data climate scientists use? How about a computer that works with the accuracy of 95% confidence levels?

Different things need different degrees of accuracy.

well…I do agree that the use of anomalies should be abandoned. This will in itself show just how small the temperature changes are.

In an average year, global average temperatures vary by 3.8 C between January and July. So anomalies are actually way less, therefore I do not see anomalies as a problem.

Without anomalies we would go insane, our eyes would never stop waving and we’d probably miss the seasonal trend comparisons. Please don’t take my anomalies away. (Besides, I anomalize each station to itself, not some arbitrary baseline.)
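As a rough illustration of anomalizing a station “to itself”, the sketch below subtracts each calendar month's own average from a synthetic monthly series. The station (10 C mean, 12 C seasonal amplitude, small warming drift) is invented for the example, and `monthly_anomalies` is a hypothetical helper, not anyone's production code.

```python
import math

def monthly_anomalies(temps):
    """temps: monthly means starting in January, whole years only.

    Returns each value minus this station's own mean for that calendar month.
    """
    if len(temps) % 12 != 0:
        raise ValueError("expected whole years of monthly data")
    years = len(temps) // 12
    climatology = [sum(temps[m::12]) / years for m in range(12)]
    return [t - climatology[i % 12] for i, t in enumerate(temps)]

# Synthetic station: seasonal cycle plus a 0.01 C/month drift (made-up numbers).
temps = [10 + 12 * math.sin(2 * math.pi * (i % 12) / 12) + 0.01 * i
         for i in range(120)]
anoms = monthly_anomalies(temps)

raw_range = max(temps) - min(temps)    # dominated by the seasonal swing
anom_range = max(anoms) - min(anoms)   # only the drift is left
print(round(raw_range, 2), round(anom_range, 2))
```

The seasonal swing of some 24 C drops out and only the slow drift remains, which is why the small changes become visible on a graph at all.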

I see a need for both, and consider that a part of any comparison between disparate data sets may find that using absolute GMT is helpful in finding differences. (BTW, the IPCC models produce some interesting absolute GMTs.)

Also, do not forget that GISS deletes polar SST data…

https://bobtisdale.wordpress.com/2010/05/31/giss-deletes-arctic-and-southern-ocean-sea-surface-temperature-data/

Well, they have to be viewed in light of the typical global Temperature range on any ordinary northern summer day, which is at least 100 deg. C and could be as much as 150 deg. C.

So looking for a one deg. C change over 150 years in that is just silly.

G

– George

From freezing to boiling in the same day? Where do you live?

Ah! Globally! Never mind, lol.

If you go just by the hemisphere having summer, the swing isn’t that great, but if you include the winter lows in Antarctica with the highs in the NH, then, yeah, even 150 degrees C on some days is possible. Let’s say a blistering but possible 57 C somewhere in the north and the record of -93 C in the south.

No place in the NH gets anywhere nearly as cold as Antarctica.

“well…I do agree that the use of anomalies should be abandoned. This will in itself show just how small the temperature changes are.”

BerkeleyEarth doesnt use anomalies. The answer is the same. its just algebra.

What Mosh said.

Anyway, anomalies are much easier to deal with if you’re making comparisons on graphs. We only have two eyes and they don’t even have adjustable microscopes. Our team uses anomalies.

And, yeah, you can do it to the degree C, even over a century. (Tenths, hundredths, thousandths, is problematic.)

1/10ths, 1/100ths, 1/1000ths are not a problem

you fundamentally dont understand what it means. it is not a measure of precision.

I will do a simple example.

I give you a scale. it reports pounds, integer pounds.

I ask you to weigh a rock

you weigh it three times.

1 pound

1 pound

2 pounds

Now I ask you the following question:

based on your measurements PREDICT the answer I will get if I weigh the rock with a scale that reports weight to 3 digits?

When we do spatial statistics the math underlying it is Operationally performing a prediction of what we would measure at unmeasured locations IF we had a perfect thermometer.

It’s an estimate.

So lets return to the rock weighing.

You average the three data points and you come up with 1.33

That doesnt mean you think your scale is good to 1/100th.

What it MEANS.. is this.. IF you predict 1.33 as being the true weight, then your prediction will MINIMIZE the error.

So what would you predict? would you predict 1 lb? well that’s just 1.000000

would you predict 2 pounds? or 2.000000

The point in doing a spatial “average” is that you are doing a prediction of the points you didnt measure.

And you are saying 1.33 will be closer than any other prediction, it will never be correct, it will just be LESS WRONG than other approaches. and you can test that.
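The arithmetic of the rock example can be checked directly. This sketch only shows that the average minimizes squared error against the three readings themselves. Whether it is also closest to the true weight depends on the scale's errors being unbiased, which is assumed here and not demonstrated.

```python
readings = [1, 1, 2]  # the three integer-pound weighings from the example

def sum_sq_error(guess, data):
    """Total squared miss if `guess` is used as the prediction for every reading."""
    return sum((x - guess) ** 2 for x in data)

mean = sum(readings) / len(readings)

err_one = sum_sq_error(1.0, readings)
err_mean = sum_sq_error(mean, readings)
err_two = sum_sq_error(2.0, readings)
print(round(mean, 2), err_one, round(err_mean, 3), err_two)
```

Predicting 1 lb gives a total squared error of 1, predicting 2 lb gives 2, and predicting the average gives about 0.667, smaller than either whole-number guess.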

Same thing if you weigh 100 swedes. suppose I weighed 100 swedes with a scale that was only good to a pound. I average the numbers and I come up with 156.76.

Whats that mean? Well, if we want to USE the average to make predictions it means this.

Go find some swedes i didnt weigh.

Now weigh them with a really good scale…

I will predict that my error of prediction will be smaller if I choose 156.76 than if I choose 156 or 157

or pick the same 100 and re weigh them with a really good scale

Its not that I know the weight of the 100 to 1/100th. I dont. Its rather this: I use the weight of the known to predict the weight of the unknown and then I can test my prediction and judge it by its accuracy.
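The “re-weigh the same 100 with a really good scale” test can be simulated. This is a sketch under stated assumptions: the true weights are drawn from a made-up normal distribution (mean 157 lb, spread 25 lb), the coarse scale rounds to the nearest pound with no bias, and the comparison is averaged over 200 repeated trials because any single trial can go either way.

```python
import random

def trial(rng, n=100):
    """One experiment: weigh n people on a whole-pound scale, then 're-weigh' exactly."""
    true_weights = [rng.gauss(157.0, 25.0) for _ in range(n)]  # hypothetical population
    scale_readings = [round(w) for w in true_weights]          # scale reports whole pounds
    true_mean = sum(true_weights) / n
    avg = sum(scale_readings) / n
    err_fractional = (avg - true_mean) ** 2        # keep the decimals of the average
    err_whole = (round(avg) - true_mean) ** 2      # round the average to a whole pound
    return err_fractional, err_whole

rng = random.Random(0)
results = [trial(rng) for _ in range(200)]
mse_fractional = sum(r[0] for r in results) / len(results)
mse_whole = sum(r[1] for r in results) / len(results)
print(mse_fractional < mse_whole)
```

On average the fractional average misses the precisely measured mean by less than the rounded one does. The sketch says nothing about biased scales, where extra decimals would not help.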

A spatial average is nothing more and nothing less than a prediction of the temperature at the PLACES where we have no measurement. by definition that is what it is.

So for example. we can create a field using all the BAD stations and PREDICT what we should find at the CRN stations..

we can use the bad and predict.. and then check.. what did the CRN stations really say?

And we can use the CRN stations to predict what the other stations around it should say..

and then test that.

i know we call it averaging.. but thats really confusing people. its using an “averaging” process to predict

http://www.spatialanalysisonline.com/HTML/index.html?geostatistical_interpolation_m.htm
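The predict-then-check idea can be sketched with a hold-out station. The linked page covers geostatistical interpolation; the code below swaps in plain inverse-distance weighting because it fits in a few lines, so it is a stand-in and not the method any of the temperature groups use. The station coordinates and temperatures are invented.

```python
import math

def idw_predict(target, stations, power=2.0):
    """Inverse-distance-weighted estimate at `target` from (x, y, value) stations."""
    num = 0.0
    den = 0.0
    for x, y, value in stations:
        d = math.hypot(x - target[0], y - target[1])
        if d == 0.0:
            return value  # target coincides with a station
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den

# Hypothetical stations: (x km, y km, temperature C). Not real data.
stations = [(0, 0, 10.0), (10, 0, 12.0), (0, 10, 11.0), (10, 10, 13.0)]
held_out = (5, 5, 11.4)  # pretend this is a reference station kept back for testing

predicted = idw_predict(held_out[:2], stations)
error = predicted - held_out[2]
print(round(predicted, 2), round(error, 2))
```

With the held-out point equidistant from all four stations the prediction is their plain average, 11.5 C, and the check against the withheld value gives the prediction error.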

“Steven, isn’t the principle that intermediate and final results should not be given to a greater precision than the inputs?”

Of course it is, Forrest. In absolutely every scientific and engineering discipline apart from climate “science”, that is.

Steven suffers from False Precision Syndrome, along with the rest of his disingenuous ilk.

I know, Mosh, I know.

I agree with you.

We do the same kind of thing.

Regarding: “Within the satellite era their agreement is very good,”: I think there is a problem even within the satellite era. If one splits the satellite era into two periods for obtaining linear trends, pre and post 1998-1999 El Nino, with the split being in early 1999 (ends come closest to meeting without adding an offset), and one compares GISS, HadCRUT4 and either of the main satellite indices (I chose RSS), one gets this:

http://woodfortrees.org/plot/rss/from:1979/to:1999.09/trend/offset:0.15/plot/rss/from:1999.09/trend/offset:0.15/plot/hadcrut4gl/from:1979/to:1999.09/trend/plot/hadcrut4gl/from:1999.09/trend/plot/gistemp/from:1979/to:1999.09/trend/offset:-0.05/plot/gistemp/from:1999.09/trend/offset:-0.05

What I see: HadCRUT4 and the satellites agree that warming was rapid from 1979 to 1999 and slower after 1999, and GISS disagrees with this.
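For readers who want to reproduce this kind of split-trend comparison offline, here is a minimal sketch. The series is synthetic (0.2 C/decade before 1999, 0.05 C/decade after, both made-up values) and the split is placed at 1999.0 for simplicity; the plot above splits at 1999.09. `ols_slope` is an ordinary least-squares helper written for the example.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

def synthetic_anomaly(t):
    """Made-up anomaly: fast warming to 1999, slower warming afterwards."""
    if t < 1999:
        return 0.02 * (t - 1979)
    return 0.40 + 0.005 * (t - 1999)

times = [1979 + i / 12 for i in range(12 * 37)]  # monthly, Jan 1979 to Dec 2015
values = [synthetic_anomaly(t) for t in times]

split = 1999.0
before = [(t, v) for t, v in zip(times, values) if t < split]
after = [(t, v) for t, v in zip(times, values) if t >= split]

trend_before = 10 * ols_slope(*zip(*before))  # C per decade
trend_after = 10 * ols_slope(*zip(*after))    # C per decade
print(round(trend_before, 3), round(trend_after, 3))
```

Feeding real monthly anomalies for each dataset through the same two fits would give the pairs of trends being compared here.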

After NOAA came out with their pause buster, GISS promptly followed. My earlier article here compares GISS anomalies at the end of 2015 versus the end of 2014 in the first table:

https://wattsupwiththat.com/2016/01/27/final-2015-statistics-now-includes-december-data/

Donald Klipstein says:

…GISS disagrees…

Here’s a look at RSS vs GISS:

And this shows Michael Mann’s prediction versus Reality:

http://s28.postimg.org/c6vz5v2i5/Hadcrut4_versus_Something.png

Another comparison:

One more showing GISS vs RSS:

NASA/GISS is out of whack with satellite data, and GISS always shows much more warming. But do they ever explain why?

dbstealey wrote: “NASA/GISS is out of whack with satellite data, and GISS always shows much more warming. But do they ever explain why?”

They never explain why because if they did they would have to admit to manipulating the data for political purposes.

I agree, this is my take on it.

http://i62.tinypic.com/2yjq3pf.png

Something changed.

This is one I made last summer around the time everybody was talking about the Karl “Pausebusters” paper.

https://andthentheresphysics.wordpress.com/2016/03/08/guest-post-surface-and-satellite-discrepancy/

Isn’t that the paper many many scientists believe to be fraudulent?

The satellite series has a break when they switch to AMSU.. around may 1998.

Well, they do know that. (And they discuss it and tell us how they deal with it.)

However, one must expect natural surface trend — both warming and cooling — to be somewhat larger than satellite: Natural ground heat sink will have that effect. Thats why Tmax generally comes ~4 hours after noon, and the ground is still flushing its excess heat at sunrise. Indeed, it is this effect that causes spurious warming due to bad microsite. UAH 6.0 for CONUS shows a 13% smaller trend than the reliable USCRN from 2005 to 2014 (a period of cooling: i.e, UAH cools less).

“Well, they do know that. (And they discuss it and tell us how they deal with it.)”

Actually there is a debate on how to handle it. My post got thrown in the trash.

but if you handle AMSU correctly the discrepancy vanishes.

http://www.atmos.uw.edu/~qfu/Publications/jtech.pochedley.2015.pdf

the differences between satellites and the surface are Limited to those regions of the earth that have Variable land cover, Like SNOW covered areas. The satellite algorithms assume a constant emissivity and well.. thats wrong.

GISS (via Hansen et al., 1981) showed a global cooling trend of -0.3 C between 1940 and 1970.

http://www.giss.nasa.gov/research/features/200711_temptracker/1981_Hansen_etal_page5_340.gif

Then, after that proved inconvenient, it was “adjusted” to show a warming trend instead.

Hansen et al., 1981:

http://www.atmos.washington.edu/~davidc/ATMS211/articles_optional/Hansen81_CO2_Impact.pdf

“The temperature in the Northern Hemisphere decreased by about 0.5 C between 1940 and 1970, a time of rapid CO2 buildup. The time history of the warming obviously does not follow the course of the CO2 increase (Fig. 1), indicating that other factors must affect global mean temperature.”

“A remarkable conclusion from Fig. 3 is that the global mean temperature is almost as high today [1980] as it was in 1940.”

I don’t think CO2 buildup was all that rapid from 1940 to 1970. In 1970, CO2 was around 325 PPMV. The Mauna Loa record did not exist in 1940, but CO2 was probably around 300 PPMV then. It is around 405 PPMV now.

http://woodfortrees.org/plot/esrl-co2

CO2 was 310 ppm in 1940. It was 300 ppm in 1900.

CO2 emissions rates rose rapidly during 1940 to 1970, from about 1 GtC/yr to 4.5 GtC/yr. And yet global cooling of -0.3 C occurred during this period.
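The concentration figures quoted in this exchange can be turned into average growth rates. This sketch uses only the round numbers given above (310 ppm in 1940, about 325 ppm in 1970, about 405 ppm “now”) and assumes “now” means 2016, the year of these comments.

```python
# Concentrations as quoted in the comments; 2016 for "now" is an assumption.
co2_ppm = {1940: 310.0, 1970: 325.0, 2016: 405.0}

def mean_rate(start_year, end_year):
    """Average CO2 growth in ppm per year between two of the quoted years."""
    return (co2_ppm[end_year] - co2_ppm[start_year]) / (end_year - start_year)

rate_1940_1970 = mean_rate(1940, 1970)
rate_1970_2016 = mean_rate(1970, 2016)
print(rate_1940_1970, round(rate_1970_2016, 2))
```

On these figures the concentration rose about 0.5 ppm per year from 1940 to 1970 and about 1.7 ppm per year afterwards. This concerns concentration only; the emissions figures in the comment above are a separate quantity.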

Made a mistake. The 0.3C difference is in the Hansen ’81 global chart. That’s the one you eyeballed to get that value, kenneth (it’s the difference between 1940 and 1965). The “about 0.5” difference was NH land, as quoted by Hansen.

For completeness, here’s the current global (land) comparison.

1940 = 0.06

1965 = -0.17

1970 = 0.07

Worth noting: Hansen ’81 had “several hundred” land stations. GISS current has 10 times that many. One check to see any changes in processing/adjustments would be to use the same land stations for both.

NASA/GISS (1981): “Northern latitudes warmed ~ 0.8 C between the 1880s and 1940, then cooled ~ 0.5 C between 1940 and 1970, in agreement with other analyses. .. The temperature in the Northern Hemisphere decreased by about 0.5 C between 1940 and 1970. … A remarkable conclusion from Fig. 3 is that the global mean temperature is almost as high today [1980] as it was in 1940.”

In 1981, NASA/GISS had the NH warming by 0.8 C. They’ve now removed half of that NH warming, as it’s down to 0.4 C.

In 1981, NASA/GISS had the NH cooling by -0.5 C between 1940 and 1970. It’s now been warmed up to just -0.2 C of NH cooling.

In 1981, NASA/GISS had 1980 almost -0.1 C cooler than 1940. 1980 is now +0.2 C warmer than 1940.

Apparently you find these “adjustments” to past data defensible, barry. What’s happened to all this recorded cooling? Why has it been removed?

Agee, 1980

http://journals.ametsoc.org/doi/pdf/10.1175/1520-0477%281980%29061%3C1356%3APCCAAP%3E2.0.CO%3B2

The summaries by Schneider and Dickinson (1974) and Robock (1978) show that the mean annual temperature of the Northern Hemisphere increased about 1°C from 1880 to about 1940 and then cooled about 0.5°C by around 1960. Subsequently, overall cooling has continued (as already referenced) such that the mean annual temperature of the Northern Hemisphere is now approaching values comparable to that in the 1880s.

Cimorelli and House, 1974

http://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19750020489.pdf

Aside from such long-term changes, there is also evidence which indicates climate changes occurring in contemporary history. Mitchell (1971) among others, claims that during the last century a systematic fluctuation of global climate is revealed by meteorological data. He states that between 1880 and 1940 a net warming of about 0.6°C occurred, and from 1940 to the present our globe experienced a net cooling of 0.3°C.

Benton, 1970

http://www.pnas.org/content/67/2/898.short

In the period from 1880 to 1940, the mean temperature of the earth increased about 0.6°C; from 1940 to 1970, it decreased by 0.3-0.4°C.

Schneider, 1974

http://link.springer.com/chapter/10.1007/978-3-0348-5779-6_2#page-1

Introduction: In the last century it is possible to document an increase of about 0.6°C in the mean global temperature between 1880 and 1940 and a subsequent fall of temperature by about 0.3°C since 1940. In the polar regions north of 70° latitude the decrease in temperature in the past decade alone has been about 1°C, several times larger than the global average decrease

Mitchell, 1970

http://link.springer.com/chapter/10.1007/978-94-010-3290-2_15

Although changes of total atmospheric dust loading may possibly be sufficient to account for the observed 0.3°C-cooling of the earth since 1940, the human-derived contribution to these loading changes is inferred to have played a very minor role in the temperature decline.

Robock, 1978

http://climate.envsci.rutgers.edu/pdf/RobockInternalExternalJAS1978.pdf

Instrumental surface temperature records have been compiled for large portions of the globe for about the past 100 years (Mitchell, 1961; Budyko, 1969). They show that the Northern Hemisphere annual mean temperature has risen about 1°C from 1880 to about 1940 and has fallen about 0.5 °C since then

Those reports were based on a few hundred land stations. Current data is based on 6000+. The big picture is much the same, only the details have changed.

Mid-century is still showing cooling. 1965 is still much cooler than 1940, 1970 is still cooler than 1940. 1980 is now warmer. No reason every annual offset should be the same or even in the same direction with 6000 more NH weather stations added, nor any reason why the trends should be expected to remain exactly the same.

Another check one can make on GISS (or any of the global surface temperature data) is to compare with products from other institutes.

NOAA, HadCRUt, JMA, for example.

I’ll nominate the JMA (Japanese Meteorological agency).

http://ds.data.jma.go.jp/tcc/tcc/products/gwp/temp/ann_wld.html

Unfortunately, NH land-only data is not available from JMA, so we’ll have to make do with this global land+ocean plot. Looks very similar to the other products. And 1980 is warmer than 1940.

4 institutes producing very similar global plots.

Could it be that the plots from 40 years ago were worse estimates than the current ones? Have you considered that possibility at all?

If not, why not?

barry: “Those reports were based on a few hundred land stations. Current data is based on 6000+.”

So…what does the current number of stations have to do with the modern-day adjustments to temperature data available from the 1940 to 1970 period that showed a global-scale cooling of -0.3 C that has now been removed to show about a -0.05 to -0.1 C cooling at most? Answer: absolutely nothing. We can’t add stations to the historical record. Is that really the logic you’re using here?

I will ask again. And, likely, you will ignore it again. Why was the -0.3 C cooling removed from the global record, since that’s what the 1970s and 1980s datasets showed with the temperature data available at that time? Why is 1980 now +0.2 C warmer than 1940 when it was -0.1 C cooler as of 1981 (NASA)? Adding stations has absolutely nothing to do with these questions. It’s hand-waving.

As for your claims about station data being so much better now — which, again, has absolutely nothing to do with altering past temperature datasets from the 1970s and 1980s — were you aware that thousands of land stations have been removed since the 1980s — mostly in the rural areas, as these didn’t show enough warming?

http://realclimatescience.com/2016/01/the-noaa-temperature-record-is-a-complete-fraud/

NOAA has no idea what historical temperatures are. In 1900, almost all of their global min/max temperature data was from the US. Their only good data, the US temperature data, is then massively altered to cool the past.

NOAA has continued to lose data, and now have fewer stations than they did 100 years ago.

This is why their [NOAA] data keeps changing. They are losing the rural stations which show less warming, causing the urban stations to be weighted more heavily.

http://realclimatescience.com/wp-content/uploads/2016/01/Screenshot-2016-01-21-at-05.06.48-AM-768×444.png

As was clear in Figure 1-1 above, the GHCN sample size was falling rapidly by the 1990s. Surprisingly, the decline in GHCN sampling has continued since then. Figure 1-3 shows the total numbers of GHCN weather station records by year. Notice that the drop not only continued after 1989 but became precipitous in 2005. The second and third panels show, respectively, the northern and southern hemispheres, confirming that the station loss has been global.

The sample size has fallen by about 75% from its peak in the early 1970s, and is now smaller than at any time since 1919. As of the present the GHCN samples fewer temperature records than it did at the end of WWI.

http://static.skepticalscience.com/pics/hadcrut-bias3.png

barry, can you provide an explanation for why land and sea temperatures rose and fell largely in concert from 1900 to 1975, and then, after that, land temperatures rose by about +0.8 or 0.9 C, but SSTs only rose by 0.3 C? What could scientifically explain this gigantic divergence, and what scientific papers can support these explanations?

You can’t add more historical data as you find it and increase the pool of information?? What nonsense.

In the 1990s researchers for the GHCN collected and digitized reams of weather data from thousands of non-reporting weather stations around the world and published a paper on it in 1997. That’s why the number of stations dropped precipitously thereafter. The off-line station data they collected was dated only up to the time the project finished.

Stations weren’t taken away. They were added.

http://www.ncdc.noaa.gov/oa/climate/research/Peterson-Vose-1997.pdf

Can’t imagine why this should be a problem. More information is better.

The cooling between 1940 and 1970 wasn’t “adjusted to show a warming trend.” It’s still there.

http://www.woodfortrees.org/plot/gistemp/from:1930/to:1980/mean:12/plot/gistemp/from:1940/to:1971/trend

This is a global data set. WFT doesn’t have GISS NH separately. But it would show even more cooling than global, as the SH cooled very little in that period.

Temperature data from that era indicated that the cooling was -0.3 C globally.

Benton, 1970

http://www.pnas.org/content/67/2/898.short

Climate is variable. In historical times, many significant fluctuations in temperature and precipitation have been identified. In the period from 1880 to 1940, the mean temperature of the earth increased about 0.6°C; from 1940 to 1970, it decreased by 0.3-0.4°C. Locally, temperature changes as large as 3-4°C per decade have been recorded, especially in sub-polar regions. … The drop in the earth’s temperature since 1940 has been paralleled by a substantial increase in natural volcanism. The effect of such volcanic activity is probably greater than the effect of manmade pollutants.

The GISS temp now says the cooling was only about -0.05 C to -0.1 C. In other words, the cooling period was warmed up via “adjustments”.

http://www.woodfortrees.org/plot/gistemp/from:1940/to:1970/mean:12/plot/gistemp/from:1940/to:1970/trend

And why are you using the 1930 to 1980 plot and 1940 to 1971 trend when it was apparently your point to specify the 1940 to 1970 period?

At WFT, if you run the trend to 1971, that means the trend runs to Dec 31 1970. But it makes little difference to Dec 31 1969, if that’s what you meant. The cooling trend is still there and slightly steeper.
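The end-date behaviour described here amounts to a half-open interval: the start is included and the stop is not. The sketch below only illustrates that convention as the comment describes it; `select` is a hypothetical helper, not WoodForTrees code, and the zero values are placeholders.

```python
def select(series, start, stop):
    """Keep (decimal_year, value) pairs with start <= decimal_year < stop."""
    return [(t, v) for t, v in series if start <= t < stop]

# Placeholder monthly series, Jan 1940 to Dec 1979; the values do not matter here.
monthly = [(1940 + i / 12, 0.0) for i in range(12 * 40)]

window = select(monthly, 1940, 1971)
n_months = len(window)
first_time = window[0][0]
last_time = window[-1][0]
print(n_months, first_time, round(last_time, 3))
```

A window from 1940 to 1971 therefore holds 372 months, the last of them December 1970, so 1971 itself contributes nothing to the fit.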

The extra 10 years in the plot either side of the period was so I could get a visual on a few years before or after: makes no difference to the trend result for the specified period. Cooling trend is still apparent in GISS after adjustments.

Also, if you look carefully at Hansen’s 1981 graph, the eyeballed temperature difference from 1940 to 1970 is about 0.1C. Slightly less in the current GISS data. There is a bigger dip in Hansen ’81 around 1965 that looks more like a -0.3C difference from 1970. Perhaps that’s what you eyeballed?

In the current GISS data, there is a -0.5C difference in temps globally from 1945 to 1965.

None of these are trends of course, just yearly differences. Original assertion: “[GISS] was “adjusted” to show a warming trend instead” is completely wrong. I note you have changed the point to the period being less cooling. You seem to think it was deliberate. Same accusation could have been flung at the UAH record at certain times.

All data sets, satellite included, undergo revisions. Except for UAH in 2005 applying corrections that produced a clear warming signal after no-warming prior, the big picture has remained quite similar. Looks like someone has noticed (yet again) that there are differences between the data sets and decided (yet again) that those differences are supremely meaningful.

http://realclimatescience.com/wp-content/uploads/2016/06/2016-06-10070042-1.png

This is what I was referring to when I wrote that the global cooling period of about -0.3 C to -0.4 C (1940 to 1970) was adjusted out of the long term trend. Sorry if this wasn’t clear.

http://realclimatescience.com/2016/06/the-100-fraudulent-hockey-stick/

Isn’t it interesting that NASA had 1980 temperatures as almost -0.1 C cooler than 1940.

Hansen et al., 1981: “A remarkable conclusion from Fig. 3 is that the global mean temperature is almost as high today [1980] as it was in 1940.”

And yet the current NASA/GISS graph has essentially no cooling between 1940 and 1970, and 1980 is now +0.2 C warmer than 1940.

http://data.giss.nasa.gov/gistemp/graphs_v3/Fig.A.gif

The adjustments have removed the -0.3 C cooling between 1940 to 1970 and replaced them with a warming of almost +0.3 C between 1940 and 1980.

This is what was meant by “adjusted to show a warming trend.” Sorry if this wasn’t clear.

barry: “Also, if you look carefully at Hansen’s 1981 graph, the eyeballed temperature difference from 1940 to 1970 is about 0.1C.”

I’m not “eyeballing” this, barry. I’m using the words of Hansen himself from his 1981 paper:

“Northern latitudes warmed ~ 0.8 C between the 1880s and 1940, then cooled ~ 0.5 C between 1940 and 1970, in agreement with other analyses”

To have only cooled by -0.1 C between 1940 and 1970 – which is apparently your claim – this would require that the -0.5 C NH cooling was counterbalanced by a +0.3 C SH warming. Do you have evidence that the NASA data showed a +0.3 C warming during the 1940 to 1970 period, or are you just “eyeballing” this claim?
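The +0.3 C figure follows from treating the global mean as the simple average of two equal-area hemispheres. A minimal check of that arithmetic, assuming both numbers refer to the same kind of series (mixing land-only with land-plus-ocean data would break the assumption):

```python
def implied_sh_change(global_change, nh_change):
    """Southern Hemisphere change implied by global = (NH + SH) / 2."""
    return 2 * global_change - nh_change

# Figures from the comment above: -0.1 C global, -0.5 C Northern Hemisphere.
sh_change = implied_sh_change(-0.1, -0.5)
print(round(sh_change, 2))
```

With those two inputs the implied Southern Hemisphere change is +0.3 C, which is the number the question turns on.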

Where did the -0.5 C of NH cooling as reported in 1981 go, barry?

kenneth, the Hansen quote is about NH temps, land stations only. Let’s compare apples with apples.

GISS provide NH land-only surface temperatures in their current list of indexes. I’ll compare anomalies and link to the data set below to check for yourself.

I think it’s a waste of time, because in 1981 there were fewer stations in the GISS database. Far more have been added since then (including with data 1940-70), and processing has changed. It’s utterly unsurprising that the plots will be different, and niggling over it is practically futile. For the sake of argument, however….

Current NH GISS land only:

1940 = 0.10

1970 = 0.02

Difference of about -0.1C, quite similar to the Hansen plot.

From the Hansen chart you have clearly selected 1965 as the coolest point – that’s the point at which the numerical difference from 1940 is about 0.3C (1970 is much warmer than 1965 on that graph – it’s only 0.1C or so different from 1940: look again carefully, the low spike is NOT at 1970). You described this numerical difference between one year and another as a “trend.” It is not. Trends are calculated using all data between two years.

If we take 1965 as the low point WRT Hansen 1981 and use that as a reference for the current GISS data, we get:

Current NH GISS:

1940 = 0.10

1965 = -0.20

A difference of about 0.3C, quite similar to the difference between those years in Hansen ’81.
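The distinction between a year-to-year difference and a trend can be made concrete. The sketch below takes the three current NH land values quoted in this comment (1940 = 0.10, 1965 = -0.20, 1970 = 0.02) and, purely for illustration, fills the years in between with straight lines. The real annual values wander far more, so the fitted slope here is not the actual GISS trend.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs, using every point."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

def illustrative_anomaly(year):
    """Hypothetical straight-line fill between the three quoted values."""
    if year <= 1965:
        return 0.10 + (-0.20 - 0.10) * (year - 1940) / 25
    return -0.20 + (0.02 + 0.20) * (year - 1965) / 5

years = list(range(1940, 1971))
anoms = [illustrative_anomaly(y) for y in years]

diff_1970 = anoms[years.index(1970)] - anoms[0]   # endpoint difference
diff_1965 = anoms[years.index(1965)] - anoms[0]   # difference to the low point
trend_per_decade = 10 * ols_slope(years, anoms)   # uses all 31 years
print(round(diff_1970, 2), round(diff_1965, 2), round(trend_per_decade, 3))
```

The same series yields -0.08 C, -0.30 C, or a fitted trend of roughly -0.07 C per decade depending on which measure is used, which is why the choice of years and of method needs to be stated.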

Well. I’m actually surprised they are so similar, but consider it partly coincidence.

Here’s the GISS data page. Make sure to select Northern Hemisphere Land-Surface Air Temperature Anomalies. Otherwise you’ll be comparing apples to oranges WRT Hansen ’81.

http://data.giss.nasa.gov/gistemp/

Or you can go directly to the table of NH, land-only anomalies by clicking the link below.

http://data.giss.nasa.gov/gistemp/tabledata_v3/NH.Ts.txt

kennethrichards June 16, 2016 at 8:30 pm wrote:

“Isn’t it interesting that NASA had 1980 temperatures as almost -0.1 C cooler than 1940.

Hansen et al., 1981: “A remarkable conclusion from Fig. 3 is that the global mean temperature is almost as high today [1980] as it was in 1940.”

And yet the current NASA/GISS graph has essentially no cooling between 1940 and 1970, and 1980 is now +0.2 C warmer than 1940.”

This is more properly described as blatant alarmist fraud.

GISS has 10 times more land stations now as they had 35 years ago, and improved methods.

But in the spirit of understanding, here is a map of the world you would probably prefer to the fraudulent “modern” maps they have nowadays.

And people believe that Sydney actually exists!

From that graph it warmed 0.6 degrees in 10 years, was that runaway catastrophic global warming?

And the following lost about 0.3 C in under a month:

https://moyhu.blogspot.ca/p/latest-ice-and-temperature-data.html#NCAR

Neither is “runaway catastrophic global warming”. That is due to the cyclical nature of things. If either change were to hold steady for a century, then we could talk about whether or not we have “runaway catastrophic global warming”.

different data sources in 1981, different method..

Yes, and prior global records from earlier show an even greater drop and a higher 1940s blip, and records of NH ice decrease and extremes are a confirmation of this, as well as the “Ice Age Scare” which was very much real, if also inconvenient to CAGW enthusiasts.

The climate-gate emails openly talk about removing the blip, and how that would be “good”. (Good for what? Good for funding? Good for scaring people?) Remember this, no paper has been produced explaining the major changes that comprise the historic changes in the global record, and simple hand waving of we have better methods and compilation now, are not adequate or even reasonable explanations.

Central Intelligence Agency,1974

http://documents.theblackvault.com/documents/environment/potentialtrends.pdf

“Potential Implications of Trends in World Population, Food Production, and Climate”

According to Dr. Hubert Lamb–an outstanding British climatologist–22 out of 27 forecasting methods he examined predicted a cooling trend through the remainder of this century. A change of 2°-3° F. in average temperature would have an enormous impact. [pg. 28, bottom footnote]

A number of meteorological experts are thinking in terms of a return to a climate like that of the 19th century. This would mean that within a relatively few years (probably less than two decades, assuming the cooling trend began in the 1960’s) there would be broad belts of excess and deficit rainfall in the middle-latitudes; more frequent failure of the monsoons that dominate the Indian sub-continent, south China and western Africa; shorter growing seasons for Canada, northern Russia and north China. Europe could expect to be cooler and wetter. … [I]n periods when climate change [cooling] is underway, violent weather — unseasonal frosts, warm spells, large storms, floods, etc.–is thought to be more common.

“…Finally, there is the ongoing problem with using anomalies in the first place rather than computing global average temperatures. ”

An excellent point.

This should be the only issue that realists talk about.

Don’t get pulled in to the faux debate about comparing anomalies. You’re arguing with charlatan con-men about their techniques for pulling the wool over your eyes, and pretending the issue is valid and meaningful.

It is not.

Look at other important statistical averages that are commonly used:

Stock market averages

Baseball: pitchers’ earned run average, batters’ batting average, etc.

Football: runners’ yards per game average, quarterbacks’ completion average, etc.

Basketball: shooters’ points per game average, shooters’ free throw completion average, etc.

And on, and on…..

“Anomalies” are a con game created to confuse the masses with a complicated faux-statistical manipulation.

The measured “global average temperature” (which is in itself meaningless, but that’s a whole ‘nother issue), even accounting for all the “homogenization,” and other manipulations by the charlatans, has risen by 1.8 degrees F!

From 57.2 degrees average from 1940 to 1980 to 59 degrees average in 2015!

That’s “averaging” the deserts of the Empty Quarter of Saudi Arabia, the Gobi, Death Valley, the Sahara, and others (130 F) with the Arctic and Antarctic (-50 F) over a year.

That’s not too scary.

But an “anomaly” can be made much scarier, and is easier to manipulate.

Forget anomalies, don’t get sucked into the con-men’s game.

Well, the total range of global Temperature on any day like today is at least 100 deg. C and can be as high as 150 deg. C, from -94 deg. C in the Antarctic highlands to +60 deg. C in dry North African deserts or the Middle East.

And EVERY possible Temperature in such a daily extreme range can be found some place on earth, in fact in an infinity of places.

Nothing in the real universe besides human beings responds in any way to the average of anything; it cannot even be observed; simply calculated after the fact, and adding no information to what we already know from the real measurements.

So just what the hell is global climate anyway ??

G

george says:

So just what the hell is global climate anyway ??

That’s easy. It’s the opening scene of a science fiction thriller, in which all of human existence is threatened with extinction by a slightly warmer, more pleasant world.

It just needs a little work before they sell the movie rights.

I hear Leo and Matt are already interested…

Yes, George. That’s the point.

Realists have allowed ourselves to be pulled into the con-men’s terms of reference. Big mistake.

“Global average temperature anomalies” are the tools of a con game–designed to obfuscate the reality that you described–a global range of daily temperatures from -50 F to +130 F.

“Global average temperature” is meaningless to begin with. “Global average temperature anomalies” just further obfuscate reality.

Don’t get sucked in to the con-men’s faux-reality.

That basically boils down how Climate Inc. so easily spreads propaganda to the masses. The general everyday citizen/simpleton/idiot/layman doesn’t even understand that climate change over at least the past 200 years is only apparent on high resolution (too high) temperature graphs of something that’s not even tangible. Climate, by its very definition, is a statistical concept, and who would actually expect the moving average of an inherently variable system not to change over time?

If Climate Inc. instead concentrated on biome change, the tangible effect of climate change, then they would have much more credibility, and might even traverse the divide from pseudoscience to science. But scientifically attributing local biome shifts to an anthropogenic signal is much more difficult than creating an easily manipulated data set of something abstract and passing it off as real. Concentrating on actual biome change would also draw too much attention to the positives of a warmer climate, and it would be very difficult to call any of it “global” considering very few places outside the Arctic are showing biome changes.

anomalies?

http://static.berkeleyearth.org/pdf/decadal-with-forcing.pdf

http://berkeleyearth.lbl.gov/auto/Regional/TAVG/Figures/global-land-TAVG-Trend.pdf

anomalies?

http://berkeleyearth.org/wp-content/uploads/2015/03/land-and-ocean-baseline-comparison-map-large.png

We don't use anomalies.

If you don't like anomalies, just add a constant.

The constant to add for each month is in this file.

http://berkeleyearth.lbl.gov/auto/Global/Land_and_Ocean_complete.txt

the answer doesnt change

Yes, that would work. But would you not then have to plot all things with a 12 month mean to even out the natural 3.8 C variation every year?
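To make the 12-month-mean point concrete, here is a minimal sketch. It assumes a purely sinusoidal seasonal cycle with the 3.8 C swing mentioned above, laid over a flat annual mean; the monthly values are hypothetical, not taken from any data file.

```python
import math

ANNUAL_MEAN_C = 14.762    # annual constant cited in this thread
SEASONAL_RANGE_C = 3.8    # peak-to-trough seasonal swing mentioned above

# Hypothetical monthly absolute temperatures: a flat climate plus a
# sinusoidal seasonal cycle (an assumption made for illustration only).
monthly = [
    ANNUAL_MEAN_C + (SEASONAL_RANGE_C / 2) * math.sin(2 * math.pi * m / 12)
    for m in range(48)
]

def running_mean(values, window=12):
    """Mean of each run of `window` consecutive values."""
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]

smoothed = running_mean(monthly)

raw_spread = max(monthly) - min(monthly)          # the full seasonal swing
smoothed_spread = max(smoothed) - min(smoothed)   # what is left after averaging

print(f"raw spread: {raw_spread:.3f} C, 12-month-mean spread: {smoothed_spread:.3e} C")
```

Any window that spans exactly one full cycle cancels a regular seasonal signal, which is why monthly absolute temperatures are usually read through a 12-month mean, while monthly anomalies already have the cycle removed.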

http://berkeleyearth.lbl.gov/auto/Global/Land_and_Ocean_summary.txt

Add 14.762 to every year.. Annual absolute temperature.
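A short sketch of why "the answer doesn't change": adding a constant shifts every value equally, so the fitted trend is the same. The constant 14.762 is the one quoted above; the anomaly values below are made up for illustration, and `ols_slope` is a helper written for this example.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

BASELINE_C = 14.762  # constant quoted in the comment above

years = [2010, 2011, 2012, 2013, 2014]
anomalies = [0.60, 0.50, 0.55, 0.58, 0.65]  # made-up values, illustration only

# Annual absolute temperature = anomaly + constant.
absolutes = [a + BASELINE_C for a in anomalies]

slope_anom = ols_slope(years, anomalies)
slope_abs = ols_slope(years, absolutes)

print(f"trend from anomalies: {slope_anom:.4f} C/yr")
print(f"trend from absolutes: {slope_abs:.4f} C/yr")
```

The two slopes agree to within floating-point rounding, because the constant cancels when the mean is subtracted inside the least-squares fit.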

According to NOAA it was 62.45 degrees F in 1997 and even hotter in 1998.

Short time periods tend to show more variation in trend between GMST data sets. They use different methods/coverage after all (GISS interpolates Arctic, which has warmed more strongly according to UAH, while Had4 doesn’t).

(First graph in the OP links to the second, BTW)

Run 1960 to present and the trends converge. Not exact, but then we don’t expect them to be.

http://www.woodfortrees.org/plot/gistemp/from:1960/plot/hadcrut4gl/from:1960/plot/gistemp/from:1960/trend/plot/hadcrut4gl/from:1960/trend

Had4 = 0.135C/decade

GISS = 0.161C/decade

A better comparison would be to mask those parts of the globe HadCRUt doesn’t cover that GISS does. Then the area being measured would be apples to apples at least.
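As a rough sketch of what that masking involves: average both datasets only over grid cells where both have data, weighting each cell by the cosine of its latitude. The toy grids, the `None` convention for missing cells, and the function name are all assumptions for illustration; this is not how GISS or HadCRUT actually store or process their fields.

```python
import math

def masked_global_means(lats, grid_a, grid_b):
    """Area-weighted means of two grids over cells where BOTH have data.

    lats: latitude in degrees of each grid row's centre.
    grid_a, grid_b: lists of rows; None marks a cell with no data.
    """
    sum_a = sum_b = sum_w = 0.0
    for lat, row_a, row_b in zip(lats, grid_a, grid_b):
        weight = math.cos(math.radians(lat))  # cell area shrinks toward the poles
        for a, b in zip(row_a, row_b):
            if a is None or b is None:
                continue  # skip cells missing in either dataset
            sum_a += weight * a
            sum_b += weight * b
            sum_w += weight
    if sum_w == 0.0:
        raise ValueError("no overlapping cells")
    return sum_a / sum_w, sum_b / sum_w

# Toy 3-row grids with made-up anomalies; the top row stands in for the Arctic.
lats = [75.0, 0.0, -75.0]
interpolated = [[2.0, 2.0], [0.5, 0.5], [0.3, 0.3]]      # filled in near the poles
sparse = [[None, None], [0.5, 0.5], [0.3, None]]         # gaps near the poles

mean_a, mean_b = masked_global_means(lats, interpolated, sparse)
print(f"common-coverage means: {mean_a:.3f} vs {mean_b:.3f}")
```

In this toy case the two means match once the warm interpolated polar row is excluded, which is the apples-to-apples comparison the comment asks for.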

Yes. A good clue is this.

ANYTIME you see people using woodfortrees to do analysis you can be pretty certain that something is wrong with the analysis. It's bitten me so many times that I just stopped.

1. Its a secondary source— like wikipedia

2. It doesn't allow you to mask data to ensure the same spatial coverage.

“1. Its a secondary source— like wikipedia”

Disingenuous – mendacious even, like most of your evasive efforts.

Woodfortrees data sources are the industry standard ones, as can be readily checked, so are anything but secondary sources.

It’s a good basic tool.

But I wouldn’t use it if I’m going to do serious work on that sort of stuff; I want the raw and adjusted data, at least. I want a site survey or exact location so I can do the survey. And I may want separate stages of adjustment data, for that matter (or not, depending on what I’m doing). And that’s not even touching on the metadata (so important and so much missing).

cat weazel..

did you VERIFY that WFT uses the exact data that BEST publishes?

that CRU publishes

that GISS publishes

forget that they dont have current versions of UAH did ya?

Ask Evan this… Did he get his data from WFT. Nope. Why not?

There is NO VALIDATION. No documentation. no code no traceability. just a random ass website.

Regarding Steven Mosher’s note about comparing WFT to original-source data such as HadCRUT, etc: I have done that enough times to see that WFT is real. Also, WFT does not use UAH 6 because that version is still in beta status according to Spencer until a relevant paper passes peer review. Meanwhile, UAH 6 is similar enough to RSS 3.4 (the version used by WFT) for most meaningful purposes of finding trends.

“Regarding Steven Mosher’s note about comparing WFT to original-source data such as HadCRUT, etc: I have done that enough times to see that WFT is real. Also, WFT does not use UAH 6 because that version is still in beta status according to Spencer until a relevant paper passes peer review. Meanwhile, UAH 6 is similar enough to RSS 3.4 (the version used by WFT) for most meaningful purposes of finding trends.”

Funny. How did your check of BEST go?

It's even funnier since IF you really checked then you would know how to get the real source data, and nobody who had the real source data would ever take the chance that a secondary source might change month to month.

In short, even IF you checked a few times, a good skeptic who always questions would not simply trust the next month to be right because the check was right the month before.

By the way.. did you check the calculation of trends? the filtering? the averaging?

Nope.

“I am not an academic researcher and hence have no need for formal references. However, if you’ve found this site useful, an informal ‘mention in dispatches’ and a Web link wouldn’t go amiss.

This cuts both ways, however: The algorithms used on this site have not been formally peer reviewed and hence should not be used unverified for academic publication (and certainly not for policy- making!). This site is only intended to help find interesting directions for further research to be carried out more formally.”

Only skeptics would just use stuff without checking.

Hint.. If we change the URL or file format his stuff breaks.. which means he doesnt maintain the site.

Paul updated some things a year ago after I emailed him, but WFT hasn’t updated since, and half the temperature data is now outdated. BEST data is outdated, HadCRU4 is previous version, UAH is not yet updated (version 6 is still ‘Beta’). Etc etc.

It’s a handy tool for big picture stuff, but not so great for detailed analysis. Uses basic Ordinary Least Squares trend analysis, which doesn’t account for autocorrelation. There’s no uncertainty interval output.
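For readers wondering what "accounting for autocorrelation" looks like, here is a minimal sketch of one common approach: fit the OLS trend, estimate the lag-1 autocorrelation of the residuals, and widen the standard error using an effective sample size. This is a textbook-style AR(1) adjustment, not WFT's method and not necessarily what any dataset provider uses. The series is synthetic, with an assumed trend of 0.015 C/yr and assumed AR(1) noise, and the function name is hypothetical.

```python
import math
import random

def ols_trend_with_ar1(t, y):
    """OLS slope with a naive and an AR(1)-adjusted standard error."""
    n = len(t)
    mean_t = sum(t) / n
    mean_y = sum(y) / n
    sxx = sum((ti - mean_t) ** 2 for ti in t)
    slope = sum((ti - mean_t) * (yi - mean_y) for ti, yi in zip(t, y)) / sxx
    intercept = mean_y - slope * mean_t
    resid = [yi - (intercept + slope * ti) for ti, yi in zip(t, y)]

    # Lag-1 autocorrelation of the residuals.
    sse = sum(e * e for e in resid)
    r1 = sum(a * b for a, b in zip(resid[:-1], resid[1:])) / sse

    # Naive standard error: treats every residual as independent.
    se_naive = math.sqrt(sse / (n - 2) / sxx)

    # Effective sample size shrinks when residuals persist month to month.
    # (Assumes n_eff stays above 2; very short or very persistent series break this.)
    n_eff = n * (1 - r1) / (1 + r1)
    se_adj = se_naive * math.sqrt((n - 2) / (n_eff - 2))
    return slope, se_naive, se_adj, r1, n_eff

# Synthetic monthly series: 20 years, assumed trend plus AR(1) noise.
rng = random.Random(0)
t = [i / 12 for i in range(240)]
noise, prev = [], 0.0
for _ in t:
    prev = 0.6 * prev + rng.gauss(0.0, 0.1)
    noise.append(prev)
y = [0.015 * ti + e for ti, e in zip(t, noise)]

slope, se_naive, se_adj, r1, n_eff = ols_trend_with_ar1(t, y)
print(f"trend = {slope * 10:.3f} C/decade, "
      f"2-sigma naive = {2 * se_naive * 10:.3f}, adjusted = {2 * se_adj * 10:.3f}")
```

With persistent residuals the adjusted interval comes out wider than the naive one, which is the caveat being raised: a bare OLS slope with no interval can look more certain than it is.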

Apply caveats when using.

Ask Evan this… Did he get his data from WFT. Nope. Why not?

Because surviving peer review is a Good Thing.

Getting back to a more simplistic query.

I’ve often wondered, if the entirety of the AGW adventure is derived from a global temperature change of 1 degree, or so, isn’t the reliability in the ability to accurately measure the temperature of the planet over 100 years, or so, vital to every assertion?

It appears as though the global temperature measuring is a bit more sloppy than we have been led to believe and it’s not so reliable?

There must be some margin of error. Do scientists know?

If climate scientists are incapable of determining the margin of error, doesn’t that further diminish the reliability of the global temperature?

From my view the AGW seems more like a chaos of unreliable presumptions being repeatedly massaged by unscrupulous “experts” who had mistakenly invested their entire careers in a tall tale.

I see constant contradictions like this.

SHOCK WEATHER FORECAST: Hottest August in 300 YEARS on way …

http://www.express.co.uk › News › Nature

Daily Express

Jul 31, 2014 – Britain faces record temperatures as the heatwave is fuelled by the jet stream … say the UK heatwave in August will be the hottest in 300 years …

SHOCK CLAIM: World is on brink of 50 year ICE AGE and BRITAIN …

http://www.express.co.uk › News › Weather

Daily Express

Oct 27, 2015 – BRITAIN faces DECADES of savage winters driven by freak changes in … SHOCK WEATHER WARNING: Coldest winter for 50 YEARS set to bring. … winter whiteouts and led to the River Thames freezing 300 years ago.

And when I look at this I want to dismiss the entirety of climate science as worse than useless.

http://www.longrangeweather.com/global_temperatures.htm

I just want to know what is going on. Is that too much to ask?

My BS detector has been buzzing for so long I fear it is either broken or the climate change crusade is the biggest fraud in human history.

The Express is awful for alarmist weather scaremongering that never comes off and is not based on science. These headlines are just as bad as those from the alarmist so-called climate scientists.

Steve Oregon:

“I see constant contradictions like this.”

No contradiction.

Do not mistake ANYTHING regarding weather/climate in the Daily Express for anything other than garbage. Dangerous garbage at that, with its potential to cause distress to the elderly. Here are some thoughts on what the meteorological community thinks of it. The chief journalist involved is one Nathan Rao, and the chief expert, James Madden.