Climate Modeling Dominates Climate Science

By PATRICK J. MICHAELS and David E. Wojick

What we did

We found two pairs of surprising statistics. To do this we first searched the entire literature of science for the last ten years, using Google Scholar, looking for modeling. There are roughly 900,000 peer reviewed journal articles that use at least one of the words model, modeled or modeling. This shows that there is indeed a widespread use of models in science. No surprise in this.

However, when we filter these results to only include items that also use the term climate change, something strange happens. The number of articles is only reduced to roughly 55% of the total.

In other words it looks like climate change science accounts for fully 55% of the modeling done in all of science. This is a tremendous concentration, because climate change science is just a tiny fraction of the whole of science. In the U.S. Federal research budget climate science is just 4% of the whole and not all climate science is about climate change.

In short it looks like less than 4% of the science, the climate change part, is doing about 55% of the modeling done in the whole of science. Again, this is a tremendous concentration, unlike anything else in science.

We next find that when we search just on the term climate change, there are very few more articles than we found before. In fact the number of climate change articles that include one of the three modeling terms is 97% of those that just include climate change. This is further evidence that modeling completely dominates climate change research.

To summarize, it looks like something like 55% of the modeling done in all of science is done in climate change science, even though it is a tiny fraction of the whole of science. Moreover, within climate change science almost all the research (97%) refers to modeling in some way.
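The arithmetic behind these shares can be sketched in a few lines. This is only an illustration built on the rounded figures quoted above; the function names are hypothetical, and the derived article counts are back-calculated from the percentages rather than reported search results.

```python
# Rounded figures quoted in the article; nothing here is a new measurement.
TOTAL_MODEL_ARTICLES = 900_000      # articles using model, modeled or modeling
CLIMATE_SHARE_OF_MODELING = 0.55    # share of those that also mention "climate change"
MODEL_SHARE_OF_CLIMATE = 0.97       # share of climate-change articles using a modeling term
CLIMATE_SHARE_OF_BUDGET = 0.04      # climate science share of the U.S. federal research budget


def implied_counts(total_model, share_of_modeling, model_share_of_climate):
    """Back-calculate article counts implied by the quoted percentages."""
    both = total_model * share_of_modeling          # modeling AND climate change
    climate_total = both / model_share_of_climate   # all climate-change articles
    return both, climate_total


def concentration_ratio(share_of_modeling, share_of_science):
    """How over-represented the field is relative to its share of science."""
    return share_of_modeling / share_of_science


both, climate_total = implied_counts(
    TOTAL_MODEL_ARTICLES, CLIMATE_SHARE_OF_MODELING, MODEL_SHARE_OF_CLIMATE
)
ratio = concentration_ratio(CLIMATE_SHARE_OF_MODELING, CLIMATE_SHARE_OF_BUDGET)
print(f"implied articles with both terms: {both:,.0f}")
print(f"implied climate-change articles:  {climate_total:,.0f}")
print(f"concentration ratio:              {ratio:.1f}x")
```

The ratio treats the 4% budget share as a stand-in for the field's share of science, which is the same assumption the text makes.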

This simple analysis could be greatly refined, but given the hugely lopsided magnitude of the results it is unlikely that they would change much.

What it means

Climate science appears to be obsessively focused on modeling. Modeling can be a useful tool, a way of playing with hypotheses to explore their implications or test them against observations. That is how modeling is used in most sciences.

But in climate change science modeling appears to have become an end in itself. In fact it seems to have become virtually the sole point of the research. The modelers’ oft stated goal is to do climate forecasting, along the lines of weather forecasting, at local and regional scales.

Here the problem is that the scientific understanding of climate processes is far from adequate to support any kind of meaningful forecasting. Climate change research should be focused on improving our understanding, not modeling from ignorance. This is especially true when it comes to recent long term natural variability, the attribution problem, which the modelers generally ignore. It seems that the modeling cart has gotten far ahead of the scientific horse.

Climate modeling is not climate science. Moreover, the climate science research that is done appears to be largely focused on improving the models. In doing this it assumes that the models are basically correct, that the basic science is settled. This is far from true.

The models basically assume the hypothesis of human-caused climate change. Natural variability only comes in as a short term influence that is negligible in the long run. But there is abundant evidence that long term natural variability plays a major role in climate change. We seem to recall that we have only very recently emerged from the latest Pleistocene glaciation, around 11,000 years ago.

Billions of research dollars are being spent in this single minded process. In the meantime the central scientific question – the proper attribution of climate change to natural versus human factors – is largely being ignored.

This is really what Carl Sagan was referring to. Billions of dollars and billions of models, more models than there are grains of sand on the beaches of the earth.

It seems to me that climate models never seem to compute the expected climate at ANY place on earth where actual climate related measurements are made.

A model (of anything) should first of all behave just like the real anything, which means you should be comparing the real and the modeled (in the same places).

G

So what happens when you search climate change and filter model…

Why would I want to do that ??

I’ve seen no climate change that seems worthy of note. Well I’ve never been to the Antarctic highlands so there are climate extremes I haven’t experienced; but I also haven’t seen the plots of ANY climate models or groups of climate models that would even hint that they even related to this planet, let alone anything real. If I ever get to where I start to think climate doesn’t change, I’ll let everybody know. I have no idea where the notion that ANYBODY doesn’t believe climate changes, ever came from. Certainly wasn’t my idea.

Yes I do believe CO2 captures 15 micron LWIR photons, and I do believe CO2 in the air has gone from 315 ppm to circa 400 since IGY 1957/58.

So what; doesn’t seem to have done anything much that I can see.

G

Whilst I’m highly sceptical of the utility of current GCMs wrt policy and, frankly, appalled by the amount of speculative papers based on model output, I think this misses the point. It’s also easy strawman material for those who give much more credence to model output than I think they should. GCMs, we are told, are not meant to simulate or compute the existing earth climate. They are meant to model the response of the climate system to various forcings. It’s the trend, response and sensitivity that are important. I believe it’s a stronger argument to focus on the fact that GCMs, in nearly all cases, clearly get these wrong too and that the ‘model ensemble’ is statistical garbage whose only apparent purpose is to broaden the spread through which observations run. If each individual model was run many times to understand its precision wrt input parameters and then compared against observations, I reckon we’d be able to cull at least 97% of them.

You can’t “model” what you don’t know. To model the climate would require actually knowing all the ins and outs and all the factors that affect it. You can write “simulations” of things you don’t know, like Sim City and Sim Farm or Sim Climate. You don’t need to understand the subject you are simulating all that well to approximate it. Climate “models” do not exist at this time since we don’t have enough understanding of climate to model it, and that is why it is so frustrating to hear them say that they are worthwhile. In another 50 years, when due diligence in scientific work brings enough understanding of climate, they can write better simulations, but they won’t be able to model climate until there is enough actual raw, unaltered data to base their model on in the first place. The same applies to other fields in science as well.

Billions of research dollars are being spent in this single minded process. Billions of dollars that could have gone into repairing America’s crumbling infrastructure instead of into the pockets of green scammers.

Why don’t we funnel some of the funds into zika virus research?

http://youtu.be/0gDErDwXqhc

Excellent

Thanks Galen, great video. I am sharing it with the warmists I know (sadly some are family). I hope they have the patience to get the point!

Galen,

This is why I love this website. Articles like this.

Thank you.

Good, except it buys Hansen’s easily disprovable claim that Venus can be explained as a GHG “runaway”.

We have 100’s of millions of years of climate history and we are postulating on a natural process that has gone on for billions of years.

And, just about a few tenths of a degree per (whichever time period)

Moreover, within climate change science almost all the research (97%) refers to modeling in some way.

There’s that magic 97% number again. ;->

Yes but it only occurs in 57 Countries or States.

g

Varieties of locations!

97% – Cook’s Constant! How could it have been any other number?

Whoa! At the time I viewed this page there were 272,977,970 WUWT views…. 97 and 97 again! That’s it … tinfoil hat time!

Ha haa I remember Cook’s Constant from school. The bovine scatology number you used to get the answer you wanted

“Cook’s Constant” – Excellent. I will have to remember that.

In marketing it’s recommended to price your product at $97 rather than $100 or $95; it seems to be more believable to the consumer.

So it’s a marketing ploy, not real science.

I think I’d be hard-pressed to find any paper that I’ve been a co-author on that included the term “climate change” that didn’t also include some form of the term “model.” If we weren’t using a statistical (empirical) model ourselves, we most certainly were comparing our result to those based on some sort of “model.” The latter examples were not envisioned as explicit “model” improvement projects, but rather either formal or informal model testing.

-Chip

Indeed so. This paper seems to identify occurrences of “model” with GCMs as suggested by the Cray picture. But as it also says, models are basic in science, and climate papers will use many kinds – statistical models, radiative models, etc. Not all costs billions.

I agree, which is why Wojick and I have a pretty vigorous debate about the utility of semantic analysis. Nonetheless, it seems very odd that “model” would appear so much less in the other disciplines that presumably may use the same statistical tools.

I’m not so sure about this avenue of research, or, perhaps, that it yields meaningful results. More than anything else, I put this up to see what kind of comments it would generate, and I think Chip and Nick are right in their observations.

So, are any of you suggesting climate models are not central to the CAGW case? I find that extremely hard to doubt, and if true, then the “science” done using the model’s outputs/results as though credible indicators of real world eventualities are, in effect, extensions of the money spent on those models. Don’t see a lot of “What effect would global cooling have on critter/environment X” . . do you?

Very odd is the point of the analysis.

Yes. The article only sees the word “model”, it doesn’t see how it is used. There aren’t too many climate hypotheses that can be tested without some kind of model. So the distinction needs to be made, somehow, between the models whose use is regarded as research and research which is tested using a model. May I be so bold as to classify these as “bad” and “good” model uses respectively. (Well, good if done properly).

Having said that, though, the reality is that the vast majority of all climate research has “bad” model use. Or certainly, it seems, where public funding is used. To compound the problem, the “bad” use models are unfit for purpose, because their structure is upside-down. As I explained here https://wattsupwiththat.com/2015/11/08/inside-the-climate-computer-models/ the models are simply low-resolution weather models which necessarily become hopelessly inaccurate in a few days. When they then aggregate their weather results into a climate result, the whole thing necessarily remains a work of fiction. For a model to be useful for this purpose, it would surely have to be structured as a climate model.

It is not surprising that “there aren’t too many climate hypotheses that can be tested without some kind of model”, since at root the logical difference between “hypothesis” and “model” is a minor one.

Hypotheses are probably commonly understood to refer more to a binary sort of model, with an answer like “yes” or “no”. Likewise, models may typically tend to predict more complicated curves, but certainly the word “model” could be used to refer to a binary sort of question.

Perhaps the prevalence of “models” as a term of art seen in climate science is nothing more than a historically based convention.

I’m not seeing how either of those two uses of a model are legitimate. A computer model produces only what it is programmed to produce, so I can’t see it as a legitimate source of “research.” Nor do I see how the output of a computer model is in any way useful in testing research, for the same reason. I can see a model used as a source of a hypothesis to be tested by actual research.

As for why climate science accounts for so much of the modeling done in science, the reason seems obvious; it’s the only tool in a climate scientist’s arsenal to quantify any postulated climate phenomenon. You can’t conduct any type of controlled experiment on the planet’s climate system, and seeing as there is only one Earth, you can’t perform any kind of statistical study like an epidemiologist might do to determine the effect of, say, butter consumption on cholesterol levels. In other words, honest-to-goodness scientific procedures aren’t available to the modern climate scientist who might want to explore how much X you get for a given change in Y. But instead of being honest and saying “we don’t know and we will never know,” the modern climate scientist fabricates their evidence by programming a computer to simulate the way they think (wish) the climate behaves, and then uses the output of their creation as “data” to support the assumptions they used to program the computer.

When the computer output doesn’t predict actual measurements taken later (and only a fool would think it within the realm of possibility that a mathematical model could do that, given the pitifully small amount of time we’ve had to study the Earth’s climate and the overwhelming handicap we have when collecting data and experimenting on the Earth’s climate system), the modern climate scientist cheats using a variation on the Texas Sharpshooter fallacy. Since past data is known at the time the model is programmed, the model can be tuned to generally show the historical up and down trends of past climate, and then all you have to do is later graph the prediction and future measurements using anomalies, while cherry picking the base or reference period for the anomalies. In this manner, the subsequent measurements can be vertically lined up to fall within the very wide predictive portion of the range bands of the model, thereby “painting the target” within the model’s predictions, and while the past measured temperature anomalies may drift in and out of the models’ ranges, they generally track the inflection points of the past climate, since those were known and could be pre-programmed into the model. All in all, it looks nice to the average journalist and politician, but it’s still just an illusion.

In most other fields people don’t write articles about their every day use of models .

In fields like algorithmic high frequency trading , where powerful languages like those from http://Kx.com are used , they keep their models secret and secure if they work and bury them silently if they don’t .

I do think that with the advent of GCMs, “models” came to dominate the climate science literature–not through GCM-development papers alone (although there certainly are plenty of those), but also through papers using GCM output either to drive impact analyses (these are a dime a dozen) or papers that compare observations with model-derived predictions/projections (if even in an off-hand fashion).

I think Pat and David’s analysis shows this general result, but probably requires refinements in order to be able to drill down to specifics.

-Chip

whew! – it’s a relief that some question the validity of the authors’ method – it includes but doesn’t exclude – and does neither wisely

Chip and Nick, can you explain why the rest of science does not talk this way? I think the dominance of modeling in climate science is so entrenched that we cannot imagine it being otherwise, that is we cannot imagine a true science of climate change. Would that we could.

I think a lot of it is that in other fields of physical science you work up from fundamental classical equations , which , along with the necessary math , are the first textbooks bought and the first things taught to undergraduates .

There is no such foundation for “climate science” . You cannot find the essential quantitative sequence of equations explaining how optical phenomena “trap” greater heat at the bottoms of atmospheres than their tops . It should be trivial to point to the page or so of non-optional equations in an intro text and say “plug in these values for the parameters and you see it get X much hotter on the side away from the source” . But you can’t .

Thus 70+ never rejected “models” and decades of stagnation .

climate forecasting:economic forecasting::climate modeling:economic modeling

a.k.a. grossly inaccurate.

Science is in a downward spiral, being rotted from within by shoddy practices and poor practitioners. Has science entered a death spiral, as indifferent, inept scientists raise up new generations of even poorer researchers? The facts look grim. http://theresilientearth.com/?q=content/science-death-spiral

That web page of yours looks grim, too — white text on brown background is really hard to read.

MP, My eyes are not what they used to be. Hint—– hold down the control key and scroll your mouse.

Ideas have Consequences: Post-modern philosophy has led to post-modern science. Everyone is entitled to their own facts.

It seems the artwork on the super-computer in the picture is not current; there is an updated version that better reflects the advanced processing steps that occur inside those boxes.

For all of the people pictured in that article, you need to find a picture with them holding a hat. Preferably in front of them, open end up.

…and the federal observation network lay in ruins. Just think what could be done to improve climate measurement with a small fraction of the modeling money. At some point, there may not be any credible observations to calibrate climate models and then we have a self-fulfilled prophecy – modeled observed data and modeled forecasts. Who needs to verify anything? Gore triumphs!

I spent 15 years as an electronics simulation expert, and this was what got me looking at GCMs. It’s great if it’s right, but they use modeling to confirm attribution of observations, which are used to validate models.

Are you saying that “data” are used to set up the models, and then the models are validated by producing the “data” that were used to set them up?

Right here, you have the long and the short of it. This is what many people consider to be the fundamental failings of the models. The modelers would have you look at the devilishly complex calculations embodied in how the models do what they do. Others take a more global view to see what the models are doing overall. The output of the models simply reaffirms the assumptions built into them; the rest is smokescreen.

Do you mean SPICE modeling or the like ??

I did a lot of SPICE like modeling of CMOS Analog/Digital circuits, which included the real Semiconductor Physics of the specific structures I was laying out.

We never ever made any masks for any ICs that did not already perform properly in the SPICE environment, and that included, what the hell the circuit would do, during the power turn on transient. Quite often, a circuit that would function properly with the correct power supply voltages applied, would simply never get through the transient turn on states, to ever reach that steady state operating condition.

I designed some beautifully elegant Analog circuits, that were damnably clever, until the SPICE discovered it wouldn’t turn on.

You couldn’t afford enough prototype engineering runs in wafer fab to find out such glitches.

G

Modelling doesn’t necessarily mean you need a Terra-Computer.

Years ago, you could buy a three bit mechanical flip flop “logic” gizmo from one of those science hobby stores. It was just a bunch of levers and such that you could assemble into say three JK flip flops, with various inputs and feedbacks, and you could actually make a three bit counter out of it.

You slid a lever back and forth, and that was the clocking signal, and the damn thing would cycle through the states.

Well of course, such a three bit circuit must have eight possible states.

I had designed a very high speed counter (for that time). It had a total count of 200,000 and it was an up down counter that could run at a clock speed of 200MHz. The very fastest then available logic flip flops, were Motorola MECL3 D-flipflops, that could clock at 300 MHz. Nobody made a JK flip flop that would toggle at 300 MHz.

Well my counter needed to be parallel loaded with a number for the terminal count, and then counted down to zero, and reloaded. But it had to be able to divide the input clock frequency by ANY integer from 1 to 200,000. at 200 MHz.

So you had to be able to detect the zero state (or terminal count); enable the parallel load input gates, load the count number, and be ready to start counting five nanoseconds later, whether the count was 1, or 200,000 or anything in between.

Normally the cost of making such a thing would require a lot of flip flops that were a damn sight faster than 200 MHz and a totally parallel counting system.

MY counter started with a divide by four, made out of those MECL3 D-flip flops; three of them plus some special transistor gates made out of fast transistors to get around the D-limitation (I needed JK).

That was followed by a divide by five quinary counter that was made out of 50MHz high speed TTL JK flip flops (Sylvania). The remaining counter of 10,000 count was made of low power 10 MHz TTL Fairchild MSI ICs , namely four decade counters, and it was all basically ripple counters, rather than parallel. (Very clever architecture).
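The reason this ripple arrangement works with slow parts can be checked with a few lines of arithmetic: each stage only has to keep up with the already-divided clock from the stage before it. The sketch below is a simplification under stated assumptions. It treats every stage as a fixed divider and ignores the programmable parallel load described above, and the names are illustrative rather than taken from the original design.

```python
# (description, division ratio, maximum toggle rate in Hz), as quoted in the comment.
STAGES = [
    ("divide-by-4, MECL3 D flip-flops", 4, 300_000_000),
    ("divide-by-5 quinary, high speed TTL JK", 5, 50_000_000),
    ("10,000 count, low power TTL decade counters", 10_000, 10_000_000),
]


def stage_input_rates(clock_hz, stages):
    """Return (description, input rate, limit, within_limit) for each stage."""
    report = []
    rate = clock_hz
    for description, ratio, limit_hz in stages:
        report.append((description, rate, limit_hz, rate <= limit_hz))
        rate //= ratio  # the next stage sees the divided-down clock
    return report


def total_count(stages):
    """Product of all division ratios in the chain."""
    count = 1
    for _, ratio, _ in stages:
        count *= ratio
    return count


for description, rate, limit, ok in stage_input_rates(200_000_000, STAGES):
    print(f"{description}: input {rate / 1e6:g} MHz, limit {limit / 1e6:g} MHz, ok={ok}")
print("total count:", total_count(STAGES))
```

Only the first stage ever sees the full 200 MHz clock, which is why only that stage needs the fastest logic family.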

But I digress, the thing was my quinary was made out of three JK flip flops with appropriate feedbacks to count by five.

So hell, I built a model of that quinary out of that Edmund Scientific mechanical contraption to see if it would work. It was a simple matter to connect all the levers to provide the proper feedbacks, and in minutes I was merrily sliding the clock strip back and forth, and watching the thing step through the five states.

So I hightailed it over to my boss to show him what a genius I was, and he played with it, not quite understanding the correct clock slide stroke required, so he rough housed it a bit, and then told me, he couldn’t even move the clock strip.

Well I had had no problem, so I grabbed it off him, and sure enough the damn thing was locked up solid, and no way to move the clock, without busting something.

Well my quinary was supposed to count like a shift register (for speed), so the sequence of states was: 000, 001, 011, 110, 100, … 000 …

Well of course three bits gives you eight states, not five, so there were three states, my counter never got into.

They were 010, 101, and 111.

So I looked at what my boss had done, and sure enough, my fancy counter was in the 111 state, and in that state, if you clocked it, it sure enough was supposed to go from 111 to 111. In the mechanical gizmo, that meant no go on the clock input.

So that left the 010 and 101 states. So I forcibly flipped the middle flip flop back to zero to get the 101 state, and lo and behold, the clock line was again free, and it now toggled back and forth between 010 and 101, which the logic diagram confirmed it was supposed to do.

So I had to add additional logic gates to my quinary to force a return to 000 if it ever got into 010, or 101, or 111.

Well I cheated, and simply recognized the illegal 1X1 state and it clocked from there to 000.
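The lock-up story can be replayed as a tiny state machine. The sketch below is a reconstruction, not the original wiring: it assumes a three-bit left-shifting register whose incoming bit is chosen so that the transitions match the ones described (the five-state cycle, 111 stuck at 111, and 010 swapping with 101). The table and function names are hypothetical.

```python
# Bit shifted into the low end, keyed by the current state (b2 b1 b0).
# ASSUMPTION: inferred from the state sequences in the comment, not from the real gates.
FEEDBACK = {
    0b000: 1, 0b001: 1, 0b011: 0, 0b110: 0, 0b100: 0,  # the intended five-state cycle
    0b010: 1, 0b101: 0, 0b111: 1,                      # the three unused states
}


def step(state):
    """One clock of the original counter: shift left, bring in the feedback bit."""
    return ((state << 1) & 0b111) | FEEDBACK[state]


def step_fixed(state):
    """Same counter with the fix: any 1X1 state is forced to 000 on the next clock."""
    if state & 0b101 == 0b101:
        return 0b000
    return step(state)


def run(state, clocks, stepper):
    """Return the list of states visited after each of `clocks` clock pulses."""
    visited = []
    for _ in range(clocks):
        state = stepper(state)
        visited.append(state)
    return visited


for start in (0b000, 0b010, 0b111):
    before = [format(s, "03b") for s in run(start, 5, step)]
    after = [format(s, "03b") for s in run(start, 5, step_fixed)]
    print(format(start, "03b"), "original:", before, "fixed:", after)
```

Decoding only the 1X1 pattern is enough because 010 steps to 101 on its own, so every illegal state reaches 000 within two clocks.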

That Edmunds Scientific mechanical contraption saved me a ton of grief.

The counter was for a completely digital timing unit for a pulse generator for semiconductor testing, so it could count a 200 MHz crystal controlled oscillator to make accurate 5 nanosec increments. It also included logic switched PC board strip lines , giving ten delay increments of 100 psec, and four delay increments of 1 nsec, so I could make pulse widths, and periods and anything else in 100 psec steps, all with crystal controlled accuracy. That was in 1968 as near as I can remember.
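As a rough illustration of how those three timing resources combine, a requested interval can be split into whole clock periods, 1 nsec strip-line steps and 100 psec strip-line steps. This is a sketch under assumptions: the function name is made up, and it assumes the fine steps are used 0 to 9 at a time and the 1 nsec steps 0 to 4 at a time, which the comment does not spell out.

```python
CLOCK_PERIOD_PS = 5_000  # one period of the 200 MHz crystal clock
NS_STEP_PS = 1_000       # strip-line delay increment of 1 nsec
FINE_STEP_PS = 100       # strip-line delay increment of 100 psec


def decompose(interval_ps):
    """Split an interval into (clock periods, 1 ns steps, 100 ps steps)."""
    if interval_ps < 0 or interval_ps % FINE_STEP_PS != 0:
        raise ValueError("interval must be a non-negative multiple of 100 ps")
    clocks, remainder = divmod(interval_ps, CLOCK_PERIOD_PS)
    ns_steps, remainder = divmod(remainder, NS_STEP_PS)
    fine_steps = remainder // FINE_STEP_PS
    return clocks, ns_steps, fine_steps


clocks, ns_steps, fine_steps = decompose(12_300)
print(f"12.3 ns = {clocks} clock periods + {ns_steps} x 1 ns + {fine_steps} x 100 ps")
```

The counter supplies the coarse, crystal-accurate part and the switched delay lines fill in the remainder below one clock period.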

It never went into production. They one day laid off the entire advanced circuits group, and decided to outsource the testing to a big company already in that business, instead of trying to build their own.

That ultra fast divide by N counting architecture was never published and to my knowledge it is still the fastest way to do a divide by N counter. But today, the same architecture could do GHz speeds, with today’s hardware.

Yes some modeling can be invaluable when it is done properly.

G

Spice, digital, digital mated with real vlsi chips, timing, transmission line, pretty much all of it.

The interesting thing is your circuit might have been fine, but the numerical engine might not have stabilized within the time steps allowed. That was a lot of what I did too, explain results when it worked and when it didn’t.

You could model the climate in spice, same kind of differential equation solver, but adjusting the model would be time consuming.

Mr. Analog, Bob Pease had a lot of not so nice things to say about SPICE:

http://electronicdesign.com/author/bob-pease

” Mr. Analog”

Well we all can’t be Mr. Analog 🙂

As Harry would sort of say, you have to know your limitations.

I designed a Fairchild I^2L gate array for NASA on a 68010 multi bus system and a vt100 that worst case clocked at about 270 MHz, and best at 450 MHz; it counted the number of bits or anti bits in a 32 bit word, forwards or backwards.

But nothing as clever as your Babbage Machine 🙂

Analog is better. The sampling rate is higher. LOL

Amen. If you apply a little engineering understanding, you can often get the job done with greatly reduced resources. On the other hand, if you have the resources, the brute force method often gets the job done quicker, and cheaper, in terms of engineering man-hours.

If you’re going to build a million of something, you can spend a lot of time figuring out the clever efficient solution. If you’re going to build one, you’re better to brute force it because your major expense is engineering. (… unless you’re building one thing for a satellite.)

On the other hand, there’s nothing like the satisfaction of doing something on an 8 bit computer that somebody else couldn’t do because it crashed his mainframe. 🙂

The story photo shows a beautiful, pristine and unmarred landscape on the front of them. Shouldn’t it show acres of land pockmarked with windmills and solar panels to power said computers. Maybe the UPS and diesel back-up generator too. I guess whatever makes them sleep at night.

Well festooned with dead Eagles !!

g

Cannot hind cast.

Cannot forecast.

Cannot replicate.

Cannot be science.

+10

You cannot infer the amount of modeling done by climate scientists from the number of times three words are used in scientific papers. Did it ever occur to you that one model could be mentioned thousands of times?

The total number of models does not matter.

It’s how many papers are using modeling instead of real world data that matters.

Which you cannot determine using this post’s spurious correlation. Did you know there is a 95.23% correlation between the number of math doctorates awarded and the amount of uranium stored at US nuclear power plants?

http://www.tylervigen.com/spurious-correlations

The only correlation claimed is between the use of the word modeling and doing modeling. So how is that spurious? Words refer to things.

Saul is once again desperate to change the subject.

That’s hardly surprising. The models are the only place that CAGW has ever been found.

Spot on.

“It seems that the modeling cart has gotten far ahead of the scientific horse.”

Not only is the cart ahead of the horse, but it’s so far ahead that the horse can’t even see it any more.

MarkW, knowing a bit about horses, by that time the horse ( smart animal that he is) has realized that the barn is the best place to be and is back there already.

Do we need to distinguish between the normal process of scientific modelling which tries to visualize the interaction of the various components of a system and craaaaaazy, whacked out modelling which plugs data into holes where it doesn’t fit, which glosses over huge gaps in the understanding of processes and which can never be falsified no matter how poorly it fits reality, all in support of predetermined beliefs about how the system works?

I continue to contend that a competitive planetary model can be written in at most a few hundred succinct APL definitions — no more expressions than required in a textbook working thru the quantitative physics . And will execute efficiently on anything from a smart phone to a supercomputer .

And be understandable to those who can understand mathematical physics in any field .

An example of just how succinct and expressive an APL can be : the dot product can be defined in Arthur Whitney’s K from http://Kx.com as

dot : ( +/ * )

and will calculate the dot product not just between a pair of vectors but between arbitrary arrays of pairs of vectors , for instance pairs for every voxel in a 3D map over the globe .
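For readers who do not read K, here is a rough Python analogue of the same idea: one short definition that handles a single pair of vectors and also nested collections of pairs. It is only a sketch of the concept. It uses plain nested lists with the vector components on the innermost level, which is an assumption of this example and not a statement about how K lays out its arrays.

```python
def dot(a, b):
    """Sum of elementwise products; recurses over matching nested lists."""
    if a and isinstance(a[0], (list, tuple)):
        # A collection of vectors: pair them up and take the dot product of each pair.
        return [dot(x, y) for x, y in zip(a, b)]
    return sum(x * y for x, y in zip(a, b))


# A single pair of vectors.
print(dot([1, 2, 3], [4, 5, 6]))

# A 2 x 2 grid of cells, each holding a pair of 2-component vectors.
grid_a = [[[1, 0], [0, 1]], [[2, 3], [1, 1]]]
grid_b = [[[4, 5], [6, 7]], [[6, 7], [2, 2]]]
print(dot(grid_a, grid_b))
```

The Python version needs an explicit recursion to get what the array language provides implicitly, which is the point the comment is making about brevity.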

Anybody interested in cooperating on such a model , contact me on my website , http://CoSy.com .

Well so that would get you presumably a correct result for each of the Physics processes actually included.

So what about all of the real world physics processes, that are not included in your iphone model ??

G

I’m not sure what you mean ?

200 equations , when , for instance , the Planck function can be expressed in one line and be applied to whole sets of frequencies and sets of temperatures like dot above , can express a hell of a lot of physics . And it’s a lot easier to understand what’s being done . And if it’s wrong , correct it . Here’s the Planck function in K in terms of the 3 ways to measure distance and time : temporal and spatial frequency ( wave number ) and wave length .

boltz : 1.3806488e-23 / Boltzmann constant / Joule % Kelvin

Planckf : {[ f ; T ] ( 2 * h * ( f ^ 3 ) % c ^ 2 ) % ( _exp ( h * f ) % boltz * T ) - 1 }

Planckf..h : " in terms of temporal frequency / W % sr * m ^ 2 from cycles % second "

Planckn : {[ n ; T ] ( 2 * h * ( c ^ 2 ) * n ^ 3 ) % ( _exp ( h * c * n ) % boltz * T ) - 1 }

Planckn..h : " in terms of spatial frequency / W % sr * m ^ 2 from cycles % meter "

Planckl : {[ l ; T ] ( ( 2 * h * c ^ 2 ) % l ^ 5 ) % ( _exp ( h * c ) % l * boltz * T ) - 1 }

Planckl..h : " in terms of wave length / W % sr * m ^ 2 from meters % cycle "
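For comparison, the same three textbook forms of the Planck function can be written in Python and cross-checked against each other. This is a sketch, not a translation of the commenter's library: the Boltzmann value is the one quoted above, while the Planck constant and speed of light are not given in the comment and are filled in here from the SI definitions as an assumption.

```python
import math

BOLTZ = 1.3806488e-23   # Boltzmann constant, J/K (value quoted in the comment)
H = 6.62607015e-34      # Planck constant, J s (ASSUMPTION: not given in the comment)
C = 299_792_458.0       # speed of light, m/s (ASSUMPTION: not given in the comment)


def planck_frequency(f, T):
    """Spectral radiance per unit temporal frequency; f in Hz, T in K."""
    return (2 * H * f**3 / C**2) / math.expm1(H * f / (BOLTZ * T))


def planck_wavenumber(n, T):
    """Spectral radiance per unit spatial frequency; n in cycles per meter."""
    return (2 * H * C**2 * n**3) / math.expm1(H * C * n / (BOLTZ * T))


def planck_wavelength(l, T):
    """Spectral radiance per unit wavelength; l in meters."""
    return (2 * H * C**2 / l**5) / math.expm1(H * C / (l * BOLTZ * T))


# The three forms describe the same radiation, so they are related by the
# change of variable f = C / l = C * n.
wavelength = 15e-6   # 15 microns
temperature = 288.0  # K
f = C / wavelength
n = 1.0 / wavelength
b_f = planck_frequency(f, temperature)
print(math.isclose(planck_wavenumber(n, temperature), b_f * C, rel_tol=1e-9))
print(math.isclose(planck_wavelength(wavelength, temperature), b_f * C / wavelength**2, rel_tol=1e-9))
```

The cross-check at the end is a cheap way to catch a slipped exponent in any one of the three forms.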

I implement the computations of the temperature of a gray ball in our orbit from the temperature , radius and distance from the sun in a small handful of expressions in my Heartland presentation , and add one more for the computation in terms of arbitrary spectra . A couple more would apply a cosine map over the sphere and any spectral map over the surface .
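The gray ball calculation mentioned here follows from a simple radiative balance, and can be sketched as below. This is the standard textbook result for a uniform-temperature sphere whose absorptivity equals its emissivity, not the code from the presentation. The solar temperature, solar radius and orbital distance are nominal values supplied as assumptions for illustration.

```python
import math

# ASSUMPTION: nominal values chosen for illustration, not taken from the comment.
T_SUN_K = 5772.0           # effective temperature of the sun
R_SUN_M = 6.957e8          # radius of the sun
ORBIT_M = 1.495978707e11   # mean distance from the sun to our orbit


def gray_ball_temperature(t_source, r_source, distance):
    """Equilibrium temperature of a gray sphere lit by a distant spherical source.

    Absorbed power scales with the ball's cross-section (pi r^2) and emitted power
    with its full surface (4 pi r^2), so T^4 = T_source^4 * (r_source / distance)^2 / 4.
    """
    return t_source * math.sqrt(r_source / (2.0 * distance))


t_ball = gray_ball_temperature(T_SUN_K, R_SUN_M, ORBIT_M)
print(f"gray ball temperature in our orbit: {t_ball:.1f} K")
```

The ball's own radius and its gray-level cancel out of the balance, which is why only the source temperature, source radius and distance appear as parameters.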

What’s the downside ? It’s hard to compare what these notations accomplish versus the hundreds or thousands of lines of traditional scalar , I’ll call them Algol family , languages . It is this power and flexibility which causes them to find their market in money center financial applications . Because , if you’re really good at number crunching , the numbers with the greatest value are ones that have currency symbols attached . And generally , http://Kx.com in particular , run on some of the biggest fastest systems anywhere . But nowadays these APLs also run on Raspberry Pi .

So , I’m not sure what you mean in your comment .

My own priority these days is getting other heads to join me in fleshing out my own 4th.CoSy , abstracting all I’ve learned from years in K and traditional APLs in a chip level Forth so it will run on any current or future processors . But modeling planetary physics I see as almost a virgin field given the ungainly archaic languages such models are currently quite inscrutably implemented in .

As your friendly neighborhood environmental scientist I have to say: no, just no.

First, science in general is very focused on modelling. If anything, climate modelling is quite down to earth because its parameters are so easily measured. Virtually all of economics, theoretical physics, astronomy and a very substantive part of experimental physics and chemistry etc. etc. are based on computer modelling of some sort. The climate research field is WAY too small to produce 55% of all papers related to modelling.

Second, that’s just not how google scholar works. It always gives you a couple of million results if you use a general search term. Try using web of science or something for a more suitable search engine.

Clearly this article does not pass the first ‘but does this statistic have any bearing on reality?’ test.

Well, back to doing some system dynamics modelling of my own.

Cheers

Ben

benben says: “First, science in general is very focused on modeling…”

That was clearly stated when discussing the 900,000 papers found. How’d you miss that?

55% of these model-related papers seem to be related to climate change, which suggests modeling is lopsidedly high in climate science. How’d you miss that?

benben says: “…If anything, climate modelling is quite down to earth because its parameters are so easily measured…”

Lol, good one. A handful of model parameters as “so easily measured.” There are plenty of unknown parameters, parameters that are not modeled, uncertainty in the parameters involved in feedbacks, etc. And even if you had those nailed-down, using the hydrostatic approximation would screw you over.

How about your buddies at Nature.com? http://www.nature.com/news/climate-forecasting-build-high-resolution-global-climate-models-1.16351

Nature.com says: “…Simplified formulae known as ‘parameterizations’ are used to approximate the average effects of convective clouds or other small-scale processes within a cell. These approximations are the main source of errors and uncertainties in climate simulations. As such, many of the parameters used in these formulae are impossible to determine precisely from observations of the real world…”

Is “parameters used in the formulae are impossible to determine precisely from observations” the same thing as “parameters are so easily measured?” Sounds like complete opposites.

benben says: “…Well, back to doing some system dynamics modelling of my own…”

Good luck to that poor system.

I don’t see how you manage to misinterpret what I wrote so badly, but bravo!

Climate science is such a tiny field compared to equally modelling heavy fields such as theoretical physics and economics that it is completely impossible that 55% of ALL modelling related papers across all sciences come from climate change field, even if every single paper ever written by a climate scientist was 100% modelling based.

Second, Nature is correct, but nevertheless climate is a lot easier to measure than 11th-dimensional constructs (theoretical physics) or completely non-existent constructs (the homo economicus, in economics). Climate science does not exist in a vacuum, which somehow people here seem to think it does, and just because it uses modelling doesn’t make it automatically wrong, which people here definitely seem to think is true.

Well, at least you got that last one on the ball. My system is feeling particularly queasy today!

@ DB, + Benben, + Betty, (and whoever can help me) The word “climate scientist” keeps showing up everywhere but can any of you tell what that is and at what uni I can get a “climate scientist” degree? To me that description did not exist until like, 4 years ago. But all of a sudden everybody has a degree in it. I am just wondering. Whenever I look at the credentials of these “Climate Scientists” I get confused. The descriptions seem to vary from Psychologist to astronomer (or is that Astrologist?) or Meteorologist (that one I get). But nowhere does there seem to be a description that kind of makes it clear to me, and thanks for any answer.

The word “climate scientist” keeps showing up everywhere but can any of you tell what that is and at what uni I can get a “climate scientist” degree?

Well it sure doesn’t seem to require any quantitative courses beyond some sort of physics for poets pretend quant courses .

I got sucked into this slough because of the grossly amateurish level of math and physics I saw pervade these blog battles . Clearly not up to even that required in just about any field of undergraduate engineering .

And to wield the power of the national or global government on these matters , better to have a degree in poli sci or sociology .

I think ‘climate scientist’ is mostly a bogeyman invented on the far right spectrum of American politics. I have quite a few people working on climate change and none of them would call themselves ‘climate scientist’. It’s just easier to create an alternative narrative if you have only a limited number of players. That’s why you keep seeing the same few ‘bad people’ pop up on this site (michael strong, the Indian guy from the IPCC, Gore). And because people don’t want to deal with the fact that virtually all of the sciences work on climate change sometimes and are happy to do so, they invent the generic sinister person ‘the climate scientist’.

It’s quite impressive writing from that point of view, what Anthony et al. project on this site!

Anyway, more to the point: my flatmate who works on the climate models so disliked by the commenters here refers to his field as atmospheric physics.

Funny that benben declares that the name that most climate scientists use to refer to themselves is nothing more than an invention of the far right.

BTW, has anyone else noticed the constant habit of liberals to declare that everyone who is more conservative than they are, is far right, extreme right, or something similar.

It goes back to their desperate need to de-legitimize anyone who disagrees with them.

MarkW, I think that if you take the world average political preference and plot the average WUWT commenter on that they’d end up pretty far on the right end of the spectrum. I’m obviously not saying extreme right, which we use (in Europe at least) to signify a whole different type of social movement.

So not a dismissal, just an observation. An accurate one I think.

It really is sad when leftists assume that they are the measure against which all things should be measured.

As always, the communists call the socialists conservative.

“It really is sad when leftists assume that they are the measure against which all things should be measured.”

The left know they’re super-smart and everyone else is wrong.

The right know about the Dunning-Kruger Effect.

benbenben says:

If anything, climate modelling is quite down to earth because its parameters are so easily measured.

OK then, measure this parameter: quantify AGW with a measurement.

Ready? OK. On your mark…

…Get set…

…GO!

Hey! What happened? You didn’t ‘Go!‘. Why not?

Could it be that the AGW parameter isn’t so easy to measure?

In fact, AGW is central to the entire ‘dangerous man-made global warming’ debate. You know, the scientific debate that your alarmist contingent has decisively lost.

If you can’t even measure the most basic parameter, then all you’re doing is blowing hot air… Mr. “Environmental Scientist”. (Heavy emphasis on ‘environmental.‘ But on ‘scientist’… not so much.)

[Deleted. More wasted paragraphs from a site pest. -mod]

Not only that, ‘Greenhouse’ was a theory when it was proposed and it still is a theory today.

[Deleted. More wasted paragraphs from a site pest. -mod]

DB’s whole point is that you can’t quantitatively measure AGW.

As for the assertion that modeled parameters are easily measured, that assertion is demonstrably false unless your definition of “easily” is completely divorced from “accurately.” We don’t even measure the average surface temperature of the Earth, but instead use an assumed proxy – the mean of the daily min/max at locations mostly selected around population centers. No person could ever think that a min/max mean is accurately representative of heat flux from a surface, which varies proportionally to the fourth power of instantaneous temperature. (What if the daily max temp at a location doesn’t change over time but stays at that point for longer as time passes? What if the min temperature goes up but the max stays the same while the time spent at the max shortens over time?) Nor were those measurement locations selected for the purpose of global climate measurement, and even if they were, they are mostly corrupted by biases that have never been shown to be reliably corrected for.
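The first hypothetical in that comment (same daily min and max, but more time spent at the max) can be put into numbers with a small sketch. The hourly temperatures below are made up for illustration, the function names are hypothetical, and the surface is assumed to radiate as an ideal blackbody, which is a simplification.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 * K^4)


def minmax_mean(temps):
    """The proxy discussed above: midpoint of the daily minimum and maximum."""
    return (min(temps) + max(temps)) / 2.0


def mean_emitted_flux(temps):
    """Time-averaged blackbody flux, which goes as the fourth power of T."""
    return sum(SIGMA * t**4 for t in temps) / len(temps)


# Two made-up days of 24 hourly readings in kelvin (illustrative only).
# Both days share the same minimum (280 K) and maximum (295 K).
day_short_peak = [280.0] * 18 + [295.0] * 6   # 6 hours at the maximum
day_long_peak = [280.0] * 6 + [295.0] * 18    # 18 hours at the maximum

if __name__ == "__main__":
    for name, day in (("short peak", day_short_peak), ("long peak", day_long_peak)):
        print(name, minmax_mean(day), mean_emitted_flux(day))
```

Both days report the same min/max mean, yet the day that lingers at the maximum emits noticeably more on average. Whether real station records behave like either toy day is a separate question that this sketch does not address.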

We have no reliable temperature record of the oceans, or of the atmosphere except at a very thin layer. We have no reliable measurement of amounts of precipitation over the oceans (70% of the Earth’s surface). We can’t model clouds. We have no time machine to go back and verify the accuracy of proxy temperature reconstructions used to tune the models.

We simply do not have the data to even begin to develop a mathematical model that one could reasonably believe accurately simulates the behavior of a system as complex as the Earth’s climate system. Nor will we ever have that data since collection of it would require procedures that are simply not available. Ask yourself a simple question – if computer models are good enough for climate scientists to tell us how much of a temperature increase we get for a doubling of CO2, and then to proceed to tell us how much more flooding, and droughts, and hurricanes we get for that temperature increase, why are other scientists in other disciplines bothering with that pesky process of actual experimentation? Why don’t the drug companies and the epidemiologists stop all those time-consuming, expensive double-blind studies and long-term tracking of hundreds of patients? Hell, they should just get with the program, develop a “mathematical model” of the human body and simulate its response to whatever drugs or things we eat, submit it to the FDA for approval, and save a ton of time and money. Why is it only climate scientists who insist on driving public policy based solely on the unverified quantitative output of theoretical computer models?

Betty, betty, betty, benben claimed that it was easy. All DB did was prove that benben is once again lying.

[Note: “Betty” is a fake. “Her” comments have been deleted. -mod]

Well, I would say that some of the parameters are inferred and others are directly observed. And we have a HUGE amount of empirical data that is being fed directly into these models (precipitation, moisture, albedo, particles etc etc etc.). So it’s kind of weird to ask for a single AGW parameter and then rail against the fact that that does not exist.

Once again I invite all skeptics to just follow a MOOC or two and make their own model and then play around with some of the published CMIP5 models. It’s not that difficult and it’ll certainly take less time than spending years arguing in the comment section of this blog.

Cheers!

Ben

We do have giga-bytes worth of data. The problem is that tera-bytes would still be insufficient for the problem at hand.

MarkW, sure, that may or may not be true, but to say that there is no data at all (like DB likes to do) is not true. I’m glad we agree on that at least 😉

The difference between having less than 0.1% of the data needed and having no data at all is hardly worth mentioning, unless your goal is deception.

“Virtually all of economics, theoretical physics, astronomy and a very substantive part of experimental physics and chemistry etc. etc. are based on computer modelling of some sorts.”:

That’s nonsense. Aside from your “theoretical” physics example, none of the fields in that list are “based on” modeling. Each may USE computer models, but those models are developed only after physical data was used to postulate relationships, and further physical data was used to confirm and quantify the accuracy of those postulated relationships. In none of those fields do scientists rely exclusively on the output of a computer model to quantify a physical phenomenon, even so far as to question the accuracy of physical measurements that conflict with the model, as do many “climate scientists.” You’re confusing instances where past scientific practices have utilized models independently verified to produce reliable results (astronomy, physics, chemistry), with reliance on theoretical models as a substitute for the scientific inability to experimentally measure the behavior of a system in response to a change in an input.

Benben, unlike Google, Google Scholar does not give you millions of hits. GS is a precise bibliometric instrument. People use the term modeling when they talk about modeling. The use of this term in climate science is incredibly high. I believe this is diagnostic, as stated. Modeling has taken over climate science.

https://scholar.google.com/scholar?hl=en&q=modelling+AND+climate+AND+change&btnG=&as_sdt=1%2C7&as_sdtp=

2,380,000 results

I’m glad that you at least made an easily falsifiable statement David 😉

Cheers,

Ben

benben, if the inputs are so easily measured, what’s the exact amount of aerosols released into the atmosphere for each of the last 50 years, broken down by chemical composition.

Your belief in things already disproven is admirable.

Climate “science” likes models because they are easier to manipulate than measurements to obtain a politically defined result.

(They adjust the data also, but it is easier to spot those machinations than those hidden deep in the assumptions of a complex computer program.)

To be correct, 55% of *published articles* are about climate modeling, not 55% of research done. If you are running a GCM it is incredibly easy to run the model, publish a paper, tweak the model, run it again, publish. This way you rack up an impressive number of publications with very little effort. Compare that with old school climatology where you did field work, tramping around collecting ice cores, tree rings, etc to try and tease out past climates. That’s labor intensive and hence far fewer papers published.

And are you saying 4% of the entire Federal science research budget or 4% of NOAA’s budget. Huge difference. 4% of the overall Federal budget would not be “a tiny fraction”

You may be on to something here, but I think you need to refine your methodology and tighten your definitions.

This is so true. Just looking at the number of publications is… just not very relevant. Nothing wrong with writing a couple of modelling papers. Good exercise in scientific writing and dealing with reviewers and such. But it’s kind of ridiculous to write these kinds of blog posts based on that.

The articles report the research so they are a reasonable proxy for it. Most of the research is based on modeling in one way or another. The point is very simple.

I was going to say something very similar about that 55% number. It is an over-reach to say #papers ~ research done.

That said, you have to understand the motivation to cram the peer-reviewed literature with quantity: it precludes a careful quality assessment when the bulk of papers in the GCM field is so large. One person or group could never carefully and exhaustively review such a bulk. To any naysaying, a counterparty could always say, “but what about these papers.”

And that is precisely the point. Advocates of climate change, and of the model outputs it entirely depends upon, are still attempting to position it as the null hypothesis by flooding the journals with circular-logic papers.

Joelobryan, I don’t think that that’s true. If you’re in the field you know exactly what the value of these and other papers is. And the interface between science and policy is always messy and that hardly has anything to do with modelling itself. See for instance medical research!

As a layman you can still get a feel for how important a work is. Is it published in a top ranking journal? It’s probably good. Is it published in some random Chinese journal? Not so much. Can you only find it via google scholar or is it a conference proceeding? Ha.

Anyway, the truth of the matter is that climate research is pretty well done nowadays. Websites like WUWT create this alternative narrative of a bunch of crazy power hungry bureaucrats abusing incredibly simplistic models to undermine democracy. It’s entertaining to read but it’s just really far removed from the boring daily grind of science, of which climate research is very much a part.

Hey, you’re always free to follow a couple of MOOCs on the topic and see for yourself how things are done, instead of relying on stuff like what’s posted here!

Cheers,

Ben

Ben,

No, it’s like the CMIP 3/5 ensembles. With such a spaghetti splay of supposedly plausible in silico climate responses to rising pCO2, one can then, post hoc, cherry-pick the one or few to claim success against observation. That’s the very definition of pseudoscience.

“a bunch of crazy power hungry bureaucrats”

Don’t need that; inertia and momentum are enough.

Joel,

My flatmate makes climate models for a living, so I know how they work and have played around a bit with them. It’s just pretty standard science. The models all point in roughly the same direction because that’s just the way it is, not because of some massive conspiracy.

You want proof? Very easy: just go on coursera, follow a MOOC or two and make your own model. Shouldn’t take you more than a couple of weeks, depending on your math skills. And then play around with any of the hundreds of publicly published models.

Please also note that the comment section here is never frequented by someone who says ‘I made a climate model myself and it proves that these CMIP models are bullshit’. The reason being that anyone who has the skills to make a climate model understands that the CMIP models are doing quite a good job.

Contrast that with the post on which we are commenting here. The guys at WUWT can’t even put together a decent google scholar search.

Now, I understand that most of the stuff on this site is actually a super conservative American flamewar against liberal Americans, but it’s just weird to see science caught up in it.

Cheers,

Ben

benben says:

…the CMIP models are doing quite a good job.

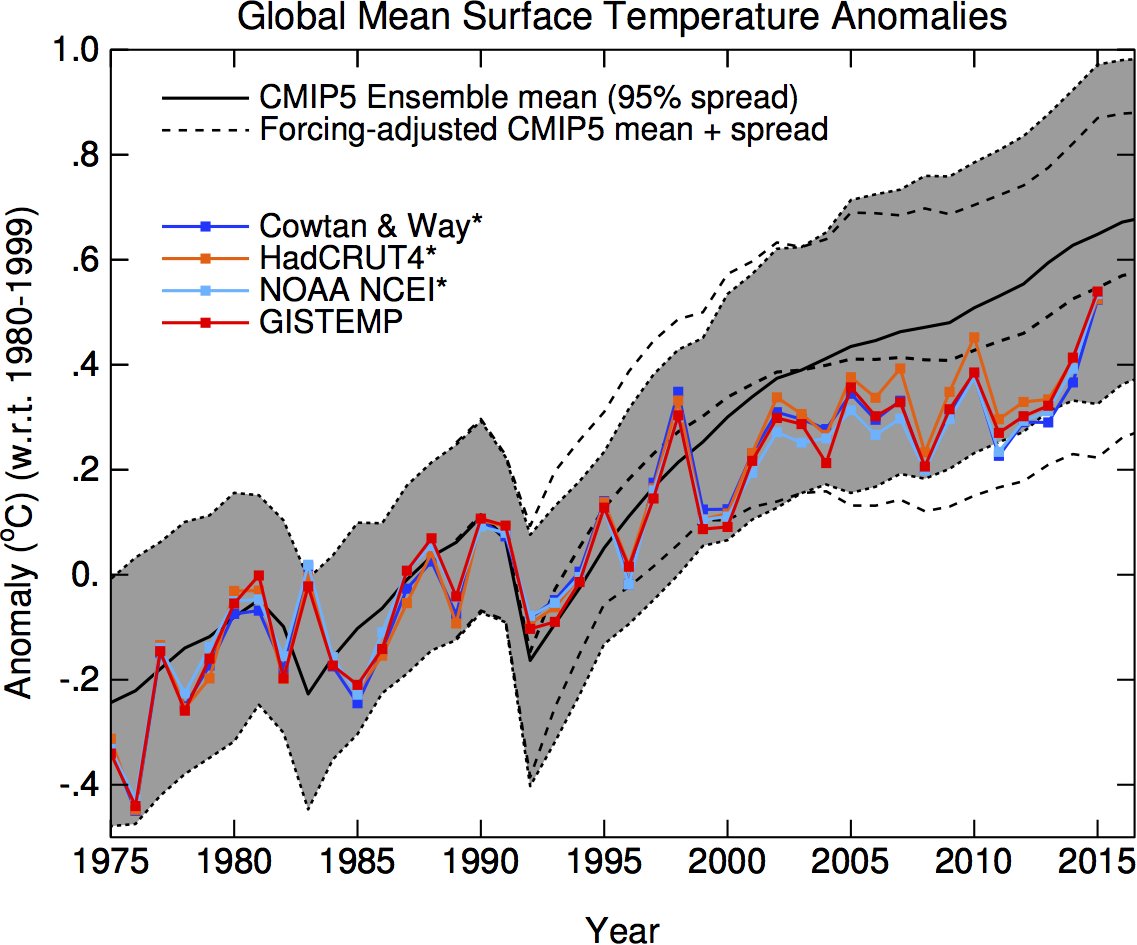

http://l.yimg.com/fz/api/res/1.2/u.i.A9hIbX2Ql7L7LC5_jg–/YXBwaWQ9c3JjaGRkO2g9Njk5O3E9OTU7dz0xMDE1/http://notrickszone.com/wp-content/uploads/2013/09/73-climate-models_reality.gif

(*snicker*)

I find it virtually impossible even to get people to agree on how to calculate the temperature of a uniformly colored ( isotropic spectrum ) ball in our orbit .

And if you can’t start with that , you have left the domain of analytical physics .

Which largely explains Cork Hayden’s observation that if it were really science there would be 1 model , not , what is it now ? 70+ ?

dbstealey:

*snicker*

Yes, Tone, giggle like a little girl.. suits you.

We all know that Giss, Had, Noaa are all massively adjusted to try to get somewhere near the erroneous models.

Your point is?

yep, dbstealey

The models basically construct the side of a barn.. and still missed it by a proverbial mile. !!

dbstealey: That graph you posted was manipulated by selecting a base period from 1980-1999 to measure the anomaly. All this does is force the four measurement lines into the model range. The surface temperature data starts more than a century ago – why do you suppose that cute little picture of yours not only focused only on the tail end of it, but re-centered the measurements to the model projections around an arbitrary slice within that window?
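The mechanics of a base period are easy to show with a toy example. The sketch below uses two synthetic, made-up series (not real temperature data) and hypothetical function names. It only demonstrates the arithmetic: taking anomalies against a base period subtracts a constant from each series, so any two series are made to agree on average inside that window, while their trends are left unchanged.

```python
def anomalies(values, years, base_start, base_end):
    """Subtract the mean over [base_start, base_end] from every value."""
    base = [v for v, y in zip(values, years) if base_start <= y <= base_end]
    offset = sum(base) / len(base)
    return [v - offset for v in values]


def window_mean(values, years, start, end):
    """Average of the values whose year falls in [start, end]."""
    picked = [v for v, y in zip(values, years) if start <= y <= end]
    return sum(picked) / len(picked)


# Synthetic series, invented purely for illustration.
years = list(range(1900, 2000))
slow = [14.0 + 0.005 * (y - 1900) for y in years]   # slower warming trend
fast = [13.0 + 0.020 * (y - 1900) for y in years]   # faster warming trend

# Same late base period for both series, plus an early one for comparison.
slow_late = anomalies(slow, years, 1980, 1999)
fast_late = anomalies(fast, years, 1980, 1999)
slow_early = anomalies(slow, years, 1900, 1919)

if __name__ == "__main__":
    print(window_mean(slow_late, years, 1980, 1999))
    print(window_mean(fast_late, years, 1980, 1999))
    print(slow_late[0] - slow_early[0])
```

Both series average to zero over 1980-1999 by construction, and switching the base period moves a series up or down by one fixed amount. Whether a given choice of window is misleading depends on what the chart is used to claim.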

Kurt, I think you mean Toneb not dbstealey. Toneb posted the manipulated graph.

It’s also possible that the ongoing ‘adjustments’ to the series in Toneb’s graph are designed to keep the series in the model range.

Billy,

Kurt was obviously referring to Toneb’s chart. He referred to four models, not to 70+. The one I linked to has been posted numerous times without any criticism or questioning. And similar charts have also been posted showing the same thing.

Next:

Bob Armstrong,

Correct, there are more than 70 climate models. And Toneb’s are more in error than most of them. For those who may want to know why, just do a search for ‘cowtan & way’. They’ve been thoroughly debunked, and now only True Believers like toneb pay them any attention.

Bob Armstrong, I don’t know exactly what discussion you’re referring to, but it’s pretty hyperbolic to state that just because you can’t agree on how to model something you have ‘left the domain of analytical physics’. And anyway, atmospheric physics is a different domain to start with so that isn’t such a big problem 😉

And also: “Which largely explains Cork Hayden’s observation that if it were really science there would be 1 model , not , what is it now ? 70+”

So the assumption here is that something is only science if it has one answer? Or that it can only be modeled in 1 way? That just… isn’t how science works. If only because different models focus on different things. I think the main thing CMIP5 shows is that you can model the climate in a very wide variety of ways and yet get roughly the same outcome (more CO2 = higher temperatures). That is exactly what gives the rest of the world the confidence in the AGW hypothesis. It’s not just 1 model. It’s, like you say, 70+ independently constructed models that show the same behaviour.

Cheers,

Ben

Because they are programmed that way.

BenBen , watching this discussion I have concluded you are an intellectually dishonest troll . You should realize that anything other than an accurate understanding of reality is not optimal for your own welfare or the welfare of your family or anybody other than the crapitalist class which feeds off the force required to subjugate the rational to their willful delusions .

Here’s the model , in K for thermal power spectrum as a function of wavelength:

Planckl : {[ l ; T ] ( ( 2 * h * c ^ 2 ) % l ^ 5 ) % ( _exp ( h * c ) % l * boltz * T ) - 1 }

Planckl..h : " in terms of wave length / W % sr * m ^ 2 from meters % cycle "

Here’s the model for its integral in 4th.CoSy :

: T>Psb ( T -- P ) 4. _f ^f 5.6704e-8 _f *f ;

Those are the only models , winnowed thru many decades of experiment and observation , for those processes .

That’s physics . There is only one model for calculating the temperature of a gray ball in our orbit and that gives a temperature of about 278.5 +- 2.3 which explains 97% ( ’tis a magic number ) of our estimated surface temperature .
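As a rough check on that figure, here is a minimal sketch of the standard radiative-balance calculation for a gray ball. It assumes the ball absorbs over its cross-section and emits over its whole surface, so the gray absorptivity cancels. The solar temperature, solar radius and orbital distance below are round reference values I have assumed; they are not taken from the comment, and the function names are hypothetical.

```python
import math

SIGMA = 5.6704e-8     # Stefan-Boltzmann constant as quoted above, W / (m^2 * K^4)
T_SUN = 5772.0        # effective solar temperature, K (assumed)
R_SUN = 6.957e8       # solar radius, m (assumed)
D_ORBIT = 1.496e11    # mean Sun-Earth distance, m (assumed)


def stefan_boltzmann_power(t):
    """Power per unit area radiated by a blackbody at temperature t."""
    return SIGMA * t**4


def gray_ball_temperature(t_source, r_source, distance):
    """Equilibrium temperature of a gray ball lit by a spherical source.

    Absorbed: a * pi * r^2 * sigma * Ts^4 * (Rs / d)^2
    Emitted:  a * 4 * pi * r^2 * sigma * T^4
    Setting them equal gives T = Ts * sqrt(Rs / (2 * d)).
    """
    return t_source * math.sqrt(r_source / (2.0 * distance))


if __name__ == "__main__":
    t_ball = gray_ball_temperature(T_SUN, R_SUN, D_ORBIT)
    print(t_ball, stefan_boltzmann_power(t_ball))
```

With these assumed inputs the result lands near 278 K, inside the range quoted above. The exact figure moves with the solar temperature and the point in the orbit that are chosen.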

The model for a ball asymmetrically irradiated with a uniform ( isotropic ) arbitrary spectrum is

( ( dot[ ae ; source ] % dot[ ae ; sink ] ) ^ 1%4 ) * TgrayBody

where ae is the absorption=emission spectrum ( color ) of the object .

Either that is correct and the next expression in constructing a quantitative audit trail from the output of the Sun to our estimated surface temperature , or we need to stop right there and get it right .
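A literal Python transcription of that expression is sketched below. Spectra are represented as equal-length lists sampled on a shared wavelength grid, and the source and sink spectra are assumed to be normalized to the same total; both choices are my assumptions, since the comment does not say how the spectra are represented. The function names are hypothetical.

```python
def dot(a, b):
    """Inner product of two spectra sampled on the same grid."""
    if len(a) != len(b):
        raise ValueError("spectra must be sampled on the same grid")
    return sum(x * y for x, y in zip(a, b))


def colored_ball_temperature(ae, source, sink, t_gray):
    """Scale the gray-body temperature by the fourth root of the ratio of
    the object's spectrum weighted by the source to that weighted by the sink."""
    return (dot(ae, source) / dot(ae, sink)) ** 0.25 * t_gray


if __name__ == "__main__":
    # Made-up three-band spectra, each normalized to sum to 1 (illustrative only).
    source = [0.7, 0.2, 0.1]   # weighted toward short wavelengths
    sink = [0.1, 0.2, 0.7]     # weighted toward long wavelengths
    flat = [0.5, 0.5, 0.5]     # gray object: same absorptivity in every band
    tinted = [0.9, 0.5, 0.1]   # absorbs more where the source is strong
    print(colored_ball_temperature(flat, source, sink, 278.5))
    print(colored_ball_temperature(tinted, source, sink, 278.5))
```

Two properties follow directly from the formula under these assumptions: a flat spectrum returns the gray-body temperature unchanged, and scaling ae by any constant has no effect, since the constant cancels in the ratio.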

Any other procedure is not physics .

“70+ independently constructed models that show the same behaviour.”

And not a single one of them matches what the real world has done.

Funny thing that.

But MarkW, we can at least agree on the obvious right? That the models show that there is a certain amount of warming, and that observations also show that there is warming.

Ignoring the magnitudes for a second, the upward trend is pretty clear for both model and observation:

https://wattsupwiththat.com/2016/05/15/april-2016-global-surface-landocean-and-lower-troposphere-temperature-anomaly-update/

But that doesn’t show any sort of relation, might as well compare the models to population.

And in fact, there has been no measurable loss of night time cooling, which would be required if CO2 was the cause of the warming.

benben is starting to sound a lot like Nye.

CO2 is up, temperatures are up, therefore it’s settled.

The models have gotten the amount of warming off by huge amounts.

The models have gotten the timing of the warming off by huge amounts.

The models have gotten the distribution of the warming, both geographically and in terms of altitude, off by huge amounts.

If the best you can do is state that models predicted warming and it warmed, then you are in pathetically bad shape.

I’m not trying to prove that the models work by saying that. I’m just wondering if I can get you to agree with something that is blazingly obvious. The temp trend in the observations is up. The models point up. You don’t have to agree with anything else, not causality or anything. Just that the trend for both is up.

You guys are so amazingly combative, I’m just trying to get the barest glimmer of an actual conversation going here 🙂

Cheers

Ben

Eh, I’m not sure the published temperature series mean anything. So the fact they show a slight warming isn’t all that compelling, especially since the changes in surface temp do not have the fingerprint of CO2 warming; they have mostly regional changes to min temp.

A different way to see the surface station data

https://micro6500blog.wordpress.com/2015/11/18/evidence-against-warming-from-carbon-dioxide/

Bob Armstrong,

“BenBen , watching this discussion I have concluded you are an intellectually dishonest troll.”

I’ve watched him for months, and conclude he’s psychopathic, and appeals to consider “the welfare of your family or anybody other than the crapitalist class” are falling on deaf ears, so to speak.

Meanwhile, IRS complains about its IT budgets.

From IPCC working group I – executive summary:

“The climate system is a coupled non-linear chaotic system, and therefore the long-term prediction of future climate states is not possible.”

So why, exactly, is all this modeling being done?

answer: a dollar sign with a 1 and followed by 13 zeros behind it.

Jock

Straight to the heart of the matter – my hero

Climate science is a shambles. There are very few scientists, just data collectors and modellers. What a sad state of affairs. Nearly every paper I read states “can’t come to a conclusion, need more data” and yet they are swimming in data. The AGW have got most tied up trying to find the holy grail instead of putting their heads out the window and looking at reality. There are only a very few individuals that can truly call themselves Climate Scientists. Looking from the outside, it’s a disgrace.

The local TV station frequently provides the predictions of three different models when giving its prognostications of the next day’s rainfall or snowfall. They even give a map of these predictions. Rarely are the results ever the same and even more rarely is even one of them correct in predicting the amount. Three times they predicted heavy snowfall over the entire county over the last two years. The county had the snow plows at the ready but no snow fell. Once they predicted “less than an inch, just a heavy dusting.” We got 18 inches. The snow came so fast that by the time they called out the drivers none could make it to the maintenance yard. It took days before people could go to work. And the CAGW Cult loves these models.

Those weather models are still inherently more reliable than climate models. See comment below and my previous guest post here on the trouble with models.

Exactly.

Agreed.

Science has progressed to become an institution of prophets, profits, and white collar jobs. It was inevitable.