UPDATE 2: Animation 1 from this post is happily displaying the differences between the “Best” models and observations in the first comment at a well-known alarmist blog. Please see update 2 at the end of this post.

# # # #

UPDATE: Please see the update at the end of the post.

# # #

The new paper Risbey et al. (2014) will likely be very controversial based solely on the two co-authors identified in the title above (and shown in the photos to the right). As a result, I suspect it will garner a lot of attention…a lot of attention. This post is not about those two controversial authors, though their contributions to the paper are discussed. This post is about the numerous curiosities in the paper. For those new to discussions of global warming, I’ve tried to make this post as non-technical as possible, but these are comments on a scientific paper.

OVERVIEW

The Risbey et al. (2014) paper Well-estimated global surface warming in climate projections selected for ENSO phase is yet another paper trying to blame the recent dominance of La Niña events for the slowdown in global surface warming known as the hiatus. This one, however, also states that ENSO contributes to the warming when El Niño events dominate, as they did from the mid-1970s to the late 1990s. Risbey et al. (2014) also has a number of curiosities that make it stand out from the rest. One of them is the claim that 4 specially selected climate models (which the authors failed to identify) can reproduce the spatial patterns of warming and cooling in the Pacific (and the rest of the ocean basins) during the hiatus period, while the maps of observed versus modeled trends they presented contradict that claim.

IMPORTANT INITIAL NOTE

I’ve read and reread Risbey et al. (2014) a number of times and I can’t find where they identify the “best” 4 and “worst” 4 climate models presented in their Figure 5. I asked Anthony Watts to provide a second set of eyes, and he was also unable to find where they list the models selected for that illustration.

Risbey et al. (2014) identify 18 models, but not the “best” and “worst” of those 18 they used in their Figure 5. Please let me know if I’ve somehow overlooked them. I’ll then strike any related text in this post.

Further to this topic, Anthony Watts sent emails to two of the authors on Friday, July 18, 2014, asking whether the models selected for Figure 5 had been named somewhere. Refer to Anthony’s post A courtesy note ahead of publication for Risbey et al. 2014. Anthony has not received replies. While Risbey et al. (2014) present numerous other 15-year periods, along with numerous other “best” and “worst” models, our questions pertained solely to Figure 5 and the period of 1998-2012, so they should have been relatively easy to answer. One would also have expected the models to be identified in the Supplementary Information for the paper, but there is no Supplementary Information.

Because Risbey et al. (2014) have not identified the models they’ve selected as “best” and “worst”, their work cannot be verified.

INTRODUCTION

The Risbey et al. (2014) paper Well-estimated global surface warming in climate projections selected for ENSO phase was just published online. Risbey et al. (2014) are claiming that if they cherry-pick a few climate models from the CMIP5 archive (used by the IPCC for their 5th Assessment Report)—that is, if they select specific climate models that best simulate a dominance of La Niña events during the global warming hiatus period of 1998 to 2012—then those models provide a good estimate of warming trends (or lack thereof) and those models also properly simulate the sea surface temperature patterns in the Pacific, and elsewhere.

Those are very odd claims. The spatial patterns of warming and cooling in the Pacific are dictated primarily by ENSO processes, and climate models still can’t simulate the most basic of ENSO processes. Even if a few of the models created the warming and cooling spatial patterns by some freak occurrence, the models still do not (cannot) properly simulate ENSO processes. In that respect, the findings of Risbey et al. (2014) are pointless.

Additionally, their claim that the very small, cherry-picked subset of climate models provides good estimates of the spatial patterns of warming and cooling in the Pacific for the period of 1998-2012 is not supported by the data and model outputs they presented, so Risbey et al. (2014) failed to deliver.

There are a number of other curiosities, too.

ABSTRACT

The Risbey et al. (2014) abstract reads (my boldface):

The question of how climate model projections have tracked the actual evolution of global mean surface air temperature is important in establishing the credibility of their projections. Some studies and the IPCC Fifth Assessment Report suggest that the recent 15-year period (1998–2012) provides evidence that models are overestimating current temperature evolution. Such comparisons are not evidence against model trends because they represent only one realization where the decadal natural variability component of the model climate is generally not in phase with observations. We present a more appropriate test of models where only those models with natural variability (represented by El Niño/Southern Oscillation) largely in phase with observations are selected from multi-model ensembles for comparison with observations. These tests show that climate models have provided good estimates of 15-year trends, including for recent periods and for Pacific spatial trend patterns.

Curiously, in their abstract, Risbey et al. (2014) note a major flaw with the climate models used by the IPCC for their 5th Assessment Report (that they are “generally not in phase with observations”), but they don’t accept that as a flaw. If your stockbroker’s models were out of phase with observations, would you continue to invest with that broker based on their out-of-phase models, or would you look for another broker whose models were in-phase with observations? Of course, you’d look elsewhere.

Unfortunately, we don’t have any other climate “broker” models to choose from. There are no climate models that can simulate naturally occurring coupled ocean-atmosphere processes that can contribute to global warming and that can stop global warming…or, obviously, simulate those processes in-phase with the real world. Yet governments around the globe continue to invest billions annually in out-of-phase models.

Risbey et al. (2014), like numerous other papers, are basically attempting to blame a shift in ENSO dominance (from a dominance of El Niño events to a dominance of La Niña events) for the recent slowdown in the warming of surface temperatures. Unlike others, they acknowledge that ENSO would also have contributed to the warming from the mid-1970s to the late 1990s, a period when El Niños dominated.

CHANCE VERSUS SKILL

The fifth paragraph of Risbey et al. (2014) begins (my boldface):

In the CMIP5 models run using historical forcing there is no way to ensure that the model has the same sequence of ENSO events as the real world. This will occur only by chance and only for limited periods, because natural variability in the models is not constrained to occur in the same sequence as the real world.

Risbey et al. (2014) admitted that the models they selected had the proper sequence of ENSO events by chance, not through skill, which undermines the intent of their paper. If the focus of the paper had been the need for climate models to be in-phase with observations, they would have achieved their goal. But that wasn’t the aim of the paper. The concluding sentence of the abstract claims that “…climate models have provided good estimates of 15-year trends, including for recent periods…” when, in fact, it was by pure chance that the cherry-picked models aligned with the real world. No skill involved. If models had any skill, the outputs of the models would be in-phase with observations.

ENSO CONTRIBUTES TO WARMING

The fifth paragraph of the paper continues:

For any 15-year period the rate of warming in the real world may accelerate or decelerate depending on the phase of ENSO predominant over the period.

Risbey et al. (2014) admitted with that sentence that if a dominance of La Niña events can cause surface warming to slow (“decelerate”), then a dominance of El Niño events can provide a naturally occurring and naturally fueled contribution to global warming (“accelerate” it), above and beyond the forced component of the models. Unfortunately, climate models were tuned to a period when El Niño events dominated (the mid-1970s to the late 1990s), yet climate modelers assumed all of the warming during that period was caused by manmade greenhouse gases. (See the discussion of Figure 9.5 from the IPCC’s 4th Assessment Report here and Chapter 9 from AR4 here.) As a result, the models have grossly overestimated the forced component of the warming and, in turn, climate sensitivity.

Some might believe that Risbey et al (2014) have thrown the IPCC under the bus, so to speak. But I don’t believe so. We’ll have to see how the mainstream media responds to the paper. I don’t think the media will even catch the significance of ENSO contributions to warming since science reporters have not been very forthcoming about the failings of climate science.

Risbey et al. (2014) have also overlooked the contribution of the Atlantic Multidecadal Oscillation during the period to which climate models were tuned. From the mid-1970s to the early 2000s, the additional naturally occurring warming of the sea surface temperatures of the North Atlantic contributed considerably to the warming of sea surface temperatures of the Northern Hemisphere (and in turn to land surface air temperatures). This also adds to the overestimation of the forced component of the warming (and climate sensitivity) during the recent warming period. Sea surface temperatures in the North Atlantic have also been flat for the past decade, suggesting that the Atlantic Multidecadal Oscillation has ended its contribution to global warming, and, because by definition the Atlantic Multidecadal Oscillation lasts for multiple decades, the sea surface temperatures of the North Atlantic may continue to remain flat or even cool for another couple of decades. (See the NOAA Frequently Asked Questions About the Atlantic Multidecadal Oscillation (AMO) webpage and the posts An Introduction To ENSO, AMO, and PDO — Part 2 and Multidecadal Variations and Sea Surface Temperature Reconstructions.)

For more than 5 years, I have gone to great lengths to illustrate and explain how El Niño and La Niña processes contributed to the warming of sea surface temperatures and the oceans to depth. If this topic is new to you, see my free illustrated essay “The Manmade Global Warming Challenge” (42MB). Recently Kevin Trenberth acknowledged that strong El Niño events cause upward steps in global surface temperatures. Refer to the post The 2014/15 El Niño – Part 9 – Kevin Trenberth is Looking Forward to Another “Big Jump”. And now the authors of Risbey et al. (2014), including the two activists Stephan Lewandowsky and Naomi Oreskes, are admitting that ENSO can contribute to global warming. How many more years will pass before mainstream media and politicians acknowledge that nature can and does provide a major contribution to global warming? Or should that be how many more decades will pass?

RISBEY ET AL. (2014) – AN EXERCISE IN FUTILITY

IF (big if) the climate models in the CMIP5 archive were capable of simulating the coupled ocean-atmosphere processes associated with El Niño and La Niña events (collectively called ENSO processes hereafter), Risbey et al. (2014) might have value…if the intent of their paper was to point out that models need to be in-phase with nature. Then, even though none of the models properly simulates the timing, strength or duration of ENSO events, Risbey et al. (2014) could have selected, as they have done, the specific models that best simulated ENSO during the hiatus period.

However, climate models cannot properly simulate ENSO processes, even the most basic of processes like Bjerknes feedback. (Bjerknes feedback, basically, is the positive feedback between the strength of the trade winds and the east-to-west sea surface temperature gradient in the equatorial Pacific.) These model failings have been known for years. See Guilyardi et al. (2009) and Bellenger et al. (2012). It is very difficult to find a portion (any portion) of ENSO processes that climate models simulate properly. Therefore, the fact that Risbey et al. (2014) selected models that better simulate the ENSO trends for the period of 1998 to 2012 is pointless, because the models are not correctly simulating ENSO processes. The models are creating variations in the sea surface temperatures of the tropical Pacific, but that “noise” has no relationship to El Niño and La Niña processes as they exist in nature.

Oddly, Risbey et al (2014) acknowledge that the models do not properly simulate ENSO processes. The start of the last paragraph under the heading of “Phase-selected projections” reads [Reference 28 is Guilyardi et al. (2009)]:

This method of phase aligning to select appropriate model trend estimates will not be perfect as the models contain errors in the forcing histories (ref. 27) and errors in the simulation of ENSO (refs 25, 28) and other processes.

The climate model failings with respect to how they simulate ENSO aren’t minor errors. They are catastrophic model failings, yet the IPCC has not come to terms with the importance of those flaws. On the other hand, the authors of Guilyardi et al. (2009) were quite clear in their understanding of those climate model failings when they wrote:

Because ENSO is the dominant mode of climate variability at interannual time scales, the lack of consistency in the model predictions of the response of ENSO to global warming currently limits our confidence in using these predictions to address adaptive societal concerns, such as regional impacts or extremes (Joseph and Nigam 2006; Power et al. 2006).

ENSO is one of the primary processes through which heat is distributed from the tropics to the poles. Those processes are chaotic and they vary over annual, decadal and multidecadal time periods.

During some multidecadal periods, El Niño events dominate. During others, La Niña events are dominant. During the multidecadal periods when El Niño events dominate:

- ENSO processes release more heat than “normal” from the tropical Pacific to the atmosphere, and

- ENSO processes redistribute more warm water than “normal” from the tropical Pacific to adjoining ocean basins, and

- through teleconnections, ENSO processes cause less evaporative cooling from, and more sunlight than “normal” to reach into, remote ocean basins, both of which result in ocean warming at the surface and to depth.

As a result, during multidecadal periods when El Niño events dominate, like the period from the mid-1970s to the late 1990s, global surface temperatures and ocean heat content rise. In other words, global warming occurs. There is no way global warming cannot occur during a period when El Niño events dominate. But projections of future global warming and climate change based on climate models don’t account for that naturally caused warming because the models cannot simulate ENSO processes…or teleconnections.

Now that ENSO has switched modes so that La Niña events are dominant, the climate-science community is scrambling to explain the loss of naturally caused warming, which they’ve been blaming on manmade greenhouse gases all along.

RISBEY ET AL. (2014) FAIL TO DELIVER

Risbey et al (2014) selected 18 climate models from the 38 contained in the CMIP5 archive for the majority of their study. Under the heading of “Methods”, they listed all of the models in the CMIP5 archive and boldfaced the models they selected:

The set of CMIP5 models used are: ACCESS1-0, ACCESS1-3, bcc-csm1-1, bcc-csm1-1-m, BNU-ESM, CanESM2, CCSM4, CESM1-BGC, CESM1-CAM5, CMCC-CM, CMCC-CMS, CNRM-CM5, CSIRO-Mk3-6-0, EC-EARTH, FGOALS-s2, FIO-ESM, GFDL-CM3, GFDL-ESM2G, GFDL-ESM2M, GISS-E2-H, GISS-E2-H-CC, GISS-E2-R, GISS-E2-R-CC, HadGEM2-AO, HadGEM2-CC, HadGEM2-ES, INMCM4, IPSL-CM5A-LR, IPSL-CM5A-MR, IPSL-CM5B-LR, MIROC-ESM, MIROC-ESM-CHEM, MIROC5, MPI-ESM-LR, MPI-ESM-MR, MRI-CGCM3, NorESM1-M and NorESM1-ME.

Those 18 were selected because model outputs of sea surface temperatures for the NINO3.4 region were available from those models:

A subset of 18 of the 38 CMIP5 models were available to us with SST data to compute Niño3.4 (ref. 24) indices.

For their evaluation of warming and cooling trends, spatially, during the hiatus period of 1998 to 2012, Risbey et al (2014) whittled the number down to 4 models that “best” simulated the trends and 4 models that simulated the trends “worst”. They define how those “best” and “worst” models were selected:

To select this subset of models for any 15-year period, we calculate the 15-year trend in Niño3.4 index (ref. 24) in observations and in CMIP5 models and select only those models with a Niño3.4 trend within a tolerance window of ±0.01 K y⁻¹ of the observed Niño3.4 trend. This approach ensures that we select only models with a phasing of ENSO regime and ocean heat uptake largely in line with observations. In this case we select the subset of models in phase with observations from a reduced set of 18 CMIP5 models where Niño3.4 data were available (ref. 25) and for the period since 1950 when Niño3.4 indices are more reliable in observations.
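For readers who want to see that selection rule in concrete terms, here is a minimal Python sketch of it. It assumes annual-mean Niño3.4 values and an ordinary least-squares trend; the function names and toy numbers are hypothetical. It is my reading of the quoted Methods text, not the authors’ code.

```python
# A minimal sketch (not the authors' code) of the phase-selection rule quoted above.
# Assumption: each series holds 15 annual-mean Nino3.4 values in kelvin, so the
# least-squares slope is in K per year. Names and toy data are hypothetical.

def linear_trend(values):
    """Ordinary least-squares slope of values against time steps 0, 1, 2, ..."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    return sxy / sxx


def select_in_phase(obs_nino34, model_nino34, tolerance=0.01):
    """Names of models whose Nino3.4 trend lies within +/- tolerance (K/yr) of the observed trend."""
    obs_trend = linear_trend(obs_nino34)
    return sorted(
        name
        for name, series in model_nino34.items()
        if abs(linear_trend(series) - obs_trend) <= tolerance
    )


# Toy example: observed Nino3.4 trend of -0.02 K/yr over 15 years.
obs = [-0.02 * t for t in range(15)]
models = {
    "A": [-0.025 * t for t in range(15)],  # 0.005 K/yr away: selected
    "B": [0.010 * t for t in range(15)],   # 0.030 K/yr away: rejected
    "C": [-0.015 * t for t in range(15)],  # 0.005 K/yr away: selected
}
print(select_in_phase(obs, models))  # ['A', 'C']
```

Note that nothing in the rule looks at how a model produces its Niño3.4 trend; a model passes the filter whenever its trend happens to land inside the window.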

The opening phrase “To select this subset of models for any 15-year period…” indicates that the “best” and “worst” models varied depending on the 15-year time period. Risbey et al. (2014) presented the period of 1998 to 2012 in their Figure 5. But in other discussions, such as those of their Figures 4 and 6, both the number of “best” and “worst” models and the models themselves changed. The caption for their Figure 4 includes:

The blue dots (a,c) show the 15-year average trends from only those CMIP5 runs in each 15-year period where the model Niño3.4 trend is close to the observed Niño3.4 trend. The size of the blue dot is proportional to the number of models selected. If fewer than two models are selected in a period, they are not included in the plot. The blue envelope is a 2.5–97.5 percentile loess-smoothed fit to the model 15-year trends weighted by the number of models at each point. b and d contain the same observed trends in red for GISS and Cowtan and Way respectively. The grey dots show the average 15-year trends for only the models with the worst correspondence to the observed Niño3.4 trend. The grey envelope in b and d is defined as for the blue envelope in a and c. Results for HadCRUT4 (not shown) are broadly similar to those of Cowtan and Way.

That is, the “best” models, and the number of them, change with each 15-year period. In other words, they’ve used a sort of running cherry-pick for the models in their Figure 4. A novel approach. Somehow, though, this gets highlighted in the abstract as “These tests show that climate models have provided good estimates of 15-year trends, including for recent periods and for Pacific spatial trend patterns.” But they failed to highlight the real finding of their paper: that climate models must be in-phase with nature if the models are to have value.
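The “running” selection described in the Figure 4 caption can be sketched the same way. This is a minimal illustration under stated assumptions: annual-mean series, least-squares trends, the 0.01 K per year tolerance from the paper’s Methods, and the caption’s rule that periods with fewer than two selected models are not plotted. The names and data layout are hypothetical, not the authors’.

```python
# A minimal sketch (not the authors' code) of a running, per-window model selection.
# Assumptions: annual-mean series of equal length, least-squares trends, the
# 0.01 K/yr tolerance from the paper's Methods, and the Figure 4 caption's rule
# that windows with fewer than two selected runs are dropped. Names are hypothetical.

WINDOW = 15  # years


def linear_trend(values):
    """Ordinary least-squares slope of values against time steps 0, 1, 2, ..."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    return sxy / sxx


def running_selection(obs_nino34, runs, tolerance=0.01, min_runs=2):
    """Map each window's start index to (number of runs selected, mean GMST trend of those runs).

    runs: dict of run name -> {"nino34": [...], "gmst": [...]}, same length as obs_nino34.
    """
    results = {}
    for start in range(len(obs_nino34) - WINDOW + 1):
        end = start + WINDOW
        obs_trend = linear_trend(obs_nino34[start:end])
        # The selected set is recomputed for every window, so its membership can change.
        selected = [
            linear_trend(run["gmst"][start:end])
            for run in runs.values()
            if abs(linear_trend(run["nino34"][start:end]) - obs_trend) <= tolerance
        ]
        if len(selected) >= min_runs:
            results[start] = (len(selected), sum(selected) / len(selected))
    return results


# Toy example with 17 years of data, i.e. three overlapping 15-year windows.
years = range(17)
obs = [-0.02 * t for t in years]
runs = {
    "run1": {"nino34": [-0.02 * t for t in years], "gmst": [0.01 * t for t in years]},
    "run2": {"nino34": [-0.015 * t for t in years], "gmst": [0.02 * t for t in years]},
    "run3": {"nino34": [0.03 * t for t in years], "gmst": [0.05 * t for t in years]},
}
for start, (count, trend) in running_selection(obs, runs).items():
    print(start, count, round(trend, 4))  # each window: 2 runs, mean trend about 0.015 K/yr
```

In real model output the Niño3.4 trends are not straight lines, so different runs would pass the filter in different windows; that shifting membership is what the term “running cherry-pick” refers to.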

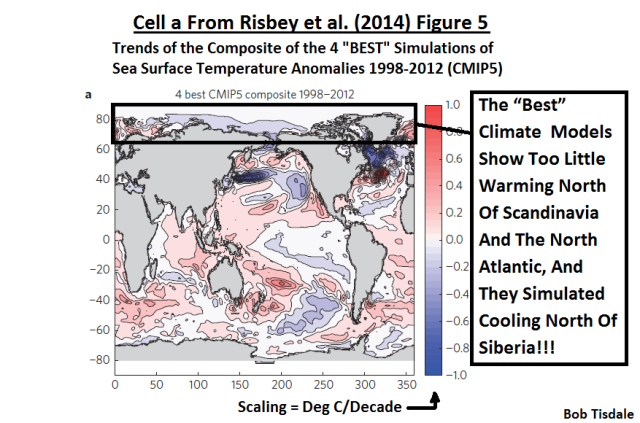

As noted earlier, I’ve been through the paper a number of times, and I cannot find where they listed which models were selected as “best” and “worst”. They illustrated those “best” and “worst” modeled sea surface temperature trends in cells a and b of their Figure 5. See the full Figure 5 from Risbey et al (2014) here. They also illustrated in cell c the observed sea surface temperature warming and cooling trends during the hiatus period of 1998 to 2012. About their Figure 5, they write, where the “in phase” models are the “best” models and “least in phase” models are the “worst” models (my boldface):

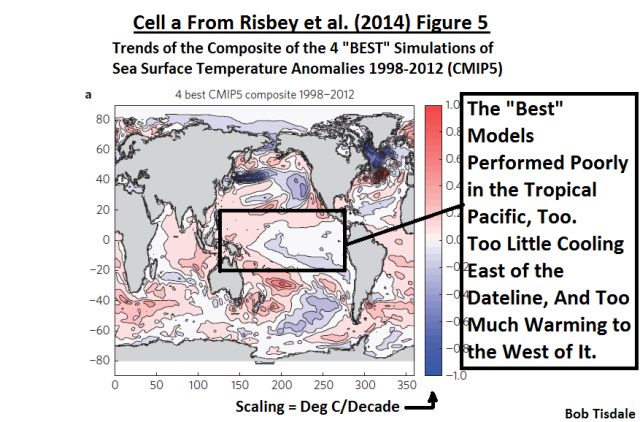

The composite pattern of spatial 15-year trends in the selection of models in/out of phase with ENSO regime is shown for the 1998-2012 period in Fig. 5. The models in phase with ENSO (Fig. 5a) exhibit a PDO-like pattern of cooling in the eastern Pacific, whereas the models least in phase (Fig. 5b) show more uniform El Niño-like warming in the Pacific. The set of models in phase with ENSO produce a spatial trend pattern broadly consistent with observations (Fig. 5c) over the period. This result is in contrast to the full CMIP5 multi-model ensemble spatial trends, which exhibit broad warming (ref. 26) and cannot reveal the PDO-like structure of the in-phase model trend.

Let’s rephrase that. According to Risbey et al (2014), the “best” 4 of their cherry-picked (unidentified) CMIP5 climate models simulate a PDO-like pattern during the hiatus period and the trends of those models are also “broadly consistent” with the observed spatial patterns throughout the rest of the global oceans. If you’re wondering how I came to the conclusion that Risbey et al (2014) were discussing the global oceans too, refer to the second boldfaced sentence in the above quote. Figure 5c presents the trends for all of the global oceans, not just the extratropical North Pacific or the Pacific as a whole.

We’re going to concentrate on the observations and the “best” models in the rest of this section. There’s no reason to look at the models that are lousier than the “best” models, because the “best” models are actually pretty bad.

To put it bluntly, and in total contradiction of the claims made, there are no similarities whatsoever between the spatial patterns in the maps of observed and modeled trends presented by Risbey et al. (2014). See Animation 1, which compares the trend maps for the observations and the “best” models from their Figure 5 for the period of 1998 to 2012.

Animation 1

Again, those are the trends for the observations and the models Risbey et al (2014) selected as being “best”. I will admit “broadly consistent” is a vague phrase, but the spatial patterns of the model trends have no similarities with observations, not even the slightest resemblance, so “broadly consistent” does not seem to be an accurate representation of the capabilities of the “best” models.
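“Broadly consistent” is a qualitative phrase. One common way to put a number on how closely two trend maps resemble each other is an area-weighted pattern correlation. The sketch below is a generic illustration of that technique with hypothetical gridded inputs (lists of rows, one per latitude band, with None for land). It is not a calculation from Risbey et al. (2014), and no result for their Figure 5 is implied.

```python
import math


def pattern_correlation(map_a, map_b, lats):
    """Centred pattern correlation of two gridded trend maps, weighted by cos(latitude).

    map_a, map_b: lists of rows, one row per latitude band; None marks a missing cell.
    lats: latitude (degrees) of each row. Grid cells shrink toward the poles, so each
    cell is weighted by the cosine of its latitude as a simple proxy for its area.
    """
    cells = []
    for row_a, row_b, lat in zip(map_a, map_b, lats):
        weight = math.cos(math.radians(lat))
        for a, b in zip(row_a, row_b):
            if a is not None and b is not None:  # use only cells present in both maps
                cells.append((weight, a, b))
    total = sum(w for w, _, _ in cells)
    mean_a = sum(w * a for w, a, _ in cells) / total
    mean_b = sum(w * b for w, _, b in cells) / total
    cov = sum(w * (a - mean_a) * (b - mean_b) for w, a, b in cells)
    var_a = sum(w * (a - mean_a) ** 2 for w, a, _ in cells)
    var_b = sum(w * (b - mean_b) ** 2 for w, _, b in cells)
    return cov / math.sqrt(var_a * var_b)


# Hypothetical 3 x 3 trend maps (K per decade); None is a land cell.
lats = [-30.0, 0.0, 30.0]
observed = [[-0.2, -0.1, 0.0], [0.1, None, -0.3], [0.2, 0.3, 0.1]]
modeled = [[0.1, 0.2, 0.1], [0.0, 0.1, 0.2], [-0.1, 0.0, 0.1]]
print(round(pattern_correlation(observed, modeled, lats), 2))
```

A value near +1 would indicate closely matching spatial patterns, a value near 0 no resemblance, and a negative value opposing patterns.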

A further breakdown follows. I normally wouldn’t go into this much detail, but the abstract does close with “spatial trend patterns.” So I suspect that science reporters for newspapers, magazines and blogs are going to be yakking about how well the selected “best” models simulate the spatial patterns of sea surface temperature warming and cooling trends during the hiatus.

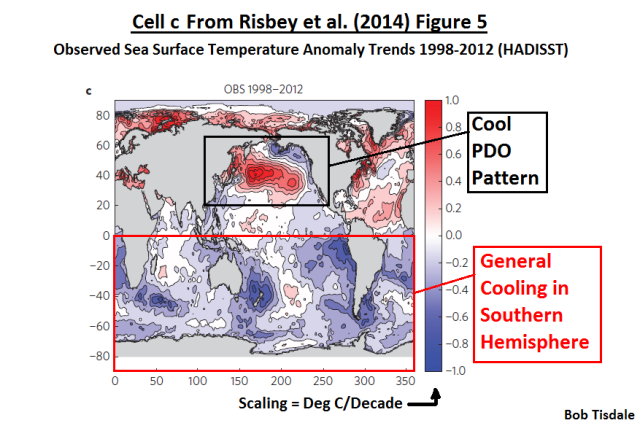

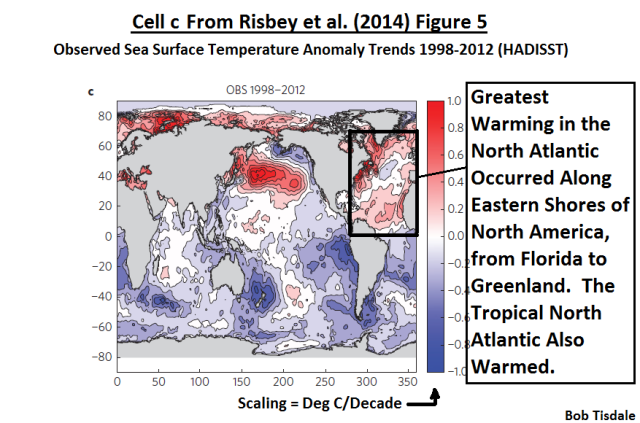

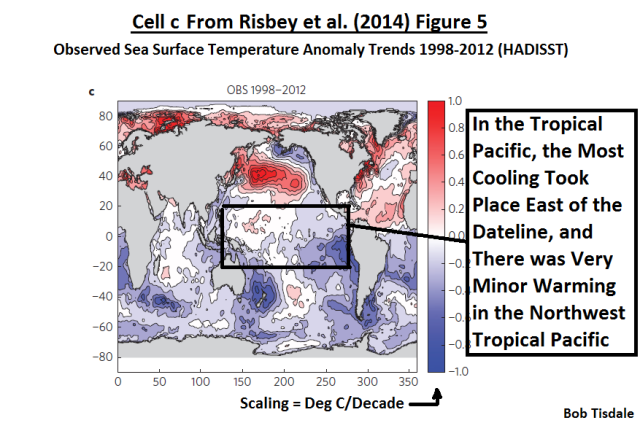

My Figure 1 is cell c from their Figure 5. It presents the observed sea surface temperature trends during the hiatus period of 1998-2012. I’ve highlighted 2 regions. At the top, I’ve highlighted the extratropical North Pacific. The Pacific Decadal Oscillation index is derived from the sea surface temperature anomalies in that region, and the Pacific Decadal Oscillation data refer to that region only. See the JISAO PDO webpage here. JISAO writes (my boldface):

Updated standardized values for the PDO index, derived as the leading PC of monthly SST anomalies in the North Pacific Ocean, poleward of 20N.
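“Leading PC” means the first principal component: the time series that goes with the dominant spatial pattern (the leading eigenvector of the covariance matrix) of the anomaly field. The pure-Python sketch below finds it by power iteration on hypothetical anomalies; selecting grid points poleward of 20N in the North Pacific is left to the caller. It is a generic illustration of the technique, not JISAO’s processing chain, which involves choices this sketch omits (for example area weighting of grid cells and the choice of sign, which an eigenvector analysis leaves arbitrary).

```python
import math
import random


def leading_pc(anomalies, iterations=200, seed=0):
    """Standardized leading principal component of a (time x grid point) anomaly matrix.

    anomalies: one row per month, each row the SST anomalies at every grid point,
    with the monthly climatology already removed.
    """
    n_time = len(anomalies)
    n_space = len(anomalies[0])
    # Remove each grid point's time mean so the covariance is centred.
    col_means = [sum(row[j] for row in anomalies) / n_time for j in range(n_space)]
    x = [[row[j] - col_means[j] for j in range(n_space)] for row in anomalies]
    # Power iteration: repeatedly apply the covariance matrix X^T X to a vector.
    rng = random.Random(seed)
    e = [rng.uniform(-1.0, 1.0) for _ in range(n_space)]
    for _ in range(iterations):
        proj = [sum(r[j] * e[j] for j in range(n_space)) for r in x]  # X e
        new_e = [sum(proj[t] * x[t][j] for t in range(n_time)) for j in range(n_space)]  # X^T (X e)
        norm = math.sqrt(sum(v * v for v in new_e))
        e = [v / norm for v in new_e]
    # Project the anomalies onto the leading pattern and standardize the result.
    pc = [sum(r[j] * e[j] for j in range(n_space)) for r in x]
    mean = sum(pc) / n_time
    std = math.sqrt(sum((p - mean) ** 2 for p in pc) / n_time)
    return [(p - mean) / std for p in pc]


# Hypothetical example: 60 months of anomalies at 4 grid points, driven by one
# oscillating pattern plus small noise.
rng = random.Random(1)
signal = [math.sin(0.3 * t) for t in range(60)]
pattern = [1.0, 2.0, -1.0, 0.5]
field = [[signal[t] * p + rng.gauss(0.0, 0.01) for p in pattern] for t in range(60)]
index = leading_pc(field)
print([round(v, 2) for v in index[:6]])
```

Because the spatial pattern and the time series come out together, the leading pattern can contain regions of opposite sign, which is how warming in the Kuroshio-Oyashio Extension and cooling off the west coast of North America can belong to one and the same mode.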

The spatial pattern of the observed trends in the extratropical North Pacific agrees with our understanding of the “cool phase” of the Pacific Decadal Oscillation (PDO). Sea surface temperatures of the real world in the extratropical North Pacific cooled along the west coast of North America from 1998 to 2012. That cooling was countered by the ENSO-related warming of the sea surface temperatures in the western and central extratropical North Pacific, with the greatest warming taking place in the region east of Japan called the Kuroshio-Oyashio Extension. (See the post The ENSO-Related Variations In Kuroshio-Oyashio Extension (KOE) SST Anomalies And Their Impact On Northern Hemisphere Temperatures.) Because the Kuroshio-Oyashio Extension dominates the “PDO pattern” (even though it’s of the opposite sign; i.e. it shows warming while the east shows cooling during a “cool” PDO mode), the Kuroshio-Oyashio Extension is where readers should focus their attention when there is a discussion of the PDO pattern.

Figure 1

The second “region” highlighted in Figure 1 is the Southern Hemisphere. According to the trend map presented by Risbey et al. (2014), real-world sea surface temperatures throughout the Southern Hemisphere (based on HadISST data) cooled between 1998 and 2012. That’s a lot of cool blue trend in the Southern Hemisphere.

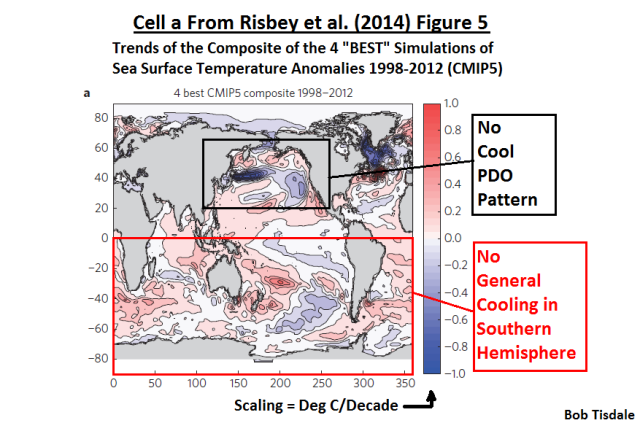

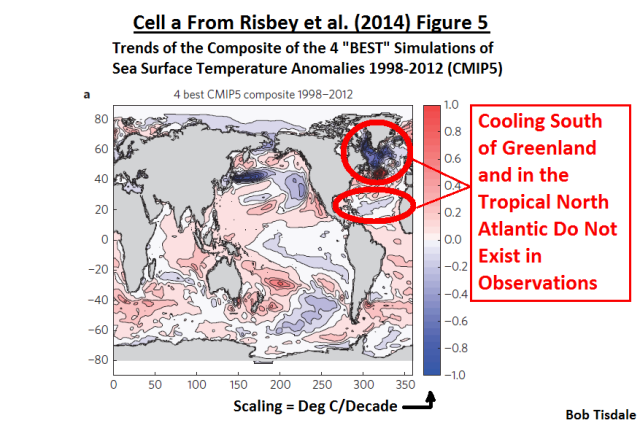

I’ve highlighted the same two regions in Figure 2, which presents the composite of the sea surface temperature trends from the 4 (unidentified) “best” climate models. A “cool” PDO pattern does not exist in the extratropical North Pacific of the virtual world of the climate models, and the models show an overall warming of the sea surfaces in the South Pacific and the entire Southern Hemisphere, where the observations showed cooling. If you’re having trouble seeing the difference, refer again to Animation 1.

Figure 2

The models performed no better in the North Atlantic. The virtual-reality world of the models showed cooling in the northern portion of the tropical North Atlantic and they showed cooling south of Greenland, which are places where warming was observed in the real world from 1998 to 2012. See Figures 3 and 4. And if need be, refer to Animation 1 once again.

Figure 3

# # #

Figure 4

The tropical Pacific is critical to Risbey et al (2014), because El Niño and La Niña events take place there. Yet the models that were selected and presented as “best” by Risbey et al (2014) cannot simulate the observed sea surface temperature trends in the real-world tropical Pacific either. Refer to Figures 5 and 6…and Animation 1 again if you need.

Figure 5

# # #

Figure 6

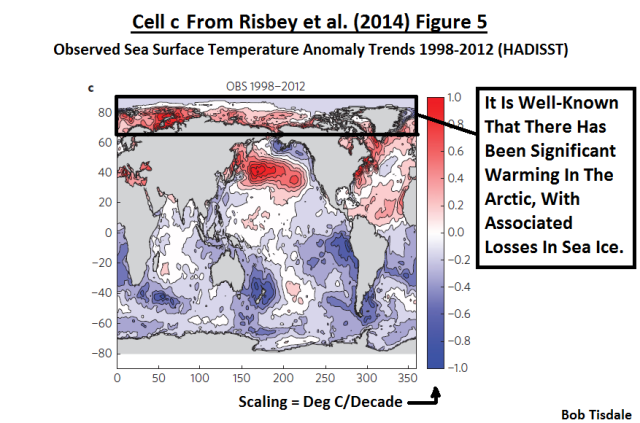

One last ocean basin to compare: the Arctic Ocean. The real-world observations (Figure 7) show a significant warming of the surface of the Arctic Ocean, and that warming is associated with the sea ice loss. The “best” models (Figure 8), of course, do not indicate a similar warming in their number-crunched Arctic Oceans. The differences between the observations and the “best” models stand out like a handful of sore thumbs in Animation 1.

Figure 7

# # #

Figure 8

Because the CMIP5 climate models cannot simulate that warming in the Arctic and the loss of sea ice there, Stroeve et al. (2012) “Trends in Arctic sea ice extent from CMIP5, CMIP3 and Observations” [paywalled] noted that the model failures there were an indication that the loss of sea ice occurred naturally, the result of “internal climate variability”. The abstract of Stroeve et al. (2012) reads (my boldface):

The rapid retreat and thinning of the Arctic sea ice cover over the past several decades is one of the most striking manifestations of global climate change. Previous research revealed that the observed downward trend in September ice extent exceeded simulated trends from most models participating in the World Climate Research Programme Coupled Model Intercomparison Project Phase 3 (CMIP3). We show here that as a group, simulated trends from the models contributing to CMIP5 are more consistent with observations over the satellite era (1979–2011). Trends from most ensemble members and models nevertheless remain smaller than the observed value. Pointing to strong impacts of internal climate variability, 16% of the ensemble member trends over the satellite era are statistically indistinguishable from zero. Results from the CMIP5 models do not appear to have appreciably reduced uncertainty as to when a seasonally ice-free Arctic Ocean will be realized.

WHY CLIMATE MODELS NEED TO SIMULATE SEA SURFACE TEMPERATURE PATTERNS

If you’re new to discussions of global warming and climate change, you may be wondering why climate models must be able to simulate the observed spatial patterns of the warming and cooling of ocean surfaces. The spatial patterns of sea surface temperatures throughout the global oceans are one of the primary factors that determine where land surfaces warm and cool and where precipitation occurs. If climate models should happen to create the proper spatial patterns of precipitation and of warming and cooling on land, without properly simulating sea surface temperature spatial patterns, then the models’ success on land is by chance, not skill.

Further, because climate models can’t simulate where, when, why and how the ocean surfaces warm and cool around the globe, they can’t properly simulate land surface temperatures or precipitation. And if they can’t simulate land surface temperatures or precipitation, what value do they have? Quick answer: No value. Climate models are not yet fit for their intended purposes.

Keep in mind that, in our discussion of the Risbey et al. Figure 5, we’ve been looking at the models (about 10% of the models in the CMIP5 archive) that have been characterized as “best”, and those “best” models performed horrendously.

INTERESTING CHARACTERIZATIONS OF FORECASTS AND PROJECTIONS

In the second paragraph of the text of Risbey et al. (2014), they write (my boldface):

A weather forecast attempts to account for the growth of particular synoptic eddies and is said to have lost skill when model eddies no longer correspond one to one with those in the real world. Similarly, a climate forecast of seasonal or decadal climate attempts to account for the growth of disturbances on the timescale of those forecasts. This means that the model must be initialized to the current state of the coupled ocean-atmosphere system and the perturbations in the model ensemble must track the growth of El Niño/Southern Oscillation (ENSO; refs 2, 3) and other subsurface disturbances (ref. 4) driving decadal variation. Once the coupled climate model no longer keeps track of the current phase of modes such as ENSO, it has lost forecast skill for seasonal to decadal timescales. The model can still simulate the statistical properties of climate features from this point, but that then becomes a projection, not a forecast.

If the models have lost their “forecast skill for seasonal to decadal timescales”, they have also lost their forecast skill for multidecadal and century-long timescales.

The fact that climate models were not initialized to match any state of the past climate came to light back in 2007 with Kevin Trenberth’s blog post Predictions of Climate at Nature.com’s ClimateFeedback. I can still recall the early comments generated by Trenberth’s blog post. For example, see Roger Pielke Sr’s blog posts here and here and the comments on the threads at ClimateAudit here and here. That blog post from Trenberth is still being referenced in blog posts (this one included). Papers like Risbey et al. (2014) are now saying that, for climate models to have forecast skill, they “must be initialized to the current state of the coupled ocean-atmosphere system and the perturbations in the model ensemble must track the growth of El Niño/Southern Oscillation.” But skeptics have been saying this for years.

Let’s rephrase the above boldfaced quote from Risbey et al. (2014). It does a good job of explaining the differences between “climate forecasts” (which many people believe they’ve been getting from the climate science community) and climate projections (which is what we’re actually getting from the climate science community). Because climate models cannot simulate naturally occurring coupled ocean-atmosphere processes like ENSO and the Atlantic Multidecadal Oscillation, and because the models are not “in-phase” with the real world, climate models are not providing forecasts of future climate…they are only providing out-of-phase projections of a future world that have no basis in the real world.

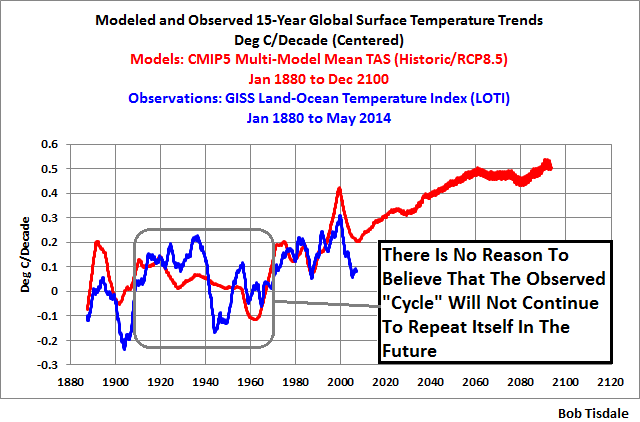

Further, what Risbey et al. (2014) failed to acknowledge is that the current hiatus could very well last for another few decades, and then, after another multidecadal period of warming, we might expect yet another multidecadal warming hiatus—cycling back and forth between warming and hiatus on into the future. Of course, the IPCC does not factor those multidecadal hiatus periods into their projections of future climate. We discussed and illustrated this in the post Will their Failure to Properly Simulate Multidecadal Variations In Surface Temperatures Be the Downfall of the IPCC?

Why don’t climate models simulate natural variability in phase with the multidecadal variations exhibited in observations? There are numerous reasons. First, climate models cannot simulate the naturally occurring processes that cause multidecadal variations in global surface temperatures. Second, the models are not initialized in an effort to match the multidecadal variations in global surface temperatures. It would be a fool’s errand anyway, because the models can’t simulate the basic ocean-atmosphere processes that cause those multidecadal variations. Third, if climate models were capable of simulating multidecadal variations as they occurred in the real world (their timing, magnitude and duration), and if the models were allowed to produce those multidecadal variations on into the future, then the future in-phase forecasts of global warming (different from the out-of-phase projections that are currently provided) would be reduced significantly, possibly by half. (Always keep in mind that climate models were tuned to a multidecadal upswing in global surface temperatures, a period when the warming of global surface temperatures temporarily “accelerated”, to use Risbey et al.’s term, due to naturally occurring ocean-atmosphere processes associated with ENSO and the Atlantic Multidecadal Oscillation.) Fourth, if the in-phase forecasts of global warming were half of the earlier out-of-phase projections, the assumed threats of future global warming-related catastrophes would disappear…and so would funding for climate model-based research. The climate science community would be cutting their own throats if they were to produce in-phase forecasts of future global warming, and they are not likely to do that anytime soon.

A GREAT ILLUSTRATION OF HOW POORLY CLIMATE MODELS SIMULATE THE PAST

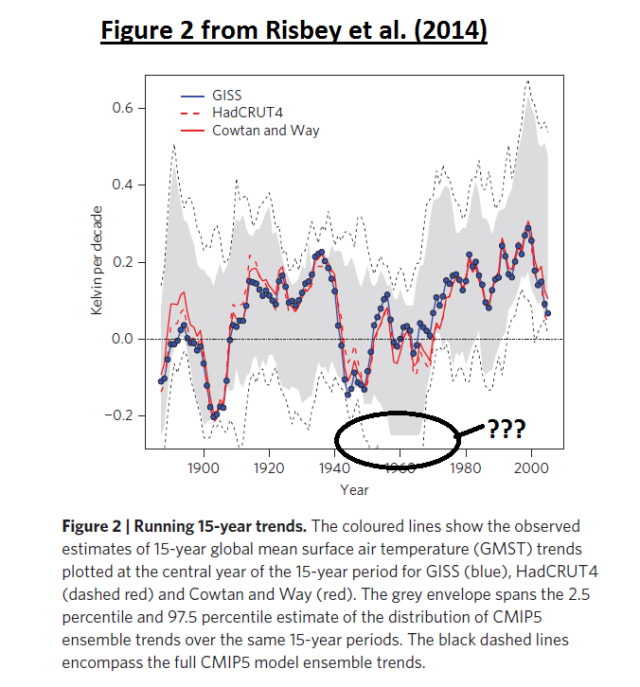

My Figure 9 is Figure 2 from Risbey et al. (2014). I don’t think the authors intended this, but that illustration clearly shows how poorly climate models simulate global surface temperatures since the late 1800s. Keep in mind while viewing that graph that it shows 15-year trends (not temperature anomalies) and that the units of the y-axis are K/decade.

Figure 9

Risbey et al. (2014) describe their Figure 2 as (my boldface):

To see how representative the two 15-year periods in Fig. 1 are of the models’ ability to simulate 15-year temperature trends we need to test many more 15-year periods. Using data from CMIP5 models and observations for the period 1880-2012, we have calculated sliding 15-year trends in observations and models over all 15-year periods in this interval (Fig. 2). The 2.5-97.5 percentile envelope of model 15-year trends (grey) envelops within it the observed trends for almost all 15-year periods for each of the observational data sets. There are several periods when the observed 15-year trend is in the warm tail of the model trend envelope (~1925, 1935, 1955), and several periods where it is in the cold tail of the model envelope (~1890, 1905, 1945, 1970, 2005). In other words, the recent ‘hiatus’ centred about 2005 (1998-2012) is not exceptional in context. One expects the observed trend estimates in Fig. 2 to bounce about within the model trend envelope in response to variations in the phase of processes governing ocean heat uptake rates, as they do.

While the recent hiatus may not be “exceptional” in that context, it was obviously not anticipated by the vast majority of the climate models. And if history repeats itself, and there’s no reason to believe it won’t, the slowdown in warming could very well last for another few decades.

I really enjoyed the opening clause of the last sentence: “One expects the observed trend estimates in Fig. 2 to bounce about within the model trend envelope…” Really? Apparently, climate scientists have very low expectations of their models.

Referring to their Figure 2, the climate models clearly underestimated the early 20th Century warming, from about 1910 to the early 1940s. The models then clearly missed the cooling that took place in the 1940s but then overestimated the cooling in the 1950s and 60s…so much so that Risbey et al. (2014) decided to erase the full extent of the modeled cooling rates during that period by limiting the range of the y-axis in the graph. (This time climate scientists are hiding the decline in the models.) Then there’s the recent warming period. There’s one reason and one reason only why the models appear to perform well during the recent warming period, seeming to run along the mid-range of the model spread from about 1970 to the late 1990s. And that reason is, the models were tuned to that period. Now, since the late 1990s, the models are once again diverging from the data, because they are not in-phase with the real world.

Will surface temperatures repeat the “cycle” of warming and hiatus/cooling that exists in the data? There’s no reason to believe they will not. Do the climate models simulate any additional multidecadal variability in the future? See Figure 10. Apparently not.

Figure 10

My Figure 10 is similar to Figure 2 from Risbey et al. (2014). For the model simulations of global surface temperatures, I’ve presented the 15-year trends (centered) of the multi-model ensemble-member mean (not the spread) of the historic and RCP8.5 (worst case) forcings (which were also used by Risbey et al.). For the observations, I’ve included the 15-year trends of the GISS Land-Ocean Temperature Index data, which is one of the datasets presented by Risbey et al. (2014). The data and model outputs are available from the KNMI Climate Explorer. The GISS data are included under Monthly Observations and the model outputs are listed as “TAS” on the Monthly CMIP5 scenario runs webpage.
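For readers who want to reproduce this kind of graph, the sliding 15-year trend calculation can be sketched as follows. This is a minimal sketch, assuming annual-mean anomalies, ordinary least-squares trends and centred labelling (so the 1998-2012 trend is plotted at 2005); the function names `sliding_trends` and `model_envelope` are hypothetical, and this is not the KNMI Climate Explorer’s code or the code used by Risbey et al. (2014). The percentile envelope is included only to show how a 2.5-97.5 percentile spread like the grey band in Figure 9 could be computed.

```python
import numpy as np

def sliding_trends(years, values, window=15):
    """Centred OLS trends (units per decade) for every complete `window`-year span."""
    years = np.asarray(years, dtype=float)
    values = np.asarray(values, dtype=float)
    centers, trends = [], []
    for start in range(len(years) - window + 1):
        y = years[start:start + window]
        v = values[start:start + window]
        slope = np.polyfit(y, v, 1)[0]   # least-squares slope, units per year
        centers.append(y.mean())         # centred label, e.g. 1998-2012 -> 2005
        trends.append(10.0 * slope)      # convert to units per decade
    return np.array(centers), np.array(trends)

def model_envelope(years, runs, window=15, lo=2.5, hi=97.5):
    """Ensemble-mean 15-year trend plus a lo-hi percentile envelope across runs.

    `runs` is a 2-D array shaped (n_runs, n_years) of annual anomalies.
    """
    all_trends = np.array([sliding_trends(years, r, window)[1] for r in runs])
    centers = sliding_trends(years, runs[0], window)[0]
    mean = all_trends.mean(axis=0)
    lower, upper = np.percentile(all_trends, [lo, hi], axis=0)
    return centers, mean, lower, upper
```

Because a least-squares slope is linear in the data, the trend of the multi-model ensemble-member mean is identical to the mean of the individual runs’ trends, so either order of operations gives the same ensemble-mean curve.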

It’s quite easy to see two things: (1) the modelers did not expect the current hiatus, and (2) they do not anticipate any additional multidecadal variations in global surface temperatures.

Note: If you’re having trouble visualizing what I’m referring to as “cycles of warming and hiatus/cooling” in Figure 8, refer to the illustration here of Northern Hemisphere temperature anomalies from the post Will their Failure to Properly Simulate Multidecadal Variations In Surface Temperatures Be the Downfall of the IPCC?

OBVIOUSLY MISSING FROM RISBEY ET AL (2014)

One of the key points of Risbey et al. (2014) was their claim that the selected 4 “best” (unidentified) climate models could simulate the spatial patterns of the warming and cooling trends in sea surface temperatures during the hiatus period. We’ve clearly shown that their claims were unfounded.

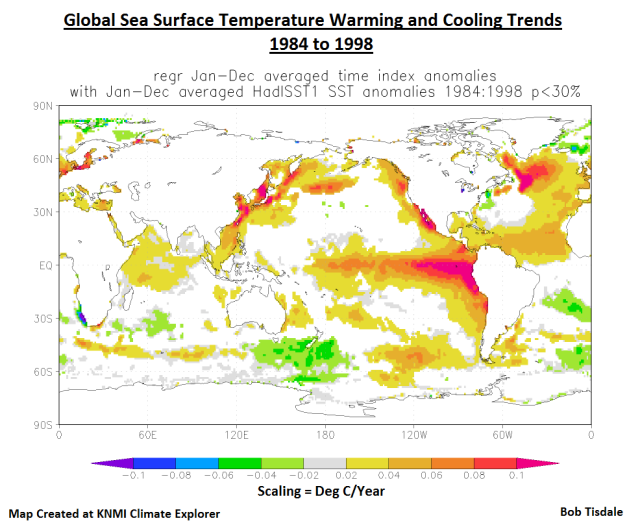

It’s also quite obvious that Risbey et al. (2014) failed to present evidence that the “best” climate models could reproduce the spatial patterns of the warming and cooling rates in global sea surface temperatures during the warming period that preceded the hiatus. They presented histograms of the modeled and observed trends for the 15-year warming period (1984-1998) before the 15-year hiatus period in cell b of their Figure 1 (not shown in this post). So, obviously, that period was important to them. Yet they did not present how well or poorly the “best” models simulated the spatial trends in sea surface temperatures for the important 15-year period of 1984-1998. If the models had performed well, I suspect Risbey et al. (2014) would have been more than happy to present those modeled and observed spatial patterns.

My Figure 11 shows the observed warming and cooling rates in global sea surface temperatures from 1984 to 1998, using the HADISST dataset, which is the sea surface temperature dataset used by Risbey et al. (2014). There is a clear El Niño-related warming in the eastern tropical Pacific. The warming of the Pacific along the west coasts of the Americas also appears to be El Niño-related, a response to coastally trapped Kelvin waves from the strong El Niño events of 1986/87/88 and 1997/98. (See Figure 8 from Trenberth et al. (2002).) The warming of the North Pacific along the east coast of Asia is very similar to the initial warming there in response to the 1997/98 El Niño. (See the animation here which is Animation 6-1 from my ebook Who Turned on the Heat?) And the warming pattern in the tropical North Atlantic is similar to the lagged response of sea surface temperatures (through teleconnections) in response to El Niño events. (Refer again to Figure 8 from Trenberth et al. (2002), specifically the correlation maps with the +4-month lag.)

Figure 11

Climate models do not properly simulate ENSO processes or teleconnections, so it really should come as no surprise that Risbey et al. (2014) failed to provide an illustration that should have been considered vital to their paper.

The other factor obviously missing, as discussed in the next section, was the modeled increases in ocean heat uptake. Ocean heat uptake is mentioned numerous times throughout Risbey et al (2014). It would have been in the best interest of Risbey et al. (2014) to show that the “best” models created the alleged increase in ocean heat uptake during the hiatus periods. Oddly, they chose not to illustrate that important factor.

OCEAN HEAT UPTAKE

Risbey et al (2014) used the term “ocean heat uptake” 11 times throughout their paper. The significance of “ocean heat uptake” to the climate science community is that, during periods when the Earth’s surfaces stop warming or the warming slows (as has happened recently), ocean heat uptake is (theoretically) supposed to increase. Yet Risbey et al (2014) failed to illustrate ocean heat uptake with data or models even once. The term “ocean heat uptake” even appeared in one of the earlier quotes from the paper. Here’s that quote again (my boldface):

To see how representative the two 15-year periods in Fig. 1 are of the models’ ability to simulate 15-year temperature trends we need to test many more 15-year periods. Using data from CMIP5 models and observations for the period 1880-2012, we have calculated sliding 15-year trends in observations and models over all 15-year periods in this interval (Fig. 2). The 2.5-97.5 percentile envelope of model 15-year trends (grey) envelops within it the observed trends for almost all 15-year periods for each of the observational data sets. There are several periods when the observed 15-year trend is in the warm tail of the model trend envelope (~1925, 1935, 1955), and several periods where it is in the cold tail of the model envelope (~1890, 1905, 1945, 1970, 2005). In other words, the recent ‘hiatus’ centred about 2005 (1998-2012) is not exceptional in context. One expects the observed trend estimates in Fig. 2 to bounce about within the model trend envelope in response to variations in the phase of processes governing ocean heat uptake rates, as they do.

Risbey et al. (2014) are making a grand assumption with that statement. Before the early 2000s, there are insufficient subsurface ocean temperature data for depths of 0-2000 meters on which to base those claims. The subsurface temperatures of the global oceans were not sampled fully (or as fully as they can be sampled) to depths of 2000 meters before the ARGO era, and the ARGO floats were not deployed until the early 2000s, with near-complete coverage around 2003. Even the IPCC acknowledges in AR5 the lack of sampling of subsurface ocean temperatures before ARGO. See the post AMAZING: The IPCC May Have Provided Realistic Presentations of Ocean Heat Content Source Data.

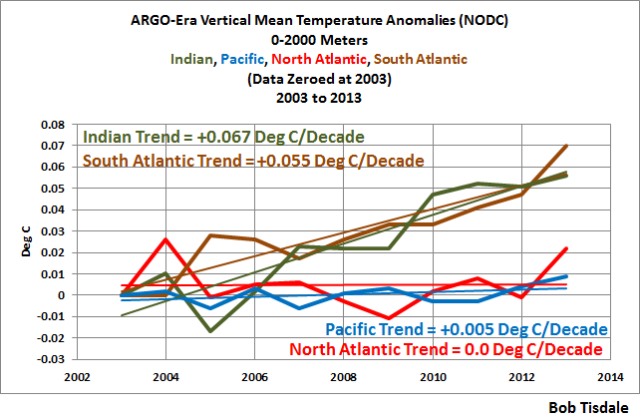

Additionally, ARGO float-based data do not even support the assumption that ocean heat uptake increased in the Pacific during the hiatus period. That is, if the recent domination of La Niña events were, in fact, causing an increase in ocean heat uptake, we would expect to find an increase in the subsurface temperatures of the Pacific Ocean to depths of 2000 meters over the last 11 years. Why in the Pacific? Because El Niño and La Niña events take place there. Yet the NODC vertically averaged temperature data (which are adjusted for ARGO cool biases) from 2003 to 2013 show little warming in the Pacific Ocean…or in the North Atlantic for that matter. See Figure 12.
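For those new to the term, a vertically averaged temperature for 0-2000 meters is, in essence, a thickness-weighted mean of the temperatures of the layers within that depth range. The sketch below is only an illustration of that idea under simple assumptions (a stack of layers with hypothetical boundaries and temperatures, and a hypothetical function name `vertical_average`); it is not the NODC’s actual procedure.

```python
import numpy as np

def vertical_average(tops_m, bottoms_m, temps_c):
    """Thickness-weighted mean temperature (deg C) over a stack of layers.

    tops_m / bottoms_m are layer boundaries in meters (positive downward);
    temps_c is the mean temperature of each layer.
    """
    tops = np.asarray(tops_m, dtype=float)
    bottoms = np.asarray(bottoms_m, dtype=float)
    temps = np.asarray(temps_c, dtype=float)
    thickness = bottoms - tops
    if np.any(thickness <= 0):
        raise ValueError("each layer bottom must lie below its top")
    # Each layer counts in proportion to its thickness.
    return float(np.sum(thickness * temps) / np.sum(thickness))

# Hypothetical two-layer column: a warm 0-100 m layer over a cold 100-2000 m layer.
print(vertical_average([0, 100], [100, 2000], [20.0, 5.0]))  # -> 5.75
```

Because the deep layers carry most of the weight, changes confined to the near-surface layers have only a small effect on a 0-2000 meter average.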

Figure 12

It sure doesn’t look like the dominance of La Niña events during the hiatus period has caused any ocean heat uptake in the Pacific over the past 11 years. Subsurface ocean warming occurred only in the South Atlantic and Indian Oceans. Now, consider that manmade greenhouse gases including carbon dioxide are said to be well mixed, meaning they are pretty well evenly distributed around the globe. It’s difficult to imagine how a well-mixed greenhouse gas like manmade carbon dioxide caused the South Atlantic and Indian Oceans to warm to depths of 2000 meters, while having no impact on the North Atlantic or the largest ocean basin on this planet, the Pacific.

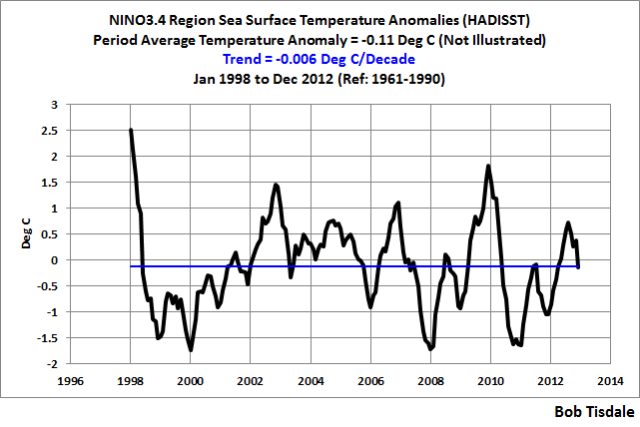

REFERENCE NINO3.4 DATA

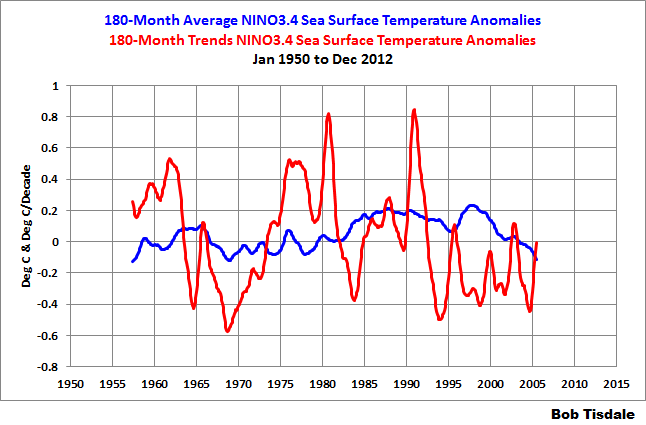

For those interested, as a reference for the discussion of Figure 5 from Risbey et al. (2014), my Figure 13 presents the monthly HADISST-based NINO3.4 region sea surface temperature anomalies for the period of January 1998 to December 2012, which are the dataset and time period used by Risbey et al. for their Figure 5; the NINO3.4 region data and model outputs were also the bases for their model selection. The UKMO uses the base period of 1961-1990 for their HADISST data, so I used those base years for anomalies. The period-average temperature anomaly (not shown) is slightly negative, at -0.11 deg C, indicating there was a slight dominance of La Niña events then. The linear trend of the data is basically flat at -0.006 deg C/decade.
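The three steps described above (anomalies against a 1961-1990 base, a period average, and a linear trend in deg C/decade) can be sketched as follows. This is a minimal sketch, assuming one value per calendar month and a month-by-month climatology; the function names are hypothetical, and the sketch does not reproduce the UKMO’s or KNMI’s processing of HADISST.

```python
import numpy as np

def monthly_anomalies(years, months, sst, base=(1961, 1990)):
    """Anomalies relative to a month-by-month climatology over `base` (inclusive)."""
    years = np.asarray(years)
    months = np.asarray(months)
    sst = np.asarray(sst, dtype=float)
    in_base = (years >= base[0]) & (years <= base[1])
    anomalies = np.empty_like(sst)
    for m in range(1, 13):
        this_month = months == m
        # e.g. the mean of all base-period Januaries is subtracted from every January
        climatology = sst[this_month & in_base].mean()
        anomalies[this_month] = sst[this_month] - climatology
    return anomalies

def trend_per_decade(years, months, values):
    """OLS linear trend in units per decade, using mid-month decimal years as time."""
    t = np.asarray(years) + (np.asarray(months) - 0.5) / 12.0
    return 10.0 * np.polyfit(t, np.asarray(values, dtype=float), 1)[0]
```

For the January 1998 to December 2012 window, one would select `sel = (years >= 1998) & (years <= 2012)`, then take `anomalies[sel].mean()` for the period average and `trend_per_decade(years[sel], months[sel], anomalies[sel])` for the trend.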

Figure 13

SPOTLIGHT ON CLIMATE MODEL FAILINGS

Let’s return to the abstract again. It includes:

We present a more appropriate test of models where only those models with natural variability (represented by El Niño/Southern Oscillation) largely in phase with observations are selected from multi-model ensembles for comparison with observations. These tests show that climate models have provided good estimates of 15-year trends, including for recent periods and for Pacific spatial trend patterns.

What they’ve said indirectly, but failed to expand on, is:

- IF (big if) climate models could simulate the ocean-atmosphere processes associated with El Niño events, which they can’t, and…

- IF (big if) climate models could simulate the ocean-atmosphere processes associated with longer-term coupled ocean-atmosphere processes like the Atlantic Multidecadal Oscillation, another process they can’t simulate, and…

- IF (big if) climate models could simulate the decadal and multidecadal variations of those processes in-phase with the real world, which they can’t because they can’t simulate the basic processes…

…then climate models would have a better chance of being able to simulate Earth’s climate.

Climate modelers have been attempting to simulate Earth’s climate for decades, and climate models still cannot simulate those well-known global warming- and climate change-related factors. In order to overcome those shortcomings of monstrous proportions, the modelers would first have to be able to simulate the coupled ocean-atmosphere processes associated with ENSO and the Atlantic Multidecadal Oscillation…and with teleconnections. Then, as soon as the models have conquered those processes, the climate modelers would have to find a way to place those chaotically occurring processes in phase with the real world.

As a taxpayer, you should ask the government representatives that fund climate science two very simple questions. After multiple decades and tens of billions of dollars invested in global warming research:

- why aren’t climate models able to simulate natural processes that can cause global warming or stop it? And,

- why aren’t climate models in-phase with the naturally occurring multidecadal variations in real world climate?

We already know the answers, but it would be good to ask.

THE TWO UNEXPECTED AUTHORS

I suspect that Risbey et al (2014) will get lots of coverage based solely on two of the authors: Stephan Lewandowsky and Naomi Oreskes.

Naomi Oreskes is an outspoken activist member of the climate science community. She has recently been known for her work in the history of climate science. At one time, she was an Adjunct Professor of Geosciences at the Scripps Institution of Oceanography. See Naomi’s Harvard University webpage here. And she has co-authored at least two papers in the past about numerical model validation.

Stephan Lewandowsky is a very controversial Professor of Psychology at the University of Bristol. How controversial is he? He has his own category at WattsUpWithThat, and at ClimateAudit, and there are numerous posts about his recent work at a multitude of other blogs. So why is a professor of psychology involved in a paper about ENSO and climate models? He and lead author James Risbey gave birth to the idea for the paper. See the “Author contributions” at the end of Risbey et al. (2014) (my boldface):

J.S.R. and S.L. conceived the study and initial experimental design. All authors contributed to experiment design and interpretation. S.L. provided analysis of models and observations. C.L. and D.P.M. analysed Niño3.4 in models. J.S.R. wrote the paper and all authors edited the text.

The only parts of the paper that Stephan Lewandowsky was not involved in were writing it and the analysis of NINO3.4 sea surface temperature data in the models. But, and this is extremely curious, psychology professor Stephan Lewandowsky was solely responsible for the “analysis of models and observations”. I’ll let you comment on that.

CLOSING

The last sentence of the abstract of Risbey et al. (2014) clearly identifies the intent of the paper:

These tests show that climate models have provided good estimates of 15-year trends, including for recent periods and for Pacific spatial trend patterns.

Risbey et al. (2014) took 18 of the 38 climate models from the CMIP5 archive, then whittled those 18 down to the 4 “best” models for their trends presentation in Figure 5. In other words, they’ve dismissed 89% of the models. That’s not really too surprising. von Storch et al. (2013) “Can Climate Models Explain the Recent Stagnation in Global Warming?” found:

However, for the 15-year trend interval corresponding to the latest observation period 1998-2012, only 2% of the 62 CMIP5 and less than 1% of the 189 CMIP3 trend computations are as low as or lower than the observed trend. Applying the standard 5% statistical critical value, we conclude that the model projections are inconsistent with the recent observed global warming over the period 1998-2012.

Then again, Risbey et al. (2014) had different criteria than von Storch et al. (2013).
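The counting logic in the von Storch et al. (2013) quote can be sketched as follows: find the share of model 15-year trends that are as low as or lower than the observed trend, and compare that share with the standard 5% critical value. This is a simplified, one-sided illustration under stated assumptions; the function names are hypothetical, and von Storch et al.’s actual handling of the CMIP3 and CMIP5 runs may differ in detail.

```python
import numpy as np

def fraction_at_or_below(model_trends, observed_trend):
    """Share of model trend values that are as low as or lower than the observed trend."""
    trends = np.asarray(model_trends, dtype=float)
    return float(np.mean(trends <= observed_trend))

def inconsistent(model_trends, observed_trend, alpha=0.05):
    """One-sided check: True when fewer than `alpha` of the model trends are <= observed."""
    return fraction_at_or_below(model_trends, observed_trend) < alpha
```

With that rule, a result like “only 2% of the 62 CMIP5 … trend computations are as low as or lower than the observed trend” falls below the 5% threshold, which is how von Storch et al. reached their conclusion of inconsistency.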

Risbey et al. (2014) also failed to deliver on their claim that their tests showed “that climate models have provided good estimates of 15-year trends, including for recent periods and for Pacific spatial trend patterns.” And their evaluation of climate model simulations conveniently ignored the fact that climate models do not properly simulate ENSO processes, which basically means the fundamental overall design and intent of the paper was fatally flawed.

Some readers may believe Risbey et al. (2014) should be dismissed as a failed attempt at misdirection: please disregard those bad models from the CMIP5 archive and only pay attention to the “best” models. But Risbey et al. (2014) have been quite successful at clarifying a few very important points. They’ve shown that climate models must be able to simulate naturally occurring coupled ocean-atmosphere processes, like those associated with El Niño and La Niña events and with the Atlantic Multidecadal Oscillation, and the models must be able to simulate those naturally occurring processes in phase with the real world, if climate models are to have any value. And they’ve shown quite clearly that, until climate models are able to simulate naturally occurring processes in phase with nature, forecasts/projections of future climate are simply computer-generated conjecture with no basis in the real world…in other words, they have no value, no value whatsoever.

Simply put, Risbey et al. (2014) has very effectively undermined climate model hindcasts and projections, and the paper has provided lots of fuel for skeptics.

In closing, I would like to thank the authors of Risbey et al. (2014) for presenting their Figure 5. The animation I created from its cells a and c (Animation 1) provides a wonderful and easy-to-understand way to show the failings of the climate-scientist-classified-“best” climate models during the hiatus period. It’s so good I believe I’m going to link it in the introduction of my upcoming ebook. Thanks again.

# # #

UPDATE: The comment by Richard M here is simple but wonderful. Sorry that it did not occur to me so that I could have included something similar at the beginning of the post.

Richard M says: “One could use exactly the same logic and pick the 4 worst models for each 15 year period and claim that cimate [sic] models are never right…”

So what have Risbey et al. (2014) really provided? Nothing of value.
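Richard M’s point can be illustrated with a toy simulation. In the hypothetical sketch below, the 38 “models” and the “observations” are independent random walks, so the models have no skill by construction. For each 15-year window, choosing the 4 runs whose trends sit closest to the observed trend produces an apparently good match, while choosing the 4 farthest produces an apparently bad one. The selection rule (closeness of 15-year trends) and the function names are assumptions made for illustration; Risbey et al. (2014) selected on NINO3.4 phase and did not identify their models, so this is not their procedure.

```python
import numpy as np

def window_trend(series, start, window=15):
    """OLS trend per decade of the annual values series[start:start + window]."""
    x = np.arange(window)
    return 10.0 * np.polyfit(x, series[start:start + window], 1)[0]

def select_runs(runs, obs, start, k=4, best=True, window=15):
    """Pick the k runs whose window trend is closest to (best=True) or farthest from the observed trend."""
    obs_trend = window_trend(obs, start, window)
    run_trends = np.array([window_trend(r, start, window) for r in runs])
    order = np.argsort(np.abs(run_trends - obs_trend))  # closest first
    chosen = order[:k] if best else order[-k:]
    return obs_trend, run_trends[chosen]

# Toy data: 38 skill-free "models" and one "observed" series, all independent random walks.
rng = np.random.default_rng(42)
n_runs, n_years = 38, 60
obs = np.cumsum(rng.normal(0.0, 0.1, n_years))
runs = np.cumsum(rng.normal(0.0, 0.1, (n_runs, n_years)), axis=1)

for start in range(0, n_years - 15 + 1, 15):
    obs_t, best = select_runs(runs, obs, start, best=True)
    _, worst = select_runs(runs, obs, start, best=False)
    print(f"window {start:2d}: obs {obs_t:+.2f}  best-4 mean {best.mean():+.2f}  "
          f"worst-4 mean {worst.mean():+.2f}")
```

Because the runs are re-ranked for every window, the “best” and “worst” sets can change from one 15-year period to the next, much like the running selection commenters describe below.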

UPDATE 2: Dana Nuccitelli has published a much-anticipated blog post at The Guardian proclaiming climate models are now accurate due to the cleverness of Risbey et al. (2014). Blogger Russ R. was the first to comment on the cross post at SkepticalScience, and Russ linked my Animation 1 from this post, along with the comment:

Dana, which parts of planet would you say that the models “accurately predicted”?

The gif animation from this post is happily blinking away below that comment. I wasn’t sure how long it would stay there before it was deleted, so I created an animation of two screen caps from the SkS webpage for your enjoyment. Thanks, Russ R.

Note to Rob Honeycutt: I believe Russ asked a very clear question…no alluding involved.

Thanks, Anthony!

…and Thanks, Bob!

holy smokes Bob, you wrote us a book! 🙂

Thanks. It’s gonna take some chewin…

Great review. NOW you can take ’em to the cleaners. WE hope this trash is going to be retracted. BTW, AW’s talk and presentation of data at the 9ICCC conference was spectacular and basically agrees with SG re confirmation bias (except that SG calls it fabrication) of USA data. So let’s all be pals. LOL

The lack of any Supplementary Information (SI) suggests these authors aren’t much interested in transparency…but we already knew that from dealings with Lewandowsky. It’s all about getting that talking point in the media “climate models replicated the pause”, and really little else.

Few if any journalists will understand what they have been fed beyond “climate models correctly simulated ‘the pause’, so all is well with climate science”. They’ll miss the fact that a handful of cherry-picked models from the CMIP5 ensemble were used (shades of Yamal and the handful of trees) or that just because they line up with ENSO forcing in the period doesn’t mean they have any predictive skill.

Basically what went on here is that they a priori picked the “best” models that lined up with observations (without identifying them so they can be checked). They chose which models performed best with observations and called that confirmation while ignoring the greater population of models.

It would be like picking some weather forecast models, out of the dozens we have, that predicted a rainfall event (weather) most accurately, then saying that because of that those weather forecast models in general are validated for all rainfall events (climate). It says nothing, though, about the predictive skill of those same models under a different set of conditions. Chances are they’ll break down under different combinations of localized synoptic forcings, just like those “best” climate models likely won’t hold up in different scenarios of AMO, PDO, ENSO, etc.

Given the number of citizen scientists that populate this blog it is probably not a good idea to question the ability of a psychologist to contribute to the science and politics of climate change. In the case of this particular psychologist it is far better to allow his record to scream its madness for all to hear and to highlight that cacophonous prattle as indicators of the quality of his work. He is quite capable of stepping on his own diction.

” For those new to discussions of global warming, I’ve tried to make this post as non-technical as possible,..”

==========

Umm, not sure it is possible to get “non-technical” enough for this reader.

However, the thought is appreciated 🙂

And your hard work.

That is, the “best” models and the number of them changes for each 15-year period. In other words, they’ve used a sort of running cherry-pick for the models in their Figure 4. A novel approach.

….

As noted earlier, I’ve been through the paper a number of times, and I cannot find where they listed which models were selected as “best” and “worst”.

Since the “best” models keep changing depending on the 15-year period chosen, are they saying that all of the models are “best”, according to when they are chosen?

That is even better than averaging a number of “bad” models in order to get a good one.

Excellent constructive criticism of a paper, Prof Tisdale. A forensic examination with, “nothing added”. An object lesson in scientific debate for others to learn from and follow.

Isn’t Oreskes’s and Lewandowsky’s role here quite clear? They have been reliable foot soldiers of the climate scare industry. To co-author a paper which is strictly about the physics of climate is just a prop up, perhaps one to be wielded as a bat to hunt down the likes of (say) Monckton, who are somewhat at the fringe of climate science trying to get in, while these two clowns get a free ride to inflate their lists of publications.

Anthony Watts says:

July 20, 2014 at 12:06 pm

The lack of any Supplementary Information (SI) suggests these authors aren’t much interested in transparency…but we already knew that from dealings with Lewandowsky. It’s all about getting that talking point in the media “climate models replicated the pause”, and really little else.

(Bold mine)

True that.

[snip – way way off topic, stop with the thread bombing of irrelevant solar topics please -Anthony]

What’s the full list of authors–the entire rogue’s gallery?

Bob, can’t get the link/animation to work….

The animation I created from its cells a and c (Animation 1)……….

I don’t understand why they would publish the paper that so clearly undermines climate models? Are they so blinded by confirmation bias that they cannot see it?

Figure 10, GISS.

The continued ex post facto hammering down upon the 1930s temperature stands out again. F Scott Fitzgerald would be incredulous.

They’re here.

The Sydney Morning Herald headline about Risbey et al (2014): “Climate models on the mark, Australian-led research finds”

http://www.smh.com.au/environment/climate-change/climate-models-on-the-mark-australianled-research-finds-20140720-zuuoe.html

On the mark?

Latitude says: “Bob, can’t get the link/animation to work….”

Have you clicked on it? Sometimes they hang up.

15 year period? But isn’t that just weather and all real climate observations must be over 30 years? Hoist them upon their own petard, and all that.

rogerknights says: “What’s the full list of authors–the entire rogue’s gallery?”

Listed at the top of the page here:

http://www.nature.com/nclimate/journal/vaop/ncurrent/full/nclimate2310.html

James S. Risbey, Stephan Lewandowsky, Clothilde Langlais, Didier P. Monselesan, Terence J. O’Kane & Naomi Oreskes

All of these papers by the “team” of true believers start out with the flawed premise that Anthropogenic CO2 driven global warming is unequivocally true as an a priori assumption. Then they try and come up with reasons the real world doesn’t comply with their predictions. This is exactly the wrong approach, a true scientist in Feynman’s mold would try and determine what is wrong with the models and theory, rather than try and find out why the data is “wrong.” They assume the theory is right and try and find weasel room excuses for why the real world isn’t complying with their pet theory.

And the two coauthors? Can we do the same thing they do to discredit this? Say, well, they aren’t “climate scientists” so what they say doesn’t matter?

Bob Tisdale says:

July 20, 2014 at 12:29 pm

They’re here.

The Sydney Morning Herald headline about Risbey et al (2014): “Climate models on the mark, Australian-led research finds”

Bob –

Is “on the mark” Australian for “not even close”?

And another thing…once again with the hiding the pea. We have models that are accurate! We won’t tell you what they are though…you just want to prove us wrong. Idiotic, and disgraceful that they will probably get away with it, just like the list of Chinese weather stations that went missing after the paper that proves there is no UHI effect. Dog ate my homework again.

Seems like a classic case of the Texas Sharpshooter Fallacy

http://youarenotsosmart.com/2010/09/11/the-texas-sharpshooter-fallacy/

JohnWho says: “Since the “best” models keep changing depending on the 15-year period chosen, are they saying that all of the models are “best”, according to when they are chosen?”

We don’t know which models were chosen to be “best” for any 15-year period. Therefore, we don’t know if each of the 18 models with sea surface temperature data made it to the “best” list at least once or whether “best” was dominated by a handful of models.

Everything Lewandowsky touches feels like a trap these days. I wonder what his ethics committee thinks they’ve approved for this one… My commenting alone will obviously prove that I wear a tinfoil hat, in his next paper.

Publication of this paper is the stimulus designed by Lewandowsky for his continuing research into the psychology of “deniers”. Lewandowsky’s minions are gathering blog responses and comments as we speak which will in due course be analysed, rated, binned, and characterized in his next psychology paper.

So is this really just a variant of the old Texas Sharpshooter?

If you fire enough climate models and pick the one that hits the target after the event… you are actually aiming by picking where the shot hit – not by having a clue which end of the gun is the pointy bit.

And you also seem to be saying they changed the “best models” for each 15 year period (repeating the logical error)?

Surely that can’t get published. Not even in Nature Climate Change.

I’ve just emailed the author of that piece (Peter Hannam) Bob Tisdale pointed out, providing him a link to this. You can too:

phannam@fairfax.com.au

BTW his email link is on his article, so no “outing” is going on here.

I suggest every WUWT reader follow suit when they see articles like that: email the author and tell them they didn’t look beyond the press release.

Please be respectful and factual if you do.

“We present a more appropriate test of models…” that get us the most public money.

daviditron says at July 20, 2014 at 12:37 pm …

We cross-posted. It does sound like repeated application of the Texas Sharpshooter Fallacy.

But I just can’t believe that could ever get published. The editor of the journal would have to “Out” the incompetent peer reviewers.

It can’t be that stupid.

Will J. Richardson says: “Publication of this paper is the stimulus designed by Lewandowsky for his continuing research into the psychology of “deniers”.”

Maybe they’ll make me laugh as much as Figure 5 from this paper did. I sure hope so. I can use a good laugh now and then.

Bob Tisdale: “Because Risbey et al. (2014) have not identified the models they’ve selected as “best” and “worst”, their work cannot be verified.”

Enough said, except: climate agnotology from a historian and a psychologist at work.

Meh.

Bob Tisdale wrote: “ENSO is one of the primary processes through which heat is distributed from the tropics to the poles. Those processes are chaotic and they vary over annual, decadal and multidecadal time periods.”

Then he quoted from the paper:

The oceans, via ENSO factors, play a major role in the natural heat distribution of our planet, and the models can’t handle that; and yet the IPCC claims that ONLY CO2 could account for any observed warming, since they say they can think of nothing else. It looks to me as if this paper admits there is at least one “something else” that needs to be accounted for, especially since CO2’s correlation with warming has failed over the last 17 years. It has been my position for years that we are not accounting for the ocean and the atmosphere properly while we chase the magic molecule CO2 as the driver of climate.

Thanks to Bob for this very informative analysis.

Bob Tisdale says:

July 20, 2014 at 12:29 pm

They’re here.

The Sydney Morning Herald headline about Risbey et al (2014): “Climate models on the mark, Australian-led research finds”

http://www.smh.com.au/environment/climate-change/climate-models-on-the-mark-australianled-research-finds-20140720-zuuoe.html

On the mark?

============================

It’s almost as if he had the article already written.

Thanks, Bob.

That animation switching between the four “good” models and reality says it all. If that is the best the models can do, I would be very reluctant to board that boat. I think it is sunk at the dock.

In fact the paper is so poor that I can’t help but think it is chaff, dropped to foul up our radar, more than a real paper put out by sincere scientists.

Good work, gentlemen! And a recension of the paper clear enough for even us dumb arts guys to follow. Good science and a thoughtful explanation of the evidence: that’s a rare combination in Climate Studies. Keep up the good work, and thanks.

Bob: Well done. I really enjoyed the article and appreciate the work that went into pulling it apart. My one suggestion is that, now that you have time, perhaps you could develop a shorter, more pointed piece. For me the most telling part was the fact that the models chosen failed to describe the actual pattern of 15-year trends in the Pacific, Atlantic or Arctic Oceans. It seems to me that this is a massive disconfirmation of Risbey’s approach and a confirmation of your point that models fail to encapsulate ENSO processes.

@Bob Tisdale –

I can’t get to the animation either from here or from your site.

Supposedly, it is here:

But WordPress says “404 – file not found”.

daviditron says: July 20, 2014 at 12:37 pm

Seems like a classic case of the Texas Sharpshooter Fallacy

Thanks for that link. That about sums it up neatly.

Climate models are not yet fit for their intended purposes. Again I think this paper, like the actual meaning of Adaptation in IPCC, is once again confirming that climate models are not intended to be Enlightenment type hard objective science. They are social science models created to justify forcing social theories into K-12 classrooms and policy making. Did you know Jeremy Rifkin now even uses the term ‘Empathy Science’ to describe this new view of science where students will learn to reparticipate in the Biosphere?

This is where Lew’s background factors in: the use of this climate modelling to promote a continued emphasis on the Biosphere in the political sense. The one created by Verdansky in the USSR that US Earth System Science is founded upon in the first place. It keeps coming up in my research on the complementary concept of Teilhard de Chardin’s noosphere.

That should be Vladimir Ivanovich Vernadsky, not Verdansky.

Caffeine time for Robin.

Bob Tisdale says:

July 20, 2014 at 12:29 pm

“The Sydney Morning Herald headline about Risbey et al (2014): “Climate models on the mark, Australian-led research finds”

On the mark?”

———————————————————————————————————————–

“mark”: noun; a person who is the target of a fraud.

Whoa, I’ll have to read this opus again later, thanks Bob.

“Animation 1” hyperlink in the last paragraph is broken, should lead to http://bobtisdale.files.wordpress.com/2014/07/risbey-et-al-figure-5-animation-best-v-obs1.gif

On the basis of a comparison of published figure 5a with 5c, this paper should never have gotten through peer review. On top of that, the ‘best 4’ models which fail in figure 5 are not identified. I would think an appropriate note to NCC requesting a corrigendum/retraction would be in order on those grounds alone.

This one is going to end as an own goal, since the basic flaws are self-evident.

Very nice work, Bob. I had just finished reading the paper when you got this post up. Could have saved myself some wasted time.

@ FergalR – (July 20, 2014 at 1:18 pm)

Thanks. That works.

Given the visual inconsistencies in Figure 5 that Bob has pointed out, how did this get through Nature’s peer review process?

Judging from the part of it that I read before my eyes glazed over, this would have been a really good series of 3-5 parts.

As a single post, 90% of it will go unread. You don’t communicate when you write words. You communicate when other people read them.

Eighty-five hundred words is three times the length of the typical article in Nature.

FergalR says: ““Animation 1” hyperlink in the last paragraph is broken, should lead to http://bobtisdale.files.wordpress.com/2014/07/risbey-et-al-figure-5-animation-best-v-obs1.gif”

I fixed the hyperlink in the last paragraph.

Latitude and JohnWho, was that the link you were discussing?

I’m afraid that the major input of Professor Lewandowsky into this paper will lead to much criticism on the grounds that, as a cognitive psychologist, he knows nothing about the analysis of climate models and climate observations. This criticism will unfortunately tend to obscure the fact that he knows nothing about questionnaire design and data analysis in his chosen field of psychological research either. Similarly, Oreskes knows nothing about the analysis of historical sources. They are charlatans in their academic fields, but they’re big at the Guardian, Salon, and Huffington Post.

Londo suggests above that Lewandowsky and Oreskes have been given a leg up into the world of serious science. But isn’t the push in the other direction? Two media superstars in the wonderful world of warmology are willing to promote the unknown Risbey into the media limelight of climate change superstardom in exchange for billing as supporting acts. (Remember, Lewandowsky and Oreskes get cited on Obama Twitter accounts, something that doesn’t happen to many scientists.)

It’s just possible that the whole thing may backfire. The hook to catch the attention of the media big fish is that this is a paper in Nature. Thanks to the speedy footwork of Bob Tisdale and Anthony, Nature editors will already be aware that they’ve got a can of rotting worms on their hook. Not all Nature’s readers are blinkered activists.

@ Bob Tisdale –

Yes. That was the link.

Thanks

Bob (if I may be so familiar) thanks for this, I must now read the paper.

However, in reading your comments (having also been reading the recent material at Jo Nova’s) and having an interest in modelling, it occurred to me that the spatial distributions of surface temperatures (say) across the globe could be used as input to a “black box” model of the climate.

I’m obviously aware of your interest in this area – have you (or anyone else to your knowledge) considered taking the step towards modelling using this information as a basis?

Bob, Thanks for the enlightenment.

It is a shame that the media pick up the press release and run with the headline as you noted:

> The Sydney Morning Herald headline about Risbey et al (2014): “Climate models on the mark, Australian-led research finds”

This coverage is something out of an alternative universe.

If I understand this correctly then the following is a list of the issues that Bob Tisdale claims this paper has:

1 It doesn’t show that the “best models” match observation but the paper asserts it does.

2 The “best models” are just closest to observations by chance – best has no meaning.

3 The “best models” are defined through applying the Texas Sharpshooter method of picking whichever model is closest to observations at that time period and not discussing the reason why it’s closest (which is luck – see 2)… and then repeating the error for each time period with no discussion as to what ceased to be working for the previous “best models” when they are discarded.

4 It neglects to describe which models are the “best models” for figure 5 which makes the paper untestable.

5 The expected variation of the models is so large that the findings of “consistent with” are almost trivial.

Is that a fair list?

@ Bob Tisdale –

Link is still a problem at your WordPress site.

JohnWho says: “Link is still a problem at your WordPress site.”

I just fixed it at my blog, too. Thanks.

The first version of Animation 1 didn’t have the units identified for the color scaling, so, after I added the note and uploaded the revised animation, I deleted that first version. But then I forgot to go back and update that link in the final paragraph. Sorry for the confusion.

Oops, make that:

“It’s all about getting that talking point in the media “climate models replicated the pause”, and really little else.”

What will they say when the temperature starts declining?

HAS says: “… it occurred to me that the spatial distributions of surface temperatures (say) across the globe could be used as input to a “black box” model of the climate. I’m obviously aware of your interest in this area – have you (or anyone else to your knowledge) considered taking the step towards modelling using this information as a basis?”

The climate science community actually uses sea surface temperature data for specialty models that are categorized as AMIP.

M Courtney says: “Is that a fair list?”

I haven’t studied the Texas Sharpshooter method so I can’t confirm, and I did not address your item 5 in my post: “The expected variation of the models is so large that the findings of “consistent with” are almost trivial.”

It is with great satisfaction that I notice that this paper from the climate industry, Risbey et al. (2014), whose authors include luminaries such as Lewandowsky and Oreskes, now acknowledges that changes in the intensities and frequencies of El Niños have an important influence on global temperature anomalies.

Of course, as noted here, the current ENSO models are unable to simulate or forecast ENSO variations.

The only thing that would happen if they used more powerful computers to forecast ENSO is that they would arrive at an erroneous result more quickly.

The only way for these models to produce better results is if the main drivers are included and understood, which I now know come from a combination of tidal forcing and changes in the electromagnetic activity of the Sun. I’m currently compiling material for a presentation that I’m going to make on this subject.

Don’t Mosher and the IPCC claim that the mean of all the models was the most accurate?

It seems that the historian and psychologist are trying to demonstrate the failures of the models. Maybe we should give them more rope?

Sorry about point 5.

It was last because I was least confident of that.

I took it from:

My misunderstanding – I withdraw point 5:

“5 The expected variation of the models is so large that the findings of “consistent with” are almost trivial.”

But I do think you’ve spotted the Texas Sharpshooter fallacy in this paper. So did daviditron at July 20, 2014 at 12:37 pm and he provided a handy link.

“Bjerknes feedback”

I get that after a few too many burritos.

IOW, consensus has dropped from 97% to 11%.

I would have been surprised if you couldn’t cherry-pick a few models from 89 that could then, when used in isolation, produce an outcome that would line up with ENSO forcing over a 15-year period.

One wonders what on earth (or on whatever planet) Oreskes and Lewandowsky have to do with this paper. The objective of this paper clearly has nothing to do with science.

On the positive side thanks again Bob for a detailed factual analysis. I am in awe of your mental endurance. Also thanks to Anthony for his unflinching persistence on these issues.

Well written, easy to follow, and just technical enough to force me to read under my breath. 🙂 Your own competency lent it grace.

Per Strandberg (@LittleIceAge) says: “Of course as noticed here the current ENSO models are unable to simulate or forecast ENSO variations.”

We’re not discussing ENSO models here, Per. We’re discussing the models used by the IPCC for hindcasting and projections of future climate that are stored in the CMIP5 archive.

Cheers.

Bob Tisdale says:

July 20, 2014 at 12:29 pm

They’re here.

The Sydney Morning Herald …..

————————————————

The lunatics are in my hall.

The paper holds their folded faces to the floor

And every day the paper boy brings more.

– Pink Floyd

How did this crock pass peer review?

Oh sorry, my bad- it was “pal” review.

I just mistyped then corrected fundcasting (instead of hindcasting). Fundcasting: a climate model based study in search of additional funding.