By Christopher Monckton of Brenchley

I propose to raise a question about the Earth’s energy budget that has perplexed me for some years. Since further evidence in relation to my long-standing question is to hand, it is worth asking for answers from the expert community at WUWT.

A.E. Housman, in his immortal parody of the elegiac bromides often perpetrated by the choruses in the stage-plays of classical Greece, gives this line as an example:

I only ask because I want to know.

This sentiment is not as fatuous as it seems at first blush. Another chorus might say:

I ask because I want to make a point.

I begin by saying:

You say I aim to score a point. Not so:

I only ask because I want to know.

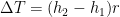

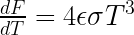

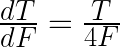

Last time I raised the question, in another blog, more heat than light was generated because the proprietrix had erroneously assumed that T / 4F, a differential essential to my argument, was too simple to be a correct form of the first derivative ΔT / ΔF of the fundamental equation (1) of radiative transfer:

$$F = \varepsilon \sigma T^{4}, \qquad \text{Stefan-Boltzmann equation (1)}$$

where F is radiative flux density in W m⁻², ε is emissivity constant at unity, the Stefan-Boltzmann constant σ is 5.67 × 10⁻⁸ W m⁻² K⁻⁴, and T is temperature in Kelvin. To avert similar misunderstandings (which I have found to be widespread), here is a demonstration that T / 4F, simple though it be, is indeed the first derivative ΔT / ΔF of Eq. (1):
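A short worked derivation, sketched on the assumption that ε and σ are held constant (as stated above) and writing the derivative in the usual dT/dF notation rather than ΔT / ΔF:

```latex
\begin{align*}
F &= \varepsilon\sigma T^{4}
  \quad\Longrightarrow\quad
  T = \left(\frac{F}{\varepsilon\sigma}\right)^{1/4}, \\
\frac{dT}{dF}
  &= \frac{1}{4}\left(\frac{F}{\varepsilon\sigma}\right)^{-3/4}\frac{1}{\varepsilon\sigma}
   = \frac{1}{4}\left(\frac{F}{\varepsilon\sigma}\right)^{1/4}\frac{1}{F}
   = \frac{T}{4F}.
\end{align*}
```

The emissivity and the Stefan-Boltzmann constant cancel, which is why the result depends only on T and F.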

Like any budget, the Earth’s energy budget is supposed to balance. If there is an imbalance, a change in mean temperature will restore equilibrium.

My question relates to one of many curious features of the following energy-budget diagrams for the Earth:

Energy budget diagrams from (top left to bottom right) Kiehl & Trenberth (1997), Trenberth et al. (2008), IPCC (2013), Stephens et al. (2012), and NASA (2015).

Now for the curiosity:

“Consensus”: surface radiation FS falls on the interval [390, 398] W m⁻².

There is a “consensus” that the radiative flux density leaving the Earth’s surface is 390-398 W m⁻². The “consensus” would not be so happy if it saw the implications.

When I first saw FS = 390 W m⁻² in Kiehl & Trenberth (1997), I deduced it was derived from observed global mean surface temperature 288 K using Eq. (1), assuming surface emissivity εS = 1. Similarly, TS = 289.5 K gives 398 W m⁻².
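The arithmetic behind those two flux densities can be checked with a minimal sketch of Eq. (1). The function names `sb_flux` and `sb_temperature` are illustrative choices, not anything used by the cited papers; the constant is the value of σ quoted above.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W m^-2 K^-4 (value quoted in the text)


def sb_flux(temp_k: float, emissivity: float = 1.0) -> float:
    """Radiative flux density F = emissivity * sigma * T^4, in W m^-2."""
    return emissivity * SIGMA * temp_k ** 4


def sb_temperature(flux: float, emissivity: float = 1.0) -> float:
    """Invert Eq. (1): T = (F / (emissivity * sigma)) ** 0.25, in K."""
    return (flux / (emissivity * SIGMA)) ** 0.25


# The two surface temperatures mentioned in the text.
for t_s in (288.0, 289.5):
    print(f"T_S = {t_s:.1f} K -> F_S = {sb_flux(t_s):.1f} W m^-2")
```

With unit emissivity, the two temperatures land close to the 390 and 398 W m⁻² shown in the diagrams, which is consistent with the surface flux having been computed rather than measured.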

The surface flux density cannot be reliably measured. So did the “consensus” use Eq. (1) to reach the flux densities shown in the five diagrams? Yes. Kiehl & Trenberth (1997) wrote: “Emission from the surface is assumed to follow Planck’s function, assuming a surface emissivity of 1.” Planck’s function gives flux density at a particular wavelength. Eq. (1) integrates that function across all wavelengths.

Here (at last) is my question. Does not the use of Eq. (1) to determine the relationship between TS and FS at the surface necessarily imply that the Planck climate-sensitivity parameter λ0,S applicable to the surface (where the coefficient 7/6 ballparks allowance for the Hölder inequality) is given by

$$\lambda_{0,S} = \frac{7}{6}\,\frac{T_S}{4F_S} = \frac{7}{6}\cdot\frac{288}{4(390)} = 0.215\ \mathrm{K\,W^{-1}\,m^{2}}\,? \qquad (3)$$
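As a numerical check, here is a minimal sketch that assumes the surface Planck parameter takes the form (7/6) · TS / (4FS), i.e. the derivative T / 4F scaled by the 7/6 coefficient mentioned above. The function name `planck_parameter` and its arguments are hypothetical.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def planck_parameter(temp_k: float, flux: float, holder_factor: float = 7.0 / 6.0) -> float:
    """Assumed form: lambda_0 = holder_factor * T / (4 F), in K W^-1 m^2."""
    return holder_factor * temp_k / (4.0 * flux)


T_S = 288.0                # global mean surface temperature, K
F_S = SIGMA * T_S ** 4     # surface flux density from Eq. (1) with unit emissivity

lambda_0_s = planck_parameter(T_S, F_S)
print(f"lambda_0,S = {lambda_0_s:.3f} K W^-1 m^2")
```

Under these assumptions the result agrees with the 0.215 K W⁻¹ m² used for the surface in the rest of the argument.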

The implications for climate sensitivity are profound. For the official method of determining λ0 is to apply Eq. (1) to the characteristic-emission altitude (~300 mb), where incoming and outgoing radiative fluxes are by definition equal, so that Eq. (4) gives incoming and hence outgoing radiative flux FE:

$$F_E = \frac{\pi r^{2}}{4\pi r^{2}}\,S\,(1-\alpha) = \frac{1366 \times 0.7}{4} \approx 239\ \mathrm{W\,m^{-2}} \qquad (4)$$

where FE is the product of the ratio πr²/4πr² of the area of the disk the Earth presents to the Sun to the surface area of the rotating sphere; total solar irradiance S = 1366 W m⁻²; and (1 – α), where α = 0.3 is the Earth’s albedo. Then, from (1), mean effective temperature TE at the characteristic emission altitude is given by Eq. (5):

$$T_E = \left(\frac{F_E}{\varepsilon\sigma}\right)^{1/4} \approx 255\ \mathrm{K} \qquad (5)$$

The characteristic emission altitude is ~5 km above ground level. Since mean surface temperature is 288 K and the mean tropospheric lapse rate is ~6.5 K km⁻¹, Earth’s effective radiating temperature TE = 288 – 5(6.5) ≈ 255 K, in agreement with Eq. (5). The Planck parameter λ0,E at that altitude is then given by (6):

$$\lambda_{0,E} = \frac{7}{6}\,\frac{T_E}{4F_E} \qquad (6)$$
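The chain from solar irradiance to the emission-altitude Planck parameter can be sketched as follows. This is only an illustration under stated assumptions: the 7/6 · T / (4F) form is assumed to apply at the emission altitude as it does at the surface, and the variable names are hypothetical.

```python
SIGMA = 5.67e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
S = 1366.0        # total solar irradiance, W m^-2
ALBEDO = 0.3      # Earth's albedo

# Disk-to-sphere area ratio is pi r^2 / (4 pi r^2) = 1/4.
f_e = S * (1.0 - ALBEDO) / 4.0

# Effective radiating temperature from Eq. (1) with unit emissivity.
t_e = (f_e / SIGMA) ** 0.25

# Independent estimate from the lapse rate: 5 km at 6.5 K per km below 288 K.
t_e_lapse = 288.0 - 5.0 * 6.5

# Assumed form of the Planck parameter at the emission altitude.
lambda_0_e = (7.0 / 6.0) * t_e / (4.0 * f_e)

print(f"F_E        = {f_e:.1f} W m^-2")
print(f"T_E        = {t_e:.1f} K (lapse-rate estimate {t_e_lapse:.1f} K)")
print(f"lambda_0,E = {lambda_0_e:.3f} K W^-1 m^2")
```

With these inputs the parameter comes out between the 0.310 used in the tables and the 0.313 quoted in the text; the exact third decimal depends on the values chosen for S and α.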

Equilibrium climate sensitivity to a CO2 doubling is given by (7):

$$\Delta T_{S,\infty} = \lambda_0\,\frac{\Delta F_{2\times}}{1 - \lambda_0 f} \qquad (7)$$

where the numerator of the fraction is the CO2 radiative forcing, and f = 1.5 is the IPCC’s current best estimate of the temperature-feedback sum to equilibrium.

Where λ0,E = 0.313, equilibrium climate sensitivity is 2.2 K, down from the 3.3 K in IPCC (2007) because IPCC (2013) cut the feedback sum f from 2.05 to 1.5 W m⁻² K⁻¹ (though it did not reveal that climate sensitivity must then fall by a third).

However, if Eq. (1) is applied at the surface, the value λ0,S of the Planck sensitivity parameter is 0.215 (Eq. 3), and equilibrium climate sensitivity falls to only 1.2 K.

If f is no greater than zero, as a growing body of papers finds (see e.g. Lindzen & Choi, 2009, 2011; Spencer & Braswell, 2010, 2011), climate sensitivity falls again to just 0.8 K.

If f is net-negative, sensitivity falls still further. Monckton of Brenchley et al. (2015; click “Most Read Articles” at www.scibull.com) suggest that the thermostasis of the climate over the past 810,000 years and the incompatibility of high net-positive feedback with the Bode system-gain relation indicate a net-negative feedback sum on the interval –0.64 [–1.60, +0.32] W m⁻² K⁻¹. In that event, applying Eq. (1) at the surface gives climate sensitivity on the interval 0.7 [0.6, 0.9] K.
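The sensitivities quoted in the last few paragraphs can be reproduced with a minimal sketch. Two things here are assumptions rather than statements from the text: the CO2 forcing is taken as 5.35 ln(C/C0) W m⁻² (a commonly used simplified expression), and the feedback relation is taken as λ0 ΔF / (1 − λ0 f). The function names are hypothetical.

```python
import math


def co2_forcing(concentration_ratio: float, coefficient: float = 5.35) -> float:
    """Assumed simplified CO2 forcing, coefficient * ln(C / C0), in W m^-2."""
    return coefficient * math.log(concentration_ratio)


def equilibrium_sensitivity(lambda_0: float, f: float, forcing: float) -> float:
    """Assumed feedback relation: dT = lambda_0 * dF / (1 - lambda_0 * f), in K."""
    loop_gain = lambda_0 * f
    if loop_gain >= 1.0:
        raise ValueError("loop gain lambda_0 * f must be below 1")
    return lambda_0 * forcing / (1.0 - loop_gain)


DOUBLING = co2_forcing(2.0)

# (label, lambda_0 in K W^-1 m^2, feedback sum f in W m^-2 K^-1)
CASES = [
    ("emission altitude, f = +1.50", 0.313, 1.50),
    ("surface, f = +1.50", 0.215, 1.50),
    ("surface, f = 0", 0.215, 0.0),
    ("surface, f = -0.64", 0.215, -0.64),
    ("surface, f = -1.60", 0.215, -1.60),
    ("surface, f = +0.32", 0.215, 0.32),
]

for label, lam, f in CASES:
    dt = equilibrium_sensitivity(lam, f, DOUBLING)
    print(f"{label:30s} {dt:.2f} K")
```

Rounded to one decimal place, these cases give the 2.2 K, 1.2 K, 0.8 K and 0.7 [0.6, 0.9] K figures cited above, which suggests the assumed forms are at least close to those used in the article.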

Two conclusions are possible. Either one ought not to use Eq. (1) at the surface, reserving it for the characteristic emission altitude, in which event the value for surface flux density FS may well be incorrect, no one has any idea of what the Earth’s energy budget is, and still less does anyone know whether there is any surface “radiative imbalance” at all. Or the flux density at the Earth’s surface is correctly determined from observed global mean surface temperature by Eq. (1), as all five sources cited above determined it, in which event sensitivity is harmlessly low even under the IPCC’s current assumption of strongly net-positive temperature feedbacks.

Table 1 summarizes the effect on equilibrium climate sensitivity of assuming that Eq. (1) defines the relationship between global mean surface temperature TS and mean outgoing surface radiative flux density FS.

Climate sensitivities to a CO2 doubling

| Source | Altitude | λ0 (K W⁻¹ m²) | f (W m⁻² K⁻¹) | ΔTS,100 (K) | ΔTS,∞ (K) |
|---|---|---|---|---|---|
| AR5 (2013) upper bound | 300 mb | 0.310 | +2.40 | 2.3 | 4.5 |
| AR4 (2007) central estimate | 300 mb | 0.310 | +2.05 | 1.6 | 3.3 |
| AR5 implicit central estimate | 300 mb | 0.310 | +1.50 | 1.1 | 2.2 |
| AR5 lower bound | 300 mb | 0.310 | +0.75 | 0.8 | 1.5 |
| M of B (2015) upper bound | 300 mb | 0.310 | +0.32 | 0.7 | 1.3 |
| **AR5 central estimate** | **1013 mb** | **0.215** | **+1.50** | **0.6** | **1.2** |
| M of B central estimate | 300 mb | 0.310 | −0.64 | 0.5 | 1.0 |
| **M of B upper bound** | **1013 mb** | **0.215** | **+0.32** | **0.5** | **0.9** |
| M of B lower bound | 300 mb | 0.310 | −1.60 | 0.4 | 0.8 |
| **M of B central estimate** | **1013 mb** | **0.215** | **−0.64** | **0.4** | **0.7** |
| Lindzen & Choi (2011) | 300 mb | 0.310 | −1.80 | 0.4 | 0.7 |
| Spencer & Braswell (2011) | 300 mb | 0.310 | −1.80 | 0.4 | 0.7 |
| **M of B lower bound** | **1013 mb** | **0.215** | **−1.60** | **0.3** | **0.6** |

Table 1. 100-year (ΔTS,100) and equilibrium (ΔTS,∞) climate sensitivities to a doubling of CO2 concentration, applying Eq. (1) at the characteristic-emission altitude (300 mb) and, boldfaced, at the surface (1013 mb).
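The rows of Table 1 can be recomputed with a short sketch. It assumes the forcing for a doubling is 5.35 ln 2 W m⁻², the feedback relation λ0 ΔF / (1 − λ0 f), and a 100-year response of half the equilibrium value (the fraction the article attributes to the IPCC). The λ0 and f values are copied from the table; the names `ROWS` and `recompute` are hypothetical.

```python
import math

FORCING_2X = 5.35 * math.log(2.0)  # assumed CO2-doubling forcing, W m^-2


def equilibrium_sensitivity(lambda_0: float, f: float, forcing: float = FORCING_2X) -> float:
    """Assumed relation: dT = lambda_0 * dF / (1 - lambda_0 * f), in K."""
    return lambda_0 * forcing / (1.0 - lambda_0 * f)


# (source, lambda_0, f, tabulated equilibrium sensitivity) copied from Table 1.
ROWS = [
    ("AR5 (2013) upper bound", 0.310, 2.40, 4.5),
    ("AR4 (2007) central estimate", 0.310, 2.05, 3.3),
    ("AR5 implicit central estimate", 0.310, 1.50, 2.2),
    ("AR5 lower bound", 0.310, 0.75, 1.5),
    ("M of B (2015) upper bound", 0.310, 0.32, 1.3),
    ("AR5 central estimate (surface)", 0.215, 1.50, 1.2),
    ("M of B central estimate", 0.310, -0.64, 1.0),
    ("M of B upper bound (surface)", 0.215, 0.32, 0.9),
    ("M of B lower bound", 0.310, -1.60, 0.8),
    ("M of B central estimate (surface)", 0.215, -0.64, 0.7),
    ("Lindzen & Choi (2011)", 0.310, -1.80, 0.7),
    ("M of B lower bound (surface)", 0.215, -1.60, 0.6),
]


def recompute(rows=ROWS):
    """Return (source, equilibrium K, 100-year K, tabulated K) for each row."""
    results = []
    for source, lam, f, tabulated in rows:
        eq = equilibrium_sensitivity(lam, f)
        results.append((source, eq, 0.5 * eq, tabulated))
    return results


for source, eq, century, tabulated in recompute():
    print(f"{source:36s} 100-yr {century:4.2f} K  equilibrium {eq:4.2f} K  (table {tabulated} K)")
```

Most rows round to the tabulated figures. A row or two at 300 mb may differ in the last digit when λ0 = 0.310 is used, which could reflect rounding of λ0, given that the text quotes 0.313.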

It is worth noting that, even before taking any account of the “consensus’” use of Eq. (1) to govern the relationship between TS and FS, the reduction in the feedback sum f between IPCC’s 2007 and 2013 assessment reports mandates a corresponding reduction in its central estimate of climate sensitivity from 3.3 to 2.2 K, of which only half, or about 1 K, would be expected to occur within a century of a CO2 doubling. The remainder would make itself slowly and harmlessly manifest over the next 1000-3000 years (Solomon et al., 2009).

Given that the Great Pause has endured for 18 years 6 months, the probability that there is no global warming in the pipeline as a result of our past sins of emission is increasing (Monckton of Brenchley et al., 2013). All warming that was likely to occur from emissions to date has already made itself manifest. Therefore, perhaps we start with a clean slate. Professor Murry Salby has estimated that, after the exhaustion of all affordably recoverable fossil fuels at the end of the present century, an increase of no more than 50% on today’s CO2 concentration – from 0.4 to 0.6 mmol mol⁻¹ – will have been achieved.

In that event, replace Table 1 with Table 2:

Climate sensitivities to a 50% CO2 concentration growth

| Source | Altitude | λ0 (K W⁻¹ m²) | f (W m⁻² K⁻¹) | ΔTS,100 (K) | ΔTS,∞ (K) |
|---|---|---|---|---|---|
| AR5 (2013) upper bound | 300 mb | 0.310 | +2.40 | 1.3 | 2.6 |
| AR4 (2007) central estimate | 300 mb | 0.310 | +2.05 | 0.9 | 1.8 |
| AR5 implicit central estimate | 300 mb | 0.310 | +1.50 | 0.6 | 1.3 |
| AR5 lower bound | 300 mb | 0.310 | +0.75 | 0.4 | 0.9 |
| M of B (2015) upper bound | 300 mb | 0.310 | +0.32 | 0.4 | 0.7 |
| **AR5 central estimate** | **1013 mb** | **0.215** | **+1.50** | **0.3** | **0.7** |
| M of B central estimate | 300 mb | 0.310 | −0.64 | 0.3 | 0.6 |
| **M of B upper bound** | **1013 mb** | **0.215** | **+0.32** | **0.3** | **0.5** |
| M of B lower bound | 300 mb | 0.310 | −1.60 | 0.2 | 0.4 |
| **M of B central estimate** | **1013 mb** | **0.215** | **−0.64** | **0.2** | **0.4** |
| Lindzen & Choi (2011) | 300 mb | 0.310 | −1.80 | 0.2 | 0.4 |
| Spencer & Braswell (2011) | 300 mb | 0.310 | −1.80 | 0.2 | 0.4 |
| **M of B lower bound** | **1013 mb** | **0.215** | **−1.60** | **0.2** | **0.3** |

Table 2. 100-year (ΔTS,100) and equilibrium (ΔTS,∞) climate sensitivities to a 50% increase in CO2 concentration, applying Eq. (1) at the characteristic-emission altitude (300 mb) and, boldfaced, at the surface (1013 mb).
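The relation between the two tables can be sketched in a few lines, assuming the CO2 forcing is logarithmic in concentration. Under that assumption the forcing coefficient cancels, so a 50% increase scales every doubling sensitivity by ln 1.5 / ln 2. The function name `log_forcing_ratio` is hypothetical.

```python
import math


def log_forcing_ratio(growth: float, reference_growth: float = 2.0) -> float:
    """Ratio of forcings for two concentration ratios under logarithmic forcing."""
    return math.log(growth) / math.log(reference_growth)


scale = log_forcing_ratio(1.5)
print(f"forcing for +50% CO2 relative to a doubling: {scale:.3f}")

# Scale a few equilibrium sensitivities taken from Table 1.
for eq_2x in (4.5, 2.2, 1.2):
    print(f"{eq_2x:.1f} K per doubling -> {eq_2x * scale:.2f} K for +50% CO2")
```

Because the tabulated inputs are already rounded to one decimal place, scaling them this way can differ from Table 2 by 0.1 K in some rows.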

Once allowance has been made not only for the IPCC’s reduction of the feedback sum f from 2.05 to 1.5 W m⁻² K⁻¹ and the application of Eq. (1) to the relationship between TS and FS but also for the probability that f is not strongly positive, for the possibility that a 50% increase in CO2 concentration is all that can occur before fossil-fuel exhaustion, for the IPCC’s estimate that only half of equilibrium sensitivity will occur within the century after the CO2 increase, and for the fact that the CO2 increase will not be complete until the end of this century, it is difficult, and arguably impossible, to maintain that Man can cause a dangerous warming of the planet by 2100.

Indeed, even if one ignores all of the considerations in the above paragraph except the first, the IPCC’s implicit central estimate of global warming this century would amount to only 1.1 K, just within the arbitrary 2-K-since-1750 limit, and any remaining warming would come through so slowly as to be harmless. It is no longer legitimate – if ever it was – to maintain that there is any need to fear runaway warming.

Quid vobis videtur?

I am not a scientist, but a little common sense would seem to indicate that 400 or even 540 parts per million of CO2 in the atmosphere could not have the major temperature driving force that the “science is settled” crowd would have us believe.

That is putting a lot of power in a trace gas that is even just a tiny percentage of the greenhouse gases in the atmosphere.

I really do think that is a pretty weak argument.

Common sense might tell you something if and only if you have a strong grasp of the underlying facts; if not, it is worth nothing. When you have some knowledge of this, we are not talking about ‘common’ sense any more.

That is an invalid response to a simple point. OK, you disagree, but you fail to provide any rationale or argument to support your disagreement.

Not one of the supposed physics processes has addressed the ‘magic’ of how one lone molecule causes 10,000 other molecules to warm 0.5, 1.0, 2.0, 3.0, 4.0 and so on degrees Kelvin.

Instead the individual molecular interactions are ignored and buried with gross assumptions.

Hugh,

I’m with Dave on this one. Common sense questions often reveal good insights.

Here is another one …

In the IPCC energy budget for the Earth, it shows 342 W/sqm as the down-welling energy from ‘greenhouse gasses’. Can you please tell me where on that chart the down-welling energy is shown from the 99.96% of the atmosphere that is not CO2?

You won’t find it because it is a fudge. All gasses emit photons downwards to the earth, not just ‘greenhouse gasses’. The percentage that come from CO2 is tiny.

Perhaps we should use the special man-made CO2 to insulate our houses in winter.

It seems it’s the most insulative material in the universe, trapping all that heat.

Just think of all the polar bears we can save, not having to burn that evil fossil fuel…

The possibilities are endless…. You can have pockets of man-made CO2 on our jackets, earmuffs, gloves, etc.

Don’t be bloody rude. Einstein said that physics is not common sense. However, many people will naturally apply common sense to physics as they do to their everyday lives.

morLogicThanU June 27, 2015 at 3:40 pm

Perhaps we should use the special man-made CO2 to insulate our houses in winter.

Not too wild an idea. We currently use argon gas in the insulating space between panes of multi-pane windows, but CO2 has a thermal conductivity ~5% lower than argon. Argon is around 23 times more abundant than CO2, however, and is the main residual gas left from the industrial production of oxygen and nitrogen, so it’s handier to use. And that leaves more CO2 in the air to help grow broccoli and keep us warm.

It would appear that a basic course in thermodynamics is a prerequisite.

“The emissivity of a real surface varies with the temperature of the surface as well as the wavelength and the direction of the emitted radiation.”

http://www.kostic.niu.edu/352/_352-posted/Heat_4e_Chap12-Radiation_Fundamentals_lecture-PDF.pdf

http://kntu.ac.ir/DorsaPax/userfiles/file/Mechanical/OstadFile/Sayyalat/Bazargan/cen58933_ch11.pdf

http://web.iitd.ac.in/~prabal/MEL242/(33)-radiation-fundamentals%20-2.pdf

http://faculty.uoh.edu.sa/n.messaoudene/Heat%20Transfer/Heat%20Trans%20Ch%2012.pdf

https://en.wikipedia.org/wiki/Black-body_radiation

https://en.wikipedia.org/wiki/Emissivity

https://en.wikipedia.org/wiki/Radiation_properties

So, if emissivity is a function of T (temperature), as the textbooks suggest, then everything shown after Equation (1) is wrong.

From wikipedia (atmosphere of earth):

“If the entire mass of the atmosphere had a uniform density from sea level, it would terminate abruptly at an altitude of 8.50 km (27,900 ft).”

Pre-industrial levels of CO2 at 280 ppm would equal a layer of air of 2.38 m at sea level pressure (this ballpark figure assumes for simplicity that each molecule in the atmosphere takes up equal space: 8500*280/1000000). At present there is around 400 ppm, suggesting that mankind added around 1 m of infra-red absorbing CO2 (at sea level pressure). Are you sure that this could not possibly have an effect because it is a trace gas?
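The ballpark figure in this comment can be reproduced with a minimal sketch, using the same simplification that every molecule takes up equal space in a uniform-density atmosphere 8.5 km deep. The function name `equivalent_layer_m` is hypothetical.

```python
UNIFORM_ATMOSPHERE_M = 8500.0  # depth of a uniform-density atmosphere, from the Wikipedia quote


def equivalent_layer_m(ppm: float, column_m: float = UNIFORM_ATMOSPHERE_M) -> float:
    """Thickness in metres of a pure layer of a gas present at `ppm` by volume."""
    return column_m * ppm / 1_000_000


pre_industrial = equivalent_layer_m(280.0)
present = equivalent_layer_m(400.0)

print(f"280 ppm -> {pre_industrial:.2f} m")
print(f"400 ppm -> {present:.2f} m")
print(f"added   -> {present - pre_industrial:.2f} m")
```

This only restates the concentration as an equivalent thickness; it says nothing by itself about how strongly that layer absorbs.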

Take a 0.3mm thick sheet of aluminum foil and measure how much light passes through it. Now add another 0.3mm thick sheet and measure again.

Are you sure doubling the total thickness couldn’t possibly have an effect?

Scot,

No, I am not sure about that 🙂

However, I am really not sure what point you are trying to make (really!).

linear thought in a logarithmic world will get you lost every time.

Joel,

Are you saying that Scot is pointing out the logarithmic relation between greenhouse gas concentrations and temperature? I know that. I still don’t know why it needs pointing out 😉

–At present there is around 400 ppm, suggesting that mankind added around 1 m of infra-red absorbing CO2 (at sea level pressure). Are you sure that this could not possibly have an effect because it is a trace gas?–

Suppose one had a flat piece of land 1 km square, and one measured the air temperature in the middle of this field in a white box 5 feet above the ground, and it had an average temperature of 12 C.

And then put up a glass box 1 km square by 5 meters high, elevated above the ground by 5 meters, so that the top of the box is 10 meters above the ground.

Then you filled the box with air will enough pressure so that the air does become a lower pressure then outside air when air is the coldest. So it’s sealed and designed to withstand any higher pressure were air to become warmer.

Then measure the average temperature for another year with the white box in the middle. It should be warmer than it was without the big glass box. Then you replace the air with pure CO2, thereby adding 5 meters of CO2.

And the question would be: how much warmer would the average temperature as measured in the white box be?

And by how much does it increase the highest daytime temperature? And/or does it increase the night-time temperatures the most?

Gbaikie,

Can you please re-write? As an example:

“Then you filled the box with air will enough pressure so that the air does become a lower pressure then outside air when air is the coldest. ”

I can try to interpret what you mean, but it will only lead to misunderstandings.

Have it slightly over pressurized- say 1/2 psi.

Whether CO2 is a trace gas is actually irrelevant; the key issue is whether an absorption-band photon leaving the surface would make it through to space. Surprisingly, in spite of the low concentration, the answer is that such a photon will encounter a CO2 molecule every few tens of metres. We call this the mean free path. Thus, even at 400 ppm, an outbound photon will encounter many CO2 molecules on its route upwards. This should explain why increasing the concentration beyond a certain level doesn’t have all that much of an effect on the odds of a photon escaping, ending up back at the surface, or being converted into bulk atmospheric heat.

Here is the mathematics behind it: http://www.biocab.org/Mean_Free_Path_Length_Photons.html

In my view, the minutiae of arguments about atmospheric warming rates and responses within the frame of fluctuations of natural variability under decadal time scales are nonsense. The absolutely only thing that matters is that the earth is warming with MASSIVE amounts of excess heat. This is happening when the sun is at its longest and deepest minimum in over 100 years, and with nearly 40% of the total warming effects of CO2 being shielded by aerosols (dust and pollution) in the atmosphere.

please observe the following graphic:

The amount of heat represented in this graph is about 93% of the total global heat accumulation that has occurred over the last 10 years (I am only considering the very accurate ARGO buoy analysis annotated in red). This warming represents so much heat energy that, if it were to be put into the earth’s atmosphere, the atmosphere would warm by nearly 17C (and we would all be dead).

The reason that this matters is that the only way that the earth reaches equilibrium is through blackbody radiation to space. This heat energy represents a continual investment of energy into the earth that will NEVER GO AWAY, until the earth’s surface warms enough to equalize with the incoming energy.

So my question to you all is:

With this definitive evidence of heat accumulation in the earth, why do you still believe that humans are not the primary contributor to this effect?

and

If you believe that humans are the primary contributor to this effect, why would you want to do anything but maximize the implementation of alternative energy and distributed generation, using clean fuel sources that give people the independence and freedom to charge their own cars from the solar panels on their own roofs and never send a single more dime to Saudi Arabia?

???

Ian,

What about the molecules of N2 and O2 or other atmospheric gases? They also absorb and emit photons – how do you isolate the CO2 effect? The outbound photon is much more likely to hit an N2 or O2 molecule which will block its path to space.

The chances are 99.96% that the photon will hit an air molecule other than CO2. This other molecule will either emit a new photon or simply vibrate a bit more.

The link you provided actually gives the answer in its first conclusion:

“The results obtained by experimentation coincide with the results obtained by applying astrophysics formulas. Therefore, both methodologies are reliable to calculate the total emissivity/absorptivity of **any** gas of any planetary atmosphere.”

Any gas acts this way – not just CO2. That is why CO2 only being a trace gas is relevant.

Bernard,

https://en.m.wikipedia.org/wiki/Greenhouse_gas

See section under non greenhouse gases.

Wagen,

Thanks for that link. I have read it before and its definition of radiative gases is helpful. My first point is that all matter radiates photons – provided it is above absolute zero. This includes N2 and O2. Larger gas molecules tend to be more radiative than smaller molecules as your link describes – but they all do radiate. My second point is that the chances of an emitted photon hitting a non CO2 molecule are 99.96% which means that most of what happens next is driven by the properties of those molecules, not CO2. Hence CO2 only being a trace gas is relevant.

And given that these photons are travelling at 299792.458 km/sec, what does it matter if they interact with some CO2 molecules on their way out to space?

So the photon takes a zig-zag course on its way through the 50 km of atmosphere whilst travelling at 299792.458 km/sec; this merely delays the photon by seconds at most (possibly only fractions of seconds).

Given that on average there are 12 hours of darkness, this gives plenty of time for photons to escape to space before the next surge of incoming photons from the sun is received and the entire process repeats itself, such that there is no build-up in temps. All the energy received during the day has plenty of opportunity to find its way out to space during the nighttime hours.
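For scale, the uninterrupted flight time through the 50 km path mentioned in this comment can be worked out with a minimal sketch. It computes only the straight-line transit at the speed of light; it does not model absorption, collisional thermalization or re-emission, which other comments in this thread argue are what actually matter. The function name `straight_line_transit_s` is hypothetical.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # km per second, as quoted in the comment


def straight_line_transit_s(path_km: float) -> float:
    """Time in seconds for light to cross `path_km` with no interactions."""
    return path_km / SPEED_OF_LIGHT_KM_S


transit = straight_line_transit_s(50.0)
print(f"50 km straight-line transit: {transit * 1e3:.3f} ms")
```

The direct transit is a fraction of a millisecond, so any delay of the order of seconds would have to come from interactions along the way rather than from the path length itself.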

Sorry, wiki again (on N2):

“Molecular nitrogen is largely transparent to infrared and visible radiation because it is a homonuclear molecule and, thus, has no dipole moment to couple to electromagnetic radiation at these wavelengths. Significant absorption occurs at extreme ultraviolet wavelengths, beginning around 100 nanometers.”

If your point is that all molecules in the atmosphere absorb and re-radiate, then you have difficulties explaining the direct effects of sunshine. I may misunderstand your point, though.

Richard,

CO2 molecules hit by infra-red may radiate back (and this could be back to the ground) or they may give the energy of their excited state to another molecule they are colliding with.

Wagen,

Do you agree that all matter with a temperature greater than absolute zero emits thermal radiation? I had assumed that was a generally accepted principle but please correct me if I am wrong as what I say next comes from this.

If you do agree then you will agree that N2 and O2 will therefore emit radiation according to their temperature. Approximately half of that will be back down towards the Earth’s surface.

Question is – where did they get their thermal energy from? You say that N2 and O2 are largely transparent to visible and IR radiation. Logically that means they must get their energy from other frequencies? Or maybe they simply get it from convection and conduction in the atmosphere?

Whatever the source of their energy, N2 and O2 are emitting thermal radiation to the ground just like CO2. The wavelengths may vary but even if they are not in the LWIR range, they will still be absorbed by the earth and re emitted at longer wavelengths just like when sunlight or UV hits the surface of the earth. Bottom line is that CO2 and the other ‘greenhouse gasses’ are not the only sources of down-welling radiation.

Jai: When you look at that “very accurate” ARGO data, please note that the heat content of the top 100 m has fallen (through 2013 at least) and that of the next 200 m has remained almost unchanged. Most of the heat has accumulated from 700-2000 m. That heat accumulation is large, but so is the mass warming, so the temperature change over a decade is ridiculously small – averaging about 0.01 degK. IMO, the ARGO data is interesting, but doesn’t agree with what was logically expected and climate models projected. Perhaps we will know more in a decade or two, but I see little reason to believe such tiny changes in temperature constitute conclusive evidence of global warming, much less the amount of warming expected from anthropogenic CO2.

Ian Macdonald, may I suggest that there is more to it than the CO2 absorption band for photons leaving the Earth’s surface. What is missing from the IPCC is any mention of the absorption bands across the entire spectrum, in particular, for the incoming Sunshine.

The absorption spectrum for CO2 has its primary maximum at about 4.3 microns, within the spectral range of our incoming Sunshine. The secondary maximum is at about 15 microns, within the spectral range of the average Earth’s surface emission. All of the other spectral lines are at shorter wavelengths, that is, not within the range of the Earth’s emissions.

The primary peak for CO2 absorbs about three times the energy from the incoming Sunshine as does the secondary peak in absorbing energy out-going from the Earth’s surface. Hence any increase in CO2 atmospheric concentration will cause cooling of the Earth as less energy reaches its surface in the first place, that is, before any surface emission that might be “trapped” by the atmosphere.

As the Earth’s temperature has been stable throughout this century, with neither warming nor cooling, it is apparent that the four molecules of CO2 to the 10,000 molecules of other atmospheric gases is insufficient to cause any measurable effect on the Earth’s temperature regardless of what path the photons may take. This is especially important for the back-radiation of the incoming Sun’s energy as those photons escape directly into space never to return.

Bernard, Wagen and others: Your comments reflect some significant misunderstanding about the behavior of GHGs and radiation.

1). The troposphere and most of the stratosphere are in local thermodynamic equilibrium. This means that an excited vibrational state of CO2 is relaxed by collisions much faster than the excited state can emit a photon. Therefore essentially all thermal infrared photons arise from GHG molecules that were excited by collisions, not by absorption of a photon. Therefore emission of thermal infrared photons by GHGs in the atmosphere depends only on the local temperature – local thermodynamic equilibrium. “Re-emission” of absorbed photons is negligible. Essentially all absorbed photons become kinetic energy (heat) when they are absorbed. “Thermalized” is a name for this process.

2). Essentially all the radiative cooling of the atmosphere is done by CO2 and water vapor. “All matter above absolute zero may radiate” at some low rate, but the power radiated by N2 and O2 in our atmosphere is negligible compared with GHGs.

3). The atmosphere is warmed by absorption of thermal infrared by GHGs and cooled by emission of thermal infrared by GHGs. These energy gains or losses are transmitted to and from N2 and O2 by collisions. In general, more energy is lost than gained by radiation in the troposphere and the difference is supplied by latent heat from the condensation of water vapor into liquid water and ice (clouds). About 1/3 of sunlight that isn’t reflected back into space is absorbed by the atmosphere before it reaches the ground. Convection of latent heat stops at the tropopause, so all heat transfer above occurs by radiation. Since DLR and OLR are similar near the surface, convection is more important than net radiation in removing heat from the surface.

4). The strongest absorption lines for CO2 are saturated by all of the CO2 in the atmosphere, but weaker lines are not. And all lines have width, so that, even if the center is saturated, the sides may not be. The 3.7 W/m2 forcing for 2XCO2 is a small change in the 100+ W/m2 contribution of CO2 to OLR and comes mostly from the weaker lines and sides of stronger lines. So saturation is a real phenomenon, but increasing CO2 still decreases OLR.

Hope this helps.

— Bernard Lodge

June 27, 2015 at 9:56 pm

Wagen,

Do you agree that all matter with a temperature greater than absolute zero emits thermal radiation? I had assumed that was a generally accepted principle but please correct me if I am wrong as what I say next comes from this.-*-

Well if matter emits thermal radiation, one can assume such emission would involve energy leaving this matter.

Matter does not inherently have an infinite amount of energy to emit, so one can say it has some finite amount of energy it could emit. And this finite energy would be what is meant by being at a temperature greater than zero.

Gas temperature is kinetic energy. A gas molecule can stop moving [and *could* do this hundreds of times within one second] and then regain its velocity via collision with other gas molecules. And were all gas molecules in an area to stop moving, then that gas would be at absolute zero temperature.

So a gas molecule is like a fast-moving bullet; the difference is that a bullet could also be warm and radiating while racing towards something before it hits anything, whereas a molecule does not likewise radiate between hitting something [another molecule or, say, a photon].

All gas can interact with other matter and can gain energy by such interaction, which can then cause the gas molecule to emit a photon. But the molecule itself does not have some finite amount of energy which it emits.

Or the temperature of a gas is the average velocity of millions or billions of gas molecules.

And a gas molecule absorbs and emits electromagnetic energy by having its electrons go to a higher energy level and then return to a more stable energy level.

Since matter is comprised of protons and electrons, and all electrons could go to a higher energy level, all matter can absorb and emit thermal radiation.

Now, according to the ideal gas law, ideal gases don’t lose their kinetic energy via collision with other ideal gases. If they radiated energy via collision, that would mean they would lose kinetic energy [or that’s all the energy they have to lose].

So CO2, N2, O2 are considered ideal gases. H2O is not an ideal gas because at earth temperature and pressure it can condense [form into water]. So H2O molecules can lose energy in collisions with other H2O molecules [they stop being a gas and become liquid; of course the liquid gains the kinetic energy, but the gas loses a member. But also briefly sticking or clumping together adds heat which can be radiated].

So what CO2 and other greenhouse gases are is gases whose electrons are much more easily [than those of non-greenhouse gases] energized by infrared light. They gain electromagnetic energy and then emit the same electromagnetic energy, and emit it in a random direction [all matter emits in random directions].

So the greenhouse gases aren’t transparent to some wavelengths of IR, whereas non-greenhouse gases are mostly transparent to IR [but, being transparent, they can reflect or scatter some of this light, but don’t absorb and re-radiate very much of it].

Jai Mitchell:

“…warming with MASSIVE amounts of excess heat. This is happening when the sun is at it’s longest and deepest minimum in over 100 years,”

It sounds like you’re saying the sun has been in some kind of minimum during the whole 20th century? If anything can be said about max or min, it seems some kind of minimum started in the 21st century, mostly during the so-called “hiatus”. However, climatic responses would normally be deduced on multi-decadal timescales, often 30-year periods, with significant trends around half of that, around 15 years. That’s why the 18+ years hiatus in isolation is still significant and at the same time not yet definitive in terms of climate science.

“…and with nearly 40% of the total warming effects of CO2 being shielded by aerosols (dust and pollution) in the atmosphere.”

It sounds like you’re saying adding dust and pollution would be a good protection from global warming? If we’re already blocking 40% of the looming disaster now, perhaps doubling the aerosols might be a solution? :-p

I’m a little confused as to how NOAA got 1955-2015 data for 0-2000m heat content, when their own data page only shows data for 0-700m for that timescale. For 0-2000m, the data only goes back to 2005.

CO2 by its nature accelerates the evaporative heat transport. So the individual photon’s incidental contact with a CO2 molecule gives the photon a boost on its way out of the system.

Same reason why the evaporation of liquid CO2 causes minus (-109.3) degree dry ice. Sucks all of the heat out of the local area and keeps on running. http://www.thegreenhead.com/2007/10/portable-dry-ice-maker.php

Jai Mitchell is putting up Mister Karl’s delusional mish mash of boat intake corrections applied to sea temperature buoys.

That’s a party foul. It’s on a level with barfing in the punch.

Bernard,

“Do you agree that all matter with a temperature greater than absolute zero emits thermal radiation? I had assumed that was a generally accepted principle but please correct me if I am wrong as what I say next comes from this.”

No. Some molecules only move faster, i.e. the atmosphere warms (which may lead other molecules to radiate more, though).

Hugh.

“It sounds like you’re saying adding dust and pollution would be a good protection from global warming?”

Yes it would (lowering ocean pH ignored here). You would have to live with the smog though.

Frank,

Thanks for your reply.

You say …. ‘the power radiated by N2 and O2 in our atmosphere is negligible compared with GHGs’.

Do you have a source for that?

‘Negligible’ is an opinion – can you quantify it?

Jai Mitchell

My question to you is, why do you ignore the geologic and glacial history showing that natural processes dominate the climate system? We’ve seen large fluctuations in climate and temperatures in the past, with no CO2 “cause” for the fluctuation. From Rasmussen et al. (2014): “About 25 abrupt transitions from stadial to interstadial conditions took place during the Last Glacial period and these vary in amplitude from 5 °C to 16 °C, each completed within a few decades”, and no, the world did not “die”… Please leave your bubble and join the real world… http://www.sciencedirect.com/science/article/pii/S0277379114003485

Bernard Lodge wrote: “You say …. ‘the power radiated by N2 and O2 in our atmosphere is negligible compared with GHGs’. Do you have a source for that? ‘Negligible’ is an opinion – can you quantify it?”

Rats, you noticed the modestly vague words I used when I was sure of these facts but didn’t have proof at my fingertips. Time to do better.

You can go to the online MODTRAN calculator at the link below and remove carbon dioxide, water vapor and methane from the spectrum of outgoing radiation by putting zeros in the top five boxes. The software calculates the spectrum of OLR at the top of the atmosphere (70 km). The spectrum of the radiation reaching space then looks like the blackbody spectrum emitted by the surface of the earth at a single temperature. At no wavelength is there any evidence that the N2 and O2 in the atmosphere have absorbed and emitted any OLR. When the traditional GHGs absorb and emit, less radiation is emitted at characteristic wavelengths. For example, with the default setting of 400 ppm of CO2, the intensity emitted around 15 um is appropriate for a temperature of 220 K, because all of the 15 um radiation emitted by the earth’s surface has been absorbed. The average photon that escapes to space at this wavelength is emitted by CO2 molecules near the tropopause (about 13 km), where the temperature is 220 K. 15 um photons emitted upward from lower in the atmosphere have little chance of escaping to space. If you play with the model, perhaps you can convince yourself that traditional GHGs have a much bigger impact on OLR and therefore N2 and O2 are negligible in comparison.

http://climatemodels.uchicago.edu/modtran/modtran.html
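To put rough numbers on the 15 um point above, here is a minimal Python sketch that evaluates the Planck blackbody function at 15 um for a surface at 288 K and for emission at 220 K. The 288 K surface temperature and the helper name `planck_radiance` are assumptions made for illustration; this is a plain blackbody comparison, not a reproduction of what MODTRAN computes.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K

def planck_radiance(wavelength_m, temp_k):
    """Blackbody spectral radiance in W m^-2 sr^-1 m^-1 (hypothetical helper)."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)

WAVELENGTH = 15e-6                                # 15 um, centre of the CO2 band
surface = planck_radiance(WAVELENGTH, 288.0)      # assumed mean surface temperature
tropopause = planck_radiance(WAVELENGTH, 220.0)   # temperature quoted in the comment
ratio = tropopause / surface

print(f"15 um radiance at 220 K relative to 288 K: {ratio:.2f}")
```

Under these assumptions the emission at 15 um from 220 K is roughly a third of what a 288 K surface would emit at the same wavelength, which is the kind of dip the MODTRAN spectrum shows in the CO2 band.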

You can find many similar figures with the absorption spectra of GHGs on the web (search infrared spectrum gases), such as the one below. Such figures never include N2, because “everyone” knows it is “negligible”. They show O2 plus O3, and the strongest bands in this spectrum are due to O3. (O2 does absorb UV.)

http://www.meteor.iastate.edu/gccourse/forcing/spectrum.html

At http://www.spectracalc.com, you can plot the spectrum of N2 and CO2. The strongest lines for CO2 are 10 orders of magnitude stronger than for N2. For O2 and CO2, the difference is about 6 orders of magnitude. I don’t have much experience with this website.

Hope this helps.

A different view, putting you in the position of a photon trying to make it from the surface to space past those ‘few’ CO2 molecules is pursued at http://moregrumbinescience.blogspot.com/2009/11/how-co2-matters.html

Similar answer ultimately, but perhaps easier to visualize.

Bernard,

There’s an easier way to think of this. Sure, the N2/O2 is matter with the potential to emit EM energy, but it has an emissivity of near zero and near unit transmittance. Would you assert that if the Earth’s atmosphere contained only its present amounts of N2 and O2 and nothing else, significant emissions from the O2/N2 would be seen from space, and that the average temperature and sensitivity would be any different from the case of no atmosphere at all? The only photons we can see escaping the planet either originate directly from the surface or clouds and pass through the N2/O2 and GHGs without being absorbed, or are emissions by GHG molecules. If you examine the spectral properties of the planet’s emissions, those in the GHG absorption bands are about 3 dB lower than they would be (about 1/2) without GHG absorption. While the N2/O2 do have emission/absorption bands, they are not in the visible or relevant LWIR spectrum.

George

daveandrews723 June 27, 2015 at 7:12 am

Not only are you not a scientist but you aren’t a philosopher or logician either. What you have written comes into that famous “not even wrong” category.

Argument from personal incredulity is no argument at all. It irks me that Mr Watts permits such dreck to be published here as it makes us climate realists look stupid.

https://yourlogicalfallacyis.com/personal-incredulity

Of course, the other fudge is showing DWIR from “greenhouse gases”, and then pretending that is coming from CO2, when H2O is doing almost all of it.

CO2 does not radiate below 11km.

Unexpected effect of CO2

The weight of air pressure is equivalent to the pressure of 10m of water. On a simple pro rata calculation 280 parts per million of this is equivalent to a 3mm thick layer of solid CO2.

The radiation emitted to space treating earth as a black body would imply a black body temperature of 255K, which is -18 degrees C, yet the surface temperature is effectively 288K or +15 degrees C, giving a 33 degree C (or K) difference with and without atmosphere.

The standard texts say that water vapour is responsible for most of this greenhouse gas-driven rise, and that CO2 is responsible for only around 23% of it. So you can work out that the pre-industrial level of 280 ppm CO2 is responsible for an 8 degree C higher surface temperature than would be the case without it. Not bad for a 3mm thick solid layer – and contradictory to what at least your common sense is telling you.
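The back-of-envelope figures in this comment can be retraced in a few lines. This is only a sketch of the “simple pro rata” arithmetic as stated, taking the 10 m water column, 280 ppm, the 255 K and 288 K temperatures, and the 23% share directly from the comment; the variable names are illustrative, and no correction is made for the difference between volume and mass fractions or for the density of solid CO2.

```python
WATER_COLUMN_M = 10.0    # atmosphere's weight as an equivalent depth of water
CO2_PPM = 280.0          # pre-industrial CO2 concentration from the comment
CO2_SHARE = 0.23         # share of the greenhouse effect attributed to CO2

# Pro rata layer thickness, converted from metres to millimetres
layer_mm = WATER_COLUMN_M * CO2_PPM * 1e-6 * 1000.0

# Greenhouse effect as the gap between surface and blackbody temperatures
greenhouse_effect_k = 288.0 - 255.0
co2_warming_k = CO2_SHARE * greenhouse_effect_k

print(f"Equivalent CO2 layer: {layer_mm:.1f} mm")
print(f"Greenhouse effect: {greenhouse_effect_k:.0f} K, CO2 share: {co2_warming_k:.1f} K")
```

The pro rata layer comes out at about 2.8 mm and the CO2 share at about 7.6 K, which the comment rounds to 3 mm and 8 degrees.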

Common sense might then tell you that doubling the thickness from 3mm to 6mm (concentration rising from 280 ppm to 560 ppm) would double the temperature rise. But again common sense would be wrong, because it is somewhat less than that. All the discussion centres around how much more water vapour would be in the atmosphere if CO2 caused an initial temperature rise, and what the additional rise from the extra water vapour greenhouse effect would be – and there is a lot more water vapour in the atmosphere than CO2.

So common sense is really not a good guide when a calculation based on known physics can get you the right answer.

Climate Pete

You and the general population have been grossly misled. The fact is that the Greenhouse Effect of 33 degrees Celsius is completely fictitious.

A primary claim for the cause of global warming has always been that the Earth suffers a “greenhouse effect”, being 33 degrees Celsius warmer than it would be if there were no greenhouse gases in the atmosphere. This is calculated from the difference between the estimated global surface temperature of 15 deg.C and the estimated temperature of the Earth without greenhouse gases. How many of the public are aware of the method used to calculate the latter?

The calculated temperature is derived from the surface temperature of the Sun, the radius of the Sun, the distance of the Earth’s orbit from the centre of the Sun and the radius of the Earth using the Stefan Boltzmann equation with an albedo of 0.3 to give an effective blackbody temperature of -18 deg.C.
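For readers who want to see that calculation spelled out, here is a minimal sketch. The solar temperature, solar radius and orbital distance below are assumed round values, not figures from this thread, and `effective_temperature` is a hypothetical helper name. Note that the Earth’s radius cancels: the planet intercepts sunlight over a disc of area πr² and emits over a sphere of area 4πr², which is where the factor of one quarter comes from.

```python
SIGMA = 5.67e-8        # Stefan-Boltzmann constant, W m^-2 K^-4
T_SUN = 5772.0         # assumed solar surface temperature, K
R_SUN = 6.957e8        # assumed solar radius, m
D_ORBIT = 1.496e11     # assumed mean Earth-Sun distance, m
ALBEDO = 0.3           # albedo quoted in the comment

def effective_temperature(t_sun, r_sun, d_orbit, albedo):
    """Return (solar constant in W m^-2, effective blackbody temperature in K)."""
    # Flux leaving the Sun's surface, diluted by the inverse-square law
    solar_constant = SIGMA * t_sun**4 * (r_sun / d_orbit) ** 2
    # Absorbed flux averaged over the whole sphere: disc/sphere area ratio is 1/4
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    # Invert F = sigma * T^4 for the emitting temperature
    return solar_constant, (absorbed / SIGMA) ** 0.25

solar_constant, t_eff = effective_temperature(T_SUN, R_SUN, D_ORBIT, ALBEDO)
print(f"Solar constant: {solar_constant:.0f} W/m^2")
print(f"Effective temperature: {t_eff:.1f} K ({t_eff - 273.15:.1f} deg C)")
```

With these inputs the result lands close to 255 K, i.e. about -18 deg C, matching the figure quoted above. The sketch makes the same uniform-sphere assumptions the comment goes on to criticise.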

Behind this seemingly innocuous calculation are hidden rarely stated assumptions. For example, the Stefan Boltzmann equation applies to radiation from a cavity in thermal equilibrium emitting directly into a vacuum. That means that the model Earth is a perfectly smooth sphere with a uniform surface, no oceans, forests, deserts, mountains, ice sheets and, amazingly, no atmosphere, with the same surface temperature everywhere, that is, no day or night. Furthermore the model assumes that the Sun’s insolation is spread uniformly across the whole Earth surface, so no tropical equatorial zone and no ice-covered poles, just a uniform solid surface with a uniform albedo of 0.3 everywhere receiving a uniform insolation of one quarter of the Sun’s known insolation, everywhere across the spherical surface of the Earth. Does anyone, other than the IPCC and its followers, think that this is a reasonable model of the Earth from which to estimate the “greenhouse effect”, that is, the temperature difference between an Earth with and without “greenhouse gases” in the atmosphere, when the model does not even include an atmosphere? I certainly do not.

Well Dave, you seem to have diverted the whole thread away from MofB’s question regarding the appropriateness of the 390 Wm^-2 surface total radiant emittance value.

Where I think Christopher might have had some gear slippage is that this question seems to be based on an expectation that TSI less albedo attenuation losses should match the simple BB radiation emittance of the surface, using of course the 1/4 sphere / circle surface area factor; a factor which personally I find totally bogus.

342 Wm^-2 over the entire earth surface, less the 30% or so albedo losses, can’t possibly raise the surface Temperature to 288 K, corresponding to the 390 value.

So your Lordship, have you considered the additional energy, over and above the Planckian radiative assumption, that is conveyed from the surface to the atmosphere and subsequently lost to space?

You have direct thermal conductivity of HEAT energy (noun) from the total surface to the atmosphere, followed of course by convective transfer of this energy (as heat) to the upper atmosphere, which at some point must be converted to some spectrum of LWIR radiation (also BB like) for transfer out to space (at least half of it).

Then of course, for the oceanic parts of the earth condensed surface, you will have a considerable latent heat of evaporation that gets added to the atmospheric “heat energy”, and also convected to higher altitudes, where it will get extracted in the condensation and possibly freezing phase changes.

Remember that this “heat energy” must get extracted by transfer to other colder atmospheric gases, or of course by THERMAL (BB like) emission from the H2O molecules, so when the water droplets or ice crystals fall to earth as rain or snow or other precipitation, that latent heat does not return to the surface with the water, since the water had to lose it already before the phase change can occur.

So the total energy in the upper atmosphere that must eventually be lost as thermal radiation to space, is somewhat greater than just the original surface total radiant emittance number.

And frankly, I’m not convinced that we have good data on just what all those processes contribute to the mix.

I also do not like the feedback model that has increased CO2 simply creating increased downward GHG LWIR radiation back to the surface.

In my view, the controlling feedback connection, is the direct Surface Temperature change resulting in an evaporation change of 7% per deg. C, as found by Wentz et al (SCIENCE for July 13 2007, “How much more rain will global warming bring ?” ) or words close to that.

That atmospheric H2O change implies somewhere, a commensurate change in cloud cover that directly attenuates the incoming solar spectrum radiation that is able to reach the surface.

And that effect is a huge and negative feedback factor, as it directly affects the amount of atmospheric (CAVU) attenuation that reduces the exo TSI from 1362 Wm^-2 down to a surface value closer to 1,000 Wm^-2.

The outgoing CO2 LWIR capture is of course real. But I also believe it is quite irrelevant to the outcome, as the water feedback to the solar input, is where the stabilizing control is.

And Dave you say that you are NOT a scientist.

Evidently not a solid state Physicist either.

Your computer, or perhaps some finger toy, or whatever you logged in here on, contains silicon chips that contain silicon atoms in a diamond lattice at a density of about 5 E+22 Si atoms per cc.

Surface layers in CMOS structures that make the thing work contain tiny amounts of dopant atoms at densities of maybe 10^16 to 10^18 impurity atoms per cc, and without that impurity content of one part in five million, to one in 50,000, which is way less than the atmospheric CO2 abundance, you simply couldn’t be here conversing with us all.
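The ratios quoted above are easy to check. The sketch below assumes a silicon atomic density of about 5 × 10^22 atoms per cc (the value the “one in five million” and “one in 50,000” figures imply) and a present-day CO2 level of 400 ppm; both are assumptions for illustration, as are the variable names.

```python
SI_ATOMS_PER_CC = 5.0e22      # assumed silicon atomic density, atoms per cc
CO2_FRACTION = 400e-6         # assumed atmospheric CO2 fraction (400 ppm)

# Dopant densities quoted in the comment, in atoms per cc
dopant_densities = (1e16, 1e18)

# "One part in N" for each dopant level
one_in = [SI_ATOMS_PER_CC / d for d in dopant_densities]
co2_one_in = 1.0 / CO2_FRACTION

for density, n in zip(dopant_densities, one_in):
    print(f"Dopant at {density:.0e} per cc: one part in {n:,.0f}")
print(f"CO2 at 400 ppm: one part in {co2_one_in:,.0f}")
```

On these numbers the dopants are present at one part in 5,000,000 to one part in 50,000, while CO2 is about one part in 2,500 of the atmosphere, so the impurities that make a chip work are indeed far more dilute.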

So don’t hang your hat on the “too little to do anything” mantra.

Denying the CO2 or other GHG capture process, is not a good hill to choose to die on.

Just my opinion of course.

I forgot to add that the sun beating down on the surface (below it) at about 1,000 Wm^-2, rather than 240 Wm^-2 as Trenberth asserts, will easily raise the surface Temperature during the day to Temperatures which we all experience every day.

I’m lost on one point- *when* did they decide it was 2C since 1750? I never heard that until the last year or so. And I thought I understood that the Avg Temp had already risen a fair chunk of that 2C between 1750 and 1850…?

IMO it’s because the Industrial Age began shortly after 1750, not 1850, and CO2 levels were similar in both centuries.

Much of the warming since the depths of the little Ice Age during the Maunder Minimum occurred in the early 18th century. After 1750 there was both cooling during the Dalton Minimum and warming after it until the Modern Warming Period began in the late 19th century.

Here ya go.

http://www.carbonbrief.org/blog/2014/12/two-degrees-a-selected-history-of-climate-change-speed-limit/

I believe an economist came up with the idea a few decades ago. Sorry, I can’t remember the name now. And… before you ask… yes, I mean an economist, and, yes, I mean a few decades ago.

Well done CM of B. I guess Trenberth and his cohorts didn’t expect to ever be questioned,

I mean, such a pretty set of illustrations!

But interestingly, the little “o” knows 97% of “real scientists” are “in agreement”?

This reminds me of Barry’s Dame Edna’s stage show when singling out any foreigners in the audience;

“They don’t understand a word I’m saying, but they love colour and movement”.

Should it be “I ask only because I want to know” and not “I only ask because ————”?

I ask only because I want to know.

Fowler, in Modern English Usage, argued strongly that the more logical placement of “only” should not be made a guideline for copy editors.

“I ask only because I want to know” would not scan, which was why Housman wrote “I only ask because I want to know”.

I am chuckling to myself after reading this… So in summary, if we assume that the “Scientific Consensus” actually had a valid point in understanding the “heat budget”, M of B can demonstrate using the same process it leads to a tiny amount of warming… Precious. LOL

It takes a Texan to get straight to the main point. Well done, Robert!

CO2 has been found guilty

When no crime’s been reported,

But the alarmists’ agenda

Demands facts are distorted!

http://rhymeafterrhyme.net/climate-the-numbers-dont-add-up/

So is that what the judge did in the Netherlands? Guilty without evidence?

Judges aren’t scientists

And neither am I,

But we’re being led down a path

And you need to ask why?

Given that surface temperatures are changing at different rates (higher rates that is) than the troposphere temperature trends, we should, indeed, move the sensitivity estimates to be based on the surface only rather than on the troposphere like it is in climate theory.

And, the change in temperature (K) per additional forcing (1.0 W/m2) is, indeed, smaller at the surface (0.184 K/W/m2) than at the tropopause (0.265 K/W/m2).

And, the surface needs to increase its forcing (including the feedbacks) by +16.5 W/m2 in order to increase its temperature by 3.0K while the tropopause only needs +11.5 W/m2.
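These figures appear to follow from the Stefan-Boltzmann relation with the surface at 288 K and the tropopause figure taken at an emission temperature of 255 K. That mapping is an assumption on my part, since the comment does not state the temperatures used, and the function names below are illustrative. A short sketch reproduces the numbers:

```python
SIGMA = 5.67e-8   # Stefan-Boltzmann constant, W m^-2 K^-4

def flux(temp_k):
    """Blackbody flux density F = sigma * T^4, in W m^-2."""
    return SIGMA * temp_k**4

def sensitivity(temp_k):
    """Zero-feedback sensitivity dT/dF = T / (4F), in K per W m^-2."""
    return temp_k / (4.0 * flux(temp_k))

def extra_flux(temp_k, warming_k):
    """Extra flux needed to raise a blackbody from T to T + warming."""
    return flux(temp_k + warming_k) - flux(temp_k)

T_SURFACE = 288.0      # assumed surface temperature, K
T_EMISSION = 255.0     # assumed temperature behind the tropopause figure, K

for name, t in (("surface", T_SURFACE), ("tropopause", T_EMISSION)):
    print(f"{name}: F = {flux(t):.1f} W/m2, "
          f"dT/dF = {sensitivity(t):.3f} K/W/m2, "
          f"+3 K needs {extra_flux(t, 3.0):.1f} W/m2")
```

Under these assumptions the sketch gives roughly 0.18 and 0.27 K/W/m2, and about 16.5 and 11.5 W/m2 for 3 K of warming, in line with the values quoted.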

In the theory, the tropopause goes up in temperature by 3.0K per doubling because there is +4.2 W/m2 of direct GHG forcing per doubling (including other GHGs besides CO2) and the water vapor and cloud and albedo and lapse rate feedbacks add another 7.3 W/m2 of forcing.

And the lapse rate feedback is supposed to be negative. The lapse rate of 6.5K/km is supposed to decrease in climate theory (the troposphere hotspot), while it is clearly increasing in the current environment, given that the troposphere is increasing in temperature by much less than the surface.

Climate science uses a bunch of shortcuts in order to avoid doing all this simple math properly. Lately, they have been avoiding it because the math does not work to get to 3.0C per doubling.

If we move the calculations to the surface and include the proper feedbacks for water vapor and clouds that are actually showing up, we get only 1.1C of warming per doubling and a total forcing change from the current 390 W/m2 at the surface to 395.9 W/m2.

And that only occurs because we are also ignoring the Planck feedback whereby outgoing longwave radiation should increase as the surface temperature rises. You never ever hear anyone in the climate science community talking about this.

How much energy does it take to accelerate the hydrological cycle, and to grow 20 percent more bio-life.

I forgot the question mark, but I only ask because I want to know.

A minimum of some.

Bill Illis:

The answer is that it doesn’t matter. Whatever the source of warming — assuming that there is indeed some such source of warming — the hotspot is still required in theory.

The reason is that hot gases become less dense and rise, to the point at which they shed their heat via radiation to space. So no matter what was causing it to get hot, there would still be a hotspot. Different gases might have to rise to somewhat different altitudes before they shed that heat, but remember too that atmospheric gases tend to be rather well-mixed.

So the absence of a hotspot is not just evidence against CO2-based warming, it is evidence against significant warming, period.

+1

Hi Christopher,

Subject to certain limitations that don’t apply here, the inverse of a derivative equals the derivative of the inverse. So your starting assumption is correct. We can double check by taking the derivative of the inverse explicitly:

F = kT^4, therefore

T = (F/k)^0.25

= (1/k)^0.25 * F^0.25

So:

dT/dF = 0.25 * (1/k)^0.25 * F^(-0.75)

= 0.25 * (1/k)^0.25 * F^(0.25) * F^(-1)

= 0.25 * T * F^(-1)

= T/(4F)
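The algebra above can also be checked numerically. The sketch below compares T/(4F) with a central finite-difference estimate of dT/dF at F = 390 W m^-2; taking k as the Stefan-Boltzmann constant with unit emissivity, the step size, and the function names are all assumptions for illustration.

```python
K = 5.67e-8   # k in F = k * T^4 (Stefan-Boltzmann constant, emissivity 1)

def temperature(f):
    """Invert F = k * T^4 to get T = (F / k)^0.25."""
    return (f / K) ** 0.25

def numerical_derivative(f, h=1e-3):
    """Central finite-difference estimate of dT/dF at flux f."""
    return (temperature(f + h) - temperature(f - h)) / (2.0 * h)

F_SURFACE = 390.0                                    # W m^-2, from the head post
analytic = temperature(F_SURFACE) / (4.0 * F_SURFACE)   # T / (4F)
numeric = numerical_derivative(F_SURFACE)

print(f"T/(4F)            = {analytic:.6f} K per W/m2")
print(f"finite difference = {numeric:.6f} K per W/m2")
```

The two values agree to many decimal places, which is what the derivation predicts.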

Without quibbling over the use of S-B at all, the consensus method seems to describe a stable system. Which is what we have.

Are the tales of runaway warming in the Summary for Policymakers or described in the actual AR5 report?

(or did I completely misunderstand your math this early morning).

jeanparisot,

You wrote “the consensus method seems to describe a stable system. Which is what we have.”

You are correct.

The tales of runaway warming exist only in the fevered imaginations of a certain type of “skeptic”. And, I suppose, in history since in the early days (perhaps 40 years ago) there was some speculation that it *might* be a possibility.

Hansen still warns of the Venus Express, does he not? Or do you include the former head of GISS and perpetrator of the global warming scare of the 1980s to 2010s as a certain kind of skeptic?

I love it when Venus is mentioned. 96.5% concentration of CO2 and 96 times the atmospheric mass. So approximately 230,000 times as many CO2 molecules as here in Earth’s atmosphere, but only about 2.5 times the absolute temperature at the surface. If you still believe in “dangerous climate change” after those stats, well, there is no helping you!
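The arithmetic behind the 230,000 figure can be retraced as follows. This is only a sketch of the comment’s own ratio: the 400 ppm Earth CO2 level and the 737 K Venus and 288 K Earth surface temperatures are assumed values not given in the comment, and the calculation crudely treats the ratio of atmospheric masses as a ratio of molecule counts.

```python
VENUS_CO2_FRACTION = 0.965     # from the comment
VENUS_MASS_RATIO = 96.0        # Venus atmosphere relative to Earth's, from the comment
EARTH_CO2_FRACTION = 400e-6    # assumed 400 ppm

T_VENUS_K = 737.0              # assumed Venus surface temperature
T_EARTH_K = 288.0              # assumed Earth mean surface temperature

# Ratio of CO2 amounts, treating mass ratio as molecule ratio (a rough shortcut)
co2_ratio = VENUS_CO2_FRACTION * VENUS_MASS_RATIO / EARTH_CO2_FRACTION
temperature_ratio = T_VENUS_K / T_EARTH_K

print(f"CO2 ratio, Venus to Earth: about {co2_ratio:,.0f}")
print(f"Surface temperature ratio: about {temperature_ratio:.2f}")
```

With these inputs the CO2 ratio is a little over 230,000 and the temperature ratio is about 2.6, consistent with the rounded figures in the comment.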

Sturgis Hooper wrote: “Hansen still warns of the Venus Express”.

I was going to answer “not so far as I am aware” but then I thought, “well this is Hansen we’re talking about” so I decided I better do a search.

So I correct myself: “only in the fevered imaginations of a certain type of “skeptic” and certain kooks on the alarmist fringe”.

Mike M.

June 27, 2015 at 9:13 am

You appear to have joined the debate late. Your observation is, however, evidence that as little as 4 to 5 years ago the consensus was pushing thermogeddon, and that since the reality of the pause became reluctantly accepted by the consensus (despite the recent NOAA attempt to “hide the pause”), the number of death-by-fire CAGW types has shrunk considerably (your certain type of ‘sceptic’, I guess; a funny word for the remaining end-of-the-world zealots that used to make up the mainstream).

I can see that your late arrival at a time when real sceptics had already chopped the crisis in half would make it look like sceptics were quibbling over a degree or so.

Just in the past week we have been treated to actual “end of the world and no human can survive” warnings.

And all manner of other crazy s**t.

And it is not coming from skeptics.

This is a bizarre contention from Mike M.

A paper from just two years ago in Nature Geoscience calculates that “The runaway greenhouse may be much easier to initiate than previously thought”.

http://news.nationalgeographic.com/news/2013/13/130729-runaway-greenhouse-global-warming-venus-ocean-climate-science/

So in fact “tales of runaway warming” still do exist in the fevered imaginations of Catastrophic Anthropogenic Climate Change Alarmists (CACCA).

Mike M. (the imposter) wrote:

So Gavin Schmidt is a “certain type of skeptic” by even acknowledging it nine years ago?

http://www.realclimate.org/index.php/archives/2006/07/runaway-tipping-points-of-no-return/

And oh my lying eyes, it must be my imagination allowing me to read this as well – http://arxiv.org/abs/1201.1593

I think most informed people know that the ESSENTIAL ingredient for CAGW theory has always been that more CO2 will beget warming that will then beget more water vapor which begets even MORE warming thus begetting even more water vapor (plus methane from tundra under the north pole, heh heh), ad nauseam until there is so much begetting going on that the planet is ….

Gee, I wonder who it was who introduced the idea that we would reach a point in man made global warming where it would be impossible to do anything about it? In 2006 someone said –

Mike M (the one who struggles with reading comprehension):

I was responding to a comment by jeanparisot who wrote: “Are the tales of runaway warming in the Summary of Policymakers or described in the actual AR5 report?”

Mainstream climate science, even IPCC, does not warn about runaway warming. Here is a link that talks about why, and also about the often inapt use of the phrase “tipping point”: http://www.realclimate.org/index.php/archives/2006/07/runaway-tipping-points-of-no-return/

Maybe if you read it a second time, you will understand it.

And your other link says that “almost all lines of evidence lead us to believe that is unlikely to be possible, even in principle, to trigger full a runaway greenhouse by addition of non-condensible greenhouse gases such as carbon dioxide to the atmosphere”.

In my original reply to jeanparisot, I overstated by implying that none of the alarmists make such ridiculous claims. I have since corrected that.

Yeah, “(the one who struggles with reading comprehension)” was needlessly nasty. Just wanted to make the point that two can play that game. Maybe you were just trying to be funny, but calling someone an imposter is not funny.

I applaud your efforts, Lord Monckton; the further you dig into the data and theory, the less valid it becomes. Observations: http://drtimball.com/wp-content/uploads/2011/05/average-global-temperature.jpg

1) CO2 traps heat most efficiently at 15 micrometers. That is consistent with -80 degrees C. The absorption band does spread out to include 0 degree C or 255 degree K. At 5 km into the atmosphere both H2O and CO2 exist, so 255 degree K IR should be absorbed. If CO2 were the cause, the atmospheric warming would be in the upper atmosphere, not in the surface temperatures. CO2 doesn’t absorb 10 micrometer IR very efficiently. The problem is, there isn’t a hot spot 5 km into the atmosphere.

http://wattsupwiththat.com/2014/08/04/what-stratospheric-hotspot/

2) We have 800 million years of geologic history when CO2 reaches levels as high as 7,000 PPM. Never in the past 800 million years, when 99.999% of the record had higher CO2 levels, did the earth experience catastrophic warming… never. Clearly, using the data from the past 600 million years, the sensitivity of CO2 and temperature is non-existent. Are climate scientists not aware of the data they have already published that totally refutes their theory?

http://drtimball.com/wp-content/uploads/2011/05/average-global-temperature.jpg

3) The “evil sister” of Global Warming is Ocean Acidification. For your next article you may want to do the math exploring how much CO2 would be required to alter the pH of the Oceans, and put that in terms of CO2 existing in the atmosphere and fossil fuel consumption.

Once again, keep up the great work.

It really does seem to me that if the chart you posted is accurate, then it proves there is nothing to worry about.

What is the source of this graph, please?

http://www.geocraft.com/WVFossils/Carboniferous_climate.html

The graph at the link provided by Nick Boyce is very similar to the one posted by co2islife, but they are not the same. The one posted by co2islife does not include the error limits (more than a factor of 2). But I suspect that the ultimate source for the “data” in the two graphs is the same. The link to the paper is provided on the web page linked to by Nick. It’s a model!

I believe the original source is: http://www.biocab.org/carbon_dioxide_geological_timescale.html

And here is the revised version from the above source, to wit:

http://www.biocab.org/Geological_Timescale.jpg

Whenever I see these charts it appears that everyone seems to think that gravity has been constant; however, there is no way that the giant dinosaurs could have survived with our existing gravity. Therefore, how might a gravity of about 50% of the present have affected our atmosphere millions of years ago?

You are assuming that the fossil interpretation of giant land animals is correct. An easier explanation is that all large dinosaurs were water based. Perhaps the T-Rex, for example was half submerged in water, like a Carnivorous hippo.

Or gravity has remained the same, but atmospheric pressure was greater during the time of the dinosaurs. Some suggest that the atmosphere must have been about 2 bar.

O2 was far higher.

“there is no way that the giant dinosaurs could have survived with our existing gravity.”

If one is to assume that gravity is variable, then the implications of that render just about all of scientific inquiry a waste of time, as one must allow that all the other physical constants and forces also varied.

And if they vary, why just in time? Why not in space?

Separately, such logic as is attempted by this statement also concludes that bumblebees cannot fly, and various other conclusions that are at variance with observation.

Anyway, this train of thought makes as much sense as the guys who say there is no such thing as water vapor (calling it the myth of cold steam) or convection.

Earl,

There is every way that the giant dinosaurs could have and did survive under gravity the same as at present.

The only suborder of dinosaurs outside the size range of Cenozoic land animals were the sauropods, and far from all of them. Estimates of their weight are coming down, as more is learned of their anatomy, but they’re still bigger than anything before or since.

However mechanical studies of their hollow-boned structure show that they violate no laws of mass or motion.

It may be that some spent part of their lives in water, but that former hypothesis is no longer required to explain their unusual size. At least some of them did however apparently float, as shown by footprints. Sauropods apparently returned to North America in the Late Cretaceous across water gaps from South America, after a long absence.

By what mechanism do you suppose gravity to have been the same as now in the Paleozoic, when land animals were smaller than now, then suddenly much greater for part of the Mesozoic, then back down again?

Earl

Out in the world there is a doctor who needs a patient. Help him out. Go find him.

Eugene WR Gallun

Earl,

To paraphrase another poster above this post, ……. that claim about gravity makes as much sense as ……. the claim that the giant pterosaur named Quetzalcoatlus was one of the largest known flying animals of all time …… with a wingspan estimated to be 10–11 meters (33–36 ft) ……. and an estimated weight of around 200–250 kilograms (440–550 lb).

Read more @ https://en.wikipedia.org/wiki/Pterosaur_size

http://news.nationalgeographic.com/news/2009/01/images/090107-pterosaur-picture_big.jpg

There were some very large mammals during the Oligocene epoch. Approx. 30 million years ago.

Samuel,

Unless gravity still hadn’t reached present strength 25 to six million years ago, how then to explain these flying giant birds:

http://www.washingtonpost.com/national/health-science/a-newly-declared-seabird-species-may-be-the-largest-to-ever-live/2014/07/07/0940783c-05e4-11e4-8a6a-19355c7e870a_story.html

No reason to suppose that the largest pterosaurs didn’t fly, as all evidence suggests that they did. Their remains have been found far out to sea. They didn’t paddle there. They soared, like big sea birds, such as the one reported in the above link.

Biomechanical study of Argentinosaurus, the largest sauropod known from good material, shows no need to invoke variable gravity or mass of earth:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3864407/

Conclusion:

Forward dynamic simulations shows that an 83 tonne sauropod is mechanically competent at slow speed locomotion. However it is clear that this is approaching a functional limit and that restricting the joint ranges of motion is necessary for a model without hypothetical passive support structures. Much larger terrestrial vertebrates may be possible but would probably require significant remodelling of the body shape, or significant behavioural change, to prevent joint collapse due to insufficient muscle.

PS: One reason herbivores eating such low quality vegetation as conifer needles could reach such great mass was the abundance of trees even in their often arid environments, thanks to the healthy levels of ambient CO2 in those lush, luxurious periods.

Mid-Cretaceous CO2 was probably around 2000 ppm, but might have been higher. Arboreal vegetation probably maxes out its potential at a little lower level than that, but the extra plant food in the air didn’t hurt.

@ sturgishooper June 29, 2015 at 1:38 pm

I fail to comprehend the inference that the force of gravitational attraction on the body mass of land animals, …… as measured at sea level, ….. was increasing prior to 6 million years ago, let alone 25 million years ago. But on the contrary, it would be logical to assume that the force of gravitational attraction on the body mass of land animals, …… as measured at sea level, ….. was decreasing post-22,000 years BP simply because of the Post Glacial Sea Level Rise of 450+ feet.

Now flying giant birds is one thing, but flying giant reptiles is a per se, …. horse of a different color. And the last statement in your cited reference pretty much supports my claim about the inability of giant pterodactyls, and possibly all pterodactyls, of having the ability of flight or flying. To wit, quoting from your cited source:

The ability of “self powered” flight surely evolved on or near sea shores by the smaller species of dinosaurs that were in the process of evolving a “feathered” covering of their body …. simply because of the steady and consistent “on-shore” and “off-shore” winds which made for an easy “lift-off” from the ground …. as well as an “easy task” for said evolving dinosaurs to remain “off-ground” for extended periods of time. There are several Sea Bird species of today that are dependent on said “sea breezes” for getting airborne.

Phooey, …. there are several good reasons why. To wit:

1. The length of their wing-span and calculated/estimated body weight.

2. Their lack of sufficient wing muscles for self-powered flight.

3. Their inability to achieve “lift-off” without the aid of an extremely strong breeze or wind.

4. Their extremely large “bill-snipper” compared to body size would be of no use whatsoever to a “flying” predator ….. and would probably prove quite dangerous in trying to control their flight path …. due to a weight “imbalance”, … a very short tail …… and/or the applied forces of unanticipated “wind shear”.

“Found far out to sea” means nothing …. because that locale may have been a swampy area, a tidal zone or a very shallow Inland Sea ….. many, many eons ago.

Methinks, and actually believe, that all pterosaurs were shallow-water feeding reptiles that employed the same “feeding technique” as does the present day Black Heron, to wit:

http://ibc.lynxeds.com/files/pictures/IMG_0954_0.jpg

The hot spot only mattered when the believers thought they found it. Once it was gone it was irrelevant.

The entire theory of CAGW is based on a positive feed back loop… as your chart shows; when does this feed back occur?

My belief is man-made CO2 has special properties that regular CO2 doesn’t have … You simply don’t know it yet… /sarc

I have that chart pinned up on my wall at work, along with the 18+ year satellite-measured temperature increase pause graphs, regularly updated from the good Lord Monckton.

+1

The cool upper atmosphere cannot warm the warm lower atmosphere, particularly the lower two-to-two hundred meters where most of us live.

Lord Monckton: Your math is correct only in the limit where the changes in T and F approach zero, the standard assumption one makes in elementary derivatives. Your equation can be re-written in a form I find more useful:

dF/F = 4*(dT/T)

A 1% change in F (2.4 W/m2 post albedo) produces a 0.25% change in T (about 3/4 of a degK). This equation is independent of any assumptions about emissivity.

The above equation becomes inaccurate with large percentage changes in T, because it keeps only the linear term of the full expansion:

[T*(1+dT/T)]^4 = T^4 * [1 + 4*(dT/T) + 6*(dT/T)^2 + 4*(dT/T)^3 + (dT/T)^4]

The linear form ignores the higher powers of dT/T, which are negligible for the changes of a few percent encountered in climate change on Earth.
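For anyone who wants to check how quickly the linear rule degrades, here is a minimal Python sketch. The function names are illustrative and not from the comment above; the code simply compares 4*(dT/T) with the exact value (1 + dT/T)^4 - 1 for a few fractional temperature changes.

```python
# Compare the linear rule dF/F = 4*(dT/T) with the exact fourth-power change.
# Function names are hypothetical, chosen only for this illustration.


def exact_fractional_flux_change(x: float) -> float:
    """Exact dF/F for a fractional temperature change x = dT/T, from F ~ T^4."""
    return (1.0 + x) ** 4 - 1.0


def linear_fractional_flux_change(x: float) -> float:
    """First-order approximation: only the 4*(dT/T) term of the expansion."""
    return 4.0 * x


if __name__ == "__main__":
    for x in (0.0025, 0.01, 0.05, 0.20):
        exact = exact_fractional_flux_change(x)
        linear = linear_fractional_flux_change(x)
        # Fraction of the exact change that the linear rule misses.
        shortfall = (exact - linear) / exact
        print(f"dT/T = {x:.4f}  exact = {exact:.6f}  "
              f"linear = {linear:.6f}  shortfall = {shortfall:.2%}")
```

For a 0.25% change in T the two agree to well under one percent of the flux change, while for a 20% change in T the linear rule misses roughly a quarter of it.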

If the entire system warmed by 1 deg., then (as you say) the increase in surface emission is much greater than the increase in emission of the whole system to outer space. But it is the latter that matters in climate sensitivity, since it is what is actually lost by the system. Does that answer your basic question?
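As a rough numerical illustration of this point, the sketch below evaluates dF/dT = 4F/T for two flux values. It assumes unit emissivity, a surface flux of 390 W m–2 (the lower end of the interval quoted in the head post) and an outgoing flux of 240 W m–2 (implied by the earlier remark that 2.4 W/m2 is 1% of F after albedo). The function names are hypothetical.

```python
# Emission increase per kelvin of warming, at the surface and at the
# level that radiates to space, using F = sigma * T^4 with emissivity 1.

SIGMA = 5.67e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def temperature_from_flux(flux: float) -> float:
    """Blackbody temperature (K) that emits the given flux (W m^-2)."""
    return (flux / SIGMA) ** 0.25


def flux_change_per_kelvin(flux: float) -> float:
    """dF/dT = 4F/T, the first derivative of F = sigma * T^4."""
    return 4.0 * flux / temperature_from_flux(flux)


if __name__ == "__main__":
    surface_flux = 390.0   # assumed: lower end of the quoted [390, 398] interval
    outgoing_flux = 240.0  # assumed: 2.4 W/m2 taken as 1% of post-albedo F
    for label, flux in (("surface", surface_flux), ("to space", outgoing_flux)):
        t = temperature_from_flux(flux)
        print(f"{label:9s} F = {flux:5.1f} W/m2  T = {t:6.2f} K  "
              f"dF/dT = {flux_change_per_kelvin(flux):.2f} W/m2 per K")
```

Under these assumptions a 1 K warming raises surface emission by about 5.4 W m–2 but emission to space by only about 3.8 W m–2, which is the distinction drawn above.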

Correct.

The F involved here is forcing. That’s the hypothetical initial radiation reduction that an instantaneous change in (say) atmospheric opacity would cause by raising the effective radiation altitude. To restore equilibrium, what needs to happen is for that new radiation altitude to warm up enough to get outgoing radiation back up to the incoming level, and to a first approximation it warms about the same as the surface.

So it’s the temperature at the effective radiation altitude, not at the surface, that goes into the Stefan-Boltzmann equation.

I seem to remember some previous difficulty in explaining forcing to Lord Monckton, so I’ll elaborate.

When we talk about sensitivity, we’re talking about response to a given forcing. So you have to know what the forcing is. If you start at pre-industrial CO2 concentration (and thus atmospheric optical density), the effective radiation altitude is h1, where the temperature is Teq, the temperature at which the Stefan-Boltzmann equation gives an outgoing radiation intensity the same as the incoming value from space. By convention, we can consider that concentration’s forcing to be zero.

Now, if optical density were to increase instantaneously from that zero-forcing level to a level at which the new radiation altitude is [formula missing], then the new radiation altitude’s temperature would initially be [formula missing], where [formula missing] and [formula missing] is lapse rate. This would cause an initial radiation imbalance of [formula missing]. The value of that hypothetical initial imbalance is what is referred to as the “forcing” associated with the concentration increase.

Because of that imbalance the surface warms. How much would the surface warm without feedback? If we ignore things like lapse-rate feedback, etc., it warms by [formula missing], i.e., by the change in radiation-altitude temperature that would be needed to redress the initial radiation imbalance. As Dr. Spencer observed, the change in surface, as opposed to radiation-altitude, radiation would be more than the imbalance to be eliminated. But it isn’t the surface radiation we’re interested in when we talk about climate sensitivity; it’s the outgoing radiation.

So the change in surface temperature would need to be more than would be required for the surface radiation to increase by the forcing; it would instead be the temperature change required for the radiation-altitude radiation to increase by that quantity: a larger number. Although we are indeed talking about a temperature change at the surface, therefore, it’s the absolute temperature at the effective radiation altitude, not at the surface, that needs to be used in Stefan-Boltzmann to determine the “Planck parameter.”

John, perhaps you can explain a couple of confusing issues to a simpleton like me. I am fully aware of the fact that energy, radiation and temperature are not the same things so I don’t think my confusion is there.

My first confusion has to do with the gas laws. I understand that there can be an energy gain due to GHG but I do not understand how that can translate to higher atmospheric temperatures at surface altitudes unless the atmospheric pressure increases as a result of this increase in energy. Common sense tells me that the atmospheric volume would increase a little and the temps would stay the same rather than pressure go up. To me you seem to be somewhat implying this but still translate that into a surface temperature increase. What am I missing between the universal gas laws and the atmosphere?

The second confusion that I have is in the radiation budget diagrams. I see no inclusion implying long term storage of energy within the biomass, and the rate of storage in the biomass increases with increasing GHG. Would this have something to do with my confusion about the first question? Maybe I am doomed to stay confused.

Perre DM:

Not sure I understand your question, but maybe this will help:

To a first approximation, don’t worry about the gas-law issues. Just think about the radiators.

Radiation that reaches space comes from the molecules at the surface and at various altitudes in the atmosphere, but the overall effect is as though they were all at an effective radiating altitude, which is somewhere in the troposphere that is higher if there is more greenhouse gas and there thus are more radiators to make a thicker “blanket.” Due to the lapse rate, a higher effective altitude means a larger temperature difference between that altitude and the surface. But that altitude’s temperature must over time equal the level that results in the same amount of radiation that comes from space (and isn’t reflected), so it can be thought of as fixed independently of what the effective radiation altitude is. Since the effective radiating altitude is therefore always at the same temperature independently of how high it is, but the difference between its temperature and the surface depends on that altitude, the surface temperature has to be higher for a greater effective radiating altitude.
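The picture just described can be put into a few lines of Python. This is only a sketch under stated assumptions: a fixed radiating-level temperature of 255 K and a constant lapse rate of 6.5 K per km are conventional round numbers chosen for illustration, not values given in this thread, and the function name is hypothetical.

```python
# Surface temperature implied by a fixed radiating-level temperature and
# a constant lapse rate: T_surface = T_eq + lapse_rate * altitude.

T_EQ_K = 255.0             # assumed temperature at the effective radiating altitude
LAPSE_RATE_K_PER_KM = 6.5  # assumed constant tropospheric lapse rate


def surface_temperature(radiating_altitude_km: float,
                        t_eq: float = T_EQ_K,
                        lapse_rate: float = LAPSE_RATE_K_PER_KM) -> float:
    """Surface temperature (K) for a given effective radiating altitude (km)."""
    return t_eq + lapse_rate * radiating_altitude_km


if __name__ == "__main__":
    for altitude_km in (5.0, 5.1, 5.2):
        print(f"radiating altitude {altitude_km:.1f} km -> "
              f"surface {surface_temperature(altitude_km):.2f} K")
```

With the radiating-level temperature held fixed, each extra 100 m of effective radiating altitude adds 0.65 K at the surface in this toy model, which is the sense in which a "thicker blanket" implies a warmer surface.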

Joe Born

I would question you about the assumption you make in the first paragraph — and assumption it most certainly is — as you yourself admit.

“If you start at pre-industrial CO2 concentration (thus atmospheric optical density) the effective radiation altitude is h1, where the temperature is Teq, the temperature at which the Stefan-Boltzmann equation gives an outgoing radiation intensity the same as the incoming value from space. By convention, we can consider that concentration’s forcing to be zero.”

“By convention” means that the basis of your whole argument begins with an assumption.

Do you have any proof for it? Isn’t it just another warmist meme that is “cherry picked”? To put what you are saying another way, using a well-known cultural image:

O Noes, we were living in the garden of carbon dioxide paradise and we screwed it up!

Really, isn’t that what you are assuming? That we were just a couple of hundred years ago living in the garden of carbon dioxide paradise?

What if I say that carbon dioxide levels during pre-industrial times were too low and then blame the Little Ice Age on the lack of CO2 in the atmosphere?

I then could say that if in fact there is CO2-caused global warming we are merely returning to a time when CO2 concentration was truly optimal, and soon we will actually reach the concentration at which “the Stefan-Boltzmann equation gives an outgoing radiation intensity the same as the incoming value from space”.

Because you “assume” at the beginning, all your subsequent arguments are worthless. You are employing a well-known Sophist trick. Let me set the initial assumption and I will have you admitting that pigs can fly.

Eugene WR Gallun

Joe Born: So the change in surface temperature would need to be more than would be required for the surface radiation to increase by the forcing; it would instead be the temperature change required for the radiation-altitude radiation to increase by that quantity: a larger number. Although we are indeed talking about a temperature change at the surface, therefore, it’s the absolute temperature at the effective radiation altitude, not at the surface, that needs to be used in Stefan-Boltzmann to determine the “Planck parameter.”