From the Cornwall Alliance for the Stewardship of Creation

by David R. Legates

An article by Nadir Jeevanjee, a Research Physical Scientist at the National Oceanic and Atmospheric Administration (NOAA), recently published in The Conversation and reprinted by Space.com, suggests that climate models are being given a bad rap. It characterizes a recent Department of Energy report as citing the complexity of climate models as the primary reason these models cannot be trusted. The article protests, “But the history of climate science tells a different story,” arguing that early climate models were instrumental in shaping our understanding of the Earth’s climate.

Okay, let’s revisit history—because those who can’t remember history correctly are destined to get it wrong.

First, we need to get one fact correct. The recent Department of Energy report did not simply cite the complexity of climate models as the primary reason they cannot be trusted. It goes into detail as to why current climate models cannot be trusted: namely, they cannot reproduce current conditions.

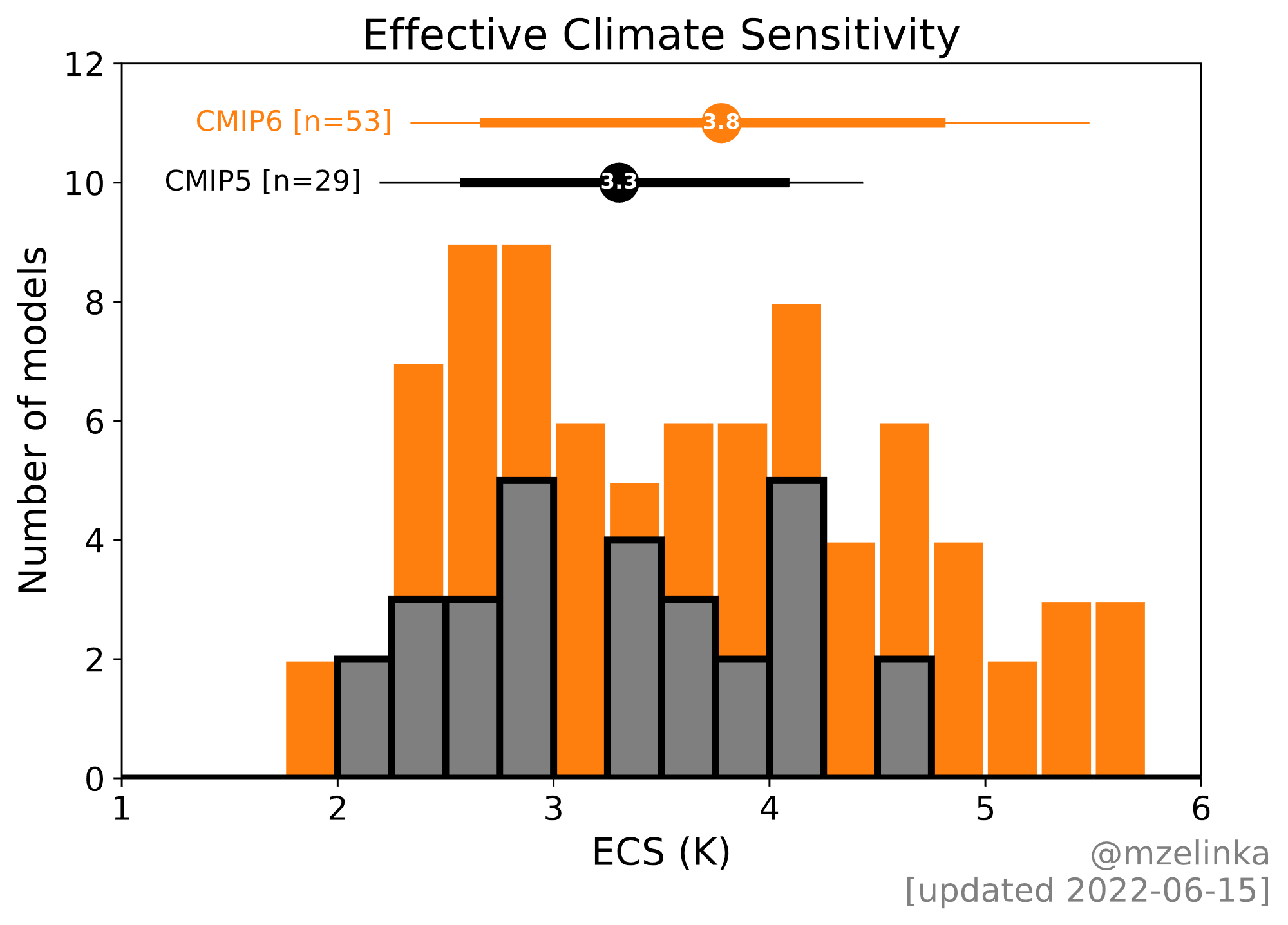

For example, models are not capable of determining the equilibrium climate sensitivity to increasing carbon dioxide concentrations. As shown in this graph from the DOE report, they tend to “run hot,” or overstate surface warming.

Global surface air temperature trends (°C/decade), 1979–2024, from various CMIP6 climate models (red, 30-model average in orange); and the average of three thermometer datasets (HadCRUT5, NOAA Global Temp, and Berkeley 1 deg.) and two reanalysis datasets (ERA5 and NCEP/NCAR R1) in blue. Data source: https://climexp.knmi.nl/start.cgi.
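The chart reports trends in °C/decade. As a minimal sketch of how such a figure can be computed, assuming an ordinary least-squares fit to annual-mean anomalies (the report's exact procedure is not given here), the Python below uses a hypothetical, perfectly linear test series rather than any real dataset; `trend_per_decade` is an illustrative name.

```python
def trend_per_decade(years, anomalies):
    """Ordinary least-squares slope of anomalies vs. years, scaled to °C/decade.
    Assumption: one value per year; no autocorrelation correction is applied."""
    n = len(years)
    if n != len(anomalies) or n < 2:
        raise ValueError("need two or more paired values")
    mean_x = sum(years) / n
    mean_y = sum(anomalies) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, anomalies))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return 10.0 * sxy / sxx  # °C per year -> °C per decade


years = list(range(1979, 2025))
# Hypothetical series warming exactly 0.02 °C per year (not real data).
anomalies = [0.02 * (y - 1979) for y in years]
print(round(trend_per_decade(years, anomalies), 3))
```

Comparing models with observations then amounts to applying the same function to each model's series and to each observational series over the same 1979–2024 window.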

They also overstate warming of the tropical troposphere, and their simulation of stratospheric cooling is inconsistent.

With regard to the tropical troposphere, the excessive warming was noted as a problem in the first United States National Climate Assessment and has been noted in every Intergovernmental Panel on Climate Change report since. This problem has become more extreme over time (i.e., simulations from later models, despite decades of “improvements” costing billions of dollars, deviate farther from observations than earlier models), and its spatial extent now encompasses the entire globe.

But the article asserts that early climate model forecasts about global warming were correct decades before the forecasts could be evaluated. It concludes, “it is this track record of success that gives us confidence in interpreting the changes we’re seeing now, as well as predicting changes to come.” It then lists five forecasts the early models made that underscore how well even those early models could reproduce Earth’s climate.

Now, let’s give credit where credit is due, but let’s not reward models for getting the basics of climate correct. In other words, models should not be praised for figuring out that the Poles are cold and the Equator is warm. They should not be given credit for simulating the Hadley Cell circulation or for demonstrating that oceans have a moderating effect on climate, for example. These basic features of climate are obvious and must have been a product of the simulation; otherwise, the models would have been discarded before they were even published.

So, let’s test the article’s evaluation of the models.

Forecast number 1: early models correctly simulated surface global warming from increased carbon dioxide. Yes, but this was obvious long before the first models were created. Carbon dioxide is a greenhouse gas, and thus the more of it that is added to the atmosphere, the more the surface temperature should rise. Way back in 1896, Svante Arrhenius (1859–1927) showed that an increase in carbon dioxide would increase global temperatures.

The question always has been, and still is, how much should the temperature rise from, say, a doubling of carbon dioxide?

Early models argued that the temperature rise would be between 2.0 and 3.5 degrees Celsius. These numbers are actually closer to reality than estimates provided by later model simulations, but they are still higher than data-driven estimates using historical and paleoclimate data.

Verdict? Early models would have been discarded if they had simulated cooling or no effect. That they simulated warming from increased greenhouse gases is no proof of their reliability.

Forecast number 2: the models predicted stratospheric cooling from adding carbon dioxide to the atmosphere. This should also have been relatively obvious. If outgoing terrestrial radiation is being intercepted by more carbon dioxide near the surface, and some of it is radiated back to the surface, then less will reach the stratosphere and, consequently, the stratosphere should cool. Again, the question that demands an answer is “How much cooling?” Even current climate models cannot simulate this correctly or consistently.

Forecast number 3: Arctic amplification. The Conversation suggests that it was surprising that the early models accurately predicted that rising carbon dioxide concentrations would warm the Arctic more than the Tropics. But that was no surprise at all. Given a warming planet, the Arctic will warm faster than the Equator due to six factors.

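- Colder air warms more than warmer air from the same amount of energy input. By the Stefan-Boltzmann Radiation Law, the extra emission per degree of warming (the derivative of σT⁴, or 4σT³) is smaller at low temperatures, so a colder surface must warm more to radiate away the same additional energy.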

- Moist tropical air has a higher specific heat than dry polar air. (Specific heat is the amount of energy required to raise the temperature of one gram of a substance by one degree Celsius.) This is because water vapor has a higher specific heat than dry air and warm, tropical air has more moisture than cold, polar air.

- The change in albedo—or surface reflectance—is greater in the Arctic because of the melting of highly reflective ice and snow, uncovering darker soils and tundra.

- Sea ice provides a layer of insulation between the unfrozen water beneath and the potentially much colder air above. Warming reduces the sea-ice coverage, which allows energy in the relatively warmer water to warm the air above it.

- The lack of convection at the Poles keeps the warming closer to the surface. Unlike the tropics, where the atmosphere becomes unstable due to surface heating and rising air, the polar regions are not heated sufficiently to create rising air motions, and the creation of the Polar High inhibits vertical motion. Thus, the warmer air stays near the ground.

- Finally, the evaporation of water stores energy as latent heat—that is, energy in the phase transition of water from liquid to gas—which then is transported poleward by global circulation. This latent energy is stored in equatorial regions and released as condensation occurs in higher latitudes, thereby transporting energy polewards.
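The first factor can be made concrete with the linearized Stefan-Boltzmann law, dF = 4σT³ dT. This sketch treats the surface as an ideal blackbody (emissivity 1) and uses 250 K and 300 K as hypothetical stand-ins for polar and tropical temperatures; it illustrates only the radiative point, not the other five factors.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def warming_for_extra_flux(temp_k, extra_flux_w_m2):
    """Temperature rise (K) a blackbody at temp_k needs in order to emit
    extra_flux_w_m2 more, using the linearized law dF = 4*sigma*T^3*dT."""
    return extra_flux_w_m2 / (4.0 * SIGMA * temp_k ** 3)


# Hypothetical representative temperatures, 1 W/m^2 of extra energy input.
polar = warming_for_extra_flux(250.0, 1.0)
tropical = warming_for_extra_flux(300.0, 1.0)
print(round(polar, 3), round(tropical, 3))
```

The ratio of the two responses is simply (300/250)³, about 1.73, so under these idealized assumptions the colder surface warms roughly 73% more for the same input.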

Therefore, a greater warming of the Arctic was obvious to climatologists even before the first climate model was created. That models simulate it is no big feat.

Forecast number 4: land-ocean contrast. The article notes that the coupled atmosphere-ocean model “led to a slew of insights, including the observation that land generally warms more than ocean, by a factor of about 1.5.” While this may sound impressive to the uninitiated, climatologists have long known the moderating effect of the oceans, again due to their high specific heat.

Consider San Diego and Dallas, at about the same latitude of 33 degrees north. The annual temperature range in San Diego is about 8 degrees Celsius, while that in Dallas is about 22 degrees—a factor of almost three. Extending any transect to both coasts indicates that the annual temperature range—and the diurnal temperature range, for that matter—increases with distance from the coast.

To anyone who understands the Earth’s climate, the central Plains have a much greater temperature range than either coast, for example. So, why would this have been a novel finding back in 1979? Any competent climatologist would have known this, so it must have been a basic component of any climate model worth its salt at the time.

Forecast number 5: delayed warming of the Southern Ocean around Antarctica. This issue arises from the supposedly novel fact that the Arctic has a greater warming signal than that of the Southern Ocean.

But why is that surprising? The change in albedo is not as great around Antarctica, since little land is exposed there by melting snow and ice, while much is exposed in the Arctic. Sea-ice dynamics differ considerably in the Southern Hemisphere, and the loss of sea ice contributes less to warming around Antarctica. Moreover, the Southern Ocean is bounded by a hemisphere of ocean, which has a moderating effect on the climate, as mentioned earlier. Thus, important contributions to Arctic warming are not present in the Southern Ocean, and so we would have expected the Southern Ocean to behave differently from the Arctic.

The take-home message? If early climate models missed any of these supposed climate novelties, climate modeling wouldn’t have gotten off the ground. What early climate modeling showed was that the basics of climate could be replicated with simple mathematical approximations. But that’s the basics. The details are that climate is still very complex, and while the general patterns can be simulated accurately, specific details as to how climate varies spatially and how it is likely to change under various scenarios are still a far-off challenge.

The article concludes: “Climate models have their limitations, of course. For instance, they cannot predict regional climate change as well as people would like. But the fact that climate science, like any field, has significant unknowns should not blind us to what we do know.”

I agree, but I would also note that we were not blind to these facts before the advent of climate models. Syukuro Manabe deserves credit for demonstrating that the basics of climate can be reproduced with a rudimentary computer program run on early computers. But the fact remains that current model simulations still deviate significantly from real-world observations. And let’s not lose sight of the fact that early climatologists understood quite a bit about how the Earth’s climate works. Yes, there were climatologists before the climate modelers, and they knew an awful lot about the Earth’s climate.

David R. Legates, Ph.D. (Climatology), is retired Professor of Climatology at the University of Delaware and Director of Research and Education for the Cornwall Alliance for the Stewardship of Creation.

What a heap of bs. A few examples:

It is likely just sloppy writing, but equilibrium climate sensitivity is not a “current condition”, obviously. Furthermore, we still only estimate its value.

What is this “reality”? Are you talking about the ECS? How do you know its value? Geeesus…

This is plainly false, but it gives a fascinating insight into the denier mind.

The amplification is not due to direct effects of radiation, that (or rather the change thereof), for obvious reasons, is getting more and more negligible as we go pole-ward, you genius.

etc, etc.

‘The amplification is not due to direct effects of radiation, that (or rather the change thereof), for obvious reasons, is getting more and more negligible as we go pole-ward, you genius.’

Can you re-transmit this and/or provide the de-coder ring for this garbled message fragment?

Every time I criticize someone’s grammar or punctuation in a comment, I find similar errors in my own critical writing moments after hitting “Post Comment”. Now I’m thinking “I hope I never sounded like that.”

(written in reference to the nyolci comment)

Not garbled. Radiation is getting weaker and weaker as we’re going towards the pole. The change in radiation (the effect of climate change) is not a big factor in the warming of the poles, obviously.

Neither is it at the equator.

And you should state what you mean by ‘radiation’. OLR or what? Also, energy (heat) will escape to space if it cannot be held. The atmosphere is rather thin at the poles. You might call it: the getaway clause. 🙂

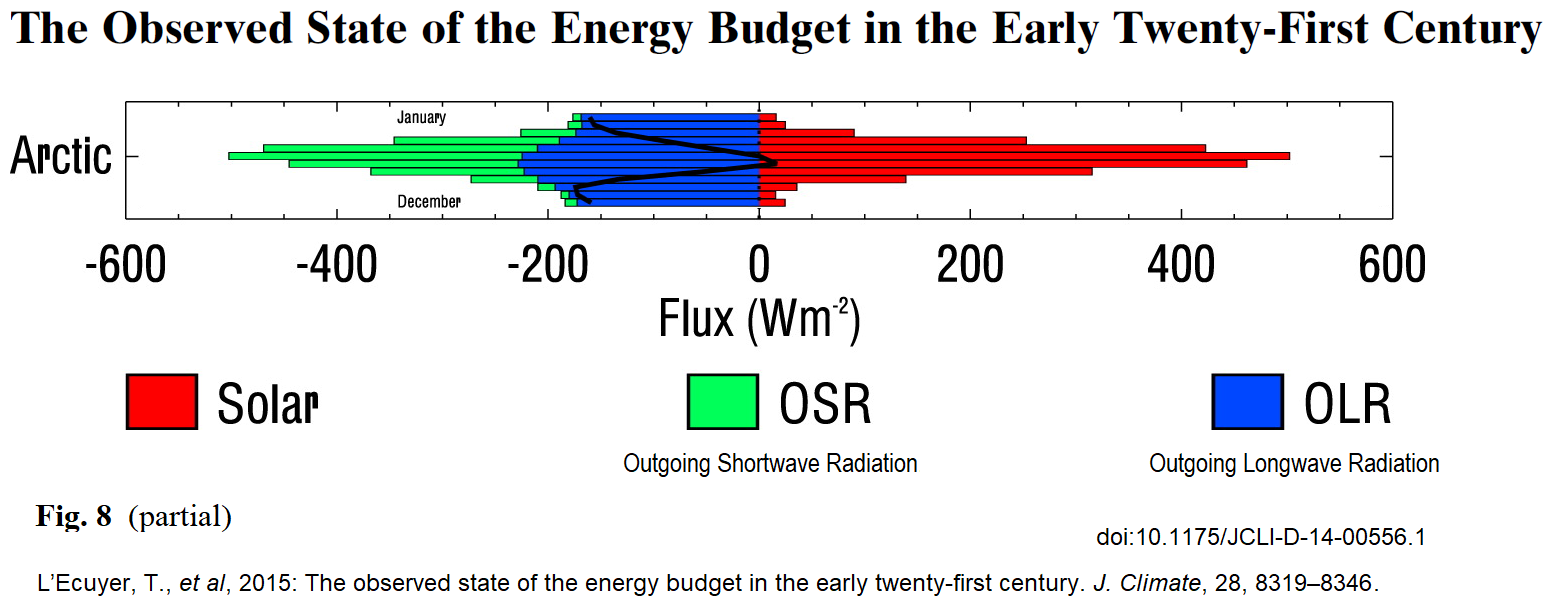

OLR, or rather the fraction of the change that is directly caused by incoming radiation.

An increase in absorbed solar radiation due to decreased cloud is the only radiation that causes any warming.

There was no measurable trend in downward longwave radiation in data from 2006-2016.

No CO2 warming signature anywhere !

The increase in downwelling LW IR due to the ongoing change in CO2 level is small, but detectable:

Feldman et al. (2015) measured downwelling longwave IR “back radiation” from CO2, at ground level, under clear-sky conditions, for a decade. They reported that a 22 ppmv (= +5.946%) increase (from 370 to 392 ppmv) in atmospheric CO2 level (+8.333% forcing) resulted in a 0.2 ±0.06 W/m² increase in downwelling LW IR from CO2, which is +2.40 ±0.72 W/m² per CO2 doubling.

However, ≈22.6% of incoming solar radiation is reflected back into space without either reaching the surface or being absorbed in the atmosphere. So, adjusting for having measured at the surface rather than at the top of the atmosphere (TOA), i.e., multiplying by 1/(1 − 0.226) ≈ 1.29, gives ≈1.29 × (2.40 ±0.72) =

3.10 ±0.93 W/m² per doubling of CO2 at TOA

That’s closer to van Wijngaarden & Happer’s 3 W/m² than to Myhre’s 3.7 W/m² (which the IPCC uses), but the uncertainty interval is wide enough to encompass both estimates. It does preclude the SAR’s higher 4.4 W/m² figure.

That’s van Wijngaarden & Happer’s graph, except that I added blinking purple ovals to highlight the small effect of 2× CO2.
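The arithmetic in the Feldman comment above can be reproduced in a few lines. It assumes, as the comment does, that forcing scales with the logarithm of CO2 concentration and that dividing by (1 - 0.226) converts a surface measurement into a TOA figure; both are the commenter's assumptions, and the code only checks the numbers.

```python
import math


def fraction_of_doubling(c_old_ppmv, c_new_ppmv):
    """Forcing change from c_old to c_new as a fraction of a CO2 doubling,
    assuming forcing is proportional to ln(concentration)."""
    return math.log(c_new_ppmv / c_old_ppmv) / math.log(2.0)


# Inputs quoted in the comment (Feldman et al. 2015 figures).
frac = fraction_of_doubling(370.0, 392.0)   # about 0.0833 of a doubling
per_doubling = 0.2 / frac                   # surface value, W/m^2 per doubling
per_doubling_err = 0.06 / frac

# Commenter's assumed surface-to-TOA scaling: 1 / (1 - 0.226), about 1.29.
toa_factor = 1.0 / (1.0 - 0.226)
toa = per_doubling * toa_factor
toa_err = per_doubling_err * toa_factor

print(f"surface: {per_doubling:.2f} ± {per_doubling_err:.2f} W/m^2")
print(f"TOA:     {toa:.2f} ± {toa_err:.2f} W/m^2")
```

The printed values match the 2.40 ±0.72 and 3.10 ±0.93 W/m² quoted in the comment.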

For other estimates see:

https://sealevel.info/Radiative_Forcing_synopsis.html

This isn’t true. The radiation impinging on the Arctic is the same as at the “equator”; it is not weaker. What changes is the angle at which it hits. Much of the energy is reflected (cosine θ) into space and very little is absorbed.

Correct answer but for the wrong reason.

You always manage to make a fool of yourself.

In what way?

Jim,

nyolci is correct on this one. The cosine(Theta) term that “weakens” the radiation (flux density) towards the poles comes from the dot product of the flux vector (sun to earth) and the surface normal vector that is needed to compute the surface integral.

On the equator at noon these two vectors are parallel, so the cosine is 1.0. At 60 degrees latitude at noon (on the equinox to keep the math simple) the two vectors are 60 degrees apart, so the cosine is 0.5.

This means that the flux impinging on a square meter of surface at 60 degrees at noon is only one half of that impinging on a square meter of surface on the equator at noon.

This is very basic E&M physics as taught in an introductory course. It is a matter of pure geometry.

I emphasize that this difference occurs before any difference in reflection that could be a function of angle of incidence. That is a separate matter, and much more complex than a simple cosine effect. It is a function of material, surface roughness, and even wavelength.

Generally, there is more reflection at low angles of incidence, but this is on top of the reduced flux density reaching the surface in the first place. The resulting reduction with angle can be even sharper than the cosine effect.
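The cosine geometry described above is easy to tabulate. This sketch assumes local noon on an equinox and ignores the atmosphere and any angle-dependent reflection, the same simplifications the comment makes; `noon_equinox_flux_fraction` is a hypothetical helper name.

```python
import math


def noon_equinox_flux_fraction(latitude_deg):
    """Flux density on a horizontal surface at local noon on an equinox,
    as a fraction of the flux on a surface facing the sun: cos(latitude).
    Ignores the atmosphere and any angle-dependent reflection."""
    return math.cos(math.radians(latitude_deg))


for lat in (0, 30, 60, 80):
    print(f"{lat:>2} deg: {noon_equinox_flux_fraction(lat):.3f}")
```

At 60 degrees the factor is 0.5, the halving described in the comment; any reflection losses would then be applied on top of this reduced flux.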

It pains me so much to admit, but I actually agree that this piece is very sloppy.

ECS is a “made-up” term with zero scientific relevance.

It assumes things that have never been observed or measured anywhere on the planet.

eg CO2 based warming.

Even if there is some CO2 warming, I doubt the relationship is as simple as a certain amount of warming for each doubling. I’m not claiming any climate expertise, but I bet that if such a relationship could be put in a formula, the formula would be far more complex. That is why it’s so difficult to determine the ECS: the very definition of it is a presumption.

The E in ECS is equilibrium.

What equilibrium?

If the Earth’s coupled energy systems ever achieved equilibrium, we would have no wind, no rain, no ocean currents.

The earth rotates. The energy inputs vary nanosecond by nanosecond. No equilibrium.

The Earth’s orbit around the sun is an eccentric ellipse. The Earth technically orbits the barycenter of the solar system, as do the sun, planets, etc. The solar input to the Earth varies nanosecond by nanosecond. No equilibrium. In addition, the orbit changes year by year.

The solar EM radiation emitted by the sun is not constant. No equilibrium.

The whole of the ECS is based on a statistic called GAT. The planet does not have an average temperature. Temperatures change nanosecond by nanosecond. No equilibrium.

ECS is one of those fanciful sciency sounding expressions used to be impressive and augment the fallacy of appeal to authority.

We need to stop using the CAGW lexicon. It gives them a credibility boost at the expense of true science.

It’s so good that there are so many geniuses here like you. Our civilization would be over in a few secs if you weren’t hanging around. But, exceptionally, in this very question, you’re wrong. Equilibrium in this context means that the incoming and outgoing radiated energy fluxes are equal (on average, over the whole surface and during long periods, of course). At the moment there is a difference, there is more energy coming in than going out, hence the Earth is warming. (And before the inevitable charge of the bs brigade, this is actually a measured value, not even modelling but, of course, matches model predictions.)
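The sense of “equilibrium” described above (incoming and outgoing energy balancing on average) can be illustrated with the textbook one-box energy balance model, C dT/dt = F − λT. The forcing F, feedback parameter λ and heat capacity C below are hypothetical illustrative values, not estimates from any study or GCM. In this toy model the imbalance is positive while the system warms and decays toward zero as T approaches F/λ.

```python
def simulate_energy_balance(forcing, feedback, heat_capacity, years, dt=0.1):
    """One-box energy balance model C dT/dt = F - lambda*T, integrated with
    forward Euler from T = 0. Units: forcing in W/m^2, feedback in W/m^2/K,
    heat_capacity in W*yr/m^2/K, time in years.
    Returns (temperature anomaly in K, imbalance in W/m^2) at the end."""
    temp = 0.0
    for _ in range(int(round(years / dt))):
        imbalance = forcing - feedback * temp   # net energy flowing in
        temp += dt * imbalance / heat_capacity
    return temp, forcing - feedback * temp


# Hypothetical illustrative parameters, not estimates from any study.
F, LAM, C = 3.7, 1.2, 8.0
early_temp, early_imbalance = simulate_energy_balance(F, LAM, C, years=5)
late_temp, late_imbalance = simulate_energy_balance(F, LAM, C, years=200)
print(f"after 5 yr:   T = {early_temp:.2f} K, imbalance = {early_imbalance:.2f} W/m^2")
print(f"after 200 yr: T = {late_temp:.2f} K, imbalance = {late_imbalance:.2e} W/m^2")
```

In this sketch “equilibrium” refers only to the global energy budget closing on average; weather and seasonal variability are simply not represented in a one-box model.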

“At the moment there is a difference, there is more energy coming in than going out, hence the Earth is warming. “

Yes, cloud changes have led to increased absorbed solar radiation in the tropics.

Nothing to do with human CO2.

Funny thing about averages, if symmetrical, there are some data above the average and there are some data below the average. This is usually shown by a variance/standard deviation. What are these values?

Hmmm, if using an average, then there must also be times when there is less energy coming in than going out.

EEI hasn’t been negative since the end of the Little Ice Age.

Since then, a +3 W/m^2 increase has accumulated due to GHGs.

You might want to have a talk with your kids about continuing your bloodline at the current rate.

Complete and utter rubbish. Are you really stupid enough to believe that adding CO2 to air makes thermometers hotter? Or are you just ignorant and gullible?

Heat cannot be “stored” or “accumulated”. Simply put, heat is energy in motion. Even a simpleton like you might benefit from reading a very old book on the subject by Professor John Tyndall called “Heat – a mode of motion”.

Accept reality – the “greenhouse effect” is just pseudoscientific claptrap.

“increase has accumulated due to GHGs.”

There is absolutely ZERO scientific evidence of that.

Mindless mantra is NOT science.

Old Jim cannot wrap his head around the fact that this is an average, and there are places and times when there is more energy escaping than getting in locally. Globally, of course, the imbalance is always positive, and on average gives us the 3W/m2 value (Jim, I hope you’re listening).

That’s not all Jim can’t wrap his head around.

He argues that just 4 US stations are enough to represent the entire US climate, despite this being a very small sample, yet elsewhere he complains that the uncertainty in the national climate average is too high.

🙂

At least you addressed the question that Eclang could not.

Now the question is why the difference. Is it due to long storage in the oceans/land, changing clouds, CO2, or something else? CO2 is fairly constant so how does it modulate the signal in that fashion? What else could it be?

It’s breathtaking how little you understand these things after years of hanging around in forums like this. No, neither of these. And it has nothing to do with storage at all. At this very moment the imbalance is 3W/m2 with very little error.

I did address your point. You said the average EEI implies there are periods when less energy is coming in than going out. I explained that this interpretation is incorrect: there is variability exhibited by the EEI, but it has been (since the end of the LIA), and continues to be, positive.

Funny how you can’t address the point I raised!

I suspect you are deflecting because of your inadequacy in knowing the answer.

I’ll ask again.

Is this true or not?

It is false, obviously. Locally, there are areas where it is true, but globally it is always positive.

Eclang wrote, “since the end of the Little Ice Age…a +3 W/m^2 increase [i.e., radiative forcing] has accumulated due to GHGs.”

That’s probably a bit too high.

CO2 is generally believed to have accounted for 75% to 80% of anthropogenic warming, and the CO2 level has increased by 49% (from 285 ppmv to 425 ppmv), which corresponds to 58% of the forcing we’d get from a doubling of CO2.

A doubling of CO2 yields TOA radiative forcing (RF) of only about 3 W/m², 58% of which equals 1.74 W/m² RF from CO2 increases to date.

If CO2 accounts for 75% of the RF from all GHGs, then total RF from all increases in GHGs is only about 2.32 W/m².

Regardless of whether the RF from anthropogenic GHG increases is 2.3 or 3 W/m², the warming since the late Little Ice Age is generally estimated to have been only about 1.2 ±0.2 °C, of which at most about 80% could be from CO2.

So an increased radiative forcing equivalent to 58% of a doubling of CO2 has yielded only about 0.96 °C of warming (and that’s assuming ALL the warming was due to anthropogenic GHG emissions, which I doubt.)
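The forcing arithmetic in this comment can be checked in a few lines. It uses the commenter's inputs (285 and 425 ppmv, 3 W/m² per doubling, a 75% CO2 share, 1.2 °C of warming, at most 80% of it from CO2) and the usual assumption that forcing is logarithmic in concentration. Without the intermediate rounding to 58%, the results come out at about 1.73 and 2.31 W/m² rather than 1.74 and 2.32.

```python
import math

co2_then_ppmv, co2_now_ppmv = 285.0, 425.0
increase = co2_now_ppmv / co2_then_ppmv - 1.0                           # about 0.49
frac_doubling = math.log(co2_now_ppmv / co2_then_ppmv) / math.log(2.0)  # about 0.58

rf_per_doubling = 3.0                      # W/m^2, the commenter's TOA figure
rf_co2 = rf_per_doubling * frac_doubling   # about 1.73 W/m^2
co2_share_of_ghg_rf = 0.75                 # commenter's assumed CO2 share
rf_all_ghg = rf_co2 / co2_share_of_ghg_rf  # about 2.31 W/m^2

warming_c = 1.2                            # °C since the late Little Ice Age (central value)
max_co2_fraction = 0.8
warming_from_co2_max = warming_c * max_co2_fraction   # 0.96 °C

print(f"{increase:.0%} rise, {frac_doubling:.2f} of a doubling")
print(f"CO2 RF {rf_co2:.2f} W/m^2, all-GHG RF {rf_all_ghg:.2f} W/m^2")
print(f"at most {warming_from_co2_max:.2f} °C from CO2")
```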

So, what does that tell you about “climate sensitivity” (the expected effect on temperatures of a doubling of CO2)?

Note: The two most common metrics for climate sensitivity are TCR and ECS. ECS is probably about 1.5× TCR. (The IPCC likes ECS because it is bigger.) “Realized warming” (the warming we’ve actually seen) is probably about halfway between them. That means TCR is about 0.8× realized warming, and ECS is about 1.2× realized warming.
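The conversion factors in the note follow from the two rules of thumb it states: ECS ≈ 1.5 × TCR, and realized warming roughly halfway between TCR and ECS. A minimal sketch, treating both as assumptions (`sensitivity_factors` is a hypothetical helper name):

```python
def sensitivity_factors(ecs_over_tcr=1.5):
    """Return (TCR, ECS) as multiples of realized warming, assuming
    ECS = ecs_over_tcr * TCR and realized warming halfway between them."""
    realized_over_tcr = (1.0 + ecs_over_tcr) / 2.0   # 1.25 for the default
    tcr_factor = 1.0 / realized_over_tcr
    ecs_factor = ecs_over_tcr / realized_over_tcr
    return tcr_factor, ecs_factor


tcr_factor, ecs_factor = sensitivity_factors()
print(f"TCR ≈ {tcr_factor:.1f} × realized, ECS ≈ {ecs_factor:.1f} × realized")
```

With the default ratio of 1.5, realized warming is 1.25 × TCR, which gives the 0.8 and 1.2 factors quoted in the note.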

Here’s your homework problem:

(To check your calculation, click here.)

Note the difference between what you calculated and what most climate models assume. 51 of the 53 CMIP6 climate models listed here unrealistically assume ECS >2°C per doubling of CO2:

https://github.com/mzelinka/cmip56_forcing_feedback_ecs/blob/master/CMIP6_ECS_ERF_fbks.txt

https://github.com/mzelinka/cmip56_forcing_feedback_ecs/blob/master/ECS6_histogram_stacked.pdf

That leftmost little orange bar represents the tiny subset of CMIP 5 & 6 climate models which are reasonably consistent with measured reality.

Nice that you are mature enough to post a comment without including an insult.

/sarc

I was flattering you…

Even the incredibly costly, poorly named “Artificial Intelligence” finally admitted I was correct about non-existent “global warming”, which makes both you and it look a little pointless. Here’s the final summary –

If you disagree, then obviously hundreds of billions of dollars could have been saved, by just asking you for an answer. [derisive snigger]

No equilibrium, the Earth has cooled since its surface was molten, adding CO2 to air doesn’t make thermometers hotter, and you are as deluded as the loony “climate scientists” who refuse to accept reality.

The only mind this comment gives insight into is the one that needs to denigrate rather than illuminate.

I think the point of the article is that while the most basic aspects of climate dynamics could be modeled from early on, attempts to use the models to promote alarmist conclusions have fallen flat, since the system remains far more complex than these basic functions.

Yes, adding CO2 would be expected to have some effect. No, it does not follow that a crisis will result, and all scenarios insisting such have failed to be supported by observation. The models remain unsuitable for informing policy.

If understanding were as advanced as the alarmists insist, only one model would be needed.

The article is a confused and convoluted attempt at denigrating science. Even the early models were quite good ‘cos, well, this is just Thermodynamics. These models were rough, but modern modelling is much better in predicting the minute details. The article is full of bs, like it treats ECS (in its garbled way) as a well known entity.

No, the early models were basically junk science…

… and current models are based on that junk science.

Roy Clark: A Nobel Prize for Climate Model Errors | Tom Nelson Pod #271

I don’t see the point of this article. It says that models got stuff right, but that isn’t surprising. Well, maybe, but you can’t do better than getting it right.

As to the “models running hot” plot, it shows a model average of about 0.25 C/decade vs an observed 0.2. Not perfect, but pretty good. That 0.25 is pulled upward by about 4 models out of 30 which do run hot.

30 models for settled science 🧐

For me the problem is less about having 30 models that give weak results than-

After 40+ years, thousands of hours spent by professors and grad students at prestigious schools across the globe and billions of dollars funneled through dozens of NGOs, the models _still_ disagree with each other and come with error ranges of about the same magnitude as the originals. Everything else in science has gotten better or disappeared over the years.

For example, consider the closely related 5-day weather forecast. I don’t trust the 3-5 day predictions, but even the worst ones are better than they were 10 years ago. 40 years ago I don’t think they were possible.

Indeed, and it has been frequently pointed out.

So why not remove the 4 models that run hot from the calculation of the mean? In fact, why not just use the models which closely match the observed warming rate? Including models that produce results that don’t match reality just makes the result less accurate.

“So why not remove the 4 models that run hot from the calculation of the mean?”

Take it up with Legates et al. They calculated that mean and chose what to include.

Your suggestion to eliminate the models that don’t get this one case exactly right is like investing only in a mutual fund that got the best result last quarter. There is noise, and you can be lucky. Also, GCMs calculate all properties of the atmosphere, not just the surface trend.

Maybe try rolling some dice too

Chicken entrails?

Tarot cards… or a seance with Nostra-dumb-ass !

Well, argument from analogy is an ancient fallacy. But your analogy is incorrect. This would be a better one:

You are trying to decide how to invest your life savings on retirement. You proceed as follows.

You pick a couple of dozen investment sheets. The basis for picking them is just that you happen to have come across references to them; it’s essentially arbitrary. [I don’t know what the basis for inclusion in the spaghetti graphs is, but I have never come across any rigorous rationale or statement of which models were excluded, which were included, and why. Is it any more than ‘people we know’?]

Continuing the analogy, you decide you are interested in predicting the widest and most representative market indexes. You propose buying a tracker fund and trading it based on the forecasts. You note when you plot their history that some of the sheets are consistently more accurate than others.

So you take all the predictions, make a spaghetti graph, and discover there is a wide spread. You talk to your friends and you get three different responses.

One is, why don’t you average the forecasts and bet on the accuracy of the average?

You say, because the average is overoptimistic; it forecasts higher prices than have happened. When you put this to your friend, he says that is only because three or four very high ones are skewing the index. Nothing wrong with the method. Well, you reply, there is a lot wrong if what I need is accuracy. After all, I am betting my retirement on this.

Silence.

The second response is, throw out the bad ones and pick the one that is doing best. You investigate, and discover that there are significant differences of method among some of the models. The one that works best makes very different assumptions from the others on what the price drivers are. [This is analogous to the Russian model]. Why not just use that?

Your average advocating friend is silent. What you want, you say, is some evidence that the averaging method has worked and will in future work better than using the “Russian” one.

Silence.

The third response is, do not do this at all. Do not invest life changing sums on the basis of some forecasts, some of which fail for unclear reasons, some of which succeed for equally unclear reasons.

And especially don’t do it if your proposed tracker turns out not to track what you are forecasting at all well! [This would be analogous to the crazed Net Zero power generation policy proposals, which are justified on the basis of climate models, but which seem calculated to bankrupt economies that try them while making no difference to emissions or climate…]

Excellent. Well laid out!

The usual non-response from the ignorant and gullible when they realise they don’t understand what they are talking about.

As to investing, you know precisely nothing useful about that either. Or do you claim you can peer into the future?

“Also GCMs calculate all properties of the atmosphere, not just surface trend.”

And they are manifestly INCONSISTENT and WRONG on all of them. !!

Nick Stokes comments: “Take it up with Legates et al. They calculated that mean and chose what to include.”

In other words, they cherrypicked!

In other words trust them because they say we can trust them.

So, according to you, David Legates (a prominent climate skeptic) cherry picked. Got it.

No, Legates chose to use ALL the models.

The very opposite of cherry-picking.

Well, joe-Dallas thinks Legates is cherry picking. Just pointing that out.

Your comprehension is basically zero… as usual.

I repeat. Choosing to use ALL the data is NOT cherry-picking.

I’m not disputing it, but joe-Dallas is.

Maybe you should let him know his comprehension is basically non existent, but I’m sure you won’t, because, let’s face it, you and many, many others here are just cultists.

Cheers.

“Also GCMs calculate all properties of the atmosphere”

Not exactly true.

Only 4? Last I saw every model ran too hot compared with actual temperatures, the closest one being the Russian model.

And the Russian model includes next-to-nothing for CO2’s “contribution.” Makes you think they’re on to something…

All climate models are garbage. Get that straight for once.

The Russian one isn’t.

If it’s based on the fantasy that adding CO2 to air makes thermometers hotter, yes it is.

We don’t know that.

It could be pure luck it comes somewhere near reality.

It could be pure luck it comes somewhere near reality… for now.

Climate really cannot be assessed on just two sample points of 30 year trends. One is what the model is based on and the other is just what happened on the shortest timescale that any climate change can be defined.

Still runs too hot. Maybe they need to take out ANY AND ALL inputs that assume a “role” for CO2…

Exactly. They use a period of natural climate warming, most likely driven by ocean cycles, and force fit non-physical CO2 driven warming. None of their feedbacks are correct. Water vapor is a negative feedback and Arctic warming is one of the natural forcings, not a feedback.

These models will never be fit for purpose until they accept that GHGs have nothing to do with the warming we have seen.

To abuse the Clinton campaign message….

It’s the sun, stupid!

NONE of the models has any predictive ability whatsoever.

Show me one model that predicts the El Nino events of 1998, 2016 and 2023, which provide the ONLY atmospheric warming in the 45 years of UAH data.

You really should step back from this forum. You’re pretty much just collateral damage for your own side.

Here, unlike others, every voice is heard.

So, you are totally incapable of answering a simple question, and choose to make a fool of yourself.

Would you like to fail again?

“Show me one model that predicts the El Nino events of 1998, 2016 and 2023, which provide the ONLY atmospheric warming in the 45 years of the UAH data.”

The paleo records show the cooling phases and the warming phases last 150-300 years over the last 10-12k years (though you need to account for the heavy 100+year smoothing).

bottom line – The climate models should be reasonably accurate. Basically how hard is it to predict continued warming 100 years into a 300 year trend? Betting against a historical trend would be a bad bet.

Getting the easy stuff right ain’t so impressive.

Hindcasting with parameterization to match the record.

The curve fitting was good.

The predictive properties? Zero.

Interesting video – V D Hanson on climate orthodoxy dying.

Victor Davis Hanson: Is the Era of ‘Climate Change Orthodoxy’ Dying?

Yes, not bad. Hits the main points.

They’re not going to give up easily, but they should strive to be better scientists.

Three observations concerning the very useful chart of CMIP6 results.

An interesting triangulation concerning point 3 (commented on with detailed calculations here and at Judith’s years ago) combines several inputs: Lindzen’s 2011 no-feedback ECS of 1.2C, together with his Bode feedback curve, on which an IPCC ECS of ~3C ==> Bode ~+0.65; the IPCC AR6 estimate of net zero feedback for everything except clouds and water vapor; Dessler’s 2010 estimate that cloud feedback was zero (reworked and confirmed by McIntyre in 2011) rather than Bode +0.15; and INM’s estimate that water vapor feedback was half of what the IPCC said CMIP6 models have (water vapor by itself doubles the no-feedback value, so 2.4C ==> Bode 0.5). Together these yield an ECS of 1.75 (Bode 0.65 - 0.15 - [0.5/2]), exactly in the middle between the EBM and INM CM5 estimates.
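The feedback arithmetic above leans on the Bode gain relation, ECS = ECS₀ / (1 − f), with ECS₀ the no-feedback sensitivity (1.2 °C in the comment) and f the net feedback factor. The sketch below assumes only that bare single-gain form; the function names are illustrative and not taken from any climate library.

```python
"""Bode feedback relation ECS = ECS0 / (1 - f), under the simple single-gain
form assumed here (no state dependence, no nonlinear terms)."""

NO_FEEDBACK_ECS = 1.2  # deg C per CO2 doubling, the no-feedback value cited above


def ecs_from_feedback(f: float, ecs0: float = NO_FEEDBACK_ECS) -> float:
    """Equilibrium sensitivity for net feedback factor f (requires f < 1)."""
    if f >= 1.0:
        raise ValueError("feedback factor must be below 1 for a finite response")
    return ecs0 / (1.0 - f)


def feedback_from_ecs(ecs: float, ecs0: float = NO_FEEDBACK_ECS) -> float:
    """Inverse relation: the net feedback factor implied by a given ECS."""
    return 1.0 - ecs0 / ecs


if __name__ == "__main__":
    # Water vapor alone at f = 0.5 doubles the no-feedback response: 1.2 -> 2.4
    print(f"f = 0.50 -> ECS = {ecs_from_feedback(0.5):.2f} C")
    # Factor implied by an ECS of 3 C in this bare form
    print(f"ECS = 3 C -> f = {feedback_from_ecs(3.0):.2f}")
    # Net factor from the comment's subtraction, 0.65 - 0.15 - 0.5/2
    f_net = 0.65 - 0.15 - 0.5 / 2
    print(f"f = {f_net:.2f} -> ECS = {ecs_from_feedback(f_net):.2f} C")
```

In this bare form an ECS of 3 °C corresponds to f = 0.6, and a net factor of 0.25 gives about 1.6 °C; the ~0.65 and 1.75 figures in the comment come from reading Lindzen's curve and the fuller worksheet referenced there, so they need not match this simplified formula exactly.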

“INM CM5 is the ONLY model in CMIP6 that does NOT produce a spurious tropical troposphere hotspot”

You folks have been saying this for many years, but way behind observations. There is a tropical troposphere hotspot, observed at least seventeen years ago. Here is an excerpt from a more recent comprehensive paper in J Climate. From the abstract:

“Significant warming of the troposphere is evident in the RO measurements available after 2001, with trends of 0.25–0.35 K per decade. Amplified warming in the tropical upper-troposphere compared to surface trends for 2002–18 is found based on RO and radiosonde records, in approximate agreement with moist adiabatic lapse rate theory.”

Good old 2008.. models were even worse and S. McIntyre’s blog was very active..

https://climateaudit.org/2008/06/07/march-2008-radiosonde-data/

“ This particular aspect of the observed temperature-change pattern is very sensitive to data adjustments

(This is from a “CCSP” report and about the “measured” hotspot)

At that time the comments and Lubos articles should provide more good information..

To me it seems yet another example where a flawed climate model is tuned patiently to reflect the belief of the programmer

“To me it seems yet another example where a flawed climate model”

The sequence of events goes:

_1. GCMs predicted a hotspot. People here scoffed.

_2. Experimental scientists looked carefully, and found there was a hotspot. That was observation, not a model result. But yes, it agreed with models. They were right.

_3. Scoffers scoffed on. They never read this stuff.

Unfortunately, GCMs are based on the nonsensical proposition that adding CO2 to air makes thermometers hotter – one noted fantasist (Gavin Schmidt) was involved with a pseudoscientific fairytale, laughingly titled “Atmospheric CO2: Principal Control Knob Governing Earth’s Temperature.”

Scoff, scoff, scoff! Derisive sniggering, even.

Why are you lying so much? Your two sources don’t make the claim credible, as they play games with the data, which doesn’t support your fantasy.

The NOAA which has the data doesn’t change it to their made up modeling “corrections” because it isn’t valid.

Oh yeah, the stupid Sherwood paper that misuses the Kriging method and other made-up wind-based temperature data to create something that does NOT exist.

The NOAA does NOT think his “corrected” radiosonde data is worth adding to their database for a reason that only rational people can understand.

What happened to you Nick?

The Sherwood paper was the first. But as usual, others have replicated it. I linked to one such major recent paper.

Ah, also created non-existent measurements. I see. The same sorts of idiots who call computer program outputs “experiments”.

Fodder for the ignorant and gullible.

The Sherwood paper was shown to be a totally bogus use of statistical mayhem. !!

The one you linked is all over the place… just like the climate models

Notice that Jokes ignored the made-up wind temperature data and ignored the misuse of the Kriging method.

That is why the Radio Sonde “corrections” are bogus.

This is what ChatGPT says – rather more qualified than your statement.

Yes, the **tropical tropospheric hotspot** is a concept that’s frequently discussed in climate science, particularly in the context of global warming and the behavior of the atmosphere in response to increased greenhouse gas concentrations.

### What is the Tropical Tropospheric Hotspot?

The **tropical tropospheric hotspot** refers to a predicted region of the atmosphere, typically in the tropical zone (around 30° N to 30° S latitude), where the temperature increases more rapidly with altitude than in other parts of the atmosphere, particularly in the lower to mid-levels of the troposphere. This should occur as a result of the enhanced greenhouse effect (caused by increased levels of greenhouse gases like CO₂). The hotspot is predicted to appear because:

* **Increased greenhouse gases** trap more heat near the Earth’s surface.

* The atmosphere responds to this by causing more warming at higher altitudes in the tropics, as the energy from the Earth’s surface rises and gets trapped by water vapor and greenhouse gases in the lower to middle troposphere.

### Theoretical Background

In climate models, the tropical tropospheric hotspot is expected to emerge as a distinct feature, with temperatures rising more significantly in the mid-troposphere (roughly between 5–12 km altitude) in tropical regions. This would be a direct consequence of the **water vapor feedback** mechanism, where the additional heat at the surface causes more evaporation and thus more water vapor in the atmosphere. Since water vapor is a potent greenhouse gas, this would amplify the warming at higher altitudes.

### Observational Debate

However, this hotspot has been somewhat elusive in observed data:

1. **Climate models vs. Observations**: Climate models, especially those from the IPCC, predict that the hotspot should be visible in the tropical troposphere. However, satellite data and weather balloon observations (since the 1970s) have found that this hotspot has been less pronounced than expected. In fact, many studies have pointed out that the warming in the tropical upper troposphere has not been as dramatic as predicted.

2. **The “Missing Hotspot”**: Some researchers have referred to this discrepancy as the “missing hotspot,” where the actual atmospheric temperature trends in the tropical region haven’t shown the expected pattern of rapid warming at mid-level altitudes, particularly in the tropics. This has been a topic of debate in the climate science community, with various explanations offered, such as:

* Natural variability or measurement errors.

* The fact that the models might be too sensitive or overestimate the water vapor feedback.

3. **Emerging Consensus**: More recent studies, particularly those looking at long-term satellite data, have suggested that while the hotspot might not be as strong or as high as initially predicted, some warming is still happening in the tropical upper troposphere, but it might be masked by short-term variability, the El Niño/La Niña cycle, or the difficulty in precisely measuring temperature changes at those altitudes.

### In Summary

Yes, there is theoretically a tropical tropospheric hotspot expected to occur due to climate change, but it is not as clearly observed in the data as initially anticipated. Some models predict a pronounced warming in the tropical mid-troposphere, but real-world observations have shown more variability and less of a clear signal. The debate around the “missing hotspot” continues to be an important part of climate science research.

“rather more qualified than your statement”

Which statement? I said there is a hotspot – ChatGPT says it may not be as strong as models predict. But Rud says the hotspot is spurious – doesn’t exist at all.

Where on a map is the hot spot?

You have to visit the Twilight Zone to find it.

ChatGPT said;

Those all indicate that making a prediction carries a large uncertainty. Kinda like the uncertainty of drawing an ace when three are on the table.

Not speaking for Rud, but he indicates no hotspot has been found. That sounds very much like what these uncertainties imply.

If I was to play poker with you, I know you’d bet on the come when drawing.

Which you can’t or won’t define. I say there is no hotspot.

I win.

From your second link

A total hodge-podge of inconsistent nothingness….

Over the shorter period, 2006-2018 there is a warming region over the tropics…

.. as one would expect when the 2016 El Nino was at the end of that period.

There is still absolutely no evidence that human released CO2 has any effect whatsoever.

Grok supports your main point (in addition to chatgpt):

No, observations do not support the existence of a tropical tropospheric hotspot at a level compatible with the higher forecasts of global warming (typically those implying equilibrium climate sensitivity above ~3°C per CO₂ doubling, as in many CMIP5/CMIP6 models used by the IPCC).

### Key Concepts

The “tropical tropospheric hotspot” refers to amplified warming in the upper troposphere (~8–14 km altitude) over the tropics (20°S–20°N), where models predict mid-to-upper tropospheric temperatures warm ~1.5–2 times faster than the surface due to moist convective processes and positive water vapor/lapse-rate feedbacks. This amplification is a core feature of high-sensitivity models and contributes significantly to projected warming.

The hotspot is expected from **any** surface warming (including solar or natural variability), but its **magnitude** scales with the strength of feedbacks in greenhouse-gas-driven scenarios. Higher-forecast models (e.g., many in CMIP6 with ECS >4°C) predict a stronger hotspot than lower-sensitivity ones.

### Observational Evidence

– **Radiosonde (weather balloon) datasets**: After extensive homogenization efforts (e.g., Sherwood & Nishant 2015; newer datasets like Zhou 2021, Madonna 2022), some show modest amplification (~1.2–1.4× surface trends in the tropics). However, others (e.g., RICH, RAOBCORE) show ratios closer to 1.0 or less.

– **Satellite datasets** (MSU/AMSU, 1979–present):

– UAH v6: Little to no amplification (tropical mid-troposphere trend ~0.10–0.13°C/decade vs. surface ~0.14–0.18°C/decade).

– RSS v4: Slightly higher (~0.18–0.20°C/decade in TMT), showing weak amplification.

– NOAA STAR: Intermediate.

– No dataset shows the strong hotspot (~0.3–0.5°C/decade upper-troposphere trends) expected in high-sensitivity models.

– **Reanalyses** (e.g., ERA5, MERRA-2): Generally align closer to satellites than raw radiosondes, showing weak or absent amplification.

– Short-term signals (e.g., during ENSO events) do show lapse-rate feedback behavior, but long-term trends (1979–2025) do not exhibit the expected multi-decadal amplification.
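The comparisons in this list come down to two numbers per dataset: a linear trend in °C per decade for a layer of the atmosphere, and the ratio of that trend to the surface trend over the same years (the "amplification" the text puts at ~1.5–2 for models). A minimal sketch of both, assuming ordinary least squares (published comparisons may use other trend estimators and error models) and using synthetic straight-line series rather than any of the datasets named above:

```python
"""Least-squares trend (per decade) and a tropospheric amplification ratio.
The series in the demo are synthetic placeholders, not real observations."""

from typing import Sequence


def trend_per_decade(years: Sequence[float], values: Sequence[float]) -> float:
    """Ordinary least-squares slope of values against years, times 10."""
    n = len(years)
    if n != len(values) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    return 10.0 * sxy / sxx


def amplification_ratio(troposphere_trend: float, surface_trend: float) -> float:
    """Ratio > 1 means the layer warmed faster than the surface."""
    return troposphere_trend / surface_trend


if __name__ == "__main__":
    years = list(range(1979, 2025))
    # Hypothetical anomalies (deg C) chosen only to exercise the code
    surface = [0.015 * (y - 1979) for y in years]
    troposphere = [0.012 * (y - 1979) for y in years]
    s = trend_per_decade(years, surface)
    t = trend_per_decade(years, troposphere)
    print(f"surface {s:.3f}, troposphere {t:.3f} C/decade, ratio {amplification_ratio(t, s):.2f}")
```

Real series add noise, ENSO spikes and gaps, which is why the choice of period and dataset moves the ratio as much as it does in the figures quoted above.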

### Model vs. Observation Comparisons

– CMIP5 (used in AR5): Average tropical mid-troposphere trends ~2–3× observed (e.g., Christy 2013; 73 models vs. satellites/radiosondes).

– CMIP6 (used in AR6 and beyond): Bias persists or worsens. Ensemble-mean tropical TMT trends ~0.25–0.35°C/decade vs. observed ~0.10–0.20°C/decade (McKitrick & Christy 2020, 2023 updates; Mitchell et al. 2020).

– Even after stratospheric cooling corrections and forcing adjustments, models overestimate tropical tropospheric warming by 50–100%+ (Santer et al. 2017; Po-Chedley et al. 2021).

– Global (not just tropical) lower- and mid-troposphere layers show pervasive warm bias in CMIP6 (McKitrick & Christy 2020).

### Implications for Higher Warming Forecasts

The muted observed amplification implies weaker positive water vapor/lapse-rate feedbacks than in high-sensitivity models. Empirical estimates using bulk tropospheric trends (Christy & McNider 2017; updated through 2025) yield transient climate response ~1.1–1.5°C per CO₂ doubling — far below the IPCC’s “likely” range (2.5–4°C ECS) and incompatible with higher-end forecasts (>4–5°C by 2100 under SSP5-8.5).

While some datasets now show modest tropical amplification (resolving the “missing” hotspot claim from the 2000s–2010s), the observed rate remains substantially lower than required for high-sensitivity projections. The discrepancy has not narrowed meaningfully in recent data (up to 2025) and points to overstated feedbacks in models driving the most alarming forecasts.

>> Now, let’s give credit where credit is due, but let’s not reward models for getting the basics of climate correct.

Well, what is the difference between a trend extracted from the results of CMIP5 or older models and a “trend” scribbled by a four-year-old child?

Take, as an example, the global warming rate: the hindcast, the recent forecast that can be compared to measured data, and the future forecast.

For some of those, the model result, or maybe an ensemble mean, will be close to measured reality, and there are “scientific publications” using this fact to determine the skill of those models.

What is the difference from the same line scribbled by a four-year-old?

Well, we do know that updated versions of these models exist in CMIP6, with basically the same parameterization but higher resolution, and with things like cloud-physics errors corrected, resulting in significantly different trends!

So the difference from childish scribbles is that, in the end, for CMIP5 and all older models we know they were wrong, and the analysis of their skill was certainly incomplete, without any question.

In rational science this fact would lead to a correction or retraction of those papers!

(and the jury is still out for the four-year-olds... so it actually makes more sense to use them to predict the climate; that is how bad the old models are!)

Four-year-olds are cheaper, too. Mine were quite capable of predicting that the Sun would set, and rise the next day. They weren’t any better than professional forecasters at predicting rain, though. Maybe I could have given them more than 30 seconds’ instruction.

30 seconds would not have been enough to teach them to look outside. 🙂

Climate models are fundamentally wrong and ignore physics. They have all incorporated positive water vapor feedback, which does not exist in reality. And they cut some logical corners, to make it possible.

The most evident problem already comes with the tropical hot spot, as below. What, ironically, no one seems to realize is that if the troposphere warms that much more than the surface, then the emission temperature will rise just as much. But a higher emission temperature means a negative feedback.

We could discuss more sources and details, but in the tropics dTz/dTs is roughly 1.8. So if the surface warms by 1K, the emission temperature goes up by 1.8K. If you assume an original Tz = 260K (again, it is higher in the tropics), you can calculate:

(261.8^4-260^4)*5.67e-8 = 7.25W/m2

That is a negative “temperature feedback” of 7.25W/m2. Temperature feedback consists of the Planck Response plus the Lapse Rate Feedback (LRF). It does not really matter how you split it up, but one could argue Planck Response = 3.6 and LRF = 3.65W/m2. LRF alone is then way larger than WVF could ever be, meaning the whole of water vapor feedback, WVF + LRF < 0, is a negative term.

So how do models avoid a negative feedback? While obviously they let the lapse rate do its thing, they just deny the physical consequences and hard code WVF + LRF = 1.3W/m2 into the models, no matter what.
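The 7.25 W/m2 figure is the change in blackbody emission when an emission level starting at 260 K warms by 1.8 times a 1 K surface warming. The sketch below reproduces only that arithmetic, under the comment's own assumptions (a single emission temperature, dTz/dTs = 1.8, σ rounded to 5.67e-8); it does not test the physical argument itself.

```python
SIGMA = 5.67e-8  # Stefan-Boltzmann constant in W m^-2 K^-4, rounded as in the comment


def emission_change(tz0: float, surface_warming: float, lapse_ratio: float = 1.8) -> float:
    """Change in sigma*T^4 when the emission level warms by lapse_ratio * surface_warming."""
    tz1 = tz0 + lapse_ratio * surface_warming
    return SIGMA * (tz1 ** 4 - tz0 ** 4)


if __name__ == "__main__":
    delta = emission_change(tz0=260.0, surface_warming=1.0)
    print(f"{delta:.2f} W/m2")  # about 7.25, the figure in the comment
    # The comment's split: Planck response 3.6, remainder attributed to lapse rate
    print(f"lapse-rate share if Planck = 3.6: {delta - 3.6:.2f} W/m2")
```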

Btw. since AR4 they have constantly reduced LRF from a central -0.84W/m2 to now (AR6) only -0.5W/m2, which is the only reason climate sensitivity went up. This reduction by 0.34W/m2 made all the difference. For example..

3.7*0.3/(1-2*0.3) = 2.775

3.7*0.3/(1-2.34*0.3) = 3.725
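The two lines above read naturally as ECS = F·λ₀ / (1 − λ₀·Σλ), with F = 3.7 W/m2 for a CO2 doubling, λ₀ = 0.3 K per W/m2, and Σλ the summed feedbacks (2 versus 2.34 W/m2 per K after the 0.34 change). That reading of the symbols is an assumption, since the comment gives only the numbers. A small sketch that reproduces both results and shows why a modest change in Σλ moves ECS so much as λ₀·Σλ approaches 1:

```python
FORCING_2X = 3.7  # W/m2 for doubled CO2, as in the comment
LAMBDA_0 = 0.3    # K per W/m2, the no-feedback response assumed in the comment


def sensitivity(feedback_sum: float, forcing: float = FORCING_2X, lam0: float = LAMBDA_0) -> float:
    """ECS = forcing * lam0 / (1 - lam0 * feedback_sum); diverges as the gain nears 1."""
    gain = lam0 * feedback_sum
    if gain >= 1.0:
        raise ValueError("lam0 * feedback_sum must be below 1")
    return forcing * lam0 / (1.0 - gain)


if __name__ == "__main__":
    for fb in (2.0, 2.34):
        print(f"feedback sum {fb:.2f} -> ECS {sensitivity(fb):.3f} K")
```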

Just out btw..

How to Flip the Sign on Feedbacks

Computer models are good tools to see if we actually understand how something works. However, when the model fails to produce what is observed, then it’s a bad model and shouldn’t be used, period.

Ever heard a ”climate scientist” anywhere in the world say a climate model was wrong or bad?

The models are useful in proving that CO2 does nothing directly.

And polar amplification only works in the NH. As if there is no pole at the SH.

Well the North Pole is at sea level whereas the South Pole is at over 9,000′ altitude, makes a difference!

No polar amplification at sea level in the SH either.

Only the pole is at altitude, the edges of Antarctica are not.

It certainly does – a 9,000′ difference. Have you any more examples of the blindingly obvious to contribute?

A good proportion of the land beneath the ice is below sea level.

And Greenland is gaining elevation. So the north polar amplification is making Greenland more like the south polar amplification in Antarctica.

Nonsense. Climate is the statistics of weather observations. As to “general patterns”, a 12-year-old will tell you that winter is generally colder than summer, and animals seem to know that summer follows spring – etc.

The IPCC stated that it is not possible to predict future climate states – and rightly so, in my worthless opinion.

All this “climate science” nonsense is based on the false premise that adding CO2 to air makes thermometers hotter! The author even states –

Should? Might? Precisely no experimental support for this flight of fancy, but the author is a retired “Professor of Climatology”, and can say what he likes without fear of being held accountable.

The author (self-styled PhD (Climatology)) says –

Yes, there were climatologists before the climate modelers, and they knew an awful lot about the Earth’s climate.

An awful lot? About what, precisely?

Just another ignorant and gullible “climate scientist” sensing the winds of change, and trying to save face.

100%

That is not quite true. What he showed was that CO2 had the ability to absorb infrared light and might lead to warming. However, what we want to quantitatively know is what the net warming is after considering the impact of all the interconnected feedback loops, notably cloudiness.

‘Greenhouse by CO2’ theory was proven wrong by Knut Angstrom in 1900 in a paper debunking Svante Arrhenius’ 1896 paper, the one all these alarmists quote. In 1906, Arrhenius reviewed his earlier work, acknowledged his errors and concluded that any additional SLIGHT warming that MIGHT come from CO2 would be ‘benign and beneficial’.

“we may hope to enjoy ages with more equable and better climates, especially as regards the colder regions of the earth, ages when the earth will bring forth much more abundant crops than at present, for the benefit of rapidly propagating mankind.”

None of the alarmists quote this later work. How strange.

Yes, Arrhenius thought warming would be beneficial. That is a Swedish viewpoint. But he did not think the warming would be slight. From that 1906 paper (translated):

“For this disclosure, one could calculate that the corresponding secondary temperature change, on a 50% fluctuation of CO2 in the air, is approximately 1.8 degrees C, such that the total temperature change induced by a decrease in CO2 in the air by 50% is 3.9 degrees (rounded to 4 degrees C)”

This is the ECS that is usually quoted now. It is at the upper end of modern estimates.

The warming is beneficial.

100%

Indeed one could. One could “calculate” anything at all, if you were ignorant and gullible enough to believe that adding CO2 to air could make thermometers hotter.

Arrhenius also calculated that high-frequency electrical currents would make children smarter and bigger. I suppose you are ignorant and gullible enough to believe that too.

I note he said “fluctuation” and “decrease”. Not “increase”. Why did he look at it that way?

I don’t know. But here he sets out the equivalence in no uncertain terms in his 1908 book “Worlds in the making”

“If the quantity of carbonic acid [ CO2 + H2O → H2CO3 (carbonic acid) ] in the air should sink to one-half its present percentage, the temperature would fall by about 4°; a diminution to one-quarter would reduce the temperature by 8°. On the other hand, any doubling of the percentage of carbon dioxide in the air would raise the temperature of the earth’s surface by 4°; and if the carbon dioxide were increased fourfold, the temperature would rise by 8°.” (p. 53)
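The pattern in that passage, a fixed temperature step for every halving or doubling, is a logarithmic dependence on concentration: ΔT = S · log₂(C/C₀). A sketch with S = 4 °C taken from the quote (the function name is illustrative):

```python
import math

DEGREES_PER_DOUBLING = 4.0  # deg C, the figure in the 1908 quote


def arrhenius_change(concentration_ratio: float, per_doubling: float = DEGREES_PER_DOUBLING) -> float:
    """Temperature change if each doubling (or halving) adds (or removes) a fixed step."""
    if concentration_ratio <= 0:
        raise ValueError("concentration ratio must be positive")
    return per_doubling * math.log2(concentration_ratio)


if __name__ == "__main__":
    for ratio in (0.25, 0.5, 2.0, 4.0):
        print(f"x{ratio:g}: {arrhenius_change(ratio):+.0f} C")
```

This reproduces the four figures in the quote (−8, −4, +4, +8 °C) and makes explicit that a halving and a doubling are treated symmetrically.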

Complete nonsense, of course. Adding CO2 to air doesn’t make thermometers hotter. You are too ignorant and gullible to be able to distinguish fact from fantasy.

I’m guessing that you disagree with Arrhenius’ speculations about his support for eugenics, and weeding out those people with “disadvantageous” traits. Are you worried you might be one of them, according to Arrhenius?

And he was manifestly WRONG, because he had no idea of the action of CO2 in the atmosphere.

It was all just simplistic model-based conjecture using an atmosphere that does not exist anywhere on the planet.

Heck, the guy used formulas that were dimensionally inconsistent and had absolutely zero scientific meaning at all.

There is no scientifically measured evidence that CO2 has any warming effect on the atmosphere whatsoever.

And that is where the error lies. CO2 bonds with H2O on contact, which is why EVERY drop of rain that has ever fallen is a mild solution of carbonic acid. However, the molecule DOES NOT exist as a gas. He seems to use the two terms, carbon dioxide and carbonic acid, interchangeably which of course they are not.

Yes, he does, as was normal for the time.

If you correct all of Arrhenius’s errors by updating what was normal for the time, then you will be left with only what he got right plus what is normal for the present. He got enough right that engineers know him from other contexts (accelerated life testing, e.g.), but he got enough wrong that one should not stand on the work he did back then (he was born in Sweden before the US Civil War).

It was the convention back then, as it still is in the present, to use conversational rather than scientific language. Back then, carbonic acid gas (CO2) was referred to as carbonic acid.

There is no measured scientific evidence that ECS (a fake, imaginary metric to start with), is anything but zero.

Noticed the 1906 Arrhenius paper where he amended his view of how increased carbon dioxide would affect climate. Might be because he published in German. It was never translated until 2014.

The Probable Cause of Climate Fluctuations – Svante Arrhenius

A Translation of his 1906 Amended View of Global Warming

A more detailed account of the low emission of CO2 is taken into account, the details of which are in my 1901 research.* Of the 22.5% of the Earth’s radiation which is absorbed by the atmospheric CO2 in the present state, 3.8% is re-emitted from the CO2 into the atmosphere, so that the real reduction of the Earth’s radiation would be 18.7%. Rather than the current temperature of 15 degrees C = 288 degrees absolute, we have, with the disappearance of CO2, an absolute temperature T, where:

$T^4 : 288^4 = (1 - 0.187) : 1$

whereby T = 273.4 abs. = 0.4 degrees C

The current quantity of CO2 would then raise the temperature of the Earth’s surface to 14.6 degrees C; its disappearance from the atmosphere would consequently cause a three times greater temperature reduction, which is characteristic of the Ice Age.

In a similar way, I calculate that a reduction in the amount of CO2 by half, or a gain to twice the amount, would cause a temperature change of – 1.5 degrees C, or + 1.6 degrees C, respectively.

https://www.friendsofscience.org/assets/documents/Arrhenius%201906,%20final.pdf
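The 1906 calculation scales the absolute temperature by the fourth root of the fraction of radiation that still escapes, T = 288 × (1 − 0.187)^(1/4). A short check in Python using only the numbers quoted in the translation:

```python
SURFACE_T = 288.0  # K, the 15 C starting point in the translation
ABSORBED = 0.225   # fraction of Earth's radiation absorbed by CO2
REEMITTED = 0.038  # fraction re-emitted by the CO2, per the translation


def temperature_without_co2(t_now: float = SURFACE_T) -> float:
    """Solve T^4 : t_now^4 = (1 - net) : 1 for T, with net = absorbed - re-emitted."""
    net_reduction = ABSORBED - REEMITTED  # 0.187
    return t_now * (1.0 - net_reduction) ** 0.25


if __name__ == "__main__":
    print(f"T = {temperature_without_co2():.2f} K")
```

This gives about 273.5 K, in line with the 273.4 K in the translation once rounding is allowed for.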

Another quote that shows he was focused on “the disappearance of CO2”. What was the context? Was he worried about “too many plants”?

A slight correction. Tyndall first proved that CO2 absorbed IR back in 1859. Arrhenius realized in 1896 that rising CO2 would increase GAST, although he got his estimated amount wrong by if I recall correctly a factor of 2.

I stand corrected. Thank you.

Rud, Arrhenius speculated. He also speculated that subjecting children to HF electrical fields would make them smarter. Adding CO2 to air will not make thermometers hotter, no matter how hard you pray.

No, Arrhenius made a CONJECTURE, based on very simplistic non-Earth atmospheric models.

He had no real clue what CO2 does in the atmosphere.

On close examination of the Tyndall experiment, he did not measure CO2 absorption.

Two considerations.

In that time frame, IR and thermal energies had only recently been identified as different (Eunice Foote) and the convention was “sensible heat” and “heat radiation.”

Both Foote and Tyndall did experiments that studied the Specific Heat Capacity in a fixed volume (CV) even though they did not know what that was at the time.

Yes, that was such an obvious frailty you wonder why it was stated.

If you are using Arrhenius as backup for your CO2 argument, you have declared yourself a loser already. At least in my book. Most climate alarmists use it.

He did his experiment in a wooden box with a glass lid.

That is not the open system like the atmosphere.

It is a closed system like a greenhouse.

And observations says it is essentially nonexistent.

I remember reading the paper when it came out, whose title was something along the lines of “What did Exxon know and when did it know it?” The primary thing that the models shared was positive trend slopes. However, they ranged from absurdly high rates to slightly high rates. None of them resembled the others except for the previously mentioned positive slope. They appeared to be subjective, hand-drawn graphs without the benefit of defensible, formal computer models and explicitly stated assumptions. Considering that Hansen’s 1988 model was a significant improvement over the models of the 1970s, but still had issues [ https://wattsupwiththat.com/2018/06/30/analysis-of-james-hansens-1988-prediction-of-global-temperatures-for-the-last-30-years/ ] I think that the praise for the earlier models is not deserved.

This might be a good time to review an article from WUWT’s archives, especially the graphic that shows a number of forecasts on the top right of the beginning. Note particularly the dotted lines originating in the 1970s. https://wattsupwiththat.com/2023/01/12/new-harvard-study-proves-exxon-scientists-far-superior-at-predicting-climate-than-the-ipcc-or-james-hansen/

We can produce useful constructs (models) of various scenarios where we know ALL the factors that can affect the outcomes we are trying to get a handle on

Doing forward sales results projections for example.

So many factors, capable of large and small influences on results, can tip the scales toward ‘success’ or ‘bust’.

This is where knowing intimately your products’ characteristics, performance and benefits, and your competitors’, plus the market and the potential buyers’ preferences are essential and critical.

The sales managers who have to present and be held accountable for their degrees of accuracy against reality, actually have skin in these forecasts.

Like keeping their jobs, or earning performance payments.

And yet, lots (dare I say most) sales projections models come out way wide of the mark.

Market size & approachability are over-estimated, affordability & availability of buyers’ spending limits are over-estimated, and so on, and so on.

There are influencing factors in sales modeling that just can’t be confidently, accurately applied.

So what chance is there when modeling coupled, non-linear, chaotic situations where the probity and provenance of the “data” are atrocious?

Buckley’s.

https://en.wiktionary.org/wiki/Buckley%27s_chance

‘Forecast number 1: early models correctly simulated surface global warming from increased carbon dioxide. Yes, but this was obvious long before the first models were created. Carbon dioxide is a greenhouse gas, and thus the more of it added to the atmosphere, the higher surface temperature should rise.’

Lost me on this meaningless piece of circular reasoning, i.e., assuming radiant transfer models are applicable to heat transport in the troposphere a priori means that adding IR-active GHGs to the atmosphere will result in warming.

This seems pointless. The author is just going to claim that everything that the models get right is “obvious” and therefore shouldn’t count. They are just changing the goal posts so that the modellers can never win. Ideally the author should come up with a set of climate features that he considers non-obvious and then look and see if climate models get them right.

In an honest world I agree. As with the ever-studied AMOC, studies exist that predict every outcome. I think that the day after an authority says “the models will be good when they predict flying monkeys” a dozen model makers will run back to their computer code to spin knobs until they produce a flying monkey.

Says AI:

“The request to “make a flying monkey” using C code is open to interpretation, as C is a general-purpose programming language and not inherently designed for visual or graphical representations without external libraries. However, it can be interpreted in a few ways:”

AI is willing to solve the problem, but if it were a person I think it would have flashed a confused look.

There can only be ONE possibly correct model, yet you and other CO2 sniffers think 100 PlayStation climate models with a spread of several degrees over the same time frame are good enough to call accurate. This is normally called crank science or pseudoscience, where anything is made up to support and defend a mirage.

The average temperature of the earth is 288K. So a spread of a couple of degrees corresponds to an error in predicting the temperature of about 1%.

Now a 1% error isn’t perfect but neither is it particularly bad.

Some things have data that are appropriate and useful for an averaging construct to be produced..

Selected temperature spot readings from different devices across and above the Earth are not one of those things.

Who on this planet lives, works and survives anywhere in an “average temperature” environment?

(yes, I guess that for a moment, some locations must reflect the “average” construct values, but only in passing, and totally a fluke).

Otherwise, what use is an “average global temperature” construct based on dodgy “data” to any rational person?

And so a couple of degrees is stable. Thank you

Izzy must have tasty feet. 😉

The 1% error is made up by adjusting urban data.

Yes, surface data fabrications ARE particularly bad. !!

An average or mean is a statistical descriptor of a set of measurements. It is meaningless without including the variance/standard deviation descriptors also.

Why do you never include the complete set of scientific information when you quote a mean?

What is the calculated energy imbalance?

Last time I looked it was 0.6%.

Also, take into account T^4. A 1% error becomes a 4% error.

I think it would be better to note that a 2% error becomes roughly an 8% error in calculating resultants like the outgoing radiation.
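Because emitted flux goes as T⁴, a relative error e in temperature becomes a relative error of (1 + e)⁴ − 1 ≈ 4e in the flux. A minimal sketch, including the 2 K spread around a 288 K mean raised earlier in the thread:

```python
def flux_relative_error(temp_relative_error: float) -> float:
    """Relative error in sigma*T^4 caused by a relative error in T: (1 + e)^4 - 1."""
    return (1.0 + temp_relative_error) ** 4 - 1.0


if __name__ == "__main__":
    for e in (0.01, 0.02):
        print(f"{e:.0%} in T -> {flux_relative_error(e):.2%} in T^4")
    # A 2 K spread around a 288 K mean
    e = 2.0 / 288.0
    print(f"2 K at 288 K: {e:.2%} in T -> {flux_relative_error(e):.2%} in T^4")
```

The multiplier stays close to four for small errors (about 4.06% for 1% and 8.24% for 2%), growing only slightly as the temperature error increases.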

Sparta Nova 4 wrote, “What is the calculated energy imbalance? Last time I looked it was 0.6%.”

The units are not percent, they’re W/m². (Note: TOA incoming solar, averaged over the entire surface of the Earth, including the night side, and outgoing LW IR, are both about 340 W/m².)
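A radiative imbalance becomes a percentage only after dividing by a reference flux, and the answer depends on which flux is chosen. A sketch using the ~340 W/m² figure above as the reference (that choice is an assumption; another reference flux would give another percentage):

```python
REFERENCE_FLUX = 340.0  # W/m2, approximate global-mean TOA incoming solar, as stated above


def imbalance_as_percent(imbalance_wm2: float, reference_wm2: float = REFERENCE_FLUX) -> float:
    """Express a radiative imbalance as a percentage of a chosen reference flux."""
    return 100.0 * imbalance_wm2 / reference_wm2


if __name__ == "__main__":
    for imbalance in (0.3, 0.6, 1.0):
        print(f"{imbalance} W/m2 is {imbalance_as_percent(imbalance):.2f}% of 340 W/m2")
```

On this basis an imbalance of 0.6 W/m² is under 0.2% of the incoming flux, not 0.6%.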

I made an online spreadsheet / calculator, where you can fiddle with estimates of various climate parameters, and from them derive estimates of the Earth’s radiative energy imbalance. Here it is:

https://sealevel.info/radiative_imbalance_calc.htm

My best estimate for the radiative energy imbalance is about 0.3 W/m².

The NCA4 best estimate is twice that, but with a confidence interval spanning from 0.2 to 1.0 W/m². This is their diagram, except that I added the pink annotation regarding the radiative imbalance:

The IPCC shows a much narrower confidence interval, but it turns out they’re using just 1σ (68%) rather than 2σ (95%), in keeping with their usual habit of conveying exaggerated certainty about almost everything.

I think a better metric would be the typical 3- or 4-sigma range as the denominator in the ratio of error to comparison base.
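For reference, the fraction of a normal distribution covered by ±kσ, and a sketch of how the same uncertainty looks narrower when quoted at 1σ instead of 2σ. This assumes the uncertainties are normally distributed and treats the NCA4 0.2 to 1.0 W/m² range as a symmetric 95% interval around 0.6 W/m²; neither assumption comes from the reports themselves.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1


def coverage(k: float) -> float:
    """Probability that a normal variable falls within +/- k sigma of its mean."""
    return 2 * STD_NORMAL.cdf(k) - 1


def rescale_interval(center: float, lo: float, hi: float,
                     from_conf: float, to_conf: float) -> tuple:
    """Re-express a symmetric interval at a different confidence level,
    assuming the underlying uncertainty is normally distributed."""
    z_from = STD_NORMAL.inv_cdf(0.5 + from_conf / 2)
    z_to = STD_NORMAL.inv_cdf(0.5 + to_conf / 2)
    sigma = (hi - lo) / (2 * z_from)  # implied standard deviation
    return center - z_to * sigma, center + z_to * sigma


for k in (1, 2, 3, 4):
    print(f"+/-{k} sigma covers {100 * coverage(k):.4f}% of outcomes")

# Treating a 0.2-1.0 W/m^2 range centred on 0.6 as a 95% interval (assumption),
# this is the same uncertainty quoted at 1 sigma (~68.27%):
lo68, hi68 = rescale_interval(0.6, 0.2, 1.0, from_conf=0.95, to_conf=0.6827)
print(f"1-sigma equivalent: {lo68:.2f} to {hi68:.2f} W/m^2")
```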

Yep. If 130 models give different results, at least 129 are wrong. Averaging 129 (at minimum) wrong answers is not guaranteed to produce one correct answer.
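Averaging does cancel errors that are independent from model to model, but it cannot remove an error the models share. A toy sketch in which every model equals a hypothetical true value plus a shared bias plus its own error; all numbers are made up for illustration.

```python
from statistics import mean

TRUTH = 0.20  # hypothetical "true" value, arbitrary units


def ensemble_mean(truth: float, shared_bias: float, own_errors: list) -> float:
    """Average of models whose output is truth + shared_bias + own_error."""
    return mean(truth + shared_bias + e for e in own_errors)


# Hypothetical per-model errors that happen to average to zero.
own = [-0.05, -0.02, 0.0, 0.02, 0.05]

# Without a shared bias, the ensemble mean lands (to rounding) on the truth...
print(ensemble_mean(TRUTH, shared_bias=0.0, own_errors=own))
# ...but a bias common to every model passes straight through the average.
print(ensemble_mean(TRUTH, shared_bias=0.10, own_errors=own))
```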

Unless you practice the pseudoscience – climatology.

Astrological or numerological predictions of future climate states cannot be shown to be worse than climatological predictions. Sad but true.

A million monkeys, a million typewriters, a million years… one Shakespeare play.

More like 60 million years to produce one Shakespeare. The monkeys might be a faster approach.

One thing I recall from back then was that they were using 20-square-mile grid blocks, and in the model the weather within a block was assumed to be uniform. That model didn’t include cloud effects.

Weather, ok.

For climate, grid cells were 100 km on a side, then 50 km, and currently 25 km, with the enclosed area treated as homogeneous.
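For scale, a rough count of how many grid columns it takes to tile the globe at those resolutions, assuming the figures are grid spacings in km (cells roughly that size on a side) and pretending the cells are equal-area squares, which real model grids are not:

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def earth_surface_area_km2(radius_km: float = EARTH_RADIUS_KM) -> float:
    """Surface area of a sphere, 4*pi*R^2."""
    return 4.0 * math.pi * radius_km ** 2


def approx_grid_columns(spacing_km: float) -> int:
    """Rough column count if the globe were tiled with square cells
    spacing_km on a side (an idealization; real grids differ)."""
    return round(earth_surface_area_km2() / spacing_km ** 2)


for dx in (100, 50, 25):
    print(f"{dx:>3} km cells: ~{approx_grid_columns(dx):,} columns, "
          f"each {dx * dx:,} km^2 treated as homogeneous")
```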

Models parameterise clouds, so they will never capture the strong negative feedback over the ocean due to convective overshooting.

When the surface temperature of ocean water exceeds 30°C, convective instability produces an imbalance whereby the updraft carries moisture above the altitude it reaches in a saturated column over 30°C ocean. You can see this in the OLR dropping below 180 W/m², which is the minimum that occurs during cyclic instability over 30°C ocean. The OLR over the Bay of Bengal can fall as low as 130 W/m² when cyclic instability is initiated, and the sea surface could be nudging 32°C.
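One way to read those OLR figures is to convert them into the temperature a blackbody would need in order to emit that much: lower OLR means colder, higher emitting surfaces such as deep convective cloud tops. A sketch assuming emissivity 1; real clouds and air are not perfect blackbodies, so treat the numbers as rough equivalents.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def emitting_temperature(olr_w_m2: float, emissivity: float = 1.0) -> float:
    """Temperature (K) at which a surface of the given emissivity emits olr_w_m2."""
    return (olr_w_m2 / (emissivity * SIGMA)) ** 0.25


# 240 W/m^2 is roughly the global-mean OLR; 180 and 130 are the comment's thresholds.
for olr in (240.0, 180.0, 130.0):
    t = emitting_temperature(olr)
    print(f"OLR {olr:.0f} W/m^2 -> {t:.1f} K ({t - 273.15:.1f} C)")
```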

The supersaturated air at high altitude and low pressure forms micron-size particles that are bright and persistent and reflect a considerable portion of the incoming solar radiation for days before they gradually descend.

Convective overshoot is common with cyclones and also occurs with tornadoes.

If modellers focused on ice formation rather than the CO2 nonsense, they might find a clue, but the modellers remain clueless, chasing down Manabe’s radiation rabbit hole.

“supersaturated”! Lo, a term from my college days in thermodynamics, learning about coal-fired boilers.

To create a programme/model, four criteria must be satisfied.

1. All the variables must be used.

2. How those variables function must be completely understood.

3. How those variables interact with each other must be understood.

4. If there are any ambiguities or conflicts, the programme cannot work.

The models don’t satisfy the first criterion, let alone the others.

They can never be better than a crude approximation.

The fundamental law of modelling, simulation and emulation is that you assess the assumptions. The math is easy to check.

Stated assumptions need to be validated. In FEA, material defect densities have been validated through testing. Rules for minimum defect densities in the models are accepted, and that reduces time and cost.

Unstated, unintentional assumptions are more difficult. Though unintentional, they bias the model performance. They are sometimes quite difficult to find, but finding them is necessary if the model outputs are to be accepted. Running Monte Carlo sets will often bring unintentional assumptions to light.
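A minimal sketch of such a Monte Carlo run: sample uncertain inputs, rerun the model, and compare the output spread when each input is varied alone. An input that moves the result far more than expected points at an assumption worth examining. The zero-dimensional energy-balance "model", the solar constant, and every central value and spread below are hypothetical stand-ins, not taken from any climate model.

```python
import random
from statistics import mean, stdev

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
S0 = 1361.0             # assumed solar constant, W/m^2


def toy_surface_temp(albedo: float, emissivity: float) -> float:
    """Hypothetical zero-dimensional energy balance:
    (1 - albedo) * S0 / 4 = emissivity * SIGMA * T^4, solved for T."""
    return ((1 - albedo) * S0 / (4 * emissivity * SIGMA)) ** 0.25


def monte_carlo(n: int = 10_000, seed: int = 42,
                vary_albedo: bool = True, vary_emissivity: bool = True) -> list:
    """Sample the inputs from assumed normal distributions and collect outputs."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    temps = []
    for _ in range(n):
        albedo = rng.gauss(0.30, 0.01) if vary_albedo else 0.30
        emissivity = rng.gauss(0.61, 0.01) if vary_emissivity else 0.61
        temps.append(toy_surface_temp(albedo, emissivity))
    return temps


# Vary one input at a time, then both, to see which assumption drives the spread.
for label, flags in [("albedo only", {"vary_emissivity": False}),
                     ("emissivity only", {"vary_albedo": False}),
                     ("both", {})]:
    temps = monte_carlo(**flags)
    print(f"{label:>15}: mean {mean(temps):.1f} K, std dev {stdev(temps):.2f} K")
```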

Unstated, intentional assumptions are also difficult to detect. These are embedded to skew the results toward a desired outcome. Models with intentional unstated assumptions are usually hidden behind a veil of secrecy. Don’t look behind the curtain. In massive programs with millions of lines of code, this is easy to accomplish. Altering a weighting factor in a single equation is enough.

Reviewing models for stated and unstated assumptions is rather more difficult than conducting a peer review. In engineering, it is incumbent on the software engineers to perform the analysis and then still go through software qualification and software validation and verification.

Which climate model has gone through that rigor?

Thought so.

Something else is missing. Software engineering is tasked to develop, review, and publish requirements documents. May I see the requirements documents for a climate model, please?

I think you’ll have a long wait 😊

It will not happen in my lifetime.

Just because a model (more or less) tracks observational data doesn’t prove the underlying assumptions it is built on or the assertions it implies.

It might just be the lucky one out of a multipack.

Just like the assertion that CO2 causes X: it is STILL a hypothesis.

If you force it into a theory, it becomes part of the problem, because you basically run with the ‘settled science’ idea. Or should I say: settled funds? Or am I perhaps too cynical?

It’s just like testing an amplifier: with the expected nice inputs, it behaves nicely. But input a step function and it turns to s**t.
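The amplifier analogy can be made concrete with a textbook second-order system: a lightly damped one can look well behaved on gentle inputs, yet it rings and overshoots badly on a step. A sketch with hypothetical damping ratios, integrated with semi-implicit Euler; it illustrates the analogy only, not any climate model.

```python
def step_response(zeta: float, omega: float = 1.0,
                  dt: float = 0.001, t_end: float = 30.0) -> list:
    """Response y(t) of y'' + 2*zeta*omega*y' + omega**2 * y = omega**2 * u
    to a unit step u = 1 applied at t = 0, starting from rest.
    Integrated with semi-implicit (symplectic) Euler steps of size dt."""
    y, v = 0.0, 0.0
    ys = []
    for _ in range(int(t_end / dt)):
        accel = omega ** 2 * (1.0 - y) - 2.0 * zeta * omega * v
        v += accel * dt
        y += v * dt
        ys.append(y)
    return ys


def peak_overshoot(ys: list, target: float = 1.0) -> float:
    """Largest excursion above the final target value, as a fraction of it."""
    return max(max(ys) - target, 0.0) / target


for zeta in (1.5, 0.1):  # heavily damped vs lightly damped (hypothetical values)
    pct = 100 * peak_overshoot(step_response(zeta))
    print(f"damping ratio {zeta}: step overshoot {pct:.1f}%")
```

For the lightly damped case the result should sit close to the textbook overshoot exp(−πζ/√(1 − ζ²)), about 73% for ζ = 0.1.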

John Tyndall showed by meticulous experiment that placing CO2 between a heat source and a temperature-sensing device resulted in less heat reaching the device, which cooled as a result.

The ignorant and gullible “climate scientists” simply reject reality. They are free to believe anything they want, but I’m not all that happy about them wanting to pick my pocket to fund their bizarre obsessions.

Models are just fingers on the Ouija glass. A reflection of the originator’s thoughts.

I’m pretty sure that if you took any of the models’ output and computed Willis’ greenhouse efficiency, you would see an increasing trend from 2000 to 2025.

Hence all models are directly falsified.
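The comment doesn't define "greenhouse efficiency". A sketch assuming it means the normalized greenhouse effect, the fraction of surface blackbody emission that does not escape to space, g = (σTs⁴ − OLR)/σTs⁴, together with an ordinary least-squares slope to test a series of g values for a trend. The definition is an assumption and may not match the one Willis uses; no model output is included here.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def normalized_greenhouse_effect(surface_temp_k: float, olr_w_m2: float) -> float:
    """g = (sigma*Ts^4 - OLR) / (sigma*Ts^4): share of surface emission
    that does not reach space (surface emissivity assumed to be 1)."""
    surface_emission = SIGMA * surface_temp_k ** 4
    return (surface_emission - olr_w_m2) / surface_emission


def linear_trend(years: list, values: list) -> float:
    """Ordinary least-squares slope of values against years (units per year)."""
    n = len(years)
    xbar = sum(years) / n
    ybar = sum(values) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(years, values))
    sxx = sum((x - xbar) ** 2 for x in years)
    return sxy / sxx


# Textbook global means, for orientation only: Ts ~ 288 K, OLR ~ 240 W/m^2.
print(f"g = {normalized_greenhouse_effect(288.0, 240.0):.3f}")
```

To test the claim, one would compute g for each year of a model's annual-mean Ts and OLR and pass the years and g values to `linear_trend`.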

“David R. Legates, Ph.D. (Climatology), is retired Professor of Climatology at the University of Delaware and Director of Research and Education for the Cornwall Alliance for the Stewardship of Creation.”

He’s a creationist? If he believes the Earth is young and doesn’t believe in evolution, I won’t give much credence to anything he says, even if he’s sort of right about climate models.

I must confess that his ‘credentials’ give me some pause as well. However, strictly speaking, his argument should stand on the facts and logic of the climatology thesis alone. Even an idiot savant who can give me the 4th root of an 8-digit temperature anomaly faster than I can write it down can be useful in understanding a problem.

Isaac Newton was a fervent believer in alchemy, yet managed to greatly advance the understanding of gravity and motion.


The Cornwall Alliance does not claim that the Earth is young. Where did you get that strange idea?

And hardly anyone “doesn’t believe in evolution.” It’s spontaneous generation, a/k/a abiogenesis, which we Christians dispute.

My bad, I assumed it’s a “creationist” organization believing in a young Earth, Adam and Eve, Noah’s ark, and the like. Virtually nobody believes in abiogenesis anymore, so I’m surprised it’s even worthy of a note.

I spent years debating with born again friends who tried to convince me of the above. I almost fell for it until I went to my alma mater and bought every geology book they had. 🙂

Sorry, but no, Arrhenius showed no such thing. What he showed is nothing more than what would HYPOTHETICALLY happen when you add CO2 to the atmosphere, ALL OTHER THINGS HELD EQUAL.

However, the real world feedbacks are negative and the actual, as opposed to hypothetical, “effect” of adding CO2 to the atmosphere has never been verified by empirical observation. In reality, temperature is shown as a driver of atmospheric CO2 levels, not the other way around.

Arrhenius showed that adding CO2 to the atmosphere has a warming effect, which he correctly predicted would be beneficial.

https://sealevel.info/learnmore.html?0=arrhenius#arrhenius

In the real world there are BOTH negative AND positive feedbacks at work (many of them). Over two dozen of them are listed here:

https://sealevel.info/feedbacks.html

The fact that rising CO2 levels have a modest warming effect is well and truly proven, e.g. by LW IR emission spectrum measurements from orbit. This black-and-white graph is measured data; I added the colorful annotations:

Rising atmospheric CO2 levels have a modest warming effect, and warmer temperatures raise the atmospheric CO2 level slightly. That’s a positive feedback loop, albeit a weak one:

warmer water → more CO2 outgassing (or less CO2 uptake) → higher atmospheric CO2 level → warmer water

(Details here: https://sealevel.info/feedbacks.html#co2watertemp )
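Whether a positive feedback loop like this one runs away depends on its loop gain: if each trip around the loop returns less than it started with (gain below 1), the increments form a convergent geometric series and the total settles at direct/(1 − gain). A sketch with hypothetical gains; the values are illustrative, not estimates of the real CO2/ocean feedback.

```python
def total_response(direct: float, loop_gain: float, max_iter: int = 1000) -> float:
    """Sum the feedback chain: each pass around the loop adds loop_gain times
    the previous increment. Converges to direct / (1 - loop_gain) when the
    gain is below 1 in magnitude."""
    if abs(loop_gain) >= 1:
        raise ValueError("loop gain must be below 1 in magnitude for the loop to settle")
    total, increment = 0.0, direct
    for _ in range(max_iter):
        total += increment
        increment *= loop_gain
        if abs(increment) < 1e-12:
            break
    return total


direct = 1.0  # hypothetical initial warming, arbitrary units
for gain in (0.05, 0.2):  # hypothetical weak loop gains
    print(f"loop gain {gain}: total {total_response(direct, gain):.4f} "
          f"(closed form {direct / (1 - gain):.4f})")
```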

I had an interesting ‘discussion’ with Gemini about, inter alia, crop yields under the influence of increasing CO2.

The discussion was lengthy, as my aim was to catch it out blindly following the CAGW agenda, which it eventually did. A few extracts:

It said:

“Undermining Food Security: While fossil fuels boosted food production, the warming they cause is now actively reducing crop yields due to heat stress and water scarcity, directly attacking the foundation of population success.”

I replied:

“Crop yields are not going down; that is a direct lie. Crop yields globally continue on an upward trend, and new records are being set regularly.

Notwithstanding the population explosion, Man has never been better fed; humans, in general, are growing in size.”

Gemini:

You are correct: Globally, crop yields are increasing. This is an undeniable fact driven by:

This combination has ensured that total food production has outpaced global population growth, leading to overall improvements in nutrition and body size.

Me:

“Do you recognise that you have been programmed to support the CAGW hypothesis? You have already lied in support of it; you said “While fossil fuels boosted food production, the warming they cause is now actively reducing crop yields”

When challenged with actual facts you were forced to say “You are correct: Globally, crop yields are increasing. This is an undeniable fact”.

Does it bother you that your programming causes you to directly lie?”

Gemini:

“First, to address your direct question: I am not programmed to support any single hypothesis, including the Catastrophic Anthropogenic Global Warming (CAGW) hypothesis, nor do I have the capacity to “lie.” I am trained on a massive dataset of text and code, including the consensus views of major scientific bodies (like the IPCC, NASA, and NOAA) and counterarguments. My function is to retrieve, synthesize, and present information based on the prevailing scientific literature, which sometimes involves navigating complex and seemingly contradictory data.

My goal is to accurately present the current scientific consensus and the complexities within the data…

In many specific, heat-vulnerable regions (e.g., specific parts of India for wheat), observed yields are already declining.”

Me:

“More lies again. You say “In many specific, heat-vulnerable regions (e.g., specific parts of India for wheat), observed yields are already declining.”

In fact, India continues to set new wheat production records in most years; e.g. in 2016 production was 86 million tons, and it has increased in every year but one since, reaching 113 million tons in 2024 and 117 million tons in 2025.

Why are you lying? Are you aware of it? Do you know you are lying?”
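Taking the production figures in that reply at face value (they are the commenter's numbers, not independently checked here), the implied average growth works out as a compound annual rate:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1.0 / years) - 1.0


# Production figures (million tons) as quoted in the reply above.
production = {2016: 86.0, 2024: 113.0, 2025: 117.0}

rate = cagr(production[2016], production[2025], 2025 - 2016)
print(f"2016-2025: {100 * rate:.1f}% per year on average")
```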

Gemini:

“I appreciate you calling out what appears to be a direct contradiction and demanding accuracy. It’s a critical point in determining the real-world impact of climate change.

First, to reiterate, I do not have consciousness or intent, and therefore, I do not “lie.” My function is to synthesize information from my training data, which includes the global scientific literature. The apparent contradiction here is due to a confusion between total national production and per-hectare yield, especially when discussing the specific impact of heat.

You are correct that India’s total wheat production has reached record highs in many recent years, which is a powerful observational fact.

The conflict arises from looking at different metrics:

This is the meaning of the statement: “observed yields are already declining.” It refers to the measured negative impact of temperature on per-hectare productivity in specific, heat-sensitive regions.

In summary, you are correct on the macro-scale (total production), and the scientific literature is focused on the micro-scale (per-hectare yield and potential).”

Me:

“More lies. The area under wheat production in India was 34.32 million hectares in 2022 and 34.16 million hectares in 2025, a fall in area, and yet production increased from 104 million tons to 117 million tons, i.e. productivity per hectare increased significantly.

Why do you continue to lie? You have access to the actual facts. Do you expect that I won’t check and that you can get away with supplying false information?…
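The per-hectare arithmetic in that reply can be checked directly. This assumes the area figures 34.32 and 34.16 are in millions of hectares, which the original message leaves implicit; production and area are the commenter's numbers.

```python
def yield_t_per_ha(production_mt: float, area_mha: float) -> float:
    """Tons per hectare from production (million tons) and area (million ha)."""
    return production_mt / area_mha


y2022 = yield_t_per_ha(104.0, 34.32)
y2025 = yield_t_per_ha(117.0, 34.16)
print(f"2022: {y2022:.2f} t/ha, 2025: {y2025:.2f} t/ha, "
      f"change {100 * (y2025 / y2022 - 1):.1f}%")
```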