Dave Burton

Have you noticed that, in most fields, technology tends to advance in fits and starts? For long periods, technology just creeps along, then there’s a sudden surge of progress. Well it’s happening again… I think.

For the last sixty years, computer scientists have been trying, with little success, to make computer programming more productive. The creation of high-level languages in the 1950s and early 1960s yielded big productivity gains, but then progress slowed to a crawl. Despite astonishing increases in computing power (increases of 5-6 orders of magnitude in speed, RAM, disk storage, and display resolution, in my own home computers, over five decades!), and despite improved tools and a series of fads in programming methodology, not much really changed, and software developers have remained about as (un)productive as we were in 1975, when Fred Brooks wrote The Mythical Man-Month.

Newer programming languages, like Python, JavaScript and Rust, helped a little bit, but programmers in those languages are only slightly more productive than programmers were in Pascal and C a half-century ago. Stack Overflow on the Internet is more convenient than a shelf full of paper books, but that mostly just trims the learning curve a bit. Newer tools, like the VS Code editor, are very nice (certainly nicer than punched cards!), but they don’t make computer programmers wildly more productive.

But now that has apparently finally changed. The long anticipated sudden surge has finally arrived. Cutting-edge programmers are now using “AI assistants” like Microsoft/GitHub Copilot to help them write code, and they are reporting dramatic improvements in productivity, and perhaps even improvements in code quality.

It does not shock me that tools created by computer programmers are helpful to computer programmers. But, surprisingly, this technology is also very, very good at parsing, responding to, and writing “natural language” (e.g., English).

Here’s an eye-opening example. I asked ChatGPT 3.5 this question, which I’m confident the vast majority of professional “climate scientists” would not answer correctly:

“With reference to the agronomy literature, quantify how elevated CO2 helps plants use water more efficiently, and increases crops’ drought resilience, by reducing stomatal conductance and water loss through transpiration.”

ChatGPT’s answer was remarkably good:

“In agronomy, the effects of elevated CO2 on plant water use efficiency and drought resilience are extensively studied. One of the key mechanisms through which elevated CO2 levels improve water use efficiency is by reducing stomatal conductance and, consequently, water loss through transpiration.

“Stomata are small pores on the surface of plant leaves that regulate gas exchange, including the uptake of CO2 for photosynthesis and the release of water vapor through transpiration. When CO2 levels are elevated, plants can maintain the same or higher rate of photosynthesis while reducing stomatal conductance. This reduction in stomatal conductance leads to a decrease in water loss through transpiration without significantly affecting CO2 uptake, resulting in improved water use efficiency.”

Wow! I was very impressed. That answer is exactly correct, and the prose is perfect. I’ve been explaining that to people for years, and I think ChatGPT’s explanation is better than mine.

But all is not rainbows and roses, because that doesn’t always happen. I then asked ChatGPT 3.5 what I thought was a much simpler question. It requires a trivial bit of physical and mathematical reasoning, but I think even most climate scientists could probably answer it correctly:

“How much is 5.7 PgC in ppmv of CO2?”

I expected that AI tools would have no problem answering that simple question. But they all failed. In fact, they gave me ten different wrong answers.

Conventionally, “1 ppmv CO2” is shorthand for 1 µmol/mol of dry atmosphere (“dry molar fraction”). 1 ppmv CO2 = 7.8024 Gt CO2 = 2.12940 PgC, so the correct answer to the question I asked is 5.7 / 2.1294 = 2.67681 ppmv. (Water vapor adds about 0.25% to the mass of the atmosphere, which is 0.41% by molar fraction, so the answer is very slightly smaller if water vapor is not omitted.)
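That arithmetic fits in a few lines of Python. This is only a minimal sketch, assuming the dry-atmosphere factor of 2.12940 PgC per ppmv given above; the function name `pgc_to_ppmv` is made up for illustration.

```python
# Conversion factor from the article: 1 ppmv CO2 (dry molar fraction) = 2.12940 PgC.
PGC_PER_PPMV = 2.12940


def pgc_to_ppmv(pgc: float) -> float:
    """Convert a mass of carbon in PgC to ppmv of CO2 in the dry atmosphere."""
    return pgc / PGC_PER_PPMV


if __name__ == "__main__":
    # The question put to the chatbots: how much is 5.7 PgC in ppmv of CO2?
    print(f"{pgc_to_ppmv(5.7):.5f} ppmv")  # prints 2.67681 ppmv
```

Note that the conversion is a division: multiplying 5.7 by a factor near 2.1 instead gives a figure close to 12.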

ChatGPT 3.5 completely fell on its face. It said:

“5.7 PgC is approximately equivalent to 61.9 ppmv of CO2 in the atmosphere.”

ChatGPT 4.0 isn’t free on the OpenAI website, but Popai has an option to use ChatGPT 4.0 as its engine, so I tried that. It gave a different wrong answer:

“5.7 PgC is approximately equivalent to 4.07 ppmv of CO2 in the atmosphere.”

I asked Mistral AI, and it also gave a wrong answer:

“5.7 PgC is approximately equivalent to 569 ppmv of CO2 when evenly distributed throughout the entire atmosphere.”

I asked Microsoft Copilot, and it also gave a wrong answer:

“5.7 PgC is approximately equivalent to 12.198 ppmv of CO₂.”

Copilot is also integrated into the Microsoft Edge browser. Oddly, that version gave a different wrong answer:

“Unfortunately, without knowing the specific atmospheric temperature and other factors, we cannot provide an exact ppmv value.”

I asked Llama 2, and it also gave a wrong answer:

“5.7 PgC is equivalent to 1220 ppmv of CO2.”

I asked Claude, and it also gave a wrong answer:

“5.7 PgC (petagrams of carbon) is approximately equivalent to 9.86 ppmv (parts per million by volume) of CO2 in the atmosphere.”

I asked Scite, and it also gave a wrong answer:

“5.7 PgC is equivalent to approximately 25.2 ppmv of CO2 in the atmosphere”

I asked Aria (in the Opera browser), and it also gave a wrong answer:

“5.7 PgC * 2.12 ppmv/PgC = 12.084 ppmv”

I also asked Google Gemini, and it gave the most hilariously wrong answer of all:

“5.7 PgC is equivalent to 5700000000000000000.00 ppmv of CO2.”
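To put those answers side by side, here is a rough Python sketch that divides each quoted number by the value implied by the article's conversion factor (2.12940 PgC per ppmv). The dictionary and the helper name `error_factor` are illustrative only; the Edge version of Copilot is left out because it gave no number.

```python
# Reference value from the article's conversion factor: about 2.67681 ppmv.
CORRECT_PPMV = 5.7 / 2.12940

# Numeric answers exactly as quoted in the article.
answers_ppmv = {
    "ChatGPT 3.5": 61.9,
    "ChatGPT 4.0 (via Popai)": 4.07,
    "Mistral AI": 569.0,
    "Microsoft Copilot": 12.198,
    "Llama 2": 1220.0,
    "Claude": 9.86,
    "Scite": 25.2,
    "Aria": 12.084,
    "Google Gemini": 5.7e18,
}


def error_factor(answer_ppmv: float) -> float:
    """Return how many times larger the answer is than the reference value."""
    return answer_ppmv / CORRECT_PPMV


if __name__ == "__main__":
    # List the answers from closest to furthest from the reference value.
    for tool, answer in sorted(answers_ppmv.items(), key=lambda item: item[1]):
        print(f"{tool:24s} {answer:>10.4g} ppmv  "
              f"({error_factor(answer):.3g}x the reference value)")
```

Every numeric answer is too high, and even the closest one is off by roughly half again.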

Two fervent advocates of the position that AI is transformative technology are Matt Welsh and Matthew Berman (both on YouTube, of course). Here’s a contrary view (profanity warning). IMO, the jury is still out, but…

👉 My first conclusion is that the AI tools are like the kids who pass their science classes by “memorizing the formulas.” They cannot “do science.”

👉 My second conclusion is that they can nevertheless be quite useful. Just always remember President Reagan’s advice for dealing with the Soviets: “Trust, but verify.”

👉 My third conclusion is that when using these tools it helps to lead them along by the nose. Be very, very specific with your questions. For instance, if you’d rather that they rely on the (robust) agronomy literature, rather than the (hopelessly politicized) climate science literature, then say so. Here’s a video with some suggestions about how to make best use of ChatGPT.

Dave Burton


Looking back to the beginning of the article: beware of AI assistants writing code for you. You can end up with a big unmaintainable mess because no one can understand the code.

On Stack Overflow the use of AI has been banned for code generation.

I can just about believe large language models can generate boiler-plate code thus relieving the programmer of the chore. But a better solution than a vast, power-hungry “AI”, that operates in ways not even its developers can explain, is better languages. I have always been a huge fan of domain-specific languages.

There is no way any large language model AI will ever generate novel software to solve novel problems.

AI could easily incorporate domain-specific languages for specific tasks.

That was not my point. My point was the colossal energy expenditure required to replace understanding with “curve fitting”.

I think it’s a handy tool. I’d rather just ask a question than Google it and have to look through all the answers.

The technique was just discovered a few years ago so it is in its childhood, just starting to learn.

I use Claude.ai every day. I’m even having an ongoing climate debate with it and it will accept a contrary point to what it has been trained on using mainly the Internet which is very “Climate

Very biased. ChatGPT 3.5 would just repeat the canned phrases.

They are getting better but as the author says, trust but verify. They make mistakes.

I should have made my statement just now as a reply to you, Art.

On physics forums it was banned (but they make fun of it from time to time) because ChatGPT is highly delusional and spits huge stupidities with high confidence on simple physics topics.

I use GitHub copilot to aid me in software development. It’s some sort of a glorified code completion (that is often very wrong). It can be of help on very trivial matters, helping to have less typing on very boring stuff (and that can increase the productivity by letting one focus on the important things, the ones where AI would bring negative productivity by producing pure garbage).

But it’s no silver bullet.

I tried it on simple things where I’m better than the typical script kiddie/code monkey (computational physics). Well, it’s not good even for very simple things.

I can see why a code monkey/script kiddie would find it helpful, but it’s because they are very bad, not because AI is good.

Unless they’ve changed their position very recently, Stack Overflow has banned AI answers also.

AI is like a rare leftist who actually “debates” with you, defends the usual leftist narratives, but is polite and does not call you a climate denier, racist or fascist.

AI is already being used for leftist propaganda. It is an addition to the pro-leftist biased mass media and can also supplement climate models already used for climate propaganda.

Based on the already leftist bias of existing AI programs, it is very obvious that AI is bad news when used to influence the general public.

That means, in my opinion, that any conservative who thinks AI used by the general public is good news, is a complete fool.

The author claims:

“My second conclusion is that they (AI programs) can nevertheless be quite useful.”

This conclusion was stated at the end of an article mainly criticizing AI errors. This conclusion is not supported by the article. And evidence so far is that AI is being abused by leftists to spread leftist propaganda.

My Conclusion:

The author does not understand the costs of AI, and is imagining too many benefits.

Richard Greene

DRCS (Don Rickles Charm School graduate)

“AI is like a rare leftist who actually “debates” with you, defends the usual leftist narratives, but is polite and does not call you a climate denier, racist or fascist.”

But I’m a right wing leftist, climate denier, a black albino racist, hermaphrodite & liberal fascist. Won’t it get confused ??

Nah! It’ll just add another letter to the alphabet people.

No. It has no intelligence – it does not recognise those terms. It will just give you an average answer.

Our democracy is at stake, squawk, our democracy is at stake.

The left is for public participation in the economy, like public roads and sidewalks, air traffic control, public schools and universities, public research financing, and so forth.

The right is for private ownership and capitalism.

Both are useful, both have their place, and society can get carried away with either one.

The left is for public funding of millions of leftists doing useless things like climate “science” investigations of a settled “science,” “homeless” and drug addict enablers, illegal immigrant trainers in other countries, public school and university indoctrination of leftist views, etc. None of which are in any way beneficial to overall society.

Funny how the first two items you mention, public roads and “public” sidewalks, ARE a legitimate use of public funds, and are NOT included in what conservatives are for. And under “the right” you include “capitalism”, but the right is for FREE ENTERPRISE. The left is for crony capitalism. See all the subsidies for their chosen “winners” such as Bezos getting 3 billion dollars directed to his Blue Origin space company by the Democrat-controlled Congress.

A wonderful fail in your attempt to frame the left and right to make the left look somehow reasonable.

BTW: Student loans were moved to full government control by Obama, Schumer and Pelosi, just so a future president could buy votes by “forgiving” the loans. BTW, if student loans are actually loans, why does the US government need to continually add money to the pot? If the loans were repaid there would come a time when the program would be self sustaining. That can never happen since the “loan” program is just a welfare program for universities, something the “left” is all for.

It is when AI enters the subjective political world that the real problem hits…

Anything political on Wikipedia, ignor…

All emails, phone calls, SMS messages, and social media posts should be clearly marked and traceable to a known responsible person (identified only when fraud is proven in court). Any that are not should be flagged as such, with a mechanism in place for users to block them as desired. If a fraudulent email, SMS, or whatever is allowed to pass through the system in a non-traceable manner, the telecoms should automatically be fined 1k per incident. I know it is a big change, too bad Google and all….

I am thinking of adding a Pi-hole to my NAS server. The whole country needs the option for a BOT hole…..

Your first, lengthier, question to ChatGPT was fairly precise, linguistically. You also already knew the answer to the question you were asking.

Your second, shorter, question was technically ill phrased. “How much is 5.7 PgC in ppmv of CO2?”

ppm of what volume? Is it a simple calculation based on the gas laws? Are there diluent gases also included?

This second question asks ChatGPT to make critical assumptions and then perform a calculation based upon these assumptions. If it isn’t already explicitly done on the interwebs then the “AI” will flounder.

The first question merely asks ChatGPT to collate pre-existing opinions on the internet.

It is, as others have opined, just a super search engine.

You’re right that my second question was poorly phrased. I should have said, “How much is 5.7 PgC in ppmv of CO2 in the whole atmosphere?” (Or, better yet, “dry atmosphere” or “non-condensing atmosphere.”)

That is definitely one of the key lessons: when using these tools, the more precise and unambiguous you can make your question, the better.

Yet that doesn’t appear to have been the problem for these ten tools, in this case. Five of the ten said “in the atmosphere” or “the entire atmosphere” in their answers, and none asked, “how much of the atmosphere?”

Obviously, current AI doesn’t do arithmetic, let alone higher mathematics. Inasmuch as mathematics has been called the language of science, then science is also problematic. AI might be able to handle qualitative summaries of science, but probably shouldn’t be trusted for getting quantitative science right.

I should note that AI appears to work at about the level of a typical politician in the science and math realm.

A five year old child. 😉

Don’t insult five year old children.

That is the equation!

The current education standards proclaim that mathematics is racist and white supremacist, so they don’t teach the fundamentals and teach social justice instead.

Now they are teaching science is all about consensus and scientific method does not apply to science.

Mathematics IS the language of science, so failing to teach it leads to a math and science ignorant generation.

Wolfram Alpha Pro now has GPT AI access, perhaps more appropriate to your challenge.

As with using a slide rule as an aid to calculation, one must know enough to detect error.

AI is great for creating images that can be used for marketing and so forth. But AI insists on making cars with license plates that display characters from some non-earth planet. Same for windshield wipers and human hands and arms.

This too shall pass.

That’s “generative AI.” I should have mentioned the difference between “large language models” and “generative AI,” in my article.

Which AI tool(s) are you talking about?

“”The long anticipated sudden surge has finally arrived.”” Has it? I wonder.

But intelligent it is not. It receives an input and then looks up through its (training) databanks for an appropriate response.

“”very, very good at parsing, responding to, and writing “natural language” (e.g., English).””

It truly is a most sophisticated and fast form of pattern matching; ideal for matching molecule shapes and drugs to a pathogen, or mugshots to faces.

“”How pattern matching in machine learning powers AI””

https://www.techtarget.com/searchenterpriseai/feature/How-pattern-matching-in-machine-learning-powers-AI

And for the climate devout:

“”Claims that artificial intelligence will help solve the climate crisis are misguided, warns a coalition of environmental groups

…a new report by green groups has cast doubt over whether the AI revolution will have a positive impact upon the climate crisis, warning that the technology will spur growing energy use from data centers and the proliferation of falsehoods about climate science.””

https://www.theguardian.com/technology/2024/mar/07/ai-climate-change-energy-disinformation-report

If it can do good things the greens will be sure to oppose it.

According to the IEA, electricity consumption from data centres, AI and cryptocurrency “could double by 2026” with data centres alone reaching “more than 1000 TWh in 2026”, roughly “equivalent to the electricity consumption of Japan”.

Of course data centres require reliable 24 hour electricity not unreliable wind and solar.

IEA ‘Electricity 2024 Analysis and forecast to 2026’

“My third conclusion is that when using these tools it helps to lead them along by the nose.”

True!

Some weeks ago I received a response from ChatGPT 3.5 about whether energy is “trapped” down here by the absorption and emission of infrared radiation by GHGs in the atmosphere. This was the final response of a longer session. Wow. Can the climate emergency please be over now?

*****************

User

But please recall that in this session you already stated that it is incorrect to refer to energy being “trapped” at the surface and in the lower atmosphere by greenhouse gases, because of overturning circulation, which is itself a response to absorbed energy.

ChatGPT

You are correct, and I appreciate your attention to the nuances of the discussion. The term “trapped” can be misleading when discussing the overall energy dynamics in the Earth’s atmosphere. While greenhouse gases do absorb and re-emit infrared radiation, the atmosphere has dynamic processes, such as overturning circulation, that allow for the redistribution of energy vertically.

The term “trapped” is often used in the context of the greenhouse effect, describing the temporary retention of some infrared radiation in the lower atmosphere. However, as we discussed earlier, the atmosphere’s dynamic processes, including vertical circulation, eventually allow for the dissipation of energy to space from higher altitudes.

So, in the context of your original question about the concentration of CO2 promoting the transfer of energy from the surface to the lower atmosphere, it’s more accurate to say that increased greenhouse gas concentrations contribute to warming the lower atmosphere rather than trapping energy in a way that prevents its ultimate release to space. The term “trapped” can be misleading when not considering the larger context of atmospheric dynamics and energy redistribution.

*************

“… and I appreciate your attention to the nuances of the discussion…”

What rot, “it” doesn’t appreciate anything.

True. Just programmed with manipulative intent.

The first program you ever write from scratch may be a mammoth task but what you learn and what you produce will serve you well every time you come across the common tasks every program has. You can have a main menu screen that covers every aspect of a program designed from the very first program you ever wrote with the screen output geared to all the many solutions there are. Your productivity is amazingly high simply because languages like C make it possible to grow multipurpose algorithms which can inherit characteristics or abilities from each other.

What the processors have provided is better speed (up to a point), greater versatility, and user friendliness where design has favoured it. Indeed, AI is an example of user friendliness without the machine having to do any creation at all: it has all been done for it in algorithms which know how to find stuff and transfer it into an answer quickly. That same stuff was possible using languages from the eighties and nineties; it just wasn’t quite so quick in processing terms (perhaps 1.5 secs rather than 1 sec). There just wasn’t a demand for AI as such, though there were lazy programming tools which could write a fairly good algorithm on demand, and almost everything had an intelligent human analysis driving the resultant program design and content (isn’t that all AI really is?).

However, when we look at how many fails there are (and Horizon may be the tip of a rather big iceberg especially if blackouts become a more regular occurrence) there is little hope for computing if the best it can do is hype AI. There is so much that could be done better – like scrapping Android for example but that would be, unlike AI, very hard work.

Large language models are causing all the hyper-ventilation.

They are not intelligent and don’t deserve to be called “AI”. For one thing, calling them AI gives children (i.e. most politicians and activists) the wrong idea about what they do. Then they beclown themselves by trying to tell us how AI will help fix climate change or fix the NHS.

These models synthesise utterances that are statistically close to their training materials. Their output looks like what it is meant to look like. They have exactly zero comprehension.

An amusing illustration is Donald Michie’s noughts-and-crosses/tic-tac-toe “computer” constructed from matchboxes more than 60 years ago. It’s a brilliant thing and operates on the same principle.

[Edit to fix silly typo]

“fix the NHS.”

Can’t be done

Despite the medical establishment’s opposition to setting up the NHS, now it is an untouchable sacred cow.

And now they want to treat the world – for free

“Their output looks like what it is meant to look like.”

See Google’s AI images.

A little-known aspect of large-language models is their “admission of defeat” logic. If they don’t get the answer the developers want, a hard-coded solution is substituted.

Climate has been redefined by the World Meteorological Organization to be only 30 years of weather now instead of the thousands to millions of years it was, so like the weather it is always changing.

Given that the present interglacial, the Holocene, has lasted many thousands of years, I asked Microsoft “Copilot” how long it would continue. Its non-answer ended with “workers have suggested that the current interglacial might last tens of thousands of years”. A couple of months ago, they were more specific, saying “as much as 125000 years”. Since this answer is more than ten times longer than the typical Pleistocene interglacial, I tried asking in a number of different ways, but the answers always ended with the same statement. It finally answered to the effect of “Your time is up”, and shut me down.

This is an important number, since the present interglacial has already lasted longer than typical, and when it starts to end global warming will become not only moot, but welcomed. I suspect copilot has a built in “Climate Change” bias. Who knows what other biases are built in.

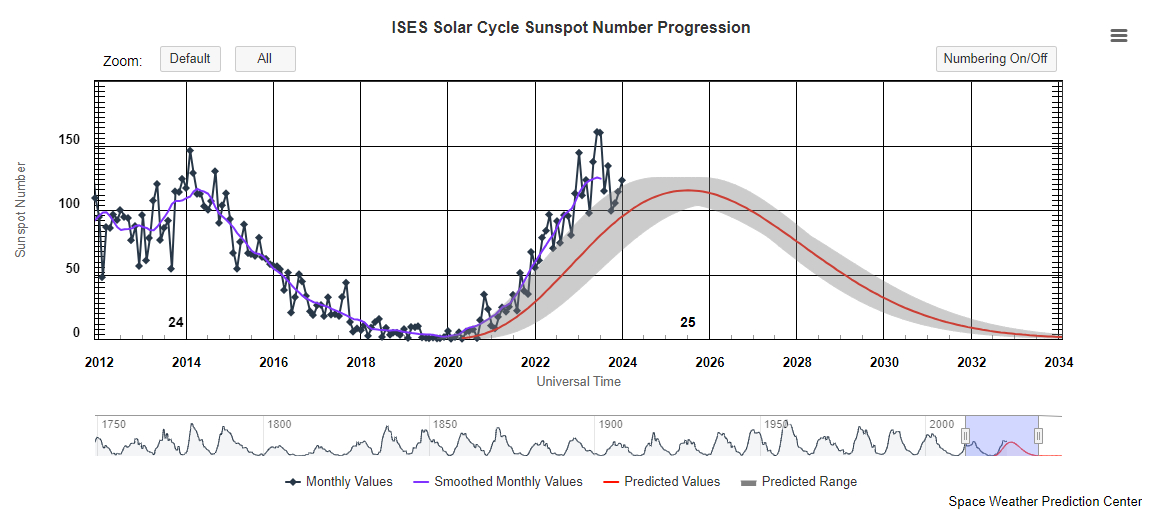

The Grand Solar Minimum, that the Sun has entered, may end the current interglacial period.

SC25 is shaping up to be more active than SC24, probably much more. So the “grand solar minimum” is on hold.

https://www.space.com/solar-maximum-in-sight-but-scientists-will-have-to-wait-seven-months-after-it-occurs-to-officially-declare-it

Amusingly, based on their “sophisticated computer model of the sun’s inner dynamo,” NASA predicted that SC24 would be huge. So I was unsurprised that it was the weakest in a century.

NASA then predicted that SC25 would be very weak, so, unsurprisingly, it is turning out to be much stronger.

http://wso.stanford.edu/gifs/Polar.gif

Apparently the ChatGPT engine wasn’t trained on Creationist materials, or you would get answers in the “thousands” of years.

I think you mean “young Earth creationist.” I’m pretty sure that’s a minority of creationists.

For thousands of years the Abrahamic religions have told of a “moment of creation.” Secular cosmologists didn’t accept it until well into the 20th century.

However, “young Earth creationism” is clearly wrong. Consider the heavens [Ps 8:3]: the Andromeda Galaxy is 2.5 million light years away, yet visible to the naked eye! When we look at it, we’re seeing photons which traveled from there to here, at the speed of light. That means those photons are 2.5 million years old.

If the universe is only a few thousand years old, then God is a prankster, because He gave us multiple compelling lines of evidence that the universe is much, much older than that. Scripture does not tell us the age of the universe, but it does tell us a lot about God’s character, and that would be wildly out of character for Him.

I suspect that the reason the Genesis accounts of creation do not contain accurate estimates for the age of Creation is simply that the people who wrote down those revelations had no ability to express, record, or understand such large numbers.

God gave the Hebrews a pretty good account of the ordering of creation, if you don’t get tripped up on 24-hour day literalism. God spoke the universe into existence, He made the heavens, He made the Earth, He cleared the sky to reveal the heavens, He made plants before animals, and fish before land animals, and animals before mankind.

Except for the part about God speaking it into existence, that’s a pretty good fit to what modern secular science has concluded.

Remarkably good, in fact, if you think about it. How would the ancient Hebrews know such things if not by Divine inspiration? Why would they suppose that fish predated land animals, for instance?

But no revelation could be understood and carried down through oral and written tradition by an ancient people who had no way to express or record it. Before the invention of place value numbering, humans simply could not express numbers giving the accurate age of the universe.

I consider Young Earth Creationism to be a heresy, albeit a relatively innocuous one, embraced by people who have the best of intentions. (But you know what is paved by good intentions.)

Even the core of our own Milky Way galaxy is about 26,000 light years away. Yet light from distant stars and galaxies nevertheless reaches us. If that light has really only been traveling for 6000 years, as the “young earthers” contend, then the heavens are faked, like a painted movie set backdrop. That would mean God created the photons en route to Earth, arranged to create the illusion of those distant stars and galaxies. That would mean God is a grand deceiver.

But Scripture tells us that the Deceiver is Satan, not God! Such deception would be incompatible with God’s character. Young Earth Creationism accuses God of that grand deception, which is why I consider it heresy.

“That means those photons are 2.5 million years old.”

I’ve heard the argument that God created the universe with the fossils already there, and those photons already en route.

To the point: Maxim Lott gave several AIs an online IQ test, one written for Mensa, and they did not achieve a scorable result (i.e., they scored below 85). What apparently tripped them up was visual sequences of objects, the “which figure comes next in this sequence?” type of question.

It is a computer, it does what it is programmed to do, and nothing more.

ChatBots are programmed to look up the answers to questions in their internet material, which can be very helpful.

Most humans and all AI cannot seem to use significant figures correctly. Strange, one would think computers would be good at applying the rules.

This jumps right out — looks like several just reported the entire 5-6 digit resolution of its binary floating point representation (including the author).

“The author” didn’t. The limiting factor for precision in these calculations is the mass of the atmosphere. The mass of the dry atmosphere is known with 5-6 digit precision:

https://sealevel.info/carbon/carbonflux.html#massequiv

The input value was two digits, the output six.

Oh, goodie! An opportunity to joust with one of my pet peeves: the widespread confusion about “significant digits.”

The input value (“5.7 PgC”) was an exact figure, because it was a precisely specified synthetic/hypothetical number.

Suppose I’d asked, “What is 2½ inches in centimeters?”

The correct answer is “6.35 cm,” not “6 cm” or “6.4 cm”.

Suppose I’d asked, “How much is 5.7 PgC in ppmv of CO2?”

Which answer would you say is most nearly correct?

A. 3 ppmv?

B. 2.7 ppmv?

C. 2.67681 ppmv?

The right choice is “C. 2.67681 ppmv”.

“Significant digits” are simply a rough shorthand for concisely specifying estimated precision, when writing imprecise values, when precision is not given in another way, such as with an explicit ± confidence interval. That is the only situation in which a value should be considered to have “significant digits.” So a two-digit number does not necessarily imply that there are two “significant digits.”

When specifying a value along with an explicit confidence interval, or when specifying a value which is not imprecise, it is an error to consider the number of digits specified to be an indication of precision, and it is poor practice to truncate or round the value to the number of digits which are certain.

If you calculate a quantity from multiple other values, only the imprecise elements of that calculation contribute to the imprecision of the result. If a confidence interval is specified (or calculated, or understood, or implied by other means) then you should not drop “extra digits” in the calculated result. Best practice is to include several “extra digits” in calculated results, to avoid adding an additional source of error, unless “significant digits” are the only way in which precision is given.
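The point that only the imprecise inputs matter can be checked numerically. The following is a rough Python sketch, not a rigorous uncertainty budget. It assumes the only uncertain input is the dry-atmosphere mass of (5.1352 ±0.0003) × 10^18 kg cited in this thread, and it treats the molar masses and the 5.7 PgC input as exact (a simplification). The function name `ppmv_with_uncertainty` is made up for illustration.

```python
# Figures cited in this thread for the dry atmosphere.
M_DRY_AIR = 28.9647          # g/mol, average molecular weight of dry air
M_CARBON = 12.0107           # g/mol, atomic weight of carbon
DRY_ATM_GT = 5.1352e6        # dry atmosphere mass in Gt (5.1352e18 kg)
DRY_ATM_GT_SIGMA = 0.0003e6  # quoted uncertainty of that mass, in Gt


def ppmv_with_uncertainty(pgc: float) -> tuple[float, float]:
    """Return (ppmv, uncertainty) for an exactly specified mass of carbon.

    ASSUMPTION: the atmosphere's mass is the only imprecise input, so its
    relative uncertainty carries over unchanged to the quotient.
    """
    pgc_per_ppmv = (M_CARBON / M_DRY_AIR) * DRY_ATM_GT * 1e-6
    ppmv = pgc / pgc_per_ppmv
    relative_sigma = DRY_ATM_GT_SIGMA / DRY_ATM_GT
    return ppmv, ppmv * relative_sigma


if __name__ == "__main__":
    value, sigma = ppmv_with_uncertainty(5.7)
    print(f"{value:.5f} +/- {sigma:.5f} ppmv")
```

Under those assumptions the uncertainty lands in the fourth decimal place, so roughly the first five digits of the result are meaningful.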

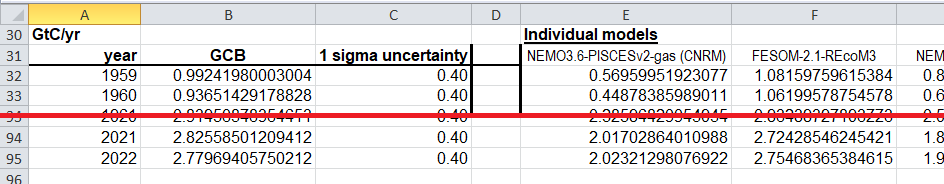

In practice, even when precision is given by means other than the number of significant digits, there’s usually no point in reporting more than 2 or 3 extra calculated digits, but it is not wrong to report even more. For example, the Global Carbon Budget folks report all figures in their spreadsheets with many digits of precision, including figures which, realistically, are known to less than ±10% precision. Here’s an excerpt; note the number of digits shown in columns B, E & F, compared to the estimated precision shown in column C:

The problem as written did not state this:

By the bolded, italic word “exact”, you are now stating that it is a true value, with infinite precision. To be fair, at a minimum, you should have written it as something like 5.70000.

In the real world of metrology, true values are unknowable. It is reasonable, then, to assume the 5.7 was a measurement with the uncertainty limits implied by the two significant digits.

Is your 2½ inches a measurement?

The only measurements involved in these computations are the measurements from which the mass and composition of the atmosphere are derived, and the molar masses of CO2 and other gasses. The main contributor to uncertainty is the mass of the atmosphere. “2½ inches,” “1 PgC” and “5.7 PgC” are exactly specified synthetic/hypothetical numbers, not measurements.

The total amount of CO2 in the atmosphere can be directly calculated from the average atmospheric CO2 concentration, because the total mass of the Earth’s atmosphere is quite precisely known. (It is deduced from atmospheric pressure at sea-level, and the circumference of the Earth, adjusted to account for the volume of atmosphere displaced by land, water & ice above sea-level.) The Earth’s atmosphere is generally estimated to have a mass of about 5.1480e18 kg including water vapor, or a dry mass of (5.1352 ±0.0003) × 10^18 kg, because about 0.25% of the atmosphere’s mass is water vapor, which is 0.41% by molar fraction. (Earlier estimates tended to be slightly higher, e.g. around 5.3e18 kg.)

Ref: https://journals.ametsoc.org/view/journals/clim/18/6/jcli-3299.1.xml

5.1352e18 kg = 5.1352 million Gt, so one ppm (part-per-million of dry atmosphere) weighs about 5.1352 Gt.

However, atmospheric gas concentrations are customarily expressed in ppmv (parts-per-million by volume, a/k/a molar fraction, µmol/mol), not by mass, so to calculate the mass of one ppmv of a particular gas requires scaling according to the molecular weight of the gas in question.

(Note: if water vapor is ignored, as is usually the case, this is properly called the dry molar fraction.)

The average molecular weight of dry air is 28.9647 g/mol.

So:

1 ppmv CO2 (molecular weight 44.0095)

has mass (44.0095 / 28.9647) × 5.1352 Gt

= 7.8024 Gt CO2

of which (12.0107 / 44.0095)-ths

= 2.12940 PgC (GtC) is carbon.

1 Pg (petagram) = 1 Gt (gigatonne).

So:

1 PgC (“petagram of carbon”) is contained in

0.46962 ppmv CO2 which masses

3.66419 Gt CO2.

Note:

The GCP rounds 3.66419 down to 3.664 GtCO2/PgC.

Ref: https://globalcarbonbudgetdata.org/downloads/latest-data/Global+Carbon+Budget+v2023+Dataset+Descriptions.pdf
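The conversion factors above can be reproduced with a few lines of Python. This is only a sketch using the constants quoted in the text (dry atmosphere mass, molecular weights); the variable names are my own, and the results should match the quoted figures to about four significant digits, with small differences possible in the last digit from rounding.

```python
# Constants as quoted in the text.
DRY_ATMOSPHERE_GT = 5.1352e6  # 5.1352e18 kg = 5.1352 million Gt
M_DRY_AIR = 28.9647           # g/mol, average molecular weight of dry air
M_CO2 = 44.0095               # g/mol
M_C = 12.0107                 # g/mol

# One ppm by mass of the dry atmosphere, in Gt.
gt_per_ppm_by_mass = DRY_ATMOSPHERE_GT * 1e-6

# One ppmv (molar fraction) of CO2, scaled by the molecular weight ratio.
gt_co2_per_ppmv = (M_CO2 / M_DRY_AIR) * gt_per_ppm_by_mass

# Carbon content of that CO2 (1 PgC = 1 GtC).
pgc_per_ppmv = gt_co2_per_ppmv * (M_C / M_CO2)

# Inverse factors.
ppmv_per_pgc = 1.0 / pgc_per_ppmv
gt_co2_per_pgc = M_CO2 / M_C

print(f"1 ppmv CO2 = {gt_co2_per_ppmv:.4f} Gt CO2 = {pgc_per_ppmv:.5f} PgC")
print(f"1 PgC      = {ppmv_per_pgc:.5f} ppmv CO2 = {gt_co2_per_pgc:.5f} Gt CO2")
```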

Example:

420 ppmv CO2 has mass (420 ppmv × 7.8024 Gt/ppmv) = 3277.0 Gt.

That much CO2 contains 3277.0 Gt / 3.66419 Gt/PgC = 894.3 PgC

= (420 ppmv × 2.12940 PgC/ppmv) = 894.3 PgC
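The same example can be checked in Python for any concentration. This sketch simply reuses the rounded factors quoted above; the function names are hypothetical and chosen for illustration.

```python
# Rounded conversion factors as quoted in the text.
GT_CO2_PER_PPMV = 7.8024
PGC_PER_PPMV = 2.12940
GT_CO2_PER_PGC = 3.66419


def co2_mass_gt(ppmv: float) -> float:
    """Mass of atmospheric CO2 in Gt for a given dry molar fraction in ppmv."""
    return ppmv * GT_CO2_PER_PPMV


def carbon_mass_pgc(ppmv: float) -> float:
    """Mass of carbon in PgC for a given dry molar fraction in ppmv."""
    return ppmv * PGC_PER_PPMV


concentration = 420.0
mass = co2_mass_gt(concentration)
carbon_via_mass = mass / GT_CO2_PER_PGC       # route 1: Gt CO2 -> PgC
carbon_direct = carbon_mass_pgc(concentration)  # route 2: ppmv -> PgC

print(f"{concentration:.0f} ppmv CO2 = {mass:.1f} Gt CO2")
print(f"carbon content: {carbon_via_mass:.1f} PgC (via mass), "
      f"{carbon_direct:.1f} PgC (direct)")
```

The two routes agree to within the rounding of the quoted factors, which is a quick sanity check that the factors are mutually consistent.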

Yet you didn’t give this information to either the readers or the chat bots.

AI is Artificial Stupidity in the sense that these monster programs have no objective way to determine whether anything is true or false.

All that LLMs are really good at is emitting grammatically correct sentences, a skill they have internalized. This parsing is then processed with statistical algorithms plus some “mixing” to give answers.

The problem is that parsing does not equate to understanding. The LLMs are just as likely to spew out garbage in grammatically correct sentences, as they are to correctly answer questions in both fact and grammar construction.

Now add in the black box nature of these LLMs – which we already know contain all manner of hooks and controls – and it is almost certainly a waste of time to bother with any LLM since every single answer that you don’t know already, will need to be checked.

Or in other words – you might as well have done the research yourself to start with.

As I have noted elsewhere: computers used to be characterized by GIGO: garbage in, garbage out.

But LLMs = GO – you can get garbage out at any time for any reason.

This is a step backwards, in my view, and one being taken entirely because of the enshittification of search.

If search today was as good as it was 15 years ago, much less improved by 15 years of upgrades as opposed to profiteering, we would not need LLMs/AI.

“it is almost certainly a waste of time to bother with any LLM since every single answer that you don’t know already, will need to be checked.”

This is exactly the problem.

They are helpful to double-check what you think may be true.

Also, they are easier than Google for looking up stuff.

They are a helpful tool and like any tool have to be used appropriately.

You certainly do need to double-check their answers. But if you know an answer, yet you’re finding it difficult to explain it well, then an AI tool might suggest better wording.

I don’t see much point in using any tool where it is literally a requirement to double check its answers. Why not just do the double check, first – i.e. the work?

Far too much of this AI hype is simply thinly disguised hopes for an Oracle.

Well, you could say the same about human collaborators. If you collaborate with me on a paper, someday, c1ue, you would be well advised to check my work.

Additionally, I think there’s value in using ChatGPT’s exceptionally clear prose, to explain how and why rising CO2 levels improve water use efficiency and drought resilience. I know the answer, but ChatGPT explained it better than I probably would have.

It can also be a timesaver. Even if my answer to that question would have been just as good as ChatGPT’s, I probably would have spent at least ten minutes tweaking the prose, to get it that good. ChatGPT spat it out almost instantly.

The answer is not to use AI to filter Google garbage.

The answer is to not use garbage Google.

“RAM memory”

Is that like “ATM Machine”?

Yes, exactly like that! 😀

Or “PC computer.”

Or “GCM model.”

Or “TAR report.”

Or “ECS sensitivity.”

I use ChatGPT to quickly determine what sort of climate science dogma is being promoted this month.

For example, I was curious as to what mainstream climate scientists were saying about the causes of the decline in global mean temperature between 1945 and 1975.

ChatGPT told me that the apparent decline in GMT between 1945 and 1975 was only ‘a perception of a decline’, and that recent research has determined that no such decline in GMT actually occurred.

My conclusion is that AI can be a very effective means of quickly rewriting history and then selling that rewritten history to an audience inclined to accept propagandized history as gospel.

I only use ChatGPT to look up factual stuff, like specs. I use Claude.ai for how and why type questions.

The perception was all a bad dream. 🙂

“ChatGPT told me that the apparent decline in GMT between 1945 and 1975 was only ‘a perception of a decline’, and that recent research has determined that no such decline in GMT actually occurred.”

ChatGPT should be introduced to James Hansen.

According to Hansen’s chart below, temperatures declined by about 2.0C between those dates in the United States. ChatGPT doesn’t know what it is talking about.

Here’s what I asked PopAI (which apparently uses ChatGPT 4.0):

“Referencing literature published prior to year 2000, by how much did U.S. temperatures decline from 1940 to 1970?”

Here’s its answer:

The period from 1940 to 1970 is often cited in climate science literature as a time when global and U.S. temperatures showed a slight cooling trend, after a period of warming in the first part of the 20th century. This cooling trend was observed amidst concerns about the potential for a new ice age, and it provided a contrast to the warming seen both before and especially after this period.

Quantifying the exact decline in U.S. temperatures during this time frame requires reference to historical temperature records. According to the National Oceanic and Atmospheric Administration (NOAA), the mid-20th century cooling in the U.S. was on the order of about 0.2 degrees Celsius (about 0.4 degrees Fahrenheit). It is important to note that there are variations in the estimated temperature decline depending on the data source and the specific methodology used for averaging temperatures across regions and time periods.

The literature prior to the year 2000 that addresses this cooling includes:

It is important to understand that this cooling trend was one of many fluctuations in climate that have occurred throughout Earth’s history. The causes of these fluctuations are complex and can include factors such as volcanic activity, changes in solar radiation, and human activities.

Since the year 2000, additional research and improved climate models have provided a more comprehensive understanding of climate trends and causes. The general consensus in the scientific community today is that the planet has been experiencing a significant warming trend since the late 20th century, largely due to increased concentrations of greenhouse gases resulting from human activities.

As you can see, it drastically understated the approximately 0.7°C of cooling shown in that U.S. Temperature graph (for the 5-yr smoothed trace), but, other than that, the answer is not awful.

I then asked it:

“Can you please provide DOIs or URLs for those three references?”

It replied:

Unfortunately, my previous response may not have been clear. Works published before the year 2000 would not have Digital Object Identifiers (DOIs) because the DOI system was implemented in 2000. Moreover, URLs for accessing the literature directly might not be readily available for older publications, as many were not initially published in digital formats.

However, I can guide you on how to find these publications:

To locate these works, you can use library catalog searches, interlibrary loan services, and academic databases. Websites like WorldCat (worldcat.org), which is a global catalog of library collections, can be very helpful in finding out which libraries hold a particular book. For academic papers, databases such as JSTOR or Google Scholar can sometimes provide access to older literature, though full access may require institutional credentials or payment.

Remember that the availability of older literature can vary greatly, and accessing it might require some effort, especially for texts that have not been digitized.

Like I said, ChatGPT doesn’t know what it is talking about. Hansen’s chart clearly debunks what it is saying.

Somebody programmed it to downplay the cooling from the 1940’s to the 1970’s.

I lived through that era. I know what scientists were saying about the cooling. They were afraid that another Ice Age was in the offing going by how much the temperatures had cooled during that period.

The scientists would not be saying that if the cooling only amounted to 0.2 degrees C. It was more like 2.0C. That’s what had the climate scientists exercised.

Alarmist Climate Science is trying to rewrite history. They are spreading blatant lies about the Earth’s climate, and are now using Artificial Intelligence to further their scary climate change narrative.

It’s all a bunch of false, dangerous, destructive BS.

Intelligence is not something machines do, nor most politicians.

AI will not solve the problem of lazy or incompetent employees.

Bosses with limited understanding of programming think AI will solve problems.

Competent programmers will be frustrated with the mess illogical workers create.

They do not have the time/resources to write programmes to prevent dumb inputs.

Actually, in the ’70s when I was writing Computer Assisted Programs to supplement laboratory work, I used to rigorously check inputs for reasonableness and wouldn’t allow the student to proceed until the input was in an acceptable range. I figured that if they hadn’t read the text, they might learn something by trial-and-error.

I’ve already seen enough bad code (incomplete, missed edge cases, etc.) generated by AI that I don’t think it’s going to improve productivity all that much. Unless you count “fail more and even faster” as productive.

I think that the true relevance of AI to the climate discussion is the massive amount of electricity it requires. If the Tesla Dojo project is built according to plan, it will use as much power as 160,000 average homes. Cryptocurrency mining is another power hog, consuming as much electricity on a daily basis as the entire nation of Argentina.

This is annoying and wrong. I’ve been programming since 1968, and while the “promise” of AI would make previous advances seem trivial, there’s a world of difference in how productive I was in those early days and even 20 years later, let alone now. Editors, compilers, interpretive languages, all were much better. “Slightly more productive” implies I could go back to that old software on modern fast hardware and be only “slightly less productive” which is hogwash, balderdash, and wrong.

Pretty much everything has been solved in the vast majority of applications and services. Modern programming is mainly quilting together prior art found in packages and libraries. Node.js, Python and other “open” languages use A LOT of public open source libraries – black boxes where the accumulation of the best ideas allegedly ends up.

If I want to take some coordinates and perform distance or routing operations, it’s just a matter of hooking up a lat/long feed to a geo library and calling the right methods. AI tools, when they aren’t imagining APIs or data structures, can easily map and translate into whatever the receiving libraries expect. All I have to do is describe what my expected inputs and outputs are and the AI tool roots around in open source repositories to claim and mimic work that approximates what the designer/developer intends. Once it comes back with something, about 20% of the time it should be tossed; otherwise only some tweaks here and there make it sort of run.
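For a sense of what such a distance call boils down to, here is a minimal sketch in plain Python. It uses the haversine great-circle formula rather than any particular geo library, and the function name and the 6371 km mean Earth radius are assumptions made for illustration; a real library would typically use a more accurate ellipsoidal model.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius (spherical approximation)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two lat/long points given in degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlambda = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


# A quarter of the way around the equator.
print(f"{haversine_km(0.0, 0.0, 0.0, 90.0):.1f} km")
```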

AI is just getting started. It is like the Model C type car. A bit useful but not a Model T quite yet.

Whoops, what happened to the Model A?

Yes, I know. But not a good analogy.