Opinion by Kip Hansen — 2 February 2020

The attacks continue in the Journals of Science against the EPA’s proposed “Strengthening Transparency in Regulatory Science” rule — commonly referred to as the Secret Science rule.

[WARNING: This is a long Opinion Piece — only those particularly interested in this policy issue should invest the time to read it. Others can click back to the home page and select another posting. — kh ]

The latest salvo comes from two scientists: David B. Allison, PhD, of the Indiana University School of Public Health–Bloomington, and Harvey V. Fineberg, MD, PhD, of the Gordon and Betty Moore Foundation.

David Allison is known as a long-term critic of faddish obesity research and “in 2008, Allison resigned as president-elect of the Obesity Society after signing an affidavit (expert report) stating that there was insufficient scientific evidence available to determine whether a proposed law to require calorie counts to be listed on restaurant menus would be effective in reducing obesity levels.” [ source ] and “has been described as one of the leading skeptics regarding commonly issued nutrition advice”. [ source ] Further, “the National Institutes of Health is currently funding Allison to explore statistical tools to improve research reproducibility, replicability, and generalizability so as to contribute broadly to fostering fundamental creative discoveries, innovative research strategies, and promoting the highest level of scientific integrity in the conduct of science.” [ source ].

Harvey V. Fineberg is the president of the Gordon and Betty Moore Foundation. Moore was a co-founder of INTEL. Fineberg was also previously a Dean of the Harvard School of Public Health (now Harvard Chan School of Public Health). He has been involved with the National Academies of Science committee on reproducibility and replicability in scientific research.

These credentials are very solid for speaking up on the subject of what science should be used to set public policy. Both of these men have been active in combating what is known today as the Replication Crisis. This crisis in science is not a figment of the imaginations of a few skeptics and science-deniers. It is a very mainstream problem, both for specific science fields, like psychology, and for science in general.

You would think then that both of these scientists would support the “Strengthening Transparency in Regulatory Science” rule whose purpose is to:

“EPA should ensure that the data and models underlying scientific studies that are pivotal to the regulatory action are available for review and reanalysis. The “Strengthening Transparency in Regulatory Science” rulemaking is designed to increase transparency in the preparation, identification and use of science in rulemaking. When final, this action will ensure that the regulatory science underlying EPA’s actions are made available in a manner sufficient for independent validation.” …. “…the science transparency rule will ensure that all important studies underlying significant regulatory actions at the EPA, regardless of their source, are subject to a transparent review by qualified scientists.”

Yet, we find that Allison and Fineberg have joined forces as co-authors of two “shotgun” opinion/editorial pieces (simultaneous pieces in different journals), each being lead author on one and co-author on the other.

EPA’s proposed transparency rule: Factors to consider, many; planets to live on, one — by David B. Allison and Harvey V. Fineberg [published in PNAS as an Editorial]

and

The Use and Misuse of Transparency in Research — Science and Rulemaking at the Environmental Protection Agency — by Harvey V. Fineberg and David B. Allison [published in JAMA as a Viewpoint/Opinion piece]

Each of these articles uses rather peculiar logic to oppose the EPA’s proposed rule that would require that pivotal studies upon which public policy is based should be transparent and have their data and methods made available for independent validation by qualified scientists.

Allison, in his PNAS piece, uses the following:

“All other things being equal, absent the opportunity to fully inspect the data, methods, and logical connections of a study, scientists are less able to judge the validity of conclusions or the truth of propositions drawn from a study. …. Generating and evaluating the scientific evidence to form conclusions about the truth of a proposition is fundamental to the work of science. Notably, the Environmental Protection Agency (EPA) is not a scientist; it is a regulatory agency. EPA employs scientists and uses science to aid in its mission, but its primary mission is regulation and the protection of the environment and the public health, rather than simply drawing scientific conclusions. Regulatory decisions can, are, and should be informed by science. But science alone is not dispositive of regulatory decisions, and one should not conflate the role of scientists qua scientists with the role of scientists working in a regulatory process. Scientists working in a regulatory process must utilize the best information available to fulfill their charge of making decisions that benefit society, often under conditions of uncertainty.”

Somehow, scientists working at the EPA, “working in a regulatory process”, are not qualified “to form conclusions about the truth of a proposition” presented in scientific research – even though that is the very purpose of allowing scientists “the opportunity to fully inspect the data, methods, and logical connections of a study”. Still Allison says that “scientists working in a regulatory process must utilize the best information available” — but states that they may, or even should, be denied access to the evidence necessary to form conclusions about the validity of findings presented to them.

Allison seems to intentionally obfuscate the issue by conflating replication with EPA’s stated desire to be able to have “independent validation”.

“Certainly, reproducibility and replicability play an important role in achieving rigor and transparency, and for some lines of scientific inquiry, replication is one way to gain confidence in scientific knowledge. For other lines of inquiry, however, direct replications may be impossible due to the characteristics of the phenomena being studied. The robustness of science is less well represented by the replications between two individual studies [or reproduction of one or more studies] than by a more holistic web of knowledge reinforced through multiple lines of examination and inquiry”

Independent Validation of a study’s findings, or of the findings of a series of studies, is not the same thing as replication and not the same as reproducibility.

The National Academies offer these definitions:

Reproducibility means computational reproducibility—obtaining consistent computational results using the same input data, computational steps, methods, code, and conditions of analysis.

Replicability means obtaining consistent results across studies aimed at answering the same scientific question, each of which has obtained its own data.
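The difference between these two definitions can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_study` is a hypothetical stand-in for a study (it simulates noisy measurements of an assumed true effect), and the seeds, sample size and effect size are invented for the example and come from no real data set.

```python
import random
import statistics


def run_study(seed, n=1000, true_effect=0.5):
    """Hypothetical study: collect n noisy measurements and estimate the effect.

    The seed stands in for "which data set was collected"; true_effect and
    the noise level are assumptions made purely for illustration.
    """
    rng = random.Random(seed)
    data = [rng.gauss(true_effect, 1.0) for _ in range(n)]
    return statistics.mean(data)


# Reproducibility (National Academies sense): same input data, same
# computational steps, same code. The result should match exactly.
original = run_study(seed=1)
reproduced = run_study(seed=1)
print("reproduced exactly:", original == reproduced)

# Replicability: a new study that gathers its own data (a different seed)
# while asking the same question. Results should be consistent, not identical.
replication = run_study(seed=2)
print("original estimate:   ", round(original, 3))
print("replication estimate:", round(replication, 3))
```

Note that neither check covers the third case discussed in this essay, where a second team redoes the entire procedure step by step and must then argue about whether it truly followed the same protocol.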

Reproducibility and Replication are two similar terms that are often mistakenly conflated. It is difficult to talk about these terms without exact definitions — but many readers may, like myself, not quite agree with the above definitions. Neither covers the most basic action considered to be replicating a study — doing exactly the same thing over again and seeing if the results are the same — not just the computational steps, but everything. We see complaints when there are replication failures (when studies are found not to reproduce/replicate, as in the Ocean acidification fish behavior studies) that the reproduction/replication team did not follow exactly the same procedures — “that’s why it failed to replicate”, they say.

The National Academies has produced this poster on the subject:

[ click to download full-sized .pdf ]

I generally think of this whole topic as two separate scientific activities: one is an attempt to recreate exactly all the steps and procedures of a previously reported experiment to see if one gets the same results and the other is doing a similar experiment(s) meant to elucidate the same natural phenomena [phenomenon] — to see if one can ascertain if a reported effect really exists in the real world.

I fear when Allison speaks of “a more holistic web of knowledge reinforced through multiple lines of examination and inquiry” he means “lots of studies done by groups of like-thinking researchers who all support the same bias.” Ioannidis referred to this as “research findings may often be simply accurate measures of the prevailing bias.”

Allison quotes “Importantly, confidence in results can be obtained in other ways. These include peer review, replication [defined as “obtaining consistent results across studies aimed at answering the same scientific question, each of which has obtained its own data”], demonstration of generalizability, and yet other procedures.” I am afraid that Allison slips off the tracks when he makes the claim that Peer Review can give us confidence in results. Allison is active in the Replication Crisis arena and knows full well that, despite the peer review process of all important journals, most research findings, across nearly all fields, are probably false. Note that Ioannidis is not just talking about epidemiological, psychological or nutritional research; he is talking about medical and clinical research as well.

Recent findings in the Ocean Acidification field demonstrate Allison’s hope that “confidence in results can be obtained” by peer review and “consistent results across studies” is a false hope. Psychology discovered this sad-but-true fact years ago. What is required to obtain true confidence in results is Independent Validation.

The tendency in many fields of research that are “popular”, such as nutritional epidemiology, environmental health issues and climate science, is to claim that if one has many studies that all show the same small and tentative associational results that this then represents “proof” that has been “reinforced through multiple lines of examination and inquiry”. That concept is not true and is not scientific. Having lots of studies with very small associational results means that there might be something that deserves further rigorous study — and this comes with the caveat that there might well be nothing there at all.

Allison actually tries to make the point that EPA not only can, but should, make policy based on science that is not definitive.

“Given this, many studies which might be uniquely informative and offer sound scientific evidence on which to base policy decisions might be excluded from the process. Again, this might be fine for a scientist qua scientist drawing conclusions about the truth of a proposition who might justifiably state that he or she is unwilling to declare a proposition to be demonstrated unless some rigorous standard of science has been met, but it is not appropriate for a decision-making entity which has the goal of making prudent decisions. Such a decision-making entity should base its decisions on the best available information, even when that best available information may not support definitive scientific conclusions.”

In other words, Allison promotes making regulations based not only on strong evidence, but on prevailing bias.

Allison raises the false flag of “patient confidentiality” as almost all detractors of the EPA proposed rule do, without any specific-to-EPA examples — always vague hand-waving about some other science somewhere else. There is a valid question regarding patient confidentiality — but it does not exclude serious scientists from reviewing data (for which permission has already been granted by the study subjects) for the purposes of validation. Obviously, these subsequent scientists would be under the same obligation of confidentiality as the original researchers. It has never been EPA’s position that any and all data from all studies must be made available to the general public.

After arguing that: “Should EPA trust all reported conclusions from scientific papers without probing further and, where reasonable, requiring that the data and studies be made reproducible and transparent? In our opinion, no.” he then falls back on “As we have argued here and elsewhere, reproducibility and public availability of data, while valuable, are neither necessary nor sufficient markers of the soundness of science and are not the only indicators of the soundness of science. Therefore, relying only on reproducible studies and publicly available data cannot be taken as equivalent to using the best available science, and adopting such a restrictive policy would be contrary to EPA’s responsibility.”

Allison raises the issue of trust — and concludes that EPA and its administrators cannot be trusted to identify the best available science under the proposed new rule. Yet, he apparently trusts them to have done so in the past, when they selected the science upon which to base regulations. It is apparent that Allison fears that some preferred science in the past is in danger of being invalidated under the proposed rule. Which science? Secret Science — science used to formulate regulations in the past for which the data and methods are still being hidden from EPA and from independent review by other qualified scientists.

In all of Allison’s long and wandering dissertation about trust and replication, he neglects the simple and obvious fact that EPA has always been trusted to have and to hold the scientific data necessary to fulfill its functions. Someone has always had to decide what science is valid and applicable to every regulatory decision. The fear that bias might be injected into the process does not only apply to the future under the proposed rule, but has always applied equally in the past — past biases may have driven policy making. This is the point of requiring that data and methods be available for independent validation by disinterested, qualified scientists.

I have demonstrated, in my recent essay, Secret Science Under Attack — Part 2, how weak and uncertain the science of the Harvard Six Cities Study and the American Cancer Society study known as CPS II was. James Enstrom, of UCLA and the Scientific Integrity Institute, in a recent letter to Allison, includes a link to his 22-page document in support of the EPA’s proposed “Strengthening Transparency in Regulatory Science” rule, which includes a massive amount of information concerning the weaknesses and faults of the past EPA’s scientific justification of air pollution regulations, especially those concerning PM2.5. Readers with deep interest in the subject should download Enstrom’s document and use the links and references as a topical guide to the literature that does not support the current PM2.5 regulations.

The JAMA opinion piece by Harvey Fineberg is easier to discuss, though just as logically odd. Fineberg freely admits:

“Transparency in science is a laudable goal. By describing with sufficient clarity, detail, and completeness the methods they use, and by making available the raw data that underlie their analyses, scientists can help ensure the reproducibility of their results and thus increase the trustworthiness of their findings and conclusions.”

but he then falls back on one of the same arguments presented by Allison:

“At the same time, transparency is not in and of itself a definitive standard for the usefulness of science in policy making.”

And, that is true — simply because some piece of science is transparent and its data freely available, does not mean that it is suitable as a basis for policy making. However, if it is transparent and data is available, then other scientists, both at EPA and elsewhere, can easily determine if it is suitable, or not.

Raising the same false flag of “patient confidentiality”, Fineberg launches into the oh-so-typical spiel of:

While sometimes falling short in its use of science, the EPA has traditionally strived to base regulations on the best available scientific evidence. For example, in 1997 the EPA adopted new air pollution regulations based mainly on 2 large epidemiological studies. The Harvard Six Cities study had begun in the 1970s to monitor the health of more than 8000 adults and children in 6 cities over 15 years while simultaneously tracking levels of air pollution, mainly related to burning of fossil fuels to generate electricity. Published in December 1993, the study found a strong gradient of mortality associated with increasing levels of airborne small particulates (diameter <2.5 μm). A second, independent study by the American Cancer Society followed 500 000 people in 154 cities for 8 years and reached similar conclusions in 1995.

Out of the thousands of research studies that the EPA must have used over the last 25 years to support regulations of differing kinds, Fineberg, like nearly all other detractors of the EPA proposed Secret Science rule, is concerned about ONLY TWO studies — the Harvard Six Cities study and the American Cancer Society study, known as CPS II. These two studies are the scientific backbone used to support the EPA’s current regulation on PM2.5 air pollution and their [I believe “entirely unsupported”] claims of “thousands of lives lost every year”.

The National Academies poster on Reproducibility and Replicability above [repeat link] does not call for use of non-definitive science — science which does not really answer a question — but warns:

“One type of scientific research tool, statistical inference, has an outsized role in replicability discussions due to the frequent misuse of statistics and the use of a p-value threshold for determining “statistical significance.” Biases in published research can occur due to the excess reliance on and misunderstanding of statistical significance.”

and

“Beyond reproducibility and replicability, systematic reviews and syntheses of scientific evidence are among the important ways to gain confidence in scientific results.”

Neither opinion piece, by Allison/Fineberg or by Fineberg/Allison, makes a strong case for opposing the EPA’s proposed “Strengthening Transparency in Regulatory Science” rule.

Bottom Line:

If we want governmental regulations based on strong science, then that science must be fully available for review and validation by qualified, disinterested (not involved in the policy fight) scientists. That’s transparency.

Yes, we do want EPA’s internal scientists to be able to review, re-analyze and validate any science that is going to be used to make policy and regulations.

Yes, we do want other qualified statisticians and epidemiologists and clinical researchers to be able to see all the pertinent data, all the methods, all the computer code, all the statistical assumptions — everything. We want them to find the strengths and weaknesses of research results so that follow-up research can be done and to prevent costly and destructive policy from being based on research fads and prevailing biases in fields of research.

No, I do not want to take your word for it. I don’t care how many letters you have behind your name or what school you went to, who your mentor was or who your research pals are. I don’t care how many important names you can get to sign on to your paper as co-authors. If your research is important enough to have weight with policy makers and regulators, then I want that research independently validated. I don’t want to personally pay, nor do I want society to pay, for your hubris and pride.

# # # # #

Author’s Comment:

One way in today’s world to tell if something is a good idea is to gauge how much certain segments of society protest against the idea. The better the idea, the more the outcry.

EPA’s Secret Science rule is a fine example of this. In calling for transparency, a broad cadre of scientists, researchers and journal editors have simultaneously realized, I think, that Independent Validation based on all-the-data-and-code-and-methods transparency will reveal that their favorite bedrock environmental studies are like the Emperor’s-New-Clothes: not much there.

The National Academies poster on reproducibility [pdf] contains this odd [to me] point as its #6: “Not all studies can be replicated. While scientists are able to test for replicability of most studies, it is impossible to do so for studies of ephemeral phenomena.” They only have ten points on the poster and they include this one? Readers, please, can you give important examples of this principle? You can buy the NAS book “Reproducibility and Replicability in Science” as an eBook for about US$ 55 — it may explain ephemeral phenomena.

Addressing your comment to “Kip…” will bring it to my attention.

# # # # #

“ephemeral phenomena”

That’s like when you see a UFO, but it’s gone before you can take a picture of it.

David Blenkinsop ==> Good one! (That’s the kind of thing I was afraid they were talking about….)

Kip just a friendly suggestion. You should remove your warning.

It is an excellent posting on policy, and gets to the heart of the politics of creating Hobgoblins. It is a must read for the vast middle who don’t understand the truth of the CAGW scare. Your warning can only serve to run away the very readers that need it. Consider:

1st – Your post, really, is not that long.

2nd – Readers are fully capable of paging down to see how long a post is.

3rd – If you fear it is too long then replace the warning with a bullet point executive summary for those who fear detail

I am more fatigued by the length of the comment board than the length of your posting

Bill ==> Thanks for your suggestion.

I have written about 150 essays here — and have learned what all journalists learn eventually — a majority of readers only get through the lede and then quickly lose interest.

Secret Science 3 is over 3,000 words — my normal limit is 2,000 words….thus the warning.

Appreciate your viewpoint, in any case. Thanks for being interested and speaking up.

I have had people comment after reading only the title.

A simple warning of a long essay not only warns casual readers but can act as a hook to make some readers curious enough to continue.

Kip,

[ You ] have had people comment after reading only the title shows:

– your title transports the topic

– the commenter is acquainted with the topic; and may help to contribute on its own risk.

Which should be in your mind.

Johann Wundersamer ==> Essay titles are like newspaper headlines — they are meant to introduce the topic and attract the eye of readers — encouraging them to begin reading. When they begin reading, they get the lede. In journalism, the lede is intended to be the important stuff of a story. In other types of writing, an essay or article can begin with “the hook”: “The “hook” is that critical piece of newsworthy information that will capture the attention and interest of … audiences.”

Journalists have different methods and abilities at these two parts of the trade.

“I don’t care how many important names you can get to sign on to your paper as co-authors. If your research is important enough to have weight with policy makers and regulators, then I want that research independently validated. I don’t want to personally pay, nor do I want society to pay, for your hubris and pride.”

Absolutely completely agree with you Kip.

The secret science of the past EPAs, science where public policy is controlled, and those who want to keep it secret, must end.

The corruption of science behind a hidden agenda of ideology must end… for science sake.

Transparency is the bleach that washes away whitewash pseudoscience fabrications to meet personal political agendas. Agendas from those who think they know what is right, but are usually quite wrong.

Joel O’Bryan, PhD

“the bleach that washes away whitewash pseudoscience fabrications”

That is one of the worst mixed metaphors I’ve seen since Shakespeare’s “slings and arrows”. In fact it’s worse, I’d say it’s unprecedented !

Apart from that you are spot on. The alarmists constantly try to claim the authority of science while doing their damnedest to throw the whole of science under a bus to force through their mindless agenda.

Yes, a pretty bad metaphor. I agree.

You got me.

I’ll try to do better going forward.

Admit you are wrong? Bad “scientist”, how DARE you!

Sorry, admitting you are wrong will NOT get you a chair at Harvard. Resubmit your resume and leave that out.

the problem is “peer review”….and how it’s been corrupted to mean truth

peer review is just advanced speel chex….peer review gets it out there so others can see it and try to replicate it

..and then if it holds up….it eventually becomes a maybe truth

science has gotten lazy…and is under pressure to publish or perish….once something is cited enough it’s almost impossible to back up

“doing exactly the same thing over again and seeing if the results are the same”

Climate models can’t even replicate themselves.

Jeff ==> Climate models are inherently chaotic — extremely sensitive to initial conditions — therefore NEVER return the same results unless started with exactly the same parameters.

Dr. O’Bryan ==> Thank you for your support. We are dealing cadres of like-minded researchers enforcing biases across whole fields of investigation. Ruinous when there “science” hits the policy-makers.

I’ve studied environmental epidemiology for a number of years. I made a list of the negative studies, air quality/health effects, that show no association with adverse health effects.

https://junkscience.com/2018/06/negative-studies-and-pm2-5/#more-93941

I’ve also published papers finding no mortality effects of PM2.5 and ozone in California, ~2M e death certificates.

Stan ==> Thank you for weighing in — Jim Enstrom pointed me to some of your work in the field, which I have found enlightening and enjoyed reading.

READERS: see Stan Young’s list of PM2.5 studies linked above — well worth your time.

“Thank you for your support. We are dealing [with] cadres of like-minded researchers enforcing biases across whole fields of investigation. Ruinous when there [their] “science” hits the policy-makers.”

I realize it’s just a comment, but I know how meticulous you are, Kip. 🙂

Jeff ==> Thanks for your careful reading == I like to blame the auto-spelling feature (though it is often my fingers that are at fault….)

Reproducibility and Replicability is a rewarding front on which to attack the pseudo-science of the Warmistas, as they depend to a large degree on Secret Science, more accurately described as propaganda. Warmistas do not like to subject their speculative pronouncements to any sort of Independent Validation. This is the same reason that they do not like public debates as they cannot even survive that.

Indeed. Alarmists keep telling us that the Hockey Stick has been independently validated many times. Problem is, those “validations” almost always have one or more of the same authors, not really independent. Climate Audit is a gold mine for this sort of thing.

It’s unfortunate that two scientists who have engaged in tackling the reproduction crisis seem to have fallen prey to the same group-think which is causing the problem.

The politically corrupt body which is the target of these new rules on openness and transparency, the federal EPA, is riddled with group-think, bias and political activism masquerading as science.

Since its rules are aimed not just at protecting the environment but at pursuing a political agenda, there are many there who are prepared to bend the rules, distort and misrepresent science “for the cause”. Classic noble cause corruption.

It seems these two champions of “good science” are victims of the same noble cause corruption; they are contorting their own arguments to say: but this is climate regulation, THAT’S DIFFERENT.

Greg,

Yes

Classic case of Noble Cause Corruption.

And the downfall of science.

The destruction of ethics.

Ideology subverts science.

Circa 1620 AD.

Greg ==> In this particular battle, of the hundreds of attacks in the EPA’s proposed rule, the opposition is peculiarly aligned behind JUST TWO studies: the Harvard Six Cities study and the CPS II. I suspect that if the wall of secrecy of these two studies is breached, there will be a domino effect of weak and iffy science all tumbling down and careers crashing.

….attacks on the EPA’s proposed….

Jim Enstrom secured the data for CPS II. He found that the original study did not use all the available data. Using all the data, he found no effect.

Stan ==> Yes, I linked to Enstrom’s work earlier in the series.

It’d be nice, KIP, but don’t agree. There’s always a juggernaut of more money & apparatchiks behind the “wall” ready to fill in any breach.

beng135 ==> i hope you’re not right.

Kip – Possibly I’m just being cynical (I doubt it) but the coordinated use of those TWO studies is probably, well, coordinated. I think the memo went out and is being dutifully obeyed, as usual. The two opinion pieces you review were also coordinated: the Empire Strikes back.

Redefining ‘reproducibility’, a fairly self-explanatory term (if you do something once and get a result, do you get the same result when you do it again), as some jargony ‘computational reproducibility’ is bewilderingly illogical. If you don’t get the same results when using the same data, calculations, methods and conditions, then you don’t have anything at all. Most experiments would address the last three of these internally by replication to assess any experimental error in the data. Sounds like Newspeak to me.

Anyway, pretty interesting, if depressing, series on the assault against scientific transparency. Thanks, I guess.

Bureaucracy:

Rule #1 –> Expand power and people.

Rule #2 –> See Rule #1

Rule 3: Failure gets you more money to “fix” problems. Success usually the opposite.

Kip & Greg,

Yes!

Allison & Fineberg are using “Occham’s Shoehorn”*: a new device that helps you squeeze the data into your preconceived narrative.

* – a new term I just read in Lee Jussim’s delightful Quillette article:

//quillette.com/2020/01/29/an-orwelexicon-for-bias-and-dysfunction-in-psychology-and-academia/

Zippered ==> Loved the Quillette piece: Re-doing the link:

https://quillette.com/2020/01/29/an-orwelexicon-for-bias-and-dysfunction-in-psychology-and-academia/

This sort of twoke vocabularianism in embiasing.

That is an accurate representation of the way the issue is presented but is also a deceitful way of representing what such studies show to make it sound more dramatic.

Nearly all such epidemiological studies are statistical results claiming to show “early deaths”, sometimes by as little as just a few days “earlier” in the stats.

To present this as “lives lost” means someone died who would otherwise have survived. Not losing a few days of incontinent senility that I would probably be willing to trade away for a packet of nuts.

If the choice is not having my own independent transport and having to bus or walk to work against a statistical risk of dying 6 days earlier in a lousy retirement home at the age of 95, I’ll take the PM2.5s any day of the week.

How many days of my life will be lost by the EPA refusing me access to time saving transport? Once again there is no cost/benefit assessment.

Won’t you please think of poor Nemo?

https://www.msn.com/en-au/motoring/research/tyre-emissions-1000-times-worse-than-exhausts/ar-BBZvfoU

Personally I’ve found that ‘ephemeral phenomena’ a superbly sublime cudgel to beat sanctimonious EV gits over the head with now. As in- Are you aware of the latest scientific research that shows….. and what do you think we evil pollooders should do about it now we’re all in this together? Petard.. hoist!

+1

I am going to visit mom in Florida shortly. She is 94. I’ll ask her about some of the trade-offs she has had to make over the years.

To Greg.

Instead of using “early deaths” they have been using “premature deaths”.

At a seminar presentation, I asked the speaker to give me the number for “premature”. Weeks, Months, Years? No answer. Premature is premature!

Kip is doing a good presentation on the fact that EPA is refusing data access.

Kip,

surely most events involving humans are ephemeral? How would you suggest people study whether or not humans were responsible for the extinction of megafauna? It only happened once and is never going to happen again. Similarly many events in the historical sciences (geology, evolutionary biology) only ever occurred once and will never occur again — the rise of photosynthesis for example?

In astronomy many events are also essentially ephemeral. The last supernova in our galaxy occurred several hundred years ago and while we might see another one we are never going to see enough to get decent statistics on anything.

None of your examples, even if they were all correct, are relevant to regulatory practices. Thus they are not relevant to the topic at hand – which could be characterized as being about speculation on what might happen in the future if such and such is or is not done.

The EPA uses “ephemeral” for things like waterways and gullies (I personally love the gullies one). Ephemeral streams: momentary running water. Not the same as intermittent, which is predictable by seasons and so forth. It allows the EPA to expand its influence over virtually everything in your life, and the definition is, well, ephemeral. Lovely Catch-22.

Izaak Walton ==> I don’t think that “nearly unique” or “only happened once in universal history” is what they are talking about as “ephemeral phenomena”. I would like to see some examples…..certainly examples as it applies to replicability or reproducibility.

Kip,

how about studying the effects of dropping nuclear weapons on cities? Or the effects of the Bhopal disaster? Data from such incidents is useful but hopefully won’t be replicated any time soon.

Izaak ==> The data collected by the original researchers in such cases was already collected after the event. That data should be available for re-analysis. We don’t need to drop more nuclear weapons or poison more people — we just need access to the data that was collected — and we need to apply our scientific sensibilities to the notion that, with the event gone by and the immediate effects no longer available for observation — that our conclusions will be tentative and prone to unknown and unknowable error.

Kip,

now you are dodging the question. Is the Bhopal disaster an “ephemeral event” that is an example that applies to “replicability or reproducibility”. Being able to re-analyse the data is a completely different question.

Izaak ==> We are talking about research findings about “ephemeral phenomena” — whether or not that research could be replicated. See the NAS chart in the essay — #6.

It is not the event that needs to be replicated — it is the research about the event and its findings.

“The last supernova in our galaxy occurred several hundred years ago”

Not even wrong. There are most likely many supernovae going on right now, we just won’t see them for hundreds, thousands, millions of years, if at all.

Hi Jeff,

The key part of the sentence was “our galaxy”. The last observed supernova that occurred in the Milky Way was in 1680. On average there should be one supernova every 50 years in our galaxy so one is overdue. See

https://www.skyandtelescope.com/astronomy-news/milky-way-supernova-rate-confirmed/

for example.

Of course plenty of other supernovas have been observed since 1680 just none in our galaxy.
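As a rough check on “overdue”, here is a minimal sketch that treats galactic supernovae as a Poisson process at the quoted rate of one per 50 years and asks how likely a gap from 1680 to 2020 would be. The Poisson model, the 2020 end date, and the function name are assumptions for illustration only, and the calculation presumes every event would have been visible from Earth.

```python
import math

def prob_no_events(rate_per_year, years):
    """Poisson probability of seeing zero events in the given span."""
    expected = rate_per_year * years
    return math.exp(-expected)

# Figures taken from the comment: one supernova per 50 years, last seen in 1680.
rate = 1 / 50
years = 2020 - 1680  # assumed end date: the year of this discussion

expected_count = rate * years
p_none = prob_no_events(rate, years)
print(f"expected events: {expected_count:.1f}, P(none observed) = {p_none:.4f}")
```

Under those assumptions a gap this long would be very unlikely, which points either to a lower true rate or to events that happened but were never observed.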

Do you think we have been able to observe every supernova in our galaxy since 1680? I think you overestimate our ability to observe the entire galaxy.

Not an ephemeral event. The evidence of it having happened is still available. Same is true of supernova. From the context of usage of the term I would say that daylight meteorite falls and the green flash are examples of ephemeral phenomena. Science may have some difficulty with such happenings initially, but eventually understands the circumstances of occurrence well enough to gather data or model the phenomenon. A true ephemeral phenomenon the likes of which you seem to be suggesting is something that happens only once, leaves nothing behind. That can’t possibly be the basis of regulatory policy, nor even of science.

Kevin ==> I agree, research on ephemeral events can’t be the basis of policy…not in my opinion.

sorry kip — my reply was actually to izaak walton, but got misplaced.

Kevin ==> No worries – it’s like a conversation at a party sometimes — one is not always sure what comments are aimed at who.

Procedurally, It helps if one always starts a comment with the name of the person you are speaking to. The NY Times has started automatically beginning replies to comments with @persons_name.

I have always used my own mark-up, “Kevin ==> message”. I have been using it so long now that I doubt I’ll change to the more popular “@…”

Reproduce vs. Replicate

The definitions and distinctions between the terms are a quagmire. However, defining reproducibility as computational reproducibility is just BS.

I don’t see how that would yield other than 100% reproducibility.

There is a book, Rigor Mortis written by Richard Harris, which focuses on work by Amgen and Bayer to try to replicate published research findings as the first step in developing new drugs. It refers to the fact that scientists are often unable to reproduce their own experimental results.

The useful distinction is that reproduction does the experiment exactly the same way. If the results aren’t the same, then the experiment is, on the face of it, unreliable.

Replication attempts to validate the results using different methods. If you get the same results several different ways, the results are more trustworthy.

The problem is that some specialized fields have definitions of replication and reproduction which are exactly opposite to the ones above. For instance, when you talk about the replication of a data packet, you mean an exact copy. The nuance is that those fields, like data science or biology, use the terms replicate and reproduce in a different context than discussing published research findings.

Using field specific definitions of reproduction and replication to discuss the validity of published research findings is unhelpful. The context is completely different.

In any event, we need a term that means, “we did the work exactly the same way” and the results were, or were not, different. I would argue that that term is ‘reproduction’.

Agreed – computational reproduction only proves that you are smart enough to not try perpetrating an obvious fraud.

commie ==> Yes, I ran into the confusing/contrary definitions problem writing this series. The National Academies has a whole book (linked in the essay) on the topic, and they have supplied that “computational reproducibility” bit — with which I DO NOT agree. The NAS definitions leave out the most common-sense pragmatic action of replication/reproduction of findings.

To me, reproduce means to “produce again” the same thing. In other words, do exactly the same thing with the same inputs. Where replicate means to make a copy of something, which by implication is not exactly the same as the original; the inputs and methods may be somewhat different but the output is the same (or nearly). To me this means there is no contradiction in terms and it is clear which should be used when. For example, when we say a data packet has been replicated, it means we made a copy, not reproduced it using the same input data and computations as the original.

Paul Penrose ==> In the essay, I quote the National Academies definitions from their big (book length) study of R and R.

I don’t agree with their definitions — they leave something big to be desired.

[] focuses on work by Amgen and Bayer to try to replicate published research findings as the first step in developing new drugs.

It refers to the fact that scientists are often unable to reproduce their own experimental results –> It refers to the fact that scientists with every new development have to start from zero, even if there’s already experience in that sector.

That paper is worth a look:

https://www.nature.com/articles/s41558-019-0677-4

Forget CO2

Don’t think there’s much to be seen there – they apply a “model ensemble” (sound familiar?) to “determine” what would happen with less “ozone depleting substances” – which does nothing more than reflect the (undoubtedly wrong) input assumptions into the “models.”

Since the whole “ozone depleting substances” basis for the Montreal Protocol has been identified elsewhere as junk science (just like CO2 “driving” the Earth’s climate), I think that paper is just more junk science.

The problems with reproducibility and transparency are very deep and very wide. Scientists at the tops of their respective fields are unlikely to welcome any reform that may threaten their positions. Nor are they likely to welcome as their replacements (upon retirement) people who will take actions implicitly critical of their predecessors.

The only action with a reasonable prospect of success is wholesale culling of the upper echelons of the various sciences and promotion of those dedicated to the scientific method. (Gross oversimplification.) Unfortunately, this will reduce the likelihood of scientists delivering the ‘right’ (read ‘palatable’) results to a ruling political class whose relationship with the truth is frequently adversarial in nature. Therefore, the will to take the necessary drastic action is nonexistent.

The famous ‘self-correcting’ quality of Science will only come to the fore when the errors are too egregious to be denied. While the cracks in the edifice can be ignored or papered over, they will be.

The EPA reform is highly desirable, but I expect it to be watered down and/or ignored by those whose power and prestige have been enhanced by practising dodgy science. I hope to be proved wrong, but fear and self-interest almost always trump matters of principle.

Well said. Too many egos at stake for them not to fight the notion of their “results” being “questioned,” especially those that have been trumpeted to the public already.

Lefty ==> It is unfortunate that this issue is almost entirely political — and its resolution will depend on the outcome of the next U.S. Presidential election.

Sabine Hossenfelder puts it this way:

Professional judgement is only ‘gut feeling’ until it is substantiated. Allowing people to use their professional judgement as the basis for developing regulations just results in bad regulations. Over regulation is a severe systemic problem which affects the viability of our society and economy. It’s not too much to expect regulations to be the result of robust science. Of course, when science is sufficiently robust, it becomes engineering. 🙂

commie ==> There is a lot of science being done to confirm the prevailing bias in many fields, and not enough effort is being made to dis-confirm these biases in their fields of study.

The Secret Science battle today is focused on the PM2.5 studies — which are so weak that in other fields they would be declared “nothing found” — which are emotionally so important to so many who have based their careers on finding tiny, iffy, “maybe” associations which are then declared to be costing (or saving) “thousands of lives”.

Other fields, outside of air pollution, are filled with similar “foundational” studies that are scientifically flawed — which if brought down, will collapse whole bulwarks of shoddy science.

There are some brave scientists risking their careers trying to correct all of this.

I have a suspicion that if the rule was proposed by Obama or some other President not named Trump, there would be much more support for it.

DHR ==> The issue is flamingly political. So many liberal/progressive causes are supported by un-sound science — the Sixties flower children now in positions of power — thinking that they can glow everything right. There are so many things that they “don’t like” and they have built card-houses of pseudo-science to bolster their views.

Those nasty conservatives are threatening those houses of cards.

I’d like to see a pot of government gold or foundation funds dedicated to replication – with an inherent bias to disprove claims. While this use of the editorial pages and PNAS is legit, too often those vehicles are abused.

It is like those “expert” scientists who make dubious claims but hide their work behind firewalls – not availing of open source journals and encouraging transparency. Perhaps they do not want to allow non-scientists to point out the flaws in their logic and reasoning.

Publishers, not scientists, hide behind firewalls.

Journals establish their cachet by having great research. This makes the journal worth paying for. And thus the journal is viable across time.

The alternative is: scientists pay for their share of the publication costs. We see where this has gotten us: there is now a growing topic of how to tell a decent open-access journal from a fly-by-night operation.

Thanks for this post, and the series, Kip.

Regards,

Bob

Bob ==> appreciate your support and hope you are doing well, — healthy and happy.

They just don’t want their fraudulent papers exposed. If the EPA checked them, the world would find out that they are not scientists but just everyday hustlers.

Apin ==> Their papers are not really fraudulent — they are based on an invalid scientific viewpoint — a very odd idea that lots of itty-bitty findings add up to a big strong finding. This is not true — I will write another essay in this series with an example using medical research in which findings which appear much stronger than those in the Six Cities study or CPS II study are declared “no effect found”

Kip, Your reply to Apin said among other things:

“Their papers are not really fraudulent — they are based on an invalid scientific viewpoint — a very odd idea that lots of itty-bitty findings add up to a big strong finding.”

I’d call it having plausible deniability against accusations of fraud. A less than charitable description would be cherry picking. I’m thinking of all those charts where the temperature or sea level rise creep up over time. Colorado University’s Sea Level Research Group steadily increased the 1992-2004 rate of sea level rise from 2.6 mm/year beginning in 2004. By the end of that year it was 2.9 mm/yr, by 2006 it was 3.2, it was upped to 3.4 in 2007, and it was 3.5 by 2016. In 2018 all the numbers were changed and there was a big announcement that they had found an acceleration of 0.084 mm/yr².

Dr. James Hansen said five meters of sea level rise was possible by 2100. Here’s the link:

http://www.columbia.edu/~jeh1/mailings/2011/20110118_MilankovicPaper.pdf

See Figure 7 Page 14 and 15 where Dr. Hansen is quoted:

” The 5 m estimate is what Hansen (2007) suggested was possible”

I’d call that an unsupported assertion.

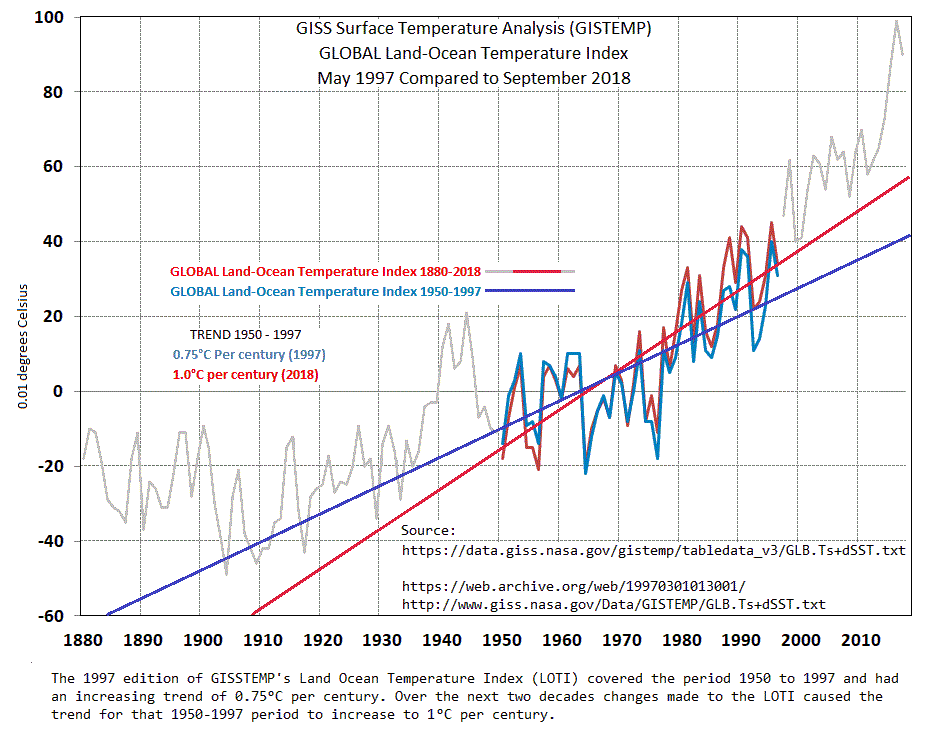

NASA’s GISTEMP is a steady story of changes every month. Here’s a graphic that shows the results of that:

Steve ==> These Public Health guys really really believe that they are right and justified. That’s the odd thing to me — I am following up with an example of medical research that finds RRs of the same magnitude, and declares the whole result a null finding.

Kip. Another fine article. Two comments:

1. I’m a big fan of Ioannidis and of better scientific reporting. But let’s not lose track of the fact that collecting the results of months or years of experimenting with probably numerous changes/improvements in data collection and reduction over the course of the effort is likely to be a LOT of work. I think there may be a real danger of setting standards so high that no one can or will comply with them.

2. “a more holistic web of knowledge reinforced through multiple lines of examination and inquiry …” — I don’t know about anyone else, but I find this stuff to be beyond my ability to parse. Is there an English translation somewhere? Is an English translation even possible?

Don K ==> As for your point #1 == if the researchers have collected enough data to perform their original analysis, then that information is already stored and collected in a usable format — and it is not more difficult to archive it than it was to record it in the first place. Ages ago, this would have been a problem — how to preserve all those lab notebooks with scribbled notations? Today, before any serious analysis can be done, everything is digitized and put in a spreadsheet or database — lab notes are typed in with a word processor and carefully saved as dated files, etc. There are plenty of places in the cloud to safely store gigabytes, even terabytes of original data. External solid-state backup drives are cheap.

The “holistic web of knowledge” sounds like someone went to the University Of Disney.

As a matter of fact, I had my first run-in with the term “holistic” from Eli Wallach, the actor, who was commenting on an EIS (Environmental Impact Statement) I wrote for the Connecticut DOT in the 70’s. I was working as a locational analyst and had to look up the definition in order to respond…

yirgach ==> and how did it turn out in the end?

Every time I hear the words “holistic” and “wellness”, I immediately think “scam”.

“The problems with reproducibility and transparency are very deep and very wide. Scientists at the tops of their respective fields are unlikely to welcome any reform that may threaten their positions. Nor are they likely to welcome as their replacements (upon retirement) people who will take actions implicitly critical of their predecessors.”

AKA “Science advances one funeral at a time”

attrib. Max Planck (but it’s more of a paraphrase really)

Most of the papers showing 97% consensus have flaws. They manage to get them through the Pal review (repeat, not Peer review) system. You can see how quickly those papers are getting published. People with some common sense can even spot problems with those studies. But using models you can show anything you like! This is the way they are misleading the whole of climate science research. If someone wants to publish their genuine concern about those flawed papers via letters or correspondence, the journals never publish those. They are not even sent for peer review. All those manipulations need to be exposed.

Mods

Has the new system started yet ?

If so, where do you register ?

Thank you

“We see complaints when there are replication failures (when studies are found not to reproduce/replicate, as in the Ocean acidification fish behavior studies) that the reproduction/replication team did not follow exactly the same procedures — “that’s why it failed to replicate”, they say.”

I have a PhD son that works in immunology research. He says this is the main bugaboo of so much research – failure to perfectly describe experiment procedures so they can be replicated, followed by not implementing the described procedures exactly. I never realized how many different strains of lab mice there are, all with varying genetic traits. If you don’t perfectly describe which strain of mice you used then it’s a good bet your experiment will fail to be replicated. Even gene manipulation is an inherently inexact process. If you don’t test to find out exactly what your manipulation caused and describe this along with exactly how you did it then replicability will suffer.

“even when that best available information may not support definitive scientific conclusions.”

The governing principle here should be “first, do no harm”. If you don’t know where you came from then how can you judge where you need to go?

“There is a valid question regarding patient confidentiality — but it does not exclude serious scientists from reviewing data (for which permission has already been granted by the study subjects) for the purposes of validation.”

You don’t even need subject permission in most cases to do a replicative study, let alone to evaluate data. Accumulated data is typically anonymous from the outside. Do you need to tag each of the lab mice you use with an ID number and label your data with these IDs in order for others to replicate your experiment?

If an event is ephemeral then why should government regulation be based on it? This just sounds like wanting to base regulation on record events, e.g. the hottest day in the past decade or the largest precipitation event in a century. It’s like basing regulation on an “average global temperature” when you don’t even know what the maximums and minimums are doing (which is where most environmental impacts occur) let alone what is actually happening in your region!

NASA’s global CO2 concentration map shows the US with some of the highest CO2 concentrations across the globe, yet much of the US is a global warming hole. It also shows that CO2 concentrations are not homogeneous around the globe. It shows Russian Siberia with an extremely high concentration while southern Russia and Mongolia have very low concentrations, so exactly how much dispersal of atmospheric CO2 actually happens? And supposedly much of Russian Siberia is a global warming hole just like the US! So, if high CO2 concentrations result in global warming holes and dispersion of atmospheric CO2 is questionable, then *exactly* what should US regulations be? Pushing an empty swing while your child is in the next swing over is a losing proposition. Is the US trying to lower its atmospheric CO2 concentrations the same kind of thing?

Tim ==> Thanks for your input. You have seen through many of the arguments used to fight the secret science rule.

The whole mice strain thing is wild — the public should never even be exposed to any science that is based on mouse studies — they are a fine investigative tool but their results have NOTHING to do with the health or treatment of human beings — the findings of such studies may someday lead to something that may apply to people — maybe.

Kip.

Yes mice strain thing is wild.

Now, the US has the most genetically diverse human population. Have fun with the epidemiology studies.

I think the IARC finding on glyphosate speaks volumes on this topic.

I think part of the problem is that these things are not ‘experiments’. They are not scientific method activities. They are ‘studies’ subject to the ‘garbage in garbage out’ problem with computer models. Being a long term IBM employee involved in decades of computer modeling and data research, I believe that the data analysis available at the time these studies were undertaken is crude compared to today’s, and if we could simply get the data into one of our new analytics engines we might be able to confirm, or at least report, on the actual markers in the information. Sadly, not sure that will ever happen.

Tom Bauch ==> Part of the problem is that they are trying to find effects that may not be there at all — they hope to find something — they NEED to find something because of their pre-existing bias. This leads to Risk Ratios that in other fields would be dismissed as null findings — and even these tiny RRs may have been found through statistical manipulation that did not follow the best statistical practices — may have involved a bit of juggling.

“and if we could simply get the data into one of our new analytics engines we might be able to confirm, or at least report, on the actual markers in the information. Sadly, not sure that will ever happen.”

No amount of analysis will help identify anything based on a “global average temperature”. No controls are laid on the measuring devices concerning altitude or humidity and both can affect temperature readings at any point in time. Couple this with the fact that 70% to 80% of the values used are *assumptions* (called “infill” by the climate modelers) and nothing you find with any analysis is anything but questionable.

It would be like you “infilled” most of the heights of the African population with the average height of Watusi’s. Any analytic conclusions you might reach would be fatally flawed from the git-go!

“How to Lie with Statistics” and throw a hissy when caught. Taught in every AGW class out there, and all government entities.

Sheri ==> It’s a combination of the Wizard of Oz and Chinatown. Ordering the world to “Pay no attention to the man behind the curtain!” and the apathy expressed to Jake by his friend “Forget it, Jake, it’s Chinatown” — implying that the whole situation is so corrupt that one shouldn’t let oneself be discouraged by it all.

Agreed.

Very well done. Thank you for doing all the work necessary to provide such a definitive post, thank you for adding links to the words I have never seen before, thank you for providing the link to the Enstrom paper, and thank you for the excellent bottom line summary.

Roger Caiazza ==> You are welcome for it all — and thank you for noticing some of the time consuming finer-points of what I try to do.

Just to give you an idea how these “benefits” are used: New York State’s offshore wind benefits study (https://cris.nyserda.ny.gov/All-Programs/Programs/Offshore-Wind/Offshore-Wind-in-New-York-State-Overview/Benefits-of-Offshore-Wind) claims “By developing the first 2,400 MW of offshore wind energy, New York will avoid more than 1,800 tons of NOx, 780 tons of SO2, and 180 tons of PM2.5 compared to a business-as-usual scenario without offshore wind energy. New Yorkers will also save approximately $1.0 billion in health costs and, more importantly, avoid about 100 fewer premature deaths.”

Roger ==> Utter nonsense, of course, but par for the course.

I find this subject interestingly similar to computer software issues of Verification and Validation. Verification is making sure a software product is built (programmed) and documented according to its specified design. Validation is making sure the software actually performs the job it was intended to do correctly, in the real world.

It should be obvious that Verification of a software product does not prove its correctness or even usefulness in the real world. Validation can only be claimed if the product correctly produces results that match real world values from multiple independent input sources.
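The distinction can be sketched in a few lines of code. This is a toy illustration only: the stopping-distance “spec”, the function names, and every number in the observation lists are invented for the example and are not real measurements. Verification checks the code against its spec; validation checks the output against independent observations.

```python
G = 9.81  # gravitational acceleration, m/s^2

def stopping_distance(speed_ms, friction=0.7):
    """Hypothetical spec: d = v^2 / (2 * friction * g)."""
    return speed_ms ** 2 / (2 * friction * G)

def verify():
    """Verification: does the code compute what the spec says?"""
    expected = 20.0 ** 2 / (2 * 0.7 * G)
    return abs(stopping_distance(20.0) - expected) < 1e-9

def validate(observations, tolerance=0.10):
    """Validation: do outputs match independent observations within tolerance?"""
    return all(
        abs(stopping_distance(speed) - measured) / measured <= tolerance
        for speed, measured in observations
    )

# Invented (speed m/s, measured distance m) pairs, for illustration only.
dry_road = [(20.0, 29.0), (30.0, 66.0)]
wet_road = [(20.0, 51.0), (30.0, 115.0)]

print("verified against spec:", verify())
print("validated on dry-road data:", validate(dry_road))
print("validated on wet-road data:", validate(wet_road))
```

The same code passes verification in every case, yet it validates only against the inputs its built-in assumption happens to fit, which is the point about needing multiple independent input sources.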

Gary Wescom ==> Very apt comparison. I worked the early web at IBM Int HQ in Armonk…..bunch of crazy guys hauled in to drag the company into the 21st century — only partially successful.

Kip. “Readers, please, can you give important examples of this principle?” [Ephemeral Phenomena]

Maybe they are thinking of observational sciences like astronomy, vulcanology and such where you can’t set up your instruments, calibrate them, then call up a supernova or eruption in order to do your replicative data collection? I’m guessing that there are analogous situations in other fields where timespans, economic considerations, altered ethical principles, humanitarian concerns, changing demographics, etc effectively preclude replication?

Don K ==> That’s an interesting guess — and may well be right.

I’m hoping someone has purchased the NAS book and will quote examples! (Or has access to it through their university library.)

Don K February 3, 2020 at 7:43 am

Kip. “Readers, please, can you give important examples of this principle?” [Ephemeral Phenomena]

Maybe they are thinking of observational sciences like astronomy, vulcanology and such where you can’t set up your instruments, calibrate them, then call up a supernova or eruption in order to do your replicative data collection? I’m guessing that there are analogous situations in other fields where timespans, economic considerations, altered ethical principles, humanitarian concerns, changing demographics, etc effectively preclude replication.

____________________________________

Don, the difference is historical science, Technics and Military.

– Historical Science NEVER refers to “if”, what would have been “if”. Historical Science investigates what WAS.

– Technic, Military have to develop from already known to new challenges with “what would be achieved with if – “.

Johann ==> Maybe — but truthfully, studies of “Ephemeral Phenomena” are unlikely to produce evidence of causal relationships important to public health and used at EPA – nor to have data that needs to be withheld based on personal privacy issues.

From the PNAS editorial

“Unfortunately, the premise for this argument is false. Not all studies can be made reproducible and transparent, even by careful and well-intentioned scientists…….. EPA is wise to take steps to make the science they rely on reproducible and publicly available when feasible. In doing so, EPA will be providing a service to and participating in promoting greater openness and rigor within the scientific community at large. ……Again, in our opinion, the answer is no. Doing so would replace EPA’s legal and eminently reasonable mandate to make decisions on the “best available science””

Fisheries got in trouble using the “Best Available Science.”

They simply do not trust us. From an American Scientist book review on “THE MISINFORMATION AGE: How False Beliefs Spread. Cailin O’Connor and James Owen Weatherall. 266 pp. Yale University Press, 2019.” https://www.americanscientist.org/article/do-people-care-about-evidence

“First, we have to give up on the idea that we live in a free “marketplace of ideas” that allows only the best to prevail. Information has to be regulated to make sure that it conforms to the facts. Second, human beings are too vulnerable to manipulation by misinformation to be able to sustain a democracy. Democracy may be a moral imperative, but we need institutions that allow us to make decisions based on evidence rather than ignorance. These implications may be unappetizing, but they are insights that must be digested if we want a more informed society that makes wiser decisions.” The US is a Representative Republic.

AND

Smith, E. P. 2020. Ending Reliance on Statistical Significance Will Improve Environmental Inference and Communication. Estuaries and Coasts. 43:1–6.

“The American Statistical Association [mentioned in first paragraph of editorial] has published an editorial that presents guidelines for the use and interpretation of p-values. Numerous authors have commented and criticized its use as a means to identify scientific importance of results and have called for an end to using the term ‘statistical significance.’ ……Recent articles in Estuaries and Coasts were evaluated for reliance on the use of statistical significance and reporting errors were identified.” Better late (DECADES OR MORE!!) than never? Nothing in the abstract about communication. I looked in my stat book for p, “We are asking if the sample difference (example) may be no more than sampling variation from the hypothetical difference.”

The statistical knowledge of some of the “climate scientists” is atrocious. Temperature measurements are numbers, i.e. zero uncertainty, zero error. The Central Limit Theory lets you eliminate uncertainty and increase precision by 1/(sqrt N), where they think of N being the number in the total population. You can average populations of temperatures while paying no attention to the variances of the individual populations. On and on.

These folks wouldn’t last a week doing trending and forecasting on stock exchanges or performing quality control assessments in a complicated quality control process.
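The 1/sqrt(N) point can be made concrete with a small simulation. The sketch below is an illustration only: the true value, noise level, and instrument bias are made-up parameters, and the function names are hypothetical. It shows the textbook behavior that averaging N readings shrinks the random scatter of the mean roughly as 1/sqrt(N), while a systematic offset shared by all readings is untouched by averaging.

```python
import random
import statistics

def mean_of_readings(true_value, n, noise_sd, bias, rng):
    """Average n simulated readings: truth + fixed bias + random noise."""
    readings = [true_value + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return statistics.fmean(readings)

def spread_and_offset(n, trials=2000, true_value=15.0,
                      noise_sd=0.5, bias=0.3, seed=1):
    """Repeat the averaging many times; return (scatter of the means,
    average distance of the means from the true value)."""
    rng = random.Random(seed)
    means = [mean_of_readings(true_value, n, noise_sd, bias, rng)
             for _ in range(trials)]
    return statistics.stdev(means), statistics.fmean(means) - true_value

for n in (1, 25, 400):
    spread, offset = spread_and_offset(n)
    print(f"N={n:4d}  scatter of mean={spread:.3f}  remaining offset={offset:.3f}")
```

With these assumed parameters the scatter falls as N grows, but the offset stays near the assumed bias: precision improves, accuracy does not.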

“Temperature measurements are numbers, i.e. zero uncertainty, zero error.”

Measuring devices don’t have uncertainty factors?

Jeff ==> I believe Jim was being facetious…

I have often written about “errorless sea level rise…”

The difference between accuracy and precision.

And don’t let scientists step on the toes of regulators because regulators know best for whatever they wish to influence. After all it is procedure that counts, and tradition. Science can only be used to validate. Cherry pick.

Mere scientists don’t know the complexities that regulators have created. Only one who has advanced on government can know.

Or how about difference and differences.

I don’t see why the thing doesn’t get addressed at front end of the grant system as well. The feds hold the purse strings on $40B in grant money. Set up transparency standards as a requirement for a grant.

Mike ==> Many Federal agencies are working on this front — and attempting to establish and institute systems for study pre-registration, data caching, etc etc.

They are fighting a long established system — but at least they are making the effort.

Yes it certainly is entrenched. The grant system goes back to the end of WWII. It has become welfare for higher education, with no accountability. It’s galling to see Harvard with its hand outstretched while sitting on a $40.9B endowment.

No one is going to be able to EXACTLY reproduce another study if it involves laboratory experiments. The fish tanks won’t be at the exact level of cleanliness, the fish won’t be exactly the same as the original study, humans won’t exactly add or subtract the same amount of catalyst or re-agent or whatever. Perhaps such discrepancies won’t make a difference, but how would you know?

Jeff–Very good point, especially about field experiments [EXACTLY IMPOSSIBLE], especially where stats are applied. However, there is more than one way to confirm, or at least have some faith in results, without exact repetition. That is another reason why the argument about privacy is fallacious.

We have too many in the scientific community who want control.

Jeff ==> This is why that kind of replication/reproduction is hard. It also requires that original researchers actually know and have carefully recorded all the details of their experiment so that others can try to do the same thing.

However, this isn’t about trying to fault research teams — it is about trying to discover truths about the world around us.

So, for example, if slightly increased CO2 lowers sea water pH (a complicated topic) and this change really affects fish behavior, then other similar experiments will show the same kinds of effects. When another team of researchers tried to find the effects, they were unable to do so. Independent Validation (in this case, lack of validation).

“ephemeral phenomena”

These are events that are possible but are an extremely infrequent natural occurrence and/or difficult to impossible to make happen for practical or ethical reasons. Particle physics has lots of theory but experimental proofs have required tremendous effort and expense to obtain.

I have dealt with a form of this kind of thing called single event effects, which are collisions of atmospheric particles with atoms in a memory device. Early memory technologies required higher voltage levels and were not as dense, so they were less likely to experience such a collision than our modern devices. Even with modern devices you need 1000’s of hours of exposure for a collision to occur in a detectable manner.

Since most flight test programs are on the order of 1000 hours spread over multiple aircraft, it becomes very unlikely that a collision will occur during a flight test program. This has forced aircraft manufacturers to require that devices be tested under high energy particle beams to show that they are robust to particle collisions.
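The arithmetic behind "very unlikely" can be sketched with a simple model. This assumes detectable upsets arrive as a Poisson process with a constant rate, which is an assumption of this sketch and not something stated above, and the rate used is a made-up number for illustration only.

```python
import math

def prob_at_least_one_event(rate_per_hour, exposure_hours):
    """P(at least one event) for a Poisson process with a constant rate."""
    return 1.0 - math.exp(-rate_per_hour * exposure_hours)

# Hypothetical rate, for illustration only: one detectable upset per 5,000 hours.
rate = 1.0 / 5000.0

for hours in (1000, 5000, 50000):
    p = prob_at_least_one_event(rate, hours)
    print(f"{hours:>6} hours of exposure: P(at least one upset) = {p:.3f}")
```

Under this model, the chance of seeing even one event in a test program shorter than the typical interval between events is small. That is why a particle beam, which raises the effective rate, gets used.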

Another sort of thing altogether would be a coding error that only appears once every 1000 hours of operation. If you haven’t got instrumented code in place at the time of the occurrence you are left with nothing to explain what happened or what led up to the problem. Very difficult to find these sorts of things.

Another favorite was the software error that disappeared when the code was instrumented. We call them heisenbugs. Very frustrating things!

pauligon59 ==> Thanks for filling me in on this ephemeral phenomena issue. I have done the tech/programming thing, and am familiar with the weird kinds of things that can happen.

If reproducibility is defined as getting the same computational result as a previous study for the same input data, this is important to check for computational errors or, in the case of the “hockey stick”, verifying that statistical calculation methods were not deliberately skewed or cherry-picked to produce results desired by the analysts. Mark Twain once said that there were “lies, damned lies, and statistics”, and checking reproducibility may help in discovering statistical lies.

But “reproducibility” defined as above is not sufficient to validate a scientific study–the same data analyzed a hundred times should produce the same conclusion a hundred times (if all the analysts are honest), but the data could have been cherry-picked, or in the case of epidemiological studies, the researchers may not have accounted for confounding factors (such as smoking, age, sex, or obesity).

This is where “replicability” is needed–to repeat the experiment or research with a different group of people or subjects of study (such as trees or ice cores in a climate study), and analyze all experiments with the same statistical technique. If all such experiments with similar controls and methods reveal similar results (within some statistical margin of error), then a conclusion may be justified. If a series of similar experiments yield widely differing results, then all results should be considered inconclusive, and no regulations should be based on them.
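The distinction between the two checks can be sketched in a few lines. Everything here is hypothetical: the "studies" are simulated draws, the analysis is a plain mean with an approximate 95% margin of error, and the consistency rule (margins overlapping) is a crude stand-in for a proper statistical comparison.

```python
import random
import statistics

def analyze(sample):
    """The fixed analysis: sample mean and an approximate 95% margin of error."""
    mean = statistics.fmean(sample)
    margin = 1.96 * statistics.stdev(sample) / len(sample) ** 0.5
    return mean, margin

def draw_study(seed, true_effect=2.0, noise_sd=5.0, n=200):
    """Hypothetical independent study: new subjects, same protocol."""
    rng = random.Random(seed)
    return [rng.gauss(true_effect, noise_sd) for _ in range(n)]

def consistent(a, b):
    """Crude check: do the two estimates agree within their combined margins?"""
    (m1, e1), (m2, e2) = a, b
    return abs(m1 - m2) <= e1 + e2

# Reproducibility: the same data and the same analysis give the identical result.
original = draw_study(seed=0)
assert analyze(original) == analyze(list(original))

# Replicability: independent data sets, same analysis, compared within margins.
results = [analyze(draw_study(seed)) for seed in range(5)]
agree = sum(consistent(results[0], r) for r in results[1:])
print(f"{agree} of {len(results) - 1} replications are consistent with the original")
```

The first check can only catch computational errors, because it reuses the original data. The second is the one that would expose a cherry-picked or unrepresentative sample.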

Neither reproducibility nor replicability (as defined in the article) is sufficient alone to validate a scientific theory, but both combined are, and both need to be verified by a large number of independent investigators–“independent” meaning that the financial well-being of each investigator does not depend on agreeing or disagreeing with another investigator. The problem is, in the real world, such independence is difficult to find.

Steve Z ==> I quite agree. The more important for society — the bigger the societal impact of the research — the more important it is that several groups do independent verification.

“in the case of the “hockey stick”, verifying that statistical calculation methods were not deliberately skewed or cherry-picked to produce results desired by the analysts.”

And then the gauntlet of hatred and bile you have to negotiate via the establishment when you’re pointing out major flaws in a consensus paper. Just ask Steve McIntyre, among others.

Allison: “Notably, the Environmental Protection Agency (EPA) is not a scientist; it is a regulatory agency.”

And yet “scientists”, that don’t want to reveal their data and models – that is, follow the bloody scientific method!, want to regulate. Yes, Kip, some mighty odd logic going on…

Thanks Kip. This is an extremely important discussion. As somebody who worked for decades under the tyranny of “best available data”, in my opinion this phrase is fatal to science based regulation. It results in inadequate data going into unfit models which generate whatever the modeller thinks the outcome should be. Researchers involved in regulation need to be able to say that available data is inadequate for a desired analysis and they should not be coerced into making do with promises that the work will be completed in the future. I know from personal experience that once a decision is made, there is low probability that any promised science will be done later. “Best available data” should be “data fit for intended use” and when there is inadequate data only interim regulation is justifiable.

BCBill ==> Yes, I agree that “Best available data” is code for data that we like (due to our biases) even if it is not definitive, solid science.

Talk about Doubling Down. Lancet editor knows very well the problems in his own field. Yet he supports Doctors for Xtinction Rebellion

Sheesh!!

Offline: What is medicine’s 5 sigma? Lancet Editor Richard Horton

“A lot of what is published is incorrect.” I’m not allowed to say who made this remark because we were asked to observe Chatham House rules. We were also asked not to take photographs of slides. Those who worked for government agencies pleaded that their comments especially remain unquoted, since the forthcoming UK election meant they were living in “purdah”—a chilling state where severe restrictions on freedom of speech are placed on anyone on the government’s payroll. Why the paranoid concern for secrecy and non-attribution? Because this symposium—on the reproducibility and reliability of biomedical research, held at the Wellcome Trust in London last week—touched on one of the most sensitive issues in science today: the idea that something has gone fundamentally wrong with one of our greatest human creations.

The case against science is straightforward: much of the scientific literature, perhaps half, may simply be untrue. Afflicted by studies with small sample sizes, tiny effects, invalid exploratory analyses, and flagrant conflicts of interest, together with an obsession for pursuing fashionable trends of dubious importance, science has taken a turn towards darkness. As one participant put it, “poor methods get results”.

http://www.thelancet.com/pdfs/journals/lancet/PIIS0140-6736%2815%2960696-1.pdf

Lancet editor Richard Horton urges medical licensing body not to punish doctors in Extinction Rebellion protests

The Lancet is one of the most respected medical journals in the world.

So when its editor, Richard Horton, endorsed nonviolent protests to address a climate emergency, it generated attention far and wide.

Horton’s videotaped statement is available on the website of Doctors for Extinction Rebellion, which is a collective of medical professionals who’ve participated in civil disobedience.

They’ve done this because they see climate change as “an impending public health catastrophe”.

“The climate emergency that we’re facing today is the most important existential crisis facing the human species,” Horton said. “And since medicine is all about protecting and strengthening the human species, it should be absolutely foundational to the practice of what we do every single day.”

https://www.straight.com/life/1317926/video-lancet-editor-richard-horton-urges-medical-licensing-body-not-punish-doctors

Lancet Editor: Protesting Climate Change Is a Doctor’s Duty

https://nonprofitquarterly.org/lancet-editor-protesting-climate-change-is-a-doctors-duty/

Amazing such an idiot could become a doctor. I’d be seriously concerned about being his patient, when he’s that delusional.

I can give you an amazing example of “ephemeral data” by NASA in what is the most significant cornerstone of the “GHE”. The first graph gives NASA’s estimate of global cloud forcing according to their “satellite data”. Essentially NASA claims clouds were largely neutral, or even positive over land. Over the ocean however they would be predominantly cooling, and massively so in certain regions.

Of course most weather observation (and the data hereto) is placed on land. Which is also true for the very raw NOAA data I downloaded before they pulled it from their site. But incidentally this data set also included 10 stations located on the Aleutian Islands amid the Bering Sea, with strict maritime climate, which gives us some insight into one of those “deep red” regions with massive negative cloud forcing.

The cloud / temperature correlation gives us the familiar patterns, with clouds cooling in spring and warming in autumn (for obvious reasons I would say).

The annual average then also shows a clear picture, with an almost linear correlation. The more clouds, the warmer it is.

And here is the obvious problem. These real, independent data, which do not require a bottlenecked access to satellites, clearly show clouds are warming even in the Bering Sea, where NASA claims they were massively cooling. This result pretty much corresponds to all the regions investigated. Of course, if clouds are warming the planet rather than cooling it, the whole “GHE” story becomes obsolete, as there is not much scope for GHGs.

Epilogue:

The sheer magnitude of the push-back against the EPA’s proposed Transparency rule is truly astonishing. But very typical of what I have seen in each and every one of the Modern Scientific Controversies I have written about here at WUWT.

There exists a small group of researchers and air pollution scientists that have done research that contradicts the Six Cities and CPSII studies — finding no effect from ambient concentrations of PM2.5.

It is interesting times for Science in general.

I say: if Six Cities and CPSII are that important — then let’s put them out in the open with all the data (less personal identifiers) and methods made available to disinterested independent researchers. If the studies are sound and the findings solid, they will be validated. If not, then a huge regulatory burden can be lifted from society.

From the official published results of Six Cities and CPSII — they are null findings.

The greater good of science will be served either way.

# # # # #

Agreed. Time to rip down the curtain, and not permit another to be put up.

Don K February 3, 2020 at 7:43 am

Kip. “Readers, please, can you give important examples of this principle?” [ephemeral phenomena]

Maybe they are thinking of observational sciences like astronomy, vulcanology and such where you can’t set up your instruments, calibrate them, then call up a supernova or eruption in order to do your replicative data collection? I’m guessing that there are analogous situations in other fields where timespans, economic considerations, altered ethical principles, humanitarian concerns, changing demographics, etc effectively preclude replication.

____________________________________

Don, the difference is historical science, Technics and Military.