Guest essay by John Ridgway

It was late in the evening of April 19, 1995, when the crestfallen figure of McArthur Wheeler could be found slumped over an interrogation-room table at the Pittsburgh Police Department. Forlorn and understandably distressed by his predicament, he could be heard muttering his dumbfounded astonishment at his arrest. “I don’t understand it,” he would repeat, “I wore the juice, I wore the juice!”

Wheeler’s bewilderment was indeed understandable, since he had followed his expert accomplice’s advice to the letter. His fellow felon, Clifton Earl Johnson, was well acquainted with the use of lemon juice as an invisible ink. Surely, reasoned Clifton, by smearing such ‘ink’ on the face, one’s identity would be hidden from the plethora of CCTV cameras judiciously positioned to cause maximum embarrassment to any would-be bank robber. Sadly, even after he appeared to have confirmed the theory by taking a Polaroid selfie, Mr Wheeler’s attempt at putting theory into practice proved a massive disappointment. Contrary to expectation, CCTV footage of his grinning overconfidence was quickly broadcast on the local news, and he was identified, found and arrested before the day was out.

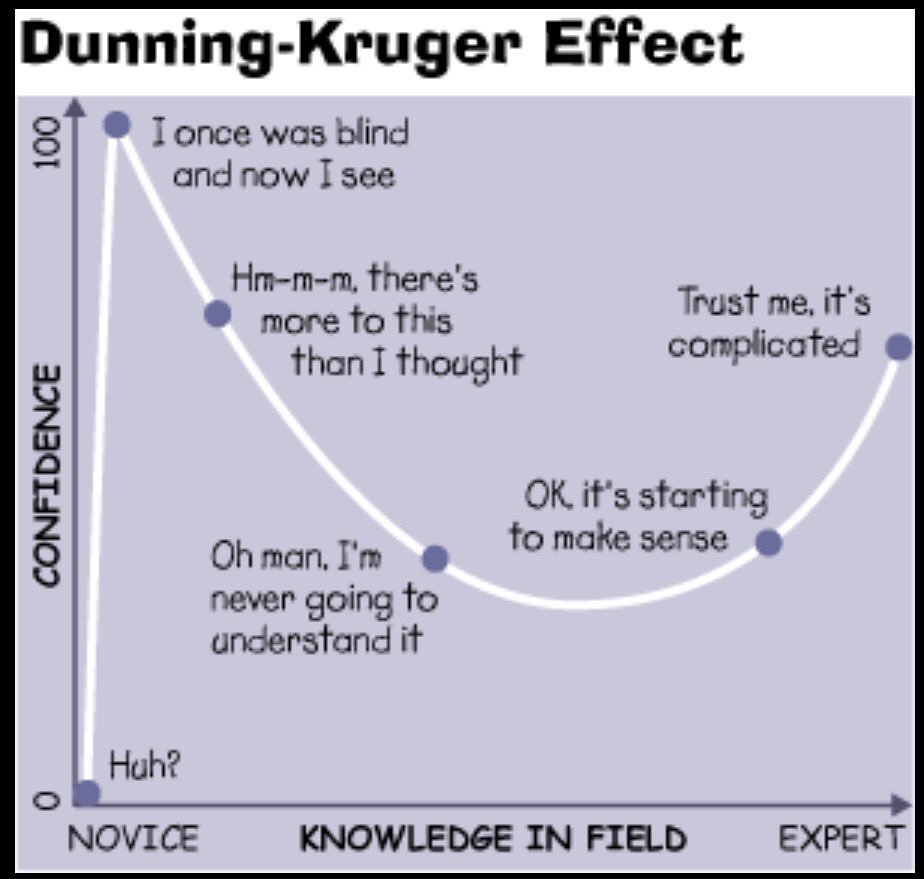

Professor David Dunning of Cornell University will tell you that this sad tale of self-delusion inspired him to investigate further a cognitive bias known as illusory superiority, that is to say, the predisposition in us all to think we are above average.1 After collaborating with Justin Kruger of New York University’s Stern School of Business, Professor Dunning was then in a position to declare his own superiority by announcing to the world the Dunning-Kruger effect.2 The effect can be pithily summarised by saying that sometimes people are so stupid that they do not know they are stupid – a point that some pundits considered so obvious that it led to the good professor and his colleague being awarded the 2000 Ig Nobel Prize for pointless research in psychology.3

The idea behind the Dunning-Kruger effect is that those who lack understanding will also usually lack the metacognitive skills required to detect their ignorance. Experts, on the other hand, may have gaps in their knowledge but their expertise provides them with the ability to discern that such gaps exist. Indeed, according to Professor Dunning’s research, experts are prone to underestimate the difficulties they have overcome and so underappreciate their skills. Modesty, it seems, is the hallmark of expertise.

And yet, the current perception of experts is that they persistently make atrocious predictions, endlessly change their advice, and say whatever their paymasters require. This paints a picture of experts whose superiority may be as delusory as that demonstrated by bank robbers gadding about with lemon juice smeared on their faces. If so, they may be fooling no-one but themselves. However, the real problem with experts is that, no matter what one might think of them, we can’t do without them. We would dearly wish to base all our decisions upon solid evidence, but this is often unachievable. And where there are gaps in the data, there will always be the need for experts to stick their fat, little expert fingers into the dyke of certitude, lest we be overwhelmed by doubt. Nowhere is this service more important than in the assessment of risk and uncertainty, and nowhere is the assessment of risk and uncertainty more important than in climatology, particularly in view of the concerns about Catastrophic Anthropogenic Global Warming (CAGW). For that reason, I was more than curious as to what the experts of the Intergovernmental Panel on Climate Change (IPCC) had to say in their Fifth Assessment Report (AR5), Chapter 2, “Integrated Risk and Uncertainty Assessment of Climate Change Response Policies” (hereafter AR5 (2)). Does AR5 (2) reassure the reader that the IPCC’s position on climate change is supported by a suitably expert evaluation of risk and uncertainty? Does it even reassure the reader that the IPCC knows what risk and uncertainty are, and knows how to go about quantifying them? Well, let us see what my admittedly jaundiced assessment made of it.

But First, Let Us State the Obvious

I should point out that the credentials carried by the authors of AR5 (2) look very impressive. They are most definitely what you might call ‘experts’. If it were down to merely examining CVs, I might be tempted to fall to my knees chanting, “I am not worthy”. In fact, not to do so would incur the ‘warmist’ wrath: Who am I to criticise the findings of the world’s finest experts?4 Nevertheless, if one ignores the inevitable damnation one receives whenever one dares to challenge IPCC output, there are plenty of conclusions to be drawn from reading AR5 (2). The first is that the expert authors involved, despite their impressive qualifications, are not averse to indulging in statements of the crushingly obvious. Take, for example:

“There is a growing recognition that today’s policy choices are highly sensitive to uncertainties and risk associated with the climate system and the actions of other decision makers.”

One wonders why such recognition had to grow. Then there is:

“Krosnick et al. (2006) found that perceptions of the seriousness of global warming as a national issue in the United States depended on the degree of certainty of respondents as to whether global warming is occurring and will have negative consequences coupled with their belief that humans are causing the problem and have the ability to solve it.”

Is there no end to the IPCC’s perspicacity?

Now, I appreciate that my sarcasm is unappealing, but this sort of padding and waffle would sit far more comfortably in an undergraduate’s essay than it does in a document of supposedly world-changing importance. It’s not a fatal defect, but there is quite a lot of it and it adds little value to the document. Fortunately, however, there is plenty within AR5 (2) that is of more substance.

The IPCC Discovers Psychology

To be honest, what I was really looking for in a document that includes in its title the phrase ‘Integrated Risk and Uncertainty Assessment’ was a thorough account of the concepts of risk and uncertainty and a reassurance that the IPCC is employing best practice in their assessment. What I found was a great deal on the psychology of decision-making (based largely upon the research of Kahneman and Tversky), highlighting the distinction to be made between intuitive and deliberative thinking. In particular, much is made of the importance of loss aversion and ambiguity aversion in influencing organisations and individuals who are contemplating whether or not to take action on climate change. In the process, some pretty flaky theories (in my opinion) are expounded, such as the following assertion regarding intuitive thinking in general:

“. . .for low-probability, high-consequence events. . .intuitive processes for making decisions will most likely lead to maintaining the status quo and focusing on the recent past.”

And specifically on the subject of loss aversion:

“Yet, other contexts fail to elicit loss aversion, as evidenced by the failure of much of the global general public to be alarmed by the prospect of climate change (Weber, 2006). In this and other contexts, loss aversion does not arise because decision makers are not emotionally involved (Loewenstein et al., 2001).”

Well, that doesn’t accord with my understanding of loss aversion, but I don’t want to get drawn into a debate regarding the psychology of decision-making. It’s a fascinating subject but the IPCC’s interest in it seems to be purely motivated by the desire to psychoanalyse their detractors and to take advantage of people’s attitudes to risk and uncertainty in order to manipulate them into supporting green policy. For example, whilst they maintain that:

“Accurately communicating the degree of uncertainty in both climate risks and policy responses is therefore a critically important challenge for climate scientists and policymakers.”

they go on to say:

“. . .campaigns looking to increase the number of citizens contacting elected officials to advocate climate policy action should focus on increasing the belief that global warming is real, human-caused, a serious risk, and solvable.”

This leaves me wondering whether they want to increase understanding or are really just looking to increase belief. I suspect that AR5’s concentration on the psychology of risk perception reveals that the IPCC’s primary interest is in the latter. The IPCC seems more interested in exploitation than in education. For example, the authors explain how framing decisions so as to make the green choice the default option takes advantage of a presupposed psychological predilection for the status quo. This may be so, but this is a sales and marketing ploy; it has nothing to do with ‘accurately communicating the degree of uncertainty’.

Given the IPCC’s agenda, such emphasis is understandable, but once one has removed the waffle and the psychology lecture from AR5 (2), what is left? Remember, I’m still looking for a good account of the concepts of risk and uncertainty and how they should be quantified. Such an account would provide a good indication that the IPCC is consulting the right experts.

Another Expert, Another Definition

Let us look at the document’s definitions for risk and uncertainty:

“‘Risk’ refers to the potential for adverse effects on lives, livelihoods, health status, economic, social and cultural assets, services (including environmental), and infrastructure due to uncertain states of the world.”

Nothing too controversial here, although I would prefer to see a definition that emphasises that risk is a function of likelihood and impact and may be assessed as such.
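As a minimal sketch of what assessing risk as a function of likelihood and impact can look like, the snippet below scores each hazard as likelihood multiplied by impact (i.e. an expected loss). The hazard names, probabilities and impact figures are hypothetical, chosen only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Hazard:
    name: str          # hypothetical label for the adverse event
    likelihood: float  # probability of the event over the assessment period (0 to 1)
    impact: float      # consequence if it occurs, in arbitrary loss units

    def risk(self) -> float:
        # Risk scored as likelihood x impact, i.e. the expected loss.
        return self.likelihood * self.impact


# Hypothetical hazards: a rare, severe event and a common, mild one.
hazards = [
    Hazard("rare but severe", likelihood=0.01, impact=1000.0),
    Hazard("common but mild", likelihood=0.5, impact=10.0),
]

# Rank hazards from highest to lowest risk score.
ranked = sorted(hazards, key=lambda h: h.risk(), reverse=True)
for h in ranked:
    print(f"{h.name}: {h.risk():.1f}")
```

Multiplying the two puts a rare catastrophe and a common nuisance on one scale, but it also hides the difference between them, which is why risk matrices typically keep likelihood and impact as separate axes as well.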

For ‘uncertainty’, we are offered the following definition:

“‘Uncertainty’ denotes a cognitive state of incomplete knowledge that results from a lack of information and / or from disagreement about what is known or even knowable. It has many sources ranging from quantifiable errors in the data to ambiguously defined concepts or terminology to uncertain projections of human behaviour.”

I find this definition more problematic, since it only addresses epistemic uncertainty (a ‘cognitive state of incomplete knowledge’). The document therefore fails to explain how the propagation of uncertainty may take into account an incomplete knowledge of a system (the epistemic uncertainty) combined with its inherent variability (aleatoric uncertainty). The respective roles of epistemic and aleatoric uncertainties are a central and fundamental theme of uncertainty analysis that appears to be completely absent from AR5 (2).5
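One common way of keeping the two kinds of uncertainty apart is a nested (“double-loop”) Monte Carlo simulation: the outer loop samples what we are unsure of through lack of knowledge, and the inner loop samples the system’s inherent variability for each of those choices. The sketch below assumes a hypothetical system whose output is normally distributed with a poorly known mean (epistemic, taken to lie somewhere between 1.0 and 2.0 and, for simplicity, sampled uniformly, which is itself an assumption) and a fixed spread (aleatoric). All names and numbers are illustrative.

```python
import random
import statistics


def nested_monte_carlo(n_outer=100, n_inner=1000, seed=1):
    """Return one 95th-percentile estimate of the output per epistemic sample.

    Hypothetical model: output ~ Normal(mu, 0.5). The mean mu is only known
    to lie somewhere in [1.0, 2.0] (epistemic uncertainty); the standard
    deviation of 0.5 stands for inherent variability (aleatoric uncertainty).
    """
    rng = random.Random(seed)
    p95s = []
    for _ in range(n_outer):
        # Outer loop: draw one plausible value for the poorly known mean.
        mu = rng.uniform(1.0, 2.0)
        # Inner loop: simulate the system's variability for that mean.
        outputs = [rng.gauss(mu, 0.5) for _ in range(n_inner)]
        # quantiles(..., n=20) returns 19 cut points; the last is the 95th percentile.
        p95s.append(statistics.quantiles(outputs, n=20)[-1])
    return p95s


p95s = nested_monte_carlo()
print(f"95th percentile ranges from {min(p95s):.2f} to {max(p95s):.2f}")
```

The spread of the 95th percentile across outer samples reflects what we do not know; each individual value reflects what varies regardless. Collapsing both loops into one simulation would produce a single blended distribution and lose that distinction.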

Later within the document one finds that:

“These uncertainties include absence of prior agreement on framing of problems and ways to scientifically investigate them (paradigmatic uncertainty), lack of information or knowledge for characterizing phenomena (epistemic uncertainty), and incomplete or conflicting scientific findings (translational uncertainty).”

Not only does this differ from the previously provided definition for uncertainty (a classic example of the ‘ambiguously defined concepts or terminology’ that the authors had been so keen to warn against), it also introduces terms that will be unfamiliar to all but those who have trawled the bowels of the sociology of science. What I would much rather have seen was a definition that one could use to measure uncertainty, for example:

“For a given probability distribution, the uncertainty is $H = -\sum_i p_i \ln p_i$, where $p_i$ is the probability of outcome $i$.”
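The measure above is Shannon entropy (in nats, because of the natural logarithm). As a minimal sketch of how little is needed to compute it, the following assumes a discrete distribution supplied as a list of probabilities summing to one; the example distributions are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy H = -sum(p_i * ln p_i) of a discrete distribution, in nats."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p_i = 0 contribute nothing (p ln p tends to 0 as p tends to 0).
    return sum(-p * math.log(p) for p in probs if p > 0)


print(entropy([0.25, 0.25, 0.25, 0.25]))  # four equally likely outcomes: ln 4, about 1.386
print(entropy([0.97, 0.01, 0.01, 0.01]))  # one near-certain outcome: much lower
print(entropy([1.0]))                     # complete certainty: 0.0
```

Entropy is greatest when every outcome is equally likely and falls to zero when one outcome is certain, which is what makes it usable as a measure of uncertainty.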

Despite the document’s promise to explain how uncertainty may be quantified, nothing in AR5 (2) comes close to doing so. Fifty-six pages of expert wisdom are provided with not a single formula in sight. I suspect that I am not as impressed as the authors expected me to be.

Finally, I see nothing in the document that comes near to covering ontological uncertainty, i.e. the concept of the unknown unknown. This is very telling since it is ontological uncertainty that lies at the heart of the Dunning-Kruger effect. Could it be that the good folk of the IPCC are unconcerned by the possibility that their analytical techniques lack metacognitive skill?

However, if the definitions offered for risk and uncertainty left me uneasy, this is nothing compared to the disquiet I experienced when reading what the authors had to say about uncertainty aversion:

“People overweight outcomes they consider certain, relative to outcomes that are merely probable — a phenomenon labelled the certainty effect.”

Embarrassingly enough, this is actually the definition for risk aversion, not uncertainty aversion!6
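Note 6 gives the textbook test for risk aversion. A minimal sketch, assuming a hypothetical decision maker with a square-root utility function (a standard concave choice, not anything taken from AR5 (2)), shows the certainty equivalent of a 50/50 gamble falling below its expected value:

```python
import math


def expected_value(outcomes):
    """Sum of probability-weighted outcomes; outcomes is a list of (probability, payoff)."""
    return sum(p * x for p, x in outcomes)


def certainty_equivalent(outcomes, utility, inverse_utility):
    """Sure amount whose utility equals the gamble's expected utility."""
    expected_utility = sum(p * utility(x) for p, x in outcomes)
    return inverse_utility(expected_utility)


# Hypothetical gamble: 50% chance of 100, 50% chance of 0.
gamble = [(0.5, 100.0), (0.5, 0.0)]

ev = expected_value(gamble)
# Square-root utility is concave, which is what makes this decision maker risk averse.
ce = certainty_equivalent(gamble, math.sqrt, lambda u: u ** 2)

print(f"expected value = {ev}, certainty equivalent = {ce}")  # 50.0 and 25.0
print("risk averse" if ce < ev else "not risk averse")        # risk averse
```

Nothing in this calculation involves ambiguity about the probabilities themselves: both are known to be 0.5. Aversion to that kind of ambiguity is a separate matter.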

All of this creates very serious doubts regarding the expertise of the authors of AR5 (2). I’m not accusing them of being charlatans – far from it. But being an expert on risk and uncertainty can mean many things, and those who are experts often specialise in a way that gives them a narrow focus on the subject. This appears to be particularly evident when one looks at what the AR5 (2) authors have to say regarding the tools available to assess risk and uncertainty.

Probability, the Only Game in Town?

A major theme of AR5 (2) is the distinction that exists between deliberative thinking and intuitive thinking. The document (incorrectly, in my opinion) equates this with the distinction to be made between normative decision theory (how people should make their decisions) and descriptive decision theory (how people actually make their decisions). According to AR5 (2):

“Laypersons’ perceptions of climate change risks and uncertainties are often influenced by past experience, as well as by emotional processes that characterize intuitive thinking. This may lead them to overestimate or underestimate the risk. Experts engage in more deliberative thinking than laypersons by utilizing scientific data to estimate the likelihood and consequences of climate change.”

So what is this deliberative thinking that only experts seem to be able to employ when deciding climate change policy? According to AR5 (2), it entails decision analysis founded upon expected utility theory, combined with cost-benefit analysis and cost-effectiveness analysis. All of these are probabilistic techniques. Unsurprisingly, therefore, when it comes to quantifying uncertainty, AR5 (2) informs us that:

“Probability density functions and parameter intervals are among the most common tools for characterizing uncertainty.”

This is actually true, but it doesn’t mean it is acceptable. Anyone who is familiar with the work of IPCC Lead Author Roger Cooke will understand the prominence given in AR5 (2) to the application of Structured Expert Judgement methods. These depend upon the solicitation of subjective probabilities and the propagation of uncertainty through the construction of probability distributions. In defence of the probabilistic representation of uncertainty, Cooke has written:7

“Opponents of uncertainty quantification for climate change claim that this uncertainty is ‘deep’ or ‘wicked’ or ‘Knightian’ or just plain unknowable. We don’t know which distribution, we don’t know which model, and we don’t know what we don’t know. Yet, science based uncertainty quantification has always involved experts’ degree of belief, quantified as subjective probabilities. There is nothing to not know.”

I agree that there is no such thing as an unknown probability; probability simply comes in varying degrees of subjectivity. However, it is factually incorrect to state that ‘science based’ uncertainty quantification has always involved degrees of belief quantified as subjective probabilities, and it is a gross misrepresentation to assert that opponents of their use are automatically opposed to uncertainty quantification. Even within climatology one can find scientists using non-probabilistic techniques to quantify climate model uncertainty. For example, modellers who applied possibility theory to map parameter uncertainty onto output uncertainty found the ratio of output uncertainty to parameter uncertainty to be five times that obtained in an analysis using standard probability theory (Held H., von Deimling T.S., 2006).8 This is not a surprising result. Possibility theory was developed specifically for situations of incomplete and/or conflicting data, and the mathematics behind it is designed to ensure that the uncertainty thereby entailed is fully accounted for. Other non-probabilistic techniques that have found application in climate change research include Dempster-Shafer theory, info-gap analysis and fuzzy logic, none of which gets a mention in AR5 (2).9
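To give a flavour of how a possibilistic calculation differs from a probabilistic one, here is a minimal sketch. It is not the Held and von Deimling analysis and does not reproduce their figure; the toy model (the product of two positive inputs) and the triangular input ranges are hypothetical. Each input is described by a triangular possibility distribution and propagated with α-cuts (interval arithmetic at a chosen possibility level); for comparison, the same triangles are treated as independent probability densities and pushed through a Monte Carlo simulation.

```python
import random
import statistics


def alpha_cut(tri, alpha):
    """Interval of a triangular possibility distribution (low, mode, high) at level alpha."""
    low, mode, high = tri
    return (low + alpha * (mode - low), high - alpha * (high - mode))


def model(a, b):
    # Hypothetical toy model, increasing in both inputs when a and b are positive.
    return a * b


def possibilistic_interval(tri_a, tri_b, alpha):
    """Alpha-cut of the output; for a model increasing in each input, the extremes lie at the interval ends."""
    a_lo, a_hi = alpha_cut(tri_a, alpha)
    b_lo, b_hi = alpha_cut(tri_b, alpha)
    return (model(a_lo, b_lo), model(a_hi, b_hi))


def probabilistic_interval(tri_a, tri_b, n=100_000, seed=0):
    """5th to 95th percentile of the output, assuming independent triangular densities."""
    rng = random.Random(seed)
    # random.triangular takes (low, high, mode).
    samples = [
        model(rng.triangular(tri_a[0], tri_a[2], tri_a[1]),
              rng.triangular(tri_b[0], tri_b[2], tri_b[1]))
        for _ in range(n)
    ]
    cuts = statistics.quantiles(samples, n=20)
    return (cuts[0], cuts[-1])


a = b = (1.0, 2.0, 3.0)  # hypothetical ranges: (low, most plausible, high)
print("possibilistic, alpha = 0.1:", possibilistic_interval(a, b, 0.1))
print("probabilistic, 5%-95%:     ", probabilistic_interval(a, b))
```

Under the usual reading of possibility theory, the α = 0.1 cut has a necessity of at least 0.9, so it is a loose counterpart to the 90% probability interval. The probabilistic interval comes out markedly narrower, largely because assuming independent, well-shaped distributions makes it improbable that both inputs sit near their extremes at once; the possibilistic calculation does not discount that combination.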

In summary, I find that AR5 (2) places undue confidence in probabilistic techniques and fails woefully in its attempt to survey the quantitative tools available for the assessment of climate uncertainty. At times, it just looks like a group of people who are pushing their pet ideas.

Beware the Confident Expert

The Dunning-Kruger effect warns that there comes a point when stupidity runs blind. Fortunately, however, we will always have our experts, and they are presumed to be immune to the Dunning-Kruger effect because their background and learning provide them with the metacognitive apparatus to acknowledge and respect their own limitations. As far as Dunning and Kruger are concerned, experts are even better than they think they are. But even if I accepted this (and I don’t), it doesn’t mean that they are as good as they need to be.

AR5 (2) appears to place great store by the experts, especially when their opinions are harnessed by Structured Expert Judgement. However, insofar as experts continue to engage in purely probabilistic assessment of uncertainty, they are at risk of making un-evidenced but critical assumptions regarding probability distributions; assumptions that the non-probabilistic methods avoid. As a result, experts are likely to underestimate the level of uncertainty that is framing their predictions. Even worse, AR5 (2) pays no regard to ontological uncertainty, which is a problem, because ontological uncertainty calls into question the credence placed in expert confidence. All of this could mean that the potential for climate disaster is actually greater than currently assumed. Conversely, the risk may be far less than currently thought. The authors of AR5 (2) wrote at some length about the difference between risk and the perception of risk, and yet they failed to recognise the most pernicious of uncertainties in that respect – ontological uncertainty. In fact, when I hear an IPCC expert say, “There is nothing to not know”, I smell the unmistakable odour of lemon juice.

John Ridgway is a physics graduate who, until recently, worked in the UK as a software quality assurance manager and transport systems analyst. He is not a climate scientist or a member of the IPCC but feels he represents the many educated and rational onlookers who believe that the hysterical denouncement of lay scepticism is both unwarranted and counter-productive.

Notes:

1 This cognitive bias goes by many names, illusory superiority being the worst of them. The bias refers to a delusion rather than an illusion and so the correct term ought to be delusory superiority. However, the term illusory superiority was first used by the researchers Van Yperen and Buunk in 1991, and to this day, none of the experts within the field has seen fit to correct the obvious gaffe. So much for expertise.

2 Kruger J., Dunning D. (1999), Unskilled and unaware of it: How difficulties in recognizing one’s own incompetence lead to inflated self-assessments. Journal of Personality and Social Psychology, vol. 77, no. 6, pp. 1121–1134.

3 See http://www.improbable.com/ig/winners.

4 As it happens, I am someone who made a living by (amongst other things) constructing safety cases for safety-critical computer systems. This requires a firm understanding of the concepts of risk and uncertainty and how to form an evidence-based argument for the acceptability of a system, prior to it being commissioned into service. In short, I was a professional forecaster who relied on statistics, facts and logic to make a case. As such, I might even allow you to call me an expert. So, whilst I fully respect the authors of AR5, Chapter 2, I do believe that I am sufficiently qualified to comment on their proclamations on risk and uncertainty.

5 See, for example, Der Kiureghian A. (2007), Aleatory or epistemic? Does it matter? Special Workshop on Risk Acceptance and Risk Communication, March 26–27, 2007, Stanford University. If nothing else, please read its conclusions.

6 In expected utility theory, if the amount for which a person or organisation would be prepared to sell out rather than take a risk (i.e. the ‘Certainty Equivalent’) is less than the sum of the probability-weighted outcomes (i.e. the ‘Expected Value’), then that person or organisation is, by definition, risk averse.

7 Cooke R. M. (2012), Uncertainty analysis comes to integrated assessment models for climate change…and conversely. Climatic Change, DOI 10.1007/s10584-012-0634-y.

8 Held H., von Deimling T.S. (2006), Transformation of possibility functions in a climate model of intermediate complexity. In: Lawry J. et al. (eds), Soft Methods for Integrated Uncertainty Modelling. Advances in Soft Computing, vol 37. Springer, Berlin, Heidelberg.

9 It is not surprising that fuzzy logic is overlooked, since its purpose is to address the uncertainties resulting from vagueness (i.e. uncertainties relating to set membership), and this source of uncertainty seems to have completely escaped the authors of AR5 (2); unless, of course, they are confusing vagueness with ambiguity, which wouldn’t surprise me to be honest.
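Note 9 turns on the difference between vagueness and probability. A minimal sketch of the fuzzy-set idea, using a hypothetical ‘hot day’ category with made-up thresholds: instead of a crisp yes/no, each temperature gets a degree of membership between 0 and 1, expressing how far the vague label applies rather than how likely anything is.

```python
def crisp_hot(temp_c, threshold=30.0):
    """Classical set: a day is either 'hot' or not, with a sharp cut-off."""
    return 1.0 if temp_c >= threshold else 0.0


def fuzzy_hot(temp_c, start=25.0, full=35.0):
    """Fuzzy set: membership rises linearly from 0 at `start` to 1 at `full` (hypothetical bounds)."""
    if temp_c <= start:
        return 0.0
    if temp_c >= full:
        return 1.0
    return (temp_c - start) / (full - start)


for t in (24.0, 29.9, 30.0, 33.0, 36.0):
    print(t, crisp_hot(t), round(fuzzy_hot(t), 2))
```

The crisp set jumps from 0 to 1 between 29.9 °C and 30.0 °C, whereas the fuzzy membership changes by only about 0.01; a membership of 0.5 at 30 °C is not a 50% chance of anything.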

The Dunning-Kruger effect is alive and well – thanks to IPCC members expertly and repeatedly demonstrating exactly how it functions!

How do we know that David Dunning and Justin Kruger didn’t themselves belong to the group of ‘experts’ characterised by what is known as the D-K effect, i.e. the cognitive bias wherein people of low ability suffer from illusory superiority? Or, to put it simply, how do we know that two academics were much more competent than everyone else to judge the thinking ability of the rest of us?

How? Just test their conclusions/predictions.

… Or were you just asking a rhetorical/sarcastic question?

Actually, we all fall somewhere along the DKE curve — a spectrum of points between accurate self-awareness and cluelessness. And at different places depending on the topic, I suppose.

Hi Gary, like a few others, I too have been diagnosed on more than one occasion by our resident expert as a prime candidate for D&K treatment.


In my case, I know very little about a huge volume of stuff or, to put it in more scientific terms, the quantity/quality ratio ~ infinity.

Personally, with an M.S. in Agriculture, I know a little about a whole lot. Expert at “ground” truth.

This is just a further example of the effect of power on the human mind.

This was well discussed in a book of a similar name.

In essence people believe their own BS at some point.

Brain pathways are opened, as with drug addiction, and can never be closed.

“In essence people believe their own BS at some point.”

… or more often someone else’s BS that they know nothing of, such as the universe is a hologram pulled around by strings ever since it emerged from a singularity to give birth to billions of new black ones, floating around in some other dark stuff.

To some it is BS, to others the beautiful stuff of science, but to its peddlers it could be a precious fertiliser, good for farming large grants and, more often, lifelong academic incomes.

“Academics” and “Climate Scientists” have a brain? Who’d a thunk.

Yeap. Climastrological ‘experts’ think they know more physics than a physicist, more chaos theory than a chaos theory expert, more computer science than a computer scientist, more statistics than a statistician, more mathematics than a mathematician and the list goes on and on.

Funny about that Dunning, though, since psychology is largely a pseudoscience and has abysmal reproducibility results.

And it’s funny how experts in some of the vaguer fields prove to have less expertise than advertised when checked: http://repository.upenn.edu/cgi/viewcontent.cgi?article=1010&context=marketing_papers

And simply having experts in homeopathy, astrology, alchemy, creationism or climastrology for that matter does not prove they are really above Bozo the clown, no matter what a psychologist says.

Mann pontificating on hurricanes, a subject for which he has no expertise, is a classic example of your first paragraph

Great link, Adrian! I hope a lot of viewers read at least part of it.

Great quote from the linked paper which explains a lot:

The D-K effect is psychobabble nonsense.

There is no mean for this. It’s an epic generalisation of a mythical human, and does not relate to real people whatsoever.

“It’s an epic generalisation of a mythical human, and does not relate to real people whatsoever”


I see a lot of ignorant yet boastful people around me, babbling confidently about things they know nothing about, typical of the D-K syndrome. They are real, and numerous. If you don’t see any of them “whatsoever”, try to get out of your bubble.

They’re called know-it-alls, and we recognized them just fine before Dunning and Kruger’s superfluous self-important pontificating.

The “illusory superiority bias” is specific to a certain type of person and behavior, NOT the average person. The DK studies were specific to SOME of the test subjects, in two distinct categories amongst them (who were real people and not mythical at all), and there are numerous validating studies where people were tested/observed and those two groups of people did indeed exhibit this particular cognitive bias more so than those subjects who were NOT in those groups.

There is nothing “mythical” about the fact that SOME people who are extremely competent actually do underestimate/under-value their own skills and SOME incompetent people overestimate/over-value their own. In the studies, the two groups represented the top quarter of subjects and the bottom quarter of subjects, and NONE of the studies suggested that ALL of the subjects in both categories suffered from the bias. It also means that more than HALF of the subjects did NOT suffer from it. The studies ALSO indicated that after training, the incompetent actually corrected their bias.

The fact that your statement sounded really “superior” regarding this bias, AND demonstrated an obvious lack of understanding of even the most basic definition of it….well….does more to support it than refute it.

Just saying 🙂

Here’s a quote that may be more indicative of what we are seeing:

“By ‘educated incapacity’ we mean an acquired or learned inability to understand or see a problem, much less a solution. Increasingly the more expert, or at least the more educated, a person is, the more likely he is to be affected by this.”

Herman Kahn, William Brown, and Leon Martel, The Next 200 Years, William Morrow and Company, 1976, p. 22.

This was far too good not to share.

That’s why the most important part of scientific training is to realize how easy it is to fool yourself and how to take measures against it.

Something modern education too often fails to address. Parroting your profs’ words may help you pass the next test, but it does not count as scientific expertise.

It’s about never forgetting that you have a New Zealand cow.

(Non-Zero Chance of Wrong)

“For example, the authors explain how framing decisions so as to make the green choice the default option takes advantage of a presupposed psychological predilection for the status quo. This may be so, but this is a sales and marketing ploy”

Spot on!

In future, I shall call my bullshit detector the “Ridgway Test”.

Better keep the psychologists, with their word puzzles and Cheshire Cat word definitions, out of the real sciences. Wow, it would be impossible to study statistics and probability, or the mathematics of chemistry, if all the terms and names were wrapped in this gobbledygook of made-up words, or known words with their meanings changed. And can you imagine what they could mess up with a set of engineering specifications and drawings? A design for a simple valve might end up looking like a Citron.

I gather you mean a French Citroen, the most complex simple car.

“Status quo”? Is that latin for “settled science”? They would like to think so.

Good article. Like every bit of it. No criticisms.

My brother was a geothermal scientist, and when we meet we debate climate change. He says, “I know that air with elevated amounts of CO2 will warm up more than ambient air. End of story.” I tell him it is not that simple: the theory of global warming depends on positive feedbacks, and positive feedbacks depend on the tropical hot spot in the atmosphere, which has never been located.

Back in the nineties I met and became a friend of John Maunder, a meteorologist from New Zealand, and in casual conversation I said I didn’t believe in CAGW and he said neither did he. I learnt a lot from him and have not seen any convincing proof to change my mind since. Silly climate scare stories that are floated constantly might convince the younger generations, but us old guys have seen it all before. To cap it all off, if the theory was proven beyond doubt, no one would rely on consensus to reinforce their case.

“…that is to say, the predisposition in us all to think we are above average.1”

I’m with Homer on this, we aspire to be ‘average’!

Remember, 97% of all people are “above average”.

I am glad to always claim to be average, at best. A lifetime of experience has demonstrated to me that this is so. I would hate to be part of the 97% of anything.

The Lake Wobegon effect.

HA, not so.

For the past 30+ years the Public School System “edumacators” have been brainwashing their students into believing “everyone is average percentile equal” ….. and that no student actually “fails” a subject, …… that they are just rated in the “lower percentile” of superior achieving intellectuals.

OOPS, only the word “average” should have had a strike-thru.

….. into believing “everyone is ~~average~~ percentile equal” …..

“This cognitive bias goes by many names, illusory superiority being the worst of them. The bias refers to a delusion rather than an illusion and so the correct term ought to be delusory superiority.”

Dictionaries equate “illusory” to misperception. Since cognitive bias is also a misperception, dictionaries make “illusory superiority” SEEM correct. Since illusory and illusion both refer to a characteristic of the subject being perceived, in this case superiority, illusory would only be correct if others also perceived the superiority. Since they do not, that perception of superiority is a self deception.

While I agree in substance with your thinking, most dictionaries equate “delusory” to deception of others, while equating “delusion” to deception of self. Your terminology should thus be “delusional superiority”.

SR

Yes, you’re right. Better still to call it “delusional superiority”. I had taken my cue from the fact that the psychologists refer to “illusory superiority” rather than “illusional superiority” and I had not been aware that dictionaries are making a distinction between “delusory” and “delusionary”. That said, I too notice that some dictionaries refuse to accept the distinction you and I would make between an illusion and a delusion.

Magicians and conjurors practise illusions, and we are deluded, or delude ourselves, if we believe these illusions are reality.

John,

Delusion tends to be applied with regard to mental illness, and people can have cognitive biases without being mentally ill.

That you noted that your opinion (and Stevan’s) differs from that of the “experts” in word definitions and applications is a positive thing.

🙂

I am pleased that you posted here, Aphan, since you provide a timely reminder that psychiatrists do indeed use the term ‘delusion’ in the narrow sense of a mental illness. That would explain why psychologists have avoided the term ‘delusionary superiority’; they presumably invented their term for the benefit of fellow academics (not the lay public) and therefore would not wish to be accused of assuming that the cognitive bias is a form of mental illness. Your post also provides me with the opportunity to point out another frustrating habit that experts have: they appropriate existing words and imbue them with a narrower and/or altered meaning in order to create their jargon. They can then chastise the lay public when it fails to pick up on this.

Meanwhile, in the big, wide world, we non-experts are advised by authorities such as H.W. Fowler, who had this to say in ‘Fowler’s Modern English Usage’:

“A delusion is a belief that, though false, has been surrendered to and accepted by the whole mind as the truth and so may be expected to influence action. An illusion is an impression that, though false, is entertained provisionally on the recommendation of the senses or the imagination, but awaits full acceptance and may be expected not to influence action.”

On such a basis, the lay public would find ‘delusionary superiority’ to be the appropriate terminology.

When I simply observe that the distinction between ‘delusion’ and ‘illusion’ appears to have become blurred in current usage, I am not differing from expert opinion, I am just wryly observing the dumbing down of language. I suspect I may also be speaking here for Stevan.

The IPCC’s position is that climate sensitivity to increasing atmospheric CO2 is up to ~10 times higher than it really is, and therefore humanity should beggar our economies in the developed world and deny cheap reliable energy to the developing world, in response to their fictitious threat.

As evidence of the IPCC’s utter incompetence, none of their scary scenarios have actually materialized in the decades that they have been in existence. They have a perfectly negative predictive track record, so nobody should believe anything they do or say.

George Carlin on the IPCC (warning: language):

Of course, the IPCC is pervaded by self-delusional superiority, because for the most part the Authors of the Report are not independent Experts, but instead are reviewing and relying upon either their own works (i.e., papers which they themselves have written), or on work for which they themselves were reviewers (due to the incestuous nature of peer review). The IPCC is essentially witness, judge and jury all rolled into one, and hence there is no objective critical thinking.

The IPCC is not fit for purpose since it does not consist of a body of independent Experts charged with ascertaining what is meant by climate, how climate works, what climate change, if any, there has been at regional and global levels, why regional changes have differed, and what has caused such change.

The IPCC is not a scientific organisation, but rather a political one charged with promoting a particular agenda.

“….but rather a political one charged with promoting a particular agenda.”

As it seems to me are several organisations and think tanks which oppose climate science.

which oppose…the idiotic conclusions of…. climate science.

Run, Griff! The ice is returning! Lmao!

Griff returns with the same comment, but without the direct mention of ‘Koch’ or ‘fossil fuel industry funded’.

One-trick pony, Billy Goat Griff.

Griff, you talk as if our two sides have the same role and the same tests. But if my role is paying for your expedition to find the lost gold mine, and I have found your map to be unhelpful, I have no responsibility to come up with a better map. I just pull the plug instead.

It would be nice to do more, but there is no such contract. In this situation I’m not being paid to do your expert job for you.

Griff,

George Carlin (above) has your number. Apparently you are all three.

Griff, even if the organisations which you see as opposed to the CAGW theory are just political, they seem to have a better record at predicting outcomes than the CAGW climate models. Do you ever stop to think about that?

This is a great post.

Have to disagree with one thing you said there, that the IPCC is “not fit for purpose.” It is, in fact, fit for a purpose – exactly the one you describe in your last paragraph. It is a political body charged with promoting “belief” in AGW to foster submission to economically suicidal (for most, but not for the politically connected cronies positioned to profit enormously) “climate policies.”

“So What Happened to Expertise with the IPCC?”

From what I can see, anyone with ANY real expertise left after they read the POLITICAL summaries.

Darts at a dartboard, thrown by monkeys . . .

That comes from Philip Tetlock. He studied the predictions of experts for decades. It turns out that experts have a lousy (worse than a dart-throwing monkey) record of predicting the future. Here’s an example about trying to outguess the stock market.

There are two kinds of expertise. There is expert performance wherein someone is able to repeat, and possibly go a bit beyond, what they have learned to do. An example is a surgeon. Here’s a link to a wonderful survey paper by K. Anders Ericsson. It’s where the 10,000 hour figure cited by Malcolm Gladwell comes from.

Expert performance is very reliable. Civilization would collapse if that weren’t true. If half the bridges failed because of unreliable engineering we wouldn’t build bridges. etc. etc.

The other kind of expert is deemed to be so because of her superior education and experience. The problem is that we bestow on such people the respect earned by the expert performers. The truth is that we should take their bloviations cum grano salis.

The folks pushing CAGW are not expert performers. Their predictions are no better than those of a blindfolded dart-throwing monkey and should be treated as such.

Dunning pointed out that stupid people tend to overestimate their abilities. He also found that experts often claim to know more than they actually do. Tetlock pointed out that experts have a plethora of mechanisms to protect them from acknowledging their failed predictions. Experts are just as prone to human foibles as anyone else; they just have the skills to convince most people that they actually know what they’re talking about.

Spot on CommieBob . . . The older I get the more I become aware of just how little we really know.

“The other kind of expert is deemed to be so because of her superior education and experience. The problem is that we bestow on such people the respect earned by the expert performers.”

This is pounding the nail on the head in one stroke. There is a difference between experts and the credentialed. Although a credentialed person can be expert, he can only be so based on thousands of hours of performance. The credentialed without any discernible record of performance is not an expert, just some guy or gal with a diploma.

This is a good essay by one who is clearly well aware of the unreliability of forecasts of highly complex (possibly chaotic), multi-variate, non-linear systems.

Not only does climatology not know the coefficients, it doesn’t even know the independent variables.

Most WUWT readers are already well aware of the poor quality (collection, compilation, methods and coverage) of the historic temperature record.

These known substantial weaknesses in our knowledge of climate don’t exactly accord with most people’s concept of “settled science.”

The inherent contradictions in the progressive worldview are simply mind boggling.

Be who you are, you be you… think for yourself… question everything, especially authority … be a critical thinker… embrace your own truth… be an individual, not a conformist… trust the experts, not your own cognitive abilities… don’t question what authorities say… don’t think independent of what is acceptable.

Where the [pruned] have you seen a progressive saying you must think for yourself and question anything? A progressive is just enable to see a person; he only sees a representative of a group. You are not you, you are a part of a category (male, white, denier, or whatever, depending on the issue).

A progressive knows he is just a stupid ignorant man, and to be a better person is very simple: just stick to the beliefs of do-gooders. Do NOT think for yourself. Do NOT trust authority. Trust the group, the GOOD group, the group that talks about being good and doing good, whatever it takes (including hating and killing “evil” people).

[“just enable” should be what? .mod]

What you are describing is the result, not how progressives see themselves (their identity). The incremental (progressive) steps to get to that result (the hive mind) leveraged and co-opted the ideals of liberalism (some of the things that I described) through a process of mind-phuck (pardon my rather simplistic description, lol) to get to that point.

“just unable”?

Just unable?

Oops. Somehow I missed that, DM.

A leftist believes that most people are stupid and thinks, I need to be running these people’s lives.

A rightist believes that most people are stupid and thinks, I sure don’t want those people running my life.

A whatever-ist (like myself) believes that most people are stupid and thinks, “holy phuck Batman, we’re all going to die, so just let everyone live their own lives as they see fit and try to do things that don’t hurt them.”

When I said “most people are stupid”, methinks I should have said “most people are stupid (like myself)”

(p.s. it’s wonderful to not care what people think about you; very liberating). Cheers!

MarkW,

Yes, I think that one of the issues society deals with is the hubris of someone with (at best) a liberal arts background who thinks that they are smarter than everyone else and therefore has a responsibility to run everyone else’s lives. Most vocal progressives that I have come across have spoken and behaved as though they thought that they were smarter than those they disagreed with. That is why they often view themselves as Social Justice Warriors, on par with Joan of Arc.

Some people are unskilled and can be trained, some are ignorant and can be taught, some are misguided and can be advised, some are foolish… and I fear there’s no remedy for that. Like an incurable disease, we can only let the affliction run its course and hope the sufferer survives.

And I do not automatically exclude myself from any of the aforementioned groups. 🙂

“feels he represents the many educated and rational onlookers who believe that the hysterical denouncement of lay scepticism is both unwarranted and counter-productive”

“feels he represents the many educated and rational onlookers who believe that the hysterical denouncement of lay scepticism is both unwarranted and counter-productive”

Very interesting and accessible article about a little-known and complicated matter. Lots to be learned here.

Part of the problem is that we love a sort of mini-Authority Fallacy in experts. To use an example that is often used by people defending experts: yes, I want an expert to fly my plane. However, I don’t think that expert will be very good at forecasting developments in aircraft, or passenger numbers, or even what the flying will be like next Tuesday.

Similarly, the IPCC assembled climate experts. Yes, perhaps the best qualified climate scientists in the world. But it then asked them to do something for which they had demonstrated no expertise whatsoever: forecasting the future climate for the next century or so.

The fact that they are not very good at forecasts doesn’t make them bad climate scientists, because we really don’t have enough knowledge or other abilities (e.g. computing power) to be good at that at all. But now that they have got themselves into the position where being a good climate scientist is taken to mean being a good forecaster, they will be judged by their forecasting ability. Thus they will defend their forecasts and perhaps even (unwittingly in many cases) cross over to manipulating data to show that their forecasts are right.

This is the corruption and waste we see now. Experts should have said “we can’t do this very well at all, so these are really just guesses” and then worked hard to [understand] the climate so that they could improve those forecasts. Instead, just about every piece of research seems to be about proving that the forecasts are in fact right.

As an author, adviser, and peer reviewer to the IPCC I am in a position to comment on their internal biases. When I have raised difficult questions about such topics as the CO2 output associated with the production of polycrystalline silicon cells, or whether hurricanes are increasing in frequency and intensity (or not), I find myself waved away with vague and unverifiable statements that “that doesn’t really matter”.

I repeatedly tell my students to Question Everything. But do not try that with the IPCC. Their minds were already made up 20 or more years ago, so new data are simply minor inconveniences.

And as for that “peer review” thing, most of the reviewers of my chapter in their mighty tome had no background in the field in question, and I found their questions inane. To answer them I had to go back to basics and start in their freshman year. And many of the papers they sent me to review were so far afield from my area of specialization that I had to return them with no comments. Needless to say, the IPCC seems to be less than satisfied with my performance. I guess I should be covered in rue, but I do have professional standards to uphold.

And I am, I hope, the inverse of the Dunning-Kruger Effect, because I am constantly beset with self-doubt. Maybe that means I have at least a modicum of expertise.

An expert is someone who knows more and more about less and less. Or alternatively, someone from out of town with a PowerPoint presentation.

The psychologist Edward de Bono, in his book “A Five Day Course in Lateral Thinking”, described the difference between an expert and an inpert by reference to a story about Edison. Towards the end of his career, Edison was allocated a number of bright young men to assist him. One day he assigned them the task of calculating the volume of a light bulb. The young men took the bulb away, measured every dimension, laboured with paper, pen and slide rule and came back with an answer. “You’re out by at least 10%” said Edison who drilled a small hole in the bulb, filled it with water and poured it into a measuring jug.

We need more inperts who understand a subject and fewer experts.

This speaks to my appreciation of an elegant automatic control mechanism that I encountered: a fire door mounted on an inclined track (down to close) held open by a fusible link. That approach of leveraging simple laws of nature (rather than more complicated technological solutions) has influenced my approach to life ever since.

We used to call them gravity feed systems, as gravity is more reliable than pumps.

But all he got was the interior volume, less the volume of the filament and its supports, and less the volume of the fitting.

To get the volume of the bulb, all his students needed to do was to immerse the bulb in water, and measure the volume displaced. Simple Archimedes. QED.

I agree with Dudley, and that was the solution I would have used: displacement. However, the problem was to ‘calculate’ the volume, not just to ‘determine’ it.

Sorry, I disagree with Dudley. The immersion approach is simple, but it doesn’t measure the interior volume. That may actually have been the goal: if one plans to replace the air with some other gas, one reasonably cares how much is needed. That volume is the interior volume, and should exclude the filament and supports. That said, I would do it differently. Start with immersion to get the gross volume, then break the bulb and use the immersion technique to get the volume of the glass, etc., then subtract to get the net volume of the interior.

Dudley/Jsuter – You have identified a classic source of measurement uncertainty; incomplete definition of the measurand.

I don’t think Edison was very bright. He could have immersed the bulb in water and measured the volume change.

Sorry! Not paying attention I guess. Some “expert” got there ahead of me!

Like the legend of how Alexander the Great, when presented with the Gordian Knot and asked to try to undo it, drew his sword and cut it apart.

“…I am constantly beset with self-doubt. Maybe that means I have at least a modicum of expertise.”

“(Maybe) I don’t know” is key to all learning, and the converse attitude stops learning. To paraphrase a saying: the more a wise person learns, the more that person realizes how little (s)he knows, and the more a foolish person learns, the more that person esteems how knowledgeable (s)he is.

🙂

An expert is anyone from out of town with a briefcase…

Or a carpet bag …

Climate and psychology are both consequential, fascinating subjects with a great deal of uncertainty and an almost equal number of claims to expertise. Economics is perhaps even worse.

It is a question for psychology why people claim to have the knowledge to give advice that others should follow when, if forced to explain the uncertainties of their field, they are really stating that, given a large enough group, their recommendation will have a small positive effect but will fail most of the time for most of the subjects. That is not something one could readily sell.

Why people want certainty, or the illusion of certainty, is a consequential issue. Lyndon Johnson’s crack about wanting a “one-armed economist” is nearly a Zen koan in the psychology of politics. Why is it that there is a market for bad advice?

Actually, it was Harry Truman’s crack.

You can hardly blame Johnson (or Truman) for that remark. What’s the old saying? Ask 10 economists for an opinion and you’ll get 14 answers?

If an economist and a lawyer were both drowning, and you could only save one of them, would you sit and watch, or just walk away?

One of many economist jokes on the wall in the econ department at UM St. Louis.

And somehow this predisposition and the desired result are aided by the “Precautionary Principle.” It amazes me how many times those pushing the argument of supposedly “settled science” also throw in that, even if it is not provable, we must do this out of precaution for our children and their children.

Reply to Usurbrain:-

And they have got the “Precautionary Principle” wrong. According to Wikipedia:

“The precautionary principle (or precautionary approach) to risk management states that if an action or policy has a suspected risk of causing harm to the public, or to the environment, in the absence of scientific consensus (that the action or policy is not harmful), the burden of proof that it is not harmful falls on those taking that action.”

In the case of CAGW, this means that as all the actions proposed to deal with “Climate Change” have a suspected risk of causing harm to the public, it is up to the proponents of these proposed actions to combat “Global Warming” to demonstrate that these actions are not harmful.

To date all the actions proposed by CAGW enthusiasts have been shown to have a substantial cost to the public, with actual harm in many cases (as in making power prices so high that people do not use heating or cooling when the weather is cold or hot, and die as a result, or businesses find their electricity costs have grown so high that they can no longer continue in business, and therefore make their workers unemployed). The plausible benefits of the proposed actions have been found to be low, and their “projections” of future states of the weather have been found to be incorrect, such that some have now admitted that their models are wrong (remember Ben Santer, and I note that Michael E. Mann was a signatory to that paper!).

The Precautionary Principle is the darling of environmentalists. However, progressives seem to give it no thought when they advocate for major social changes without precedent. It is sufficient that they think changing the way that things are done will lead to a better, more just world.

The Precautionary Principle bites both ways. Who is to say that the cure will not cause more problems than the disease when the cure has never been tried except on a very limited scale? And where it has been tried, lots of unforeseen problems have resulted. As you scale up, small problems become big problems.

Anyone who thinks that a climate sensitivity bounded with +/- 50% uncertainty is settled is either a fool or a charlatan. We know that extraterrestrial life exists somewhere in the Universe with near absolute certainty, and yet this is far from settled. Climate science gets even worse as the various RCP scenarios add at least an additional +/- 50% uncertainty to the anthropogenic component considered equivalent to post-albedo solar forcing. Making this so much worse is that, even with all the uncertainty, the low end of the IPCC’s presumption isn’t even low enough to include the worst-case magnitude of the effect as limited by the laws of physics.

I am constantly surprised that anyone is surprised that the IPCC has gotten much science wrong or dabbles in propaganda, data manipulation, or other bad habits. Just take a look at their founding documents. The United Nations Framework Convention on Climate Change (UNFCCC) and the International Panel on Climate Change (IPCC) that it started were political constructs from the start. All the science summaries and research reported are strictly on “human caused” climate change. The conclusion was assumed from the start and they looked only at supporting evidence.

To them there are no “unknown unknowns”, propaganda is a useful tool in shaping outcomes, and the results are already established.

Agreed – but it’s the Intergovernmental Panel, not International.

Therefore its acronym should be IGPOCC.