![clip_image002](http://wattsupwiththat.files.wordpress.com/2017/09/clip_image00241.jpg?resize=489%2C357&quality=83)

NCAR

Guest Post by Clyde Spencer

Because of recent WUWT guest posts, and their comments, I decided to do something I had been putting off for too long – reading what the IPCC has to say about climate modeling. What follows are my remarks on what I consider some of the most important statements in FAQ 9.1, which asks and answers the question “Are Climate Models Getting Better, and How Would We Know?”

IPCC AR5 FAQ 9.1 (p. 824) claims:

“The complexity of climate models…has increased substantially since the IPCC First Assessment Report in 1990, so in that sense, current Earth System Models are vastly ‘better’ than the models of that era.”

Here, they explicitly define “better” as more complex. However, what policy makers need to know is whether the predictions are more precise and more reliable! That is, are they usable?

FAQ 9.1 further states:

“An important consideration is that model performance can be evaluated only relative to past observations, taking into account natural internal variability.”

This is only true if model behavior is determined by tuning to match past weather, particularly temperature and precipitation. However, this pseudo model-performance is little better than curve fitting with a high-order polynomial. What should be done instead is to minimize reliance on historical data, lean on first principles more than is currently done, make a projection, and then wait 5 or 10 years to see how well it forecasts actual future temperatures. Although the current approach does use first principles, it may be no better than a ‘black box’ neural network for making predictions, because it relies on what engineers call “fudge factors” to match history.

FAQ 9.1 goes on to say:

“To have confidence in the future projections of such models, historical climate—and its variability and change—must be well simulated.”

It is obvious that if models didn’t simulate historical climate well, there would be no confidence in their ability to predict. However, a good historical fit alone isn’t sufficient to guarantee that projections will be correct. Polynomial fits to data can have high correlation coefficients, yet are notorious for flying off into the Wild Blue Yonder when extrapolated beyond the data sequence. That is why I say above that the true test of skill is to let the models actually forecast the future. Another approach would be to tune the models not to all historical data, but only to the pre-industrial or pre-World War II segment, and then let them demonstrate how well they model the last half-century.
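To make the curve-fitting point concrete, here is a minimal Python sketch of the split-sample test just described. Everything in it is a hypothetical stand-in: the “climate record” is a single slow sine-wave oscillation, the “model” is a degree-5 polynomial, and `fit_poly` and `r_squared` are illustrative helpers, not anything a modelling centre uses. The polynomial is tuned only to the first 60% of the record and then scored on the held-out final 40%.

```python
import math

def fit_poly(ts, ys, degree):
    """Least-squares polynomial fit via the normal equations.

    Times are centred and scaled to [-1, 1] first to keep the normal
    equations reasonably well conditioned. Returns a callable p(t).
    """
    centre = (max(ts) + min(ts)) / 2
    half = (max(ts) - min(ts)) / 2
    xs = [(t - centre) / half for t in ts]
    n = degree + 1
    # Augmented normal equations [A^T A | A^T y] for the monomial design matrix A.
    m = [[sum(x ** (i + j) for x in xs) for j in range(n)]
         + [sum(y * x ** i for x, y in zip(xs, ys))] for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution for the polynomial coefficients.
    coef = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * coef[j] for j in range(i + 1, n))
        coef[i] = (m[i][n] - tail) / m[i][i]
    return lambda t: sum(c * ((t - centre) / half) ** k for k, c in enumerate(coef))

def r_squared(obs, pred):
    """Coefficient of determination of pred against obs."""
    mean = sum(obs) / len(obs)
    ss_res = sum((o - p) ** 2 for o, p in zip(obs, pred))
    ss_tot = sum((o - mean) ** 2 for o in obs)
    return 1 - ss_res / ss_tot

# Hypothetical "climate record": one slow oscillation sampled at 101 points.
ts = [i / 100 for i in range(101)]
ys = [math.sin(2 * math.pi * t) for t in ts]

# Tune on the first 60% of the record; hold out the final 40%.
train = [(t, y) for t, y in zip(ts, ys) if t <= 0.6]
held_out = [(t, y) for t, y in zip(ts, ys) if t > 0.6]
model = fit_poly([t for t, _ in train], [y for _, y in train], degree=5)

r2_train = r_squared([y for _, y in train], [model(t) for t, _ in train])
err_train = max(abs(model(t) - y) for t, y in train)
err_test = max(abs(model(t) - y) for t, y in held_out)
print(f"in-sample R^2: {r2_train:.5f}")
print(f"max error, tuning period:   {err_train:.4f}")
print(f"max error, held-out period: {err_test:.4f}")
```

Under these assumptions the in-sample R² should come out very close to 1, while the error over the held-out period is expected to be orders of magnitude larger than over the tuning period: an excellent hindcast that says little about skill outside the fitted range.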

One of the problems with tuning to historical data is that if the extant models don’t include all the factors that influence weather (and they almost certainly don’t), then the influence of the missing factor(s) is inappropriately proxied by other factors. That is to say, if there was a past ‘disturbance in the force(ing)’ of unknown nature and magnitude, correcting for it would require adjusting, by trial and error, the variables that are in the models. Can we be certain that we have identified all exogenous inputs to climate? Can we be certain that all feedback loops are mathematically correct?
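The proxying problem can be illustrated with a toy regression, sketched here in Python under deliberately artificial assumptions: a response driven by two hypothetical forcings, `f_known` (included in the model) and `f_missing` (left out), which happen to rise together over the “historical” period. Fitting a one-factor model to that history inflates the tuned sensitivity to `f_known`, and the error only shows up once the two forcings stop moving together.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys on xs (one regressor plus intercept)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    return cov / var

# Hypothetical history: f_missing rises alongside f_known, with a small wiggle.
f_known = [float(i) for i in range(10)]
f_missing = [0.6 * i + (0.3 if i % 2 else -0.3) for i in range(10)]
TRUE_K, TRUE_M = 1.0, 0.5  # assumed "true" sensitivities to each forcing
response = [TRUE_K * k + TRUE_M * m for k, m in zip(f_known, f_missing)]

# Tune a model that only knows about f_known: its sensitivity absorbs f_missing.
k_tuned = ols_slope(f_known, response)
print(f"true sensitivity: {TRUE_K:.3f}, tuned sensitivity: {k_tuned:.3f}")

# Future: f_known rises by 10 units while f_missing stays flat.
true_change = TRUE_K * 10
model_change = k_tuned * 10
print(f"true change: {true_change:.2f}, projected change: {model_change:.2f}")
```

The tuned model reproduces the history perfectly well; it is only in the future scenario, where the omitted factor behaves differently, that its inflated sensitivity leads to an overestimate.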

Inconveniently, it is remarked in Box 9.1 (p. 750):

“It has been shown for at least one model that the tuning process does not necessarily lead to a single, unique set of parameters for a given model, but that different combinations of parameters can yield equally plausible models (Mauritsen et al., 2012).”

These models are so complex that it is impossible to predict how an infinitude of combinations of parameters might influence the various outputs.
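The non-uniqueness described in the Box 9.1 quote shows up even in a two-parameter toy. The Python sketch below is purely illustrative (the forcings, the linear `run` model and the parameter sets are all hypothetical): when two inputs co-vary over the historical period, any parameter pair with the same sum gives an identical hindcast, yet the projections diverge as soon as the inputs stop moving together.

```python
# Two hypothetical forcing series that are identical over the historical
# period but diverge in the future.
hist_a = [0.1 * t for t in range(20)]
hist_b = [0.1 * t for t in range(20)]
future_a = [2.0 + 0.1 * t for t in range(20)]  # keeps rising
future_b = [2.0] * 20                          # levels off

def run(params, fa, fb):
    """Toy linear 'model': response = p_a * forcing_a + p_b * forcing_b."""
    p_a, p_b = params
    return [p_a * a + p_b * b for a, b in zip(fa, fb)]

# Three parameter sets whose changes offset one another (p_a + p_b = 1.0).
param_sets = [(1.0, 0.0), (0.5, 0.5), (0.0, 1.0)]
hindcasts = [run(p, hist_a, hist_b) for p in param_sets]
forecasts = [run(p, future_a, future_b) for p in param_sets]

for p, h, f in zip(param_sets, hindcasts, forecasts):
    print(p, "final hindcast:", round(h[-1], 3), "final forecast:", round(f[-1], 3))
```

All three parameter sets are indistinguishable on the historical data, so a hindcast alone gives no basis for choosing among them.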

The kind of meteorological detail available in modern data is unavailable for historical data, particularly prior to the 20th Century! Thus, it would seem to be a foregone conclusion that missing forcing information is assigned to other factors that are in the models. To make this clearer: in historical time, we know when most volcanoes erupted. However, the density of ash in the atmosphere can only be estimated, at best, whereas the ash and aerosol density of modern eruptions can be measured. Historical eruptions in sparsely populated regions may be known only through speculation based on a sudden decline in global temperatures lasting a couple of years. We only have qualitative estimates of exceptional events such as the Carrington Coronal Mass Ejection of 1859. We can only wonder what such a massive injection of energy into the atmosphere is capable of doing.

Recently, concern has been expressed about how ozone depletion may affect climate. In fact, some have been so bold as to claim that the Montreal Protocol has forestalled some undesirable climate change. We can’t be certain that some volcanoes, such as Mount Katmai (Valley of Ten Thousand Smokes, AK), which are known to have had anomalous hydrochloric and hydrofluoric acid emissions (see page 4), didn’t have a significant effect on ozone levels before we were even aware of variations in ozone. For further insight on this possibility, see the following:

Continuing, FAQ 9.1 remarks:

“Inevitably, some models perform better than others for certain climate variables, but no individual model clearly emerges as ‘the best’ overall.”

This is undoubtedly because modelers make different assumptions regarding parameterizations, and the models are tuned to their variable of interest. This suggests that tuning is overriding first principles and dominating the results!

My supposition is supported by their subsequent FAQ 9.1 remark:

“…, climate models are based, *to a large extent* [my emphasis], on verifiable physical principles and are able to reproduce many important aspects of past response to external forcing.”

It would seem that tuning is a major weakness of current modeling efforts, along with the necessity for parameterizing energy exchange processes (convection and clouds), which occur at a spatial scale too small to model directly. Tuning is ‘the elephant in the room’ that is rarely acknowledged.

The authors of Chapter 9 acknowledge in Box 9.1 (p. 750):

“…the need for model tuning may increase model uncertainty.”

Exacerbating the situation is the remark in this same section (Box 9.1, p. 749):

“With very few exceptions … modelling centres do not routinely describe in detail how they tune their models. Therefore the complete list of observational constraints toward which a particular model is tuned is generally not available.”

Lastly, the authors clearly question how tuning impacts the purpose of modeling (Box 9.1, p. 750):

“The requirement for model tuning raises the question of whether climate models are reliable for future climate projections.”

I think that it is important to note that buried in Chapter 12 of AR5 (p. 1040) is the following statement:

“In summary, there does not exist at present a single agreed on and robust formal methodology to deliver uncertainty quantification estimates of future changes in all climate variables ….”

This is important because it implies that the quantitative correlations presented below are nominal values with no anchor to their inherent uncertainty. That is, if the uncertainties are very large, then the correlations themselves have large uncertainties and should be accepted with reservation.
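One standard way to attach error bars to a single correlation coefficient is the Fisher z-transformation. The Python sketch below assumes independent sample pairs and a hypothetical r = 0.8; it captures only sampling uncertainty, not the structural uncertainty the Chapter 12 quote is about. Spatially autocorrelated fields contain far fewer independent samples than grid cells, so the effective sample size (`n_eff` here, chosen arbitrarily) may be much smaller than the number of cells.

```python
import math

def correlation_ci(r, n, z_crit=1.96):
    """Approximate 95% confidence interval for a Pearson correlation r
    estimated from n independent pairs (Fisher z-transformation)."""
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)

# Same nominal r, two hypothetical effective sample sizes.
for n_eff in (20, 200):
    lo, hi = correlation_ci(0.8, n_eff)
    print(f"n_eff = {n_eff:3d}: r = 0.80, 95% CI about [{lo:.2f}, {hi:.2f}]")
```

With few effective samples the same nominal r of 0.8 is compatible with anything from a modest to a very strong relationship; the interval is also asymmetric, because r is bounded by 1.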

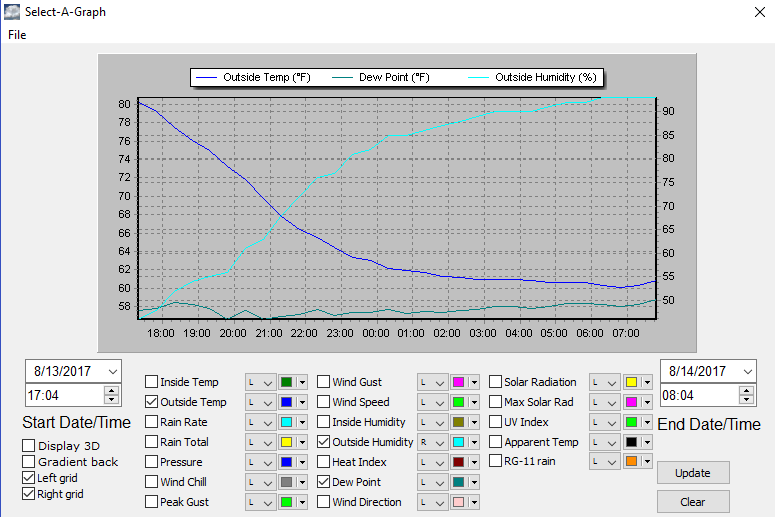

Further bearing on the issue of reliability are this quote and the following illustration from FAQ 9.1:

“An example of changes in model performance over time is shown in FAQ 9.1, Figure 1, and illustrates the ongoing, *albeit modest*, [my emphasis] improvement.”

Generally, one should expect a high, non-linear correlation between temperature and precipitation. It doesn’t rain or snow much in deserts, or at the poles (effectively cold deserts). Warm regions, i.e. the tropics, allow abundant evaporation from the oceans and transpiration from vegetation, and provide abundant precipitable water vapor. Therefore, I’m a little surprised that the following charts show a higher correlation between temperature and spatial patterns than between precipitation and spatial patterns. To the extent that some areas have model temperatures higher than measured temperatures, there must be other areas where model temperatures are lower than measured, in order to meet the tuning constraint of the global average. Therefore, I’m not totally convinced by the claims of high correlations between temperatures and spatial patterns. Might it be that the “surface temperatures” include ocean temperatures, and because the oceans cover more than 70% of the Earth and don’t have the extreme temperatures of land, the temperature patterns are weighted heavily by sea surface temperatures? That is, would the correlation coefficients be nearly as high if only land temperatures were used?

![clip_image004](http://wattsupwiththat.files.wordpress.com/2017/09/clip_image00441.jpg?resize=318%2C494&quality=83)

The reader should note that the claimed correlation coefficients for both CMIP3 and CMIP5 imply that only about 65% of the spatial variation in precipitation (the square of the correlation coefficient) can be predicted from location or spatial pattern. If precipitation patterns are so poorly explained compared to average surface temperatures, it doesn’t give me confidence that regional temperature patterns will have correlation coefficients as high as the global average.
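The land-only question, and the r² reading of a correlation coefficient, can both be explored with a short Python sketch. The grid-cell values below are invented for illustration (ocean cells are idealized as simulated perfectly, land cells as having wider extremes and being simulated poorly), and `pattern_correlation` is a hypothetical helper. Real pattern correlations would normally be area-weighted, e.g. with cos(latitude) weights, which the optional `weights` argument allows. The precipitation r of 0.8 is likewise an assumed value, chosen only because its square is close to the 65% figure above.

```python
import math

def pattern_correlation(obs, model, weights=None):
    """Centred (optionally weighted) Pearson correlation between two fields."""
    if weights is None:
        weights = [1.0] * len(obs)
    wsum = sum(weights)
    mo = sum(w * o for w, o in zip(weights, obs)) / wsum
    mm = sum(w * m for w, m in zip(weights, model)) / wsum
    cov = sum(w * (o - mo) * (m - mm) for w, o, m in zip(weights, obs, model))
    var_o = sum(w * (o - mo) ** 2 for w, o in zip(weights, obs))
    var_m = sum(w * (m - mm) ** 2 for w, m in zip(weights, model))
    return cov / math.sqrt(var_o * var_m)

def variance_explained(r):
    """Share of variance accounted for by a linear relation with correlation r."""
    return r * r

# Invented grid-cell temperatures (deg C). Ocean: idealized as simulated perfectly.
ocean_obs = [0.0, 5.0, 25.0, 30.0, 2.0, 28.0]
ocean_model = list(ocean_obs)
# Land: wider extremes, simulated poorly.
land_obs = [-10.0, 5.0, 20.0, 35.0]
land_model = [5.0, -8.0, 30.0, 18.0]

r_all = pattern_correlation(ocean_obs + land_obs, ocean_model + land_model)
r_land = pattern_correlation(land_obs, land_model)
print(f"all cells: r = {r_all:.2f}; land only: r = {r_land:.2f}")

# Assumed precipitation pattern correlation, for the r -> r^2 reading.
print(f"r = 0.80 -> {variance_explained(0.8):.0%} of spatial variance explained")
```

In this toy, well-simulated ocean cells lift the all-cell correlation well above the land-only value, which is the kind of masking effect the question above is asking about; whether it happens in the real CMIP comparisons would have to be checked against the actual fields.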

To read any or all of the IPCC AR5, go to the following hyperlink: http://www.ipcc.ch/report/ar5/wg1/

*Intergovernmental Panel on Climate Change, Fifth Assessment Report: Working Group 1; Climate Change 2013: The Physical Science Basis: Chapter 9 – Evaluation of Climate Models

It seems to me, and I’m no expert on climate models …. but … that all of this grid cell garbage, and climate models in general, assess only the vertical movement of energy. Thus …. they fail. It is a fact that the earth system absorbs, stores, moves, converts, converts again, stores again, and releases energy over an infinite number of time scales.

As such, a model that is driven purely by absorption and release is destined to fail miserably. And fail they do.

“As such a model that is driven purely by absorption and release is destined to fail miserably. And fail they do.”

Isn’t the problem much deeper than how the software is coded?

Isn’t the real problem that we cannot as yet consistently and accurately describe earth’s climate system *period*, and therefore, regardless of how the model is coded (e.g., “driven purely by…”, etc.) it is destined to fail?

Just as fundamental, in my view, is that we can’t get accurate temp readings. If your initial data is questionable, no amount of adjustment will make it more reliable.

The IPCC said long ago that models cannot predict the future. The best they can provide is a probability. Where the IPCC went off the rails was to try and average this probability and produce a forecast. This is statistical nonsense.

“no individual model clearly emerges as ‘the best’ overall.”

————

In other words, we cannot tell if a model is right or wrong.

ferdberple,

Or even the degree to which a model is right or wrong because “… there does not exist at present a single agreed on and robust formal methodology to deliver uncertainty quantification estimates of future changes in all climate variables ….”

Splitting the data into training and validation datasets only works when tuning is not allowed.

As soon as you tune a model to better match the validation dataset you have made the validation dataset part of the training dataset and it loses all value for validation.

Training IS tuning
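The point about validation data can be demonstrated with pure noise. In this hypothetical Python sketch, 500 candidate “tunings” with no skill whatsoever are scored on a small validation set and the best one is kept. Its validation score looks impressive, but on data that played no part in the selection it performs at chance level (an expected mean squared error of about 2, since predictions and targets are independent standard normals). The candidate counts and set sizes are arbitrary choices.

```python
import random

rng = random.Random(42)
N_CANDIDATES, N_VAL, N_TEST = 500, 20, 1000

# Targets are pure noise, so no candidate can have real skill.
y_val = [rng.gauss(0, 1) for _ in range(N_VAL)]
y_test = [rng.gauss(0, 1) for _ in range(N_TEST)]

def mse(pred, obs):
    """Mean squared error between predictions and observations."""
    return sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs)

# Each hypothetical candidate stands in for one tuned parameter setting.
best = None
for _ in range(N_CANDIDATES):
    pred_val = [rng.gauss(0, 1) for _ in range(N_VAL)]
    pred_test = [rng.gauss(0, 1) for _ in range(N_TEST)]
    score = mse(pred_val, y_val)
    if best is None or score < best[0]:
        best = (score, mse(pred_test, y_test))

val_mse, test_mse = best
print(f"selected on validation data: MSE = {val_mse:.2f}")
print(f"same candidate on untouched test data: MSE = {test_mse:.2f}")
```

Once the validation set has been used to pick the winner, its score is no longer an honest estimate of skill; only data held back from every tuning decision can provide one.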

Similarly, around the time of AR5’s publication, I too decided that I should read the IPCC’s prognostications in more detail. I went first to my main speciality in chemistry, specifically carbon chemistry. My PhD thesis involved biochemistry, quantum-mechanical modelling, and the preparation of compounds that are derivatives of carbon dioxide. I was quickly so outraged by some of the statements made about the chemistry of carbonic acid that my blood felt like it was approaching boiling point and I had to stop reading. It was as if it had been written by a Greenpeace activist. I don’t know if I will ever go back and attempt to read the IPCC reports in full. I simply cannot take that organisation seriously.

“I was quickly so outraged by some of the statements made about the chemistry of carbonic acid that my blood felt like it was approaching boiling point and I had to stop reading. ”

———————

Indeed. The paragraphs on CO2 “lifetime” are an outrageous example of obfuscation and pseudo-scientific spin, meant to entertain the idea that the satanic fossil-fuel CO2 will stay in the atmosphere for ages to roast us. The IPCC wrote such garbage not to inform but to misdirect. And that was in the scientific report; imagine the propaganda in the economics and policy reports.

MH,

Have you shared your opinion of the behavior of CO2 with Nick Stokes? He has some interesting views on the behavior of CO2.

Forrest,

I agree with your assessment. However, I was primarily tweaking Stokes’s nose, and hoping that someone stronger in chemistry than me would take him to task for his unconventional position on acids.

Could you point me to these? I have a degree in chemistry and really got into physiology. Blood acid/base chemistry is fascinating. Blood, for those who are not aware of it, is a buffer solution in which carbon dioxide and its hydrated and ionized forms are key. Blood entering the lungs is less alkaline than the blood leaving them. This does two things: it offloads carbon dioxide more effectively, and it loads oxygen more effectively. The arterial-to-alveolar partial pressure difference pumps oxygen in. In the tissue, the oxygen is consumed, carbon dioxide concentrations (bound and unbound) increase, and the blood pH goes from about 7.4 to 7.2. It gets lower than that, too, under certain conditions.

cdquarles,

Go to this post: http://wattsupwiththat.com/2015/09/15/are-the-oceans-becoming-more-acidic/

Search in the comments for “Nick Stokes.”

The same hindcast fit can be achieved by altering pairs (or any number) of parameters by amounts that offset changes in one or more. This is a trivial statement and essentially means there are, in practical terms, an infinite number of combinations of parameters that give the same hindcast.

Even suspending disbelief that there is a right parameter set that can forecast the future, the chances of finding it are infinitesimally small. Let’s do a thought experiment to hammer this home. Imagine a successful forecast supported by 20 years of observations after it was made. Now imagine changing its parameters in offsetting ways to arrive at the same successful forecast. What we have is not a skilled model: there are still an infinite number of parameter combinations giving the same result, which makes the model useless, because it is certain to go wildly off going forward. A lucky forecast with the wrong or an incomplete set of parameters is certainly possible (I’ve been dreading such an advent).

I believe the problem is a top-down one. We’ve had an undulating series of glacial and interglacial periods with remarkably similar frequency. This natural variability demonstrates an overriding forcing more powerful than that of CO2. Indeed, in detail, the sawtooth swings on multi-century to millennial scales observable in ice core records are far greater than CO2 forcing and are evidently independent of CO2.

Another large factor that chisels CO2 down to, at best, a second- to third-order component is the enthalpy of water phase changes and the attendant convective transfer of heat (bypassing the bulk of the atmosphere) to high altitudes for direct expulsion of heat from the surface to space. The attendant albedo of clouds, which form when incident heating reaches a certain maximum, acts to reflect heat energy back out of the atmosphere to space.

There was a fine article here at WUWT a few months ago that compared a number of interglacials and what information about our future they contain. If you want some scary climate, take a look at how precipitously we drop into a new glacial period:

Oops the png didn’t “print”. I’ll retry.

http://www.co2science.org/education/reports/prudentpath/figures/Figure6ao.gif

Gary,

There is no greater intellectual ‘sin’ than to be right for the wrong reason because the prediction cannot be replicated.

This is like saying that because I can draw an accurate picture of an existing tree, that I should also be able to draw an accurate picture of what that tree will look like in 20 years.

again with lies about tuning to history.

read Gavin’s latest paper.

no tuning to past temperature or precipitation.

They may have changed the term to something else, but it’s still tuning.

No, they used and adjusted aerosols for decades because until a few years ago they didn’t have real data, so they made it up to tune model runs.

SM,

The official IPCC description of what goes on differs from your claim. However, it is germane to take note of my above quote: “With very few exceptions … modelling centres do not routinely describe in detail how they tune their models. Therefore the complete list of observational CONSTRAINTS toward which a particular model is tuned is generally not available.”

Now, the AR5 does state clearly that neither temperature nor precipitation is itself used as a tuning parameter. However, they are typically used as constraints to which other parameters are adjusted, in order to obtain a match to historical temp’s and/or precip’. One has to make the grammatical distinction (a concept that shouldn’t be foreign to you) between “tuning to” and “used to tune.”

Inasmuch as none of your above sentences have more than seven words, perhaps something ‘got lost in the translation.’ You might want to try to clarify what you meant.

Clyde, Steven Mosher calls it lies because they use a more devious reverse engineering with temps, and tune with exaggerated fantasy aerosol effects to avoid other negative feedbacks, so they can call a sheep a wolf because of how they dress it up.

I can understand the difficulty of dealing with unknown factors, but they deliberately leave out known factors, negative feedbacks, and, until the pause, clearly big natural-variability factors, because acknowledging them would chisel away further at a puny CO2 effect that has diminished to a point where they know we couldn’t get to plus 1.5C by 2100 even with business-as-usual in overdrive. They had to drop it to 1.5C when they realized the arbitrary 2C was impossible to attain, even going to all coal. Even the data manipulators don’t have thumbs heavy enough. The Paris agreement didn’t even bother pressing for commensurate reductions, because they are going to get less than 1.5 no matter what. This, Mister Mosher, is real deep-seated lying that wasn’t even known before these zany times.

This is what passes for honesty in a sadly amoral, ends-justify-the-means big segment of society these days. They even tune the education system to create designer-brained clones of themselves to grow the next generation’s consensus. Alinsky 101 is all they need. What Hausfather and Schmidt knowingly did with a perpetually fiddled CMIP3 is devious, and they will be applauded for it. In an earlier age they would have been known as con artists. I used to cry shame, but that is now a gentle word from the past.

C’mon, Mods, there’s a lot worse out there. I was called a liar by Moshe.

What parameters do they “tune” and how do those parameters relate to temperature and precipitation? Are we looking at lie by misdirection, Mr. Mosher?

So then, Mosh, you do understand the totality of how the climate of planet earth works. Running the models, all of them, from 1865 produces exactly the same result, which exactly matches the data from all rural surface thermometers. Am I correct, or are you just waffling?

You used to be better than this current crap. What the hell happened to you? Howszyerfather? and Muller?

“read Gavin’s latest paper.”

Nah, when it comes to foretelling the future I prefer Nostradamus.

Or possibly Saint John the Divine.

Maybe even Mystic Meg.

But not Gavin.

Models fail regionally but can look good globally…that’s a sure sign of garbage.

The Weather Channel made a comment that the 2017 version of the American forecast model did worse at predicting hurricane paths than the 2016 version. So much for model updates.

I’d be curious to see what the models do out to the year 10,000,000 in order to see if the earth ends up getting hotter than the sun.

JL,

Well, if the models are running about 0.03 deg too hot per annum, then after some 10^6 years they would be predicting an Earth temperature of about 3×10^4 deg C. Not quite as hot as the sun! But it would make going outside very uncomfortable.

Can someone please remind me which factors the ‘modelers’ are unable to factor in to a model? I believe ocean currents is/are one; what about wind, aerosols etc?

Convection. But that doesn’t really matter. It’s not like, you know, clouds are important.

Well, in the end, only radiation matters.

Clouds do stuff. But you don’t even need them to understand this.

The only condition anyone needs to really understand is multiple day clear skies. Preferably in dry, humid, warm and cold places.

Understand cooling at night. That is all that really matters.

We live on a water world, and that is the key driver of everything. That is the governor, and it would appear that everything else is just a pimple.

The top microns of the ocean, the ocean/atmosphere interface, the bottom microns of the atmosphere.

Well extend that to millimetres, but it is resolution within the 100 micron band that is critical.

The models are hopeless since they cannot even replicate the ITCZ (see http://english.iap.cas.cn/RE/201510/t20151022_153743.html), and most energy in and most energy out is the equatorial region of the planet. If we cannot get the equatorial region of this planet right, there is no hope.

Incidentally, many people consider the poles to be important, and they are, since ocean currents distribute energy received in the tropics polewards. That said, little energy is radiated from the poles because they are so very cold, and emission scales with T^4. It is the tropical region which not only receives most solar insolation but also, due to its higher temperatures, radiates the most energy.
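The T^4 point is easy to quantify with the Stefan-Boltzmann law. The Python sketch below treats the surface as a blackbody (emissivity 1) at two illustrative temperatures, 300 K and 250 K, which are assumptions rather than values from the comment; actual outgoing radiation at the top of the atmosphere is lower and depends on the atmosphere above.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def blackbody_flux(temp_k, emissivity=1.0):
    """Power emitted per unit area by a grey body at temp_k kelvin."""
    return emissivity * SIGMA * temp_k ** 4

# Illustrative temperatures (assumed): a warm tropical vs a cold polar surface.
tropical, polar = 300.0, 250.0
ratio = blackbody_flux(tropical) / blackbody_flux(polar)
print(f"{blackbody_flux(tropical):.0f} vs {blackbody_flux(polar):.0f} W/m^2 (ratio {ratio:.2f})")
```

Because of the fourth power, a surface only 20% warmer in kelvin emits roughly twice as much energy per unit area.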

I drive only using data from my rear view mirror.

+1

+2

Clyde: My criticism of model tuning would extend even farther than yours:

First, if your model is going to be used to attribute warming to man, then using any of the historical record to tune your model violates scientific ethics: You are tuning your model so that it attributes 100% of warming to known forcing (which is essentially all anthropogenic forcing). Then you are using that model to attribute warming to anthropogenic forcing and naturally the answer will always be about 100%.

Second, given the uncertainty in forcing (particularly aerosol forcing), some uncertainty in warming, and the possibility of some unforced variability in climate (ENSO, AMO, PDO?, MWP?), one can’t really validate or invalidate models based on how much warming they predict. Is a model that predicts 0.3 K or even 0.5 K too much or too little warming over the historical record inferior to one that is right on?

Third: given the first two points, the only legitimate way to tune a model is to see how well it reproduces current climate and the patterns of temperature, precipitation, rainfall, sea ice, and wind around the planet, including seasonal changes. Precipitation may be the most important of these observables. (Saturation water vapor pressure rises 7%/K of warming. If that humidity were all convected into the upper atmosphere at the current rate, latent heat flux from the surface would increase by 5.6 W/m2/K. If climate sensitivity is 3.7, 1.8 or 1.2 K/doubling, only an additional 1, 2 or 3 W/m2/K of heat, respectively, escapes across the TOA to space. To get climate sensitivity right, AOGCMs must correctly suppress the rate at which moist air is convected aloft, thereby raising relative humidity over the ocean and slowing evaporation.)

Fourth, the uncertainty associated with a model projection depends on how small a “parameter space” produces models that are equally good at reproducing today’s climate and how ECS varies within that parameter space. Unless one samples that parameter space, one has no legitimate scientific basis for assigning an uncertainty to any projection.
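The arithmetic in the third point, and the parameter-space sampling in the fourth, can both be sketched in a few lines of Python. The first part simply reproduces the comment’s numbers: the 80 W/m² baseline latent heat flux is not stated in the comment but is what its 5.6 W/m²/K figure implies (5.6 / 0.07), and 3.7 W/m² per doubling is the forcing its 1, 2 or 3 W/m²/K figures imply. The second part is a hypothetical toy: two made-up parameters of which “today’s climate” constrains only the sum, while a made-up sensitivity depends on their difference.

```python
# --- Third point: back-of-envelope numbers from the comment ---
F_2X = 3.7          # W/m^2 per CO2 doubling (implied by the comment's figures)
LATENT_FLUX = 80.0  # W/m^2 baseline latent heat flux (implied: 5.6 / 0.07)
CC_RATE = 0.07      # ~7 %/K rise in saturation vapour pressure

latent_increase = CC_RATE * LATENT_FLUX  # W/m^2 per K if convection kept pace
toa_response = {ecs: F_2X / ecs for ecs in (3.7, 1.8, 1.2)}  # W/m^2 per K to space
print(f"latent heat flux increase: {latent_increase:.1f} W/m^2/K")
for ecs, extra in toa_response.items():
    print(f"ECS {ecs} K/doubling -> {extra:.1f} W/m^2/K escapes at the TOA")

# --- Fourth point: sampling a (hypothetical) parameter space ---
def present_climate_metric(p1, p2):
    """Toy stand-in for a tuning target; it constrains only p1 + p2."""
    return p1 + p2

def toy_ecs(p1, p2):
    """Toy stand-in for climate sensitivity; it depends on p1 - p2."""
    return 3.0 + 1.5 * (p1 - p2)

TARGET, TOL = 1.0, 0.05
grid = [i / 10 for i in range(11)]
accepted = [(p1, p2) for p1 in grid for p2 in grid
            if abs(present_climate_metric(p1, p2) - TARGET) <= TOL]
ecs_values = [toy_ecs(p1, p2) for p1, p2 in accepted]
print(f"{len(accepted)} parameter pairs match 'today's climate' equally well")
print(f"toy ECS spans {min(ecs_values):.2f} to {max(ecs_values):.2f} K")
```

In the toy, every accepted pair reproduces the target equally well, yet the implied sensitivity spans a threefold range; only by sampling the whole acceptable region does that spread become visible.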

No, it’s not arm waving. Well, not in reality anyway. On a clear night without water vapor, it would cool all night, likely at 5 or 6F/hr. This is somewhat typical of deserts.

But when the air cools at night, when it nears dew point, latent energy bound as water vapor becomes sensible heat that slows cooling by flooding at least some of the water vapor emission lines in IR.

This slows or stops the air temperature from dropping below the dew point under clear skies that are still radiating 50-80W/m^2 through the optical window.

This fast-cooling/slow-cooling behavior isn’t captured by surface-equilibrium SB (Stefan-Boltzmann) equations; it’s not in equilibrium. Everyone assumes it is.

35-40% of the surface spectrum is exposed to temps well below zero, even after it stops cooling. That defies physics. Since it’s not defying physics, the general understanding is wrong.

This effect is elicited specifically as air temps near the dew point; until the air cools to near the dew point, it cools quickly. If it starts from a slightly warmer temperature, it will still cool quickly, at the same rate, to nearly the same temperature, just taking a little longer. In effect, this nonlinear action trades time at the low rate for time at the high rate. If the high rate is 4F/hr and the slow rate is 0.5F/hr, a 1C warmer Tmax leads to only a 1/8C increase in Tmin, as long as the night is long enough for this rate switch to happen.

But hardly anyone seems to get this. This reduces climate sensitivity (CS) to a fraction of a degree with no positive water vapor feedback; an overwhelming negative water vapor feedback erases most or all of it. And it translates to an over 97% correlation of Tmin with dew point temperature.

The surface follows the ocean. But apparently no one makes any money if this all goes away.
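The fast/slow cooling arithmetic above can be checked with a simple piecewise-linear sketch in Python. It is a toy under stated assumptions: the dew point is held fixed overnight, and the 12-hour night and the 80 °F / 60 °F starting values are hypothetical; only the 4 and 0.5 per-hour rates come from the comment. Because the extra warmth is shed at the fast rate and then costs time at the slow rate, the rise in Tmin works out to slow_rate / fast_rate (1/8) of the rise in Tmax, in whatever degree unit is used consistently.

```python
def overnight_min(t_max, dew_point, fast_rate, slow_rate, night_hours):
    """Minimum temperature after a night that cools at fast_rate until the air
    reaches the dew point and at slow_rate afterwards (degrees per hour).
    The dew point is assumed fixed overnight, a deliberate simplification."""
    hours_to_dew = (t_max - dew_point) / fast_rate
    if hours_to_dew >= night_hours:
        return t_max - fast_rate * night_hours  # never reaches the dew point
    return dew_point - slow_rate * (night_hours - hours_to_dew)

# Rates from the comment (deg F/hr); starting temperatures and night length assumed.
base = overnight_min(80.0, 60.0, 4.0, 0.5, 12.0)
warmer = overnight_min(81.0, 60.0, 4.0, 0.5, 12.0)
rise = warmer - base
print(f"Tmin: {base:.3f} -> {warmer:.3f} (rise {rise:.3f} for a 1 deg warmer start)")
```

If the night is too short for the air to reach the dew point, the first branch applies and the full 1 degree carries through to Tmin, matching the comment’s caveat that the night must be long enough for the rate switch to happen.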

The other day, I pointed out that on the shores of the Med, in the summer, temperature falls away very slowly. I suspect that it was closely following the dew point.

On 25th September there was a daytime high of about 26 °C in the early afternoon under cloudless skies. Still under cloudless skies at 02:30 hrs on 26th September, it was still 24 °C, and even at 05:00 hrs (under cloudless skies) it was 22 °C. In fact, the coolest time was reached at 08:00 hrs on 26th September, around 10 to 15 mins after sunup, and it was not until 11:00 hrs (under clear skies) that temperatures were back up to 24 °C, the temperature earlier that day at 02:30 hrs!!!

And the surface was not in equilibrium with the sky it radiates to. Something, I think energy stored as water vapor, maintains that.

It even stops cooling at the surface.

And the optical window is cold compared to the surface

There appears to be something in it.

There seems to be an under appreciation that this planet is a water world, and the full implications of that.

At worst, on most nights you exchange time cooling slowly for time cooling fast, until the difference between the two is gone.

Specifically, because the rate change is tied to the dew point temperature, it acts as a switch. Switches are nonlinear. Transistors are switches, and nonlinear. And they can regulate, because they have gain, and can change the rate of flow; in this case, the flow between the surface and space.

My concern is not what the IPCC says about models but what they do not say. Some important questions still remain unanswered.

In November 2016 Rosenbaum at Caltech posited that nature cannot be modeled with classical physics but theoretically might be modeled with quantum physics. (http://www.caltech.edu/news/caltech-next-125-years-53702) In the article “Global atmospheric particle formation from CERN CLOUD measurements,” sciencemag.org, 49 authors concluded “Atmospheric aerosol nucleation has been studied for over 20 years, but the difficulty of performing laboratory nucleation-rate measurements close to atmospheric conditions means that global model simulations have not been directly based on experimental data….. The CERN CLOUD measurements are the most comprehensive laboratory measurements of aerosol nucleation rates so far achieved, and the only measurements under conditions equivalent to the free and upper troposphere.” (December 2, 2016, volume 354, Issue 6316) The article emphasizes the importance of replacing theoretical calculations in models with laboratory measurements.

I have found no discussion in IPCC releases or in the mass media of whether earth systems can or cannot be modeled with Newtonian physics. Until that question is answered, comments from the IPCC regarding the question “Are Climate Models Getting Better, and How Would We Know?” seem irrelevant to me. Why have the results of the CERN CLOUD experiments been ignored by both sides of the climate change debate? Are the spokespersons on both sides too heavily vested in their respective narratives to consider other ideas?

When I asked Brent Lofgren (NOAA Physical Scientist) why climate models are based only on Newtonian physics, he replied: “Spectroscopy of atoms and molecules has its theoretical underpinnings in quantum mechanics, and this forms the basis for the definition of greenhouse gases. Although the QM is not explicitly modeled (doing this at the level of a climate model would be absurdly expensive), it is parameterized in the routines for radiative transfer, emission, and absorption, which lie at the root cause of not only GHG-caused climate change, but also the natural state of climate….”

Who made the decision that using QM to do climate models is too expensive? If the science used in models were the wrong science, policies stemming from the models would be the wrong policies. These are the really important questions that the IPCC needs to answer.

“My concern is not what the IPCC says about models but what they do not say.”

The following doesn’t give you concern?

“In sum, a strategy must recognise what is possible. In climate research and modelling, we should recognise that we are dealing with a coupled non-linear chaotic system, and therefore that the long-term prediction of future climate states is not possible. The most we can expect to achieve is the prediction of the probability distribution of the system’s future possible states by the generation of ensembles of model solutions. This reduces climate change to the discernment of significant differences in the statistics of such ensembles. The generation of such model ensembles will require the dedication of greatly increased computer resources and the application of new methods of model diagnosis. Addressing adequately the statistical nature of climate is computationally intensive, but such statistical information is essential.”

http://ipcc.ch/ipccreports/tar/wg1/505.htm

To summarize, the above *appears* to admit that:

1) as yet no valid scientific hypothesis (utilizing either ‘classical’ or ‘quantum’ physics) can accurately describe earth’s climate system, resulting in the truism that any accurate future predictions of the state of the climate are *impossible*

2) because we can’t yet describe the system we’re modeling, obviously it follows we can’t build a model to simulate it, therefore we’re reduced to building a bunch of them based on the limited information we do know and *hopefully* getting some sort of predictability probability that *might* give us a hint, but we can’t be sure

3) therefore, climate change is reduced to whatever statistical differences 2) shows us from the results of the model ensembles that can’t be trusted in the first place

4) we don’t yet have the hardware to run the model that can’t describe the system

5) even if we had 4), we can’t diagnose the model to see whether it accurately represents the system we cannot yet describe

Should I not be concerned?

Yes, everyone should be very concerned. The various ensemble estimates are meaningless. There is no best estimate. The most that can be said is that the best estimate might lie somewhere between the estimated 95% extreme values. No single value in the range is more likely to be right than another value. None of the model runs has any long-term predictive value. What the IPCC is not saying specifically is how they plan to fix the problems other than spending more money.

“What the IPCC is not saying specifically is how they plan to fix the problems other than spending more money.”

Well good grief, Tom! These good people are trying to save the world, how dare you question their methods over something as base as doing so on everyone else’s dime!

For shame!

/sarc

“Although the QM is not explicitly modeled (doing this at the level of a climate model would be absurdly expensive), it is parameterized in the routines for radiative transfer, emission, and absorption, which lie at the root cause of not only GHG-caused climate change, but also the natural state of climate….”

I pointed out this problem years ago. Many people talk about radiative physics only in classical-physics terms, and still do, when radiative physics is strictly Einsteinian. Warming is a function of heat and kinetics, not of absorption and re-radiation to the ground state.

If the basic physics is well known and understood and properly replicated in the model, there is no need for any tuning. In my opinion tuning is an admission that either the basic physics is not properly understood and/or it is not properly replicated in the model.

Further, why hindcast the model? Are we not giving models an easy ride by hindcasting? If the model has inherent errors, asking it to go backwards as well as forwards limits the implications and consequences of those errors. Far better to start the model in the past and go forward, since that will more reliably test any inherent error in the model. I envisage that this is why Dr Spencer presents his model plot the way he does. It better shows the true extent of the divergence.

If the physics is well known and understood and properly replicated in the model, why are models not run forward only? Say, start the putative models at 1850 with the parameters then known, let them run out to 2100, and assess their worth by how they fare to date. After all, it is claimed that in the long run ENSO is neutral and volcanoes produce only short-term cooling, not a long-term impact.

One could make a suitable allowance over the short term (say, over any 3-year period) to take account of any short-lived natural event, but if the model is more than 0.3 °C out at the date of review, the model should be chucked out.

If the model does not replicate, within the 95% confidence level, the temperature record from 1850 to, say, 2012 (other than for a short-lived spike), the model should be chucked out.

If one wants to do a model run today, one sets the model parameters for 1850, runs the model forward through to 2100, and reviews its output for the period 1850 to date to see whether it is close enough to observed reality (if there is such a thing as observed reality, given our highly worked and reworked temperature reconstructions).
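The forward-run acceptance test proposed above can be sketched in a few lines. This is only an illustration under stated assumptions, not anyone's actual validation procedure: the function names `accept_forward_run` and `trailing_mean` are hypothetical, smoothing the yearly model-minus-observation residual with a trailing 3-year mean is one assumed way of implementing the "3 year" allowance for short-lived events, and the toy anomaly series are invented for illustration. Only the 0.3 °C tolerance and the 3-year window come from the comment.

```python
from typing import Sequence


def trailing_mean(values: Sequence[float], window: int) -> list[float]:
    """Trailing moving average; the first window-1 entries average what is available."""
    out = []
    for i in range(len(values)):
        start = max(0, i - window + 1)
        chunk = values[start:i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def accept_forward_run(
    modelled: Sequence[float],
    observed: Sequence[float],
    tolerance_c: float = 0.3,
    window_years: int = 3,
) -> bool:
    """Hypothetical pass/fail test for a model run forward from 1850.

    Both series are annual temperature anomalies (deg C) for the same years,
    ending at the review date. The yearly residual is smoothed with a trailing
    3-year mean so that a single short-lived event does not by itself fail
    the run; the run is rejected if the smoothed residual at the review date
    is more than the tolerance away from zero.
    """
    if len(modelled) != len(observed):
        raise ValueError("series must cover the same years")
    residual = [m - o for m, o in zip(modelled, observed)]
    smoothed = trailing_mean(residual, window_years)
    return abs(smoothed[-1]) <= tolerance_c


# Toy anomaly series (illustrative numbers only, not real data).
obs = [0.0, 0.1, 0.2, 0.3, 0.4]
print(accept_forward_run([0.05, 0.12, 0.25, 0.33, 0.45], obs))  # close run
print(accept_forward_run([0.1, 0.3, 0.6, 0.8, 0.9], obs))       # runs hot
print(accept_forward_run([0.0, 0.1, 0.2, 0.3, 1.0], obs))       # one-year spike
```

A check against the 95% confidence band suggested for the 1850 to present record would need the observational uncertainty as an extra input, which is left out of this sketch.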

Homemade Chaotic Pendulum

And the corollary is that the magnets always remain in precisely the same place and at the same strength, whereas in Earth’s system the equivalents are always changing their positions and strengths.

A better example is the double-jointed pendulum, which quickly becomes chaotic.

What a déjà vu moment! In my freshman physics lab on mechanics in 1956, the class carried out individual experiments of choice. One classmate, Dave, chose to analyze the motion of a double-jointed pendulum. Until now I did not appreciate the significance of this experiment. As I recall, Dave was not successful in analyzing the motions, but I always considered his experiment to be the most creative one in the class.
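The sensitivity to initial conditions that makes the double-jointed pendulum a good example of chaos can be shown numerically. The sketch below assumes an ideal double pendulum (two equal 1 kg point masses on massless 1 m rods, angles measured from the downward vertical), uses the standard equations of motion for that idealization, and integrates them with a fixed-step fourth-order Runge-Kutta scheme. The function names, step size, run length, and starting angles are illustrative choices, not taken from the discussion. Two runs released from rest 1e-9 rad apart stay together at small amplitude but separate by many orders of magnitude when released from large angles.

```python
import math

G = 9.81        # gravitational acceleration, m/s^2
M1 = M2 = 1.0   # bob masses, kg (assumed)
L1 = L2 = 1.0   # rod lengths, m (assumed)


def derivs(state):
    """Time derivatives of (theta1, omega1, theta2, omega2) for an ideal double pendulum."""
    t1, w1, t2, w2 = state
    d = t1 - t2
    den = 2 * M1 + M2 - M2 * math.cos(2 * t1 - 2 * t2)
    a1 = (-G * (2 * M1 + M2) * math.sin(t1)
          - M2 * G * math.sin(t1 - 2 * t2)
          - 2 * math.sin(d) * M2 * (w2 ** 2 * L2 + w1 ** 2 * L1 * math.cos(d))) / (L1 * den)
    a2 = (2 * math.sin(d) * (w1 ** 2 * L1 * (M1 + M2)
                             + G * (M1 + M2) * math.cos(t1)
                             + w2 ** 2 * L2 * M2 * math.cos(d))) / (L2 * den)
    return (w1, a1, w2, a2)


def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = derivs(state)
    k2 = derivs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
    k3 = derivs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
    k4 = derivs(tuple(s + dt * k for s, k in zip(state, k3)))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + e)
                 for s, a, b, c, e in zip(state, k1, k2, k3, k4))


def separation_after(theta0, perturbation=1e-9, t_end=30.0, dt=0.005):
    """Distance in state space between two runs whose upper arms start perturbation apart."""
    a = (theta0, 0.0, theta0, 0.0)
    b = (theta0 + perturbation, 0.0, theta0, 0.0)
    for _ in range(round(t_end / dt)):
        a = rk4_step(a, dt)
        b = rk4_step(b, dt)
    return math.dist(a, b)


print(separation_after(0.05))  # small swing: the 1e-9 difference stays tiny
print(separation_after(2.0))   # large swing: the difference grows enormously
```

The same equations with the same step size are used for both runs, so the growth of the gap reflects the dynamics rather than a difference in numerical treatment.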

All,

With the notable exception of Mosher, who graced us with three whole(?) sentences of seven words or fewer, the usual coterie of protesters has been conspicuous by its absence. Maybe they don’t feel they have good responses to those I quoted who wrote the AR5 chapter 9. Maybe they don’t think that it is worth their time to defend the lynchpin of the alarmist forecasts. Whatever the reason(s), the silence is deafening!

I know poor Mosher went from English to world AGW expert, but at least in the beginning he was able to think critically and logically. He seems to have completely lost the plot since teaming up with Howsyerfather.

I hate to see a man brought down like that. It’s Bill mckitten-like

Forrest,

I don’t understand your remarks. We DO have historical information on many past events such as volcanic eruptions, and they can be input into the models at the appropriate time(s). What we can’t know is WHEN in the future there may be eruptions. That could be handled in a couple of different ways. One is to parameterize the activity, because there seems to be some cyclicity, or at least episodic eruptive behavior. Such a treatment might get the actual years wrong but keep the trend on track. Another approach would be to freeze the version of the model, initialize the forecast at some year before the model is actually run, and add in any volcanic activity that took place after the model was frozen and before the computer run was started.

The real issue with tuning is that there are many energy exchanges, such as convection and cloud formation, that can’t be computed at the spatial scale at which they work. So, they have to write sub-models to deal with that. These sub-models are the parameterizations. When the models are run, and don’t give reasonable results, the modelers adjust the parameters in the sub-models. The problem is, the discrepancies might be because of some reason other than the sub-models being wrong. That is where things go wrong!
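The point that a discrepancy may come from something other than the sub-model being tuned can be shown with a deliberately oversimplified toy. Everything in it is hypothetical and it is not how any GCM is actually calibrated: the "true" temperature is assumed to respond equally to two drivers, F and G, both of which rise during the tuning period, while the model represents only F through a single adjustable coefficient k. Fitting k to history by least squares quietly absorbs G's contribution, so the hindcast looks perfect and the projection goes wrong once G stops tracking F.

```python
def true_temperature(f, g):
    # Hypothetical "real world": equal sensitivity to both drivers.
    return 1.0 * f + 1.0 * g


def tune_coefficient(f_hist, t_hist):
    """Least-squares k for the one-driver model T = k * F (no intercept)."""
    return sum(f * t for f, t in zip(f_hist, t_hist)) / sum(f * f for f in f_hist)


years_hist = range(50)          # tuning ("historical") period
years_future = range(50, 100)   # projection period

# Driver F keeps rising. Driver G (missing from the model) rises along with F
# during the tuning period, then levels off at its last historical value.
F = {y: 0.02 * y for y in range(100)}
G = {y: 0.01 * min(y, 49) for y in range(100)}

t_hist = [true_temperature(F[y], G[y]) for y in years_hist]
k = tune_coefficient([F[y] for y in years_hist], t_hist)

hindcast_error = max(abs(k * F[y] - true_temperature(F[y], G[y])) for y in years_hist)
projection_error = abs(k * F[99] - true_temperature(F[99], G[99]))

print(f"tuned k = {k:.3f}")
print(f"worst hindcast error = {hindcast_error:.3g}")
print(f"projection error in final year = {projection_error:.3f}")
```

Here the tuned coefficient is inflated to compensate for the missing driver, which is exactly the kind of error that a good hindcast cannot reveal.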

Forrest,

I wouldn’t say “opposite conclusions.” We aren’t that different in our take on the status quo.

There is a long-standing, general admonition against over-fitting data. The best approach for polynomials is to use the minimum order that is physically justifiable, especially if one wants any hope of being able to extrapolate beyond the available data. Higher-order polynomial fits are perhaps sometimes justified as providing better interpolation than a linear interpolation. In effect, there is an inverse relationship between the polynomial order and the implicit filtering of the data. In something as complicated as a GCM or ESM, with non-linear, non-polynomial relationships AND feedback loops, it is difficult even to know whether the data are over-fitted! (Although I suspect that is the case.) Perhaps the modelers should be reminded of the KISS principle. The approach used currently is so naïve that one can’t specify a priori the optimum number of variables, which variables are most important, or when one reaches the point of diminishing returns in trying to improve the models. It seems to be a lot of trial-and-error along the lines of, “Well, that didn’t work quite the way we hoped; what if we were to add (x) and see what that does?” I’m reminded of the quote to the effect, “If you have to resort to statistics, you didn’t design the experiment properly.” GCMs are really very complex, formal hypotheses that are being tested for compatibility with observations. Although GCMs have been around since at least the 1970s, I have to question how much thought has gone into the high-level design of the hypotheses. It may be time to walk away from what has been done and take a fresh look at the whole problem.
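The admonition against over-fitting can be illustrated with a small sketch. NumPy is an assumption here (nothing in the comment specifies a tool), and the data are synthetic: eleven points on a straight line plus a small deterministic "noise" term. They are fitted with a first-order and a ninth-order polynomial using `numpy.polynomial.Polynomial.fit`. The high-order fit always matches the data at least as well in-sample, but extrapolating five units past the data shows it running away from the underlying trend while the linear fit stays close.

```python
import numpy as np
from numpy.polynomial import Polynomial

x = np.arange(11, dtype=float)        # "historical" sample points 0..10
trend = 0.5 * x                       # underlying linear relationship (assumed)
noise = 0.1 * np.sin(2.3 * x)         # deterministic stand-in for measurement noise
y = trend + noise

low = Polynomial.fit(x, y, deg=1)     # minimum physically justifiable order
high = Polynomial.fit(x, y, deg=9)    # nearly one parameter per data point

# In-sample RMS residuals: the high-order fit always looks at least as good.
rms_low = float(np.sqrt(np.mean((low(x) - y) ** 2)))
rms_high = float(np.sqrt(np.mean((high(x) - y) ** 2)))

# Extrapolate beyond the data, where the underlying value is 0.5 * 15 = 7.5.
x_new = 15.0
err_low = float(abs(low(x_new) - 0.5 * x_new))
err_high = float(abs(high(x_new) - 0.5 * x_new))

print(f"in-sample RMS:       deg 1 = {rms_low:.4f}, deg 9 = {rms_high:.4f}")
print(f"extrapolation error: deg 1 = {err_low:.4f}, deg 9 = {err_high:.4f}")
```

The ninth-order fit spends its extra parameters reproducing the noise, which is the "fudge factor" risk in miniature: better agreement with history, worse behaviour outside it.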

Is it really worthwhile to discuss such qualitative statements by the IPCC [FAQ 9.1]???

Dr. S. Jeevananda Reddy

Dr. S. Jeevananda,

I’m not sure how to adequately respond to such a terse and open-ended question. There have been over 160 comments, so a number of people have obviously felt it worthwhile to respond to the qualitative points I have raised. Einstein was famous for his use of qualitative ‘Thought Experiments’ before he formalized the derived relationships in mathematics. Not everyone reading this blog can follow a more rigorous mathematical treatment of the issues. So, unless you are suggesting that we ignore those who can’t follow a more quantitative treatment, I’d say that a qualitative treatment is as good a place as any to start raising questions. I’d suggest that you feel free to continue the dialogue with a more quantitative guest submission.

Clyde

Climate models are hopeless for long-term forecasts. Four adjustable parameters are laughable; five or more is a fantasy from Disneyland. The anecdote about adjustable parameters in modeling (“With four parameters I can fit an elephant, and with five I can make him wiggle his trunk”) originated with the great John von Neumann, was retold by another great, Enrico Fermi, and finally by Freeman Dyson. Their collective wisdom warns us of the pitfalls of mathematical modeling.

What’s also lacking in every climate model run is a consistent set of initial conditions – consistent across models, and consistent with reality. Evidently, climate “scientists” take very little care in setting the initial conditions for a multi-year run, and the models, if left to their own devices, “blow up.” That is, numerical instabilities grow to the point where results are obviously absurd. To “counter” this, rather than trying to get initial conditions right, the modelers put in algorithms which detect and damp out excursions. Those algorithms then become part of the Navier-Stokes equations (already adulterated with specious but indispensable empirical turbulence equations), and drive the overall model even further away from an actual description of the fluid mechanics.

Honestly, this whole thing is just a huge, huge farce, and a blight on science.
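The "blow up, then damp it out" pattern described in the comment above can be illustrated with a toy far simpler than any GCM, offered as an analogy only and not as a description of any model's actual filters. The assumed system is an undamped oscillator, x'' = -x, whose true energy is constant. Integrated with the forward Euler method, the numerical energy grows by a factor of exactly (1 + dt²) every step; adding an artificial drag term keeps the run bounded, but only by draining energy that the real system would conserve. The function name, step size, and damping coefficient are arbitrary illustrative choices.

```python
def euler_energy(steps, dt, damping=0.0):
    """Integrate x'' = -x, optionally with artificial drag -damping*v, by forward Euler.

    Starts from x = 1, v = 0, so the true energy 0.5*(x^2 + v^2) is 0.5 at all
    times. Returns the numerical energy after the given number of steps.
    """
    x, v = 1.0, 0.0
    for _ in range(steps):
        # Both updates use the old x and v (explicit Euler).
        x, v = x + dt * v, v - dt * x - dt * damping * v
    return 0.5 * (x * x + v * v)


E0 = 0.5
undamped = euler_energy(1000, dt=0.1)             # grows like 0.5 * 1.01**1000
damped = euler_energy(1000, dt=0.1, damping=0.2)  # bounded, but energy drains away

print(f"true energy:          {E0}")
print(f"Euler, no damping:    {undamped:.1f}")
print(f"Euler, with damping:  {damped:.2e}")
```

Neither numerical run reproduces the constant energy of the true system: one explodes, and the "fix" replaces the explosion with a spurious loss, which is the sense in which a stabilizing term moves the calculation away from the physics it is meant to describe.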