Guest essay by Mike Jonas

In this article, I take a look inside the workings of the climate computer models (“the models”), and explain how they are structured and how useful they are for prediction of future climate.

This article follows on from a previous article (here) which looked at the models from the outside. This article takes a look at their internal workings.

The Models’ Method

The models divide up the atmosphere and/or oceans and/or land surface into cells in a three-dimensional grid and assign initial conditions. They then calculate how each cell influences all its neighbours over a very short time. This process is then repeated a very large number of times so that the model then predicts the state of the planet over future decades. The IPCC (Intergovernmental Panel on Climate Change) describes it here. The WMO (World Meteorological Organization) describes it here.
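As a rough illustration of the cell-and-timestep idea described above, here is a minimal Python sketch. It is a toy and not how any actual climate model is coded: a one-dimensional ring of cells, each holding a single temperature value, where every short step nudges a cell toward the average of its two neighbours. The names `step` and `run`, the `coupling` parameter and all the numbers are assumptions made up for illustration.

```python
def step(cells, coupling=0.1):
    """Advance every cell by one short time step.

    Each cell moves a little toward the mean of its two neighbours.
    The ring wraps around, so the first and last cells are neighbours.
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        left = cells[(i - 1) % n]
        right = cells[(i + 1) % n]
        neighbour_mean = (left + right) / 2.0
        new_cells.append(cells[i] + coupling * (neighbour_mean - cells[i]))
    return new_cells


def run(cells, n_steps, coupling=0.1):
    """Repeat the single-step update n_steps times."""
    for _ in range(n_steps):
        cells = step(cells, coupling)
    return cells


if __name__ == "__main__":
    initial = [10.0, 10.0, 30.0, 10.0, 10.0, 10.0]  # one warm cell (made-up values)
    final = run(initial, n_steps=1000)
    print([round(t, 2) for t in final])
```

Real models work in three dimensions with many variables per cell, but the loop structure (update every cell from its neighbours, then repeat a very large number of times) is the part this sketch is meant to show.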

Seriously powerful computers are needed, because even on a relatively coarse grid, the number of calculations required to predict just a few years ahead is mind-bogglingly massive.

Internal and External

At first glance, the way the models work may appear to have everything covered. After all, every single relevant part of the planet is (or can be) covered by a model for all of the required period. But there are three major things that cannot be covered by the internal workings of the model:

1 – The initial state.

2 – Features that are too small to be represented by the cells.

3 – Factors that are man-made or external to the planet, or which are large scale and not understood well enough to be generated via the cell-based system.

The workings of the cell-based system will be referred to as the internals of the models, because all of the inter-cell influences within the models are the product of the models’ internal logic. Factors 1 to 3 above will be referred to as externals.

Internals

The internals have to use a massive number of iterations to cover any time period of climate significance. Every one of those iterations introduces a small error into the next iteration. Each subsequent iteration adds in its own error, but is also misdirected by the incoming error. In other words, the errors compound exponentially. Over any meaningful period, those errors become so large that the final result is meaningless.
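The compounding described above can be written as a simple recurrence. The sketch below is only an illustration under stated assumptions: each iteration multiplies the incoming error by a fixed `growth` factor and adds a fixed `per_step_error`. Both values are hypothetical, and whether the growth factor in a real model is above 1 is exactly the assumption that carries the argument.

```python
def accumulated_error(n_steps, growth=1.01, per_step_error=0.001):
    """Error after n_steps when each step amplifies the incoming error
    by `growth` and then adds its own `per_step_error`.

    Both parameters are illustrative assumptions, not measured values.
    """
    error = 0.0
    for _ in range(n_steps):
        error = growth * error + per_step_error
    return error


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, accumulated_error(n))
```

With `growth` above 1 the result follows the closed form per_step_error × (growth^n − 1) / (growth − 1), which grows geometrically with the number of steps. With `growth` exactly 1 the error only adds up linearly.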

NB. This assertion is just basic mathematics, but it is also directly supportable: Weather models operate on much finer data with much more sophisticated and accurate calculations. They are able to do this because unlike the climate models they are only required to predict local conditions a short time ahead, not regional and global conditions over many decades. Yet the weather models are still unable to accurately predict more than a few days ahead. The climate models’ internal calculations are less accurate and therefore exponentially less reliable over all periods. Note that each climate cell is local, so the models build up their global views from local conditions. On the way from ‘local’ to ‘global’, the models pass through ‘regional’, and the models are very poor at predicting regional climate [1].

At this point, it is worth clearing up a common misunderstanding. The idea that errors compound exponentially does not necessarily mean that the climate model will show a climate getting exponentially hotter, or colder, or whatever, and shooting “off the scale”. The model could do that, of course, but equally the model could still produce output that at first glance looks quite reasonable – yet either way the model simply has no relation to reality.

An analogy : A clock which runs at irregular speed will always show a valid time of day, but even if it is reset to the correct time it very quickly becomes useless.

Initial State

It is clearly impossible for a model’s initial state to be set completely accurately, so this is another source of error. As NASA says : “Weather is chaotic; imperceptible differences in the initial state of the atmosphere lead to radically different conditions in a week or so.” [2]
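Sensitivity to the initial state is easy to demonstrate with a standard textbook chaotic system. The sketch below uses the logistic map as a stand-in; it is not an atmosphere model, and the starting value, the size of the perturbation and the threshold are arbitrary choices for illustration.

```python
def logistic_orbit(x0, n_steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x), returning every state."""
    xs = [x0]
    for _ in range(n_steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def first_divergence(x0, perturbation, threshold=0.1, n_steps=200):
    """Return the first step at which two runs, started `perturbation`
    apart, differ by more than `threshold` (or None if they never do)."""
    a = logistic_orbit(x0, n_steps)
    b = logistic_orbit(x0 + perturbation, n_steps)
    for step_number, (xa, xb) in enumerate(zip(a, b)):
        if abs(xa - xb) > threshold:
            return step_number
    return None


if __name__ == "__main__":
    print(first_divergence(0.2, 1e-10))
```

Two runs that start one part in ten billion apart stay close for a while and then separate completely within a few dozen iterations, which is the behaviour the NASA quote describes for weather.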

This NASA quote is about weather, not climate. But because the climate models’ internals are dealing with weather, ie. local conditions over a short time, they suffer from the same problem. I will return to this idea later.

Small Externals

External factor 2 concerns features that are too small to be represented in the models’ cell system. I call these the small externals. There are lots of them, and they include such things as storms, precipitation and clouds, or at least the initiation of them. These factors are dealt with by parameterisation. In other words, the models use special parameters to initiate the onset of rain, etc. On each use of these parameters, the exact situation is by definition not known because the cell involved is too large. The parameterisation therefore necessarily involves guesswork, which itself necessarily increases the amount of error in the model.
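To make the point about guesswork concrete, here is a toy parameterisation in Python. It is a hypothetical scheme invented for illustration, not one taken from any real model: the cell only knows its mean humidity, so the scheme assumes the humidity inside the cell is spread uniformly over the mean plus or minus `assumed_spread`, and treats anything at or above `saturation` as raining.

```python
def parameterised_rain_fraction(cell_mean_humidity, assumed_spread=0.2, saturation=1.0):
    """Estimate the raining fraction of a cell from its mean humidity alone.

    Assumes (hypothetically) that sub-grid humidity is spread uniformly
    over mean +/- assumed_spread.
    """
    low = cell_mean_humidity - assumed_spread
    high = cell_mean_humidity + assumed_spread
    if high <= saturation:
        return 0.0
    if low >= saturation:
        return 1.0
    return (high - saturation) / (high - low)


def actual_rain_fraction(sub_grid_humidities, saturation=1.0):
    """Raining fraction when the sub-grid values really are known."""
    wet = sum(1 for h in sub_grid_humidities if h >= saturation)
    return wet / len(sub_grid_humidities)


if __name__ == "__main__":
    patchy = [0.5, 0.5, 0.5, 1.3]   # same mean (0.7), one saturated patch
    uniform = [0.7, 0.7, 0.7, 0.7]  # same mean (0.7), no saturated patch
    print(parameterised_rain_fraction(0.7))
    print(actual_rain_fraction(patchy), actual_rain_fraction(uniform))
```

The two made-up cells have the same mean, so the parameterisation gives them the same answer, even though one of them contains a raining patch and the other does not.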

For example, suppose that the parameterisations (small externals) indicate the start of some rain in a particular cell at a particular time. The parameterisations and/or internals may then change the rate of rain over hours or days in that cell and/or its neighbours. The initial conditions of the cells were probably not well known, and if the model had progressed more than a few days the modelled conditions in those cells were certainly by then totally inaccurate. The modelled progress of the rain – how strong it gets, how long it lasts, where it goes – is therefore ridiculously unreliable. The entire rain event would be a work of fiction.

Large Externals

External factor 3 could include man-made factors such as CO2 emissions, pollution and land-use changes (including urban development), plus natural factors such as the sun, galactic cosmic rays (GCRs), Milankovitch cycles (variations in Earth’s orbit), ocean oscillations, ocean currents, volcanoes and, over extremely long periods, things like continental drift.

I covered some of these in my last article. One crucial problem is that while some of these factors are at least partially understood, none of them are understood well enough to predict their effect on future climate – with just one exception. Changes in solar activity, GCRs, ocean oscillations, ocean currents and volcanoes, for example, cannot be predicted at all accurately, and the effects of solar activity and Milankovitch cycles on climate are not at all well understood. The one exception is carbon dioxide (CO2) itself. It is generally accepted that a doubling of atmospheric CO2 would by itself, over many decades, increase the global temperature by about 1 degree C. It is also generally accepted that CO2 levels can be reasonably accurately predicted for given future levels of human activity. But the effect of CO2 is woefully inadequate to explain past climate change over any time scale, even when enhanced with spurious “feedbacks” (see here, here, here, here).

Another crucial problem is that all the external factors have to be processed through the models’ internal cell-based system in order to be incorporated in the final climate predictions. But each external factor can only have a noticeable climate influence on time-scales that are way beyond the period (a few days at most) for which the models’ internals are capable of retaining any meaningful degree of accuracy. The internal workings of the models therefore add absolutely no value at all to the externals. Even if the externals and their effect on climate were well understood, there would be a serious risk of them being corrupted by the models’ internal workings, thus rendering the models useless for prediction.

Maths trumps science

The harsh reality is that any science is wrong if its mathematics is wrong. The mathematics of the climate models’ internal workings is wrong.

From all of the above, it is clear that no matter how much more knowledge and effort is put into the climate models, and no matter how many more billions of dollars are poured into them, they can never be used for climate prediction while they retain the same basic structure and methodology.

The Solution

It should by now be clear that the models are upside down. The models try to construct climate using a bottom-up calculation starting with weather (local conditions over a short time). This is inevitably a futile exercise, as I have explained. Instead of bottom-up, the models need to be top-down. That is, the models need to work first and directly with climate, and then they might eventually be able to support more detailed calculations ‘down’ towards weather.

So what would an effective climate model look like? Well, for a start, all the current model internals must be put to one side. They are very inaccurate weather calculations that have no place inside a climate model. They could still be useful for exploring specific ideas on a small scale, but they would be a waste of space inside the climate model itself.

A climate model needs to work directly with the drivers of climate such as the large externals above. The work done by Wyatt and Curry [3] could be a good starting point, but there are others. Before such a climate model could be of any real use, however, much more research needs to be done into the various natural climate factors so that they and their effect on climate are understood.

Such a climate model is unlikely to need a super-computer and massive complex calculations. The most important pre-requisite would be research into the possible drivers of climate, to find out how they work, how relatively important they are, and how they have influenced climate in the past. Henrik Svensmark’s research into GCRs is an example of the kind of research that is needed. Parts of a climate model may well be developed alongside the research and assist with the research, but only when the science is reasonably well understood can the model deliver useful predictions. The research itself may well be very complex, but the model is likely to be relatively straightforward.

The first requirement is for a climate model to be able to reproduce past climate reasonably well over various time scales with an absolutely minimal number of parameters (John von Neumann’s elephant). The first real step forward will be when a climate model’s predictions are verified in the real world. Right now, the models are a long way away from even the first requirement, and are heading in the wrong direction.

###

Mike Jonas (MA Maths Oxford UK) retired some years ago after nearly 40 years in I.T.

References

[1] A Literature Debate … R Pielke October 28, 2011 [Note : This article has been referenced instead of the original Demetris Koutsoyiannis paper, because it shows the subsequent criticism and rebuttal. Even the paper’s critic admits that the models “have no predictive skill whatsoever on the chronology of events beyond the annual cycle. A climate projection is thus not a prediction of climate [..]“. A link to the original paper is given.]

[2] The Physics of Climate Modeling. NASA (By Gavin A. Schmidt), January 2007

[3] M.G. Wyatt and J.A. Curry, “Role for Eurasian Arctic shelf sea ice in a secularly varying hemispheric climate signal during the 20th century,” (Climate Dynamics, 2013). The best place to start is probably The Stadium Wave, by Judith Curry.

Abbreviations

CO2 – Carbon Dioxide

GCR – Galactic Cosmic Ray

IPCC – Intergovernmental Panel on Climate Change

NASA – National Aeronautics and Space Administration

WMO – World Meteorological Organization

I think the modelers are trying to do too much with too many unknowns of a dynamic system using fixed geographic cells. I would argue that since climate can be viewed as “long term weather” and weather is carried by clouds and winds, the priority should be to accurately model clouds and winds over the globe. Thoughts?

It is noted above, this time by richardscourtney, that a 2% increase in cloud cover will compensate for the warming caused by a doubling of CO2.

Some people take the approach that a model must include all variables, all at once, to be valid. OK, that is a valid point of view.

I take the opposite view: start with the most important variable and get a first approximation. Add a second variable, get a second approximation. And so on. At least you start getting results.

From this point of view, cloud cover would be a major climate driver, and CO2 would be a bit player at the bottom of the list. {I know, this begs the question, what drives cloud cover, but I doubt it is CO2, and you must start somewhere}

But this fixation on CO2 cannot help but mislead, when there seem to be more important factors, even by the models.

Recent studies are suggesting Cosmic Rays and the strength of the Solar Winds affect the aerosols in the atmosphere to create more or less clouds !!!!

TonyL:

You say

Yes, cloud cover is a “major climate driver” but nobody really knows what changes cloud cover.

Good records of cloud cover are very short because cloud cover is measured by satellites that were not launched until the mid-1980s. But it appears that cloudiness decreased markedly between the mid-1980s and late-1990s

(ref. Pinker, R. T., B. Zhang, and E. G. Dutton (2005), Do satellites detect trends in surface solar radiation?, Science, 308(5723), 850– 854.)

Over that period, the Earth’s reflectivity decreased to the extent that if there were a constant solar irradiance then the reduced cloudiness provided an extra surface warming of 5 to 10 Watts/sq metre. This is a lot of warming. It is between two and four times the entire warming estimated to have been caused by the build-up of human-caused greenhouse gases in the atmosphere since the industrial revolution. (The UN’s Intergovernmental Panel on Climate Change says that since the industrial revolution, the build-up of human-caused greenhouse gases in the atmosphere has had a warming effect of only 2.4 Watts/sq metre).
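The "two to four times" comparison follows directly from the figures quoted above. A quick check in Python, using only those numbers (the variable names are mine):

```python
# Figures quoted in the comment above, in watts per square metre.
cloud_warming_low = 5.0
cloud_warming_high = 10.0
greenhouse_warming = 2.4

ratio_low = cloud_warming_low / greenhouse_warming
ratio_high = cloud_warming_high / greenhouse_warming

print(f"{ratio_low:.1f} to {ratio_high:.1f} times")
```

This prints "2.1 to 4.2 times", so the stated range is a slight rounding down of what the quoted numbers give.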

So, changes to cloud cover alone could be responsible for all the temperature rise from the Little Ice Age but nobody knows the factors that control changes to cloud cover.

Richard

Has anyone ever tried to correlate cloud cover with CO2? Remember correlation does not imply causation but causation does imply correlation. ie no-correlation implies no-causation.

son of mulder:

You ask

Firstly, a correction.

Lack of correlation indicates lack of direct causation but correlation does NOT imply causation.

Now, to answer your question.

There is insufficient data to determine if there is correlation of atmospheric CO2 with cloud cover.

Clouds are a local effect so if atmospheric CO2 variations affect variations in cloud formation and cessation then it is the local CO2 which induces the alteration. Cloud cover is monitored but little recent evidence of local CO2 exists. And the OCO-2 satellite data of atmospheric CO2 concentration lacks sufficient spatial resolution.

One could adopt unjustifiable assumptions to make estimates of the putative correlation. There is good precedent for such unscientific practice in studies of atmospheric CO2; e.g. there are people who claim changes in atmospheric CO2 are entirely caused by anthropogenic emissions because if one assumes nothing changes without the anthropogenic emissions then a pseudo mass balance indicates the anthropogenic emissions are causing the change!

Richard

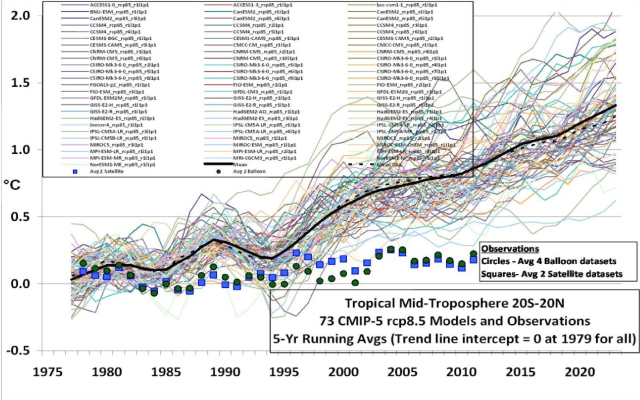

The issues with the models pertain to why their outputs diverge from reality as observed. Here is an analysis taking that approach.

https://rclutz.wordpress.com/2015/03/24/temperatures-according-to-climate-models/

“The climate system is a coupled non-linear chaotic system, and therefore the long-term prediction of future climate states is not possible. ”

https://www.ipcc.ch/ipccreports/tar/wg1/501.htm

And yet confidence is high. But not as high as anyone who claims to be confident in modelling the impossible.

GCMs use solar forcing that is based on the Earth’s eccentricity, obliquity (axial tilt), and precession – i.e., the exact factors that create Milankovitch cycles. We can calculate these very precisely. The author appears unaware of this fact.

The models also use the Solar Flux Factor. Yes, that is as bad as it sounds. The Solar Flux Factor is typically set at around 70% of actual; the models run way too hot otherwise. Very precise, so much for accuracy.

Calculation of the Solar Flux Factor is a routine factor in determining insolation for a planet. To obtain the insolation at any given time of year, this flux factor is multiplied by the solar constant at the time of perihelion.

Your claim that “The Solar Flux Factor is typically set at around 70% of actual…” in GCMs is incorrect.

Kevin O’Neill – I address Milankovitch cycles in my previous article (linked above). In part : The most important-looking cycles don’t show up in the climate, and for the one that does seem to show up in the climate (orbital inclination) we just don’t know how or even whether it affects climate. So you see, knowing everything about Earth’s orbit doesn’t help because we don’t know how it affects climate.

Mike Jonas – you have said in your previous article and in this one that Milankovitch cycles are not included in GCMs. This is untrue. Milankovitch cycles *are* included thru the orbital forcings.

How Milankovitch cycles affect climate is obvious. One only needs to compare them to the glacial-interglacials to see how they affect climate. Changes in these orbital forcings will have noticeable effects over longer timescales with little noticeable effect on short timescales.

Mike – there is a long history of analyzing orbital forcings (Milankovitch Cycles) in GCMs on past climate. This is in contrast to your view that GCMs do not incorporate Milankovitch Cycles. I’ll quote from just one paper, Northern Hemisphere forcing of Southern Hemisphere climate during the last deglaciation, He, Shakun, Clark, Carlson, Liu, Otto-Bliesner & Kutzbach, Nature (2013):

“…our transient simulation with only orbital changes supports the Milankovitch theory in showing that the last deglaciation was initiated by rising insolation during spring and summer in the mid-latitude to high-latitude Northern Hemisphere and by terrestrial snow–albedo feedback.”

Kevin O’Neill, you say “ One only needs to compare them to the glacial-interglacials to see how they affect climate.“. But we have no mechanism, so the “seeing how” cannot be coded into the models. In any case, we don’t “see how”, because the past climate hasn’t matched up with the orbital cycles, other than the orbital inclination. That’s the “100,000 year” cycle that matches the glacial-interglacial cycle, and it has people scratching their heads because it is the only cycle that doesn’t change Earth’s position wrt the sun. A lot of attention has been paid to the 65N idea without success, and various other ideas have been tried without success. The only workable hypothesis I have seen involves dust in space and there is no direct evidence that such dust exists.

Mike Jonas writes: “A lot of attention has been paid to the 65N idea without success, and various other ideas have been tried without success…”

As the paper quoted above shows, you are unfamiliar with the scientific literature.

oneillinwisconsin – the He et al paper you cite looks at only the first half of the last deglaciation, which represents one small part of one type of Milankovitch cycle. It suffers from the problem that there were other such Milankovitch cycles that were not associated with deglaciation. Their model, which is based on insolation forcing, would certainly have predicted deglaciation for each such cycle. So we still don’t know. My suspicion – just a suspicion, don’t take it too seriously – is that virtually everyone is looking in the wrong place, namely the northern hemisphere, while it is the southern hemisphere that principally drives climate on those timescales.

Mike – you said that GCMs do *not* include Milankovitch Cycles. I have shown that they do. The history of using GCMs to examine orbital forcings (Milankovitch Cycles) goes back to at least the 1980s. So any claim that GCMs do not include Milankovitch cycles (as you have made) shows a complete lack of familiarity with the actual science behind GCMs.

Now you are morphing your argument without admitting your initial claim was wrong. This is par for the course on the internet, but needs to be pointed out since you are unwilling to admit the error.

Now you write: “… it has people scratching their heads because it is the only cycle that doesn’t change Earth’s position wrt the sun.” This displays a misconception on your part with regards to these orbital forcings and their effect. The Milankovitch cycles’ most important changes are to the latitudinal distribution of solar forcing – not necessarily the total amount. This is why the insolation at 65N becomes important. It does not have scientists scratching their heads – just people that don’t understand the science.

oneillinwisconsin – you make it sound like the effect of Milankovitch cycles on climate is well understood. I don’t think it is. NOAA says “What does The Milankovitch Theory say about future climate change?

Orbital changes occur over thousands of years, and the climate system may also take thousands of years to respond to orbital forcing. Theory suggests that the primary driver of ice ages is the total summer radiation received in northern latitude zones where major ice sheets have formed in the past, near 65 degrees north. Past ice ages correlate well to 65N summer insolation (Imbrie 1982). Astronomical calculations show that 65N summer insolation should increase gradually over the next 25,000 years, and that no 65N summer insolation declines sufficient to cause an ice age are expected in the next 50,000 – 100,000 years.” (http://www.ncdc.noaa.gov/paleo/milankovitch.html). [my bold]

But Hays, Imbrie and Shackleton say : “A model of future climate based on the observed orbital-climate relationships, but ignoring anthropogenic effects, predicts that the long-term trend over the next seven thousand years is toward extensive Northern Hemisphere glaciation. “. (http://www.sciencemag.org/content/194/4270/1121) [my bold] [I assume that’s the same Imbrie, but I haven’t checked].

So what is it to be, warmer or cooler?

Hays et al also say “an explanation of the correlation between climate and eccentricity probably requires an assumption of nonlinearity“. In other words, they have no mechanism, they have some correlation but they can’t quite get it to fit, and they do think they are on the right track. They may well be, but trying to put any of that into bottom-up climate models is an exercise in futility.

Mike – Quote-mining is rarely an intellectually honest endeavor. The sentence immediately preceding the quote you selected from Hays et al says: “ It is concluded that changes in the earth’s orbital geometry are the fundamental cause of the succession of Quaternary ice ages.” Now, does that sound familiar? It’s the argument *I* made, that you dismissed.

As to your question: “So what is it to be, warmer or cooler?” You need to read harder. First, Hays et al say, “… the results indicate that the longterm trend over the next 20,000 years is toward extensive Northern Hemisphere glaciation and cooler climate .”

If we track down the references from the NOAA page you quoted we find “No soon Ice Age, says Astronomy,” by Jan Hollan. The figure from Hollan for future insolation also shows numerous warming/cooling periods over the next 20 thousand years. Per Hollan summer insolation is both increasing *and* decreasing over the next 20ky – the peaks are higher than today and the troughs are lower.

Now, Hays et al was written in 1976 and Hollan’s webpage says December 18, 2000. We can also note they were using different astronomical data sources. I don’t believe either would be used today as definitive of the state of scientific knowledge vis a vis orbital forcings. Still, it is only a very uncharitable reader who would claim they contradict each other.

Mike – both sources you quote believe that orbital changes are the basis for past glaciations. Where they differ is in their prediction for the next glaciation. Hays et al, working in the mid-70s, did not have access to the same orbital calculations that became available through the work of Andre Berger. Here are just three quotes from Climate change: from the geological past to the uncertain future – a symposium honouring Andre Berger:

“Investigators interested in the problem of ice ages, such as L. Pilgrim (1904), M. Milankovitch (1941) and, later, Sharaf et al. (1967) and A. Vernekar (1972) computed timeseries of elements expressed relative to the vernal equinox and the ecliptic plane, hence more relevant for climatic theories. However, the characteristic periods of these elements were not known, and there was no extensive assessment of their accuracy. In particular, there was nothing like a “double” precession peak in the orbital frequencies known at the time.” Note that Hays et al were using astronomical data from Vernekar.

“… his [Berger’s] finding of an analytical expression for the climatic astronomical elements, under the form a sum of sines and cosines (Berger 1978b). In this way, the periods characterising them could be listed in tables for the first time, and insolation could be computed at very little numerical cost. This very patient and obstinate work yielded the demonstration that the spectrum of climatic precession is dominated by three periods of 19, 22 and 24 kyr, that of obliquity, by a period 41 kyr, and that of eccentricity has periods of 400 kyr, 125 kyr and 96 kyr (the Berger periods). The origin of the 19 and 23 kyr peaks in the Hays et al. spectra was thus elucidated (Berger, 1977b). According to John Imbrie, this result constituted the most delicate proof that orbital elements have a sizable effect on the slow evolution of climate.”

Hays et al was a seminal paper in that it found these orbital periods in proxy data. Berger provided subsequent researchers even better orbital data through precise calculations.

“Berger was also the first to introduce and compute the longterm variations of daily, monthly and seasonal insolation and demonstrated the large amplitude of daily insolation changes (Berger, 1976a), which is now used in all climate models. His prediction in the early 1990s about the shortening of astronomical periods back in remote times (Berger et al., 1989,1992) has been confirmed by palaeoclimate data of Cretaceous ages, and constitutes thus an important validation and development of the Milankovitch theory for Pre-Quaternary climates.“

” I’ll quote from just one paper, Northern Hemisphere forcing of Southern Hemisphere climate during the last deglaciation, ”

Sorry Kevin, this paper is just model hoopla that studiously avoids plotting their output against the ice core data.

The only reasonable inference is that the AMPLITUDE of 65N insolation VARIABILITY correlates reasonably well, with high amplitude variability corresponding to stadials.

This is not surprising since when you zoom out from the Pleistocene time scale to the Phanerozoic time scale and its three glacial periods (which don’t correspond to any Milankovitch scale oscillation), it is clear that the transition to the Pleistocene is associated with extreme variability as well as general cooling.

It’s like a stabilizing feedback gets dampened or delayed. The variability could cause the cooling, result from the cooling, or both could be forced by something else. We just don’t know.

Whoops, got it upside down. 65N variability corresponds with interstadials, which IS sort of surprising as it is contrary to the trend into the Pleistocene.

Too long since looking at that graphic. 65N is a composite of M components, but 65N variability seems also associated with extremes of eccentricity, which is not at all surprising.

Boiling it all down : there are major changes in climate which correlate to some extent with Milankovitch cycles, but there is still no known mechanism. Lots of work has been done on changes in insolation (and yes these are in the models, see below) but they don’t explain the changes. Particular attention has been given to 65N because it looks most hopeful but it still doesn’t cut the mustard. Without a mechanism they can’t code anything into the models’ internal workings.

There’s a parallel here with solar activity on shorter time scales. There are very small variations in solar activity, which are coded into the models, but which have trivial effect on climate. Yet if you look back at past climate there is abundant evidence that the sun had more influence on climate. But there is no mechanism, so it can’t be coded into the models’ internal workings.

Worse than that, in both cases they don’t code anything else into the models. They could put ideas into the large externals but they don’t. They do put untested ideas in there when it suits them (eg. the water vapour and cloud feedbacks). Put simply, they ignore Milankovitch cycles in the same way that they ignore solar activity – it looks like the models are handling them but they haven’t tried to emulate them.

And this brings me back to my article above : OK so they can’t code solar activity and Milankovitch cycles into the models’ internal workings because there isn’t a mechanism. But they could put them into the large externals along with the various other real or imaginary things that are there (they might then have to protect them from the vagaries of the internal workings, but that is something they are doing already). So what would you have then? You would have a large complex cell-based computer model driven almost entirely by factors fed into it from outside that cell-based system. A top-down system. While you are about it, chuck in a few other things that you don’t have a mechanism for. Given that in this system the internal cell-based logic is contributing absolutely nothing of value and has at times to be prevented from mucking things up, you might as well just ditch it and suddenly you are working with a cheap fast flexible model that can easily be understood by others when you explain your results to them. The Occam’s Razor of climate models. Now that would be a real advance.

From above:

Well, think again, but invert that series of thoughts.

The “global climate models” really are neither “global” nor “climate” models. Rather, they started as “general circulation models” (also GCM by the convenient initials), but as LOCAL models of dust and aerosols in a single local area (particularly the LA Basin, and the dust and nickel refining “acid rain” plumes from the Toronto area refineries for the Adirondacks acid rain studies).

THEN – only after the acid rain legislation and ozone hole legislation hysteria showed that the enviro movement could indeed get international laws passed in a general hysterical propaganda cloud of “immediate action is needed immediately” against a nebulous far-reaching problem that could be endlessly studied by government-paid “scientists” in government-paid glamorous campuses holding tens of billions of government-paid bureaucrats and programmers – only then did the CO2 global warming scenario begin its power play.

But the original local models were fairly good. It is why the GCM (general circulation models) look at the aerosol sizes and particle shapes and particle densities and the individual characteristics of every different size of particle so carefully. Entire papers are dedicated to particle estimates and models as a subset of the modeling.

Now, admittedly, the LOCAL wind and temperature conditions over short ranges of time began to be modestly accurate in these elaborate NCAR and Boulder campus computers – and the rest of the academia began demanding “their share” of the money. And the attention. And the papers. And the programmers. So they simply expanded the model boundaries to the world’s sphere.

Thanks, Mike Jonas. Very good article, an excellent continuation for your previous article.

“The mathematics of the climate models’ internal workings is wrong.”

For an “inside” article, this is remarkably superficial. You have not shown any mathematics is wrong. You have not shown any mathematics. You have said nothing about the equations being solved. It’s just argument from incredulity. “Gosh, it’s hard – no-one could solve that”.

“In other words, the errors compound exponentially.”

No, that is wrong. They can – that is the mode of failure. It’s very obvious – they “explode”. In CFD (computational fluid dynamics), people put a lot of effort into finding stable algorithms. They succeed – CFD works. Planes fly.

That’s the problem with these sweeping superficial dismissals – they aren’t specific to climate. This is basically an assertion that differential equations can’t be solved. But they have been, for many years.

“Initial state”

Yes, the models do not solve differential equations as initial value problems. That is very well understood. Neither does most CFD. They produce a working system that “models” the atmosphere.

“Small Externals”

Actually internals. But sub-grid issues are unavoidable when equations are discretised. Turbulence in CFD is the classic. And the treatment by Reynolds averaging goes back to 1895.

“Large Externals”

Yes, some future influences can’t be predicted from within the model. That’s why we have scenarios.

Here is an example of what AOGCM’s do produce, just from an ocean with bottom topography and solar energy flux etc. It isn’t just the exponential accumulation of error.

Nick Stokes – You say I have “not shown any mathematics“. You clearly do not understand what mathematics is. Mathematics at its most basic is simply the study of patterns (eg. http://www.amazon.com/Mathematics-Science-Patterns-Search-Universe/dp/0805073442 “To most people, mathematics means working with numbers. But [..] this definition has been out of date for nearly 2,500 years. Mathematicians now see their work as the study of patterns—real or imagined, visual or mental, arising from the natural world or from within the human mind.“). I do explain the pattern that leads to exponential error in the models.

You go on with “That’s the problem with these sweeping superficial dismissals – they aren’t specific to climate. “. But that is why I supported the argument with a climate-specific example. See : “NB. This assertion is just basic mathematics, but it is also directly supportable […].“.

The problems may well have been resolved in fluid dynamics, as you say, but they have not been resolved in climate. wrt temperature, the models’ output consists of a trend plus wiggles. The trend basically fits the formula

δTcy = k * Rcy

(see http://wattsupwiththat.com/2015/07/25/the-mathematics-of-carbon-dioxide-part-1/)

and is in effect protected from the models’ internal cell-based calculations while the wiggles are generated. The wiggles are meaningless, and no-one, not even the modellers, expects them to actually match future climate. So we can profitably throw away the mechanism that provides the wiggles, and concentrate on the things that give us trends. Currently, the models have one trend and one trend only – from CO2. It is clearly inadequate because modelled temperature deviates substantially from real temperature both now and in the past. All I am arguing is that the expensive part of the models is totally useless and can be thrown away while we work on the major drivers of climate. Those are all that we need in order to model climate. After we have nailed the climate, we can maybe – maybe – start to look at future weather.

“I do explain the pattern that leads to exponential error in the models.”

No, you don’t. There is a pattern which leads to exponential growth, but you explain none of it. Differential equations have a number of solutions, and there is just one that you want. Error will appear as some combination of the others. The task of stable solution is to ensure that, when discretised, the other solutions decay relative to the one you want. People who take the trouble to find out understand how to ensure that. Stability conditions like Courant are part of it. You explain none of this, and I see no evidence that you have tried to find out.
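The Courant condition mentioned above can be illustrated with a minimal sketch. It assumes a one-dimensional advection equation solved with a first-order upwind scheme on a periodic grid, which is far simpler than anything inside a GCM. The function name `advect_upwind`, the grid size, the step count and the initial bump are all illustrative choices, not taken from any climate model.

```python
import numpy as np


def advect_upwind(courant, n_cells=100, n_steps=200):
    """First-order upwind scheme for u_t + c*u_x = 0 on a periodic grid.

    `courant` is the Courant number c*dt/dx (assumed positive).
    Returns the largest absolute value in the field after n_steps,
    starting from a smooth bump of height 1.
    """
    x = np.linspace(0.0, 1.0, n_cells, endpoint=False)
    u = np.exp(-200.0 * (x - 0.5) ** 2)  # hypothetical initial condition
    for _ in range(n_steps):
        # Each new value is a weighted mix of the cell and its upwind neighbour.
        u = u - courant * (u - np.roll(u, 1))
    return float(np.max(np.abs(u)))


stable = advect_upwind(0.8)    # Courant number below 1
unstable = advect_upwind(1.2)  # Courant number above 1
print(f"Courant 0.8: max |u| = {stable:.3g}")
print(f"Courant 1.2: max |u| = {unstable:.3g}")
```

With a Courant number of 0.8 the bump never grows beyond its starting height, while at 1.2 the same code blows up, which is the “explode” mode of failure. Whether the stable run is also accurate is a separate question that this sketch does not address.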

“The problems may well have been resolved in fluid dynamics, as you say, but they have not been resolved in climate”

Climate models are regular CFD. The fact that you claim to have identified a pattern in the output in no way says they are unstable; in fact, with instability there would be no such pattern.

“So we can profitably throw away the mechanism that provides the wiggles, and concentrate on the things that give us trends.”

Actually, you can’t. That is the lesson of the Reynolds averaging I cited earlier. There you can average to get rid of the wiggles, but a residual remains, which has the very important effect of increasing the momentum transport, or viscosity. And so in climate. The eddies in that video I showed are not predictions. They won’t happen at that time and place. But they are a vital part of the behaviour of the sea. The purpose of modelling them is to account for their net effect.
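In symbols, the Reynolds averaging referred to here splits each quantity into a mean and a fluctuation. A standard textbook form, with the notation (overbar for the average, prime for the fluctuation, u and v for two velocity components) chosen here for illustration, is:

```latex
u = \bar{u} + u', \qquad v = \bar{v} + v', \qquad \overline{u'} = \overline{v'} = 0
\overline{u v} = \bar{u}\,\bar{v} + \overline{u' v'}
```

The last term, the averaged product of the fluctuations, is the residual that survives the averaging even though each fluctuation averages to zero on its own.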

“They won’t happen at that time and place.“. Says it all, really.

I don’t mean that the eddies for example that you mention are unimportant. But you can’t model them together with everything else over an extended period using the models’ cell-based structure. It simply cannot work. In the article, I said “The research itself may well be very complex, but the model is likely to be relatively straightforward.“. Using your example, eddy research could identify how the eddies work in detail, and then work out how they influence climate in general. The general part could then be included in the climate models. There’s no point in trying to be more accurate than that, given all the other uncertainties. It’s the same as the relationship between pure mathematics and applied mathematics – the research is the pure, and what should go into the models is the applied.

You argue much about stability. I explain that the exponential errors in the models need not create instability. But they do still create errors that render them useless. Work on eddies in an eddy model until you really understand them, then put the results into a climate model. Don’t put mythical eddies, whose time and place are as you say unknown, into a climate model, it just adds to cost while doing nothing for results.

Speaks for itself:

«When initialized with states close to the observations, models ‘drift’ towards their imperfect climatology (an estimate of the mean climate), leading to biases in the simulations that depend on the forecast time. The time scale of the drift in the atmosphere and upper ocean is, in most cases, a few years. Biases can be largely removed using empirical techniques a posteriori. … The bias adjustment itself is another important source of uncertainty in climate predictions. There may be nonlinear relationships between the mean state and the anomalies, that are neglected in linear bias adjustment techniques. … »

(Ref: Contribution from Working Group I to the fifth assessment report by IPCC; 11.2.3 Prediction Quality; 11.2.3.1 Decadal Prediction Experiments )

This is Decadal Prediction – quite different. It is an attempt to actually predict from initial conditions on a decadal scale. It’s generally considered to have not succeeded yet, and they are describing why. Climate modelling does not rely on initial conditions.

Nick Stokes:

You say

That is an outrageous falsehood!

Modeling evolution of climate consists of a series of model runs where the output of each run is used as the initial conditions of the next run with other change(s) – e.g. increased atmospheric GHG concentration – also input to the next run.

Each individual run may be claimed to “not rely on initial conditions” but the series of model runs does. This is because each run can be expected to amplify errors resulting from uncertainty of the data used as input for the first run in the series.

Richard

So climate is not a chaotic system? This is very big news. You ought to do a paper.

You’re missing the point Nick. The issue isn’t whether there is an acceptable solution at any time step in the model run, the problem is that at every time step an error is generated which itself will have a resulting positive or negative bias on the projected temperature. And it won’t have an average of zero over time either. Why should it?

The Truth About SuperComputer Models

SuperComputer models used by environmentalists have such complex programs that no person can understand them.

So it’s impossible for any scientist to double-check SuperComputer projections with a pencil, paper and slide rule — which is mandatory for good science.

I am a fan of all models: Plastic ships assembled with glue, tall women who strut on runways, and computer models too.

During my intensive research on SuperComputer models, I discovered something so amazing, so exciting, that I fell off my bar stool.

It seems that no matter what process was being modeled — DDT, acid rain, the hole in the ozone layer, alar, silicone breast implants, CO2 levels, etc. — the SuperComputer output is the same — a brief prediction typed on a small piece of paper — and that piece of paper always says the same thing: “Life on Earth will end as we know it”.

That’s exactly what the general public has been told, again and again, for various environmental “crises”, starting with scary predictions about DDT in the 1960s.

I also have an alternate SuperComputer theory, developed while I worked in a different laboratory (sitting on a bar stool in a different bar):

— Scientists INPUT their desired conclusion to the SuperComputer: “Life on Earth will end as we know it”, followed by a description of the subject being studied, such as “CO2”.

—- Then the SuperComputer internally develops whatever physics model is required to reach the desired conclusion.

My alternative theory best explains how the current climate physics model was created.

By Jove, I think you have hit the nail on the head!

Now can we discuss the tall, strutting women more?

I am glad I was not sitting on a bar stool when I read Richard Greene’s comment, or I would have fallen off it from laughing.

Because it is true.

Who can take seriously the gloomy, monotonous, and never-correct-yet pronouncements of scaremongering end–of-the-world alarmists?

Such predictions are as old as language, it would seem.

The difference between “then” and now is that it did not use to be a hugely profitable business model to predict the end of the world.

Or did it?

Old Church: Do as we say or you will go to hell !

New Church: Do as we say or life on Earth will become hell (from the global warming catastrophe) !

None of this matters, because we will soon all be dead from DDT, acid rain, hole in the ozone layer, alar, or popping silicone breast implants. The latter would be a true catastrophe. I’m not sure which threat will do us in first. All these threats were said to end life on Earth as we know it, according to leftists, but they were not sure of the year.

Who’d want to live, anyway, if we all had to take rowboats, canoes and gondolas to get to work each morning, because of all the urban flooding from global warming. And think of the huge taxes required to replace subway cars with submarines, because the subway tunnels would be filled with water.

I suppose this would make a good science fiction movie … but it’s painful to hear this nonsense from people who actually believe it.

This is not the first article that I have read on the problems with the climate models. This one does it with brevity and is understandable.

All we have to do to better understand things with regard to models is to look back in time only about a year and the failure of the more precise regional models to get the measure of the coming blizzard in NYC. They shut the city down including the subways. The models proved to be wrong I think as close as 12 hours out.

The present climate models lack fidelity with current measurements. How is it then possible that they can be used to project temperatures out to the end of the century? This can only be called folly.

I spent a career on pump design. Given the same circumstances, I would never have stepped in front of my managers with a program such as this and said you can believe its results. Yet folks are doing that. What standards are they using?

If it had been a model I was developing, management would have never known about it. Upon reviewing the results and sensing how well it matched measured data I would have concluded that I missed something fundamental and it would be back at the drawing board for further consideration and evaluation. I think it is that simple.

In my work on pump designs I worked with a very knowledgeable hydraulics designer. Years ago we started to use Computational Fluid Dynamics (CFD) in evaluating impeller designs. That initial effort was not successful. My experienced hydraulics designer said CFD stands for “Colorful Fluid Deception”. In the meantime, less sophisticated design tools worked quite well.

I can report that issue has been resolved and that improved CFD tools became available.

I came to this work as a “gear head” but became a “sparky” part time. My manager was an experienced designer of induction motors. We developed a model using TKsolver that married the hydraulic design with the motor. Over time it became more sophisticated. I became familiar with induction motor design and the equivalent circuit. It was not difficult for me to get the hydraulic design into the model using pump affinity laws.

When finished, it matched measured results within 2 to 3 percent. In a matter of minutes, I could resize an impeller, change the operating fluid temperature, change the motor winding and get accurate predictions including the full head flow curve.

This was all done without resorting to FEA. Later I learned how to use 2D magnetic FEA for the motor design. That was truly only necessary when we wanted to look at esoteric aspects of the design.

This is running a little longer than I wanted it to but I wanted to present a complete picture.

I also became quite familiar with the benefits of FFT in solving vibration issues from instrumented pump measurements.

It is that aspect that I will now delve into.

I kept reading in articles on this site about a 60-year cycle and I had seen other information about solar cycles so using a canned program that I had available I tried to fit these cycles to the measured Hadcrut4 data. In the figures that follow you will see a fit to the yearly H4 data that only employs seven cycles. I included a contribution from CO2 as well. In the end I was able to use natural cycles and accommodate a contribution from CO2 that fits the yearly data quite nicely.

https://onedrive.live.com/redir?resid=A14244340288E543!12220&authkey=!ADOWraTy8yvNgbY&ithint=folder%2c

Note the low value of ECS that was required from the table. The green line in the figure is the contribution of CO2 all by itself that is already part of the red fit line in the figure. The 60-year cycle became 66. The 350-year cycle and the 85-year cycle are from known solar cycles. Others are too.

Some, I am sure, will want to call it curve fitting. That does not bother me; I did many things just like this for 35 years and solved a lot of problems. Don’t try to tell me the climate models could do this.
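The kind of fit described above (a handful of cycles plus a CO2 contribution, matched to a temperature series) can be sketched as follows. This is not the commenter’s program. It is a simplified illustration that assumes the cycle periods are fixed in advance, so the fit becomes an ordinary linear least-squares problem, and it runs on a synthetic series rather than Hadcrut4. The function name `fit_cycles`, the synthetic CO2 curve and every coefficient are hypothetical.

```python
import numpy as np


def fit_cycles(t, y, periods, co2_term):
    """Least-squares fit of y to a constant, a CO2 term and fixed-period sinusoids.

    Returns (coeffs, fitted, sse). coeffs[0] is the constant, coeffs[1]
    multiplies co2_term, then one sine and one cosine weight per period.
    """
    columns = [np.ones_like(t), co2_term]
    for period in periods:
        omega = 2.0 * np.pi / period
        columns.append(np.sin(omega * t))
        columns.append(np.cos(omega * t))
    design = np.column_stack(columns)
    coeffs, *_ = np.linalg.lstsq(design, y, rcond=None)
    fitted = design @ coeffs
    sse = float(np.sum((y - fitted) ** 2))  # sum of squared errors
    return coeffs, fitted, sse


# Synthetic yearly series (assumed, for illustration only).
t = np.arange(1850.0, 2016.0)
co2_term = np.log2(1.0 + 0.4 * ((t - 1850.0) / 165.0) ** 2)  # made-up CO2 curve
y = -0.2 + 0.3 * co2_term + 0.1 * np.sin(2.0 * np.pi * t / 66.0)

coeffs, fitted, sse = fit_cycles(t, y, [66.0], co2_term)
print("CO2 coefficient:", coeffs[1])
print("sum of squared errors:", sse)
```

Letting the periods themselves vary, as a nonlinear minimizer started from initial guesses would, turns this into a nonlinear problem; the fixed-period version is used here only because it is short and has a unique solution.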

I started a quest to add more cycles and improve fidelity to the measured data. In these cases, I went to the monthly data and used additional datasets.

I was having problems with finding additional frequencies to add to the analysis. That is when I came upon the Optimized Fourier Transform developed by Dr. Evans. That changed things.

The OFT is contained in Dr. Evans’s spreadsheet, and you can locate it here.

http://sciencespeak.com/climate-nd-solar.html

In my analysis I used the results of the OFT as input guesses into my program that tries to minimize the Sum of the Squares Error in fitting the data. Here are the results of my evaluations on various datasets using the most recent added data points. The RSS evaluations use my approximation for the Mauna Loa CO2 measurements that are included in the first link.

RSS Global

https://onedrive.live.com/redir?resid=A14244340288E543!12222&authkey=!AD4i2Hpt1SxLzjo&ithint=folder%2c

RSS NH

https://onedrive.live.com/redir?resid=A14244340288E543!12224&authkey=!ALVk9XRS9vmK6p8&ithint=folder%2c

RSS Tropics

https://onedrive.live.com/redir?resid=A14244340288E543!12226&authkey=!ALBywJBN8U-nY3c&ithint=folder%2c

RSS SH

https://onedrive.live.com/redir?resid=A14244340288E543!12227&authkey=!AMZTz5ITX7Yunic&ithint=folder%2c

Hadcrut4

https://onedrive.live.com/redir?resid=A14244340288E543!12229&authkey=!AJfJzy5TqCI0dqk&ithint=folder%2c

I will add a bonus evaluation that was completed in a similar manner.

NINO 3.0

https://onedrive.live.com/redir?resid=A14244340288E543!12231&authkey=!AHwCAjgenmKflao&ithint=folder%2c

The number of natural cycles included in the above is approximately 90 sinusoids. With the exception of the NINO 3.0 evaluation, it should be noted that all the evaluations have a low value of ECS. That was required to accommodate CO2 with the combined cycles and still match the measured data.

I think these evaluations fully support a much lower value of ECS than currently being contemplated in the climate models. I would then ask which method is doing a better job.

Only recently there are reasons to support a much lower value of ECS. They can be found here.

http://joannenova.com.au/2015/11/new-science-18-finally-climate-sensitivity-calculated-at-just-one-tenth-of-official-estimates/#more-44879

Therein, Dr. Evans concludes the following based upon his architectural changes he has proposed for the climate model:

Conclusions

There is no strong basis in the data for favoring any scenario in particular, but the A4, A5, A6, and B4 scenarios are the ones that best reflect the input data over longer periods. Hence we conclude that:

• The ECS might be almost zero, is likely less than 0.25 °C, and most likely less than 0.5 °C.

• The fraction of global warming caused by increasing CO2 in recent decades, μ, is likely less than 20%.

• The CO2 sensitivity, λC, is likely less than 0.15 °C W⁻¹ m².

Given a descending WVEL, it is difficult to construct a scenario consistent with the observed data in which the influence of CO2 is greater than this.

BTW, I have projected these results forward in time. It is a little problematical with the short number of years for the RSS dataset. If I don’t go too far I think the projections will be satisfactory. That is a story for another time.

Forget the models! I don’t know how reliable they are, or how a computer can take into account all the possible variables…. Instead, let’s take a look at the past, at the way in which climate has evolved and what the influences are in this situation. Here are some ideas on that: http://oceansgovernclimate.com/.

Most models are not any better than the simplest model possible: take the main factors involved (CO2, human and natural aerosols and the solar “constant”) and calculate their influence in a spreadsheet. That is called an “Energy Balance Model” or EBM, a top-down approach.

The result is as good, or better than from the multi-million-dollar “state of the art” GCM’s. See the report of Robert Kaufmann and David Stern at:

http://www.economics.rpi.edu/workingpapers/rpi0411.pdf

No wonder that report couldn’t find a publisher…
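For readers who have not met one, the spreadsheet-style energy balance model described above can be sketched in a few lines. This is a generic zero-dimensional form, not the model from the Kaufmann and Stern report. The feedback and heat-capacity values are assumed round numbers, the function names are hypothetical, and the CO2 forcing uses the commonly quoted simplified expression 5.35·ln(C/C0) W/m².

```python
import math


def co2_forcing(ppm, ppm0=280.0):
    """Simplified CO2 radiative forcing in W/m^2 relative to ppm0."""
    return 5.35 * math.log(ppm / ppm0)


def run_ebm(forcing, feedback=1.2, heat_capacity=8.0, dt=1.0):
    """Zero-dimensional energy balance: C * dT/dt = F(t) - feedback * T.

    forcing: total forcing in W/m^2, one value per time step (years).
    feedback: W/m^2 per K (assumed value).
    heat_capacity: W*yr/m^2 per K (assumed value).
    Returns the temperature anomaly in K after each step (forward Euler).
    """
    temps = []
    temp = 0.0
    for f in forcing:
        temp += dt * (f - feedback * temp) / heat_capacity
        temps.append(temp)
    return temps


# Hold CO2 at double its reference level for 200 years.
doubling = co2_forcing(560.0)
temps = run_ebm([doubling] * 200)
print(f"forcing for doubled CO2: {doubling:.2f} W/m^2")
print(f"warming after 200 years: {temps[-1]:.2f} K")
```

Aerosol and solar terms would simply be added to the forcing list before the run. The equilibrium response is the forcing divided by the feedback parameter, so the result depends entirely on the numbers fed in, which is the sense in which such a model is top-down.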

FE – and does the simple model have any spatial detail? Does it tell us anything about the hydrological cycle? How many other outputs does a GCM have besides surface temperature? Perhaps you should learn what a GCM is actually used *for* before you make such pronouncements. As the authors of the paper you cite say:

“ It does appear that there is a trade-off between the greater spatial detail and number of variables provided by the GCMs and more accurate predictions generated by simple time series models.”

How well does the simple energy balance model do on a five-day forecast for thousands of cities scattered across 7 continents?

oneillsinwisconsin:

You ask and say:

A simple model does better than GCMs at providing spatial detail, the hydrological cycle and cannot be misused to misrepresent such information because

(a) a simple model does not provide those indications

but

(b) GCMs provide wrong indications of those indications.

No information is preferable to wrong information.

And you would know these facts if you were to “learn what a GCM is actually used *for*”.

The GCMs represent temperature reasonably well but fail to indicate precipitation accurately. This failure probably results from the imperfect representation of cloud effects in the models. And it leads to different models indicating different local climate changes.

For example, the 2008 US Climate Change Science Program (CCSP) report provided indications of precipitation over the continental U.S. ‘projected’ by a Canadian GCM and a British GCM. Where one GCM ‘projected’ greatly increased precipitation (with probable increase to flooding) the other GCM ‘projected’ greatly reduced precipitation (with probable increase to droughts), and vice versa. It is difficult to see how provision of such different ‘projections’ “can help inform the public and private decision making at all levels” (which the CCSP report said was its “goal”).

Richard

It seems quite impressive that climate modellers have created climate models that give an output that APPEARS to some to be meaningful or even predictive.

But, I suspect that that is all that they have so far achieved.

If I was building a model of climate then I suspect that most of my time would be spent trying to stop my model from lurching from one extreme to the other.

Or trying to stop my model from doing something which is clearly ludicrous or counter to what we witness in reality.

If I was really good at making computer models of climate, then maybe my model could behave itself reasonably well for much of the time. And possibly even do a reasonable impression of reality.

In which case I could then go on to present the output as meaningful and predictive.

I could even suggest that people come and “study” various things by experimenting with the conditions inside my model.

In looking at this topic, I notice that modellers often reveal that this is exactly what they are doing. For example in this quote:

“By extending the convective timescale to understand how quickly storm clouds impact the air around them, the model produced too little rain,” said Dr. William I. Gustafson, Jr., lead researcher and atmospheric scientist at PNNL. “When we used a shorter timescale it improved the rain amount but caused rain to occur too early in the day.”

Keep tweaking Willy. And at some point you’ll maybe have a set of tweaks which do all the right things.

Though not necessarily for any of the right reasons…

Quote from: http://phys.org/news/2015-04-higher-resolution-climate-daytime-precipitation.html#jCp

That works only for a steady state system. We are definitely living on a planet that yo-yos between hot and cold mainly cold.

“This assertion is just basic mathematics, but it is also directly supportable”

I believe you are correct, at least in an analogous sense. Unfortunately though, it is just a belief of mine. The “direct support” you provide is a rhetorical argument and not one of “basic mathematics”. This article would have been better if the equations were provided. No rhetoric should be required if it “is just basic mathematics.”

Any computer model that ignores the obvious periodical, regular Ice Age cycles is useless. We can only guess as to what causes these regular, long, cold events. We don’t absolutely know how these happen, no absolute causation but we do know they happen and happen suddenly, not slowly.

Fine tuned models that ignore this fact are useless for predictions since they all assume the ‘normal’ is the warm part of the periodic, short Interglacials. This presumption is a huge problem.

Dear Mike,

To chase down your mathematical assertion, it would be interesting to examine how the supercomputer programmers treat the rounding problem. E.g. a rounding to the nearest billionth of a number in a programme that does a billion arithmetic operations per second with that number, could show an error in the most significant digit.
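The rounding effect raised here is easy to see in miniature. The sketch below is only an illustration in double-precision Python and says nothing about how any particular climate code handles rounding. It adds 0.1 repeatedly with a plain loop and compares the result with `math.fsum`, which keeps track of the low-order bits that each ordinary addition discards. The helper name `naive_sum` is hypothetical.

```python
import math


def naive_sum(values):
    """Add values left to right, rounding after every addition."""
    total = 0.0
    for v in values:
        total += v
    return total


tenths = [0.1] * 10
print(naive_sum(tenths) == 1.0)   # False: rounding errors have accumulated
print(math.fsum(tenths) == 1.0)   # True: the lost bits are tracked

# The drift between the two methods for a longer run of additions.
many = [0.1] * 1_000_000
drift = abs(naive_sum(many) - math.fsum(many))
print("drift after a million additions:", drift)
```

The absolute drift grows with the number of operations, which is why long-running numerical codes pay attention to summation order and precision. How much it matters in practice depends on the algorithm, not just on the operation count.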

While everyone is piling on, don’t forget truncation due to format conversion and radix differences. One can really get off the wall results if those are ignored.

Mr. Layman here.

I said this some time ago regarding long range “climate models”. It seems to fit.

Confession of a computer modelling grad student:

As part of my MASc programme I wrote a huge simulation platform for a personal computer. The engine of the simulator used finite difference methods, since the time I had, and the memory, were limited. The engine calculated the potential flow field of a non-Newtonian fluid flowing around mobile solid elements, boundarylessly. I wanted to simulate a resin injection into a gas-filled cavity partially filled with fiber reinforcements. I wanted to simulate the initial stages and the final result, to maximize mixing and minimize gas entrapment. It was the hardest thing I ever had to do. It took me months to write the code, circa 1990. Subsequently, I have used significant numerical analysis tools for any number of problems.

My rule:

Garbage in = Gorgeous garbage out that can be used to convince anyone of anything.

I do not believe in the veracity of the gorgeous simulations. Though climate simulators may look convincing and real, the output has to be compared with historical before and after data. That has NEVER happened. This programme can’t, with any kind of certainty, predict what will happen anywhere 24 hrs into the future.

So… computer models and fancy renderings routinely conceal the lack of precision and accuracy of the models by dithering the post analysis results to a pretty, and fake, higher precision. That is called marketing… not proper science. I know, and I did both.

Ask Willis E. He knows. Ask him how many pixels represent a “cell” in his ditherings and how much atmosphere and land area is represented by an image pixel compared with the actual thermometer that existed in the cell providing the initial conditions. He is not alone. All computer wonks do this.

Which temperature data set are these computer games using?

The ones that had 1880 at one temp?….or the ones that had it 2 degrees colder?

..the one that showed the pause?…or the one that erased the pause?

The ones that had the 1930’s hotter?…or the ones that erased it?

…and on and on

What a waste of perfectly good brain cells, debating whether a computer game is right or not. No one will ever know.

The odds on it being right differ infinitesimally from zero.

I know if I was running thousands of computer games….after all that time, effort, and money…and someone told me they changed my numbers

I would blow the roof off!!

Someone should explain this to Steve Mosher. He recently made the claim that while climate-model projections may not be accurate in the short term, they will prove accurate at about 50 years out. If I’m remembering that correctly, I’d like to know the reasoning behind his claim. He must believe that all those compounding errors will magically cancel each other out over time. I sure wish that would happen with all the errors I have made over the years.

to do that….within the next 10 years global temperatures will have to jump up 1 degree almost over night

Easy – Steve hopes not to be around in 50 years. That’s also why IPCC concentrates on year 2100, not on year 2020.

Assuming those models will average out to a correct value seems to me like standing on a golf course and not being able to see where the hole is, but assuming that after one hits a few hundred balls down towards where one supposes it might be, the hole will be at the mathematical average position of all of the balls that were hit.

Such belief assumes a lot, including that one is even hitting the ball in the proper direction.

The hole may be behind you

Temperatures may fall.

These modelling clowns can win a million dollars if they figure out how to solve the fundamentals of atmospheric fluid dynamics.

http://www.theguardian.com/science/blog/2010/dec/14/million-dollars-maths-navier-stokes

I’d suggest it’s a random walk and nothing to do with fluid dynamics.

The lack of transparency of organizations like the UN (UNFCCC, IPCC, WMO), university government contractor groups on the dole (NCAR and UEA et al.) and government groups like ECMWF, NOAA et al. lends suspicion of chicanery when such groups give out “information” about “here is how we do it” like the IPCC and WMO “models” when these models produce unvalidatable results, which are well known, as exemplified by their reports. I tend to think the “here is how we do it” by such groups is just disinformation designed to deceive.

Not long ago Christopher Monckton of Brenchley and other authors showed in their paper a very simple climate model which can be benchmarked and had good results.

So perhaps Monckton of Brenchley and others should start a modeling initiative, an online “center for validatable climate modeling” with their simple climate model and funded through KickStarter, for instance, to stand as an alternative to modeling groups like the IPCC, WMO, UEA et al. whose honesty is in doubt.

🙂

I guess simple models that give good results (and show the world is doing just fine) aren’t giving value to those who want different answers.

Whereas a complex model with lots of parameters that show the world will soon end, has value to those who can use these answers for their own ends.

It’s all about whether the model is useful; in this case: can be marketed to make money or control.

Here is the explanation for the failure of Climate Models:

“making a solid prediction of what’s going to happen in the next few decades is much tougher than modeling what’s going to happen to the whole globe a century from now,”

William Collins, IPCC lead author and climate modeller.

So – there you have it. Climate model projections will all converge upon reality. But only long AFTER the retirement and death of all currently existing climate modellers.

I know this may sound like skepticism – BUT – is this not just a feeble attempt to explain away the failure of computer-assisted guessing to make any useful predictions whatsoever over the last two decades?

The predictions will all come to pass, we promise – but only after everyone currently alive – is dead.

From: http://newscenter.lbl.gov/2008/09/17/impacts-on-the-threshold-of-abrupt-climate-changes/

Seems there’s a simple solution to this problem that could save the day for Climate Science? We’ll just need to ask the current batch of climate modelers to “take one for the team” and jump off the nearest tall building?

First we could show them our projections. Before we project them from the parapet.

Assuming that a climate modeller is spherical. And that gravity g is invariant with height. And ignoring air resistance. And providing an arbitrarily selected mass for an average climate scientist taken from WHO tables of mass for the average sedentary person in highly paid academic employment.

Then we could commence with obtaining empirical validation.

Let the fun begin…

So how much money and resources have been thrown at climate modelling? All for nothing, if the methodology is wrong. It must have been the biggest waste ever in the history of science. Think of all the worthwhile engineering projects that could have been completed to help the third world, if the same effort and money had been applied.

So, after all this herein above and all the prior graphs, data, points of order, yelling, attacking, back stabbing, lies, fraud, misplaced honor, fudging, it seems to some that what we have is to the point where we get in many of the Senate, House, and U.N. meet ups and assorted other hearings

In a back room somewhere there is a “fly keeper” of a billion flies and a guy with 100 lbs of fine ground black pepper. They have mixed up the fly dung and black pepper on and in papers to be reviewed, and now everyone is hotly debating:

Fly dung, 2 ,Pepper,,,6,,,, no!!!,,, no!!!!!! Pepper three, Fly dung 6,,,,,,

Did dung get removed from use?