Even on business as usual, there will be less than 1 K of warming this century

By Christopher Monckton of Brenchley

Curiouser and curiouser. As one delves into the leaden, multi-thousand-page text of the IPCC’s 2013 Fifth Assessment Report, which reads like a conversation between modelers about the merits of their models rather than a serious climate assessment, it is evident that they have lost the thread of the calculation. There are some revealing inconsistencies. Let us expose a few of them.

The IPCC has slashed its central near-term prediction of global warming from 0.28 K/decade in 1990, via 0.23 K/decade in the first draft of IPCC (2013), to 0.17 K/decade in the published version. The biggest surprise to honest climate researchers reading the report, therefore, is that the long-term or equilibrium climate sensitivity has not been slashed as well.

In 1990, the IPCC said equilibrium climate sensitivity would be 3 [1.5, 4.5] K. In 2007, its estimates were 3.3 [2.0, 4.5] K. In 2013 it reverted to the 1990 interval [1.5, 4.5] K per CO2 doubling. However, in a curt, one-line footnote, it abandoned any attempt to provide a central estimate of climate sensitivity – the key quantity in the entire debate about the climate. The footnote says models cannot agree.

Frankly, I was suspicious about what that footnote might be hiding. So, since my feet are not yet fit to walk on, I have spent a quiet weekend doing some research. The results were spectacular.

Climate sensitivity is the product of three quantities:

- The CO2 radiative forcing, generally thought to be in the region of 5.35 times the natural logarithm of the proportionate concentration change: thus, 3.71 Watts per square meter for a CO2 doubling;

- The Planck or instantaneous or zero-feedback sensitivity parameter, which is usually taken as 0.31 Kelvin per Watt per square meter; and

- The system gain or overall feedback multiplier, which allows for the effect of temperature feedbacks. The system gain is 1 where there are no feedbacks or they sum to zero.

In the 2007 Fourth Assessment Report, the implicit system gain was 2.81. The direct warming from a CO2 doubling is 3.71 times 0.31, or rather less than 1.2 K. Multiply this zero-feedback warming by the system gain and the harmless 1.2 K direct CO2-driven warming becomes a more thrilling (but still probably harmless) 3.3 K.
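The three-factor product above can be sketched in a few lines of Python, assuming the 5.35 ln(C/C0) forcing formula and the 0.31 K per W/m² Planck parameter given in the text. The function names are illustrative only. With the Planck parameter taken as exactly 0.31, a gain of 2.81 gives about 3.23 K; the slightly higher figures in the text reflect rounding (a Planck parameter of 1/3.2 ≈ 0.3125, the reciprocal of the 3.2 W m⁻² K⁻¹ value mentioned later, gives about 3.26 K).

```python
import math

def co2_forcing(c_new, c_old, coeff=5.35):
    """CO2 radiative forcing in W/m^2 from the logarithmic formula 5.35 ln(C/C0)."""
    return coeff * math.log(c_new / c_old)

def warming(forcing, planck=0.31, gain=1.0):
    """Warming in K: forcing (W/m^2) x Planck parameter (K per W/m^2) x system gain."""
    return forcing * planck * gain

f2x = co2_forcing(2.0, 1.0)                    # doubling: about 3.71 W/m^2
direct = warming(f2x)                          # zero-feedback warming, about 1.15 K
with_feedbacks = warming(f2x, gain=2.81)       # about 3.23 K with the 2007 gain
print(f"F = {f2x:.2f} W/m^2, direct = {direct:.2f} K, with G = 2.81: {with_feedbacks:.2f} K")
```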

That was then. However, rootling through chapter 9, which is yet another meaningless expatiation on how well the useless models are working, one finds buried an interesting graph that quietly revises the feedback sum sharply downward.

In 2007, the feedback sum implicit in the IPCC’s central estimate of climate sensitivity was 2.06 Watts per square meter per Kelvin. That is close enough to the implicit sum f = 1.91 W m⁻² K⁻¹ (water vapor +1.8, lapse rate –0.84, surface albedo +0.26, cloud +0.69) given in Soden & Held (2006), which is shown as a blue dot in the “TOTAL” column of the IPCC’s 2013 feedback graph (fig. 1):
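The Soden & Held (2006) total quoted above is simply the sum of the four individual feedbacks. A one-line check in Python, using only the values given in the text:

```python
# Individual feedbacks quoted from Soden & Held (2006), in W m^-2 K^-1
feedbacks = {
    "water vapor": 1.8,
    "lapse rate": -0.84,
    "surface albedo": 0.26,
    "cloud": 0.69,
}

f_sum = sum(feedbacks.values())
print(f"feedback sum f = {f_sum:.2f} W m^-2 K^-1")
```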

Figure 1. Estimates of the principal positive (above the line) and negative (below it) temperature feedbacks. The total feedback sum, which excludes the Planck “feedback”, has been cut from 2 to 1.5 Watts per square meter per Kelvin since 2007.

Note in passing that the IPCC wrongly characterizes the Planck or zero-feedback climate-sensitivity parameter as itself being a feedback, when it is in truth part of the reference-frame within which the climate lives and moves and has its being. It is thus better and more clearly expressed as 0.31 Kelvin of warming per Watt per square meter of direct forcing than as a negative “feedback” of –3.2 Watts per square meter per Kelvin.

At least the IPCC has had the sense not to attempt to add the Planck “feedback” to the real feedbacks in the graph, which shows the 2013 central estimate of each feedback in red flanked by multi-colored outliers and, alongside it, the 2007 central estimate shown in blue.

Look at the TOTAL column on the right. The IPCC’s old feedback sum was 1.91 Watts per square meter per Kelvin (in practice, the value used in the CMIP3 model ensemble was 2.06). In 2013, however, the value of the feedback sum fell to 1.5 Watts per square meter per Kelvin.

That fall in value has a disproportionately large effect on final climate sensitivity, because the equation by which individual feedbacks are mutually amplified to give the system gain G is as follows:

$$G = \frac{1}{1 - g}, \qquad g = \lambda_0 f \tag{1}$$

where g, the loop gain, is the product of the Planck sensitivity parameter λ0 = 0.31 Kelvin per Watt per square meter and the feedback sum f, now 1.5 Watts per square meter per Kelvin (2.06 in 2007). The unitless overall system gain G was thus 2.81 in 2007 but is just 1.88 now.

And just look what effect that reduction in the temperature feedbacks has on final climate sensitivity. With f = 2.06 and consequently G = 2.81, as in 2007, equilibrium sensitivity after all feedbacks have acted was then thought to be 3.26 K. Now, however, it is just 2.2 K. As reality begins to dawn even in the halls of Marxist academe, the reduction of one-quarter in the feedback sum has dropped equilibrium climate sensitivity by fully one-third.
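The calculation behind these figures can be sketched in Python with equation (1). One assumption is flagged: the sketch takes λ0 = 1/3.2 ≈ 0.3125 K per W/m² (the reciprocal of the 3.2 W m⁻² K⁻¹ Planck value mentioned in the text) rather than exactly 0.31, because that choice reproduces the quoted gains of 2.81 and 1.88. With λ0 = 0.31 exactly, the gains come out at about 2.77 and 1.87.

```python
import math

F_2X = 5.35 * math.log(2)   # forcing from a CO2 doubling, about 3.71 W/m^2
LAMBDA_0 = 1 / 3.2          # Planck parameter, ~0.3125 K per W/m^2 (assumed; the text rounds to 0.31)

def system_gain(f, planck=LAMBDA_0):
    """Bode system gain G = 1 / (1 - g), where the loop gain g = planck * f."""
    g = planck * f
    return 1.0 / (1.0 - g)

def sensitivity(f):
    """Equilibrium warming (K) per CO2 doubling: F_2X * LAMBDA_0 * G."""
    return F_2X * LAMBDA_0 * system_gain(f)

for label, f in [("2007", 2.06), ("2013", 1.5)]:
    print(f"{label}: f = {f} W m^-2 K^-1, G = {system_gain(f):.2f}, "
          f"sensitivity = {sensitivity(f):.2f} K")
```

The printed sensitivities, about 3.25 K and 2.18 K, agree with the 3.26 K and 2.2 K in the text to within rounding.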

Now we can discern why that curious footnote dismissed the notion of determining a central estimate of climate sensitivity. For the new central estimate, if they had dared to admit it, would have been just 2.2 K per CO2 doubling. No ifs, no buts. All the other values that are used to determine climate sensitivity remain unaltered, so there is no wriggle-room for the usual suspects.

One should point out in passing that equation (1), the Bode equation, is of general application to dynamical systems in which, if there is no physical constraint on the loop gain exceeding unity, the system response will become one of attenuation or reversal rather than amplification at loop-gain values g > 1. The climate, however, is obviously not that kind of dynamical system. The loop gain can exceed unity, but there is no physical reality corresponding to the requirement in the equation that feedbacks that had been amplifying the system response would suddenly diminish it as soon as the loop gain exceeded 1. The Bode equation, then, is the wrong equation. For this and other reasons, temperature feedbacks in the climate system are very likely to sum to net-zero.
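To see the behavior the paragraph describes, this sketch simply tabulates equation (1) for loop gains approaching and exceeding 1. It illustrates the mathematics of the Bode equation only and makes no claim about the physical climate system.

```python
def system_gain_from_loop_gain(g):
    """Bode equation G = 1 / (1 - g); singular at g = 1, negative for g > 1."""
    if g == 1.0:
        raise ZeroDivisionError("G is singular at loop gain g = 1")
    return 1.0 / (1.0 - g)

for g in (0.0, 0.5, 0.9, 0.99, 1.01, 1.5, 2.0):
    print(f"g = {g:5.2f}  ->  G = {system_gain_from_loop_gain(g):8.2f}")
```

As g rises toward 1, G grows without limit; just above 1 it jumps to large negative values, and for g = 2 it is –1.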

The cut the IPCC has now made in the feedback sum is attributable chiefly to Roy Spencer’s dazzling paper of 2011 showing the cloud feedback to be negative, not strongly positive as the IPCC had previously imagined.

But, as they say on the shopping channels, “There’s More!!!” The IPCC, to try to keep the funds flowing, has invented what it calls “Representative Concentration Pathway 8.5” as its business-as-usual case.

On that pathway (one is not allowed to call it a “scenario”, apparently), the prediction is that CO2 concentration will rise from 400 to 936 ppmv; that including projected increases in CH4 and N2O concentration one can make that 1313 ppmv CO2 equivalent; and that the resultant anthropogenic forcing of 7.3 Watts per square meter, combined with an implicit transient climate-sensitivity parameter of 0.5 Kelvin per Watt per square meter, will warm the world 3.7 K by 2100 (at a mean rate equivalent to 0.44 K per decade, or more than twice as fast on average as the maximum supra-decadal rate of 0.2 K/decade in the instrumental record to date) and a swingeing 8 K by 2300 (fig. 2). Can they not see the howling implausibility of these absurdly fanciful predictions?
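The RCP 8.5 arithmetic in the paragraph above, as a short Python check. The start year of 2014 (when the post was written) is an assumption; with it, the mean rate comes out at roughly 0.42 K/decade, a little below the 0.44 K/decade quoted, so the exact figure depends on the start year and rounding used.

```python
# Figures quoted in the text for RCP 8.5
forcing_2100 = 7.3          # anthropogenic forcing by 2100, W/m^2
transient_param = 0.5       # implicit transient sensitivity parameter, K per W/m^2
warming_2100 = forcing_2100 * transient_param   # 3.65 K, i.e. about 3.7 K

# Assumed start year: 2014, when the post was written
years = 2100 - 2014
rate_per_decade = warming_2100 / years * 10     # mean rate, K per decade

max_observed_rate = 0.2     # K/decade, maximum supra-decadal rate cited in the text
print(f"{warming_2100:.2f} K by 2100; {rate_per_decade:.2f} K/decade; "
      f"{rate_per_decade / max_observed_rate:.1f}x the observed maximum")
```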

Let us examine the IPCC’s “funding-as-usual” case in a little more detail.

Figure 2. Projected global warming to 2300 on four “pathways”. The business-as-usual “pathway” is shown in red. Source: IPCC (2013), fig. 12.5.

First, the CO2 forcing. A rise from 400 ppmv today to 936 ppmv in 2100 is frankly implausible even if the world, as it should, abandons all CO2 targets altogether. There has been very little growth in the annual rate of CO2 increase: it is little more than 2 ppmv a year at present. Even if this rate were to rise linearly to 4 ppmv a year by 2100, there would be only 655 ppmv CO2 in the air by then. So let us generously call it 700 ppmv. That gives us our CO2 radiative forcing by the IPCC’s own method: 5.35 ln(700/400) = 3.0 Watts per square meter.
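The arithmetic of this paragraph, as a Python sketch. The 85-year horizon (2015 to 2100) is an assumption; it reproduces the 655 ppmv figure exactly, whereas counting from 2014 gives about 658 ppmv. The linear ramp in the annual increase is treated as continuous, so the total added is the mean rate times the number of years.

```python
import math

def co2_at_end(c_start=400.0, years=85, rate_start=2.0, rate_end=4.0):
    """CO2 (ppmv) after `years` if the annual increase rises linearly
    from rate_start to rate_end ppmv/yr (continuous linear ramp)."""
    mean_rate = (rate_start + rate_end) / 2   # average of a linear ramp
    return c_start + mean_rate * years

def co2_forcing(c_new, c_old, coeff=5.35):
    """Logarithmic CO2 forcing, W/m^2."""
    return coeff * math.log(c_new / c_old)

c2100 = co2_at_end()                  # 655 ppmv
f2100 = co2_forcing(700.0, 400.0)     # forcing for the rounded-up 700 ppmv, ~2.99 W/m^2
print(f"{c2100:.0f} ppmv; forcing at 700 ppmv = {f2100:.2f} W/m^2")
```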

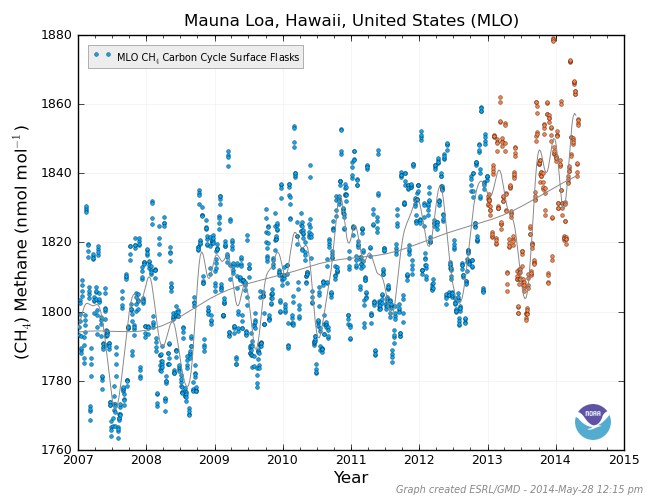

We also need to allow for the non-CO2 greenhouse gases. For a decade, the IPCC has been trying to pretend that CO2 accounts for as small a fraction of total anthropogenic warming as 70%. However, it admits in its 2013 report that the true current fraction is 83%. One reason for this large discrepancy is that, once Gazputin had repaired the methane pipeline from Siberia to Europe, the rate of increase in methane concentration slowed dramatically in around the year 2000 (fig. 3). So we shall use 83%, rather than 70%, as the CO2 fraction.

Figure 3. Observed methane concentration (black) compared with projections from the first four IPCC Assessment Reports. This graph, which appeared in the pre-final draft, was removed from the final draft lest it give ammunition to skeptics (as Germany and Hungary put it). Its removal, of course, gave ammunition to skeptics.

Now we can put together a business-as-usual warming case that is a realistic reflection of the IPCC’s own methods and data but without the naughty bits. The business-as-usual warming to be expected by 2100 is as follows:

3.0 Watts per square meter CO2 forcing

x 6/5 (approximately the reciprocal of 83%) to allow for non-CO2 anthropogenic forcings

x 0.31 Kelvin per Watt per square meter for the Planck parameter

x 1.88 for the system gain on the basis of the new, lower feedback sum.

The answer is not 3.7 K, let alone 8 K. It is just 2.1 K. That is all.
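The business-as-usual chain above, multiplied out in Python. The second product uses the exact reciprocal of 83% instead of the rounded 6/5, to show that the rounding does not change the answer at one decimal place.

```python
co2_forcing = 3.0      # W/m^2, CO2 forcing for 700 ppmv
planck = 0.31          # K per W/m^2
gain = 1.88            # system gain for the 2013 feedback sum of 1.5 W m^-2 K^-1

warming_rounded = co2_forcing * (6 / 5) * planck * gain    # text's 6/5 factor
warming_exact = co2_forcing / 0.83 * planck * gain         # exact reciprocal of 83%
print(f"{warming_rounded:.2f} K (6/5), {warming_exact:.2f} K (1/0.83)")
```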

Even this is too high to be realistic. Here is my best estimate. There will be 600 ppmv CO2 in the air by 2100, giving a CO2 forcing of 2.2 Watts per square meter. CO2 will represent 90% of all anthropogenic influences. The feedback sum will be zero. So:

2.2 Watts per square meter CO2 forcing from now to 2100

x 10/9 to allow for non-CO2 anthropogenic forcings

x 0.31 for the Planck sensitivity parameter

x 1 for the system gain.

That gives my best estimate of expected anthropogenic global warming from now to 2100: three-quarters of a Celsius degree. The end of the world may be at hand, but if it is, it won’t have anything much to do with our paltry influence on the climate.
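The same multiplication for the best-estimate case, with a check that 600 ppmv does give a CO2 forcing of about 2.2 W/m² by the 5.35 ln(C/C0) formula:

```python
import math

co2_forcing_600 = 5.35 * math.log(600 / 400)   # about 2.17 W/m^2; the text rounds to 2.2

warming_best = (
    2.2          # CO2 forcing from now to 2100, W/m^2
    * 10 / 9     # CO2 taken as 90% of anthropogenic forcing
    * 0.31       # Planck parameter, K per W/m^2
    * 1.0        # system gain with a zero feedback sum
)
print(f"CO2 forcing at 600 ppmv: {co2_forcing_600:.2f} W/m^2; warming: {warming_best:.2f} K")
```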

Your mission, gentle reader, should you choose to accept it, is to let me know in comments your own best estimate of global warming by 2100 compared with the present. The Lord Monckton Foundation will archive your predictions. Our descendants 85 years hence will be able to amuse themselves comparing them with what happened in the real world.

“thingadonta says:

June 9, 2014 at 3:40 am

….

Water vapour and associated cloud changes give negative feedback, as in the tropics (which is why they don’t get hotter than the deserts).”

Actually, Florida is somewhat warmer than similar latitudes in the Sahara Desert. Florida’s daytime temperatures are a lot less hot than the Sahara’s, but its nights are a lot less cold; the AVERAGE for Florida is somewhat higher.

Mr Cook wonders why I don’t criticize those who come up with different predictions from mine. Well, there are so many uncertainties that it wouldn’t be fair to expect everyone to take the same view. But just about everybody’s prediction was in the range -1 to +1 Celsius for 21st-century global warming. All these outcomes are plausible, but +3.7 Celsius by 2100, or 8 C by 2300 (the IPCC’s estimates) are implausible.

Mr Bolder is very kind, but in a dark age those who persevere in insisting on the truth are seldom regarded as attractive. However, the ever-widening gap between prediction and reality cannot indefinitely be ignored. Perhaps in as little as 20 or 30 years people will look back and wonder what all the fuss was about.

My estimate comes from a simple model: an oscillating rate of warming plus C × time for the extra rate of warming due to anthropogenic carbon dioxide. Human use of fossil fuel is going up exponentially, but the effect of CO2 on temperature is supposed to be logarithmic, so I chose a linear function of time.

We get this plot

http://s5.postimg.org/fco4rwz8n/prediction.jpg

when fitted to the rate data.

http://s5.postimg.org/voy6hndk7/rate_model.jpg

It predicts that in 50 years’ time another hiatus will occur, at 0.4–0.5 degrees warmer than now, lasting until 2100. The present hiatus, on this model, will last another 10–15 years.

Please give the prize to my cozy grandchildren.
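The commenter’s model is described only in words, so what follows is a hypothetical Python sketch of its form: a sinusoidal rate of warming plus a term C × t, integrated analytically to give the temperature change. The amplitude, period, phase and C below are placeholders chosen purely for illustration; the fitted values behind the linked plots are not given in the comment, and this sketch does not reproduce them.

```python
import math

def warming_rate(t, amp, period, phase, c):
    """Rate of warming (K/yr) at t years after a reference year: oscillation plus c * t."""
    return amp * math.sin(2 * math.pi * t / period + phase) + c * t

def temperature_change(t, amp, period, phase, c):
    """Temperature change (K) since t = 0: the exact integral of warming_rate from 0 to t."""
    w = 2 * math.pi / period
    oscillation = amp / w * (math.cos(phase) - math.cos(w * t + phase))
    return oscillation + 0.5 * c * t ** 2

# Hypothetical parameters for illustration only (not the commenter's fitted values)
params = dict(amp=0.015, period=60.0, phase=0.0, c=0.0001)
for year in (0, 25, 50, 75, 86):
    print(year, round(temperature_change(year, **params), 3))
```

Integrating analytically, rather than summing the rate year by year, keeps the temperature curve exactly consistent with the assumed rate model.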

Sir Christopher. At the bottom of your piece you say:

“That gives my best estimate of expected anthropogenic global warming from now to 2100: three-quarters of a Celsius degree.”

Quite clear. Except: temperature of what, estimated by whom? What about attribution (who says what made the temp rise/fall)?

http://www.ipcc.ch/publications_and_data/ar4/wg1/en/ch9s9-2-4.html

Care to clarify?

“Village Idiot” asks me to clarify my projection that Man will cause about 0.75 K global warming between now and 2100. The calculations are set out quite clearly in the head posting. They are of course subject to enormous uncertainty, but I should be prepared to bet quite a large sum that 21st-century business-as-usual warming will be closer to my estimate than to the business-as-usual 3.7 K projected by the IPCC. The differences between the IPCC’s estimate and mine are clearly set out in the head posting.

The passage in the IPCC’s fourth assessment report mentioned by “Village Idiot” is outdated. Among the many factors the IPCC has revised is its estimate of aerosol-driven negative forcing. It has greatly reduced what was always something of a fudge-factor intended to allow the modelers to make a case for high climate sensitivity. However, as the head posting again carefully explains, the implicit new central estimate of climate sensitivity in IPCC (2013) is about 2.2 K, not the 3.3 K in the previous report.

So we’re talking surface temperature or troposphere temps?

We are not talking of either surface temperatures or any particular tropospheric temperatures. We are talking of changes in surface temperatures. Read the definition of climate sensitivity in any IPCC report.

Monckton of Brenchley says:

June 10, 2014 at 11:43 am

Mr Koenders has indeed “missed something”: in the passage he quotes, I am discussing CO2-driven forcing as a fraction of total anthropogenic forcing. If methane concentration does not grow as fast as predicted (as it is not growing),

Really?

http://www.mfe.govt.nz/environmental-reporting/atmosphere/greenhouse-gases/images/ch4-baring.jpg

Methane is as much of a false alarm as ocean “acidification”.

The failed [as usual] predictions were that there would be a giant methane burp from clathrates outgassing due to accelerating global warming.

To date, exactly NO catastrophic AGW predictions have happened. Why should anyone believe the alarmist clique, when everything they predict turns out to be wrong?

Me, I prefer to not listen to those swivel-eyed preachers of doom and gloom. They are always wrong.

Monckton of Brenchley says: June 10, 2014 at 5:38 pm

> Mr Cook wonders why I don’t criticize those who come up with different predictions from mine. Well, there are so many uncertainties that it wouldn’t be fair to expect everyone to take the same view.

It wouldn’t be WISE for everyone to take the same view. I am something of a lukewarmer on the issue of warming (of course it’s warming some), but am wholly and vehemently skeptical of claims that “business as usual” must be suspended in order to deal with the supposed problem.

Business as usual, as described by Mosher above, is for the authorities, decision makers, and responsible parties of various and competing organizations to make, severally and separately, assumptions about current trends, and to make plans based on those assumptions. But there is no good reason to assume everybody must make the SAME assumptions.

We see this all over. Suppose _The Old Farmer’s Almanac_ recommends the dark of the moon as the best time to plant. Whatever basis and assumptions go into this recommendation, some will plant accordingly, some earlier, and some later. Some will be wrong. But if EVERYBODY plants at the same time, it’s a recipe for famine whenever ANY of the _OFA_’s hidden assumptions, algorithms and models turns out to be wrong.

Suppose we say NO CHILD LEFT BEHIND means all children must learn to read from a phonics-first or phonics-only program. There is good evidence to support phonics as a teaching method. But if EVERY school is REQUIRED to use the same sorts of textbooks in the same sequence, the children who, for example, have hearing impairments affecting their ability to process phonemes will, by design of the program, fall behind. The same is true now, over a decade later, with Common Core. Assuming, as the US federal Department of Education does, that ALL children MUST learn the same things in the same sequence is a recipe for failing those on a different (not necessarily earlier or later or better or worse, just different) trend line. Yet billions of dollars are committed, decade after decade, to programs that routinely fail to deliver to whole deciles and quintiles of the demographics supposedly served.

Don’t even START with me about Stalin or Mao deciding on the best way to industrialize…

“Phil.” is wrong, as usual. Methane concentration is indeed not rising as predicted, as the graph in the head posting amply demonstrates. As Mr Stealey has rightly pointed out, methane is yet another foolish scare. The IPCC admits that non-CO2 forcings are not 30% of anthropogenic forcings, as originally predicted, but just 17%, chiefly because methane concentration has not risen anything like as fast as predicted.

Chris. So when surface temperatures have risen, say, 1.5 degrees by the end of the century compared to June 2014 (compared to some running average), would you (theoretically 😉 ) concede that you had underestimated climate sensitivity, or say that you were correct and that the extra warmth came from something other than anthropogenic ghg emissions and their feedbacks?

Bear with me, please – I am going somewhere with this, and possibly have a suggestion that you may find engaging 🙂

In answer to “Village Idiot”, even if one uses the IPCC’s own methods and data there is simply no excuse for its contention that on business as usual we might see – as a central estimate, albeit flanked by enormous error-bars – anything like as much as 3.7 K of global warming by 2100. My central estimate is 0.75 K, coincidentally about the same as the (largely natural) warming of the 20th century. But my estimate too is flanked by large error bars. So, as I have said earlier in this thread, one can say with near-certainty that the 21st-century business-as-usual anthropogenic warming will be closer to 0.75 than to 3.7 K, but beyond that one would be falling into the same trap as the IPCC itself – trying to make predictions that lack a sufficient scientific underpinning of available precision.

The crowd-sourced estimate here, from some 29 participants, is that 21st-century warming will fall on the interval 0.12 [-1, 1] K. That interval of course encompasses my own estimate, which may indeed be on the high side. However, nowhere on that interval is there disaster for mankind.

Monckton of Brenchley:

At June 12, 2014 at 12:43 am you say

And my estimate is similar but I cheated by ‘stealing’ it.

I like empirical results, and empirical – n.b. not model-derived – determinations indicate climate sensitivity is less than 1.0°C for a doubling of atmospheric CO2 equivalent. This is indicated by the studies of

Idso from surface measurements

http://www.warwickhughes.com/papers/Idso_CR_1998.pdf

and Lindzen & Choi from ERBE satellite data

http://www.drroyspencer.com/Lindzen-and-Choi-GRL-2009.pdf

and Gregory from balloon radiosonde data

http://www.friendsofscience.org/assets/documents/OLR&NGF_June2011.pdf

I strongly recommend that everybody read the Idso paper: although it is ‘technical’, it is a good and interesting read which reports eight different ‘natural experiments’.

Richard

My idea.

You say yourself that your monthly RSS pause mallard is destined for a watery quietus; temps will rise (a bit/eventually).

What about a new monthly thingy? For example, something like plotting IPCC ‘pathway/s’ (probably best for emitted CO2 equivalents rather than business as usual?), running average of the three surface temperature estimates, WUWT crowd-sourced estimate, your best shot. That sort of idea. Should be able to see some sort of development in the next 15-20 years, and the chance either for you to crow, or others to watch you squirm 😉

If you feel inclined, post a notice on the Village notice board. The Villagers – and hopefully some unbelieving Outsiders – can come with their suggestions on how to set it up, just to make sure there’s transparency and no monkey business.

Village Idiot:

re your post at June 12, 2014 at 1:14 am.

My idea.

If you want to ‘do your own thing’ then do it so others can admire it or laugh at it. Until then please stop disrupting WUWT threads with assertions based on nothing such as

Richard

In answer to “Village Idiot”, I have made it plain since I first wrote publicly about global warming in 2006 that some warming is to be expected (though probably not very much). It follows that the long pause shown so clearly in the RSS satellite record is going to come to an end. The theory is clear enough: all other things being equal, adding greenhouse gases to the atmosphere will be likely to cause warming. The question is whether the amount of warming caused is likely to have serious consequences, to which the answer is No.

The significance of the pause is that it is so startling a departure from what was predicted; that it has occurred at a time when CO2 concentration has been rising strongly; and that it contributes to the overall picture of slow warming at half the near-term rate predicted by the IPCC a quarter of a century ago.

Richard Courtney is, as always, right: if you want to compile your own temperature index and get it peer-reviewed by your “village”, then stop whining about my entirely straightforward monthly temperature analyses – which are of course a standing embarrassment to those who are trying to pretend we face a “climate crisis” that is simply not present in the record – and get on and do it.

Should I take that as a ‘No’? Of course, I can understand your reticence. Better get on it! 🙂

“Village Idiot” should know by now that I do not do science by consensus, so there would be no evidential value in presenting a mere head-count as though it were science. I am already producing everything else it asks for every month. As for my own prediction, the basis for it is clearly explained in the head posting. I have deliberately simplified the math to make the argument as widely accessible as possible. And I have consciously favored the climate-extremist case by having done the calculations for the IPCC’s case on an equilibrium basis rather than on the transient-sensitivity basis, which would approximately halve the quantum of warming to be expected.

If the “Idiot” wants the calculations or graphs done on some different basis, I am happy to hear its proposals for paying me to carry out the necessary work. Otherwise, as I have suggested, it should do the work itself. However, displaying clear graphs every month is not easy. It requires updating of half a dozen temperature datasets every month, as well as displaying the data in a manner that everyone can understand, while still maintaining academic rigor. The program I wrote to draw the graphs has some 400 lines of code. The graphs are now widely circulated in samizdat form every month, for the Marxstream media are not interested in the interesting fact that there has been no global warming recently notwithstanding the quite rapid increase in CO2 concentration.

Gradually, the world is finding out that the models were and are wrong. Faced with the evidence that the models have never yet succeeded in predicting global temperature correctly, but have consistently run very hot, the “Idiot” should be reconsidering its support for the pseudo-science based on the output of those models, which have been thoroughly discredited by events.

Monckton of Brenchley says:

June 11, 2014 at 6:49 pm

“Phil.” is ~~wrong~~ correct, as usual.

As shown in the graph I linked to, CH4 continues to rise after the brief hiatus between 2000 and 2005. The graph in the head posting conveniently stops in 2011, whereas up-to-date data show continuing growth.

Methane concentration is indeed not rising as predicted, as the graph in the head posting amply demonstrates. As Mr Stealey has rightly pointed out, methane is yet another foolish scare. The IPCC admits that non-CO2 forcings are not 30% of anthropogenic forcings, as originally predicted, but just 17%, chiefly because methane concentration has not risen anything like as fast as predicted.

As the data in your own post indicate, it is ‘rising as fast as predicted’ in AR4, with a lag caused by the 5-year hiatus. Based on the Mauna Loa data (above), it has now made up for that lag and is within the envelope of the AR4 projection.