UPDATE: for those visiting here via links, see my recent letter regarding Dr. Richard Muller and BEST.

I have some quiet time this Sunday morning in my hotel room after a hectic week on the road, so it seems like a good time and place to bring up statistician William Briggs’ recent essay and to add some thoughts of my own. Briggs has taken a look at what he thinks will be going on with the Berkeley Earth Surface Temperature project (BEST). He points out the work of David Brillinger, whom I met with for about an hour during my visit. Briggs isn’t far off.

Brillinger, another affable Canadian from Toronto, with an office covered in posters to remind him of his roots, has not even a hint of the arrogance and advance certainty that we’ve seen from people like Dr. Kevin Trenberth. He’s much more like Steve McIntyre in his demeanor and approach. In fact, the entire team seems dedicated to providing an open source, fully transparent, and replicable method no matter whether their new metric shows a trend of warming, cooling, or no trend at all, which is how it should be. I’ve seen some of the methodology, and I’m pleased to say that their design handles many of the issues skeptics have raised and has done so in ways that are unique to the problem.

Mind you, these scientists at LBNL (Lawrence Berkeley National Laboratory) are used to working with huge particle accelerator datasets to find minute signals in the midst of seas of noise. Another person on the team, Dr. Robert Jacobsen, is an expert in analysis of large data sets. His expertise in managing reams of noisy data is being applied to the problem of the very noisy and very sporadic station data. The approaches that I’ve seen during my visit give me far more confidence than the “homogenization solves all” claims from NOAA and NASA GISS, and I expect that the BEST result will be closer to the ground truth than anything we’ve seen.

But as the famous saying goes, “there’s more than one way to skin a cat”. Different methods yield different results. In science, sometimes methods are tried, published, and then discarded when superior methods become known and accepted. I think, based on what I’ve seen, that BEST has a superior method. Of course that is just my opinion, with all of its baggage; it remains to be seen how the rest of the scientific community will react when they publish.

In the meantime, never mind the yipping from climate chihuahuas like Joe Romm over at Climate Progress, who are trying to destroy the credibility of the project before it even produces a result (hmmm, where have we seen that before?). It is simply the modus operandi of the fearful, who don’t want anything to compete with the “certainty” of climate change they have been pushing courtesy of NOAA and GISS results.

One thing Romm won’t tell you, but I will, is that one of the team members is a serious AGW proponent, one who wields very great influence because his work has been seen by millions. Yet many people don’t know of him, so I’ll introduce him by his work.

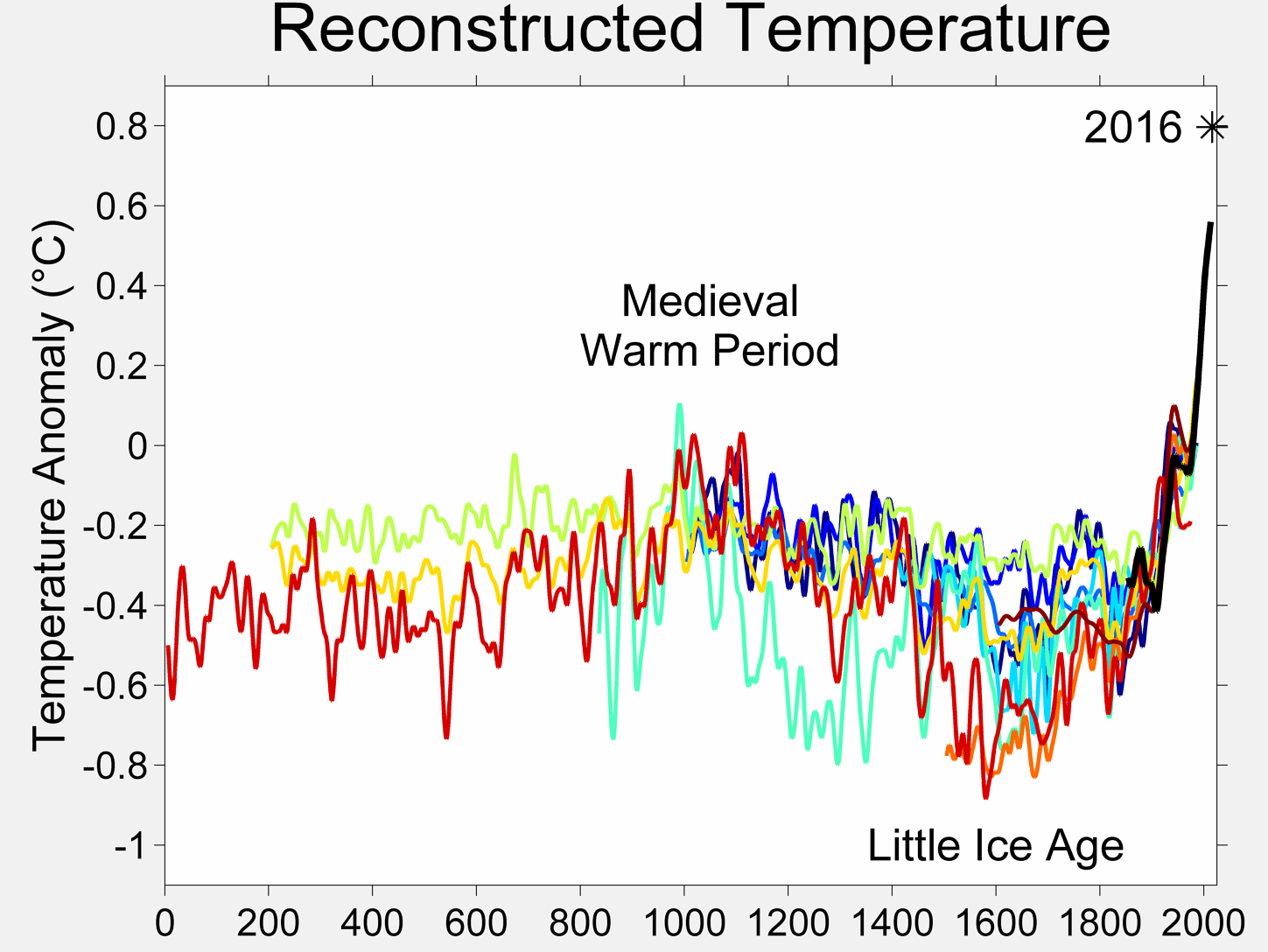

We’ve all seen this:

It’s one of the many works of global warming art that pervade Wikipedia. In the description page for this graph we have this:

The original version of this figure was prepared by Robert A. Rohde from publicly available data, and is incorporated into the Global Warming Art project.

And who is the lead scientist for BEST? One and the same. Now contrast Rohde with Dr. Muller who has gone on record as saying that he disagrees with some of the methods seen in previous science related to the issue. We have what some would call a “warmist” and a “skeptic” both leading a project. When has that ever happened in Climate Science?

Other than making a lot of graphical art that represents the data at hand, Rohde hasn’t been very outspoken, which is why few people have heard of him. I met with him and I can say that Mann, Hansen, Jones, or Trenberth he isn’t. What struck me most about Rohde, besides his quiet demeanor, was the fact that it was he who came up with a method to deal with one of the greatest problems in the surface temperature record that skeptics have been discussing. His method, which I’ve been given in confidence and agreed not to discuss, gave me one of those “Gee whiz, why didn’t I think of that?” moments. So, the fact that he was willing to look at the problem fresh, and come up with a solution that speaks to skeptical concerns, gives me greater confidence that he isn’t just another Hansen and Jones re-run.

But here’s the thing: I have neither certainty nor expectations about the results. Like them, I have no idea whether it will show more warming, about the same, no change, or cooling in the land surface temperature record they are analyzing. Neither do they, as they have not run the full data set, only small test runs on certain areas to evaluate the code. However, I can say that having examined the method, on the surface it seems to be a novel approach that handles many of the issues that have been raised.

As a reflection of my increased confidence, I have provided them with my surfacestations.org dataset so that they can run comparisons against their data. The only caveat is that they won’t release my data publicly until our upcoming paper and the supplemental info (SI) have been published. Unlike NCDC and Menne et al, they respect my right to first publication of my own data and have agreed.

And, I’m prepared to accept whatever result they produce, even if it proves my premise wrong. I’m taking this bold step because the method has promise. So let’s not pay attention to the little yippers who want to tear it down before they even see the results. I haven’t seen the global result, nobody has, not even the home team, but the method isn’t the madness that we’ve seen from NOAA, NCDC, GISS, and CRU, and there aren’t any monetary strings attached to the result that I can tell. If the project were terminated tomorrow, nobody would lose a job, no large government programs would get shut down, and no dependent programs would crash either. That lack of strings attached to funding, plus the broad mix of people involved, especially those who have previous experience in handling large data sets, gives me greater confidence in the result being closer to a bona fide ground truth than anything we’ve seen yet. Dr. Fred Singer also gives a tentative endorsement of the methods.

My gut feeling? The possibility that we may get the elusive “grand unified temperature” for the planet is higher than ever before. Let’s give it a chance.

I’ve already said way too much, but it was finally a moment of peace where I could put my thoughts about BEST to print. Climate related website owners, I give you carte blanche to repost this.

I’ll let William Briggs have a say now, excerpts from his article:

=============================================================

Word is going round that Richard Muller is leading a group of physicists, statisticians, and climatologists to re-estimate the yearly global average temperature, from which we can say such things like this year was warmer than last but not warmer than three years ago. Muller’s project is a good idea, and his named team are certainly up to it.

The statistician on Muller’s team is David Brillinger, an expert in time series, which is just the right genre to attack the global-temperature-average problem. Dr Brillinger certainly knows what I am about to show, but many of the climatologists who have used statistics before do not. It is for their benefit that I present this brief primer on how not to display the eventual estimate. I only want to make one major point here: that the common statistical methods produce estimates that are too certain.

…

We are much more certain of where the parameter lies: the peak is in about the same spot, but the variability is much smaller. Obviously, if we were to continue increasing the number of stations the uncertainty in the parameter would disappear. That is, we would have a picture which looked like a spike over the true value (here 0.3). We could then confidently announce to the world that we know the parameter which estimates global average temperature with near certainty.

Are we done? Not hardly.

Read the full article here
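The point in the excerpt above, that uncertainty in the parameter shrinks toward a spike as stations are added, can be illustrated with a small simulation. This is only a sketch under stated assumptions: the true value of 0.3 comes from the excerpt, while the station-to-station spread of 1.0, the Gaussian noise, and the function name `simulate_station_mean` are made up for illustration and are not Briggs’ or BEST’s actual method.

```python
import math
import random
import statistics


def simulate_station_mean(n_stations, true_value=0.3, station_sd=1.0, seed=0):
    """Draw hypothetical station readings and summarize them.

    Returns (estimated mean, standard error of that mean, spread of stations).
    The Gaussian noise model and station_sd are illustrative assumptions.
    """
    rng = random.Random(seed)
    readings = [rng.gauss(true_value, station_sd) for _ in range(n_stations)]
    mean = statistics.fmean(readings)
    sd = statistics.stdev(readings)       # spread of individual stations
    sem = sd / math.sqrt(n_stations)      # uncertainty in the estimated mean
    return mean, sem, sd


for n in (20, 200, 2000, 20000):
    mean, sem, sd = simulate_station_mean(n)
    print(f"n={n:6d}  mean={mean:+.3f}  std.err={sem:.4f}  station sd={sd:.3f}")
```

The standard error of the estimated parameter falls roughly as one over the square root of the number of stations, while the spread of the individual station readings stays about where it was. Near certainty about the parameter is therefore not the same thing as near certainty about any actual temperature.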

Observation #1 – We have statements and/or inferences from various sources that the BEST Project’s data, methodology, code and all related documents will be provided without needing to be requested. Also, we have statements and/or inferences from various sources that those will be available to everyone, not just restricted to the academic/institutional science community. We shall see if those inferences materialize. Fair enough. That is the critical go-no-go check point of an open and transparent step in the science of GST time series analysis. NOTE: I do not see any discussion of whether those will be behind a paywall or not, but given the funding it might very well be behind a paywall.

Observation #2 – If the BEST Project is to be objectively independent from the past GST science, then its data sources must be the original raw data, not processed HADCRU, NOAA, GISS nor any other processed datasets. I do not recall that this has been stated and/or inferred about the BEST project. That is another of the critical go-no-go checkpoints of an open and transparent step in the science of GST time series analysis. It would be disappointing if the BEST project stands on the shoulders of previously processed data rather than on original raw data. Time will tell.

Observation #3 – Premises that have formed the fundamental basis of the BEST Project have not, to my knowledge, been articulated even though the project has been in development and implementation for up to 1 year already. I mean, for example, premise statements like (my words), “We are fundamentally basing our approach on X, Y and Z.” What kind of X, Y and Z would I like to see? First, I would like to see a statement of BEST Project statistical premises. Second, I would like to see a statement of their quality assurance approach, especially their internal processes for bias auditing. Third, I would like to see a statement of the bounding conditions of their study, the known and unknown limits that they assume as a starting point. Fourth, I would like to see a statement of their context to the rest of the climate science body of knowledge. Without such premise statements, we just do not have anything to base our expectations of the result of the BEST Project on. Everything stated and/or inferred so far publicly is fluffy like a cuddly warm kitten.

Observation #4 – Could this BEST Project be an “Et tu, Brute?” moment in GST climate science at these independent science blogs or a “Berlin, 9 November 1989” moment at these climate science blogs? Time will tell us which kind of moment it is, but the amount of openness with the climate blogs by the BEST project so far during its development and implementation phases does send up cautionary signals in my mind. The BEST Project has a right to its privacy during development and implementation. It has also been free to be much more open during its development and implementation phases. They have made a choice so far; it was a choice they had a right to make. My question is why they have chosen to restrict openness on the climate science blogs so far.

I deeply appreciate that WUWT has provided this forum to discuss climate science past, present and with expectations of the future.

John

I too have been waiting for what you find in that paper. It would make an interesting comparison with the result of Menne et al 2010 paper, which used only half of your surface stations data (without your permission) and presumably a different methodology to find that UHI effect has been properly accounted for in US temperature sets.

One can only hope Dr Matthew J Menne doesn’t turn out to be one of the peers reviewing your paper, Anthony. That would be insane.

Logan says at March 6, 2011 at 9:11 am

“OK, sounds great. But what about the ARGO ocean buoy data, which is not often mentioned?”

Just where is the Argo data?

Why is it not available every month like Dr. Spencer’s satellite data?

I keep asking, but nobody replies.

Surely somebody knows.

Where is the Argo data?

polistra says in part, on March 6, 2011 at 12:11 pm

“If you’re looking for long-term GLOBAL trends, they must show up in a consistent and well-calibrated record of two or three consistently rural places. If nothing else, simple data gives you a much better chance of pinning down the contaminating factors.”

I agree and have been trying to say just that for some time.

That’s why I have been looking at the longish record of certain Australian locations in some detail.

AusieDan – have you looked at

http://www.argo.ucsd.edu/Argo_data_and.html

(Argo data and how to get it)

or

http://www.pmel.noaa.gov/tao/elnino/drifting.html

(NOAA drifting buoy data – has a link to Argo Home Page amongst others)

I think it all comes down to attitude by those involved. That attitude is one of wishing to be absolutely certain that you are as confident as you can be in the results, and of humility, because you’ve had the experience that sometimes no matter how confident you are of the results you can still get it wrong.

Now if you work for an institution looking for quarks, Higgs bosons, or the “god particle” or whatever your press office has spun the latest research as, you are probably used to feeling humble as the data doesn’t quite show what you thought it would, let alone what it was spun as by the Press Office.

But if you are a climate “scientist” with a pretty third rate degree, in a subject where everyone else got there because there was too much competition in real science, and your only “research” has been to state that temperature got warmer and predict it will get warmer in 100 years, and you don’t ever make testable predictions, and “peer review” means a buddy using a spell checker … then basically there’s no way you can ever be wrong. In climate “science” … they’ve never had that humbling experience of real scientists of finding their cherished theory was a load of tripe and then learning to be a lot more careful the next time.

And more than that, it’s the reputation of the whole institution that also counts. Sometimes by sheer damned luck some scientists never get it wrong. But in a big institution like Berkeley there’s enough of them that sooner or later someone will drop an enormous clanger that affects everyone. So, they know that you can’t let rogue groups just make up the rules as they go along, and so the whole institution just can’t afford to come up with a temperature series that some later work proves to be woefully biased. UEA on the other hand … reputation? What reputation?

‘A wow moment, why didn’t I think of that’

you have me chomping at the bit now Anthony. I love things like this, new ways of looking at stuff

EO

“there’s more than one way to skin a cat”.

Forgive the pedantry of a Charles Kingsley lover, but that famous ‘quote’ is

“There are more ways of killing a cat than choking it with cream”

This has the appearance of being the sort of sensible approach that we have long needed.

I look forward to reading the results of BEST. You can’t judge it before you see it.

eadler says:

March 6, 2011 at 4:33 pm

Unfortunately the ocean heat is quite difficult to measure. A lot of corrections have been needed recently. Sampling the top 700 m of ocean, worldwide, is not a piece of cake.

In fact, if you look at the surface temperature record and ocean heat graph, they have shown fairly parallel increases:

http://bobtisdale.blogspot.com/2009/10/nodc-corrections-to-ocean-heat-content.html

http://data.giss.nasa.gov/gistemp/graphs/Fig.A2.gif

So because the right metric is difficult – continue using the wrong one?

I don’t know, choosing method before analysing data, that is very unorthodox climate research.

What if it brings wrong results? Do we have enough tricks to correct them?

/sarc off/

Very interesting, not knowing is much worse than guessing wrong.

I am excited to see the results. Perhaps we finally have some “real” science going on? Would be a breath of fresh air. I do however reserve a bit of skepticism, but will maintain my optimism until all the results are compiled and scrutinized.

Thanks for the great post Anthony!

To Dave,

This statement is false. While I would agree on your other points, this one in particular bothers me as I have seen and read so much literature that specifically contradicts this statement. I no longer believe that CO2, or any other “greenhouse” gas can do as you claim. I no longer believe there is such a thing as a “greenhouse” gas, as a gas cannot A) trap anything, or B) back-radiate (which would be required).

Can a “greenhouse” gas “slow” cooling processes? .. The jury is still out on this one, but I would suggest that it may, but the lapse rate is extremely small, perhaps beyond the point of reasonable measurement.

To boldly suggest as you do is false. There is no evidence to support your point, either empirically or experimentally. This point also happens to be the very linchpin of the entire AGW argument, for which, again, there is absolutely no empirical evidence.

We and the world should not accept a “fait accompli” from BEST. There are issues that must be discussed up front. For example, whose historical data will be used? At the time of Climategate, the canonical version of historical data back to 1850 had been assembled by Phil Jones and blessed by all Warmista. Is that still the case? Are we taking for granted the accuracy of Phil Jones’ (non-existent) data? If we are, then I have no hope that BEST can produce something useful.

I have downloaded Roy Spencer’s satellite data and graphed it against the NCDC global land and ocean index.

Visually these agree very closely.

Correlation about 0.8 which means that about 64% of one, “explains” or coincides with the other, not bad for chaotic climate data.

To my mind that means the NCDC is not badly contaminated by UHI nor any of the shortcomings that we tend to concentrate upon.

Now the NCDC historic data keeps changing, which annoys me greatly.

Nevertheless it shows that since 1880, the temperature has risen by 0.6 degrees Celsius per 100 years.

Overlaying this strong, yet gentle linear trend, is the well known 60 year up and down cycle.

There is no visual appearance of any recent acceleration in the long term trend, which you would expect if it were being driven by the increasing human CO2 emissions.

So I would not be surprised if the Best index more or less validates the existing ones and just corrects their more shaky work, without changing the general trend.

Also I am still expecting that the next 30 years will be cooler than the late 1990’s, but that the gentle underlying trend will thereafter take the temperature back on up.

None of that speaks to the question of what is causing the rise to occur.

Several people here keep talking about measuring the heat balance rather than the temperature.

Roy Spencer has already done work on that.

While it is outside my area, it is probably where we should search for the truth.

The wild card in all this is the question of the quiet sun and its impact, if any, on the climate.

I have an open mind on that.

Interesting times.

Charlie A says: “With many (most) distributions of data, compliance with Nyquist sampling theorem is NOT required. ”

Charlie – you seem to have fallen straight into the trap of thinking that this is a problem of statistical sampling. I was trying to highlight the fact that it’s not.

(Even if it was just that, the assumption of convergence of statistical properties does not hold when a signal is aliased.)

If I am designing a digital control system, I cannot propose a control methodology based on statistical aggregates of measured variables. I don’t care what the average or standard deviation of a controlled variable is – I need to know where it is NOW in order to determine what control inputs are required to achieve a desired outcome.

And then I need to know again where it has got to within a determined interval in order to review the control inputs. I need this at an adequate frequency to be extremely confident that the sampled measured variable is not aliased.

That means it is the detailed pattern of the signal (in time, or perhaps in space) that must be determined, not its statistical properties.

That’s when we have the benefit of designing a sampled data system at the outset – the first task is to understand the dynamical properties of the controlled variable(s) to ensure the sampling scheme is adequate for the purpose.

The BEST project doesn’t have the luxury of designing a sampled data scheme at the outset to measure the PATTERN of warming in recent times. Like others, they will need to use whatever data is available to them. So the problem needs to be re-phrased.

There is still a need to determine which sampling scheme (in space and time) is adequate for the purpose of measuring a PATTERN without risk of aliasing.

The project still needs to determine the basic criteria for sampling the real environment for their purpose. It will then need to consider whether there are periods when the available data meets those criteria, and if there are periods when the criteria are not met.

If the available measurements do not meet the criteria, the measured trend will not be reliable as it may be subject to aliasing.
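The aliasing risk described above can be shown with a toy signal. The sketch below is purely illustrative and rests on assumptions that are not in the comment: the signal is a pure 24-hour sinusoid with no trend at all, and the two sampling intervals (6 hours and 25 hours) and the helper names are hypothetical. It says nothing about how BEST or anyone else actually samples station data.

```python
import math


def sample_cycle(interval_hours, n_samples, period_hours=24.0):
    """Sample a trendless sinusoid of the given period at a fixed interval."""
    times = [k * interval_hours for k in range(n_samples)]
    values = [math.sin(2 * math.pi * t / period_hours) for t in times]
    return times, values


def ols_slope(xs, ys):
    """Slope of the ordinary least-squares line through the points (x, y)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den


# Four readings per cycle (more than the two per cycle Nyquist requires), 7 days.
dense_t, dense_v = sample_cycle(6.0, 28)
# One reading every 25 hours (less than one per cycle), about 7 days.
sparse_t, sparse_v = sample_cycle(25.0, 7)

dense_slope = ols_slope(dense_t, dense_v) * 24    # fitted "trend" per day
sparse_slope = ols_slope(sparse_t, sparse_v) * 24

print(f"adequately sampled: fitted trend {dense_slope:+.3f} per day")
print(f"undersampled:       fitted trend {sparse_slope:+.3f} per day")
print("undersampled readings:", [round(v, 2) for v in sparse_v])
```

Sampled every 25 hours, the daily cycle masquerades as a slow oscillation with a period of 25 days, and over the first week the readings climb steadily even though the underlying signal has no trend. That is the sense in which a trend measured from an inadequate sampling scheme cannot be trusted.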

It is good to see that there is an expert on sampled data systems on the team (described as an expert in time series – but the same should apply to the spatial issues). I will be very interested to see how they have handled the above issues.

With regard to whether the climate is a stationary process, my point is more related to whether an adequate sampling regime at one point in time is also adequate at another.

sHx says:

March 6, 2011 at 10:41 pm

REPLY: It is in late stage peer review, I’ll announce more when I get the word from the journal. I suspect there will be another round of comments we have to deal with, but we may get lucky. Bear in mind all the trouble Jeff Condon and Ryan O’Donnell had with hostile reviewers getting their work out. – Anthony

I too have been waiting for what you find in that paper. It would make an interesting comparison with the result of Menne et al 2010 paper, which used only half of your surface stations data (without your permission) and presumably a different methodology to find that UHI effect has been properly accounted for in US temperature sets.

One can only hope Dr Matthew J Menne doesn’t turn out to be one of the peers reviewing your paper, Anthony. That would be insane.

One half of the surface stations is a pretty good sample considering the number. Using all of them probably wouldn’t change the conclusions much given the methodology that was used. This is not a judgment on the way Anthony was treated when they used his data, it is a statistical judgment.

It would make sense for Dr Menne to be a reviewer of the paper. It would improve the quality, assuming the editor is fair minded. In the case of O’Donnell, the editor ended up publishing an improved version.

If one attacks the institutions of science in the blogosphere, and then attempts to publish something, one should expect to get worked over a little. Scientists are only human. Turnabout is fair play.

Regarding station data. I am unimpressed by the history of these data plots. Once again, the simple seed plot works as a comparison. Let’s say, in our effort to develop a grand, all weather wheat seed, we scattered pots of seed round the world, paying little attention to their locations as a variable. Over time, some of our grad students left school, thus letting their seed plots go to hell in a handbasket. Others, thinking it would be more convenient to move their plot closer to the BBQ, did so, not bothering to record the move, or let us in on their plan before the move was completed. Along the way, all kinds of other things happened to these pots of seed. In the end, we had a vastly different set of variables, compared to when we started. Oh well. Let’s clean up what we have and let’s package up the product anyway, and say this is wheat that can withstand all temperatures, all altitudes, all seasons, etc. Would you buy futures in this wheat?

Wow Romm spouts a lot of nonsense in a very shrill and arrogant fashion.

Curse you Anthony for sending me back there.

I’m not holding my breath.

Roger Pielke, Sr has papers on inherent night-time low biases that occur even at relatively pristine, rural sites. Because of these biases, he’s recommended that trends be determined from high temperatures only, simply disregarding the min temps. It would be very useful if the BEST team determined additional trends from just daily high temps.

RP Sr should be included in this study (if he isn’t already). At least he should be involved in any review process.

eadler (March 6, 2011 at 4:33 pm): You provided a link to my post on the revised monthly OHC data and a link to an annual GISTEMP LOTI curve and you somehow call them similar. Have you actually scaled one and compared them? Do you know if the two curves are in fact similar, other than having positive trends?

In the meantime, never mind the yipping from climate chihuahuas like Joe Romm over at Climate Progress who are trying to destroy the credibility of the project before it even produces a result (hmmm, where have we seen that before?) , it is simply the modus operandi of the fearful, who don’t want anything to compete with the “certainty” of climate change they have been pushing courtesy NOAA and GISS results.

One has to wonder: why, exactly, are they so afraid? Their position on this is definitely NOT the position of one who has confidence in his own work.

John Whitman says:

March 6, 2011 at 10:38 pm

I’m glad that you found the time to type up all your observations of concern. Let me be the first to second them. Indeed, if BEST accepts the time series of annual averages as presented by current versions of GHCN and USHCN as bona fide data, then they are doomed to repeat the historical distortions. No matter how sound their method(s) of mathematical estimation may be in principle, in practice it is the quality and the spatial distribution of the station data that ultimately limits the reliability. Unfortunately, there is no one on the BEST team with any practical experience in handling the peculiar problems of sparsely sampled geophysical data, even without the urban-biased siting of long-term land stations. Without any knowledge of what a high-quality temperature record sampled every second reveals in its power spectrum, I’m not even sure that such recondite issues as aliasing of harmonics of the diurnal cycle into the lowest frequencies and the difference between true daily averages and mid-range values obtained from Tmax and Tmin will be given their proper due.
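The difference between a true daily average and the mid-range value obtained from Tmax and Tmin is easy to see on an asymmetric daily curve. The following is a minimal sketch, not real station data: the temperature curve (a 15-degree mean, a 5-degree daily cycle and a 2-degree second harmonic) and the function name `toy_diurnal_temperature` are assumptions chosen only to make the curve lopsided.

```python
import math


def toy_diurnal_temperature(hour):
    """Hypothetical temperature (deg C) at a given hour of the day.

    The second harmonic makes the warm peak sharper than the cool trough;
    the shape is illustrative, not a model of any real site.
    """
    angle = 2 * math.pi * hour / 24
    return 15.0 + 5.0 * math.cos(angle) + 2.0 * math.cos(2 * angle)


# One reading per minute over a full day.
minutes_per_day = 24 * 60
readings = [toy_diurnal_temperature(m / 60) for m in range(minutes_per_day)]

true_daily_mean = sum(readings) / len(readings)
mid_range = (max(readings) + min(readings)) / 2

print(f"true daily mean : {true_daily_mean:.2f}")
print(f"(Tmax + Tmin)/2 : {mid_range:.2f}")
print(f"difference      : {mid_range - true_daily_mean:+.2f}")
```

On this made-up curve the mid-range value sits well over a degree above the true daily mean. The size and sign of the gap depend entirely on the shape of the daily cycle, so anything that changes that shape over the years could show up in a mid-range record without any change in the true average.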

AusieDan says:

March 7, 2011 at 5:08 am

“Correlation about 0.8 which means that about 64% of one, “explains” or coincides with the other, not bad for chaotic climate data.

To my mind that means the NCDC is not badly contaminated by UHI nor any of the shortcomings that we tend to concentrate upon.”

The cited zero-lag correlation is largely the product of coherent sub-decadal variations over a ~30-yr interval. “Trends” are the product of multidecadal, quasi-centennial and even longer irregular oscillations. It is precisely at these low frequencies that coherence between intensely urban and provincial town stations most often breaks down in very long station records. The concentration of attention upon fitted linear trends, rather than upon the cross-spectral disparity, is where the mistake is made. You can fit a linear trend to any bounded set of data. That does not mean that one is inherent in the underlying physical process.
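Both halves of this exchange can be checked on synthetic numbers: a correlation of 0.8 does square to 0.64, and a high zero-lag correlation can coexist with quite different trends. The sketch below is a toy under loud assumptions: the two series are invented, they share the same month-to-month wiggles (standard deviation 0.3), and one is given an extra trend of 0.15 degrees per decade. None of it is the NCDC or satellite data discussed above.

```python
import math
import random


def pearson_r(xs, ys):
    """Zero-lag Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    syy = sum((y - y_mean) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def ols_slope(ts, ys):
    """Slope of the ordinary least-squares line of ys against ts."""
    n = len(ts)
    t_mean = sum(ts) / n
    y_mean = sum(ys) / n
    num = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys))
    den = sum((t - t_mean) ** 2 for t in ts)
    return num / den


rng = random.Random(42)
months = 360                                  # a 30-year interval
years = [m / 12 for m in range(months)]
shared = [rng.gauss(0.0, 0.3) for _ in range(months)]   # common short-term wiggles

series_a = list(shared)                                   # no added trend
series_b = [s + 0.015 * t for s, t in zip(shared, years)]  # +0.15 deg per decade

r = pearson_r(series_a, series_b)
r_squared = r * r
slope_a = ols_slope(years, series_a) * 10     # degrees per decade
slope_b = ols_slope(years, series_b) * 10

print(f"r = {r:.2f}, r squared = {r_squared:.2f}")
print(f"trend A = {slope_a:+.3f} per decade, trend B = {slope_b:+.3f} per decade")
```

The shared short-term variation keeps the correlation high even though the two fitted trends differ by the full 0.15 degrees per decade that was added to one of them. A high correlation alone therefore cannot show that two records agree on the long-term trend.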

beng says:

March 7, 2011 at 6:51 am

I’m not holding my breath.

Roger Pielke, Sr has papers on inherent night-time low biases that occur even at relatively pristine, rural sites. Because of these biases, he’s recommended that trends be determined from high temperatures only, simply disregarding the min temps. It would be very useful if the BEST team determined additional trends from just daily high temps.

RP Sr should be included in this study (if he isn’t already). At least he should be involved in any review process.

Beng,

The fact is that AGW implies a larger increase in minimum night-time temperatures than in daytime temperatures, because at night the downwelling radiation is the only factor sending energy to the earth’s surface, since the sun is absent. In fact, 2/3 of the increase in average temperature observed recently is because of the increase in the night-time minimum temperature.

If you desire to deny that AGW exists, then clearly the best thing to do is to ignore the increase in the night-time minimum temperature.