Jeff Id emailed me today to ask if I wanted to post this, with the caveat “it’s very technical, but I think you’ll like it”. Indeed I do, because it represents a significant step forward in the puzzle that is the Steig et al. paper published in Nature this year (Nature, Jan 22, 2009), which claims to have reversed the previously accepted idea that Antarctica is cooling. From the “consensus” point of view, it is very important for “the Team” to make Antarctica start warming. But then there’s that pesky problem of all that above-normal ice in Antarctica. Plus, there are other problems, such as buried weather stations, which tend to read warmer when covered with snow. And the majority of the weather stations (and thus data points) are on the Antarctic Peninsula, which weights the results. The Antarctic Peninsula could even be classified under a different climate zone, given its separation from the mainland and strong maritime influence.

A central point behind all this is that Steig has so far refused requests to provide all of the code needed to fully replicate his work in MATLAB and RegEM. So without the code, replication would be difficult, and without replication, there could be no significant challenge to the validity of the Steig et al. paper.

Steig’s claim that there has been “published code” is only partially true, and what has been published by him is only akin to a set of spark plugs and a manual on using a spark plug wrench when given the task of rebuilding an entire V-8 engine.

In a previous Air Vent post, Jeff C points out the percentage of code provided by Steig:

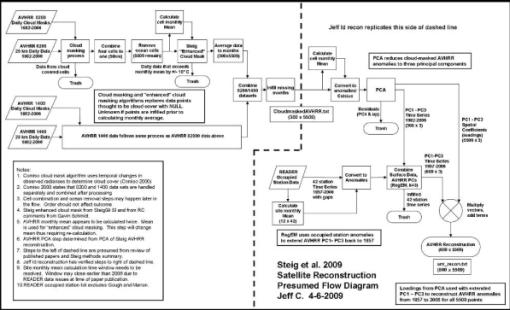

“Here is an excellent flow chart done by JeffC on the methods used in the satellite reconstruction. If you see the little rectangle which says RegEM at the bottom right of the screen, that’s the part of the code which was released, the thousands of lines I and others have written for the rest of the little blocks had to be guessed at, some of it still isn’t figured out yet.”

With that, I give you Jeff and Ryan’s post below. – Anthony

Posted by Jeff Id on May 20, 2009

I was going to hold off on this post because Dr. Weinstein’s post is getting a lot of attention right now (it has been picked up on several blogs and even translated into different languages), but this is too good not to post.

Ryan has done something amazing here, no joking. He’s recalibrated the satellite data used in Steig’s Antarctic paper, correcting offsets and trends, determined a reasonable number of PCs for the reconstruction, and actually calculated a reasonable trend for the Antarctic with proper cooling and warming distributions. He basically fixed Steig et al. by addressing the very concern I had: that AVHRR vs surface station temperature (SST) trends and AVHRR station vs SST correlation were not well related in the Steig paper.

Not only that, he demonstrated with a substantial blow the ‘robustness’ of the Steig/Mann method at the same time.

If you’ve followed this discussion whatsoever you’ve got to read this post.

RegEM for this post was originally ported to R by Steve McIntyre. Some of the versions used are the truncated-PC variant by Steve M, along with code modified by Ryan.

Ryan O – Guest post on the Air Vent

I’m certain that all of the discussion about the Steig paper will eventually become stale unless we begin drawing some concrete conclusions. Does the Steig reconstruction accurately (or even semi-accurately) reflect the 50-year temperature history of Antarctica?

Probably not – and this time, I would like to present proof.

I: SATELLITE CALIBRATION

As some of you may recall, one of the things I had been working on for a while was attempting to properly calibrate the AVHRR data to the ground data. In doing so, I noted some major problems with NOAA-11 and NOAA-14. I also noted a minor linear decay of NOAA-7, while NOAA-9 just had a simple offset.

But before I was willing to say that there were actually real problems with how Comiso strung the satellites together, I wanted to verify that there was published literature that confirmed the issues I had noted. Some references:

(NOAA-11)

i1520-0469-59-3-262.pdf

(Drift)

(Ground/Satellite Temperature Comparisons)

p26_cihlar_rse60.pdf

The references generally confirmed what I had noted by comparing the satellite data to the ground station data: NOAA-7 had a temperature decrease with time, NOAA-9 was fairly linear, and NOAA-11 had a major unexplained offset in 1993.

Let us see what this means in terms of differences in trends.

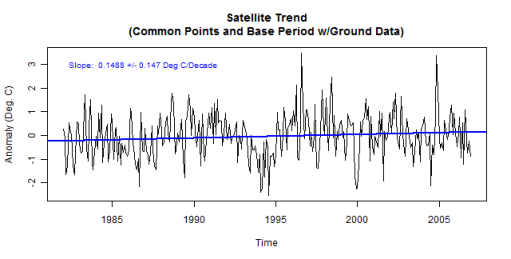

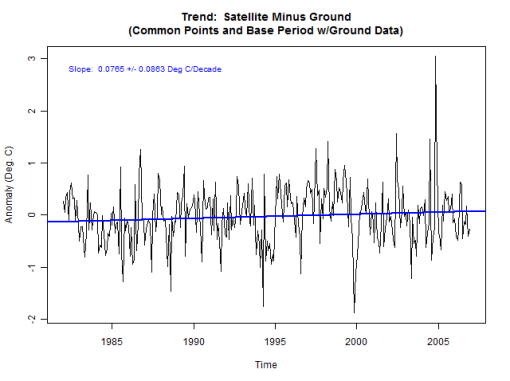

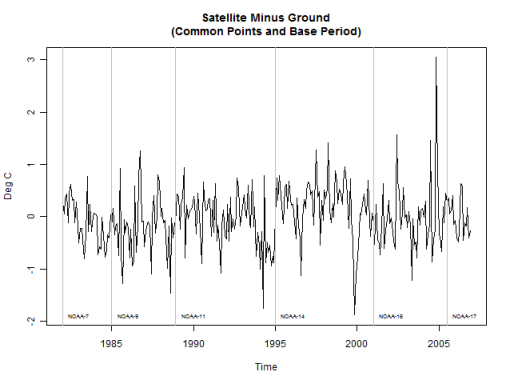

The satellite trend (using only common points between the AVHRR data and the ground data) is double that of the ground trend. While zero is still within the 95% confidence intervals, remember that there are 6 different satellites. So even though the confidence intervals overlap zero, the individual offsets may not.

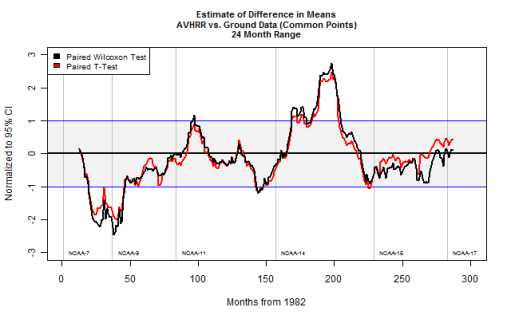

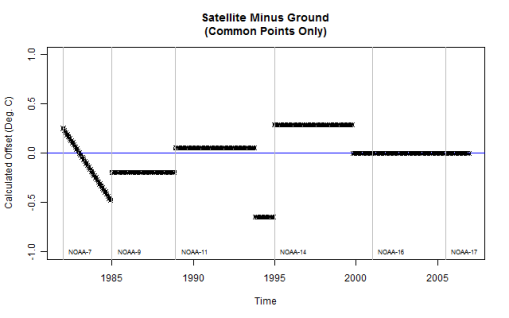

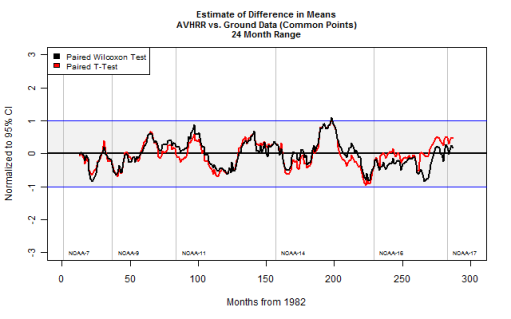

In order to check the individual offsets, I performed running Wilcoxon and t-tests on the difference between the satellites and ground data using a +/-12 month range. Each point is normalized to the 95% confidence interval. If any point exceeds +/- 1.0, then there is a statistically significant difference between the two data sets.

Note that there are two distinct peaks well beyond the confidence intervals and that both lines spend much greater than 5% of the time outside the limits. There is, without a doubt, a statistically significant difference between the satellite data and the ground data.

As a sidebar, the Wilcoxon test is a non-parametric test. It does not require correction for autocorrelation of the residuals when calculating confidence intervals. The fact that it differs from the t-test results indicates that the residuals are not normally distributed and/or the residuals are not free from correlation. This is why it is important to correct for autocorrelation when using tests that rely on assumptions of normality and uncorrelated residuals. Alternatively, you could simply use non-parametric tests, and though they often have less statistical power, I’ve found the Wilcoxon test to be pretty good for most temperature analyses.
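
The running-window check described above can be sketched roughly as follows. This is not Ryan's code, only a minimal illustration of the t-test half of the idea. It assumes a monthly series of satellite-minus-ground differences, uses a normal approximation in place of the exact t critical value, and applies a common lag-1 (AR(1)) effective-sample-size adjustment, which is an assumption here rather than something stated in the post. The function and parameter names are hypothetical, and the Wilcoxon version is omitted.

```python
import math
from statistics import NormalDist, mean


def lag1_autocorr(x):
    """Lag-1 autocorrelation of a sequence (0.0 if the variance is zero)."""
    m = mean(x)
    denom = sum((v - m) ** 2 for v in x)
    if denom == 0:
        return 0.0
    num = sum((x[i] - m) * (x[i + 1] - m) for i in range(len(x) - 1))
    return num / denom


def running_normalized_mean(diff, half_window=12, level=0.95, ar1_correct=True):
    """Window mean divided by the half-width of its confidence interval.

    Values beyond +/- 1.0 indicate a window whose mean difference is
    distinguishable from zero at the chosen level. Points too close to
    either end of the series to fill a full window are returned as None.
    """
    # Assumption: normal quantile used as a stand-in for the t critical value.
    z = NormalDist().inv_cdf(0.5 + level / 2)
    out = []
    for i in range(len(diff)):
        lo, hi = i - half_window, i + half_window + 1
        if lo < 0 or hi > len(diff):
            out.append(None)
            continue
        w = diff[lo:hi]
        n = len(w)
        m = mean(w)
        var = sum((v - m) ** 2 for v in w) / (n - 1)
        n_eff = n
        if ar1_correct:
            # Shrink the sample size for positive autocorrelation only.
            r1 = max(0.0, min(lag1_autocorr(w), 0.99))
            n_eff = n * (1 - r1) / (1 + r1)
        half_width = z * math.sqrt(var / n_eff)
        out.append(m / half_width if half_width > 0 else None)
    return out
```

With positively autocorrelated residuals the effective sample size drops, the interval widens, and fewer windows cross the +/- 1.0 line, which is the correction the sidebar is talking about.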

Here’s what the difference plot looks like with the satellite periods shown:

The downward trend during NOAA-7 is apparent, as is the strange drop in NOAA-11. NOAA-14 is visibly too high, and NOAA-16 and -17 display some strange upward spikes. Overall, though, NOAA-16 and -17 do not show a statistically significant difference from the ground data, so no correction was applied to them.

After having confirmed that other researchers had noted similar issues, I felt comfortable in performing a calibration of the AVHRR data to the ground data. The calculated offsets and the resulting Wilcoxon and t-test plot are next:

To make sure that I did not “over-modify” the data, I ran a Steig (3 PC, regpar=3, 42 ground stations) reconstruction. The resulting trend was 0.1079 deg C/decade and the trend maps looked nearly identical to the Steig reconstructions. Therefore, the satellite offsets – while they do produce a greater trend when not corrected – do not seem to have a major impact on the Steig result. This should not be surprising, as most of the temperature rise in Antarctica occurs between 1957 and 1970.

II: PCA

One of the items on which we’ve spent a lot of time doing sensitivity analysis is the PCA of the AVHRR data. Between Jeff Id, Jeff C, and myself, we’ve performed somewhere north of 200 reconstructions using different methods and different numbers of retained PCs. Based on that, I believe that we have a pretty good feel for the ranges of values that the reconstructions produce, and we all feel that the 3 PC, regpar=3 solution does not accurately reproduce Antarctic temperatures. Unfortunately, our opinions count for very little. We must have a solid basis for concluding that Steig’s choices were less than optimal – not just opinions.

How many PCs to retain for an analysis has been the subject of much debate in many fields. I will quickly summarize some of the major stopping rules:

1. Kaiser-Guttman: Include all PCs with eigenvalues greater than the average eigenvalue. In this case, this would require retention of 73 PCs.

2. Scree Analysis: Plot the eigenvalues from largest to smallest and take all PCs where the slope of the line visibly ticks up. This is subjective, and in this case it would require the retention of 25 – 50 PCs.

3. Minimum explained variance: Retain PCs until some preset amount of variance has been explained. This preset amount is arbitrary, and different people have selected anywhere from 80-95%. This would justify including as few as 14 PCs and as many as 100.

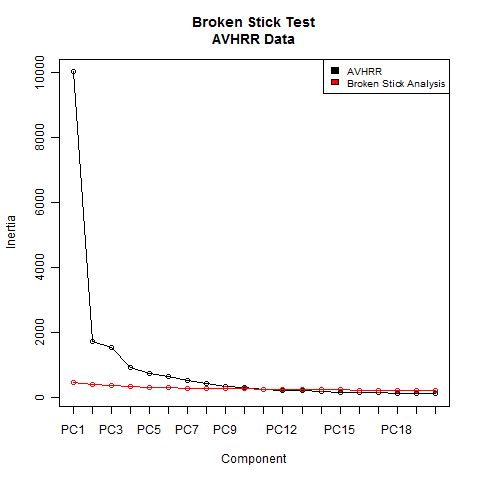

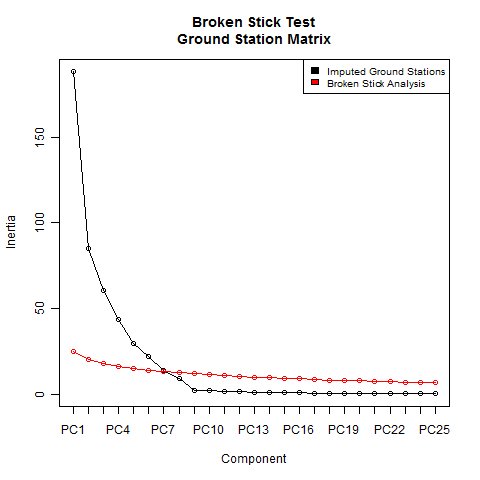

4. Broken stick analysis: Retain PCs that exceed the theoretical scree plot of random, uncorrelated noise. This yields precisely 11 PCs.

5. Bootstrapped eigenvalue and eigenvalue/eigenvector: Through iterative random sampling of either the PCA matrix or the original data matrix, retain PCs that are statistically different from PCs containing only noise. I have not yet done this for the AVHRR data, though the bootstrap analysis typically yields about the same number (or a slightly greater number) of significant PCs as broken stick.
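
For readers who want to see how rules 1, 3 and 4 differ in practice, here is a minimal sketch. It is not the code used for this post. The function names are hypothetical, the eigenvalues are made up for illustration and are not the AVHRR eigenvalues, and the broken stick rule is implemented with one common convention (stop at the first PC that fails to exceed its expected share), which may differ from the exact variant used above.

```python
def kaiser_guttman(eigenvalues):
    """Rule 1: count PCs whose eigenvalue exceeds the average eigenvalue."""
    avg = sum(eigenvalues) / len(eigenvalues)
    return sum(1 for ev in eigenvalues if ev > avg)


def min_explained_variance(eigenvalues, threshold=0.90):
    """Rule 3: smallest number of PCs whose cumulative variance reaches threshold."""
    total = sum(eigenvalues)
    running = 0.0
    for k, ev in enumerate(sorted(eigenvalues, reverse=True), start=1):
        running += ev
        if running / total >= threshold:
            return k
    return len(eigenvalues)


def broken_stick_expected(p):
    """Expected variance share of each of p PCs under the broken stick model.

    The k-th share is (1/p) * sum over j = k..p of 1/j.
    """
    return [sum(1.0 / j for j in range(k, p + 1)) / p for k in range(1, p + 1)]


def broken_stick(eigenvalues):
    """Rule 4: count leading PCs whose variance share exceeds the expected share."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    count = 0
    for ev, expected in zip(ordered, broken_stick_expected(len(ordered))):
        if ev / total <= expected:
            break  # stop at the first PC that looks like noise
        count += 1
    return count


# Made-up eigenvalues, purely for illustration.
example = [5.0, 2.0, 1.0, 0.5, 0.3, 0.2]
retained = {
    "kaiser_guttman": kaiser_guttman(example),
    "min_explained_variance_90": min_explained_variance(example, 0.90),
    "broken_stick": broken_stick(example),
}
```

Even on this toy spectrum the three rules disagree, with broken stick being the most conservative.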

The first 3 rules are widely criticized for being either subjective or retaining too many PCs. In the Jackson article below, a comparison is made showing that 1, 2, and 3 will select “significant” PCs out of matrices populated entirely with uncorrelated noise. There is no reason to retain noise, and the more PCs you retain, the more difficult and cumbersome the analysis becomes.

The last 2 rules have statistical justification. And, not surprisingly, they are much more effective at distinguishing truly significant PCs from noise. The broken stick analysis typically yields the fewest significant PCs, but is normally very comparable to the more robust bootstrap method.

Note that all of these rules would indicate retaining far more than simply 3 PCs. I have included some references:

North_et_al_1982_EOF_error_MWR.pdf

I have not yet had time to modify a bootstrapping algorithm I found (it was written for a much older version of R), but when I finish that, I will show the bootstrap results. For now, I will simply present the broken stick analysis results.

The broken stick analysis finds 11 significant PCs. PCs 12 and 13 are also very close, and I suspect the bootstrap test will find that they are significant. I chose to retain 13 PCs for the reconstruction to follow.

Without presenting plots for the moment, retaining more than 11 PCs does not end up affecting the results much at all. The trend does drop slightly, but this is due to better resolution on the Peninsula warming. The rest of the continent does not change if additional PCs are added. The only thing that changes is the time it takes to do the reconstruction.

Remember that the purpose of the PCA on the AVHRR data is not to perform factor analysis. The purpose is simply to reduce the size of the data to something that can be computed. The penalty for retaining “too many” – in this case – is simply computational time or the inability of RegEM to converge. The penalty for retaining too few, on the other hand, is a faulty analysis.

I do not see how the choice of 3 PCs can be justified on either practical or theoretical grounds. On the practical side, RegEM works just fine with as many as 25 PCs. On the theoretical side, none of the stopping criteria yield anything close to 3. Not only that, but these are empirical functions. They have no direct physical meaning. Despite claims in Steig et al. to the contrary, they do not relate to physical processes in Antarctica – at least not directly. Therefore, there is no justification for excluding PCs that show significance simply because the other ones “look” like physical processes. This latter bit is a whole other discussion that’s probably post worthy at some point, but I’ll leave it there for now.

III: RegEM

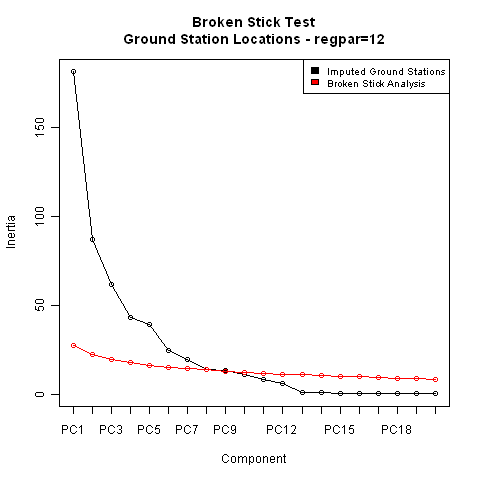

We’ve also spent a great deal of time on RegEM. Steig & Co. used a regpar setting of 3. Was that the “right” setting? They do not present any justification, but that does not necessarily mean the choice is wrong. Fortunately, there is a way to decide.

RegEM works by approximating the actual data with a certain number of principal components and estimating a covariance from which missing data is predicted. Each iteration improves the prediction. In this case (unlike the AVHRR data), selecting too many can be detrimental to the analysis as it can result in over-fitting, spurious correlations between stations and PCs that only represent noise, and retention of the initial infill of zeros. On the other hand, just like the AVHRR data, too few will result in throwing away important information about station and PC covariance.

Figuring out how many PCs (i.e., what regpar setting to use) is a bit trickier because most of the data is missing. Like RegEM itself, this problem needs to be approached iteratively.

The first step was to substitute AVHRR data for station data, calculate the PCs, and perform the broken stick analysis. This yielded 4 or 5 significant PCs. After that, I performed reconstructions with steadily increasing numbers of PCs and performed a broken stick analysis on each one. Once the regpar setting is high enough to begin including insignificant PCs, the broken stick analysis yields the same result every time. The extra PCs show up in the analysis as noise. I first did this using all the AWS and manned stations (minus the open ocean stations).

I ran this all the way up to regpar=20 and the broken stick analysis indicates that 9 PCs are required to properly describe the station covariance. Hence the appropriate regpar setting is 9 if all the manned and AWS stations are used. It is certainly not 3, which is what Steig used for the AWS recon.

I also performed this for the 42 manned stations Steig selected for the main reconstruction. That analysis yielded a regpar setting of 6 – again, not 3.

The conclusion, then, is similar to the AVHRR PC analysis. The selection of regpar=3 does not appear to be justifiable. Additional PCs are necessary to properly describe the covariance.

IV: THE RECONSTRUCTION

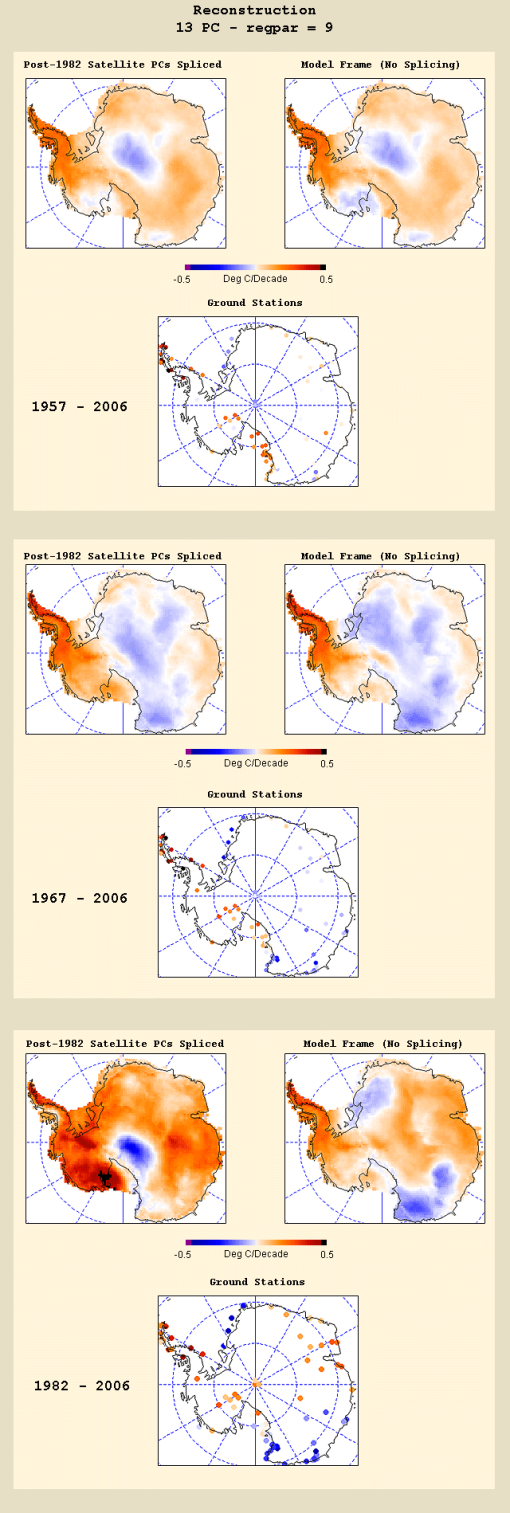

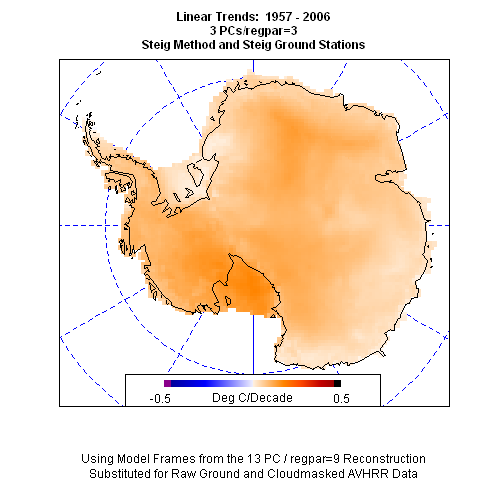

So what happens if the satellite offsets are properly accounted for, the correct number of PCs are retained, and the right regpar settings are used? I present the following panel:

(Right side) Reconstruction trends using just the model frame.

RegEM PTTLS does not return the entire best-fit solution (the model frame, or surface). It only returns what the best-fit solution says the missing points are. It retains the original points. When imputing small amounts of data, this is fine. When imputing large amounts of data, it can be argued that the surface is what is important.

RegEM IPCA returns the surface (along with the spliced solution). This allows you to see the entire solution. In my opinion, in this particular case, the reconstruction should be based on the solution, not a partial solution with data tacked on the end. That is akin to doing a linear regression, throwing away the last half of the regression, adding the data back in, and then doing another linear regression on the result to get the trend. The discontinuity between the model and the data causes errors in the computed trend.

Regardless, the verification statistics are computed vs. the model – not the spliced data – and though Steig did not do this for his paper, we can do it ourselves. (I will do this in a later post.) Besides, the trends between the model and the spliced reconstructions are not that different.

Overall trends are 0.071 deg C/decade for the spliced reconstruction and 0.060 deg C/decade for the model frame. This is comparable to Jeff’s reconstructions using just the ground data, and as you can see, the temperature distribution of the model frame is closer to that of the ground stations. This is another indication that the satellites and the ground stations are not measuring exactly the same thing. It is close, but not exact, and splicing PCs derived solely from satellite data on a reconstruction where the only actual temperatures come from ground data is conceptually suspect.

When I ran the same settings in RegEM PTTLS – which only returns a spliced version – I got 0.077 deg C/decade, which checks nicely with RegEM IPCA.

I also did 11 PC, 15 PC, and 20 PC reconstructions. Trends were 0.081, 0.071, and 0.069 for the spliced and 0.072, 0.059, and 0.055 for the model. The reason for the reduction in trend was simply better resolution (less smearing) of the Peninsula warming.

Additionally, I ran reconstructions using just Steig’s station selection. With 13 PCs, this yielded a spliced trend of 0.080 and a model trend of 0.065. I then did one after removing the open-ocean stations, which yielded 0.080 and 0.064.

Note how when the PCs and regpar are properly selected, the inclusion and exclusion of individual stations does not significantly affect the result. The answers are nearly identical whether 98 AWS/manned stations are used, or only 37 manned stations are used. One might be tempted to call this “robust”.

V: THE COUP DE GRACE

Let us assume for a moment that the reconstruction presented above represents the real 50-year temperature history of Antarctica. Whether this is true is immaterial. We will assume it to be true for the moment. If Steig’s method has validity, then, if we substitute the above reconstruction for the raw ground and AVHRR data, his method should return a result that looks similar to the above reconstruction.

Let’s see if that happens.

For the substitution, I took the ground station model frame (which does not have any actual ground data spliced back in) and removed the same exact points that are missing from the real data.

I then took the post-1982 model frame (so the one with the lowest trend) and substituted that for the AVHRR data.

I set the number of PCs equal to 3.

I set regpar equal to 3 in PTTLS.

I let it rip.

Look familiar?

Overall trend: 0.102 deg C/decade.

Remember that the input data had a trend of 0.060 deg C/decade, showed cooling on the Ross and Weddell ice shelves, showed cooling near the pole, and showed a maximum trend in the Peninsula.

If “robust” means the same answer pops out of a fancy computer algorithm regardless of what the input data is, then I guess Antarctic warming is, indeed, “robust”.

———————————————

Code for the above post is HERE.

I thought I had a vague idea of what was being said until I read “Bootstrapped eigenvalue and eigenvalue/eigenvector” and realised the whole thing is the world’s most complex ever anagram competition.

Wow – I am thoroughly impressed!

Ryan, I’m sure that the critics will be all over this, but after reading all the comments on Steig et al. on CA – well done sir! As esoteric as some of this is, I can still follow it.

Keep this up and WUWT will be the best science blog for a second year.

It would be interesting to hear Eric Steig’s comments on all this. He must surely be back from his 3 months in Antarctica now. Perhaps you could ask him.

Great work! I hope you plan on submitting this for publication to the same folks that so eagerly offered Steig et al. a soapbox to trumpet their obviously less worthy work. Of course, if they do actually publish it, I’ll owe you a six pack of Guinness, ’cause I’m betting their bias won’t allow them to do it.

It’ll be interesting to see if Steig can mount a defense of using only 3 PCs. I’m betting he won’t even bother — either ignore you or reply ad hominem. The intellectual community will, despite their biases, step up (I think/hope).

When this paper by Steig et al. was first being discussed I think I wrote something to the effect that in some types of analyses (socio/economic) where the first few components do have a meaningful interpretation one should go beyond those with caution, but in this case it shouldn’t matter. That was meant to imply that one should use all that helped in the reconstruction. Many years have passed since I’ve worked with such techniques (punched cards on an IBM 360) but I’ve retained just enough to appreciate what you have done. You have done an amazing amount of work. Also, I’m amazed that I can follow along. Must be your excellent writing skills. Good job, John.

Excellent job on a herculean task!!! What we need is a brave journalist to risk his livelihood to hand this to the editor/producer. I happen to know the planet is cooling. Last night I awoke to find 2 feet of ice in my bed. Both of them belonged to my wife. 8^]

OT, Just on Icecap

http://icecap.us/images/uploads/GlobalWarmingMyth.pdf

Somewhere in all this there must be a joke about how many PhDs it takes to read a thermometer.

Why is measuring temperature such a pseudoscience? If an accurate answer is so important, let’s develop some new temp stations, recalibrate them once or twice a year, and take an average temp. Remove the outliers and voila, we have the answer of it getting colder or hotter over some arbitrary timespan. Let’s find a way to calibrate the satellite data with high altitude balloons or something.

To me if you have to apply a multitude of algorithms to it the data is not even worth using. We are ultimately talking about measuring temps not tweaking an atom smasher to recreate the big bang. Why is this so hard?

Steig had a reputation of being a nice guy but hanging around Michael Mann has tarnished his reputation.

I think it should be added that there is cooling instead of warming, if the starting date is shifted a few years forward, falsifying the CO2 thesis even more drastically.

@ FatBigot (21:19:30) :

I believe the Jackson article referenced in the text is: Stopping Rules in Principal Components Analysis: A Comparison of Heuristical and Statistical Approaches, Donald A. Jackson (1993) which corresponds to the referenced url: http://labs.eeb.utoronto.ca/jackson/pca.pdf.

FatBigot (21:19:30) :

I thought I had a vague idea of what was being said until I read “Bootstrapped eigenvalue and eigenvalue/eigenvector” and realised the whole thing is the world’s most complex ever anagram competition.

I’ve always had trouble with eigenvalues, too. I do remember that in “No Way Out” while looking for who killed Gene Hackman’s mistress, the CIA took a throwaway Polaroid backpaper, kicked up the eigenvalue, and came up with a studio quality photo of Kevin Costner. Impressive technology.

This is similarly a great piece of crime scene investigation.

It’s nice to know we have a few folks who aren’t gee-whizzed by the “real” scientists’ techspeak. Excellent work, Jeff, Jeff, and Ryan.

After the Seattle Times ran a story giving coverage to this fiasco (Steig et al.), I emailed both the paper and the “scientist”, protesting the very unscientific methods and conclusion of this “piece of work”. I received no response from the individual, and the Times editor ignored my email.

This man gives computer science and the state of Washington no service.

Perhaps readers here would like to drop a postal mail to the individual and invite him to come here and defend his “slop”?

Eric J. Steig

Department of Earth and Space Sciences and Quaternary Research Center, University of Washington, Seattle, Washington 98195

Saw a report yesterday in the UK about climate protestors in Washington, angry about coal as a fuel, who were trying to close down a power generation plant. James Hansen was amongst them.

I noticed that there was snow in Washington. Was this recent? Is snow in mid-May usual in this part of the world?

If an accurate answer is so important, let’s develop some new temp stations, recalibrate them once or twice a year, and take an average temp.

No, it’s far more important to spend that huge pile of money they just got to spruce up the home office in Asheville.

Great post. Of course what was done at the end should have been the very first step performed by the Steig team. “If we create a plausible data set, then run our algorithms on it, do we more or less get a summary of the original data set back out”. That is science. Instead it went through the meat grinder and came out with the answer they wanted and that of course was enough to get it published.

While looking at previous posts on this, a key issue identified was that 35% of the weather stations were on the peninsula, which is 5% of the land mass. We know that the peninsula is warming due to other effects (including Urban Heat Island). Another issue was that four weather stations on offshore islands were thrown in for good measure. As I understood it, the approach of Steig was to essentially pretend that the weather stations were evenly spread.

This of course seems like a nonsensical approach to the average layman. Many posters here likened it to trying to map the climate history of the United States based on temperatures in Florida.

So this gets me to the question. This article would seem to imply that the underlying methodology is sound, but that the statistical manipulation is wrong.

… or are both the underlying methodology and the statistical manipulation wrong?

In any case, incredible work.

As suspected it is the Steig methodology that is flawed.

Well done to everyone for a great effort.

Anthony should consider giving you guys a medal for this work.

Impressive on all fronts – I hope this work can find its way to publication.

So is Steig’s work/publication in Nature now falsified? This is really the crucial question.

I haven’t a clue what most of that means and my eyes began to glaze over after the first couple of graphs. However, I was able to conclude that the warmists have been caught cooking the books yet again.

Well done!

Fantastic work! Now if only the Establishment would admit this work even exists. I guess no chance of getting Nature to retract Steig’s paper….

@VG

IMHO it puts Steig’s work in nearly as bad light as Mann’s infamous hockey stick. Which is to say it’s a badly done analysis, which tends to give the Alarmists the answer they want (i.e. more warming than is likely to have actually occurred).

Whether or not this was intentional, one can only speculate. I guess Steig’s response (or lack thereof) will determine that.

I am speculating, but I sense that the real story here is how the data was Mann-handled into fitting the theory, rather than Steig’s work. RegEM and PCA were the root of the hockey stick, I believe it was shown that the process mined data for hockey sticks, now a fathered-out-of-wedlock version of the same thing produces a predetermined result in the Antarctic. I sense a pattern.

Aren’t there lots of gaps in tropical troposphere radiosonde data, and an overlap of satellite and balloon data sets? The script almost writes itself. Tropical troposphere hotspot revealed!!! Mike Mann et al.

Wow. Just like the hockey stick. No matter what you put in…..

Excellent. Objective efforts clearly explained (such as this one) advance knowledge (which is the highest praise I can give).

Perhaps Steig et al should take their books and consider taking part in a reality show. Something along the lines of FOX Broadcasting’s Hell’s Kitchen. Perhaps they could compete against Mann et al.

Reading this gave me déjà vu all over again. ☺

I hope this gets a writeup like Bishop Hill’s Caspar and the Jesus Paper so that all those whose eyes glaze over with eigenvalues can come to understand enough of the science as well as the story.

Thank you Jeff Id Jeff C Ryan O and all who’ve done the sterling work. We maybe should establish an alternative Nobel prize here… though really, I’d rather the real Nobel Prize got freed from its corruption by work like this.

Why are all the heads of official science establishments on the gravy train?

This is a job well done.

It’s hard proof of the fact that the scientific world is infected with a breed of scientists that have sold their integrity to a political attempt to achieve absolute power.

Let us be clear about the consequences for all of us if this power grab succeeds.

It’s tyranny, nothing more nothing less.

Who would have expected that 30 years after the fall of the Iron Curtain, the event which ended the Cold War, our freedom once again is threatened, this time by an enemy from within?

We now have a clear example of how the data was falsified to serve the outcome of a warming Antarctic.

Let’s hope we will be able to use this research against those who decided to serve the dark agenda of Anthropogenic Global Warming.

The publication here at WUWT is an important step because it shows that people will not bend to tyranny of any kind.

It’s a clear statement that falsified science, doctrines and political schemes based on lies and manipulation are not accepted.

The most outstanding problem with the Steig et al. [2009] reconstruction is that the whole study completely ignores the fact that in the last 20-25 years you can’t find any warming in the Antarctic region. Even the Antarctic Peninsula shows no warming after a very steep temperature increase in the 1970s. See the temperature records of some stations, Rothera Point for example.

In Steig et al. the whole continent has an almost homogeneous warming trend of about 0.1°C/decade for the 1957-2006 period, as shown above. According to the available instrumental data, this statement is ridiculous. The Amundsen-Scott Base at the South Pole, the Russian station of Vostok and the Australian Casey station near the Ross Ice Shelf are showing a cooling trend for the period examined by Steig et al. The only area on the whole Antarctic continent which has warmed significantly is the Antarctic Peninsula. It is less than 5% of the total area of the Antarctic continent. And don’t forget: even the peninsula shows no net warming in the last 25 years.

More than 10 years after the first appearance of the widely discredited MBH98 hockey stick, the Steig et al. paper has brought climate science to a new low. How can a ‘high-profile’ scientific journal like Nature publish such nonsense?

This paper has been peer-reviewed like the ‘hockey paper’ in 1998. It is quite clear that peer-review cannot guarantee good science. One more thing: the findings of the MBH98 study were used as smoking gun evidence in the IPCC Third Assessment Report. I bet that the ‘precious’ work of Steig and his colleagues will appear in the next IPCC report. Any opinion?

For the vast majority of the voting public, any attempt to judge the validity of any of this would be like serving on a jury in a trial where all the attorneys and their witnesses spoke only Greek. The jury would be left searching for clues on the expressions of the witnesses’ faces. In such a case, the jury would likely end up simply believing whatever they chose to believe regardless of the testimony (“We liked his tie!”).

Unless this paper can gain an official seal of authenticity in some quarter recognized by the public (The Goracle has his Nobel Prize), it is just so much spit in the ocean from a public perspective.

That said, I applaud this mountain of work for its own sake in the pursuit of scientific truth.

I rather like the Webster 5th definition of *ROBUST*:

” of, relating to, resembling, or being a relatively large, heavyset australopithecine (especially Australopithecus robustus and A. boisei) characterized especially by heavy molars and small incisors adapted to a vegetarian diet.”

I recommend inserting this verbiage wherever the alarmists use rob-us-t…

In real science, the refusal of a scientist to release the methods used to make a finding is reason enough to impeach the credibility of the finding and the scientist claiming to have the finding.

In AGW, on the other hand, secrecy and non-reproducible work is just the way things are.

I wonder if the magic number 3 gives the highest possible trend. Ought to graph number of PCs versus trend.

Good job Jeff Id (and to the others who helped).

Considering how “herculean” (someone said) this effort was, it is clear this paper did not receive a proper peer review in the sense that one would think – some kind of replication of the methods.

Not that peer review needs to involve a great measure of replication, but when one is doing a novel technique, using novel data about something this important to a scientific field, Nature should not be putting it on its front cover unless at least one of the reviewers can say “it looks like the methodology was done correctly.”

Well, it wasn’t (double-checked or done correctly).

And it has happened before in this field and large parts of it are based on these novel mathematical calculations that are not replicated or tested in an experimental sense. The more we dig into the calculations and methodologies and check the experimental/empirical data, the less sense one gets that the science is being done objectively.

Adam,

You ask how high profile publications can participate in promoting AGW frauds.

Historians will puzzle over how AGW became such a fixation and was able to mislead and dominate public policy for many years.

Social movements like this are very corrosive and impose a high price. The bad ideas developed to solve non-existent problems come at the expense of good ideas.

AGW profiteers are taking advantage of the distractions they have caused very well.

UK Sceptic (02:50:07) :

“….my eyes began to glaze over after the first couple of graphs.”

Yeah, I have the same problem, but glad I persevered. This one really could do with an executive summary.

Very nicely done.

Can somebody tell me what exactly is the definition of academic fraud and why Steig’s work does not qualify?

OT: Global warming may be twice as bad as previously expected

By Doyle Rice, USA TODAY

Global warming will be twice as severe as previous estimates indicate, according to a new study published this month in the Journal of Climate, a publication of the American Meteorological Society.

http://www.usatoday.com/tech/science/environment/2009-05-20-global-warming_N.htm

Because the computer models tell them so!

All I see is that any type of reconstruction, with all corrections you want, still gives a warming Antarctica. When I think how many times I’ve read here and there that this conclusion was “obviously false” or “proved that data were manipulated”, I seriously wonder how the final result could be turned into a “victory” of some sort… A “coup de grace”? For skeptics?

Exactly. So long as the AGW establishment has a stranglehold on the ‘official’ scientific bodies (AAAS, NSF, NASA, NOAA, the professional societies, etc.) and the peer-reviewed journals, critiques such as this one will be dismissed by the media as the ravings of an Internet fringe, and the public at large will follow blindly along.

The big question for the scientists who have not jumped onto the AGW gravy train should be this: “How do we break that stranglehold?”

I hope that the authors of the post above will submit it to Nature, and if rejected, take whatever steps they can to challenge that rejection.

Is it time for legal action, on grounds that learned societies and journals have broken with their solemn obligation to scientific objectivity and have instead become handmaidens of a political agenda?

It might be futile, but could get media attention. And a lot more scientists now sitting on the sidelines might be encouraged to speak up within their associations and departments.

Tuesday the President of the United States said this:

“We have over the course of decades slowly built an economy that runs on oil. It has given us much of what we have — for good but also for ill. It has transformed the way we live and work, but it’s also wreaked havoc on our climate.”

That last of course is simply not true. But how can Joe Public counter it? The stakes are becoming enormously high. The further the US and other Western governments pursue the ‘climate change’ chimera, the more damage they will do to their national economies, our standard of living, and ultimately our very freedom and autonomy.

Laymen cannot turn this around. It’s up to the scientists who understand arcana like “Bootstrapped eigenvalue and eigenvalue/eigenvector” (thanks FatBigot!) to break through and challenge the dominant establishment. Once that happens, the media will start to pay attention, and if they do, then the politicians will have to as well.

/Mr Lynn

Lucy Skywalker (04:08:46) :

………….

“We maybe should establish an alternative Nobel prize here… though really, I’d rather the real Nobel Prize got freed from its corruption by work like this.”

Surely you are not mistaking the peace prize (always a bad joke from Oslo, Norway) for the real prizes? (Royal Swedish Academy of Science, Stockholm, Sweden)

You warned me that it was a bit technical so I don’t want to waste too much of anyone’s precious time but can someone tell me what are the physical realities that Steig correlates with his three PCs and (how) does he justify that the others are not significant – we are talking about 0.07°C/decade! Is it likely that he did an analysis similar to the above and then selected the one that best fit the AGW models? This kind of forensics should be done on all the AGW models.

I forgot: The Norwegian Nobel Committee (Den norske Nobelkomité) awards the Nobel Peace Prize each year. Its five members are appointed by the Norwegian parliament.

They are politicians!! Got that?

http://en.wikipedia.org/wiki/Norwegian_Nobel_Committee

A correction to my previous comment:

I mentioned cooling trends near the Ross Ice Shelf, but the Casey station is about 1,000 km away from there. McMurdo (http://data.giss.nasa.gov/cgi-bin/gistemp/gistemp_station.py?id=700896640008&data_set=1&num_neighbors=1) is located next to the ice shelf and shows no significant trend in the last 30 years.

The Casey station is on the edge of the East Antarctic Ice Sheet, and shows a clear cooling trend since about 1980: see this graph.

Ah, but Ryan’s methodology differs from that of Steig and Mann. The two analyses therefore cannot be compared. We have apples vs. oranges.

That will be the response from the team. The fact that Steig’s methods are held back or incompletely described will not be included in any response.

Jeff Id and Ryan O, if they try to publish their study in Nature, will have it languish for a year and then receive a rejection.

The best route is to submit it elsewhere, where it has a chance of being accepted. E&E may not have the distribution and “prestige” of Science or Nature, but it will provide a citable reference for posterity – a reminder in 50 years that not all of science was corrupted by AGW nonsense.

Wow, that’s what I call dropping the hammer, facts-wise. It took me some time to read all the information. Maybe I should do what Congress is doing.

http://speakyourmindnews.com/site/index.php?itemid=39

So what you are saying is that if you put in the temperature of bananas as they rot in your kitchen as a proxy for antarctic temperature, you get antarctic warming? And just to be sure I get this, if you put in the temperature of your son’s socks as they warm through the day, you would also get antarctic warming? Does this mean that the part of the code that Stulag, or whatever his name is, hasn’t published could contain an algorithm that takes whatever you put in, and adjusts it to his predicted antarctic warming? There are math tricks that do this. You plug in a number (any number, and even a banana if you want to) and the equation always equals the same thing.

Steig et al. My bad.

Claude Harvey (05:00:25) :

For the vast majority of the voting public, any attempt to judge the validity of any of this would be like serving on a jury in a trial where all the attorneys and their witnesses spoke only Greek. The jury would be left searching for clues on the expressions of the witnesses’ faces. In such a case, the jury would likely end up simply believing whatever they chose to believe regardless of the testimony (”We liked his tie!”).

Unless this paper can gain an official seal of authenticity in some quarter recognized by the public (The Goracle has his Nobel Prize), it is just so much spit in the ocean from a public perspective.

That said, I applaud this mountain of work for its own sake in the pursuit of scientific truth.

—————————-

I think the Internet is changing the rules of the game here. Efforts like this, as Adam Soereg (04:57:45) argued, show that peer review –in the traditional sense — is not working, at least not in the field of climate science. The Internet is giving rise to a different kind of “peer review,” one that is more robust, and less dominated by established cliques of authority. And that makes it truly more scientific, because science at its best is inherently anti-authoritarian.

The analogy to serving on a jury here is quite apt. In the law, juries are “lay people.” They are not experts at anything, and are not expected to be experts at anything. The experts that present testimony are “qualified” through “voir dire” before they are allowed to present their testimony. Yet, in almost every case where expert testimony is presented (I’m sure exceptions occur), there are experts on both sides of the issue. And if the testimony and evidence of the experts is such that a lay jury cannot tell the difference, or say one is likely more right than the other, then the outcome of the case should depend on something other than the expert testimony.

The difference between the courtroom analogy and modern science, or at least modern climate science, is that the cliques of authority are acting like judges who allow only one side of a dispute to present their evidence. But the issues are no longer being tried in their courtroom. It is their own fault, really, but the issues — here I’m thinking specifically of issues relating to climate change and AGW — are being tried in the court of public opinion. And just as the Internet is showing that traditional bastions of public opinion — MSM like the NYT, Times magazine, etc. — are no longer in control of the courtroom of public opinion, the same is happening with respect to politicized “science.”

The antics of traditional bastions of authority in science, like Science and Nature, are now increasingly obvious through the power of the Internet. Just as the NYT is losing influence, so are they. The “it was peer reviewed” or “it was not peer reviewed” is becoming recognized as pure and simple appeal to authority, and not indicative of any intrinsic scientific value. The public is waking up to the fact that “peer review” is now a lot like a court room where the judge (the editors of a paper) only lets one side of a dispute qualify their expert.

As long as this continues, the influence of traditional centers of authority in science will continue to erode. There will be further development of “open source” publishing in science — cf. http://www.doaj.org/ — precisely because the traditional venues are now less about promoting free and open inquiry than they are about shaping public opinion.

John Silver

A little while ago I researched the credentials of those that awarded Al Gore the Nobel prize. Earnest and clever people that they undoubtedly are, it was very hard to see this as being anything other than a political gesture bearing in mind the green credentials of most of them

Tonyb

Thanks to all the comments about this; I appreciate them. There are a few points that I would like to make.

1. In the course of doing this, I had several occasions where I needed to contact Steig for clarification on his methods. I personally did not ever ask for code, as I felt that (once we had a working version of RegEM) there would be much more learned by me in attempting to replicate his work from the methods description. Regardless, in every case, he was courteous and professional. On more than one occasion he freely offered to send me intermediate data not published on his website. So the personal comments about Dr. Steig I feel are unjustified – all of my interactions with him were positive.

2. Steig’s choice of 3 PCs is probably based largely on previous work that he had done with Schneider (such as attempting to find circulation patterns using PCA and doing analyses with the 37GHz satellite information). For those specific studies, 2-3 PCs were all that were needed to describe their results. This led them to believe that the temperature field could also be described by 3 PCs. I would strongly discourage the use of “fraud” or any other related terms, as I can see how their past work would have led them to this particular conclusion. I would say, rather, that the Antarctic warming paper suffered from confirmation bias, not fraud or anything close to fraud. I feel that they should have done more sensitivity studies to see if 3 PCs was a correct choice, but I would stop far short of calling this fraud.

3. RegEM and PCA, when used properly, can greatly enhance our ability to analyze sparse data accurately. They are not magic hockey-stick making algorithms. They are legitimate tools for scientific investigation. It is incumbent upon the researcher to use them properly and understand their limitations. In the Antarctic case, I feel that this was not done appropriately, but I personally would chalk that up to confirmation bias, not fraud.

John Galt (06:15:34) :

OT: Global warming may be twice as bad as previously expected

By Doyle Rice, USA TODAY

Global warming will be twice as severe as previous estimates indicate, according to a new study published this month in the Journal of Climate, a publication of the American Meteorological Society.

http://www.usatoday.com/tech/science/environment/2009-05-20-global-warming_N.htm

Because the computer models tell them so!

—

Oh brother!!! This is why I will never purchase a USA Today paper! Check out the same news release from this site:

http://www.sciencedaily.com/releases/2009/05/090519134843.htm

Here, buried near the end of the article, is all you need to know about this junk science:

Prinn stresses that the computer models are built to match the known conditions, processes and past history of the relevant human and natural systems, and the researchers are therefore dependent on the accuracy of this current knowledge. Beyond this, “we do the research, and let the results fall where they may,” he says. Since there are so many uncertainties, especially with regard to what human beings will choose to do and how large the climate response will be, “we don’t pretend we can do it accurately. Instead, we do these 400 runs and look at the spread of the odds.”

–

The money quote from above:

“…we don’t pretend we can do it accurately.”

Now, where was this in the USA Today article??

This, by the way, is a textbook example of how Global Warming stories in the media go from “…we don’t pretend we can do it accurately…” to highly accurate projections with near 100% certainty.

Unbelievable!!

Flanagan,

The Antarctic is not warming. It has not warmed in the past decades.

The interesting thing for AGW believers is this:

When it became obvious Antarctica was cooling, AGW promoters claimed this was *proof* of the looming apocalypse.

Now, by way of undisclosed techniques, AGW promoters claim that the Antarctic (ignoring that it has been cooling for over 20 years) has warmed (in only one small area) and that this is now *proof* that the apocalypse is looming.

So the problem for AGW believers like you is this: how do you maintain your credulity in something that is so patently contrived?

John Galt (06:05:34) :

Can somebody tell me what exactly is the definition of academic fraud and why Steig’s work does not qualify?

It is only fraud if there was fraudulent intent, and that has not been shown to be the case.

Flanagan says:

Tell me, how useful is this function?

Flanagan(X) = { for all x, Flanagan(X) = [snip] }

I sent a quick summary and a link to this post to Nature.

Chris H : @vg Should this work/analysis not then be submitted as a letter to Nature?

@Flanagan

The Steig paper has been heavily criticized by scientists, even from those known to support the AGW hypothesis.

Essentially, Steig created data where none existed and won’t release how the results were obtained. Is the computer code some sort of trade secret? Maybe Steig is planning to file for a software patent of his intellectual property. But until he releases his raw data, the adjusted data, and the fully documented source code, this falls under the heading of junk science.

When I was an undergrad, a paper like this would not get a passing grade. But then again, I’m not a climate scientist.

He’s back!

Eric Steig

Former SIL Post Doc

Faculty, Quaternary Research Center, Univ. of Washington; Associate Professor, Earth & Space Sciences, University of Washington

Ph.D., Geological Sciences, Univ. of Washington 1996

steig “AT” ess.washington.edu <== email

(206) 685-3715 (206) 543-6327

The link:

http://instaar.colorado.edu/sil/people/person_detail.php?person_ID=17

The Boy of John

Another email for Steig

steig@ess.washington.edu

He’s taking questions.

The Boy of John

If Steig is to be pilloried for poor science, so too should the peer reviewers of his article.

Perhaps the work of all persons approving or involved in falsified studies should also be double-checked in order to reveal if they have created a group that merely perpetuates their own pre-conceived ideas.

There was an analysis performed on the papers for the IPCC 2007 report (I think) that demonstrated the close relationship of many persons who were ‘peer reviewers’ of each other’s work, a sure sign that they could be self-serving.

I see Ryan’s comment above and felt like adding my two cents.

I also have no intention of calling this fraud but am somewhat more suspicious. As you know I am completely unwelcome over at RC due to my appropriately toned discussions about the intent behind the M08 hockey stick. When I requested code politely from Dr. Steig, it did not receive a warm welcome or a professional response, probably due to my assigned rather than deserved reputation. Imagine the welcome some of the other fact checking blog hosts receive at RC.

Since that time when I started to replicate some of the work in the paper, Dr. Steig has been polite to my email correspondence although he has so far refused to release his own code which I’m sure has details we would be interested in.

In the meantime, Ryan is correct in that this is possibly just confirmation bias. However, from my experience with the hockey stick and the fact that a different number entered in the algorithm (any regpar number or PC number different than 3) will produce reduced trends, I am reasonably suspicious.

I did a summary of the reconstructions including different techniques recently which lays out the trends from hundreds of different methods. It was nearly impossible to produce a higher trend than Steig et al.

http://noconsensus.wordpress.com/2009/05/14/antarcti-summary-part-1-a-trend-of-trends/

The paper didn’t discuss trying any numbers other than regpar=3, but I find it hard to believe they didn’t try them and then make a conscious decision not to use them, for minimally discussed and apparently incorrect reasons.

Frank K.,

It would be interesting to study how many articles have been published in the past 5-10 years that claim to *prove* that “AGW is twice as bad as we thought it was going to be”.

My brief google of the search term showed 248,000 hits. A survey of the first few pages showed articles dated from 2001 with the phrase “Global Warming Worse Than Predicted”.

I think the AGW promotion industry has been running this one up the flag pole unchallenged far too long.

But heck, AGW promoters are still getting away with claiming that tropical cyclones have gotten worse and that the world is much hotter.

John Trigge,

Pielke, Sr. has pointed out this very thing in his most recent posting. I urge you to read it and to follow the .ppt link and read that, as well.

It is very revealing.

AGW depends on an echo chamber of promoters and believers constantly repeating, and never defending, certain basic ideas.

The conflicts of interest between reviewers, writers, profiteers, and media are striking.

Ryan O (07:14:49) :

Thanks to all the comments about this; I appreciate them. There are a few points that I would like to make.

You make three points attesting to Dr. Steig’s professionalism. Thank you, and thanks and kudos to him. Being “in error” isn’t the same as “being fraudulent” or “being evil.” I agree with your point that, in my own words, he made an understandable mistake in his methodology. We should all refrain from the ad homs.

I think it would be a good thing if he were to join in the discussion here.

BBC Radio 4 to run a programme on “The first victims of climate change” on May 25th at 21.00. ‘The Carteret Islands – Sharks in the garden’ will undoubtedly blame man-made climate change, even though islanders have been using dynamite to blow up the surrounding reefs to kill fish – thereby removing natural barriers.

John W. (08:41:16) :

Thank you.

I would also like to point out that I would take my reconstruction with a grain of salt. I personally would not make the claim that my reconstruction represents the Truth as far as Antarctic temperatures go. The only thing I would be willing to say is that it might be true. I have not properly quantified the uncertainties in the analysis, and there are many. The calculated satellite offsets have uncertainties. The imputation of the ground station temperatures has uncertainty. The PCA on the AVHRR data has uncertainty. The method Comiso used for cloudmasking has uncertainty.

This, honestly, is my biggest criticism of Steig’s work. These uncertainties are never quantified and, for the ones that are actually mentioned in the papers, they receive only passing attention. The uncertainties in the linear trends reported are simply the +/- 95% confidence intervals (and they are not appropriately corrected for autocorrelation) of the linear regression. They assume that the satellite data is perfect, that the PC decomposition of the satellite data is perfect, that the RegEM imputation is perfect, and that the covariance between the stations is perfect and is constant in time.

Personally, I would like to see much better quantification of uncertainty in papers of this type. It helps put the conclusions in perspective.
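As an illustration of the autocorrelation point above, here is a minimal Python sketch. It is not the method used in Steig et al or in this reconstruction; it assumes AR(1) residuals and applies the common effective-sample-size adjustment n_eff = n(1 - r1)/(1 + r1), where r1 is the lag-1 autocorrelation of the regression residuals. The function names and the synthetic series are hypothetical, made up for the example.

```python
import math
import random


def ols_trend(y):
    """Fit y against its index by ordinary least squares.

    Returns (slope, residuals, naive standard error of the slope).
    """
    n = len(y)
    t_mean = (n - 1) / 2.0
    y_mean = sum(y) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    slope = sum((t - t_mean) * (yi - y_mean) for t, yi in enumerate(y)) / sxx
    intercept = y_mean - slope * t_mean
    resid = [yi - (intercept + slope * t) for t, yi in enumerate(y)]
    s2 = sum(r * r for r in resid) / (n - 2)  # residual variance, n - 2 dof
    return slope, resid, math.sqrt(s2 / sxx)


def lag1_autocorr(resid):
    """Lag-1 autocorrelation of the residuals."""
    m = sum(resid) / len(resid)
    num = sum((a - m) * (b - m) for a, b in zip(resid[:-1], resid[1:]))
    den = sum((a - m) ** 2 for a in resid)
    return num / den


def trend_standard_errors(y):
    """Return (slope, naive SE, AR(1)-adjusted SE, lag-1 autocorrelation)."""
    slope, resid, se = ols_trend(y)
    n = len(y)
    r1 = lag1_autocorr(resid)
    r1_pos = max(r1, 0.0)  # assumption: only ever widen the interval
    n_eff = n * (1.0 - r1_pos) / (1.0 + r1_pos)
    if n_eff <= 2:
        raise ValueError("too few effective samples for a trend estimate")
    # Swap the n - 2 degrees of freedom for n_eff - 2 in the residual variance.
    se_adj = se * math.sqrt((n - 2) / (n_eff - 2))
    return slope, se, se_adj, r1


def make_ar1_series(n, phi, slope, seed):
    """Synthetic test data: linear trend plus AR(1) noise (not real station data)."""
    rng = random.Random(seed)
    noise = 0.0
    out = []
    for t in range(n):
        noise = phi * noise + rng.gauss(0.0, 1.0)
        out.append(slope * t + noise)
    return out


if __name__ == "__main__":
    series = make_ar1_series(600, 0.6, 0.01, seed=0)
    slope, se, se_adj, r1 = trend_standard_errors(series)
    print("slope", slope, "naive SE", se, "adjusted SE", se_adj, "r1", r1)
```

Multiplying either standard error by roughly 2 gives an approximate 95% interval. With positively autocorrelated residuals, the adjusted interval comes out wider than the naive one, which is the sense in which an uncorrected interval overstates confidence in the trend.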

Flanagan (06:15:54) :

All I see is that any type of reconstruction, with all corrections you want, still gives a warming Antarctica.

That’s because you do not understand what these results mean. That’s OK, of course. Nobody here believes you would answer any other way, even if you did understand.

Mark

I have copied a post I made a few days ago about fraud in science. I do not think the IPCC set out to be fraudulent at all, but the points made in the article as to how everyone goes along with the flow are well worth reading in order to understand how we have got to this state.

This article below says it all. Fraud (or misunderstanding), peer jealousy, and the need to gather more money and prestige for your dept are all major drivers in science these days, much bigger climate drivers than CO2 is.

http://www.telegraph.co.uk/scienceandtechnology/5345963/The-scientific-fraudster-who-dazzled-the-world-of-physics.html

“Schön’s fraud was the largest ever exposed in physics; he ended up without a job, and was forced to leave America in disgrace. But the ease with which his fraudulent findings and grotesque errors were accepted by his peers raises troubling questions about the way in which scientists assess each other’s work, and whether there might be other such cases out there.”

We are being dazzled by reputations, unproven theories and computer models (which even the IPCC admit are flawed) whilst we set aside history and observational evidence. Yet still some believe everything they are told. I’m going to have a Mencken moment here…

The Climate Industrial Complex, which would not exist without the AGW HOAX:

http://online.wsj.com/article/SB124286145192740987.html

The test of Eric Steig’s bona fides will be how he reacts to these developments.

He could:

1. Not respond at all: This would in effect cede the game to Ryan O, Jeff ID et al

2. Respond, and defend his paper, explaining why Ryan O, Jeff ID et al are incorrect.

3. Acknowledge the work done by Ryan O, Jeff ID et al, and accept that their work enhances and refines his own work, and engage with them in producing an updated version of his paper that sets the record straight.

If Eric Steig chooses not to respond at all, it seems likely that his professional reputation will be challenged, whereas he can preserve his professional reputation by taking actions 2 or 3. I wonder which course he will choose?

I’ll wade in too. We should be very, very, VERY careful and thorough and ALWAYS default to respect before ever even hinting at charging fraud.

It’s just slimy to do otherwise. Irresponsible, too.

We owe it to ourselves to behave in the same manner we would have others behave in. I know that I would be enraged to the point of distraction if someone were to smugly claim fraud on my part because they cursorily read a critical article. That’s the way you poison wells and burn bridges.

There are a lot of men and women of good will who have been duped or have inadvertently come to the wrong conclusions. We need to acknowledge this (there but for the grace of Dog go I…), and leave the door open to them to come back into the fold. It’s hard enough to do as is.

Mark

What is happening at the Southern Hemisphere?

In New Zealand, winter has arrived skipping Autumn this year.

http://www.iceagenow.com/2009_Other_Parts_of_the_World.htm

And heavy hail covering a surfer resort and low temperatures have convinced some people that a new ice age is due.

http://mickysmuses.blogspot.com/2009/05/global-warming-hits-my-home-town.html

Anthony is celebrated as the big “Star” promoting “climate common sense”.

Off Topic but I just thought I would bring this story to everyone’s attention. This one crossed my home page today and the claims in item number 2 caught my eye. I am very skeptical about “super pollen”. Also the longer season item in 1. is suspect. I noticed they didn’t comment on how great the cold spring this year will be to reduce allergies.

http://healthandfitness.sympatico.msn.ca/HealthyLiving/ContentPosting?newsitemid=547102&feedname=RODALE-WOMENSHEALTH&show=False&number=0&showbyline=True&subtitle=&detect=&abc=abc&date=False

I’ve spent a lot of time over at CA, and so, as a result, I did not find this post to be “too technical” at all–in fact, quite the opposite: very cogent and well-presented, IMO. My thanks, also, for all your time, effort, and ingenuity. Let’s face it: It’s the efforts of people like you who enable the rest of us to see through the darkness, at least to some extent.

“”” John Silver (06:26:57) :

Lucy Skywalker (04:08:46) :

………….

“We maybe should establish an alternative Nobel prize here… though really, I’d rather the real Nobel Prize got freed from its corruption by work like this.”

Surely you are not mistaking the peace prize (always a bad joke from Oslo, Norway) for the real prizes? (Royal Swedish Academy of Science, Stockholm, Sweden) “””

You mean you believe there’s a difference ? I think history shows that both are pretty much popularity contests.

Just take the case of Albert Einstein for example; the vast majority of people know of Einstein only because of E=Mc^2 and his special and general theories of relativity (as well as his Jewishness of course), yet none of that work won him a Nobel prize despite its impact on the course of Science.

He actually got his Nobel prize for his work on the Photo-Electric effect; about which the general public knows virtually nothing.

So far as I know, even today there is NO classical physical explanation for the PE effect; it can only be explained via Quantum mechanics which Einstein himself virtually rejected.

Even our latest Wunderkind; Energy Secretary Steven Chu was awarded his Physics Nobel for work on Optical Trapping; something which was invented (discovered if you will) fifteen years before Chu did his work, by Arthur Ashkin at Bell Labs, Holmdel. Chu was taught by Ashkin; yet neither the teacher nor any of Chu’s many co-workers who worked on the project was ever recognised.

It’s all about who knows whom, and who is the better politician; not who does the best science.

With regard to the present essay; and figs (1) and (2), I would love to see the signal filter that turns the black chicken scratchings into the blue graph (with forgiveness for the pixel quantization noise).

Does anyone really believe that the blue is representative of the black ?

George

“That’s because you do not understand what these results mean. That’s OK, of course. Nobody here believes you would answer any other way, even if you did understand.

Flanagan(X) = { for all x, Flanagan(X) = [snip] }”

is there life on mars?

Well I see I made a misteak and should have put: E=mc^2

And I had some exchanges with Steig before all the fat hit the shin; so I think it’s a little bit rash to be throwing around words like science fraud. Having Mann’s name associated with that work gets me blinking.

I believe that both Steig et al, and the current essay suffer from the same problem.

That is the attempt to use statistical mathematics to rectify a more fundamental problem; namely a failure to observe the rules for sampled Data Systems. No amount of Statistics; or Central Limit theorems, can buy you a reprieve from aliasing noise due to Nyquist violation.
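The aliasing point can be shown with a minimal Python sketch. It is an illustration only, with made-up numbers unrelated to the Antarctic data, and `sample_sine` and `alias_frequency` are hypothetical helper names. A 9 Hz sine sampled at 10 Hz, below the 18 Hz needed to satisfy Nyquist, produces the same samples as a -1 Hz sine, so nothing done to the samples afterwards can tell the two apart.

```python
import math


def sample_sine(freq_hz, rate_hz, n):
    """Return n samples of sin(2*pi*f*t) taken at times t = k / rate_hz."""
    return [math.sin(2 * math.pi * freq_hz * k / rate_hz) for k in range(n)]


def alias_frequency(freq_hz, rate_hz):
    """Frequency within +/- rate_hz / 2 that yields the same samples as freq_hz."""
    return freq_hz - rate_hz * round(freq_hz / rate_hz)


if __name__ == "__main__":
    rate = 10  # samples per second, too slow for a 9 Hz signal
    fast = sample_sine(9, rate, 50)
    slow = sample_sine(alias_frequency(9, rate), rate, 50)
    worst = max(abs(a - b) for a, b in zip(fast, slow))
    print("alias frequency:", alias_frequency(9, rate))
    print("largest sample difference:", worst)
```

The largest difference is at the level of floating-point rounding: once the signal is undersampled, the high-frequency content is indistinguishable from a spurious low-frequency signal.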

Why attempt to create information where none exists.

George

This is great work. I have been following it every day at the Air Vent and at Climate Audit. I will be shocked if it gets anywhere in the MSM.

A coworker sent me a copy of a report a month ago and I finally got around to reading it. It is from SRI Consulting Business Intelligence, “The US Consumer and Global Warming”. It is scary:

Quote—-: In early 2008, a nonprofit environmental advocacy organization, ecoAmerica, lined up a half dozen partners to launch a research effort, the American Climate Values Survey (ACVS), to measure the impact that a year of global warming messaging had had on U.S. attitudes toward global warming and related issues.

The ACVS showed that only 18% of U.S. consumers strongly agreed that global warming is happening, is harmful, and is caused by humans.

Researchers at SRIC-BI use cultural gender to measure the extent to which consumers view an issue, product, or attitude as stereotypically feminine (or tender) versus masculine (or tough). Findings showed that U.S. consumers perceive concern for global warming in culturally feminine/tender terms, whereas they perceive denying global warming as a problem in strictly masculine/tough terms. One reason for the lack of masculine associations with global warming concerns and solutions may be that the problem does not allow for brute-force solutions typical of masculine problem-solving strategies. Further contributing to the divide, current messaging themes surrounding global warming often appear in feminine terms, focusing on the nurturing issue of protecting children and future generations from the harm of warming.

In addition to revealing masculine denial of the problem, the ACVS findings revealed a type of consumer who primarily denies global warming’s existence on intellectual or scientific grounds. Global warming “contrarians” are outspoken, informed consumers who instill public doubt about the science of global warming through blogs, newspaper articles, and face-to-face discussions. Because of their outsize influence, contrarians became a key messaging target in 2008, and ecoAmerica is launching a major media-watch group to monitor and counter claims made by contrarians. ————–End quote

I have begun to feel that we “contrarians” have more imagination than most. The AGW propagandists are using untruthful pictures of fuzzy animals and pretty landscapes and claiming they are all going to be destroyed by CO2. But they get the pictures up. When I hear that power plants are not going to be built, I don’t need to see pictures of the destroyed industry, unemployment, suicides, or burned out houses where the poor died while trying to keep warm with a fire. I can see it all in my mind. I doubt that the average person does. They worry more about a perfectly safe polar bear warming himself in the sun on an ice floe than they do about the unemployed people down the street.

To get back on topic this report by Jeff Id needs a picture. Perhaps one of a frozen penguin chick with a caption about how cold it still is in Antarctica:

http://tinyurl.com/qxyxo5

Here is what the AGW propagandists do:

http://news.nationalgeographic.com/news/bigphotos/51400691.html

The pictures that appear here at WattsUpWithThat of “how not to measure temperature, number xx” are great. When policy topics come up such as biofuels, besides the charts and graphs think about adding a picture of fertilizer runoff from marginal cropland or a starving child from somewhere where the crops have failed and imports are too expensive.

@Leif

Thanks for the clarification. I withdraw the implication of fraud. It’s still bad science, though.

I used to be good at doing math in my head. One day our 6th grade science class was asked to calculate the distance light travels in a year. I correctly calculated the answer but received no credit because I didn’t show my work.

Steig’s methods of creating data where none exist are dubious. Still, he should be asked to show all his work. His paper should have been rejected outright and this also calls into question the peer-review process.

George E. Smith (10:21:17) :

Ryan’s work as I see it is basically a better representation of the spatial distribution of ground data, infilling between stations based on covariance with the satellite data. By releasing the RegEM TTLS requirement that the satellite grid data is fixed in the reconstruction, it becomes a more appropriate blend of the two sets, allowing the actual measured temperatures to direct the reconstruction. This is why it and other higher-order RegEM reconstructions match well with the area-weighted reconstructions, which included only surface station data.

As far as fraud goes, Ryan and I have no intention of calling Steig et al. fraud, and nobody else I know of who’s worked on this paper would call it fraud. I don’t mind calling it incorrect, though, and do have suspicions about choices made during publication – see the link in my comments above.

I think Mann was chosen as a coauthor for his work with RegEM on the M08 hockeystick.

” David Ball (23:08:49) :

Last night I awoke to find 2 feet of ice in my bed. Both of them belonged to my wife. 8^]”

Has to make it to finalist for QOTW.

DaveE.

From the post- “So without the code, replication would be difficult, and without replication, there could be no significant challenge to the validity of the Steig et al paper.”

I respectfully disagree. Without replication, there is no validity. period. It is totally against the scientific process to call a finding valid if it cannot be fully explained and recreated independent of the original scientist. Anyone remember cold fusion?

“Malcolm (02:17:46) :

As suspected it is the Steig methodology that is flawed.

Well done to everyone for a great effort.

Anthony should consider giving you guys a medal for this work.”

They’ll get their medals in due course, just not from Oh, Bummer.

DaveE.

“George E. Smith (10:21:17) :

Why attempt to create information where none exists.”

Jim Hansen, (Henson?)/GISS & Phil Jones/HADCRU have been doing it for years, why stop now?

DaveE.

Doesn’t this sound familiar?

“…Schön was, in effect, doing science backwards: working out what his conclusions should be, and then using his computer to produce the appropriate graphs…”

http://www.telegraph.co.uk/scienceandtechnology/5345963/The-scientific-fraudster-who-dazzled-the-world-of-physics.html

“Leon Brozyna (04:07:47) :

Perhaps Steig et al should take their books and consider taking part in a reality show. Something along the lines of FOX Broadcasting’s Hell’s Kitchen. Perhaps they could compete against Mann et al.”

This was Mann et al.! All that changed was the name on the cover.

DaveE.

“I think Mann was chosen as a coauthor for his work with RegEM on the M08 hockeystick.”

Is not that a very good reason to be suspicious, having in mind that Mann has a pretty good track record of performing scientific frauds, such as the Hockey Stick reconstruction? In Steig’s shoes I would be much more reluctant to team up with a proven fraudster.

Moreover, your assertion that Mann was probably invited because of his statistical skills in constructing the Hockey Stick is even more worrying and suggests that he was employed to help make a new fraud, by using the same fraudulent statistical tricks, abundantly documented by McIntyre and McKitrick. As far as I could see, Steve McIntyre considered the Steig et al. methodology similar to Mann’s in MBH 98 and 99.

George E. Smith (10:09:06) :

Does anyone really believe that the blue is representative of the black ?

No. The blue is simply a linear model. No one believes that a linear model is an accurate model for near-surface temperatures. It is used because it is simple and provides a gross comparison between different analyses. Because it is a poor model of the underlying physical process, the confidence limits associated with it are quite large.

Your comments about the Nyquist sampling theorem are not quite apt in this case. There is no attempt – either in Steig or in the above post – to reconstruct the high-frequency portion of the temperature signal. The attempt is to reconstruct the low-frequency portion, the wavelength of which is determined by the interval over which you want to determine the trend. Given that Steig was not concerned with trends of less than about 15 years, there is no need to sample at high rates.

Will there be aliasing and interpolation errors? Absolutely. A portion of that concern is eliminated by monthly averages, which are essentially a low-pass filter to remove the diurnal and higher frequency components. A portion of that concern is eliminated by the use of anomalies rather than absolute temperatures to (partially) remove the annual and higher frequency components. A portion of that is eliminated by the use of linear trends, which are insensitive to wavelengths much shorter than the period you are trending.

The purpose of statistics, when used properly, is to place bounds on those errors to avoid conclusions that over-reach the data.
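To make the three filtering steps above concrete, here is a minimal sketch in Python on purely synthetic data. Everything in it is an assumption chosen for illustration: the 360-day year with 30-day months, the 0.02 °C/year trend, the 10 °C seasonal swing, the noise level, and the function names. It is not the procedure used in Steig et al. or in the post above, only a toy showing that monthly averaging, anomalies, and a linear fit together can recover a slow trend buried under much larger short-period variation.

```python
import numpy as np

DAYS_PER_MONTH = 30          # simplifying assumption: 12 equal months
MONTHS_PER_YEAR = 12

def monthly_means(daily):
    """Average daily values into months (a crude low-pass filter)."""
    n_months = len(daily) // DAYS_PER_MONTH
    trimmed = daily[: n_months * DAYS_PER_MONTH]
    return trimmed.reshape(n_months, DAYS_PER_MONTH).mean(axis=1)

def monthly_anomalies(monthly):
    """Subtract each calendar month's long-term mean to remove the annual cycle."""
    n_years = len(monthly) // MONTHS_PER_YEAR
    by_year = monthly[: n_years * MONTHS_PER_YEAR].reshape(n_years, MONTHS_PER_YEAR)
    climatology = by_year.mean(axis=0)
    return (by_year - climatology).ravel()

def linear_trend(series, step_years):
    """Least-squares slope of the series, in units per year."""
    t = np.arange(len(series)) * step_years
    slope, _intercept = np.polyfit(t, series, 1)
    return slope

# Synthetic daily "temperatures": slow trend + annual cycle + random weather noise.
rng = np.random.default_rng(0)
n_years = 30
true_trend = 0.02            # assumed, degrees C per year
days = n_years * MONTHS_PER_YEAR * DAYS_PER_MONTH
t_years = np.arange(days) / (MONTHS_PER_YEAR * DAYS_PER_MONTH)
daily = (true_trend * t_years
         + 10.0 * np.sin(2 * np.pi * t_years)
         + rng.normal(0.0, 1.0, days))

monthly = monthly_means(daily)
anom = monthly_anomalies(monthly)
slope = linear_trend(anom, 1.0 / MONTHS_PER_YEAR)
print(f"recovered trend: {slope:.4f} C/yr (true value {true_trend})")
```

The fitted slope still carries an uncertainty that depends on the noise and the record length, which is where the confidence limits come in.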

Gary P (10:35:33) : —–

That was a sad article you quoted and doomed to failure actually. It tries to continue the “deniers are dumb cavemen with clubs” meme, but then is forced to acknowledge that “Global warming “contrarians” are outspoken, informed consumers”. Because their analysis is not internally consistent, I suspect that they will have trouble formulating any kind of coherent response more effective than the equivalent of the playground taunt “you’re just a doodyhead”.

A better analysis on their part would have relied more on the yin/yang argument of passivity/receptiveness vs drive/action. But if they had used that then they would have to admit that AGW types tend to be herd followers and ‘contrarians’ tend to be questioners. Probably not so good for the ol’ PR campaign.

Re: highaltitude (23:10:11) :

Accurate measurements are far from simple, and when, as is the case with the satellite data, each instrument has its own idiosyncrasies and each satellite has its own orbital history, the data has to be calibrated. If you took chemistry in college or high school, you will remember converting your measurements to STP conditions in order to ensure comparable results. There is no difference here. Even a simple measurement like the length of a board can be complex if the required accuracy is even moderately high, e.g., you want your corners square. As far as reading the thermometer goes, that may seem simple enough, but when there are many thermometers scattered over thousands of kilometers, and hundreds of meters difference in altitude, each reading could be accurate to within the instrument limits. But if you want to compare them continentally, you want to extract global trends in those readings. That is another matter, and satellites don’t USE thermometers, so you need to achieve comparison between disparate TYPES of instruments. It isn’t pseudo science at all, though it does look as though Steig et al. might have stopped work too soon.

Ryan O and Jeff Id, fraud or not, the results of the Steig et al. paper are not robust and are biased. For a paper that made the cover of Nature, and was relayed by the MSM the world over, we all are entitled to a bit more explanation from both authors and journal. The questions are: 1) Who are the Nature reviewers who bought Steig’s results at face value without doing what you guys did, i.e. replicate? The same reviewers failed to correct mistakes that Steve McIntyre found. 2) How long would it take, and what chance of success would a proper Nature comment about Steig’s paper have? Would the comment receive the same media coverage? 3) Bias or fraud, when are scientists who are systematically involved in controversial papers (i.e. Mann and the Team) going to be brought before a peer body to explain their actions and face consequences? Your work shows it is time to make these people accountable once and for all.

Manfred (12:24:16),

Very good link, thanks for posting. I’ve been following Schön’s and Hwang’s deception for a few years now, and from what I can see, the problem is getting worse. The current withholding of taxpayer funded data and methodology by many climate scientists is unacceptable. It invites fraud.

It wouldn’t be surprising in the least if Schön’s name was replaced with Michael Mann’s, their stories are so similar. It is outrageous that the IPCC political scientists have so uncritically accepted Mann’s fraudulent hockey stick chart — simply because Mann was saying what they wanted to hear. That’s not science, it’s advocacy of a predetermined result.

Ron de Haan (09:41:47) :

What is happening at the Southern Hemisphere?

In New Zealand, winter has arrived skipping Autumn this year.

http://www.iceagenow.com/2009_Other_Parts_of_the_World.htm

It certainly has. We’re getting strong southerlies straight from the Antarctic and it doesn’t feel like it’s warming much to me.

Not only has it been very cold, but also very wet. Dunedin airport has doubled its previous rainfall record for May, with a week to go before month’s end and the official start of winter. Snow has fallen in the higher parts of town for the third time this year; the start of the week saw some schools closed along with two of the three main routes into town. The main Dunedin-Christchurch highway was closed by flooding and the power companies are spilling water in record volumes; the hydro lakes are already over-full. Queensland (Australia) has also seen extensive flooding.

This is what might be expected in July and, even then, not every year. The ski-fields could have opened more than a month early if staffing had been available.

The farming community believes that it’s going to be a very hard winter. Good job we all understand that it’s only weather.

Re: “Leif Svalgaard (07:35:48): It is only fraud if there was fraudulent intent, and that has not been shown to be the case.”

Intent has so many different meanings and applications that it can be considered ambiguous unless used in context that provides specific meaning. See for example:

http://en.wikipedia.org/wiki/Fraud

This link provides several varying meanings for intent as used in the context of civil, academic and criminal rules.

http://article.nationalreview.com/?q=NjZkNGZiODM0YjNmODNkMWFhZDU5Mzk2ZDMwNGRmMDQ

This article by Andrew McCarthy (prosecutor of 1993 World Trade Towers bombers) discusses the differences between “general intent” and “specific intent” in a criminal law context concerning what is torture and the requirements to prove it for water boarding.

Steig et al. could commit fraud in numerous ways, including falsifying data, conducting research using empirical data that is deceptively organized to produce false outcomes or opinions that are intended to mislead the public, failing to disclose conflicts of interest that can bias the research, refusing to disclose data and analysis in order to prevent detection of deceptive work, or violating laws, rules and procedures that are intended to protect the public from fraudulent or misleading research by providing transparency.

Academic fraud, intellectual dishonesty, and scientific misconduct are forms of fraud that should discredit research and those responsible. Intent in those contexts requires proof that the actors knew what they were doing, and that the outcome was what a reasonable person who performed the acts would expect. If the outcome of the misconduct misleads those who rely upon the research, intent to commit fraud is proved.

John Silver (06:33:02) :

I forgot: The Norwegian Nobel Committee (Den norske Nobelkomité) awards the Nobel Peace Prize each year. Its five members are appointed by the Norwegian parliament.

They are politicians!! Got that?

http://en.wikipedia.org/wiki/Norwegian_Nobel_Committee

Sure, the Nobel Peace Prize has always been a political one. At the time Alfred Nobel established the Nobel Prize (1895), Sweden and Norway were in a union. That ended in 1905.