The opening paragraph of NOAA’s press release “NCDC Releases June 2013 Global Climate Report” begins with alarmist statistics and an error (my boldface):

According to NOAA scientists, the globally averaged temperature for June 2013 tied with 2006 as the fifth warmest June since record keeping began in 1880. It also marked the 37th consecutive June and 340th consecutive month (more than 28 years) with a global temperature above the 20th century average. The last below-average June temperature was June 1976 and the last below-average temperature for any month was February 1985.

First, the error: according to the NOAA Monthly Global (land and ocean combined into an anomaly) Index (°C), the last below-average temperature for any month was in reality December 1984, not February 1985. It makes one wonder: if they can’t read a list of temperature anomalies, should we believe they can read thermometers?
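Checking a claim like this against the index is mechanical. Here is a minimal sketch, assuming the monthly index has already been loaded as (year, month, anomaly) tuples; the function name and the sample values are hypothetical and only illustrate the lookup, they are not NOAA data.

```python
from typing import Iterable, Optional, Tuple

Row = Tuple[int, int, float]  # (year, month, anomaly in deg C)


def last_below_average(rows: Iterable[Row]) -> Optional[Tuple[int, int]]:
    """Return (year, month) of the latest month with a negative anomaly, or None."""
    latest = None
    for year, month, anomaly in rows:
        # Tuples compare year first, then month, so this tracks the latest date.
        if anomaly < 0 and (latest is None or (year, month) > latest):
            latest = (year, month)
    return latest


# Hypothetical values for illustration only (not the NOAA index).
sample = [(1984, 11, 0.02), (1984, 12, -0.05), (1985, 1, 0.01), (1985, 2, 0.03)]
print(last_below_average(sample))  # (1984, 12)
```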

Second, it’s very obvious that NOAA press releases have degenerated into nothing but alarmist babble. More than two years ago, NOAA revised the base years they use for anomalies for most of their climate metrics. The CPC Update to Climatologies Notice webpage includes the following statement (my boldface):

Beginning with the January 2011 monthly data, all climatologies, anomalies, and indices presented within and related to the monthly Climate Diagnostics Bulletin will be updated according to current WMO standards. For datasets that span at least the past 30 years (such as atmospheric winds and pressure), the new anomalies will be based on the most recent 30-year climatology period 1981-2010.

Apparently, the NCDC didn’t get the same memo as the CPC. The Japanese Meteorological Agency (JMA) got the memo.

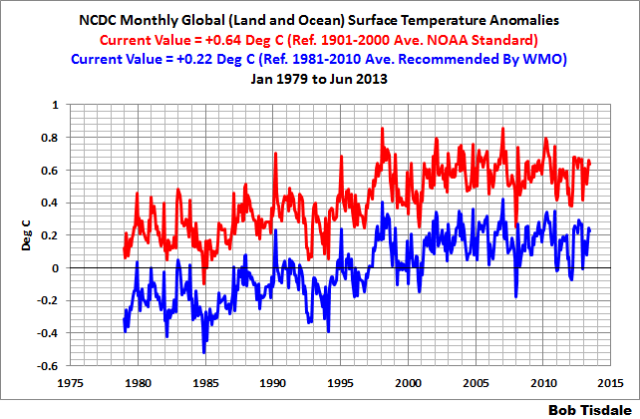

The following graph compares the NCDC global surface temperature product from January 1979 to June 2013, with the base years of 1901-2000 used by the NCDC and the base years of 1981-2010 recommended by the WMO.
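Because NCDC publishes anomalies rather than absolute temperatures, a comparison like this one re-expresses the published anomalies against the new base years. A minimal sketch, assuming the anomalies are held in a dict keyed by (year, month); the function name and the toy series are hypothetical. For each calendar month it subtracts the mean of that month’s existing anomalies over the new base years.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Tuple

Series = Dict[Tuple[int, int], float]  # (year, month) -> anomaly in deg C


def rebaseline(series: Series, start: int, end: int) -> Series:
    """Re-express anomalies relative to the start..end (inclusive) base years.

    Every calendar month in the series must have data in the new base period,
    otherwise the offsets lookup raises KeyError.
    """
    by_month = defaultdict(list)
    for (year, month), value in series.items():
        if start <= year <= end:
            by_month[month].append(value)
    offsets = {m: mean(values) for m, values in by_month.items()}
    return {(y, m): v - offsets[m] for (y, m), v in series.items()}


# Hypothetical June anomalies on a 1901-2000 base, rising 0.01 deg C per year.
june = {(y, 6): 0.01 * (y - 1950) for y in range(1901, 2014)}
rebased = rebaseline(june, 1981, 2010)
```

Each calendar month is shifted by one constant, so year-to-year differences for a given month (and therefore the ranking of, say, all Junes) are unchanged; only the zero line moves.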

If the NCDC had revised their base years to comply with WMO recommendations, the press release wouldn’t have the same alarm-bell ring to it:

According to NOAA scientists, the globally averaged temperature for June 2013 tied with 2006 as the fifth warmest June since record keeping began in 1880. It also marked the 17th consecutive June and 16th consecutive month (less than two years) with a global temperature above the 1981-2010 average. The last below-average June temperature was June 1996 and the last below-average temperature for any month was February 2012, though December 2012 was basically zero.

The monthly global surface temperature stats would be pretty boring if NOAA complied with WMO standards. Pretty boring indeed.

Errr…

The reference period chosen makes absolutely NO difference to the magnitude of the anomalies or the ranking of which year or month is hottest or fourth warmest or whatever.

Only the mathematically illiterate are going to think that a 0.22°C anomaly on the 1981-2010 reference period is somehow ‘better’ than a 0.64°C anomaly on the 1901-2000 reference period.
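Both sides of this exchange can be checked with a toy example. A minimal sketch using hypothetical June anomalies and an assumed constant offset of 0.40 deg C between base periods: shifting every value by the same constant leaves the ranking alone, but it changes the anomaly values and therefore how many recent months count as “above average,” which is the statistic the press release leads with.

```python
from typing import List, Sequence


def trailing_run_above(anomalies: Sequence[float]) -> int:
    """Count how many of the most recent values are above zero (the base-period mean)."""
    run = 0
    for value in reversed(anomalies):
        if value <= 0:
            break
        run += 1
    return run


def rank_order(anomalies: Sequence[float]) -> List[int]:
    """Indices sorted from warmest to coolest."""
    return sorted(range(len(anomalies)), key=lambda i: anomalies[i], reverse=True)


# Hypothetical June anomalies relative to an older, cooler base period.
old_base = [0.10, 0.35, 0.20, 0.45, 0.30, 0.50]
# Rebaselining to a later, warmer base subtracts one constant (assumed 0.40 here).
new_base = [a - 0.40 for a in old_base]

print(rank_order(old_base) == rank_order(new_base))  # True
print(trailing_run_above(old_base), trailing_run_above(new_base))  # 6 1
```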

So, it’s the warmest decade in a century (after adjusting away the dust bowl). It’s also the coolest millennium in this Epoch. Reporting in this one-sided manner shows that these agencies’ press releases are no longer controlled by objective observers but by advocates of a political agenda.

How would the observations differ if the warming were natural and cyclical over longer timescales than our measly record?

Thanks, Bob. A very good point indeed.

It seems like NOAA is willing to go down in warmista flames to support a dying agenda that causes poverty.

Who cares what the baseline is? Trends show up regardless, and anyone intelligent and/or interested enough to be reading articles like this can easily understand the shifting anomalies. UAH shifted its baseline the other way. Comparisons to average 20th century temperatures are useful, as are comparisons to the 1981-2010 averages. The major temp keepers have always used different baselines, and there are numerous sources that plot them together. Much ado about nothing.

The Central England Temperature record (CET) doesn’t suggest that June 2013’s temperature differs in any notable way from those of preceding centuries. This June it was 13.6 degrees Centigrade, and in 1659 13.0 degrees.

A simplistic observation? Of course, but a look at all the June temperatures over the years indicates that recent June temperatures are typical for this month.

At no time has the average yearly temperature quoted in the CET gone above 11 degrees Centigrade. That’s from 1659 to the present day.

Yes, averages lose detailed information about what happened month by month in any given year (the average temperatures in 1956, 2010, 1902, 1754, and 1659 are all given as 8.83 degrees Centigrade), but isn’t it interesting that 2010 is in the above list?

I’m still waiting for someone to explain that new quartiles map to me. Why are areas with negative temperature anomalies consistently shown as above normal on the quartiles map? Why do areas with a slight positive anomaly show as much above normal on the quartiles map? I understand it depends on the area and anomaly, but I just can’t wrap my head around why the quartiles map looks so much warmer than the raw anomaly map.

These are the two maps I am talking about:

http://www.ncdc.noaa.gov/sotc/service/global/map-blended-mntp/201306.gif

http://www.ncdc.noaa.gov/sotc/service/global/map-percentile-mntp/201306.gif

The global temperature record is quoted as an anomaly versus that month’s average global temperature, so every monthly anomaly is comparable to every other monthly anomaly (there is a seasonal cycle in global temperatures, with July being the warmest month).

It makes no sense to say this June versus that June.

And then the NCDC is adjusting its historical temperature record every month and it is a systematic change, cooling the past (particularly around 1900) and warming the recent temperatures (particularly in the mid-1990s). They have added 0.15C to the trend in just the last five years.

http://s7.postimg.org/3y7l79bpn/NCDC_Changes_since_Dec_2008.png

I’m appalled. If I believed I was responsible for global warming I would kill myself to save the planet. I’m not and I won’t. CAGW has been, and always will be, about the money and control.

Buzz B says:

July 22, 2013 at 5:58 am

“Who cares what the baseline is? Trends show up regardless, and anyone intelligent and/or interested enough to be reading articles like this can easily understand the shifting anomalies. UAH shifted its baseline the other way. Comparisons to average 20th century temperatures are useful, as are comparisons to the 1981-2010 averages. The major temp keepers have always used different baselines, and there are numerous sources that plot them together. Much ado about nothing.”

“Who cares what the baseline is?”

Apparently they do, since they pick one that allows them to hawk global warming, which has not been happening for about 15 years despite every effort to manipulate the raw data.

Clearly their methodology is to provide red meat for the progressive agenda which is to mislead the people, nothing more. Do you see the acceleration in warming mentioned by the Administration?

Anthony: Off topic, but you’d probably be interested in this (if you haven’t already seen it): http://cliffmass.blogspot.ca/2013/07/are-nighttime-heat-waves-increasing-in.html

Hi Bob,

Sorry for the off-topic comment, but are you aware of anywhere I could find the latest El Nino Modoki index weekly values please? The most recent I can find online only go to October 2012. To judge by trade wind anomalies I’d expect that the current value may be in seriously negative territory, but I can’t find the data that would show whether this is the case or not.

Bob, it seems to me that you are confusing the base temperature for the anomaly calculation with the _average_. Before sounding off you need to be more careful.

Whatever base period is used does not affect what years are above or below average.

The key criticism is the one Bill Innis makes, that most of the reason temperatures are “warmer” is because they keep cooling the past. They are rigging the data to fit what they want to say.

In the time since the end of the LIA, anything but a steady increase in temperature, and changes to those things affected by increasing temperature, would be alarming. This temperature drift is not alarming. The governmental response to it gives me goose bumps.

izen says:

July 22, 2013 at 5:23 am

“…Only the mathematically illiterate are going to think that a 0.22°C anomaly on the 1981-2010 reference period is somehow ‘better’ than a 0.64°C anomaly on the 1901-2000 reference period.”

_____________________________________________________________________________

This is a press release and therefore goes out to the dyscalculic press and then to the even more dyscalculic public.

The monthly Global Temperature Report – UAHuntsville is easy to read, at http://nsstc.uah.edu/climate/

Also very clear, the Latest Global Average Tropospheric Temperatures at http://www.drroyspencer.com/latest-global-temperatures/

When I read Matt’s “Why are areas with negative temperature anomalies consistently shown as above normal on the quartiles map?” above, I thought it was because the normal temperature for that area is lower than the negative anomaly. But even so, given the background of alarmist rhetoric from NOAA, I stick with UAH.

Keith says: “Sorry for the off-topic comment, but are you aware of anywhere I could find the latest El Nino Modoki index weekly values please?”

I’ve never found El Nino Modoki values published anywhere. I’ve always had to calculate them using the equation in Ashok et al (2009):

https://www.jamstec.go.jp/frcgc/research/d1/iod/publications/modoki-ashok.pdf

Regards
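The El Nino Modoki Index in the Ashok et al. papers is a weighted difference of area-averaged SST anomalies over three tropical Pacific boxes: EMI = [SSTA]A − 0.5×[SSTA]B − 0.5×[SSTA]C. A minimal sketch of that weighting, assuming the box-averaged anomalies have already been computed; the box bounds below are as I understand them from the Ashok et al. papers and should be checked against the linked PDF before use.

```python
from typing import Dict, Tuple

# Box bounds (lat_south, lat_north, lon_west, lon_east), longitudes in degrees east (0-360).
# ASSUMPTION: taken from my reading of the Ashok et al. El Nino Modoki papers; verify them.
EMI_BOXES: Dict[str, Tuple[float, float, float, float]] = {
    "A": (-10.0, 10.0, 165.0, 220.0),  # 165E-140W, central Pacific
    "B": (-15.0, 5.0, 250.0, 290.0),   # 110W-70W, eastern Pacific
    "C": (-10.0, 20.0, 125.0, 145.0),  # 125E-145E, western Pacific
}


def emi(ssta_a: float, ssta_b: float, ssta_c: float) -> float:
    """EMI from area-averaged SST anomalies (deg C) for boxes A, B and C."""
    return ssta_a - 0.5 * ssta_b - 0.5 * ssta_c


# Hypothetical box anomalies: warm central Pacific, cool flanks.
print(round(emi(0.6, -0.2, -0.4), 3))  # 0.9
```

The area averaging itself needs gridded SST data (HadISST, for instance) and is left out here.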


Greg says: “Bob, it seems to me that you are confusing the base temperature for the anomaly calculation with the _average_. Before sounding off you need to be more careful.”

I’m not confusing anything. The average depends on the base years, Greg. Look again at Figure 1.

“…the press release wouldn’t have the same alarm-bell ring to it,” and therefore it would have failed to achieve its objective.

izen says: “Only the mathematically illiterate are going to think that a 0.22°C anomaly on the 1981-2010 reference period is somehow ‘better’ than a 0.64°C anomaly on the 1901-2000 reference period.”

It’s all a matter of perspective.

Izen: “Only the mathematically illiterate are going to think that a 0.22°C anomaly on the 1981-2010 reference period is somehow ‘better’ than a 0.64°C anomaly on the 1901-2000 reference period.”

That’s the whole reason why they do it. Most of the voting public is “mathematically illiterate”.

izen says: “The reference period chosen makes absolutely NO difference to the magnitude of the anomalies…”

Not only is your trollish remark wrong, it is laughably wrong. Once again, you’ve broadcast to everybody reading this thread that you haven’t the slightest idea what you’re talking about. Look again at Figure 1.

izen says: “…or the ranking of which year or month is hottest or fourth warmest or whatever.”

I didn’t say the reference period changed the ranking, so why are you arguing about it?

But the base years do change the values of the anomalies, contrary to your beliefs.

Thanks Bob.

——————

Several years ago I, and others, noted the problem the gatekeepers of the data would have after 2010, when the updated 30-year climatology would appear. Having adjusted the temperature record to be high during the 2001-2010 years, they began to have the properties of a calculated ‘mean’ or simple average (strongly affected by outliers) stare them in the face. Only if the CO2 hypothesis worked could the numbers continue to show what they seemed to want. With strong natural variation at work, there is little wonder that NCDC failed “to get the memo.”

On the other hand, one ought to understand why the 30-year period became “climatology” in the first place and then reflect on whether or not it has meaning in the science of climate. I don’t think it does. It is just a convenience for human memory. Why not use the whole record for the science? That would have NCDC using, say, 1901 through 2012 and not stopping at the year 2000. They are conflicted.

I always thought Dr. Uccellini was a class act; I can’t believe he is now a part of NOAA’s global temperature manipulation for political ends. I am hopeful (though increasingly doubtful) that he has not yet had a chance to evaluate this situation.

Thanks, John F. Hultquist.

I made a mental note of your words then, now I’m beginning to see the problems floating up from the bottom of the deception.

I see more extreme acrobatics in the scam, epicycles all the way down.

izen and Greg: If you’re not aware, temperature anomalies for a given month are created by subtracting the average temperature for that month for a base period from the temperature for a given month. Because global temperatures have warmed, the average for the base period of 1901-2000 will be lower than the average for the period of 1981-2010.

The following graph is of NOAA’s global ERSST.v3b sea surface temperatures for June from 1854 to 2013. Also illustrated are the average June sea surface temperatures for the base-year periods of 1901-2000 and 1981-2010. Obviously, the sea surface temperature anomalies (calculated as the differences between the sea surface temperatures and the base-year average for the respective month) will be higher for the base years of 1901-2000 because the average temperature is about 0.35 deg C cooler than the average for the period of 1981-2010.

http://bobtisdale.files.wordpress.com/2013/07/global-sst-vs-base-year-averages1.png
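The calculation described above, done from absolute temperatures, can be sketched as follows. This is a minimal illustration, assuming monthly temperatures in a dict keyed by (year, month); the function name and the steadily warming toy series are hypothetical, not ERSST.v3b values. With a warming series, the 1901-2000 climatology is cooler than the 1981-2010 one, so every anomaly on the older base is larger by the difference between the two base-period averages.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Tuple

Temps = Dict[Tuple[int, int], float]  # (year, month) -> absolute temperature, deg C


def monthly_anomalies(temps: Temps, base_start: int, base_end: int) -> Temps:
    """Anomaly = temperature minus the base-period mean for the same calendar month."""
    base = defaultdict(list)
    for (year, month), t in temps.items():
        if base_start <= year <= base_end:
            base[month].append(t)
    climatology = {m: mean(values) for m, values in base.items()}
    return {(y, m): t - climatology[m] for (y, m), t in temps.items()}


# Hypothetical June temperatures warming 0.01 deg C per year (illustrative only).
temps = {(y, 6): 16.0 + 0.01 * (y - 1901) for y in range(1854, 2014)}
old = monthly_anomalies(temps, 1901, 2000)
new = monthly_anomalies(temps, 1981, 2010)
print(round(old[(2013, 6)] - new[(2013, 6)], 3))  # 0.45
```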

Thanks, Bob. Your clarity is very welcome.

As the discrepancies between reality and CAGW become more apparent, are we to expect more extreme acrobatics in the scam, or can we hope for a return to science?

December 1984 was the coldest I’ve ever been in the state of North Carolina. High was -5F on my grandfather’s porch way in the mountains.

Wow. 28 consecutive years above 20th century average. Guess they could have said 28 consecutive years above 19th century average too. In 1979. But at that time, cooling was hot.

Thanks Bob. The values I did find were from the same site as the Ashok paper:

http://www.jamstec.go.jp/frcgc/research/d1/iod/modoki_home.html.en

but as you can see, the monthly values currently only go to March 2013 and the weekly values only to October 17th 2012. Do you know where the raw data to be able to calculate the EMI may be available please?

Yes, ConTrari. Thanks.

Pray the Earth doesn’t take the steep down road any time soon.

The reference Bob gives (or http://www.jamstec.go.jp/frcgc/research/d1/iod/enmodiki) provides monthly and weekly values directly. The equation(s) Bob refers to are far too difficult for me to handle :-(( I’ve simply downloaded the actual values. Easy!

Robin

Robin Edwards: Thanks for finding the El Nino Modoki Index data. I couldn’t get your link to work. I assume they’re these.

Weekly:

http://www.jamstec.go.jp/frcgc/research/d1/iod/DATA/emi.weekly.txt

Monthly:

http://www.jamstec.go.jp/frcgc/research/d1/iod/DATA/emi.monthly.txt

And the main page for the JAMSTEC EMI:

http://www.jamstec.go.jp/frcgc/research/d1/iod/enmodoki_home_s.html.en

Regards

Keith: According to Ashok et al (2009), they used HadISST for that paper. Unfortunately, HadISST lags the other datasets by a few months. It’s current through May 2013 at the KNMI Climate Explorer:

http://climexp.knmi.nl/selectfield_obs.cgi?someone@somewhere

The weekly data start date gives me the impression they’re using NOAA’s Reynolds OI.v2 data. And it of course is available through the NOAA NOMADS website:

http://nomad3.ncep.noaa.gov/cgi-bin/pdisp_sst.sh?ctlfile=oiv2.ctl&varlist=on&new_window=on&lite=&ptype=ts&dir=

It is interesting to see that Government Organizations are being penetrated by NGO’s. The EPA now refers readers to the Environmental Defense Fund for more data on the toxic nature of chemicals. That is like having the State Department refer to the Communist Party of America for more info on Foreign Policy.

Example: http://www.epa.gov/enviro/html/emci/chemref/68476346.html

DIESEL FUEL NO. 2

CAS #68476-34-6

The following information resources are not maintained by Envirofacts. Envirofacts is neither responsible for their informational content nor for their site operation, but provides references to them here as a convenience to our Internet users.

Reference information on this chemical can be found at the following locations:

Non-Governmental Organizations

The Environmental Defense Fund’s Chemical Scorecard summarizes information about health effects, hazard rankings, industrial and consumer product uses, environmental releases and transfers, risk assessment values and regulatory coverage.

So, for the most common of products produced in high volume, diesel fuel, the EPA intimates that there is no info on the toxicological nature of this product. Sorry, there is a lot of data on this product.

So, let’s go to the EDF site: http://scorecard.goodguide.com/chemical-profiles/summary.tcl?edf_substance_id=68476-34-6

•Human Health Hazards

Health Hazard Reference(s)

Recognized: —

Suspected: —

——————————————————————————–

•Hazard Rankings Data lacking; not ranked by any system in Scorecard.

——————————————————————————–

•Chemical Use Profile This is a high volume chemical with production exceeding 1 million pounds annually in the U.S. No data on industrial or consumer use in Scorecard.

——————————————————————————–

•Rank Chemicals by Reported Environmental Releases in the United States No data on environmental releases in Scorecard.

•Regulatory Coverage Not on chemical lists in Scorecard.

——————————————————————————–

•Basic Testing to Identify Chemical Hazards 6 of 8 basic tests to identify chemical hazards have not been conducted on this chemical, or are not publicly available according to US EPA’s 1998 hazard data availability study.

Track whether this chemical is being voluntarily tested to provide basic hazard data. Copy its CAS number above and check the HPV Chemical Tracker.

——————————————————————————–

•Information Needed for Safety Assessment Lacks at least some of the data required for safety assessment. See risk assessment data for this chemical from U.S. EPA or Scorecard.

There is no further info at EDF worthy of referring to this site for more info. Curious!

Looking at other CAS numbers yields similar results. It seems that EDF wants you to believe that their perception of lack of data means that nothing has ever been done to test this “common” chemical. This is so far from the truth as to be totally unbelievable. Tox data on this and hundreds of other chemicals are readily available or can be inferred by Structure-Activity Relationship programs developed by the EPA.

What IS going on here?

I think the apparent disagreement between Bob Tisdale and izen and Greg points to something that needs clarification.

We agree on these three points, right?

1. Comparing one absolute temperature with another absolute temperature is legit.

2. Comparing an “anomaly” with another anomaly referenced to the same base period is legit.

3. Comparing an anomaly with another anomaly referenced to a *different* base period is bogus.

It looks to me as if Bob Tisdale is accusing NOAA of doing #3, although he doesn’t say so directly, while izen and Greg are assuming that NOAA is sufficiently careful to make its comparisons via #1 or #2. Personally, although I may have overlooked something in Bob Tisdale’s post, I see no evidence that NOAA has done #3, nor even an explicit allegation that it has. Please, Bob Tisdale, if that is your point, could you make it clearer?
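One way to keep points 2 and 3 apart is to carry the base period along with every anomaly and refuse to compare values tagged with different bases until one is converted. A minimal sketch; the class and function names are hypothetical, and the 0.42 offset is simply the gap implied by the 0.64°C and 0.22°C figures quoted earlier in the thread, used here for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Anomaly:
    value: float           # deg C
    base: Tuple[int, int]  # base period, inclusive years, e.g. (1901, 2000)


def difference(a: Anomaly, b: Anomaly) -> float:
    """Point 2 is allowed; point 3 (different base periods) is rejected."""
    if a.base != b.base:
        raise ValueError(f"base periods differ: {a.base} vs {b.base}; convert first")
    return a.value - b.value


def convert(a: Anomaly, new_base: Tuple[int, int], offset: float) -> Anomaly:
    """Re-express on new_base; offset = new base-period mean minus old one, same month."""
    return Anomaly(a.value - offset, new_base)


june_2013 = Anomaly(0.64, (1901, 2000))
same_month_new_base = Anomaly(0.22, (1981, 2010))
converted = convert(june_2013, (1981, 2010), offset=0.42)  # illustrative offset
print(round(difference(converted, same_month_new_base), 6))  # 0.0
```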

‘Climatological period’ is an exercise in psychology and has nothing to do with the physics of the earth or any other planet. It may have something to do with the emotional state of the ‘period perceiver’.

Don’t know Bob, I mean the WMO is pretty much absolutely positively certain that the earth is getting warmer, MUCH warmer. I mean in their most recent report on the years 2000-2010 they say,

“Nine of the decade’s years were among the 10 warmest on record. The warmest year ever recorded was 2010, with a mean temperature anomaly estimated at 0.54°C above the 14.0°C baseline, followed closely by 2005. The least warm year was 2008, with an estimated anomaly of +0.38°C, but this was enough to make 2008 the warmest La Niña year on record.”

http://library.wmo.int/pmb_ged/wmo_1119_en.pdf

“But the base years do change the values of the anomalies, contrary to your beliefs.”

Selecting base periods does change the values of anomalies. That’s almost a tautology.

Ideally you want to do what NOAA have done and use a century scale (1901-2000) or use the whole period. If you can’t do that, then you should use the minimum of 30 years as set out by the WMO. The point of the 30-year window is that when you average 30 Januaries you will hopefully have a small variance. If you are ABLE to use longer periods, say 100 years or the entire time series, that is a big bonus when it comes to looking at certain things that are variance dependent. So the WMO has set a standard that you can consider a MINIMUM. If you are going to calculate anomalies, use a minimum of 30 years. If you can use more years, then by all means use more. There is a specific reason why you might want to use more... see if you can guess.
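The variance point can be made concrete with the standard error of a base-period mean. A minimal sketch, assuming independent years and a hypothetical year-to-year standard deviation of 0.15 deg C for one calendar month; real temperature series are autocorrelated, so this independence assumption is optimistic.

```python
import math


def climatology_standard_error(interannual_sd: float, n_years: int) -> float:
    """Standard error of a base-period mean, assuming independent years."""
    return interannual_sd / math.sqrt(n_years)


sd = 0.15  # hypothetical interannual standard deviation for one calendar month, deg C
for n in (30, 100):
    print(n, round(climatology_standard_error(sd, n), 4))
```

Going from 30 to 100 years shrinks the uncertainty of the climatology by a factor of about 1.8 (the square root of 100/30).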

@jai mitchell 10:46 am

I mean the WMO is pretty much absolutely positively certain that the earth is getting warmer, MUCH warmer.

The WMO is not measuring the temperature of the “Earth”.

The WMO is using statistics that ultimately come from

1: a few thousand thermometers, each of which has its own UHI and micrositing issues,

2: with error corrections, adjustments, and regional homogenization in some black-box algorithm,

3: in a continuous process whereby historical temperatures have a non-random tendency to get cooler.

Even given all the problems above, the temperature plateau for the past 15 years should give anyone objective pause. Maybe the earth was getting warmer, maybe not. But the evidence that it is getting warmer is getting weaker by the month.

The unjustified outrage concerning base years is puzzling, and that outrage makes regular appearances on WUWT. It reflects the tense mindset of those who fret about it, nothing more. Data sets can be converted to any baseline period for purposes of apples-to-apples comparison. As Mosher points out, eliminating angst about direct data set comparison isn’t necessarily the driving factor in selecting a base period.

I have now had a look at the El Nino Modoki Monthly data, which do indeed terminate at March 2013. However, my guess/expectation is that any data added since then will do little to affect the very obvious main features of this index. My old-fashioned but nevertheless trusted technique is to plot the cusums of /any/ time series that I acquire. It takes me only a very few minutes and provides an excellent overview of the main features (grand scale) of the data. ENModoki is a case in point. If you plot the data you get a typical climate data mess, which may become somewhat less daunting if you form some moving averages – remember that you will be dismissing much of the detail, as in any rounding process. Cusums retain all the detail. I wish I could post some plots, but I don’t know how.

However, what comes out very clearly is that there are three main regimes, during which no enduring changes occurred. The first runs from the start of the data (1870) to Aug 1900, when a sharp upward event occurred. Then for the next 69 years there was no further enduring change; El Nino Modoki was effectively constant. Then in Sept 1970 a sudden downturn occurred. The new “level” has been maintained up to March 2013, when the data end, though with at least one pronounced up-and-down excursion. New data will not have any effect on these robust conclusions unless an enduring change starts.

I do not attempt to put forward any explanations or reasons for these abrupt changes. I simply note that the 1970 downward step precedes the great PDO upward change, which occurred (by my reckoning) in Jul 1976, and which was followed a few months later by step changes in (most) Alaskan sites.

It is the rather ubiquitous occurrence of similar (presumably unrelated) step changes all over the climate data from countless sources that gives rise to my surmise that climate may be inherently unpredictable. Such step changes, revealed by cusum plotting, generally exhibit no “warning” signs as far as I can see. Yet they happen. For the ENModoki data, just fit some regressions for the periods I’ve suggested as the ends of stable periods, and compare them with the simple (i.e. naive) regression on the complete original data set. Its linear regression (which people seem to think of as a linear trend!) has RSq = 0.002578, a constant term = 0.993, and the slope is -0.0005116, Std Error 0.0002428, with its t value therefore 2.107, probability 0.0353, and of course the cusum of the residuals has the same properties as the original data cusum. There are 1719 data points, by the way.

I consider this trend fitting as being of no practical value. Please enlighten me.

Robin
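Robin’s two tools are easy to reproduce. A minimal sketch: the cusum here is taken as the cumulative sum of deviations from the overall mean (my assumption about the exact variant he uses), and the regression is plain OLS against the month index. His quoted t value is just slope divided by standard error: 0.0005116 / 0.0002428 ≈ 2.107 (he reports the absolute value).

```python
from itertools import accumulate
from statistics import mean
from typing import List, Sequence, Tuple


def cusum(values: Sequence[float]) -> List[float]:
    """Cumulative sum of deviations from the overall mean.

    A steady slope in the cusum marks a regime whose level differs from the
    overall mean; a change of slope marks a step change between regimes.
    """
    m = mean(values)
    return list(accumulate(v - m for v in values))


def ols_slope_t(values: Sequence[float]) -> Tuple[float, float, float]:
    """Return (slope, standard error, t) for an OLS line against index 0..n-1 (n >= 3)."""
    n = len(values)
    x_bar, y_bar = (n - 1) / 2, mean(values)
    sxx = sum((x - x_bar) ** 2 for x in range(n))
    slope = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values)) / sxx
    intercept = y_bar - slope * x_bar
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in enumerate(values))
    se = (rss / (n - 2) / sxx) ** 0.5
    return slope, se, slope / se


# A single step change: the cusum bottoms out exactly where the step occurs.
step = [0.0] * 10 + [1.0] * 10
cs = cusum(step)
print(cs.index(min(cs)))  # 9
```

The step series also shows why a straight-line fit can mislead: OLS reports a positive “trend” for data that are really two flat regimes.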

@Mosher 11:31 am

Ideally you want to do what NOAA have done and use a century scale (1901-2000) or use the whole period.

Yeah! That way you can keep your investment in adjusted downward historical temperature records. Why let newer decades of better quality temperatures “infect” the studies from early decades with records NCDC worked so long to lower? /sarc.

For the record…. I don’t have much problem using raw unadjusted absolute temperature records, uncut, unspliced, from long running stations. We can recognize each record will be contaminated by some UHI effects, but the UHI is in there only once, rather than many times. These records should serve as an independent analysis for any conclusions that come from data that went through the homogenizer and/or the BEST Cuisinart.

jai mitchell says: “Don’t know Bob, I mean the WMO is pretty much absolutely positively certain that the earth is getting warmer, MUCH warmer. I mean in their most recent report on the years 2000-2010 they say…”

The graph I provided shows the Earth has warmed, jai Mitchell. So I’m not sure what your point is. This is a discussion of the effects of base years on the presentation of that warming, or has the topic of the post eluded you once again?

Peter Pearson: I believe the problem is more fundamental. Based on their comments, izen and Greg did not understand how anomalies were calculated and that the base years used for anomalies impact the presentation of the data.

Steven Mosher says: “Ideally you want to do what NOAA have done and use a century scale (1901-2000) or use the whole period.”

Obviously, the WMO disagrees with you.

Mosher,

As far as I know, NCDC doesn’t actually use 1901-2000 to calculate anomalies (since they use CAM, they would be throwing out a lot of stations with shorter records). They use a shorter period (1971-2000?) and rebaseline the results.

Using 1981-2010 to calculate the anomalies with a common anomaly method (CAM) and GHCN-M would not be a particularly good idea. There are still a sizable number of stations with no data in GHCN-M after 1992, and a 1981-2010 baseline period would only give 10 years of common coverage for those.
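The coverage problem described here can be illustrated with a simple eligibility check. A minimal sketch, assuming each station is represented only by the years in which it has data; the station names and the 25-year minimum are hypothetical, and the threshold NCDC actually applies is not stated in this thread.

```python
from typing import Dict, Iterable, List


def years_in_base(station_years: Iterable[int], base_start: int, base_end: int) -> int:
    """Count distinct years of data a station has inside the base period (inclusive)."""
    return len({y for y in station_years if base_start <= y <= base_end})


def cam_eligible(stations: Dict[str, Iterable[int]], base_start: int, base_end: int,
                 min_years: int) -> List[str]:
    """Stations with enough base-period coverage to get their own climatology."""
    return sorted(
        sid for sid, years in stations.items()
        if years_in_base(years, base_start, base_end) >= min_years
    )


# Hypothetical stations: one whose record stops in 1990, one still reporting.
stations = {"ends_1990": range(1950, 1991), "full": range(1950, 2014)}
print(cam_eligible(stations, 1981, 2010, min_years=25))  # ['full']
print(cam_eligible(stations, 1961, 1990, min_years=25))  # ['ends_1990', 'full']
```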

The data goes all the way back to 1880? Wow! That’s a significant proportion of earth’s history — NOT!

bobby said,

The graph I provided shows the Earth has warmed, jai Mitchell. So I’m not sure what your point is. This is a discussion of the effects of base years on the presentation of that warming, or has the topic of the post eluded you once again?

Bob,

while I am sure that you would love to have a baseline of all measures taken from a time when the Anthropogenic Global Warming signal has definitively been extracted from the noise of the record with statistically significant accuracy, the simple fact is that our global targets for preserving our modern civilization in the face of undeniable and potentially catastrophic climate change are all compared to those levels determined as the norm prior to 1880.

http://ec.europa.eu/clima/policies/international/negotiations/future/docs/brochure_2c_en.pdf

Global mean temperature increases of up to 2°C (relative to pre-industrial levels) are likely to allow adaptation to climate change for many human systems at globally acceptable economic, social and environmental costs. However, the ability of many natural ecosystems to adapt to rapid climate change is limited and may be exceeded before a 2°C temperature increase is reached.

As an aside, I think we can pretty much save the date today for the first time that WUWT advocated that a United States based agency follow United Nation directed protocols. a red letter day!!!

jai Mitchell, thanks once again for proving to one and all that you have the unlimited capacity to parrot dogma.

Have a nice day.

Dr. Bob says: @ July 22, 2013 at 9:52 am

It is interesting to see that Government Organizations are being penetrated by NGO’s. The EPA now refers readers to the Environmental Defense Fund for more data on the toxic nature of chemicals. That is like having the State Department refer to the Communist Party of America for more info on Foreign Policy…..

So, for the most common of products produced in high volume, diesel fuel, the EPA intimates that there is no info on the toxicological nature of this product. Sorry, there is a lot of data on this product.

So, let’s go to the EDF site….

There is no further info at EDF worthy of referring to this site for more info. Curious!

Looking at other CAS numbers yields similar results. It seems that EDF wants you to believe that their perception of lack of data means that nothing has ever been done to test this “common” chemical. This is so far from the truth as to be totally unbelievable. Tox data on this and hundreds of other chemicals are readily available or can be inferred by Structure-Activity Relationship programs developed by the EPA.

What IS going on here?

>>>>>>>>>>>>>>>>>>>>>>>>>>

WHAT!?!

Heck, back in the dark ages (~1976), having been tapped to do safety in a chemical factory, I ordered A Comprehensive Guide to the Hazardous Properties of Chemical Substances.

Later, in the 1980s, companies were ordered by OSHA to have MSDSs (material safety data sheets) for all chemicals they produced or used. These had to be made available to workers. Again (different company) I got tapped for explaining to factory workers what the sheets meant and how to read them. (I used ethanol as an example.)

OSHA’s Hazard Communication Standard (HCS)

jai mitchell says:

July 22, 2013 at 10:46 am

Don’t know Bob, I mean the WMO is pretty much absolutely positively certain that the earth is getting warmer, MUCH warmer…..

>>>>>>>>>>>>>>>>>>>>>>>

You are kidding right? RIGHT?

Long term the earth is getting cooler. GRAPH

And the reason I say that is the Northern Hemisphere Summer Energy Leading Indicator Graph

340th consecutive month (more than 28 years) with a global temperature above the 20th century average

And for some perspective, RSS shows no warming for 199 months.
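A “no warming for N months” figure is usually found by searching for the earliest start month from which the least-squares trend to the present is zero or negative. A minimal sketch of that search, assuming a plain list of monthly anomalies; the function names and the 24-month minimum window are hypothetical, and this is not necessarily how the 199-month figure was computed.

```python
from statistics import mean
from typing import Sequence


def ols_slope(values: Sequence[float]) -> float:
    """Least-squares slope of values against index 0..n-1."""
    n = len(values)
    x_bar, y_bar = (n - 1) / 2, mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


def months_without_warming(values: Sequence[float], min_len: int = 24) -> int:
    """Length of the longest window ending at the latest month with an OLS trend <= 0.

    Returns 0 if no window of at least min_len months qualifies.
    """
    for start in range(len(values) - min_len + 1):
        if ols_slope(values[start:]) <= 0:
            return len(values) - start
    return 0


# Hypothetical series: 100 months of steady warming, then 50 flat months.
series = [0.01 * i for i in range(100)] + [1.0] * 50
print(months_without_warming(series))  # 50
```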

Since the past thousand years was the coolest 1,000-year period of the past 8,000 years, it is laughable how ignorance breeds panic that overrules reason. The current modest warming is a natural rebound from the Little Ice Age, the coldest extended period of the past 8,000 years. All of the back and forth of the past 40 years, a period of natural warming which is also a rebound from cooling (1950-1975), is “sound and fury signifying nothing.” Through it all, good observational science forges ahead against the headwind of failing, continuously reconstructed climate models. Never has such failure been so lavishly rewarded, as global warming gives way to climate change (the constant state since the inception of climate), to severe weather (made exceptional only as a result of ignorance and obfuscation), and now the magic of heat sequestration in the ocean depths. How the tiny atmosphere heats the massive ocean while not indulging itself in warming has become a monument to alarmists’ passionate quest for a Hail Mary for the salvation of their floundering religiously pseudoscience. The alarmists believe, as all deeply religious folk do, that miracles do happen, so why not for them. They certainly could use some.

Exactly. The leadership at NCDC has been involved with such blatant, obvious AGW wording for many years now. Their “agenda” is VERY apparent. Sad!

@ Bob Tisdale: Sorry for the off topic, but this would be your area. This fellow at the Met is claiming to have observed the missing heat transiting to the deep oceans. Is this worthy of a new post?

http://www.independent.co.uk/news/uk/home-news/has-global-warming-stopped-no–its-just-on-pause-insist-scientists-and-its-down-to-the-oceans-8726893.html

Humans live at the South Pole, where temperatures average -50C, and in Ethiopia, where the mean temperature is 34C.

I’m pretty sure we can adapt to a small 2C temperature increase. We evolved by taking advantage of the hottest time of the day on the African savanna when the other animals were hunkered down in the shade, panting to try to keep cool. We are warm-adapted already.

Chris D. says:

July 23, 2013 at 4:05 am

@ Bob Tisdale: Sorry for the off topic, but this would be your area. This fellow at the Met is claiming to have observed the missing heat transiting to the deep oceans. Is this worthy of a new post?

———————————-

Temps at the Surface, in the 0-700 metre Ocean and in the 0-2000 metre Ocean going back to 1955.

I don’t see how a small 0.0021C per year increase in the 0-2000 metre Ocean can be seen as acceleration. It is the same as it has always been in the record. It is also a lower rate than the error margin in the Argo floats. And at this rate, in 100 years, it will have warmed 0.2C. Wow, that should really change things. In addition, it is equivalent to 0.5 W/m2, while net GHG forcing is over 2.0 W/m2. With the surface cooling and the ocean accumulating only 25%, where is the other 75% going?
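The arithmetic in that comment can be checked with a short sketch. It reads the 0.0021C figure as a per-year rate, which is what the “0.2C in 100 years” extrapolation implies. The seawater density (1025 kg/m³) and specific heat (3990 J/(kg·K)) are assumed textbook values, not numbers from the comment. The flux comes out per square metre of ocean surface; spread over the whole globe (oceans cover roughly 71%), it would be about 0.39 W/m² instead.

```python
# Assumed seawater properties (typical textbook values, not from the comment).
DENSITY_KG_M3 = 1025.0
SPECIFIC_HEAT_J_KG_K = 3990.0
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def warming_rate_to_flux(rate_c_per_year: float, depth_m: float) -> float:
    """Average heat flux (W per m^2 of ocean surface) implied by warming a
    water column of the given depth at the given rate."""
    # Energy stored per square metre per year: rho * c_p * depth * dT/dt (J/m^2/yr).
    joules_per_m2_per_year = (
        DENSITY_KG_M3 * SPECIFIC_HEAT_J_KG_K * depth_m * rate_c_per_year
    )
    # Divide by the seconds in a year to convert to watts per square metre.
    return joules_per_m2_per_year / SECONDS_PER_YEAR


def ocean_share(ocean_flux: float, forcing: float) -> float:
    """Fraction of a forcing accounted for by ocean heat uptake."""
    return ocean_flux / forcing


if __name__ == "__main__":
    rate = 0.0021  # deg C per year in the 0-2000 m layer (figure from the comment)
    print(f"warming over 100 years: {rate * 100:.2f} C")  # prints 0.21 C
    print(f"implied flux: {warming_rate_to_flux(rate, 2000):.2f} W/m^2 of ocean")  # prints 0.54
    print(f"share of 2.0 W/m^2 forcing: {ocean_share(0.5, 2.0):.0%}")  # prints 25%
```

With these assumed constants the implied flux is about 0.54 W/m² of ocean surface, close to the 0.5 W/m² quoted in the comment, and 0.5 out of 2.0 W/m² gives the 25% share.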

http://s13.postimg.org/iiu9nju0n/Temps_Surface_2000_M_Ocean_Q1_2013.png

Thanks Bob and Robin for trying to find updated indices 🙂 Can’t seem to get anything plotted from the links you supplied Bob, so I guess I’ll just have to wait for the updated indices.

What I’m curious about is the relative stasis in the ENSO index in the past few months, at a time when it is usually haring off in one direction or the other. From what I can tell, such periods are unusual in the past 30 years and tend to be during periods of weak positive-to-neutral conditions, rather than weak negative-to-neutral conditions. Are prolonged ENSO-neutral conditions in store, I wonder, or do the strengthening trade winds indicate La Nina on the way? Is it a passing phase prior to El Nino?

Something I have noticed in the Modoki data is that, though the numbers are basically up and down for the overall index and for Boxes A and B, Box C (the equatorial Western Pacific) shows a very marked step up following the 1998 El Nino, remaining at elevated levels ever since. I know Bob has frequently posted about this step-change for the Western Pacific as a whole, but hadn’t noticed until now that the equatorial western warm pool itself has been the major part of this, not just the poleward extensions.

Further to my previous comment, the strong trade winds at present may perhaps turn out to be analogous to those of the fairly weak 1995-96 La Nina, which Bob shows to be the trigger for the step-change in Western Pacific temperatures and the subsequent El Nino. I don’t know if there’s enough warm water built up there at present to trigger another big El Nino (there doesn’t appear to be), but it could be one to watch.

Illiteracies often go together.

mathematically illiterate

Last time I noticed, the standard for GISS was roughly 1950-1980. (Correlates well with a time of relatively stable readings.) And the standards for HadCRUT and IPCC were 1961-1990.

Meanwhile, the warming rate in the graph appears to me largely unchanged between the two curves, even though I see them as not perfectly identical. And a “current” anomaly of 0.22 degree C above the 1981-2010 average (the “WMO standard”) sounds to me like warming on top of a 1981-2010 period that itself experienced warming.
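Switching base periods (roughly 1950-1980 for GISS, 1961-1990 for HadCRUT and the IPCC, 1981-2010 for the WMO, 1901-2000 for the NCDC) only subtracts a different constant from every value, which is why the warming rate looks the same in both curves. The minimal sketch below rebases annual anomalies with a single offset. That is a simplifying assumption: monthly products typically use a separate base-period mean for each calendar month. The function name and sample values are invented for illustration.

```python
from statistics import mean
from typing import Dict


def rebase(anomalies: Dict[int, float], start: int, end: int) -> Dict[int, float]:
    """Re-express annual anomalies relative to the mean of years start..end (inclusive)."""
    base = [value for year, value in anomalies.items() if start <= year <= end]
    if not base:
        raise ValueError("no data inside the requested base period")
    offset = mean(base)
    # Subtracting one constant shifts every year equally, so any trend is unchanged.
    return {year: value - offset for year, value in anomalies.items()}


if __name__ == "__main__":
    # Invented annual anomalies against an older baseline (NOT real NOAA values).
    old = {2008: 0.50, 2009: 0.60, 2010: 0.70, 2011: 0.55, 2012: 0.62}
    new = rebase(old, 2008, 2010)  # the 2008-2010 mean here is 0.60
    for year in sorted(new):
        print(year, round(old[year], 2), round(new[year], 2))
```

Each rebased value is smaller by the same amount, so year-to-year differences and trends are untouched. Only statements like “above average” or “Nth consecutive month above average” change.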

I think that sticking to a lack of warming since 2001 (12 years) is a lot easier to sell, and a lot closer to the truth, than stuff said above and other stuff spouted about a lack of warming for 16 or 17 years. Just look at the graph, and consider that from late 1997 to early 1998 we had a century-class El Nino, whose spike needs some discounting from the trend over a period of only almost 35 years. The most recent greater spike was 1877-1878, close to 2 cycle peaks before the 2004-2005 one in the periodic cycle that I see as discernible in HadCRUT3.

(The surface index that I note as having the greatest resemblance to the UAH and RSS satellite lower-troposphere indices.)
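Claims like “no warming since 2001”, “no warming for 199 months” in RSS, or “discount the 1997-98 El Nino spike” are all about a least-squares trend fitted over a chosen window. The minimal sketch below computes such a slope for equally spaced values. The function name and toy series are invented for illustration, and this is a plain ordinary-least-squares fit, not any dataset provider’s official trend method. The toy series shows how one spike near the start of a short window can pull the slope below zero even though everything else is flat.

```python
from typing import Sequence


def ols_slope(values: Sequence[float]) -> float:
    """Ordinary least-squares slope per time step for equally spaced values."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points")
    x_mean = (n - 1) / 2  # mean of the time indices 0..n-1
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


if __name__ == "__main__":
    # Invented series, NOT real data: flat except for one early spike.
    flat_with_early_spike = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
    print(ols_slope(flat_with_early_spike))  # negative, about -0.036
```

For monthly anomalies the slope is per month; multiplying by 120 gives a per-decade trend.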

NOAA always likes to report/comment like “June 2013 is the 5th warmest since 1880”, or the 13th warmest, etc. As I see it, this is strictly for “show”, or to be “alarming”. It is known that the globe warmed from 1977 to about 1998. To continually present the latest “month” as warmest since records started in 1880 is very childish. We have a new high. Over the past 16 years, any reported temperature ranked below 8th indicates cooling; one ranked above 8th indicates warming. But against the total of the past 16 years, if the reading is just a couple of hundredths of a degree higher or lower, the change to the global average is minor, and there is no change at all to the new normal. This is called political or biased spin. This is not what our “scientists” should be practicing.
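The rule of thumb above (against a roughly flat 16-year period, a reading ranked below the middle suggests cooling and one ranked above it suggests warming) amounts to comparing the newest value with the median of the recent values. The minimal sketch below does that. The ranking convention (warmest = 1, ties share the better rank), the function name, and the sample numbers are all assumptions made for illustration, not real data.

```python
from statistics import median
from typing import Sequence, Tuple


def rank_and_offset(latest: float, recent: Sequence[float]) -> Tuple[int, float]:
    """Rank `latest` against `recent` (warmest = 1) and give its distance from their median."""
    # Rank = 1 + number of recent values strictly warmer; ties share the better rank.
    rank = 1 + sum(1 for value in recent if value > latest)
    return rank, latest - median(recent)


if __name__ == "__main__":
    # 16 invented annual values, NOT real data, roughly flat around 0.5 deg C.
    recent = [0.48, 0.52, 0.50, 0.47, 0.53, 0.51, 0.49, 0.50,
              0.52, 0.48, 0.51, 0.50, 0.49, 0.53, 0.47, 0.51]
    rank, offset = rank_and_offset(0.49, recent)
    print(rank, round(offset, 3))  # prints: 11 -0.01
```

Here a reading only 0.01 degree below the median already drops to 11th, which illustrates how small differences can move a ranking a lot when recent values are tightly bunched.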