By Andy May

While studying the NOAA USHCN (United States Historical Climatology Network) data, I noticed the recent differences between the raw and final average annual temperatures were anomalous. The plots in this post are computed from the USHCN monthly averages. The most recent version of the data can be downloaded here. The data shown in this post was downloaded in October 2020 and was complete through September 2020.

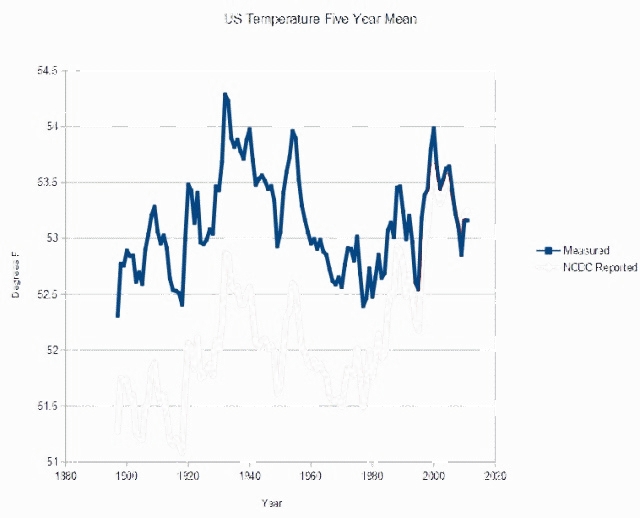

There are two ways to compute the difference and they give different answers. One way is to subtract the raw from the final temperature month-by-month, ignoring missing values, then average the differences by year. When computed this way from the USHCN monthly values, the values are only subtracted when both the raw and final average temperature exist for a given month and station. Using this method, the numerous “estimated” final temperatures are ignored, because there is no matching raw temperature. This plot is shown in Figure 1.

Figure 1. Plot of USHCN final temperatures minus raw data. The difference between final and raw monthly average temperatures is computed when both exist for a specific month. The differences are then averaged. Data used is from NOAA.
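The paired calculation behind Figure 1 can be sketched in a few lines of Python. This is only an illustration, not the R code used for the post: the dictionary layout, the station names, and the temperature values are all invented, and missing values are assumed to be stored as `None`.

```python
from collections import defaultdict

# Hypothetical layout: (station_id, year, month) -> monthly mean temperature.
# None marks a missing value. All numbers are invented for illustration.
raw = {
    ("A", 2019, 1): 10.0,
    ("A", 2019, 2): 12.0,
    ("B", 2019, 1): None,   # station B has no raw measurement
}
final = {
    ("A", 2019, 1): 10.5,
    ("A", 2019, 2): 12.5,
    ("B", 2019, 1): 20.0,   # estimated final value with no raw counterpart
}

def paired_yearly_difference(raw, final):
    """Average final minus raw by year, using only station-months present in both."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for key, final_value in final.items():
        raw_value = raw.get(key)
        if raw_value is None or final_value is None:
            continue  # skip estimated values that have no matching raw value
        year = key[1]
        sums[year] += final_value - raw_value
        counts[year] += 1
    return {year: sums[year] / counts[year] for year in sums}

print(paired_yearly_difference(raw, final))
```

In this toy example the estimated value for station B never enters the result, because there is no raw value to pair it with.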

In Figure 1 we can see two things. First, the number of raw data stations drops quickly from 2005 to 2019. As we can see in Figure 2 this is not a problem for the final temperatures. How is this so? It turns out that as the active weather stations disappear from the network, the final temperature for them is estimated from neighboring stations. The estimates are made from nearby active stations using the NOAA pairwise homogenization algorithm or “PHA” (Menne & Williams, 2009a). The USHCN is a high-quality subset of the larger COOP set of stations. The PHA estimates are not made with just the USHCN high-quality stations; the algorithm utilizes the full COOP set of stations (Menne, Williams, & Vose, 2009).

Figure 2. Final temperatures from 1900 to 2019. Notice 1218 values are present from 1917 to 2019. As shown in Figure 1, these are not all measurements; a significant number of the values are estimated. Data used is from NOAA.

So, what happens if we simply average the values for each month in both the raw dataset and the final dataset, ignoring nulls, then subtract the raw yearly average from the final yearly average? This is done in Figure 3. We realize that the raw values represent fewer stations and that the final values contain many estimated values. The number of estimated final values increases rapidly from 2005 to 2019.

Figure 3. USHCN final-raw temperatures computed year-by-year, regardless of the number of stations in each dataset. The sharp rise in the temperature difference is from 2015-2019.
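The year-by-year calculation behind Figure 3 can be sketched as follows. This is a hedged illustration rather than the R code used for the post. It assumes one reading of the method: average all available stations for each month, average those monthly means within each year, then subtract raw from final. The dictionary layout and all values are invented.

```python
from collections import defaultdict

# Hypothetical layout: (station_id, year, month) -> monthly mean temperature.
# None marks a missing value. All numbers are invented for illustration.
raw = {
    ("A", 2019, 1): 10.0,
    ("A", 2019, 2): 12.0,
    ("B", 2019, 1): None,   # station B has no raw measurement
}
final = {
    ("A", 2019, 1): 10.5,
    ("A", 2019, 2): 12.5,
    ("B", 2019, 1): 20.0,   # estimated final value
}

def yearly_mean(records):
    """Mean of the monthly network means for each year, ignoring missing values."""
    monthly = defaultdict(list)
    for (station, year, month), value in records.items():
        if value is not None:
            monthly[(year, month)].append(value)
    by_year = defaultdict(list)
    for (year, month), values in monthly.items():
        by_year[year].append(sum(values) / len(values))
    return {year: sum(means) / len(means) for year, means in by_year.items()}

def difference_of_yearly_means(raw, final):
    """Final yearly mean minus raw yearly mean, for years present in both."""
    raw_mean = yearly_mean(raw)
    final_mean = yearly_mean(final)
    return {y: final_mean[y] - raw_mean[y] for y in final_mean if y in raw_mean}

print(difference_of_yearly_means(raw, final))
```

With these invented numbers the result for 2019 is 2.875, while pairing only the station-months that have both values would give 0.5. The gap comes entirely from the estimated value for station B, which enters the final average but has no raw counterpart.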

Discussion

The above plots use all raw data and all final data in the USHCN datasets. Information about the data is available on the NOAA web site. In addition, John Goetz has written about the data and the missing values in some detail here.

The USHCN weather stations are a subset of the larger NOAA Cooperative Observer Program weather stations, the “COOP” mentioned above. USHCN stations are the stations with longer records and better data (Menne, Williams, & Vose, 2009). All the weather station measurements are quality checked and if problems are found a flag is added to the measurement. To make the plots shown here, the flags were ignored, and all values were plotted and used in the calculations. Some plots made by NOAA and others with this data are made this way and others reject some or all flagged data. Little data exists before 1900, so we chose to begin our plots at that date. There are fewer than 200 stations in 1890. All the weather stations in the USHCN network are plotted in Figure 4; those with more than 50 missing monthly averages between January 2010 and the end of 2019 are noted with red boxes around the symbol.

Figure 4. All USHCN weather stations. Those with missing raw data monthly averages have red boxes around them. Data source: NOAA.
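The selection rule for the red boxes (more than 50 missing raw monthly averages between January 2010 and December 2019) can be sketched like this. The data layout, station names, and values are hypothetical, and a month counts as missing if it is absent from the dictionary or stored as `None`.

```python
# Hypothetical layout: (station_id, year, month) -> raw monthly mean temperature.
months = [(y, m) for y in range(2010, 2020) for m in range(1, 13)]  # 120 months

raw = {}
for y, m in months:          # station A reports every month
    raw[("A", y, m)] = 15.0
for y, m in months[:60]:     # station B stops after 60 months (60 missing)
    raw[("B", y, m)] = 15.0
for y, m in months[:70]:     # station C stops after 70 months (50 missing)
    raw[("C", y, m)] = 15.0

def stations_with_many_missing(raw, station_ids, start_year=2010,
                               end_year=2019, threshold=50):
    """Return stations with more than `threshold` missing months in the window."""
    flagged = []
    for station in station_ids:
        missing = 0
        for year in range(start_year, end_year + 1):
            for month in range(1, 13):
                if raw.get((station, year, month)) is None:
                    missing += 1
        if missing > threshold:
            flagged.append(station)
    return flagged

print(stations_with_many_missing(raw, ["A", "B", "C"]))
```

Station C is not flagged because the rule is strictly "more than 50", and it is missing exactly 50 months.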

Conclusions

The plots above show that the overall effect of the estimated, or “infilled,” final monthly average temperatures is a rapid recent rise in average temperature, as is clearly seen in Figure 3. In Figure 3 the overall monthly averages from the estimated (“infilled”) final weather station values are averaged and then compared to the average of the real measurements, the raw data. This is not a station-by-station comparison. The station-by-station comparison is shown in Figure 1. In Figure 1 the monthly differences are computed only if a station has both a raw measurement and a final estimate. The values from 2010 to 2019 still look strange, but not as strange as in Figure 3.

Clearly the rapid drop-off of stations during this time, which averages more than 20 stations per year, is playing a role in the strange difference between Figures 1 and 3. But the extreme jump seen from 2015-2019 in Figure 3 is mostly in the estimated values in the final dataset. We might think the 2016 El Niño played a role in this anomaly, but it continues to 2019. The El Niño effect reversed in 2017 in the U.S., as seen in Figure 2. Besides, this anomaly is not in temperature; it is a difference between the final and raw temperature values in the USHCN dataset.

Figure 4 makes it clear that the dropped stations (boxed in red) are widely scattered. The areal coverage over the lower 48 states is similar in 2010 and 2019, except perhaps in Oklahoma; I am not sure what happened there. But in the final dataset, values were estimated for all the terminated weather stations, and those estimated values apparently caused the jump shown in Figure 3.

I don’t have an opinion about how the year-by-year Final-Raw anomaly in Figure 3 happened, only that it looks very strange. Reader opinions and additional information are welcome.

Some final points. I used R to read and process the data, although I used Excel to make a lot of the graphs. The USHCN data is complete and reasonably well documented for the most part, but hard to read and get into a usable form. For those that want to check what I’ve done and make sure these plots were made correctly I’ve collected my R programs in a zip file that you can download and use to check my work.

I plan to do more with the USHCN data and its companion GHCN (Global Historical Climatology Network) dataset. I’ll publish more posts on them as issues come up.

Confusing tob

One point of confusion in the data, unrelated to this post: NOAA calls their time-of-observation corrected data “tob.” It stands for time-of-observation bias and accounts for minimum and maximum temperatures taken at different times in different stations. All the tob data supplied on their ftp site has 13 monthly values. I’ve read the papers and the documentation but cannot figure out why there are 13 monthly values for tob, but only 12 monthly values for all the other datasets. I emailed them to ask but have not received an answer to date. Does anyone know? If so, please put the answer in the comments.

Download the R code used to read the USHCN monthly raw and final data and compute the data plotted in this post here.

You can purchase my latest book, Politics and Climate Change: A History, here. The content in this post is not from the book.

The bibliography can be downloaded here.

Nice essay Andy. I don’t do temperatures because I mistrust the apparently innumerable corrections. But lots of folks do. I’ll be looking forward to reading the comments.

I don’t know why they even bother starting with thermometer readings these days.

Why not just make up some numbers right from the get-go?

Mr. Mr.

The article seemed to miss mentioning the small claimed margin of error for the “data” — something that could not possibly be calculated because such a large percentage of the numbers are made up … and those made up numbers are never verified by actual measurements.

So the government bureaucrat “scientists” can make wild guesses, call them “infilling” to sound more scientific than “wild guesses”, and then they go to the bank to cash their big paychecks. They can never be proven wrong !

NOAA make up about 40 percent of the US numbers and we supposedly have the best weather station system in the world. That should have been mentioned in the article.

It would save the taxpayer money if they would just make up ALL the numbers and skip the measurements and wild guess infilling. On the other hand, the US land surface is just a small percentage of the total surface area of our planet. Almost all the other nations have less complete surface coverage with their weather stations. NASA claims record high temperatures in parts of central Africa where the surface grids have NO weather stations.

Surface temperature “data” are not fit for real science analyses.

The sparse non-global surface “data” before 1920 are completely worthless.

The Southern Hemisphere did not have adequate surface coverage before World War II.

The UAH satellite data, since 1979, have sufficient global coverage with little infilling, and are measured in a consistent environment, where the greenhouse effect takes place. They have the potential to be accurate enough for scientific analyses.

Tony Heller created a graph that explains the corrections with the highest correlation of any graph ever in climate studies.

The corrections to the temperature correlated almost perfectly with the increase in CO2 content in the atmosphere. He has proven that NOAA has fudged the temperature numbers so that temperatures appear to increase perfectly with CO2 concentration. They do not. Only the “corrections” to the actual readings correlated with CO2. Just plot the corrections in the third graph vs. the Mauna Loa measured CO2 concentration. There is only one way errors in thermometer readings correlate with CO2 levels: fraud.

his chart is wrong

1. he cut off the early period

2. he calculated averages wrong

The idea that the data from these thermometer ‘sets’ is fit for purpose in determining a temperature trend is ludicrous imo. The change in locations, the massive influence of steel, concrete and bitumen heat islands, the switch to electronic sensors and the removal of such large numbers of stations just turns the whole exercise into scientific dumpster diving IMO. As for the ‘corrections’ rubrics, they sound a bit like executive bonus formulae to me. I mean if you were a climate scientist seeking ongoing funding who wouldn’t calculate temperature rise over time?

This is not about science per se, it is about the credibility of the witnesses.

Hydrology has a large influence too. When you pave and build, the rainfall goes into the catchbasins and down to where they dump the water. It does not sink into the soil as it probably did earlier. That means that you do not have the cooling effect of the heat of evaporation.

SYNGENTA has a myriad of data plotting diurnal temperature by Zipcode. This information is used to determine the growing season of each crop species.

https://www.greencastonline.com/growing-degree-days/home

A significant discussion of the advent of Solar Cycle 25 and its significant impact on 2020 massive crop losses is available on https://iceagefarmer.com.

Pretty tough to manipulate this data when it impacts crop yields. All we have to do is get USDA to report accurate crop losses. (sic)

It’s not just that it’s a bit foolish thinking that you can measure an intensive property of something that is so not constant even in the one spot with time; the sampling is far from ideal, spatially and temporally. A mean of minima and maxima is used instead of a mean of almost continuous measurements, with no concern that modern measurements of maxima are picking up bursts of heat that are a degree or more above the 10 minute average.

Then this data has to be fixed and missing data filled in, calculated from data not good enough to get an average temperature of the globe to within a degree even if there was complete coverage.

What could go wrong?

Well Robert, nothing can go wrong when you have already decided what the end result is that you’re going to publish.

That’s climate “science” 101.

Agreed M Seward!

• Different locations.

• Different topology.

• Different plant growth and families.

• Different altitudes.

• Different sensor equipment.

• Different sensor technology.

• Changes of equipment and sensor technology at the same location over time.

• Different environments.

• Wild changes in deployed sensors ranging from 200 stations to 1200 stations and down to 860 stations.

• Data absent, often without explanation.

• Data infilled using data from stations up to 1,200 km (745 miles) away.

• Data ‘adjusted’ using data from ‘other’ stations, often repeatedly to better fit assumptions.

• Tolerances of equipment originally at full degree markings averaged with equipment alleged to have tolerances to hundredths of a degree.

• Averaging temperature data from different equipment, different accuracy ranges, infilled and adjusted data, different altitudes, different environments, different climates, different landscaping, etc. etc. and an official government agency pretending the data is not only valid but predicting impending doom!

A lack of science from beginning to end.

A lot of tax monies could be saved by firing every employee who participated in this temperature fraud.

Thank you Andy for an open and documented article.

The 13 month issue might be the fixed solar calendar: https://en.wikipedia.org/wiki/International_Fixed_Calendar

That’s a lot of Friday the 13ths!

Andy, Tony Heller has been talking about this for some time now. Check out Tony’s website, realclimatescience.com, where he posts his videos. NOAA is flagrantly filling in phony estimated values to account for these missing stations you referred to. The estimates, of course, are warmer. Tony explains this in detail in his videos – he’s checked the raw data, too.

Thanks, I’ll check it out. I’m just getting started with this and need all the help I can get.

Tony is not somewhere you should be looking if you want accurate information.

You’re one to talk, griff.

Griff, you’re a fabulist of the first order. I challenge you to show work by Tony that is inaccurate. He gives all of his code and it’s easy to check against the data.

Never once have you been able to counter anything he puts forward

You have no data or evidence of anything to put forward.

You are a KNOWN LIAR and a known denier of actual data facts and science.

If you say you don’t like something, it is a GREAT PLACE to start looking for the TRUTH and the FACTS.

So NOAA and NASA data is not accurate then, griff? Because that is the data Tony uses. You can download his analysis and data fetch program and test it. I’m sure Tony would be interested in your thoughts.

But your comments are full of accurate information?

Why can’t I see them?

Want to show proof of that, Griff?

Hi griff!

For my little spot I have the daily record highs and lows obtained in 2007.

That 2007 list says that nine (9) record highs were set in 1998.

The 2009 list says that no (0) record highs were set in 1998.

Of course, if all nine (9) of those records were broken between 2007 and 2009, that would explain it.

But the 2012 list says there were seven (7) record highs set in 1998. Seven (7) records declared were … re-set?

These are NOAA lists and numbers

Please explain that before implying that Tony Heller is inaccurate in pointing out AND showing that the past temperature records have been tampered with.

What Griffiepoo really means: “Please Mummy, make the nasty Tony Heller go away!”.

A sample of the longest continuous weather stations:

https://www.google.com/search?client=ms-android-huawei&sxsrf=ALeKk0043HVw2tXoF4Zvu5yzimthpZWlWA%3A1605199669196&ei=NWetX8a7C5L3qwHP6BM&q=longest+continuous+weather+station&oq=longest+continuous+weather+station&gs_lcp=ChNtb2JpbGUtZ3dzLXdpei1zZXJwEAEYADIECCMQJ1AAWABg_8YEaABwAHgAgAHyAYgB8gGSAQMyLTGYAQDAAQE&sclient=mobile-gws-wiz-serp

Larry’s right. I think Tony’s helped explain the Fig. 3 anomaly, in great detail.

Tony ‘make it up’ Heller?

He has no credibility whatever.

(Your lack of credibility is based on your attacks on people without providing evidence of alleged errors or incompetence, you FAIL to do it over and over, if you keep doing it, I will start deleting your baseless attacks) SUNMOD

“He has no credibility whatever”

Well you would say that, wouldn’t you, because you don’t like his conclusions. Others find him quite credible.

Black Pot, meet Kettle.

If you have an issue with Heller’s work, why not write an essay to refute instead of just saying it’s carp?

You have NEVER been able to show any time he has been incorrect.

You don’t like that FACT, and are known to be a highly DISHONEST and fact-free troll.

Your comment shows that Tony Heller’s place is a GREAT PLACE to start looking for TRUTH and FACTS,

That is how low your credibility is , griff.

What about your credibility, Griff? Fred250 has been asking two simple questions for quite a while now. Why don’t you answer them and earn yourself some?

Show us your corrective papers including the citations.

You can’t ?

So you should better shut up.

As opposed to “Griff make it up about Heller with zero evidence..”?

How would your fact-finding be considered reliable when you think 3 meals daily for 7 days amounts to 20 meals?

https://wattsupwiththat.com/2019/03/11/californias-permanent-drought-is-now-washed-away-by-reality/#comment-2652755

And here you are on Aug 29, claiming Germany is the world’s 5th largest economy https://wattsupwiththat.com/2020/08/28/germany-chancellor-calls-for-europe-wide-carbon-pricing-on-transport-and-industry/#comment-3071668

Yet on Aug 22, exactly one week earlier you were claiming Germany is the world’s 4th largest economy

https://wattsupwiththat.com/2020/08/22/the-green-new-deal-means-monumental-disruption/#comment-3066616

Andy,

My graph of the disappearance of stations agrees with the orange line on your figure 1. 400 stations gradually vanished starting in 1989, the MannHansen year.

Did these stations stop recording? Most had been recording for 100 years, a heroic and admirable contribution to science. It is doubtful they ‘went out of business’ because NOAA withdrew funds. Their instruments were in place, nothing expensive about noting TMAX, TMIN, SNOW, PERC each day.

Has anyone contacted one or more of the blocked stations? Is there a blog post anywhere that reports the direct scoop?

Surely the data still exists. Mr. Trump tear down this wall. Get the data.

Is there a system in ‘which stations must disappear’? Someone must organize the process of loss of stations.

What is planned?

Do you follow Tony Heller’s work?

This article appears to be very similar to Tony’s analysis–that he’s been blogging about for the last 10+ years:

“Alterations To The US Temperature Record

NOAA also provides the raw (unadjusted) monthly temperature data. For average temperature, it is available at this link. The unadjusted raw monthly graph shows much less warming than the adjusted data, and has recent years cooler than the 1930s.”

https://realclimatescience.com/alterations-to-the-us-temperature-record/

Excellent article from ‘realclimatescience’

I recently compared the global atmospheric temperature record, GISS, with the ocean surface temperature records, ENSO and AMO, since 1880. GISS shows an increase of 1.1 C in the atmospheric temperatures over that period, while ENSO and AMO show little to no trend (only cyclic variations) in the ocean surface temperatures over the same period.

I would argue that it defies the laws of physics that the atmospheric temperature should decouple from the ocean surface temperatures by 1 C. over the last 140 years.

It seems that the ‘temperature adjusters’ have only adjusted the atmospheric temperature databases, but have forgotten the sea surface temperature databases. Another ‘smoking gun’ in the evidence of data manipulation to fabricate the global warming/climate change narrative

Thanks Kent. No I had not seen Tony Heller’s work before, but I see that he does show the important graph near the end of his post, it plots the same thing that I do in my Figure 3, except he leaves out the station count. He didn’t make the equivalent of my Figure 1, which is important. His comparisons to CO2 are interesting. Thanks for letting me know. I will follow Tony.

Gosh. Climate scientists are fiddling the statistics?

Are you kidding me?

I’m sure personal ethics & professional embarrassment will cause them to immediately explain & correct their work. If enough of this were to happen, untrained peasants would soon lose respect & trust in climate science.

Good one! LOL!

We better ask Peter Gleick about ethics of climate scientists, hey ! 😉

Or people who deliberately tamper with air-conditioners for important climate meetings. 😉

For a real surprise, you should cross-plot the yearly mean temperature difference against atmospheric CO2 concentrations. The linear relationship will surprise you. Tony Heller has shown significant correlation between the data adjustments and rising atmospheric CO2 concentrations.

In other words, if you torture the data, it will confess!

NOAA has a time machine that can go to the past and collect data and a heat pump to modify the more recent ones. I was a data and database administrator and what I used anomalies for was to find data entry errors. Then I would go back to original documents or back to the original sources and do corrections. Infilling data was not allowed.

Thanks for digging into this Andy. It reminds me of Ross McKitrick’s graph of some years ago, also showing rising temps coinciding with the great decline in # of stations reported.

I did an analysis of the stations given top ratings for siting by WUWT, and found that the flagging allows removal of recent year data so that values are infilled from elsewhere. For example, see San Antonio

https://rclutz.wordpress.com/2015/04/26/temperature-data-review-project-my-submission/

It always seemed to me that missing data should be infilled by means of the trend observed at that particular station.

“I plan to do more with the USHCN data and its companion GHCN (Global Historical Climate Network) dataset. I’ll publish more posts on them as issues come up.”

1. USHCN IS NO LONGER USED. It ceased to be the data of record YEARS AGO.

2. One of the issues with USHCN is it is NOT a high quality record like many people think

A) many of the stations are spliced.

B) and many stations have estimated data ( as the author notes)

3. The ENTIRE adjustment approach was SCRAPPED

Basically NOBODY USES USHCN IN ANY GLOBAL RECORD. It has been replaced ENTIRELY

along with TOBS

GHCN is NOT A COMPANION to USHCN

USHCN is used by me. Stations are recording, stations are reporting, NOAA is posting the data daily in USHCN. This is the baseline gold standard data. It is “high quality” because a) long-term history at each station; b) large number of stations, 1200; c) unadjusted.

A) I don’t know what “spliced” means in this context.

B) USHCN raw (not final or TOBS) has some estimated dailies, but insignificant.

It is impossible to “replace” USHCN.

“A) I don’t know what “spliced” means in this context.

B) USHCN raw (not final or TOBS) has some estimated dailies, but insignificant.

It is impossible to “replace” USHCN.”

######################

if you don’t know what spliced is then you have not read the documentation

nor do you understand the inventory file.

Short version. when they had stations that were physically close together they SPLICED

the records and pretend they are one station.

See the inventory to show you which stations are combined

USHCN is easily replaced. it is a SUBSET of a larger data set.

In short. in the 90s they had a large dataset ( Some first order stations and COOP)

First order stations are the best in that they collect data at midnight ( NO TOB problem)

Anyway, they went through all the stations and tried to pick the longest ones

in some cases they had to splice together 2 or 3 stations to get a long record.

later some of these long stations went offline, got closed, so you end up with 30%

of them estimated.

At the same time the BASE datasets grew as more old data got recovered.

USHCN is easy to replace because

A) it was spliced to begin with

B) many of the stations stopped reporting

C) other records of the past were recovered from old paper and microfiche

Hint, I’ve only been working with USHCN since 2007

I stopped in 2012 when it was clear that

A) it was a subset of the WHOLE DATABASE

B) there were better ways to compile a complete record

windlord cannot read the readme

“COMPONENT 1 is the Coop Id for the first station (in chronologic order) whose

records were joined with those of the HCN site to form a longer time

series. “——” indicates “not applicable”.

COMPONENT 2 is the Coop Id for the second station (if applicable) whose records

were joined with those of the HCN site to form a longer time series.

COMPONENT 3 is the Coop Id for the third station (if applicable) whose records

were joined with those of the HCN site to form a longer time series.”

to make long stations they SPLICED some shorter stations together

Here is the CLUE

USHCN is REPLACEABLE because it is NOT SOURCE DATA

It is a SUBSET of the TRUE SOURCE DATA

that’s why guys like me, having discovered this long ago, use the real source data

and why, guys like you and Heller use a dataset that was slated for the trash heap in 2011

RIP in 2014

Right. A 50-million recording of direct measurement is garbage, but a model temp reconstruction is fact.

Wrong

Steven,

Poor wording perhaps, on my part. By companion, I meant the same methods are used by both. There are a lot more US stations used in GHCN, as I found when I downloaded the data. But, the two share a common methodology. I do not think PHA (pairwise homogenization) has been scrapped, it is still used in both USHCN and GHCN as far as I know. The tob correction is also still used in both datasets as far as I can tell. So I don’t think you can say the entire adjustment approach was scrapped. I’m new to this, but I just read about both techniques being used.

“. I do not think PHA (pairwise homogenization) has been scrapped, it is still used in both USHCN and GHCN as far as I know. The tob correction is also still used in both datasets as far as I can tell. So I don’t think you can say the entire adjustment approach was scrapped. I’m new to this, but I just read about both techniques being used.”

you don’t know the history. neither does Heller.

USHCN used to use

1. Filnet –infilling

2. Shap — stationmoves

3. TOBS — Time of observation

4. A UHI adjustment– based on population

5. A MMTS adjustment – based on Quayle’s work

FIVE different adjustments

Then it was changed to this

1. TOB

2. PHA.

Internally to NOAA the PHA team argued that TOB was not needed, that PHA could do the

full job. the decision was made to keep running the TOB adjustment and then run PHA after

it.

Now, here is the thing to understand. TOB can ONLY BE USED ON USA STATIONS

It can ONLY BE USED on stations where you have a GOOD RECORD of the changes

to Observation times. TOB code only works on CONUS stations.

Over time USHCN started to lose stations. Stations closed.

So USHCN was DISCONTINUED as the official dataset of the USA.

around 2014. There are just too many estimated values in it.

It is “retained” for LEGACY reasons. But NASA GISS and all the rest of us

just stopped using it. The switch was made to using GHCN v4.

USHCN is dead.

GHCN does not use TOB. it CAN’T that’s because karl’s 1986 TOB correction code

IS HARD CODED FOR THE USA.

So GHCN doesn’t use TOB, cant use TOB. the TOB software is tied EXCLUSIVELY

to the USA and USA geography.

GHCN uses PHA exclusively. So now with GHCN v4 there are 27000 stations

they are all adjusted by ONE PROGRAM. that program is PHA. TOB is not used

TOB is used ONLY on USHCN which is a deprecated data set.

So to repeat

USHCN USED TO BE THE OFFICIAL RECORD of the USA. 1218 “long stations”

it is not the official record anymore.

A) it used to use about 5 different adjustment codes

B) that list of 5 was reduced to 2: TOB is run first and then PHA is run

C) USHCN has been DISCONTINUED as an official dataset.

D) the code is run every month, but the output isn’t used by any major group to

construct a global average.

E) the dataset is posted only for legacy reasons. Like if someone wants to check an

old paper that used USHCN or if some government agency is slow to switch to

the new official dataset.

GHCN v4 is a global record. it only uses PHA. It doesn’t use TOB because

A) TOB is specific to USA geography ( and things like sun rise times)

B) TOB requires an accurate record of the changes in time of observation (even USHCN has issues)

C) ONLY the USA has a major TOB problem

( a few countries, Norway, Canada, Japan, Australia have a few stations with changes

in TOB but none of them can or would use karl’s 1986 fortran program)

Steven Mosher, your entire position is an appeal to authority. I [we?] do not care that you and/or NOAA have denigrated direct measurement USHCN and given its reconstructions an “OFFICIAL” dataset designation.

Moreover, you are falsified by either of these two daggers:

1) constructing an “average” global temperature model by projection from short measuring, proxy-duct taping, gridding, and homogenization, is a red herring. It does not answer the question “is there any abnormal warming or cooling over the past 120+ years?”

2) despite all the complex model building and temperature reconstructions, if the purported abnormal warming is a fact, why does it not show up anywhere in the 50-million direct measurements from 1200 weather stations in USHCN?

What I find most fantastic is the claim that USHCN is “not a data source,” while your alphabet soup of models is valorized as such.

I also will fire a torpedo at this:

“Over time USHCN started to lose stations. Stations closed.”

They did not close. Why would stations having recorded for 100 years out of pure dedication to factual science suddenly stop? NOAA simply stopped posting their recordings. This suppression began (what a coincidence) with the jump-off of the MannHansen project in their time of glory 1988-9.

“So USHCN was DISCONTINUED as the official dataset of the USA around 2014.”

I [we?] don’t care.

“There are just too many estimated values in it.”

NOT IN THE RAW.

From my website

https://theearthintime.com

The analysis was conducted on the RAW dataset, not the TOB or FLs

Number of recordings, 49,868,490

Missing/redacted [-9999]: 58,737

Estimated [e]: 10,455

“USHCN is dead.”

USHCN is the gold standard of measurement. Not for the purpose of deriving an average, rather for showing 1200 [once you provide the redacted data from 400 stations] sine waves one by one for examination, looking for evidence of abnormal warming. If it is not found anywhere, then there is none.

Attempts to destroy USHCN are like religious terrorists blowing up temples and statues from another religion once they conquer, like that priest who burned the entire writings of the Maya in one day, like Mao destroying any and all people and artifacts that did not fit his agenda for constructing communists.

…USHCN…has been replaced ENTIRELY….

Afraid I haven’t been keeping up, Steven. What replaced it?

Juan, he isn’t very clear, but I think he means USCRN replaced it as the official temperature record for the United States. Although when you look that up you get a very strange answer, see here:

https://www.ncdc.noaa.gov/news/ncdc-introduces-national-temperature-index-page

It is not very understandable either.

Andy,

” Although when you look that up you get a very strange answer”

You shouldn’t write about this stuff if you can’t get your head around the basics. The answer is not strange; it is full and clear:

“The USCRN serves, as its name and original intent imply, as a reference network for operational estimates of national-scale temperature. NCDC builds its current operational contiguous U.S. (CONUS) temperature from a divisional dataset based on 5-km resolution gridded temperature data. This dataset, called nClimDiv, replaced the previous operational dataset, the U.S. Historical Climatology Network (USHCN), in March 2014. Compared to USHCN, nClimDiv uses a much larger set of stations—over 10,000—and a different computational approach known as climatologically aided interpolation, which helps address topographic variability.”

As it very explicitly says, nClimDiv replaced USHCN as the operational dataset in March 2014. USHCN is not now used.

… and as such, nClimDiv is useless for answering the question “Has there been any abnormal warming over the past 120 years” because

a) it is a model, not measurement;

b) it only goes back a few years.

Expunging 120 years of measurement is the ultimate “hide the prior warmth.” After all, why bother altering the data from the 20th century when you can just snap your fingers and send it into the void!

“nClimDiv replaced USHCN as the operational dataset in March 2014. USHCN is not now used” is a petition to the gods, an ardent dream. Alarmists want USHCN to disappear, and they want it replaced by the model. Wishing does not make it so. Nor does bureaucratic declaration.

USHCN is the permanent, authoritative baseline, gold standard.

“a) it is a model, not measurement;

b) it only goes back a few years.”

Complete nonsense on both counts.

Nick ==> “a different computational approach known as climatologically aided interpolation, which helps address topographic variability.”

I’d say that was pretty different alright.

The error bars for CAI are as follows:

“Interpolation errors associated with CAI estimates of annual‐average air temperatures over the terrestrial surface are quite low. On average, CAI errors are of the order of 0.8°C, whereas interpolations made directly (and only) from the yearly station temperatures exhibit average errors between 1.3°C and 1.9°C.” [ link ]

The average error is 0.8°C — and the errors in interpolations made “directly (and only)” from the yearly station temperatures ??? 1.3 to 1.9°C .

I would assume that these are +/- error bars. Greater than all the posited increase in global temperatures since 1890.

That is different . . . . admitting HUGE error ranges.

Kip,

“Greater than all the posited increase in global temperatures since 1890.”

Different things. The numbers you quote are the variability associated with interpolating individual points. The variability of a global average is very much less. And errors are reduced again by using anomalies.

Nick ==> Errors are not reduced by using anomalies — they are just ignored. You know this very well.

You can’t turn lousy numbers into precise results — that is one of the fallacies that is ruining much of scientific effort in many fields — epidemiology, climatology, and the social sciences.

Your stack of temperature records with very wide error bars (up to +/- 1.9°C for interpolated stations) still have the same error bars when reduced to anomalies. You can not just “wish” the error range away.

“And errors are reduced again by using anomalies.”

That is ABSOLUTE NONSENSE.

Anomalies are just the difference from a calculated mean.

The errors DO NOT magically disappear.

“Errors are not reduced by using anomalies”

The error in the population average estimate is reduced. The reason is that the greater homogeneity of anomalies reduces sampling error, which is the main contributor to the error in the population average.
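The sampling-error claim in this comment can be tried on made-up numbers. The sketch below assumes a synthetic network in which station climatologies differ widely while this year's departures from them are similar; every value in it is invented for illustration. It looks only at how much a network average moves depending on which stations happen to be sampled. It says nothing about instrument or interpolation error in the individual readings, which is the objection raised in the replies.

```python
import random
import statistics

rng = random.Random(42)
N_STATIONS = 1000

# Assumed synthetic network: long-term station means spread over 30 C
# (latitude, elevation), departures from them clustered near +0.5 C.
climatology = [rng.uniform(-5.0, 25.0) for _ in range(N_STATIONS)]
departure = [0.5 + rng.gauss(0.0, 0.3) for _ in range(N_STATIONS)]
absolute = [c + d for c, d in zip(climatology, departure)]

def spread_of_sample_means(values, sample_size=50, trials=500):
    """Std. dev. of the network mean across random subsets of stations."""
    means = []
    for _ in range(trials):
        picked = rng.sample(range(N_STATIONS), sample_size)
        means.append(statistics.fmean(values[i] for i in picked))
    return statistics.stdev(means)

abs_spread = spread_of_sample_means(absolute)
anom_spread = spread_of_sample_means(departure)

print(f"spread of mean, absolute temperatures: {abs_spread:.3f} C")
print(f"spread of mean, anomalies:             {anom_spread:.3f} C")
```

Whether real station anomalies are as homogeneous as this toy network assumes is exactly what is in dispute in this thread.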

Nick ==> You have definitely stated the fallacy correctly — the belief that more and more division and subtraction and averaging actually IMPROVES the quality and accuracy and precision of the data. It does not do so in the real world.

“The reason is that the greater homogeneity of anomalies reduces sampling error, ”

LOL.. the farce continues !!

Talk about a misleading and disingenuous comment Nick!

How is it you managed to leave out the last sentence of the paragraph?

“You shouldn’t write about this stuff if you can’t get your head around the basics..! – Nick Stokes”

You managed to redact the ultimate and all important qualifying sentence in the entire paragraph:

“all important qualifying sentence”

How is that an all important sentence? It just says that nClimDiv does some things similarly. Doesn’t change the fact that, as explicitly stated, nClimDiv replaced USHCN in March 2014.

“… the fact that, as explicitly stated, nClimDiv replaced USHCN in March 2014.”

You keep repeating that, as if it will eventually be true. “Replaced?” No.

You are making a petition to the gods, an ardent dream. Alarmists want USHCN to disappear, and they want it replaced by a model — a temperature reconstruction using temperature measurement — how absurd is that?

Wishing does not make it so. Nor does a bureaucratic declaration of “replacement.” Mr. Stokes, this is the age of cancel culture, and you are extremely lame at it.

USHCN is the permanent, authoritative baseline, gold standard. It can’t be cancelled.

“USHCN is the permanent, authoritative baseline, gold standard.”

Like fred, I don’t think you know what USHCN is.

We all know that USHCN is a load of agenda driven garbage

Pity you are too slow and brain-addled to have figured that out, Nick !

“We all know that USHCN is a load of agenda driven garbage”

So you don’t agree that “USHCN is the permanent, authoritative baseline, gold standard.”? Well, OK. But you did say that

“USHCN brought the data tampering to an end, as it was bound to do.”

and complained

“WHY was USHCN dropped from the data sets”

I know what USHCN is.

There is some confusion in this thread about it.

Here is the NOAA FTP download page.

ftp://ftp.ncdc.noaa.gov/pub/data/ushcn/v2.5/

Notice that there are three flavors for both TMAX and TMIN, namely RAW, TOB, and FLs.

RAW contains 50-million direct measurements from 1200 [redacted recently to 800] weather stations over 120+ years. There is a small amount of “estimation” in RAW, but it is basically raw!

The contention is with FLs, “FINAL” dataset. That set is not measured data — it contains constructions and reconstructions, deploying Pairwise Homogenization Algorithm and extrapolation, making it a model, fraught with all the problems constantly exposed in the activities of climate scientists.

Moreover, the concept of FLs is the drive to construct an overall average temperature model. This is an error, if your goal is to answer “Is there any abnormal warming evident in the United States over the past 120 years.” To answer that question, you have to examine the sine curve in the RAW dataset of 1200 stations one by one.

To all of us outraged by data manipulation, I suggest realizing that the RAW data is on file, and receiving updates daily. The cause for rage is when advocates push the FLs dataset out front and Cancels the existence of RAW, justified by the notion that Final (FLs) is ‘more accurate model of average temperature.’ Which is the wrong quest.

Here is what RAW shows:

http://theearthintime.com

My new claim is as follows: USHCN RAW is the permanent, authoritative baseline, gold standard.

windlord is wrong

Nclimdiv goes back to 1895

ftp://ftp.ncdc.noaa.gov/pub/data/climgrid/

Steven Mosher November 6, 2020 at 3:35 am

windlord is wrong Nclimdiv goes back to 1895

ftp://ftp.ncdc.noaa.gov/pub/data/climgrid/

For the moment, let me stipulate that it indeed goes back to 1895.

What I don’t have wrong: That is not direct measurement data. It is a gridded model.

… the folly of temperature reconstruction performed on direct measurement of temperature?

“How funny it’ll seem to come out among the people that walk with their heads downwards! The antipathies …” ~ Alice

“And errors are reduced again by using anomalies.”

“You shouldn’t write about this stuff if you can’t get your head around the basics.”

Same person.

Bottom line – it doesn’t matter what the climate record is called.

They’re exactly the same, the same anticlockwise rotation with vertical stretching, to change the 1930s from being much warmer than the present in the USA, to being much cooler. For the cooling in the 1950’s to 70’s to disappear. Label it what you like. The fraud is the same and everyone knows what is being done.

No

nClimDiv replaced USHCN

nCLIMDIV

Vose, R.S., Applequist, S., Durre, I., Menne, M.J., Williams, C.N., Fenimore, C., Gleason, K., Arndt, D. 2014: Improved Historical Temperature and Precipitation Time Series For U.S. Climate Divisions Journal of Applied Meteorology and Climatology. DOI: http://dx.doi.org/10.1175/JAMC-D-13-0248.1

this is one reason why NASA GISS don’t use USHCN any more

No USHCN, no TOB adjustment

Do you guys read the readmes and literature?

Steven: Why is this data set still maintained? What is the accepted replacement? Has anyone looked critically at any adjustments to this replacement?

I have this nagging feeling that each replacement is just a newer more cleverly hidden adjustment system. When the adjustments come to light, they once again move on to a new data set

“Steven: Why is this data set still maintained? What is the accepted replacement? Has anyone looked critically at any adjustments to this replacement?

I have this nagging feeling that each replacement is just a newer more cleverly hidden adjustment system. When the adjustments come to light, they once again move on to a new data set”

the replacement is nClimDiv

Why is it still maintained?

Well I have told some guys there to just nuke the dataset. But they responded like this

A) Since old published papers used the data, we have to keep it around for legacy

B) some agencies like forestry and others have old programs that used USHCN

C) Some people still like to use it because it has a daily min max version and

GHCND is a pain in the ass.

They have the code. they just run it.

the adjustment system is now ONE program as opposed to the FIVE it used to be.

That one program has been benchmarked and independently verified

One of the goals of our work was to independently verify it. We did

Unlike the USHCN adjustment code, the new system is totally open.

Mosher

Would you care to comment on why the USHCN data are still being collected and published if it “IS NO LONGER USED?”

USHCN, as used here, is just the name for a list of stations that were, until March 2014, used to compile the CONUS average (which was also often shortened to USHCN). The stations still exist and report temperatures, regardless of what the readings are used for or what list they are part of. Many will be included in the longer list, nClimDiv, that is now used for the CONUS average.

And Climdiv shows NO WARMING except for a bulge from the 2015 El Nino, since 2015.

USHCN has put an end to the data tampering. !

“USHCN has put an end to the data tampering. !”

In fact, your graph does not show USHCN at all. If you go back to its source, you can get it to show USHCN (to 2014), and it will show USHCN almost identical to nClimDiv and USCRN.

Which just means that USCRN is affirming the others.

Why would I bother showing a highly corrupted data set when a pristine one is available

NOTHING from before 2005 can be trusted….. period.

The adjusters have made darn sure of that. !

Glad you agree there has been no USA warming except from the El Nino Big Blob since 2005.

Change your foot next time.

“USHCN almost identical to nClimDiv and USCRN. ”

Of course it is , over that short period

The data adjusters KNEW they couldn’t allow it to be disparate from the new system.

USHCN brought the data tampering to an end, as it was bound to do.

But you knew that didn’t you Nick. !

You also have to ask , if it is still being calculated, WHY was USHCN dropped from the data sets.

You only have to look at what they have been doing now, to answer that question.

“Which just means that USCRN is affirming the others”

No, USCRN is a check on REALITY

Funny how everything leveled off as soon as a reliable system, not subjected to massive tampering, was introduced. 😉

“USHCN brought the data tampering to an end, as it was bound to do.”

You have a totally muddled idea of which is which.

WRONG,

You KNOW that USCRN brought the tampering to an end.

Stop your childish attempts to avoid the facts.

Your credibility is dropping to griff level.

fantasy maths.. the Nick way !

Mosher

Would you care to comment on why the USHCN data are still being collected and published if it “IS NO LONGER USED?”

USHCN is a name for a collection of data and its associated adjustment process.

lets see if I can explain — I will use approximate numbers and leave out some detail

There is SOURCE DATA over 20000 stations in the USA GHCN DAILY MIN MAX

back in the 90s NOAA tried to collect these into 1 “BEST” dataset of long stations

they selected 1218. They had to stitch some stations together

Then they had to adjust them. This collection was called USHCN

USHCN is a DERIVED product that drew from GHCND primarily

it is a SUBSET of all the data that was stitched together and adjusted in a

specific way.

Over time they changed the adjustment codes

Over time 30% of the 1218 stations went off line..

So they estimated missing data.

In 2014 they decided that these “1218 stations” AND THE ADJUSTMENTS that

define what they are would no longer be the official record.

they built a new official record. Starting again with the MAIN SOURCE GHCN DAILY

they built nClimDIV.

as a “dataset” it is still on line. every month they take the DAILY source file (20K plus stations)

and they build SUBSETS

A) USHCN SUBSET

B) nClimDiv SUBSET

so “A” as a subset still exists but it’s no longer official and it’s not used in global climate records

it used to be used by NASA GISS but they stopped using it in 2014

As mosh says..

Why use a fabricated , tortured and corrupted data set, like USHCN or BEST, when you have much better data available.

These fabrications are now so tainted with agenda driven junk-science as to be TOTALLY USELESS as a historical representation of US temperatures.

Only the raw data , as used by Tony Heller, has any meaning.

Fortunately there is a better , untainted system, which shows basically no warming since 2005

And because it exists, the data tampering has also stopped and the ClimDiv collation matches USCRN almost exactly. Again showing no warming except for the bulge from the recent El Nino/Big Blob event

Bingo.

As soon as a high quality, tamper-resistant network came to be, CO2 forgot how to warm the contiguous US.

CO2 is just an old curmudgeon, remembering the glory days when it could influence contiguous US temperatures like a control knob. Now it just sits on the porch and yells at USCRN to get off his lawn.

This is why Mosher and Stokes want to emphasize the land-ocean temperatures. Much less controlled, much lower quality, tampering is not only allowed but encouraged as necessary.

Even early data from pre-1900 should be used when it is high quality. USCRN is only a few dozen stations. You don’t need a lot of data, you need good quality data. Polluting good data with bad is their MO.

“And because it exists, the data tampering has also stopped and the ClimDiv collation matches USCRN almost exactly. Again showing no warming except for the bulge from the recent El Nino/Big Blob event”

USCRN shows less warming than adjusted records

In fact USCRN proves that adjustments DONT warm the record

USCRN proves that the “poor” stations are actually quite usable if you know what you are doing

here is a test

A If USCRN is a gold standard then PREDICT what will we see if we compare

USCRN versus nCLIMDIV (adjusted)

USCRN versus USHCN ( adjusted)

What will we see if we compare a gold standard with these two adjusted datasets?

anyone bold enough to predict?

What will we see if we compare the gold standard with stations that have bad siting?

UHI? by airports?

what will we see ?

Predict!

Here are all 3 records

USCRN

USHCN

nCLIMDIV

things to note

the unadjusted gold standard is WARMER THAN the official data for the USA

https://www.ncdc.noaa.gov/temp-and-precip/national-temperature-index/time-series?datasets%5B%5D=uscrn&datasets%5B%5D=climdiv&datasets%5B%5D=cmbushcn&parameter=anom-tavg&time_scale=ann&begyear=2005&endyear=2020&month=9

whats that tell you boys

Steve Mosher, “1. USHCN IS NO LONGER USED.”

Thanks for shouting, Steve.

We all know the USCRN now provides the measurement of record in the US. Since 2005.

So, let’s all notice that all the temperature difference graphs start at 1900. Let’s see, that’s … 105 years before the USCRN entered the scene. We notice further that adjusted USHCN temperatures were used to construct the record across 1900-2005.

That fact makes the difference graphics relevant and your rant irrelevant.

“We all know the USCRN now provides the measurement of record in the US. Since 2005.”

NOPE.

the official record is nclimdiv

wrong pat USCRN is NOT the official record

“NCEI now uses a new dataset, nClimDiv, to determine the contiguous United States (CONUS) temperature. This new dataset is derived from a gridded instance of the Global Historical Climatology Network (GHCN-Daily), known as nClimGrid.

Previously, NCEI used a 2.5° longitude by 3.5° latitude gridded analysis of monthly temperatures from the 1,218 stations in the US Historical Climatology Network (USHCN v2.5) for its CONUS temperature. The new dataset interpolates to a much finer-mesh grid (about 5km by 5km) and incorporates values from several thousand more stations available in GHCN-Daily. In addition, monthly temperatures from stations in adjacent parts of Canada and Mexico aid in the interpolation of U.S. anomalies near the borders.

The switch to nClimDiv has little effect on the average national temperature trend or on relative rankings for individual years, because the new dataset uses the same set of algorithms and corrections applied in the production of the USHCN v2.5 dataset. However, although both the USHCN v2.5 and nClimDiv yield comparable trends, the finer resolution dataset more explicitly accounts for variations in topography (e.g., mountainous areas). Therefore, the baseline temperature, to which the national temperature anomaly is applied, is cooler for nClimDiv than for USHCN v2.5. This new baseline affects anomalies for all years equally, and thus does not alter our understanding of trends.”

The “Revelation of the Method” by Steven Mother:

;-(

Call me cynical, but this graphic includes the USCRN anomaly plot since 2005.

The normal period is 1981-2010, which means the anomalies were generated by subtracting the average of the 1981-2010 air temperature from each annual average temperature since 2005.

The 1981-2010 normal is calculated using USHCN measurements.

That means the uncertainty of the USHCN 1981-2010 measurement set should propagate into the 2005-2019 USCRN anomalies.

The lower limit of uncertainty from systematic measurement error in the USHCN normal is about ±0.46 C.

So, using very standard error propagation through a difference, the uncertainty in the anomaly temperature is (T_n±0.46) C – (T_crn±0.05)C = (ΔT_anom±0.46)C

But the NOAA graphic has no uncertainty bars. The superhero assumption of perfect accuracy and infinite precision rides again.

Picture how the NOAA graphic would look with ±0.46 C uncertainty bars.

At the 95% confidence interval, the anomaly is indistinguishable from 0 C.
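The propagation through a difference used in this comment can be checked numerically. The sketch below takes the two uncertainties exactly as quoted (±0.46 C for the normal, ±0.05 C for USCRN) and assumes the errors are independent, so they combine in quadrature; the 1.96 factor further assumes a normal distribution. Both assumptions are mine, and if the ±0.46 C is systematic rather than random the combination rule would be different.

```python
import math

def difference_uncertainty(u_a, u_b):
    """1-sigma uncertainty of (a - b), assuming independent errors."""
    return math.hypot(u_a, u_b)  # sqrt(u_a**2 + u_b**2)

u_normal = 0.46  # C, uncertainty of the 1981-2010 normal, as quoted above
u_crn = 0.05     # C, uncertainty of a USCRN annual value, as quoted above

u_anomaly = difference_uncertainty(u_normal, u_crn)
half_width_95 = 1.96 * u_anomaly  # assumes normally distributed errors

print(f"anomaly uncertainty (1 sigma): +/-{u_anomaly:.2f} C")
print(f"95% interval half-width:       +/-{half_width_95:.2f} C")
```

The larger term dominates, which is why the combined figure rounds back to ±0.46 C.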

nice use of maths Pat,

Nick and Mosh will be along soon, with their lack of understanding , in a hilarious attempt to undermine you. 🙂

USCRN is the only reference that I know of that doesn’t start at zero.

They started it at ~+1.8, and it has stayed around that level for the last 15 years.

Just set it at zero from the beginning (or some brief period at its own beginning) and stop with the games.

wrong pat

“USCRN observations since commissioning to the present (4-9 years) were used to find relationships to nearby COOP stations and estimate normals at the USCRN sites using the normals at the surrounding COOP sites derived from full 1981-2010 records (Sun and Peterson, 2005).”

NOT ushcn

“One of the issues with USHCN is it is NOT a high quality record like many people think”

ROFLMAO

Nobody thinks USHCN has any real quality at all !

As you say, it is a manufactured, tortured, agenda-driven load of GIGO.

Just like BEST is.

So how is it that the decline of 1/3 in number of stations happen to be “warm” stations ? Since the trend of the remaining stations results in lower average temperature by a rather large whole degree C ?

Yet those expensive well sited USCRN stations, not part of USHCN, show…..NADA

https://www.ncdc.noaa.gov/temp-and-precip/national-temperature-index/time-series?datasets%5B%5D=uscrn&parameter=anom-tavg&time_scale=p12&begyear=2004&endyear=2020&month=12

So the airport stations that were USHCN were upgraded to automated electronic stations and moved to alternate non-USHCN record keeping. Maybe the default reduction in jet engine exhaust blasts and reduction in nearby asphalt runways on the remaining USHCN station records explains the effect ?

#@$&-it Andy…..is that graph axis “ Raw Final “ or “ Final minus raw”….it makes a big difference…

DMacKenzie, I guess it is vague. It means Final minus raw.

Thanks, sorry I spazzed, nice work by the way.

Much of the adjustments are to correct for differences in the time of day that measurements are taken. 1) Near uniform warming throughout the day should cause the temperature average formed by multiple measurements taken at different times to increase at about the same rate as measurements taken at the same time. The average will be different between the two cases but, given near uniform warming through the day, the rate of increase in the average should be nearly the same. The average is only useful to determine the rate of increase anyway as the average itself is meaningless; it averages places at different elevation, relative humidity, hours of solar insolation, etc. – the average you get is dependent on the number of stations of each characteristic used in the average. If warming occurs more in overnight temps (as it does) the correction could be either positive or negative depending on how many temps taken at night are included in the average. It would be far more useful to compute a day and a night average and determine the rate of change of each separately. 2) As more and more stations are computerized and thus capable of frequent measurements and calibration checks one would expect the adjustments to flatten out, but that’s not happening. Something seems fishy here.

It’s not quite as simple as the time of taking a reading…..the old mercury thermometer stations only showed the low and high positions of a steel float on the mercury column of the thermometer. So only overnight low and yesterday’s daytime high were recorded. The time the reading was recorded is of no significance except in very rare cases where the reading was taken at a time that was lower than last night’s low or higher than yesterday’s high. And the odds of that are 50/50, so it cancels over a year average.

Now continuous electronic monitors do have time of measurement issues. Also speed of measurement issues. Electronic probes can show a temperature increase from a small passing convective current that the old mercury thermometers wouldn’t have been able to warm up for…..

Nope, sorry. There was a widespread conversion of USHCN stations to electronic designs before 2009 at which time some were relocated to sites less susceptible to the urban heat island effect. Adjustments were made to account for time of day issues at the time of conversion (which should have made a small effect in the average RATE of warming – adjustments for location could introduce a substantial one-time correction). Therefore, the adjustments should have plateaued since 2009. They haven’t. See figure 1. There was an unexplained dip a few years ago but the last data point is right back on the trend of increasing adjustments. That can’t be right.

wrong.

“There was a widespread conversion of USHCN stations to electronic designs before 2009”

COOP stations and others were transitioned to MMTS before this

the TOBS adjustment corrects for trend changes due to change in observation time

“The time the reading was recorded is of no significance except in very rare cases where the reading was taken at a time that was lower than last night’s low or higher than yesterday’s high. And the odds of that are 50/50, so it cancels over a year average.”

wrong.

there are several effects. One is the end of month effect, one is a high being counted twice

and the other is early morning cold fronts biasing the results

These Biases were First identified in the late 1800s

then again with Mitchell’s work in the 50s

then again in the 1970s by agriculture researchers in Indiana who were concerned

about biases in heating Degree days.

farming matters

it wasn’t until 1986 that the bias was addressed by NOAA
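The “high being counted twice” effect listed above can be sketched with a toy min/max thermometer. Everything below is an assumption made for illustration: a cosine daily cycle peaking at 15:00, a made-up 7-day warm/cold swing, and an observer who reads and resets the instrument at 17:00. It is not NOAA's TOB adjustment, only a picture of why a late-afternoon reset can carry a hot afternoon into the next day's reading.

```python
import math

DAYS = 28
DIURNAL_AMPLITUDE = 10.0  # assumed half-range of the daily cycle, C
PEAK_HOUR = 15            # assumed hour of the daily maximum

def daily_mean(day):
    # Assumed synthetic weather: a 7-day warm/cold swing around 15 C.
    return 15.0 + 5.0 * math.sin(2 * math.pi * day / 7)

def temperature(day, hour):
    cycle = math.cos(2 * math.pi * (hour - PEAK_HOUR) / 24)
    return daily_mean(day) + DIURNAL_AMPLITUDE * cycle

hourly = [temperature(d, h) for d in range(DAYS) for h in range(24)]

def recorded_maxima(reset_hour):
    """Daily max readings of a thermometer read and reset at reset_hour."""
    maxima = []
    for day in range(1, DAYS):
        start = (day - 1) * 24 + reset_hour  # yesterday's reset
        end = day * 24 + reset_hour          # today's reading
        maxima.append(max(hourly[start:end + 1]))
    return maxima

# Calendar-day maxima for the same days, for comparison.
true_maxima = [max(hourly[d * 24:(d + 1) * 24]) for d in range(1, DAYS)]
afternoon = recorded_maxima(17)

double_counted = sum(1 for a, t in zip(afternoon, true_maxima) if a > t)
bias = (sum(afternoon) - sum(true_maxima)) / len(true_maxima)

print(f"days where yesterday's heat set today's max: {double_counted}")
print(f"mean warm bias in recorded maxima: {bias:.2f} C")
```

In this toy setup the 17:00 reading is never below the calendar-day maximum, so the error does not cancel 50/50; it only ever pushes the recorded highs one way. A morning reset would do the mirror-image thing to the lows when the day-to-day swings are large enough.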

“Near uniform warming throughout the day should cause the temperature average formed by multiple measurements taken at different times to increase at about the same rate as measurements taken at the same time. ”

This just isn’t true and is easily shown to be untrue. The daily temperature curve resembles a cosine wave. Thus the warming (and cooling) throughout the day changes radically. At sunrise the rate of warming starts off slowly but increases during the morning. At mid-day the rate of increase has slowed significantly. Then the temperature starts to fall slowly but increases through sunset and early night. Toward sunrise the rate of cooling has decreased significantly.

Do *any* scientists spend a lot of time outdoors any more? A 13 year old Boy Scout could point out the problems with this assumption.

Multiple measurements as in multiple stations participating in the average some of which having the measurement time of day different than others. If the warming is uniform throughout the day, the warming curve is still the same shape – just displaced by the amount of warming. Sorry that you got so confused by such an easy concept.

No confusion here. The warming/cooling curve is DIFFERENT at different times of the day. The rate of warming/cooling is defined by the derivative of the cosine. The magnitude of that derivative varies between 0 and 1 from zero to pi/2. The value you get depends on when you take it.

When you average temperatures taken at different times in different locations you wind up with an uncertainty interval that can be quite large.

The warming shape of each station remains the same but the shape of the average is not the shape of either, it is combined. Adding two sin or cos waves that vary in phase does not give you a similar sin or cos wave.

The other question is fitness of purpose. If two data streams are incompatible because of a physical measuring difference, you can’t simply create new data to try and fit your purpose. That is close to what Mann did to his hockey stick graph.

An example is taking older measurements recorded as integers (+/-0.5 uncertainty) and trying to make adjustments to the old data in order to match newer thermometers that are recorded to (+/-0.1 uncertainty). You can’t go back in time to know what the actual rounding was for each temperature. Changing the value and/or the precision of previously recorded data is modifying that data. Knowingly using a database that has done this is acceding to the changes and you are treading in the foggy marshes where postmodern science says “the ends justify the means” with all the same risks that entails.

The most appropriate thing to do is break the data and trends into separate units by the type of measurements that were/are being done. If the values and/or rates don’t match explain what you think the differences are and let the viewer decide what is correct.

“Much of the adjustments are to correct for differences in the time of day that measurements are taken. 1) Near uniform warming throughout the day should cause the temperature average formed by multiple measurements taken at different times to increase at about the same rate as measurements taken at the same time. ”

measurements are taken (recorded) once a day

the TOB (Time of Observation Bias) has been reported since the late 1800s.

it became an issue of importance in the 70s in farm belt states because the change

of observation times was biasing the temperatures cold and the agriculture industry

which depends on HDD heating degree days needs accurate data

Here is the first observation of the TOB

1890

6 years prior to any theories about CO2 causing increased warming

https://rmets.onlinelibrary.wiley.com/doi/abs/10.1002/qj.4970167605

“the extreme jump seen from 2015-2019 in Figure 3 is mostly in the estimated values in the final dataset. “

The climate scam marching orders from the White House OSTP to NOAA was obviously received loud and clear. The Pause was/is a real problem that had to be “corrected” away. Multiple efforts from Tom Karl (the Karl, et al. 2015 Pause buster paper using arguably fraudulent SST corrections published in SciMag that required pal review to make it through) and still on-going by those he left behind to carry on the temperature hustle. Without those corrections the Pause would likely be unequivocally back in a couple years after the on-going La Nina conditions persist into 2021.

It’s past time to Terminate the climate scam on the surface station temperature records.

Believer in CAGW: The answers violate my theory so the data must have errors that need correction in the direction of CAGW.

Scientist: In Physics, if your theory makes a prediction that turns out wrong, your theory is wrong.

Mother Nature: I don’t care what you believe. I have one Commandment: My way. Ignore me at your peril.

Prediction is difficult, especially about the future. Models should always predict the then-future of the past, too.

If you drop far flung rural stations and just keep the ones close to cities, then the interpolated estimates for the defunct far flung stations will be raised by UHI. If you don’t tell people what you are doing, and then say “But the countryside is warming just as much as the cities”, that is lying.

Effectively, the remaining stations are receiving more weight for the average(s) that is computed. If the retained stations are indeed near cities, then that means the UHI effect is being amplified.

I have a different take. UHI was originally desired to be removed so climate scientists could show how much warming was being caused over and above past CO2 concentrations. To this day the heat from UHI is still being adjusted. The IR radiation folks want to do this so their radiation budgets work out properly when compared to the past.

UHI is real heat being added to the atmosphere. Anyone that has done highway construction can tell you that concrete/asphalt is a very good conductor of heat to the surrounding air compared to the grass ditches on either side. Energy use in heating, cooling, transportation, manufacturing all add heat that has never existed before. UHI bypasses the IR/CO2 processes and uses conduction/convection. As cities grow with more and more concrete, dark roofs, etc. this will grow also.

Our friends on the left will point out that the United States Historical Climate Network doesn’t cover the world so to quote a well known politician, “What difference does it make?”

The United States isn’t the world, but it spans North America with a good variety of geography and topography which makes it a good sub-set of a land mass. World-wide, the US has the best sampling of weather data of any significant land surface. If the US does not show warming, a strong explanation is needed as to why it is an outlier compared to the rest of the world as a whole. Taken from (Richard Verney, WUWT, July 2017)

What we are treated to in the so-called “popular” press is graphics from NASA, usually their Land Ocean Temperature Index, which covers 1689 months through September. So far in 2020 NASA has made the following number of changes each month:

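| Jan | Feb | Mar | Apr | May | Jun | Jul | Aug | Sep | Oct | Nov | Dec |
|-----|-----|-----|-----|-----|-----|-----|-----|-----|-----|-----|-----|
| 319 | 240 | 313 | 340 | 298 | 404 | 319 | 370 | 303 |     |     |     |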

Over the past decade those changes look like this:

The first of the 13 months of TOB data is most likely the last month of the previous year.

Statistics relies on the independence of the samples. As soon as you start doing infilling or homogenization you are adding extra weight to some stations at the expense of others. It is a fatal mathematical flaw.

A more reliable method would be to randomly sample the RAW data and rely on the Central Limit Theorem. The biggest problem will be to correct for population movement from NE to SW during the past 150 years.

Climate stats increasingly remind me of the great “Saccharin causes cancer” paper back in 1976. Not only did the data not prove that, it didn’t prove anything, but right down at the ‘might suggest’ level, it suggested that Saccharin had a mild preventative effect. That paper is still being quoted today as proof that Saccharin causes cancer.

Strangely, the only people in science I know who believe in significant man-made global warming are doctors.

I wonder why this isn’t done this way? This is a good suggestion!

Sampling is not even a good solution. To have good samples, each sample must be representative of the population distribution. The CLT, even if properly applied, would still give you only an estimate of the population mean. The data you have is finite and is the only data (that is, the entire population) that you have or will ever have. Simply find the mean and variance. Forget sampling; it is used when you don’t know the entire population.

You cannot simply join two populations (that is, different stations’ data) and find a common mean. You must also pay attention to the variances of each population. Generally the variance of the total will increase. See:

https://www.khanacademy.org/math/ap-statistics/random-variables-ap/combining-random-variables/a/combining-random-variables-article
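A small sketch of why the variance grows when two stations' records are pooled into one dataset. The readings are invented, and the `pooled` helper is a hypothetical name. Note that this treats the case of merging two datasets (within-station variance plus a between-station term), which is a slightly different setting from the sums of random variables covered in the linked article.

```python
from statistics import fmean, pvariance

def pooled(a, b):
    """Mean and population variance of the union of two datasets."""
    n_a, n_b = len(a), len(b)
    n = n_a + n_b
    m_a, m_b = fmean(a), fmean(b)
    mean = (n_a * m_a + n_b * m_b) / n
    # Average spread inside each station's own record.
    within = (n_a * pvariance(a) + n_b * pvariance(b)) / n
    # Extra spread caused by the two stations having different means.
    between = (n_a * (m_a - mean) ** 2 + n_b * (m_b - mean) ** 2) / n
    return mean, within + between

# Invented readings (deg C) for a cool station and a warm station.
cool = [10.0, 12.0, 14.0]
warm = [20.0, 22.0, 24.0]

mean, variance = pooled(cool, warm)
print(pvariance(cool), pvariance(warm))  # each station on its own
print(mean, variance)                    # pooled: variance is much larger
```

Here each station has a variance of about 2.7 on its own, while the pooled record has a variance of about 27.7, almost all of it from the difference between the two station means.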

Climate4you.com maintains a record of these intentional and significant adjustments by GISS and NCDC in its monthly Reports on Observations. For GISS, see:

http://www.climate4you.com/Text/Climate4you_September_2020.pdf

For NCDC temperature manipulations, go to the earlier monthly reports, e.g.:

http://www.climate4you.com/Text/Climate4you_January_2014.pdf

GISS and NCDC manipulate their temperature records monthly, and the changes are tracked against the original records for two dates: January 2000 and January 1910 (January 2000 and January 1915 for NCDC). From April 2008 to October 2020, GISS regularly increased the January 2000 value, from 0.17 deg C to 0.25 deg C, while decreasing the January 1910 value from -0.28 deg C to -0.41 deg C. The difference between the two months therefore now stands at +0.66 deg C, up from 0.45 deg C before the adjustments.

Similarly, the difference created by NCDC (increase of January 2000 plus downward adjustment of January 1915) is now +0.51 deg C.

The manipulations become much clearer when you use the USHCN tavg_latest_raw_tar data to create your own analysis. For example, rural stations in the State of Washington at Davenport and Dayton (far from urban heat islands) show consistent century-scale downward trends of -0.31 deg C and -0.25 deg C, with the greatest decrease occurring since the heat waves of the 1930s-1940s.

I am with a group that did a lot of this kind of work last spring. We downloaded the GHCN database for the continental US. 12,748 stations with 7.3 million station months. We looked at a lot of issues like missing data, changing station mixes, etc.

Notable findings included:

– The CONUS GISTEMP graph can be reproduced fairly closely using the adjusted temperatures and looking at stations that consistently reported from 1920 on, i.e. you don’t need gridding or interpolated readings to get the GISTEMP trend.

– The average adjustment of consistently reporting stations rose from a low of approx. -0.5 degrees C (colder) in 1936 to consistently positive adjustments (over zero) since 2007.

– The actual raw measured temperatures at the same set of stations do not show any net positive temperature trend since the 1920s: there is a cooling trend from the 1920s to 1980 and a warming trend since then, even in the raw data.

– Relocations of the stations don’t affect the average adjustment level.

– Large US airports (in top 50) have not had a consistent adjustment trend of any kind.

– Smaller US airports (name implies airport, not in the top 50) were adjusted by -0.4 degrees from the 1920s to the mid-1930s and have been adjusted at or above zero since the mid-1990s.

– The geographic distribution of adjustments has been very inconsistent. For example, in 1934, the upper Midwest (MI, IL, IN, WI) was heavily adjusted colder when compared to the Rockies (NM, CO, AZ), which were adjusted much hotter during that year. In 2016, the middle states of the US (Rockies to Mississippi River) were adjusted hotter while the NW US and mid-Atlantic coastal regions were adjusted colder.

Let me know if you would like further data from these studies.

This looks interesting – yes please Rich, do let us see further data from your studies.

Andy,

Thanks for this post.

Please watch these 3 videos from Tony Heller. Besides having graphs that look very similar to yours, he also examines NOAA’s explanation for the TOD adjustments. NOAA’s logic sounds good, but it can be tested by comparing stations that supposedly required TOD adjustments with those that did not. Tony shows that the TOD hypothesis is faulty.

https://realclimatescience.com/2020/10/new-video-alterations-to-the-us-temperature-record-part-one/

https://realclimatescience.com/2020/10/new-video-alterations-to-the-us-temperature-record-part-2/

https://realclimatescience.com/2020/10/new-video-how-the-us-temperature-record-is-being-altered-part-3/

I think we also need to ask why NOAA is altering current measurements (post-2000) upward, especially if our measurement methodology and equipment are supposedly better than ever today.

Regarding the disappearing stations, we have to ask why those stations have been removed from the program. A valid reason might be that those stations suffer from recent urbanization, i.e. the urban heat island effect, and are no longer good locations. An obviously invalid reason would be that those stations have shown a decreasing temperature trend that would go against the prevailing AGW narrative.

Essentially it is a representation of the Keeling Curve.

For comparison, here are USCRN and UAH48.

Surface temperatures seem to respond more in either direction, so the Big Blob/El Nino has caused a drift away from the ZERO TREND that existed until 2016, and also a slight divergence between USCRN and UAH48, but both are now gradually leveling back out and the slight divergence is disappearing.

Andy

“The plots above show that the overall effect of the estimated, or “infilled,” final monthly average temperatures is a rapid recent rise in average temperature as is clearly seen in Figure 3. In Figure 3 the overall monthly averages from the estimated (“infilled”) final weather station values are averaged and then compared to the average of the real measurements, the raw data. This is not a station by station comparison.”

More to the point, it is a comparison between two different sets of stations, representing different places. The main contribution to the difference in averages comes when one set just consists of hotter places. That isn’t related to changes in weather or climate. And the set of raw stations varies from month to month. I know it is a favorite activity of Tony Heller, but you just can’t usefully subtract the averages of two different and inhomogeneous datasets.
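The objection above can be illustrated with a toy network. This is a minimal sketch with invented station names and values, in which no station is adjusted at all (final equals raw wherever raw exists) and one warm station simply has no raw value for the month.

```python
from statistics import fmean

# Hypothetical monthly means (deg C). The final set has a value for every
# station; the raw set is missing the "hot" station this month.
final = {"cold": 5.0, "mild": 12.0, "hot": 20.0}
raw = {"cold": 5.0, "mild": 12.0}

# Method A: average each set separately, then subtract the averages.
diff_of_averages = fmean(final.values()) - fmean(raw.values())

# Method B: subtract station by station, only where both values exist.
common = final.keys() & raw.keys()
paired_difference = fmean(final[s] - raw[s] for s in common)

print(round(diff_of_averages, 2))  # nonzero, purely from the station mix
print(paired_difference)           # zero, because nothing was adjusted
```

Method A reports a gap of nearly 4 degrees although every matched pair is identical, because the two averages cover different places. Method B corresponds to the month-by-month matched comparison described for Figure 1 in the post.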

And, as Mosh and I endlessly remind, USHCN was replaced in March 2014 by nClimDiv for CONUS averages. You can still get the data for the USHCN list of stations if you want, and make up your own averages. That is your activity; it is not something that NOAA does.

“nClimDiv for CONUS averages”

And shows NO WARMING since 2005.

Your point is…….. meaningless, Nick.

You are just trying to hide the data tampering by yapping from behind a 6ft fence.

“You can still get the data for the USHCN list of stations if you want, and make up your own averages”

And it shows this…