From AGU EOS —Terri Cook, Freelance Writer

Was the Recent Slowdown in Surface Warming Predictable?

From the early 2000s to the early 2010s, there was a temporary slowdown in the large-scale warming of Earth’s surface. Recent studies have ascribed this slowing to both internal sources of climatic variability—such as cool La Niña conditions and stronger trade winds in the Pacific—and external influences, including the cooling effects of volcanic and human-made particulates in the atmosphere.

Several studies have suggested that climate models could have predicted this slowdown and the subsequent recovery several years ahead of time—implying that the models can accurately account for mechanisms that regulate decadal and interdecadal variability in the planet’s temperature. To test this hypothesis, Mann et al. combined estimates of the Northern Hemisphere’s internal climate variability with hindcasting, a statistical method that uses data from past events to compare modeling projections with the already observed outcomes.
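As a rough illustration of what a statistical hindcast involves, the sketch below fits a simple model to the early part of a series and then "forecasts" a later part whose outcome is already known. It is a minimal sketch only: the AR(1) model, the synthetic series, and the names `fit_ar1` and `hindcast` are assumptions made for illustration, not the semiempirical method used by Mann et al.

```python
import random


def fit_ar1(series):
    """Least-squares estimate of the mean and lag-1 coefficient of an AR(1) model."""
    mean = sum(series) / len(series)
    dev = [x - mean for x in series]
    num = sum(a * b for a, b in zip(dev[:-1], dev[1:]))
    den = sum(a * a for a in dev[:-1])
    return mean, num / den


def hindcast(series, split):
    """Fit on series[:split], forecast the held-out remainder, return (forecast, rmse)."""
    train, held_out = series[:split], series[split:]
    mean, phi = fit_ar1(train)
    forecast = []
    dev = train[-1] - mean  # last known departure from the training mean
    for _ in held_out:
        dev *= phi  # an AR(1) forecast decays toward the mean at each step
        forecast.append(mean + dev)
    squared_errors = [(f - o) ** 2 for f, o in zip(forecast, held_out)]
    rmse = (sum(squared_errors) / len(held_out)) ** 0.5
    return forecast, rmse


# Synthetic stand-in for an internal-variability series (hypothetical data).
rng = random.Random(0)
series = [0.0]
for _ in range(119):
    series.append(0.8 * series[-1] + rng.gauss(0.0, 0.1))

forecast, rmse = hindcast(series, split=100)
print(f"hindcast RMSE over {len(forecast)} held-out steps: {rmse:.3f}")
```

A large error on the held-out steps, relative to the size of the variability itself, is the kind of result that would count as "not predictable" for that statistical method.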

The team’s analyses indicate that statistical methods could not have forecast the recent deceleration in surface warming because they can’t accurately predict the internal variability in the North Pacific Ocean, which played a crucial role in the slowdown. In contrast, a multidecadal signal in the North Atlantic does appear to have been predictable. According to their results, however, its much smaller signal means it will have little influence on Northern Hemisphere temperatures over the next 1 to 2 decades.

This minor signal in the North Atlantic is consistent with previous studies that have identified a regional 50- to 70-year oscillation, which played a more important role in controlling Northern Hemisphere temperatures in the middle of the 20th century than it has so far this century. Should this oscillation reassume a dominant role in the future, argue the researchers, it will likely increase the predictability of large-scale changes in Earth’s surface temperatures.

Paper:

Predictability of the recent slowdown and subsequent recovery of large-scale surface warming using statistical methods

Authors

Michael E. Mann, Byron A. Steinman, Sonya K. Miller, Leela M. Frankcombe, Matthew H. England, Anson H. Cheung

(Geophysical Research Letters, doi:10.1002/2016GL068159, 2016)

Abstract

The temporary slowdown in large-scale surface warming during the early 2000s has been attributed to both external and internal sources of climate variability. Using semiempirical estimates of the internal low-frequency variability component in Northern Hemisphere, Atlantic, and Pacific surface temperatures in concert with statistical hindcast experiments, we investigate whether the slowdown and its recent recovery were predictable. We conclude that the internal variability of the North Pacific, which played a critical role in the slowdown, does not appear to have been predictable using statistical forecast methods. An additional minor contribution from the North Atlantic, by contrast, appears to exhibit some predictability. While our analyses focus on combining semiempirical estimates of internal climatic variability with statistical hindcast experiments, possible implications for initialized model predictions are also discussed.

Yet they’re sure it will be at least three degrees warmer in 2100 than now.

What will Mann say when the Plateau resumes with the coming La Nina, or turns into a valley rather than resuming foothill status?

Mann needn’t worry about a La Nina cooling. The Church Priests will Karlize the temperature data to report what they need.

I thought Mr Mann and his ilk had actually gone to some lengths to deny that the ‘pause’ ever happened at all?? Now he’s saying it did?

You notice he called it a temporary slowdown… Lol

“The temporary deceleration in warming across the Northern Hemisphere earlier this century could not have been foreseen by statistical forecasting methods, a new study concludes.”

Well I hate to break the news to them, but absolutely nothing “can be foreseen by statistical forecasting methods.”

Statistics is always an analysis of stuff you already know because it has already happened. So you can’t even do any analysis, until you know what happened.

But then you still know absolutely nothing about anything that hasn’t happened yet, and you never will, until it has happened.

You can take a wild ass guess on what you THINK might happen.

But your guess is no better than mine on that. Neither of us knows what is going to happen next.

Nothing ever happens again, so the fact that something did happen, is no basis for assuming it will happen again, because it won’t; something else will.

G

george e. smith: Well I hate to break the news to them, but absolutely nothing “can be foreseen by statistical forecasting methods.”

I’m guessing that you don’t use Garmin or a modern cell phone to help you navigate in a place you have never been before. Are you guessing when Halley’s comet will return, or the next lunar eclipse will occur?

Put more directly, statistical modeling and forecasting methods (e.g. Kalman filters, weighted nonlinear least squares regression) are used routinely to estimate the positions and trajectories of the GPS satellites.

It’s more like a plateau and escarpment, the Klimatariat will have nowhere to run.

So, the bottom line here is that Mann and his models cannot make any worthwhile predictions.

We all already knew that, pity it has taken him so long.

But he got a bunch more grant $$$.

If the accuracy of climate models was graphed in numerical form as temperatures are, without alteration they would have a negative anomaly approaching 100.

The models do not know if we are rapidly entering an ice age, or entering a global sauna…

Geologists have identified the evidence of (at least) 17 previous Ice Ages separated by brief Inter-Glacials. There may be more Ice / warm cycles before that, but the evidence kept getting chewed up by the geological processes at work.

A core tenet of Geology is that the processes we see at work today were at work in the past. Given the cyclical nature of Ice Ages and Inter-Glacials, I’m betting that another 170K-year-long Ice Age will soon be upon us, and the petty musings of Mann and his superstitious fear-mongers will be proven very wrong.

The Greenies were lucky that after their failed Ice Age prophecies there was an uptick from the rebound at the end of the Little Ice Age. They rode that pony, but it has died. We are now entering at least a 35 year period of lower temperatures, which Mann and his disciples will shake their Ju-Ju Beans at and make all sorts of pronouncements, as they try in vain to keep milking the cash cow that will progressively dry up more each year.

I predict all sorts of drastic actions by the Alarmists, including violence, but if we Skeptics can just hold on (and stay alive long enough to pass our belief in The Scientific Method on to the next generation) we will finally be vindicated.

Reference is incorrect. It should read:

Paper:

Predictability of even more excuses for the recent slowdown and continuing failure of climate models and consensus using statistical bullshit.

Authors

Michael E. Mann, Byron A. Steinman, Sonya K. Miller, Leela M. Frankcombe, Matthew H. England, Anson H. Cheung

Spot on Michael – LOL

And only a couple of years back many of them were still refusing to discuss ‘the pause’…I guess they had to wait for the El Nino spike to save face.

Strange bedfellows Mann and Statistics!

We don’t need no stinkin’ statistics !

One Charlie Brown Christmas Tree in Yamal, is all you need to tell you the climate anywhere, at any time.

g

“statistical methods could not have forecast the recent deceleration in surface warming because they can’t accurately predict the internal variability in the North Pacific Ocean”

Other than the fact the models don’t work they’re wonderful at supporting some people’s belief that man is the cause of global climate change.

OK, so they couldn’t predict the recent deceleration in surface warming but they can predict, with what, 95% confidence, that the surface will warm in an “unprecedented” manner?

Yeah, I can’t see where anyone would have a problem with that.

Semi-empirical mann bends over backwards to retain the models –

oh-you-cargo-cult-pseudo-scientist – you!

“statistical methods could not have forecast the recent deceleration in surface warming…” because statistics isn’t physics. Statistics cannot predict physical events.

The fact that Mann & Co., can even write that sentence is enough to demonstrate without doubt that none of them are scientists.

If I read this right, Mann is asking “if we’d tried to use our models to predict the current pause, could we have done so?” But they already did NOT predict this. So what he’s really asking is can we tweak our models now to match what we now know really happened? And then he finds the answer to be “no.” So then he feels the need to equivocate, so makes up a story about how they could have predicted a small component of a cool-down in the North Atlantic, if they’d wanted to.

And I need to give up fossil fuels for this level of chicanery and prestidigitation?

you said it all

Which leaves us wondering how it could be published.

“because statistics isn’t physics”

The mechanism is physical – AMO and NAO cycles. Statistics are needed to identify the cycles, and estimate the extent to which they can overcome natural variability, including possible variability in the oscillations themselves.

“Statistics are needed to identify the cycles, and estimate the extent to which they can overcome natural variability, including possible variability in the oscillations themselves.”

But the science is settled, right, Nick? Our CO2 emissions will cause catastrophic global warming, despite the physics only indicating slight warming, and the rest resulting from hypothetical amplification mechanisms, assumed into the models . . which tell us with certainty that assuming those in causes the models to project catastrophic warming . . Which will then become real . . unless we give our firstborn to the UN, right?

Mickey Reno — well, said. — Eugene WR Gallun

Nick Stokes, at 5:47 AM…”The mechanism is physical – AMO and NAO cycles. Statistics are needed to identify the cycles, and estimate the extent to which they can overcome natural variability, including possible variability in the oscillations themselves.”

So..AMO and NAO are not part of “natural variability”? Are they then……Super-natural?

Can you point us to a paper that defines which variable and unpredictable physical processes are “natural” vs. “supernatural”?

Sorry, but these ill-defined terms allow people to say some strange things…

Nick, “Statistics are needed to identify the cycles, and estimate the extent to which they can overcome natural variability, …”

Not correct, Nick. Physics is needed to identify climate cycles. Statistics might be used to estimate time of appearance, if sufficient cycles are identified as physically iso-causal.

I’m glad you showed up here by the way, so I can let you know that your BOM resolution argument was wrong. And the self-congratulatory purring among some of the commenters at your site didn’t speak well for them, either.

Astounding how they can’t “see” (natural) variability as a cause of anything BUT the failure of their GIGO “models” to “predict” ANYTHING. And also astounding that they actually believe that people seeing the phrase “internal variability” are gullible enough to believe it means anything less than “We have no idea what the $^#! is going on.”

What does this mean?

“In contrast, a multidecadal signal in the North Atlantic does appear to have been predictable. According to their results, however, its much smaller signal means it will have little influence on Northern Hemisphere temperatures over the next 1 to 2 decades.”

He seems to be saying that they can detect a longer signal but it doesn’t have any significance? My head hurts now.

If I recall properly, Mann + Co. presented a paper where they used dendrochronology to reconstruct the NAO (North Atlantic Oscillation). That’s right, tree rings as a proxy for ocean currents. No doubt using the Global Climate Teleconnection Signal (I love that one). {The paper was featured here at WUWT} This current work seems to be based on that previous effort.

Your head feel better now?

No, and now my stomach is bothering me as well.

I just left a large Mann-uscript in the loo. Lit a candle to alleviate the remainders and turned on the fan.

Too bad lighting a candle won’t remove the Mann-stench in Climate Science.

Well it does appear to have been predictable, but the fact of the matter is that it WAS NOT PREDICTED.

So fooey; you Monday Morning quarterbackers. If you didn’t predict, don’t tell us later that you could have.

So Cartoon !!

Andy Capp, somewhat sloshed, is staggering home on radar, in the late evening fog in London. He crashes into another lost drunk staggering along, and grabs a hold of him for stability. ” ‘ear; ‘ang on tuh me, mate; I know where every drainage ditch in London is ” ; ‘ Sssploosh !!! ‘; ” See, there’s one of ’em now ! ”

Yeah; predict, my A***.

G

So the science has been unsettled?

Hmm, the total ocean heat capacity is about 1000x greater than the atmosphere’s. The surface layer they are modeling and measuring is the first 1.5 m of an atmosphere that extends 1000s of meters up. If approximately 1/1000 of the atmosphere’s mass is in the lower 1.5 m (seems like a reasonable approximation, but it’s a difficult calculation with pressure dropping as you go up), then they are measuring/modeling something that represents 1/1,000,000 of the earth’s total heat capacity, while completely ignoring the other 999,999/1,000,000 portion which is constantly and actively transferring heat in and out of the surface layer. And they wonder why the models can’t predict the surface temperature.
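The "difficult calculation" of how much of the atmosphere's mass sits in the lowest 1.5 m can be roughed out with a textbook simplification. The sketch below assumes an isothermal atmosphere in which pressure falls off exponentially with a scale height of about 8.5 km, and takes the 1000x ocean-to-atmosphere heat capacity ratio from the comment as given. The constant and function names are illustrative only.

```python
import math

SCALE_HEIGHT_M = 8500.0  # assumed isothermal scale height of the atmosphere
LAYER_TOP_M = 1.5  # height of the near-surface layer discussed in the comment
OCEAN_TO_ATMOS_HEAT_CAPACITY = 1000.0  # ratio quoted in the comment, not derived here


def mass_fraction_below(height_m, scale_height_m=SCALE_HEIGHT_M):
    """Fraction of atmospheric mass below height_m for an exponential pressure profile."""
    return 1.0 - math.exp(-height_m / scale_height_m)


# Share of the atmosphere's mass (and so, roughly, its heat capacity) in the layer.
layer_fraction = mass_fraction_below(LAYER_TOP_M)

# Share of the combined ocean-plus-atmosphere heat capacity held by that layer.
total_fraction = layer_fraction / (1.0 + OCEAN_TO_ATMOS_HEAT_CAPACITY)

print(f"layer share of atmospheric mass: {layer_fraction:.3e}")
print(f"layer share of ocean+atmosphere heat capacity: {total_fraction:.3e}")
```

Under these assumptions the layer holds a bit under 1/5,000 of the atmosphere's mass, so the 1/1000 guess is, if anything, generous to the surface layer.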

may be but then comes the magic part…

Oh, but see you’re just providing us with a preview of the next round of excuse-mongering. They’ll just dust off that “heat is hiding in the oceans” (which couldn’t be heated by IR beyond the top few MICRONS of surface) canard, when they no longer have El Nino to produce “scary” thermometer numbers.

But I thought Karl had abolished the “pause”.

banished by waving engine room readings over the data from specifically designed measuring devices mounted on buoys.

So how can the Mann be so off message to refer to a “Temporary deceleration”.

Not a pause of course.

I wonder what he would call a full stop?

I guess when you have nothing left to support your belief except models,then you must cling to them.

Re-examining one’s beliefs is far too hard.

I almost feel sorry for the mann, way in over his head and first to be thrown to the angry mob as the mass hysteria dies.

But I thought Karl had abolished the “pause”.

Wah, you beat me to it.

Karl says the pause never existed, Mann says it did and explains why the models missed it.

This “consensus” seems rather fractious, does it not?

Good thing Mann isn’t a police officer, “Sir, you failed to STOP at that STOP sign. Now I’m going to have to charge you”.

Car driver “But the car hasn’t moved in 19 years, how much stopped do you want?”

Mann never acknowledges the pause either.

He calls it a slowdown in warming.

A variation of the old “The long term trend is still (when I start my “trend” in the LIA) up!” canard.

Wow, the threat of a president Trump has really shaken things up !!

Marcus, I like your take on the situation. With this study, these Climate Change Charlatans may very well be positioning themselves for Trump’s possible election.

I don’t think anything they do will save them from intense Trump scrutiny, though.

If Trump gets elected, the whole CAGW three-ring-circus is going to be put under a microscope.

I can’t wait! 🙂

Here in Britain, we can’t wait either. Trump is going to stuff it to so many people – here in Britain too.

For a moment I read that to say “TREE ring circus,” which would have given you the “Pun of the Climate Cycle” Award. 😀

From the article: “From the early 2000s to the early 2010s, there was a temporary slowdown in the large-scale warming of Earth’s surface.”

Why end it in 2010? The years subsequent to 2010 are also included in the “flatline” of the 21st century, since they are all within the margin of error.

Because once the period exceeds 10 years it is approaching a substantial fraction of the “standard” climatological period of 30 years. As it is, the “pause” is now more than half of such a period. Do you think they will ever wish to use 1991-2020 (or 2001-2030) as a reference period?

“Why end it in 2010?”

They didn’t. Your quote says “early 2010s”, not 2010. In fact they took data to 2014, but note that the slowdown effect diminishes if you go to the end of that period:

“Though less pronounced if data through 2014 are used [Cowtan et al., 2015; Karl et al., 2015], the slowdown in Northern Hemisphere (NH) mean warming is evident through at least the early part of this decade.”

Thanks for that clarification, Nick.

Gee, Nick! The Pacific Ocean and its Blob had nothing to do with 2014 temperatures, did they? Ram and jam through 2015 and 2016 Pacific Ocean ENSO temperatures and Cowtan and Karl will really have something to show the rubes. No big pressers projected after 2017? Get a grip.

“The Pacific Ocean and its Blob had nothing to do with 2014 temperatures”

They were talking about an observed slowdown. By 2014 they weren’t observing a slowdown any more. No use saying – well there really was an observed slowdown, except for the Blob, and ENSO etc. On that basis, you could project the slowdown for ever.

Mann is off-message. I thought they did away with the pause, just like Mann did away with the Medieval warm. Very convenient things, models and proxies. About as good as oneiromancy, but convenient.

Tom, He is trying to buy street cred. He’ll jump on the pause bandwagon long enough to be quoted and therefore relevant. …shallow has been.

Shallow never was

Since when do the warmists use statistical models anyway? I got the impression all their supercomputer-powered models were deterministic…wholly dependent on input variables, assumptions, and initial conditions. Statistical models wouldn’t have really predicted a specific outcome either, only assigned a probability estimate. Bottom line, this warmist propaganda piece is fail on top of fail inside a fail.

Exactly.

If you can’t do a deterministic model, that means you don’t understand the process. That, in turn, means your predictions of future behaviour should not be believed.

“If you put tomfoolery into a computer, nothing comes out of it but tomfoolery. But this tomfoolery, having passed through a very expensive machine, is somehow ennobled and no-one dares criticize it.”

– Pierre Gallois

Deterministic models don’t work on just about everything, especially on computers. See Edward Norton Lorenz’s paper Deterministic Nonperiodic Flow for the full explanation. The short answer:

Also note that weather is an instance of climate and if weather is chaotic, then self-similarity forces climate to be chaotic as well.
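The sensitivity Lorenz described can be reproduced in a few lines. The sketch below integrates the three Lorenz equations with their usual textbook parameters (sigma = 10, rho = 28, beta = 8/3) from two starting points that differ by one part in a hundred million, and reports how far apart the two runs get. The RK4 integrator, step size, starting point, and function names are choices made for this illustration; it shows chaos in this toy system only and says nothing by itself about any particular climate model.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz (1963) equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    def shifted(base, slope, scale):
        return tuple(b + scale * s for b, s in zip(base, slope))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def max_separation(perturbation=1e-8, steps=4000, dt=0.01):
    """Largest distance reached between two runs that start `perturbation` apart."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + perturbation, 1.0, 1.0)
    largest = 0.0
    for _ in range(steps):
        a, b = rk4_step(a, dt), rk4_step(b, dt)
        gap = sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5
        largest = max(largest, gap)
    return largest


print(f"largest separation between the two runs: {max_separation():.3g}")
```

Both runs follow exactly the same deterministic rules, yet the tiny initial difference grows until the two trajectories are as far apart as the attractor allows.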

Yes. You beat me to it, but that’s the real problem and also the reason the models they’ve developed using “hindcasting” can never work.

Anyone with even a basic understanding of statistical modeling knows you can’t ever use an empirically derived model outside its calibration range; it is never valid to extrapolate from an empirical model.

I’ve had this discussion with devotees of the CMIP models many times, on every occasion I’ve been assured I don’t know squat about how those models work, that they are in fact theoretical models based on physical relationships (i.e. the so called “greenhouse” effect) and therefore may legitimately be used to predict the behavior of Earth’s climate. Mann very clearly just denied that and in so doing admitted the methods currently used to model climate change are completely invalid. They can never succeed.

It’s a fundamental rule: You can’t ever extrapolate from an empirical model. Unless you have a complete theoretical model of the system, it will fail to predict. It may appear to succeed randomly, but it will never consistently predict the behavior of the modeled system. This is burned into the brain of every single experiment designer employing statistical methods.

Under this declaration, there is absolutely no reason to continue funding this research. It’s snake oil. Plain and simple.

I have argued for years that the climate models are nothing but expensive curve fitting programs. The super computers and millions of lines of code are just the lipstick they put on the curve fitting pig.

My landlord does this every year.

From the database of past years, the monthly costs of winter heating are derived, and then adjusted every new year.

Predictions -forget it.

“Since when do the warmists use statistical models anyway? I got the impression all their supercomputer-powered models were deterministic…wholly dependent on input variables, assumptions, and initial conditions. “

People use statistical models when appropriate. But yes, GCM’s are solutions of the basic conservation equations (mass, momentum, energy) locally. That is precisely the issue of Mann’s paper. Some say that the slowdown was statistically predictable by identifying PDO, AMO etc. GCM’s don’t generally do that, for good reason. The physics they use is local, within elements, and superimposing other effects has to be done very carefully. Still, it is done, most notably with radiation. Mann is finding out whether adding observed cycle information (without mechanism) would improve the result. He’s something of an AMO enthusiast himself. But no, he says, statistically it wouldn’t have helped predict the slowdown. NAO gives some help, but only predicts a small effect.

Gavin Schmidt probably was one of his anonymous reviewers. He likes this sort of hand waving.

Mann was a physics hick when he started scamming fake hockey stick generators as statistics, and you’re one now.

What’s the name of the law of thermodynamics to solve temperature of gas in chemistry?

What’s its equation and what do all the factors stand for?

Show where the Green House Gas Effect fits when solving temperature in gas physics.

Or you’re just another fake like all these other Green House Gas Effect barking

hicks.

Nick Stokes on May 11, 2016 at 7:31 pm

“Since when do the warmists use statistical models anyway? I got the impression all their supercomputer-powered models were deterministic…wholly dependent on input variables, assumptions, and initial conditions. “

People use statistical models when appropriate.

________________________

And yes, Nick: people use heavy metall when appropriate.

What are you gonna

CON vince

us.

How can you model something that even the IPCC’ers admit they don’t understand?

“The team’s analyses indicate that statistical methods could not have forecast the recent deceleration in surface warming because they can’t accurately predict the internal variability in the North Pacific Ocean, which played a crucial role in the slowdown.”

Well if the models can’t predict the past then what good are they for predicting the future? This sounds like an indictment of their own sloppy half-a$$ed conjuring. I am surprised that the twit Mann didn’t instrument trees to get a signal for the future…. somehow.

Translation – We simply have no idea what is going on and we are scrambling to find something in our models and everything else we have said that sounds like we aren’t completely full of it.

In contrast, a multidecadal signal in the North Atlantic does appear to have been predictable

=====

There’s so much wrong with this lucky guess……..

It has been shown that the surface temperature series is not a random walk but a Hurst process at a wide range of time scales. So it is unlikely that the models will ever get it right with OLS trends that treat the temperature time series as Gaussian and independent and ignore memory, dependence, and persistence in the data. See for example

http://papers.ssrn.com/sol3/papers.cfm?abstract_id=2776867

“It has been shown that the surface temperature series is not a random walk but a Hurst process at a wide range of time scales.”

By whom? It is neither.

” So it is unlikely that the models will ever get it right with OLS trends that treat the temperature time series as Gaussian and independent “

GCMs do nothing like that.

Of course they do, except for stuff they want to throw in.

…Our greatest threat is the liberal media !


Statistical models are not fit for the purpose of forecasting climate trends. Forecasting general trends, with probable useful accuracy, is straightforward when we know where we are with regard to the natural millennial and 60 year cycles which are obvious by simple inspection of the temperature and solar records.

See Figs 1, 5, 5a and 8 at

http://climatesense-norpag.blogspot.com/2016/03/the-imminent-collapse-of-cagw-delusion.html

Here is Fig 1 which illustrates the general trends, forecasts and in particular the millennial peak and trend inversion at about 2003.

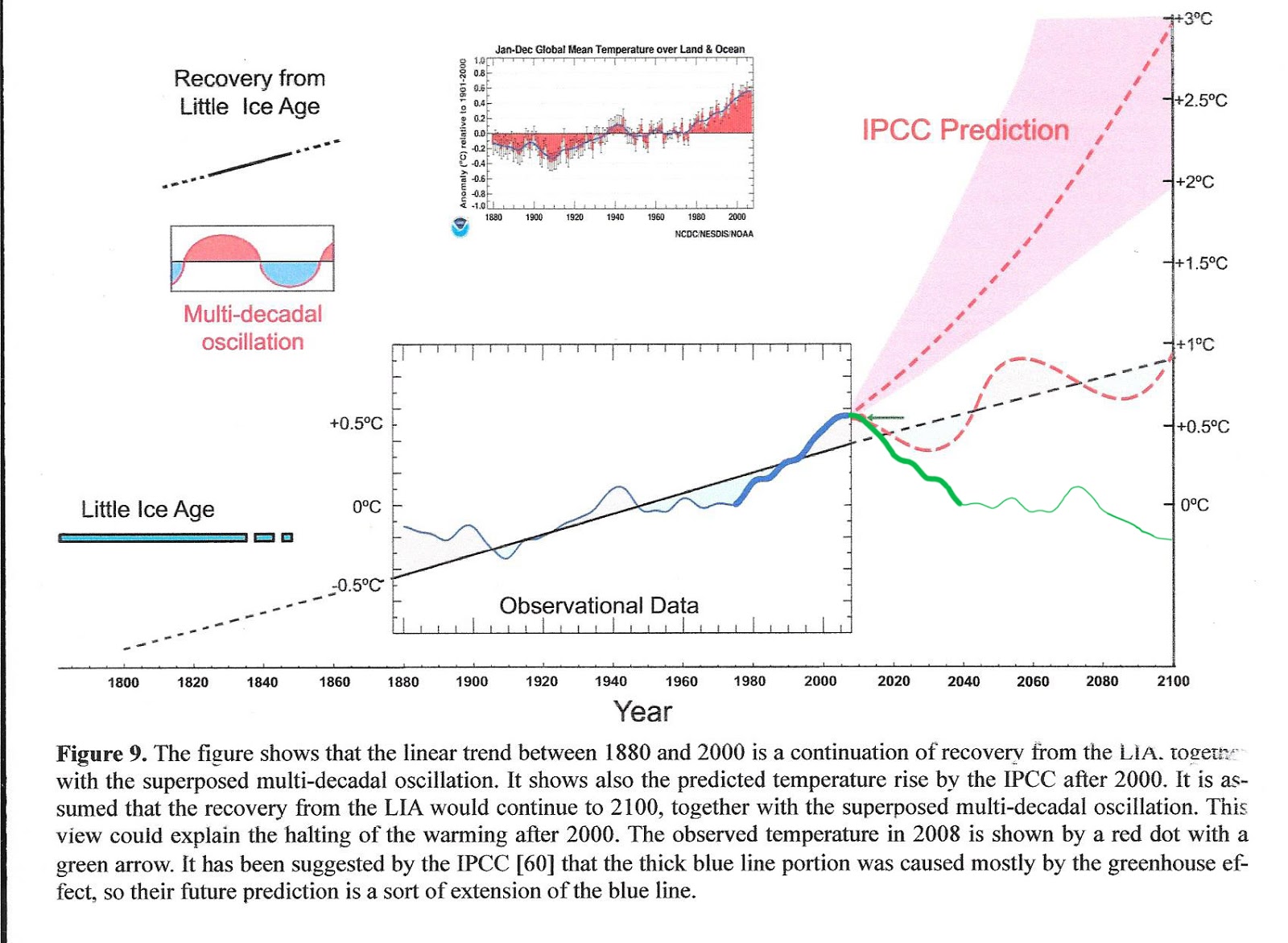

Figure 1 above compares the IPCC forecast with the Akasofu paper forecast and with the simple but most economic working hypothesis of this post (green line) that the peak at about 2003 is the most recent peak in the millennial cycle so obvious in the temperature data. The data also show that the well documented 60 year temperature cycle coincidentally peaks at about the same time.

Here are Figs 5 and 5a

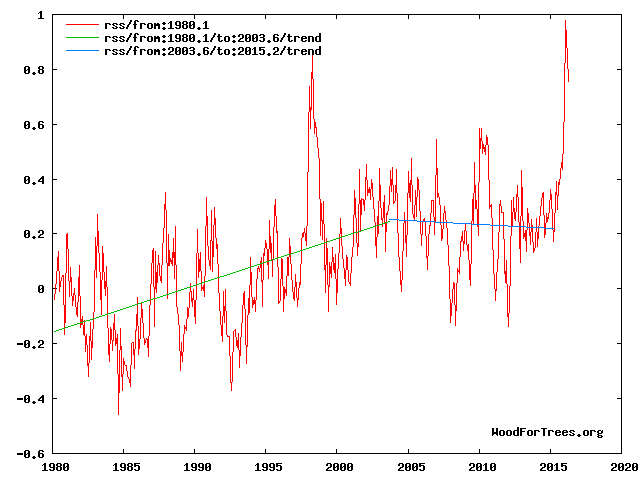

The cooling trends are truncated to exclude the current El Nino as an end point. The Enso events are temporary aberrations which don’t have much influence on the trends – see the 1998 and 2010 events in Figs 5 and 5a.

By any other name a Bond Event in the Holocene, aka a D-O event in the larger-longer Pleistocene.

What is semiempirical?

I taught I taw a puddy-cat!

I did, I Did, I DID saw a puddy tat!

Thought the same but there is a definition. Sounds like an oxymoron to me. In fact I reckon it is.

In the government, they have base funding, one-time funding, and recurring one-time funding…I only wish I was making that up.

Nonempirical describes a theoretical model: a construct of logic and underlying principles that makes predictions, against which observations and experiments can strengthen or weaken confidence. Empirical means based on, concerned with, or verifiable by observation or experience rather than theory or pure logic. So it follows that semiempirical is not based on pure logic and underlying principles and is only supported by adjusted data products!

It must be a bit like semiepregnant.

It seems the opening line of the paper presumes to know the future by stating flatly that the pause was temporary.

It seems patently unscientific to start with a predetermined conclusion.

Of course, this is no surprise to me or anyone else who has kept up with the great yet settled debate, but it does immediately flag the author, for even a new-comer to the issue, as highly biased and therefore not to be taken as a credible source of scientific information.

” the internal variability of the North Pacific, which played a critical role in the slowdown, does not appear to have been predictable using statistical forecast methods”.

They didn’t know about the PDO so it wasn’t ‘predictable’, now they do, they have to adjust the models.

thingodonta, you didn’t read what they said. They said that North Pacific internal variability was not “… predictable …” That means that future models still won’t use “… statistical forecast methods.” North Pacific internal variability will not (cannot in their world) be used in future models going forward. The next IPCC Report, then, need not concern itself with inaccurate prior “projections” as they relate to the intervening past. Thus, the writers of history just erase portions of the past. In the future, those pointing out discrepancies are dismissed as cranks.

William R nails it, but I’ll add my bit anyway: The climate computer models (see here) operate entirely on smallish bits of local weather. That is, they use a global grid of smallish cells interacting in small time slices (by definition, conditions in a small area over a short time are “weather”). They are not statistical models.

The article says “Several studies have suggested that climate models could have predicted this slowdown [..]. To test this hypothesis, Mann et al [compared] [..] compare modeling projections with the already observed outcomes. The team’s analyses indicate that statistical methods could not have forecast the recent deceleration in surface warming“.

The finding by Mann et al is therefore completely false, because what they did (applying statistical methods to model projections and observed outcomes) has nothing whatsoever to do with what goes on inside climate models and therefore cannot determine whether the climate models could have predicted the slowdown. All the study did was to show that the models did not predict the slowdown.

But we knew that already.

Mike, I assume (see here) was meant to be a link? It didn’t come through. I’m very interested because what you’ve done is refute Mann’s understanding of the models, an argument I’ve faced many times before when trying to point out the fundamental architectural and mathematical failures of GCM models.

I’d really like to read and understand your sources.

Bartleby – the link is one of a series of articles that I wrote a while back, looking at and into the models. The missing link was: https://wattsupwiththat.com/2015/11/08/inside-the-climate-computer-models/

The sources for that article are linked from the article. There’s also a link to my previous article (take special note of the footnotes!).

Thanks Mike, I appreciate it!

Mike writes in the citation above:

Thanks for the treatment Mike, now I believe I have a better understanding of the GCM models and the term “semiempirical”.

After reading your review, I don’t feel compelled to withdraw my criticism; these models may include some poorly understood theoretical components, but they also attempt to “blend” those theoretical parts with empirical models. In my opinion the approach is both flawed and doomed to failure.

I don’t think the criticism I made, that it isn’t legitimate to extrapolate from empirical models, is invalid. They appear to be doing exactly that, while at the same time claiming the models are based on theoretical models. I have no idea how such an approach ever made it past a scientific review since it is completely unfounded.

PS.

Thinking just a bit more about this (and after putting down a couple glasses of good red wine so don’t take this too seriously) I’d speculate the GCM models adapted for Climate Change use resulted from a small group of post-docs having an “oh shit!” moment when they began to understand the true complexity of statistical thermodynamics, not to mention the outrageous computational resources needed to make any progress at all.

Books have been written on the perils of attempting to simultaneously solve multiple partial differentials uniquely, how could they make anyone believe they’d cornered the market? Where are the papers?

I definitely agree with your conclusions. This is all smoke and mirrors.

Carbon dioxide in the atmosphere cannot be compared to blue dye in a river. Metacycles like PDO even less so. Filtering them out and reinserting them is just a tautology.

Statistics is just witching, useful only as an indication of where to drill for physics.

“The finding by Mann et al is therefore completely false, because what they did (applying statistical methods to model projections and observed outcomes) has nothing whatsoever to do with what goes on inside climate models and therefore cannot determine whether the climate models could have predicted the slowdown. All the study did was to show that the models did not predict the slowdown.”

No, that isn’t what the study did. It showed that statistical models could not have predicted the slowdown (using cycles), or at least not much. He’s really interested in whether a newish style of decadal predictions using AMO etc information might have been able to do better. These are initialised, and would operate, he suggests, by removing the AMO component from the initialising data, and restoring it in the results. But that isn’t going to work until some statistical predictability is shown.

With all due respect, that’s tosh. “Several studies have suggested that climate models could have predicted this slowdown [..]. To test this hypothesis, Mann et al [..]“. Mann et al purported to be testing the hypothesis that climate models, NB. not statistical models, could have predicted …..

Ferchristsakes, there have been randomly varying temperatures, with a general downtrend over the last few thousand years, over this interglacial. There is no statistical predictability over any timeframe. Other than the use of pseudo-scientific mumbo jumbo, there is no way to ascribe CO2 to the minor jig from 1975 to 1998. Public alarm will play out, at great cost, to the benefit of the obvious.

Dave Fair

Mike,

With all due respect, that’s tosh. “Several studies have suggested that climate models could have predicted this slowdown [..].

No. The press release didn’t specify GCMs. In fact, they are talking about decadal prediction – could have been clearer. But the paper is very clear. They tested statistical models – not GCMs. There is some use of EBM’s, which is different again. But what is perfectly clear, from both abstract and paper, is that Mann was testing statistical models. And the purpose, clear in the paper, is to test whether they (AMO etc) might be useful in decadal prediction.

Nobody expects that GCMs would predict the slowdown. They aren’t initialised to do that. “possible implications for initialized model predictions are also discussed” is the key phrase in the abstract.

Nick – OK I accept your explanation. But the article as written did say otherwise.

I’m amused by your statement “Nobody expects that GCMs would predict the slowdown. They aren’t initialised to do that.” You and I know that the climate models can never produce any climate prediction of any value, but in the wider community surely everybody expects the models to make reasonable climate predictions, and surely everybody expects climate models to be initialised correctly for the real climate. Isn’t it now long overdue for the wider community to be told that the climate models bear absolutely no relationship to the real climate?

“Isn’t it now long overdue for the wider community to be told that the climate models bear absolutely no relationship to the real climate?”

No, climate models do track the evolution of earth climate. They just don’t do weather, because they aren’t initialised to do that. For the longer scale weather (ENSO) they do show the appropriate oscillations – they just can’t predict the phasing.

Here’s an analogy. You’re injecting blue dye into a river. You want to predict where and how much you’ll see blue downstream. If you carefully measure the river flow at the input point, you might predict the downstream trajectory for a metre or so. That’s like NWP. But pretty soon (a few metres), you won’t be able to say where the eddies will take it.

But if you look 1 km downstream, and you know the injection rate, you’ll be able to give a good estimate of how blue the sampled water is, and it won’t depend on the eddies that were around at injection time. All that has faded, but conservation of mass still works, and gives a good answer, even though medium term prediction failed. That’s because of mixing. The weather itself doesn’t mix, but the climate averaging process does, and conservation holds.
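The dye analogy can be put into a toy calculation. What follows is only a sketch under stated assumptions, not anything taken from a climate model: the channel has unit width, eddies are replaced by a Gaussian random walk across the stream, and the names `mixed_concentration`, `lateral_fractions`, `release_y` and `eddy_step` are all made up for illustration. It shows the two halves of the argument: where an individual blob of dye ends up depends on the random eddies, while the well-mixed concentration far downstream follows from conservation of mass alone.

```python
import random


def mixed_concentration(injection_rate_kg_s, river_flow_m3_s):
    """Well-mixed downstream concentration (kg/m^3) from conservation of mass."""
    return injection_rate_kg_s / river_flow_m3_s


def lateral_fractions(n_steps, seed, n_particles=1000, n_bins=4,
                      release_y=0.1, eddy_step=0.05):
    """Fraction of dye particles in each cross-stream bin after n_steps.

    Assumption: each downstream step moves a particle sideways by a Gaussian
    random amount (a crude stand-in for eddies) in a channel of width 1,
    with particles reflecting off both banks.
    """
    rng = random.Random(seed)
    counts = [0] * n_bins
    for _ in range(n_particles):
        y = release_y
        for _ in range(n_steps):
            y += rng.gauss(0.0, eddy_step)
            # Reflect off the banks at y = 0 and y = 1.
            while y < 0.0 or y > 1.0:
                y = -y if y < 0.0 else 2.0 - y
        counts[min(int(y * n_bins), n_bins - 1)] += 1
    return [c / n_particles for c in counts]


if __name__ == "__main__":
    # Close to the injector the dye is still bunched near the release point.
    print("near field:", lateral_fractions(n_steps=5, seed=1))
    # Far downstream, different eddy histories give nearly the same mixed state.
    print("far field, seed 1:", lateral_fractions(n_steps=400, seed=1))
    print("far field, seed 2:", lateral_fractions(n_steps=400, seed=2))
    # The mixed concentration needs only the injection rate and the flow.
    print("kg/m^3:", mixed_concentration(0.5, 100.0))
```

The sketch deliberately assumes the river flow is known and that dye is neither added nor removed along the way. Those are exactly the assumptions that the replies below dispute.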

But your analogy assumes the “scientist” – who is demanding the “right” to experiment with the world’s entire economic drivers, the life and health and well-being of every person living on the planet – does know everything sufficient about the river to APPLY the well-known approximations and assumptions of “mass flow” of the river. Of the ink. And of all of the “other stuff” affecting the river, the blue ink, and all of the rest of the stuff going on that affects the river between the test point and every subsequent point downstream.

We do know, absolutely, that the global average temperature has increased, been steady, decreased, been steady, and increased when CO2 has been steady.

We do know, convincingly, that global average temperature has increased, been steady, decreased, and been steady, and increased while CO2 has been steadily increasing.

Thus, we do know, absolutely, that the global circulation models are incomplete. They do NOT sufficiently predict the future, because they do NOT predict even the present, the past, nor the “invented” past.

For example: Your little analogy falls apart if the blue ink evaporates. Or if the ink is alcohol-based, so that the volume added and the change in final volume do not correspond. If the water evaporates, or goes into a sinkhole. Gets sucked out by an irrigation pump or city water works. Gets added by the clean discharge from a factory or a sewage treatment plant. Gets disturbed by a filling lock or opening dam. Gets sucked up by a deer or antelope playing nearby. Gets the sewage from a bear in the woods.

1. There is NO HARM from CO2 being added to the atmosphere – except when the “scientists” and their tame politicians and social-multipliers become propagandists.

Nick writes:

Nick, this isn’t news though. The important part is they used techniques that are known (well known) to not work. Ever. Under any circumstance.

They appear to have blithely violated every rule of mathematical inference. This isn’t a small thing.

RACookPE1978 writes:

However we also know that CO₂ has increased significantly, while temperature has not?

And isn’t this observation alone sufficient to break the model that correlation equals causality, which appears to be the basic hypothesis of AGW? Correlation isn’t causality, but the complete absence of correlation certainly must deny causality?

Nick writes

This analogy has assumptions that make it invalid IMO. Firstly you can see how fast it’s flowing and so you can guess how fast the dye will travel with reasonable accuracy even though you can’t know the detail. Flow rate at one point in the river implies flow rate throughout. AGW simply isn’t like that, the flow rate of the river is what we’re trying to work out.

Secondly there are many injectors of ink, not just the one you’re interested in. Also there are consumers of ink. And all that muddies the waters as far as knowing whether your ink is what you’re looking at downstream.

Nick,

“No, climate models do track the evolution of earth climate.”

No maybe at all? No if they got it right? Just do? . .

Logically speaking, you just said it’s not even possible to mess up a climate model such that it will not track the evolution of earth climate, and that is very hard for me to believe. Did you mean something more like they’re only designed to track earth climate, not weather?

Like Bartleby, I would like to see the details of the climate models. I am reviewing John Casti’s text on mathematical models (Reality Rules) to refresh my memory before checking to see if the climate models are “good.” (In an earlier life I built some models to perform reliability and maintainability analyses, but they were much simpler than a climate model.) A main point is that a model is not the system being modeled. Although a model does not include all the factors that affect the actual system, it must include all the most important factors and must be coded correctly. Another issue is round-off error, which can be magnified in sequential runs of a model where the output of one run is used as the input for the next run without correcting the round-off error. Unfortunately, the current climate models are not likely to be abandoned until a better model is developed and adopted. Casti emphasizes that the person doing the modeling must have extensive knowledge of the system and also extensive knowledge of math model development including the algorithms used to represent how the system actually functions, but does not need to be an expert. The community of AGW skeptics should be working to develop a better model (or system of models) or a more accurate alternative method to project future climate performance.
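The round-off point can be illustrated with a toy, offered as a sketch only. It assumes a stand-in nonlinear "model" (the logistic map with r = 3.9, which has nothing to do with any actual climate code) and imitates round-off by rounding each run's output to six decimals before handing it to the next run. The names `step`, `chained_runs` and `divergence` are hypothetical.

```python
def step(x, r=3.9):
    """One 'run' of a toy nonlinear model (the logistic map)."""
    return r * x * (1.0 - x)


def chained_runs(x0, n_runs, digits=None):
    """Feed each run's output into the next run.

    If digits is given, the hand-off value is rounded to that many decimals,
    imitating round-off that is never corrected between runs.
    """
    xs = [x0]
    x = x0
    for _ in range(n_runs):
        x = step(x)
        if digits is not None:
            x = round(x, digits)
        xs.append(x)
    return xs


def divergence(x0=0.2, n_runs=100, digits=6):
    """Absolute gap, run by run, between full-precision and rounded chains."""
    full = chained_runs(x0, n_runs)
    rounded = chained_runs(x0, n_runs, digits)
    return [abs(a - b) for a, b in zip(full, rounded)]


if __name__ == "__main__":
    gaps = divergence()
    for n in (2, 10, 20, 40, 100):
        print(f"run {n:3d}: gap = {gaps[n]:.3e}")
```

In this toy the gap starts below one part in a million and grows until the two chains bear no relation to each other. Whether a real model behaves this way depends on its equations and numerics, which this sketch does not attempt to represent.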

See my reply to Bartleby above. Note that I don’t go far into the models, because long before you get to the sort of detail that you are talking about it becomes very clear that the models are incapable of working. You would like to see a better model (or system of models) or a more accurate alternative method. From my article you can see that the models are heading up a blind alley. As they are currently structured, you just can’t get a “better” or “more accurate” model. A completely different kind of model – top down instead of bottom up – is needed.

“I would like to see the details of the climate models.”

CCSM is a well documented GCM. The detailed scientific description is here. The code is available via here.

Mr. Jonas and Mr. Stokes, thanks for your responses. SRI

Mmmmm, subversion code. Interesting terminology for the climate model. Descriptive?