![latest[1]](http://wattsupwiththat.files.wordpress.com/2010/12/latest1.jpg?resize=256%2C256&quality=83) The sun went spotless yesterday, the first time in quite a while. It seems like a good time to present this analysis from my friend David Archibald. For those not familiar with the Dalton Minimum, here’s some background info from Wiki:

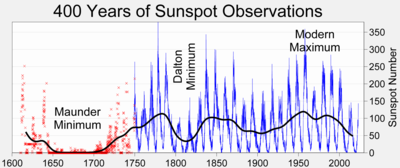

The Dalton Minimum was a period of low solar activity, named after the English meteorologist John Dalton, lasting from about 1790 to 1830.[1] Like the Maunder Minimum and Spörer Minimum, the Dalton Minimum coincided with a period of lower-than-average global temperatures. The Oberlach Station in Germany, for example, experienced a 2.0°C decline over 20 years.[2] The Year Without a Summer, in 1816, also occurred during the Dalton Minimum. Solar cycles 5 and 6, as shown below, were greatly reduced in amplitude. – Anthony

Guest post by David Archibald

James Marusek emailed me to ask if I could update a particular graph. Now that it is a full two years since the month of solar minimum, this was a good opportunity to update a lot of graphs of solar activity.

Figure 1: Solar Polar Magnetic Field Strength

The Sun’s current low level of activity starts from the low level of solar polar magnetic field strength at the 23/24 minimum. This was half the level at the previous minimum, and Solar Cycle 24 is expected to be just under half the amplitude of Solar Cycle 23.

Figure 2: Heliospheric Current Sheet Tilt Angle

It is said that solar minimum isn’t reached until the heliospheric current sheet tilt angle has flattened. While the month of minimum for the 23/24 transition is considered to be December 2008, the heliospheric current sheet didn’t flatten until June 2009.

Figure 3: Interplanetary Magnetic Field

The Interplanetary Magnetic Field remains very weak. It is almost back to the levels reached in previous solar minima.

Figure 4: Ap Index 1932 – 2010

The Ap Index remains under the levels of previous solar minima.

Figure 5: F10.7 Flux 1948 – 2010

The F10.7 Flux is a more accurate indicator of solar activity than the sunspot number. It remains low.

Figure 6: F10.7 Flux aligned on solar minima

In this figure, the F10.7 flux records for the last six solar minima are aligned on the month of minimum, showing the two years of decline to each minimum and the three years of subsequent rise. The Solar Cycle 24 trajectory is much lower and flatter than the rises of the five previous cycles.
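For readers who want to try this kind of alignment themselves, here is a minimal sketch of one way it could be done. The function name `align_on_minimum` and the toy numbers are made up for illustration; they are not the data or the method behind Figure 6.

```python
def align_on_minimum(monthly_values, minimum_index, months_before=24, months_after=36):
    """Return (offset, value) pairs centred on the month of minimum.

    Offsets run from -months_before to +months_after, so several cycles
    can be overlaid on a common "months from minimum" axis. Months that
    fall outside the record are skipped.
    """
    aligned = []
    for offset in range(-months_before, months_after + 1):
        i = minimum_index + offset
        if 0 <= i < len(monthly_values):
            aligned.append((offset, monthly_values[i]))
    return aligned


# Toy series (NOT real F10.7 data), with its lowest value at index 3.
toy_flux = [90, 80, 72, 68, 70, 75, 83]
window = align_on_minimum(toy_flux, minimum_index=3, months_before=2, months_after=3)
```

Running the same function on each cycle, with that cycle's own month of minimum, gives curves that share an x-axis and can be plotted on top of each other.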

Figure 7: Oulu Neutron Count 1964 – 2010

A weaker interplanetary magnetic field means more cosmic rays reach the inner planets of the solar system. The neutron count was higher this minimum than in the previous record. Thanks to the correlation between the F10.7 Flux and the neutron count in Figure 8 following, we now have a target for the Oulu neutron count at Solar Cycle 24 maximum in late 2014 of 6,150.

Figure 8: Oulu Neutron Flux plotted against lagged F10.7 flux

Neutron count tends to peak one year after solar minimum. Figure 8 was created by plotting Oulu neutron count against the F10.7 flux lagged by one year. The relationship demonstrated by this graph indicates that the most likely value for the Oulu neutron count at the Solar Cycle 24 maximum, expected in late 2014 at an F10.7 flux value of 100, will be 6,150.
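The lag-then-fit idea can be sketched roughly as follows. This is only an illustration under stated assumptions: the helper names `lagged_pairs` and `fit_line` are hypothetical, a plain least-squares straight line is assumed (the post does not say what fit was used), and the numbers are invented so that the arithmetic is easy to check. They are not Oulu or F10.7 measurements, and the result is not the 6,150 figure above.

```python
def lagged_pairs(flux, neutrons, lag):
    """Pair each neutron count with the flux value `lag` steps earlier."""
    return [(flux[t - lag], neutrons[t]) for t in range(lag, len(neutrons))]


def fit_line(pairs):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented yearly values (NOT real data); a lag of 1 step stands in for one year.
toy_flux = [70, 80, 100, 120, 150, 130]
toy_neutrons = [6600, 6650, 6600, 6500, 6400, 6250]

slope, intercept = fit_line(lagged_pairs(toy_flux, toy_neutrons, lag=1))
# Neutron count the toy fit would imply for a chosen flux value.
estimate_at_100 = slope * 100 + intercept
```

With real monthly data the lag would be 12 steps rather than 1, and the scatter around the line shows how much weight the extrapolated value can bear.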

Figure 9: Solar Cycle 24 compared to Solar Cycle 5

I predicted in a paper published in March 2006 that Solar Cycles 24 and 25 would repeat the experience of the Dalton Minimum. With two years of Solar Cycle 24 data in hand, the trajectory established is repeating the rise of Solar Cycle 5, the first half of the Dalton Minimum. The prediction is confirmed. Like Solar Cycles 5 and 6, Solar Cycle 24 is expected to be 12 years long. Solar maximum will be in late 2014/early 2015.

Figure 10: North America Snow Cover Ex-Greenland

The northern hemisphere is experiencing its fourth consecutive cold winter. The current winter is one of the coldest for a hundred years or more. For cold winters to provide positive feedback, snow cover has to survive from one winter to the next so that snow’s higher albedo relative to bare rock will reflect sunlight into space, causing cooler summers. The month of snow cover minimum is most often August, sometimes July. We have to wait another eight months to find out how this winter went in terms of retained snow cover. The 1970s cooling period had much higher snow cover minima than the last thirty years. Despite the last few cold winters, there was no increase in the snow cover minima. The snow cover minimum may have to get to over two million square kilometres before it starts having a significant effect.

David Archibald

December 2010

Geoff Sharp says:

December 23, 2010 at 4:51 am

Chicken and egg I guess, but there was a link that might be worth checking (McKinnon 1987).

The second slide of http://www.leif.org/research/SIDC-Seminar-14Sept.pdf is from McKinnon [quoting Waldmeier 1961: Sunspot numbers, 1610-1985 : based on “The sunspot activity in the years 1610-1960” by Waldmeier]

“I hope we can close that chapter now and accept the words of the observers on this point.”

Perhaps you are too trusting. The sunspot count is a bit of a boys’ club; surely you don’t believe everything you read?

like Wolf’s number for the Dalton? Yes, in case of the annual reports, I do believe them.

There is good reason for doubt, the data is not solid.

There is no reason to doubt that the 8 cm was used throughout. The data is what we have and we have to work with that.

But let’s get back to your earlier statement. You say there are two factors in place, a 0.6 factor that Wolfer introduced to align his new counting method with Wolf, and then another 0.6 factor on top for the change in telescopes when Locarno took over. I do not see any evidence to back up your statement.

There is a bit of mystery here. If Locarno with a factor of 0.6 matches Zurich that also has a factor of 0.6, then Locarno (13.5 cm telescope) and Zurich (8 cm) see the same things and the telescope size doesn’t matter: 0.6 * Locarno [13.5] = 0.6 * Wolfer [8] = 1 * Wolf [8]. I prefer to think that there should be a difference because of the different size telescope, so the trick is to figure out what Waldmeier means when he says that Locarno has a factor of 0.6. I’m prepared to accept that there is no hidden factor of 0.6 and that the size of the telescope doesn’t matter. The Locarno observer before Cortesi was Rapp, and Waldmeier says that the factor for Rapp was 0.8. That would be consistent with a larger scope seeing more spots. In 1957, Rapp [with factor 0.8] overlapped with Cortesi [k=0.53 for 1957] and 0.53/0.8 = 0.66 which is what I would expect. The k-factor for Statne [Becvar] was 0.77, for Istanbul [Gleissberg – of Gleissberg cycle fame] was 0.96. These values are for 1948, and both observers used a 13 cm telescope. Consistent with Rapp [also 13.5 cm].

So the mystery is why with the same scope Rapp has 0.8 and Cortesi has 0.53. The other observer [Pittini] at Locarno in the 1959-1978 period also had 0.6, so it is not Cortesi that has gone rogue or something. This whole thing is under investigation. I don’t know the answer yet.

Geoff Sharp says:

December 23, 2010 at 5:12 am

Robuk has some valid points.

No he hasn’t, as nobody doubts that very few spots were visible. And everybody recognizes that the early observers were good [I showed him some drawings to make him understand that] and that their telescopes were adequate, so his nonsense [Leif, come CLEAN!] is uncalled for.

I can’t understand why you invoke L&P (whatever that is) to describe grand minima. You say there was isotope modulation during the Maunder, but how does it rate when compared with active times like recent decades past?

The L&P is a simple and natural explanation for why no spots were seen and yet the modulation was on par with recent times. Here is McCracken and Beer: http://www.leif.org/research/McCracken-HMF2.png

They have the level wrong, but that does not affect the modulation. If one would claim that the level was correct, then the Maunder Minimum modulation [as a fraction of the whole] would be much larger than the modern values. Pick your poison.

Analysis [by Mayahara – Japanese Cedar trees] of 14C during both the Maunder and Spoerer minima also shows a large variation. So, solar activity measured by its magnetic field and/or the number of CMEs [and their effect on cosmic rays] during those Grand Minima was considerable, and yet no spots were seen. To use your phrases, the speck ratio was extreme, in fact all spots had turned into specks. This is what the L&P effect is: the convection is weaker and specks do not grow to become spots. [All spots begin their life as specks which assemble into larger and larger spots, except when the L&P effect prevents them from doing so]. So, a grand minimum is not because the dynamo is disrupted and magnetic fields are scarce, but because the surface convection [causing the ‘percolation’] is weaker [we don’t know why – but perhaps during SC24 we’ll find out].

Leif Svalgaard says:

December 23, 2010 at 9:10 am

There is a bit of mystery here. If Locarno with a factor of 0.6 matches Zurich that also has a factor of 0.6, then Locarno (13.5 cm telescope) and Zurich (8 cm) see the same things and the telescope size doesn’t matter

It turns out to be very hard to get information about the exact instrumentation in Locarno. Their recent publications state 15 cm aperture. I have notes in Waldmeier’s own hand that in 1948 the instrument had 13.5 cm aperture. In both cases what is really important [according to Waldmeier] is the size of the projected image which has been constant at 25 cm diameter.

Leif Svalgaard says:

December 23, 2010 at 9:10 am

If we do assume the records are correct it probably makes the growth in sunspot numbers worse. If Wolfer did invent the weighting scheme as well as use the 150mm telescope at the same time as implementing the 0.6 factor then all would be sweet. But your research into the sudden jump at 1945 would suggest otherwise.

So going on the accepted knowledge we still have 3 areas of possible steps in the counting method. The Waldmeier step being the largest but the increased speck ratio and larger telescopes also adding. The Locarno values look to be discounted by 0.6 to arrive at the SIDC value so we must assume these steps are in place.

Your linked graph of the isotope modulation using a small sample of trees is not compelling. It is suggesting that the magnetic modulation is the same during the Maunder as SC19. That would be like comparing the F10.7 flux value of SC24 to SC19, we know the levels will be vastly different. Of course the grand minimum type cycle is still functioning but at a subdued rate and this same logic applies to L&P. Magnetic activity as I have shown is increasing with the cycle as it approaches cycle max, it is not slowly dying on a continuous ramp down. You can’t have your cake and eat it too, but I have noticed you are now pushing the increasing speck ratio as a function of L&P’s observations which is totally plausible…I just don’t think you need to measure every speck as it can only continue to drive down the overall magnetic count artificially. If L&P is just about more specks that is good, but the title of their paper suggests otherwise.

I find it more interesting that the ratio of large alpha spots with high magnetic strength is increasing but the F10.7 and EUV output from these groups is very weak. This is the bigger story I think.

Geoff Sharp says:

December 23, 2010 at 7:41 pm

So going on the accepted knowledge we still have 3 areas of possible steps in the counting method. The Waldmeier step being the largest but the increased speck ratio and larger telescopes also adding.

The larger telescopes are taken care of by applying a factor taking that into account, although I think that is a small effect. At any rate, it is measured empirically and so is not under debate. The increased number of specks is just L&P at work and actually leads to smaller sunspot numbers as the specks eventually disappear.

Your linked graph of the isotope modulation using a small sample of trees is not compelling.

What nonsense is that? The McCracken-Beer graph is derived from 10Be in ice cores. Mayahara’s cedar trees are just corroborating evidence [and their sample was not small – and in principle a single tree is all that is needed as we just need to measure the 14C value in each tree ring. 14C is a global thing, because of efficient atmospheric mixing]

It is suggesting that the magnetic modulation is the same during the Maunder as SC19.

It is not ‘suggesting’. The values are derived from measured values of the 10Be flux.

That would be like comparing the F10.7 flux value of SC24 to SC19, we know the levels will be vastly different.

Again, we measure that the modulation was the same, so we know that it was not vastly different.

Magnetic activity as I have shown is increasing with the cycle as it approaches cycle max, it is not slowly dying on a continuous ramp down.

You have not shown anything about magnetic activity. You postulate things. The cosmic ray guys and gals [Mayahara] actually measure stuff.

I just don’t think you need to measure every speck as it can only continue to drive down the overall magnetic count artificially. If L&P is just about more specks that is good, but the title of their paper suggests otherwise.

What they measure and say [and remember Bill L is a good friend of mine and we exchange views and data on this] amounts to this: There is a distribution of spots from large to specks. This is the vertical width of the points on their graph. That whole distribution is steadily shifting downwards in magnetic fields and upwards in intensity. What gets shifted under 1500 G and above 1.000 is still there, but is not visible. This means that although magnetic activity will be slightly lower by the downward shift of the distribution, the effect on the spot count will be much more severe, as the smaller spots will no longer be counted, and the sunspot number is dominated by the small spots. See e.g. http://www.specola.ch/drawings/2003/loc-d20031030.JPG and look at group 266. It has about 10 larger spots and 50 specks.
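To see why losing the specks hits the count so hard, here is a rough sketch using the standard relative sunspot number, R = k(10g + f), where g is the number of groups and f the number of individual spots. The figures of about 10 larger spots and 50 specks are the ones quoted above for group 266. Using k = 0.6 and counting every spot once are simplifying assumptions for illustration, not the actual Locarno procedure.

```python
def relative_sunspot_number(groups, spots, k=1.0):
    """Wolf's relative sunspot number R = k * (10 * groups + spots)."""
    return k * (10 * groups + spots)


# One group with roughly 10 larger spots and 50 specks (approximate figures from the comment).
larger_spots = 10
specks = 50

# Assumed k-factor of 0.6, chosen only for illustration.
with_specks = relative_sunspot_number(1, larger_spots + specks, k=0.6)
without_specks = relative_sunspot_number(1, larger_spots, k=0.6)
fraction_lost = 1 - without_specks / with_specks
```

On these assumptions, this single group's contribution drops by roughly 70 percent once the specks stop being visible, even though the group itself is still there.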

I find it more interesting that the ratio of large alpha spots with high magnetic strength is increasing but the F10.7 and EUV output from these groups is very weak. This is the bigger story I think.

This is well-known and has a simple explanation. F10.7 and EUV depend on a bipolar [or more] group with lots of close field lines going from one polarity to the other, so the field in the chromosphere and corona that determines F10.7 and EUV is strong and hence F10.7 and EUV are strong. For alpha groups there is still the same amount of both polarities, but where one polarity is concentrated in the visible spot, the other is spread over a large area around the spot. This spreads the field lines over a large volume and the field is thus weaker, and so are F10.7 and EUV. So, nothing fundamental or interesting there, if one knows what’s going on.

As the specks disappear only larger spots will be left, like during the Maunder Minimum, where the reported spots were often large and single. All this makes eminent sense.

Leif Svalgaard says:

December 23, 2010 at 8:39 pm

None of your comments shed any light on the discussion, they just moved around the issues raised. We can leave it there if you like.

Geoff Sharp says:

December 24, 2010 at 12:40 am

None of your comments shed any light on the discussion, they just moved around the issues raised. We can leave it there if you like.

Well, I had hoped you would have contributed constructively, but if that is not to be, let us leave it where it is at.

Realclimate

Did the Sun hit record highs over the last few decades?

Alec Rawls says:

10 August 2005 at 2:04 AM

Nice post, but the conclusion: “… solar activity has not increased since the 1950s and is therefore unlikely to be able to explain the recent warming,” would seem to be a non-sequitur.

What matters is not the trend in solar activity but the LEVEL. It does not have to KEEP going up to be a possible cause of warming. It just has to be high, and it has been since the forties.

Presumably you are looking at the modest drop in temperature in the fifties and sixties as inconsistent with a simple solar warming explanation, but it doesn’t have to be simple. Earth has heat sinks that could lead to measured effects being delayed, and other forcings may also be involved. The best evidence for causality would seem to be the long term correlations between solar activity and temperature change. Despite the differences between the different proxies for solar activity, isn’t the overall picture one of long term correlation to temperature?

[Response: You are correct in that you would expect a lag, however, the response to an increase to a steady level of forcing is a lagged increase in temperature and then an asymptotic relaxation to the eventual equilibrium. This is not what is seen. In fact, the rate of temperature increase is rising, and that is only compatible with a continuing increase in the forcing, i.e. from greenhouse gases. – gavin]

===========================================================

http://i446.photobucket.com/albums/qq187/bobclive/reconstructedTSI.jpg

http://i446.photobucket.com/albums/qq187/bobclive/irradiance.gif

For me, the evidence above is clear, a ramp up of TSI from around 1900 and then a steady high from 1940 to 2000 leading to the slightly higher temperatures we see today, no room for CO2.

Sun spots or the lack of them are just the visual marker of an active or less active sun which correlate well with temperature trends here on earth. In fact it is hard to find a better correlation between two variables.

Gavin’s response is utter rubbish if the two graphs above are correct.

http://www.realclimate.org/index.php/archives/2005/08/did-the-sun-hit-record-highs-over-the-last-few-decades/

Robuk says:

December 24, 2010 at 10:08 am

For me, the evidence above is clear, a ramp up of TSI from around 1900 and then a steady high from 1940 to 2000 leading to the slightly higher temperatures we see today, no room for CO2.

TSI today is what it was in 1900 because the magnetic field that drives variations of TSI is today what it was around 1900.

Robuk says:

December 24, 2010 at 10:08 am

What matters is not the trend in solar activity but the LEVEL. It does not have to KEEP going up to be a possible cause of warming. It just has to be high, and it has been since the forties.

Exactly, and as the warmists seem to forget, the ocean oscillations also play a major role. Trying to link all climate variation with solar output is just creating a strawman. Mixing solar variation with oceanic patterns completely explains the last 100 years of world climate trends.

Why do you believe it will last two cycles or be limited to two cycles?

A prediction for the next 2 cycles only doesn’t preclude the effect continuing after that, but if longer term predictions can’t be reliably made based on the data available, it’s good to not make them.

We don’t know enough about what makes the sun tick to make any long term predictions (nor do we know enough about what makes planetary climate systems tick to make long term predictions about those, not even a month ahead, not that that stops greenies from making sweeping predictions for centuries in the future based on cooked computer models in order to sell their agenda).

JTW says:

December 25, 2010 at 12:08 am

A prediction for the next 2 cycles only doesn’t preclude the effect continuing after that, but if longer term predictions can’t be reliably made based on the data available, it’s good to not make them.

The prediction record shows that we can probably predict one cycle ahead, once we know the polar fields a few years before solar minimum. Any more than that will be statistics only, but that has some merit too, once you accept that it holds only in a statistical sense and that you might be wrong.

Leif Svalgaard says:

December 24, 2010 at 10:44 am

Robuk says:

December 24, 2010 at 10:08 am

For me, the evidence above is clear, a ramp up of TSI from around 1900 and then a steady high from 1940 to 2000 leading to the slightly higher temperatures we see today, no room for CO2.

TSI today is what it was in 1900 because the magnetic field that drives variations of TSI is today what it was around 1900.

Are these two graphs total rubbish? They appear to show a rising TSI. Perhaps there is some other unknown force; I believe the jet stream was not discovered until the mid-1940s and the PDO until the mid-1990s.

http://i446.photobucket.com/albums/qq187/bobclive/reconstructedTSI.jpg

http://i446.photobucket.com/albums/qq187/bobclive/irradiance.gif

TSI is not worth considering. The AGW crowd will lean on this at every opportunity. Solar output is more than just heat, the magnetic and EUV output varies on much larger scales and is now looking to influence atmospheric changes at a minimum….just look at the northern hemisphere right now.

Geoff Sharp says:

December 25, 2010 at 5:48 am

TSI is not worth considering. The AGW crowd will lean on this at every opportunity. Solar output is more than just heat, the magnetic and EUV output varies on much larger scales

The energy in those ‘other’ things is tens of thousands of times smaller.

The AGW debate ended at about the same point when debt capacity and income growth ended. Those who were left with the budget tab for these two massive errors will be hurt the most by a cooling outcome.