NEW UPDATES POSTED BELOW

As regular readers know, I have more photographs and charts of weather stations on my computer than I have pictures of my family. A sad commentary to be sure, but necessary for what I do here.

Steve Goddard points out this NASA GISS graph of the Annual Mean Temperature data at Godthab Nuuk Lufthavn (Nuuk Airport) in Greenland. It has an odd discontinuity:

Source data is here

The interesting thing about that end discontinuity is that it is an artifact of incomplete data. In the link to source data above, GISS provides the Annual Mean Temperature (metANN) in the data, before the year 2010 is even complete:

Yet, GISS plots it here and displays it to the public anyway. You can’t plot an annual value before the year is finished. This is flat wrong.

But even more interesting is what you get when you plot and compare the GISS “raw” and “homogenized” data sets for Nuuk. My plot is below:

Looking at the data from 1900 to 2008, where there are no missing years of data, we see no trend whatsoever. When we plot the homogenized data, we see a positive artificial trend of 0.74°C from 1900 to 2007, about 0.7°C per century.
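The per-century figure is just the total change scaled by the length of the record. A minimal check of that arithmetic, using only the two numbers quoted above (the function name is made up for illustration):

```python
def per_century(total_change_c: float, start_year: int, end_year: int) -> float:
    """Scale a total temperature change over a span of years to degrees C per 100 years."""
    years = end_year - start_year
    return total_change_c / years * 100.0

# 0.74 C over 1900-2007, as quoted for the homogenized Nuuk series.
rate = per_century(0.74, 1900, 2007)
print(f"{rate:.2f} C per century")
```

Over 107 years, 0.74°C works out to roughly 0.69°C per century, which rounds to the 0.7°C quoted.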

When you look at the GISS plotted map of trends with 250 km smoothing, using that homogenized data and the GISS standard 1951-1980 baseline, you can see Nuuk is assigned an orange block of 0.5 to 1°C trend.

Source for map here

So it seems clear that, at least for Nuuk, Greenland, the GISS-assigned temperature trend is artificial in the scheme of things. Given that Nuuk is at an airport, and that it has gone through steady growth, the adjustment applied by GISS is, in my opinion, inverted.

The Wikipedia entry for Nuuk states:

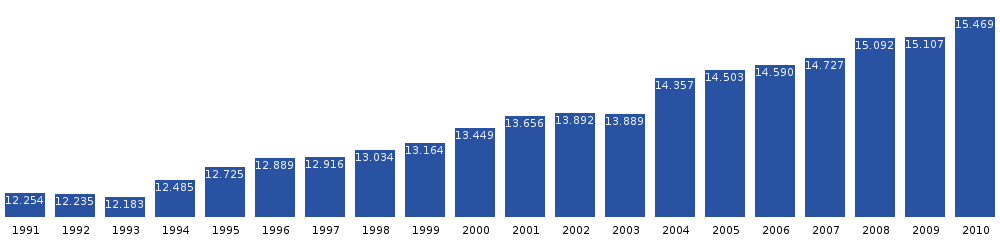

With 15,469 inhabitants as of 2010, Nuuk is the fastest-growing town in Greenland, with migrants from the smaller towns and settlements reinforcing the trend. Together with Tasiilaq, it is the only town in the Sermersooq municipality exhibiting stable growth patterns over the last two decades. The population increased by over a quarter relative to the 1990 levels, and by nearly 16 percent relative to the 2000 levels.

Nuuk population growth dynamics in the last two decades. Source: Statistics Greenland

Instead of adjusting the past downwards, as we see GISS do with this station, the population increase would suggest that if adjustments must be applied, they logically should cool the present. After all, with the addition of modern aviation and additional population, the expenditure of energy in the region and the changing of natural surface cover both increase.

The Nuuk airport is small but modern. Here’s a view on approach:

Closer views reveal what very well could be the Stevenson Screen of the GHCN weather station:

Here’s another view:

The Stevenson Screen appears to be elevated so that it does not get covered with snow, which of course is a big problem in places like this. I’m hoping readers can help crowdsource additional photos and/or verification of the weather station placement.

[UPDATE: Crowdsourcing worked, the weather station placement is confirmed, this is clearly a Stevenson Screen in the photo below:

Thanks to WUWT reader “DD More” for finding this photo that definitively places the weather station. ]

Back to the data. One of the curiosities I noted in the GISS record here, was that in recent times, there are a fair number of months of data missing.

I picked one month to look at, January 2008, at Weather Underground, to see if it had data. I was surprised to find just a short patch of data graphed around January 20th, 2008:

But even more surprising, was that when I looked at the data for that period, all the other data for the month, wind speed, wind direction, and barometric pressure, were intact and plotted for the entire month:

I checked the next missing month on WU, Feb 2008, and noticed a similar curiosity; a speck of temperature and dew point data for one day:

But like January 2008, the other values for other sensors were intact and plotted for the whole month:

This is odd, especially for an airport where aviation safety is of prime importance. I just couldn’t imagine they’d leave a faulty sensor in place for two months.

When I switched the Weather Underground page to display days, rather than the month summary, I was surprised to find that there was apparently no faulty temperature sensor at all, and that the temperature data and METAR reports were fully intact. Here’s January 2nd, 2008 from Weather Underground, which showed up as having missing temperature in the monthly WU report for January, but as you can see there’s daily data:

But like we saw on the monthly presentation, the temperature data was not plotted for that day, but the other sensors were:

I did spot checks of other dates in January and February of 2008, and found the same thing: the daily METAR reports were there, but the data was not plotted on graphs in Weather Underground. The Nuuk data and plots for the next few months at Weather Underground have similar problems, as you can see here:

But they gradually get better. Strange. It acts like a sensor malfunction, but the METAR data is there for those months and seems reasonably correct.

Since WU makes these web page reports “on the fly” from stored METAR reports in a database, to me this implies some sort of data formatting problem that prevents the graph from plotting it. It also prevents the WU monthly summary from displaying the correct monthly high, low, and average temperatures. Clearly what they have for January 2008 is wrong as I found many temperatures lower than the monthly minimum of 19 °F they report for January 2008, for example, 8°F on January 17th, 2008.

So what’s going on here?

- There’s no sensor failure.

- We have intact hourly METAR reports (the World Meteorological Organization standard for reporting hourly weather data) for January and February 2008.

- We have erroneous/incomplete presentations of monthly data both on Weather Underground and NASA GISS for the two months of Jan Feb 2008 I examined.

What could be the cause?

WUWT readers may recall these stories where I examine the impacts of failure of the METAR reporting system:

GISS & METAR – dial “M” for missing minus signs: it’s worse than we thought

Dial “M” for mangled – Wikipedia and Environment Canada caught with temperature data errors.

These had to do with missing “M’s” (for minus temperatures) in the coded reports, causing cold temperatures like -25°C to become warm temperatures of +25°C, which can really screw up monthly average temperatures with one single bad report.
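To see how much damage one dropped “M” can do, here is a rough sketch with made-up numbers: a 30-day month where every daily mean is -25°C, except that one report loses its minus sign. The values are purely illustrative and are not taken from any station record.

```python
# Hypothetical month: 30 daily mean temperatures, all -25 C (illustrative values only).
true_temps = [-25.0] * 30

# Same month, but one report lost its "M" and was read as +25 C.
corrupted = true_temps.copy()
corrupted[0] = 25.0

true_mean = sum(true_temps) / len(true_temps)
bad_mean = sum(corrupted) / len(corrupted)
bias = bad_mean - true_mean

print(f"true monthly mean:      {true_mean:.2f} C")
print(f"with one bad report:    {bad_mean:.2f} C")
print(f"warm bias from one day: {bias:.2f} C")
```

One bad report out of 30 shifts the monthly mean by 50/30, or about 1.7°C, which is larger than the century-scale trends being discussed.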

Following my hunch that I’m seeing another variation of the same METAR coding problem, I decided to have a look at that patch of graphed data that appeared on WU on January 19th-20th 2008 to see what was different about it compared to the rest of the month.

I looked at the METAR data for formatting issues, and ran samples of the data from the times it plotted correctly on WU graphs, versus the times it did not. I ran the METAR reports through two different online METAR decoders:

http://www.wx-now.com/Weather/MetarDecode.aspx

http://heras-gilsanz.com/manuel/METAR-Decoder.html

Nothing stood out from the decoder tests I ran. The only thing I can see is that some of the METAR reports seem to have extra characters, like /// or 9999. In the samples below from January 19th, the first one didn’t plot data on WU, but the second one, an hour later, did:

METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989

METAR BGGH 192050Z 10007KT 050V190 9999 SCT040 BKN053 BKN060 M00/M06 Q0988

I ran both of these (and many others from other days in Jan/Feb) through decoders, and they decoded correctly. However, that still leaves the question of why Weather Underground’s METAR decoder for graph plotting isn’t decoding them correctly for most of Jan/Feb 2008, and leaves the broader question of why GISS data is missing for these months too.
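For reference, the temperature/dew point group is easy enough to pull out of both samples with a few lines of code. This is a minimal sketch, not Weather Underground's or NOAA's decoder; the regular expression and the function name are assumptions made for illustration, and they handle only the plain temperature/dew point group with optional `M` prefixes.

```python
import re

# Temperature/dew point group: two digits each, optional "M" prefix meaning minus.
TEMP_GROUP = re.compile(r"^(M?)(\d{2})/(M?)(\d{2})$")

def decode_temp_group(report: str):
    """Return (temperature_c, dewpoint_c) from a METAR string, or None if absent."""
    for token in report.split():
        match = TEMP_GROUP.match(token)
        if match:
            t_sign, t, d_sign, d = match.groups()
            temp = -int(t) if t_sign else int(t)
            dew = -int(d) if d_sign else int(d)
            return temp, dew
    return None

samples = [
    "METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989",
    "METAR BGGH 192050Z 10007KT 050V190 9999 SCT040 BKN053 BKN060 M00/M06 Q0988",
]
decoded = [decode_temp_group(s) for s in samples]
print(decoded)
```

Both reports decode to below-freezing or zero values (-1/-4 and 0/-6), so a decoder that stumbles on the `M` prefix would lose both of them, along with most of a Greenland winter.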

Now here’s the really interesting part.

We have missing data in Weather Underground and in GISS, for January and February of 2008, but in the case of GISS, they use the CLIMAT reports (yes, no “e”) to gather GHCN data for inclusion into GISS, and final collation into their data set for adjustment and public dissemination.

The last time I raised this issue with GISS I was told that the METAR reports didn’t affect GISS at all because they never got numerically connected to the CLIMAT reports. I beg to differ this time.

Here’s where we can look up the CLIMAT reports, at OGIMET:

http://www.ogimet.com/gclimat.phtml.en

Here’s what the one for January 2008 looks like:

Note the Nuuk airport is not listed in January 2008

Here’s the decoded report for the same month, also missing Nuuk airport:

Here’s February 2008, also missing Nuuk, but now with another airport added, Mittarfik:

And finally March 2008, where Nuuk appears, highlighted in yellow:

The -8.1°C value of the CLIMAT report agrees with the Weather Underground report, and all the METAR reports are there for March, but the WU plotting program still can’t resolve the METAR report data except on certain days.

I can’t say for certain why two months of CLIMAT data is missing from OGIMET, why the same two months of data is missing from GISS, or why Weather Underground can only graph a few hours of data on each of those months, but I have a pretty good idea of what might be going on. I think the WMO created METAR reporting format itself is at fault.

What is METAR you ask? Well in my opinion, a government invented mess.

When I was a private pilot (which I had to give up due to worsening hearing loss – tower controllers talk like auctioneers on the radio and one day I got the active runway backwards and found myself head-on to traffic. I decided then I was a danger to myself and others.) I learned to read SA reports from airports all over the country. SA reports were manually coded teletype reports sent hourly worldwide so that pilots could know what the weather was in airport destinations. They were also used by the NWS to plot synoptic weather maps. Some readers may remember Alden Weatherfax maps hung up at FAA Flight service stations which were filled with hundreds of plotted airport station SA (surface aviation) reports.

The SA reports were easy to visually decode right off the teletype printout:

From page 115 of the book “Weather” By Paul E. Lehr, R. Will Burnett, Herbert S. Zim, Harry McNaught – click for source image

Note that in the example above, temperature and dewpoint are clearly delineated by slashes. Also, when a minus temperature occurs, such as -10 degrees Fahrenheit, it was reported as “-10”, not with an “M”.

The SA method originated with airmen and teletype machines in the 1920s and lasted well into the 1990s. But like anything these days, government stepped in and decided it could do it better. You can thank the United Nations, the French, and the World Meteorological Organization (WMO) for this one. SA reports were finally replaced by METAR in 1996, well into the computer age, even though it was designed in 1968.

From Wikipedia’s section on METAR

METAR reports typically come from airports or permanent weather observation stations. Reports are typically generated once an hour; if conditions change significantly, however, they can be updated in special reports called SPECIs. Some reports are encoded by automated airport weather stations located at airports, military bases, and other sites. Some locations still use augmented observations, which are recorded by digital sensors, encoded via software, and then reviewed by certified weather observers or forecasters prior to being transmitted. Observations may also be taken by trained observers or forecasters who manually observe and encode their observations prior to transmission.

History

The METAR format was introduced 1 January 1968 internationally and has been modified a number of times since. North American countries continued to use a Surface Aviation Observation (SAO) for current weather conditions until 1 June 1996, when this report was replaced with an approved variant of the METAR agreed upon in a 1989 Geneva agreement. The World Meteorological Organization‘s (WMO) publication No. 782 “Aerodrome Reports and Forecasts” contains the base METAR code as adopted by the WMO member countries.[1]

Naming

The name METAR is commonly believed to have its origins in the French phrase message d’observation météorologique pour l’aviation régulière (“Aviation routine weather observation message” or “report”) and would therefore be a contraction of MÉTéorologique Aviation Régulière. The United States Federal Aviation Administration (FAA) lays down the definition in its publication the Aeronautical Information Manual as aviation routine weather report[2] while the international authority for the code form, the WMO, holds the definition to be aerodrome routine meteorological report. The National Oceanic and Atmospheric Administration (part of the United States Department of Commerce) and the United Kingdom‘s Met Office both employ the definition used by the FAA. METAR is also known as Meteorological Terminal Aviation Routine Weather Report or Meteorological Aviation Report.

I’ve always thought METAR coding was a step backwards.

Here is what I think is happening

METAR was designed at a time when teletype machines ran newsrooms and airport control towers worldwide. At that time, the NOAA weatherwire used 5 bit BAUDOT code (rather than 8 bit ASCII) transmitting at 56.8 bits per second on a current loop circuit. METAR was designed with one thing in mind: economy of data transmission.

The variable format created worked great at reducing the number of characters sent over a teletype, but that strength for that technology is now a weakness for today’s technology.

The major weakness with METAR these days is the variable length and variable positioning of the format. If data is missing, the sending operator can just leave out the data field altogether. Humans trained in METAR decoding can interpret this; computers, however, are only as good as the programming they are endowed with.

Characters that change position or type, fields that shift or that may be there one transmission and not the next, combined with human errors in coding can make for a pretty complex decoding problem. The problem can be so complex, based on permutations of the coding, that it takes some pretty intensive coding to handle all the possibilities.
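The two Nuuk reports quoted earlier show the shifting-field problem in miniature. The sketch below (an illustration only, not any production decoder) splits each report on whitespace and looks up where the temperature group lands. A decoder that assumed a fixed field position would get one of them wrong.

```python
reports = [
    "METAR BGGH 191950Z VRB05G28KT 2000 -SN DRSN SCT014 BKN018 BKN024 M01/M04 Q0989",
    "METAR BGGH 192050Z 10007KT 050V190 9999 SCT040 BKN053 BKN060 M00/M06 Q0988",
]

def temp_field_index(report: str) -> int:
    """Index of the whitespace-separated field that holds temperature/dew point."""
    fields = report.split()
    for i, field in enumerate(fields):
        # Crude test for illustration: a "/" somewhere inside the field.
        if "/" in field.strip("/"):
            return i
    return -1

positions = [temp_field_index(r) for r in reports]
fifth_fields = [r.split()[4] for r in reports]

print(positions)     # where the temperature group sits in each report
print(fifth_fields)  # the same slot holds different kinds of data
```

The temperature group is the eleventh field in the first report and the tenth in the second, and the fifth field is visibility (`2000`) in one report and a wind-direction range (`050V190`) in the other. A decoder has to recognize each group by its shape, not by its position.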

Of course in 1968, missed characters or fields on a teletype paper report did little more than aggravate somebody trying to read it. In today’s technological world, METAR reports never make it to paper; they get transmitted from computer to computer. Coding on one computer system can easily differ from another, mainly due to things like METAR decoding being a task for an individual programmer, rather than a well-tested, off-the-shelf, universally accepted format and software package. I’ve seen all sorts of METAR decoding programs, from ancient COBOL, to FORTRAN, LISP, BASIC, PASCAL, and C. Each program was done differently, each was done to a spec written in 1968 for teletype transmission, and each computer may run a different OS, have a program written in a different language, and a different programmer. Making all that work to handle the nuances of human coded reports that may contain all sorts of variances and errors, with shifting field presence and placement, is a tall order.

That being said, NOAA made a free METAR decoder package available many years ago at this URL:

http://www.nws.noaa.gov/oso/metardcd.shtml

That has disappeared now, but a private company is distributing the same package here:

This caveat on the limulus web page should be a red flag to any programmer:

Known Issues

- Horrible function naming.

- Will probably break your program.

- Horrible variable naming.

I’ve never used either package, but I can say this: errors have a way of staying around for years. If there was an error in this code that originated at NWS, it may or may not be fixed in the various custom applications that are based on it.

Clearly Weather Underground has issues with some portion of its code that is supposed to plot METAR data. Coincidentally, in many of the same months where their plotting routine fails, we also have missing CLIMAT reports.

And on this page at the National Center for Atmospheric Research (NCAR/UCAR), we have reports of the METAR package failing in odd ways, discarding reports:

>On Tue, 2 Mar 2004, David Larson wrote:
>
>>
>>Robb,
>>
>>I've been chasing down a problem that seems to cause perfectly good
>>reports to be discarded by the perl metar decoder. There is a comment
>>in the 2.4.4 decoder that reads "reports appended together wrongly", the
>>code in this area takes the first line as the report to process, and
>>discards the next line.
>>

Here’s another:

On Fri, 12 Sep 2003, Unidata Support wrote:
>
> ------- Forwarded Message
>
> >To: support-decoders@xxxxxxxxxxxxxxxx
> >From: David Larson <davidl@xxxxxxxxxxxxxxxxxx>
> >Subject: perl metar decoder -- parsing visibility / altimeter wrong
> >Organization: UCAR/Unidata
> >Keywords: 200309122047.h8CKldLd027998
>
>
> The decoder seems to mistake the altimeter value for visibility in many
> non-US METARs.

So my point is this: METAR is fragile. Even at the world’s premier climate research center, it seems to have problems that suggest it doesn’t handle worldwide reports, yet it appears to be the backbone for all airport-based temperature reports in the world, which get collated to GHCN for climate purposes. I think the people that handle these systems need to reevaluate and test their METAR code.

Even better, let’s dump METAR in favor of a more modern format, that doesn’t have variable fields and variable field placements requiring the code to not only decode the numbers/letters, but also the configuration of the report itself.

In today’s high speed data age, saving a few characters from the holdover of teletype days is a wasted effort.

The broader point is this: our reporting system for climate data is a mess of entropy on so many levels.

UPDATE:

The missing data can be found at the homepage of DMI, the Danish Meteorological Institute, http://www.dmi.dk/dmi/index/gronland/vejrarkiv-gl.htm (“vælg by” means “choose city”). Choose Nuuk to get the monthly numbers back to January 2000.

Thanks for that. The January 2008 data is available, and plotted below at DMI’s web page.

So now the question is, if the data is available, and a prestigious organization like DMI can decode it, plot it, and create a monthly average for it, why can’t NCDC’s Peterson (who is the curator of GHCN) decode it and present it? Why can’t Gavin at GISS do some QC to find and fix missing data?

Is the answer simply “they don’t care enough?” It sure seems so.

UPDATE: 10/4/10 Dr. Jeff Masters of Weather Underground weighs in. He’s fixed the problem on his end:

Hello Anthony, thanks for bringing this up; this was indeed an error in our METAR parsing, which was based on old NOAA code:

/* Organization: W/OSO242 – GRAPHICS AND DISPLAY SECTION */

/* Date: 14 Sep 1994 */

/* Language: C/370 */

What was happening was our graphing software was using an older version of this code, which apparently had a bug in its handling of temperatures below freezing. The graphs for all of our METAR stations were thus refusing to plot any temperatures below freezing. We were using a newer version of the code, which does not have the bug, to generate our hourly obs tables, which had the correct below freezing temperatures. Other organizations using the old buggy METAR decoding software may also be having problems correctly handling below freezing temperatures at METAR stations. This would not be the case for stations sending data using the synoptic code; our data plots for Nuuk using the WMO ID 04250 data, instead of the BGGH METAR data, were fine. In any case, we’ve fixed the graphing bug, thanks for the help.

REPLY: Hello Dr. Masters.

It is my pleasure to help, and thank you for responding here. One wonders if similar problems exist in parsing METAR for generation of CLIMAT reports. – Anthony

A shareable, editable map of Greenland with stations added. Testing 1,2,3

Steven Mosher says:

October 3, 2010 at 9:53 pm

DMI seems to have them. Anthony has shown that they are available, so why doesn’t GISS use them? Maybe because they would give an answer that GISS doesn’t want.

#######

it’s pretty simple. You have these things to consider:

1. the DMI process (its models + data)

2. Do they pick DMI up going forward or recalculate the past? If they recalculate the past, many people will scream (see recent threads here)

3. The DMI would have to be accepted as a trusted source. Not saying they aren’t.

4. CRU and NCDC don’t use it.

5. Nothing stops anybody else from importing the data into GISTEMP and running the numbers.

—…—…

But the DMI daily values for the summer (the melting season), for every day since 1958 HAVE been graphed (here, at WUWT less than 5 weeks ago).

Average daily summer temperatures at 80N have consistently and steadily decreased.

The rate of temperature change is accelerating, Arctic summer temperatures are getting increasingly colder as CO2 rises.

And ,”Yes”, GISS does not want to admit this “inconvenient fact” because it does not fit Hansen’s agenda. It destroys his funding base. It destroys his power base.

Lucy Skywalker hit the nail on the head and suggested the only reasonable approach. Take a small number of stations, widely dispersed, with long records, and research them. Document everything from changes in personnel to equipment to physical moves to local landscape changes. Put it in a standard format with proper documentation and publish it.

The current method uses arcane technology incapable of correctly processing human or other errors, relies on a chain of custody that is obscure at best, and worst of all, requires knowledge that only a handful of people like Hansen, Mosher, Tisdale and Watts have, and they only because of the extensive amount of time they have put into it. Consider Mosher’s detailed responses above regarding error codes and adjustment methodologies. You won’t find that nicely laid out on the GISS web site for just anybody to understand.

Lucy’s suggestion would cost a fraction of what is being proposed for upgrades to the existing network and would make data available in a format that would enable the expertise of thousands of times as many scientists to do meaningful analysis with a fraction of the effort. While Mr Mosher’s knowledge of the system is impressive, giving credence to his assertion that nothing nefarious is going on, the system itself is a cobbled together mess that provides little assurance that it is remotely accurate. As for something nefarious going on… I’m not as certain as Mr. Mosher but a few thousand statisticians and other researchers with access to detailed data meticulously documented in a (modern) standard format would soon answer the question.

Thanks for the map Mosh, a great start. Haven’t figured out how to edit yet.

I see the problem – plain as the nose on one’s face. Everyone in Nuuk drives an SUV, and apparently there’s no carpool policy. If ever a town needed light rail more. They need a rail link between the city core and the airport, and possibly a cluster of windmill generators on the hilltop at the end of the runway.

Anthony, I’ll add you as a collaborator on the fusion table.

RACook:

“But the DMI daily values for the summer (the melting season), for every day since 1958 HAVE been graphed (here, at WUWT less than 5 weeks ago). ”

if you only have summer values, then that is a problem for Hansen’s method and for CRU’s method. Further, just because somebody posts a chart does not mean the data meets standards. Just saying. If somebody wants to recommend data, then that data has to be on the same footing as other data. Like it or not, GHCN is a clearing house for data. The question is why doesn’t GHCN import DMI data.

“Average daily summer temperatures at 80N have consistently and steadily decreased.

The rate of temperature change is accelerating, Arctic summer temperatures are getting increasingly colder as CO2 rises.”

Well, RSS and UAH don’t exactly agree with you there, but maybe I’m misremembering the work I did looking at that. Perhaps you can go pull that data and post about it.

“And ,”Yes”, GISS does not want to admit this “inconvenient fact” because it does not fit Hansen’s agenda. It destroys his funding base. It destroys his power base.”

That’s a theory. How do we test it? how do we test your claim that this is the reason? if we have no way to test it, then I’m not really concerned with it.

Has anybody figured out how GISS do their averages when they have supposedly missing data?

For example, below is the raw GISTEMP data for Esperance, a town on the south coast of Western Australia:

http://data.giss.nasa.gov/work/gistemp/STATIONS//tmp.501946380000.0.1/station.txt …

… for 2009 …

21.3 21.0 19.5 17.2 15.4 13.6 12.6 13.4 999.9 16.5 20.1 20.9

DJF MAM JJA SON ANNUAL

20.3 17.4 13.2 17.5 17.10

DJF is (18.7 + 21.3 + 21.0) / 3 = 20.3 (correct)

MAM is (19.5 + 17.2 + 15.4) / 3 = 17.4 (correct)

JJA is (13.6 + 12.6 + 13.4) / 3 = 13.2 (correct)

SON is presumably (16.5 + 20.1) / 2 = 18.3 (incorrect)

The missing September data makes one wonder about their SON calculation of 17.5. Maybe it would help GISS if they visit the Australian Bureau of Met website …

http://www.bom.gov.au/climate/dwo/200909/html/IDCJDW6040.200909.shtml

This is their data source so the September 2009 average mean temp they can’t find turns out to be 13.2, which happens to be the third coldest September since their Esperance records began in 1969. Let’s try again …

SON = (13.2 + 16.5 + 20.1) / 3 = 16.6

Their SON of 17.5 is almost a degree too high and the new Esperance annual average for 2009 is 16.87, not 17.1.
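The Esperance arithmetic can be reproduced in a few lines. This sketch follows the simple averaging used in this comment (plain means of the available months, and an annual mean taken as the mean of the four seasons). It is not the GISTEMP code, the function names are made up for illustration, and the December 2008 value of 18.7 is the one used in the DJF line above.

```python
MISSING = 999.9  # marker used for a missing monthly value in the GISS station file

def mean_available(values):
    """Plain mean of the non-missing values, or None if all are missing."""
    present = [v for v in values if v != MISSING]
    return sum(present) / len(present) if present else None

def seasons(months, prev_dec):
    """Return (DJF, MAM, JJA, SON) means for a January..December list of monthly values."""
    djf = mean_available([prev_dec, months[0], months[1]])
    mam = mean_available(months[2:5])
    jja = mean_available(months[5:8])
    son = mean_available(months[8:11])
    return djf, mam, jja, son

dec_2008 = 18.7
# Esperance 2009, January..December, as listed above.
y2009 = [21.3, 21.0, 19.5, 17.2, 15.4, 13.6, 12.6, 13.4, MISSING, 16.5, 20.1, 20.9]

as_published = seasons(y2009, dec_2008)

filled = y2009.copy()
filled[8] = 13.2  # September 2009 mean from the BoM page
with_bom = seasons(filled, dec_2008)
annual = sum(with_bom) / 4

print([round(s, 1) for s in as_published])
print([round(s, 1) for s in with_bom])
print(f"{annual:.3f}")
```

With September missing, a plain mean of October and November gives the 18.3 shown above; with the BoM value filled in, SON drops to 16.6 and the annual mean to about 16.87. Neither matches the 17.5 on the GISS page, which is the point of the comparison.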

Similar problem several hundred kilometres inland at Kalgoorlie-Boulder, the gold mining capital of Australia, where September 2009 is 999.9.

GISTEMP page

http://data.giss.nasa.gov/work/gistemp/STATIONS//tmp.501946370004.2.1/station.txt

BoM “rescue page” for September 2009 mean temp …

http://www.bom.gov.au/climate/dwo/200909/html/IDCJDW6061.200909.shtml

Which shows a chilly September 2009 average mean temp at 13.9, meaning the Kalgoorlie-Boulder SON works out to be 19.4, not the 20.5 they conjured from the missing September data. So the annual mean for Kalgoorlie-Boulder in 2009 was 19, not 19.28 as calculated by GISS.

How about the capital city of Perth Airport readings? September remains a problem:

GISTEMP page

http://data.giss.nasa.gov/work/gistemp/STATIONS//tmp.501946100000.2.1/station.txt

BoM “rescue page” for September 2009 mean temp …

http://www.bom.gov.au/climate/dwo/200909/html/IDCJDW6110.200909.shtml

Chilly September 2009 mean temp once again at 13.9, meaning the Perth Airport SON works out to be 17.2, not the 17.7 calculated by GISS from the missing September data. The annual mean for Perth Airport was 18.75, not 18.88 as calculated by GISS.

13.2, 13.9 and 13.9. Maybe 13 is their unlucky number?

Meanwhile, back at the ranch, the Australian BoM suffered a database bug which in November last year saw them upward “correct” all their August 2009 temps in Western Australia by .4 to .5 of a degree at almost all locations.

For example, Esperance August 2009 av mean min up from 8.3 to 8.8, max from 17.7 to 18.1; Kalgoorlie-Boulder August 2009 min up from 6.8 to 7.2, max from 20.3 to 20.7; and Perth (metro, not airport) min up from 8.8 to 9.3, max from 18.5 to 18.9.

The GISS data for Esperance and Kalgoorlie-Boulder in August 2009 is the BoM database bug corrected data. August 2009 was the hottest ever August recorded in Australia.

I’m sure it all makes sense to statisticians but I’m off to the casino where I can rely on the numbers.

Watts Up With Nuuk?

So many bad puns. So little bandwidth . . .

evan.

Can you test if you can edit the map

in this day and age it should be simple to develop a simple XML format to encode this data in an easily read (by computer or people) format that would eliminate the gross number of data errors we see almost daily … that it has not been done is a crime and the motives of those in charge can easily be questioned …

give me 1 month and 2 web programmers and I’ll deliver a global internet based encrypted data capture system with basic error checking (i.e. it would ask “are you sure” if today’s temp number varies by more than x percent from yesterday’s number)

given the billions and even trillions of dollars/euros/etc at stake the world should expect accurate data collection as the minimum effort expected of “climate scientists” …
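A rough sketch of the kind of entry check described in this comment, with one change that should be flagged: it compares against an absolute jump in degrees rather than a percentage, since a percentage is ill-defined for temperatures near 0°C. The function name and the 15-degree default are assumptions for illustration only.

```python
def needs_confirmation(today_c: float, yesterday_c: float, max_jump_c: float = 15.0) -> bool:
    """True if today's reading differs from yesterday's by more than max_jump_c degrees."""
    return abs(today_c - yesterday_c) > max_jump_c

small_change = needs_confirmation(-24.0, -25.0)  # ordinary day-to-day change
sign_flip = needs_confirmation(25.0, -25.0)      # what a dropped minus sign looks like

print(small_change, sign_flip)
```

A check this simple would not catch every bad value, but it would flag a -25°C reading that arrives as +25°C before it gets into a monthly average.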


@Jeff Masters: Thank you for the prompt truth. This is so important to many here that science is what science should be, honest and transparent. h/t to you! Could you also see if such mistakes have been carried through in the logic to the charts and graphs that GISS has been publishing for some period into the past? This could explain some of GISS’s rather large divergence.

Anthony

‘You can’t plot an annual value before the year is finished. This is flat wrong.’

It’s not just Nuuk. They seemed to have done this to many stations.

Try:-

http://data.giss.nasa.gov/work/gistemp/STATIONS//tmp.425724500020.1.1/station.txt

and:-

http://data.giss.nasa.gov/work/gistemp/STATIONS//tmp.501949100003.1.1/station.txt

Sorry to anyone above who has mentioned this.

Hansen just can’t keep his thumb off the temperature scale!

From looking at all of the nice photos, I don’t think they could have placed the temp station in a better place, IF you only consider the pilot and passenger safety.

Like most of the airport sites around the globe, it is just not suited for climate studies.

Trying to do too much with too little, or adapting to dual use where one suffers as a result.

Where do you stick the thermometer?

I happen to be a climate skeptic by nature, but even in this day of global satellite monitoring of just about everything, there seems to be no rational system of monitoring the various parameters of climate that would give us a clue as to what has happened, what is happening and, maybe, what to expect.

Nuuk appears to be a charming place, but how do I relate that to global climate, ice cover, glaciers, rainfall patterns, etc.?

All we get are anecdotes which appear to prove the point of whoever is telling the story.

Is this only part of the cosmic war between good and evil or is there a thing called science?

I’ve posted two worked examples at http://oneillp.wordpress.com/2010/05/06/gistemp-anomaly-calculation-post-2-of-6-in-gistemp-example/

There is a bug in the computation of seasonal means for the station data pages at the GIStemp website, for three month periods with one missing month. Note that this bug is not present in the actual GIStemp code. The anomalies computed by the GIStemp code indicate that the seasonal and annual means have been correctly calculated, but as these values are not saved by the GIStemp code they must be calculated again to fulfil plot or data requests at the GIStemp station data webpages, and it is at this stage that the bug has crept in.

So the actual GIStemp analysis is not affected by this bug, but users downloading station data (or generating plots) which include missing months should recalculate seasonal and annual means where any of the three month seasonal periods includes one missing month, before use.
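For anyone wanting to find the rows in question, here is a minimal sketch that flags the seasons with exactly one missing month. It assumes missing months are marked with the sentinel 999.9 and that each year arrives as a list of twelve monthly values; the function and variable names are hypothetical and not taken from any GISS code.

```python
# Assumption: a missing monthly value is stored as 999.9.
MISSING = 999.9

# Meteorological seasons as month indices (0 = January). D-J-F needs
# December of the previous year, which the caller passes in separately.
SEASONS = {"M-A-M": (2, 3, 4), "J-J-A": (5, 6, 7), "S-O-N": (8, 9, 10)}


def is_missing(value):
    """True if the value is the missing-data sentinel (with some slack)."""
    return value >= MISSING - 0.05


def seasons_to_recheck(months, prev_december=MISSING):
    """Return the names of seasons that have exactly one missing month.

    months        -- twelve monthly means for one year, January first
    prev_december -- December mean of the previous year (for D-J-F)
    """
    triples = {"D-J-F": (prev_december, months[0], months[1])}
    for name, indices in SEASONS.items():
        triples[name] = tuple(months[i] for i in indices)
    return [name for name, values in triples.items()
            if sum(is_missing(v) for v in values) == 1]


# Hypothetical year with May missing (values invented for illustration).
year = [-8.0, -9.0, -7.5, -3.0, MISSING, 4.0, 6.5, 6.0, 3.0, -1.0, -4.0, -6.0]
print(seasons_to_recheck(year, prev_december=-7.0))
```

Seasons with two or three missing months are not flagged here, since the concern above is only with the single-missing-month case.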

The other seasonal means with a single missing month are also wrong, but to see the problem, see the SEP-OCT-NOV 1884 (should be -1.0) and MAR-APR-MAY 1885 (should be -2.05 -> 2.1) errors outlined in the initial rows of the Nuuk data:

http://oneillp.files.wordpress.com/2010/10/metann.jpg

I’ve not been able to detect any pattern to these errors, and so without the source code for the website I cannot guess what has gone wrong. Reported to GISS.

As this bug affects the first two figures in this post, a corrected plot can be found at http://oneillp.files.wordpress.com/2010/10/nuuk1.jpeg

A plot with the full GISS data dating back to 1880 rather than from 1900 on can be found at http://oneillp.files.wordpress.com/2010/10/nuuk2.jpeg

And if data from the GIStemp July update (data to June 2010) is used instead, so eliminating the additional data which allowed calculation of a 2010 mean, the effect on trends can be seen by comparing the two corresponding plots:

http://oneillp.files.wordpress.com/2010/10/nuuk3.jpeg and

http://oneillp.files.wordpress.com/2010/10/nuuk4.jpeg

(These plots show trends both over the full time period for the plot and over the last 30 years. The figures in parentheses are the R squared values. The adjustment is also shown on each plot).
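For readers who want to reproduce figures of this kind, a trend and its R squared value can be obtained from an ordinary least-squares fit of annual mean against year. The sketch below is only an illustration of that standard calculation, not the code used to make the plots above; the function name is hypothetical, and years with no annual mean are assumed to have been dropped before the call.

```python
def linear_trend(years, temps):
    """Ordinary least-squares fit of temps against years.

    Returns (slope, r_squared), where slope is in degrees per year.
    Years without a valid annual mean must be removed beforehand.
    """
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    syy = sum((y - mean_y) ** 2 for y in temps)
    slope = sxy / sxx
    # R squared for a straight-line fit is the squared correlation.
    r_squared = (sxy * sxy) / (sxx * syy) if syy > 0 else 0.0
    return slope, r_squared


# Invented numbers, purely to show the call.
slope, r2 = linear_trend([2000, 2001, 2002, 2003], [1.0, 1.5, 2.0, 2.5])
print(f"trend: {slope * 100:.1f} per century, R squared: {r2:.2f}")
```

Multiplying the slope by 100 gives the per-century figure, and restricting the input lists to the last 30 years gives the short-period trend.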

Update and correction

I’ve received a reply from Reto Ruedy at GISS explaining that the seasonal and annual means returned by the station data webpages are not calculated in the same manner as the corresponding seasonal and annual means are calculated when calculating anomalies in STEP2 of the GIStemp analysis.

The procedure used can be found at

http://data.giss.nasa.gov/gistemp/station_data/seas_ann_means.html ,

and I have verified for the Godthab Nuuk data that this procedure does give the seasonal and annual means returned by the station data pages, other than an occasional differing final digit in cases where differing machine precision can lead to rounding up on one machine and rounding down on the other. So the difference between the results using the two different procedures is intentional, and there is in fact no bug.

My apologies for wrongly jumping to the conclusion that the seasonal and annual means returned by the station data pages should be identical to those calculated as intermediate results while calculating anomalies in GIStemp STEP2, and as a result suggesting that differing values indicated a bug. The outcome of this mistake on my part however is that there is now clarification for Chris Gillham’s question which I set out to answer. My blog post which I linked still provides a worked example of the procedure used in STEP2 of GIStemp, while calculating anomalies, and Reto Ruedy’s reply provides a link to the procedure used to calculate these values for the data returned by the station data pages.

Reto Ruedy’s reply explained the reason for two different procedures: that missing months are much less important when dealing with anomalies than when dealing with absolute temperatures. When calculating anomalies a straight average over the appropriate three or two months has been used, whereas when dealing with absolute temperatures this more sophisticated procedure is used to handle missing months. He also mentioned that the code used for this is in fact part of the GIStemp STEP1 code, contained in

STEP1/EXTENSIONS/monthly_data/monthlydatamodule.c

(I had noticed when implementing GIStemp STEP1 that there appeared to be some additional code not used by the GIStemp analysis itself, but did not examine that code more closely for the reason that it was not used in the analysis).
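To show why a missing month matters more for absolute temperatures than for anomalies, here is a small sketch comparing a straight average with one plausible climatology-based alternative. This is an assumption made for illustration, not a transcription of monthlydatamodule.c or of the procedure on the GISS page: the functions, the monthly normals and the observed values are all hypothetical.

```python
def straight_mean(values):
    """Average the months that are present; need at least two of three."""
    present = [v for v in values if v is not None]
    if len(present) < 2:
        return None
    return sum(present) / len(present)


def climatology_mean(values, monthly_normals):
    """Seasonal mean built from anomalies (an assumed approach).

    Each present month is turned into an anomaly against its own
    long-term normal, the anomalies are averaged, and the result is
    added back onto the seasonal normal.
    """
    anomalies = [None if v is None else v - c
                 for v, c in zip(values, monthly_normals)]
    mean_anomaly = straight_mean(anomalies)
    if mean_anomaly is None:
        return None
    return sum(monthly_normals) / len(monthly_normals) + mean_anomaly


# Invented M-A-M example: March is missing and is normally the coldest month.
normals = (-8.0, -4.0, 0.0)
observed = (None, -3.0, 1.0)
print(straight_mean(observed))              # -1.0, biased warm
print(climatology_mean(observed, normals))  # -3.0
```

In this invented case the straight average of the two remaining months comes out at -1.0 simply because the coldest month dropped out, while the anomaly-based version gives -3.0. When the inputs are already anomalies, the missing month carries no such seasonal offset, which is consistent with the reason given for using a straight average there.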