From Dr Roy Spencer’s Global Warming Blog

by Roy W. Spencer, Ph. D.

Metop-C Satellite Added to Our Processing

With this update, we have added Metop-C to our processing, so along with Metop-B we are back to having two satellites in the processing stream. The Metop-C data record begins in July of 2019. Like Metop-B, Metop-C was designed to use fuel to maintain its orbital altitude and inclination, so (until fuel reserves are depleted) there is no diurnal drift adjustment needed. Metop-B is beginning to show some drift in the last year or so, but it’s too little at this point to worry about any diurnal drift correction.

The Version 6.1 global average lower tropospheric temperature (LT) anomaly for November, 2024 was +0.64 deg. C departure from the 1991-2020 mean, down from the October, 2024 anomaly of +0.75 deg. C.

The Version 6.1 global area-averaged temperature trend (January 1979 through November 2024) remains at +0.15 deg. C/decade (+0.21 C/decade over land, +0.13 C/decade over oceans).

The following table lists various regional Version 6.1 LT departures from the 30-year (1991-2020) average for the last 23 months (record highs are in red). Note the tropics have cooled by 0.72 deg. C in the last 8 months, consistent with the onset of La Nina conditions.

| YEAR | MO | GLOBE | NHEM. | SHEM. | TROPIC | USA48 | ARCTIC | AUST |
|---|---|---|---|---|---|---|---|---|
| 2023 | Jan | -0.06 | +0.07 | -0.19 | -0.41 | +0.14 | -0.10 | -0.45 |
| 2023 | Feb | +0.07 | +0.13 | +0.01 | -0.13 | +0.64 | -0.26 | +0.11 |
| 2023 | Mar | +0.18 | +0.22 | +0.14 | -0.17 | -1.36 | +0.15 | +0.58 |
| 2023 | Apr | +0.12 | +0.04 | +0.20 | -0.09 | -0.40 | +0.47 | +0.41 |
| 2023 | May | +0.28 | +0.16 | +0.41 | +0.32 | +0.37 | +0.52 | +0.10 |
| 2023 | June | +0.30 | +0.33 | +0.28 | +0.51 | -0.55 | +0.29 | +0.20 |
| 2023 | July | +0.56 | +0.59 | +0.54 | +0.83 | +0.28 | +0.79 | +1.42 |
| 2023 | Aug | +0.61 | +0.77 | +0.45 | +0.78 | +0.71 | +1.49 | +1.30 |
| 2023 | Sep | +0.80 | +0.84 | +0.76 | +0.82 | +0.25 | +1.11 | +1.17 |
| 2023 | Oct | +0.79 | +0.85 | +0.72 | +0.85 | +0.83 | +0.81 | +0.57 |
| 2023 | Nov | +0.77 | +0.87 | +0.67 | +0.87 | +0.50 | +1.08 | +0.29 |
| 2023 | Dec | +0.75 | +0.92 | +0.57 | +1.01 | +1.22 | +0.31 | +0.70 |
| 2024 | Jan | +0.80 | +1.02 | +0.58 | +1.20 | -0.19 | +0.40 | +1.12 |
| 2024 | Feb | +0.88 | +0.95 | +0.81 | +1.17 | +1.31 | +0.86 | +1.16 |
| 2024 | Mar | +0.88 | +0.96 | +0.80 | +1.26 | +0.22 | +1.05 | +1.34 |
| 2024 | Apr | +0.94 | +1.12 | +0.77 | +1.15 | +0.86 | +0.88 | +0.54 |
| 2024 | May | +0.78 | +0.77 | +0.78 | +1.20 | +0.05 | +0.22 | +0.53 |
| 2024 | June | +0.69 | +0.78 | +0.60 | +0.85 | +1.37 | +0.64 | +0.91 |
| 2024 | July | +0.74 | +0.86 | +0.62 | +0.97 | +0.44 | +0.56 | -0.06 |
| 2024 | Aug | +0.76 | +0.82 | +0.70 | +0.75 | +0.41 | +0.88 | +1.75 |
| 2024 | Sep | +0.81 | +1.04 | +0.58 | +0.82 | +1.32 | +1.48 | +0.98 |
| 2024 | Oct | +0.75 | +0.89 | +0.61 | +0.64 | +1.90 | +0.81 | +1.09 |
| 2024 | Nov | +0.64 | +0.88 | +0.41 | +0.54 | +1.12 | +0.79 | +1.00 |

The full UAH Global Temperature Report, along with the LT global gridpoint anomaly image for November, 2024, and a more detailed analysis by John Christy, should be available within the next several days here.

The monthly anomalies for various regions for the four deep layers we monitor from satellites will be available in the next several days at the following locations:

Fine, it’s going down 👍

A lot of people in 1816 would have loved today’s temperatures. Instead they died from cold and starvation.

It looks a lot like the 1998 biggie. I expect it to follow the pattern and settle into a multi-year pause a few 10ths of a degree above the last long pause. All the warming has been a staircase driven by big El Niño cycles.

El Nino doesn’t explain the observed warming:

ROFLMAO.

Urban CRAP fabrication.

Is that the best you have !!

El Nino explains ALL THE WARMING in the atmospheric data.

It does not, particularly because El Ninos aren’t trending stronger:

There is simply no mechanism by which El Nino could be driving a multi-decadal warming trend without some external energy input.

You are a moron, AJ.

Are you really DENYING the 1998, 2016/17 and 2023/24 El Nino events and the effect they had on the atmosphere… .. WOW !!

There has been external energy.. It’s called the SUN.

And of course CO2 is not an external energy input, so you have kicked yourself in the rear end, yet again.

1… Please provide empirical scientific evidence of warming by atmospheric CO2.

2… Please show the evidence of CO2 warming in the UAH atmospheric data.

3… Please state the amount of CO2 warming in the last 45 years, giving measured scientific evidence for your answer.

The claim being made is that El Nino peaks keep getting warmer and warmer and warmer over time in a stepwise fashion, and that this is driving the observed warming trend. I am saying that there is no evidence that El Ninos are exhibiting a long term trend and there is no evidence that El Nino can drive the observed warming trend. I am not denying the existence of the El Nino peaks.

Are you saying the sun’s output is increasing and that this is driving the El Nino peaks to get warmer and warmer?

“I am saying that there is no evidence that El Ninos are exhibiting a long term trend and there is no evidence that El Nino can drive the observed warming trend”

See, I told you that you were a moron.

Not understanding the difference between the localised ENSO indicator and the actual energy released to the atmosphere.

STILL DENYING the effect of the three El Ninos on the atmosphere.. WOW !!!

Those three El Nino events account for ALL the warming in the UAH data.

Noted that you were totally incapable of showing any evidence of CO2 warming anywhere.

Talk about “climate deniers”, you are a prime example.

How does one characterize the amount of energy released to the atmosphere during an El Nino? Please share that analysis.

Look at the UAH atmospheric data, idiot !!

Calling someone an idiot will not change their mind, nor will it do much for the fence sitters.

“You are a moron, AJ”

What gives you the right to begin your reply with this statement? Whether you agree or disagree with another contributor, what’s so difficult about being civil?

The point AJ makes is perfectly reasonable and deserves a reasonable response. If you yourself maintain that the science isn’t settled, then it’s not settled in any sense – in terms of evidence for or against AGW. Therefore, it’s as absurd for you to claim the El Niño effect on warming as settled science, as it is for AJ to contend CO2 as a forcing! At least stand or fall by your own contentions.

The evidence from both satellite and land based data show a definite warming trend for several decades. At least acknowledge the possibility that CO2 might be partially the driving force. It might prove not to be so or it might become increasingly clear that there’s no other explanation outside of CO2. But crucially, science must keep minds open for further enquiry. There’s little profit in pointing the finger at mainstream climate science, claiming lack of integrity, when you engage in exactly the same tactics. The polarised attitudes do nothing at all to help clean up the mire, they just make it more filthy.

WRONG.. The UAH data shows warming ONLY at major El Nino events

Near zero trend from 1980-1997

Near zero trend from 2001-2015

Slight cooling trend from 2017/2023.4

It is basically zero trend, apart from El Nino events

If you think there is evidence of warming by atmospheric CO2 then produce the evidence.

1… Please provide empirical scientific evidence of warming by atmospheric CO2.

2… Please show the evidence of CO2 warming in the UAH atmospheric data.

3… Please state the exact amount of CO2 warming in the last 45 years, giving measured scientific evidence for your answer.

CO2 is not an energy source. It can absorb and emit radiation. But, the emission can never be greater than the absorption. Consequently, CO2, by itself, can not add heat to the system.

Until the atmosphere becomes warmer than the surface, land and ocean, the atmosphere can not add heat to the surface. That assumes the sun can not directly warm the atmosphere, but that is not manmade heat is it?

“At least acknowledge the possibility that CO2 might be partially the driving force. “

Then why was it hotter in the 30’s than today – when CO2 in the atmosphere was smaller?

Because he has proven time and time again that he is. !!

AJ is a vapid AGW apostle. Are you ???

Noted that yet again he has been totally unable to provide any proof whatsoever of CO2 warming.

Only a complete moron or a vapid AGW activist (same thing) would put forward, as evidence, surface data that he knows is both highly agenda corrupted and highly urban affected….

…especially when the whole topic is the UAH atmospheric data.

Wouldn’t you agree. !!

Something the mods here seem to be incapable of dealing with, in this particular sad individual’s case.

Yes, you are a very sad non-entity, fungal.

FYI.

Absorbed solar energy continues to increase.

So, to clarify, the argument is that absorbed shortwave radiation is driving the observed long term warming trend, not a stepwise El Nino forcing?

OMG…. this is why I call you a moron.

The sun helps charge the El Nino events.!

But you were well aware of that, weren’t you.!

Or maybe you will suggest that the SUN doesn’t provide energy to the oceans, or something daft like that.

So again…. where is your evidence of any human caused atmospheric warming in the UAH data.

So when you say that the warming is actually occurring stepwise at each El Nino, what you mean is that the warming is being driven by increased shortwave absorption, and that the warming is simply expressed at El Nino events because they are natural high temperature peaks?

Again…. where is your evidence of any human caused atmospheric warming in the UAH data.

Still a complete FAILURE !!

If you can’t understand simple concepts, not my problem

Where do you think most of the energy that is released at El Nino events comes from .. surely not CO2.. that would be dumb even for you.

I am putting aside my personal opinions about the cause of the observed warming and am exploring the theory that was proposed earlier by David. David says “All the warming has been a staircase driven by big El Niño cycles.” I’m asking what is driving the trend in the El Nino cycles. You seem to be saying it’s an increase in shortwave absorption?

Where is your evidence that “the sun helps charge the El Nino events?”

The sun’s radiation is the source of all energy (discounting geological and manmade heat) in the system. The atmosphere can be warmed directly from the sun or by radiation, conduction, convection from the surface. Either requires more absorption from the sun.

Now if one wants to make an argument that all heat generated by humans from dung cooking fires to nuclear submarines to air conditioners alters the heat in the Earth’s system, feel free.

I agree that the sun is the source of all energy, but if the argument being made is that there is a long term change in shortwave absorption that is driving the long term temperature trend, and this trend is readily apparent at each El Nino highstand, that’s quite a bit different than claiming that the El Ninos themselves are providing some mode of stepwise climate forcing that is producing the observed trend.

The original poster, David, seemed to be saying the latter, and I’m not clear on where bnice stands based on their comments, or what mechanism might be proposed to allow El Nino to produce such a forcing.

If the oceans are absorbing more of the sun’s energy, then El Ninos will obviously have higher temperatures to radiate away. An El Nino could complete at a higher temperature than the preceding one causing additional warming of the whole atmosphere.

The problem is that CO2 can’t cause the warming step but a constant low to high range of El Nino could.

So, you mean it would have to look something like this …

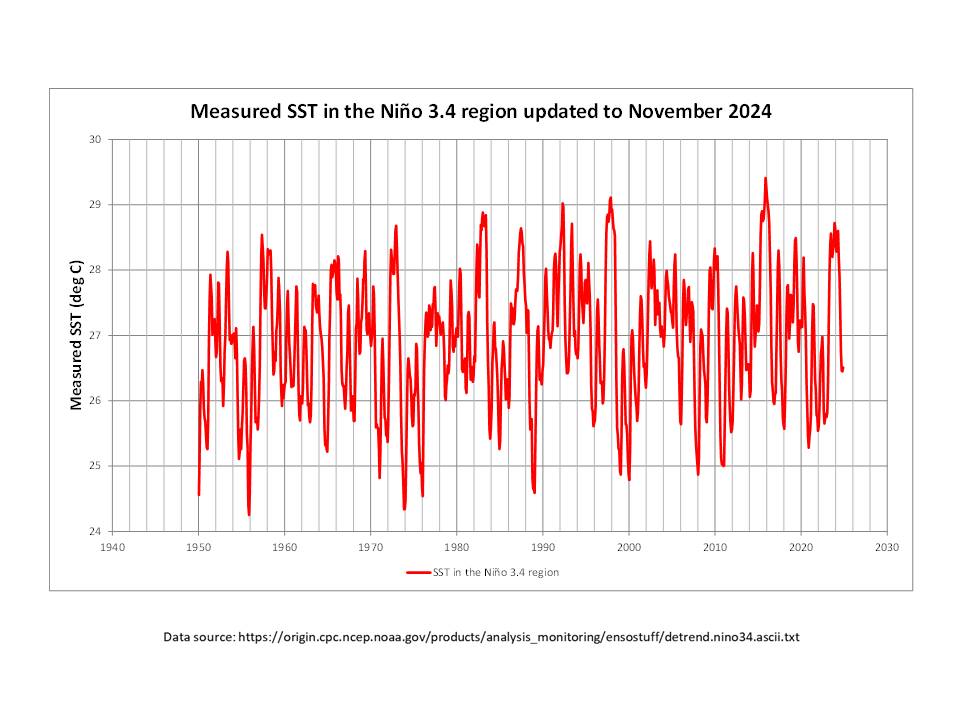

As you probably are well aware, one of the primary indicators, and classification, of El Niño/La Niña events is the Oceanic Niño Index (ONI), which is the rolling three-month average of the sea surface temperature (SST) anomaly in the Niño-3.4 region in the equatorial Pacific Ocean (5°N-5°S, 120°-170°W). In my view, it is instructive to look at the measured SST values themselves rather than simply focussing on the ONI (anomaly) values derived from them, not least because the ONI values are de-trended.

In the plot above, the monthly SST maximum values (El Niño events) and the minimum values (La Niña events) both show a long term increase of around 1°C since 1950, while maintaining the maximum range of about 4°C.

If the warming is the result of a long term persistent forcing then the “step” appearance of the El Nino events is simply the effect of superimposing cyclic variability atop a linear trend:

So we cannot assume ipso facto that the appearance of a “stepwise” pattern in the data is indicative of any step-forcing driving the change.
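The point about cyclic variability riding on a steady trend can be illustrated with a minimal sketch. Everything here is synthetic and assumed for illustration only: the trend rate, the cycle amplitude and the 48-month period are hypothetical values, not fitted to UAH or Niño-3.4 data. The running maximum of such a series looks like a staircase even though the only persistent forcing in it is linear.

```python
import math

def running_max(values):
    """Highest value seen so far at each time step (the 'record high' envelope)."""
    out, best = [], float("-inf")
    for v in values:
        best = max(best, v)
        out.append(best)
    return out

# Hypothetical parameters, chosen only to make the pattern visible.
TREND_PER_MONTH = 0.15 / 120   # a steady 0.15 deg per decade
AMPLITUDE = 0.4                # size of the cyclic swing, in deg
PERIOD_MONTHS = 48             # length of one cycle

months = range(480)
series = [
    TREND_PER_MONTH * m + AMPLITUDE * math.sin(2 * math.pi * m / PERIOD_MONTHS)
    for m in months
]
envelope = running_max(series)

# Count the months in which the record high does not move: the flat "treads".
flat_months = sum(1 for a, b in zip(envelope, envelope[1:]) if b == a)
print(f"record high unchanged in {flat_months} of {len(series) - 1} months")
print(f"total rise of the envelope: {envelope[-1] - envelope[0]:.2f} deg")
```

The envelope sits flat for most of each cycle and jumps only near the peaks, so a stepwise appearance on its own does not distinguish a step forcing from a smooth one.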

But, again, as near as I can tell, bnice, and now you, are claiming that the warming is being caused by increased shortwave forcing, not by El Nino at all, which is a claim at odds with the poster I initially responded to.

Submarine volcanoes?

Earth’s declining magnetic field strength .

Please do not confuse BeNasty 2000 with data. He starts out confused. As you should know, the El Nino Nutters like BeNasty are also greenhouse effect deniers and CO2 Does Nothing Nutters. Triple Nutters.

And of course they think 99.9% of scientists have been wrong about the greenhouse effect since 1896. Based on the science degrees, science knowledge and science work experience they do not have. But they can look at a UAH chart and they see temperature spikes.

What is being observed is not temperature but numbers emitted from computers after very inaccurate temperature data from an array of inaccurate measuring instruments not calibrated to a single reference instrument, are averaged, fed into a digital melting pot seething with algorithms to allow for this and that, and bubble out with exquisite accuracy far greater than that of the input data.

There are multiple independent global temperature analyses that yield consistent results, so the results are likely robust:

Statistical precision is not accuracy!

This statement is true but too general to be useful here I think. Precise results instill greater confidence than imprecise results. It is implausible to think there is a common systematic error in the various observation datasets (land, ocean, satellite), so systematic error in any form of analysis should be expected to produce disagreement among the results.

It’s hockey stick time again, kids!

Sorry, I’m not of the same mindset. While I don’t claim this is impossible, I believe there’s a better explanation. The warming over the past 30 years has been driven mainly by the +AMO phase. And, it’s recently been enhanced by the Hunga-Tonga eruption.

Over longer time periods we are seeing an influence from the millennial cycle which also led to the Minoan, Roman and Medieval warm periods.

El Niño does have shorter term effects which are countered by La Niña.

The good news is the next AMO phase change is due within the next 5 years. We should then be able to break down its contribution to recent warming.

Have a look at the UAH atmospheric data.

The ONLY warming has come at major El Nino events. !

The statement by David, that…

“ All the warming has been a staircase driven by big El Niño cycles.”

… is manifestly obvious. !

I think it may be more subtle. The AMO phase change from 1995-97 preceded the 1997-98 El Nino and had a large change in clouds associated with it. It could well be the El Nino (and multi-year La Nina events which followed) were just a coincidence but caused so much noise in the data the AMO change was hidden.

We then had the long pause with little change in temperature.

The 2015-16 El Nino also came after another cloud change from 2014-16. You’ve posted the cloud data so you can check it. Once again it could be the El Nino was just noise masking the cloud changes. The PDO also went positive in 2014 and the Bardarbunga eruption occurred.

Now we have the 2023-24 El Nino following the Hunga-Tonga eruption and yes, more cloud changes.

So, in all cases we can see cloud changes occurring which can explain the step-up in temperature after the El Nino events. To me the cloud changes are the real reason we’ve seen warming. However, they occurred before the El Nino events.

That only establishes correlation, not causation.

You and BeNasty are the head WUWT El Nino Nutters

The ENSO cycles are temperature neutral over the long run of 30 to 50 years. Since climate requires at least a 30 year average, the claim that global warming is only caused by El Ninos is claptrap. El Ninos merely caused temporary spikes in the rising trend since 1975 and La Ninas flattened the rising trend temporarily. The El Nino Nutters look at the spikes like a child looks at candy and ignore the La Ninas.

If El Ninos were the sole cause of global warming, then the global cooling period from 1940 to 1975 could not have happened. It does not take a Ph.D. to figure that out.

Assuming no big changes prior to 2023, this is the second warmest November. Ten warmest Novembers in UAH history are:

2023 0.77

2024 0.64

2019 0.42

2020 0.40

2016 0.34

2017 0.22

2015 0.21

2009 0.14

1990 0.12

2018 0.12

My projection for the year rises slightly to +0.765 ± 0.055°C, and it’s even more certain that 2024 will be the warmest on record, beating 2023 by at least 0.3°C. December would have to be -3.5°C for the record not to be beaten, so I think it’s a fairly safe bet.
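The December threshold quoted above can be checked with a few lines of arithmetic. This sketch uses the GLOBE column from the table in the post and assumes the annual value is a simple unweighted mean of the twelve monthly anomalies, which may differ slightly from how UAH computes its official annual figure.

```python
# GLOBE anomalies (deg. C) copied from the table in the post.
anoms_2023 = [-0.06, 0.07, 0.18, 0.12, 0.28, 0.30,
              0.56, 0.61, 0.80, 0.79, 0.77, 0.75]
anoms_2024 = [0.80, 0.88, 0.88, 0.94, 0.78, 0.69,
              0.74, 0.76, 0.81, 0.75, 0.64]          # January to November

mean_2023 = sum(anoms_2023) / len(anoms_2023)
ytd_2024 = sum(anoms_2024) / len(anoms_2024)

# December value at which the 2024 twelve-month mean would only tie 2023.
dec_needed = sum(anoms_2023) - sum(anoms_2024)

print(f"2023 annual mean:       {mean_2023:+.3f}")
print(f"2024 Jan-Nov mean:      {ytd_2024:+.3f}")
print(f"December needed to tie: {dec_needed:+.2f}")
```

Under that assumption the break-even December value comes out at about -3.5, in line with the figure in the comment.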

Fans of meaningless trends will be delighted to know that the trend has been negative since August 2023, so a 16 month pause. How long before it reaches the magic 5 years, and the return of the monthly update?

In the mean time, the meaningless trend over the last 5 years is currently 1.1°C / decade.

How hai is a Chinaman…

Not very.

Not as hi as a Hunter.

“Fans of meaningless trends”

How about fans of meaningless averages?

I’d like to know where it’s been “the hottest”. There’s been none of it in my region. Oh! Wait! Maybe it’s not global, and bogus global averages give completely false impressions of what’s really happening!

“How about fans of meaningless averages?”

The meaningless average that gets published here every month, and around which an entire phony pause was based.

“I’d like to know where it’s been “the hottest”.”

It’s the second warmest global average for November. I doubt many places in the northern hemisphere are “the hottest”.

It’s even more bizarre when you read that scientists use the GAT index, which includes temperatures from tropical regions, to make inferences about ice sheet feedbacks lol.

https://academic.oup.com/oocc/article/3/1/kgad008/7335889

These are all time series trends. Do you recall seeing any effort to make the trend stationary? I never see any discussions about time series analysis techniques. Just simple linear regression of variables that are not even related by any accepted functional relationship.

UAH were quick to release the gridded data this time, so here’s my map of the anomalies for November.

It’s surprising how warm it shows the UK. Ground based observations only have it slightly above the 1991 – 2020 average.

Is that Met Office data?

Yes.

https://www.metoffice.gov.uk/research/climate/maps-and-data/uk-temperature-rainfall-and-sunshine-time-series

UK sunshine

UK Sunshine for November

Correlation between sun and mean temperatures for November in the UK.

Remember, the UK is in the Northern Hemisphere.

LOL… cherry picking one month. Well done 😉

No human causation, is there.

The month we were talking about – November.

If you want to see a strong correlation between sunshine and temperature you need to look to the months with longer days, July and August show very strong correlation. But you will have to do a lot better than your usual hand waving to demonstrate that increased sunshine is the main reason for the overall warming in the UK.

What is the r^2 value for that fit? It doesn’t look very high.

For this comment?

https://wattsupwiththat.com/2024/12/03/uah-v6-1-global-temperature-update-for-november-2024-0-64-deg-c/#comment-4002745

I’m getting 0.1357. But the trend itself is quite statistically durable. There is slightly more than one chance in 100,000 that it is, in fact, flat or up.

Finally realize that R^2 is essentially useless for what you are seeking. Do the work. Download the data. Find its trend and the standard error of its trend. Note the R^2. Now detrend the data and repeat. Note that while the standard error of the trend is unchanged, the R^2 goes essentially to zero.
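The detrending exercise described above can be sketched as follows. The data here are synthetic (a hypothetical slope of 0.02 per step plus Gaussian noise), standing in for whatever series a reader downloads, and the fit is an ordinary least squares line written out by hand so the example needs only the standard library.

```python
import math
import random

def ols(x, y):
    """Ordinary least squares line fit.

    Returns (slope, standard error of the slope, R^2, residuals).
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sse = sum(r * r for r in resid)                 # unexplained variation
    sst = sum((yi - mean_y) ** 2 for yi in y)       # total variation
    se_slope = math.sqrt(sse / (n - 2) / sxx)
    r_squared = 1.0 - sse / sst
    return slope, se_slope, r_squared, resid

# Synthetic stand-in data: hypothetical trend plus noise.
random.seed(0)
x = list(range(100))
y = [0.02 * xi + random.gauss(0.0, 0.5) for xi in x]

slope, se, r2, resid = ols(x, y)

# Detrend (keep only the residuals) and fit again.
slope_d, se_d, r2_d, _ = ols(x, resid)

print(f"original:  slope={slope:.4f}  se={se:.4f}  R^2={r2:.3f}")
print(f"detrended: slope={slope_d:.4f}  se={se_d:.4f}  R^2={r2_d:.3f}")
```

The residual scatter is the same in both fits, so the standard error of the slope does not change, while R^2 collapses because there is no longer any trend for the line to explain.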

The standard error of the trend is only a metric for how well the line fits the data. It simply tells you nothing about the accuracy of the data and, therefore, of the accuracy of the trend line.

Only “nothing” to the extent that the data spreads increase that standard error. This is the usual goal post shift. Tell us how the uncertainty of each data point is distributed, and I’ll tell you the total standard error of their trend. Spoiler alert folks. For any credible spreads, it won’t increase much….

Error is not uncertainty.

True. Every measurement has both. But only meaningful if they have enough of them together to significantly change the evaluation under discussion. Anyone, anyone, please provide credible guesses on the errors and uncertainties in this data that could possibly do so.

How do you know the true values?

I don’t. You never do. But it’s not an all purpose way of criticizing every inconvenient evaluation you run across. Well, apparently it is for you….

Then you cannot know the magnitude of any error.

You might know this if you had any experience in real metrology, beyond Statistics Uber Alles.

AGAIN, the all purpose argument. Since every data point has error and uncertainty, only the evaluations we find convenient should be performed using them. Monckton is certainly cheering you on.

And predictably, you and the rest of your coterie continue to whine interminably about how your revealed (only to you all) truths are ignored outside of this tiny forum. I should have realized that alt.world, with its hard shell of circular logic, would inevitably mutate from the political sphere into health and the sciences. I’m thankful that, unlike the world outside of these disciplines, it has done so very poorly, so far.

Meaningless word salad!

I’ll try my example one more time.

You have two temperatures:

.2 +/- 0.5

.3 +/- 0.5

The possible delta’s (i.e. the slope of the connection line between the two points):

-.3 to .8 Δ = +1.1

.2 to .3 Δ = +.1

.7 to -.2 Δ = -.9

If you use only the stated values you get a positive slope of .1. If you use the measurement uncertainties you get slopes ranging from -.9 to +1.1.

In other words you can’t even tell the direction of the slope let alone the value of the slope if you *do* consider the measurement uncertainty.
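The two-point example can be reproduced with a short sketch. It treats each ±0.5 as a hard bound on where the true value may lie, which is the worst-case reading used in the comment; it is an assumption of this sketch and says nothing about how likely any particular combination is.

```python
from itertools import product

def delta_range(t1, t2, u):
    """Smallest and largest possible difference t2 - t1 when each reading
    may lie anywhere within +/- u of its stated value."""
    deltas = [
        (t2 + s2 * u) - (t1 + s1 * u)
        for s1, s2 in product((-1, 0, 1), repeat=2)   # low, stated, high
    ]
    return min(deltas), max(deltas)

stated_delta = 0.3 - 0.2
low, high = delta_range(0.2, 0.3, 0.5)

print(f"stated delta:    {stated_delta:+.1f}")
print(f"possible deltas: {low:+.1f} to {high:+.1f}")
```

With these inputs the extremes are -0.9 (first reading at its upper bound, second at its lower) and +1.1 (the reverse), so the interval straddles zero.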

Ignoring measurement uncertainty *is* just the meme of “all measurement uncertainty is random, Gaussian, and cancels”. It’s garbage and you are trying to throw it at the wall hoping it will stick. All it does is smell up the place as it slides down the wall!

Meaningless and ignorant word salad.

He rejects the idea that uncertainty intervals are real, much less their implications.

Well, blob, you’ve just exposed your abject ignorance: metrology isn’t your long suit.

It is glaringly obvious you’ve never had to comply with ISO 17025.

NIST TN 1900 has a representative value of ±1.8°C and that should be doubled because you are subtracting two random variables. To properly evaluate the trend line you will need to evaluate every possible combination of those values to find the variation in the trend line slope and intercept.

“NIST TN 1900 has a representative value of ±1.8°C..”

Please expand. Is this a 2 sigma distribution for every temperature measurement in the plot furnished by Bellman?

“…and that should be doubled because you are subtracting two random variables.”

Nope. I’m evaluating the trend between 2 “random variables”.

Nope, yer just stringing random words together.

And it is bellman the weasel’s job to supply the info you seek, not Jim’s.

You can freely download this Technical Note and examine Example 2.

The ±1.8°C is the expanded experimental standard uncertainty of the mean for the monthly average value of Tmax at a station located at the NIST campus station close to I-270.

You should note that this is very similar to what NOAA states as ASOS uncertainty, ±1.0°C. Far, far above what is used when calculating anomaly only standard deviation after throwing away the inherited measurement uncertainty from the actual measurements.

How do you evaluate the trend between two random variables that have measurement uncertainty by using only the stated values?

You are just using the common climate science meme of “all measurement uncertainty is random, Gaussian, and cancels” so you can assume the stated values are 100% accurate and will generate an accurate trend line. It’s a garbage meme.

“How do you evaluate the trend between two random variables that have measurement uncertainty by using only the stated values?”

Per this absurdism, you never can. In any case, anywhere. But thankfully, above ground, people use common sense. They judge the upper bounds of credible error and uncertainty ranges for the data, and use them to bound possible resultant uncertainties for evaluative results. If they don’t significantly change those results, they go with them, and refine them as required.

For the 2 sigma 1.8 degC range that you referenced for temperature, and for Bellman’s last example, the good news for you is that the chance of the trend being wrong increased – as it should. The bad news is that it increased from slightly less than 1 in 100000 to 1 in 100. So, qualitatively, Bellman went from being probably right to being – er – probably right.

But thankfully, above ground, people use common sense. They judge the upper bounds of credible error and uncertainty ranges for the data,

Give one example of a climatologist providing real uncertainty “judgements”, blob. Just one.

I dare you.

If the difference between adjacent points can be down because of measurement uncertainty then the slope of the line between them will be down. *YOU* eliminate that possibility by just assuming no measurement uncertainty and the stated values are 100% accurate!

This is nothing more than one more invocation of the garbage climate science meme that “all measurement uncertainty is random, Gaussian, and cancels”. If the temp change is 0.2 +/- 0.5 then you simply don’t know if the trend is up or down. So you and climate science just WISH away the +/- 0.5!

Nope. I never claimed any of that.

“If the temp change is 0.2 +/- 0.5 then you simply don’t know if the trend is up or down.”

You never “know”, in any case. But you do “know” that a trend of 0.2, with a standard error of 1.0, still has a 58% chance of being positive. Bellman’s trend was for an expected value of -0.046 deg/unit change in solar intensity, with a standard error of 0.011 deg/unit change in solar intensity. Do the work and you’ll get the same chance of it, in fact, being positive as me.
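The probabilities quoted here can be checked with a small sketch. It assumes the trend estimate is normally distributed around its expected value with the stated standard error; a t-distribution with the appropriate degrees of freedom would give slightly different tails, and nothing here addresses whether the standard error itself is the right measure of uncertainty.

```python
import math

def prob_positive(estimate, std_error):
    """P(true value > 0) if the estimate is normal with the given standard error."""
    z = estimate / std_error
    # Normal CDF at z, written with erfc so that small tail values stay accurate.
    return 0.5 * math.erfc(-z / math.sqrt(2))

p_small_trend = prob_positive(0.2, 1.0)        # trend 0.2, standard error 1.0
p_solar_trend = prob_positive(-0.046, 0.011)   # the trend figures quoted above

print(f"trend 0.2 +/- 1.0:        P(positive) = {p_small_trend:.2f}")
print(f"trend -0.046 +/- 0.011:   P(positive) = {p_solar_trend:.2e}")
print(f"which is about 1 chance in {1 / p_solar_trend:,.0f}")
```

Under the normal assumption the first case comes out near 58% and the second at a little over one chance in 100,000 of the trend being flat or positive.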

Your all purpose diss is that the data has “errors” and “uncertainties”. AGAIN, provide any credible estimate of how much, and we can find out if it changes things enough to matter.

Technical words of which you have no understanding, thus you try to paper over your ignorance of same with yet another word salad (YAWS).

The 0.2 is *NOT* the slope of the trend line. It is the difference between two adjacent data points. Meaning the difference could be positive or negative. We’ve been down this road before. If *all* the differences between adjacent points are negative then the slope *has* to be negative. You immediately eliminate that possibility by using the “all measurement uncertainty is random, Gaussian, and cancels” meme so you can concentrate on the stated value alone.

“There is slightly more than one chance in 100,000 that it is, in fact, flat or up.”

If that is so then the trend also has slightly less than 99,999 chances in 100,000 (i.e. it’s almost certain) that it is, in fact, DOWN!

Is that what you intended to suggest?

Yes, six is indeed a half dozen…

What are you talking about, bob? I don’t have time to play mind-games.

Many have wondered the same…

I’ll check when I get back, but it’s pretty low for all months apart from July and August.

It’s better if you look at annual data, but I think that hides a lot of the details. I keep looking at the data, but don’t think I can draw much of a conclusion. Quite possibly some UK warming is caused by increased sunshine, but it can’t explain all or most of it. And that still leaves the question of why sunshine has been increasing over the last 50 years.

R^2 for November is 0.14.

As I said, only the summer months have a reasonably strong R^2.

The months with the shortest days tend to have negative correlation. They also show the strongest slope, which may just be because there is less variation in the number of hours in the month.

You just explained one reason why Tavg on a global basis is a joke. One half of the globe has winter and short days while the other half has summer and long days. Do you really think that combining opposites gives a statistical significant answer? You mention “hiding”. Guess what?

They have all been trained to think solely in terms of GATavg, and cannot escape this trap.

“You just explained one reason why Tavg on a global basis is a joke.”

You need to explain where you think I said any such thing. Did you ever mention to Monckton that his pause was a joke based on the UAH global mean?

“One half of the globe has winter and short days while the other half has summer and long days.”

So you accept the world’s round at least. Though you don’t seem to think that the tropics exist.

“Do you really think that combining opposites gives a statistical significant answer?”

The anomalies are not “opposites”. Look at the UAH data. Currently both hemispheres have positive anomalies, and have had them for the past year or so. As to statistical significance, you will have to explain what your hypothesis is. If the hypothesis is that global anomalies have increased over the last 40+ years, then I think we can say the results using the global average are statistically significant.

“You mention “hiding”. Guess what?”

Why do you always have to speak in riddles. Where did I mention “hiding”? What was the context? What do you want me to guess?

The anomalies inherit the variances of the absolutes. Meaning the anomalies in each hemisphere have different variances. You *have* to add them using proper weighting – which climate science never does. It just averages the anomalies while ignoring the variances – just like they always ignore measurement uncertainties.

He will never admit this is true.

You really need to figure out what it is you are asking and then explain it clearly. And tell it to Dr Spencer rather than me.

I tried to do what I thought you were after a couple of months ago, but you just blew it away. But again, here’s the UAH data expressed in terms of local deviation from the 1991-2020 base period. It’s an interesting approach as it shows where a particular anomaly is unusually high or low for that location and time of year.

And I can produce a global average based on that metric.

The standard beelman weasel.

Thanks showing everyone it is extra sunshine, and NOT CO2 causing the warming.

Doing well 🙂

Correlation does not imply causation. But obviously it’s rain that is making the UK warmer.

Still absolutely ZERO EVIDENCE of any human causation

You are doing well, Bellboy !!

But he’s got his 12-gauge shotgun pointed at paper targets.

Yep, I reckon that’s wrong for the UK. I think the bulge of warmth across the Atlantic has been extended too far east. The UK just hasn’t been that warm in November.

Does that tell you something about the difference in measurement techniques being used? Perhaps they are not comparable at all!

And here’s the average of the last 12 months. (Note, I’ve changed the temperature scale to allow for more detail.)

The effect of the 2023 El Nino event has been hanging around, hasn’t it?

No evidence of any human causation though, is there.

And in relation to the discussion of the 17 month pause in Australia discussed below, here are what the trends across the globe are like since July 2023.

Note, the scale is running from -20°C / decade to +20°C / decade, which should give some indication of why I think it’s meaningless.

Yep, look at the tropical area cooling down, exactly as expected.

A slower decline from the 2023 El Nino transient because of the extra WV in the stratosphere..

Absolutely ZERO EVIDENCE of any human causation. in the whole of the UAH data.

Lets run Sept, Oct Nov together.

You can clearly see the tropics is finally clearing from the solid yellow it has been since the start of the 2023 El Nino.

I actually expected it to last a bit longer.

PS.. I hate how WordPress blurs images, just click on it to get an unblurred one

“You can clearly see the tropics is finally clearing from the solid yellow it has been since the start of the 2023 El Nino.”

As this article points out, the Tropics have cooled by 0.72°C since March. I’m not sure why that should be a surprise, given the end of the El Niño. In 1998 the Tropics cooled by 1.04°C over the same period.

Here’s the graph again, using the correct version of the data.

March – November 2024

You can see the El Nino effect starting to subside, just as I said.

Well done !

Evidence of human causation NONE. !

Still waiting for you to admit that the 2023/24 El Nino event was NOT ANTHROPOGENIC in any way shape or form.

“Well done !”

Thanks. But I can’t claim credit for the theory that El Niño effects subside. If they didn’t El Niños would cause permanent warming which is absurd.

“Still waiting for you to admit that the 2023/24 El Nino event was NOT ANTHROPOGENIC in any way shape or form.”

Why should I admit something I’ve never disagreed with? I’ve never claimed this or any El Niño was caused by humans.

Thank you for agreeing that ALL the warming in the UAH atmospheric data is NON-ANTHROPOGENIC.

Perhaps there is hope for you. !

Yes, the El Nino effect is finally winding down… slower than usual.

At least now you are admitting this is all just part of the El Nino event.

You really like your pathetic strawmen arguments, don’t you. Nobody has ever suggested that El Niños do not cause a warming spike, and hence temperatures cool down after the El Niño ends.

It’s something I and others keep pointing out whenever people keep claiming spurious pauses caused by El Niño spikes.

The question this time is whether this particular spike can be explained entirely by this particular El Niño, and I’ve always said we probably won’t know that with any clarity for some time.

Again, Thanks for admitting that there is absolutely no sign of any human caused warming in the UAH data.

No room for any imaginary CO2 warming.

And no, you have it totally bas-ackwards… El Ninos break the zero trend periods.

They start after an El Nino spike/step, and continue until the next El Nino.

No human causation anywhere… as you continue to emphasise.

Have they released the latest adjustment to their ‘pristine’ data yet?

dolt !!

““Fans of meaningless trends””

That’d be YOU !!

“that the trend has been negative since August 2023, so a 16 month pause.”

Yep a slow escape of the 2023 El Nino energy, actually peaked in April 2024.

Why is the trend so meaningless? Please explain! If the trend becomes downward over time, would you still contend that it would be “meaningless”?

Bellboy is what we call a “trend monkey”

He thinks linear trends can be applied to everything, and loves using step changes like at El Nino events, to create linear trends.

Looks like the 2023 El Nino energy is finally dissipating… slowly.

bnice2000 is what we call a “liar”. He claims I think linear trends can be applied to everything, despite the fact I specifically called them meaningless.

I’ve constantly criticized the use of linear trends applied to make spurious points, including those used by Monckton and others to claim pauses, whilst ignoring the uncertainty in the short term trend, and the cherry-picking nature of the choice of start date. I’ve made the same points about bnice2000 claims of “step changes”. Again the result of selecting arbitrary short term trends, and ignoring the uncertainty and ENSO conditions.
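To make the point about short-term trend uncertainty concrete, here is a minimal sketch of an ordinary least squares trend with its standard error. The function name and the monthly series are hypothetical, not UAH data. It also assumes independent residuals; monthly temperature anomalies are positively autocorrelated, which would make the real uncertainty larger than this estimate.

```python
import math


def ols_trend(y):
    """Return (slope per step, standard error of slope) for equally spaced data."""
    n = len(y)
    x = list(range(n))
    xbar = sum(x) / n
    ybar = sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    intercept = ybar - slope * xbar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r * r for r in residuals) / (n - 2)  # residual variance
    return slope, math.sqrt(s2 / sxx)


# Hypothetical 12-month anomaly series (deg C), for illustration only.
series = [0.9, 0.7, 1.0, 0.8, 0.6, 0.9, 0.7, 0.8, 0.6, 0.9, 0.7, 0.6]
slope, se = ols_trend(series)
print(f"trend = {slope * 120:.2f} +/- {2 * se * 120:.2f} deg C/decade (approx. 2 sigma)")
```

Over windows this short the interval is typically much wider than the slope itself, which is the sense in which such trends say very little.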

Poor bellboy.. writes a post highlighting his ignorance.. Very funny !

He knows the only way he can show a positive linear trend is to use the El Nino spike/steps, which he invariably does.

LYING to himself, fooling no-one but himself… and too dumb to realise it.

Selected short periods that are El Nino.. or can’t you tell when the El Ninos are, little child. !!

No cherry-picking needed! They are where they are.

And they have step changes that are patently obvious to anyone but a blind monkey !

And the bell-child is always running away when asked to produce evidence of human causation.

Monckton calculation is not spurious in the least. It asks a set question, then does a mathematical calculation to answer that question.

For one thing it is a time series. Analyzing time series has requirements that are not met by simple linear regressions.

Another is that in simple terms the global temperature follows a distribution with the max at the tropics and the tails at the poles. Think a normal distribution. To properly describe the GAT ( Global Average Temperature) one should also include a variance description.

1 mK!

HAHAHAHAHAHAHAHAHAHAHAHAHAHAH

I thought that would trigger you. As you clearly don’t understand what the ± 0.055 means, and are incapable of understanding why it might be better to avoid premature rounding, and as you are incapable of figuring out how to round numbers in your head – then just for you:

Dork. Both you and Spencer need to learn significant digit rules.

Oh, you know better than Roy Spencer now… Clever boy.

Poster child for WUWT.

All you’ve done here is expose your abject ignorance of significant digit rules.

Good job.

Have you ever calculated what irradiance change is necessary to contribute a temperature change of 0.001K? Can the detectors on the satellites accurately detect that small amount of change.

Tell us what the ΔW/m² is for a change from 276.000K to 276.001K!

Then tell us what the specification of the satellites have for detecting changes.
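The first question can at least be bounded with the Stefan-Boltzmann law. This is a rough sketch that assumes an ideal blackbody (emissivity 1) and total broadband emission. The satellite instruments measure microwave emission in narrow bands rather than total irradiance, so this is only an order-of-magnitude illustration and says nothing about the instrument specification.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def blackbody_flux(t_kelvin):
    """Total emitted flux of an ideal blackbody, in W/m^2."""
    return SIGMA * t_kelvin ** 4


delta = blackbody_flux(276.001) - blackbody_flux(276.000)
# Same result from the derivative dF/dT = 4 * sigma * T^3, times 0.001 K.
delta_linear = 4 * SIGMA * 276.0 ** 3 * 0.001
print(f"{delta:.5f} W/m^2 (finite difference), {delta_linear:.5f} W/m^2 (derivative)")
```

Under those assumptions the change is a little under 0.005 W/m² against a total of roughly 329 W/m².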

If you want to criticize Dr Spencer, do it to his face, rather than through me. My indifference to the “rules” of significant figures is my own heresy.

Thanks for admitting you only post to WUWT to torque people up.

“He’s a troll, Tim” — Pat Frank

Truer words…

He is obviously aware that the 2023 El Nino event energy has had trouble escaping.

And still no proof of a CO2 causation.

+0.765 ± 0.055°C translates to 273.915 K ± 0.02% as a relative uncertainty!

Absurdly absurd.

He doesn’t know the difference between precision and accuracy.

Nor error and uncertainty.

We’ll be able to see how absurd it is once we have December’s figure.

And in case you are not getting this, the projection is for what the UAH annual average will be. I make no claims to the accuracy of that data. The simple fact is that with just one month to go the likelihood of the annual average changing by much is small.

December only needs to be between 0.11 and 0.90 for the annual average to be within that ±0.02% range.

Right because who cares about data accuracy, right? It’s only a trivial detail!

/sarc

/plonk/

He doesn’t even realize he’s undermining himself 💀.

There are seasonal differences in instrument functionality that need to be weighed accordingly.

Religious faith is a great thing, isn’t it?

When the significant figures suggest only a centiKelvin is justified.

Exactly.

I am not interested in warming records. Right now I am battling sub-freezing COLD.

Multiple downvoting of a presentation of the actual data from the regular monthly posting on this site!

Actual data

Yes and at least 22 people downvoted it!

Hope your safe bets are better than the Iowa polling “expert” who had Harris beating Trump by 3 points.

ps – I’ve never had to rely on any models to tell me that there is no such thing as a “safe bet” in anything.

Particularly so where “chaos” is the dominant influence.

Correction –

there is one “safe bet”.

Life – nobody is coming out of this alive.

If you want to put some money on 2024 being colder than 2023 according to UAH, then be my guest. Just say what you think the odds are.

Betting on any of nature’s vagaries is how species become extinct.

Like, for example – betting that humanity will have a viable replacement for modern life-sustaining fossil fuels such as coal, oil and gas before they become rare commodities.

Jeez, who would be that stupid?

Edit –

betting that humanity HAS (not “will have”) a viable replacement (is it beer o’clock yet?)

“(is it beer o’clock yet?)”

Always ! Somewhere.

Just contact a friend in the right time zone, via chat.

And have a beer with them. 🙂

I don’t know why, but that reminded me of a message on the side of a 12-pack of Yuengling beer:

“Please Recycle

Save our Planet

It’s the only one with Beer.”

😎

at last!- a rational reason for recycling.

You are obviously well aware that the El Nino effect has been hanging about for most of 2024, as opposed to only 6 months of 2023.

That means you are obviously well aware that this is NOT anthropogenic.

Can you bring yourself to say it. 😉

“Can you bring yourself to say it.”

Still waiting ! 😉

Do you just pull these random insults out of your fundament? Nothing you said has the slightest relevance to my comment, which was about how safe a bet it is that UAH will say 2024 was the warmest year in record. The reason why it’s the warmest is irrelevant to that bet.

Where was the insult?

Asking you to tell the truth ? sorry you think that is an insult. 😉

Come on.. have the guts to say it. or keep squirming.

You are making a victim of yourself.

Repeat after me…

“The 2023/24 El Nino has no human causation, and is totally Non-Anthropogenic.”

Just like the 1998, and 2016/17 El Ninos.

That is why there is no sign of any human caused warming in the UAH data.

I know its hard for you to admit the facts, but at least try !

And still totally natural because of the “hanging in there” effect of the 2023 El Nino + HT WV

Absolutely zero evidence that this is related to human caused “Klimate Khange with a trade mark”.

anomalies are not temperature records … especially when the underlying data is “massaged/tortured” until it yields what you want … and that’s not even dealing with UHI and horrid thermometer siting issues … you don’t have ANY good, fit for purpose data to make ANY projections with …

“that’s not even dealing with UHI and horrid thermometer siting issues”

Haven’t you worked out yet that this thread is about satellite data?

So what?

Everyone noticed how you conveniently snipped his main point about your precious anomalies.

I’ve lived longer, literally, than the satellite records.

Keep the records honest to pass on and in a hundred or two hundred years they will be useful for a real look at real “Global” temperature trends.

The global average temp is not even a metric for CLIMATE.

A 1C change from -20C to -19C somewhere on the globe does *NOT* change the climate at all, either locally, regionally, or globally. Same for a change from 70F to 71F.

It can’t even tell you if it is getting *warmer* since warmth is determined by the heat content, i.e. the enthalpy.

Yet climate science enthusiastically refuses to convert from using temperature as a metric to using enthalpy.

Why do you suppose that is?
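The temperature versus enthalpy distinction can be illustrated with the common approximation for the specific enthalpy of moist air, h ≈ cp·T + Lv·q. This is a sketch only: the constants are rounded textbook values, the function name is hypothetical, and the two air parcels are made up.

```python
CP_DRY_AIR = 1005.0       # J/(kg K), approximate specific heat of dry air
LATENT_HEAT_VAP = 2.5e6   # J/kg, approximate latent heat of vaporization


def moist_enthalpy(t_kelvin, specific_humidity):
    """Approximate specific enthalpy of moist air in J/kg (h = cp*T + Lv*q)."""
    return CP_DRY_AIR * t_kelvin + LATENT_HEAT_VAP * specific_humidity


# Two hypothetical parcels at the same temperature (30 deg C) but different humidity.
dry_parcel = moist_enthalpy(303.15, 0.005)    # 5 g/kg water vapour
humid_parcel = moist_enthalpy(303.15, 0.020)  # 20 g/kg water vapour
print(humid_parcel - dry_parcel, "J/kg difference at identical temperature")
```

In this approximation the same thermometer reading can correspond to heat contents tens of kJ/kg apart, depending on the moisture.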

O/T

On the Grenadian island of Carriacou, even the dead are now climate victims – The Guardian.

Will ghosts, poltergeists, demons etc rise up in anger?

Poor victims. !!

It shows just a few feet up from the shore it is already a metre above sea level. They’re gonna be aaaaaalright.

The nearest longish-term tide data I could find shows 2.16mm/year…. SCARY !!
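For scale, a back-of-envelope sketch of how long a 2.16 mm/year rise would take to cover the one metre mentioned above. It assumes the rate stays constant, which is an assumption and not a forecast; the function name is hypothetical.

```python
def years_to_rise(height_mm, rate_mm_per_year):
    """Years needed to rise `height_mm` at a constant linear rate."""
    return height_mm / rate_mm_per_year


years = years_to_rise(1000.0, 2.16)
print(f"about {years:.0f} years to rise one metre at 2.16 mm/year")
```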

And of course the beach will build itself up as the sea rises.

Send in the ruler monkeys…

Looking at the current crop of Western “leaders”, I am ready to swap out for monkey rulers.

And the Earth just keeps on turning.

Yet another adjustment from UAH.

Are they back to being ‘pristine’ all over again?

Navel gazing can be fun – if you’re that way inclined.

-3

And three people are.

That “adjustment” is called “doing science the careful and proper way” . . . as in monitoring one’s data gathering instruments to minimize error sources, both known (as in diurnal drift from a satellite that has run out of propellant for maintenance of its orbital ephemeris) and spurious/random.

But it looks like you are unaware of such a concept, despite it being specifically mentioned in the above article.

I agree. I’m just pointing out that UAH cannot be considered to be pristine, or ‘gold standard’, etc as is often proclaimed here. It’s flawed and needs constant revision and adjustment, like all the other data producers.

It’s funny that when surface temperature data producers like GISS and NOAA make similar adjustments, even describing their reasons and methods in peer reviewed papers, they are often accused here of fraud.

It does get a bit suspicious when a plot of their adjustments almost matches a plot of their surface temperature anomalies.

As soon as you “adjust” (that is – “tamper”) with recorded observations, you no longer have “data”, you have “constructs“.

And if you need to use your constructs to prosecute a particular case, full disclosure of your method and treatment of inputs should explain upfront that your constructs are your version of recorded observations, and as such, your conclusions are better described as “opinion”.

And as has accurately been observed for aeons, opinions are like arses – everybody has one.

Hmmmm . . . just wondering if that applies to things like rounding off “recorded observations” to the number of mathematically-supportable significant digits or to calculating an average of a given number of recorded data values?

And if an instrument circuit failure causes zero output, but the parallel data recording system keeps recording “0.0000, 0.0000, 0.000, . . .” for tens to hundreds of times, must one include those “recorded observations” for fear of elsewise being accused of constructing/adjusting/tampering with “data”?

Data rounding and flagging poor or nonexistent data is nowhere close to the fraudulent Fake Data practices of climastrology.

One of these is standard data handling techniques, the other is fraud.

The critical deficiencies of climate “data” –

Bingo.

That may well be true, but fraud wasn’t mentioned by Mr. in his post, nor in my reply to him.

Your “questions”:

Nope.

And if an instrument circuit failure causes zero output, but the parallel data recording system keeps recording “0.0000, 0.0000, 0.000, . . .” for tens to hundreds of times, must one include those “recorded observations” for fear of elsewise being accused of constructing/adjusting/tampering with “data”?

Nope.

Both of these are standard data handling techniques.

His statement:

…is exactly right, it is fraud.

What about applying a calibration curve (adjustment) to recorded observations (i.e., recorded data). I do believe NIST does that all the time with their laboratory measurements of experiment data.

Personally, I wouldn’t accuse NIST of tampering with or “constructing” data because they use this process. But I guess that’s just me.

So, please, carry on.

Where do you get “calibration curves” for historic air temperature data?

Thanks for admitting you are just another Fake Data fraudster.

You typically use calibration curves for electronic temperature measurement instruments, typically a resistance temperature device (RTD) such as a platinum resistance thermometer (PRT) or a dissimilar-metals voltage-generating device such as a thermocouple. The calibration curve is needed to convert the easily electronically-measured parameter, for example resistance in a PRT, into an equivalent temperature because there is not an exact linear relationship between the two, as shown in the attached graph excerpted from https://blog.beamex.com/pt100-temperature-sensor .

To the best of my knowledge there is no instrument that does not use an intermediary (such as expansion of liquids in glass thermometers, or radiometers in pyrometers, or electronic devices such as RTDs and thermocouples, or IR photodetectors in thermal imaging systems) to “measure” the temperature of a given object or medium. All have non-linearities that require calibration curves to obtain highly accurate conversion of the physically-measured parameter into an equivalent temperature.
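As an illustration of the non-linearity described above, here is a minimal sketch of the Callendar-Van Dusen relation for a Pt100 at or above 0 °C, R(t) = R0(1 + A·t + B·t²), using the nominal IEC 60751 coefficients. The function names are hypothetical, and a real sensor would use its own calibrated coefficients instead of the nominal ones.

```python
import math

R0 = 100.0       # ohms at 0 deg C for a nominal Pt100
A = 3.9083e-3    # 1/degC, nominal IEC 60751 coefficient
B = -5.775e-7    # 1/degC^2, nominal IEC 60751 coefficient


def pt100_resistance(t_c):
    """Nominal Pt100 resistance in ohms for 0 <= t_c (deg C)."""
    return R0 * (1 + A * t_c + B * t_c ** 2)


def pt100_temperature(r_ohm):
    """Invert the quadratic to get temperature in deg C (valid for t >= 0)."""
    disc = A * A - 4 * B * (1 - r_ohm / R0)
    return (-A + math.sqrt(disc)) / (2 * B)


def linear_estimate(r_ohm):
    """Straight-line estimate using the nominal 0.385 ohm/degC slope."""
    return (r_ohm / R0 - 1) / 0.00385


r = pt100_resistance(50.0)
print(pt100_temperature(r), linear_estimate(r))
```

At 50 °C the straight-line estimate is off by a few tenths of a degree, which is the gap the calibration curve is there to close.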

You only use calibration curves if you want the highest levels of accurate temperature “measurements”. You may not know this, but “historic air temperature data” was NEVER required to be highly accurate! Usually, +/- 0.5 deg-F was good enough.

There have been numerous WUWT articles that discuss the various inaccuracies associated with “historical”, archived temperature data.

Now, you were saying something about admitting something . . .

Nice rant. Converting a sensor output signal to temperature has NOTHING to do with the abuse to which climatology subjects air temperature data. They (and you) believe they can reduce “error” years after-the-fact.

First you wanted to question the use of calibration curves as they appear to be missing from “historic air temperature data” . . . but now you choose to divert the conversation over to “abuse” of data without even defining what you mean by that?

Why am I not surprised?

BTW, I’m glad to have provided a rant that pleased you.

BTW^2, I know for a fact that photo-manipulation programs (e.g., Photoshop) can correct errors in existing photos (e.g., scratches, printing blemishes, faded contrast, washed-out colors, astigmatism) years after they were taken. I have no problem with that. I know that similar after-the-fact-error-correction processes are used across multiple science disciplines.

They do not “correct” errors. They estimate a value from the surrounding information. There is an uncertainty associated with doing this.

Many moons ago I did photo retouching using Photoshop. You could magnify down to the pixel level and replace with an estimated RGB value. Invariably, at a macro level, you see that the surrounding pixels then needed shading to make a smooth transition.

This is why stations are “retouched” multiple times as the algorithms begin to shade further and further abroad to make smooth transitions.

For physical measurements, each and every change should be documented in a database with an explanation of why.

In business, this climate science practice will get you hung by your thumbs and flayed by lawyers. Why does climate science allow it?

And it is ever so easy to lose information in a photograph with the various enhancement algorithms.

Here’s a nickel, kid, go buy yourself a clue.

That’s all you got?

It’s all you deserve, dork.

Care to address the message and not the messenger?

ROTFLMAO.

Dork.

/plonk/

“a resistance temperature device (RTD) such as a platinum resistance thermometer (PRT) or a dissimilar-metals voltage-generating device such as a thermocouple.”

You’ve been down this rat-hole before. Even PRT sensors have given calibration drift factors based on long term heating from current flow. The calibration curve developed before the sensor is installed in the field will *NOT* be correct after a period of time suffering from operating heat.

There is absolutely no reason that a time-of-use-dependent calibration curve for a PRT cannot be generated and used to offset “operating heat”, assuming such is even significant.

Duh.

Have at it. Be sure to publish your results.

I am constantly amazed at how little those supporting CAGW know about the real world.

In the other comments section this guy was trying to claim that paper has never been used to insulate wire cabling.

Paper-insulated cabling was a major repair issue in the telephone system, especially in residential areas. It was a never ending battle to keep the cables from getting wet and noisy. In the 70’s there were nitrogen bottles hooked into many cable runs in my neighborhood to keep a positive pressure which kept the water from getting in.

My guess is that this guy has never wired a house with old Romex cable where the wires were paper insulated.

Yep!

You guessed wrong . . . look to the words in the postings to which you might be referring. BTW, you refer to wiring for telephone “systems”, whereas the real issue is that related to electric power wiring in homes.

I grew up in a 1880’s era Victorian house that—guess what—had uninsulated wires strung between ceramic standoffs in its attic. That pre-dated Romex cables!

Flat out made up and positively FALSE!

Malarky! It’s called “manufacturing tolerances”.

For “a” PRT, as in a single one, how do you generate a time-dependent heat drift curve when you don’t even know the environment it will be installed in? It’s the *heat* that is important, a PRT in an Arizona desert station will see a higher heat drift total than one in Nome, AK.

He doesn’t know about the standard RTD calibration curves either.

The calibration curves for PRTs (of a given type/brand) do NOT vary from one unit to the next . . . they have a calibration curve that is based on operating temperature versus time. It is inherently based on the exact materials (alloys) of construction, NOT the manufacturing date or lot number or the location where such PRT may be used!

Now, you were saying something about malarky . . .

From Fluke:

“Calibration is performed by measurement of the resistance of the unit under test (UUT) while it is exposed to a temperature. Fundamentally, four instruments are required as follows:

Each individual sensor requires calibration which generates a calibration curve. There is *NOT* one calibration curve for a given type.

From Cole-Parmer:

Calibration Procedures

Characterization

Characterization is the method that is most often used for medium to high accuracy PRT calibration. With this method, a new resistance vs. temperature relationship is determined anew with each calibration. Generally, with this type of calibration, new calibration coefficients and a calibration table are provided as a product of the calibration. There are five basic steps to perform as listed below:

Did you *really* think that manufacturing runs at different times won’t affect the calibration curve of individual PRT’s?
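To show what “new calibration coefficients” per sensor can mean, here is a simplified three-point sketch that solves for R0, A and B in R(t) = R0(1 + A·t + B·t²) from an ice-point reading and two other calibration points. This is not the Fluke or Cole-Parmer procedure, which use more points and reference standards; the function name and the sensor values are hypothetical.

```python
def characterize_prt(r_ice, point1, point2):
    """Solve R = R0*(1 + A*t + B*t^2) for (R0, A, B).

    r_ice is the resistance at 0 deg C; each point is (t_degC, r_ohm).
    """
    r0 = r_ice
    (t1, r1), (t2, r2) = point1, point2
    y1 = r1 / r0 - 1
    y2 = r2 / r0 - 1
    # Two linear equations in A and B, solved with Cramer's rule.
    det = t1 * t2 ** 2 - t2 * t1 ** 2
    a = (y1 * t2 ** 2 - y2 * t1 ** 2) / det
    b = (t1 * y2 - t2 * y1) / det
    return r0, a, b


# Hypothetical readings from one individual sensor (ohms).
r0, a, b = characterize_prt(100.02, (50.0, 119.41), (100.0, 138.52))
print(r0, a, b)
```

Two sensors of the same nominal type will generally return slightly different triples, so each unit ends up with its own curve.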

Read the GUM Section D.3.3. If one has a calibration curve for the device, the realized value is corrected at the time of measurement. The correction value should be recorded with the measurement for future reference.

Read this site and carefully examine the simple uncertainty budget that is shown.

https://www.muelaner.com/uncertainty-budget/

Where do you find a calibration chart or uncertainty budget for each station location in the past?

Read this part of the GUM:

F.1.1 Randomness and repeated observations

See if you can determine why NIST TN 1900 calculated uncertainty in the fashion they did based on this section.

Wherever/whenever did I state, or even imply, that applying calibration curves reduced measurement uncertainties to zero?

I see you are having loads of fun with lame semantic games.

Facts matter.

If that isn’t your implication of the usefulness of a calibration curve then why mention it? What’s your point? My guess is that you don’t have one!

That’s a very poor guess on your part.

“laboratory measurements”

The operative words in your statement. Field measurements, including satellite measurements, are not done in a lab!

It is *exactly* what measurement uncertainty is meant to convey! You don’t change the data, you change the measurement uncertainty interval to account for possible inaccuracy.

Absolutely, but climatology rewrites rules then claims the ability to remove “errors” after-the-fact.

No.

Obvious crap is just obvious crap.

No “adjustments” required.

Just toss it out altogether.

I see, said the blind man.

This (half) blind man can still make out crap when it appears in front of him.

And he knows what to do with it.

Just as the gorillas in the zoos do – chuck it at the imbeciles fixated by it.

Rounding recorded observations is not a thing. Manipulating calculations in a fashion that is accepted globally by the use of significant digits rules is done to preserve the information that is available from measurements.

From: http://www.astro.yale.edu/astro120/SigFig.pdf

In other words there is a reason for significant digits rules. If you wish to disagree with them, then show your argument.

.

As to rounding averages to the resolution of the least precise measurements being averaged, the same concept applies. Simple arithmetic CAN NOT add information to measurements. A simple example is 2 in²/3 in. Where does one decide to stop showing how accurately the result can be displayed? Sig fig rules indicate the proper result is 0.7 in. Would you show 0.66666667?
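The 2 in²/3 in example can be reproduced with a small helper that rounds to a given number of significant figures. The helper name is hypothetical, and the choice of one significant figure simply follows the example above.

```python
def round_sig(x, sig):
    """Round x to `sig` significant figures using general-format printing."""
    return float(f"{x:.{sig}g}")


raw = 2 / 3                  # 0.666..., from 2 in^2 divided by 3 in
print(raw, round_sig(raw, 1))
```

The raw quotient carries as many digits as the floating-point format allows; the rounded value keeps only what the one-significant-figure inputs support.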

Why is it so hard for climate science to understand basics like these?

WOW, really? Who knew???

/sarc

Well, you better point that out to Roy Spencer and Co at UAH. They are currently on their version 6.1.1 of the various recorded observations.

I guess you view UAH as a ‘construct’?

The same old tired, trite propaganda of the Fake Data Mannipulators.

A reliable construct.

… compared to the GISS et al junk built on lies, data tampering, fakery and urban warming.

No, GISS et al. make adjustments on past data on a whim using fake statistical homogenisation.

Which is NOT science.

But you are incapable of understanding the difference.

This monkey is always trying to stir the pot.

At some point, the adjustments should cease. But GISS and NOAA continually adjust the adjustments as well.

Remember, the canvas bucket 30 second evaporation correction of ship measured ocean temperature can only evaporate once.

Lol

Don’t confuse fungal with facts.

Seems to have made very little difference to the previous version. Having two satellites should be a big improvement over one.

The fact there is so little change shows they were doing a pretty good job with just one.

Right, but they both support a statistically significant global warming trend.

The extent of the change isn’t really my point; in fact I applaud them for making these adjustments.

My point is that no global temperature data set, by their very nature, can be considered ‘pristine’ or ‘gold standard’.

UAH may have a slower warming trend than all the others; but it’s still a statistically significant warming trend.

Global average temperature should never decrease if the well mixed atmospheric CO2 is driving the warming.

After all, the global average mass of atmospheric CO2 is always increasing since 1850.

The thing is that the extended El Nino event of 2023/24 had absolutely nothing to do with CO2, human or otherwise.

“Global average temperature should never decrease if the well mixed atmospheric CO2 is driving the warming.”

That would be true if CO2 was the only factor affecting temperature – but nobody suggests that.

There is no evidence CO2 is a “factor” at all.

You have continued to prove that. !

Not disputing that. Just pointing out that this is a further adjustment to the UAH satellite data that many here seem to think is carved in solid rock.

It’s as fallible and moveable as all the other sources, surface or satellite.

They all paint the same general picture of continued warming, though.

No they don’t.

The satellite data shows warming only at major El Nino events.

You have yet to show us any evidence of warming by CO2 or any other human causation in the satellite data.

A total and continual FAILURE.

And no, it is far more robust than anything coming from randomly spaced junk and fabricated urban surface stations.

Very tiny adjustment in the method of extracting NEW data, due to the use of an extra satellite.

This should actually have IMPROVED the measurements.

They will never be “pristine”, but by far the most consistent and reliable we have available.

This cannot be said about the highly corrupted and constantly past-changing of often-fake surface data.

You’ve missed the point, my angry wee mate.

It’s fine to make adjustments that improve your data.

It’s not fine to criticise one group for doing it whilst ignoring the other that does exactly the same thing.

For years here WUWT complained about the NOAA and GISS adjustments to the surface temperature data, even though their methods were clearly set out in peer reviewed journals.

The satellite UAH data was held up as the ‘gold standard’; yet UAH made some of the biggest trend changes of all the global temperature data sets and continues to make change after change.

“…the most consistent and reliable we have available…”

I hardly think so.

Lol

You missed the point, that is because you have zero comprehension of the difference between scientific re-calibrations, and deliberate agenda-driven fakery and mal-adjustments and urban crappymess.

Yes, you hardly think !!.. you have shown you are basically incapable of it.

Zero is exactly the correct amount. None, zip, nada, nil.

Polar bears won’t know what ice is in 2027-

Arctic could be ice free by 2027 in ‘ominous milestone’ for the planet

assuming they’re not all extinct by Xmas of course.

For the past decade the concept of “ice free” had the definition of less than 1 million sq. km (or 1 Wadhams). See: Peter Wadhams, who predicted an “ice free” Arctic Ocean by 2020 – in 2014. Similar predictive failures have been made by others.

Moving the goalposts – has Professor Wadhams Explained His Now Changed ‘ice-free’ Arctic Prediction? – Watts Up With That?

That’s a ludicrous definition. Ice free should mean no ice, not a MILLION sq. km.

Arctic sea ice will start to increase. It’s near its 30-35 year sea ice low phase (currently at 30). This means the sea ice will soon start increasing.

Are we doomed yet? 🙄

The UK is…

Yes. I predict that you’ll pay more to solve non existent problems for the rest of your life.

For all the Hunga-Tonga-eruption-explains-the-spike-in-UAH-GLAT-trending-despite-the-18-month-delay-in-its-occurrence proponents out there, please note the following consistently warmer temperatures in northern hemisphere LAT compared to southern hemisphere LAT based on the UAH data provided in the above article:

Aug 2024 — 0.12 C warmer

Sept 2024 — 0.46 C warmer

Oct 2024 — 0.28 C warmer

Nov 2024 — 0.47 C warmer

(note: not that I personally believe such numbers are accurate to two decimal places)

Hmmm . . . this happening despite the facts that (a) the Hunga-Tonga volcano is located at -20.5 degrees latitude, that is, in the Southern hemisphere, (b) the most significant one of the Hunga-Tonga undersea volcano eruptions took place on January 15, 2022, and (c) the average solar radiation intensity in Earth’s northern hemisphere is declining while it is simultaneously increasing in the southern hemisphere during the months of August through November.

It appears then that HT-injected water vapor “decided” to race up to the northern hemisphere’s stratosphere to cause excessive warming there, but not hang around in the southern hemisphere’s stratosphere to cause even the slightest additional warming there. And all the while, atmospheric physicists assured us the water vapor would be uniformly distributed across Earth’s global stratosphere certainly within one year from time of the eruption! /SARC

Not to mention the purported increase in water vapor was 0.1%.

Yeah, I am so sick of the Hunga-Tongan Climatist Cult that I want to throw my computer out of the window. These people have invalidated everything they ever said in critique of the “greenhouse effect.” It seems they are perfectly willing to assign climate blame to minuscule changes in the level of trace gases, as long as the gas isn’t CO2.

All you need to do is dig down into the details a little deeper and it all makes sense. There are few people who deny certain gases have unique radiative properties, aka greenhouse gases. I think most of them also accept that water vapor is the strongest greenhouse gas.

When you look much closer at the science you find out CO2 does have a warming effect which fades away as it reaches low atmospheric saturation. Since this has always been the case on planet Earth, it is not possible for CO2 to cause any warming.

Water vapor has also reached low atmosphere saturation, but it is not a well mixed gas. As a result, it can and does have significant effects higher in the atmosphere.

It actually moved to both the NH and SH high latitudes and is clearing over the tropics.

It doesn’t cause “extra warming”, it slows down the escape of the energy from the El Nino event.

Or don’t you believe that large amounts of extra H2O high in the atmosphere block radiative energy release?

The phrase “extra warming” is used in the generic sense of discussing the positive “spike” in temperature shown in the UAH graph of GLAT that is given in the above article.

In fact, as noted by https://earthobservatory.nasa.gov/features/EnergyBalance/page4.php (among many other sites) :

“About 23 percent of incoming solar energy is absorbed in the atmosphere by water vapor, dust, and ozone, and 48 percent passes through the atmosphere and is absorbed by the surface.”

(my bold emphasis added)

So, you see, by absorbing incoming solar radiation in the stratosphere, water vapor (specifically NOT clouds) does directly cause warming of Earth’s atmosphere. This is above and beyond water vapor acting as a “greenhouse gas” as it absorbs LWIR radiated off Earth’s surface.

Except there was NOT extra warming after the initial El Nino event which peaked in early 2024.

EXCEPT the most recent El Nino event was declared to have ended or dissipated in May 2024 . . . to repeat, it ended then . . . and yet here we are full 6+ months later without any sudden decrease in GLAT that is comparable to the sudden increase indicated about mid-2023, right around the declared onset of that El Nino.

A couple of refs for official end of the latest El Nino:

https://www.cnn.com/2024/06/13/weather/el-nino-la-nina-summer-forecast-climate/index.html

and

https://en.wikipedia.org/wiki/2023%E2%80%932024_El_Ni%C3%B1o_event

Does absence of correlation disprove causation?

OMG another dope that is incapable of seeing that this El Nino event has had a greatly extended EFFECT, and probably incapable of seeing why.

The ENSO region is just a tiny pocket used as an indicator.

It does not tell us how much energy is released at the El Nino event…

… that can only be judged by looking at the effect on the atmosphere.

bnice2000, thank you for once again demonstrating for all to see the insightful wisdom of Socrates, who said:

“When the debate is lost, slander becomes the tool of the loser.”

BTW, please explain exactly what you mean by:

(a) “judging” the “energy released” at the El Nino “event” . . . that would be in comparison to what? (And it would be nice if you could give such energy values for, oh, just the last four El Nino events . . . of course, I’m certain that you cannot do such scientifically), and

(b) “the effect on the atmosphere”? Would that be “just a tiny pocket” (hah!), or a global average atmosphere, or just the troposphere, or the troposphere + stratosphere, or just over land, or over land and oceans? And why would such an “effect” be limited just to the atmosphere when it is well known that geographical shifts in warm ocean waters are characteristic of El Ninos? Would “the effect” include change in atmospheric cloud coverage and/or precipitation patterns and intensities? What about atmosphere-to-ocean (or vice versa) heat transfers?

After all, this is YOUR opportunity to be seen as not just another in a long line of dopes. 😜 /sarc

YAWN. You failed to produce anything apart from victimhood. So sad.

If you can’t see the El Nino effect spreading rapidly out from the ENSO region since mid 2023, you are either blind or stupid.

And yes, the El Nino effect is also on the ocean water, as the energy spreads out via the currents.

Well done. Where are you in that long line of dopes ??

(SIGH) . . . as previously commented to you, apparently without any effect:

“EXCEPT the most recent El Nino event was declared to have ended or dissipated in May 2024 . . . to repeat, it ended then . . . and yet here we are full 6+ months later . . .”

I guess I’m in that long line waiting, in effect, for you to, as the saying goes, “put up or . . .” And in this case, you are EXACTLY right: I’m a dope (and stupid) for believing there was any chance at all of either of those two options happening!

YAWN,

It is patently obvious to anyone with eyes and a brain that the EFFECT of the 2023 El Nino is still lingering, and only just starting to dissipate.

Yes you are an idiot, and determined to make yourself look very stupid, because you refuse to look at and comprehend the UAH data.

Even the most stupid and clueless person should be able to see that the 2023 El Nino started much earlier in the year than usual, climbed faster, and has hung-around much longer than either the 1997/98 or 2015/16 El Nino.

(1 is January of the listed year, dot is when the El Nino effect started)

I can only assume that you are less than clueless.

Too stupid to realise that the ENSO region is just an “indicator” region, when the effect of the El Nino is only now starting to diminish.

Try not to remain so dumb !!

Strange that you think this is not likely. It is precisely what NASA observations have shown. The water vapor in the SH is fading away faster than the NH. Here’s the data.

Notice the SH tends to dry up more every year. What’s happening was actually predictable.

Well done Richard M, I was about to look for that graph 🙂

Do you have one for the Tropics which, iirc, shows the stratospheric WV mostly dissipated?

Here’s one up in the stratosphere (26 km) which shows the water vapor starting to dissipate at higher altitudes almost a year ago. Don’t know why this one is not updated.

Here’s another one up a little lower (19 km).

There’s a lot more:

Here’s one part way, showing the stratospheric WV over the tropics starting to clear.

Strange that you casually overlook the fact, based on your presented Aura MLS contour plot for 75S latitude, that there was NO sign of any unusual, excess water vapor injection at any altitude from 13 to 40 km (i.e., essentially most of the vertical height of the stratosphere) until around November 2022, that being a full 9 months after the Hunga-Tonga eruption.

As for your assertion that the contour plots of Aura MLS water vapor patterns match predictions, please provide reference to a prediction made prior to October 2022 that at 75N latitude, between the altitudes of 20 and 30 km, water vapor levels would very suddenly rise by 1.0-1.5 ppm around November 2022, then precipitously drop by 1.0-1.75 ppm around June 2023, then suddenly rise again by 0.5-1.0 ppm around October 2023, only to then persist at a +1.0 ppm “anomaly level” for about 8 months, until about May 2024, when they began to start erratically tapering off in a “pulsating” fashion as revealed in the plot.

I am confident that nobody predicted such an on-again/off-again pattern in water vapor concentrations would take place at any combination of latitude and altitude in the stratosphere of Earth’s southern hemisphere. Prove me wrong.

Ooops . . . my typo: the first sentence in the second paragraph of my above post should read (with correction noted in strikethrough):

“ . . . please provide reference to a prediction made prior to October 2022 that at ~~75N~~ 75S latitude, between the altitudes of 20 and 30 km, water vapor . . .”

I wasn’t referring to any “precise prediction”. I was referring to the changes seen in the data prior to the eruption. Those changes strongly hinted at the changes that were forthcoming.

Dr Evil revealed – story tip

“…reports now suggest Musk is considering giving $100m to Reform UK as what has been described as a “f*** you Starmer payment” that would in effect install Nigel Farage as leader of the opposition. The Guardian reported on Monday that Labour might consider closing some of the loopholes that make such a wild suggestion possible – but only in the second half of this parliament, which can only mean the government has failed to understand how urgent this is.”

https://www.theguardian.com/commentisfree/2024/dec/03/elon-musk-reform-uk-political-donations-cap

Even the unions can only cough up a few million….

Money shouldn’t be driving politics.

It’s the Guardian..

Shouldn’t be? Yes, in the ideal world. But not today. Not here and there and there.

All money can do in politics is to buy advertising to try and affect your vote.

Unless you are claiming that the money is being used for bribery.

But the fact that the last big US election cost democrats 1.2 billion and they still lost is evidence against that.

As the noted philosopher Clara Peller once asked, “Where’s the beef?”

“cost democrats 1.2 billion”

Seems that may be a “low” estimate.

And none of it went to anything that could have affected votes towards the Democrats.

A lot of Dems are asking for a full accounting, and I don’t think Kamala will be inclined to give one. 😉

We can wait and see if Kamala buys a new and bigger mansion.

Money is the basis of politics.

It snowed 20 inches last month where I live on the Front Range, and it was a little cooler than average. In other words, it was a normal November…

Check out the weather in Seoul.

Attention: Story Tip.

Anyone familiar with the NSW power woes last week will find these Sydney County Council electricity commercials from the mid-1980s promoting cheap, reliable, and abundant power very amusing.

In reality, however, they should shame us all for what we all allowed governments to do to us.

https://youtu.be/tEpXRt0AFxY

For some Southern Hemisphere land data, I am resuming a graph each month of the Viscount Monckton style “pause”.

The graph has had only a few months of negative trend since the passing of the recent strong peak that topped out here in August 2024, as the graph shows. However, if the temperature decline continues for a few months, some earlier months will again appear on the graph, adding to the present 17 months.
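For readers unfamiliar with how a “pause” length of this kind can be obtained, the sketch below shows one common reading of the idea: find the longest run of months, ending at the latest month, whose least-squares trend is zero or negative. This is an assumption about the general approach, not necessarily the exact calculation behind the graph, and the anomaly values are synthetic numbers chosen only to make the example run.

```python
def ols_slope(values):
    """Ordinary least-squares slope of values against their index (per month)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


def pause_length(anomalies, min_months=2):
    """Months in the longest window ending at the latest month with a
    non-positive OLS trend; 0 if no such window exists."""
    # Try the earliest start first, so the first hit is the longest window.
    for start in range(len(anomalies) - min_months + 1):
        if ols_slope(anomalies[start:]) <= 0:
            return len(anomalies) - start
    return 0


# Synthetic monthly anomalies (deg. C), NOT real UAH data.
series = [0.1, 0.2, 0.9, 0.8, 0.7, 0.6]
print(pause_length(series))
```

Because the window always ends at the latest month, adding a few cooler months at the end can pull earlier months back into the window, which is the effect described above.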

To reiterate, the purpose of this depiction is mainly to indicate that (a) land in the southern hemisphere behaves differently, in detail, to that in the Northern Hemisphere that receives most emphasis and (b) to give a different trend perspective, particularly one that shows how temperatures can move with trends different to the total trend as reported by Dr Spencer each month, giving rise to descriptions like step ladder etc.

Mechanisms that allow periods of months to years of cooling need better explanation in a time when so much emphasis is placed on warming as opposed to no warming or cooling. When you put your saucepan on the hot plate to heat your food, you do not expect the heating to lessen or stop now and then, you expect a steady increase. (No, I am not referring to intermittent renewables as a heat source).

What turns down the “control knob” for which steadily-increasing CO2 is currently favoured, but under increasing threat of being wrong?

Geoff S

So not a warming trend then. 🙂

With generous cherry picking. Here is a little more of the story:

We are talking about after the 2023 El Nino, dopey!

Everyone except you seems to know that major El Ninos cause a warming spike…

.. that is totally unrelated to any human caused warming.