From Dr. Roy Spencer’s Global Warming Blog

September 4th, 2023 by Roy W. Spencer, Ph. D.

The Version 6 global average lower tropospheric temperature (LT) anomaly for August 2023 was +0.69 deg. C departure from the 1991-2020 mean. This is a little above the July 2023 anomaly of +0.64 deg. C.

The linear warming trend since January, 1979 now stands at +0.14 C/decade (+0.12 C/decade over the global-averaged oceans, and +0.19 C/decade over global-averaged land).

Various regional LT departures from the 30-year (1991-2020) average for the last 20 months are:

| YEAR | MO | GLOBE | NHEM. | SHEM. | TROPIC | USA48 | ARCTIC | AUST |

| 2022 | Jan | +0.03 | +0.06 | -0.00 | -0.23 | -0.12 | +0.68 | +0.10 |

| 2022 | Feb | -0.00 | +0.01 | -0.01 | -0.24 | -0.04 | -0.30 | -0.50 |

| 2022 | Mar | +0.15 | +0.28 | +0.03 | -0.07 | +0.22 | +0.74 | +0.02 |

| 2022 | Apr | +0.27 | +0.35 | +0.18 | -0.04 | -0.25 | +0.45 | +0.61 |

| 2022 | May | +0.17 | +0.25 | +0.10 | +0.01 | +0.60 | +0.23 | +0.20 |

| 2022 | Jun | +0.06 | +0.08 | +0.05 | -0.36 | +0.46 | +0.33 | +0.11 |

| 2022 | Jul | +0.36 | +0.37 | +0.35 | +0.13 | +0.84 | +0.56 | +0.65 |

| 2022 | Aug | +0.28 | +0.32 | +0.24 | -0.03 | +0.60 | +0.50 | -0.00 |

| 2022 | Sep | +0.24 | +0.43 | +0.06 | +0.03 | +0.88 | +0.69 | -0.28 |

| 2022 | Oct | +0.32 | +0.43 | +0.21 | +0.04 | +0.16 | +0.93 | +0.04 |

| 2022 | Nov | +0.17 | +0.21 | +0.13 | -0.16 | -0.51 | +0.51 | -0.56 |

| 2022 | Dec | +0.05 | +0.13 | -0.03 | -0.35 | -0.21 | +0.80 | -0.38 |

| 2023 | Jan | -0.04 | +0.05 | -0.14 | -0.38 | +0.12 | -0.12 | -0.50 |

| 2023 | Feb | +0.08 | +0.17 | 0.00 | -0.11 | +0.68 | -0.24 | -0.12 |

| 2023 | Mar | +0.20 | +0.24 | +0.16 | -0.13 | -1.44 | +0.17 | +0.40 |

| 2023 | Apr | +0.18 | +0.11 | +0.25 | -0.03 | -0.38 | +0.53 | +0.21 |

| 2023 | May | +0.37 | +0.30 | +0.44 | +0.39 | +0.57 | +0.66 | -0.09 |

| 2023 | June | +0.38 | +0.47 | +0.29 | +0.55 | -0.35 | +0.45 | +0.06 |

| 2023 | July | +0.64 | +0.73 | +0.56 | +0.87 | +0.53 | +0.91 | +1.43 |

| 2023 | Aug | +0.69 | +0.88 | +0.51 | +0.86 | +0.94 | +1.54 | +1.25 |

The full UAH Global Temperature Report, along with the LT global gridpoint anomaly image for August, 2023 and a more detailed analysis by John Christy of the unusual July conditions, should be available within the next several days here.

Lower Troposphere:

http://vortex.nsstc.uah.edu/data/msu/v6.0/tlt/uahncdc_lt_6.0.txt

Mid-Troposphere:

http://vortex.nsstc.uah.edu/data/msu/v6.0/tmt/uahncdc_mt_6.0.txt

Tropopause:

http://vortex.nsstc.uah.edu/data/msu/v6.0/ttp/uahncdc_tp_6.0.txt

Lower Stratosphere:

http://vortex.nsstc.uah.edu/data/msu/v6.0/tls/uahncdc_ls_6.0.txt

If the next four months average anomalies of .54 degrees C, 2023 will pip out by one hundredth degree the Super El Nino year of 2016 for warmest in the satellite record, ie since 1979.

This could happen, as the combined effects of a strengthening El Nino, the 2022 submarine Tongan eruption and cleaner ship bunker fuel continue to affect global weather. Obviously, CO2 increasing at the same rate as since 1979 is not responsible for suddenly increased atmospheric warmth or decreased Antarctic ice.

However, the cooling trend since Feb. 2016 is at risk.

The climate system really does not want to meet anyone’s expectations.

A rapid rise is usually offset by a rapid fall after a while.

The chart still looks like we are at or near a natural warming peak but the degree of irregularity is surprising.

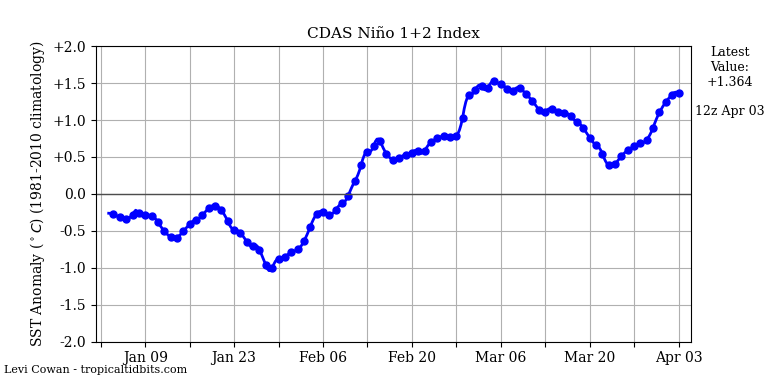

UAH TLT lags ENSO by 4-5 months. The 0.69 C anomaly corresponds with an ONI of -0.1 or 0.2 depending on whether it is 4 or 5 months. If the typical ENSO lag holds in this case then we are only at the ENSO-neutral level right now. I would not eliminate the possibility that higher values will occur within the next 12 months considering the August ENSO value came in at 1.3 with July ONI at 1.1.

I wonder if the temp level after the El Nino is over will be about .2°C above the ~2000-2015 level, which in turn was about .2°C above the previous period.

Rinse and repeat.

https://imgur.com/ecl8wyD

I don’t know man. This one is different. All of this heat is coming from somewhere.

Maybe volcano belching H2O and ocean dumping heat?

The Sun supplies 99+ percent of the heat. Radioactive decay is the rest.

This is as high as it will go. 2016 peak will not be topped.

We’ll see. I can’t say how long the Tongan effect will persist, but the fuel switch could last a while, however minor.

Last month you said the downtrend from 2016 will remain intact. Are you still confident in that prediction?

All analysis must include that the Earth is still in the warming trend that represents rising out of the Little Ice Age.

There does seem to be evidence the Earth has ever spent much time with stable unchanging temperatures over decades and centuries

Almost like some inviolable rule of complex systems in motion, there is always either a warming or cooling phase in play.

I think of spinning tops, or planets, necessarily precessing on their axes. So our climate system “precessing” around its very long term equilibrium——long term meaning a billion or so years.

In a generally warming environment, of course “new” records for high temperatures are to be expected. The breathless terror these “records” generate in the climate alarmist crowd is amusing in light of climate reality.

Chicken Little and Henny Penny reign supreme in the media world.

TempLS has a similar result for August global surface temperature; about 0.05C higher than July, and about 0.24C higher than any previous August. The mean for year to date is now 0.05C higher than 2016, which had its warm months early in the year.

July and August’s abruptly higher temperatures clearly have nothing whatsoever to do with man-made “climate change”. As of yesterday, Arctic sea ice was right on the average for that date of the decade 2011-20. Last year and 2021 were higher, so this decade is setting up to be icier than the prior decade, despite continually rising CO2, including during the plandemic-driven global deindustrialization of 2020-22.

And what about the long term trend of 0.14 degrees per decade? Is that also unrelated to the rising CO2 levels?

What is “long term” in climate science? The run-up from the little ice age well into the 20th century was not CO2 forced and was much longer term than the satellite record.

Yes. Rising CO2 is mainly the result of natural warming since the end of the LIA c. 170 years ago.

How do you figure that Milo ? If the SST warmed a degree that would only be 12 ppm increase in CO2 due to SST. Meanwhile the increase from 280 ppm to 410 ppm is about half of the amount of CO2 emitted by humans burning stuff. So other phenomena must have absorbed the other half.

So, CO2 causes some warming, which causes an upward temperature trend over a long time, barely discernible against natural variability, that should have an exponential decay ending at about 3C of of global warming by the time we have burnt all our fossil fuel reserves (about 3200 ppm CO2).

Except, well before that (roughly about 800ppm), the finding cost of more fossil fuels will cause nuclear and synthetic or biofuels to be relatively economical (but likely quite expensive for the average person barring some sort of liquid fuel synthesis breakthrough) and the problem (if one assumes the CO2 is a problem) will go away on its own.

DM,

Surely it is time to destroy that 12 ppm calculation calculation which, among others, came from Ferdinand Engelbeen.

The amount of CO2 gas released into the air from the heating of ocean water might or might not follow Henry’s Law.

If it followed that Law, then the weight of gas would relate to the weight of heated water.That amount is not known, adequately or at all, because we lack temperature profiles with depth over all of the oceans. We can only make assumptions about thermocline depths etc.

The picture is one of continuous mixing of water at a rate over time that is not adequately known. In some models, the rate at which charged water is brought to the surface to release gas, is not known.

Second, many chemical reactions increase their rate with heat. If there are biological or inorganic reactions at work to produce CO2 in the oceans, these will affect the relationship apart from Henry’s Law, which starts with a fixed, closed volume in the lab experiments.

Third, the constants in the Henry’s Law equation are derived from small, controlled laboratory experiments where neither the mixing effect nor the background chemical gas production system (if any) will operate.

Apart from that, the laboratory system is not in a vessel with hot, moving plates at its base, spreading and spewing heat and almost always having significant CO2 emission from underwater volcanos, magnitude completely unknown.

It is quite dangerous to use the logic you wrote.

….

BTW, it is also dangerous to assume that future nuclear will be expensive. Our construction cost is currently artificially elevated from past actions of inhappy agitators. Needs must, the price might drop a lot when synthetic concerns are shoved aside. I think that costs in Korea and China are closer to the future costs that we can expect. For some extra material see this: Geoff S

https://wattsupwiththat.com/2023/07/18/corruption-of-science-by-money-and-power/

You should have some faith in Henry’s Law and when people claim more CO2 than Henry’s Law suggests, be skeptical of those claims. The amount of water vapor in the air above the ocean corresponds pretty well to Clausius-Clapeyron when one makes an educated guess of what the relative humidity could be (for mixed vertical air transport). Also, CO2 is well mixed in the atmosphere, so one should expect Henry’s Law to be approximately true as well.

And I never said anything about future nuclear being expensive, my point is that future liquid fuels will be expensive because the days of it being pumped out of the ground nearly for free at accessible locations are coming to an end, decade by decade.

That is demonstrably false and discredits us skeptics. The increase can’t be occurring naturally when CO2 isn’t going up at least as much as we’re emitting.

The annual increase of CO2 in the atmosphere is only about half the CO2 emitted by fossil fuel burning.

In fact natural fluxes are net absorbing CO2. (Which is why the earth is greening and agriculture is booming). Fossil fuel burning and cement production are slightly increasing CO2 concentration in the atmosphere (it’s gone from 0.028% to 0.043%).

It’s all good. CO2 is LIFE!

There’s NO CLIMATE EMERGENCY!

You mean the zero trend apart from El Ninos?

Less tropical cloud.

strong sun still..

I’m not sure why someone would downvote you for posting facts. The Wattage increase in TSI is about 1/3 of what is expected from “greenhouse forcing” which is just better insulation of the energy that already exists in the system, whereas the TSI increase means the whole system has more energy to play with and to reach a new equilibrium.

An increase in TSI of 1.1 W/m2 is equivalent to a radiative forcing of about 0.2 W/m2.

bdgwx,

“I.G. Enting, inEncyclopedia of the Anthropocene, 2018

Radiative ForcingRadiative forcing is the change in the net, downward minus upward, radiative flux (expressed in W m− 2) at the tropopause or top of atmosphere due to a change in an external driver of climate change such as a change in the concentration of carbon dioxide or the output of the Sun.”

So what you are saying seems to be incorrect…

1.1 W/m2 * 0.7 / 4 = 0.2 W/m2

Where’s the mistake?

TSI wasn’t measured prior to the 1980s. That graph is from a model in which scientists introduced a rising trend for which there is no evidence. According to sunspots, solar activity is as low as in the 1900s.

Natalia Krivova and Judith Lean explain it in a 2018 publication:

https://arxiv.org/ftp/arxiv/papers/1601/1601.05397.pdf

As we continue to come out of the Little Ice Age – the world has been warming since about 1700 or earlier – hopefully back up to the warmer levels of the early Roman Empire period, or of the even warmer Minoan period, the warming oceans will release CO2, reaching a new equilibrium with the atmosphere and the biosphere of both land and sea. That’s why, even though humans make more and more CO2 every year, the planet absorbs about half of that, even though that half is larger this year than last year or the year. The biosphere has a lag time in growing in relation to the extra CO2 available. Once human CO2 emissions level off once the population levels off and once everyone reaches the same level of prosperity, we’ll see total CO2 levels continue to grow anyway as the biosphere expands, if the warming continues too.

Roughly and ‘by back of an envelope eyeballing” temperature reconstructions, it seems there was a peak in temps AD100 and AD1100 and troughs (years without summers and economic collapse) roughly AD540 and AD1650 – unfortunately the Minoan Warm period and the bronze age collapse don’t fit the timeframe – but anyways, all other things being equal the natural warming we’re experiencing now would seem to reach a peak in AD2100. But that’s just following the reconstruction graphs – we don’t know why there is this roughly 1000 year cycle, it doesn’t have anything to do with the know orbital variations.

“back of an envelope eyeballing”. Now there is a good way to do science. Not to mention that it depends strongly on what reconstruction you use. Neither the PAGES 12K reconstruction nor Marcott et al.’s reconstrcuction show what you claim to see. Have a look at:

https://content.csbs.utah.edu/~mli/Economics%207004/Marcott_Global%20Temperature%20Reconstructed.pdf

or

https://www.realclimate.org/index.php/archives/2013/09/paleoclimate-the-end-of-the-holocene/

Marcott got pings for scientific malpractice.

Pages2K is a far-left propaganda collaboration aimed at a fake attempt to shore up the AGW scam.

PCman999,

Recovery from the LIA seems a logical matter, but it helps greatly if one also mentions the mechanism by which the temperature has been increased. Is it, for example, from more incoming solar short wave, from extra CO2 in the air, from increased volcanism, from various effects of cloud changes, or what?

Geoff S

Whatever is causing it is cyclical:

https://149366104.v2.pressablecdn.com/wp-content/uploads/2022/06/1850THad-

1654131568.5335.jpg

That’s odd. I can’t get that url to post properly.

You have to copy and paste the whole url to get it to work.

It shows that there were three periods since the Little Ice Age ended where there were periods of warming that were equal in magnitude to the warming of the present day.

It warms for a few decades and then it cools for a few decades and has done so since the 1880’s.

Let me try adding this to the WUWT database.

It MAY be related Izaak. Personally I think that there is an effect. BUT—it’s a minor BENEFICIAL effect. The better question is why you think that a slightly milder climate is a crisis?

If there are any negative effects, we just need to use some of the vast increases in wealth that society can expect in the coming decades to adapt to whatever change comes our way.

What evidence do you have that another 1.1°C rise in temperature by 2100 is going to do anything but increase arable land area and extend growing seasons?

Warmer is better. More CO2 More Life. There is NO CLIMATE EMERGENCY!

The IPCC said the trend should be 0.4 degrees per decade for the measured increase in CO2 levels, with a possible range of 0.25 to 0.65 degrees/decade. The long term trend being so far below the minimum shows that either the assumption of CO2 as the driver of warming was incorrect or the effect of CO2 was so vastly overstated there is no long term concern.

Empirical ECS is 1.7 not 3+

There is NO CLIMATE EMERGENCY!

Yes

LOL, there were long periods of time of no warming in between El-Nino’s which is a step-up warming event in temperature data which indicate that CO2 isn’t driving the trend.

When the trend is trivial, in the end the driver whatever it is must also be weak and offset by negative feedbacks.

I see ENSO as a storage-discharge cycle. It is to be expected that temperatures level off or even decline while energy is stored in the ocean during La Niña. Then when it discharges during El Niño, atmospheric temperatures rise. A continuously rising CO2 concentration generates an increasing LW flux but it’s a complex system. Temperature responds in fits and starts.

The even longer geological history shows that warming and cooling are totally out of synch with CO2. Ergo, CO2 has no effect on climate.

Past CO2 changes were caused by geological and biological process. Man has manages to add 35% CO2 to the atmosphere in only about a century. This doesn’t seem to have done any harm so far, but it’s worth keeping an eye out for what effect on climate it might have. Its also worth keeping an eye out that the opportunists of the world don’t use increased CO2 as a money grubbing opportunity.

I wouldn’t suggest the jump in the last couple of months was due directly to CO2. There’s clearly something very unusual happening, and I doubt anyone knows for sure why.

But the linear rate of warming over the course of the UAH record, about 0.6°C over the last 44 years, is an underlying cause of any record. Whatever caused the recent spike is doing it on top of an already warmer planet.

But the rise in CO2 is largely due to the natural warming coming out of the Little Ice Age, coldest interval of the Holocene, ie past 11,400 years.

https://news.yahoo.com/ozone-hole-above-antarctica-opened-100003567.html

It always opens when it’s dark down there. I think you need the Sun’s rays to blast O2 so that there are free O to join up with O2 to make O3

Bellman:

For the cause of the recent temp. spike read:

“Definitive proof that CO2 does not cause global warming” An update.

https://doi.org/10.30574/wjarr.2023.19.2.1660

The recent spike, like the last spike in 2016 and the one in 1997-8 is due to the El Nino, which has been documented for hundreds of years. If it’s man made, you’ll have to forgive the Conquistadors for destroying the Inca temples and ending the live human sacrifices.

Notice how flat temps are (they go up and they come down and so average out) between El Ninos. The only time there’s the “CO2 signature ” is the 1979-1997 period where the rising trend is clear visible even with the oscillations – though that increase was more likely from pollution reduction than the little bit of CO2 increase, since that started in the early seventies, whereas CO2 production has been increasing for a couple hundred years and never seemed to affect temperature then.

Dr Spencer himself said in the July UAH update that it’s too early for the current El Nino to be influencing global lower stratospheric temperatures. We still have that to look forward to.

“global lower stratospheric temperatures.”

How the **** is that relevant, clown !

“The recent spike, like the last spike in 2016 and the one in 1997-8 is due to the El Nino…”

This is claimed a lot, but it makes no sense to me based on the actual figures. By this time in 1997 or 2015 ENSO was well into red territory (values of over 1.0) and had been positive for some time. Yet the peaks wouldn’t be reached until the following year. This year the June-July period is the first to be even slightly positive.

If the last few months have been in response to the current predicted El Niño, it:s behaving in a way that is very different to what we have seen before. Maybe the accumulated ocean heat has caused some fundamental change in the nature of the ENSO cycle, which could be worrying. But for now I’m just going to assume we don’t know what is happening.

“There’s clearly something very unusual happening, and I doubt anyone knows for sure why.”

There’s something we can agree on.

Tom Abbott:

“I doubt that anyone knows for why”

You must have missed my earlier post:

https://doi.org/10.30574/wjarr.2023.19.2.1660

Nick,

Your specialty, mathematics.

Is a difference of 0.05 deg C statistically significant?

Or, to pose it another way, are 2 of these monthly temperature anomalies able to be distinguised if they are 0.05 deg C different, or are we seeing noise?

Geoff S

It’s close to significant for the full year. But it will be higher by December.

The trend is highly significant.

People like to confuse statistical significance with didn’t happen. Any hot day isn’t statistically significant. But it is still hot.

“But it will be higher by December.”

Nick is playing with his crystal balls again !

No crystal ball required. The El Nino’s effect isn’t even being felt yet in UAH.

How would know ?

You have never been correct with one statement you have ever made.

You are a classic climate alarmist BSer.

Roy Spencer, the guy who makes UAH, says so.

So he said for July. What about August?

Roy has been unequivocal that UAH TLT lags ENSO by 4-5 months. The 4 and 5 month ONI values are 0.2 and -0.1 respectively. UAH still hasn’t responded to the El Nino. It has, however, responded to the transition from La Nina to neutral. Unless the ENSO correlation has suddenly broken down then we haven’t seen the peak in UAH TLT yet.

LOL 🙂

I’m cold. 65F and windy with stratus. What does this mean Holy Nick?

I’d say not noise, but the predicted effect of a rare volcanic event, plus switch in ship fuel.

What is the uncertainty envelope for those numbers?

This is a pretty mechanical response. Did you ask for the uncertainty of Roy’s UAH numbers?

Do you REALLY think that no one noticed that you didn’t answer the question? The uncertainty of UAH is irrelevant to the question posed directly to you.

It’s obvious that you either don’t know the answer or don’t want to offer the answer for some reason.

Either way, it puts a load of doubt on everything you post since no one can judge whether the ΔT is large enough to overcome the uncertainty.

So is there a load of doubt on Roy’s post?

It’s not doubt, it’s uncertainty that no one ever discusses. Even you treat the ΔT as an exact number that has no variance associated with it. People who work with real physical measurements never do this.

When you see a measurement whose uncertainty is 0.2, quoted to the hundredths digit, alarm bells go off. If the uncertainty is quoted to two digits, like 0.20, one can believe the uncertainty has been evaluated to the hundredths digit.

Too many mathematicians have been trained that numbers are just numbers to be manipulated however you wish.

To physical scientists and engineers, numbers are not just numbers. They portray and represent physical measurements that have rules to be followed when using them. No measurement is ever considered exact, there is always uncertainty based upon Type A and Type B uncertainties and resolution is one of the things that causes uncertainty.

Satellites are about ±0.2 C [1][2]. Traditional surface are about ±0.05 C [3][4][5]

Not only NO, but HELL NO! Traditional surface temperature measurement devices have uncertainties in the +/- 0.3C to +/- 1.0C range.

No matter how you try to rationalize it to yourself the surface average temperature simply can’t have an uncertainty less than that.

You continue to push two memes no matter how often you are shown they are wrong.

Surface measurement devices always, ALWAYS, have systematic uncertainty. It can’t be identified through statistical methods and therefore cannot be cancelled out in any way, shape, or form.

q = Σx_i/n is the AVERAGE VALUE of a series of temperature measurements. When you find the uncertainty associated with that formula you are finding the AVERAGE UNCERTAINTY, not the uncertainty of the average.

See the attached picture. The average uncertainty is 2.2. The total uncertainty is 13. They are *NOT* the same. All you do when you find the average uncertainty is take the total uncertainty and spread it evenly across all individual members of the data set – you still wind up with the same total uncertainty.

The proof of the pudding is that you will not list out the variances of the temperature data sets, beginning with the individual daily mid-range values and ending with the final global average. Neither will anyone else trying to advance the CAGW agenda. Averaging doesn’t lower variance. Anomalies don’t lower variance.

The daily temperature has a variance of Tmax – Tmin. When you do (Tmax+Tmin)/2 you don’t lower that variance, it remains. The result should be written as [ mean = m, variance = d] where d is the diurnal range for that day.

When you combine Day1 random variable with Day 2 random variable you get:

m_t = m1 + m2, d_t = d1 + d2 (variances add when adding random variables)

This only involves the stated values of the temperatures and doesn’t even consider the measurement uncertainties associated with the stated values of the temperature.

Now come back and tell us that all measurement uncertainty is random, Gaussian, and cancels plus variances can be ignored.

And if all the rounds go through the same hole, the standard deviation is ZERO, which means the SEM is ZERO, yet somehow all the rounds are still off-bullseye.

Go figure.

Which as I explained to you in another comment thread, is a good illustration of the usefulness of the SEM. If you have a very small (or even zero) SEM, you know that any difference between the sample average and the expected average (in this case a bullseye) can not be down to chance. You’ve just demonstrated, using SEM that there is a systematic error in your gun.

You just demonstrated your abject ignorance, again.

In real metrology true values are UNKNOWN.

bgwxyz trots out his milli-Kelvin “uncertainties” again, nothing new under the sun.

The real problem is using the variance in the anomaliy distribution to represent the inherent uncertainty.

You are averaging small numbers and will end up with small variances.

Anomalies are calculated by subtracting absolute temperatures. The uncertainty should carry the variance of those absolute temperatures, and not the variance of the anomalies.

I’m sure many, many people here that are familiar with measuring instruments also know that it is impossible to increase the resolution of measurement by averaging.

If I measure something to the nearest 1/8 of an inch. I cannot, no way, average 1000 measurements and get an answer that has a smaller resolution. Not one chemistry lab, physics lab, or engineering class/lab would let a student do this.

“If I measure something to the nearest 1/8 of an inch. I cannot, no way, average 1000 measurements and get an answer that has a smaller resolution.”

I keep demonstrating this is not true if you are taking the average of things of different size. You could easily test it for yourself if you weren’t afraid of proving every chemistry lab, physics lab, or engineering class/lab wrong.

Just take 1000 random bits of wood, all of different sizes. Measure each with a very precise instrument, and then again with your 1/8 inch ruler. Compare the averages. Is your low resolution average closer to the precise average, than 1/8 inch.

I can easily test this using R. I’ve just generated 1000 random rods, from a random uniform distribution going from 10″ to 14″.

The precise average of these 1000 rods is 11.915″.

Then I “measure” each with your low resolution ruler – that is I round each size to the nearest 1/8″, i.e 0.125″. By your logic the resolution of the average of the low resolution measurements should be 1/8″. In fact the average is 11.914″.

The precise difference between the two was 0.00118″. This to my mind suggests the resolution is better than 0.125″.

No, you keep generating clownish noise to obscure your trendology non-physical nonsense.

Your lack of training in the physical sciences is showing again. Why do you insist on speaking of things you know nothing about?

Science uses the rules of significant figures. When you add/subtract/multiply/divide your final answer should have no more significant digits than the element with the least significant figures.

Your supposed simulation went from 3 decimal places to 5 decimal places. How did you do that?

All you know is statistics world where the number of digits on your calculator determines the final answer. The more digits it displays the better resolution you have.

That isn’t how physical science works.

(p.s. .914 / .125 is not an even number. You didn’t even get the number of 1/8″ increments correct in your average)

The magic of averaging?

Magical thinking, certainly.

And as usual, rather than addressing the argument, or providing evidence against it – there’s the usual ad hominem and arguments from tradition. “It’s what we were taught at school, and you don’t understand anything.”

It’s as if you keep insisting man will never fly, I keep pointing to planes, and you just say they are impossible and say I need to learn why they are impossible before I’ll understand. It’s religious dogma.

“Science uses the rules of significant figures”

You know I don’t share that faith – quoting from the holy scriptures is futile.

“When you add/subtract/multiply/divide your final answer should have no more significant digits than the element with the least significant figures.”

You don’t even understand your own dogma. The “rules” are when you multiply and divide the result has the same number of significant figures as the element with he least, but when add or subtract it’s the element with the fewest decimal places that determines the resulting number of decimal places.

As I’ve demonstrated before, just following these rules will allow you to have more significant figures in an average than you would like. Add 100 figures to 1 decimal place, the total will still be to 1 decimal place. Now divide by 100 and the answer would be quoted to 3 decimal places.

Which is why to justify your claim there has to be an extra special rule that only applies to averages.

“Your supposed simulation went from 3 decimal places to 5 decimal places. How did you do that?”

Oh I’m a naughty heretic – I quoted to as many figures as a I wanted to demonstrate the point. But if it offends your sensibilities

“The more digits it displays the better resolution you have.”

No. The closer the calculated average was tot he true average, the better the resolution. Really this shouldn’t be hard even for. I created a set of random numbers known to as many decimal places as you could wish and calculated their average. Then I reduced the resolution of the figures by rounding to 1/8″ (0.125″), and according to your logic the resolution of the average should have been 1/8″, yet it was almost identical to the true average, differing only by 0.001″. This demonstrates that the resolution of an average can be higher than that of the individual measurements.

“p.s. .914 / .125 is not an even number.”

Huh?

Nice screed, LoopholeMan.

“You know I don’t share that faith – quoting from the holy scriptures is futile.”

We *all* know you think physical science is totally wrong in using significant figures. It stems from you having *NO* STEM background at all. You’ve apparently never, ever had a high level physical science lab course (i.e. physics, chemistry, engineering, etc) let alone any real world experience in actually measuring something that impacts human life and well-being.

“As I’ve demonstrated before, just following these rules will allow you to have more significant figures in an average than you would like.”

NO, they will not. You misapply them every single time. Jim already pointed out to you the reason you can’t is right there in Taylor’s book – which you absolutely refuse to study.

” Now divide by 100 and the answer would be quoted to 3 decimal places.”

Nope! You can’t even count significant figures. 100 has *ONE* significant figure. If the measurements are good to one significant figure then dividing by a number with one significant figure won’t change anything.

If the 100 measurements are good only to the tenths digit (i.e. uncertainty is +/- 0.1) then the answer is good only to the tenths digit. Same for their average.

You are trying to somehow rationalize that you can take an average and it will increase resolution. Climate science may believe that, *YOU* may believe that, but in the real world NO ONE believes that.

“We *all* know you think physical science is totally wrong in using significant figures.”

Only if it using these simplified rules to work out uncertainty.

“Nope! You can’t even count significant figures. 100 has *ONE* significant figure. ”

And there you go, demonstrating you don;t even understand the dogma you force on others. 100 is an exact number, exact numbers have infinite significant figures.

http://www.ruf.rice.edu/~kekule/SignificantFigureRules1.pdf

http://www.astro.yale.edu/astro120/SigFig.pdf

“You are trying to somehow rationalize that you can take an average and it will increase resolution.”

Yes, that’s exactly what I’ve been trying to tell you. An average can have a better resolution than the measurements that make it up. If you want to persuade me I’m wrong, you need to provide some evidence that is better than simply yelling that it can’t be so.

“Jim already pointed out to you the reason you can’t is right there in Taylor’s book”

He has not done anything of the sort. Taylor does not say that – he explicitly points out that in some exercises you can quote more figures than the individual measurements. All he says is the number of figures has to be the same order as the uncertainty – something I agree with. And he also points out that taking an average reduces the uncertainty.

Maybe on Neptune. Stop sniffing methane.

As usual, you didn’t bother to even read the definitions you cherry-picked!

“Thus, number of apparent significant figures in any exact number can be ignored as a limiting factor in determining the number of significant figures in the result of a calculation.”

I said: “Nope! You can’t even count significant figures. 100 has *ONE* significant figure. If the measurements are good to one significant figure then dividing by a number with one significant figure won’t change anything.”

It simply doesn’t matter if you consider the 100 as having infinite sig digits or one, it doesn’t affect the number of sig digits the answer has.

“If you want to persuade me I’m wrong, you need to provide some evidence that is better than simply yelling that it can’t be so.”

I have. Multiple times. The fact that you can’t read is not *my* problem, it’s yours. Your logic says you can reduce uncertainty to the thousandths digit using a yardstick. It’s nonsense on the face of it. And it is something you have yet to explain. You just ignore it when someone points that simple fact out to you.

“Taylor does not say that – he explicitly points out that in some exercises you can quote more figures than the individual measurements.”

Once again, you have not STUDIED Taylor at all. You continue to just cherry-pick with no understanding of context at all. Taylor covers this in Chapter 2, right at the start of the book! “In high-precision work, uncertainties are sometimes stated with two significant figures, but for our purposes we can state the following rule:

Rule for stating uncertainties:Experimental uncertaintites should almost always be rounded to one significant figure.”

He follows this with Rule 2.9: “The last significant figure in any stated answer should usually be of the same order of magnitude (in the same decimal position) as the uncertainty.”

There is a *reason* why physical science and engineering uses the significant figure rules. You don’t seem to be able to grasp it at all. Instead you just claim that hundreds of years of practice by physical scientists and engineers is wrong because you can calculate out to 8 or 16 digits on your calculator.

The concept has to do with repeatability. If you are publishing experimental results or design calculations what you state for the stated value and for the uncertainty has to be repeatable. Being repeatable applies to averages as well as individual measurements. Taylor states it very well in Chapter 2: “If we measure the acceleration of gravity g, it would be absurd to state a result like 9.82 +/- 0.02385 m/s^2”

My guess is that you don’t have a clue as to why this would be an absurd result. It *is* what *you* might get by averaging the individual uncertainties of the multiple measurements taken during the experiment and ignoring significant figure rules.

I’m not eve going to give you a hint as to why this is an absurd result. You’d just say it’s wrong.

“As usual, you didn’t bother to even read the definitions you cherry-picked!”

You made two statements. I was correcting the first one. Statement 1) A divisor of 100 has 1 significant figure. Statement 2) some waffle about measurements being good to 1 significant figure.

Your first statement is just wrong. Your second is also probably wrong, and irrelevant to the case we were discussing.

Let’s suppose you had 100 measurements, each with a single significant figure. Lets say they were all integers between 1 and 9. How many significant figures does the sum of these 100 figures have? Remember when adding it’s the lowest decimal place that counts, so the sum will have a unit as it’s final significant figure. Obviously the sum has to be between 100 and 900, so has to have 3 significant figures. Now divide by 100, and report to the same number of significant figures as the smallest value, i.e. 3 (3 < infinity). So if say the sum was 518, the average would be written 5.18.

“Taylor covers this in Chapter 2, right at the start of the book! “In high-precision work, uncertainties are sometimes stated with two significant figures, but for our purposes we can state the following rule:”

Which is nothing to do with the point I’m making. Which is if you have a mean of a large number of values measured to the nearest unit, the uncertainty may well be less than a tenth of a unit, in which case you should report the result to the nearest tenth of a unit.

“… because you can calculate out to 8 or 16 digits on your calculator.”

Again, you just demonstrate you didn’t read what I wrote, and are just arguing with the voices in your head.

“Let’s suppose you had 100 measurements, each with a single significant figure. Lets say they were all integers between 1 and 9.”

If these are measurements and you are getting values ranging from 1 to 9 then I would tell you to get a different measuring device!

“Which is nothing to do with the point I’m making.”

The only issue at hand is MEASUREMENTS and MEASUREMENT UNCERTAINTY. Significant figures and measurement uncertainty are concepts to make measurements in the physical sciences and in engineering repeatable and meaningful.

Something which you simply cannot address with any competency at all.

All you want to do is show how many digits your calculator can handle – which is meaningless when it comes to measurements.

“Which is if you have a mean of a large number of values measured to the nearest unit, the uncertainty may well be less than a tenth of a unit, in which case you should report the result to the nearest tenth of a unit.”

If your measurements range from 1 to 9 then the uncertainty is in the units digit – PERIOD.

“If these are measurements and you are getting values ranging from 1 to 9 then I would tell you to get a different measuring device!”

For the hard of thinking, that’s 100 measurements of different things.

“The only issue at hand is MEASUREMENTS and MEASUREMENT UNCERTAINTY. ”

and the point flies over his head again.

“All you want to do is show how many digits your calculator can handle”

A) I am not using a calculator.

B) The measurements are rounded to 1 decimal place, so each have 3 significant figures.

“If your measurements range from 1 to 9 then the uncertainty is in the units digit – PERIOD. ”

Which is why I said the measurements only have one digit. But, following the precious rules allows me to write the average to 3 decimal places.

Ambiguity strikes again. You blokes are using separate lines of argument.

bellman is pretty much correct about the number of decimal places which can be used in reporting averages in the absence of uncertainty. It’s the order of magnitude of the count.

The arithmetic mean is the ratio of the sum over the count.

125/100 is 1.25

1250/1000 is 1.250

1250000/1000000 is 1.250000

Tim is also right about the number of decimal places used with measurements, which always have uncertainties.

A measurement of 1.25 is shorthand for 1.250 +/- 0.005, just as 1.250 is shorthand for 1.2500 +/- 0.0005.

Hopefully some progress can be made once the definitions and topic of discussion are agreed upon.

As to whether the uncertainties should be added directly or in quadrature, or as absolute or relative uncertainties, my Physics and Chemistry lab sessions are too far in the past.

“Ambiguity strikes again. You blokes are using separate lines of argument.”

In more ways than one.

“bellman is pretty much correct about the number of decimal places which can be used in reporting averages in the absence of uncertainty.”

Thanks – though I should say that some rules for significant figures do say that the mean should only be quoted to the same number of places as the measurements. My point is that this seems to be tacked on as a “special” rule, and I’ve never seen justified.

I suspect the problem is that these rules are only intended as an introduction, before proper rules of uncertainty are introduced. They make sense when dealing with averaging the same thing a few times, but are way off when applied to large scale samples of different things.

“A measurement of 1.25 is shorthand for 1.250 +/- 0.005, just as 1.250 is shorthand for 1.2500 +/- 0.0005.”

I don;t disagree that that is a short hand, and in some cases might be a useful one. But I much prefer having an explicit uncertainty range. The more I’ve thought about this convention for problems I see.

From what Tim has said though I think the real problem is we never agree with what an average is. I see it as an abstract description of the mean of a population,. whereas he sees it as representing an actual thing – and if nothing is the same as an average than the average does not exist.

The idea that the average can only be as good as a single measure springs from that, and would make sense if you were talking about a median, rather than a mean.

I kept it to integer values to take measurement uncertainty out of that part of the picture. I was purely looking at the number of significant digits attributable to the order of magnitude of the denominator.

That’s a point I’ve brought up a few times. “Average” is a very vague term, which usually means one of the 3 Ms, and you have to guess which one from context.

Even “mean” is ambiguous, ranging from the commonly accepted “arithmetic mean” or “weighted arithmetic mean” to “expected value”. Without agreeing on defined terms, it’s almost guaranteed that people will talk past each other and become frustrated.

Interesting. My educational background assumes it’s derived from the sample, and to determine whether it’s the mean, median or mode.

Then there’s the question of what the mean means. Again, my background says it’s just one of the measures of centrality of a distribution, and to look for the mode, median, range and variance/SD to characterise the distribution.

“Thanks – though I should say that some rules for significant figures do say that the mean should only be quoted to the same number of places as the measurements. My point is that this seems to be tacked on as a “special” rule, and I’ve never seen justified”

This has been justified to you over and over and over again.

It’s so that others looking at your results don’t assume you were using a measuring device with higher resolution that you were actually using! It has *everything* to do with the repeatability of measurement results.

We remember that your ultimate goal is to justify climate science identifying anomaly differences in the hundredths digit from measurements with uncertainties in the units digit. Thus you claim you can increase measurement resolution, and therefore uncertainty, by averaging.

When confronted you start making up numbers like 100 measurements ranging from 1 through 9 – and then refuse to accept that the range of those values indicate either a broken measurement device or a multi-modal distribution – and in either case the measurement uncertainty is huge, even for the average! You just retreat to the excuse that the SEM is the uncertainty of the average.

A mathematician versus a physical engineer. Measurements of a measurand ARE a description of a physical phenomenon. They are not an abstract notion of a population.

It is why you continually dwell on statistical calculations of how to do the math to achieve a number. It is why you never give examples of physical things to measure and how their physical measurements should be calculated.

To you temperature measurements are abstract numbers and the entire process is finding a number that shows how close the mean can be calculated. The more decimal digits you can calculate, the more accurate you can say the mean is. It is why you want to shoehorn an average of disparate measurements into a functional relationship that can be treated as a real measurement of a real thing. The more you include, the larger “n” becomes and the smaller the SEM becomes. You have reached the goal of a mathematician, you calculated the mean to an exact number.

Engineers and physical scientists deal with physical things. Our goal is to measure the physical properties of a measurand as best we can. It is unethical to reduce measurement uncertainty by just concentrating on how accurately a mean can be calculated while the measurements themselves demonstrate a large variance.

Here is how Dr. Taylor explains it his book in Sections 4.3 & 4.4. He made 10 measurements of a spring constant and obtained a mean of 85.7 ± 2. The uncertainty is the Standard Deviation of the 10 measurements. He says that this uncertainty can be used to characterize SINGLE measurements of other springs with their uncertainty. I suspect this is how NOAA/NWS has determined a ±1 F uncertainty that should be applied for single temperature measurements. Dr. Taylor shows that you can also specify the SEM for the uncertainty in the one spring that has the 10 measurements. As usual, Dr. Taylor makes it plain this only works for the same spring and that it must be stated that if the SEM is used, one must specify that the SEM is being quoted for a single item.

Here is another diamond from a note by Dr. Taylor. If the single measurements of the spring constant of other springs begin to differ substantially from the average of the first spring, one must reevaluate the whole uncertainty. Think about what this means for temperatures with different devices and locations.

Lastly, resolution is important in science. It defines repeatability and what others attempting to duplicate experiments can expect. That is why Standard Deviation is more important that SEM. It informs others of the range of values you measured. An SEM does not provide the ability to know what to expect in measurements. It only defines how accurately you were able to calculate the mean without informing you of the variability of what was actually measured. Think about it, does the mean anomaly value of ΔT provide any information about the range of ΔT’s used to calculate it?

Can you imagine what might happen if bellman was responsible process control in a factory?

Yikes.

“Measurements of a measurand ARE a description of a physical phenomenon. They are not an abstract notion of a population.”

Yet you keep insisting we have to follow the “NIST method” which treats the average monthly temperature as a thing to be measured, and all the daily temperatures as different measurements of that thing.

But as I keep saying – if you don’t want to treat a sample mean as a measurement of the population, stop insisting we have to propagate the measurement uncertainties. If the mean isn’t a measurand, then nothing in the GUM applies to it, and we can just go back to using the accepted statistical results.

“It is why you continually dwell on statistical calculations of how to do the math to achieve a number.”

Because they are a powerful and well researched tool for explaining the real world.

“To you temperature measurements are abstract numbers”

They are not – but that doesn’t mean you can’t use abstract numbers to analyze and them. All numbers are abstract, even the ones you use to measure the length of your rods, it doesn’t mean they cannot describe concrete things.

“The more decimal digits you can calculate, the more accurate you can say the mean is.”

Absolutely not what I’ve said.

“It is why you want to shoehorn an average of disparate measurements into a functional relationship that can be treated as a real measurement of a real thing.”

You still don’t know what functional relationship means.

“You have reached the goal of a mathematician, you calculated the mean to an exact number.”

You really can;t be this dense. The point of estimating a SEM is because you don’t know what the exact average is. It’s telling you how much uncertainty there is in your average.

“Our goal is to measure the physical properties of a measurand as best we can.”

I would hope physical science has better goals than that. Good measurements are important, but the main goal is to figure out what they mean, what questions they raise, and how those questions can be answered – and a lot of that involves statistical reasoning.

“Here is how Dr. Taylor”

And we are into the usual “If Dr Taylor uses statistics for one purpose, that is the only way they can used.”

Who are “we”?

“ if you don’t want to treat a sample mean as a measurement of the population”

You simply can’t get *anything* right, can you? No one is saying the mean of the population is not a measurement of the population.

They are saying that the MEASUREMENT UNCERTAINTY of that mean is *NOT* the SEM. It is the propagated uncertainty from the individual elements.

Again, one more time, once your measurement uncertainty overwhelms the resolution of your measuring device, YOU ARE DONE! If you uncertainty is in the units digit then whatever value you come up with in the millionth digit is useless. It’s within the uncertainty interval and that millionth digit is UNKNOWN.

If you have a frequency counter that displays a value in the Hz digit at 10Mhz but the uncertainty of the counter is 1%, then the value in the Hz position IS UNKNOWN. It is within the uncertainty interval. The uncertainty interval would range from 9.999.990 Hz to 10.000.010 Hz. No matter how many times you measure that approximately 10Mhz signal you can’t increase the resolution to the Hz digit because it is UNKNOWN, each and every time. It doesn’t matter if you can calculate the average out to sixteen decimal places, it doesn’t change the uncertainty or the resolution of measurement.

When you say it would take 10,000 measurements or more for you to refine the reading of your cell phone timer you are speaking of the SEM! How precisely you can calculate the mean.

The problem is what you identified. Your reaction time puts the uncertainty of each and every reading in at least the hundredths digit. The uncertainty of the mean will be in at least the hundredths digit, not in the millionth digit. It simply doesn’t matter how many measurements you take or how many digits you use in calculating the mean, the measurement uncertainty will remain in at least the hundredths digit and, quite possibly, in the tenths or unit digit depending on your reaction time.

The timer resolution being in the hundredths digit doesn’t matter if your reaction time causes the measurement uncertainty to be in the tenths digit. Once the measurement uncertainty interval has covered up the resolution, you are DONE. Trying to extend the measurement resolution beyond the measurement uncertainty interval is a waste of time and resources.

The goal in measurement is not JUST using higher resolution instruments but also more accurate instruments. It doesn’t matter what the resolution of your instrument is if the measurement uncertainty covers up that resolution. Measuring 1.123456789 seconds is useless if your measurement uncertainty is +/- 0.1 seconds.

Climate science makes the same mistake you always make. They ignore the measurement uncertainty and think they can extend resolution from +/- 1.0C to +/- 0.005C merely by averaging. You can’t. Anything past the unit’s digit is UNKNOWN. What climate science is truly doing is trying to guess at the location of a diamond in a fish tank full of milk. That location is UNKNOWN and will always be UNKNOWN. Until they start properly handling the variances of the temperatures anything they come up with is simply not fit for purpose!

And the reaction time will be bias error, that is not constant!

How can this be washed away with averaging?

IT CAN’T.

Yes, that was part of my point. The reaction time will likely have a systematic error that will be left no matter how much averaging you do.

These are all integers, not measurements, therefore there is no measurement uncertainty.

Totally different.

The first half of the comment was purely about the mean, and how it can be expressed. Using integers keeps it in a purer form.

The object of the exercise is to try to get people to at least agree on the terminology so they’re arguing about roughly the same thing.

Yet measurement uncertainty deals with floating-point numbers, significant digits have absolutely no meaning for integers.

“1250000/1000000 is 1.250000″

Without decimal points on both the sum and the divisor, you can’t claim the ratio is 1.250000, i.e. seven significant digits.

I hearken back to the old venerable slide rule. It’s why, at least in engineering, you would always specify numbers as exponents of 10, e.g. 1.25 x 10^6. Easy enough to tell how many significant figures in that. Calculators have made so many people totally unable to properly represent measurements.

Goes back to the old saying: “its within an order of magnitude”.

That’s right, but significant digits do have meaning for means of integers.

They’re an indication of the sample size, at least to the order of magnitude level.

Oh, yes I can!

I counted them, so I know the numbers involved. It is what it is, and that’s all what it is. If the sum was 1250001 instead of 1250000, the result is 1.250001.

It also tells readers that the sample or population size is of the order of magnitude of a million.

If it was 1250000/999999, I wouldn’t be justified in using 1.25000125000, but I would be justified in using 1.250001.

It’s unusual for a sample to be anywhere near this large, so it also tells readers that this is the population statistic.

And none of it is of much use without the other summary statistics needed to characterise the distribution.

No, without decimal points or exponential notation, you cannot assume a number of digits.

Your huge number has to be written as 1250000. or 1.25×10^6.

No, my huge number was 1,250,000, and it came from a population of 1,000,000. Let’s say 1.250,000 cars registered to 1,000,000 people.

The mean cars registered per person is 1.250000.

The numbers I used were a bad example, because they were too round. Let’s have 1.250,108 cars, and stick with an exact 1,000,000 people. The mean is 1.250108. This is valid because of the order of magnitude of the sample size.

As I said in a reply to another comment, reconciling significant digits between counts and measurements is beyond my pay grade.

If your value is used in a software calculation, it will be converted to floating point representation because the ratio is a floating point. So it really doesn’t matter.

And if the long division is done by hand it will have the same value.

It’s just a convenient way of displaying a ratio.

I don’t think you want to get into a competition with me about how far we can wet up the wall as far as computers are concerned 🙂

??

If the division of 1.250,000 by 1,000,000 is done by hand or by calculator firmware or computer software, the result is the same.

Similarly, if the 1.250000 is used in a calculation done by hand, the result should be the same as a calculation performed in firmware or software. It will take longer, and most likely require more checking, which is why we use computers.

What calculations do you plan to use the mean in?

Counting is not measuring using a measuring device. Taylor covers this in Section 3.2.

Counting occurrences of something typically requires it to be done over a time interval. There is no guarantee that the same number of occurrences will happen in a subsequent, equal time period. So his rule is that you use the count, ν (nu), as the average +/- sqrt(ν).

average number of occurrences per time = ν +/- sqrt(ν)

If you are just counting, like the number of glasses in your kitchen cupboard, where no time interval applies, then there shouldn’t be any uncertainty to calculate. But neither does this represent a measurement.

It is measurements that are the issue here, the measurement of temperatures and how they combine to form a global average temperature.

There are so many problems with how climate science does this that the final result is simply not fit for purpose. And no amount of “averaging” or precisely locating the average value can make it so.

Remember, bellman has several evasion tactics he uses. One of them is redirecting the discussion away from the subject at hand.

The issue is *NOT* how you find the average of a series of numbers that have no relationship to measurements – but that is where he wants to take the discussion because he knows we are correct in our assessments of how you do measurements and measurement uncertainty.

“One of them is redirecting the discussion away from the subject at hand.”

Fine words from someone who has spent the last day ignoring the point of my simple demonstration, and instead focusing on how many digits I reported the random numbers to.

Yes, yes, yes. The communication problems seem to involve the correct statistical treatment of measurements.

The rules which apply to discrete values (counts, if you will) get blurred when measurements and uncertainty are involved.

The object of the exercise is to hash out whether temperature differences in the hundredths digit can be determined from temperatures with measurement uncertainty in the units digit or tenths digit.

Climate science says you *can*. bellman is trying to prove that you can. They say you can improve resolution by averaging measurements with uncertainties in the units digit or tenths digit.

The physical scientists and engineers here are saying you can’t. You can’t increase resolution past what you can measure. Calculating an average out to the limits of the calculator is not increasing resolution.

This seems to be the core of the disconnect.

It certainly is possible with discrete values, or at least it looks like it.

But, and this is a big but, it doesn’t really increase the resolution.

The number of significant digits (actually the number of digits to the right of the decimal point) is the order of magnitude of the denominator.

Just like the number of decimal places reported for a measurement represents the resolution of the measuring instrument, the number of decimal places reported for the mean of discrete values represents the order of magnitude of the population or sample size.

Reconciling the two is beyond my pay grade.

All of this is a huge non sequitur for averaging air temperature measurements, which are most certainly not integers.

Measurements are continuous on the number line. A measurement can have any value along the number line. A discrete value is one where there is no immediately adjacent area on the number line that it can take on.

Typically discrete numbers are considered to be integers but I don’t think that is a requirement. If 10.1 and 10.2 are two possible values for the object but there can’t be any values between those two points then they are discrete values. (perhaps it’s just a matter of scaling?)

Bellman and climate science try to make all measurements into basically discrete numbers, that way they don’t have to worry about the possible vales on the number line that the measurement could also be.

“Measurements are continuous on the number line.”

Not if you are measuring things to any number of significant digits, they are not.

“Bellman and climate science try to make all measurements into basically discrete numbers, that way they don’t have to worry about the possible vales on the number line that the measurement could also be.”

Of course not. The assumption is that the thing being measured can have any value, i.e.is continuous, but any measurement that involves rounding will give you a discrete value. However, the uncertainty will be a continuous value, all calculations are based on them being continuous.

Specifying an uncertainty as a standard deviation, or as an expanded uncertainty interval generally implies that the measurand could take any value within the range.

“Not if you are measuring things to any number of significant digits, they are not.”

Once again you totally miss the point. Measurements are a CONTINUOUS variable. The value can be *anything*. There is no separation between one value and the next. With a discrete variable it can only take on certain values. There is always a gap between one value and the next.

You are simply unable to understand the real world at all. Resolution is only how far down you can measure it. It is not a determinative factor as to whether it is continuous or not.

“The assumption is that the thing being measured can have any value, i.e.is continuous, but any measurement that involves rounding will give you a discrete value”

NO! It doesn’t become a discrete value. Look at the GUM one more time! ““the dispersion of the values that could reasonably be attributed to the measurand”

There is absolutely nothing that keeps the measurement from taking on ANY value that can be attributed to the measurand. You just keep on ignoring what the uncertainty interval is for! The stated value is just that, a stated value, it’s the dial indication if you will. But the uncertainty interval gives you an indication of what the stated value *could* be if the measurement is repeated. Those possible values are CONTINUOUS on the number line.

“Specifying an uncertainty as a standard deviation, or as an expanded uncertainty interval generally implies that the measurand could take any value within the range.”

And here is your cognitive dissonance at its finest. A measurement is a discrete value but it can take on any value within the range.

Just more waffling and shooting from the hip.

Yep!

Or what about rounding? That standard method of converting air temperature F to C comes to mind.

Yes, they are different animals.

That wasn’t about measurements, it was about how means of discrete integer values can validly be decimals, to a certain number of decimal places.

Without the metrology domain knowledge, it is easy to bring those integer rules into the field of measured values.

By the same token, it is easy to view everything as measured values with implicit or explicit resolution limits.

This is all about measurements; without them, you know nothing,

I *still* can’t find a statistics textbook that has any examples where the data values are given as “stated value +/- uncertainty”. They all assume the stated values are 100% accurate and how precisely you can calculate the population mean is the “uncertainty of the mean”.

That simply isn’t real world. It’s why engineers and physical scientists learn early on in lab work that you *have* to work with measurement uncertainty. You just can’t assume all stated values are 100% accurate.

bellman says his cell phone stop watch only reads in the hundredths digit and he would need many, many measurements to get to the microsecond. He can’t seem to understand that when he says this he is talking about the SEM, i.e. how precisely he can locate the population average. It is *NOT* the measurement uncertainty of the average which would likely be in the tenths digit if he has *very* good reaction times.

If your uncertainty is in the tenths digit then the value in any decimal point further out is UNKNOWN. It doesn’t matter how many digits you carry out the SEM calculation, once you go past the measurement uncertainty digit it’s all UNKNOWN, including the population average!

I have the classic Snedcor & Cochoran text and it certainly doesn’t. The closest it comes is when it deals with linear regression when the “x axis has error”, if I remember right.

That’s because they’re statistics textbooks, not metrology textbooks.

Most statistics work is done with “things” rather than measurements, and it’s usually the number of “things”.

That’s the basis on which more specialised areas build. Metrology is one of those specialised areas.

Would it be useful to at least touch on the additional complexities introduced by measurement uncertainty? Yes, certainly. But it would only be a light touch, or statisticians would be metrologists.

This is not a fight between statistics and metrology: bellman apparently believes it is possible to extract information from nothing. Where in statistics is this outlandish claim made?

“from nothing”? I think you can extract information from large amounts of measurements – the larger the better,

The SEM is *NOT* a statistical descriptor of a population distribution. When you try to use it as one then you *are* trying to extract information from nothing.

https://pubmed.ncbi.nlm.nih.gov/12644429/

“Background: In biomedical research papers, authors often use descriptive statistics to describe the study sample. The standard deviation (SD) describes the variability between individuals in a sample; the standard error of the mean (SEM) describes the uncertainty of how the sample mean represents the population mean. Authors often, inappropriately, report the SEM when describing the sample. As the SEM is always less than the SD, it misleads the reader into underestimating the variability between individuals within the study sample.”

https://pubmed.ncbi.nlm.nih.gov/24767642/

“The study was designed to investigate the frequency of misusing standard error of the mean (SEM) in place of standard deviation (SD) to describe study samples in four selected journals published in 2011. Citation counts of articles and the relationship between the misuse rate and impact factor, immediacy index, or cited half-life were also evaluated.”

The SEM tells you literally NOTHNG about the population distribution. It is *NOT* a descriptive statistic for the population. The SEM is only an indicator of how closely the sample represents the population and even then it is subject to restrictions in its use.

Your focus on the SEM as a statistical descriptor of the population is wrong-headed, even for a statistician. The fact that you won’t admit that is telling.

I see the phrase of the day is “statistical descriptor “.

Correct,the standard error of an the mean is not s statistical descriptor. That’s why it’s better to not call it a standard deviation.

Why you would that mean the SEM is created out of nothing, I’s something only you could explain.

“Your focus on the SEM as a statistical descriptor of the population is wrong-headed, even for a statistician. ”

And nobody should be doing that. The sample mean is a best estimate for the population mean. The SEM is an indicator of how good that estimate is. You might not have a use for that information or understand it, but it is widely used throughout the sciences.

Have you gotten past the Introduction for your paper yet?

The standard error of the sample means – the *real* definition of the SEM – *is* a standard deviation. It is the spread of the sample means. It’s just not the standard deviation of the population or the measurement uncertainty of the population.

The SEM tells you *NOTHING* about the population since it is not a statistical descriptor of the population. Anything *YOU* or climate science uses it for in relation to the population of temperature measurements *IS* creating information out of nothing.

“The sample mean is a best estimate for the population mean.”

*The* sample mean is NOT the best estimate for the population mean. The existence of an SEM means there is sample error which, in turn, means *the* sample mean is not the best estimate of the population mean.

The *best* estimate of the population mean is the distribution formed from MULTIPLE samples of the population. If the population distribution is not Gaussian there is no guarantee that a single sample will represent the non-Gaussian population. As OC tried to tell you, since the SEM is *not* a statistical descriptor of the population, you need additional information in order to properly describe the population.

Your reliance on the SEM for describing the population of global temperature is exactly the same as climate science, garbage, statistical garbage.

“*is* a standard deviation”

Of the sampling distribution. Hence not a statistical descriptor of the population.

“The SEM tells you *NOTHING* about the population since it is not a statistical descriptor of the population.”

It’s telling you something about the sample, and how good an estimate the sample mean is of the population. Your insistence in tying yourself in knots over these common concepts, whilst shouting “NOTHING” at random points, doesn’t make you look as knowledgeable as you would like.

“*The* sample mean is NOT the best estimate for the population mean.”

It is – by definition of maximum likelihood.

“The existence of an SEM means there is sample error which, in turn, means *the* sample mean is not the best estimate of the population mean.”

No. It means the sample mean is unlikely to be the same as the population mean – it is still the best estimate.

“The *best* estimate of the population mean is the distribution formed from MULTIPLE samples of the population.”

Once again, if you take multiple samples, all you are doing is getting one bigger sample. The bigger sample will be a better estimate of the mean, because uncertainty decreases as sample size increases.

“If the population distribution is not Gaussian there is no guarantee that a single sample will represent the non-Gaussian population.”

And exactly the same is true if you remove the words “not” and “non”.

“As OC tried to tell you, since the SEM is *not* a statistical descriptor of the population, you need additional information in order to properly describe the population.”

Of course you do. The mean of a sample is an estimate of one thing, the mean of the population. If you want additional information you can also use the additional information from the sample, and if you want look at the standard errors for them. The sample standard deviation is an estimate for the population standard deviation. The skew of the sample is an estimate of the skew for the population.

But in general the first, and most important thing you want to look at is the mean. That’s becasue if the mean of two populations are different you know they are not the same population.

“Your reliance on the SEM for describing the population of global temperature is exactly the same as climate science, garbage, statistical garbage.”

I don’t know how many times this need to be said, but I have never relied on the SEM to describe the population of global temperature.

And you still don’t know Lesson #1 about uncertainty, yet you lecture on and on and on and on something you don’t understand.

YEP! He claims to not assume everything is Gaussian and random but then turns around and ALWAYS assumes just that!

And somewhere down there he admits it!

“If you tack lots and lots (and lots) of different things together and average them [i.e. air temperatures], the bias errors magically become random and cancel”.

He is quoting Nick Stokes. That is *exactly* what Stokes claims.

You realize kalo made the quote up?

No, he didn’t. I was a participant in the sub-thread where Stokes claimed all measurement uncertainty, including systematic bias, is random, Gaussian, and cancels.

I couldn’t believe it when I read it.

You and karlo said I was quoting Nick Stokes. Now you just say it’s something Nick Stokes once said, whilst karl admits it was just a paraphrase. As always an actual link, with the exact quote in context would be moire useful than having to argue wioth the mixed up things you remember.

You’re either lying, drunk, or unable to read with comprehension.

I paraphrased what YOU wrote, and also quoted it verbatim. Funny that it also matches up so well with nonsense generated by Nitpick Nick Stokes.

“I paraphrased what YOU wrote”

You misspelled “made up”.

Liar.

To be expected of a climate “scientist”.

Got your next backpedal queued up yet?

I was *INI* the sub-thread. It was one of my messages he was replying to!

Believe it or not. You both say the same thing. It’s a common meme in climate science and it is your meme as wall:

measurement uncertainty is random, Gaussian, and cancels – ALWAYS!

You say you don’t assume that but you do EVERY SINGLE TIME!

He has so many stories (the kind word) going at the same time he can’t keep any of them straight.

You pretty much nailed it. It’s the signature of a troll that really doesn’t understand the subject. He’s looking for clicks.

Its a paraphrase, you disingenuous person.

Shall I pull out your exact words?

That will show this is exactly what you claimed?

“What I am saying is that if you take the average of a a lot of different things, all with different sizes, then the error caused by the resolution will be effectively random, and so will tend to cancel when taking the average.” — bellman

https://wattsupwiththat.com/2023/09/05/uah-global-temperature-update-for-august-2023-0-69-deg-c/#comment-3782334

Now try and claim you weren’t referring to air temperature measurements…

Heh, the downvote crew is already out of bed.

Which is not the same as claiming that bias errors become random.

I’m specifically talking about errors caused by resolution.

Either way, still nonsense.

OMG! Not another insane assertion! Do you *ever* stop?

Resolution is *NOT* an error. Resolution sets a minimum floor on uncertainty but it is *NOT* error.

No, he never stops.

And he still can’t get past uncertainty not being “error”.

Even the GUM was late to this understanding. When measuring crankshaft journals 60 years ago it was never an issue of “error*, it was an issue of not being sure of the true value – i.e. not knowing, i.e. the UNKNOWN. True value/error has *always* been an artifact of “statistical world”, not of the real world.

ASTM treats it as “precision and bias”, but assumes it is possible to quantify non-random errors through testing against known materials. They still haven’t tried to reconcile with ±U.

And this is the problem with Stokes’ alleged systematic-to-random conversion: they are unknown, so how can he prove this really happens?

What do you compare the weather station at Forbes AFB in Topeka, KS with as far as testing against known materials?

Precision and bias is ok for calibration but field instruments simply can’t be assumed to remain calibrated.

That’s the problem, and there are a lot of test procedures in ASTM that are completely incompatible with having a standard reference material.

“Resolution is *NOT* an error.”

Just read what I said. “errors caused by resolution”. Not “Resolution is an error”.

Resolution does not *cause* error. Resolution causes UNKNOWN!

Does he just make this stuff up as he goes along?

He’s a true troll. He simply doesn’t care what he says as long as it gets him attention.

UNKNOWN errors.

You are nit-picking. What do you think you are proving?

Resolution doesn’t cause errors. Resolution is not an error. Errors are not caused by resolution. Take your pick!

Error requires you to *know* the true value. Otherwise you don’t know the error. IT IS AN UNKNOWN.

How hard is that to understand?

He’s been told this uncounted times, and it still can’t stick.

“Error requires you to *know* the true value. ”

It does not. Can’t even imagine why you should make such claim. You can know an error exists without knowing it’s value. You can asses the range of likely errors without knowing what any individual error is. That’s the basis of all the error analysis in all the books you keep promoting.

If you knew the exact error it would mean you knew true mean, and so there would be no uncertainty.

Today’s yarn is…

“You can know an error exists without knowing it’s value.”

More evasion! If you don’t know its value then how do you determine the distribution of the errors?