![latest[1]](http://wattsupwiththat.files.wordpress.com/2010/12/latest1.jpg?resize=256%2C256&quality=83) The sun went spotless yesterday, the first time in quite a while. It seems like a good time to present this analysis from my friend David Archibald. For those not familiar with the Dalton Minimum, here’s some background info from Wiki:

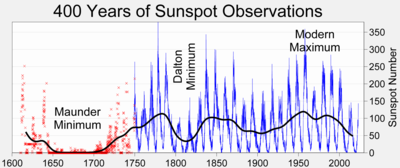

The Dalton Minimum was a period of low solar activity, named after the English meteorologist John Dalton, lasting from about 1790 to 1830.[1] Like the Maunder Minimum and Spörer Minimum, the Dalton Minimum coincided with a period of lower-than-average global temperatures. The Oberlach Station in Germany, for example, experienced a 2.0°C decline over 20 years.[2] The Year Without a Summer, in 1816, also occurred during the Dalton Minimum. Solar cycles 5 and 6, as shown below, were greatly reduced in amplitude. – Anthony

Guest post by David Archibald

James Marusek emailed me to ask if I could update a particular graph. Now that it is a full two years since the month of solar minimum, this was a good opportunity to update a lot of graphs of solar activity.

Figure 1: Solar Polar Magnetic Field Strength

The Sun’s current low level of activity starts from the low level of solar polar magnetic field strength at the 23/24 minimum. This was half the level at the previous minimum, and Solar Cycle 24 is expected to be just under half the amplitude of Solar Cycle 23.

Figure 2: Heliospheric Current Sheet Tilt Angle

It is said that solar minimum isn’t reached until the heliospheric current sheet tilt angle has flattened. While the month of minimum for the 23/24 transition is considered to be December 2008, the heliospheric current sheet didn’t flatten until June 2009.

Figure 3: Interplanetary Magnetic Field

The Interplanetary Magnetic Field remains very weak. It is almost back to the levels reached in previous solar minima.

Figure 4: Ap Index 1932 – 2010

The Ap Index remains under the levels of previous solar minima.

Figure 5: F10.7 Flux 1948 – 2010

The F10.7 Flux is a more accurate indicator of solar activity than the sunspot number. It remains low.

Figure 6: F10.7 Flux aligned on solar minima

In this figure, the F10.7 flux records for the last six solar minima are aligned on the month of minimum, showing the two years of decline to the minimum and the three years of subsequent rise. The Solar Cycle 24 trajectory is much lower and flatter than the rises of the five previous cycles.
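The alignment described for Figure 6 amounts to cutting each record to the same window around its month of minimum so the curves can be overlaid. A minimal sketch of that windowing, assuming a plain list of monthly F10.7 values and a known index for the month of minimum; the function name `align_on_minimum` and its parameters are illustrative and do not come from the post:

```python
from typing import List, Optional


def align_on_minimum(
    monthly_flux: List[float],
    minimum_index: int,
    months_before: int = 24,
    months_after: int = 36,
) -> List[Optional[float]]:
    """Return the values from `months_before` months ahead of the minimum
    to `months_after` months past it. Months that fall outside the record
    (for example, the part of the current cycle not yet observed) are
    returned as None so that every window has the same length."""
    window: List[Optional[float]] = []
    for offset in range(-months_before, months_after + 1):
        i = minimum_index + offset
        window.append(monthly_flux[i] if 0 <= i < len(monthly_flux) else None)
    return window


if __name__ == "__main__":
    # Placeholder series (just the month index), NOT real F10.7 data.
    placeholder = [float(m) for m in range(100)]
    window = align_on_minimum(placeholder, minimum_index=98)
    print(len(window))    # 61 months: 24 before, the minimum, 36 after
    print(window[24:27])  # the minimum, one later month, then missing data
```

Padding with `None` keeps an incomplete cycle on the same horizontal axis as the completed ones, which is what lets a two-year-old cycle be compared with earlier rises.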

Figure 7: Oulu Neutron Count 1964 – 2010

A weaker interplanetary magnetic field means more cosmic rays reach the inner planets of the solar system. The neutron count was higher this minimum than in the previous record. Thanks to the correlation between the F10.7 Flux and the neutron count in Figure 8 following, we now have a target for the Oulu neutron count at Solar Cycle 24 maximum in late 2014 of 6,150.

Figure 8: Oulu Neutron Flux plotted against lagged F10.7 flux

Neutron count tends to peak one year after solar minimum. Figure 8 was created by plotting Oulu neutron count against the F10.7 flux lagged by one year. The relationship demonstrated by this graph indicates that the most likely value for the Oulu neutron count at the Solar Cycle 24 maximum, which is expected in late 2014 at an F10.7 flux value of 100, will be 6,150.
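The procedure behind Figure 8 can be sketched as a lagged straight-line fit: pair each flux value with the neutron count one lag later, fit a line, then read off the count at a chosen flux. The sketch below is an assumption about the method, not the author's actual calculation. The helper names are hypothetical, the fit is ordinary least squares, and the demonstration numbers are abstract placeholders rather than Oulu or F10.7 data.

```python
from typing import List, Sequence, Tuple


def lagged_pairs(
    driver: Sequence[float], response: Sequence[float], lag: int
) -> List[Tuple[float, float]]:
    """Pair driver[t] with response[t + lag] wherever both values exist."""
    usable = min(len(driver), len(response) - lag)
    return [(driver[t], response[t + lag]) for t in range(usable)]


def fit_line(pairs: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict(slope: float, intercept: float, x: float) -> float:
    """Evaluate the fitted line at x."""
    return slope * x + intercept


if __name__ == "__main__":
    # Abstract placeholder values, NOT measurements: the response is
    # built to follow the driver one step later with slope -2.
    driver = [1.0, 2.0, 3.0, 4.0, 5.0]
    response = [99.0] + [10.0 - 2.0 * x for x in driver]
    slope, intercept = fit_line(lagged_pairs(driver, response, lag=1))
    print(slope, intercept)
    print(predict(slope, intercept, 2.5))
```

With monthly data, a one-year lag would correspond to `lag=12`. A negative slope is expected here, since a stronger Sun admits fewer cosmic rays.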

Figure 9: Solar Cycle 24 compared to Solar Cycle 5

I predicted in a paper published in March 2006 that Solar Cycles 24 and 25 would repeat the experience of the Dalton Minimum. With two years of Solar Cycle 24 data in hand, the trajectory established is repeating the rise of Solar Cycle 5, the first half of the Dalton Minimum. The prediction is confirmed. Like Solar Cycles 5 and 6, Solar Cycle 24 is expected to be 12 years long. Solar maximum will be in late 2014/early 2015.

Figure 10: North America Snow Cover Ex-Greenland

The northern hemisphere is experiencing its fourth consecutive cold winter. The current winter is one of the coldest for a hundred years or more. For cold winters to provide positive feedback, snow cover has to survive from one winter to the next so that snow’s higher albedo relative to bare rock will reflect sunlight into space, causing cooler summers. The month of snow cover minimum is most often August, sometimes July. We have to wait another eight months to find out how this winter went in terms of retained snow cover. The 1970s cooling period had much higher snow cover minima than the last thirty years. Despite the last few cold winters, there was no increase in the snow cover minima. The snow cover minimum may have to get to over two million square kilometres before it starts having a significant effect.

David Archibald

December 2010

Vukcevic says

Quote

One of the top people in the field, Angelopoulos, estimates the total energy of the two-hour event (magnetic storm/rope) at five hundred thousand billion (5 x 10^14) Joules. That’s approximately equivalent to the energy of a magnitude 5.5 earthquake. Electric current is estimated at 650,000 A.

Unquote

That’s only during “glow mode”; the power input continues, but in dark mode. How does that translate in Gigawatts?

Looks like a good correlation to me.

Leif says

Quote

The excellent conductor moving into a magnetic field B with speed V sees an electric field VxB driving a current.

Unquote

This I believe is called distraction. It does not answer my point that plasma is an excellent conductor. The sun generates the current and it flows to earth along the conductor, where it encounters load. This generates heat.

The inference that the sun generates current, then produces a plasma that reaches earth, which then generates a mirror current is unique, totally contrary to plasma physics.

Was it not that heat and also pressure demagnetize? Please do not forget that wherever there is magnetism (and especially in fridge magnets) there is electricity at work, at the same time and at a phase angle of 90 degrees. In the case of the fridge magnet, if made from magnetite, it happens between FeO and Fe2O3. Magnets are commonly made from a few metals which also share a common characteristic: jumping valences 2, 3.

Leif Svalgaard says:

December 21, 2010 at 8:20 am

……………

1. I quoted NASA’s numbers, but if you say 6.7 magnitude (30 times stronger than 5.5 magnitude on the Richter scale) that is fine; your numbers are reliable, but your conclusions I often wonder about.

2. Magnetic ropes/ (wrongly named electric current loops) have their base attached to the sun 149,000,000 km away, but the Arctic is less than 6,000 km from wherever field is generated. In addition I know exactly how ‘degaussing coil’ works.

3. You really have to start questioning current understanding of the GMF. Antarctica’s (north MP) is decaying faster than combination of Hudson Bay – Siberia, or either of two individually, whichever data you take (NOAA, Gufm or CALS7K), in addition the Hudson Bay is declining and the Siberia is gaining strength. Logic says it can’t be a single dynamo, and forget about harmonics, that is only a cover for false hypothesis.

Therefore I propose that there are 3 conical vortices, and they behave independently: Siberia (getting stronger), Antarctica (getting weaker); these two are probably far down, while the Hudson Bay is likely to be very shallow (getting weaker with a reverse correlation to the solar activity – ‘degaussed’). Here are the details:

http://www.vukcevic.talktalk.net/MF.htm

I don’t expect you to agree today, tomorrow or the day after, but it might be that your young grandson’s generation may come to accept it as a more realistic proposition.

Grey Lensman says:

December 21, 2010 at 8:43 am

…………..

Known electric power (30KV x 0.65MA) , round number 20TW, for two hours (=40TWh), but stand to be corrected.

Leif Svalgaard says:

December 21, 2010 at 8:20 am

There is a 0.6 factor to reduce Locarno to the 80 mm standard telescope [this is what I referred to when I said that each observer has his own factor], then there is another 0.6 factor to reduce the result to Wolf’s scale [to account for Wolf not counting pores].

I didn’t explain this too well. Trying again: since 1860 Wolf used an even smaller telescope [his ‘handheld’ – because he was often traveling]. Wolfer used the 80 mm scope since 1877. The various factors are:

suppose Wolf had a count with his handheld of 20, then that count was multiplied by 1.5 making it 30 to compare it to the 80 mm. To get to what Wolfer counted with the 80 mm, you divide the 30 by 0.6, giving 50. So, Wolfer using the 80 mm counting every little speck he could see, would have counted 50 where Wolf would have counted 20 with his handheld. All Wolf’s counts between 1860 and 1893 were done with the handheld [which in a sense is the true Wolf standard].

Or to take a recent example: This past November, the ratio between SIDC and Locarno was 0.584. The counts [on the same days] were Loc=33.44, SIDC=19.69. Now, the SIDC count can be converted to Wolfer’s scale by dividing by 0.6, so Wolfer would have counted 19.69/0.6 = 33, which Wolf with his handheld would have seen as 33/1.5 = 22.
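The scale conversions in this comment are plain multiplications and divisions, so they can be checked step by step. A minimal sketch that follows the arithmetic exactly as stated above, using the 1.5 and 0.6 factors from the comment; the constant and function names are illustrative only:

```python
# Factors quoted in the comment; the names are hypothetical.
HANDHELD_TO_80MM = 1.5  # handheld count times 1.5 compares to the 80 mm
WOLF_REDUCTION = 0.6    # divide by 0.6 to reach what Wolfer counted


def handheld_to_wolfer(handheld_count: float) -> float:
    """Wolf's handheld count -> what Wolfer would count with the 80 mm."""
    return handheld_count * HANDHELD_TO_80MM / WOLF_REDUCTION


def reduced_to_wolfer(reduced_count: float) -> float:
    """A count already reduced by 0.6 (e.g. SIDC) -> Wolfer's raw count."""
    return reduced_count / WOLF_REDUCTION


def to_handheld(count: float) -> float:
    """Undo the 1.5 factor, as the comment does in its last step."""
    return count / HANDHELD_TO_80MM


if __name__ == "__main__":
    print(round(handheld_to_wolfer(20)))    # 50, the first example
    print(round(reduced_to_wolfer(19.69)))  # 33, the November SIDC value
    print(round(to_handheld(33)))           # 22, the final step quoted
```

Keeping each factor as a named constant makes it easy to see which step a given reduction applies to.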

Perhaps a bit OT, but this issue of magnetism and electricity reminds me of the current colloquial expression “narrow-mindedness”: it is really meaningful. As our brains work on electricity, the narrower its phase angle the lesser its “emission field”, then the heavier and more somber our thoughts.

Eric (skeptic):

I’m not sure what you mean by catastrophists (sensitivity > 4 °C ?), but there are two issues here. First, the observed warming is consistent with the usual range of estimates of the climate sensitivity, and the usual range of estimates of the net climate forcing (basically CO2 + Methane + black carbon – aerosol direct & indirect effects). If you think the climate sensitivity is lower than 2 °C, that means you think the aerosol forcing is smaller than the usual estimates. Why do you think that?

Second, the upper ocean adjusts pretty quickly to the atmosphere CO2 concentration, so no one who understands the carbon cycle believes that a sudden halt in CO2 output would result in a quick draw-down of CO2. Here’s the standard reference: http://geosci.uchicago.edu/~archer/reprints/archer.2009.ann_rev_tail.pdf

If we were to stop putting CO2 into the atmosphere today, it would take several hundred years before concentrations fell to half-way between today’s values and preindustrial values (unless we pay to pump the stuff out of the air and store it somewhere very stable).

Can somebody perhaps just tell me what is the SI unit of the number displayed on the y-axis of figure 9? What were they measuring 200 years ago and what was the accuracy of that measurement?

Grey Lensman says:

December 21, 2010 at 8:43 am

How does that translate in Gigawatts?

About 100 GW over an area of 100 million square kilometers.

The sun generates the current and it flows to earth along the conductor, where it encounters load. This generates heat.

No, the current does not flow to the Earth. The neutral plasma does. When the neutral plasma meets the Earth’s magnetic field, a current is induced in the Earth’s magnetosphere.

The inference that the sun generates current, then produces a plasma that reaches earth, which then generates a mirror current is unique, totally contrary to plasma physics.

There is no mirror current, it is a new current generated locally at the Earth.

vukcevic says:

December 21, 2010 at 9:22 am

In addition I know exactly how ‘degaussing coil’ works.

But, apparently not how the Earth works.

You really have to start questioning current understanding of the GMF.

Why should I do that? The GMF is generated in the core as the result of a very chaotic process with multiple convection cells, each generating their own magnetic field, resulting in a very jumbled and irregular field. As you move away from any complicated field, it always becomes more regular, in the limit perfectly dipolar.

I don’t expect you to agree today, tomorrow or the day after,

I don’t expect you to learn today, tomorrow, etc

but it might be that your young grandson’s generation may come to accept it as a more realistic proposition.

It is already wrong. You might begin to become a little less self-glorifying.

Leif Svalgaard says: December 21, 2010 at 9:52 am

The GMF is generated in the core as the result of a very chaotic process with multiple convection cells, each generating their own magnetic field, resulting in a very jumbled and irregular field.

I am tempted to say nonsense. Earth rotation is pretty regular, lunar and solar tides are also of perfect regularity, inner sphere is either solid or very high viscosity magma, none prone to chaotic movement.

Why now multiple cells ? Two or three conical vortices can explain whole process. Even the less well behaved Earth’s stratosphere occasionally falls into precisely same pattern as described here:

http://www.vukcevic.talktalk.net/MF.htm

and here:

http://www.vukcevic.talktalk.net/MF-PV.htm

Science evolves; dogma does not, it simply dies.

Your induction idea is wrong too.

About 100 GW (?! ) over an area of 100 million square kilometers (?!).

See numbers here:

http://www.nasa.gov/images/content/203795main_FluxPower_400.jpg

Faraday will tell you that induced current will be concentrated in an outer ring not in a disk.

HenryP says:

December 21, 2010 at 9:51 am

Can somebody perhaps just tell me what is the SI unit of the number displayed on the y-axis of figure 9? What were they measuring 200 years ago and what was the accuracy of that measurement?

It has no units as it is a count. Roughly twice the number of sunspots visible on the Sun at any given time. 200 years ago, the uncertainty was about a factor of two, but can be reduced somewhat by also including the effects of sunspots on the Earth, so is probably better than a factor of two for the high counts, but likely not the low counts, e.g. solar cycle 5, where we actually have very few measurements.

Dan Kirk-Davidoff says:

December 21, 2010 at 9:38 am

I’m not sure what you mean by catastrophists (sensitivity > 4 °C ?), but there are two issues here. First, the observed warming is consistent with the usual range of estimates of the climate sensitivity, and the usual range of estimates of the net climate forcing (basically CO2 + Methane + black carbon – aerosol direct & indirect effects). If you think the climate sensitivity is lower than 2 °C, that means you think the aerosol forcing is smaller than the usual estimates. Why do you think that?

Second, the upper ocean adjusts pretty quickly to the atmosphere CO2 concentration, so no one who understands the carbon cycle believes that a sudden halt in CO2 output would result in a quick draw-down of CO2. Here’s the standard reference: http://geosci.uchicago.edu/~archer/reprints/archer.2009.ann_rev_tail.pdf

If we were to stop putting CO2 into the atmosphere today, it would take several hundred years before concentrations fell to half-way between today’s values and preindustrial values (unless we pay to pump the stuff out of the air and store it somewhere very stable).

Maybe you should read up a bit on your model forcings.

http://wattsupwiththat.com/2010/12/19/model-charged-with-excessive-use-of-forcing/

thanks Leif,

I gather that 200 years ago, to do the measurement, they projected an image of the sun on a screen and did an actual count (was that done every day?). And you say it can be reasonably accurately determined that compared to how we do it now (how?) they missed 50%?

vukcevic says:

December 21, 2010 at 10:29 am

I am tempted to say nonsense. Earth rotation is pretty regular, lunar and solar tides are also of perfect regularity, inner sphere is either solid or very high viscosity magma, none prone to chaotic movement.

The magnetic field is generated in the outer core which has the same viscosity as water. Convecting water is highly chaotic and turbulent [boil some water and have a look]. Same with solar convection zone.

Why now multiple cells ?

Because that is what we observe. The core-mantle boundary is very lumpy.

Science evolves; dogma does not, it simply dies.

A little knowledge can be a dangerous thing. And pseudo-science never dies, no matter what the lack of evidence or understanding [or perhaps just because of that].

Your induction idea is wrong too.

Same comment about a little knowledge.

About 100 GW (?! ) over an area of 100 million square kilometers (?!).

Measured energy input for Kp=5 is 100 GW over the hemisphere. The extent of the area where the precipitation occurs is a ring [actually, two – one in each hemisphere] that can cover [for a large storm] the area I mentioned. For quiet periods the area and the energy are an order of magnitude smaller.

http://www.swpc.noaa.gov/pmap/index.html

Read my explanation of the calculation in the link I gave you.

Faraday will tell you that induced current will be concentrated in an outer ring not in a disk.

The current comes from the magnetosphere, and is concentrated into the auroral oval. A similar oval is induced at depth of a few tens of kilometers. Since the magnetic field is generated in the core, there are no lasting effects from these ‘telluric currents’. The heating is minuscule. Try to calculate Joule per cubic meter and find out yourself. All this is well-known textbook stuff. You can learn something on this blog by paying attention.
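The figures quoted in this reply (100 GW over 100 million square kilometers) can be turned into a power per unit area with a unit conversion. A minimal sketch of that arithmetic, using only the numbers given above; the function name is illustrative:

```python
def power_density_w_per_m2(power_gw: float, area_million_km2: float) -> float:
    """Average power per square metre for a power in GW spread over an
    area given in millions of square kilometres."""
    power_w = power_gw * 1e9                # GW -> W
    area_m2 = area_million_km2 * 1e6 * 1e6  # million km^2 -> km^2 -> m^2
    return power_w / area_m2


if __name__ == "__main__":
    # Storm-time figures quoted in the reply: 100 GW over 100 million km^2.
    print(power_density_w_per_m2(100, 100))
    # Quiet periods: both energy and area an order of magnitude smaller.
    print(power_density_w_per_m2(10, 10))
```

Both cases work out to about one milliwatt per square metre when averaged over the stated area, since the energy and the area shrink by the same factor.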

Leif Svalgaard says:

December 21, 2010 at 8:32 am

Robuk says:

December 21, 2010 at 6:24 am

Leif states that the benchmark telescope is similar to this,

http://i446.photobucket.com/albums/qq187/bobclive/telescope1850-1852.jpg

Before 1856 this type of reflector telescope had a speculum mirror which only

Leif says,

No, the telescopes used for sunspot counting are usually refractors. The standard telescope [which is still in use] is this

http://www.leif.org/research/Wolf-Telescope.jpg

Leif COME CLEAN, this is the telescope you refer to, it was built in 1847,

http://amazing-space.stsci.edu/resources/explorations/groundup/lesson/scopes/harvard/index.php

too late to observe the low sunspot count of the Maunder minimum as shown on your graph, which ended around 1835.

http://i446.photobucket.com/albums/qq187/bobclive/sunspots2.jpg

All observations prior to that date must have been made with inferior speculum reflector telescopes or inferior smaller refractor telescopes.

You are counting sunspots today with superior equipment; unless you can show the exact date these new larger refractors were widely used by observers, and that must precede the year 1800, then you cannot compare today’s observations with a telescope like the Harvard 15 inch refractor built around 1845. You are comparing what you could see on a 405 line TV of the ’50s with today’s Hi Def TVs.

Dan Kirk-Davidoff says:

December 21, 2010 at 9:38 am

“so no one who understands the carbon cycle believes that a sudden halt in CO2 output would result in a quick draw-down of CO2. ”

Irrigating the Sahara and Australian deserts and planting trees could get 8Gtc/year.

Leif Svalgaard says:

December 21, 2010 at 12:23 pm

…………..

You got magma viscosity wrong, even Wikipedia knows that, ever seen film of magma coming down volcano slope, not exactly as rush of water.

Induction would happen in the layer of highest conductivity, not the one you wish it to be. As far as numbers are concerned they are given by NASA for the Arctic as 30kV and 0.65MA:

http://www.nasa.gov/images/content/203795main_FluxPower_400.jpg

hence ~ 40TWh .

The energy is not necessarily converted to heat; two magnetic fields acting against each other produce heat only in so-called ‘reconnection’ (currents short circuit). It is either mechanical movement which may cause tremors (as investigated by NASA) or more likely a minuscule effect on the originator of the magnetic field itself, the Earth’s rotation or LOD, hence its direct correlation with solar activity, and the rise of a hypothesis that LOD is the cause of GW.

I think the more arguments you produce, the less relevant they are, so here I agree to disagree; feel free and go ahead with whatever you think may make your case.

Dr. Svalgaard, Can you update your chart for those of us who follow the 10.7 values? Yours is the most succinct and informative IMHO. TIA!

Leif Svalgaard says:

December 21, 2010 at 8:20 am

There is a 0.6 factor to reduce Locarno to the 80 mm standard telescope [this is what I referred to when I said that each observer has his own factor], then there is another 0.6 factor to reduce the result to Wolf’s scale [to account for Wolf not counting pores].

I think maybe you are the confused one. In the past you have maintained that the 150mm & 80mm telescopes with the same magnification of 64x see the same. Now you are suggesting an extra factor is now applied to bring them together?

I think what is happening is that on some days Locarno gets overridden by the other stations; this occurs when the deviation between stations is bigger. It can be ad hoc, with other days showing the SIDC finished value at Locarno x 0.6. It’s a mess really, but surprisingly the difference between NOAA and SIDC remains around .6 since 2001, maybe not by accident? It might be interesting to look at the Locarno raw figures over a longer period and see how they track against the SIDC value month to month.

HenryP says:

December 21, 2010 at 12:17 pm

I gather that 200 years ago, to do the measurement, they projected an image of the sun on a screen and did an actual count (was that done every day?). And you say it can be reasonably accurately determined that compared to how we do it now (how?) they missed 50%?

Since about 1850 sunspots have been counted [every day] by visual inspection [and often not by projection, but with a filter]. Before that we’ll take any and all observations by whichever means and try to calibrate them in modern terms. There are other means of calibrating the sunspot count – counting aurorae, observing wiggles in the Earth’s magnetic field, measuring radioactive isotopes in ice and tree rings, in meteorites, and more. As we go back in time, we are not ‘missing’ counts; they just become more uncertain and we are not sure if a count of 75 back then is actually that or any other value between 50 and 100 [or worse].

Robuk says:

December 21, 2010 at 1:00 pm

You are counting sunspots today with superior equipment, unless you can show the exact, etc…

I’m not sure what kind of learning disability you have, but sunspots today are counted [and/or reduced to] what observers back in the 1850s would have seen with the telescope I have shown you [and which is still being used for that purpose]. Astronomers deliberately use ‘inferior’ telescopes for two reasons: 1) to stay compatible with the old counts, and 2) once the telescope is ‘good enough’, improving its resolution does not show any more spots, because there is a minimum size to what we call a spot. As I said, you can count people across the street by just looking. Using a high-powered telescope will not show any more people.

Mom2girls says:

December 21, 2010 at 4:31 pm

Dr. Svalgaard, Can you update your chart for those of us who follow the 10.7 values? Yours is the most succinct and informative IMHO. TIA!

Thanks for the encouraging words. I’ll try to find time Real Soon Now.

vukcevic says:

December 21, 2010 at 2:55 pm

You got magma viscosity wrong, even Wikipedia knows that, ever seen film of magma coming down volcano slope, not exactly as rush of water.

As I said [too] little knowledge can be dangerous. You are a good example of that. And you also have a learning disability, it seems. The material in the outer core is not ‘magma’. The viscosity of water is about 0.001-0.002 Pa s, the core at the mantle boundary about 0.001 Pa s. [GEOPHYSICAL RESEARCH LETTERS, VOL. 29, NO. 8, 1217, 10.1029/2001GL014392, 2002].

As far as numbers are concerned they are given by NASA for the Arctic as 30kV and 0.65MA, hence ~ 40TWh .

Which is several hundred times too high. You quoted a ‘top man’ in saying that the energy was 5*10^14 Joule. This number is correct [I got the same number back in 1973]. Now, Mr expert in de-gaussing and Faraday/Maxwell, please tell us how many Joules, 40 TWh is.
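The unit conversion asked for here is short enough to work through. The sketch below takes the thread's figures at face value (40 TWh, 5*10^14 Joule, 30 kV, 0.65 MA, two hours) and only converts units; the function names are illustrative:

```python
SECONDS_PER_HOUR = 3600.0


def twh_to_joules(twh: float) -> float:
    """Terawatt-hours -> joules (1 Wh = 3600 J, 1 TWh = 1e12 Wh)."""
    return twh * 1e12 * SECONDS_PER_HOUR


def electrical_power_watts(volts: float, amps: float) -> float:
    """P = V * I."""
    return volts * amps


if __name__ == "__main__":
    quoted_energy_j = 5e14           # the 5*10^14 Joule figure in the thread
    claimed_j = twh_to_joules(40)    # the ~40 TWh figure
    print(claimed_j)                 # about 1.44e17 J
    print(claimed_j / quoted_energy_j)

    power_w = electrical_power_watts(30e3, 0.65e6)  # 30 kV x 0.65 MA
    print(power_w / 1e9)                            # in GW
    print(power_w * 2 * SECONDS_PER_HOUR)           # two hours, in joules
```

On these numbers 40 TWh is about 1.44 x 10^17 J, roughly 290 times the quoted 5 x 10^14 J. The product of 30 kV and 0.65 MA comes to 19.5 GW rather than 20 TW, and two hours at that power is about 1.4 x 10^14 J, the same order of magnitude as the quoted energy.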

This is showing a correlation between warming/cooling with the activity level of the Sun and the strength of the magnetic field. Beats the old CO2 concentration correlation with warming/cooling – talk about the elephant in the middle of the room… This is the kind of thing we need – careful monitoring of the physical variables over a long period of time. Of course, we must have “accurate” monitoring without political fudging of the data – something that seems to be difficult to achieve…

Geoff Sharp says:

December 21, 2010 at 6:29 pm

I think maybe you are the confused one. In the past you have maintained that the 150mm & 80mm telescopes with the same magnification of 64x see the same. Now you are suggesting an extra factor is now applied to bring them together?

There is indeed some confusion about this. Waldmeier always claimed that a factor of 0.6 was necessary to bring Locarno to agree with Zurich’s 80 mm telescope. The only reasonable interpretation is that if you take Locarno values and multiply by 0.6, you get Wolfer values [actually Waldmeier values]. This is verified by the November values I gave. But the Wolfer values are actually already reduced by 0.6 to get Wolf numbers. So, if Wolf counted 24, Wolfer would have counted 24/0.6 = 40, but he would have reported 24 [to match Wolf]. Then Waldmeier [and my recent test] claims if you take Locarno and multiply by 0.6 you also get 24, so Locarno must also have seen 40, as Wolfer did with the smaller telescope. In other words: 0.6*Locarno = Waldmeier, and Wolf = 0.6*Waldmeier [or Wolfer – setting aside the weighting for a moment], so Wolf = 0.6*(0.6*Locarno), but SIDC is also 0.6*Locarno, which means they are not on the Wolf scale. I’m researching the original documents to try to see where this disconnect originates.

difference between NOAA and SIDC remains around .6 since 2001, maybe not by accident?

They strive to align themselves. But also note that the ratio was 10% higher [0.66] before 2001, attesting to SIDC undercounting.

Mom2girls says:

December 21, 2010 at 4:31 pm

Dr. Svalgaard, Can you update your chart for those of us who follow the 10.7 values? Yours is the most succinct and informative IMHO. TIA!

Updated now.