This post provides an update of the values for the three primary suppliers of global land+ocean surface temperature reconstructions—GISS through August 2015 and HADCRUT4 and NCEI (formerly NCDC) through July 2015—and of the two suppliers of satellite-based lower troposphere temperature composites (RSS and UAH) through August 2015. It also includes a model-data comparison.

INITIAL NOTES (BOILERPLATE):

The NOAA NCEI product is the new global land+ocean surface reconstruction with the manufactured warming presented in Karl et al. (2015).

Even though the changes to the ERSST reconstruction since 1998 cannot be justified by the night marine air temperature product that was used as a reference for bias adjustments (See comparison graph here), GISS also switched to the new “pause-buster” NCEI ERSST.v4 sea surface temperature reconstruction with their July 2015 update.

The UKMO also recently made adjustments to their HadCRUT4 product, but they are minor compared to the GISS and NCEI adjustments.

We’re using the UAH lower troposphere temperature anomalies Release 6.0 for this post even though it’s in beta form. And for those who wish to whine about my portrayals of the changes to the UAH and to the GISS and NCEI products, see the post here.

The GISS LOTI surface temperature reconstruction and the two lower troposphere temperature composites are for the most recent month. The HADCRUT4 and NCEI products lag one month.

Much of the following text is boilerplate…updated for all products. The boilerplate is intended for those new to the presentation of global surface temperature anomalies.

Most of the update graphs start in 1979. That’s a commonly used start year for global temperature products because many of the satellite-based temperature composites start then.

We discussed why the three suppliers of surface temperature products use different base years for anomalies in the post Why Aren’t Global Surface Temperature Data Produced in Absolute Form?

Since the July 2015 update, we’re using the UKMO’s HadCRUT4 reconstruction for the model-data comparisons.

GISS LAND OCEAN TEMPERATURE INDEX (LOTI)

Introduction: The GISS Land Ocean Temperature Index (LOTI) reconstruction is a product of the Goddard Institute for Space Studies. Starting with the July 2015 update, GISS LOTI uses the new NOAA Extended Reconstructed Sea Surface Temperature version 4 (ERSST.v4), the pause-buster reconstruction, which also infills grids without temperature samples. For land surfaces, GISS adjusts GHCN and other land surface temperature products via a number of methods and infills areas without temperature samples using 1200km smoothing. Refer to the GISS description here. Unlike the UK Met Office and NCEI products, GISS masks sea surface temperature data at the poles, anywhere seasonal sea ice has existed, and they extend land surface temperature data out over the oceans in those locations, regardless of whether or not sea surface temperature observations for the polar oceans are available that month. Refer to the discussions here and here. GISS uses the base years of 1951-1980 as the reference period for anomalies. The values for the GISS product are found here. (I archived the former version here at the WaybackMachine.)

Update: The August 2015 GISS global temperature anomaly is +0.81 deg C. It rose (an increase of about +0.06 deg C) since July 2015.

Figure 1 – GISS Land-Ocean Temperature Index

NCEI GLOBAL SURFACE TEMPERATURE ANOMALIES (LAGS ONE MONTH)

NOTE: The NCEI publishes only the product with the manufactured-warming adjustments presented in the paper Karl et al. (2015). As far as I know, the former version of the reconstruction is no longer available online. For more information on those curious adjustments, see the posts:

- NOAA/NCDC’s new ‘pause-buster’ paper: a laughable attempt to create warming by adjusting past data

- More Curiosities about NOAA’s New “Pause Busting” Sea Surface Temperature Dataset

- Open Letter to Tom Karl of NOAA/NCEI Regarding “Hiatus Busting” Paper

- NOAA Releases New Pause-Buster Global Surface Temperature Data and Immediately Claims Record-High Temps for June 2015 – What a Surprise!

Introduction: The NOAA Global (Land and Ocean) Surface Temperature Anomaly reconstruction is the product of the National Centers for Environmental Information (NCEI), which was formerly known as the National Climatic Data Center (NCDC). NCEI merges their new Extended Reconstructed Sea Surface Temperature version 4 (ERSST.v4) with the new Global Historical Climatology Network-Monthly (GHCN-M) version 3.3.0 for land surface air temperatures. The ERSST.v4 sea surface temperature reconstruction infills grids without temperature samples in a given month. NCEI also infills land surface grids using statistical methods, but they do not infill over the polar oceans when sea ice exists. When sea ice exists, NCEI leaves a polar ocean grid blank.

The source of the NCEI values is their Global Surface Temperature Anomalies webpage. (Click on the link to Anomalies and Index Data.)

Update (Lags One Month): The July 2015 NCEI global land plus sea surface temperature anomaly was +0.81 deg C. See Figure 2. It dropped (a decrease of -0.06 deg C) since June 2015 (based on the new reconstruction).

Figure 2 – NCEI Global (Land and Ocean) Surface Temperature Anomalies

UK MET OFFICE HADCRUT4 (LAGS ONE MONTH)

Introduction: The UK Met Office HADCRUT4 reconstruction merges the CRUTEM4 land-surface air temperature product and the HadSST3 sea-surface temperature (SST) reconstruction. CRUTEM4 is the product of the combined efforts of the Met Office Hadley Centre and the Climatic Research Unit at the University of East Anglia. And HadSST3 is a product of the Hadley Centre. Unlike the GISS and NCEI reconstructions, grids without temperature samples for a given month are not infilled in the HADCRUT4 product. That is, if a 5-deg latitude by 5-deg longitude grid does not have a temperature anomaly value in a given month, it is left blank. Blank grids are indirectly assigned the average values for their respective hemispheres before the hemispheric values are merged. The HADCRUT4 reconstruction is described in the Morice et al (2012) paper here. The CRUTEM4 product is described in Jones et al (2012) here. And the HadSST3 reconstruction is presented in the 2-part Kennedy et al (2012) paper here and here. The UKMO uses the base years of 1961-1990 for anomalies. The monthly values of the HADCRUT4 product can be found here.
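Here is a minimal sketch of what "indirectly assigned the average values for their respective hemispheres" means in practice. It assumes cosine-of-latitude area weights and an equal-weight merge of the two hemispheric means; the function name and the toy grid are illustrative and are not taken from the Met Office code.

```python
import numpy as np

def hemispheric_then_global(grid, lats):
    """Area-weighted NH and SH means over non-blank cells, then their simple average.

    grid: (n_lat, n_lon) anomalies, NaN where a cell has no value that month.
    lats: cell-centre latitudes in degrees, one per grid row.
    """
    weights = np.cos(np.deg2rad(lats))[:, None] * np.ones_like(grid)

    def hemi_mean(rows):
        g, w = grid[rows], weights[rows]
        ok = ~np.isnan(g)
        return np.sum(g[ok] * w[ok]) / np.sum(w[ok])

    nh, sh = hemi_mean(lats > 0), hemi_mean(lats < 0)
    return nh, sh, 0.5 * (nh + sh)

# Toy 5x5-degree grid (made-up values): NH at +1.0, SH at -0.2, Arctic rows blank.
lats = np.arange(-87.5, 90.0, 5.0)
grid = np.where(lats[:, None] > 0, 1.0, -0.2) * np.ones((lats.size, 72))
grid[lats > 70] = np.nan

nh, sh, merged = hemispheric_then_global(grid, lats)

# For contrast: one global area-weighted mean that simply skips the blank cells.
w = np.cos(np.deg2rad(lats))[:, None] * np.ones_like(grid)
ok = ~np.isnan(grid)
skipped = np.sum(grid[ok] * w[ok]) / np.sum(w[ok])
print(nh, sh, merged, skipped)
```

Merging the hemispheric means gives the same number as filling the blank Arctic rows with the NH mean first, which is why the blank cells can be described as indirectly infilled. The single global mean that skips blank cells under-weights the hemisphere with more gaps.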

Update (Lags One Month): The July 2015 HADCRUT4 global temperature anomaly is +0.69 deg C. See Figure 3. It decreased (about -0.04 deg C) since June 2015.

Figure 3 – HADCRUT4

UAH LOWER TROPOSPHERE TEMPERATURE ANOMALY COMPOSITE (UAH TLT)

Special sensors (microwave sounding units) aboard satellites have orbited the Earth since the late 1970s, allowing scientists to calculate the temperatures of the atmosphere at various heights above sea level (lower troposphere, mid troposphere, tropopause and lower stratosphere). The atmospheric temperature values are calculated from a series of satellites with overlapping operation periods, not from a single satellite. Because the atmospheric temperature products rely on numerous satellites, they are known as composites. The level nearest to the surface of the Earth is the lower troposphere. The lower troposphere temperature composite includes the altitudes of zero to about 12,500 meters, but is most heavily weighted to the altitudes of less than 3000 meters. See the left-hand cell of the illustration here.

The monthly UAH lower troposphere temperature composite is the product of the Earth System Science Center of the University of Alabama in Huntsville (UAH). UAH provides the lower troposphere temperature anomalies broken down into numerous subsets. See the webpage here. The UAH lower troposphere temperature composite is supported by Christy et al. (2000) MSU Tropospheric Temperatures: Dataset Construction and Radiosonde Comparisons. Additionally, Dr. Roy Spencer of UAH presents at his blog the monthly UAH TLT anomaly updates a few days before the release at the UAH website. Those posts are also regularly cross-posted at WattsUpWithThat. UAH uses the base years of 1981-2010 for anomalies. The UAH lower troposphere temperature product is for the latitudes of 85S to 85N, which represent more than 99% of the surface of the globe.

UAH recently released a beta version of Release 6.0 of their atmospheric temperature product. Those enhancements lowered the warming rates of their lower troposphere temperature anomalies. See Dr. Roy Spencer’s blog post Version 6.0 of the UAH Temperature Dataset Released: New LT Trend = +0.11 C/decade and my blog post New UAH Lower Troposphere Temperature Data Show No Global Warming for More Than 18 Years. The UAH lower troposphere anomalies Release 6.0 beta through August 2015 are here.

Update: The August 2015 UAH (Release 6.0 beta) lower troposphere temperature anomaly is +0.28 deg C. It rose (an increase of about +0.10 deg C) since July 2015.

Figure 4 – UAH Lower Troposphere Temperature (TLT) Anomaly Composite – Release 6.0 Beta

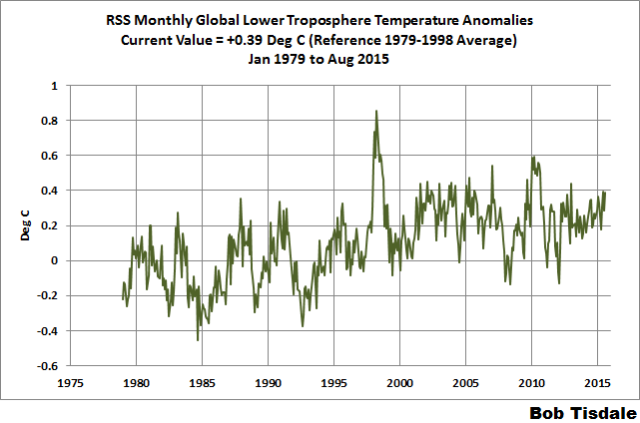

RSS LOWER TROPOSPHERE TEMPERATURE ANOMALY COMPOSITE (RSS TLT)

Like the UAH lower troposphere temperature product, Remote Sensing Systems (RSS) calculates lower troposphere temperature anomalies from microwave sounding units aboard a series of NOAA satellites. RSS describes their product at the Upper Air Temperature webpage. The RSS product is supported by Mears and Wentz (2009) Construction of the Remote Sensing Systems V3.2 Atmospheric Temperature Records from the MSU and AMSU Microwave Sounders. RSS also presents their lower troposphere temperature composite in various subsets. The land+ocean TLT values are here. Curiously, on that webpage, RSS lists the composite as extending from 82.5S to 82.5N, while on their Upper Air Temperature webpage linked above, they state:

We do not provide monthly means poleward of 82.5 degrees (or south of 70S for TLT) due to difficulties in merging measurements in these regions.

Also see the RSS MSU & AMSU Time Series Trend Browse Tool. RSS uses the base years of 1979 to 1998 for anomalies.

Update: The August 2015 RSS lower troposphere temperature anomaly is +0.39 deg C. It rose (an increase of about +0.10 deg C) since July 2015.

Figure 5 – RSS Lower Troposphere Temperature (TLT) Anomalies

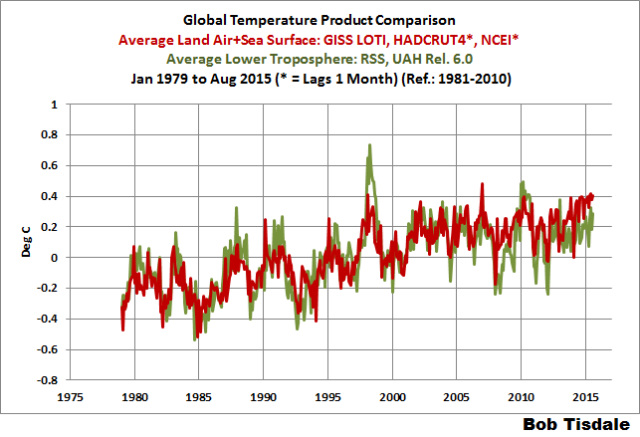

COMPARISONS

The GISS, HADCRUT4 and NCEI global surface temperature anomalies and the RSS and UAH lower troposphere temperature anomalies are compared in the next three time-series graphs. Figure 6 compares the five global temperature anomaly products starting in 1979. Again, due to the timing of this post, the HADCRUT4 and NCEI updates lag the UAH, RSS and GISS products by a month. For those wanting a closer look at the more recent wiggles and trends, Figure 7 starts in 1998, which was the start year used by von Storch et al (2013) Can climate models explain the recent stagnation in global warming? They, of course, found that the CMIP3 (IPCC AR4) and CMIP5 (IPCC AR5) models could NOT explain the recent slowdown in warming, but that was before NOAA manufactured warming with their new ERSST.v4 reconstruction.

Figure 8 starts in 2001, which was the year Kevin Trenberth chose for the start of the warming slowdown in his RMS article Has Global Warming Stalled?

Because the suppliers all use different base years for calculating anomalies, I’ve referenced them to a common 30-year period: 1981 to 2010. Referring to their discussion under FAQ 9 here, according to NOAA:

This period is used in order to comply with a recommended World Meteorological Organization (WMO) Policy, which suggests using the latest decade for the 30-year average.
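Re-referencing to a common base period, as described above, can be sketched as follows. This is an assumption about the simplest way to do it (subtracting each series' own 1981-2010 mean); the function name and the synthetic series are illustrative and are not the supplier values.

```python
import numpy as np

def rebase(years, anomalies, start=1981, end=2010):
    """Shift a monthly anomaly series so its mean over start..end (inclusive) is zero."""
    years = np.asarray(years)
    anomalies = np.asarray(anomalies, dtype=float)
    in_base = (years >= start) & (years <= end)
    return anomalies - np.nanmean(anomalies[in_base])

# Synthetic illustration only: the same made-up signal reported against two
# different base periods, which shows up as two different constant offsets.
years = np.repeat(np.arange(1979, 2016), 12)    # calendar year of each month
signal = 0.015 * (years - 1979)                 # hypothetical slow warming, deg C
series_a = signal + 0.40
series_b = signal - 0.10

rebased_a = rebase(years, series_a)
rebased_b = rebase(years, series_b)
print(np.allclose(rebased_a, rebased_b))
```

Once both series are on the same base period the constant offsets disappear, so any remaining differences between products reflect the reconstructions themselves rather than the choice of base years.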

The impacts of the unjustifiable adjustments to the ERSST.v4 reconstruction are visible in the two shorter-term comparisons, Figures 7 and 8. That is, the short-term warming rates of the new NCEI and GISS reconstructions are noticeably higher during “the hiatus”, as are the trends of the newly revised HADCRUT product. See the June update for the trends before the adjustments. But the trends of the revised reconstructions still fall short of the modeled warming rates.

Figure 6 – Comparison Starting in 1979

#####

Figure 7 – Comparison Starting in 1998

#####

Figure 8 – Comparison Starting in 2001

Note also that the graphs list the trends of the CMIP5 multi-model mean (historic and RCP8.5 forcings), which are the climate models used by the IPCC for their 5th Assessment Report.
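For readers who want to reproduce trend values like those listed on the graphs, here is a minimal sketch of an ordinary least-squares linear trend expressed in deg C per decade. It is an assumption that the graphed trends are simple linear fits of this kind; the function name and test series are illustrative.

```python
import numpy as np

def trend_per_decade(anomalies, start_year, start_month=1):
    """OLS linear trend of a monthly anomaly series, in deg C per decade."""
    y = np.asarray(anomalies, dtype=float)
    # Time axis in decimal years, one step per month.
    t = start_year + (start_month - 1 + np.arange(y.size)) / 12.0
    ok = ~np.isnan(y)                        # skip months with no value
    slope_per_year = np.polyfit(t[ok], y[ok], 1)[0]
    return 10.0 * slope_per_year

# Synthetic check: a made-up series that warms at exactly 0.015 deg C per year.
months = np.arange(12 * 20)
fake = 0.015 * months / 12.0
print(round(trend_per_decade(fake, 1998), 3))
```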

AVERAGE

Figure 9 presents the average of the GISS, HADCRUT and NCEI land plus sea surface temperature anomaly reconstructions and the average of the RSS and UAH lower troposphere temperature composites. Again, because the HADCRUT4 and NCEI products lag one month in this update, the most current average only includes the GISS product.
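The effect of the one-month lag on the average can be sketched like this. The numbers are hypothetical placeholders, not the actual supplier values, and the series are assumed to be on a common base period already.

```python
import numpy as np

# Hypothetical last three months of anomalies (deg C).
# np.nan marks a month that a supplier has not yet published.
giss    = np.array([0.40, 0.38, 0.44])
hadcrut = np.array([0.36, 0.33, np.nan])
ncei    = np.array([0.41, 0.37, np.nan])

stack = np.vstack([giss, hadcrut, ncei])
average = np.nanmean(stack, axis=0)          # mean of whatever is available each month
n_used = np.sum(~np.isnan(stack), axis=0)    # how many products went into each mean
print(average, n_used)
```

In the final month only one product contributes, so the "average" there is simply that product's value.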

Figure 9 – Average of Global Land+Sea Surface Temperature Anomaly Products

MODEL-DATA COMPARISON & DIFFERENCE

Note: The HADCRUT4 reconstruction is now used in this section. [End note.]

Considering the uptick in surface temperatures in 2014 (see the posts here and here), government agencies that supply global surface temperature products have been touting record high combined global land and ocean surface temperatures. Alarmists happily ignore the fact that it is easy to have record high global temperatures in the midst of a hiatus or slowdown in global warming, and they have been using the recent record highs to draw attention away from the growing difference between observed global surface temperatures and the IPCC climate model-based projections of them.

There are a number of ways to present how poorly climate models simulate global surface temperatures. Normally they are compared in a time-series graph. See the example in Figure 10. In that example, the UKMO HadCRUT4 land+ocean surface temperature reconstruction is compared to the multi-model mean of the climate models stored in the CMIP5 archive, which was used by the IPCC for their 5th Assessment Report. The reconstruction and model outputs have been smoothed with 61-month filters to reduce the monthly variations. Also, the anomalies for the reconstruction and model outputs have been referenced to the period of 1880 to 2013 so as not to bias the results.

Figure 10

It’s very hard to overlook the fact that, over the past decade, climate models are simulating way too much warming and are diverging rapidly from reality.

Another way to show how poorly climate models perform is to subtract the observations-based reconstruction from the average of the model outputs (model mean). We first presented and discussed this method using global surface temperatures in absolute form. (See the post On the Elusive Absolute Global Mean Surface Temperature – A Model-Data Comparison.) The graph below shows a model-data difference using anomalies, where the data are represented by the UKMO HadCRUT4 land+ocean surface temperature product and the model simulations of global surface temperature are represented by the multi-model mean of the models stored in the CMIP5 archive. Like Figure 10, to assure that the base years used for anomalies did not bias the graph, the full term of the graph (1880 to 2013) was used as the reference period.

In this example, we’re illustrating the model-data differences in the monthly surface temperature anomalies. Also included in red is the difference smoothed with a 61-month running mean filter.
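The difference calculation and the 61-month smoothing can be sketched as follows. It is an assumption that the filter is a centered running mean and that months without a full window are left blank; the function names and the synthetic inputs are illustrative only.

```python
import numpy as np

def running_mean(x, window=61):
    """Centered running mean; months without a full window are left as NaN."""
    x = np.asarray(x, dtype=float)
    out = np.full(x.size, np.nan)
    half = window // 2
    smooth = np.convolve(x, np.ones(window) / window, mode="valid")
    out[half:half + smooth.size] = smooth
    return out

def model_minus_data(model_mean, observed, window=61):
    """Monthly model-minus-observations difference and its smoothed version.

    Each input is first referenced to its own mean over the full period.
    """
    m = np.asarray(model_mean, dtype=float)
    o = np.asarray(observed, dtype=float)
    diff = (m - m.mean()) - (o - o.mean())
    return diff, running_mean(diff, window)

# Synthetic inputs covering 1880-2013 (134 years of months), made-up warming rates.
t = np.arange(12 * 134) / 12.0
model = 0.008 * t
obs = 0.006 * t
diff, smooth = model_minus_data(model, obs)
```

Because both series are referenced to the full period, the difference averages to zero over 1880 to 2013; what the graph shows is how the difference moves within that period.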

Figure 11

Based on the red curve of the smoothed difference, the greatest difference between models and reconstruction occurs now.

There was also a major difference, but of the opposite sign, in the late 1880s. That difference decreases drastically from the 1880s and switches signs by the 1910s. The reason: the models do not properly simulate the observed cooling that takes place at that time. Because the models failed to properly simulate the cooling from the 1880s to the 1910s, they also failed to properly simulate the warming that took place from the 1910s until 1940. That explains the long-term decrease in the difference during that period and the switching of signs in the difference once again. The difference cycles back and forth, nearing a zero difference in the 1980s and 90s, indicating the models are tracking observations better (relatively) during that period. And from the 1990s to present, because of the slowdown in warming, the difference has increased to its greatest value ever…where the difference indicates the models are showing too much warming.

It’s very easy to see the recent record-high global surface temperatures have had a tiny impact on the difference between models and observations.

See the post On the Use of the Multi-Model Mean for a discussion of its use in model-data comparisons.

MONTHLY SEA SURFACE TEMPERATURE UPDATE

The most recent sea surface temperature update can be found here. The satellite-enhanced sea surface temperature composite (Reynolds OI.2) is presented on global, hemispheric and ocean-basin bases. We discussed the recent record-high global sea surface temperatures in 2014 and the reasons for them in the post On The Recent Record-High Global Sea Surface Temperatures – The Wheres and Whys.

Bob–

You refer to the UAH 6.0 Beta without indicating whether this is beta 2 or beta 3:

“Figure 4 – UAH Lower Troposphere Temperature (TLT) Anomaly Composite – Release 6.0 Beta”

I haven’t seen an explanation of why Beta 3 differs so sharply from Beta 2 in recent years.

For an excellent graphic showing the difference between the two, see:

http://www.drroyspencer.com/2015/09/uah-v6-0-global-temperature-update-for-aug-2015-0-28-c/#comment-198744

In beta2, 2014 was the 6th warmest, but in beta3, 2014 was 5th warmest.

If the present 8 month average of 0.223 were to continue for the rest of the year, UAH would come in third.

There is absolutely no way that UAH will beat 1998 in 2015. With an 8 month average of 0.223, the average over the next 4 months needs to be 1.00 to beat 1998 this year. The highest anomaly ever was in April 1998 when it was 0.742. It is a big jump from 0.276 in August to 0.742. However while 0.742 could get beaten by December, there is no way that we can average 1.00 over the last 4 months of 2015.

The same general situation applies to RSS.
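The arithmetic behind the "needs to be 1.00" figure can be checked with a short sketch. The 1998 annual mean is not stated in the comment, so the value used below is only what the comment's own numbers imply; the function name is illustrative.

```python
def required_average(target_annual, avg_so_far, months_so_far=8, months_in_year=12):
    """Average needed over the remaining months for the annual mean to reach target_annual."""
    remaining = months_in_year - months_so_far
    return (months_in_year * target_annual - months_so_far * avg_so_far) / remaining

# Annual mean implied by the comment: 8 months at 0.223 plus 4 months at 1.00.
implied_annual = (8 * 0.223 + 4 * 1.00) / 12
print(round(implied_annual, 3))
print(round(required_average(implied_annual, 0.223), 2))
```

For comparison, the highest single month quoted above is 0.742, well below the roughly 1.00 that would have to be sustained for four months.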

Well Bob, your fig 2 and 3 for GISS and HADCRUD certainly seem to have scratched the pause from 1998 ish, but ” pause busting ” is a bit of a stretch for my calibrated eyeballs.

The pause seems to me to be alive and well for about 1998-2013/14 ish.

Specially when you compare the slope during that time with the overall historical linear trend rate that they plot.

They may be trying to fool us Bob, but it ain’t working too gud !

Thanx for the update.

g

The “here” goes to beta2 which is not found. What you wanted was beta3 here:

http://vortex.nsstc.uah.edu/data/msu/v6.0beta/tlt/tltglhmam_6.0beta3.txt

Bob, please insert vertical lines in Figures 10 and 11 indicating the time at which the models were constructed. Otherwise these types of charts make it look like the models were validly forecasting for decades and only recently diverged – when in reality the models were hindcasting and failing completely at forecasting.

“But the trends of the revised reconstructions still fall short of the modeled warming rates.”

Needs more fiddling to get the desired result.

Again, Bob, why do you use the GISS and NCEI data, which is manipulated, while the satellite data is not?

It is time to put both GISS /NCEI data with an asterisk which says manipulated.

I for one do not and will not consider their data.

http://icecap.us/images/uploads/JULYR6.png

This is the true global temp. picture.

That is NCEP.

A model

Real time temperatures. Not proxy reconstructions or anything like that. Much different than the BS we see from most other models and provides a very viable forecast of what’s going to come in the real world based on past temperature patterns. Joe Bastardi explains a little of that starting at the 13:50 mark in his most recent weatherbell Saturday Summary: http://www.weatherbell.com/saturday-summary-september-12-2015

wrong rah

The input data stream to NCEP is garbage. go google the station data that goes into the system

that garbage is massaged.

Then that garbage is put into a physics model. the same physics as a GCM.

Plus NCEP sucks compared to other forecasting models

And that’s how Weatherbell, which uses NCEP extensively to formulate its forecasts, has such a miserable record right? And the reason why those guys in the private concern are just starving to death because nobody will buy their products, Right! [sarc]!

Not the garbage GISS puts out.

Millions of $US are spent in calculating global temperatures. Last year I found a fundamental error in the UK’s MetOffice numbers, emailed the info and appropriate correction was made.

Currently I am looking at the ‘natural variability’ component; it appears there might be some problems in there too.

My climate resolutions: stop looking at manipulated data and stop wasting time trying to study the reasoning behind AGW theory. I have wasted so much of my time trying to understand their theory which I have concluded is nonsense.

There is no legitimate reason to do that, really, there is not even an excuse for such behavior. We have excellent revision control systems available, so it is extremely easy to publish all versions of a dataset simultaneously in a structured manner along with plenty of explanation and/or algorithmic support for version upgrades. This gaping omission, an attempt to rewrite the past with no trace whatsoever, is done on purpose, and that purpose can be anything but pretty.

I’m pretty sure the reports that detail the revisions are available online. Is this what you were looking for?

http://www1.ncdc.noaa.gov/pub/data/ghcn/v3/techreports/Technical%20Report%20GHCNM%20No15-01.pdf

http://www1.ncdc.noaa.gov/pub/data/ghcn/v3/techreports/Technical%20Report%20NCDC%20No12-02-3.2.0-29Aug12.pdf

Nope. One would need all versions, not just the most recent v2 & v3 ghcn-m datasets. Which are not available for anonymous ftp anyway.

See?

ftp://ftp.ncdc.noaa.gov/pub/data/ghcn/v2/

ftp://ftp.ncdc.noaa.gov/pub/data/ghcn/v3/

There can only be one version of a data set – the actual data set. Any other “version” is not a data set, but rather an estimate set. Calling a collection of adjusted temperatures a “data set” is a travesty.

+100

No such thing as the Actual data. There is a First report

take a LIG thermometer min max thermometer.

Some time during the day a min temp occurs.

some time during the day a max temp occurs.

THAT is the actual data.

Sometime during the day the thermometer records what it thinks are the min and max. That may not

be done correctly

sometime during the day the observer comes to look at the device.

he observes the device. not the temperature. his observation may be wrong.

he writes down a ROUNDED version of his observation.

this may or may not be accurately done.

And it goes on from there.

However, I will grant you your wish to use RAW data.

You just INCREASED the warming since the net effect of adjustments is to COOL the record

By Steven Mosher’s definition in his reply below, there is no global temperature data; and, thus, there are no global temperature data sets. There are merely sets of rounded observations of questionable estimates of min/max temperatures. From these sets of rounded observations of questionable estimates of min/max temperatures, the producers of the global mean surface temperature records then “conjure up” a global mean surface temperature anomaly to two decimal place precision and zero decimal place accuracy.

I have noted here previously the differences among the anomaly change calculations by these producers for December, 2014, which ranged from 0.06C to 0.15C, or roughly 10% of the total anomaly reported. While we can not be certain that any of the reported anomalies is accurate, we can be sure that not all of them are, since all purport to calculate the temperature change for the same globe for the same month. As laughable as that is, the July report by NCEI of an actual global mean surface temperature to two decimal place precision is even more humorous, at least to those of us with warped senses of humor.

Monthly NINO3.4 time series

http://climexp.knmi.nl/data/ihadisst1_nino3.4a.png

Is the Blob dissipating?

http://www.ospo.noaa.gov/data/sst/anomaly/2015/anomnight.9.14.2015.gif

It is, has been, and always will be total rot to take the trend of half of a cycle of any known cyclic phenomenon and project that indefinitely into the future. The bottom of the AMO was in the 1970s, the peak was in the late 2000s. This is a known cycle. To then take the trend from 1980 to 2010 and project that forward as a linear trend is not science, it is rubbish. But then again so is the eschatological cargo cult of the AGW. I continue to find it of interest that the bottom of the AMO tends to occur at the local bottom of the solar magnetic cycle in 1908 and the 1970s. If the apparent cycle repeats, 2030s will be quite cold and a minimum of the solar activity once again.

Agree.

As I posted on the JC’s blog only 3 days ago.

The AMO has a periodicity of around 60 years (most likely around 64). As this is an oscillating variable, calculating the trend line should be limited to a whole number of cycles, counting back from 2014 (looking at the annual data).

For two full cycles the N. Atlantic SST data prior to 1900 should show a clear peak, but it doesn’t. For almost 50 years of the early data the SST shows no trend, hence for calculating the trend it may be advisable to use just one cycle (in this case 1940 to 2014). As it happens this may not make a great deal of difference.

http://www.vukcevic.talktalk.net/AMO-1cy.gif

From the above it may be concluded that the ‘negative’ AMO may be some years off, considering that the 9 year cycle is near its minimum, and may soon be on the upward trend.

The N. A. SST on the multidecadal scale rises slowly and falls fast, but considering that the last peak lasted some 20 years, I would (think) that the AMO’s ‘flip’ around 2020 is the most likely outcome.

Vuk. Maybe i’m missing something. 1940 to 2014 is 74 years. You are referring to 64 year cycles?

Thanks, you’re ok, it is me who is missing some brain cells. Will correct.
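The point made in this sub-thread about fitting a straight line to part of a cycle can be illustrated with an idealized sketch. It assumes a pure, trend-free cosine with the roughly 64-year period quoted above and a record that starts exactly at a trough; real AMO data are noisier and the result depends on where in the cycle the record starts.

```python
import numpy as np

def ols_slope(t, y):
    """Ordinary least-squares slope of y against t."""
    return np.polyfit(t, y, 1)[0]

def cycle(t, period=64.0):
    """Pure oscillation with no underlying trend; trough at t = 0."""
    return -np.cos(2.0 * np.pi * t / period)

period = 64.0                                  # years, figure quoted in the thread
t_full = np.linspace(0.0, period, 65)          # annual samples, trough to trough
t_half = t_full[t_full <= period / 2.0]        # trough to peak only

slope_half = ols_slope(t_half, cycle(t_half))  # clearly positive
slope_full = ols_slope(t_full, cycle(t_full))  # essentially zero
print(slope_half, slope_full)
```

The half-cycle fit reports a sizeable "trend" even though the signal has none, while the trough-to-trough fit does not. A whole cycle that starts at some other phase would not cancel as cleanly, so this is a best-case illustration rather than a general rule.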

Several problems.

1. you should not compare the observations to the mean of all models. The mean of all models is a model of models. Although it's fun to test against the mean of all models, it is questionable whether the mean of all models is the best model.

2. For comparing land ocean from a model to land ocean from observations, you have to do it right.

You haven't.

In engineering and design models are essential tools. In the climate ‘science’ models are essentially for the WUWT’s punters amusement.

Bob cant even get the basics right.

As many skeptics have argued the “mean of the models” is statistically meaningless.

we accept or reject a model based on that model's skill versus observations. he needs to compare individual models with the observations or he merely recapitulates the IPCC mistake.

further he needs to pull the right model data . and show his work

As usual, Fig. 10 would very much benefit from distinguishing between the forecast and the hindcast.

ANYBODY can get a model to agree with a curve when the required curve shape is sitting in front of you. What counts is the success of the out of sample prediction.

Typo: Bob, the following needs an “as” inserted:

“Also, the anomalies for the reconstruction and model outputs have been referenced to the period of 1880 to 2013 so [as] not to bias the results.”

What about the missing models, Steven?

The missing models. If a model is missing, then it is missing and you cant look at its skill.

any more silly questions.

if you ran 50 different models who in their right mind would average all 50 to see if any given model was correct.

Look, skeptics prattle on about Feynman. each model is a separate and unique theory of the climate turned into code. Did Feynman say that you average theories and then compare them to observations?

NOPE. Every theory every model has to stand the test ALONE… Imagine this

Imagine the world warmed at 1C per century.

Imagine you had 2 models. one with zero warming and one with 2C.

The average would warm at 1C and be perfect.

Thats Bobs method. average them and compare. BUT we know that both are wrong. Bobs approach says they are perfect. two wrongs make a right.

Feynman my butt
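The two-model thought experiment in this comment is easy to put into numbers. The sketch below uses only the rates given in the comment; the variable names are illustrative.

```python
import numpy as np

observed_rate = 1.0                    # deg C per century, from the thought experiment
model_rates = np.array([0.0, 2.0])     # the two imagined models

ensemble_mean_error = abs(model_rates.mean() - observed_rate)
individual_errors = np.abs(model_rates - observed_rate)
print(ensemble_mean_error, individual_errors)
```

The ensemble mean has zero error while each model on its own is off by 1 deg C per century, which is the commenter's argument for scoring models individually.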

Bob, or anyone:

I am trying to do some investigations of my own into temperature trends, and I have run into a problem.

Which online dataset is the most faithful to the actual numbers someone trudged out in the rain in 1921 to write down in a log book? (Worldwide, or wherever you know of good raw data) I have looked at the main online sites and it simply isn’t at all clear what is raw data and what has been “adjusted”. Helpful advice from the well-informed will be greatly appreciated.

For the US you’d be looking for a b91.. its been a while since anthony and some other folks (me) requested them. the problem is you either cant get them or you cant verify that they are original copies..

unless they are hand written and you have writing samples from the observer to compare.

There is doubt all the way down. its just depends how skeptical you want to be.

And even if you have it in hand writing there is no assurance that the person ACTUALLY walked outside to record the measure or that they saw the device correctly, or that they wrote it down and rounded it correctly.

You realize that the FIRST STEP is to round the observation before writing it down? you realize that this is an adjustment and you realize that you can never verify that this was done properly.

Doubt all the way down… if U are a true skeptic..

plus adjustments cool the record, so good luck

You can get B91 forms here.

Thanks Steven, but that’s not what I had in mind. They are selling paper copies. I want a downloadable computer dataset, but the one that is the least manipulated from the original digitisation of the old records.

I think Feynman would first discard the models which are incoherent with the observations and extrapolate from those that come closest to reality. Some of the models are marginally close to reality, isn’t that a clue that CO2’s reradiation effect has been quite overstated?

Or, are the natural factors grossly understated?

Note to Bob Tisdale; Can you handle a delay of a few more days on these monthly reports? The NCEI release schedule is listed at https://www.ncdc.noaa.gov/monitoring-references/dyk/monthly-releases They have both “US National” and “Global” tentative release schedules. Yes, a few times they do have last-minute delays, but they mostly do make the scheduled release date. The August Global data is scheduled to be released 17 September 2015, 11:00 AM EDT. The September data is scheduled to be released 19 October 2015, 11:00 AM EDT.

Does anyone know the answer to this question? When models show a moderately good correlation with historic global average temperatures (whatever that means), they show occasional declines, which they need to do to correlate with the data. What factors are programmed into the models to cause those declines, e.g. the decline in the 1960s in figure 10?

The temperature of the lower troposphere and AMO.

http://woodfortrees.org/plot/rss/plot/esrl-amo/from:1979

I guess Steven Mosher would like to see Bob Tisdale present the Model/real world difference more like this.

I think Bob Tisdale’s ‘average’ model result gets his point across even if it is not technically correct or meaningful to just show the mean. But Maybe it would be better to show all the runs as it better shows the huge uncertainty in any future ‘prediction’.

Back in 2013 I guess a few people were pretty worried. Most models did not seem to have much predictive skill.

http://www.climate-lab-book.ac.uk/2013/updates-to-comparison-of-cmip5-models-observations/

But we can all sigh with relief now as they are back on track.

http://www.climate-lab-book.ac.uk/comparing-cmip5-observations/

Until the latest El Nino ends anyway.

It's a good time to remind people that there is a lag with respect to how global and tropical temperatures respond to the ENSO.

It takes time for an El Nino to build up and then for it to dissipate afterward. 80% of ENSO events peak in the November to February period. This El Nino is not done yet as there is still a lot of warm water in the equatorial undercurrent which has not surfaced yet and it takes time for that water to then reach the Nino 3.4 area where it has the most impact. Unlike last year’s stunted El Nino, there is very little cold water in the eastern Pacific to cool off the warm water surfacing from the undercurrent so this El Nino will not get cut-off like last year.

And then it takes time for all that warm water in the Nino 3.4 area to build up into large convection cloud systems, nearly continuous they get. Then all those clouds actually hold in all that heat that is trying to escape to space. Then it takes even more time for that warm tropical atmosphere over the cloudy Nino 3.4 and Nino 4.0 areas to enter the atmospheric flow patterns north and south and west and affect the rest of the world.

It takes 2-3 months for the tropical atmosphere to peak after the Nino 3.4 peaks and it takes 3-4 months for the rest of the world to be impacted.

Temperatures have only just started to go up from this El Nino.

If the El Nino peaks in mid-December, the most common period, global and tropical temperatures will not peak until February or March or April 2016.

Temps are going up for another 7 months.

(Unless we have a stratospheric volcanic eruption in the mean-time).

http://s23.postimg.org/ggp96uijv/UAH_RSS_Global_Tropics_ENSO_Aug_15.png

Thanks, Bill Illis.

It makes sense that ENSO; the big movements of heat in the Pacific Ocean, and the cloudiness and clear skies it causes in different places, “control” the climate much more than CO2.

CO2 is like a persistent background and its small increase is an unlikely culprit for anything but the greening of the Earth.

I found Fig9 the most interesting as it shows the greatest divergence between the SST and Sat series. While there has been divergence in the past it has been for short periods. What is shown at the end is different as they seem to be running parallel or even growing apart. Is this evidence of the effect of the fiddling going on with the SSTs? As has been mentioned here before, is it a sign that they have overdone the ‘corrections’ to create warming ready for COP21 and that there will be increasing scrutiny and questioning if the two sets continue to diverge?

Thanks, Bob.

I can see the satellites showing no change, the thermometers showing positive change and the models showing alarming trend.

I think the satellites get it right, the thermometers are too few, badly distributed and way overcooked. The CO2-based models, well, the poor things, at least they got the sign correctly for the end of the 20th century. But they really disagree too much among themselves to be anything like a credible party.