Guest Post by Werner Brozek, Edited by Just The Facts:

Some of you may have wondered why the title and the above plot compare different data sets. The reason is that GISS and HadCRUT4.3 are very similar, and UAH6.0 is now very similar to RSS. However, WFT has neither the latest UAH nor the latest HadCRUT. So when you plot UAH on WFT, you are actually plotting version 5.5 and not even 5.6. Version 5.5 has not been updated since December 2014, and HadCRUT4.2 has not been updated since July 2014, so those slopes are off by a large amount because HadCRUT has been at record levels over the last year. GISS and RSS, however, are up to date on WFT.

The times of 58 months and 162 months were chosen to give a symmetric picture of shorter and longer terms where the slopes diverge between the satellites and ground based data sets.

In the next four paragraphs, I will give information for HadCRUT4.2 in July 2014, HadCRUT4.3 in April 2015, UAH5.6 in March 2015 and UAH6.0 in April 2015. The information will be:

1. For how long the slope is flat;

2. Since when the warming is not statistically significant according to Nick Stokes’s calculation;

3. Since when the warming is not statistically significant according to Dr. McKitrick’s calculation where applicable;

4. The previous hot record year; and

5. Where each data set would rank after the given number of months.

For HadCRUT4.2 in July 2014, the slope was flat for 13 years and 6 months. There was no statistically significant warming since November 1996 according to Nick Stokes. Dr. McKitrick said there was no statistically significant warming for 19 years. The previous record warm year was 2010. As of July 2014, HadCRUT4.2 was on pace to be the third warmest year on record.

For HadCRUT4.3 in April 2015, the slope is not negative for any period worth mentioning. There is no statistically significant warming since June 2000 according to Nick Stokes. The previous record warm year was 2014. As of April, HadCRUT4.3 is on pace to set a new record. Note that on all criteria, HadCRUT4.3 is warmer than HadCRUT4.2.

For UAH5.6 as of March 2015, the slope was flat for an even 6 years. There was no statistically significant warming since August 1996 according to Nick Stokes. Dr. McKitrick said there was no statistically significant warming for 16 years; however, this would be from about April 2014. The previous record warm year was 1998. As of March 2015, UAH5.6 was on pace to be the third warmest year on record.

For UAH6.0 as of April 2015, the slope is negative for 18 years and 4 months. There is no statistically significant warming since October 1992 according to Nick Stokes. The previous record warm year was 1998 as well. As of April, UAH6.0 is on pace to be the 8th warmest year. Note that unlike the HadCRUT comparison, UAH6.0 is colder than UAH5.6.

A year ago, Dr. McKitrick used HadCRUT4.2 and UAH5.6 to come up with times for no statistically significant warming on each of these data sets. In the meantime, HadCRUT4.2 has been replaced by HadCRUT4.3 which has been setting hot records over the past year. However UAH5.6 has been replaced with UAH6.0 which is much cooler than the UAH5.6 version. As a result, his times are no longer valid for these two data sets so I will not give them any more.

For RSS, Dr. McKitrick had a time of 26 years. From Nick Stokes’s time of November 1992 for last April to the present time of January 1993, there is very little change in the starting time, however we are now a year later. Therefore I would predict that if Dr. McKitrick ran the numbers again, he would get a time of 27 years without statistically significant warming.

For UAH5.6, Dr. McKitrick had a time of 16 years. However, Nick Stokes’s new time for UAH6.0 is from October 1992. Since this is three months earlier than the RSS time, I would predict that if Dr. McKitrick ran the numbers again, he would also get a time of 27 years without statistically significant warming for the new UAH6.0.

For Hadcrut4.2, Dr. McKitrick had a time of 19 years. At that time, Nick Stokes had a time since October 1996. However, Nick Stokes’s new time for Hadcrut4.3 is from June 2000. It would be reasonable to assume that Dr. McKitrick would get 15 years if he did the calculation today.

In the sections below, as in previous posts, we will present you with the latest facts. The information will be presented in three sections and an appendix. The first section will show for how long there has been no warming on some data sets. At the moment, only the satellite data have flat periods of longer than a year. The second section will show for how long there has been no statistically significant warming on several data sets. The third section will show how 2015 so far compares with 2014 and the warmest years and months on record so far. For three of the data sets, 2014 also happens to be the warmest year. The appendix will illustrate sections 1 and 2 in a different way. Graphs and a table will be used to illustrate the data.

Section 1

This analysis uses the latest month for which data is available on WoodForTrees.com (WFT). All of the data on WFT is also available at the specific sources as outlined below. We start with the present date and go to the furthest month in the past where the slope is at least slightly negative on at least one calculation. So if the slope from September is 4 x 10^-4 but it is – 4 x 10^-4 from October, we give the time from October so no one can accuse us of being less than honest if we say the slope is flat from a certain month.
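The search described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `ols_slope` and `earliest_flat_start` are hypothetical, the series is a plain list of monthly anomalies ending at the latest month, and periods shorter than 12 months are ignored. It is not the WFT implementation.

```python
def ols_slope(values):
    """Least-squares slope of the values against their index (units per month)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    return sxy / sxx


def earliest_flat_start(anomalies, min_months=12):
    """Index of the furthest-back start month whose slope to the latest month
    is negative, or None if no start month of at least min_months qualifies."""
    for start in range(len(anomalies) - min_months + 1):
        if ols_slope(anomalies[start:]) < 0:
            return start
    return None


# Made-up example: a steep rise followed by a very gentle decline.
series = [0.0, 0.2, 0.4, 0.6] + [0.6 - 0.001 * k for k in range(1, 15)]
flat_from = earliest_flat_start(series)
```

With real data, the returned index would be mapped back to a calendar month, such as December 1996 for RSS.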

1. For GISS, the slope is not flat for any period that is worth mentioning.

2. For Hadcrut4, the slope is not flat for any period that is worth mentioning. Note that WFT has not updated Hadcrut4 since July and it is only Hadcrut4.2 that is shown.

3. For Hadsst3, the slope is not flat for any period that is worth mentioning.

4. For UAH, the slope is flat since January 1997 or 18 years and 4 months. (goes to April using version 6.0)

5. For RSS, the slope is flat since December 1996 or 18 years and 5 months. (goes to April)

The next graph shows just the lines to illustrate the above. Think of it as a sideways bar graph where the lengths of the lines indicate the relative times where the slope is 0. In addition, the upward sloping blue line at the top indicates that CO2 has steadily increased over this period.

When two things are plotted as I have done, the left axis only shows a temperature anomaly.

The actual numbers are meaningless since the two slopes are essentially zero. No numbers are given for CO2. Some have asked that the log of the concentration of CO2 be plotted. However WFT does not give this option. The upward sloping CO2 line only shows that while CO2 has been going up over the last 18 years, the temperatures have been flat for varying periods on the two sets.

Section 2

For this analysis, data was retrieved from Nick Stokes’s Trendviewer available on his website. This analysis indicates for how long there has not been statistically significant warming according to Nick’s criteria. Data go to their latest update for each set. In every case, note that the lower error bar is negative so a slope of 0 cannot be ruled out from the month indicated.
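As a rough illustration of what "the lower error bar is negative" means, the sketch below fits a straight line to a monthly series and returns an approximate 95% interval for the slope. It is not Nick Stokes's method. The function name `trend_with_ci`, the normal approximation (z = 1.96) and the simple lag-1 autocorrelation adjustment of the sample size are all assumptions made for this example.

```python
import math


def trend_with_ci(values, z=1.96):
    """Return (slope, lower, upper) for a least-squares trend per time step.

    The standard error is inflated with an effective sample size based on the
    lag-1 autocorrelation of the residuals (an assumed, simplified correction).
    """
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    slope = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values)) / sxx
    residuals = [v - (mean_y + slope * (i - mean_x)) for i, v in enumerate(values)]
    sse = sum(r * r for r in residuals)
    r1 = sum(a * b for a, b in zip(residuals, residuals[1:])) / sse if sse > 0 else 0.0
    r1 = min(max(r1, 0.0), 0.99)  # ignore negative autocorrelation, avoid blow-up
    n_eff = n * (1 - r1) / (1 + r1)
    se = math.sqrt(sse / max(n_eff - 2, 1) / sxx)
    return slope, slope - z * se, slope + z * se


# Made-up series: pure noise, and the same noise on top of a clear trend.
noise = [0.1 * (-1) ** t for t in range(24)]
flat = trend_with_ci(noise)
rising = trend_with_ci([0.05 * t + e for t, e in enumerate(noise)])
```

In the first case the interval straddles zero, so a slope of 0 cannot be ruled out; in the second the lower bound is positive.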

On several different data sets, there has been no statistically significant warming for between 14 and 22 years according to Nick’s criteria. Cl stands for the confidence limits at the 95% level.

The details for several sets are below.

For UAH6.0: Since October 1992: Cl from -0.042 to 1.759

This is 22 years and 7 months.

For RSS: Since January 1993: Cl from -0.023 to 1.682

This is 22 years and 4 months.

For Hadcrut4.3: Since June 2000: Cl from -0.015 to 1.387

This is 14 years and 10 months.

For Hadsst3: Since June 1995: Cl from -0.013 to 1.706

This is 19 years and 11 months.

For GISS: Since November 2000: Cl from -0.041 to 1.354

This is 14 years and 5 months.
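The durations above count both the first and the last month. A small sketch of that arithmetic follows; the helper name `inclusive_span` is hypothetical.

```python
def inclusive_span(start_year, start_month, end_year, end_month):
    """Years and months from the start month through the end month, inclusive."""
    months = (end_year - start_year) * 12 + (end_month - start_month) + 1
    return divmod(months, 12)


uah = inclusive_span(1992, 10, 2015, 4)     # October 1992 through April 2015
rss = inclusive_span(1993, 1, 2015, 4)      # January 1993 through April 2015
hadsst3 = inclusive_span(1995, 6, 2015, 4)  # June 1995 through April 2015
```

For Hadcrut4.3 and GISS the quoted durations correspond to an end month of March 2015 rather than April, which fits the caveat in the table notes that the months may be off by one depending on when the update was last done.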

Section 3

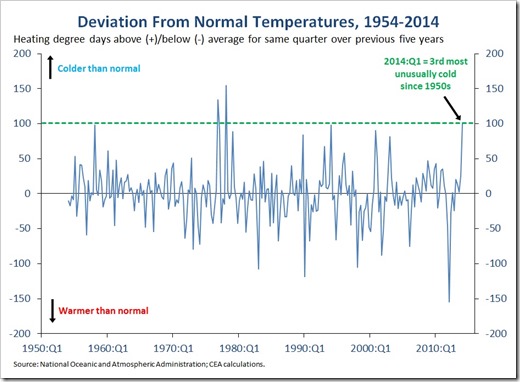

This section shows data for 2015 so far and other information in the form of a table. The five data sources are shown along the top of the table and repeated further down so they should be visible at all times. The sources are UAH, RSS, Hadcrut4, Hadsst3, and GISS.

Down the column are the following:

1. 14ra: This is the final ranking for 2014 on each data set.

2. 14a: Here I give the average anomaly for 2014.

3. year: This indicates the warmest year on record so far for that particular data set. Note that the satellite data sets have 1998 as the warmest year and the others have 2014 as the warmest year.

4. ano: This is the average of the monthly anomalies of the warmest year just above.

5. mon: This is the month where that particular data set showed the highest anomaly. The months are identified by the first three letters of the month and the last two numbers of the year.

6. ano: This is the anomaly of the month just above.

7. y/m: This is the longest period of time where the slope is not positive given in years/months. So 16/2 means that for 16 years and 2 months the slope is essentially 0. Periods of under a year are not counted and are shown as “0”.

8. sig: This is the first month for which warming is not statistically significant according to Nick’s criteria. The first three letters of the month are followed by the last two numbers of the year.

9. sy/m: This is the years and months for row 8. Depending on when the update was last done, the months may be off by one month.

10. Jan: This is the January 2015 anomaly for that particular data set.

11. Feb: This is the February 2015 anomaly for that particular data set, etc.

14. ave: This is the average anomaly of all months to date taken by adding all numbers and dividing by the number of months.

15. rnk: This is the rank that each particular data set would have for 2015 without regard to error bars and assuming no changes. Think of it as an update 20 minutes into a game.

| Source | UAH | RSS | Had4 | Sst3 | GISS |
|---|---|---|---|---|---|
| 1.14ra | 6th | 6th | 1st | 1st | 1st |
| 2.14a | 0.170 | 0.255 | 0.564 | 0.479 | 0.68 |
| 3.year | 1998 | 1998 | 2014 | 2014 | 2014 |
| 4.ano | 0.483 | 0.55 | 0.564 | 0.479 | 0.68 |
| 5.mon | Apr98 | Apr98 | Jan07 | Aug14 | Jan07 |
| 6.ano | 0.742 | 0.857 | 0.835 | 0.644 | 0.93 |
| 7.y/m | 18/4 | 18/5 | 0 | 0 | 0 |
| 8.sig | Oct92 | Jan93 | Jun00 | Jun95 | Nov00 |
| 9.sy/m | 22/7 | 22/4 | 14/10 | 19/11 | 14/5 |
| Source | UAH | RSS | Had4 | Sst3 | GISS |
| 10.Jan | 0.261 | 0.367 | 0.690 | 0.440 | 0.75 |
| 11.Feb | 0.157 | 0.327 | 0.660 | 0.406 | 0.80 |
| 12.Mar | 0.139 | 0.255 | 0.680 | 0.424 | 0.84 |
| 13.Apr | 0.065 | 0.174 | 0.655 | 0.557 | 0.71 |
| Source | UAH | RSS | Had4 | Sst3 | GISS |
| 14.ave | 0.156 | 0.281 | 0.671 | 0.457 | 0.78 |
| 15.rnk | 8th | 6th | 1st | 2nd | 1st |
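Row 14 can be checked directly from rows 10 to 13. The sketch below is one way to do it; the names `MONTHLY` and `average_anomaly` are hypothetical, and `Decimal` with half-up rounding is an assumption chosen so that the result is rounded the way one would round by hand.

```python
from decimal import Decimal, ROUND_HALF_UP

# January to April 2015 anomalies copied from rows 10 to 13 of the table.
MONTHLY = {
    "UAH": ["0.261", "0.157", "0.139", "0.065"],
    "RSS": ["0.367", "0.327", "0.255", "0.174"],
    "Had4": ["0.690", "0.660", "0.680", "0.655"],
    "Sst3": ["0.440", "0.406", "0.424", "0.557"],
    "GISS": ["0.75", "0.80", "0.84", "0.71"],
}


def average_anomaly(readings):
    """Mean of the readings, rounded half-up to the precision of the inputs."""
    values = [Decimal(r) for r in readings]
    places = Decimal(10) ** values[0].as_tuple().exponent  # e.g. Decimal('0.001')
    return (sum(values) / len(values)).quantize(places, rounding=ROUND_HALF_UP)


averages = {name: average_anomaly(vals) for name, vals in MONTHLY.items()}
```

The results match row 14 for all five data sets.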

If you wish to verify all of the latest anomalies, go to the following:

For UAH, version 6.0 was used. Note that WFT uses version 5.5; however, this version was last updated for December 2014 and it looks like it will no longer be given.

http://vortex.nsstc.uah.edu/data/msu/v6.0beta/tlt/tltglhmam_6.0beta2

For RSS, see: ftp://ftp.ssmi.com/msu/monthly_time_series/rss_monthly_msu_amsu_channel_tlt_anomalies_land_and_ocean_v03_3.txt

For Hadcrut4, see: http://www.metoffice.gov.uk/hadobs/hadcrut4/data/current/time_series/HadCRUT.4.3.0.0.monthly_ns_avg.txt

For Hadsst3, see: http://www.cru.uea.ac.uk/cru/data/temperature/HadSST3-gl.dat

For GISS, see:

http://data.giss.nasa.gov/gistemp/tabledata_v3/GLB.Ts+dSST.txt

To see all points since January 2014 in the form of a graph, see the WFT graph below. Note that Hadcrut4 is the old version that has been discontinued. WFT does not show Hadcrut4.3 yet. As well, only UAH version 5.5 is shown which stopped in December. WFT does not show version 6.0 yet.

As you can see, all lines have been offset so they all start at the same place in January 2014. This makes it easy to compare January 2014 with the latest anomaly.

Appendix

In this part, we are summarizing data for each set separately.

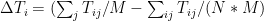

RSS

The slope is flat since December, 1996 or 18 years, 5 months. (goes to April)

For RSS: There is no statistically significant warming since January 1993: Cl from -0.023 to 1.682.

The RSS average anomaly so far for 2015 is 0.281. This would rank it as 6th place. 1998 was the warmest at 0.55. The highest ever monthly anomaly was in April of 1998 when it reached 0.857. The anomaly in 2014 was 0.255 and it was ranked 6th.

UAH6.0

The slope is flat since January 1997 or 18 years and 4 months. (goes to April using version 6.0)

For UAH: There is no statistically significant warming since October 1992: Cl from -0.042 to 1.759. (This is using version 6.0 according to Nick’s program.)

The UAH average anomaly so far for 2015 is 0.156. This would rank it as 8th place. 1998 was the warmest at 0.483. The highest ever monthly anomaly was in April of 1998 when it reached 0.742. The anomaly in 2014 was 0.170 and it was ranked 6th.

Hadcrut4.3

The slope is not flat for any period that is worth mentioning.

For Hadcrut4: There is no statistically significant warming since June 2000: Cl from -0.015 to 1.387.

The Hadcrut4 average anomaly so far for 2015 is 0.671. This would set a new record if it stayed this way. The highest ever monthly anomaly was in January of 2007 when it reached 0.835. The anomaly in 2014 was 0.564 and this set a new record.

Hadsst3

For Hadsst3, the slope is not flat for any period that is worth mentioning. For Hadsst3: There is no statistically significant warming since June 1995: Cl from -0.013 to 1.706.

The Hadsst3 average anomaly so far for 2015 is 0.457. This would rank 2nd if it stayed this way. The highest ever monthly anomaly was in August of 2014 when it reached 0.644. The anomaly in 2014 was 0.479 and this set a new record.

GISS

The slope is not flat for any period that is worth mentioning.

For GISS: There is no statistically significant warming since November 2000: Cl from -0.041 to 1.354.

The GISS average anomaly so far for 2015 is 0.78. This would set a new record if it stayed this way. The highest ever monthly anomaly was in January of 2007 when it reached 0.93. The anomaly in 2014 was 0.68 and it set a new record.

Conclusion

Why are the new satellite and ground data sets going in opposite directions? Is there any reason that you can think of where both could simultaneously be correct? Lubos Motl has an interesting article in which it looks as if satellites can “see” things the ground data sets miss. Do you think there could be something to this for at least a partial explanation?

Updates:

RSS May: With the May anomaly at 0.310, the 5 month average is 0.287 and RSS would remain in 6th place if it stayed this way. The time for a slope of zero increases to 18 years and 6 months from December 1996 to May 2015.

WFT Update: A few days ago, WFT was updated and now shows HadCRUT4.3 to date. UAH5.5 has also been updated, but it shows UAH5.6 and not UAH6.0, so it cannot be used to verify the 18 year and 4 month pause for UAH6.0.


Also relevant to this discussion is the following observation:

http://realclimatescience.com/2015/06/data-tampering-on-the-other-side-of-the-pond/

As I commented there, there’s a publicly available full explanation for the changes out there somewhere, isn’t there?

Seems like HADCRUT 4 has been Karled.

Thank you! In addition, we also had earlier articles here:

http://wattsupwiththat.com/2014/10/05/is-wti-dead-and-hadcrut-adjusts-up-again-now-includes-august-data-except-for-hadcrut4-2-and-hadsst3/

http://wattsupwiththat.com/2014/11/05/hadcrut4-adjustments-discovering-missing-data-or-reinterpreting-existing-data-now-includes-september-data/

Has anyone taken a good look at the UHI around those added Chinese stations? It’s not like there hasn’t been major economic development in the country, after all…

There’s a whole lot of “no statistically significants” in that there above Werner, so it begs the question:

Over the time frames discussed above would there be ANY statistically significant difference between the log of the atmospheric CO2; say from Mauna Loa, and the best straight line linear fit to the same data ??

In other words; why would anybody want to plot something that couldn’t possibly show anything that is statistically significant ?

I venture that statistical significance, is significant only to persons who play the game of statistics.

It isn’t of any significance to the physical universe, which is not even aware that statistical mathematics even exists.

G

I have never seen this discussed anywhere. It would be something for Nick Stokes to comment on. I just sent him an email asking if he would like to respond.

I’m not sure whether the difference would be statistically significant. The CO2 curve is not well modelled by a random process. The deviations are mainly cyclic.

But in fact the difference between log and linear over the period in question is not great. The arithmetic mean (linear) of 280 and 400 is 340. The geometric mean (log) is 334.7.
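The two midpoints quoted above are easy to reproduce. A minimal check, with variable names chosen only for this example:

```python
import math

low_ppm, high_ppm = 280.0, 400.0

# Midpoint on a linear scale versus midpoint on a logarithmic scale.
arithmetic_mean = (low_ppm + high_ppm) / 2
geometric_mean = math.sqrt(low_ppm * high_ppm)
```

The gap between the two is about 5 ppm, which is the sense in which the difference between log and linear is "not great" over this range.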

“I venture that statistical significance, is significant only to persons who play the game of statistics.”

Statistical significance matters if you are trying to deduce something from observations. But often you aren’t. Exponential rise of CO2 is a reasonable descriptor; no principle really hangs on it. The log dependence of forcing on CO2 is a matter of radiative calculation.

Why are satellite and surface datasets different? Because they are measurements of different things.

From memory Cowtan and Way came up with a method for converting one to the other, at least in part.

Could you elaborate why temperature of lower troposphere has a hiatus, pause or plateau, which would not coincide (killing of money?) with surface pause?

Hugh,

I am not sure I understand your question – are you asking me to explain WHY they are different? I do not know; if I did, I would try to write a paper about it.

Which periods from the UAH and RSS datasets do you think contain a “pause”? That is checkable.

Harry believes that the surface is warming, but that above the surface it’s not.

That’s why the cooked data from HADCRUT shows warming, but the satellite data doesn’t.

Yes, they do measure different things. But unless the adiabatic lapse rate is changing, there should not be huge differences over the long run.

Perhaps a better way of saying it is that they measure the “same thing” but take the measurements at different places.

The satellite measurements are most certainly a more uniformly distributed measurement of the atmosphere than are the ground station measurements, which are predominantly clustered around people and cities.

“there should not be huge differences over the long run”

What???

Lower troposphere should have about 2/3 of surface warming rate. Difference should be huge over the long run.

I could be wrong here, but I do not think they converted one to the other. I think they used satellite data where there was no surface data available and figured out what the Hadcrut data would have been if it were available. But now that I look at it this way, I suppose it is a conversion of sorts. The interesting thing is that Cowtan and Way must have had a formula such as a constant adiabatic lapse rate to even attempt this conversion. This just reinforces the idea that the two data sets should not be diverging in my opinion.

Werner Brozek.

I have never seen a scientific study saying the satellite and surface temperatures should track each other. If there is such a study someone can post a citation.

The UAH and RSS estimates do not always track each other, either. I do know the methods they use to allow for unsampled regions and data contamination differ.

I do believe if base lines are aligned correctly and a longer trend is computed then satellite and surface temperatures track each other better.

Harry, you don’t need a study, just a knowledge of basic physics.

Unless the air column follows the adiabatic rate, it will become unstable. If the air at the surface is warming faster than the air at altitude, then the atmosphere becomes unstable and quickly overturns. This is why you get afternoon thunderstorms in many places.

See Paul Homewood’s comment below:

“For those who argue that surface and satellites don’t necessarily follow each other, remember what the Met Office had to say in 2013, when discussing their HADCRUT sets:

Changes in temperature observed in surface data records are corroborated by records of temperatures in the troposphere recorded by satellites””

http://wattsupwiththat.com/2015/06/09/huge-divergence-between-latest-uah-and-hadcrut4-revisions-now-includes-april-data/#comment-1958564

As for the tracking, that was true before UAH6.0. Now they more or less agree.

Werner Brozek.

“Changes in temperature observed in surface data records are corroborated by records of temperatures in the troposphere recorded by satellites”

Well yes, as I said above if you align the baselines correctly and take longer trendlines they do match better. But on shorter time scales, they don’t – that is pretty obvious.

I still need a citation to the scientific literature. The stand-alone quote is ambiguous, context is required.

Werner Brozek.

The only reference I can find says this:

“Changes in temperature observed in surface data records are corroborated by measurements of temperatures below the surface of the ocean, by records of temperatures in the troposphere recorded by satellites and weather balloons, in independent records of air temperatures measured over the oceans and by records of sea-surface temperatures measured by satellites.”

In context he means the changes are “corroborated”. He doesn’t say they follow each other. So I am calling a straw man argument on this one.

The two data sets should not be diverging, period, unless everything we understand about atmospheric thermal dynamics is wrong. That is, I will add my “opinion” to Werner’s and point out that it is based on simple atmospheric physics taught in any relevant textbook.

This does not mean that the cannot and are not systematically differing; it just means that the growing difference is strong evidence of bias in the computation of the surface record. This bias is not, really surprising, given that every new version of HadCRUT and GISS has had the overall effect of cooling the past and/or warming the present! This is as unlikely as flipping a coin (at this point) ten or twelve times each, and having it come up heads every time for both products. In fact, if one formulates the null hypothesis “the global surface temperature anomaly corrections are unbiased”, the p-value of this hypothesis is less than 0.01, let alone 0.05. If one considers both of the major products collectively, it is less than 0.001. IMO, there is absolutely no question that GISS and HadCRUT, at least, are at this point hopelessly corrupted.
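The coin-flip figures in the comment above can be reproduced with simple arithmetic. The sketch assumes, as the comment does, that each revision behaves like an independent fair coin, and it takes ten revisions per product (the lower of the "ten or twelve" mentioned); both are assumptions of the illustration, not measured facts.

```python
REVISIONS_PER_PRODUCT = 10  # assumed count, the lower of "ten or twelve"

# Probability that every revision of one product goes the same given way.
p_single_product = 0.5 ** REVISIONS_PER_PRODUCT

# Probability that this happens for both products, treated as independent.
p_both_products = p_single_product ** 2
```

Under these assumptions the first value is a little under 0.001 and the second a little under 0.000001, consistent with the thresholds quoted in the comment.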

One way in which they are corrupted is with the well-known Urban Heat Island effect, wherein urban data or data from poorly sited weather stations shows local warming that does not accurately reflect the spatial average surface temperature in the surrounding countryside. This effect is substantial, and clearly visible if you visit e.g. Weather Underground and look at the temperature distributions from personal weather stations in an area that includes both in-town and rural PWSs. The city temperatures (and sometimes a few isolated PWSs) show a consistent temperature 1 to 2 C higher than the surrounding country temperatures. Airport temperatures often have this problem as well, as the temperatures they report come from stations that are deliberately sited right next to large asphalt runways, as they are primarily used by pilots and air traffic controllers to help planes land safely, and only secondarily are the temperatures they report almost invariably used as “the official temperature” of their location. Anthony has done a fair bit of systematic work on this, and it is a serious problem corrupting all of the major ground surface temperature anomalies.

The problem with the UHI is that it continues to systematically increase independent of what the climate is doing. Urban centers continue to grow, more shopping centers continue to be built, more roadway is laid down, more vehicle exhaust and household furnace exhaust and water vapor from watering lawns bumps greenhouse gases in a poorly-mixed blanket over the city and suburbs proper, and their perimeter extends, increasing the distance between the poorly sited official weather stations and the nearest actual unbiased countryside.

HadCRUT does not correct in any way for UHI. If it did, the correction would be the more or less uniform subtraction of a trend proportional to global population across the entire dataset. This correction, of course, would be a cooling correction, not a warming correction, and while it is impossible to tell how large it is without working through the unknown details of how HadCRUT is computed and from what data (and without using e.g. the PWS field to build a topological correction field, as UHI corrupts even well-sited official stations compared to the lower troposphere temperatures that are a much better estimator of the true areal average) IMO it would knock at least 0.3 C off of 2015 relative to 1850, and would knock off around 0.1 C off of 2015 relative to 1980 (as the number of corrupted stations and the magnitude of the error is not linear — it is heavily loaded in the recent past as population increases exponentially and global wealth reflected in “urbanization” has outpaced the population).

GISS is even worse. They do correct for UHI, but somehow, after they got through with UHI the correction ended up being neutral to negative. That’s right, UHI, which is the urban heat island effect, something that has to strictly cool present temperatures relative to past ones in unbiased estimation of global temperatures ended up warming them instead. Learning that left me speechless, and in awe of the team that did it. I want them to do my taxes for me. I’ll end up with the government owing me money.

However, in science, this leaves both GISS and HadCRUT (and any of the other temperature estimates that play similar games) with a serious, serious problem. Sure, they can get headlines out of rewriting the present and erasing the hiatus/pause. They might please their political masters and allow them to convince a skeptical (and sensible!) public that we need to spend hundreds of billions of dollars a year to unilaterally eliminate the emission of carbon dioxide, escalating to a trillion a year, sustained, if we decide that we have to “help” the rest of the world do the same. They might get the warm fuzzies themselves from the belief that their scientific mendacity serves the higher purpose of “saving the planet”. But science itself is indifferent to their human wishes or needs! A continuing divergence between any major temperature index and RSS/UAH is inconceivable and simple proof that the major temperature indices are corrupt.

Right now, to be frank, the divergence is already large enough to be raising eyebrows, and is concealed only by the fact that RSS/UAH only have a 35+ year base. If the owners of HadCRUT and GISSTEMP had the sense god gave a goose, they’d be working feverishly to cool the present to better match the satellites, not warm it and increase the already growing divergence, because no atmospheric physicist is going to buy a systematic divergence between the two, as Werner has pointed out, given that both are necessarily linked by the Adiabatic Lapse Rate which is both well understood and directly measurable and measured (via e.g. weather balloon soundings) more than often enough to validate that it accurately links surface temperatures and lower troposphere temperatures in a predictable way. The lapse rate is (on average) 6.5 C/km. Lower Troposphere temperatures from e.g. RSS sample predominantly the layer of atmosphere centered roughly 1.5 km above the ground, and by their nature smooth over both height and surrounding area (that is, they don’t measure temperatures at points, they directly measure a volume averaged temperature above an area on the surface). They by their nature give the correct weight to the local warming above urban areas in the actual global anomaly, and really should also be corrected to estimate the CO2 linked warming, or rather the latter should be estimated only from unbiased rural areas or better yet, completely unpopulated areas like the Sahara desert (where it isn’t likely to be mixed with much confounding water vapor feedback).
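The lapse-rate argument in the comment above can be put in numbers. The sketch assumes a fixed average lapse rate of 6.5 C/km and a layer centered 1.5 km up, both taken from the comment; the function name `temperature_at_height` and the 15.0 C surface value are hypothetical. Whether the real lapse rate stays fixed is exactly what is disputed elsewhere in this thread.

```python
LAPSE_RATE_C_PER_KM = 6.5  # average value quoted in the comment
LAYER_HEIGHT_KM = 1.5      # approximate center of the lower troposphere layer


def temperature_at_height(surface_c, height_km, lapse=LAPSE_RATE_C_PER_KM):
    """Temperature at a given height for a fixed (assumed constant) lapse rate."""
    return surface_c - lapse * height_km


before = temperature_at_height(15.0, LAYER_HEIGHT_KM)
after = temperature_at_height(15.3, LAYER_HEIGHT_KM)  # surface warms by 0.3 C
change_aloft = after - before
```

With a constant lapse rate the layer is always 9.75 C cooler than the surface, so a 0.3 C change at the surface shows up as the same 0.3 C change aloft, which is the sense in which the two anomalies would be expected to move together.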

RSS and UAH are directly and regularly confirmed by balloon soundings and, over time, each other. They are not unconstrained or unchecked. They are generally accepted as accurate representations of LTT’s (and the atmospheric temperature profile in general).

The question remains as to how accurate/precise they are. RSS uses a sophisticated Monte Carlo process to assess error bounds, and eyeballing it suggests that it is likely to be accurate to 0.1-0.2 C month to month (similar to error claims for HadCRUT4) but much more accurate than this when smoothed over months or years to estimate a trend as the error is generally expected to be unbiased. Again this ought to be true for HadCRUT4, but all this ends up meaning is that a trend difference is a serious problem in the consistency of the two estimators given that they must be linked by the ALR and the precision is adequate even month by month to make it well over 95% certain that they are not, not monthly and not on average.

If they grow any more, I would predict that the current mutter about the anomaly between the anomalies will grow to an absolute roar, and will not go away until the anomaly anomaly is resolved. The resolution process — if the gods are good to us — will involve a serious appraisal of the actual series of “corrections” to HadCRUT and GISSTEMP, reveal to the public eye that they have somehow always been warming ones, reveal the fact that UHI is ignored or computed to be negative, and with any luck find definitive evidence of specific thumbs placed on these important scales. HadCRUT5 might — just might — end up being corrected down by the ~0.3 C that has probably been added to it or erroneously computed in it over time.

rgb

rgbatduke, brilliant post. Worthy of being made a main article, if….

It is true that GISS increases its warming trend to counter the increasing Urban Heat Island effect.

That cannot possibly be true. It makes no sense.

Are you sure of that?

Good comments by rgb – thank you.

Also thanks to Werner Brozek, Richard Courtney and others – a worthwhile discussion.

For the record, in my January 2008 paper at http://icecap.us/images/uploads/CO2vsTMacRaeFig5b.xls

I used UAH5.2 and HadCrut3.

In Fig. 1 you can see an apparent warming bias in HadCrut3 vs. UAH5.2 of about 0.2C in three decades, or about 0.06-0.07C per decade.

A critic might suggest that warming bias is closer to 0.1C in three decades… whatever…

M Courtney

Yes, rgb is right about UHI adjustments.

But there are worse problems than that.

Temperature is an intrinsic property so it cannot be averaged according to the laws of physics. But temperature is averaged according to the laws of climate science, and those laws are problematic.

1. There is no agreed definition of global average surface temperature anomaly (GASTA).

2. Each team that produces values of GASTA uses its own definition of GASTA.

3. Each team that produces values of GASTA alters its definition of GASTA most months and each such alteration changes its indications of past GASTA values.

4. Whatever definition of GASTA is or could be adopted, there is no possibility of a calibration reference for measurements of GASTA.

I commend you to read this item especially its Appendix B.

Richard

Thank you very much rgb!

I also agree that this should be elevated to be a main article.

(If it is, this typo in the second paragraph should be fixed: “This does not mean that the cannot and are not systematically differing”

they cannot)

No. However, I’ve read a number of articles that are at least reasonably convincing that suggest that it is, some of them on WUWT or linked sites. One such article actually broke down the GISS UHI correction by station and showed that roughly a third of the UHI corrections decreased station temperatures, a third increased them, and a bunch in the middle showed no change. Sadly, I failed to link it and don’t remember who did it (but I have little reason to doubt what they stated). Indeed, articles openly published on the UHI effect by warmist sites directly state that it is the lack of any substantive change to the global anomaly upon applying the GISS correction that proves that UHI is a non-issue.

Other sites (again, referencing articles on WUWT in several cases by people who I believe to be both generally honest and reasonably competent) disagree:

http://www.greenworldtrust.org.uk/Science/Scientific/UHI.htm

or more recently:

http://wattsupwiththat.com/2014/03/14/record-daily-temperatures-and-uhi-in-the-usa/

Personally, I find it very suspicious that a correction that almost certainly should show some cooling effect ends up neutral when applied by GISS, and AFAIK HadCRUT just ignores UHI, and both warmist and skeptical sites seem to agree that the GISS correction is small, essentially neutral. NCDC disagrees, but claims that their (sometimes large) homogenization correction accounts for it and that it doesn’t explain CONUS warming over the last century. The WUWT article linked above (which admittedly focuses on the southeast) disagrees. So does the article on rural vs urban county warming in California. North Carolina (where I live) has been temperature neutral for over 100 years, with no significant trend.

That’s the odd thing about this “Global” warming. It’s not terribly global, even over a century of data, when you look at unadjusted data or data maintained by people who don’t use a model to turn it into a global product.

So no, I’m not certain, and yes, it is a shame because I’d love to be able to trust a global temperature record so that one could compare model predictions or forecasts to an objective standard. However, direct quotes of e.g. Phil Jones in climategate letters and Hansen’s obvious personal bias make it pretty clear that HadCRUT and GISS — their personal babies — have been built and maintained not by objective scientists but by strongly politically partisan scientists who have proven directly obstructive (in the case of Jones) when people interested in checking their data and methods attempted to do so. Yet simple red flags such as the obvious bias in corrections to the major temperature products over many version changes go ignored. If we are to believe them, all of the measurements made before 1950 or so caused daily temperatures to be overestimated, all of the measurements made after 1950 including contemporary ones (go figure!) cause them to be underestimated. Obviously, 1950 was a very good year for buying thermometers. Wish I had me one of them vintage 1950 mercury babies… then I’d know the temperature outside…

This is, to put it simply, extremely implausible. In particular it is difficult to understand how temperatures over the last three decades could possibly require additional upwards correction. From the 50’s or 60’s on (post-World War II), weather reporting and weather stations have been built using proper equipment and paid, trained personnel and, in much of that, electronic reporting that could not reasonably have a high or low systematic bias such as time of day.

The latest paper that is being skewered is simply the latest, clumsiest version of this. A major world meeting (G7) is going on to try to get agreement among the major world powers on global warming and the need to basically halt modern civilization in its tracks to prevent it at any or all human cost and at exactly the right moment a new paper appears that claims that the fact that there has been no statistically significant warming for at least 15 years (acknowledged even in AR5, which is not known for its objectivity on the issue) if not 18 to 20 years — basically the entire shelf life of the Assessment Reports themselves — is incorrect, that there really has been warming, and that the source of this warming is the underreporting of intake water temperatures on ships!

I’m still working on that one. Intake water drawn in by a ship has not been warmed by the engine in any ship that is under way. The water behind the ship might conceivably be warmed by the engine, if you sampled it at the precise spot where the cooling water was returned to the ocean and within seconds of doing so. After a few seconds, we have a simple mixing problem. Assuming cooling water with an exit temperature of 373 K (100 C, an overestimate) and ocean water at 273 K (0 C, an obvious underestimate), to get a 0.1 C temperature change in the latter we have to mix each liter of hot exhaust water with 1000 liters of ocean water. 1000 liters happens to be 1 cubic meter of ocean water. No matter how I mentally juggle the mixing of water in the wake of a major boat underway or during the laminar flow of water up from a forward intake to even a badly located temperature sensor (one e.g. sitting right on top of the engine itself) I don’t see much chance of a major warming relative to the true intake temperature (which is true SST). I absolutely don’t see how engine exhaust heat returned as water that is immediately mixed by the wake behind the boat is going to affect intake water temperatures in front of the wake, or for that matter behind the boat after the boat has travelled as little as a single meter. I’d want to see direct evidence, and I do mean direct, experiments performed on an ensemble of randomly selected actual ships underway on randomly selected parts of the ocean with reliable instruments in the sea in front of the intake valves compared to the intake temperature, before I’d even imagine such a correction to be globally accurate.
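The mixing arithmetic above can be checked with a few lines of Python. This is only a rough sketch: it assumes the two bodies of water have equal density and heat capacity, so the mixed temperature is a simple volume-weighted mean, and the function names are made up for illustration.

```python
def mixed_temperature(t_hot, t_cold, litres_hot, litres_cold):
    """Volume-weighted mean temperature of two water parcels.

    Assumes equal density and heat capacity for both parcels.
    """
    total = litres_hot + litres_cold
    return (t_hot * litres_hot + t_cold * litres_cold) / total


def dilution_for_rise(t_hot, t_cold, rise):
    """Litres of cold water per litre of hot water that leave the
    mixture exactly `rise` degrees above the cold temperature."""
    return (t_hot - t_cold) / rise - 1.0


# 373 K exhaust water into 273 K sea water, 1 litre into 1000 litres.
rise = mixed_temperature(373.0, 273.0, 1.0, 1000.0) - 273.0
litres_needed = dilution_for_rise(373.0, 273.0, 0.1)
```

Under these assumptions one litre mixed into 1000 litres gives a rise of 100/1001 degrees, just under 0.1, and an exact 0.1 degree rise needs 999 litres, which matches the "1000 liters, about a cubic meter" figure to within rounding.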

Worse, this paper argues that ARGO buoys are not measuring SST correctly, but corrected ship intake measurements do! Really? So we spent a gazillion dollars on ARGO to get systematically biased results? My recollection of ARGO is that it was motivated largely because ship-based data is highly unreliable and becomes more unreliable the further you go into the past until it is based on sampling buckets of water drawn up by hand using ordinary thermometers held up in the wind to take a reading by indifferent skippers along 19th century trade routes (giving us no samples at all in most of the ocean).

So what are the odds that this paper is a) apolitical; b) objective; c) timed by pure chance? I’d have to say “zero”. And we haven’t even gotten to the next actual climate summit. Expect yet another “adjustment” of the present and its nasty old inaccurate thermometers just in time to be able to claim that the hiatus is over.

The only problem is, these adjustments are going to serve no useful purpose but to increase the already glaring gap between surface temperatures and RSS/UAH (and the latest creates a new, bigger gap between it and even far-from-objective HadCRUT and GISSTemp, to add to their gap with RSS). At some point the scientists involved will have no choice but to address this gap, because there are simply too many ways to directly measure and challenge the individual temperatures at grid points to build the averages.

Also, the public dislikes being lied to or “fooled”. If the latest paper is torn to shreds in short order even by those that believe in global warming — as I think is not unlikely, as they are going head to head with all of the people with an interest in ARGO, so there is actual money and academic reputation on the line across a broad patch of scientists here who have used ARGO data and Hadley data at face value, all now being challenged — it is not at all unlikely that it will backfire.

I can hardly wait for September. Will we see HadCRUT4 and GISS magically sprout a 0.2 C jump in August (with RSS remaining stubbornly nearly unchanged)? Will ENSO save the day for warmists everywhere and convince China and India to remain energy poor for the rest of eternity, convince the US Congress to commit economic hara-kiri? Will past-peak solar activity start to have an impact through as-yet unverified means? Stay tuned, folks, and get out that popcorn!

rgb

It’s hard to keep up with this mini-thread, but:

Sadly, Richard, I just can’t get started properly on GASTA itself, but I feel your pain. The assertion that is implicit in GASTA is that we know the anomaly as a function of position and time worldwide all the way back into the indefinite past and across multiple changes of equipment and local environment to a precision ten times the precision we know the actual average temperature produced by the exact same thermometers. Thermometers, as you point out, are forced to maintain a constant reference. It would be embarrassing if a thermometer maker sold thermometers that showed water boiling at 110 C or water freezing at -5 C, so nearly all secular thermometers are very likely to be quality controlled across those points.

Then we get to a miracle of modern statistics. The assertion is basically that we know the globally averaged anomaly, the average of T(x, t) − T_ref(x) over all sites x, more accurately than we know the globally averaged temperature, the average of T(x, t) itself.

In words, this means that we know the local temperature anomaly at some site, computed as the difference between its recorded temperature and a reference temperature computed using some base period. Somehow this number, averaged over the entire surface of the planet, is more accurate than the direct estimate of the average temperature. I’m still trying to figure that one out. I’d very much like somebody to show me an independent, identically distributed set of “random” data, generated numerically from a common distribution, where any similarly defined anomaly in the data is known more accurately than the simple average of the data. Ordinarily I’d say that the sums are linear, the error in the reference temperature has to be added to the overall error obtained by any reaveraging of the monthly local data, and this difference will always be less accurate than the simple average.

But I could be mistaken about this. Stats is hard, and I’m not expert on every single aspect of it. I just have never seen a theorem supporting this in any stats textbook I’ve looked at, and it makes no sense when I try to derive it myself. But perhaps there is a set of assumptions that can justify it. I’d just like to learn what they are supposed to be.
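For what it is worth, the i.i.d. case described above is easy to try numerically. The sketch below is a toy Monte Carlo, not anybody's actual method. It assumes the base-period readings and the current readings are independent draws from the same Gaussian, and the sample sizes, sigma and function name are all made up. It deliberately does not model persistent site-to-site offsets, so it says nothing either way about what happens once those are included.

```python
import random
import statistics


def simulate(n_base=30, n_now=30, sigma=1.0, trials=2000, seed=0):
    """Compare the scatter of a simple mean with the scatter of an
    'anomaly' (current mean minus base-period mean) for i.i.d. data.

    Returns (spread of the mean, spread of the anomaly) over all trials.
    """
    rng = random.Random(seed)
    means, anomalies = [], []
    for _ in range(trials):
        base = [rng.gauss(0.0, sigma) for _ in range(n_base)]
        now = [rng.gauss(0.0, sigma) for _ in range(n_now)]
        mean_now = statistics.fmean(now)
        means.append(mean_now)
        # The reference mean has its own sampling error, which is
        # carried into the anomaly when it is subtracted.
        anomalies.append(mean_now - statistics.fmean(base))
    return statistics.pstdev(means), statistics.pstdev(anomalies)


sd_mean, sd_anomaly = simulate()
```

With equal sample sizes, the variances of two independent means add, so under these assumptions the anomaly should scatter about sqrt(2) times as widely as the simple mean.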

In the meantime, we know global average surface temperature today no more accurately than 1 C (according to NASA) but we know the anomaly in the year 1850 to within around 0.3 C (according to HadCRUT4), which is also only around twice as imprecise as our knowledge of the anomaly today (according to HadCRUT4).

I have to say that I am very, very tempted to just state that this has to be incorrect in some really humongous, unavoidable way. I can see no conceivable way that our knowledge of the global anomaly in 1850 could possibly be only twice as imprecise as our knowledge today. If a Ph.D. student made such an assertion, I’d bitch-slap them and tell them to go try again, or be prepared to have their data and stats gone over with a fine-toothed comb. But it just sits there, on Hadley’s website, right there in their data file containing HadCRUT4, unchallenged.

Madness. Possibly mine, of course. But I’m willing to be taught and corrected, if anybody can direct me to a place where this sort of thing is derived as being valid.

rgb

Converting one to the other means making up numbers that were never a part of any observation anywhere by anybody. Sorta like Scotch mist!

g

The main purpose of Cowtan and Way was to refute the pause. They did this by infilling data at the poles, where there are few records, with what they thought the data should be. And voila! Pesky pause removed.

I am curious what their analysis would show with the new UAH6.0 that is very close to RSS now. Would the pause still be there?

Reg Nelson,

I don’t see any problem with a study that comes up with an estimate for unsampled regions. From memory that is why HadCRUT4 and GISTEMP differ as well.

I keep saying the IPCC never referred to the slowdown as a ‘pause’, they called it a hiatus. But I find the new found trust in the various global average temperature increase encouraging, far better than outright denial.

There is always a problem with estimating missing data.

Among other things, it dramatically increases your error bars.

The other is when the estimation is inherently invalid, such as taking land stations and stretching them across an ice-covered ocean.

Data that do not exist cannot be created from “whole cloth” and “infilled”. No measurement=no data.

It seems to me that most people here trust the satellites that show no temperature increase.

Werner Brozek,

Correct. Satellite data is the most accurate.

That said, it should be noted that no dataset agrees completely with any others. There are reasons for those slight discrepancies. In the past, RSS and UAH have diverged by a tenth of a degree or so. But UAH 6 is almost identical now with RSS.

What is more important than a specific temperature point is the trend. For the past 18 ½ years there has been no trend in global T. Global warming has stopped.

It may resume. Or not. Or the planet could cool. But readers should remember that the change in T over the past century is only about 0.7ºC. Geologically speaking, that is nothing. That is as close to no change as you can find in the temperature record.

Just prior to our current Holocene, temperatures changed by TENS of degrees — and within only a decade or two. That is scary; 0.7ºC is not. In fact, it is hard to find a century in the temperature record that has been as benign as the past century. Current global temperatures could hardly be any better.

Climate alarmists are desperately looking for something to show that there is a problem. So far, they have failed.

True. For one thing, UAH goes to 85 S but if I am not mistaken, RSS only goes to 70 S. However there is good agreement despite this. Presumably 70 S to 85 S is not much different than the rest of the earth.

Werner, this is my problem with some contributors here. I am deeply suspicious of satellite data, as it doesn’t directly record temperature. Surface temperature datasets are dubious for known reasons, but I am uneasy about satellite data being taken as ‘gospel’ on here by authors of articles, and contributors to those posts. Believing what you want to because it reinforces your belief is dangerous to your objectivity. We need 1,000 (worldwide) set of pylons, 100 metres up (away from influence) containing a Stevenson screen – and direct temperatures taken without any adjustments, run by solar cells, and the info beamed across the internet 24 hours a day. Imagine, no more fiddling, and no more arguing!

In the same way, does a mercury thermometer really record temperature or does it record the expansion of mercury in a glass tube that has certain markings on it? Different physical attributes can easily be quantified to represent temperature differences. I do not share this suspicion of yours although the mercury thermometer seems more straightforward to me. Nothing here is perfect, but we do the best we can with what we have.

TGOBJC,

What’s important for the purposes of the pause argument is not whether satellites measure the absolute temperature accurately, but whether or not they measure the changes in temperature from their previous measurements accurately. I would argue that they do so sufficiently accurately to establish that the rate of change for the past 18 years has been near enough to nil.

Additionally, any adjustments are made in a transparent manner and for transparent reasons, based on sound calculations. Compare that with the vast problems of ground and sea-based temperature measurements (calibration, siting, site changes, missing data) and the subsequent opaque and in many cases, downright questionable adjustments (TOBA, site changes, in-filling missing data, homogenising “nearby” stations, etc.). The result is such a vastly altered record as to massively reduce the confidence in even the comparability of previous readings with present readings, such that you may as well be comparing early 20th century apples with 21st century pork sausages.

TGOBJC, as I recall, the satellite readings of the lower troposphere (LT) are verified by balloon measurements of the LT, at least within measurement uncertainty.

If you buy one of the new 4K ultra-high definition television sets (from almost any vendor) you face the problem that there is virtually no interesting 4K software to show you.

Nobody broadcast the recent French Open Tennis event (way to go Serena) in 4K TV broadcasts; so HD is about all you can get (I don’t even pay to get any of that on my 26 inch TV).

BUT !! every one of those fancy sets can “upconvert” or “back fill” faux hi res interpolations between the HD data. Maybe they use Dr. Roy Spencer’s third order polynomial “just for amusement” algorithm.

Bottom line is that those 4K up conversions from HD create astonishingly good looking TV images, which are very pleasant to watch.

But of course they are quite fake pictures. Well the Louvre is full of “fake pictures”.

No the paintings aren’t forgeries; but they are some artist’s representation of a false reality, which many of us find quite wonderful in many ways.

I dare say, that all of these up conversions and backfilling of real data with false rubbish also creates beautiful pictures that some find pleasant to view.

But beautiful or not; they are still fake pictures, and really don’t add anything different from “an artist’s impression” of reality.

So we should not be taken in by fake 4K climate data; it isn’t an image of reality.

g

Well they are measuring different things and in different ways as well.

So if that’s the case “global temperature” seems to be a mythical creature like a Unicorn, that is not existing in reality or in any empirical sense.

Taking temperature readings from the center, four corners, and various elevations in my home and factoring out elevation, distance to stove, where most people congregate, heating elements, sunny days which heat upper elevations, etc. to determine an annual “Home Temperature” cannot be considered a temperature reading. It is a concept of “Home Temperature” based on measurements, which in the case of homes is useless and I wonder in terms of the earth if it is equally useless. Yeah I know if “Global Temperature” increases by x amount everything bad that can possibly go bad in the world will go bad. Unfortunately there is about as much evidence for that as evidence for the Rapture.

Regardless, the problem is the term “temperature” suggests an empirical measurement (like heating an oven to 350 degrees) when “Global Temperature” is not an empirical measurement at all. It is a stew of measurements, calculations and theory, whose ingredients and seasoning are based entirely on the whims of the cook.

Alx,

I am surprised you know the difference between a hot day and a cold day, considering you do not trust thermometers.

Harry, did you take lessons in missing the point, or are you just naturally talented?

He said nothing about not trusting thermometers, he said that taking a handful of readings does not equate to knowing the “temperature” of the whole house.

Surface station average temperature is the average of Tmin and Tmax, which is in all honesty temporally extremely sparse: only 2 measurements per day, where the satellite takes measurements on 16 orbits per day. Surface station temperatures are also both spatially sparse and irregular, resulting in the need for infilling and its problems; satellite measurements, while not perfect, are at least regular and are producing something much closer to what most people would think of when they think average.

The global Temperature is 288 K or 15 deg. C or 59 deg. F; Kevin Trenberth et al. say so, and it never changes any time or anywhere.

That should be compared to the climate Temperature (local phenomenon) from various places on earth where the reading can be from about -94 deg. C and about + 60 deg. C , and due to an argument by Galileo Galilei , there are an infinite number of places all over the earth, where you will be able to find ANY Temperature in that entire range.

No that does not work; you cannot have two different Temperatures for any single place at any single time, only one Temperature per instance please.

But given that for the real world, a one deg. C change in 150 years, at some place that nobody knows where it was measured, is not something to even mention; let alone worry about.

g

That is the average. It actually changes by 3.8 C between January and June. See:

http://theinconvenientskeptic.com/2013/03/misunderstanding-of-the-global-temperature-anomaly/

Hear, hear! It’s obvious to everyone that the heat is hiding in the deep atmosphere. Due to the very slow circulation it will reappear some 500 to 1000 years from now.

Cowtan and Way used a questionable method known as kriging to infill the high latitudes, and voilà, the hiatus was gone. It’s kind of the same game GISS plays – most of GISS’s warming occurs where there are no reporting stations – their grid squares use extrapolated data. Remove the estimated data and you remove almost all of the “warming”.

Kriging per se isn’t necessarily questionable — it is just error prone. If you have to krige, it cannot “produce” data, it’s just a way of smoothing over missing regions not unlike a cubic spline or other smooth interpolation. The questionable part is strictly the way it affects precision. Kriged data cannot reduce error estimates as if it is an independent and identically distributed sample from some distribution. It by its nature smooths out any peaks or valleys in the kriged region, and is perfectly happy to make systematic errors.

For example, if one kriged missing data in Antarctica using sea surface temperatures from a ring around the continent, one would make a really huge error, would one not? Because the SSTs are all going to be in the vicinity of 0 C, causing a krige of 0 C across Antarctica in winter, which is a wee bit of a joke. Nor can one place a single weather station at the south pole and krige between it and the surrounding ring — this would produce a smooth temperature field out to the warm boundary and is again a joke — the temperature variation between the sea and the land is enormous and rapid. Similar considerations involve the Arctic — kriging either way across different surface and (especially!) across the arctic circulation and local phenomena that strongly affect local temperatures is going to smooth away reality and replace it with a number, but one that has to have a huge error bar added to it that contributes just as much error to the final error estimate as having no data there at all because you can’t squeeze statistical blood from a stone! Missing information is missing.

rgb

See: John Christy et al.

Geophysical Research Letters, Vol. 28, Jan 2001

“Differential Trends in Tropical Sea Surface and Atmospheric Temperatures since 1979”

“””””…..Missing information is missing.

rgb…..”””””

Well Robert, I Kringe every time I think of Kriging.

I would venture (lacking a handy rigorous proof) that Kriging can only be tolerated, if NONE of the “Kriged” faux data points causes the Kriged signal to suddenly acquire faux signal component frequencies that are above the Nyquist bandwidth limit corresponding to the pre-Kriged sampled data.

As I have (in effect) asserted elsewhere, “Kriged pictures look prettier; but they are still false representations of reality.”

g

James….use Ad Block Plus

Thank you VERY much! WUWT ads aren’t too bad because they allow you to skip them, but I absolutely detest some other sites, such as YouTube and my local news site, because of either having to wait for complete ads to play or the stupid pop-ups – I’ve yet to see one of these that made me think “Hey – I need to buy that!”.

Anyway, I’ve installed Ad Block Plus and have so far had a most enjoyable morning, ad free!

UHI, plus data fabrication of surface records is, IMV, causing the divergence. There is significant evidence for this.

Nowhere in any of this is there any sign of an increase in acceleration of warming which is the basic premise of “human produced CO2 will cause a run away global warming catastrophe”. No acceleration of warming means the theory of man made climate destruction is wrong.

If accelerated warming occurred without the predicted spike in water vapor, wouldn’t that also disprove the AGW hypothesis?

You certainly couldn’t get to the more alarming end of the claims without the feedbacks that were to cause 2/3 of the warming.

Even NOAA did not attempt to show an acceleration in warming. I do not agree with their analysis, but they were happy to show no hiatus by comparing 1950 to 1999 with 2000 to 2014. In my opinion, they should have compared 2000 to 2014 with 1975 to 1998.

The problem I see with this data analysis is that it ignores seasonality. It would seem that a better approach would be to take the average of the differences 12 months apart. In other words average [t(i+12) -t(i)].

This way you are comparing January with January and February with February etc.

Most of the parametric statistical tests used are invalid. However, with the suggested method, you can easily do a non-parametric test on the signs of these differences.
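A minimal sketch of the suggestion above: take the differences t(i+12) - t(i), average them, and run a sign test on them. The function names and the toy series are made up for illustration. One caveat I am sure of: overlapping 12-month differences from an autocorrelated series are not independent, so the p-value here is indicative at best.

```python
from math import comb


def year_over_year_differences(series, lag=12):
    """Differences t(i + lag) - t(i), so January is compared with January."""
    return [series[i + lag] - series[i] for i in range(len(series) - lag)]


def sign_test_p_value(diffs):
    """Two-sided sign test: are positive and negative differences
    equally likely? Zero differences are dropped."""
    pos = sum(d > 0 for d in diffs)
    neg = sum(d < 0 for d in diffs)
    n = pos + neg
    if n == 0:
        return 1.0
    k = min(pos, neg)
    # Binomial(n, 1/2) probability of k or fewer in the rarer direction.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Toy series: a steady 0.01 per month rise over three years.
toy = [0.01 * i for i in range(36)]
diffs = year_over_year_differences(toy)
mean_diff = sum(diffs) / len(diffs)
p_value = sign_test_p_value(diffs)
```

For the toy series every difference is positive, so the test rejects "no change" easily; with real monthly anomalies the signs would be mixed and the result far less clear-cut.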

Good point Walt D. It would be … interesting to see annual ‘anomalies’ if years started in different months. Does 2014 ‘break a record’ if taken from March 1st to March 1st 2015? Shifting the ‘year’ by two months (or more, or less, forward or backwards) would expose the lottery we are basing ‘our’ treasure-shift upon.

Yes, shifting to different 12 month intervals can give slightly higher values than the January to December averages. If you want to clearly see this, plot what you want on WFT, then take a mean of 12.
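Away from WFT, the same "mean of 12" can be sketched in a few lines. The data below are made-up numbers chosen only to show the effect, and the function names are hypothetical.

```python
def rolling_means(monthly, window=12):
    """Mean of every run of `window` consecutive monthly values."""
    return [
        sum(monthly[i:i + window]) / window
        for i in range(len(monthly) - window + 1)
    ]


def calendar_year_means(monthly, window=12):
    """Means of non-overlapping January-to-December blocks.

    Assumes the series starts in January.
    """
    return [
        sum(monthly[i:i + window]) / window
        for i in range(0, len(monthly) - window + 1, window)
    ]


# Made-up data: a warm spell running from July of year 1 to June of year 2.
monthly = [0.0] * 6 + [1.0] * 12 + [0.0] * 6
best_calendar_year = max(calendar_year_means(monthly))
best_any_12_months = max(rolling_means(monthly))
```

Because the calendar years are just two of the possible 12-month windows, the best rolling window can never come out lower than the best calendar year; in this toy case it is 1.0 against 0.5.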

Walt D.

I agree, it seems to make sense to annualise the temperature data. The seasons are a pretty obvious cycle.

Yet globally all seasons are occurring all the time. However the difference between the global mean of each season is informative. In January the earth experiences plus 90 watts per sq meter insolation yet the atmosphere cools on average.

If I’m not mistaken, Willis Eschenbach has searched in vain for a “seasonal” signature in “Global Temperature”, and so far found none, despite some quite intriguing methodologies.

Temperature change is not a global phenomenon; it is entirely local.

g

By using anomalies, we automatically compare Januaries with Januaries, etc. If we did not use anomalies, then all Januaries would be 3.8 C colder than all Julies on the average.
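As a rough illustration of that point, here is how a monthly anomaly can be computed by subtracting each calendar month's own mean. This is a simplified sketch: it assumes the series starts in January, has at least 12 values, and uses the whole series as the base period, whereas the published data sets each use their own fixed base period.

```python
def anomalies(monthly):
    """Subtract each calendar month's own long-term mean.

    Assumes monthly[0] is a January and the list holds at least 12 values.
    """
    baseline = []
    for month in range(12):
        values = monthly[month::12]  # every January, every February, ...
        baseline.append(sum(values) / len(values))
    return [t - baseline[i % 12] for i, t in enumerate(monthly)]


# Two made-up years with a strong seasonal cycle; year 2 is 1 degree warmer.
year1 = [float(m) for m in range(12)]
year2 = [float(m) + 1.0 for m in range(12)]
result = anomalies(year1 + year2)
```

The seasonal cycle drops out entirely: every month of the first year comes out at -0.5 and every month of the second at +0.5, so only the year-to-year change is left.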

When you get down to such small fractions of a degree, they are meaningless relative to each other. I grant you, you may discover different seasons are warming or cooling at different rates if the size of the units permits definitive stats. Since we are examining global warming and the wiggles are in the noise, it’s best to do it the way it’s done here. We already have too much distraction with statistically insignificant data in climate science.

Yes, when you take a step back, warming rates of .01 deg C a year in the AGW era are insanely small. Yet, somehow, we are being told it will do all of this…

http://whatreallyhappened.com/WRHARTICLES/globalwarming2.html

I wonder how many of those bad things are contradictions of each other.

This is the kind of posting that makes the Team shiver with fright and impending trials LOL. Keep it up. Tony Heller does it every day.

UAH Update: With the May anomaly of 0.27, the 5 month average is 0.18 and this would rank in 6th place if it stayed this way.

Hadsst3 Update: With the May anomaly of 0.593, the 5 month average is 0.484 and this would set a new record if it stayed this way.

It’s what they call “the Paris effect”: the last-ditch attempt by the numbskulls to try to con everyone that their modified data “proves” the world is/was/is going/will be going to/certainly will be going to … warm by a fraction of a degree.

How many climate change angels are dancing on the head of the dataset pin (you ask)? The answer is; cAGW CO2 hypothesis, says we should be seeing accelerated catastrophic warming, all observations and all data sets show no such warming rates, the cAGW hypothesis/theory is dead wrong (those angels aren’t dancing).

“With the May anomaly of 0.593”

When will people please stop printing temperatures to the thousandth of a degree? Is it supposed to make it look more accurate? Why not release .59325439777 as the absolute temperature anomaly? Now that would make me believe.

With the land/sea based measuring system, claiming an accuracy of 1C is way too optimistic.

Anomalies reported to resolutions higher than the instrument resolution are a joke. Three decimal place resolution for “adjustments” is hysterical.

None of the land data sets are worth a plug nickel. The extent to which they have been manipulated in one form or another renders them absolutely useless. Administrative adjustments, as Dr. Ole Humlum has shown, produce half of the “warming” we have seen.

And maybe ocean temps as well. Attempting to resolve incomplete and varying types of suspicious (can’t think of the right word) data with adjustments seems to be stretching for a result that can be regarded as scientific.

“questionable”, I think is the word you were looking for.

How about “worthless”?

Although for color I like the ” not worth a plug nickel” line.

Then again, they are only worthless from the perspective of doing objective scientific analysis.

For the purpose of political posturing, or for obtaining fat grants, or for attempting to prove a false hypothesis, they seem to have some value.

I can’t believe they are already working with HadCRUT4.3. Didn’t they move from HadCRUT3 to HadCRUT4 five years ago? Did they already modify the set twice since then? Or three times?

There have been several updates. And guess in which direction the latest numbers went? See:

http://wattsupwiththat.com/2015/06/09/huge-divergence-between-latest-uah-and-hadcrut4-revisions-now-includes-april-data/#comment-1958519

“…renders them absolutely useless”

Useless? They’re absolutely priceless!

Look at all of the “hottest whatever since whenever” press.

ClimSci(TM) might be interested in this webinar:

http://www.foresteruniversity.net/webinar-surviving-media-crisis.html

“Surviving a Media Crisis

“At some point we all find ourselves in the hot seat. And if you’re a public figure, that hot seat is likely in front of a media firing squad. Are you prepared to answer the tough questions? Are you ready for the media’s tricks? Do you have a plan in hand to manage your reputation? You should.”

It’s pretty obvious why these are diverging.

People whose jobs depend on evidence of temperature increasing are “adjusting” GISS and HADCRUT, particularly GISS. When your temperature readings represent a small fraction of 1% of the earth’s surface and you are compelled to interpolate, extrapolate, adjust for UHI, and otherwise torture the data you can get any answer that Obama wants. Heck, we’re only talking variations of around 1C for the whole shebang, so it’s easy to fiddle around and shave a tenth or two off here and there. Then all you have to do is withhold raw data and methods and who can challenge the result? Is anyone really surprised?

The Russians have a saying: The future is certain. It’s the past that keeps changing.

It’s far harder to muck with RSS or UAH and keep the tampering off the ‘radar’ so to speak. Also, RSS and particularly UAH are run by dirty dog skeptics (sorry Dr. Roy, you know I love you) so they are continuously under the microscope. Actually, it’s hard to imagine that our Imperious Leader has tolerated RSS and UAH divergence for so long, and one would expect a “purge of the unfaithful” to bring these results more in line with the thermometer measurements.

But hey, color me cynical.

The real difference between the surface temperature data sets and the satellite data sets is that the algorithms for calculating adjustments to the surface temperature data sets and the subsequent trends are publicly available. However, the algorithms for UAH are not released.

Not that you can blame the owners of this data set. The longest available UAH trend at each point in time has had to be raised time and time again as errors in the UAH calculation were found, errors that independent reviewers had to identify the hard way, without having access to the code.

http://d35brb9zkkbdsd.cloudfront.net/wp-content/uploads/2014/07/Christy-Spencer-638×379.jpg

After all, why give those wishing to independently check the UAH results any more assistance than you absolutely need to?

Have you seen this very extensive article:

http://www.drroyspencer.com/2015/04/version-6-0-of-the-uah-temperature-dataset-released-new-lt-trend-0-11-cdecade/

If it does not have what you are looking for, ask them for what it is that you need.

And just what is the Ministry of Truth doing while RSS and UAH perpetuate these thought crimes?

The Ministry of Truth is too busy re-writing history and public property (temperature data) to notice that RSS and UAH are committing the crime of non-corruption of public property. When they notice (or perhaps can grasp the concepts), then the just will be punished and the data adjusted.

For those who argue that surface and satellites don’t necessarily follow each other, remember what the Met Office had to say in 2013, when discussing their HADCRUT sets:

“Changes in temperature observed in surface data records are corroborated by records of temperatures in the troposphere recorded by satellites”

https://notalotofpeopleknowthat.wordpress.com/2015/01/17/met-office-say-surface-temperatures-should-agree-with-satellites/

Well, except when they’re not!

Thank you! At what point will scientists wake up and smell the coffee?

Paul Homewood.

The only reference I can find says this:

“Changes in temperature observed in surface data records are corroborated by measurements of temperatures below the surface of the ocean, by records of temperatures in the troposphere recorded by satellites and weather balloons, in independent records of air temperatures measured over the oceans and by records of sea-surface temperatures measured by satellites.”

In context he means the changes are “corroborated”. He doesn’t say they follow each other. So I am calling a straw man argument on this one.

“Why are the new satellite and ground data sets going in opposite directions? ”

Don’t both GISS and HadCRUT get (at least some of) their input from NCDC?

How accurate is the measurement of global temperature? How about global ocean temperatures?

I haven’t seen a good article that addresses this based on real data…the monthly disparities in the different data sets.

Here is one way of looking at it. I took the Wood For Trees Index (WTI) components RSS UAH HADCRUT GISS… then I computed how much each month’s change was different from the mean monthly change.

Here are some results. First, the WTI had a monthly standard deviation of 0.09 deg C. So, that means there is about a 5% chance that the monthly temp will change by more than 0.18 deg (two standard deviations).

UAH was most correlated to the mean each month (84%). Hadcrut was lowest (66%).

Over the last 60 months, the four data sets were an average of 0.06 degrees away from the mean of the four. In other words, the different data sets disagree by an average of 0.06 a month. If they were perfect, they wouldn’t disagree at all. (well, they would a bit because they do measure slightly different things).

So, seems to me that a rough measure of the measurement accuracy is about 0.06 deg for global temp. That’s a ballpark but I think it’s reasonable.
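For anyone who wants to repeat this kind of back-of-the-envelope check, here is a minimal sketch of one way to do it. It is not the commenter's actual calculation: the function names are made up for illustration, the toy numbers are invented, and it assumes the "0.06 degrees away from the mean" figure is a mean absolute deviation of each data set from the four-set average on a common baseline.

```python
import math
from statistics import mean, pstdev

def monthly_changes(series):
    """Month-to-month differences of a list of monthly anomalies."""
    return [b - a for a, b in zip(series, series[1:])]

def pearson(x, y):
    """Plain Pearson correlation (undefined if either input is constant)."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def disparity_stats(datasets):
    """datasets: dict of name -> monthly anomalies, equal length, same baseline."""
    names = list(datasets)
    n = len(datasets[names[0]])
    # The index is the simple average of all data sets for each month.
    index = [mean(datasets[k][i] for k in names) for i in range(n)]
    index_changes = monthly_changes(index)
    return {
        # Standard deviation of the index's month-to-month change.
        "sd_of_change": pstdev(index_changes),
        # Correlation of each set's monthly change with the index's change.
        "corr": {k: pearson(monthly_changes(datasets[k]), index_changes)
                 for k in names},
        # Average distance of a data set from the index (assumed metric).
        "mean_abs_dev": mean(abs(datasets[k][i] - index[i])
                             for k in names for i in range(n)),
    }

# Two-sided chance of exceeding two standard deviations, if changes are normal.
two_sigma_tail = math.erfc(2 / math.sqrt(2))

# Invented toy numbers, only to show the call.
toy = {"A": [0.0, 0.2, 0.1], "B": [0.2, 0.4, 0.3]}
stats = disparity_stats(toy)
```

With real monthly anomalies for RSS, UAH, HadCRUT and GISS in place of the toy dictionary, `stats["mean_abs_dev"]` is the number to compare with the 0.06 deg quoted above, and `two_sigma_tail` shows where the "about 5%" comes from if the monthly changes are roughly normal.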

Ocean Temps…

I have read that the ARGO data measures the Ocean Temps with an error of 0.005°C. Considering the analysis above, I hope you are laughing at the absurdity of the ARGO accuracy claims. My gut says ocean error must be at least as high as air, but I could be wrong. But I’m sure it is vastly larger than 0.005 deg C.

This is all back of the envelope. I would love if people could add to this.

Are you aware of the fact that the WTI is totally out of date? It uses Hadcrut3 which has not been updated since May 2014. So there is no WTI since then either. And with record high temperatures since then on certain data sets, that makes a huge difference. As well, it used UAH5.5 and of course does not use the new UAH6.0 which would also make a huge difference.

I maintain my own WTI data set and did the calcs from that

Is there anything you want to make available for the rest of us to use? For example, I would really love to plot the NOAA numbers on a WFT type format, but WFT does not have NOAA.

“Is there anything you want to make available for the rest of us to use? For example, I would really love to plot the NOAA numbers on a WFT type format, but WFT does not have NOAA.”

The stat guys here at work did this all “back of envelope” so the data would need to be double-checked. But there is nothing magical about WTI. It’s just an average of the four main data sets…adjusted to a baseline.

I like to use it because it eliminates the cherry picking and arguing over which is better… sat vs ground. It just uses them all. Although not perfect as the many comments here point out, it is a nice consensus statistical assessment of the relative warming of the planet.

Could you please send me the URL for what you have so I can double check it? Thanks!

The Argo floats are calibrated before they are released. More to the point, the occasional float drifts ashore by chance. Here are the results of retesting the calibration of Argo floats after they have been in the water for a few years.

http://api.ning.com/files/p0ZiZvXgHQgJ3GvAOz4p06LWIb0*vjZa*BuLBDKPYxkvlpeSIaNQMBeEFKGlICP3jdJRtIX1ApcKaelqe7YMMlOVlV*2a559/ArgoRecoveredFloatTestResults.jpg

Note the American float had been in the water for 3 years before checking the calibration. And it was still accurate to three ten-thousandths of a degree C.

Apart from the sensor accuracy and repeatability itself, there is every reason why the Argo float temperature sensor readings should be much more accurate than surface temperature thermometers. The Argo float is at a depth well away from the warming influence of the sun. And it is stationary relative to the water, drifting with the current at that depth. And lastly the water has intimate contact with the whole of the float assembly and has plenty of time to come to thermal equilibrium.

The complexity with the Argo floats is that there are under 4,000 of them to cover all earth’s oceans, though they do take readings at depths between 0 and 2000m, so you get good vertical coverage down to 2000m.

It just goes to show how wrong gut feel can be.

ARGO shows cooling at most depths:

http://tumetuestumefaisdubien1.sweb.cz/ARGO-sea-temperature-max-max.PNG

climate pete:

Argo measures to 0.00001C???? That’s absurd.

3600 floats to cover 3.6 * 10^8 km^2. And you call that good coverage?

Your standards are amazingly flexible.

I agree with much of this and get the fact that measuring the temp of water is in many ways easier than air. But there are a lot of other uncertainties. First, in the error chart above, all the errors for dT and dS and dp are in the same direction. The statistics on overall ARGO errors generally assume a normally distributed error. But the chart above shows that they can all be in the same direction, leading to a bias far greater than a bell-shaped estimate. Then there is the problem of having just one buoy for every 100,000 sq km and the drifting of measurements.

So getting a representative overall ocean heat content measure is significantly more imprecise than the estimated error rate of a few thermometers.

I think I’m going to buy an Argo float to use as a benchtop multimeter. It seems that Argo floats can outperform the best 7½ digit multimeter that money can buy by a large margin. The Keysight (formerly Agilent, formerly Hewlett-Packard) 34470A only manages to measure resistance (i.e. temperature if using a platinum resistance thermometer) to an accuracy of 40 parts per million after an hour warm-up in an environment within 1°C of the calibration temperature within 24 hours of calibration. After 2 years its accuracy is 170 parts per million after a 1 hour warm-up in an environment within 5°C of the calibration temperature.

To make measurements in a dynamic environment when far away from the calibration temperature makes Argo floats the best thermometers in the galaxy!

In reality, I suspect a lot of self-delusion is going on.
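To put the multimeter figures in temperature terms, here is a rough conversion. It is only a sketch under stated assumptions: a Pt100 platinum resistance thermometer near 0°C (100 ohms, roughly 0.385 ohms per degree C), and the quoted ppm treated as a plain fraction of the reading. Neither assumption comes from the comment above, and the names are made up for illustration.

```python
# Assumed sensor: Pt100 near 0 deg C (nominal values, not from the thread).
R0_OHMS = 100.0
SENSITIVITY_OHMS_PER_C = 0.385

def ppm_to_degc(ppm):
    """Temperature error implied by a resistance error given in ppm of reading."""
    resistance_error = R0_OHMS * ppm * 1e-6
    return resistance_error / SENSITIVITY_OHMS_PER_C

err_24h = ppm_to_degc(40)    # 24-hour figure quoted above
err_2yr = ppm_to_degc(170)   # 2-year figure quoted above

print(f"40 ppm  -> {err_24h:.4f} C")
print(f"170 ppm -> {err_2yr:.4f} C")
```

On those assumptions, 40 ppm works out to roughly 0.01°C and 170 ppm to roughly 0.04°C, both larger than the 0.005°C figure quoted for Argo.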

My gut feeling reading your chart is that the second float’s serial number is entered incorrectly. Call me superstitious if you like, but I doubt that Japan has released 2871011 argo buoys, so something is amiss.

I am still curious about the exceptionally low uncertainty you indicate for the individual temperature measurements in each ARGO float. However, you also have an unquantified statement about vertical coverage. I guess it took you a few seconds to write: “so you get good vertical coverage down to 2000m”.

Sorry for not being able to debunk your claims at the same tremendous speed as you make them.

However here are some findings on the good vertical coverage:

“[52] In conclusion, our study has shown encouraging agreement, typically to within 0.5°C, between the Argo-based temperature field at 36°N and the corresponding field from a hydrographic section. Furthermore, the model analysis demonstrated that within the subtropical North Atlantic, sampling of the temperature field at the Argo resolution results in a level of uncertainty of around 10–20 Wm−2 at monthly timescales, falling to 7 Wm−2 at seasonal timescales. This is sufficiently small that it should allow investigations of variability in this region, on these timescales.”

On the accuracy of North Atlantic temperature and heat storage fields from Argo

Authors R. E. Hadfield, N. C. Wells, S. A. Josey, J. J-M. Hirschi

First published: 25 January 2007

1 ARGO float per 100,000 square km performing 1 measurement every ten days.

Even if the accuracy of each ARGO temperature sensor holds its specification of 0.005 °C, I think the uncertainty related to sparse sampling of a vast volume will dominate the uncertainty budget when determining the average temperature at selected depths down to 2000 meters.

I think it is misleading to draw attention to the exceptionally low uncertainty of the temperature sensor when it makes such a low contribution to the total uncertainty.
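The coverage figure is easy to check. A quick sketch, assuming about 3,600 active floats (the number used elsewhere in this thread), a global ocean area of roughly 3.6 * 10^8 square km, and one profile per float every ten days:

```python
import math

OCEAN_AREA_KM2 = 3.6e8   # approximate global ocean surface area
FLOATS = 3600            # approximate number of active floats (from the thread)
CYCLE_DAYS = 10          # one profile per float every ten days

area_per_float = OCEAN_AREA_KM2 / FLOATS        # square km per float
side_km = math.sqrt(area_per_float)             # side of an equivalent square
profiles_per_year = FLOATS * 365 / CYCLE_DAYS   # profiles from the whole array

print(f"{area_per_float:,.0f} sq km per float")
print(f"about {side_km:.0f} km between neighbouring floats")
print(f"{profiles_per_year:,.0f} profiles per year")
```

That is one float for a patch of ocean a bit over 300 km on a side, sampled about 36 times a year, which is why the sampling term rather than the sensor spec is likely to dominate.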

“Argo floats drift freely at a predetermined parking depth, which is typically 2000 decibars, rising up to the sea surface every ten days by changing their volume and buoyancy. During the ascent they measure temperature, salinity, and pressure with a conductivity-temperature-depth (CTD) sensor module. They send the observed temperature and salinity data, obtained at about 70 sampling depths, to satellites during their stay at the sea surface, and then return to the parking depth. The floats’ battery capacity is equivalent to more than 150 CTD profiles, which determines their lifetime of about four years. The accuracy requirement of the float measurements in Argo is 0.005°C for temperature and 0.01 practical salinity units (psu) for salinity. The temperature requirement is relatively easy to attain, while that for salinity is not easy, due to drift of the conductivity sensor.”

(Ref: Makito Yokota et al., “Recalibration of temperature and conductivity sensors affixed on Argo floats”, JAMSTEC Report of Research and Development, Volume 5, March 2007, 31–39)

Argo shows heating between 2004 and 2012.

dbstealey said

Argo floats take readings down to 2000m. All of dbstealey’s Argo charts show layers from the surface down to particular depths, so the temperature of a higher layer is included in the average for the chart at the next depth down.

So what the charts show is a drop in temperature over 8 years of around 0.06 C for depths down to 20m. By the time you take averages for all layers down to 150m the trend is flat. The average of 0-200m shows an increase of 0.02 C. In other words, deeper layers are warming and are more than offsetting the cooling trend at the surface.

It is not the layer at 200m alone which shows warming. It is the average of all layers from the surface down to 200m which shows warming.
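To see what the surface-to-depth averages imply for the individual layers, here is a small sketch. It assumes each chart value is a simple thickness-weighted average from the surface down to the stated depth, which is my assumption and not something stated on the charts; the function name is made up for illustration.

```python
def layer_trends(cumulative):
    """cumulative: list of (bottom_depth_m, change in the 0..bottom average),
    ordered from shallow to deep. Returns the change implied for each layer,
    assuming the averages are weighted by layer thickness."""
    out = []
    prev_depth, prev_change = 0.0, 0.0
    for depth, change in cumulative:
        # depth * change is the depth-integrated change down to this level;
        # the difference of two integrals belongs to the layer in between.
        layer = (depth * change - prev_depth * prev_change) / (depth - prev_depth)
        out.append(((prev_depth, depth), layer))
        prev_depth, prev_change = depth, change
    return out

# Approximate values read off the charts, as quoted above (deg C over 8 years).
charts = [(20, -0.06), (150, 0.0), (200, 0.02)]
implied = layer_trends(charts)
for (top, bottom), change in implied:
    print(f"{top:.0f}-{bottom:.0f} m: {change:+.3f} C")
```

Under that assumption, a +0.02 C change in the 0-200m average, next to a flat 0-150m average, means the 150-200m layer alone changed by about +0.08 C.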