The title question often appears during discussions of global surface temperatures. That is, GISS, Hadley Centre and NCDC only present their global land+ocean surface temperature products as anomalies. The question is: why don’t they produce the global surface temperature products in absolute form?

In this post, I’ve included the answers provided by the three suppliers. I’ll also discuss sea surface temperature data and a land surface air temperature reanalysis which are presented in absolute form. And I’ll include a chapter that has appeared in my books that shows why, when using monthly data, it’s easier to use anomalies.

Back to global temperature products:

GISS EXPLANATION

GISS on their webpage here states:

Anomalies and Absolute Temperatures

Our analysis concerns only temperature anomalies, not absolute temperature. Temperature anomalies are computed relative to the base period 1951-1980. The reason to work with anomalies, rather than absolute temperature is that absolute temperature varies markedly in short distances, while monthly or annual temperature anomalies are representative of a much larger region. Indeed, we have shown (Hansen and Lebedeff, 1987) that temperature anomalies are strongly correlated out to distances of the order of 1000 km. For a more detailed discussion, see The Elusive Absolute Surface Air Temperature.

UKMO-HADLEY CENTRE EXPLANATION

The UKMO-Hadley Centre answers that question…and why they use 1961-1990 as their base period for anomalies on their webpage here.

Why are the temperatures expressed as anomalies from 1961-90?

Stations on land are at different elevations, and different countries measure average monthly temperatures using different methods and formulae. To avoid biases that could result from these problems, monthly average temperatures are reduced to anomalies from the period with best coverage (1961-90). For stations to be used, an estimate of the base period average must be calculated. Because many stations do not have complete records for the 1961-90 period several methods have been developed to estimate 1961-90 averages from neighbouring records or using other sources of data (see more discussion on this and other points in Jones et al. 2012). Over the oceans, where observations are generally made from mobile platforms, it is impossible to assemble long series of actual temperatures for fixed points. However it is possible to interpolate historical data to create spatially complete reference climatologies (averages for 1961-90) so that individual observations can be compared with a local normal for the given day of the year (more discussion in Kennedy et al. 2011).

It is possible to develop an absolute temperature series for any area selected, using the absolute file, and then add this to a regional average in anomalies calculated from the gridded data. If for example a regional average is required, users should calculate a time series in anomalies, then average the absolute file for the same region then add the average derived to each of the values in the time series. Do NOT add the absolute values to every grid box in each monthly field and then calculate large-scale averages.
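The procedure described in that last paragraph can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two grid boxes, their climatological normals and their anomalies are made-up numbers, the boxes are treated as equal in area, and a single normal per box stands in for the full monthly "absolute file". The second month, in which the cold box has no data, shows one plausible reason (not stated in the quoted text) for the warning against adding absolutes box by box before averaging.

```python
def mean(values):
    """Average the values that are present; None marks a box with no data."""
    present = [v for v in values if v is not None]
    return sum(present) / len(present)

# Hypothetical 1961-90 normals for two equal-area grid boxes, in deg C.
climatology = {"warm_box": 25.0, "cold_box": 5.0}

# Hypothetical anomalies for two months; the cold box is missing in month 2.
anomalies = [
    {"warm_box": 0.2, "cold_box": 0.2},
    {"warm_box": 0.2, "cold_box": None},
]

# Recommended order: average the anomalies, then add the regional normal.
regional_normal = mean(climatology.values())
recommended = [mean(month.values()) + regional_normal for month in anomalies]

# Discouraged order: add the normal to every box first, then average.
discouraged = [
    mean([None if a is None else a + climatology[box] for box, a in month.items()])
    for month in anomalies
]

print([round(t, 2) for t in recommended])   # regional series, anomalies first
print([round(t, 2) for t in discouraged])   # regional series, absolutes first
```

With these made-up values, the anomaly-first series stays at the same level in both months, while the absolute-first series jumps by 10 deg C in the second month simply because the cold box dropped out.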

NCDC EXPLANATION

Also see the NCDC FAQ webpage here. They state:

Absolute estimates of global average surface temperature are difficult to compile for several reasons. Some regions have few temperature measurement stations (e.g., the Sahara Desert) and interpolation must be made over large, data-sparse regions. In mountainous areas, most observations come from the inhabited valleys, so the effect of elevation on a region’s average temperature must be considered as well. For example, a summer month over an area may be cooler than average, both at a mountain top and in a nearby valley, but the absolute temperatures will be quite different at the two locations. The use of anomalies in this case will show that temperatures for both locations were below average.

Using reference values computed on smaller [more local] scales over the same time period establishes a baseline from which anomalies are calculated. This effectively normalizes the data so they can be compared and combined to more accurately represent temperature patterns with respect to what is normal for different places within a region.

For these reasons, large-area summaries incorporate anomalies, not the temperature itself. Anomalies more accurately describe climate variability over larger areas than absolute temperatures do, and they give a frame of reference that allows more meaningful comparisons between locations and more accurate calculations of temperature trends.

SURFACE TEMPERATURE DATASETS AND A REANALYSIS THAT ARE AVAILABLE IN ABSOLUTE FORM

Most sea surface temperature datasets are available in absolute form. These include:

- the Reynolds OI.v2 SST data from NOAA

- the NOAA reconstruction ERSST

- the Hadley Centre reconstruction HADISST

- and the source data for the reconstructions ICOADS

The Hadley Centre’s HADSST3, which is used in the HADCRUT4 product, is only produced in anomaly form, however. And I believe Kaplan SST is also only available in anomaly form.

With the exception of Kaplan SST, all of those datasets are available to download through the KNMI Climate Explorer Monthly Observations webpage. Scroll down to SST and select a dataset. For further information about the use of the KNMI Climate Explorer see the posts Very Basic Introduction To The KNMI Climate Explorer and Step-By-Step Instructions for Creating a Climate-Related Model-Data Comparison Graph.

GHCN-CAMS is a reanalysis of land surface air temperatures and it is presented in absolute form. It must be kept in mind, though, that a reanalysis is not “raw” data; it is the output of a climate model that uses data as inputs. GHCN-CAMS is also available through the KNMI Climate Explorer and identified as “1948-now: CPC GHCN/CAMS t2m analysis (land)”. I first presented it in the post Absolute Land Surface Temperature Reanalysis back in 2010.

WHY WE NORMALLY PRESENT ANOMALIES

The following is “Chapter 2.1 – The Use of Temperature and Precipitation Anomalies” from my book Climate Models Fail. There was a similar chapter in my book Who Turned on the Heat?

[Start of Chapter 2.1 – The Use of Temperature and Precipitation Anomalies]

With rare exceptions, the surface temperature, precipitation, and sea ice area data and model outputs in this book are presented as anomalies, not as absolutes. To see why anomalies are used, take a look at global surface temperature in absolute form. Figure 2-1 shows monthly global surface temperatures from January, 1950 to October, 2011. As you can see, there are wide seasonal swings in global surface temperatures every year.

The three producers of global surface temperature datasets are the NASA GISS (Goddard Institute for Space Studies), the NCDC (NOAA National Climatic Data Center), and the United Kingdom’s National Weather Service known as the UKMO (UK Met Office). Those global surface temperature products are only available in anomaly form. As a result, to create Figure 2-1, I needed to combine land and sea surface temperature datasets that are available in absolute form. I used GHCN+CAMS land surface air temperature data from NOAA and the HADISST Sea Surface Temperature data from the UK Met Office Hadley Centre. Land covers about 30% of the Earth’s surface, so the data in Figure 2-1 is a weighted average of land surface temperature data (30%) and sea surface temperature data (70%).
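For readers who want to reproduce that kind of blend, the 30%/70% weighting can be sketched as follows. The function name and the sample temperatures are hypothetical, chosen only to show the arithmetic; they are not values from GHCN+CAMS or HADISST.

```python
LAND_FRACTION = 0.30  # approximate share of the Earth's surface that is land

def land_ocean_average(land_temp, sea_temp, land_fraction=LAND_FRACTION):
    """Area-weighted average of a land value and a sea surface value (deg C)."""
    return land_fraction * land_temp + (1.0 - land_fraction) * sea_temp

# Made-up monthly values, for illustration only.
example = land_ocean_average(land_temp=10.0, sea_temp=18.0)
print(round(example, 2))
```

The same function would be applied month by month to the land and sea surface series to build a combined record.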

When looking at absolute surface temperatures (Figure 2-1), it’s really difficult to determine if there are changes in global surface temperatures from one year to the next; the annual cycle is so large that it limits one’s ability to see when there are changes. And note that the variations in the annual minimums do not always coincide with the variations in the maximums. You can see that the temperatures have warmed, but you can’t determine the changes from month to month or year to year.

Take the example of comparing the surface temperatures of the Northern and Southern Hemispheres using the satellite-era sea surface temperatures in Figure 2-2. The seasonal signals in the data from the two hemispheres oppose each other. When the Northern Hemisphere is warming as winter changes to summer, the Southern Hemisphere is cooling because it’s going from summer to winter at the same time. Those two datasets are 180 degrees out of phase.

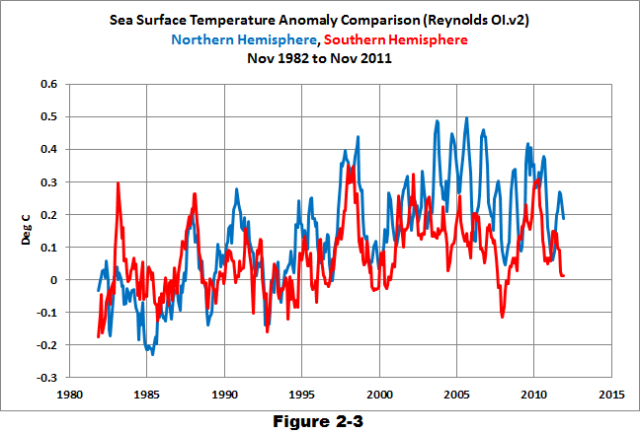

After converting that data to anomalies (Figure 2-3), the two datasets are easier to compare.

Returning to the global land-plus-sea surface temperature data, once you convert the same data to anomalies, as was done in Figure 2-4, you can see that there are significant changes in global surface temperatures that aren’t related to the annual seasonal cycle. The upward spikes every couple of years are caused by El Niño events. Most of the downward spikes are caused by La Niña events. (I discuss El Niño and La Niña events a number of times in this book. They are parts of a very interesting process that nature created.) Some of the drops in temperature are caused by the aerosols ejected from explosive volcanic eruptions. Those aerosols reduce the amount of sunlight that reaches the surface of the Earth, cooling it temporarily. Temperatures rebound over the next few years as volcanic aerosols dissipate.

HOW TO CALCULATE ANOMALIES

For those who are interested: To convert the absolute surface temperatures shown in Figure 2-1 into the anomalies presented in Figure 2-4, you must first choose a reference period. The reference period is often referred to as the “base years.” I use the base years of 1950 to 2010 for this example.

The process: First, determine average temperatures for each month during the reference period. That is, average all the surface temperatures for all the Januaries from 1950 to 2010. Do the same thing for all the Februaries, Marches, and so on, through the Decembers during the reference period; each month is averaged separately. Those are the reference temperatures. Second, determine the anomalies, which are calculated as the differences between the reference temperatures and the temperatures for a given month. That is, to determine the January, 1950 temperature anomaly, subtract the average January surface temperature from the January, 1950 value. Because the January, 1950 surface temperature was below the average temperature of the reference period, the anomaly has a negative value. If it had been higher than the reference-period average, the anomaly would have been positive. The process continues as February, 1950 is compared to the reference-period average temperature for Februaries. Then March, 1950 is compared to the reference-period average temperature for Marches, and so on, through the last month of the data, which in this example was October 2011. It’s easy to create a spreadsheet to do this, but, thankfully, data sources like the KNMI Climate Explorer website do all of those calculations for you, so you can save a few steps.
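For those who would rather script it than build a spreadsheet, the two steps above can be sketched in Python. The data here are synthetic: a made-up seasonal cycle plus a small made-up warming trend, running from January 1950 to October 2011. The function names are my own and are not from any library.

```python
from collections import defaultdict

def monthly_reference(records, base_start, base_end):
    """Step 1: average each calendar month separately over the base years."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for year, month, temp in records:
        if base_start <= year <= base_end:
            sums[month] += temp
            counts[month] += 1
    return {month: sums[month] / counts[month] for month in sums}

def monthly_anomalies(records, base_start, base_end):
    """Step 2: subtract the matching reference temperature from each month."""
    reference = monthly_reference(records, base_start, base_end)
    return [(year, month, temp - reference[month]) for year, month, temp in records]

# Synthetic absolute temperatures (deg C): seasonal cycle plus 0.01 deg C/year.
seasonal = [12.0, 12.3, 13.0, 14.0, 15.0, 15.6, 15.8, 15.6, 15.0, 14.0, 13.0, 12.3]
records = [
    (year, month, seasonal[month - 1] + 0.01 * (year - 1950))
    for year in range(1950, 2012)
    for month in range(1, 13)
    if (year, month) <= (2011, 10)  # the example data end in October 2011
]

anomalies = monthly_anomalies(records, 1950, 2010)
print(anomalies[0])    # January 1950: below the base-period average
print(anomalies[-1])   # October 2011: above the base-period average
```

Because each calendar month is compared only with its own reference value, the seasonal cycle drops out and only the departures from normal remain.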

CHAPTER 2.1 SUMMARY

Anomalies are used instead of absolutes because anomalies remove most of the large seasonal cycles inherent in the temperature, precipitation, and sea ice area data and model outputs. Using anomalies makes it easier to see the monthly and annual variations and makes comparing data and model outputs on a single graph much easier.

[End of Chapter 2.1 from Climate Models Fail]

There are a good number of other introductory discussions in my ebooks, for those who are new to the topic of global warming and climate change. See the Tables of Contents included in the free previews to Climate Models Fail here and Who Turned on the Heat? here.

durr.

Well, at Berkeley we don’t average temperatures and we don’t create anomalies and average them.

We estimate the temperature field in absolute C

From that field you can then do any kind of averaging you like or take anomalies.

Hmm.

Here is something new

lets see if the link works

Werner Brozek: I can’t answer your question. I presented the results of a sea surface temperature dataset and a land surface air temperature reanalysis. You’d have to ask the author of the post you linked for his sources.

Regards

drat,

try this

https://mapsengine.google.com/11291863457841367551-12228718966281122880-4/mapview/?authuser=0

Allan M.R. MacRae says: “Have you looked into Steven Goddard’s latest discovery?”

Sorry, I haven’t had the time to examine it in any detail.

Can’t use temperatures. Reason is simple, there’s no significance without randomization (R.A Fisher). Thermometers are not in random spots but at places where there are people. So even if you’re not bothered by the difference between intensive and extensive variables, you cannot get a proper sample and thus no valid average. So they have to pretend they’re measuring all. Hence anomalies which are correlated over large distances instead of temperatures that aren’t. For extra misdirection go gridded, homogenize and readjust. And stop talking about temperature…..

I like this answer from GISS:

The Elusive Absolute Surface Air Temperature (SAT)

http://data.giss.nasa.gov/gistemp/abs_temp.html

Bottom line you are looking at the results of model runs and each run produces different results.

Your graph of NH vs SH temp anomalies makes it look like the “global” warming is principally a Northern Hemisphere phenomenon. If we were to take this analysis further, would we discover that “global” warming is really a western Pacific and Indian Ocean phenomenon?

Computational Reality: the world is warming as in your second graph. Representational warming: the Northern hemisphere is warming while the Southern hemisphere is cooling.

I find the regionalism very disturbing, but not as disturbing as the Computational-Representational problem. The Hockey Stick is a fundamental concept of financed climate change programs, but (as pointed out repeatedly in the skeptic blogs) the blade portion is a function of an outlier of one or two Yamal tree ring datasets and a biased computational program. The conclusion that representationally the Urals were NOT warming, as the combined tree chronologies show, has been lost because the mathematics is correct.

I do not doubt that CO2 causes warmer surface temperatures. But the regionalism that is everywhere in the datasets corrupts the representational aspect of observational analysis and conclusion-making. Even the recent argument that the world is still warming if you add in the Arctic data that is not being collected: the implication that the Arctic, not the world, is warming somehow has failed to be noted.

We are seeing, IMHO, a large artifact in the pseudo-scientific, politicized description of climate change. The world is a huge heat redistribution machine that does not redistribute it perfectly globally either geographically or temporally. Trenberth looks for his “missing heat” because he cannot accept philosophically that the world is not a smoothly functioning object without occasional tremors, vibrations or glitches. The heat HAS to be hidden because otherwise he would see a planet with an energy imbalance greater than he can (philosophically, again) accept.

We, all of us, accept natural variations. Sometimes things go up, sometimes they go down, without us being able to predict the shifts because the interaction of myriad forces is greater than our data collection ability and interpretive techniques. But the variations we accept in the consensus public and scientific world are short-term. Even the claim that the post-1998 period of pause is a portion of natural variation is a stretch for the IPCC crowd: the world is supposed to be a smoothly functioning machine within a time period of five years, to gauge by the claims for the 1975-1998 period. The idea that the heat redistribution systems of the world fiddle about significantly on the 30-year period is only being posited currently as a way out of accepting flaws in the CAGW narrative.

The world is not, again in my estimation, a smoothly functioning machine. There are things that happen HERE and not all over the place. When the winds don’t blow and the skies are clear in central Australia temperatures rise to “record” levels: is this an Australian phenomenon or a global phenomenon? Only an idiot-ideologue would cling to the position that it is a global phenomenon; however, when those regional temperatures are added into the other records, there is (possibly) a blip in the global record. Thus “global” comes out and is affirmed by a regional situation (substitute “Arctic” for “central Australia” and you’ll hear claims you recognize).

I do not disagree that the sun also has a large part in the post 1850 warming, nor especially in the post 1975 warming. I agree that more CO2 has some effect. But when Antarctica ices over while the Arctic melts, I consider it likely that unequal heat redistribution is also a phenomenon that has led to the appearance of a “global” warming.

We are facing a serious error in procedure and belief wrt the CAGW debacle. The smart minds have told us that the bigger the dataset the better the end result. I hold this to be a falsehood that comes from an ill-considered view of what drives data regionally and how outliers and subsets can be deferentially determined from the greater dataset. Computational reality is not necessarily and, in the global warming situation, not actually the same as Representational reality. Just because your equations give you an answer does not mean that the answer reflects what the situation is in the world.

timetochooseagain says:

January 26, 2014 at 9:01 am

“NCDC does not usually report in “absolute”, but they *do* offer “normals” you can add to their anomalies to get “absolute” values.”

Good tip!

I find for NOV 2013:

http://www.ncdc.noaa.gov/sotc/global/2013/11/

“Global Highlights

The combined average temperature over global land and ocean surfaces for November 2013 was record highest for the 134-year period of record, at 0.78°C (1.40°F) above the 20th century average of 12.9°C (55.2°F).”

So we were at 12.9+0.78 = 13.68 deg C in NOV 2013.

Here’s again the values that markx collected:

“1988: 15.4°C

1990: 15.5°C

1999: 14.6°C

2004: 14.5°C

2007: 14.5°C

2010: 14.5°C

2012: 14.0°C

2013: 14.0°C”

So NCDC admits that in NOV 2013 the globe was coolest since beginning of Global Warming Research.

This Global Warming is sure a wicked thing.

I guess it’s all got to do with cooling the past, and with “choosing between being efficient and being honest” ((c) Steven Schneider).

This Orwellian science stuff is so fun.

“””””…..GISS EXPLANATION

GISS on their webpage here states:

Anomalies and Absolute Temperatures

Our analysis concerns only temperature anomalies, not absolute temperature. Temperature anomalies are computed relative to the base period 1951-1980. The reason to work with anomalies, rather than absolute temperature is that absolute temperature varies markedly in short distances, while monthly or annual temperature anomalies are representative of a much larger region. Indeed, we have shown (Hansen and Lebedeff, 1987) that temperature anomalies are strongly correlated out to distances of the order of 1000 km. For a more detailed discussion, see The Elusive Absolute Surface Air Temperature…….””””””

“”..indeed, we have shown (Hansen and Lebedeff, 1987) that temperature anomalies are strongly correlated out to distances of the order of 1000 km. ..””

These immortal words, should be read in conjunction with Willis Eschenbach’s essay down the column about sunspots, temperature, and piranhas; well rivers.

Willis demonstrated how correlations are created out of filtering, where none existed before.

Anomalies are simply a filter, and like all filters, they throw away much of the information you started with; most of it in fact, and they create the illusion of a correlation between disconnected data sets.

Our world of anomalies can have no weather, since there can be no winds flowing on an anomalous planet, that is all at one temperature.

So the gist of GISS is that the base period is 1951-1980. That period conveniently contains the all-time record sunspot peak of 1957/58, during the IGY.

So anomalies dispense with weather, in the study of climate, that is in fact the long term integral of the weather; and they replace the highly non linear physical environment system of planet earth with a dull linear counterfeit system, on a non-rotating earth, uniformly illuminated by a quarter irradiance permanent sunlight.

So anomalies are strongly correlated over distances of 1,000 km. Temperatures on the other hand, show simultaneous, observed ranges of 120 deg. C quite routinely, to about 155 deg. C in the extreme cases, over a distance of perhaps 12,000 km.

We are supposed to believe these two different systems are having the same behavior.

So if we have good anomalies out to 1,000 km, then an anomaly measuring station is only required every 2,000 km, so you can sample the equator with just 20 stations and likewise, the meridian from pole to pole through Greenwich.

Do enough anomalizationing, and we could monitor the entire climate system, with a single station say in Brussels, or Geneva, although Greenwich would be a sentimental favorite.

Yes, the GISS anomaly charter gives us a good idea of just what the man behind the curtain is actually doing.

Anomalies are ok, but must be used carefully. You can exaggerate anything that has no importance or you could hide the important. Maybe anomalies should be presented together with absolute values and the seasonal changes, especially around 0 C absolute temperature. I don’t know how to do it practically, but as someone said, if it was not for those climatists producing the anomalies you would never know of any temperature changes.

It is a bit like a thermographic image with a resolution of 0.1 degree or better where a 1 degree surface temperature change goes from deep blue to hot red.

High morals are needed to sell rubber bands by the meter.

The elephant in the room is explicitly stated by GISS:

The entire analysis and claim of AGW is based on the claims that

1) temperature anomalies are strongly correlated out to distances of the order of 1000 km, and

2) the associated error estimates are correct

For example, based on this type of analysis, Hansen et al claim to calculate the “global mean temperature anomaly” back in 1890 to within +/- 0.05C [1 sigma]

http://data.giss.nasa.gov/gistemp/2011/Fig2.gif

Looking at the Hansen and Lebedeff, 1987 paper

http://pubs.giss.nasa.gov/abs/ha00700d.html

GCM based error estimates, given the known problems with GCMs, should be treated with skepticism. A sensitivity analysis to estimate systematic, as opposed to statistical, errors does not appear to have been done.

If the error bars have been significantly underestimated, then the global warming claim is nothing more than a case of flawed rejection of the null hypothesis.

Considering how much has been written in the ongoing debate on the existence of AGW, it’s surprising that this key point has been mostly ignored.

“””””…..MikeB says:

January 26, 2014 at 5:27 am

Could I just make a very pedantic point because I have become a very grumpy old man. Kelvin is a man’s name. The units of temperature are ‘kelvin’ with a small ‘k’.

If you abbreviate kelvin then you must use a capital K (following the SI convention).

Further, we don’t speak of ‘degrees kelvin’ because the units in this case are not degrees, they are kelvins ( or say K, but not degrees K )……”””””

Right on Mate !

But speaking of SI and kelvins; do they also now ordain that we no longer have Volts, or Amps, or Siemens, or Hertz ??

I prefer Kelvins myself for the very reason it is The man’s name. And I always tell folks that one deg. Kelvin is a bloody cold Temperature. I use deg. C exclusively for temperature increments, and (K)kelvins are ALWAYS absolute Temperatures; not increments.

Steven Mosher says:January 26, 2014 at 10:12 am

Is the Berkeley BEST raw data now finalised?

Have they removed all the obvious errors?

Is it still available at the same place?

From your title, I thought you were going to show this GISS absolute temperature graph:

http://suyts.files.wordpress.com/2013/02/image_thumb265.png?w=636&h=294

Really alarming, isn’t it, the way temperatures have climbed since the 1880s?

Do I need a sarc tag?

Thanks to D.B. Stealey – March 5, 2013 at 12:39 pm…for finding this graph…

Joe Solters: I think I understand the essence of this thread on average global temperature. One, there is no actual measurement of a global temperature due to an insufficient number of accurate thermometers located around the earth. So approximations are necessary. These approximations are calculated to tenths or hundredths of a degree. Two, monthly average temperature estimates are calculated by extrapolation from sites which may be 1000 km apart on land. Maybe further at sea. Three, these monthly estimates are then compared with monthly average estimates for past base periods going back in time 20 or 30 years. These final calculations are called anomalies, and used to approximate increases or decreases in average global temperature over time. The anomaly scale starts at zero for the base period selected and shows approximations in tenths of a degree difference in average temperature. Few, if any, of the instruments used to record actual temperatures are accurate to tenths of a degree. These approximate differences in tenths of a degree are used to support government policies directed at changing fundamental global energy production and use with enormous impacts on lives. Assuming average global temperature is the proper metric to determine climate change, there has to be a better, more accurate way to measure what is actually happening to global temperature. If the data aren’t available, stop the policy nonsense until they are available.

I wish I knew where D.B. Stealey found that original GISS data graph so it could be viewed in its larger form…

http://suyts.files.wordpress.com/2013/02/image_thumb265.png?w=636&h=294

J. Philip Peterson says:

January 26, 2014 at 12:02 pm

“I wish I knew where D.B. Stealey found that original GISS data graph so it could be viewed in its larger form…”

http://suyts.wordpress.com/2013/02/22/how-the-earths-temperature-looks-on-a-mercury-thermometer/

DirkH says:

January 26, 2014 at 11:01 am

The combined average temperature over global land and ocean surfaces for November 2013 was record highest for the 134-year period of record, at 0.78°C (1.40°F) above the 20th century average of 12.9°C (55.2°F).”

Here’s again the values that markx collected:

“1988: 15.4°C

1990: 15.5°C

1999: 14.6°C

2004: 14.5°C

2007: 14.5°C

2010: 14.5°C

2012: 14.0°C

2013: 14.0°C”

So NCDC admits that in NOV 2013 the globe was coolest since beginning of Global Warming Research.

This sounds contradictory but it really is not. Note the question in my comment at:

Werner Brozek says:

January 26, 2014 at 9:07 am

It is apparent that the numbers around 14.0 come from a source that goes from 12.0 to 15.8 for the year. And the 12.0 is in January so 12.9 in November is reasonable. To state it differently, you can have the warmest November in the last 160 years and it will still be colder in absolute terms than the coldest July in the last 160 years.

Thanks DirkH!, I tried searching at suyts.files.wordpress and all I would get was 404 — File not found. Guess I wouldn’t be much of a hacker…

“Rienk says:

January 26, 2014 at 10:17 am

Can’t use temperatures. Reason is simple, there’s no significance without randomization (R.A Fisher). Thermometers are not in random spots but at places where there are people. ”

######################################

Untrue. Around 40% of the sites have no population to speak of (less than 10 people) within 10km. Further, you can test whether population has an effect (needs to be randomized).

Population doesn’t have a significant effect unless the density is very high (like over 5k per sq km). In short, if you limit to stations with small population, the answer does not change in any statistically significant way from the full sample.

#####################################

So even if you’re not bothered by the difference between intensive and extensive variables, you cannot get a proper sample and thus no valid average. So they have to pretend they’re measuring all. Hence anomalies which are correlated over large distances instead of temperatures that aren’t. For extra misdirection go gridded, homogenize and readjust. And stop talking about temperature

Wrong as well. Temperatures are correlated over long distances. You can prove this by doing out-of-sample testing. You decimate the sample (drop 85% of the stations), create the field, and then use the field to predict the temperature at the 85% you held out. Answer? Temperature is correlated out to long distances. The distance varies with latitude and season.

“Don’t even get me started in 1000km correlation crap of GISS”

actually you’ll find correlation to distances beyond this, and some distances less than this.

It’s really quite simple. You tell me the temperature at position x,y and mathematically you can calculate a window of temperature for a location x2,y2. Depending on the season, depending on the latitude, the estimate will be quite good.

This is just plain old physical geography. Give me the temperature and the month and you can predict ( with uncertainty) the location at which the measurement was taken. add in the altitude and you can narrow it down even more.

Given the latitude and the altitude and the season you can predict the temperature within about 1.6 C 95% of the time. Why? Because physical geography determines a large amount of the variance in temperature. Why? It’s the sun, stupid.

Isn’t it lucky we’ve got the UAH satellite record to provide such solid support for the surface temperature data.

On the point in Bill Illis’ post about the use of Kelvin rather than Degrees Celsius for absolute temperature. He’s absolutely correct. The Celsius scale, in effect, simply reports anomalies relative to a constant base line of 273K. However, despite its attraction to some, a lot of information would be ‘lost’ if the Kelvin scale were used in practice. The glacial/interglacial variation in global mean temperature is only ~5 degrees C. On a graph using the Kelvin scale that would only show as a relatively tiny departure in the temperature record.

As far as climate is concerned, we are interested in temperature variation within a narrow range. We want to know whether the world is going to warm 3 degrees, 1 degree … or not at all over the rest of this century. Anomalies are just fine for assessing the likely outcomes.

A C Osborn says:

January 26, 2014 at 11:33 am

Steven Mosher says:January 26, 2014 at 10:12 am

Is the Berkeley BEST raw data now finalised?

Have they removed all the obvious errors?

Is it still available at the same place?

####################################

1. Raw data is a myth. There are first reports. When an observer writes down the value he thinks he saw on the thermometer, this is a first report. When a station transmits its data to a data center, this is a first report. They may not be “raw”, whatever that means.

From day 1 the “raw” data (as you call it) has been available.

A) we pull from open sources, 14 of them, the code shows you the URLS

B) those files are ingested monthly, they are output in a “common format”. values are not changed, merely re ordered.Common format is posted.

2. It is never finalized since new reports continue to come in. Further, archives are being added to. This last month 2000 new stations were added. There are mountains of old data being added to the stream. These old data show us that our predictions for the temperature field are correct within uncertainty bounds. For example, with the old data we have an estimate for Montreal in 1884. New data comes in from digitization projects where old records are added to the system. These “new” observations can be used to check the accuracy of the estimate. Guess what?

3. Have all obvious errors been removed? With over 40000 stations this is the biggest challenge. For example, a grad student found a duplication error that affected 600 stations. After fixing that problem.. the answer……. did not change. Currently working with guys who are specialists in various parts of the world, fixing issues as they crop up. Again, no material changes. LLN is your friend.

4. They should be in the same place. Researchers who are going over the data seem to have no problem finding it or accessing the SVN. When they find issues they write me and the issues get reviewed.

When you pose the problem in the right mathematical framework.. “How do i estimate the temperature at places where I dont have measures?”, RATHER THAN “how do I average temperatures where I do have them?” you get a wonderful way of showing that your prediction is accurate within the stated uncertainties. So you can get it..

Take all 20K stations in the US. Drop the CRN stations (110). These are the stations which WUWT thinks are the gold standard. Drop those. Next, estimate the field using the roughly 19,890 other stations. This field is a prediction: temperature at x,y,z and time t will be X.

Then, see what you predict for those 110 CRN stations. Is your prediction within your uncertainty or not?

As Steve McIntyre explained, the temperature metric is not rocket science. It’s plain old ordinary accounting (well, ordinary geostatistics); it’s used every day in business. Nothing cutting edge about it whatsoever. The weird thing is that GISS and CRU use methods they invented but never really tested. Berkeley? We just used what businesses use who operate in this arena.

Bottom line. There was an LIA. It’s warmer now. And we did land on the moon.