The title question often appears during discussions of global surface temperatures. That is, GISS, Hadley Centre and NCDC only present their global land+ocean surface temperature products as anomalies. The question is: why don’t they produce the global surface temperature products in absolute form?

In this post, I’ve included the answers provided by the three suppliers. I’ll also discuss sea surface temperature data and a land surface air temperature reanalysis which are presented in absolute form. And I’ll include a chapter that has appeared in my books that shows why, when using monthly data, it’s easier to use anomalies.

Back to global temperature products:

GISS EXPLANATION

GISS on their webpage here states:

Anomalies and Absolute Temperatures

Our analysis concerns only temperature anomalies, not absolute temperature. Temperature anomalies are computed relative to the base period 1951-1980. The reason to work with anomalies, rather than absolute temperature is that absolute temperature varies markedly in short distances, while monthly or annual temperature anomalies are representative of a much larger region. Indeed, we have shown (Hansen and Lebedeff, 1987) that temperature anomalies are strongly correlated out to distances of the order of 1000 km. For a more detailed discussion, see The Elusive Absolute Surface Air Temperature.

UKMO-HADLEY CENTRE EXPLANATION

The UKMO-Hadley Centre answers that question…and why they use 1961-1990 as their base period for anomalies on their webpage here.

Why are the temperatures expressed as anomalies from 1961-90?

Stations on land are at different elevations, and different countries measure average monthly temperatures using different methods and formulae. To avoid biases that could result from these problems, monthly average temperatures are reduced to anomalies from the period with best coverage (1961-90). For stations to be used, an estimate of the base period average must be calculated. Because many stations do not have complete records for the 1961-90 period several methods have been developed to estimate 1961-90 averages from neighbouring records or using other sources of data (see more discussion on this and other points in Jones et al. 2012). Over the oceans, where observations are generally made from mobile platforms, it is impossible to assemble long series of actual temperatures for fixed points. However it is possible to interpolate historical data to create spatially complete reference climatologies (averages for 1961-90) so that individual observations can be compared with a local normal for the given day of the year (more discussion in Kennedy et al. 2011).

It is possible to develop an absolute temperature series for any area selected, using the absolute file, and then add this to a regional average in anomalies calculated from the gridded data. If for example a regional average is required, users should calculate a time series in anomalies, then average the absolute file for the same region then add the average derived to each of the values in the time series. Do NOT add the absolute values to every grid box in each monthly field and then calculate large-scale averages.
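The procedure described in that last paragraph can be sketched in a few lines of Python. This is only a minimal illustration, not the Hadley Centre's code: the two-box "region", its values, the cosine-of-latitude area weighting and the function name `area_weighted_mean` are all assumptions made for the example.

```python
import math

def area_weighted_mean(boxes):
    """Average (latitude_deg, value) pairs, weighting by cos(latitude).
    Boxes whose value is None (no data) are skipped."""
    num = den = 0.0
    for lat, value in boxes:
        if value is None:
            continue
        weight = math.cos(math.radians(lat))
        num += weight * value
        den += weight
    return num / den

# Hypothetical gridded anomalies: (year, month) -> [(lat, anomaly), ...]
anomaly_fields = {
    (2013, 1): [(0.0, 0.2), (60.0, None)],   # one box has no data this month
    (2013, 2): [(0.0, 0.4), (60.0, 1.0)],
}
# Hypothetical "absolute file": month -> [(lat, base-period average), ...]
absolute_fields = {
    1: [(0.0, 26.0), (60.0, -4.0)],
    2: [(0.0, 26.0), (60.0, -1.0)],
}

# Step 1: regional time series in anomalies.
regional_anomaly = {k: area_weighted_mean(v) for k, v in anomaly_fields.items()}
# Step 2: regional average of the absolute file (spatially complete).
regional_normal = {m: area_weighted_mean(v) for m, v in absolute_fields.items()}
# Step 3: add the regional normal to each value of the anomaly series.
regional_absolute = {
    (y, m): a + regional_normal[m] for (y, m), a in regional_anomaly.items()
}

for key in sorted(regional_absolute):
    print(key, round(regional_absolute[key], 2))
```

In this toy case the January result is about 16.2. Had the absolute values been added box by box first, the missing high-latitude box would have dropped out of the average and January would have come out near 26.2, which is one way to see why the order of operations matters.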

NCDC EXPLANATION

Also see the NCDC FAQ webpage here. They state:

Absolute estimates of global average surface temperature are difficult to compile for several reasons. Some regions have few temperature measurement stations (e.g., the Sahara Desert) and interpolation must be made over large, data-sparse regions. In mountainous areas, most observations come from the inhabited valleys, so the effect of elevation on a region’s average temperature must be considered as well. For example, a summer month over an area may be cooler than average, both at a mountain top and in a nearby valley, but the absolute temperatures will be quite different at the two locations. The use of anomalies in this case will show that temperatures for both locations were below average.

Using reference values computed on smaller [more local] scales over the same time period establishes a baseline from which anomalies are calculated. This effectively normalizes the data so they can be compared and combined to more accurately represent temperature patterns with respect to what is normal for different places within a region.

For these reasons, large-area summaries incorporate anomalies, not the temperature itself. Anomalies more accurately describe climate variability over larger areas than absolute temperatures do, and they give a frame of reference that allows more meaningful comparisons between locations and more accurate calculations of temperature trends.

SURFACE TEMPERATURE DATASETS AND A REANALYSIS THAT ARE AVAILABLE IN ABSOLUTE FORM

Most sea surface temperature datasets are available in absolute form. These include:

- the Reynolds OI.v2 SST data from NOAA

- the NOAA reconstruction ERSST

- the Hadley Centre reconstruction HADISST

- and the source data for the reconstructions ICOADS

The Hadley Centre’s HADSST3, which is used in the HADCRUT4 product, is only produced in anomaly form, however. And I believe Kaplan SST was also only available in anomaly form.

With the exception of Kaplan SST, all of those datasets are available to download through the KNMI Climate Explorer Monthly Observations webpage. Scroll down to SST and select a dataset. For further information about the use of the KNMI Climate Explorer see the posts Very Basic Introduction To The KNMI Climate Explorer and Step-By-Step Instructions for Creating a Climate-Related Model-Data Comparison Graph.

GHCN-CAMS is a reanalysis of land surface air temperatures and it is presented in absolute form. It must be kept in mind, though, that a reanalysis is not “raw” data; it is the output of a climate model that uses data as inputs. GHCN-CAMS is also available through the KNMI Climate Explorer and identified as “1948-now: CPC GHCN/CAMS t2m analysis (land)”. I first presented it in the post Absolute Land Surface Temperature Reanalysis back in 2010.

WHY WE NORMALLY PRESENT ANOMALIES

The following is “Chapter 2.1 – The Use of Temperature and Precipitation Anomalies” from my book Climate Models Fail. There was a similar chapter in my book Who Turned on the Heat?

[Start of Chapter 2.1 – The Use of Temperature and Precipitation Anomalies]

With rare exceptions, the surface temperature, precipitation, and sea ice area data and model outputs in this book are presented as anomalies, not as absolutes. To see why anomalies are used, take a look at global surface temperature in absolute form. Figure 2-1 shows monthly global surface temperatures from January, 1950 to October, 2011. As you can see, there are wide seasonal swings in global surface temperatures every year.

The three producers of global surface temperature datasets are the NASA GISS (Goddard Institute for Space Studies), the NCDC (NOAA National Climatic Data Center), and the United Kingdom’s National Weather Service known as the UKMO (UK Met Office). Those global surface temperature products are only available in anomaly form. As a result, to create Figure 2-1, I needed to combine land and sea surface temperature datasets that are available in absolute form. I used GHCN+CAMS land surface air temperature data from NOAA and the HADISST Sea Surface Temperature data from the UK Met Office Hadley Centre. Land covers about 30% of the Earth’s surface, so the data in Figure 2-1 is a weighted average of land surface temperature data (30%) and sea surface temperature data (70%).
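The weighting described above amounts to a one-line calculation. The sketch below assumes the 30% land fraction quoted in the text; the function name and the two input temperatures are hypothetical values chosen only to show the arithmetic.

```python
LAND_FRACTION = 0.30  # approximate share of the Earth's surface that is land

def combine_land_ocean(land_temp, sea_temp, land_fraction=LAND_FRACTION):
    """Weighted average of a land surface air temperature and a sea
    surface temperature, both in the same units (deg C here)."""
    return land_fraction * land_temp + (1.0 - land_fraction) * sea_temp

# Hypothetical monthly values, for illustration only.
global_temp = combine_land_ocean(land_temp=9.0, sea_temp=18.0)
print(round(global_temp, 2))
```

The same function would be applied month by month to build a combined series.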

When looking at absolute surface temperatures (Figure 2-1), it’s really difficult to determine if there are changes in global surface temperatures from one year to the next; the annual cycle is so large that it limits one’s ability to see when there are changes. And note that the variations in the annual minimums do not always coincide with the variations in the maximums. You can see that the temperatures have warmed, but you can’t determine the changes from month to month or year to year.

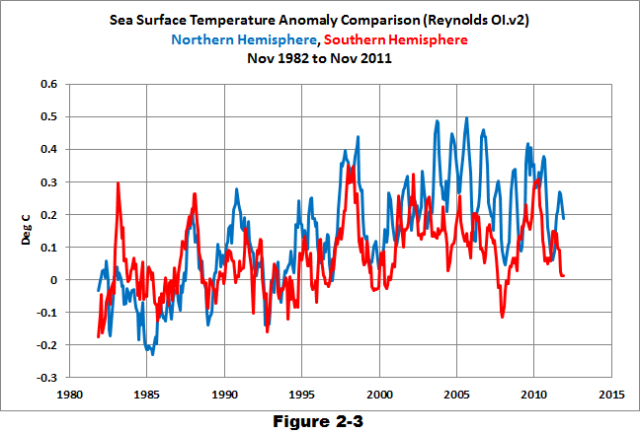

Take the example of comparing the surface temperatures of the Northern and Southern Hemispheres using the satellite-era sea surface temperatures in Figure 2-2. The seasonal signals in the data from the two hemispheres oppose each other. When the Northern Hemisphere is warming as winter changes to summer, the Southern Hemisphere is cooling because it’s going from summer to winter at the same time. Those two datasets are 180 degrees out of phase.

After converting that data to anomalies (Figure 2-3), the two datasets are easier to compare.

Returning to the global land-plus-sea surface temperature data, once you convert the same data to anomalies, as was done in Figure 2-4, you can see that there are significant changes in global surface temperatures that aren’t related to the annual seasonal cycle. The upward spikes every couple of years are caused by El Niño events. Most of the downward spikes are caused by La Niña events. (I discuss El Niño and La Niña events a number of times in this book. They are parts of a very interesting process that nature created.) Some of the drops in temperature are caused by the aerosols ejected from explosive volcanic eruptions. Those aerosols reduce the amount of sunlight that reaches the surface of the Earth, cooling it temporarily. Temperatures rebound over the next few years as volcanic aerosols dissipate.

HOW TO CALCULATE ANOMALIES

For those who are interested: To convert the absolute surface temperatures shown in Figure 2-1 into the anomalies presented in Figure 2-4, you must first choose a reference period. The reference period is often referred to as the “base years.” I use the base years of 1950 to 2010 for this example.

The process: First, determine average temperatures for each month during the reference period. That is, average all the surface temperatures for all the Januaries from 1950 to 2010. Do the same thing for all the Februaries, Marches, and so on, through the Decembers during the reference period; each month is averaged separately. Those are the reference temperatures. Second, determine the anomalies, which are calculated as the differences between the reference temperatures and the temperatures for a given month. That is, to determine the January, 1950 temperature anomaly, subtract the average January surface temperature from the January, 1950 value. Because the January, 1950 surface temperature was below the average temperature of the reference period, the anomaly has a negative value. If it had been higher than the reference-period average, the anomaly would have been positive. The process continues as February, 1950 is compared to the reference-period average temperature for Februaries. Then March, 1950 is compared to the reference-period average temperature for Marches, and so on, through the last month of the data, which in this example was October 2011. It’s easy to create a spreadsheet to do this, but, thankfully, data sources like the KNMI Climate Explorer website do all of those calculations for you, so you can save a few steps.
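For readers who would rather script it than build a spreadsheet, the two steps above can be sketched in Python. This is a minimal illustration with made-up temperatures and a shortened base period; the function name `monthly_anomalies` and the data layout are assumptions for the example, not anything used by the data suppliers.

```python
from collections import defaultdict

def monthly_anomalies(series, base_start, base_end):
    """series: list of (year, month, temperature) tuples.
    Returns (year, month, anomaly) tuples relative to the base years
    base_start..base_end (inclusive). Every calendar month in the series
    must also occur somewhere in the base period."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    # Step 1: average each calendar month separately over the base years.
    for year, month, temp in series:
        if base_start <= year <= base_end:
            sums[month] += temp
            counts[month] += 1
    reference = {month: sums[month] / counts[month] for month in sums}
    # Step 2: subtract the matching monthly reference from every value.
    return [(y, m, t - reference[m]) for y, m, t in series]

# Made-up values (deg C) for two calendar months over three years.
series = [
    (1950, 1, 14.0), (1950, 7, 17.0),
    (1951, 1, 14.4), (1951, 7, 17.2),
    (1952, 1, 14.8), (1952, 7, 17.4),
]
anoms = monthly_anomalies(series, base_start=1950, base_end=1951)
for year, month, anomaly in anoms:
    print(year, month, round(anomaly, 2))
```

Because each month is compared only with its own reference value, the January-to-July seasonal swing of about 3 deg C in the made-up data disappears from the anomalies.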

CHAPTER 2.1 SUMMARY

Anomalies are used instead of absolutes because anomalies remove most of the large seasonal cycles inherent in the temperature, precipitation, and sea ice area data and model outputs. Using anomalies makes it easier to see the monthly and annual variations and makes comparing data and model outputs on a single graph much easier.

[End of Chapter 2.1 from Climate Models Fail]

There are a good number of other introductory discussions in my ebooks, for those who are new to the topic of global warming and climate change. See the Tables of Contents included in the free previews to Climate Models Fail here and Who Turned on the Heat? here.

So here is a plot of daily average temperatures in Ithaca, NY for January, in °K. Hardly anything! So what’s all this I’ve been hearing about a cold snap in the US?

I agree, if we/they are math-literate “scientists” (scare-quotes!), then why not report and discuss in kelvin.

“Why Aren’t Global Surface Temperature Data Produced in Absolute Form?”, because climatologists aren’t physicists.

Thank you Bob Tisdale, you have confirmed what I have always suspected that these data sets are all SWAGs.

http://wattsupwiththat.com/2014/01/25/hadcrut4-for-2013-almost-a-dnf-in-top-ten-warmest/#comment-1548993

Or Engineers either.

Why aren’t all the datasets based on 1981-2010, which is the WMO policy for meteorological organisations? They would then all be comparable.

For instance, GISS use 1951-80, which produces a much bigger, scarier anomaly.

Based on 1981-2010, annual temperature anomalies for 2013 would be:

RSS 0.12C

UAH 0.24C

HADCRUT 0.20C

GISS 0.21C

http://notalotofpeopleknowthat.wordpress.com/2014/01/26/global-temperature-report-2013/#more-6627

Bill Illis says:

January 26, 2014 at 3:50 am

“The temperatures should really be in degrees Kelvin or 288K average with a range on the planet of 200K to 323K.”

Makes perfect sense. It would eliminate the scaling problems with different graphs:

Fig 2-1 covers a range of 5 degrees C

Fig 2-2 covers a range of 9 degrees C

Fig 2-3 covers a range of .9 degrees C

Fig 2-4 covers a range of 1.4 degrees C

Of course it would also reduce the scare impact many manipulators are quite happy to use.

They use anomalies because it lets them hide the fact that the signal they’re looking for (the trend) is a tiny fraction of the natural daily & annual cycles that everything and everyone on the planet tolerates. Basically it’s a tool that enables exaggeration.

Good article, Bob. When I read the title my first thought was kelvin scale. The only thing that seemed to be missing is estimates of error. After a discussion of difficulties in data sets with sparse, widely distributed measurements, problems with elevations, attempts to homogenize the data sets to come out with a product called anomalies, there is no discussion of the uncertainty of these measurements. I find it interesting that climate scientists wax eloquent about anomalies to 2-3 decimal places without showing measurement uncertainties.

Back in the dark ages when I was teaching college chem labs and pocket calculators first came out, the kiddies would report results to 8+ decimal places because the calculator said so. I’d make them read the silly stuff about significant figures, measurement error and then tell me what they could really expect using a 2-place balance. Climate science seems to be a bit more casual about measurements.

With anomalies it is easier to manipulate.

It is also easier to force extraction of bogus meaning to dishonestly present as well developed a science that is only starting.

Don’t even get me started on the 1000km correlation crap of GISS.

Use of anomalies sounds good, but if they were forced to show actual global average temperatures as well, then it would be more difficult for particularly NASA/GISS but also the other surface temperature providers to “hide the decline” in temperatures before the satellite age in order to produce man made global warming i.e. global warming produced by manipulations.

markx says:

January 26, 2014 at 4:49 am

“But, the accepted ‘mean global temperature’ has apparently changed with time: From contemporary publications:

1988: 15.4°C

1990: 15.5°C

1999: 14.6°C

2004: 14.5°C

2007: 14.5°C

2010: 14.5°C

2012: 14.0°C

2013: 14.0°C

”

Thanks so much, markx! I forgot to bookmark Pierre’s post which amused me immensely when I first read it!

So: We know that warming from here above 2 deg C will be catastrophic! That’s what we all fight against! Now, as we are at 14 deg C now, and we were at 15.5 in 1990, that means we were 0.5 deg C from extinction in 1990! Phew, close call!

I remember all those barebellied techno girls… Meaning, if Global Warming continues with, say 0.1 deg C per decade (that is the long term trend for RSS starting at 1979), we will see a return of Techno parades in 2163! Get out the 909!

The anomalies are fine as long as you include the scale of the temperature. Since we produce a “global temperature” then we in turn MUST use a global scale.

When plotted on a scale of about -65 deg C to +45 deg C, the anomalies become meaningless in the grand scale of the planet.

The recent release of 34 years of satellite data shows that even when using the skewed data (it was shifted 0.3 deg C in 2002 with the Aqua launch) the relative trend has been completely flat inside the margin of error for the entire 34-year range.

This makes sense as it’s basically an average of averages CALCULATED from raw data.

The only place anomalies show trends is when you take the temperature measurements down to smaller, more specific areas such as regions and specific weather stations.

When you do this, fluctuations and decade patterns emerge in the overall natural variance of the location. Groups of warm winters or cold summers are identifiable.

As well, recording changes become apparent, such as when equipment screens switched from whitewashed to painted, when electronics began to be included in screens, and when weather stations started to become “urban” with city growth.

You can see definite temperature jumps and plateaus when these things happen. All of these contribute to that infinitely small global average tucked into a ridiculously small plus or minus half degree scale shown on anomaly graphs.

It’s not the roller coaster shown, it’s a flatline with a minuscule trend upward produced by the continual adjustment UP whenever an adjustment is required. Satellites did not see a 0.3 deg C jump up in 2002.

All pre Aqua satellites were adjusted up that amount to match the new more accurate Aqua satellite.

Anecdotally, I ask everyone I know if the weather in their specific area has changed outside of the historical variance known by themselves, their parents, their grandparents.

The resounding answer is NO

The Canadian Prairies where I live are the same for well over 100 years now.

Nederland (Holland), where my ancestry is from, is the same.

Take note: Holland is NOT drowning from sea level increases.

Mediterranean ports are still ports.

I work with pressurized systems

I do NOT buy a gauge that reads in a range of 1001 to 1003 psi and freak out when the needle fluctuates between 1001.5 and 1002.5

I buy a gauge that reads 0 to 1500 psi and note how it stays flat around a bit over 1000 psi

On the prairies I’ve been in temps as low as -45 and as high as + 43 deg C

But I know I’m going to spend most of my year at -20 to +28. If it goes outside of that, I know it’s a warm summer or cold winter, but nothing special.

Anomaly temps are fine if taken for what they are

Flat CALCULATED averages of averages on a global scale.

My 2 cents but what do I know about data and graphing and history?

When we talk about global warming we are talking about the temperature on the SURFACE of the earth, because that is where we live. So, how do we measure the absolute temperature of the surface of the earth? There is no practical way to do that. Although there are a large number of weather stations dotted around the globe, do they provide a representative sample of the whole surface? Some stations may be on high mountains, others in valleys or local microclimates.

What is more, the station readings have not been historically cross-calibrated with each other. Although we now have certain standards for the siting and sheltering of thermometers, a recent study by our own Anthony Watts found that many U.S. temperature stations are located near buildings, in parking lots, or close to heat sources and so do not comply with the WMO requirements (and if a developed country like the USA doesn’t comply, what chance the rest of the world?).

It is difficult (impossible) therefore to determine the absolute temperature of the earth accurately with any confidence. We could say that it is probably about 14 or 15 deg.C ( my 1905 encyclopaedia puts it at 45 deg.F). If you really want absolute temperatures then just add the anomalies to 14 or 15 degrees. It doesn’t change the trends.

However, we have a much better chance of determining whether temperatures are increasing or not, by comparing measurements from weather stations with those they gave in the past, hence anomalies. That is to say, my thermometer may not be accurately calibrated, but I can still tell if it is getting warmer or colder.
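The point that adding a constant to the anomalies leaves the trend unchanged is easy to check numerically. The sketch below fits an ordinary least-squares slope to a short made-up anomaly series and to the same series shifted by 14 degrees; the values and the helper name `linear_trend` are purely illustrative.

```python
def linear_trend(values):
    """Ordinary least-squares slope of values against their index
    (0, 1, 2, ...), in units of value per time step."""
    n = len(values)
    x_mean = (n - 1) / 2.0
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den

# Made-up annual anomalies (deg C) and the same data offset by 14 deg C.
anomalies = [-0.1, 0.0, 0.1, 0.3, 0.2]
absolutes = [a + 14.0 for a in anomalies]

print(round(linear_trend(anomalies), 4))
print(round(linear_trend(absolutes), 4))
```

Both calls give the same slope, because the offset cancels when the mean is subtracted.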

P.S. Oh dear Nick, just after I said don’t say degrees K, you put °K.

John Peter says:

January 26, 2014 at 6:41 am

Use of anomalies sounds good, but if they were forced to show actual global average temperatures as well.

Well said but please if you may, what exactly (and I do mean EXACTLY) is the GLOBAL average temperature? This IS science is it NOT? There must be an exact number.

Find me ONE location on earth where 14.5 (or close to that number) is the normal temperature day and night for more than 50% of the year.

The whole idea of an average on this rotating ball of variability borders on insane.

I love asking that when people tell me the planet is warming. Where? What temp? How fast? In the summer? Winter? Day? Night? Surface? Atmosphere? etc

AlexS says:

January 26, 2014 at 6:41 am

Alex can you please start to explain 1000km correlation of GISS data for us?

Lol

😉

Bob Tisdale says:

January 26, 2014 at 5:05 am

markstoval, PS: There’s no Dr. before my name.

Cheers

Maybe, but there should be!

Thank you Bob,

Have you looked into Steven Goddard’s latest discovery? If correct, this should be interesting.

Regards, Allan

http://wattsupwiththat.com/2014/01/25/hadcrut4-for-2013-almost-a-dnf-in-top-ten-warmest/#comment-1549043

The following seems to have fallen under the radar. I have NOT verified Goddard’s claim below.

http://stevengoddard.wordpress.com/2014/01/19/just-hit-the-noaa-motherlode/?utm_source=newsletter&utm_medium=email&utm_campaign=newsletter_January_19_2014

Independent data analyst, Steven Goddard, today (January 19, 2014) released his telling study of the officially adjusted and “homogenized” US temperature records relied upon by NASA, NOAA, USHCN and scientists around the world to “prove” our climate has been warming dangerously.

Goddard reports, “I spent the evening comparing graphs…and hit the NOAA motherlode.” His diligent research exposed the real reason why there is a startling disparity between the “raw” thermometer readings, as reported by measuring stations, and the “adjusted” temperatures, those that appear in official charts and government reports. In effect, the adjustments to the “raw” thermometer measurements made by the climate scientists “turns a 90 year cooling trend into a warming trend,” says the astonished Goddard.

Goddard’s plain-as-day evidence not only proves the officially-claimed one-degree increase in temperatures is entirely fictitious, it also discredits the reliability of any assertion by such agencies to possess a reliable and robust temperature record.

Regards, Allan

I have whined about this many times on this blog. The real answer is that by showing the anomalies, rather than absolutes, one changes the scale and makes the changes look more ‘dangerous’ than they really are. That hockey stick goes away. Just google the subject and try to find some actual temperatures. Hard to find.

Our climate is remarkably, possibly unreasonably, stable in the scheme of things. Probably one reason why we’re here at all. When one considers all of the variables that need to be “just right” for this Goldilocks phenomenon to occur one might conclude that there is a divine Planner, after all. It also leads me to believe that all of the billions of estimated other planets that may exist in habitable zones around other stars might be short of much life other than possibly bacteria or moss and lichens.

This web page

http://www.ncdc.noaa.gov/cmb-faq/anomalies.html

used to say:

Average Land Surface Mean Temp Base Period 1901 – 2000 ( 8.5°C)

Average Sea Surface Mean Temp Base Period 1901 – 2000 ( 16.1°C)

but now it doesn’t. Here’s the Wayback Machine page:

It told you that the ocean temperature is 5.7°C warmer than the air temperatures which is nice to know when “THEY” start claiming the atmosphere’s back radiation warms up the ocean.

@RichardLH

I wonder if you’re familiar with Marcia Wyatt’s application of MSSA?

http://www.wyattonearth.net/home.html

Marcia bundles everything into a ‘Stadium Wave’ (~60 year multivariate wave):

http://www.wyattonearth.net/originalresearchstadiumwave.html

Paraphrasing Jean Dickey (NASA JPL):

Everything’s coupled.

With due focus, this can be seen.

When you’re ready to get really serious, let me know.

Regards

NCDC does not usually report in “absolute”, but they *do* offer “normals” you can add to their anomalies to get “absolute” values.

Thank you for this article. Your Figure 2-1 suggests that global average temperatures vary between about 14.0 and 17.7, however the following site says the variation is from 12.0 to 15.8. Why the difference? Thanks!

http://theinconvenientskeptic.com/2013/03/misunderstanding-of-the-global-temperature-anomaly/

Perhaps it’s because the absolute temperature is not known.

I could go around our back yard with a thermometer and come up with many different readings.

Thanks Bob a timely posting.

Thanks Markx, I was looking for that list of the steadily adjusted down Average Global Temperatures.

As most other commenters have covered, the anomalies are useful… Politically

Without error bars, a defined common AGT, and assumptions listed, they are wild assed guesses at best. Political nonsense at worst.

Of course the activists and advocates will not use absolute values; that would fail the public relations intent of their communications. Where are the extremes? The high peaking graphs?

Communicating science being IPCCspeak for propaganda.

Sorry, still chuckling over that Brad Keyes comment: what will Mike Mann be remembered for?

His communication or his science?

The trouble with the lack of scientific discipline on this front, temperature as proxy for energy accumulation, is that we may actually be cooling overall through the time periods of claimed warming.

If so the consequences of our government policies may be very unhelpful.

We have been impoverished as a group by this hysteria over assumed warming; poor people have fewer resources to respond to crisis. The survivors of a crisis caused by political stupidity have very unforgiving attitudes.