While climate models tend to be open-ended and impossible to verify for the future they predict until the future becomes the measured present, weather models can and have been verified, and they keep getting better. Here is one such example.

NSF-supported research at the University of Oklahoma uses supercomputers and simulations to improve storm forecasts

When a hailstorm moved through Fort Worth, Texas, on May 5, 1995, it battered the densely populated area with hail up to 4 inches in diameter and struck a local outdoor festival known as the Fort Worth Mayfest.

The Mayfest storm was one of the costliest hailstorms in U.S. history, causing more than $2 billion in damage and injuring at least 100 people.

Scientists know that storms with a rotating updraft on their southwestern sides, which are particularly common in the spring on the U.S. southern plains, are associated with the biggest, most severe tornadoes and also produce a lot of large hail. However, a clear understanding of how these storms form, and of how to predict such events in advance, has proven elusive.

A team based at the University of Oklahoma (OU), working on the Severe Hail Analysis, Representation and Prediction (SHARP) project with support from the National Science Foundation (NSF), is working to solve that mystery.

Performing experimental weather forecasts using the Stampede supercomputer at the Texas Advanced Computing Center, researchers have gained a better understanding of the conditions that cause severe hail to form, and are producing predictions with far greater accuracy than those currently used operationally.

Improving model accuracy

To predict hail storms, or weather in general, scientists have developed mathematically based physics models of the atmosphere and the complex processes within, and computer codes that represent these physical processes on a grid consisting of millions of points. Numerical models in the form of computer codes are integrated forward in time starting from the observed current conditions to determine how a weather system will evolve and whether a serious storm will form.
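To make "integrated forward in time" concrete, here is a minimal Python sketch of the idea on a one-dimensional grid. It is a toy, not the SHARP model: it assumes a single quantity carried along by a constant wind on a periodic grid and uses first-order upwind differencing, one of the simplest schemes. The function name and parameters are illustrative only. Each step updates every grid point from its current value and its upwind neighbor, marching the state forward from the starting conditions.

```python
def advect_upwind(field, wind_speed, dx, dt, n_steps):
    """Integrate du/dt + c * du/dx = 0 forward in time on a periodic 1-D grid.

    Toy illustration: first-order upwind differencing, assuming a constant
    wind speed c > 0 (air moving toward higher grid indices).
    """
    courant = wind_speed * dt / dx
    # Explicit schemes are only stable if information moves at most one cell per step.
    if not 0 < courant <= 1:
        raise ValueError("CFL condition violated: need 0 < c*dt/dx <= 1")
    u = list(field)
    n = len(u)
    for _ in range(n_steps):
        # u[i - 1] with i == 0 wraps to u[-1], giving a periodic boundary.
        u = [u[i] - courant * (u[i] - u[i - 1]) for i in range(n)]
    return u


initial = [0.0] * 10
initial[2] = 1.0
# 500 m spacing, 10 m/s wind, 50 s step: Courant number exactly 1.
print(advect_upwind(initial, wind_speed=10.0, dx=500.0, dt=50.0, n_steps=3))
```

With a Courant number of exactly 1, a single nonzero value moves one grid cell per step, so after three steps the value that started at index 2 sits at index 5. Real storm models solve coupled three-dimensional equations for wind, pressure, temperature, moisture and precipitation, but the step-by-step forward marching is similar in spirit.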

Because of the wide range of spatial and temporal scales that numerical weather predictions must cover and the fast turnaround required, they are almost always run on powerful supercomputers. The finer the grid used to simulate the phenomena, the more accurate the forecast tends to be; but the finer the grid, the more computation is required.

The National Weather Service’s highest-resolution official forecasts use a grid spacing of three kilometers between points. The model the Oklahoma team is using in the SHARP project, on the other hand, uses a grid spacing of 500 meters, which is six times finer in each horizontal direction.

“This lets us simulate the storms with a lot higher accuracy,” says Nathan Snook, an OU research scientist. “But the trade-off is, to do that, we need a lot of computing power — more than 100 times that of three-kilometer simulations. Which is why we need Stampede.”
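The jump from "six times finer" to "more than 100 times" the computing power can be reconciled with a back-of-the-envelope estimate. The sketch below is our own rough reasoning, not the team’s published cost model. It assumes the same domain size and number of vertical levels, and that the time step shrinks in proportion to the grid spacing (the usual Courant–Friedrichs–Lewy stability limit for explicit schemes).

```python
def relative_cost(coarse_dx_m: float, fine_dx_m: float, scale_time_step: bool = True) -> float:
    """Rough ratio of compute cost for a fine grid versus a coarse grid.

    Assumptions (ours): same domain and same number of vertical levels, so only
    the two horizontal directions gain points; optionally, a time step
    proportional to the grid spacing, so more steps are needed per forecast hour.
    """
    refinement = coarse_dx_m / fine_dx_m  # refinement per horizontal direction
    cost = refinement ** 2                # extra points in x and in y
    if scale_time_step:
        cost *= refinement                # proportionally more time steps
    return cost


print(relative_cost(3000.0, 500.0))                         # 216.0
print(relative_cost(3000.0, 500.0, scale_time_step=False))  # 36.0
```

Under these assumptions, the 500-meter grid has 36 times as many horizontal points as the 3-kilometer grid and needs roughly 216 times the computation, consistent with Snook’s "more than 100 times." Adding vertical levels or more expensive physics would push the factor higher.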

Stampede is currently one of the most powerful supercomputers in the U.S. for open science research and serves as an important part of NSF’s portfolio of advanced cyberinfrastructure resources, enabling cutting-edge computational and data-intensive science and engineering research nationwide.

CREDIT University of Oklahoma

According to Snook, there’s a major effort underway to move to a “warning on forecast” paradigm — that is, to use computer-model-based, short-term forecasts to predict what will happen over the next several hours and use those predictions to warn the public, as opposed to warning only when storms form and are observed.

“How do we get the models good enough that we can warn the public based on them?” Snook asks. “That’s the ultimate goal of what we want to do — get to the point where we can make hail forecasts two hours in advance. ‘A storm is likely to move into downtown Dallas, now is a good time to act.'”

With such a system in place, it might be possible to prevent injuries to vulnerable people, divert planes or move them into hangars, and protect cars and other property.

Looking at past storms to predict future ones

To tackle the problem, the team first reviews the previous season’s storms to identify the best cases to study. They then perform numerical experiments to see whether new, improved techniques let their models predict these storms better than the original forecasts did. The goal is ultimately to transition the higher-resolution models they are testing into operational use.

Now in the third year of their hail forecasting project, the researchers are getting promising results. Studying the storms that produced the May 20, 2013, tornado in Moore, Oklahoma, which led to 24 deaths, destroyed 1,150 homes and caused an estimated $2 billion in damage, they developed 0- to 90-minute hail forecasts that captured the storm’s impact better than the National Weather Service forecasts produced at the time.

“The storms in the model move faster than the actual storms,” Snook says. “But the model accurately predicted which three storms would produce strong hail and the path they would take.”

The models required Stampede to solve multiple fluid dynamics equations at millions of grid points and also incorporate the physics of precipitation, turbulence, radiation from the sun and energy changes from the ground. Moreover, the researchers had to simulate the storm multiple times — as an ensemble — to estimate and reduce the uncertainty in the data and in the physics of the weather phenomena themselves.
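The ensemble idea can be illustrated with a minimal Python sketch: start several runs from slightly different initial states, representing uncertainty in the observations, and summarize the results by their mean and spread. The logistic-growth `toy_forecast` function, the Gaussian perturbations and all parameter values are hypothetical stand-ins; the article does not describe how the SHARP ensemble is configured. The sketch also covers only estimating uncertainty, not any step that would reduce it (for example, by folding in new observations).

```python
import random
import statistics


def toy_forecast(x0: float, n_steps: int = 10, growth: float = 0.3) -> float:
    """Hypothetical stand-in for a storm model: logistic growth of one quantity.

    The starting value is clipped at zero so every member stays finite.
    """
    x = max(0.0, x0)
    for _ in range(n_steps):
        x += growth * x * (1.0 - x)
    return x


def run_ensemble(observed_x0: float, n_members: int, obs_error: float, seed: int = 0):
    """Run the toy model from perturbed initial states; return (mean, spread)."""
    if n_members < 2:
        raise ValueError("need at least two members to estimate spread")
    rng = random.Random(seed)  # seeded so the ensemble is reproducible
    # Each member starts from the observed state plus a random error
    # drawn from a normal distribution with standard deviation obs_error.
    forecasts = [
        toy_forecast(observed_x0 + rng.gauss(0.0, obs_error))
        for _ in range(n_members)
    ]
    return statistics.mean(forecasts), statistics.stdev(forecasts)


mean, spread = run_ensemble(observed_x0=0.05, n_members=40, obs_error=0.01, seed=1)
print(f"ensemble mean {mean:.3f}, spread {spread:.3f}")
```

A larger spread signals a less certain forecast; that spread is the kind of information Ming Xue expects future operational hail forecasts to carry alongside the forecast itself.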

“Performing all of these calculations on millions of points, multiple times every second, requires a massive amount of computing resources,” Snook says.

The team used more than a million computing hours on Stampede for the experiments and additional time on the Darter system at the National Institute for Computational Science for more recent forecasts. The resources were provided through the NSF-supported Extreme Science and Engineering Discovery Environment (XSEDE) program, which acts as a single virtual system that scientists can use to interactively share computing resources, data and expertise.
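The "million computing hours" puzzled several readers, since a million hours on a single processor would be about 114 years. Allocations on machines like Stampede are normally counted as processor time summed over everything running in parallel (core-hours or node-hours; the release does not say which), so the elapsed time on the full machine is far shorter. The sketch below does that arithmetic. The node count and cores per node are our assumptions about Stampede’s configuration, not figures from the release, and the even-split model ignores queueing and the fact that the machine is shared with other users.

```python
HOURS_PER_YEAR = 365.25 * 24  # 8,766 hours in an average year


def wall_clock_hours(total_compute_hours: float, units_in_parallel: int) -> float:
    """Elapsed time if the work were spread evenly across parallel units
    (nodes or cores), ignoring queueing, sharing and imperfect scaling."""
    return total_compute_hours / units_in_parallel


compute_hours = 1_000_000  # "more than a million computing hours" (a lower bound)
NODES = 6_400              # assumption: Stampede node count
CORES_PER_NODE = 16        # assumption: two 8-core CPUs per node

print(compute_hours / HOURS_PER_YEAR)                           # ~114 years on one unit
print(wall_clock_hours(compute_hours, NODES))                   # 156.25 hours if node-hours
print(wall_clock_hours(compute_hours, NODES * CORES_PER_NODE))  # 9.765625 hours if core-hours
```

If the figure is in node-hours, it corresponds to about a week of the whole machine; if in core-hours, to under half a day. Either way, the number measures total work, not elapsed time.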

The potential of hail prediction

Though the ultimate impacts of the numerical experiments will take some time to realize, their potential motivates Snook and the severe hail prediction team.

“This has the potential to change the way people look at severe weather predictions,” Snook says. “Five or 10 years down the road, when we have a system that can tell you that there’s a severe hail storm coming hours in advance, and to be able to trust that — it will change how we see severe weather. Instead of running for shelter, you’ll know there’s a storm coming and can schedule your afternoon.”

Ming Xue, the leader of the project and director of the Center for Analysis and Prediction of Storms (CAPS) at OU, gave a similar assessment.

“Given the promise shown by the research and the ever increasing computing power, numerical prediction of hailstorms and warnings issued based on the model forecasts, with a couple of hours of lead time, may indeed be realized operationally in a not-too-distant future, and the forecasts will also be accompanied by information on how certain the forecasts are.”

The team published its results in the proceedings of the 20th Conference on Integrated Observing and Assimilation Systems for Atmosphere, Oceans and Land Surface (IOAS-AOLS); they will also be published in an upcoming issue of the American Meteorological Society journal Weather and Forecasting.

“Severe hail events can have significant economic and safety impacts,” says Nicholas F. Anderson, program officer in NSF’s Division of Atmospheric and Geospace Sciences. “The work being done by SHARP project scientists is a step towards improving forecasts and providing better warnings for the public.”

###

…This is the realm that climate forecasting should have stayed in: short-range weather!!

Finally, a model that they are willing to compare with empirical data!

+97

I love it. Pick up a hailstone off the ground. Place hailstone on top of tape measure. Read hail Temperature proxy off tape measure. Report suspected severe hailstorm happening immediately.

g

Once upon a time I saw, parked on the runway, a KC-135 that had gone through a hailstorm. It looked like it had been worked over with a thousand ball-peen hammers.

Predicting the possibility of hail, and where it goes, is of vital importance to aircraft. Money well spent, versus the other money-wasting efforts.

Maybe next we could predict tornadoes; in either case, 20 minutes would really help.

5 minutes would save a lot of lives, property damage is going to happen.

I understand that they can currently give more than 20 minutes’ warning, and now the concern is that giving too much warning leads people to do things that put them in more danger (e.g. driving to get kids out of school) instead of hunkering down in a safe space.

Yeah, in the 1960’s I remember having about 10 minutes’ warning, by means of a police car racing through the neighborhoods giving the alarm.

Now they usually advise us 2-3 days in advance that the weather will be stormy. By the time that day arrives, they can tell us the part of the state and the time of day the hazardous conditions will begin. Storm trackers position themselves out in the field hours beforehand, and we often have an hour of warning when hail or tornadoes begin.

Mike is right, though, at times you wonder if they give too much warning. 😉

Another thing we have now is lots of video footage from ground-based trackers and helicopters, as well as animated live radar images. Some of those are interactive, and you can see it down to the neighborhood level.

One stormy night, there was such a brouhaha over tornadoes near Oklahoma City that they never even talked about the storm that came near my town, about an hour away. But I watched the radar online and knew what was happening. On one computer, I watched the weatherman’s live reports, and on the other computer I watched the online radar. We had supper in the building where we shelter, and it was over by bedtime. Good times! Got popcorn?

It does give one an idea of why global climate models are so inadequate. If it requires a supercomputer to model one feature of storms, one can only imagine what would be needed for good climate modeling.

40 years to model 1 year of what we think is the global climate, on the best hardware available.

If they are waiting for actual quantum computing, they may keep waiting. Other than hyped-up nonsense, that area is struggling.

And, these researchers are thrilled at the prospect of making good forecasts 90 minutes ahead of time. CAGW “forecasters” who think they can predict many decades out are lunatics.

“While climate models tend to be open-ended and impossible to verify for the future they predict until the future becomes the measured present, weather models can and have been verified, and they keep getting better. ”

Open ended? That is a meaningless term.

“Impossible to verify the future they predict until it becomes the present”?

That is true of ALL models.

Weather models verified? Ah, no. You measure their skill. They are always wrong. The question is how wrong COMPARED to other models.

Next, some GCMs are just weather models that are run for a longer time period.

So there is no fundamental distinction between “weather” model and climate model.

In both cases ( weather models and GCM), they cant be fully evaluated until the future.

You don’t “verify” ( verify is checking against an end user accuracy specification ), you measure their skill: how wrong they are.

Given the complexity of the system, GCMs do OK. Again, it’s a relative measure that matters: are they better than a naive forecast that “nothing changes”? Yup.

[Another wild claim from drive-by Mosher. I’ve used weather forecast models on a daily basis for 3 decades. Yes, their predictive skill has improved since 30 years ago. Many, many times, then and now, they get the forecast for the next day for a location exactly right – that’s what I call verified. The prediction was verified by the actual result. Climate models that predict 25-50, or 100 years out have NEVER been verified by an actual result, and can’t be until the time elapses. They can’t even be assessed for predictive skill right now for 25-100 year forecasts. That’s why I call climate models open-ended, and it is not a meaningless term, but a judgment of their inability to be verified. Tough noogies if you don’t like my description. -Anthony]

The Mosher definition: “( verify is checking against an end user accuracy specification )”.

Is “end user accuracy specification” another way of saying “irrevocable truth” or is it more like “measure their skill”?

Steer, don’t brake Steven.

=========

“some GCMs are just weather models that are run for a longer time period.

So there is no fundamental distinction between “weather” model and climate model.”

=========

Name a weather model that uses atmospheric concentration of CO2 as a “forcing” to generate weather forecasts.

======

“In both cases ( weather models and GCM), they cant be fully evaluated until the future.”

======

In meteorology, today’s weather in the real world allows us to evaluate the accuracy of yesterday’s forecast.

In carbon climatology, today’s climate can’t be used to evaluate yesterday’s doomsday predictions, simply because yesterday didn’t happen in the post-normal world. Instead, a shiny new model gets wheeled out each year to explain why today is like today. e.g.:

============

Cold winters have been caused by global warming: new research

Telegraph, Oct 2014

[…]

Research at Tokyo University and Japan’s national Institute of Polar Research – published in the current issue of the journal Nature Geoscience – has linked the cold winters with the “rapid decline of Arctic sea ice”, caused by warming, over the past decade.

The most comprehensive computer modelling study on the issue to date, it concludes the risk of severe winters in Europe and Northern Asia has doubled as the result of the climate change.

http://www.telegraph.co.uk/news/earth/environment/climatechange/11191520/Cold-winters-have-been-caused-by-global-warming-say-scientists.html

============

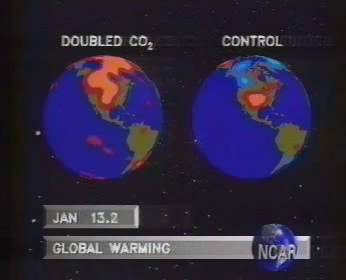

–Billion dollar climate model – Schneider/NCAR, 1990

Hey – there’s not a cloud in the sky! It must be a weather model.

Hey, he has to say this stuff because he has bills to pay…..

“The team used more than a million computing hours on Stampede for the experiments”. Unless a computing hour is different from a “normal” hour, that would be about 114 years of computer usage. Quite the feat.

I see this all the time in press releases. They mean a million hours of processing time. All supercomputers are massively parallel these days.

Looking at the specifications of Stampede, they probably mean something between 150 and 175 hours of time, split between the 6,400 “nodes” of the system. (If they mean “cores” – they only took a couple of hours of system time. That doesn’t seem worth mentioning, but I could be wrong.)

You are correct, if the data was serially processed it would take a million hours.

This is a great prediction tool. It can predict what is going to happen some time after doing a million hours of computations.

At the end of the simulated imitation run, the computer will ask: “And we are doing this computation for what reason??”

Simply wunnerful ! And they can do this for the entire globe’s climate at the same time ??

G

Mark, I can assure you that out in the real world the data is serially processed. First of all something happens. It happens so fast it will make your head spin; maybe as short as 10^-43 seconds. Then next something else happens; also very fast. No time is wasted, everything happens as fast as it can as soon as it can. Nothing happens before it is possible, and nothing waits for something else to happen, before it happens; if it can happen then.

G

Parallel processing. Thanks.

Cloud computation – c’s netted in clouds.

“Five or 10 years down the road, when we have a system that can tell you that there’s a severe hail storm coming hours in advance, and to be able to trust that — it will change how we see severe weather. Instead of running for shelter, you’ll know there’s a storm coming and can schedule your afternoon.”

============

Sounds like bar weather to me, with the added incentive of betting on whose farm gets the worst of the predicted hail storms.

If you play it right, the hail never happens and you collect all the bets.

That will be some computer system, since it will have to process data faster than Mother Gaia can, and she is as fast as it gets. Computers can process fast enough to make tomorrow’s headlines in the newspaper (after the event).

G

There should be a different word to describe the sort of verified computer models used in short term weather predictions, and the unverified speculations used in long term climate models. They are both merely calculations. Some calculations can be extremely accurate, others less so.

There are words. The first is “science,” the second is “money.”

The radar indicated/predicted graph looks like someone has just thrown a whole bunch of green darts at the maps.

Amazing accuracy that dartboard computer program.

It should humble the “climate model” prognosticators to look at the computing power that needs to be applied to hope to get a handle on a mere weather event with the current state of atmospheric science. Applied science as it should be!

The forecasts and warnings from the severe weather forecast center in Norman have probably saved tens of thousands of lives, to date, in central Oklahoma. Some of the most violent weather events ever recorded on the planet have occurred here and have been preceded by warnings to get below ground or evacuate, because survival was not possible above ground. People around here listen when the folks in Norman speak, because we’ve seen the results of their previous warnings and they were right.

We’ve seen paths of destruction for miles in which there was nothing but piles of rubble and we’ve seen worse, where there were not even piles of rubble left- just bare nothing, with even sections of cement slab foundations and chunks of sidewalks and roadway ripped away, if the winds could find an exposed edge.

Any tools which these researchers need to perfect and expand their craft are just fine with me.

From the descriptions and the maps provided, there are quite a number of questions.

Norman Oklahoma’s day to day weather watching is excellent and most of their weather reports are about as accurate as the claimed weather reports above.

When researchers trot out results that are still on par with current forecasts, I wonder just what are they celebrating?

In looking at their map, did they actually predict individual storms along the entire front? Or just a few near their center?

Why do they title a graph as ‘Graupel’ that is predicting 50+mm sized hail?

For what it is worth, in most of the nasty thunderstorm alley, everyone sane checks the weather forecast. Severe thunderstorm watches and warnings should be taken seriously.

Predicted storms with a chance of hail are fair warning that it is foolish to wait till one is bonked in the head or one’s car is dented.

Predicted thunderstorms with potential for rotation and tornadoes mean one keeps the weather radio on and heeds all warnings.

The Mayfest storm they refer to was not a mild graupel event. Hail, four inches across (102mm), is deadly and even cars are not safe places to hide in.

Predicting exactly which storm is going to drop 102mm hail and when would be a terrific accomplishment, but I don’t see that prediction specificity in the research above.

Which brings us back to when one observes a towering gray green black anvil cloud bearing down on you, especially if you can see rotations in the cloud; get under cover, preferably strong underground cover!

Those folks who wave off the seriousness of said storms are just Darwin Award candidates trying for their deserved destiny.

I suspect the research above is news so those folks can request their very own super duper ala t’ Booper computer to run more predictions.

What is a “computing hour”? The story says:

“The team used more than a million computing hours on Stampede for the experiments and additional time on the Darter system at the National Institute for Computational Science for more recent forecasts.”

A million hours is about 114 years. So obviously this is not a “real time” estimate of how much computing time was used. I’m sure we’re talking about some massive computing resources here, and I’d actually like to understand the magnitude. “A million computing hours” is meaningless to me.

A positive development. More data fed into a massively more powerful dedicated computer will be required to achieve useful accuracy. For my purposes, a consistent five-mile radius is the accuracy required. Farmers need that accuracy five days out. There is nothing like having hay down and ready to bale, and then a storm comes.

Cool use of modeling. Much better than the old system I was a part of as a weather spotter in the ’80s, in the Front Range foothills of the Rockies in Colorado. We reported any rainfall at or above 2″/hour from storm cells, as by the time they got onto the plains, hail and tornadoes were likely. I’d guess we were lucky to catch any with that system due to the low density of observation coverage.

I got about a 15 minute hailstorm last night, here in Eastern Oklahoma. Sure was loud.

The real-time weather forecasting around here is unreal. We have a ringside seat for every tornado, with TV, radar and storm chasers, who really give us important information about the tornadoes as they develop and move.

Jet streams. We need more jet streams on the TV weather. Jet streams tell the story. They tell you where the storm front is going and where it is going to be the strongest.

We used to have a meteorologist around here named Gary Shore (Tulsa), who always showed the jet streams with his weather forecasts, and I learned so much about weather from this guy. I sure do miss him.

I don’t see jet streams emphasized very much on the TV weather anymore, for some reason.